Submission closed

- Frontiers in Psychology

- Cognition

- Research Topics

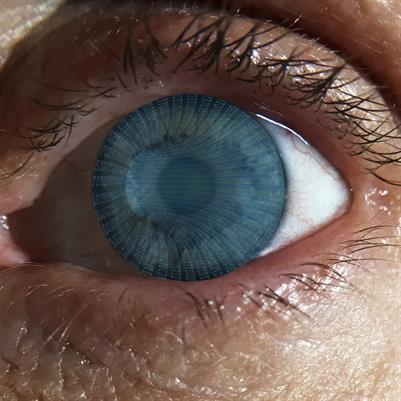

- Advancing the use of Eye-Tracking and Pupillometric data in Complex Environments.

Advancing the use of Eye-Tracking and Pupillometric data in Complex Environments.



About this Research Topic


Topic editors

Russell A. Cohen Hoffing

US DEVCOM Army Research Laboratory, Army Research Directorate

Playa Vista, CA, United States

Steven Matthew Thurman

CCDC Army Research Laboratory, Human Research and Engineering, US Army Research Laboratory

Aberdeen Proving Ground, United States

Jonathan Touryan

United States Army Research Laboratory

Adelphi, United States

Julien Epps

University of New South Wales

Kensington, Australia

Josef Faller

Optios

New York City, United States

Paul Sajda

Columbia University

New York City, United States

Frequently asked questions

Frontiers' Research Topics are collaborative hubs built around an emerging theme. Defined, managed, and led by renowned researchers, they bring communities together around a shared area of interest to stimulate collaboration and innovation.

Unlike section journals, which serve established specialty communities, Research Topics are pioneer hubs, responding to the evolving scientific landscape and catering to new communities.

The goal of Frontiers' publishing program is to empower research communities to actively steer the course of scientific publishing. Our program is built as a three-part structure of fixed field journals, flexible specialty sections, and dynamically emerging Research Topics, connecting communities of different sizes and maturity.

Research Topics originate from the scientific community. Many of our Research Topics are suggested by existing editorial board members who have identified critical challenges or areas of interest in their field.

As an editor, you can use Research Topics to build your journal, as well as your community, around emerging, cutting-edge research. Because they sit at the forefront of their fields, Research Topics attract high-quality submissions from leading experts all over the world.

A thriving Research Topic can potentially evolve into a new specialty section if there is sustained interest and a growing community around it.

Each Research Topic must be approved by the specialty chief editor, and it falls under the editorial oversight of our editorial boards, supported by our in-house research integrity team. The same standards and rigorous peer review processes apply to articles published as part of a Research Topic as for any other article we publish.

In 2023, 80% of the Research Topics we published were edited or co-edited by our editorial board members, who are already familiar with their journal's scope, ethos, and publishing model. All other topics are guest edited by leaders in their field, each vetted and formally approved by the specialty chief editor.

Publishing your article within a Research Topic alongside related articles increases its discoverability and visibility, which can lead to more views, downloads, and citations. Research Topics grow dynamically as more articles are published in them, which prompts readers to revisit them frequently and further increases visibility.

As Research Topics are multidisciplinary, they are cross-listed in several fields and section journals – increasing your reach even more and giving you the chance to expand your network and collaborate with researchers in different fields, all focusing on expanding knowledge around the same important topic.

Our larger Research Topics are also converted into ebooks and receive social media promotion from our digital marketing team.

Frontiers offers multiple article types, but the types available depend on the field and section journals in which the Research Topic is featured. The available article types for a Research Topic will appear in the drop-down menu during the submission process.

Yes, we would love to hear your ideas for a topic. Most of our Research Topics are community-led and suggested by researchers in the field. Our in-house editorial team will contact you to talk about your idea and whether you’d like to edit the topic. If you’re an early-stage researcher, we will offer you the opportunity to coordinate your topic, with the support of a senior researcher as the topic editor.

A team of guest editors (called topic editors) leads each Research Topic. This editorial team oversees the entire process, from the initial topic proposal to calls for participation, peer review, and final publication.

The team may also include topic coordinators, who help the topic editors send calls for participation, liaise with topic editors on abstracts, and support contributing authors. In some cases, they can also be assigned as reviewers.

As a topic editor (TE), you will take the lead on all editorial decisions for the Research Topic, starting with defining its scope. This allows you to curate research around a topic that interests you, bring together perspectives from leading researchers across different fields, and shape the future of your field.

You will choose your team of co-editors, curate a list of potential authors, send calls for participation and oversee the peer review process, accepting or recommending rejection for each manuscript submitted.

As a topic editor, you're supported at every stage by our in-house team. You will be assigned a single point of contact to help you on both editorial and technical matters. Your topic is managed through our user-friendly online platform, and the peer review process is supported by our industry-first AI review assistant (AIRA).

If you’re an early-stage researcher, we will offer you the opportunity to coordinate your topic, with the support of a senior researcher as the topic editor. This gives you valuable editorial experience, sharpens your ability to critically evaluate research articles, and deepens your understanding of the quality standards and requirements of scientific publishing. It is also an opportunity to discover new research in your field and expand your professional network.

Yes, certificates can be issued on request. We are happy to provide a certificate for your contribution to editing a successful Research Topic.

Research Topics thrive on collaboration, and their multidisciplinary approach to emerging, cutting-edge themes attracts leading researchers from all over the world.

As a topic editor, you can set the timeline for your Research Topic, and we will work with you at your pace. Typically, Research Topics are online and open for submissions within a few weeks and remain open for participation for 6 – 12 months. Individual articles within a Research Topic are published as soon as they are ready.


Our fee support program ensures that all articles that pass peer review, including those published in Research Topics, can benefit from open access – regardless of the author's field or funding situation.

Authors and institutions with insufficient funding can apply for a discount on their publishing fees. A fee support application form is available on our website.

In line with our mission to promote healthy lives on a healthy planet, we do not provide printed materials. All our articles and ebooks are available under a CC-BY license, so you can share and print copies.

Published in

Frontiers in Psychology

- Cognition

- Perception Science

- Cognitive Science

- Human-Media Interaction (offline)

- Neuropsychology

Participating Journals

Frontiers in Computer Science

- Impact factor: 2.4

- CiteScore: 4.3

Frontiers in Neuroscience

- Impact factor: 3.2

- CiteScore: 6.2

Impact

- 56k topic views

- 43k article views

- 11k article downloads