REVIEW article

Front. Sustain. Food Syst., 26 February 2025

Sec. Nutrition and Sustainable Diets

Volume 9 - 2025 | https://doi.org/10.3389/fsufs.2025.1551460

This article is part of the Research Topic: Integrative Multi-omics and Artificial Intelligence (AI)-driven Approaches for Superior Nutritional Quality and Stress Resilience in Crops

Danishta Aziz1†, Summira Rafiq1†, Pawan Saini2*†, Ishtiyaq Ahad1†, Basanagouda Gonal2†, Sheikh Aafreen Rehman1†, Shafiya Rashid3†, Pooja Saini4†, Gulab Khan Rohela2†, Khursheed Aalum5, Gurjeet Singh6†, Belaghihalli N. Gnanesh7†, Mercy Nabila Iliya8*†

The agriculture sector is currently facing several challenges, including the growing global human population, depletion of natural resources, reduction of arable land, a rapidly changing climate, and the frequent occurrence of human diseases such as Ebola, Lassa, Zika, Nipah, and most recently, the COVID-19 pandemic. These challenges pose a threat to global food and nutritional security and place pressure on the scientific community to achieve Sustainable Development Goal 2 (SDG2), which aims to eradicate hunger and malnutrition. Technological advancement plays a significant role in enhancing our understanding of the agricultural system and its interactions, from the cellular level to the field level, for the benefit of humanity. Remote sensing (RS), artificial intelligence (AI), and machine learning (ML) approaches are highly advantageous for producing precise and accurate datasets from which management tools and models can be developed. These technologies are beneficial for understanding soil types, efficiently managing water, optimizing nutrient application, designing forecasting and early warning models, protecting crops from plant diseases and insect pests, and detecting threats such as locusts. The application of RS, AI, and ML algorithms is a promising and transformative approach to improving the resilience of agriculture against biotic and abiotic stresses and achieving sustainability to meet the needs of the ever-growing human population. This article covers how AI algorithms and RS data can be leveraged to enable real-time monitoring, early detection, and accurate forecasting of pest outbreaks. It further discusses how these approaches allow more precise, targeted pest control interventions, reducing reliance on broad-spectrum pesticides and minimizing environmental impact. Despite challenges in data quality and technology accessibility, the integration of AI and RS holds significant potential for revolutionizing pest management.

In every field, whether rocket science or agriculture, there is a constant search for more convenient and intelligent tools to automate various processes, and this depends on the ability of machines to emulate human intelligence. Agriculture, which involves the cultivation of food crops, is a labor-intensive yet essential occupation that sustains life on planet Earth. The world is also facing an alarming situation due to the increasing population, climate change, depletion of natural resources, a reduction in farm workers, and the emergence of novel human diseases such as COVID-19. These pressures make it difficult to manage agricultural fields so as to meet the necessary food demand and achieve the second Sustainable Development Goal (SDG-2), which aims for zero hunger (Martos et al., 2021). During the last decade, there has been significant technological advancement in many industries worldwide, and the European Commission has designated the year 2021 as the beginning of a phase of industrial innovation referred to as Industry 5.0 (Martos et al., 2021). The use of wooden and metal farming tools was categorized under the first two phases of the agricultural revolution. During the third and fourth phases, the major achievements were technological advancements such as robotics, machinery, telecommunication systems, and the decoding of genetic codes. The fifth agricultural industrial revolution involves current technological interventions, such as artificial intelligence (AI), machine learning (ML), and remote sensing (RS) (Martos et al., 2021). AI and RS tools now make it easier to manage agricultural fields by improving methods for monitoring crop yield and health. This involves the timely management of insect pests, pathogens, and weeds, as well as efficient irrigation, nutrient supply, and soil fertility management (Kim et al., 2008).

Agricultural robots are designed to facilitate the effective integration of AI in the agricultural sector, providing potential solutions to various challenges. With the help of AI, farmers can use chatbots as virtual guides to receive timely advice and recommendations on various aspects of crop management. Unmanned aerial vehicles (UAVs), or drones, assisted by the global positioning system (GPS), can be remotely controlled and can replace manual labor in agricultural tasks such as crop health assessment, irrigation scheduling, herbicide application, weed and pest identification, and forecasting (Mogili and Deepak, 2018; Ahirwar et al., 2019). AI is constantly evolving in new directions as a result of advancements in computational techniques, and along with many other sectors of the economy, agriculture has begun to benefit from it. AI has contributed to significant advancements in agriculture, such as in weeding, irrigation management, and the monitoring and management of diseases and insect pests. It can also suggest suitable sowing and harvest dates for specific crops and facilitate the sale of crops in the appropriate marketplace at the optimal time (Javaid et al., 2023).

AI has significantly assisted farmers in implementing various technologies, and the prior analysis of farm activities helps to secure crop quantity and quality (Vijayakumar and Balakrishnan, 2021; Subeesh and Mehta, 2021). AI can address numerous challenges in agriculture, such as labor shortages, by offering solutions like auto-driven tractors, smart irrigation systems, fertilizer and pesticide applicators, and AI-based harvesting robots (Wongchai et al., 2022; Bu and Wang, 2019). AI and ML models use numerous datasets on temperature, soil moisture, and other resources required for optimal crop growth to gain real-time insights into when to plant, what to plant, when to fertilize, and when to harvest (Singh et al., 2022; Sabrina et al., 2022). AI algorithms can detect insects of various sizes and feeding habits and promptly notify farmers via smartphone about the invasion of insect pests in their fields, enabling them to take timely action to prevent crop losses. In this review, we explore the application of AI and RS in managing insect pests. We analyze the successes and challenges associated with utilizing these technologies in pest management. Additionally, we provide a comprehensive overview of AI and RS as potent tools for achieving sustainable and effective pest control in agricultural and ecological contexts.

The strategy for identifying, detecting, classifying, and managing various insect pests and disease-causing pathogens has shifted from traditional methods to innovative information-gathering tools and techniques such as remote sensors, geographic information systems (GIS), and GPS. RS is the art and science of gathering information about an object, area, or phenomenon through the analysis of data obtained by a device that does not make physical contact with the object under investigation (Lillesand et al., 2015). According to De Jong and Van der Meer (2007), the remote sensing process (Figure 1) consists of several stages, each of which plays a crucial role in acquiring and utilizing remotely sensed data. RS is a broad term used to encompass various technologies such as satellites, GIS, Internet of Things (IoT), cloud computing, sensors, Decision Support Systems (DSS), and autonomous robots. The process of RS is mainly influenced by the characteristics of the electromagnetic radiation (EMR) that is used to collect and interpret data. Based on the source of EMR, RS is categorized into two types: passive remote sensing and active remote sensing. Active remote sensors, such as RADAR (Radio Detection and Ranging), have their own source of radiation and operate day and night because they do not depend on external light. Passive remote sensors, in contrast, do not have their own radiation source; they depend on external sources to illuminate the object and therefore work only during the daytime.

The atmosphere plays a crucial role in the process of RS, as EMR passes through it before reaching the target object on the Earth's surface. When radiation interacts with gas molecules or small particles, such as aerosols, it can scatter in various directions, including back toward the sensor. When EMR such as visible light, infrared, or microwave radiation reaches the target object observed from a remote sensing platform, such as a satellite or a drone, several interactions may occur. These interactions are mainly determined by the inherent properties of the object and the characteristics of the incoming radiation. The three most common interactions are absorption, emission, and reflection. RS instruments are designed to detect and measure the reflected radiation, and by analyzing it at different wavelengths, researchers can extract valuable information about the target's characteristics and make informed decisions for a wide range of applications (Pellikka and Rees, 2009). After the interaction between incoming radiation and the target object, a sensor (such as a digital camera, electromagnetic scanner, radar system, multispectral scanner, or hyperspectral scanner) collects and records the data in the form of reflected or emitted electromagnetic radiation (Yang and Everitt, 2011). The recorded radiation can be stored in analog form (such as aerial photographs) or in digital form (such as signal values) on storage media such as a CD or DVD. After the EMR from the target objects has been recorded by a remote sensor, the data is transmitted in electronic form to a receiving station for further processing. For image analysis, various software options are available, such as Imagga, Hive, and Anyline. These programs automatically apply advanced algorithms to input images to extract information from them. Three basic groups of information are interpreted from an image: the assignment of class labels to individual pixels, the estimation of properties of the target object, and the monitoring of changes in target properties over time (Jensen, 2007). The final output of a remote sensing process can sometimes be used as input for further examination, such as in GIS. This information can also be used in conjunction with other types of data for various research purposes.
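Two of the interpretation tasks described above, assigning class labels to pixels and monitoring change over time, can be sketched in a few lines. The example below is a minimal illustration using a minimum-distance-to-mean classifier; the class names, the two-band (red, NIR) reflectance values, and the function names are hypothetical choices for illustration, not taken from the cited works.

```python
import math

# Hypothetical mean reflectance (red, NIR) for each class; illustrative values only.
CLASS_MEANS = {
    "vegetation": (0.05, 0.50),
    "bare_soil": (0.25, 0.35),
    "water": (0.03, 0.02),
}


def classify_pixel(pixel, class_means=CLASS_MEANS):
    """Return the label whose mean spectrum is nearest (Euclidean) to the pixel."""
    return min(class_means, key=lambda label: math.dist(pixel, class_means[label]))


def classify_image(image, class_means=CLASS_MEANS):
    """Label every pixel of a 2-D image given as rows of (red, NIR) tuples."""
    return [[classify_pixel(p, class_means) for p in row] for row in image]


def changed_fraction(labels_t1, labels_t2):
    """Fraction of pixels whose class label differs between two dates."""
    pairs = [(a, b) for r1, r2 in zip(labels_t1, labels_t2) for a, b in zip(r1, r2)]
    return sum(a != b for a, b in pairs) / len(pairs)


# Two tiny 2x2 scenes of the same area on different dates (made-up values).
date1 = [[(0.06, 0.48), (0.24, 0.33)], [(0.04, 0.03), (0.05, 0.52)]]
date2 = [[(0.26, 0.36), (0.24, 0.33)], [(0.04, 0.03), (0.05, 0.52)]]

labels1 = classify_image(date1)
labels2 = classify_image(date2)
print(labels1)
print(changed_fraction(labels1, labels2))
```

In practice the class means would be estimated from labeled training pixels, and operational workflows typically use more bands and more robust classifiers; the sketch only shows the shape of the per-pixel labeling and change-monitoring steps.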

Integrated pest management (IPM) is a comprehensive approach to controlling pests that aims to reduce the use of pesticides on crops and soil and to promote an eco-friendly environment. However, implementing IPM requires ongoing monitoring and surveillance of crops, which can be a challenging task. AI can be utilized to address this issue (Murmu et al., 2022). AI can analyze large sets of data and draw inferences based on previous reports, enabling farmers to implement timely pest management practices (Katiyar, 2022). Numerous studies have highlighted the effectiveness of RS and AI in insect pest management. Prabhakar et al. (2012) demonstrated the use of satellite-based RS to monitor and predict the spread of a major insect pest, the fall armyworm (Spodoptera frugiperda), in maize fields. In another study, Smith et al. (2020) demonstrated how drones equipped with multispectral sensors were used to assess pest damage in vineyards by analyzing the spectral signatures of the vines. Advancements in RS help to provide synoptic data of high precision at a very fast pace, even from inaccessible areas (Malinowski et al., 2015). The timeline in Figure 2 illustrates a brief history of advancements in RS technology.

The platforms on which remote sensors are mounted, i.e., RS platforms, are categorized into three types based on the elevation at which the sensors are placed above the Earth's surface: ground-based, airborne, and spaceborne (Johnson et al., 2001). Ground-based platforms are positioned near or on the Earth's surface to examine specific characteristics of the environment, plants, or objects of interest and are particularly useful for conducting detailed and localized studies, focusing on individual plants or small patches of vegetation. Airborne platforms refer to aircraft used to carry RS instruments for aerial surveys. These platforms offer the advantage of operating at lower altitudes than satellites, which allows for higher spatial resolution and targeted data acquisition. Vehicles used for aerial survey missions include airplanes, helicopters, and UAVs. Spaceborne platforms refer to sensors and instruments placed on satellites (geostationary and sun-synchronous satellites) that orbit the Earth and capture data from space, providing global and repetitive coverage of the Earth's surface. The data collected by these satellites is instrumental in various applications, including weather forecasting, climate monitoring, land cover mapping, agriculture, and disaster response (Roy et al., 2022). The most recent example of spaceborne RS is India's Chandrayaan-3 lander, Vikram, which made a soft landing near the Moon's south pole on August 23, 2023. The rover, Pragyan, has conducted studies on the moon's atmosphere and confirmed the presence of oxygen and other mineral elements (Kanu et al., 2024).

Modern agriculture and food production, aimed at supporting the ever-growing global population, are constantly challenged by the impact of global climate change, which in turn increases the pressure of abiotic and biotic stresses on crop production, leading to an imbalance in economic and environmental sustainability (Bakala et al., 2020). In this situation, AI and RS offer a ray of hope, as they can quantify phenotypic data of crops using various tools (Jung et al., 2021). In addition to their applications in other fields, RS and AI play a vital role in agricultural and horticultural fields (Figure 3). The use of AI with Unmanned Aircraft Systems (UAS) has provided an unparalleled advantage in conducting advanced data analysis for the management of agricultural fields, thereby increasing the overall resilience and efficiency of the system (Coble et al., 2018). Various companies, such as AGEYE Technologies, aWhere, Blue River Technology, FarmShots, Fasal, Harvest CROO Robotics, HelioPas AI, Hortau Inc., Ibex Automation, PEAT, Root AI, Trace Genomics, and VineView, have developed different AI-based tools for agriculture that perform functions such as predicting weather and its correlation with pest population dynamics, managing weeds, picking and packaging fruits and vegetables, and monitoring irrigation and fertilization (Dutta et al., 2014). Remote sensing is a highly useful technology that allows continuous gathering of information about a crop without causing damage and at a very low cost (Barbedo, 2019). Images are captured by remote sensors and classified with the assistance of deep learning machine models (DLMM). Based on the input image data, an interpretation is made to determine whether the plant is healthy or diseased. Convolutional neural networks (CNNs), a subset of AI, have been used to identify the disease-causing pathogen in plants by analyzing lesions and spots on leaf images (Barbedo, 2019).

RS and AI play an important role in identifying and classifying crops by exploiting the spectral characteristics of different vegetation cover, especially when the crops being studied have similar characteristics and are difficult to distinguish. Compared with traditional crop identification methods, AI offers greater accuracy and efficiency through its ability to analyze large datasets in less time, detect subtle variations in plant features, and monitor crops in real time, leading to better decision-making and optimized resource allocation among crops. With the assistance of optical RS, Low and Duveiller (2014) defined the spatial resolution requirements for identifying a specific crop and reported that no common pixel size (one-size-fits-all) exists for identifying every crop. Instead, the pixel size and purity required for crop identification vary from crop to crop and from one landscape to another. Also, not every type of sensor is suitable for identifying the majority of crops. For example, MODIS (250 meters) can identify the majority of crops in a particular field, while Landsat (30 meters) provides object-based classification. Zhang et al. (2016) used two consumer-grade cameras, namely a Red, Green, and Blue (RGB) camera and a modified Near-Infrared (NIR) camera, mounted on an airborne RS platform for crop identification in Texas. The images captured by the NIR camera provided more effective classification results than those captured by the RGB camera and other non-spectral features.
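The effect of pixel size on crop identification can be made concrete with simple arithmetic. The sketch below estimates how many pixels of a given ground resolution fit inside a field; the 10-hectare field size is a hypothetical example, and the function name is illustrative.

```python
def pixels_per_field(field_area_ha, pixel_size_m):
    """Approximate number of square pixels covering a field of the given area."""
    field_area_m2 = field_area_ha * 10_000  # 1 hectare = 10,000 square meters
    return field_area_m2 / (pixel_size_m ** 2)


# Pixel sizes as quoted in the text: MODIS 250 m, Landsat 30 m.
for sensor, size_m in {"MODIS": 250, "Landsat": 30}.items():
    print(sensor, round(pixels_per_field(10, size_m), 1))
```

Under these assumptions, a 10-hectare field spans fewer than two 250 m pixels but more than a hundred 30 m pixels, which suggests why coarse pixels are likely to mix several cover types in small-field landscapes and why the required resolution depends on the crop and landscape.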

AI-based crop identification is now readily available for identifying different field crops by analyzing images with the help of CNNs, which can identify, classify, and detect different objects based on image features (Ranjithkumar et al., 2021). Ibatullin et al. (2022) utilized RS and AI to monitor various crops, achieving an accuracy of 85–95% in crop identification using satellites such as Sentinel-1 and Sentinel-2. The genus Ficus comprises nearly 1,000 plant species worldwide, making it challenging for a common man to identify them manually with 100% accuracy. In this context, Kho et al. (2017) developed a baseline automated system using an artificial neural network (ANN) and support vector machine (SVM) to identify and classify crop images of three Ficus species: F. benjamina, F. pellucidopunctata, and F. sumatrana with an accuracy level of approximately 83%.

The demand for various agricultural and horticultural crops fluctuates over time due to unreliable prices or market values. Therefore, it is important to accurately determine the area needed for growing specific crops on the basis of market planning and export opportunities. Estimating the crop area required to achieve a desirable yield for feeding a large population is one of the most challenging tasks faced by policymakers. Satellite-based RS, GIS, and ML are now used to estimate crop acreage over vast geographic areas. Meraj et al. (2022) estimated wheat crop acreage and yield in Uttar Pradesh, India, using satellite-acquired data and the Carnegie-Ames-Stanford Approach (CASA) model. Multispectral imagery from another satellite, SPOT-5, has been used to identify the crop type and estimate the crop area simultaneously (Yang et al., 2006). Kshetrimayum et al. (2024) integrated ML algorithms with Sentinel-1A satellite SAR data to estimate pearl millet acreage in India and to predict the yield of pearl millet in Agra and Firozabad.

Canopy structure and volume are important factors in crops, particularly for the precise application of fertilizers, pesticides, and irrigation in horticultural crops (Sharma et al., 2022). According to Trout et al. (2008), remote sensors could be used to assess the canopy characteristics of horticultural crops by quantifying the photosynthetically active radiation (PAR) absorbed by the plant canopy. Ayyalasomayajula et al. (2009) automatically measured the canopy volume and height of citrus trees with 85% accuracy using oblique or orthoimages. Temperature at the canopy level is a crucial indicator for detecting water deficit in crop plants. One of the methods used to assess crop water deficit is thermography, which involves remotely measuring the temperature of the crop canopy. Giménez-Gallego et al. (2021) developed an automatic sensor for measuring crop canopy temperature in almonds using a low-cost thermal camera and AI-based image segmentation models. To analyze the data acquired by UAVs, a user-friendly application called Agroview was developed. This cloud- and AI-based application can count and locate plants in the field, differentiate between dead and live plants, measure various characteristics of the canopy, and create disease and pest stress maps. The Agroview app was used by Ampatzidis et al. (2020) to assess the phenotypic characteristics of citrus fields, including tree height, canopy size, and pest detection in both normal and high-density plantations.

Due to the universal shift in market economies, accurate and precise information has become crucial for strategic decision-making at all levels, including producers, resource management, markets, and financing. Because RS supports prediction, farmers can now depend on it to obtain information about the factors that affect the planting and harvesting seasons of a crop. The combination of conventional imaging techniques, spectroscopy, and hyperspectral imaging systems can be used to obtain spatial and spectral data from fruits, vegetables, and other crops to determine crucial quality parameters (Singh et al., 2009). According to Hahn (2004), tomatoes can be classified by firmness as hard, soft, or very soft with 90% precision using remote sensors. Temporal IRS-AWiFS data was utilized to determine the optimal harvesting dates in apple orchards. Previously, the prediction of harvest time relied on common statistical procedures, which often resulted in errors. To address this issue, a novel harvest time prediction model based on LSTM (Long Short-Term Memory) networks has been developed using real-time production and weather data (Liu et al., 2022). AI has also been integrated with UAVs in a conceptual design for olive-harvesting drones, intended to replace traditional methods of olive harvesting and gathering, which are time-consuming and labor-intensive (Khan et al., 2024). Date fruit (Phoenix dactylifera L.) comprises a diverse group of genotypes that vary in external features as well as biochemical composition, which makes it difficult to determine the proper harvesting time and post-harvest handling for each genotype separately so as to improve marketability. To deal with this problem, AI and computer vision are now being applied and are considered an accurate, non-destructive, fast, and efficient emerging technology (Noutfia and Ropelewska, 2024). Potato harvesting time is now predicted by improved AI models such as ResNet-59, which offer greater precision and can rationalize the distribution of important resources, minimize waste, and improve food security (Abdelhamid et al., 2024). AI-driven harvest time assessment is more effective than traditional manual judgment because it more precisely ensures that the crop is harvested at its peak, taking into account factors such as even ripening, market information, shelf life, and waste reduction. By identifying the precise harvest window, AI minimizes the risk of harvesting overripe or underripe produce, which leads to better quality and lower post-harvest losses, thus supporting the economic stability of people associated with farming. In contrast, traditional methods rely only on visual observations by farmers, which are often inaccurate due to factors such as inconsistent and uneven monitoring of large areas and weather variability.

RS approaches can be used by growers to assess the final yield of a particular crop and to calculate the variation among different fields growing the same crop. Vegetation indices (VIs) based on the spectral bands of multispectral imagery can be used, with the most widely used VIs being the NIR/Red ratio (Jordan, 1969) and NDVI (Normalized Difference Vegetation Index) (Rouse et al., 1973). Zaman et al. (2006) utilized an automatic ultrasonic system and a remote sensing-based yield monitoring system and reported a linear correlation between tree size and fruit yield. Another aspect of remote sensing, thermal imaging, has been utilized to estimate the number of fruits in orchards and groves. This is based on the principle that there is a temperature difference between the fruit and the surrounding environment. Crop yield depends on the interaction of various plant and environmental factors, such as temperature, rainfall, plant type, germination percentage, flowering percentage, and pesticide application. Therefore, it is crucial to estimate the impact of each factor on crop yield. Al-Adhaileh and Aldhyani (2022) employed an ANN model in Saudi Arabia to predict crop yields using available datasets for various factors influencing potato, rice, sorghum, and wheat yields. Similarly, Babaee et al. (2021) used an ANN to estimate the yield of rice crops. In a similar context, Hara et al. (2021) emphasized the importance of selecting independent variables when predicting yield using artificial neural networks in conjunction with remotely sensed data. AI has been extensively integrated with Sentinel-2 satellite data in precision agriculture, particularly for yield assessment in crops such as maize, wheat, and rice (Aslan et al., 2024). Vegetation indices are derived from the satellite images and then used to predict yield with high accuracy through AI algorithms, including ML and deep learning models. Common metrics for validating AI-based yield prediction models are mean absolute error (MAE), mean squared error (MSE), root mean squared error (RMSE), and the coefficient of determination (R²); these metrics assess the accuracy and precision of the predictions produced by different models.
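The validation metrics named above follow standard definitions and can be computed directly from observed and predicted yields. The sketch below is a minimal pure-Python illustration; the yield values (in arbitrary units) are made up for the example and the function name is hypothetical.

```python
import math


def regression_metrics(observed, predicted):
    """Compute MAE, MSE, RMSE, and R^2 for paired observed/predicted values."""
    n = len(observed)
    errors = [p - o for o, p in zip(observed, predicted)]
    mae = sum(abs(e) for e in errors) / n
    mse = sum(e * e for e in errors) / n
    rmse = math.sqrt(mse)
    mean_obs = sum(observed) / n
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)  # total sum of squares
    ss_res = sum(e * e for e in errors)                  # residual sum of squares
    r2 = 1 - ss_res / ss_tot
    return {"MAE": mae, "MSE": mse, "RMSE": rmse, "R2": r2}


# Made-up yields for four fields, for illustration only.
observed = [4.0, 5.0, 6.0, 7.0]
predicted = [4.5, 5.0, 5.5, 7.0]
metrics = regression_metrics(observed, predicted)
print(metrics)
```

MAE and RMSE are expressed in the same units as the yield, with RMSE penalizing large errors more heavily, while R² is unitless and describes the share of observed variance that the model explains.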

The identification of insect pests and diseases on farmland and the collection of data on effective pest and disease control mechanisms are now being carried out using RS and AI. RS and AI/ML techniques are highly effective in measuring changes in plant biomass, pigments, coloration, and plant vigour during abiotic stress in crop plants (Pinter et al., 2003). Small et al. (2015) revealed that historical weather data could be beneficial in predicting potential outbreaks of late blight in tomatoes and potatoes, and they proposed the development of a web-based DSS. RS can sense physical and physiological changes in plants such as chlorosis, cell death, stunted growth, wilting, and leaf rolling. Fluorescence spectroscopy (Lins et al., 2009), fluorescence imaging (Moshou et al., 2005) and NIR spectroscopy were used to detect fire blight disease in pear plants that showed no symptoms (Spinelli et al., 2006). A relatively new technique, the electronic nose (E-nose) system, is used for identifying plant diseases. E-noses and fluorescence imaging have been used innovatively to assess stress caused by insect pests and diseases by detecting subtle changes in the volatile organic compounds (VOCs) that plants release as odour and in the fluorescence pattern of plant tissue. This allows insect pests and diseases to be detected before any visible symptoms appear. E-noses were used for early identification of, and differentiation between, fire blight and blossom blight disease in apple trees under laboratory conditions (Fuentes et al., 2018). A field experiment conducted by Lins et al. (2009) aimed to distinguish citrus canker-attacked leaves from healthy leaves showing chlorosis. SVM has been reported to outperform other ML techniques in predicting the occurrence and abundance of plant diseases and pests. Bhatia et al. (2020) utilized a hybrid approach, combining SVM and logistic regression algorithms, to detect powdery mildew disease in tomatoes. Similarly, Xiao et al. (2019) discussed the use of LSTM networks to correlate weather data with insect population dynamics and pest attacks. A smartphone application has also been devised for the timely detection and identification of pests and diseases in order to reduce substantial economic losses. The application uses a Python-based REST API, PostgreSQL, and Keycloak, and has been field-tested against pests such as Tuta absoluta in tomato (Christakakis et al., 2024).

RS techniques prove to be a more economical and quicker method for mapping weeds than traditional ground survey procedures, especially in large geographical areas. Using Gabor wavelets and neural networks, weeds have been categorized by texture as broad-leaved weeds or grass weeds to support targeted, selective herbicide application. Digital color photographs were used to identify bare spots in blueberry fields (Zhang et al., 2010). Similarly, Backes and Jacobi (2006) detected weeds in sugarbeet fields using high-resolution images from the QuickBird satellite. They were able to identify an extensive and dense infestation of Canadian thistle. Costello et al. (2022) developed a new method for detecting and mapping Parthenium-infested areas using RGB and hyperspectral imagery, supplemented by artificial intelligence.

The indiscriminate use of herbicides worldwide has led to herbicide resistance in various weeds, and managing it requires advanced AI-based strategies. Several AI-based tools are commercially available for weed management, including RS, robotics, and spectral analysis. However, despite the high potential of AI-based tools in managing herbicide resistance in weeds, they are not yet used on a large scale (Ghatrehsamani et al., 2023). The accurate detection and identification of weed categories are necessary for targeted management. AI and UAVs are now commonly used to manage weeds in rice fields (Ahmad et al., 2023). Su (2020) used drones equipped with hyperspectral cameras to identify weed species by capturing numerous images in different spectral bands. Machleb et al. (2020) demonstrated the use of autonomous mobile robots for monitoring, detecting, mapping, and efficiently managing weeds. Similarly, various ML algorithms such as Random Forest Classifier (RFC), SVM, and CNN help classify different types of digital images to detect weeds in crops such as rice (Kamath et al., 2020).

Crop plants are generally affected by several environmental factors, such as light, temperature, and water, which result in abiotic stresses like drought, salinity, acidity, and heat. Monitoring abiotic stress in plants is crucial for effective agricultural management and assessment of crop health. RS techniques provide valuable tools for detecting and diagnosing various types of abiotic stresses. One common indicator of abiotic stress in plants is the reduction in chlorophyll content. When plants experience stress, such as water scarcity or mineral toxicity, their chlorophyll content degrades, leading to changes in their spectral reflectance properties.

A new approach has been developed to sense stress levels, particularly in tree and row crops, in which the shaded portion of the canopy is taken into consideration (Jones et al., 2002). However, it has a limitation: stomata usually remain closed in shaded areas, so there is less temperature variation. In the same way, the availability of surface water can be assessed by comparing the reflectance patterns of plant canopies with and without surface water present (Pinter et al., 2003).

By combining spectral reflectance data with advanced data analysis techniques, such as vegetation indices (VIs) and ML algorithms, researchers can quantitatively assess stress levels and monitor the health of crops and vegetation over time. This helps in the early detection of abiotic stress, enabling timely interventions and better crop management practices. In addition, AI and ML-based algorithms can be used to enhance crop yield under different stress conditions. Further, the use of ML in QTL (quantitative trait loci) mining will help in identifying various genetic factors of stress tolerance in legumes (Singh et al., 2021). VIs used for analysing stress levels, particularly water stress, in plants include NDVI (Karnieli et al., 2010), PRI (Photochemical Reflectance Index) (Ryu et al., 2021), and MSI (Moisture Stress Index) (Hunt et al., 1987). NDVI can be used to optimize irrigation scheduling by identifying the drought-prone areas of a field, which supports precise water scheduling for crops. PRI indicates how efficiently a crop uses incoming radiation and is used to assess water stress and CO2 uptake in plants. MSI is sensitive to the amount of water present in leaves and is used to analyze canopy stress, fire hazard conditions, and ecosystem physiology, and to predict productivity. Navarro et al. (2022) used leaf hyperspectral image data along with an AI model to reveal the etiological cause of different stresses. An ANN was used to read the reflectance pattern in the visible and near-infrared wavelengths to identify the main cause of stress. Advances in AI have led to the development of high-throughput devices, which help to overcome biotic and abiotic stresses and provide big data sets; for example, the transportable array for remotely sensed agriculture and phenotyping reference platform (TERRA-REF) forecasts stress as early as possible (Gou et al., 2024).
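The three indices named above can be illustrated with a short Python sketch. The band choices follow commonly used definitions (NDVI from red and near-infrared reflectance, PRI from reflectance at 531 and 570 nm, MSI as the ratio of reflectance near 1600 nm to reflectance near 820 nm); these formulas and all reflectance values below are assumptions added for illustration and are not taken from the studies cited in this section.

```python
def ndvi(nir: float, red: float) -> float:
    """Normalized Difference Vegetation Index: (NIR - Red) / (NIR + Red)."""
    return (nir - red) / (nir + red)


def pri(r531: float, r570: float) -> float:
    """Photochemical Reflectance Index: (R531 - R570) / (R531 + R570)."""
    return (r531 - r570) / (r531 + r570)


def msi(r1600: float, r820: float) -> float:
    """Moisture Stress Index: R1600 / R820 (higher values suggest drier leaves)."""
    return r1600 / r820


# Hypothetical reflectance values (fraction of incident light), illustration only.
canopies = {
    "well-watered": {"red": 0.05, "nir": 0.50, "r531": 0.10, "r570": 0.10,
                     "r1600": 0.20, "r820": 0.50},
    "water-stressed": {"red": 0.12, "nir": 0.35, "r531": 0.09, "r570": 0.11,
                       "r1600": 0.30, "r820": 0.40},
}

for name, bands in canopies.items():
    print(
        name,
        round(ndvi(bands["nir"], bands["red"]), 3),
        round(pri(bands["r531"], bands["r570"]), 3),
        round(msi(bands["r1600"], bands["r820"]), 3),
    )
```

In this toy comparison the stressed canopy has a lower NDVI and PRI and a higher MSI than the well-watered one, which is the direction of change the indices are designed to capture. Real sensors report band-averaged reflectance, so the exact wavelengths depend on the instrument.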

RS can detect changes in the water level of a field, whether it is experiencing water-logged or drought conditions, with the help of variable rate irrigation technology such as the centre pivot system (McDowell, 2017). A higher-resolution land data assimilation system was used as a computational tool to develop soil moisture and temperature maps. The system aimed to provide information about the amount of soil moisture present at a specific depth and the soil temperature at various depths, including the rhizosphere (Das et al., 2017). There is a pressing need to use AI and ML models to estimate the water requirement for crop irrigation. Mohammed et al. (2023) implemented AI-based models to predict the optimal water requirements for sensor-based micro-irrigation systems powered by solar photovoltaic systems. Four ML algorithms, LR, SVR, LSTM neural network, and extreme gradient boosting (XGBoost), were used and validated for predicting water requirements; LSTM and XGBoost were more accurate than SVR and LR in predicting irrigation requirements. Under limited water availability, an automatic irrigation decision support system can help schedule irrigation intelligently to improve water use efficiency and enhance crop productivity. Katimbo et al. (2023) evaluated the performance of various AI-based algorithms and models for predicting crop evapotranspiration and crop water stress index. They achieved this by measuring soil moisture, canopy temperature, and NDVI from irrigated maize plants. Based on overall performance and scores, CatBoost and Stacked Regression were identified as the best models for calculating crop water stress index and crop evapotranspiration, respectively.
To cope with water scarcity in legume farming in Uttar Pradesh, India, AI was integrated with precision farming to raise a pea crop. AI-based precision irrigation offered a significant edge in allocating water, enhancing crop production, decreasing greenhouse gas emissions, and reducing cultivation costs (Kim and AlZubi, 2024).
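The crop water stress index mentioned above is often computed from canopy temperature relative to a wet (fully transpiring) and a dry (non-transpiring) reference. The following Python sketch uses that common empirical form; the formula choice, the reference temperatures, and the irrigation threshold are assumptions for illustration and do not reproduce the models evaluated by Katimbo et al. (2023).

```python
def cwsi(canopy_temp_c: float, wet_ref_c: float, dry_ref_c: float) -> float:
    """Empirical crop water stress index, clipped to the range [0, 1].

    0 means the canopy is as cool as the wet reference (no stress);
    1 means it is as warm as the dry reference (maximum stress).
    """
    if dry_ref_c <= wet_ref_c:
        raise ValueError("dry reference must be warmer than wet reference")
    raw = (canopy_temp_c - wet_ref_c) / (dry_ref_c - wet_ref_c)
    return min(1.0, max(0.0, raw))


def needs_irrigation(index: float, threshold: float = 0.5) -> bool:
    """Flag a plot for irrigation; the 0.5 threshold is hypothetical."""
    return index >= threshold


# Hypothetical canopy temperatures (deg C) for three plots.
plots = {"plot_a": 27.0, "plot_b": 31.5, "plot_c": 34.0}
for plot, temp in plots.items():
    index = cwsi(temp, wet_ref_c=25.0, dry_ref_c=35.0)
    print(plot, round(index, 2), needs_irrigation(index))
```

A decision support system would typically replace the fixed threshold with a model trained on soil moisture, canopy temperature, and NDVI, as in the studies described above.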

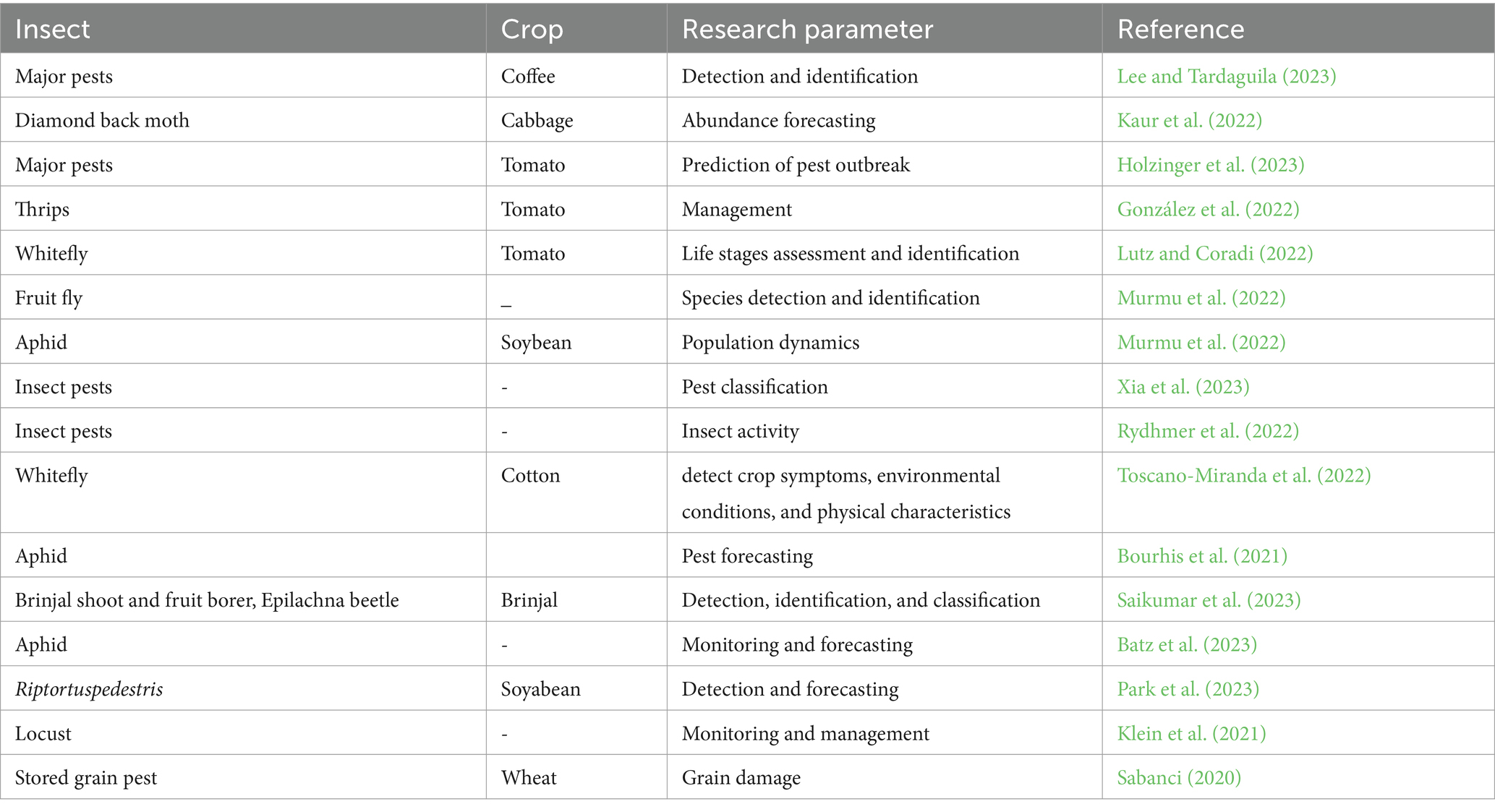

The wavelength of electromagnetic radiation (EMR) changes based on its interaction with the plant surface. Therefore, the condition of plant vigour and health may significantly affect the reflectance pattern of leaves (Luo et al., 2013). The use of RS to assess plant defoliation caused by insect pests, chlorosis, and necrosis has been conducted by comparing spectral responses (Franklin, 2001). Several studies have been conducted on the use of RS, AI, and ML techniques for insect species identification, assessment of incidence and severity, mapping of new insect habitats and breeding areas, and identification of disease symptoms (Tables 1–3 and Figure 4).

Table 3. Examples of application of artificial intelligence and machine learning in insect research.

AI algorithms have played a crucial role in identifying diseases and pests that affect cotton by automatically detecting crop symptoms, environmental conditions, and physical characteristics associated with pests or diseases. Gopinath et al. (2022) introduced an automated big data framework for classifying plant leaf diseases that employs a Convolutional Recurrent Neural Network (CRNN) classifier to differentiate between healthy and diseased leaves across diverse plant species, including bananas, peppers, potatoes, and tomatoes. Sujithra and Ukrit (2022) employed a range of deep neural networks, such as CNN, Radial Basis Neural Network (RBNN), and Feed-Forward Neural Network (FFNN), to diagnose leaf diseases in banana and sugarcane. The workflow of various AI models, along with deep learning algorithms, for making effective decisions in insect pest management is illustrated in Figure 5. The UAV-based pest detection approach uses unmanned aerial vehicles equipped with various sensors and imaging technologies (RGB cameras, multispectral cameras, infrared thermal cameras, hyperspectral cameras) to identify insect pests in crops. This approach enables early detection of pests and judicious application of pesticides while keeping the ecological balance intact. IoT-based UAVs (Yadav et al., 2024) rely on AI and Python programming to send images of different rice pests to the Imagga cloud, which provides valuable and timely information for necessary action (Bhoi et al., 2021).

Concern about the invasion of non-native pest species, which creates a biosecurity threat, is growing day by day. Such invasions occur due to activities such as tourism and cross-border trade, as well as global climate change (Hulme, 2009). Therefore, strict quarantine and inspection approaches are needed to prevent the spread of non-native invasive pests. Proximal RS is an effective approach for the automatic inspection and detection of invasive pest species in commodities, reducing inspection costs and processing time. Using reflectance data gathered by an RGB camera, the wings and aculeus of three closely resembling fruit fly species were analyzed, and the three species, Anastrepha fraterculus, A. obliqua, and A. sororcula, were identified (Faria et al., 2014). Using a NIR and hyperspectral imaging system, researchers differentiated healthy wheat kernels from insect-damaged ones in storage by employing proximal RS and hyperspectral reflectance profiles (Singh et al., 2010). Using the same technique, two fruit fly species (Dowell and Ballard, 2012), tobacco budworm, and corn earworm (Jia et al., 2007) were also identified. Similarly, Klarica et al. (2011) used imaging spectroscopy to identify two cryptic ant species (Tetramorium caespitum and T. impurum). Wang et al. (2016) used hyperspectral imaging of 37 spectral bands between 41.1 and 87 nm for insect classification, incorporating mitochondrial DNA analysis and morphometry, and identified seven species of the Evacanthine leafhopper genus Bundera.

Although species act as core units for all research, morphologically identified species can be ill-founded entities in organisms where intricate evolutionary processes such as hybridization, polyploidy, and mutation occur. Integrating taxonomy with AI under a unified species concept can therefore enable data integration and automatic feature identification that reduce the subjectivity of species delimitation (Karbstein et al., 2024). An ML algorithm developed to identify the most common insect pests infesting coffee fields by analyzing images was found to be more effective than traditional methods for detecting and identifying pests (Lee and Tardaguila, 2023). Deep learning, a branch of ML, was used to detect whiteflies in tomatoes and estimate their abundance by recognizing whiteflies at various stages (Lutz and Coradi, 2022). Saikumar et al. (2023) conducted experiments on the detection and classification of insect pests in brinjal using AI. They used Python with the Keras and TensorFlow frameworks, and the model employed was CNN-VGG-16. A total of 204 images of insect pests captured throughout the study were used as input data for the automatic identification and classification of pests such as the brinjal shoot and fruit borer and the Epilachna beetle.

Migrant insect species can be monitored using entomological RADAR. This RS technology can automatically monitor the height, horizontal speed, direction, orientation, body mass, and shape of insects. Recent advancements in RS technology have resulted in innovative harmonic RADAR systems that are effective in tracking specific insect species, such as Vespa velutina, flying at high altitudes of 500 meters (Maggiora et al., 2019). AI-based pest surveillance and monitoring systems use cameras and sensors for data collection and can accurately predict the infestation of a particular pest in an area. Scientists at California University have developed an AI-based pest monitoring system that helps to identify the cause and track the movement of pests (Sharma et al., 2022). The computer vision system and information and communication technology (ICT) algorithm proposed by Bjerge et al. (2021) show promise as an automated solution for insect observations, serving as a valuable addition to existing systems for monitoring nocturnal insects. AMMODs (Automated Multisensor Stations for Monitoring Species Diversity) share similarities with weather stations and are operated as autonomous samplers designed to monitor a range of species, including plants, birds, mammals, and insects (Wägele et al., 2022).

Biotic stresses, such as diseases and insect pests, can severely affect crop productivity. Crop stress caused by pests and diseases can be detected using remote sensing, based on the principle that biotic stress causes changes in pigmentation, physical appearance of plants, and photosynthesis, which directly alter the absorption rate of incident energy and the reflectance pattern of plants (Prabhakar et al., 2012). Prabhakar et al. (2012) detected the level of stress and severity caused by leafhopper attacks in cotton plants by assessing chlorophyll content and relative water content (RWC) using ground-based hyperspectral RS, and categorized plants into five infestation grades from 0 to 4, representing healthy to severe damage. Leaf miner damage in a tomato field was assessed using leaf reflectance spectra (Xu et al., 2007), and the symptoms of cyst nematode in beet were mapped using a combination of RS and GIS techniques (Hillnhütter et al., 2011). The abundance of diamondback moth in cabbage was predicted using deep learning, a subset of ML (Kaur et al., 2022). Similarly, transfer-learning CNNs were used to detect and identify different pests in tea plantations in Yunnan to prevent deterioration of tea leaf quality (Li et al., 2024).

Understanding the distribution of larval age in the field is an important factor in predicting primary and secondary pest outbreaks, as well as in determining the timing for effective insecticide application for pest management. RS plays a crucial role in providing information about the stage of insect pests present in the field based on accumulated degree days (Yones et al., 2012). The optimal timing for effective management of the cotton leafworm (Spodoptera littoralis) was determined based on degree days calculated from air temperature ranging between 174.85 and 197.59, using satellite RS (Yones et al., 2012). A study conducted by Nansen et al. (2015) on two species of insects, maize weevil (Sitophilus zeamais) and larger black flour beetle (Cynaeus angustus), revealed that temporal changes in body reflectance patterns were correlated with the response to two killing agents: entomopathogenic nematodes (EPNs) and insecticidal plant extract. Colour infrared photography was used to analyze infestations of black fly and brown spot scale in citrus orchards, as well as white fly infestation in cotton. Similarly, the infestation of mites in cotton fields was investigated at an early stage by observing changes in appearance, colour, and canopy over time using multispectral RS (Fitzgerald, 2000).
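Degree-day accumulation, on which the timing approach above relies, can be sketched in a few lines of Python. The simple averaging method, the base temperature of 12 °C, and the daily temperatures below are all assumptions for illustration; the 174.85 to 197.59 window is the range quoted in the text, read here as accumulated degree days, which is itself an interpretation rather than a detail confirmed by the source.

```python
from itertools import accumulate


def daily_degree_days(tmin_c: float, tmax_c: float, base_c: float) -> float:
    """Degree days for one day by the simple averaging method."""
    return max(0.0, (tmin_c + tmax_c) / 2.0 - base_c)


def days_in_window(temps, base_c, lower, upper):
    """Return 1-based day numbers whose cumulative degree days lie in [lower, upper].

    temps is a sequence of (tmin_c, tmax_c) pairs, one per day.
    """
    daily = [daily_degree_days(tmin, tmax, base_c) for tmin, tmax in temps]
    cumulative = accumulate(daily)
    return [day for day, total in enumerate(cumulative, start=1)
            if lower <= total <= upper]


# Hypothetical season: 20 identical days, 18 deg C minimum and 30 deg C maximum.
season = [(18.0, 30.0)] * 20
print(days_in_window(season, base_c=12.0, lower=174.85, upper=197.59))
```

With satellite RS, the daily temperatures would come from land surface or air temperature products rather than a fixed list, but the accumulation logic stays the same.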

ML models can help predict pest outbreaks; for example, the major pests in tomato crops were predicted with significantly higher accuracy than with traditional statistical models (Holzinger et al., 2023). ML can be used to develop decision support systems (DSS) that correlate pest population dynamics with the environment, aiding effective management. A DSS developed using ML to manage thrips in tomatoes resulted in a direct reduction in insecticide use (González et al., 2022). AI-based algorithms, such as ML, assist in early warning and forecasting of pests. In the case of fruit flies, different species were detected and classified based on their wing patterns (Murmu et al., 2022). ML algorithms can forecast how pest population dynamics respond to environmental factors such as temperature and rainfall. Deep learning algorithms can aid in predicting the occurrence and abundance of aphids in soybean (Murmu et al., 2022). AI has the potential to enhance aphid pest forecasting through various means, including optimizing monitoring infrastructure to improve predictive models (Bourhis et al., 2021).

Analyzing the large number of samples collected by traditional insect collection techniques, such as suction traps, takes considerable time. Additionally, a skilled taxonomic expert is needed for precise sample processing, creating bottlenecks in monitoring insect populations. AI-based image recognition and ML algorithms have emerged as a promising way to relieve these bottlenecks. Aphids are capable of causing heavy losses in crop yield through direct feeding or by serving as vectors for plant viral diseases. Batz et al. (2023) investigated the potential of AI, ML, and image recognition in systematically monitoring and forecasting insect pests. Riptortus pedestris, an important pest that causes heavy damage to soybean pods and leaves, was detected using a surveillance platform based on an unmanned ground vehicle equipped with a GoPro camera. The deep learning models used to study this pest were MRCNN, YOLOv3, and Detectron2 (Park et al., 2023). As the global population grows, early warning of invasions by new pests such as locusts in areas where they are not yet present becomes more important. Halubanza (2024) developed an AI-based CNN model, specifically MobileNet version 2, for automatic identification of different locust species, which achieved a precision of 91% for Locusta migratoria and 85% for Nomadacris septemfasciata.
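Per-species precision figures such as those reported above are computed from a classifier's predictions as the share of predictions for a class that are correct. The Python sketch below shows that calculation; the labels and predictions are hypothetical and do not reproduce any data from the cited studies.

```python
from collections import Counter


def per_class_precision(true_labels, predicted_labels):
    """Precision per class: correct predictions / all predictions of that class."""
    true_positives = Counter()
    predicted_counts = Counter()
    for truth, guess in zip(true_labels, predicted_labels):
        predicted_counts[guess] += 1
        if truth == guess:
            true_positives[guess] += 1
    return {label: true_positives[label] / count
            for label, count in predicted_counts.items()}


# Hypothetical ground truth and model output for six locust images.
truth = ["migratoria", "migratoria", "migratoria",
         "septemfasciata", "septemfasciata", "septemfasciata"]
guess = ["migratoria", "migratoria", "septemfasciata",
         "septemfasciata", "septemfasciata", "migratoria"]

for species, value in per_class_precision(truth, guess).items():
    print(species, round(value, 2))
```

Precision alone does not show how many individuals of a species were missed, so monitoring systems usually report recall alongside it.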

With the assistance of multispectral scanners mounted on Earth-orbiting satellites such as Landsat, RS imagery can be obtained to aid in detecting a particular insect’s habitat. In the states of Nebraska and South Dakota, USA, mosquito larval habitats were detected in Lewis and Clark Lake using a multispectral scanner. This lake was found to be home to seven mosquito species: Aedes dorsalis, A. vexans, Culex tarsalis, C. restuans, C. salinarius, Culiseta annulate, and Anopheles walkeri (Hayes et al., 1985). Similarly, RS and GIS techniques have helped to monitor locust habitats and detect breeding zones in Africa, South Europe, and Southwest Asia (Latchininsky and Sivanpillai, 2010).

Infrared sensors can detect flying insect activity by using near-infrared LED lights and high-speed photodetectors (Rydhmer et al., 2022). Various parameters, such as wing beat frequency, melanisation, and wing-to-body ratio, can be measured in the field, automatically uploaded to a cloud database, and analyzed using ML and AI. Xia et al. (2023) evaluated the effectiveness of Vision Transformer (ViT) models in pest classification. The researchers used ResNet50, MMAINet, and DNVT, together with an ensemble learner that aggregated the predictions of these three models through a final classification vote. MMAINet incorporated an attention mechanism to identify discriminative image regions. The identified regions were then used to train fine-grained CNN-based classification models at different resolutions. Locust outbreaks occur every year in different parts of the world. This is due to the difficulty in mapping and monitoring locust habitats, which are mainly located in inaccessible conflict zones of different countries. However, since the 1980s, RS applications have been used for monitoring and managing locust swarms. UAVs have now brought new potential for more efficient and rapid management of locusts. Studies have used AVHRR, SPOT-VGT, MODIS, and Landsat for monitoring locust outbreaks (Klein et al., 2021).
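Aggregating the predictions of three models through a final vote, as described above for the pest classification ensemble, can be illustrated with a hard majority vote. The Python sketch below assumes simple unweighted voting, which may differ from the scheme actually used by Xia et al. (2023); the model names are reused only as labels, and all predictions are hypothetical.

```python
from collections import Counter


def majority_vote(model_predictions):
    """Combine per-model label lists into one label per sample by majority vote.

    model_predictions is a list of equal-length lists, one list per model.
    On a tie, the label from the earliest-listed model among those tied wins,
    because Counter.most_common keeps first-seen order for equal counts.
    """
    voted = []
    for labels in zip(*model_predictions):
        winner, _count = Counter(labels).most_common(1)[0]
        voted.append(winner)
    return voted


# Hypothetical outputs of three classifiers on three pest images.
resnet50_out = ["aphid", "thrips", "mite"]
mmainet_out = ["aphid", "mite", "mite"]
dnvt_out = ["thrips", "thrips", "locust"]

print(majority_vote([resnet50_out, mmainet_out, dnvt_out]))
```

An unweighted vote treats all models as equally reliable; weighting each vote by validation accuracy is a common alternative when one model is clearly stronger.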

Crop damage due to pests, and the percentage of the crop that can be harvested under such conditions, can be assessed from reflectance patterns captured by unmanned aircraft systems (Hunt and Rondon, 2017). Crop yield has been forecast with remote sensors based on the relationship between VIs and yield (Casa and Jones, 2005). Because crop yield depends on many factors, particularly pest and disease infestation, it is a difficult parameter to estimate, but the detection of different growth profiles with field sensors has made yield forecasting possible. Sharma et al. (2020) applied a fusion of CNN and LSTM models to raw imagery data for estimating the yield of wheat crops. The proposed model demonstrated an accuracy improvement of 74% over conventional methods for crop yield prediction and outperformed other deep learning models by 50%. Dharani et al. (2021) conducted a comparative analysis of three techniques, namely ANN, CNN, and RNN with LSTM, to assess their effectiveness in crop yield prediction. The results revealed that CNN was more accurate than ANN, while RNN with LSTM outperformed all other techniques, achieving an accuracy of 89%. A CNN-RNN model was proposed for predicting soybean and corn yields in the United States (Khaki et al., 2020). Sabanci (2020) used AI techniques, including artificial bee colony optimization, ANN, and extreme learning machine algorithms, to detect sunn pest damage in wheat grains in Turkey. Toscano-Miranda et al. (2022) conducted a study on cotton crops using AI techniques such as classification, image segmentation, and feature extraction. They employed algorithms including support vector machines, fuzzy inference, back-propagation neural networks, and CNN. The study focused on the most prevalent pests, such as whiteflies, and diseases such as root rot.

The potential of AI and RS in insect pest management rests on creating precise, real-time monitoring systems capable of forecasting pest outbreaks and facilitating more focused pest control approaches. However, to ensure responsible and sustainable pest management, significant challenges must be addressed: data quality and availability, technological accessibility, computational cost, the complexity of species identification, regulatory frameworks for AI-driven pest management technologies, and adaptation to environmental shifts, especially those caused by global climate change. Promising future directions include advanced image analysis, multi-sensor integration, predictive modeling, precision pest control, drone-based monitoring, smart traps and lures, and accessible platforms through which stakeholders can share data to enable better pest management.

Agriculture heavily relies on timely and accurate information, and RS and AI play a crucial role in providing such information. The ability of RS and AI to rapidly capture data and cover large agricultural areas enables farmers and decision-makers to make informed and timely decisions about crop management, irrigation, and pest control. One specific area where RS and AI have shown great potential is insect pest management. These techniques enable pest monitoring and detection, identification of pest outbreaks, early warning issuance, and determination of the optimal timing for pest control application. This proactive approach could help farmers to prevent crop damage and minimize economic losses caused by pests. Furthermore, recent advancements in spectroscopy and other RS techniques have opened new opportunities for developing alternative and innovative approaches to crop management. The integration of RS data with traditional agricultural practices can lead to more precise and efficient resource utilization, optimizing inputs such as water, fertilizers, and pesticides. Efforts should be made to improve the speed of data acquisition and processing, allowing for a prompt response to changing conditions on the ground. This would make the information even more pertinent and practical for agricultural decision-makers and can lead to increased productivity and resilience in response to constantly changing environmental conditions.

DA: Conceptualization, Software, Writing – original draft. SMR: Conceptualization, Software, Writing – original draft. PaS: Resources, Supervision, Writing – review & editing. IA: Supervision, Writing – review & editing. BaG: Resources, Writing – review & editing. SAR: Resources, Writing – review & editing. SFR: Resources, Writing – review & editing. PoS: Resources, Writing – review & editing. GR: Resources, Writing – review & editing. KA: Resources, Writing – review & editing. GS: Resources, Writing – review & editing. BeG: Resources, Writing – review & editing. MN: Resources, Writing – review & editing.

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declare that no Generative AI was used in the creation of this manuscript.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Abdelhamid, A. A., Alhussan, A. A., Qenawy, A. S. T., Osman, A. M., Elshewey, A. M., and Eed, M. (2024). Potato harvesting prediction using an improved ResNet-59 model. Potato Res. 67, 1–20. doi: 10.1007/s11540-024-09773-6

Ahirwar, S., Swarnkar, R., Bhukya, S., and Namwade, G. (2019). Application of drone in agriculture. Int. J. Curr. Microbiol. Appl. Sci. 8, 2500–2505. doi: 10.20546/ijcmas.2019.801.264

Ahmad, S. N. I. S. S., Juraimi, A. S., Sulaiman, N., Che’Ya, N. N., Su, A. S. M., Nor, N. M., et al. (2023). Weeds detection and control in Rice crop using UAVs and artificial intelligence: a review. Adv. Agric. Food Res. J. 4:1–25. doi: 10.36877/aafrj.a0000371

Al-Adhaileh, M. H., and Aldhyani, T. H. (2022). Artificial intelligence framework for modeling and predicting crop yield to enhance food security in Saudi Arabia. Peer J. Comput. Sci. 8:e1104. doi: 10.7717/peerj-cs.1104

Ampatzidis, Y., Partel, V., and Costa, L. (2020). Agroview: cloud-based application to process, analyze and visualize UAV-collected data for precision agriculture applications utilizing artificial intelligence. Comput. Electron. Agric. 174:105457. doi: 10.1016/j.compag.2020.105457

Apan, A., Held, A., Phinn, S., and Markley, J. (2004). Detecting sugarcane ‘orange rust’ disease using EO-1 Hyperion hyperspectral imagery. Int. J. Remote Sens. 25, 489–498. doi: 10.1080/01431160310001618031

Aslan, M. F., Sabanci, K., and Aslan, B. (2024). Artificial intelligence techniques in crop yield estimation based on Sentinel-2 data: a comprehensive survey. Sustain. For. 16:8277. doi: 10.3390/su16188277

Ayyalasomayajula, B., Ehsani, R., and Albrigo, G. (2009). Automated citrus tree counting from oblique images and tree height estimation from oblique images. Proceedings of the symposium on the applications of precision agriculture for fruits and vegetables international conference. Acta Hortic. 824, 91–98. doi: 10.17660/ActaHortic.2009.824.10

Babaee, M., Maroufpoor, S., Jalali, M., Zarei, M., and Elbeltagi, A. (2021). Artificial intelligence approach to estimating rice yield. Irrig. Drain. 70, 732–742. doi: 10.1002/ird.2566

Backes, M., and Jacobi, J. (2006). Classification of weed patches in Quickbird images: verification by ground truth data. EARSeL eProceedings 5, 173–179.

Backoulou, G. F., Elliott, N. C., Giles, K., Phoofolo, M., and Catana, V. (2011). Development of a method using multispectral imagery and spatial pattern metrics to quantify stress to wheat fields caused by Diuraphis noxia. Comput. Electron. Agric. 75, 64–70. doi: 10.1016/j.compag.2010.09.011

Bakala, H. S., Singh, G., and Srivastava, P. (2020). Smart breeding for climate resilient agriculture. Plant Breeding-Curr. Future Views, 4847:77–102. doi: 10.5772/intechopen.94847

Barbedo, J. G. A. (2019). Plant disease identification from individual lesions and spots using deep learning. Biosyst. Eng. 180, 96–107. doi: 10.1016/j.biosystemseng.2019.02.002

Batz, P., Will, T., Thiel, S., Ziesche, T. M., and Joachim, C. (2023). From identification to forecasting: the potential of image recognition and artificial intelligence for aphid pest monitoring. Front. Plant Sci. 14:1150748. doi: 10.3389/fpls.2023.1150748

Bhatia, A., Chug, A., and Singh, A. P. (2020). “Hybrid SVM-LR classifier for powdery mildew disease prediction in tomato plant”, in Proceedings of the 2020 7th International Conference on Signal Processing and Integrated Networks (SPIN), (Noida, India), 218–223.

Bhoi, S. K., Jena, K. K., Panda, S. K., Long, H. V., Kumar, R., Subbulakshmi, P., et al. (2021). An internet of things assisted unmanned aerial vehicle based artificial intelligence model for rice pest detection. Microprocess. Microsyst. 80:103607. doi: 10.1016/j.micpro.2020.103607

Bjerge, K., Nielsen, J. B., Sepstrup, M. V., Helsing-Nielsen, F., and Høye, T. T. (2021). An automated light trap to monitor moths (Lepidoptera) using computer vision-based tracking and deep learning. Sensors 21:343. doi: 10.3390/s21020343

Bourhis, Y., Bell, J. R., van den Bosch, F., and Milne, A. E. (2021). Artificial neural networks for monitoring network optimisation—a practical example using a national insect survey. Environ. Model. Softw. 135:104925. doi: 10.1016/j.envsoft.2020.104925

Bravo, C., Moshou, D., West, J., McCartney, A., and Ramon, H. (2003). Early disease detection in wheat fields using spectral reflectance. Biosyst. Eng. 84, 137–145. doi: 10.1016/S1537-5110(02)00269-6

Bu, F., and Wang, X. (2019). A smart agriculture IoT system based on deep reinforcement learning. Future Gen. Comput. Syst. 99, 500–507. doi: 10.1016/j.future.2019.04.041

Casa, R., and Jones, H. G. (2005). LAI retrieval from multiangular image classification and inversion of a ray tracing model. Remote Sens. Environ. 98, 414–428. doi: 10.1016/j.rse.2005.08.005

Chivkunova, O. B., Solovchenko, A. E., Sokolova, S. G., Merzlyak, M. N., Reshetnikova, I. V., and Gitelson, A. A. (2001). Reflectance spectral features and detection of superficial scald–induced browning in storing apple fruit. J. Russ. Phytopathol. Soc. 2, 73–77.

Christakakis, P., Papadopoulou, G., Mikos, G., Kalogiannidis, N., Ioannidis, D., Tzovaras, D., et al. (2024). Smartphone-based citizen science tool for plant disease and insect Pest detection using artificial intelligence. Technologies 12:101. doi: 10.3390/technologies12070101

Coble, K. H., Mishra, A. K., Ferrell, S., and Griffin, T. (2018). Big data in agriculture: a challenge for the future. Appl. Econ. Persp. Pol. 40, 79–96. doi: 10.1093/aepp/ppx056

Costello, B., Osunkoya, O. O., Sandino, J., Marinic, W., Trotter, P., Shi, B., et al. (2022). Detection of Parthenium weed (Parthenium hysterophorus L.) and its growth stages using artificial intelligence. Agriculture 12:1838. doi: 10.3390/agriculture12111838

Das, N. N., Entekhabi, D., Kim, S., Jagdhuber, T., Dunbar, S., Yueh, S., et al. (2017). “High-resolution enhanced product based on SMAP active-passive approach using sentinel 1A and 1B SAR data”, in 2017 IEEE international geoscience and remote sensing symposium (Fort Worth, TX, USA, IGARSS), 2543–2545.

Datt, B. (2006). Early detection of exotic pests and diseases in Asian vegetables by imaging spectroscopy: a report for the Rural Industries Research and Development Corporation. Rural Ind. Res. Dev. Corp. :31.

De Jong, S. M., and Van der Meer, F. D. (2007). Remote sensing image analysis: Including the spatial domain. AA Dordrecht, The Netherlands: Springer Science & Business Media.

Dharani, M. K., Thamilselvan, R., Natesan, P., Kalaivaani, P. C. D., and Santhoshkumar, S. (2021). Review on crop prediction using deep learning techniques. J. Physics 1767:012026. doi: 10.1088/1742-6596/1767/1/012026

Dowell, F. E., and Ballard, J. W. O. (2012). Using near-infrared spectroscopy to resolve the species, gender, age, and the presence of Wolbachia infection in laboratory-reared Drosophila. G3 2, 1057–1065. doi: 10.1534/g3.112.003103

Dutta, S., Singh, S. K., and Panigrahy, S. (2014). Assessment of late blight induced diseased potato crops: a case study for West Bengal district using temporal AWiFS and MODIS data. J. Ind. Soc. Remote Sens. 42, 353–361. doi: 10.1007/s12524-013-0325-9

Faria, F. A., Perre, P., Zucchi, R. A., Jorge, L. R., Lewinsohn, T. M., and Rocha, A. (2014). Automatic identification of fruit flies (Diptera: Tephritidae). J. Vis. Commun. Image Represent. 25, 1516–1527. doi: 10.1016/j.jvcir.2014.06.014

Franke, J., and Menz, G. (2007). Multi-temporal wheat disease detection by multi-spectral remote sensing. Precis. Agric. 8, 161–172. doi: 10.1007/s11119-007-9036-y

Franklin, S. (2001). Remote sensing for sustainable forest management. Boca Raton, Florida: Lewis publisher.

Fuentes, M. T., Lenardis, A., and De la Fuente, E. B. (2018). Insect assemblies related to volatile signals emitted by different soybean–weeds–herbivory combinations. Agr. Ecosyst. Environ. 255, 20–26. doi: 10.1016/j.agee.2017.12.007

Ghatrehsamani, S., Jha, G., Dutta, W., Molaei, F., Nazrul, F., Fortin, M., et al. (2023). Artificial intelligence tools and techniques to combat herbicide resistant weeds—a review. Sustain. For. 15:1843. doi: 10.3390/su15031843

Giménez-Gallego, J., González-Teruel, J. D., Soto-Valles, F., Jiménez-Buendía, M., Navarro-Hellín, H., and Torres-Sánchez, R. (2021). Intelligent thermal image-based sensor for affordable measurement of crop canopy temperature. Comput. Electron. Agric. 188:106319. doi: 10.1016/j.compag.2021.106319

González, M. I., Encarnação, J., Aranda, C., Osório, H., Montalvo, T., and Talavera, S. (2022). “The use of artificial intelligence and automatic remote monitoring for mosquito surveillance” in Ecology and control of vector-borne diseases (PA, Leiden, The Netherlands: Wageningen Academic Publishers), 1116–1121.

Goodwin, N. R., Coops, N. C., Wulder, M. A., Gillanders, S., Schroeder, T. A., and Nelson, T. (2008). Estimation of insect infestation dynamics using a temporal sequence of Landsat data. Remote Sens. Environ. 112, 3680–3689. doi: 10.1016/j.rse.2008.05.005

Gopinath, S., Sakthivel, K., and Lalith, S. (2022). A plant disease image using convolutional recurrent neural network procedure intended for big data plant classification. J. Intell. Fuzzy Syst. 43, 4173–4186. doi: 10.3233/JIFS-220747

Gou, C., Zafar, S., Fatima, N., Hasnain, Z., Aslam, N., Iqbal, N., et al. (2024). Machine and deep learning: artificial intelligence application in biotic and abiotic stress management in plants. Front. Biosci. Landmark 29:20–34. doi: 10.31083/j.fbl2901020

Graeff, S., Link, J., and Claupein, W. (2006). Identification of powdery mildew (Erysiphe graminis sp. tritici) and take-all disease (Gaeumannomyces graminis sp. tritici) in wheat (Triticum aestivum L.) by means of leaf reflectance measurements. Open Life Sci. 1, 275–288. doi: 10.2478/s11535-006-0020-8

Hahn, F. (2004). Spectral bandwidth effect on a Rhizopus stolonifer spore detector and its on-line behavior using red tomato fruits. Can. Biosyst. Eng. 46, 49–54.

Halubanza, B. (2024). A framework for an early warning system for the management of the spread of locust invasion based on artificial intelligence technologies (Doctoral dissertation). The University of Zambia.

Hara, P., Piekutowska, M., and Niedbała, G. (2021). Selection of independent variables for crop yield prediction using artificial neural network models with remote sensing data. Land 10:609. doi: 10.3390/land10060609

Hayes, R. O., Maxwell, E. L., Mitchell, C. J., and Woodzick, T. L. (1985). Detection, identification, and classification of mosquito larval habitats using remote sensing scanners in earth-orbiting satellites. Bull. World Health Organ. 63, 361–374.

Hillnhütter, C., Mahlein, A. K., Sikora, R. A., and Oerke, E. C. (2011). Remote sensing to detect plant stress induced by Heterodera schachtii and Rhizoctonia solani in sugar beet fields. Field Crop Res. 122, 70–77. doi: 10.1016/j.fcr.2011.02.007

Holzinger, A., Keiblinger, K., Holub, P., Zatloukal, K., and Müller, H. (2023). AI for life: trends in artificial intelligence for biotechnology. New Biotechnol. 74, 16–24. doi: 10.1016/j.nbt.2023.02.001

Hulme, P. E. (2009). Trade, transport and trouble: managing invasive species pathways in an era of globalization. J. Appl. Ecol. 46, 10–18. doi: 10.1111/j.1365-2664.2008.01600.x

Hunt, E. R. Jr., Rock, B. N., and Nobel, P. S. (1987). Measurement of leaf relative water content by infrared reflectance. Remote Sens. Environ. 22, 429–435. doi: 10.1016/0034-4257(87)90094-0

Hunt, E. R. Jr., and Rondon, S. I. (2017). Detection of potato beetle damage using remote sensing from small unmanned aircraft systems. J. Appl. Remote Sens. 11:026013. doi: 10.1117/1.JRS.11.026013

Ibatullin, S., Dorosh, Y., Sakal, O., Dorosh, O., and Dorosh, A. (2022). “Crop identification using remote sensing methods and artificial intelligence” in International conference of young professionals (European Association of Geoscientists & Engineers), 1–5.

Javaid, M., Haleem, A., Khan, I. H., and Suman, R. (2023). Understanding the potential applications of artificial intelligence in agriculture sector. Adv. Agrochem. 2, 15–30. doi: 10.1016/j.aac.2022.10.001

Jensen, J. R. (2007). Remote sensing of the environment: An earth resource perspective. Pearson Prentice Hall.

Jia, F., Maghirang, E., Dowell, F., Abel, C., and Ramaswamy, S. (2007). Differentiating tobacco budworm and corn earworm using near-infrared spectroscopy. J. Econ. Entomol. 100, 759–764. doi: 10.1093/jee/100.3.759

Johnson, L. F., Bosch, D. F., Williams, D. C., and Lobitz, B. M. (2001). Remote sensing of vineyard management zones: implications for wine quality. Appl. Eng. Agric. 17, 557–560. doi: 10.13031/2013.6454

Jones, H. G., Stoll, M., Santos, T., Sousa, C. D., Chaves, M. M., and Grant, O. M. (2002). Use of infrared thermography for monitoring stomatal closure in the field: application to grapevine. J. Exp. Bot. 53, 2249–2260. doi: 10.1093/jxb/erf083

Jordan, C. F. (1969). Derivation of leaf area index from quality of light on the forest floor. Ecology 50, 663–666. doi: 10.2307/1936256

Jung, J., Maeda, M., Chang, A., Bhandari, M., Ashapure, A., and Landivar-Bowles, J. (2021). The potential of remote sensing and artificial intelligence as tools to improve the resilience of agriculture production systems. Curr. Opin. Biotechnol. 70, 15–22. doi: 10.1016/j.copbio.2020.09.003

Kamath, R., Balachandra, M., and Prabhu, S. (2020). Paddy crop and weed discrimination: a multiple classifier system approach. Int. J. Agron. 2020, 1–14. doi: 10.1155/2020/6474536

Kanu, N. J., Gupta, E., and Verma, G. C. (2024). An insight into India’s moon mission–Chandrayan-3: the first nation to land on the southernmost polar region of the moon. Planetary Space Sci. 242:105864. doi: 10.1016/j.pss.2024.105864

Karbstein, K., Kösters, L., Hodač, L., Hofmann, M., Hörandl, E., Tomasello, S., et al. (2024). Species delimitation 4.0: integrative taxonomy meets artificial intelligence. Trends Ecol. Evol. 39, 771–784. doi: 10.1016/j.tree.2023.11.002

Karnieli, A., Agam, N., Pinker, R. T., Anderson, M., Imhoff, M. L., Gutman, G. G., et al. (2010). Use of NDVI and land surface temperature for drought assessment: merits and limitations. J. Clim. 23, 618–633. doi: 10.1175/2009JCLI2900.1

Katimbo, A., Rudnick, D. R., Zhang, J., Ge, Y., DeJonge, K. C., Franz, T. E., et al. (2023). Evaluation of artificial intelligence algorithms with sensor data assimilation in estimating crop evapotranspiration and crop water stress index for irrigation water management. Smart Agric. Technol. 4:100176. doi: 10.1016/j.atech.2023.100176

Katiyar, S. (2022). “The use of pesticide management using artificial intelligence” in Artificial intelligence applications in agriculture and food quality improvement (IGI Global), 74–94.

Kaur, J., Sahu, K. P., and Singh, S. (2022). Optimization of pest management using artificial intelligence: fundamentals and applications, vol. 11: Souvenir & Abstracts.

Khaki, S., Wang, L., and Archontoulis, S. V. (2020). A CNN-RNN framework for crop yield prediction. Front. Plant Sci. 10:1750. doi: 10.3389/fpls.2019.01750

Khan, H. A., Rao, S. A., and Farooq, U. (2024). Optimizing olive harvesting efficiency through UAVs and AI integration. J. Agric. Sci. 16:45. doi: 10.5539/jas.v16n11p45

Kho, S. J., Manickam, S., Malek, S., Mosleh, M., and Dhillon, S. K. (2017). Automated plant identification using artificial neural network and support vector machine. Front. Life Sci. 10, 98–107. doi: 10.1080/21553769.2017.1412361

Kim, T. H., and AlZubi, A. A. (2024). AI-enhanced precision irrigation in legume farming: optimizing water use efficiency. Legum. Res. 47, 1382–1389. doi: 10.18805/LRF-791

Kim, Y., Evans, R. G., and Iversen, W. M. (2008). Remote sensing and control of an irrigation system using a distributed wireless sensor network. IEEE Trans. Instrum. Meas. 57, 1379–1387. doi: 10.1109/TIM.2008.917198

Klarica, J., Bittner, L., Pallua, J., Pezzei, C., Huck-Pezzei, V., Dowell, F., et al. (2011). Near-infrared imaging spectroscopy as a tool to discriminate two cryptic Tetramorium ant species. J. Chem. Ecol. 37, 549–552. doi: 10.1007/s10886-011-9956-x

Klein, I., Oppelt, N., and Kuenzer, C. (2021). Application of remote sensing data for locust research and management—a review. Insects 12:233. doi: 10.3390/insects12030233

Kshetrimayum, A., Goyal, A., and Bhadra, B. K. (2024). Semi physical and machine learning approach for yield estimation of pearl millet crop using SAR and optical data products. J. Spat. Sci. 69, 573–592. doi: 10.1080/14498596.2023.2259857

Latchininsky, A. V., and Sivanpillai, R. (2010). “Locust habitat monitoring and risk assessment using remote sensing and GIS technologies” in Integrated management of arthropod pests and insect borne diseases (Dordrecht: Springer Netherlands), 163–188.

Lee, W. S., and Tardaguila, J. (2023). “Pest and disease management” in Advanced automation for tree fruit orchards and vineyards (Cham: Springer International Publishing), 93–118.

Li, Z., Sun, J., Shen, Y., Yang, Y., Wang, X., Wang, X., et al. (2024). Deep migration learning-based recognition of diseases and insect pests in Yunnan tea under complex environments. Plant Methods 20:101. doi: 10.1186/s13007-024-01219-x

Lillesand, T., Kiefer, R. W., and Chipman, J. (2015). Remote sensing and image interpretation. John Wiley & Sons.

Lins, E. C., Belasque, J., and Marcassa, L. G. (2009). Detection of citrus canker in citrus plants using laser induced fluorescence spectroscopy. Precis. Agric. 10, 319–330. doi: 10.1007/s11119-009-9124-2

Liu, S. C., Jian, Q. Y., Wen, H. Y., and Chung, C. H. (2022). A crop harvest time prediction model for better sustainability, integrating feature selection and artificial intelligence methods. Sustainability 14:14101. doi: 10.3390/su142114101

Low, F., and Duveiller, G. (2014). Defining the spatial resolution requirements for crop identification using optical remote sensing. Remote Sens. 6, 9034–9063. doi: 10.3390/rs6099034

Luo, J., Huang, W., Yuan, L., Zhao, C., Du, S., Zhang, J., et al. (2013). Evaluation of spectral indices and continuous wavelet analysis to quantify aphid infestation in wheat. Precis. Agric. 14, 151–161. doi: 10.1007/s11119-012-9283-4

Lutz, É., and Coradi, P. C. (2022). Applications of new technologies for monitoring and predicting grains quality stored: sensors, internet of things, and artificial intelligence. Measurement 188:110609. doi: 10.1016/j.measurement.2021.110609

Machleb, J., Peteinatos, G. G., Kollenda, B. L., Andújar, D., and Gerhards, R. (2020). Sensor-based mechanical weed control: present state and prospects. Comput. Electron. Agric. 176:105638. doi: 10.1016/j.compag.2020.105638

Maggiora, R., Saccani, M., Milanesio, D., and Porporato, M. (2019). An innovative harmonic radar to track flying insects: the case of Vespa velutina. Sci. Rep. 9:11964. doi: 10.1038/s41598-019-48511-8

Malinowski, R., Groom, G., Schwanghart, W., and Heckrath, G. (2015). Detection and delineation of localized flooding from WorldView-2 multispectral data. Remote Sens. 7, 14853–14875. doi: 10.3390/rs71114853

Martos, V., Ahmad, A., Cartujo, P., and Ordoñez, J. (2021). Ensuring agricultural sustainability through remote sensing in the era of agriculture 5.0. Appl. Sci. 11:5911. doi: 10.3390/app11135911

McDowell, R. W. (2017). Does variable rate irrigation decrease nutrient leaching losses from grazed dairy farming? Soil Use Manag. 33, 530–537. doi: 10.1111/sum.12363

Meraj, G., Kanga, S., Ambadkar, A., Kumar, P., Singh, S. K., Farooq, M., et al. (2022). Assessing the yield of wheat using satellite remote sensing-based machine learning algorithms and simulation modeling. Remote Sens. 14:3005. doi: 10.3390/rs14133005

Mogili, U. R., and Deepak, B. B. V. L. (2018). Review on application of drone systems in precision agriculture. Proc. Comput. Sci. 133, 502–509. doi: 10.1016/j.procs.2018.07.063

Mohammed, M., Hamdoun, H., and Sagheer, A. (2023). Toward sustainable farming: implementing artificial intelligence to predict optimum water and energy requirements for sensor-based micro irrigation systems powered by solar PV. Agronomy 13:1081. doi: 10.3390/agronomy13041081

Moshou, D., Bravo, C., Oberti, R., West, J., Bodria, L., McCartney, A., et al. (2005). Plant disease detection based on data fusion of hyper-spectral and multi-spectral fluorescence imaging using Kohonen maps. Real Time Imaging 11, 75–83. doi: 10.1016/j.rti.2005.03.003

Murmu, S., Pradhan, A. K., Chaurasia, H., Kumar, D., and Samal, I. (2022). Impact of bioinformatics advances in agricultural sciences. AgroSci. Today 3, 480–485.

Nansen, C., Ribeiro, L. P., Dadour, I., and Roberts, J. D. (2015). Detection of temporal changes in insect body reflectance in response to killing agents. PLoS One 10:e0124866. doi: 10.1371/journal.pone.0124866

Navarro, A., Nicastro, N., Costa, C., Pentangelo, A., Cardarelli, M., Ortenzi, L., et al. (2022). Sorting biotic and abiotic stresses on wild rocket by leaf-image hyperspectral data mining with an artificial intelligence model. Plant Methods 18:45. doi: 10.1186/s13007-022-00880-4

Noutfia, Y., and Ropelewska, E. (2024). What can artificial intelligence approaches bring to an improved and efficient harvesting and postharvest handling of date fruit (Phoenix dactylifera L.)? A review. Postharvest Biol. Technol. 213:112926. doi: 10.1016/j.postharvbio.2024.112926

Park, Y. H., Choi, S. H., Kwon, Y. J., Kwon, S. W., Kang, Y. J., and Jun, T. H. (2023). Detection of soybean insect pest and a forecasting platform using deep learning with unmanned ground vehicles. Agronomy 13:477. doi: 10.3390/agronomy13020477

Pinter, P. J. Jr., Hatfield, J. L., Schepers, J. S., Barnes, E. M., Moran, M. S., Daughtry, C. S., et al. (2003). Remote sensing for crop management. Photogramm. Eng. Remote. Sens. 69, 647–664. doi: 10.14358/PERS.69.6.647

Prabhakar, M., Prasad, Y. G., Mandal, U. K., Ramakrishna, Y. S., Ramalakshmaiaha, C., Venkateswarlu, N. C., et al. (2006). Spectral characteristics of peanut crop infected by late leafspot disease under rainfed conditions. Agriculture and Hydrology Applications of Remote Sensing (SPIE), 109–115.

Prabhakar, M., Prasad, Y. G., and Rao, M. N. (2012). “Remote sensing of biotic stress in crop plants and its applications for pest management” in Crop stress and its management: Perspectives and strategies (New York: Springer), 517–549.

Pellikka, P., and Rees, W. G. (2009). Remote sensing of glaciers: techniques for topographic, spatial and thematic mapping of glaciers. CRC Press. 1–315. doi: 10.1201/b10155

Ranjithkumar, C., Saveetha, S., Kumar, V. D., Prathyangiradevi, S., and Kanagasabapathy, T. (2021). AI based crop identification application. Int. J. Res. Eng. Sci. Manag. 4, 17–21.

Rouse, J. W., Haas, R. H., Schell, J. A., and Deering, D. W. (1973). “Monitoring vegetation systems in the Great Plains with ERTS-1” in Proceedings of third earth resources technology satellite symposium (Washington, DC: Goddard Space Flight Center), 309–317.

Roy, L., Ganchaudhuri, S., Pathak, K., Dutta, A., and Gogoi Khanikar, P. (2022). Application of remote sensing and GIS in agriculture. Int. J. Res. Anal. Rev. 9:460.

Rydhmer, K., Bick, E., Still, L., Strand, A., Luciano, R., Helmreich, S., et al. (2022). Automating insect monitoring using unsupervised near-infrared sensors. Sci. Rep. 12:2603. doi: 10.1038/s41598-022-06439-6