- Department of Intelligent Manufacturing Engineering, School of Mechanical Engineering, Jiangsu University, Zhenjiang, China

Tea is rich in polyphenols, vitamins, and protein, which benefit health and give it an appealing taste. As a result, tea has become the second most popular beverage in the world after water, which makes improving its yield and quality essential. In this paper, we review the application of computer vision and machine learning in the tea industry over the last decade, covering three crucial stages: cultivation, harvesting, and processing. We found that many advanced artificial intelligence algorithms and sensor technologies have been applied to tea, resulting in some vision-based tea harvesting equipment and disease detection methods. However, these applications focus mainly on identifying tea buds, detecting a few common diseases, and classifying tea products. The current applications therefore have clear limitations and are insufficient for the intelligent and sustainable development of the tea field. Rapid progress in UAVs, visual navigation, soft robotics, and sensors could provide new opportunities for vision-based tea harvesting machines, intelligent tea garden management, and multimodal tea processing monitoring. Therefore, research combining computer vision and machine learning is undoubtedly a future trend in the tea industry.

1. Introduction

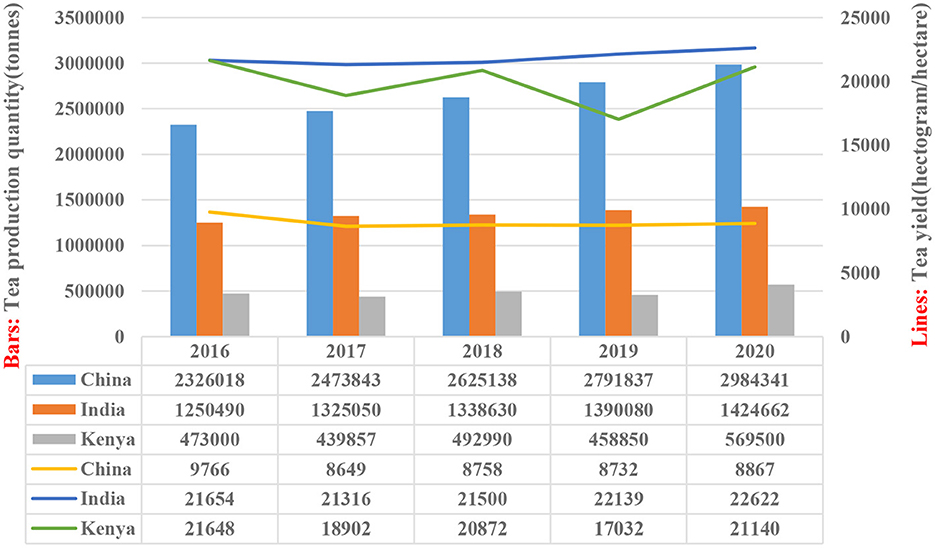

Tea is the second most consumed beverage in the world after water, and its production and processing are a significant source of livelihood for numerous households in developing countries (United Nations, 2022a). Tea is popular with the public, and its rich polyphenol content has beneficial effects against many diseases, including cancer, diabetes, and cardiovascular disease (Khan and Mukhtar, 2018; Xu et al., 2019; Alam et al., 2022). According to publicly available data (Food Agriculture Organization of the United Nations - FAO, 2022), tea production in the world's three largest tea-producing countries showed an overall upward trend from 2016 to 2020, with China ranking first. However, China's tea yield is lower than that of other tea-producing countries and is declining, from 976.6 kg per hectare in 2016 to 886.7 kg per hectare in 2020, as shown in Figure 1. Therefore, tea farmers need to adopt sustainable methods that improve the yield and quality of tea while reducing costs (Bechar and Vigneault, 2016). Tea is not only a labor-intensive crop but also a highly seasonal one. Tea harvesting still relies heavily on manual picking, which is costly and physically demanding (Han et al., 2014), so exploring automated harvesting and high-quality tea production is of great significance.

In recent years, technologies such as machine learning, big data, the Internet of Things, and generative adversarial networks have been reshaping modern agriculture (Sharma et al., 2022). For example, researchers have combined machine vision and robotics to control weeds, avoiding both the environmental pollution caused by chemical weeding and the crop damage caused by mechanical weeding; such systems could gradually be commercialized (Li Y. et al., 2022). While these advantages are substantial, the shortcomings cannot be ignored. For instance, cameras, processors, and algorithm training can be expensive in the early application phase, and complex application scenarios may impose higher requirements on some devices, such as cameras.

Many crops are already harvested automatically by agricultural harvesting robots. Jia et al. (2020) improved the Mask R-CNN algorithm to detect apples in real time with pixel-level accuracy for use on an apple harvesting robot. Williams et al. (2019) used a fully convolutional network (FCN) to detect kiwifruit and designed a soft end-effector to pick the fruit; multiple robotic arms working simultaneously significantly improved efficiency. In general, these solutions rely on computer vision and machine learning, which are applied not only to harvesting but also to other stages of crop production.

Tea presents more distinctive challenges than crops such as apples and kiwifruit. First, the tea buds to be picked are located at the top of the canopy, and their irregular shape and fragile tissue impose strict requirements on identifying the buds and locating the picking points. Second, tea buds and older leaves are similar in texture, color, and shape, which makes identification more difficult. Finally, tea buds are tiny and light, so they shake easily in the natural environment and are difficult to collect by gravity; negative pressure collection (a fan generates airflow that draws in the picked buds) may therefore be a good choice (Zhu et al., 2021).

Before our work, several scholars reviewed machine vision or artificial intelligence applications in the tea industry. Gill et al. (2011) reviewed computer vision for tea grading and monitoring, Yashodha and Shalini (2021) reviewed machine learning for early tea disease prediction, and Bhargava et al. (2022) reviewed machine learning methods for evaluating tea quality. These surveys do not comprehensively cover computer vision and machine learning applications in the tea industry, and most do not address the rapidly developing depth cameras or the deep learning algorithms driven by big data. In contrast, this study makes three main contributions. First, it focuses on computer vision and machine learning applications in the tea industry, covering scenarios such as tea harvesting, tea disease control, tea production and processing, and tea plantation navigation. Second, it organizes the cameras, algorithms, and reported performance of current applications. Finally, it discusses the industry's current research challenges and future trends.

The rest of the paper is divided into five parts. Section 2 presents the methods used for this review. Section 3 defines computer vision, explains the working principles of depth cameras, and gives an overview of machine learning. Section 4 describes in detail the application of computer vision in the tea industry. Section 5 discusses the challenges computer vision faces in the tea industry, and Section 6 presents the conclusions and prospects of this paper.

2. Methods

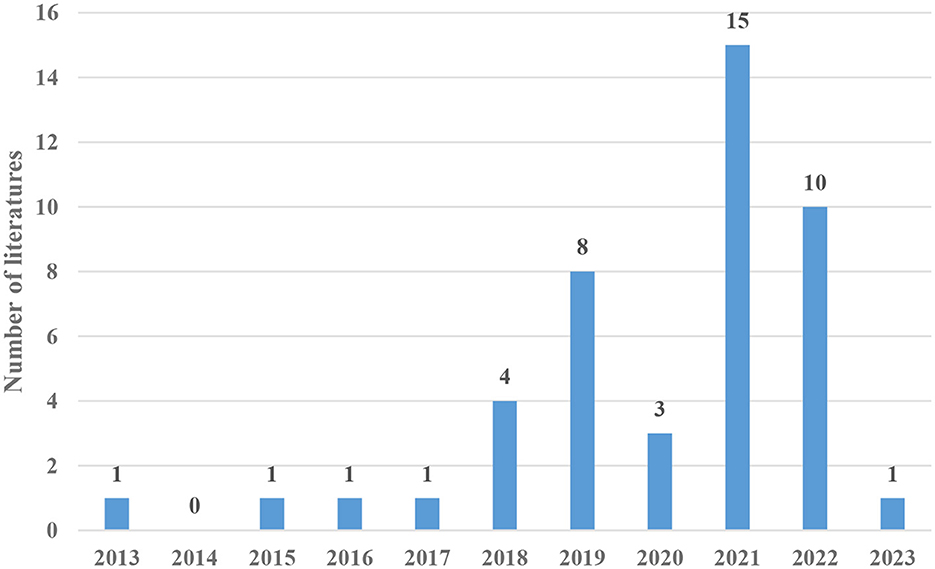

To collect the relevant literature comprehensively, we searched the ScienceDirect, IEEE, Frontiers in Plant Science, ACM, MDPI, Hindawi, Springer, and arXiv databases and used Web of Science and Google Scholar to check for omissions. Because computer vision applications in the tea industry commonly use machine learning, we searched with the string (“tea” OR “Camellia sinensis”) AND (“computer vision” OR “machine vision” OR “image” OR “visual” OR “Shot”). The search was limited to the last decade: from 2013 to January 2023, 106 articles were collected, with ScienceDirect contributing the most, followed by MDPI. We then removed reviews, duplicates, and articles with low relevance to the topic. Finally, 45 articles were retained for review; Figure 2 shows their distribution by year, which indicates that more relevant studies have appeared in the last 5 years.

3. Computer vision and machine learning

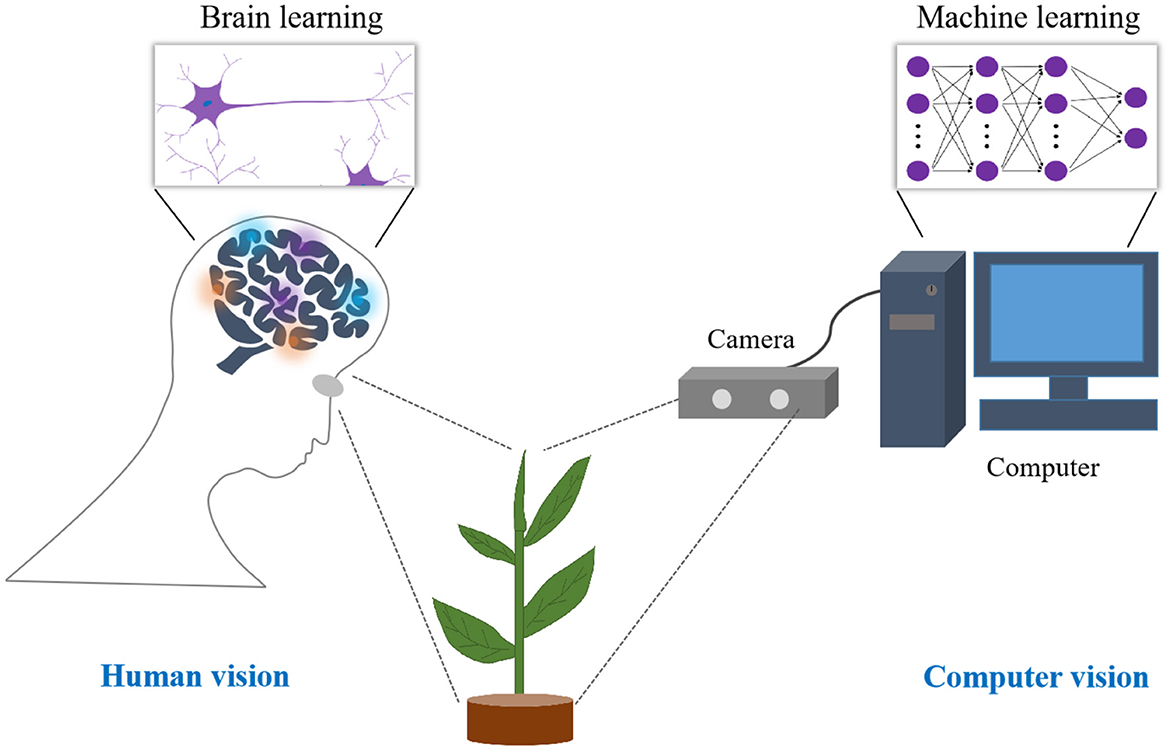

Computer vision aims to build a computational model of the human visual system that can autonomously perform specific tasks the human visual system is capable of (Huang, 1996). It can also perform tasks beyond human vision (e.g., some high-precision, high-intensity tasks). Figure 3 briefly compares the human visual system with a computer vision system. Like the human visual system, computer vision works in four steps: data acquisition, data processing, data learning, and task execution. In the data acquisition phase, cameras collect image or video information, playing the role of the human eye. In the data processing stage, the images are converted into numerical form, and distracting or useless noise is filtered out, much as humans do, so that valid data are retained for learning. In the data learning stage, computers rely on high-performance central processing units (CPUs) and graphics processing units (GPUs) to learn from data, analogous to the human brain. Unlike human learning by association, computers can extract subtle features from images for more fine-grained learning. This use of data and algorithms to imitate the way humans learn is known as machine learning (IBM, 2022). Once learning is complete, the computer vision system can perform a variety of tasks, such as image classification, object detection, semantic segmentation, and instance segmentation. In general, combining computer vision with machine learning allows a system not only to see but also to interpret what it sees.

3.1. Computer vision

The camera is a key component of a computer vision system, allowing it to see the real world much as the human eye does. The application scenario and purpose determine the choice of camera. If only image-level target detection is required, an ordinary monocular camera is sufficient. If, however, the camera must not only perform two-dimensional (2D) detection but also locate the target in three-dimensional (3D) space, a camera that outputs depth information is needed. According to how they acquire depth, depth cameras on the market fall mainly into binocular stereo (BS) cameras and RGB-D (red, green, blue plus depth) cameras. BS cameras compute depth indirectly from parallax through matching algorithms, whereas RGB-D cameras measure depth more directly by actively emitting infrared light; they are mainly divided into structured light (SL) cameras, time-of-flight (ToF) cameras, and active infrared stereo (AIRS) cameras (Li T. et al., 2022).

3.1.1. Binocular stereo cameras

As mentioned above, BS cameras measure distance using parallax: the two cameras passively receive the light reflected from the measured object (Sun et al., 2019a), making this a passive stereo vision method. ZED cameras (Stereolabs, San Francisco, CA, USA) are quintessential BS cameras and have been used in many scenarios (e.g., industrial robots, underwater robots, and cargo transportation). Owing to their optimized performance and low price, ZED cameras are rapidly gaining ground in agriculture, where they have been adopted for farm navigation (Fue et al., 2020; Yun et al., 2021), orchard 3D mapping (Tagarakis et al., 2022), and crop localization (Liao et al., 2019; Li H. et al., 2021; Hou et al., 2022). The Tara Stereo Camera (e-Con Systems, Chennai, Tamil Nadu, India) works on the same principle but is less used in agriculture because its field of view and 3D resolution are lower than those of the ZED camera. Because BS cameras rely on ambient light to compute depth, they cannot work at night. In addition, depth is derived indirectly, which incurs a sizeable computational cost. Accuracy also decreases as distance increases and ambient light dims, which remains a critical point for optimization.
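The parallax principle can be made concrete with the textbook relation for a rectified stereo pair, Z = f·B/d, where f is the focal length in pixels, B the baseline between the two lenses in metres, and d the disparity in pixels. The sketch below is a minimal illustration under that pinhole-camera assumption; the focal length and baseline are hypothetical values, not the specifications of the ZED or Tara cameras. Its first-order error term, ΔZ ≈ Z²·Δd/(f·B), shows why accuracy falls as distance grows.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth (m) of a point from its stereo disparity, pinhole model: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero disparity means infinite depth)")
    return focal_px * baseline_m / disparity_px


def depth_error(focal_px: float, baseline_m: float, depth_m: float, disparity_err_px: float = 1.0) -> float:
    """First-order depth uncertainty caused by a disparity error: |dZ| ~= Z^2 / (f * B) * |dd|."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_err_px


if __name__ == "__main__":
    f, B = 700.0, 0.12  # hypothetical focal length (px) and baseline (m)
    for d in (84.0, 42.0, 21.0):
        z = depth_from_disparity(f, B, d)
        print(f"disparity {d:5.1f} px -> depth {z:.2f} m, error {depth_error(f, B, z):.3f} m per px")
```

With these numbers, doubling the distance quadruples the depth error for the same one-pixel matching error, which is consistent with the accuracy loss at long range described above.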

3.1.2. Structured light cameras

An SL sensor is a non-contact distance-measuring instrument based on active stereo vision. Since Apple adopted SL sensors for face unlocking on its mobile devices, more and more people have come to understand and study the principle. SL technology is similar to binocular stereo vision, except that one of the two cameras is replaced by an infrared projector. The projector casts stripes of light (or discrete spots and other encoded patterns) onto the subject, and an infrared camera captures how much the pattern is distorted to derive depth. Because SL technology actively projects feature points, it effectively avoids the partial depth loss that binocular stereo vision suffers on surfaces with few features. As latecomers, SL cameras have gained popularity owing to their good depth accuracy, excellent performance in dim environments, and low model complexity. SL cameras on the market include the Kinect v1 (Microsoft, Redmond, WA, USA), DaBai (Orbbec, Shenzhen, Guangdong, China), and Xtion PRO LIVE (Asus, Taipei, Taiwan). In principle, SL cameras are suitable for the unstructured environments found in agriculture, and they have played a variety of roles there, including crop harvesting (Qingchun et al., 2014), aquaculture (Saberioon and Cisar, 2016), and livestock monitoring (Pezzuolo et al., 2018). However, because SL cameras compute depth from the distortion of the projected coded pattern captured by the camera, intense natural light, which washes out the pattern, degrades their performance. SL cameras also cannot measure the depth of occluded regions, a common problem in agriculture. Finally, they are not suitable for recognition at long distances.
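One classic way to realize the encoded-pattern idea is temporal Gray-code striping: the projector shows a sequence of binary stripe images, each camera pixel records one bit per image, and the decoded bit string identifies which projector column lit that pixel. That column then plays the role of the second camera in the stereo relation Z = f·B/d. The sketch below is illustrative only: it assumes a rectified camera-projector pair with equal focal lengths, and the coding is not necessarily what the Kinect v1, DaBai, or Xtion sensors use. Gray code is chosen because adjacent columns differ in exactly one bit, so a misread stripe boundary shifts the result by at most one column.

```python
def gray_encode(n: int) -> int:
    """Binary-reflected Gray code of n (adjacent integers differ in exactly one bit)."""
    return n ^ (n >> 1)


def gray_decode(g: int) -> int:
    """Invert gray_encode by XOR-ing together all right shifts of g."""
    n = 0
    while g:
        n ^= g
        g >>= 1
    return n


def stripe_patterns(num_columns: int, num_bits: int) -> list[list[int]]:
    """Binary stripe images to project, most significant bit first.

    Pattern k lights projector column c (value 1) iff bit k of gray_encode(c) is set.
    """
    if num_columns > 2 ** num_bits:
        raise ValueError("not enough bits to give every column a unique code")
    return [[(gray_encode(c) >> (num_bits - 1 - k)) & 1 for c in range(num_columns)]
            for k in range(num_bits)]


def decode_pixel(observed_bits: list[int]) -> int:
    """Projector column seen by one camera pixel, from its lit/unlit bit per pattern (MSB first)."""
    g = 0
    for bit in observed_bits:
        g = (g << 1) | bit
    return gray_decode(g)


def triangulate(focal_px: float, baseline_m: float, cam_x_px: float, proj_x_px: float) -> float:
    """Depth from the camera/projector disparity, Z = f * B / d.

    Assumes a rectified pair with equal focal lengths and the decoded projector column
    already converted to projector pixel coordinates.
    """
    return focal_px * baseline_m / (cam_x_px - proj_x_px)


if __name__ == "__main__":
    patterns = stripe_patterns(num_columns=16, num_bits=4)
    seen = [pattern[11] for pattern in patterns]  # bits a pixel lit by column 11 records
    print(seen, "->", decode_pixel(seen))  # prints [1, 1, 1, 0] -> 11
```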

3.1.3. Time of flight cameras

Unlike the previous two types, ToF cameras do not triangulate between two viewpoints; their structure consists mainly of an infrared transmitter and receiver. The technology relies on the constant speed of light: the emitted light travels to the target and back, so the depth equals half the product of the speed of light and the measured round-trip time. Owing to this principle, ToF cameras are unaffected by dim light and respond quickly while achieving high-precision ranging. Because of these advantages, ToF cameras are widely used in agriculture, including crop harvesting (Barnea et al., 2016; Lin et al., 2019), yield estimation (Yu et al., 2021), field navigation (Song et al., 2022), aquaculture (Saberioon and Císar, 2018), and livestock monitoring (Zhu et al., 2015). The Kinect v2 is one of the most widely used ToF cameras (Fu et al., 2020). Although ToF cameras perform well overall, their resolution is relatively low. Because the infrared signal is easily disturbed by strong light radiation, ToF cameras are not directly applicable in environments with intense natural light.
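The ranging relation can be written out for the two common ToF variants: pulsed ToF measures the round-trip time Δt directly, giving d = c·Δt/2, and continuous-wave ToF measures the phase shift φ of modulated light, giving d = c·φ/(4π·f_mod), which is unambiguous only up to c/(2·f_mod). The source does not say which variant each camera uses, so the sketch below treats both generically; the 20 MHz modulation frequency is an arbitrary example.

```python
import math

C = 299_792_458.0  # speed of light in vacuum (m/s)


def pulsed_tof_distance(round_trip_s: float) -> float:
    """Pulsed ToF: the light travels out and back, so distance is half of c * t."""
    return C * round_trip_s / 2.0


def cw_tof_distance(phase_rad: float, mod_freq_hz: float) -> float:
    """Continuous-wave ToF: d = c * phi / (4 * pi * f_mod), valid within one ambiguity interval."""
    return C * phase_rad / (4.0 * math.pi * mod_freq_hz)


def cw_ambiguity_range(mod_freq_hz: float) -> float:
    """Largest unambiguous distance c / (2 * f_mod); farther targets alias to shorter ones."""
    return C / (2.0 * mod_freq_hz)


if __name__ == "__main__":
    print(f"10 ns round trip -> {pulsed_tof_distance(10e-9):.3f} m")
    f_mod = 20e6  # hypothetical modulation frequency
    print(f"phase pi at 20 MHz -> {cw_tof_distance(math.pi, f_mod):.3f} m "
          f"(ambiguity range {cw_ambiguity_range(f_mod):.3f} m)")
```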

3.1.4. Other cameras

To compensate for the shortcomings of single-principle distance measurement, some cameras on the market integrate multiple distance measurement methods to adapt to a broader range of working environments. AIRS cameras combine binocular stereo vision technology with structured light technology to compensate for the disadvantage of low accuracy of BS cameras in dark light environments while retaining the advantage of high depth resolution. The RealSense D435 (Intel, Santa Clara, CA, USA) is a compact AIRS camera that has been used in agriculture for crop harvesting (Peng et al., 2021; Polic et al., 2022) and animal monitoring (Chen C. et al., 2019). Some cameras try to combine ToF technology with binocular vision technology, such as the SeerSense DS80 (Xvisio, Shanghai, China) camera, which can adapt to indoor and outdoor working environments.

3.2. Machine learning

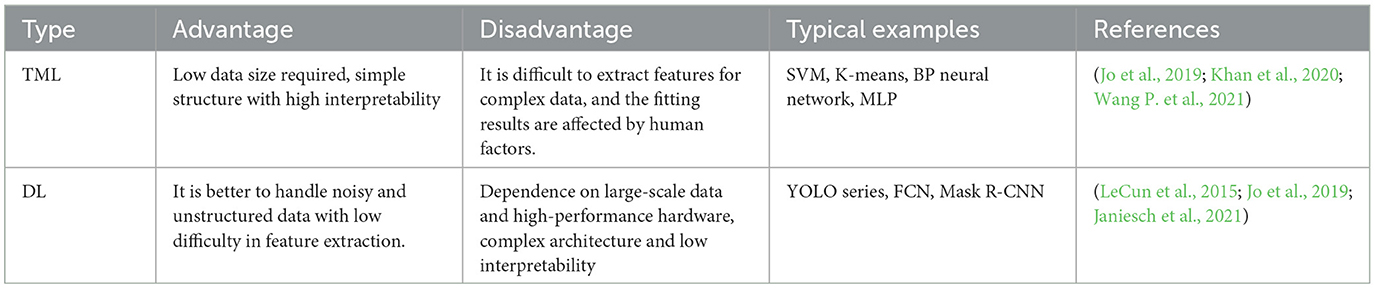

To exploit the vast amount of valuable data generated during crop growth, harvesting, and production, capable hardware alone is not enough. Machine learning (ML) allows computers to learn without being explicitly programmed (Meng et al., 2020). ML can solve identification, tracking, and prediction problems in agriculture and has been widely used in crop harvesting, disease control, yield forecasting, agricultural navigation, animal management, and aquaculture. The machine learning algorithms most commonly used in the tea industry fall into traditional machine learning (TML) and deep learning (DL); both require appropriate sample data for learning, and they are compared in Table 1.

3.2.1. Traditional machine learning

TML models generally have a shallow network structure (Wang J. et al., 2018). Their origin can be traced back to the “perceptron”, a machine that could recognize letters (Fradkov, 2020). TML usually involves multiple steps, such as image preprocessing, segmentation, feature extraction, feature selection, and classification (Khan et al., 2020). Because these steps must be processed sequentially, objects usually cannot be detected directly in an end-to-end manner. However, thanks to its simple structure, TML is highly interpretable and needs relatively little hardware to achieve excellent results on small datasets (Wang P. et al., 2021). TML is mainly used for classification and prediction problems.
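To illustrate how shallow the original perceptron is, the sketch below trains Rosenblatt's single-layer perceptron with its classic mistake-driven update rule. The logical AND problem is a toy, linearly separable dataset chosen for illustration, not tea data; the function names are our own.

```python
# Toy, linearly separable data: logical AND with labels +1 / -1 (illustrative only)
AND_X = [(0, 0), (0, 1), (1, 0), (1, 1)]
AND_Y = [-1, -1, -1, 1]


def train_perceptron(samples, labels, lr=1.0, max_epochs=100):
    """Rosenblatt's perceptron rule: adjust weights only when a sample is misclassified."""
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for x, y in zip(samples, labels):
            activation = sum(wi * xi for wi, xi in zip(w, x)) + b
            if y * activation <= 0:  # wrong side of (or exactly on) the decision boundary
                w = [wi + lr * y * xi for wi, xi in zip(w, x)]
                b += lr * y
                mistakes += 1
        if mistakes == 0:  # a full pass without errors: the data are separated
            break
    return w, b


def predict(w, b, x):
    """Sign of the linear score: +1 or -1."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else -1


if __name__ == "__main__":
    weights, bias = train_perceptron(AND_X, AND_Y)
    print(weights, bias, [predict(weights, bias, x) for x in AND_X])
```

A single weighted sum followed by a threshold is the whole model, which is why such methods are interpretable but limited to linearly separable problems.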

The classification problem concerns assigning data (which can be text, images, or speech) to the categories they belong to. In agriculture, classification algorithms are needed for scene understanding, crop recognition, and agricultural product classification. Typical algorithms include the support vector machine (SVM) and K-means clustering. An SVM separates classes with hyperplanes placed between the classes and as far as possible from the nearest samples of each class (i.e., maximizing the margin). K-means clustering, by contrast, is unsupervised: it groups data by their differences, measured as the Euclidean distance between each data point and the cluster centers, where a greater distance means a greater difference. Each point is assigned to its nearest center and the centers are updated iteratively until the assignments stop changing, so that similar data points end up in the same cluster.
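The K-means procedure described above alternates two steps: assign each point to its nearest center by Euclidean distance, then move each center to the mean of its points. The sketch below implements this Lloyd iteration. As a simplification it uses the first k points as initial centers (k-means++ or multiple random restarts are common in practice), and the 2-D points are toy values, for example standing in for two color features.

```python
import math

# Toy 2-D feature vectors (e.g., two colour features per leaf region); illustrative only
POINTS = [(1.0, 1.0), (8.0, 8.0), (1.5, 2.0), (8.5, 9.0), (1.2, 0.8), (9.0, 8.0)]


def kmeans(points, k, max_iters=100):
    """Lloyd's K-means. The first k points are the initial centers (deterministic, for clarity)."""
    centers = [list(p) for p in points[:k]]
    assignment = None
    for _ in range(max_iters):
        # Assignment step: each point joins the center at the smallest Euclidean distance
        new_assignment = [min(range(k), key=lambda j: math.dist(p, centers[j])) for p in points]
        if new_assignment == assignment:  # no point changed cluster: converged
            break
        assignment = new_assignment
        # Update step: move each center to the mean of the points assigned to it
        for j in range(k):
            members = [p for p, a in zip(points, assignment) if a == j]
            if members:  # an empty cluster keeps its previous center
                centers[j] = [sum(coords) / len(members) for coords in zip(*members)]
    return centers, assignment


if __name__ == "__main__":
    centers, labels = kmeans(POINTS, k=2)
    print(centers, labels)
```

The cluster indices carry no meaning by themselves; mapping a cluster to a category (e.g., diseased versus healthy) still requires labeled examples or expert inspection.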

Prediction, unlike classification, produces continuous-valued outputs rather than discrete class labels. Accurate prediction of yield and harvest cycles from information such as soil chemical element content, precipitation, and crop growth status is essential in precision agriculture. Forecasting algorithms are also applied to disaster warning, crop tracking, and agricultural price forecasting. Widely used forecasting models include time series models (where the output depends closely on time) and regression models (where the output is modeled from input features without explicit time dependence).
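As a minimal example of a regression model, the sketch below fits y = a·x + b by ordinary least squares in closed form. In a yield-forecasting setting x might be an input such as precipitation and y the yield, but the numbers used here are toy values rather than agricultural data, and practical models would use several features.

```python
def fit_simple_linear_regression(xs, ys):
    """Closed-form ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two paired observations")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be identical")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


if __name__ == "__main__":
    # Toy values lying exactly on y = 2x + 1 (not real yield data)
    slope, intercept = fit_simple_linear_regression([1, 2, 3, 4, 5], [3, 5, 7, 9, 11])
    print(slope, intercept, slope * 6 + intercept)  # fitted line and a forecast at x = 6
```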

3.2.2. Deep learning

DL has great potential for analyzing features in high-dimensional data. Moreover, features learned on one problem can be transferred to new problems (Ashtiani et al., 2021). DL is developing rapidly, mainly in image processing and speech recognition.

In image processing, many mature DL networks have been developed to recognize, segment, and localize targets in images, including the Single Shot MultiBox Detector (SSD) (Liu et al., 2016), FCN (Long et al., 2015), and the Mask R-CNN instance segmentation network (He et al., 2017). Although these networks perform different tasks, they all contain a backbone network for feature extraction (e.g., VGG16, ResNet50). For crop detection and tracking in agriculture, complex network structures can reduce real-time recognition performance (Zhao et al., 2018). To improve efficiency, researchers have introduced depthwise separable convolutions into networks, typical examples being MobileNets (Howard et al., 2017), MobileNetV2 (Sandler et al., 2018), and Xception (Chollet, 2017).
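The efficiency gain from depthwise separable convolution can be checked by counting weights, following the analysis in the MobileNets paper. A standard k×k convolution mapping C_in to C_out channels has k²·C_in·C_out weights, whereas a depthwise k×k convolution followed by a 1×1 pointwise convolution has k²·C_in + C_in·C_out, a ratio of 1/C_out + 1/k². Because every weight is applied once per output position, multiply-add counts shrink by the same ratio. Biases are ignored, and the layer sizes below are arbitrary examples.

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a k x k convolution from c_in to c_out channels (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k: int, c_in: int, c_out: int) -> int:
    """Depthwise k x k filter per input channel, then a 1 x 1 pointwise conv to c_out channels."""
    depthwise = k * k * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise


if __name__ == "__main__":
    k, c_in, c_out = 3, 32, 64  # example layer sizes
    std = standard_conv_params(k, c_in, c_out)
    sep = depthwise_separable_params(k, c_in, c_out)
    print(std, sep, sep / std, 1 / c_out + 1 / k ** 2)  # the last two values agree
```

For a 3×3 layer the separable version needs roughly an eighth of the weights, which is the main source of the speed-up that makes such networks attractive for real-time crop detection.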

In speech recognition, DL networks mainly convert an input signal sequence into a text sequence (Qingfeng et al., 2019). Speech recognition is significant for interactive operation, insect identification, and animal monitoring in agriculture. To overcome the effect of cluttered noise on speech recognition in agriculture, Liu et al. (2020) used convolutional LSTM networks to map the input speech spectrogram into a high-dimensional embedding space for clustering and extracted discriminative spectral vectors for denoising, which gives significant recognition advantages in noisy environments. Because complex network structures are often difficult to use, Google (Mountain View, CA, USA) has developed DL frameworks such as TensorFlow and Keras, which promote the adoption of DL in agriculture.

4. Computer vision for the tea industry

In China, tea is both a healthy beverage and a cultural symbol, and its traditional processing techniques and associated customs are listed as world Intangible Cultural Heritage (United Nations, 2022b). With the increasing demand for tea and the continuous development of agricultural machinery, many tea harvesting machines have been developed (Wu et al., 2017; Song et al., 2020; Zhao et al., 2022). However, these machines are suitable only for bulk tea: they cannot selectively harvest high-quality tea, and the quality of the harvested buds is relatively low (Zhang and Li, 2021). Although distinguishing tea buds from old leaves by color, shape, and texture is simple and convenient, accurate identification and picking remain challenging because of the many disturbing factors in the outdoor environment (Yang H. et al., 2019). Tea harvesting shares problems common to agricultural environments, such as occluded objects, unstable lighting, and complex scenes, and it also faces its own challenges: difficult identification, easily broken buds, and high growth density. Figure 4 shows the difficulties faced by vision-based tea harvesting robots.

Figure 4. Difficulties of vision-based tea harvesting machines: (A) Obscured targets; (B) Unstable light; (C) Complex environment (e.g., presence of pests, dead leaves, etc.); (D) Small differences in target and background characteristics; (E) Small size and easy to break, reproduced from Li Y. et al. (2021); (F) High target growth density.

In recent years, combining computer vision and machine learning has solved many challenges in the agricultural field and provided new solutions for intelligent tea picking. Moreover, it can also be applied to other aspects of the tea industry, such as disease control, production processing, tea plantation navigation, and growth status monitoring.

4.1. Tea harvest

In the past few years, in pursuit of tea-picking equipment with high efficiency and high picking quality, researchers have favored picking after identifying the buds over non-selective mechanical cutting.

Tea buds are usually recognized through object detection or image segmentation, tasks in which DL performs well and is widely used. Yang H. et al. (2019) proposed an improved YOLO v3 algorithm to recognize tea buds. Its accuracy on the training set exceeded 90%, and it could detect four types of tea targets (one bud with one leaf, one bud with two leaves, and the top and side views of the bud). The TML method of K-means clustering was used to determine the nine most suitable anchor boxes. In subsequent research, Yang et al. (2021) designed a complete vision-based automatic picking robot consisting mainly of a mechanical structure, a vision recognition system, and a motion control system. The robot first applies the PSO-SVM method to segment the tea buds quickly and accurately, then uses an improved ant colony algorithm (ACA) to find the optimal trajectory, and finally uses the previously developed vision recognition system to identify the buds for picking. Simulations and laboratory platforms validated the solution as highly stable and efficient. However, tea plantation scenes are complex, and many disturbing factors must be considered for the recognition model to be robust. To this end, Chen and Chen (2020) and Xu et al. (2022) constructed diverse natural-scene datasets for model training, accounting for factors such as lighting variation, tea shoot density, shooting angle, and background complexity. Their results showed that shoot recognition is more accurate when shooting from the side than from the top.
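Yang H. et al. (2019) report using K-means to choose nine anchor boxes, but their implementation details are not given here. The sketch below therefore follows the convention popularized by YOLOv2 and YOLOv3: cluster the ground-truth (width, height) pairs with the distance 1 - IoU instead of Euclidean distance, so that large boxes do not dominate the clustering. The box sizes are made-up toy values, and k = 2 keeps the example small; YOLO v3 uses k = 9 (three anchors per detection scale). The first k boxes serve as initial centroids for reproducibility, whereas a practical run would use random or k-means++ initialization with restarts.

```python
# Toy ground-truth box sizes (width, height) in pixels; illustrative only
BOXES = [(12, 20), (40, 60), (14, 22), (42, 58), (13, 18), (38, 64)]


def wh_iou(a, b):
    """IoU of two boxes compared by size only (both placed at the same top-left corner)."""
    inter = min(a[0], b[0]) * min(a[1], b[1])
    union = a[0] * a[1] + b[0] * b[1] - inter
    return inter / union


def anchor_kmeans(boxes, k, max_iters=100):
    """K-means on (w, h) with distance 1 - IoU; returns k anchors sorted by area."""
    centroids = [tuple(map(float, b)) for b in boxes[:k]]  # deterministic init for reproducibility
    assignment = None
    for _ in range(max_iters):
        # The smallest 1 - IoU distance is the same as the largest IoU
        new_assignment = [max(range(k), key=lambda j: wh_iou(b, centroids[j])) for b in boxes]
        if new_assignment == assignment:
            break
        assignment = new_assignment
        for j in range(k):
            members = [b for b, a in zip(boxes, assignment) if a == j]
            if members:
                centroids[j] = (sum(w for w, _ in members) / len(members),
                                sum(h for _, h in members) / len(members))
    return sorted(centroids, key=lambda wh: wh[0] * wh[1])


if __name__ == "__main__":
    print(anchor_kmeans(BOXES, k=2))
```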

To determine the picking location, Yang H. et al. (2019) and Chen et al. (2022) used object detection: tea buds were first detected in the image, the detected bud regions were cropped, and traditional morphological methods were used to segment, denoise, and skeletonize the target before the picking point was determined. This method achieved an accuracy of 83%. In contrast, DL-based methods segment well when a large number of samples is available. Chen and Chen (2020) proposed using two DL networks in series to determine picking points: Faster R-CNN first detected the target, FCN then segmented the stems of the detected tea buds, and the center of the segmented region was taken as the picking point. The limitation of this method is the poor real-time performance of running two convolutional neural networks for prediction. Wang T. et al. (2021) applied Mask R-CNN to segment two targets, tea buds and stems, so that a single DL network performed both detection and segmentation. The center coordinates of the tea stem were then used as the picking point, an approach that is simple and robust in complex environments. To make picking more flexible, Yan L. et al. (2022) proposed a method that outputs both picking points and picking angles. The tea bud and stem were first segmented as a whole, and a minimum bounding rectangle was then fitted around each segmentation result. The rotation angle of the rectangle served as the picking angle of the manipulator, and the picking point was determined from the position of the rectangle's bottom edge. Experiments showed that picking point localization reached an accuracy of 94.9% and a recall of 91%, which could support accurate localization for tea picking.
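Wang T. et al. (2021) take the center coordinates of the segmented stem as the picking point. Assuming the Mask R-CNN stem mask is available as a 2-D binary array, that center can be computed as the pixel centroid, as in the sketch below. The extra step of snapping the centroid to the nearest stem pixel is our own illustrative safeguard (a curved stem's centroid can fall off the stem), not part of the cited method; the helper names and masks are hypothetical.

```python
# Hypothetical stem masks (1 = stem pixel), e.g. thresholded Mask R-CNN output
STRAIGHT_STEM = [[0, 0, 1, 0, 0] for _ in range(5)]
CURVED_STEM = [
    [0, 1, 1],
    [1, 0, 0],
    [1, 0, 0],
    [1, 0, 0],
    [0, 1, 1],
]


def stem_pixels(mask):
    """(row, col) of every nonzero pixel in a 2-D binary mask."""
    return [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]


def mask_centroid(mask):
    """Mean (row, col) of the stem pixels."""
    pixels = stem_pixels(mask)
    if not pixels:
        raise ValueError("empty mask: no stem segmented")
    n = len(pixels)
    return sum(r for r, _ in pixels) / n, sum(c for _, c in pixels) / n


def picking_point(mask):
    """Stem pixel closest to the centroid (snapping keeps the point on the stem)."""
    cr, cc = mask_centroid(mask)
    return min(stem_pixels(mask), key=lambda p: (p[0] - cr) ** 2 + (p[1] - cc) ** 2)


if __name__ == "__main__":
    print(mask_centroid(CURVED_STEM), picking_point(CURVED_STEM))
```

For the C-shaped toy mask the raw centroid lies in the gap between the arms, so the snapped point is the more sensible target for an end-effector.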
Beyond image-based identification, picking point extraction is also hampered by mutual occlusion caused by the high growth density of tea. To solve this problem, Li Y. et al. (2021) proposed a method based on an RGB-D camera to locate tea buds. The depth camera was used to generate a 3D point cloud of the target, and, according to the growth characteristics of tea leaves, the picking point was defined as the midpoint of the point cloud shifted downward by a set distance. Picking experiments in tea plantations showed a success rate of 83.18%, with an average localization time of only 24 ms per tea bud. Since the grade of fresh tea leaves depends on the number of buds and leaves picked, Yan C. et al. (2022) proposed a target segmentation method based on an improved DeepLabV3+. It outputs picking points for three types of targets, namely a single bud tip, one bud with one leaf, and one bud with two leaves, with recognition accuracies of 82.85, 90.07, and 84.78%, respectively.
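The RGB-D picking rule of Li Y. et al. (2021), a point-cloud midpoint shifted downward, can be sketched as follows. The source gives neither the offset distance nor the coordinate frame, so both are parameters here: `offset_m` is the downward shift and `down` is the downward direction in the point cloud's frame (defaulting to -z, i.e., assuming z points up). Interpreting "midpoint" as the centroid of the bud's points is also an assumption, and the points below are hypothetical.

```python
# Hypothetical bud point cloud (x, y, z) in metres, in a frame where z points up
BUD_POINTS = [(0.0, 0.0, 0.10), (0.002, 0.0, 0.12), (-0.002, 0.0, 0.14)]


def picking_point_from_cloud(points, offset_m, down=(0.0, 0.0, -1.0)):
    """Centroid of the bud's 3-D points moved offset_m metres along the `down` direction."""
    if not points:
        raise ValueError("empty point cloud")
    n = len(points)
    centroid = [sum(p[i] for p in points) / n for i in range(3)]
    norm = sum(d * d for d in down) ** 0.5  # normalise so offset_m is a true distance
    if norm == 0:
        raise ValueError("down must be a non-zero vector")
    return tuple(c + offset_m * d / norm for c, d in zip(centroid, down))


if __name__ == "__main__":
    print(picking_point_from_cloud(BUD_POINTS, offset_m=0.01))  # about (0, 0, 0.11)
```

In a real camera frame the downward direction usually has to be derived from the camera's mounting pose rather than assumed to be an axis.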

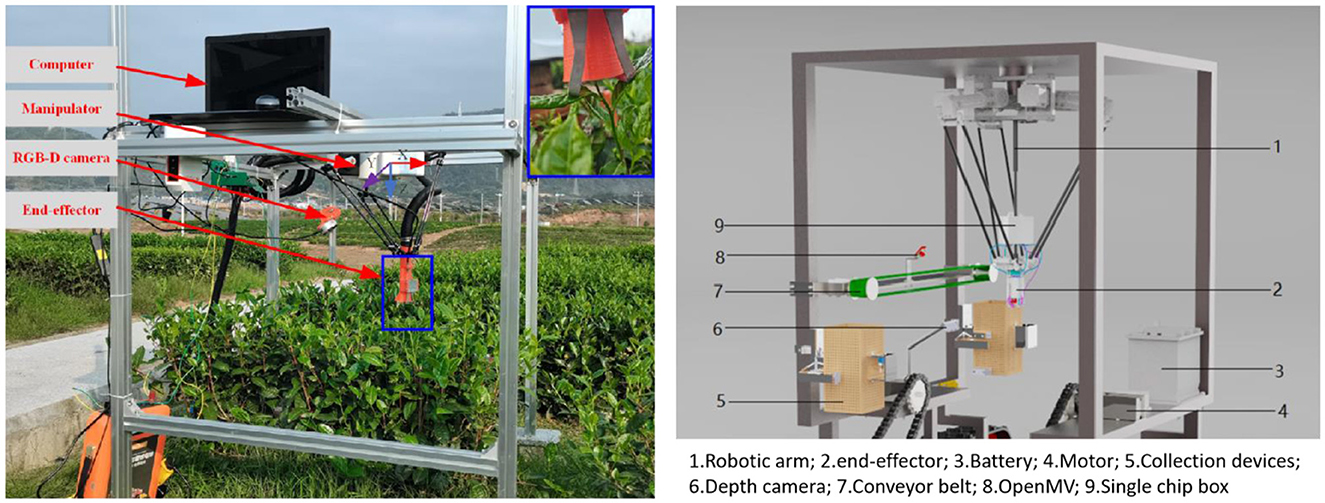

For tea bud picking, existing vision-based intelligent picking robots usually use Delta parallel robotic arms to move the end-effector in space. Yang H. et al. (2019) designed an end-effector capable of both picking and collecting buds. Considering the varied growth patterns of tea buds, Li Y. et al. (2021) and Zhu et al. (2021) designed a shear-type end-effector in which scissors mounted at the end of a hollow sleeve are opened and closed by a control device. During operation, the scissors are opened, the sleeve is lowered over the target from above, and the scissors close once the sleeve reaches the specified position; meanwhile, negative pressure at the other end of the sleeve draws in the picked tea buds. To pick high-quality tea buds, Chen et al. (2022) designed an end-effector with multiple embedded sensors and rubber pads on the jaws, which imitates the human hand to pick the target accurately without damaging it. Unlike the more common suction collection method, this design sorts and collects tea buds by having the robotic arm carry each target to a conveyor belt. Because current tea picking machines damage tea stalks and buds, Wu et al. (2022) investigated the bending of tea stalks under large perturbations to help optimize the tool structure. The stalk disturbance curve fitted from their experiments helped identify the optimal picking area and guided the movement speed and cutting frequency of the end-effector during operation. Buds obtained by shearing are of lower quality than hand-picked buds because of severe oxidation at the cut end. For this reason, Luo et al. (2022) developed a dynamic model of mechanical tea bud picking and identified the parameters for keeping damage low, including clamping pressure, bending force, and pull-out force.

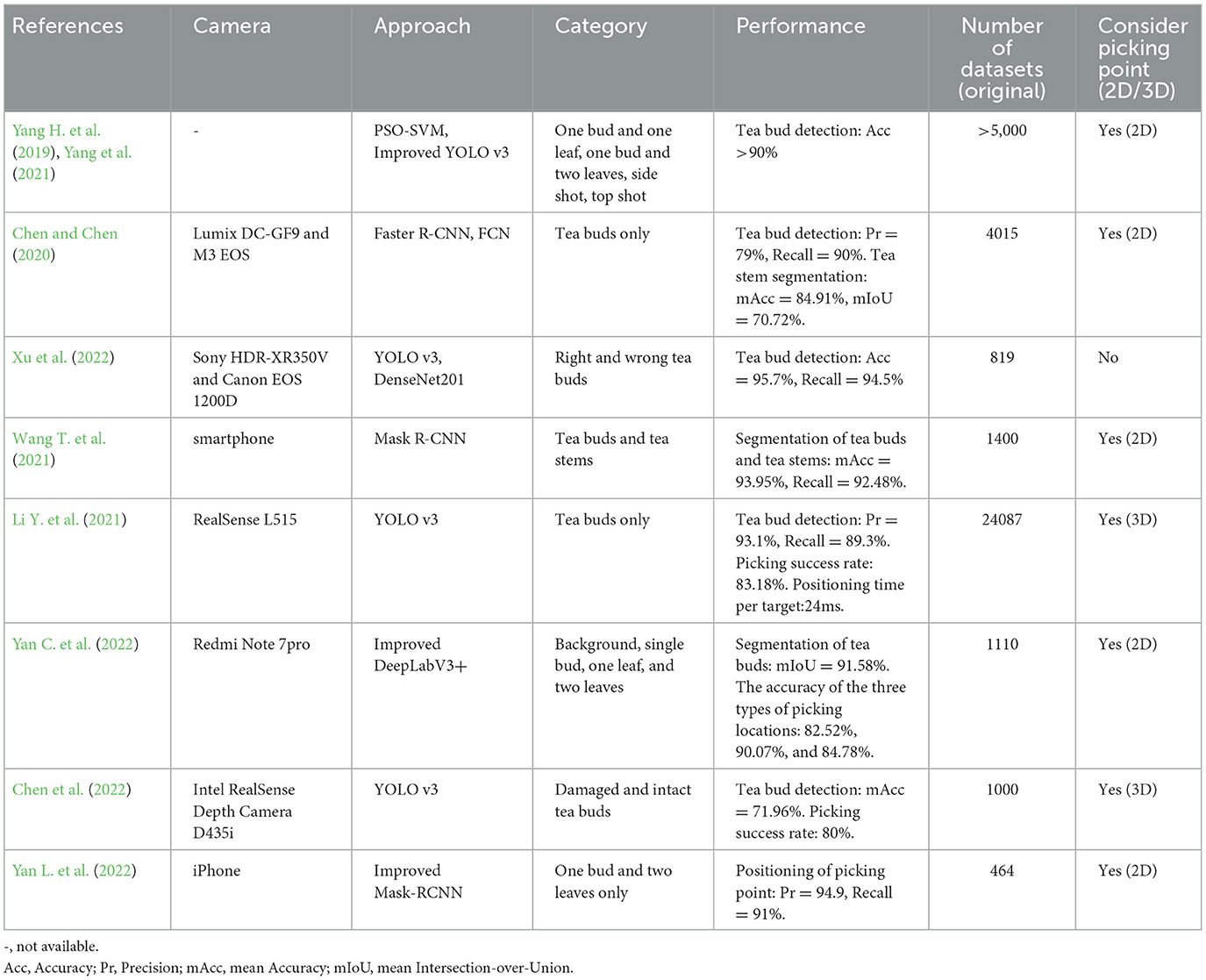

As the above discussion shows, many researchers have used algorithms to identify tea buds and picking points. However, far fewer have built prototypes and tested them in actual harvesting; the prototypes found in the literature are those of Li Y. et al. (2021) and Chen et al. (2022), shown in Figure 5. Most scholars in this field have concentrated on green tea, and only a few have studied other teas, such as flower tea (Qi et al., 2022). The research methods mainly use DL, TML, or a combination of both. Table 2 summarizes computer vision and machine learning applications in tea harvesting. Relatively few tea harvesting studies use RGB-D cameras; most collect images with ordinary cameras or even mobile phones to build datasets. However, intelligent tea harvesting in the future will require combining detection with depth-camera localization, so selecting an appropriate depth camera is also crucial.

Figure 5. Vision-based automatic tea picking machine, reproduced from Li Y. et al. (2021) and Chen et al. (2022).

4.2. Tea disease control

Diseases are an important factor affecting the yield and quality of tea, causing the loss of one-fifth of the total production value each year (Yang N. et al., 2019). Disease prevention and management are effective means of reducing yield loss and improving product quality. Traditionally, tea diseases were identified mainly through on-site investigation by experts in related fields, which is inefficient and costly (Mukhopadhyay et al., 2021). Computer vision is now expected to replace manual identification. On the one hand, visual monitoring of the tea plants' status can provide early warning of diseases. On the other hand, classifying and identifying the collected data provides an essential basis for guiding tea farmers' scientific decisions. Unlike the vision system for tea harvesting, the vision system for disease control focuses on identifying diseases and estimating their severity.

There are two main approaches to recognizing diseases on tea leaves. The first uses picked diseased leaves photographed in a structured environment, so the images contain few disturbing factors; the second uses images taken directly in the tea plantation, an unstructured environment with many more disturbing factors. For the first approach, Chen J. et al. (2019) and Sun et al. (2019b) used convolutional neural networks to classify seven common tea leaf diseases, achieving classification accuracy above 90% in both cases. Because this approach requires diseased leaves to be picked and brought to a fixed location for identification, it is inefficient and hinders the recovery of the tea plants.

The second approach, by contrast, allows real-time identification in the plantation. Its technical route is to extract or detect diseased leaves from the complex background and then identify them. Sun Y. et al. (2019) designed the SLIC_SVM algorithm, which first uses simple linear iterative clustering (SLIC) to split the image into superpixel blocks, then uses an SVM to classify the blocks, and finally applies morphological operations to obtain accurate diseased-leaf regions; this method extracted diseased leaves with an accuracy of 98.5%. Because collecting and labeling large amounts of image data increases the cost of identification, Hu et al. (2019a) and Hu and Fang (2022) applied generative adversarial networks (GANs) to augment the extracted diseased leaves, automatically generating new training samples to meet the classifier's demand for large sample sizes. Their experiments showed that the method needs only small-scale samples to complete training. Lanjewar and Panchbhai (2022) proposed a cloud-based method in which tea leaves are photographed with a smartphone and the images are uploaded to the cloud, where three tea leaf diseases were identified with 100% accuracy. Such an approach could make tea disease detection more convenient, simple, and efficient.
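The final morphological step in the superpixel pipeline of Sun Y. et al. (2019) can be illustrated with a minimal sketch of binary opening (erosion followed by dilation). It assumes the per-block SVM decisions have already been rasterized into a 0/1 mask; the choice of opening and of a 3×3 square structuring element are assumptions, and the cited study may have used different operators or element sizes.

```python
def _apply_3x3(mask, combine):
    """Slide a 3x3 square structuring element over a 0/1 mask.

    Pixels outside the image are treated as background (0).
    `combine` is all() for erosion and any() for dilation.
    """
    h, w = len(mask), len(mask[0])
    return [
        [
            int(combine(
                0 <= r + dr < h and 0 <= c + dc < w and mask[r + dr][c + dc] == 1
                for dr in (-1, 0, 1)
                for dc in (-1, 0, 1)
            ))
            for c in range(w)
        ]
        for r in range(h)
    ]


def erode(mask):
    return _apply_3x3(mask, all)


def dilate(mask):
    return _apply_3x3(mask, any)


def opening(mask):
    """Erosion then dilation: removes foreground specks too small to contain the
    3x3 element (e.g. isolated misclassified blocks) and approximately restores
    the shape of larger regions."""
    return dilate(erode(mask))
```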

Identifying a disease only answers the qualitative question; quantifying it requires an analysis of disease severity. Hu et al. (2021a) proposed a method for estimating the severity of tea leaf blight that first uses an object detection algorithm to extract diseased leaves and then feeds the detected targets into a trained VGG16 network for severity grading. However, this method relies entirely on DL models and therefore has low interpretability. To address this, Hu et al. (2021b) combined an Initial Disease Severity (IDS) index with color and texture features to estimate tea leaf blight severity. The method achieved an average accuracy of 83% and was robust to occluded and damaged diseased leaves.
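For comparison, a common and easily interpreted way to quantify severity is the fraction of leaf area covered by lesions, binned into grades. The sketch below is neither the IDS index of Hu et al. (2021b) nor the VGG16 grading of Hu et al. (2021a); it assumes leaf and lesion masks are already available from a segmentation step, and the grade thresholds in the hypothetical GRADE_BOUNDS table are invented for illustration.

```python
def lesion_ratio(leaf_mask, lesion_mask):
    """Share of leaf pixels that are also marked as lesion (both masks are 0/1 grids)."""
    leaf_px = lesion_px = 0
    for leaf_row, lesion_row in zip(leaf_mask, lesion_mask):
        for is_leaf, is_lesion in zip(leaf_row, lesion_row):
            if is_leaf:
                leaf_px += 1
                if is_lesion:
                    lesion_px += 1
    if leaf_px == 0:
        raise ValueError("leaf mask is empty")
    return lesion_px / leaf_px


# Hypothetical grading scheme: (upper bound on lesion ratio, grade).
GRADE_BOUNDS = ((0.05, 1), (0.25, 2), (0.50, 3))


def severity_grade(ratio, bounds=GRADE_BOUNDS, top_grade=4):
    """Return the first grade whose upper bound the ratio does not exceed."""
    for upper, grade in bounds:
        if ratio <= upper:
            return grade
    return top_grade
```

Because the ratio is an explicit area measurement, each grade can be traced back to a pixel count, which is the kind of interpretability the DL-only approach lacks.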

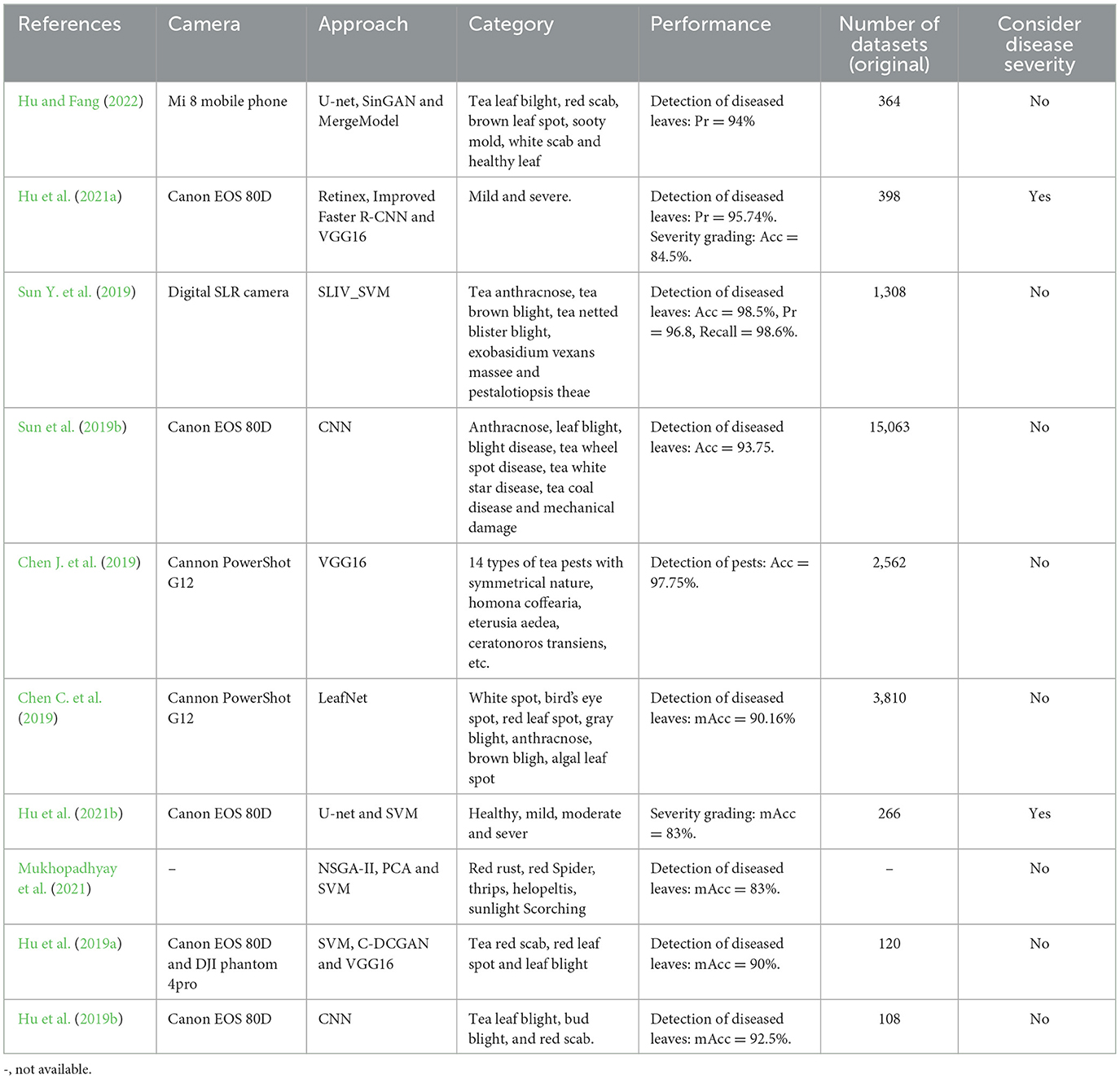

Pests in tea plantations are another important threat to tea plants, and identifying them allows countermeasures to be taken early. Chen et al. (2021) used a CNN to identify 14 tea pests; experiments showed that the method outperformed TML, reaching an accuracy of 97.75%, which meets practical needs. Table 3 summarizes the application of computer vision and machine learning in tea disease control.

Table 3. Summary of the application of computer vision and machine learning for tea disease control.

4.3. Tea production and processing

Most picked tea leaves go through five stages before becoming finished products: withering, cutting-tearing-curling, fermentation, drying, and sorting (Kimutai et al., 2020). Each stage must be strictly controlled by experienced workers to produce a finished product that meets standards. However, manual monitoring of tea production and quality grading is subject to the workers' experience, visual perception, and psychological state (Dong et al., 2017). For this reason, computer vision, with its objectivity, convenience, and low cost, has been applied to the different stages of tea processing.

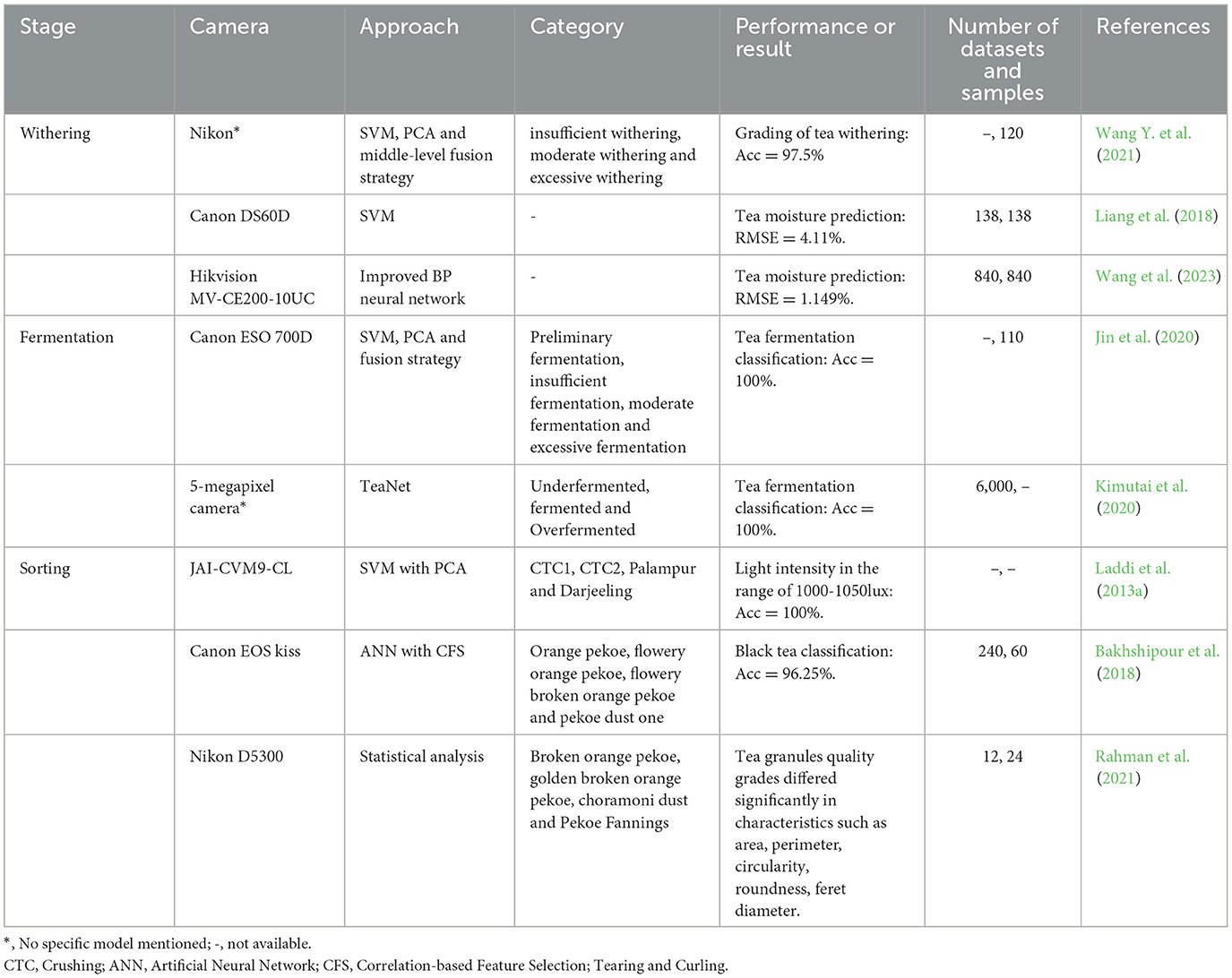

Withering causes the tea leaves to lose moisture. Moderate withering increases the concentration of cell sap and prevents the leaves from being crushed during the cutting, tearing, and curling steps (Deb and Jolvis Pou, 2016). Wang Y. et al. (2021) proposed a method for monitoring tea withering that combines visual and spectral features. The method performed mid-level fusion of the collected data and then applied an SVM model to classify the withering state into three categories (insufficient, moderate, and excessive), achieving an accuracy of 97.5% on the prediction set. However, the study relied on costly spectral measurement equipment. Liang et al. (2018) and Wang et al. (2023) instead predicted the moisture content of the leaves from color or texture features in the images, with root mean square errors (RMSEs) below 5%.
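Moisture prediction results of this kind are usually reported as a root mean square error (RMSE): the square root of the mean squared difference between predicted and measured values. A minimal Python sketch, assuming moisture content is expressed as a percentage so that the RMSE is in percentage points; the sample values are invented.

```python
import math


def rmse(y_true, y_pred):
    """Root mean square error between measured and predicted values."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("expected two non-empty sequences of equal length")
    squared = [(p - t) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared) / len(squared))


# Invented moisture contents (%): reference measurements vs. image-based predictions.
measured = [70.0, 65.0, 60.0]
predicted = [72.0, 64.0, 61.0]
print(round(rmse(measured, predicted), 3))  # errors 2, -1, 1 -> sqrt(6 / 3); prints 1.414
```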

Tea fermentation is an oxidation process that improves the taste of tea. Controlling the degree of fermentation is crucial, as either too much or too little reduces tea quality (Sharma et al., 2015). Jin et al. (2020) fused computer vision and FT-NIR techniques to evaluate the degree of fermentation of black tea. The method divided fermentation into four categories (preliminary, insufficient, moderate, and excessive) and used an SVM model to determine the fermentation level quickly and accurately, achieving 100% classification accuracy on the validation set. However, this study relied on complex and expensive spectroscopic equipment, and TML tends to underperform DL when many samples are available. Kimutai et al. (2020) instead designed the TeaNet model to classify tea fermentation levels. The method used color and texture features extracted from the images as input to a deep convolutional neural network (DCNN) that distinguished underfermented, fermented, and over-fermented tea. It outperformed the TML algorithms it was compared with, achieving an average accuracy of 100%.
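Both the withering study of Wang Y. et al. (2021) and the fermentation study of Jin et al. (2020) fuse features from two sensors before classification. The sketch below shows one generic form of such mid-level (feature-level) fusion: each modality's features are standardized using training statistics and then concatenated. A nearest-centroid classifier is swapped in for the SVM purely to keep the example dependency-free, and the feature layouts, helper names, and data are hypothetical.

```python
from statistics import fmean, pstdev


def fit_scaler(rows):
    """Per-feature mean and standard deviation, estimated on training rows only."""
    columns = list(zip(*rows))
    means = [fmean(col) for col in columns]
    stds = [pstdev(col) or 1.0 for col in columns]  # avoid dividing by zero for constant features
    return means, stds


def scale(row, scaler):
    means, stds = scaler
    return [(x - m) / s for x, m, s in zip(row, means, stds)]


def fuse(image_row, spectral_row, image_scaler, spectral_scaler):
    """Mid-level fusion: standardize each modality's feature vector, then concatenate."""
    return scale(image_row, image_scaler) + scale(spectral_row, spectral_scaler)


def fit_centroids(rows, labels):
    """Mean fused feature vector per class (stand-in for training an SVM)."""
    groups = {}
    for row, label in zip(rows, labels):
        groups.setdefault(label, []).append(row)
    return {label: [fmean(col) for col in zip(*members)] for label, members in groups.items()}


def predict(row, centroids):
    """Assign the class whose centroid is nearest in squared Euclidean distance."""
    return min(
        centroids,
        key=lambda label: sum((a - b) ** 2 for a, b in zip(row, centroids[label])),
    )


# Invented training data: 2 image features and 2 spectral features per sample.
image_feats = [[0, 0], [0, 1], [5, 5], [5, 6], [10, 10], [10, 11]]
spectral_feats = [[1, 1], [1, 2], [3, 3], [3, 4], [5, 5], [5, 6]]
labels = ["insufficient", "insufficient", "moderate", "moderate", "excessive", "excessive"]
img_scaler, spec_scaler = fit_scaler(image_feats), fit_scaler(spectral_feats)
fused = [fuse(i, s, img_scaler, spec_scaler) for i, s in zip(image_feats, spectral_feats)]
centroids = fit_centroids(fused, labels)
print(predict(fuse([5, 5.5], [3, 3.5], img_scaler, spec_scaler), centroids))
```

Per-feature standardization keeps features measured on very different scales from dominating the distance after concatenation. With z-scores, one scaler per modality gives the same result as a single scaler on the concatenated vector, but separate scalers make it easy to add modality-specific steps such as PCA.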

Sorting is the final step and focuses on classifying dried tea into grades by quality. Laddi et al. (2013b) proposed a method for distinguishing grades of black tea based on the texture features of tea particles. The method combines feature extraction with principal component analysis and takes illumination into account; experiments showed that texture features distinguished tea grades better under dark-field illumination. To assess tea quality more comprehensively, Rahman et al. (2021) studied color, texture, and shape features and found, using statistical methods, that four quality classes of tea particles could be clearly distinguished by area, circumference, roundness, and diameter. These methods established relationships between tea particle quality and various features but did not perform classification. Bakhshipour et al. (2018), by contrast, proposed a method to classify tea quality from image features: color and texture features were extracted, the number of features was reduced using correlation-based feature selection (CFS), and several machine learning methods were compared, with an artificial neural network reaching a classification accuracy of 96.25%. To reduce the cost of visual classification, Li L. et al. (2021) classified tea quality using smartphone images together with a micro-near-infrared spectrometer; combining principal component analysis, SVM models, and a mid-level fusion strategy, the method achieved an accuracy of 94.29% on the prediction set.
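Shape features such as those compared by Rahman et al. (2021) can be computed from a segmented particle's area and perimeter. The definitions below (circularity 4πA/P² for roundness and the diameter of the equal-area circle) are common choices assumed here, not necessarily those used in the study; area and perimeter are assumed to come from a contour-detection step, and mm_per_px is a hypothetical calibration factor.

```python
import math


def shape_descriptors(area_px, perimeter_px, mm_per_px=1.0):
    """Size and shape features of one segmented tea particle."""
    if area_px <= 0 or perimeter_px <= 0:
        raise ValueError("area and perimeter must be positive")
    area = area_px * mm_per_px ** 2
    perimeter = perimeter_px * mm_per_px
    return {
        "area": area,
        "perimeter": perimeter,
        # Circularity: 1.0 for a perfect circle, smaller for elongated or ragged particles.
        "roundness": 4 * math.pi * area / perimeter ** 2,
        # Diameter of the circle with the same area as the particle.
        "equivalent_diameter": math.sqrt(4 * area / math.pi),
    }
```

Roundness is scale-invariant, so it does not depend on the calibration factor, whereas area, perimeter, and diameter do.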

Table 4 summarizes computer vision and machine learning applications in tea production and processing. As the discussion and table show, there is little research on tea withering and fermentation and more on tea sorting. Current research on tea production and processing focuses mainly on black tea. Most methods are TML-based, probably because the tea production process involves few classes (generally no more than ten). Most studies in this area capture image data with RGB cameras, which can only extract two-dimensional physical features such as color, texture, and size; few applications of depth cameras to tea production have been found. As DL models become lighter and depth cameras become cheaper, computer vision is likely to be used more widely in the tea industry.

Table 4. Summary of the application of computer vision and machine learning in tea production and processing.

4.4. Other applications

To enable autonomous driving in tea plantations and monitor the growth status of tea plants, Lin and Chen (2019) and Lin et al. (2021) used semantic segmentation algorithms to extract navigation routes along rows of tea plants. The system used two cameras: one guided the riding-type tea plucking machine, and the other captured real-time images of the tea canopy as the machine moved, segmented them, and calculated canopy coverage as a measure of growth status. In navigation experiments, the method had an average deviation angle of 5.92° from the guidance line and an average horizontal displacement of 12.17 cm. After a run, it could output a map of growth status across the plantation and the average growth value. Because the method uses the segmented canopy area to reflect growth status, a tea plant with a large canopy can receive a high growth value even if it has not sprouted. Wang et al. (2022) proposed an improved YOLOv5 algorithm to count the tea buds on top of the canopy. Because the camera's field of view of the canopy is fixed, the growth density of tea buds can be calculated, and this germination density reflects the growth state of tea plants more objectively.
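Once segmentation or detection results are available, the quantities used by these navigation and monitoring systems reduce to simple geometry. The sketch below computes canopy coverage from a 0/1 canopy mask, bud density from a bud count and a fixed field-of-view area, and the angle between a guidance line and the image's vertical axis. The function names, the assumption that the field-of-view area is known from calibration, and the angle convention are illustrative; the cited systems may define these measures differently.

```python
import math


def canopy_coverage(mask):
    """Fraction of pixels labelled as canopy (1) in a 0/1 segmentation mask."""
    total = sum(len(row) for row in mask)
    if total == 0:
        raise ValueError("empty mask")
    return sum(sum(row) for row in mask) / total


def bud_density(bud_count, fov_area_m2):
    """Detected buds per square metre of canopy inside a fixed camera field of view."""
    if fov_area_m2 <= 0:
        raise ValueError("field-of-view area must be positive")
    return bud_count / fov_area_m2


def deviation_angle_deg(bottom, top):
    """Angle between a guidance line and the image's vertical axis, in degrees.

    bottom and top are (x, y) pixel coordinates of two points on the line.
    Image y grows downwards, so the vertical distance is bottom_y - top_y.
    Positive angles mean the line leans to the right.
    """
    dx = top[0] - bottom[0]
    dy = bottom[1] - top[1]
    return math.degrees(math.atan2(dx, dy))
```

The contrast drawn in the text is visible here: canopy_coverage rewards any large canopy, whereas bud_density depends only on detected buds.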

Tea is a high-value product. To prevent unscrupulous merchants from passing off substandard products and to support the healthy development of the tea industry, distinguishing between tea categories is especially important. Wu et al. (2018) used the KNN algorithm to differentiate oolong, green, and black tea, with an overall accuracy of 94.7% and an average recognition time of 0.0491 s per image. In contrast, Zhang et al. (2018) used DL to distinguish the same three categories with an overall accuracy of 98.33%.
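A k-nearest-neighbour (KNN) classifier of the kind used by Wu et al. (2018) labels a sample by majority vote among its closest training samples. A minimal sketch, assuming each image has already been reduced to a short feature vector; the mean-colour features and sample values below are invented and do not reflect the study's actual inputs.

```python
import math
from collections import Counter


def knn_predict(train, query, k=3):
    """Label `query` by majority vote among its k nearest training samples.

    train is a list of (feature_vector, label) pairs; distance is Euclidean.
    Counter.most_common keeps first-seen order for equal counts, and samples
    are counted in order of distance, so ties go to the label seen first.
    """
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Invented (mean R, mean G, mean B) features, scaled to [0, 1], for dry tea images.
TRAIN = [
    ((0.20, 0.50, 0.20), "green"),
    ((0.25, 0.55, 0.20), "green"),
    ((0.40, 0.35, 0.20), "oolong"),
    ((0.42, 0.33, 0.22), "oolong"),
    ((0.10, 0.10, 0.10), "black"),
    ((0.12, 0.10, 0.08), "black"),
]
print(knn_predict(TRAIN, (0.22, 0.52, 0.21)))
```

KNN has no training phase, so its recognition time grows with the size of the training set; this is one reason its per-image timing is worth reporting alongside accuracy.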

5. Challenges of computer vision and machine learning in the tea industry

With the continuous development of artificial intelligence and sensor technology, precision agriculture is gradually moving from idea to reality. Methods that combine computer vision and machine learning, which can remain robust and accurate in unstructured environments, have achieved impressive performance in the tea industry. However, existing research still faces several challenges: accurate picking and collection of tea buds, qualitative and quantitative analysis of multiple types of diseases, effective evaluation metrics, and open datasets for the tea industry. Resolving these issues is necessary to promote the sustainable development of the tea industry.

5.1. Accurate picking and collection of tea buds

Accurate picking of tea buds faces the following main challenges. First, in tea bud identification, some studies (Chen and Chen, 2020; Wang T. et al., 2021; Xu et al., 2022) have focused on two-dimensional detection of buds and picking points, while three-dimensional localization of picking points has received too little attention. Although these studies achieved good detection accuracy on their own datasets, they did not carry out identification experiments under actual field conditions.

Second, in tea bud picking, Li Y. et al. (2021) and Chen et al. (2022) used RGB-D cameras for 3D positioning of picking points and conducted picking trials, but only with static picking (the tea picker stays still while the robotic arm moves to pick), which limits both efficiency and quality. Improving picking quality and efficiency is essential if machines are to replace manual labor and achieve accurate picking. Luo et al. (2022) showed that when tea buds were picked by mechanical cutting, the wound surface was uneven, oxidation was severe, and picking quality was inferior to manual picking. Soft robots, however, offer flexibility, perception, and softness (Wang H. et al., 2018; Su et al., 2022) and are expected to become an effective means of picking tea buds. To improve efficiency, one feasible solution is to operate multiple robotic arms simultaneously, each assigned its own picking area; another is a vision-based tea picker capable of dynamic picking (the tea picker moves forward while the robotic arm picks).
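The multi-arm idea can be made concrete with a simple static partition of the row into equal-width strips, one per arm. This is a hypothetical sketch rather than a published scheduling method: the coordinate convention (x across the row, y along the direction of travel) and the ordering rule are assumptions, and a practical system would also need to balance workloads between strips and prevent arm collisions.

```python
def assign_to_arms(points, num_arms, row_width):
    """Split picking points among arms by lateral position.

    points: (x, y) pairs in metres, x across the row in [0, row_width],
    y along the direction of travel. Each arm owns an equal-width strip.
    """
    if num_arms < 1 or row_width <= 0:
        raise ValueError("need at least one arm and a positive row width")
    strip = row_width / num_arms
    queues = [[] for _ in range(num_arms)]
    for x, y in points:
        arm = min(max(int(x // strip), 0), num_arms - 1)  # clamp points on the edges
        queues[arm].append((x, y))
    for queue in queues:
        queue.sort(key=lambda p: p[1])  # each arm picks in order of increasing y
    return queues
```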

Finally, for collecting picked tea buds, Li Y. et al. (2021) and Yang et al. (2021) used negative-pressure suction, which collects the buds at the same time as picking and thus preserves overall efficiency. However, this collection device cannot sort the buds, so buds of different grades (single bud tip, one bud and one leaf, and one bud and two leaves) must subsequently be sorted by hand. Some studies (Yang H. et al., 2019; Yan C. et al., 2022) can classify tea buds by grade on the canopy, but little research has addressed graded harvesting devices. Chen et al. (2022) described placing picked buds on a belt and using its forward and reverse rotation to sort and collect damaged and intact buds. In the future, belts could be installed on both sides of the tea-picking machine to sort and collect different grades of tea leaves by conveyance. Although this would sacrifice some picking efficiency, the loss could be compensated for, for example by the multi-arm or dynamic picking strategies described above.

5.2. Qualitative and quantitative analysis of multiple types of diseases

There are about 130 tea diseases in China alone (Hu et al., 2019a). Current research focuses on identifying a few common diseases, which is insufficient to provide authoritative qualitative results in practical applications. Collecting and labeling data for many disease categories is necessary but costly. Data augmentation (geometric transformations, GANs) can generate additional images so that models can be trained with the limited data available. This approach has been used in several studies (Sun et al., 2019b; Hu and Fang, 2022), but it still has shortcomings (Lu et al., 2022). Semi-supervised and unsupervised learning, which require fewer annotations, may be promising approaches for multi-class disease identification.
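The geometric part of such augmentation can be as simple as generating flipped and rotated copies of each labelled image. The following sketch produces the eight flip and rotation variants of an image stored as a list of pixel rows; it covers only geometric transformations (not GAN-based synthesis) and assumes that the disease label does not depend on image orientation.

```python
def hflip(image):
    """Mirror an image (a list of pixel rows) left to right."""
    return [row[::-1] for row in image]


def rot90(image):
    """Rotate an image 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def dihedral_augment(image):
    """Return the 8 flip/rotation variants of one labelled image.

    The disease label is assumed not to depend on orientation,
    so every variant keeps the original label.
    """
    variants = []
    current = image
    for _ in range(4):
        variants.append(current)
        variants.append(hflip(current))
        current = rot90(current)
    return variants
```

Such transformations multiply the sample count but add no new lesion appearances, which is one reason the text notes that augmentation alone still has shortcomings.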

Assessing disease severity is also critical because it can guide tea farmers in applying treatments in appropriate quantities. Few studies have quantified disease using vision, and the existing ones (Hu et al., 2021a,b) still use image classification to distinguish roughly between severity levels and focus on a single disease. Future tea plantation management will require calculating the specific severity of various kinds of diseased leaves.

5.3. Effective evaluation indicators

Current evaluation metrics are poorly adapted to specific applications. Some studies (Chen J. et al., 2019; Qi et al., 2021; Zhang et al., 2021) still evaluate their methods using only metrics from computer science, such as mIoU, mAP, and F1 score. Although these metrics reflect an algorithm's strengths and weaknesses in terms of accuracy, they are hard for non-specialists to interpret and do not represent practical value. Tea producers are mainly concerned with other indicators, such as stability, operational efficiency, and input-output ratio. Effective evaluation should therefore reflect precisely the value of a technology in its application setting, which would also help attract investment to advance the technology.
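For reference, the accuracy-oriented metrics named above are built from simple quantities. The sketch computes the intersection over union (IoU) of two bounding boxes, the criterion used to match detections to ground truth when computing mAP (segmentation mIoU applies the same ratio to pixel sets and averages it over classes), and the F1 score from true-positive, false-positive, and false-negative counts.

```python
def box_iou(a, b):
    """Intersection over union of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    def area(box):
        # Negative widths or heights (no overlap) are clipped to zero.
        return max(0.0, box[2] - box[0]) * max(0.0, box[3] - box[1])

    inter = area((max(a[0], b[0]), max(a[1], b[1]), min(a[2], b[2]), min(a[3], b[3])))
    union = area(a) + area(b) - inter
    return inter / union if union > 0 else 0.0


def f1_score(tp, fp, fn):
    """Harmonic mean of precision and recall from detection counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)
```

Neither function says anything about throughput, stability, or cost, which is the gap the text points out.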

Other studies (Li Y. et al., 2021; Chen et al., 2022) have used picking success rate, localization time, and robustness to measure feasibility, but they do not weight these metrics according to the application scenario, so the methods cannot be assessed comprehensively. Disease identification, for example, must consider both accuracy and speed. If a high-performance computer is used for identification, model accuracy should be weighted heavily, since inference will be fast on such hardware. If a mobile device is used, the model's inference speed should be given a high weight. Effective evaluation indicators should therefore be comprehensive and distinguish between primary and secondary aspects. Proposing effective, application-specific evaluation indicators for agriculture deserves more attention in the future.
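The weighting idea can be sketched as a weighted average of normalized metrics, with weights chosen per deployment scenario. Everything here is a hypothetical illustration: the weights, the 25 fps target, the linear speed normalization, and the two models' figures are assumptions, not values proposed in the literature.

```python
def speed_score(fps, target_fps):
    """Map inference speed to [0, 1], saturating once the target frame rate is reached."""
    return min(fps / target_fps, 1.0)


def composite_score(metrics, weights):
    """Weighted average of metrics already normalized to [0, 1] (higher is better)."""
    if set(metrics) != set(weights):
        raise ValueError("metrics and weights must cover the same keys")
    total = sum(weights.values())
    return sum(weights[name] * metrics[name] for name in metrics) / total


# Hypothetical weights for two deployment scenarios.
WORKSTATION = {"accuracy": 0.7, "speed": 0.3}
MOBILE = {"accuracy": 0.3, "speed": 0.7}

# Two hypothetical models: A is more accurate, B is faster on both devices.
for name, weights, fps_a, fps_b in (("workstation", WORKSTATION, 60, 120), ("mobile", MOBILE, 8, 24)):
    score_a = composite_score({"accuracy": 0.97, "speed": speed_score(fps_a, 25)}, weights)
    score_b = composite_score({"accuracy": 0.88, "speed": speed_score(fps_b, 25)}, weights)
    print(name, round(score_a, 3), round(score_b, 3))
```

With these numbers, the more accurate model scores higher on the workstation, where both models exceed the target frame rate, while the faster model scores higher on the mobile device.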

5.4. Open datasets in the tea industry

Image data acquisition in agriculture must take into account factors such as topography, weather, and crop growth period. Famous teas in particular are harvested over only about 15 days (Zhang et al., 2021), so the data collection window is short. Because publicly available datasets are lacking, most researchers train and validate their algorithms on data they collected themselves. Moreover, some studies (Hu et al., 2019a,b; Wu et al., 2022) used small image datasets that lacked rich features. This has led to large variation in results across studies and a lack of common benchmarks.

Unfortunately, there are no public datasets for scenarios such as tea bud harvesting, tea leaf diseases, tea production and processing, or tea plantation navigation. Building public datasets for each application scenario would help future researchers reduce research costs and make it easier to identify the best algorithms for each scenario. Open datasets could therefore broaden the prospects for computer vision applications in the tea industry.

6. Conclusion and future perspectives

This paper reviews the applications of computer vision and machine learning in the tea industry over the past decade. Unsurprisingly, many studies have used DL to solve complex problems in tea harvesting and tea plantation navigation, since DL performs well in object detection and segmentation. In contrast, the algorithms used in tea disease control and tea processing are mainly TML, possibly because TML offers high interpretability and real-time performance in image classification and makes feature selection and extraction convenient. Depth cameras are not yet widely used in the tea industry; the few applications, in tea harvesting and tea plantation navigation, mainly use AIRS cameras with good overall performance.

We identified several challenges in past research that warrant attention: accurate picking and collection of tea buds, qualitative and quantitative analysis of multiple types of diseases, valid evaluation indicators, and open public datasets. Addressing them calls for new tea harvesting equipment, authoritative disease detection methods, comprehensive and representative evaluation indicators, and public datasets, which together would lay the foundation for future research, application, and dissemination and help overcome the obstacles facing current applications.

At present, simpler and lighter DL networks are an important research direction in computer science. Meanwhile, TML still plays an irreplaceable role, and combining the two may prove complementary. For tea bud picking, determining the specific 3D position is unavoidable, and depth cameras are a feasible choice. Because tea plants are not always grown on flat land, UAVs are more widely applicable for monitoring growth status than ground vehicles. Although most current research uses RGB cameras for visual perception, the growing popularity of consumer depth cameras promises a bright future for the tea industry and for precision agriculture.

Author contributions

HW: writing—original draft and investigation. JG: supervision and conceptualization. MW: writing and modifying. All authors contributed to the article and approved the submitted version.

Funding

Funded by Jiangsu Province Key Research and Development Program—Key Project Research and Development of Key Technology of Typical Fruit and Tea Precision Intelligent Picking Robot System under Complex Environment (Project No. BE2021016).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Alam, M., Ali, S., Ashraf, G. M., Bilgrami, A. L., Yadav, D. K., Hassan, M. I., et al. (2022). Epigallocatechin 3-gallate: from green tea to cancer therapeutics. Food Chem. 379, 132135. doi: 10.1016/j.foodchem.2022.132135

Ashtiani, S. H. M., Javanmardi, S., Jahanbanifard, M., Martynenko, A., and Verbeek, F. J. (2021). Detection of mulberry ripeness stages using deep learning models. IEEE Access 9, 100380–100394. doi: 10.1109/ACCESS.2021.3096550

Bakhshipour, A., Sanaeifar, A., Payman, S. H., and de la Guardia, M. (2018). Evaluation of data mining strategies for classification of black tea based on image-based features. Food Anal. Methods 11, 1041–1050. doi: 10.1007/s12161-017-1075-z

Barnea, E., Mairon, R., and Ben-Shahar, O. (2016). Colour-agnostic shape-based 3D fruit detection for crop harvesting robots. Biosyst. Eng. 146, 57–70. doi: 10.1016/j.biosystemseng.2016.01.013

Bechar, A., and Vigneault, C. (2016). Agricultural robots for field operations: concepts and components. Biosyst. Eng. 149, 94–111. doi: 10.1016/j.biosystemseng.2016.06.014

Bhargava, A., Bansal, A., Goyal, V., and Bansal, P. (2022). A review on tea quality and safety using emerging parameters. Food Meas. 16, 1291–1311. doi: 10.1007/s11694-021-01232-x

Chen, C., Lu, J., Zhou, M., Yi, J., Liao, M., Gao, Z., et al. (2022). A YOLOv3-based computer vision system for identification of tea buds and the picking point. Comput. Electron. Agricult. 198, 107116. doi: 10.1016/j.compag.2022.107116

Chen, C., Zhu, W., Liu, D., Steibel, J., Siegford, J., Wurtz, K., et al. (2019). Detection of aggressive behaviours in pigs using a RealSence depth sensor. Comput. Electron. Agricult. 166, 105003. doi: 10.1016/j.compag.2019.105003

Chen, J., Liu, Q., and Gao, L. (2019). Visual tea leaf disease recognition using a convolutional neural network model. Symmetry 11, 343. doi: 10.3390/sym11030343

Chen, J., Liu, Q., and Gao, L. (2021). Deep convolutional neural networks for tea tree pest recognition and diagnosis. Symmetry 13, 2140. doi: 10.3390/sym13112140

Chen, Y. T., and Chen, S. F. (2020). Localizing plucking points of tea leaves using deep convolutional neural networks. Comput. Electron. Agricult. 171, 105298. doi: 10.1016/j.compag.2020.105298

Chollet, F. (2017). “Xception: deep learning with depthwise separable convolutions,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 1251–1258. doi: 10.1109/CVPR.2017.195

Deb, S., and Jolvis Pou, K. R. (2016). A review of withering in the processing of black tea. J. Biosyst. Eng. 41, 365–372. doi: 10.5307/JBE.2016.41.4.365

Dong, C. W., Zhu, H. K., Zhao, J. W., Jiang, Y. W., Yuan, H. B., Chen, Q. S., et al. (2017). Sensory quality evaluation for appearance of needle-shaped green tea based on computer vision and non-linear tools. J. Zhejiang Univ. Sci. B 18, 544. doi: 10.1631/jzus.B1600423

Food Agriculture Organization of the United Nations - FAO (2022). https://www.fao.org/faostat/en/#data/QCL (accessed December 18, 2022).

Fradkov, A. L. (2020). Early history of machine learning. IFAC-PapersOnLine 53, 1385–1390. doi: 10.1016/j.ifacol.2020.12.1888

Fu, L., Gao, F., Wu, J., Li, R., Karkee, M., Zhang, Q., et al. (2020). Application of consumer RGB-D cameras for fruit detection and localization in field: a critical review. Comput. Electron. Agricult. 177, 105687. doi: 10.1016/j.compag.2020.105687

Fue, K., Porter, W., Barnes, E., Li, C., and Rains, G. (2020). Evaluation of a stereo vision system for cotton row detection and boll location estimation in direct sunlight. Agronomy 10, 1137. doi: 10.3390/agronomy10081137

Gill, G. S., Kumar, A., and Agarwal, R. (2011). Monitoring and grading of tea by computer vision–a review. J. Food Eng. 106, 13–19. doi: 10.1016/j.jfoodeng.2011.04.013

Han, Y., Xiao, H., Qin, G., Song, Z., Ding, W., and Mei, S. (2014). Developing situations of tea plucking machine. Engineering 6, 268–273. doi: 10.4236/eng.2014.66031

He, K., Gkioxari, G., Dollár, P., and Girshick, R. (2017). “Mask R-CNN,” in Proceedings of the IEEE international conference on computer vision, 2961–2969. doi: 10.1109/ICCV.2017.322

Hou, C., Zhang, X., Tang, Y., Zhuang, J., Tan, Z., Huang, H., et al. (2022). Detection and localization of citrus fruit based on improved You Only Look Once v5s and binocular vision in the orchard. Front. Plant Sci. 13, 1–16. doi: 10.3389/fpls.2022.972445

Howard, A. G., Zhu, M., Chen, B., Kalenichenko, D., Wang, W., Weyand, T., et al. (2017). MobileNets: efficient convolutional neural networks for mobile vision applications. arXiv preprint arXiv:1704.04861.

Hu, G., and Fang, M. (2022). Using a multi-convolutional neural network to automatically identify small-sample tea leaf diseases. Sustain. Comput. Inform. Syst. 35, 100696. doi: 10.1016/j.suscom.2022.100696

Hu, G., Wang, H., Zhang, Y., and Wan, M. (2021a). Detection and severity analysis of tea leaf blight based on deep learning. Comput. Electrical Eng. 90, 107023. doi: 10.1016/j.compeleceng.2021.107023

Hu, G., Wei, K., Zhang, Y., Bao, W., and Liang, D. (2021b). Estimation of tea leaf blight severity in natural scene images. Precision Agricult. 22, 1239–1262. doi: 10.1007/s11119-020-09782-8

Hu, G., Wu, H., Zhang, Y., and Wan, M. (2019a). A low shot learning method for tea leaf's disease identification. Comput. Electron. Agricult. 163, 104852. doi: 10.1016/j.compag.2019.104852

Hu, G., Yang, X., Zhang, Y., and Wan, M. (2019b). Identification of tea leaf diseases by using an improved deep convolutional neural network. Sustain. Comput. Inf. Syst. 24, 100353. doi: 10.1016/j.suscom.2019.100353

Huang, T. S. (1996). Computer Vision: Evolution and Promise. Available online at: https://cds.cern.ch/record/400313/files/p21 (accessed December 31, 2022).

IBM (2022). What is Machine Learning? Available online at: https://www.ibm.com/topics/machine-learning (accessed January 1, 2023).

Janiesch, C., Zschech, P., and Heinrich, K. (2021). Machine learning and deep learning. Electron. Markets 31, 685–695. doi: 10.1007/s12525-021-00475-2

Jia, W., Tian, Y., Luo, R., Zhang, Z., Lian, J., Zheng, Y., et al. (2020). Detection and segmentation of overlapped fruits based on optimized mask R-CNN application in apple harvesting robot. Comput. Electron. Agricult. 172, 105380. doi: 10.1016/j.compag.2020.105380

Jin, G., Wang, Y., Li, L., Shen, S., Deng, W. W., Zhang, Z., et al. (2020). Intelligent evaluation of black tea fermentation degree by FT-NIR and computer vision based on data fusion strategy. LWT 125, 109216. doi: 10.1016/j.lwt.2020.109216

Jo, T., Nho, K., and Saykin, A. J. (2019). Deep learning in Alzheimer's disease: diagnostic classification and prognostic prediction using neuroimaging data. Front. Aging Neurosci. 11, 220. doi: 10.3389/fnagi.2019.00220

Khan, N., and Mukhtar, H. (2018). Tea polyphenols in promotion of human health. Nutrients 11, 39. doi: 10.3390/nu11010039

Khan, S., Sajjad, M., Hussain, T., Ullah, A., and Imran, A. S. (2020). A review on traditional machine learning and deep learning models for WBCs classification in blood smear images. IEEE Access 9, 10657–10673. doi: 10.1109/ACCESS.2020.3048172

Kimutai, G., Ngenzi, A., Said, R. N., Kiprop, A., and Förster, A. (2020). An optimum tea fermentation detection model based on deep convolutional neural networks. Data 5, 44. doi: 10.3390/data5020044

Laddi, A., Prakash, N. R., Sharma, S., and Kumar, A. (2013a). Discrimination analysis of Indian tea varieties based upon color under optimum illumination. J. Food Measure. Characteriz. 7, 60–65. doi: 10.1007/s11694-013-9139-2

Laddi, A., Sharma, S., Kumar, A., and Kapur, P. (2013b). Classification of tea grains based upon image texture feature analysis under different illumination conditions. J. Food Eng. 115, 226–231. doi: 10.1016/j.jfoodeng.2012.10.018

Lanjewar, M. G., and Panchbhai, K. G. (2022). Convolutional neural network based tea leaf disease prediction system on smart phone using paas cloud. Neural Comput. Appl. 33, 1–17. doi: 10.1007/s00521-022-07743-y

LeCun, Y., Bengio, Y., and Hinton, G. (2015). Deep learning. Nature 521, 436–444. doi: 10.1038/nature14539

Li, H., Tao, H., Cui, L., Liu, D., Sun, J., Zhang, M., et al. (2021). Recognition and Localization Method of tomato based on SOM-K-means Algorithm. Transact. Chinese Soc. Agricult. Machinery 52, 23–29. doi: 10.6041/j.issn.1000-1298.2021.01.003

Li, L., Wang, Y., Jin, S., Li, M., Chen, Q., Ning, J., et al. (2021). Evaluation of black tea by using smartphone imaging coupled with micro-near-infrared spectrometer. Spectrochimica Acta Part A Mol. Biomol. Spectroscopy 246, 118991. doi: 10.1016/j.saa.2020.118991

Li, T., Feng, Q., Qiu, Q., Xie, F., and Zhao, C. (2022). Occluded apple fruit detection and localization with a frustum-based point-cloud-processing approach for robotic harvesting. Remote Sens. 14, 482. doi: 10.3390/rs14030482

Li, Y., Guo, Z., Shuang, F., Zhang, M., and Li, X. (2022). Key technologies of machine vision for weeding robots: a review and benchmark. Comput. Electron. Agricult. 196, 106880. doi: 10.1016/j.compag.2022.106880

Li, Y., He, L., Jia, J., Lv, J., Chen, J., Qiao, X., et al. (2021). In-field tea shoot detection and 3D localization using an RGB-D camera. Comput. Electron. Agricult. 185, 106149. doi: 10.1016/j.compag.2021.106149

Liang, G., Dong, C., Hu, B., Zhu, H., Yuan, H., Jiang, Y., et al. (2018). Prediction of moisture content for congou black tea withering leaves using image features and non-linear method. Sci. Rep. 8, 7854. doi: 10.1038/s41598-018-26165-2

Liao, J., Wang, Y., and Yin, J. (2019). Point cloud acquisition, segmentation and location method of crops based on binocular vision. Jiangsu J. Agricult. Sci. 35, 847–852. doi: 10.3969/j.issn.1000-4440.2019.04.014

Lin, G., Tang, Y., Zou, X., Li, J., and Xiong, J. (2019). In-field citrus detection and localisation based on RGB-D image analysis. Biosyst. Eng. 186, 34–44. doi: 10.1016/j.biosystemseng.2019.06.019

Lin, Y. K., and Chen, S. F. (2019). Development of navigation system for tea field machine using semantic segmentation. IFAC-PapersOnLine 52, 108–113. doi: 10.1016/j.ifacol.2019.12.506

Lin, Y. K., Chen, S. F., Kuo, Y. F., Liu, T. L., and Lee, S. Y. (2021). Developing a guiding and growth status monitoring system for riding-type tea plucking machine using fully convolutional networks. Comput. Electron. Agricult. 191, 106540. doi: 10.1016/j.compag.2021.106540

Liu, W., Anguelov, D., Erhan, D., Szegedy, C., Reed, S., Fu, C. Y., et al. (2016). “SSD: single shot multibox detector,” in European Conference on Computer Vision (Springer, Cham), p. 21–37. doi: 10.1007/978-3-319-46448-0_2

Liu, X., Yuan, B., and Li, K. (2020). Design for the intelligent irrigation system based on speech recognition. J. Shandong Agricult. Univ. 51, 479–481. doi: 10.3969/j.issn.1000-2324.2020.03.017

Long, J., Shelhamer, E., and Darrell, T. (2015). “Fully convolutional networks for semantic segmentation,” in Proceedings of the IEEE conference on computer vision and pattern recognition (p. 3431–40). doi: 10.1109/CVPR.2015.7298965

Lu, Y., Chen, D., Olaniyi, E., and Huang, Y. (2022). Generative adversarial networks (GANs) for image augmentation in agriculture: a systematic review. Comput. Electron. Agricult. 200, 107208. doi: 10.1016/j.compag.2022.107208

Luo, K., Wu, Z., Cao, C., Qin, K., Zhang, X., An, M., et al. (2022). Biomechanical characterization of bionic mechanical harvesting of tea buds. Agriculture 12, 1361. doi: 10.3390/agriculture12091361

Meng, T., Jing, X., Yan, Z., and Pedrycz, W. (2020). A survey on machine learning for data fusion. Inform. Fusion 57, 115–129. doi: 10.1016/j.inffus.2019.12.001

Mukhopadhyay, S., Paul, M., Pal, R., and De, D. (2021). Tea leaf disease detection using multi-objective image segmentation. Multimed. Tools Appl. 80, 753–771. doi: 10.1007/s11042-020-09567-1

Peng, Y., Zhao, S., and Liu, J. (2021). Segmentation of overlapping grape clusters based on the depth region growing method. Electronics 10, 2813. doi: 10.3390/electronics10222813

Pezzuolo, A., Guarino, M., Sartori, L., and Marinello, F. (2018). A feasibility study on the use of a structured light depth-camera for three-dimensional body measurements of dairy cows in free-stall barns. Sensors 18, 673. doi: 10.3390/s18020673

Polic, M., Tabak, J., and Orsag, M. (2022). Pepper to fall: a perception method for sweet pepper robotic harvesting. Intel. Service Robot. 15, 193–201. doi: 10.1007/s11370-021-00401-7

Qi, C., Gao, J., Pearson, S., Harman, H., Chen, K., Shu, L., et al. (2022). Tea chrysanthemum detection under unstructured environments using the TC-YOLO model. Expert Syst. Appl. 193, 116473. doi: 10.1016/j.eswa.2021.116473

Qi, F., Xie, Z., Tang, Z., and Chen, H. (2021). Related study based on Otsu watershed algorithm and new squeeze-and-excitation networks for segmentation and level classification of tea buds. Neural Process. Lett. 53, 2261–2275. doi: 10.1007/s11063-021-10501-1

Qingchun, F., Wei, C., Jianjun, Z., and Xiu, W. (2014). Design of structured-light vision system for tomato harvesting robot. Int. J. Agricult. Biol. Eng. 7, 19–26. doi: 10.3965/j.ijabe.20140702.003

Qingfeng, L., Jianqing, G., and Genshun, W. (2019). The research development and challenge of automatic speech recognition. Front. Data Computing 1, 26–36. doi: 10.11871/jfdc.issn.2096-742X.2019.02.003

Rahman, M. T., Ferdous, S., Jenin, M. S., Mim, T. R., Alam, M., Al Mamun, M. R., et al. (2021). Characterization of tea (Camellia sinensis) granules for quality grading using computer vision system. J. Agric. Food Res. 6, 100210. doi: 10.1016/j.jafr.2021.100210

Saberioon, M., and Císař, P. (2018). Automated within tank fish mass estimation using infrared reflection system. Comput. Electron. Agricult. 150, 484–492. doi: 10.1016/j.compag.2018.05.025

Saberioon, M. M., and Císař, P. (2016). Automated multiple fish tracking in three-dimension using a structured light sensor. Comput. Electron. Agricult. 121, 215–221. doi: 10.1016/j.compag.2015.12.014

Sandler, M., Howard, A., Zhu, M., Zhmoginov, A., and Chen, L. C. (2018). “MobileNetV2: inverted residuals and linear bottlenecks,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (p. 4510–4520). doi: 10.1109/CVPR.2018.00474

Sharma, P., Ghosh, A., Tudu, B., Sabhapondit, S., Baruah, B. D., Tamuly, P., et al. (2015). Monitoring the fermentation process of black tea using QCM sensor based electronic nose. Sensors Actuators B Chem. 219, 146–157. doi: 10.1016/j.snb.2015.05.013

Sharma, V., Tripathi, A. K., and Mittal, H. (2022). Technological revolutions in smart farming: Current trends, challenges and future directions. Comput. Electron. Agricult. 13, 107217. doi: 10.1016/j.compag.2022.107217

Song, Y., Li, W., Li, B., and Zhang, Z. (2020). Design and test of crawler type intelligent tea picker. J. Agricult. Mech. Res. 42, 123–127. doi: 10.13427/j.cnki.njyi.2020.08.023

Song, Y., Xu, F., Yao, Q., Liu, J., and Yang, S. (2022). Navigation algorithm based on semantic segmentation in wheat fields using an RGB-D camera. Information Processing in Agriculture. doi: 10.1016/j.inpa.2022.05.002

Su, H., Hou, X., Zhang, X., Qi, W., Cai, S., Xiong, X., et al. (2022). Pneumatic soft robots: challenges and benefits. Actuators 11, 92. doi: 10.3390/act11030092

Sun, X., Jiang, Y., Ji, Y., Fu, W., Yan, S., Chen, Q., et al. (2019a). “Distance measurement system based on binocular stereo vision,” in IOP Conference Series: Earth and Environmental Science, Vol. 252 (IOP Publishing), 052051. doi: 10.1088/1755-1315/252/5/052051

Sun, X., Mu, S., Xu, Y., Cao, Z., and Su, T. (2019b). Image recognition of tea leaf diseases based on convolutional neural network. arXiv preprint arXiv:1901.02694. doi: 10.1109/SPAC46244.2018.8965555

Sun, Y., Jiang, Z., Zhang, L., Dong, W., and Rao, Y. (2019). SLIC_SVM based leaf diseases saliency map extraction of tea plant. Comput. Electron. Agricult. 157, 102–109. doi: 10.1016/j.compag.2018.12.042

Tagarakis, A. C., Filippou, E., Kalaitzidis, D., Benos, L., Busato, P., Bochtis, D., et al. (2022). Proposing UGV and UAV systems for 3D mapping of orchard environments. Sensors 22, 1571. doi: 10.3390/s22041571

United Nations (2022a). International Tea Day | United Nations. Available online at: https://www.un.org/en/observances/tea-day (accessed December 13, 2022).

United Nations (2022b). Traditional tea processing techniques and associated social practices in China. Available online at: https://ich.unesco.org/en/RL/traditional-tea-processing-techniques-and-associated-social-practices-in-china-01884 (accessed January 31, 2023).

Wang, F., Xie, B., Lü, E., Zeng, Z., Mei, S., Ma, C., et al. (2023). Design of a moisture content detection system for yinghong No. 9 tea leaves based on machine vision. Appl. Sci. 13, 1806. doi: 10.3390/app13031806

Wang, H., Totaro, M., and Beccai, L. (2018). Toward perceptive soft robots: progress and challenges. Adv. Sci. 5, 1800541. doi: 10.1002/advs.201800541

Wang, J., Li, X., Yang, G., Wang, F., Men, S., Xu, B., et al. (2022). Research on tea trees germination density detection based on improved YOLOv5. Forests 13, 2091. doi: 10.3390/f13122091

Wang, J., Ma, Y., Zhang, L., Gao, R. X., and Wu, D. (2018). Deep learning for smart manufacturing: methods and applications. J. Manufactur. Syst. 48, 144–156. doi: 10.1016/j.jmsy.2018.01.003

Wang, P., Fan, E., and Wang, P. (2021). Comparative analysis of image classification algorithms based on traditional machine learning and deep learning. Pattern Recognit. Lett. 141, 61–67. doi: 10.1016/j.patrec.2020.07.042

Wang, T., Zhang, K., Zhang, W., Wang, R., Wan, S., Rao, Y., et al. (2021). Tea picking point detection and location based on Mask-RCNN. Information Processing in Agriculture. doi: 10.1016/j.inpa.2021.12.004

Wang, Y., Liu, Y., Cui, Q., Li, L., Ning, J., Zhang, Z., et al. (2021). Monitoring the withering condition of leaves during black tea processing via the fusion of electronic eye (E-eye), colorimetric sensing array (CSA), and micro-near-infrared spectroscopy (NIRS). J. Food Eng. 300, 110534. doi: 10.1016/j.jfoodeng.2021.110534

Williams, H. A., Jones, M. H., Nejati, M., Seabright, M. J., Bell, J., Penhall, N. D., et al. (2019). Robotic kiwifruit harvesting using machine vision, convolutional neural networks, and robotic arms. Biosyst. Eng. 181, 140–156. doi: 10.1016/j.biosystemseng.2019.03.007

Wu, W., Hu, Y., and Jiang, Z. (2022). Investigation on the bending behavior of tea stalks based on non-prismatic beam with virtual internodes. Agriculture 12, 370. doi: 10.3390/agriculture12030370

Wu, X., Li, B., Wang, X., Li, S., and Zeng, C. (2017). Design and analysis of single knapsack tea plucking machine. J. Agricult. Mechanization Res. 39, 92–96.

Wu, X., Yang, J., and Wang, S. (2018). Tea category identification based on optimal wavelet entropy and weighted k-Nearest Neighbors algorithm. Multimed. Tools Appl. 77, 3745–3759. doi: 10.1007/s11042-016-3931-z

Xu, W., Zhao, L., Li, J., Shang, S., Ding, X., Wang, T., et al. (2022). Detection and classification of tea buds based on deep learning. Comput. Electron. Agricult. 192, 106547. doi: 10.1016/j.compag.2021.106547

Xu, X. Y., Zhao, C. N., Cao, S. Y., Tang, G. Y., Gan, R. Y., Li, H. B., et al. (2019). Effects and mechanisms of tea for the prevention and management of cancers: an updated review. Crit. Rev. Food Sci. Nutr. 60, 1–13. doi: 10.1080/10408398.2019.1588223

Yan, C., Chen, Z., Li, Z., Liu, R., Li, Y., Xiao, H., et al. (2022). Tea sprout picking point identification based on improved DeepLabV3+. Agriculture 12, 1594. doi: 10.3390/agriculture12101594

Yan, L., Wu, K., Lin, J., Xu, X., Zhang, J., Zhao, X., et al. (2022). Identification and picking point positioning of tender tea shoots based on MR3P-TS model. Front. Plant Sci. 13, 962391. doi: 10.3389/fpls.2022.962391

Yang, H., Chen, L., Chen, M., Ma, Z., Deng, F., Li, M., et al. (2019). Tender tea shoots recognition and positioning for picking robot using improved YOLO-V3 model. IEEE Access 7, 180998–181011. doi: 10.1109/ACCESS.2019.2958614

Yang, H., Chen, L., Ma, Z., Chen, M., Zhong, Y., Deng, F., et al. (2021). Computer vision-based high-quality tea automatic plucking robot using Delta parallel manipulator. Comput. Electron. Agricult. 181, 105946. doi: 10.1016/j.compag.2020.105946

Yang, N., Yuan, M., Wang, P., Zhang, R., Sun, J., Mao, H., et al. (2019). Tea diseases detection based on fast infrared thermal image processing technology. J. Sci. Food Agric. 99, 3459–3466. doi: 10.1002/jsfa.9564

Yashodha, G., and Shalini, D. (2021). An integrated approach for predicting and broadcasting tea leaf disease at early stage using iot with machine learning–a review. Mater. Today Proceed. 37, 484–488. doi: 10.1016/j.matpr.2020.05.458

Yu, L., Xiong, J., Fang, X., Yang, Z., Chen, Y., Lin, X., et al. (2021). A litchi fruit recognition method in a natural environment using RGB-D images. Biosyst. Eng. 204, 50–63. doi: 10.1016/j.biosystemseng.2021.01.015

Yun, C., Kim, H. J., Jeon, C. W., Gang, M., Lee, W. S., Han, J. G., et al. (2021). Stereovision-based ridge-furrow detection and tracking for auto-guided cultivator. Comput. Electron. Agricult. 191, 106490. doi: 10.1016/j.compag.2021.106490

Zhang, J., and Li, Z. (2021). Intelligent tea-picking system based on active computer vision and internet of things. Security Commun. Netw. 2021, 1–8. doi: 10.1155/2021/5302783

Zhang, L., Zou, L., Wu, C., Jia, J., and Chen, J. (2021). Method of famous tea sprout identification and segmentation based on improved watershed algorithm. Comput. Electron. Agricult. 184, 106108. doi: 10.1016/j.compag.2021.106108

Zhang, Y. D., Muhammad, K., and Tang, C. (2018). Twelve-layer deep convolutional neural network with stochastic pooling for tea category classification on GPU platform. Multimed. Tools Appl. 77, 22821–22839. doi: 10.1007/s11042-018-5765-3

Zhao, H., Qi, X., Shen, X., Shi, J., and Jia, J. (2018). “ICNet for real-time semantic segmentation on high-resolution images,” in Proceedings of the European Conference on Computer Vision (ECCV) (p. 405–420). doi: 10.1007/978-3-030-01219-9_25

Zhao, R., Bian, X., Chen, J., Dong, C., Wu, C., Jia, J., et al. (2022). Development and test for distributed control prototype of the riding profiling tea harvester. J. Tea Sci. 42, 263–276. doi: 10.13305/j.cnki.jts.2022.02.003

Zhu, Q., Ren, J., Barclay, D., McCormack, S., and Thomson, W. (2015). “Automatic animal detection from Kinect sensed images for livestock monitoring and assessment,” in 2015 IEEE International Conference on Computer and Information Technology; Ubiquitous Computing and Communications; Dependable, Autonomic and Secure Computing; Pervasive Intelligence and Computing (IEEE), p. 1154–57. doi: 10.1109/CIT/IUCC/DASC/PICOM.2015.172

Keywords: computer vision, machine learning, tea, precision agriculture, harvest

Citation: Wang H, Gu J and Wang M (2023) A review on the application of computer vision and machine learning in the tea industry. Front. Sustain. Food Syst. 7:1172543. doi: 10.3389/fsufs.2023.1172543

Received: 23 February 2023; Accepted: 31 March 2023; Published: 20 April 2023.

Edited by:

Samuel Ayofemi Olalekan Adeyeye, Hindustan University, India

Reviewed by:

Seyed-Hassan Miraei Ashtiani, Ferdowsi University of Mashhad, Iran; Tobi Fadiji, Stellenbosch University, South Africa

Copyright © 2023 Wang, Gu and Wang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jinan Gu, gujinan@tsinghua.org.cn

Huajia Wang, Jinan Gu*