- 1Department of Information Technology and Electrical Engineering, Università degli Studi di Napoli Federico II, Napoli, Italy

- 2Department of Engineering, Università degli Studi della Campania “Luigi Vanvitelli”, Caserta, Italy

Prisma Hand II is an under-actuated prosthetic hand developed at the University of Naples Federico II to study in-hand manipulation during grasping activities. Three motors drive the hand's 19 joints through elastic tendons. The hand operates by combining tactile sensing with its under-actuation capabilities. Owing to its dexterous motion capabilities, it has the potential to be employed in both industrial and prosthetic applications. However, there are currently no commercially available tactile sensors whose dimensions are compatible with the prosthetic hand. Hence, in this work, we develop a novel tactile sensor based on opto-electronic technology for the Prisma Hand II. The optimised dimensions of the proposed sensor allow it to be integrated into the fingertips of the prosthetic hand. The output voltage of the sensor is used to determine optimum grasping forces and torques during in-hand manipulation tasks by means of Neural Networks (NNs). The grasping force values obtained using a Convolutional Neural Network (CNN) and an Artificial Neural Network (ANN) are compared on the basis of Mean Square Error (MSE) values to identify the better network for the tasks. The tactile sensing capabilities of the proposed method are presented and compared in simulation studies and experimental validations using various hand manipulation tasks. The developed tactile sensor is found to perform better than the previous version of the sensor used in the hand.

1 Introduction

Replacing a limb lost to trauma, illness, or a congenital condition with a prosthetic upper limb remains a significant challenge for the medical industry. This has prompted a need for intelligent, multi-functional prosthetic hands as replacements for a patient’s amputated limb. Many aspects of a prosthetic hand, including appropriate weight, ergonomic design, ease of use, stable force feedback, and sufficient grasping and manipulation capabilities, should be taken into account in order to meet a patient’s needs. When these factors are properly balanced, a prosthetic device can help a patient perform daily activities with little effort.

Autonomous in-hand object manipulation is among the most sought-after abilities for prosthetic hands (Feigl et al., 2023). Numerous studies address the development of prosthetic devices controlled by a patient’s electromyographic (EMG) data (Park et al., 2024; Liu et al., 2024). Along with EMG data, touch sensors allow a prosthetic device to manipulate objects in various positions and orientations by controlling grasping forces (Sharma et al., 2023). Touch sensors currently used in prosthetic hands have drawbacks that limit their effectiveness. One major issue is their lack of sensitivity, which results in inaccurate detection of grasping forces. Another is durability: the sensors can wear out quickly with regular use, leading to frequent replacements that are costly and time-consuming. Additionally, the size and weight of the sensors can add bulk to the prosthetic hand and make it less comfortable to wear for extended periods. A major limitation of touch sensors mounted on fingertips is their dimensional incompatibility. Developing a compatible fingertip touch sensor is challenging because of the dimensional constraints, and incompatible sensors yield inaccurate grasp force values. These limitations highlight the need for continued research and development to improve touch sensors in prosthetic hands so that they better mimic the capabilities of a human hand.

This paper addresses the current shortcomings of available sensing methods of prosthetic hands and proposes a novel tactile sensor developed based on opto-electronic technology for a prosthetic hand named “Prisma Hand II.” Our work aims to develop a dimensionally compatible tactile sensor to integrate with the fingertips of the Prisma Hand II. We managed to create a

2 State of the art

Manipulating an object with an under-actuated hand is a challenging yet promising field of research that continues to push the boundaries of robotic manipulation. By developing innovative control strategies and improving hand designs, researchers aim to enhance the versatility and efficiency of robotic manipulation systems. As technology advances, object manipulation is expected to play a key role in various industries, changing the way tasks are performed in complex and dynamic environments. Various prosthetic hands suitable for patients with hand disabilities are currently available on the market (Belter et al., 2013; Van Der Niet and van der Sluis, 2013; Marateb et al., 2021; Liu et al., 2019). Most of these prosthetic hands are equipped with tactile sensors to manage physical interactions. Tactile sensors work on the basis of changes in resistance, capacitance, optical distribution, or electrical charge (Fraden and King, 2004; Russell, 1990). Piezoresistive (Almassri et al., 2015), piezoelectric (Nassar et al., 2023), capacitive (Ge et al., 2022), quantum tunnel effect (Shi et al., 2020), optical (Fujiwara et al., 2018), and barometric (Liu et al., 2023) sensors are the most common types of tactile sensors used in prosthetic hands.

In Liu et al. (2012b), the contact point locations on a fingertip are determined from reconstructed force and torque values obtained from a tactile sensor integrated into a hand. The measured force values can be used for force control strategies and for optimising grasping forces. Fast-responding tactile sensors such as piezoelectric, capacitive, and barometric sensors can measure vibrations at contact points. The vibration information can be used for slip detection and object exploration. Tactile sensors are capable of measuring object shape and pressure distributions at contact points (Kyberd and Chappell, 1992). Capacitive and piezoresistive sensors can gather data from taxels directly using multiplexing circuits. Increasing the number of tactile sensors in a fingertip can lead to wiring issues, which can be overcome by using plastic optical fibres in the tactile sensors. A two-layered silicone-based haptic tactile sensor is proposed in Sato et al. (2008). The displacement of the marker is recorded by a camera and used to compute the magnitude of the force with a resolution of 0.3 N. An optical-fibre-based Fiber Bragg grating (FBG) sensor embedded in a silicone-based elastomer is introduced in Heo et al. (2008). The sensor can detect forces up to 15 N with a resolution of 0.05 N. Its main drawback is hysteresis loss caused by the silicone material in which it is embedded. Microbending optical fiber (MBOF) sensors are also demonstrated in Heo et al. (2008) to reduce the effect of optical bending issues. Another optical tactile sensor, with a silicone-based skin fitted to a fingertip, is given in Yamada et al. (2005). Reflector chips made of steel act as the top layer of the silicone skin. The intensity and direction of applied forces are measured using an optical sensing method. To avoid the bulk of optical fibres, LEDs are introduced into the tactile sensors in Rossiter and Mukai (2005).
The sensor showcased

Pressure profiles obtained while force is applied to a tactile surface are generated to measure force values in Weiß and Worn (2005). The pressure profiles are mostly used for recognising contacts (Liu et al., 2012a), estimating grasp intensity (Bekiroglu et al., 2011), and classifying objects (Drimus et al., 2014). Integrating tactile sensors into fingers and fingertips is a complex task because of dimensional constraints. Tactile sensors can be flexible (Schmitz et al., 2010) or rigid (Koiva et al., 2013), depending on the types of surfaces and required outputs. Different types of sensors fitted to robotic hands and their characteristics are given in Supplementary Table 1.

Machine learning techniques are used in a number of studies to determine forces acting at contact points using tactile sensing systems. Feature extraction is performed to filter raw signals and decrease computational time by reducing the transmission of unwanted data through the tactile sensing system (Dahiya et al., 2013). Unsupervised learning is applied to recognise features from a tactile sensing system. In Madry et al. (2014), an unsupervised feature learning scheme is employed to extract features from raw images and pool these images to determine grasping force. Along with unsupervised learning methods, deep learning techniques are also used for extracting features from tactile sensors. Krizhevsky et al. (2012) adopted a CNN to extract features of a tactile image and Sohn et al. (2017) employed a Recurrent Neural Network (RNN) to analyse the deformation of the tactile sensor padding. Most learning-based feature extraction methods are prone to over-fitting. To reduce the probability of over-fitting, most learning methods are accompanied by feature selection methods (Servati et al., 2017). Bhattacharjee et al. (2012) proposed a K-Nearest Neighbour (KNN) strategy to recognise contact points using a tactile sensor. However, its computational time during contact point recognition is higher than that of traditional recognition methods. Support vector machine (SVM) methods are employed along with tactile sensors to determine contact points and grasping forces. Gastaldo et al. (2014) employed an SVM method to obtain contact point locations during a grasping activity. Even though the SVM method produced highly accurate predictions, the computational effort of the prediction process is high.

Researchers are now focusing more on deep learning techniques to improve prediction performance. Deep learning techniques have outperformed most conventional machine learning methods (Zou et al., 2017; Zhao et al., 2018). Deep Neural Network (DNN) based force reconstruction from image patterns is presented in Sferrazza et al. (2019). The proposed DNN performed well during real-time testing; however, the generalization capabilities of the strategy are not analysed. Radial Basis Function Neural Network (RBFNN) based prediction of contact point forces is carried out in Wang and Song (2021) and Zhang et al. (2018). The RBFNN exhibited greater generalization capabilities by linearising non-linear functions with high precision. However, real-time prediction of 3D forces is not achieved using the technique. Correlation of sensor signals using ANN-based approaches is given in Gao et al. (2018) and Chuah and Kim (2016). The Artificial Neural Network (ANN) based networks performed better during simulation studies; however, their computational effort is higher in real-time implementations. An Electrical Impedance Tomography Neural Network (EIT-NN) based low-cost sensor is given in Park et al. (2021). Convolutional Neural Network (CNN) based tactile systems are employed in Kakani et al. (2021) and Meier et al. (2016) to determine force and motion characteristics during a grasping task. Various machine-learning-integrated tactile sensing systems used in different applications are given in Supplementary Table 2.

The response time of tactile sensors is crucial during task execution. Fast and accurate sensing increases the efficiency of task execution. A tactile sensor manufactured using polystyrene microspheres (Li et al., 2016) exhibited a fast response time of 38 ms. A piezoresistive tactile sensor (Wang et al., 2019) consisting of Galinstan micro-channels has a response time of 90 ms. A flexible pressure sensor (Wang et al., 2019) composed of PZT nano ribbons exhibited a fast response time of 0.1 ms. Chun et al. (2016) proposed a tactile sensor manufactured using a conductive graphene-sponge composite, with a response time of 5 ms. Several actuation techniques have also been reported, such as elastic actuation in the form of a series of elastic tendons (Grebenstein et al., 2012) combined with compliant links made of steel layers (Choi et al., 2017). Several attempts have also been made at flexure-based (Odhner et al., 2014) and spring-based (Lotti et al., 2005) elastic compliant finger joints. Several fully-actuated robotic hands, such as the Shadow Dexterous Hand (Andrychowicz et al., 2020), the KITECH hand (Lee et al., 2016), and BCL-13 (Zhou et al., 2018), have been developed based on individual joint control.

The control schemes adopted for manipulating hand postures are crucial to carrying out a grasping task effectively. In Ficuciello et al. (2012), 3 postural synergies based control strategies are adopted to improve the efficiency of grasps executed by the UB Hand IV (University of Bologna Hand, version IV). Smooth hand motions are obtained using the proposed control strategy during experimental validations. A study is conducted in Ficuciello et al. (2018) to investigate the effectiveness of postural synergies in an anthropomorphic hand, the SCHUNK 5-Fingered Hand (S5FH). Inverse kinematic schemes and feedback from an RGBD camera are used to control the motions of the hand. The motor current values are used to limit the grasping forces through motor position control in the synergy subspace. Another synergy-based control strategy adopted for the S5FH is demonstrated in Ficuciello (2018). Synergy parameters are computed using an under-actuated kinematic approach. The proposed control strategy is composed of a feedforward term and 2 correction parameters to determine fingertip positions during grasping. The development of a reinforcement learning algorithm for an anthropomorphic hand-arm system using policy search methods is given in Ficuciello (2019). The reward function generated during learning is used to assess the effectiveness of the grasp in a synergy-based framework. Experiments are conducted on a KUKA LWR4+ manipulator equipped with an S5FH to demonstrate the effectiveness of the method. Optimal contact forces are computed in Villani et al. (2012) considering the contact points and wrench. Feedback from tactile and force sensors is used to obtain an optimal grasp.

In this paper, a novel tactile sensing method based on opto-electronic technology is presented for enhancing the grasping capabilities of a prosthetic hand named “PRISMA Hand II.” We chose an optical tactile sensor for the prosthetic hand because optical tactile sensors are considered the most durable and flexible sensors with high spatial resolution (Ascari et al., 2007). We developed a compatible tactile sensor and successfully integrated it into the fingertips of the prosthetic hand.

We calibrated the sensor against a reference sensor (ATI) in order to train NNs to reconstruct the contact force components. As a result, the same sensor provides a tactile map and a contact force estimate at the same time, allowing us to implement different control approaches with the same device. Grasping force and torque values in 3 axes for manipulating an object are determined separately using ANN and CNN training methods. The accuracy of the force values obtained using these 2 NNs is compared using Mean Square Error (MSE) values. An in-hand manipulation control technique for grasping an object with the hand is also developed. The control scheme computes grasp forces/torques based on the reconstructed data from the sensors. Additionally, it controls the orientation of the object using taxel voltage data. Experimental validations are carried out to study the performance of the proposed strategies.

3 Design and sensing system of the PRISMA Hand II

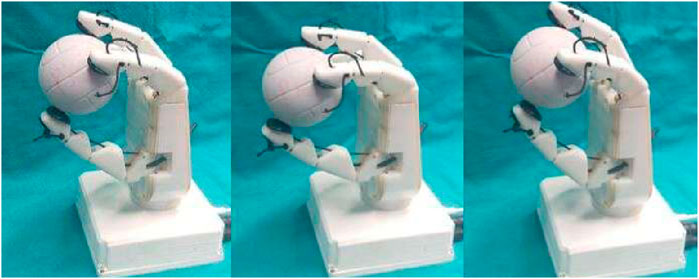

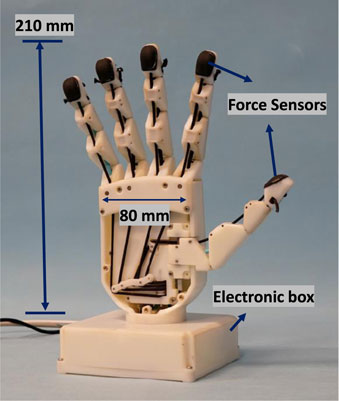

PRISMA Hand II (shown in Figure 1), an updated model of PRISMA Hand I (Ficuciello et al., 2020), is a 3D-printed, tendon-actuated prosthetic hand with 19 joints driven by 3 motors; it weighs around 0.339 kg. The thumb is composed of 1 revolute joint and 3 flexion/extension compliant rolling joints. The index, ring, and little fingers each consist of a single revolute joint and 3 flexion/extension joints. The hand is fitted with fingertip tactile sensors that provide voltage/force feedback.

Figure 1. Prisma Hand II (Ficuciello et al., 2020).

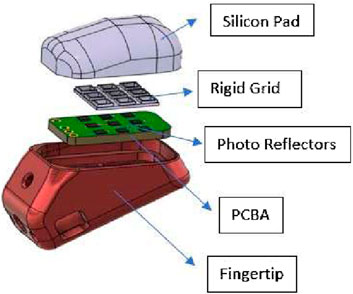

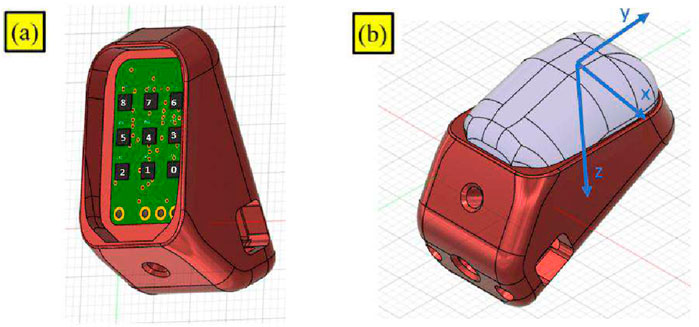

A novel tactile sensing technology was developed for the PRISMA Hand II to improve the manipulation capabilities of the hand during grasping. The method employed taxel sensing based on optoelectronic technology (Cirillo et al., 2017). The sensor shown in Figure 2 operated on the change in intensity of reflected light caused by pressing the deformable fingertip pads; this change was measured by photocouples, which output a voltage value. Depending on the deformation of the pad above the phototaxels, the light reflected onto the photocouples changed the photocurrent values. The change in photocurrent caused a voltage change that was detected by an Analog to Digital Converter (ADC) on the printed circuit board (PCB). The output of the ADC was obtained in the form of digital bytes. The voltage output bytes were translated to force and torque values in the 3 axes (X, Y, Z) using two NN training methods, ANN and CNN. MSE values were used to determine the better-performing network. During the calibration procedure, a 3-axis ATI F/T sensor served as the force/torque reference to establish the relationship between voltage and force.

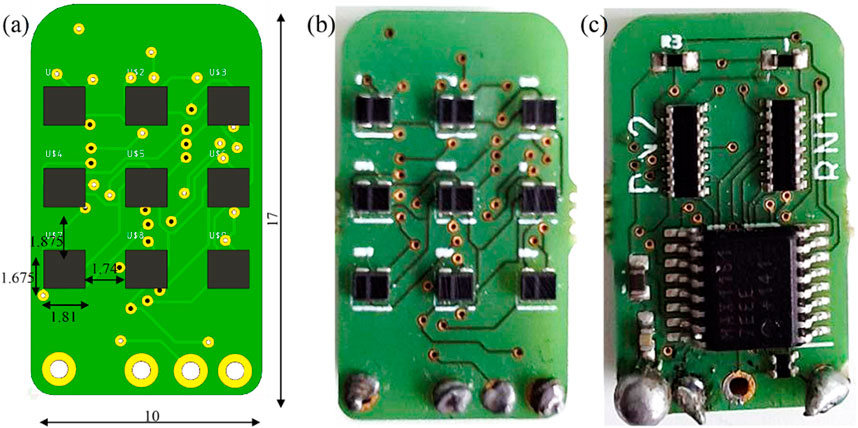

The dimensions of the fingertip sensor, along with front and rear views, are shown in Figure 3. A PCB containing LED-photo transistor couples was covered by an elastic pad. The deformable pad consisted of a top layer of black silicone (MM928) with an opaque black grid sandwiched between the silicone and the PCB. This prevented light disturbances and non-monotonic behavior under high stresses, guaranteeing maximum reflection of light at all wavelengths. The ability to receive light of any wavelength can improve the sensitivity and precision of the sensors. Two capacitors (C3, 0.1 µF ceramic, and C4, 4.7 µF tantalum) and resistors (R2 (270 kΩ), R3 (3.9 kΩ), RN1 (3.9 kΩ), RN2 (270 kΩ), and RN4 (1 kΩ)) on the PCB aided the conversion of photocurrent to voltage during operation of the sensors.

The sensor previously employed on the hand was composed of a 2 × 2 matrix of photo transistor couples placed on top of the PCB. It employed a 4-channel ADC (ADS1015). The sensors were trained using NNs with force readings from the ATI F/T sensor. In contrast, the new optimised sensors developed in this work consisted of a 3 × 3 matrix of photo transistor couples. The improved sensor was connected to an Arduino Nano microcontroller over an I2C connection. Data were communicated in the form of bits during the operation of the sensors. The unique feature of the new sensors allowed us to use a combination of force and torque data from an ATI sensor as an input for training a NN. This decreased the MSE and improved the precision of force prediction. The larger set of input data (force + torque) used to train the NNs accounted for the higher precision in output force prediction. Furthermore, the bits were converted to a voltage,

For the sensors given in the study, the

4 Force mapping using neural networks

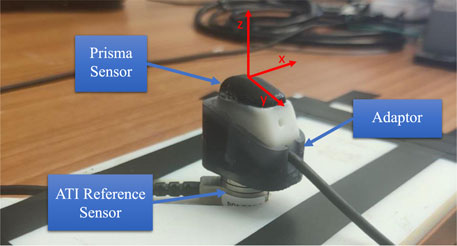

Force mapping was performed to establish a relationship between the output voltage of the sensor and the reference force/torque values in 3 axes (X, Y, Z) from an ATI F/T sensor, using an ANN and a CNN separately. This relationship was established during a calibration process by recording 140,000 samples of voltages and the corresponding forces at a frequency of 100 Hz using ROS (Koubâa, 2017). During calibration, the pad was pressed and moved at various angles using a grasping procedure to capture as many data samples as possible. An ANN developed in Cirillo et al. (2017) was used for training on the data samples. TensorFlow and Keras sequential models with dense and activation layers were used for training in Python. The performance of the NN models was compared on the accuracy of the force/torque predictions obtained after training. The optimal model was selected as the one with the lowest MSE value in the force/torque prediction phase of the tests. Figure 4 depicts sensor calibration using the axis frame of reference.
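The selection criterion described above, lowest MSE in the prediction phase, can be illustrated with a minimal Python sketch. The function names, the toy data, and the choice to sum the per-axis MSE values into a single score are assumptions made for illustration; the text does not state how the per-axis values were combined.

```python
def mean_squared_error(predicted, reference):
    """Mean of the squared differences between two equal-length sequences."""
    if len(predicted) != len(reference):
        raise ValueError("sequences must have the same length")
    return sum((p - r) ** 2 for p, r in zip(predicted, reference)) / len(reference)


def per_axis_mse(predictions, references):
    """MSE for each axis; both arguments map an axis name to a list of samples."""
    return {axis: mean_squared_error(predictions[axis], references[axis])
            for axis in references}


def select_model(mse_by_model):
    """Name of the model with the lowest MSE summed over axes (assumed criterion)."""
    return min(mse_by_model, key=lambda name: sum(mse_by_model[name].values()))


# Toy reference forces and predictions (made-up numbers, not measured data).
reference = {"x": [0.0, 1.0, 2.0], "y": [0.0, 0.0, 0.0], "z": [1.0, 2.0, 3.0]}
predictions = {
    "model_a": {"x": [0.5, 1.0, 2.0], "y": [0.0, 0.5, 0.0], "z": [1.0, 2.0, 4.0]},
    "model_b": {"x": [0.0, 1.0, 2.0], "y": [0.0, 0.0, 0.0], "z": [1.0, 2.5, 3.0]},
}
scores = {name: per_axis_mse(pred, reference) for name, pred in predictions.items()}
print(select_model(scores))
```

In practice the reference values would come from the ATI F/T sensor recordings and the predictions from the trained networks.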

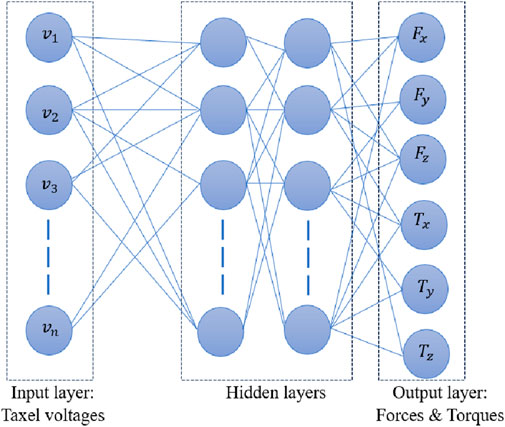

An ANN trained using the Levenberg-Marquardt (LM) method is depicted in Figure 5. The input layer was composed of the output voltages from the taxels, and the forces/torques in 3 axes comprised the output layer. The properties of the hidden layers were varied according to the task and are provided in the following sections.
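To make the layer structure concrete, the following is a minimal pure-Python sketch of a forward pass through a network with 9 taxel voltages at the input and 3 force components at the output. It is not the network of Figure 5: the hidden layer size of 8, the random untrained weights, and all function names are hypothetical, and training (for example with the LM method) is not shown. The sigmoid activation follows the choice reported for the ANN in Section 6.1.

```python
import math
import random


def sigmoid(x):
    """Logistic activation, as reported for the ANN in Section 6.1."""
    return 1.0 / (1.0 + math.exp(-x))


def dense(inputs, weights, biases, activation=None):
    """One fully connected layer; `weights` holds one row per output neuron."""
    outputs = []
    for row, bias in zip(weights, biases):
        total = sum(w * v for w, v in zip(row, inputs)) + bias
        outputs.append(activation(total) if activation else total)
    return outputs


def random_layer(n_inputs, n_outputs, rng):
    """Untrained layer with random weights and zero biases (illustration only)."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_inputs)]
               for _ in range(n_outputs)]
    return weights, [0.0] * n_outputs


def forward(voltages, hidden, output):
    """Taxel voltages -> sigmoid hidden layer -> linear force outputs."""
    hidden_out = dense(voltages, hidden[0], hidden[1], activation=sigmoid)
    return dense(hidden_out, output[0], output[1])


rng = random.Random(0)
hidden_layer = random_layer(9, 8, rng)   # 9 taxels -> 8 hidden neurons (hypothetical size)
output_layer = random_layer(8, 3, rng)   # 8 hidden neurons -> Fx, Fy, Fz
forces = forward([0.5] * 9, hidden_layer, output_layer)
print(len(forces))
```

Extending the output layer to 6 neurons would cover the torque components as well.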

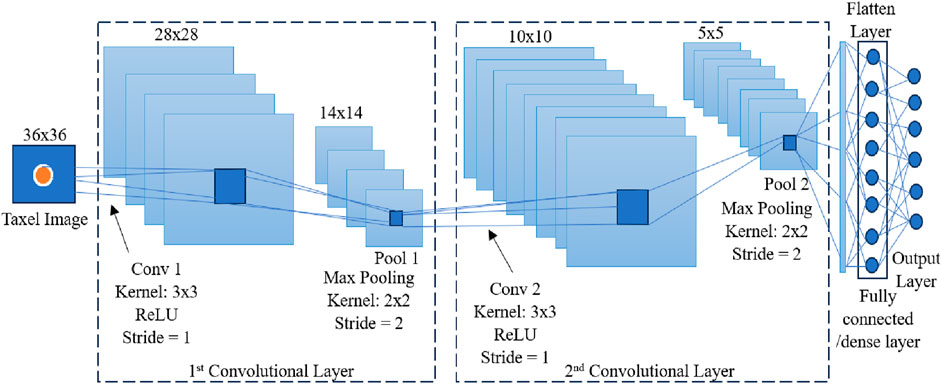

A CNN was also trained on the data samples to reconstruct force/torque values. The CNN architecture used in this work is given in Figure 6. Feature extraction from the taxel image was performed using two convolutional layers, as shown in the figure. The ReLU function was used as the activation function in the convolutional layers. A 3 × 3 kernel with stride 1 and padding 1 was used in the convolutional layers, accompanied by pooling with a 2 × 2 kernel, stride 2, and padding 1. Fully connected (dense) layers were used to predict force and torque values from the features extracted by the convolutional layers.
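The effect of these kernel, stride, and padding settings on the feature map size can be checked with the standard output-size relation, out = floor((in + 2·padding − kernel) / stride) + 1. The short Python sketch below applies it to one convolution followed by one pooling step. It assumes a 3 × 3 taxel image as input (the voltage arrangement described in Section 6.1) and that pooling directly follows the convolution; the exact layer ordering is given in Figure 6, not here.

```python
def output_size(input_size, kernel, stride, padding):
    """Spatial output size of a convolution or pooling layer along one axis."""
    return (input_size + 2 * padding - kernel) // stride + 1


taxel_grid = 3  # assumed 3 x 3 taxel image
after_conv = output_size(taxel_grid, kernel=3, stride=1, padding=1)
after_pool = output_size(after_conv, kernel=2, stride=2, padding=1)
print(after_conv, after_pool)  # prints: 3 2
```

With these settings the convolution preserves the 3 × 3 grid, and the padded pooling step reduces it to 2 × 2.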

5 In-hand manipulation control

An in-hand manipulation control method was employed to obtain an optimum grasp on objects during object manipulation tasks. The objects were manipulated based on the voltages (raw data) received from each sensor taxel. Force/torque values were reconstructed using the NNs (ANN and CNN) separately and evaluated to determine optimum grasps.

The operating principle of in-hand manipulation was based on correct voltage thresholding of all 9 taxel values collected from the sensor. The fingers were capable of executing sufficient motions to ensure a successful grasp and manipulation of objects to avoid grips on objects. The key principle of threshold selection was based on a real-time computation of the average values of all taxels’ voltages,

The use of real-time mean voltage readings was critical because the sensor was extremely sensitive and could not be assigned a conventional fixed threshold value. The contact points between fingertip and object can change during a grasping task. This shifts the pressure points on the pad and results in a voltage that varies throughout the task. The second rationale for using the average was to account for voltage variations across all taxels and to examine the whole contact surface rather than just one specific location. A heuristic approach was taken for selecting the constant of proportionality K, as changes in K can significantly affect the ranges of acceptable and non-feasible contact. In theory, increasing K reduces the range of safe contact, whereas decreasing K reduces the range of unsafe contact. The value of K was determined by conducting multiple tests and observing the behavior of the voltage change. The threshold value was then compared with the actual taxel voltage measurements. A taxel voltage below the threshold was regarded as an unsafe contact. A taxel voltage equal to or greater than the threshold was considered a safe contact between the object and the part of the sensor associated with that taxel. The contact as a whole was considered unsafe when a minimum number of taxels (an entire row) met the unsafe contact condition. The steps followed in the in-hand manipulation control scheme are given in Algorithm 1.

Algorithm 1. In-hand manipulation scheme.

Input:

1. Voltages obtained from all taxels

Output: Optimum value of grasping contact and force

2. Initialise the parameter sets and start the grasping task

3. Compute the average voltage value of all taxels

4. Compute the constant of proportionality, K

5. For all values of taxel voltage:

6. if the taxel voltage is equal to or greater than the threshold

7. final voltage obtained

8. Grasping contact and force finalised

10. else

11. Update the position of the grasp and go to step 5

12. end if
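The thresholding rule described in this section can be sketched in Python as follows. This is a minimal sketch under stated assumptions rather than the implementation used on the hand: the threshold is taken to be K times the real-time mean of the 9 taxel voltages, the taxels are assumed to be indexed 0 to 8 with each row formed by three consecutive indices, and K = 0.8 together with all function names are hypothetical.

```python
def contact_threshold(voltages, k):
    """Threshold assumed to be K times the mean of all taxel voltages."""
    return k * sum(voltages) / len(voltages)


def unsafe_rows(voltages, k, row_length=3):
    """Indices of rows in which every taxel voltage is below the threshold."""
    threshold = contact_threshold(voltages, k)
    rows = [voltages[i:i + row_length]
            for i in range(0, len(voltages), row_length)]
    return [index for index, row in enumerate(rows)
            if all(v < threshold for v in row)]


def is_safe_contact(voltages, k):
    """Contact is treated as unsafe once an entire row falls below the threshold."""
    return not unsafe_rows(voltages, k)


K = 0.8  # hypothetical value; the text selects K heuristically from repeated tests
uniform_press = [1.0] * 9
edge_lifted = [0.1, 0.1, 0.1, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0]
print(is_safe_contact(uniform_press, K), is_safe_contact(edge_lifted, K))
```

Because the threshold follows the mean, a uniform change in pressure across the pad leaves the safe/unsafe decision unchanged, while a row that drops well below its neighbours is flagged.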

6 Results and discussion

In this work, experimental validations were carried out on the sensor fitted to the thumb of the Prisma Hand II to analyse the performance of the developed sensor. Simulation studies and experimental validations were performed using different CNN architectures and experimental setups. The thumb plays a major role in horizontal and vertical in-hand manipulations. Hence, the thumb was selected to study the performance of the sensor developed for the hand.

6.1 Experimental validation of working of sensors

ANN and CNN networks were used to predict 3-axis force values in the X, Y, and Z directions. Voltages arranged in a 3 × 3 matrix were given as the input for training the networks. A sigmoid function was used as the activation function in the ANN. The ANN was trained with a batch size of 64 samples for 300 epochs. The dataset was divided into 80 percent for training and 20 percent for validation. Adaptive Moment Estimation (ADAM) was used as the optimiser, and the MSE values of the ANN trained in the X, Y, and Z-axes were found to be MSEx = 0.053, MSEy = 0.049, and MSEz = 0.319 respectively. To compare and select the best-performing network, a CNN was also trained on the same dataset. The CNN comprised 2 convolutional layers of 256 and 64 neurons in addition to a dense layer of 16 neurons. The dataset was again divided into 80 percent training data and 20 percent validation data. The CNN was trained with a batch size of 64 for 300 epochs. The MSE values for the CNN trained in the X, Y, and Z-axes were found to be MSEx = 0.022, MSEy = 0.022, and MSEz = 0.148 respectively.
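The data preparation described above, arranging the 9 taxel voltages as a 3 × 3 matrix and dividing the samples 80/20, can be sketched in plain Python. The function names are illustrative, the row-major ordering of the taxels is an assumption, and the split shown is sequential without shuffling, which the text does not specify.

```python
def to_taxel_grid(sample, side=3):
    """Arrange a flat list of side*side voltages as a side x side matrix (row-major, assumed)."""
    if len(sample) != side * side:
        raise ValueError("expected %d voltages" % (side * side))
    return [sample[row * side:(row + 1) * side] for row in range(side)]


def split_dataset(samples, train_percent=80):
    """Sequential split into training and validation parts (no shuffling)."""
    cut = len(samples) * train_percent // 100
    return samples[:cut], samples[cut:]


grid = to_taxel_grid([0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9])
train, validation = split_dataset(list(range(140000)))
print(grid[1], len(train), len(validation))
```

For the 140,000 recorded samples this split yields 112,000 training samples and 28,000 validation samples.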

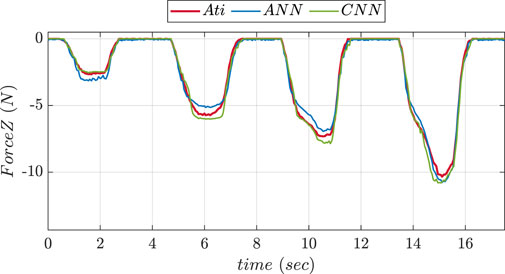

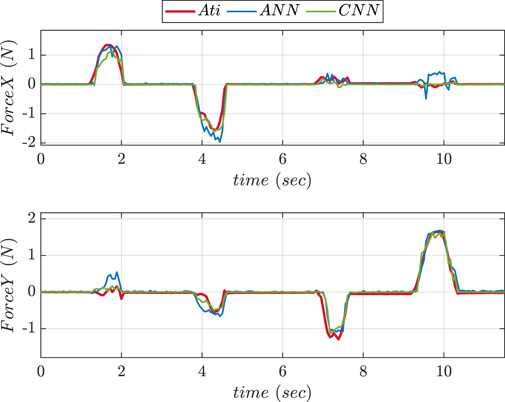

To compare the performance of the two NNs, experiments were conducted in 2 phases, with the fingertip pad carrying out a pushing task and a sliding task. During pushing tasks, the NN was expected to precisely predict the

Figure 8. Comparative prediction graphs of X and Y-axes force using ANN and CNN during slide experiment.

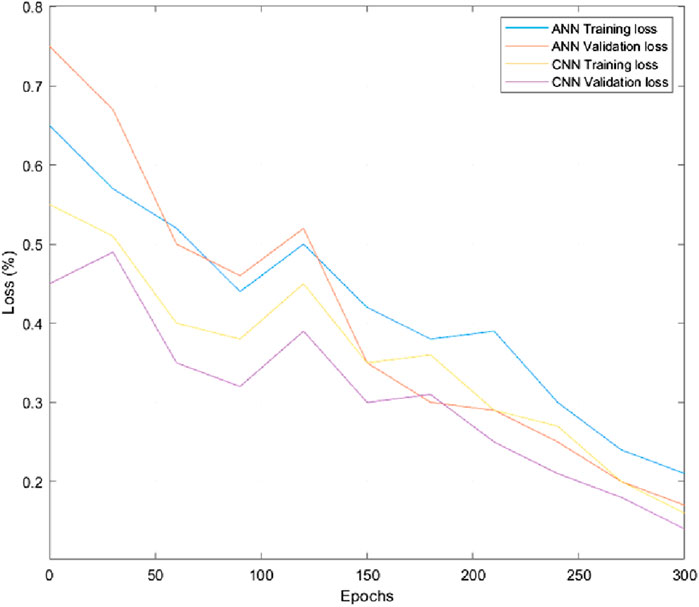

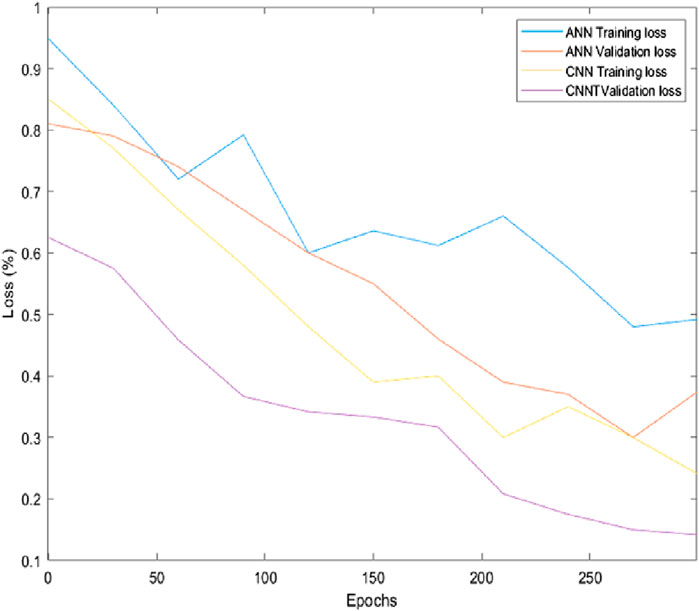

Figures 9 and 10 compare the training and validation losses during simulation and experimental validation respectively. The training and validation losses computed using the ANN and CNN at epoch 300 during simulation were 21%, 17%, 15%, and 13% respectively. Similarly, the training and validation losses during experiments using the ANN and CNN were 40%, 37.5%, 24%, and 17% respectively. Hence, the CNN performed better than the ANN in terms of losses for the specified task. The parameters and outcomes of the ANN and CNN training during simulation studies and experiments are given in Supplementary Tables 3, 4.

The accuracy of the ANN model seems to be more stable when momentum is utilized, exhibiting less volatility during the training epochs. Better performance is achieved with smaller decay levels. With a decay of

6.2 Experimental validation using updated convolutional neural network

6.2.1 Inclusion of torque values in CNN training

The previous approach to training the CNN included only 3-axis force values. To evaluate the performance of the CNN with additional data, 3-axis torque values were included in the training phase with the aim of making the force predictions more precise. The reference torque values were provided by an ATI F/T sensor, and the 9-taxel design of the sensor made the inclusion of torque values possible. An experimental validation of sensor performance with torque values was carried out for pushing and sliding actions of the thumb. The parameters and results obtained in this experimental phase are given in Supplementary Table 5.

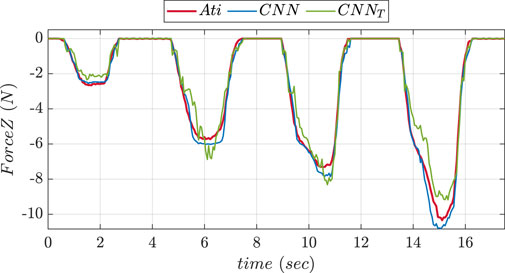

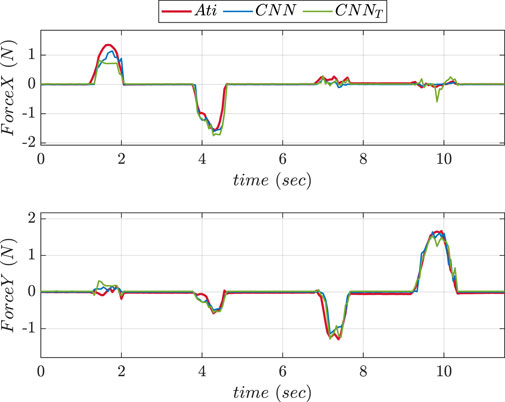

The MSE values in the X, Y, and Z directions during force training were found to be MSEx = 0.022, MSEy = 0.031, and MSEz = 0.400 respectively. The MSE values in the X, Y, and Z directions during torque training were found to be MSEx = 9.932, MSEy = 8.841, and MSEz = 0.465 respectively. Figure 11 compares the Z-axis force predictions obtained using the CNN with and without torque values during the pushing and sliding experiments. Figure 12 compares the X and Y-axes force predictions of the two CNNs during the sliding experiment.

Figure 11. Comparative prediction graph of Z-axis force using CNN with torque (CNNt) and without torque (CNN) during push experiment.

Figure 12. Comparative prediction graphs of X and Y-axes force using CNN with torque (CNNt) and without torque (CNN) during slide experiment.

The MSE in the Z-axis during the pushing experiment was 0.11 for the CNN without torque and 0.35 for the CNN with torque. The MSE in the X-axis during the sliding experiment was 0.013 without torque and 0.023 with torque. The MSE in the Y-axis during the sliding experiment was 0.01 without torque and 0.012 with torque. These MSE values show that the force prediction accuracy of the network decreased when torque values were added to the CNN.

6.2.2 Inclusion of more layers in CNN training network

Building on the previous approach of including torque during training, the number of layers in the same CNN model was increased to improve the learning ability of the network. In this architecture, 5 convolutional layers were chosen with 128, 64, 32, 32, and 16 neurons. Furthermore, a dense layer of 16 neurons was added, followed by an output layer of 6 neurons covering torque and force values. Supplementary Table 6 lists the CNN parameters and the results obtained during this experimental phase.

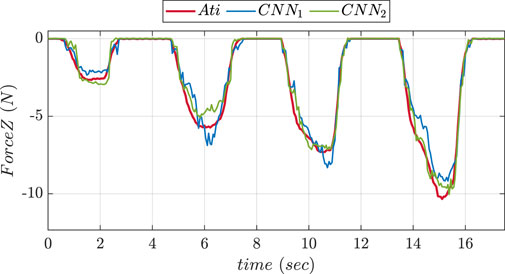

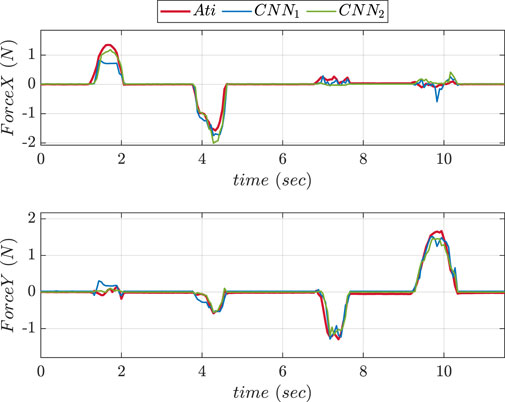

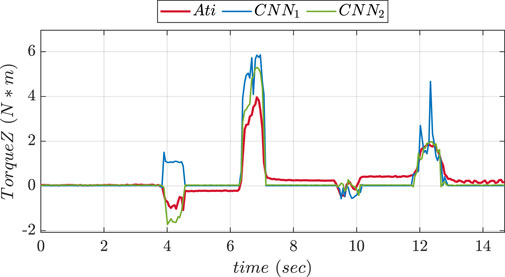

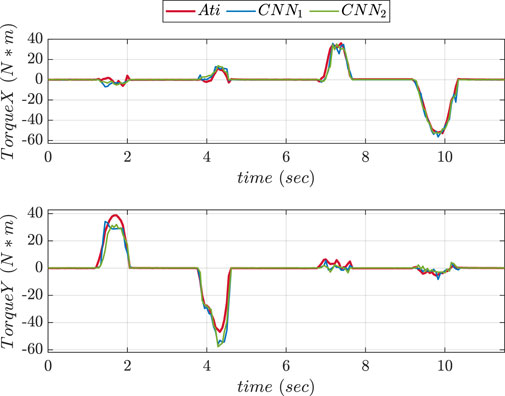

The prediction precision of the two trained CNNs was compared experimentally during pushing and sliding experiments. CNN1 (the older version) denotes the network with fewer layers and CNN2 (the updated version) the network with more layers. Figures 13, 14 show the prediction of X, Y, and Z-axes forces during the pushing and sliding experiments respectively. Experiments were also conducted to compare the X, Y, and Z-axes torques predicted by CNN1 and CNN2. Graphs comparing the predicted values are shown in Figures 15, 16. The MSE values for force training in the X, Y, and Z directions were found to be MSEx = 0.022, MSEy = 0.043, and MSEz = 0.500 respectively. The MSE values for torque training in the X, Y, and Z directions were obtained as MSEx = 11.302, MSEy = 9.221, and MSEz = 0.511 respectively.

Figure 13. Comparative prediction graph of Z-axis force using CNN with fewer layers (CNN1) and more layers (CNN2) during push experiment.

Figure 14. Comparative prediction graphs of X and Y-axes force using CNN with fewer layers (CNN1) and more layers (CNN2) during slide experiment.

Figure 15. Experiment 2 using torque: Comparative prediction graph of Z-axis torque using CNN with fewer layers (CNN1) and more layers (CNN2).

Figure 16. Experiment 1 using torque: Comparative prediction graphs of X and Y-axes torque using CNN with fewer layers (CNN1) and more layers (CNN2).

In the prediction phase, the Z-axis MSE during the push experiment was 0.35 for CNN1 and 0.19 for CNN2. During the slide experiment, the X-axis MSE was 0.023 for CNN1 and 0.015 for CNN2, and the Y-axis MSE was 0.012 for CNN1 and 0.008 for CNN2. These values show that increasing the number of layers improved the force prediction performance of the CNN.
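To put the CNN1 and CNN2 figures on a common scale, the relative reduction in MSE can be worked out per axis. The sketch below only restates the prediction-phase values reported above; `relative_reduction` is a hypothetical helper name.

```python
# Prediction-phase MSE values reported above: (CNN1, CNN2).
prediction_mse = {
    "Z force (push)": (0.35, 0.19),
    "X force (slide)": (0.023, 0.015),
    "Y force (slide)": (0.012, 0.008),
}


def relative_reduction(before, after):
    """Fractional decrease of `after` with respect to `before`."""
    return (before - after) / before


reductions = {
    axis: relative_reduction(cnn1, cnn2)
    for axis, (cnn1, cnn2) in prediction_mse.items()
}

for axis, value in reductions.items():
    print(f"{axis}: {value:.0%} lower MSE with CNN2")
```

With these numbers, the MSE of CNN2 is between roughly one third and one half lower than that of CNN1 on each axis.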

6.3 Experimental validations during in-hand manipulation tasks

Experiments were conducted to determine whether the sensors allow in-hand manipulation tasks to be carried out successfully. An object (a rubber ball) with unknown mass and geometry was chosen for the task. In the presented experiments, the manipulation approaches were based on the behavior of the taxels of the sensor placed on the thumb. Figure 17 shows the taxels of the thumb sensor.

Horizontal manipulation was governed by the taxels on the lateral side of the sensor. The thumb moved horizontally in the clockwise and anticlockwise directions at a constant speed of 20 rpm, and the lateral taxels were monitored to maintain safe contact throughout the manipulation process. The thumb first moved clockwise until the lateral taxels (6–3–0) lost contact or the joint limits were reached. After this initial phase, the thumb was turned anticlockwise to return the object to its original position.

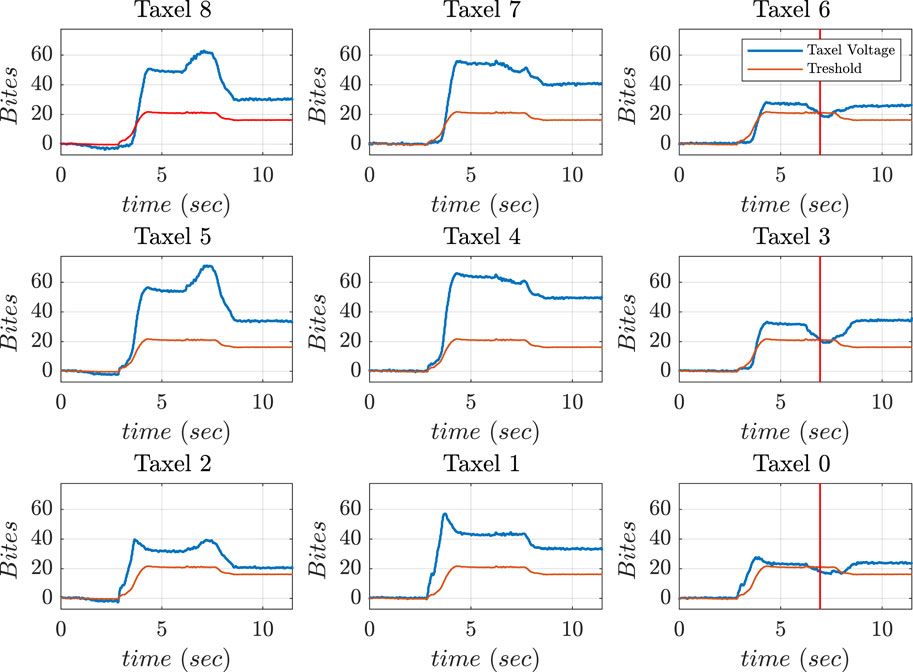

Figure 18 shows the frames of horizontal manipulation using the thumb. Figure 19 depicts the time instant of non-feasible contact, when the left side taxel voltages fall below the threshold value (the reader should consider the mirror view of the taxel matrix in the figure). The stop condition of the clockwise manipulation occurs at time instant 6.9 s, when the voltage values of taxels (6–3–0) fall below the threshold. This signified that the right lateral side of the taxels was no longer in touch with the object, which increased the chance that the object would fall out of the hand's grasp.

Figure 19. Voltage values of thumb sensor taxels along with threshold and instant of danger (red) indicated during horizontal manipulation phase.
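The stop instant of the clockwise motion can be located in a logged voltage trace by searching for the first sample at which the lateral taxels drop below the threshold. The sketch below assumes that "lost contact" means all three taxels (6, 3, 0) read below the threshold at the same sample; `first_contact_loss` is a hypothetical helper and the log is synthetic.

```python
def first_contact_loss(times, voltages, threshold, taxels=(6, 3, 0)):
    """Return the first time at which every listed taxel is below the threshold.

    times     : list of sample times in seconds
    voltages  : list of per-sample readings, each indexable by taxel number
    threshold : scalar, or list with one threshold per sample
    Returns None if the contact is never lost.
    """
    for i, (t, sample) in enumerate(zip(times, voltages)):
        limit = threshold[i] if isinstance(threshold, (list, tuple)) else threshold
        if all(sample[k] < limit for k in taxels):
            return t
    return None


# Synthetic log for nine taxels numbered 0-8 (volts, made up for illustration).
times = [0.0, 0.1, 0.2, 0.3]
voltages = [
    [0.9] * 9,
    [0.9, 0.9, 0.9, 0.4, 0.9, 0.9, 0.9, 0.9, 0.9],  # only taxel 3 is low
    [0.3, 0.9, 0.9, 0.3, 0.9, 0.9, 0.2, 0.9, 0.9],  # taxels 0, 3 and 6 are low
    [0.3, 0.9, 0.9, 0.3, 0.9, 0.9, 0.2, 0.9, 0.9],
]

stop_time = first_contact_loss(times, voltages, threshold=0.5)
print(stop_time)
```

Accepting either a scalar or a per-sample list for `threshold` leaves room for a threshold that changes over time.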

Vertical manipulation was based on the behavior of the top and bottom taxels of the thumb sensor. With reference to Figure 17, vertical manipulation comprised 2 phases. The first phase, downward manipulation, consisted of finger closure and thumb extension in the downward direction. Motions were carried out at a constant velocity of 10 rpm until the top taxels (6–7–8) lost contact or the joint limits were reached. Similarly, for upward manipulation, finger opening and thumb flexion were carried out at a constant velocity of 10 rpm until the bottom taxels (0–1–2) lost contact or the joint limits were reached.
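The two-phase sequence described above can be sketched as a small state machine over the taxel samples. This is an illustration under stated assumptions, not the controller used on the hand: a taxel group is treated as having lost contact when all of its taxels read below the threshold, and `at_joint_limit` is a hypothetical callback standing in for the joint-limit check.

```python
TOP_TAXELS = (6, 7, 8)
BOTTOM_TAXELS = (0, 1, 2)


def group_lost(sample, threshold, group):
    """True when every taxel in the group reads below the threshold (assumed criterion)."""
    return all(sample[k] < threshold for k in group)


def vertical_phase_stops(samples, threshold, at_joint_limit=lambda i: False):
    """Walk through taxel samples and return the indices where each phase stops.

    Phase 1 (downward) ends when the top taxels lose contact or a joint limit is hit.
    Phase 2 (upward) ends when the bottom taxels lose contact or a joint limit is hit.
    Returns (down_stop_index, up_stop_index); an entry is None if that phase never stops.
    """
    down_stop = up_stop = None
    phase = "down"
    for i, sample in enumerate(samples):
        if phase == "down":
            if group_lost(sample, threshold, TOP_TAXELS) or at_joint_limit(i):
                down_stop, phase = i, "up"
        elif group_lost(sample, threshold, BOTTOM_TAXELS) or at_joint_limit(i):
            up_stop = i
            break
    return down_stop, up_stop


# Synthetic samples for nine taxels numbered 0-8 (volts, made up for illustration).
full = [0.9] * 9
top_lost = [0.9, 0.9, 0.9, 0.9, 0.9, 0.9, 0.2, 0.2, 0.2]
bottom_lost = [0.2, 0.2, 0.2, 0.9, 0.9, 0.9, 0.9, 0.9, 0.9]
samples = [full, full, top_lost, full, full, bottom_lost]

print(vertical_phase_stops(samples, threshold=0.5))
```

The two returned indices play the role of the two stop time instants of the downward and upward phases.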

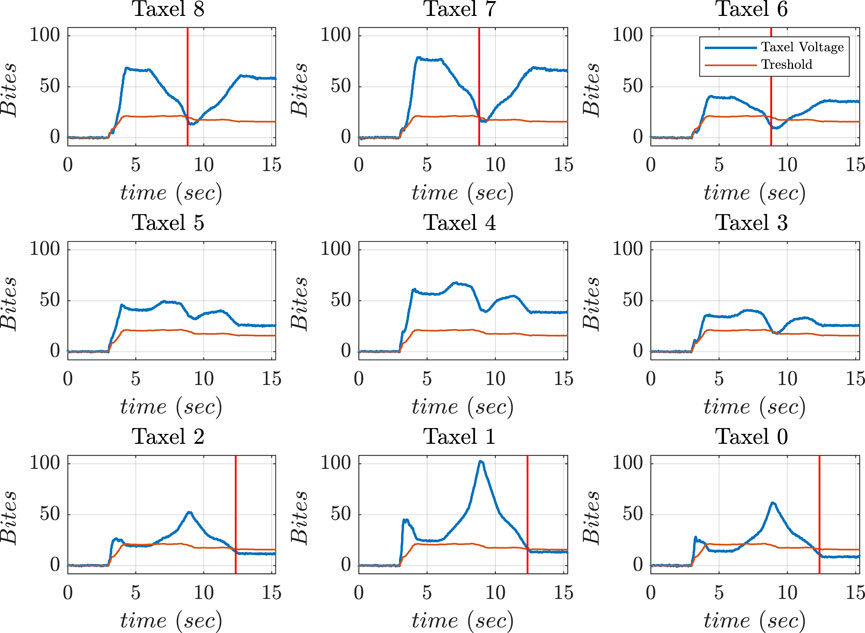

Figure 20 shows screenshots of the finger and thumb motions during the vertical manipulation task, and Figure 21 depicts the graphs obtained during the task. The results showed two stop time instants: downward manipulation stopped at 8.8 s and upward manipulation stopped at 12.3 s.

Figure 21. Voltage values of thumb sensor taxels along with threshold and instant of danger (red) indicated during vertical manipulation phase.

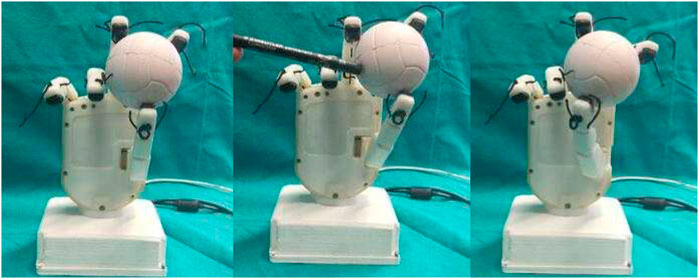

6.3.1 Experimental analysis to check reaction to disturbance

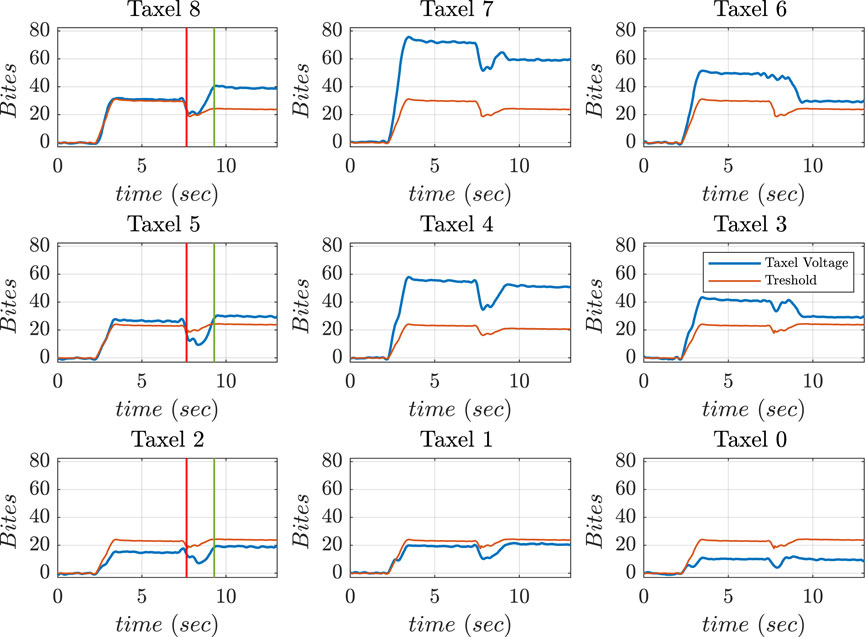

A feasible manipulation of the object was accomplished in the presence of external disturbances based on the voltage information. In this experiment, the hand executed a pinching grasp and the controller checked for possible non-feasible grasps using an instantaneous threshold value at each sampling time. The reaction to external disturbances during task execution was driven by the taxels placed on the lateral sides of the thumb. The thumb moved in the required direction (clockwise or anticlockwise) at a constant velocity of 20 rpm, depending on which lateral side lost contact with the object. The rotation stopped when at least two taxels returned to threshold values. K was set to a value higher than 0.6 to increase the performance of the controller.
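The reaction logic described above can be sketched as a generator that emits one thumb command per sample. Several points are assumptions made for illustration: a side is treated as lost when none of its three taxels reaches the threshold, the mapping from lost side to rotation direction is a guess, and the per-sample thresholds are taken as given inputs because this section does not say how they are derived from K. The taxel grouping follows the lateral columns (8, 5, 2) and (6, 3, 0) named in the text.

```python
LEFT_TAXELS = (8, 5, 2)   # lateral column called "left" in the text
RIGHT_TAXELS = (6, 3, 0)  # opposite lateral column


def in_contact(sample, threshold, group):
    """Number of taxels in the group reading at or above the threshold."""
    return sum(1 for k in group if sample[k] >= threshold)


def react(samples, thresholds):
    """Yield one command per sample: 'hold', 'clockwise' or 'anticlockwise'."""
    command = "hold"
    recovering = None  # taxel group currently being recovered
    for sample, threshold in zip(samples, thresholds):
        if recovering is None:
            if in_contact(sample, threshold, LEFT_TAXELS) == 0:
                recovering, command = LEFT_TAXELS, "clockwise"       # assumed direction
            elif in_contact(sample, threshold, RIGHT_TAXELS) == 0:
                recovering, command = RIGHT_TAXELS, "anticlockwise"  # assumed direction
        elif in_contact(sample, threshold, recovering) >= 2:
            # At least two taxels of the lost side are back: stop rotating.
            recovering, command = None, "hold"
        yield command


# Synthetic samples for nine taxels numbered 0-8 (volts, made up for illustration).
full = [0.9] * 9
left_lost = [0.9, 0.9, 0.2, 0.9, 0.9, 0.2, 0.9, 0.9, 0.2]
one_back = [0.9, 0.9, 0.2, 0.9, 0.9, 0.2, 0.9, 0.9, 0.9]
two_back = [0.9, 0.9, 0.2, 0.9, 0.9, 0.9, 0.9, 0.9, 0.9]

commands = list(react([full, left_lost, one_back, two_back], [0.5] * 4))
print(commands)
```

Requiring two recovered taxels before stopping, rather than one, avoids halting the rotation on a single marginal reading.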

The hand grasped a foam ball and an external disturbance was applied to the ball using a pen, as shown in Figure 22. The disturbance caused a non-feasible left-side contact: the voltages of taxels 8, 5, and 2 fell below the threshold value. The thumb motions were then executed to bring the voltage outputs of taxels 8 and 5 back within the threshold level. The left-side disturbance was identified at time instant 7.9 s (red) and a feasible contact was restored at time instant 9.2 s (green), as shown in Figure 23.

Figure 23. Voltage values of thumb sensor taxels, along with threshold (red), during external disturbance.

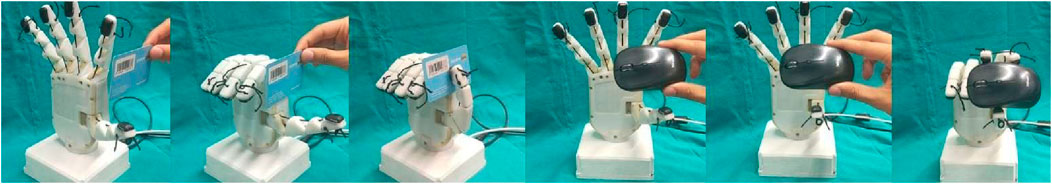

6.4 Experiment to validate force control scheme while grasping

Force control enabled a variety of gripping techniques, including pinch, power, and lateral grasps, to be used securely and effectively on objects with varying shapes and orientations. The Z-axis forces were used to determine the threshold force during grasping tasks. Similarly, the forces in the X and Y-axes were used to compute threshold forces during slip conditions. The orientation of the force axes on the fingertip pad is shown in Figure 17.

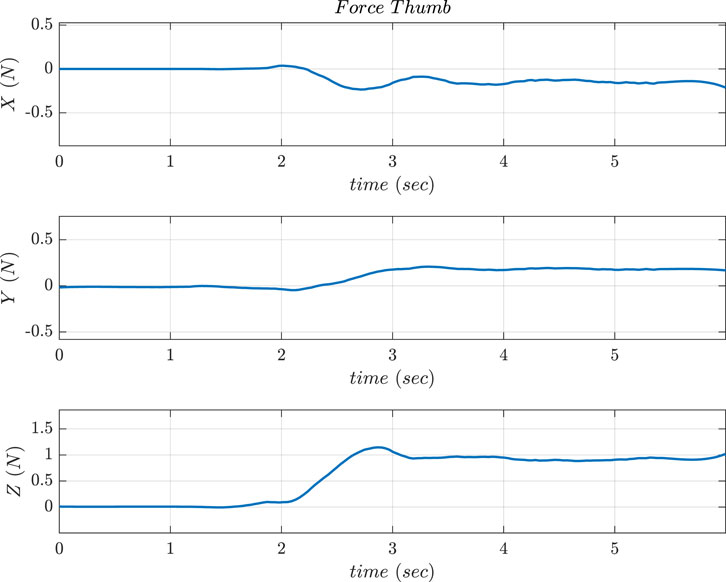

Experiments were conducted to validate the force control scheme based on threshold values during grasping tasks. The motor moved at a speed of 60 rpm until a desired value of force was reached; the finger and thumb rotations were also set to 60 rpm. For efficient grasping, the stop criterion was applied in 2 phases based on force thresholds. In the first phase, the motion of the fingers was stopped once the normal Z-axis force reached 0.8 N. In the second phase, the thumb flexion was stopped when the force reached 1 N. An added advantage of this approach was that the force limits can easily be raised or lowered depending on the requirements of the grasp. Figure 24 shows screenshots of the hand motions during lateral and pinch grasp execution. Figure 25 shows the Z-axis force reaching the desired value of 1 N during the pinch grasp (mouse). When the hand grasped an object laterally at different orientations, the motor that controlled the thumb and fingers moved them to predetermined positions. In addition, the thumb moved at a speed of 60 rpm until the required force was applied.

Figure 25. Force output obtained in 3 axes of Thumb sensor during Pinch Grasps (similar graphs are obtained for the lateral grasp).
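The two-phase stop criterion of this section can be sketched as a function that maps Z-axis force readings to motion flags. The thresholds of 0.8 N and 1 N come from the text; the latching behaviour (a motion that has stopped stays stopped) and the name `grasp_commands` are assumptions, and the readings are synthetic.

```python
FINGER_STOP_N = 0.8  # normal force at which finger motion stops (from the text)
THUMB_STOP_N = 1.0   # normal force at which thumb flexion stops (from the text)


def grasp_commands(fz_readings, finger_stop=FINGER_STOP_N, thumb_stop=THUMB_STOP_N):
    """Return (fingers_moving, thumb_moving) for each Z-axis force reading.

    Once a stop threshold has been reached the corresponding motion stays
    stopped, even if the force later dips below the threshold (assumed latch).
    """
    fingers_moving = thumb_moving = True
    commands = []
    for fz in fz_readings:
        if fingers_moving and fz >= finger_stop:
            fingers_moving = False
        if thumb_moving and fz >= thumb_stop:
            thumb_moving = False
        commands.append((fingers_moving, thumb_moving))
    return commands


# Synthetic Z-axis force readings in newtons (made up for illustration).
readings = [0.0, 0.4, 0.8, 0.9, 1.0, 0.95]
for fz, (fingers, thumb) in zip(readings, grasp_commands(readings)):
    print(f"Fz={fz:.2f} N  fingers_moving={fingers}  thumb_moving={thumb}")
```

Passing the thresholds as parameters reflects the point made above that the force limits can be raised or lowered to suit the grasp.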

6.5 Experimental validation during manipulation tasks combining raw tactile voltage and reconstructed force data

The predetermined force values were used to enhance in-hand manipulation tasks, improving the performance of the force controller described in Section 6.4. The computed force values were used to exert the desired grasping force, reduce the effects of external disturbances, and restore a feasible grasp. The behavior of the Z-axis force during the task is depicted in Supplementary Figure 1. The force reached around 1 N at 4.2 s during in-hand manipulation of the object, when an external disturbance force was applied. To maintain a stable grasp, a force of 1 N was applied to the hand in the opposite direction. In Supplementary Figure 1, the red line indicates the non-feasible grasp, the yellow line indicates the restoration of the feasible grasp, and the green line indicates the restoration of the required Z-axis force (equal to 1 N) for a feasible grasp. The raw tactile voltage values were used to carry out the grasping task during the manipulation phase between the red and yellow lines. The in-hand manipulation tasks were thus controlled using a combination of raw tactile voltage and reconstructed force values.

7 Conclusion and future works

This work presented a novel sensing strategy based on opto-electronic technology developed for a prosthetic hand. Grasping forces and torques were determined from the voltages of the novel sensor using ANN and CNN training methods. Across the trained NNs, the optimum approach for carrying out both pushing and slipping tasks was to use a CNN rather than an ANN. CNN2 was found to be the best model for determining force and torque values from ATI reference sensor measurements, with better prediction accuracy, lower training and validation losses, a better learning rate, and lower MSE values than CNN1. However, when torque values were introduced to the CNN, the accuracy of the network decreased while predicting forces/torques.

Experimental evaluations of the proposed sensor demonstrated a force capacity of up to 30 N along the Z-axis, which was sufficient for carrying out power, pinch, and lateral grasps. For force values less than 1.2 N, the NN force prediction differed by no more than 0.15 N from the actual force value. This disparity can be reduced by using an autonomous calibration procedure at the micro-force level to improve the sensor’s efficiency. The performance of the developed sensor was satisfactory when compared with similar tactile sensors available on the market. The sensor was able to achieve a better sensing capability even though it was designed under the dimensional constraints required for placement on the fingertips.

A method for controlling in-hand manipulation was developed to facilitate the grasping of objects in the presence of external disturbances. The control strategy calculated grasp forces from the reconstructed sensor data and used taxel voltage data to regulate the object’s orientation. Adopting this control strategy during grasping tasks allowed the hand to hold the object without losing contact, even in the presence of external disturbances in the vertical and horizontal directions. Experiments were conducted to validate the proposed strategies and analyze their effectiveness. The in-hand manipulation control proposed in this work can be useful for employing the PRISMA Hand II in prosthetic as well as industrial domains. Future work will focus on applying the proposed force control strategy to all the fingers to improve the efficiency of in-hand manipulation tasks.

Data availability statement

The original contributions presented in the study are included in the article/Supplementary Material; further inquiries can be directed to the corresponding author.

Author contributions

AS: Conceptualization, Methodology, Software, Validation, Writing–original draft, Writing–review and editing. MP: Methodology, Software, Writing–original draft, Writing–review and editing. PK: Conceptualization, Methodology, Validation, Writing–original draft, Writing–review and editing. SP: Investigation, Supervision, Validation, Writing–original draft, Writing–review and editing. SS: Writing–original draft, Writing–review and editing. FF: Funding acquisition, Investigation, Project administration, Resources, Supervision, Validation, Writing–original draft, Writing–review and editing.

Funding

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. This work was supported by the Italian Ministry of Research under the complementary actions to the NRRP “Fit4MedRob—Fit for Medical Robotics” Grant (PNC0000007).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declared that they were an editorial board member of Frontiers, at the time of submission. This had no impact on the peer review process and the final decision.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frobt.2024.1460589/full#supplementary-material

References

Almassri, A. M., Wan Hasan, W., Ahmad, S. A., Ishak, A. J., Ghazali, A., Talib, D., et al. (2015). Pressure sensor: state of the art, design, and application for robotic hand. J. Sensors 2015, 1–12. doi:10.1155/2015/846487

Andrychowicz, O. M., Baker, B., Chociej, M., Jozefowicz, R., McGrew, B., Pachocki, J., et al. (2020). Learning dexterous in-hand manipulation. Int. J. Robotics Res. 39, 3–20. doi:10.1177/0278364919887447

Ascari, L., Corradi, P., Beccai, L., and Laschi, C. (2007). A miniaturized and flexible optoelectronic sensing system for tactile skin. J. Micromechanics Microengineering 17, 2288–2298. doi:10.1088/0960-1317/17/11/016

Bekiroglu, Y., Laaksonen, J., Jorgensen, J. A., Kyrki, V., and Kragic, D. (2011). Assessing grasp stability based on learning and haptic data. IEEE Trans. Robotics 27, 616–629. doi:10.1109/tro.2011.2132870

Belter, J. T., Segil, J. L., Dollar, A. M., and Weir, R. F. (2013). Mechanical design and performance specifications of anthropomorphic prosthetic hands: a review. J. rehabilitation Res. Dev. 50, 599–618. doi:10.1682/jrrd.2011.10.0188

Bhattacharjee, T., Rehg, J. M., and Kemp, C. C. (2012). “Haptic classification and recognition of objects using a tactile sensing forearm,” in 2012 IEEE/RSJ international Conference on intelligent Robots and systems (IEEE), 4090–4097.

Choi, K. Y., Akhtar, A., and Bretl, T. (2017). “A compliant four-bar linkage mechanism that makes the fingers of a prosthetic hand more impact resistant,” in 2017 IEEE international conference on robotics and automation (ICRA) (IEEE), 6694–6699.

Chuah, M. Y., and Kim, S. (2016). “Improved normal and shear tactile force sensor performance via least squares artificial neural network (lsann),” in 2016 IEEE international conference on robotics and automation (ICRA) (IEEE), 116–122.

Chun, S., Hong, A., Choi, Y., Ha, C., and Park, W. (2016). A tactile sensor using a conductive graphene-sponge composite. Nanoscale 8, 9185–9192. doi:10.1039/c6nr00774k

Cirillo, A., Cirillo, P., Maria, G. D., Natale, C., and Pirozzi, S. (2017). “Force/tactile sensors based on optoelectronic technology for manipulation and physical human–robot interaction,” in Advanced mechatronics and MEMS devices II (Springer), 95–131.

Dahiya, R. S., Mittendorfer, P., Valle, M., Cheng, G., and Lumelsky, V. J. (2013). Directions toward effective utilization of tactile skin: a review. IEEE Sensors J. 13, 4121–4138. doi:10.1109/jsen.2013.2279056

Drimus, A., Kootstra, G., Bilberg, A., and Kragic, D. (2014). Design of a flexible tactile sensor for classification of rigid and deformable objects. Robotics Aut. Syst. 62, 3–15. doi:10.1016/j.robot.2012.07.021

Feigl, P., Weibel, J.-B., and Vincze, M. (2023). Autonomous in-hand object modeling from a mobile manipulator, 80–85.

Ficuciello, F. (2018). Synergy-based control of underactuated anthropomorphic hands. IEEE Trans. Industrial Inf. 15, 1144–1152. doi:10.1109/tii.2018.2841043

Ficuciello, F. (2019). Hand-arm autonomous grasping: synergistic motions to enhance the learning process. Intell. Serv. Robot. 12, 17–25. doi:10.1007/s11370-018-0262-0

Ficuciello, F., Federico, A., Lippiello, V., and Siciliano, B. (2018). Synergies evaluation of the schunk s5fh for grasping control. Adv. Robot Kinemat. 2016, 225–233. doi:10.1007/978-3-319-56802-7_24

Ficuciello, F., Palli, G., Melchiorri, C., and Siciliano, B. (2012). “Planning and control during reach to grasp using the three predominant ub hand iv postural synergies,” in 2012 IEEE international conference on robotics and automation (IEEE), 2255–2260.

Ficuciello, F., Pisani, G., Marcellini, S., and Siciliano, B. (2020). The prisma hand i: a novel underactuated design and emg/voice-based multimodal control. Eng. Appl. Artif. Intell. 93, 103698. doi:10.1016/j.engappai.2020.103698

Fraden, J., and King, J. (2004). Handbook of modern sensors: physics, designs, and applications, 3. Springer.

Fujiwara, E., Wu, Y. T., Suzuki, C. K., de Andrade, D. T. G., Neto, A. R., and Rohmer, E. (2018). “Optical fiber force myography sensor for applications in prosthetic hand control,” in 2018 IEEE 15th international workshop on advanced motion control (AMC) (IEEE), 342–347.

Gao, S., Duan, J., Kitsos, V., Selviah, D. R., and Nathan, A. (2018). User-oriented piezoelectric force sensing and artificial neural networks in interactive displays. IEEE J. Electron Devices Soc. 6, 766–773. doi:10.1109/jeds.2018.2848917

Gastaldo, P., Pinna, L., Seminara, L., Valle, M., and Zunino, R. (2014). A tensor-based pattern-recognition framework for the interpretation of touch modality in artificial skin systems. IEEE Sensors J. 14, 2216–2225. doi:10.1109/jsen.2014.2320820

Ge, C., Yang, B., Wu, L., Duan, Z., Li, Y., Ren, X., et al. (2022). Capacitive sensor combining proximity and pressure sensing for accurate grasping of a prosthetic hand. ACS Appl. Electron. Mater. 4, 869–877. doi:10.1021/acsaelm.1c01274

Grebenstein, M., Chalon, M., Friedl, W., Haddadin, S., Wimböck, T., Hirzinger, G., et al. (2012). The hand of the dlr hand arm system: designed for interaction. Int. J. Robotics Res. 31, 1531–1555. doi:10.1177/0278364912459209

Heo, J.-S., Kim, J.-Y., and Lee, J.-J. (2008). “Tactile sensors using the distributed optical fiber sensors,” in 2008 3rd international conference on sensing technology (IEEE), 486–490.

Hoshino, K., and Mori, D. (2008). “Three-dimensional tactile sensor with thin and soft elastic body,” in 2008 IEEE workshop on advanced robotics and its social impacts (IEEE), 1–6.

Kakani, V., Cui, X., Ma, M., and Kim, H. (2021). Vision-based tactile sensor mechanism for the estimation of contact position and force distribution using deep learning. Sensors 21, 1920. doi:10.3390/s21051920

Koiva, R., Zenker, M., Schürmann, C., Haschke, R., and Ritter, H. J. (2013). “A highly sensitive 3d-shaped tactile sensor,” in 2013 IEEE/ASME international conference on advanced intelligent mechatronics (IEEE), 1084–1089.

Krizhevsky, A., Sutskever, I., and Hinton, G. E. (2012). ImageNet classification with deep convolutional neural networks. Adv. neural Inf. Process. Syst. 25.

Kyberd, P. J., and Chappell, P. H. (1992). Object-slip detection during manipulation using a derived force vector. Mechatronics 2, 1–13. doi:10.1016/0957-4158(92)90034-l

Lee, D.-H., Park, J.-H., Park, S.-W., Baeg, M.-H., and Bae, J.-H. (2016). KITECH-hand: a highly dexterous and modularized robotic hand. IEEE/ASME Trans. Mechatronics 22, 876–887. doi:10.1109/tmech.2016.2634602

Li, T., Luo, H., Qin, L., Wang, X., Xiong, Z., Ding, H., et al. (2016). Flexible capacitive tactile sensor based on micropatterned dielectric layer. Small 12, 5042–5048. doi:10.1002/smll.201600760

Liu, H., Greco, J., Song, X., Bimbo, J., Seneviratne, L., and Althoefer, K. (2012a). “Tactile image based contact shape recognition using neural network,” in 2012 IEEE international Conference on multisensor Fusion and Integration for intelligent systems (MFI) (IEEE), 138–143.

Liu, H., Song, X., Bimbo, J., Althoefer, K., and Seneviratne, L. (2012b). “Intelligent fingertip sensing for contact information identification,” in Advances in reconfigurable mechanisms and robots I (Springer), 599–608.

Liu, H., Xu, K., Siciliano, B., and Ficuciello, F. (2019). “The mero hand: a mechanically robust anthropomorphic prosthetic hand using novel compliant rolling contact joint,” in 2019 IEEE/ASME international Conference on advanced intelligent mechatronics (AIM) (IEEE), 126–132.

Liu, Y., Berman, J., Dodson, A., Park, J., Zahabi, M., Huang, H., et al. (2024). Human-centered evaluation of emg-based upper-limb prosthetic control modes. IEEE Trans. Human-Machine Syst. 54, 271–281. doi:10.1109/thms.2024.3381094

Liu, Y., Yang, X., Li, J., Zhang, K., Wang, H., Zeng, X., et al. (2023). Microstructured gel polymer electrolyte and an interdigital electrode-based iontronic barometric pressure sensor with high resolution over a broad range. ACS Appl. Mater. and Interfaces 15, 58976–58983. doi:10.1021/acsami.3c16276

Lotti, F., Tiezzi, P., Vassura, G., Biagiotti, L., Palli, G., and Melchiorri, C. (2005). “Development of ub hand 3: early results,” in Proceedings of the 2005 IEEE international Conference on Robotics and automation (IEEE), 4488–4493.

Madry, M., Bo, L., Kragic, D., and Fox, D. (2014). “St-hmp: unsupervised spatio-temporal feature learning for tactile data,” in 2014 IEEE international conference on robotics and automation (ICRA) (IEEE), 2262–2269.

Marateb, H. R., Nezhad, F. Z., Nosouhi, M., Esfahani, Z., Fazilati, F., Yusefi, F., et al. (2021). “Prosthesis control using undersampled surface electromyographic signals,” in Analysis of medical modalities for improved diagnosis in modern healthcare (Florida: CRC Press), 89–112.

Meier, M., Patzelt, F., Haschke, R., and Ritter, H. J. (2016). “Tactile convolutional networks for online slip and rotation detection,” in Artificial neural networks and machine learning–ICANN 2016: 25th international conference on artificial neural networks, barcelona, Spain, september 6-9, 2016, proceedings, Part II 25 (Springer), 12–19.

Nassar, H., Khandelwal, G., Chirila, R., Karagiorgis, X., Ginesi, R. E., Dahiya, A. S., et al. (2023). Fully 3d printed piezoelectric pressure sensor for dynamic tactile sensing. Addit. Manuf. 71, 103601. doi:10.1016/j.addma.2023.103601

Odhner, L. U., Jentoft, L. P., Claffee, M. R., Corson, N., Tenzer, Y., Ma, R. R., et al. (2014). A compliant, underactuated hand for robust manipulation. Int. J. Robotics Res. 33, 736–752. doi:10.1177/0278364913514466

Ohmura, Y., Kuniyoshi, Y., and Nagakubo, A. (2006). “Conformable and scalable tactile sensor skin for curved surfaces,” in Proceedings 2006 IEEE international conference on robotics and automation, 2006 (IEEE), 1348–1353. ICRA 2006.

Park, J., Berman, J., Dodson, A., Liu, Y., Armstrong, M., Huang, H., et al. (2024). Assessing workload in using electromyography (emg)-based prostheses. Ergonomics 67, 257–273. doi:10.1080/00140139.2023.2221413

Park, H., Park, K., Mo, S., and Kim, J. (2021). Deep neural network based electrical impedance tomographic sensing methodology for large-area robotic tactile sensing. IEEE Trans. Robotics 5, 1570–1583. doi:10.1109/tro.2021.3060342

Rossiter, J., and Mukai, T. (2005). “A novel tactile sensor using a matrix of leds operating in both photoemitter and photodetector modes,” in SENSORS, 2005 (IEEE), 4.

Sato, K., Kamiyama, K., Nii, H., Kawakami, N., and Tachi, S. (2008). “Measurement of force vector field of robotic finger using vision-based haptic sensor,” in 2008 IEEE/RSJ international conference on intelligent robots and systems (IEEE), 488–493.

Schmitz, A., Maggiali, M., Natale, L., Bonino, B., and Metta, G. (2010). “A tactile sensor for the fingertips of the humanoid robot icub,” in 2010 IEEE/RSJ international Conference on intelligent Robots and systems (IEEE), 2212–2217.

Servati, A., Zou, L., Wang, Z. J., Ko, F., and Servati, P. (2017). Novel flexible wearable sensor materials and signal processing for vital sign and human activity monitoring. Sensors 17, 1622. doi:10.3390/s17071622

Sferrazza, C., Wahlsten, A., Trueeb, C., and D’Andrea, R. (2019). Ground truth force distribution for learning-based tactile sensing: a finite element approach. IEEE Access 7, 173438–173449. doi:10.1109/access.2019.2956882

Sharma, N., Prakash, A., and Sharma, S. (2023). An optoelectronic muscle contraction sensor for prosthetic hand application. Rev. Sci. Instrum. 94, 035009. doi:10.1063/5.0130394

Shi, L., Li, Z., Chen, M., Qin, Y., Jiang, Y., and Wu, L. (2020). Quantum effect-based flexible and transparent pressure sensors with ultrahigh sensitivity and sensing density. Nat. Commun. 11, 3529. doi:10.1038/s41467-020-17298-y

Sohn, K.-S., Chung, J., Cho, M.-Y., Timilsina, S., Park, W. B., Pyo, M., et al. (2017). An extremely simple macroscale electronic skin realized by deep machine learning. Sci. Rep. 7, 11061. doi:10.1038/s41598-017-11663-6

Van Der Niet, O., and van der Sluis, C. K. (2013). Functionality of i-limb and i-limb pulse hands: case report. J. rehabilitation Res. Dev. 50, 1123–1128. doi:10.1682/jrrd.2012.08.0140

Villani, L., Ficuciello, F., Lippiello, V., Palli, G., Ruggiero, F., and Siciliano, B. (2012). “Grasping and control of multi-fingered hands,” in Advanced bimanual manipulation: results from the DEXMART Project, 219–266.

Wang, C., Dong, L., Peng, D., and Pan, C. (2019). Tactile sensors for advanced intelligent systems. Adv. Intell. Syst. 1, 1900090. doi:10.1002/aisy.201900090

Wang, F., and Song, Y. (2021). Three-dimensional force prediction of a flexible tactile sensor based on radial basis function neural networks. J. Sensors 2021, 8825019. doi:10.1155/2021/8825019

Weiß, K., and Worn, H. (2005). “The working principle of resistive tactile sensor cells,” in IEEE international conference mechatronics and automation (IEEE), 2005, 471–476. doi:10.1109/icma.2005.1626593

Yamada, Y., Morizono, T., Umetani, Y., and Takahashi, H. (2005). Highly soft viscoelastic robot skin with a contact object-location-sensing capability. IEEE Trans. Industrial Electron. 52, 960–968. doi:10.1109/tie.2005.851654

Zhang, Y., Ye, J., Lin, Z., Huang, S., Wang, H., and Wu, H. (2018). A piezoresistive tactile sensor for a large area employing neural network. Sensors 19, 27. doi:10.3390/s19010027

Zhao, L., Chen, Z., Yang, Y., Wang, Z. J., and Leung, V. C. (2018). Incomplete multi-view clustering via deep semantic mapping. Neurocomputing 275, 1053–1062. doi:10.1016/j.neucom.2017.07.016

Zhou, J., Yi, J., Chen, X., Liu, Z., and Wang, Z. (2018). Bcl-13: a 13-dof soft robotic hand for dexterous grasping and in-hand manipulation. IEEE Robotics Automation Lett. 3, 3379–3386. doi:10.1109/lra.2018.2851360

Keywords: under actuation, in-hand manipulation, tactile sensing, neural networks architectures, control

Citation: Singh A, Pinto M, Kaltsas P, Pirozzi S, Sulaiman S and Ficuciello F (2024) Validations of various in-hand object manipulation strategies employing a novel tactile sensor developed for an under-actuated robot hand. Front. Robot. AI 11:1460589. doi: 10.3389/frobt.2024.1460589

Received: 06 July 2024; Accepted: 02 September 2024;

Published: 26 September 2024.

Edited by:

Yilun Sun, Technical University of Munich, Germany

Reviewed by:

Houde Dai, Chinese Academy of Sciences (CAS), China

Burkhard Corves, RWTH Aachen University, Germany

Copyright © 2024 Singh, Pinto, Kaltsas, Pirozzi, Sulaiman and Ficuciello. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Shifa Sulaiman
