- Graduate School of Integrated Design Engineering, Faculty of Science and Technology, Keio University, Yokohama, Japan

This paper presents a tangle- and contact-free path planning (TCFPP) for a mobile robot attached to a base station with a finite-length cable. This type of robot, called a tethered mobile robot, can endure long-time exploration with a continuous power supply and stable communication via its cable. However, the robot faces potential hazards that endanger its operation such as cable snagging on and cable entanglement with obstacles and the robot. To address these challenges, our approach incorporates homotopy-aware path planning into deep reinforcement learning. The proposed reward design in the learning problem penalizes the cable-obstacle and cable-robot contacts and encourages the robot to follow the homotopy-aware path toward a goal. We consider two distinct scenarios for the initial cable configuration: 1) the robot pulls the cable sequentially from the base while heading for the goal, and 2) the robot moves to the goal starting from a state where the cable has already been partially deployed. The proposed method is compared with naive approaches in terms of contact avoidance and path similarity. Simulation results revealed that the robot can successfully find a contact-minimized path under the guidance of the reference path in both scenarios.

1 Introduction

A tethered mobile robot can perform exploration for a long duration with a continuous power supply and stable communication through its cable. In addition, the robot can use the cable as a lifeline on steep slopes or cliffs to prevent falling or to hook it onto fixed obstacles. Their typical applications include the exploration of nuclear power plants (Nagatani et al., 2013), underwater areas, subterranean spaces (Martz et al., 2020), and lunar/planetary slopes and caves (Abad-Manterola et al., 2011; Schreiber et al., 2020). Untethered mobile robots have indeed explored various environments, such as uneven terrains or cluttered areas, but we believe that tethering mobile robots is such a powerful solution that will allow for exploration into previously uncharted territories while ensuring power, communication, and fall safety. However, difficulties arise when we try to deploy the tethered robot system. This is because the path planning algorithms for conventional mobile robots cannot be applied directly to tethered robots owing to constraints such as the cable length and the cable’s interaction with the robot and obstacles.

A major challenge in path planning for tethered mobile robots has been computing the shortest path to the target point, considering the cable length and cable-obstacle interaction. This issue has been studied extensively in Xavier (1999), Brass et al. (2015), Abad-Manterola et al. (2011), Kim et al. (2014), Kim and Likhachev (2015), and Sahin and Bhattacharya (2023). The motivation stems from the fact that the workspace of a tethered robot is theoretically a circular shape, whose center is the anchor point of the cable when there are no obstacles in the environment. However, this is not the case when obstacles are present. In early research, visibility graph-based approaches were proposed in Xavier (1999), Brass et al. (2015), and Abad-Manterola et al. (2011). These studies assumed that the cable automatically coiled to maintain its tension at all times.

Research in this area has gained momentum only since the work of homotopy-aware path planning, which was first proposed by Igarashi and Stilman (2010) and mathematically refined by Kim et al. (2014). The essence of this approach is to topologically encode a path by its placement with respect to the obstacles in the environment. The usefulness of this method can be observed in its application to exploration problems (Shapovalov and Pereira, 2020) and 3D environments (Sahin and Bhattacharya, 2023).

Recent studies have focused on avoiding cable-robot and cable-obstacle contacts. Path planning with cable-robot avoidance was developed in Yang et al. (2023), whereas cable-obstacle contact is admissible. Our previous study, Shimada and Ishigami (2023), proposed a waypoint refinement method based on the distance from the cable base, curvature, and proximity to obstacles, which was formulated using an artificial potential field. However, this method is only applicable when the cable is initially stored in a retractable mechanism and cannot be used when the cable is initially deployed in the environment.

Despite such intensive research effort, a comprehensive approach that balances the three objectives—overcoming cable length constraints, avoiding cable-robot contact, and avoiding cable-obstacle contact—has not been developed yet. To this end, we must consider the following two issues: 1) the global path generated by conventional mobile robot methods cannot be used directly as a path for a tethered robot, and 2) the robot has no knowledge of the cable dynamics and cannot directly control the cable position, because of the underactuated nature of the tethered robot system.

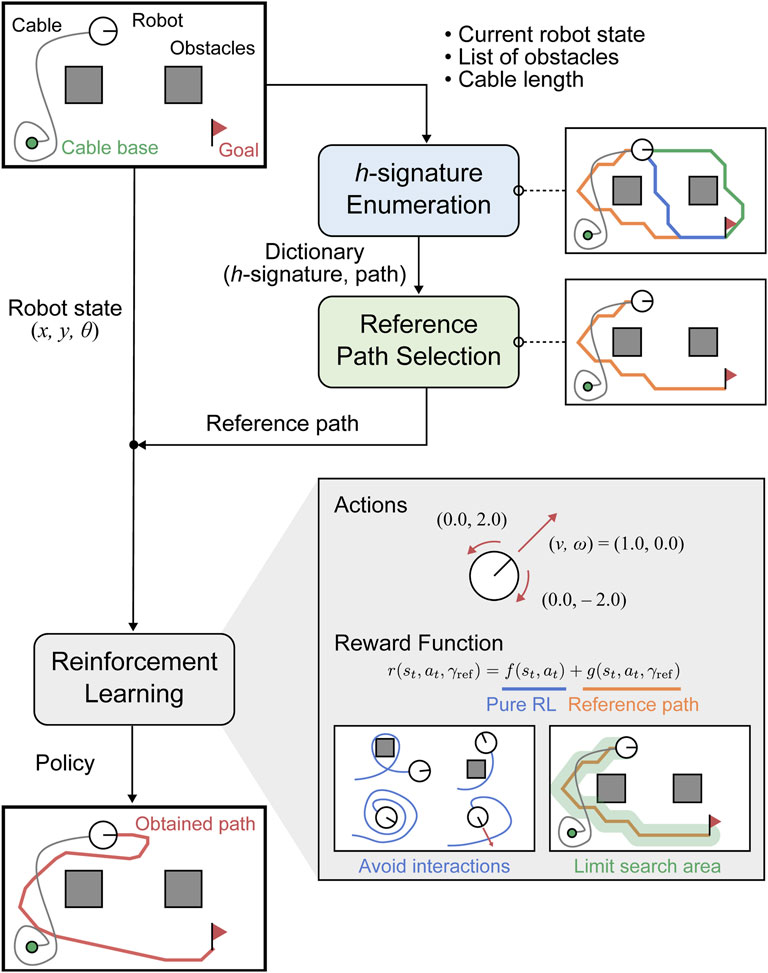

In this study, we aim to solve a path planning problem that considers the cable length constraints and minimizes the cable-obstacle and cable-robot contacts. Our approach, a tangle- and contact-free path planning (TCFPP) algorithm uses deep reinforcement learning (DRL) with a homotopy-aware reference path guidance (Figure 1). The reward function in DRL has two components: the first guides contact avoidance and the second suppresses the deviations from the reference path. We built a customized Gymnasium environment using a kinematics-based robot model and a position-based cable model. A standard DRL algorithm, Deep Q-Network (Mnih et al., 2015) can successfully determine an effective path in a given environment. We evaluated the proposed method through simulations from two aspects: contact avoidance and similarity with the paths of naive approaches.

Figure 1. Flowchart of the proposed algorithm: Tangle- and Contact-free Path Planning (TCFPP) method. The algorithm aims to generate paths that minimize cable-obstacle and cable-robot contact.

The following are the key contributions of this study:

• We develop a method for selecting a reference path for a tethered mobile robot from enumerated feasible paths in terms of homotopy class.

• We propose TCFPP, a path planning method for a tethered mobile robot that considers cable-obstacle and cable-robot avoidance using DRL with homotopy-aware reference path guidance.

• We show that the proposed method effectively balances path shortness with maintaining the distance from obstacles.

The remainder of this paper is organized as follows. Section 2 introduces the concepts of homotopy class of path and

2 Preliminaries

The proposed method uses DRL with hommotopy-aware reference path. This section introduces the notion of homotopy class of path and the basics of reinforcement learing with a reference path.

2.1 Homotopy class of paths

The notion of homotopy class of paths plays an important role in capturing their nature based on their topological relations to obstacles in the environment, rather than their geometric properties, such as length, curvature, or smoothness. Here we outline the concept of a homotopy class and introduce

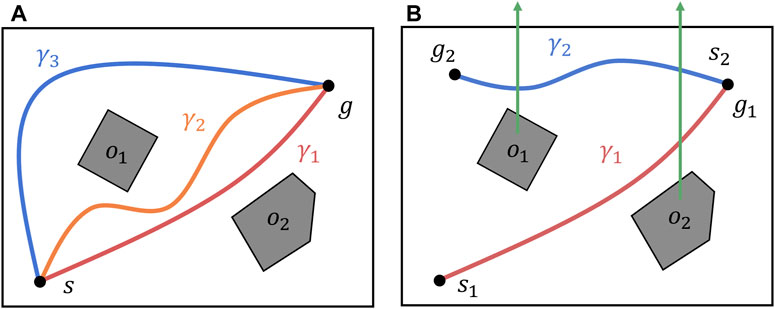

Consider two paths that share the same start and end points. The two paths belong to the same homotopy class if and only if they can be deformed into each other without intersecting any obstacles. For example, in Figure 2A, the paths

Figure 2. Homotopic relation between paths in an environment with two obstacles. (A) Three paths in an environment with two obstacles. Paths

In addition, the

2.2 Reinforcement learning with reference path

We consider the standard RL problem with reference path

The first term,

3 Problem statement

This study addresses a path planning problem that considers the constraints imposed by the cable length and minimizes the cable-obstacle and cable-robot contacts. The goal was to avoid entanglement and contact between the three entities: the robot, cable, and obstacles. Although these interactions do not always endanger the robot, they potentially impede its safe operation.

In a tethered mobile robot path planning, there are two primary scenarios based on the initial state of the cable: Unreeling Cable and Handling Deployed Cable. In the unreeling cable scenario, the cable and robot follow nearly identical paths, thereby focusing on avoiding cable-obstacle contact. In contrast, the handling deployed cable scenario treats the cable as an additional obstacle, thus requiring paths that avoid both cable-obstacle and cable-robot contacts.

Figure 1 illustrates the flowchart of the proposed algorithm. This algorithm inputs the robot’s current and target positions, cable placement, and cable length, and aims to output paths that minimize cable-related contact with the guidance of a homotopy-aware reference path. For the computation of the reference path, we first enumerate all paths with different homotopy classes that connect the robot’s current and target points, and then select the shortest and reachable path as the reference path, taking into account cable length constraints.

A key assumption is that the decision-making entity in DRL, which we call the agent, has no knowledge of the cable behavior, and its interaction with the environment can be obtained only via reward signals.

4 Proposed method: TCFPP

The proposed method comprises three main modules: 1) enumeration of shortest paths with distinct

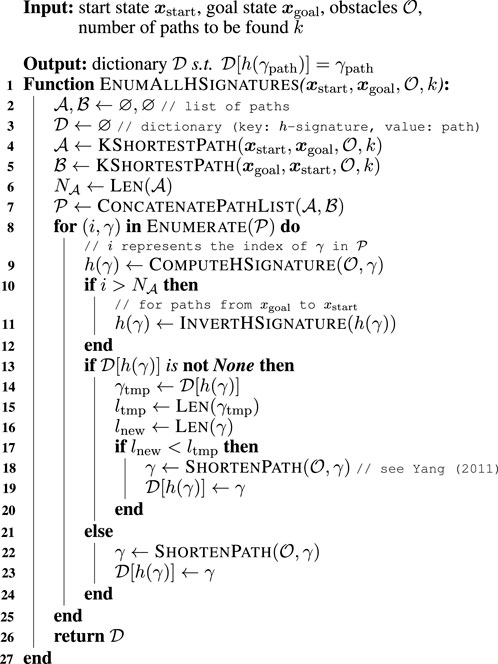

4.1 Step one—Enumerating

The objective of this step is to enumerate all possible

The algorithm initializes two lists

The next step is to determine the shortest path for each

In the

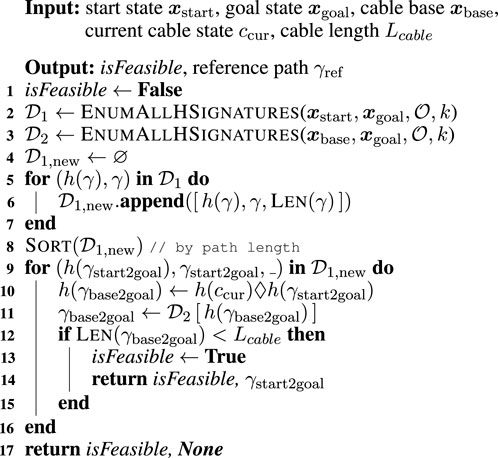

4.2 Step two—Computing reference path

Algorithm 1 allows us to find the possible kinds of

Algorithm 2 first initializes a Boolean flag with false that checks for the existence of a reachable path to the target position with consideration of cable placement and length (line 1). When this flag is returned as false, it means that the goal is too far away for the length of the cable. This algorithm then enumerates the

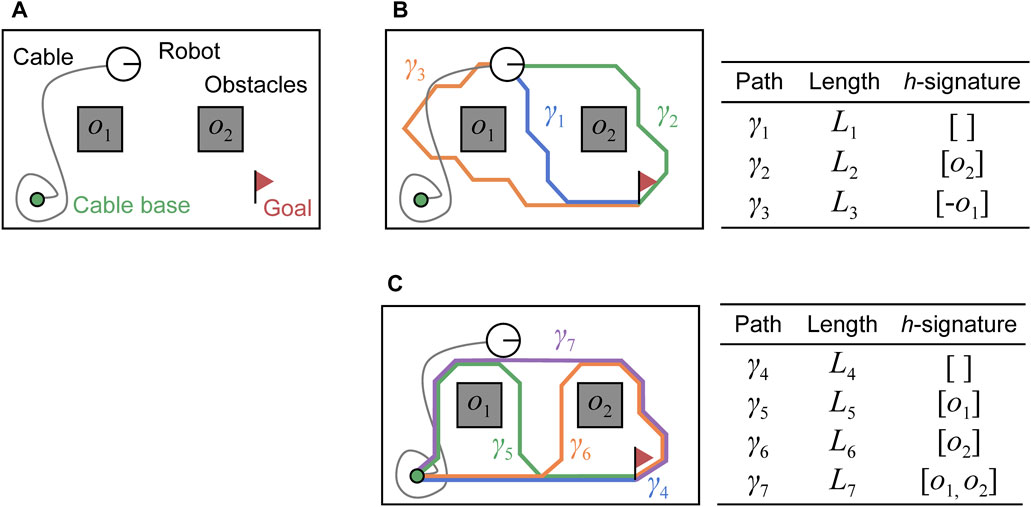

Figure 3. Example of paths stored in two dictionaries

The next step is to find the shortest path in the dictionary

After sorting the list

We explain the process in lines 9–15 using the visualized example in Figure 3. In line 9, we first retrieve the elements with shortest path from

If all paths in the list

4.3 Step three—training agent

The reward function includes two terms: the pure RL term,

The reward function,

The reward

The exact value of the positive/negative rewards was determined through experiments. In this study, we set

The reward function

Let

where

5 Simulation model

We present a tethered robot model that uses a kinematics-based robot with a position-based cable. Although this model lacks mechanical fidelity, it offers computational efficiency, which is useful for iterative RL simulations.

5.1 Kinematics-based robot model

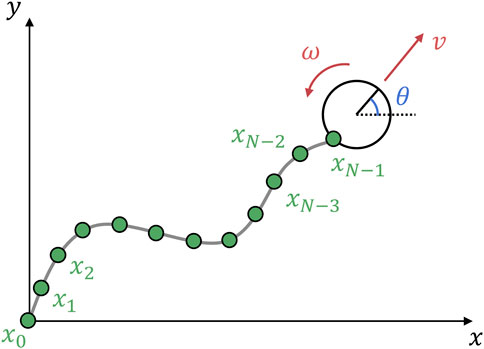

In this study, we used the velocity motion model (Thrun, 2002) (Figure 4). The command velocity can be input directly into the model. The robot state is defined as

Figure 4. Tethered mobile robot model used in this study: kinematics-based robot model and position-based cable model. The one end of the cable

5.2 Position-based cable model

A cable is modeled as a chain of nodes; when one cable node is pulled, the rest of the nodes follow. This method is called a geometry- or position-based model and is often used in computer graphics. We extended the model in Brown et al. (2004) for a tethered mobile robot to update the cable nodes from two end nodes: 1) the propagation of the robot motion to the cable, and 2) the application of the fixed node constraint to the entire cable nodes. On the first side, from the robot motion to the cable nodes, the node positions are updated as follows:

For the second side, the node positions are updated using

Equation 7 updates in the opposite direction to Equation 6 and propagates zero displacement because the endpoint

5.3 Contact detection

To detect cable-obstacle contact, we use the Liang-Barsky algorithm (Liang and Barsky, 1984), which is a line clipping algorithm. Although this algorithm originally identifies the overlaps between a rectangle and a line segment, it can also be used for contact detection. The line segment with the end points

To detect cable-robot contact, we compute the distance between the center of the robot,

where

6 Simulation results and discussion

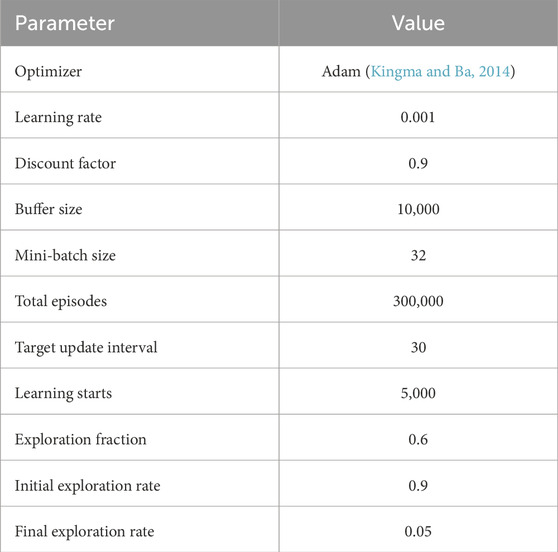

This section evaluates the proposed method in the two distinct scenarios explained in Section 3: unreeling the cable (Scenario 1) and handling the deployed cable (Scenario 2). All simulations were executed on an Intel(R) Core(TM) i7-12700 CPU clocked at 2.10 GHz, NVIDIA GeForce RTX 3070, Python 3.8, and PyTorch 1.13. A single learning with 300,000 total timesteps, as defined in stable-baselines3 (Raffin et al., 2021), required approximately 8 min.

6.1 RL environment

We formulate the TCFPP as an MDP expressed by the tuple:

The Deep Q-Network used in this study comprises the main and target networks with a three-layer neural network structure. The state of the robot is processed in the input layer, followed by two middle layers of 256 units each with ReLU activation, and finally, an output layer that maps to the robot’s actions. The termination condition for an episode is two-fold: reaching the goal and colliding with an obstacle. The agent receives a positive reward,

6.2 Experimental setup

We tested our algorithm in three environments: Dots, Two bars, and Complex environments (Figure 6). These datasets were inspired by Bhardwaj et al. (2017) and serve different purposes:

• The Dots maps represent cluttered environments in which an agent must consider multiple path patterns in the sense of homotopy classes.

• The Two bars maps represent simplified indoor environments with two rooms separated by a narrow passage, in which the agent can take multiple path patterns in the sense of geometry (not homotopy). The focus is on how distant from the obstacles the agent chooses a path.

• The Complex maps represent unstructured indoor environments with various numbers and shapes of obstacles. In these environments, the agent must consider both homotopy and geometry, which makes the path-finding task complex.

In this study, we manually defined five environments for each type and validated our proposed method in 20 cases by considering the initial cable configuration.

The generation of initial cable placements presents unique challenges for Scenario 2 because the obstacles must be considered. In this study, we generated a reasonable initial cable configuration by following a sequential process: 1) run a simulation in the unreeling cable scenario (Scenario 1); 2) record the final robot and cable placements; 3) retrieve the recorded placements and set them as the initial setting in the handling deployed cable scenario (Scenario 2); 4) assign the next goal position and start the next simulation; 5) repeat ii) to 6) until the completion of the specified number of simulations.

To evaluate contact avoidance, we used the following metrics: path length

6.3 Results

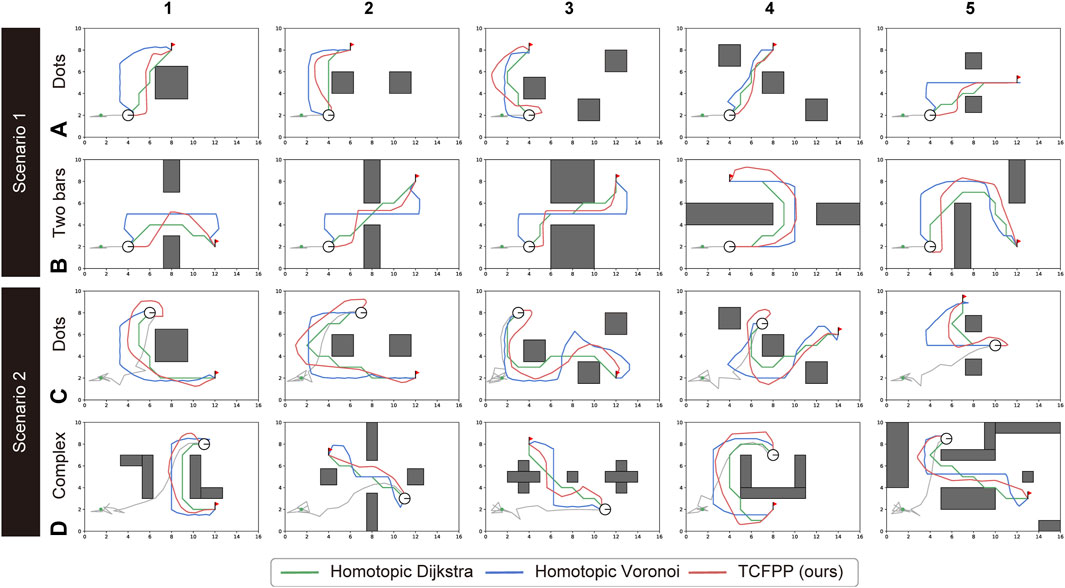

We compared our proposed method (TCFPP) with two classical path planning methods augmented with

The reason we provide

6.3.1 Qualitative evaluation on path similarity

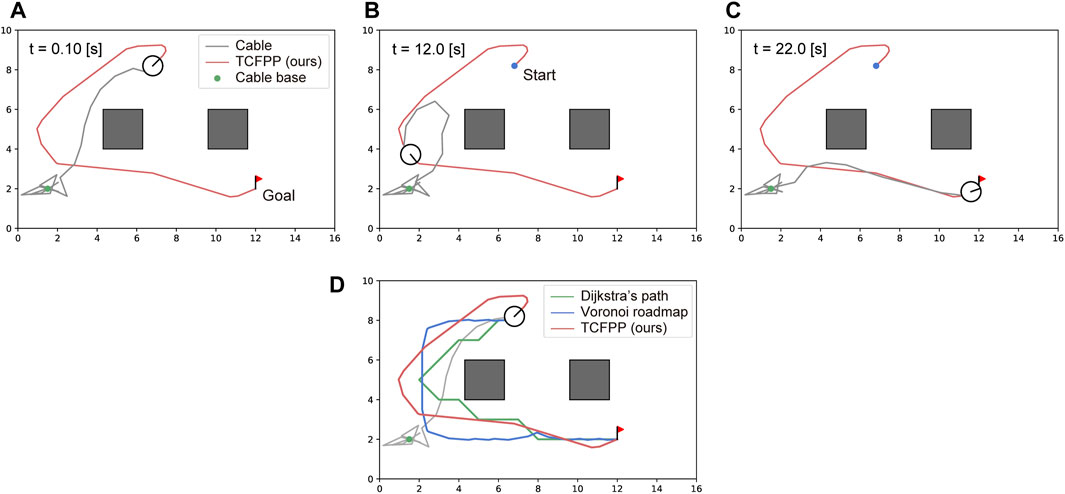

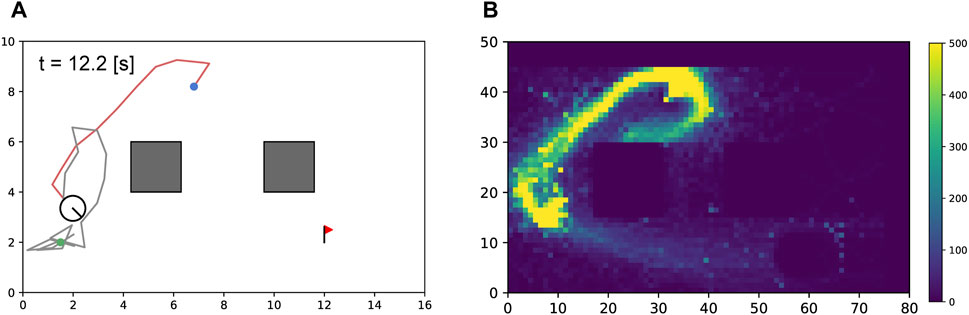

Figure 5 shows snapshots of a typical path tracking simulation and the path similarity with the baselines. Figures 5A–C show the sequential movement of the robot that follows the path obtained by TCFPP. Figure 5D shows the paths of the three methods with the same

Figure 5. Typical simulation result. (A–C) The snapshots of path tracking generated by our method are shown. The circular robot, whose line segment represents its heading, is connected with the environment via a cable (gray line) at a point (green dot). The red line represents the path generated by our method, which connects the start point (blue dot) and goal point (red flag). (D) The visual comparison of the generated path by TCFPP with two classical planning methods is depicted.

Figure 6. Path comparison for all 20 configurations (5 maps × 2 environment types × 2 scenarios). The two types of environments for Scenario 1 are (A) and (B), and for Scenario 2, they are (C) and (D). The three path planning algorithms shared the best homotopy class of the paths; however, they generated different paths with respect to the path shape, path length, and proximity to the obstacles. The green lines represent the Homotopic Dijkstra’s algorithm, the blue lines represent the Homotopic Voronoi roadmap, and the red lines represent the proposed method.

6.3.2 Quantitative evaluation on contact avoidance

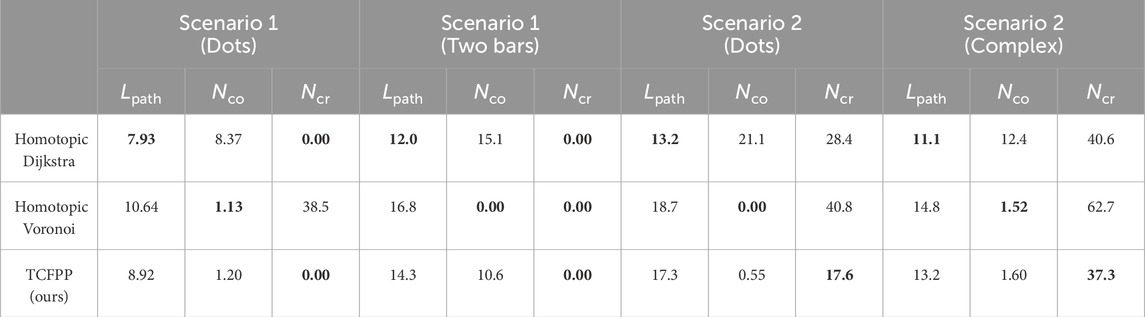

Table 2 presents a comparison of the quantitative results of the three metrics: path length

Table 2. Quantitative results over five simulations in each case. The values are the averages of five simulations (best in bold). The metrics

6.3.3 Sensitivity study on penalty for cable-robot contact

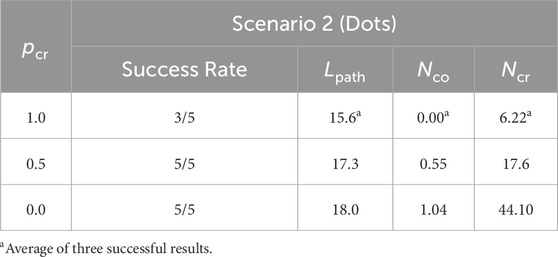

The proposed reward design was not based on physical parameters; therefore, it was difficult to understand intuitively whether the values and weights of the rewards were appropriate. Therefore, we evaluated the impact of the penalties,

Table 3. Sensitivity study on the penalty value for cable-robot contact. This penalty can adjust whether the agent can or cannot step over the cable. When

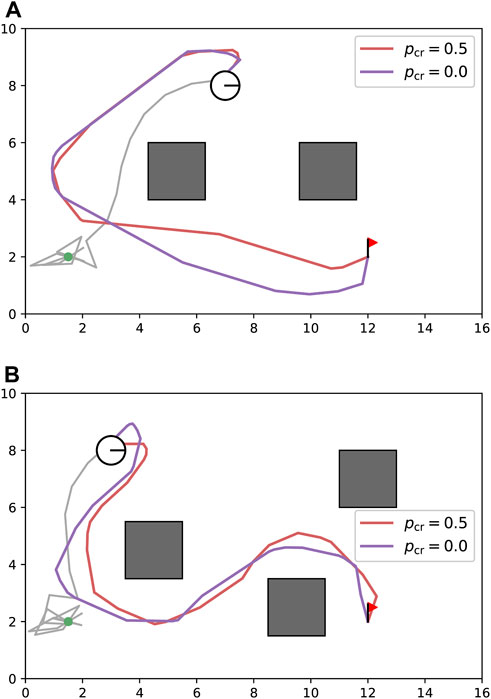

Figure 7. Sensitivity study on cable-robot penalty

For

Figure 8. Sensitivity study on cable-robot penalty

6.3.4 Ablation study on reference path

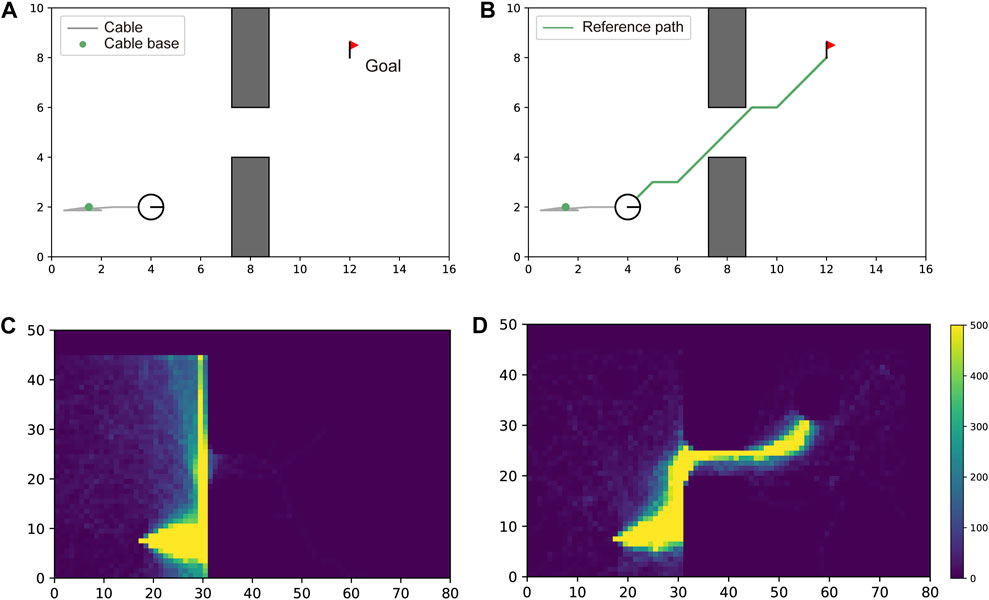

The proposed reward design has two terms: a pure RL term and a reference path term, as presented in Equation 1. We evaluated the effectiveness of providing information about the reference path to the agent. When we do not use the reference path information, we simply eliminate the second term of the reward function

Figure 9. Effectiveness of reference path for learning efficiency. In (A, C), the robot could not traverse the gap without guidance of a reference path. The number of times the agent visited each cell of the map during the training process is visualized as a heatmap. This heatmap shows the lack of experience of the agent in reaching the goal. In (B, D), the robot successfully traversed the gap with guidance from the reference path (green line). The heatmap shows the efficient search along the path.

6.3.5 Limitations and possible extensions

Although the proposed method demonstrated successful improvements in cable-robot and cable-obstacle avoidance in a specific environment, a versatile policy was not obtained. A possible extension would be to use curriculum learning (Soviany et al., 2022), where the learning environment gradually becomes more difficult as the training progresses. The key challenge is expected to be the formulation of navigational difficulties for a tethered mobile robot. To this end, considering realistic parameters of specific hardware and work environments would help. The parameters are, for example, the wheel diameter of the robot and cable thickness, which are crucial for accurately modeling cable overstepping; additionally considering cable-obstacle and cable-ground friction to more realistically simulate cable and obstacle interactions, e.g., displacement of lightweight obstacles by the cable.

7 Conclusion

This paper presented TCFPP, a tangle- and contact-free path planning for a tethered mobile robot that minimizes the cable-obstacle and cable-robot interactions with cable length constraints. Path planning for a tethered mobile robot is a challenging task due to the underactuated nature of the cable and due to the interactions between the cable, the robot, and the obstacles that potentially endanger the safe operation of the robot. We formulated this task as a reinforcement learning problem and trained the agent in simulation with Deep Q-Network. Our reward function consisted of two main parts: the first was to give penalties against cable-obstacle and cable-robot contacts, and the second was to give a penalty against the deviation from the precomputed reference path and to give a reward for the motion along the path. This reward design enabled finding paths that minimize the cable-obstacle and cable-robot contacts while efficiently searching in the vicinity of the reference path. The proposed method was tested in the two distinct scenarios—unreeling cable and handling deployed cable. Compared with classical path planning algorithms, the proposed method generated a path that balances its shortness with the avoidance of cable-obstacle and cable-robot contacts.

Future work will focus on incorporating curriculum learning to enhance the adaptability of the policy to various environments and on integrating realistic parameters such as wheel diameter and cable thickness. These factors can enable the robot to acquire a versatile policy, and further enhance the safety of tethered robot deployment in real-world scenarios.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author contributions

RS: Conceptualization, Data curation, Investigation, Methodology, Writing–original draft, Writing–review and editing, Software, Validation, Visualization. GI: Funding acquisition, Supervision, Writing–original draft, Writing–review and editing.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Abad-Manterola, P., Nesnas, I. A., and Burdick, J. W. (2011). “Motion planning on steep terrain for the tethered axel rover,” in 2011 IEEE international Conference on Robotics and automation (IEEE), 4188–4195.

Bhardwaj, M., Choudhury, S., and Scherer, S. (2017). Learning heuristic search via imitation. arXiv. arXiv:1707.03034 [preprint].

Brass, P., Vigan, I., and Xu, N. (2015). Shortest path planning for a tethered robot. Comput. Geom. 48, 732–742. doi:10.1016/j.comgeo.2015.06.004

Brown, J., Latombe, J.-C., and Montgomery, K. (2004). Real-time knot-tying simulation. Vis. Comput. 20, 165–179. doi:10.1007/s00371-003-0226-y

Coulter, R. C. (1992). Implementation of the pure pursuit path tracking algorithm. Tech. Rep. Carnegie-Mellon UNIV Pittsburgh PA Robotics INST.

Dijkstra, E. (1959). A note on two problems in connexion with graphs. Numer. Math. 1, 269–271. doi:10.1007/bf01386390

Igarashi, T., and Stilman, M. (2010). “Homotopic path planning on manifolds for cabled mobile robots,” in Algorithmic foundations of robotics IX (Springer), 1–18.

Kim, S., Bhattacharya, S., and Kumar, V. (2014). “Path planning for a tethered mobile robot,” in 2014 IEEE international Conference on Robotics and automation (IEEE), 1132–1139.

Kim, S., and Likhachev, M. (2015). “Path planning for a tethered robot using multi-heuristic a with topology-based heuristics,” in 2015 IEEE/RSJ international Conference on intelligent Robots and systems (IEEE), 4656–4663.

Kingma, D. P., and Ba, J. (2014). Adam: a method for stochastic optimization. arXiv preprint arXiv:1412.6980.

Liang, Y.-D., and Barsky, B. A. (1984). A new concept and method for line clipping. ACM Trans. Graph. 3, 1–22. doi:10.1145/357332.357333

Lozano-Pérez, T., and Wesley, M. A. (1979). An algorithm for planning collision-free paths among polyhedral obstacles. Commun. ACM 22, 560–570. doi:10.1145/359156.359164

Martz, J., Al-Sabban, W., and Smith, R. N. (2020). Survey of unmanned subterranean exploration, navigation, and localisation. IET Cyber-Systems Robotics 2, 1–13. doi:10.1049/iet-csr.2019.0043

Mnih, V., Kavukcuoglu, K., Silver, D., Rusu, A. A., Veness, J., Bellemare, M. G., et al. (2015). Human-level control through deep reinforcement learning. nature 518, 529–533. doi:10.1038/nature14236

Nagatani, K., Kiribayashi, S., Okada, Y., Otake, K., Yoshida, K., Tadokoro, S., et al. (2013). Emergency response to the nuclear accident at the fukushima daiichi nuclear power plants using mobile rescue robots. J. Field Robotics 30, 44–63. doi:10.1002/rob.21439

Ota, K., Jha, D. K., Oiki, T., Miura, M., Nammoto, T., Nikovski, D., et al. (2020). Trajectory optimization for unknown constrained systems using reinforcement learning. arXiv Prepr. arXiv:1903.05751. doi:10.48550/arXiv.1903.05751

Raffin, A., Hill, A., Gleave, A., Kanervisto, A., Ernestus, M., and Dormann, N. (2021). Stable-baselines3: reliable reinforcement learning implementations. J. Mach. Learn. Res. 22 (268), 1–8. doi:10.5555/3546258.3546526

Sahin, A., and Bhattacharya, S. (2023). Topo-geometrically distinct path computation using neighborhood-augmented graph, and its application to path planning for a tethered robot in 3d. arXiv Prepr. arXiv:2306.01203. doi:10.48550/arXiv.2306.01203

Schreiber, D. A., Richter, F., Bilan, A., Gavrilov, P. V., Lam, H. M., Price, C. H., et al. (2020). Arcsnake: an archimedes’ screw-propelled, reconfigurable serpentine robot for complex environments. IEEE International Conference on Robotics and Automation ICRA, 7029–7034.

Shapovalov, D., and Pereira, G. A. (2020). “Exploration of unknown environments with a tethered mobile robot,” in 2020 IEEE/RSJ international Conference on intelligent Robots and systems (IEEE), 6826–6831.

Shimada, R., and Ishigami, G. (2023). “Path planning with cable-obstacles avoidance for a tethered mobile robot in unstructured environments,” in Proceedings of the 34th international symposium on space technology and science. 2023-k-04).

Soviany, P., Ionescu, R. T., Rota, P., and Sebe, N. (2022). Curriculum learning: a survey. Int. J. Comput. Vis. 130, 1526–1565. doi:10.1007/s11263-022-01611-x

Werner, M., and Feld, S. (2014). “Homotopy and alternative routes in indoor navigation scenarios,” in 2014 international Conference on indoor Positioning and indoor navigation (IEEE), 230–238.

Xavier, P. G. (1999). “Shortest path planning for a tethered robot or an anchored cable,” in Proceedings 1999 IEEE international Conference on Robotics and automation (IEEE), 2, 1011–1017.

Yang, K. (2011). Anytime synchronized-biased-greedy rapidly-exploring random tree path planning in two dimensional complex environments. Int. J. Control Automation Syst. 9, 750–758. doi:10.1007/s12555-011-0417-7

Keywords: tethered mobile robot, path planning, homotopy class, reinforcement learning, deep Q-network

Citation: Shimada R and Ishigami G (2024) Tangle- and contact-free path planning for a tethered mobile robot using deep reinforcement learning. Front. Robot. AI 11:1388634. doi: 10.3389/frobt.2024.1388634

Received: 20 February 2024; Accepted: 24 July 2024;

Published: 02 September 2024.

Edited by:

Giovanni Iacca, University of Trento, ItalyCopyright © 2024 Shimada and Ishigami. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Ryuki Shimada, cnl1a2lzaGltYWRhMjE4QGtlaW8uanA=

Ryuki Shimada

Ryuki Shimada Genya Ishigami

Genya Ishigami