- 1Naval Research Laboratory, Stennis Space Center, MS, United States

- 2Naval Research Laboratory, Washington, DC, United States

Game theory offers techniques for applying autonomy in the field. In this mini-review, we define autonomy and briefly overview game theory, with a focus on Nash and Stackelberg equilibria and social dilemmas. We then discuss successful projects that apply game-theoretic approaches to several kinds of autonomous systems.

1 Introduction: Autonomy and game theory

Autonomous systems are designed with tools to respond to situations that were not anticipated during design, e.g., through decisions, self-directed behavior, and acting as human proxies [12]. Like their human counterparts, autonomous systems are likely to follow rules (e.g., laws and commanders’ intents). This short review paper is intended to illustrate how game theory can be effectively used in representative autonomous systems.

Game theory is the study of the ways in which interacting choices of agents produce outcomes with respect to the preferences (or utilities) of those agents, where the outcomes in question might have been intended by none of the agents [21].

A game represents situations in which at least one agent or player acts to maximize its utility through anticipating the responses to its actions by one or more other agents. The game provides a model of interactive situations among rational players. The key to game theory is that one player’s payoff relies on the strategy used by the other player. The structure of a game includes players and their preferences, the strategies available, and outcomes of the strategies [32].

In the interaction of rational agents [3], non-cooperative game theory is an approach often used to obtain intended objectives. The strategic game is the most widely used non-cooperative game. In this game, only the strategies and the outcomes available from a combination of choices are incorporated.

The strategy of an agent specifies the procedure by which a player chooses their actions. A solution concept is a well-specified set of rules used to predict how a game will develop. For example, the Nash equilibrium is a solution concept: a game is in Nash equilibrium when each agent has chosen what it views as the best response to the other agents’ actions, so that no agent has an incentive to deviate from its selected action [23].

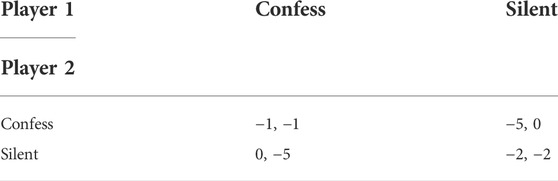

The strategic (or normal form) game is typically represented by a matrix that shows the players, strategies, and payoffs (Table 1). Equivalently, it can be represented by a function that associates a payoff for each player with every possible combination of actions. In a two-player game matrix, one player chooses the row and the other chooses the column; the numbers of rows and columns give the number of strategies available to each player, two each in Table 1. Each row/column intersection contains the payoffs as a pair of values: the first is the payoff for the row player and the second is the payoff for the column player. A game is in normal form when the players act simultaneously, or at least when each acts without knowledge of the other players’ actions.

For example, in the prisoner’s dilemma [18], each prisoner can either “confess” or be “silent”. If exactly one prisoner confesses, that prisoner’s sentence is reduced and the other prisoner receives a longer sentence. If both confess, both receive sentences shorter than that longer sentence. Hence we see that silent is strictly dominated by confess. This can be seen in Table 1 by comparing the row player’s payoffs (the first numbers) within each column, in this case 0 > −1 and −2 > −5. This comparison shows that no matter what the column player chooses, the row player does better by choosing confess. Likewise, comparing the column player’s payoffs (the second numbers) within each row gives the same values: 0 > −1 and −2 > −5. This shows that no matter what the row player chooses, the column player does better by choosing confess. Therefore the unique Nash equilibrium of this game is (confess, confess), even though both prisoners would be better off if both stayed silent.
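The dominance and equilibrium checks for a two-by-two game can be sketched in a few lines of Python. Table 1 is only available as an image here, so the payoff values below are an assumption: they are the standard textbook prisoner's dilemma payoffs, chosen to be consistent with the comparisons 0 > −1 and −2 > −5 quoted above. The function names are illustrative.

```python
from itertools import product

ACTIONS = ["silent", "confess"]

# Assumed payoffs (row player, column player); standard textbook values
# consistent with the comparisons 0 > -1 and -2 > -5 quoted in the text.
PAYOFFS = {
    ("silent", "silent"): (-1, -1),
    ("silent", "confess"): (-5, 0),
    ("confess", "silent"): (0, -5),
    ("confess", "confess"): (-2, -2),
}


def strictly_dominates(payoffs, actions, a, b):
    """True if row action a beats row action b against every column action."""
    return all(payoffs[(a, c)][0] > payoffs[(b, c)][0] for c in actions)


def pure_nash_equilibria(payoffs, actions):
    """Return every action pair from which neither player gains by deviating alone."""
    equilibria = []
    for row, col in product(actions, repeat=2):
        row_payoff, col_payoff = payoffs[(row, col)]
        row_ok = all(payoffs[(r, col)][0] <= row_payoff for r in actions)
        col_ok = all(payoffs[(row, c)][1] <= col_payoff for c in actions)
        if row_ok and col_ok:
            equilibria.append((row, col))
    return equilibria


print(strictly_dominates(PAYOFFS, ACTIONS, "confess", "silent"))
print(pure_nash_equilibria(PAYOFFS, ACTIONS))
```

Under these assumed payoffs, confess strictly dominates silent for the row player, and the only pure-strategy equilibrium is mutual confession.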

2 Robotic applications

Several projects have considered the use of game theory for applications in robotics. In a cooperative situation, robots agree on strategies that may involve sacrifices by all in order to lower the overall cost while still achieving their goals. In a non-cooperative situation, every robot must take into account that the other robots are also trying to achieve their goals independently. An equilibrium solution occurs when each robot selects its actions by taking into account the possibility that other robots will perform operations in conflict with its goals.

One example is the autonomous coordination of two robots in a complicated set of corridors. The robots have independent initial and goal locations. Conflicts arise when the robots need to occupy the same corridor at the same time while traversing their optimal paths. Game theory provides a solution suitable for both robots, although the resulting choices may be less than optimal for an individual robot [19].

A multi-robot searching task can be modeled as a multiplayer cooperative nonzero-sum game. The robotic players choose their strategies simultaneously at the beginning of the game. Although the overall searching process is dynamic, it can be treated as a sequence of static games, one at each discrete point in time, so the players must solve a nonzero-sum static game for every discrete interval. This treatment applies when observations by the other team are available with some conditional probability.

Specifically, a game theory-based strategic searching approach has been developed for cooperation in a multi-robot system performing a searching task. To account for the interactions between robots, the utility function was estimated by dynamic programming from the a priori probability map, the travel costs, and the other robots’ current states. Based on this utility function, a utility matrix was developed for an N-robot nonzero-sum game, in which both pure Nash and mixed-strategy equilibria were applied to guide the robots’ decisions [22].

A distributed decision-making approach to the problem of control effort allocation to robotic team members in a warehouse has been designed [27]. In this approach, coordination of the robotic team in completing a task in an efficient manner was the objective. A controller design methodology was developed which allowed the robot team to work together based on game theoretic learning algorithms using fictitious play and extended Kalman filters. In particular, each robot of the team predicts the other robots’ planning actions while making decisions to maximize its own expected reward that is dependent on the reward for joint completion of the task. The algorithm was successfully tested on collaborations for material handling and for patrolling robots in warehouses.
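As a rough illustration of the fictitious-play idea described above, the sketch below has two robots repeatedly choose between two tasks. Each robot tracks how often the other has picked each task and best-responds to that empirical frequency. The reward matrix, the uniform prior counts, and the function names are hypothetical, and the extended Kalman filter component of [27] is omitted entirely.

```python
# Hypothetical common team reward: REWARD[a0][a1] is the reward when
# robot 0 picks task a0 and robot 1 picks task a1.
REWARD = [[2.0, 5.0],
          [4.0, 0.0]]


def best_response(expected):
    """Index of the largest expected reward (the first index wins ties)."""
    return max(range(len(expected)), key=lambda a: expected[a])


def fictitious_play(reward, rounds=20):
    """Run fictitious play and return the list of joint actions played."""
    n0, n1 = len(reward), len(reward[0])
    # counts[a] = how often a robot has been seen playing a (prior count of 1 each).
    counts0 = [1.0] * n0
    counts1 = [1.0] * n1
    history = []
    for _ in range(rounds):
        belief0 = [c / sum(counts0) for c in counts0]  # robot 1's belief about robot 0
        belief1 = [c / sum(counts1) for c in counts1]  # robot 0's belief about robot 1
        exp0 = [sum(reward[a0][a1] * belief1[a1] for a1 in range(n1)) for a0 in range(n0)]
        exp1 = [sum(reward[a0][a1] * belief0[a0] for a0 in range(n0)) for a1 in range(n1)]
        a0, a1 = best_response(exp0), best_response(exp1)
        counts0[a0] += 1
        counts1[a1] += 1
        history.append((a0, a1))
    return history


history = fictitious_play(REWARD)
print(history[:3], history[-1])
```

With this matrix the robots miscoordinate in the first round and then settle on the joint action with the highest team reward, which is the kind of convergence the learning scheme relies on.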

In [8], a game theory-based negotiation is used to allocate functions and tasks among multiple robots. After the initial task allocation, a new approach employing utility functions was developed to choose the negotiating robots and construct the negotiation set. The robots have various tasks, and the problem is to assign jobs to them at minimum cost and without conflicts. There are m robots and n tasks, and $x_{ij}$ indicates whether job $J_i$ is allocated to robot $R_j$. The objective is then to minimize the overall cost, where $C_j$ is the maximum cost allowed for $R_j$, $w_{ij}$ is the maximum cost for job $J_i$ assigned to $R_j$, and $\varphi_j$ is a design objective function.
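A small brute-force sketch can make the allocation problem concrete. It is not the negotiation method of [8]. It assumes that each job goes to exactly one robot, that the cost a robot incurs is the sum of w_ij over its assigned jobs, that this sum may not exceed C_j, and that the design objective φ_j is simply the identity. All numbers and the function name are hypothetical.

```python
from itertools import product


def best_allocation(w, capacity):
    """Exhaustively search job-to-robot assignments.

    w[i][j] is the cost of job i on robot j; capacity[j] is the budget C_j.
    Returns (total cost, assignment), where assignment[i] = j means x_ij = 1.
    """
    n_jobs, n_robots = len(w), len(capacity)
    best_cost, best_assign = None, None
    for assign in product(range(n_robots), repeat=n_jobs):
        load = [0.0] * n_robots
        for i, j in enumerate(assign):
            load[j] += w[i][j]
        # Skip allocations that exceed any robot's cost budget.
        if any(load[j] > capacity[j] for j in range(n_robots)):
            continue
        cost = sum(load)  # assumed objective: phi_j is the identity
        if best_cost is None or cost < best_cost:
            best_cost, best_assign = cost, assign
    return best_cost, best_assign


w = [[4, 6],
     [3, 4],
     [7, 2]]
capacity = [5, 9]
print(best_allocation(w, capacity))
```

The cheapest unconstrained choice would put the first two jobs on robot 0, but that exceeds its budget, so the search returns the best feasible allocation instead. Exhaustive search grows exponentially with the number of jobs, which is one motivation for negotiation-based schemes.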

3 Autonomous/self-driving cars

Game theory has been used extensively to control self-driving cars. On the road, the decisions of drivers constantly interact with one another and with the roadway, which makes driving a game-theoretic problem. Accurately planning through road interactions is a safety-critical challenge in autonomous driving [13]. To handle the mutual influence between autonomous vehicles and humans in a computationally feasible way, the authors of [13] used a game structure to build a hierarchical framework. Their design accounted for the complex interactions in lane changing, road intersections and roundabout decisions.

The road decisions of autonomous vehicles interact with the decisions of other drivers and vehicles. Such decisions include passing another car, merging, and accident avoidance. This mutual dependence, best captured by dynamic game theory, creates a strong coupling between the vehicle’s planning and its predictions of other drivers’ behavior. The basic approach in [13] considers one human driver, H, and one autonomous vehicle, A. Their joint state at time t is $x^t$, and it evolves according to

$$x^{t+1} = f\left(x^t, u_A^t, u_H^t\right),$$

where f denotes the joint dynamics and $u_A^t$ and $u_H^t$ are the driving actions of the autonomous vehicle and the human driver, respectively.

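The system must maximize an objective that depends on the evolution of the vehicles over a finite time horizon. The reward function $R_A$ captures specifications of the vehicle’s behavior such as fuel consumption and safety. It is the cumulative return over t = 0:N,

$$R_A\left(x^{0:N}, u_A^{0:N}, u_H^{0:N}\right) = \sum_{t=0}^{N} r_A\left(x^t, u_A^t, u_H^t\right),$$

where $r_A$ denotes the reward accrued at time step t.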
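The cumulative return can be illustrated by rolling the joint state forward and summing a per-step reward. The sketch below is a toy, not the planner of [13]: the one-dimensional positions, the dynamics in `step`, and the gap-penalty reward in `stage_reward` are all hypothetical choices made only to show the structure of the computation.

```python
def step(state, u_a, u_h):
    """Hypothetical joint dynamics: each vehicle advances by its chosen speed."""
    pos_a, pos_h = state
    return (pos_a + u_a, pos_h + u_h)


def stage_reward(state, u_a, u_h):
    """Hypothetical per-step reward for A: progress minus a penalty for a small gap."""
    pos_a, pos_h = state
    gap = abs(pos_a - pos_h)
    return u_a - (10.0 if gap < 2.0 else 0.0)


def cumulative_return(x0, actions_a, actions_h):
    """Sum the per-step reward over the horizon while rolling the state forward."""
    state, total = x0, 0.0
    for u_a, u_h in zip(actions_a, actions_h):
        total += stage_reward(state, u_a, u_h)
        state = step(state, u_a, u_h)
    return total


x0 = (0.0, 5.0)  # A starts 5 units behind H
print(cumulative_return(x0, [2, 2, 2], [1, 1, 1]))  # cautious plan
print(cumulative_return(x0, [3, 3, 3], [1, 1, 1]))  # aggressive plan closes the gap
```

Because the human's actions enter both the dynamics and the reward, the autonomous vehicle cannot evaluate a plan without a prediction of what the human will do, which is the coupling the text describes.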

3.1 Autonomous vehicle lane changes

Another project considers an urban traffic scenario in which an autonomous vehicle needs to determine the level of cooperation of the vehicles in the adjacent lane in order to change lanes [26]. Smirnov and colleagues developed a game theory-based decision-making model for lane changing in congested urban intersections. The model inputs were driving parameters of the vehicles in an intersection before a car stopped completely. For game players to enhance and protect their independent interests, strategies must consider mutual awareness of the situation and the predicted outcomes. The authors reported that non-cooperative dynamic games were the most effective for lane changing.

Differential games were used to design a fully automated lane-changing and car-following control system [35]. The decisions computed for the vehicles under control minimized costs for several undesirable or unexpected situations. The discrete and continuous control variables, for lane changes and accelerations respectively, were evaluated in simulation. To provide optimal lane-changing decisions and accelerations, the authors used both cooperative and non-cooperative controllers.

A mandatory lane-changing decision-making model [2] was designed based on game theory as a two-player nonzero-sum non-cooperative game under incomplete information. Using the Harsanyi transformation [16], the authors restructured the game with incomplete information into a game with imperfect information, covering both traditional and connected environments with complete and incomplete information inputs.
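The Harsanyi transformation can be illustrated with a toy merging game. Uncertainty about the other driver is modeled as a move by nature that draws the lag vehicle's type from a known prior; the merging vehicle then maximizes its expected payoff over types. The sketch below is not the model of [2]: the two types, the prior, the payoffs, and the function names are hypothetical.

```python
from fractions import Fraction
from itertools import product

# Hypothetical prior over the lag vehicle's type (nature's move).
PRIOR = {"cooperative": Fraction(7, 10), "aggressive": Fraction(3, 10)}
MERGER_ACTIONS = ["merge", "wait"]
LAG_ACTIONS = ["yield", "block"]

# Hypothetical payoffs, indexed by (merger action, lag action).
MERGER_PAYOFF = {("merge", "yield"): 3, ("merge", "block"): -4,
                 ("wait", "yield"): 0, ("wait", "block"): 0}
LAG_PAYOFF = {
    "cooperative": {("merge", "yield"): 1, ("merge", "block"): -2,
                    ("wait", "yield"): 1, ("wait", "block"): 0},
    "aggressive": {("merge", "yield"): -1, ("merge", "block"): 1,
                   ("wait", "yield"): 0, ("wait", "block"): 2},
}


def merger_expected(action, lag_strategy):
    """Expected merger payoff, averaging over the lag vehicle's type."""
    return sum(PRIOR[t] * MERGER_PAYOFF[(action, lag_strategy[t])] for t in PRIOR)


def bayesian_nash_equilibria():
    """Enumerate pure strategies; the lag vehicle's strategy maps type to action."""
    equilibria = []
    types = list(PRIOR)
    for m in MERGER_ACTIONS:
        for choice in product(LAG_ACTIONS, repeat=len(types)):
            lag = dict(zip(types, choice))
            merger_ok = all(merger_expected(m, lag) >= merger_expected(alt, lag)
                            for alt in MERGER_ACTIONS)
            lag_ok = all(LAG_PAYOFF[t][(m, lag[t])] >= LAG_PAYOFF[t][(m, alt)]
                         for t in types for alt in LAG_ACTIONS)
            if merger_ok and lag_ok:
                equilibria.append((m, lag))
    return equilibria


print(bayesian_nash_equilibria())
```

In this toy instance the merging vehicle merges even though an aggressive lag vehicle would block, because the prior makes the expected payoff of merging positive.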

3.2 Intersection problems

A decision-making model based on a dynamic non-cooperative game was also used to investigate lane changing in an urban scenario of a congested intersection [29]. The game’s results can be predicted if each vehicle maximizes its payoff in the interaction. For this approach the context proposed was management of traffic with red lights at two-lane road intersections.

In [15] the authors developed an approach to mimic human behavior. In their project, various driving styles were assessed using utility functions involving driving safety, ride comfort and travel efficiency. They used non-cooperative games solved for Stackelberg and Nash equilibria [25]. They concluded that the algorithms developed made proper decisions under different driving situations. They also tested two lane-change scenarios, merging and overtaking, to evaluate the feasibility and effectiveness of the proposed decision-making framework for different human behaviors. Their experimental evaluations showed that decision-making for autonomous vehicles similar to observed human behavior can be achieved using both game theory approaches. In situations modeling ordinary driving styles, the Stackelberg equilibrium reduced the cost value by 20 percent compared with the Nash equilibrium.
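The difference between the two solution concepts can be seen in a small cost game. In a Nash equilibrium both players choose simultaneously, whereas in a Stackelberg equilibrium the leader commits first and the follower best-responds. The cost matrices below are hypothetical and are not taken from [15]; they only show that commitment can lower the leader's cost.

```python
# Hypothetical costs; entry [i][j] applies when the leader plays i and the follower plays j.
LEADER_COST = [[8, 6],
               [9, 7]]
FOLLOWER_COST = [[1, 2],
                 [2, 1]]


def follower_best_response(i):
    """Follower's cost-minimizing reply to leader action i."""
    return min(range(2), key=lambda j: FOLLOWER_COST[i][j])


def stackelberg():
    """Leader commits to the action whose induced follower reply costs it least."""
    i = min(range(2), key=lambda k: LEADER_COST[k][follower_best_response(k)])
    return i, follower_best_response(i)


def pure_nash():
    """All action pairs where neither player can lower its own cost alone."""
    equilibria = []
    for i in range(2):
        for j in range(2):
            leader_ok = all(LEADER_COST[i][j] <= LEADER_COST[k][j] for k in range(2))
            follower_ok = all(FOLLOWER_COST[i][j] <= FOLLOWER_COST[i][k] for k in range(2))
            if leader_ok and follower_ok:
                equilibria.append((i, j))
    return equilibria


nash = pure_nash()
leader_first = stackelberg()
print(nash, [LEADER_COST[i][j] for i, j in nash])
print(leader_first, LEADER_COST[leader_first[0]][leader_first[1]])
```

Here the leader's action 0 is dominant in the simultaneous game, yet committing to action 1 steers the follower to a reply that leaves the leader better off.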

A model of cooperative behavior strategies in conflict situations between autonomous vehicles in roundabouts has used game theory [4]. Roundabouts promote a more efficient and continuous flow of traffic, moving vehicles through an intersection quickly and with less congestion. They can be managed more effectively using cooperative decisions by autonomous vehicles, which leads to shorter waiting times and more efficient traffic control while following all traffic regulations.

For roundabouts, well defined rules of the road dictate how autonomous vehicles should interact in traffic [17]. A game strategy based on the prisoner’s dilemma has been used [4] for such roadways. The entry problem for roundabouts has been solved using non-zero sum games to yield shorter waiting intervals for each individual car.

3.3 Open road autonomy

For autonomous vehicles in uncomplicated environments with few interactions, mapping and planning are well developed [28]. The unresolved problems lie in the complexity of human interactions on the open road. One model addresses negotiation between an autonomous vehicle and another vehicle at an unsigned intersection or (equivalently) with a pedestrian at an unsigned road crossing (i.e., jaywalking), using a discrete sequential game [14]. In this model, if only car location indicates intent, the optimal behavior for both vehicles involves a non-zero collision probability. The authors also concluded that, to reduce the probability of collisions, alternative forms of control and signal usage should be considered for autonomous vehicles.

4 Aerial and underwater autonomous vehicles

A game theoretic real time planning approach for an autonomous vehicle (e.g., an aerial drone) for competitive races against several opponents over a race course while accounting for opponents’ decisions has been developed [34]. It uses an iterative best-response scheme with a sensitivity term to find approximate Nash equilibria in the space of multiple robot trajectories. The sensitivity term develops Nash equilibria that provide an advantage to individual robots. Through extensive multi-player racing simulations, where the planner exhibits rich behaviors like blocking, overtaking, nudging or threatening, it demonstrated behaviors similar to human racers.

Modeling the interactions of risk-sensitive agents is important for producing more realistic and efficient agent behavior. During interactions, the extent to which agents perform risky maneuvers is not determined solely by their own risk tolerance; it also depends on the risk sensitivity of their opponents. Agent interactions involving risk were modeled in a game-theoretic framework [20]. By being aware of the underlying risks during interactions, agents behave more safely by keeping a greater distance from other agents [33]. Anticipating feedback in game-theoretic interactions leverages other agents’ risk awareness to plan safe and time-efficient trajectories.

An important related issue is that the use of “best responses” may have a downside: in some situations, cooperation can involve violating certain ethical rules [10], which has recently engendered discussions about self-driving cars and autonomous weapons. A related question is whether user stress has an effect. It has been shown that even under increased cognitive load, such as during stressful situations, individuals are generally honest [24], and this can be significant in game-theoretic modeling of interactions between humans and autonomous systems. Such issues should be considered in system designs, as autonomous systems and their interactions with humans will become ever more varied and numerous.

4.1 Applications of unmanned aerial vehicles

Autonomous unmanned aerial vehicles (UAVs) can be tasked to search an unknown or uncertain environment and neutralize targets perceived as threats. This problem can be formulated in a game-theoretic framework: a UAV that detects multiple such targets needs to decide which target to neutralize, given uncertainty over the decisions of its opponents [5]. Bardhan and colleagues use a decentralized game-theoretic solution based on the correlated equilibrium concept that requires local information from the UAVs.

Another game was designed [31] for a swarm of autonomous UAVs, where each UAV is tasked with collecting information from an area of interest. In this setting, the mission is to maximize the amount of information collected by the UAVs. The problem is formulated by dividing the region of interest into discrete cells, each having a potential information value. Each UAV (i.e., player) behaves selfishly, choosing for itself the best of the available paths (i.e., strategies) to fly. Game payoffs are determined using information fusion to aggregate information from multiple UAVs operating at multiple locations. The efficiency of a mission is the ratio of the optimal output to the output of a pure-strategy Nash equilibrium of the corresponding game.
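The efficiency ratio can be computed directly for a toy instance. The sketch below is not the model of [31]: it assumes two UAVs, two single-cell paths, and a simple sharing rule in which a cell's value is split equally among the UAVs that cover it, while the mission output counts each covered cell once. The cell values, path names, and function names are hypothetical.

```python
from itertools import product

CELL_VALUE = {"a": 10.0, "b": 4.0}          # hypothetical information values
PATHS = {"north": {"a"}, "south": {"b"}}    # hypothetical paths and the cells they cover


def payoffs(profile):
    """Each cell's value is split equally among the UAVs whose paths cover it."""
    visits = {}
    for path in profile:
        for cell in PATHS[path]:
            visits[cell] = visits.get(cell, 0) + 1
    return [sum(CELL_VALUE[c] / visits[c] for c in PATHS[path]) for path in profile]


def welfare(profile):
    """Mission output: total value of the cells covered by at least one UAV."""
    covered = set().union(*(PATHS[p] for p in profile))
    return sum(CELL_VALUE[c] for c in covered)


def is_pure_nash(profile):
    """True if no single UAV can raise its own payoff by switching paths."""
    base = payoffs(profile)
    for k in range(len(profile)):
        for alt in PATHS:
            deviated = list(profile)
            deviated[k] = alt
            if payoffs(deviated)[k] > base[k]:
                return False
    return True


profiles = list(product(PATHS, repeat=2))
equilibria = [p for p in profiles if is_pure_nash(p)]
optimum = max(welfare(p) for p in profiles)
worst_equilibrium = min(welfare(p) for p in equilibria)
ratio = optimum / worst_equilibrium
print(equilibria, optimum, worst_equilibrium, ratio)
```

Both UAVs crowd onto the high-value cell at equilibrium, so the mission collects less than it would if they split up, and the ratio exceeds one.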

Stackelberg games can be used to obtain flight routes for UAVs operating in areas where malicious opponents use GPS spoofing attacks to divert UAVs from their chosen flight paths [11]. In a Stackelberg game between a UAV acting as the game leader and a GPS spoofer, the leader chooses a group of UAVs to protect, after which the spoofer determines its actions by observing the choice of the leader. Strategies in this game reflect the ability of each UAV group to estimate its location using the positions of nearby UAVs, allowing it to reach its destination despite ongoing GPS spoofing attacks.

4.2 Autonomous underwater vehicles

Multi-vehicle coordination and cooperation of autonomous underwater vehicles (AUVs) has been formulated with game theory. Very simple games have been used [7] to stably steer an AUV formation to the underwater position that is the best compromise between the target destination of each vehicle and the preservation of communication among all of the vehicles, given the limits on underwater communications.

A specific type of security game is the Stackelberg Security Game [30]. A key concept in this type of game is a leader-follower framework, used here for the strategies of underwater AUV patrols. In the real world, it can be assumed that attackers can learn any security pattern beforehand through reconnaissance and then exploit it. Thus, security patrols must have a certain degree of randomness while maintaining their efficiency. The leader commits to an optimal policy, and the follower finds an optimal policy after observing the leader’s actions. The leader’s policy, x, is a probability distribution over the leader’s pure strategies, where $x_i$ is the proportion of times strategy i is used in the policy. Similarly, q is the follower’s optimal policy and $q_j$ is the proportion of strategy j in response to the leader’s strategy. $R_{ij}$ and $C_{ij}$ are the rewards of the leader and follower, respectively, when the leader commits to strategy i and the follower chooses strategy j [9]. The leader will then solve the following Mixed Integer Quadratic Problem:
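The optimization problem itself is not reproduced in this text. A standard single-follower formulation from the Stackelberg security game literature that matches the symbols defined here is given below; that it is exactly the form used in [9] is an assumption, as is reading the follower's maximum reward a as a real-valued variable.

```latex
\begin{aligned}
\max_{x,\,q,\,a}\quad & \sum_{i \in X} \sum_{j \in Q} R_{ij}\, x_i\, q_j \\
\text{s.t.}\quad & \sum_{i \in X} x_i = 1, \qquad \sum_{j \in Q} q_j = 1, \\
& 0 \le a - \sum_{i \in X} C_{ij}\, x_i \le (1 - q_j)\, M \quad \forall j \in Q, \\
& x_i \in [0, 1], \qquad q_j \in \{0, 1\}, \qquad a \in \mathbb{R}.
\end{aligned}
```

The middle constraint forces the follower's chosen pure strategy (the j with q_j = 1) to attain the maximum reward a against the leader's mixed policy, while leaving the other strategies unconstrained through the large constant M.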

X and Q are index sets of leader’s and follower’s strategies, M is a large positive number and a ∈A is the follower’s maximum reward.
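For a very small game, the leader's commitment problem can be approximated without a mixed-integer solver by searching over mixed policies and letting the follower best-respond to each. The sketch below swaps the Mixed Integer Quadratic Problem for a plain grid search, which is only practical for a handful of strategies. The two-target patrol rewards, the grid resolution, and the tie-breaking rule (ties go in the leader's favor) are assumptions.

```python
from fractions import Fraction

# Hypothetical rewards; entry [i][j]: leader patrols target i, follower attacks target j.
R = [[1, -1],
     [-3, 1]]   # leader
C = [[-1, 1],
     [3, -1]]   # follower


def leader_value(x):
    """Leader's expected reward when the follower best-responds to mixed policy x."""
    follower_payoff = [sum(x[i] * C[i][j] for i in range(2)) for j in range(2)]
    best = max(follower_payoff)
    # Assumed tie-breaking: among the follower's best replies, pick the leader's favorite.
    candidates = [j for j in range(2) if follower_payoff[j] == best]
    return max(sum(x[i] * R[i][j] for i in range(2)) for j in candidates)


def solve(grid=12):
    """Search leader policies (k/grid, 1 - k/grid) with exact rational arithmetic."""
    policies = [(Fraction(k, grid), 1 - Fraction(k, grid)) for k in range(grid + 1)]
    return max(policies, key=leader_value)


x_star = solve()
print(x_star, leader_value(x_star))
print(leader_value((Fraction(1), Fraction(0))))  # best the leader can do without randomizing
```

The randomized patrol does strictly better for the leader than either fixed patrol, which is the reason the text gives for requiring a degree of randomness in security patrols.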

5 Conclusion

We have provided a mini-review, illustrative but not exhaustive, of successful autonomy applications of game theory based on Nash and Stackelberg equilibria and social dilemmas. Recent research developments in game theory can enhance such applications. Mean-field (MF) game theory [6] is a model created to deal with environments in which many participants interact. Standard game theory is typically used to describe how two participants interact with each other, whereas MF game theory describes how one participant deals with a group of others. Because of the complexity of interactions between participants, the original theory was not applicable to large groups, but with mean-field game theory, situations involving large groups become tractable. Another approach is evolutionary game theory (EGT), which focuses on evolutionary dynamics that are frequency dependent [1, 36]: the fitness payoff for a particular phenotype depends on the population composition. Classical game theory focuses largely on the properties of the equilibria of games, whereas a central feature of EGT is its focus on the dynamics of strategies and their composition in a population.
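The frequency-dependent dynamics that evolutionary game theory studies can be illustrated with the replicator equation for the classic Hawk-Dove game. This is a generic textbook sketch rather than a method from the works reviewed here; the resource value V, the fight cost C, the Euler step size, and the function names are assumptions.

```python
# Hawk-Dove payoffs with hypothetical resource value V = 2 and fight cost C = 4.
V, C = 2.0, 4.0
PAYOFF = [[(V - C) / 2, V],   # hawk vs hawk, hawk vs dove
          [0.0, V / 2]]       # dove vs hawk, dove vs dove


def replicator_step(x, dt=0.1):
    """One Euler step of the replicator equation for the hawk fraction x."""
    fitness_hawk = PAYOFF[0][0] * x + PAYOFF[0][1] * (1 - x)
    fitness_dove = PAYOFF[1][0] * x + PAYOFF[1][1] * (1 - x)
    mean_fitness = x * fitness_hawk + (1 - x) * fitness_dove
    # A strategy grows when its fitness exceeds the population average.
    return x + dt * x * (fitness_hawk - mean_fitness)


def simulate(x0, steps=2000):
    """Iterate the replicator step from an initial hawk fraction x0."""
    x = x0
    for _ in range(steps):
        x = replicator_step(x)
    return x


print(simulate(0.1), simulate(0.9))
```

Starting from either a mostly-dove or a mostly-hawk population, the hawk fraction moves toward V / C, so the emphasis is on how the population composition changes over time rather than on the equilibrium alone.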

Author contributions

All authors listed have made a substantial, direct, and intellectual contribution to the work and approved it for publication.

Acknowledgments

The authors would like to thank the Naval Research Laboratory’s Base Program for sponsoring this research.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Alexander J. The stanford encyclopedia of philosophy. In: EN Zalta, editor. Evolutionary game theory (2021). (Summer 2021 Edition). Available at: https://plato.stanford.edu/archives/sum2021/entries/game-evolutionary/.

2. Ali Y, Zheng Z, Haque MM, Wang M. A game theory-based approach for modelling mandatory lane-changing behaviour in a connected environment: A game theory approach. Transportation Res C: Emerging Tech (2019) 106:220–42. doi:10.1016/j.trc.2019.07.011

3. Amadae SM. Rational choice theory. Encyclopedia Britannica (2021). Available at: https://www.britannica.com/topic/rational-choice-theory/.

4. Banjanovic-Mehmedovic L, Halilovic E, Bosankic I, Kantardzic M, Kasapovic S. Autonomous vehicle-to-vehicle (V2V) decision making in roundabout using game theory. Int J Adv Comput Sci Appl (2016) 7:292–8. doi:10.14569/ijacsa.2016.070840

5. Bardhan R, Bera T, Sundaram S. A decentralized game theoretic approach for team formation and task assignment by autonomous unmanned aerial vehicles. In: International conference on unmanned aircraft systems (ICUAS) (2017). p. 432–7.

6. Cardaliaguet P, Porretta A. An introduction to mean field game theory. In: P Cardaliaguet, and A Porretta, editors. Mean field games. Lecture notes in mathematics. Springer Pub (2020). p. 2281.

7. Cococcioni M, Fiaschi L, Lermusiaux PFJ. Game theory for unmanned vehicles path planning in the marine domain: State of the art and new possibilities. J Mar Sci Eng (2021).

8. Cui R, Guo J, Gao B. Game theory-based negotiation for multiple robots task allocation. Robotica (2013) 31:923–34. doi:10.1017/s0263574713000192

9. Dennis S, Petry F, Sofge D. Game theory framework for agent decision-making in communication constrained environments. Unmanned Syst Tech XXIII (2021). 117580P. doi:10.1117/12.2585922

10. Du Y, Ma W, Sun Q, Sai L. Collaborative settings increase dishonesty. Front Psychol (2021) 12:650032. doi:10.3389/fpsyg.2021.650032 (retrieved 4/11/2022).

11. Eldosouky AR, Ferdowsi A, Saad W. Drones in distress: A game-theoretic countermeasure for protecting UAVs against GPS spoofing. IEEE Internet Things J (2019) 7:2840–54. doi:10.1109/jiot.2019.2963337

12. Endsley MR. Human-AI teaming: State-of-the-art and research needs. The National Academies of Sciences, Engineering, and Medicine. Washington, DC: National Academies Press (2021). Available at: https://www.nap.edu/catalog/26355/human-ai-teaming-state-of-the-art-and-research-needs (retrieved 12/27/2021).

13. Fisac J, Bronstein E, Stefansson E, Sadigh D, Sastry S, Dragan A. Hierarchical game-theoretic planning for autonomous vehicles. In: 2019 international conference on robotics and automation, ICRA (2019). p. 9590–6.

14. Fox CW, Camara F, Markkula G, Romano RA, Madigan R, Merat N. When should the chicken cross the road? Game theory for autonomous vehicle-human interactions. In: Proceedings of the 4th international conference on vehicle technology and intelligent transport systems (2018). p. 429–31.

15. Hang P, Lv C, Xing Y, Huang C, Hu Z. Human-like decision making for autonomous driving: A noncooperative game theoretic approach. IEEE Trans Intell Transp Syst (2020) 22:2076–87. doi:10.1109/tits.2020.3036984

16. Hu H, Stuart H. An epistemic analysis of the Harsanyi transformation. Int J Game Theor (2002) 30:517–25. doi:10.1007/s001820200095

17. Isebrands H, Hallmark S. Statistical analysis and development of crash prediction model for roundabouts on high-speed rural roadways. Transportation Res Rec (2012) 2389:3–13. doi:10.3141/2312-01

18. Kuhn S. The stanford Encyclopedia of philosophy (winter 2019 edition). In: E Zalta, editor. Prisoner’s dilemma (2019). Available at: https://plato.stanford.edu/archives/win2019/entries/prisoner-dilemma/.

19. LaValle S, Hutchinson S. Game theory as a unifying structure for a variety of robot tasks. In: Proc 1993 int symp on intelligent control (1993). p. 429–34.

20. Lawless WF, Sofge DA. Risk determination versus risk perception: From misperceived drone attacks, hate speech and military nuclear wastes to human-machine autonomy. Stanford University (2022). Presented at AAAI-Spring 2022. Available at: https://www.aaai.org/Symposia/Spring/sss22symposia.php#ss09.

22. Meng Y. Multi-robot searching using game-theory based approach. Int J Adv Robotic Syst (2008) 5(4):44–350. doi:10.5772/6232

24. Reis M, Pfister R, Foerster A. Cognitive load promotes honesty. Psychol Res (2022). doi:10.1007/s00426-022-01686-8

25. Simaan M, Cruz J. On the Stackelberg strategy in nonzero-sum games. J Optim Theor Appl (1973) 11(5):533–55. doi:10.1007/bf00935665

26. Smirnov N, Liu Y, Validi A, Morales-Alvarez W, Olaverri-Monreal C. A game theory-based approach for modeling autonomous vehicle behavior in congested, urban lane-changing scenarios. Sensors (2021) 21:1523–32. doi:10.3390/s21041523

27. Smyrnakis M, Veres S. Coordination of control in robot teams using game-theoretic learning. IFAC Proc Volumes (2007) 47(3):1194–202. doi:10.3182/20140824-6-za-1003.02504

28. Taeihagh A, Lim H. Governing autonomous vehicles: Emerging responses for safety, liability, privacy, cybersecurity, and industry risks. Transport Rev (2019) 39(1):103–28. doi:10.1080/01441647.2018.1494640

29. Talebpour A, Mahmassani HS, Hamdar SH. Modeling lane-changing behavior in a connected environment: A game theory approach. Transportation Res Proced (2015) 7:420–40. doi:10.1016/j.trpro.2015.06.022

30. Tambe M. Security and game theory: Algorithms, deployed systems, lessons learned. Cambridge University Press (2012).

31. Thakoor O, Garg J, Nagi R. Multiagent UAV routing: A game theory analysis with tight price of anarchy bounds. IEEE Trans Autom Sci Eng (2019) 17:100–16. doi:10.1109/tase.2019.2902360

33. Wang M, Mehr N, Gaidon A, Schwager M. Game theoretic planning for risk-aware interactive agents. In: IEEE/RSJ International Conference on Intelligent Robots and Systems Las Vegas (2020). p. 6998–7005.

34. Wang Z, Spica R, Schwager M. Game theoretic motion planning for multi-robot racing. Distributed Autonomous Robotic Syst (2020) 9:225–38. doi:10.1007/978-3-030-05816-6_16

35. Wang M, Hoogendoorn SP, Daamen W, van Arem B, Happee R. Game theoretic approach for predictive lane-changing and car-following control. Transportation Res Part C: Emerging Tech (2015) 58:73–92. doi:10.1016/j.trc.2015.07.009

Keywords: game theory, robotics, autonomous vehicles, nash equilibrium, self-driving cars

Citation: Dennis S, Petry F and Sofge D (2022) Game theory approaches for autonomy. Front. Phys. 10:880706. doi: 10.3389/fphy.2022.880706

Received: 21 February 2022; Accepted: 18 August 2022;

Published: 11 October 2022.

Edited by:

Víctor M. Eguíluz, Spanish National Research Council (CSIC), Spain

Reviewed by:

Linjie Liu, Northwest A&F University, China

Mendeli Henning Vainstein, Federal University of Rio Grande do Sul, Brazil

Paolo Grigolini, University of North Texas, United States

Copyright © 2022 Dennis, Petry and Sofge. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Fred Petry

†These authors have contributed equally to this work and share first authorship

Steven Dennis1†, Fred Petry and Donald Sofge