- College of Computer Science and Engineering, Weifang University of Science and Technology, Weifang, China

Introduction: Advances in machine vision and mobile electronics will be accelerated by the creation of sophisticated optoelectronic vision sensors that allow for sophisticated picture recognition of visual information and data pre-processing. Several new types of vision sensors have been devised in the last decade to solve these drawbacks, one of which is neuromorphic vision sensors, which have exciting qualities such as high temporal resolution, broad dynamic range, and low energy consumption. Neuromorphic sensors are inspired by the working principles of biological sensory neurons and would be useful in telemedicine, health surveillance, security monitoring, automatic driving, intelligent robots, and other applications of the Internet of Things.

Methods: This paper provides a comprehensive review of various state-of-the-art AI vision sensors and frameworks.

Results: The fundamental signal processing techniques deployed and the associated challenges were discussed.

Discussion: Finally, the role of vision sensors in computer vision is also discussed.

1 Introduction

Vision is the fundamental function of cognitive creatures and agents, responsible for comprehending and seeing their surroundings. The visual system accounts for more than 80% of information in the human perception system, greatly surpassing the total of the auditory, tactile, and other perceptual systems (Picano, 2021). How to create a sophisticated visual perception system for use in computer vision technology and artificial intelligence has long been a research focus in academia and industry (Liu et al., 2019).

A video is a series of still pictures that was created by the advancement of cinema and television technologies. It makes use of the human visual system’s visual persistence phenomena (Hafed et al., 2021). Traditional video has made significant advances in the visual viewing angle in recent years (Zare et al., 2019), but there are drawbacks such as high data sampling redundancy, a small photosensitive dynamic range, low resolution in time domain acquisition, and the ability to produce motion blur in high-speed motion scenes (Sharif et al., 2023). Furthermore, computer vision has been moving in the mainstream direction of “video camera + computer + algorithm = machine vision” (Hossain et al., 2019; Wu and Ji, 2019), but few scholars question the logic of using image sequences (videos) to express visual information, and even fewer scholars question whether this computer vision method can be realized.

The human visual system has the advantages of low redundancy, low power consumption, high dynamics and strong robustness. It can efficiently and adaptively process dynamic and static information, and has strong small sample generalization ability and comprehensive complex scene perception ability (Heuer et al., 2020). Explore the mysteries of the human visual system, and learn from the neural network structure of the human visual system and the processing mechanism of visual information sampling and processing (Pramod and Arun, 2022), to establish a new set of visual information perception and processing theories, technical standards, chips and application engineering systems. The ability to better simulate, extend or surpass the human visual perception system (Ham et al., 2021). This is the intersection of neuroscience and information science, called neuromorphic vision (Najaran and Schmuker, 2021; Martini et al., 2022; Chunduri and Perera, 2023). Neuromorphic vision is a visual perception system that includes hardware development, soft support, and biological neural models. One of its ultimate goals is to simulate the structure and mechanism of biological visual perception (Ferrara et al., 2022) in order to achieve real machine vision.

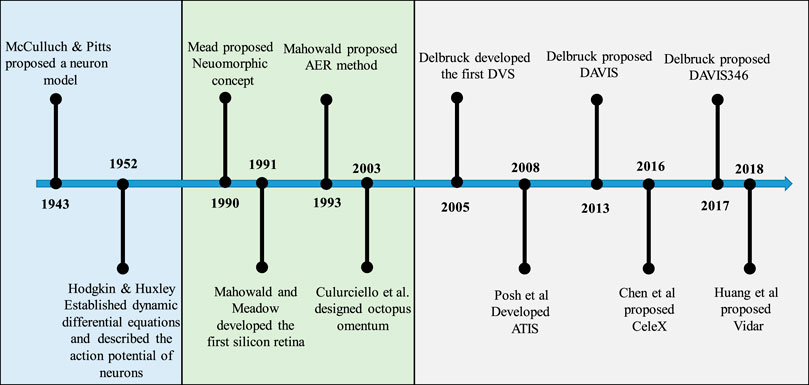

The development of neuromorphic vision sensors is based on scientific and physiologic research on the structure and functional mechanism of biological retinas. A neuron model with computing power was proposed by Alexiadis (Alexiadis, 2019). To describe the neural network, Shatnawi et al. (Shatnawi et al., 2023) established dynamic differential equations for neurons.

The generation and transmission of the action potential is called a spike. A Ph.D. student at Caltech, thought: “The brain is the birthplace of imagination, which makes me very excited. I hope to create a chip for imagining things”. The topic of stereo vision is investigated from the standpoints of science and engineering. The author initially introduced the Neuromorphic idea (Han et al., 2022), employing large-scale integrated circuits to replicate the organic nervous system. The writers featured a moving cat on the cover of “Scientific American” (Ferrara et al., 2022), launching the first silicon retina, which models the biology of cone cells, horizontal cells, and bipolar cells on the retina. It was the formal start of the burgeoning area of neuromorphic vision sensors. Marinis et al. (Marinis et al., 2021) presented Address-Event Representation (AER), a novel form of integrated circuit communication protocol, to overcome the challenge of dense three-dimensional integration of integrated circuits, which enabled asynchronous event readout. Purohit et al. (Purohit and Manohar, 2022) created Octopus Retina, an AER-based integral-discharge pulse model that represents pixel light intensity as frequency or pulse interval. Abubakar et al. (Abubakar et al., 2023) created a Dynamic Vision Sensor (DVS) that represented pixel light intensity changes with sparse spatiotemporal asynchronous events, and its commercialization was a watershed moment. DVS, on the other hand, is unable to capture fine texture images of natural scenes. Oliveria et al. (Oliveria et al., 2021) proposed an asynchronous time-based image sensor (ATIS) and an event-triggered light intensity measurement circuit to reconstruct the pixel grey level as the change occurred. Zhang et al. (Zhang et al., 2022a) created a Dynamic and Active Pixel Vision Sensor (DAVIS), a dual-mode technical route that includes an additional independent traditional image sampling circuit to compensate for DVS texture imaging flaws. It was then expanded to colour DAVIS346 (Moeys et al., 2017). To restore the scene texture, Feng et al. (Feng et al., 2020) increased the bit width of the event and let the event carry the pixel light intensity information output. Auge et al. (Auge et al., 2021) adopted the octopus retina’s principle of light intensity integral distribution sampling and replaced pulse plane transmission with the AER method to conserve transmission bandwidth. They also confirmed that the integral sampling principle can quickly reconstruct scene texture details. That is, the Fovea-like Sampling Model (FSM), also called Vidar, as shown in Figure 1. Neuromorphic vision sensors simulate biological visual perception systems, which have the advantages of high temporal resolution, less data redundancy, low power consumption and high dynamic range, and are used in autonomous driving (Chen L. et al., 2022), unmanned vehicles machine vision fields such as machine vision navigation (Mueggler et al., 2017; Mitrokhin et al., 2019), industrial inspection (Zhu Z. et al., 2022), and video surveillance (Zhang S. et al., 2022) have huge market potential, especially in scenarios involving high-speed motion and extreme lighting. The sampling, processing, and application of neuromorphic vision is another important field of neuromorphic engineering (Wang T. et al., 2022). This activity validates the computational neuroscience’s visual brain model (Baek and Seo, 2022) and is a useful method for examining human intellect. The development of neuromorphic vision sensors is still in its infancy, and more investigation and study are still required to match or even surpass the human visual system’s capacity for perception in intricately interconnected settings (Chen J. et al., 2023).

This paper systematically reviews and summarizes the development process of neuromorphic vision, the neural sampling model of biological vision, the sampling mechanism and types of neuromorphic vision sensors, neurovisual signal processing and feature expression, and vision applications, and looks forward to the future of this field. The major challenges and possible development directions of the research are discussed, and its potential impact on the future field of machine vision and artificial intelligence is discussed.

2 Neuromorphic vision model and sampling mechanism

The technical route of neuromorphic vision is generally divided into three levels: the structural level imitates the retina, the device functional level approaches the retina, and the intelligence level surpasses the retina. If the traditional camera is a simulation of the human visual system, then this bionic retina is only a primary simulation of the functional level of the device. In fact, the traditional camera is far inferior to the perception ability of the human retina in various complex environments in terms of structure, function and even intelligence (Chen et al., 2022b).

In recent years, the “Brain Projects” (Fang and Hu, 2021) in various countries have been successively deployed and launched, and the analysis of brain-like vision from the structural level is one of the important contents to support, mainly through the use of fine analysis and advanced detection technology by neuroscientists to obtain the structure of the basic unit of the retina, functions and their network connections, which provide theoretical support for the device function level approaching the biological visual perception system. The neuromorphic vision sensor starts from the device function level simulation, that is, the optoelectronic nanodevice is used to simulate the biological vision sampling model and information processing function, and the perception system with or beyond the biological vision ability is constructed under the limited physical space and power consumption conditions. In short, neuromorphic vision sensors do not need to wait until they fully understand the analytic structure and mechanism of the retina before simulating. Instead, they learn from the structure-level research mechanism and bypass this more difficult problem, and use simulation engineering techniques such as device function-level approximation. The ability to reach, extend or surpass the human visual perception system.

At present, neuromorphic vision sensors have achieved staged results (Zhou et al., 2019). There are differential visual sampling models that simulate the peripheral sensory motor function of the retina (Jeremie and Perrinet, 2023). There are also integral visual sampling models that simulate the fine texture function of the fovea, such as octopus retina (Purohit and Manohar, 2022), and Vidar (Auge et al., 2021).

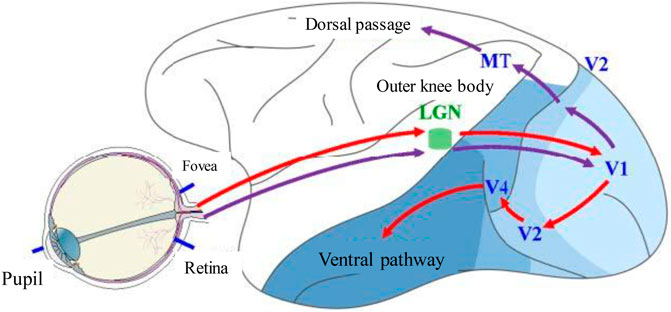

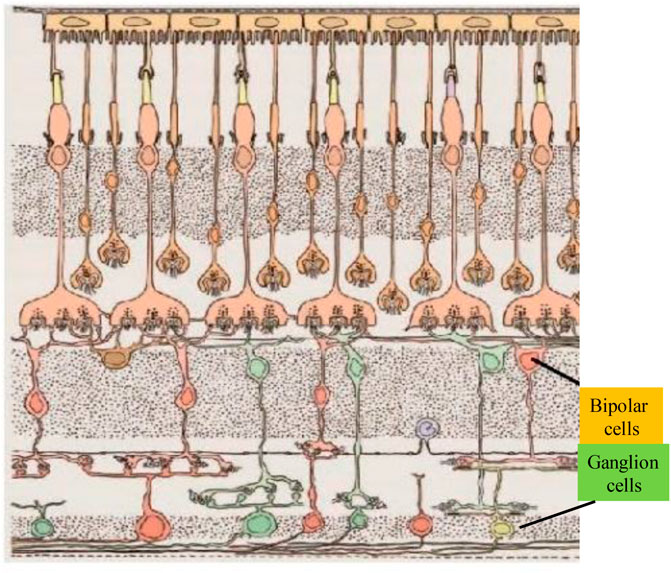

Including photoreceptor cells, bipolar cells, horizontal cells, ganglion cells and other main components (Sawant et al., 2023), as shown in Figure 2. Photoreceptor cells are divided into two types: rod cells and cone cells, which are responsible for converting light signals entering the eye into electrical signals, which are transmitted to bipolar cells and horizontal cells. Cone cells are sensitive to color and are mainly responsible for color recognition, and usually work under the condition of strong scene illumination. Rod cells are sensitive to light and can perceive weak light, mainly providing work in night scenes (Soucy et al., 2023), but they have no color discrimination ability. Bipolar cells receive signal input from photoreceptors, and they are divided into ON-type and OFF-type cells according to the different regions of the receptive field (Sun et al., 2022), which sense the increase in light intensity and the decrease in light intensity, respectively. Horizontal cells are laterally interconnected with photoreceptors and bipolar cells, which adjust the brightness of the signals output by photoreceptors, and are also responsible for enhancing the outline of visual objects. Ganglion cells are responsible for receiving visual signal input from bipolar cells, and respond in the form of spatial-temporal pulse signals, which are then transmitted to the visual cortex through visual fibers. In addition, retinal cells have multiple parallel pathways to transmit and process visual signals, which have great advantages in bandwidth transmission and speed. Among them, the Magnocellular and Parvocellular pathways are the two most important signal pathways (Sawant et al., 2023), which are respectively sensitive to the temporal changes of the scene. and spatial structure sensitive.

FIGURE 2. Schematic diagram of the cross-section of the primate retina (Sawant et al., 2023).

The primate biological retina has the following advantages:

(1) Local adaptive gain control of photoreceptors: The change of recorded light intensity replaces the absolute light intensity to eliminate redundancy, and has a high dynamic range (High Dynamic Range, HDR) for light intensity perception;

(2) Spatial bandpass filter of rod cells: filter out the visual information redundancy of low-frequency information and the noise of high-frequency information;

(3) ON and OFF types: both ganglion cells and retinal outputs are encoded by ON and OFF pulse signals, which reduces the pulse firing frequency of a single channel;

(4) Photoreceptor functional area: The fovea has high spatial resolution and can capture fine textures; its peripheral area has high temporal resolution and captures fast motion information.

Additionally, biological vision is represented and encoded by binary pulse information, and the optic nerve only needs to transmit 20 Mb/s data to the vision Cortex, the amount of data is nearly 1,000 times less than what traditional cameras need to transmit in order to match the dynamic range and spatial resolution of human vision. Therefore, the retina efficiently represents and encodes visual information by converting light intensity data into spatiotemporal pulse array signals through ganglion cells, which serves as both a theoretical foundation and a source of functional inspiration for neuromorphic visual sensors.

2.1 Vision model of the retina

The information acquisition, processing and processing of the biological visual system mainly occur in the retina, lateral geniculate body and visual cortex (Pramod and Arun, 2022), as shown in Figure 3. The retina is the first station to receive visual information. The lateral geniculate body is the information transfer station that transmits retinal visual signals to the primary visual cortex; the visual cortex is the visual central processor, which is used in advanced visual functions such as learning and memory, thinking language, and perceptual awareness (Jeremie and Perrinet, 2023). The entire process of visual cortex information processing is completed by two parallel pathways: V1, V2, and V4. the ventral pathway mainly deals with the recognition of object shape, color, and other information (Toprak et al., 2018), also known as the what pathway; V1, V2, and MT. The dorsal pathway mainly deals with spatial position, motion and other information (Freud et al., 2016), also known as the where pathway. Therefore, the neural computing model is used to explore the information processing and analysis mechanism of the human visual system, which can provide reference ideas and directions for computer vision and artificial intelligence technology, and further inspire the theoretical model and computing method of brain-like vision, so as to better mine visual feature information. It can process dynamic and static information efficiently and adaptively, close to biological vision, and has strong generalization ability of small samples and comprehensive visual analysis ability.

2.2 Differential vision sampling and AER transmission protocol

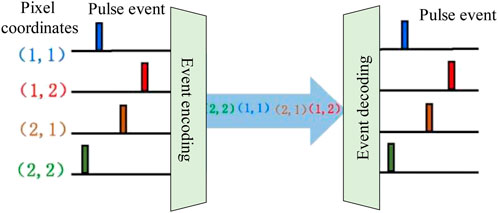

The complex connections between neurons and the transmission of impulse signals between neurons are asynchronous, so how can neuromorphic engineering systems simulate this feature? It is the Marinis team (Marinis et al., 2021) who proposed a new communication protocol AER method, as shown in Figure 4, which is used for multiple asynchronous transmission of pulse signals, and also solves the three-dimensional dense connection problem of large-scale integrated circuits, that is, the “connection problem”.

Like a regular camera, the AER technique interprets each pixel on the sensor as independent rather than sending out a picture at a set frequency. The pulse signal is sent out asynchronously in accordance with the event’s temporal sequence and is broadcast as an event. The decoding circuit then parses event attributes according to address and time. The following are the key components of the AER strategy for neuromorphic vision sensors (Buchel et al., 2021):

(1) The output events of silicon retinal pixels simulate the function of neurons in the retina to emit pulse signals;

(2) Light intensity perception, pulse generation and transmission are asynchronous between silicon retina pixels;

(3) When the asynchronous events output by the silicon retina are sparse, the event representation and transmission are more efficient.

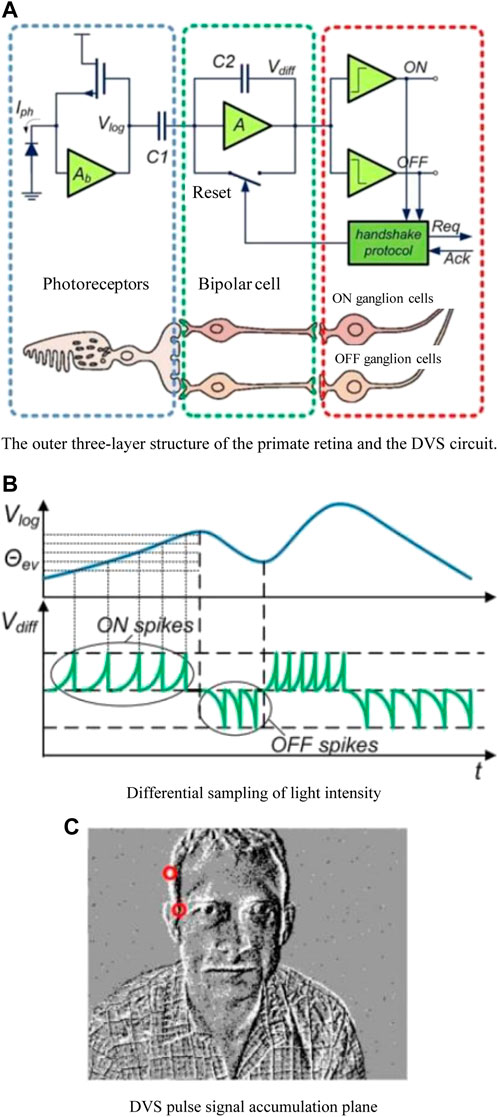

As shown in Figure 5, the abstraction of the three-layer structure of cells and ganglion cells approaches or exceeds the ability of high temporal resolution perception of the retina periphery from the functional level of the device. Differential visual sampling is the mainstream of neuromorphic visual sensor perception models (Dai et al., 2023). The DVS series of vision sensors primarily employ the logarithmic differential model, which is to say that the photocurrent and voltage use a logarithmic mapping relationship in order to increase the dynamic range of light intensity perception (b). The pixel produces a pulse signal when the voltage change exceeds the predetermined threshold due to the relative change in light intensity, as shown in Figure 5. (c). The basic idea is this:

FIGURE 5. Differential visual sampling (Najaran and Schmuker, 2021). (A) The outer three-layer structure of the primate retina and the DVS circuit. (B) Differential sampling of light intensity. (C) DVS pulse signal accumulation plane.

The AER technique is used by the differential vision sensor, and each pulse signal is represented as an event. Include a quadruple representation that includes the pixel position

(1) The output of asynchronous sparse pulses, which is no longer constrained by shutter time and frame rate and can perceive changes in light intensity, lacks the concept of “frame” and can therefore eliminate static and invariable visual redundancy;

(2) High-speed motion vision task analysis is suited for sampling due to its high temporal resolution;

(3) The capacity to perceive high and low light levels is improved and the dynamic range is increased because to the logarithmic mapping connection between photocurrent and voltage.

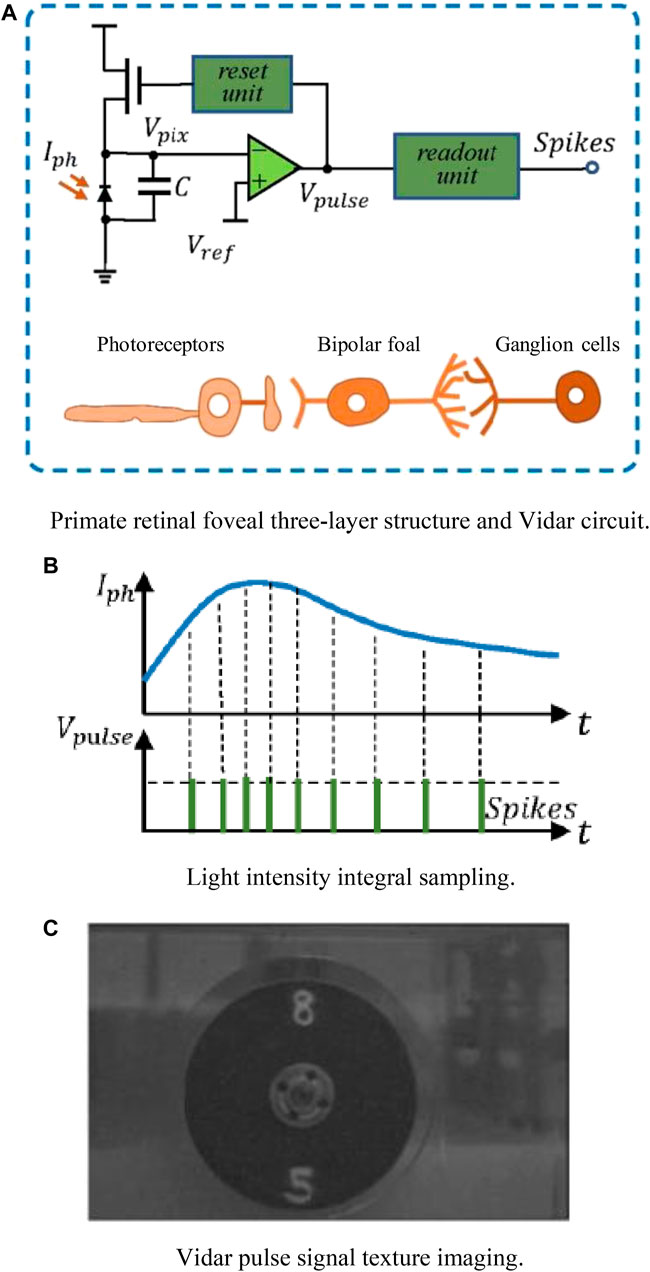

2.3 Integral vision sampling

Integral visual sampling functionally abstracts the three-layer structure of photoreceptors, bipolar cells and ganglion cells in the foveal region of primate retina, such as octopus retina (Purohit and Manohar, 2022), and Vidar (Auge et al., 2021). Integral vision sensors simulate the neuron integral firing model, encode pixel light intensity as frequency or pulse interval, and have the ability to reconstruct the fine texture of visual scenes at high speed (Zhang et al., 2022c), as shown in Figure 6. The photoreceptor converts the light signal into an electrical signal.

FIGURE 6. Integral visual sampling. (A) Primate retinal foveal three-layer structure and Vidar circuit. (B) Light intensity integral sampling. (C) Vidar pulse signal texture imaging.

The accumulation is carried out under the condition of integrator I(t) to reach the accumulated intensity A(t), when the intensity value exceeds the pulse release threshold φ, the pixel point outputs a pulse signal, and the integrator resets to clear the charge (Gao et al., 2018). The principle is as follows:

The pixels of the integrating vision sensor are independent of each other. The octopus retina (Purohit and Manohar, 2022) uses the AER method to output the pulse signal. Especially when the light intensity is sufficient, the integrating vision sensor emits dense pulses, and the event representation is prone to appear multiple times at the same position and adjacent positions. When requesting pulse output, there will be a huge pressure of data transmission, so the bus arbitration mechanism has to be designed to determine the priority for pulse output, and even the pulse signal will be lost due to bandwidth limitation. Vidar (Auge et al., 2021) explored a high-speed polling method to transmit the pulse release at each sampling moment in the form of a pulse matrix. This method does not require the coordinates and timestamps of the output pulse, and only needs to mark whether the pixel is released as “1” and “0”. Replacing the AER method with the pulse plane polling method can save the transmission bandwidth.

3 Types of vision sensors in neuromorphic process

Neuromorphic vision sensor draws on the neural network structure of biological visual system and the processing mechanism of visual information sampling, and simulates, extends or surpasses biological visual perception system at the device function level. In recent years, a large number of representative neuromorphic vision sensors have emerged, which are the prototype of human exploration of bionic vision technology. There are differential visual sampling models that simulate the peripheral sensory motor function of the retina, such as DVS (Abubakar et al., 2023), ATIS (Oliveria et al., 2021), DAVIS (Mesa et al., 2019; Sadaf et al., 2023), CeleX (Feng et al., 2020). There are also integral visual sampling models that simulate the fine texture function of the fovea, such as Vidar (Auge et al., 2021).

3.1 DVS

DVS (Abubakar et al., 2023) abstracts the function of the three-layer structure of photoreceptors, bipolar cells and ganglion cells in the periphery of primate retina, which is composed of photoelectric conversion circuit, dynamic detection circuit and comparator output circuit, as shown in Figure 5. The photoelectric conversion circuit adopts the logarithmic light intensity perception model, which improves the light intensity perception range and is closer to the high dynamic adaptation ability of the biological retina. The dynamic detection circuit adopts a differential sampling model, that is, it responds to changes in light intensity, and does not respond if there is no change in light intensity. The comparator outputs ON or OFF events according to the increase or decrease of light intensity.

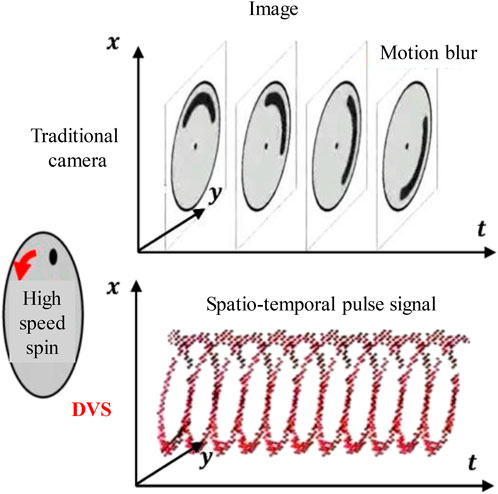

Traditional cameras have a fixed frame rate sampling technique, which in fast-moving situations is prone to motion blur. As illustrated in Figure 7, DVS employs an asynchronous space-time pulse signal to describe the change in scene light intensity and adopts the differential visual sampling model of the AER asynchronous transmission method. High time resolution (106 Hz), high dynamic range (120 dB), low power consumption, less data redundancy, and low latency are just a few benefits of DVS over conventional cameras.

The first commercial DVS128 (Abubakar et al., 2023) developed by the Delbruck team and IniVation has a spatial resolution of 128 × 128, the sampling frequency in the time domain is 106 Hz, the dynamic range is 120 dB, and it is widely used in high-speed moving object recognition, detection and tracking. In addition, the research and products of neuromorphic vision sensors such as DVS and its derivatives ATIS (Oliveria et al., 2021), DAVIS (Mesa et al., 2019; Sadaf et al., 2023) and CeleX (Feng et al., 2020) have also attracted much attention, and are gradually applied to automatic driving and UAV visual navigation and industrial inspection involving high-speed motion vision tasks. For example, Samsung has developed a spatial resolution of 640 × 480 of DVS-G2 (Xu et al., 2020), and the pixel size is 9 μ m × 9 μ m. The IBM company uses DVS128 as the visual perception system of the brain-like chip TrueNorth for fast gesture recognition (Tchantchane et al., 2023).

The DVS uses the differential visual sampling model to filter the static or weakly changing visual information to reduce data redundancy, and at the same time, it has the ability to perceive high-speed motion. However, this advantage brings the disadvantage of visual reconstruction, that is, the ON or OFF event does not carry the absolute light intensity signal, and no pulse signal is emitted when the light intensity change is weak, so that the refined texture image cannot be reconstructed. In order to solve the visual texture visualization of DVS, neuromorphic visual sensors such as ATIS (Oliveria et al., 2021), DAVIS (Mesa et al., 2019; Sadaf et al., 2023) and CeleX (Feng et al., 2020) are derived.

3.2 ATIS

On the basis of DVS, ATIS (Oliveria et al., 2021) skillfully introduced a light intensity measurement circuit based on time interval to realize image reconstruction. The idea is that every time an event occurs in the DVS circuit, the light intensity measurement circuit is triggered to work. Two different reference voltages are set, by integrating the light intensity, and recording the events that reach the two voltages. Because the time required for the voltage to change by the same amount is different under the conditions of different light intensities, by establishing the light intensity and time mapping can infer that the light intensity is small, so as to output the light intensity information at the pixel where the light intensity changes, which is also called Pulse Width Modulation (PWM) (Holesovsky et al., 2021). In addition, in order to solve the problem that the visual texture information of the static area cannot be obtained without DVS pulse signal issuance, ATIS introduces a set of global emission mechanism, that is, all pixels can be forced to emit a pulse, so that a whole image is used as the background, and then the moving area continuously generates pulses and then continuously triggers the light intensity measurement circuit to obtain the grayscale of the moving area to update the background (Fu et al., 2023).

The commercial ATIS (Holesovsky et al., 2021) developed by the Posch team and Prophesee company has a spatial resolution of 304 × 240, a sampling frequency of 106 Hz in the time domain, and a dynamic range of 143 dB, which is widely used in high-speed vision tasks. In addition, Prophessee has also received a $15 million project from Intel Corporation to apply ATIS to the vision processing system of autonomous vehicles. Subsequently, the team of Benosman (Macireau et al., 2018) further verified the technical scheme of using ATIS sampled pulse signals in the three RGB channels to re-integrate the color (Zhou and Zhang, 2022).

When ATIS is facing high-speed motion, there is still a mismatch between events and grayscale reconstruction updates (Han et al., 2022; Hou et al., 2023). The reasons are as follows: the light intensity measurement circuit is triggered after the pulse is issued, and the measurement result is the average light intensity of a period of time after the pulse is issued, resulting in motion mismatch. Slight changes in the scene do not cause pulses, so the pixels are not updated in time, resulting in obvious texture differences over time.

3.3 DVAIS

DAVIS (Mesa et al., 2019; Sadaf et al., 2023) is the most intuitive and effective fusion technology idea, which combines DVS and traditional cameras, and additionally introduces Active Pixel Sensor (APS) on the basis of DVS for visual scene texture (Zhuo et al., 2022).

The Delbruck team and IniVation further introduced a color scheme based on the DAVIS240 (Zhang K. et al., 2022) with a spatial resolution of 240 × 180.

Color DAVIS346 (Moeys et al., 2017), its spatial resolution reaches 346 260, the time domain sampling frequency is 106Hz, the dynamic range is 120dB, and the spatial position of the event coordinates generated by DVS carries RGB color information, but the sampling speed of APS circuit is far less than DVS (Zhao B. et al., 2022). The frame rate of APS mode is 50FPS, and the dynamic range is 56.7 dB. Particularly in high-speed motion scenes, the images produced by the two sets of sampling circuits cannot be precisely synchronized, and APS images exhibit motion blur.

At present, DAVIS is the mainstream of neuromorphic vision sensor commercial products, industrial applications and academic research. It originates from the academic research promotion of DVS series sensors (DVS128, DAVIS240, DAVIS346 and color DAVIS346), and the disclosure, code and a good ecological environment created by open-source software. Therefore, in this paper, the pulse signal processing, feature expression, and vision applications are mainly based on the DVS series sensors of the differential vision sampling model.

3.4 CeleX

CeleX (Feng et al., 2020) takes into account the hysteresis of the light intensity measurement circuit of ATIS, when the DVS circuit outputs the address (x, y) of the pulse event and the release time t, it also outputs the light intensity information I of the pixel in time, namely Available quads for CeleX output events (x, y, t, I) express. The design idea of CeleX mainly includes three parts (Merchan et al., 2023):

(1) Introduce buffer and readout switch circuits to directly convert the circuit of the logarithmic photoreceptor into light intensity information output;

(2) Use the global control signal to output a whole frame of image, so that the whole image can be obtained as the background and Timely global update;

(3) Specially design the light intensity value of the output buffer of the column analog readout circuit. CeleX cleverly designs the bit width of the pulse event to be 9 bits, which not only ensures the semantic information of the pulse itself, but also carries a certain amount of light intensity information.

The fifth-generation CeleX-V (Tang et al., 2023) recently released by CelePixel has a spatial resolution of 1,280,800, which basically reaches the level of traditional cameras. At the same time, the maximum output sampling frequency in the time domain is 160 MHz and the dynamic range is 120 dB. The “three highs” advantages of high spatial resolution, high temporal resolution and high dynamic range of this product have attracted the attention of the current field of neuromorphic engineering (Ma et al., 2023; Mi et al., 2023). In addition, CelePixel has also received a 40 million project funding from Baidu, using CeleX-V for automatic driving assistance systems in cars, and using its advantages to monitor abnormal driving behaviors in real time (Mao et al., 2022).

The pulse events of CeleX use 9-bit information output. When the scene is in severe motion or high-speed motion, the data volume cannot be transmitted in time, and even part of the pulse data is discarded, so that the sampling signal cannot be fidelity (Zhao J. et al., 2023), and it cannot respond to light in time. Updates and other shortcomings. However, CeleX’s “three-high” performance and its advantages in optical flow information output have great application potential in automatic driving, UAV visual navigation (Zhang et al., 2023a), industrial inspection and video surveillance and other tasks involving high-speed motion vision.

3.5 Vidar

Vidar (Auge et al., 2021) abstracted the function of the three-layer structure of photoreceptors, bipolar cells, and ganglion cells in the primate fovea, and adopted an integral visual sampling model to encode pixel light intensity as frequency or pulse interval, with the ability to reconstruct the fine texture of visual scenes at high speed (Zhang H. et al., 2022). Vidar consists of photoelectric conversion circuit, integrator circuit and comparator output circuit, as shown in Figure 6A. The photoreceptor converts the light signal into an electrical signal, the integrator integrates and accumulates the electrical signal, and the comparator compares the accumulated value with the pulse release threshold to determine the output pulse signal (Mi et al., 2022), and the integrator is reset, also known as pulse frequency modulation (PFM) (Purohit and Manohar, 2022).

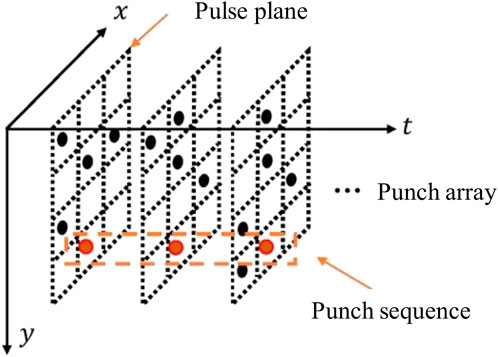

The pulse signal output between Vidar pixels is independent of each other. The pulse signals of a single pixel are arranged in a “pulse sequence” according to the time sequence, and all pixels form a “pulse array” according to the spatial position relationship. The cross-section of the pulse array at each moment is called “Pulse plane”, the pulse signal is represented by “1”, and the no pulse signal is represented by “0”, as shown in Figure 8.

The first Vidar (Auge et al., 2021) has a spatial resolution of 400 × 250, a time domain sampling frequency of 4 × 104 Hz, and an output of 476.3M of data per second. Refined texture reconstruction for static scenes or high-speed motion scenes, such as the use of sliding window accumulation method or pulse interval mapping method (Zhang K. et al., 2022). In addition, Vidar can freely set the duration of the pulse signal for image reconstruction, and has flexibility in the dynamic range of imaging. This integral vision sampling chip can perform refined texture reconstruction for high-speed motion (Zhang H. et al., 2022), and can be used for object detection, tracking and recognition in high-speed motion scenes, and applications in the fields of high-speed vision tasks such as automatic driving, UAV visual navigation, and machine vision.

Vidar uses an integral visual sampling model to encode the light intensity signal by frequency or pulse interval. The essence is to convert the light intensity information into frequency encoding. Compared with the DVS series sensors for motion perception, it is more friendly to visual fine reconstruction. However, Vidar will generate pulses in both static scenes and moving areas, and there is a huge data redundancy in sampling. How to control the pulse emission threshold to adaptively perceive different lighting scenes and control the amount of data is an urgent problem that needs to be solved in integrated visual sampling.

3.6 Performance comparison of imitation retina vision sensors

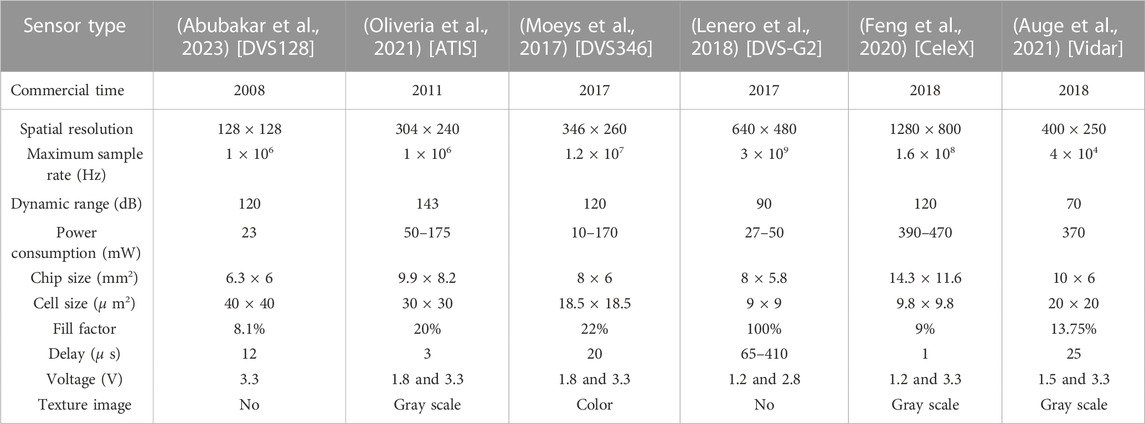

Recently, a large number of neuromorphic vision sensors have emerged and commercialized, including differential visual sampling models that simulate the peripheral sensory motor function of the retina. There are also integral visual sampling models that simulate the foveal function, such as Vidar (Auge et al., 2021). The comparison of specific performance parameters is shown in Table 1.

Neuromorphic vision sensors have two major advantages:

(1) the ability of high-speed visual sampling, which has great application potential in high-speed motion vision tasks;

(2) low power consumption, which is also the essential advantage of the neuromorphic engineering proposed by Mead (Han et al., 2021) and possible final form in the future. However, how to process the spatiotemporal pulse signals output by neuromorphic vision sensors, feature expression and high-speed visual task analysis is the current research focus of neuromorphic vision. At the same time, how to sample brain-like chips for high-speed processing of pulse signals is used in applications involving high-speed vision tasks are the focus of the neuromorphic engineering industry, such as IBM’s TrueNorth (Chen et al., 2022c) chip, Intel’s Loihi (Davies and Srinivasa, 2018) chip, and Manchester University’s SpiNNaker (Russo et al., 2022) chip.

Currently, the spatial resolution of neuromorphic vision sensors has developed from 128 × 128 of the first commercial DVS128 developed by IniVation (Abubakar et al., 2023) to 640 × 480 of Samsung’s DVS-G2 (Xu et al., 2020), CelePixel’s CeleX-V (Tang et al., 2023) 1,280 × 800, but compared with traditional HD and UHD cameras, there is a big gap due to: 1) Spatial resolution and imaging quality; 2) The original intention of dynamic vision sensor design is to perceive high-speed motion instead of high-quality visual viewing. In a word, neuromorphic vision sensors are still in the early stage of exploration, and a lot of exploration and research are needed to achieve the perception ability of the human visual system in complex interactive environments.

4 Asynchronous spatiotemporal pulse signal processing

Neuromorphic vision sensors simulate the pulse firing mechanism of the biological retina. For example, the DVS series sensors using the differential visual sampling model are stimulated by changes in the light intensity of the visual scene to emit pulse signals and record them as address events. The pulse signals present three-dimensionality in the spatial and temporal domains. The sparse discrete lattice of space is shown in Figure 7.

The traditional video signal is represented by the “image frame” paradigm for visual information representation and signal processing, which is also the mainstream direction of existing machine vision. However, “asynchronous spatiotemporal pulse signal” is different from “image frame”, and the existing image signal processing mechanism cannot be directly transferred to the application. How to establish a new set of signal processing theory and technology (Zhu R. et al., 2021) is the research difficulty and hotspot in the field of neuromorphic visual signal processing (Qi et al., 2022).

4.1 Analysis of asynchronous space-time pulse signals

In recent years, the analysis of asynchronous spatiotemporal pulse signals (Chen et al., 2020a) mainly focuses on filtering, noise reduction and frequency domain variation analysis.

The filtering analysis of pulse signal is a preprocessing technique from the perspective of signal processing, and it is also the application basis of the visual analysis task of neuromorphic vision sensor. Sajwani et al. (Sajwani et al., 2023) proposed a general filtering method for asynchronous spatiotemporal pulse signals, which consists of hierarchical filtering in the time domain or spatial domain, which can be extended to complex non-linear filters such as edge detection. Linares et al. (Linares et al., 2019) filter noise reduction and horizontal feature extraction of asynchronous spatiotemporal pulse signals on FPGA, which can significantly improve target recognition and tracking performance. Li et al. (Li H. et al., 2019) used an inhomogeneous Poisson generation process to realize the up-sampling of the asynchronous spatio-temporal pulse signal after performing spatio-temporal difference filtering for the pulse signal’s firing rate.

Neuromorphic vision sensor outputs an asynchronous spatiotemporal pulse signal that is interfered with by leakage current noise and background noise. Khodamoradi et al. (Khodamoradi and Kastner, 2018) implemented the spatiotemporal correlation filter as hardware on the sensor to minimise the DVS background noise. The spatiotemporal pulse signal generated by ATIS was denoised by the Orchard team (Padala et al., 2018) using the Spiking Neural Network (SNN) on the TrueNorth chip, and the performance of target object identification and recognition was enhanced. Du et al. (Du et al., 2021), presents an events other than moving objects were seen as noise, and optical flow was used to assess the motion consistency in order to denoise the spatiotemporal pulse signal produced by DVS.

Transform domain analysis is the basic method of signal processing (Alzubaidi et al., 2021), which transforms the time-space domain into the frequency domain, and then studies the spectral structure and variation law of the signal. Sabatier et al. (Sabatier et al., 2017) suggested an event-based fast Fourier transform for the asynchronous spatiotemporal pulse signal and conducted a cost-benefit analysis of the pulse signal’s frequency domain lossy transform.

The analysis and processing of asynchronous spatiotemporal pulse signals has the following exploration directions:

(1) The asynchronous spatiotemporal pulse signal can be described as a spatiotemporal point process in terms of data distribution (Zhu Z. et al., 2022), and the theory of point process signal processing, learning and reasoning can be introduced (Remesh et al., 2019; Xiao et al., 2019; Ru et al., 2022);

(2) Asynchronous spatiotemporal pulse signal is similar to point cloud in spatiotemporal structure, and deep learning can be used in the structure and method of point cloud network (Wang et al., 2021; Lin L. et al., 2023; Valerdi et al., 2023);

(3) The pulse signal is regarded as the node of the graph model, and the graph model signal processing and learning theory can be used (Shen et al., 2022; Bok et al., 2023);

(4) The timing advantage of the high temporal resolution of the asynchronous spatiotemporal pulse signal is to mine the temporal memory model (Zhu N. et al., 2021; Li et al., 2023) and learn from the brain-like visual signal processing mechanism (Wang X. et al., 2022).

4.2 Asynchronous spatiotemporal pulse signal measurement

Asynchronous spatiotemporal impulse signal metric is to measure the similarity between impulse streams, that is, to calculate the distance between impulse streams in metric space (Lin L. et al., 2023). It is one of the key technologies in asynchronous spatiotemporal impulse signal processing. It has a wide range of important applications in fields such as compression and machine vision tasks.

The asynchronous pulse signal appears as a sparse discrete lattice in the space-time domain, lacking the algebraic operation measure in the Euclidean space. Stereo vision research (Steffen et al., 2019) measured the output pulse signal in two-dimensional space projection and extracted the time plane of spatio-temporal relationship, and applied it to depth estimation in three-dimensional vision. Using the perspective of visual features, Lin et al. (Lin X. et al., 2022) methodically quantified the spatiotemporal pulse signal produced by DVS and used it to vision tasks including motion correction, depth estimation, and optical flow estimation. These techniques add up the time-space pulse signal’s frequency characteristics, but they do not fully use its time-domain properties. The kernel method metric was discussed in (Lin Z. et al., 2022) from the viewpoint of the signal domain, i.e., converting the discrete time-domain pulse signal into a continuous function and determining the separation of the pulse sequences in the inner product of the Hilbert space. Wu et al. (Wu et al., 2023) utilized the convolutional neural network structure to the test and verification of retinal prosthesis data by mapping discrete pulse signals to the feature space and measuring the distance between the pulse signals. Such techniques do not take into account the labelling characteristics of genuine asynchronous spatiotemporal spikes and are instead tested using neurophysiological or simulation-generated spike data (Kim and Jung, 2023).

Dong et al. (Dong et al., 2018) proposed a pulse sequence measurement method with independent pulse labeling attributes, that is, the pulse signals of ON and OFF labeling attributes output by DVS were measured separately, and the discrete pulse sequence was transformed into a smooth continuous function by using a Gaussian kernel function, using the inner product of the Hilbert space to measure the distance of the pulse sequence. In this method, the pulse sequence is used as the operation unit, and the spatial structure relationship of the pulse signal is not considered. Subsequently, the team (Schiopu and Bilcu, 2023) further modeled the asynchronous spatiotemporal pulse signal as a marked spatiotemporal point process, and used a conditional probability density function to characterize the spatial position and labeling properties of the pulse signal, which was applied to the motion in lossy encoding of the asynchronous pulse signal.

Asynchronous spatiotemporal pulse signals are unstructured data, different from normalizable structured “image frames”, and the differences cannot be directly measured in subjective vision. How to orient the measurement of asynchronous pulse signals to visual tasks and the evaluation of normalization is also a difficult problem that needs to be solved urgently.

4.3 Coding of asynchronous space-time pulse signals

With the continuous improvement of the spatial resolution of DVS series sensors, for example, the spatial resolution of Samsung’s DVS-G2 (Xu et al., 2020) is 640 × 480, and the spatial resolution of CeleX-V (Tang et al., 2023) is 1,280 × 800. The generated asynchronous spatiotemporal pulse signal is faced with huge challenges of transmission and storage. How to encode and compress the asynchronous spatiotemporal pulse signal is a brand-new spatiotemporal data compression problem (Atluri et al., 2018; Khan and Martini, 2019).

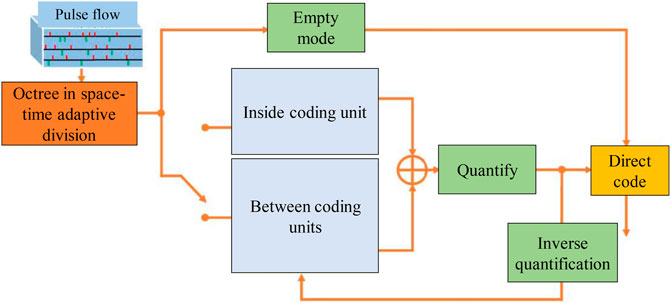

Kim et al. (Kim and Panda, 2021) first proposed a coding compression framework for spatio-temporal asynchronous pulse signals, which took the pulse cuboid as the coding unit and designed address-priority and time-priority predictive coding strategies to achieve effective compression of pulse signals. Subsequently, the team (Dong et al., 2018) further explored more flexible coding strategies such as adaptive division of octrees in the spatiotemporal domain, prediction within coding units, and prediction between coding units, which further improved the compression efficiency of spatiotemporal pulse signals. In addition, the team (Schiopu and Bilcu, 2023) performed a metric analysis of the distortion of pulse spatial position and temporal firing time, and explores vision-oriented tasks.

Analyzing the lossy coding scheme of (Cohen et al., 2018), the specific coding frame of the asynchronous spatiotemporal pulse signal is shown in Figure 9.

The compression scheme of the asynchronous spatiotemporal pulse signal is a preliminary attempt based on the traditional video coding framework and strategy, but the spatiotemporal characteristics of the asynchronous spatiotemporal pulse signal have not been fully analyzed. Can end-to-end deep learning or adaptive encoders of spiking neural networks inspired by neural computational models be applied to the encoding of asynchronous spatiotemporal spiking signals? At present, it is faced with the problems of network input, distortion measurement and sufficient data labeling of asynchronous pulse signals. Therefore, how to design a robust adaptive encoder for data compression of asynchronous spatiotemporal pulse signals will be a very challenging and valuable research topic, which can be further extended to the field of coding and compression of biological pulse signals.

5 Characteristic expression of asynchronous spatiotemporal pulse signal

Asynchronous spatiotemporal pulse signals are presented as sparse discrete lattices in three-dimensional space in both time and space domains, which are more flexible in signal processing and feature expression than the traditional “image frame” paradigm, especially in the time domain length of the pulse signal processing unit or the choice of the number of pulses also increases the difficulty of inputting the visual analysis algorithm of asynchronous spatiotemporal pulse signals. Therefore, how to express the characteristics of asynchronous spatiotemporal pulse signals (Guo et al., 2023), as shown in Figure 10, mining the spatiotemporal characteristics of asynchronous spatiotemporal pulse signals for the visual analysis tasks of “high precision” and “high speed” is the most important method in the field of neuromorphic vision. Important and core research issues also determine the promotion and application of neuromorphic vision sensors. The overview and distribution of research literature in recent years mainly focus on four aspects: rate-based image, hand-crafted feature, end-to-end deep network and spiking neural network.

FIGURE 10. Image representation of frequency accumulation of asynchronous pulse signal (Guo et al., 2023).

5.1 Frequency accumulation image

In order to apply the output of neuromorphic vision sensor to the existing visual algorithm based on “image frame”, the asynchronous spatiotemporal pulse signal can be projected or accumulated in time domain according to the fixed length or number of time domain, that is, the frequency accumulation image.

Model method: The frequency accumulation image is modeled and feature extracted according to the prior knowledge of the image pattern. Ghosh et al. (Ghosh et al., 2022) projected the pulse signals output by the two DVSs into binary images in the time domain to reconstruct the 360° panoramic visual scene. Huang et al. (Huang et al., 2018) used the pulse signal output by CeleX to interpolate image frames and guide the motion area for high-speed target tracking. The Jiang and Gehrig (Jiang et al., 2020a; Gehrig et al., 2020) accumulated the DVS frequency in the time domain as a grayscale image, and then performed maximum likelihood matching tracking with the APS image.

Deep learning method: The frequency accumulation image is input into the deep learning network based on “image frame”. Lai et al. (Lai and Braunl, 2023) accumulated the ON and OFF pulse streams into grayscale images according to the frequency in the time domain, and then used ResNet to predict the steering wheel angle of the autonomous driving scene. Zeng et al. (Zeng et al., 2023) used the pseudo-label of APS for vehicle detection in autonomous driving scenes after mapping the pulse stream output by DVS into a grayscale image. Ryan et al. (Ryan et al., 2023) combined the APS image for vehicle detection in autonomous driving scenes after extracting the pulse stream output by the DVS as an image using the integral distribution model. Cifuentes et al. (Cifuentes et al., 2022) integrated APS for pedestrian detection with the DVS pulse stream output to create a grayscale picture with a set time domain duration. Shiba et al. (Shiba et al., 2022a) utilized the suggested EV-FlowNet network for optical flow estimation and translated the pulse flow generated by the DVS into grayscale pictures in accordance with the time domain sequence. Lele et al. (Lele et al., 2022) performed high dynamic and high frame rate picture reconstruction after amassing images with a set length or a certain number in the temporal domain in accordance with the pulse flow. Xiang et al. (Xiang et al., 2022) used a fixed time domain duration and toggled ON and OFF. The proposed EV-SegNet network is used to segment autonomous driving scenes using the histogram data, which is separately counted and combined into grayscale images. Yang et al. (Yang F. et al., 2023) uses the attention mechanism to find the target by mapping the pulse flow to a grayscale image in accordance with the pulse flow’s sequence.

These techniques can quickly and successfully apply neuromorphic vision sensors to visual tasks involving high-speed motion because they succinctly convert the asynchronous spatiotemporal pulse stream into time-domain projection or frequency accumulation, which is directly compatible with the “image frame” vision algorithm. However, this approach does not completely take use of the spatiotemporal properties of the impulsive flow. The current mainstream of the feature representation of asynchronous spatiotemporal pulse signals.

5.2 Hand-designed features

Before the dominance of deep learning algorithms, hand-designed features were also widely used in the field of machine vision, such as the well-known SIFT (Tang et al., 2022) operator. How to design compact hand-designed features for asynchronous pulse signals (Ramesh et al., 2019), and have the robust characteristics of scale and rotation invariance, is an important technology for neuromorphic vision sensors to be applied to vision tasks.

How to create hand-designed characteristics for vision tasks is being investigated by certain researchers in the field of neuromorphic vision. Edge and corner features were extracted from asynchronous spatiotemporal pulse signals using their temporal and spatial distribution properties. These features were then employed for tasks including target recognition, depth estimation in stereo vision, and target local feature tracking. Zhang et al. (Zhang et al., 2021) used a sampling-specific convolution kernel chip to extract features from the pulse stream and use it for high-speed target tracking and recognition. Rathi et al. (Rathi et al., 2023) used spatiotemporal filters for unsupervised learning to extract visual receptive field features for target recognition tasks in robot navigation.

A feature of the Bag of Events (BOE) was proposed in (Afshar et al., 2020), which is used for handwritten font recognition data collected by DVS and statistical learning to analyses the probability of events. Ye et al. (Ye et al., 2023) extracted the recognition-oriented HOTS features using hierarchical clustering from the pulse stream produced by ATIS by treating each event as a separate unit and accounting for their temporal relationships within a specific airspace. Dong et al. (Dong et al., 2022) then averaged the event time surfaces in the sample neighborhood and aggregated HATS features in the time domain to remove noise’s impact with feature extraction. The edge operator feature of the pulse stream from (Scheerlinck et al., 2019) was extracted using the spatiotemporal filter and then utilized for local feature recognition and target tracking. Wang et al. (Wang Z. et al., 2022) used the time-domain linear sampling kernel to accumulate the weights of the pulse flow as a feature map, and used an unsupervised learning autoencoder network for visual tasks such as optical flow estimation and depth estimation. In addition, also the feature output is used for video reconstruction in the LSTM network. The DART feature operator proposed by the Orchard team (Ramesh et al., 2019) has scale and rotation invariance, and can be applied to the field of target object detection, tracking and recognition.

Hand-designed features have better performance in specific vision task applications, but hand-designed features require a lot of prior knowledge, in-depth understanding of task requirements and data characteristics, and a lot of debugging work. Therefore, using the task-driven cascade method to supervise learning and expressing features (Guo et al., 2023) can better exploit the spatiotemporal characteristics of asynchronous spatiotemporal pulse signals.

5.3 End-to-end deep networks

Deep learning is the current research boom in artificial intelligence, and it has shown obvious performance advantages in image, speech, text and other fields. How to make the asynchronous spatiotemporal pulse signal learn in the end-to-end deep network and fully exploit its spatiotemporal characteristics is the hotspot and difficulty of neuromorphic vision research.

Convolutional Neural Networks: Using 3D Convolution to Process Asynchronous Spatio-Temporal Pulse Signals. Sekikawa et al. (Sekikawa et al., 2018) used an end-to-end deep network to analyze the visual task of asynchronous spatiotemporal pulse signals for the first time. They used 3D spatiotemporal decomposition convolution as the calculation of the input end of the asynchronous spatiotemporal pulse signal, that is, the 3D convolution kernel was decomposed into 2D spatial kernel and 1D motion velocity kernel, and then use recursive operation to process continuous pulse flow in an efficient way, compared with the method of frequency accumulation image, it has a significant improvement in steering wheel angle prediction task in automatic driving scenes, which is a milestone in the field of neuromorphic vision tasks. Uddin et al. (Uddin et al., 2022) proposed an event sequence embedding, which uses spatial aggregation network and temporal aggregation network to extract discrete pulse signals as continuous embedding features, and its performance is compared with frequency accumulation images and hand-designed features in depth. It is estimated that the application has obvious performance advantages.

Point cloud neural network: The asynchronous spatiotemporal pulse signal is treated as a point cloud in three-dimensional space. The pulse signal output by neuromorphic vision sensor is similar to the point cloud in the three-dimensional spatial data structure distribution, but is sparser in the time domain distribution. Valerdi et al. (Valerdi et al., 2023) first used the PointNet (Wang et al., 2021; Lin L. et al., 2023) structure based on point cloud network to process asynchronous spatiotemporal pulse signals, called EventNet, which adopted efficient processing methods of temporal coding and recursive processing, and applied semantic segmentation of autonomous driving scenes and exercise assessment. Chen et al. (Chen X. et al., 2023) regarded asynchronous spatiotemporal impulse signals as event clouds, and adopted the multi-layer hierarchical structure of point cloud network PointNet++ (Lin L. et al., 2023) to extract features for gesture recognition. Jiang et al. (JiangPeng et al., 2022) regarded the asynchronous pulse signal as a point cloud, and proposed an attention mechanism to sample the domain of the pulse signal, which has a significant performance advantage compared to PointNet in gesture recognition.

Graph neural network: The impulse signal is regarded as the node of the graph model, and the processing method of the graph model is adopted. Bok et al. (Bok et al., 2023) proposed to represent the asynchronous spatiotemporal pulse signal by the relevant nodes of the probabilistic graph model, and verified the spatiotemporal representation ability of this method on visual tasks such as pulse signal noise reduction and optical flow estimation. Shen et al. (Shen et al., 2023) modeled asynchronous spatiotemporal pulse signals for the first time in a graph neural network, and achieved a significant performance improvement over frequency cumulative images and hand-designed features in recognition tasks such as gestures (Zhang et al., 2020), alphanumeric, and moving objects (Luo et al., 2022; Lin X. et al., 2023).

The end-to-end deep network can better mine the spatiotemporal characteristics of asynchronous spatiotemporal pulse signals, and its significant performance advantages have also attracted much attention. The performance advantage of deep network supervised learning driven by big data, but the asynchronous pulse signal can hardly be directly subjectively annotated like traditional images, especially in high-level visual tasks such as object detection, tracking and semantic segmentation (Lu et al., 2023a). In addition, the high-speed processing capability and low power consumption of asynchronous spatiotemporal pulse signals are the prerequisites for the wide application of neuromorphic vision sensors, while deep learning currently has no advantages in task processing speed and power consumption. At present, the feature expression of asynchronous pulse signal by end-to-end deep network is still in its infancy, and there are a lot of research points and optimization space.

5.4 Spiking neural networks

The spiking neural network is the third-generation neural network (Bitar et al., 2023), which is a network structure that simulates the biological pulse signal processing mechanism. It considers the precise time information of the pulse signal, and is also one of the important research directions for the feature learning of asynchronous spatiotemporal pulse signals.

The application of spiking neural network in neuromorphic vision mainly focuses on target classification and recognition. Wang et al. (Wang et al., 2022d) proposed a time-domain belief distribution strategy for back-propagation of spiking neural networks, and used GPU for accelerated operations. Fan et al. (Fan et al., 2023) designed a deep spiking neural network for classification tasks, and performed supervised learning and accelerated operations on the deep learning open-source platform. In addition, some researchers have also tried using spiking neural networks in complex visual tasks. Wang and Fan (Wang et al., 2022e; Fan et al., 2023) designed a spiking neural network with multi-layer neuron combination based on empirical information, which was applied to the stereo vision system of binocular parallax and monocular zoom, respectively. Joseph et al. (Joseph and Pakrashi, 2022) proposed a multi-level cascaded spiking neural network to detect candidate regions of fixed scene objects. Quintana (Quintana et al., 2022) designed an end-to-end spiking neural network based on STDP learning rules for robot vision navigation system (Lu et al., 2023b).

At present, the spiking neural network is still in the theoretical research stage, such as the gradient optimization theory of supervised learning of asynchronous spiking spatiotemporal signals (Neftci et al., 2019), the synaptic plasticity mechanism of unsupervised learning (Saunders et al., 2019), the deep learning structure inspired spiking neural network design (Tavanaei et al., 2019). Its performance on neural vision analysis tasks is far less than that of end-to-end deep learning networks. However, the spiking neural network draws on the neural computing model and is closer to the brain visual information processing and analysis mechanism, and has great development potential and application prospects (Jang et al., 2019). Therefore, how to further use the visual cortex information processing and processing mechanism to inspire theoretical models and calculation methods, provide reference ideas and directions for the design and optimization of spiking neural networks, better mine visual feature information and improve computational efficiency, how to solve the spiking neural network supervised learning of neural networks is suitable for complex visual tasks, and how to simulate the efficient calculation of neuron differential equations in hardware circuits or neuromorphic chips is an urgent problem that needs to be solved from theoretical research to practical application of spiking neural networks.

6 Applications

With the rise of cognitive brain science and vision-like computing, machine vision is an important direction to promote the wave of artificial intelligence. Neuromorphic machine vision is an important direction to promote the wave of artificial intelligence. Inspired by the structure and sampling mechanism of biological systems, neuromorphic vision is one of the effective ways to reach or surpass human intelligence, and it has become one of the effective ways for computing gods to reach or surpass human intelligence. The high temporal resolution of the morphological vision sensor, the high temporal resolution of the dynamic range, low power consumption data redundancy, data redundancy and other advantages in automatic driving, low-power data redundancy, in automatic driving, low-power data redundancy and other advantages, in automatic driving, UAV Visual Navigation (Mitrokhin et al., 2019; Galauskis and Ardavs, 2021), Industrial Inspection (Zhu S. et al., 2022), Video Surveillance (Zhang et al., 2022c), and other machine vision fields, especially in the fields of machine vision involving high-speed photography, sports.

6.1 Dataset and software platform

6.1.1 Simulation dataset

The simulation data simulates the neuromorphic vision sensors’ sampling mechanism using computational imaging techniques. The simulation data also simulates the optical environment, signal transmission, and circuit sampling in the form of rendering. It generated a pulse flow of DVS, an APS image, and a depth map of the scene while simulating DAVIS moving through a virtual 3D environment. These outputs can be applied to vision tasks like image reconstruction, depth estimation of stereo vision, and visual navigation. The Event Camera Simulator (ESIM), which simulates and produces data such DVS pulse flow, APS picture, scene optical flow, depth map, camera position and route, was open-sourced by Ozawa et al. (Ozawa et al., 2022). A large-scale multi-sensor interior scene simulation dataset for indoor navigation and localization was supplied by Qu et al. (Qu et al., 2021). In particular, to offer data-driven end-to-end deep learning algorithms, simulation datasets can simulate the effect of actual data gathering in a low-cost manner, which can further enhance the study and development of neuromorphic vision.

6.1.2 Real dataset

At present, the real data sets are mainly classification and recognition tasks: Wunderlich et al. (Wunderlich and Pehle, 2021) recorded motion MNIST and Caltech101 pictures using ATIS and displayed them on an LED monitor. The DVS128 LED was separately reported in (Li et al., 2017; Dong et al., 2022).

MNIST numerical characters and CIFAR-10 image data may be moved around on the screen. IBM (Tchantchane et al., 2023) created the DVS-Gesture gesture recognition dataset by using DVS128 to record 11 gesture motions in a variety of lighting situations. Dong et al. (Dong et al., 2022) created a binary data set of N-CAR vehicles by using ATIS to record cars in real-world road scenes. The largest dataset in the field of recognition, Shen et al. (Shen et al., 2023) assembled the genuine ALS-DVS dataset of 100,000 American letter motions using DAVIS240. Miao et al. (Miao et al., 2019) created the DVS-PAF dataset for pedestrian identification, behavior recognition, and fall detection using color DAVIS346. Zhang et al. (Zhang Z. et al., 2023) recorded the DHP19 dataset of 17 types of 3D pedestrian postures using DAVIS346.

Datasets for scene image reconstruction tasks using neuromorphic vision sensors DDD17 dataset which is also commonly used in neuromorphic vision tasks. Xia et al. (Xia et al., 2023) employed DAVIS346 to record the pulse flow, picture, and steering wheel angle of various illumination conditions. Using DAVIS240 and colour DAVIS346 respectively, Chen et al. (Chen and he, 2020) built the DVS-Intensity and CED datasets for visual scene reconstruction. Zhang et al. (Zhang S. et al., 2022) created the PKU-Spike-High-Speed dataset by using Vidar to record high-speed visual sceneries and moving objects.

Datasets for the Object Detection, Tracking, and Semantic Segmentation Neuromorphic Vision Sensor Vision Task includes (Munir et al., 2022). Ryan et al. (Ryan et al., 2023) created the PKU-DDD17-CAR vehicle detection dataset by marking the vehicles in the driving scene on the DDD17 (Xia et al., 2023) dataset. Cifuentes et al. (Cifuentes et al., 2022) created the DVS-Pedestrian pedestrian detection dataset by using DAVIS240 to capture pedestrians in campus scenarios. Liu et al. (Liu C. et al., 2022) created a DVS-Benchmark data set that may be utilized for target tracking, pedestrian recognition, and target recognition by using DAVIS240 to record the target tracking data set on the LED display. For object detection, tracking, and semantic segmentation, Zhao et al. (Zhao D. et al., 2022) built the EED and EV-IMO datasets, respectively. Munir et al. (Munir et al., 2022) recorded DET data from CeleX-V for lane line detection in scenarios involving autonomous driving.

Datasets for neuromorphic vision sensors include (Zhu et al., 2018; Pfeiffer et al., 2022; Nilsson et al., 2023) for tasks like depth estimation and visual odometry. Using DAVIS346 to record a significant number of autopilot and UAV scenes, Zhu et al. (Zhu et al., 2018) revealed the MVSEC dataset for stereo vision, which is extensively used in the field of neuromorphic vision. A DAVIS240 and an Astra depth camera mounted on a mobile robot were used to record interior scenes in (Nilsson et al., 2023) large-scale multimodal LMED dataset. For the SLAM problem, Pfeiffer et al. (Pfeiffer et al., 2022) created a UZH-FPV dataset with built-in information such as DAVIS346 pulse flow, APS image, optical flow, camera posture, and route (Paredes et al., 2019).

In conclusion, relatively few large-scale datasets are publicly available for neuromorphic vision sensors, especially for complex vision tasks such as object and semantic-level annotation in applications such as object detection, tracking, and semantic segmentation. Developing large-scale datasets for neuromorphic vision applications is the source of data-driven end-to-end supervised learning.

6.2 Visual scene image reconstruction

ATIS and DAVIS can make up for the defect that DVS cannot directly capture the fine texture of the scene, but cannot directly reconstruct the images of high-speed motion and extreme lighting scenes. Some visual scene image reconstruction methods are dedicated to making the DVS series sensors higher. Nagata et al. (Nagata et al., 2021) utilized a sliding spatiotemporal window and minimized optimization functions for scene optical flow and light intensity evaluation. Singh et al. (Singh N. et al., 2022) collected the pulse signal in accordance with the predetermined time domain length before reconstructing the picture using the block sparse dictionary learning technique. A complicated optical flow and manifold construction calculation was presented in (Reinbacher et al., 2018) to rebuild visual scene pictures in real time. Wang et al. (Wang et al., 2020) suggested an asynchronous filtering method that can reconstruct high frame rate and high dynamic video by fusing APS pictures with DVS pulse stream.

Kim et al. (Kim et al., 2023) suggested an event-based double-integral model that can deblur and reconstitute APS pictures using the pulse signal produced by DVS. To survive image-video sequences with high dynamics and high frame rates, Du et al. (Du et al., 2021) employed adversarial generative networks and event-accumulated pictures of defined temporal duration or fixed data. Wang et al. (Wang et al., 2022e) employed the temporal relationship of the LSTM network to reconstruct videos by sampling fixed-length pulse streams in the time domain as feature maps.

Neuromorphic vision sensors are not intended for high-quality visual viewing since they are focused on machine vision perception systems, particularly dynamic vision sensors. Therefore, visual sensors like CeleX-V should directly sample high-quality, high-efficiency, and high-fidelity visual pictures. The visual scene image reconstruction algorithm must also think about how to take use of the benefits of high temporal resolution and high neuromorphism dynamics, as well as how to leverage the timing relationship of pulse signals to further enhance image reconstruction quality.

6.3 Optical flow estimation

Optical flow is the instantaneous speed of pixel motion of space objects on the observation imaging plane. It not only covers the motion information of the measured object, but also has rich three-dimensional structure information. It plays an important role in the research of vision application tasks such as target detection, tracking and recognition. Zhang and Mueggler (Mueggler et al., 2017; Zhang et al., 2023c) proposed optical flow estimation in the pixel domain, which can evaluate targets in high-speed and high-dynamic scenes in real time. In addition, Ieng et al. (Ieng et al., 2017) used 4D spatiotemporal properties to further estimate the optical flow of high-speed moving objects in 3D stereo vision. Wu and Haessig (Haessig et al., 2018; Wu and Guo, 2023) sampled the spiking neural network on the TrueNorth chip for millisecond-level motion optical flow evaluation. Nagata et al. (Nagata et al., 2021) performed scene optical flow on the pulse flow of DVS and used it for visual scene reconstruction. Rueckauer and Hamzah (Rueckauer and Delbruck, 2016; Hamzah et al., 2018) used block matching to evaluate optical flow on FPGA, and further explored the block matching of adaptive time segments (Lee and Kim, 2021), which can evaluate sparse or dense pulse flows in real time. Shiba et al. (Shiba et al., 2022b) accumulated the pulse flow as a feature map by frequency, and then used the supervised learning EV-FlowNet to evaluate the optical flow. A general maximum contrast framework for motion compensation, depth estimation and optical flow estimation is employed. Hordijk et al. (Hordijk et al., 2018) used DVS128 for optical flow estimation, which could keep the UAV landing smoothly. Pardes et al. (Pardes et al., 2019) further used an unsupervised learning hierarchical spiking neural network to perceive global motion. Song et al. (Song et al., 2023) proposed an optical flow estimation method that combines the rendering mode and event gray level in the pixel domain, and samples the optical flow information in CeleX-V.

Due to the difficulty of feature representation in end-to-end supervised learning of asynchronous spatiotemporal pulse signals and the lack of large-scale optical flow datasets, the current optical flow evaluation method is mainly based on the model of prior information, which can directly provide light for the sampling chip of neuromorphic vision sensor. Stream information output. However, the end-to-end supervised learning method can fully exploit the spatiotemporal characteristics of asynchronous spatiotemporal pulse signals, thereby further improving the performance of optical flow motion estimation.

6.4 Object recognition

Neuromorphic vision sensors are widely used in character recognition, object recognition, gesture recognition, gait recognition, and behavior recognition, especially in scenes involving high-speed motion and extreme lighting. Object recognition algorithm is the mainstream of neuromorphic vision task research (Yang H. et al., 2023). From the perspective of processing asynchronous spatiotemporal pulse signals, it is mainly divided into: frequency accumulation image, hand-designed features, end-to-end deep network and spiking neural network.

Frequency cumulative image: Younsi et al. (Younsi et al., 2023) projected the pulse flow into an image according to a fixed time length, and used a feedforward network to recognize the human posture. Morales et al. (Morales et al., 2020) encoded the asynchronous pulse stream into a frequency-accumulated image with a fixed length in the time domain, and then used a convolutional neural network to recognize human poses and high-speed moving characters. Gong (Gong, 2021) accumulated the pulse stream output by DVS as image and speech signal and input it to the deep belief network for character recognition. In addition, Li et al. (Li et al., 2023) used a fixed temporal length to accumulate image sequences and used LSTM to recognize moving characters. Cherskikh (Cherskikh, 2022) accumulated the pulse stream output by ATIS into images according to fixed time domain length or fixed pulse data, and used convolutional network to identify objects. IBM (Tchantchane et al., 2023) accumulated pulse signals into images in time domain, and used convolutional neural network for gesture recognition on the neuromorphic processing chip TrueNorth. Yang et al. (Yang H. et al., 2023) used the attention mechanism to detect and recognize the target from the image of the pulse stream. Lakhshmi et al. (Lakhshmi et al., 2019) accumulated pulse streams as images, and then used convolutional neural networks for action recognition. Du et al. (Du et al., 2021) first preprocessed the pulse signal to denoise, and then input the accumulated images into a convolutional neural network for gait recognition.

Hand-designed features: Mantecon et al. (Mantecon et al., 2019) used an integral distribution model to segment the motion region, and used a hidden Markov model to extract features from the target region for gesture recognition. Afshar et al. (Afshar et al., 2020) carried out handwritten motion font recognition by extracting BOE features. Clady et al. (Clady and Maro, 2017) extracted the motion features of the pulse flow and used it for gesture recognition. In addition, Bartolozzi and Zhang (Bartolozzi et al., 2022; Zhang et al., 2023d) extracted temporal features such as HOTS and HATS respectively for the pulse stream, and used a classifier to recognize handwritten fonts. Shi et al. (Shi et al., 2018) extracted binary features from the pulse stream, and used the framework of statistical learning to recognize characters and gestures. Li et al. (Li et al., 2018) used the time domain coding method to convert the pulse stream into an image, and used the convolutional neural network to carry out features and digital recognition in the classifier.

End-to-end deep network: Chen et al. (Chen J. et al., 2023) treat asynchronous spatiotemporal pulse signals as event clouds, and adopt the hierarchical structure of the end-to-end deep point cloud network PointNet++ (Lin L. et al., 2023) for gesture recognition. Jiang et al. (JiangPeng et al., 2022) adopted an attention mechanism to sample the domain of the impulse signal and used a deep point cloud network structure for gesture recognition. Shen et al. (Shen et al., 2023) modeled asynchronous spatiotemporal pulse signals for the first time in a graph neural network for recognition tasks such as gestures, alphanumeric, and moving objects.

Spiking Neural Network: Xu et al. (Xu et al., 2023) proposed a multi-level cascaded feedforward spiking neural network for handwritten digits.

Character recognition: Zhou et al. (Zhou et al., 2023) constructed a multi-level cascaded spiking neural network model to recognize fast-moving characters. Subsequently, Wang et al. (Wang et al., 2022e) further adopted an end-to-end supervised learning spiking neural network for alphanumeric recognition. Fan et al. (Fan et al., 2023) designed a deep spiking neural network for classification tasks and performed supervised learning on the deep learning open-source platform.

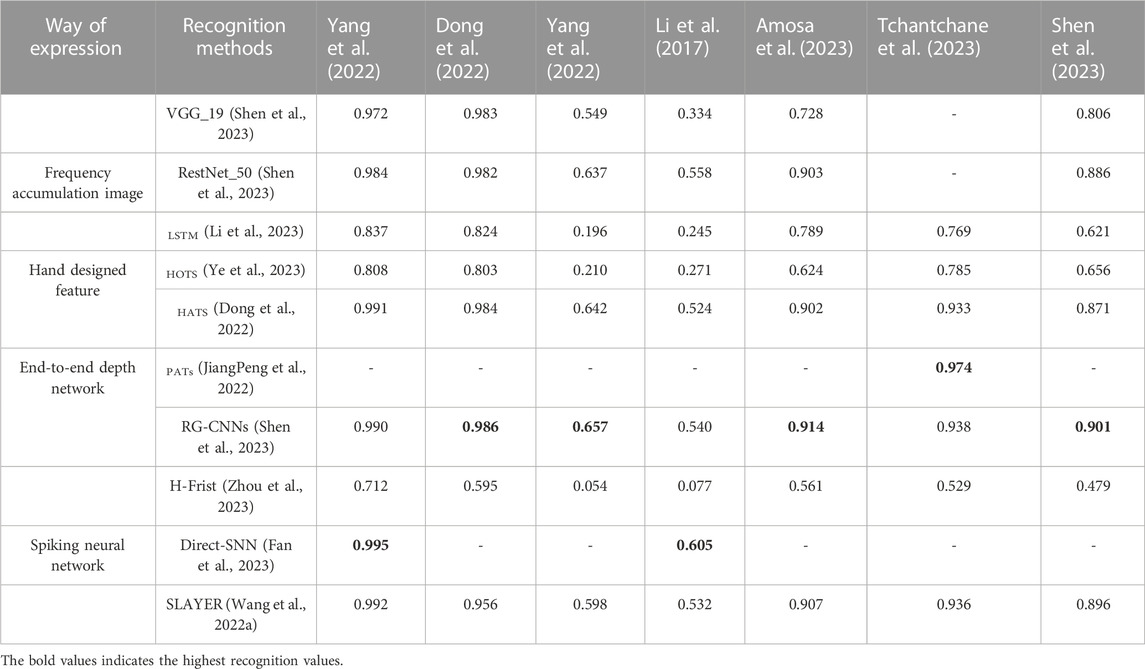

The end-to-end deep network has obvious advantages in the performance of target classification and recognition tasks, as shown in Table 2. Because the asynchronous spatiotemporal pulse signal is modeled as event points, which can better tap the spatiotemporal characteristics. In addition, large-scale classification and recognition datasets provide data-driven insights for deep network models. How to further utilize the timing advantage of high temporal resolution, mine the temporal memory model, learn from the brain-like visual signal processing mechanism (Schomarker et al., 2023), and perform high-speed recognition on the neuromorphic processing chip is an urgent problem that needs to be studied in current neuromorphic vision tasks.

6.5 Object detection, tracking and segmentation

6.5.1 Object detection

In recent years, object detection methods in neuromorphic vision are divided into two directions from the task perspective: primitive detection and target object detection.