- 1Department of Special Needs and Inclusive Education, Debre Tabor University, Debre Tabor, Ethiopia

- 2Department of English Language and Literature, Debre Tabor University, Debre Tabor, Ethiopia

Introduction: Formative assessment tries to improve learning rather than simply providing judgments on learners’ achievement.

Objective: The purpose of the study is to examine the practice of formative assessment (FA) and the influence of socio-demographic factors (experience, education, and university category) on the practice.

Methods: The study used a quantitative approach with a descriptive survey research design. Data were collected from 319 teachers through a questionnaire designed to measure three major components of FA: feedback, alignment of FA with objectives and contents, and peer and self-assessment. Participants were selected using both stratified and random sampling techniques, and the data were analyzed using both descriptive and inferential statistics.

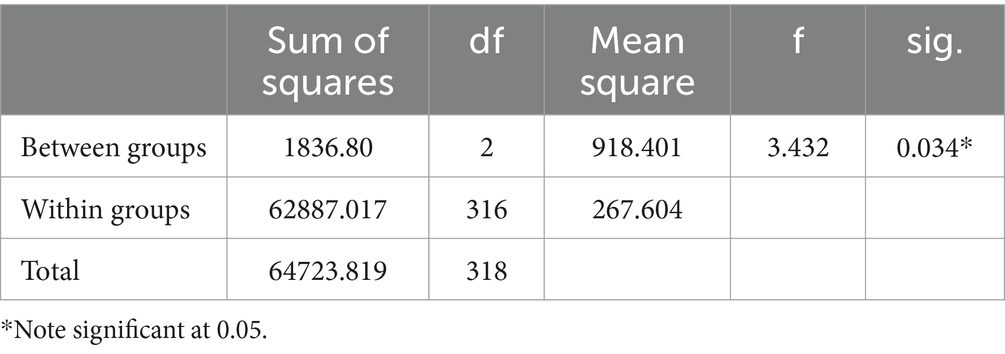

Results: The analysis revealed that most higher education teachers did not use formative assessment strategies to help students progress. This was shown by the misalignment of FA strategies with the learning objectives and contents of the course, improper feedback for learners, and a lack of learner involvement in the assessment procedure. We conducted a one-way ANOVA, a statistical test that indicates whether the differences observed between groups are likely due to chance or are statistically significant. The level of FA practice differed significantly by university category and teaching experience. However, ‘educational level’ yielded non-significant results for the extent of practicing FA, implying that holding a higher degree does not make a teacher more likely to use FA strategies.

Conclusions and recommendations: Overall, teachers in higher education institutions were not practicing FA strategies. Thus, universities are required to organize discussions and training that would enable teachers to recognize and discharge their multifaceted roles in performing FA.

Introduction

Assessment is a process of collecting and discussing information from various sources and activities to determine how well students have learned the subject matter and how well educational learning outcomes are met in all educational systems (Muskin, 2017). Summative and formative assessment (FA) tasks are used while assessing learners (Anisa et al., 2021). Summative assessment uses student learning data to measure academic accomplishment at the end of a specified period in order to assign marks, whereas FA employs student learning data to deliver feedback, diagnose difficulties, and track learning progress to scaffold students’ learning. FA combines formal and informal data collection strategies to increase student learning, and offers teachers and students ongoing, real-time information that informs and supports instruction (Nyabe, 2015).

Assessment is a comprehensive and systematic process of collecting, analyzing, and discussing information from various sources and activities to determine how well students have learned the subject matter and how effectively educational learning outcomes are met across all educational systems (Muskin, 2017). This process is integral to the educational experience as it provides critical insights into student performance, instructional effectiveness, and curriculum adequacy. By identifying the strengths and weaknesses of both students and educational programs, assessment helps ensure that educational objectives are being achieved and provides a basis for continuous improvement in teaching and learning practices. Within this framework, two primary types of assessment tasks are utilized: summative and formative assessments (Anisa et al., 2021). These assessments play a pivotal role in evaluating student performance, guiding instructional strategies, and informing educational policy decisions.

Summative assessment is designed to evaluate student learning at the conclusion of an instructional period, such as the end of a unit, course, or academic term. It uses student learning data to measure academic accomplishment and assign marks or grades, providing a clear indication of whether students have met the learning objectives (Chand and Pillay, 2024). This type of assessment is often high stakes, as it contributes significantly to final grades and academic records. Summative assessments typically include standardized tests, final exams, and end-of-term projects, which are used to make important decisions about student progression, certification, and accountability (Holmes, 2018).

In contrast, formative assessment (FA) is an ongoing process that employs student learning data to deliver timely feedback, diagnose learning difficulties, and monitor progress. The goal of FA is to scaffold students’ learning by identifying areas where they need support and providing the necessary interventions. FA combines both formal and informal data collection strategies, such as quizzes, observations, student reflections, and peer assessments, to enhance learning. It offers teachers and students continuous, real-time information that informs and supports instruction, making it a dynamic and integral part of the teaching and learning process (Andrade and Heritage, 2018). Formative assessment is characterized by its iterative nature, where feedback loops are created to help students understand their learning progress and areas for improvement. This approach fosters a growth mindset, encourages self-regulated learning, and promotes a deeper understanding of the subject matter. By integrating formative assessment into daily instructional practices, educators can create a more responsive and adaptive learning environment that meets the diverse needs of all students (Ismail et al., 2022).

Formative assessment tries to improve learning rather than simply providing judgments on learners’ achievement (Alahmadi and El Keshky, 2018). It can be used in a variety of settings, learning topics, types of knowledge and skills, and educational levels. In contrast to summative assessment, FA makes it easier to diagnose learning challenges, provide feedback, and inspire students to achieve the desired learning objectives (Holmes, 2018). It encourages a deeper comprehension of the subject and helps to identify individual strengths and limitations. It also promotes active student participation, since students are continually encouraged to stay on track and make sustained efforts throughout the course (Muskin, 2017). At the same time, the increased emphasis on FA across the world poses a difficulty for universities that have previously relied on summative assessments. Taken together, these points demonstrate the ability of FA to establish relevant learning environments in higher education institutions (Ababio and Dumba, 2013).

Specifically, FA has cognitive and metacognitive benefits for all university students, as well as for future teachers and administrators. It is an important strategy for professionals working in higher educational settings since it provides several feedback opportunities to promote self-regulated learning (Xiao and Yang, 2019). It provides students and educators with information that allows them to analyze and alter the instructional process, which improves the educator’s ability to support student learning (Playfoot, 2023). Similarly, Black and Wiliam (2005) argue that FA, when used as learning evidence, allows educators to alter teaching strategies to meet learning needs, resulting in considerable gains in student learning achievement and improvement. Thus, if university teachers build FA into their everyday lessons, students’ learning will improve. However, educators either did not comprehend this concept or were hesitant to employ it, even while acknowledging its importance in enabling learning development (Forster and Souvignier, 2014).

In constructing a formative assessment, there are a variety of aspects and components that need to be considered. First, teachers should align the assessment with learning objectives and criteria to ensure that assessment measures are directly tied to learning outcomes (Forster and Souvignier, 2014). Alignment in the context of formative assessment means that assessment strategies must directly measure specified learning objectives and criteria.

Moreover, FA should include feedback strategies that can encourage learners’ achievement (Yan and Pastore, 2022). Teachers should also ensure that the assessment includes both peer and self-assessment techniques to foster a collaborative environment where students learn from and support each other. Peer and self-assessment are techniques in which learners offer feedback to one another (peer assessment) and reflect on what they have learned (self-assessment), encouraging more thorough learning. Research evidence on peer and self-assessment underscores its significance in stimulating deep learning and critical thinking (Fisseha, 2015). Finally, teachers should use various assessment modes, such as quizzes, projects, discussions, presentations, and role play, which can provide a more comprehensive view of student learning (Fisseha, 2015; Forster and Souvignier, 2014).

The Ethiopian Education Policy document, disseminated in 2018, specifies that FA should receive more consideration at every level of study. The policy statement emphasizes improvement-oriented assessment (Ministry of Education, 2018). Regardless of the aims outlined in the policy statement, the use of FA to promote students’ learning in higher learning institutes is almost non-existent. Educators in higher education frequently neglect the use of formative assessment during the teaching and learning process for a variety of reasons, including a lack of pedagogical skills, large class sizes, and reluctance. As a result, the actual practice of FA does not align with the policy document’s requirements.

Quality assessments carried out by Jimma University and other Ethiopian educational institutions in 2007 and 2009, as well as independent quality audits conducted by the Higher Education Relevance and Quality Assurance (HERQA) in 2008, confirmed that FA practices in university courses have had limited success in improving learning outcomes. As a result, there may be a perceived need to investigate the extent to which FA is used and the accompanying hurdles that have hampered its adoption in Ethiopian higher education institutions (Birhanu, 2018). Thus, this research focused on FA practice at three Ethiopian government-run institutions, examining alignment, feedback, self-assessment, and peer assessment, as well as the influence of socio-demographic factors on the implementation of FA.

Although the importance of FA is well recognized, teachers confront several obstacles while implementing it. One major barrier is a lack of time and resources. Teachers are usually overwhelmed with curriculum objectives, leaving little time for periodic assessments (Wiliam, 2010). FA adoption is also hampered by a lack of awareness of it and of positive attitudes toward it (Berry, 2010). Furthermore, a common misconception is that formative assessment is only “testing,” which leads to a focus on summative assessments rather than ongoing feedback and correction (Yan, 2014). In addition, the large number of students per classroom, the absence of proper teaching-learning facilities, dissatisfaction arising from student cheating, and students’ unwillingness to participate in FA are important barriers to implementing it effectively in higher education institutions (Harrison, 2013). Overcoming these challenges requires considerable effort from educators.

The relationship between teachers’ experience and education and their classroom assessment practice in universities is a critical topic of research. Studies have shown that a teacher’s experience and education have a considerable influence on FA practice at all levels of schooling. In addition, different universities’ rules, cultures, and resources can influence how teachers teach and assess their students. In this context, studies revealed that teachers with more experience and higher levels of education were more likely to employ a variety of assessment strategies, including formative and summative assessment. Experienced and highly educated teachers were also more likely to use FA data in their instruction and to provide feedback to students. This link is most likely due to a combination of variables, including enhanced knowledge and abilities, improved classroom management, and higher confidence (Brookhart and Guskey, 2001). However, this relationship has not been thoroughly addressed in Ethiopian universities.

Even though the aforementioned policy documents and university working instructions emphasized the need for FA, practical evidence suggests that teachers in Ethiopian higher education institutions are not implementing it properly. There appears to be a mismatch between the intended objectives of FA and what instructors have used it for. It has been reported that FA has not been practiced to the expected standard, and as a result the intended results and goals were not met. In practice, FA is used simply to collect marks rather than to support learners with diverse interests by offering feedback.

This study looks into the gap between policy and practice regarding formative assessment in Ethiopian higher education institutions. The study focuses on three government-run universities and investigates the extent to which FA strategies are implemented, specifically alignment, feedback, and peer and self-assessment, as well as the impact of socio-demographic characteristics (teacher experience, university category, and educational level) on FA implementation. The goal is to identify important gaps and to provide recommendations about how FA might be used more effectively to increase student learning.

Research questions

1. To what extent are FA strategies implemented in Ethiopian higher education institutions?

2. Are there significant differences in FA practices used by teachers as a result of sociodemographic factors (education level, experience, and university category)?

Research methods

Research setting

The government of Ethiopia has made a commendable effort by categorizing public universities into three groups based on their missions. The first group, comprising research universities, is dedicated to doing cutting-edge research in a variety of fields. The second group, the applied science universities, is committed to educating students on issues essential to the nation’s economic growth. It is good to see the government’s dedication to providing education that suits the needs of society. Applied universities promote immediately transferable skills that equip learners for their future careers. In contrast, comprehensive universities provide a range of degrees in different disciplines pertinent to the country, providing students with a comprehensive education that blends academic knowledge with practical application. Based on this, the study was carried out in Ethiopia’s government-funded universities located in the Amhara region. Higher institutions in the region are classified into three types based on their missions and quality. Wollo, Debre Markos, and Debre Berhan universities are applied universities, whilst Bahir Dar and Gondar are research universities. The remaining universities, Debre Tabor, Injibara, Debark, Mekdela Amba, and Woldia, offer comprehensive higher education, mostly for undergraduate students.

Research design

Ledy and Ormrod (2001) define research design as a basis that guides study efforts in order to connect the research problem to relevant facts. As stated above, the research questions are: (1) To what extent are FA strategies implemented in higher education institutions? (2) Are there significant differences in FA practices used by teachers as a result of sociodemographic factors (education level, experience, and university category)? To address these research questions, the study employed a survey research design, which is chiefly quantitative. The researchers used questionnaires to gather quantitative data, which they then analyzed statistically to determine the extent of FA practice reported by respondents (Gravetter and Forzano, 2018).

Sampling techniques and samples

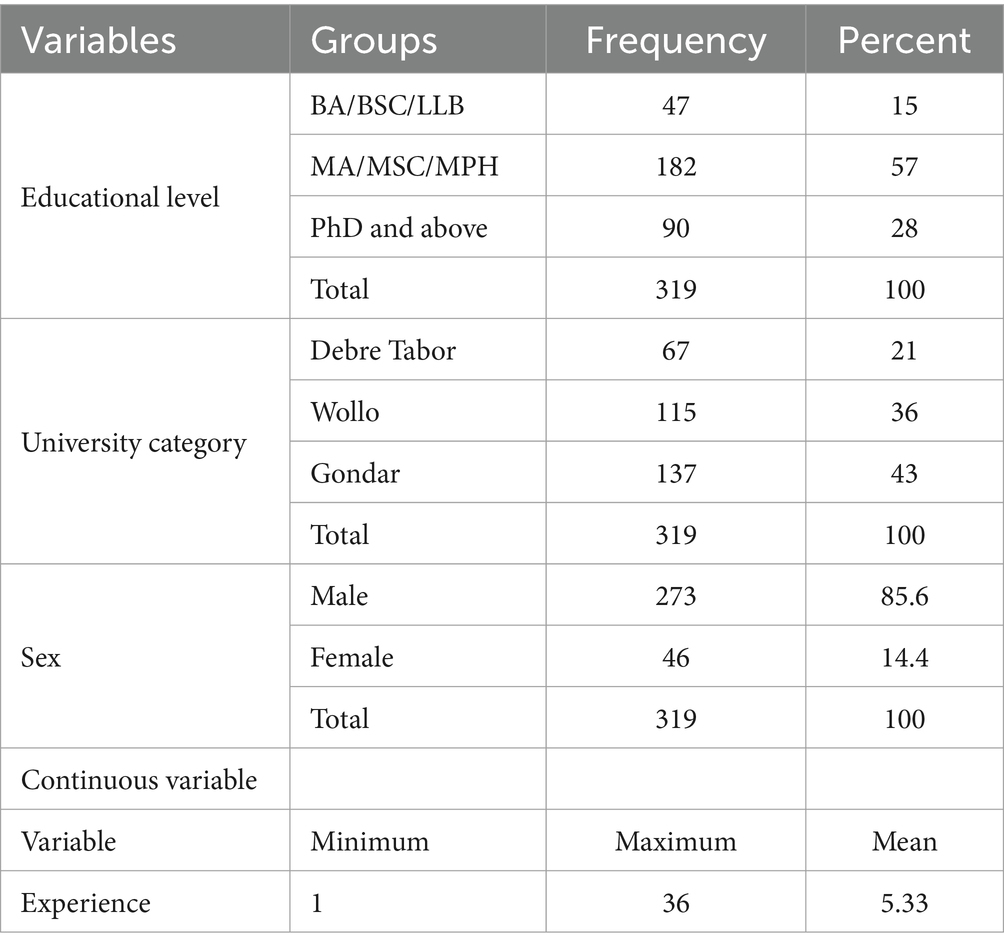

Cohen et al. (2004) indicate that for a population of around 5,320, the best sample size is 384, which is considered representative of the population at a 95% confidence level. As a result, 384 teacher participants were selected from a total population of 5,320, a figure based on the most recent available data from each university. The study used a proportionate stratified sampling technique to divide teacher participants into three strata: teachers at the University of Gondar, teachers at Wollo University, and teachers at Debre Tabor University. The sample size for each stratum was computed in proportion to the total number of teachers at each university. Within each university, colleges were first chosen at random, and teachers were contacted once the colleges had been determined. The researchers then used simple random sampling to choose 176 teachers from the University of Gondar, 139 teachers from Wollo University, and 69 teachers from Debre Tabor University. As a result, 384 people were chosen, of whom 319 (83%) fully completed the questionnaire. Table 1 shows the demographic characteristics of these 319 teacher participants.
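The proportionate allocation and within-stratum random draw described above can be sketched in Python. This is a minimal illustration rather than the authors' procedure. The per-university teacher counts are hypothetical values, chosen only so that they sum to 5,320 and reproduce the reported allocation of 176, 139, and 69; the actual stratum sizes are not reported here. The function names are likewise illustrative.

```python
import random


def proportionate_allocation(strata_sizes, total_sample):
    """Split total_sample across strata in proportion to stratum size."""
    population = sum(strata_sizes.values())
    exact = {name: size * total_sample / population
             for name, size in strata_sizes.items()}
    # Round every share down first.
    allocation = {name: int(share) for name, share in exact.items()}
    # Hand out the places lost to rounding, largest fractional part first.
    shortfall = total_sample - sum(allocation.values())
    by_remainder = sorted(exact, key=lambda name: exact[name] - allocation[name],
                          reverse=True)
    for name in by_remainder[:shortfall]:
        allocation[name] += 1
    return allocation


def simple_random_sample(stratum_size, n, seed=0):
    """Draw n distinct teacher indices from a stratum without replacement."""
    rng = random.Random(seed)
    return rng.sample(range(stratum_size), n)


# HYPOTHETICAL stratum sizes (assumption, not reported in the study).
strata = {
    "University of Gondar": 2438,
    "Wollo University": 1926,
    "Debre Tabor University": 956,
}

allocation = proportionate_allocation(strata, total_sample=384)
samples = {name: simple_random_sample(strata[name], n)
           for name, n in allocation.items()}

# Response rate reported in the text: 319 completed out of 384 distributed.
response_rate = 319 / 384
```

The largest-remainder step is one common way to make rounded shares add up to the target sample size; other rounding rules would also be defensible.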

Data collection instrument

Questionnaire

The researchers employed a questionnaire with four sets of questions based on the aspects of FA outlined in the introduction section. The first set contained items on the socio-demographic characteristics of teachers; the second dealt with the alignment of assessment techniques with objectives and contents; the third addressed peer and self-assessment practice; and the last covered the feedback and remediation strategies used in implementing FA in public universities located in the Amhara region. The areas and specific items in the questionnaire were chosen primarily on the basis of a comprehensive review of the literature, with a focus on established frameworks for effective formative assessment in education. The key concepts covered in the influential work of Hattie and Timperley (2007) and Dunn and Mulvenon (2009) on formative assessment, including feedback provision, and in the studies of Fisseha (2015), Hargreaves (2005), Demissie (2016), and Maclellan (2001) on formative assessment implementation, were used to develop the questions and contents of the questionnaire. This literature emphasizes the necessity of aligning assessment methods with the objectives and contents of the subject being taught, utilizing peer and self-assessment, and implementing feedback and remedial processes as critical components of effective FA practice. In short, we combined multiple theoretical frameworks to capture the essential features revealed in the literature review.

Following a critical analysis of the literature, the researchers constructed the questionnaire with a four-point Likert scale with values: 1 = never, 2 = rarely, 3 = sometimes, 4 = always. The FA dimensions in the questionnaire comprise a total of 28 questions.

Validity and reliability were checked for this set of questions. Content validity was checked by two assistant professors in educational psychology with 5 years of teaching experience, and questions were modified where needed. These experts rated the questionnaire’s overall content positively in measuring the variables under consideration. Responses were interpreted using the mean scores of each dimension, obtained through descriptive statistics, as recommended by Fisseha (2015), Nyabe (2015), Getinet (2016), and Gemechu et al. (2017). Therefore, a higher score on each item and dimension indicates a higher level of FA practice.

Before gathering data, it is advisable to assess the internal consistency of the questionnaire items in measuring the target variables. To examine the reliability of the instrument, twenty-five teachers took part in a pilot at Bahir Dar University, Bahir Dar, Ethiopia, and reliability was tested using Cronbach’s alpha. The test indicated that the general FA items had a reliability of 0.76, meaning that the questionnaire was internally consistent. The Cronbach’s alpha values for each dimension were between 0.72 and 0.80. Items that pilot teachers found unclear were revised for clarity before the data-gathering process began. The instrument was therefore judged reliable for use in the final data collection.
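For readers less familiar with the statistic, Cronbach’s alpha for k items is k/(k − 1) × (1 − Σ item variances / variance of total scores). The sketch below shows the calculation in plain Python. The pilot responses are hypothetical placeholders, not the study's data, and the function name is illustrative; the study itself carried out its analysis in SPSS.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    k = len(responses[0])                      # number of items
    items = list(zip(*responses))              # transpose to one tuple per item
    item_variance_sum = sum(variance(item) for item in items)
    total_variance = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_variance_sum / total_variance)


# HYPOTHETICAL pilot data: 5 respondents x 4 items on the 1-4 scale.
pilot = [
    [1, 2, 1, 2],
    [2, 2, 3, 2],
    [3, 3, 3, 4],
    [4, 3, 4, 4],
    [2, 1, 2, 1],
]

alpha = cronbach_alpha(pilot)
```

Values of about 0.70 and above are conventionally read as acceptable internal consistency, which is the reading the text applies to the reported 0.76.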

After piloting and refining the questionnaire, as previously stated, the final version was distributed to 384 teachers from Amhara’s sample universities. Data collectors physically distributed the questionnaire to participants. Teachers took 30–40 min to complete the questionnaire. We ensured participant anonymity and confidentiality of responses. Participation was entirely optional, and all participants provided informed consent before beginning the study.

Missing data were addressed by carefully reviewing incomplete responses during data cleaning. Where missing data did not compromise the integrity of a survey response, the missing values were removed; responses with significant missing data were excluded to preserve statistical validity.

Methods of data analysis

The quantitative data were analyzed with SPSS version 24. The study’s objective was to determine the extent to which FA is practiced, as well as the effect of sociodemographic characteristics (educational level, university type, and teaching experience) on FA practice. Both descriptive and inferential statistics were used. Descriptive statistics such as the mean and SD were used to present the average ratings of each FA component, including content and objective alignment, self-assessment, peer assessment, and feedback strategies. Inferential statistics, namely one-way ANOVA and the Pearson product–moment correlation, were also employed. A one-way ANOVA was used to investigate differences in the level of FA practice based on university category. For variables with a statistically significant difference, post hoc analysis was conducted to identify which groups differed, at a significance level of 0.05. In addition, the relationship between FA practice and teachers’ experience was analyzed using the Pearson product–moment correlation coefficient.
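The two inferential statistics named above can be illustrated with a short Python sketch. It is not the SPSS procedure used in the study: the group scores and experience values are hypothetical, the function names are illustrative, and the code stops at the F ratio and r because the p-value requires the F distribution, which a statistics package supplies.

```python
from math import sqrt
from statistics import mean


def one_way_anova(groups):
    """Return (F, df_between, df_within) for a list of groups of scores."""
    all_scores = [x for group in groups for x in group]
    grand_mean = mean(all_scores)
    # Variation of group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of individual scores around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between = len(groups) - 1
    df_within = len(all_scores) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


def pearson_r(xs, ys):
    """Pearson product-moment correlation between two equal-length lists."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    return cov / sqrt(sum((x - mx) ** 2 for x in xs)
                      * sum((y - my) ** 2 for y in ys))


# HYPOTHETICAL FA scores for three university categories.
groups = [[1, 2, 3], [2, 3, 4], [5, 6, 7]]
f_stat, df_between, df_within = one_way_anova(groups)

# HYPOTHETICAL years of experience and FA scores.
r = pearson_r([1, 2, 3, 4], [2, 4, 6, 8])
```

With three groups and 319 respondents, the same degrees-of-freedom formulas give 2 and 316.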

Findings and discussion

In recent times, Ethiopian higher education institutions have increasingly adopted FA, principally as a means of improving student learning outcomes and education quality. However, the data attest to improper implementation of FA, which can be attributed to a range of circumstances. This trend appears to continue in public universities in the Amhara region. This part covers the study’s key findings in two categories: the current practice of FA according to its dimensions, and the influence of teachers’ experience, education level, and university category on the extent of practicing FA.

FA implementations in public universities

The practice of FA in various activities performed by university teachers was assessed. Twenty-eight items with four response categories were used to measure the extent to which teachers practice FA activities across different dimensions (alignment of FA techniques with objectives and contents, peer and self-assessment practice, and feedback strategies employed). The survey used a numerical rating scale, with participants indicating how often they practiced the FA strategy described in each statement. The highest possible response for each statement was “always,” which received a rating of 4, and the lowest was “never,” which received a rating of 1. The mean and standard deviation of each item in each component of FA were calculated. Then, for each dimension, an average mean rating was obtained by dividing the total of the item means by the number of statements listed for that dimension. The main findings are given and discussed in the following sections.
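The scoring procedure described above (item means and SDs first, then a dimension mean taken over the item means) can be sketched as follows. The ratings are hypothetical, and the function name is illustrative only.

```python
from statistics import mean, stdev


def dimension_summary(responses):
    """Return per-item means, per-item SDs, and the dimension's average mean.

    responses: one list of item ratings (1-4) per respondent.
    """
    items = list(zip(*responses))              # one tuple of ratings per item
    item_means = [mean(item) for item in items]
    item_sds = [stdev(item) for item in items]
    # Dimension score: total of the item means divided by the number of items.
    dimension_mean = sum(item_means) / len(item_means)
    return item_means, item_sds, dimension_mean


# HYPOTHETICAL ratings: 4 respondents x 3 items in one FA dimension.
feedback_responses = [
    [1, 2, 1],
    [2, 3, 1],
    [1, 4, 2],
    [2, 3, 2],
]

item_means, item_sds, dimension_mean = dimension_summary(feedback_responses)
```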

Feedback strategies

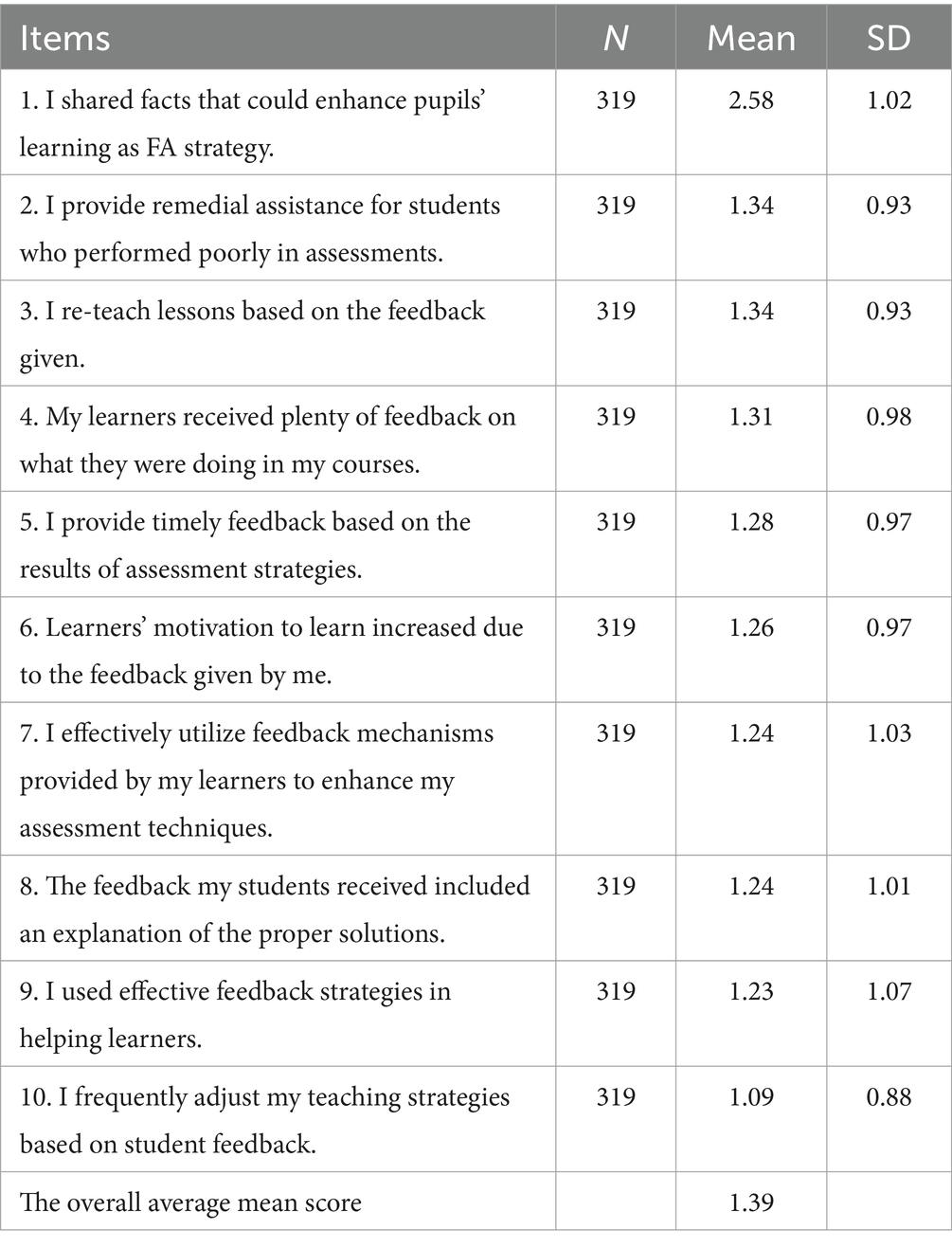

The first FA dimension that this study examined was feedback strategies (see Table 2).

According to the descriptive statistics (mean and SD), the mean ratings of all items are lower than expected (between 1.09 and 2.58). This dimension had an overall mean score of 1.39, implying that teachers in public universities infrequently used feedback strategies as part of FA. Of the 10 items, one scored above the average mean score: ‘I communicated information that could improve students’ (M = 2.58, SD = 1.02). This highest-scoring item suggests that teachers practiced some components of FA more frequently than others. On the other hand, the lowest mean score was observed for the item ‘I frequently adjust my teaching strategies based on student feedback’ (M = 1.09, SD = 0.88), which indicates that teachers practiced this activity less than the others mentioned. Thus, teachers were less engaged in using feedback and remediation strategies to support students’ learning. This evidence suggests that, despite some understanding of the value of feedback, teachers are not participating in the whole cycle of formative assessment that is required for effective teaching. The educational implication is that formative assessment techniques, particularly those involving feedback and remediation, are not being implemented effectively in these public universities. The findings point to a critical need for professional development programs that provide teachers with not just the skills but also the confidence to make data-driven changes to their instruction, for a review of assessment processes, and for an emphasis on developing student-centered learning environments in which feedback is valued and practiced.

The finding is consistent with studies conducted by Skalicky and Brown (2009), Florez and Sammons (2013), and Faremi et al. (2023). These studies noted that teachers were not using appropriate feedback and remediation for learners at higher education institutions. Given the importance of feedback, higher education institutions must take action to address this issue. This could include giving additional training and assistance to instructors and implementing new policies and procedures to ensure successful feedback and remediation. Thus, it is critical to move beyond rhetoric and make feedback a central component of the teaching and learning process using formative assessment techniques.

Peer and self-assessment practice

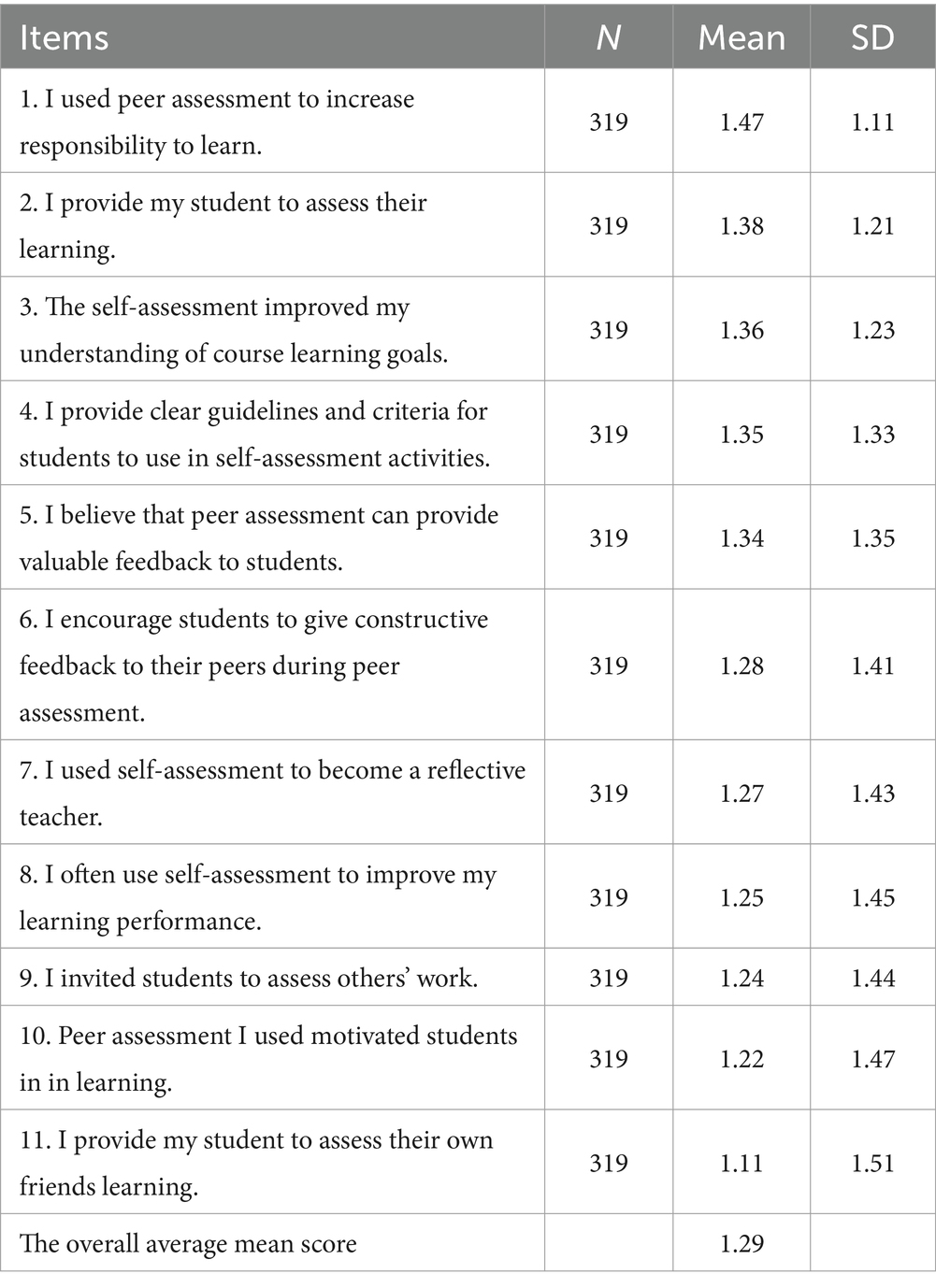

To measure teachers’ views on their use of peer and self-assessment activities, 11 items with four response options were employed. The mean scores and SDs were used (see Table 3).

In terms of peer and self-assessment, all of the items described were practiced at a low level. This dimension had a mean response of 1.29 per item, with a range of 1.11 to 1.47, which was lower than the cut-off point (2), indicating that university lecturers did not frequently employ peer and self-assessment techniques to help students learn. Teachers’ responses on students’ engagement in the assessment procedures fell consistently in the “rarely” response area. In sum, the findings revealed that teachers in universities of Amhara regional state did not engage students in assessing themselves and others. These findings are largely consistent with previous studies reporting that teachers did not employ both self-assessment and peer assessment in the teaching-learning process (Brookhart and Guskey, 2001; Skalicky and Brown, 2009; Solomon, 2014; Getinet, 2016; Kindu, 2018). As a result, it could be argued that the involvement of students in the assessment procedure in universities was underutilized. This finding has implications for both students and educators. For students, it implies they may not be receiving the feedback and support necessary to excel in their studies. For teachers, it means they may require additional training and assistance to adopt effective self-assessment and peer assessment procedures in the classroom.

Alignments of FA techniques with objectives and contents

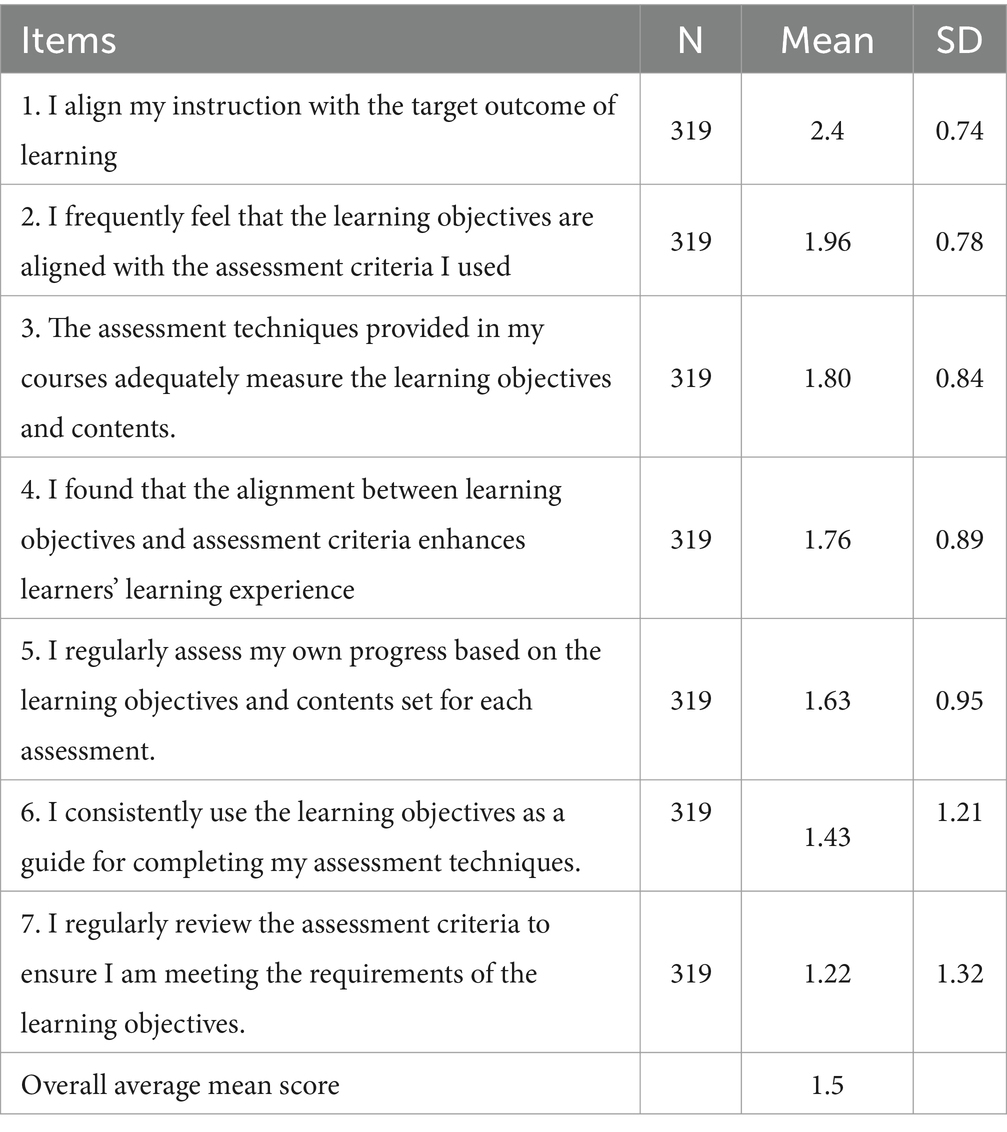

In this part, teachers were requested to respond to items on aligning assessment strategies with the objectives and contents of the course. In this sub-scale, a total of seven items were presented (see Table 4).

The response options for the teacher participants on this dimension of FA items were: always (4), usually (3), sometimes (2), and rarely (1). This dimension had an overall average mean score of 1.50 with a range of 1.22 to 2.40, implying that teachers’ alignment of their assessment techniques with the learning objectives and contents of the course was fairly low. The two items with the highest mean scores were ‘I align my instruction with target outcome of learning’ (M = 2.40, SD = 0.74) and ‘I frequently feel that the learning objectives are clearly aligned with the assessment criteria I used’ (M = 2.96, SD = 0.78).

The lowest mean scores were observed for the items ‘I regularly review the assessment criteria to ensure I am meeting the requirements of the learning objectives’ (M = 1.22, SD = 1.32) and ‘I consistently use the learning objectives as a guide for completing my assessment techniques’ (M = 1.43, SD = 1.21). Generally, teachers in public universities of Amhara regional state were not effectively maintaining alignment of FA strategies with the contents and objectives of the course. This is consistent with the findings of Maclellan (2001), Fisseha (2015), Getinet (2016), Sintayehu (2016), and Abera and Tolosa (2019). These studies lend additional evidence and credibility to the argument made in the current study.

Based on the findings stated above, the insufficient use of formative assessment techniques in Ethiopia’s public universities is a substantial challenge to improving student learning. Teachers may grasp the necessity of matching their instruction with learning objectives, but often struggle to put this understanding into practice on a daily basis. This is seen in the infrequent use of peer and self-assessment approaches, which actively engage students in their own learning, as well as in the misalignment between declared learning objectives and assessment methods. These challenges are most likely exacerbated by large class sizes and the prevalence of more traditional teaching methods. A change toward more learner-centered teaching and assessment approaches is critical for improving the student learning experience, and should be reinforced by practical teacher training opportunities.

Inferential analysis: exploring differences and relationships

Sociodemographic variation in practicing FA

The study examined how sociodemographic characteristics, namely educational level, university category, and teaching experience, affected the level of FA practice. Before running inferential statistics such as one-way ANOVA and Pearson product–moment correlation, the normality assumption was checked. Skewness and kurtosis are two measures used to determine whether data are normally distributed (Kline, 2011). In this study, the skewness and kurtosis values of each variable were divided by their standard errors, and all of the resulting z values fell between −1.96 and 1.96, indicating that the scales were approximately normally distributed. That is, the skewness and kurtosis scores revealed no substantial deviations from a normal distribution. Similarly, the histograms indicated that the data for the study’s variables were approximately normally distributed. As a result, one-way ANOVA and the Pearson product–moment correlation coefficient were used to determine the presence of differences and correlations.
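The normality screen described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names `skew_kurtosis_z` and `looks_normal` are hypothetical, the bias-corrected estimators and standard-error formulas are the ones commonly reported by statistical packages, and the study does not say which software or exact formulas were used.

```python
import math

def skew_kurtosis_z(values):
    """Return (z_skew, z_kurt): skewness and excess kurtosis divided by
    their standard errors. Hypothetical helper, not the authors' code."""
    n = len(values)
    if n < 4:
        raise ValueError("need at least 4 observations")
    mean = sum(values) / n
    # Central moments of the sample
    m2 = sum((x - mean) ** 2 for x in values) / n
    m3 = sum((x - mean) ** 3 for x in values) / n
    m4 = sum((x - mean) ** 4 for x in values) / n
    if m2 == 0:
        raise ValueError("all observations are identical")
    g1 = m3 / m2 ** 1.5          # moment-based skewness
    g2 = m4 / m2 ** 2 - 3.0      # moment-based excess kurtosis
    # Bias-corrected sample skewness and excess kurtosis
    skew = math.sqrt(n * (n - 1)) / (n - 2) * g1
    kurt = (n - 1) / ((n - 2) * (n - 3)) * ((n + 1) * g2 + 6)
    # Standard errors under normality
    se_skew = math.sqrt(6.0 * n * (n - 1) / ((n - 2) * (n + 1) * (n + 3)))
    se_kurt = 2.0 * se_skew * math.sqrt((n * n - 1) / ((n - 3) * (n + 5)))
    return skew / se_skew, kurt / se_kurt

def looks_normal(values, critical=1.96):
    """True when both z values lie within +/- critical (1.96 for alpha = 0.05)."""
    z_skew, z_kurt = skew_kurtosis_z(values)
    return abs(z_skew) <= critical and abs(z_kurt) <= critical
```

A scale would pass this screen only if both z values stay inside the ±1.96 band; a histogram, as used in the study, remains a useful visual cross-check.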

University category and extent of practicing FA

The university category was divided into three levels. The one-way ANOVA showed statistically significant differences (α = 0.05) in instructors’ FA practice by university: F (2, 316) = 3.432, p = 0.034 (p < 0.05). Multiple comparisons using the post hoc Tukey HSD (honestly significant difference) test were then performed to determine which group means differed. The group means were Debre Tabor University (M = 72), University of Gondar (M = 69.9), and Wollo University (M = 65), and the significant difference was found between Debre Tabor University and Wollo University. One possible explanation for this discrepancy is that Debre Tabor University places a greater focus on FA in its educational program. It may also offer additional opportunities for teachers to improve their FA practice through workshops, professional development, and other activities. If so, this emphasizes the importance of curriculum and training on FA strategies: certain aspects of Debre Tabor University’s program may be preparing instructors to use FA more effectively. To scale up FA practices, other universities could follow Debre Tabor University’s lead and incorporate thorough FA training into their programs. This finding is consistent with prior research (Wiliam, 2010; Guskey, 2003), which has shown that school- and university-level characteristics, such as teachers’ working environment, influence instructors’ assessment practice (see Table 5).
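To make the one-way ANOVA step concrete, the following minimal sketch computes the F statistic and its degrees of freedom from raw group scores. The function name `one_way_anova` is hypothetical and the example does not use the study’s data; obtaining the p value and the Tukey HSD comparisons would additionally require the F and studentized range distributions, which a statistics package (for example SciPy’s `scipy.stats.f_oneway` and `scipy.stats.tukey_hsd`) can supply.

```python
def one_way_anova(groups):
    """Return (F, df_between, df_within) for a list of groups of scores.
    Hypothetical helper for illustration, not the authors' analysis code."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    if k < 2 or n_total <= k:
        raise ValueError("need at least two groups and more scores than groups")
    grand_mean = sum(sum(g) for g in groups) / n_total
    ss_between = 0.0  # variation of group means around the grand mean
    ss_within = 0.0   # variation of scores around their own group mean
    for g in groups:
        group_mean = sum(g) / len(g)
        ss_between += len(g) * (group_mean - grand_mean) ** 2
        ss_within += sum((x - group_mean) ** 2 for x in g)
    df_between = k - 1
    df_within = n_total - k
    if ss_within == 0:
        raise ValueError("no within-group variation; F is undefined")
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within
```

With three university categories and 319 respondents, this gives df_between = 3 − 1 = 2 and df_within = 319 − 3 = 316, which matches the F (2, 316) reported above.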

Educational level and extent of practicing FA

Teachers’ educational level was categorized into three levels: BA/BSC/LLB, MA/MSC/MPH, and PhD and above. The ANOVA result showed no significant differences (α = 0.05) in teachers’ FA practice attributable to their educational level: F (2, 316) = 0.531, p = 0.272 (p > 0.05). The data indicate that educational level, as examined in this study, is not a significant predictor of teachers’ FA practice. This runs counter to some common assumptions about the relationship between education and formative assessment. Future research is needed to examine the possible causes of this result and the interactions between education, practical training, and other external variables that influence FA practice (see Table 6).

By contrast, Darling-Hammond (2000), Guskey (2003), and Stiggins (2005) reported that teachers with higher levels of education are more effective in their classrooms, possibly because they understand and use formative assessment more.

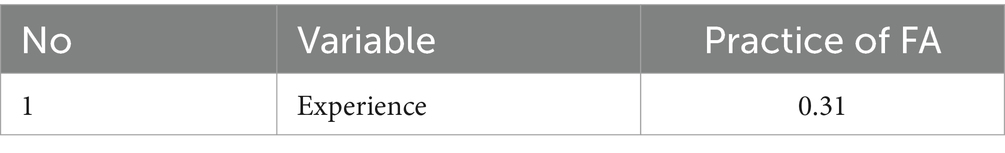

Relationship between teaching experience and extent of practicing FA

In addition to one-way ANOVA, Pearson product–moment correlation was computed to see if there is a statistically significant relationship between teachers’ work experience and practices of FA (see Table 7).

The Pearson product–moment correlation coefficient revealed a moderate, statistically significant positive relationship between the extent of practicing FA and teachers’ teaching experience: as teachers’ experience increased, so did their level of practicing FA in public universities in Amhara Region, Ethiopia. The finding is consistent with other studies (Brown and Hofer, 2014), which found that teachers with more teaching experience were more likely to use a variety of FA strategies.
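The correlation step can be illustrated with a short sketch. It computes Pearson’s r from paired observations and the t statistic (on n − 2 degrees of freedom) that is conventionally used to test whether r differs from zero. The function names and the toy inputs are assumptions made for illustration; they are not the study’s data or code.

```python
import math

def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two sequences of equal length, at least 3 pairs")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("a variable with zero variance has no correlation")
    return sxy / math.sqrt(sxx * syy)

def t_statistic(r, n):
    """t value for testing r against zero, with n - 2 degrees of freedom."""
    if abs(r) >= 1:
        raise ValueError("t is undefined for a perfect correlation")
    return r * math.sqrt((n - 2) / (1 - r * r))

# Toy example (hypothetical values): years of experience vs. FA practice score
experience = [1, 2, 3, 4]
fa_score = [1, 3, 2, 4]
r = pearson_r(experience, fa_score)
t = t_statistic(r, len(experience))
```

A positive r indicates that FA practice scores tend to rise with teaching experience; whether the relationship is statistically significant depends on comparing t with the critical value for n − 2 degrees of freedom.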

Implication of the findings

The implications of this study are multifaceted and significant for the landscape of higher education in Ethiopia. Firstly, the findings highlight a critical gap in the implementation of formative assessment (FA) strategies among higher education teachers. Despite the recognized importance of FA in enhancing student learning, the study reveals that many teachers are not effectively utilizing these strategies. This misalignment between FA strategies and learning objectives, coupled with inadequate feedback and limited student involvement, suggests a need for substantial improvements in teaching practices.

The study’s results indicate that socio-demographic factors, particularly university category and teaching experience, significantly influence the practice of FA. This suggests that institutional context and professional experience play crucial roles in shaping how teachers approach formative assessment. The significant differences observed across university categories imply that institutional policies and priorities may impact the adoption and effectiveness of FA practices. Therefore, universities must consider these factors when designing and implementing professional development programs.

Moreover, the non-significant impact of educational level on FA practices suggests that simply having advanced degrees does not necessarily translate to better FA implementation. This finding underscores the importance of targeted training and support for teachers, regardless of their educational background. Universities should focus on providing ongoing professional development opportunities that emphasize practical FA strategies and their alignment with course objectives.

The study also calls for a systemic approach to improving FA practices. Universities should organize regular discussions and training sessions to help teachers understand and fulfill their roles in formative assessment. By fostering a culture of continuous improvement and collaboration, institutions can ensure that teachers are better equipped to use FA strategies effectively. This, in turn, can lead to improved student outcomes and a more robust educational system.

In conclusion, the study underscores the need for a comprehensive strategy to enhance formative assessment practices in higher education. By addressing the identified gaps and leveraging the influence of socio-demographic factors, universities can create a more supportive environment for both teachers and students. This approach will not only improve the quality of education but also contribute to the overall development of the higher education system in Ethiopia.

Limitations of the study

Like any other research, this study had some limitations that could affect its results. First, generalizability is low because the study focuses only on public universities in Ethiopia’s Amhara region, which may not reflect the experiences of private colleges or institutions in other regions or countries. Furthermore, relying on self-reported data from teachers raises the possibility of bias, as teachers may overstate or understate their use of formative assessment (FA) strategies. The study also lacked qualitative data, which could provide further insight into the reasons behind teachers’ practices and the challenges of FA. Finally, the study does not take into consideration other potential confounding variables, such as class size, teaching load, or access to professional development opportunities, all of which could have an impact on FA strategy implementation. These limitations highlight the necessity for more complete data collection, including qualitative insights, a larger sample size, and evaluation of other factors that may influence FA practices.

Conclusion

According to the results of the study, assessment in the teaching-learning process relies on traditional assessment methods rather than on formative assessment strategies. The analysis indicates that teachers did not properly align their assessment techniques with the learning objectives and contents of their courses in universities located in Amhara region, Ethiopia. Feedback mechanisms were rarely used, and teachers rarely adjusted their instruction based on student feedback. Furthermore, peer and self-assessment procedures were underutilized, indicating that students were not fully engaged in the assessment process.

Lastly, the study found statistically significant differences in the practice of FA among the categories of universities. Specifically, Debre Tabor University teachers reported higher levels of FA implementation than others. Moreover, there is a positive relationship between teachers’ teaching experience and their practice of FA in Amhara Region public universities in Ethiopia. This suggests that as teachers gain experience, they are more likely to include FA in their instructional techniques. The data imply that, while experience is important, institutional environment is also a crucial factor influencing FA implementation in the sampled public universities. However, the analysis showed that educational level does not have a significant influence on the extent of practicing FA. That is, holding a higher degree does not make a teacher more likely to use FA strategies. Taken together, these results emphasize the connection between socio-demographic factors, such as teaching experience and institutional environment, and the practice of formative assessment in public universities of Amhara region, Ethiopia.

Recommendations

Based on the study’s findings and conclusion, the following recommendations are put forward:

• Professional development training: Seminars, conferences, and workshops should be organized regularly for university lecturers so that they acquire the skills required to practice FA adequately. The training should include practical ideas and procedures, examples of excellent student feedback, and practice in establishing clear assessment criteria. It should also discuss how to deal with large class sizes and resource constraints, which are common in Ethiopian higher education.

• Establish collaborative networks: The universities of the Amhara region already work together on a number of relevant issues through the Amhara University forum. It is therefore recommended that this forum also be used to share FA implementation practices among member universities on an equal footing.

• Policy and guidelines: It is advised that higher education institutions in the Amhara region incorporate FA techniques into their institutional policies and curricula. This would contribute to a more methodical approach to the implementation of FA strategies. Moreover, specific guidelines can help institutions implement FA techniques more consistently, eventually enhancing student learning results.

• Future research: Further studies should be carried out to validate the major findings within a broader context and across various universities. Such studies should employ a qualitative approach in which data are obtained from both teachers and students.

Data availability statement

The datasets presented in this article are not readily available because of ethical issues. Requests to access the datasets should be directed to the corresponding author.

Ethics statement

Ethical review and approval was not required for the study on human participants in accordance with the local legislation and institutional requirements. Written informed consent from the participants or the participants’ legal guardian/next of kin was not required to participate in this study in accordance with the national legislation and the institutional requirements.

Author contributions

MW: Conceptualization, Formal analysis, Funding acquisition, Investigation, Methodology, Project administration, Resources, Software, Supervision, Validation, Visualization, Writing – original draft, Writing – review & editing. BD: Conceptualization, Formal analysis, Methodology, Project administration, Software, Validation, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Acknowledgments

The authors wish to express special thanks to the participants of the study. We also acknowledge the officials at each district of the study areas.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The authors declare that no Gen AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Ababio, B. T., and Dumba, H. (2013). The value of continuous assessment strategies in students’ learning of geography in senior high schools in Ghana. Res. Humanit. Soc. Sci. 3, 71–77.

Abera, G., and Tolosa, G. (2019). Continuous assessment perception of Madda Walabu university. J. Educ. Pract. 10, 4–11.

Alahmadi, N. A., and El Keshky, M. E. S. (2018). Assessing primary school teachers’s knowledge of specific learning disabilities in the Kingdom of Saudi Arabia. J. Educ. Dev. Psychol. 9, 9–22. doi: 10.5539/jedp.v9n1p9

Andrade, H. L., and Heritage, M. (2018). Using formative assessment to enhance learning, achievement, and academic self-regulation. London: Routledge.

Anisa, V., Matthys, M., and Ashley, H. A. (2021). Is continuous assessment fit for purpose? Analyzing the experiences of academics from a south African university of technology. Educ. Inq. 14, 267–283. doi: 10.1080/20004508.2021.1940426

Berry, R. (2010). Formative assessment in higher education: the practice of feedback. J. High. Educ. Policy Manag. 32, 519–529. doi: 10.1080/1360080X.2010.510751

Birhanu, M. (2018). The implementations and challenges of assessment practices for students’ learning in public selected universities Ethiopia. Univ. J. Educ. Res. 6, 2789–2806. doi: 10.13189/ujer.2018.061213

Black, P., and Wiliam, D. (2005). Inside the black box: raising standards through classroom assessment. Phi Delta Kappan 87, 9–21. doi: 10.1177/003172170508700109

Brookhart, S. M., and Guskey, T. R. (2001). Classroom assessment: what teachers need to know and do. Educ. Leadersh. 59, 6–9.

Brown, G. T. L., and Harris, L. R. (2014). The future of self-assessment in classroom practice: Reframing self-assessment as a core competency. Front. Psychol. 5, 3–33.

Chand, S. P., and Pillay, K. (2024). Global scientific and academic research journal of education and literature. India:GSAR Publishers.

Cohen, L., Manion, L., and Morrison, K. (2004). Research methods in education. 6th. Edn. London, UK. Falmer Press.

Darling-Hammond, L. (2000). Teacher quality and student achievement. Educ. Policy Anal. Arch. 8, 1–44.

Demissie, K. (2016). Teachers’ conception and practice of continuous assessment in EFL classes: The case of four primary schools in Adulala town. Ethiopia: Addis Ababa University press.

Dunn, K., and Mulvenon, S. (2009). A critical review of research on formative assessment: the limited scientific evidence of the impact of formative assessment in education. Pract. Assess. Res. Eval. 14, 1–12. doi: 10.7275/jj3x-4y94

Faremi, Y. A., S’lungile, K., and Maziya, N. T. (2023). Practice of continuous assessment in learning business studies in high schools of Lubombo region Eswatini. J. Int. Coop. Dev. 6:32. doi: 10.36941/jicd-2023-0010

Fisseha, M. M. (2015). The use of quality formative assessment to improve student learning in west Ethiopian universities. Ethiopia: Addis Ababa University press.

Florez, M., and Sammons, P. (2013). Effective assessment strategies for elementary students. J. Elem. Educ. 25, 120–135.

Forster, N., and Souvignier, E. (2014). Learning progress assessment and goal setting: effects on reading achievement, reading motivation, and reading self-concept. Learn. Instr. 32, 91–100. doi: 10.1016/j.learninstruc.2014.02.002

Gemechu, A., Muhammed, K., and Maeregu, B. (2017). The implementations and challenges of continuous assessment in public universities of eastern Ethiopia. Int. J. Instr. 10, 109–128. doi: 10.12973/iji.2017.1047a

Getinet, S. (2016). Assessment of the implementation of continuous assessment: the case of METTU university. Eur. J. Sci. Math. Educ. 4, 534–544. doi: 10.30935/scimath/9492

Gravetter, F. J., and Forzano, L.-A. B. (2018). Research methods for the behavioral sciences. Boston, MA, USA: Cengage Learning.

Guskey, T. R. (2003). Assessment for learning: reshaping teaching and learning. Educ. Leadersh. 61, 50–55.

Hargreaves, E. (2005). Assessment for learning? Thinking outside the (black) box. Camb. J. Educ. 35, 213–224. doi: 10.1080/03057640500146880

Harrison, J. (2013). Barriers to effective implementation of formative assessment in higher education. J. Univ. Teach. Learn. Pract. 10, 12–24.

Hattie, J., and Timperley, H. (2007). The power of feedback. Rev. Educ. Res. 77, 81–112. doi: 10.3102/003465430298487

Holmes, N. (2018). Engaging with assessment: increasing student engagement through continuous assessment. Act. Learn. High. Educ. 19, 23–34. doi: 10.1177/1469787417723230

Ismail, S. M., Rahul, D. R., Patra, I., and Rezvani, E. (2022). Formative vs. summative assessment: impacts on academic motivation, attitude toward learning, test anxiety, and self-regulation skill. Lang. Test. Asia 12, 1–23. doi: 10.1186/s40468-022-00191-4

Kindu, A. A. (2018). The practice and challenges of continuous assessment in some selected government secondary schools of Gondar City Administration. Ethiopia: Gondar University.

Kline, R. B. (2011). Principles and practice of structural equation modeling. 3rd Edn. New York, NY, USA: The Guilford Press.

Ledy, D., and Ormrod, J. E. (2001). Research design: A guide to connecting research problems to relevant facts. New Jersey: Upper Saddle River, Pearson Education.

Maclellan, E. (2001). Assessment for learning: the differing perceptions of tutors and students. Assess. Eval. High. Educ. 26, 307–318. doi: 10.1080/02602930120063466

Ministry of Education. (2018). Ethiopian education development roadmap (2018–30). Addis Ababa, Ethiopia: an integrated executive summary.

Muskin, J. A. (2017). Continuous assessment for improved teaching and learning: a critical review to inform policy and practice. Paris, France: UNESCO.

Nyabe, N. T. (2015). Primary school teachers’ experience of implementation assessment policy in social studies in the Kavango region of Namibia. Windhoek: Stellenbosch University.

Playfoot, D. (2023). Flipped classrooms in undergraduate statistics: online works just fine. Teach. Psychol. 50, 243–247. doi: 10.1177/00986283211046319

Sintayehu, B. (2016). The practice of continuous assessment in primary schools: the case of Chagni Ethiopia. J. Educ. Pract. 7, 24–30.

Skalicky, J., and Brown, N. (2009). Peer learning framework: A community of practice model. Centre for the Advancement of Learning and Teaching. Tasmania, Australia: University of Tasmania.

Solomon, N. (2014). The practice and challenges of implementing continuous assessment in government First Cycle primary schools of Yeka Sub-City. Master’s thesis, Addis Ababa University.

Stiggins, R. (2005). From formative assessment to assessment for learning: A path to success in standards-based schools. Phi Delta Kappan 87, 324–328.

Wiliam, D. (2010). The impact of formative assessment on learning. Pract. Assess. Res. Eval. 15, 1–6. doi: 10.7275/ghpv-hx83

Xiao, J., and Yang, L. (2019). The impact of formative assessment on student learning outcomes. J. Educ. Psychol. 111, 456–472.

Yan, Z. (2014). A review of formative assessment: a case study of Chinese higher education. Assess. Eval. High. Educ. 39, 320–331. doi: 10.1080/02602938.2013.822944

Keywords: assessment, higher education, feedback, formative assessment, Ethiopia

Citation: Wondim MG and Dessie BA (2025) Unveiling formative assessment in Ethiopian higher education institutions: practices and socioeconomic influences. Front. Educ. 10:1515335. doi: 10.3389/feduc.2025.1515335

Edited by:

Hani Salem Atwa, Arabian Gulf University, Bahrain

Reviewed by:

Davide Parmigiani, University of Genoa, Italy

Hayam Hanafi Abdulsamea, Ibn Sina National College for Medical Studies, Saudi Arabia

Nur Sehang Thanks, Tadulako University, Indonesia

Copyright © 2025 Wondim and Dessie. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Mebrat Gedfie Wondim

†ORCID: Mebrat Gedfie Wondim, https://orcid.org/0000-0001-9965-8723

Mebrat Gedfie Wondim Behailu Atinafu Dessie2
