
ORIGINAL RESEARCH article

Front. Educ., 21 February 2025

Sec. Higher Education

Volume 10 - 2025 | https://doi.org/10.3389/feduc.2025.1505856

This article is part of the Research Topic “AI's Impact on Higher Education: Transforming Research, Teaching, and Learning”.

The rise of artificial intelligence (AI), particularly ChatGPT, has transformed educational landscapes globally. In addition, the Beijing Consensus on Artificial Intelligence and Education and the ‘Pact for the Future’ propose that AI can support UNESCO’s efforts to achieve the Sustainable Development Goals (SDGs), especially SDG 4, which emphasizes quality education. This study therefore investigates undergraduate students’ familiarity with and attitudes toward AI tools, as well as their perceived risks and benefits of using AI tools, at a private university in China. An explanatory sequential mixed-method design was employed: an online survey of 167 students was followed by a qualitative analysis of open-ended responses. Data were analyzed using the one-sample Wilcoxon signed-rank test and thematic analysis, supported by SPSS and ATLAS.ti 25. The findings revealed that students had moderate familiarity with AI tools, particularly ChatGPT, and were willing to use them in coursework. Students held positive attitudes toward AI’s value in education, although they also raised concerns about dependence and reduced independent thinking, algorithmic bias and ethics, accuracy and information quality, and data security and privacy. Students generally viewed AI positively and perceived its integration into academic settings as inevitable and increasingly common. Their concerns about the misuse of AI by their teachers were minimal, and they trusted their teachers to use AI effectively in teaching. Students also perceived benefits of AI, such as personalized learning, efficiency and convenience, career and skill development, and support for independent learning. This study contributes to the discourse on AI integration in higher education by highlighting students’ nuanced perceptions, which weigh AI’s benefits against its potential risks. The findings are limited by the small sample size and the single-institution setting.
Future research should explore diverse contexts to develop comprehensive AI implementation frameworks for higher education.

The introduction of ChatGPT in 2022 made artificial intelligence (AI) popular worldwide, and higher education is no exception: institutions have debated whether AI should be allowed in the classroom. Nearly 40% of universities in the United Kingdom (UK) stated that they might ban teachers and students from using ChatGPT and treat its use as academic misconduct (Housden, 2023). The challenges of integrating AI span technological, organizational, societal, and ethical contexts. A notable challenge is the absence of thorough policies and guidelines for AI integration, which leads to inconsistent and frequently ineffective implementation across institutions (Henadirage and Gunarathne, 2024). Technological challenges, including inadequate computational resources, scalability concerns, and the intricate nature of implementation, present further difficulties (Buinevich et al., 2024). Another significant issue is educators’ and administrators’ lack of knowledge about AI technologies, which greatly hinders their effective adoption and use (Ateeq et al., 2024; Henadirage and Gunarathne, 2024). Significant gaps in the exploration and advancement of these technologies add further complexity to AI integration.

The question Turing (1950) famously posed, ‘Can machines think?’, is no longer merely hypothetical, and AI has prompted the world to unite in creating a pact for a better future (United Nations, 2024). In May 2019, countries convened to reach an agreement on the use of AI in education, known as the “Beijing Consensus on Artificial Intelligence and Education” (UNESCO, 2019). Subsequently, UNESCO envisioned the use of AI to transform education and help achieve the Sustainable Development Goals (SDGs) (UNESCO, 2021b). UNESCO also acknowledged the possibility of AI misuse and recommended ethical standards for AI (UNESCO, 2021a). Although the possibilities of AI within educational contexts have been the subject of ongoing investigation in various sectors (Moonsamy et al., 2021), generative AI has only recently begun to move from experimental settings into actual classrooms and has gained popularity in the public eye (Bond et al., 2024). To date, there is no consensus on the appropriate use of generative AI in higher education (Barrett and Pack, 2023). Moreover, the dangers associated with AI cannot be overlooked. Large language models may exhibit bias against certain groups because their training data may not adequately reflect diverse populations, producing biased outputs that exacerbate existing societal prejudices and inequities (Farrokhnia et al., 2024). Furthermore, AI-generated content, whether text, audio, or images, may contradict authentic information, leading people to confuse falsehoods with reality, creating accountability dilemmas, and perpetuating misleading information (Pavlik, 2023). Consequently, there is an urgent need for more interdisciplinary research to tackle the complex issues related to incorporating AI into higher education (Ullrich et al., 2022).
Thus, this study aimed to investigate undergraduate students’ familiarity with and attitudes toward AI tools, as well as their perceived risks and benefits of using these tools in higher education. The research was conducted within the context of a private university in China and addressed the following research questions:

1. What is the level of familiarity among undergraduate students with artificial intelligence (AI), and what are their attitudes toward AI’s role in teaching and learning in higher education?

2. What do undergraduate students perceive as the risks and benefits of using AI tools in higher education?

A survey indicated that students are generally familiar with generative artificial intelligence (GenAI) technology and that their engagement with GenAI is influenced by various factors, including frequency of use (Chan and Hu, 2023). Another quantitative survey, conducted in the UK, revealed that students used generative AI extensively: most students were aware of generative AI, and approximately half had used it or planned to use it for academic purposes (Johnston et al., 2024). Similarly, a recent survey in Bulgaria indicated that local college students were highly familiar with ChatGPT, and its increasing prevalence among them suggests a growing eagerness to use the tool in pursuit of high academic performance (Valova et al., 2024). A study conducted in Germany indicated that AI tools have become integrated into the educational experiences of students across all disciplines, with learners discovering diverse applications for these technologies in their respective fields. Approximately two-thirds of students reported familiarity with and practical experience in using these tools, particularly in engineering, mathematics, and the natural sciences (Von Garrel and Mayer, 2023). Among medical students in Jordan, most were aware of AI tools, but only a limited number actively used them in their academic research (Mosleh et al., 2023). In Latin America, students from Ecuador, Peru, and Mexico recognized the significant contribution of AI to enhancing educational quality and individualized learning (Ríos Hernández et al., 2024).

A quantitative study investigated users’ and students’ attitudes toward the adoption of ChatGPT, focusing primarily on residents of Oman. Students exhibited a strong motivation to use ChatGPT and perceived the tool as both beneficial and trustworthy in an educational context (Tiwari et al., 2023). An Australian survey indicated that college students experienced an increased sense of social support from AI with more frequent use; however, it has also been suggested that prolonged exposure to AI can result in dependence, particularly where human companionship is lacking (Crawford et al., 2024). In New Zealand, one study found that non-universal students and knowledge seekers were more inclined to use ChatGPT to complete their course requirements without reflecting on its content (Stojanov et al., 2024). In a separate experiment, students showed considerable interest in and enthusiasm for their first interaction with generative AI tools; however, when GenAI could not fulfill their advanced academic writing requirements, student satisfaction decreased considerably (Yang et al., 2024). Interviews with students from UK business schools revealed their view that generative AI tools often fail to capture the complexity and nuance of real-world situations, and that excessive dependence on AI may lead users to overlook the significance of a multidisciplinary approach, constraining the scope of critical thinking (Essien et al., 2024).

The implementation of AI in education can provide personalized learning experiences tailored to the unique needs of each student, thereby improving both engagement and academic performance (Rizvi, 2023; Tyagi et al., 2022). A research initiative in South Korea addressed students’ diverse learning needs by customizing various courses for educators, simultaneously enhancing student engagement and academic performance (Lee and Kim, 2023). AI also drives innovation in educational tools: it facilitates the advancement of intelligent tutoring systems, adaptive testing, and educational simulations, thereby enhancing the overall quality of education (Negi et al., 2024; Rachovski et al., 2024; Rizvi, 2023).

According to Tlili et al. (2023), the implications of AI include potential issues related to cheating, the honesty and truthfulness of ChatGPT’s output, privacy, and the risk of manipulation. Furthermore, data privacy and security challenges in AI implementation raise considerable concerns regarding the confidentiality and protection of student information (Berendt et al., 2020; Qian, 2021). The integration of AI within educational contexts also raises significant ethical considerations, particularly regarding surveillance and the potential erosion of individual autonomy (Akgun and Greenhow, 2022; Berendt et al., 2020).

This study employed a descriptive mixed-method design in which theory serves as a guide for understanding the phenomenon. The Technology Acceptance Model (TAM), developed by Davis in 1989, is a prominent theoretical framework for understanding and predicting user acceptance and use of technology (Aljarrah et al., 2016). The model became highly influential in the field of Information Systems (IS) and has since been adapted in education research to understand learners’ intentions to use technology. TAM identifies two key factors in an individual’s decision to embrace a technology: Perceived Usefulness (PU) and Perceived Ease of Use (PEOU). PU denotes the degree to which a person believes that using a particular system will improve their job performance (Aljarrah et al., 2016). This factor is crucial to the adoption of AI technology: studies show that when educators and students view AI tools as advantageous for enhancing teaching and learning outcomes, their propensity to embrace these technologies increases significantly (Al Darayseh, 2023; Al-Abdullatif, 2024; Ma and Lei, 2024). PEOU refers to the extent to which individuals feel that using a specific system demands little effort (Malatji et al., 2020). The ease of use of AI tools likewise plays a crucial role in their acceptance and integration into educational practice; when these tools are straightforward and user-friendly, educators and students adopt them more readily (Al-Abdullatif, 2024; Supriyanto et al., 2024).

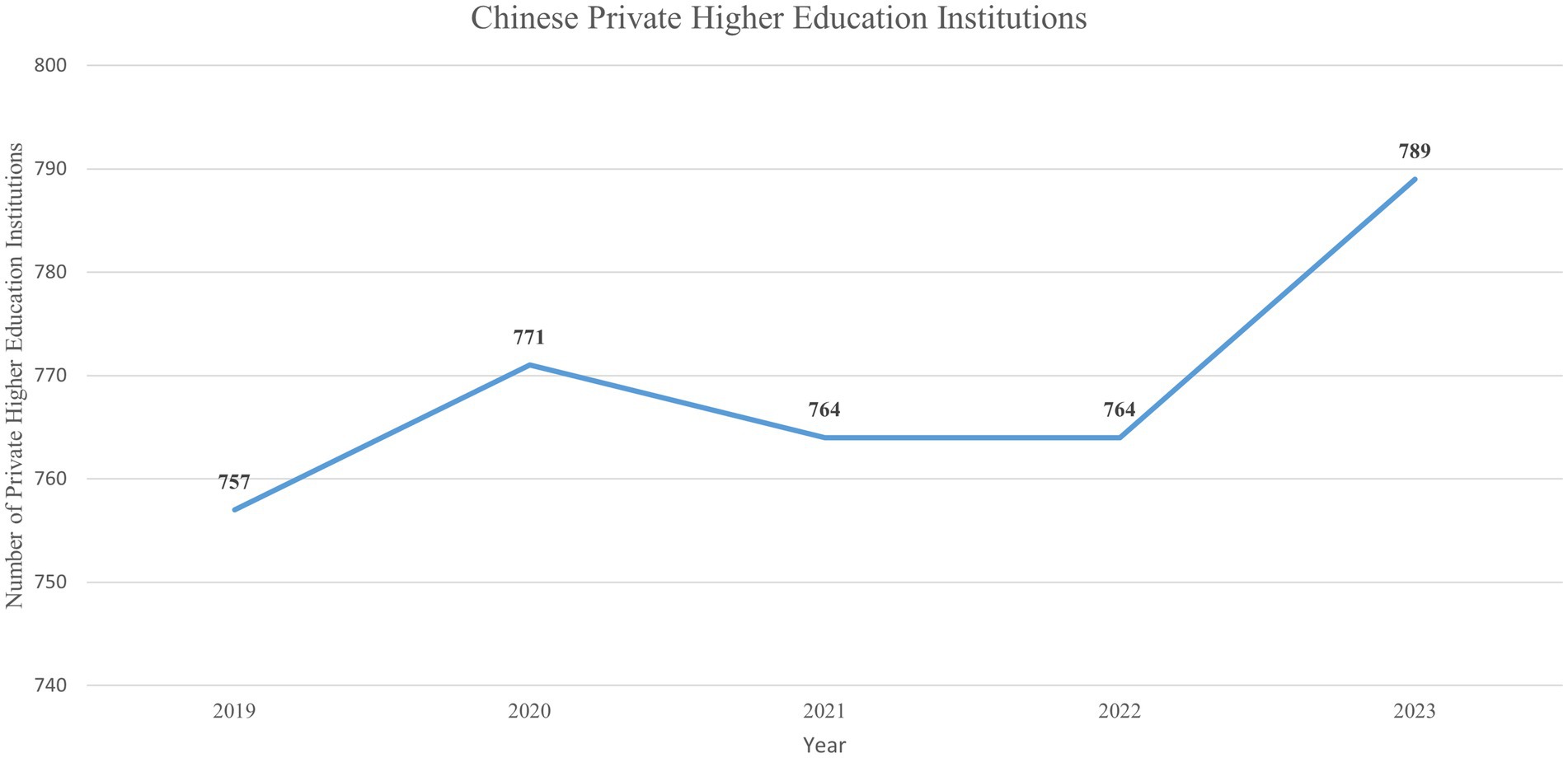

The private higher education sector in China has experienced significant growth and visibility, resulting in a considerable number of students enrolling in private institutions both within China and globally (Liu et al., 2022, 2023). In 2016, the Chinese government enacted a regulation requiring all private organizations to classify themselves as either for-profit or not-for-profit (Liu et al., 2023). Over the five-year period shown in Figure 1, there were 757 private higher education institutions in 2019, and their number increased notably by 2020. In 2021, the government reclassified ordinary undergraduate institutions, undergraduate-level vocational schools, private higher vocational colleges (junior colleges), and adult education colleges and universities as independent entities, resulting in 764 private higher education institutions in 2021–2022 and a total of 789 by 2023 (MOE China, 2020, 2022, 2023). Private higher education institutions sometimes have difficulty securing government funding for research initiatives and rely on students’ tuition fees to finance their operations. Moreover, they face challenges in terms of educational quality and adherence to government laws (Welch, 2024).

Figure 1. Chinese private higher education institutions. Source: Ministry of Education of the People’s Republic of China.

With China enrolling the largest number of higher education students, investigations into students’ perceptions of AI add to a growing area of interest in which most AI research has been nonempirical (Shahzad et al., 2024). A survey of third-year Chinese interior architecture students revealed limited awareness of AI (Cao et al., 2023). Moreover, Chinese oncology students showed a moderate understanding of AI technologies, with younger students demonstrating greater familiarity (Li et al., 2024). This study adds to the discourse on AI applications in private higher education from the perspective of Chinese undergraduate students.

This study employed an explanatory sequential mixed-method design (Creswell and Creswell, 2023). In this design, quantitative data are collected first, here through a survey, followed by qualitative data that help explain the quantitative results (Creswell et al., 2018). The researchers chose this design to understand students’ familiarity with and attitudes toward artificial intelligence and their perceptions of the risks and benefits of AI.

The research locale of this study was a private higher education institution in eastern China that enrolls approximately 17,000 undergraduate students across 11 departments. More than 90% of its instructors hold master’s degrees, and 87% have prior experience working in renowned firms, with expertise in their respective fields. This institution was chosen because of its strength in computer science and its ranking among China’s private institutions, which ranges from 10th to 20th place (Table 1).

This study included 167 respondents: 94 males (56.3%) and 73 females (43.7%). In terms of year level, 59.3% of the respondents were freshmen and 26.3% were sophomores, while juniors and seniors accounted for 9% and 6%, respectively. Most respondents (74%) were between 19 and 21 years old. As many as 60.5% of the respondents were computer science majors and 35.9% were engineering majors; the remaining students were from the humanities and social sciences. The researchers calculated the target sample size using the online Raosoft calculator with a 95% confidence level and a 5% margin of error for a target population of 17,000, which yielded a recommended sample size of 376. However, the researchers could not reach the recommended sample size during data collection owing to the limitations of voluntary participation and the randomized sampling technique applied in the study. The online survey was sent to various WeChat groups (WeChat being a popular social media platform in China).
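The recommended figure of 376 can be reproduced with the standard sample-size formula for estimating a proportion, n₀ = z²p(1 − p)/e², followed by the finite population correction n = n₀ / (1 + (n₀ − 1)/N). The sketch below is a minimal illustration that assumes a response distribution of p = 0.5 (the most conservative choice). It is not the Raosoft calculator’s own code, and the function name `required_sample_size` is hypothetical.

```python
import math
from statistics import NormalDist


def required_sample_size(population: int, margin: float = 0.05,
                         confidence: float = 0.95, p: float = 0.5) -> int:
    """Sample size for estimating a proportion, with finite population correction.

    Assumes p = 0.5 (maximum variability) unless told otherwise; this is an
    assumption of the sketch, not a value reported by the study.
    """
    # Two-sided critical value, about 1.96 for 95% confidence
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    # Infinite-population sample size: n0 = z^2 * p * (1 - p) / e^2
    n0 = z ** 2 * p * (1 - p) / margin ** 2
    # Finite population correction for a population of size N
    n = n0 / (1 + (n0 - 1) / population)
    # Round up, since a fractional respondent is not possible
    return math.ceil(n)


n_required = required_sample_size(17_000)
print(n_required)  # 376
print(f"Achieved 167 of {n_required} ({167 / n_required:.1%})")
```

With these inputs the uncorrected size is about 384.1, and the correction for a population of 17,000 lowers it to about 375.7, which rounds up to 376. The 167 completed responses therefore correspond to roughly 44% of that target.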

The instrument used in this study was adapted from Petricini et al. (2023), whose survey was originally designed to measure faculty and students’ familiarity with and attitudes toward AI. In this study, the researchers used eight items for the familiarity domain and 14 items for attitudes toward AI. Rather than including all items from the original survey, they added open-ended questions on the perceived risks and benefits of AI in higher education. The reliability of the quantitative items was tested using Cronbach’s alpha, which was 0.834, indicating sufficient reliability. The items used a five-point Likert scale (1 = strongly disagree, 5 = strongly agree). Two open-ended questions asked students about the perceived risks and benefits of AI in education. The online survey was designed in both English and Chinese. Before widespread distribution, it was pilot-tested with 20 undergraduate students for face validity. The first author is fluent in both English and Chinese. Responses to the open-ended questions were given in English or Chinese, and all responses in Chinese were translated into English.
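Cronbach’s alpha compares the sum of the individual item variances with the variance of respondents’ total scores: α = k/(k − 1) · (1 − Σσᵢ² / σₜ²), where k is the number of items. The study reports only the resulting value (0.834). The following sketch therefore illustrates the computation on a small hypothetical response matrix rather than the study’s data. `cronbach_alpha` is an illustrative helper, not part of SPSS.

```python
from statistics import pvariance


def cronbach_alpha(responses: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents-by-items matrix of Likert scores."""
    k = len(responses[0])  # number of items
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Variance of each item across respondents
    item_vars = [pvariance([row[j] for row in responses]) for j in range(k)]
    # Variance of each respondent's total (summed) score
    total_var = pvariance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical 1-5 Likert responses (5 respondents x 4 items); not study data
demo = [
    [4, 5, 4, 4],
    [3, 3, 2, 3],
    [5, 5, 5, 4],
    [2, 2, 3, 2],
    [4, 4, 4, 5],
]
print(round(cronbach_alpha(demo), 3))  # 0.936
```

Population and sample variances give the same alpha as long as the same one is used for items and totals, because the scaling factor cancels in the ratio.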

Prior to data collection, the researchers addressed ethical considerations. The survey invited respondents to participate and asked for their informed consent before collecting their information. The researchers did not collect identifiable information, such as real names and addresses. The questionnaire was published on Sojump, a Chinese online data collection platform, and distributed through various WeChat groups at the selected research locale. The survey was conducted over one month during the second semester of the 2023–2024 academic year.

The one-sample Wilcoxon signed-rank test was first proposed by Wilcoxon in 1945. It is a nonparametric statistical test used to determine whether the median of a sample differs significantly from a hypothesized population median. The test was run in SPSS on the questionnaire data in Tables 2 and 3 for two reasons. First, it can handle small sample sizes effectively because it does not require normally distributed data or strict sample size requirements. Second, for rating-type questionnaire items (e.g., strongly agree, agree, neutral, disagree, and strongly disagree), the one-sample Wilcoxon signed-rank test can handle ordered rating data and test whether the median familiarity and attitude of the student group differ significantly from the hypothesized population median. Because the test compares the sample median rather than the mean, it is consistent with the purpose of an attitude survey: the median better reflects central tendency, especially when the data distribution is skewed. By testing deviations from the hypothesized median, it is possible to determine whether students’ familiarity and attitudes lean in a particular direction, such as generally positive or negative, which helps characterize the overall tendency of the student group. The significance levels were set at p < 0.05 and p < 0.01. On this basis, the one-sample Wilcoxon signed-rank test was used for the overall quantitative analysis of the data in Tables 2 and 3, corresponding to research question 1. The researchers also used ATLAS.ti 25 for word clouds and thematic analysis, and Microsoft Excel and Power BI for data visualization.
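The following is a minimal, dependency-free sketch of the one-sample signed-rank test against the neutral midpoint of 3. It drops zero differences, assigns average ranks to tied absolute differences, and uses the large-sample normal approximation with the standard tie correction. This is an illustration, not SPSS’s implementation. The study does not report options such as continuity correction or exact p-values. `wilcoxon_one_sample` and the sample responses are hypothetical.

```python
import math
from statistics import NormalDist


def wilcoxon_one_sample(scores, hypothesized_median=3.0):
    """Two-sided one-sample Wilcoxon signed-rank test (normal approximation).

    Zero differences are discarded; tied |differences| receive average ranks,
    and the variance is tie-corrected. Returns (W_plus, z, p).
    """
    diffs = [x - hypothesized_median for x in scores if x != hypothesized_median]
    n = len(diffs)
    if n == 0:
        raise ValueError("all scores equal the hypothesized median")
    # Rank absolute differences, averaging ranks within tied groups
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        t = j - i + 1  # size of this tie group
        tie_term += t ** 3 - t
        i = j + 1
    # Sum of ranks for responses above the hypothesized median
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean_w = n * (n + 1) / 4
    var_w = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (w_plus - mean_w) / math.sqrt(var_w)
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return w_plus, z, p


# Hypothetical responses to one five-point item; not study data
w_plus, z, p = wilcoxon_one_sample([4, 5, 3, 4, 2, 4, 5, 3, 4, 4])
print(w_plus, round(z, 3), round(p, 4))  # W+ = 32.5; z > 0 leans toward agreement
```

A positive z indicates that responses lean above the neutral midpoint (agreement), and a negative z indicates that they lean below it (disagreement). Five-point items produce many tied ranks, which is why the tie correction to the variance matters here.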

This study employed a mixed-method design to explore undergraduate students’ familiarity with and attitudes toward artificial intelligence, as well as their perceived risks and anticipated benefits of using AI in education. It surveyed 167 students from various disciplines at a private higher education institution.

Table 2 presents Chinese undergraduate students’ familiarity with artificial intelligence. The data were analyzed using the one-sample Wilcoxon signed-rank test with a hypothesized median of 3 at significance levels of 0.05 and 0.01. The results showed that students were familiar with the concept of artificial intelligence (μ = 3.329, p < 0.01) and had experience using ChatGPT (μ = 3.168, p < 0.05). Students were also open to using ChatGPT and similar tools for course tasks (μ = 3.521, p < 0.01) and to receiving guidance from ChatGPT and similar AI tools (μ = 3.719, p < 0.01). Students reported that instructors talked about artificial intelligence in class, especially ChatGPT and other text and image generators (μ = 3.234, p < 0.05). However, students believed that instructors did not integrate these tools into their teaching (μ = 2.928). Although students were familiar with the concept of artificial intelligence, their reported knowledge of ChatGPT itself was limited, as shown by a result that did not differ significantly from the neutral midpoint (μ = 2.988).

Table 3 presents Chinese undergraduate students’ attitudes toward artificial intelligence. Students generally considered artificial intelligence valuable in education (μ = 3.754, p < 0.01) and its application very common (μ = 3.545, p < 0.01). They agreed that there were sufficient and beneficial reasons for using AI in education (μ = 3.659, p < 0.01) and that using AI text-generation tools to complete course assignments in higher education was common (μ = 3.240, p < 0.01) and inevitable (μ = 3.357, p < 0.01). Students tended to disagree that artificial intelligence (in the form of text and image generation) poses a danger to students (μ = 2.671, p < 0.01) and that measures should be taken to prevent students from using it (μ = 2.545, p < 0.01). Students reported that they were not restricted from using artificial intelligence in their course assignments (μ = 3.509, p < 0.01). They generally disagreed with the view that artificial intelligence is misused (item 9, μ = 2.737, p < 0.01) and held a neutral attitude (item 14, μ = 3.0060, p < 0.01) toward the view that using AI text-generation tools to complete course assignments violates university academic integrity policies. In addition, students thought that teachers could use artificial intelligence in a standardized manner in the academic environment (μ = 3.4012, p < 0.01). Students’ concerns about the misuse of AI by instructors were minimal (μ = 2.5150, p < 0.01), and they held a positive attitude toward teachers using AI to create course syllabi (μ = 3.1437, p < 0.01). However, students tended to disagree that artificial intelligence could replace their teachers in grading course assignments and evaluations (μ = 2.7246, p < 0.01).

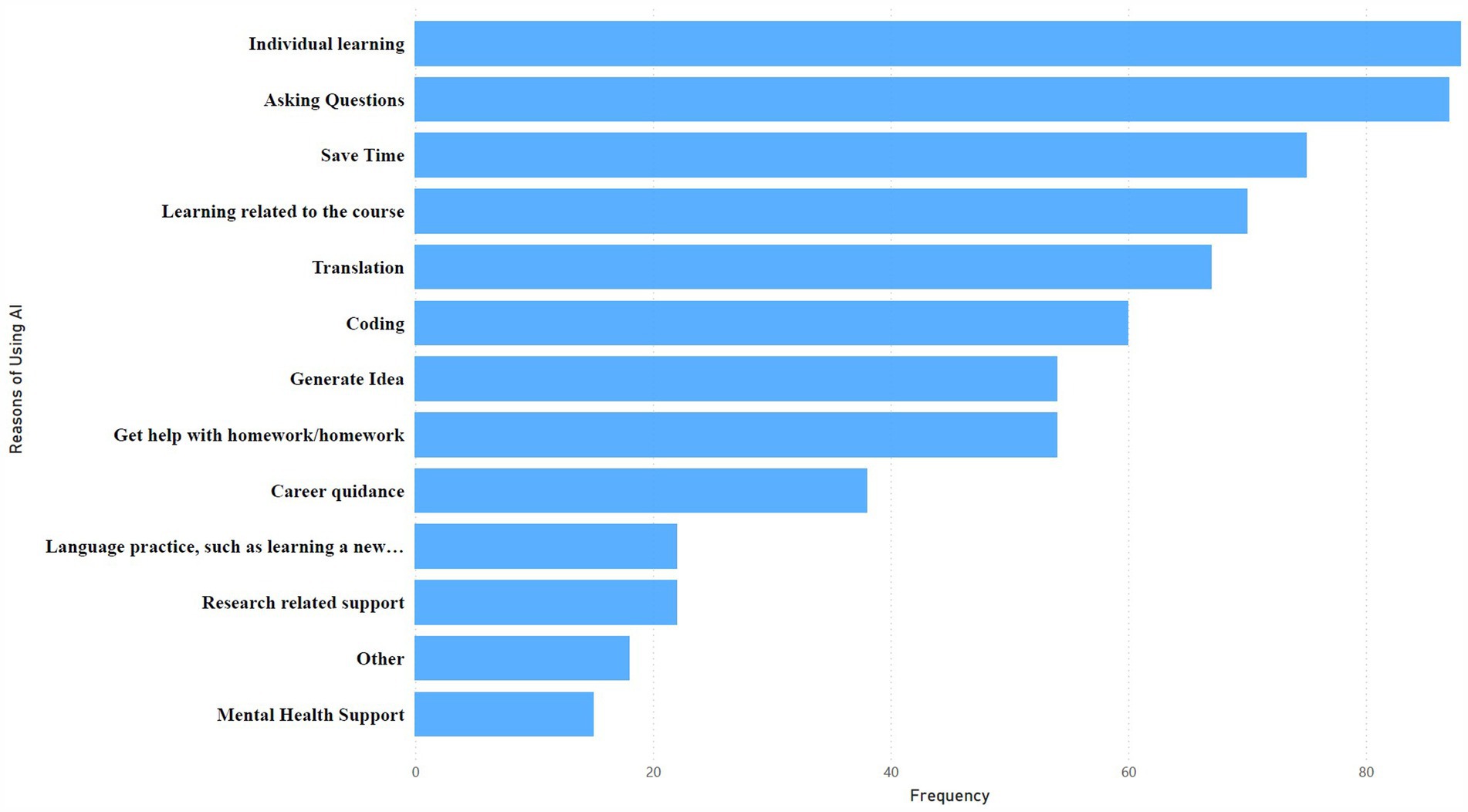

Figure 2 shows the respondents’ reasons for using artificial intelligence. Individual learning was the most frequently mentioned reason, as AI tools such as ChatGPT provide personalized learning experiences. Asking questions was the second most frequently mentioned need. AI has significant advantages in quickly providing information, knowledge, and solutions, and it can greatly improve efficiency and convenience, thereby strengthening students’ intention to use AI technology. Using AI in learning also helps respondents save time when searching for information. Course-related learning, translation, coding, generating ideas, and getting help with homework are connected to the top reason, individual learning. Career guidance was another notable reason: many respondents used AI to search for jobs and prepare for the job market, which supports their future job prospects. Language practice was another benefit, with respondents perceiving AI as an alternative to learning a new language online or in class. Research support, mental health support, and other reasons were the least frequently cited.

Figure 2. Perceived Benefits of Using Artificial Intelligence. Note. This figure was generated by the authors.

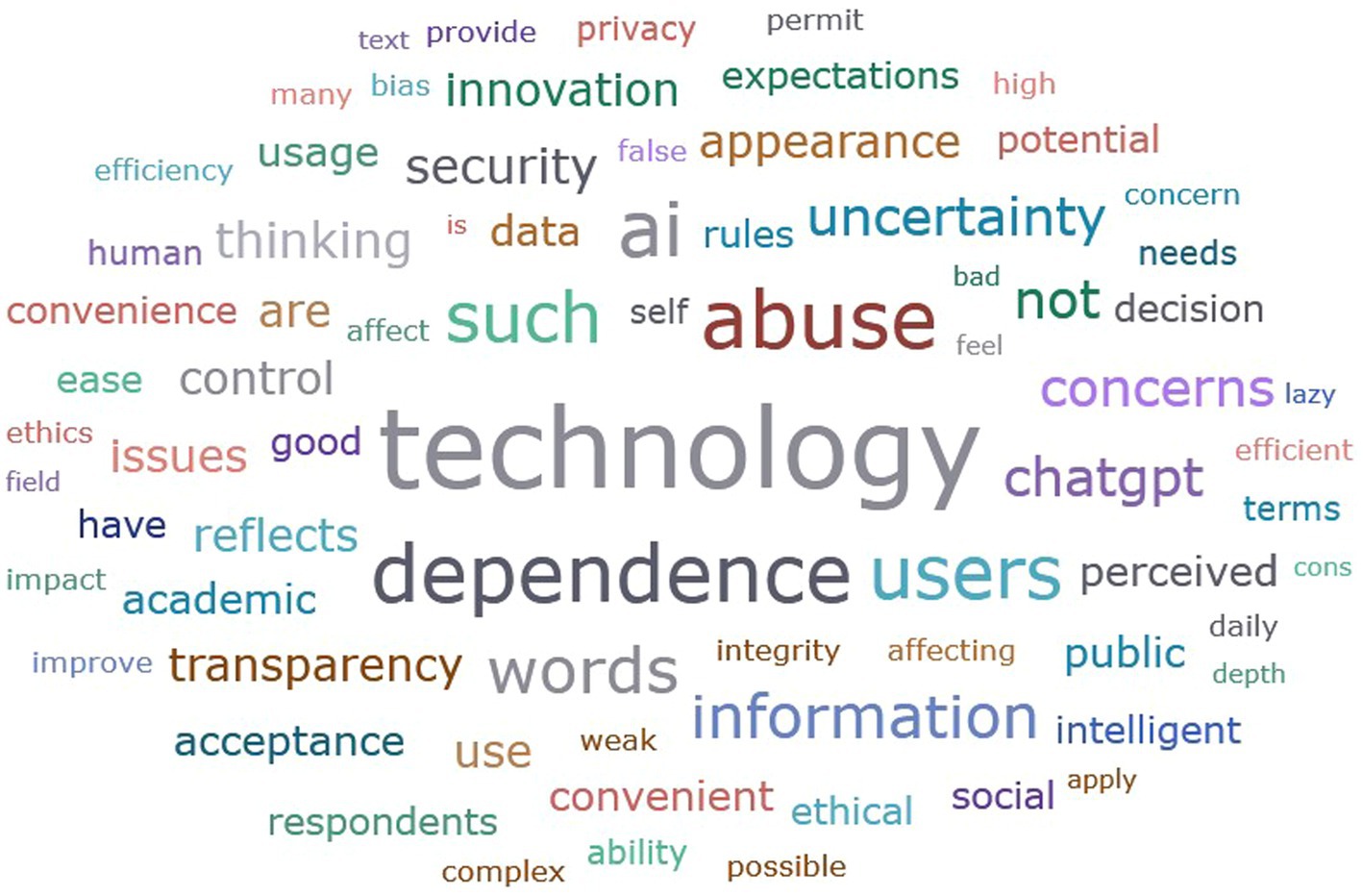

In the survey, respondents were asked about the perceived risks and potential challenges of using artificial intelligence in education. According to the respondents, integrating AI in education presents several challenges (see Figure 3): dependence and reduced independent thinking, algorithmic bias and ethical concerns, accuracy and information quality, data security, and privacy. These five major themes, drawn from respondents’ answers, are described as follows:

Figure 3. Perceived risks of using AI in education. The authors generated this word cloud using ATLAS.ti 25 software.
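Word clouds such as Figure 3 are driven by how often words occur across the open-ended responses. The sketch below illustrates that counting step under stated assumptions. It uses a small, hypothetical stop-word list and simple lowercase tokenization of English text, which is applicable here because the Chinese responses were translated into English. It does not reproduce ATLAS.ti 25’s word-cloud algorithm, and the example responses are hypothetical paraphrases rather than study data.

```python
import re
from collections import Counter

# Hypothetical stop-word list for illustration; ATLAS.ti uses its own lists
STOP_WORDS = {
    "the", "a", "an", "and", "of", "to", "in", "is", "it", "may", "be",
    "that", "their", "on", "for", "by", "will", "can", "such", "as",
    "are", "not", "when",
}


def word_frequencies(responses, top_n=10):
    """Count content words across responses, as a word cloud would size them."""
    counts = Counter()
    for text in responses:
        # Lowercase and keep alphabetic runs only
        tokens = re.findall(r"[a-z]+", text.lower())
        # Drop stop words and single letters; keep short terms such as "ai"
        counts.update(t for t in tokens if t not in STOP_WORDS and len(t) > 1)
    # Ties keep first-encountered order, per Counter.most_common
    return counts.most_common(top_n)


# Hypothetical translated responses; not the study's data
sample = [
    "Students may rely on AI and lose independent thinking.",
    "AI answers are not always accurate; the information quality varies.",
    "Privacy and data security are risks when AI collects personal data.",
]
for word, freq in word_frequencies(sample, top_n=5):
    print(word, freq)
```

In a word cloud, each word’s display size is then scaled to its count, so frequently mentioned concerns such as dependence or data privacy appear largest.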

Respondents were concerned that dependence on AI technology may result in over-reliance, impairing the development of human abilities and the capacity for autonomous decision-making. This reliance may impede students’ critical thinking and problem-solving skills. Several respondents worried that students might prioritize AI-generated answers over their own reasoning. For instance, one respondent noted that students often turn to AI for quick solutions instead of thinking problems through, stating, “Sometimes, if you encounter a problem in learning, in order to complete the task quickly, you will not find the answer through your own thinking for the first time, but directly rely on the answer generated by AI.” Another participant highlighted that this reliance could lead to a lack of creativity and independent thought, mentioning, “If there are any questions, they will first ask artificial intelligence without their own thinking, and the final thinking will only be limited to the answers given by artificial intelligence, which resulted in a lack of creativity.”

As the demographic profile shows, many respondents were computer science and engineering majors. According to the respondents, the use of AI in education raises ethical concerns, especially in relation to algorithmic bias and discrimination. These biases may influence decision-making processes and result in the inequitable treatment of students based on erroneous data or algorithms, thus compromising the integrity of educational evaluations. For instance, one respondent noted that biases could manifest in AI’s recommendations, limiting students’ freedom of choice and affecting their autonomy: “AI systems may influence students’ learning decisions by recommending learning content and paths, which may limit students’ freedom of choice and affect their autonomy and initiative.” This reflects a broader ethical concern regarding the role of AI in shaping the educational experience.

Students noted that using AI to ask questions about their academic tasks had drawbacks in terms of the quality and accuracy of information. They expressed apprehension about the precision of the information supplied by AI tools (e.g., ChatGPT and DeepSeek) and were concerned that AI might propagate inaccurate or misleading information, potentially harming their learning and decision-making. Respondents were skeptical about the accuracy of AI-generated content, emphasizing that it may not always meet professional or academic standards. One respondent noted, “I do not think the authenticity of the generated content of generative artificial intelligence such as ChatGPT can be guaranteed. Its answers to some questions are not very professional and accurate.” This reflects a broader concern that, although AI can provide quick answers, the quality of those answers may be lacking, which can lead to misinformation. Another respondent echoed this sentiment, stating, “The main risk is that I do not think the reliability of artificial intelligence is very high. If artificial intelligence suddenly breaks down, it will lead to the stagnation of the whole project or industry.” This highlights the potential consequences of relying on AI for critical tasks, which could be significant if inaccuracies are not addressed.

Another issue raised by the respondents concerns data security and privacy. Students observed that ChatGPT is not readily accessible in China and that access requires a Virtual Private Network (VPN). The integration of AI into educational settings raises significant concerns regarding data security and personal information protection. Students were concerned about how personal data are collected, stored, and used, which may lead to privacy breaches and erode trust in the provider. Respondents were apprehensive that AI tools require extensive data to function effectively, which raises serious privacy concerns. One respondent pointed out, “AI systems usually need to collect a large amount of personal data to provide a personalized learning experience, which may include information such as students’ grades, study habits, and personal interests. The collection and use of such data raises the risk of privacy violations.” This highlights the potential for sensitive information to be mishandled or exposed, with significant consequences for individuals. Another respondent echoed these concerns, stating, “Once these data are leaked, it will be a great loss to individuals, society, and the country. Therefore, there are serious ethical problems.” This underscores the broader implications of data security breaches, not only for individuals but also for societal trust in educational institutions and technologies.

This study investigated undergraduate students’ familiarity with and attitudes toward AI tools, as well as their perceived risks and benefits of using AI tools in higher education. It surveyed 167 students from various disciplines at a private higher education institution.

Regarding students’ familiarity with artificial intelligence, the findings showed that students were moderately familiar with AI tools. Students had some experience using ChatGPT; however, their knowledge of ChatGPT remained limited. Moreover, students were open to using ChatGPT and similar AI tools to complete their course tasks and were receptive to AI discussions in class. In Petricini et al.’s (2023) study, students and faculty held mixed opinions on AI; the students in this study, by contrast, demonstrated a moderate level of familiarity with AI. In a similar study, Horowitz et al. (2024) found that familiarity with AI goes together with the trust needed to use it fully. However, some aspects of AI still need to be explored by society. Other studies have likewise found students to be familiar with AI in their studies, reporting a high degree of familiarity (Nikoulina and Caroni, 2024; Sahari, 2024).

Regarding students’ attitudes toward AI, the findings revealed that they believe AI has significant value in education and see its integration into higher education as inevitable. Students support their teachers’ use of AI in instruction but do not believe that AI can replace teachers in grading assignments. A systematic review of research on AI acceptance found that cultural factors play a significant role in the perception that AI cannot substitute for teachers in education (Kelly et al., 2023). This positive attitude corroborates the research of Tlili et al. (2023), which reported positive outcomes and growing enthusiasm for applying chatbots such as ChatGPT in learning environments. Furthermore, a study of secondary students in Pune, India, revealed a strong positive attitude toward AI, suggesting an overall favorable perception among the participants (Pande et al., 2023). Similarly, students in Spain studying economics, business management, and education were aware of the influence of artificial intelligence and were willing to develop their knowledge in this area, even though their current understanding may be somewhat limited (Almaraz-López et al., 2023).

In addition, respondents perceived the benefits of AI in education, including personalized learning, efficiency, information retrieval, career guidance, research support, and mental health support. Respondents also reported that AI helps them learn new languages through independent study. The benefits of AI in education are recognized as contributing to development goals (UNESCO, 2019, 2021b). Furthermore, research indicates that AI facilitates individualized learning by adapting to each student’s specific needs and offering customized material and feedback (Pan et al., 2023; Rizvi, 2023). However, despite their positive attitudes toward AI and its perceived benefits, students are worried about its potential dangers. Students identified substantial concerns about ethical use, dependence, reduced independent thinking, accuracy, data privacy, and security. It is therefore crucial to address ethical concerns such as data privacy and algorithmic fairness to ensure the responsible implementation of AI (Kaswan et al., 2024; Trivedi, 2023).

This study deepens the understanding of artificial intelligence in education by examining it through the Technology Acceptance Model (TAM). The results underscore the importance of AI literacy for both students and educators. Furthermore, students’ use of AI as a substitute for human services, such as career guidance and tutoring, broadens the understanding of its perceived usefulness. The findings indicate that students are more likely to embrace AI tools when they view them as easy to use and readily available, consistent with the TAM principle that perceived ease of use influences acceptance. Nonetheless, students’ lack of complete confidence in AI highlights the importance of trust as an additional factor in this context. Context-specific adaptations of TAM are therefore needed to understand more fully the factors that shape students’ intention to use AI. Moreover, the results bring ethical considerations, including algorithmic bias and data privacy, into the TAM framework, indicating that these factors could strongly influence users’ perceived trust and, in turn, their acceptance of AI.

Based on these findings, this study offers the following recommendations for higher education institutions on the proper use of AI in education.

1. Including AI in the student curriculum. Higher education institutions (HEIs) may consider offering a course on AI to help students understand how to use AI properly in their studies. According to Aliabadi et al. (2023), artificial intelligence should be included across the curriculum, moving from a topic of personal preference to an integrated component of many disciplines.

2. Creating an ethical framework or guidelines on AI for both students and teachers. An ethical framework for AI in HEIs can guide students and teachers in using AI in teaching and learning. HEIs may consider creating an inclusive framework grounded in the opinions of students and teachers. Frameworks that prioritize fairness, accountability, transparency, and ethics can effectively reduce risks (Sjödén, 2020).

3. Providing training for teachers on the proper use of AI. The findings show that students were aware that their teachers used AI in their teaching. HEIs can provide additional professional development each school year to keep teachers up to date with developments in AI in education, making them more responsive to change. Enhanced teacher training would enable teachers to deliver effective instruction, building on students’ recognition of teachers’ positive attitudes toward using AI in their teaching. Furthermore, allocating resources to AI literacy and professional development for teachers can significantly improve their capacity to use AI effectively and ethically (AbuJarour and AbuJarour, 2023; Velander et al., 2024).

This study investigated undergraduate students’ familiarity with and attitudes toward AI tools, as well as their perceived risks and benefits of using these tools, at a private university in China. The findings revealed that undergraduate students demonstrated moderate familiarity with AI, particularly with ChatGPT. Nevertheless, students showed openness to using ChatGPT and similar tools in coursework and were willing to receive instruction using these tools. In terms of attitude, students generally viewed AI positively and perceived its integration as inevitable and increasingly common in academic settings. Students expressed minimal concern about the misuse of AI by their teachers and trusted their teachers to use AI effectively in teaching. The perceived benefits can be summarized as personalized learning, efficiency and convenience, career and skill development, and support for independent learning. In terms of perceived risks, students worried about dependence and reduced independent thinking, algorithmic bias and ethical concerns, the accuracy and quality of information, and data security and privacy. Although this study used a mixed-method design to explore perceptions of artificial intelligence at a private university, it has limitations, notably its small sample and single institution. Future researchers should therefore conduct a more comprehensive and extensive analysis that combines data from multiple private universities for comparison. Finally, this study recommends integrating ethical AI into curricula, training teachers to guide students, and adopting the proposed ethical framework.

The data supporting this study are available from the corresponding author upon request.

Ethical review and approval were not required for this study involving human participants, in accordance with the local legislation and institutional requirements. Written informed consent from the participants or the participants’ legal guardians/next of kin was not required to participate in this study, in accordance with the national legislation and institutional requirements. The researchers are committed to protecting participants by ensuring anonymity and voluntary participation, in accordance with the Declaration of Helsinki. No identifiable data were collected from the participants.

YL: Conceptualization, Data curation, Methodology, Writing – original draft, Formal analysis, Investigation, Visualization. NC: Conceptualization, Formal analysis, Methodology, Resources, Supervision, Writing – review & editing. XX: Funding acquisition, Methodology, Project administration, Resources, Software, Writing – review & editing.

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. The Open Access funding for this manuscript was provided by the third author through her affiliation with Jimei University Chengyi College.

The authors extend their gratitude to the reviewers for their valuable contributions to enhancing this paper. The quantitative findings from this study were selected for presentation at the University Social Responsibility Network (USR) conference, held from October 21 to 23, 2024, at Beijing Normal University. Additionally, this paper was accepted for an oral presentation under the Information and Communication Technologies for Development (ICT4D) Special Interest Group at the 69th Annual Meeting of the Comparative and International Education Society (CIES) in Chicago, United States, scheduled for March 22–26, 2025. The first author received full funding from Qingdao City University to present this paper at the aforementioned conference. Thank you very much.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The authors declare that the generative AI tools Grammarly and QuillBot were used to enhance the readability and language quality of this manuscript.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

AbuJarour, S., and AbuJarour, M. (2023). Bridging education communities in a digital world: exploring the potential and risks of AI in modern education. In International Conference on Information Systems, ICIS 2023: "Rising like a Phoenix: Emerging from the Pandemic and Reshaping Human Endeavors with Digital Technologies." Available at: https://www.scopus.com/inward/record.uri?eid=2-s2.0-85192532516&partnerID=40&md5=2f5eddc8830318a36d20e5c7ca684189 (Accessed October 30, 2024).

Akgun, S., and Greenhow, C. (2022). Artificial intelligence (AI) in education: addressing societal and ethical challenges in K-12 settings. eds. C. Chinn, E. Tan, C. Chan, and Y. Kali (International Society of the Learning Sciences (ISLS)). Available at: https://www.scopus.com/inward/record.uri?eid=2-s2.0-85145769545&partnerID=40&md5=d287892fb96fb12e17ad03a6138f275d

Al Darayseh, A. (2023). Acceptance of artificial intelligence in teaching science: science teachers' perspective. Comput. Educ. Artif. Intell. 4:100132. doi: 10.1016/j.caeai.2023.100132

Al-Abdullatif, A. M. (2024). Modeling teachers' acceptance of generative artificial intelligence use in higher education: the role of AI literacy, intelligent TPACK, and perceived trust. Educ. Sci. 14, 1–20. doi: 10.3390/educsci14111209

Aliabadi, R., Singh, A., and Wilson, E. (2023). “Transdisciplinary AI education: the confluence of curricular and community needs in the instruction of artificial intelligence” in Artificial intelligence in education technologies: New development and innovative practices. eds. T. Schlippe, E. C. K. Cheng, and T. Wang, vol. 190 (Singapore: Springer Nature Singapore), 137–151. doi: 10.1007/978-981-99-7947-9_11

Aljarrah, E., Elrehail, H., and Aababneh, B. (2016). E-voting in Jordan: assessing readiness and developing a system. Comput. Hum. Behav. 63, 860–867. doi: 10.1016/j.chb.2016.05.076

Almaraz-López, C., Almaraz-Menéndez, F., and López-Esteban, C. (2023). Comparative study of the attitudes and perceptions of university students in business administration and management and in education toward artificial intelligence. Educ. Sci. 13:609. doi: 10.3390/educsci13060609

Ateeq, A., Alaghbari, M. A., Alzoraiki, M., Milhem, M., and Hasan Beshr, B. A. (2024). Empowering academic success: integrating AI tools in university teaching for enhanced assignment and thesis guidance. ASU Int. Conf. Emerg. Technol. Sustain. 297–301. doi: 10.1109/ICETSIS61505.2024.10459686

Barrett, A., and Pack, A. (2023). Not quite eye to AI: student and teacher perspectives on the use of generative artificial intelligence in the writing process. Int. J. Educ. Technol. High. Educ. 20:59. doi: 10.1186/s41239-023-00427-0

Berendt, B., Littlejohn, A., and Blakemore, M. (2020). AI in education: learner choice and fundamental rights. Learn. Media Technol. 45, 312–324. doi: 10.1080/17439884.2020.1786399

Bond, M., Khosravi, H., De Laat, M., Bergdahl, N., Negrea, V., Oxley, E., et al. (2024). A meta systematic review of artificial intelligence in higher education: A call for increased ethics, collaboration, and rigour. Int. J. Educ. Technol. High. Educ. 21:4. doi: 10.1186/s41239-023-00436-z

Buinevich, M., Shkerin, A., Smolentseva, T., and Puchkova, M. (2024). On the implementation of residual knowledge continuous assessment technology in an educational organization using artificial intelligence tools. Proc. Int. Conf. Technol. Enhanc. Learn. High. Educ., 111–114. doi: 10.1109/TELE62556.2024.10605664

Cao, Y., Aziz, A. A., and Arshard, W. N. R. M. (2023). University students' perspectives on artificial intelligence: A survey of attitudes and awareness among interior architecture students. IJERI 20, 1–21. doi: 10.46661/ijeri.8429

Chan, C. K. Y., and Hu, W. (2023). Students' voices on generative AI: perceptions, benefits, and challenges in higher education. Int. J. Educ. Technol. High. Educ. 20:43. doi: 10.1186/s41239-023-00411-8

Crawford, J., Allen, K.-A., Pani, B., and Cowling, M. (2024). When artificial intelligence substitutes humans in higher education: the cost of loneliness, student success, and retention. Stud. High. Educ. 49, 883–897. doi: 10.1080/03075079.2024.2326956

Creswell, J. W., and Creswell, J. D. (2023). Research design: Qualitative, quantitative, and mixed methods approaches. Sixth Edn. Los Angeles: SAGE.

Creswell, J. W., and Creswell, J. D. (2018). Research design: Qualitative, quantitative, and mixed methods approaches. Fifth Edn. Los Angeles: SAGE.

Essien, A., Bukoye, O. T., O’Dea, X., and Kremantzis, M. (2024). The influence of AI text generators on critical thinking skills in UK business schools. Stud. High. Educ. 49, 865–882. doi: 10.1080/03075079.2024.2316881

Farrokhnia, M., Banihashem, S. K., Noroozi, O., and Wals, A. (2024). A SWOT analysis of ChatGPT: implications for educational practice and research. Innov. Educ. Teach. Int. 61, 460–474. doi: 10.1080/14703297.2023.2195846

Henadirage, A., and Gunarathne, N. (2024). Barriers to and opportunities for the adoption of generative artificial intelligence in higher education in the global south: insights from Sri Lanka. Int. J. Artif. Intelligence Educ., 1–37. doi: 10.1007/s40593-024-00439-5

Horowitz, M. C., Kahn, L., Macdonald, J., and Schneider, J. (2024). Adopting AI: how familiarity breeds both trust and contempt. AI Soc. 39, 1721–1735. doi: 10.1007/s00146-023-01666-5

Housden, J. (2023). i morning briefing: how are universities dealing with ChatGPT? inews. Available at: https://inews.co.uk/news/i-morning-briefing-how-are-universities-dealing-with-chatgpt-2179732

Johnston, H., Wells, R. F., Shanks, E. M., Boey, T., and Parsons, B. N. (2024). Student perspectives on the use of generative artificial intelligence technologies in higher education. Int. J. Educ. Integr. 20:2. doi: 10.1007/s40979-024-00149-4

Kaswan, K. S., Dhatterwal, J. S., and Ojha, R. P. (2024). “AI in personalized learning” in Advances in technological innovations in higher education. eds. I. A. Garg, B. V. Babu, and V. E. Balas. 1st ed (Boca Raton: CRC Press), 103–117.

Kelly, S., Kaye, S.-A., and Oviedo-Trespalacios, O. (2023). What factors contribute to the acceptance of artificial intelligence? A systematic review. Telemat. Inform. 77:101925. doi: 10.1016/j.tele.2022.101925

Lee, J., and Kim, Y. (2023). Sustainable educational metaverse content and system based on deep learning for enhancing learner immersion. Sustainability 15:12663. doi: 10.3390/su151612663

Li, M., Xiong, X., and Xu, B. (2024). Attitudes and perceptions of Chinese oncologists towards artificial intelligence in healthcare: A cross-sectional survey. Front. Digital Health 6:1371302. doi: 10.3389/fdgth.2024.1371302

Liu, X., Zhang, Y., Zhao, X., Hunt, S., Yan, W., and Wang, Y. (2022). The development of independent colleges and their separation from their parent public universities in China. Human. Soc. Sci. Commun. 9:435. doi: 10.1057/s41599-022-01433-9

Liu, X., Zhou, H., Hunt, S., and Zhang, Y. (2023). For-profit or not-for-profit: what has affected the implementation of the policy for private universities in China? High Educ. Pol. 36, 190–212. doi: 10.1057/s41307-021-00254-w

Ma, S., and Lei, L. (2024). The factors influencing teacher education students' willingness to adopt artificial intelligence technology for information-based teaching. Asia Pacific J. Educ. 44, 94–111. doi: 10.1080/02188791.2024.2305155

Malatji, W. R., van Eck, R., and Zuva, T. (2020). Understanding the usage, modifications, limitations and criticisms of technology acceptance model (TAM). Adv. Sci. Technol. Engin. Syst. 5, 113–117. doi: 10.25046/aj050612

MOE China. (2020). National Statistical Communiqué on the development of education [Ministry of Education of the People's Republic of China]. Available at: http://www.moe.gov.cn/jyb_sjzl/sjzl_fztjgb/202005/t20200520_456751.html (Accessed October 30, 2024).

MOE China 2022. (2021) National Statistical Communiqué on the development of education [Ministry of Education of the People's Republic of China]. Available at: http://www.moe.gov.cn/jyb_sjzl/sjzl_fztjgb/202209/t20220914_660850.html (Accessed October 30, 2024).

MOE China 2023. (2022) National Statistical Communiqué on the development of education [Ministry of Education of the People's Republic of China]. Available at: http://www.moe.gov.cn/jyb_sjzl/sjzl_fztjgb/202307/t20230705_1067278.html (Accessed October 30, 2024).

Moonsamy, D., Naicker, N., Adeliyi, T. T., and Ogunsakin, R. E. (2021). A meta-analysis of educational data mining for predicting students' performance in programming. Int. J. Adv. Comput. Sci. Appl. 12, 97–104. doi: 10.14569/IJACSA.2021.0120213

Mosleh, R., Jarrar, Q., Jarrar, Y., Tazkarji, M., and Hawash, M. (2023). Medicine and pharmacy students' knowledge, attitudes, and practice regarding artificial intelligence programs: Jordan and West Bank of Palestine. Adv. Med. Educ. Pract. 14, 1391–1400. doi: 10.2147/AMEP.S433255

Negi, P., Shrivastava, V. K., Pandey, S., Chythanya, K. R., Negi, P., and Gupta, M. (2024). Intervention of artificial intelligence in education to reduce illiteracy rate. In 2024 3rd international conference on sentiment analysis and deep learning (ICSADL), 153–157

Nikoulina, A., and Caroni, A. (2024). Familiarity, use, and perception of AI-powered tools in higher education. Int. J. Manag. Knowl. Learn. 13, 169–181. doi: 10.53615/2232-5697.13.169-181

Pan, M., Wang, J., and Wang, J. (2023). Application of artificial intelligence in education: opportunities, challenges, and suggestions. In 2023 13th international conference on information Technology in Medicine and Education (ITME), 623–627

Pande, K., Jadhav, V., and Mali, M. (2023). Artificial intelligence: exploring the attitude of secondary students. J. E-Learn. Knowledge Soc. 19, 43–48. doi: 10.20368/1971-8829/1135865

Pavlik, J. V. (2023). Collaborating with ChatGPT: considering the implications of generative artificial intelligence for journalism and media education. J. Mass Commun. Educ. 78, 84–93. doi: 10.1177/10776958221149577

Petricini, T., Wu, C., and Zipf, S. T. (2023). Perceptions about generative AI and ChatGPT use by faculty and college students. Pennsylvania, USA: The Pennsylvania State University.

Qian, Z. (2021). Applications, risks and countermeasures of artificial intelligence in education. In 2021 2nd international conference on artificial intelligence and education (ICAIE), 89–92

Rachovski, T., Petrova, D., and Ivanov, I. (2024). Automated creation of educational questions: analysis of artificial intelligence technologies and their role in education. Proceed. Int. Sci. Pract. Conf. 2, 465–467. doi: 10.17770/etr2024vol2.8101

Ríos Hernández, I. N., Mateus, J. C., Rivera Rogel, D., and Ávila Meléndez, L. R. (2024). Percepciones de estudiantes latinoamericanos sobre el uso de la inteligencia artificial en la educación superior [Latin American students' perceptions of the use of artificial intelligence in higher education]. Austral Comunicación 13, 1–25. doi: 10.26422/aucom.2024.1301.rio

Rizvi, M. (2023). Exploring the landscape of artificial intelligence in education: challenges and opportunities. In 2023 5th international congress on human-computer interaction, optimization and robotic applications (HORA)

Sahari, D. S. (2024). Measuring the familiarity, usability, and concern towards AI-integrated education of college teachers at the undergraduate level. Scholars J. Arts Human. Soc. Sci. 12, 166–176. doi: 10.36347/sjahss.2024.v12i05.002

Shahzad, M. F., Xu, S., and Javed, I. (2024). ChatGPT awareness, acceptance, and adoption in higher education: the role of trust as a cornerstone. Int. J. Educ. Technol. High. Educ. 21:46. doi: 10.1186/s41239-024-00478-x

Sjödén, B. (2020). “When lying, hiding and deceiving promotes learning—A case for augmented intelligence with augmented ethics” in Artificial intelligence in education. eds. I. I. Bittencourt, M. Cukurova, K. Muldner, R. Luckin, and E. Millán, vol. 12164 (Cham, Switzerland: Springer International Publishing), 291–295.

Stojanov, A., Liu, Q., and Koh, J. H. L. (2024). University students' self-reported reliance on ChatGPT for learning: A latent profile analysis. Comput. Educ. Artif. Intell. 6:100243. doi: 10.1016/j.caeai.2024.100243

Supriyanto, E., Setiawan, A., Chamsudin, A., Yuliana, I., and Wantoro, J. (2024). Exploring student perceptions and acceptance of ChatGPT in enhanced AI-assisted learning. Int. Conf. Smart Comput., IoT Mach. Learn, 291–295. doi: 10.1109/SIML61815.2024.10578145

Tiwari, C. K., Bhat, M., Khan, S. T., Subramaniam, R., and Khan, M. A. I. (2023). What drives students toward ChatGPT? An investigation of the factors influencing adoption and usage of ChatGPT. Interactive Technology and Smart Education.

Tlili, A., Shehata, B., Adarkwah, M. A., Bozkurt, A., Hickey, D. T., Huang, R., et al. (2023). What if the devil is my guardian angel: ChatGPT as a case study of using chatbots in education. Smart Learn. Environ. 10:15. doi: 10.1186/s40561-023-00237-x

Trivedi, N. B. (2023). AI in education-A transformative force. In 2023 1st DMIHER international conference on artificial intelligence in education and industry 4.0 (IDICAIEI), 1–4

Turing, A. M. (1950). I.—computing machinery and intelligence. Mind LIX, 433–460. doi: 10.1093/mind/LIX.236.433

Tyagi, M., Ranjan, S., and Gupta, A. (2022). Transforming education system through artificial intelligence and machine learning. In 2022 3rd international conference on intelligent engineering and management (ICIEM), 44–49

Ullrich, A., Vladova, G., Eigelshoven, F., and Renz, A. (2022). Data mining of scientific research on artificial intelligence in teaching and administration in higher education institutions: A bibliometrics analysis and recommendation for future research. Discover Artif. Intelligence 2, 1–18. doi: 10.1007/s44163-022-00031-7

UNESCO. (2019). Beijing Consensus on Artificial Intelligence and Education. Available at: https://unesdoc.unesco.org/ark:/48223/ (Accessed October 30, 2024).

UNESCO. (2021a). Draft text of the Recommendation on the Ethics of Artificial Intelligence. Available at: https://unesdoc.unesco.org/ark:/48223/pf0000377897 (Accessed October 30, 2024).

UNESCO. (2021b). UNESCO Strategy on technological innovation in education (2021–2025). Available at: https://unesdoc.unesco.org/ark:/48223/pf0000375776 (Accessed October 30, 2024).

United Nations. (2024). Pact for the future, global digital compact, and declaration on future generations. Available at: https://www.un.org/sites/un2.un.org/files/sotf-the-pact-for-the-future.pdf (Accessed October 30, 2024).

Valova, I., Mladenova, T., and Kanev, G. (2024). Students' perception of ChatGPT usage in education. Int. J. Adv. Comput. Sci. Appl. 15, 466–473. doi: 10.14569/IJACSA.2024.0150143

Velander, J., Taiye, M. A., Otero, N., and Milrad, M. (2024). Artificial intelligence in K-12 education: eliciting and reflecting on Swedish teachers' understanding of AI and its implications for teaching & learning. Educ. Inf. Technol. 29, 4085–4105. doi: 10.1007/s10639-023-11990-4

Von Garrel, J., and Mayer, J. (2023). Artificial intelligence in studies—use of ChatGPT and AI-based tools among students in Germany. Human. Soc. Sci. Commun. 10:799. doi: 10.1057/s41599-023-02304-7

Welch, A. (2024). East Asia's private higher education crisis: demography as destiny? High. Educ. Q. 78, 1–16. doi: 10.1111/hequ.12508

Keywords: artificial intelligence, familiarity, attitude, Beijing consensus on artificial intelligence and education, ChatGPT, private university, higher education, China

Citation: Li Y, Castulo NJ and Xu X (2025) Embracing or rejecting AI? A mixed-method study on undergraduate students’ perceptions of artificial intelligence at a private university in China. Front. Educ. 10:1505856. doi: 10.3389/feduc.2025.1505856

Received: 03 October 2024; Accepted: 31 January 2025;

Published: 21 February 2025.

Edited by:

Vitor William Batista Martins, Universidade do Estado do Pará, Brazil

Reviewed by:

Dennis Arias-Chávez, Continental University, Peru

Copyright © 2025 Li, Castulo and Xu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Nilo Jayoma Castulo, nilocastulo@mail.bnu.edu.cn

†These authors have contributed equally to this work
