
ORIGINAL RESEARCH article

Front. Educ., 03 February 2025

Sec. Digital Learning Innovations

Volume 10 - 2025 | https://doi.org/10.3389/feduc.2025.1427450

This article is part of the Research Topic Artificial intelligence (AI) in the complexity of the present and future of education: research and applications.

The effective integration of Generative Artificial Intelligence (GenAI) into educational practices holds promise for enhancing teaching and learning processes. Examining faculty acceptance and use of GenAI can provide valuable insights into the conditions necessary for its successful application. This study surveyed 208 faculty members at a private university in Mexico to measure their acceptance and use of GenAI in their educational practice. The survey instrument integrates elements of the Technology Acceptance Model (TAM) and the Theory of Reasoned Action (TRA). The original questionnaire was translated into Spanish and validated by experts to ensure reliability and validity in the new context. Overall, the acceptance dimensions obtained moderately high scores. Behavioral intention obtained the highest values, whereas Subjective norm obtained the lowest. No significant differences in GenAI acceptance were identified across faculty disciplines or sociodemographic characteristics. Faculty GenAI acceptance is also moderately and positively correlated with faculty use of GenAI to produce text. The level of acceptance identified among faculty toward the use of GenAI in educational environments suggests a promising future for its integration into teaching and learning practices. Further research is encouraged on GenAI integration for student use and on the impact of faculty training on the effective use of GenAI in educational settings.

Research indicates that the effective introduction of innovative instructional technologies in educational contexts is significantly influenced by teachers’ attitudes (Kim and Kim, 2022). Additionally, the effective integration of Generative Artificial Intelligence (GenAI) into educational practices holds promise for enhancing teaching and learning processes (Kiryakova and Angelova, 2023). Thus, examining faculty acceptance of GenAI implementation can provide valuable insights into the conditions necessary for its successful application.

The primary challenges and limitations within education underscore the need for shifts in faculty attitudes (Nikum, 2022). Therefore, gaining insights into the concerns and perspectives of educators, who serve as critical implementers in educational settings, is essential for fostering the integration of GenAI into educational environments (You, 2023).

Furthermore, educational transformation demands an understanding of technologies, proficiency in their use, and openness to progress (Nikum, 2022). Faculty members must acquire not only the technical skills required to operate technology, but also the ability to integrate it effectively into their curricula. Additionally, to be receptive to incorporating new technology into their teaching practices, faculty members must understand the instructional potential it offers (Kim and Kim, 2022).

Before November 30, 2022, AI’s presence in daily life was often subtle, embedded in smart devices perceived as simple tools aiding everyday tasks. The introduction of ChatGPT by OpenAI marked a significant shift, transforming AI from an indirect and secondary tool into a tangible and accessible resource. This development made AI’s benefits more apparent and immediate, particularly in educational settings. With ChatGPT, the potential to revolutionize education became clear, offering personalized learning, instant access to information, and the ability to engage students in new, interactive ways. However, as with any disruptive technology, its integration also brought concerns, highlighting the need for balanced and thoughtful implementation (García-Peñalvo, 2023).

Faculty are only beginning to explore the potential pedagogical benefits that GenAI applications offer in supporting learners. Consequently, there remains substantial room for faculty to pursue innovative and meaningful research and practice with GenAI that could significantly improve learning outcomes (Zawacki-Richter et al., 2019).

Several studies have examined the acceptance and use of AI by both faculty and students, revealing significant differences in their perceptions. Nonetheless, these studies also highlight similarities between faculty and student acceptance and use in certain respects.

Faculty and students have a positive attitude toward AI in learning (Hwang, 2022; Oluwadiya et al., 2023), although Ural Keleş and Aydın (2021) found that students’ negative perceptions of artificial intelligence concepts outweigh their positive perceptions. The majority of faculty and students also support the inclusion of AI in the curriculum and in their practice (Ahmed et al., 2022; Swed et al., 2022). However, Sharma et al. (2023) found that students were divided on AI integration, with students highlighting concerns about overreliance on AI.

Overall, students’ and faculty’s enthusiasm about the potential of AI slightly exceeds their concerns about future risks. However, in clinical environments students express more concern about AI than faculty, not only about its integration in educational settings but also about its integration into medical practice. A considerably higher proportion of students than faculty believe that AI might dehumanize healthcare, make physicians redundant, diminish their skills, and ultimately jeopardize patient care (Oluwadiya et al., 2023). Nevertheless, a slight majority of both faculty and students in medicine harbor concerns about AI potentially replacing their professions (Hwang, 2022).

The Technology Acceptance Model (TAM) and the Theory of Reasoned Action (TRA) are widely used frameworks for understanding and predicting user behavior toward technology and new systems. TAM simplifies the complex process of technology adoption into a model in which perceived usefulness and perceived ease of use are the primary factors influencing the decision to use a technology. It has been extensively validated and is known for its strong predictive power regarding user acceptance and usage behavior. It can also be readily adapted and extended to fit different contexts and technologies (Gaber et al., 2023). Applying TAM is therefore expected to yield insights into faculty perceptions of AI’s benefits and of how easily it can be integrated into their academic routines. TRA assumes that individuals are rational and make decisions based on their attitudes and subjective norms. It also emphasizes the role of intention in predicting behavior, which is relevant for understanding how attitudes and social influences drive technology adoption.

Compared to models like TPACK (Technological Pedagogical Content Knowledge), which integrates technology into teaching practice through the interaction of pedagogy and content (Mishra and Koehler, 2006), TAM is better suited for examining individual adoption of a specific tool or technology. Similarly, the Concerns-Based Adoption Model (CBAM) focuses on the stages of concern and levels of use among educators (Hall and Hord, 1987), emphasizing adoption as a process rather than an outcome.

For this study, TAM provides an adaptable and concise framework to explore faculty acceptance of GenAI in a higher education context, mainly because it focuses on behavioral intention and its relationship with perceived usefulness (PU), perceived ease of use (PEU), and attitudes. Incorporating TRA’s broader constructs, such as Subjective Norm (SN), adds depth by examining social influences on adoption. In addition, by aligning TAM’s constructs with the context of teaching practice, the study uses a framework tailored to technology use, which complements TPACK’s focus on pedagogical integration and CBAM’s emphasis on adoption stages. This multi-framework perspective therefore enriches the discussion and places the study within a broader dialogue on technology adoption in education.
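As a schematic aid only, the hypothesized relations among the constructs can be written in the linear form common in the TAM and TRA literature, where A denotes attitudes toward use, BI behavioral intention, PU perceived usefulness, PEU perceived ease of use, and SN subjective norm. The weights w and β are generic placeholders, and external variables and error terms are omitted. This is an illustration of the classic formulations, not a model estimated in the present study, which reports descriptive statistics and non-parametric tests.

```latex
\begin{aligned}
\text{TRA:}\quad        & BI = w_1\,A + w_2\,SN \\
\text{TAM:}\quad        & PU = \beta_1\,PEU \\
                        & A  = \beta_2\,PU + \beta_3\,PEU \\
                        & BI = \beta_4\,A + \beta_5\,PU \\
\text{Integrated:}\quad & BI = \beta_4\,A + \beta_5\,PU + \beta_6\,SN
\end{aligned}
```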

Research exploring the adoption of AI among teachers and students has focused primarily on the medical field, and its findings are inconsistent. While Oluwadiya et al. (2023) found that students use AI tools more frequently than faculty, Swed et al. (2022) found that residents and faculty have significantly more experience using AI than students.

According to Hasan et al. (2024), students and educators display an average level of AI knowledge, although students outperform faculty. In addition, Busch et al. (2024) found that students reported limited general knowledge of AI and felt inadequately prepared to use AI in their future careers. Students who had received AI training as part of their regular courses reported better AI knowledge and felt more prepared to apply this knowledge professionally.

In medical settings, most faculty and medical students showed a basic knowledge of AI, but only a minority were aware of its medical applications (Swed et al., 2022; Sharma et al., 2023). Additionally, most of them had not taken any AI-related courses (Hwang, 2022). For example, in the study conducted by Oluwadiya et al. (2023), fewer than 5% of both students and faculty reported receiving AI training.

Research by Hamd et al. (2023), Hussain (2020), and Oluwadiya et al. (2023) indicates that faculty generally hold a favorable attitude toward the use of AI. For instance, Wang et al. (2020) found that faculty are ready to integrate AI into education, particularly through intelligent tutoring systems. Additionally, faculty display overall positive attitudes toward incorporating expert systems into curricula and teaching methods (Jarrah et al., 2023). Notably, however, faculty with master’s degrees tend to hold more positive attitudes than those with doctorates (Jarrah et al., 2023).

Furthermore, faculty show positive attitudes toward the use of GenAI in their practice (Hasan et al., 2024; Kiryakova and Angelova, 2023). Overall, faculty express optimism and openness regarding the integration of AI in education, recognizing its potential benefits and opportunities to enhance teaching and learning (Shamsuddinova et al., 2024; Zastudil et al., 2023).

A significant proportion of faculty have indicated their intention to incorporate GenAI into their courses in the near future (Shankar et al., 2023). The intention to use AI in education is positively affected by factors such as AI performance expectancy, effort expectancy, social influence, and facilitating conditions (Ahmad et al., 2023).

Faculty consider GenAI a useful tool. For instance, Al Shakhoor et al. (2024) found that faculty members perceived chatbots as useful tools in higher education. Nevertheless, implementing such technologies requires training and preparation if they are to become effective learning tools.

Faculty see many opportunities for using GenAI to enhance education. For example, faculty agree that advances in GenAI are likely to change instructional methods and the barriers faced by English language learners. Faculty think AI might extend their limited time resources (Altun et al., 2024; Kiryakova and Angelova, 2023) and help overcome language barriers (Alasadi and Baiz, 2023; Morris, 2023).

Furthermore, faculty see opportunities for language models to yield a new class of intelligent tutoring systems (Cardona et al., 2023). Such tools might give students feedback on their homework, explaining in detail why an initial answer was wrong and helping them reach a correct solution independently (Alasadi and Baiz, 2023). Students could turn to a chatbot first when questions arise and visit faculty office hours only if the chatbot cannot help sufficiently, which may improve their comprehension of course materials (Morris, 2023).

Faculty believe that generative models could be used to create new, engaging lesson plans, and they think GenAI may improve time efficiency and productivity in lesson planning (Altun et al., 2024; Morris, 2023). They perceive ChatGPT as a means to provoke interest, activate and engage learners, and stimulate their critical thinking and creativity (Kiryakova and Angelova, 2023).

Faculty also highlight the potential of AI to enhance student engagement through personalized learning experiences, adaptive teaching methods, and interactive learning (Alasadi and Baiz, 2023; Altun et al., 2024). They perceive AI as a tool that can help tailor learning pathways for students, especially in contexts with large student populations and limited faculty availability. Additionally, AI is seen as beneficial for giving students opportunities to practice their knowledge and skills in a non-threatening environment, enabling continuous improvement through feedback mechanisms (Shankar et al., 2023).

The main concerns faculty express about the use of GenAI in educational settings are cheating, the provision of incorrect or biased information, and a decline in students’ abilities.

When considering the potential impact of GenAI on education, faculty frequently discuss cheating as a primary concern (Alasadi and Baiz, 2023; Farrelly and Baker, 2023; You, 2023; Zastudil et al., 2023). Accordingly, faculty are concerned about the unethical use of AI, which poses risks to the integrity and fairness of assessment practices (Morris, 2023). Educators also broadly agree that the misuse of AI is a form of plagiarism (Altun et al., 2024; Ibrahim et al., 2023).

Moreover, existing AI-text classifiers often struggle to accurately identify the utilization of ChatGPT in academic assignments. This challenge arises from their tendency to misclassify human-written responses as AI-generated, compounded by the fact that AI-generated text can be easily manipulated to avoid detection through simple editing techniques (Ibrahim et al., 2023).

In a study conducted by Morris (2023), some participants expressed concern that students might be exposed to factual errors. In this regard, faculty are concerned about outdated information, the risk of misinformation, biased content, inaccuracies, and the inability to verify sources (Altun et al., 2024; You, 2023).

Hence, several instructors cite the lack of trustworthiness of responses from GenAI tools, and students’ over-reliance on them, as significant concerns when considering students using these models in their classes (Zastudil et al., 2023). According to faculty, the most severe problem is the danger that learners will trust ChatGPT completely without checking the authenticity of the generated texts (Kiryakova and Angelova, 2023). However, training and recommendations on how to use such Generative Pre-trained Transformers (GPTs) can minimize these concerns (UNESCO IESALC, 2023).

Faculty are also concerned that AI may hinder the acquisition of knowledge and skills (Kiryakova and Angelova, 2023) because of the issues noted above, such as incorrect information and cheating. They also note that dependence on GenAI may weaken students’ problem-solving skills and critical thinking (Altun et al., 2024; Morris, 2023). In addition, GenAI tools reduce the effort required to write code and find learning materials (Zastudil et al., 2023).

Some faculty argue that GenAI does not offer the emotional support or encouragement that students often need (Altun et al., 2024). Although that study focused on the hospitality industry, Meng and Dai (2021) found that emotional support from both chatbots and humans was effective in reducing people’s stress and worry. Still, when humans and chatbots were compared as sources of emotional support, human support led to greater perceived supportiveness than chatbot support.

When concern levels were compared across disciplines, no significant differences were found based on faculty discipline, but differences were observed based on faculty experience with, and intention to use, generative AI in their classes. Faculty who had already used generative AI in their classes showed higher concerns regarding the consequences of using GenAI (You, 2023).

You (2023) found that the majority of faculty had some experience with generative AI, but that its educational use was limited. Moreover, faculty cite a lack of understanding of, and training in, AI tools for instruction as one of the most important barriers to their use (Shamsuddinova et al., 2024).

Faculty members have shown a medium level of AI awareness and report a lack of education and training programs (Hamd et al., 2023). Gaber et al. (2023) found no statistically significant relationship between AI awareness (perceived capacity to use AI applications) and technology acceptance among faculty members. A positive relationship does, however, exist between AI awareness and faculty members’ digital competencies.

Faculty members have limited experience using AI tools in their practice. High income, a higher educational level and background, and previous experience with technology predicted knowledge, attitudes, and practices toward using AI in their fields, and knowledge about AI correlates positively with attitudes toward AI (Hasan et al., 2024).

Studying faculty acceptance and use of GenAI may provide insights into educators’ readiness to integrate GenAI tools into their teaching practices and help gauge the potential of GenAI for advancing pedagogical methods. This includes understanding their willingness to adopt new technologies, adapt instructional strategies, and invest time in learning how to use GenAI tools effectively. Furthermore, researching faculty acceptance and usage makes it possible to examine the potential impact of GenAI on student learning outcomes.

In addition, studies on faculty acceptance and usage of GenAI can help identify educators’ professional development needs regarding GenAI integration. By understanding faculty skill gaps and training requirements, institutions can design targeted training programs that support faculty in leveraging GenAI tools effectively in their teaching. By addressing these needs, stakeholders can facilitate the successful implementation of GenAI initiatives in educational contexts.

To this end, this study aimed to examine faculty acceptance of GenAI in their educational practice. The study addressed the following research questions:

1. What is the level of acceptance among faculty regarding the use of GenAI in their practice?

2. What is the relationship between GenAI acceptance and gender, age, school, years of experience, and type of faculty?

3. To what extent have faculty used GenAI to create different formats such as audio, images, text and video?

4. What is the relationship between the frequency of faculty’s GenAI use and their acceptance of GenAI?

This research consisted of a survey to measure the acceptance and the use of Generative Artificial Intelligence (GenAI) in the educational practice of faculty.

The research population consisted of all 1,256 faculty members at a private university in Monterrey, Mexico, which enrolls 10,253 students in 7 schools and 47 professional programs. In this cross-sectional, non-experimental study, the survey was distributed to all faculty members by email through the school directors, and 208 members from various schools responded, yielding a sample considered representative at a 95% confidence level with a 6.2% margin of error. Recognizing that changes and developments in education must be addressed now, the university launched a strategic objective in 2023, called Next Gen Faculty, to define faculty profiles for the next generation.
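The reported margin of error can be checked with a short calculation. The sketch below assumes simple random sampling, the worst-case proportion p = 0.5, and a finite population correction; the article does not state which formula was used, so these choices are assumptions. With N = 1,256 and n = 208 it returns about 6.2%, whereas omitting the correction gives about 6.8%.

```python
import math
from statistics import NormalDist


def margin_of_error(population: int, sample: int,
                    confidence: float = 0.95, p: float = 0.5) -> float:
    """Margin of error for a proportion with a finite population correction.

    Assumes simple random sampling and, by default, the worst-case p = 0.5.
    """
    if population < 2 or not 1 <= sample <= population:
        raise ValueError("need 1 <= sample <= population and population >= 2")
    # Two-sided critical value (about 1.96 for 95% confidence).
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    standard_error = math.sqrt(p * (1 - p) / sample)
    # Finite population correction: shrinks the error as n approaches N.
    fpc = math.sqrt((population - sample) / (population - 1))
    return z * standard_error * fpc


if __name__ == "__main__":
    # Population and sample sizes reported for this study.
    print(f"{margin_of_error(1256, 208):.1%}")  # 6.2%
```

Because a voluntary email survey is not a random sample, this figure describes sampling precision under the stated assumptions rather than guaranteeing representativeness.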

Participation was voluntary, informed consent was obtained from each participant, and no ramifications or penalties were imposed on individuals who chose not to participate. Participants were told the goal of the study before completing the questionnaire and were assured that any information they provided would remain confidential. Half of the participants (50%) were female; participants were aged between 26 and 69 years (M = 45.644, SD = 10.014) and had an average of 15 years of experience as educators (M = 15.428, SD = 9.938). Of the respondents, 46.6% were full-time faculty members, 37% adjunct, and 16.3% staff.

Faculty were diverse in their academic disciplines: Arts and Design (19.7%), Health Sciences (18.8%), Engineering and Technology (13.5%), Architecture and Habitat Sciences (13%), Education and Humanities (12%), Business (12%), Law and Social Sciences (9.6%), and Integral Education (1.4%).

The Buabeng-Andoh (2018) questionnaire, which integrates TAM and TRA, was adapted and translated into Spanish to measure faculty intentions to use GenAI in their practice. Three experts reviewed the adaptation to verify that cultural and linguistic nuances were respected. The applied instrument consisted of five constructs with 18 items in total: perceived usefulness (four items), perceived ease of use (five items), attitudes toward use (three items), subjective norm (three items), and behavioral intention (three items). Each item was rated on a 5-point scale from Strongly Disagree (1) to Strongly Agree (5). Sociodemographic items, such as type of faculty (full-time, adjunct, and staff), gender, school, age, and years of experience, were included in the administered Google Form.
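A minimal sketch of how item responses could be grouped into the five constructs and averaged into construct scores. The item codes (PU1, PEU1, and so on) are hypothetical labels, and averaging the items within a construct is an assumed scoring rule, since the article reports only the number of items per construct.

```python
from statistics import fmean

# Hypothetical item codes: the article reports only the number of items per
# construct (4, 5, 3, 3 and 3), not the codes used in the Google Form.
CONSTRUCT_ITEMS = {
    "perceived_usefulness": [f"PU{i}" for i in range(1, 5)],
    "perceived_ease_of_use": [f"PEU{i}" for i in range(1, 6)],
    "attitudes_toward_use": [f"ATU{i}" for i in range(1, 4)],
    "subjective_norm": [f"SN{i}" for i in range(1, 4)],
    "behavioral_intention": [f"BI{i}" for i in range(1, 4)],
}


def construct_scores(response: dict) -> dict:
    """Average one respondent's 1-5 ratings within each construct.

    Averaging is an assumed scoring rule; a missing item raises KeyError.
    """
    scores = {}
    for construct, items in CONSTRUCT_ITEMS.items():
        ratings = [response[item] for item in items]
        if any(not 1 <= r <= 5 for r in ratings):
            raise ValueError(f"{construct}: ratings must lie on the 1-5 scale")
        scores[construct] = fmean(ratings)
    return scores


if __name__ == "__main__":
    # Hypothetical respondent who answers 4 everywhere except one SN item.
    answers = {item: 4 for items in CONSTRUCT_ITEMS.values() for item in items}
    answers["SN1"] = 2
    print(construct_scores(answers))
```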

The survey was distributed to all the faculty members to ensure representativeness across faculties. The procedure involved sending a link to the survey, hosted on Google Forms, to the directors of the faculties (schools). The directors then forwarded the survey to their respective faculty members with an invitation to participate. On average, each professor required 20 min to answer the questionnaire. Faculty members were reminded that their participation was entirely voluntary and that they could withdraw at any stage without providing a reason.

To assess the reliability of the research instrument, the internal consistency of its items and their ability to measure the intended variables were verified. Cronbach’s alpha ranged between 0.804 and 0.956 across the dimensions: Perceived usefulness (0.931), Perceived ease of use (0.812), Attitudes toward use (0.910), Subjective norm (0.804), and Behavioral intention (0.956). The alpha for the instrument as a whole is 0.893. All values therefore exceed the accepted reliability standard of 0.70, confirming the consistency among the items of the research instrument. Normality was not confirmed for any of the dimensions, so non-parametric tests were used. Spearman’s rho was employed to correlate the faculty GenAI acceptance dimensions with age and years of experience. The Mann-Whitney U test was used to compare the acceptance dimensions by gender. Lastly, the Kruskal-Wallis H test was applied to compare the acceptance dimensions across types of faculty and schools.
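Cronbach’s alpha compares the sum of the item variances with the variance of the total score: alpha = k / (k - 1) × (1 - Σ item variance / total-score variance), where k is the number of items. The sketch below uses only the Python standard library and assumes complete responses (no missing values); it illustrates the coefficient and is not the software the authors used.

```python
from statistics import variance


def cronbach_alpha(responses: list) -> float:
    """Cronbach's alpha for a respondents-by-items matrix with no missing values."""
    k = len(responses[0])
    if k < 2 or any(len(row) != k for row in responses):
        raise ValueError("need a rectangular matrix with at least two items")
    # Sample variance of each item (column) across respondents.
    item_variances = [variance([row[j] for row in responses]) for j in range(k)]
    # Sample variance of each respondent's total score.
    total_variance = variance([sum(row) for row in responses])
    if total_variance == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


if __name__ == "__main__":
    # Toy data, not study data: four respondents answering three items.
    ratings = [[4, 5, 4], [2, 2, 3], [5, 5, 5], [3, 3, 2]]
    print(round(cronbach_alpha(ratings), 3))  # 0.939
```

The ratio of variances is unaffected by whether sample or population variances are used, as long as the same choice is applied to items and totals.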

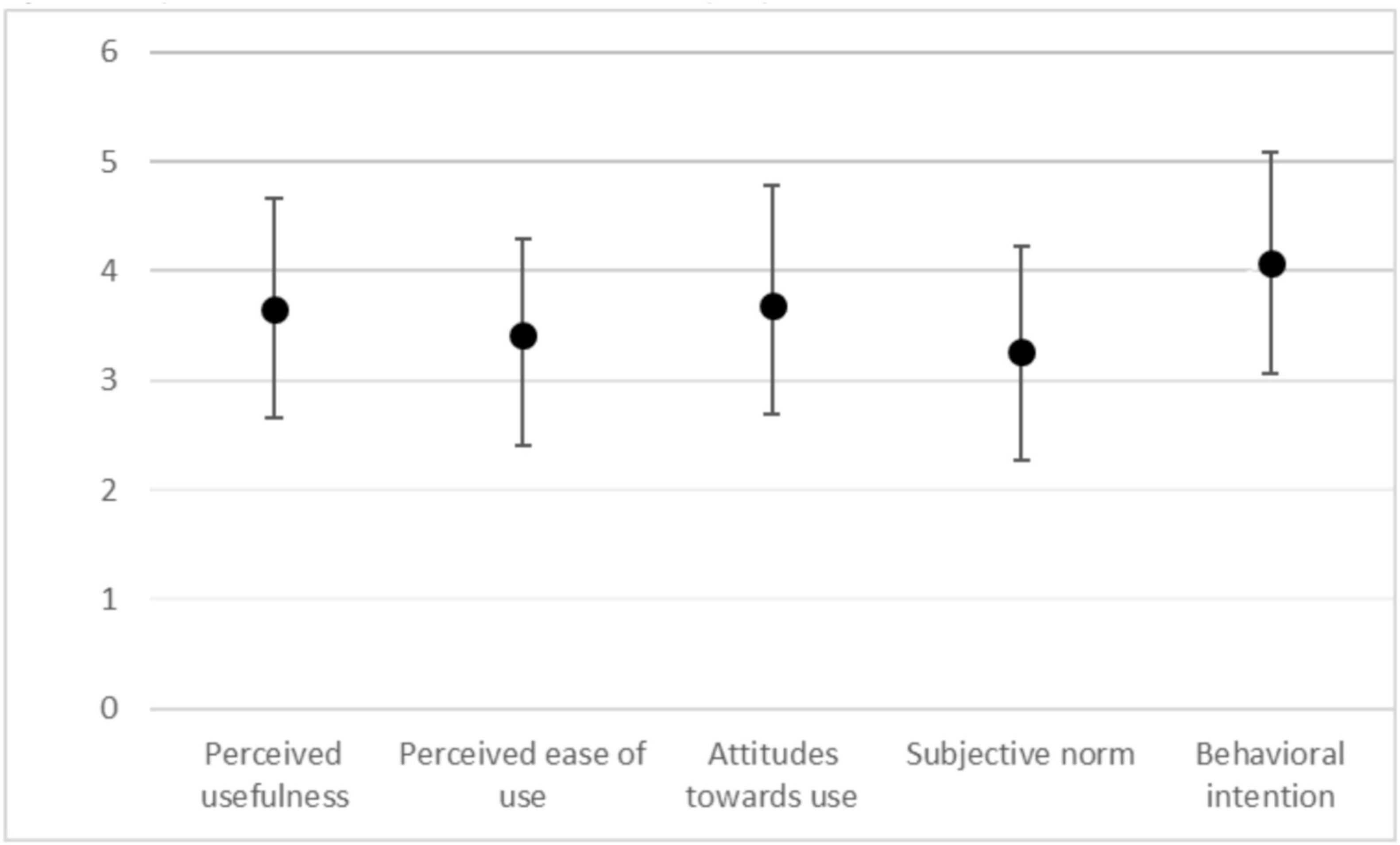

To answer the study’s first question, “What is the level of acceptance among faculty regarding the use of GenAI in their practice?”, arithmetic means and standard deviations were used. Figure 1 shows the mean of each dimension of GenAI acceptance, with error bars representing one standard deviation. Behavioral intention obtained the highest values (M = 4.069, SD = 1.026), whereas Subjective norm obtained the lowest (M = 3.269, SD = 0.965).

Figure 1. Faculty’s acceptance of GenAI for educational purposes. Error bars represent one standard deviation.

The overall mean of Perceived usefulness is 3.655 (SD = 1.014), with item means ranging between 3.495 and 3.784. The item “Using GenAI enables me to accomplish tasks more quickly” ranked first (M = 3.784, SD = 1.093), while the item “Using GenAI improves my performance” ranked last (M = 3.495, SD = 1.112) (Table 1).

The overall mean of Perceived ease of use is 3.41 (SD = 0.885), with item means ranging between 3.024 and 3.861. The item “It is easy for me to become skillful at using GenAI” ranked first (M = 3.861, SD = 1.038), while the item “I have the knowledge necessary to use GenAI” ranked last (M = 3.024, SD = 1.21) (Table 1).

The overall mean of Attitudes toward use is 3.696 (SD = 1.086), with item means ranging between 3.668 and 3.716. The item “I have positive feelings toward the use of GenAI” ranked first (M = 3.716, SD = 1.117), while the item “I like working with GenAI” ranked last (M = 3.668, SD = 1.204) (Table 1).

The overall mean of Subjective norm is 3.269 (SD = 0.965), with item means ranging between 2.913 and 3.466. The item “People who are important to me will support me to use GenAI” ranked first (M = 3.466, SD = 1.103), while the item “People who influence my behavior think I should use GenAI” ranked last (M = 2.913, SD = 1.156) (Table 1).

Finally, the overall mean of Behavioral intention is 4.069 (SD = 1.026), with item means ranging between 3.957 and 4.125. The items “I expect that I would use GenAI in future” and “I plan to use GenAI in future” ranked first, both with a mean of 4.125 (SD = 1.047 and 1.037, respectively), while the item “I intend to continue to use GenAI” ranked last (M = 3.957, SD = 1.126) (Table 1).
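The item rankings and dimension means above can be reproduced from raw ratings with a few lines of code. In this sketch, rank_items and dimension_mean are illustrative helper names, and the toy ratings are not study data. With complete responses, a dimension mean equals the average of its item means; for example, the three Behavioral intention item means reported above (4.125, 4.125, and 3.957) average to 4.069, the reported dimension mean.

```python
from statistics import fmean, stdev


def rank_items(item_ratings: dict) -> list:
    """Return (item, mean, sd) tuples ordered from highest to lowest mean."""
    summary = [(item, fmean(r), stdev(r)) for item, r in item_ratings.items()]
    return sorted(summary, key=lambda row: row[1], reverse=True)


def dimension_mean(item_means: list) -> float:
    """Mean of item means; equals the dimension mean when no responses are missing."""
    return fmean(item_means)


if __name__ == "__main__":
    # Behavioral intention item means reported in the text.
    print(round(dimension_mean([4.125, 4.125, 3.957]), 3))  # 4.069
    # Toy ratings for two hypothetical items.
    for item, mean, sd in rank_items({"item A": [3, 4, 5], "item B": [2, 3, 3]}):
        print(f"{item}: M = {mean:.3f}, SD = {sd:.3f}")
```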

In general, the results suggest that GenAI use is not yet widespread among faculty members, although they hold positive attitudes toward it. While faculty generally recognize the benefits of GenAI, as the relatively high mean scores for Perceived usefulness and Behavioral intention show, they feel less confident in some areas, particularly their knowledge and ease of use. This is reflected in lower mean scores for items such as “I have the knowledge necessary to use GenAI” in the Perceived ease of use dimension.

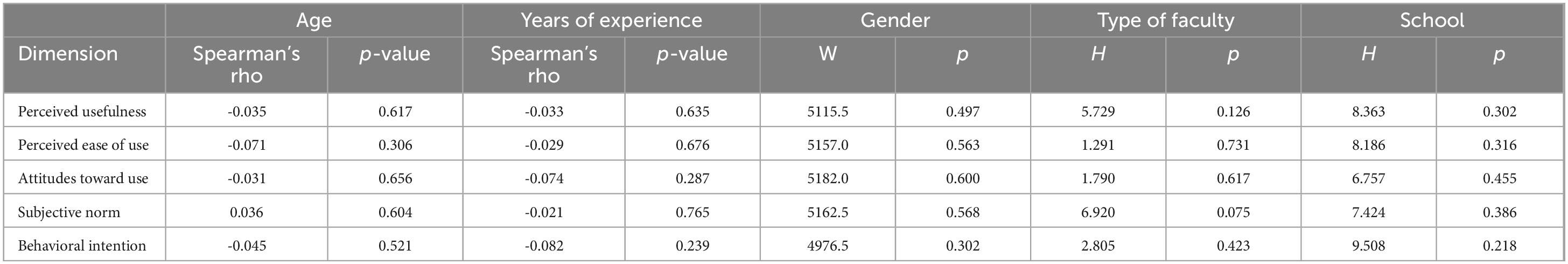

To answer the study’s second question, “What is the relationship between GenAI acceptance and gender, age, school, years of experience, and type of faculty?”, Spearman’s rho, the Mann-Whitney U test, and the Kruskal-Wallis H test were used. Table 2 shows Spearman’s rho correlations between each dimension of GenAI acceptance and age. Rho values range between -0.071 and 0.036, with p-values between 0.306 and 0.656, none of which is statistically significant at the 0.05 level. Accordingly, no significant correlation was found between GenAI acceptance and faculty age (Spearman’s rho = -0.037; p = 0.591).
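Spearman’s rho is the Pearson correlation computed on ranks, with tied values (common on 5-point scales) sharing the average of their rank positions. The standard-library sketch below computes only the coefficient; p-values such as those in Table 2 would normally come from a statistics package, for example scipy.stats.spearmanr. The function names and the example data are illustrative.

```python
import math
from statistics import fmean


def average_ranks(values: list) -> list:
    """1-based ranks in which tied values share the mean of their positions."""
    first, last = {}, {}
    for position, value in enumerate(sorted(values), start=1):
        first.setdefault(value, position)
        last[value] = position
    return [(first[v] + last[v]) / 2 for v in values]


def spearman_rho(x: list, y: list) -> float:
    """Spearman's rank correlation: Pearson's r computed on average ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y need the same length, at least 2")
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = fmean(rx), fmean(ry)
    sxy = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sxx = sum((a - mx) ** 2 for a in rx)
    syy = sum((b - my) ** 2 for b in ry)
    if sxx == 0 or syy == 0:
        raise ValueError("rho is undefined when one variable is constant")
    return sxy / math.sqrt(sxx * syy)


if __name__ == "__main__":
    # Hypothetical ages and 1-5 acceptance scores, not study data.
    ages = [28, 35, 41, 52, 60, 66]
    scores = [4.0, 3.5, 4.0, 3.0, 3.5, 3.0]
    print(round(spearman_rho(ages, scores), 3))
```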

Table 2. Correlation between acceptance dimensions and age, years of experience, gender, type of faculty and school.

Table 2 also shows Spearman’s rho correlations between each dimension of GenAI acceptance and years of teaching experience. Rho values range between -0.082 and -0.021, with p-values between 0.239 and 0.765, none of which is statistically significant at the 0.05 level. Accordingly, no significant correlation was found between faculty GenAI acceptance and years of teaching experience (Spearman’s rho = -0.061; p = 0.382).

Table 2 also shows the results of the Mann-Whitney test comparing males and females on the GenAI acceptance dimensions. W values range between 4979.5 and 5182, with p-values between 0.302 and 0.6, none of which is statistically significant at the 0.05 level. Accordingly, there is no significant difference in GenAI acceptance by gender (W = 5036.000; p = 0.392).
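The Mann-Whitney U statistic can be computed from the rank sum of one group, U1 = R1 - n1(n1 + 1)/2; R’s wilcox.test, for example, reports this quantity as W. The sketch below assumes a large-sample normal approximation with a tie correction and no continuity correction, so its p-values may differ slightly from exact or continuity-corrected results produced by statistical software. Function names and example data are illustrative.

```python
import math
from collections import Counter
from statistics import NormalDist


def average_ranks(values: list) -> list:
    """1-based ranks in which tied values share the mean of their positions."""
    first, last = {}, {}
    for position, value in enumerate(sorted(values), start=1):
        first.setdefault(value, position)
        last[value] = position
    return [(first[v] + last[v]) / 2 for v in values]


def mann_whitney_u(x: list, y: list) -> tuple:
    """Return (U for x, two-sided p) from a tie-corrected normal approximation.

    No continuity correction is applied.
    """
    n1, n2 = len(x), len(y)
    if n1 == 0 or n2 == 0:
        raise ValueError("both groups need at least one observation")
    pooled = list(x) + list(y)
    ranks = average_ranks(pooled)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    n = n1 + n2
    # Each run of t tied values reduces the variance by a term in (t**3 - t).
    tie_term = sum(t ** 3 - t for t in Counter(pooled).values())
    var_u = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if var_u <= 0:
        raise ValueError("all observations are tied")
    z = (u1 - n1 * n2 / 2) / math.sqrt(var_u)
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return u1, p


if __name__ == "__main__":
    # Hypothetical 1-5 acceptance scores for two groups, not study data.
    group_a = [3.2, 4.0, 3.6, 4.4, 2.8]
    group_b = [3.0, 3.8, 3.4, 4.2, 3.6]
    u, p = mann_whitney_u(group_a, group_b)
    print(f"U = {u}, p = {p:.3f}")
```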

Furthermore, Table 2 shows the results of the Kruskal-Wallis test comparing types of faculty (full-time, adjunct, and staff) on the GenAI acceptance dimensions. H values range between 1.291 and 6.920, with p-values between 0.075 and 0.731, none of which is statistically significant at the 0.05 level. Accordingly, there is no significant difference in GenAI acceptance by type of faculty (H = 2.075; p = 0.557).

Finally, Table 2 shows the results of the Kruskal-Wallis test comparing schools (Arts and Design, Health Sciences, Engineering and Technology, Architecture and Habitat Sciences, Education and Humanities, Business, Law and Social Sciences, and Integral Education) on the GenAI acceptance dimensions. H values range between 6.757 and 9.508, with p-values between 0.218 and 0.455, none of which is statistically significant at the 0.05 level. Accordingly, there is no significant difference in the GenAI acceptance dimensions by school (H = 8.543; p = 0.287).
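The Kruskal-Wallis H statistic extends the rank-based comparison to three or more groups: H = 12 / (N(N + 1)) × Σ R_j² / n_j - 3(N + 1), divided by a tie correction, with the p-value taken from a chi-square distribution with k - 1 degrees of freedom for k groups. The standard-library sketch below evaluates that chi-square tail for integer degrees of freedom through closed-form incomplete-gamma identities. Function names and example data are illustrative, and in practice a statistics package (for example scipy.stats.kruskal) would normally be used.

```python
import math
from collections import Counter


def average_ranks(values: list) -> list:
    """1-based ranks in which tied values share the mean of their positions."""
    first, last = {}, {}
    for position, value in enumerate(sorted(values), start=1):
        first.setdefault(value, position)
        last[value] = position
    return [(first[v] + last[v]) / 2 for v in values]


def chi2_sf(stat: float, df: int) -> float:
    """P(X >= stat) for a chi-square variable with integer df >= 1."""
    if df < 1:
        raise ValueError("df must be a positive integer")
    x = max(stat, 0.0) / 2
    if df % 2 == 0:
        # Even df: exp(-x) * sum over i < df/2 of x**i / i!
        term = total = 1.0
        for i in range(1, df // 2):
            term *= x / i
            total += term
        return math.exp(-x) * total
    # Odd df: erfc(sqrt(x)) + exp(-x) * sum over k of x**(k - 1/2) / Gamma(k + 1/2)
    total = math.erfc(math.sqrt(x))
    for k in range(1, (df - 1) // 2 + 1):
        total += math.exp(-x) * x ** (k - 0.5) / math.gamma(k + 0.5)
    return total


def kruskal_wallis(*groups: list) -> tuple:
    """Return (tie-corrected H, p-value with len(groups) - 1 degrees of freedom)."""
    if len(groups) < 2 or any(len(g) == 0 for g in groups):
        raise ValueError("need at least two non-empty groups")
    pooled = [v for g in groups for v in g]
    n = len(pooled)
    ranks = average_ranks(pooled)
    weighted, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        weighted += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12 / (n * (n + 1)) * weighted - 3 * (n + 1)
    ties = sum(t ** 3 - t for t in Counter(pooled).values())
    correction = 1 - ties / (n ** 3 - n)
    if correction == 0:
        raise ValueError("all observations are tied")
    h /= correction
    return h, chi2_sf(h, len(groups) - 1)


if __name__ == "__main__":
    # Hypothetical scores for three faculty types, not study data.
    h, p = kruskal_wallis([3.2, 4.0, 3.6], [3.0, 3.8, 4.2], [2.8, 3.4, 4.4])
    print(f"H = {h:.3f}, p = {p:.3f}")
```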

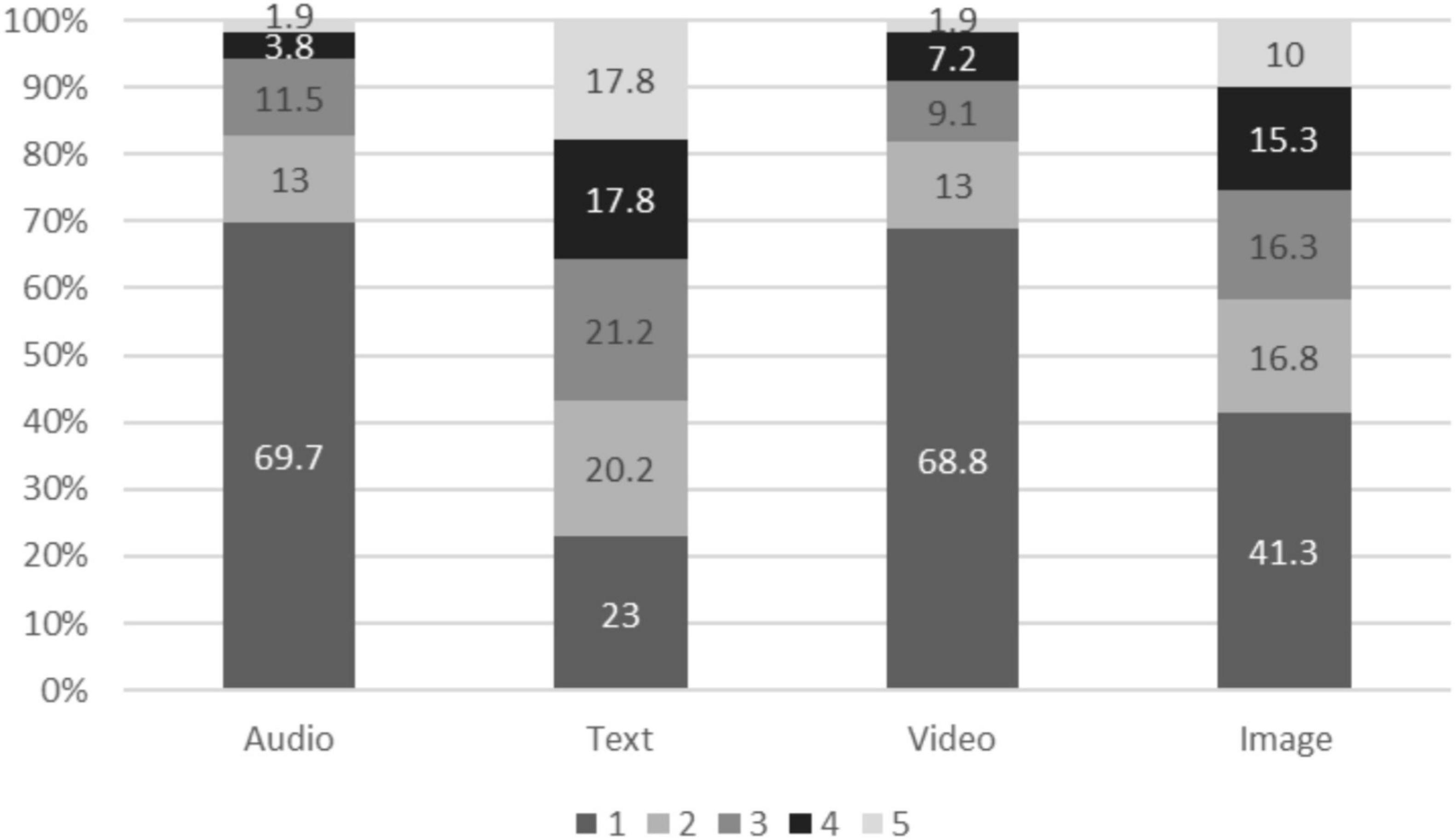

To answer the study’s third question, “To what extent have faculty used GenAI to create different formats such as audio, images, text and video?”, the percentage of responses at each point of the scale was calculated. Figure 2 shows that text is the format faculty most frequently create with GenAI (17.8% report using it frequently), and 77% of faculty members have used GenAI to produce text at least once.

Figure 2. How often faculty have used GenAI to create audio, text, video or an image. From 1 (Never) to 5 (Frequently).

Images follow: 10% of faculty use GenAI frequently to produce images, and 58.7% have done so at least once. In contrast, most educators have never used GenAI to produce audio or video (69.7% and 68.8%, respectively).
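The usage percentages above can be derived from each format’s ratings on the 1 (Never) to 5 (Frequently) scale. This sketch assumes that “at least once” means any rating above 1 and that “frequently” means a rating of 5; under that reading, 77% for text implies that 23% of faculty answered Never. The function name and example ratings are illustrative.

```python
from collections import Counter


def usage_summary(ratings: list) -> dict:
    """Percentages for one format from 1 (Never) to 5 (Frequently) ratings."""
    if not ratings or any(r not in range(1, 6) for r in ratings):
        raise ValueError("ratings must be integers from 1 to 5")
    counts = Counter(ratings)
    n = len(ratings)
    never = 100 * counts[1] / n
    return {
        "never": never,
        "at_least_once": 100 - never,  # any rating from 2 to 5
        "frequently": 100 * counts[5] / n,
    }


if __name__ == "__main__":
    # Hypothetical ratings for one format from ten respondents, not study data.
    print(usage_summary([1, 1, 1, 2, 2, 3, 4, 5, 5, 1]))
```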

To answer the study’s fourth question, “What is the relationship between the frequency of faculty’s GenAI use and their acceptance of GenAI?”, Spearman’s rho correlations were used. Table 3 shows Spearman’s rho correlations between the dimensions of GenAI acceptance and the frequency of GenAI use by type of format generated. The results show a significant positive correlation between the frequency of GenAI use and GenAI acceptance, which holds for every format generated and for every acceptance dimension. The use of GenAI to produce text has the strongest relationship with perceived usefulness, perceived ease of use, and behavioral intention.

This study provides a comprehensive examination of faculty acceptance, use, and knowledge of Generative AI (GenAI) in higher education, offering insights into its implications for teaching and learning. The findings align with previous research while also highlighting key areas for further exploration and institutional action.

Interestingly, the literature review revealed that faculty members tend to perceive GenAI more positively than students do. While students express greater concern about overreliance on AI and its educational and professional implications, faculty are optimistic about GenAI’s potential in teaching. Regarding the frequency of GenAI use, findings comparing faculty and students were inconsistent.

Garrote Jurado et al. (2023) emphasize the role of AI in enhancing personalized learning and automating grading, but they also warn of ethical challenges, such as the risk of academic dishonesty through AI-generated assignments. Both groups, faculty and students, share a basic understanding of GenAI but lack formal training, which underscores the urgent need for training programs that build their proficiency and confidence in using these tools effectively.

These results are in accordance with the findings of Ahmad et al. (2023), Shankar et al. (2023), and Wang et al. (2020), which reveal faculty willingness to use GenAI in the near future. Likewise, behavioral intention was the highest-rated dimension of GenAI acceptance in the present study, reflecting an openness to adopting GenAI in educational contexts. Furthermore, previous studies demonstrate that faculty hold favorable attitudes toward the use of AI in educational settings (Hamd et al., 2023; Hussain, 2020; Oluwadiya et al., 2023), especially when supported by institutional policies and resources.

This study confirms that faculty attitudes toward GenAI specifically are consistent with those reported in studies on AI in general. Nevertheless, comparative analyses of the digital strategies employed across higher education institutions could yield valuable insights into the factors associated with the application of AI in education, such as institutional openness toward technology, policies and the availability of resources.

This study did not find significant differences in acceptance dimensions according to faculty sociodemographics and characteristics. Consistent with You (2023), we found no significant differences in GenAI acceptance across faculty disciplines, and we did find significant correlations between faculty GenAI acceptance and their experience using GenAI.

This study’s results also agree with You (2023), since the majority of faculty reported having used GenAI at least to create text. As expected, faculty mainly use GenAI to create text. Notably, however, results for image generation did not differ greatly from those for text. The clearer distinction lies between the frequency of creating text and images on the one hand and of generating video and audio on the other. This trend may shift as new generative AI tools become more accessible in the coming years.

Overall, faculty GenAI acceptance is significantly correlated with GenAI use. Among the formats, faculty use of GenAI to create text shows the strongest correlation with faculty acceptance of GenAI for educational purposes. These results are also consistent with You (2023), who found significant differences in faculty concerns about AI according to their AI usage. However, the direction of influence between these variables is not clear. The relationship between GenAI acceptance and use appears complex and potentially bidirectional: it remains uncertain whether greater acceptance leads to more frequent use, or whether more frequent use results in higher acceptance.

The high level of acceptance among faculty toward the use of Generative Artificial Intelligence (GenAI) in educational environments points to a promising future for its integration into teaching and learning practices. It should be emphasized, however, that these findings offer only a preliminary sketch of a broader framework. For instance, further research may distinguish between faculty use of GenAI and faculty integration of GenAI for student use. Future studies should also consider the institutional policies or individual factors that enable faculty to integrate GenAI into their own practice or to adopt it for student use.

Furthermore, research initiatives aimed at evaluating the impact of faculty training on the effectiveness of GenAI use in educational settings should be promoted. Studies assessing changes in teaching practices and overall educational experiences may provide valuable insights into the benefits and limitations of GenAI integration. Targeted interventions to enhance faculty skills and knowledge, combined with peer support, may further facilitate the integration of GenAI in educational settings. However, the focus on faculty perspectives overlooks the broader ecosystem of students and administrators, whose roles are essential for effective GenAI integration. The study also did not distinguish between personal use and pedagogical integration of GenAI, nor did it delve into the ethical challenges or institutional policies that might influence adoption.

This study contributes to the understanding of the dynamics surrounding faculty engagement with GenAI in higher education. By identifying the drivers and barriers shaping faculty attitudes and behaviors toward GenAI, the results provide valuable insights for educational policymakers, administrators, and practitioners seeking to harness the full potential of AI technologies in teaching and learning contexts.

Consequently, this research aims to underscore the need for ongoing dialogue and collaboration among faculty, administrators, technologists, and ethicists to navigate the complex ethical, pedagogical, and practical considerations associated with GenAI adoption in higher education. By fostering interdisciplinary collaboration and encouraging a culture of ethical reflection, universities can harness the transformative potential of GenAI while guarding against its risks. However, the participants in this study are from a single university in Mexico, which highlights the need for further research on GenAI acceptance across diverse educational contexts.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The studies involving humans were approved by the Research Division at Universidad de Monterrey. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

JN: Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Project administration, Supervision, Writing – original draft, Writing – review & editing. JE-G: Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Writing – original draft, Writing – review & editing.

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

The authors are grateful to all those who participated in this study.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Ahmad, F. B., Al-Nawaiseh, S. J., and Al-Nawaiseh, A. J. (2023). Receptivity level of faculty members in universities using digital learning tools: A UTAUT perspective. Int. J. Emerg. Technol. Learn. 18, 209–219. doi: 10.3991/ijet.v18i13.39763

Ahmed, Z., Bhinder, K. K., Tariq, A., Tahir, M. J., Mehmood, Q., Tabassum, M. S., et al. (2022). Knowledge, attitude, and practice of artificial intelligence among doctors and medical students in Pakistan: A cross-sectional online survey. Ann. Med. Surg. 76:103493. doi: 10.1016/j.amsu.2022.103493

Al Shakhoor, F., Alnakal, R., Mohamed, O., and Sanad, Z. (2024). “Exploring business faculty’s perception about the usefulness of chatbots in higher education,” in Artificial Intelligence-Augmented Digital Twins, Studies in Systems, Decision and Control, Vol. 503, eds A. M. A. Musleh Al-Sartawi, A. A. Al-Qudah, and F. Shihadeh (Cham: Springer).

Alasadi, E. A., and Baiz, C. R. (2023). Generative AI in education and research: Opportunities, concerns, and solutions. J. Chem. Educ. 100, 2965–2971. doi: 10.1021/acs.jchemed.3c00323

Altun, O., Saydam, M. B., Karatepe, T., and Dima, ŞM. (2024). Unveiling ChatGPT in tourism education: Exploring perceptions, advantages and recommendations from educators. Worldwide Hospitality Tour. Themes 16, 105–118. doi: 10.1108/WHATT-01-2024-0018

Buabeng-Andoh, C. (2018). Predicting students’ intention to adopt mobile learning: A combination of theory of reasoned action and technology acceptance model. J. Res. Innov. Teach. Learn. 11, 178–191. doi: 10.1108/JRIT-03-2017-0004

Busch, F., Hoffmann, L., Truhn, D., Palaian, S., Alomar, M., Shpati, K., et al. (2024). International pharmacy students’ perceptions towards artificial intelligence in medicine—a multinational, multicentre cross-sectional study. Br. J. Clin. Pharmacol. 90, 649–661. doi: 10.1111/bcp.15911

Cardona, M. A., Rodríguez, R. J., and Ishmael, K. (2023). Artificial Intelligence and the Future of Teaching and Learning: Insights and Recommendations. Available online at: https://policycommons.net/artifacts/3854312/ai-report/4660267/ (accessed June 21, 2024).

Farrelly, T., and Baker, N. (2023). Generative artificial intelligence: Implications and considerations for higher education practice. Educ. Sci. 13:1109. doi: 10.3390/educsci13111109

Gaber, S. A., Shahat, H. A., Alkhateeb, I. A., Al Hasan, S. A., Alqatam, M. A., Almughyirah, S. M., et al. (2023). Faculty members’ awareness of artificial intelligence and its relationship to technology acceptance and digital competencies at King Faisal University. Int. J. Learn. Teach. Educ. Res. 22, 473–496. doi: 10.26803/ijlter.22.7.25

García-Peñalvo, F. J. (2023). “Generative artificial intelligence: Open challenges, opportunities, and risks in higher education,” in Proceedings of the 14th International Conference on e-Learning 2023, (Belgrade).

Garrote Jurado, R., Pettersson, T., and Zwierewicz, M. (2023). “Students’ attitudes to the use of artificial intelligence,” in Proceedings of the ICERI2023 16th International Conference of Education, Research and Innovation, November 13th-15th, 2023, (Seville).

Hall, G. E., and Hord, S. M. (1987). Change in Schools: Facilitating the Process. Albany, NY: State University of New York Press.

Hamd, Z., Elshami, W., Al Kawas, S., Aljuaid, H., and Abuzaid, M. M. (2023). A closer look at the current knowledge and prospects of artificial intelligence integration in dentistry practice: A cross-sectional study. Heliyon 9:e17089. doi: 10.1016/j.heliyon.2023.e17089

Hasan, H. E., Jaber, D., Al Tabbah, S., Lawand, N., Habib, H. A., and Farahat, N. M. (2024). Knowledge, attitude and practice among pharmacy students and faculty members towards artificial intelligence in pharmacy practice: A multinational cross-sectional study. PLoS One 19:e0296884. doi: 10.1371/journal.pone.0296884

Hussain, I. (2020). Attitude of university students and teachers towards instructional role of artificial intelligence. Int. J. Distance Educ. E-Learn. 5, 158–177. doi: 10.36261/ijdeel.v5i2.1057

Hwang, J. Y. (2022). Awareness, Knowledge and Attitude Towards Artificial Intelligence in Learning Among Faculty of Medicine and Health Sciences Students in University Tunku Abdul Rahman. [Doctoral dissertation]. Kampar, Malaysia: Universiti Tunku Abdul Rahman.

Ibrahim, H., Liu, F., Asim, R., Battu, B., Benabderrahmane, S., Alhafni, B., et al. (2023). Perception, performance, and detectability of conversational artificial intelligence across 32 university courses. Sci. Rep. 13:12187. doi: 10.1038/s41598-023-38964-3

Jarrah, H. Y., Alwaely, S., Darawsheh, S. R., Alshurideh, M., and Al-Shaar, A. S. (2023). “Effectiveness of introducing artificial intelligence in the curricula and teaching methods,” in The Effect of Information Technology on Business and Marketing Intelligence Systems. Studies in Computational Intelligence, Vol. 1056, eds M. Alshurideh, B. H. Al Kurdi, R. Masa’deh, H. M. Alzoubi, and S. Salloum (Cham: Springer), doi: 10.1007/978-3-031-12382-5_104

Kim, N. J., and Kim, M. K. (2022). Teacher’s perceptions of using an artificial intelligence-based educational tool for scientific writing. Front. Educ. 7:755914. doi: 10.3389/feduc.2022.755914

Kiryakova, G., and Angelova, N. (2023). ChatGPT—A challenging tool for the university professors in their teaching practice. Educ. Sci. 13:1056. doi: 10.3390/educsci13101056

Meng, J., and Dai, Y. N. (2021). Emotional support from AI Chatbots: Should a supportive partner self-disclose or not? J. Comput. Mediated Commun. 26, 207–222. doi: 10.1093/jcmc/zmab005

Mishra, P., and Koehler, M. J. (2006). Technological pedagogical content knowledge: A framework for teacher knowledge. Teachers Coll. Rec. 108, 1017–1054. doi: 10.1111/j.1467-9620.2006.00684.x

Morris, M. R. (2023). Scientists’ perspectives on the potential for generative AI in their fields. arXiv [preprint] doi: 10.48550/arXiv.2304.01420

Nikum, K. (2022). Answers to the societal demands with education 5.0: Indian higher education system. J. Eng. Educ. Trans. 36, 115–127. doi: 10.16920/jeet/2022/v36is1/22184

Oluwadiya, K. S., Adeoti, A. O., Agodirin, S. O., Nottidge, T. E., Usman, M. I., Gali, M. B., et al. (2023). Exploring artificial intelligence in the Nigerian medical educational space: An online cross-sectional study of perceptions, risks and benefits among students and lecturers from ten universities. Nigerian Postgraduate Med. J. 30, 285–292. doi: 10.4103/npmj.npmj_186_23

Shamsuddinova, S., Heryani, P., and Naval, M. A. (2024). Evolution to revolution: Critical exploration of educators’ perceptions of the impact of Artificial Intelligence (AI) on the teaching and learning process in the GCC region. Int. J. Educ. Res. 125:102326. doi: 10.1016/j.ijer.2024.102326

Shankar, P. R., Azhar, T., Nadarajah, V. D., Er, H. M., Arooj, M., and Wilson, I. G. (2023). Faculty perceptions regarding an individually tailored, flexible length, outcomes-based curriculum for undergraduate medical students. Korean J. Med. Educ. 35, 235–257. doi: 10.3946/kjme.2023.262

Sharma, V., Saini, U., Pareek, V., Sharma, L., and Kumar, S. (2023). Artificial intelligence (AI) integration in medical education: A pan-India cross-sectional observation of acceptance and understanding among students. Scripta Med. 54, 343–352. doi: 10.5937/scriptamed54-46267

Swed, S., Alibrahim, H., Elkalagi, N. K. H., Nasif, M. N., Rais, M. A., Nashwan, A. J., et al. (2022). Knowledge, attitude, and practice of artificial intelligence among doctors and medical students in Syria: A cross-sectional online survey. Front. Artificial Intell. 5:1011524. doi: 10.3389/frai.2022.1011524

UNESCO IESALC (2023). ChatGPT and Artificial Intelligence in Higher Education: Quick Start Guide. Paris: UNESCO.

Ural Keleş, P., and Aydın, S. (2021). University students’ perceptions about artificial intelligence. Shanlax Int. J. Educ. 9, 212–220. doi: 10.34293/education.v9iS1-May.4014

Wang, S., Yu, H., Hu, X., and Li, J. (2020). Participant or spectator? Comprehending the willingness of faculty to use intelligent tutoring systems in the artificial intelligence era. Br. J. Educ. Technol. 51, 1657–1673. doi: 10.1111/bjet.12998

You, J. W. (2023). Analysis of professors’ experiences with generative AI and the concerns of classroom use: Application of the concerns-based adoption model (CBAM). Korean Assoc. General Educ. 17, 333–350. doi: 10.46392/kjge.2023.17.6.333

Zastudil, C., Rogalska, M., Kapp, C., Vaughn, J., and MacNeil, S. (2023). “Generative AI in computing education: Perspectives of students and instructors,” in Proceedings of the 2023 IEEE Frontiers in Education Conference (FIE), (Piscataway, NJ: IEEE), 1–9.

Keywords: faculty, generative artificial intelligence, technology acceptance, educational technology, higher education

Citation: Nevárez Montes J and Elizondo-Garcia J (2025) Faculty acceptance and use of generative artificial intelligence in their practice. Front. Educ. 10:1427450. doi: 10.3389/feduc.2025.1427450

Received: 03 May 2024; Accepted: 16 January 2025;

Published: 03 February 2025.

Edited by:

Ignacio Despujol Zabala, Universitat Politècnica de València, Spain

Copyright © 2025 Nevárez Montes and Elizondo-Garcia. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Julián Nevárez Montes, julian.nevarez@udem.edu
