- ¹Department of Psychology, Goldsmiths, University of London, London, United Kingdom

- ²Research and Development, Ultraleap Ltd., Bristol, United Kingdom

Introduction: Gesture-based interactions provide control over a system without the need for physical contact. Mid-air haptic technology lets users receive system information without visually engaging with the interface, and its feedback is readily manipulable, which has positive implications for automotive environments. It is important, however, that the user still feels a sense of agency, which here refers to perceiving system changes as caused by their gesture.

Methods: In the current study, 36 participants engaged in an experimental time perception task with an automotive-themed infotainment menu, serving as an implicit quantitative measure of agency. This was supplemented with additional self-reported measures. Participants selected different icons via gesture poses and received sensory feedback either visually or haptically. In addition, the feedback was either the same for each icon, arbitrarily different, or carried semantic information.

Results: Mid-air haptics increased agency compared to visual feedback, and this did not vary as a function of feedback meaning. Agency was also associated with general measures of trust and usability.

Discussion: Our findings demonstrate positive implications for mid-air haptics in automotive contexts and highlight the general importance of user agency.

1 Introduction

Gesture recognition technologies provide users control over systems without physical contact with the device (Janczyk et al., 2019). This allows for more flexible and natural interactions (O’Hara et al., 2013). One promising area of application is automotive infotainment systems (Ashley, 2014), with some studies showing that mid-air interactions with these systems reduce driving errors and improve user experience (Ohn-Bar and Trivedi, 2014; Parada-Loira et al., 2014). However, a concern with mid-air interactions is providing appropriate sensory feedback, which users need in order to perceive system state changes and which is a crucial factor for their sense of agency (SoA) (Martinez et al., 2017). SoA refers to the conscious experience of having a causal influence over the environment via our actions, an experience that extends to our interactions with technology (Haggard, 2017).

A commonly used sensory modality, albeit typically in tandem with touchscreens, is visual feedback. However, visual demands in an automotive context can distract drivers and increase the risk of accidents (National Highway Traffic Safety Administration, 2020). Auditory feedback in response to gesture commands has been explored as an alternative that relieves demands on visual resources (Tabbarah et al., 2023; Shakeri et al., 2017; Sterkenburg et al., 2017). However, such feedback could compete with other auditory information inherent to automotive contexts, both inside and outside the vehicle, so it still risks dual-task demands and, ultimately, noisy signals. In view of these concerns, mid-air haptic technology has been developed. This technology provides tactile information directly to the hand by stimulating the mechanoreceptors via ultrasound waves (Georgiou et al., 2022). It shows promise as an alternative (haptic) feedback modality for these interactions and is particularly appropriate in automotive scenarios (Georgiou et al., 2022; Harrington et al., 2018; Spakov et al., 2022; Young et al., 2020). This feedback is readily manipulable and can be used to represent the features of a selection more closely, without the need to direct visual or auditory attention.

The current study’s objective was to empirically investigate the user’s SoA in gesture-based interactions that use mid-air haptics and to examine whether this is modulated by differences in the semantic value of the feedback. Our primary research questions were whether SoA is maintained, or even increased, with mid-air haptic feedback compared to visual feedback, and whether providing the feedback with semantic meaning adds value. As exploratory factors, we measured trust in and usability of the gesture recognition system, as well as individual differences in general attitudes toward human-computer interaction (HCI). Our secondary research questions were therefore whether there is a relationship between SoA and user experience with gestural input, and between SoA and general HCI factors.

The contributions of this article are as follows: (1) to apply robust psychological methods, including implicit and explicit measures, to provide empirical evidence for SoA in gesture-based interactions, (2) to examine positive implications for the use of mid-air haptics with gesture-recognition technology, and (3) to propose agency as an important psychological variable in the wider context of HCI.

2 Background

This section introduces the measurement and theory of SoA from a psychological research perspective. It then highlights the importance and applicability of SoA to HCI research. Finally, it builds on findings with touchless systems and recent work on mid-air gestures with automotive user interfaces (UIs) as a rationale for the experiment.

2.1 Sense of agency

There are a number of different measures of SoA, which can be broadly split into two categories: implicit and explicit measures. Explicit measures require the participants to report aspects of their agentic experience, such as making an agency attribution judgment (Waltemate et al., 2016; Farrer et al., 2008) or reporting the amount of control they feel over an action and/or its outcome (Sidarus and Haggard, 2016; Aoyagi et al., 2021). Implicit measures quantify a perceptual correlate of voluntary action in order to infer something about participants’ agentic experiences. A widely used implicit measure is intentional binding (Moore and Obhi, 2012), in which participants perceive movements and their effects to be closer together in time when they are under voluntary control. This effect has been widely replicated (Moore et al., 2009, 2010; Antusch et al., 2021; Engbert et al., 2008; Haggard et al., 2002) and is considered to be an indirect measure of SoA (Coyle et al., 2012; Winkler et al., 2020; Christensen et al., 2019; Wen et al., 2015). Explicit and implicit measures do not always coincide (Dewey and Knoblich, 2014), which is believed to reflect a difference in the level of awareness, namely between the judgment and the feeling of SoA (Synofzik et al., 2008). It is therefore important to incorporate both types of measure where possible.

There are different theories concerning the neurocognitive origins of SoA. Retrospective theories argue that a sense of agency is informed by signals generated by the action itself (Wegner and Wheatley, 1999). Prospective theories argue that a sense of agency is informed by predictive, internal signals from the motor system (Blakemore and Frith, 2003). Alternatively, a more recent cue integration account suggests that SoA is the result of both prospective and retrospective mechanisms (Synofzik et al., 2013; Synofzik et al., 2008). Thus, different cues can inform SoA including predictive motor signals and external sensory feedback. The relative influence of these cues depends on their reliability (Moore et al., 2009; Moore et al., 2009; Moore and Fletcher, 2012).
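One common way to formalize the idea that cues are weighted by their reliability is inverse-variance (maximum-likelihood) cue combination. The sketch below is a generic illustration under that assumption, not a model fitted in this study: each cue (e.g., a predictive motor signal and an external sensory outcome) is treated as a noisy estimate of the same quantity with a known variance, and the function name and numbers are hypothetical.

```python
def integrate_cues(estimates, variances):
    """Combine noisy cue estimates by inverse-variance (reliability) weighting.

    Returns (combined_estimate, combined_variance, weights). Reliability is
    1 / variance, and each cue's weight is its share of the total reliability.
    """
    if not estimates or len(estimates) != len(variances):
        raise ValueError("need one variance per estimate")
    reliabilities = [1.0 / v for v in variances]
    total = sum(reliabilities)
    weights = [r / total for r in reliabilities]
    combined = sum(w * e for w, e in zip(weights, estimates))
    # The combined estimate is more reliable than either cue alone.
    combined_variance = 1.0 / total
    return combined, combined_variance, weights


# Hypothetical example: a precise predictive cue (250 ms, variance 400)
# and a noisier sensory cue (350 ms, variance 1600) for the same interval.
estimate, variance, weights = integrate_cues([250.0, 350.0], [400.0, 1600.0])
print(f"combined = {estimate:.1f} ms, variance = {variance:.1f}, weights = {weights}")
```

Because the predictive cue here is four times as reliable, it receives 80% of the weight, so lowering a cue's reliability (raising its variance) reduces its influence on the combined estimate.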

2.2 Agency in HCI: input modality and system feedback

Interactions with systems involve a continuous stream of user actions and their intended effects, so SoA translates directly to HCI. A key focus of user-interface design, as noted by Schneiderman and Plaisant (2004), is to foster a sense of control over a system, with a focus on how that system responds.

Input modality is an important factor for agency in HCI, as it is what the user acts with to bring about an intended change (Limerick et al., 2014). Here, the aim is to bridge the Gulf of Execution, which refers to the translation of user intentions into changes in the system (Norman, 1986). Research has shown that SoA varies across input modalities. For example, intentional binding is reduced for speech input compared to keyboard input (Limerick et al., 2015), indicating a reduced SoA. Conversely, intentional binding is increased for skin input compared to keyboard input (Coyle et al., 2012), indicating an increased SoA.

System feedback is another key factor for agency in HCI, as it signals to the user that an interaction has taken place (Limerick et al., 2014). Here, the objective is to bridge the Gulf of Evaluation, which refers to the translation of system changes back to the user (Norman, 1986). Research has shown that SoA is sensitive to the feedback provided for actions. For example, latency between user input and the corresponding movement on the system can negatively impact SoA (Berberian et al., 2013; Evangelou et al., 2021). In addition, systems provide feedback on the outcomes of users’ actions, which can retrospectively impact SoA. For example, delays between actions and outcomes have been shown to have a greater impact than latency in movement feedback (David et al., 2016). Furthermore, SoA has also been shown to be modulated by outcome valence and congruence (Wen et al., 2015; Barlas and Kopp, 2018).

2.3 Touchless systems: gesture recognition and sensory modality

Gesture input involves hand movements without direct physical contact, which could weaken SoA given the importance of sensory feedback. Martinez et al. (2017) carried out a study directly comparing gesture input to a physical button press in an action-outcome binding paradigm. They found comparable effects, suggesting that users feel just as in control when using gestures to input commands as they do with physical interactions.

The feedback given to the user from their gesture input may play a crucial role in the feeling that they have caused the change. Further to their comparison between gesture and physical input, Martinez et al. (2017) also compared the effects of feedback from different sensory modalities. The binding effect was greater for mid-air haptic and audio feedback as compared to visual feedback. This suggests that the experience of SoA is stronger in gesture-based interactions when sensory feedback is tactile (mid-air haptic) or auditory, and potentially diminishes when the feedback is visual.

Notably, the aforementioned research investigated gesture-based input of a button press that emulated the physical action. To support a greater variety of interactions with more complex systems, advances in gesture recognition use poses that are assigned meaning, for example, opening the hand to activate a map before manipulating it (Graichen et al., 2019). Although these studies have looked at usability and trust, less is known about SoA.

In addition to the meaning of the gesture, the meaning of the feedback in response to the recognized gesture has also been considered. Brown et al. (2022) used mid-air haptics to assign semantic value to the feedback for selecting automotive icons via their respective gesture poses. This was intended to enhance not only the user’s recognition that a selection had been made but also their recognition that the correct selection had been made. The results showed that the semantic value of the mid-air haptic patterns was translated and recognized by users, which supported previous work on vibrotactile “Hapticons” (Maclean and Enriquez, 2003). As recognizing the feedback that follows an action is intrinsic to SoA, SoA may also benefit from the added recognition value of meaningful mid-air haptics.

3 Materials and methods

3.1 Design

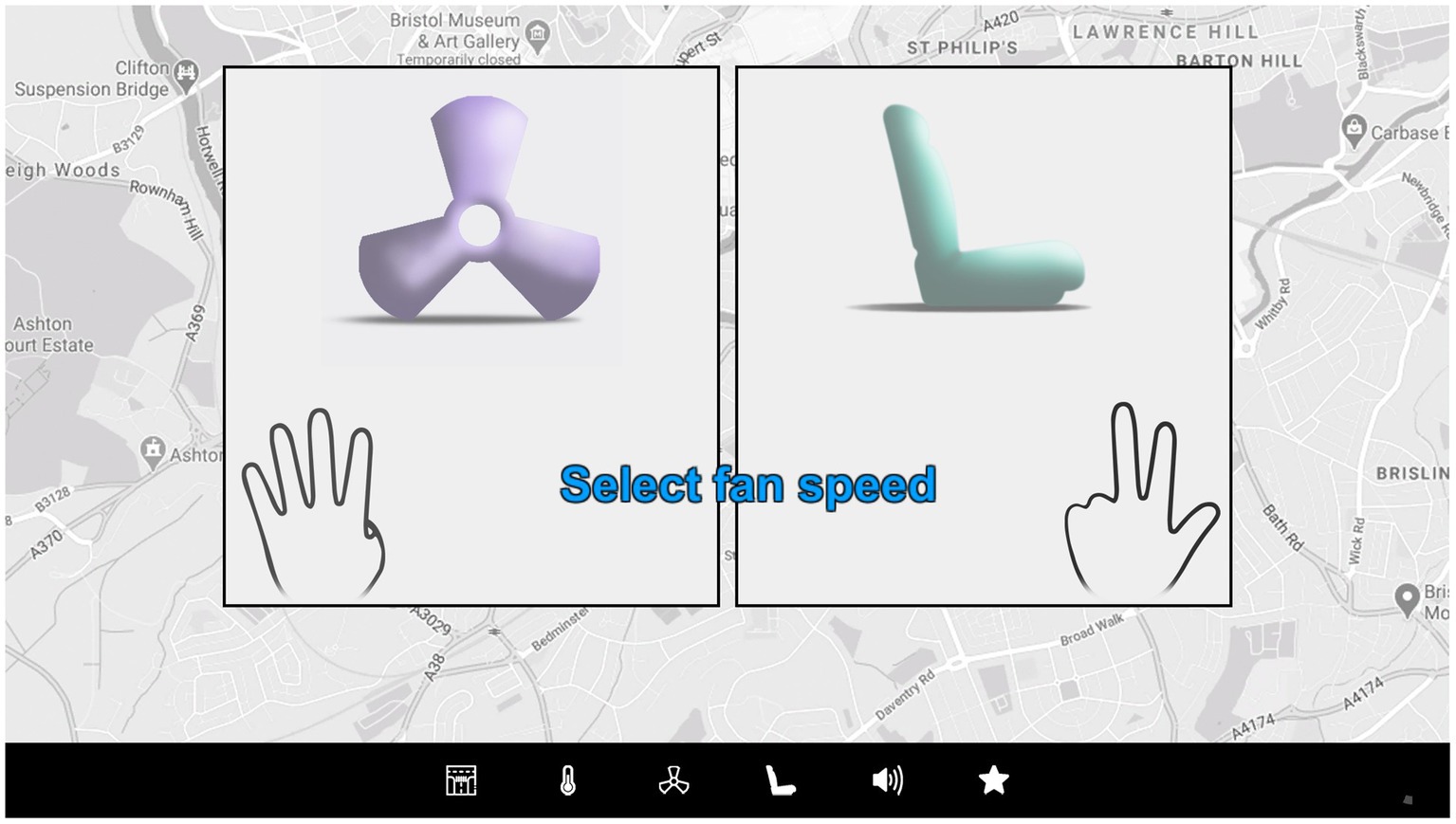

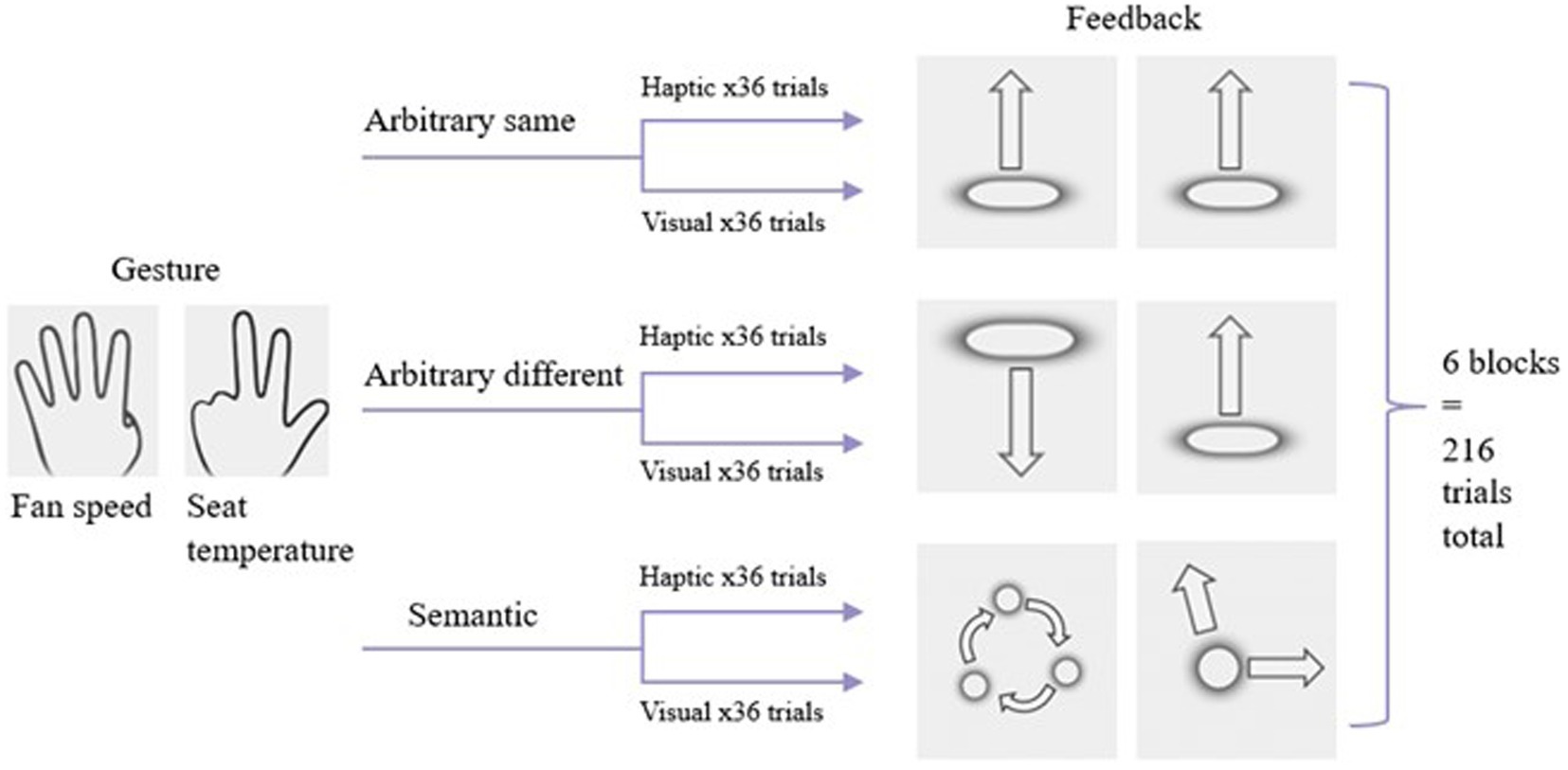

In the current study, we investigate gesture recognition via poses and how differences in the feedback received impact SoA, namely (a) sensory modality and (b) feedback meaning. Participants interacted with an automotive-themed infotainment menu, selecting one of two icons (fan speed or seat temperature) via a gesture pose and receiving feedback for their selection. Feedback was received either visually or (mid-air) haptically. Importantly, the feedback meanings also differed: the feedback was either the same for both icons, arbitrarily different, or semantically different.

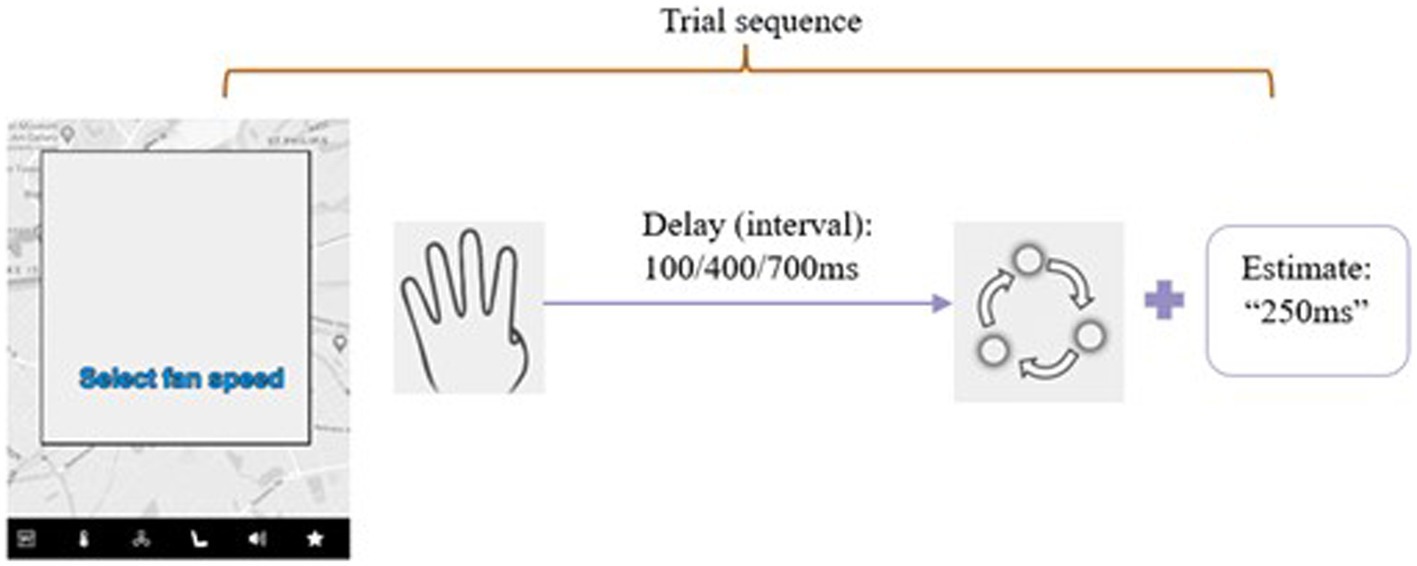

To measure implicit SoA, we used the interval estimation paradigm (Engbert et al., 2008). We introduced varying time delays between the gesture and the feedback, and participants were asked to estimate these delays. Differences in the perceived interval between actions and their effects are taken as differences in the magnitude of the agentic experience (Winkler et al., 2020; Coyle et al., 2012; Evangelou et al., 2021). For explicit SoA, we adapted self-report questions from previous studies (Evangelou et al., 2021) to assess control over actions and feelings of causal influence. We also took measures of trust, usability, technological readiness, and computer anxiety to explore their associations with SoA.

A 2 × 3 within-subjects design was used, with participants taking part in all six conditions (Figure 1). There were two sensory feedback conditions: haptic and visual. These were unimodal, such that feedback was given either haptically or visually. There were three feedback type conditions: arbitrary same, arbitrary different, and semantic. In the arbitrary same condition, the feedback was an upward scan for either icon; in the arbitrary different condition, the feedback was an upward scan for the seat icon and a downward scan for the fan icon. In the semantic condition, the feedback for the seat icon traced an L-shape representing the seat, and the feedback for the fan icon was a circular motion representing a fan.

Figure 1. Research design schematic. Feedback images show the visual feedback conditions as displayed on the screen and represent how the haptic scanning was rendered.
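To make the mapping between icons and feedback explicit, the sketch below encodes the six conditions and the pattern each icon selection triggers, following the design described above. The identifiers (`FEEDBACK_PATTERNS`, `feedback_for`, and the pattern labels) are hypothetical names chosen for illustration; they are not taken from the study's Unity implementation.

```python
from __future__ import annotations

from itertools import product

MODALITIES = ("haptic", "visual")
FEEDBACK_TYPES = ("arbitrary_same", "arbitrary_different", "semantic")
ICONS = ("fan", "seat")

# Pattern displayed (visual) or rendered (haptic) after each icon selection.
FEEDBACK_PATTERNS = {
    "arbitrary_same": {"fan": "scan_up", "seat": "scan_up"},
    "arbitrary_different": {"fan": "scan_down", "seat": "scan_up"},
    "semantic": {"fan": "circle", "seat": "l_shape"},
}


def feedback_for(modality: str, feedback_type: str, icon: str) -> tuple[str, str]:
    """Return (modality, pattern) for one selection; feedback is unimodal."""
    if modality not in MODALITIES:
        raise ValueError(f"unknown modality: {modality}")
    return modality, FEEDBACK_PATTERNS[feedback_type][icon]


# The 2 x 3 within-subjects design: every modality crossed with every feedback type.
CONDITIONS = list(product(MODALITIES, FEEDBACK_TYPES))

for modality, ftype in CONDITIONS:
    patterns = {icon: feedback_for(modality, ftype, icon)[1] for icon in ICONS}
    print(modality, ftype, patterns)
```

Keeping modality and pattern as separate fields mirrors the design: the same pattern is shown as an animation in visual blocks and rendered as a tactile path in haptic blocks.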

3.1.1 Hypotheses

H1: SoA (explicit and implicit) will be equal to or stronger in the haptic conditions than in the visual conditions.

H2: SoA (explicit and implicit) will be equal to or stronger overall as feedback gains meaning.

H3: The effects of feedback meaning will be more pronounced for mid-air haptics.

H4: SoA will be associated with more trust and higher usability in the gesture recognition system.

H5: There will be a relationship between SoA and general attitudes toward technology and HCI.

The objective of H1 is to establish the potential use, or even the benefits, of mid-air haptics for gestural input. The objective of H2 is to establish a use for feedback meaning. The objective of H3 is to investigate whether adding semantic value to the feedback is particularly beneficial with the haptic modality. The objective of H4 is to show a close relationship between SoA, trust, and usability with gestural input. The objective of H5 is to emphasize the importance of SoA as a variable in HCI contexts.

3.2 Participants

G*Power was used to calculate a required sample size of 29 participants for a power of 0.8, based on a previous study (Martinez et al., 2017). Overall, 36 participants were recruited via the SONA participation database and word of mouth and received £10 compensation. One participant was excluded from the analysis because they had particular difficulty selecting the requested icon (an error rate of ~50%, suggesting they may have been selecting one of the two icons at random). In total, 35 participants (19 female) were included in the analysis, aged 18 to 52 years (M = 27.4, SD = 7.6). Participants were screened for handedness; however, since this paradigm was designed for automotive systems in the United Kingdom and intended for left-hand use only, handedness served only as a check for a potential confound. No visual or somatosensory impairments were reported.
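The G*Power inputs (test family and effect size) are not reported here, so the calculation cannot be reproduced exactly. As a rough illustration only, the sketch below uses the standard normal approximation for a two-sided within-subjects (paired) comparison, n = ((z₁₋α/₂ + z_power) / d_z)². The effect size d_z = 0.55 is a hypothetical value, not the one used in the study, and the approximation slightly underestimates the exact noncentral-t result that G*Power reports.

```python
from math import ceil
from statistics import NormalDist


def approx_paired_n(dz: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Normal-approximation sample size for a two-sided paired comparison.

    dz is the standardized mean difference of the within-subject differences.
    The result is rounded up; exact t-based methods give a slightly larger n.
    """
    z = NormalDist()
    n = ((z.inv_cdf(1 - alpha / 2) + z.inv_cdf(power)) / dz) ** 2
    return ceil(n)


# Hypothetical effect size for illustration only.
print(approx_paired_n(0.55))
```

Smaller assumed effects require disproportionately more participants, since n scales with 1 / d_z².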

3.3 Apparatus

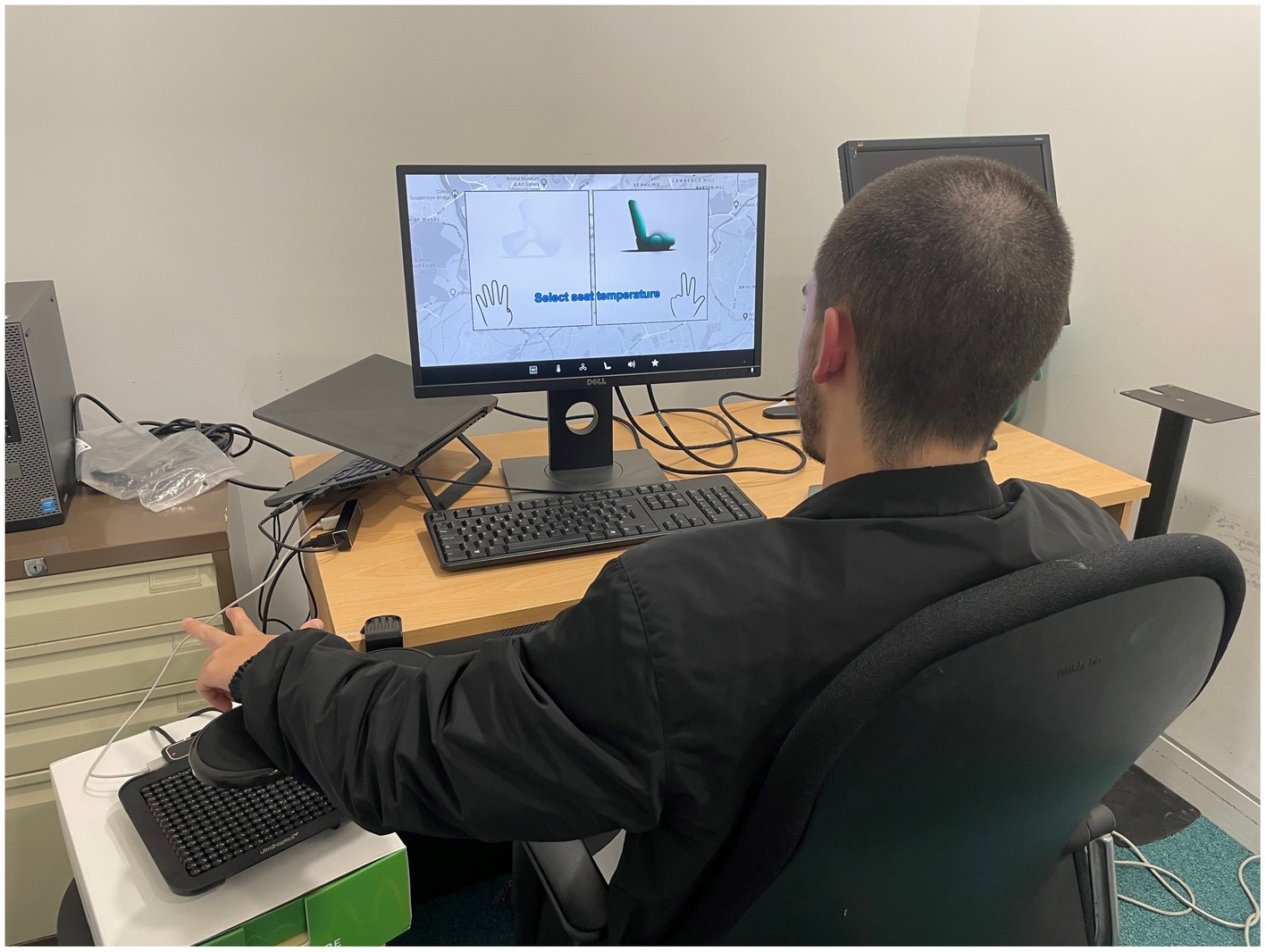

A gesture-controlled infotainment system with a fan speed icon and a seat temperature icon was set up and run in the Unity engine (v2020.3.27f1). These icons were selectable via a 4-finger pose and a 3-finger pose, respectively (see Figure 1). The interaction was enabled by an Ultraleap STRATOS Explore development kit, which consists of a Leap Motion camera and an ultrasound array. This device recognizes the gesture pose as input and provides mid-air haptic feedback by focusing ultrasound on parts of the hand, stimulating the mechanoreceptors and thereby producing a tactile sensation (Carter et al., 2013). The haptic sensations used were an upward scan (base-to-top of fingers), a downward scan (top-to-base of fingers), a circular sensation (partly on fingers and palm) corresponding to the fan icon, and an L-shape (tip of fingers along to tip of thumb, and back) corresponding to the seat icon. Visual animations were made to match the haptic sensations: scanning up, scanning down, circular (fan) motion, and L-shaped (seat) motion (Figure 1).
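The four tactile patterns can be thought of as paths traced by the ultrasound focal point across the hand. The sketch below generates such paths as lists of 2D points in a hypothetical, normalized hand coordinate frame; the frame, the function names, and all dimensions are assumptions for illustration. It does not use or reproduce the Ultraleap SDK, and how the sensations were actually rendered on the STRATOS Explore kit is not described here.

```python
from __future__ import annotations

import math

# Hypothetical normalized hand frame: x runs across the hand (thumb side
# negative), y runs from the base of the fingers (0.0) to the fingertips (1.0).
Point = tuple[float, float]


def scan(direction: str, steps: int = 20) -> list[Point]:
    """Straight scan along the fingers: 'up' = base-to-tip, 'down' = tip-to-base."""
    ys = [i / (steps - 1) for i in range(steps)]
    if direction == "down":
        ys.reverse()
    elif direction != "up":
        raise ValueError("direction must be 'up' or 'down'")
    return [(0.0, y) for y in ys]


def circle(radius: float = 0.3, center: Point = (0.0, 0.3), steps: int = 40) -> list[Point]:
    """One clockwise loop over fingers and palm (the 'fan' pattern)."""
    cx, cy = center
    return [
        (cx + radius * math.sin(2 * math.pi * i / steps),
         cy + radius * math.cos(2 * math.pi * i / steps))
        for i in range(steps)
    ]


def l_shape(finger_tip: Point = (0.2, 1.0), thumb_tip: Point = (-0.5, 0.3),
            steps: int = 20) -> list[Point]:
    """Fingertips down to a corner, across to the thumb tip, then back (the 'seat' pattern)."""
    corner = (finger_tip[0], thumb_tip[1])

    def segment(a: Point, b: Point) -> list[Point]:
        # Linear interpolation from a towards b, excluding b itself.
        return [(a[0] + (b[0] - a[0]) * t / steps, a[1] + (b[1] - a[1]) * t / steps)
                for t in range(steps)]

    outbound = segment(finger_tip, corner) + segment(corner, thumb_tip)
    return outbound + [thumb_tip] + outbound[::-1]
```

Representing each sensation as a point sequence also makes it straightforward to drive the matching visual animation from the same path, which is one way to keep the haptic and visual conditions equivalent.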

A 14” HD monitor was used, with participants seated at a safe distance and an armrest at the side of their left hand. The Ultraleap device was positioned so that the hand was tracked at a height at which the arm was slightly raised, as it would be in an automotive environment (Figure 2). Notably, the ultrasound array works in tandem with the Leap Motion camera; therefore, as long as the hand is in view of the camera, the mid-air haptics render on the same hand areas described above, irrespective of the hand’s position. Depending on the condition, the gesture input was followed by either a visual animation or a haptic sensation, and 1 s later a UI panel opened in which participants entered their estimates via the keyboard. Headphones were worn to minimize possible confounding between the audible sound of the ultrasound array and the tactile mid-air haptic feedback.

3.4 Tasks and measures

3.4.1 Sense of agency

We used the interval estimation method to measure the implicit sense of agency, which requires participants to directly estimate the interval between actions and outcomes. Participants made the gesture pose and received the (haptic or visual) feedback after a time interval, which they were told would vary between 1 and 1,000 ms. Following the standard format (Engbert et al., 2008), the interval in fact took only three values (100, 400, or 700 ms), presented in pseudorandom order (Figure 3). Participants entered their estimates manually in the UI panel after each trial. Shorter interval estimates indicate a greater sense of agency.

Figure 3. A typical experimental trial sequence. Note that intervals were pseudorandomized: each interval occurred 12 times per block (36 trials) in a random order. Participants then entered their estimate via the keyboard and pressed Enter to start the next trial.
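The block structure described here (36 trials, 12 per interval, 18 per requested icon, self-report after every 18 trials) can be generated by shuffling a balanced trial list. The sketch below is one way to do this; fully crossing interval with icon (six repeats of each of the six combinations) is an assumption, since only the marginal counts are reported, and the field names are hypothetical.

```python
from __future__ import annotations

import random

INTERVALS_MS = (100, 400, 700)
ICONS = ("fan", "seat")
TRIALS_PER_BLOCK = 36
RATING_EVERY = 18  # self-report questions after trials 18 and 36


def make_block(rng: random.Random) -> list[dict]:
    """Build one shuffled block: 12 trials per interval and 18 per requested icon."""
    repeats = TRIALS_PER_BLOCK // (len(INTERVALS_MS) * len(ICONS))  # 6 per cell
    trials = [
        {"interval_ms": interval, "icon": icon}
        for interval in INTERVALS_MS
        for icon in ICONS
        for _ in range(repeats)
    ]
    rng.shuffle(trials)
    # Flag the trials after which the explicit agency ratings are collected.
    for i, trial in enumerate(trials, start=1):
        trial["rate_agency_after"] = i % RATING_EVERY == 0
    return trials


block = make_block(random.Random(42))
print(block[:3])
```

Shuffling a fixed, balanced list guarantees the per-block counts exactly, whereas drawing each trial independently at random would only match them on average.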

Explicit agency was measured using self-report by having participants rate the amount of agency they felt during an interaction. Two questions were adapted from Evangelou et al. (2021) and tailored to the gesture interaction: “I feel in control when making my gesture command” and “I feel the feedback is caused by my gesture command.” As we included the element of selecting the icon as requested in this paradigm, we also had a rating of responsibility: “I feel responsible for which feature is selected.” All ratings were taken on a Likert scale from 1 (strongly disagree) to 7 (strongly agree) and collected every 18 trials (twice per block). Higher ratings indicated a greater sense of agency.

3.4.2 Trust and usability

HCI measures of trust and usability were adapted to the task at hand as post-task measures of the user’s trust in, and experience with, the gesture control infotainment system. We tailored the Trust Between People and Automation scale (Jian et al., 2000), which then included items such as “I am suspicious of the gesture control system’s intent, actions and outputs” and “The gesture control system is dependable.” Items were rated on a 1–7 Likert scale (a non-numbered click-and-drag slider) and averaged, so that scores range from 1 (low trust) to 7 (high trust). The short version of the User Experience Questionnaire (UEQ-S; Schrepp et al., 2017) was used to measure pragmatic (e.g., inefficient/efficient) and hedonic (e.g., boring/exciting) usability, with the two words of each pair at opposite ends of a 1–5 non-numbered slider scale.

3.4.3 Individual differences in HCI

We measured computer anxiety using the 19-item Computer Anxiety Rating Scale (CARS) (Heinssen et al., 1987), which includes items such as “I am afraid that if I begin to use computers I will become dependent upon them and lose some of my reasoning skills” and “Learning to operate computers is like learning any new skill—the more you practice, the better you become.” Items are rated on a 1–5 Likert scale, with total summed scores ranging from 19 (low anxiety) to 95 (high anxiety). We also measured technology readiness using the 16-item TRI 2.0 (Parasuraman and Colby, 2015), which includes items such as “Technology gives people more control over their daily lives” and “Sometimes, I think that technology systems are not designed for use by ordinary people.” These items were also rated on a 1–5 Likert scale, with the mean score ranging from 1 (low) to 5 (high).
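The trust scale and the HCI questionnaires all combine Likert responses into a single score, either a sum (CARS) or a mean (trust, TRI 2.0), typically after reverse-coding items worded in the opposite direction (e.g., “I am suspicious of ...”). The sketch below shows this scoring step. Which items are reverse-coded in each scale is an assumption here: the index sets and responses are hypothetical, and the published scoring keys should be used in practice.

```python
def reverse(score: int, low: int, high: int) -> int:
    """Reverse-code a Likert response, e.g. 2 -> 4 on a 1-5 scale."""
    return low + high - score


def scale_score(responses, reversed_items, low, high, method="mean"):
    """Score one questionnaire after reverse-coding the given 0-based item indices."""
    if any(not low <= r <= high for r in responses):
        raise ValueError("response outside scale range")
    coded = [
        reverse(r, low, high) if i in reversed_items else r
        for i, r in enumerate(responses)
    ]
    total = sum(coded)
    if method == "sum":
        return total
    if method == "mean":
        return total / len(coded)
    raise ValueError("method must be 'sum' or 'mean'")


# Hypothetical trust responses on a 1-7 scale; items 0-4 assumed negatively worded.
trust = scale_score([2, 1, 3, 2, 1, 6, 6, 5, 6, 6, 5, 4], set(range(5)), 1, 7)

# CARS-style summed score: bounds of the possible totals for 19 items rated 1-5.
cars_min = scale_score([1] * 19, set(), 1, 5, method="sum")
cars_max = scale_score([5] * 19, set(), 1, 5, method="sum")
print(round(trust, 2), cars_min, cars_max)
```

Reverse-coding before aggregation ensures that a high score always points in the same direction (e.g., more trust, more anxiety), which the averaged and summed ranges reported above depend on.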

3.5 Procedure

Prior to the experimental session, participants completed the CARS and TRI 2.0 online. Participants first underwent a familiarization phase with the apparatus and the gesture control infotainment system. During this phase, both icons and their corresponding gesture poses were displayed on the screen the entire time (Figure 4). The gesture poses were also described verbally and demonstrated physically. Each gesture pose was followed by both the visual and haptic stimuli simultaneously. Participants selected the requested icon in 10 practice trials with varying time intervals between performing the gesture and receiving feedback. For this practice phase only, the correct interval was displayed on the screen to give participants a sense of the millisecond timescale. All participants experienced the same time intervals in the practice phase; these were (in a random order; in ms): 50, 200, 400, 600, 800, 950.

For the experimental phase, participants were simply asked to select an icon (Figure 3) and were told that intervals would now vary anywhere between 1 and 1,000 ms. They were told whether each block would provide haptic or visual feedback, but not which feedback type (arbitrary same, arbitrary different, or semantic) it would use. For each feedback type, the haptic and visual blocks were completed consecutively; for example, in the arbitrary different condition, the haptic block was completed first, followed by the visual block. Conditions were counterbalanced to account for order effects. There were six blocks of 36 trials each. Within each block, there were 12 trials of each interval and 18 trials for each requested icon, and self-reported agency was measured twice (every 18 trials).
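The counterbalancing scheme is not specified beyond the constraint that each feedback type’s haptic and visual blocks ran consecutively. One possible scheme, sketched below under that constraint, crosses every order of the three feedback types with both within-pair modality orders (6 × 2 = 12 sequences) and assigns participants to sequences cyclically. Counterbalancing the modality order is an assumption, and the names are hypothetical.

```python
from __future__ import annotations

from itertools import permutations

FEEDBACK_TYPES = ("arbitrary_same", "arbitrary_different", "semantic")
MODALITY_ORDERS = (("haptic", "visual"), ("visual", "haptic"))

# Each sequence lists six (feedback_type, modality) blocks; the two blocks of
# a feedback type are always adjacent.
SEQUENCES = [
    [(ftype, modality) for ftype in ftype_order for modality in modality_order]
    for ftype_order in permutations(FEEDBACK_TYPES)
    for modality_order in MODALITY_ORDERS
]


def blocks_for_participant(participant_index: int) -> list[tuple[str, str]]:
    """Assign sequences cyclically so that each is used about equally often."""
    return SEQUENCES[participant_index % len(SEQUENCES)]


print(blocks_for_participant(0))
```

Cycling through a complete set of orders balances how often each feedback type appears in each position across participants, which is what protects the condition comparisons against practice and fatigue effects.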

After the interval estimation task, participants completed the Trust Between People and Automation scale and the UEQ-S. The questions were tailored to the gesture control system they had just used, and responses were given via a click-and-drag slider UI. Participants were then asked whether they had any questions or thoughts and were debriefed where requested.

4 Results

Interval estimations were averaged for each condition; lower scores indicate greater implicit agency. Self-reported control, causation, and responsibility scores were each averaged per condition; higher scores indicate greater explicit agency. No outliers were detected (all |z| < 3). The data were processed in Excel, and analyses were carried out in Jamovi version 2.
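The preprocessing described above was done in Excel. For readers who want to replicate it in code, the sketch below averages trial-level estimates per participant and condition and flags values with |z| ≥ 3 (e.g., across participants within a condition). The field names (`participant`, `modality`, `feedback`, `estimate_ms`) and the use of the sample standard deviation are assumptions.

```python
from statistics import mean, stdev


def condition_means(trials):
    """Mean interval estimate for each (participant, modality, feedback) cell."""
    cells = {}
    for t in trials:
        key = (t["participant"], t["modality"], t["feedback"])
        cells.setdefault(key, []).append(t["estimate_ms"])
    return {key: mean(values) for key, values in cells.items()}


def z_outliers(values, threshold=3.0):
    """Indices of values whose |z| (using the sample SD) is at least `threshold`."""
    if len(values) < 2:
        return []
    mu, sd = mean(values), stdev(values)
    if sd == 0:
        return []
    return [i for i, v in enumerate(values) if abs(v - mu) / sd >= threshold]


# Hypothetical trial records for one participant in one condition.
trials = [
    {"participant": "p01", "modality": "haptic", "feedback": "semantic", "estimate_ms": 350},
    {"participant": "p01", "modality": "haptic", "feedback": "semantic", "estimate_ms": 410},
]
print(condition_means(trials))
```

An empty result from `z_outliers` for every condition corresponds to the “all |z| < 3” criterion reported above.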

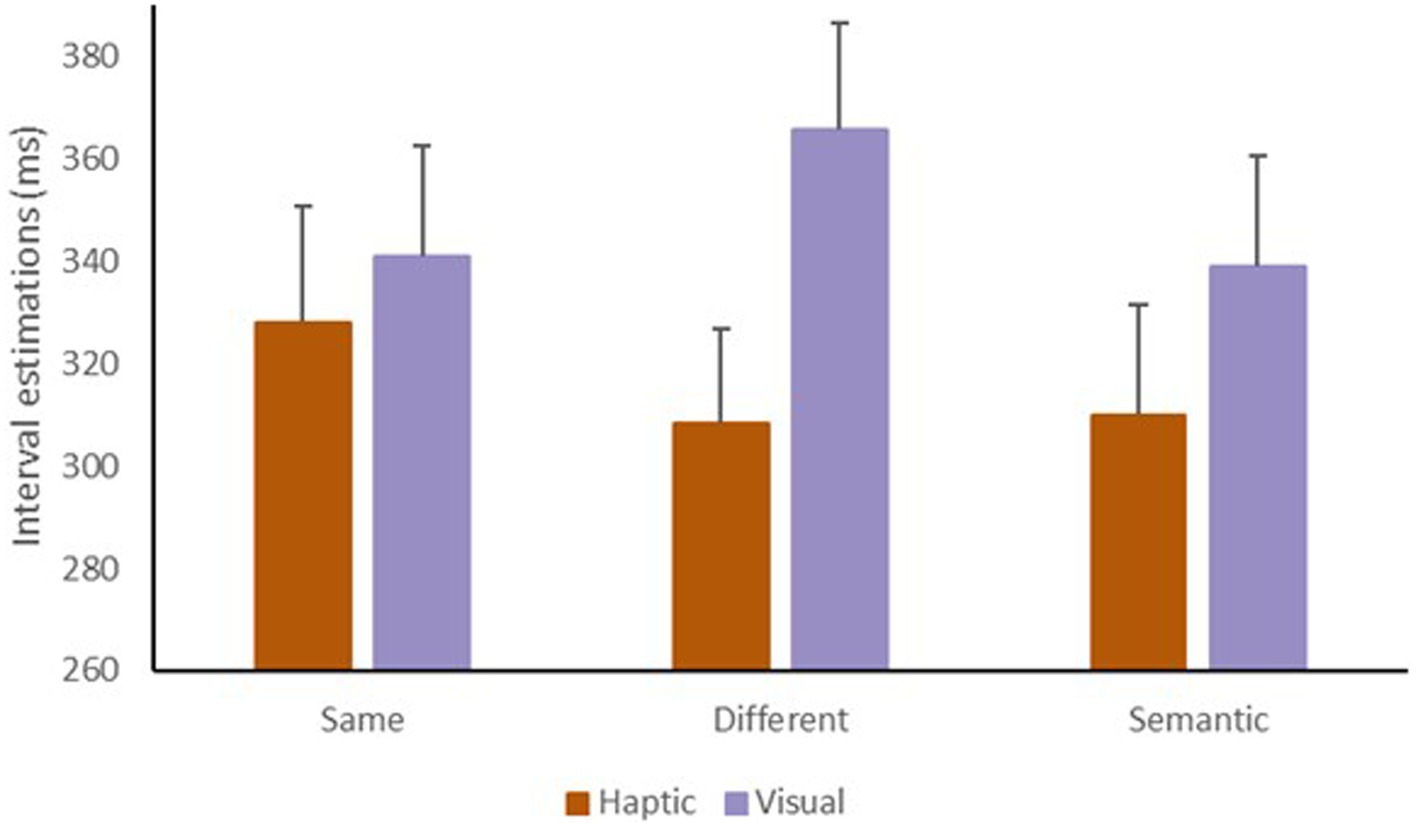

4.1 Sensory modality and feedback meaning on interval estimations

A 2 × 3 repeated measures (RM) ANOVA was carried out with sensory modality (haptic or visual) and feedback meaning (arbitrary same, arbitrary different, or semantic) on interval estimations (Figure 5). There was a significant main effect of sensory modality, F(1, 34) = 9.16, p = 0.005, ηp² = 0.21, such that interval estimations were shorter in the haptic conditions than in the visual conditions (Mdifference = −33.14 ms). There was no significant effect of feedback meaning, F(2, 68) = 0.25, p = 0.778, ηp² = 0.01. There was also no significant interaction between sensory modality and feedback meaning, F(2, 68) = 1.78, p = 0.176, ηp² = 0.05. Overall, this suggests that implicit SoA was significantly greater with haptic feedback than with visual feedback and that the meaning of the feedback received had no influence.

Figure 5. Mean interval estimations by sensory modality, as a function of feedback meaning. Error bars represent standard errors across participants.
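As a consistency check, partial eta squared can be recovered from an F ratio and its degrees of freedom, because ηp² = SS_effect / (SS_effect + SS_error) = F·df_effect / (F·df_effect + df_error). The sketch below applies this identity to the three F values reported for interval estimations; the function name is hypothetical.

```python
def partial_eta_squared(f: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared from an F statistic: F*df1 / (F*df1 + df2)."""
    return (f * df_effect) / (f * df_effect + df_error)


# F values and degrees of freedom reported above for interval estimations.
reported = {
    "sensory modality": (9.16, 1, 34),
    "feedback meaning": (0.25, 2, 68),
    "interaction": (1.78, 2, 68),
}
for effect, (f, df1, df2) in reported.items():
    print(f"{effect}: partial eta^2 = {partial_eta_squared(f, df1, df2):.2f}")
```

Rounded to two decimals, this reproduces the reported effect sizes (0.21, 0.01, and 0.05), and the same identity can be applied to the ANOVAs on the aligned ranks.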

4.2 Sensory modality and feedback meaning on self-reported agency

Due to significant departures from normality in the self-report data (Shapiro–Wilk, p < 0.001; skewness Z > 1.96), we applied the aligned rank transform (Wobbrock et al., 2011) before conducting the ANOVAs. This method allows factorial ANOVAs, including tests of interactions, to be applied to non-parametric data.
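The aligned rank transform first strips out every effect except the one being tested, then ranks the result. The sketch below implements this align-and-rank step for a two-factor design such as sensory modality (A) × feedback meaning (B). It ignores the participant factor, which in a repeated-measures analysis is handled by the ANOVA subsequently run on the ranks (not shown), and the function names are hypothetical; in practice, an established implementation such as the ARTool package would be used.

```python
from statistics import mean


def average_ranks(values):
    """Rank values 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # mean of ranks i+1 .. j+1
        i = j + 1
    return ranks


def align_and_rank(rows, effect):
    """Aligned rank transform for one fixed effect of an A x B design.

    rows: list of (a_level, b_level, y); effect: "A", "B" or "AB".
    1. residual = y - mean of its A x B cell
    2. add back the estimated effect of interest (main effect or interaction)
    3. rank the aligned values; an ANOVA on these ranks is then interpreted
       only for the effect that was aligned for.
    """
    grand = mean(y for _, _, y in rows)

    def level_mean(pred):
        return mean(y for a, b, y in rows if pred(a, b))

    aligned = []
    for a, b, y in rows:
        cell = level_mean(lambda x, z: x == a and z == b)
        mean_a = level_mean(lambda x, z: x == a)
        mean_b = level_mean(lambda x, z: z == b)
        if effect == "A":
            estimate = mean_a - grand
        elif effect == "B":
            estimate = mean_b - grand
        elif effect == "AB":
            estimate = cell - mean_a - mean_b + grand
        else:
            raise ValueError("effect must be 'A', 'B' or 'AB'")
        aligned.append((y - cell) + estimate)
    return average_ranks(aligned)
```

Aligning separately for each effect is what allows interactions to be tested: ranking the raw data directly would mix main effects into the interaction term.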

4.2.1 Self-reported control over gestural input

A 2 × 3 RM ANOVA was carried out with sensory modality (haptic or visual) and feedback meaning (arbitrary same, arbitrary different, or semantic) on the aligned ranks for self-reported control. There were no statistically significant main effects of sensory modality, F(1, 34) = 0.09, p = 0.771, ηp² = 0.00, nor feedback meaning, F(2, 68) = 0.43, p = 0.651, ηp² = 0.01. There was also no statistically significant interaction between sensory modality and feedback meaning, F(2, 68) = 0.36, p = 0.703, ηp² = 0.01. Overall, this suggests there were no effects of outcome feedback on ratings of control over the gesture action made.

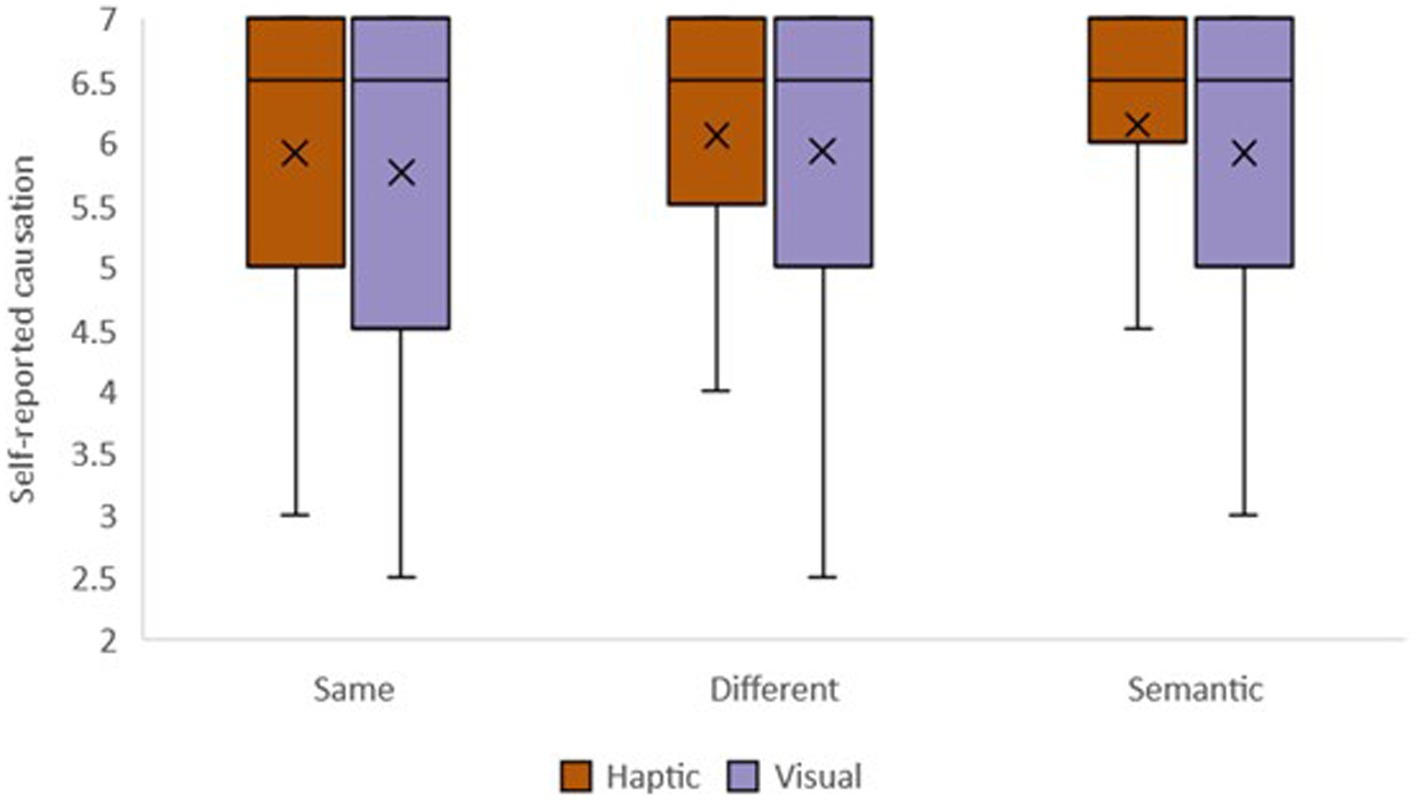

4.2.2 Self-reported causal influence over feedback received

A 2 × 3 RM ANOVA was carried out with sensory modality (haptic or visual) and feedback meaning (arbitrary same, arbitrary different, or semantic) on the aligned ranks for self-reported causation (Figure 6). There was a significant main effect of sensory modality, F(1, 34) = 5.02, p = 0.032, ηp² = 0.13, such that ratings of causal influence over the feedback were greater with haptics as compared to visual (Mdifference = 0.17). There was no statistically significant effect of feedback meaning, F(2, 68) = 0.16, p = 0.855, ηp² = 0.01. There was also no statistically significant interaction between sensory modality and feedback meaning, F(2, 68) = 0.42, p = 0.656, ηp² = 0.01. Overall, this suggests that self-reported causal influence was significantly greater with haptic feedback than with visual and that there was no influence of the meaning of feedback received.

Figure 6. Ratings of causation plotted as a function of sensory modality and feedback meaning. The middle line of each box indicates the median (X marks the mean); the lower and upper box limits indicate the first and third quartiles. The whiskers extend to 1.5 × the interquartile range or to the minimum or maximum value.

4.2.3 Self-reported responsibility for icon selection

A 2 × 3 RM ANOVA was carried out with sensory modality (haptic or visual) and feedback meaning (arbitrary same, arbitrary different, or semantic) on the aligned ranks for self-reported responsibility. There were no significant main effects of sensory modality, F(1, 34) = 0.21, p = 0.638, ηp² = 0.01, nor feedback meaning, F(2, 68) = 0.38, p = 0.689, ηp² = 0.01. There was also no significant interaction observed between sensory modality and feedback meaning, F(2, 68) = 0.47, p = 0.629, ηp² = 0.01. Overall, this suggests there may be no effects of outcome feedback on ratings of responsibility for which icon was selected.

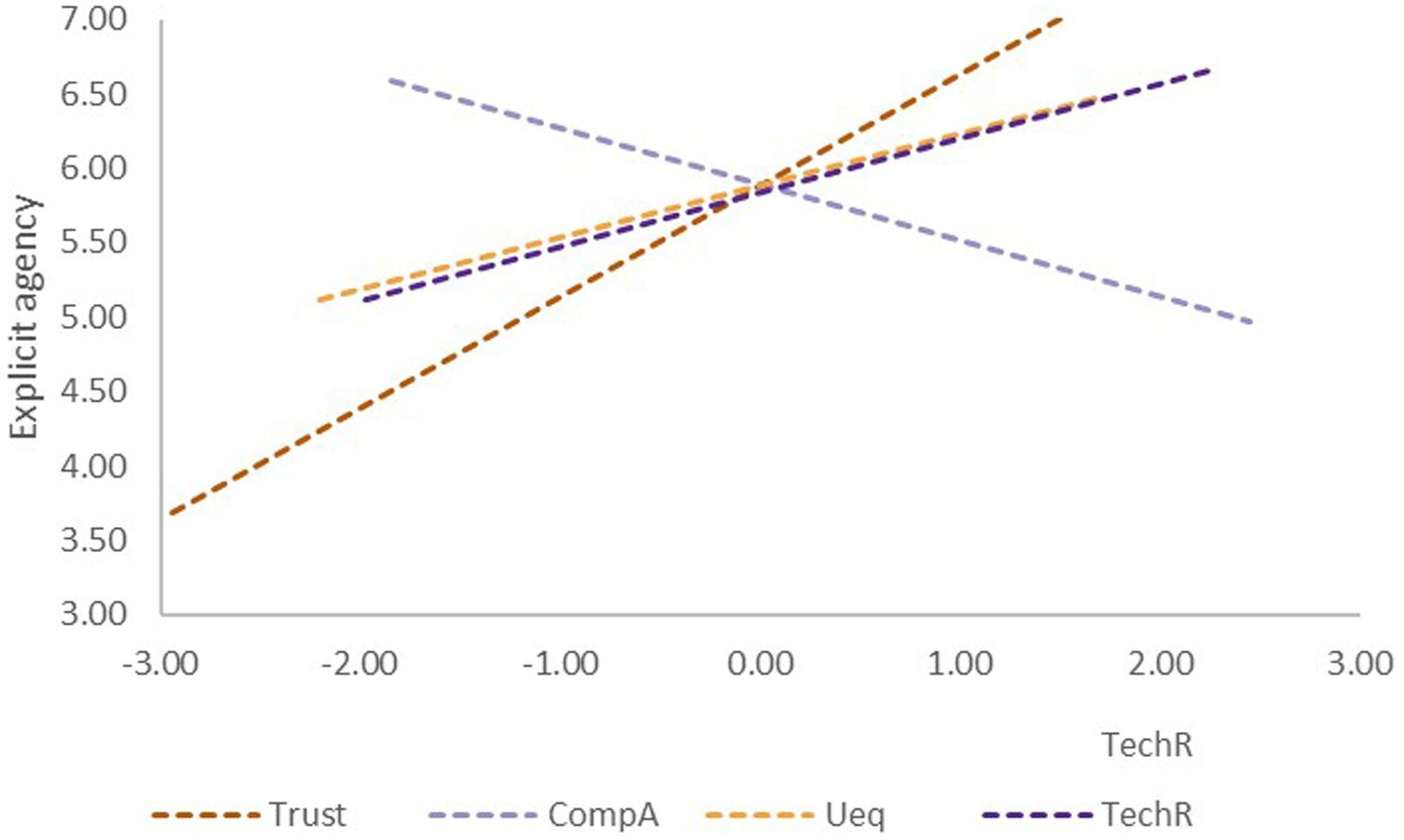

4.3 Relationship between agency and other HCI factors

To explore the relationship between agency and other HCI factors, we looked at correlations between the measures (Figure 7). For implicit agency, interval estimations were averaged across conditions. For explicit agency, self-reported control and causation were averaged across conditions. Spearman’s correlations were used where there were significant departures from normality.

Figure 7. Correlations between explicit agency and HCI factors. All scores on HCI scales are standardized for parity on the graph (centered around 0). CompA, Computer Anxiety; Ueq, Usability; TechR, Technological readiness.

Implicit agency did not significantly correlate with any of the other HCI factors (all p > 0.05). Explicit agency significantly positively correlated with trust, rs(35) = 0.644, p < 0.001, 95% CI [0.37, 0.62], and negatively with computer anxiety, rs(35) = −0.364, p = 0.031, 95% CI [−0.36, 0.03], but only showed a marginal positive trend with technological readiness, rs(35) = 0.326, p = 0.056, 95% CI [0.01, 0.61], and usability, rs(35) = 0.211, p = 0.061, 95% CI [0.32, 0.61]. Overall, this suggests there is a relationship between SoA and trust with gestural input, and SoA and general computer anxiety. Additionally, there is a potential relationship between SoA and perceived usability with gestural input, and SoA and general technological readiness.
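Spearman’s rs is the Pearson correlation computed on ranks. The sketch below computes it and an approximate two-sided p-value and 95% CI using the Fisher z-transformation with standard error 1/√(n − 3). This is one common approximation for rank correlations and may differ from the method Jamovi uses, so its output is best treated as a rough cross-check; the function names are hypothetical.

```python
import math
from statistics import NormalDist


def ranks_with_ties(values):
    """1-based ranks; tied values share the mean of their positions."""
    first, count = {}, {}
    for pos, v in enumerate(sorted(values), start=1):
        first.setdefault(v, pos)
        count[v] = count.get(v, 0) + 1
    return [first[v] + (count[v] - 1) / 2 for v in values]


def pearson(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def spearman(x, y):
    """Spearman's rank correlation: Pearson's r on (tie-averaged) ranks."""
    return pearson(ranks_with_ties(x), ranks_with_ties(y))


def fisher_z_inference(r, n, conf=0.95):
    """Approximate two-sided p-value and CI for a correlation via Fisher's z."""
    z = math.atanh(r)
    se = 1 / math.sqrt(n - 3)
    nd = NormalDist()
    p = 2 * (1 - nd.cdf(abs(z) / se))
    crit = nd.inv_cdf(0.5 + conf / 2)
    return p, (math.tanh(z - crit * se), math.tanh(z + crit * se))


p, ci = fisher_z_inference(0.326, 35)
print(f"p = {p:.3f}, 95% CI = [{ci[0]:.2f}, {ci[1]:.2f}]")
```

For example, `fisher_z_inference(0.326, 35)` gives p ≈ 0.056, in line with the value reported for technological readiness; the interval is computed on the z scale and back-transformed, so it is asymmetric around rs.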

5 Discussion

The current study aimed to investigate how differences in the feedback received in response to gesture input impact SoA, and explored associations between SoA and other important factors of the interaction. We found that, compared with visual feedback, haptic feedback significantly increased implicit SoA and explicit judgments of causation, regardless of whether the feedback was meaningful. We also found that reporting greater SoA in the interaction was associated with greater trust in the system, with a similar trend for usability. Finally, greater general computer anxiety was associated with reporting less SoA, and general technological readiness showed a trend toward a positive association with SoA.

5.1 Mid-air haptic information for gesture recognition

This study supports and extends research on SoA with gesture-based interactions using mid-air haptic feedback. Previous research looked at mid-air haptics for touchless interfaces with an in-air button press activation and found that they improved SoA compared to visual feedback (Martinez et al., 2017). Here, the gesture is more separate from the outcome, as the pose-feedback model does not emulate a physical interaction, unlike a button press, which is typically accompanied by tactile feedback. We find that the increase in SoA provided by mid-air haptics does indeed extend to this scenario. Similarly, although not with gesture recognition, previous research has shown weaker binding for such separate visual outcomes (a color change) relative to auditory tone outcomes (Imaizumi and Tanno, 2019; Ruess et al., 2018). Together, these findings suggest that sensory feedback is a relatively influential external cue in the agentic chain, highlighting the importance of retrospective cues relative to the prospective cues that are already available (Moore and Fletcher, 2012; Synofzik et al., 2013).

The current findings also suggest that mid-air haptics may be a particularly beneficial outcome-feedback cue, as both implicit and explicit SoA increased. Previous research on mid-air haptics accompanying virtual objects as action feedback showed that they can increase explicit but not implicit SoA (Evangelou et al., 2023). Such findings highlight the complexity of agency processing, which comprises both a feeling and a judgment, and the importance of including both implicit and explicit measures. The current study extends this work by showing that both the feeling and the judgment of agency are positively affected by mid-air haptic feedback in response to a gesture pose. This has positive implications for mid-air haptics in automotive environments: they not only promise to decrease eyes-off-road time by removing visual elements (Shakeri et al., 2018) but may also improve SoA with gesture control systems. Notably, the current study found an increase in explicit judgments of causation but not of (action) control. Interestingly, previous research found that the positive effects of action feedback may be more pronounced for reported control than for causation (Evangelou et al., 2021). Together, this suggests that judgments of control over actions and of causal influence over outcomes may be affected by action feedback and outcome feedback, respectively. Future research could directly compare these types of feedback to test this and further inform models of agency.

Finally, this study revealed that differences in the meaning of the feedback received did not modulate SoA. This finding is surprising, as previous research has shown the importance of sensory prediction congruency. Lafleur et al. (2020) manipulated congruency by having the visual result of a pinch action either match or not match the force actually applied, which modulated SoA. Here, we manipulated the meaning of the feedback so that it was the same for each pose, arbitrarily different for each pose, or uniquely representative of the icon. The results show that the positive impact of mid-air haptics does not depend on this manipulation and, perhaps more unexpectedly, that differences in the meaning of the haptic feedback did not affect SoA. These more nuanced differences may instead be more beneficial for recognizing the selected icon and for its hedonic qualities (Brown et al., 2020, 2022). However, previous research has shown that subtle differences in mid-air haptics for virtual objects may affect SoA (Evangelou et al., 2024). Research focusing specifically on mid-air haptic differences in gesture recognition could investigate this further.

5.2 Trust, usability, and agency with gesture-based automotive UI

This research provides an exploratory first look at the relationship between trust and agency when gesture control is used to operate an automotive UI system. We found that higher trust in the system was associated with greater reported agency. A marginally significant positive relationship between agency and usability was also observed. This suggests that judgments of control and influence over a system are related to the degree of trust in its ability to perform changes as intended, as well as to how usable the system is perceived to be. Whether this speaks more to the gulf of execution or the gulf of evaluation (Norman, 1986) is a question for future research. For example, future work could examine whether changes in the gesture poses made or the feedback received affect trust in and usability of the system in the same way they appear to affect SoA.

5.3 Technology readiness, anxiety and agency in HCI

Our exploratory findings also provide insight into a relationship between general anxiety about engaging with technology and reported sense of agency. We found that higher general computer anxiety was associated with lower reported agency. A marginally significant positive trend between technology readiness and agency was also identified. This suggests that greater apprehension about using technology, and potentially a general disinclination to engage with it, is associated with a reduced sense of control over it. This speaks to wider discussions around the self and agency in HCI (McCarthy and Wright, 2005), as well as the role of affect (Hudlicka, 2003). For example, future research could test whether improving people’s attitudes toward technology and reducing anxiety around its use enhances their sense of agency in HCI.

5.4 Limitations and future directions

One limitation of the current study is the lack of a passive control condition, which is typically used in psychological research on SoA. A passive condition provides a baseline against which to establish categorically that participants experienced SoA in the active conditions (Bednark et al., 2015; Cravo et al., 2009). As we were already comparing many conditions, we opted instead to compare the magnitude of agency between them, an approach that remains informative, as seen in previous research (Winkler et al., 2020; Coyle et al., 2012; Evangelou et al., 2021). Future research could therefore narrow down the comparisons of interest, allowing for the inclusion of a passive condition.

Another limitation is the limited ecological validity. Although we can say that mid-air haptics in response to gesture poses improve SoA compared with visual feedback, it remains uncertain whether this extends to an automotive environment or whether it might interact with the driving experience. It is also unclear whether these effects depend on the driving experience or behaviour itself. Future research could validate the effects reported here in a driving context, such as a simulator, and compare mid-air haptics with the audio feedback more typically used in existing gesture control automotive systems (Sterkenburg et al., 2017; Stecher et al., 2018).

We also included a relatively large number of conditions (six in this case), which may have decreased statistical power for detecting smaller effects. For example, Figure 5 suggests larger, albeit not statistically significant, differences between the haptic and visual conditions when the feedback differed across icons, whether arbitrarily or semantically. Future research could use just two conditions for a more powerful statistical analysis.

6 Conclusion

In sum, the current study examined SoA in gesture-based interactions, focusing on the impact of different feedback modalities and types. We also explored the relationship between agency and other HCI variables. Our results showed an overall improvement in both implicit and explicit SoA with mid-air haptics compared with visual feedback, irrespective of differences in the meaning of the feedback received. We also found self-reported agency with gesture control to be positively related to trust and usability. Finally, those with more general technology-related anxiety tended to report less agency. These findings have positive implications for gesture recognition technology, demonstrating the benefits of mid-air haptics using robust, quantitative measures of SoA. They also show that agency is an important measure, as it relates to trust and usability. Furthermore, the relationships observed with general HCI factors broaden the scope for future research.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving humans were approved by Goldsmiths, University of London Ethics Committee. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

GE: Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Project administration, Resources, Software, Validation, Writing – original draft, Writing – review & editing. OG: Conceptualization, Funding acquisition, Methodology, Resources, Writing – review & editing. EB: Conceptualization, Methodology, Resources, Software, Writing – review & editing. JM: Conceptualization, Funding acquisition, Methodology, Project administration, Supervision, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. This research was supported by UKRI with ESRC SeNSS (grant no. 2238934). OG acknowledged support from the EU Horizon 2020 research and innovation program (grant agreement no. 101017746).

Conflict of interest

OG and EB were employed by Ultraleap Ltd.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declared that they were an editorial board member of Frontiers, at the time of submission. This had no impact on the peer review process and the final decision.

Generative AI statement

The author(s) declare that no Gen AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Antusch, S., Custers, R., Marien, H., and Aarts, H. (2021). Studying the sense of agency in the absence of motor movement: an investigation into temporal binding of tactile sensations and auditory effects. Exp. Brain Res. 239, 1795–1806. doi: 10.1007/s00221-021-06087-8

Aoyagi, K., Wen, W., An, Q., Hamasaki, S., Yamakawa, H., Tamura, Y., et al. (2021). Modified sensory feedback enhances the sense of agency during continuous body movements in virtual reality. Sci. Rep. 11, 1–10. doi: 10.1038/s41598-021-82154-y

Barlas, Z., and Kopp, S. (2018). Action choice and outcome congruency independently affect intentional binding and feeling of control judgments. Front. Hum. Neurosci. 12:137. doi: 10.3389/fnhum.2018.00137

Bednark, J. G., Poonian, S. K., Palghat, K., McFadyen, J., and Cunnington, R. (2015). Identity-specific predictions and implicit measures of agency. Psychol. Conscious. Theory Res. Pract. 2, 253–268. doi: 10.1037/cns0000062

Berberian, B., Le Blaye, P., Schulte, C., Kinani, N., and Sim, P. R. (2013). “Data transmission latency and sense of control” in Engineering Psychology and Cognitive Ergonomics. Understanding Human Cognition. EPCE 2013. Lecture Notes in Computer Science, vol. 8019. Ed. D. Harris (Berlin, Heidelberg: Springer), 3–12.

Blakemore, S.-J., and Frith, C. (2003). Self-awareness and action. Curr. Opin. Neurobiol. 13, 219–224. doi: 10.1016/S0959-4388(03)00043-6

Brown, E., Large, D. R., Limerick, H., and Burnett, G. (2020). “Ultrahapticons: ‘Haptifying’ drivers’ mental models to transform automotive mid-air haptic gesture infotainment interfaces” in 12th International Conference on Automotive User Interfaces and Interactive Vehicular Applications (New York, NY: ACM), 54–57.

Brown, E., Large, D. R., Limerick, H., Frier, W., and Burnett, G. (2022). “Augmenting automotive gesture infotainment interfaces through mid-air haptic icon design” in Ultrasound Mid-Air Haptics for Touchless Interfaces. Human–Computer Interaction. Eds. O. Georgiou, W. Frier, E. Freeman, C. Pacchierotti, and T. Hoshi (Cham: Springer) 119–145.

Carter, T., Seah, S. A., Long, B., Drinkwater, B., and Subramanian, S. (2013). “UltraHaptics: multi-point mid-air haptic feedback for touch surfaces” in UIST 2013: Proceedings of the 26th Annual ACM Symposium on User Interface Software and Technology (New York, NY: ACM), 505–514.

Christensen, J. F., Di Costa, S., Beck, B., and Haggard, P. (2019). I just lost it! Fear and anger reduce the sense of agency: a study using intentional binding. Exp. Brain Res. 237, 1205–1212. doi: 10.1007/s00221-018-5461-6

Coyle, D., Moore, J., Kristensson, P. O., Fletcher, P., and Blackwell, A. (2012). “I did that! Measuring users’ experience of agency in their own actions” in Proceedings of the 2012 ACM Annual Conference on Human Factors in Computing Systems - CHI ’12 (New York, NY: ACM Press), 2025.

Cravo, A. M., Claessens, P. M. E., and Baldo, M. V. C. (2009). Voluntary action and causality in temporal binding. Exp. Brain Res. 199, 95–99. doi: 10.1007/s00221-009-1969-0

David, N., Skoruppa, S., Gulberti, A., Schultz, J., and Engel, A. K. (2016). The sense of agency is more sensitive to manipulations of outcome than movement-related feedback irrespective of sensory modality. PLoS One 11:e0161156. doi: 10.1371/journal.pone.0161156

Dewey, J. A., and Knoblich, G. (2014). Do implicit and explicit measures of the sense of agency measure the same thing? PLoS One 9:e110118. doi: 10.1371/journal.pone.0110118

Engbert, K., Wohlschläger, A., and Haggard, P. (2008). Who is causing what? The sense of agency is relational and efferent-triggered. Cognition 107, 693–704. doi: 10.1016/j.cognition.2007.07.021

Evangelou, G., Georgiou, O., and Moore, J. (2023). Using virtual objects with hand-tracking: the effects of visual congruence and mid-air haptics on sense of agency. IEEE Trans. Hapt. 16, 580–585. doi: 10.1109/TOH.2023.3274304

Evangelou, G., Georgiou, O., and Moore, J. W. (2024). “Mid-air haptic congruence with virtual objects modulates the implicit sense of agency” in 14th International Conference on Human Haptic Sensing and Touch Enabled Computer Applications, EuroHaptics 2024, Lille, France, June 30–July 03, 2024, Proceedings. Eds. H. Kajimoto, P. Lopes, C. Pacchierotti, M. Basdogan, B. Gori, and M. Semail (Cham: Springer International Publishing).

Evangelou, G., Limerick, H., and Moore, J. (2021). “I feel it in my fingers! Sense of agency with mid-air haptics” in 2021 IEEE World Haptics Conference (WHC) (Montreal, QC, Canada: IEEE), 727–732.

Farrer, C., Bouchereau, M., Jeannerod, M., and Franck, N. (2008). Effect of distorted visual feedback on the sense of agency. Behav. Neurol. 19, 53–57. doi: 10.1155/2008/425267

Georgiou, O., Frier, W., Freeman, E., and Pacchierotti, C. (2022). “Ultrasound mid-air haptics for touchless interfaces” in Human–computer interaction series. eds. O. Georgiou, W. Frier, E. Freeman, C. Pacchierotti, and T. Hoshi (Cham: Springer International Publishing).

Graichen, L., Graichen, M., and Krems, J. F. (2019). Evaluation of gesture-based in-vehicle interaction: user experience and the potential to reduce driver distraction. Hum. Fact. 61, 774–792. doi: 10.1177/0018720818824253

Haggard, P. (2017). Sense of agency in the human brain. Nat. Rev. Neurosci. 18, 196–207. doi: 10.1038/nrn.2017.14

Haggard, P., Clark, S., and Kalogeras, J. (2002). Voluntary action and conscious awareness. Nat. Neurosci. 5, 382–385. doi: 10.1038/nn827

Harrington, K., Large, D. R., Burnett, G., and Georgiou, O. (2018). “Exploring the use of mid-air ultrasonic feedback to enhance automotive user interfaces” in Proceedings of the 10th International Conference on Automotive User Interfaces and Interactive Vehicular Applications (New York, NY: ACM), 11–20.

Heinssen, R. K., Glass, C. R., and Knight, L. A. (1987). Assessing computer anxiety: development and validation of the computer anxiety rating scale. Comput. Hum. Behav. 3, 49–59. doi: 10.1016/0747-5632(87)90010-0

Hudlicka, E. (2003). To feel or not to feel: the role of affect in human–computer interaction. Int. J. Hum. Comput. Stud. 59, 1–32. doi: 10.1016/S1071-5819(03)00047-8

Imaizumi, S., and Tanno, Y. (2019). Intentional binding coincides with explicit sense of agency. Conscious. Cogn. 67, 1–15. doi: 10.1016/j.concog.2018.11.005

Janczyk, M., Xiong, A., and Proctor, R. W. (2019). Stimulus-response and response-effect compatibility with touchless gestures and moving action effects. Hum. Fact. 61, 1297–1314. doi: 10.1177/0018720819831814

Jian, J.-Y., Bisantz, A. M., and Drury, C. G. (2000). Foundations for an empirically determined scale of trust in automated systems. Int. J. Cogn. Ergon. 4, 53–71. doi: 10.1207/S15327566IJCE0401_04

Lafleur, A., Soulières, I., and d’Arc, B. F. (2020). Sense of agency: sensorimotor signals and social context are differentially weighed at implicit and explicit levels. Conscious. Cogn. 84:103004. doi: 10.1016/j.concog.2020.103004

Limerick, H., Coyle, D., and Moore, J. W. (2014). The experience of agency in human-computer interactions: a review. Front. Hum. Neurosci. 8:643. doi: 10.3389/fnhum.2014.00643

Limerick, H., Moore, J. W., and Coyle, D. (2015). “Empirical evidence for a diminished sense of agency in speech interfaces” in Conference on Human Factors in Computing Systems - Proceedings (New York, NY: ACM), 3967–3970.

MacLean, K., and Enriquez, M. (2003). “Perceptual design of haptic icons” in Proceedings of EuroHaptics 2003 (Dublin, Ireland), 351–363. Available at: https://www.cs.ubc.ca/~murphy/cpsc590/EH03-hapticIcon-reprint.pdf or https://www.mle.ie/palpable/eurohaptics2003/

Martinez, P. C., De Pirro, S., Vi, C. T., and Subramanian, S. (2017). “Agency in mid-air interfaces” in Conference on Human Factors in Computing Systems – Proceedings (New York, NY: ACM), 2426–2439.

McCarthy, J., and Wright, P. (2005). Putting ‘felt-life’ at the centre of human–computer interaction (HCI). Cogn. Tech. Work 7, 262–271. doi: 10.1007/s10111-005-0011-y

Moore, J. W., and Fletcher, P. C. (2012). Sense of agency in health and disease: a review of cue integration approaches. Conscious. Cogn. 21, 59–68. doi: 10.1016/j.concog.2011.08.010

Moore, J. W., Lagnado, D., Deal, D. C., and Haggard, P. (2009). Feelings of control: contingency determines experience of action. Cognition 110, 279–283. doi: 10.1016/j.cognition.2008.11.006

Moore, J. W., and Obhi, S. S. (2012). Intentional binding and the sense of agency: a review. Conscious. Cogn. 21, 546–561. doi: 10.1016/j.concog.2011.12.002

Moore, J. W., Ruge, D., Wenke, D., Rothwell, J., and Haggard, P. (2010). Disrupting the experience of control in the human brain: pre-supplementary motor area contributes to the sense of agency. Proc. R. Soc. B Biol. Sci. 277, 2503–2509. doi: 10.1098/rspb.2010.0404

Moore, J. W., Wegner, D. M., and Haggard, P. (2009). Modulating the sense of agency with external cues. Conscious. Cogn. 18, 1056–1064. doi: 10.1016/j.concog.2009.05.004

National Highway Traffic Safety Administration. (2020). Overview of the National Highway Traffic Safety Administration’s driver distraction program. Available at: https://crashstats.nhtsa.dot.gov/Api/Public/Publication/813309

Norman, D. A. (1986). “Cognitive engineering” in User-Centered System Design: New Perspectives on Human-Computer Interaction. Eds. D. A. Norman and S. W. Draper (Hillsdale, NJ: Lawrence Erlbaum Associates), 31–61.

O’Hara, K., Harper, R., Mentis, H., Sellen, A., and Taylor, A. (2013). On the naturalness of touchless. ACM Trans. Comput. Hum. Interact. 20, 1–25. doi: 10.1145/2442106.2442111

Ohn-Bar, E., and Trivedi, M. M. (2014). Hand gesture recognition in real time for automotive interfaces: a multimodal vision-based approach and evaluations. IEEE Trans. Intell. Transp. Syst. 15, 2368–2377. doi: 10.1109/TITS.2014.2337331

Parada-Loira, F., Gonzalez-Agulla, E., and Alba-Castro, J. L. (2014). “Hand gestures to control infotainment equipment in cars” in 2014 IEEE Intelligent Vehicles Symposium Proceedings (IEEE), 1–6.

Parasuraman, A., and Colby, C. L. (2015). An updated and streamlined technology readiness index. J. Serv. Res. 18, 59–74. doi: 10.1177/1094670514539730

Ruess, M., Thomaschke, R., and Kiesel, A. (2018). Intentional binding of visual effects. Atten. Percept. Psychophys. 80, 713–722. doi: 10.3758/s13414-017-1479-2

Shneiderman, B., and Plaisant, C. (2004). Designing the user interface: strategies for effective human-computer interaction. 4th Edn. Reading, MA: Pearson Addison-Wesley.

Schrepp, M., Hinderks, A., and Thomaschewski, J. (2017). Design and evaluation of a short version of the user experience questionnaire (UEQ-S). Int. J. Interact. Multim. Artif. Intell. 4:103. doi: 10.9781/ijimai.2017.09.001

Shakeri, G., Williamson, J. H., and Brewster, S. (2017). “Novel multimodal feedback techniques for in-car mid-air gesture interaction” in Proceedings of the 9th International Conference on Automotive User Interfaces and Interactive Vehicular Applications (New York, NY: ACM), 84–93.

Shakeri, G., Williamson, J. H., and Brewster, S. (2018). “May the force be with you: ultrasound haptic feedback for mid-air gesture interaction in cars” in Proceedings of the 10th International Conference on Automotive User Interfaces and Interactive Vehicular Applications (New York, NY: ACM), 1–10.

Sidarus, N., and Haggard, P. (2016). Difficult action decisions reduce the sense of agency: a study using the Eriksen flanker task. Acta Psychol. 166, 1–11. doi: 10.1016/j.actpsy.2016.03.003

Spakov, O., Farooq, A., Venesvirta, H., Hippula, A., Surakka, V., and Raisamo, R. (2022). “Ultrasound feedback for mid-air gesture interaction in vibrating environment” in Human Interaction & Emerging Technologies (IHIET-AI 2022): Artificial Intelligence & Future Applications, vol. 23 (Lausanne, Switzerland: AHFE International), p. 10.

Stecher, M., Michel, B., and Zimmermann, A. (2018). The benefit of touchless gesture control: an empirical evaluation of commercial vehicle-related use cases. Adv. Intell. Syst. Comput. 597, 383–394. doi: 10.1007/978-3-319-60441-1_38

Sterkenburg, J., Landry, S., and Jeon, M. (2017). “Eyes-free in-vehicle gesture controls: auditory-only displays reduced visual distraction and workload” in Proceedings of the 9th International Conference on Automotive User Interfaces and Interactive Vehicular Applications Adjunct (New York, NY: ACM), 195–200.

Synofzik, M., Vosgerau, G., and Newen, A. (2008). Beyond the comparator model: a multifactorial two-step account of agency. Conscious. Cogn. 17, 219–239. doi: 10.1016/j.concog.2007.03.010

Synofzik, M., Vosgerau, G., and Voss, M. (2013). The experience of agency: an interplay between prediction and postdiction. Front. Psychol. 4:127. doi: 10.3389/fpsyg.2013.00127

Tabbarah, M., Cao, Y., Shamat, A. A., Fang, Z., Li, L., and Jeon, M. (2023). “Novel in-vehicle gesture interactions: design and evaluation of auditory displays and menu generation interfaces” in Proceedings of the 15th International Conference on Automotive User Interfaces and Interactive Vehicular Applications (New York, NY: ACM), 224–233.

Waltemate, T., Senna, I., Hülsmann, F., Rohde, M., Kopp, S., Ernst, M., et al. (2016). “The impact of latency on perceptual judgments and motor performance in closed-loop interaction in virtual reality” in Proceedings of the ACM Symposium on Virtual Reality Software and Technology, VRST 2016 (New York, NY: ACM), 27–35.

Wegner, D. M., and Wheatley, T. (1999). Apparent mental causation: sources of the experience of will. Am. Psychol. 54, 480–492. doi: 10.1037/0003-066X.54.7.480

Wen, W., Yamashita, A., and Asama, H. (2015). The influence of action-outcome delay and arousal on sense of agency and the intentional binding effect. Conscious. Cogn. 36, 87–95. doi: 10.1016/j.concog.2015.06.004

Winkler, P., Stiens, P., Rauh, N., Franke, T., and Krems, J. (2020). How latency, action modality and display modality influence the sense of agency: a virtual reality study. Virt. Real. 24, 411–422. doi: 10.1007/s10055-019-00403-y

Wobbrock, J. O., Findlater, L., Gergle, D., and Higgins, J. J. (2011). “The aligned rank transform for nonparametric factorial analyses using only Anova procedures” in Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (New York, NY: ACM), 143–146.

Keywords: agency, gesture, sensory, modality, haptics, visual, feedback

Citation: Evangelou G, Georgiou O, Brown E and Moore JW (2025) Sense of agency in gesture-based interactions: modulated by sensory modality but not feedback meaning. Front. Comput. Sci. 7:1511928. doi: 10.3389/fcomp.2025.1511928

Edited by:

Bruno Mesz, National University of Tres de Febrero, Argentina

Reviewed by:

Hideyuki Nakanishi, Kindai University, Japan
Asterios Zacharakis, Aristotle University of Thessaloniki, Greece

Copyright © 2025 Evangelou, Georgiou, Brown and Moore. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: George Evangelou, g.evangelou@gold.ac.uk