- 1 Department of Political Sciences, Communication and International Relations, University of Macerata, Macerata, Italy

- 2 Department of Information Engineering, Università Politecnica delle Marche, Ancona, Italy

- 3 Department of Construction, Civil Engineering and Architecture, Università Politecnica delle Marche, Ancona, Italy

Artificial Intelligence (AI) has revolutionized various sectors, including Cultural Heritage (CH) and Creative Industries (CI), creating novel opportunities and challenges in preserving tangible and intangible human productions. In this context, Neural Rendering (NR) paradigms play a pivotal role by reconstructing 3D objects or scenes through optimization on images that depict them. However, few works have examined the ethical concerns associated with their usage. These concerns are particularly relevant in scenarios where NR is applied to items protected by intellectual property rights, UNESCO-recognized heritage sites, or items critical for data-driven decisions. We therefore outline the main ethical findings in this area and place them in a novel framework to guide stakeholders and developers through the principles and risks associated with the use of NR in CH and CI. The framework examines AI's ethical principles in connection with NR, CH, and CI, supporting the definition of novel ethical guidelines.

1 Introduction

Artificial Intelligence (AI) has sparked advancements across various sectors, both in industry and academia. Among the most impacted sectors are Cultural Heritage (CH) and Creative Industries (CI), often considered as a single discipline (CCI; Jobin et al., 2019; Pansoni et al., 2023a; European Commission, 2024b,c; Cascarano et al., 2022b).

Through AI paradigms, tangible and intangible CCI can be better analyzed, preserved, and promoted, with a positive social impact (Jobin et al., 2019; Pansoni et al., 2023a). In particular, AI has facilitated digitization efforts supported by international institutions such as the EU Commission and UNESCO, democratizing accessibility, preservation, and dissemination of culture (European Commission, 2024b; UNESCO, 2024a,b). Monuments, sites, and intangible traditions such as crafts and art are just a few examples of CCI elements that are being preserved through AI.

The advent of Neural Rendering (NR) techniques has dramatically improved this digitization and preservation process, thanks to their ability to reconstruct 3D objects and scenes while being optimized only on the 2D images that depict them. In the NR arena, Neural Radiance Fields (NeRFs) and 3D Gaussian Splatting (3DGS) are the most widely adopted paradigms. They can be applied efficiently in different environments with variable illumination, to pictures taken in the wild, and can be optimized with a small number of images (Gao et al., 2022; Mazzacca et al., 2023; Cascarano et al., 2022a; Abdal et al., 2023; Manfredi et al., 2023a). This allows their adoption to digitize scenes and objects not only in ideal laboratory conditions but also in non-optimal ones, including objects that no longer exist, such as lost heritage (Halilovich, 2016; Fangi et al., 2022). However, the application of NR in CCI raises new challenges from an ethical perspective. As a preliminary example, one could question the authenticity and (intellectual) property of the digital replica (European Commission, 2024b; Micozzi and Giannini, 2023). Such issues become even more relevant for elements protected by UNESCO or critical for data-driven decisions (UNESCO, 2024a,b). Nevertheless, few works have analyzed the ethical implications of applying NR to CCI items, which also means that there is no robust framework from which new guidelines and regulations can be derived.

This paper fills this gap by reviewing the primary ethical evidence in this area. It aims to clarify the ethical principles and risks associated with the use of NR in CCI contexts. Our framework attempts to navigate the complex ethical landscape of NR integration, taking into account the well-established principles of trustworthy AI contained in the AI Act, including responsibility, reliability, fairness, sustainability, and transparency (Madiega, 2021; Manfredi et al., 2022). Moreover, it takes into consideration other widely recognized ethical guidelines, such as the European Commission's White Paper on AI (European Commission, 2024b), the Assessment List for Trustworthy AI (ALTAI; European Commission, 2024a), the ICOM Code of Ethics [International Council of Museums (ICOM), 2018], UNESCO's Recommendation on the Ethics of Artificial Intelligence (UNESCO, 2024b), and its report on Cultural and Creative Industries (UNESCO, 2024a). The framework aims to support and enhance the development of NR technologies in CCIs while preserving their intrinsic values and importance. At the same time, it lays the groundwork for developing ethical guidelines for NR solutions by identifying specific risks associated with their application in CCI, such as those concerning transparency, fairness, and sustainability. Guidelines built on these considerations can help stakeholders mitigate these risks, while also promoting the adaptation of our framework into quantitative metrics and indicators to objectively assess and monitor ethical compliance in NR technologies across different CCI applications.

The main contributions of this paper are to: (i) identify the ethical pitfalls in the current literature on NR paradigms applied to CCI data and use cases; (ii) design and implement a structured ethical framework, inspired by globally relevant guidelines, that stakeholders can use to address technical risks, challenges, and regulatory concerns in NR applications; (iii) provide a foundational background for defining novel guidelines for the development of NR solutions, taking into account their specific risks and delineating future work directions.

The paper is organized as follows. Section 2 reviews existing literature and research efforts related to NR, along with ethical frameworks for AI and their applications in CCI contexts. Section 3 presents our proposed ethical framework for trustworthy NR, detailing its key components and principles. Section 4 presents the results obtained by applying this framework and delineates its limitations. Finally, Section 5 concludes the paper by summarizing our contributions and suggesting directions for future research.

2 Related works

In this section, we provide a thorough review of the state of the art of NR as applied to CH and CI, while delineating ethical considerations. This section is therefore divided into two distinct but related parts: “Technical State of the Art” and “Ethical State of the Art.”

2.1 Technical state of the art

Traditionally, 3D models have been extracted from 2D images primarily through conventional geometric methods. These methods rely on established techniques such as photometric consistency and gradient-based features to extract depth cues from visual data (Strecha et al., 2006; Goesele et al., 2007; Remondino et al., 2008; Hirschmuller, 2008; Barnes et al., 2009; Furukawa and Ponce, 2010; Brocchini et al., 2022; Jancosek and Pajdla, 2011; Bleyer et al., 2011; Schönberger et al., 2016). However, recent advances in neural networks have paved the way for novel techniques that generate 3D volumes from 2D images by approximating non-linear functions (Xie et al., 2020; Murez et al., 2020; Tewari et al., 2020). For example, Xie et al. (2020) introduced a Convolutional Neural Network (CNN) encoder-decoder with a multi-scale fusion module that selects high-quality reconstructions from multiple coarse 3D volumes, approximating a 3D voxel representation. However, this method is limited to voxel representations and is optimized on a single dataset, which restricts its context of use. To overcome the limitations of the voxel representation, Murez et al. (2020) proposed a 3D voxel reconstruction method similar to that of Xie et al. (2020) but refined it through a 3D CNN encoder-decoder network, producing a Truncated Signed Distance Function (TSDF) volume from which a mesh is extracted. However, because it relies first on voxels and then on a TSDF, this approach is memory-intensive and low-resolution. Moreover, the method primarily focuses on geometric reconstruction, which limits its capacity to capture complex visual details and phenomena. To overcome these limitations, a novel research direction has recently gained considerable traction.
This direction, Neural Rendering (NR; Tewari et al., 2020), efficiently learns higher levels of visual detail by approximating a 3D representation directly with a neural network. NR techniques are deep image or video generation methods that provide explicit or implicit control over various scene properties, including camera parameters and geometry. Such models learn complex mappings from existing images to generate new ones (Tewari et al., 2020). Within this space, two paradigms are emerging: Neural Radiance Fields (NeRFs) and 3D Gaussian Splatting (3DGS). These have attracted considerable attention due to their reconstruction power and speed (Tewari et al., 2020; Mildenhall et al., 2022; Kerbl et al., 2023; Meng et al., 2023). NeRFs represent a radiance field implicitly through multi-layer perceptrons (MLPs), optimized with a rendering reconstruction loss over 2D images to learn the complex geometry and lighting of the 3D scene they capture (Mildenhall et al., 2022; Gao et al., 2022). While primarily recognized for novel view synthesis, NeRFs also allow the extraction of 3D surfaces, meshes, and textures (Tancik et al., 2023). This is achieved through an internal representation as an Occupancy Field (OCF) or a Signed Distance Function (SDF), which can be converted into a 3D mesh using conventional algorithms such as Marching Cubes (Lorensen and Cline, 1987). Similarly, 3DGS aims to efficiently learn and render high-quality 3D scenes from 2D images (Kerbl et al., 2023). In contrast to traditional volumetric representations such as voxel grids, 3DGS introduces a continuous and adaptive framework based on differentiable 3D Gaussian primitives. These primitives parameterize the radiance field, allowing novel views to be generated during rendering. 
3DGS achieves real-time rendering through a tile-based rasterizer, unlike NeRF which relies on computationally intensive volumetric ray sampling (Kerbl et al., 2023; Tosi et al., 2024). Both NeRFs and 3DGS are self-supervised and can be trained using only multi-view images and their corresponding poses, eliminating the need for 3D/depth supervision (using algorithms such as Structure from Motion to extract camera poses). In addition, they generally deliver higher photo-realistic quality compared to traditional novel view synthesis methods (Gao et al., 2022). These factors make them suitable for various applications in different domains, especially in the context of CCI, where the generation of the most faithful representation is key. NeRF has recently been considered for CH applications for different contexts and data, such as those collected with smartphones or professional cameras, in different environments (Mazzacca et al., 2023; Croce et al., 2023; Balloni et al., 2023). At the same time, they have been used in the context of CI, mainly for industrial design, and various fashion applications, such as 3D object reconstruction and human generation (Poole et al., 2022; Manfredi et al., 2023b; Wang K. et al., 2023; Fabra et al., 2024; Fu et al., 2023; Yang H. et al., 2023). Notwithstanding its newness, 3DGS has also been considered and applied in CH, where it was compared with NeRF for the reconstruction of real monuments, and also in CI, where it was used to efficiently generate dressed humans (Abdal et al., 2023; Basso et al., 2024). Although not specifically applied to the CCI context, few-shot approaches amount to a variation of NR that can be optimized for the 3D representation of scenes and objects by using only a few frames (typically 1–10) (Kim et al., 2022; Yang J. et al., 2023; Long et al., 2023). 
Such approaches can be adopted for CCI objects that can no longer be captured and survive only in a small number of images, as well as for objects that can only be captured from a limited set of views. In this context, a relevant work is PixelNeRF, which preserves the spatial alignment between images and 3D representations by learning a prior over different input views (Yu et al., 2021). In contrast, models such as DietNeRF, RegNeRF, InfoNeRF, and FreeNeRF address few-shot optimization without relying on prior knowledge, instead employing optimization and regularization strategies along with auxiliary semantic losses (Jain et al., 2021; Kim et al., 2022; Yang J. et al., 2023).
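
To make the rendering reconstruction loss mentioned above more concrete, the following minimal Python sketch composites density and colour samples along a single camera ray with the standard volume rendering quadrature and compares the result with a ground-truth pixel. It is an illustration under simplifying assumptions, not the implementation of any cited method: the names `composite_ray` and `photometric_loss` are hypothetical, the samples are passed in directly instead of being predicted by an MLP, and no optimization step is shown.

```python
import math


def composite_ray(densities, colors, deltas):
    """Composite samples along one ray, front to back.

    densities: non-negative volume density at each sample
    colors:    (r, g, b) tuple emitted at each sample
    deltas:    length of the ray segment covered by each sample
    Returns the rendered pixel colour and the per-sample weights.
    """
    transmittance = 1.0  # fraction of light not yet absorbed along the ray
    pixel = [0.0, 0.0, 0.0]
    weights = []
    for sigma, color, delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this segment
        weight = transmittance * alpha          # contribution of this sample
        weights.append(weight)
        for k in range(3):
            pixel[k] += weight * color[k]
        transmittance *= 1.0 - alpha
    return tuple(pixel), weights


def photometric_loss(rendered, target):
    """Mean squared error between a rendered pixel and the observed pixel."""
    return sum((r - t) ** 2 for r, t in zip(rendered, target)) / len(target)


# Illustrative values only: an empty sample followed by a dense green one.
pixel, weights = composite_ray(
    densities=[0.0, 50.0],
    colors=[(1.0, 0.0, 0.0), (0.0, 1.0, 0.0)],
    deltas=[1.0, 1.0],
)
loss = photometric_loss(pixel, (0.0, 1.0, 0.0))
```

In a NeRF-style pipeline, a loss of this kind would be averaged over many rays and minimized with respect to the network parameters that produce the densities and colours. 3DGS blends its projected Gaussians front to back with the same accumulated-transmittance weighting, although it reaches the per-pixel samples through rasterization rather than ray sampling.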

2.2 Ethical state of the art

As mentioned in the introduction, while the ethical implications of AI in CCI have been explored, those of NR paradigms have received little attention. For this reason, works such as Jobin et al. (2019), Srinivasan and Uchino (2021), Loli Piccolomini et al. (2019), Pansoni et al. (2023a,b), Piskopani et al. (2023), Giannini et al. (2019), and Tiribelli et al. (2024) provide important and relevant sources of knowledge regarding the ethics of AI and, in some cases, the ethics of generative AI. In particular, Jobin et al. (2019) outlined the implications of AI across sectors on a global scale, sparking debates about the ethical principles that should guide its development and use. Concerns include potential job displacement, misuse by malicious actors, accountability issues, and algorithmic bias. The work also highlights efforts to engage different stakeholders, including public and private companies, questions about their motivations, and the convergence of ethical principles. Finally, it discusses the main ethical principles currently analyzed in AI ethics, while delineating guidelines to develop fair and trustworthy systems. Specifically for CH, Pansoni et al. (2023a,b) analyzed ethical concerns regarding the role of AI in activities such as creating digital replicas or providing unbiased explanations of artworks. They also developed an ethical framework for these activities, including relevant ethical principles such as shared responsibility, meaningful participation, and accountability. Their findings underscore the need to develop sector-specific ethical guidelines for AI in both tangible and intangible CH to ensure its sustainable development while preserving its values, meaning, and social impact. In the context of CI, Flick and Worrall (2022) pointed out the urgency of defining ethical rules and exploring issues of ownership and authorship, biases in datasets, and the potential dangers of non-consensual deepfakes. 
In the same context, Srinivasan and Uchino (2021) analyzed the lack of ethical discussion around generative AI, particularly around biases, while exploring their implications from a socio-cultural art perspective. Their findings showed that generative AI models exhibit biases toward artists' styles that were also present in the training data. To establish a robust AI ethical framework for NR, we should also reflect on public primary sources of global significance for AI ethics. To this end, we rely first on the Assessment List for Trustworthy Artificial Intelligence (ALTAI) developed by the European Commission's High-Level Expert Group on Artificial Intelligence (implemented by HLEGAI in 2019; European Commission, 2024a). ALTAI identifies seven requirements necessary to achieve trustworthy AI, covering aspects such as human oversight, technical robustness, privacy, transparency, fairness, societal wellbeing, and accountability. It is important to note that these ethical imperatives are regulative, not legally binding, and serve as guiding principles for the responsible development of the technology. Second, UNESCO's Recommendation on the Ethics of Artificial Intelligence and the Readiness assessment methodology provide systematic regulatory and evaluation guidance with a globally sensitive perspective to guide companies in responsibly managing the impact of AI on individuals and society (UNESCO, 2024b, 2023). These recommendations emphasize bridging digital and knowledge gaps among nations throughout the AI lifecycle and precisely define the values guiding the responsible development and utilization of AI systems. In line with the EU guidelines, UNESCO emphasizes 'transparency and accountability' as key principles for trustworthy AI. Transparency guarantees that the public is informed when AI systems influence policy decisions, promoting comprehension of their significance. 
This transparency is essential to ensure equity and inclusivity in the outcomes of AI-based systems. Explainability refers to understanding how different algorithmic pipelines work, from the received input data to their processed outputs. We also considered the European Commission's White Paper on AI (European Commission, 2024b), which highlights the importance of a European approach to the development of AI, based on ethical values and aimed at promoting benefits while addressing risks. In particular, it outlines the need for the trustworthiness of AI systems based on European values and fundamental rights such as human dignity and privacy. It provides a regulatory and investment-oriented approach to address the ethical risks of AI, focusing on building an ecosystem of excellence and trust throughout its lifecycle. We then considered specifically the CCI context of our research, starting with the ICOM Code of Ethics for Museums [International Council of Museums (ICOM), 2018], which defines ethical standards on issues specific to museums and provides standards of professional practice that can serve as a normative basis for museum institutions. The code begins with a position statement that explains the purpose of museums and their responsibilities. It then focuses on the specific challenges faced by museums, including (i) the responsibility to safeguard both tangible and intangible natural and cultural heritage, while protecting and promoting this heritage within the human, physical, and financial resources allocated; (ii) the acquisition, conservation, and promotion of collections as a contribution to the preservation of heritage; (iii) the provision of access to, interpretation of, and promotion of heritage; (iv) the definition of policies for the conservation of community heritage and identity. 
Again in the context of the CCI, we considered the well-known artists' associations' specification of the EU AI law dedicated to the creative arts, including safeguards that require rights holders to be specifically (Piskopani et al., 2023; Urheber.info, 2024). Such a document, issued by 43 unions representing creative authors, performers, and copyright holders, emphasizes the urgent need for effective regulatory measures to deal with generative AI. In particular, the document highlights how existing measures are insufficient to protect the digital ecosystem and society at large. It sets out requirements for providers of foundational models, including transparency about training materials, their accuracy and diversity, and compliance with legal frameworks for data collection and use. These proposals aim to ensure the responsible development and deployment of generative AI systems while protecting against potential harms such as misinformation, discrimination, and infringements of privacy and copyright. Finally, we have included in our analysis the UNESCO document on Cultural and Creative Industries in the COVID-19 era (UNESCO, 2024a). This document was one of the first to analyze the impact of the pandemic by exploring the use of digital technologies by audiences and cultural professionals in the CCIs, which are now becoming pervasive, particularly in the visual industries, and which can be analyzed through an ethical lens.

3 Methodology

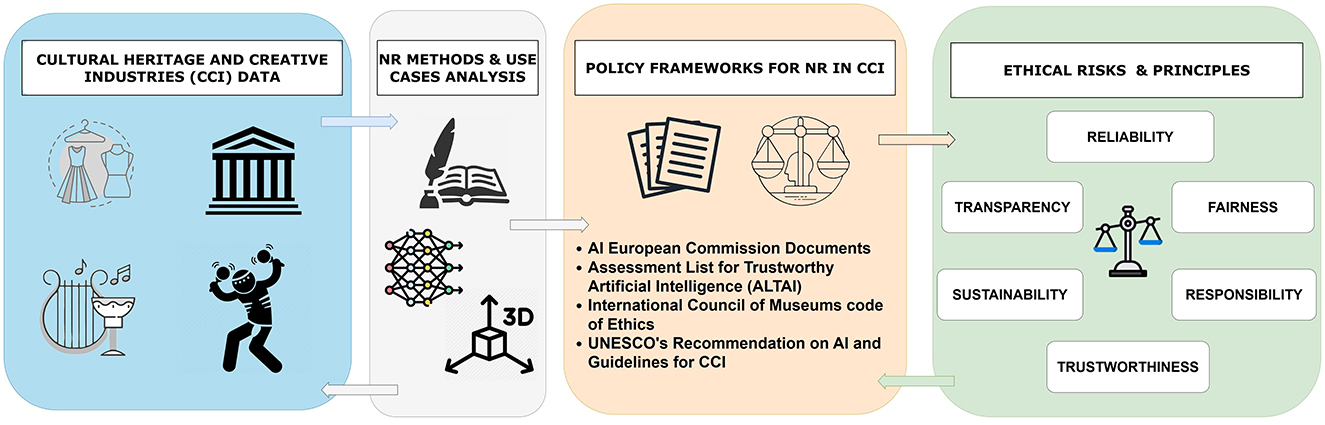

In this section, we present a detailed approach for the analysis of the ethical pitfalls of NR techniques in CCI. Building on this analysis, we define an ethical framework for assessing the trustworthiness of such techniques, given the lack of work on this topic. Our study begins with an analysis of the scientific literature on NR approaches, focusing on NeRFs and 3DGS, their use cases, and the CCI data to which they have been or could be applied. From this research, we derived the technical challenges of their application in CCI. Then, considering these challenges, we examined ethical documents issued by globally relevant public bodies, together with the scientific literature. Through these, we highlight the key ethical risks that these technical challenges may pose, along with their associated and well-established principles. Following these documents and their guidelines, we selected the principles and risks that can be linked to specific NR challenges and that could help mitigate them. This process results in a novel framework to analyze the applications of NR methods in CCI through an ethical lens (illustrated in Figure 1).

Figure 1. Ethical workflow for NR in CCI applications. The approach starts from the selection of the NR methodology and data where they are applied. Then the ethical framework defines principles that must be evaluated to avoid risks, providing a conclusive analysis and quantification of ethical compliance scores.

This framework aims to build NR systems with a trustworthy approach by providing a structured methodology for the analysis of the ethical risks associated with NR in the CCI sector. To clarify the framework application, we here report the flow that we adopted in its usage:

• The first step involves gathering data from CCI, such as 3D scans, digital archives, or other creative data forms like images, dance, or even fashion designs. This data forms the basis for applying NR techniques, which are used to create high-quality digital representations of CCI artifacts or creative assets;

• In the second step, a thorough analysis of the NR methods and their use cases is performed. This includes understanding the specific algorithms being used, their potential and current applications in CCI, and evaluating the technical challenges they present, such as accuracy, representation quality, and limitations when applied to various creative domains;

• Once the technical challenges have been identified, we move to the third step, where existing ethical guidelines and policy documents, such as the European Commission's documents on AI, the Assessment List for Trustworthy Artificial Intelligence (ALTAI), the International Council of Museums code of ethics, and UNESCO's recommendations on AI, are reviewed. These guidelines help frame the analysis within a broader ethical context to derive specific regulations and policies for NR in CCI;

• Finally, the main ethical risks associated with NR in CCI (e.g., those concerning transparency and responsibility) are identified. These risks are mapped to the principles outlined in the ethical documents, ensuring that they are addressed systematically. This step is key to ensuring an ethical application of NR in the CCI field. The resulting analysis of those risks ensures that these methods are applied responsibly, transparently, and fairly, supporting sustainability, reliability, and trustworthiness.
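
The four steps above can be read as a simple data flow, which the following Python sketch tries to capture. It is only one possible way to organize the information and makes several assumptions that do not come from the framework itself: the `Assessment` record, the `map_risks` helper, and the example challenge-to-principle mapping are all hypothetical and chosen purely for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Assessment:
    """Hypothetical record filled in while walking through the four steps."""
    dataset: str                                     # step 1: CCI data being digitized
    nr_method: str                                   # step 2: e.g. "NeRF" or "3DGS"
    challenges: list = field(default_factory=list)   # step 2: technical challenges found
    guidelines: list = field(default_factory=list)   # step 3: documents reviewed
    risks: dict = field(default_factory=dict)        # step 4: principle -> challenges


def map_risks(assessment, challenge_to_principle):
    """Step 4: group each identified challenge under an ethical principle.

    Challenges without a known principle are collected under "unmapped"
    so that they remain visible for human review instead of being dropped.
    """
    for challenge in assessment.challenges:
        principle = challenge_to_principle.get(challenge, "unmapped")
        assessment.risks.setdefault(principle, []).append(challenge)
    return assessment


# Illustrative usage; the mapping below is an assumption, not a prescribed one.
example = Assessment(
    dataset="smartphone photos of a monument",
    nr_method="NeRF",
    challenges=["reconstruction accuracy", "training energy use"],
    guidelines=["ALTAI", "ICOM code of ethics"],
)
map_risks(example, {
    "reconstruction accuracy": "reliability",
    "training energy use": "sustainability",
})
```

Keeping unmapped challenges explicit is a deliberate choice in this sketch: it leaves the final judgment to the multidisciplinary stakeholders rather than to the tooling.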

The next section will detail the Challenges and Opportunities of NR in CCI that emerged by applying our framework. Then, we will analyze the Ethical Principles and the description of how those are related to these paradigms, highlighting risks and possible mitigation strategies.

3.1 Challenges and opportunities of Neural Rendering in CCI

When NR is applied to specific elements of the CCI domain, several challenges and technical risks may arise. These include, but are not limited to: (i) understanding complex AI models and validating the data collection process; (ii) ensuring the accuracy of reconstructions; (iii) demonstrating stability and generalization in different (social) environments; (iv) obtaining unbiased and fair results; (v) ensuring ethical data ownership; (vi) minimizing environmental impact. Regarding (i), significant challenges arise from the lack of interpretability of NR models that embed prior knowledge or are conditioned on models with prior knowledge (Yu et al., 2021; Haque et al., 2023). Moreover, missing descriptions of the data acquisition steps hinder accountability and a data-driven decision-making approach (Schneider, 2018). These challenges underline the importance of developing methods and tools to improve transparency, interpretability, and accountability in NR systems (Barceló et al., 2020; Haque et al., 2023; Xie et al., 2023; Cainelli et al., 2022). Other technical challenges and risks relate to confidence in the accuracy and fidelity of the reconstructions and in the consistency of the generated outputs (ii). Inconsistent outputs may result from few-shot learning approaches, in-the-wild datasets, or data corruption, and therefore require rigorous testing and validation procedures (Martin-Brualla et al., 2021; Yu et al., 2021; Toschi et al., 2023). Validating the consistency and fidelity of NR input and synthesized data across domains is crucial to define generalized and reliable systems. 
Stability and generalization across different (social) environments (iii) are also an issue, since NR methods may lack visual generalization and produce inconsistent geometric representations, which are significant barriers to achieving robust performance in diverse CCI contexts (Condorelli et al., 2021; Croce et al., 2023; Mazzacca et al., 2023). This can happen when an NR model is optimized on only a few views or on an incomplete set of scene views. A possible solution is to adopt few-shot architectures or pre-trained models (Yu et al., 2021; Kim et al., 2022; Yang J. et al., 2023). In this case, however, (iv) we should consider the architectural approach followed by those few-shot networks (e.g., some overlook high-frequency details; Yang J. et al., 2023) and the bias that pre-trained models carry in their knowledge priors (Yu et al., 2021; Kim et al., 2022; Cao et al., 2022). Such models, along with biases that may emerge during the data collection process, highlight the importance of developing methods that mitigate bias and promote equitable results (Zheng et al., 2023). It is also worth mentioning the critical issues (v) that emerge when challenges already discussed, such as misuse of input and generated data and unfaithful generation, are considered in the context of data ownership and responsibility (Avrahami and Tamir, 2021; Chen et al., 2023). Ownership of NR-generated 3D items entails the rightful possession of data and the responsibility to ensure proper usage and protection against misuse (Pansoni et al., 2023a). Data misuse poses a great risk, ranging from unauthorized reproduction to malicious manipulation, which could be applied in NR to generate unfaithful items (Haque et al., 2023), damaging stakeholders that have an economic or emotional interest in them (Pansoni et al., 2023a). 
For example, if some views or geometric structures of the 3D models reconstructed by NR methods are inconsistent with reality, one could question their authenticity and also debate their intellectual property (Luo et al., 2023). All of these aspects make it urgent to integrate social considerations into the system functionality, which requires careful human validation protocols (Stacchio et al., 2023). Finally, (vi) NR poses significant risks for the environment (Poole et al., 2023; Lee et al., 2023). Sustainability is a critical aspect, given the high computational demand of NR processes and the energy used to keep renderers ready for visualization (Wang Y. et al., 2023). Moreover, the indirect energy costs stemming from activities such as professional digital photography waste, setting up photo capture environments, data transmission, and storage further contribute to the environmental footprint of NR paradigms.
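
As a rough aid for challenge (vi), the sketch below shows how the direct energy use and emissions of an NR optimization run could be estimated from power draw and duration, with a separate term for the indirect costs mentioned above. The function `training_footprint`, its parameters, and all numbers in the usage example are assumptions made for illustration; real values would have to be measured for the specific hardware, data center, and electricity grid.

```python
def training_footprint(gpu_power_watts, gpu_count, hours,
                       pue=1.0, overhead_kwh=0.0, grid_kgco2_per_kwh=0.0):
    """Estimate energy (kWh) and emissions (kg CO2e) of one optimization run.

    gpu_power_watts:    average power draw of one GPU during the run
    gpu_count:          number of GPUs used
    hours:              wall-clock duration of the run
    pue:                power usage effectiveness of the facility (>= 1.0)
    overhead_kwh:       indirect energy, e.g. capture, transmission, storage
    grid_kgco2_per_kwh: carbon intensity of the electricity used
    """
    compute_kwh = gpu_power_watts * gpu_count * hours / 1000.0
    energy_kwh = compute_kwh * pue + overhead_kwh
    return energy_kwh, energy_kwh * grid_kgco2_per_kwh


# Placeholder inputs only; none of these figures are measurements.
energy, emissions = training_footprint(
    gpu_power_watts=300, gpu_count=1, hours=2,
    pue=1.5, overhead_kwh=0.1, grid_kgco2_per_kwh=0.4,
)
```

Reporting such an estimate alongside each reconstruction would make the sustainability trade-off between methods and capture settings easier to compare, even if the individual inputs remain approximate.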

3.2 Ethical principles of Neural Rendering in CCI

Our study begins with a review of guidelines from key regulatory frameworks, including the Assessment List for Trustworthy Artificial Intelligence (ALTAI; European Commission, 2024a), the UNESCO Recommendation on the Ethics of Artificial Intelligence (UNESCO, 2024b), and the European Commission's White Paper on AI (European Commission, 2024b). We also thoroughly analyzed Jobin et al. (2019), Srinivasan and Uchino (2021), and Piskopani et al. (2023), which provide a global mapping of AI regulations and robust ethical principles. Then, given the CCI context of our investigation, we considered the ICOM Code of Ethics for Museums [International Council of Museums (ICOM), 2018] and a specification of the AI Act for the creative arts (Piskopani et al., 2023; Urheber.info, 2024). We have also included in our analysis the UNESCO report on Cultural and Creative Industries in the face of COVID-19 (UNESCO, 2024a). Following these documents and their guidelines, we selected specific ethical principles to develop a framework for the usage of NR in CCI. In the following, we highlight the ethical principles considered and how they connect to the technical challenges listed in Section 3.1.

3.2.1 Responsibility

One of the most relevant ethical principles that should be recognized for a trustworthy application of NR in CCI is responsibility. Responsibility refers to the moral obligation of individuals, organizations, and societies to ensure that AI technologies are developed, deployed, and used in ways that respect and preserve cultural heritage and promote the wellbeing of individuals and communities involved in creative endeavors (Jobin et al., 2019). It is worth highlighting that, in NR, the actions taken from data capture to model training, evaluation, and deployment depend on different stakeholders (e.g., data generators, data owners, and ML engineers). For this reason, accountability for the actions taken through NR falls on engineers as well as on cultural managers and creative professionals [International Council of Museums (ICOM), 2018; Giannini and Iacobucci, 2022]. A multidisciplinary approach is therefore required to ensure NR accountability, defining policies to co-create and evaluate processes and results. This principle should be applied both to the data fed to NR models and to the data they generate, ensuring adherence to ethical guidelines throughout the entire data lifecycle (Pansoni et al., 2023a). This includes transparent documentation of data sources, data usage consent, and robust security measures to safeguard against misuse (Pansoni et al., 2023a). Furthermore, ensuring the authenticity of generated content is essential to uphold trust and credibility in NR systems, particularly in applications where the generated output may influence decision-making or perception, such as the replication of UNESCO-protected material or the real-time monitoring of Digital Twins (UNESCO, 2023; Chen et al., 2023; Luo et al., 2023; Jignasu et al., 2023; Li Y. et al., 2023; Stacchio et al., 2022; Dashkina et al., 2020). 
By establishing data ownership and complying with ethical principles, stakeholders can mitigate the risks associated with data misuse and unfaithful generation. Responsibility for the ownership of real and generated data, and legal liability for unfaithful data, are essential to maintain the integrity of NR applications (Pansoni et al., 2023a; Jobin et al., 2019).
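
One lightweight way to support the transparent documentation of data sources and consent described above is to store a provenance record next to each capture session. The sketch below, which uses only the Python standard library, fingerprints every input image with SHA-256 so that later tampering or substitution can be detected. The record fields and the helper names `provenance_record` and `verify` are hypothetical; they are not taken from any of the cited guidelines.

```python
import hashlib
import json
from datetime import datetime, timezone


def provenance_record(images, source, consent_reference, operator):
    """Build a provenance record for a set of input images.

    images: dict mapping a file name to the raw bytes of that image
    The remaining arguments are free-text fields (illustrative schema).
    """
    entries = []
    for name in sorted(images):
        digest = hashlib.sha256(images[name]).hexdigest()
        entries.append({"file": name, "sha256": digest})
    return {
        "source": source,
        "consent_reference": consent_reference,
        "operator": operator,
        "created_utc": datetime.now(timezone.utc).isoformat(),
        "images": entries,
    }


def verify(record, images):
    """Return True only if every recorded image is present and unmodified."""
    for entry in record["images"]:
        data = images.get(entry["file"])
        if data is None or hashlib.sha256(data).hexdigest() != entry["sha256"]:
            return False
    return True


# Illustrative usage with placeholder bytes instead of real photographs.
session = {"view_001.jpg": b"abc", "view_002.jpg": b"def"}
record = provenance_record(session, "museum archive", "consent form (placeholder)", "operator A")
serialized = json.dumps(record, indent=2)  # can be stored with the NR model
```

A record like this does not settle ownership or liability by itself, but it gives the stakeholders involved a shared, checkable reference for which data a reconstruction was actually built from.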

3.2.2 Transparency and explainability

Transparency and explainability are core principles in the development of NR systems, in particular to define accountability and trustworthiness in CCI. Transparency involves the clear and open communication of the processes, algorithms, data, and outcomes associated with NR (Jobin et al., 2019), enabling stakeholders to understand how decisions are made and to assess potential biases or limitations (Pansoni et al., 2023a; Flick and Worrall, 2022). Explainability instead concerns the ability of NR systems to provide understandable explanations for their synthesis and 3D model extraction (Xu et al., 2019). For example, if NR produces an incorrect visual representation of a real-world facility, the affected stakeholders must be able to understand the reason (Bhambri and Khang, 2024). Considering the complexity of NR approaches for novel view synthesis and 3D object rendering, and their implicit black-box structure, the adoption of explainability techniques for their analysis is required. For example, visualizing the learned geometrical structure, computing saliency maps, interpreting network activations, or analyzing the influence of input parameters on the rendered images are all techniques that could be adopted to support NR (Samek et al., 2017; Li X. et al., 2023; Nousias et al., 2023). In particular, such approaches could support the improvement of these systems from both an architectural and a data-centric perspective, detecting biases and also comparing different models according to their learned features (Samek et al., 2017; Li X. et al., 2023; Nousias et al., 2023). These aspects are all crucial in the context of CCI, where an enormous tangible and intangible patrimony can now be digitized cheaply and quickly thanks to NR paradigms (Croce et al., 2023). 
For these reasons, it is crucial to tackle the aforementioned challenges and elucidate the inner workings of such algorithms, so that their decisions are understandable and accountable and NR reliability, fairness, and impact can be guaranteed.
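
As a minimal sketch of one of the techniques listed above, analyzing the influence of input parameters on the rendered images, the following example perturbs each parameter of a renderer by a small amount and measures how much the output image changes. The function `toy_render` and its three parameters are hypothetical stand-ins introduced only for illustration; they are not an actual NR model, and a real analysis would replace them with the trained network under study.

```python
import math

def toy_render(params, width=8, height=8):
    """Hypothetical stand-in for an NR model: maps 3 parameters to a grayscale image."""
    light, azimuth, roughness = params
    image = []
    for y in range(height):
        row = []
        for x in range(width):
            shade = light * (0.5 + 0.5 * math.cos(azimuth + x / width))
            # Roughness is deliberately given a very small effect on the output.
            shade += 0.01 * roughness * (y / height)
            row.append(shade)
        image.append(row)
    return image

def mean_abs_diff(img_a, img_b):
    """Mean absolute per-pixel difference between two images of equal size."""
    total, count = 0.0, 0
    for row_a, row_b in zip(img_a, img_b):
        for a, b in zip(row_a, row_b):
            total += abs(a - b)
            count += 1
    return total / count

def parameter_sensitivity(render, params, eps=1e-3):
    """Finite-difference estimate of how strongly each parameter changes the image."""
    base = render(params)
    scores = []
    for i in range(len(params)):
        perturbed = list(params)
        perturbed[i] += eps
        scores.append(mean_abs_diff(render(perturbed), base) / eps)
    return scores

names = ["light", "azimuth", "roughness"]
scores = parameter_sensitivity(toy_render, [1.0, 0.3, 0.5])
# Parameters sorted from most to least influential on the rendered image.
ranking = sorted(zip(names, scores), key=lambda pair: pair[1], reverse=True)
```

A ranking of this kind only describes local behavior around the chosen parameter values; it complements, rather than replaces, saliency maps or activation analysis.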

3.2.3 Reliability

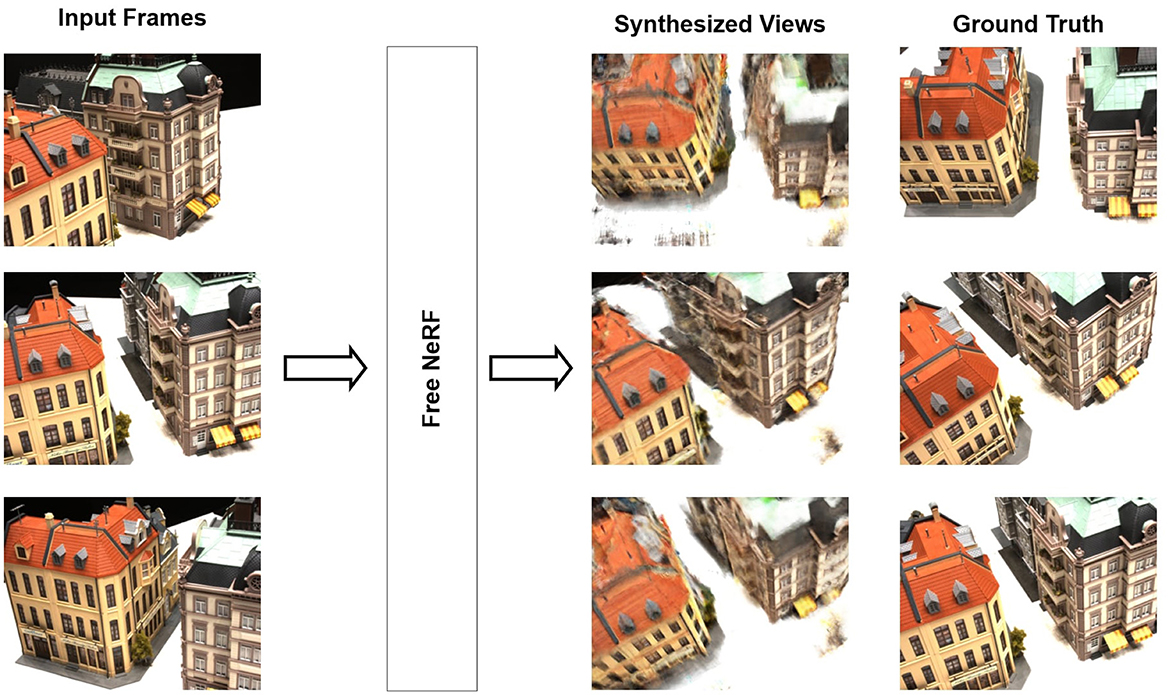

Reliability refers to the ability of AI applications to comply with data protection while providing high accuracy and completeness, considering both the input datasets used to develop and train the models and their outcomes (European Commission, 2024a; Jobin et al., 2019). For NR to be reliable, we should first consider the completeness of the data. In general, we should acquire between 50 and 150 pictures, depending on the object complexity, following a spherical omnidirectional approach to optimize NR methods (Müller et al., 2022). Even with such pictures at our disposal, techniques that extract 3D geometric structures from the optimized networks, like marching cubes for NeRFs and Poisson reconstruction from point clouds, could discard several high-frequency details (Guédon and Lepetit, 2023). Moreover, such a quantity of pictures may not be available for several CCI items (e.g., objects that no longer exist or that cannot be moved to be captured from all sides; Halilovich, 2016). Even adopting few-shot NR architectures (Niemeyer et al., 2022; Yang J. et al., 2023), we need 3–9 sparse viewpoints to obtain reasonable results, which are still not comparable, quantitatively or qualitatively, with those obtained from dense captures. To visually explore this concept, we re-trained one of the state-of-the-art few-shot NeRFs, named FreeNeRF (Yang J. et al., 2023), using the same 3-image setting reported by the authors, and depict the results in Figure 2. As can be qualitatively appreciated, different parts of the synthesized views present artifacts and incomplete geometrical structures. It is worth highlighting that these artifacts were observed on pictures taken in a controlled laboratory setting, with fixed illumination and camera poses. This raises ethical concerns related to missing data biases, i.e., the lack of data from underrepresented regions, cultures, and objects (Pansoni et al., 2023a). 
Such bias could negatively influence the training of NR, creating distorted geometries and textures (Yu et al., 2021). It also involves camera pose estimation, which is a necessary step for NR when pictures are taken with classical RGB cameras (Over et al., 2021). In particular, this raises two ethical concerns: camera pose estimation algorithms could (i) provide inaccurate estimates or (ii) fail to converge. Such situations mostly arise in few-shot settings with low scene coverage and in in-the-wild settings (Iglhaut et al., 2019; Martin-Brualla et al., 2021; Cutugno et al., 2022; Balloni et al., 2023). Recent methods based on Diffusion Models are emerging, with preliminary results toward few-image camera pose estimation, which however only work under fixed environmental conditions (Zhang et al., 2024). Considering these concerns, rigorous quantitative and qualitative validation of the fidelity of collected and generated data is necessary to determine the reliability of NR.
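
The difference between a dense spherical capture and a few-shot capture can be illustrated with a small coverage check. The sketch below is an assumption-laden illustration, not a capture protocol from the cited works: it places viewpoints on a unit sphere with a Fibonacci lattice (one common way to spread points roughly evenly) and reports the largest angle between any probed direction and its nearest camera. The function names and the choice of 500 probe directions are hypothetical.

```python
import math

def fibonacci_sphere(n, radius=1.0):
    """Return n roughly evenly spread positions on a sphere (Fibonacci lattice)."""
    golden_angle = math.pi * (3.0 - math.sqrt(5.0))
    points = []
    for i in range(n):
        z = 1.0 - 2.0 * (i + 0.5) / n          # height in (-1, 1)
        ring = math.sqrt(1.0 - z * z)          # radius of the horizontal ring
        theta = golden_angle * i
        points.append((radius * ring * math.cos(theta),
                       radius * ring * math.sin(theta),
                       radius * z))
    return points

def angle_deg(u, v):
    """Angle in degrees between two 3D direction vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    cosine = max(-1.0, min(1.0, dot / (norm_u * norm_v)))
    return math.degrees(math.acos(cosine))

def worst_case_gap_deg(cameras, probes):
    """Largest angle between any probe direction and its nearest camera."""
    return max(min(angle_deg(p, c) for c in cameras) for p in probes)

probes = fibonacci_sphere(500)                 # directions used to probe coverage
gap_sparse = worst_case_gap_deg(fibonacci_sphere(3), probes)    # few-shot capture
gap_dense = worst_case_gap_deg(fibonacci_sphere(100), probes)   # dense capture
```

A large worst-case gap indicates parts of the object that no camera observes closely, which is where missing geometry and artifacts are most likely to appear. Real captures are rarely this regular, so such a check is only a first, coarse indicator.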

Figure 2. FreeNeRF (Yang J. et al., 2023) trained on three images from the DTU dataset with the same setting provided by the original authors and three synthesized novel views compared against their ground truths.

3.2.4 Trustworthiness

Trustworthiness refers to the capacity of AI systems to act ethically in terms of transparency, accountability, and respect for human values and rights (Jobin et al., 2019). A trustworthy system not only produces accurate and consistent results but also operates in a manner that aligns with ethical principles and societal expectations (European Commission, 2024b). Given such a broad definition, we here contextualize trust in NR paradigms in terms of technical robustness (the ability of the system to function reliably and effectively) and social robustness (the ability of the system to integrate and operate ethically in different social contexts; Petrocchi et al., 2023; Pansoni et al., 2023a). Such models must demonstrate stability and reliability in their generations, maintaining coherent performance, above all in use cases related to CCI, where complex objects, dresses, buildings, and variable illumination conditions are aspects of their everyday usage (Pansoni et al., 2023a). This principle is closely tied to reliability and responsibility and shares the same considerations. To demonstrate trust, novel empirical frameworks should be defined to take into account the performance of such models in extreme cases (e.g., strong luminance, one-shot settings), where information about the scene or the object we want to reconstruct is missing (Cui et al., 2023).

3.2.5 Sustainability

The ethical dimensions of sustainability represent a critical focus within contemporary AI research and development (UNESCO, 2024b; European Commission, 2024a). Central to this discourse is a comprehensive understanding of the environmental impact of models and the optimization of resources across their lifecycle, spanning data collection, model training, and deployment phases. NR research should therefore analyze the environmental footprint stemming from the various computational activities integral to model development and rendering pipelines (Jobin et al., 2019). Data collection, iterative model training procedures, and model deployment exert considerable energy demands (Poole et al., 2023; Kuganesan et al., 2022). Smart data capture settings and training strategies should be adopted to define computationally efficient processes that minimize energy waste (Guler et al., 2016). For example, intelligent protocols could be adopted to reduce the number of cameras, and/or GPU processing techniques could be used for camera pose estimation (Xu M. et al., 2024). Indirect sources of energy consumption, like human photographer transportation, picture capture settings, digital photography, data transmission, and storage, should also be taken into account (Balde et al., 2022). Considering model training and deployment, relevant efforts should involve the refinement of model architectures to optimize computational efficiency, taking into account the usage of lower image resolutions to reduce memory and teraflops, the exploitation of optimized hardware systems, and the adoption of renewable energy sources. In particular, considering model architectures, distillation or quantization techniques could be adopted to optimize NR training and deployment (Gordon et al., 2023; Shahbazi et al., 2023). Sustainable practices are necessary to reduce these impacts and promote environmental responsibility. 
This includes optimizing models, training pipelines, and infrastructure to minimize energy consumption, considering the environmental implications at every stage of the NR workflow. By prioritizing sustainability in development and deployment, stakeholders can minimize the environmental footprint of NR and contribute to a more sustainable digital ecosystem.
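
To make the energy considerations above concrete, a first-order estimate of the footprint of a training run can be obtained from the average power draw, the duration, a data-center overhead factor (PUE), and the carbon intensity of the electricity grid. The sketch below assumes this simple multiplicative model; the function name and all numeric inputs are illustrative placeholders, not measurements from this study. In practice, the power figures would come from a measurement tool such as the one described by Anthony et al. (2020).

```python
def training_footprint(avg_power_watts, hours, pue=1.0, grid_kg_co2_per_kwh=0.0):
    """First-order estimate of energy (kWh) and emissions (kg CO2e) of a run.

    avg_power_watts: average electrical power drawn by the hardware.
    hours: duration of the run.
    pue: power usage effectiveness of the facility (1.0 means no overhead).
    grid_kg_co2_per_kwh: carbon intensity of the local electricity mix.
    """
    energy_kwh = avg_power_watts * hours / 1000.0 * pue
    emissions_kg = energy_kwh * grid_kg_co2_per_kwh
    return energy_kwh, emissions_kg

# Placeholder values chosen only to illustrate the arithmetic:
# 300 W for 2 h with PUE 1.5 gives 0.9 kWh; at 0.4 kg CO2e/kWh this is 0.36 kg.
energy_kwh, emissions_kg = training_footprint(
    avg_power_watts=300.0, hours=2.0, pue=1.5, grid_kg_co2_per_kwh=0.4
)
```

Comparing such estimates across capture settings, image resolutions, or model variants gives a simple way to weigh reconstruction quality against energy cost, although indirect sources such as transportation and storage are not covered by this formula.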

3.2.6 Fairness

Fairness in AI encompasses justice, consistency, inclusion, equality, non-bias, and non-discrimination, denoting the principled and equitable treatment of individuals and communities (UNESCO, 2024b; European Commission, 2024a). NR systems must also ensure that the rights, dignity, and opportunities of these individuals and communities are upheld and respected (Jobin et al., 2019). Following this principle, NR should produce consistent results that are unbiased and fair across different demographics, environments, and scenarios. Such principles are particularly at risk when considering NR methods with prior knowledge, or those that exploit regularization and optimizations for few- or one-shot settings (e.g., by synthetically generating other views or ignoring high-frequency details; Niemeyer et al., 2022; Zhu et al., 2023; Long et al., 2023; Yang J. et al., 2023). Ensuring unbiased and fair outcomes for NR therefore necessitates careful consideration of potential biases introduced during pre-training, which can influence the generation of outputs in ways that exacerbate existing inequalities or inaccuracies (Long et al., 2023; Yang J. et al., 2023). Such biases may be cultural, social, or historical, inherent in the training data or in the underlying assumptions embedded within the model architecture, especially for underrepresented items (Amadeus et al., 2024; Xu Z. et al., 2024). At the same time, NR architectures that exploit strategies for few- or one-shot settings (e.g., overlooking high-frequency details or synthesizing novel 3D views; Long et al., 2023; Niemeyer et al., 2022; Yang J. et al., 2023; Liu et al., 2023) can contribute to disparities in the representation and depiction of scenes or objects within NR outputs (creating phenomena similar to the one depicted in Figure 2). Such oversights may affect certain features or characteristics, leading to biased or unfair outcomes, mostly in contexts where high-frequency detail is essential for accurate representation (e.g., dance, fashion, and art). 
To ameliorate these phenomena, data quality and bias analysis must be performed, along with an examination of the biases in the pre-trained knowledge learned by the models. Moreover, technical improvements in architectures, optimization losses, regularization, and generative models should be fostered (in particular considering domain adaptation paradigms; Joshi and Burlina, 2021). Taken as a whole, this includes rigorous evaluation and validation of biases, as well as the incorporation of diversity considerations into model design and development. To date, a patch-wise combination of quantitative metrics should be applied, for example combining PSNR, LPIPS, and MSE for novel view synthesis; DICE, DMax, and ASD for 3D meshes; and Chamfer, Hausdorff, and Earth Mover's distances for synthesized point clouds (Elloumi et al., 2017; Mejia-Rodriguez et al., 2012; Zhang et al., 2021; Bai et al., 2023). Such quantitative analysis should focus in particular on cases where limited training data (few- or one-shot) are employed, considering that several artifacts could be generated and that a small change in the input data can lead to significantly different representations (Yang J. et al., 2023; Niemeyer et al., 2022).
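
Two of the metrics mentioned above can be sketched in a few lines to show why a patch-wise evaluation matters. The example below is a minimal, dependency-free illustration and not the evaluation code used in this work: it computes PSNR per image patch on a synthetic grayscale image with intensities in [0, 1], and a symmetric Chamfer distance between two small point sets. The patch size, the toy data, and the squared-distance convention for the Chamfer distance are assumptions (other conventions exist).

```python
import math

def psnr_from_mse(mse_value, max_value=1.0):
    """Peak signal-to-noise ratio in dB; infinite when the images are identical."""
    if mse_value == 0.0:
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / mse_value)

def patchwise_psnr(pred, target, patch=4, max_value=1.0):
    """PSNR per patch, keyed by the (row, column) of the patch's top-left pixel."""
    height, width = len(target), len(target[0])
    scores = {}
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            errors = [
                (pred[y][x] - target[y][x]) ** 2
                for y in range(top, min(top + patch, height))
                for x in range(left, min(left + patch, width))
            ]
            scores[(top, left)] = psnr_from_mse(sum(errors) / len(errors), max_value)
    return scores

def chamfer_distance(points_a, points_b):
    """Symmetric Chamfer distance using mean squared nearest-neighbor distances."""
    def one_way(src, dst):
        return sum(
            min(sum((s - d) ** 2 for s, d in zip(p, q)) for q in dst) for p in src
        ) / len(src)
    return one_way(points_a, points_b) + one_way(points_b, points_a)

# Toy 8x8 ground truth and a prediction with an error confined to one 4x4 patch.
target = [[0.5] * 8 for _ in range(8)]
pred = [row[:] for row in target]
for y in range(4):
    for x in range(4, 8):
        pred[y][x] = 0.6

local_scores = patchwise_psnr(pred, target, patch=4)
global_psnr = patchwise_psnr(pred, target, patch=8)[(0, 0)]  # one patch = whole image
worst_patch_psnr = min(local_scores.values())

cloud_a = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
cloud_b = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.5)]
cloud_distance = chamfer_distance(cloud_a, cloud_b)
```

In this toy case the global PSNR is higher than the PSNR of the damaged patch, because averaging over the whole image dilutes a localized artifact. Reporting the worst patch alongside the global value makes such local failures visible.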

4 Results and discussions

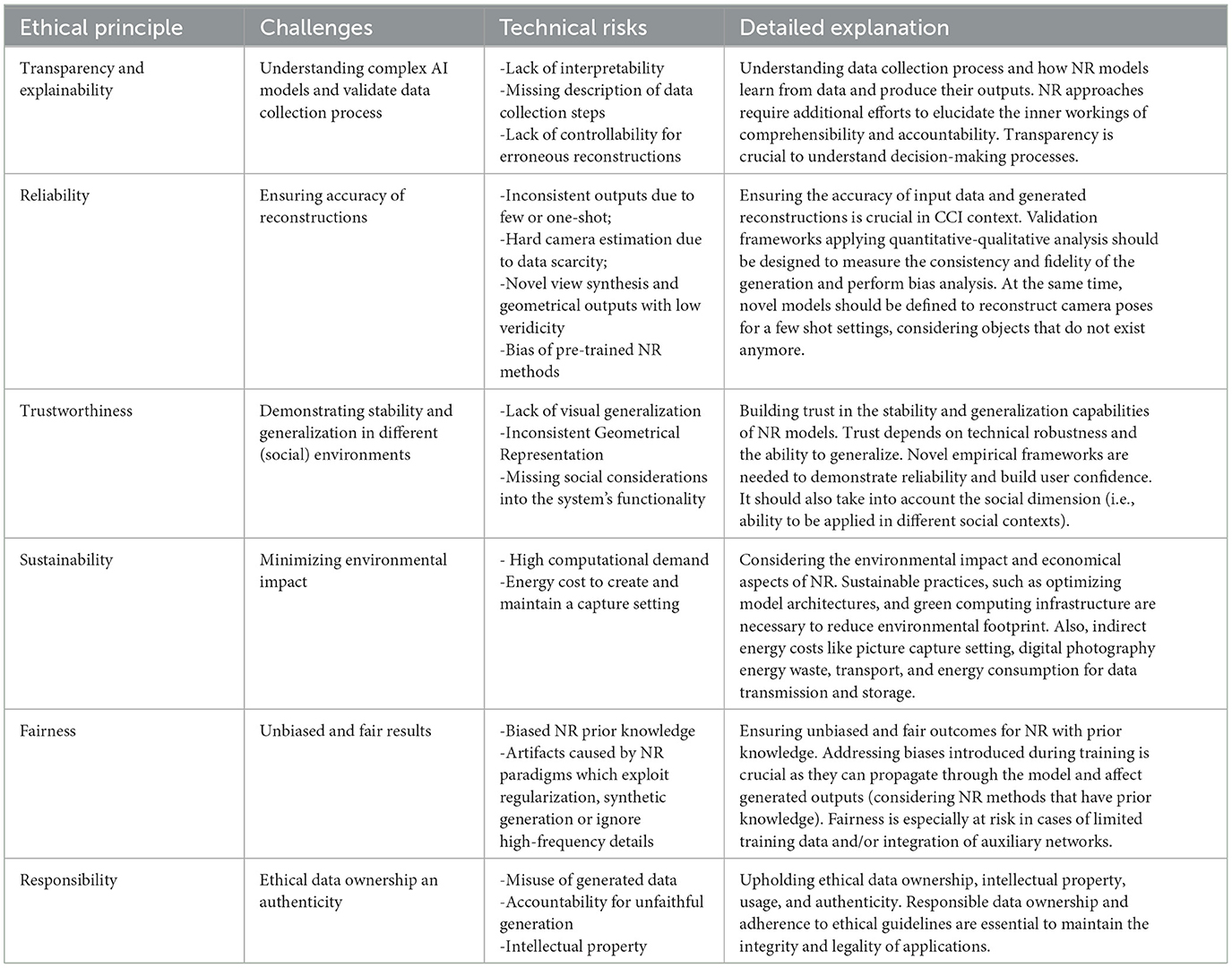

We here summarize the key ethical principles and challenges of NR in CCI in a framework, highlighting the technical risks we aim to mitigate. Table 1 schematically reports the findings produced by our investigation. The ethical documents and the scientific literature acted as mediators, bridging data related to CCI and AI ethical principles to the key ethical risks of NR applied to them, providing a robust basis for defining fair regulations. This holds in particular for CCI items that naturally exhibit ethical issues such as bias, fairness, and responsibility and that are prone to raise reliability concerns. Such an ethical framework should, in principle, support stakeholders in identifying the principles and responsibilities that should be considered when designing, implementing, monitoring, and evaluating NR in CCI.

Table 1. Neural Rendering ethical principles, challenges, and risks detailed starting from the designed ethical framework.

Several solutions can be implemented to reduce the identified ethical risks associated with NR. First, addressing the challenge of transparency and explainability requires comprehensive documentation of the data collection process and efforts to improve the description and interpretability of NR generative pipelines. In this context, we could also use well-established explainability paradigms (Wen et al., 2023; Alabi et al., 2023) to describe how NR models learn from data and generate outputs. To mitigate reliability-related risks, rigorous testing and validation protocols should be established to verify the accuracy and consistency of NR reconstructions in high-variance settings, including extreme cases (e.g., poor lighting and occluded objects). However, reliability goes beyond technical stability and includes social aspects that should be considered when developing NR models. It becomes mandatory to include social factors such as cultural sensitivity and historically accurate narration, collaborating with domain experts in a multidisciplinary approach (Pansoni et al., 2023a). Sustainability concerns can be addressed by optimizing multi-camera hardware and model architectures while adopting energy-efficient optimization algorithms and hardware systems (Anthony et al., 2020). In particular, adopting energy-efficient algorithms involves implementing techniques such as model pruning, quantization, and compression, which reduce the computational workload with an often negligible loss in performance (Kulhánek et al., 2022). Ensuring fairness requires carefully addressing biases in training data and model architectures. For example, imagine digitizing ancient sculptures from various civilizations for virtual museum exhibits. Biases in the training data, such as a disproportionate focus on artifacts from dominant cultures, could lead the NR model to prioritize the reconstruction of those artifacts, neglecting others. 
To mitigate biases, we can curate a diverse training dataset, including artifacts from different cultures, periods, and geographical regions. The integration of auxiliary networks to detect and correct biases in the rendering process can improve the fairness of NR outputs. Finally, responsibility in NR requires ethical data ownership practices, protection against misuse of generated data, and ensuring faithful generation following ethical guidelines and legal frameworks. This could include the implementation of encryption protocols, and data anonymization techniques to protect the integrity and confidentiality of digitized objects.
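
One simple way to check whether a curated dataset is balanced across cultures is to compute the normalized Shannon entropy of its labels, which equals 1 for a perfectly even distribution and approaches 0 when one group dominates. The following sketch is a hypothetical illustration: the label names and counts are invented placeholders, and the measure is only one of many possible diversity indicators.

```python
import math
from collections import Counter

def normalized_entropy(labels):
    """Shannon entropy of the label distribution, scaled to the range [0, 1]."""
    counts = Counter(labels)
    if len(counts) < 2:
        return 0.0  # a single group carries no diversity
    total = len(labels)
    entropy = -sum((c / total) * math.log(c / total) for c in counts.values())
    return entropy / math.log(len(counts))

# Placeholder provenance labels for two hypothetical sculpture datasets.
skewed = ["culture_a"] * 90 + ["culture_b"] * 5 + ["culture_c"] * 5
balanced = ["culture_a"] * 30 + ["culture_b"] * 30 + ["culture_c"] * 30

skewed_score = normalized_entropy(skewed)
balanced_score = normalized_entropy(balanced)
```

Note that this score only considers the groups present in the dataset: a culture with no samples at all does not lower it, so the list of expected groups should be reviewed with domain experts before interpreting the result.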

It is worth noting that the kind of critical analysis provided by our framework is highly portable across different use cases. In particular, it could be applied in fields like digital heritage preservation, virtual museums, education, and fashion. In digital heritage preservation, NR can accurately recreate historical artifacts and monuments, with the ethical framework ensuring cultural sensitivity, fairness in representation, and responsible data ownership. Similarly, virtual museums and exhibitions benefit from NR by providing immersive experiences that are inclusive and unbiased. In architectural and archaeological reconstructions, NR allows for the digital restoration of damaged or lost structures, emphasizing historical accuracy and sustainable practices. In education, NR can facilitate the creation of interactive 3D models for cultural and historical learning, ensuring that bias is mitigated and intellectual property rights are respected. The fashion and art industries can also adopt NR for virtual prototyping, enabling sustainable design processes and fair representation of cultural styles and diverse body types.

At the same time, our framework is not without limitations. While the proposed ethical framework addresses crucial principles such as transparency, fairness, and sustainability, a significant limitation remains its predominantly qualitative nature, which means that it can only be applied with the support of ethics experts. Before this framework can be broadly applied across various fields, including managerial contexts, it must be converted into a quantitative model that can objectively measure compliance with ethical principles. This quantitative approach requires the development of novel scales and metrics capable of evaluating the extent to which NR applications adhere to these ethical standards. Defining quantitative indicators, such as transparency scores for data provenance, reliability metrics for reconstruction accuracy, or sustainability indices for energy consumption, would enable a more precise evaluation of NR implementations from an ethical perspective. These quantitative tools would allow stakeholders to assess not only whether ethical guidelines are being followed but also to what degree they are respected. Moreover, this quantitative approach would ensure that ethical compliance in NR becomes measurable, facilitating the standardization and monitoring of NR technologies across diverse applications within the CCI and beyond.
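
Purely as an illustration of what such indicators might look like, the sketch below maps three measurable quantities to scores in [0, 1]: the share of documented data sources (transparency), the achieved reconstruction PSNR relative to a target (reliability), and the unused fraction of an energy budget (sustainability). Every definition, field name, threshold, and number here is a hypothetical assumption made for this example; they are not validated metrics and are not part of the proposed framework.

```python
from dataclasses import dataclass

@dataclass
class EthicsIndicators:
    """Hypothetical raw measurements collected for one NR application."""
    documented_sources: int    # data sources with provenance documentation
    total_sources: int         # all data sources used
    mean_psnr_db: float        # measured reconstruction quality
    psnr_target_db: float      # quality target agreed with domain experts
    energy_kwh: float          # energy consumed by training and deployment
    energy_budget_kwh: float   # energy budget set for the project

def clamp01(value):
    """Restrict a value to the interval [0, 1]."""
    return max(0.0, min(1.0, value))

def indicator_scores(ind):
    """Map raw measurements to illustrative scores between 0 and 1."""
    transparency = (
        ind.documented_sources / ind.total_sources if ind.total_sources else 0.0
    )
    reliability = (
        ind.mean_psnr_db / ind.psnr_target_db if ind.psnr_target_db else 0.0
    )
    sustainability = (
        1.0 - ind.energy_kwh / ind.energy_budget_kwh if ind.energy_budget_kwh else 0.0
    )
    return {
        "transparency": clamp01(transparency),
        "reliability": clamp01(reliability),
        "sustainability": clamp01(sustainability),
    }

# Placeholder measurements, invented for the example.
example = EthicsIndicators(45, 50, 24.0, 30.0, 2.0, 8.0)
report = indicator_scores(example)
```

Keeping the indicators separate, rather than collapsing them into a single number, leaves the weighting between principles to the ethics experts and stakeholders involved.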

5 Conclusions and future works

Our research explored the use of NR in CCI, focusing on the ethical considerations and relevant legal frameworks that pertain to these domains. The output of this process is a new ethical framework that serves as a guide for addressing the potential ethical risks identified in our analysis and provides a structured approach for ethical decision-making in the context of NR applications in CCI. We have further elaborated on the specific ethical principles that should be prioritized, since they are crucial to ensure the responsible use of NR. We also highlighted ethical pitfalls that require clear guidelines to protect the integrity and sustainability of CCI sectors when applying NR technologies. Building on the foundation established by this work, future developments will focus on transforming the ethical framework from a qualitative-only guide into a mixed qualitative-quantitative tool capable of quantitatively assessing ethical compliance in NR applications. This will involve defining measurable ethical standards, criteria, and metrics to quantify the degree to which different NR methodologies adhere to ethical principles. For example, transparency could be quantified through scores reflecting the interpretability of NR models and the documentation of data sources, while fairness could be assessed through metrics that evaluate the diversity and inclusiveness of training datasets. The development of such metrics will enable a systematic, data-driven evaluation of NR technologies, making it possible to compare and benchmark applications across different sectors within CCI (e.g., cultural, social, and historical). This qualitative-quantitative hybrid approach will be rigorously validated across diverse CCI contexts to assess its adaptability and resilience. 
Finally, we will explore the potential integration of this ethical framework within managerial decision-making processes, ensuring that it can be used not only for technical evaluations but also for strategic planning and policy development in organizations leveraging NR technologies. This will further ensure that NR applications are ethical, sustainable, and aligned with the long-term goals of CCI sectors.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material, further inquiries can be directed to the corresponding author.

Author contributions

LS: Writing – original draft, Writing – review & editing. EB: Writing – original draft, Writing – review & editing. LG: Writing – review & editing. AM: Writing – review & editing. BG: Writing – review & editing. ST: Writing – review & editing. PZ: Writing – review & editing.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This work was funded by the European Union's Horizon 2020 research and innovation programme under the Marie Skłodowska-Curie grant agreement no. 101007820-TRUST.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Author disclaimer

This publication reflects only the author's view and the REA (Research Executive Agency) is not responsible for any use that may be made of the information it contains.

References

Abdal, R., Yifan, W., Shi, Z., Xu, Y., Po, R., Kuang, Z., et al. (2023). Gaussian shell maps for efficient 3D human generation. arXiv preprint arXiv:2311.17857. doi: 10.1109/CVPR52733.2024.00902

Alabi, R. O., Elmusrati, M., Leivo, I., Almangush, A., and Mäkitie, A. A. (2023). Machine learning explainability in nasopharyngeal cancer survival using lime and shap. Sci. Rep. 13:8984. doi: 10.1038/s41598-023-35795-0

Amadeus, M., Castañeda, W. A. C., Zanella, A. F., and Mahlow, F. R. P. (2024). From pampas to pixels: fine-tuning diffusion models for gaúcho heritage. arXiv preprint arXiv:2401.05520. doi: 10.48550/arXiv.2401.05520

Anthony, L. F. W., Kanding, B., and Selvan, R. (2020). Carbontracker: tracking and predicting the carbon footprint of training deep learning models. arXiv preprint arXiv:2007.03051. doi: 10.48550/arXiv.2007.03051

Avrahami, O., and Tamir, B. (2021). Ownership and creativity in generative models. arXiv preprint arXiv:2112.01516. doi: 10.48550/arXiv.2112.01516

Bai, Y., Dong, C., Wang, C., and Yuan, C. (2023). “PS-NeRV: patch-wise stylized neural representations for videos,” in 2023 IEEE International Conference on Image Processing (ICIP) (Kuala Lumpur: IEEE), 41–45.

Balde, A. Y., Bergeret, E., Cajal, D., and Toumazet, J.-P. (2022). Low power environmental image sensors for remote photogrammetry. Sensors 22:7617. doi: 10.3390/s22197617

Balloni, E., Gorgoglione, L., Paolanti, M., Mancini, A., and Pierdicca, R. (2023). Few shot photogrametry: a comparison between nerf and mvs-sfm for the documentation of cultural heritage. Int. Archiv. Photogrammet. Rem. Sens. Spat. Inform. Sci. 48, 155–162. doi: 10.5194/isprs-archives-XLVIII-M-2-2023-155-2023

Barceló, P., Monet, M., Pérez, J., and Subercaseaux, B. (2020). Model interpretability through the lens of computational complexity. Adv. Neural Inform. Process. Syst. 33, 15487–15498. doi: 10.48550/arXiv.2010.12265

Barnes, C., Shechtman, E., Finkelstein, A., and Goldman, D. B. (2009). Patchmatch: a randomized correspondence algorithm for structural image editing. ACM Trans. Graph. 28:24. doi: 10.1145/1531326.1531330

Basso, A., Condorelli, F., Giordano, A., Morena, S., and Perticarini, M. (2024). Evolution of rendering based on radiance fields. the palermo case study for a comparison between nerf and gaussian splatting. Int. Archiv. Photogrammet. Rem. Sens. Spat. Inform. Sci. 48, 57–64. doi: 10.5194/isprs-archives-XLVIII-2-W4-2024-57-2024

Bhambri, P., and Khang, A. (2024). “The human-machine nexus with art-making generative AIS,” in Making Art With Generative AI Tools, ed. S. Hai-Jew (Hershey, PA: IGI Global), 73–85.

Bleyer, M., Rhemann, C., and Rother, C. (2011). “Patchmatch stereo-stereo matching with slanted support windows,” in Proceedings of the British Machine Vision Conference (BMVC) (Dundee).

Brocchini, M., Mameli, M., Balloni, E., Sciucca, L. D., Rossi, L., Paolanti, M., et al. (2022). “Monster: a deep learning-based system for the automatic generation of gaming assets,” in International Conference on Image Analysis and Processing (Berlin: Springer), 280–290.

Cainelli, G., Giannini, V., and Iacobucci, D. (2022). How local geography shapes firm geography. Entrepr. Region. Dev. 34, 955–976. doi: 10.1080/08985626.2022.2115559

Cao, A., Rockwell, C., and Johnson, J. (2022). “FWD: real-time novel view synthesis with forward warping and depth,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (New Orleans, LA: IEEE), 15713–15724.

Cascarano, P., Comes, M. C., Sebastiani, A., Mencattini, A., Loli Piccolomini, E., and Martinelli, E. (2022a). Deepcel0 for 2D single-molecule localization in fluorescence microscopy. Bioinformatics 38, 1411–1419. doi: 10.1093/bioinformatics/btab808

Cascarano, P., Franchini, G., Porta, F., and Sebastiani, A. (2022b). On the first-order optimization methods in deep image prior. J. Verif. Valid. Uncertain. Quantif. 7:e041002. doi: 10.1115/1.4056470

Chen, L., Liu, J., Ke, Y., Sun, W., Dong, W., and Pan, X. (2023). MarkNerf: watermarking for neural radiance field. arXiv preprint arXiv:2309.11747. doi: 10.32604/cmc.2024.051608

Condorelli, F., Rinaudo, F., Salvadore, F., and Tagliaventi, S. (2021). A comparison between 3D reconstruction using NERF neural networks and mvs algorithms on cultural heritage images. Int. Archiv. Photogrammet. Rem. Sens. Spat. Inform. Sci. 43, 565–570. doi: 10.5194/isprs-archives-XLIII-B2-2021-565-2021

Croce, V., Caroti, G., De Luca, L., Piemonte, A., and Véron, P. (2023). Neural radiance fields (NeRF): review and potential applications to digital cultural heritage. Int. Archiv. Photogrammet. Rem. Sens. Spat. Inform. Sci. 48, 453–460. doi: 10.5194/isprs-archives-XLVIII-M-2-2023-453-2023

Cui, Z., Gu, L., Sun, X., Ma, X., Qiao, Y., and Harada, T. (2023). Aleth-NeRF: low-light condition view synthesis with concealing fields. arXiv preprint arXiv:2303.05807. doi: 10.48550/arXiv.2303.05807

Cutugno, M., Robustelli, U., and Pugliano, G. (2022). Structure-from-motion 3D reconstruction of the historical overpass ponte della cerra: a comparison between micmac® open source software and metashape®. Drones 6:242. doi: 10.3390/drones6090242

Dashkina, A., Khalyapina, L., Kobicheva, A., Lazovskaya, T., Malykhina, G., and Tarkhov, D. (2020). “Neural network modeling as a method for creating digital twins: from industry 4.0 to industry 4.1,” in Proceedings of the 2nd International Scientific Conference on Innovations in Digital Economy (New York, NY), 1–5.

Elloumi, N., Kacem, H. L. H., Dey, N., Ashour, A. S., and Bouhlel, M. S. (2017). Perceptual metrics quality: comparative study for 3D static meshes. Int. J. Serv. Sci. Manag. Eng. Technol. 8, 63–80. doi: 10.4018/IJSSMET.2017010105

European Commission (2024a). Assessment List for Trustworthy Artificial Intelligence (ALTAI) for Self-assessment. Available at: https://digital-strategy.ec.europa.eu/it/node/806 (accessed June 5, 2024).

European Commission (2024b). White Paper on Artificial Intelligence: A European Approach to Excellence and Trust. Available at: https://commission.europa.eu/publications/white-paper-artificial-intelligence-european-approach-excellence-and-trust_en (accessed June 5, 2024).

European Commission (2024c). Cultural Heritage and Cultural and Creative Industries (CCIS). Available at: https://research-and-innovation.ec.europa.eu/research-area/social-sciences-and-humanities/cultural-heritage-and-cultural-and-creative-industries-ccis_en (accessed March 17, 2024).

Fabra, L., Solanes, J. E., Muñoz, A., Martí-Testón, A., Alabau, A., and Gracia, L. (2024). Application of neural radiance fields (NeRFs) for 3D model representation in the industrial metaverse. Appl. Sci. 14:1825. doi: 10.3390/app14051825

Fangi, G., Wahbeh, W., Malinverni, E. S., Di Stefano, F., and Pierdicca, R. (2022). “Documentation of syrian lost heritage: from 3D reconstruction to open information system,” in Challenges, Strategies and High-Tech Applications for Saving the Cultural Heritage of Syria (Minna Silver), 213–226.

Flick, C., and Worrall, K. (2022). “The ethics of creative AI,” in The Language of Creative AI: Practices, Aesthetics and Structures (Berlin: Springer), 73–91.

Fu, Y., Ye, Z., Yuan, J., Zhang, S., Li, S., You, H., et al. (2023). “Gen-NeRF: Efficient and generalizable neural radiance fields via algorithm-hardware co-design,” in Proceedings of the 50th Annual International Symposium on Computer Architecture (New York, NY), 1–12.

Furukawa, Y., and Ponce, J. (2010). Accurate, dense and robust multiview stereopsis. IEEE Trans. Pat. Anal. Machine Intell. 32, 1362–1376. doi: 10.1109/TPAMI.2009.161

Gao, K., Gao, Y., He, H., Lu, D., Xu, L., and Li, J. (2022). NeRF: neural radiance field in 3D vision, a comprehensive review. arXiv preprint arXiv:2210.00379. doi: 10.48550/arXiv.2210.00379

Giannini, V., and Iacobucci, D. (2022). “The role of internal capital market in business groups,” in The Palgrave Handbook of Managing Family Business Groups, ed. P. Macmillan (Berlin: Springer), 49–64.

Giannini, V., Iacobucci, D., and Perugini, F. (2019). Local variety and innovation performance in the EU textile and clothing industry. Econ. Innov. N. Technol. 28, 841–857. doi: 10.1080/10438599.2019.1571668

Goesele, M., Snavely, N., Curless, B., Hoppe, H., and Seitz, S. M. (2007). “Multi-view stereo for community photo collections,” in Proceedings of the IEEE 11th International Conference on Computer Vision (ICCV) (Rio de Janeiro: IEEE), 1–8.

Gordon, C., Chng, S.-F., MacDonald, L., and Lucey, S. (2023). “On quantizing implicit neural representations,” in Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (Waikoloa, HI), 341–350.

Guédon, A., and Lepetit, V. (2023). Sugar: surface-aligned gaussian splatting for efficient 3D mesh reconstruction and high-quality mesh rendering. arXiv preprint arXiv:2311.12775. doi: 10.1109/CVPR52733.2024.00512

Guler, P., Emeksiz, D., Temizel, A., Teke, M., and Temizel, T. T. (2016). Real-time multi-camera video analytics system on GPU. J. Real-Time Image Process. 11, 457–472. doi: 10.1007/s11554-013-0337-2

Halilovich, H. (2016). Re-imaging and re-imagining the past after “memoricide”: intimate archives as inscribed memories of the missing. Archiv. Sci. 16, 77–92. doi: 10.1007/s10502-015-9258-0

Haque, A., Tancik, M., Efros, A. A., Holynski, A., and Kanazawa, A. (2023). “Instruct-NeRF2NeRF: editing 3D scenes with instructions,” in Proceedings of the IEEE/CVF International Conference on Computer Vision (Paris: IEEE), 19740–19750.

Hirschmuller, H. (2008). Stereo processing by semiglobal matching and mutual information. IEEE Trans. Pat. Anal. Machine Intell. 30, 328–341. doi: 10.1109/TPAMI.2007.1166

Iglhaut, J., Cabo, C., Puliti, S., Piermattei, L., O'Connor, J., and Rosette, J. (2019). Structure from motion photogrammetry in forestry: a review. Curr. For. Rep. 5, 155–168. doi: 10.1007/s40725-019-00094-3

International Council of Museums (ICOM) (2018). ICOM Code of Ethics for Museums. Available at: https://icom.museum/wp-content/uploads/2018/07/ICOM-code-En-web.pdf (accessed June 5, 2024).

Jain, A., Tancik, M., and Abbeel, P. (2021). “Putting NeRF on a diet: semantically consistent few-shot view synthesis,” in Proceedings of the IEEE/CVF International Conference on Computer Vision (Montreal, QC: IEEE), 5885–5894.

Jancosek, M., and Pajdla, T. (2011). “Multi-view reconstruction preserving weakly-supported surfaces,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (Colorado Springs, CO), 3121–3128.

Jignasu, A., Herron, E., Jubery, T. Z., Afful, J., Balu, A., Ganapathysubramanian, B., et al. (2023). “Plant geometry reconstruction from field data using neural radiance fields,” in 2nd AAAI Workshop on AI for Agriculture and Food Systems.

Jobin, A., Ienca, M., and Vayena, E. (2019). The global landscape of AI ethics guidelines. Nat. Machine Intell. 1, 389–399. doi: 10.1038/s42256-019-0088-2

Joshi, N., and Burlina, P. (2021). AI fairness via domain adaptation. arXiv preprint arXiv:2104.01109. doi: 10.48550/arXiv.2104.01109

Kerbl, B., Kopanas, G., Leimkühler, T., and Drettakis, G. (2023). 3D Gaussian splatting for real-time radiance field rendering. ACM Trans. Graph. 42:3592433. doi: 10.1145/3592433

Kim, M., Seo, S., and Han, B. (2022). “InfoNeRF: ray entropy minimization for few-shot neural volume rendering,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (New Orleans, LA: IEEE), 12912–12921.

Kuganesan, A., Su, S.-Y., Little, J. J., and Rhodin, H. (2022). UNeRF: time and memory conscious U-shaped network for training neural radiance fields. arXiv preprint arXiv:2206.11952. doi: 10.48550/arXiv.2206.11952

Kulhánek, J., Derner, E., Sattler, T., and Babuška, R. (2022). “Viewformer: NeRF-free neural rendering from few images using transformers,” in European Conference on Computer Vision (Berlin: Springer), 198–216.

Lee, J., Choi, K., Lee, J., Lee, S., Whangbo, J., and Sim, J. (2023). “Neurex: a case for neural rendering acceleration,” in Proceedings of the 50th Annual International Symposium on Computer Architecture (New York, NY), 1–13.

Li, X., Guo, Y., Jin, H., and Zheng, J. (2023). Neural surface reconstruction with saliency-guided sampling in multi-view. IET Image Process. 17, 3411–3422. doi: 10.1049/ipr2.12873

Li, Y., Lin, Z.-H., Forsyth, D., Huang, J.-B., and Wang, S. (2023). “ClimateNeRF: extreme weather synthesis in neural radiance field,” in Proceedings of the IEEE/CVF International Conference on Computer Vision (Paris: IEEE), 3227–3238.

Liu, R., Wu, R., Van Hoorick, B., Tokmakov, P., Zakharov, S., and Vondrick, C. (2023). “Zero-1-to-3: zero-shot one image to 3D object,” in Proceedings of the IEEE/CVF International Conference on Computer Vision (Paris), 9298–9309.

Loli Piccolomini, E., Gandolfi, S., Poluzzi, L., Tavasci, L., Cascarano, P., and Pascucci, A. (2019). “Recurrent neural networks applied to GNSS time series for denoising and prediction,” in 26th International Symposium on Temporal Representation and Reasoning (TIME 2019). Wadern: Schloss-Dagstuhl-Leibniz Zentrum für Informatik.

Long, X., Guo, Y.-C., Lin, C., Liu, Y., Dou, Z., Liu, L., et al. (2023). Wonder3D: single image to 3D using cross-domain diffusion. arXiv preprint arXiv:2310.15008. doi: 10.48550/arXiv.2310.15008

Lorensen, W. E., and Cline, H. E. (1987). “Marching cubes: a high resolution 3D surface construction algorithm,” in Proceedings of the 14th Annual Conference on Computer Graphics and Interactive Techniques, SIGGRAPH '87 (New York, NY: Association for Computing Machinery), 163–169.

Luo, Z., Guo, Q., Cheung, K. C., See, S., and Wan, R. (2023). “CopyrNeRF: protecting the copyright of neural radiance fields,” in Proceedings of the IEEE/CVF International Conference on Computer Vision (Paris), 22401–22411.

Madiega, T. (2021). Artificial Intelligence Act. European Parliament: European Parliamentary Research Service.

Manfredi, G., Capece, N., Erra, U., Gilio, G., Baldi, V., and Di Domenico, S. G. (2022). “Tryiton: a virtual dressing room with motion tracking and physically based garment simulation,” in Extended Reality, eds. L. T. De Paolis, P. Arpaia, and M. Sacco (Cham: Springer International Publishing), 63–76.

Manfredi, G., Capece, N., Erra, U., and Gruosso, M. (2023a). TreeSketchNet: from sketch to 3D tree parameters generation. ACM Trans. Intell. Syst. Technol. 14:5. doi: 10.1145/3579831

Manfredi, G., Gilio, G., Baldi, V., Youssef, H., and Erra, U. (2023b). ViCo-DR: a collaborative virtual dressing room for image consulting. J. Imag. 9:76. doi: 10.3390/jimaging9040076

Martin-Brualla, R., Radwan, N., Sajjadi, M. S., Barron, J. T., Dosovitskiy, A., and Duckworth, D. (2021). “NeRF in the wild: neural radiance fields for unconstrained photo collections,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (Nashville, TN: IEEE), 7210–7219.

Mazzacca, G., Karami, A., Rigon, S., Farella, E., Trybala, P., Remondino, F., et al. (2023). NeRF for heritage 3D reconstruction. Int. Archiv. Photogram. Rem. Sens. Spat. Inform. Sci. 48, 1051–1058. doi: 10.5194/isprs-archives-XLVIII-M-2-2023-1051-2023

Mejia-Rodriguez, A. R., Scalco, E., Tresoldi, D., Bianchi, A. M., Arce-Santana, E. R., Mendez, M. O., et al. (2012). “Mesh-based approach for the 3D analysis of anatomical structures of interest in radiotherapy,” in 2012 Annual International Conference of the IEEE Engineering in Medicine and Biology Society (San Diego, CA: IEEE), 6555–6558.

Meng, X., Chen, W., and Yang, B. (2023). “NeAT: learning neural implicit surfaces with arbitrary topologies from multi-view images,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (Vancouver, BC: IEEE), 248–258.

Micozzi, A., and Giannini, V. (2023). How does the localization of innovative start-ups near the universities influence their performance? L'industria 44, 129–152. doi: 10.1430/107739

Mildenhall, B., Srinivasan, P. P., Tancik, M., Barron, J. T., Ramamoorthi, R., and Ng, R. (2022). NeRF: representing scenes as neural radiance fields for view synthesis. Commun. ACM 65, 99–106. doi: 10.1145/3503250

Müller, T., Evans, A., Schied, C., and Keller, A. (2022). Instant neural graphics primitives with a multiresolution hash encoding. ACM Trans. Graph. 41, 102–115. doi: 10.1145/3528223.3530127

Murez, Z., Van As, T., Bartolozzi, J., Sinha, A., Badrinarayanan, V., and Rabinovich, A. (2020). “Atlas: end-to-end 3D scene reconstruction from posed images,” in Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part VII 16 (Berlin: Springer), 414–431.

Niemeyer, M., Barron, J. T., Mildenhall, B., Sajjadi, M. S., Geiger, A., and Radwan, N. (2022). “RegNeRF: regularizing neural radiance fields for view synthesis from sparse inputs,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (New Orleans, LA: IEEE), 5480–5490.

Nousias, S., Arvanitis, G., Lalos, A., and Moustakas, K. (2023). Deep saliency mapping for 3D meshes and applications. ACM Trans. Multimed. Comput. Commun. Appl. 19, 1–22. doi: 10.1145/3550073

Over, J.-S. R., Ritchie, A. C., Kranenburg, C. J., Brown, J. A., Buscombe, D. D., Noble, T., et al. (2021). Processing Coastal Imagery With Agisoft Metashape Professional Edition, Version 1.6—Structure From Motion Workflow Documentation. Technical Report, US Geological Survey, Reston, VA.

Pansoni, S., Tiribelli, S., Paolanti, M., Di Stefano, F., Frontoni, E., Malinverni, E. S., et al. (2023a). Artificial intelligence and cultural heritage: design and assessment of an ethical framework. Int. Archiv. Photogram. Rem. Sens. Spat. Inform. Sci. 48, 1149–1155. doi: 10.5194/isprs-archives-XLVIII-M-2-2023-1149-2023

Pansoni, S., Tiribelli, S., Paolanti, M., Frontoni, E., and Giovanola, B. (2023b). “Design of an ethical framework for artificial intelligence in cultural heritage,” in 2023 IEEE International Symposium on Ethics in Engineering, Science, and Technology (ETHICS) (West Lafayette, IN: IEEE), 1–5.

Petrocchi, E., Tiribelli, S., Paolanti, M., Giovanola, B., Frontoni, E., and Pierdicca, R. (2023). “Geomethics: ethical considerations about using artificial intelligence in geomatics,” in International Conference on Image Analysis and Processing (Berlin: Springer), 282–293.

Piskopani, A. M., Chamberlain, A., and Ten Holter, C. (2023). “Responsible AI and the arts: the ethical and legal implications of AI in the arts and creative industries,” in Proceedings of the First International Symposium on Trustworthy Autonomous Systems (New York, NY), 1–5.

Poole, B., Jain, A., Barron, J., Mildenhall, B., Hedman, P., Martin-Brualla, R., et al. (2023). Rendering a better future. Comput. Inflect. Sci. Disc. 66:15. doi: 10.1145/3603748

Poole, B., Jain, A., Barron, J. T., and Mildenhall, B. (2022). Dreamfusion: text-to-3D using 2D diffusion. arXiv preprint arXiv:2209.14988. doi: 10.48550/arXiv.2209.14988

Remondino, F., El-Hakim, S., Gruen, A., and Zhang, L. (2008). Turning images into 3D models-development and performance analysis of image matching for detailed surface reconstruction of heritage objects. IEEE Sign. Process. Mag. 25, 55–65. doi: 10.1109/MSP.2008.923093

Samek, W., Wiegand, T., and Müller, K.-R. (2017). Explainable artificial intelligence: understanding, visualizing and interpreting deep learning models. arXiv preprint arXiv:1708.08296. doi: 10.48550/arXiv.1708.08296

Schneider, K. P. (2018). Methods and ethics of data collection. Methods Pragmat. 2018, 37–93. doi: 10.1515/9783110424928-002

Schönberger, J. L., Zheng, E., Frahm, J.-M., and Pollefeys, M. (2016). “Pixelwise view selection for unstructured multi-view stereo,” in Computer Vision—ECCV 2016, eds. B. Leibe, J. Matas, N. Sebe, and M. Welling (Cham: Springer International Publishing), 501–518.

Shahbazi, M., Ntavelis, E., Tonioni, A., Collins, E., Paudel, D. P., Danelljan, M., et al. (2023). “NeRF-GAN distillation for efficient 3D-aware generation with convolutions,” in Proceedings of the IEEE/CVF International Conference on Computer Vision (Paris), 2888–2898.

Srinivasan, R., and Uchino, K. (2021). “Biases in generative art: a causal look from the lens of art history,” in Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency (New York, NY), 41–51.

Stacchio, L., Angeli, A., and Marfia, G. (2022). Empowering digital twins with extended reality collaborations. Virt. Real. Intell. Hardw. 4, 487–505. doi: 10.1016/j.vrih.2022.06.004

Stacchio, L., Scorolli, C., and Marfia, G. (2023). “Evaluating human aesthetic and emotional aspects of 3D generated content through extended reality,” in CREAI@AI*IA, 38–49.

Strecha, C., Fransens, R., and Van Gool, L. (2006). “Combined depth and outlier estimation in multi-view stereo,” in 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR'06), Volume 2 (New York, NY: IEEE), 2394–2401.

Tancik, M., Weber, E., Ng, E., Li, R., Yi, B., Wang, T., et al. (2023). “NeRFstudio: a modular framework for neural radiance field development,” in ACM SIGGRAPH 2023 Conference Proceedings (New York, NY), 1–12.

Tewari, A., Fried, O., Thies, J., Sitzmann, V., Lombardi, S., Sunkavalli, K., et al. (2020). “State of the art on neural rendering,” in Computer Graphics Forum, Volume 39 (Wiley Online Library), 701–727.

Tiribelli, S., Giovanola, B., Pietrini, R., Frontoni, E., and Paolanti, M. (2024). Embedding AI ethics into the design and use of computer vision technology for consumer's behavior understanding. Comput. Vis. Image Understand. 2024:104142. doi: 10.1016/j.cviu.2024.104142

Toschi, M., De Matteo, R., Spezialetti, R., De Gregorio, D., Di Stefano, L., and Salti, S. (2023). “Relight my NeRF: a dataset for novel view synthesis and relighting of real-world objects,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (Vancouver, BC), 20762–20772.

Tosi, F., Zhang, Y., Gong, Z., Sandström, E., Mattoccia, S., Oswald, M. R., et al. (2024). How NeRFs and 3D Gaussian splatting are reshaping SLAM: a survey. arXiv preprint arXiv:2402.13255. doi: 10.48550/arXiv.2402.13255

UNESCO (2023). Readiness Assessment Methodology: a Tool of the Recommendation on the Ethics of Artificial Intelligence.

UNESCO (2024a). UNESCO Cultural and Creative Industries in the Face of COVID-19: an Economic Impact Outlook.

Urheber.info (2024). Call for Safeguards Around Generative AI. Available at: https://urheber.info/diskurs/call-for-safeguards-around-generative-ai (accessed June 5, 2024).

Wang, K., Zhang, G., Cong, S., and Yang, J. (2023). “Clothed human performance capture with a double-layer neural radiance fields,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (Vancouver, BC: IEEE), 21098–21107.

Wang, Y., Li, Y., Zhang, H., Yu, J., and Wang, K. (2023). “Moth: a hardware accelerator for neural radiance field inference on FPGA,” in 2023 IEEE 31st Annual International Symposium on Field-Programmable Custom Computing Machines (FCCM) (Marina Del Rey, CA: IEEE), 227–227.

Wen, Y., Liu, B., Cao, J., Xie, R., and Song, L. (2023). “Divide and conquer: a two-step method for high quality face de-identification with model explainability,” in Proceedings of the IEEE/CVF International Conference on Computer Vision (Paris: IEEE), 5148–5157.

Xie, B., Li, B., Zhang, Z., Dong, J., Jin, X., Yang, J., et al. (2023). “NaviNeRF: NeRF-based 3D representation disentanglement by latent semantic navigation,” in Proceedings of the IEEE/CVF International Conference on Computer Vision (Paris), 17992–18002.

Xie, H., Yao, H., Zhang, S., Zhou, S., and Sun, W. (2020). Pix2Vox++: Multi-scale context-aware 3D object reconstruction from single and multiple images. Int. J. Comput. Vis. 128, 2919–2935. doi: 10.1007/s11263-020-01347-6

Xu, F., Uszkoreit, H., Du, Y., Fan, W., Zhao, D., and Zhu, J. (2019). “Explainable AI: a brief survey on history, research areas, approaches and challenges,” in Natural Language Processing and Chinese Computing: 8th CCF International Conference, NLPCC 2019, Dunhuang, China, October 9–14, 2019, Proceedings, Part II 8 (Berlin: Springer), 563–574.

Xu, M., Wang, Y., Xu, B., Zhang, J., Ren, J., Huang, Z., et al. (2024). A critical analysis of image-based camera pose estimation techniques. Neurocomputing 570:127125. doi: 10.1016/j.neucom.2023.127125

Xu, Z., Zhang, X., Chen, W., Liu, J., Xu, T., and Wang, Z. (2024). MuralDIFF: diffusion for ancient murals restoration on large-scale pre-training. IEEE Trans. Emerg. Top. Comput. Intell. 2024:3359038. doi: 10.1109/TETCI.2024.3359038

Yang, H., Chen, Y., Pan, Y., Yao, T., Chen, Z., and Mei, T. (2023). “3Dstyle-diffusion: pursuing fine-grained text-driven 3D stylization with 2D diffusion models,” in Proceedings of the 31st ACM International Conference on Multimedia (New York, NY), 6860–6868.