ORIGINAL RESEARCH article

Front. Comput. Sci., 15 April 2022

Sec. Human-Media Interaction

Volume 4 - 2022 | https://doi.org/10.3389/fcomp.2022.844245

Parts of this article's content have been modified or rectified in:

Erratum: Take it to the curb: Scalable communication between autonomous cars and vulnerable road users through curbstone displays

Automated driving will require new approaches to the communication between vehicles and vulnerable road users (VRUs) such as pedestrians, e.g., through external human–machine interfaces (eHMIs). However, the majority of eHMI concepts are neither scalable (i.e., take into account complex traffic scenarios with multiple vehicles and VRUs), nor do they optimize traffic flow. Speculating on the upgrade of traffic infrastructure in the automated city, we propose Smart Curbs, a scalable communication concept integrated into the curbstone. Using a combination of immersive and non-immersive prototypes, we evaluated the suitability of our concept for complex urban environments in a user study (N = 18). Comparing the approach to a projection-based eHMI, our findings reveal that Smart Curbs are safer to use, as our participants spent less time on the road when crossing. Based on our findings, we discuss the potential of Smart Curbs to mitigate the scalability problem in AV-pedestrian communication and simultaneously enhance traffic flow.

The ultimate goal of automated driving is a “Vision Zero” in which no more fatal accidents occur on roadways (Forum, 2013). However, in fully automated driving, existing human-to-human communication (using eye gaze, gestures, or additional signaling devices) needs to be replaced by other concepts or adapted. This ensures that automated vehicles (AVs) and vulnerable road users (VRUs, such as pedestrians or cyclists) understand each other (Sucha, 2017; Rasouli and Tsotsos, 2019; Holländer et al., 2021). Although path planning algorithms are becoming more sophisticated, research suggests that it is particularly difficult to cater for the social expectations of individuals (Jiang et al., 2021; Pelikan, 2021) and to overcome recently observed phenomena such as griefing (i.e., deliberately misleading) of AVs (Moore et al., 2020a), which can lead to dangerous situations or deadlocks. This holds especially true for urban areas with a high density of road users (Mobility and transport, 2021). Researchers argue that external human-machine interfaces (eHMIs) on AVs could increase trust (Holländer et al., 2019b) and road safety (Holländer et al., 2019a), and support VRUs in better estimating a vehicle's behavior and intent (Dey et al., 2020a,c).

However, eHMIs have an inherent scalability problem and are mostly evaluated in one-to-one scenarios (Colley et al., 2020) (i.e., a single VRU crossing in front of a solitary AV). In a real world scenario, however, the eHMI might be visible for multiple road users, and it might not always be clear which of them is meant. Even for eHMI concepts which address the vehicle's situational awareness of vulnerable road users (e.g., through distance-based eHMIs Dey et al., 2020c), the support of multiple road users will reach a natural limit determined by the eHMI's display space and form factor (Dey et al., 2020a). Furthermore, as eHMIs are attached to the vehicle itself, in right-of-way negotiations most concepts are designed to communicate the corresponding vehicle's decision to come to a stop and give way; however they neither consider other vehicles' status and intent nor the input from stationary smart city infrastructure (e.g., signaling devices). While this seems logical from an information design perspective (i.e., the displayed message is attached to and refers to its physical referent Willett et al., 2017), it is problematic in traffic situations where VRUs have to cross multiple lanes. In this case, a VRU might start crossing the first lane before considering the vehicle's intent on the second lane. This scenario illustrates that VRUs might have to check multiple eHMIs to safely cross a road, which may lead to increased cognitive load and contradictory situations. Additionally, this scenario also illustrates how current eHMI concepts neglect the important aspect of traffic flow optimization (Forum, 2013), as the VRU might unintentionally block the vehicle on the first lane until the second lane becomes safe to cross.

In this work, we developed a design solution called Smart Curbs. Speculating on a novel system integrated into traffic infrastructure, Smart Curbs are specifically targeted to overcome the scalability problem of eHMIs, increase road safety, and optimize traffic flow. Smart Curbs illuminate the curbstone in real time at a specific position where it is safe to cross the road (cf. Figure 1). Hence, when vehicles are moving, the curbstone's signaling color updates accordingly and each pedestrian can see if it is safe to cross at their current position. In contrast to eHMIs, the interface is not attached to an individual vehicle but becomes an integral part of the urban environment. Following a VRU-centered design, Smart Curbs inform vulnerable road users about when and where to cross the street safely by dynamically displaying this information via red and green lights. Because the LED lights are embedded into the curbstone, information can be spread over large parts of the street. Unlike with eHMIs or conventional traffic lights, VRUs are addressed at any position along the modified infrastructure.

Figure 1. Frames from a VR simulation of the two communication concepts we compared: Smart Curbs (left) using LED lights embedded in the curbstone, lighting up in green (safe to cross) or red (not safe), and Projections (middle). In our evaluation, up to four vehicles and two pedestrians shared the road simultaneously; study participants could actually cross the street in our VR lab (right).

In a user study with 18 participants, we assessed crossing time, perceived safety, comprehensibility, and usability of Smart Curbs, collecting quantitative and qualitative data in the process. Within an immersive VR environment, participants crossed the street in three different scenarios using Smart Curbs vs. a projection-based eHMI concept. We compared the concepts with each other and to a baseline condition (i.e., no explicit communication display). To further evaluate the concepts, we asked participants to draw walking routes into multi-user scenarios on a tablet. We found that Smart Curbs worked as intended: they ensured the safest use of all tested concepts. Especially in scenarios with several road users, Smart Curbs can stand up to the Projections concept in spite of its ease of use and single point of information. Overall, we argue that Smart Curbs have the potential to increase acceptance of AVs and to contribute to more fluent traffic flow within urban environments.

Research on pedestrian safety has stressed that a pedestrian's decision to cross a road in front of an approaching vehicle is mainly based on the gap size toward the vehicle and on its driving behavior (Yannis et al., 2013; Dey et al., 2019). Furthermore, communication between drivers and pedestrians (e.g., through eye contact or gestures) is another important factor for pedestrians to reinforce their crossing decision (Rasouli et al., 2017). In light of the introduction of fully autonomous vehicles, research and industry have started to investigate design solutions (e.g., explicit human-machine interfaces) to substitute driver-to-pedestrian communication as shown in a recent review by Rasouli and Tsotsos (2019) and Mora et al. (2020). While some research indicated that pedestrians make their decision to cross in front of an AV based on implicit cues (e.g., motion) (Dey et al., 2017; Moore et al., 2019), others have shown that explicit communication channels can increase the subjective feeling of safety and user experience (Holländer et al., 2019b; M. Faas et al., 2021). Below, we provide an overview of previous work on HCI solutions to support pedestrian crossing decisions. We group them according to the interface placement (Dey et al., 2020a), namely (a) AV-pedestrian interfaces attached to the vehicle, often also referred to as eHMIs, (b) handheld devices carried by the VRU, and (c) interfaces integrated into the traffic infrastructure. We discuss the feasibility of those solutions to enable scalable AV to VRU communication.

Researchers and designers have explored a wide range of eHMI concepts to communicate an AV's intent and action to surrounding pedestrians (Dey et al., 2020a; Rasouli and Tsotsos, 2019). Predominant communication modalities include visual cues that can be abstract (Dey et al., 2020b), anthropomorphic (Holländer et al., 2019b), symbolic (Nguyen et al., 2019), textual (Bazilinskyy et al., 2019) or audio-visual (Böckle et al., 2017; Moore et al., 2020b). Various display technologies have been used, for example low-resolution lighting and high-resolution displays attached to the exterior of the vehicle. Another promising approach is the use of projection-based eHMIs with the projector attached to the vehicle and the road being used as a display surface (Nguyen et al., 2019). While empirical studies have demonstrated that eHMIs can increase trust and pedestrian safety, there are still remaining challenges: for example, several systematic reviews indicated that most eHMI concepts are designed for one-to-one scenarios with one VRU interacting with a single AV (Dey et al., 2020a; Colley et al., 2020). Another issue which has been discussed is the underlying communication strategy in right-of-way negotiations, e.g., whether the vehicle should display its intention or give an instruction (Eisma et al., 2021). Here, in particular for color-based eHMIs, the use of red and green lights can lead to confusion as it can be interpreted as a signal for VRUs to cross (e.g., traffic light metaphor) or represent the state of the vehicle itself (Verma et al., 2019). Furthermore, there are strict regulations for the use of colors that are already implemented in vehicles or traffic signaling devices, which would currently prohibit the use of red and green, amongst others, for eHMIs attached to the vehicle (Dey et al., 2020a).

With the ubiquity of hand-held mobile devices (e.g., smartphones), which are equipped with a wide range of sensors, research and industry have looked into the use of mobile pedestrian safety applications. For example, Hwang et al. (2014) presented SANA, which calculates potential safety-risks based on GPS data and sends an alert to pedestrian and driver. At the same time, smartphone usage in public has been shown to alter visual attention of the surrounding environment (Argin et al., 2020), also as smartphones usually do not provide information at the periphery of attention (Bakker et al., 2016). As a consequence, walking in urban traffic while looking at a smartphone has been shown to risk accidents (Nasar, 2013) which such applications aim to prevent (CGactive LLC, 2009; Wang et al., 2012). In the context of automated vehicles, Mahadevan et al. (2018) studied the use of haptic feedback through a phone to communicate to pedestrians when it is safe to cross. While haptic feedback can avoid users constantly having to look at their phones while navigating through traffic situations, such implicit feedback is also limited in terms of its information capacity and might interfere with feedback from other apps (e.g., messenger apps). Holländer et al. (2020a) evaluated on-screen guidance concepts for smartphone-assisted street crossing in front of automated vehicles, thus providing targeted communication cues to individual users. However, while information cues on hand-held devices could overcome the scalability problem of eHMIs, there are several limitations acknowledged by the authors. 
On the other hand, Smart Curbs (as one HMI example) are integrated into the surrounding environment, do not require constant visual attention, and provide information at the periphery of attention. They thereby enable barrier-free and easy access while also addressing ethical considerations such as avoiding the use of smartphones in potentially dangerous traffic situations (Holländer et al., 2020a).

The integration of AV-interfaces into the traffic infrastructure has received little attention in the research community so far (Dey et al., 2020a). Mahadevan et al. (2018) studied AV-interfaces that reside on the street infrastructure using an exploratory Wizard-of-Oz prototyping approach. They placed LED lights on a chair to simulate a street cue that communicates to pedestrians when it is safe to cross in front of an AV. Furthermore, there exist a few conceptual works and test-bed implementations from design practice that go beyond existing and prevalent traffic signal designs: for example, the Australian firm Büro North (Morby, 2015) proposed a ground-embedded traffic light display tailored to face-down smartphone users. The London-based design studio Umbrellium (Umbrellium, 2017) developed the Starling Crossing, a full-scale prototype of an interactive LED-based pedestrian crossing that dynamically visualizes crossing support for pedestrians in real time. However, such test-bed implementations are expensive and have not yet been adapted to the context of automated driving. Building on those examples from industry, to the best of our knowledge, only Löcken et al. (2019) studied a smart infrastructure concept in Virtual Reality. They compared a smart crosswalk concept with other prevalent eHMI concepts (e.g., projections and light band). They found that the smart crosswalk concept performed best regarding trust, perceived safety and user experience. While these results are promising to make the case for integrating AV-interfaces into traffic infrastructure, there are remaining questions regarding the design concept and the evaluation setup: What could a more ubiquitous infrastructure-based concept look like that allows crossing not only at designated places? How does such a ubiquitous infrastructure-based concept perform in more complex scenarios with multiple lanes and road users (i.e., multiple vehicles approaching from both sides)?

To overcome the current lack of scalability of eHMIs, we investigate Smart Curbs in an immersive Virtual Reality (VR) study under laboratory conditions (see Figure 1). We imagine this design concept being integrated into dense, urban environments. The implemented car is a model of a Citroën C-Zero, which is “ideal for city driving” according to an online electric car reviewing platform.1 In addition to addressing the scalability problem of eHMIs, the concept of Smart Curbs, as described below, is characterized above all by improving traffic safety and easing communication between AVs and VRUs in future smart cities.

The aim of our research was to find out whether Smart Curbs could succeed in realistic multi-user traffic environments in terms of safety and comprehensibility. Additionally, we aimed to find out if and under which conditions Smart Curbs outperform Projections, which we use as an example of a previously studied eHMI concept (Nguyen et al., 2019). We tested, from a VRU perspective (in our case pedestrians), which concept offers a more understandable, comfortable, and safer use. We further compared Smart Curbs and Projections to a baseline condition without explicit communication. The underlying hypotheses which guide our investigation are based on the following assumptions: In the interaction between pedestrians and automated or driverless vehicles, the lack of human-to-human communication leads to uncertainty for vulnerable road users. To be fairer toward the Projections concept, we assume an urban environment with multiple vehicles exclusively of SAE automation levels four and five (Society of Automotive Engineers), because in environments with multiple road users and varying levels of automation, eHMIs attached to individual vehicles will no longer function adequately.

Based on these assumptions, we formulated the following three hypotheses:

(H1) Smart Curbs will reduce the time pedestrians spend on the road and thereby increase safety.

(H2) Smart Curbs create a feeling of greater subjective safety for pedestrians than projections or no aid at all.

(H3) Smart Curbs are easier to understand than projections or the unaided traffic situation.

The motivating idea behind H1 is not an increase in walking speed or decision making, but the fact that pedestrians can cross in one step when the smart curb allows this, instead of making partial crossing decisions, observing the projections or behavior of different cars subsequently. Reducing time on the road in such a way will increase safety, simply because it means a shorter exposure to traffic. We investigate each hypothesis by comparing Smart Curbs to the projection-based eHMI concept of Nguyen et al. (2019) as well as a baseline condition (no explicit communication cues).

Below, we describe the design concepts of Smart Curbs and Projections, guided along the eHMI classification taxonomy presented by Dey et al. (2020a). The target audience for both concepts is vulnerable road users. For both Smart Curbs and Projections, we opted for a minimalist design. Thus, we decided to display abstract visual information (re: modality of communication Dey et al., 2020a). Unlike text, abstract visual information also addresses illiterate people and children (Charisi et al., 2017; Nguyen et al., 2019). Furthermore, abstract visual information is most commonly used for eHMIs (Dey et al., 2020a), which allows a comparison of our results to existing prominent approaches. The covered vehicle states are “cruising” and “yielding,” resulting in two distinct messages that are displayed in both communication concepts.

For Smart Curbs (see Figure 1, left), we integrate the displayed information into the curbstone as part of the city infrastructure. Unlike Starling Crossing (Umbrellium, 2017) and the smart crosswalk evaluated in Löcken et al. (2019), only the curbstone—instead of the entire road surface—is augmented with digital information. Thus, we argue that the concept becomes more cost-effective for a potential implementation. Assuming a fully connected smart city infrastructure, sensors on the street will detect approaching cars and communicate the status through the color of LEDs embedded in the curbs. The state of protected road users (PRUs, i.e., drivers2) is indicated by two colors. From a VRU's perspective, green lighting indicates that the street can be crossed safely. In contrast, sections illuminated in red indicate that it is not safe to cross. Especially children might benefit from using common traffic light patterns which follow existing mental models (Charisi et al., 2017). Furthermore, we assume that the use of red and green cues located on static traffic infrastructure would not cause any confusion in traffic negotiations, such as previously reported for eHMI concepts that are inspired by traffic light patterns and located on the vehicle itself (Nguyen et al., 2019; Rouchitsas and Alm, 2019). As the lighting of the Smart Curbs is visible from every direction, all VRUs are equally addressed (re: addressing multiple road users Dey et al., 2020a). At the same time, each VRU receives a distinct and unambiguous message relative to its spatial location (re: communication resolution Dey et al., 2020a). Further, when approaching the Smart Curbs, VRUs get a quick overview where they can safely cross the street and do not have to focus on individual messages from multiple vehicles. 
Dynamically switching from green to red and vice versa depending on the vehicles' current positions, the curbs provide VRUs with real-time feedback on not only when, but also in which direction (e.g., when crossing diagonally) the road can be crossed safely, as the illuminated green curbs mark an entire safe area. Thus, the message of communication (regarding right-of-way negotiations Dey et al., 2020a) covers not only the vehicle's current operation state, but also indicates the danger and safety zone. Our red area had a buffer distance of about 1.5 m longitudinally around the car, so that the participants could change their mind after making a first move without colliding. We chose this distance based on initial tests within the team, ensuring that there was a comfortable space between the vehicle and pedestrians. The Smart Curbs concept in its current state has one remaining drawback, which is accessibility for red-green-deficient users, who comprise about 7% of the male population (cf. Lee et al., 2017, page 102). This could be mitigated by adding an additional layer of information coding such as color intensity or patterns, but this remains future work for now.
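The position-dependent coloring described above can be illustrated with a minimal sketch. It is not the implementation used in the study: the `Vehicle` class, the one-dimensional street coordinate, the segment size, and the vehicle length are hypothetical and chosen only for illustration. The sketch models only the 1.5 m longitudinal buffer around each car; how far the red area extends ahead of a moving vehicle is not specified in the text and is therefore left out.

```python
from dataclasses import dataclass

BUFFER_M = 1.5  # longitudinal buffer around each car, as stated in the text


@dataclass
class Vehicle:
    front_m: float   # front bumper position along the street (hypothetical coordinate)
    length_m: float  # vehicle length in meters (hypothetical value)
    direction: int   # +1 or -1, direction of travel along the street

    def red_interval(self):
        """Return the (start, end) stretch of curb that should be red."""
        rear_m = self.front_m - self.direction * self.length_m
        lo, hi = sorted((self.front_m, rear_m))
        return lo - BUFFER_M, hi + BUFFER_M


def curb_colors(vehicles, street_length_m, segment_m=1.0):
    """Color each curb segment red if it overlaps any vehicle's red interval."""
    intervals = [v.red_interval() for v in vehicles]
    colors = []
    for i in range(int(street_length_m // segment_m)):
        seg_lo, seg_hi = i * segment_m, (i + 1) * segment_m
        blocked = any(seg_lo < hi and seg_hi > lo for lo, hi in intervals)
        colors.append("red" if blocked else "green")
    return colors


# One car, 3.5 m long, front bumper at 10 m, on a 20 m stretch of curb.
colors = curb_colors([Vehicle(front_m=10.0, length_m=3.5, direction=1)], 20.0)
```

In this example, the red interval spans 5.0 m to 11.5 m, so the one-meter segments starting at 5 m through 11 m turn red and all others stay green. A pedestrian standing anywhere along the curb reads the color at their own position, which is what gives the concept its spatial communication resolution.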

Our projection-based eHMI (see Figure 1, middle) projects information onto the road in front of the vehicle. For the message of communication, we decided to communicate an instruction to the VRU (Dey et al., 2020a). This decision was made in order to achieve comparability with the Smart Curbs' communication message and to avoid confusion in the subsequent user study. In terms of the visualization concept, we build on the design by Nguyen et al. (2019), who proposed a wave pattern for the vehicle's moving state and crossing lines when the vehicle has come to a halt. In their study, they used a color encoding inspired by current traffic light designs. However, they later reported contradictory assumptions made by participants when interpreting the colors on a moving vehicle. We therefore opted for a more neutral color encoding: for the crossing lines, which signal to the VRU that it is safe to cross, we chose cyan, as suggested by previous research (Dey et al., 2020b). For the wave pattern, which signals the VRU to wait, we chose orange. In terms of addressing multiple road users, following Dey's taxonomy (Dey et al., 2020a), the number of VRUs being addressed can be classified as “unlimited” as the message is broadcast. However, unlike with the Smart Curbs, only VRUs who are in sight of the vehicle and facing toward the vehicle's front can see the projections. Further, the communication resolution is lower for Projections, as all surrounding VRUs are subject to the same message, and danger and safety zones are not explicitly communicated.

Unlike Smart Curbs, the implemented Projections concept does not rely on a connected infrastructure (i.e., each eHMI works self-sufficiently and does not incorporate information about surrounding vehicles). We acknowledge that during the concept development phase we discussed the possibility of implementing a centralized and interconnected eHMI. This would mean that, for example, the eHMI would only switch its message to communicate a yielding state when vehicles on the second lane would also give confirmation to come to a halt. However, we abandoned such an implementation for two reasons: Firstly, in the existing literature, AV-pedestrian interfaces attached to the vehicle never supported such a mode of operation, which would make it more difficult to compare our results to the literature. Secondly, from an information perspective, we assume that for an attached eHMI displaying a message that is not matching the operational state of the associated vehicle, along with needing to consider the operational state of surrounding vehicles, would lead to confusion. This assumption is also based on previous research which demonstrated that implicit movement-based cues are an important factor for a pedestrian's crossing decision (Moore et al., 2019; Dey et al., 2020c), and therefore implicit and explicit cues should be in alignment to communicate effectively (Dey et al., 2021).
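The self-sufficient behavior of the Projections concept can be sketched as a simple lookup from a vehicle's own state to its projected message. This is an illustrative sketch under stated assumptions, not the study's Unity implementation: the names `VehicleState`, `PROJECTION`, `projections_for`, and `messages_conflict` are hypothetical, while the patterns, colors, and the two vehicle states are taken from the concept description.

```python
from enum import Enum


class VehicleState(Enum):
    CRUISING = "cruising"
    YIELDING = "yielding"


# (pattern, color, instruction to the VRU) per vehicle state, as described in the text
PROJECTION = {
    VehicleState.CRUISING: ("wave pattern", "orange", "wait"),
    VehicleState.YIELDING: ("crossing lines", "cyan", "cross"),
}


def projections_for(vehicle_states):
    """Each vehicle projects a message based only on its own state."""
    return [PROJECTION[state] for state in vehicle_states]


def messages_conflict(vehicle_states):
    """True if vehicles on different lanes give the VRU different instructions."""
    instructions = {PROJECTION[state][2] for state in vehicle_states}
    return len(instructions) > 1


# First lane yields while the second lane is still cruising.
lanes = [VehicleState.YIELDING, VehicleState.CRUISING]
conflict = messages_conflict(lanes)
```

Because no vehicle takes the others into account, a pedestrian facing two lanes can see a "cross" instruction on one lane and a "wait" instruction on the other, and has to reconcile them on their own.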

Previous studies have highlighted that safety (objective and subjective), comprehensibility, and usability are decisive factors when evaluating VRU-AV communication concepts (Noah et al., 2017; Wintersberger et al., 2018). To determine which concepts lead to the best results, we aimed to measure these factors through an immersive virtual reality investigation that simulates a realistic first-person view experience. Previous work has shown the effectiveness of VR for pedestrian safety research in the sense that participant behavior in VR matches behavior observed in the real world, and that participants perceive the virtual environment as realistic (Deb et al., 2017). We conducted a within-subject design study, meaning that each participant tested all three conditions (Smart Curbs, Projections, and the baseline), in combination with three different traffic scenarios.

To further investigate if participants understood the design concepts as intended, we subsequently let participants draw walking routes on a tablet application depicting a birds-eye view of the different concepts. This task allowed us to gain deeper insights into the comprehensibility of the concepts and whether participants could apply them to even more complex traffic situations.

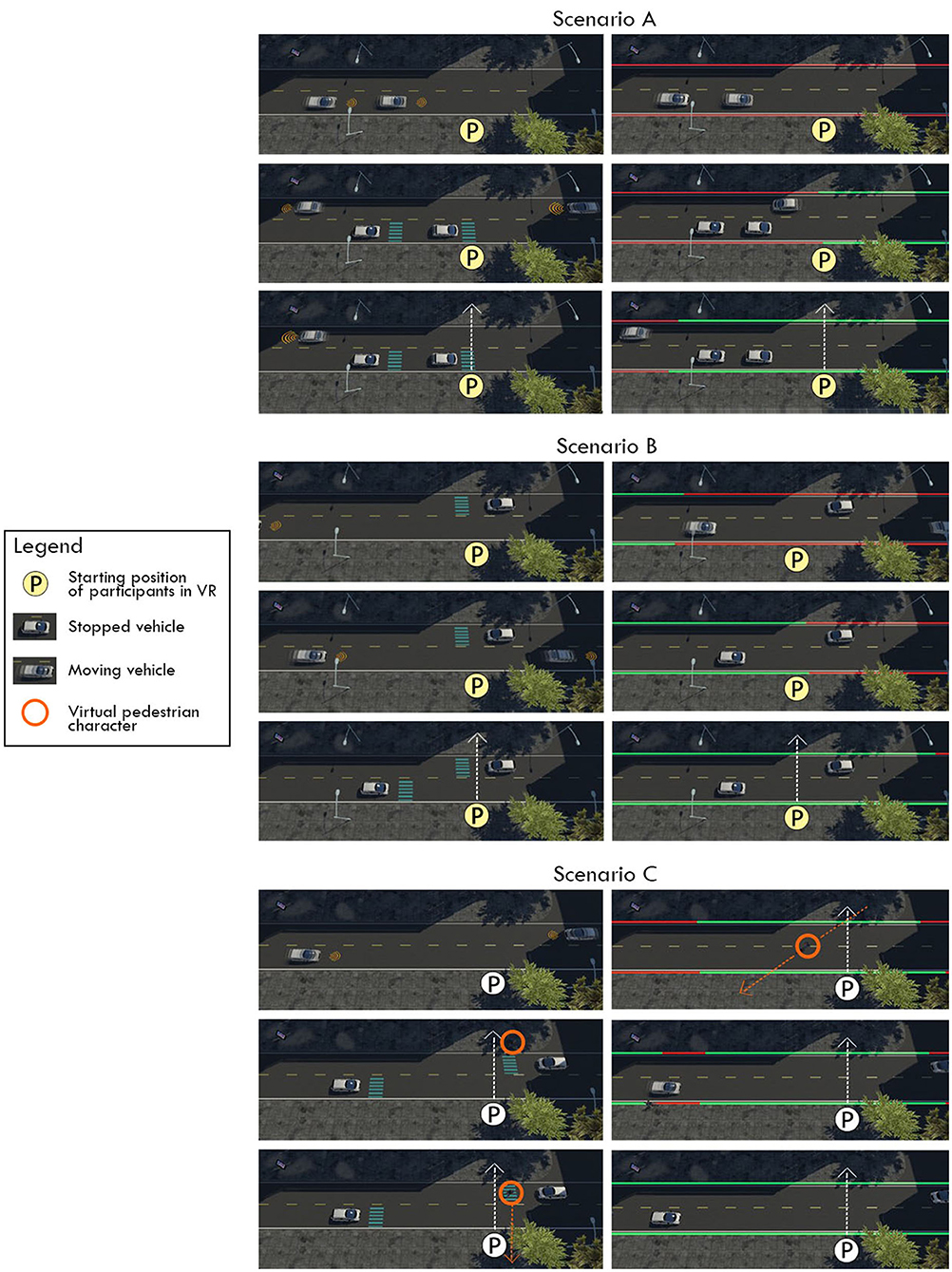

For the VR investigation, we developed three different scenarios in which the approaching AVs behave differently, thus reducing the potential learning effect when comparing the two design concepts and the baseline condition. Furthermore, previous research has stressed that a majority of evaluations do not consider how eHMI concepts perform in various traffic situations (Dey et al., 2020a; Holländer et al., 2019a), in particular with more complex scenarios often missing (Colley et al., 2020). In addition to simpler traffic situations, we therefore also deliberately created situations in which the concepts would reach their limits. In the following sections, we explain the investigated scenarios, which are also depicted in Figure 2. All scenarios were created for right-hand traffic, and all vehicles drove at a constant speed of 30 km/h. The lanes had a width of 3.6 m; thus, the road was 7.2 m wide. The street was 261.1 m long.

Figure 2. Still images from the three tested scenarios in VR (left: Projections, right: Smart Curbs). “P” marks the starting position of participants. Vehicles in motion at the given point in time are slightly blurred to indicate this. In Scenario C, the orange circle marks the virtual pedestrian character. This figure includes three blocks of images, one for each scenario (A, B, and C). The images contain screenshots from the VR scenario which match the descriptions from Section 4.1 (“Scenarios”).

In Scenario A, two vehicles are approaching from the left (i.e., on the first lane from the study participant's perspective) and come to a halt in front of the participant. Meanwhile, another two vehicles are approaching from the right side (i.e., second lane); however, they drive by without stopping. For the Projections concept, the displayed crosswalks match the slowing-down behavior of the vehicles on the first lane and therefore appear before the vehicles from the right-hand side drive by (i.e., when it is not yet safe to cross both lanes). In contrast, the Smart Curbs begin to illuminate in green (from right to left), with the green lights approaching the VR participant only after the last vehicle on the second lane passes (i.e., when the road can be crossed safely). In this scenario, we assume that conventional eHMIs would potentially underperform because they do not regard the surrounding PRUs (i.e., other road users on multiple lanes).

In Scenario B, after a first vehicle passes from the left on the first lane, a second vehicle approaches from the right and, shortly after, a third vehicle approaches from the left. Both the second and third vehicle stop before they reach the participant. For the Projections condition, the second vehicle (approaching from the right and closer to the participant) projects a crosswalk when it slows down to a halt, while the third vehicle on the left is still cruising. Although it was not possible to anticipate whether the third vehicle would stop as well in this scenario, participants were given enough time to start crossing early and safely cross both lanes. In the respective Smart Curbs condition, green lights along the participant's crossing area only appear after both vehicles stand still. Thus, in this scenario participants could have a small time advantage in the Projections condition. We created this scenario to demonstrate to participants that Projections do not necessarily underperform, in particular in such a simple traffic situation.

In Scenario C, two vehicles are approaching: one vehicle from the right, and one from the left. In this scenario, a virtual pedestrian character (orange circle in Figure 2) approaches the street, on the opposite side of the VR participant. As Smart Curbs already indicate when it is safe to cross within the entire area, the virtual character starts to go right across the street. In this case, the participant can cross the street immediately, even though the vehicles are still moving (before coming to a complete halt). For the Projections, the virtual character crosses the lane partially as the vehicle on the right displays a crosswalk. However, the VR participant has to wait until the vehicle on their side comes to a stop and a crosswalk is projected. With this scenario, we wanted to find out how the two concepts would perform when the study participant is potentially influenced by another pedestrian's crossing behavior (Rasouli and Tsotsos, 2019).

In our study, each scenario (A, B, C) was combined with each of the three communication conditions (Smart Curbs, Projections, and Baseline), which adds up to 9 runs per participant. In order for participants to experience and understand the concepts sufficiently, each concept was tested in one coherent block, consisting of the scenarios A, B, and C. The order of the concept blocks as well as the order of the scenarios within the blocks were counterbalanced and varied for each participant.
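One way such a blocked, counterbalanced run order could be generated is sketched below. The text does not specify the exact counterbalancing scheme, so the assignment rule here (cycling through all permutations by participant index) is an assumption made for illustration, and the function names `run_order` and `block_order_counts` are hypothetical.

```python
from collections import Counter
from itertools import permutations

CONCEPTS = ("Smart Curbs", "Projections", "Baseline")
SCENARIOS = ("A", "B", "C")

CONCEPT_ORDERS = list(permutations(CONCEPTS))    # 3! = 6 possible block orders
SCENARIO_ORDERS = list(permutations(SCENARIOS))  # 3! = 6 possible scenario orders


def run_order(participant_index):
    """Return the 9 (concept, scenario) runs for one participant.

    Concepts are kept in coherent blocks; the block order cycles through all
    six permutations, and the scenario order is shifted per block (assumed rule).
    """
    blocks = CONCEPT_ORDERS[participant_index % len(CONCEPT_ORDERS)]
    runs = []
    for block_index, concept in enumerate(blocks):
        scenarios = SCENARIO_ORDERS[(participant_index + block_index) % len(SCENARIO_ORDERS)]
        runs.extend((concept, scenario) for scenario in scenarios)
    return runs


def block_order_counts(n_participants):
    """Count how often each block order is used across participants."""
    return Counter(CONCEPT_ORDERS[p % len(CONCEPT_ORDERS)] for p in range(n_participants))


first_participant = run_order(0)
usage = block_order_counts(18)
```

With 18 participants and six possible block orders, this scheme uses every block order exactly three times, and each participant still completes all nine concept and scenario combinations.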

We recruited 18 participants between 19 and 64 years (M = 34.33 years, SD = 16.22 years), of which nine self-identified as female and nine as male. Ten participants were students (56%), six employees (33%), and two pensioners (11%). All indicated how often they had worn head-mounted displays (HMDs) so far. 33.33% had never, 38.89% had once, 22.22% a few times and 5.56% often experienced Virtual Reality via HMDs. Hence, about two thirds of all participants had used an HMD at least once. People suffering from color deficiencies were excluded from the study. 27.78% of the study participants wore glasses while participating in the study. Participants also rated their willingness to take risks when crossing the street as “Very low” (5.56%), “Low” (16.67%), “Neutral” (38.89%), “High” (16.67%), or “Very high” (22.22%). All participants conducted the study in their native language <omitted for anonymity>.
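Since the sample is small, the reported percentages can be converted back into participant counts. The following sketch does this arithmetic for the HMD experience and risk-willingness items; the helper `to_count` and the variable names are hypothetical, and the percentages are those reported above.

```python
N_PARTICIPANTS = 18  # sample size reported in the text


def to_count(percent, n=N_PARTICIPANTS):
    """Convert a reported percentage back to a whole number of participants."""
    return round(percent / 100 * n)


hmd_experience = {"never": 33.33, "once": 38.89, "a few times": 22.22, "often": 5.56}
risk_willingness = {
    "Very low": 5.56,
    "Low": 16.67,
    "Neutral": 38.89,
    "High": 16.67,
    "Very high": 22.22,
}

hmd_counts = {label: to_count(p) for label, p in hmd_experience.items()}
risk_counts = {label: to_count(p) for label, p in risk_willingness.items()}

# Participants who had used an HMD at least once
used_hmd = N_PARTICIPANTS - hmd_counts["never"]
```

The counts come out as 6, 7, 4, and 1 for HMD experience and 1, 3, 7, 3, and 4 for risk willingness, each summing to 18. Twelve of 18 participants had used an HMD at least once, which matches the "about two thirds" stated above.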

The study was conducted in accordance with the rules set forth by the responsible ERB of our institution. Upon arrival at the lab, participants were welcomed by a researcher and introduced to the study. By handing out an information sheet about the study's purpose and tasks, we made sure that all participants could familiarize themselves with the topic. All participants were asked to fill out a consent form to take part in the study. In addition, participants filled out a demographic questionnaire and answered questions about eyesight-related problems, prior VR experience, and their willingness to take risks when crossing streets.

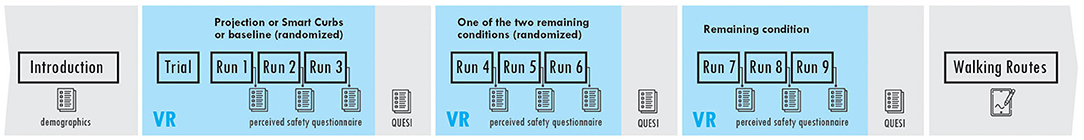

At first, participants moved to the starting position, which we had marked with tape on the ground (see Figure 1, right). Then they put on the HMD and headphones (to hear ambient background sounds and the vehicles' engines). We made sure that the headset was optimally fitted and that it was adjusted accordingly for participants who wore glasses. To give participants a chance to familiarize themselves with the VR system, we showed them the urban environment, however without any vehicles yet. We told participants that the urban environment would stay the same throughout the study, asked them to look around, and made sure they understood the upcoming study task. We explained to participants that all approaching vehicles were automated, and instructed them that they should cross the street when they felt it was safe. In order to become familiar with the crossing task, we asked the participants to cross the street, but without any vehicles involved yet. Thus, they could also accustom themselves to the spatial limitations of the room which were marked through a grid-like cage in VR. When they felt comfortable and understood what to do, we started the actual scenes and they completed their first run. After crossing the street in the first run, the participants were led back to the starting position. After each run, they answered three questions on their experienced feeling of safety. In order to interrupt the immersion as little as possible and reduce the study's duration (Schwind et al., 2019), participants kept the HMD on and answered the corresponding questions directly in VR. Using a button on the HMD, participants could select their answers independently. Each participant completed nine trials in different order and, hence, had to decide nine times when to cross (see Figure 3).

Figure 3. User study procedure. Walking routes were drawn on static images presented on a tablet. The images horizontally present the study procedure in 8 steps: (1) the introduction, (2) a trial and the first randomized VR run, (3) answering the QUESI questionnaire, (4) the second VR run, depending on the order calculated for the participant, (5) answering the QUESI questionnaire, (6) the last VR run, (7) answering the QUESI questionnaire, and (8) drawing the walking routes. The study procedure is also described in section 4.3.

After three trials (i.e., completing one of the three concepts), the participant took off the HMD and answered the QUESI questionnaire (Naumann and Hurtienne, 2010) for the experienced communication concept. This process, consisting of the three experienced scenarios, the perceived safety questions, and the QUESI questionnaire on the communication concept, was then repeated with the second and the third concept.
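The block structure described above (three concepts, each experienced as one block of three runs) can be illustrated with a small counterbalancing sketch. The article does not state how the order was calculated for each participant, so the round-robin assignment over all six permutations, the function name `concept_order`, and the concept labels used here are assumptions for illustration only.

```python
from itertools import permutations

# Labels for the three conditions compared in the study.
CONCEPTS = ("Baseline", "Projections", "Smart Curbs")

# All 3! = 6 possible block orders.
ORDERS = list(permutations(CONCEPTS))


def concept_order(participant_index):
    """Hypothetical assignment: cycle through the six orders participant by participant."""
    return ORDERS[participant_index % len(ORDERS)]


# With 18 participants, each of the six orders would be used exactly three times.
assignments = [concept_order(i) for i in range(18)]
```

Under this assumed scheme, every concept appears equally often in the first, second, and third block, which is one common way to limit order effects.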

The room in which the study took place was 8.6 m by 3.6 m, with a physical movement area of approximately 3 m by 3 m. We used an HTC VIVE Pro Eye VR setup with a corresponding lighthouse tracking system. The simulation ran on a Windows 10 PC with an Nvidia GTX 1980Ti graphics card, an Intel Core i7-6700k processor, and 16 GB of RAM. The study environment was created in Unity version 2018.4.16f1. We used a freely available city model downloaded from the Unity Asset Store (see footnote 3) and an animated character from Mixamo (see footnote 4) for the virtual pedestrian.

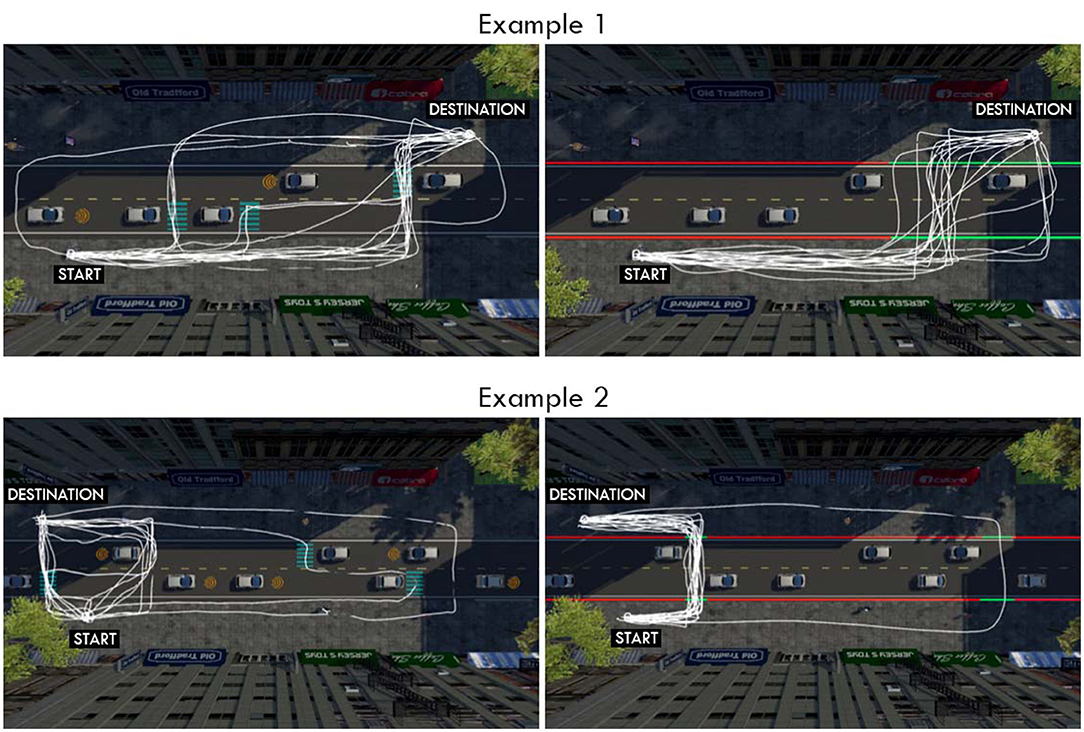

After the experiment in VR, we asked participants to outline walking routes on bird's eye view images on a tablet. We decided to implement this method to evaluate if participants could easily indicate optimal walking routes in more complex traffic situations. For example, we also wanted to see which walking routes participants would take when their destination point was further away and not orthogonal to the street. Such an investigation would not have been possible in VR due to the physical boundaries of our room and hardware. Participants were exposed to six static images taken from the VR simulation and asked to draw the walking path they would most likely choose. This was done for both the Projections and the Smart Curbs concept. We first explained this task via examples and made sure that the participants fully understood both concepts. The estimated walking paths were drawn with a Microsoft Surface Pen on a Microsoft Surface Book 2.

At the end of the study we asked the participants for further comments regarding the user study and concepts. Overall, they spent about 45 to 60 minutes in the lab. We compensated them for their time with 10 Euros in cash. The whole study procedure is also shown in Figure 3.

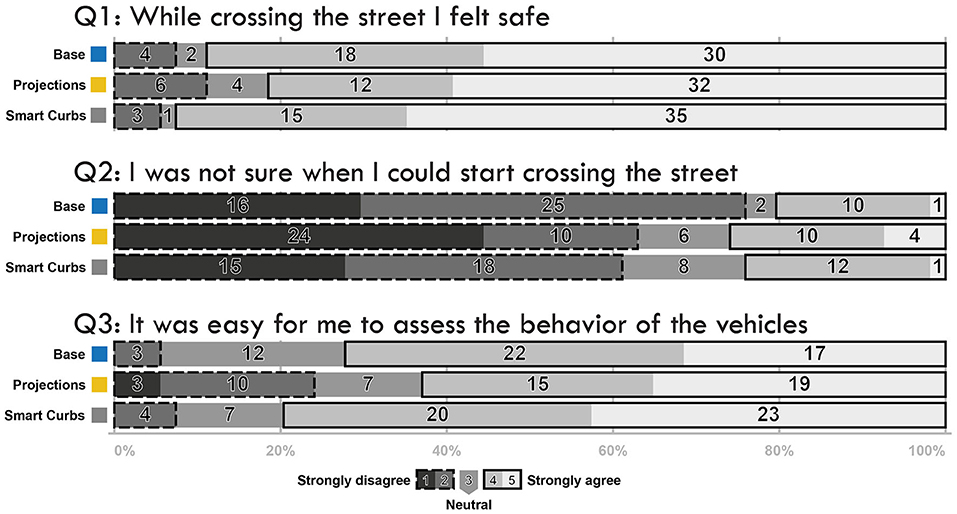

In order to investigate safety, we measured the time to cross (TTC) the street. For this, we recorded the time until participants stepped onto the street, the time until they reached the second lane, and the time until they reached the destination point. Additionally, we report collisions between participants and approaching vehicles as well as the time at which the crash occurred and the speed of the vehicle at that time. To investigate subjectively perceived safety, we presented three statements, which participants rated on a 5-point Likert scale (Joshi et al., 2015) ranging from fully disagree to fully agree. The statements were: (1) While crossing the road I felt safe. (2) I was not sure when I could start crossing. (3) It was easy for me to assess the behavior of the vehicles.
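As a minimal sketch of how the three recorded timestamps translate into time spent per lane, the following snippet assumes that each timestamp is logged in seconds since the start of the scene. The `CrossingLog` structure and its field names are hypothetical and are not taken from the study software.

```python
from dataclasses import dataclass


@dataclass
class CrossingLog:
    """Timestamps in seconds since scene start (hypothetical log format)."""
    stepped_onto_street: float
    reached_second_lane: float
    reached_destination: float


def lane_durations(log):
    """Derive the time spent on each lane and on the road as a whole."""
    first_lane = log.reached_second_lane - log.stepped_onto_street
    second_lane = log.reached_destination - log.reached_second_lane
    return {
        "first_lane": first_lane,
        "second_lane": second_lane,
        "on_road": first_lane + second_lane,
    }


# Example with made-up values: stepping onto the street at 10.0 s,
# reaching the second lane at 12.5 s and the destination at 14.0 s.
example = lane_durations(CrossingLog(10.0, 12.5, 14.0))
```

A participant who waits on the first lane for a gap would show up here as a large `first_lane` value, which is the behavior the per-lane analysis is meant to expose.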

In order to assess if participants intuitively understood how to make use of the experienced communication concept in VR, we used the QUESI (Naumann and Hurtienne, 2010) questionnaire. QUESI is a standardized questionnaire to measure the subjective consequences of intuitive use. The questionnaire consists of 14 items to calculate 5 subscales; all items correspond to 5-point Likert scales (ranging from fully disagree to fully agree). To investigate if participants could apply the experienced eHMI concepts to more complicated traffic situations, we visually compared the paths that participants drew on a tablet (from a bird's eye view). We particularly looked for incorrect routes, which would potentially lead to a collision. To further investigate if participants understood the concepts, we measured the time it took them to draw potential walking routes on the tablet (using static images as shown in Figure 8).

In this section we present the results of the immersive investigation and the non-immersive drawing task.

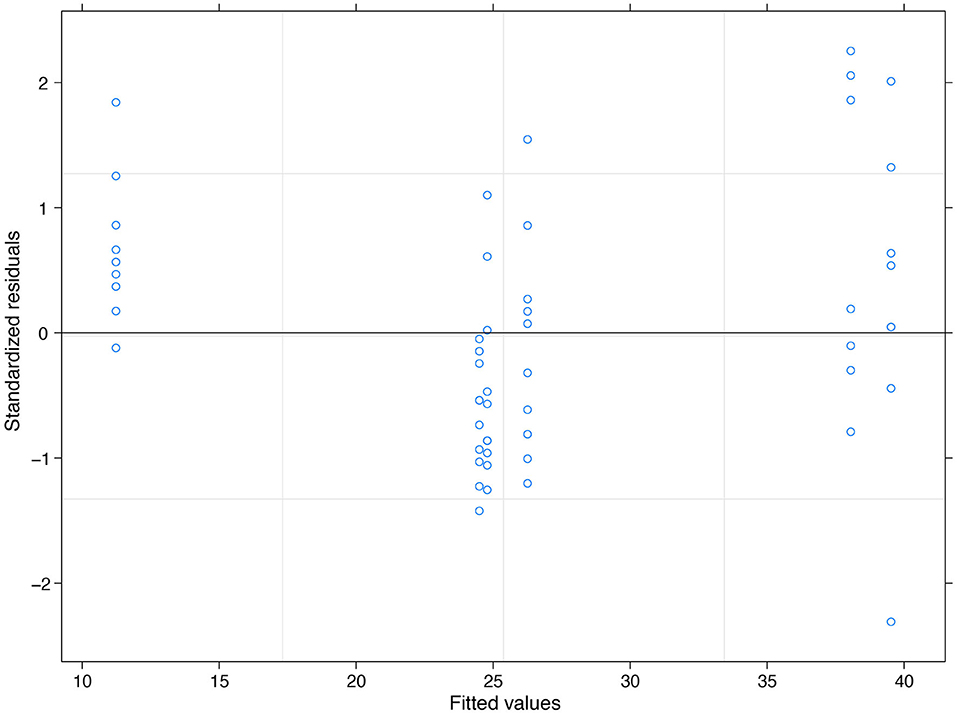

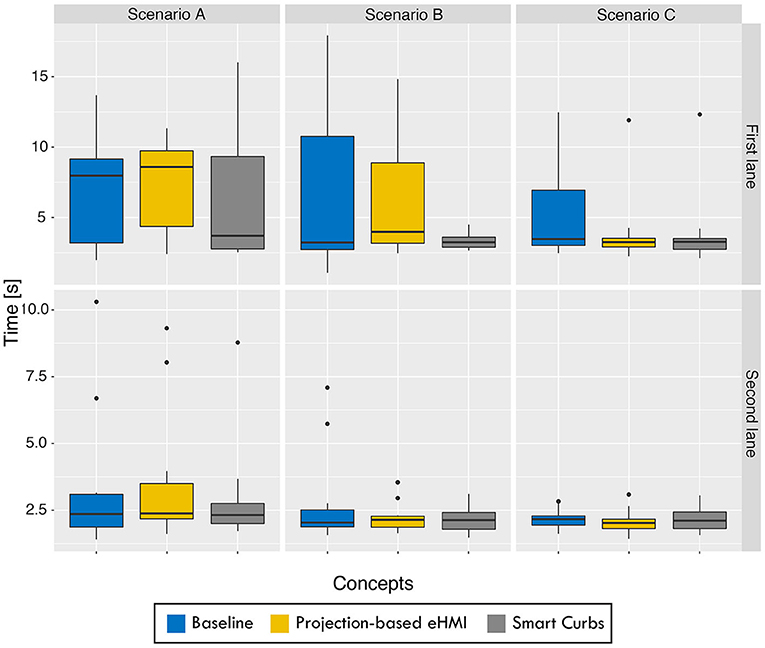

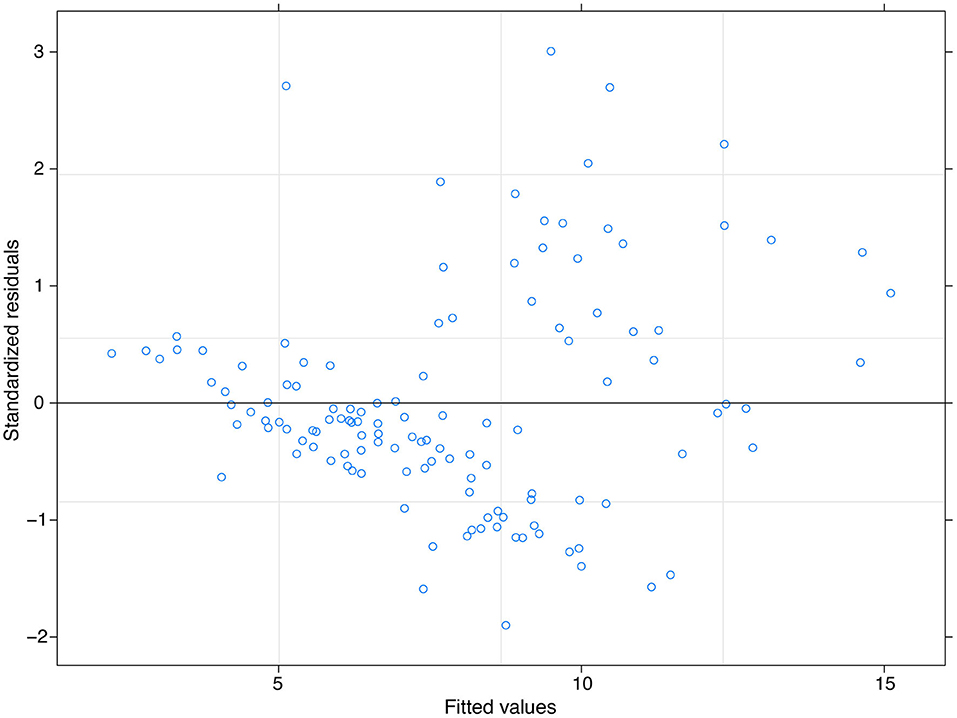

We set up a linear mixed effects model to evaluate the time participants spent on each part of the street. Using Smart Curbs, participants spent significantly less time on the road (estimate: -1.97 s, p-value: 0.0115) compared to crossing the street without any communication, as shown in Table 1 and Figure 4. Further p-values were not significant (α = 5%). A boxplot of the crossing times is shown in Figure 5, illustrating that Smart Curbs enable rather constant crossing times whereas the baseline and Projections lead to a much higher variation.
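One common way to write such a model is given below as a sketch. It assumes fixed effects for the communication concept and the lane plus a random intercept per participant; the article does not spell out the random-effects structure, so this form and all symbol names are assumptions.

```latex
t_{p,c,\ell} = \beta_0 + \beta_c + \beta_\ell + u_p + \varepsilon_{p,c,\ell},
\qquad u_p \sim \mathcal{N}(0, \sigma_u^2),
\qquad \varepsilon_{p,c,\ell} \sim \mathcal{N}(0, \sigma^2)
```

Here $t_{p,c,\ell}$ is the time participant $p$ spent on lane $\ell$ under concept $c$. If the baseline is taken as the reference level ($\beta_{\text{Baseline}} = 0$), the reported estimate of -1.97 s would correspond to $\beta_{\text{Smart Curbs}}$ under this assumed parameterization.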

Figure 4. Standardized residuals and fitted values of measured data (see Table 1). Since the residuals do not exhibit any systematic structure we argue that the model fits the data well. Plot with standardized residuals (ranging from -2 to 3) on the y-axis and fitted values (ranging from 0 to 15) on the x-axis. Each residual is represented by a dot. These dots appear to be scattered randomly within the graph.

Figure 5. Results of measured crossing duration (in s) per lane and scenario as boxplot (with dots representing outliers). First lane, closer to the participant (top); second lane, farther away from the participant (bottom). Boxplots of participants' crossing times in seconds for both lanes and the three experimental conditions. Interquartile ranges are between 1 and 5 seconds, with the first lane having a much broader variance in crossing times among all conditions. For the second lane, Base and Projection conditions show the most outliers.

For the projection-based concept, we noted one collision with a participant in scenario 2. Observations and comments revealed that the participant had seen a projected crosswalk on the farther lane and felt confident to start crossing. At the same time, a vehicle approached from the left side on the first lane and collided with the participant at a speed of 30 km/h and 13.57 s after the start of the scene.

After each of the nine runs, we asked the participants to answer three questions to measure their subjective feeling of safety. Figure 6 shows the frequency of answers for each concept and question. To emphasize the relative distributions of answers, we present the Likert-scale results as axis-aligned frequency plots (Maurer, 2013). We report the median and mode values because they are useful descriptive indicators of a tendency and because we used single-item Likert scales here (i.e., we analyzed ordinal data). For Q1 (“feeling safe while crossing”), there is a slightly higher approval for Smart Curbs, followed by the baseline condition, and a slightly higher disapproval for Projections (however, for all conditions: median=5, mode=5). For Q2 (“not sure when to start to cross”; negatively worded, so disagreement is the favorable answer), there is a slightly higher disapproval for the baseline condition, followed by Projections. Projections (median=2, mode=1), compared to the other two conditions (median=2, mode=2), provoke higher frequencies of extreme responses at both ends of the scale. For Projections, “strongly agree” was counted 4 times, compared to 1 time each for Smart Curbs and the baseline condition. For Q3 (“easy to assess the vehicle behavior”), the highest approval was measured for Smart Curbs (median=4, mode=5) with 80% positive agreement, compared to Projections (median=4, mode=5) with 63% and the baseline condition (median=4, mode=4) with 72%.

Figure 6. Results of the Likert-Scale questionnaire on the subjective feeling of safety, showing the frequency of answers collectively across all runs (i.e., in total 54 answers per concept and question). Bar chart with frequency of 54 answers from Likert-Scale questions. Q1 Base: strongly disagree: 4; disagree: 2; neutral: 18; agree: 30. Q1 Projections: strongly disagree: 6; disagree: 4; neutral: 12; agree: 32. Q1 Smart Curbs: strongly disagree: 4; disagree: 2; neutral: 18; agree: 30. Q2 Base: strongly disagree: 18; disagree: 25; neutral: 2; agree: 10; strongly agree: 1. Q2 Projections: strongly disagree: 24; disagree: 10; neutral: 6; agree: 10; strongly agree: 4. Q2 Smart Curbs: strongly disagree: 15; disagree: 18; neutral: 8; agree: 12; strongly agree: 1. Q3 Base: strongly disagree: 3; disagree: 12; neutral: 22; agree: 17. Q3 Projections: strongly disagree: 3; disagree: 10; neutral: 7; agree: 15; strongly agree: 19. Q3 Smart Curbs: strongly disagree: 4; disagree: 7; neutral: 20; agree: 23.
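The descriptive statistics reported for the Likert items can be reproduced from frequency counts such as those listed in the caption above. The sketch below uses the five counts given for Q3 under Projections and assumes they are ordered from "strongly disagree" (score 1) to "strongly agree" (score 5); the helper name `summarize_likert` is hypothetical.

```python
import statistics


def summarize_likert(counts):
    """counts[i] is the number of answers with score i + 1 (1 = strongly disagree, 5 = strongly agree)."""
    # Expand the frequency table into one score per answer.
    answers = [score for score, n in enumerate(counts, start=1) for _ in range(n)]
    # Share of answers that are "agree" or "strongly agree" (scores 4 and 5).
    positive_share = sum(counts[3:]) / len(answers)
    return {
        "n": len(answers),
        "median": statistics.median(answers),
        "mode": statistics.mode(answers),
        "positive_share": round(positive_share, 2),
    }


# Counts for Q3 under Projections as listed in the Figure 6 caption.
q3_projections = [3, 10, 7, 15, 19]
summary = summarize_likert(q3_projections)
```

Under the assumed ordering, these counts give a median of 4, a mode of 5, and a positive share of 0.63, which matches the values reported for Projections on Q3.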

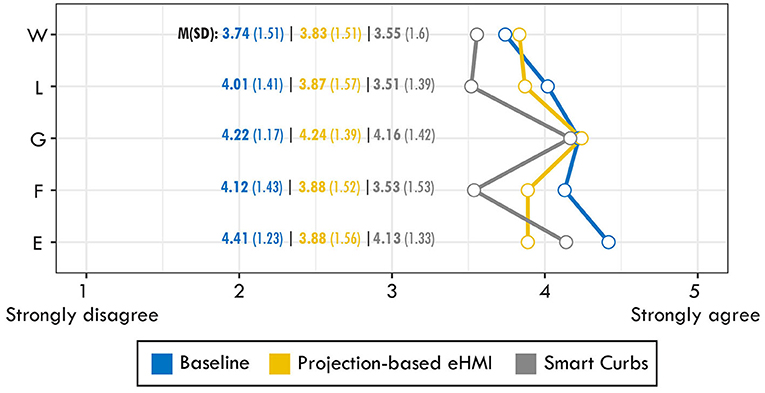

Results of the QUESI questionnaire—measuring subjective consequences of intuitive use—show that the baseline condition ranked highest in the subscales low perceived effort of learning (L), high familiarity (F), and low perceived error rate (E) (cf. Figure 7). Projections ranked highest in the subscales low subjective mental workload (W) and high perceived achievement of goals (G), however closely followed by the baseline and Smart Curbs in (G). Smart Curbs ranked lowest in all subscales except E, where Smart Curbs outperformed Projections. A univariate ANOVA found no statistically significant differences for any of the subscales.

Figure 7. Results of the QUESI questionnaire measuring subjective consequences of intuitive use. A line graph showing the results of the QUESI questionnaire.

First we performed a qualitative analysis of drawn walking routes. From the visual assessment of the six presented images, we can conclude that Smart Curbs led to more consistent results. Two examples of the drawn walking routes are shown in Figure 8. In addition to the qualitative analysis of the drawn walking routes, we determined the time required by the participants to map the walking routes to the scenarios in order to see how long the participants had to think about the tasks. Due to technical problems, we could unfortunately only use the data of eleven study participants. Since each participant completed three tasks with the Projections concept and the same three tasks with the Smart Curbs concept, 66 values were accumulated. We performed a linear fixed effects model regarding drawing times (see Table 2, Figures 4, 9). The drawing time with Smart Curbs was significantly lower than in the projection condition (estimate: -11.45 s, p: 0.0001).

Figure 8. Two examples from the drawing task in two different scenarios (top and bottom). In the figures, the drawing routes from all participants are overlaid, which allowed us to quickly assess the consistency of the suggested walking routes. Here are two example scenarios from the drawing task. The images originate from VR screenshots. Each exemplary scenario shows a starting point and a pre-defined destination. These scenarios differ from the ones which participants experienced in VR. Here, we created more complex situations with five vehicles on the road simultaneously. Cars from the projection condition either show a cyan zebra crossing or an orange wave pattern. Smart Curbs illuminate the curbs either red or green symmetrically on either side of the road. Participants were asked to draw their most likely chosen walking route. We overlaid all drawn paths, and it was clearly observable that for Smart Curbs the path became more consistent and shorter.

Figure 9. Standardized residuals and fitted values of measured data (see Table 2). The residuals given above are for straight line regression via least squares, where residuals are scattered both above and below the reference line. We therefore argue the model fits the data well.

Below, we will interpret our results in relation to our stated hypotheses. We will also address some limitations of the study and provide an outlook on the possible application of Smart Curbs, and outline future research questions.

The most important requirement for well-functioning VRU-AV communication is, in our opinion, safety. Smart Curbs performed best regarding the measured safety, as our results show that, on average, participants spent significantly less time on the road, which in turn reduces their exposure to traffic. In the baseline or Projections condition, participants stepped onto the road earlier, although it was not (yet) safe to cross. We believe that this is also the reason why the baseline and Projections result in larger variations in crossing times (see Figure 5). Based on our observations, the display of the projected crosswalks immediately triggered the feeling in many participants that the road could be crossed safely, independently of the other vehicles. When they realized after stepping onto the first lane that this was not (yet) the case, they waited there until it was safe to cross, resulting in longer times on the first lane (i.e., standing on the roadway). With Smart Curbs, on the other hand, participants tended to only start walking when the entire road could be safely crossed, which resulted in less time spent on the road on average. We argue that spending less time on the road, and deciding to cross only when the entire road is free, reduces the risk of being hit by a car and optimizes traffic flow (as no lane is occupied by a crossing VRU). Therefore, Smart Curbs and the baseline worked more safely in this respect. This may be due to the fact that some participants developed overtrust (Holländer et al., 2019b) by looking at one vehicle projecting a zebra crossing and assuming that the whole road was safe to cross. This is also the situation in which the collision occurred in the Projections condition. While we acknowledge that in total only one collision occurred, it provides additional support that Smart Curbs can lead to safer crossing decisions. With Smart Curbs, participants tended to look around again. 
We assume this was due to the spread of the illuminated curbstone and participants tried to see the whole red and green areas of Smart Curbs.

The assessment of subjective feeling of safety showed only a tendency of higher agreement toward the Smart Curbs concept. For Smart Curbs, we received slightly higher positive agreement that participants felt safe to cross and could easily assess the vehicle behavior. In contrast, the question “I was not sure when I could start crossing” showed better results for the baseline condition. This could be because perceived safety was recorded after each of the three runs of a concept, including the first ones. Thus, the participants probably had to get used to the communication concepts in order to understand them, while they were more familiar with the baseline condition based on experience in everyday life.

These results indicate that we can accept Hypothesis 1 (“Smart Curbs will reduce the time pedestrians spend on the road and thereby increase safety”). For the assessment of subjective feeling of safety, there is only a small tendency toward Smart Curbs and therefore we cannot accept Hypothesis 2 (“Smart Curbs create a feeling of greater subjective safety for pedestrians than projections or no aid at all”) at this point.

Another key factor for a successful implementation of VRU-AV concepts is comprehensibility. This goes hand in hand with the safety a concept is capable of providing. After the three runs of each concept, we tested the participants' intuitive understanding of the respective concept. The baseline scored best in the categories “High perceived achievement of goals,” “Low perceived effort of learning,” “High familiarity,” and “Low perceived error rate.” This is reasonable, as all participants have interacted with this scenario many times in their lives. In the absence of a human driver (i.e., fully automated vehicle), pedestrians made their crossing decision mainly depending on the distance of the approaching car, which is in line with the findings of prior investigations (Oxley et al., 2005; Yannis et al., 2013; Moore et al., 2019; Dey et al., 2020c; Holländer et al., 2020b). However, we believe that explicit communication has the potential to enhance trust in automated driving and should therefore not be neglected (Noah et al., 2017; Wintersberger et al., 2018; Holländer et al., 2019b). Projections scored second best for intuitive understanding and slightly exceeded the baseline in the point “Low subjective mental workload.” This is in line with the statements of various participants who felt that they perceived the use of the crosswalk as intuitive since they know crosswalks already. Still, we believe that contradictory crosswalk projections remain a potential source of confusion. Although both concepts, Projections and Smart Curbs, use common traffic metaphors, the evaluation shows that Projections seem to be even more familiar to the participants than the traffic light metaphor of Smart Curbs. In terms of “Low perceived error rate,” Smart Curbs can catch up with Projections. In addition, participants rated Smart Curbs with about 80% as the best concept to easily assess the behavior of the vehicles (see Q3 in Figure 6). 
Nevertheless, we have to conclude that, considering the results from the QUESI questionnaire, the projection-based eHMI performed better in the VR evaluation for intuitive use, even though not significantly. Interestingly, in the drawing task, we found that Smart Curbs outperformed Projections. When evaluating complex traffic situations, participants needed significantly less time to detect an optimal crossing path. The detected paths were also more consistent among participants (see Figure 8), indicating that there were fewer doubts about the optimal path. Because the results of the QUESI questionnaires are not significant, with a tendency toward the projection-based eHMI over Smart Curbs (see Figure 7), and there is a discrepancy with the results from the drawing task, we cannot accept Hypothesis 3 (“Smart Curbs are easier to understand”).

Nevertheless, there are also strong hints from participants that Smart Curbs do provide better comprehensibility and that a longer familiarization phase is needed. For example, P1 stated that “[…] perception and assessment of the situation was easiest for [him] with the Smart Curbs.” P1 also mentioned: “[…] as soon as I understood the system [Smart Curbs], I only had to concentrate on the color of the curb and for the Projections concept […] you had to fix each car clearly and observe it well to draw your own conclusions […]”. P16 explained that they “clearly found the Smart Curbs better, because you didn't have to concentrate on the cars here,” referring to a faster overview of the traffic situation. On the other hand, this statement points to potential over-trust issues if users rely fully on external communication of autonomous vehicles (Holländer et al., 2019b). P17 claimed: “[Compared to Projections], you pay more attention to the cars, so the Smart Curbs are better, [however] it takes a while to get it.” In a similar vein, participant P2 said that “[…] it took a while to understand the principle of the curbs completely, however [I] think it is the best help for pedestrians.” These quotes indicate, in alignment with the results from the drawing task, that Smart Curbs might be more intuitive to use in complex traffic situations. On the other hand, it might take the user longer at the beginning to accept that it is not required to look at individual vehicles anymore. Further, users have to adapt the existing traffic light metaphor to a novel type of display that is spatially and temporally different from existing traffic lights. This might also explain why our findings, in terms of intuitive use, differ from those presented by Löcken et al. (2019). In their work, the smart infrastructure concept outperforms eHMI concepts in terms of intuitive use; however, they rely on an existing crosswalk design. 
Thus, in their comparison, the scalability problem, multi-user situations, and the offering of ubiquitous crossing support are not specifically targeted. We therefore argue that our results enrich the domain of VRU-AV interaction research with these perspectives.

In terms of feasibility, one limitation of our concept is that an information network of all vehicles and their planned routes would be needed. If such a system existed, it could also be used for other VRU guidance concepts, for example projection-based eHMIs or light bands located on the vehicle. However, we argue that Smart Curbs have the advantage of presenting information centrally and at the position where VRUs need it to make safe decisions. Thus, VRUs do not have to observe each vehicle individually and guess for whom the displayed information is relevant.

Due to the complexity and costs associated with a potential real-world implementation, we fell back on VR simulations. Furthermore, we did not want to risk the health of our participants (indeed a crash occurred). However, we acknowledge that VR simulations cannot represent the complexity of the real world. On the other hand, all of our participants reported a high perceived realism in the simulated urban environment (see also Deb et al., 2017; Hoggenmüller et al., 2021) and that they behaved as they would have in real traffic situations. A particular focus in our study was whether the concept is understandable for pedestrians in environments with multiple road users; in our case multiple vehicles and other pedestrians. Due to the limited space in our VR room, it is possible that participants would have moved differently if there were no space restrictions. To counteract the limited space, we additionally used a non-immersive prototype representation where participants could draw their potential walking routes from a bird's-eye view. We do not argue that this can replace immersive first-person view evaluations in VR. However, we think that a mixed methods approach, such as presented here, can help to mitigate the current limitations of laboratory VR evaluations, thereby allowing investigation of eHMI concepts in highly complex traffic situations.

From our study we cannot identify whether the results from comparing Smart Curbs with a projection-based eHMI are transferable to other eHMIs. We agree with Dey et al. (2020a) that a more systematic investigation across the most promising concepts is required. Also, the inconclusive results regarding H2 and H3 suggest that other, more reliable measures might have to be found to assess perceived safety and comprehensibility. In the long run, we agree with one of our reviewers who argued that these aspects can only truly be assessed in real world prototypes, as no simulation can believably claim to capture the complexity of all real world traffic scenarios.

Based on the discussion of our study results and reflecting on the design concepts and evaluation setup presented in this article, we outline a series of future research directions:

Enabling bidirectional communication: Implementing means for VRUs to communicate their intention to cross has received little attention so far (Gruenefeld et al., 2019). One idea to enable bidirectional communication is that Smart Curbs register approaching VRUs. Possible options to detect VRUs might be the integration of pressure sensors, cameras, or smartphone tracking. The sensitive zone should be close to the sidewalk and the road. By entering this zone, VRUs could communicate their crossing intention. As long as no VRU is around, Smart Curbs would operate in a sleep mode with the illumination turned off. Also, VRUs could easily exit such a zone if they no longer intend to cross the street. This approach would take advantage of the fact that most people intuitively approach the street when they want to cross (Holländer et al., 2020b).
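The proposed behavior can be sketched as a small decision function. This is only an illustration of the idea: the two boolean inputs (`vru_in_zone` from some detection mechanism, `road_clear` from the assumed vehicle information network) and all names are hypothetical, and a real system would need far more careful handling of sensing errors and timing.

```python
from enum import Enum


class CurbState(Enum):
    SLEEP = "off"    # illumination turned off, no VRU nearby
    GREEN = "green"  # crossing at this curb segment is indicated as safe
    RED = "red"      # crossing at this curb segment is indicated as unsafe


def curb_state(vru_in_zone, road_clear):
    """Choose the display state of one curb segment (hypothetical logic)."""
    if not vru_in_zone:
        # Nobody has signalled a crossing intention by entering the zone.
        return CurbState.SLEEP
    return CurbState.GREEN if road_clear else CurbState.RED
```

For example, `curb_state(False, True)` yields `CurbState.SLEEP`, reflecting that the curb stays dark until a VRU enters the sensitive zone, and a VRU who steps back out of the zone returns the segment to sleep mode.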

Addressing visually impaired VRUs: Systematic reviews have shown that the majority of eHMI concepts currently neglect the inclusion of VRUs with impairments (e.g., color or complete blindness) (Colley et al., 2019; Dey et al., 2020a). Future work needs to address this gap. We argue that the integration of audio signals might be more promising for infrastructure-based eHMI solutions such as Smart Curbs. Similar to existing accessible traffic lights, the audio output can be placed in close proximity to the VRU. Furthermore, VRUs do not have to pay attention to the audio signals of multiple vehicles.

Reducing complexity in implementation: For the successful uptake of eHMIs, the associated implementation complexity will be a key factor (Dey et al., 2020a). While acknowledging the higher cost and complexity associated with Smart Curbs, future work should investigate whether the curbstone display needs to be continuous along the road, or if single individual spotlights might be sufficient. Furthermore, introducing Smart Curbs requires an advanced city infrastructure; for example, curbs might not be a part of every street. As our approach showed that integrating VRU-AV communication in the infrastructure is promising, we hope to inspire fellow researchers with our study to develop other realizations apart from curbs. Future work should also address real world effects, in particular regarding the potential distractions of integrating illuminated curbs.

Testing interconnectivity for existing eHMI concepts: Our work was motivated, amongst other factors, by the failure of current eHMI concepts to take into account complex traffic scenarios with multiple vehicles. As we were able to demonstrate that Smart Curbs significantly reduced the time participants spent on the road in such scenarios, we argue that interconnectivity should be further explored for predominant eHMI designs (i.e., those that are attached to the vehicle). In this regard, new challenges might arise as each vehicle is no longer only communicating its own status and intent, but also taking into account the behavior of other vehicles in its communication message. Therefore, future research needs to address, in particular, the preferred message of communication for interconnected eHMI concepts.

Addressing additional evaluation scenarios: In our study and argumentation, we assumed that only vehicles of SAE automation levels four and five are driving. Therefore, it would be important to clarify in future studies how the Smart Curbs concept can work within mixed traffic environments including all levels of automation, i.e., also manually controlled cars with human drivers and vehicles of different types and sizes, including motorcycles and scooters. We acknowledge that the main focus of this work was the initial assessment of Smart Curbs; thus, we aimed to avoid introducing too many variables. As our study showed the potential of Smart Curbs for complex traffic situations, future work should also consider the influence of other vehicle types and how different driving styles affect safety and user experience. A line of recent research suggests that implicit cues (e.g., motion; Dey et al., 2017; Moore et al., 2019) are an important factor for pedestrians' crossing decisions. It is therefore our belief that eHMI concepts, such as Smart Curbs, constitute one means to improve pedestrian safety; however, other factors need to be considered as well to design safe and intuitive interactions between autonomous vehicles and VRUs. Future work should further consider multi-user virtual environments (Carlsson and Hagsand, 1993) instead of using computer-controlled virtual avatars in order to fully assess the influence of social aspects on crossing behavior.

Based on our results and discussion, we conclude that Smart Curbs could succeed in realistic traffic environments in terms of safety, comprehensibility, and acceptance. In direct comparison to previously studied projection-based eHMIs (Nguyen et al., 2019), we showed that Smart Curbs have the potential to further decrease the risk of accidents between autonomous vehicles and pedestrians. Using Smart Curbs, VRUs would no longer have to focus on multiple vehicles and their eHMIs, but could rely on information displayed at a focal point and situated within the immediate urban environment. This would make it easier for VRUs to recognize the intention of all vehicles at a glance and instantly receive guidance on where to best cross a road. We showed that Smart Curbs lower the time that pedestrians spend on the road. This, we argue, not only has implications for safety, but can also enhance traffic flow, as VRUs are less likely to block a lane while crossing. Speculating on the upgrade of urban environments with smart technologies, our work contributes to the domain of AV-VRU communication research by offering a starting point for addressing the scalability problem of eHMIs.

The original contributions presented in the study are included in the article/Supplementary Material, further inquiries can be directed to the corresponding author/s.

The studies involving human participants were reviewed and approved by LMU Munich Ethics Committee Mediainformatics. The patients/participants provided their written informed consent to participate in this study.

KH and MH conceptualized the work. RG created the study setup. AB and SV refined the final presentation of this work. All authors contributed to the article and approved the submitted version.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fcomp.2022.844245/full#supplementary-material

1. ^https://www.drivingelectric.com/citroen/c-zero, last accessed: September 2021.

2. ^For a classification of protected and unprotected road users we refer to the taxonomy introduced by Holländer et al. (2021).

3. ^https://assetstore.unity.com/packages/3d/environments/urban/nyc-block-6-16272, last accessed September 2021.

4. ^https://www.mixamo.com, last accessed September 2021.

Argin, G., Pak, B., and Turkoglu, H. (2020). Between post-flâneur and smartphone zombie: smartphone users' altering visual attention and walking behavior in public space. ISPRS Int. J. Geo Inf. 9, 700. doi: 10.3390/ijgi9120700

Bakker, S., Hausen, D., and Selker, T. (2016). Peripheral Interaction: Challenges and Opportunities for HCI in the Periphery of Attention, 1st Edn. Berlin: Springer Publishing Company.

Bazilinskyy, P., Dodou, D., and de Winter, J. (2019). Survey on ehmi concepts: The effect of text, color, and perspective. Transp. Res. Part F Traffic Psychol. Behav. 67, 175–194. doi: 10.1016/j.trf.2019.10.013

Böckle, M.-P., Brenden, A. P., Klingegård, M., Habibovic, A., and Bout, M. (2017). “Sav2p: exploring the impact of an interface for shared automated vehicles on pedestrians' experience,” in Proceedings of the 9th International Conference on Automotive User Interfaces and Interactive Vehicular Applications Adjunct, AutomotiveUI '17 (New York, NY: Association for Computing Machinery), 136–140.

CGactive LLC. (2009). Available online at: https://www.type-n-walk.com (accessed April 1, 2021).

Charisi, V., Habibovic, A., Andersson, J., Li, J., and Evers, V. (2017). “Children's views on identification and intention communication of self-driving vehicles,” in Proceedings of the 2017 Conference on Interaction Design and Children, IDC '17 (New York, NY: Association for Computing Machinery), 399–404.

Colley, M., Walch, M., Gugenheimer, J., and Rukzio, E. (2019). “Including people with impairments from the start: external communication of autonomous vehicles,” in Proceedings of the 11th International Conference on Automotive User Interfaces and Interactive Vehicular Applications: Adjunct Proceedings AutomotiveUI '19 (New York, NY: Association for Computing Machinery), 307–314.

Colley, M., Walch, M., and Rukzio, E. (2020). “Unveiling the lack of scalability in research on external communication of autonomous vehicles,” in Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems CHI EA '20 (New York, NY: Association for Computing Machinery), 1–9.

Deb, S., Carruth, D. W., Sween, R., Strawderman, L., and Garrison, T. M. (2017). Efficacy of virtual reality in pedestrian safety research. Appl. Ergon. 65, 449–460. doi: 10.1016/j.apergo.2017.03.007

Dey, D., Habibovic, A., Löcken, A., Wintersberger, P., Pfleging, B., Riener, A., et al. (2020a). Taming the ehmi jungle: a classification taxonomy to guide, compare, and assess the design principles of automated vehicles' external human–machine interfaces. Transp. Res. Interdiscipl. Perspect. 7, 100174. doi: 10.1016/j.trip.2020.100174

Dey, D., Habibovic, A., Pfleging, B., Martens, M., and Terken, J. (2020b). “Color and animation preferences for a light band ehmi in interactions between automated vehicles and pedestrians,” in Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems CHI '20 (New York, NY: Association for Computing Machinery), 1–13.

Dey, D., Holländer, K., Berger, M., Eggen, B., Martens, M., Pfleging, B., and Terken, J. (2020c). “Distance-dependent ehmis for the interaction between automated vehicles and pedestrians,” in 12th International Conference on Automotive User Interfaces and Interactive Vehicular Applications AutomotiveUI '20 (New York, NY: Association for Computing Machinery), 192–204.

Dey, D., Martens, M., Eggen, B., and Terken, J. (2017). “The impact of vehicle appearance and vehicle behavior on pedestrian interaction with autonomous vehicles,” in Proceedings of the 9th International Conference on Automotive User Interfaces and Interactive Vehicular Applications Adjunct AutomotiveUI '17 (New York, NY: Association for Computing Machinery), 158–162.

Dey, D., Martens, M., Eggen, B., and Terken, J. (2019). Pedestrian road-crossing willingness as a function of vehicle automation, external appearance, and driving behaviour. Transp. Res. Part F Traffic Psychol. Behav. 65, 191–205. doi: 10.1016/J.TRF.2019.07.027

Dey, D., Matviienko, A., Berger, M., Pfleging, B., Martens, M., and Terken, J. (2021). Communicating the intention of an automated vehicle to pedestrians: the contributions of ehmi and vehicle behavior. it - Inf. Technol. 63, 123–141. doi: 10.1515/itit-2020-0025

Eisma, Y., Reiff, A., Kooijman, L., Dodou, D., and de Winter, J. (2021). External human-machine interfaces: Effects of message perspective. Transp. Res. Part F Traffic Psychol. Behav. 78, 30–41. doi: 10.1016/j.trf.2021.01.013

Forum, T. I. T. (2013). Towards Zero: Ambitious Road Safety Targets and the Safe System Approach. Available online at: http://www.internationaltransportforum.org/jtrc/safety/targets/targets.html

Gruenefeld, U., Weiß, S., Löcken, A., Virgilio, I., Kun, A. L., and Boll, S. (2019). “Vroad: Gesture-based interaction between pedestrians and automated vehicles in virtual reality,” in Proceedings of the 11th International Conference on Automotive User Interfaces and Interactive Vehicular Applications: Adjunct Proceedings AutomotiveUI '19 (New York, NY: Association for Computing Machinery), 399–404.

Hoggenmüller, M., Tomitsch, M., Hespanhol, L., Tran, T. T. M., Worrall, S., and Nebot, E. (2021). “Context-based interface prototyping: understanding the effect of prototype representation on user feedback,” in Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems CHI '21 (New York, NY: Association for Computing Machinery).

Holländer, K., Krüger, A., and Butz, A. (2020a). “Save the smombies: App-assisted street crossing,” in Proceedings of the 22nd International Conference on Human-Computer Interaction with Mobile Devices and Services MobileHCI 2020 (New York, NY: ACM).

Holländer, K., Colley, A., Mai, C., Häkkilä, J., Alt, F., and Pfleging, B. (2019a). “Investigating the influence of external car displays on pedestrians' crossing behavior in virtual reality,” in Proceedings of the 21st International Conference on Human-Computer Interaction with Mobile Devices and Services MobileHCI 2019 (New York, NY: ACM).

Holländer, K., Colley, M., Rukzio, E., and Butz, A. (2021). A taxonomy of vulnerable road users for hci based on a systematic literature review.

Holländer, K., Schellenberg, L., Ou, C., and Butz, A. (2020b). “All fun and games: obtaining critical pedestrian behavior data from an online simulation,” in Extended Abstracts, CHI Conference on Human Factors in Computing Systems CHI'20 Adjunct Proceedings (New York, NY: ACM).

Holländer, K., Wintersberger, P., and Butz, A. (2019b). “Overtrust in external cues of automated vehicles: An experimental investigation,” in Proceedings of the 11th International Conference on Automotive User Interfaces and Interactive Vehicular Applications AutomotiveUI '19 (New York, NY: ACM).

Hwang, T., Jeong, J. P., and Lee, E. (2014). “Sana: safety-aware navigation app for pedestrian protection in vehicular networks,” in 2014 International Conference on Information and Communication Technology Convergence (ICTC) (Busan), 947–953.

Jiang, D., Worrall, S., and Shan, M. (2021). What is the appropriate speed for an autonomous vehicle? designing a pedestrian aware contextual speed controller.

Joshi, A., Kale, S., Chandel, S., and Pal, D. K. (2015). Likert scale: explored and explained. Curr. J. Appl. Sci. Technol. 7, 396–403. doi: 10.9734/BJAST/2015/14975

Lee, J., Wickens, C., Liu, Y., and Boyle, L. (2017). Designing for People: An Introduction To Human Factors Engineering.

Löcken, A., Golling, C., and Riener, A. (2019). “How should automated vehicles interact with pedestrians? a comparative analysis of interaction concepts in virtual reality,” in Proceedings of the 11th International Conference on Automotive User Interfaces and Interactive Vehicular Applications AutomotiveUI '19 (New York, NY: Association for Computing Machinery), 262–274.

Mahadevan, K., Somanath, S., and Sharlin, E. (2018). “Communicating awareness and intent in autonomous vehicle-pedestrian interaction,” in Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems CHI '18 (New York, NY: Association for Computing Machinery), 1–12.

Maurer, M.-E. (2013). likertplot.com - plot likert scales. Available online at: http://www.likertplot.com/

Faas, S. M., Kraus, J., Schoenhals, A., and Baumann, M. (2021). “Calibrating pedestrians' trust in automated vehicles: does an intent display in an external hmi support trust calibration and safe crossing behavior?” in Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems CHI '21 (New York, NY: Association for Computing Machinery).

Mobility and Transport, European Commission. (2021). Road Safety Pedestrians and Cyclists. Available online at: https://ec.europa.eu/transport/road_safety/specialist/knowledge/pedestrians_en

Moore, D., Currano, R., Shanks, M., and Sirkin, D. (2020a). “Defense against the dark cars: design principles for griefing of autonomous vehicles,” in HRI '20: Proceedings of the 2020 ACM/IEEE International Conference on Human-Robot Interaction (New York, NY: Association for Computing Machinery), 201–209.

Moore, D., Currano, R., and Sirkin, D. (2020b). “Sound decisions: how synthetic motor sounds improve autonomous vehicle-pedestrian interactions,” in 12th International Conference on Automotive User Interfaces and Interactive Vehicular Applications AutomotiveUI '20 (New York, NY: Association for Computing Machinery), 94–103.

Moore, D., Currano, R., Strack, G. E., and Sirkin, D. (2019). “The case for implicit external human-machine interfaces for autonomous vehicles,” in Proceedings of the 11th International Conference on Automotive User Interfaces and Interactive Vehicular Applications AutomotiveUI '19 (New York, NY: Association for Computing Machinery), 295–307.

Mora, L., Wu, X., and Panori, A. (2020). Mind the gap: developments in autonomous driving research and the sustainability challenge. J. Clean. Prod. 275, 124087. doi: 10.1016/j.jclepro.2020.124087

Morby, A. (2015). Büro North proposes ground-level traffic lights to prevent pedestrian accidents. Available online at: https://www.dezeen.com/2016/07/28/movie-buronorth-ground-level-traffic-lights-prevent-pedestrian-accidents-video/

Nasar, J. L. (2013). Pedestrian injuries due to mobile phone use in public places. Accid. Anal. Prevent. 57, 91–95. doi: 10.1016/j.aap.2013.03.021

Naumann, A., and Hurtienne, J. (2010). “Benchmarks for intuitive interaction with mobile devices,” in Proceedings of the 12th International Conference on Human Computer Interaction with Mobile Devices and Services (Lisbon: ACM), 401–402. doi: 10.1145/1851600.1851685

Nguyen, T. T., Holländer, K., Hoggenmueller, M., Parker, C., and Tomitsch, M. (2019). “Designing for projection-based communication between autonomous vehicles and pedestrians,” in Proceedings of the 11th International Conference on Automotive User Interfaces and Interactive Vehicular Applications AutomotiveUI '19 (New York, NY: ACM).

Noah, B. E., Wintersberger, P., Mirnig, A. G., Thakkar, S., Yan, F., Gable, T. M., et al. (2017). “First workshop on trust in the age of automated driving,” in Proceedings of the 9th International Conference on Automotive User Interfaces and Interactive Vehicular Applications Adjunct AutomotiveUI '17 (New York, NY: Association for Computing Machinery), 15–21.

Society of Automotive Engineers. SAE International Releases Updated Visual Chart for Its “Levels of Driving Automation” Standard for Self-Driving Vehicles. Available online at: https://www.sae.org/news/press-room/2018/12/sae-international-releases-updated-visual-chart-for-its-%E2%80%9Clevels-of-driving-automation%E2%80%9D-standard-for-self-driving-vehicles (accessed April 14, 2021).

Oxley, J. A., Ihsen, E., Fildes, B. N., Charlton, J. L., and Day, R. H. (2005). Crossing roads safely: an experimental study of age differences in gap selection by pedestrians. Accid. Anal. Prevent. 37, 962–971. doi: 10.1016/j.aap.2005.04.017

Pelikan, H. R. (2021). “Why Autonomous Driving Is So Hard: The Social Dimension of Traffic,” in HRI '21 Companion: Companion of the 2021 ACM/IEEE International Conference on Human-Robot Interaction (New York, NY: Association for Computing Machinery), 81–85.

Rasouli, A., Kotseruba, I., and Tsotsos, J. K. (2017). “Agreeing to cross: how drivers and pedestrians communicate,” in 2017 IEEE Intelligent Vehicles Symposium (IV) (Los Angeles, CA), 264–269.

Rasouli, A., and Tsotsos, J. K. (2019). Autonomous vehicles that interact with pedestrians: a survey of theory and practice. IEEE Trans. Intell. Transp. Syst. 21, 900–918. doi: 10.1109/TITS.2019.2901817

Rouchitsas, A., and Alm, H. (2019). External human–machine interfaces for autonomous vehicle-to-pedestrian communication: a review of empirical work. Front. Psychol. 10, 2757. doi: 10.3389/fpsyg.2019.02757

Schwind, V., Knierim, P., Haas, N., and Henze, N. (2019). “Using presence questionnaires in virtual reality,” in CHI '19: Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (New York, NY: Association for Computing Machinery), 1–12.

Sucha, M., Dostal, D., and Risser, R. (2017). Pedestrian-driver communication and decision strategies at marked crossings. Accid. Anal. Prevent. 102, 41–50. doi: 10.1016/j.aap.2017.02.018

Umbrellium (2017). Starling crossing: interactive pedestrian crossing. Available online at: https://umbrellium.co.uk/projects/starling-crossing/

Verma, H., Evéquoz, F., Pythoud, G., Eden, G., and Lalanne, D. (2019). “Engaging pedestrians in designing interactions with autonomous vehicles,” in Extended Abstracts of the 2019 CHI Conference on Human Factors in Computing Systems CHI EA '19 (New York, NY: Association for Computing Machinery), 1–6.

Wang, T., Cardone, G., Corradi, A., Torresani, L., and Campbell, A. T. (2012). “Walksafe: a pedestrian safety app for mobile phone users who walk and talk while crossing roads,” in Proceedings of the Twelfth Workshop on Mobile Computing Systems & Applications HotMobile '12 (New York, NY: Association for Computing Machinery).

Willett, W., Jansen, Y., and Dragicevic, P. (2017). Embedded data representations. IEEE Trans. Visual. Comput. Graph. 23, 461–470. doi: 10.1109/TVCG.2016.2598608

Wintersberger, P., Noah, B. E., Kraus, J., McCall, R., Mirnig, A. G., Kunze, A., Thakkar, S., and Walker, B. N. (2018). “Second workshop on trust in the age of automated driving,” in Adjunct Proceedings of the 10th International Conference on Automotive User Interfaces and Interactive Vehicular Applications AutomotiveUI '18 (New York, NY: Association for Computing Machinery), 56–64.

Keywords: smart cities, eHMIs, city infrastructure, automated vehicles, VRUs, AV-VRU communication, human computer interaction (HCI)

Citation: Holländer K, Hoggenmüller M, Gruber R, Völkel ST and Butz A (2022) Take It to the Curb: Scalable Communication Between Autonomous Cars and Vulnerable Road Users Through Curbstone Displays. Front. Comput. Sci. 4:844245. doi: 10.3389/fcomp.2022.844245

Received: 27 December 2021; Accepted: 14 February 2022; Published: 15 April 2022.

Edited by: