- Management College, Beijing Union University, Beijing, China

Introduction: Blended learning combines the strengths of online and offline teaching and has become a popular approach in higher education. Despite its advantages, maintaining and enhancing students’ continuous learning motivation in this mode remains a significant challenge.

Methods: This study utilizes questionnaire surveys and structural equation modeling to examine the role of AI performance assessment in influencing students’ continuous learning motivation in a blended learning environment.

Results: The results indicate that AI performance assessment positively influences students’ continuous learning motivation indirectly through expectation confirmation, perceived usefulness, and learning satisfaction. However, AI performance assessment alone does not have a direct impact on continuous learning motivation.

Discussion: To address these findings, this study suggests measures to improve the effectiveness of AI performance assessment systems in blended learning. These include providing diverse evaluation metrics, recommending personalized learning paths, offering timely and detailed feedback, fostering teacher-student interactions, improving system quality and usability, and visualizing learning behaviors for better tracking.

1 Introduction

With the rapid advancement of information technology, particularly the rise of artificial intelligence (AI), educational technology has gained widespread application. Blended learning combines the advantages of online and offline instruction, offering a more flexible and efficient teaching model for higher education (Yang et al., 2023). However, a persistent challenge in this teaching model is how to continuously stimulate students’ learning motivation (Zhang et al., 2016). Although research indicates that blended learning can enhance students’ learning experiences and engagement, their sustained motivation often remains limited. This limitation arises from the higher demands placed on students’ self-discipline and autonomous learning capabilities, causing some students to feel lost or unmotivated during their studies (Wang and Huang, 2023).

While numerous studies have explored the role of AI in enhancing educational outcomes, few have delved into how AI-enabled assessment systems affect students’ sustained motivation in blended learning environments. Existing research largely focuses on the technical advantages of AI in optimizing teaching processes or providing personalized feedback (Guo et al., 2023; Rad et al., 2023). However, understanding the mechanisms through which these systems operate—particularly how they influence key motivational constructs such as expectation confirmation, perceived usefulness, and learning satisfaction in an integrated teaching model—remains limited. This study addresses this research gap by applying the Expectation Confirmation Model (ECM) and Self-Determination Theory (SDT) to explore how AI performance assessment systems can enhance students’ sustained motivation in blended learning.

The potential theoretical contributions of this study include verifying the impact of AI performance assessment on students’ learning experiences and expanding the application of the ECM and SDT. By analyzing the influence of AI performance assessment technology in blended learning, this research reveals the critical roles of expectation confirmation and perceived usefulness in enhancing students’ motivation for continuous learning, providing a theoretical foundation for future research in related fields. Practically, this study offers valuable insights for educational institutions and technology developers. The findings suggest that AI performance assessment systems should focus on designing personalized feedback and enhancing perceived usefulness to improve student learning satisfaction and intentions for continuous learning. Effective application of technology in blended learning environments can significantly enhance students’ learning experiences and provide strong support for long-term motivation.

2 Literature review and research hypotheses

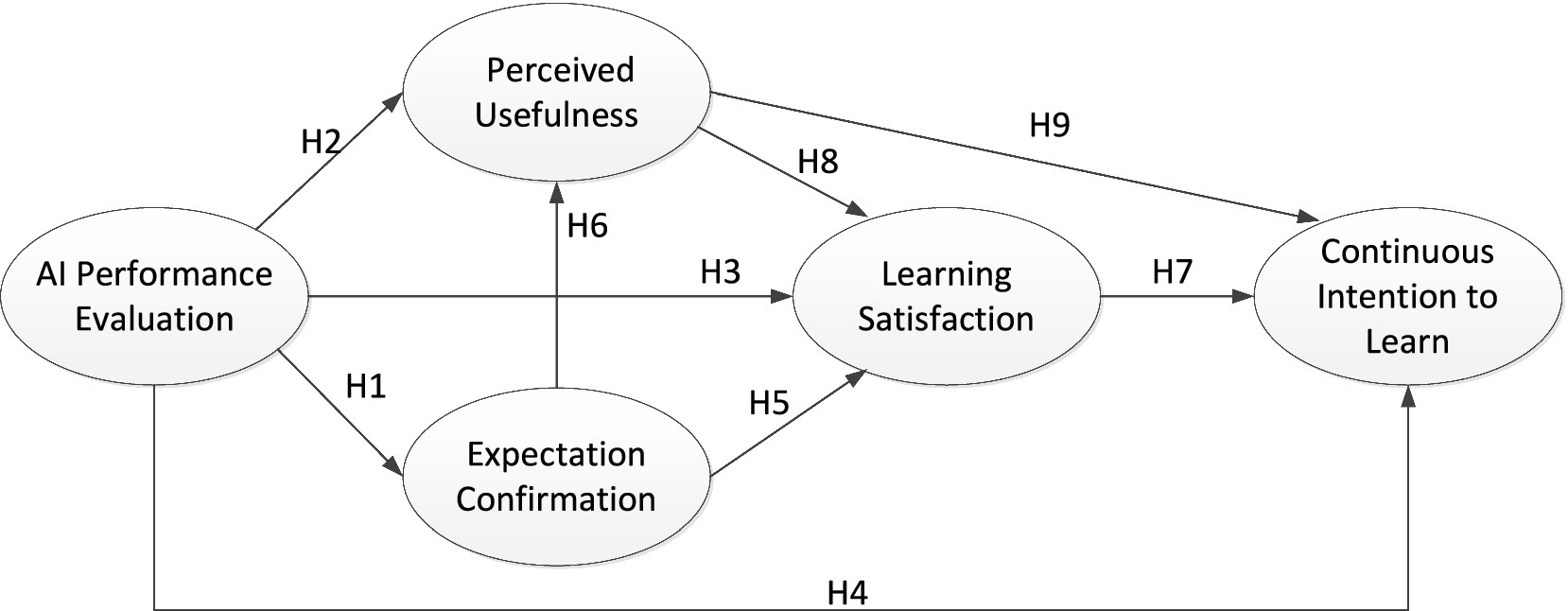

Figure 1 presents the conceptual framework of this study, which examines the influence of AI-driven performance assessment on students’ continuous intention to learn within a blended learning environment. This model is grounded in two primary theoretical frameworks: Self-Determination Theory and the Expectation Confirmation Model. These theories underscore the essential psychological and motivational factors that contribute to sustained learning engagement and satisfaction. Within this framework, AI performance assessment functions as an independent variable, influencing three key psychological responses among students: expectation confirmation, perceived usefulness, and learning satisfaction. These responses collectively shape students’ continuous intention to learn.

Specifically, expectation confirmation reflects the extent to which students perceive that their experience with AI assessment aligns with or surpasses their initial expectations. When students’ expectations are met or exceeded, they are more likely to view the assessment as both useful and satisfying. Perceived usefulness, on the other hand, captures students’ beliefs about the value that AI assessment adds to their learning experience and outcomes; higher perceived usefulness is often linked to greater satisfaction and a stronger inclination to continue utilizing AI tools for learning. Learning satisfaction encompasses students’ overall evaluation of their experience with AI-driven assessment, shaped by factors such as personalized feedback, autonomy, and real-time support provided by the AI system. Satisfied students are generally more inclined to engage persistently in the learning model.

This framework posits several causal relationships among these constructs, suggesting that continuous intention to learn is influenced by both satisfaction and perceived usefulness derived from AI performance assessments. With this conceptual foundation in place, the specific hypotheses are developed as follows.

2.1 The impact of AI performance assessment on students

SDT posits that individual motivation is driven by three fundamental psychological needs: autonomy, competence, and relatedness (Ryan, 2017). In blended learning environments, AI grading systems enhance students’ sense of control over their learning processes by providing personalized feedback and immediate support, thereby fostering greater engagement and sustained effort in their studies.

Firstly, AI grading systems facilitate a better understanding of students’ learning progress and areas needing improvement through real-time, personalized feedback. This tailored feedback not only boosts students’ confidence but also significantly enhances learning outcomes by helping them adjust their learning strategies (Guo et al., 2023; Rad et al., 2023). Personalized feedback is particularly effective in blended learning contexts, as it allows students to receive direct responses during their self-directed learning, thereby reducing uncertainty and maintaining high levels of participation (Lim et al., 2020). This feedback mechanism is a crucial factor in expectation confirmation, as it helps students verify whether their learning progress aligns with their goals (Wang and Lehman, 2021). When students receive immediate and specific feedback during their learning journey, they are more likely to affirm their expectations and recognize their progress, thus enhancing their motivation for continued learning. Therefore, we propose the following hypothesis:

H1: AI Performance Assessment positively influences the level of expectation confirmation among students in blended learning.

Additionally, AI Performance Assessment systems help students identify knowledge gaps and adjust their learning strategies promptly through automated grading and personalized feedback. This immediate and detailed feedback enhances students’ sense of control over their learning progress in blended learning, reducing their anxiety while waiting for responses (Riezebos et al., 2023) and clarifying how blended learning supports their education. SDT emphasizes that greater autonomy in tasks fosters intrinsic motivation. When students recognize that the system can quickly and accurately identify their learning needs and provide effective improvement suggestions, their perceived usefulness increases significantly (Troussas et al., 2023). Furthermore, students gradually realize that the system not only addresses their current learning challenges but also continuously supports their academic progress, leading to overall performance improvements (Pulfrey et al., 2011). Consequently, students’ trust in and reliance on the blended learning system increase, further reinforcing their perception of the system’s importance and usefulness in achieving learning objectives. Therefore, we propose the following hypothesis:

H2: AI Performance Assessment positively influences students' perceived usefulness in blended learning.

Furthermore, AI Performance Assessment systems can effectively enhance students’ satisfaction with blended learning. Learning satisfaction refers to students’ overall evaluation of their learning experiences and is influenced by various factors, including the timeliness of feedback, fulfillment of learning outcomes, and personalized support (Huo et al., 2018). AI systems utilize intelligent analysis and algorithms to quickly identify students’ learning needs and provide timely, personalized support and suggestions. This prompt feedback not only meets students’ learning requirements but also enhances their learning experience and recognition of learning outcomes (Gong et al., 2021). When students perceive that their learning needs are effectively addressed, their satisfaction increases significantly (Rad et al., 2023). Particularly in blended learning environments, students require more personalized support; the feedback mechanisms provided by AI help them feel a greater sense of attention, further improving overall learning satisfaction (Huo et al., 2018). Therefore, we propose the following hypothesis:

H3: AI Performance Assessment positively influences students' satisfaction with blended learning.

Finally, AI Performance Assessment systems can directly enhance students’ willingness to engage in continuous learning. The concept of “willingness” refers to students’ readiness and motivation to pursue ongoing learning opportunities, which is essential for academic success. According to SDT, intrinsic motivation plays a critical role in this process. When students experience a sense of autonomy and control over their learning, their self-efficacy increases significantly (Chen and Hoshower, 2003). AI systems provide immediate feedback and personalized learning path recommendations, helping students gain a clearer understanding of their learning progress. This sense of control boosts students’ confidence and encourages them to adjust their learning strategies based on feedback. When students can adapt their strategies in a timely manner and witness their own progress, their self-efficacy improves significantly (Pulfrey et al., 2011), thereby fostering their willingness to continue learning. Research indicates that AI-based feedback mechanisms not only enhance students’ academic performance but also effectively boost their intrinsic motivation (Fidan and Gencel, 2022). Therefore, we propose the following hypothesis:

H4: AI Performance Assessment positively influences students' willingness to engage in continuous learning in blended environments.

2.2 Expectation confirmation model and blended learning

The ECM was developed by Bhattacherjee (2001) based on Oliver’s (1980) Expectation Confirmation Theory (ECT). Bhattacherjee (2001) posited that users’ behavior in using information systems is highly consistent with consumer behavior in purchasing, where the users’ experience with the information system impacts their willingness to continue using it. ECM merges the pre-purchase expectations and post-purchase perceived effects in ECT into a single variable: perceived usefulness. It suggests that the continued use intention of information technology or information systems is influenced by perceived usefulness, expectation confirmation, and satisfaction. Hayashi et al. (2004) noted that the main difference between ECT and ECM is that ECM focuses on constructs after the technology’s use.

In recent years, the ECM has been widely applied in educational technology research, demonstrating its effectiveness in explaining the sustained use of information technology in education. According to the ECM, after using educational technology, students reevaluate the technology’s usefulness by assessing the difference between their actual experience and their expectations, which then influences their satisfaction and their intention to continue using the technology (Limayem and Cheung, 2008; Shin et al., 2011).

Specifically, in a blended learning environment, when students experience high levels of expectation confirmation, they are more likely to perceive the blended learning model as useful. This perceived usefulness encompasses not only students’ recognition of the teaching tools and resources used in blended learning but also their positive evaluation of learning outcomes in this teaching model (Kumar et al., 2021). Therefore, we hypothesize:

H5: Students' degree of expectation confirmation positively influences their perceived usefulness in blended learning.

Furthermore, the ECM suggests that when students’ learning experiences meet or exceed their expectations, they exhibit higher learning satisfaction. In blended learning, this satisfaction comes not only from the learning outcomes but also from the learning process and resources (Gong et al., 2021). Satisfaction with the learning resources and teaching process in blended learning will enhance students’ overall learning experience and their willingness to continue learning. Therefore, we hypothesize:

H6: Students' degree of expectation confirmation positively influences their learning satisfaction in blended learning.

According to ECM theory, satisfaction is a key factor influencing the intention to continue using a system. In a blended learning environment, when students are satisfied with the learning process and outcomes, they are more motivated to continue using this teaching model. Students with high satisfaction are not only content with their current learning but also maintain a positive attitude towards future blended learning, thereby enhancing their willingness for continuous learning (Chen et al., 2022). Therefore, we hypothesize:

H7: Learning satisfaction positively influences students' willingness for continuous blended learning.

Perceived usefulness is an important factor affecting student satisfaction. For example, studies have shown that course quality and perceived practicality significantly influence college students’ satisfaction and behavioral intentions (Chen et al., 2022). Additionally, research has found that blended learning significantly improves students’ motivation, emotional state, and satisfaction compared to traditional teaching methods (Lozano-Lozano et al., 2020). If students perceive blended learning as beneficial to their learning outcomes, they are more likely to be satisfied with the entire blended learning experience. Therefore, we hypothesize:

H8: Students' perceived usefulness of blended learning positively influences their satisfaction with blended learning.

Perceived usefulness not only affects student satisfaction but also directly influences their continuous learning intention. Studies have shown that information quality and self-efficacy are key factors determining student satisfaction and continued learning intentions (Li and Phongsatha, 2022). Research during the pandemic has also shown that information quality and self-efficacy significantly influence the intention to continue using Learning Management Systems (LMS) (Alzahrani and Seth, 2021). These studies suggest that when students find blended learning clearly beneficial to their learning, they are not only satisfied with their current learning experience but also more willing to continue using this learning method. This continuous use intention reflects students’ recognition of their current learning experience and predicts their positive attitude towards future learning. Therefore, we hypothesize:

H9: Students' perceived usefulness of blended learning positively influences their continuous learning intention in a blended learning environment.
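Taken together, H1 to H9 describe a set of directed paths among five constructs. The following is a minimal sketch of that structure, not part of the study's analysis; the abbreviations AIPA, EC, PU, LS, and CLI are labels introduced here for illustration only.

```python
# Hypothesized paths H1-H9 as (predictor, outcome) pairs.
# Abbreviations are illustrative, not taken from the questionnaire:
# AIPA = AI performance assessment, EC = expectation confirmation,
# PU = perceived usefulness, LS = learning satisfaction,
# CLI = continuous learning intention.
HYPOTHESES = {
    "H1": ("AIPA", "EC"),
    "H2": ("AIPA", "PU"),
    "H3": ("AIPA", "LS"),
    "H4": ("AIPA", "CLI"),
    "H5": ("EC", "PU"),
    "H6": ("EC", "LS"),
    "H7": ("LS", "CLI"),
    "H8": ("PU", "LS"),
    "H9": ("PU", "CLI"),
}


def predictors_of(outcome):
    """Return the constructs hypothesized to influence `outcome` directly."""
    return sorted(src for src, dst in HYPOTHESES.values() if dst == outcome)


def exogenous_constructs():
    """Return constructs that appear only as predictors, never as outcomes."""
    sources = {src for src, _ in HYPOTHESES.values()}
    targets = {dst for _, dst in HYPOTHESES.values()}
    return sorted(sources - targets)


if __name__ == "__main__":
    print("Direct predictors of CLI:", predictors_of("CLI"))
    print("Exogenous constructs:", exogenous_constructs())
```

Written this way, AI performance assessment is the only construct without a hypothesized predictor, and continuous learning intention has three hypothesized direct predictors (H4, H7, and H9).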

3 Research methodology

3.1 Participants

This study targeted undergraduate business students at the university, covering various disciplines such as accounting, finance, marketing, and international trade. The gender distribution among participants was relatively balanced, with approximately 48% male and 52% female. The grade distribution was as follows: 30% first-year students, 25% second-year, 28% third-year, and 17% fourth-year students. In terms of academic performance, 35% of participants were in the top 20% of their class, 50% were at an average level, and 15% were in the lower percentile. Additionally, 32% of participants reported having prior experience with AI technology.

3.2 Data collection procedure

The questionnaire was designed and distributed via an online platform (Wenjuanxing). The introduction of the survey included a brief explanation of the study’s purpose, privacy protection measures, and the principle of voluntary participation. It was emphasized that all data would be anonymized and used solely for academic research, and participants could withdraw at any time without affecting their academic performance or other rights. The questionnaire link was shared through WeChat class and course groups, allowing students to complete it online by clicking the link.

To boost participation, the research team sent regular reminders via WeChat class and course groups throughout the two-week survey period, with two additional reminders sent during the final 3 days to encourage students to complete the survey promptly. A total of 282 questionnaires were distributed, and 202 valid responses were collected, yielding a valid response rate of 71.6% (202/282). Invalid or incomplete questionnaires were removed during the data-cleaning process to ensure data quality and reliability.

3.3 Scale design

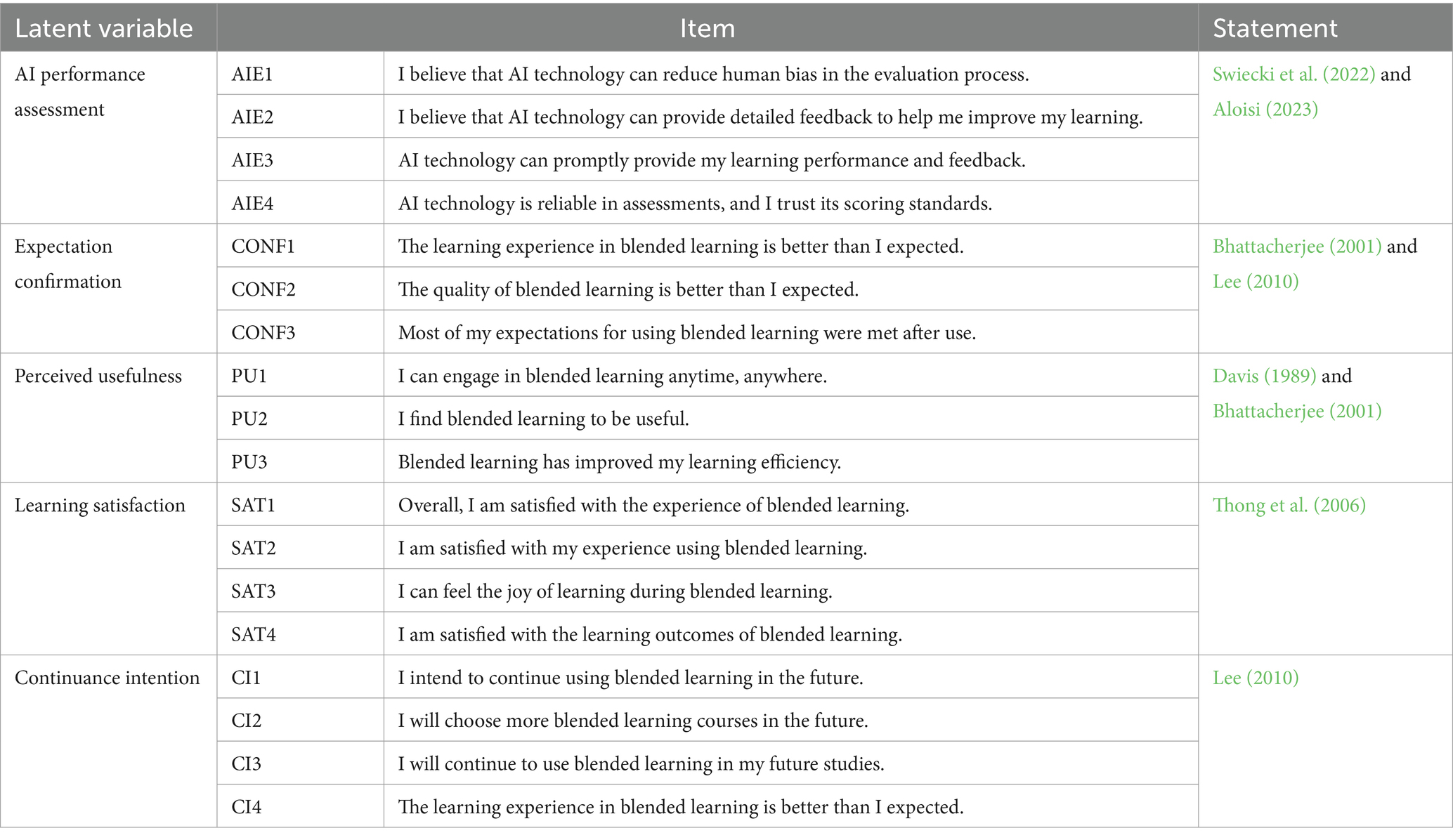

The questionnaire scales used in this study were primarily based on established and validated scales. The AI performance assessment was designed according to the findings of Swiecki et al. (2022) and Aloisi (2023). The perceived usefulness scale was derived from Davis et al. (1989) and Bhattacherjee (2001); the student expectation confirmation scale referenced Bhattacherjee (2001) and Lee (2010); the student satisfaction scale was based on Thong et al. (2006); and the intention to continue mobile learning scale was adapted from Lee (2010).

After the initial design of the questionnaire, we invited three experts in the field of educational technology to review it. They provided valuable feedback on the relevance, wording, and clarity of the items. Based on their suggestions, we revised the wording to ensure that each item accurately reflects its corresponding construct and is easily understood by participants.

To further verify the reliability of the questionnaire, we conducted a pilot study with 30 students who shared similar characteristics with the main sample. The results showed that the Cronbach’s α coefficients for all scales exceeded 0.75, indicating good internal consistency. Based on feedback from the pilot study, we made minor adjustments to certain items to enhance the reliability and validity of the scales.

All scales employed a 7-point Likert scale, ranging from “1” (strongly disagree) to “7” (strongly agree). At the beginning of the questionnaire, we provided clear instructions emphasizing voluntary participation and data confidentiality. We also ensured uniformity in the data collection process, with all participants completing the questionnaire under the same conditions to minimize the influence of external factors on the data.

3.4 Data analysis and model evaluation

This study employed Structural Equation Modeling (SEM) for data analysis and hypothesis testing. SEM is a multivariate statistical technique that simultaneously addresses measurement and structural models, making it suitable for analyzing complex relationships between latent variables.

To ensure the validity and reliability of the SEM analysis, sample size adequacy is crucial. Following the guideline of 5 to 10 observations per item attributed to Hair et al. (2019), the 18 observed variables in this study (measuring 5 latent constructs) imply a minimum sample size of 90 (18 × 5) and a more conservative target of 180 (18 × 10). With 202 valid questionnaires collected, the sample exceeds both figures, meeting this requirement and supporting the stability of parameter estimates.
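The ratio rule above is simple arithmetic. A minimal sketch follows; the function names are hypothetical, and the 5:1 and 10:1 ratios are the ones quoted in the text.

```python
def sample_size_range(n_items, low_ratio=5, high_ratio=10):
    """Return (minimum, conservative target) sample sizes for a ratio rule."""
    return n_items * low_ratio, n_items * high_ratio


def meets_rule(n_valid, n_items, ratio):
    """Check whether the valid sample reaches `ratio` observations per item."""
    return n_valid >= n_items * ratio


if __name__ == "__main__":
    minimum, target = sample_size_range(18)
    print(f"minimum = {minimum}, conservative target = {target}")
    print("202 responses meet 5:1 :", meets_rule(202, 18, 5))
    print("202 responses meet 10:1:", meets_rule(202, 18, 10))
```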

Data preprocessing and descriptive statistical analyses were conducted using SPSS 22, addressing any missing values and outliers. Subsequently, AMOS 24.0 was utilized for SEM analysis, including Confirmatory Factor Analysis (CFA) and structural model testing.

Reliability was assessed using Cronbach’s α coefficient, with values above 0.7 indicating acceptable internal consistency (Taber, 2018). Convergent validity was evaluated through CFA, ensuring factor loadings exceeded 0.5, composite reliability (CR) was above 0.6, and average variance extracted (AVE) surpassed 0.5 (Fornell and Larcker, 1981). Discriminant validity was confirmed following Hair et al. (2013) by comparing constrained and unconstrained models in CFA; significant differences in chi-square values indicated adequate discriminant validity. Model fit was assessed using multiple indices, including chi-square (χ²), degrees of freedom (df), χ²/df ratio, Root Mean Square Error of Approximation (RMSEA), Comparative Fit Index (CFI), Incremental Fit Index (IFI), and Tucker-Lewis Index (TLI).
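For readers unfamiliar with these indices, the sketch below shows how Cronbach’s α, CR, and AVE are conventionally computed. It is not the study's own procedure, which used SPSS and AMOS; the loadings and item responses in the usage section are hypothetical values chosen only to exercise the functions.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    k = len(responses[0])
    item_variances = [variance([row[i] for row in responses]) for i in range(k)]
    total_variance = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


def composite_reliability(loadings):
    """CR from standardized loadings: (sum l)^2 / ((sum l)^2 + sum(1 - l^2))."""
    squared_sum = sum(loadings) ** 2
    error_variance = sum(1 - l ** 2 for l in loadings)
    return squared_sum / (squared_sum + error_variance)


def average_variance_extracted(loadings):
    """AVE from standardized loadings: mean of the squared loadings."""
    return sum(l ** 2 for l in loadings) / len(loadings)


if __name__ == "__main__":
    # Hypothetical standardized loadings for one three-item construct.
    loadings = [0.7, 0.8, 0.9]
    print("CR :", round(composite_reliability(loadings), 3))
    print("AVE:", round(average_variance_extracted(loadings), 3))

    # Hypothetical 7-point Likert responses (rows = respondents).
    responses = [[5, 6, 5], [3, 3, 4], [7, 6, 7], [4, 5, 4]]
    print("alpha:", round(cronbach_alpha(responses), 3))
```

Under these definitions a construct passes the thresholds above when α exceeds 0.7, CR exceeds 0.6, and AVE exceeds 0.5.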

By utilizing these methods, the study assessed the reliability and validity of the measurement and structural models, providing a statistical foundation for testing the research hypotheses. Detailed analysis results are reported in Section 4.

4 Research results

We follow the two-step analysis method of structural equation modeling, which consists of confirmatory factor analysis to assess the validity of the model constructs, followed by path analysis and significance testing of the structural model.

4.1 Confirmatory factor analysis

The reliability and validity of the scales were evaluated using Confirmatory Factor Analysis (CFA), focusing on three key aspects: reliability, convergent validity, and discriminant validity.

Reliability: The Cronbach’s α coefficients for each construct ranged from 0.815 to 0.912, exceeding the recommended threshold of 0.7 (Taber, 2018). This indicates a high level of internal consistency among the items within each construct, confirming good reliability.

Convergent Validity: The factor loadings for all items ranged between 0.612 and 0.936, all above the acceptable minimum of 0.5. Composite Reliability (CR) values were between 0.829 and 0.950, exceeding the recommended threshold of 0.6. The Average Variance Extracted (AVE) ranged from 0.551 to 0.865, surpassing the minimum requirement of 0.5 (Fornell and Larcker, 1981). These results confirm that the scales exhibit good convergent validity.

Discriminant Validity: Discriminant validity was assessed using the method proposed by Hair et al. (2013). The chi-square differences between constrained models (where correlations between constructs are fixed at 1) and unconstrained models (where correlations are freely estimated) ranged from 25.6 to 131.0, all of which were significant at p < 0.001. This demonstrates strong discriminant validity between the constructs, indicating that each construct is distinct and measures a unique aspect.
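The chi-square difference test can be illustrated as follows. This sketch assumes that each comparison constrains a single correlation, so the difference statistic has 1 degree of freedom; the text does not state the degrees of freedom explicitly. For 1 degree of freedom the upper-tail probability has the closed form erfc(√(x/2)), which avoids any dependency beyond the standard library.

```python
import math


def chi2_pvalue_1df(delta_chi2):
    """Upper-tail p-value of a chi-square difference with 1 degree of freedom."""
    return math.erfc(math.sqrt(delta_chi2 / 2.0))


if __name__ == "__main__":
    # Smallest and largest chi-square differences reported in the text.
    for delta in (25.6, 131.0):
        p = chi2_pvalue_1df(delta)
        print(f"delta chi2 = {delta}: p = {p:.2e}, significant at 0.001: {p < 0.001}")
```

Under the 1-df assumption, any difference above roughly 10.83 is significant at p < 0.001, so the smallest reported difference of 25.6 clears the threshold comfortably.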

These findings collectively suggest that the measurement model has good reliability and validity, making it suitable for subsequent structural model analysis.

4.2 Model fit and path coefficient analysis

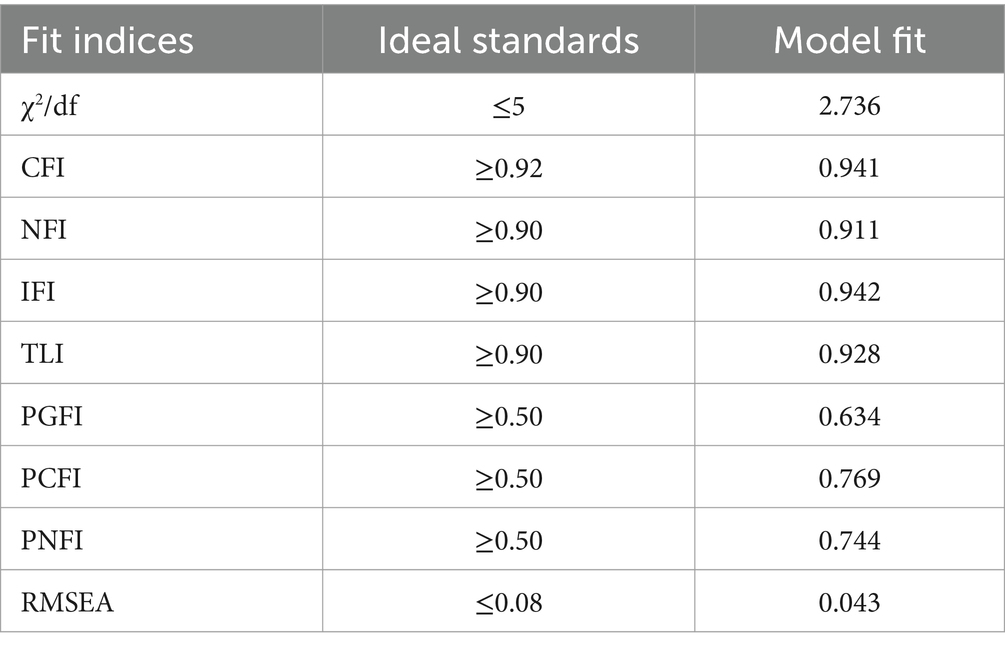

Model fit measures the degree of consistency between the theoretically estimated model and the actual sample data. A better fit indicates that the model-implied covariance matrix aligns more closely with the sample covariance matrix, and good fit indices are essential for structural equation modeling (SEM) analysis. The model fit indices used in this study are shown in Table 1. Specifically, the normed chi-square value (χ²/df) is 2.736, which is below the commonly used cutoff of 5. The CFI, NFI, IFI, and TLI values are 0.941, 0.911, 0.942, and 0.928, respectively, all exceeding the 0.90 benchmark, indicating good model fit. The RMSEA is 0.043, which is less than 0.08, indicating acceptable approximate fit. Additionally, the parsimony fit indices (PGFI, PCFI, and PNFI) are all above 0.50, suggesting that the model is not overly complex. Overall, these results indicate a good fit between the model and the data, allowing for further path analysis.
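The comparison of fit indices against their cutoffs can be expressed as a simple check. The sketch below uses only the values and cutoffs quoted in the text; PGFI, PCFI, and PNFI are omitted because their numeric values appear only in Table 1. The dictionary layout and function name are illustrative.

```python
# Fit indices as reported in the text.
REPORTED = {
    "chi2/df": 2.736,
    "CFI": 0.941,
    "NFI": 0.911,
    "IFI": 0.942,
    "TLI": 0.928,
    "RMSEA": 0.043,
}

# Cutoffs quoted in the text: ("<", x) means the index should be below x,
# (">", x) means it should be above x.
CUTOFFS = {
    "chi2/df": ("<", 5.0),
    "CFI": (">", 0.90),
    "NFI": (">", 0.90),
    "IFI": (">", 0.90),
    "TLI": (">", 0.90),
    "RMSEA": ("<", 0.08),
}


def check_fit(reported, cutoffs):
    """Return {index: True/False} for whether each index meets its cutoff."""
    results = {}
    for name, value in reported.items():
        direction, threshold = cutoffs[name]
        results[name] = value < threshold if direction == "<" else value > threshold
    return results


if __name__ == "__main__":
    for name, ok in check_fit(REPORTED, CUTOFFS).items():
        print(f"{name:8s} {REPORTED[name]:.3f} meets cutoff: {ok}")
```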

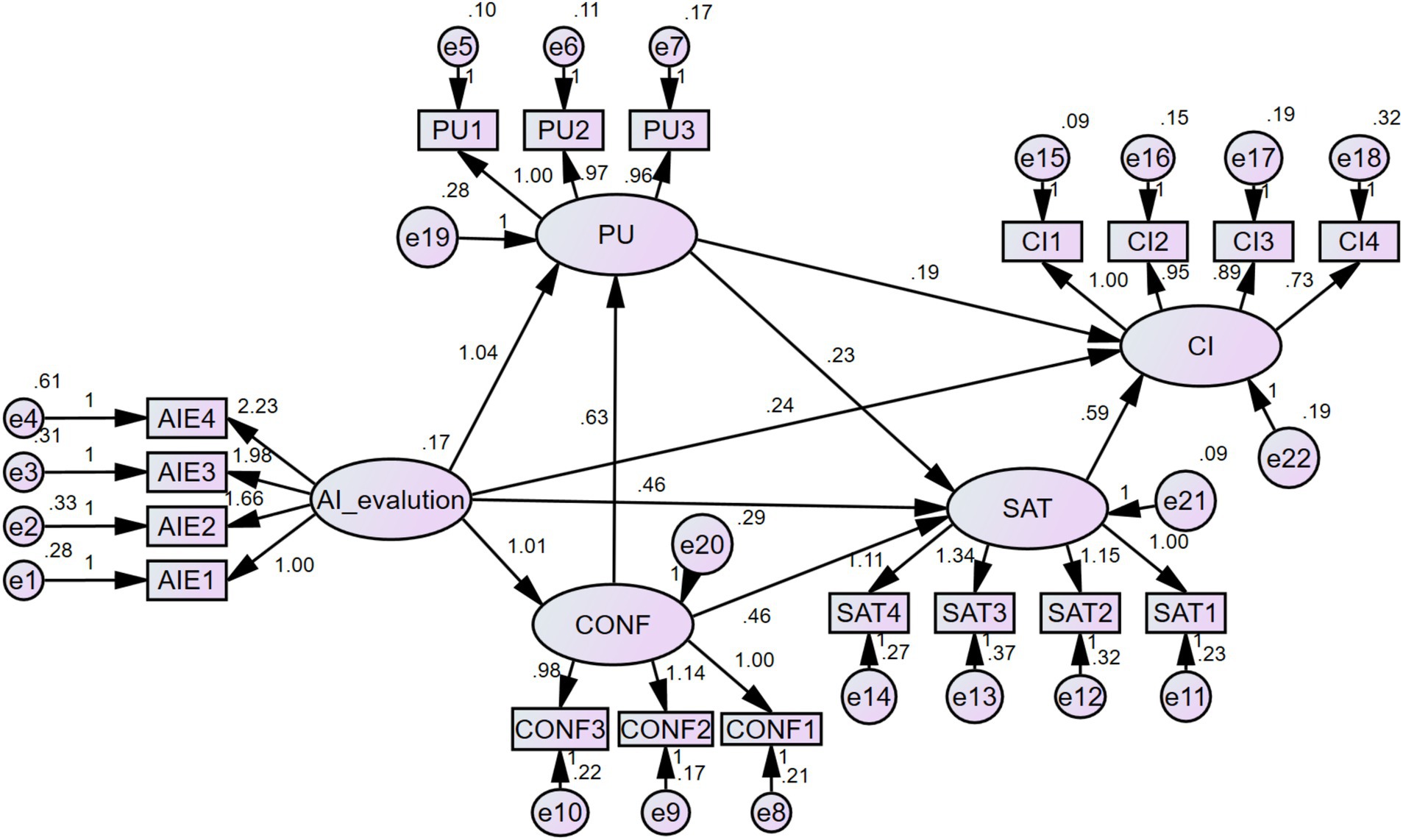

The model fit results are shown in Figure 2, and the standardized path coefficients and hypothesis testing results are presented in Table 2. The analysis indicates that AI performance assessment significantly enhances students’ expectation confirmation (path coefficient = 0.610, p < 0.001), supporting hypothesis H1. It also has a significant positive effect on students’ perceived usefulness (path coefficient = 0.455, p < 0.001), supporting H2, and significantly improves students’ learning satisfaction (path coefficient = 0.267, p < 0.001), supporting H3. However, AI performance assessment does not have a direct significant effect on students’ continuous learning intention (path coefficient = 0.125, p = 0.15), which does not support H4.

Further analysis reveals that expectation confirmation has a significant positive effect on perceived usefulness (path coefficient = 0.461, p < 0.001), supporting H5, and it also significantly enhances learning satisfaction (path coefficient = 0.437, p < 0.001), supporting H6. Learning satisfaction significantly boosts continuous learning intention (path coefficient = 0.530, p < 0.001), supporting H7. Perceived usefulness not only has a positive effect on learning satisfaction (path coefficient = 0.303, p < 0.001), supporting H8, but also directly increases continuous learning intention (path coefficient = 0.228, p < 0.05), supporting H9.

In summary, all hypotheses were supported except H4. The results suggest that AI performance assessment indirectly influences students’ continuous learning intention by enhancing expectation confirmation, perceived usefulness, and learning satisfaction, highlighting the positive role of AI technology in optimizing blended learning.
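To make the indirect influence concrete, the standardized coefficients reported above can be multiplied along each mediated route from AI performance assessment to continuous learning intention. The following is a sketch only: the construct abbreviations are labels introduced here, the products are point estimates, and the significance of indirect effects would normally be tested separately (for example by bootstrapping), which is not reported in this section.

```python
# Standardized path coefficients as reported in the text (H1-H9).
# AIPA = AI performance assessment, EC = expectation confirmation,
# PU = perceived usefulness, LS = learning satisfaction,
# CLI = continuous learning intention (abbreviations are illustrative).
PATHS = {
    ("AIPA", "EC"): 0.610,
    ("AIPA", "PU"): 0.455,
    ("AIPA", "LS"): 0.267,
    ("AIPA", "CLI"): 0.125,  # H4, direct path, not significant
    ("EC", "PU"): 0.461,
    ("EC", "LS"): 0.437,
    ("LS", "CLI"): 0.530,
    ("PU", "LS"): 0.303,
    ("PU", "CLI"): 0.228,
}


def indirect_routes(source, target, paths):
    """Yield (route, product of coefficients) for routes with >= 1 mediator."""
    def walk(node, route, product):
        for (src, dst), coef in paths.items():
            if src != node:
                continue
            new_route = route + [dst]
            new_product = product * coef
            if dst == target:
                if len(new_route) > 2:  # skip the direct source -> target path
                    yield new_route, new_product
            else:
                yield from walk(dst, new_route, new_product)

    yield from walk(source, [source], 1.0)


def total_indirect(source, target, paths):
    """Sum the coefficient products over all mediated routes."""
    return sum(product for _, product in indirect_routes(source, target, paths))


if __name__ == "__main__":
    for route, product in indirect_routes("AIPA", "CLI", PATHS):
        print(" -> ".join(route), round(product, 3))
    print("total indirect:", round(total_indirect("AIPA", "CLI", PATHS), 3))
```

With the reported coefficients there are six mediated routes, and their products sum to roughly 0.57, compared with the non-significant direct coefficient of 0.125.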

5 Discussion

This study examined the impact of AI performance assessment on students in blended learning environments. The results indicate that all hypotheses, except H4, were supported. These findings align with existing literature and further validate the applicability of SDT and the ECM, emphasizing the critical role of personalized feedback in enhancing students’ learning experience. In the context of this study, it is essential to consider the unique cultural and educational environment in which these findings were obtained. Local factors, such as students’ expectations of teacher-student interaction and their familiarity with technology in educational settings, likely influence how AI-based assessments are perceived and utilized. In regions where students highly value close interaction with instructors, AI feedback mechanisms may complement traditional teaching styles by providing continuous support between in-person sessions. Alternatively, in contexts with limited exposure to AI technology, students may require additional guidance to fully engage with these systems.

Firstly, the support for H1 indicates that AI performance assessment significantly improves students’ expectation confirmation. According to Self-Determination Theory (Deci and Ryan, 2013), personalized and timely feedback fulfills students’ basic psychological needs for autonomy and competence, helping them better manage their learning progress, reduce uncertainty, and boost confidence. Through personalized feedback provided by AI technology, students can gain real-time insights into their learning status, clearly identifying which aspects meet their expectations and which require improvement. This process helps students compare their actual learning experiences with their initial expectations, thus strengthening their expectation confirmation. This finding is consistent with Wang and Lehman (2021), who found that personalized feedback not only helps students confirm whether their learning progress aligns with expectations but also enhances their confidence and motivation. In the context of AI performance assessment, precise and customized feedback enables students to promptly adjust their learning strategies, ensuring that their learning experience remains aligned with their expected goals. This alignment enhances students’ expectation confirmation, which contributes to increased learning satisfaction and a stronger intention to continue participating (Bhattacherjee, 2001). In the local educational context, where students may prioritize clear feedback and guidance, AI-driven feedback fulfills this need by offering real-time, objective evaluations that students might not otherwise receive as consistently. This increased feedback frequency and specificity can play a critical role in meeting students’ expectations, especially in educational systems where personalized feedback from instructors may be limited due to larger class sizes or resource constraints.

The support for H2 demonstrates that AI performance assessment has a significant positive effect on students’ perceived usefulness. Perceived usefulness reflects the sense of competence from SDT, specifically students’ confidence in their ability to master learning tasks and their sense of control over the learning process. Through personalized feedback, AI performance assessment allows students to promptly understand their learning progress, identify strengths and weaknesses, and thus enhance their control over the learning process. This not only boosts students’ recognition of their own learning abilities but also increases their trust in and reliance on teaching tools and resources. This finding aligns with the results of Troussas et al. (2023), who found that AI performance assessment enhances students’ trust in the system through precise feedback, thereby increasing their perceived usefulness of the blended learning model. When students feel that they can effectively use these tools to improve their learning outcomes, their perceived usefulness is significantly enhanced, further supporting the positive role of AI performance assessment in blended learning. In regions where students may not have extensive prior experience with technology in educational contexts, the perceived usefulness of AI tools may initially depend on their ease of use and the clarity of feedback provided. By ensuring that AI tools are intuitive and tailored to local educational needs, educators can enhance perceived usefulness and encourage broader acceptance and adoption of AI systems.

To further enhance students’ perceived usefulness, educators should provide timely and targeted feedback through AI performance assessment systems. One effective method is utilizing Learning Analytics Dashboards (LAD) to track and visualize students’ learning behaviors, helping them accurately assess their progress. Additionally, offering a personalized learning experience is key to boosting perceived usefulness. AI systems should recommend relevant supplementary materials and exercises based on students’ learning progress and interests to meet their individual needs and improve learning outcomes. In some educational contexts, these tools could address a gap where students may otherwise lack access to detailed, individualized guidance. By adapting LAD and personalized content to reflect the specific curriculum and cultural expectations, educators can further increase the perceived relevance and value of these tools.

Teacher support and trust are also crucial. To ensure the effective use of AI tools, it is important that these tools do not increase teachers’ workload but instead assist in simplifying the assessment process and providing useful teaching insights. By offering support mechanisms and addressing ethical concerns, educators’ trust and acceptance of AI systems can be strengthened, leading to more positive adoption of these tools in the teaching process and further improving students’ perceived usefulness.

The validation of H3 demonstrates that AI performance assessment significantly improves students’ learning satisfaction. According to SDT, learning satisfaction is derived not only from external feedback but also from a sense of autonomy and control during the learning process. Through personalized feedback provided by AI systems, students gain clearer insights into their learning progress, identify areas that need improvement, and adjust their learning strategies accordingly. This feedback mechanism not only enhances students’ sense of control over their learning tasks but also improves their overall learning experience in blended learning, thereby increasing their learning satisfaction. This finding is consistent with Lim et al. (2020), who found that timely feedback and personalized support play a critical role in improving students’ learning satisfaction. In local contexts where teacher-student relationships are highly valued, the role of AI in supplementing teacher feedback may be especially important. By providing timely and personalized feedback, AI systems can bridge potential gaps in teacher availability, allowing students to feel supported and improving their satisfaction with the learning experience.

To further boost students’ learning satisfaction, educators should fully leverage the advantages of AI systems to ensure that students receive timely and detailed performance feedback. By using AI tools to automatically generate comprehensive evaluations and promptly deliver them to students, educators can help students clearly understand their learning progress and areas for improvement. This not only enhances learning outcomes but also strengthens students’ trust in AI systems. Furthermore, when designing AI systems, it is essential to account for students’ social backgrounds, psychological conditions, and cultural diversity to offer inclusive and personalized features that increase learning satisfaction. For example, in regions with diverse linguistic backgrounds, AI systems that offer multilingual support or culturally relevant content can enhance satisfaction and make the learning process more inclusive. This kind of personalized support effectively meets students’ psychological needs for autonomy, competence, and relatedness, thereby improving their overall learning experience.

Additionally, improving the quality and usability of AI systems is vital. Through regular updates and system optimizations, educational institutions can ensure that students experience convenient and efficient operations while using the system. This reduces technical barriers and enhances students’ positive experience with the system, leading to greater learning satisfaction. To address the diverse needs of students, providing easy-to-understand user guides and necessary training can help students use AI systems more effectively, giving them a greater sense of autonomy and control over their learning. Mastery of the system’s functionality can reduce technical difficulties and uncertainty, further increasing students’ satisfaction with the system.

Although H4 was not supported, indicating that AI performance assessment does not have a direct impact on students’ continuous learning intentions, the effects of expectation confirmation and perceived usefulness on continuous learning intentions remain significant through indirect pathways. This aligns with the conclusions of Chen and Hoshower (2003), suggesting that students’ motivation to continue learning depends more on the positive experiences gained during the learning process than on the direct influence of AI technology itself. Autonomy and competence, key elements of motivation in SDT, are reinforced when students receive personalized feedback through AI systems and perceive progress in their learning. This boosts their learning motivation and confidence. However, the findings for H4 also indicate that while AI performance assessment enhances students’ sense of autonomy and competence, it is insufficient to directly influence their continuous learning intentions. Instead, it requires mediation through other variables, such as expectation confirmation or perceived usefulness, to have an indirect effect. This suggests that future research could explore how to further enhance the interactivity and personalized support of AI systems to directly impact students’ continuous learning intentions.
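The difference between a direct and an indirect effect can be made concrete with a small simulation. The Python sketch below is not the structural equation model estimated in this study: it uses synthetic data, ordinary least squares, a single mediator, and assumed path coefficients (0.6 and 0.5) chosen purely for illustration. The variable names `x`, `m`, and `y` stand in loosely for AI performance assessment, perceived usefulness, and continuous learning intention.

```python
import numpy as np


def ols_slopes(y, *predictors):
    """Return OLS slope coefficients of y on the predictors (intercept dropped)."""
    design = np.column_stack([np.ones(len(y)), *predictors])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coef[1:]


def mediation_effects(x, m, y):
    """Product-of-coefficients decomposition for a single-mediator model."""
    a = ols_slopes(m, x)[0]          # path x -> m
    direct, b = ols_slopes(y, x, m)  # direct path x -> y, and path m -> y
    total = ols_slopes(y, x)[0]      # total effect of x on y
    return {"a": a, "b": b, "direct": direct,
            "indirect": a * b, "total": total}


# Synthetic data with NO direct effect of x on y (assumed coefficients).
rng = np.random.default_rng(0)
n = 2000
x = rng.normal(size=n)
m = 0.6 * x + rng.normal(size=n)            # x influences the mediator
y = 0.5 * m + 0.0 * x + rng.normal(size=n)  # y depends on x only through m

effects = mediation_effects(x, m, y)
for name, value in effects.items():
    print(f"{name:>8}: {value: .3f}")
```

In this setup the estimated direct path is close to zero while the indirect path (the product of the two mediated coefficients) is clearly positive, mirroring the pattern reported for H4: an antecedent can matter for an outcome even when its direct coefficient is not significant. In a linear OLS setting the total effect equals the sum of the direct and indirect effects.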

The empirical results show that H5 and H6 were supported, consistent with the predictions of the ECM. When students used the AI performance assessment system, they confirmed the alignment between their learning experience and expectations, which enhanced their perceived usefulness and learning satisfaction. This is consistent with Bhattacherjee’s (2001) ECM, which states that the higher a user’s expectation confirmation, the greater their perceived usefulness and satisfaction.

To strengthen the expectation confirmation mechanism, educators should introduce a diverse set of assessment metrics that comprehensively reflect students’ learning progress, ensuring the thoroughness and accuracy of evaluation results. Additionally, integrating AI-based teaching evaluations with intelligent tutoring systems and chatbots can enhance students’ understanding and mastery of the learning process by providing real-time support. A third approach is to adopt a comprehensive assessment method that combines quantitative and qualitative metrics, to ensure the reliability of students’ performance assessments. By leveraging AI to analyze vast amounts of learning data, educators can better understand students’ learning conditions and needs, thereby meeting their expectations.

The results for H7, H8, and H9 demonstrate that learning satisfaction and perceived usefulness have a significant impact on students’ continuous learning intentions. Learning satisfaction directly enhances students’ willingness to continue participating in blended learning, while perceived usefulness influences continuous learning intentions both directly and indirectly, by improving learning satisfaction. This aligns with Davis’s (1989) Technology Acceptance Model (TAM), which emphasizes the central role of perceived usefulness and satisfaction in technology adoption and continued use. Students’ recognition of teaching tools and resources, as well as the satisfaction they gain from the learning process, are key drivers of sustained learning motivation.

To further enhance learning satisfaction and perceived usefulness, educators and technology developers should implement a variety of strategies. First, optimizing instructional design by providing high-quality content and a user-friendly experience will better meet students’ practical needs. Second, diversifying learning resources to cater to different students’ interests and needs can increase the appeal and engagement of the learning process. Additionally, improving the user interface of AI systems to make them more intuitive and convenient will reduce barriers to usage. Establishing incentive mechanisms, such as points and rewards, can also motivate students to participate more actively, boosting their learning motivation and engagement with the system. Finally, strengthening data security and privacy protection will ensure that students’ data is handled appropriately, enhancing their trust in the system.

In summary, this study reveals the relationships between AI performance assessment, expectation confirmation, perceived usefulness, learning satisfaction, and continuous learning intentions. The validation of multiple pathways enriches the existing theoretical framework, highlighting the importance of meeting students’ psychological needs and enhancing the learning experience in technology-enhanced education. This research also expands the application of the ECM and SDT.

On a practical level, the findings offer valuable insights for educational institutions and technology developers. First, AI performance assessment systems should focus on the design of personalized feedback to enhance students’ sense of autonomy and competence. Second, improving students’ perceived usefulness of, and satisfaction with, teaching tools is key to promoting their continuous learning intentions. Thus, instructional design should be centered on students’ actual needs, offering valuable learning resources and support. Although AI technology itself did not directly increase continuous learning intentions, it can indirectly achieve this goal by improving students’ learning experiences. This underscores that in blended learning, the effective use of technology should be student-centered, with a focus on enhancing expectation confirmation, perceived usefulness, and learning satisfaction to foster students’ sustained learning motivation.

6 Conclusion

This study summarizes the role of AI performance assessment systems in blended learning and their influence on students’ continuous learning intentions. By integrating SDT and the ECM, this study demonstrates the multiple pathways through which AI performance assessment systems affect students’ learning motivation. The findings show that AI performance assessment does not directly impact students’ continuous learning intentions but instead exerts an indirect effect through key mediating variables such as expectation confirmation, perceived usefulness, and learning satisfaction. This discovery broadens the application of existing theories, revealing how technology tools enhance intrinsic motivation by fulfilling students’ psychological needs for autonomy, competence, and relatedness. Expectation confirmation plays a crucial role in this process, enhancing students’ learning experience and outcomes in technology-enhanced learning environments.

On a practical level, the study offers valuable guidance for educators and technology developers. To maximize the positive impact of AI performance assessment on learning outcomes, educational institutions should design AI systems that meet or exceed students’ expectations, thereby improving perceived usefulness and learning satisfaction, which in turn promotes continuous learning motivation. Educators should focus on providing personalized feedback to address students’ learning needs, improving system usability and functionality, and ensuring that these systems fulfill students’ psychological needs for autonomy, competence, and relatedness. Technology developers should prioritize optimizing user experience, protecting data privacy, and providing AI tools that reduce educators’ workloads rather than adding to them.

However, this study has some limitations. First, the data were collected solely from business students at one university. Future research could expand the sample to include students from various disciplines and educational levels to better validate the generalizability of the findings. Second, this study used a survey method for data collection, which may be subject to social desirability bias. Future research could incorporate experimental designs or longitudinal studies to more comprehensively assess the long-term impact of AI performance assessment on students’ learning motivation and performance. Finally, while this study examined the overall effects of AI performance assessment systems, it did not delve into the specific performance of different types of AI tools in various educational contexts. Future studies could analyze the application and potential impact of AI tools such as adaptive learning platforms, virtual teaching assistants, and intelligent feedback systems across different teaching scenarios to better understand the role of AI in education.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author contributions

HJ: Conceptualization, Data curation, Formal analysis, Funding acquisition, Investigation, Methodology, Project administration, Resources, Software, Supervision, Validation, Visualization, Writing – original draft, Writing – review & editing. LS: Data curation, Writing – original draft, Writing – review & editing. HC: Validation, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. This research was funded by the Educational Science Research Project at Beijing Union University, titled “Optimization Path and Effect Study on the Evaluation of Core Competencies of Undergraduate Accounting Talents Empowered by Digital Intelligence” (No. JK202407).

Acknowledgments

We sincerely thank Huantong Wang from the School of Art, Tianjin Polytechnic University, for her valuable contributions during the revision stages of this manuscript, particularly in review, editing, and visualization.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Aloisi, C. (2023). The future of standardised assessment: validity and trust in algorithms for assessment and scoring. Eur. J. Educ. 58, 98–110. doi: 10.1111/ejed.12542

Alzahrani, L., and Seth, K. P. (2021). Factors influencing students’ satisfaction with continuous use of learning management systems during the COVID-19 pandemic: an empirical study. Educ. Inf. Technol. 26, 6787–6805. doi: 10.1007/s10639-021-10492-5

Bhattacherjee, A. (2001). Understanding information systems continuance: an expectation-confirmation model. MIS Q. 25, 351–370. doi: 10.2307/3250921

Chen, Y., and Hoshower, L. B. (2003). Student evaluation of teaching effectiveness: an assessment of student perception and motivation. Assess. Eval. High. Educ. 28, 71–88. doi: 10.1080/02602930301683

Chen, X., Xu, X., Wu, Y. J., and Pok, W. F. (2022). Learners’ continuous use intention of blended learning: TAM-SET model. Sustainability 14:16428. doi: 10.3390/su142416428

Davis, F. D. (1989). Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. 13, 319–340. doi: 10.2307/249008

Davis, F. D., Bagozzi, R. P., and Warshaw, P. R. (1989). User acceptance of computer technology: a comparison of two theoretical models. Manag. Sci. 35, 982–1003. doi: 10.1287/mnsc.35.8.982

Deci, E. L., and Ryan, R. M. (2013). Intrinsic motivation and self-determination in human behavior. New York, USA: Springer Science & Business Media.

Fidan, M., and Gencel, N. (2022). Supporting the instructional videos with chatbot and peer feedback mechanisms in online learning: the effects on learning performance and intrinsic motivation. J. Educ. Comput. Res. 60, 1716–1741. doi: 10.1177/07356331221077901

Fornell, C., and Larcker, D. F. (1981). Evaluating structural equation models with unobservable variables and measurement error. J. Mark. Res. 18, 39–50. doi: 10.1177/002224378101800104

Gong, J., Ruan, M., Yang, W., Peng, M., Wang, Z., Ouyang, L., et al. (2021). Application of blended learning approach in clinical skills to stimulate active learning attitudes and improve clinical practice among medical students. PeerJ 9:e11690. doi: 10.7717/peerj.11690

Guo, K., Zhong, Y., Li, D., and Chu, S. K. W. (2023). Effects of chatbot-assisted in-class debates on students’ argumentation skills and task motivation. Comput. Educ. 203:104862. doi: 10.1016/j.compedu.2023.104862

Hair, J. F., Ringle, C. M., and Sarstedt, M. (2013). Partial least squares structural equation modeling: rigorous applications, better results and higher acceptance. Long Range Plan. 46, 1–12. doi: 10.1016/j.lrp.2013.01.001

Hair, J. F., Risher, J. J., Sarstedt, M., and Ringle, C. M. (2019). When to use and how to report the results of PLS-SEM. Eur. Bus. Rev. 31, 2–24. doi: 10.1108/ebr-11-2018-0203

Hayashi, A., Chen, C., Ryan, T., and Wu, J. (2004). The role of social presence and moderating role of computer self-efficacy in predicting the continuance usage of e-learning systems. J. Inf. Syst. Educ. 15, 139–154. Available at: https://aisel.aisnet.org/jise/vol15/iss2/5

Huo, Y., Xiao, J., and Ni, L. M. (2018). Towards personalized learning through class contextual factors-based exercise recommendation. In: 2018 IEEE 24th International Conference on Parallel and Distributed Systems (ICPADS). Sentosa, Singapore: IEEE.

Kumar, A., Krishnamurthi, R., Bhatia, S., Kaushik, K., Ahuja, N. J., Nayyar, A., et al. (2021). Blended learning tools and practices: a comprehensive analysis. IEEE Access 9, 85151–85197. doi: 10.1109/access.2021.3085844

Lee, M.-C. (2010). Explaining and predicting users’ continuance intention toward e-learning: an extension of the expectation–confirmation model. Comput. Educ. 54, 506–516. doi: 10.1016/j.compedu.2009.09.002

Li, C., and Phongsatha, T. (2022). Satisfaction and continuance intention of blended learning from perspective of junior high school students in the directly-entering-socialism ethnic communities of China. PLoS One 17:e0270939. doi: 10.1371/journal.pone.0270939

Lim, L.-A., Dawson, S., Gašević, D., Joksimović, S., Fudge, A., Pardo, A., et al. (2020). Students’ sense-making of personalised feedback based on learning analytics. Australas. J. Educ. Technol. 36, 15–33. doi: 10.14742/ajet.6370

Limayem, M., and Cheung, C. M. (2008). Understanding information systems continuance: the case of internet-based learning technologies. Inf. Manag. 45, 227–232. doi: 10.1016/j.im.2008.02.005

Lozano-Lozano, M., Fernández-Lao, C., Cantarero-Villanueva, I., Noguerol, I., Álvarez-Salvago, F., Cruz-Fernández, M., et al. (2020). A blended learning system to improve motivation, mood state, and satisfaction in undergraduate students: randomized controlled trial. J. Med. Internet Res. 22:e17101. doi: 10.2196/17101

Oliver, R. L. (1980). A cognitive model of the antecedents and consequences of satisfaction decisions. J. Mark. Res. 17, 460–469. doi: 10.1177/002224378001700405

Pulfrey, C., Buchs, C., and Butera, F. (2011). Why grades engender performance-avoidance goals: the mediating role of autonomous motivation. J. Educ. Psychol. 103, 683–700. doi: 10.1037/a0023911

Rad, H. S., Alipour, R., and Jafarpour, A. (2023). Using artificial intelligence to foster students’ writing feedback literacy, engagement, and outcome: a case of Wordtune application. Interact. Learn. Environ. 32, 5020–5040. doi: 10.1080/10494820.2023.2208170

Riezebos, J., Renting, N., van Ooijen, R., and van der Vaart, A. (2023). Adverse effects of personalized automated feedback. In: 9th International Conference on Higher Education Advances (HEAd 2023). Valencia, Spain: Universidad Politecnica de Valencia.

Ryan, R. M. (2017). Self-determination theory: basic psychological needs in motivation, development, and wellness. New York, USA: Guilford Press.

Shin, D.-H., Shin, Y.-J., Choo, H., and Beom, K. (2011). Smartphones as smart pedagogical tools: implications for smartphones as u-learning devices. Comput. Hum. Behav. 27, 2207–2214. doi: 10.1016/j.chb.2011.06.017

Swiecki, Z., Khosravi, H., Chen, G., Martinez-Maldonado, R., Lodge, J. M., Milligan, S., et al. (2022). Assessment in the age of artificial intelligence. Comput. Educ. Artif. Intell. 3:100075. doi: 10.1016/j.caeai.2022.100075

Taber, K. S. (2018). The use of Cronbach’s alpha when developing and reporting research instruments in science education. Res. Sci. Educ. 48, 1273–1296. doi: 10.1007/s11165-016-9602-2

Thong, J. Y., Hong, S.-J., and Tam, K. Y. (2006). The effects of post-adoption beliefs on the expectation-confirmation model for information technology continuance. Int. J. Hum. Comput. Stud. 64, 799–810. doi: 10.1016/j.ijhcs.2006.05.001

Troussas, C., Papakostas, C., Krouska, A., Mylonas, P., and Sgouropoulou, C. (2023). “Personalized feedback enhanced by natural language processing in intelligent tutoring systems” in International conference on intelligent tutoring systems. (Corfu, Greece: Springer).

Wang, Q., and Huang, Q. (2023). Engaging online learners in blended synchronous learning: a systematic literature review. IEEE Trans. Learn. Technol. 17, 594–607. doi: 10.1109/tlt.2023.3282278

Wang, H., and Lehman, J. D. (2021). Using achievement goal-based personalized motivational feedback to enhance online learning. Educ. Technol. Res. Dev. 69, 553–581. doi: 10.1007/s11423-021-09940-3

Yang, H., Cai, J., Yang, H. H., and Wang, X. (2023). Examining key factors of beginner’s continuance intention in blended learning in higher education. J. Comput. High. Educ. 35, 126–143. doi: 10.1007/s12528-022-09322-5

Zhang, Y., Dang, Y., and Amer, B. (2016). A large-scale blended and flipped class: class design and investigation of factors influencing students’ intention to learn. IEEE Trans. Educ. 59, 263–273. doi: 10.1109/te.2016.2535205

Appendix

Keywords: AI performance assessment, blended learning, continuous learning motivation, expectation confirmation model (ECM), educational technology

Citation: Ji H, Suo L and Chen H (2024) AI performance assessment in blended learning: mechanisms and effects on students’ continuous learning motivation. Front. Psychol. 15:1447680. doi: 10.3389/fpsyg.2024.1447680

Edited by:

Mei Tian, Xi’an Jiaotong University, China

Reviewed by:

Fidel Çakmak, Alanya Alaaddin Keykubat University, Türkiye

Yuqi Wang, Beihang University, China

Copyright © 2024 Ji, Suo and Chen. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Hao Ji, hao.ji@buu.edu.cn
