94% of researchers rate our articles as excellent or good

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.

Find out more

REVIEW article

Front. Neurorobot., 18 February 2025

Volume 19 - 2025 | https://doi.org/10.3389/fnbot.2025.1451923

This article is part of the Research TopicNeural Network Models in Autonomous RoboticsView all 7 articles

Jun Hu1,2

Jun Hu1,2 Yuefeng Wang2

Yuefeng Wang2 Shuai Cheng2*

Shuai Cheng2* Jinghan Xu3

Jinghan Xu3 Ningjia Wang4

Ningjia Wang4 Bingjie Fu4

Bingjie Fu4 Zuotao Ning2

Zuotao Ning2 Jingyao Li2

Jingyao Li2 Hualin Chen4

Hualin Chen4 Chaolu Feng5

Chaolu Feng5 Yin Zhang2

Yin Zhang2Autonomous driving technology has garnered significant attention due to its potential to revolutionize transportation through advanced robotic systems. Despite optimistic projections for commercial deployment, the development of sophisticated autonomous driving systems remains largely experimental, with the effectiveness of neurorobotics-based decision-making and planning algorithms being crucial for success. This paper delivers a comprehensive review of decision-making and planning algorithms in autonomous driving, covering both knowledge-driven and data-driven approaches. For knowledge-driven methods, this paper explores independent decision-making systems, including rule based, state transition based, game-theory based methods and independent planing systems including search based, sampling based, and optimization based methods. For data-driven methods, it provides a detailed analysis of machine learning paradigms such as imitation learning, reinforcement learning, and inverse reinforcement learning. Furthermore, the paper discusses hybrid models that amalgamate the strengths of both data-driven and knowledge-driven approaches, offering insights into their implementation and challenges. By evaluating experimental platforms, this paper guides the selection of appropriate testing and validation strategies. Through comparative analysis, this paper elucidates the advantages and disadvantages of each method, facilitating the design of more robust autonomous driving systems. Finally, this paper addresses current challenges and offers a perspective on future developments in this rapidly evolving field.

Autonomous driving technology exemplifies a crucial application of robotics theories and techniques, aiming to ensure safe and efficient self-driving in real-world traffic environments (Zhang et al., 2024). Within autonomous driving systems, decision-making and planning algorithms are pivotal, tasked with generating driving behaviors and planning trajectories. Broadly autonomous driving encompasses two phases: behavior decision-making and motion planning. Behavior decision-making addresses responses to temporary events, such as abnormal driving behaviors of other vehicles, sudden pedestrian crossings, and emergency vehicle avoidance (Xu et al., 2024; Wang T. et al., 2024). At this phase, the decision system must exhibit high adaptability and predictive capability for potential future scenarios, allowing for quick adjustments like lane changes, acceleration, or deceleration based on real-time conditions (Yang et al., 2024; Feng et al., 2024). Motion planning delves into a more granular aspect of autonomous driving, generating detailed trajectories based on the current vehicle state and behavior decision outputs (Li Z. et al., 2023). The motion planning ensures the smoothness and comfort of the vehicle's trajectory while adhering to dynamic constraints such as speed and acceleration. Given the significant challenges associated with achieving safe and flexible interactions, behavior decision-making and motion planning have become critical focal points in autonomous driving research, which is also the primary subject of this paper.

The decision-making and motion planning algorithms for autonomous vehicles integrate theories from multiple disciplines, including machine learning, pattern recognition, intelligent optimization, and nonlinear control (Lu et al., 2024). Deep learning techniques effectively improve the ability to encode model features (Bidwe et al., 2022). These technologies provide the foundation for safe interactions between autonomous vehicles and other road users on public roads. Furthermore, decision-making and planning algorithms must consider ethical and legal responsibilities, ensuring adherence to socially accepted moral standards and compliance with traffic regulations during emergencies (Zheng et al., 2024; Gao et al., 2024). Current research on decision-making and planning algorithms focuses on improving robustness, enhancing stability and safety in unforeseen situations, and increasing predictive accuracy of the surrounding environment and other traffic participants (Wen et al., 2023; Wang W. et al., 2023; Zhai et al., 2023). Additionally, efforts are being made to reduce computational resource consumption and improve algorithmic efficiency to achieve rapid responses under resource-constrained conditions.

Aiming at the aforementioned research objectives, the researchers primarily employ knowledge-driven and data-driven approaches to construct decision-making and motion planning systems. The knowledge-driven approach simulates human decision-making processes through the encoding of expert knowledge and logical rules. By integrating information such as road characteristics, traffic regulations, and historical behavior data, these approaches can search for the optimal driving path or optimize for a specific objective function, thereby achieving safe and efficient driving strategies (Jia et al., 2023; Chen L. et al., 2023; Aoki et al., 2023). Concurrently, data-driven approaches have emerged prominently propelled by advancements in machine learning and statistical analysis. Unlike knowledge-driven methods, data-driven approaches do not necessitate pre-defined explicit rules. They enhance decision accuracy and adaptability by training and optimizing decision models using vast amounts of real driving data. Particularly in complex and dynamic traffic environments, data-driven strategies effectively learn and emulate human driver behaviors and decision-making processes (Wang T. H. et al., 2023). The application of data-driven technologies also significantly enhances their generalizability across different environments. However, solely relying on data-driven methods has its limitations; these methods typically require large volumes of labeled data for training and often have poor interpretability, making it challenging to ensure consistent and safe decisions.

On the other hand in industry, the deployment of autonomous vehicles (AVs) is incrementally expanding, particularly within the commercial sector. The available AVs on the market primarily employ several key decision-making and planning methodologies. These include rule-based systems, state transition models such as Markov Decision Processes (MDPs) and Partially Observable Markov Decision Processes (POMDPs), as well as game-theoretic approaches. These vehicles utilize sophisticated algorithms to process road environments and vehicle states, optimizing state transitions to make the best possible decisions.

The deployment of AVs is being tested and operated in specific geographic areas and under certain traffic conditions. Waymo and Tesla stand out as prominent examples. Waymo offers autonomous taxi services in Phoenix, Arizona, and is expanding its service reach. Reports indicate that Waymo plans to extend its services to Miami, Florida, in an effort to gain an edge in the intensifying competitive market. Moreover, Waymo has established a partnership with the automotive financing company Moove, which will manage Waymo's fleet operations in Phoenix, including maintenance of the autonomous taxis and management of charging infrastructure. Currently, Waymo has deployed approximately 200 autonomous vehicles in Phoenix. Tesla, on the other hand, collects data through its fleet learning program to improve its autonomous driving systems. While Tesla's Autopilot and Full Self-Driving (FSD) systems have made significant advancements in autonomous technology, there is still a considerable gap before achieving true Level 4 (L4) autonomous driving capabilities. Tesla employs an end-to-end (E2E) deep learning strategy, integrating neural networks and reinforcement learning in an attempt to enhance the intelligence level of autonomous driving. Tesla's Robotaxi technology faces challenges, including safety and reliability issues, regulatory and licensing hurdles, and market acceptance and operational challenges. These deployments demonstrate the applicability and challenges of autonomous technology under real-world conditions and highlight how industry leaders are testing and optimizing their technologies in specific geographic and traffic settings. As technology matures and regulatory environments adapt, it is anticipated that the deployment of AVs will become more widespread and in-depth.

Despite the immense potential of AVs, they still face certain limitations in decision-making and planning. These include interactions with human drivers under mixed traffic conditions, responses to unexpected situations, and adaptability within complex traffic environments. Additionally, AV decision-making and planning systems must consider ethical and legal responsibilities, ensuring adherence to socially accepted moral standards and compliance with traffic regulations during emergencies.

Neurorobotic approaches, which combine neural networks and robotics, offer new possibilities for AV decision-making and planning. These methods can improve the accuracy and adaptability of decision-making by learning from and optimizing decision models with extensive real-world driving data. Particularly in complex and dynamic traffic environments, data-driven strategies effectively learn and emulate human driver behaviors and decision-making processes, significantly enhancing generalizability across different environments. However, relying solely on data-driven methods has its limitations; these methods typically require large volumes of labeled data for training and often have poor interpretability, making it challenging to ensure consistent and safe decisions.

Thus, an increasing number of researchers are attempting to combine knowledge-driven and data-driven methods to complement each other. In this paper, we refer to these combined methods as hybrid methods. Hybrid methods harness the advantages of both approaches: the data-driven component improves the system's adaptability to complex environments and the accuracy of predictions by extracting patterns and behaviors from extensive driving data; meanwhile, the knowledge-driven component ensures decisions comply with traffic regulations and safety standards, providing systematic constraints and guidance within a well-defined framework. This combination allows for more flexible, robust, and interpretable decision planning (Singh, 2023). Although hybrid methods aim to integrate the strengths of knowledge-driven and data-driven approaches, they also present certain limitations and potential challenges in development. The design and implementation of hybrid methods are complex, requiring precise integration of two fundamentally different techniques. This not only demands strong theoretical knowledge from algorithm designers but also necessitates continuous tuning and optimization in practice to achieve optimal performance. By thoroughly discussing and comparing these algorithms, we aim to understand their respective advantages and limitations and explore effective ways to integrate these methods to tackle complex decision-making and motion planning problems in autonomous driving.

This review provides a comprehensive overview of decision-making and planning technologies in autonomous driving systems. The following sections detail the research progress in each aspect.

Introduction: The introduction reviews the essential role of decision-making and planning in autonomous driving systems, outlining the historical applications and unique advantages and limitations of knowledge-driven, data-driven, and hybrid methods. It provides a detailed comparative analysis of these methods, discusses their effectiveness in various scenarios, and summarizes the article's structure to offer a comprehensive understanding of the advancements in the field.

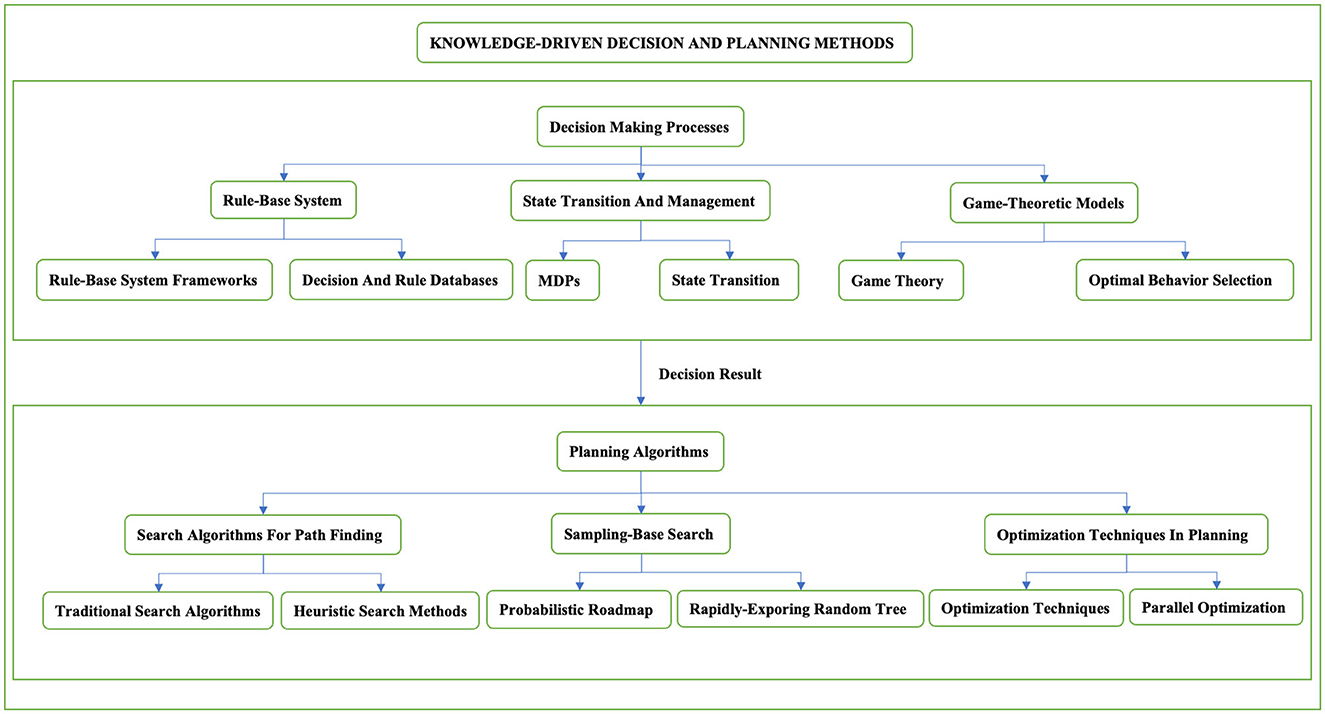

Knowledge-driven decision and planning methods: This section explores knowledge-driven decision-making and planning methods, focusing on the decision process and path planning process. It covers the framework of rule based systems, state-transition systems including Markov Decision Processes (MDPs), game-theory based decision models, and path planning methods like search-based algorithms (A* and Dijkstra) and optimization-based techniques.

Data-driven decision and planning methods: This section explores data-driven decision-making and planning methods, covering imitation learning, reinforcement learning, inverse reinforcement learning, and associated challenges. It discusses training systems via expert behavior observation, environment interaction for optimal strategy learning, and inferring reward functions, emphasizing their application and real-world challenges in autonomous driving.

Hybrid decision and planning methods: This section examines hybrid decision-making and planning methods that merge knowledge-driven and data-driven approaches to improve accuracy and efficiency. It discusses the integration of expert knowledge with techniques like imitation and reinforcement learning, highlighting both the benefits of this combination and the technical and practical challenges in implementation.

Experiment platform: This section explores pivotal resources for autonomous driving: datasets and simulation platforms, detailing their sources, composition, applications, and support for decision-making algorithm development. It also evaluates simulation platforms, examining their features and role in testing algorithms and simulating complex traffic environments, crucial for advancing autonomous driving technology.

Challenges and future perspectives: This section addresses current challenges in autonomous driving decision-making and planning, including environmental perception uncertainties, unpredictability of traffic participants, and limitations of data-driven algorithms, and examines industry and academic responses. It also looks to future trends, such as multi-sensor fusion, deep learning, behavior prediction models, scenario simulation, reinforcement learning, synthetic data, continuous learning, and explainable AI.

The autonomous driving industry has been progressing at a rapid pace, yet there exists a notable gap in the systematic analysis and synthesis of decision-making and planning methods. Our review aims to bridge this gap by providing a comprehensive and systematic categorization, comparison, and analysis of the current state of the art in autonomous driving. Through this rigorous examination, we have identified a clear trend and a compelling direction for future research: the integration of knowledge-driven and data-driven approaches into hybrid methods.

The lack of systematic analysis in the field has led to fragmented development and a lack of clarity on the most effective strategies for advancing autonomous driving systems. Our review stands as a testament to the need for a structured evaluation of the various methodologies, encompassing rule-based, state transition-based, game-theory based, search-based, sampling-based, and optimization-based methods. By conducting a thorough comparative analysis, we have been able to elucidate the strengths and limitations of each approach and how they complement one another.

Our systematic summary and synthesis have led us to conclude that the future of autonomous driving decision-making and planning lies in hybrid methods. This conclusion is not merely a promotion of a particular approach but is grounded in the recognition that no single methodology can address the multifaceted challenges of autonomous driving. Hybrid methods offer a balanced and comprehensive framework that leverages the strengths of both knowledge-driven and data-driven strategies, thereby enhancing the adaptability, safety, and interpretability of autonomous vehicles.

We advocate for hybrid methods as the future research direction because they hold the potential to: Improve Adaptability: By incorporating data-driven learning, hybrid methods can adapt to dynamic and complex traffic scenarios that exceed the capabilities of traditional rule-based systems.

Enhance Safety and Reliability: Knowledge-driven components provide a safety net, ensuring that decisions comply with predefined rules and ethical standards, which is critical for public trust and regulatory compliance.

Ensure Interpretability: The combination of data-driven flexibility with knowledge-driven structure allows for greater transparency in decision-making processes, which is essential for debugging, optimization, and building user trust.

In conclusion, our systematic analysis and synthesis of autonomous driving decision-making and planning methods provide a clear premise and direction for the field. We believe that the hybrid approach, informed by our comprehensive review, is not only a promising direction but also a necessary evolution in the development of autonomous driving systems. Our review serves as a roadmap for researchers and practitioners, guiding the industry toward a future where autonomous vehicles can operate with enhanced safety, efficiency, and reliability. The contributions of this paper can be summarized as follows:

This paper provides a comprehensive overview of automated decision-making and planning methods, and innovatively classifies these methods into three categories: knowledge-driven methods, data-driven methods, and hybrid methods. Regarding knowledge-driven methods, we highlight the efficiency and accuracy of expert systems in handling specific decision problems through rule system design and state management, and we explore the role of game theory in strategy formation. Additionally, we also discuss how search and optimization algorithms plan paths. For data-driven methods, we analyze decision-making and planning methods based on reinforcement learning, imitation learning, and inverse reinforcement learning. We explore the theoretical foundations, current applications, and challenges of these algorithms. This comprehensive analysis highlights the strengths and limitations of various methods and provides direction for future research and technological improvements. Moreover, we delve into hybrid methods, emphasizing their potential to integrate the advantages of data-driven and knowledge-driven approaches in autonomous driving decision systems.

Furthermore, we detail various virtual simulation platforms and physical experimental facilities necessary for testing and validating algorithms, emphasizing their crucial role in transitioning algorithms from theory to real-world applications.

Finally, we discuss industry challenges and prospects, clarifying future research directions and the integration potential of emerging technologies in the field of automated decision-making and planning.

Overall, this paper enriches academic research in automated decision-making and planning and guides practitioners in the field, contributing positively to technological advancements in this domain.

The knowledge-driven method typically separates the decision-making phase from the path planning phase to enhance modularity, manageability, and efficiency. This distinction allows the decision module to focus on high-level strategies, such as overtaking or following other vehicles, while the path planning module translates these strategies into specific driving paths. The overall classification structure of knowledge driven methods is shown in Figure 1.

Figure 1. Classification structure of knowledge driven methods. This figure carefully classifies the knowledge-driven decision-making planning methods and introduces the decision-making planning methods in stages. It is mainly divided into two stages, including the decision-making process and the planning process. The second section will discuss each method in the two-stage process in detail.

The advantage of the knowledge-driven planning method is that it ensures the vehicle remains in a safe state within the predefined range of rules. However, this approach also has several drawbacks, such as overly conservative decision-making and increased time complexity due to the accumulation of rules. While the introduction of trajectory post-processing helps ensure the smoothness and safety of the vehicle's trajectory, the issue of planning delays persists. In the following sections, we will discuss the knowledge-driven planning method in detail, including rule-based and learning-based approaches, examining its benefits, limitations, and potential improvements.

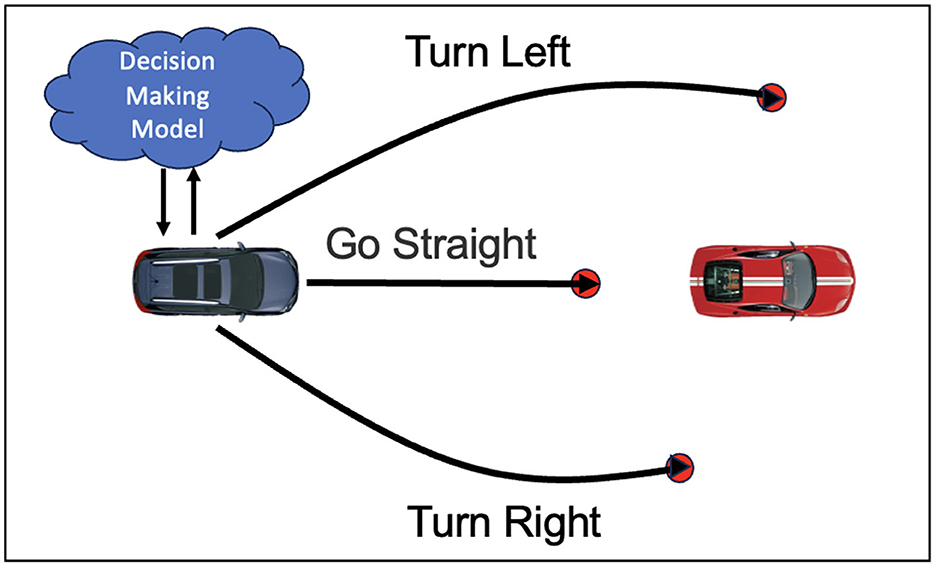

The decision module provides initial coarse-grained decision results for the knowledge driven decision and planning model, as shown in Figure 2. Decision-making methods can be categorized into rule based, state-transition based, and game-theory based approaches. Rule based methods use predefined traffic rules and driving strategies, making decisions with conditional logic and reasoning. State-transition methods, such as Markov Decision Processes (MDP) and Partially Observable Markov Decision Processes (POMDP), represent the driving environment and vehicle states as a state space, optimizing state transitions for optimal decisions. Game-theory based approaches treat autonomous driving as a multi-agent system, using game theory to analyze and predict other traffic participants' behaviors to form cooperative or competitive driving strategies. The following sections provide a detailed overview of these decision-making methods.

Figure 2. Schematic diagram of knowledge driven decision-making. The figure describes the possible decisions that the vehicle may make when encountering an obstacle vehicle, including going straight, turning left, and turning right. The vehicle plans the optimal trajectory based on the decision-planning model.

Rule based systems make decisions with predefined rules and logic. These systems rely on expert knowledge and experience to build decision logic and rules. Zhao et al. (2021) propose a rule based system using if-then rules to process perception information from the traffic environment, generating corresponding driving behaviors. The decision logic of this system is to select the optimal driving strategy based on the surrounding traffic conditions and potential risk assessment. Additionally, the design of the rule database is a crucial component of rule based systems. Pellkofer and Dickmanns (2002) propose a behavior decision module, which execute task plans generated by task planning experts rule database. Hillenbrand et al. (2006) introduce a multi-level collision mitigation system that decides whether to intervene by evaluating the remaining reaction time (TTR). The system employs a time-based decision-making approach, and provides a flexible trade-off between potential benefits and risks while maintaining product liability protection and driver acceptance. Dam et al. (2022) propose an advanced predictive mechanism that comprehensively analyzes the current state of traffic flow and vehicle behavior patterns. This system can anticipate potential upcoming situations by utilizing probabilistic models and machine learning techniques.

In highly complex traffic environments, maintaining system reliability and effectiveness is a critical issue. Zhang T. et al. (2023) address this problem by proposing several solutions, including enhanced perception capabilities, real-time traffic data analysis, adaptive rule adjustment, multimodal decision fusion, safety strategies, redundancy design, and human-machine interaction optimization. Koo et al. (2015) discuss how to enhance the transparency of rule based systems, making their decision-making processes easier to understand and verify. Noh and An (2017) define a decision-making framework for highway environments, capable of reliably and robustly assessing collision probabilities under current traffic conditions and automatically determining appropriate driving strategies. This framework consists of two main components: situation assessment and strategy decision-making. The situation assessment component uses multiple complementary “threat metrics” and Bayesian networks to calculate “threat levels” at both vehicle and lane levels, assessing collision probabilities in specific highway traffic conditions. The strategy decision-making component automatically determines suitable driving strategies for given highway scenarios, aiming for collision-free, goal-oriented behavior.

Trajectory prediction and multi-angle trajectory quality evaluation must be introduced into the rule-based decision-making system. This will allow the drivable trajectory to be planned at future moments more quickly and accurately while ensuring driving safety. The most important thing is to ensure the absolute safety of all agents in a complex environment.

State transition and management models describe how a vehicle moves between different states. State management involves making decisions based on current state and environmental information. The application of Markov Decision Processes (MDPs) is particularly significant, providing an effective mathematical framework for state management. Galesloot et al. (2024) introduce a novel online planning algorithm to address challenges in multi-agent partially observable Markov decision processes (MPOMDPs). They integrate weighted particle filtering into sample-based online planners, and leverage the locality of agent interactions to develop new online planning algorithms operating on a Sparse Particle Filter Tree. Sheng et al. (2023) introduce a safe online POMDP planning approach that computes shields to restrict unsafe actions violating reach-avoid specifications. These shields are integrated into the POMDP algorithm, presenting four different methods for shield computation and integration, including a decomposed variant aimed at enhancing scalability. Furthermore, Barenboim and Indelman (2024) propose an online POMDP planning method that provides deterministic guarantees by simplifying the relationship between the actual solution and the theoretical optimum. They derived tight deterministic upper and lower bounds for selecting observation subsets at each posterior node of the tree. The method simultaneously constrains subsets of state and observation spaces to support comprehensive belief updates. Ulfsjöö and Axehill (2022) combine POMDP and scenario model predictive control (SCMPC) in a two-step planning method to address uncertainty in highway planning for autonomous vehicles. Huang Z. et al. (2024) propose an online learning-based behavior prediction model and an efficient planner for autonomous driving, utilizing a transformer-based model integrated with recurrent neural memory to dynamically update latent belief states and infer the intentions of other traffic participants. They also employed an option-based Monte Carlo Tree Search (MCTS) planner to reduce computational complexity by searching action sequences. Schörner et al. (2019) develop a hierarchical framework for autonomous vehicles in multi-interaction environments. This framework addresses decision-making under occluded conditions by computing the vehicle's observation range. Additionally, it considers current and predicted environments to foresee potential hidden traffic participants. Lev-Yehudi et al. (2024) introduce a novel POMDP planning approach for target object search in partially unknown environments. Liu et al. (2015) present a trajectory planning method using POMDP to handle scenarios with hidden road users. Chen and Kurniawati (2024) propose a context-aware decision-making algorithm for urban autonomous driving, modeling the decision problem as a POMDP and solving it online.

The utilization of Markov Decision Processes (MDP) and their various extensions in the field of autonomous driving has greatly enhanced the capacity for effective decision-making and strategic planning, particularly in environments that are partially observable or involve multiple interacting agents. In real-world driving scenarios, vehicles often encounter situations where not all variables or conditions are fully visible or predictable, such as obstacles obscured from sensors or dynamic traffic patterns. MDPs provide a structured framework to address these uncertainties by allowing autonomous systems to evaluate potential actions based on probabilistic models of outcomes, thereby optimizing decision-making under uncertainty. Furthermore, in multi-agent environments where interaction with other vehicles, pedestrians, and traffic systems is required, extensions of MDPs, such as Multi-agent MDPs (MMDPs), facilitate coordinated strategies that ensure safe and efficient navigation. These advanced models enable autonomous vehicles to anticipate and respond to the actions of other agents, leading to more reliable and intelligent driving solutions. Overall, the applications of MDP and its variants in autonomous driving enable effective decision-making and planning in partially observable and multi-agent environments.

Game theory plays a critical role in decision-making by analyzing the strategies and potential actions of different participants, enabling autonomous driving systems to optimize their behavior in competitive environments. Fisac et al. (2019) propose a hierarchical dynamic game theory planning algorithm, effectively handling the complex interactions between autonomous vehicles and human drivers by decomposing dynamic games into long-term strategic games and short-term tactical games. Sankar and Han (2020) employ adaptive robust game theory decision strategies within a hierarchical game theory framework to manage vehicle interactions on highways. This strategy allows autonomous vehicles to adjust their behavior based on other drivers' actions, reducing collision rates and increasing lane-changing success. Li et al. (2020), Cheng et al. (2019), and Li et al. (2018) utilize non-cooperative game theory methods to address traffic decision-making at unsignalized intersections. In these methods, each vehicle is viewed as an independent decision-maker to minimize its travel time or enhance its safety without necessarily cooperating with other vehicles. Martin et al. (2023), Tian et al. (2022), and Fang et al. (2024) explore cooperative game theory to enhance the efficiency and safety of interactions among autonomous vehicles. In cooperative game theory, multiple participants form coalitions and share information or resources to achieve common goals, such as reducing overall travel time or increasing overall system safety. The cooperative driving framework allows vehicles to share their location and speed information and predict the intentions and behaviors of others, leading to more coordinated and safer decisions in complex road environments. For autonomous vehicle control at roundabouts, Tian et al. (2018) demonstrate the effectiveness of adaptive game theory decision algorithms by online estimating the opponent driver types and adjusting strategies accordingly, thus managing multi-vehicle interactions in complex traffic environments.

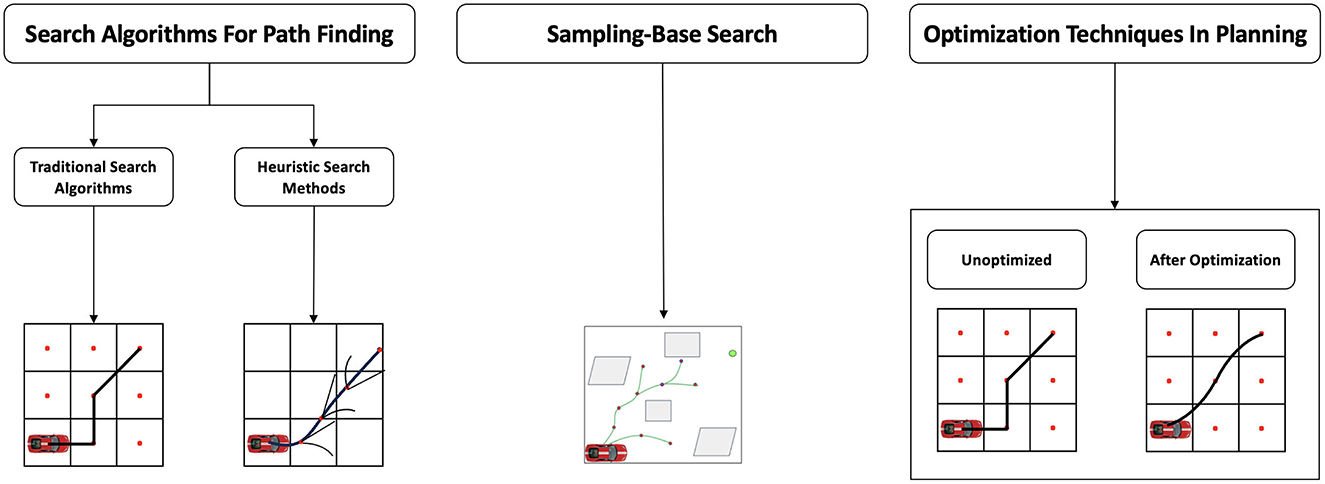

Path planning methods are typically divided into two stages. The first stage includes search-based methods, such as A* and Dijkstra, which systematically search all possible paths to find the optimal solution for well-defined and relative problems. Additionally, it also includes sampling-based methods, such as Rapidly-Exploring Random Trees (RRT) and Probabilistic Roadmaps (PRM), which generate candidate paths through random sampling and are suitable for high-dimensional and complex planning spaces. The second stage primarily employs optimization techniques by designing objective functions and constraints to model the path optimization goals. Ultimately, this process results in a planned path that meets requirements for smoothness, safety, and efficiency. Figure 3 depicts the architectural forms of different planning algorithms.

Figure 3. The knowledge-driven algorithm framework is mainly divided into three parts: search-based methods, sampling-based methods, and optimization-based methods. From the figure, the hybrid search effect is more prominent in the search-based methods, and the trajectory planned by the optimization-based method is smoother.

Traditional search algorithms such as A* and Dijkstra systematically explore the path space to find the optimal route from a start to an end point. However, the environments for path planning have become increasingly complex. Diverse heuristic and meta-heuristic search methods have emerged to enhance computational efficiency and path quality. Ferguson and Stentz (2006) explore the field D* algorithm, which calculates path cost estimates during linear interpolation to generate paths with continuous headings. Daniel et al. (2010) find shorter paths on grids without restricting path direction. Wang J. et al. (2019) combine A* with neural networks to incorporate rich contextual information and learn user movement patterns. Meanwhile, Melab et al. (2013) demonstrate the efficiency and practicality of the ParadisEO-MO-GPU framework, a GPU-based parallel local search meta-heuristic algorithm, which implements parallel iterative search models on graphical processing units. Stochastic search algorithms and their variants are also applied in autonomous vehicle path planning to handle dynamic obstacles and complex scenarios. Kuffner and LaValle (2000) solve single-query path planning problems by constructing trees from both the start and end points. Yu et al. (2024a) improve planning efficiency in dynamic environments, which combines dual-tree search with efficient collision detection mechanisms. Huang and Lee (2024) achieve asymptotically optimal path planning in narrow corridors by adaptive information sampling and tree growth strategies.

Sampling-based methods address path planning problems in complex and dynamic environments by generating candidate paths with random sampling in the planning space. Rapidly-exploring Random Trees (RRT) and their variants are representative of these methods. Specifically, Wang Z. et al. (2024) demonstrate that by introducing guided paths and dynamically adjusting weights, which effectively reduces planning time and path curvature. Similarly, Dong et al. (2020) propose a knowledge-biased sampling-based path planning method for Automatic Parking (AP). This approach improves the algorithm's integrity and feasibility by introducing reverse RRT tree growth, using Reeds-Shepp curves to directly connect tree branches, and employing standardized parking space/vehicle knowledge-biased RRT seeds. Chen et al. (2022) propose a strategy combining RRT and Dijkstra algorithms to adapt to semi-structured roads. The method first narrows the planning area using an RRT-based guideline planner, then translates the path planning problem into a discrete multi-source cost optimization problem. The final output path is obtained by applying an optimizer to a discrete cost evaluation function designed to consider obstacles, lanes, vehicle kinematics, and collision avoidance performance. Huang H. et al. (2024) adopt the least action principle to general optimal trajectory planning for autonomous vehicles, offering a method to simulate driver behavior for safer and more efficient trajectory planning. Beyond RRT algorithms, the Probabilistic Roadmap (PRM) algorithm and its improved versions have shown superiority in narrow path planning. Huang Y. et al. (2024) enhance the PRM method by combining uniform sampling with Gaussian sampling, increasing the success rate and efficiency of path planning in narrow passages. Zhang Z. et al. (2023) transform a grid map into a formal context of concepts, mapping the relative positional relationships between rectangular areas. They then convert these relationships into partial order relationships within a rectangular region graph based on concept lattices.

Optimization techniques in planning are broadly applied and critical, By appropriately selecting and applying suitable optimization methods, the performance and efficiency of planning systems can be significantly improved. Xu et al. (2012) introduce a real-time motion planner that achieves efficient path planning through trajectory optimization. Similarly, Zhang et al. (2020) decompose the path planning process into two stages: generating smooth driving guidance lines and then optimizing the path within the Frenet frame. Additionally, Werling et al. (2010) combine long-term goals (such as speed maintenance, merging, following, stopping) with reactive collision avoidance. The method demonstrates its capability in typical highway scenarios, generating trajectories that adapt to traffic flow and validating. Zhang Y. et al. (2023) and Gulati et al. (2013) employ nonlinear constrained optimization methods to compute trajectories that comply with kinematic constraints. The method focuses on dynamic factors such as continuous acceleration, obstacle avoidance, and boundary conditions to achieve human-acceptable comfortable motion.

In practical applications, the efficiency and feasibility of implementing optimization algorithms are critical. Stellato et al. (2020) propose the OSQP solver, which effectively addresses convex quadratic programming problems using the Alternating Direction Method of Multipliers (ADMM), making it suitable for real-time applications. High-performance nonlinear optimization has been realized through domain-specific languages (DSL), where GPU acceleration is used to enhance solving efficiency (Yu et al., 2024b). Furthermore, Huang X. et al. (2023) adopt the Levenberg-Marquardt optimization algorithm for nonlinear systems to the control of continuous stirred-tank reactors, showing faster convergence rates and stronger disturbance resistance.

In summary, optimization techniques play a pivotal role in enhancing the efficiency and effectiveness of planning systems, particularly in the context of autonomous driving and real-time applications. By strategically selecting and applying appropriate optimization methods, such as trajectory optimization and nonlinear constrained optimization, these systems can achieve significant improvements in path planning and dynamic response to environmental factors. The integration of advanced solvers like the OSQP for convex quadratic programming and the utilization of domain-specific languages for leveraging GPU acceleration further highlight the importance of optimization in achieving high-performance planning. These approaches demonstrate the capability to address complex scenarios, maintain compliance with kinematic constraints, and ensure comfort and safety in motion, thereby validating their critical role in theoretical and practical applications across various domains.

Imitation Learning (IL) has become a pivotal methodology in the advancement of Autonomous Vehicles (AVs), leveraging expert demonstrations to navigate around the complexities and hazards intrinsic. Imitation Learning for autonomous vehicles is categorized into three main approaches: Behavioral Cloning (BC), Inverse Reinforcement Learning (IRL), and Generative Adversarial Imitation Learning (GAIL).

Behavioral Cloning (BC) is a straightforward approach that directly mimics human driving behavior. The approach offers several advantages, including simplicity, ease of training, and effective performance when there is a substantial amount of high-quality human driving data available. However, BC encounters difficulties when confronted with unfamiliar road scenarios, is susceptible to noise in the training data, and does not consider the long-term implications of decisions. BC is typically employed in relatively simple and structured environments, such as highways or known routes. Inverse reinforcement learning (IRL) offers the advantage of understanding the underlying intent of human drivers by inferring a reward function, thereby capturing complex driving strategies. This makes it an advantageous approach in diverse and complex scenarios. It demonstrates effective adaptation to novel environments; however, it is associated with considerable computational complexity, necessitating substantial resources and well-designed features and models. IRL is frequently utilized in scenarios that necessitate the comprehension of intricate decision-making processes, such as urban driving. Generative Adversarial Imitation Learning (GAIL) integrates the strengths of generative adversarial networks to enhance model robustness and generalization through adversarial training. GAIL can imitate complex behaviors without explicit reward functions, thereby providing better adaptability to unknown situations. However, its training process can be unstable, involves complex tuning, and demands high-quality and diverse training data. GAIL is suitable for uncertain driving environments that require high robustness and adaptability, such as dynamic urban traffic. An overview of these approaches will be presented in this section.

Imitation Learning approach exploits the vast repository of human driving data to train policies that emulate expert behavior. The fundamental problem definition for IL in the context of AVs revolves around deriving a policy π* that closely matches the expert's policy πE by minimizing the discrepancy between their state-action distributions across a dataset D of demonstrated trajectories. Each trajectory t within D consists of sequential state-action pairs (sit,ait), where ait is the action executed by the expert in state sit according to πE. The optimization framework for achieving this can be mathematically formalized as:

where is a divergence measure that quantifies the dissimilarity between the expert's policy and the learned policy.

BC simplifies the IL challenge by transforming it into a supervised learning problem, with the aim of learning a policy. πθ that minimizes the loss function over the dataset D of state-action tuples, thereby replicating the expert's behavior:

Where the PE(s ∣ πE) is the state distribution of the expert policy, and the can be a loss function that measures the imitation quality of the expert's actions. Commonly, can be the L1 loss (mean absolute error) or the L2 loss (mean squared error). Taking L2 loss as an example, the loss function can be:

The problem of IRL in autonomous driving revolves around inferring a reward function r* from expert demonstrations. Given a set of state-action pairs sampled under the expert policy π*, IRL attempts to learn a reward function r* such that:

Generative Adversarial Imitation Learning (GAIL) is a framework that extracts expert-driving policies without explicitly defining reward functions or employing laborious reinforcement learning cycles. It synergistically combines imitation learning with Generative Adversarial Networks (GANs), creating a duel between a generator and a discriminator. The generator, parameterized by θ, emulates expert maneuvers by matching the distribution of state-action pairs observed in demonstrations. Meanwhile, the discriminator represented by Dω within the interval (0, 1) serves as a stand-in reward evaluator, quantifying the likeness between the generated and actual expert behaviors. The GAIL lies in a min-max optimization objective, succinctly expressed as:

This formula pits the generator against the discriminator, where Eπθ[logDω(s, a)] encourages the generator to produce actions indistinguishable, and ensures the discriminator's sharpness in distinguishing real from fake samples. The term EπE[log(1 − Dω(s, a))] introduces entropy regularization to promote policy exploration. Here, H(πθ) denotes the entropy of policy π, a measure of randomness in action selection that fosters learning flexibility. Gradients guiding updates for both components are defined as:

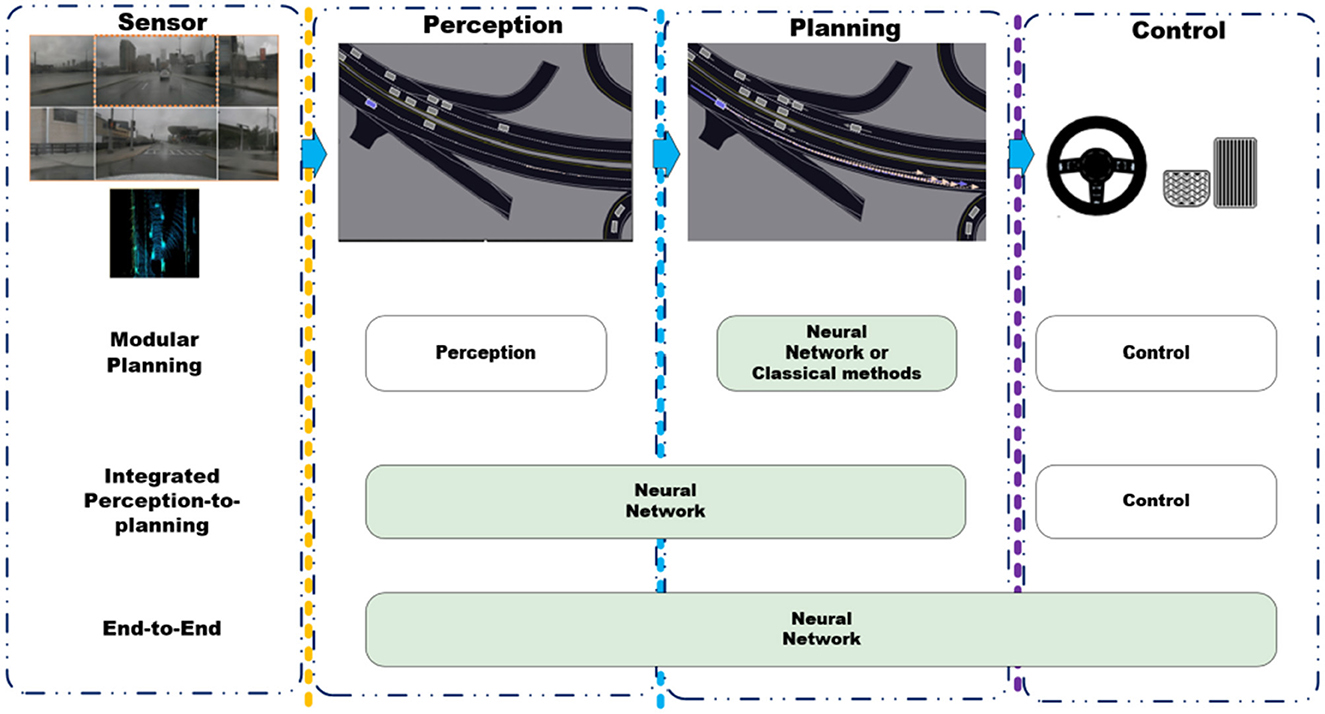

We refine the taxonomy of Behavioral Cloning into two unique categories, as shown in Figure 4: End-to-End and Modular Planning.

Figure 4. The taxonomy of Behavioral Cloning. Autonomous driving tasks are composed of three key components: perception, planning, and control. Perception generates environmental perception outcomes based on sensor inputs. Planning creates the future trajectory of the vehicle by considering perception results and historical state information. Control implements vehicle control actions according to the planning outcomes. Regarding the taxonomy of Behavioral Cloning methods within the context of planning task, it can be categorized into three types. Modular Planning links the perception, planning, and control modules in a pipeline fashion. Integrated Perception-to-planning integrates the perception and planning tasks into a single module. End-to-End indicates that the entire perception, planning, and control tasks are accomplished by a unified module.

End-to-End methodologies involve models that are optimized to infer precise steering and acceleration commands directly from raw sensor data. ALVINN (Pomerleau, 1988) was the first implementation of end-to-end Imitation Learning for autonomous driving in 1989. There is a front-facing camera installed on ALVINN as visual input, and it uses a 3-layer MLP as the policy function approximator. ALVINN learns in real time using data from the human driver to train for lane-keeping steering commands. The AVLINN is extended with obstacle avoidance by Muller et al. (2005). It presents an obstacle avoidance system for small off-road vehicles equipped with twin forward-facing cameras named DAVE. Using end-to-end learning, the system is trained on raw image data coupled with human driver input in a variety of environments. The work from NVIDIA (Bojarski et al., 2016) has taken the concept of end-to-end imitation learning a step further with its DAVE-2, which uses inputs from three onboard cameras. The dual perspective provided by the offset left and right cameras enables the system to correct for vehicle drift. A further work from NVIDIA (Bojarski et al., 2017) provides an exhaustive analysis of the interpretability of the neural network for autonomous driving proposed by Bojarski et al. (2016). The core of this research is to reveal how the deep neural network decides the driving direction based on the input road images, particularly in the context of an end-to-end learning framework. Another work (Cultrera et al., 2020) proposes an explainable autonomous driving system using imitation learning with visual attention. By integrating an attention mechanism, the system highlights important image sections to enhance decision transparency. Hecker et al. (2018) present an end-to-end driving model utilizing eight surround-view cameras strategically mounted for a 360-degree perspective. The model integrates CNNs for feature extraction and LSTM layers for temporal encoding. Codevilla et al. (2018) propose Conditional Imitation Learning (CIL), a framework that enriches the learning process by incorporating explicit intent information alongside visual observations. The CIL model is designed to receive not only visual data from a three-camera configuration–inspired by the DAVE-2 system (Bojarski et al., 2016), but also a high-level command indicating the intended action (e.g., turn left, turn right, go straight). Their network comprises convolutional layers for feature extraction, followed by Long Short-Term Memory (LSTM) units to handle temporal sequences.

The efficacy of the CIL framework is assessed through a dual-pronged evaluation approach. Hawke et al. (2020) advance the application of Conditional Imitation Learning (CIL) by presenting an end-to-end autonomous driving system proficient in executing both steering and speed adjustments amidst intricate urban landscapes. To infuse temporal awareness, an optical flow model is incorporated by Sun D. et al. (2018), enhancing the system's understanding of motion dynamics. Xiao et al. (2020) develop an end-to-end autonomous vehicle framework that integrates both RGB imagery and depth data from onboard LiDAR sensors. Wang Q. et al. (2019) employ the vehicle's current location and intended trajectory to compute a “subgoal angle,” which serves as an input to the neural network. The network takes sequential images, vehicle speed, and the computed subgoal angle as inputs. Its initial seven layers are pre-trained on ImageNet to facilitate feature extraction. Separate feature extractor modules process the input images, speed, and subgoal angle independently. These extracted features are subsequently combined to forecast steering commands and throttle inputs. Haavaldsen et al. (2019) delve into the integration of recurrent layers within end-to-end autonomous vehicle architectures. It involves the training of two distinct models: a standard Convolutional Neural Network (CNN) operating in an end-to-end framework and a CNN with recurrent layers. The training data contains 3 camera images, traffic signals, a high-level command, output steers, and speed control signals. Chi and Mu (2017) introduce a model that incorporates LSTM architecture to enhance its capacity for temporal reasoning. This model treats the steering angle as a dynamically evolving variable, reflecting the continuity inherent in driving actions. The integration of LSTM within a dual-subnetwork framework ensures a comprehensive understanding of the driving environment and historical vehicle dynamics. Kebria et al. (2019) uncover that models with increased depth surpass their less deep counterparts, with a substantial improvement particularly evident when transitioning from 9 to 12 layers. Furthermore, models incorporating a varied assortment of filter sizes emerged as the top performers, highlighting the advantage of filter size diversity. Barnes et al. (2017) integrate video odometry for tracking vehicle movement and employs LiDAR for detecting impediments. By merging visual cues from the camera with spatial data from LiDAR, the system can partition the incoming visuals into three classifications at the pixel level: traversable paths, non-traversable areas, and unclassified zones. Cai et al. (2019) propose a model that integrates camera visuals, high-level navigational commands, and past trajectories of autonomous vehicles. This model is structured with three distinct sub-networks, each dedicated to executing a fundamental maneuver: maintaining a straight course, turning left, or turning right. These sub-networks are concatenated with Long Short-Term Memory (LSTM) and Fully Connected (FC) layers to formulate trajectories. Bansal et al. (2018) transform bird's-eye view environmental inputs into driving commands. To overcome the limitations of standard behavior cloning, the model integrates synthesized data simulating challenging scenarios such as collisions and off-road incidents. By augmenting the loss function to penalize unwanted events and encourage progression, the system learns from both ideal and adverse behaviors, achieving higher robustness. Caltagirone et al. (2017) propose a LiDAR-based driving path generation approach using a fully convolutional neural network (FCN) that integrates LiDAR point clouds, GPS-IMU data, and Google navigation instructions. The system learns to perform perception and path planning directly from real-world driving sequences. This learning-based method bridges low-level scene understanding with behavioral reflexes, enhancing autonomous vehicle technology by producing human-interpretable outputs for vehicle control. Xu et al. (2017) present an end-to-end learning framework for autonomous vehicles with a large, uncalibrated, crowd-sourced video dataset to mitigate the Cascading Error Problem. Their model integrates spatial and temporal cues for continuous steering angle prediction. Model assessment entails contrasting the highest probability predicted action against the actual action for validation. Unlike approaches that directly use a single network to fit autonomous driving tasks, some researchers have introduced more complex multi-stage architectures to enhance network performance. Chen et al. (2020) outline a novel two-stage training method for autonomous driving systems named “Learning by Cheating.” Initially, a “privileged” agent is trained with access to ground-truth environmental data, providing an unrealistic advantage akin to “cheating.” This agent then teaches a “sensorimotor” agent, which operates solely on visual input, mimicking expert behavior without direct access to the privileged information. This strategy breaks new ground by separating perception from decision-making, enabling the vision-based agent to excel without needing explicit environmental cues. Chen and Krähenbühl (2022) present a pioneering system that leverages the experiences of not just the ego-vehicle but also surrounding vehicles for autonomous driving policy training. This innovative approach enriches the diversity of driving scenarios without requiring additional data collection. Wu et al. (2022) propose an integrated solution for autonomous vehicles, merging trajectory planning and control prediction into one system. Shao et al. (2023b) process intricate urban traffic scenarios by incorporating both temporal and global reasoning mechanisms. This system uniquely addresses the challenges of predicting future object movements and managing obscured entities, enhancing safety through superior anticipation of potential hazards in complex situations. Hu et al. (2023b) propose a novel framework that departs from conventional autonomous driving architectures by focusing on planning as the objective. UniAD integrates perception, prediction, and planning tasks into one unified network. The network's unique design revolves around transformer decoder-based modules that facilitate multi-task cooperation and emphasize a planning-first mindset.

In contrast to the aforementioned end-to-end architectures that use data-driven methods to model the entire autonomous driving system, Modular Planning focuses on the data-driven aspects, specifically within the decision-making and planning components. Chen et al. (2019) address the challenges associated with conventional model-based decision-making systems. By learning from offline expert driving datasets, the proposed method bypasses the need for manual policy design, thereby offering scalability and adaptability to diverse road conditions, including varying topologies, geometries, and traffic regulations. Sun L. et al. (2018) present planning and control framework, addressing the challenges of real-time, safety, and efficiency. Renz et al. (2022) deviate from conventional pixel-based planning systems that often struggle with efficiency and interpretability in complex environments. PlanT employs an object-centric representation, processing a compact set of scene elements rather than dense grids, thereby enhancing both computational speed and decision transparency. Guo et al. (2023) innovate urban autonomous driving by tackling the covariate shift issue in behavior cloning. It introduces a policy mapping context states directly to ego vehicle trajectories, bypassing combined state-action predictions. Cheng et al. (2023) address the inefficiencies arising from the lack of a standardized benchmark in evaluating imitation-based autonomous driving planners. By leveraging the newly introduced nuPlan dataset and its closed-loop benchmarking framework, they conduct an extensive analysis focusing on two key aspects: crucial features for ego-motion planning and effective data augmentation strategies to mitigate compounding errors. Cheng et al. (2024a) propose a query-based model that integrates lateral and longitudinal self-attentions sequentially, followed by a cross-attention mechanism that aligns the decoded trajectory with the scene context. To mitigate computational intensity, PLUTO employs factorized attention, reducing complexity without sacrificing expressiveness.

Inverse Reinforcement Learning (IRL) recognizes reward structures from expert demonstrations that are critical for emulating nuanced human driving behaviors in autonomous vehicles. While Reinforcement Learning (RL) optimizes actions based on known rewards, Inverse Reinforcement Learning (IRL) deduces the underlying reward functions from observed behaviors, providing valuable insights into decision-making processes.

Rosbach et al. (2019) optimize driving styles in an integrated general-purpose planner for autonomous vehicles. The learning process uses human demonstration data, approximating feature expectations within the planner's graph representation to facilitate maximum entropy IRL. Sadigh et al. (2016) adopt IRL to develop strategies for autonomous vehicles that proactively shape the behavior of human drivers. By approximating the human driver as an optimal planner with reward functions learned from demonstrations, the method optimizes robot actions without explicit communication coding. Brown and Niekum (2018) address the challenge of deriving high-confidence performance bounds in scenarios, where the reward function is unknown by employing a Bayesian Inverse Reinforcement Learning (IRL) sampling method. Using demonstration data, the framework employs Markov Chain Monte Carlo techniques to sample reward functions. These samples are used to compute a tight upper bound on the worst-case performance discrepancy between any evaluated policy and the optimal policy induced by the expert's latent reward structure. Palan et al. (2019) employ a novel hybrid framework, which synergistically integrates human demonstrations and preference queries for efficient reward function learning. Lee et al. (2022) adopt an IRL-based spatiotemporal approach for Model Predictive Control (MPC), utilizing a deep neural network structure with goal-conditioning. This network design ingests concatenated bird's eye view images, encompassing occupancy, velocity, acceleration, and lane information, to implicitly learn an interpretable reward function. Cai et al. (2021) adopt IRL to harmoniously blend imitation learning with model-based reinforcement learning techniques. Phan-Minh et al. (2022) employ a unique structured neural network, processing separated features with masked self-attention before integration. Liang et al. (2018) employ a Controllable Imitative Reinforcement Learning (CIRL) methodology. The architecture features a gating mechanism enabling conditional policy execution based on distinct commands, processing raw visual inputs to output continuous control actions like steering angles. Huang et al. (2023c) use IRL for conditional predictive behavior planning in autonomous vehicles. It features a Transformer-based network structure that integrates future ego plans with agent history and vectorised map.

Generative Adversarial Imitation Learning (GAIL) innovatively combines the strengths of imitation and generative adversarial networks (GANs) for autonomous vehicle policy learning. Unlike traditional reinforcement learning, GAIL learns policies directly from expert demonstrations. It employs a two-part structure: a generator acting as a policy and a discriminator acting as a reward function. With GAIL, autonomous systems can learn sophisticated behaviors in an end-to-end manner, improving their adaptability and performance in the field. Li et al. (2017) extend GAIL for unsupervised discovery of latent structures in expert demonstrations. By leveraging visual data, it learns interpretable representations directly from raw pixel inputs. Kuefler and Kochenderfer (2017) extend the InfoGAIL algorithm to address multi-modal imitation learning for sustained behavioral replication. By introducing “burn-in demonstrations,” the method conditions policies at test time, enhancing their ability to mimic expert behavior over extended periods. Kuefler et al. (2017) utilize GAIL to emulate human highway driving patterns. In particular, the performance of this method is significantly better on longer-term predictions (over 3 s), highlighting the superiority under a wide range of assessment metrics. Merel et al. (2017) introduce a novel extension to GAIL, specifically tailored for extracting human-like behaviors from sparse and noisy motion capture data. This method innovates by successfully training policies using partial state features alone. It further demonstrates the feasibility of imitation across dissimilar body structures and dynamics. By integrating a context variable into the GAIL framework to manage multi-behavior policies, the approach fosters seamless transitions between various learned behaviors. Sharma et al. (2018) propose a framework for learning hierarchical policies from unsegmented demonstrations. Directed-info Gail adopts directed information flow in a graphical model to uncover subtasks without the need for action labels. Using an L2 loss, it refines action replication in complex tasks and demonstrates effectiveness in various environments, including grid navigation and continuous control scenarios such as hopper and walker. Fei et al. (2020) introduce a novel approach to the multi-modal GAIL method. It integrates an auxiliary skill selector, enabling the system to adaptively choose behaviors in response to varying contexts. Theoretical convergence guarantees for both the generator and selector ensure optimal policy learning.

RL is primarily classified into three methodologies: value-based, policy-based, and actor-critic methods. Value-based methods, such as Q-learning, concentrate on estimating the value of actions in given states in order to derive optimal policies. The principal benefit of these methods is their simplicity and efficacy in discrete action spaces, rendering them well-suited to environments where the state-action space can be accurately represented and managed. However, they frequently encounter difficulties when confronted with extensive action spaces, a phenomenon known as the curse of dimensionality. Furthermore, they necessitate a considerable amount of exploration to reach optimal policies. These methods are typically employed in scenarios with a finite set of actions, such as grid-world problems or simplified driving tasks. In contrast, policy-based methods directly parameterise and optimize the policy itself, thereby offering advantages in the handling of continuous action spaces and enabling more direct learning of stochastic policies. These methods are particularly effective in environments where the action space is continuous or high-dimensional, such as robotic control or complex maneuvering tasks. However, policy-based methods may be less sample-efficient and may exhibit high variance during training, necessitating the careful tuning of learning rates and exploration strategies. Actor-critic methods integrate the advantages of both value-based and policy-based techniques by employing two distinct structures: the actor, which updates the policy, and the critic, which assesses the action taken by the actor. This combination enables more stable and efficient learning, reducing variance and improving convergence rates. Actor-critic methods are versatile and can be applied in a wide range of settings, from relatively simple tasks to those of a more complex nature, such as autonomous driving in dynamic environments. However, they are prone to being computationally intensive and require careful balancing between the updates to the actor and critic in order to maintain stability.

Reinforcement Learning (RL) constitutes a foundational mathematical framework grounded in the principle of trial-and-error learning. Mathematically refined of RL, is formalized as (S, A, P, R, γ), with S and A representing the sets of all possible states and actions, respectively. The transition dynamics function, P(st+1 ∣ st, at): S × S × A → [0, 1], maps state-action pairs to a probability distribution over subsequent states. The instantaneous reward function, R(st, at, st+1): S × A × S → , furnishes the learning cues. A discount factor γ ∈ [0, 1] governs the present valuation of prospective rewards, with lower values promoting shortsighted decision-making.

For scenarios where some environments are not fully observable, they incorporate an observation space Ω and an observation function O, such that O(at, st + 1, ot+1) = P(ot+1 ∣ at, st+1) quantifies the likelihood of perceiving ot+1 following the execution of action at leading to state st+1.

At each discrete time step t, the agent conditioned on its present state st chooses an action at from the action set A, subsequently earning a numerical reward rt+1 and transitioning to a new state st+1, as shown in Figure 5. The sequential record {s0, a0, r1, s1, a1, r2, ...} formed is termed a rollout or trajectory. The anticipated accumulation of future rewards, encapsulated by the expected discounted return Gt beyond time step t, is mathematically defined as follows:

where T represents a finite value for problems with a finite horizon, and ∞ for those with an infinite horizon. The policy π(a ∣ s) assigns probabilities to each potential action based on the current state. Meanwhile, the value function under policy π, denoted vπ(s), estimates the expected cumulative return when starting from state s and adhering to policy π thereafter.

Similarly, the action-value function qπ(s, a) is defined as:

which satisfies the recursive Bellman equation:

The objective of RL is to identify the optimal policy that maximizes the expected return .

RL is primarily classified into three methodologies: value-based, policy-based, and actor-critic methods.

Value-based RL focuses on assessing the value of states or state-action pairs to guide decision-making. By iteratively updating a value function that predicts future rewards, these methods prioritize actions associated with the highest expected return. Alizadeh et al. (2019) adopt DQN (Deep Q-Network) to address a discrete action space where decisions such as lane changes are made in autonomous driving simulations. Reward mechanisms are designed to incentivise safe behavior, penalize collisions or aggressive maneuvers and encourage goal-directed navigation. Deshpande and Spalanzani (2019) address the challenge of autonomous in the presence of pedestrians. The action space encompasses velocity adjustments and steering decisions, while the state space is depicted through a grid-based representation encoding vehicle and pedestrian positions. Reward mechanisms are in place to encourage safe distances from pedestrians and adherence to traffic rules. Tram et al. (2019) integrate DQN with Model Predictive Control (MPC) to address intersection navigation. The action space comprises six distinct actions, including proceeding through the intersection and yielding. The state space encompasses the dynamic vehicle conditions and partial observability of surrounding traffic. A reward mechanism is devised to ensure that driving practices are both safe and effective. Li and Czarnecki (2018) employ a multi-objective Deep Q-Network variant to address the challenge of autonomous driving. The action space encompasses a wide range of maneuvers, including lane changes and adhering to traffic rules at intersections. The model employs a neural network architecture featuring shared and specialized layers, with inputs including vehicle states and environmental factors. Auxiliary factored Q-functions are integrated to enhance learning efficiency, leveraging structured representations of the environment. Ronecker and Zhu (2019) address the complexity of decision-making in autonomous driving on highways. The action space encompasses the selection of target points for trajectory planning. The reward functions have been designed to align with the specific requirements of highway scenarios. The method provides incentives for safe and efficient driving behaviors, including maintaining speed, executing smooth lane changes, and avoiding collisions. Yuan et al. (2019) employ a Multi-Reward Architecture based DQN approach. The reward functions have been structured in such a way as to incentivise the maintenance of a certain speed, overtakes and safe lane changes. A distinctive network design incorporates a shared low-level with three distinct high-level branches, each dedicated to a specific reward aspect. The training process encompasses a diverse range of scenarios that have been simulated for autonomous highway driving. Liu et al. (2019) employ the DDQN (Double Deep Q-Network) for reinforcement learning, navigating within a discrete action space comprised of semantic actions. Rewards are tailored for safe and efficient maneuvers. Min et al. (2019) employ the DDQN (Distributional Deep Q-Network), which operates in a discrete action space characterized by highway driving scenarios. The rewards are designed for safe and efficient navigation. The network architecture integrates convolutional layers for processing camera images and additional layers for LiDAR data fusion. A distinctive dual Q-function mechanism serves to prevent value overestimation, thereby enhancing the stability of the training process. The model undergoes rigorous learning phases, validated through simulations in a Unity-based highway driving environment. Shi et al. (2019) employ an HDQN (Hierarchical DQN) approach, which addresses the complexity of autonomous lane change tasks in dynamic environments. The rewards are shaped by safety and feasibility metrics. The method employs a dual-layer network structure, with fully connected layers used for decision-making. Through iterative learning and rigorous training, the model demonstrates its proficiency via simulations, exhibiting convergent loss curves and accumulating rewards that signify effective decision-making and planning.

Policy-based RL directly optimizes a policy function, mapping states directly to action probabilities. This approach adjusts the policy parameters based on the policy gradient. Policy-based methods naturally handle continuous action spaces and can induce more complex behaviors, yet they may suffer from higher variance during optimization. Osiński et al. (2020) employ PPO (Proximal Policy Optimization) to optimize steering commands in a continuous action. The network is trained predominantly on synthetic data, which is more cost-effective than training on real data. The results of real-world validation demonstrated remarkable success in sim-to-real transfer, evidenced by the performance of the system in nine diverse driving scenarios, totalling 2.5 km. Belletti et al. (2017) encompass a variety of policy update methods, with PPO exhibiting a faster convergence to optimal policies. Tang (2019) employ PPO for learning multi-agent negotiations in complex environments. The study addresses a continuous action space, where agents perform tasks such as acceleration, steering, and signaling in a state space that encompasses dynamic traffic scenarios. The rewards are designed to encourage safe and efficient navigation, with penalties for collisions and incentives for adherence to traffic rules. Jang et al. (2019) train autonomous vehicles (AVs) to navigate traffic in a continuous action space with the TRPO (Trust Region Policy Optimization) algorithm. The objective of the reward signals was to minimize traffic delays and to promote smooth merging behaviors. The neural networks process inputs and apply nonlinear transformations for decision-making. Chen et al. (2018) adopt a deep HRL (hierarchical reinforcement learning) approach to address the challenge of autonomous driving tasks with distinct behaviors, such as passing or stopping at traffic lights. The reward system is dynamically adjusted based on the vehicle's action and the factors of vehicle velocity, distance to crossing line, and time till signal change. The hierarchical structure comprises distinct modules for decision-making levels, with inputs including vehicle dynamics and the generation of acceleration commands through a policy network.

Actor-critic algorithms integrate the strengths of both methods: an “actor” generates actions based on learned policies, while a “critic” evaluates these actions through a value function. Actor-critic methods often achieve greater stability and learning efficiency, particularly in complex tasks. Next, we will explore in detail the application of these three methods in decision-making and planning for autonomous driving. Wu et al. (2018) address the challenge of dynamically assigning varying speed limits across lanes, which requires consideration of a complex state space that encompasses multiple traffic factors. The method employs Deep Deterministic Policy Gradient (DDPG), a variant of reinforcement learning, which operates in continuous action spaces. The reward signals encompass efficiency metrics, safety indicators, and environmental impact through emissions. The DDPG model employs a sophisticated actor-critic architecture that utilizes inputs reflecting real-time traffic conditions to learn optimal speed limit adjustments. The training process optimizes both the actor and critic via temporal difference errors and deterministic policy gradients. Gao and Chang (2021) use the Soft Actor-Critic(SAC) algorithm to model autonomous driving system. A ResNet-34 architecture serves as the backbone for both actor and critic networks, processing raw image states coupled with vehicle speed as inputs. Imitation learning pre-training is adopted to improve model initialization before reinforcement learning fine-tuning for optimal performance. Chu et al. (2019) employ Advantage Actor-Critic (A2C) to address the challenge of large, discrete action space inherent in traffic signal control. Utilizing Long Short-Term Memory (LSTM) networks, the model processes complex spatio-temporal traffic flows, maintaining historical context without overwhelming the state representation. The model proposed by Lin et al. (2018) is also an A2C method operating in a discrete action space, where decisions involve switching or maintaining traffic light phases. The state space includes a 2-D tensor reflecting the number of stopped vehicles and average speeds across a 3 × 3 intersection grid. Mousavi et al. (2017) use deep policy gradient algorithms to control traffic signal operations. The model operates in a discrete action space, dictating traffic signal phases and navigating high-dimensional state spaces derived from complex urban traffic dynamics.

Hybrid methods exhibit unique advantages in the decision-making and planning of autonomous driving, yet they also encounter numerous challenges. Their merit lies in the successful integration of the learning capabilities of data-driven approaches and the characteristics of knowledge-driven methods. On one hand, the knowledge-driven component furnishes a rule framework that ensures the legality, consistency, and interpretability of decisions, enabling autonomous driving behaviors to adhere to traffic regulations and common sense. On the other hand, the data-driven part, by virtue of learning and mining from vast amounts of data, accurately identifies and adapts to complex and variable scenarios. Algorithms such as EPSILON and MARC, through the collaboration of deep learning models and tree models, significantly enhance the decision-making level, accomplishing flexible, safe, and highly interactive decision planning and strengthening the system's capacity to cope with diverse road conditions and the variety of traffic participants.

Nevertheless, the limitations of hybrid methods cannot be overlooked. In terms of technology integration, due to the disparate underlying principles, data processing logics, and model architectures between data-driven and knowledge-driven technologies, compatibility issues readily emerge during integration, leading to a substantial increase in system complexity. For instance, when combining deep learning models with rule decision trees, differences in data formats, learning modes, and reasoning logics can easily trigger system malfunctions or performance degradation. Regarding stability and reliability, potential conflicts between data and rules frequently disrupt the normal operation of the system. In extreme scenarios, strategies learned from data may contravene knowledge rules, resulting in decision chaos or even system collapse, severely endangering driving safety and reliability.

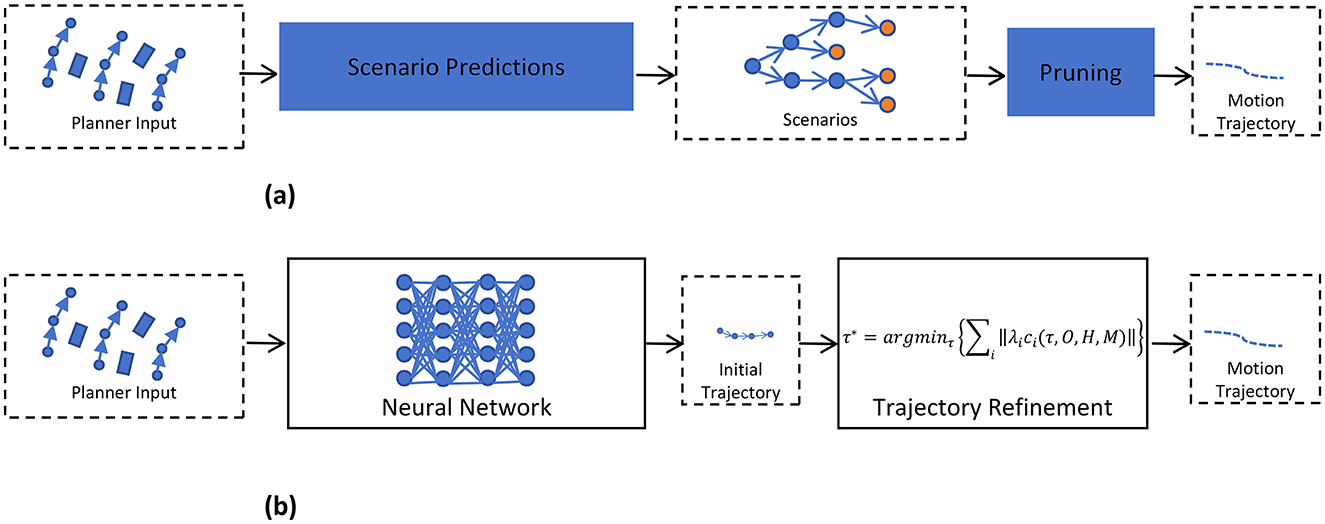

The algorithmic framework of hybrid models achieves flexible and safe high-interactivity decision planning by integrating the data-driven learning capabilities with the interpretability, safety, and efficiency of knowledge-driven approaches. The knowledge-driven component of the hybrid model provides rules to ensure the legality, consistency, and interpretability of decisions. Simultaneously, the data-driven component offers insights to identify and adapt to complex scenarios and dynamic changes that fall outside the scope of predefined rules. Algorithms such as EPSILON (Ding et al., 2021), MARC (Li T. et al., 2023), DTPP (Huang et al., 2023a), TPP (Chen Y. et al., 2023), and GameFormer (Huang et al., 2023b) enhance the decision-making process through deep learning models, while using tree-based modeling to incorporate prior knowledge for rule constraints, the pipeline of these methods are shown in Figure 6A.

Figure 6. Two typical hybrid model pipelines. (A) Represents the pipeline of EPSILON, MARC, DTPP, TPP, and GameForme, and the (B) represents the pipeline of methods using neural networks to provide initial solutions.