- ¹Faculty of Humanities, Department of Languages and Translation, Birzeit University, Ramallah, Palestine

- ²Faculty of Humanities and Educational Sciences, An-Najah National University, Nablus, Palestine

The emergence of generative AI in education introduces both opportunities and challenges, especially in student assessment. This paper explores the transformative influence of generative AI on assessment practices, drawing from recent training workshops conducted with educators in the Global South. It examines how AI can enrich traditional assessment approaches by fostering critical thinking, creativity, and collaboration. The paper introduces innovative frameworks, such as AI-resistant assessments and the Process-Product Assessment Approach, which emphasize evaluating not only the final product but also the student’s interaction with AI tools throughout their learning journey. Additionally, it provides practical strategies for integrating AI into assessments, emphasizing ethical use and the preservation of academic integrity. Addressing the complexities of AI adoption, including concerns around academic misconduct, this paper equips educators with tools to navigate the intricacies of human-AI collaboration in learning settings. Finally, it discusses the significance of institutional policies for guiding the ethical use of AI and offers recommendations for faculty development to align with the evolving educational landscape.

The role of generative AI in educational assessment

Generative AI (Gen AI) has undergone significant advancements, transforming from simple text generation tools into highly sophisticated systems capable of producing human-like content across a broad spectrum of domains (Feuerriegel et al., 2024). With the emergence of advanced models such as GPT-4o and the o1-preview model, AI is now able to perform a range of complex tasks, including text analysis and natural language understanding, and it even demonstrates creativity in writing and problem solving (Shahriar et al., 2024). This leap in AI capabilities has opened up new possibilities for its integration into education, particularly in providing feedback and enhancing assessment processes.

In the past year, the rapid development and increasing power of generative AI tools have sparked a revolution in education. These tools have enabled educators to devise innovative teaching strategies and redesign student tasks to better meet the demands of the AI era (Khlaif et al., 2023). As a result, there is a growing movement among decision makers, educators, practitioners, and researchers aimed at exploring how generative AI can be integrated into both higher and public education systems, creating opportunities for significant advancements across a variety of educational contexts (Noroozi et al., 2024).

Within education, generative AI is proving to be much more than a tool for administrative efficiency; it is opening new doors for improving student engagement and transforming the way learning is assessed. Furthermore, generative AI is becoming a point of competition among higher education institutions that strive to meet global goals such as the United Nations’ Sustainable Development Goals (SDGs), particularly SDG 4 (quality education) and SDG 5 (gender equality) (George and Wooden, 2023; Pisica et al., 2023).

However, despite these promising advancements, significant challenges remain in the process of integrating AI into higher education. Based on the author’s experience, supported by numerous studies, these challenges are not limited to financial costs. Rather, a critical issue lies in the attitudes and perspectives of the academic community, which are often accompanied by a lack of clear vision and strategy within higher education institutions, particularly in many countries of the Global South (Bozkurt et al., 2023; Jin et al., 2024). Even though UNESCO has published guidance (Holmes and Miao, 2023) to assist decision makers, progress in AI adoption in these regions remains slow. While individual initiatives, such as professional development workshops, attempt to close these gaps and integrate AI into educational practices, many countries continue to lag behind.

Thus, while generative AI offers tremendous potential for reshaping education and advancing institutional goals, its full integration will require overcoming not only technical and financial barriers but also addressing the broader academic and structural challenges that persist, especially in less-resourced regions.

Assessment has always been a cornerstone of the educational process. It serves as a critical mechanism to measure learning outcomes, provide feedback, and ensure that educational goals are met (Botuzova et al., 2023). Assessment practices continue to evolve in response to technological advancements and new teaching strategies, from standardized tests and exams to authentic assessments and, more recently, the flipped-assessment approach (Aziz et al., 2020). These developments aim to align assessment with 21st-century skills and enhance the quality of education, thereby contributing to SDG 4 (quality education).

Addressing the gaps in AI-driven assessment research

While the use of AI in education has been explored extensively in areas such as personalized learning, instructional design, and administrative automation, there exists a significant gap in understanding how generative AI can be integrated into assessment practices, particularly in higher education. Most current research focuses on the application of AI to automate grading or provide instant feedback, but there is limited research on how AI can transform the nature of assessments themselves. Additionally, much of the discourse around AI in education has been concentrated in the Global North, with insufficient attention paid to how these technologies can be adapted and implemented in Global South contexts, where resource constraints and educational challenges differ. This paper addresses these gaps by exploring how generative AI can be harnessed to rethink assessment methods, focusing on early adopters in the Global South and their diverse academic fields, such as medical education, humanities, and engineering.

Rethinking assessment strategies with AI

The purpose of this paper is to provide a comprehensive framework for rethinking assessment in the era of generative AI, drawing on both theoretical insights and practical experiences from workshops conducted with faculty members in higher education. By examining how generative AI can be integrated into assessment strategies, this paper aims to offer educators, particularly in the Global South, practical solutions for designing more meaningful, AI-enhanced assessments that align with modern educational needs. Furthermore, the present paper will highlight how these assessments can promote the development of higher-order thinking skills, encourage creativity, and provide more equitable and scalable solutions to challenges faced by educators worldwide. Through this exploration, this paper seeks to contribute to the ongoing dialog about the future of assessment in an AI-driven society.

Key insights from AI assessment workshops

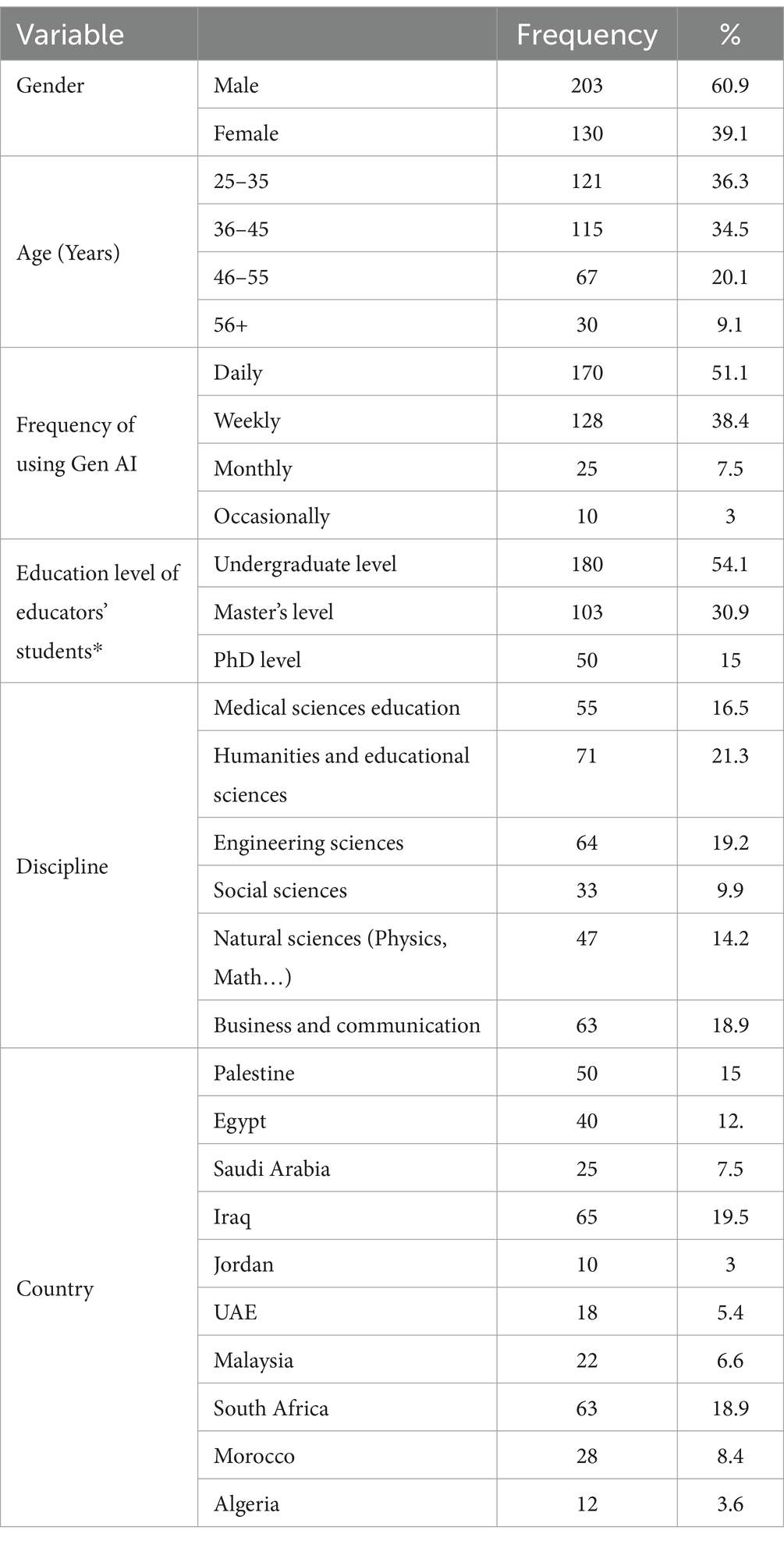

Over the past year, the author designed and delivered a series of comprehensive training workshops and public lectures aimed at rethinking educational assessment in the age of artificial intelligence (AI). These sessions were conducted both in person and online, bringing together 333 educators from various countries. The workshops were offered in both Arabic and English to accommodate the diverse linguistic backgrounds of the attendees. These sessions focused on equipping educators with theoretical insights and practical tools to redesign student assignments, fostering critical thinking, creativity, and ethical AI use in the classroom. Table 1 presents the demographic information of the participants in the training workshops.

The workshops were structured into two key components: theoretical discussions and practical applications. In the theoretical part, the author explored cutting-edge models and frameworks for assessing student work in the context of AI. One of the key models introduced was the AI Task Assessment Scale, a tool designed to help educators systematically evaluate student performance in assignments that incorporate AI tools. Another major framework discussed was the Process-Product Assessment Model, an emerging approach that not only assesses the final product of student work but also evaluates the quality of the steps students take during the process. This includes how they develop AI prompts and collaborate with AI tools throughout their learning journeys. This shift in assessment philosophy places greater emphasis on human-AI interaction, which offers educators a richer and more comprehensive understanding of student learning and critical-thinking processes.

In the practical component, educators engaged in hands-on activities to design AI-resistant assignments. This approach aligns with the AI-Resistance Assessment Scale (AIAS), which the author developed to guide educators in creating tasks that promote critical engagement and ethical AI use. Educators explored various ways to encourage students to use generative AI tools constructively, particularly during the brainstorming phase of assignments. This way of integrating AI into the learning process is intended to keep students responsible for the content generated by AI, fostering accountability and deepening their understanding of the material. By encouraging students to develop AI prompts carefully and strategically, educators can assess the cognitive processes involved in human-AI collaboration rather than focusing only on the output.

A central theme of the workshops was the Process-Product Approach, which was discussed extensively as a new and emerging method of assessment. Educators were trained to use this approach to evaluate both the quality of the final product and the process that led to it. This includes assessing the interaction between students and AI, as well as the steps students take in developing effective prompts. The aim was to help educators design tasks that allow for a detailed evaluation of human-AI collaboration, ensuring that students engage critically with AI tools rather than use them passively. This focus on the process helps educators identify the depth of student understanding and decision making during their assignments.

As the educators engaged in these discussions, they reflected on ways to incorporate AI into student work while fostering critical thinking. One key suggestion was to allow students to use generative AI during the brainstorming and proofreading stages of their assignments. However, to ensure that students critically engage with the AI-generated content, educators recommended requiring students to write a reflection paper about their experiences using AI in course projects. This reflection would not only detail the students’ interactions with AI but also include a critique of the ideas and content generated by the tools. The purpose of this approach is to equip students with critical-thinking skills and foster the development of essential 21st-century skills such as problem solving, ethical decision making, and the ability to assess the credibility of AI-generated information.

Educators were particularly interested in designing a variety of AI-resistant tasks, which refer to assignments structured to minimize the risk of over-reliance on AI-generated content. These tasks require students to engage deeply with the subject matter, ensuring that they contribute their own insights and creativity while using AI as a supportive tool rather than a replacement for original thought. Emphasis was placed on designing assignments that challenge students to use AI ethically, critically, and responsibly. By requiring students to validate and reflect on AI outputs, educators can assess not only the final product but also how effectively students navigate the complexities of human-AI collaboration.

During the workshops, educators raised concerns about the implications of generative AI on academic integrity. Many feared that AI might dilute the originality of student work or lead to plagiarism. To address these concerns, we discussed strategies for shifting the focus of assessment from the final product to the learning process itself. By assessing the quality of the interactions between students and AI, educators can maintain academic integrity while still embracing the potential benefits of AI. Tasks that require problem solving, critical thinking, and creativity were highlighted as particularly resistant to AI overreach, as they demand original input from students and cannot be fully automated.

One of the key insights that emerged from these discussions was the need for authentic assessment, meaning assignments that reflect real-world challenges and require students to apply their knowledge in complex, context-rich situations. These tasks make it more difficult for students to rely entirely on AI-generated content, as they require critical thinking, collaboration, and ethical reasoning. This shift toward authentic and process-based assessments allows educators to better evaluate student learning in a way that safeguards academic integrity while fostering deeper engagement with the course material.

Looking ahead, the author plans to publish a detailed guide for teachers and academics on how to design AI-resistant assessments using the AI-Resistance Assessment Scale (AIAS). This guide will provide educators with practical strategies for creating tasks that enhance critical thinking, creativity, and ethical AI use. In the meantime, educators can begin applying the concepts discussed in the workshops by using the AI Task Assessment Scale to navigate the integration of AI into their teaching while maintaining the integrity of student learning.

In conclusion, these training workshops offered more than just theoretical insights; they provided a platform for educators from diverse backgrounds to collaboratively rethink the future of educational assessment in the age of AI. By focusing on critical thinking, human-AI collaboration, and ethical responsibility, educators are now better equipped to design assessments that are not only resistant to the misuse of AI but also promote deeper, more meaningful student learning. These workshops laid the foundation for educators to harness the potential of AI while safeguarding the essential values of creativity, originality, and academic integrity in education.

Open discussions: sharing best practices and experiences

After each training session, educators engaged in open discussions where they exchanged best practices for integrating AI into teaching and professional development. These conversations allowed faculty members to reflect on their individual experiences and learn from their colleagues’ diverse approaches. The collaborative environment fostered an exchange of practical resources, including AI-based activities and assignments. By sharing these resources, educators were able to explore the broad potential of generative AI tools and consider how to implement them effectively within their individual teaching contexts. This peer-to-peer learning was crucial in expanding educators’ understanding of AI’s applications in education.

Redesigning assessments for the AI era

One of the most critical points of discussion during the workshops was the need to redesign student assessments to align with the realities of the AI era. Faculty explored strategies for adapting assessments that not only integrate AI tools but also ensure students are challenged in ways that AI cannot easily replicate. In particular, they emphasized context-specific solutions tailored to their disciplines and the unique challenges of their institutions. These discussions underscored the importance of designing assessments that encourage deeper engagement with the subject matter, creativity, and the development of critical-thinking skills. The focus was on moving beyond rote learning and creating tasks that would require students to demonstrate higher-order thinking, problem-solving abilities, and ethical decision making in their interactions with AI tools.

Institutional policy and vision: the path forward

A recurring theme throughout the workshops was the necessity of establishing a clear institutional vision and policy regarding the integration of AI into both teaching and research. Educators agreed that institutions must provide clear guidance on how AI should be used, with policies that encompass both the educational and research contexts. As noted by Pedro et al. (2019), such policies are essential for fostering positive attitudes toward AI and encouraging educators to adopt these tools in thoughtful and productive ways.

Moreover, institutions with well-defined AI policies can play a pivotal role in raising awareness about AI literacy and competencies. Faculty and students alike need a structured framework to navigate the ethical and practical implications of using AI in their academic work. As Spivakovsky et al. (2023) highlighted, these policies can help build a more informed academic community that is capable of leveraging AI’s benefits while remaining mindful of its challenges.

Policy development for AI-enhanced courses: addressing assessment challenges

Another key takeaway from the workshops was the importance of developing policies specifically for AI-enhanced courses, with particular focus given to addressing assessment-related challenges. Such policies can provide students with clear guidelines on when and how to use AI tools in their coursework. By setting clear boundaries, these policies enhance students’ understanding of the ethical implications of AI use, ensuring that the technology is applied responsibly.

Faculty members also discussed the importance of balancing AI’s benefits with the need to preserve the authenticity of student work. A well-crafted policy framework would empower educators to manage AI use effectively, reducing concerns about cheating and misuse. For example, a few educators reported creating custom GPTs for their courses, uploading lecture content, and using the tool to generate both closed- and open-ended questions. While some colleagues appreciated this practice, others criticized it, noting that the AI-generated questions were sometimes repetitive or misaligned with the course material. These conversations highlighted the need for a thoughtful, well-monitored approach to integrating AI into assessments.

Human-AI collaboration: redefining student assignments

A recurring theme in the workshops was the importance of viewing AI as a collaborative tool that supports, rather than replaces, student learning. Some educators shared their experience using generative AI to help design assignments or generate initial ideas for tasks. These assignments were structured around the AI-Resistance Assessment Scale (Petihakis et al., 2024), which encourages students to engage critically with AI tools for brainstorming, idea generation, proofreading, and project development.

Educators suggested linking this AI assessment scale to Bloom’s Taxonomy (Forehand, 2010) to ensure a deeper alignment with educational objectives. This integration encourages students to reflect on the ideas generated by AI, compare them with their own thoughts, and improve their critical-thinking skills. By combining AI-supported tasks with a clear course policy, students are guided to use AI responsibly while deepening their understanding of the subject matter.

Process-product assessment: emphasizing critical engagement with AI

During the workshop discussions, the facilitator introduced the concept of Process-Product Assessment as an emerging evaluation approach that extends the focus beyond the final product to include the process involved in completing a task. This approach is particularly relevant in the context of human-AI collaboration, where the interaction between the learner and AI tools becomes a vital component of the assessment.

The Process-Product Assessment method evaluates how students develop prompts to guide AI tools and how they collaborate with AI throughout the learning journey. This model encourages educators to assess not only the final output but also the decisions students make during the process. Faculty members were encouraged to involve students in the development of evaluation rubrics, creating a partnership in the assessment process. This collaborative approach allows students to critically engage with AI-generated content, refining their initial ideas through comparison and critical reflection.

AI and authentic assessments: real-world applications in education

Another significant topic covered during the workshops was the role of AI in fostering authentic assessments which focus on real-world tasks and applications. Authentic assessments differ from traditional tests by requiring students to apply their knowledge in practical, context-rich scenarios which reflect the complexities they will encounter in professional settings (Fatima et al., 2024; Thanh et al., 2023). Xia et al. (2024) emphasized the importance of authentic assessment in the AI era, where tools like ChatGPT can challenge students’ beliefs and promote critical thinking. In this context, students demonstrate their understanding by applying their knowledge to evaluate complex cases generated by ChatGPT. Moving beyond traditional knowledge-based assessments, there is a growing need to focus on problem solving, data interpretation, and case-study-based questions. This highlights the significance of carefully designing new assessment strategies that prioritize the learning process, cultivate higher-order thinking, and immerse students in meaningful, real-world tasks.

For example, instead of a traditional essay, educators might ask students to create podcasts, develop multimedia presentations, or solve real-world problems using AI tools. These assessments foster creativity, collaboration, and critical thinking, promoting higher-order skills that are essential in an AI-driven world.

An educator in the Faculty of Humanities and Educational Sciences developed the following authentic assessment for her students:

“Develop a comprehensive marketing campaign for a new product. Please integrate AI level 2 for brainstorming and generating ideas, then critique the generated ideas by AI tool.”

In the business field, an educator provided the following example:

“Develop a sales strategy for a new generative AI tool designed to assist academic writing in a student’s area of interest.”

Another educator in medical education, specifically nursing, provided the following:

“Create a comprehensive nursing care plan for a patient with a specific set of health issues (e.g., a diabetic patient with hypertension and a risk of stroke).”

AI-resistant assessments: a new approach to preserving academic integrity

AI-resistant assessments have emerged as a critical approach in the AI era, particularly as educators grapple with concerns about academic integrity (Khlaif et al., 2024b). The rise of generative AI has made it easier for students to produce automated content, increasing the risk of plagiarism and over-reliance on AI-generated outputs. To counter these challenges, educators and researchers have introduced AI-resistant assessments designed to minimize the risk of students misusing AI tools while still leveraging AI for productive learning.

The central idea behind AI-resistant assessments is to create tasks that cannot be solved easily by AI, encouraging deeper engagement from students. This type of assessment typically requires higher-order thinking skills such as critical analysis, creativity, and problem solving—areas in which AI struggles to fully replicate human abilities. Additionally, AI-resistant assessments focus on the process of learning rather than just the final product. This means that students are evaluated based on their interactions with AI tools, including how they develop prompts, refine their ideas, and critically engage with AI-generated content.

The purpose of reflective writing is to assess learners’ ability to connect theoretical knowledge and skills with individual experiences, fostering critical thinking and self-awareness (Sudirman et al., 2021). In reflective writing tasks, students are encouraged to use AI tools to brainstorm ideas, generate drafts, or receive feedback on their work. However, rather than submitting AI-generated content directly, students are required to write a reflection on how they used AI, detailing the specific prompts they used, their rationale for selecting certain AI-generated content, and how they modified or critiqued the AI’s suggestions. Reflective writing therefore serves as an effective AI-resistant form of assessment: it draws on personal, subjective experiences that are challenging for AI to replicate. Because reflective writing requires self-reflection and connections to individual experiences, the authenticity and originality of the student’s thoughts are essential (Zeng et al., 2024). The majority of the educators in the training sessions considered reflective writing an AI-resistant assessment for the following reasons:

• Personalization: Reflective assignments encourage students to share their unique experiences and interpretations, which reduces the risk of AI-generated responses.

• Critical thinking: They foster critical thinking and analysis skills that go beyond factual recall or synthesis, which AI can generate easily.

• Process documentation: Students are often asked to document their thought processes and revisions, making it difficult for AI to substitute original, evolving reflections.

• Ethical considerations: In fields like medicine, reflective practice is tied to professional development and empathy, involving inherently human qualities.

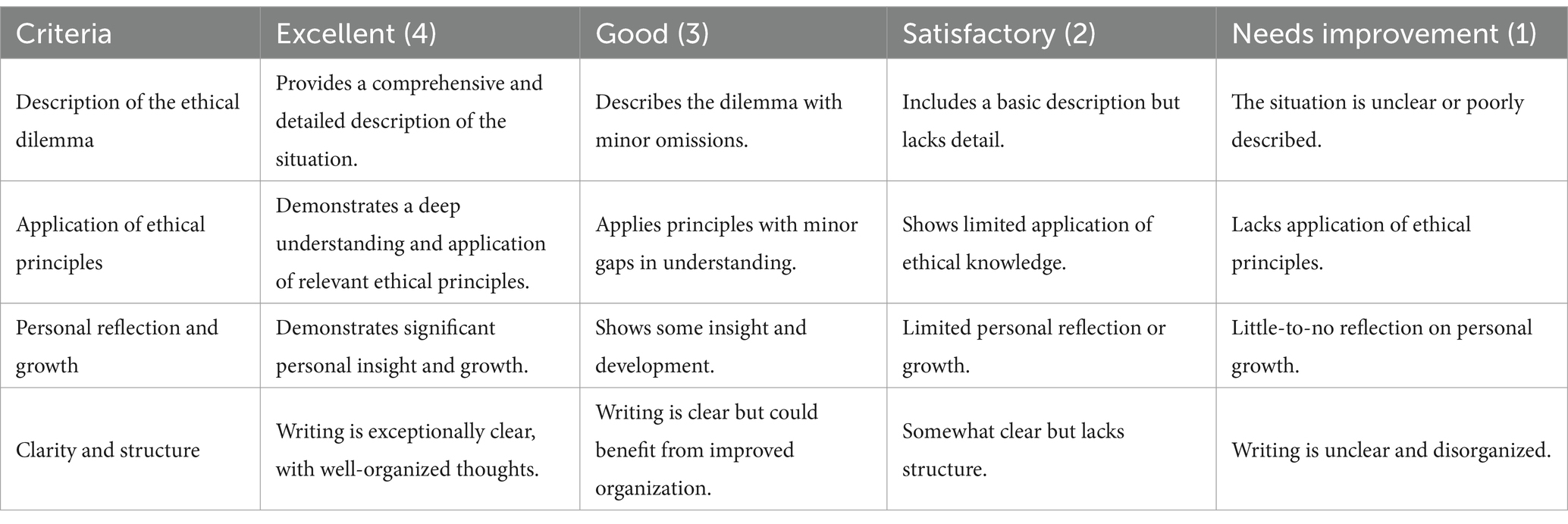

For further clarification, a faculty member from medical education presented an example of reflective writing from her course and the rubric (Table 2) she used to evaluate her students’ final work.

This reflective component requires students to engage critically with AI, ensuring they remain responsible for the final product. The assessment is based on a rubric developed by the educators. She reported: “In my field of teaching, medical education, I use reflective writing to assess the students’ responses to real-life clinical experiences.”

Here is the example relating to medical education:

Assignment title: Reflective essay on handling ethical dilemmas in clinical practice

• Instructions: Write a 1,500-word reflective essay about an ethical dilemma you encountered or observed during your clinical practice. Describe the situation, the ethical conflict, and the resolution, if any. Reflect on your emotions, the challenges faced, and how this experience has influenced your professional growth.

• Objective: Encourage students to connect their theoretical knowledge of medical ethics with real-world experiences and reflect on their personal development.

• Expected outcome: Students will demonstrate their understanding of ethical principles (e.g., autonomy, beneficence) and their application in clinical settings.

Discussion

The findings from the training workshops connect directly with UNESCO’s AI guidelines for education and research (Holmes and Miao, 2023) and align with recent academic research on the transformative potential of AI in educational settings (Moorhouse et al., 2023; Xia et al., 2024). This alignment is evident in several key areas: ethical AI use, inclusivity, the enhancement of educational quality, teacher development, and institutional policy frameworks.

Ethical use of AI in assessment

The emphasis on AI-resistant assessments and the ethical use of AI in education mirrors UNESCO’s advocacy for human-centric AI integration that ensures fairness, transparency, and academic integrity (Holmes and Miao, 2023; Moorhouse et al., 2023). The workshops’ focus on fostering critical thinking and ethical considerations in AI usage, particularly in assessment, supports recent findings that stress the need for ethical deployment of AI to prevent academic dishonesty and to protect student data (Xia et al., 2024; Saaida, 2023). Moreover, AI-driven assessment tools must address issues of fairness and data privacy, which are critical to maintaining trust in AI-enhanced educational environments (Lyanda et al., 2024).

Process-product assessment and human-AI collaboration

The introduction of the Process-Product Assessment Model in the workshops aligns with the literature advocating for the evaluation of both final outcomes and the process of human-AI interaction in learning (Xia et al., 2024). This approach is supported by studies emphasizing that AI-powered assessments should evaluate not only the end product but also critical thinking, problem solving, and the quality of decision making in human-AI collaboration (Lyanda et al., 2024). This shift from product-based to process-based assessment encourages deeper student engagement with AI and fosters self-regulated learning, a benefit also associated with adaptive learning platforms that dynamically adjust to student performance (Selwyn, 2022; Gamage et al., 2023).

Accessibility, inclusiveness, and SDG 4: quality education

The workshops, which involved educators from the Global South, resonate with UNESCO’s goal to bridge the digital divide and promote inclusivity in education. As AI technologies become more pervasive, equitable access to these tools is critical, particularly in underserved regions (Holmes and Miao, 2023; Saaida, 2023). By focusing on how AI tools can be leveraged to enhance learning experiences in diverse educational contexts, the workshops contribute to SDG 4’s goal of ensuring inclusive and equitable quality education for all (Lyanda et al., 2024). AI-driven personalized learning tools, such as intelligent tutoring systems and adaptive assessments, offer tailored learning pathways that address diverse learning needs, further enhancing educational inclusivity (George, 2023).

Professional development and teacher capacity building

A key aspect of the workshops was the emphasis on building teacher capacity to integrate AI responsibly into their assessments. This echoes UNESCO’s call for professional development initiatives that equip educators with the skills to navigate AI-enhanced teaching and learning environments (Holmes and Miao, 2023). As recent studies have demonstrated, AI tools like chatbots and virtual assistants can play a pivotal role in supporting teachers, reducing their workload, and enhancing their ability to provide timely and personalized feedback (Lyanda et al., 2024; Saaida, 2023). Teacher training programs, as recommended by George (2023), should prioritize critical thinking, creativity, and adaptability, preparing educators for the changing dynamics of AI-driven education.

Institutional policy development and vision

The workshops also addressed the need for clear institutional policies that guide the ethical integration of AI in education, a point echoed in UNESCO’s guidelines and supported by recent studies (Holmes and Miao, 2023; Moorhouse et al., 2023; Khlaif et al., 2024b). As educational institutions increasingly adopt AI technologies, it is crucial to develop localized policies that ensure academic integrity, protect data privacy, and promote inclusivity (Saaida, 2023; Lyanda et al., 2024). In addition, the need for ongoing dialog between educators and policymakers to ensure that AI implementation aligns with broader educational goals has been highlighted in the literature (Selwyn, 2024).

Redesigning assessment for AI-enhanced learning

Participants in the workshops explored innovative assessment redesigns that integrate AI tools like ChatGPT, not just for brainstorming but for fostering critical thinking and ethical reasoning. This reflects findings by Wang et al. (2024) that students recognize the value of AI-generated content but emphasize the importance of critically engaging with AI outputs. The workshops went beyond this by providing educators with strategies to design assessments that hold students accountable for AI-generated content (Khlaif et al., 2024a), ensuring they reflect on and critique the material they engage with, thus promoting ethical AI use in student assessments (Lyanda et al., 2024).

Addressing academic integrity concerns

Concerns about academic integrity, raised in both the workshops and current research, underscore the importance of developing AI-resistant assessments that encourage authentic student engagement and reflection. Studies by Xia et al. (2024) and Khlaif et al. (2024b) highlight the challenges of detecting AI-generated content, emphasizing the need for assessments that foster student responsibility and ethical decision-making. The workshops’ focus on reflective writing tasks, where students critically engage with AI outputs, aligns with calls in the academic community for more authentic and process-based assessments (Lyanda et al., 2024).

Theoretical implications

This paper offers several important theoretical implications for the field of education, particularly in the context of integrating AI into assessment practices. Traditionally, assessment methods in higher education have relied on standardized tests, essays, and projects. However, the introduction of AI shifts the focus toward more dynamic and complex forms of assessment, such as AI-resistant assessments and human-AI collaboration. The paper explores how AI can not only automate grading but also transform the way educators assess higher-order thinking skills like critical analysis, creativity, and ethical reasoning. This challenges existing assessment paradigms by pushing the boundaries of what can be evaluated in educational settings.

One of the key theoretical contributions of the paper is the proposal of the AI Task Assessment Scale and the Process-Product Assessment Model, both of which offer frameworks for assessing student interactions with AI. These models provide a foundation for rethinking how students’ learning processes, rather than just their final outcomes, are evaluated. The paper also highlights the need for a shift from purely product-based assessments to assessments that evaluate both the process and the student’s engagement with AI tools, which is a relatively unexplored area in educational theory. Additionally, the discussion on AI-resistant assessments introduces a new layer of complexity in how educators design tasks that mitigate the risk of over-reliance on AI, thus enriching the theoretical dialog on the integrity of assessment.

Practical implications

From a practical perspective, the present paper provides educators and institutions with actionable strategies to integrate AI into their teaching and assessment practices. For faculty members, it outlines how to design assessments that encourage student engagement with AI while maintaining academic integrity. For example, the paper discusses the implementation of the AI-Resistance Assessment Scale (AIAS), which can be used to design tasks that promote critical thinking and responsible AI use. It also offers practical guidance on how educators can assess not only the final output but also the process students undergo when using AI tools, thus providing a more comprehensive understanding of student learning.

In addition to classroom practices, this paper underscores the importance of institutional policies in supporting the integration of AI into education. It recommends that institutions develop clear policies that guide both students and educators on how AI should be used ethically in educational settings. These policies can help mitigate risks such as academic dishonesty while ensuring that AI is used as a tool to enhance, rather than replace, learning. Furthermore, the paper provides a roadmap for professional development, emphasizing the need for continuous training for educators to stay up to date with AI advancements and their implications for assessments.

Conclusion

This research has demonstrated the potential of integrating generative AI into educational assessments while addressing the ethical, pedagogical, and institutional challenges that accompany its use. The development of AI-resistant assessments, such as the Process-Product Assessment Model, offers a practical framework for evaluating both the final output and the collaborative steps between students and AI tools. These findings emphasize the importance of fostering critical thinking, creativity, and ethical reasoning, ensuring that AI serves as a supplement rather than a replacement in learning processes.

Moreover, the research highlights the necessity of institutional policies that guide AI integration in education, particularly in regions with limited resources, such as the Global South. By offering solutions that address academic integrity and promote responsible AI use, this study bridges gaps in the global educational landscape and provides actionable strategies for educators to implement AI-enhanced assessments effectively.

Overall, the findings reinforce the idea that AI can be a transformative force in education when used thoughtfully, with an emphasis on fostering lifelong learning skills, preserving ethical standards, and adapting assessment methods to meet the evolving demands of an AI-driven world. A comprehensive assessment plan that can be adapted to the different disciplines of higher education is still needed. Future research should establish clear guidelines for applying these assessments consistently across contexts. Doing so will help ensure fairness across fields of study and address the challenges that come with using advanced AI tools such as ChatGPT in education.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author contributions

WA: Conceptualization, Formal analysis, Investigation, Writing – review & editing, Funding acquisition. ZK: Conceptualization, Formal analysis, Investigation, Methodology, Resources, Supervision, Validation, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Acknowledgments

We used ChatGPT-4o for proofreading, and the authors take responsibility for the accuracy of the generated text.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Aziz, M. N. A., Yusoff, N. M., and Yaakob, M. F. M. (2020). Challenges in using authentic assessment in 21st century ESL classrooms. Int. J. Eval. Res. Educ. 9, 759–768. doi: 10.11591/ijere.v9i3.20546

Botuzova, Y., Iievliev, O., Okipniak, I., Yandola, K., and Charkina, T. (2023). Innovative approaches to assessment in pedagogical practice: new technologies, methods and development of objective assessment tools. Cadernos de Educação Tecnologia e Sociedade 16, 386–398. doi: 10.14571/brajets.v16.n2.386-398

Bozkurt, A., Junhong, X., Lambert, S., Pazurek, A., Crompton, H., Koseoglu, S., et al. (2023). Speculative futures on ChatGPT and generative artificial intelligence (AI): a collective reflection from the educational landscape. Asian J. Dist. Educ. 18, 53–130.

Fatima, S. S., Sheikh, N. A., and Osama, A. (2024). Authentic assessment in medical education: exploring AI integration and student-as-partners collaboration. Postgrad. Med. J. 783. doi: 10.1093/postmj/qgae088

Feuerriegel, S., Hartmann, J., Janiesch, C., and Zschech, P. (2024). Generative AI. Bus. Inf. Syst. Eng. 66, 111–126. doi: 10.1007/s12599-023-00834-7

Gamage, K. A., Dehideniya, S. C., Xu, Z., and Tang, X. (2023). ChatGPT and higher education assessments: more opportunities than concerns? J. Appl. Learn. Teach. 6.

George, A. S. (2023). Preparing students for an AI-driven world: rethinking curriculum and pedagogy in the age of artificial intelligence. Partners Univ. Innov. Res. Publ. 1, 112–136.

George, B., and Wooden, O. (2023). Managing the strategic transformation of higher education through artificial intelligence. Admin. Sci. 13:196. doi: 10.3390/admsci13090196

Holmes, W., and Miao, F. (2023). Guidance for generative AI in education and research. Paris, France: UNESCO Publishing.

Jin, Y., Yan, L., Echeverria, V., Gašević, D., and Martinez-Maldonado, R. (2024). Generative AI in higher education: a global perspective of institutional adoption policies and guidelines. arXiv preprint arXiv:2405.11800.

Khlaif, Z. N., Ayyoub, A., Hamamra, B., Bensalem, E., Mitwally, M. A., Ayyoub, A., et al. (2024b). University teachers’ views on the adoption and integration of generative AI tools for student assessment in higher education. Educ. Sci. 14:1090. doi: 10.3390/educsci14101090

Khlaif, Z. N., Mousa, A., Hattab, M. K., Itmazi, J., Hassan, A. A., Sanmugam, M., et al. (2023). The potential and concerns of using AI in scientific research: ChatGPT performance evaluation. JMIR Med. Educ. 9:e47049. doi: 10.2196/47049

Khlaif, Z., Odeh, A., and Bsharat, T. R. (2024a). "Generative AI-powered adaptive assessment" in Power of persuasive educational technologies in enhancing learning (USA: IGI Global), 157–176.

Lyanda, J. N., Owidi, S. O., and Simiyu, A. M. (2024). Rethinking higher education teaching and assessment in-line with AI innovations: a systematic review and meta-analysis. Afr. J. Emp. Res. 5, 325–335. doi: 10.51867/ajernet.5.3.30

Moorhouse, B. L., Yeo, M. A., and Wan, Y. (2023). Generative AI tools and assessment: guidelines of the world's top-ranking universities. Comp. Educ. Open 5:100151. doi: 10.1016/j.caeo.2023.100151

Noroozi, O., Soleimani, S., Farrokhnia, M., and Banihashem, S. K. (2024). Generative AI in education: pedagogical, theoretical, and methodological perspectives. Int. J. Technol. Educ. 7, 373–385.

Pedro, F., Subosa, M., Rivas, A., and Valverde, P. (2019). Artificial intelligence in education: Challenges and opportunities for sustainable development.

Petihakis, G., Farao, A., Bountakas, P., Sabazioti, A., Polley, J., and Xenakis, C. (2024). "AIAS: AI-ASsisted cybersecurity platform to defend against adversarial AI attacks" in Proceedings of the 19th International Conference on Availability, Reliability and Security, 1–7.

Pisica, A. I., Edu, T., Zaharia, R. M., and Zaharia, R. (2023). Implementing artificial intelligence in higher education: pros and cons from the perspectives of academics. Societies 13:118. doi: 10.3390/soc13050118

Saaida, M. B. (2023). AI-driven transformations in higher education: opportunities and challenges. Int. J. Educ. Res. Stud. 5, 29–36.

Selwyn, N. (2022). The future of AI and education: some cautionary notes. Eur. J. Educ. 57, 620–631.

Selwyn, N. (2024). On the limits of artificial intelligence (AI) in education. Nordisk tidsskrift for pedagogikk og kritikk 10, 3–14.

Shahriar, S., Lund, B. D., Mannuru, N. R., Arshad, M. A., Hayawi, K., Bevara, R. V. K., et al. (2024). Putting GPT-4o to the sword: a comprehensive evaluation of language, vision, speech, and multimodal proficiency. Appl. Sci. 14:7782. doi: 10.3390/app14177782

Spivakovsky, O. V., Omelchuk, S. A., Kobets, V. V., Valko, N. V., and Malchykova, D. S. (2023). Institutional policies on artificial intelligence in university learning, teaching and research. Inform. Technol. Learn. Tools 97, 181–202. doi: 10.33407/itlt.v97i5.5395

Sudirman, A., Gemilang, A. V., and Kristanto, T. M. A. (2021). Harnessing the power of reflective journal writing in global contexts: a systematic literature review. Int. J. Learn. Teach. Educ. Res. 20, 174–194. doi: 10.26803/ijlter.20.12.11

Thanh, B. N., Vo, D. T. H., Nhat, M. N., Pham, T. T. T., Trung, H. T., and Xuan, S. H. (2023). Race with the machines: assessing the capability of generative AI in solving authentic assessments. Australas. J. Educ. Technol. 39, 59–81. doi: 10.14742/ajet.8902

Wang, Y., Wang, L., and Siau, K. L. (2024). Human-centered interaction in virtual worlds: a new era of generative artificial intelligence and metaverse. Int. J. Hum. Comput. Interact., 1–43.

Xia, Q., Weng, X., Ouyang, F., Lin, T. J., and Chiu, T. K. (2024). A scoping review on how generative artificial intelligence transforms assessment in higher education. Int. J. Educ. Technol. High. Educ. 21:40. doi: 10.1186/s41239-024-00468-z

Zeng, W., Goh, Y. X., Ponnamperuma, G., Liaw, S. Y., Lim, C. C., Jayarani, D., et al. (2024). Promotion of self-regulated learning through internalization of critical thinking, assessment and reflection to empower learning (iCARE): a quasi-experimental study. Nurse Educ. Today 142:106339. doi: 10.1016/j.nedt.2024.106339

Keywords: assessment, Gen AI, human-AI engagement, UNESCO, AI-resistant assessment, quality of education, sustainability

Citation: Awadallah Alkouk W and Khlaif ZN (2024) AI-resistant assessments in higher education: practical insights from faculty training workshops. Front. Educ. 9:1499495. doi: 10.3389/feduc.2024.1499495

Edited by:

Huichun Liu, Guangzhou University, China

Reviewed by:

Hui Luan, National Taiwan Normal University, Taiwan

Copyright © 2024 Awadallah Alkouk and Khlaif. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Zuheir N. Khlaif, zkhlaif@najah.edu

†ORCID: Wejdan Awadallah Alkouk, https://orcid.org/0009-0006-8803-2147

Zuheir N. Khlaif, https://orcid.org/0000-0002-7354-7512

Wejdan Awadallah Alkouk1† Zuheir N. Khlaif