REVIEW article

Front. Comput. Sci., 19 November 2024

Sec. Computer Graphics and Visualization

Volume 6 - 2024 | https://doi.org/10.3389/fcomp.2024.1423129

Nvidia’s Omniverse platform represents a paradigm shift in virtual environments and simulation technologies. This paper presents a comprehensive, systematic survey of the Omniverse’s impact across various scientific fields, underscoring its role in fostering innovation and shaping the technological future. Our focus includes the Omniverse Replicator, which generates synthetic data to address data insufficiency; Isaac Sim, with Isaac Gym and its software development kit (SDK), for robotic simulation; and Drive Sim for autonomous vehicle emulation. We further investigate the Extended Reality (XR) suite for augmented and virtual reality, as well as the Audio2Face application, which translates audio inputs into animated facial expressions. A critical analysis of the Omniverse’s technical architecture, user-accessible applications, and extensions is provided. We contrast existing surveys on the Omniverse with those on the metaverse, delineating their focus, applications, features, and constraints. The paper identifies potential domains where the Omniverse excels and explores its real-world capabilities by discussing how existing research papers use the platform. Finally, we discuss the challenges facing the Omniverse’s broader adoption and implementation, addressing the lack of surveys that focus solely on the Omniverse.

Modern technology has brought us to a time where imagination and the real world converge. In today’s digital age, the combination of 3D simulation, data, and artificial intelligence has turned ideas once confined to science fiction into practical tools. Nvidia Omniverse (henceforth referred to simply as the Omniverse) is a major part of this change: a platform with the potential to transform how we use graphics, simulations, and data.

In the past few years, the metaverse has attracted considerable research interest and has become a major topic of study and discussion, reflecting growing curiosity about what it could mean for how we interact with digital worlds. This interest is pushing scientists and technologists to explore and understand its different aspects. Nvidia is a significant player in the AI and HPC domains, renowned for its powerful Graphics Processing Units (GPUs), whose massively parallel architecture makes them particularly effective for deep learning and other AI workloads. Its contributions have been instrumental in advancing AI and in transforming scientific research. The CUDA platform, a pivotal component, enables general-purpose parallel programming on GPUs and is widely used across diverse research areas. However, Nvidia’s impact extends beyond hardware. It offers a comprehensive range of software, including the CUDA Toolkit, which supports AI and HPC applications, and libraries such as cuDNN and TensorRT, which are highly optimized for deep learning, empowering researchers and developers in AI-related work (Huynh-The et al., 2023).

The Omniverse is a platform that helps individuals and teams collaborate on 3D projects using the Universal Scene Description (USD) standard. It breaks down the barriers between separate 3D data silos, helping large teams work together more effectively on new 3D projects and simulations, a significant step for the future of 3D workflows and simulation (Nvidia, 2021). In the Omniverse, developers can create custom extensions, tools, and microservices that improve their 3D workflows, generate synthetic data, and build industrial metaverse applications. The Omniverse also connects different creative applications through the USD framework, allowing users to visualize and manipulate their 3D scene data in real time across interconnected applications that use the platform.

This paper takes a close look at the Omniverse platform and its main components, namely Nvidia Replicator, Nvidia Create, Nvidia Audio2Face, Nvidia Create XR, Nvidia Nucleus, Nvidia Drive Sim, and Isaac Sim, as well as two supporting libraries, Isaac Orbit and SKRL. Each component is a significant step forward in its field, but each also has its own challenges and limitations, and this paper also discusses the opportunities each one brings. We explore the application of the Omniverse in diverse areas such as synthetic data generation, education, military, business, manufacturing, medicine, culture, and smart cities. This helps us envision how the Omniverse may evolve and improve in its graphics, simulation, and AI capabilities.

As we consider the opportunities and limitations of each component of the Omniverse platform, we envision a future in which these limitations are addressed and the platform integrates even better with other technologies, creating a more complete and realistic simulation world. The Omniverse aims to change how we use graphics, simulation, and data generation, which could lead to important advances in artificial intelligence, autonomous vehicles, and robotics. This survey aims to provide a detailed, in-depth study of the platform. It differs from related reviews in that it treats the Omniverse as a sophisticated metaverse ecosystem rather than discussing the metaverse concept from a general perspective.

To the best of our knowledge, this is the first comprehensive survey that analyzes all the major components of the Omniverse in detail. In addition, no prior survey has analyzed the application of the Omniverse across as wide a range of domains as this work.

The main contributions of this paper are as follows: (1) A novel in-depth analysis of Nvidia Omniverse, currently a state-of-the-art metaverse ecosystem. (2) A comparison of existing surveys on the Omniverse and the metaverse from multiple viewpoints, namely available apps and functionality and potential domains for implementing the metaverse concept, which identifies the novelty of our work. (3) A quantitative analysis of the Omniverse based on recent systematic research papers. (4) A comparative analysis between the Omniverse and other metaverse platforms. (5) Suggested implementations of the Omniverse, with details of how each implementation can use its capabilities.

The paper is organized as follows. Section 2 outlines the motivation behind our focus on the Omniverse ecosystem, highlighting its relevance and potential impact. In Section 3, we dive deeper into the Omniverse, discussing its technical architecture and the applications it supports. Section 4 provides a comprehensive summary of related surveys and presents quantitative results on the Omniverse, along with a comparative analysis between the Omniverse and other leading metaverse systems. In Section 5, we detail our methodology, including the research questions and the criteria used for selecting relevant papers. Section 6 explores the various domains and applications of the Omniverse, supported by real-world implementations across different fields. In Section 7, we synthesize our findings, addressing limitations, challenges, and security concerns, and propose future research directions. Finally, Section 8 concludes the paper.

In this section, we will explore why the Omniverse is a system of choice when it comes to designing and implementing AI metaverse applications. We will also discuss the main functionalities offered by the Omniverse, including synthetic data generation, 3D environment for real-time robotics simulations, and XR integration.

The Omniverse’s importance is motivated by a combination of key factors that showcase the platform’s potential to be a game-changer in the evolving metaverse landscape. Leveraging Nvidia’s renowned industry expertise in graphics and AI technologies, the Omniverse holds the promise of delivering exceptionally high-quality graphics, simulations, and AI capabilities within the metaverse, making it an attractive choice for those who prioritize immersive and realistic experiences. Its commitment to interoperability, creating an open platform that seamlessly connects various 3D design and simulation tools, positions it as a valuable resource for professionals in architecture, gaming, and entertainment, streamlining complex 3D workflows. Additionally, the emphasis on advanced collaboration tools makes the Omniverse an appealing option for teams and organizations seeking to engage in collaborative design, simulations, and content creation within a shared virtual environment. Furthermore, the platform’s potential to foster a diverse and vibrant metaverse ecosystem through strategic partnerships and developer engagement adds to its allure. For those already invested in Nvidia hardware, the Omniverse offers the advantage of seamless integration and optimized performance. Lastly, the tailored features for specific industries and applications make the Omniverse adaptable to a wide array of use cases, aligning with the unique needs and objectives of potential users (Huynh-The et al., 2023).

The prevailing trend among existing surveys is to discuss the metaverse in general terms rather than concentrating on specific platforms. Notably, none of the surveys we observed focuses solely on the Omniverse; they either mention it in passing or compare it with other platforms without a detailed exploration of its functionalities and applications. Although the Omniverse is widely regarded as a leading metaverse platform, research dedicated to examining it in detail is conspicuously absent. Because the Omniverse is a sophisticated ecosystem with many innovative features, it holds considerable appeal for researchers. Our survey therefore sets out to examine the Omniverse meticulously, elucidating its full spectrum of applications, capabilities, and potential real-world implementations.

Synthetic data generation (SDG) is a fundamental technique in data science and machine learning. It involves creating artificial datasets designed to emulate real-world data, and it serves several purposes (Geyer, 2023). Foremost among them is producing large datasets that would otherwise be difficult or prohibitively expensive to acquire. Such datasets strengthen model training and validation, thereby improving the robustness of machine learning algorithms. Another key objective is preserving data privacy and security: synthetic data lets researchers develop and assess algorithms without exposing sensitive or confidential information, which is particularly important in domains with stringent privacy regulations. Furthermore, SDG addresses data scarcity, which often impedes progress in emerging technologies such as deep learning, where large, diverse datasets are essential for accurate and reliable model performance. The Omniverse pursues these objectives through its synthetic data generation tool, the Replicator, which offers a refined data generation pipeline that can be customized to the user’s specific needs (Conde et al., 2021). Ultimately, the overarching goal of SDG is to advance artificial intelligence applications by improving model generalization, reducing data-related constraints, and enabling innovation across a wide spectrum of domains (Geyer, 2023).

Robotics and autonomous vehicle (AV) simulation is a critical technology in the development and testing of robots and self-driving vehicles. It involves creating virtual environments in which robots or autonomous vehicles can operate, allowing engineers and researchers to assess their performance under various scenarios and conditions. Simulation enables the refinement of algorithms, testing of safety mechanisms, and validation of control systems without costly physical prototypes or the risk of real-world accidents. It is an indispensable tool that accelerates the advancement of autonomous systems, making them safer, more efficient, and better equipped to navigate the complexities of the real world. In robotics and AV simulation, synthetic data facilitates the development and testing of algorithms and control systems in a safe, virtual environment. This technology plays a pivotal role in autonomous vehicle training, offering a cost-effective means to simulate various driving scenarios and improve vehicle safety. In robotics, it accelerates the advancement of robot capabilities, enabling realistic testing and refinement of navigation, perception, and control algorithms and fostering innovation in industries from manufacturing to healthcare and beyond (Khatib et al., 2002). The Omniverse, through Isaac Sim and Drive Sim, offers a convenient way to generate environments that emulate real-life locations. Furthermore, these applications provide an extensive range of tools for managing and monitoring the learning process, accompanied by detailed feedback mechanisms (Makoviychuk et al., 2021).

Augmented Reality (AR), Virtual Reality (VR), and Mixed Reality (MR) are cutting-edge technologies that blend the digital and physical worlds, each with its own characteristics and applications. Extended Reality (XR), an umbrella term for VR, AR, and MR, has proven to be one of the most promising areas for technological innovation. AR overlays digital information onto the real world through devices such as smartphones or AR glasses, providing contextually enriched experiences. VR immerses users in entirely virtual environments, typically through headsets, offering an unparalleled level of immersion. MR goes further, letting the virtual and physical worlds interact seamlessly, and XR encompasses all three kinds of experience (Zhan et al., 2020). These technologies attract strong interest because of their transformative potential. AR, for instance, enhances productivity in fields such as manufacturing and healthcare by providing real-time data visualization and assistance, and it also powers engaging consumer experiences such as interactive marketing campaigns and location-based gaming. VR transports users to virtual realms, transforming industries such as gaming, education, and therapy through immersive, realistic simulations. XR extends these possibilities to applications such as remote collaboration in MR environments or augmented training for professionals. The Omniverse provides the Create XR application, a pipeline for integrating XR technologies that works seamlessly with all other Omniverse applications (Shapira, 2022).

This section provides a comprehensive overview of the scholarly contributions within the domain of the Omniverse, its functionalities, architectural components, and its applications, elucidating contemporary research themes and conducting a systematic assessment of the latest empirical discoveries.

This study addresses the following research questions (RQs):

1. What is NVIDIA Omniverse and what are its capabilities?

2. What have other surveys covered so far with respect to the Omniverse?

3. How does Nvidia Omniverse compare to other metaverse platforms?

4. How are research papers utilizing the Omniverse platform?

5. What are possible domains and applications of the Omniverse platform?

6. What gaps does the Omniverse fill?

7. What are the limitations and challenges of the platform?

8. What are the future plans of the platform?

This Systematic Literature Review (SLR) used Google Scholar as the primary scientific search engine to collect relevant surveys and articles. The search parameters were carefully defined, focusing on publications from 2018 to 2024, and the search terms were configured to match anywhere within the text of the papers. The search terms were:

1. “Nvidia Omniverse survey”

2. “Omniverse Survey”

3. “Metaverse” AND “Omniverse”

4. “Omniverse Replicator”

5. “Synthetic Data Generation” AND (“Nvidia Omniverse” OR “Omniverse”)

6. “Robotics Simulation” AND (“Nvidia Omniverse” OR “Omniverse”)

7. “Nvidia Drive sim”

8. “Isaac sim” AND (Isaac gym)

The initial search phase resulted in a total of 456 papers. We then conducted a thorough review of the full text of each paper to assess their relevance to our research questions, ultimately narrowing the selection down to 70 papers based on specific inclusion and exclusion criteria.

Inclusion Criteria

• Studies published within the specific time frame of 2018 to 2024.

• Studies published in English only.

• Seminal or highly cited papers outside the time frame may also be included for relevance.

• Quantitative, qualitative, or mixed-method studies that fit the scope of the SLR.

• Experimental, observational, or simulation-based studies.

• Published reviews, meta-analyses, or survey papers relevant to the topic.

• Theses or dissertations (if they contribute valuable insights).

Exclusion Criteria

• Studies published in languages other than English unless an official translation is available.

• Studies published outside the defined time frame, unless highly relevant.

• Presentations, posters, or abstracts without full papers.

• Preliminary studies or incomplete research (e.g., pilot studies without full results).

Figure 1 quantifies the volume and percentage distribution of the selected papers and articles retrieved with the specified search criteria, broken down by year from 2018 through 2024, whereas Figure 2 shows the SLR search strings.

In this section, we will explore the architectural components of the Omniverse ecosystem and discuss their structure in detail. We will also address our first research question (RQ1), focusing on the core elements and how they interact within the Omniverse architecture.

The Omniverse is a cutting-edge metaverse technology platform built on the Universal Scene Description format (OpenUSD). It enables users to develop and manage metaverse applications, eliminating 3D data silos, fostering collaboration within large teams, and enhancing 3D and simulation workflows. Users can build personalized extensions, tools, and microservices tailored to industrial 3D workflows, produce synthetic data, and synchronize seamlessly with creative applications for streamlined 3D data manipulation. Additionally, the Omniverse offers a range of built-in applications and extensions for users’ convenience. Please see Figure 3 for an overview of the built-in applications and extensions available in the Omniverse.
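To make the idea of a USD-based scene concrete, the sketch below writes a tiny scene in USD's human-readable `.usda` text form: a root `Xform` prim named `World` with one translated `Sphere` child. It is a minimal illustration under stated assumptions: the prim names and values are arbitrary, and real Omniverse tooling would normally author stages through the OpenUSD Python bindings (`pxr`, for example `Usd.Stage.CreateNew`) rather than by string formatting, which is used here only to keep the example dependency-free.

```python
def make_usda(sphere_radius: float, translate: tuple) -> str:
    """Return a minimal .usda document: one Sphere prim under a /World Xform.

    Illustrative only: real tools would author this through the OpenUSD
    (pxr) API; the prim names "World" and "Ball" are arbitrary choices.
    """
    x, y, z = translate
    return (
        "#usda 1.0\n"
        "(\n"
        '    defaultPrim = "World"\n'
        ")\n"
        "\n"
        'def Xform "World"\n'
        "{\n"
        '    def Sphere "Ball"\n'
        "    {\n"
        f"        double radius = {sphere_radius}\n"
        f"        double3 xformOp:translate = ({x}, {y}, {z})\n"
        '        uniform token[] xformOpOrder = ["xformOp:translate"]\n'
        "    }\n"
        "}\n"
    )


# Print the scene; saved as e.g. ball.usda, it can be opened by USD-aware tools.
print(make_usda(2.0, (0.0, 0.0, 1.0)))
```

Because every Omniverse application reads and writes this same scene description, a layer like this one can be opened, edited, and shared across tools without format conversion.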

The Replicator is the Omniverse’s synthetic data gathering and generation tool. It leverages the power of NVIDIA’s RTX GPUs to create highly photorealistic environments and scenarios using a wide range of assets from both NVIDIA’s repository and external sources. These assets can be customized and scaled according to the needs of various AI applications, offering users an intuitive and user-friendly interface that simplifies the entire data generation process, making it accessible even to those with limited experience in 3D modeling or simulation (Kokko and Kuhno, 2024).

Within the Omniverse ecosystem, Replicator functions similarly to a highly advanced digital camera embedded within the scene or environment of any Omniverse application. Much like cameras used in CGI creation, Replicator captures scenes with the added power of NVIDIA’s ray tracing technology, specifically path tracing. This technology enables the rendering of scenes onto a 2D plane with exceptional accuracy and realism, producing detailed 2D views of environments by leveraging cutting-edge rendering techniques. The ease of integration with various Omniverse applications further enhances its usability, allowing seamless workflow transitions between tools (Abu Alhaija et al., 2023).
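The "digital camera" analogy can be grounded in the standard pinhole camera model, which maps a 3D point in camera coordinates onto the 2D image plane. The sketch below assumes an ideal pinhole camera looking along +Z with focal lengths `fx`, `fy` and principal point `cx`, `cy` in pixels; it ignores lens distortion and says nothing about how Replicator's path tracer computes pixel colours, which is a separate light-transport problem.

```python
from typing import Optional, Tuple


def project_point(
    point_cam: Tuple[float, float, float],
    fx: float, fy: float, cx: float, cy: float,
) -> Optional[Tuple[float, float]]:
    """Project a camera-space point (X, Y, Z) to pixel coordinates (u, v).

    Assumes an ideal pinhole camera (no distortion). Returns None for points
    at or behind the camera plane (Z <= 0), which cannot appear in the image.
    """
    x, y, z = point_cam
    if z <= 0.0:
        return None
    u = fx * x / z + cx  # perspective divide, then shift to the principal point
    v = fy * y / z + cy
    return (u, v)


# A point 1 m right of the optical axis and 4 m in front of the camera,
# for a 640x480 image with a 500 px focal length:
print(project_point((1.0, 0.0, 4.0), 500.0, 500.0, 320.0, 240.0))  # (445.0, 240.0)
```

The same projection is what turns 3D object extents into the 2D annotations (such as bounding boxes and key points) that a synthetic-data camera can emit alongside each image.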

Figure 4 shows the Replicator pipeline workflow from importing 3D assets until generating synthetic data.

The Replicator features a wide range of tunable parameters that can be adjusted to meet specific user needs, allowing for highly customized synthetic data generation. Users can set the batch size to control the number of images or samples generated per iteration, while applying various augmentations such as scaling, rotation, flipping, or noise addition to increase data diversity. The distribution and frequency of objects appearing in the generated data can also be finely tuned, alongside the addition or modification of noise in lighting, textures, or object positions. Specific parameters like bounding box generation, including padding and format (2D or 3D), and the control over segmentation masks and depth maps are also available, allowing for detailed customization of these critical annotations. Key points can be specified, including the number and precise locations for each object, enhancing the accuracy of the generated data. Users can configure multi-view rendering to generate data from different angles and set the frame rate for temporal consistency, which is essential for applications like video analysis. The Replicator also offers control over render layers, enabling the inclusion of specific data such as RGB, depth, and normals. For further customization, users can define an augmentation pipeline, applying transformations such as color jittering or blur during data generation. Domain randomization extends flexibility by allowing random changes in textures, lighting, and object placements, which is particularly useful for creating robust training datasets. Additionally, parameters like camera path, field of view variation, and depth of field adjustments can be modified to simulate various scenarios, such as automated driving or robotics. 
Finally, the output format of the generated dataset can be specified, including the file type and structure, while annotation frequency and data labels can be customized to ensure compatibility with specific machine learning pipelines. This ease of use is complemented by the portability of the generated datasets, which can be easily transferred and utilized across different platforms and applications, making it an invaluable tool for diverse AI projects (Richard et al., 2024).
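As an illustration of how a padded 2D bounding-box annotation can be derived from a rendered instance-segmentation mask, consider the sketch below. It is not Replicator's implementation; it assumes the mask is a row-major grid of integer instance IDs (0 meaning background) and that `padding` is given in pixels and clipped to the image bounds.

```python
from typing import Dict, List, Tuple

BBox = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max), inclusive pixels


def bboxes_from_mask(mask: List[List[int]], padding: int = 0) -> Dict[int, BBox]:
    """Compute a padded, image-clipped 2D box for every non-zero instance ID.

    Assumption: mask[y][x] holds an instance ID, with 0 reserved for background.
    """
    height, width = len(mask), len(mask[0])
    extents: Dict[int, List[int]] = {}
    for y, row in enumerate(mask):
        for x, inst in enumerate(row):
            if inst == 0:
                continue  # background pixel
            if inst not in extents:
                extents[inst] = [x, y, x, y]
            else:
                e = extents[inst]
                e[0] = min(e[0], x)
                e[1] = min(e[1], y)
                e[2] = max(e[2], x)
                e[3] = max(e[3], y)
    # Grow each tight box by the padding, then clip it to the image.
    return {
        inst: (
            max(0, e[0] - padding),
            max(0, e[1] - padding),
            min(width - 1, e[2] + padding),
            min(height - 1, e[3] + padding),
        )
        for inst, e in extents.items()
    }


mask = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0],
    [0, 1, 1, 0, 2],
    [0, 0, 0, 0, 2],
]
print(bboxes_from_mask(mask, padding=1))  # {1: (0, 0, 3, 3), 2: (3, 1, 4, 3)}
```

Deriving boxes from the mask, rather than labelling them separately, is one reason synthetic pipelines can annotate automatically: the renderer already knows which object covers each pixel.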

Replicator also integrates seamlessly with the Omniverse’s advanced visual features, such as accurate physics via the PhysX engine, realistic lighting, and materials using MDL. It excels in simulating diverse scenarios, producing high-quality datasets, and automating labeling. By employing advanced algorithms for object detection and semantic segmentation, it enhances the robustness and accuracy of AI models, while speeding up data annotation by up to 100 times and reducing costs proportionately. Its standout feature, domain randomization, alters digital scenes to create varied datasets from one set of assets, ensuring models can handle real-world scenarios by changing parameters like lighting, textures, and object positions, making digital landscapes look different each time. This flexibility, combined with the tool’s intuitive interface, makes it highly accessible and adaptable for various use cases (Abou Akar et al., 2022).
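Domain randomization, as described above, amounts to drawing fresh scene parameters for every generated frame. The sketch below samples hypothetical lighting, texture, and object-placement parameters from user-set ranges with a seeded generator, so that a dataset can be regenerated exactly. The parameter names, ranges, and the `SceneParams` structure are illustrative assumptions; they do not reproduce the Replicator API (`omni.replicator.core`), which provides its own randomization functions.

```python
import random
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class SceneParams:
    """Hypothetical per-frame scene settings (not Replicator's data model)."""
    light_intensity: float                       # arbitrary units
    light_color: Tuple[float, float, float]      # RGB, each in [0.8, 1.0]
    texture: str                                 # name of a texture asset
    object_position: Tuple[float, float, float]  # metres; z fixed to the ground
    object_yaw_deg: float


def randomize_scene(rng: random.Random, textures: Sequence[str],
                    intensity_range: Tuple[float, float] = (500.0, 3000.0),
                    position_extent: float = 1.0) -> SceneParams:
    """Draw one randomized configuration from the user-set ranges."""
    return SceneParams(
        light_intensity=rng.uniform(*intensity_range),
        light_color=(rng.uniform(0.8, 1.0), rng.uniform(0.8, 1.0), rng.uniform(0.8, 1.0)),
        texture=rng.choice(list(textures)),
        object_position=(rng.uniform(-position_extent, position_extent),
                         rng.uniform(-position_extent, position_extent),
                         0.0),
        object_yaw_deg=rng.uniform(0.0, 360.0),
    )


def randomize_batch(seed: int, n_frames: int, textures: Sequence[str]) -> List[SceneParams]:
    """One configuration per frame; the same seed reproduces the same batch."""
    rng = random.Random(seed)
    return [randomize_scene(rng, textures) for _ in range(n_frames)]


for i, params in enumerate(randomize_batch(7, 3, ["wood", "metal", "concrete"])):
    print(i, params)
```

Seeding the generator is a deliberate design choice: the dataset stays varied from frame to frame, yet any frame can be reproduced later for debugging or re-rendering.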

The Replicator interacts synergistically with other applications such as Isaac Sim, Nucleus, Audio2Face, DriveSim, Create XR, and USD Composer. For instance, Isaac Sim, used for simulating robots, benefits from the realistic environments generated by Replicator to enhance the training of robotic AI systems. Audio2Face utilizes the harvested synthetic data for creating life-like facial animations driven by audio inputs, which can be used in virtual characters and avatars. DriveSim employs the synthetic scenarios generated by Replicator to train and validate autonomous driving systems in a variety of conditions. Create XR and USD Composer facilitate the creation and manipulation of extended reality environments and complex 3D scenes, respectively, both benefiting from the high-quality data produced by Replicator. These applications benefit from Replicator’s portability, as the synthetic datasets can be easily shared via Nucleus, allowing for efficient collaboration and deployment across different platforms (Conde et al., 2021).

NVIDIA DRIVE Sim is an end-to-end AV simulation platform designed from the ground up for large-scale, physically based multi-sensor simulation. This open, scalable, and modular platform supports AV development and validation from concept to deployment by creating highly realistic virtual environments for testing and training (Apostolos, 2024). The platform supports Hardware-in-the-Loop (HiL) testing by connecting to physical hardware, and it handles both single- and multi-vehicle scenarios for research and commercial applications. It helps prepare AI models for real-world unpredictability, speeds up development, and helps ensure regulatory compliance (Bjornstad, 2021). Using advanced data from Nvidia AutoDrive, DRIVE Sim improves decision-making and employs a neural reconstruction engine to create interactive 3D test environments (Ghodsi et al., 2021). Its architecture includes highly accurate sensor models that simulate cameras, LiDAR, and radar, mirroring real-world conditions. It creates dynamic traffic scenarios with multiple vehicles, pedestrians, and environmental factors, adding complexity to simulations, and it uses advanced rendering techniques to replicate real-world driving conditions precisely. Its standout feature is the Neural Reconstruction Engine, a deep learning tool that turns real roads scanned by specific cameras into interactive 3D test environments, enhancing both the realism and the variability of simulations. It also uses data gathered from various sensors to replicate real-world vehicles as assets that can be reused in different scenes (Autonomous Vehicle International, 2023). Figure 5 illustrates this: the left image shows a yellow truck scanned from a real-life scenario, which is then used as an asset in a different environment, shown in the right image.

Figure 5. Asset harvesting using the Neural Reconstruction Engine Autonomous Vehicle International (2023).
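To give a feel for what a physically based range-sensor model computes, the sketch below casts the beams of a hypothetical single-plane (2D) LiDAR against circular obstacles and adds Gaussian range noise to each return. It is a toy under stated assumptions (a 2D world, perfectly circular obstacles, a fixed maximum range, independent per-beam noise) and is not DRIVE Sim's sensor model, which the text describes only at a high level.

```python
import math
import random
from typing import List, Optional, Sequence, Tuple

Circle = Tuple[float, float, float]  # hypothetical obstacle: (center_x, center_y, radius) in metres


def ray_circle_distance(ox: float, oy: float, dx: float, dy: float,
                        circle: Circle) -> Optional[float]:
    """Distance along the unit-direction ray (ox, oy) + t*(dx, dy) to a circle, or None."""
    cx, cy, r = circle
    fx, fy = ox - cx, oy - cy
    b = dx * fx + dy * fy           # half of the linear coefficient of the quadratic in t
    c = fx * fx + fy * fy - r * r
    disc = b * b - c
    if disc < 0.0:
        return None                 # the ray's line misses the circle
    root = math.sqrt(disc)
    t = -b - root                   # nearer intersection
    if t < 0.0:
        t = -b + root               # origin inside the circle: use the far intersection
    return t if t >= 0.0 else None  # circle lies entirely behind the sensor


def lidar_scan(origin: Tuple[float, float], obstacles: Sequence[Circle],
               n_beams: int = 360, max_range: float = 30.0, noise_std: float = 0.0,
               rng: Optional[random.Random] = None) -> List[float]:
    """One range reading per beam, with beams evenly spaced over 360 degrees."""
    rng = rng if rng is not None else random.Random(0)
    ox, oy = origin
    ranges: List[float] = []
    for i in range(n_beams):
        angle = 2.0 * math.pi * i / n_beams
        dx, dy = math.cos(angle), math.sin(angle)
        hits = [d for d in (ray_circle_distance(ox, oy, dx, dy, c) for c in obstacles)
                if d is not None]
        dist = min(hits, default=math.inf)
        if dist > max_range:
            ranges.append(max_range)    # no return within range
            continue
        if noise_std > 0.0:
            dist += rng.gauss(0.0, noise_std)  # independent Gaussian range noise
        ranges.append(min(max(dist, 0.0), max_range))
    return ranges


print(lidar_scan((0.0, 0.0), [(5.0, 0.0, 1.0)], n_beams=4))  # [4.0, 30.0, 30.0, 30.0]
```

A production sensor model would add effects such as beam divergence, material-dependent reflectivity, and rolling-shutter timing; the point here is only the geometric core of turning a scene into range readings.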

DRIVE Sim is connected to the Nucleus so it can share environments with Isaac Sim, which utilizes these realistic settings for robotic simulations. The Replicator generates diverse and realistic datasets that DRIVE Sim uses for training and validating autonomous driving systems.

Isaac Sim is the Omniverse application for robotics training and simulation, known for its high-fidelity 3D simulations and its integration with deep reinforcement learning through Isaac Gym, which runs both physics simulation and learning on the GPU and allows extensive customization for robotic control research (Makoviychuk et al., 2021). Isaac Sim also offers a user-friendly interface for controlling and automating robotic actions such as movement and rotation. For advanced users, it includes a built-in Python IDE for manual control, modifying robots, and scripting scenarios. Isaac Sim supports importing custom robots not only in USD but also in MJCF and URDF formats, offering flexibility for various design needs. It also provides pre-built robot models such as Jetbot, Jackal, and various robotic arms (Figure 6), each equipped with a different suite of sensors, including perception-based sensors and physics-based sensors such as contact, IMU, effort, force, proximity, and lightbeam sensors.

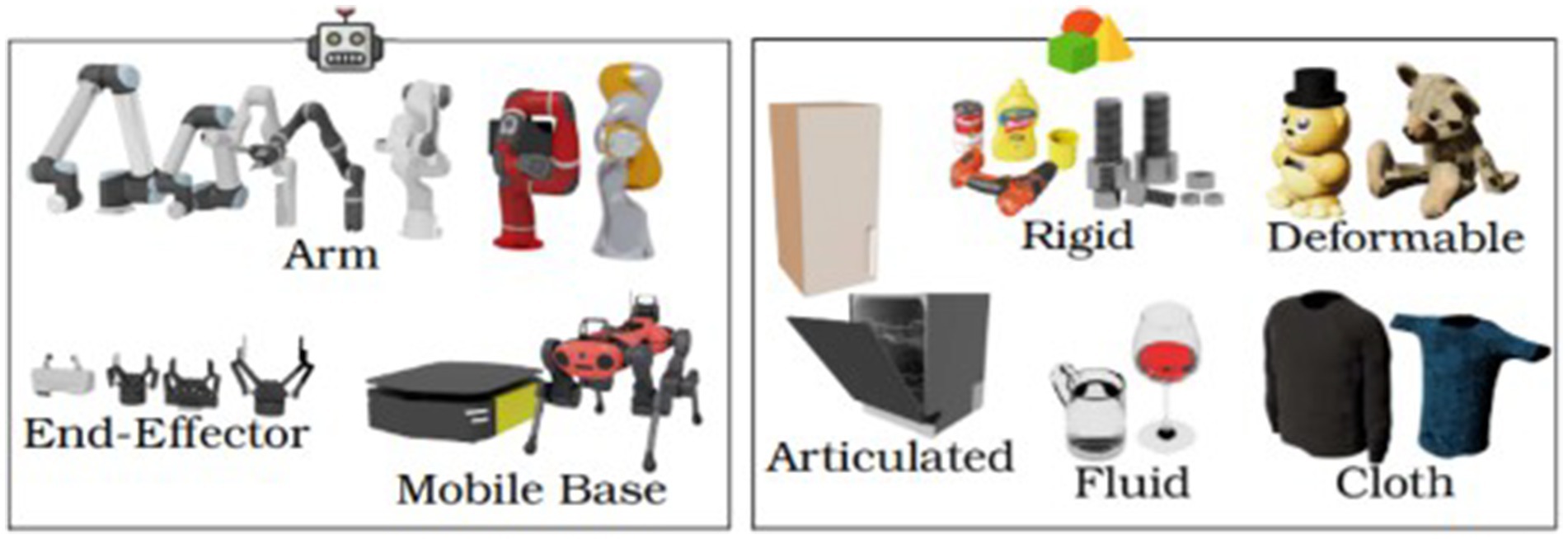
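Scripted robot motion of the kind described above ultimately reduces to driving joints toward target positions. A common way to do this is a proportional-derivative (PD) law, which joint drives with stiffness and damping gains in physics engines such as PhysX broadly follow. The sketch simulates a single revolute joint as a rigid rotor with inertia `inertia` under the torque tau = kp * (theta_target - theta) - kd * omega, integrated with semi-implicit Euler. The gains, inertia, and time step are illustrative assumptions rather than Isaac Sim defaults, and the code does not call any Isaac Sim API.

```python
from typing import List


def simulate_pd_joint(
    theta0: float,
    theta_target: float,
    kp: float = 50.0,       # stiffness gain (N*m/rad), illustrative value
    kd: float = 10.0,       # damping gain (N*m*s/rad), illustrative value
    inertia: float = 1.0,   # rotor inertia (kg*m^2), illustrative value
    dt: float = 0.01,       # integration step (s)
    steps: int = 500,
) -> List[float]:
    """Return the joint-angle trajectory of one joint under a PD controller."""
    theta, omega = theta0, 0.0
    trajectory = [theta]
    for _ in range(steps):
        torque = kp * (theta_target - theta) - kd * omega
        omega += (torque / inertia) * dt  # update velocity first...
        theta += omega * dt               # ...then position (semi-implicit Euler)
        trajectory.append(theta)
    return trajectory


traj = simulate_pd_joint(theta0=0.0, theta_target=1.57)
print(f"final angle: {traj[-1]:.4f} rad, peak: {max(traj):.4f} rad")
```

With these gains the damping ratio is kd / (2 * sqrt(kp * inertia)), about 0.71, so the joint overshoots slightly and settles within about a second of simulated time; raising kd trades speed for less overshoot.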

Within the Isaac Sim and Gym ecosystem, two supporting libraries stand out: SKRL and Isaac Orbit. SKRL is an open-source Python library for reinforcement learning (RL), noted for its modularity and its compatibility with environments such as OpenAI Gym, Farama Gymnasium, DeepMind, and Nvidia Isaac Gym. It provides a comprehensive suite of RL agents, trainers, and learning rate schedulers, as well as basic rendering capabilities using Pygame (Serrano-Munoz et al., 2023). Isaac Orbit, another open-source framework, provides realistic simulations for a variety of tasks such as reaching, lifting, cabinet manipulation, cloth manipulation, and rope reshaping (Mittal et al., 2023). Figure 7 illustrates the diverse range of robot types and manipulable objects available through Isaac Orbit.

Figure 7. Available robots and manipulable objects in Nvidia Isaac Orbit (Mittal et al., 2023).

Isaac Sim works in conjunction with Omniverse USD Composer, allowing users to create detailed environments and scenarios that can be easily imported into Isaac Sim for simulation and testing, which makes it simple to build and test realistic simulation settings. For immersive experiences, Isaac Sim integrates with Omniverse XR, allowing users to interact with and test their robotics simulations in a VR environment and making the design and validation process more intuitive and hands-on. These features are supported by Isaac Sim’s integration with Omniverse Nucleus, which lets users store, version, and collaborate on 3D assets, ensuring smooth workflow integration across applications. Users can share robot models, simulation environments, and datasets with team members or other Omniverse applications, making collaboration and asset management easy.

Nvidia’s Create XR is the Omniverse tool for immersive world-building and interactive scene assembly for navigation in VR and AR (Ortiz et al., 2022). It lets developers create immersive, ray-traced XR experiences using specialized SDKs and RTX GPUs that render complex scenes in real time (Shapira, 2022). It enhances immersion with features such as realistic illumination, soft shadows, and authentic reflections, and its compatibility with Oculus and HTC Vive controllers further improves the user experience (Chamusca et al., 2023). Create XR integrates with Nucleus, enabling the import of assets and scenes made with other applications in the Omniverse ecosystem, such as Nvidia USD Composer. Additionally, scenes created in Create XR can be easily shared and used in other Omniverse applications, ensuring a seamless workflow and collaboration (Vatanen, 2024).

Audio2Face is the Omniverse application for creating facial expressions such as amazement, anger, and joy. It uses generative AI to create expressive, lifelike animations for 3D characters in real time from audio inputs, making it a useful tool for animators and developers (Omn, 2022a). Audio2Face supports multiple languages and can animate multiple characters simultaneously, each synchronized to the same or a different audio source, which streamlines the creation of dynamic, interactive characters in settings ranging from video games to virtual assistants and cinematic productions. Users can also customize emotional responses by manually tuning the parameters that control the facial masks, the smoothing of the upper and lower face, and the strength of the overall motion; the strength of each individual emotion can also be controlled manually, providing versatility and ease of use (Nvidia, 2024). Its integration with Nvidia’s Nucleus allows assets to be shared with other Omniverse applications at the press of a button.
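The per-emotion controls described above can be pictured as post-processing on a frame-by-frame table of emotion weights. The sketch below is a hypothetical illustration, not Audio2Face's algorithm or API: it lets a user override individual emotions, applies exponential smoothing across frames, scales everything by a global strength factor, and clamps the result to [0, 1]. The emotion names, the smoothing rule, and the parameter names are all assumptions.

```python
from typing import Dict, List, Optional

Frame = Dict[str, float]  # hypothetical: emotion name -> weight in [0, 1]


def postprocess_emotions(
    frames: List[Frame],
    overrides: Optional[Dict[str, float]] = None,
    smoothing: float = 0.5,   # 0 = none; values closer to 1 smooth more heavily
    strength: float = 1.0,    # global multiplier applied to every emotion
) -> List[Frame]:
    """Apply manual overrides, exponential smoothing, global strength and clamping."""
    overrides = overrides or {}
    out: List[Frame] = []
    prev: Frame = {}
    for frame in frames:
        current = {**frame, **overrides}  # manual values replace automatic ones
        smoothed: Frame = {}
        for name, value in current.items():
            # Exponential moving average over the previous frame's smoothed value;
            # an emotion with no history starts from its current value.
            base = prev.get(name, value)
            smoothed[name] = smoothing * base + (1.0 - smoothing) * value
        prev = smoothed
        out.append({k: min(1.0, max(0.0, v * strength)) for k, v in smoothed.items()})
    return out


auto = [{"joy": 0.2, "anger": 0.0}, {"joy": 0.4, "anger": 0.1}, {"joy": 0.4, "anger": 0.1}]
for frame in postprocess_emotions(auto, overrides={"anger": 0.8}):
    print(frame)
```

Smoothing before scaling keeps the temporal behaviour independent of the strength setting, so turning the overall intensity up or down does not make the animation any jerkier.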

Figure 8 shows the level of each emotion in every frame of the animated output. Values between 0 and 0.5 are set automatically by the application, while values above 0.5 indicate that the strength of that emotion was adjusted manually.
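To make the 0.5 boundary described for Figure 8 concrete, the following minimal Python sketch flags, for each frame, the emotions whose strength lies above 0.5 and was therefore manually adjusted. The per-frame dictionary layout, the emotion names, and the function `flag_manual_emotions` are hypothetical illustrations, not the Audio2Face API.

```python
from typing import Dict, List

# Boundary described for Figure 8: values up to 0.5 are set automatically,
# values above 0.5 indicate a manually adjusted emotion strength.
AUTO_MAX = 0.5


def flag_manual_emotions(frames: List[Dict[str, float]]) -> List[List[str]]:
    """Return, for each frame, the sorted names of emotions above the 0.5 boundary.

    `frames` is a hypothetical per-frame mapping from emotion name to weight in [0, 1].
    """
    flagged = []
    for weights in frames:
        for name, value in weights.items():
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"weight for {name!r} out of range: {value}")
        flagged.append(sorted(n for n, v in weights.items() if v > AUTO_MAX))
    return flagged


if __name__ == "__main__":
    frames = [
        {"joy": 0.3, "anger": 0.1, "amazement": 0.0},
        {"joy": 0.8, "anger": 0.2, "amazement": 0.6},  # joy and amazement tuned by hand
    ]
    print(flag_manual_emotions(frames))  # [[], ['amazement', 'joy']]
```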

Figure 8. Audio2Face Emotions Visualizer (NVIDIA, 2024).

Its architecture is based on a Generative Adversarial Network (GAN) combined with Recurrent Neural Networks (RNNs) and Convolutional Neural Networks (CNNs). The GAN generates realistic facial expressions from audio input. It consists of two neural networks, a generator and a discriminator, that work in tandem (Goodfellow et al., 2014): the generator creates facial animations, while the discriminator evaluates their realism and provides feedback that improves the generator. RNNs (Wu, 2023), particularly Long Short-Term Memory (LSTM) networks, handle the temporal aspects of the audio signal: they analyze the sequence of audio frames to capture dynamics and emotional content over time, keeping the animation synchronized. CNNs extract features from the audio signal that correspond to specific facial movements and expressions (Choi et al., 2017). In the pipeline, the audio input is first converted into a spectrogram, which is fed into the CNN to extract relevant audio features. These features are processed by the RNN (LSTM) to capture temporal dependencies and emotional detail, and its outputs guide the GAN: the generator creates facial expressions, which are continuously refined through the discriminator’s feedback for realism (Liu et al., 2024).
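The first stage of this pipeline, turning audio into a spectrogram before CNN feature extraction, can be illustrated with a short, dependency-free Python sketch. It computes a magnitude spectrogram using a Hann window and a naive discrete Fourier transform. The frame size, hop length, and function names are illustrative assumptions rather than Audio2Face parameters, and a production pipeline would use an FFT library instead of the O(N²) DFT shown here.

```python
import cmath
import math
from typing import List


def hann(n: int) -> List[float]:
    """Periodic Hann window of length n."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / n) for i in range(n)]


def magnitude_spectrogram(signal: List[float], frame: int = 64, hop: int = 32) -> List[List[float]]:
    """Split `signal` into overlapping windowed frames and return |DFT| per frame.

    Each row holds frame // 2 + 1 magnitudes (non-negative frequency bins),
    so the result is a time x frequency matrix that a CNN could consume.
    """
    window = hann(frame)
    rows = []
    for start in range(0, len(signal) - frame + 1, hop):
        chunk = [s * w for s, w in zip(signal[start:start + frame], window)]
        row = []
        for k in range(frame // 2 + 1):
            acc = sum(x * cmath.exp(-2j * math.pi * k * n / frame) for n, x in enumerate(chunk))
            row.append(abs(acc))
        rows.append(row)
    return rows


if __name__ == "__main__":
    # A pure tone lying exactly on DFT bin 8 of a 64-sample frame.
    tone = [math.sin(2 * math.pi * 8 * n / 64) for n in range(256)]
    spec = magnitude_spectrogram(tone)
    print(len(spec), len(spec[0]))  # 7 33
```

With this test tone, every row of the spectrogram peaks at bin 8, which is the kind of frequency-localized pattern the CNN stage is described as learning from.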

Figure 9 shows the Audio2Face pipeline, from audio input to the final rendered facial animation.

Nvidia USD Composer (formerly Create) is an Omniverse application for large-scale 3D world construction workflows (Sim et al., 2024). It integrates design, content creation, CAD, and simulation tools into a single platform built on the unified USD format. Composer also supports complex simulations, including fluid dynamics and soft-body physics, through Nvidia’s PhysX simulation technology, bringing realistic physics to scenes and enabling immersive 3D content (Omn, 2022b).

For model creation, Composer supports USD meshes and subdivision surfaces, as well as USD Geom Points, specialized geometric primitives for rendering small particles such as rain, dust, and sparks. It also supports rendering and authoring basis curves in USD, giving artists, developers, and engineers more workflow flexibility for uses ranging from hair to camera tracks (Figure 10). Composer additionally supports OpenVDB volumes as textures, offering advanced texturing capabilities (NVIDIA Corporation, 2024), and Nvidia IndeX for interactively visualizing and exploring entire volumetric datasets in a collaborative setting (Schneider et al., 2021).
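The point and curve primitives named above are standard OpenUSD schemas (`Points` and `BasisCurves`). As a dependency-free illustration, the sketch below writes a small `.usda` text layer containing a `Points` prim (for example, rain particles) and a cubic B-spline `BasisCurves` prim (for example, a hair strand). The prim names, coordinates, and the helper `write_usda` are hypothetical; in practice such layers are usually authored through the `pxr` Python bindings or interactively in USD Composer rather than by string formatting.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def _points_literal(points: List[Vec3]) -> str:
    """Format a list of 3D points as a USDA array literal."""
    return "[" + ", ".join(f"({x}, {y}, {z})" for x, y, z in points) + "]"


def write_usda(rain: List[Vec3], width: float, strand: List[Vec3]) -> str:
    """Return a USDA layer with one Points prim and one cubic B-spline curve."""
    if len(strand) < 4:
        raise ValueError("a cubic B-spline curve needs at least 4 control points")
    widths = "[" + ", ".join(str(width) for _ in rain) + "]"  # one width per particle
    return "\n".join([
        "#usda 1.0",
        "",
        'def Points "Rain"',
        "{",
        f"    point3f[] points = {_points_literal(rain)}",
        f"    float[] widths = {widths}",
        "}",
        "",
        'def BasisCurves "HairStrand"',
        "{",
        '    uniform token type = "cubic"',
        '    uniform token basis = "bspline"',
        '    uniform token wrap = "nonperiodic"',
        f"    int[] curveVertexCounts = [{len(strand)}]",
        f"    point3f[] points = {_points_literal(strand)}",
        "}",
        "",
    ])


if __name__ == "__main__":
    print(write_usda(
        rain=[(0.0, 2.0, 0.0), (0.5, 1.5, 0.2)],
        width=0.01,
        strand=[(0.0, 0.0, 0.0), (0.0, 0.3, 0.05), (0.0, 0.6, 0.0), (0.0, 0.9, -0.05)],
    ))
```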

Figure 10. An astronaut model created in USD Composer demonstrates impressive visual quality (NVIDIA Corporation, 2024).

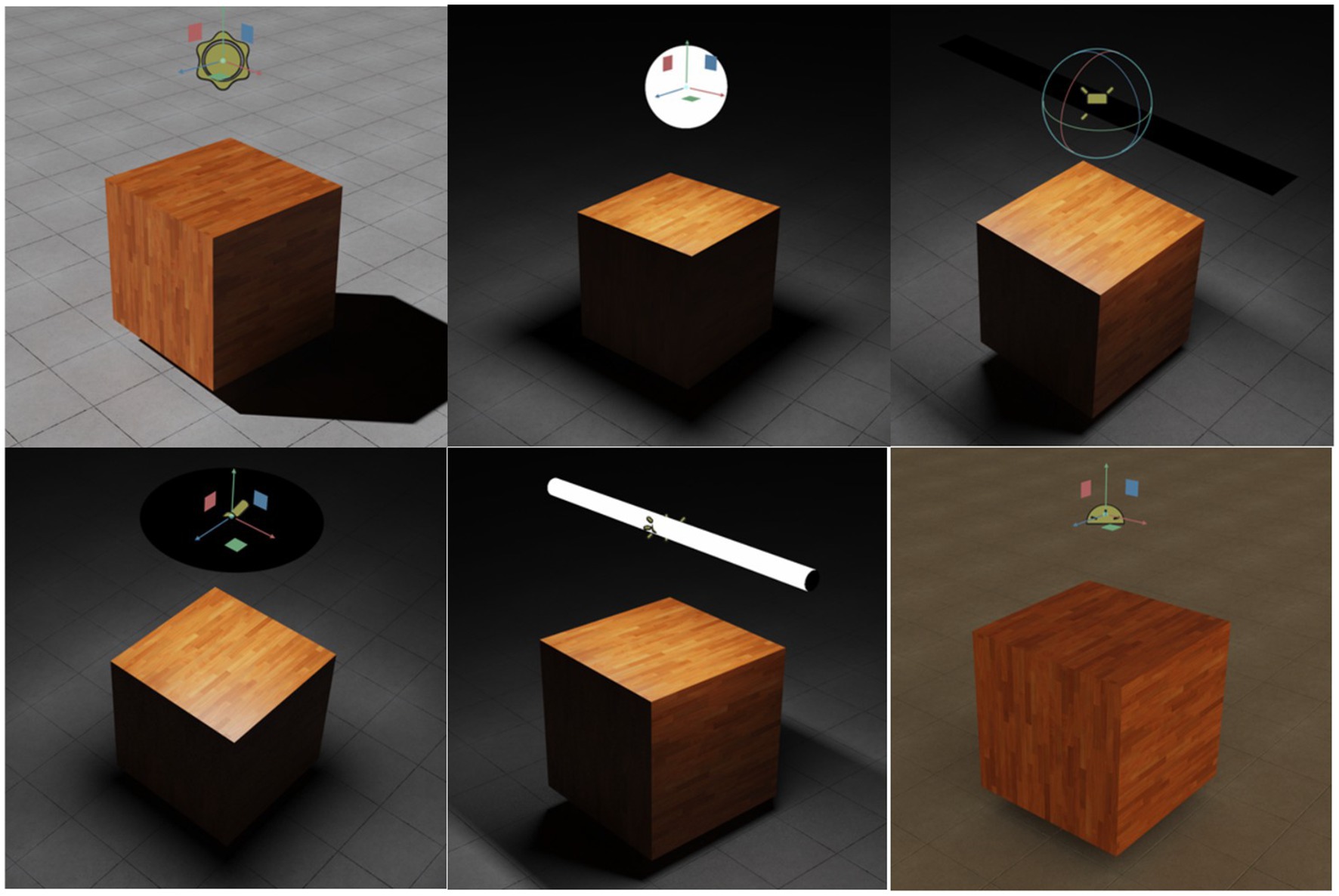

For rendering, Composer uses the built-in RTX Renderer for enhanced visual quality, and its ability to interface directly with Hydra renderers such as Pixar’s Storm adds considerable flexibility. It offers post-processing features such as tone mapping, color correction, and chromatic aberration, alongside core technologies like MDL (Material Definition Language) (Zhao et al., 2022). To light scenes, it supports a range of light sources, including Distant Light (resembling sunlight or moonlight), Sphere Light, Rect Light, Disc Light, Cylinder Light, Dome Light, and HDRI Skysphere (background) lighting (Figure 11). Rendering can further be accelerated on NVIDIA GPUs with DLSS (Deep Learning Super Sampling), which significantly boosts rendering performance while preserving image quality.
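Tone mapping, one of the post-processing features listed above, compresses high-dynamic-range luminance into a displayable range. The sketch below uses the classic extended Reinhard operator, L_d = L(1 + L/W²)/(1 + L), purely as a generic example; it is not a description of the RTX Renderer’s own tone-mapping implementation, and the white-point parameter `white` and the function name are assumptions.

```python
from typing import List


def reinhard_extended(luminance: List[float], white: float = 4.0) -> List[float]:
    """Map HDR luminance values to [0, 1] with the extended Reinhard operator.

    `white` is the smallest luminance mapped to pure white; values at or
    above it are clipped to 1.0.
    """
    if white <= 0:
        raise ValueError("white point must be positive")
    out = []
    for lum in luminance:
        if lum < 0:
            raise ValueError("luminance must be non-negative")
        mapped = lum * (1.0 + lum / (white * white)) / (1.0 + lum)
        out.append(min(mapped, 1.0))
    return out


if __name__ == "__main__":
    print(reinhard_extended([0.0, 1.0, 4.0, 10.0]))  # [0.0, 0.53125, 1.0, 1.0]
```

The operator is monotonic for non-negative input, so the ordering of bright and dark regions is preserved while highlights above the white point saturate.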

Figure 11. Different types of lights available in the USD Composer (NVIDIA, 2024).

USD Composer is portable and user-friendly, running on both Windows and Linux; this cross-platform support is particularly advantageous for teams working in mixed environments. It ships with an extensive library of built-in assets that can be added through a simple drag-and-drop interface, and it also connects to an external asset store for more options. For performance monitoring, USD Composer includes built-in tools that track CPU, GPU, and RAM usage, supporting efficient resource management. It also provides an asset processing extension that both imports assets from external sources and converts non-compatible assets into compatible formats such as USD. Users can choose among three viewing modes (Draft, Preview, and Photo): Draft uses the Omniverse RTX Renderer’s Real-Time mode, while Preview and Photo use RTX Interactive Path Tracing (IPT), letting users balance quality against performance for different needs, including rendering for the Replicator tool. Composer is directly connected to the Omniverse Nucleus API, so all assets and virtual worlds created in it are readily accessible to other applications such as Isaac Sim and the XR application (Vatanen, 2024).

The Omniverse Connectors enable smooth communication between the Omniverse and other software, allowing 3D assets and data to be exchanged in USD format and enhancing the Omniverse’s versatility and portability across platforms and workflows (Li et al., 2022). These connectors ensure that assets can be transferred between software environments without loss of fidelity or functionality.

The Nucleus is the backbone of the Omniverse ecosystem, connecting applications such as Omniverse Create, Isaac Sim, and Drive Sim. It acts as the central hub for data and asset exchange, providing version control, live collaboration, and real-time updates so that multiple users can work on the same assets simultaneously without conflicts, keeping collaboration synchronized across the entire ecosystem. Nucleus operates under a publish/subscribe model: clients publish changes to digital assets and subscribe to updates, while flexible deployment options meet various operational needs. Technically, Nucleus is built on a scalable architecture that supports high-performance data storage and retrieval using Content Addressable Storage (CAS) (Kaigom, 2024), which assigns each piece of data a unique identifier, its content address, derived from its content rather than its location. This allows CAS to store only one copy of a given piece of data, even if it is used across multiple projects, minimizing redundancy and optimizing storage usage. When data needs to be retrieved, the system uses the content address to locate and access it quickly, reducing latency. CAS also supports deduplication and versioning: identical files are stored only once, which is vital for maintaining version control and facilitating real-time collaboration. The mechanism is particularly advantageous in large-scale environments where data is frequently accessed or modified, as it reduces data-management overhead. Overall, CAS is a critical component of Nucleus, enabling it to meet the demands of real-time, collaborative 3D workflows (Lin et al., 2023).
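The content-addressing idea described above can be sketched in a few lines of Python. The toy store below keys each blob by its SHA-256 digest, so identical content is stored once, and keeps a per-path version history that only references digests. The class and method names, and the choice of SHA-256 as the content address, are illustrative assumptions; they do not describe Nucleus’s actual storage format or API.

```python
import hashlib
from typing import Dict, List


class ContentStore:
    """Toy content-addressable store with deduplication and per-path versions."""

    def __init__(self) -> None:
        self._blobs: Dict[str, bytes] = {}        # content address -> data
        self._history: Dict[str, List[str]] = {}  # asset path -> addresses, oldest first

    def put(self, path: str, data: bytes) -> str:
        """Store `data` as a new version of `path` and return its content address."""
        address = hashlib.sha256(data).hexdigest()
        self._blobs.setdefault(address, data)     # identical content is stored only once
        self._history.setdefault(path, []).append(address)
        return address

    def get(self, address: str) -> bytes:
        """Retrieve data directly by its content address."""
        return self._blobs[address]

    def versions(self, path: str) -> List[str]:
        """Return the content addresses of every version of `path`, oldest first."""
        return list(self._history.get(path, []))

    def blob_count(self) -> int:
        """Number of distinct blobs actually stored."""
        return len(self._blobs)


if __name__ == "__main__":
    store = ContentStore()
    store.put("projA/robot.usd", b"mesh-v1")
    store.put("projB/robot.usd", b"mesh-v1")  # same asset reused in another project
    print(store.blob_count())  # 1
```

Because versions hold only addresses, a new revision of an asset costs one new blob, and unchanged assets shared between projects cost nothing extra.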

The Nucleus operates through interconnected services that users can interact with via built-in APIs and interfaces, without additional programming (see Figure 12). Its design emphasizes portability: it can be deployed in various configurations, from local servers to cloud-based solutions, so it can be adapted to different organizational infrastructures and project requirements. Because these services are interconnected, changes are reflected instantly across all connected applications, enabling a truly collaborative workflow (Omn, 2022d).
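The publish/subscribe pattern behind this instant propagation of changes can be sketched as a minimal in-process broker. The `LiveSession` class, the callback signature, and the asset paths are hypothetical. Real Nucleus clients communicate over the network through Omniverse’s own client libraries, which this example does not model.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

Callback = Callable[[str, str], None]  # (asset_path, change_description)


class LiveSession:
    """Minimal in-process publish/subscribe broker keyed by asset path."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Callback]] = defaultdict(list)

    def subscribe(self, asset_path: str, callback: Callback) -> None:
        """Register `callback` to be notified of every change to `asset_path`."""
        self._subscribers[asset_path].append(callback)

    def publish(self, asset_path: str, change: str) -> int:
        """Deliver `change` to every subscriber of `asset_path`; return how many were notified."""
        callbacks = list(self._subscribers.get(asset_path, []))
        for cb in callbacks:
            cb(asset_path, change)
        return len(callbacks)


if __name__ == "__main__":
    session = LiveSession()
    composer_view, isaac_view = [], []
    session.subscribe("/Projects/factory.usd", lambda p, c: composer_view.append(c))
    session.subscribe("/Projects/factory.usd", lambda p, c: isaac_view.append(c))
    session.publish("/Projects/factory.usd", "moved conveyor by 0.5 m")
    print(composer_view, isaac_view)
```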

In recent years, there has been a significant increase in literature surveys exploring new technologies related to the metaverse. However, the Omniverse has received relatively little attention in these papers.

Our paper addresses this gap by specifically targeting the Omniverse, narrowing the focus from the broader concept of the metaverse. This work is pioneering in providing a comprehensive technical analysis of the Omniverse as an independent ecosystem, thoroughly exploring its capabilities and limitations. To address the second research question (RQ2), we have summarized, presented, and discussed recent surveys and systematic papers related to this topic.

Xu et al. (2022) conducted a detailed study on the metaverse, suggesting it could be the next big thing after smartphones and the internet. They recognized that people have not yet tapped into everything the metaverse can do but highlighted the importance of new technologies, like edge computing, which could help the metaverse reach its full potential. Their study looked closely at the complicated technology needed for the metaverse and discussed problems with building it, including issues with computing power, network connections, and device communication. They suggested some possible solutions, especially using blockchain technology, which could help overcome these problems. They also paid more attention to the Omniverse compared to other studies, laying the groundwork for more research into Nvidia’s specific metaverse technology.

Chow et al. (2022) conducted a detailed study comparing different metaverse systems, including the Omniverse. Their research covered a broad range of issues, especially security problems, emphasizing the need to protect these online spaces. They also highlighted important features of metaverse environments and proposed future directions, showing the evolving nature of the metaverse.

Huynh-The et al. (2023) reviewed the metaverse, focusing on its integration with artificial intelligence and various applications. Their study maintained a broad perspective without concentrating on any specific metaverse platform. However, they did not fully address the challenges and limitations of the metaverse, indicating a need for further investigation. Similarly, Agarwal et al. (2022) explained the basics and potential benefits of metaverse technology. While they touched on some challenges, their discussion lacked depth, primarily highlighting the negatives. This suggests a gap in understanding the complex issues critical to the development of metaverse technology. Wang et al. (2022) focused on the security aspects of the metaverse. They examined various security problems, such as access control, identity verification, network vulnerabilities, and financial risks. They also looked closely at data handling. The paper discussed important features that define the metaverse, like realism, spatial and temporal consistency, persistence, interoperability, and scalability. The research also explored many uses of the metaverse, including highly immersive games.

Ning et al. (2023) provided a thorough look at the metaverse in general rather than any specific version of it. They examined the rules and policies that different countries, companies, and organizations have put in place regarding the metaverse. The study looked at the current development of the metaverse and its many potential uses, such as gaming, virtual socializing, shopping, online collaboration, and content creation. They also explored how technologies like digital twins and blockchain could be used in the metaverse and how they work together. The research concluded by discussing open questions and future directions, especially regarding security, underscoring the need for continued investigation as the metaverse grows. Zhang et al. (2022) investigated robot control software, focusing on the Robot Operating System (ROS) and its successor, ROS 2. Their work helps explain new ways to design and build technology that connects robots and enables them to work in various areas. They studied how well these designs work with ROS and provided detailed examples from other studies, highlighting the flexibility of these technologies and their potential applications in many industries. Although they mentioned well-known systems like AWS RoboMaker and the Omniverse, they did not discuss them in much detail, maintaining a broad view of this growing field. Mann et al. (2023) wrote an in-depth paper about XR, encompassing AR and mixed reality. They aimed to clarify these terms and their relationships, and to make the subject easier to understand, they introduced a new name, XV (eXtended meta-uni-omni-Verse), which they believe could be key in the XR world. They also categorized different types of reality to help readers grasp the subject. Their paper discussed the Omniverse as a platform that could unify these technologies, although without much technical detail. 
They addressed challenges related to XR, such as privacy, data ownership, and the impact of XV on jobs and social interactions, showing the complexity of XR. As this analysis of related surveys shows, none of the existing works presents a comprehensive analysis of the Omniverse and its applications. Table 1 reveals that existing surveys place little emphasis on the extensions and components of the Omniverse. Specifically, Huynh-The et al. (2023), Agarwal et al. (2022), Chow et al. (2022), Xu et al. (2022), Ning et al. (2023), and Wang et al. (2022) make no mention of Omniverse applications or extensions. In contrast, Zhang et al. (2022) delve into the Replicator tool and the Isaac Sim simulation environment, while Mann et al. (2023) briefly touch on possible implementations of Create XR. In our survey, we have deliberately concentrated on thoroughly exploring all aspects, components, and extensions of the Omniverse platform.

Our survey also goes into much greater detail on the applications of the Omniverse. Table 2 shows that the synthetic data generation aspect of the Omniverse is mentioned only by Zhang et al. (2022). In teaching and education, possible metaverse implementations are acknowledged by Huynh-The et al. (2023), Xu et al. (2022), Ning et al. (2023), and Wang et al. (2022). Interestingly, no survey to date has explored the military potential of the Omniverse. The business sector is the most frequently discussed domain for metaverse applications: Huynh-The et al. (2023), Agarwal et al. (2022), Xu et al. (2022), Ning et al. (2023), Wang et al. (2022), and Mann et al. (2023) all discuss possible metaverse implementations in business and trade. Only two surveys, Xu et al. (2022) and Wang et al. (2022), address the benefits of the metaverse for industry and manufacturing. In the medical field, Agarwal et al. (2022), Xu et al. (2022), and Ning et al. (2023) have explored potential applications of the metaverse. For culture and tourism, Huynh-The et al. (2023), Xu et al. (2022), Ning et al. (2023), and Wang et al. (2022) have contributed discussions, while smart cities are addressed only by Ning et al. (2023).

Kokko and Kuhno (2024) used Replicator to develop and apply a synthetic data generator. The goal was to create a reference generator that could produce high-quality training data for computer vision tasks, specifically semantic segmentation. Replicator was chosen for its integration capabilities, its use of the USD format, and its connectivity to various third-party applications, which facilitated importing diverse 3D assets and tools into the platform. The study found that the Omniverse’s rendering quality, combined with Replicator’s domain randomization and domain-gap bridging techniques, significantly improved the visual realism and diversity of the synthetic data. Domain randomization in particular broadened the data distribution and improved the model’s ability to generalize to real-world scenarios: a DeepLabV3+ model trained solely on the generated synthetic data achieved a mean Intersection over Union (mIoU) of 94.05% on a small hand-labeled evaluation dataset. The study also reports a substantial decrease in annotation costs: manually annotating an image could cost around $6, whereas generating a synthetic image costs about $0.06, a 100-fold reduction.
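Mean Intersection over Union, the metric reported above, averages the per-class ratio IoU = TP / (TP + FP + FN) over the classes present. The sketch below computes it from flattened label maps. The function name and the convention of skipping classes absent from both prediction and ground truth are assumptions (evaluation toolkits differ on this detail), and this is not the evaluation code used by Kokko and Kuhno (2024).

```python
from typing import Sequence


def mean_iou(pred: Sequence[int], truth: Sequence[int], num_classes: int) -> float:
    """Mean IoU over classes that appear in `pred` or `truth` (flattened label maps)."""
    if len(pred) != len(truth):
        raise ValueError("prediction and ground truth must have the same length")
    ious = []
    for c in range(num_classes):
        tp = sum(1 for p, t in zip(pred, truth) if p == c and t == c)
        fp = sum(1 for p, t in zip(pred, truth) if p == c and t != c)
        fn = sum(1 for p, t in zip(pred, truth) if p != c and t == c)
        denom = tp + fp + fn
        if denom:  # skip classes absent from both maps
            ious.append(tp / denom)
    return sum(ious) / len(ious) if ious else 0.0


if __name__ == "__main__":
    # Class 0: IoU 1/2; class 1: IoU 2/3; mean 7/12.
    print(mean_iou([0, 0, 1, 1], [0, 1, 1, 1], num_classes=2))
```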

Zhou et al. (2024) present a benchmark for robotic manipulation using Isaac Sim. The authors propose it as a reliable development platform for designing and assessing AI-enabled robotic systems, and they surveyed industrial and academic practitioners to compare Isaac Sim with other physics simulators. The benchmark consists of eight manipulation tasks, each designed to test a different aspect of a robot’s manipulation capabilities: Point Reaching (PR), Cube Stacking (CS), Peg-in-Hole (PH), Ball Balancing (BB), Ball Catching (BC), Ball Pushing (BP), Door Opening (DO), and Cloth Placing (CP). The study employs deep reinforcement learning (DRL) algorithms such as Trust Region Policy Optimization (TRPO) and Proximal Policy Optimization (PPO). Evaluations used metrics such as Success Rate (SR), Dangerous Behavior Rate (DBR), Task Completion Time (TCT), and Training Time (TT), under conditions with and without action noise.
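For reference, the two policy-optimization methods named above are usually written in terms of the probability ratio between the new and old policies, with $\hat{A}_t$ an advantage estimate at step $t$. TRPO maximizes a surrogate objective under a trust-region constraint on the KL divergence (bound $\delta$), while PPO replaces the constraint with a clipped objective (clip range $\epsilon$). These are the standard textbook formulations, shown for orientation; the hyperparameters used in the benchmark are not given here.

$$
r_t(\theta) = \frac{\pi_\theta(a_t \mid s_t)}{\pi_{\theta_{\text{old}}}(a_t \mid s_t)}
$$

$$
\text{TRPO:}\quad \max_\theta \; \hat{\mathbb{E}}_t\big[r_t(\theta)\,\hat{A}_t\big] \quad \text{s.t.} \quad \hat{\mathbb{E}}_t\Big[D_{\mathrm{KL}}\big(\pi_{\theta_{\text{old}}}(\cdot \mid s_t)\,\big\|\,\pi_\theta(\cdot \mid s_t)\big)\Big] \le \delta
$$

$$
\text{PPO:}\quad L^{\text{CLIP}}(\theta) = \hat{\mathbb{E}}_t\Big[\min\big(r_t(\theta)\,\hat{A}_t,\ \operatorname{clip}\big(r_t(\theta),\,1-\epsilon,\,1+\epsilon\big)\,\hat{A}_t\big)\Big]
$$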

Table 3 summarizes the performance of AI controllers (PPO and TRPO) on the manipulation tasks across different simulators: Isaac Sim, Gazebo, PyBullet, and MuJoCo. Tasks were evaluated with and without action noise. Isaac Sim paired with TRPO generally outperforms the other combinations, achieving high success rates (up to 100%) and low error rates. In contrast, PyBullet and MuJoCo show higher error rates and longer task times, especially under noise. Performance on tasks such as PR and BB is strongest with Isaac Sim and TRPO, while more complex tasks such as DO and BP see performance drops across all simulators.

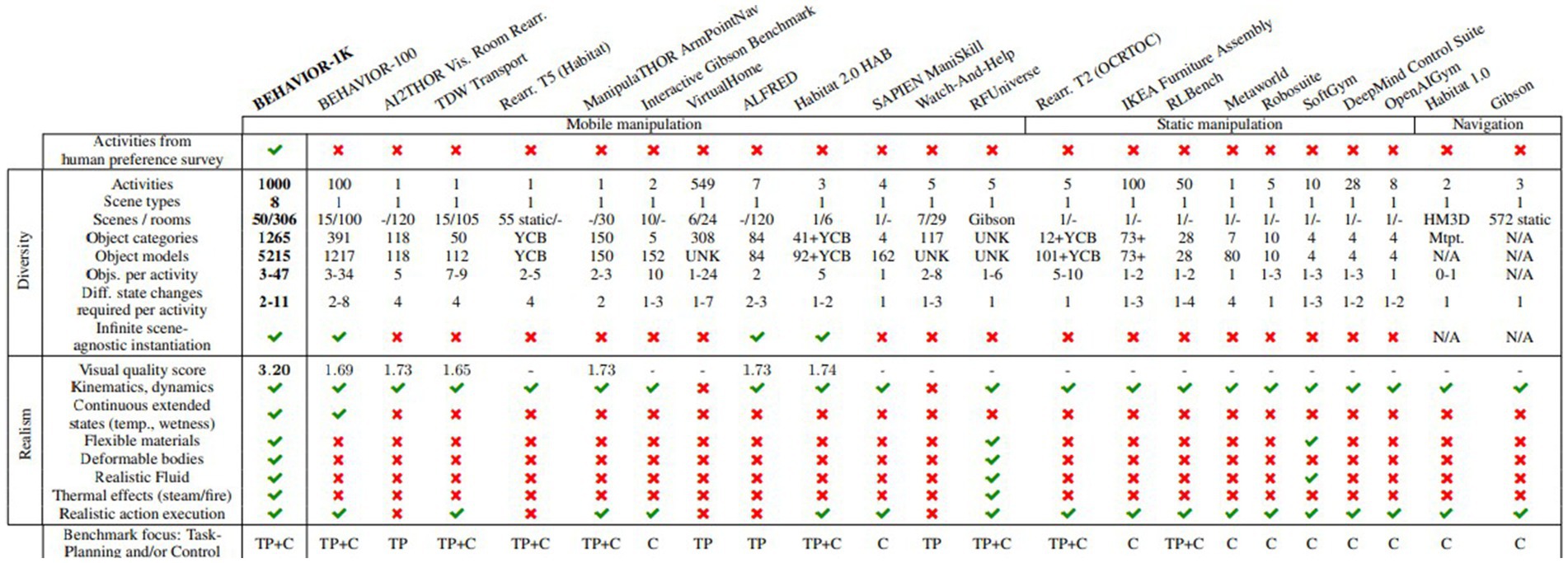
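The episode-level metrics used in this benchmark reduce to simple aggregates over evaluation runs. The sketch below computes Success Rate, Dangerous Behavior Rate, and mean Task Completion Time from a list of episode records. The `Episode` fields, what counts as “dangerous”, and the choice to average completion time over successful episodes only are assumptions rather than the exact definitions used by Zhou et al. (2024).

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Episode:
    success: bool
    dangerous: bool                   # e.g. a collision or joint-limit violation (assumed definition)
    completion_time: Optional[float]  # seconds; None if the task was not completed


def summarize(episodes: List[Episode]) -> dict:
    """Return SR and DBR as percentages and mean TCT over successful episodes."""
    if not episodes:
        raise ValueError("no episodes to summarize")
    n = len(episodes)
    times = [e.completion_time for e in episodes if e.success and e.completion_time is not None]
    return {
        "SR": 100.0 * sum(e.success for e in episodes) / n,
        "DBR": 100.0 * sum(e.dangerous for e in episodes) / n,
        "TCT": sum(times) / len(times) if times else None,
    }


if __name__ == "__main__":
    runs = [Episode(True, False, 2.0), Episode(True, True, 4.0), Episode(False, False, None)]
    print(summarize(runs))
```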

Li et al. (2023) introduced the BEHAVIOR-1K dataset and the OMNIGIBSON simulation environment, both developed using Nvidia’s Omniverse technologies. OMNIGIBSON exploits the capabilities of the Omniverse and PhysX 5 to deliver high-fidelity physics simulation and rendering of rigid bodies, deformable bodies, and fluids. The environment is designed to support 1,000 diverse activities with realistic simulation features, including temperature, soaking, and dirtiness states, enabling the simulation of complex activities such as cooking and cleaning. The paper also presents a quantitative comparison between OMNIGIBSON and its competitor AI2-THOR, which is based on the Unity engine, highlighting OMNIGIBSON’s superior performance across various metrics. In a survey assessing visual realism, OMNIGIBSON achieved a score of 3.20 ± 1.23, significantly higher than AI2-THOR’s 1.73 ± 1.37. Furthermore, OMNIGIBSON offers a broader range of activities and scene types, featuring 1,265 object categories and 5,215 object models, compared to AI2-THOR’s 118 object categories and 118 object models (Figure 13).

Figure 13. Comparison of Embodied AI Benchmarks: BEHAVIOR-1K contains 1,000 diverse activities that are grounded by human needs. It achieves a new level of diversity in scenes, objects, and state changes involved (Srivastava et al., 2022), table extended by Li et al. (2023).

The Omniverse stands out among various metaverse platforms due to its advanced capabilities in real-time simulation, AI integration, and seamless collaboration, making it ideal for professional and industrial applications (Lee et al., 2021). Unlike Decentraland, Facebook’s Horizon, and Roblox, which focus on social interaction, user-generated content, and entertainment, the Omniverse emphasizes high-fidelity simulation and content creation. Decentraland leverages blockchain for a decentralized economy, Horizon focuses on social networking, and Roblox prioritizes user creativity and gaming. The Omniverse’s precision, interoperability, and industrial applicability set it apart from these socially and creatively oriented platforms (Wang et al., 2022). The Omniverse also distinguishes itself from platforms like Second Life and VRChat, which focus on social interaction and user-generated content. The Omniverse’s robust integration capabilities and real-time collaborative environment make it a comprehensive tool for developers to build sophisticated virtual worlds with photorealistic graphics and precise physics. Its interoperability with various software applications and support for Universal Scene Description (USD) enable seamless collaboration across different domains, contrasting with the more isolated ecosystems of other metaverse platforms (Lee et al., 2021). Compared to Unity, which caters to 2D and 3D game development with broad cross-platform compatibility and an extensive asset store, the Omniverse leverages Nvidia’s AI and rendering technologies, supports Python and C++ for customization, and is based on the OpenUSD platform, making it suitable for large-scale projects and enterprises. Unity, supporting multiple scripting languages, is more geared toward indie developers and mobile projects (Aircada, 2024a). 
When compared to Unreal Engine 5, the Omniverse is optimized for industrial applications with its USD-based platform and tools for interconnected workflows, suitable for enterprise-level virtual world creation and simulation. Unreal Engine 5, ideal for game development, supports various platforms and offers a financially feasible royalty-based model for developers. Both platforms have unique strengths, catering to different user bases and needs (Aircada, 2024b).

Table 4 answers (RQ3) by highlighting the strengths and weaknesses of various metaverse platforms across different aspects. Each aspect is rated as Excellent, Good, Moderate, Low, or No, representing the platform’s capability in that area. “Excellent” indicates the highest level of performance or support, “Good” signifies a strong but not top-tier capability, “Moderate” reflects an average level of functionality, “Low” indicates below-average performance, and “No” means the feature or capability is absent.

In this section we provide an overview and synthesis of potential domains and applications within the Omniverse, answering (RQ4) and (RQ5) with specific emphasis on ten primary fields outlined in Figure 14.

The Omniverse can generate synthetic data by building a simulated environment that incorporates all the assets needed for a complete scene. The Omniverse Replicator can then apply domain randomization, randomizing one or more attributes within the USD framework (see Figure 15). This yields a diverse set of synthetic data that can be annotated automatically, facilitating the training and development of artificial intelligence models (Geyer, 2023).
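The domain-randomization step can be sketched without Omniverse itself: for each frame, sample the scene attributes from distributions and record them with the frame index, so that the rendered image and its automatic annotation stay consistent. The attribute names, ranges, and the `randomize_scene` helper below are hypothetical. Replicator exposes this functionality through its own `omni.replicator.core` module, whose API is not reproduced here.

```python
import random
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class SceneSample:
    frame: int
    position: Tuple[float, float, float]  # object translation (units assumed to be metres)
    rotation_deg: float                   # rotation about the vertical axis
    color: Tuple[float, float, float]     # albedo RGB in [0, 1]
    light_intensity: float                # arbitrary illustrative range


def randomize_scene(num_frames: int, seed: int = 0) -> List[SceneSample]:
    """Draw independent uniform samples of each randomized attribute per frame."""
    rng = random.Random(seed)  # seeded so the same dataset can be regenerated exactly
    samples = []
    for frame in range(num_frames):
        samples.append(SceneSample(
            frame=frame,
            position=(rng.uniform(-1, 1), 0.0, rng.uniform(-1, 1)),
            rotation_deg=rng.uniform(0, 360),
            color=(rng.random(), rng.random(), rng.random()),
            light_intensity=rng.uniform(500, 3000),
        ))
    return samples


if __name__ == "__main__":
    for sample in randomize_scene(3, seed=7):
        print(sample)
```

Each sample would drive one render of the same asset set, which is how a handful of assets can yield the diverse scenes described for Figure 15.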

Figure 15. Domain randomization using Nvidia replicator generating three different scenes using the same assets [The Omniverse replicator Huynh-The et al. (2023)].

In a study (Omn, 2022c), researchers used the Omniverse to generate synthetic data and build an AI model that detects defects in car parts, specifically for the SIERRA RX3 model. The main goal was to create a large, varied dataset from a single defect-free computer model of a SIERRA car part, in order to train a robust defect detection model. They used several tools, including the Nvidia Omniverse Replicator, a custom extension toolset for creating synthetic defects, and RoboFlow, which helps label images and train AI models. They created synthetic scratches on the car part whose shape, size, and placement could be adjusted; Figure 16 shows the car part before and after the synthetic scratches are projected onto its surface. Then, using the Omniverse Replicator and randomization techniques, they generated many images showing these synthetic defects in different ways.

Figure 16. Left image shows the Sierra RX3 defect-free part (CAD), while the right images show decals projecting synthetic scratches onto the defect-free car part [Nvidia defect detection Omn (2022c)].

This case study produced an effective defect detection model for a particular car part, attaining a precision of 97.7% and a recall of 73% using only a single CAD file. This addresses the problem of limited data availability and reduces the time and cost that would be involved in creating a comparable dataset from real defective parts.
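Precision and recall, the two figures reported for this case study, are computed from true positives (TP), false positives (FP), and false negatives (FN). The helpers below are a generic sketch, not code from the study. The F1 score they also compute is not reported in the source and is included only to show how the two figures combine.

```python
from typing import Tuple


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Return (precision, recall, F1) from detection counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def f1_from_rates(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall given as fractions."""
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0


if __name__ == "__main__":
    print(round(f1_from_rates(0.977, 0.73), 3))  # 0.836
```

Plugging in the reported precision (0.977) and recall (0.73) gives an F1 of about 0.836, which shows that recall, that is, missed defects, is the limiting factor for this model.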

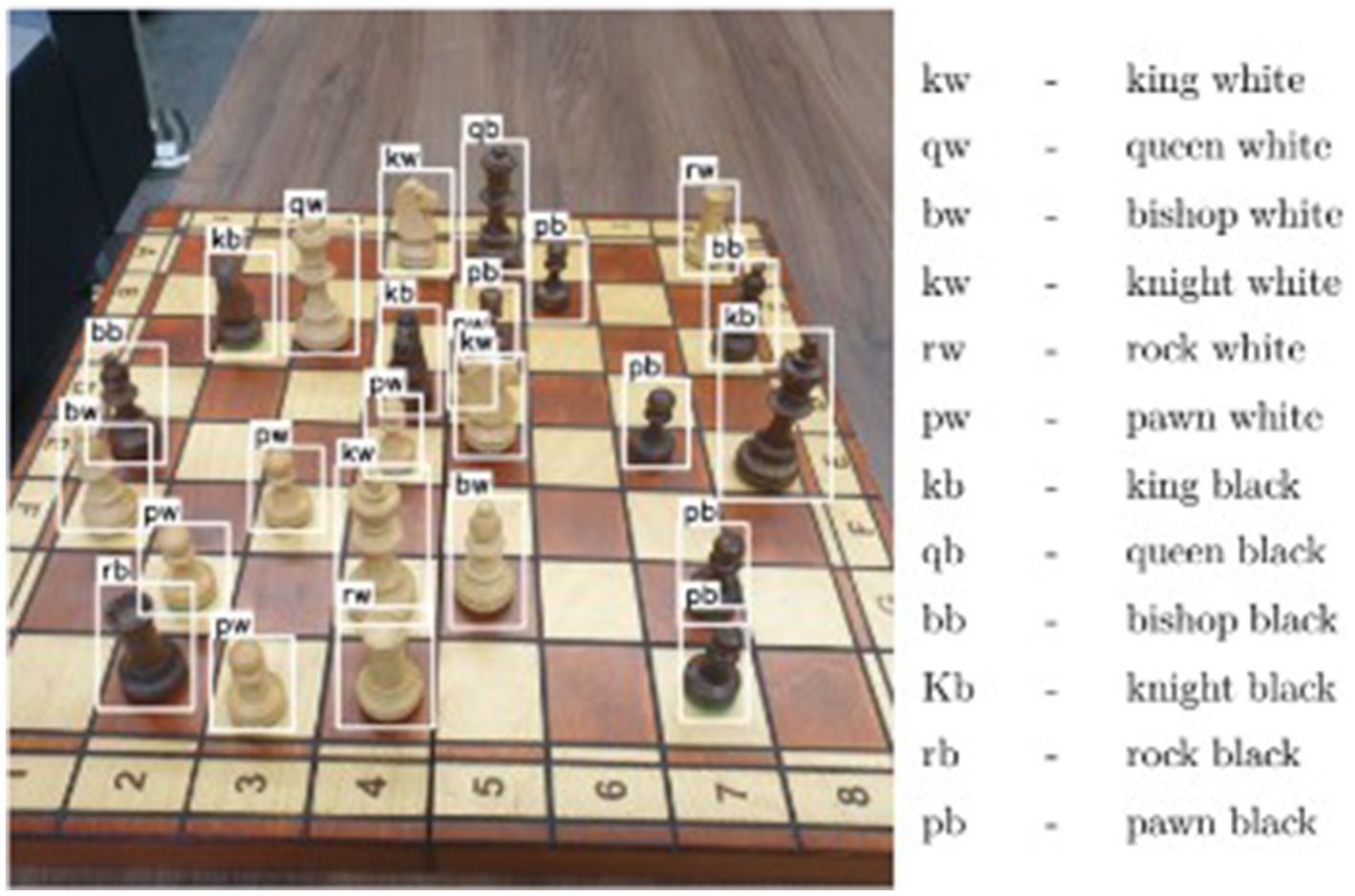

In another study, Metzler et al. (2023) proposed using the Omniverse and synthetic data to address the challenge of training object detection models. They used the Omniverse to automate the generation of synthetic images for robust object detection, particularly in scenarios where capturing diverse real-world images is impractical due to time, cost, and environmental constraints. The methodology revolves around creating 3D models of objects, applying domain randomization, and rendering synthetic images within the Omniverse. The authors applied their method to a chess piece recognition task, where the Omniverse Replicator was used for its photorealistic rendering, domain randomization, and efficient GPU-based batch processing, helping to overcome the sim-to-real gap through realistic textures, lighting conditions, and randomized object placements (Figure 17). The paper reports an object detection accuracy of 98.8% using the YOLOv5 model trained on the generated synthetic dataset. The study also compares different training dataset sizes and YOLOv5 variants.
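Automatically generated labels for YOLOv5 are commonly stored in the YOLO text format: one line per object giving the class index followed by the box centre, width, and height, all normalized by the image size. The sketch below converts a pixel-space bounding box into such a line and computes box IoU, the overlap measure used to decide whether a detection matches a ground-truth box. The function names and the example class index are hypothetical, and the code is not taken from Metzler et al. (2023).

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def to_yolo_line(class_id: int, box: Box, img_w: int, img_h: int) -> str:
    """Format a pixel-space box as a YOLO label line with normalized values."""
    x_min, y_min, x_max, y_max = box
    if not (0 <= x_min < x_max <= img_w and 0 <= y_min < y_max <= img_h):
        raise ValueError(f"box {box} lies outside a {img_w}x{img_h} image")
    xc = (x_min + x_max) / 2 / img_w
    yc = (y_min + y_max) / 2 / img_h
    w = (x_max - x_min) / img_w
    h = (y_max - y_min) / img_h
    return f"{class_id} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}"


def box_iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


if __name__ == "__main__":
    print(to_yolo_line(3, (100, 50, 300, 250), 640, 480))  # 3 0.312500 0.312500 0.312500 0.416667
```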

Figure 17. Example of a picture from the validation dataset with labels (Metzler et al., 2023).

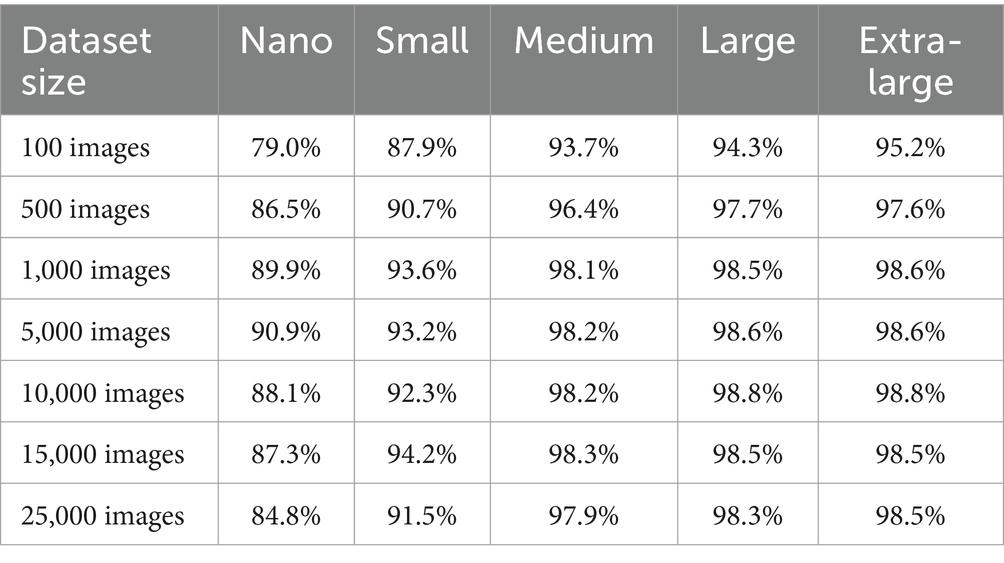

Table 5 summarizes the performance across different dataset sizes and YOLOv5 model variants, from Nano to Extra Large. The results show that even with a small dataset of just 100 images, the model achieved high accuracy across the YOLOv5 variants: the Extra-Large variant reached 95.2%, while the Nano variant achieved 79.0%. These accuracy levels, despite the limited dataset size, indicate the high quality of the synthetic data generated using the Omniverse and the Replicator tool.

Table 5. Performance of YOLOv5 variants across different dataset sizes (Metzler et al., 2023).

In April 2020, UNICEF reported that because of COVID-19, most students worldwide could not attend regular schools (Miks and McIlwaine, 2020). Almost all schools, colleges, and universities moved to online learning. They used the internet to teach, but there were problems, such as students getting distracted and not feeling as if they were in a real classroom. Today, better technology and powerful computers make it possible to create engaging online classrooms. The XR functionality of the Omniverse lets students and teachers feel as if they are in a real classroom, using digital characters, so students can learn from anywhere in a setting close to a physical classroom (Spitzer et al., 2022). But this is not just about copying regular classes. Students can also do experiments and practical lessons online, even ones that might be dangerous in real life. This is safer and cheaper, students can repeat the experiments as many times as they need, and the virtual tools keep everything safe and within the rules. The Omniverse can also help people with special needs. For example, it can use digital characters to teach sign language, making learning engaging for students who cannot hear and helping them overcome challenges and learn better (Novaliendry et al., 2023).

At Astana IT University, the Omniverse was used to help students learn by working on projects together. The students learned about 3D modeling and design even though they were far away from each other. They had to build a virtual model of the university that looked just like the real one. This allowed teachers to see how well the students were doing and help them fix mistakes quickly, and the teachers also used data to learn more about how students worked together (Alimzhanov et al., 2021). The project report describes how this kind of learning was organized during quarantine using the Omniverse platform. The team used Omniverse View to see the 3D model in real time and Omniverse Connectors to connect everything to the Omniverse Nucleus. All of this ran on a computer with an Nvidia Quadro RTX 4000 graphics card. They used software such as Autodesk 3ds Max and Blender to create the objects in the virtual building. The rooms were modeled carefully from architectural plans in Autodesk 3ds Max and then imported into Unreal Engine using a dedicated tool. Through the connectors, students connected to a server where the 3D building model was kept, and they could easily add objects to the virtual space (Alimzhanov et al., 2021).

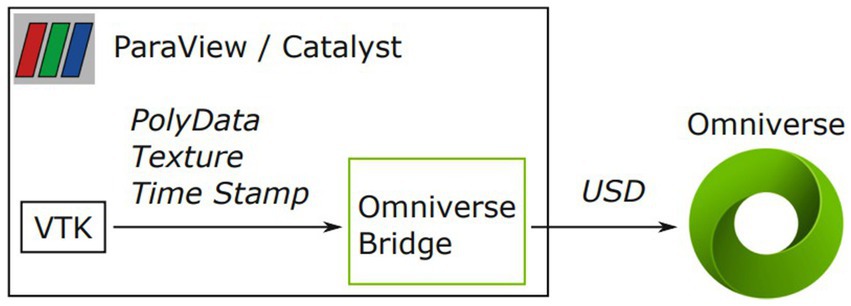

Hummel and van Kooten (2019) propose using the Omniverse as a ‘Rosetta Stone’ for in situ visualization geometry, enabling scientific visualization data to be integrated directly with creative tools, assets, and engines.

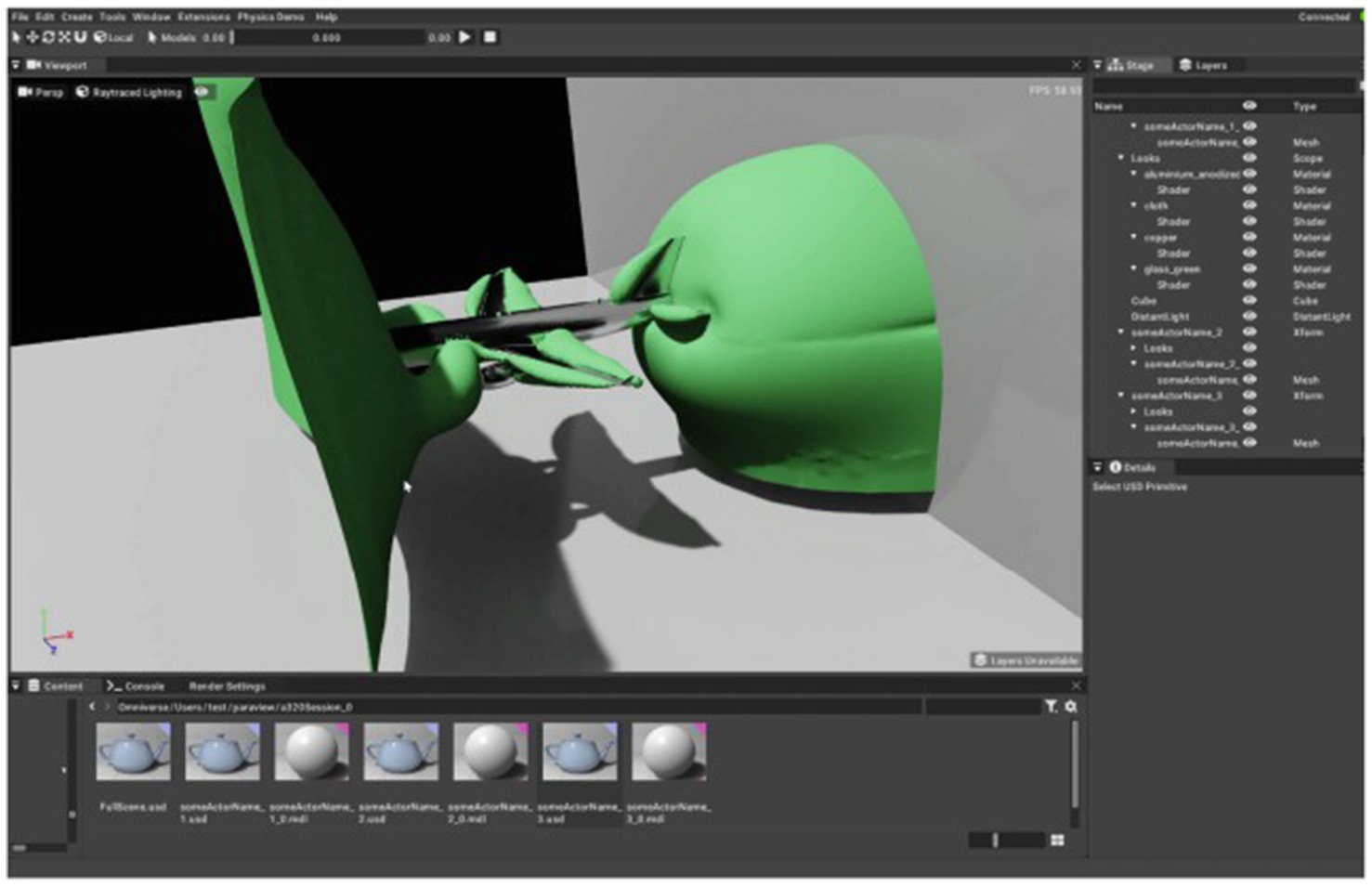

To achieve this, they have developed the Omniverse bridge, an adapter that connects in situ visualization tools with Omniverse. Distilled geometry, textures, and color maps are converted on-the-fly to USD and pushed to the Omniverse as they become available (Figure 18). If the visualization geometry includes time step information, these are represented as time samples in USD. The current implementation is integrated with ParaView, where the Omniverse bridge functions as a specialized render view. Any geometry flagged for rendering through the Omniverse bridge is automatically converted to USD and transferred into the Omniverse. In a Catalyst script, this view can be registered with the coprocessor in the same way as a regular render view would be used to render and save images.
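The representation of time-step information as USD time samples can be illustrated with a small text layer. The sketch below writes a `Mesh` prim whose `points` attribute carries one time sample per simulation step, the kind of structure a client such as Omniverse Kit can play back. The helper name, prim name, and step numbering are hypothetical, and the Omniverse bridge itself, which streams updates to the Omniverse server, is not reproduced.

```python
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


def mesh_with_time_samples(frames: Dict[int, List[Vec3]], counts: List[int], indices: List[int]) -> str:
    """Return a USDA layer with a Mesh whose points are time-sampled per step."""
    if not frames:
        raise ValueError("need at least one time sample")
    if len({len(pts) for pts in frames.values()}) != 1:
        raise ValueError("every time sample must have the same number of points")
    if sum(counts) != len(indices):
        raise ValueError("faceVertexCounts must sum to the number of indices")
    lines = [
        "#usda 1.0",
        "(",
        f"    startTimeCode = {min(frames)}",
        f"    endTimeCode = {max(frames)}",
        ")",
        "",
        'def Mesh "Surface"',
        "{",
        f"    int[] faceVertexCounts = {counts}",   # topology is constant over time
        f"    int[] faceVertexIndices = {indices}",
        "    point3f[] points.timeSamples = {",     # positions vary per time step
    ]
    for t in sorted(frames):
        pts = ", ".join(f"({x}, {y}, {z})" for x, y, z in frames[t])
        lines.append(f"        {t}: [{pts}],")
    lines += ["    }", "}", ""]
    return "\n".join(lines)


if __name__ == "__main__":
    quad0 = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0)]
    quad1 = [(x, y, 0.5) for x, y, _ in quad0]
    print(mesh_with_time_samples({0: quad0, 1: quad1}, [4], [0, 1, 2, 3]))
```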

Figure 18. The Omniverse bridge converts visualization geometry from the in situ visualization framework into USD format and then integrates the results into the Omniverse (Hummel and van Kooten, 2019).

Airliner Flow Simulation: In this example, an OpenFOAM (Jasak et al., 2007) simulation models the airflow around an A320 jet aircraft (Weller et al., 1998). ParaView Catalyst was used to compute an isosurface of the pressure field in situ, which was then transferred to the Omniverse using the Omniverse bridge, along with the aircraft’s boundary mesh. In the Omniverse Kit, the visualization geometries were combined with planes as a contextual background. The aircraft mesh was given a polished aluminum material using MDL. Any modifications to the visualization geometry, such as changing the isovalue, are automatically updated in the Omniverse Kit. Advanced rendering techniques like ray-traced reflections, ambient occlusion, and shadows deliver high-quality visuals in real-time (Figure 19).

Figure 19. An isosurface of the pressure volume and the airliner boundary mesh, both pushed into the Omniverse by the Omniverse bridge, are visualized using the Omniverse Kit application. Materials and background geometry were added in the Omniverse Kit; the underlying visualization geometry is updated when it changes in ParaView/Catalyst (Hummel and van Kooten, 2019).

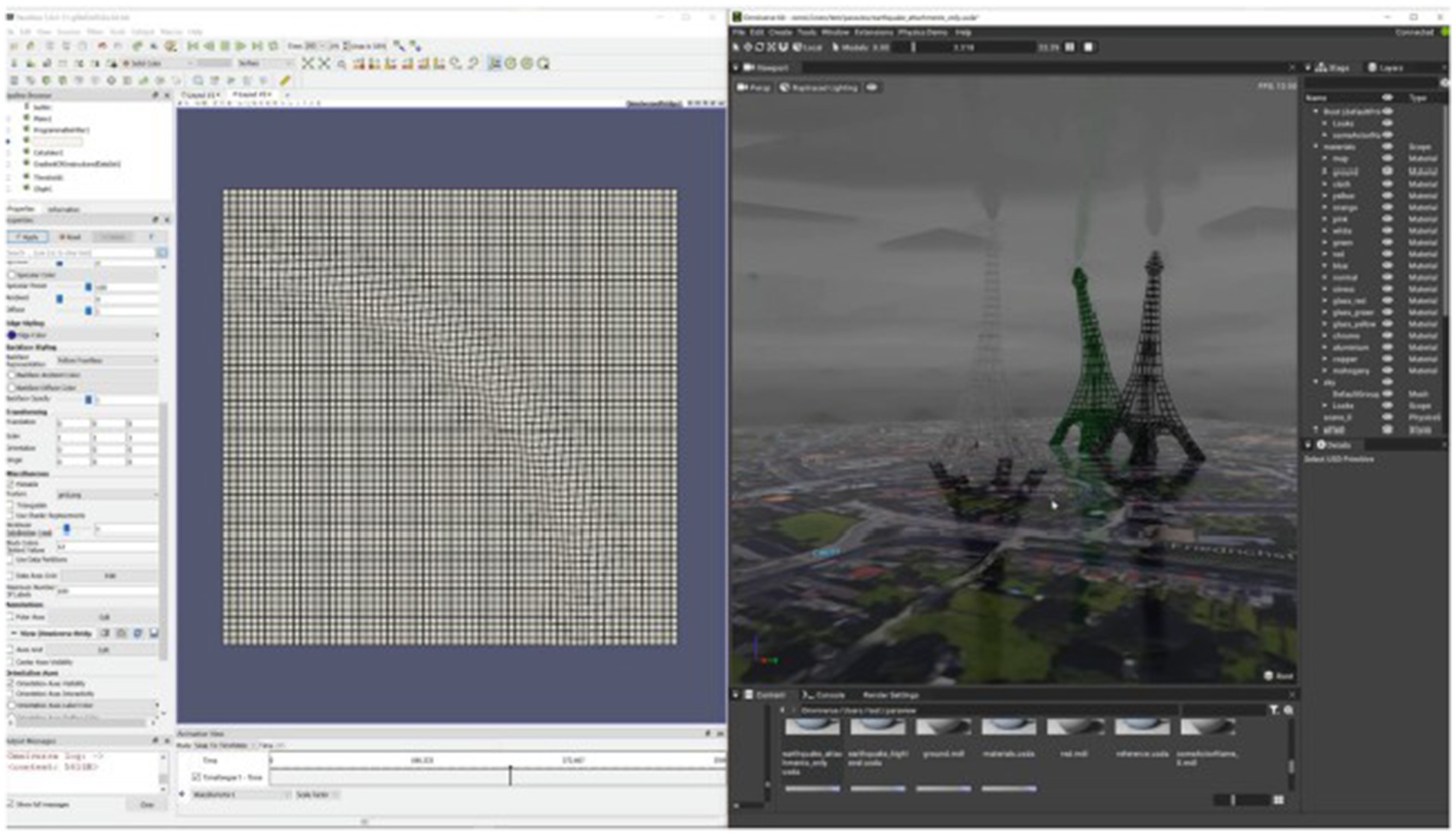
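ParaView performs the in situ isosurface extraction with its own contour filter on 3D data. Purely as an illustration of why changing the isovalue yields new geometry that the bridge must re-send, the sketch below computes the 2D analogue: the points where a contour line crosses the edges of a scalar grid, located by linear interpolation as in marching squares (only the edge-crossing step, without assembling line segments). The function name `edge_crossings` and the toy `pressure` grid are hypothetical.

```python
from typing import List, Tuple


def edge_crossings(field: List[List[float]], iso: float) -> List[Tuple[float, float]]:
    """Points where the iso-contour crosses grid edges (marching-squares edge step).

    field[j][i] is the scalar value at grid point (x=i, y=j). An edge is crossed when
    its two end values lie strictly on opposite sides of `iso`; the crossing point is
    placed by linear interpolation along the edge.
    """
    points: List[Tuple[float, float]] = []
    rows, cols = len(field), len(field[0])
    for j in range(rows):
        for i in range(cols):
            a = field[j][i]
            if i + 1 < cols:  # horizontal edge (i, j) -> (i + 1, j)
                b = field[j][i + 1]
                if (a - iso) * (b - iso) < 0:
                    points.append((i + (iso - a) / (b - a), float(j)))
            if j + 1 < rows:  # vertical edge (i, j) -> (i, j + 1)
                b = field[j + 1][i]
                if (a - iso) * (b - iso) < 0:
                    points.append((float(i), j + (iso - a) / (b - a)))
    return points


# Toy 2 x 2 "pressure" grid; changing the isovalue moves the extracted geometry.
pressure = [[0.0, 1.0],
            [1.0, 2.0]]
low = edge_crossings(pressure, 0.5)
high = edge_crossings(pressure, 1.5)
print(low, high)
```

Every new isovalue produces a different set of crossing points, which is the kind of change the bridge has to convert and push again.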

Earthquake: The Earthquake sample is not derived from an actual simulation. Instead, it uses a programmable filter within ParaView to create a time-varying, wave-like distortion on a planar surface, which is then transmitted to the Omniverse (see Figure 20). As the filter generates time-based samples of the surface, the Omniverse bridge updates the USD representation, and differential updates are sent to the Omniverse server. A client, such as the Omniverse Kit, can then play back all the available time samples of the surface, incorporating any new samples into the ongoing animation as they arrive.

Figure 20. A programmable filter is used in ParaView to generate a time-varying, wave-shaped distortion on a planar surface (left). The resulting time-varying mesh is pushed into the Omniverse using the Omniverse bridge. The mesh is used as an animated ground model in Omniverse Kit (right), to which a number of physics-enabled building models are attached (Map Data: Google, GeoBasis-DE/BKG). As the animation is played back, the wave passes underneath the buildings and causes them to sway (Hummel and van Kooten, 2019).
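The programmable filter used in the Earthquake sample is not listed in the source. The following is a hedged Python sketch, assuming a regular planar grid and a sine wave whose front advances along +x, of (a) generating time-varying surface samples and (b) computing a differential update, i.e., only the vertices that moved since the previous sample. All names and parameters (`plane`, `wave`, `changed_points`, amplitude, wavelength, speed) are hypothetical and independent of ParaView's filter API.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]


def plane(n: int, spacing: float = 1.0) -> List[Point]:
    """Flat n x n grid in the z = 0 plane; index = j * n + i."""
    return [(i * spacing, j * spacing, 0.0) for j in range(n) for i in range(n)]


def wave(points: List[Point], t: float, amplitude: float = 0.5,
         wavelength: float = 4.0, speed: float = 1.0) -> List[Point]:
    """Displace z by a sine wave whose front has travelled speed * t along +x."""
    k = 2 * math.pi / wavelength
    front = speed * t
    return [(x, y, amplitude * math.sin(k * (x - front)) if x <= front else 0.0)
            for x, y, _ in points]


def changed_points(prev: List[Point], curr: List[Point],
                   tol: float = 1e-9) -> Dict[int, Point]:
    """Differential update: index -> new position for vertices that moved more than tol."""
    return {idx: c for idx, (p, c) in enumerate(zip(prev, curr))
            if max(abs(a - b) for a, b in zip(p, c)) > tol}


base = plane(4)
samples = [wave(base, t) for t in range(3)]  # three time samples
updates = [changed_points(samples[t - 1], samples[t]) for t in range(1, len(samples))]
print([sorted(u) for u in updates])
```

In this toy run only the column the wave front has reached changes at the first step, so that update carries 4 of the 16 vertices rather than the whole mesh.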

The research conducted in Bahrpeyma et al. (2023) uses the Omniverse Isaac Sim environment to simulate multi-step robotic inspection tasks, focusing specifically on the UR10 robotic arm (Supplementary Figure 1). The core objective is to optimize the robot's positioning across multiple inspection points in a dynamic manufacturing setting using reinforcement learning (RL) algorithms. In this study, Isaac Sim's ability to replicate real-world physics accurately and to support complex robotic systems is particularly beneficial. The platform allows the creation of a detailed simulation environment that closely mirrors the dynamic conditions of actual manufacturing processes. This capability is essential for testing the robot's performance under conditions it would encounter in real-world scenarios, such as varying inspection points and the associated time constraints.

The simulation setup within the Omniverse involves the UR10 robotic arm performing a series of movements to reach predefined inspection points accurately. Four RL algorithms, namely Deep Deterministic Policy Gradient (DDPG), Twin Delayed DDPG (TD3), Trust Region Policy Optimization (TRPO), and Proximal Policy Optimization (PPO), are tested within this environment to optimize the robot's trajectory planning and joint control.

The realistic simulation environment provided by the Omniverse was critical in these evaluations, allowing the researchers to observe how each RL algorithm performed under various constraints, such as time limits and dynamic target positions. This high-fidelity simulation not only helped in developing a robust RL-based solution but also provided insights into potential deployment scenarios in actual manufacturing settings. The results underscore the Omniverse’s value as a simulation platform, particularly in applications requiring precise and reliable robotic positioning in dynamic environments, such as those found in mass customization and modern production lines.
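The survey does not describe the training code of Bahrpeyma et al. (2023). For orientation only, the sketch below implements the clipped surrogate objective that defines PPO, one of the four algorithms they compared: for each sample it takes min(r * A, clip(r, 1 - eps, 1 + eps) * A), where r is the ratio of new to old policy probabilities and A is the advantage estimate, and it averages over the batch. The function names and input values are illustrative, not data from the study.

```python
from typing import Sequence


def clip(x: float, lo: float, hi: float) -> float:
    """Clamp x to the interval [lo, hi]."""
    return max(lo, min(x, hi))


def ppo_clip_objective(ratios: Sequence[float], advantages: Sequence[float],
                       eps: float = 0.2) -> float:
    """PPO clipped surrogate objective (to be maximized), averaged over a batch.

    ratios[i]     = pi_new(a_i | s_i) / pi_old(a_i | s_i)
    advantages[i] = advantage estimate for (s_i, a_i)
    """
    if len(ratios) != len(advantages) or not ratios:
        raise ValueError("need equally sized, non-empty batches")
    terms = [min(r * a, clip(r, 1.0 - eps, 1.0 + eps) * a)
             for r, a in zip(ratios, advantages)]
    return sum(terms) / len(terms)


# Illustrative batch: the first ratio is clipped to 1.2, the second to 0.8.
value = ppo_clip_objective([1.5, 0.5], [1.0, -1.0])
print(value)  # approximately 0.2
```

Taking the minimum removes any incentive to push r outside [1 - eps, 1 + eps], which is what keeps PPO's policy updates conservative.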

Immersive training in the metaverse is an intriguing proposition for military applications. By integrating AR into synthetic training environments within virtual domains, Omniverse technology promises a practical and highly efficient training experience. Such environments can replicate a wide spectrum of real-world combat scenarios, allowing military personnel to meet training objectives while keeping safety paramount. Furthermore, real-time capture and analysis of data streams enable soldiers to engage in targeted exercises that examine enemy performance, which improves training efficiency. The recorded data can also be used to evaluate each soldier's progress and performance in real time (Zhai et al., 2023).

Using the Omniverse for fighter-pilot training is a paradigm-shifting approach that equips pilots with the skills needed for the formidable challenges they may encounter. It combines digital twin models with AR and XR to create an immersive, hyper-realistic training environment that emulates the rigors of real aerial combat. In this virtual domain, trainees operate virtual cockpit interfaces and fly a range of missions, including aerial duels, reconnaissance, and precision target engagement, under diverse simulated weather conditions and terrains. The Omniverse also supports collaboration between trainees and virtual ground personnel, which builds teamwork and makes combat coordination more realistic. A key advantage of this metaverse-enabled training is that it can simulate scenarios too dangerous or too expensive to recreate in reality, giving pilots a safe yet dynamic arena in which to refine rapid decision-making and precise execution without risking lives or valuable equipment. In summary, the metaverse offers fighter-pilot training a secure, dynamic, and highly realistic platform in which the next generation of aviators can hone their abilities and prepare to defend their airspace effectively (Zhai et al., 2023).

Another prospective use of the Omniverse platform lies in communication and collaboration. Because the platform lets users interact within a shared virtual environment, it could strengthen communication channels, particularly among military personnel. Such a technology could connect soldiers in the field with their commanding officers and support inter-branch and inter-service communication across the military. The result could be better-informed, higher-quality decisions under high-pressure and complex circumstances, improving operational efficiency and effectiveness (Fawkes and Cheshire, 2023).

Electronic warfare (EW) is the strategic use of electromagnetic energy to disrupt an adversary's electronic equipment, including communication systems and radar. Creating lifelike electromagnetic environments for EW training is difficult in practice, but in metaverse-based EW training, users can engage with simulated electromagnetic scenarios, including various forms of electromagnetic interference, and learn to apply Electronic Countermeasures (ECM) and Electronic Counter-Countermeasures (ECCM) to detect and mitigate these disruptions. A significant advantage of an Omniverse-based approach is the ability to generate intricate electromagnetic (EM) environments, letting users tailor exercises to their specific needs by selecting from diverse simulated settings, devices, and scenarios. This supports preparedness for wartime scenarios and makes it possible to explore electronic spoofing techniques even in peacetime. In addition, the Material Definition Language (MDL) contributes to these simulations by replicating real-world materials accurately, adding authenticity and precision to the training scenarios (Fawkes and Cheshire, 2023).

Missile defense systems are integral to national security worldwide, and ensuring the readiness and competence of air defense systems is a top priority for any sovereign nation. Effective missile defense training requires a highly authentic, immersive simulation environment that captures the complexity of real-world missile threats. In the age of hypersonic technologies, an Omniverse-based approach to missile defense training offers several potential benefits over traditional methods. It enables highly adaptable training scenarios in which users can simulate a wide range of missile threats and the corresponding defensive tactics, and it can make exercises more cost-effective and safer. However, implementing this approach successfully demands substantial technical expertise and resources, including precise modeling of missile flight paths, physics, and sensor systems, to achieve a practical and effective augmented training environment (Zhai et al., 2023).

Using the metaverse for marketing is no longer futuristic speculation; it has become a prominent focus within the Omniverse platform, driven mainly by the accessibility and versatility the technology offers. Current marketing methods have limitations, whereas the Omniverse platform lets businesses create and promote digital versions of physical products, in effect using the metaverse as an advertising medium. The Omniverse's XR technology also lets potential customers interact with these products through AR from any location, removing the constraints of distance and rigid advertising schedules. In the metaverse, businesses can collect large amounts of customer data in real time. These data help build and refine recommendation algorithms and give businesses insight into customer behavior and preferences. Together, these capabilities help companies plan more effectively and reduce advertising costs (Jeong et al., 2022).

The retail industry can benefit substantially from Omniverse-based shopping, which makes online shopping feel more like visiting a physical store. The concept combines two technologies, digital twins and real-time shopping, to improve on conventional online shopping. Customers use virtual avatars to shop in a virtual store where, unlike in conventional online shopping, they can not only view products but also examine them and even try them virtually. Through the Omniverse's AR applications, customers can also explore products as 3D replicas, making it easier to judge quality and design. The Omniverse further uses Material Definition Language (MDL) to render materials such as cloth and metal realistically, making the shopping experience more lifelike. Together, these technologies turn the old "Click-To-Buy" model into a more advanced "Experience-To-Buy" concept (Jeong et al., 2022).

Real estate companies can use the Omniverse to build 3D models of real properties, letting prospective clients explore a property online and judge whether it suits them before committing large sums of money. These realistic experiences rely on the Omniverse's digital twin features and XR technology (Azmi et al., 2023). The approach addresses the inconvenience of physically visiting property sites and offers an alternative that may reduce the need for real estate agents and lower costs. Overall, it helps customers make better-informed purchasing decisions.

A key feature of the Omniverse is a shared virtual space in which design engineers and product experts can collaborate (Kritzinger et al., 2018). XR powered by the Omniverse streamlines this collaboration, making new-product design easier and cheaper, and the accurate real-time data the Omniverse provides further simplify the design process. Third-party manufacturers can join engineers and designers in real time, so feedback can be gathered and changes made without large costs in time and money. All of this helps reduce a product's overall cost while improving its quality and efficiency (Zhang et al., 2020).

The metaverse combines data from different sources to provide real-time visualizations of production processes, giving leaders a new view of how their business is performing. Engineers can use it to monitor operations and troubleshoot problems, especially in challenging environments such as mines and oil fields, and to assist field technicians remotely (Lee and Banerjee, 2011). The metaverse also lets manufacturers test alternative production strategies, observing what happens when they increase or decrease output and how automation can improve the process. With XR technology, manufacturers can quickly identify equipment faults, which improves quality control and maintenance and thereby reduces defective products and maintenance costs (Siyaev and Jo, 2021). The BMW Group is adopting this approach with a digital-first strategy, in which virtual tools are used to plan production before it begins physically. BMW uses the Omniverse for this in its factories worldwide, including the electric vehicle plant in Hungary scheduled to open in 2025 (Geyer, 2023). The virtual version of the Hungarian plant illustrates how intelligent software can be used to run factories, a significant change in how goods are made.

The Omniverse can enhance traditional 2D medical images, such as X-rays and CT scans, in two ways. First, 2D images can be turned into 3D representations by building digital models of organs directly in the Omniverse platform. Alternatively, other software such as MEDIP PRO can convert medical images into the USD format, which can then be imported into the Omniverse. Either way, doctors and researchers can view and study medical data, including images, in 3D.

With its fast rendering and advanced techniques, the Omniverse is a significant step toward photorealistic 3D medical imaging. For example, MEDIP PRO was used to analyze a CT scan of a 6-day-old infant with coarctation of the aorta, a congenital heart defect. The relevant structures in the image were converted to USD and imported into the Omniverse, where its ray-traced rendering (Supplementary Figure 2) was used to generate a highly accurate digital twin of the organ (Yoon and Goo, 2023).
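Neither the Omniverse's nor MEDIP PRO's processing pipeline is described in the source. As a hedged sketch of the generic first step in turning 2D slices into a 3D model, the Python below orders CT slices by their z position, stacks them into a volume, and segments a structure with an intensity window, producing a voxel mask and a volume estimate that a later meshing step could convert to USD. The function names, intensity window, voxel spacing, and toy slices are hypothetical; clinical segmentation is considerably more involved.

```python
from typing import List, Tuple

Image = List[List[float]]  # one 2D slice: rows x columns of intensities


def stack_slices(slices: List[Tuple[float, Image]]) -> List[Image]:
    """Sort (z_position, image) pairs along z and stack them into volume[k][j][i]."""
    ordered = [img for _, img in sorted(slices, key=lambda pair: pair[0])]
    rows, cols = len(ordered[0]), len(ordered[0][0])
    if any(len(img) != rows or any(len(r) != cols for r in img) for img in ordered):
        raise ValueError("all slices must have the same dimensions")
    return ordered


def threshold_mask(volume: List[Image], lo: float, hi: float) -> List[List[List[bool]]]:
    """Voxel mask: True where lo <= intensity <= hi (a crude intensity-window segmentation)."""
    return [[[lo <= v <= hi for v in row] for row in img] for img in volume]


def segmented_volume_mm3(mask: List[List[List[bool]]],
                         spacing_mm: Tuple[float, float, float]) -> float:
    """Number of selected voxels times the volume of one voxel (dx * dy * dz)."""
    dx, dy, dz = spacing_mm
    count = sum(v for img in mask for row in img for v in row)
    return count * dx * dy * dz


# Toy 2 x 2 slices supplied out of order; the window 200..400 is arbitrary.
s0 = [[0.0, 300.0], [250.0, 50.0]]
s1 = [[310.0, 20.0], [0.0, 280.0]]
volume = stack_slices([(1.0, s1), (0.0, s0)])
mask = threshold_mask(volume, 200.0, 400.0)
print(segmented_volume_mm3(mask, (0.5, 0.5, 1.0)))  # 1.0
```

Sorting by slice position before stacking matters because slices are not guaranteed to arrive in anatomical order; the resulting mask is only the input to surface extraction, not a finished organ model.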