MINI REVIEW article

Front. Robot. AI, 14 February 2025

Sec. Industrial Robotics and Automation

Volume 12 - 2025 | https://doi.org/10.3389/frobt.2025.1540476

This article is part of the Research Topic: Advances in Industrial Robotics

This paper presents a mini-review of the current state of research in mobile manipulators with variable levels of autonomy, emphasizing their associated challenges and application environments. The need for mobile manipulators in different environments, especially hazardous ones such as decommissioning and search and rescue, is evident due to the unique challenges and risks each presents. Many systems deployed in these environments are not fully autonomous, requiring human-robot teaming to ensure safe and reliable operations under uncertainties. Through this analysis, we identify gaps and challenges in the literature on Variable Autonomy, including cognitive workload and communication delays, and propose future directions, including whole-body Variable Autonomy for mobile manipulators, virtual reality frameworks, and large language models to reduce operators’ complexity and cognitive load in some challenging and uncertain scenarios.

Robots are deployed in different environments to aid and complement humans in tasks, including manufacturing (Rajendran et al., 2021), healthcare (Lin et al., 2020), and agriculture (Chen et al., 2022), where the benefits of automation are observable. Robots have also been used in more challenging scenarios. For example, mobile robots and mobile manipulators deployed in disaster zones (Chen and Cho, 2019) or in other extreme environments such as nuclear disaster response or decommissioning (Nagatani et al., 2013; Chiou et al., 2022; Cragg and Hu, 2003) excel because of their mobility and manipulation capabilities. However, despite their potential, uncertainties prevent these systems from being fully autonomous. Human intervention remains essential due to a lack of trust and the limitations of current autonomous systems.

The deployment of autonomous robots across environments shows that no single solution fits all needs. Autonomous systems are limited by their prior knowledge and adaptive capabilities, with training being difficult and time-consuming. Learning-from-demonstration approaches (Moridian et al., 2018) are often confined to their training environments and require human intervention for decisions beyond their training. Improvements in path planning for mobile bases and manipulators (Rajendran et al., 2021; Hargas et al., 2015; Chen et al., 2022) also face limitations that call for human intelligence. Manual operation requires significant training and concentration, with cognitive demands varying between operators. These cognitive challenges make manual systems more error-prone than semi-autonomous or fully autonomous systems (Rastegarpanah et al., 2024; Chiou et al., 2015).

Robots deployed in complex environments increase operator demands for alertness (Chiou, 2017), adaptability to new information (Rastegarpanah et al., 2024), and concentration due to unexpected delays and drops in connection (Cragg and Hu, 2003). These burdens can cause physical fatigue and monotony. Some challenges can be alleviated by balancing teleoperation and autonomy, as proposed by field exercises (Chiou et al., 2022), and reinforced by our anecdotal interactions with operators in Japan preparing for a teleoperated deployment at Fukushima Daiichi Nuclear Power Plant. The operators noted that these three factors are needed due to environmental uncertainties and issues with repetitiveness and communication delays. Automating simple, repetitive processes can address some issues, while other conditions require manual control. Given the challenges for fully autonomous and manual systems, developing systems that can switch between human control and autonomy is logical. Mobile bases give the freedom to explore environments, while manipulators enable interaction with objects within them, making mobile manipulators a system worth studying within this context.

Previous reviews on Variable Autonomy (VA) have focused on cognitive aspects, methodologies, and applications but not specifically on mobile manipulators. For instance, Tabrez et al. (2020) examined mental models and traits such as fluency, adaptability, and effective communication. Villani et al. (2018) discussed cognitive and physical aspects of programming collaborative and shared control robots in industrial settings, emphasizing safe interaction and intuitive interfaces. Bengtson et al. (2020) focused on computer vision for semi-autonomous control of assistive robots, while Moniruzzaman et al. (2022) focused on the teleoperation of mobile robots. On the other hand, reviews on mobile manipulators have focused on motion planning (Sandakalum and Ang, 2022) and the decision-making process of planning algorithms (Thakar et al., 2023), with limited coverage of human-robot interaction and Variable Autonomy.

Our previous work has addressed varying levels of autonomy in disaster and rescue scenarios, focusing on cognitive and robotic challenges within this scope, limited to mobile robots (Chiou, 2017; Chiou et al., 2021; Panagopoulos et al., 2022; Ramesh et al., 2023). However, there is a need to expand this understanding to other environments where human-robot teams are deployed and mobile manipulators are used. Mobile manipulators can function as single-entity systems, where locomotion and manipulation are coupled, or as dual-entity systems, treating the base and manipulator separately. With this mini-review, we aim to 1) present the current state of research, 2) identify some challenges, insights, and gaps from the current literature, and 3) propose future research directions with a focus on mobile manipulators, their control within human-robot teams, and Variable Autonomy.

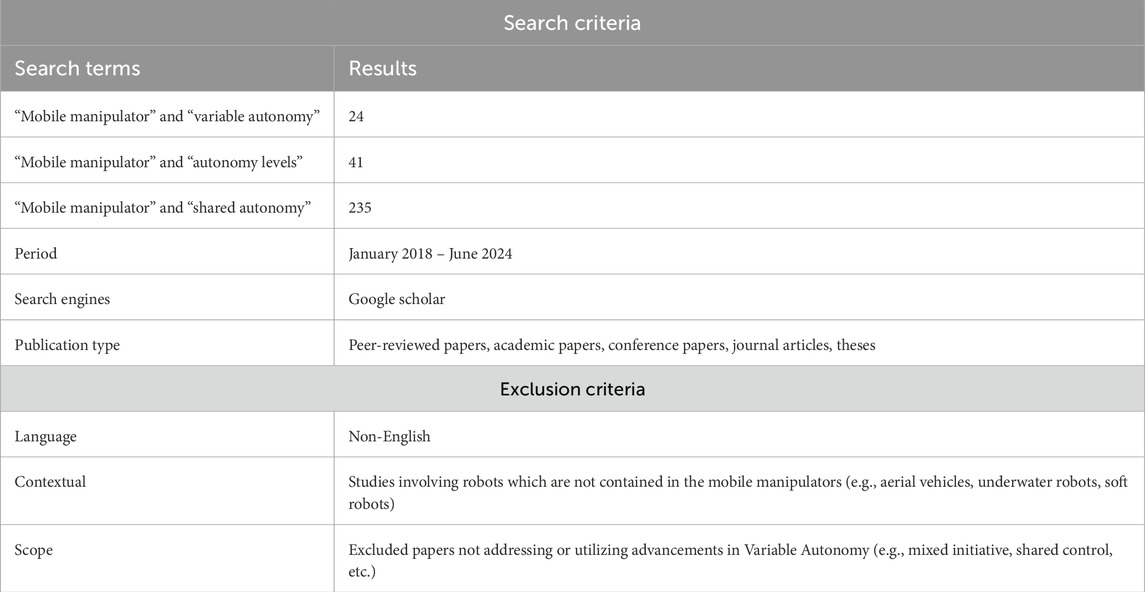

For the review, we performed a Google Scholar search, with the specifics of our search criteria found in Table 1. We define mobile manipulators as robots with locomotion decoupled from manipulation, moving primarily on the ground. This definition includes humanoid robots, quadruped robots, wheeled manipulators, and robots on tracks. For the manipulation system, we consider any robot capable of performing tasks typically done by a hand, such as throwing, pushing, grasping, or cutting. The system does not necessarily need to perform any manipulation or mobile base task, but must be physically capable of doing so.

Table 1. Search and Exclusion Criteria for the mini-review on Mobile Manipulators with Variable Autonomy.

This definition excludes robots with integrated or inseparable locomotion and manipulation systems, such as snake, octopus-inspired, and soft robots. It also excludes aerial and underwater robots and stationary robotic arms without a mobile base.

Based on the number of search results, most research is centred around shared autonomy rather than mixed initiative or other forms of Variable Autonomy. After applying the exclusion and contextual criteria, 38 papers were included in the review.

Variable Autonomy systems enable flexible control by humans and machines across different levels of operation. Although various definitions exist for Variable Autonomy in the literature (Reinmund et al., 2024; Methnani et al., 2024), we classify these systems into two primary categories. The first is autonomy-changing systems, where autonomy levels can be modified during task execution. Full VA or Mixed-Initiative systems allow both humans and machines to adjust these levels. Only humans can make autonomy changes in human-initiative (HI) or adjustable autonomy systems, whereas AI-initiative (AI-I) or sliding autonomy systems permit machines to make them. The second category is autonomy-sharing systems, often called Shared Control systems. In these systems, humans and robots work together on tasks. Shared Control can be either supervisory, where humans provide high-level directives, or assistive, where humans directly control the robot with system support, such as visual feedback or trajectory guidance.
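The classification above can be sketched as a small data structure. This is only an illustrative encoding, not something taken from the reviewed papers: the names `Initiator`, `AutonomyChanging`, `SharedControl`, and `may_change_level` are hypothetical and chosen here for readability.

```python
from enum import Enum


class Initiator(Enum):
    """Who requests a change of autonomy level (hypothetical naming)."""
    HUMAN = "human"
    AI = "ai"


class AutonomyChanging(Enum):
    """Autonomy-changing systems: levels can be modified during a task."""
    MIXED_INITIATIVE = "mixed-initiative"  # also called Full VA
    HUMAN_INITIATIVE = "human-initiative"  # also called adjustable autonomy
    AI_INITIATIVE = "ai-initiative"        # also called sliding autonomy


class SharedControl(Enum):
    """Autonomy-sharing systems: human and robot work on the task together."""
    SUPERVISORY = "supervisory"  # human gives high-level directives
    ASSISTIVE = "assistive"      # human controls directly, system supports


# Which initiators may change the autonomy level in each system type.
ALLOWED_INITIATORS = {
    AutonomyChanging.MIXED_INITIATIVE: {Initiator.HUMAN, Initiator.AI},
    AutonomyChanging.HUMAN_INITIATIVE: {Initiator.HUMAN},
    AutonomyChanging.AI_INITIATIVE: {Initiator.AI},
}


def may_change_level(system: AutonomyChanging, initiator: Initiator) -> bool:
    """Return True if this initiator is allowed to change the autonomy level."""
    return initiator in ALLOWED_INITIATORS[system]
```

For example, `may_change_level(AutonomyChanging.HUMAN_INITIATIVE, Initiator.AI)` evaluates to `False`, because only the human may change levels in an adjustable autonomy system.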

The first focus of this paper was on robotic deployment environments, analyzing works detailing their application methodologies. This condition identified seven primary environments: hazardous materials and environments handling, disaster response, industrial manufacturing, Research and Development (R&D) in laboratories, healthcare and medical applications, agriculture or farming, and domestic/household environments. Table 2 lists the papers by environment and briefly describes their applications.

After categorizing the papers by environment, the next step is to categorize them by the tasks to which Variable Autonomy is applied. This categorization means focusing on how Variable Autonomy and human-robot interaction are utilized as tools to accomplish various tasks. In other words, while some papers include Variable Autonomy, they do so to aid in completing other tasks and not necessarily researching ways to change the autonomy levels.

Humanoid robots often take inspiration from human capabilities (Lin et al., 2020; Baek et al., 2022; Pohl et al., 2024). Some of these systems use motion mapping based on human posture or control (Rastegarpanah et al., 2016). For instance, Baek et al. (2022) utilize human leaning to control velocity while avoiding obstacles. In this approach, the robot provides force feedback based on proximity to obstacles, allowing users to adjust their input. The robot can also alter its own velocity and path. Similarly, Lin et al. (2020) map human movements to specific humanoid movements to simplify a pick-and-place task. In their system, humans only need to make physical movements and signs that indicate a decision, and the robot autonomously completes the task.

Research in manipulation includes grasping, autonomous manipulation, and load balancing. This type of research is characterized by robots performing tasks with some level of autonomy. Papers that involve manipulation research in any form include (Lin et al., 2020; Chen et al., 2022; Hargas et al., 2015; Frese et al., 2022; Roennau et al., 2022; Rastegarpanah et al., 2021; Schuster et al., 2020; Wedler et al., 2021; Merkt et al., 2019; Stibinger et al., 2021; Cheong et al., 2021; Fozilov et al., 2021; Kapusta and Kemp, 2019; Sanchez and Smart, 2021; 2022; Mirjalili et al., 2024; Park et al., 2020; Pohl et al., 2024; Rakita et al., 2019). Unlike teleoperation research, in this area, the robot completes most of the manipulation tasks by itself. For example, Frese et al. (2022) use a giant excavator that plans how to dig soil, understands its properties, and balances the load. The system can ask an operator for help if the probability of success is low. Another example is Cheong et al. (2021), who allow the operator to select the object they want to manipulate, with the robot autonomously extracting key points and finding grasp candidates.

This area includes path planning that considers the geometry and physical properties of the objects being moved, as well as obstacles in the path of the robot or object (Woock et al., 2022; Sirintuna et al., 2024; Benzi et al., 2022; Abubakar et al., 2020). For example, ARNA, from Abubakar et al. (2020), can transport an object while assisting a patient walking through a scene. In this case, the patient only controls the direction, while the robot independently manipulates and transports the object. Sirintuna et al. (2024) proposed a collaborative approach where the robot provides haptic feedback through a belt worn by a human in an occluded environment, helping them transport an object collaboratively with the robot by letting them feel a force when obstacles get closer. The human commands the direction, while the robot provides environmental information and transports the object with a fixed end-effector position relative to the base.

This subsection explores areas where VA has provided critical support, including collision avoidance, communication handling, semantic understanding, and intent recognition.

This area utilizes sensor integration and real-time processing to enable robots to make decisions and adjust their path to avoid collisions. Relevant papers include (Hargas et al., 2015; Frese et al., 2022; Roennau et al., 2022; Woock et al., 2022; Cheong et al., 2021; Fozilov et al., 2021; Gholami et al., 2020; Valner et al., 2018; Kapusta and Kemp, 2019). Roennau et al. (2022) describe a system where an operator selects an object to retrieve, and the robot plans the path using a 3D SLAM-generated map to avoid collisions. Another example is Valner et al. (2018), who developed a framework where the machine autonomously switches to another sensor feed if one fails. The human operator provides high-level commands, asking the robot to capture the environment while the system handles mapping.

The impact of communication delays is discussed by various researchers (Frese et al., 2022; Merkt et al., 2019; Båberg, 2022; Li et al., 2024; Valner et al., 2018). Båberg (2022) presents work on user interfaces that help an operator assess network reliability. Frese et al. (2022) rely on hardware communication speeds, with buffer configuration and pre-allocation of memory. Variable Autonomy can help mitigate delays by providing autonomous control when high latency is detected, running directly on the robot’s internal systems, while allowing long-distance manual control when latency is low.
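The latency-based switching described above could look roughly like the following. This is a minimal sketch under stated assumptions, not a method from any of the cited papers: the class name `LatencySwitch`, the mode labels, and both threshold values are hypothetical, and the use of two thresholds (hysteresis) is an added design choice to avoid rapid toggling when latency hovers around a single cut-off.

```python
from dataclasses import dataclass


@dataclass
class LatencySwitch:
    """Chooses a control mode from measured round-trip latency.

    Threshold values are illustrative placeholders, not taken from the
    literature; a real system would tune them for the task and network.
    """
    high_ms: float = 500.0  # at or above this, hand control to onboard autonomy
    low_ms: float = 200.0   # at or below this, return control to the operator
    mode: str = "teleoperation"

    def update(self, latency_ms: float) -> str:
        """Update and return the mode for the latest latency sample."""
        if self.mode == "teleoperation" and latency_ms >= self.high_ms:
            self.mode = "autonomy"
        elif self.mode == "autonomy" and latency_ms <= self.low_ms:
            self.mode = "teleoperation"
        # Between the two thresholds the current mode is kept unchanged.
        return self.mode
```

Feeding the samples 100, 600, 300, and 150 ms into a fresh `LatencySwitch()` yields teleoperation, autonomy, autonomy, and teleoperation: the 300 ms sample does not return control to the operator because it is still above the lower threshold.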

This area takes advantage of computational power for object recognition (Lin et al., 2020; Woock et al., 2022; Cheong et al., 2021; Bhattacharjee et al., 2020; Park et al., 2020; Pohl et al., 2024), task learning (Wong et al., 2022; Park et al., 2020; Rakita et al., 2019), and the use of Large Language Models (LLMs) (Kim et al., 2023; Mirjalili et al., 2024) for developing smarter systems. The primary focus of this area is helping humans reduce their cognitive load; smarter systems can allow humans to take a supervisory role in tasks and only take full control when the system encounters an object or task it was not trained for. As an example, Bhattacharjee et al. (2020) allow a user to select a food from an interface, limiting the choices to fruits detected by a perception algorithm but allowing the user to take manual control of other feeding processes.

This area covers research that allows humans to manually control a robotic system from a distance or share control with the robot (Schuster et al., 2020; Verhagen et al., 2024; Baek et al., 2022; Chen et al., 2018; Gholami et al., 2020; Li et al., 2024; Wong et al., 2022; Bhattacharjee et al., 2020; Kemp et al., 2022). Some researchers focus on providing high-level commands, enabling the robot to execute pre-programmed tasks while they explore other methods to communicate their intentions. Bhattacharjee et al. (2020) employ voice commands, Wong et al. (2022) try influencing a robot with physical touch, and Chen et al. (2018) propose utilising hand gestures. Other researchers use computer assistance for specific tasks while manually moving the robots. Li et al. (2024) manage teleoperation of the mobile base and manipulator arm independently but use the system to decide when to switch between devices.

The explored literature on Variable Autonomy for mobile manipulators is divided into two focuses: 1) high-level control, or supervisory control, and 2) low-level control, or system assistance. Most implementations involving manipulation, obstacle avoidance, mapping, transportation, and machine learning research aim for fully automated tasks. In these cases, the role of the operator is primarily to decide, choose tasks, or supervise to ensure the robot is not making mistakes. For known problems, this approach works well, providing automated solutions that are easy to use. On the other hand, there are teleoperated scenarios, often with some uncertainty. In these, humans drive the base or move the arm, with autonomy serving in an assistive capacity, with the main objective of lowering human cognitive load or reducing the operation completion time.

Separate Focus on Base and Manipulator - In mobile manipulators, Variable Autonomy is still primarily focused on controlling the base or the manipulator separately. Current research does not consider the joint problem of integrating changes in autonomy for both. This can be seen in many papers, including (Lin et al., 2020; Frese et al., 2022; Woock et al., 2022; Merkt et al., 2019; Båberg, 2022; Cheong et al., 2021; Fozilov et al., 2021; Gholami et al., 2020; Palan et al., 2019; Valner et al., 2018; Sanchez and Smart, 2022; Bhattacharjee et al., 2020; Karim et al., 2023; Kemp et al., 2022; Kim et al., 2023; Mirjalili et al., 2024; Park et al., 2020; Pohl et al., 2024; Rakita et al., 2019). Researchers in this area focus on applying varying levels of autonomy to one of the two subsystems while keeping the rest of the robot static or at the same autonomy level throughout the task. Even transportation tasks follow a sequential process that alternates between the two: reaching a position with the base, picking the object with the manipulator, reaching a dropping position with the base, and placing the object with the manipulator. This approach can theoretically limit the operational workspace of a mobile manipulator. For example, in Sanchez and Smart (2022), the disinfection area is limited because the mobile base is not used simultaneously to increase the reach of the manipulator.

Human Cognitive Load - This refers to the mental effort required to perform a task, and it is acknowledged and investigated in multiple papers, including (Chiou, 2017; Sirintuna et al., 2024; Baek et al., 2022; Lin et al., 2020). However, it is still primarily studied using subjective measurements, such as the NASA Task Load Index (NASA-TLX). Currently, objective metrics and biometric data from the human operator are not widely used in systems of mobile manipulators with Variable Autonomy or involving human-in-the-loop operations. Incorporating objective data from the participants would enhance our understanding of cognitive load and help design better support for human operators.
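To make the subjective measurement concrete, the sketch below shows how a NASA-TLX score is commonly computed from the six subscale ratings, both in the unweighted ("Raw TLX") form and in the weighted form that uses tallies from 15 pairwise comparisons. The function names, dictionary keys, and example numbers are illustrative choices made here, and the 0 to 100 rating range is the commonly used convention rather than something stated in this review.

```python
# The six NASA-TLX subscales; key spellings are chosen for this example.
SUBSCALES = (
    "mental_demand",
    "physical_demand",
    "temporal_demand",
    "performance",
    "effort",
    "frustration",
)


def raw_tlx(ratings: dict) -> float:
    """Unweighted (Raw TLX) score: the mean of the six subscale ratings."""
    missing = [s for s in SUBSCALES if s not in ratings]
    if missing:
        raise ValueError(f"missing subscales: {missing}")
    return sum(ratings[s] for s in SUBSCALES) / len(SUBSCALES)


def weighted_tlx(ratings: dict, weights: dict) -> float:
    """Weighted score: each weight is how often a subscale was chosen
    in the 15 pairwise comparisons, so the weights must sum to 15."""
    total_weight = sum(weights[s] for s in SUBSCALES)
    if total_weight != 15:
        raise ValueError("pairwise-comparison weights must sum to 15")
    return sum(ratings[s] * weights[s] for s in SUBSCALES) / 15


# Made-up ratings and weights for a single operator, for illustration only.
example_ratings = {
    "mental_demand": 80, "physical_demand": 20, "temporal_demand": 60,
    "performance": 40, "effort": 70, "frustration": 30,
}
example_weights = {
    "mental_demand": 5, "physical_demand": 0, "temporal_demand": 3,
    "performance": 2, "effort": 4, "frustration": 1,
}
```

With these made-up values, `raw_tlx(example_ratings)` gives 50.0, while the weighted score is 970 / 15, roughly 64.7, because the operator judged mental demand and effort to matter most. Either way the score is collected after the task, which is why it cannot drive autonomy changes during deployment in the way a real-time objective metric could.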

Communication Delays and System Reliability - Although communication delays and reliability problems are known to cause issues, they are often ignored or not measured in implementations of mobile manipulation. There is a lack of studies addressing this problem in relevant environments. Moniruzzaman et al. (2022) mention compensation techniques, such as future pose estimation and point-cloud 3D reconstruction, that could benefit the area if applied. In addition, Variable Autonomy could be used to switch from manual teleoperation to a local compensation algorithm when higher latency is detected.

Uncertain Environments - Applying varying levels of autonomy in known environments, where high-level and supervisory control is feasible, is a popular and researched area. The challenge lies in extending high-level control strategies to more complex and unpredictable environments, where robust decision-making and adaptability matter.

In future work, several key directions merit attention to advance the field further. First, better system integration is essential, with research focusing on enabling simultaneous control of both the base and manipulator, whether coupled or decoupled. Such integration would facilitate switching between different levels of autonomy for each component. Building on existing work that allows the autonomous switching of operator control between the base and manipulator (Li et al., 2024), this approach could expand the operational range to larger manipulation workspaces.
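One way to picture per-component autonomy is a state object in which the base and the manipulator each carry their own level. This is a hypothetical sketch of the proposed direction, not an existing system: the `Level` values, the `WholeBodyAutonomy` class, and its method names are all assumptions made for illustration.

```python
from dataclasses import dataclass
from enum import IntEnum


class Level(IntEnum):
    """Illustrative autonomy levels; real systems may define more."""
    MANUAL = 0
    ASSISTED = 1
    AUTONOMOUS = 2


@dataclass
class WholeBodyAutonomy:
    """Tracks a separate autonomy level for each subsystem."""
    base: Level = Level.MANUAL
    manipulator: Level = Level.MANUAL

    def set_level(self, component: str, level: Level) -> None:
        """Change one component's level without touching the other."""
        if component not in ("base", "manipulator"):
            raise ValueError(f"unknown component: {component}")
        setattr(self, component, level)

    def operator_controls(self) -> list:
        """Components the operator is still (at least partly) driving."""
        return [
            name for name in ("base", "manipulator")
            if getattr(self, name) != Level.AUTONOMOUS
        ]
```

For instance, after `state = WholeBodyAutonomy()` and `state.set_level("base", Level.AUTONOMOUS)`, the call `state.operator_controls()` returns `["manipulator"]`: the base drives itself while the operator keeps the arm, and both can act at the same time instead of in sequence.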

Second, Virtual Reality (VR) offers significant potential in this domain. Current studies already highlight VR’s role in reducing cognitive load and enhancing environmental awareness (Baek et al., 2022; Woock et al., 2022; Rastegarpanah et al., 2024). Future research could delve deeper into its application in more complex mobile manipulation problems. Incorporating considerations like world physics and virtual world design metrics could create a smoother and more intuitive operator experience.

Another promising avenue lies in employing machine learning to address delays and errors. Techniques like intent recognition, already used in task assignment and reward function learning (Gholami et al., 2020; Palan et al., 2019), could be further developed to manage tasks typically dropped due to latency. By integrating onboard autonomous compensation algorithms with manual teleoperation, long-distance systems could benefit from reduced errors and delays. Switching between autonomous and manual modes could offer additional resilience.

Finally, the increasing capability of Large Language Models (LLMs) presents an exciting opportunity. As these models evolve to become multimodal, they can serve as versatile general assistants. Research has shown their potential for contextual awareness (Kim et al., 2023), which could be leveraged to enhance task awareness and dynamically adjust autonomy levels based on previously unconsidered data. This adaptability could enable the development of on-demand algorithms, significantly improving the flexibility and efficiency of mobile manipulators.

This mini-review synthesized current research on mobile manipulators with Variable Autonomy, revealing gaps and possible opportunities. We identified four gaps. First, Variable Autonomy and control in mobile manipulators often focus separately on the base and the manipulator. However, some tasks, such as cutting on large surfaces (Pardi et al., 2020) and cleaning contaminated areas (Sanchez and Smart, 2022), require control of, and changes in, both simultaneously. Second, studies on cognitive workload rely heavily on subjective metrics. However, tasks with operators in the loop in hazardous environments would benefit from real-time objective metrics during robot deployment (Chiou et al., 2022), as these could help set the system’s autonomy levels according to operator load. Third, communication delays and reliability issues are acknowledged but not extensively addressed, even though they can be an important factor in the time-critical situations (Moniruzzaman et al., 2022) that arise in search and rescue or hazardous environments. Finally, most of the research is designed for static and known environments and lacks implementations in uncertain environments, even though this research is most needed in situations under heavy uncertainty (search and rescue, manufacturing, decommissioning) (Båberg, 2022; Rajendran et al., 2021; Woock et al., 2022). Future research should aim to develop integrated Variable Autonomy for both the base and the manipulator; use VR or other intuitive interfaces to manage workload and facilitate shared control of the robot systems; implement adaptive communication protocols or change the robot’s autonomy levels to handle network instability; and implement real-time general decision-making frameworks based on LLMs that dynamically adjust autonomy levels based on situational and contextual demands.

CC: Conceptualization, Data curation, Formal Analysis, Methodology, Writing–original draft, Writing–review and editing. AR: Conceptualization, Funding acquisition, Investigation, Project administration, Resources, Supervision, Writing–review and editing. MC: Conceptualization, Funding acquisition, Methodology, Project administration, Resources, Supervision, Writing–original draft, Writing–review and editing. RS: Conceptualization, Funding acquisition, Resources, Supervision, Writing–review and editing.

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. This work was funded by the Nuclear Decommissioning Authority (NDA) and supported by the United Kingdom National Nuclear Laboratory (UKNNL). This work was also supported by the project called “Research and Development of a Highly Automated and Safe Streamlined Process for Increase Lithium-ion Battery Repurposing and Recycling” (REBELION) under Grant 101104241.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declare that no Generative AI was used in the creation of this manuscript.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Abubakar, S., Das, S. K., Robinson, C., Saadatzi, M. N., Logsdon, M. C., Mitchell, H., et al. (2020). “Arna, a service robot for nursing assistance: system overview and user acceptability,” in 2020 IEEE 16th International Conference on Automation Science and Engineering (CASE), Hong Kong, China, 20-21 August 2020 (IEEE), 1408–1414. doi:10.1109/CASE48305.2020.9216845

Båberg, F. (2022). Improving manipulation and control of search and rescue UGVs operating across autonomy levels. Ph.D. thesis (Stockholm: Kungliga Tekniska högskolan).

Baek, D., Chen, Y., Chang, Y., and Ramos, J. (2022). A study of shared-control with force feedback for obstacle avoidance in whole-body telelocomotion of a wheeled humanoid. Dblp Comput. Sci. Bibliogr. doi:10.48550/ARXIV.2209.03994

Bengtson, S. H., Bak, T., Struijk, L. N. S. A., and Moeslund, T. B. (2020). A review of computer vision for semi-autonomous control of assistive robotic manipulators (arms). Disabil. Rehabilitation Assistive Technol. 15, 731–745. doi:10.1080/17483107.2019.1615998

Benzi, F., Mancus, C., and Secchi, C. (2022). Whole-body control of a mobile manipulator for passive collaborative transportation. IFAC-PapersOnLine 55, 106–112. doi:10.1016/j.ifacol.2023.01.141

Bhattacharjee, T., Gordon, E. K., Scalise, R., Cabrera, M. E., Caspi, A., Cakmak, M., et al. (2020). “Is more autonomy always better?,” in Proceedings of the 2020 ACM/IEEE International Conference on Human-Robot Interaction (ACM), Cambridge, United Kingdom, 23-26 March 2020, 181–190. doi:10.1145/3319502.3374818

Chen, J., and Cho, Y. K. (2019). “Detection of damaged infrastructure on disaster sites using mobile robots,” in 2019 16th International Conference on Ubiquitous Robots (UR), Jeju, Korea (South), 24-27 June 2019 (IEEE), 648–653. doi:10.1109/URAI.2019.8768770

Chen, M., Liu, C., and Du, G. (2018). A human–robot interface for mobile manipulator. Intell. Serv. Robot. 11, 269–278. doi:10.1007/s11370-018-0251-3

Chen, Y., Fu, Y., Zhang, B., Fu, W., and Shen, C. (2022). Path planning of the fruit tree pruning manipulator based on improved rrt-connect algorithm. Int. J. Agric. Biol. Eng. 15, 177–188. doi:10.25165/j.ijabe.20221502.6249

Cheong, S., Chen, T. P., Acar, C., You, Y., Chen, Y., Sim, W. L., et al. (2021). “Supervised autonomy for remote teleoperation of hybrid wheel-legged mobile manipulator robots,” in 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September 2021 - 01 October 2021 (IEEE), 3234–3241. doi:10.1109/IROS51168.2021.9635997

Chiou, E. (2017). Flexible robotic control via co-operation between an operator and an ai-based control system. Ph.D. thesis (United Kingdom: University of Birmingham Research Archive E-theses Repository).

Chiou, M., Epsimos, G.-T., Nikolaou, G., Pappas, P., Petousakis, G., Mühl, S., et al. (2022). “Robot-assisted nuclear disaster response: report and insights from a field exercise,” in 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan, 23-27 October 2022 (IEEE), 4545–4552. doi:10.1109/IROS47612.2022.9981881

Chiou, M., Hawes, N., and Stolkin, R. (2021). Mixed-initiative variable autonomy for remotely operated mobile robots. ACM Trans. Human-Robot Interact. 10, 1–34. doi:10.1145/3472206

Chiou, M., Hawes, N., Stolkin, R., Shapiro, K. L., Kerlin, J. R., and Clouter, A. (2015). “Towards the principled study of variable autonomy in mobile robots,” in 2015 IEEE International Conference on Systems, Man, and Cybernetics (IEEE), Hong Kong, China, 09-12 October 2015, 1053–1059. doi:10.1109/SMC.2015.190

Cragg, L., and Hu, H. (2003). “Application of mobile agents to robust teleoperation of internet robots in nuclear decommissioning,” in IEEE International Conference on Industrial Technology, 2003 (IEEE), Maribor, Slovenia, 10-12 December 2003, 1214–1219. doi:10.1109/ICIT.2003.1290838

Fozilov, K., Hasegawa, Y., and Sekiyama, K. (2021). “Towards self-autonomy evaluation using behavior trees,” in 2021 IEEE International Conference on Systems, Man, and Cybernetics (SMC) (IEEE), Melbourne, Australia, 17-20 October 2021, 988–993. doi:10.1109/SMC52423.2021.9658838

Frering, L., Eder, M., Kubicek, B., Albert, D., Kalkofen, D., Gschwandtner, T., et al. (2022). Enabling and assessing trust when cooperating with robots in disaster response (easier). arXiv preprint arXiv:2207.03763.

Frese, C., Zube, A., Woock, P., Emter, T., Heide, N. F., Albrecht, A., et al. (2022). An autonomous crawler excavator for hazardous environments. A. T. - Autom. 70, 859–876. doi:10.1515/auto-2022-0068

Gholami, S., Garate, V. R., Momi, E. D., and Ajoudani, A. (2020). “A shared-autonomy approach to goal detection and navigation control of mobile collaborative robots,” in 2020 29th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), Naples, Italy, 31 August 2020 - 04 September 2020 (IEEE), 1026–1032. doi:10.1109/RO-MAN47096.2020.9223583

Hargas, Y., Mokrane, A., Hentout, A., Hachour, O., and Bouzouia, B. (2015). “Mobile manipulator path planning based on artificial potential field: application on robuter/ulm,” in 2015 4th International Conference on Electrical Engineering (ICEE), Boumerdes, Algeria, 13-15 December 2015 (IEEE), 1–6. doi:10.1109/INTEE.2015.7416774

Kapusta, A., and Kemp, C. C. (2019). Task-centric optimization of configurations for assistive robots. Aut. Robots 43, 2033–2054. doi:10.1007/s10514-019-09847-2

Karim, R., Nanavati, A., Faulkner, T. A. K., and Srinivasa, S. S. (2023). Investigating the levels of autonomy for personalization in assistive robotics.

Kemp, C. C., Edsinger, A., Clever, H. M., and Matulevich, B. (2022). “The design of stretch: a compact, lightweight mobile manipulator for indoor human environments,” in 2022 International Conference on Robotics and Automation (ICRA) (IEEE), 3150–3157. doi:10.1109/ICRA46639.2022.9811922

Kim, G., Kim, T., Kannan, S. S., Venkatesh, V. L. N., Kim, D., and Min, B.-C. (2023). Dynacon: dynamic robot planner with contextual awareness via llms.

Li, W., Huang, F., Chen, Z., and Chen, Z. (2024). Automatic-switching-based teleoperation framework for mobile manipulator with asymmetrical mapping and force feedback. Mechatronics 99, 103164. doi:10.1016/j.mechatronics.2024.103164

Lin, T.-C., Krishnan, A. U., and Li, Z. (2020). “Shared autonomous interface for reducing physical effort in robot teleoperation via human motion mapping,” in 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May 2020 - 31 August 2020 (IEEE), 9157–9163. doi:10.1109/ICRA40945.2020.9197220

Merkt, W., Ivan, V., Yang, Y., and Vijayakumar, S. (2019). “Towards shared autonomy applications using whole-body control formulations of locomanipulation,” in 2019 IEEE 15th International Conference on Automation Science and Engineering (CASE), Vancouver, BC, Canada, 22-26 August 2019 (IEEE), 1206–1211. doi:10.1109/COASE.2019.8843153

Methnani, L., Chiou, M., Dignum, V., and Theodorou, A. (2024). Who’s in charge here? A survey on trustworthy AI in variable autonomy robotic systems. ACM Comput. Surv. 56, 1–32. doi:10.1145/3645090

Mirjalili, R., Krawez, M., Silenzi, S., Blei, Y., and Burgard, W. (2024). “LAN-grasp: an effective approach to semantic object grasping using large language models,” in First Workshop on Vision-Language Models for Navigation and Manipulation at ICRA 2024.

Moniruzzaman, M., Rassau, A., Chai, D., and Islam, S. M. S. (2022). Teleoperation methods and enhancement techniques for mobile robots: a comprehensive survey. Robotics Aut. Syst. 150, 103973. doi:10.1016/j.robot.2021.103973

Moridian, B., Kamal, A., and Mahmoudian, N. (2018). “Learning navigation tasks from demonstration for semi-autonomous remote operation of mobile robots,” in 2018 IEEE International Symposium on Safety, Security, and Rescue Robotics (SSRR), Philadelphia, PA, USA, 06-08 August 2018 (IEEE), 1–8. doi:10.1109/SSRR.2018.8468640

Nagatani, K., Kiribayashi, S., Okada, Y., Otake, K., Yoshida, K., Tadokoro, S., et al. (2013). Emergency response to the nuclear accident at the Fukushima Daiichi nuclear power plants using mobile rescue robots. J. Field Robotics 30, 44–63. doi:10.1002/rob.21439

Palan, M., Landolfi, N. C., Shevchuk, G., and Sadigh, D. (2019). Learning reward functions by integrating human demonstrations and preferences.

Panagopoulos, D., Petousakis, G., Ramesh, A., Ruan, T., Nikolaou, G., Stolkin, R., et al. (2022). “A hierarchical variable autonomy mixed-initiative framework for human-robot teaming in mobile robotics,” in 2022 IEEE 3rd International Conference on Human-Machine Systems (ICHMS), Orlando, FL, USA, 17-19 November 2022 (IEEE), 1–6. doi:10.1109/ICHMS56717.2022.9980686

Pardi, T., Maddali, V., Ortenzi, V., Stolkin, R., and Marturi, N. (2020). “Path planning for mobile manipulator robots under non-holonomic and task constraints,” in 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020 - 24 January 2021, 6749–6756. doi:10.1109/IROS45743.2020.9340760

Park, D., Hoshi, Y., Mahajan, H. P., Kim, H. K., Erickson, Z., Rogers, W. A., et al. (2020). Active robot-assisted feeding with a general-purpose mobile manipulator: design, evaluation, and lessons learned. Robotics Aut. Syst. 124, 103344. doi:10.1016/j.robot.2019.103344

Pohl, C., Reister, F., Peller-Konrad, F., and Asfour, T. (2024). MAkEable: memory-centered and affordance-based task execution framework for transferable mobile manipulation skills.

Rajendran, P., Thakar, S., Bhatt, P. M., Kabir, A. M., and Gupta, S. K. (2021). Strategies for speeding up manipulator path planning to find high quality paths in cluttered environments. J. Comput. Inf. Sci. Eng. 21. doi:10.1115/1.4048619

Rakita, D., Mutlu, B., Gleicher, M., and Hiatt, L. M. (2019). Shared control–based bimanual robot manipulation. Sci. Robotics 4, eaaw0955. doi:10.1126/scirobotics.aaw0955

Ramesh, A., Braun, C. A., Ruan, T., Rothfuß, S., Hohmann, S., Stolkin, R., et al. (2023). “Experimental evaluation of model predictive mixed-initiative variable autonomy systems applied to human-robot teams,” in 2023 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Honolulu, Oahu, HI, USA, 01-04 October 2023 (IEEE), 5291–5298.

Rastegarpanah, A., Contreras, C. A., and Stolkin, R. (2024). Semi-autonomous robotic disassembly enhanced by mixed reality. RobCE 24 Proc. 2024 4th Int. Conf. Robotics Control Eng., 7–13. doi:10.1145/3674746.3674748

Rastegarpanah, A., and Saadat, M. (2016). Lower limb rehabilitation using patient data. Applied Bionics and Biomechanics (1), 2653915.

Rastegarpanah, A., Hathaway, J., and Stolkin, R. (2021). Vision-guided MPC for robotic path following using learned memory-augmented model. Front. Robotics AI 8, 688275.

Reinmund, T., Salvini, P., Kunze, L., Jirotka, M., and Winfield, A. F. T. (2024). Variable autonomy through responsible robotics: design guidelines and research agenda. J. Hum.-Robot Interact. 13, 1–36. doi:10.1145/3636432

Roennau, A., Mangler, J., Keller, P., Besselmann, M. G., Huegel, N., and Dillmann, R. (2022). Grasping and retrieving unknown hazardous objects with a mobile manipulator. Automatisierungstechnik 70, 838–849. doi:10.1515/auto-2022-0061

Sanchez, A. G., and Smart, W. D. (2021). “A shared autonomy surface disinfection system using a mobile manipulator robot,” in 2021 IEEE International Symposium on Safety, Security, and Rescue Robotics (SSRR), New York City, NY, USA, 25-27 October 2021 (IEEE), 176–183. doi:10.1109/SSRR53300.2021.9597678

Sanchez, A. G., and Smart, W. D. (2022). Verifiable surface disinfection using ultraviolet light with a mobile manipulation robot. Technologies 10, 48. doi:10.3390/technologies10020048

Sandakalum, T., and Ang, M. H. (2022). Motion planning for mobile manipulators—a systematic review. Machines 10, 97. doi:10.3390/machines10020097

Schuster, M. J., Muller, M. G., Brunner, S. G., Lehner, H., Lehner, P., Sakagami, R., et al. (2020). The ARCHES space-analogue demonstration mission: towards heterogeneous teams of autonomous robots for collaborative scientific sampling in planetary exploration. IEEE Robotics Automation Lett. 5, 5315–5322. doi:10.1109/LRA.2020.3007468

Sirintuna, D., Kastritsi, T., Ozdamar, I., Gandarias, J. M., and Ajoudani, A. (2024). Enhancing human–robot collaborative transportation through obstacle-aware vibrotactile warning and virtual fixtures. Robotics Aut. Syst. 178, 104725. doi:10.1016/j.robot.2024.104725

Stibinger, P., Broughton, G., Majer, F., Rozsypalek, Z., Wang, A., Jindal, K., et al. (2021). Mobile manipulator for autonomous localization, grasping and precise placement of construction material in a semi-structured environment. IEEE Robotics Automation Lett. 6, 2595–2602. doi:10.1109/LRA.2021.3061377

Tabrez, A., Luebbers, M. B., and Hayes, B. (2020). A survey of mental modeling techniques in human–robot teaming. Curr. Robot. Rep. 1, 259–267. doi:10.1007/s43154-020-00019-0

Thakar, S., Srinivasan, S., Al-Hussaini, S., Bhatt, P. M., Rajendran, P., Jung Yoon, Y., et al. (2023). A survey of wheeled mobile manipulation: a decision-making perspective. J. Mech. Robotics 15, 020801. doi:10.1115/1.4054611

Valner, R., Vunder, V., Zelenak, A., Pryor, M., Aabloo, A., and Kruusamäe, K. (2018). “Intuitive ‘human-on-the-loop’ interface for tele-operating remote mobile manipulator robots,” in International Symposium on Artificial Intelligence, Robotics, and Automation in Space (i-SAIRAS), 1–8.

Verhagen, R. S., Neerincx, M. A., and Tielman, M. L. (2024). Meaningful human control and variable autonomy in human-robot teams for firefighting. Front. Robotics AI 11, 1323980. doi:10.3389/frobt.2024.1323980

Villani, V., Pini, F., Leali, F., and Secchi, C. (2018). Survey on human–robot collaboration in industrial settings: safety, intuitive interfaces and applications. Mechatronics 55, 248–266. doi:10.1016/j.mechatronics.2018.02.009

Wedler, A., Müller, M. G., Schuster, M., Durner, M., Brunner, S., Lehner, P., et al. (2021). “Preliminary results for the multi-robot, multi-partner, multi-mission, planetary exploration analogue campaign on Mount Etna,” in Proceedings of the International Astronautical Congress, IAC.

Wong, C. Y., Samadi, S., Suleiman, W., and Kheddar, A. (2022). Touch semantics for intuitive physical manipulation of humanoids. IEEE Trans. Human-Machine Syst. 52, 1111–1121. doi:10.1109/THMS.2022.3207699

Keywords: mobile manipulators, uncertain environments, variable autonomy, human-robot teaming, shared control, human-robot interaction

Citation: Contreras CA, Rastegarpanah A, Chiou M and Stolkin R (2025) A mini-review on mobile manipulators with Variable Autonomy. Front. Robot. AI 12:1540476. doi: 10.3389/frobt.2025.1540476

Received: 05 December 2024; Accepted: 20 January 2025; Published: 14 February 2025.

Edited by:

Seemal Asif, Cranfield School of Engineering, United Kingdom

Reviewed by:

Jonathan M. Aitken, The University of Sheffield, United Kingdom

Copyright © 2025 Contreras, Rastegarpanah, Chiou and Stolkin. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Cesar Alan Contreras, cac214@student.bham.ac.uk; Alireza Rastegarpanah, a.rastegarpanah@bham.ac.uk

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
