- 1Autonomous Systems Laboratory, Universidad Politécnica de Madrid, Madrid, Spain

- 2Centre for Automation and Robotics, Universidad Politécnica de Madrid-CSIC, Madrid, Spain

Autonomous robots are already present in a variety of domains performing complex tasks. Their deployment in open-ended environments offers endless possibilities. However, there are still risks due to unresolved issues in dependability and trust. Knowledge representation and reasoning provide tools for handling explicit information, endowing systems with a deeper understanding of the situations they face. This article explores the use of declarative knowledge for autonomous robots to represent and reason about their environment, their designs, and the complex missions they accomplish. This information can be exploited at runtime by the robots themselves to adapt their structure or re-plan their actions to finish their mission goals, even in the presence of unexpected events. The primary focus of this article is to provide an overview of popular and recent research that uses knowledge-based approaches to increase robot autonomy. Specifically, the ontologies surveyed are related to the selection and arrangement of actions, representing concepts such as autonomy, planning, or behavior. Additionally, they may be related to overcoming contingencies with concepts such as fault or adapt. A systematic exploration is carried out to analyze the use of ontologies in autonomous robots, with the objective of facilitating the development of complex missions. Special attention is dedicated to examining how ontologies are leveraged in real time to ensure the successful completion of missions while aligning with user and owner expectations. The motivation of this analysis is to examine the potential of knowledge-driven approaches as a means to improve flexibility, explainability, and efficacy in autonomous robotic systems.

1 Introduction

Autonomous robots have endless possibilities; they are applied in a variety of sectors such as transport, logistics, industry, agriculture, healthcare, education, and energy. Robotics has shown enormous potential in diverse tasks and environments. However, there are still open issues that compromise autonomous robot dependability. To support their deployment in real-world scenarios, we need to provide robots with tools to act and react properly in unstructured environments with high uncertainty.

Various strategies support the pursuit of a better grasp of intelligence: neuroscience tries to understand how the brain processes information; mathematics seeks computation and rules to draw valid conclusions using formal logic or handling uncertainty with probability and statistics; control theory and cybernetics aim to ensure that the system reaches desired goals, etc. (Russell and Norvig, 2021). One way that we explore in this survey is the use of symbolic explicit knowledge as a means to enhance system intelligence.

Knowledge representation and reasoning (KR&R) is a sub-area of artificial intelligence that concerns analyzing, designing, and implementing ways of representing information on computers so that computational agents can use this information to derive information implied by it (Shapiro, 2003). Reasoning is the process of extracting new information from the implications of existing knowledge. One of the challenges of getting robots to perform tasks in open environments is that programmers cannot fully predict the state of the world in advance. KR&R provides some background to reason about the runtime situation and act accordingly. In addition to adaptability, these approaches can provide an explanation; knowledge can be queried so humans or other agents can understand why a robot acts in a certain way. Lastly, KR&R provides reusability. The robot needs information about its capabilities and the environment in which it operates; this information can be shared among different agents, applications, or tasks because knowledge bases can be stored in broadly applicable modular chunks.

To be usable by robots, knowledge bases must be machine-understandable because the robot shall read, reason about, and update their content. In the context of intelligent robots, ontologies allow us to define the conceptualizations that the robot requires to support autonomous decision making. These ontologies are written in specific computer languages. In this article, we present a review of the ontologies used by autonomous robots to perform complex missions. In our analysis, we focus on the critical aspects of robot autonomy, that is, how KR&R can contribute to increasing the dependability of these types of systems. We aim to draw a landscape of how ontologies can support decision making to build more dependable robots performing elaborate tasks. The main contributions of this work are:

1. A classification of ontologies based on concepts for autonomous robots,

2. An analysis of existing approaches that use ontologies to facilitate action selection, and

3. A discussion of future research directions to increase dependability in autonomous robots.

This article is structured as follows. Section 2 provides background information on KR&R with a special focus on ontologies for robot autonomy. Section 3 discusses the classification criteria for ontologies that support robust autonomy in robotic applications. In Section 4, we describe the selection process for a systematic review in the field of ontologies for autonomous robots and briefly discuss other surveys in the field. In Section 5, we classify and compare the selected approaches based on their main application domain. Section 6 provides a general discussion and describes future research directions. Lastly, Section 7 presents the article's conclusions.

2 Knowledge representation and reasoning for robot autonomy

In this section, we introduce the use of ontologies to increase dependability in autonomous systems and the main languages for knowledge-based agents. Finally, we also discuss the essential and desirable capabilities of fully autonomous robots.

Dependability is the ability to provide the intended services of the system with a certain level of assurance, including factors such as availability, reliability, safety, and security (SEBoK Editorial Board, 2023). As an introductory exploration into this field, Guiochet et al. (2017) provide an analysis of techniques used to increase safety in robots and autonomous systems. Avižienis et al. (2004) offer definitions and conceptualizations of aspects related to dependability and create a taxonomy of dependable computing and its associated faults as a framework.

Dependability ensures consistent performance that goes beyond reliability, as it also considers factors such as fault tolerance, error recovery, and maintaining service levels. Various models for tackling these challenges include fault trees, failure mode and effects analysis (FMEA), and safety case modes. There are also model-based approaches in which fault analysis can be used at runtime to evaluate goals and develop and execute alternative plans (Abbott, 1990). Similarly, this survey focuses on using explicit models to select the most suitable actions for the situation the robot faces to provide the desired outcomes. This survey concentrates on leveraging ontologies as a means to enhance dependability rather than analyzing dependability aspects concerning robotics.

2.1 Languages for ontologies

The most widespread approach to robot programming is procedural: the intended robot behavior is explicitly encoded in an imperative language. Knowledge-based agents depart from this, using declarative approaches to abstract the control flow. A knowledge base (KB) stores information that allows the robot to deduce how to operate in the environment. This information can be updated with new facts, and repeated queries are used to deduce new facts relevant to the mission. A successful agent often combines declarative knowledge with procedural programming to produce more efficient code (Russell and Norvig, 2021). This declarative knowledge is commonly encoded in logic systems to formally capture conceptualizations.

Prolog (PROgramming in LOGic) is the most widely used declarative programming language. Many knowledge-based systems have been written in this language for legal, medical, financial, and other domains (Russell and Norvig, 2021). Prolog defines a formalism based on Horn clauses, a restricted fragment of first-order logic (FOL). In addition to its notation, which is somewhat different from standard FOL, the main difference is the closed-world assumption. The closed-world assumption asserts that only the statements that can be derived from the KB are true; everything else is assumed to be false, so there is no way to explicitly declare that a sentence is false.

Another widely used language for writing ontologies is the Web Ontology Language (OWL). OWL is not a programming language like Prolog but rather a family of languages that can be used to represent knowledge. It is designed for applications that need to process information rather than simply presenting information to humans. Therefore, OWL facilitates greater machine interpretability with a panoply of different formats, such as extensible markup language (XML), resource description framework (RDF), and RDF Schema (RDF-S). The language has been specified by the W3C OWL Working Group (2012) and is a cornerstone of the semantic web.

The formal basis of OWL is description logic (DL): a family of languages with a compromise between expressiveness and scalability. DL uses decidable fragments of FOL to reason with more expressiveness. The main difference between Prolog and OWL is that the latter uses the open-world assumption. This means that a statement can be true whether it is known or not; that is, only explicitly false predicates are false, in contrast to the closed-world assumption in which only explicitly true predicates are true.
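The contrast between the two assumptions can be illustrated with a minimal Python sketch. It is only a toy model: the fact tuples, the `Truth` enumeration, and the two query functions are hypothetical names introduced here, and real Prolog or OWL reasoners derive answers from rules and axioms rather than from simple set lookups.

```python
from enum import Enum


class Truth(Enum):
    TRUE = "true"
    FALSE = "false"
    UNKNOWN = "unknown"


# Hypothetical knowledge base: facts known to hold and facts known not to hold.
positive_facts = {("robot", "r1"), ("charged", "r1")}
negative_facts = {("charged", "r2")}


def query_closed_world(fact):
    """Closed world: whatever is not known to be true is taken as false."""
    return Truth.TRUE if fact in positive_facts else Truth.FALSE


def query_open_world(fact):
    """Open world: a fact is false only when its negation is explicitly known."""
    if fact in positive_facts:
        return Truth.TRUE
    if fact in negative_facts:
        return Truth.FALSE
    return Truth.UNKNOWN


unseen = ("robot", "r2")  # never mentioned in the knowledge base
print(query_closed_world(unseen))
print(query_open_world(unseen))
```

Under the closed-world reading the unseen fact is rejected, whereas under the open-world reading the system only reports that it does not know.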

Regarding accessibility, OWL can be encoded by hand or by editors such as Protégé (Musen, 2015) for a user-friendly environment. OWL can be integrated into robotic software through application program interfaces (APIs) such as Jena Ontology API or OWLAPI implemented in Java or OWLREADY (Lamy, 2017) implemented in Python. There are also reasoners such as FaCT++, Pellet, or HermiT.

Prolog can also be used to reason about knowledge represented by OWL or to directly encode a Prolog ontology. The main Prolog APIs are GNU-PROLOG and SWI-PROLOG. There is even a package for the Robot Operating System (ROS) called rosprolog that interfaces between SWI-Prolog and ROS.

2.2 Fundamental and domain ontologies

Ontological systems can be classified according to several criteria. We distinguish three levels of abstraction based on Guarino’s hierarchy (Guarino, 1998): upper level, domain, and application. Upper-level or foundational ontologies conceptualize general terms such as object, property, event, state, and relations such as parthood, constitution, participation, etc. Domain ontologies provide a formal representation of a specific field that defines contractual agreements on the meaning of terms within a discipline (Hepp et al., 2006); these ontologies specify the highly reusable vocabulary of an area and the concepts, objects, activities, and theories that govern it. Application ontologies contain the definitions required to model knowledge for a particular application: information about a robot in a specific environment, describing a particular task. Note that the environment or task knowledge could be a subdomain ontology, depending on the reusability it allows. In fact, the progress from upper-level to application ontologies is a continuous spectrum of concept subclassing, with somewhat arbitrary divisions into levels of abstraction reified as ontologies.
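The idea that the three levels form a continuous chain of subclassing can be sketched with plain Python classes. The class names below are hypothetical and chosen only for illustration; they are not taken from any of the ontologies surveyed.

```python
# Upper level: very general categories.
class Entity:
    pass


class PhysicalObject(Entity):
    pass


class Event(Entity):
    pass


# Domain level: reusable robotics vocabulary.
class Robot(PhysicalObject):
    pass


class Task(Event):
    pass


# Application level: one robot in one environment doing one task.
class MineInspectionRobot(Robot):
    pass


class InspectTunnel(Task):
    pass


def abstraction_chain(cls):
    """List a concept and its ancestors, from most specific to most general."""
    return [c.__name__ for c in cls.__mro__ if c is not object]


print(abstraction_chain(MineInspectionRobot))
```

Where the line between "domain" and "application" falls in such a chain is a modeling decision, which is the sense in which the division into levels is somewhat arbitrary.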

Perhaps the most widespread upper-level ontology is the Suggested Upper Merged Ontology (SUMO) (Niles and Pease, 2001; Pease, 2011). SUMO is the largest open-source ontology that has expressive formal definitions of its concepts. Domain ontologies for medicine, economics, engineering, and many other topics are part of SUMO. This formalism uses the Standard Upper Ontology Knowledge Interchange Format (SUO-KIF), a logical language to express concepts with higher-order logic (a logic with more expressiveness than first-order logic) (Brown et al., 2023).

Another relevant foundational ontology for this research is the Descriptive Ontology for Linguistic and Cognitive Engineering (DOLCE), described as an “ontology of universals” (Gangemi et al., 2002), which means that it has classes but not relations. It aims to capture the ontological categories that underlie natural language and human common sense (Mascardi et al., 2006). The taxonomy of the most basic categories of particulars assumed in DOLCE includes, for example, abstract quality, abstract region, agentive physical object, amount of matter, temporal quality, etc. Although the original version of the few dozen terms in DOLCE was defined in FOL, it has since been implemented in OWL; most extensions of DOLCE are also in OWL.

The DOLCE + DnS Ultralite ontology (DUL) simplifies some parts of the DOLCE library, such as the names of classes and relations with simpler constructs. The most relevant aspect of DUL is perhaps the design of the ontology architecture based on patterns.

Other important foundational ontologies are the Basic Formal Ontology (BFO) (Arp et al., 2015), the Bunge–Wand–Weber Ontology (BWW) (Bunge, 1977; Wand and Weber, 1993), and the Cyc Ontology (Lenat, 1995). BFO focuses on continuant entities involved in a three-dimensional reality and occurring entities, which also include the time dimension. BWW is an ontology based on Bunge’s philosophical system that is widely used for conceptual modeling (Lukyanenko et al., 2021). Cyc is a long-term project in artificial intelligence that aims to use an ontology to understand how the world works by trying to represent implicit knowledge and perform human-like reasoning.

2.2.1 Robotic domain ontologies

Ontologies have gained popularity in robotics with the growing complexity of actions that systems are expected to perform. A well-defined standard for knowledge representation is recognized as a tool to facilitate human–robot collaboration in challenging tasks (Fiorini et al., 2017). The IEEE Standard Association of Robotics and Automation Society (RAS) created the Ontologies for Robotics and Automation (ORA) working group to address this need.

They first published the Core Ontology for Robotics and Automation (CORA) (Prestes et al., 2013). This standard specifies the most general concepts, relations, and axioms for the robotics and automation domain. CORA is based on SUMO and defines what a robot is and how it relates to other concepts. For this, it defines four main entities: robot part, robot, complex robot, and robotic system. CORA is an upper-level ontology currently extended in the IEEE Standard 1872-2015 (IEEE SA, 2015) with other subontologies, such as CORAX, RPARTS, and POS.

CORAX is a subontology created to bridge the gap between SUMO and CORA. It included high-level concepts that the authors claimed to not be explicitly defined in SUMO and particularized in CORA, in particular those associated with design, interaction, and environment. RPARTS provides notions related to specific kinds of robot parts and the roles they can perform, such as grippers, sensors, or actuators. POS presents general concepts associated with spatial knowledge, such as position and orientation, represented as points, regions, and coordinate systems.

However, CORA and its extensions are intended to cover a broad community, so their definitions of ambiguous terms are based solely on necessary conditions and do not specify sufficient conditions (Fiorini et al., 2017). For this reason, concepts in CORA must be specialized according to the needs of specific subdomains or robotics applications.

CORA, like most of the other application ontologies considered here, is defined in a language of very limited expressiveness, mostly expressible in OWL-Lite, and is therefore limited to simple classification queries. Although it is based on upper-level terms from SUMO, it recreated many terms that could have been used directly from SUMO. Moreover, given its choice of representation language, it did not use the first- and higher-order logic formulas from SUMO, limiting its reuse to only the taxonomy.

The IEEE ORA group created the Robot Task Representation subgroup to produce a middle-level ontology with a comprehensive decomposition of tasks, from goal to subgoals, that enables humans or robots to accomplish their expected outcomes at a specific instance in time. It includes a definition of tasks and their properties and terms related to the performance capabilities required to perform them. Moreover, it covers a catalog of tasks demanded by the community, especially in industrial processes (Balakirsky et al., 2017).

This working group also created three additional subgroups for more specific domain knowledge: Autonomous Robots Ontology, Industrial Ontology, and Medical Robot Ontology. Only the first of these has been active. The Autonomous Robot subgroup (AUR) extends CORA and its associated ontologies for the domain of autonomous robots, including, but not limited to, aerial, ground, surface, underwater, and space robots.

They developed the IEEE Standard for Autonomous Robotics Ontology (IEEE SA, 2022) with an unambiguous identification of the basic hardware and software components necessary to provide a robot or a group of robots with autonomy. It was conceived to serve different purposes, such as to describe the design patterns of Autonomous Robotics (AuR) systems, to represent AuR system architectures in a unified way, or as a guideline to build autonomous systems consisting of robots operating in various environments.

In addition to the developments of the IEEE ORA working group, there are other relevant domain ontologies, such as OASys and the Socio-physical Model of Activities (SOMA). The Ontology for Autonomous Systems (OASys) (Bermejo-Alonso et al., 2010) captures and exploits concepts to support the description of any autonomous system with an emphasis on the associated engineering processes. It provides two levels of abstraction: systems in general and autonomous systems in particular. This ontology connects concepts such as architecture, components, goals, and functions with the engineering processes required to achieve them.

The SOMA for Autonomous Robotic Agents represents the physical and social context of everyday activities to facilitate accomplishing tasks that are trivial for humans (Beßler et al., 2021). It is based on DUL, extending its concepts to different event types such as action, process, and state, the objects that participate in the activities, and the execution concept. It is worth mentioning that SOMA was intended to be used at runtime along with the concept of narratively enabled episodic memories (NEEMs), which are comprehensive logs of raw sensor data, actuator control histories, and perception events, all semantically annotated with information about what the robot is doing and why, using the terminology provided by SOMA.

The relationship between upper-level ontologies and domain ontologies is a relationship of progressive domain focalization (Sanz et al., 1999). The frameworks described in the following sections are mostly specializations of these general robotic ontologies and other foundations.

2.3 Capabilities for robot autonomy

Etymologically, autonomy means being governed by the laws of oneself rather than by the rules of others (Vernon, 2014). Beer et al. (2014) provide a definition more closely related to robotics: autonomy as the extent to which a robot can sense its environment, plan based on that environment, and act on that environment with the intention of reaching some task-specific goal (either given or created by the robot) without external control. A related, systems-oriented perspective pursued in our lab considers autonomy as a relationship between system, task, and context (Sanz et al., 2000).

Rational agents use sense-decide-act loops to select the best possible action. According to Vernon (2014), cognition allows one to increase the repertoire of actions and extend the time horizon of one’s ability to anticipate possible outcomes. He also reviews several cognitive architectures to support artificial cognitive systems and discusses the relationship between cognition and autonomy. For him, cognition includes six attributes: perception, learning, anticipation, action, adaptation, and, of course, autonomy.

Following the link between cognition and autonomy, Langley et al. (2009) also review cognitive architectures and establish the main functional capabilities that autonomous robots must demonstrate. Knowledge is described as an internal property to achieve the following capabilities: (i) recognition and categorization to generate abstractions from perceptions and past actions, (ii) decision making and choice to represent alternatives for selecting the most promising action considering the situation, (iii) perception and situation assessment to combine perceptual information from different sources and provide an understanding of the current circumstances, (iv) prediction and monitoring to evaluate the situation and the possible effects of actions, (v) problem solving and planning to specify desired intermediate states and the actions required to reach them, (vi) reasoning and belief maintenance to use and update the KB in dynamic environments, (vii) execution and action to support deliberative and reactive behaviors, (viii) interaction and communication to share knowledge with other agents, and (ix) remembering, reflection, and learning to apply meta-reasoning so that past executions serve as experiences for the future.

Brachman (2002) argues for the promising capabilities of reflective agents. For him, real improvements in computational agents come when systems know what they are doing, that is, when the agent can understand the situation: what it is doing, where, and why. Brachman establishes practically the same foundations as Langley et al. (2009) but explicitly mentions the necessity of coordinated teams and robust software and hardware infrastructure.

In conclusion, most authors emphasize the importance of the features described above with different levels of granularity. In the next section, we explain and conceptualize those functional capabilities that enable autonomous robot operation. Note that the systems under study use explicit knowledge, in the form of ontologies, as the backbone to achieve autonomy.

3 Processes for knowledge-enabled autonomous robots

In this section, we introduce the classification on which we will base the review of ontologies for dependable robot autonomy in Section 5. The classification criterion is based on the capabilities introduced in Section 2.3. It establishes the fundamental processes that an autonomous robot should perform.

3.1 Perception

A percept is the belief produced as a result of a perceptor sensing the environment at a given instant. Perception involves five entities: sensor, perceived quality, perceptive environment, perceptor, and the percept itself.

A sensor is a device that detects, measures, or captures a property in the environment. Sensors can measure one particular aspect of the physical world, such as thermometers, or capture complex characteristics, such as segmenting cameras.

A perceived quality is a feature that allows the perceptor to recognize some part of the environment or of the robot itself. Note that this quality is mapped into the percept as an instantiation, a belief produced to translate physical information to the system model. Examples of perceived qualities are the temperature, visual images of the environment, and the rotation of a wheel measured by a rotary encoder placed in a robot.

A perceptive environment is the part of the environment that the sensor can detect. The perceptive region can be delimited by the sensor’s resolution or to save memory or other resources.

An agent that perceives is a perceptor. It constitutes the link between perception and categorization because it takes sensor information and categorizes it. Usually, the perceptor embodies the sensor, as is the case in autonomous robots, but the two could be decoupled if the system processes information from external sensors. Finally, the percept is the inner entity—a belief—that results from the perceptual process.
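The five entities described above can be sketched as a small data model. This is a minimal illustration under stated assumptions: the class and field names are hypothetical, and the perceptive environment is reduced to a numeric range that the sensor can detect.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sensor:
    name: str
    measured_quality: str  # the perceived quality, e.g. "temperature"
    min_value: float       # lower bound of the perceptive environment
    max_value: float       # upper bound of the perceptive environment


@dataclass(frozen=True)
class Percept:
    """Belief produced by sensing one quality at one instant."""
    quality: str
    value: float
    timestamp: float
    source: str


class Perceptor:
    """Agent that turns raw sensor readings into percepts (beliefs)."""

    def __init__(self, name):
        self.name = name
        self.beliefs = []

    def perceive(self, sensor, raw_value, timestamp):
        # Readings outside the perceptive environment produce no belief.
        if not sensor.min_value <= raw_value <= sensor.max_value:
            return None
        percept = Percept(sensor.measured_quality, raw_value, timestamp, sensor.name)
        self.beliefs.append(percept)
        return percept


thermometer = Sensor("thermometer", "temperature", -40.0, 125.0)
robot = Perceptor("robot_1")
print(robot.perceive(thermometer, 21.5, timestamp=0.0))
```

Keeping the sensor and the perceptor as separate objects mirrors the remark that the two can be decoupled when the system processes information from external sensors.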

3.2 Categorization

Percepts are instantaneous approximate representations of a particular aspect of the physical world. To provide the autonomous robot with an understanding of the situation in which it is deployed, the perceptor must abstract the sensor information and recognize objects, events, and experiences.

Categorization is the process of finding patterns and categories to model the situation in the robot’s knowledge. It can be done at different levels of granularity. Examples of categorizations appear everywhere: in sensor fusion processes that combine different types of sensors, with their corresponding uncertainty propagation; in the classification of an entity as a mobile obstacle when it is an uncontrolled object approaching the robot; or, at a more abstract level, when a mobile miner robot recognizes the type of mine ore depending on its geo-chemical properties.

In general, this step corresponds to a combination of information about objects, events, action responses, physical properties, etc., to create a picture of what is happening in the environment and in the robot itself. For this purpose, the robot shall incorporate other processes, such as reasoning and prediction.
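The mobile-obstacle example can be written as a simple rule over percepts. The sketch below assumes, for illustration only, that positions and velocities are expressed in a frame centered on the robot and that a hypothetical `controlled` flag tells whether the robot commands the entity; the category labels are likewise invented here.

```python
import math
from dataclasses import dataclass


@dataclass
class TrackedEntity:
    position: tuple   # (x, y) in meters, robot at the origin
    velocity: tuple   # (vx, vy) in meters per second
    controlled: bool  # True if the robot itself commands this entity


def categorize(entity, speed_threshold=0.05):
    """Map a tracked entity to a coarse category."""
    vx, vy = entity.velocity
    if math.hypot(vx, vy) < speed_threshold:
        return "static_object"
    px, py = entity.position
    # A negative dot product means the distance to the robot is shrinking.
    approaching = (px * vx + py * vy) < 0
    if approaching and not entity.controlled:
        return "mobile_obstacle"
    return "moving_object"


print(categorize(TrackedEntity((2.0, 0.0), (-0.5, 0.0), controlled=False)))
```

In an ontology-based system the same rule would typically be stated declaratively as a class definition, so that a reasoner performs the classification instead of hand-written code.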

3.3 Decision making

An autonomous robot must direct its actions towards a goal. When an action cannot be performed, the robot shall implement mechanisms to make decisions and select among the most suitable alternatives for the runtime situation.

Note the difference between decision making and planning. Decision making has a shorter time frame because it focuses on the successful completion of the plan. An example of a decision at this level could be to slightly change the trajectory of a robot to avoid an obstacle and then return to the initial path. Planning, on the other hand, is concerned with achieving a goal; it has a longer time horizon to establish the action sequences to complete a mission. For example, the miner robot presented above must examine the mine, detect the mineral vein, and dig in that direction.

Decision making acts upon the different alternatives that the robot can select, for example, in terms of directions and velocity, but also in terms of what component with functional equivalence can substitute for a defective one. The execution of the action—how it is done—changes slightly, but the plan—the action sequence that achieves the goal—remains the same.
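The substitution of a defective component by a functionally equivalent one can be sketched as a lookup over a component catalogue. The catalogue, component names, and function labels below are hypothetical; the point is only that the plan stays fixed while the binding of one step changes.

```python
# Hypothetical catalogue: which function each component provides and its health.
components = {
    "lidar_front": {"function": "obstacle_detection", "healthy": False},
    "depth_camera": {"function": "obstacle_detection", "healthy": True},
    "wheel_encoder": {"function": "odometry", "healthy": True},
}


def select_component(function, preferred, catalogue):
    """Return the preferred component, or a healthy functional equivalent."""
    if catalogue[preferred]["healthy"]:
        return preferred
    for name, info in catalogue.items():
        if name != preferred and info["function"] == function and info["healthy"]:
            return name
    return None  # no alternative: the decision must be escalated to replanning


# The plan (the action sequence) is untouched by the decision.
plan = ["localize", "detect_obstacles", "move_to_goal"]
binding = select_component("obstacle_detection", "lidar_front", components)
print(plan, binding)
```

Returning `None` when no equivalent exists marks the boundary between decision making and planning: at that point the action sequence itself has to change.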

3.4 Prediction and monitoring

Once the robot has an internal model, it can supervise the situation. This model can be given a priori or created before starting the task; it could also be learned. The model reflects the understanding of the robot about its own characteristics, its interactions with the environment, and the relationships between its actions and its outcomes. At runtime, the robot can use the model to predict the effect of an action. It can also anticipate future events based on the way the situation is evolving.

This mechanism also allows the robot to monitor processes and compare the result obtained with the expected response. In the event of inconsistencies, it can inform an external operator or use adaptation techniques to solve possible errors. For example, if a robot is stuck, it may change its motion direction to get out, and if this is not possible, it can alert the user.
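The stuck-robot example can be sketched as a comparison between a predicted and a measured displacement. The model used here (displacement equals commanded speed times elapsed time), the tolerance, and the returned action labels are assumptions made for illustration.

```python
def matches_prediction(expected, measured, tolerance=0.1):
    """Monitoring: does the observed outcome agree with the predicted one?"""
    return abs(expected - measured) <= tolerance


def handle_step(commanded_speed, dt, measured_displacement,
                recovery_attempts, max_attempts=2):
    """Decide what to do after one motion step."""
    # Prediction from a deliberately simple internal model.
    expected = commanded_speed * dt
    if matches_prediction(expected, measured_displacement):
        return "continue"
    if recovery_attempts < max_attempts:
        return "change_direction"  # adaptation attempt
    return "alert_user"            # adaptation exhausted, inform the operator


print(handle_step(0.5, 1.0, 0.48, recovery_attempts=0))
print(handle_step(0.5, 1.0, 0.0, recovery_attempts=0))
print(handle_step(0.5, 1.0, 0.0, recovery_attempts=2))
```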

The prediction process can also benefit from a learning procedure to improve the models from experience and refine them over time. Furthermore, monitoring can be used during learning, as it detects errors that can help improve the model.

3.5 Reasoning

Reasoning is the process of using existing knowledge to draw logical conclusions and extend the scope of that knowledge. It requires solid definitions and relationships between concepts. It uses instances of such concepts to ground them to the robot’s operation and to be used during the mission.

The reasoning process can be used to infer events based on the current percepts, such as the dynamic object approaching the robot, or to infer the best possible action to overcome it based on its background knowledge, such as reducing speed and slightly changing the direction. Lastly, as robots operate in dynamic worlds—specifically, they operate by changing their environment—the knowledge shall evolve over time.

Reasoning includes two processes: (i) infer and maintain beliefs and (ii) discard beliefs that are no longer valid. Logical systems support assertions and retractions for this purpose; however, they must be handled carefully to maintain ontological consistency. Truth maintenance is a critical capability for cognitive agents situated in dynamic environments.
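A minimal sketch of both processes is given below. It uses naive forward chaining that recomputes all derived beliefs from the asserted facts on every query, so a conclusion disappears as soon as one of its supports is retracted. This is a simplification chosen for brevity, not how a production truth maintenance system works; the rule contents and the `BeliefBase` class are hypothetical.

```python
class BeliefBase:
    """Asserted facts plus rules; derived beliefs are recomputed on demand."""

    def __init__(self, rules):
        self.asserted = set()
        self.rules = rules  # list of (frozenset of premises, conclusion)

    def tell(self, fact):
        self.asserted.add(fact)

    def retract(self, fact):
        self.asserted.discard(fact)

    def beliefs(self):
        """Forward-chain from the asserted facts until nothing new is derived."""
        known = set(self.asserted)
        changed = True
        while changed:
            changed = False
            for premises, conclusion in self.rules:
                if premises <= known and conclusion not in known:
                    known.add(conclusion)
                    changed = True
        return known


rules = [
    (frozenset({"object_moving", "object_uncontrolled"}), "mobile_obstacle"),
    (frozenset({"mobile_obstacle"}), "reduce_speed"),
]
kb = BeliefBase(rules)
kb.tell("object_moving")
kb.tell("object_uncontrolled")
print("reduce_speed" in kb.beliefs())
kb.retract("object_moving")  # the object has stopped
print("reduce_speed" in kb.beliefs())
```

Because derived beliefs are never stored, retracting a premise cannot leave a stale conclusion behind, at the cost of recomputing the closure each time.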

3.6 Planning

Planning is the process of finding a sequence of actions to achieve a goal. To reach a solution, the problem must be structured and well defined, especially in terms of the starting state, which the robot shall transform into a desired goal state. The system also needs to know the constraints to execute an action and its expected outcome, that is, preconditions and postconditions. These conditions are also used to establish the order between actions and the effect that they may have on subsequent actions.
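The role of preconditions and postconditions can be illustrated with a small state-space planner. The sketch below encodes the miner robot example with hypothetical action and state names and uses breadth-first search over sets of facts; it is one simple way to plan, not the method of any particular system surveyed here.

```python
from collections import deque

# Each action: (preconditions, facts added, facts deleted). Names are hypothetical.
ACTIONS = {
    "examine_mine": (frozenset({"at_entrance"}), frozenset({"mine_examined"}), frozenset()),
    "detect_vein": (frozenset({"mine_examined"}), frozenset({"vein_located"}), frozenset()),
    "dig": (frozenset({"vein_located"}), frozenset({"ore_extracted"}), frozenset()),
}


def plan(initial, goal, actions):
    """Breadth-first search from the initial state to any state containing the goal."""
    start = frozenset(initial)
    goal = frozenset(goal)
    frontier = deque([(start, [])])
    visited = {start}
    while frontier:
        state, steps = frontier.popleft()
        if goal <= state:
            return steps
        for name, (pre, add, delete) in actions.items():
            if pre <= state:  # the action is applicable only if preconditions hold
                successor = (state - delete) | add
                if successor not in visited:
                    visited.add(successor)
                    frontier.append((successor, steps + [name]))
    return None  # the goal is unreachable from the initial state


print(plan({"at_entrance"}, {"ore_extracted"}, ACTIONS))
```

The ordering of the resulting plan is not written anywhere explicitly; it emerges from the way each action's postconditions satisfy the next action's preconditions.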

The required information is usually stored in three types of models: environment, robot, and goal models. Most authors only mention the environmental model, which includes the most relevant information about the robot world and its actions, tasks, and goals; however, we prefer to isolate the three models to make explicit the importance of proprioceptive information and performance indicators for a more dependable autonomous robot.

Plans can completely guide the behavior of the robot or suggest a succession of abstract actions that can be expanded in different ways. This can result in branches of possible actions, depending on the result of previous states.

Planning is also closely related to monitoring; the supervision output can be used to assess the effectiveness of the plan or to detect planned actions that have become unreachable. In this case, the plan may need adjustments, such as changing parameters or replacing some actions. Replanning can use part of the plan or draw a completely new structure depending on the progress and status of the plan and the available components.

Lastly, successful plans or sub-plans can be stored for reuse. These stored plans can also benefit from learning, especially with regard to the environmental response to changes and action constraints and outputs.

3.7 Execution

A key process in robotic deployments is the execution of actions that interact with the environment. The robot model must represent the motor skills that produce such changes. Execution can be purely deliberative or combined with more reactive approaches; for example, a patrolling robot may reduce its speed or stop to ensure safety when close to a human—reactiveness—but it also needs a defined set of waypoints to fully cover an area—deliberation.

Hence, an autonomous robot must facilitate the integration of both reactive and deliberative actions within a goal-oriented hierarchy. A strictly reactive approach would limit the ability to direct the robot’s actions toward a defined objective. Meanwhile, an exclusively deliberative approach might be excessively computationally intensive and lead to delayed responses to instantaneous changes.
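The patrolling example can be sketched as a single control cycle in which a deliberative layer chooses the target and a reactive layer overrides the speed. The radii, speeds, and function name are hypothetical values chosen only for illustration.

```python
def patrol_step(waypoints, index, human_distance,
                cruise_speed=1.0, slow_radius=3.0, stop_radius=1.0):
    """Return (target waypoint, speed) for one control cycle."""
    # Deliberative layer: follow the planned waypoint sequence, cycling forever.
    target = waypoints[index % len(waypoints)]
    # Reactive layer: adjust speed immediately based on the nearest human.
    if human_distance <= stop_radius:
        speed = 0.0
    elif human_distance <= slow_radius:
        speed = cruise_speed * 0.5
    else:
        speed = cruise_speed
    return target, speed


route = [(0, 0), (5, 0), (5, 5), (0, 5)]
print(patrol_step(route, 1, human_distance=10.0))
print(patrol_step(route, 1, human_distance=2.0))
print(patrol_step(route, 1, human_distance=0.5))
```

The reactive rule never changes the target, so the goal-directed structure of the patrol is preserved while safety responses stay cheap to compute.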

Another aspect of execution is control. Robots use controllers to overcome small deviations from their state. These controllers can operate in open-loop or closed-loop mode. Open-loop controllers apply predetermined actions based on a set of inputs, assuming that the system will respond predictably. Although they lack the ability to correct runtime deviations, they are often simpler and faster. Closed-loop controllers provide a more accurate and precise action based on inputs and feedback received. Control grounds the decision-making process by specifying the final target value for the robot effectors. It constitutes the final phase of action execution.
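The difference between the two controller types can be seen in a toy simulation. The sketch assumes a one-dimensional robot whose position integrates the commanded velocity plus a constant unmodeled disturbance; the gain, step count, and disturbance value are arbitrary illustrative choices.

```python
def simulate(controller, target, steps=50, dt=0.1, disturbance=-0.2):
    """Integrate position under a velocity command and a constant disturbance."""
    x = 0.0
    for k in range(steps):
        u = controller(target, x, k)
        x += (u + disturbance) * dt
    return x


def open_loop(target, x, k, steps=50, dt=0.1):
    """Predetermined command: the constant velocity that would reach the target
    in the allotted time if the model were perfect. The state x is ignored."""
    return target / (steps * dt)


def closed_loop(target, x, k, kp=2.0):
    """Proportional feedback: the command depends on the measured error."""
    return kp * (target - x)


target = 1.0
open_error = abs(target - simulate(open_loop, target))
closed_error = abs(target - simulate(closed_loop, target))
print(open_error, closed_error)
```

With this disturbance the open-loop command is cancelled entirely, while feedback brings the robot close to the target. A purely proportional controller still leaves a residual error, which is why practical controllers often add an integral term.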

3.8 Communication and coordination

In many applications, robots operate with other agents—humans or robots of a different nature. Communication is a key feature to organize actions and coordinate them towards a shared goal. Moreover, in knowledge-based systems, communication provides an effective way to obtain knowledge from other agents’ perspectives.

Shared information provides a means to validate perceived elements, fuse them with other sources, and provide access to unperceivable regions of the world. However, this requires a way to exchange information between agents in a neutral, shared conceptualization that is understandable and usable by all of them.

Once communication is established, we shall coordinate the actions of the systems involved. Decision-making and planning processes should take into account the capabilities and availability of agents to direct and sequence their actions toward the most promising solution. For example, in multirobot patrols, agents shall share their pose and planned path to avoid collisions. Another example could be exploring a difficult-to-access mine in which a wheeled robot could be used for most of the inspection activities, and a legged robot could be used to inspect the unreachable areas.
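
For the multirobot patrol example, a minimal sketch of how shared planned paths could be checked for collisions is given below. It assumes, purely for illustration, that each robot publishes its plan as a list of grid cells indexed by time step; the function name and data layout are hypothetical.

```python
from itertools import combinations


def find_conflicts(paths):
    """Return (time_step, robot_a, robot_b) for every step where two robots
    plan to occupy the same grid cell.

    `paths` maps a robot name to its planned list of (x, y) cells, one per step.
    Only same-cell conflicts are checked; robots swapping cells are not detected.
    """
    conflicts = []
    for (name_a, path_a), (name_b, path_b) in combinations(sorted(paths.items()), 2):
        for step, (cell_a, cell_b) in enumerate(zip(path_a, path_b)):
            if cell_a == cell_b:
                conflicts.append((step, name_a, name_b))
    return conflicts
```

A planner could use the returned conflicts to delay one robot or to request a new path before execution starts.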

3.9 Interaction and design

Interaction and design are often omitted when analyzing autonomous capabilities. Although they are part of the design phase, this engineering knowledge holds considerable influence over the robot’s performance and dependability.

Interaction between agents can be handled through coordination; however, the embodiment of robots can produce interactions between software and/or hardware components. Robots should be aware of the interaction ports and the possible errors that arise from them. This concern is presented by Brachman (2002), as awareness of interaction allows the robot to step back from action execution and understand the sources of failure. This becomes particularly significant during the integration of diverse components and subsystems, where the application of systems engineering techniques proves to be highly beneficial.

Hernández et al. (2018) argue for the need to exploit functional models to make design decisions and alternatives explicit at runtime. These models can provide background knowledge about requirements, constraints, and assumptions under which a design is valid. With this knowledge, we can endow robots with more tools for adaptability, providing the capability to overcome deviations or contingencies that may occur. For example, a manipulator robot with several tools may have one optimal tool for a task, but if this component is damaged, it can use an alternative tool to solve the problem in a less-than-optimal way.

3.10 Learning

Most of the processes described above can improve their efficacy through learning: categorization, decision making, prediction and monitoring, planning, execution, coordination, design, etc. Learning can be divided into three steps: remember, reflect, and generalize.

• Remember is the ability to store information from previous executions.

• Reflect involves analyzing remembered information to detect patterns and establish relationships.

• Generalize is the process of abstracting conclusions from reflection and extending them for use in future experiences.

The classification of these competencies reveals their incorporation into KBs, identifies potential underrepresented elements, and explores the contributions of knowledge structures to the decision-making process.

4 Review process

One of our main objectives in this article is to conduct a systematic analysis of recent and relevant projects that use ontologies for autonomous robots. The methodology followed is inspired by relevant surveys discussed in the next section, such as those by Olivares-Alarcos et al. (2019) and Cornejo-Lupa et al. (2020). To avoid personal bias, the entire article selection process has been cross-analyzed by two people, and the framework analysis has been validated by the five authors.

The first step in our review process was to search for relevant keywords in scientific databases. Specifically, we used the most widely used literature databases, Scopus and Web of Science (WOS). These databases provide a wide range of peer-reviewed literature, including scientific journals, books, and conference proceedings. Scopus includes more than 7,000 publishers, and WOS includes more than 34,000 journals, including important journals in the field, such as those from IEEE, Springer, and ACM. Both also have user-friendly interfaces to store, analyze, and display articles. Moreover, related articles cited in the analyzed articles were included in the review process to ensure that relevant articles were not missed. The search covered titles, abstracts, and keywords containing the terms robot, ontology, plan, behavior, adapt, autonomy, or fault. In practice, we used the following search string. The search can be replicated using the provided query. However, it is important to note that the survey was conducted in 2022, and there may have been developments or new publications since then. Additionally, a list of the analyzed works and intermediate documents is available upon request.

The required terms are “ontology” and “robot,” as they are the foundation of our survey. Using this restriction may seem somewhat limiting because there could be knowledge-based approaches to cognitive robots that are not based on ontologies. However, in this review, we are specifically interested in using explicit ontologies for this purpose, hence the strong requirement regarding “ontology.” We also target at least one keyword related to (i) selection and arrangement of actions—autonomous, planning, behavior—or (ii) overcoming contingencies—fault, adapt. Note that we use asterisks to be flexible with the notation.

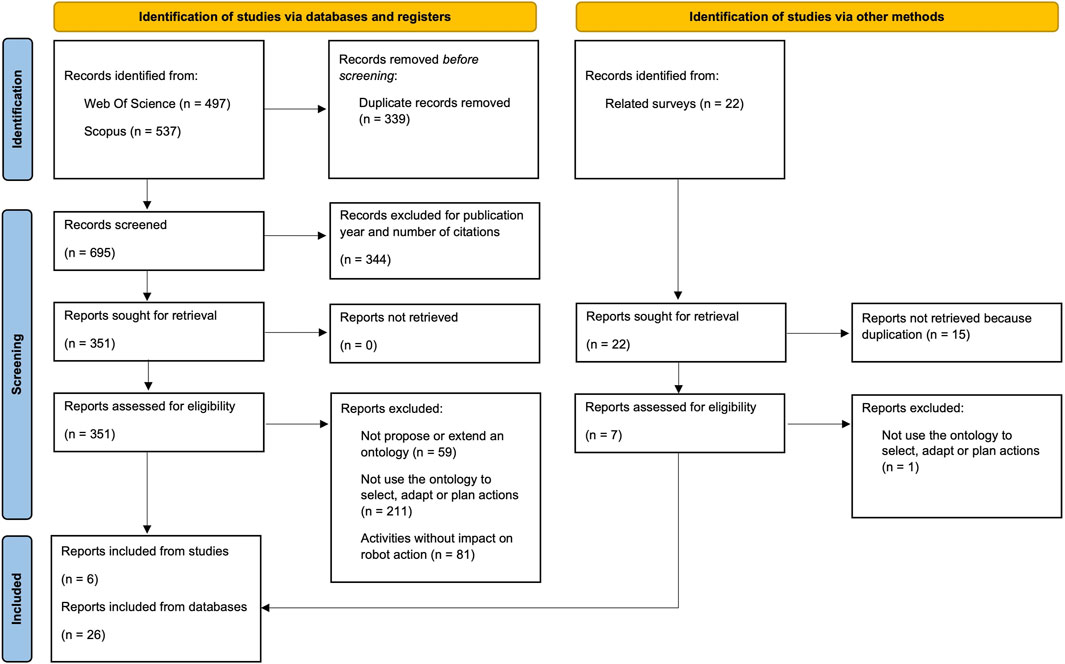

This search returned 695 articles after removing duplicates. To mitigate potential biases, we implemented specific inclusion criteria based on publication dates and citation counts. We only include articles widely cited, with 20 or more citations, before 2010; articles between 2010 and 2015 with five or more citations; and articles between 2015 and 2018 with two or more citations. All articles from 2018 onward were included. With these criteria, we reduced the list to 351 articles. The selection process applied these criteria to identify articles that are not only recent but have also demonstrated impact and influence within their respective publication periods.
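
The citation thresholds above can be written as a small filter. The following Python sketch is only an illustration of the stated criteria; because the year ranges in the text share their boundary years, it assumes that 2015 and 2018 belong to the later period in each case.

```python
def meets_inclusion_criteria(year: int, citations: int) -> bool:
    """Apply the publication-date and citation-count thresholds.

    Assumption: boundary years (2015, 2018) are assigned to the later period.
    """
    if year < 2010:
        return citations >= 20
    if year < 2015:
        return citations >= 5
    if year < 2018:
        return citations >= 2
    return True  # all articles from 2018 onward are kept
```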

Then, we analyzed the content of the articles. We included works in which the main point of the article is the ontology. In particular, the work (i) proposes or extends the ontology and (ii) uses the ontology to select, adapt, or plan actions. We eliminated a number of articles on how to use ontologies to encode simulations, on-line generators of ontologies, or ontologies only used for conversation, perception, or collaboration without impact on robot action. The application of these criteria produced 26 relevant articles.

Finally, we complemented our search with articles from related surveys described in Section 4.1. This snowball process ensured that we included all relevant and historical articles in the field. In this step, we obtained 22 more articles; some of them were already included in the previous list, and others did not meet our inclusion criteria. After evaluating them, we included six more articles, resulting in a total of 32 articles for in-depth review. The entire process is depicted in Figure 1 with a Preferred Reporting Items for Systematic reviews and Meta-Analyses (PRISMA) flow diagram.

Figure 1. PRISMA flow diagram, adapted from Page et al. (2021).

4.1 Related surveys

This section evaluates a variety of comparative studies and surveys on the application of KR&R to several robotic domains. These works address robot architecture, as well as specific topics such as path planning, task decomposition, and context comprehension. However, our approach transcends these specific domains, aiming to offer a more comprehensive view of the role of ontologies in enhancing robot understanding and autonomy.

Gayathri and Uma (2018) compare languages and planners for robotic path planning from the KR&R perspective. They discuss ontologies for spatial, semantic, and temporal representations and their corresponding reasoning. In Gayathri and Uma (2019), the same authors expand their review by focusing on DL for robot task planning.

Closer to our perspective are articles that compare and analyze different approaches for ontology-based robotic systems, specifically those articles that focus on what to model in an ontology. Cornejo-Lupa et al. (2020) compare and classify ontologies for simultaneous localization and mapping (SLAM) in autonomous robots. The authors compare domain and application ontologies in terms of (i) robot information such as kinematic, sensor, pose, and trajectory information; (ii) environment mapping such as geographical, landmark, and uncertainty information; (iii) time-related information and mobile objects; and (iv) workspace information such as domain and map dimensions. They focus on one important but specific part of robot operation, autonomous navigation, and, in a particular type of robot, mobile robots.

Manzoor et al. (2021) compare projects based on application, ideas, tools, architecture, concepts, and limitations. However, this article does not examine how architecture in the compared frameworks supports autonomy. Their review focuses on objects, environment maps, and task representations from an ontology perspective. It does not compare the different approaches regarding the robot’s self-model. It also does not tackle the mechanisms and consequences of using such knowledge to enhance the robot’s reliability.

Perhaps the most relevant review from our perspective is the one by Olivares-Alarcos et al. (2019). They analyze five of the main projects that use KBs to support robot autonomy. They ground their analysis in (i) ontological terms, (ii) the capabilities that support robot autonomy, and (iii) the application domain. Moreover, they discuss robots and environment modeling. This work is closely related to the development of the recent IEEE Standard 1872.2 (IEEE SA, 2022).

Our approach differentiates itself from previous reviews because we focus on modeling not only robot actions and their environments but also engineering design knowledge. This type of knowledge is not often considered but provides a deeper understanding of the robot’s components and their interactions, design requirements, and possible alternatives to accomplish a mission. We also base our comparison on explicit knowledge of the mission and how we can ensure that it satisfies the user-expected performance. For this reason, we focus on works that select, adapt, or plan actions. Our search used more flexible inclusion criteria to analyze a variety of articles, even if they address only some of the issues or their ontologies are not publicly available. We have adopted this wide perspective to draw a general picture of different approaches that build the most important capabilities for dependable autonomous robots.

4.2 Review scope

Our analysis focuses on exploring the role of ontologies in advancing the autonomy of robotic systems. For this purpose, we delve into research articles that address ontologies created or extended to select, adapt, or plan actions autonomously. We excluded studies that solely encode simulations or utilize ontologies for non-action-related tasks, aiming to concentrate on contributions directly impacting robot action. Furthermore, our scope excludes non-ontology-based approaches, even if they are important for dependability or autonomy, such as all the developments in safety-critical systems.

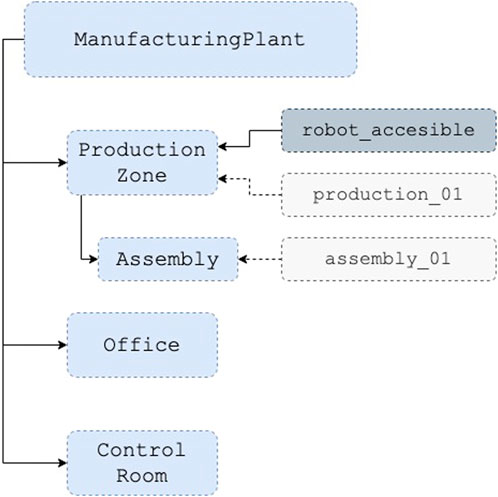

An ontology is a shared conceptualization that structures objects into classes, which can be seen as categories within the domain of discourse. While interaction with the world involves specific objects, referred to as individuals or instances, much reasoning occurs at the class level. Classes can possess properties that characterize the collection of objects they represent or are related to other classes. Additionally, they are organized through inheritance, expressed by the subclass relation. Figure 2 represents a simple ontology with partial information about zones in a manufacturing plant.

Figure 2. Simple example of manufacturing zones ontology. Classes in light blue, properties in dark blue, and individuals in gray.

Consider the assertion of the class ProductionZone with the property robot_accessible. According to this assertion, every instance of ProductionZone, such as the specific area production_01, is considered robot_accessible. In this hierarchy, AssemblyZone is a subclass of ProductionZone, so we can deduce that every instance of AssemblyZone, such as assembly_01, inherits the properties of its superclass, including robot_accessible. Zones without the property, such as Office or Control Room, are considered restricted.

This kind of reasoning based on classification and consistency can be used to derive new assertions about elements in the robot environment and the robot itself. In this example, the robot can use this information to select a route through the plant that traverses only the accessible areas. Therefore, the use of knowledge-driven policies facilitates the fulfillment of safety regulations and compliance standards and can enhance human understanding of the decisions the robot is making.
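
The inheritance reasoning in this example can be mimicked with a few dictionaries. The sketch below is not an OWL reasoner; it is a hedged, plain-Python illustration in which the root class `Zone`, the class `ControlRoom`, and the individual `office_01` are hypothetical additions. Following the text, a zone lacking the property is treated as restricted, which is a closed-world reading.

```python
# Subclass relation: child class -> parent class ("Zone" is an assumed root).
SUBCLASS_OF = {
    "AssemblyZone": "ProductionZone",
    "ProductionZone": "Zone",
    "Office": "Zone",
    "ControlRoom": "Zone",
}

# Properties asserted directly on classes.
CLASS_PROPERTIES = {"ProductionZone": {"robot_accessible"}}

# Individuals and their classes ("office_01" is a hypothetical individual).
INSTANCE_OF = {
    "production_01": "ProductionZone",
    "assembly_01": "AssemblyZone",
    "office_01": "Office",
}


def inherited_properties(cls):
    """Collect properties of a class and all of its superclasses."""
    properties = set()
    while cls is not None:
        properties |= CLASS_PROPERTIES.get(cls, set())
        cls = SUBCLASS_OF.get(cls)
    return properties


def is_robot_accessible(individual):
    """An individual is accessible if its class inherits robot_accessible."""
    return "robot_accessible" in inherited_properties(INSTANCE_OF[individual])


def route_is_allowed(route):
    """A route is allowed only if every zone it crosses is accessible."""
    return all(is_robot_accessible(zone) for zone in route)
```

Here `assembly_01` is accessible only because its class inherits the property from `ProductionZone`, which is the deduction described above.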

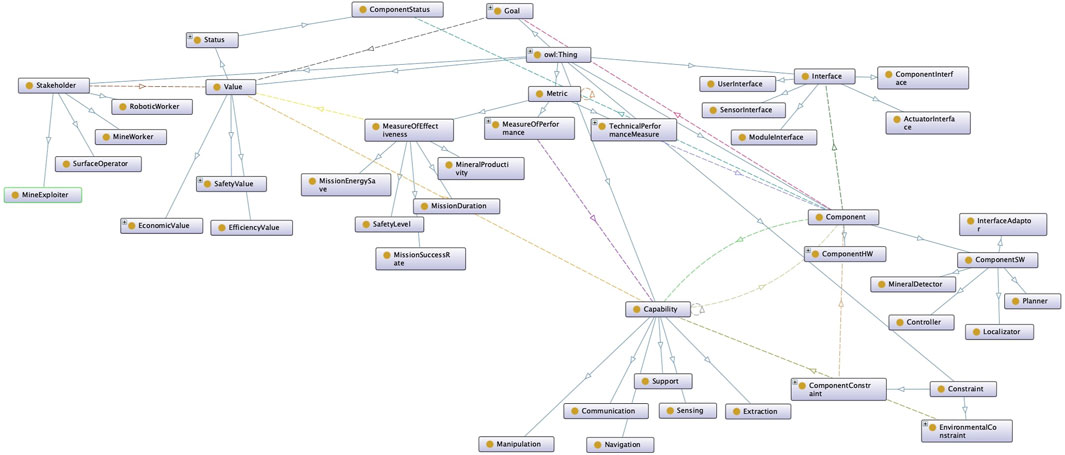

If the ontology represented components, capabilities, and goals, these conclusions could be used to adapt the system. Figure 3 represents a robotic ontology based on components, capabilities, goals, and values to determine which design alternatives are available for the robot. Following the approach of TOMASys (Hernández et al., 2018), we are working on a system that selects the most suitable reconfiguration action for the robot. For example, if a component fails, the ontology can find another component that provides an equivalent capability so the robot can use it to complete the mission. This KB is also useful for determining which interfaces the system requires in that case, which metrics would be affected, and which stakeholders should be advised of the change. More information on the fundamental aspects of this ontology can be found in Aguado et al. (2024).

Figure 3. Example of an ontology to formalize robotics system models and their runtime deployment to select the most appropriate reconfiguration strategy in response to unexpected circumstances. This ontology represents the robot components, capabilities, and mission goals. The objective of the ontology is to find adaptation solutions that are not predefined or ad hoc; rather, they are computed during operation based on explicit engineering knowledge from the design phase.
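
To make the component-failure example concrete, the following Python sketch looks up an available component that provides the same capability as the failed one. It is a simplified illustration, not the TOMASys mechanism or the ontology of Figure 3; the `Component` fields and the single `performance` score are assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    name: str
    capability: str       # capability the component provides
    performance: float    # hypothetical expected quality, higher is better
    available: bool = True


def select_alternative(components, failed_name):
    """Return the best available component offering the failed one's capability,
    or None when no equivalent component exists."""
    failed = next(c for c in components if c.name == failed_name)
    candidates = [
        c for c in components
        if c.name != failed_name and c.available and c.capability == failed.capability
    ]
    return max(candidates, key=lambda c: c.performance, default=None)
```

A fuller model would also report the affected interfaces, metrics, and stakeholders mentioned above; this sketch covers only the capability lookup.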

The analysis developed here does not address different approaches to solving a specific problem; rather, it focuses on examining, for each framework, the capabilities explicitly represented in ontologies or those that leverage the ontology at runtime to enhance the operation of the robot.

The survey is centered on action-related conceptualizations, with the objective of finding the theoretical foundations and relevant applications of ontological frameworks within the field of robotics. For this reason, and especially given that most of the articles do not provide source files for the ontology, we focus on the concepts; discussions regarding scalability, computational cost, and efficiency are outside the scope of our study.

4.3 Review audience

This survey is directed towards a specific audience that is not seeking introductory knowledge about ontologies or their basic usage. That content can be found in resources such as Staab and Studer (2009). For an introduction from the robotics perspective, refer to Chapter 10 in Russell and Norvig (2021). Our target audience consists of people interested in understanding the practical application of concepts related to robot autonomy during robot task execution. We aim to cater to researchers, practitioners, and academics who already possess a foundational understanding of ontologies and are now seeking to explore how these concepts intersect with and enhance the execution of tasks in robotic systems.

5 A survey of applications using ontologies for robot autonomy

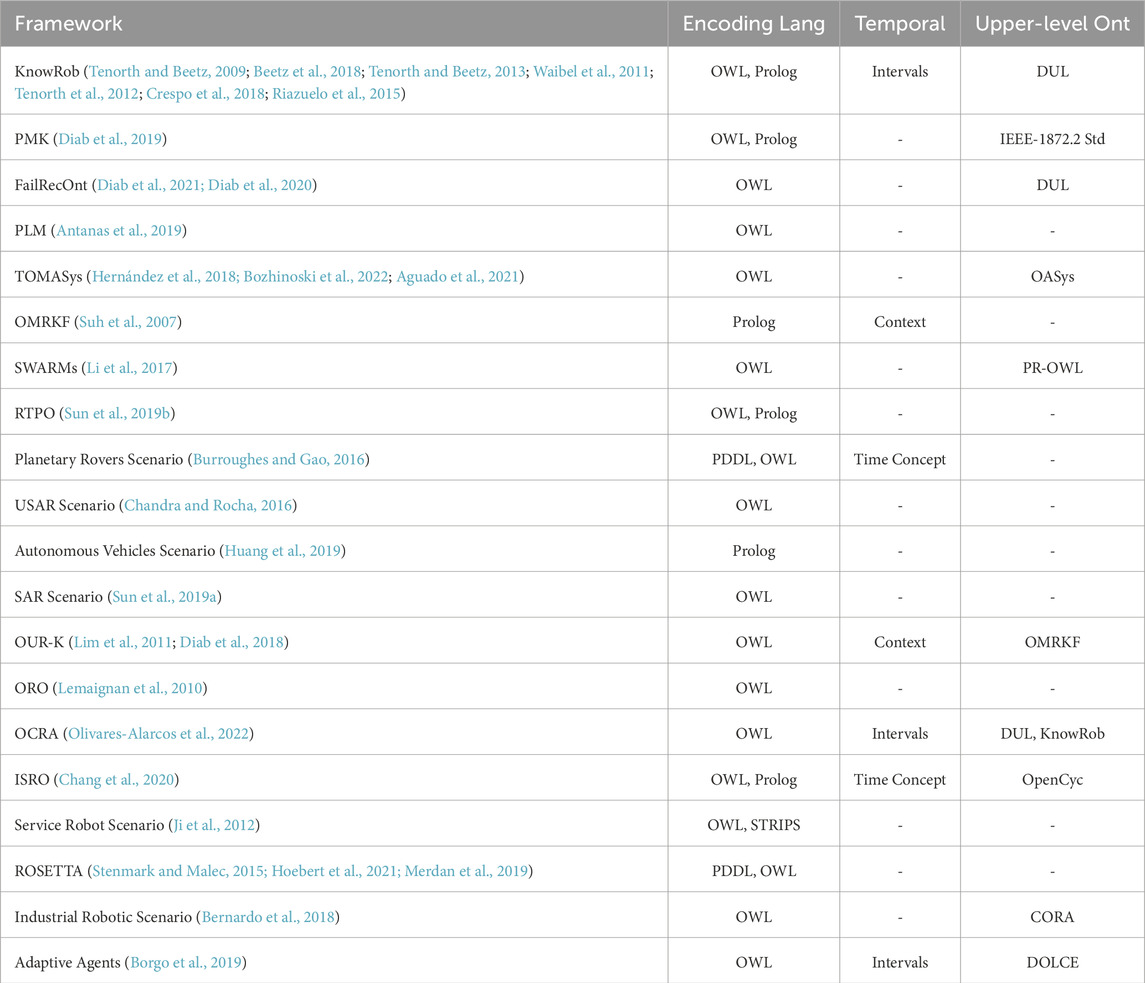

The following sections review and compare some projects to find their specific contributions to the field of robotic ontologies. We have organized the analysis of the projects under review based on the application domain—manipulation, navigation, social, and industrial—in which they have been deployed. However, most of the work has a broader perspective and may be applied to other domains. Each analyzed work includes a brief description and a discussion of the above elements. Finally, each domain provides a comparative table with the most relevant aspects of each capability.

5.1 Manipulation domain

In the domain of robotics, manipulation refers to the control and coordination of robotic arms, grippers, and other mechanical systems to interact with objects in the physical world. This includes a wide range of applications, from industrial automation to tasks in unstructured environments, such as household chores or healthcare assistance. In this section, most of the work under analysis focuses on domestic applications for manipulation.

5.1.1 KnowRob and KnowRob-based approaches

KnowRob is a framework that provides a KB and a processing system to perform complex manipulation tasks (Tenorth and Beetz, 2009; Tenorth and Beetz, 2013). KnowRob2 (Beetz et al., 2018) represents the second generation of the framework and serves as a bridge between vague instructions and specific motion commands required for task execution.

KnowRob2’s primary objective is to determine the appropriate action parametrization based on the required motion and identify the physical effects that must be achieved or avoided. For example, if the robot is asked to pick up a cup and pour out its contents, the KB retrieves the necessary action of pouring, which includes a sub-task to grasp the source container. Subsequently, the framework queries for pre-grasp and grasp poses, along with grasp force, to establish the required motion parameters.

The KnowRob ontology includes a spectrum of concepts related to robots, including information about their body parts, connections, sensing and action capabilities, tasks, actions, and behavior. Objects are represented with their parts, functionalities, and configuration, while context and environment are also taken into account. Additionally, the ontology incorporates temporal predicates based on event logic and time-dependent relations.

While KnowRob’s initial ontology was based on Cyc (Lenat, 1995), which was designed to understand how the world works by representing implicit knowledge and performing human-like reasoning, Cyc has remained proprietary. OpenCyc, a public initiative related to Cyc, is no longer available. The KnowRob framework later transitioned to DUL, which was chosen for its compatibility with concepts for autonomous robots. KnowRob uses Prolog as a query and assert interface, but all perception, navigation, and manipulation actions are encoded in plans rather than Prolog queries or rules.

One of the main expansions of KnowRob is RoboEarth, a worldwide open-source platform that allows any robot with a network connection to generate, share, and reuse data (Waibel et al., 2011). It uses the principle of linked data connections through web and cloud services to speed up robot learning and adaptation in complex tasks.

RoboEarth uses an ontology to encode concepts and relations in maps and objects and a SLAM map that provides the geometry of the scene and the locations of objects with respect to the robot (Riazuelo et al., 2015). Each robot has a self-model that describes its kinematic structure and a semantic model to provide meaning to robot parts, such as a set of joints that form a gripper. This model also includes actions, their parameters, and software components, such as object recognition systems (Tenorth et al., 2012).

Another example of a KnowRob-based application is Crespo et al. (2018). In this case, the focus is on using semantic concepts to annotate a SLAM map with additional conceptualizations. This application diverges somewhat from KnowRob’s initial emphasis on robot manipulators. The work models the environment using semantic concepts, specifically capturing the relationships between rooms, objects, object interactions, and the utility of objects.

The detailed analysis of comparison criteria follows:

• Perception and categorization: KnowRob and RoboEarth incorporate inference mechanisms to abstract sensing information, particularly in the context of object recognition (Beetz et al., 2018; Crespo et al., 2018). As highlighted in Tenorth and Beetz (2013), inference processes information perceived from the external environment and abstracts it to the most appropriate level while retaining the connection to the original percepts. These ontologies serve as shared conceptualizations that accommodate various data types and support various forms of reasoning, effectively handling uncertainties arising from sensor noise, limited observability, hallucinated object detection, incomplete knowledge, and unreliable or inaccurate actions (Tenorth and Beetz, 2009).

• Decision making and planning: KnowRob places a strong emphasis on determining action parametrizations for successful manipulation, using hybrid reasoning with the goal of reasoning with eyes and hands (Beetz et al., 2018).

This approach equips KnowRob with the ability to reason about specific physical effects that can be achieved or avoided through its motion capabilities. Although KnowRob’s KB might exhibit redundancy or inconsistency, the reasoning engine computes multiple hypotheses, subjecting them to plausibility and consistency checks and ultimately selecting the most promising parametrization. The planning component of KnowRob2 is tailored for motion planning and solving inverse kinematics problems. Tasks are dynamically assembled on the basis of the robot’s situation.

• Prediction and monitoring: KnowRob uses its ontology to represent the evolution of the state, facilitating the retrieval of semantic information and reasoning (Beetz et al., 2018). Through these heterogeneous processes, the framework can predict the most appropriate parameters for a given situation. However, the monitoring capabilities within this framework are limited to objects in the environment. Unsuccessful experiences are labeled and stored in the robot’s memory, contributing to the selection of action parameters in subsequent scenarios. The framework introduces NEEMs, allowing queries about the robot’s actions, their timing, execution details, success outcomes, the robot’s observations, and beliefs during each action. This knowledge is used primarily during the learning process.

• Reasoning: KnowRob2 incorporates a hybrid reasoning kernel comprising four KBs with their corresponding reasoning engines (Beetz et al., 2018):

• Inner World KB: Contains CAD and mesh models of objects positioned with accurate 6D poses, enhanced with a physics simulation.

• Virtual KB: Computed on demand from the data structures of the control system.

• Logic KB: Comprises abstracted symbolic sensor and action data enriched with logical axioms and inference mechanisms. This type of reasoning is the focus of our discussion in this article.

• Episodic Memories KB: Stores experiences of the robotic agent.

• Execution: KnowRob execution is driven by competency questions to bridge the gap between undetermined instructions and action. The framework incorporates the Cognitive Robot Abstract Machine (CRAM), where one of its key functionalities is the execution of the plan. The framework provides a plan language to articulate flexible, reliable, and sensor-guided robot behavior. The executor then updates the KB with information about perception and action results, facilitating the inference of new data to make real-time control decisions (Beetz et al., 2010).

RoboEarth and earlier versions of KnowRob rely on action recipes for execution. Before executing an action recipe, the system verifies the availability of the skills necessary for the task and orders each action to satisfy the constraints. In cases where the robot encounters difficulties in executing a recipe, it downloads additional information to enhance its capabilities (Tenorth et al., 2012). Once the plan is established, the system links robot perceptions with the abstract task description given by the action recipe. RoboEarth ensures the execution of reliable actions by actively monitoring the link between robot perceptions and actions (Waibel et al., 2011).

• Communication and coordination: RoboEarth (Tenorth et al., 2012) uses a communication module to facilitate the exchange of information with the web. This involves making web requests to upload and download data, allowing the construction and updating of the KB.

• Learning: KnowRob includes learning as part of its framework. It focuses on acquiring generalized models that capture how the physical effects of actions vary depending on the motion parametrization (Beetz et al., 2018). The learning process involves abstracting action models from the data, either by identifying a class structure among a set of entities or by grouping the observed manipulation instances according to a specific property (Tenorth and Beetz, 2009). KnowRob2 extends its capabilities with the Open-EASE knowledge service (Beetz et al., 2015), offering a platform to upload, access, and analyze NEEMs of robots involved in manipulation tasks. NEEMs use descriptions of events, objects, time, and low-level control data to establish correlations between various executions, facilitating the learning-from-experience process.
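
The action-recipe step described above for RoboEarth (verify that the required skills are available, then order the actions so that their constraints are satisfied) can be sketched as follows. This is a hedged illustration using Python's standard `graphlib`; the recipe format, action names, and skill names are hypothetical and do not reproduce the RoboEarth recipe language. Where RoboEarth would download additional information, the sketch simply reports the missing skills.

```python
from graphlib import TopologicalSorter

# Hypothetical recipe: action -> required skill and actions that must come first.
EXAMPLE_RECIPE = {
    "locate_cup": {"skill": "perceive", "after": set()},
    "grasp_cup": {"skill": "grasp", "after": {"locate_cup"}},
    "pour_contents": {"skill": "pour", "after": {"grasp_cup"}},
}


def prepare_recipe(recipe, robot_skills):
    """Check skill availability, then return the actions in a valid order."""
    missing = {step["skill"] for step in recipe.values()} - set(robot_skills)
    if missing:
        # The sketch only reports the gap instead of fetching new knowledge.
        raise LookupError(f"missing skills: {sorted(missing)}")
    # TopologicalSorter expects a mapping from each node to its predecessors.
    graph = {action: step["after"] for action, step in recipe.items()}
    return list(TopologicalSorter(graph).static_order())
```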

5.1.2 Perception and Manipulation Knowledge

The Perception and Manipulation Knowledge (PMK) framework is designed for autonomous robots, with a specific focus on complex manipulation tasks; it provides semantic information about objects, types, geometrical constraints, and functionalities. In concrete terms, this framework uses knowledge to support task and motion planning (TAMP) capabilities in the manipulation domain (Diab et al., 2019).

The PMK ontology is grounded in IEEE Standard 1872.2 (IEEE SA, 2022) for knowledge representation in the robotic domain, extending it to the manipulation domain by incorporating sensor-related knowledge. This extension facilitates the link between low-level perception data and high-level knowledge for comprehensive situation analysis in planning tasks. The ontological structure of PMK comprises a meta-ontology for representing generic information, an ontology schema for domain-specific knowledge, and an ontology instance to store information about objects. These layers are organized into seven classes: feature, workspace, object, actor, sensor, context reasoning, and actions. This structure is inspired by OUR-K (Lim et al., 2011), which is further described in Section 5.3.

The detailed analysis of comparison criteria follows:

• Perception and categorization: The PMK ontology incorporates RFID sensors and 2D cameras to facilitate object localization, explicitly grouping sensors to define equivalent sensing strategies. This design enables the system to dynamically select the most appropriate sensor based on the current situation. PMK establishes relationships between classes such as feature, sensor, and action. For example, it stores poses, colors, and IDs of objects obtained from images.

• Decision making and planning: PMK augments TAMP parameters with data from its KB. The KB contains information on action feasibility considering object features, robot capabilities, and object states. The TAMP module utilizes this information, combining a fast-forward task planner with physics-based motion planning to determine a feasible sequence of actions. This helps determine where the robot should place an object and the associated constraints.

• Reasoning: PMK reasoning targets potential manipulation actions employing description logic’s inference for real-time information, such as object positions through spatial reasoning and relationships between entities in different classes. Inference mechanisms assess robot capabilities, action constraints, feasibility, and manipulation behaviors, facilitating the integration of TAMP with the perception module. This process yields information about constraints such as interaction parameters (e.g., friction, slip, maximum force), spatial relationships (e.g., inside, right, left), feasibility of actions (e.g., arm reachability, collisions), and action constraints related to object interactions (e.g., graspable from the handle, pushable from the body).

• Interaction and design: PMK represents interaction as manipulation constraints, specifying, for example, which part of an object is interactable, such as a handle. It also considers interaction parameters such as friction coefficient, slip, or maximum force.
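
The idea of grouping sensors into equivalent sensing strategies and choosing one according to the situation can be sketched as below. This is an assumed, simplified encoding and not the PMK ontology itself: the goal name, the situation flags, and the preference order are hypothetical.

```python
from typing import Callable, Optional

Situation = dict[str, bool]

# Sensors grouped by the sensing goal they can fulfil, in assumed preference
# order, each with a hypothetical applicability condition.
EQUIVALENT_SENSORS: dict[str, list[tuple[str, Callable[[Situation], bool]]]] = {
    "object_localization": [
        ("camera_2d", lambda s: s.get("object_visible", False)),
        ("rfid", lambda s: s.get("object_tagged", False)),
    ],
}


def select_sensor(goal: str, situation: Situation) -> Optional[str]:
    """Return the first sensor in the group that is applicable in the situation."""
    for name, applicable in EQUIVALENT_SENSORS.get(goal, []):
        if applicable(situation):
            return name
    return None
```

For instance, when the object is occluded but tagged, the sketch falls back from the camera to the RFID sensor.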

5.1.3 Failure Interpretation and Recovery in Planning and Execution

Failure Interpretation and Recovery in Planning and Execution (FailRecOnt) is an ontology-based framework featuring contingency-based task and motion planning. This system enables robots to handle uncertainty, recover from failures, and engage in effective human–robot interactions. Grounded in the DUL ontology, it addresses failures and recovery strategies, but it also takes some concepts from CORA and SUMO for robotics and ontological foundations, respectively.

The framework identifies failures through the non-realized situation concept and proposes corresponding recovery strategies for actions. To improve the understanding of failure, the ontology models terms such as causal mechanism, location, time, and functional considerations, which facilitates a reasoning-based repair plan (Diab et al., 2020; Diab et al., 2021).

The failure ontology requires a system knowledge model in terms of tasks, roles, and object concepts. Lastly, FailRecOnt has some similarities with KnowRob: both target manipulation tasks, are at least partially based on DUL, and share some of the authors. Moreover, they propose using PMK as a model of the system (Diab et al., 2021).

The detailed analysis of comparison criteria follows:

• Perception and categorization: Perception in FailRecOnt is limited to monitoring actions in order to detect abnormal events. For geometric information and environment categorization, the framework leverages PMK to abstract perceptual information related to the environment.

• Decision making and planning: FailRecOnt is structured into three layers: planning and execution, knowledge, and an assistant low-level layer. The planning and execution layer provides task planning and a task manager module. The assistant layer manages perception and action execution for the specific robot, determining how to sense an action and checking whether a configuration is collision-free. The framework has been evaluated for a task that involves storing an object in a given tray according to its color; it can handle situations such as facing a closed or flipped box and continuing the plan (Diab et al., 2021).

• Prediction and monitoring: Monitoring is a crucial aspect of the FailRecOnt framework. It continuously monitors executed actions and signals a failure to the recovery module if an error occurs. The reasoning component interprets potential failures and, if possible, triggers a recovery action to repair the plan.

• Reasoning: The FailRecOnt ontology describes how the perception of actions should be formalized. Reasoning selects an appropriate recovery strategy depending on the kind of failure, why it happened, and whether other activities are affected.

• Execution: FailRecOnt relies on planning for execution. The task planner generates a sequence of symbolic-level actions without geometric considerations. Geometric reasoning comes into play to establish a feasible path. During action execution, the framework monitors each manipulation action for possible failures by sensing. Reasoning is then applied to interpret potential failures, identify causes, and determine recovery strategies.

• Communication and coordination: FailRecOnt incorporates the reasoning for communication failure from Diab et al. (2020) to address failures in scenarios where multiple agents exchange information.
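The monitor, interpret, and recover cycle described above can be illustrated with a minimal Python sketch. It is not the FailRecOnt implementation: the action names, the failure causes (`box_closed`, `box_flipped`), and the three lookup tables are hypothetical stand-ins for knowledge that the framework would obtain from its ontology and from sensing.

```python
# Hypothetical knowledge, standing in for what an ontology query would return.
BLOCKING = {"place_in_tray": {"box_closed", "box_flipped"}}   # causes that make an action fail
RECOVERY = {"box_closed": ["open_box"], "box_flipped": ["flip_box"]}  # cause -> recovery actions
CLEARS = {"open_box": "box_closed", "flip_box": "box_flipped"}       # recovery action -> cause removed


def execute_with_recovery(plan, conditions):
    """Execute a symbolic plan, repairing it whenever monitoring signals a failure.

    `conditions` is the set of abnormal conditions currently true in the
    (simulated) world. Returns the sequence of actions actually executed.
    """
    conditions = set(conditions)
    pending = list(plan)
    trace = []
    while pending:
        action = pending.pop(0)
        # Monitoring: check whether any known failure cause affects this action.
        causes = BLOCKING.get(action, set()) & conditions
        if causes:
            cause = sorted(causes)[0]          # interpret one cause at a time
            recovery = RECOVERY.get(cause)
            if recovery is None:
                raise RuntimeError(f"no recovery strategy for {cause!r}")
            # Plan repair: run the recovery actions, then retry the failed action.
            pending = recovery + [action] + pending
            continue
        conditions.discard(CLEARS.get(action))  # a recovery action removes its cause
        trace.append(action)
    return trace


if __name__ == "__main__":
    print(execute_with_recovery(["pick_object", "place_in_tray"], {"box_closed"}))
```

In this sketch a failed action is retried after its recovery actions have run, which mirrors the idea of repairing the plan and continuing rather than re-planning from scratch.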

5.1.4 Probabilistic Logic Module

Probabilistic Logic Module (PLM) offers a framework that integrates semantic and geometric reasoning for robotic grasping (Antanas et al., 2019). Specific details about the KB, such as the source files, are not publicly available, so the information provided here is derived from articles about the framework.

The primary focus of this work is on an ontology that generalizes similar object parts to semantically reason about the most probable part of an object to grasp, considering object properties and task constraints. This information is used to reduce the search space for possible final gripper poses. This acquired knowledge can also be transferred to objects within the same category.

The object ontology comprises specific objects such as cup or hammer, along with supercategories based on functionality, such as kitchen container or tool. The task ontology encodes grasping tasks with objects, such as pick and place right or pour in. Additionally, a third ontology conceptualizes object-task affordances, considering the manipulation capabilities of a two-finger gripper and the associated probability of success.

In using the ontology, high-level knowledge is combined with low-level learning based on visual shape features to enhance object categorization. Subsequently, high-level knowledge utilizes probabilistic logic to translate low-level visual perception into a more promising grasp planning strategy.

The detailed analysis of comparison criteria follows:

• Perception and categorization: This approach is based on visual perception, employing vision to identify the most suitable part of an object for grasping. The framework utilizes a low-level perception module to label visual data with semantic object parts, such as detecting the top, middle, and bottom areas of a cup and its handle. The probabilistic logic module combines this information with the affordances model.

• Decision making and planning: PLM uses ontologies to support grasp planning. It integrates ontological knowledge with probabilistic logic to translate low-level visual perception into an effective grasp planning strategy. Once an object is categorized and its affordances are inferred, the task ontology determines the most likely object part to be grasped, thereby reducing the search space for possible final gripper poses. Subsequently, a low-level shape-based planner generates a trajectory for the end effector.

• Prediction and monitoring: The framework does not monitor robot actions. Its use of prediction is limited to estimating the probability of success of alternative tasks in order to select among them.

• Reasoning: PLM uses semantic reasoning for grasping. It selects the best grasping task based on object affordances, addressing the uncertainty of visual perception through a probabilistic approach.

• Learning: Learning techniques are used to identify the visual characteristics of the shape, which are then categorized. The robot utilizes the acquired knowledge for grasp planning.
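As an illustration of how uncertain categorization and object-task affordances can be combined to choose a grasp region, the following Python sketch computes an expected success score per object part. It is a simplification under stated assumptions: PLM itself relies on probabilistic logic, whereas this example uses a plain weighted sum, and every probability, category, and part name in the `AFFORDANCE` table is invented for illustration.

```python
# Hypothetical affordance model: P(success | category, part, task).
AFFORDANCE = {
    ("cup", "handle", "pour_in"): 0.9,
    ("cup", "middle", "pour_in"): 0.6,
    ("cup", "top", "pour_in"): 0.1,
    ("hammer", "handle", "pick_and_place"): 0.8,
    ("hammer", "head", "pick_and_place"): 0.4,
}


def score_parts(category_beliefs, task):
    """Expected grasp success per part, marginalizing over uncertain categories.

    `category_beliefs` maps a category name to the probability assigned by
    perception (assumed to come from a separate, low-level classifier).
    """
    scores = {}
    for (category, part, affordance_task), p_success in AFFORDANCE.items():
        if affordance_task != task:
            continue
        weight = category_beliefs.get(category, 0.0)
        scores[part] = scores.get(part, 0.0) + weight * p_success
    return scores


def best_part(category_beliefs, task):
    """Return the part with the highest expected success, or None if unknown task."""
    scores = score_parts(category_beliefs, task)
    if not scores:
        return None
    return max(scores, key=scores.get)


if __name__ == "__main__":
    print(best_part({"cup": 0.8, "hammer": 0.2}, "pour_in"))
```

Restricting the downstream planner to the selected part is what reduces the search space of final gripper poses; the geometric planning itself is outside the scope of this sketch.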

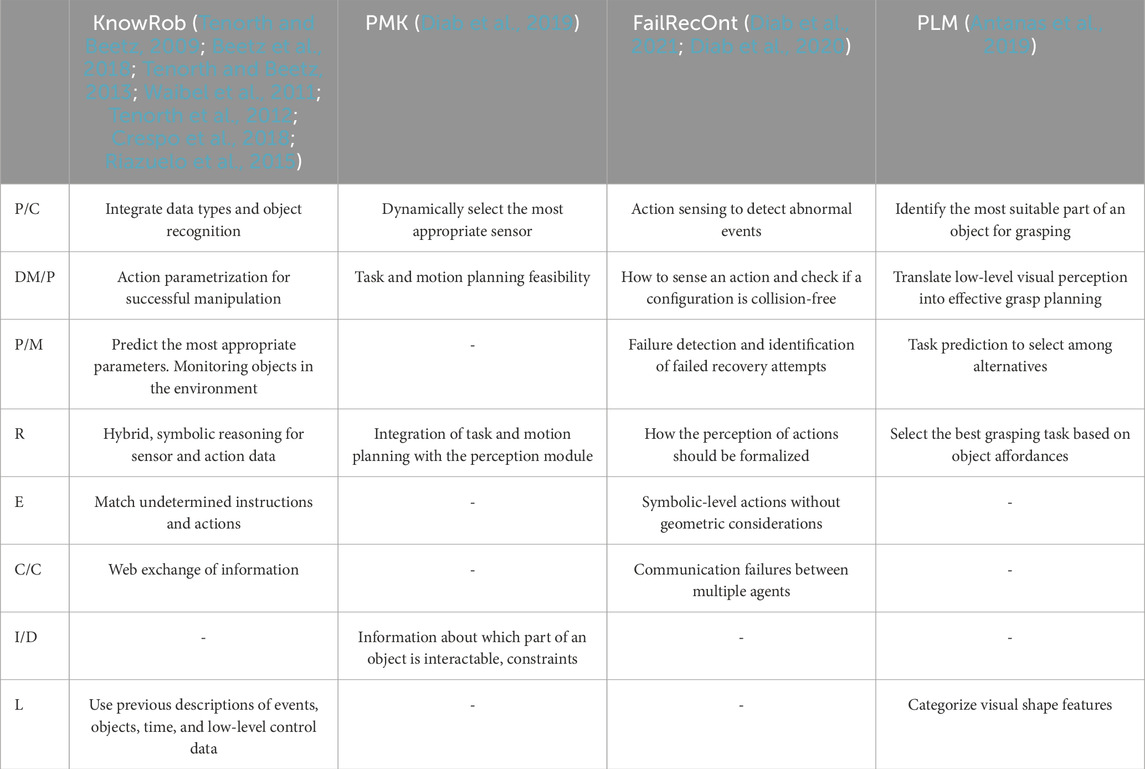

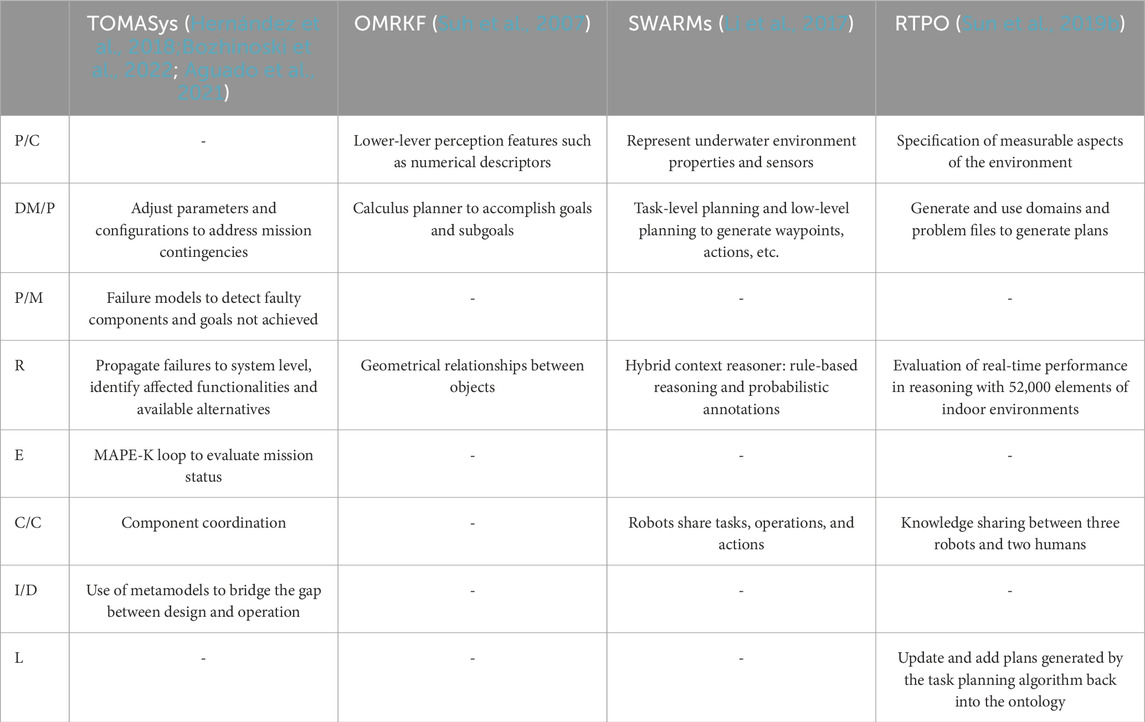

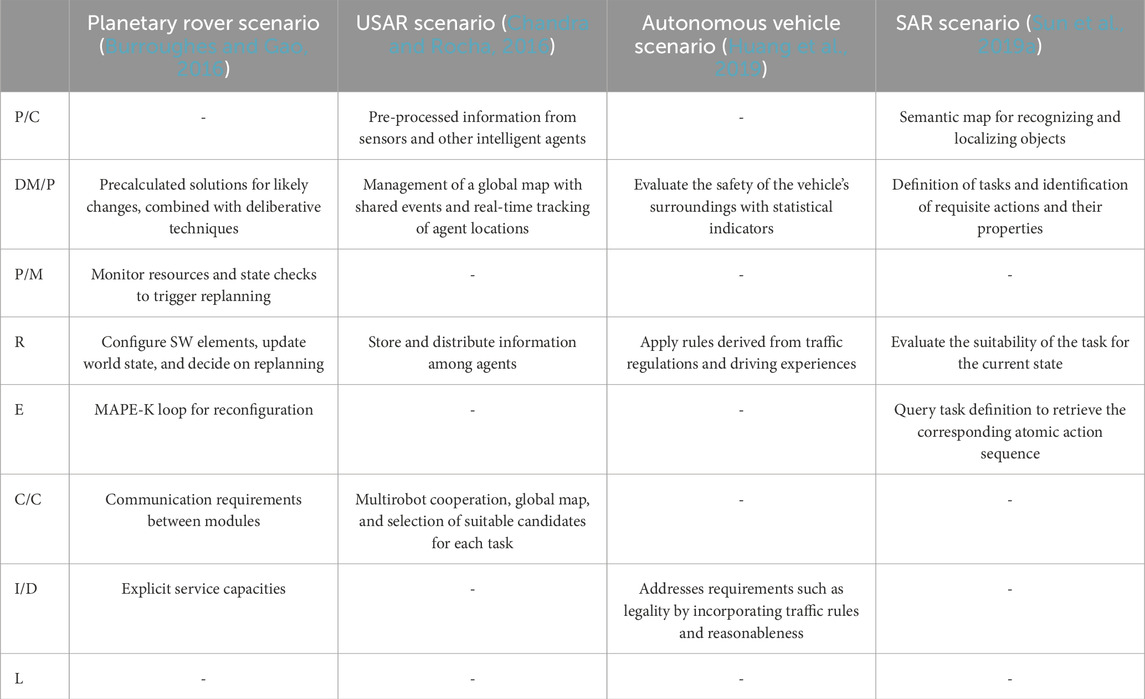

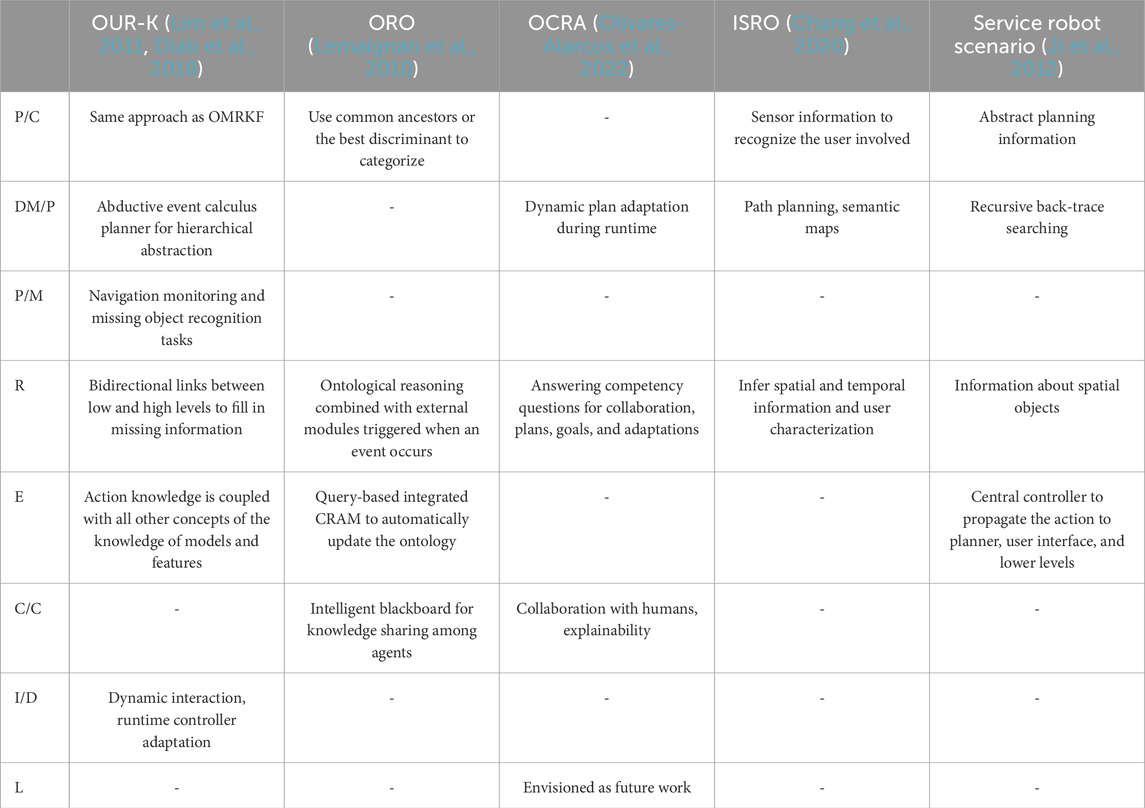

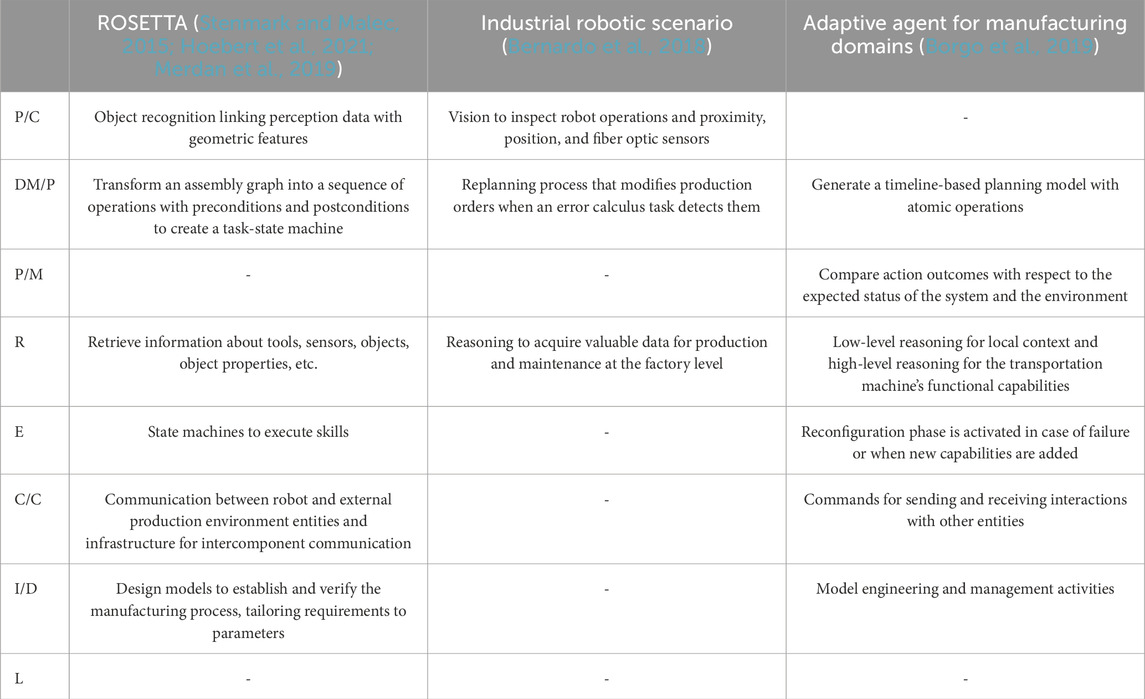

Table 1 provides a concise comparison of the four frameworks analyzed. Although all of them address perception and categorization, decision making and planning, and reasoning, the specific aspects involved depend on the perspective. Only half of the frameworks explicitly utilize their KB for execution, prediction and monitoring, communication and coordination, and learning. In particular, interaction and design are addressed exclusively by the PMK framework.

Table 1. Use of ontologies in the manipulation domain for perception and categorization (P/C), decision making and planning (DM/P), prediction and monitoring (P/M), reasoning (R), execution (E), communication and coordination (C/C), interaction and design (I/D) and learning (L).

5.2 Navigation domain

The navigation domain describes the challenges and techniques involved in enabling robots to autonomously move around their environment. This involves the processes of guidance, navigation, and control (GNC), incorporating elements such as computer vision and sensor fusion for perception and localization, as well as control systems and artificial intelligence for mapping, path planning, or obstacle avoidance.

5.2.1 Teleological and Ontological Model for Autonomous Systems

Teleological and Ontological Model for Autonomous Systems (TOMASys)17 is a metamodel designed to consider the functional knowledge of autonomous systems, incorporating both teleological and ontological dimensions. The teleological aspect includes engineering knowledge, which represents the intentions and purposes of system designers. The ontological dimension categorizes the structure and behavior of the system.

TOMASys serves as a metamodel to ensure robust operation, focusing on mission-level resilience (Hernández et al., 2018). This metamodel relies on a functional ontology derived from the Ontology for Autonomous Systems (OASys) (Bermejo-Alonso et al., 2010), establishing connections between the robot’s architecture and its mission. The core concepts in TOMASys include functions, objectives, components, and configurations. However, it operates as a metamodel and intentionally avoids representing specific features of the operational environment, such as objects, maps, etc.

At the core of the TOMASys framework is the metacontroller. While a conventional controller closes a loop to maintain a system component’s output close to a set point, the metacontroller closes a control loop on top of a system’s functionality. This metacontroller triggers reconfiguration when the system deviates from the functional reference, allowing the robot to adapt and maintain desired behavior in the presence of failures. Explicit knowledge of mission requirements is leveraged for reconfiguration using the system’s functional specifications captured in the ontology.

In practice, TOMASys has been applied to various robots and environments, particularly for navigation tasks. Examples include its application to an underwater mine explorer robot (Aguado et al., 2021) and a mobile robot patrolling a university campus (Bozhinoski et al., 2022).

The detailed analysis of comparison criteria follows:

• Decision making and planning: In TOMASys, the metacontrol system manages decision making by adjusting parameters and configurations to address contingencies and mission deviations. It assumes the presence of a nominal controller responsible for standard decisions. In the case of failure detection or unmet mission requirements, the metacontroller selects an appropriate configuration. The planning process is integrated into the metacontrol subsystem, where reconfiguration decisions can impact the overall system plan, potentially altering parameters, components, functionalities, or even relaxing mission objectives to ensure task accomplishment.

• Prediction and monitoring: Monitoring is a critical aspect of TOMASys, providing failure models to detect contingencies or faulty components. Reconfiguration is triggered not only in the event of failure but also when mission objectives are not satisfactorily achieved. Observer modules are used to monitor reconfigurable components of the system.

• Reasoning: TOMASys uses a DL reasoner for real-time system diagnosis. It propagates component failures to the system level, identifying affected functionalities and available alternatives. This reasoning process helps to select the most promising alternative to fulfill mission objectives.

• Execution: The execution in TOMASys follows the monitor-analyze-plan-execute over a shared knowledge base (MAPE-K) loop (IBM Corporation, 2005). It evaluates the mission and system state through monitoring observers, uses ontological reasoners for assessing mission objectives and propagating component failures, decides reconfigurations based on engineering and runtime knowledge, and executes the selected adaptations.

• Communication and coordination: TOMASys uses its hierarchical structure to coordinate components working toward a common goal. Components utilize roles that specify parameters for specific functions, and bindings facilitate communication by connecting component roles with function specifications during execution. Bindings are crucial for detecting component failures or errors. In cases where the metacontroller cannot handle errors, a function design log informs the user.

• Interaction and design: TOMASys provides a metamodel that leverages engineering models from design time to runtime. This approach aims to bridge the gap between design and operation, relying on functional and component modeling to map mission requirements to the engineering structure. The explicit dependencies between components, roles, and functions, along with specifications of required component types based on functionality, support the system’s adaptability at runtime.
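The analyze and plan steps of such a metacontrol loop can be sketched in a few lines of Python. This is only an illustration under assumptions: the `FunctionDesign` class, its fields, and the `performance` score are hypothetical names chosen for this example, and the set intersection stands in for the DL reasoning that TOMASys uses to propagate component failures.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FunctionDesign:
    """One way of realizing a function with a given set of components (hypothetical model)."""
    name: str
    solves: str             # function that this design realizes
    components: frozenset   # components the design depends on
    performance: float      # expected performance, used to rank alternatives


def analyze(designs, failed_components):
    """Propagate component failures: keep only designs with no failed component."""
    return [d for d in designs if not (d.components & failed_components)]


def plan(function, current, designs, failed_components):
    """Select the configuration for `function`, reconfiguring only when needed.

    Returns the current design if it is still feasible, the best feasible
    alternative otherwise, or None if the objective cannot be met.
    """
    feasible = [d for d in analyze(designs, failed_components) if d.solves == function]
    if current in feasible:
        return current
    if not feasible:
        return None
    return max(feasible, key=lambda d: d.performance)


if __name__ == "__main__":
    laser_nav = FunctionDesign("laser_nav", "navigate", frozenset({"laser", "wheels"}), 0.9)
    camera_nav = FunctionDesign("camera_nav", "navigate", frozenset({"camera", "wheels"}), 0.6)
    selected = plan("navigate", laser_nav, [laser_nav, camera_nav], {"laser"})
    print(selected.name if selected else "objective unreachable")
```

When `plan` returns `None`, a real system would have to escalate, for example by relaxing the mission objective or informing the user, as described above.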

5.2.2 Ontology-based Multi-layered Robot Knowledge Framework

The Ontology-based Multi-layered Robot Knowledge Framework (OMRKF) aims to integrate high-level knowledge with perception data to enhance the intelligence of a robot in its environment (Suh et al., 2007). Specific details about the KB, such as the source files, are not publicly available, so the information provided here is derived from articles about the framework.

The framework is organized into knowledge boards that cover four knowledge classes: perception, activity, model, and context. Each class is divided into three knowledge levels (high, middle, and low). Perception knowledge involves visual concepts, visual features, and numerical descriptions. Similarly, activity knowledge is classified into service, task, and behavior, while model knowledge includes space, objects, and their features. The context class is organized into high-level context, temporal context, and spatial context.

At each knowledge level, OMRKF employs three ontology layers: (a) a meta-ontology for generic knowledge, (b) an ontology schema for domain-specific knowledge, and (c) an ontology instance to ground concepts with application-specific data. The framework uses rules to define relationships between ontology layers, knowledge levels, and knowledge classes.

OMRKF facilitates the execution of sequenced behaviors by allowing the specification of high-level services and guiding the robot in recognizing objects even with incomplete knowledge. This capability enables robust object recognition, successful navigation, and inference of localization-related knowledge. Additionally, the framework provides a querying-asking interface through Prolog, enhancing the robot’s interaction capabilities.

The detailed analysis of comparison criteria follows:

• Perception and categorization: OMRKF includes perception as one of its knowledge classes, specifically addressing the numerical descriptor class in the lower-level layer. Examples of these numerical descriptors are generated by robot sensors and image processing algorithms such as Gabor filter or scale-invariant feature transform (SIFT) (Suh et al., 2007).

• Decision making and planning: OMRKF uses an event calculus planner to define the sequence to execute a requested service. The framework relies on query-based reasoning to determine how to achieve a goal. In cases of insufficient knowledge, the goal is recursively subdivided into subgoals, breaking down the task into atomic functions such as go to, turn, and extract feature. Once the event calculus planner generates an output, the robot follows a sequence to complete a task, such as the steps involved in a delivery mission.

• Reasoning: OMRKF employs axioms, such as the inverse relation of left and right or on and under, to infer useful facts using the ontology. The framework uses Horn rules to identify concepts and relations, enhancing its reasoning capabilities.
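Two of the mechanisms mentioned above, inverse-relation axioms and recursive goal subdivision, can be sketched as follows. OMRKF expresses them with ontology axioms, Horn rules, and an event calculus planner queried through Prolog; the Python below is only an analogy, and the `INVERSE` and `DECOMPOSITION` tables, as well as the `deliver_cup` and `find_cup` goals, are hypothetical examples.

```python
# Hypothetical inverse-relation axioms.
INVERSE = {"left_of": "right_of", "right_of": "left_of", "on": "under", "under": "on"}

# Hypothetical decomposition knowledge: goal -> ordered subgoals.
DECOMPOSITION = {
    "deliver_cup": ["find_cup", "go_to"],
    "find_cup": ["go_to", "turn", "extract_feature"],
}
ATOMIC = {"go_to", "turn", "extract_feature"}


def infer_inverses(facts):
    """Add the fact (o, inverse(r), s) for every known fact (s, r, o)."""
    inferred = set(facts)
    for subject, relation, obj in facts:
        inverse = INVERSE.get(relation)
        if inverse is not None:
            inferred.add((obj, inverse, subject))
    return inferred


def decompose(goal):
    """Recursively subdivide a goal until only atomic functions remain."""
    if goal in ATOMIC:
        return [goal]
    if goal not in DECOMPOSITION:
        raise ValueError(f"no knowledge on how to achieve {goal!r}")
    steps = []
    for subgoal in DECOMPOSITION[goal]:
        steps.extend(decompose(subgoal))
    return steps


if __name__ == "__main__":
    print(infer_inverses({("cup", "on", "table")}))
    print(decompose("deliver_cup"))
```

The sketch assumes that the decomposition table is acyclic; a goal that refers back to itself would recurse without end.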

5.2.3 Smart and Networked Underwater Robots in Cooperation Meshes ontology

The Smart and Networked Underwater Robots in Cooperation Meshes (SWARMs) ontology addresses information heterogeneity and facilitates a shared understanding among robots in the context of maritime or underwater missions (Li et al., 2017). Specific details about the KB, such as the source files, are not publicly available, so the information provided here is derived from articles about the ontology.

SWARMs leverages the probabilistic ontology PR-OWL18 to annotate the uncertainty of the context based on the multi-entity Bayesian network (MEBN) theory (Laskey, 2008). This allows SWARMs to perform hybrid reasoning on (i) the information exchanged between robots and (ii) environmental uncertainty.

SWARMs establishes a core ontology to interrelate several domain-specific ontologies. The core ontology manages entities, objects, and infrastructures. The domain-specific ontologies include:

– Mission and Planning Ontology: Provides a general representation of the entire mission and the associated planning procedures.

– Robotic Vehicle Ontology: Captures information on underwater or surface vehicles and robots.

– Environment Ontology: Characterizes the environment through recognition and sensing.

– Communication and Networking Ontology: Describes the communication links available in SWARMs to interconnect different agents involved in the mission, enabling communication between the underwater segment and the surface.

– Application Ontology: Provides information on scenarios and their requirements. PR-OWL is included in this layer to handle uncertainty.