ORIGINAL RESEARCH article

Front. Res. Metr. Anal., 20 April 2017

Sec. Research Policy and Strategic Management

Volume 2 - 2017 | https://doi.org/10.3389/frma.2017.00004

Thed N. van Leeuwen* and Paul F. Wouters

In this article, we present the results of an analysis that describes the research centered on Journal Impact Factors (JIFs). The purpose of the analysis is to begin studying the part of quantitative science studies that deals with the most famous and classic bibliometric indicator, and to see what characterizes the research on JIFs. We start with a general description of this research from the perspective of the journals used, the fields in which research on JIFs appeared, and the countries that contributed to it. Finally, the article presents a first attempt to describe the coherence of the field, which will form the basis for the next steps in this line of research on JIFs.

Undeniably, one of the most widely used bibliometric indicators is the Journal Impact Factor (JIF). This indicator reflects the average impact of a journal and is defined as the number of citations a journal receives in a particular year to the documents it published in the 2 preceding years, divided by the number of citable documents it published in those 2 years. The JIF was developed by Eugene Garfield in the 1950s and 1960s, with first publications on the indicator in 1955 and 1963 (Garfield, 1955; Garfield and Sher, 1963). Journal citation statistics were included in the Journal Citation Reports (JCR), the annual summarizing volumes to the printed editions of the Science Citation Index (SCI) and the Social Sciences Citation Index (SSCI). In an ever more complex world, in which more and more journals appeared on the scene and thereby complicated the life of academic librarians, the JIF was a helpful tool in decision-making on collection management. When the JCR started to appear on electronic media, first on CD-ROM and later through the Internet, the JIF was more frequently used for other purposes, such as assessments on various levels. Garfield himself warned against this use of the JIF (Garfield, 1972, 2006). The JIF has been included in the JCR from 1975 onward, initially only for the SCI, later also for the SSCI. For the Arts and Humanities Citation Index, no JIFs were produced. From the definition of the JIF, it becomes apparent that the JIF is a relatively simple measure, is easily available through the JCR, and relates to scientific journals, which are the main channel for scientific communication in the natural sciences, biomedicine, and parts of the social sciences (e.g., in psychology, economics, business, and management) and humanities (e.g., in linguistics).
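
To make the definition concrete, the following is a minimal sketch of the two-year JIF calculation. The function name and the example numbers are hypothetical and serve only as an illustration; they are not taken from the JCR.

```python
def journal_impact_factor(citations_to_prev_two_years: int,
                          citable_items_prev_two_years: int) -> float:
    """Two-year JIF for year Y (illustrative sketch).

    citations_to_prev_two_years: citations received in year Y to items
        the journal published in years Y-1 and Y-2.
    citable_items_prev_two_years: number of citable documents the journal
        published in years Y-1 and Y-2.
    """
    if citable_items_prev_two_years <= 0:
        raise ValueError("the journal needs at least one citable item")
    return citations_to_prev_two_years / citable_items_prev_two_years


# Hypothetical journal: 600 citations in year Y to 200 citable items
# published in years Y-1 and Y-2.
example_jif = journal_impact_factor(600, 200)
```

With these made-up numbers, the journal would have a JIF of 3.0, meaning that its recent items were cited three times on average in the census year.
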
The bibliometrics community mainly studied the methodological issues related to JIFs (Moed and van Leeuwen, 1995; Seglen, 1997; Archambault and Lariviere, 2009) and other journal impact measures such as the Eigenfactor (Bergstrom et al., 2008), the Audience Factor (Zitt and Small, 2008), and the Source Normalized Impact per Paper (Moed, 2010; Waltman et al., 2013). While this work was of a critical nature on the usage of journal impact measures for assessment purposes, trying to develop solutions for the problems related to JIFs, more recently, we observe many publications in the bibliometrics literature using the JIF in a less critical manner, applying JIF in various assessment contexts. Many studies outside the bibliometrics community examined the possibilities of the application of JIFs in management of research, journals and journal publishing, or simply reported on the value of the JIF for their own journal. This literature is an indication of the growing relevance of this bibliometric indicator for science and science management.

In this study, we describe the development of the research about JIFs from 1981 until 2015. The focus is on the development of output related to the JIF, looking at the disciplinary and geographical origin of that output. Co-occurrence analysis of title and abstract words is used to see how the publications in the research on JIFs are related. This article is a first step in a line of research: it describes the landscape in a general sense. In follow-up research, we want to trace the development of the research on JIFs, for example, to see whether we can speak of the development of a scholarly specialty, and to study how the use of JIFs is embedded in evaluation practices in academia worldwide, which actors use these indicators in those practices, and what role academic libraries play therein. This follow-up research will also extend the period of analysis to the most recent years, up until 2016, as that will connect to discussions on actors, specialty development, etc.

We collected from the Web of Science all publications that contain the words “Impact Factor” or the abbreviation JIF in their title or abstract. We concluded that the abbreviation “IF” could not be included in the search on its own, because this would retrieve all occurrences of the word “if” as a conjunction in the English language (over 1.2 million hits in Web of Science online). A first search was conducted in November 2012 and resulted in a set of 2,855 publications of various document types. The search was updated in January 2017, adding another 1,772 publications to the set. This set of 4,627 publications was combined with the in-house version of the Web of Science at CWTS, a bibliometric version of the original Web of Science database. This resulted in a set of 3,932 publications that were present in both versions of the WoS database. The main reason for the difference between the two sets is the gap between the periods they cover: the CWTS version was up-to-date for analytical purposes to 2015. A detailed manual analysis of the contents of the publications resulted in the deletion of 462 publications that focused on topics other than JIFs.
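
The search logic can be illustrated with a small local filter. This is only a sketch under stated assumptions: the actual Web of Science query syntax is not given in this article, and the regular expressions and the function name `mentions_jif` are hypothetical. The sketch matches the phrase “impact factor” regardless of case and the acronym JIF in upper case, and deliberately does not match a bare “IF”.

```python
import re

# Hypothetical patterns; not the query used in the Web of Science.
PHRASE = re.compile(r"\bimpact factors?\b", re.IGNORECASE)
ACRONYM = re.compile(r"\bJIFs?\b")  # case-sensitive on purpose


def mentions_jif(title: str, abstract: str = "") -> bool:
    """Return True if title or abstract mentions 'impact factor' or 'JIF'."""
    text = f"{title} {abstract}"
    return bool(PHRASE.search(text) or ACRONYM.search(text))


# Set sizes reported in the text, checked for consistency.
first_search, update = 2855, 1772
combined = first_search + update           # publications found online
in_both_versions, removed_manually = 3932, 462
final_set = in_both_versions - removed_manually
```

The last lines simply reproduce the arithmetic of the data collection: 2,855 plus 1,772 gives 4,627 publications, and 3,932 minus 462 gives the final set of 3,470.
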

The resulting data set contained 3,470 publications in WoS.

Below, we analyze the disciplinary embedding of the selected publications according to the so-called Journal Subject Categories in the WoS database. As the data for the study were collected irrespective of the field to which the publications belong, the set contains publications from a variety of subfields. Information on geographical origin was extracted from the addresses attached to the selected publications. We only looked at country names attached to publications and counted each country name only once per publication, regardless of how often it appears in the addresses.
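
The counting rule for countries can be sketched as follows. The data layout (one list of address countries per publication) and the example records are assumptions made for illustration only.

```python
from collections import Counter
from typing import Iterable, List


def count_countries(publications: Iterable[List[str]]) -> Counter:
    """Count publications per country, each country once per publication."""
    counts: Counter = Counter()
    for address_countries in publications:
        # A set removes repeated country names within one publication.
        counts.update(set(address_countries))
    return counts


# Hypothetical records: the first paper has two Dutch addresses.
example = [
    ["Netherlands", "Netherlands", "Spain"],
    ["Spain"],
]
country_counts = count_countries(example)
```

In this made-up example the Netherlands is counted once and Spain twice, even though the Netherlands appears in two addresses of the first publication.
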

In this study, we use the VOS Viewer methodology, through which structures between publications are identified on the basis of the co-occurrence of title and abstract words, but also of other entities in science such as journals, authors, or institutions. The VOS Viewer is an analysis tool developed at CWTS that allows one to construct and visualize networks based on bibliometric information. The relations used can be copublications, and consequently coauthorship and coinstitution occurrences, as well as cocitation or bibliographic coupling relations (van Eck and Waltman, 2010). We start this analysis from 1996 onward, the year in which WoS publications structurally started to have abstracts in the database. This gives us 20 years of publication data, which we analyze divided into four equally long periods of 5 years (1996–2000, 2001–2005, 2006–2010, and 2011–2015).
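
The basic input of such a term map is a count of how often two terms appear in the same publication. The sketch below shows only that counting step, under the assumption that terms have already been extracted from titles and abstracts. It is not the VOS Viewer algorithm itself, which additionally handles term selection, normalization, layout, and clustering; the function name and the example terms are hypothetical.

```python
from collections import Counter
from itertools import combinations
from typing import Iterable, Set, Tuple


def cooccurrence_counts(docs: Iterable[Set[str]]) -> Counter:
    """Count, for each pair of terms, the documents containing both."""
    pairs: Counter = Counter()
    for terms in docs:
        # Sorting gives every pair one canonical (a, b) order.
        for pair in combinations(sorted(terms), 2):
            pairs[pair] += 1
    return pairs


# Hypothetical term sets for two publications.
docs = [
    {"impact factor", "journal"},
    {"impact factor", "journal", "citation"},
]
pair_counts = cooccurrence_counts(docs)
```

Here the pair ("impact factor", "journal") co-occurs in both publications, while each pair involving "citation" co-occurs in one.
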

In the part on the disciplinary and geographical background, we use a standard bibliometric impact indicator, namely MNCS, the field-normalized mean citation score, to give an impression of the extent to which publications in the research on JIFs are more or less influential and visible in the fields to which they belong (Waltman et al., 2011). One of the main features of the MNCS is field normalization. Fields have different citation densities, so normalization for these differences is needed if one wants to know the relative position of an article within its field. Of course, if one is interested in the differences in citation density among fields, one should not normalize. MNCS is an indicator developed by CWTS, but similar field-normalized impact indicators have been developed elsewhere as well. Furthermore, MNCS also takes the age of publications and their document types into consideration.
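
As a rough illustration of field normalization, the sketch below computes a mean normalized citation score as the average, over publications, of the ratio between actual citations and the expected citations for publications of the same field, year, and document type. The record layout, the reference values, and the numbers are hypothetical; the exact CWTS implementation is described in Waltman et al. (2011), not here.

```python
from typing import Dict, List, Tuple

# (field, publication year, document type)
Key = Tuple[str, int, str]


def mncs(publications: List[Tuple[Key, int]],
         expected: Dict[Key, float]) -> float:
    """Mean of citations divided by the expected citations per reference set."""
    if not publications:
        raise ValueError("no publications to score")
    ratios = [cites / expected[key] for key, cites in publications]
    return sum(ratios) / len(ratios)


# Hypothetical world averages per field, year, and document type.
expected = {
    ("Library and Information Science", 2012, "article"): 5.0,
    ("Medicine, general and internal", 2012, "editorial"): 2.0,
}
publications = [
    (("Library and Information Science", 2012, "article"), 10),  # ratio 2.0
    (("Medicine, general and internal", 2012, "editorial"), 1),  # ratio 0.5
]
score = mncs(publications, expected)
```

A score above 1 indicates citation impact above the world average of the relevant reference sets; in this made-up example the score is 1.25.
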

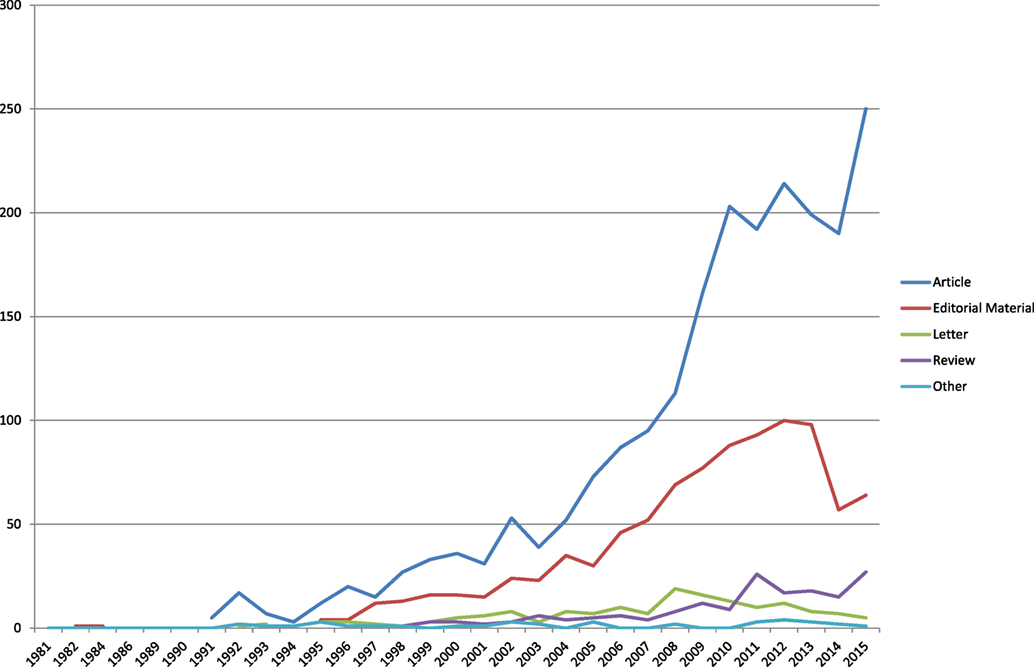

The overall development of research and output on JIFs is displayed in Figure 1. After taking off in the early 1990s, the output has increased steadily since 1994. In Figure 1, we also display the annual overall growth of output in WoS. To quantify the growth in output, we apply the following formula: we take the output in the last year, subtract the output in the first year, and divide the difference by 1% of the output in the first year. The result can be interpreted as a percentage, although growth rate is the more accurate term. As the analysis further focuses on the 20-year period 1996–2015, we report the growth rate of research on JIFs over that period in comparison with the global increase in output numbers in the WoS database. In addition, we report the growth rate within that period, by looking at the last 10 years, 2006–2015. For the period 1996–2015, this yields a growth rate of 84 for the world and 1,157 for the research on JIFs, which leads to the conclusion that research on JIFs grew about 13 to 14 times as fast as global science. For the last 10 years (2006–2015), the growth rate for global science is 39, while that of research on JIFs is 135, which leads to the conclusion that research on JIFs grew three to four times as fast as global science. So in this last period, we notice some sort of stabilization, which is also visible in Figure 1 from 2010 onward, with a drop after 2012, after which the output starts to increase again.
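
The growth-rate calculation described above can be written out as a short sketch. The function name is hypothetical, and the output numbers in the first example are invented; only the growth rates 84, 1,157, 39, and 135 are taken from the text.

```python
def growth_rate(first_year_output: float, last_year_output: float) -> float:
    """(last - first) divided by 1% of first, i.e. a percentage increase."""
    if first_year_output <= 0:
        raise ValueError("output in the first year must be positive")
    return 100 * (last_year_output - first_year_output) / first_year_output


# Invented outputs: 100 papers in the first year, 184 in the last year.
illustrative_rate = growth_rate(100, 184)

# Ratios of the growth rates reported in the text.
ratio_1996_2015 = 1157 / 84   # research on JIFs vs. global science
ratio_2006_2015 = 135 / 39
```

The two ratios come out at roughly 13.8 and 3.5, which corresponds to the statements that research on JIFs grew 13 to 14 times, and later three to four times, as fast as global science.
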

In Figure 2, we display the trend shown in Figure 1, broken down by document type in the WoS database. Regular articles account for the largest share of the output, as can be expected. Remarkably, the document type Editorial material covers nearly 25% of all publications on JIFs. Editorial material is apparently a popular way to discuss JIFs. Moreover, we hypothesize that editorials function as a way for journals to make the value of their own JIF public. The other document types play a relatively modest role.

Figure 2. Trend analysis of output in WoS on Journal Impact Factors across various document types, 1981–2015.

We notice a change in the trends of output numbers of articles from 2009 onward, after which the annual numbers fluctuate, with a recovery of the upward trend in 2015. For Editorial material, we observe this decrease somewhat later in time, in 2012. Finally, Reviews seem to be gaining in importance from 2010 onward.

Given the low numbers of publications before 1996, the year in which we clearly observe an increase in the number of publications on JIFs, we focus from here on on the period 1996–2015, dividing it into four equally long 5-year periods.

In Table 1, we present the publications about JIFs in the period 1996–2015 by journal. We show only the 25 journals that appeared most frequently in the period 2011–2015 and then look back in time to see to what extent these journals contributed to the overall increase of output in the research on JIFs. Among the 25 journals that published most frequently about JIFs in 2011–2015, Scientometrics is the top-ranking journal, with 158 publications; it also published on this topic in the three previous periods. A new journal that publishes research about JIFs is the Journal of Informetrics (launched in 2007), with 79 publications in the period 2011–2015. Other journals among the most frequently publishing journals in 2011–2015 are PLoS ONE, Current Science, and Journal of the American Society for Information Science and Technology. Together with Scientometrics and the Journal of Informetrics, these five journals form the core journals for the specialty. Next in the table, we find two journals that published about JIFs for the first time in 2011–2015 (Revista Portuguesa de Pneumologia and Academic Emergency Medicine). Learned Publishing, also shown in Table 1, is visible in all four periods of the analysis.

The development of the total number of publications per period indicates the rapid growth in the research on JIFs: it roughly doubles from one period to the next, and the number of publications in 2011–2015 is 10-fold the output in the period 1996–2000. Table 2 shows the journals that contained publications about JIFs in every period of our analysis, which is indicative of the stability in the research on JIFs. The first two journals were mentioned in the discussion of Table 1. A remarkable fact in Table 2 is the dominance of biomedical journals (10 out of 17). Another remarkable fact is the relatively large number of Spanish journals (n = 4) among these 17 journals. An explanation for this phenomenon may be the strong pressure in the Spanish science system to publish internationally (Jimenez-Contreras et al., 2003), in particular in journals with a high JIF. In the manual selection process, we noted a relatively large number of publications on JIFs from Germany and in the German language. However, this did not result in a very strong visibility of one particular journal from Germany or in the German language.

In Table 3, we present the distribution of the main contributing countries to the research around JIFs. The countries are ordered by their number of publications in the period 2011–2015. The USA takes first position in the research on JIFs. Rather surprising is the position of Spain. Although Spain's share of the output decreases, its absolute number of publications increases strongly and, equally interesting, the citation impact of these publications increases as well. The prominent position of Spain in this analysis is mainly due to two reasons: the aforementioned pressure, also legal, on Spanish academics to publish in high impact journals, in combination with the fact that most universities in Spain have faculties of library and information science, so that many scholars are working in the field. China appears in the second period of our analysis and increases its output in research on JIFs in the last two periods. This development is in line with the overall growth of the number of scientific publications from China (Moed, 2002; Bouabid et al., 2016). Although based on somewhat lower numbers of publications, the citation impact of some of the countries publishing on JIFs stands out, in particular that of the Netherlands, Canada, and Australia.

In Figure 3, we compare the output of the countries that are most active in the research on JIFs with their total contribution to global science in the period 2011–2015. Please note that shares are taken among this group only, for both the contribution to global science and the contribution to the body of publications on JIFs. The global shares presented here are therefore not the actual contributions, which might be somewhat smaller due to the exclusion of some countries from this analysis. Moreover, these scores contain all document types, since editorials seem to be of importance in the research on JIFs. The countries are presented in the order of their contribution to global science, so we expect the USA and China, together with large science-producing European countries such as Great Britain, Germany, and France, to be on top of the figure. However, for the top three countries, the contribution to the research on JIFs is lower than the contribution to global science, while Great Britain and Germany have relatively more output on JIFs compared to their global contribution to science. Spain's contribution to the research on JIFs is more than three times as high as its contribution to global science output, and Taiwan's is twice as high. Other countries that are overrepresented compared to their national share of global science are Canada, Italy, Australia, the Netherlands, Brazil, and Belgium.

In Table 4, we present the disciplinary background of the journals publishing on JIFs. The social sciences field Library and Information Science plays the most important role. Many journals classified under this heading in the WoS are also labeled as Computer Science, interdisciplinary applications (which explains why that field is so strongly visible in all three periods). The second largest field when it comes to publications on the topic of JIFs is Medicine, general and internal. Next to well-known general medicine journals such as New England Journal of Medicine, British Medical Journal, JAMA, and The Lancet, this field contains many local medicine journals, many of which occasionally publish on JIFs. We see this as evidence for the popularity of JIFs in this field.

Another remarkable phenomenon in the overview of the disciplinary composition of the research on JIFs is the fact that these articles tend to have high impact. Moreover, those publications in rather peripheral fields, as seen from the core of the research on JIFs, still seem to generate high impact scores.

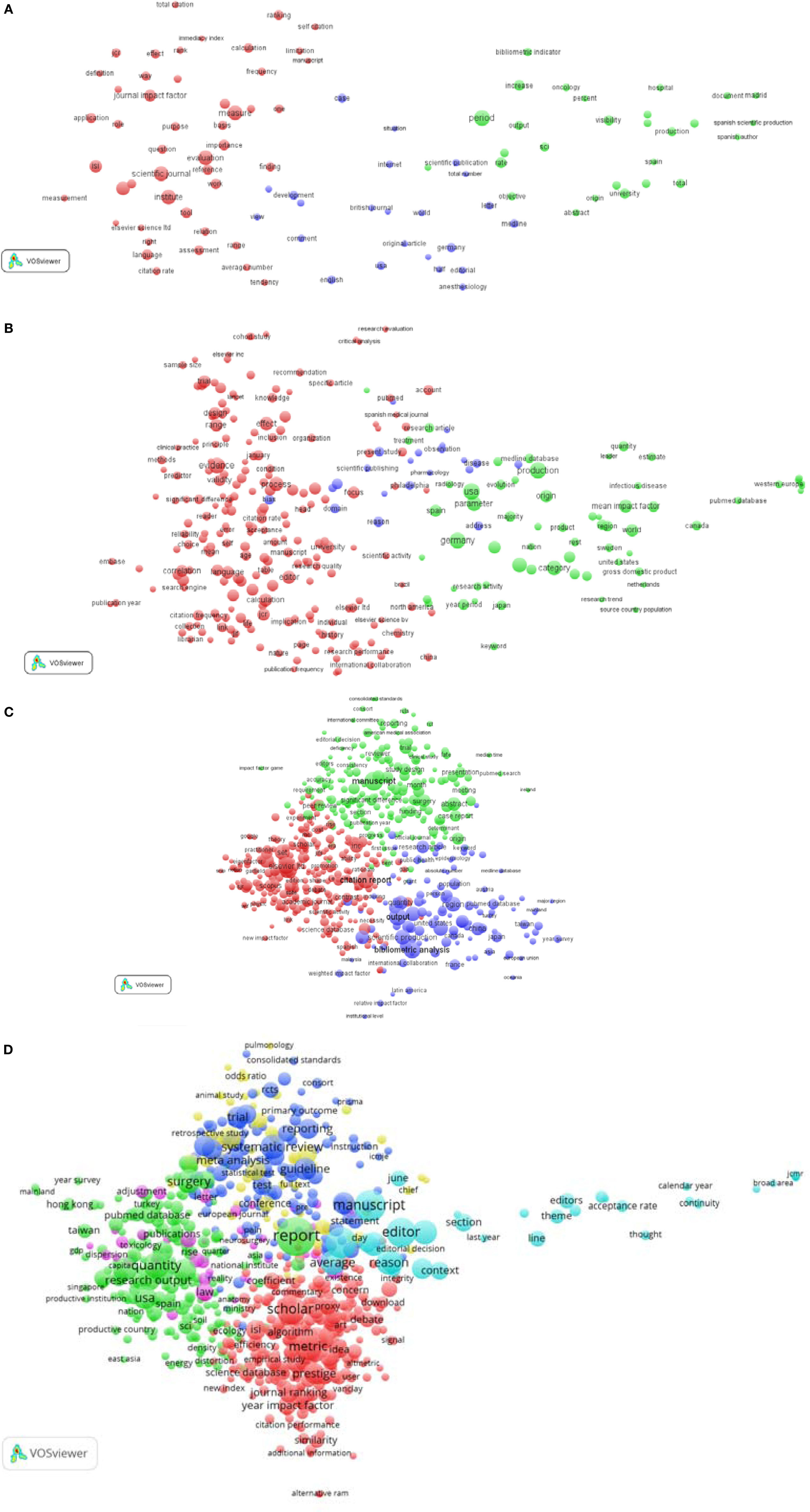

Next, we focus on the way the publications in the research on JIFs are interrelated on the basis of terms (title and abstract words) and how these terms co-occur on the publications in the research on JIFs. For this, we used the VOS Viewer methodology.

In Figures 4A–D, we present the publications related to research on JIFs in the periods 1996–2000, 2001–2005, 2006–2010, and 2011–2015, respectively. In Figure 4A, we show the network for the period 1996–2000. Here, we distinguish three loosely connected, somewhat stretched-out clusters, of which the leftmost cluster contains the core of the research field. Here, we find important terms, such as Scientific journal, Journal Impact factor, Evaluation, Institute, and Measure. The other two clusters seem to contain terms that are less relevant to the research on JIFs, with some focus on application in both the geographic dimension (with terms such as Spanish scientific production, but also Germany, British journal, and English) and the disciplinary dimension (with terms such as Oncology, Hospital, Anaesthesiology). We recall that, as shown in the earlier parts of this study, output numbers in the research on JIFs had only just started to increase in this period, from 1996 to 2000.

Figure 4. (A) Term map of title and abstract words in output on Journal Impact Factors (JIFs), 1996–2000 (based on VOS Viewer). (B) Term map of title and abstract words in output on JIFs, 2001–2005 (based on VOS Viewer). (C) Term map of title and abstract words in output on JIFs, 2006–2010 (based on VOS Viewer). (D) Term map of title and abstract words in output on JIFs, 2011–2015 (based on VOS Viewer).

In Figure 4B, we show the term map that represents the research on JIFs in the period 2001–2005. In the graph, we distinguish three different clusters as expressed by the color coding; however, on closer inspection, there seem to be two major clusters, one on the right-hand side, in green, and one on the left-hand side, in red. A third, much smaller cluster, in purple, is positioned somewhere in the middle and consists of a small number of terms. The green cluster mainly contains terms related to geographic issues in JIF research, while the red cluster contains terms that point to issues such as Research evaluation, Research performance, University, and Citation rate. So here we observe a much more evaluative context in the research centered on JIFs.

In Figure 4C, we show the term map for the period 2006–2010. Here, the words plotted in the graph show a dense network, in which we distinguish three different clusters of nearly equal size and density. On the lower left (in red), we observe the cluster that contains the core of the library and information science and evaluation-related topics. The second cluster (in green) contains both elements of scientific publishing and terms from biomedicine, while the lower right part of the figure contains the third cluster (in purple). This cluster contains mainly elements of a geographical nature.

Finally, in Figure 4D, we present the structure based on terms in the period 2011–2015. Remarkably, for the first time we can distinguish six separate clusters of terms. The blue and yellow clusters, which sit on top of the structure and are intermingled, relate to biomedical research. In the publications selected for the analysis in this period, we notice an increase in systematic reviews and guidelines, in the light of a discussion on publishing in high impact factor journals. A further development is visible in the green cluster, which represents the output on research assessment and contains various country names, indicating the importance of JIF research for the research assessment realm. The light blue cluster, in turn, represents the discussion on the editorial role, the length of the citation window, etc. A small pink cluster of JIF research relates to law. Finally, the red cluster indicates the core of scientometric research, as can be read from terms such as metric, scholar, and impact factor.

As we have seen previously in our analysis, the number of publications grew steadily through time, which means that in the maps displaying the structure of the words used in these publications, the underlying relations also become more prominent from one period to the next. The technique used displays a complicated structure in a two-dimensional manner, which of course limits how faithfully the cognitive structure underlying the publications can be represented. So the increasing density in the four maps reflects the increased density of both the terms and the underlying relationships between the words used in titles and abstracts of the publications in the field of research on JIFs.

In this study, we applied bibliometric techniques in a descriptive fashion, to understand the developments around the JIF. We first focused on a description of the characteristics of the research on JIFs. We observed a strong growth in output in the research on JIFs, stronger than the overall output growth in WoS. Focusing on the documents in which research about JIFs is published, editorials play an important role in the publishing on JIFs, and they cover approximately 25% of the output. When we shift our attention to the geographical location of research on JIFs, we can conclude that some countries contribute particularly strongly to research on JIFs, such as Spain and Australia. For these countries, we observe relatively large contributions to the research on JIFs, compared to their overall contribution to science. In a follow-up study, we will investigate to what extent this type of geographical focus on JIF-related scientometric research coincides with the degree to which JIFs are used for various purposes in these science systems.

The initial selection of the publications in this study taught us that we can distinguish three different types of publications on JIFs: publications from the field of library and information science that form the core of the research on the topic (e.g., the critical studies on the JIF and its various ways of application, or the comparison of the JIF with newly developed journal impact measures); a set of publications in other fields that relate to the popularity of the indicator in research management (e.g., publications that report on the value of the JIF or propose usage in a policy context); and finally research papers on the controversies around JIFs (these can be of a methodological or a more policy-oriented nature).

Some countries seem to be contributing more to the research on JIFs compared to other countries. A possible reason for the fact that Spain, Denmark, Greece, and Australia contribute more than expected given their overall scientific production might be that in these countries library and information sciences are a discipline often separately distinguished within the academic landscape, housed in separate faculties, next to faculties of social sciences. This is a topic for further research, in particular related to the typology of research papers on JIFs, described in the previous paragraph.

The VOS Viewer graphs in this article suggest an increasing coherence of the research on JIFs. However, future research based on citation relations might help to understand the development of the research on the topic in more detail. Does research on JIFs demonstrate the characteristics of an emerging specialty or can we explain the observed coherence in other ways? We are also interested in the question of replicability and redundancies in the literature. Is this area demonstrating scientific progress by building up a more advanced body of knowledge or do we rather witness a cyclical process in which older findings are regularly repeated? And how can we characterize the social network underpinning the body of literature? Do we see a fragmented adhocracy or rather a distributed community?

Another interesting topic for future studies is the question to what extent the DORA Declaration (2012), in which the editors of molecular biology journals called for a stop on using the JIF for assessment purposes, as well as the 2015 Leiden Manifesto (Hicks et al., 2015) and the Metric Tide (Wilsdon et al., 2015), which call for a more sensible use of bibliometric indicators (among which the JIF) in research assessment contexts, influence the global research on JIFs. Trends in the output numbers showed fluctuations around the moments these documents were published, in particular for the DORA Declaration, so further inquiry is necessary to fully understand the influence of these documents on the scholarly communities working on and using JIFs.

PW is the co-author who presented this research at the 2013 ISSI Conference, while most of the writing, both of the conference paper and of this manuscript, was done by TL. Most research was conducted by TL.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

This article is an improved and extended version of the contribution to the 2013 ISSI Conference in Vienna. The manuscript appeared in the Proceedings of the Conference on pages 66–76 (for the conference proceedings, please see http://issi2013.org/Images/ISSI_Proceedings_Volume_I.pdf). Compared to that paper, the text has been revised, the aim of the study has been adapted, and the mapping part in the current article is completely new. The authors want to express their thanks to Ludo Waltman for his instruction on the VOS Viewer usage for this study.

Archambault, E., and Lariviere, V. (2009). History of the journal impact factor: contingencies and consequences. Scientometrics 79, 635–649. doi:10.1007/s11192-007-2036-x

Bergstrom, C. T., West, J. D., and Wiseman, M. A. (2008). The Eigenfactor™ metrics. J. Neurosci. 28, 11433–11434. doi:10.1523/JNEUROSCI.0003-08.2008

Bouabid, H., Paul-Hus, A., and Lariviere, V. (2016). Scientific collaboration and high-technology exchanges among BRICS and G-7 countries. Scientometrics 106, 873–899. doi:10.1007/s11192-015-1806-0

DORA Declaration. (2012). The San Francisco Declaration on Research Assessment. Available at: http://www.ascb.org/dora/

Garfield, E. (1955). Citation indexes to science: a new dimension in documentation through association of ideas. Science 122, 108–111. doi:10.1126/science.122.3159.108

Garfield, E. (1972). Citation analysis as a tool in journal evaluation. Science 178, 471–479. doi:10.1126/science.178.4060.471. Reprinted in: Current Contents 6, 5–6 (1973); Essays of an Information Scientist, 1, 527–544 (1977).

Garfield, E. (2006). The history and meaning of the journal impact factor. JAMA 295, 90–93. doi:10.1001/jama.295.1.90

Garfield, E., and Sher, I. H. (1963). New factors in the evaluation of scientific literature through citation indexing. Am. Doc. 14, 195–201. doi:10.1002/asi.5090140304

Hicks, D., Wouters, P. F., Waltman, L., de Rijcke, S., and Rafols, I. (2015). The Leiden Manifesto for research metrics: use these 10 principles to guide research evaluation. Nature 520, 429–431. doi:10.1038/520429a

Hubbard, S. C., and McVeigh, M. E. (2011). Casting a wide net: the journal impact factor numerator. Learn. Publ. 24, 133–137. doi:10.1087/20110208

Jimenez-Contreras, E., de Moya-Anegon, F., and Delgado Lopez-Cozar, E. (2003). The evolution of research activity in Spain. The impact of the National Commission for the Evaluation of Research Activity (CNEAI). Res. Pol. 32, 123–142. doi:10.1016/S0048-7333(02)00008-2

McVeigh, M. E., and Mann, S. J. (2009). The journal impact factor denominator. Defining citable (counted) items. JAMA 302, 1107–1109. doi:10.1001/jama.2009.1301

Moed, H. F. (2002). Measuring China’s research performance using the science citation index. Scientometrics 52, 281–296. doi:10.1023/A:1014812810602

Moed, H. F. (2010). Measuring contextual citation impact of scientific journals. J. Informetr. 4, 265–277. doi:10.1016/j.joi.2010.01.002

Moed, H. F., and van Leeuwen, T. N. (1995). Improving the accuracy of Institute for Scientific Information’s JIFs. J. Am. Soc. Inf. Sci. Technol. 46, 461–467. doi:10.1002/(SICI)1097-4571(199507)46:6<461::AID-ASI5>3.0.CO;2-G

Rossner, M., van Epps, H., and Hill, E. (2007). Show me the data. J. Cell Biol. 179, 1091–1092. doi:10.1083/jcb.200711140

Seglen, P. O. (1997). Why the impact factor of journals should not be used for evaluating research. Br. Med. J. 314, 498–502. doi:10.1136/bmj.314.7079.497

van Eck, N. J., and Waltman, L. (2010). Software survey: VOSviewer, a computer program for bibliometric mapping. Scientometrics 84, 523–538. doi:10.1007/s11192-009-0146-3

Waltman, L., van Eck, N. J., van Leeuwen, T. N., and Visser, M. S. (2013). Some modifications to the SNIP journal impact indicator. J. Informetr. 7, 271–285. doi:10.1016/j.joi.2012.11.011

Waltman, L., van Eck, N. J., van Leeuwen, T. N., Visser, M. S., and van Raan, A. F. J. (2011). Towards a new crown indicator: some theoretical considerations. J. Informetr. 5, 37–47. doi:10.1016/j.joi.2010.08.001

Wilsdon, J., Allen, L., Belfiore, E., Campbell, P., Curry, S., Hill, S., et al. (2015). The Metric Tide: Report of the Independent Review of the Role of Metrics in Research Assessment and Management. doi:10.13140/RG.2.1.4929.1363

Keywords: Journal Impact Factor, scientometric measures, specialty development, science mapping, quantitative science studies

Citation: van Leeuwen TN and Wouters PF (2017) Analysis of Publications on Journal Impact Factor Over Time. Front. Res. Metr. Anal. 2:4. doi: 10.3389/frma.2017.00004

Received: 23 November 2016; Accepted: 31 March 2017;

Published: 20 April 2017

Edited by:

Henk F. Moed, Sapienza University, Italy

Reviewed by:

Peter Jacso, University of Hawaii at Manoa, USA

Copyright: © 2017 van Leeuwen and Wouters. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Thed N. van Leeuwen, leeuwen@cwts.leidenuniv.nl

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
