- 1Physical Medicine and Rehabilitation Unit, AUSL-IRCCS di Reggio Emilia, Reggio Emilia, Italy

- 2Department of Biomedical, Metabolic and Neural Sciences, University of Modena and Reggio Emilia, Modena, Italy

- 3Azienda Ospedaliero Universitaria (AOU) of Modena, Modena, Italy

Introduction: Recent developments in the field of social cognition have led to a renewed interest in basic and social emotion recognition in early stages of Alzheimer's Disease (AD) and FrontoTemporal Dementia (FTD). Despite the growing attention to this issue, only a few studies have attempted to investigate emotion recognition using both visual and vocal stimuli. In addition, recent studies have presented conflicting findings regarding the extent of impairment in patients in the early stages of these diseases. The present study aims to investigate emotion understanding (both basic and social emotions), using different tasks with visual and auditory stimuli, in order to identify supramodal deficits in AD and FTD. The goal is to provide a reliable tool to better outline their behavioral and emotional profile, as well as useful instruments for their management.

Methods: Eighteen patients with AD and 15 patients with FTD were included in the study. Healthy control subjects (HCs) were recruited to obtain normative data for the basic emotion recognition tests and the social emotion recognition tasks. To evaluate basic emotion recognition, the Facial Emotion Recognition Battery (FERB) and the Emotional Prosody Recognition Battery (EPRB) were administered. To evaluate social emotion recognition, the Faux Pas (FP), Reading the Mind in the Eyes (RME), and Reading the Mind in the Voice (RMV) tests were employed.

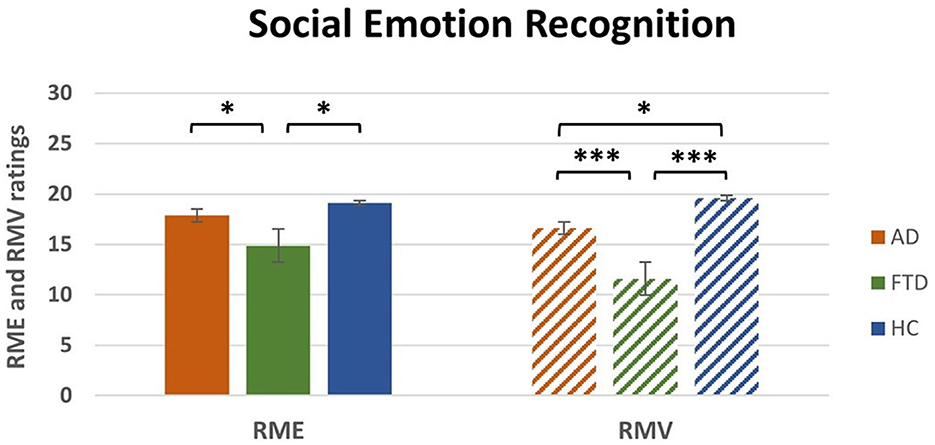

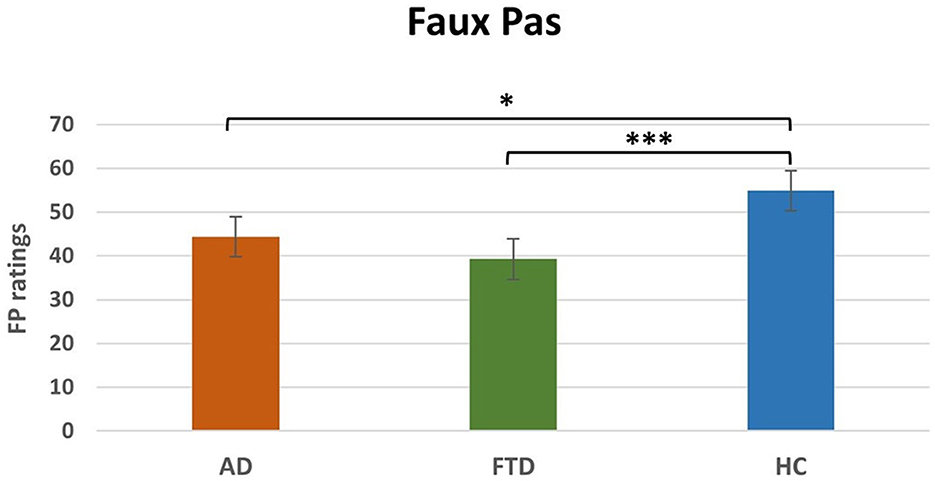

Results: FTD patients performed significantly worse than HCs in most of the subtests of the basic emotion recognition batteries, whereas AD patients were significantly impaired only when required to match emotional facial expressions across different individuals (a subtask of the FERB). Moreover, FTD patients scored significantly lower in the RME and RMV tests compared with both AD patients and HCs. In addition, AD patients were selectively impaired in RMV with respect to HCs.

Discussion: FTD patients showed deficits in emotion recognition, affecting both basic and social emotions, whether conveyed through facial expressions or prosody. This result may explain the well-known social behavioral difficulties observed in FTD patients from the early stages of the disease. The fewer and specific deficits in AD patients with comparable MMSE scores may be attributed to the mild degree of impairment, as these deficits may appear later in the progression of AD.

1 Introduction

The term “Social Cognition” refers to several abilities involved in social information processing, consisting of inferring emotions and socially relevant stimuli to modulate behavior (Adolphs, 1999; Frith, 2008).

Emotional processing plays an important role among high-level social abilities; several studies support the idea that it relies on a broad neural network including the fusiform face area (FFA; Kanwisher and Yovel, 2006), the amygdala (Adolphs et al., 1998, 1994; Todorov et al., 2013), and the insula (Wicker et al., 2003; Craig, 2009). Additional areas appear to be specifically involved in processing prosody, an important social signal, during emotional recognition, including the superior temporal sulcus (STS; Grandjean et al., 2005; Sander et al., 2005), middle temporal gyrus (MTG), inferior frontal gyrus (IFG; Johnstone et al., 2006), putamen, pallidum, subthalamic nucleus, and cerebellum (Ceravolo et al., 2021).

Recent data have emphasized the need for a supramodal approach to understanding the neural basis of emotion processing (Schirmer and Adolphs, 2017). Each distinct input channel engages partly non-overlapping neuroanatomical systems with different processing specializations. Then, elaborations of signals across different modalities converge into supramodal representations in areas involving a modality-non-specific abstract code, such as STS, prefrontal and posterior cingulate cortex.

Deficits in emotional processing are observed in both Alzheimer's Disease (AD) and Frontotemporal Dementia (FTD). In AD, mild impairments in emotion recognition, particularly for low-intensity or negative emotions, emerge early and worsen over time (Luzzi et al., 2007; Maki et al., 2013; Torres et al., 2015; Garcia-Cordero et al., 2021; Amlerova et al., 2022; Chaudhary et al., 2022). These deficits extend to multiple sensory modalities, including emotional prosody, likely due to overlap between memory and emotional processing regions affected by neurodegeneration (Bediou et al., 2012). On the other hand, in FTD, significant impairments in recognizing visual and vocal emotional stimuli, especially negative emotions, are more severe than in AD (Fernandez-Duque and Black, 2005; Dara et al., 2013; Bertoux et al., 2015; Bora et al., 2016; Jiskoot et al., 2021; Wright et al., 2018). These deficits, prominent in the behavioral variant (bvFTD), are linked to atrophy in brain regions involved in emotional and social cognition (Rascovsky et al., 2011).

Within social cognition abilities, Theory of Mind (ToM) pertains to the capacity to attribute mental states to others and to anticipate, describe, and explain behavior based on these mental states (Baron-Cohen, 1997). Traditionally, ToM is divided into two subcomponents: cognitive ToM and affective ToM (Zhou et al., 2023), which rely on different neural networks. The capacity to understand others' beliefs, intentions and goals (cognitive ToM; Amodio and Frith, 2006) has been connected to the activity of the dorsolateral prefrontal cortex (dlPFC; Shamay-Tsoory and Aharon-Peretz, 2007). On the other hand, the ventromedial prefrontal cortex (vmPFC) and the orbitofrontal cortex (OFC), together with the amygdala, are involved in the representation and top-down regulation of emotional states and represent the node for the affective processing of others' mental states (Abu-Akel and Shamay-Tsoory, 2011).

Whereas cognitive ToM has been explored using the first-order (Baillargeon et al., 2010) and the second-order (Perner and Wimmer, 1985) false belief tasks, affective ToM has usually been investigated using the Reading the Mind in the Eyes task (RME; Baron-Cohen et al., 2001) and the Reading the Mind in the Voice task (RMV; Rutherford et al., 2002; Golan et al., 2007). Additionally, the Faux Pas test (Baron-Cohen et al., 1999) is commonly used to assess ToM in a non-specific way.

Despite the extensive research on ToM abilities in neurodegenerative diseases, the findings are highly heterogeneous. Although some studies have shown that AD patients exhibit deficits only in ToM tasks that require high cognitive demand (Castelli et al., 2011; Demichelis et al., 2020; Kessels et al., 2021; de Lucena et al., 2023), other results suggest that certain subcomponents of ToM abilities are preserved in AD (e.g., interpretation of sarcasm, social inference, and emotion evaluation; Kumfor et al., 2017). In contrast, research on patients with FTD has shown more consistent results, with a widespread and severe impairment of ToM abilities, which could serve as a clinical marker distinguishing FTD from other neurodegenerative diseases (Gossink et al., 2018; Dodich et al., 2021).

Given the clinical and social importance of AD and FTD, and the relevance of social cognition in these two neurodegenerative diseases, the purpose of this study was to better characterize them by using a complete assessment that investigates both visual and auditory processing, for both basic and social emotions, in the same patients. Although these tests may not reveal differences striking enough to be used for individual diagnosis, we aim to provide a reliable tool to better outline the behavioral and emotional profile of these two pathologies, thus also providing useful instruments for their management. To this aim, we used both visual and prosodic stimuli, specifically two batteries (the Facial Emotion Recognition Battery and the Emotional Prosody Recognition Battery) devised by our research group (Benuzzi et al., 2004; Ariatti et al., 2008). Furthermore, processing of social emotions (affective ToM) within the visual and the prosodic domain was assessed by the RME and RMV tasks. Finally, the Faux Pas test (FP) was used to assess the cognitive component of ToM abilities.

We hypothesized that early-stage FTD patients would exhibit a global impairment in emotion recognition tasks and in the affective component of ToM abilities, as opposed to substantially preserved functions in early-stage AD patients.

2 Materials and methods

2.1 Participants

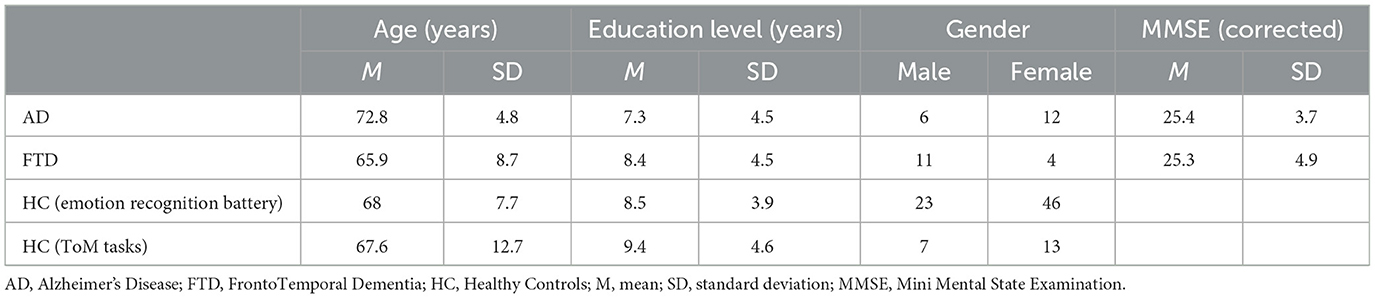

Patients affected by either AD or FTD were recruited among those followed by the Neuropsychology Service of the University Hospital (AOU) of Modena. Eighteen patients with AD (mean age = 72.8 years, SD ± 4.8 years; mean education = 7.3 years, SD ± 4.5 years; mean MMSE = 25.4, SD ± 3.7) and 15 patients with FTD (mean age = 65.9 years, SD ± 8.7 years; mean education = 8.4 years, SD ± 4.5 years; mean MMSE = 25.3, SD ± 4.9) were included in the study. They were all right-handed (assessed using the Edinburgh Inventory; Oldfield, 1971) and diagnosed with either AD or FTD according to criteria given by McKhann et al. (2011). Exclusion criteria were as follows: MMSE < 16, history of stroke, history of psychiatric illness or of traumatic brain injury.

As control groups, 70 healthy controls (HC; mean age = 68 years, SD ± 7.7 years; mean education = 8.5 years, SD ± 3.9 years) were recruited through public announcements among employees or former employees of the University of Modena and Reggio Emilia. They were administered the emotion recognition battery. Among these HC participants, 20 also completed the ToM tasks (mean age = 67.6 years, SD ± 12.7 years; mean education = 9.4 years, SD ± 4.6 years). HCs were recruited according to the following exclusion criteria: a history of neurological or psychiatric diseases, alcoholism, brain injury, cerebrovascular disease or other neurological conditions. Moreover, exclusion criteria included the presence of depression and obsessive-compulsive disorders, since it has been demonstrated that these disorders interfere with emotion identification (Gur et al., 1992; Abbruzzese et al., 1995; Bouhuys et al., 1999). The presence of these diseases was assessed through the Beck Depression Inventory (BDI > 11; Sica and Ghisi, 2007) and a reduced version of the Maudsley Obsessive-Compulsive Questionnaire (MOCQ-R < 75th percentile; Sanavio et al., 1986). See Table 1 for the demographic and clinical features of groups (AD, FTD, HC).

All subjects gave their informed consent to participate in the study. Consent was obtained according to the Declaration of Helsinki. Moreover, the study procedure was approved by the ethical committee of the University of Modena and Reggio Emilia (Comitato Etico di Ateneo per la Ricerca, CEAR; Prot. n 83243). Both patients and HC underwent a clinical neuropsychological evaluation, which was conducted during a single session lasting approximately 1.5 h. On a subsequent day, experimental tests (emotion recognition batteries and ToM tasks) were administered in a single session lasting between 40 min and 60 min, depending on the individual patient's abilities.

2.2 Materials

2.2.1 Basic emotion recognition

To evaluate the ability to process basic emotions (fear, happiness, sadness, anger, and disgust; Ekman, 1999), two batteries were used (Benuzzi et al., 2004).

The Facial Emotion Recognition Battery includes the following subtests:

Face Matching (FM). In this task, subjects are presented with a vertically arranged set of four neutral expression faces and must select the photograph identical to a target face. Photographs of different individuals of the same gender are used as distractors. The task includes 14 trials and assesses perceptual deficits in face discrimination. One point was assigned for each correct answer (score range 0–14); higher scores indicate better performance.

Facial Identity Recognition (FIR). This task evaluates the ability to recognize a single person across various facial expressions. It consists of 14 trials, in which the subject is asked to identify the target person from a vertically arranged set of four faces, each showing different expressions. The task assesses associative deficits in face perception. For each correct answer, one point was assigned (range score 0–14), thus the higher the score, the better the performance.

Facial Affect Naming (FAN). Subjects are asked to choose the name that best describes the emotional expression displayed from five options printed below a stimulus face. The subtest includes 25 trials, with five trials for each basic emotion. One point was assigned for each correct answer (score range 0–25); higher scores indicate better performance.

Facial Affect Selection (FAS). The participant is asked to select, from a vertically arranged set of five faces, the face whose expression matches a target label. The test includes 25 trials. One point was assigned for each correct answer (score range 0–25); higher scores indicate better performance.

Facial Affect Matching (FAM). In this task, subjects must choose, from a vertically arranged set of five faces, the one displaying the same expression as a stimulus face. The person in the stimulus photo is always different, with one identity foil included, i.e., a photograph of the same individual as the stimulus, but with a different expression. The test comprises 25 trials. One point was assigned for each correct answer (score range 0–25); higher scores indicate better performance.

FM and FIR subtests represent control tasks, since they assess the ability to discriminate the perceptual features of faces. On the other hand, FAN, FAS, and FAM assess basic emotion processing and recognition. There is no time limit to complete the task.

The Emotional Prosody Recognition Battery (Benuzzi et al., 2004; Ariatti et al., 2008; Bonora et al., 2011) evaluates the ability to process basic emotions from prosodic cues presented via a computer application. Before the administration of the battery, all participants underwent an auditory acuity evaluation. All subjects (HC, AD, and FTD) had a normal hearing threshold. Stimuli are brief Italian sentences with a neutral meaning (e.g., “Marta is combing the cat”). Sentences vary only with respect to emotional prosody, which could express one of the five basic emotions: fear, anger, sadness, happiness, and disgust. At the beginning of the testing session, the computer volume is regulated by the examiner according to the subject's requests. Sentences are presented both orally and in written form on a computer screen at the same time, and subjects can listen to each trial up to three times. The Emotional Prosody Recognition Battery includes different subtests as follows:

Vocal Identity Discrimination (VID) assesses basic voice discrimination abilities. Participants are asked to determine whether two sentences are spoken by the same person. VID consists of 16 pairs of neutral (aprosodic) stimuli. One point was assigned for each correct answer (score range 0–16); higher scores indicate better performance.

Prosodic Discrimination (PrD) measures basic intonation discrimination abilities. Given two sentences, subjects must identify whether they are uttered with the same prosodic intonation. PrD consists of 16 pairs of sentences expressing four different intonations: interrogative, declaratory, exclamatory, and imperative. One point was assigned for each correct answer (score range 0–16); higher scores indicate better performance.

Prosodic Affect Naming (PrAN) assesses emotional prosodic recognition abilities. Subjects are asked to choose from five options on the screen (representing five basic emotions) the one that best describes the emotional prosody of the target recorded sentence. PrAN consists of 25 trials. One point was assigned for each correct answer (score range 0–25); higher scores indicate better performance.

Prosodic Affect Discrimination (PrAD) measures emotional prosodic discrimination abilities. Given two recorded sentences, subjects must decide whether they are spoken with the same emotional prosody. PrAD consists of 45 pairs of sentences expressing the five basic emotions. One point was assigned for each correct answer (score range 0–45); higher scores indicate better performance.

As in the Facial Emotion Recognition Battery, some subtests (here, VID and PrD) represent control tasks, since they assess basic prosodic recognition abilities. In contrast, PrAN and PrAD assess the ability to recognize and discriminate emotional prosody. There is no time limit for answering.

2.2.2 Social emotion recognition (ToM tasks)

To assess social emotion recognition, the following three tasks were used: the Reading the Mind in the Eyes test (RME; Baron-Cohen et al., 2001), the Reading the Mind in the Voice (RMV; Rutherford et al., 2002; Golan et al., 2007) and the Faux Pas test (FP; Stone et al., 2002).

For the RME test (Baron-Cohen et al., 2001), we translated the official version of the Baron-Cohen test (https://www.autismresearchcentre.com/tests/eyes-test-adult/) into Italian, since the first participants were tested before the Italian adaptation was published. Furthermore, we selected 30 out of the original 36 items, excluding items where the verbal label in Italian corresponded to a word with very low usage frequency. In order to ensure methodological consistency, data collection was carried out in the same manner for all the subsequent participants. An independent group of 15 healthy subjects validated the chosen stimuli. The selected 30 images were presented using PowerPoint on a 15″ screen. Each slide featured a black-and-white photograph of the eye region of a human face against a white background, accompanied by four adjectives (e.g., bothered, joking, passionate, comforting). Subjects are asked to choose the adjective that best describes the mental state expressed by the person in the image. There was no time limit for responding.

RMV (Rutherford et al., 2002; Golan et al., 2007) assesses the ability to recognize a speaker's intention from prosody. We adapted the original version of the task to Italian, including two different distractors for each trial (see below), and selected 35 new short sentences that were recorded using the Audacity software 1.2.6 (http://audacity.sourceforge.net/). These sentences were then validated by an independent group of healthy subjects, and 30 of them were selected for the task.

Stimuli are presented both orally and in written form at the same time, on a computer screen through Microsoft Office PowerPoint. The items were designed so that the meaning of each sentence never matched the prosody with which it was pronounced. For instance, the sentence “I swear I have” typically indicates the completion of an action. However, when pronounced with a sarcastic tone, it implies the opposite, namely that the action has not been completed. The task required subjects to listen to the sentence and select the label that best describes the prosodic meaning conveyed by the sentence. The labels include: (i) an adjective that accurately reflects the prosody (correct answer); (ii) an adjective that corresponds to the sentence's semantic meaning (semantic error); (iii) an adjective that matches neither the prosody nor the meaning of the sentence (incorrect answer).
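The three response categories described above can be tallied per participant. The following Python sketch is only an illustration, not the scoring procedure used in the study: the `RmvItem` structure, the label strings, and the example responses are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class RmvItem:
    """One RMV trial (hypothetical structure): the three labels shown to the subject."""
    prosodic_label: str   # adjective matching the prosody (correct answer)
    semantic_label: str   # adjective matching the literal meaning (semantic error)
    unrelated_label: str  # adjective matching neither (incorrect answer)


def classify_response(item, response):
    """Map the chosen label to one of the three response categories."""
    if response == item.prosodic_label:
        return "correct"
    if response == item.semantic_label:
        return "semantic_error"
    if response == item.unrelated_label:
        return "incorrect"
    raise ValueError(f"{response!r} is not one of the labels for this item")


def score_rmv(items, responses):
    """Count correct answers, semantic errors, and incorrect answers."""
    if len(items) != len(responses):
        raise ValueError("one response is required per item")
    counts = Counter(classify_response(i, r) for i, r in zip(items, responses))
    return {k: counts.get(k, 0) for k in ("correct", "semantic_error", "incorrect")}


# Made-up example: two trials with labels invented for illustration only.
items = [
    RmvItem("sarcastic", "sincere", "frightened"),
    RmvItem("annoyed", "grateful", "sleepy"),
]
print(score_rmv(items, ["sarcastic", "grateful"]))
# {'correct': 1, 'semantic_error': 1, 'incorrect': 0}
```

Keeping semantic errors separate from other incorrect answers makes it possible to analyze them as their own outcome measure.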

FP (Stone et al., 2002) assesses both the cognitive and affective components of ToM. Given the length of time required to administer the entire test, the 10 least complex stories in terms of comprehension were selected, choosing five stories that contain faux pas and five stories that do not (control tasks), from the Italian version developed by Massaro and colleagues (https://www.autismresearchcentre.com/tests/faux-pas-test-adult). The task requires participants to listen to a story read by an examiner. Some stories contain a faux pas, in which a character says or does something that unintentionally offends or embarrasses another character, while others do not. After each story, participants are asked to answer a series of questions designed to test their understanding of the social dynamics. These questions include: (i) Faux Pas detection; (ii) Theory of Mind questions; and (iii) Control questions to ensure basic comprehension. The total score was obtained from the sum of the single scores. Correct identification of faux pas and correct answers to ToM-related questions indicate an understanding of social nuances and the ability to infer others' mental states, while errors might indicate difficulties in recognizing or interpreting social information.

2.2.3 Neuropsychological assessment

All patients underwent a comprehensive neuropsychological evaluation. For the purpose of the present study, performances in the following tasks were considered: the Mini-Mental State Examination (MMSE; Magni et al., 1996) to assess the stage of the neurodegenerative disease, the Benton test of facial recognition (Benton et al., 1983) to identify perceptual difficulties in processing faces, and the Similarities subtest from the Italian revised version of the Wechsler Adult Intelligence Scale (Orsini and Laicardi, 1997) as a measure of executive functions.

2.3 Statistical analyses

Data management and analysis were performed using RStudio (2024; RStudio: Integrated Development for R. RStudio, PBC, Boston, MA URL http://www.rstudio.com/) and Statistica (https://docs.tibco.com/).

Normality of variables was assessed using the Shapiro-Wilk test.

Parametric tests (repeated-measures ANOVAs; t-test for independent samples) and non-parametric tests (Kruskal-Wallis rank sum test; Pearson's Chi-squared test) were used to investigate differences among groups. For post-hoc comparisons, Newman-Keuls tests were used, and the resulting p values were adjusted by Bonferroni correction for multiple comparisons to control the type I error rate. Finally, Pearson's or Spearman's correlations were performed to assess the relationship between neuropsychological scores and emotion recognition test scores.
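As an illustration of the multiple-comparison adjustment, the sketch below applies a plain Bonferroni correction to a set of post-hoc p values. It is a minimal example under stated assumptions: the p values are invented, and the number of comparisons (three pairwise group contrasts) is assumed rather than taken from the study's analysis scripts.

```python
def bonferroni(p_values):
    """Return Bonferroni-adjusted p values: each p is multiplied by the
    number of comparisons and capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


def survives(p_values, alpha=0.05):
    """Flag which comparisons remain significant after the adjustment."""
    return [p_adj < alpha for p_adj in bonferroni(p_values)]


# Hypothetical post-hoc p values for three pairwise contrasts
# (illustrative numbers only, not results from the study).
raw = [0.0004, 0.02, 0.3]
print(survives(raw))  # [True, False, False]
```

With three comparisons, an unadjusted p of 0.02 becomes 0.06 after adjustment and no longer meets the 0.05 threshold, which is the situation described as not surviving the correction.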

3 Results

Analyses of data distribution using the Shapiro-Wilk test showed that age and corrected MMSE scores were normally distributed, whereas education and gender were not. Scores on the basic emotion recognition batteries, RME, RMV, the Benton test of facial recognition, and the Similarities WAIS subtest were also normally distributed, whereas FP and MMSE scores were not.

3.1 Demographic data

A one-way ANOVA was conducted with Age as the dependent variable and Group (AD, FTD, HC) as the between-subjects factor. The main effect of Group was not significant [F(3, 119) = 2.11, p = 0.1, η2p = 0.05]. A Kruskal-Wallis rank sum test was conducted with Education as the dependent variable and Group (AD, FTD, HC) as the between-subjects factor. The main effect of Group was not significant [H(3) = 3.81, p = 0.3]. A t-test for independent samples on corrected MMSE scores showed no significant difference between AD and FTD [t(31) = 0.06, p = 0.95]. A Chi-squared test of independence was performed to analyze the distribution of gender across the groups (AD, FTD, HC) and revealed a significant difference among them (χ2 = 8.46, df = 3, p = 0.04).

3.2 Basic emotion recognition batteries

3.2.1 Facial emotion recognition battery

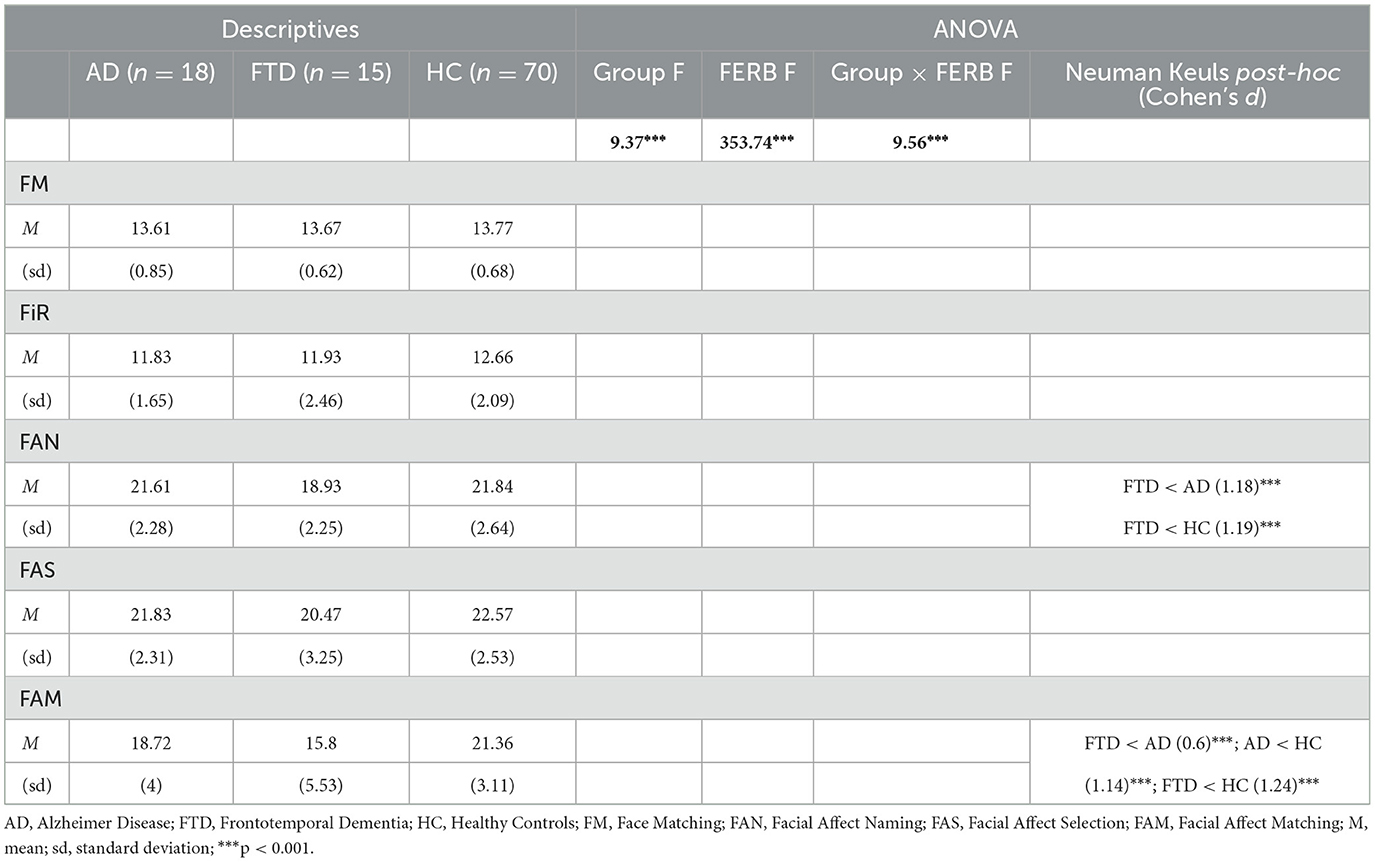

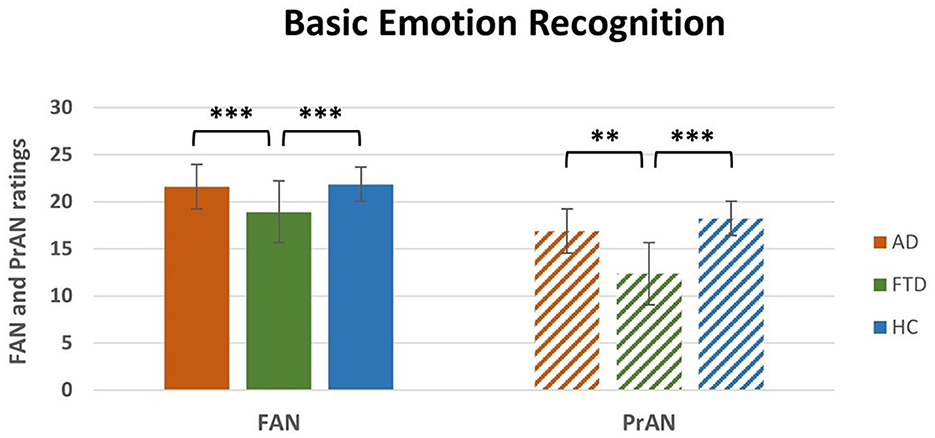

A repeated measures ANOVA was conducted on the Facial Emotion Recognition Battery scores with Subtests (FM, FIR, FAN, FAS, and FAM) as within-subjects factor and Group (AD, FTD, HC) as between-subjects factor (Table 2). The main effects of Group [F(2, 100) = 9.4, p < 0.001, η2p = 0.16] and Subtests [F(4, 400) = 9.4, p < 0.001, η2p = 0.78] were statistically significant, as was the Subtests * Group interaction [F(8, 400) = 9.6, p < 0.001, η2p = 0.16]. The post-hoc comparisons (Table 2) showed that FTD patients significantly differed in the FAN (M = 18.9, SEM = 0.6; Figure 1, left) and in the FAM (M = 15.8, SEM = 1.4) subtests from both AD (FAN M = 21.6, SEM = 0.1, p < 0.01; FAM M = 18.7, SEM = 0.2, p < 0.001) and HC (FAN M = 21.8, SEM = 0.3, p < 0.01; FAM M = 21.4, SEM = 0.4, p < 0.001); in addition, AD differed from HC in the FAM subtest (p < 0.01). Interestingly, the differences remained significant in an Analysis of Covariance (ANCOVA) with age and education as covariates [F(2, 98) = 14.2, p < 0.001]. All p values survived Bonferroni correction.

Table 2. Descriptive statistics, repeated measures ANOVA and post-hoc results conducted on facial emotion recognition battery (FERB) scores.

Figure 1. Interaction Subtest * Group post-hoc results for FAN and PrAN subtests. FAN, Facial Affect Naming; PrAN, Prosodic Affect Naming; AD, Alzheimer's Disease; FTD, FrontoTemporal Dementia; HC, Healthy Controls. **p < 0.01, ***p < 0.001.

3.2.2 Emotional prosody recognition battery

A repeated measures ANOVA was conducted on the Emotional Prosody Recognition Battery scores with Subtests (VID, PrD, PrAN, PrAD) as within-subjects factor and Group (AD, FTD, HC) as between-subjects factor (Table 3). The main effects of Group [F(2, 100) = 13, p < 0.001, η2p = 0.21] and of Subtests [F(3, 300) = 1063.3, p < 0.001, η2p = 0.91] were significant. The Subtests * Group interaction was also significant [F(6, 300) = 4.3, p < 0.001, η2p = 0.08]. The post-hoc analyses (Table 3) showed that FTD significantly differed in the PrAN (M = 12.3, SEM = 1.2; Figure 1, right) subtest from both AD (M = 16.9, SEM = 0.2, p < 0.01) and HC (M = 18.2, SEM = 0.5, p < 0.001). FTD patients' scores (M = 33.6, SEM = 0.9) were also significantly different from HC (M = 37.7, SEM = 0.6, p < 0.001) and AD (M = 35.8, SEM = 0.2, p = 0.02) in the PrAD subtest. The difference between FTD and AD did not survive Bonferroni correction for multiple comparisons, whereas all other p values did. Interestingly, the differences remained significant in an ANCOVA with age and education as covariates [F(2, 98) = 14.7, p < 0.001].
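The partial eta squared values reported with each F statistic can be recovered from the F value and its degrees of freedom through the standard identity η2p = (F × df_effect) / (F × df_effect + df_error). The short sketch below applies it to the three effects reported above for the prosody battery. The function name is ours, and the check is an illustration rather than part of the original analysis.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared from an F statistic and its degrees of freedom."""
    if df_effect <= 0 or df_error <= 0 or f_value < 0:
        raise ValueError("F must be non-negative and both df must be positive")
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# F values and degrees of freedom as reported for the prosody battery.
print(round(partial_eta_squared(13, 2, 100), 2))      # Group: 0.21
print(round(partial_eta_squared(1063.3, 3, 300), 2))  # Subtests: 0.91
print(round(partial_eta_squared(4.3, 6, 300), 2))     # Subtests * Group: 0.08
```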

Table 3. Descriptive statistics, repeated measures ANOVA and post-hoc results conducted on emotional prosody recognition battery (EPRB) scores.

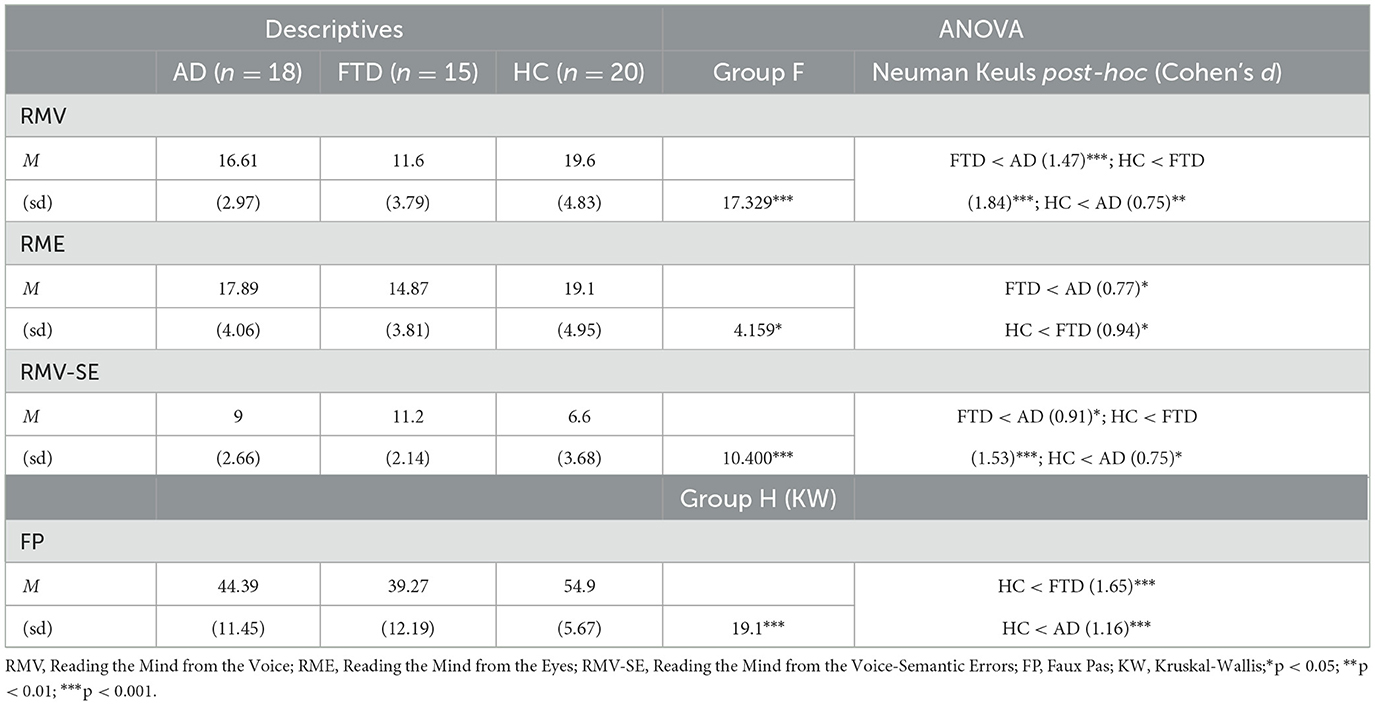

3.3 Social emotion recognition (ToM tasks)

One-way between-subject ANOVA on RME ratings revealed a significant effect of Group [F(2, 50) = 4.2, p < 0.05; η2p = 0.14, Table 4]. Post-hoc comparisons (Table 4) revealed that FTD ratings (M = 14.9, SEM = 1) were significantly lower compared to HC (M = 19.1, SEM = 1.1; p < 0.05) and to AD (M = 17.9, SEM = 1; p < 0.05). There was no significant difference between HC and AD (Figure 2, left).

Table 4. Descriptive statistics, repeated measures ANOVA and post-hoc results conducted on ToM scores.

Figure 2. Main effect of group post-hoc results for RME and RMV tasks. RME, Reading the Mind in the Eyes; RMV, Reading the Mind in the Voice; AD, Alzheimer's Disease; FTD, FrontoTemporal Dementia; HC, Healthy Controls. *p < 0.05, ***p < 0.001.

One-way between-subject ANOVA on Semantic Errors of RMV revealed a significant effect of Group [F(2, 50) = 10.4, p < 0.001; η2p = 0.29, Table 4]. Post-hoc comparisons (Table 4) revealed that FTD ratings (M = 11.2, SEM = 0.6) were significantly higher compared to HC (M = 6.6, SEM = 0.8; p < 0.001) and AD (M = 9, SEM = 0.6; p < 0.05). Moreover, there was a significant difference between AD and HC (p < 0.05).

One-way between-subject ANOVA on RMV ratings revealed a significant effect of Group [F(2, 50) = 17.3, p < 0.0001; η2p = 0.41, Table 4]. Post-hoc comparisons (Table 4) revealed that FTD ratings (M = 11.6, SEM = 1) were significantly lower compared to HC (M = 19.6, SEM = 1.1; p < 0.001) and to AD (M = 16.6, SEM = 0.7; p < 0.001). Moreover, there was a significant difference between AD and HC (p < 0.05; Figure 2, right).

A non-parametric one-way between-subject analysis (Kruskal-Wallis rank sum test) on FP ratings revealed a significant effect of Group [H(2) = 19.1, p < 0.0001, Table 4]. Post-hoc comparisons (Table 4) revealed that FTD (M = 16.9, SEM = 3.1; p < 0.001) and AD (M = 22.6, SEM = 2.7; p < 0.05) ratings were significantly lower compared to HC (M = 38.5, SEM = 1.3). The difference between FTD and HC survived Bonferroni correction, whereas there was no significant difference between AD and FTD (Figure 3).

Figure 3. Main effect of group post-hoc results for FP. FP, Faux Pas; AD, Alzheimer's Disease; FTD, FrontoTemporal Dementia; HC, Healthy Controls. *p < 0.05, ***p < 0.001.

3.4 Neuropsychological tests

There was no significant difference between AD and FTD on the Benton test of facial recognition [t(30.54) = 1.38, p = 0.18]. The mean score of FTD patients on the Similarities WAIS subtest (M = 27.32, SEM = 3.8) was significantly lower than that of AD patients [M = 39.91, SEM = 1.5; t(18.51) = 3.06, p < 0.01].
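The fractional degrees of freedom reported above are what an unequal-variance (Welch) t-test produces. The sketch below recomputes the Similarities comparison from the reported means and SEMs. It rests on two assumptions that the text does not confirm: that a Welch test was used, and that all 18 AD and 15 FTD patients contributed scores.

```python
import math


def welch_from_summary(mean1, sem1, n1, mean2, sem2, n2):
    """Welch t statistic and Welch-Satterthwaite degrees of freedom
    computed from group means, standard errors of the mean, and sizes."""
    var_sum = sem1 ** 2 + sem2 ** 2  # squared SEM equals variance / n
    t = (mean1 - mean2) / math.sqrt(var_sum)
    df = var_sum ** 2 / (sem1 ** 4 / (n1 - 1) + sem2 ** 4 / (n2 - 1))
    return t, df


# Reported summary values; the group sizes (18 and 15) are assumed.
t, df = welch_from_summary(39.91, 1.5, 18, 27.32, 3.8, 15)
print(f"t = {t:.2f}, df = {df:.1f}")
```

Under these assumptions the result lands near the reported t(18.51) = 3.06; small discrepancies are expected because the published means and SEMs are rounded.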

3.5 Correlations

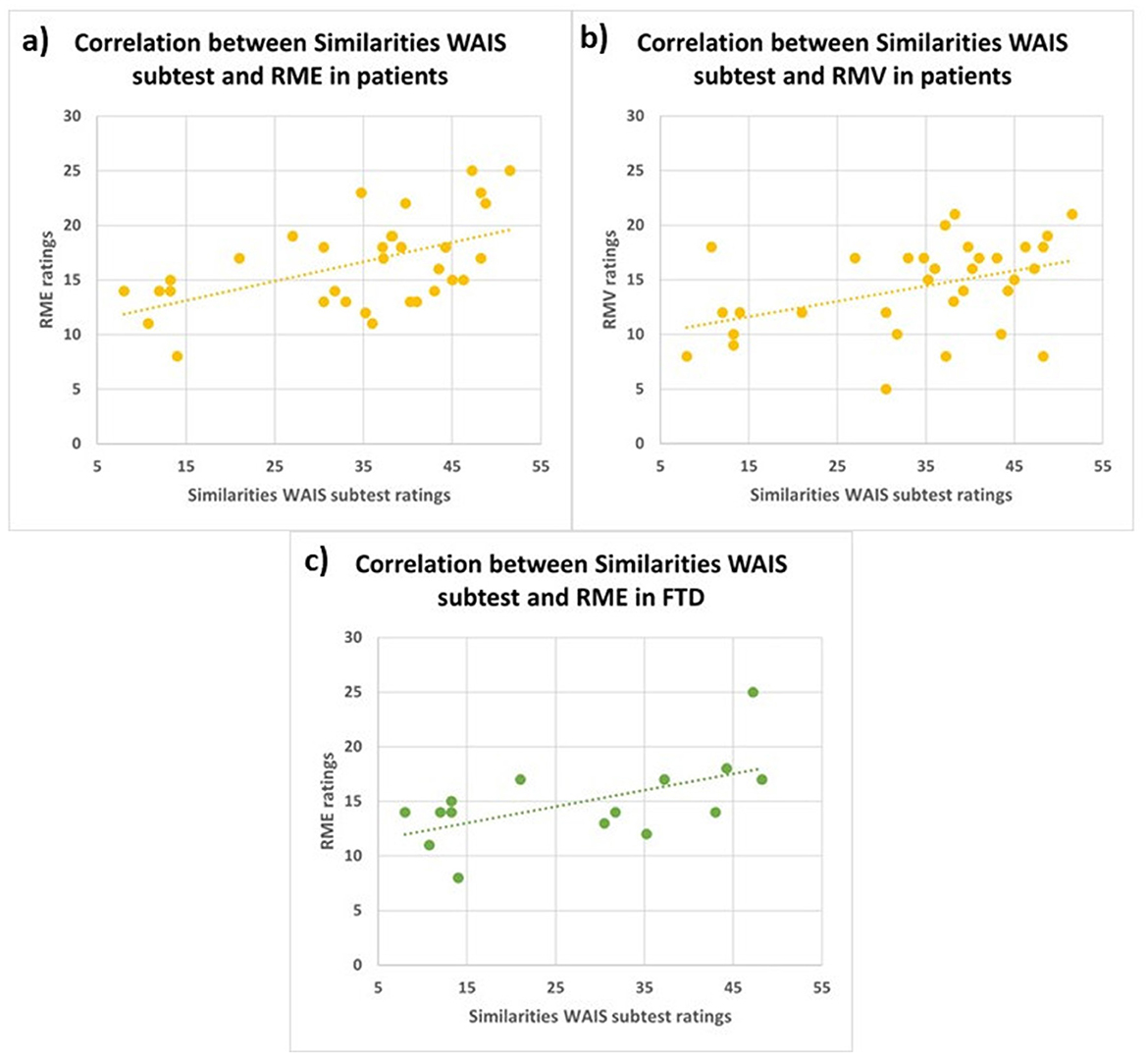

Pearson's correlation analyses revealed three significant positive correlations: between the Similarities WAIS subtest and RME in FTD patients (r = 0.6, p < 0.05); between the Similarities WAIS subtest and RME in all patients (r = 0.5, p < 0.01); and between the Similarities WAIS subtest and RMV in all patients (r = 0.4, p < 0.05; Figure 4).
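The significance of a Pearson correlation follows from the statistic t = r × sqrt(n − 2) / sqrt(1 − r²), which has n − 2 degrees of freedom. The sketch below computes it for the three correlations above, assuming that every patient contributed data (n = 15 for FTD, n = 33 for all patients). These sample sizes are an assumption, since the text does not state them for the correlation analyses.

```python
import math


def t_from_r(r, n):
    """t statistic (n - 2 degrees of freedom) for a Pearson correlation r
    computed on n paired observations."""
    if n < 3 or not -1 < r < 1:
        raise ValueError("need n >= 3 and -1 < r < 1")
    return r * math.sqrt(n - 2) / math.sqrt(1 - r * r)


# (label, r, assumed n); r values as reported, n values assumed.
cases = [
    ("Similarities vs RME, FTD patients", 0.6, 15),
    ("Similarities vs RME, all patients", 0.5, 33),
    ("Similarities vs RMV, all patients", 0.4, 33),
]
for label, r, n in cases:
    print(f"{label}: t({n - 2}) = {t_from_r(r, n):.2f}")
```

Each t value can then be compared with the two-tailed critical value for its degrees of freedom from a standard t table.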

Figure 4. Correlations between Similarities WAIS subtest and RME in all patients (A; r = 0.5, p < 0.01), between Similarities WAIS subtest and RMV in all patients (B; r = 0.4, p < 0.05) and between Similarities WAIS subtest and RME in FTD patients (C; r = 0.6, p < 0.05). RME, Reading the Mind in the Eyes; RMV, Reading the Mind in the Voice; FTD, FrontoTemporal Dementia.

4 Discussion

In the present study we aimed to assess social skills in the early stages of Alzheimer's Disease (AD) and FrontoTemporal Dementia (FTD); this information can be useful both for a better characterization and for a better clinical management of the two conditions. To this end, we recruited two groups of patients, one with AD and one with FTD, whose cognitive impairment was comparable (MMSE not significantly different between the two groups), and a control group of healthy participants. Both visual and auditory tasks were administered, for both basic emotion and social emotion (ToM) recognition. In addition, to test cognitive ToM, the Faux Pas (FP) test was used. Overall, our results suggest that in early stages of FTD and AD there is an impairment of social cognition.

Regarding FTD, in line with the literature, our data show that these patients are significantly impaired, as compared to AD patients and HC, in all tasks that evaluate basic and social emotions processing. Specifically, FTD patients' deficits emerge, in comparison to AD patients, in those subtests of Facial Emotion Recognition Battery and Emotional Prosody Recognition Battery which require the association of an emotional (visual and auditory) expression with a verbal label, that is, in the Facial Affect Naming (FAN) and Prosodic Affect Naming (PrAN) subtests. Various studies showed consistent deficits in emotion recognition common to both visual (Bertoux et al., 2015; Jiskoot et al., 2021) and vocal stimuli (Dara et al., 2013; Wright et al., 2018), and particularly severe for negative emotions (Rosen et al., 2002; Fernandez-Duque and Black, 2005). Indeed, social cognition deficits are widely recognized as a hallmark of FTD, especially in the behavioral variant (bvFTD; Rascovsky et al., 2011). Notably, this impairment is particularly relevant in bvFTD as well as in the semantic variant of FTD (svFTD; Kumfor et al., 2014; Lee et al., 2020), and has been linked to structural atrophy in brain regions such as the anterior temporal lobes and the amygdala (Rosen et al., 2002, 2006; Kumfor et al., 2014; Lee et al., 2020). On the other hand, previous findings showed that tasks that increase the intensity of emotional expressions may mitigate recognition issues in bvFTD and primary progressive non-fluent aphasia (PNFA), suggesting that attentional and perceptual difficulties contribute to deficits in some FTD subtypes (Rascovsky et al., 2011). However, in svFTD, these issues are likely due to primary emotion processing impairments, rather than to cognitive overload. Interestingly, negative emotion recognition turned out to be particularly useful for differentiating FTD from AD (Bora et al., 2016), as AD patients typically show milder deficits.

Deficits in ToM skills are usually found in FTD patients, especially in the behavioral variant, with respect to AD patients, both in the visual (Gregory et al., 2002) and in the auditory modality (Orjuela-Rojas et al., 2021). Our findings demonstrate that FTD patients exhibit significantly greater impairments in both RME and RMV tests, compared to HC. Additionally, FTD patients show significantly worse performance in the RMV test compared to AD patients. Interestingly, especially in FTD patients, we found that scores on the Similarities subtest of the WAIS and RME scores were positively correlated, showing that the greater the deficit in executive functions, the greater the impairment in recognizing emotions from eye cues. The association between executive functions and emotion recognition has been reported in healthy aging (Circelli et al., 2013) and in several psychiatric diseases (David et al., 2014; Yang et al., 2015; Williams et al., 2015), as well as in neurodegenerative diseases, such as Parkinson's Disease (Péron et al., 2012) and AD (Buçgün et al., 2023). In particular, social cognition skills could rely on executive processes, such as mental speed, cognitive flexibility, and inhibitory control, to disregard personal viewpoints and concentrate on pertinent aspects, enabling the timely processing of all relevant information (David et al., 2014). Indeed, the ability to understand others' beliefs, intentions, and goals (cognitive ToM) relies on a frontotemporal network comprising the dorsolateral prefrontal cortex (dlPFC; cognitive processing of mental states and perspective-taking), the ventromedial prefrontal cortex (vmPFC), the orbitofrontal cortex (OFC), and the amygdala (processing and regulating emotional states; Amodio and Frith, 2006; Shamay-Tsoory and Aharon-Peretz, 2007; Abu-Akel and Shamay-Tsoory, 2011). On the other hand, the posterior superior temporal sulcus (pSTS), the temporo-parietal junction (TPJ), and the medial prefrontal cortex (mPFC) belong to both the emotional and the cognitive ToM networks (Schurz and Perner, 2015; Molenberghs et al., 2016). This network facilitates representing others' mental states and differentiating them from one's own, regardless of the nature of the states.

Lastly, we found that FTD patients made significantly more semantic errors in the RMV test than healthy controls. This indicates that these patients were able to understand the meaning of the sentences but were selectively impaired in recognizing the affective aspects of prosody.

Focusing on AD, our study revealed a clear impairment in the recognition of emotional expressions in the Facial Affect Matching (FAM) subtest, which requires keeping two emotional stimuli in memory and comparing them. This deficit could be explained by the overlap between the areas engaged in memory tasks and those involved in emotional processing, both of which are prone to neurodegeneration in AD (Bediou et al., 2012). Indeed, most previous studies concluded that the ability to understand facial and prosodic emotional expressions is likely impaired because of the general cognitive decline observed in these patients (Amlerova et al., 2022; Buçgün et al., 2023). The mild deficits in emotion recognition described in the early stages of AD were more specifically related to low-intensity or negative emotions, such as sadness (Maki et al., 2013; Torres et al., 2015; Garcia-Cordero et al., 2021). On the other hand, emotion recognition seemed preserved in tasks with low cognitive demand (Luzzi et al., 2007). Furthermore, deficits in emotional processing in AD also extended across different sensory modalities (e.g., prosody; Amlerova et al., 2022). Therefore, the deficits observed in AD patients in the FAM subtest could be related to the high cognitive demand intrinsic to the task, since the patient is required to remember a face and associate it with an emotional label.

Regarding ToM skills in AD patients, according to Wright et al. (2018), the recognition of affective prosody relies on a ventral processing stream involving the superior temporal cortex as well as the inferior and anterior temporal cortex in the right hemisphere. Impairments in this pathway may result in compromised access to the Abstract Representations of Acoustic Characteristics that Convey Emotion (ARACCE; Wright et al., 2018). This is in line with our finding that AD patients are selectively impaired in recognizing emotions from the voice, that is, from prosody, as compared to the HC group. These emotion recognition deficits in AD are consistent with neurodegeneration in the temporal lobes (Bediou et al., 2012; Amlerova et al., 2022), which affects the abstract representations of acoustic features that convey emotions.

Finally, both FTD and AD patients are significantly impaired in several Faux Pas (FP) subtests, including the affective and cognitive scores, as compared to the HC, whereas the two patient groups did not differ from each other. Our FP task contained several questions that enabled us to assess whether patients understood both the semantic aspects of the stories (control stories and questions) and the social gaffes (faux pas stories and questions).

Recent neuroimaging studies showed that the areas associated with the RME task are the left and right middle temporal gyri, superior temporal gyrus, cingulate gyrus, superior frontal gyrus, inferior frontal gyrus, middle frontal gyrus and left precentral gyrus. A recent FDG-PET and magnetic resonance imaging (MRI) study hypothesized that the neural correlates of ToM can be categorized into hubs and spokes (Orso et al., 2022). Within the connectionist paradigm (van den Heuvel et al., 2009), it has been suggested that regions with greater connectivity to other components of a network (i.e., the “hubs”) play a more crucial role in network functioning than those with less connectivity (i.e., the “spokes”; Hwang et al., 2013). Moreover, it has been hypothesized that damage to secondary nodes (spokes) can be compensated by the integrity of central nodes (hubs), whereas damage to the hubs themselves may result in clinical symptoms (van den Heuvel et al., 2009; Hwang et al., 2013). According to the structural connectivity and the distribution of hypometabolism, hubs of the RME network were identified in frontal regions. This may explain the ToM deficits commonly observed in FTD patients, in whom neurodegeneration affects these hubs in the early stages of the disease (Adenzato et al., 2010). In contrast, in AD, the functional involvement of these hubs typically becomes evident in the later stages of the disease, thus explaining the absence of ToM impairments in the early stages (Lucena et al., 2020). Indeed, our results in the RME test are consistent with these hypotheses, in that we only found deficits in FTD patients, which could likely be due to the neurodegeneration of these hubs.

To the best of our knowledge, this is the first extensive evaluation of emotional and social abilities in groups of neurodegenerative patients in the Italian population. In particular, we revised the RMV task to better assess the prosodic affective component of ToM in FTD patients, introducing the possibility of distinguishing semantic from non-semantic errors. The development of two tools for studying ToM abilities in the Italian language fills a gap in neuropsychological testing by providing instruments specifically adapted for use with Italian patients, which were previously unavailable. Furthermore, alongside the ability to quantify errors in the RMV test, we have introduced the capability to qualify these errors. This allows for the identification of patients with deficits in mental state processing stemming from semantic impairments, as opposed to those whose errors may be attributed to task complexity, thus reflecting the underlying neurodegenerative process. The modified versions of the two ToM tests are therefore sensitive in detecting deficits that cannot be attributed to a generic neurodegenerative process. Combined with the two emotion processing batteries, they represent effective tools for both the quantification and the qualification of social cognition impairments, even in patients with different neurological conditions such as traumatic brain injury, epilepsy (as demonstrated in our previous studies; Benuzzi et al., 2004; Bonora et al., 2011), focal lesions, and brain tumors.

Some limitations of the present study must be considered. Firstly, larger samples of both groups of patients with dementia will be necessary. Specifically, in the group of patients with FTD, future studies should examine the impact of the different dementia variants on social abilities. Additionally, using a larger number of tests and/or more refined tests than in the present study will enable a better understanding of the changes in the various components of ToM and emotion recognition in various forms of dementia.

Summing up, in the current study we found that FTD patients are significantly impaired in social cognition abilities, in both the visual and the auditory modality, as compared to both AD patients and HC. On the other hand, in AD the emotion recognition impairment is prevalent in the auditory modality. Therefore, introducing the evaluation of these aspects into the clinical neuropsychological assessment could provide new insights into the cerebral localization of emotional and social skills, and into the neurodegenerative processes that may affect them.

Considering the clinical and social influence of social cognition impairments in these two neurodegenerative diseases, this study aimed to provide a more comprehensive characterization of their impact. Specifically, we employed an extensive assessment protocol designed to evaluate both visual and auditory processing across basic and social emotions within the same patient groups. While these tests may not produce clear differences sufficient for individual diagnosis, our objective is to offer a robust and reliable framework for delineating the behavioral and emotional profiles characteristic of AD and FTD. This, in turn, can serve as a valuable tool for enhancing our understanding of these diseases and facilitating improved clinical management strategies, including tailored therapeutic interventions and caregiver support.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving humans were approved by Comitato Etico di Ateneo per la Ricerca, CEAR; Prot. n 83243. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

CS: Investigation, Methodology, Validation, Writing – review & editing. VZ: Formal analysis, Visualization, Writing – original draft, Writing – review & editing. CC: Formal analysis, Validation, Writing – original draft, Writing – review & editing. MM: Conceptualization, Methodology, Supervision, Writing – review & editing, Investigation. FR: Writing – original draft, Writing – review & editing. OC: Software, Writing – review & editing. MT: Writing – review & editing, Investigation. FL: Conceptualization, Project administration, Supervision, Writing – review & editing. PN: Conceptualization, Supervision, Writing – review & editing. FB: Conceptualization, Data curation, Investigation, Project administration, Supervision, Validation, Writing – review & editing, Methodology, Writing – original draft.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declared that they were an editorial board member of Frontiers, at the time of submission. This had no impact on the peer review process and the final decision.

Generative AI statement

The author(s) declare that no Gen AI was used in the creation of this manuscript.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Abbruzzese, M., Bellodi, L., Ferri, S., and Scarone, S. (1995). Frontal lobe dysfunction in schizophrenia and obsessive-compulsive disorder: a neuropsychological study. Brain Cogn. 27, 202–212. doi: 10.1006/brcg.1995.1017

Abu-Akel, A., and Shamay-Tsoory, S. (2011). Neuroanatomical and neurochemical bases of theory of mind. Neuropsychologia 49, 2971–2984. doi: 10.1016/j.neuropsychologia.2011.07.012

Adenzato, M., Cavallo, M., and Enrici, I. (2010). Theory of mind ability in the behavioural variant of frontotemporal dementia: an analysis of the neural, cognitive, and social levels. Neuropsychologia 48, 2–12. doi: 10.1016/j.neuropsychologia.2009.08.001

Adolphs, R. (1999). Social cognition and the human brain. Trends Cogn. Sci. 3, 469–479. doi: 10.1016/S1364-6613(99)01399-6

Adolphs, R., Tranel, D., and Damasio, A. R. (1998). The human amygdala in social judgment. Nature 393, 470–474. doi: 10.1038/30982

Adolphs, R., Tranel, D., Damasio, H., and Damasio, A. (1994). Impaired recognition of emotion in facial expressions following bilateral damage to the human amygdala. Nature 372, 669–672. doi: 10.1038/372669a0

Amlerova, J., Laczó, J., Nedelska, Z., Laczó, M., Vyhnálek, M., Zhang, B., et al. (2022). Emotional prosody recognition is impaired in Alzheimer's disease. Alzheimer. Res. Ther. 14:50. doi: 10.1186/s13195-022-00989-7

Amodio, D. M., and Frith, C. D. (2006). Meeting of minds: the medial frontal cortex and social cognition. Nat. Rev. Neurosci. 7, 268–277. doi: 10.1038/nrn1884

Ariatti, A., Benuzzi, F., and Nichelli, P. (2008). Recognition of emotions from visual and prosodic cues in Parkinson's disease. Neurol. Sci. 29, 219–227. doi: 10.1007/s10072-008-0971-9

Baillargeon, R., Scott, R. M., and He, Z. (2010). False-belief understanding in infants. Trends Cogn. Sci. 14, 110–118. doi: 10.1016/j.tics.2009.12.006

Baron-Cohen, S., O'Riordan, M., Stone, V., Jones, R., and Plaisted, K. (1999). Recognition of faux pas by normally developing children and children with Asperger syndrome or high-functioning autism. J. Autism Dev. Disord. 29, 407–418. doi: 10.1023/A:1023035012436

Baron-Cohen, S., Wheelwright, S., Hill, J., Raste, Y., and Plumb, I. (2001). The “Reading the Mind in the Eyes” test revised version: a study with normal adults, and adults with Asperger syndrome or high-functioning autism. J. Child Psychol. Psychiatry Allied Discipl. 42, 241–251. doi: 10.1111/1469-7610.00715

Bediou, B., Brunelin, J., d'Amato, T., Fecteau, S., Saoud, M., Hénaff, M. A., et al. (2012). A comparison of facial emotion processing in neurological and psychiatric conditions. Front. Psychol. 3:98. doi: 10.3389/fpsyg.2012.00098

Benton, A., Hamsher, K. D., Varney, N., and Spreen, O. (1983). Contribution to Neuropsychological Assessment. New York, NY: Oxford University Press.

Benuzzi, F., Meletti, S., Zamboni, G., Calandra-Buonaura, G., Serafini, M., Lui, F., et al. (2004). Impaired fear processing in right mesial temporal sclerosis: a fMRI study. Brain Res. Bull. 63, 269–281. doi: 10.1016/j.brainresbull.2004.03.005

Bertoux, M., de Souza, L. C., Sarazin, M., Funkiewiez, A., Dubois, B., and Hornberger, M. (2015). How preserved is emotion recognition in Alzheimer disease compared with behavioral variant frontotemporal dementia? Alzheimer Dis. Assoc. Disord. 29, 154–157. doi: 10.1097/WAD.0000000000000023

Bonora, A., Benuzzi, F., Monti, G., Mirandola, L., Pugnaghi, M., Nichelli, P., et al. (2011). Recognition of emotions from faces and voices in medial temporal lobe epilepsy. Epilepsy Behav. 20, 648–654. doi: 10.1016/j.yebeh.2011.01.027

Bora, E., Velakoulis, D., and Walterfang, M. (2016). Meta-analysis of facial emotion recognition in behavioral variant frontotemporal dementia: comparison with Alzheimer disease and healthy controls. J. Geriatric Psychiatry Neurol. 29, 205–211. doi: 10.1177/0891988716640375

Bouhuys, A. L., Geerts, E., and Gordijn, M. C. (1999). Depressed patients' perceptions of facial emotions in depressed and remitted states are associated with relapse: a longitudinal study. J. Nervous Mental Dis. 187, 595–602. doi: 10.1097/00005053-199910000-00002

Buçgün, I., Korkmaz, S. A., and Öyekçin, D. G. (2023). Facial emotion recognition is associated with executive functions and depression scores, but not staging of dementia, in mild-to-moderate Alzheimer's disease. Brain Behav. 14:e3390. doi: 10.1002/brb3.3390

Castelli, I., Pini, A., Alberoni, M., Liverta-Sempio, O., Baglio, F., Massaro, D., et al. (2011). Mapping levels of theory of mind in Alzheimer's disease: a preliminary study. Aging Mental Health 15, 157–168. doi: 10.1080/13607863.2010.513038

Ceravolo, L., Frühholz, S., Pierce, J., Grandjean, D., and Péron, J. (2021). Basal ganglia and cerebellum contributions to vocal emotion processing as revealed by high-resolution fMRI. Sci. Rep. 11:10645. doi: 10.1038/s41598-021-90222-6

Chaudhary, S., Zhornitsky, S., Chao, H. H., van Dyck, C. H., and Li, C. R. (2022). Emotion processing dysfunction in Alzheimer's disease: an overview of behavioral findings, systems neural correlates, and underlying neural biology. Am. J. Alzheimer. Dis. Other Dement. 37, 1–33. doi: 10.1177/15333175221082834

Circelli, K. S., Clark, U. S., and Cronin-Golomb, A. (2013). Visual scanning patterns and executive function in relation to facial emotion recognition in aging. Neuropsychol. Dev. Cogn. Sect. B Aging Neuropsychol. Cogn. 20, 148–173. doi: 10.1080/13825585.2012.675427

Craig, A. D. (2009). How do you feel–now? The anterior insula and human awareness. Nat. Rev. Neurosci. 10, 59–70. doi: 10.1038/nrn2555

Dara, C., Kirsch-Darrow, L., Ochfeld, E., Slenz, J., Agranovich, A., Vasconcellos-Faria, A., et al. (2013). Impaired emotion processing from vocal and facial cues in frontotemporal dementia compared to right hemisphere stroke. Neurocase 19, 521–529. doi: 10.1080/13554794.2012.701641

David, D. P., Soeiro-de-Souza, M. G., Moreno, R. A., and Bio, D. S. (2014). Facial emotion recognition and its correlation with executive functions in bipolar I patients and healthy controls. J. Affect. Disord. 152–154, 288–294. doi: 10.1016/j.jad.2013.09.027

de Lucena, A. T., Dos Santos, T. T. B. A., Santos, P. F. A., and Dourado, M. C. N. (2023). Affective theory of mind in people with mild and moderate Alzheimer's disease. Int. J. Geriatric Psychiatry 38:e6032. doi: 10.1002/gps.6032

Demichelis, O. P., Coundouris, S. P., Grainger, S. A., and Henry, J. D. (2020). Empathy and theory of mind in Alzheimer's disease: a meta-analysis. J. Int. Neuropsychol. Soc. 26, 963–977. doi: 10.1017/S1355617720000478

Dodich, A., Crespi, C., Santi, G. C., Cappa, S. F., and Cerami, C. (2021). Evaluation of discriminative detection abilities of social cognition measures for the diagnosis of the behavioral variant of frontotemporal dementia: a systematic review. Neuropsychol. Rev. 31, 251–266. doi: 10.1007/s11065-020-09457-1

Ekman, P. (1999). “Basic emotions,” in Handbook of Cognition and Emotion, eds. T. Dalgleish and M. J. Power (New York, NY: John Wiley and Sons Ltd), 45–60. doi: 10.1002/0470013494.ch3

Fernandez-Duque, D., and Black, S. E. (2005). Impaired recognition of negative facial emotions in patients with frontotemporal dementia. Neuropsychologia 43, 1673–1687. doi: 10.1016/j.neuropsychologia.2005.01.005

Frith, C. D. (2008). Social cognition. Philos. Trans. R. Soc. Lond. Ser. B Biol. Sci. 363, 2033–2039. doi: 10.1098/rstb.2008.0005

Garcia-Cordero, I., Migeot, J., Fittipaldi, S., Aquino, A., Campo, C. G., García, A., et al. (2021). Metacognition of emotion recognition across neurodegenerative diseases. Cortex 137, 93–107. doi: 10.1016/j.cortex.2020.12.023

Golan, O., Baron-Cohen, S., Hill, J. J., and Rutherford, M. D. (2007). The ‘reading the mind in the voice' test-revised: a study of complex emotion recognition in adults with and without autism spectrum conditions. J. Autism Dev. Disord. 37, 1096–1106. doi: 10.1007/s10803-006-0252-5

Gossink, F., Schouws, S., Krudop, W., Scheltens, P., Stek, M., Pijnenburg, Y., et al. (2018). Social cognition differentiates behavioral variant frontotemporal dementia from other neurodegenerative diseases and psychiatric disorders. Am. J. Geriatric Psychiatry 26, 569–579. doi: 10.1016/j.jagp.2017.12.008

Grandjean, D., Sander, D., Pourtois, G., Schwartz, S., Seghier, M. L., Scherer, K. R., et al. (2005). The voices of wrath: brain responses to angry prosody in meaningless speech. Nat. Neurosci. 8, 145–146. doi: 10.1038/nn1392

Gregory, C., Lough, S., Stone, V., Erzinclioglu, S., Martin, L., et al. (2002). Theory of mind in patients with frontal variant frontotemporal dementia and Alzheimer's disease: theoretical and practical implications. Brain 125, 752–764. doi: 10.1093/brain/awf079

Gur, R. C., Erwin, R. J., Gur, R. E., Zwil, A. S., Heimberg, C., and Kraemer, H. C. (1992). Facial emotion discrimination: II. Behavioral findings in depression. Psychiatry Res. 42, 241–251. doi: 10.1016/0165-1781(92)90116-K

Hwang, K., Hallquist, M. N., and Luna, B. (2013). The development of hub architecture in the human functional brain network. Cereb. Cortex 23, 2380–2393. doi: 10.1093/cercor/bhs227

Jiskoot, L. C., Poos, J. M., Vollebergh, M. E., Franzen, S., van Hemmen, J., Papma, J. M., et al. (2021). Emotion recognition of morphed facial expressions in presymptomatic and symptomatic frontotemporal dementia, and Alzheimer's dementia. J. Neurol. 268, 102–113. doi: 10.1007/s00415-020-10096-y

Johnstone, T., van Reekum, C. M., Oakes, T. R., and Davidson, R. J. (2006). The voice of emotion: an FMRI study of neural responses to angry and happy vocal expressions. Soc. Cogn. Affect. Neurosci. 1, 242–249. doi: 10.1093/scan/nsl027

Kanwisher, N., and Yovel, G. (2006). The fusiform face area: a cortical region specialized for the perception of faces. Philos. Trans. R. Soc. Lond. Ser. B Biol. Sci. 361, 2109–2128. doi: 10.1098/rstb.2006.1934

Kessels, R. P. C., Waanders-Oude Elferink, M., and van Tilborg, I. (2021). Social cognition and social functioning in patients with amnestic mild cognitive impairment or Alzheimer's dementia. J. Neuropsychol. 15, 186–203. doi: 10.1111/jnp.12223

Kumfor, F., Honan, C., McDonald, S., Hazelton, J. L., Hodges, J. R., and Piguet, O. (2017). Assessing the “social brain” in dementia: applying TASIT-S. Cortex 93, 166–177. doi: 10.1016/j.cortex.2017.05.022

Kumfor, F., Sapey-Triomphe, L. A., Leyton, C. E., Burrell, J. R., Hodges, J. R., and Piguet, O. (2014). Neuroanatomical correlates of emotion recognition deficits in behavioral variant frontotemporal dementia: a voxel-based morphometry study. PLoS ONE 8:e67457. doi: 10.1371/journal.pone.0067457

Lee, J. H., Jeong, H. K., Kim, Y. H., et al. (2020). Facial emotion recognition and its neuroanatomical correlates in elderly Korean adults with mild cognitive impairment, Alzheimer's disease, and frontotemporal dementia. Front. Psychol. 11:571760. doi: 10.3389/fnagi.2019.00091

Lucena, A. T., Bhalla, R. K., Belfort Almeida Dos Santos, T. T., and Dourado, M. C. N. (2020). The relationship between theory of mind and cognition in Alzheimer's disease: a systematic review. J. Clin. Exp. Neuropsychol. 42, 223–239. doi: 10.1080/13803395.2019.1710112

Luzzi, S., Piccirilli, M., and Provinciali, L. (2007). Perception of emotions on happy/sad chimeric faces in Alzheimer disease: relationship with cognitive functions. Alzheimer Dis. Assoc. Disord. 21, 130–135. doi: 10.1097/WAD.0b013e318064f445

Magni, E., Binetti, G., Bianchetti, A., Rozzini, R., and Trabucchi, M. (1996). Mini-mental state examination: a normative study in Italian elderly population. Euro. J. Neurol. 3, 198–202. doi: 10.1111/j.1468-1331.1996.tb00423.x

Maki, Y., Yoshida, H., Yamaguchi, T., and Yamaguchi, H. (2013). Relative preservation of the recognition of positive facial expression “happiness” in Alzheimer disease. Int. Psychogeriatr. 25, 105–110. doi: 10.1017/S1041610212001482

McKhann, G. M., Knopman, D. S., Chertkow, H., Hyman, B. T., Jack, C. R. Jr., Kawas, C. H., et al. (2011). The diagnosis of dementia due to Alzheimer's disease: recommendations from the National Institute on Aging-Alzheimer's Association workgroups on diagnostic guidelines for Alzheimer's disease. Alzheimer. Dement. 7, 263–269. doi: 10.1016/j.jalz.2011.03.005

Molenberghs, P., Johnson, H., Henry, J. D., and Mattingley, J. B. (2016). Understanding the minds of others: a neuroimaging meta-analysis. Neurosci. Biobehav. Rev. 65, 276–291. doi: 10.1016/j.neubiorev.2016.03.020

Oldfield, R. C. (1971). The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia 9, 97–113. doi: 10.1016/0028-3932(71)90067-4

Orjuela-Rojas, J. M., Montañés, P., Rodríguez, I. L. L., and González-Marín, N. R. (2021). Recognition of musical emotions in the behavioral variant of frontotemporal dementia. Rev. Colomb. Psiquiatr. 50, 74–81. doi: 10.1016/j.rcp.2020.01.002

Orsini, A., and Laicardi, C. (1997). WAIS-R. Contributo alla taratura italiana. Firenze: OS Organizzazioni Speciali.

Orso, B., Lorenzini, L., Arnaldi, D., Girtler, N., Brugnolo, A., Doglione, E., et al. (2022). The role of hub and spoke regions in theory of mind in early Alzheimer's disease and frontotemporal dementia. Biomedicines 10:544. doi: 10.3390/biomedicines10030544

Perner, J., and Wimmer, H. (1985). John thinks what Mary thinks that...: attribution of second order false belief by 5- to 10-years old children. J. Exp. Child Psychol. 39, 437–471. doi: 10.1016/0022-0965(85)90051-7

Péron, J., Dondaine, T., Le Jeune, F., Grandjean, D., and Vérin, M. (2012). Emotional processing in Parkinson's disease: a systematic review. Mov. Disord. 27, 186–199. doi: 10.1002/mds.24025

Rascovsky, K., Hodges, J. R., Knopman, D., Mendez, M. F., Kramer, J. H., Neuhaus, J., et al. (2011). Sensitivity of revised diagnostic criteria for the behavioural variant of frontotemporal dementia. Brain 134(Pt 9), 2456–2477. doi: 10.1093/brain/awr179

Rosen, H. J., Perry, R. J., Murphy, J., Kramer, J. H., Mychack, P., Schuff, N., et al. (2002). Emotion comprehension in the temporal variant of frontotemporal dementia. Brain 125(Pt 10), 2286–2295. doi: 10.1093/brain/awf225

Rosen, H. J., Wilson, M. R., Schauer, G. F., Allison, S., Gorno-Tempini, M. L., Pace-Savitsky, C., et al. (2006). Neuroanatomical correlates of impaired recognition of emotion in dementia. Neuropsychologia 44, 365–373. doi: 10.1016/j.neuropsychologia.2005.06.012

Rutherford, M. D., Baron-Cohen, S., and Wheelwright, S. (2002). Reading the mind in the voice: a study with normal adults and adults with Asperger syndrome and high functioning autism. J. Autism Dev. Disord. 32, 189–194. doi: 10.1023/A:1015497629971

Sanavio, E., Bertolotti, G., Michielin, P., Vidotto, G., and Zotti, A. M. (1986). CBA-2.0 Scale Primarie: Manuale. Una batteria a largo spettro per l'assessment psicologico. Firenze: GIUNTI – Organizzazioni Speciali.

Sander, D., Grandjean, D., Pourtois, G., Schwartz, S., Seghier, M. L., Scherer, K. R., et al. (2005). Emotion and attention interactions in social cognition: brain regions involved in processing anger prosody. Neuroimage 28, 848–858. doi: 10.1016/j.neuroimage.2005.06.023

Schirmer, A., and Adolphs, R. (2017). Emotion perception from face, voice, and touch: comparisons and convergence. Trends Cogn. Sci. 21, 216–228. doi: 10.1016/j.tics.2017.01.001

Schurz, M., and Perner, J. (2015). An evaluation of neurocognitive models of theory of mind. Front. Psychol. 6:1610. doi: 10.3389/fpsyg.2015.01610

Shamay-Tsoory, S. G., and Aharon-Peretz, J. (2007). Dissociable prefrontal networks for cognitive and affective theory of mind: a lesion study. Neuropsychologia 45, 3054–3067. doi: 10.1016/j.neuropsychologia.2007.05.021

Sica, C., and Ghisi, M. (2007). “The Italian versions of the beck anxiety inventory and the beck depression inventory-II: psychometric properties and discriminant power,” in Leading-Edge Psychological Tests and Testing Research, ed. M. A. Lange (Hauppauge, NY: Nova Science Publishers), 27–50.

Stone, V., Baron-Cohen, S., Knight, R. T., Gregory, C., Lough, S., Erzinclioglu, S., et al. (2002). Faux Pas recognition test (adult version). J. Cogn. Neurosci. 10, 640–656. doi: 10.1162/089892998562942

Todorov, A., Mende-Siedlecki, P., and Dotsch, R. (2013). Social judgments from faces. Curr. Opin. Neurobiol. 23, 373–380. doi: 10.1016/j.conb.2012.12.010

Torres, B., Santos, R. L., Sousa, M. F., Simões Neto, J. P., Nogueira, M. M., Belfort, T. T., et al. (2015). Facial expression recognition in Alzheimer's disease: a longitudinal study. Arq. Neuropsiquiatr. 73, 383–389. doi: 10.1590/0004-282X20150009

van den Heuvel, M. P., Stam, C. J., Kahn, R. S., and Hulshoff Pol, H. E. (2009). Efficiency of functional brain networks and intellectual performance. J. Neurosci. 29, 7619–7624. doi: 10.1523/JNEUROSCI.1443-09.2009

Wicker, B., Keysers, C., Plailly, J., Royet, J. P., Gallese, V., and Rizzolatti, G. (2003). Both of us disgusted in My insula: the common neural basis of seeing and feeling disgust. Neuron 40, 655–664. doi: 10.1016/S0896-6273(03)00679-2

Williams, G. E., Daros, A. R., Graves, B., McMain, S. F., Links, P. S., and Ruocco, A. C. (2015). Executive functions and social cognition in highly lethal self-injuring patients with borderline personality disorder. Pers. Disord. 6, 107–116. doi: 10.1037/per0000105

Wright, A., Saxena, S., Sheppard, S. M., and Hillis, A. E. (2018). Selective impairments in components of affective prosody in neurologically impaired individuals. Brain Cogn. 124, 29–36. doi: 10.1016/j.bandc.2018.04.001

Yang, C., Zhang, T., Li, Z., Heeramun-Aubeeluck, A., Liu, N., Huang, N., et al. (2015). The relationship between facial emotion recognition and executive functions in first-episode patients with schizophrenia and their siblings. BMC Psychiatry 15:241. doi: 10.1186/s12888-015-0618-3

Keywords: Theory of Mind, emotion recognition, emotional prosody, Alzheimer's disease, frontotemporal dementia

Citation: Sola C, Zanelli V, Molinari MA, Casadio C, Ricci F, Carpentiero O, Tondelli M, Lui F, Nichelli PF and Benuzzi F (2025) Understanding basic and social emotions in Alzheimer's disease and frontotemporal dementia. Front. Psychol. 16:1535722. doi: 10.3389/fpsyg.2025.1535722

Received: 27 November 2024; Accepted: 20 January 2025;

Published: 07 February 2025.

Edited by:

Sara Isernia, Fondazione Don Carlo Gnocchi Onlus (IRCCS), Italy

Reviewed by:

Valeria Manera, Université Côte d'Azur, France

Valeria Blasi, Fondazione Don Carlo Gnocchi Onlus (IRCCS), Italy

Copyright © 2025 Sola, Zanelli, Molinari, Casadio, Ricci, Carpentiero, Tondelli, Lui, Nichelli and Benuzzi. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Claudia Casadio, claudia.casadio@unimore.it

†These authors share first authorship
