ORIGINAL RESEARCH article

Front. Psychol., 27 February 2025

Sec. Educational Psychology

Volume 16 - 2025 | https://doi.org/10.3389/fpsyg.2025.1321712

Introduction: The immersive and interactive nature of panoramic video empowers learners with experiences that are infinitely close to the real environment and increases the use of imagination in learners’ knowledge acquisition. Studies have shown that embedding question feedback in traditional educational videos can effectively improve learning. However, little research has been conducted on embedding question feedback in panoramic videos to explore what types of question feedback effectively improve the dimensions of learners’ learning engagement and yield better learning experiences and learning effects.

Methods: This study embedded questions with feedback within panoramic videos by categorizing feedback into two types: simple feedback and elaborated feedback. Using eye tracking, brainwave meters, and subjective questionnaires as measurement tools, this study investigated which type of question feedback embedded in panoramic videos improved various dimensions of learner engagement and academic performance. Participants (n = 91) were randomly assigned to the experimental group (simple feedback, elaborated feedback) or the control group (no feedback).

Results: The results of the study showed that (1) the experimental group significantly improved in cognitive engagement, behavioral engagement, and emotional engagement compared to the control group. When the precision of feedback information was greater, the learner’s behavioral engagement was greater; however, the precision of feedback information did not significantly affect cognitive and emotional engagement. (2) When the feedback information was more detailed, the learners’ academic performance was better.

Discussion: The findings of this study can support strategic recommendations for the design and application of panoramic videos.

There has been great progress in the field of virtual reality (VR) technology in recent years, and there is a growing demand for VR technology in various industries; the Horizon Report considers VR technology to be one of the key technologies for promoting informatization and intelligence in education (Lan et al., 2021). Panoramic video supported by VR technology brings new vitality to technology-enabled education and guides a new direction for educational innovation. The near-real learning environment that it creates can provide learners with an immersive feeling. Panoramic videos can be viewed or controlled in a low-immersion manner on a desktop or in a highly immersive way with head-mounted displays (HMDs) (Rosendahl and Wagner, 2023). However, due to the omnidirectional view of panoramic videos, learners usually get lost in the wide view and thus miss the learning content that they should pay attention to, which can lead to inattention while learning (Johnson, 2018) and make it difficult for this technology to significantly impact academic performance (Panchuk et al., 2018).

Embedding questions in traditional instructional videos is an important form of interaction that significantly impacts learning. For example, it can significantly improve learners’ academic performance (Rose et al., 2016) and effectively help learners avoid the tendency to wander off during the learning process (Szpunar et al., 2013). The design and application of embedded questions are also becoming increasingly widespread to improve the interactivity and teaching effectiveness of instructional videos (Callender and McDaniel, 2007). Feedback can be defined as information that is provided to learners about their performance or behavior (Hattie and Timperley, 2007). This study defines question feedback as the presetting of questions based on panoramic video learning content, where the questions are embedded into the panoramic video and learners are provided with feedback information after they answer the questions.

Panoramic video provides a low-cost opportunity for video instruction (Roche et al., 2021) that extends the benefits of traditional video through immersion and multiview reflection (Rosendahl and Wagner, 2023). Therefore, the question of how to increase the interaction between learners and videos and improve learner engagement in an immersive learning environment such as a panoramic video by embedding question feedback deserves attention. The aims of this study are to investigate whether the presence or absence of feedback within panoramic videos embedded with questions has an impact on learners’ engagement and academic performance and to explore the impact of different types of feedback on learning engagement and academic performance. The findings of this study will provide guidance for the design and development of educational panoramic videos.

Panoramic video is shot with an omnidirectional camera, which allows the viewer to see the scene in an uninterrupted circle, as opposed to the fixed point of view of traditional 2D video (Meyer et al., 2019). Numerous studies have investigated the pedagogical applications of panoramic videos. For example, Araiza-Alba et al. (2020) compared three media (panoramic video, traditional video, and posters) for learning water safety skills and found that children learning through panoramic videos experience greater interest and enjoyment and that panoramic videos are more encouraging and engaging than traditional methods of learning. Ulrich et al. (2021) compared panoramic videos to traditional videos and found that panoramic videos can influence students’ emotional responses to the learning atmosphere in a positive way. Rupp et al. (2019) had participants watch space-themed panoramic educational videos on four devices with different levels of immersion (a smartphone, Google Cardboard, Oculus Rift DK2, and Oculus CV1) and found that the highly immersive experience of panoramic videos resulted in a positive learning experience, improved learning outcomes, and increased engagement and attention. Li et al. (2020) conducted a quasi-experimental study on the empathic accuracy of 68 teacher trainees by recording VR panoramic videos in a fixed camera position and found that VR panoramic videos were more effective at improving teacher trainees’ empathic accuracy than traditional videos. Although most studies on the learning effects of panoramic videos have shown positive effects, some studies have shown that panoramic videos distract students (Rupp et al., 2016), make them physically uncomfortable (Wilkerson et al., 2022), and ultimately fail to improve or even inhibit learning effects. Therefore, researchers have not yet reached a consensus on the effectiveness of panoramic videos in educational applications.

Previous studies have investigated types of feedback on questions. Hsieh and O’Neil (2002) found that learners learning from videos embedded with questions learned better when elaborated feedback was provided than when simple feedback was provided. Levant et al. (2018) compared two types of delayed feedback (correct feedback and correct feedback with justification) provided to students after an exam in a computer-based testing system and found that the delayed feedback that provided not only correct feedback but also a rationale resulted in a greater increase in exam scores. Lin et al. (2013) combined instructional agents with verbal feedback (simple and elaborated feedback) and showed that participants who learned using an animated agent that provided detailed feedback scored significantly higher on a learning measure. Lang et al. (2022) investigated the effects of both emotional and detailed feedback on multimedia learning with an instructional agent and found that well-designed feedback increased intrinsic motivation and transfer scores but reduced the associated cognitive load. Lin (2011) found that among three types of static and dynamic visual materials (without questions, with questions, and with both questions and feedback), learning with questions and feedback was the best. Xie et al. (2021) used an eye-tracking experiment to examine the effects of pre-embedded questions and feedback design in instructional videos on learners’ attention allocation and learning performance and found that pre-embedded questions without feedback not only increased learners’ attention to the content but also improved their learning performance. According to the feedback principle in multimedia learning, novice students learn better with explanatory feedback than with corrective feedback alone (Mayer, 2014).
Explanatory feedback provides learners with a principle-based explanation for why their answer was correct or incorrect, whereas corrective feedback merely informs learners that their answer was correct or incorrect (Mayer, 2014). Some scholars have also found that feedback is not positively effective for learning outcomes. For example, Kluger and DeNisi (1996) found that feedback interventions instead decrease performance, and Pashler et al. (2005) reported that timely or delayed feedback after a participant has answered correctly has little or no effect on learning outcomes. Jordan (2012) noted that feedback can be effective if learners can understand the content of the feedback. If the feedback provided can explain the errors made by learners rather than just providing answers, then it is more helpful in promoting learning.

Learning engagement is the energy, flexibility, and positive emotions that an individual displays during the learning process and reflects the learner’s comprehension of the nature of learning and immersion in it (Wu and Zhang, 2018). Fredricks et al. (2004) argued that learning engagement is the emotional and behavioral engagement of students through the learning process and encompasses three independent dimensions: behavioral, emotional, and cognitive. Learning engagement has a determining influence on the effectiveness of active learning. In this study, learning engagement is the intrinsic and extrinsic behavioral state of learners through the learning process by actively participating in information exchange activities and by investing time and energy into deep thinking, which includes three dimensions: cognitive engagement, behavioral engagement, and emotional engagement.

As an important indicator for observing the learning process and measuring the quality of learning (Kim et al., 2021), learning engagement has a direct positive effect on learning outcomes (Hu et al., 2020). For example, Pascarella et al. (2010) found that learning engagement can positively impact academic performance, Pizzimenti and Axelson (2015) concluded that the level of students’ learning engagement can effectively predict their learning outcomes, and Liu (2022) determined that when the learning engagement of hearing-impaired students is greater, they are more willing to devote their time and energy to learning, which is conducive to improving academic performance. These studies show that learning engagement is an important factor that affects learning outcomes and deserves the attention of researchers.

Numerous studies have measured learning engagement. Junco (2012) used a questionnaire to study the impact of over two thousand school students’ use of social media on their learning engagement and found that indulgence in social media was detrimental to students’ learning engagement in the classroom. Zheng et al. (2021) developed a virtual simulation experimental teaching platform for situational English, used a questionnaire survey method, and found that enhancing the motivation of English language learners promoted their level of learning engagement. Cao et al. (2019) observed that the multimodal learning engagement recognition method is superior to the unimodal learning engagement recognition method. Using a multimodal data analysis approach, Wang et al. (2021) determined that learners’ cognitive engagement and behavioral engagement were greater in desktop VR learning environments than in online learning environments. Moreover, learning engagement is affected by the learning environment, and both directly intervening in the learning process and changing the learning environment can affect learner engagement (Lawson and Lawson, 2013).

Based on the above analysis, it is worth further verifying whether the impact of question feedback types on learning in panoramic videos is the same as that in traditional videos. This study focuses on exploring what types of feedback can enhance learners’ learning engagement and academic performance in a panoramic video environment while also comparing the impact of the presence or absence of feedback on learning engagement and academic performance. Therefore, the following research questions are proposed in this study:

1: What type of question feedback embedded in panoramic videos improves learning engagement and academic performance?

2: What impact does the presence or absence of feedback in panoramic videos with embedded questions have on learning engagement and academic performance?

This study selected a total of 127 undergraduate students from M University, aged between 19 and 21 years old, with normal or corrected-to-normal vision. To eliminate the impact of prior knowledge on academic performance, participants who were relatively familiar with the experimental materials were excluded through a pre-test questionnaire. The remaining 102 individuals, who had little understanding of the relevant information in the experimental materials, were chosen as subjects and randomly divided into three groups.
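The random division into three groups described above can be sketched as follows. This is a minimal illustration only: the source does not report the randomization procedure or the resulting group sizes, so the round-robin dealing, the seed, the group labels, and the participant IDs are all assumptions.

```python
import random


def assign_groups(participant_ids, group_names, seed=None):
    """Shuffle participants, then deal them round-robin into the named groups."""
    rng = random.Random(seed)  # seeded so the (hypothetical) allocation is reproducible
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    groups = {name: [] for name in group_names}
    for index, pid in enumerate(shuffled):
        groups[group_names[index % len(group_names)]].append(pid)
    return groups


# Hypothetical IDs 1..102 stand in for the 102 retained participants.
group_names = ["no_feedback", "simple_feedback", "elaborated_feedback"]
groups = assign_groups(range(1, 103), group_names, seed=2025)
sizes = {name: len(members) for name, members in groups.items()}
print(sizes)
```

Dealing round-robin after a shuffle keeps the group sizes as equal as possible, which is one common design choice; simple random assignment without balancing would be another.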

This study developed a panoramic video that embeds questions and provides feedback. Referring to Shute’s (2008) classification of feedback types, question feedback types were divided into simple feedback and elaborated feedback. Simple feedback refers to providing learners with only correct or incorrect answers, while elaborated feedback indicates providing learners with not only correct or incorrect answers but also detailed explanations of the question. The research hypotheses of this study are as follows:

H1: Compared to simple feedback, elaborated feedback can better enhance learners’ learning engagement and academic performance.

H2: Compared to panoramic videos without feedback, providing feedback can enhance learners’ learning engagement and academic performance.

The panoramic video resources embedded with question feedback in this study were mainly developed in Unity 3D, and the learning material is a video about “Deng Xiaoping, the Great Man,” which shows the site of Deng Xiaoping’s former residence and exhibition hall, briefly explains Deng Xiaoping’s biography and experiences, and then explains the main contents of Deng Xiaoping’s theory of socialism with Chinese characteristics, the development strategy of socialist construction, and the reform and opening-up. The panoramic video in this study was viewed with an HMD, which increased the learners’ immersion during the learning process and enabled them to turn their heads 360° to view the entire scene as in real life. The experimental materials consisted of three versions: a no-feedback version, a simple-feedback version, and an elaborated-feedback version. The study standardized the simple feedback phrases as “Great, correct answer!” or “Go for it, wrong answer!” and consistently used “Correct, the question is about the core knowledge and explanation” or “Wrong, the question is about the core knowledge and explanation” for elaborated feedback. The content of the narration in the panoramic video and the embedded questions and feedback are shown in Appendix Table 1. An example of simple question feedback embedded in question one is shown in Figure 1, and an example of elaborated question feedback is shown in Figure 2.

Measurement of prior knowledge and its instruments: All prior-knowledge test questions were related to the main events of Comrade Deng Xiaoping’s great and glorious life, with a total of 16 single-choice questions.

The tools used to measure learning engagement are shown in Table 1.

In this study, an HTC VIVE Pro Eye helmet (the eye-tracking version) was used, and the eye-movement data were recorded and collected in Tobii Pro VR Analytics; an HP Z620 workstation ran the software required for the experiment and displayed the panoramic video learning materials. A BrainLink-Lite portable brainwave meter from Hongli Technology was used to monitor and record the subjects’ brainwaves and other mental state data in real time.

Knowledge post-test questionnaire: The post-test questionnaire was compiled by combining the pre-test questionnaire and the knowledge points in the study materials of “Deng Xiaoping, the Great Man,” which focused on the main content of Deng Xiaoping’s theory of socialism with Chinese characteristics, the international situation at the time, the basis for the formation of Deng Xiaoping’s theories, the significance of Deng Xiaoping’s theories during the revolutionary period, the content and significance of the basic line of the primary stage of socialism, and the main content of the Reform and Opening Up. The questionnaire contained 16 multiple-choice questions.

The eye movement data in this study were recorded and collected in Tobii Pro VR Analytics, a plug-in that provides metrics such as total gaze time, total number of gazes, first gaze time, interaction time, and number of interactions. After the data recording was completed, the plug-in’s data recording and export function was used to select and export the relevant eye movement indicators; the indicators exported in this experiment were mainly total gaze time and total number of gazes.

To start recording eye movement data, the VR headset must first be activated, which can be done in the Home tab. When everything is ready, the “New Recording” button in the “Recording” control bar of the main recording interface is used to start recording. While data are being recorded (as shown in Figure 3), the learner’s learning process can be viewed in real time through the VR view provided by SteamVR.

Finally, the eye movement data were exported after the experiment was completed. In the replay mode of Tobii Pro VR Analytics, selecting “Record” in the eye movement data export menu bar brings up the eye movement data recording interface (Figure 4); after the experimental record is checked, selecting “Record” in the eye movement data export control bar at the bottom of the page exports the data.

The brainwave data in this experiment were recorded and exported in the app accompanying the BrainLink device, through which the trend of the brainwave data and the brainwave energy can be monitored in real time. Figure 5 shows the brainwave trend after the data recording was completed; the brainwave energy at a given moment can also be viewed. Finally, the brainwave data recording was selected in the app and exported for analysis.

This experiment was conducted in the Virtual Reality Laboratory at M University. One week before the start of the experiment, all subjects gathered in a computer room, where each was randomly given a test serial number and completed the knowledge pre-test questionnaire. They then entered the VR training room to become familiar with the VR equipment.

The experimental assistant led the subjects to the waiting room. Each subject then entered the formal measurement laboratory and provided demographic information, and the researcher explained the experiment, the use of the study materials, and the precautions and then waited for the subject to become familiar with the experimental environment before starting the formal experiment. The HTC helmet and brainwave meter were fitted according to the subject’s actual condition, and the helmet was adjusted for comfort. After confirming that the subject could see the panoramic video, the researcher assisted the subject in calibrating his or her eye movements through the five-point calibration method built into the HTC helmet and handed the subject the handheld controller. Then, the researcher turned on the brainwave meter and started recording the eye movement data, and the subject watched the video and learned the experimental material; during the video, the subject answered questions by selecting options with the controller.

After the subjects completed the study, the researcher stopped recording the data, helped the subjects remove the HTC helmet, and guided the subjects to sit in the corresponding area. The participants then completed the Learning Engagement Questionnaire and the Knowledge Post-test Questionnaire. The specific flow of the experiment is shown in Figure 6.

This application study of panoramic video teaching resources embedded with question feedback mainly examined the impact of question feedback type on learning engagement and academic performance, and the results were analyzed comprehensively using both subjective and objective measurement data. After eliminating the subject samples with incomplete eye movement data or brainwave data, a total of 91 samples remained. Descriptive statistics of learners’ prior knowledge were calculated, and the results are shown in Table 2.

To test whether the learners’ prior knowledge was at the same level across groups, a test of homogeneity of variance and a one-way ANOVA were conducted successively with the pre-test scores as the dependent variable. The one-way ANOVA showed that the difference in total pre-test scores among the three groups did not reach the level of significance [F (2, 88) = 0.033, P = 0.967 > 0.05]. The learners’ knowledge of the experimental material therefore tended to be the same before the experiment, and prior knowledge is unlikely to have affected the results. The results of the one-way ANOVA are shown in Table 3.
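For readers unfamiliar with the statistic, the one-way ANOVA F ratio used throughout this section can be sketched in a few lines. The scores below are made up purely for illustration and are not the study’s data; the function name is hypothetical. Converting F to a P value requires the F distribution and is left to a statistics package.

```python
def one_way_anova(groups):
    """Return (F, df_between, df_within) for a list of per-group score lists."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]
    # Between-group sum of squares: spread of the group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Within-group sum of squares: spread of the scores around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Illustrative scores only (three groups of three hypothetical learners).
f_stat, df_b, df_w = one_way_anova([[1, 2, 3], [2, 3, 4], [3, 4, 5]])
print(f_stat, df_b, df_w)  # 3.0 2 6
```

With three groups and 91 participants, the same formulas give degrees of freedom of 3 − 1 = 2 and 91 − 3 = 88, which matches the F (2, 88) reported here.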

Descriptive statistics on the subjective measures of the cognitive engagement questionnaire, concentration, total gaze time, and total number of gazes were obtained, and the statistical results are shown in Table 4.

To further examine whether there was a significant difference in the subjective judgments of cognitive engagement among the groups for the three types of feedback, a one-way analysis of variance (ANOVA) was conducted on the subjective judgments of cognitive engagement of the three groups. The results showed that the differences in the subjective cognitive engagement judgments among the three groups reached significance at the 0.1 level [F (2, 88) = 2.716, P = 0.072 < 0.1]. Post hoc multiple comparisons using the least significant difference (LSD) method revealed that the difference in the subjective judgment values of cognitive engagement between the no-feedback group and the elaborated-feedback group was significant (P = 0.027 < 0.05), whereas the differences between the no-feedback group and the simple-feedback group (P = 0.554 > 0.05) and between the simple-feedback group and the elaborated-feedback group (P = 0.103 > 0.05) were not significant.
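The LSD comparisons reported in this section rest on a pairwise t statistic that uses the pooled within-group mean square from the ANOVA. A minimal sketch follows, with made-up scores and hypothetical names; the P value for each pair comes from the t distribution with N − k degrees of freedom and is not computed here.

```python
import math
from itertools import combinations


def lsd_t_statistics(groups):
    """Return ({(name_a, name_b): t}, df_within) for Fisher's LSD comparisons."""
    n_total = sum(len(v) for v in groups.values())
    df_within = n_total - len(groups)
    means = {name: sum(v) / len(v) for name, v in groups.items()}
    ss_within = sum((x - means[name]) ** 2 for name, v in groups.items() for x in v)
    mse = ss_within / df_within  # pooled error variance shared by every comparison
    t_stats = {}
    for a, b in combinations(groups, 2):
        se = math.sqrt(mse * (1 / len(groups[a]) + 1 / len(groups[b])))
        t_stats[(a, b)] = (means[a] - means[b]) / se
    return t_stats, df_within


# Illustrative scores only; these are not the study's data.
scores = {
    "no_feedback": [1, 2, 3],
    "simple_feedback": [2, 3, 4],
    "elaborated_feedback": [3, 4, 5],
}
t_stats, df_within = lsd_t_statistics(scores)
for pair, t in t_stats.items():
    print(pair, round(t, 3))
```

Because LSD applies no correction for multiple comparisons, it is the most liberal of the common post hoc procedures, which is worth keeping in mind when reading the pairwise P values.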

To further examine whether there was a significant difference among the groups of learners’ levels of concentration for the three types of feedback, a one-way ANOVA was conducted to analyze the learners’ levels of concentration of the three groups. The results showed that the differences in concentration among the three groups reached the level of significance (F = 5.699, P = 0.005 < 0.05). Post hoc multiple comparisons of the learners’ levels of concentration using LSD revealed that there was a significant difference in concentration between the no-feedback group and the simple-feedback group (P = 0.001 < 0.05) and between the no-feedback group and the elaborated-feedback group (P = 0.025 < 0.05) and that the difference between the simple-feedback group and the elaborated-feedback group was not significant (P = 0.299 > 0.05).

To further examine whether there were significant differences in the total gaze time and the total number of gazes among the groups for the three types of feedback, a one-way ANOVA was conducted on the total gaze time and the total number of gazes of the three groups. The results showed that the differences among the three groups reached a significant level for both total gaze time [F (2, 88) = 14.003, P = 0.000 < 0.05] and the total number of gazes [F (2, 88) = 14.497, P = 0.000 < 0.05]. Post hoc multiple comparisons using LSD revealed that, for total gaze time, the difference between the no-feedback group and the simple-feedback group was significant (P = 0.001 < 0.05), the difference between the no-feedback group and the elaborated-feedback group was significant (P = 0.000 < 0.05), and the difference between the simple-feedback group and the elaborated-feedback group was not significant (P = 0.094 > 0.05). For the total number of gazes, the differences between the no-feedback group and the simple-feedback group (P = 0.000 < 0.05) and between the no-feedback group and the elaborated-feedback group (P = 0.000 < 0.05) were significant, whereas the difference between the simple-feedback group and the elaborated-feedback group was not (P = 0.695 > 0.05). The results of the one-way ANOVA for cognitive engagement are shown in Table 5.

Descriptive statistics of the subjective questionnaire data on behavioral engagement and of study duration were obtained, and the results are shown in Table 6.

To further examine whether the subjective judgments of the behavioral engagement of the three groups were significantly different for the three types of feedback, a one-way ANOVA was conducted on the subjective judgments of behavioral engagement for the three groups. The results showed that the differences among the three groups of subjective behavioral engagement judgments reached the level of significance [F (2, 88) = 4.135, P = 0.019 < 0.05]. Post hoc multiple comparisons of the subjective judgments of behavioral engagement using LSD revealed significant differences between the no-feedback group and the elaborated-feedback group (P = 0.010 < 0.05) and between the simple-feedback group and the elaborated-feedback group (P = 0.025 < 0.05) and revealed non-significant differences between the no-feedback group and the simple-feedback group (P = 0.714 > 0.05).

With the type of feedback as the independent variable and study duration as the dependent variable, a test of homogeneity of variance and a one-way ANOVA were conducted successively. The homogeneity test showed that the variances of the three groups differed significantly [F (2, 88) = 7.119, P = 0.001 < 0.05]; that is, the assumption of equal variances was not met. The one-way ANOVA showed that the difference in study duration among the three groups reached a significant level [F (2, 88) = 23.492, P = 0.000 < 0.05]. Because of the heterogeneity of variance in study duration across the three groups, post hoc multiple comparisons were made using Tamhane’s T2 method, which revealed significant differences between the no-feedback group and the simple-feedback group (P = 0.001 < 0.05), between the no-feedback group and the elaborated-feedback group (P = 0.000 < 0.05), and between the simple-feedback group and the elaborated-feedback group (P = 0.013 < 0.05). The results of the one-way ANOVA for behavioral engagement are shown in Table 7.
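The source does not name the homogeneity-of-variance test that was used. Assuming it was Levene’s test (mean-centred), the statistic is simply a one-way ANOVA F computed on each score’s absolute deviation from its group mean, as the sketch below shows. The durations are made up for illustration, and the function name is hypothetical.

```python
def levene_statistic(groups):
    """Return (W, df1, df2): a one-way ANOVA F on absolute deviations from group means."""
    deviations = []
    for g in groups:
        mean = sum(g) / len(g)
        deviations.append([abs(x - mean) for x in g])
    k = len(deviations)
    n_total = sum(len(d) for d in deviations)
    grand = sum(sum(d) for d in deviations) / n_total
    dev_means = [sum(d) / len(d) for d in deviations]
    between = sum(len(d) * (m - grand) ** 2 for d, m in zip(deviations, dev_means))
    within = sum((z - m) ** 2 for d, m in zip(deviations, dev_means) for z in d)
    w_stat = (between / (k - 1)) / (within / (n_total - k))
    return w_stat, k - 1, n_total - k


# Illustrative study durations only: same mean, increasingly wide spread.
w_stat, df1, df2 = levene_statistic([[9, 10, 11], [8, 10, 12], [4, 10, 16]])
print(round(w_stat, 4), df1, df2)
```

A significant result, as reported above for study duration, indicates unequal variances, which is why a post hoc method that does not assume equal variances is then appropriate.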

This study measured emotional engagement during panoramic video learning for three types of question feedback through emotional engagement questionnaire data and relaxation.

Descriptive statistics of the subjective questionnaire data on emotional engagement and relaxation were obtained, and the results are shown in Table 8.

To further examine whether there was a significant difference in the subjective judgments of emotional engagement among the groups for the three types of feedback, a one-way ANOVA was conducted on the subjective judgments of emotional engagement of the three groups. The results showed that the differences in the subjective judgments of emotional engagement among the three groups did not reach the level of significance [F (2, 88) = 1.105, P = 0.336 > 0.05].

To further examine whether there was a significant difference among the relaxation levels of the groups of learners for the three types of feedback, a one-way ANOVA was conducted on the relaxation levels of the three groups of learners. The results showed that the difference in learners’ relaxation levels among the three groups reached the level of significance [F (2, 88) = 7.842, P = 0.001 < 0.05]. Post hoc multiple comparisons of learner relaxation using LSD revealed significant differences between the no-feedback group and the simple-feedback group (P = 0.000 < 0.05) and between the no-feedback group and the elaborated-feedback group (P = 0.005 < 0.05), whereas the difference between the simple-feedback group and the elaborated-feedback group was not significant (P = 0.344 > 0.05). The results of the one-way ANOVA for emotional engagement are shown in Table 9.

The descriptive statistics of the pre-test and post-test scores are shown in Table 10.

To test whether the learners’ prior knowledge levels were similar, a one-way ANOVA was conducted with the pre-test scores of each group as the dependent variable, and the results showed that the differences between the total pre-test scores of the three groups did not reach a significant level [F (2, 88) = 0.033, P = 0.967 > 0.05]. The learners’ level of knowledge of “Deng Xiaoping, the Great Man” before they participated in the experiment tended to be similar, and prior knowledge and experience did not affect the results of the experiment.

A test of between-subjects effects was conducted with the total post-test score as the dependent variable, the feedback format as the independent variable, and the total pre-test score as the covariate. The feedback format × total pre-test score interaction was not significant [F (2, 85) = 2.258, P = 0.111 > 0.05], which indicates that the relationship between the total pre-test score and the total post-test score did not differ significantly across the feedback formats. The homogeneity-of-slopes assumption was therefore met, and the relationship between the feedback format and the post-test score can be tested with covariance analysis.

Based on this, a one-way covariance analysis of learners’ post-test academic performance across the feedback formats was conducted, with feedback format as the independent variable, post-test performance as the dependent variable, and the total pre-test performance score as the covariate. The results showed that the question feedback format significantly affected learners’ post-test performance [F (2, 87) = 21.460, P = 0.000 < 0.05].
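The error degrees of freedom reported for the three kinds of model in this section (88, 85, and 87) can be checked with simple parameter counting. The sketch below assumes one grouping factor with three levels, 91 participants, and a single covariate, as described in the text; the helper name is hypothetical.

```python
def error_df(n_total, n_groups, n_covariates=0, with_interaction=False):
    """Residual degrees of freedom for a one-factor linear model.

    Parameters counted: the intercept plus (k - 1) group effects (k in total),
    one slope per covariate, and (k - 1) extra slopes per covariate when the
    group-by-covariate interaction is fitted.
    """
    n_params = n_groups + n_covariates
    if with_interaction:
        n_params += (n_groups - 1) * n_covariates
    return n_total - n_params


anova_df = error_df(91, 3)                                          # one-way ANOVA
slopes_df = error_df(91, 3, n_covariates=1, with_interaction=True)  # slopes test
ancova_df = error_df(91, 3, n_covariates=1)                         # ANCOVA
print(anova_df, slopes_df, ancova_df)  # 88 85 87
```

Each covariate or interaction term added to the model consumes residual degrees of freedom, which is why the ANCOVA reports F (2, 87) rather than the F (2, 88) of the plain ANOVA.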

Then, with the question feedback type as the independent variable and the learners’ post-test scores as the dependent variable, post hoc comparisons using the LSD method were conducted to analyze the extent to which the feedback type affected the post-test scores. The comparisons revealed significant differences between the no-feedback group and the simple-feedback group (P = 0.001 < 0.05), between the no-feedback group and the elaborated-feedback group (P = 0.000 < 0.05), and between the simple-feedback group and the elaborated-feedback group (P = 0.005 < 0.05).

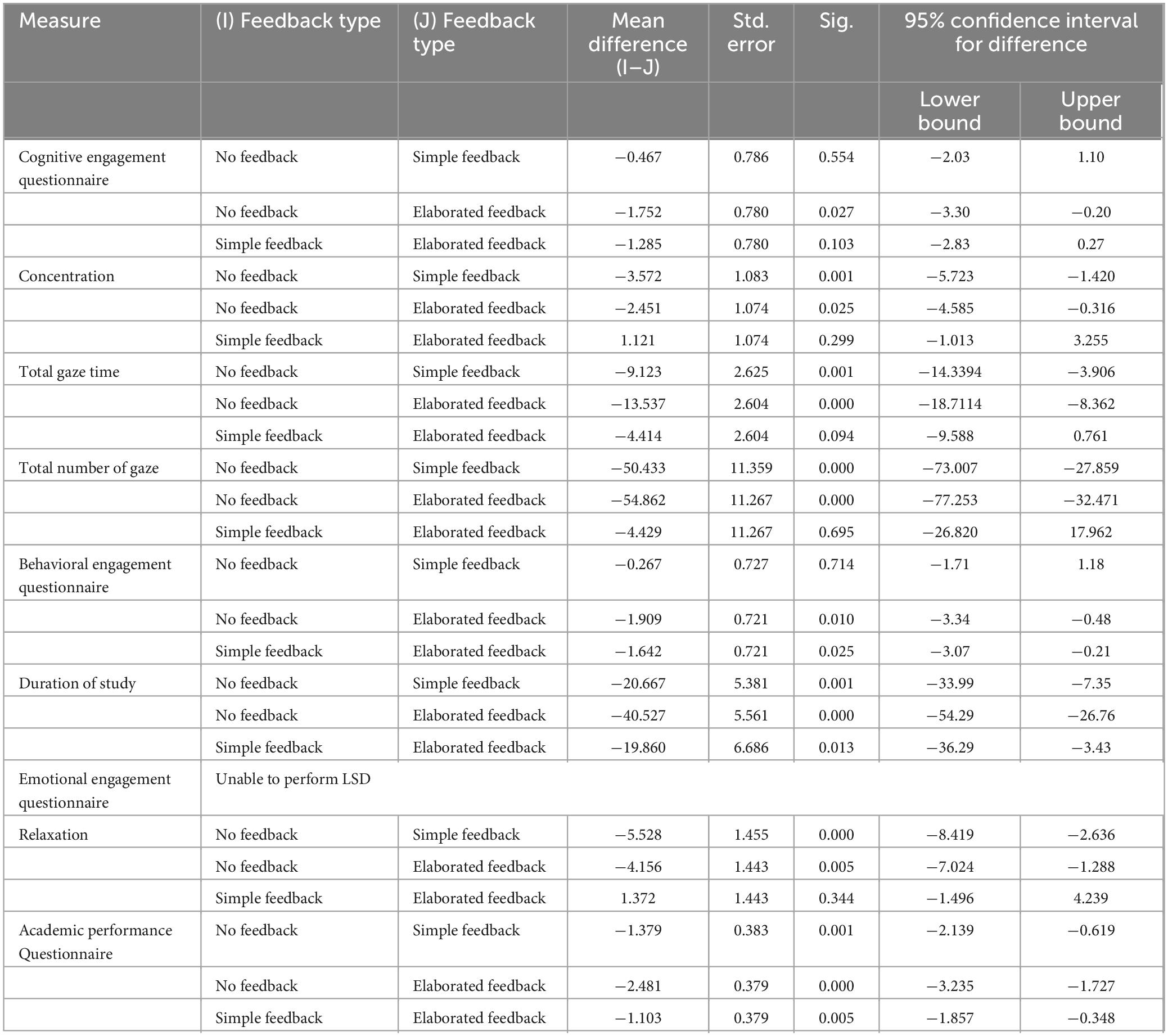

The post hoc comparison results of the effect of question feedback on learning engagement and academic performance are shown in Table 11.

Table 11. Post hoc comparisons of the effect of question feedback on learning engagement and academic performance.

Combining the results of the subjective and objective measurement data revealed that the presence or absence of feedback information in panoramic videos embedded with questions significantly affects learners’ cognitive engagement, but there is no significant difference in the impact of simple feedback and elaborated feedback on cognitive engagement.

According to cognitive conflict theory, when learners receive feedback information and discover that new knowledge is inconsistent with their existing knowledge, cognitive conflict can arise, which stimulates their learning motivation (Rahim et al., 2015). Cognitive engagement includes both psychological and cognitive factors. In terms of psychological factors, the learning motivation triggered by cognitive conflict makes learners more willing to work hard to understand knowledge. In addition, triadic reciprocal determinism, an important branch of social learning theory, was proposed by the American psychologist Bandura in the 1960s based on Lewin’s model (Chen and Li, 2015). From this perspective, learners acquire knowledge through the interaction of individual factors, behaviors, and environmental factors. Individual factors refer to the intrinsic traits of learners, such as cognition and personal characteristics; behavior refers to the specific activities, actions, and external manifestations that can be observed during the learning process; and environmental factors refer to the external environment that, in combination with individual factors, can influence learner behavior. Embedding question feedback in panoramic videos creates an external environment for learners, and learning engagement is the reflection of the actions that learners take during the learning process. When learners engage in internal self-regulation to learn knowledge, they receive feedback stimuli from the external environment, which can lead them to monitor and adjust their cognitive processes.

Learners learn through their own knowledge and experience. When the feedback information they receive conflicts with their existing cognition, their attention increases, which leads them to adjust their cognitive processes and change the learning strategies that they use. They exert psychological effort toward the knowledge being learned, which affects their cognitive engagement in the learning process. When learners receive feedback that aligns with their existing cognition, they consider their learning strategies and psychological effort appropriate; they may monitor their cognitive processes but will rarely make adjustments. Therefore, compared to no feedback, embedding question feedback in panoramic videos can improve learners’ cognitive engagement.

Previous studies have shown that learning engagement is influenced by the learning environment (Lawson and Lawson, 2013). Compared to a 2D environment, a 3D immersive VR environment can enhance learners’ interest and learning motivation (Huang et al., 2010). The learning motivation generated in a highly immersive environment such as panoramic video promotes learners’ cognitive engagement. Because the precision of the feedback information stimulates learners less strongly than the learning environment does, there was no significant difference between the effects of simple feedback and elaborated feedback on cognitive engagement.

Combining the results of the subjective and objective measurement data showed that the presence or absence of feedback information in panoramic videos with embedded questions significantly affects learners’ behavioral engagement, and compared to simple feedback, elaborated feedback can better enhance learners’ behavioral engagement.

According to triadic reciprocal determinism, in a panoramic video learning environment with embedded questions, learners receive feedback information after encountering and answering the embedded questions. When the feedback information deviates from their expectations, learners adjust their learning strategies and invest more psychological effort, time, and energy, thus improving behavioral engagement. This explains why learners in the feedback groups showed greater improvement in behavioral engagement than those in the no-feedback group.

When learners learn by using panoramic videos with embedded questions, they are unable to communicate with or receive timely feedback from the outside world. They can only solve problems independently by recalling their previous knowledge and experience and connecting the problems to it. The presence of feedback information allows learners to actively explore, process, and work through the problems and difficulties that they encounter. According to the brainwave data, the elaborated-feedback group had longer learning durations than the simple-feedback group, indicating that learners in the elaborated-feedback group invested more time and energy, were more willing to spend time learning, and thus showed greater behavioral engagement than the simple-feedback group. Wang et al. (2021) also used learning duration to reflect learners’ behavioral engagement and reported that learners spent more time learning in desktop VR environments than in traditional learning environments. Therefore, learners show better behavioral engagement in desktop VR environments. The inconsistency between the subjective and objective measures of behavioral engagement for the no-feedback and simple-feedback groups may arise because, although learners spent some time attending to and learning from the simple feedback information, they spent more time on the question-answering activities; as a result, they did not subjectively perceive that they had invested much learning behavior or time in the simple feedback information.

Combining the results of the subjective and objective measurement data revealed that the presence or absence of feedback information did not significantly affect emotional engagement. There was no significant difference between the simple-feedback group and the elaborated-feedback group.

The presence or absence of feedback information may not have significantly affected emotional engagement because learners’ emotional states are already stimulated when they learn with panoramic videos (Stavroulia et al., 2019). Learners in immersive learning environments have an overall higher emotional state, and the additional stimulus provided by feedback may not have been sufficient to change their emotions, resulting in a non-significant difference in emotional engagement. The level of detail of the feedback information may not have significantly affected emotional engagement because the learning materials were not difficult and met the learners’ expectations, so learners did not put much mental effort into the feedback information.

The results of this study showed that, when learning from panoramic videos, learners who receive elaborated feedback tend to achieve better academic performance.

When learners learn from panoramic video teaching content without feedback, they may repeatedly wonder during the learning process whether they answered the questions correctly; when they learn from panoramic video teaching content with simple feedback, they may be unsettled by their wrong answers and left in doubt about them. Providing elaborated feedback can effectively address both situations. Feedback can help learners identify shortcomings in the learning process and make improvements, thereby enhancing academic performance (Lerdpornkulrat et al., 2019). According to the feedback principle, providing elaborated information to learners during a multimedia lesson aids their knowledge construction and reduces cognitive processing demands (Mayer, 2014). The academic performance measured in this study refers to retention performance, and this conclusion is in line with Mayer’s feedback principle.

This study helps to expand the boundaries of feedback principles in multimedia learning and provides reference suggestions for the design and development of panoramic teaching videos. However, there are still some shortcomings in this study.

1. The study was conducted on undergraduate students, with an unbalanced gender ratio, and the study materials contained ideological and political content. Therefore, caution should be exercised in generalizing the findings to other groups or courses. Future research should examine participants of different ages and other learning materials.

2. This study did not examine learners’ own characteristics, such as prior knowledge, learning styles, and technology preferences, as moderating variables, and therefore did not provide a detailed understanding of how these factors affect the relationship between question feedback type and learning engagement. Future research could explore the influence of learners’ own characteristics on learning engagement.

3. The learners’ emotional engagement in this study was measured by subjective questionnaires and relaxation scales, but it is worthwhile to further explore whether these measures reflect the learners’ actual emotional engagement.

4. This study was conducted in a laboratory environment, and further research is needed to investigate the effects of practical application in the classroom.

5. This study did not explore the effects of different multimedia interactive environments (e.g., VR, AR, MR, and AI) on learning engagement. Future research could compare panoramic video with other interactive educational technologies to determine the specific strengths and weaknesses of this medium.

This study explored the impact of question feedback on learning engagement in panoramic videos. The results showed that in panoramic video learning, providing feedback information enhances learners’ cognitive engagement, behavioral engagement, and academic performance more than providing only questions without feedback. Compared to simple feedback, elaborated feedback better enhances learners’ behavioral engagement and academic performance, although the two feedback types did not differ significantly in their effect on cognitive engagement. Therefore, instructional designers can consider embedding questions and providing elaborated feedback when using panoramic video teaching to improve learners’ learning engagement and academic performance.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The studies involving human participants were reviewed and approved by the Ethics Committee of China West Normal University. The participants provided their written informed consent to participate in this study.

GH: Writing – original draft. HZ: Writing – original draft. JZ: Writing – original draft. WC: Writing – original draft.

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. This work was supported by the 2024 Sichuan Provincial Education Science Planning Project “Interdisciplinary Teaching Practice Research on Cultivating Scientific Exploration Ability of Primary and Secondary School Students” (No: SCJG24C308) and by the Sichuan Higher Education Association 2024 Education Informatization Special Research Project “Virtual Simulation Experiment Design and Application Research on Innovative Design Literacy Cultivation”.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The authors declare that no Generative AI was used in the creation of this manuscript.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Araiza-Alba, P., Keane, T., Matthews, B. L., Simpson, K., Strugnell, G., Chen, W. S., et al. (2020). The potential of 360-degree virtual reality videos to teach water-safety skills to children. Comput. Educ. 163:104096. doi: 10.1016/j.compedu.2020.104096

Callender, A. A., and McDaniel, M. A. (2007). The benefits of embedded question adjuncts for low and high structure builders. J. Educ. Psychol. 99, 339–348. doi: 10.1037/0022-0663.99.2.339

Cao, X., Zhang, Y., Zhang, Y., Pan, M., Zhu, S., and Yan, H. L. (2019). Research on learning engagement recognition methods in the view of artificial intelligence - Based on an experimental analysis of deep learning with multimodal data fusion. J. Distance Educ. 1, 32–44. doi: 10.15881/j.cnki.cn33-1304/g4.2019.01.003

Fredricks, J. A., Blumenfeld, P. C., and Paris, A. H. (2004). School engagement: Potential of the concept, State of the evidence. Rev. Educ. Res. 74, 59–109. doi: 10.3102/00346543074001059

Fredricks, J. A., Blumenfeld, P., Friedel, J., and Paris, A. (2005). “School Engagement,” in What do Children Need to Flourish: Conceptualizing and Measuring Indicators of Positive Development, eds K. A. Moore and L. H. Lippman (Berlin: Springer Science), 305–321. doi: 10.1007/0-387-23823-9_19

Hsieh, I.-L. G., and O’Neil, H. F. (2002). Types of feedback in a computer-based collaborative problem-solving task. Comput. Hum. Behav. 18, 699–715. doi: 10.1016/S0747-5632(02)00025-0

Hu, X. Y., Hu, X. Y., and Chen, Z. X. (2020). An empirical study on the relationship between learners’ information literacy, online learning engagement and learning performance. China Electrochem. Educ. 3, 77–84.

Huang, H.-M., Rauch, U., and Liaw, S.-S. (2010). Investigating learners’ attitudes toward virtual reality learning environments: Based on a constructivist approach. Comput. Educ. 55, 1171–1182. doi: 10.1016/j.compedu.2010.05.014

Johnson, C. D. L. (2018). Using virtual reality and 360-degree video in the religious studies classroom: An experiment. Teach. Theol. Religion 21, 228–241. doi: 10.1111/TETH.12446

Jordan, S. E. (2012). Student engagement with assessment and feedback: Some lessons from short-answer free-text e-assessment questions. Comput. Educ. 58, 818–834.

Junco, R. (2012). The relationship between frequency of Facebook use, participation in Facebook activities, and student engagement. Comput. Educ. 58, 162–171. doi: 10.1016/j.compedu.2011.08.004

Kim, D., Jung, E., Yoon, M., Chang, Y., Park, S., Kim, D., et al. (2021). Exploring the structural relationships between course design factors, learner commitment, self-directed learning, and intentions for further learning in a self-paced MOOC. Comput. Educ. 166:104171. doi: 10.1016/j.compedu.2021.104171

Kluger, A. N., and DeNisi, A. (1996). The effects of feedback interventions on performance: A historical review, a meta-analysis, and a preliminary feedback intervention theory. Psychol. Bull. 119, 254–284. doi: 10.1037/0033-2909.119.2.254

Kumar, A., Khanna, R., Srivastava, R. K., Iqbal, A., and Wadhwani, P. (2014). Alternative healing therapies in today’s era. Int. J. Res. Ayurveda Pharm. 5, 394–396. doi: 10.7897/2277-4343.05381

Lan, G. S., Wei, J. C., Zhang, Y., Guo, Q., Zhang, W. F., Kong, X. K., et al. (2021). The future of teaching and learning in higher education: Macro-trends, key technological practices and future development scenarios - Highlights and reflections from the Horizon Report 2021 (Teaching and Learning Edition). Open Educ. Res. 3, 15–28. doi: 10.13966/j.cnki.kfjyyj.2021.03.002

Lang, Y., Xie, K., Gong, S., Wang, Y., and Cao, Y. (2022). The Impact of emotional feedback and elaborated feedback of a pedagogical agent on multimedia learning. Front. Psychol. 13:810194. doi: 10.3389/fpsyg.2022.810194

Lawson, M. A., and Lawson, H. A. (2013). New conceptual frameworks for student engagement research, policy, and practice. Rev. Educ. Res. 83, 432–479. doi: 10.3102/0034654313480891

Lerdpornkulrat, T., Poondej, C., Koul, R., Khiawrod, G., and Prasertsirikul, P. (2019). The positive effect of intrinsic feedback on motivational engagement and self-efficacy in information literacy. J. Psychoeduc. Assess. 37, 421–434. doi: 10.1177/0734282917747423

Levant, B., Zückert, W., and Paolo, A. (2018). Post-exam feedback with question rationales improves re-test performance of medical students on a multiple-choice exam. Adv. Health Sci. Educ. Theory Pract. 23, 995–1003. doi: 10.1007/s10459-018-9844-z

Li, S., Li, R., and Yu, C. (2018). A model for assessing distance learners’ learning engagement based on LMS data. Open Educ. Res. 24, 91–102. doi: 10.13966/j.cnki.kfjyyj.2018.01.009

Li, W. H., Li, Y. F., Xiang, W., and Chen, D. M. (2020). The effect of VR panoramic video on teacher trainees’ empathy accuracy. Modern Educ. Technol. 12, 62–68.

Lin, H. (2011). Facilitating learning from animated instruction: Effectiveness of questions and feedback as attention-directing strategies. J. Educ. Technol. Soc. 14, 31–42.

Lin, L., Atkinson, R. K., Christopherson, R. M., Joseph, S. S., and Harrison, C. J. (2013). Animated agents and learning: Does the type of verbal feedback they provide matter? Comput. Educ. 67, 239–249. doi: 10.1016/j.compedu.2013.04.017

Liu, Z. H. (2022). The effect of school satisfaction on academic achievement of hearing impaired students: The mediating role of learning engagement. China Special Educ. 3, 56–62.

Mayer, R. E. (2014). The Cambridge Handbook of Multimedia Learning. Cambridge: Cambridge University Press.

Meyer, O. A., Omdahl, M. K., and Makransky, G. (2019). Investigating the effect of pre-training when learning through immersive virtual reality and video: A media and methods experiment. Comput. Educ. 140:103603. doi: 10.1016/j.compedu.2019.103603

Panchuk, D., Klusemann, M. J., and Hadlow, S. M. (2018). Exploring the effectiveness of immersive video for training decision-making capability in elite, youth basketball players. Front. Psychol. 9:2315. doi: 10.3389/fpsyg.2018.02315

Pascarella, E., Seifert, T., and Blaich, C. (2010). How effective are the NSSE benchmarks in predicting important educational outcomes? Change Magazine High. Learn. 42, 16–22. doi: 10.1080/00091380903449060

Pashler, H., Cepeda, N. J., Wixted, J. T., and Rohrer, D. (2005). When does feedback facilitate learning of words? J. Exp. Psychol. Learn. Mem. Cogn. 31, 3–8. doi: 10.1037/0278-7393.31.1.3

Pizzimenti, M. A., and Axelson, R. D. (2015). Assessing student engagement and self-regulated learning in a medical gross anatomy course. Anatomical Sci. Educ. 8, 104–110. doi: 10.1002/ase.1463

Rahim, R. A., Noor, N. M., and Zaid, N. M. (2015). Meta-analysis on element of cognitive conflict strategies with a focus on multimedia learning material development. Int. Educ. Stud. 8, 73–78.

Roche, L., Kittel, A., Cunningham, I., and Rolland, C. (2021). 360° video integration in teacher education: A SWOT analysis. Front. Educ. 6:761176. doi: 10.3389/feduc.2021.761176

Rose, E., Claudius, I., Tabatabai, R., Kearl, L., Behar, S., and Jhun, P. (2016). The flipped classroom in emergency medicine using online videos with interpolated questions. J. Emerg. Med. 51, 284–291.e1. doi: 10.1016/j.jemermed.2016.05.033

Rosendahl, P., and Wagner, I. (2023). 360° videos in education – A systematic literature review on application areas and future potentials. Educ. Inf. Technol. 29, 1319–1355. doi: 10.1007/s10639-022-11549-9

Rupp, M. A., Kozachuk, J., Michaelis, J. R., Odette, K. L., Smither, J. A., and McConnell, D. S. (2016). The effects of immersiveness and future VR expectations on subjective-experiences during an educational 360° video. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 60, 2108–2112. doi: 10.1177/1541931213601477

Rupp, M. A., Odette, K. L., Kozachuk, J., Michaelis, J. R., Smither, J. A., and McConnell, D. S. (2019). Investigating learning outcomes and subjective experiences in 360-degree videos. Comput. Educ. 128, 256–268. doi: 10.1016/j.compedu.2018.09.015

Shute, V. J. (2008). Focus on formative feedback. Rev. Educ. Res. 78, 153–189. doi: 10.3102/0034654307313795

Skinner, E., Furrer, C., Marchand, G., and Kindermann, T. (2008). Engagement and disaffection in the classroom: Part of a larger motivational dynamic? J. Educ. Psychol. 100, 765–781. doi: 10.1037/a0012840

Smith, E. E., and Kosslyn, S. M. (2006). Cognitive Psychology: Mind and Brain. London: Pearson Education.

Stavroulia, K. E., Christofi, M., Baka, E., Michael-Grigoriou, D., Magnenat-Thalmann, N., and Lanitis, A. (2019). Assessing the emotional impact of virtual reality-based teacher training. Int. J. Inf. Learn. Technol. 36, 192–217.

Sun, J. (2014). Influence of polling technologies on student engagement: An analysis of student motivation, academic performance, and brainwave data. Comput. Educ. 72, 80–89. doi: 10.1016/j.compedu.2013.10.010

Szpunar, K. K., Khan, N. Y., and Schacter, D. L. (2013). Interpolated memory tests reduce mind wandering and improve learning of online lectures. PNAS Proc. Natl. Acad. Sci. U S A. 110, 6313–6317. doi: 10.1073/pnas.1221764110

Ulrich, F., Helms, N., Frandsen, U., and Rafn, A. (2021). Learning effectiveness of 360° video: Experiences from a controlled experiment in healthcare education. Interactive Learn. Environ. 29, 98–111. doi: 10.1080/10494820.2019.1579234

Wang, C., Xu, P., and Hu, Y. (2021). The effects of desktop virtual reality learning environment on learning engagement and performance - based on multimodal data. Open Educ. Res. 3, 112–120. doi: 10.13966/j.cnki.kfjyyj.2021.03.012

Watson, D., Clark, L. A., and Tellegen, A. (1988). Development and validation of brief measures of positive and negative affect: The PANAS scales. J. Pers. Soc. Psychol. 54, 1063–1070. doi: 10.1037/0022-3514.54.6.1063

Wilkerson, M., Maldonado, V., Sivaraman, S., Rao, R. R., and Elsaadany, M. (2022). Incorporating immersive learning into biomedical engineering laboratories using virtual reality. J. Biol. Eng. 16:20. doi: 10.1186/s13036-022-00300-0

Wu, F., and Zhang, Q. (2018). Learning behavioral input: definition, analytical framework and theoretical model. China Electrochem. Educ. 1, 35–41.

Xie, Y., Jiu-Min, Y., Chung-Ling, P., Dai, C., and Liu, C. (2021). A study on the impact of pre-embedded questions and feedback on learning in instructional videos. China Distance Educ. 12, 63–77. doi: 10.13541/j.cnki.chinade.2021.12.006

Zheng, C., Lu, C.-H., Liu, H., Wang, L.-L., and Han, S.-H. (2021). A study of college students’ English learning perspectives and learning engagement in virtual reality environments. Foreign Lang. Teach. Learn. 2, 85–92.

Keywords: panoramic video, question feedback, cognitive engagement, emotional engagement, behavior engagement

Citation: Huang G, Zhang H, Zeng J and Chen W (2025) A study of the effect of question feedback types on learning engagement in panoramic videos. Front. Psychol. 16:1321712. doi: 10.3389/fpsyg.2025.1321712

Received: 14 November 2023; Accepted: 17 February 2025;

Published: 27 February 2025.

Edited by:

Douglas F. Kauffman, Consultant, Greater Boston Area, United States

Reviewed by:

Ali Hamed Barghi, Texas A&M University, United States

Copyright © 2025 Huang, Zhang, Zeng and Chen. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Guan Huang, aGVsZW4xOTgzMjI2QGFsaXl1bi5jb20=
