- 1 School of Education, Jingchu University of Technology, Jingmen, China

- 2 The Education University of Hong Kong, Hong Kong SAR, China

- 3 Haile Experimental School, Shenzhen, China

Although the importance and benefits of students’ active role in the feedback process have been widely discussed in the literature, an instrument for measuring students’ self-feedback behavior is still lacking. This paper reports the development and validation of the Self-feedback Behavior Scale (SfBS), which comprises three dimensions: seeking, processing, and using feedback. The SfBS items were constructed in line with the self-feedback behavioral model. A total of 1,252 high school students (Grades 10 to 12) in mainland China participated in the survey. Exploratory factor analysis revealed a three-factor model, which was confirmed by confirmatory factor analysis (CFA). Multi-group CFA supported the measurement invariance of the SfBS across gender. The SfBS can help researchers and teachers better understand students’ self-feedback behavior and optimize the benefits students derive from the self-feedback process.

Introduction

The shift of feedback research from a teacher-centered to a student-centered model has attracted growing academic attention (Winstone et al., 2017; Bennett et al., 2017; Ryan and Deci, 2017; Mahoney et al., 2019). This shift highlights student agency and how students can become more actively involved in, and eventually benefit from, the feedback process to enhance their learning outcomes (Boud and Molloy, 2013; Dunworth and Sanchez, 2016). This study describes self-feedback behavior as students’ intentional behavior of seeking, processing, and using feedback to improve their learning (Lipnevich et al., 2013; Lipnevich et al., 2016; Lipnevich and Panadero, 2021). Students can benefit from the self-feedback process because those who actively engage in it become more proficient in eliciting feedback, making sense of information, and using feedback to improve their learning (Carless and Boud, 2018; Panadero et al., 2019a; Malecka et al., 2020; Yan and Carless, 2021). Furthermore, students who take a more proactive role in the self-feedback process are likely to become more proficient learners and thus create more advanced learning opportunities for themselves. Eventually, they may gain greater academic self-efficacy through the self-feedback process and attain higher academic achievement (Panadero et al., 2017; Van der Kleij, 2024).

Despite the importance of self-feedback behavior, the measurement of students’ actions of seeking, processing, and using feedback remains understudied (Evans, 2013; Winstone et al., 2017; Malecka et al., 2020). An instrument measuring these actions is expected to translate theoretical discussions into a shared understanding of students’ self-feedback behavior, thereby advancing research on students’ engagement in the feedback process. Closing this knowledge gap is the primary goal of this study.

Literature review

Behavior model of self-feedback

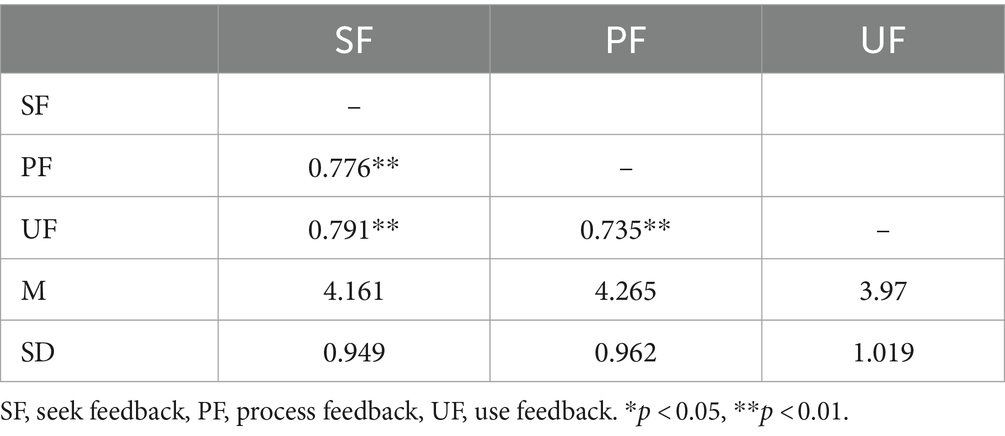

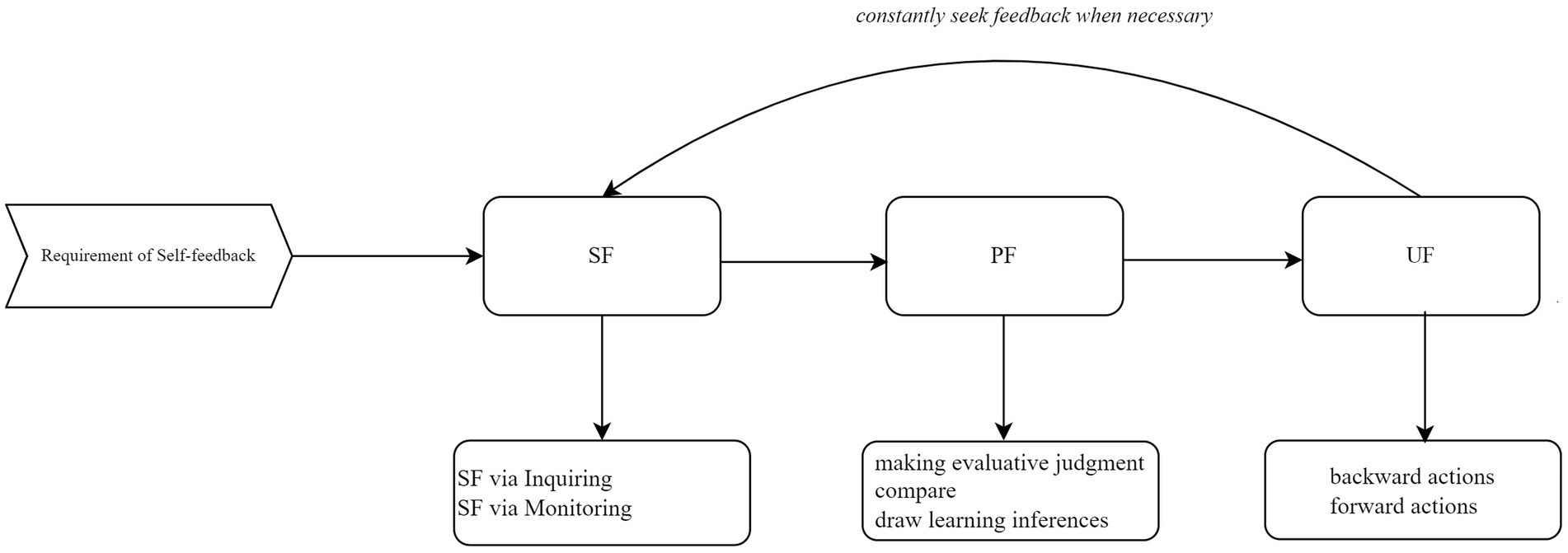

The shift of feedback studies toward a “student-centered” framework has led many researchers to re-examine what students need to know to use feedback to improve their learning. In this vein, Carless and Boud (2018, p. 1315) proposed feedback literacy, which “denotes the understandings, capacities, and dispositions needed to make sense of information and use it to enhance work or learning strategies.” However, the specific behaviors through which students engage in the feedback process have been surprisingly understudied (Molloy et al., 2019; Malecka et al., 2020), which limits the potential of feedback to enhance students’ learning. In this paper, unlike the conventional description of “self-level” feedback, which highlights students as the sole agents generating internal feedback without seeking input from external sources (Hattie and Timperley, 2007), we argue that self-feedback should highlight students’ active agency and comprise three components: students taking the initiative to seek, process, and use feedback information to improve their learning performance (see Figure 1). Each self-feedback action is elaborated on below.

Figure 1. The self-feedback behavioral model. SF, seek feedback; PF, process feedback; UF, use feedback.

Seek feedback

Previous studies on feedback emphasize that students should actively seek feedback from external sources, positioning students as proactive participants in the feedback process (Winstone et al., 2017; Carless and Boud, 2018; Boud and Molloy, 2013; Yan and Carless, 2021). It is crucial for students to deliberately solicit comments from their physical learning environment and engage with relevant individuals (Nicol, 2021). There are two main behavioral strategies for obtaining feedback: (a) inquiry, which involves directly soliciting input from teachers, peers, and friends, and (b) monitoring, which involves indirectly drawing explicit inferences from the environment, one’s past experiences, and peers’ work (Carless, 2006; Leenknecht et al., 2019). This study adopts these two forms of feedback-seeking behavior, given their appropriateness in the context of self-feedback.

Process feedback

Once feedback is obtained, the next action is processing it, as feedback information is often mixed or conflicting, arriving from various sources and in different formats (Han and Xu, 2021). Students should, therefore, intentionally make evaluative judgments about the elicited comments (Nicol, 2014). However, making such judgments can be emotionally and intellectually challenging, particularly when feedback originates from various sources, which complicates its interpretation (McConlogue, 2015; Carless and Boud, 2018). To manage this complexity, students must develop the capacity to evaluate their work against the feedback received, using success criteria and exemplars as benchmarks (Carless, 2015; Tai et al., 2017). This evaluative process is ideally enhanced through meta-dialogue with teachers and peers, supplemented by additional learning resources (Carless and Boud, 2018; Panadero et al., 2019b). Students can then identify valuable inputs for further use. The ultimate goal of feedback processing is to engage students in deeper reflection on their work by explicitly comparing it with previous coursework, learning objectives, and success criteria.

Use feedback

Students need to take the initiative to use feedback to maximize their learning attainment throughout the self-feedback process (Malecka et al., 2020; Molloy et al., 2019; Winstone et al., 2022; Wood, 2022; Dawson et al., 2023). Self-feedback actions can be classified as short-term or long-term depending on when follow-up actions are taken. Short-term actions often include correcting errors in their work, summarizing their strengths and weaknesses, reflecting on their learning attainment, and possibly calibrating the success criteria of their learning. These are all recommended practices to be embedded and operationalized in a curriculum setting (Malecka et al., 2020; Yan and Carless, 2021). Long-term actions include re-calibrating their learning goals, building self-feedback skills into their daily learning experiences, and eventually formulating individualized learning growth plans (Malecka et al., 2020; Molloy et al., 2019; Yan and Carless, 2021).

Lastly, self-feedback is not a one-off behavior. Rather, it should operate as a cycle to enhance its effectiveness: students should consistently practice self-feedback strategies during their learning process to maximize its potential benefits.

Given the theoretical connections of self-feedback with internal feedback, self-assessment, and feedback literacy (Falchikov and Boud, 1989; Panadero et al., 2016; Li and Han, 2021), its relationship with these related concepts warrants further explanation. Nicol (2021) describes internal feedback as “the new knowledge that students generate when they compare their current knowledge and competence against some reference information” (p. 2), highlighting how students internalize external inputs through comparisons (Laudel and Narciss, 2023). Self-feedback, however, focuses on processing elicited external feedback to form students’ own learning inferences rather than on the internal generation of information. Self-assessment, defined as a process “during which students collect information about their performance, evaluate, and reflect on the quality of their learning process and outcomes according to selected criteria to identify their strengths and weaknesses” (Yan and Brown, 2017, p. 1248), emphasizes assessment accuracy, whereas self-feedback highlights the actions taken upon processed feedback. Carless and Boud (2018) conceptualize feedback literacy with four key elements: appreciating feedback, making judgments, managing affect, and taking action (Molloy et al., 2019, p. 3), framing it as a feedback capacity rather than behavioral engagement (Noble et al., 2020). Self-feedback, in contrast, emphasizes students’ behavioral engagement in the feedback process.

Methods

Instrument development

Following a theory-driven approach, the Self-feedback Behavior Scale (SfBS) was developed in line with the self-feedback behavioral model described above. Accordingly, the SfBS comprises three subscales: seek feedback (SF), process feedback (PF), and use feedback (UF). The SfBS items were developed from two primary resources: items adapted from the Feedback Literacy Behavior Scale (Dawson et al., 2023) and the Self-assessment Practice Scale (Yan, 2018, 2020). For the Seek Feedback (SF) and Use Feedback (UF) dimensions, the items were initially adapted from the corresponding dimensions of the Feedback Literacy Behavior Scale, given their theoretical connections; SF in the SfBS emphasizes seeking feedback from various learning resources, and UF emphasizes the cyclical process in which students constantly seek external feedback to optimize their learning improvement plans. For the Process Feedback (PF) dimension, items were initially adapted from the Make Sense of Information (MS) dimension of the Feedback Literacy Behavior Scale and the Self-reflection (SER) dimension of the Self-assessment Practice Scale, with the SfBS emphasizing the importance of making evaluative judgments about comments elicited from multiple sources. In addition, all original items were generated based on three rounds of focus group discussions, whose members had different levels of feedback proficiency across various subject domains. An initial set of 26 items was created, five of which were eliminated due to content redundancy. The remaining 21 items were then reviewed by six experts: three from the educational assessment field and three veteran teachers. The expert panel evaluated the appropriateness of the factor-item structure and the accuracy of item content, and four items were removed due to their ambiguity. After several rounds of review, 17 items were retained.

The scale of seek feedback

Seeking feedback refers to students proactively soliciting comments from external sources. Following Ashford and Cummings (1983), two strategies can be distinguished: “seeking feedback through inquiry” and “seeking feedback through monitoring.” Accordingly, SF consists of six items measuring these two types of feedback-seeking behavior: (a) seeking feedback through inquiry (4 items, e.g., I ask people for feedback on certain elements of my work); and (b) seeking feedback through monitoring (2 items, e.g., When I am working on a task, I consider comments I have received on similar tasks).

The scale of process feedback

Process feedback refers to students evaluating the comments they receive, for example, by considering the credibility of the comments’ sources and comparing the inputs with their prior experiences of similar work and their future learning goals (Han and Xu, 2020). Accordingly, PF comprises six items measuring students’ various feedback-processing steps. Items such as “When receiving conflicting information from different sources, I judge what I will use” and “I carefully consider comments about my work before deciding if I will use them or not” measure what students do when they receive conflicting comments from different sources, namely, whether they judge if the comments are correct and relevant to their coursework before considering them further. Items such as “When deciding what to do with comments, I consider the credibility of their sources” measure how students distinguish valuable information from useless or misleading information. Items such as “I explicit the inferences after comparing comments with my learning experiences” measure students’ practice of comparing feedback with their past learning experiences on similar coursework.

The scale of using feedback

Using feedback refers to how students use the processed information to facilitate their learning improvement. Accordingly, the five-item UF subscale measures how students use the comments and learning inferences they obtain to optimize their future learning strategies. Items such as “I can formulate my learning improvement plan after explicit inferences” measure whether students take measures to create a learning growth scheme after seeking and processing feedback information. Items such as “I would spend more time working on my advantageous areas” and “I would spend more time working on my weak areas” measure which areas students focus their efforts on to improve their learning attainment.

The scale was drafted in simplified Chinese for administration to high school students in mainland China. It uses a six-point, positively packed Likert-type response format (1 = strongly disagree; 2 = disagree; 3 = slightly agree; 4 = agree; 5 = mostly agree; 6 = strongly agree) (Lam and Klockars, 1982). This format was intended to accommodate Chinese students’ inclination toward positive conformity and thereby elicit more variation in their responses (Brown and Harris, 2013).

Data collection

Approval from the Human Research Ethics Committee (HREC) was obtained before the investigation commenced. Psychometricians and behavioral experts were consulted regarding potential risks and associated precautions. Before the survey started, consent forms and information sheets were provided to relevant stakeholders. Students from three high schools in mainland China were surveyed, yielding a dataset of 1,252 students from Grade 10 to Grade 12 (aged 15–18 years).

Sample

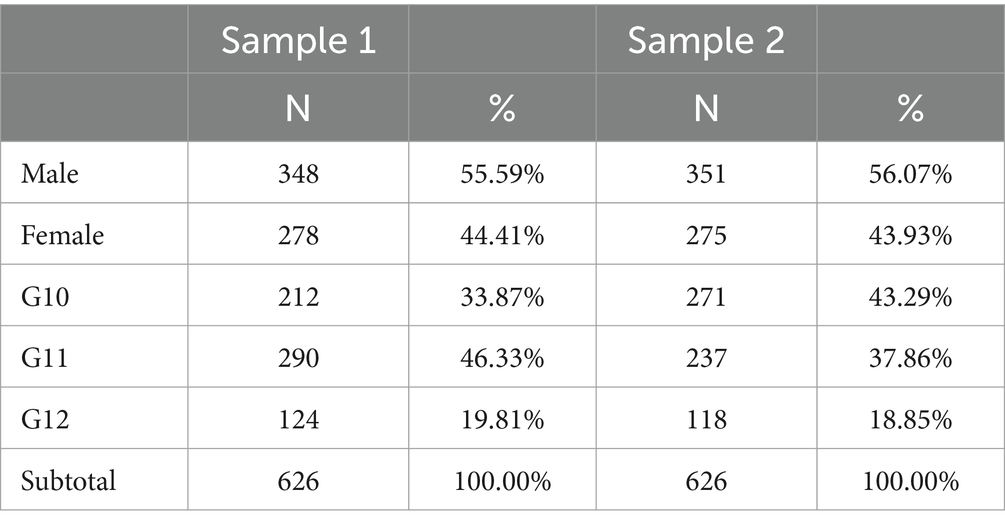

The dataset was then randomly divided into two subsamples. Sample 1 (N = 626) was used for a series of exploratory factor analyses (EFA) to identify the factorial structure of self-feedback behavior, whereas Sample 2 (N = 626) was used for confirmatory factor analysis (CFA) to assess the model fit of that structure. The demographics of participants in both samples are reported in Table 1.
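The split-sample design can be sketched in a few lines of Python. This is a minimal illustration, not the authors' procedure: the article does not report how the random split was drawn, so the function name `split_half` and the seed value are hypothetical.

```python
import random

def split_half(ids, seed=2024):
    """Randomly split respondent IDs into two equal-sized, non-overlapping subsamples.

    The seed is an arbitrary, hypothetical value used only to make the split
    reproducible; the article does not report how its random split was drawn.
    """
    shuffled = list(ids)
    rng = random.Random(seed)  # local generator: does not disturb global random state
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]

# 1,252 respondents -> Sample 1 (EFA) and Sample 2 (CFA)
sample1, sample2 = split_half(range(1, 1253))
print(len(sample1), len(sample2))  # prints: 626 626
```

Fixing the seed makes the EFA/CFA partition reproducible, which matters because every later result depends on which respondents fall into each half.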

Data analysis

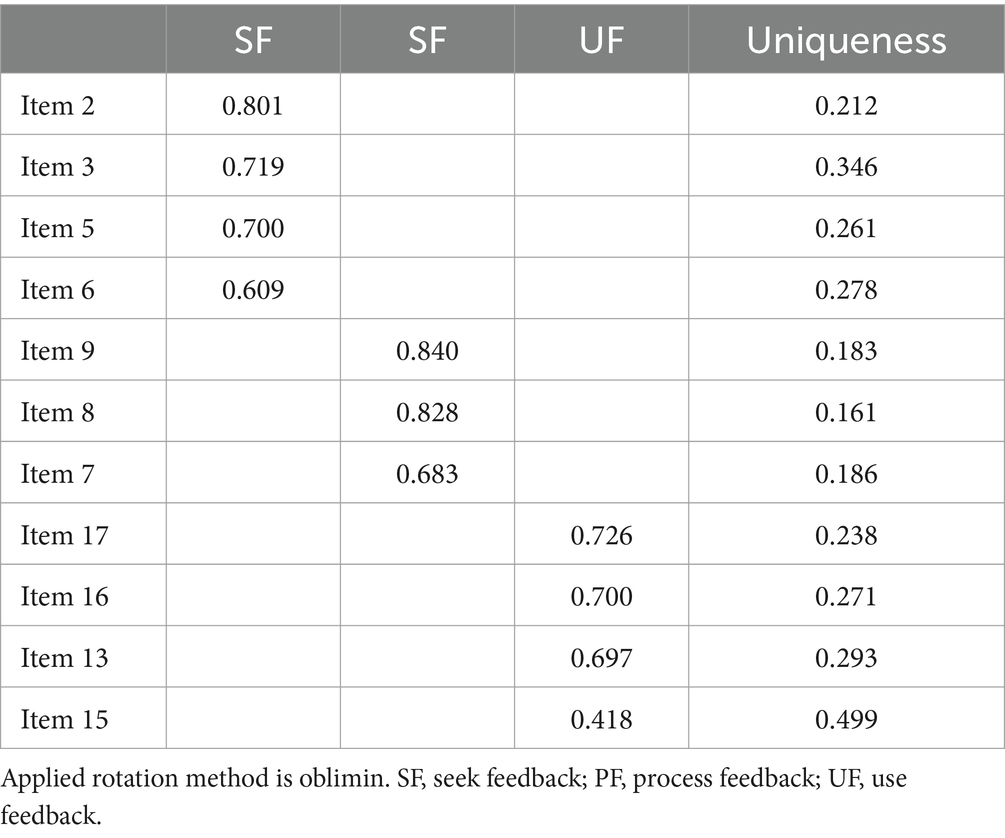

First, EFA with principal component extraction and oblique rotation (direct oblimin) was conducted in Sample 1 to delineate the factorial structure of the 17-item SfBS. Multiple criteria guided factor extraction: inspection of the scree plot (Cattell, 1966; Floyd and Widaman, 1995) and Kaiser’s criterion, which retains factors with eigenvalues equal to or exceeding 1.00 (Kaiser, 1960). Additionally, the communalities of each variable, the proportion of variance explained, and the interpretability of the resulting factors were evaluated. Items whose primary and secondary factor loadings differed by less than 0.20 and whose secondary loading was at least 0.30 were identified as cross-loading items and excluded (Schaefer et al., 2015).
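The item-screening rules above can be expressed as small checks. The sketch below assumes the eigenvalues and a matrix of rotated pattern loadings are already available from a statistics package; the function names are hypothetical, the 0.40 salience floor is the one applied in the EFA results, and the eigenvalues and loadings are invented for illustration.

```python
def kaiser_count(eigenvalues, cutoff=1.00):
    """Number of factors retained under Kaiser's criterion (eigenvalue >= cutoff)."""
    return sum(1 for ev in eigenvalues if ev >= cutoff)

def is_cross_loading(loadings, max_gap=0.20, min_secondary=0.30):
    """Flag an item whose primary and secondary absolute loadings differ by
    less than max_gap while the secondary loading is at least min_secondary.
    Assumes at least two factors (two loadings per item)."""
    primary, secondary = sorted((abs(x) for x in loadings), reverse=True)[:2]
    return (primary - secondary) < max_gap and secondary >= min_secondary

def lacks_salient_loading(loadings, floor=0.40):
    """Flag an item that fails to reach the salience floor on every factor."""
    return max(abs(x) for x in loadings) < floor

# Hypothetical eigenvalues and pattern loadings (not the article's data)
eigenvalues = [7.2, 1.6, 1.1, 0.8, 0.6]
items = {
    "clean": [0.78, 0.12, 0.05],
    "cross": [0.52, 0.41, 0.05],
    "weak": [0.35, 0.22, 0.10],
}
print(kaiser_count(eigenvalues))  # prints: 3
for name, row in items.items():
    print(name, is_cross_loading(row), lacks_salient_loading(row))
```

Using absolute loadings keeps the rules valid for negatively signed loadings, which oblique rotations can produce.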

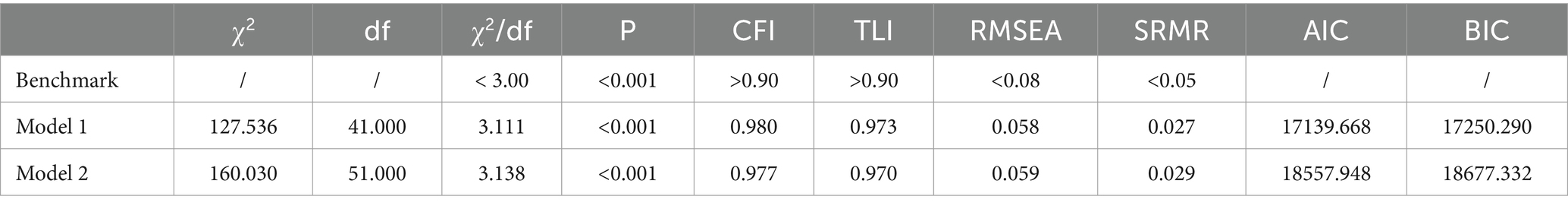

Second, CFA with maximum likelihood estimation was conducted in Sample 2 to compare the fit of the theoretically hypothesized structure with that of the measurement structure derived from the EFA in Sample 1. Because the model chi-square statistic tends to be significant and is affected by sample size (Bentler and Bonett, 1980; Jöreskog and Sörbom, 1993; Newsom, 2012), a series of other fit indices was also considered, as reported in Table 2.

Third, the validity of the SfBS was assessed using Messick’s (1995) framework, which treats validity as a unified concept comprising six distinct aspects: content, substantive, structural, generalizability, external, and consequential validity (Messick, 1995). Moreover, convergent and discriminant validity were examined to evaluate the item-factor structure of the SfBS. Cronbach’s alpha (α) was also calculated for the three factors of the SfBS; an α equal to or greater than 0.70 indicates acceptable scale reliability (George and Mallery, 2003; Kline, 1999). The composite score of each subscale was computed as the mean of its item scores.
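For reference, Cronbach’s alpha for a subscale of k items is α = k/(k − 1) · (1 − Σσᵢ²/σ²_total), where σᵢ² are the item variances and σ²_total is the variance of the summed score. The Python sketch below implements that formula together with the mean-based composite score; it is an illustration with hypothetical data and function names, not the SPSS routine used in the study.

```python
from statistics import mean, variance

def cronbach_alpha(responses):
    """Cronbach's alpha for one subscale.

    responses: one list of k item scores per respondent.
    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)
    """
    k = len(responses[0])
    item_columns = list(zip(*responses))
    item_var_sum = sum(variance(col) for col in item_columns)  # sample (n - 1) variances
    total_var = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - item_var_sum / total_var)

def composite_scores(responses):
    """Subscale composite score = mean of each respondent's item scores."""
    return [mean(row) for row in responses]

# Hypothetical 6-point responses of four students to a three-item subscale
subscale = [[4, 5, 4], [2, 3, 3], [5, 6, 5], [3, 3, 2]]
alpha = cronbach_alpha(subscale)
print(round(alpha, 3), alpha >= 0.70)  # prints: 0.963 True
print(composite_scores(subscale))
```

Because the same (n − 1) divisor appears in both the item and total variances, it cancels in the ratio, so using population variances would give the same alpha.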

The EFA and CFA were conducted using the lavaan package (Rosseel, 2012) in the R statistical computing environment (R Core Team, 2019), and the validity and internal-consistency reliability analyses were performed in SPSS 26.0.

Results

Exploratory factor analysis

Before the EFA was performed on Sample 1, the skewness and kurtosis of each item were examined. All values fell within ±1, supporting the assumption of approximate normality (Bryman and Cramer, 2001; Kline, 2010). The item-total correlations were also satisfactory according to the criteria suggested by Wu (2010) (r > 0.40, p < 0.01), indicating that Sample 1 was suitable for EFA. The Kaiser-Meyer-Olkin measure of sampling adequacy was 0.953, and Bartlett’s test of sphericity was significant, χ²(136) = 10143.701, p < 0.001, implying that the data were suitable for factor analysis.
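The pre-EFA screening can be approximated as follows. The sketch uses moment-based skewness (g1) and excess kurtosis (g2); SPSS reports small-sample-adjusted versions, which differ only slightly at n = 626. It also implements Bartlett’s test statistic, χ² = −[(n − 1) − (2p + 5)/6] · ln|R| with df = p(p − 1)/2, where R is the p × p item correlation matrix; for the 17 SfBS items this gives df = 17 × 16 / 2 = 136, matching the reported χ²(136). The function names and toy data are hypothetical, and the routine assumes R is positive definite.

```python
import math

def screen_item(scores, bound=1.0):
    """Moment-based skewness (g1) and excess kurtosis (g2) of one item,
    plus whether both fall within +/- bound."""
    n = len(scores)
    m = sum(scores) / n
    m2 = sum((x - m) ** 2 for x in scores) / n
    m3 = sum((x - m) ** 3 for x in scores) / n
    m4 = sum((x - m) ** 4 for x in scores) / n
    g1 = m3 / m2 ** 1.5        # skewness
    g2 = m4 / m2 ** 2 - 3.0    # excess kurtosis (0 for a normal distribution)
    return g1, g2, abs(g1) <= bound and abs(g2) <= bound

def determinant(matrix):
    """Determinant by Gaussian elimination with partial pivoting."""
    a = [list(row) for row in matrix]
    size = len(a)
    det = 1.0
    for c in range(size):
        pivot = max(range(c, size), key=lambda r: abs(a[r][c]))
        if abs(a[pivot][c]) < 1e-12:
            return 0.0                      # singular matrix
        if pivot != c:
            a[c], a[pivot] = a[pivot], a[c]
            det = -det                      # a row swap flips the sign
        det *= a[c][c]
        for r in range(c + 1, size):
            factor = a[r][c] / a[c][c]
            for k in range(c, size):
                a[r][k] -= factor * a[c][k]
    return det

def bartlett_sphericity(R, n):
    """Bartlett's chi-square and df for a p x p correlation matrix R from n
    respondents; R must be positive definite (determinant > 0)."""
    p = len(R)
    chi2 = -((n - 1) - (2 * p + 5) / 6) * math.log(determinant(R))
    df = p * (p - 1) // 2
    return chi2, df

# Hypothetical data: a symmetric item and a toy 2 x 2 correlation matrix
print(screen_item([1, 2, 3, 4, 5]))
chi2, df = bartlett_sphericity([[1.0, 0.5], [0.5, 1.0]], n=100)
print(round(chi2, 2), df)  # prints: 28.05 1
identity17 = [[1.0 if i == j else 0.0 for j in range(17)] for i in range(17)]
print(bartlett_sphericity(identity17, n=626)[1])  # prints: 136
```

An identity correlation matrix has determinant 1, so its Bartlett statistic is zero; strongly intercorrelated items drive ln|R| toward large negative values and the statistic upward.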

Three factors with eigenvalues over 1.00 were identified and could be interpreted, and the scree test (Cattell, 1966) further supported this solution. The identified three-factor structure was aligned with the self-feedback behavioral model. However, three items did not reach the acceptable loading of 0.40 on any factor and were deleted. The item “I reflect on the quality of my own work and use my reflection as a source of information to improve my work” was deleted because students did not appear to regard self-reflection as a source of feedback, preferring instead to seek external feedback from other people or their learning environment. The item “When other people provide me with input about my work I listen or read thoughtfully” was deleted because students might have interpreted it as emphasizing their listening and reading behavior rather than feedback-seeking behavior. The item “I would spend more time working on my advantageous areas” was deleted because students preferred spending more time improving their weak areas rather than maintaining their advantageous areas.

Meanwhile, another three items showed cross-loadings on both PF and UF: “I consider how comments relate to criteria or standards,” “I consider my experience on similar tasks when doing my current work,” and “I explicit the inferences after comparing comments with my learning objectives.” Interestingly, this finding was consistent with the focus group discussions, in which some students interpreted these three items as Use Feedback behavior, whereas others thought they belonged to Process Feedback behavior. Eventually, a three-factor solution based on 11 items (Table 3) was obtained: Seek Feedback (SF) with four items, Process Feedback (PF) with three items, and Use Feedback (UF) with four items. The three-factor solution accounted for 70.9% of the total variance.

Confirmatory factor analysis

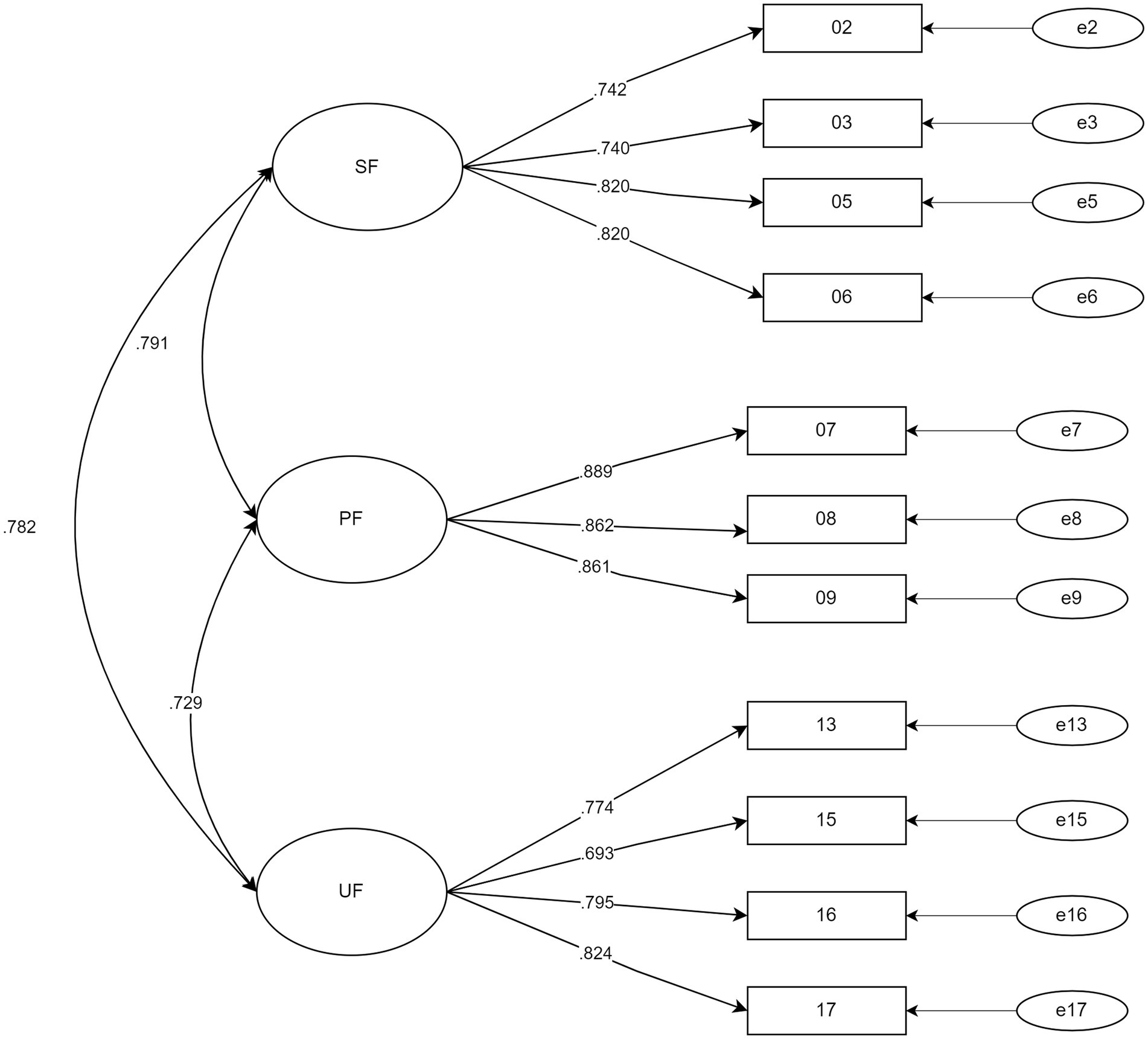

Two CFA models were tested in Sample 2 to cross-validate the factorial structure: the structure derived from the EFA (Model 1) and the theoretically hypothesized model (Model 2). Model 1 produced a satisfactory fit, as reported in Table 2. For Model 2, a series of CFAs was conducted, and an adequate fit was achieved (Hu and Bentler, 1999; Wu, 2010) after five items were removed because of low factor loadings (less than 0.3) or high modification indices (greater than 4, which indicated poor goodness of fit). The final Model 2 thus consisted of 12 items and achieved an acceptable fit. In conclusion, the cross-validation of both models supported the three-factor structure of the SfBS, which aligns with the theoretical description of the self-feedback behavioral model.

Notably, because of the comparatively large sample size (N = 626), the chi-square statistics were not ideal: the χ²/df ratios for both models slightly exceeded 3.0. Therefore, model fit was evaluated using the remaining fit indices (Browne and Cudeck, 1993). In addition, information criteria such as Akaike’s Information Criterion (AIC) and the Bayesian Information Criterion (BIC) were used to compare the two models. As Kline (2010) suggested, smaller AIC and BIC values indicate a better-fitting model.
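The fit statistics discussed here (χ²/df, RMSEA, AIC, and BIC) can be computed from a fitted model’s chi-square, degrees of freedom, log-likelihood, and number of free parameters q. The sketch below assumes simple-structure CFAs (each item loads on one factor, residuals uncorrelated, factors correlated, no mean structure); under that assumption an 11-item, three-factor model has 66 unique variances and covariances and 25 free parameters, so df = 41. The function names and the chi-square value are hypothetical, and the RMSEA formula uses N − 1, whereas some programs use N.

```python
import math

def simple_structure_df(p_items, m_factors):
    """df for a CFA in which each item loads on one factor, residuals are
    uncorrelated, factors are correlated, and there is no mean structure."""
    moments = p_items * (p_items + 1) // 2                  # unique variances and covariances
    free = 2 * p_items + m_factors * (m_factors - 1) // 2   # loadings + residuals + factor covariances
    return moments - free                                   # (factor variances fixed at 1)

def rmsea(chi2, df, n):
    """RMSEA in the common (N - 1) form; some programs divide by N instead."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))

def aic(loglik, q):
    """Akaike's information criterion from the log-likelihood and q free parameters."""
    return -2.0 * loglik + 2.0 * q

def bic(loglik, q, n):
    """Bayesian information criterion; the parameter penalty grows with ln(n)."""
    return -2.0 * loglik + q * math.log(n)

df_model1 = simple_structure_df(11, 3)   # 11 items, 3 factors
print(df_model1)  # prints: 41

chi2_example = 130.0  # hypothetical chi-square, chosen so chi2/df is slightly above 3
print(round(chi2_example / df_model1, 2), round(rmsea(chi2_example, df_model1, 626), 3))  # prints: 3.17 0.059
```

The example shows why a χ²/df ratio slightly above 3 can coexist with an acceptable RMSEA in a sample of this size.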

Table 2 reports the goodness-of-fit indices for both CFA models. Both models obtained acceptable fit: CFI and TLI values were greater than 0.90, RMSEA values were less than 0.08, and SRMR values were less than 0.05. However, Model 1 achieved comparatively better conventional fit indices, and both its AIC and BIC were smaller than those of Model 2. Therefore, Model 1 with 11 items was chosen as the final model, as shown in Figure 2.
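The model-selection logic described here, checking each model against fit cutoffs and then preferring the model with the smaller AIC and BIC, might be coded as follows. The cutoffs (CFI and TLI above 0.90, RMSEA below 0.08, SRMR below 0.05) are illustrative parameters; many authors instead use SRMR below 0.08 following Hu and Bentler (1999). The function names and all fit values are hypothetical, not those in Table 2.

```python
# Illustrative cutoffs; adjust to the thresholds a study actually adopts.
CUTOFFS = {"cfi": 0.90, "tli": 0.90, "rmsea": 0.08, "srmr": 0.05}

def acceptable_fit(fit, cutoffs=CUTOFFS):
    """True when incremental indices exceed, and residual indices fall below, their cutoffs."""
    return (fit["cfi"] > cutoffs["cfi"] and fit["tli"] > cutoffs["tli"]
            and fit["rmsea"] < cutoffs["rmsea"] and fit["srmr"] < cutoffs["srmr"])

def preferred_model(fits):
    """Name of the model with the smallest AIC and BIC, or None if the two criteria disagree."""
    best_aic = min(fits, key=lambda name: fits[name]["aic"])
    best_bic = min(fits, key=lambda name: fits[name]["bic"])
    return best_aic if best_aic == best_bic else None

# Hypothetical fit results (not the values reported in the article)
fits = {
    "Model 1": {"cfi": 0.97, "tli": 0.96, "rmsea": 0.06, "srmr": 0.03, "aic": 10250.0, "bic": 10361.0},
    "Model 2": {"cfi": 0.95, "tli": 0.94, "rmsea": 0.07, "srmr": 0.04, "aic": 11120.0, "bic": 11240.0},
}
print({name: acceptable_fit(f) for name, f in fits.items()})
print(preferred_model(fits))  # prints: Model 1
```

Returning None when AIC and BIC disagree makes the ambiguous case explicit instead of silently favoring one criterion.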

Figure 2. Confirmatory factor analysis item loadings and correlations for the final model (Model 1).

Multi-group CFAs

Multi-group CFAs were conducted for male and female participants. Because the chi-square difference test for invariance is overly stringent (Cheung and Rensvold, 2002), particularly given the comparatively large sample size, changes in CFI were adopted as the criterion for evaluating model differences. A decrease in CFI of no more than 0.01 indicates that invariance holds, whereas a larger decrease indicates a lack of invariance across groups (Byrne, 1998).

Table 4 reports the multi-group CFA results at different constraint levels. In M1, no equality constraints were imposed, and the CFI, RMSEA, and PNFI indicated a good fit. In M2, equality constraints were imposed on the measurement weights; the model still fit well, and the change in CFI was 0.001, implying that the measurement weights are equivalent across gender groups. In M3, equality constraints were imposed on both the measurement weights and the measurement intercepts, and no change in CFI was observed. Finally, in M4, equality constraints were imposed on the measurement weights, intercepts, and structural covariances, and again no change in CFI was observed. In conclusion, these multi-group CFA tests support the invariance of the SfBS structure across gender.
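The ΔCFI decision rule can be applied step by step to a sequence of increasingly constrained models. In this sketch (hypothetical function and labels), a decrease in CFI greater than 0.01 relative to the preceding model is treated as evidence against invariance, following Cheung and Rensvold (2002). The CFI values are invented; only their differences mirror the pattern reported above (a change of 0.001 at M2 and none thereafter).

```python
def invariance_steps(models, max_drop=0.01):
    """Compare each nested model with the previous, less constrained one.

    models: ordered (label, CFI) pairs from least to most constrained.
    Returns (label, delta_CFI, invariance_supported) for each added constraint.
    """
    results = []
    for (_, prev_cfi), (label, cfi) in zip(models, models[1:]):
        # CFI is reported to three decimals; rounding avoids float noise at the 0.01 boundary
        delta = round(cfi - prev_cfi, 3)
        results.append((label, delta, -delta <= max_drop))
    return results

# Hypothetical CFIs whose differences mirror the pattern reported in the text
models = [("M1 configural", 0.962), ("M2 weights", 0.961),
          ("M3 + intercepts", 0.961), ("M4 + covariances", 0.961)]
for step in invariance_steps(models):
    print(step)
```

Comparing each model with its immediate predecessor locates the constraint at which invariance would first break down, should a later step exceed the 0.01 threshold.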

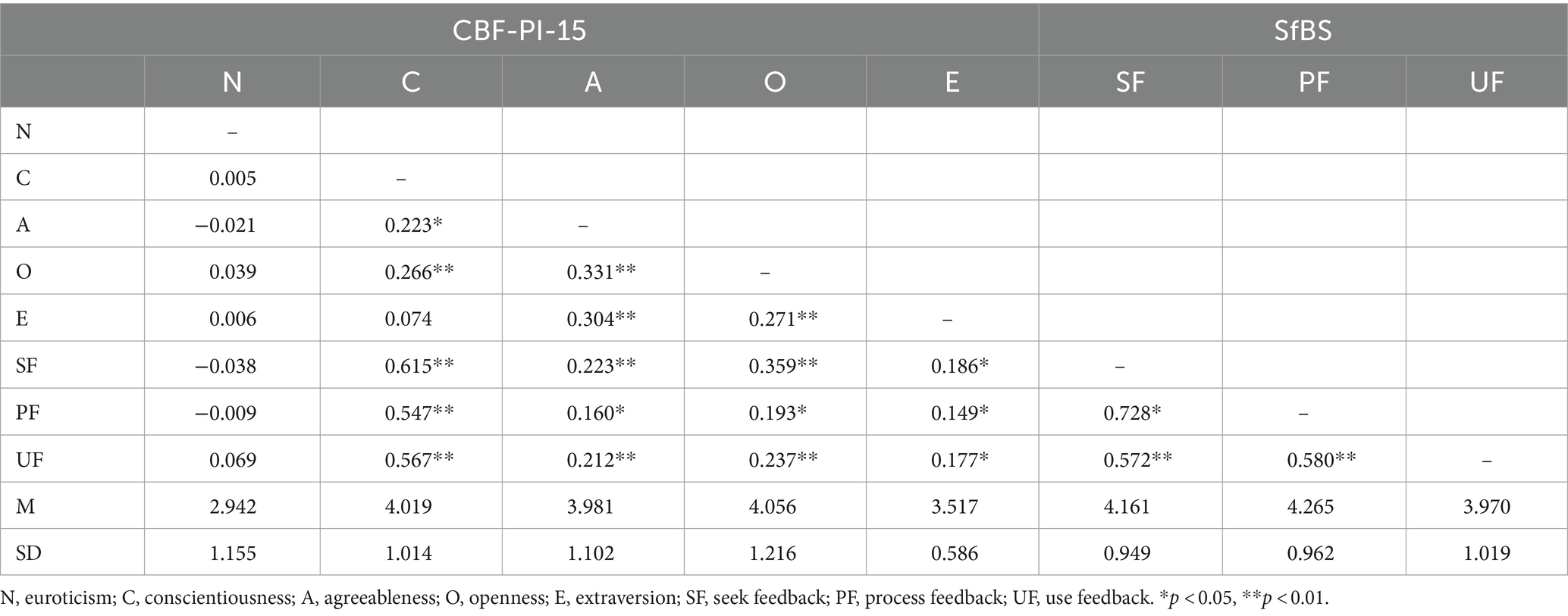

Validity

Table 5 reports the correlations between the three subscales of the SfBS and the five subscales of the CBF-PI-15 (Zhang et al., 2019). All three subscales of self-feedback behavior (SF, PF, and UF) had no significant correlation with neuroticism (N) but had significant correlations with conscientiousness, agreeableness, openness, and extraversion (ranging from 0.15 to 0.73, p < 0.05). This indicates that students’ level of neuroticism was not associated with their self-feedback behavior. Moreover, students with a low level of neuroticism were more likely to be active in seeking and processing feedback. In contrast, students’ conscientiousness was strongly correlated with self-feedback behaviors. Conscientiousness reflects thoroughness, responsibility, self-motivation, achievement orientation (Barrick and Mount, 1991; Costa and McCrae, 1992; Goldberg, 1993), and cooperation (Molleman et al., 2004). Individuals high in conscientiousness are more purposeful and motivated to accomplish tasks (Witt et al., 2002), suggesting that such students are more proactive in seeking, processing, and using feedback. Agreeableness, linked to compassion and cooperation, was also positively correlated with self-feedback, indicating that agreeable students are more likely to engage in the feedback process (Laursen et al., 2002). Openness, which captures creativity and receptiveness to new experiences, may encourage students to seek and use feedback to enhance their performance (Schretlen et al., 2010). Extraversion, reflecting sociability and outgoingness, suggests that extroverted students are more likely to interact with teachers and peers to elicit feedback and apply it to their learning (Godfrey and Koutsouris, 2023).

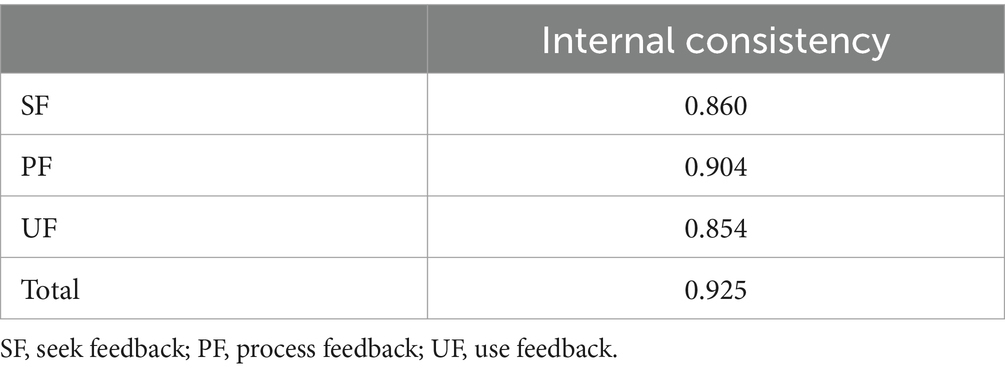

Furthermore, the convergent and discriminant validity of the SfBS were evaluated. All factor loadings fell within an acceptable range of 0.69 to 0.89, supporting convergent validity at the item level. Discriminant validity was evaluated with the heterotrait-monotrait ratio of correlations (HTMT), which divides the mean inter-item correlation between two constructs by the geometric mean of the average inter-item correlations within each construct. Values of 0.85 or less are acceptable (Henseler et al., 2015). All three factors showed adequate discriminant validity in this study. In short, the factors were meaningfully different, and the strong correlations among them did not result from cross-loading items (Table 6).
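HTMT, as defined by Henseler et al. (2015), divides the mean correlation between items of two different constructs by the geometric mean of the average correlations among items within each construct. The sketch below computes it from an item correlation matrix; the function name and correlation values are hypothetical and, as in the original formulation, the items are assumed to be positively keyed.

```python
import math
from itertools import combinations
from statistics import mean

def htmt(R, items_i, items_j):
    """Heterotrait-monotrait ratio for two constructs.

    R: item correlation matrix; items_i / items_j: item indices of each construct
    (each needs at least two items). Assumes positively keyed items.
    """
    hetero = mean(R[a][b] for a in items_i for b in items_j)      # between-construct correlations
    mono_i = mean(R[a][b] for a, b in combinations(items_i, 2))   # within construct i
    mono_j = mean(R[a][b] for a, b in combinations(items_j, 2))   # within construct j
    return hetero / math.sqrt(mono_i * mono_j)

# Hypothetical 4-item correlation matrix: items 0-1 form one construct, items 2-3 another
R = [[1.0, 0.6, 0.3, 0.3],
     [0.6, 1.0, 0.3, 0.3],
     [0.3, 0.3, 1.0, 0.6],
     [0.3, 0.3, 0.6, 1.0]]
value = htmt(R, [0, 1], [2, 3])
print(round(value, 2), value <= 0.85)  # prints: 0.5 True
```

A ratio well below 0.85 means items correlate much more strongly within their own construct than across constructs, which is the pattern discriminant validity requires.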

Reliability

Cronbach’s alpha (α) for the three factors ranged from 0.85 to 0.90 (Table 7), exceeding the 0.70 criterion for acceptable reliability. Therefore, the remaining items of the SfBS (see Appendix) were considered sufficient and reliable.

Discussion

This study aimed to develop and validate an instrument for assessing students’ self-feedback behavior using samples of mainland Chinese students. The results supported the Self-feedback Behavior Scale (SfBS) as an appropriate instrument for measuring the self-feedback behavior of high school students in mainland China. EFA and CFA supported a three-factor model representing seeking, processing, and using feedback. Multi-group CFA supported measurement invariance across gender, indicating that the SfBS measures self-feedback behavior consistently among male and female students.

This study has two limitations. First, all participants were drawn from a single cultural context, namely mainland China, where Confucian values are deeply embedded. These cultural norms likely shape students’ self-feedback behaviors, as they tend to show deference to teachers’ feedback (Hofstede, 2001). In contrast, students from Western cultures may be more inclined to question teachers’ feedback and rely on their own learning experiences (Joy and Kolb, 2009; Holtbrügge and Mohr, 2010). Consequently, future research should explore self-feedback behaviors across diverse sociocultural contexts to determine whether the items in the SfBS exhibit cross-cultural invariance. Second, while this study provides preliminary evidence for the content, substantive, structural, and generalizability aspects of the SfBS’s validity, it did not address the consequential dimension of validity. As Panadero et al. (2024) argue, students’ active participation in the self-feedback process can “have a stronger effect on performance and learning” (p. 4). Therefore, future studies should further investigate this dimension to enhance the assessment of self-feedback practices using the SfBS and examine its relationship with students’ academic performance.

Despite areas for improvement, the SfBS offers a valuable instrument for researchers investigating student self-feedback behavior. Its theoretical foundation aligns with the proposed self-feedback process and enables the collection of crucial data for constructing a comprehensive understanding of student actions in the self-feedback process. Enhanced insight into students’ self-feedback behavior can inform teaching practices, promoting self-feedback and optimizing its effects on learning outcomes.

From the teachers’ perspective, understanding how to effectively implement self-feedback as an instructional strategy in the classroom can illuminate its benefits and foster active student engagement in the feedback process. From the students’ viewpoint, being equipped with self-feedback as a learning strategy can reduce the likelihood of misinterpreting feedback. This, in turn, enables students to make more nuanced evaluative judgments of feedback and enhances their feedback self-efficacy (Carless and Boud, 2018; Panadero et al., 2019b). Moreover, students can derive greater benefits from the self-feedback process by taking informed actions, such as correcting misconceptions or errors in assignments through feed-back, adjusting their learning objectives through feed-up, and refining their learning improvement strategies through feed-forward (Carless and Boud, 2018; Hattie et al., 2021; Mandouit and Hattie, 2023).

Conclusion and implications

This study contributes theoretically to an in-depth understanding of the behavioral model of self-feedback. This model can help researchers and teachers better understand how students take the initiative to engage in the self-feedback process, thus offering insights into effective classroom instructional strategies. Furthermore, the SfBS provides a reliable instrument for researchers and practitioners to further investigate students’ self-feedback behavior and related areas of interest. Researchers can use the SfBS to collect essential data on students’ self-feedback actions. With a clear description and understanding of students’ self-feedback, researchers and practitioners can better help students engage in and benefit from the self-feedback process and, eventually, improve their academic self-efficacy and achievement.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving humans were approved by the Human Research Ethics Committee, The Education University of Hong Kong. The studies were conducted in accordance with the local legislation and institutional requirements. Written informed consent for participation in this study was provided by the participants’ legal guardians/next of kin.

Author contributions

YY: Conceptualization, Methodology, Writing – original draft. ZY: Conceptualization, Supervision, Writing – review & editing. JZ: Investigation, Writing – review & editing. WG: Resources, Writing – review & editing. JW: Investigation, Writing – review & editing. BH: Methodology, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. This work was supported by the Start-up Research Grant funded by the Jingchu University of Technology (Grant No. YY202419) and the Research Center for Basic Education Quality Development (Grant No. 2024YB01), Jingchu University of Technology, and 2024 Higher Educational Science Planning Grant, Chinese Association of Higher Education (Grant No. 24XX0403).

Acknowledgments

We are grateful to the focus group and expert panel members for their constructive feedback and meticulous comments on the SfBS item revisions.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declared that they were an editorial board member of Frontiers, at the time of submission. This had no impact on the peer review process and the final decision.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Ashford, S. J., and Cummings, L. L. (1983). Feedback as an individual resource: personal strategies of creating information. Organ. Behav. Hum. Perform. 32, 370–398. doi: 10.1016/0030-5073(83)90156-3

Barrick, M. R., and Mount, M. K. (1991). The Big Five personality dimensions and job performance: a meta-analysis. Pers. Psychol. 44, 1–26. doi: 10.1111/j.1744-6570.1991.tb00688.x

Bennett, S., Dawson, P., Bearman, M., Molloy, E., and Boud, D. (2017). How technology shapes assessment design: findings from a study of university teachers. Br. J. Educ. Technol. 48, 672–682. doi: 10.1111/bjet.12439

Bentler, P. M., and Bonett, D. G. (1980). Significance tests and goodness of fit in the analysis of covariance structures. Psychol. Bull. 88, 588–606. doi: 10.1037/0033-2909.88.3.588

Boud, D., and Molloy, E. (2013). Rethinking models of feedback for learning: the challenge of design. Assess. Eval. High. Educ. 38, 698–712. doi: 10.1080/02602938.2012.691462

Brown, G. T. L., and Harris, L. R. (2013). “Student self-assessment” in The SAGE handbook of research on classroom assessment. ed. J. H. McMillan (Thousand Oaks, CA: Sage), 367–393.

Browne, M. W., and Cudeck, R. (1993). “Alternative ways of assessing model fit” in Testing structural equation models. eds. K. A. Bollen and J. S. Long (Newbury Park, CA: Sage), 136–162.

Bryman, A., and Cramer, D. (2001). Quantitative data analysis with SPSS release 8 for Windows: a guide for social scientists. New York, NY: Routledge.

Byrne, B. M. (1998). Structural equation modeling with LISREL, PRELIS, and SIMPLIS: basic concepts, applications, and programming. Mahwah, NJ: Lawrence Erlbaum Associates Publishers.

Carless, D. (2006). Differing perceptions in the feedback process. Stud. High. Educ. 31, 219–233. doi: 10.1080/03075070600572132

Carless, D. (2015). Excellence in university assessment: learning from award-winning practice. London: Routledge.

Carless, D., and Boud, D. (2018). The development of student feedback literacy: enabling uptake of feedback. Assess. Eval. High. Educ. 43, 1315–1325. doi: 10.1080/02602938.2018.1463354

Cattell, R. B. (1966). The scree test for the number of factors. Multivar. Behav. Res. 1, 245–276. doi: 10.1207/s15327906mbr0102_10

Cheung, G. W., and Rensvold, R. B. (2002). Evaluating goodness-of-fit indexes for testing measurement invariance. Struct. Equ. Model. Multidiscip. J. 9, 233–255. doi: 10.1207/S15328007SEM0902_5

Costa, P. T., and McCrae, R. R. (1992). Normal personality assessment in clinical practice: the NEO Personality Inventory. Psychol. Assess. 4, 5–13. doi: 10.1037/1040-3590.4.1.5

Dawson, P., Yan, Z., Lipnevich, A., Tai, J., Boud, D., and Mahoney, P. (2023). Measuring what learners do in feedback: the feedback literacy behaviour scale. Assess. Eval. High. Educ. 49, 348–362. doi: 10.1080/02602938.2023.2240983

Dunworth, K., and Sanchez, H. S. (2016). Perceptions of quality in staff-student written feedback in higher education: a case study. Teach. High. Educ. 21, 576–589. doi: 10.1080/13562517.2016.1160219

Evans, C. (2013). Making sense of assessment feedback in higher education. Rev. Educ. Res. 83, 70–120. doi: 10.3102/0034654312474350

Falchikov, N., and Boud, D. (1989). Student self-assessment in higher education: a meta-analysis. Rev. Educ. Res. 59, 395–430. doi: 10.3102/00346543059004395

Floyd, F. J., and Widaman, K. F. (1995). Factor analysis in the development and refinement of clinical assessment instruments. Psychol. Assess. 7, 286–299. doi: 10.1037/1040-3590.7.3.286

George, D., and Mallery, P. (2003). SPSS for Windows step by step: a simple guide and reference 11.0 update. 4th Edn. Boston: Allyn & Bacon.

Godfrey, E., and Koutsouris, G. (2023). Is personality overlooked in educational psychology? Educational experiences of secondary-school students with introverted personality styles. Educ. Psychol. Pract. 40, 159–184. doi: 10.1080/02667363.2023.2287524

Goldberg, L. R. (1993). The structure of phenotypic personality traits. Am. Psychol. 48, 26–34. doi: 10.1037/0003-066X.48.1.26

Han, Y., and Xu, Y. (2020). The development of student feedback literacy: the influences of teacher feedback on peer feedback. Assess. Eval. High. Educ. 45, 680–696. doi: 10.1080/02602938.2019.1689545

Han, Y., and Xu, Y. (2021). Student feedback literacy and engagement with feedback: a case study of Chinese undergraduate students. Teach. High. Educ. 26, 181–196. doi: 10.1080/13562517.2019.1648410

Hattie, J., Crivelli, J., Van Gompel, K., West-Smith, P., and Wike, K. (2021). Feedback that leads to improvement in student essays: testing the hypothesis that “where to next” feedback is most powerful. Front. Educ. 6:645758. doi: 10.3389/feduc.2021.645758

Hattie, J., and Timperley, H. (2007). The power of feedback. Rev. Educ. Res. 77, 81–112. doi: 10.3102/003465430298487

Henseler, J., Ringle, C. M., and Sarstedt, M. (2015). A new criterion for assessing discriminant validity in variance-based structural equation modeling. J. Acad. Mark. Sci. 43, 115–135. doi: 10.1007/s11747-014-0403-8

Hofstede, G. H. (2001). Culture’s consequences: comparing values, behaviors, institutions and organizations across nations. Beverly Hills, CA: Sage.

Holtbrügge, D., and Mohr, A. T. (2010). Cultural determinants of learning style preferences. Acad. Manag. Learn. Educ. 9, 622–637. doi: 10.5465/amle.2010.56659880

Hu, L. T., and Bentler, P. M. (1999). Cutoff criteria for fit indexes in covariance structure analysis: conventional criteria versus new alternatives. Struct. Equ. Model. Multidiscip. J. 6, 1–55. doi: 10.1080/10705519909540118

Jöreskog, K. G., and Sörbom, D. (1993). LISREL 8: structural equation modeling with the SIMPLIS command language. Chicago, IL: Scientific Software International; Lawrence Erlbaum Associates, Inc.

Joy, S., and Kolb, D. A. (2009). Are there cultural differences in learning style? Int. J. Intercult. Relat. 33, 69–85. doi: 10.1016/j.ijintrel.2008.11.002

Kaiser, H. F. (1960). The application of electronic computers to factor analysis. Educ. Psychol. Meas. 20, 141–151. doi: 10.1177/001316446002000116

Kline, R. B. (2010). Principles and practice of structural equation modeling. 3rd Edn. New York: The Guilford Press.

Lam, T. C. M., and Klockars, A. J. (1982). Anchor point effects on the equivalence of questionnaire items. J. Educ. Meas. 19, 317–322. doi: 10.1111/j.1745-3984.1982.tb00137.x

Laudel, H., and Narciss, S. (2023). The effects of internal feedback and self-compassion on the perception of negative feedback and post-feedback learning behavior. Stud. Educ. Eval. 77:101237. doi: 10.1016/j.stueduc.2023.101237

Laursen, B., Pulkkinen, L., and Adams, R. (2002). The antecedents and correlates of agreeableness in adulthood. Dev. Psychol. 38, 591–603. doi: 10.1037/0012-1649.38.4.591

Leenknecht, M., Hompus, P., and van der Schaaf, M. (2019). Feedback seeking behaviour in higher education: the association with students’ goal orientation and deep learning approach. Assess. Eval. High. Educ. 44, 1069–1078. doi: 10.1080/02602938.2019.1571161

Li, F., and Han, Y. (2021). Student feedback literacy in L2 disciplinary writing: insights from international graduate students at a UK university. Assess. Eval. High. Educ. 47, 198–212. doi: 10.1080/02602938.2021.1908957

Lipnevich, A. A., Berg, D., and Smith, J. K. (2016). “Toward a model of student response to feedback” in Human factors and social conditions in assessment. eds. G. T. L. Brown and L. Harris (New York, NY: Routledge), 169–185.

Lipnevich, A. A., McCallen, L. N., and Smith, J. K. (2013). Perceptions of the effectiveness of feedback: school leaders’ perspectives. Assess. Matters 5, 74–93. doi: 10.18296/am.0109

Lipnevich, A. A., and Panadero, E. (2021). A review of feedback models and theories: descriptions, definitions, and conclusions. Front. Educ. 6:720195. doi: 10.3389/feduc.2021.720195

Mahoney, P., Macfarlane, S., and Ajjawi, R. (2019). A qualitative synthesis of video feedback in higher education. Teach. High. Educ. 24, 157–179. doi: 10.1080/13562517.2018.1471457

Malecka, B., Boud, D., and Carless, D. (2020). Eliciting, processing and enacting feedback: mechanisms for embedding student feedback literacy within the curriculum. Teach. High. Educ. 27, 908–922. doi: 10.1080/13562517.2020.1754784

Mandouit, L., and Hattie, J. (2023). Revisiting “the power of feedback” from the perspective of the learner. Learn. Instr. 84:101718. doi: 10.1016/j.learninstruc.2022.101718

McConlogue, T. (2015). Making judgements: investigating the process of composing and receiving peer feedback. Stud. High. Educ. 40, 1495–1506. doi: 10.1080/03075079.2013.868878

Messick, S. (1995). Validity of psychological assessment: validation of inferences from persons' responses and performances as scientific inquiry into score meaning. Am. Psychol. 50, 741–749. doi: 10.1037/0003-066X.50.9.741

Molleman, E., Nauta, A., and Jehn, K. A. (2004). Person-job fit applied to teamwork: a multilevel approach. Small Group Res. 35, 515–539. doi: 10.1177/1046496404264361

Molloy, E., Boud, D., and Henderson, M. (2019). Developing a learner-centred framework for feedback literacy. Assess. Eval. High. Educ. 45, 527–540. doi: 10.1080/02602938.2019.1667955

Nicol, D. (2014). “Guiding principles for peer review: unlocking learners’ evaluative skills” in Advances and innovations in university assessment and feedback, 197–224. doi: 10.1515/9780748694556-014

Nicol, D. (2021). The power of internal feedback: exploiting natural comparison processes. Assess. Eval. High. Educ. 46, 756–778. doi: 10.1080/02602938.2020.1823314

Noble, C., Billett, S., Armit, L., Collier, L., Hilder, J., Sly, C., et al. (2020). "It's yours to take": generating learner feedback literacy in the workplace. Adv. Health Sci. Educ. 25, 55–74. doi: 10.1007/s10459-019-09905-5

Panadero, E., Broadbent, J., Boud, D., and Lodge, J. M. (2019b). Using formative assessment to influence self- and co-regulated learning: the role of evaluative judgement. Eur. J. Psychol. Educ. 34, 535–557. doi: 10.1007/s10212-018-0407-8

Panadero, E., Brown, G. T. L., and Strijbos, J.-W. (2016). The future of student self-assessment: a review of known unknowns and potential directions. Educ. Psychol. Rev. 28, 803–830. doi: 10.1007/s10648-015-9350-2

Panadero, E., Fernández, J., Pinedo, L., Sánchez, I., and García-Pérez, D. (2024). A self-feedback model (SEFEMO): secondary and higher education students’ self-assessment profiles. Assess. Educ. 31, 221–253. doi: 10.1080/0969594X.2024.2367027

Panadero, E., Jonsson, A., and Botella, J. (2017). Effects of self-assessment on self-regulated learning and self-efficacy: four meta-analyses. Educ. Res. Rev. 22, 74–98. doi: 10.1016/j.edurev.2017.08.004

Panadero, E., Lipnevich, A., and Broadbent, J. (2019a). “Turning self-assessment into self-feedback” in The impact of feedback in higher education: improving assessment outcomes for learners. eds. M. Henderson, R. Ajjawi, D. Boud, and E. Molloy (Cham: Palgrave Macmillan), 147–163.

R Core Team (2019). R: a language and environment for statistical computing. Vienna: R Foundation for Statistical Computing. Available at: https://www.R-project.org/ (Accessed December 12, 2023).

Rosseel, Y. (2012). lavaan: an R package for structural equation modeling. J. Stat. Softw. 48, 1–36. doi: 10.18637/jss.v048.i02

Ryan, R. M., and Deci, E. L. (2017). Self-determination theory: basic psychological needs in motivation, development, and wellness. New York: Guilford.

Schaefer, L. M., Burke, N. L., Thompson, J. K., Dedrick, R. F., Heinberg, L. J., Calogero, R. M., et al. (2015). Development and validation of the sociocultural attitudes towards appearance questionnaire-4 (SATAQ-4). Psychol. Assess. 27, 54–67. doi: 10.1037/a0037917

Schretlen, D. J., van der Hulst, E. J., Pearlson, G. D., and Gordon, B. (2010). A neuropsychological study of personality: trait openness in relation to intelligence, fluency, and executive functioning. J. Clin. Exp. Neuropsychol. 32, 1068–1073. doi: 10.1080/13803391003689770

Tai, J., Ajjawi, R., Boud, D., Dawson, P., and Panadero, E. (2017). Developing evaluative judgement: enabling students to make decisions about the quality of work. High. Educ. 76, 467–481. doi: 10.1007/s10734-017-0220-3

Van der Kleij, F. M. (2024). Supporting students to generate self-feedback: critical reflections on the special issue. Stud. Educ. Eval. 81:101348. doi: 10.1016/j.stueduc.2024.101348

Winstone, N., Balloo, K., and Carless, D. (2022). Discipline-specific feedback literacies: a framework for curriculum design. High. Educ. 83, 57–77. doi: 10.1007/s10734-020-00632-0

Winstone, N., Nash, R. A., Rowntree, J., and Parker, M. (2017). ‘It’d be useful, but I wouldn’t use it’: barriers to university students’ feedback seeking and recipience. Stud. High. Educ. 42, 2026–2041. doi: 10.1080/03075079.2015.1130032

Witt, L. A., Burke, L. A., Barrick, M. R., and Mount, M. K. (2002). The interactive effects of conscientiousness and agreeableness on job performance. J. Appl. Psychol. 87, 164–169. doi: 10.1037/0021-9010.87.1.164

Wood, J. (2022). Making peer feedback work: the contribution of technology-mediated dialogic peer feedback to feedback uptake and literacy. Assess. Eval. High. Educ. 47, 327–346. doi: 10.1080/02602938.2021.1914544

Wu, M. (2010). Statistical analysis of questionnaire: manipulation of SPSS. Chongqing: Chongqing University Press.

Yan, Z. (2018). The Self-assessment Practice Scale (SaPS) for students: development and psychometric studies. Asia Pac. Educ. Res. 27, 123–135. doi: 10.1007/s40299-018-0371-8

Yan, Z. (2020). Developing a short form of the Self-assessment Practices Scale: psychometric evidence. Front. Educ. 4:153. doi: 10.3389/feduc.2019.00153

Yan, Z., and Brown, G. T. L. (2017). A cyclical self-assessment process: towards a model of how students engage in self-assessment. Assess. Eval. High. Educ. 42, 1247–1262. doi: 10.1080/02602938.2016.1260091

Yan, Z., and Carless, D. (2021). Self-assessment is about more than self: the enabling role of feedback literacy. Assess. Eval. High. Educ. 47, 1116–1128. doi: 10.1080/02602938.2021.2001431

Zhang, X., Wang, M.-C., He, L., Jie, L., and Deng, J. (2019). The development and psychometric evaluation of the Chinese Big Five Personality Inventory-15. PLoS One 14:e0221621. doi: 10.1371/journal.pone.0221621

Appendix

Self-feedback Behavior Scale (SfBS)

Seek feedback (SF)

1. I seek out examples of good work to improve my work.

2. I ask for comments about specific aspects of my work from others.

3. When I am working on a task, I consider comments I have received on similar tasks.

4. I seek feedback information from various learning resources.

Process feedback (PF)

5. I carefully consider comments about my work before deciding whether to use them.

6. When receiving conflicting information from different sources, I judge what I will use.

7. When deciding what to do with comments, I consider the credibility of their sources.

Use feedback (UF)

8. I can formulate my learning improvement plan after explicit inferences.

9. I would spend more time working on my weak areas.

10. I plan how I will use feedback to improve my future work, not just the immediate task.

11. I would continue seeking comments to improve my future learning.
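As a minimal sketch of how the items above might be scored, the Python below groups items 1–4, 5–7, and 8–11 into the SF, PF, and UF dimensions, averages one respondent's ratings within each dimension, and computes Cronbach's alpha for a dimension across respondents. It assumes numeric Likert-type ratings keyed by item number. The response format, the mean-based scoring rule, and the function names `subscale_scores` and `cronbach_alpha` are illustrative assumptions, not the authors' published scoring procedure.

```python
from statistics import mean, variance

# Item-to-dimension mapping taken from the SfBS appendix (items numbered 1-11).
SUBSCALES = {
    "SF": [1, 2, 3, 4],    # Seek feedback
    "PF": [5, 6, 7],       # Process feedback
    "UF": [8, 9, 10, 11],  # Use feedback
}


def subscale_scores(responses):
    """Return the mean rating per dimension for one respondent.

    `responses` maps item number -> numeric rating. Averaging is an
    assumed, illustrative scoring rule, not necessarily the authors'.
    """
    return {name: mean(responses[i] for i in items)
            for name, items in SUBSCALES.items()}


def cronbach_alpha(rows, items):
    """Cronbach's alpha for the given item numbers across respondents.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of totals),
    using sample variances throughout.
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    item_vars = [variance([r[i] for r in rows]) for i in items]
    total_var = variance([sum(r[i] for i in items) for r in rows])
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - sum(item_vars) / total_var)
```

Averaging rather than summing keeps the three dimensions on the same metric even though PF has three items while SF and UF have four; whether the SfBS is scored this way is not stated in this section.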

Keywords: self-feedback behavior, scale development and validation, Chinese student, cross validation, measurement invariance

Citation: Yang Y, Yan Z, Zhu J, Guo W, Wu J and Huang B (2025) The development and validation of the Student Self-feedback Behavior Scale. Front. Psychol. 15:1495684. doi: 10.3389/fpsyg.2024.1495684

Edited by:

Wei Wei, Macao Polytechnic University, China

Reviewed by:

Tanase Tasente, Ovidius University, Romania

Mihaela Luminita Sandu, Ovidius University, Romania

Copyright © 2025 Yang, Yan, Zhu, Guo, Wu and Huang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yongle Yang, yang0313@qq.com
