ORIGINAL RESEARCH article

Front. Psychol., 04 February 2025

Sec. Perception Science

Volume 15 - 2024 | https://doi.org/10.3389/fpsyg.2024.1458373

Qian Xu1,2*

Perceiving facial expressions plays a crucial role in face-to-face social interactions. A wealth of studies has revealed the unconscious processing of emotional stimuli, including facial expressions. However, the relationship between the unconscious processing of happy faces and socially oriented personality traits, such as extraversion and prosocial tendency, remains largely unexplored. By pairing backward-masked faces with supraliminally presented faces in both visual fields, we found that the discrimination of visible emotional faces was modulated by the facial expressions of the invisible faces in the opposite visual field. The emotionally consistent condition showed a shorter reaction time (Exp 1) or higher accuracy (Exp 2) than the inconsistent condition. Moreover, the unconscious processing of happy faces was positively correlated with prosocial tendency but not with extraversion personality. These findings shed new light on the adaptive functions of unconscious emotional face processing, and highlight the importance of future investigations into the unconscious processing of extrafoveal happy expression.

Facial expressions play a crucial role in social communication (Tracy et al., 2015). Happy expressions can convey trust, friendliness, approval, or liking. Angry expressions might signal hostility or dissatisfaction, while fearful expressions can suggest vigilance or potential danger. Our perception of others’ facial expressions often influences our subsequent decisions and behaviors toward them.

Over the past three decades, numerous studies have shown that emotional stimuli, such as facial expressions, can be registered by parts of the brain, including the amygdala, even when they are not consciously perceived (Bertini and Làdavas, 2021; Diano et al., 2016; Jiang and He, 2006; Pegna et al., 2005; Qiu et al., 2022; Tamietto and De Gelder, 2010; Wang et al., 2023). This unconscious perception can influence social perception (Anderson et al., 2012; Gruber et al., 2016; Sagliano et al., 2020), and guide behaviors (Almeida et al., 2013) or eye movements (Vetter et al., 2019). “Unconscious” refers to sensory input below the consciousness threshold in psychophysics. Only a small percentage of sensory input triggers conscious perception. There are two types of unconsciousness (or unawareness): sensory and attentional (Tamietto and De Gelder, 2010). Sensory unconsciousness occurs when stimuli are presented with extremely weak strength, such as being too short in duration or too low in contrast, leading us to perceive nothing even when we are actively paying attention to the stimuli (Tamietto and De Gelder, 2010). Through a forced-choice awareness check procedure conducted after the main experiment, researchers could measure the objective awareness level. Some studies employed a trial-by-trial awareness check procedure to rigorously ensure sensory unconsciousness (Liu et al., 2023; Skora et al., 2024).

Previous research has suggested significant individual differences in the unconscious processing of facial expressions. For instance, a series of studies (Alkozei and Killgore, 2015; Cui et al., 2014; Günther et al., 2020; Günther et al., 2023; Vizueta et al., 2012) demonstrated correlations between the neuroimaging response to the subliminal fearful faces and psychopathology-related traits, such as negative affectivity which entails anxiety, depression, neuroticism, and so on (Günther et al., 2020; Vizueta et al., 2012). However, little is known about the individual differences in the unconscious processing of happy faces, particularly in extrafoveal vision.

Behavioral-level experimental studies have developed methods for measuring the degree of unconscious perception of extrafoveal emotional faces. Research by Bertini et al. (2013) and Tamietto and Gelder (2008) presented participants with visible and visually masked emotional faces (happy and fearful) in both visual fields, respectively, asking them to make emotional judgments about the visible face. They consistently found that when the invisible and visible emotional faces had the same expression, reaction times were significantly shorter than in other conditions. This “interhemispheric interaction between seen and unseen facial expressions” (Tamietto and Gelder, 2008) provides a behavioral measure for the unconscious processing of emotional faces, particularly in extrafoveal vision.

Since facial expressions convey crucial social communicative information (Frith, 2009), we hypothesize that unconscious processing of facial expressions might enhance social interactions. We predict that the stronger one’s ability to unconsciously perceive others’ facial expressions, the stronger their social communication abilities, and consequently, the more pronounced their extroverted personality. In addition, the happy facial expression is the most recognizable facial expression and a positive sign of prosocial intentions that is recognized in even the most remote cultures (Ekman and Friesen, 1971). Happy faces are identified more accurately and quickly than other facial expressions, even in the extrafoveal visual field (Calvo et al., 2010). Therefore, we also hypothesize that unconsciously perceiving happiness in others’ faces might facilitate our prosocial behaviors toward them. In other words, unconscious processing of happy facial expressions might enhance one’s prosocial tendency. It is important to distinguish between extraversion and prosocial tendency, as they are distinct and unrelated personality traits (Kline et al., 2019). Extraversion refers to an individual’s enjoyment of and tendency to seek out social interactions. In contrast, prosocial tendency describes one’s inclination to act in ways that benefit others during social interactions. Notably, introverted individuals can also display high levels of prosocial behavior. Thus, these two personality traits, extraversion and prosocial tendency, are separate and not necessarily correlated.

The present study aims to investigate whether there is a significant positive correlation between the unconscious processing of happy faces and socially oriented personality traits, particularly extraversion and prosocial tendency. The unconscious processing of happy faces was measured at the behavioral level, using the paradigm of previous studies (Bertini et al., 2013; Tamietto and Gelder, 2008). Experiment 1 investigates the unconscious processing of happy faces. Experiment 2 further validates the phenomenon and explores its correlation with prosocial tendency and extraversion personality traits.

A repeated-measures, within-factors power analysis in G*Power indicated a minimum sample size of 14 to achieve appropriate power to detect a medium effect size (parameters: effect size f(U) = 0.5, α = 0.05, power = 0.85, number of groups = 1, number of measurements = 6, “as in SPSS” option enabled) (Faul et al., 2009).

A total of 27 undergraduates participated in this study. Nine participants were excluded due to high performance in the post-experiment awareness check procedure (see section “2.5.1 Exclusion criteria”). Therefore, there were 18 valid participants (4 males and 14 females), with an average age of 19.40 ± 4.98 years. All participants reported normal or corrected-to-normal visual acuity and were naive to the purpose of the experiment. They all gave written informed consent in accordance with procedures and protocols approved by the institutional review board of our university and received payment for their participation.

The experiment was conducted in an independent cubicle laboratory. Stimuli were displayed on a Tsinghua Tongfang CRT display with a refresh rate of 60 Hz (resolution 1280 × 1024 pixels). The participants were seated in a chair with a chin rest fixed 57 cm from the monitor. The experimental program was written in MATLAB 7.1 (MathWorks) using the Psychtoolbox-3 platform (Brainard, 1997; Kleiner et al., 2007; Pelli, 1997).

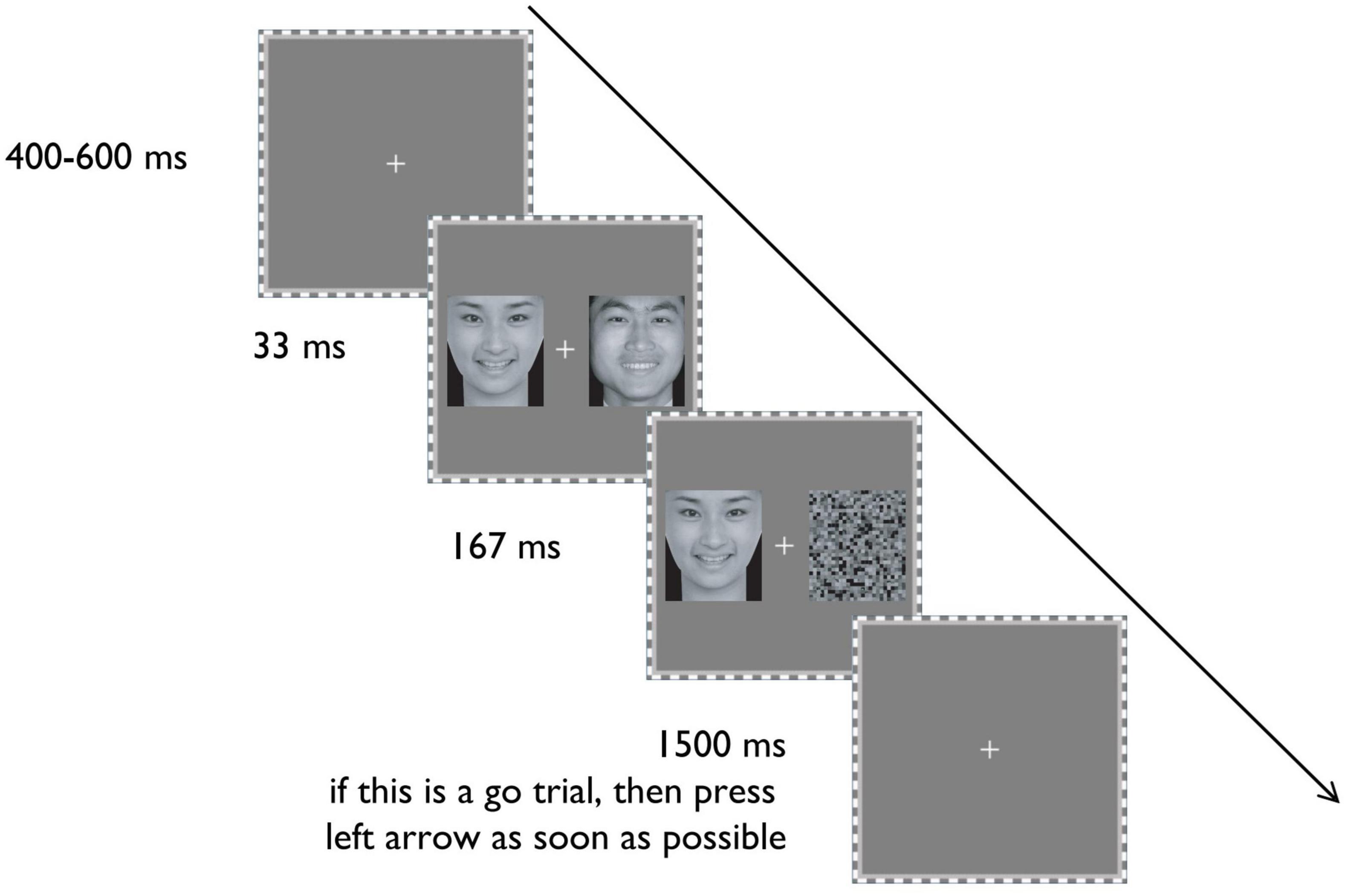

From the Chinese Emotional Face System (Gong et al., 2011), we selected pictures of happy, fearful, and neutral faces. Six happy and six fearful faces with high degrees of arousal were selected, with 3 male and 3 female faces for each expression. In addition, six neutral faces (3 male and 3 female) were chosen as the invisible neutral faces in the experiment. Another 180 new neutral faces (half male and half female) were selected and spatially scrambled, serving as masks (see Figure 1).

Figure 1. The schematic display of the procedure of a typical trial. Reproduced with permission from Gong et al. (2011).

The mean valence of the six fearful faces (2.79) is smaller than that of the neutral faces (5.00), which is smaller than that of the happy faces (6.58). The differences between any two types of faces reached statistical significance (ps < 0.003).

The mean arousal of the six fearful faces (5.89) is approximately equal to that of happy faces (5.78), which is higher than that of neutral faces (3.33). There is no significant difference between the arousals of fearful and happy faces (p ≈ 1.0), but both are significantly higher than the arousals of neutral faces (ps < 0.001).

Finally, we adjusted the average RGB luminance of each face to around 128 through Photoshop software.

As shown in Figure 1, each trial starts with a fixation at the center of the screen for 400–600 ms. Subsequently, an emotional (either happy or fearful) face appears on one side of the screen (randomly left or right side) for 200 ms. Simultaneously, on the opposite side, an emotional (either happy or fearful) or neutral face is displayed for 33 ms, followed by a spatially scrambled neutral face for 167 ms as a backward mask.
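Because the display refreshed at 60 Hz, the nominal durations above correspond to whole numbers of video frames. The following is a minimal sketch of that conversion, for illustration only: the helper name `frames_for` is hypothetical, rounding to the nearest frame is an assumption, and the original program was written in MATLAB with Psychtoolbox rather than Python.

```python
REFRESH_HZ = 60  # refresh rate reported for the CRT display in Experiment 1


def frames_for(duration_ms: float, refresh_hz: int = REFRESH_HZ) -> int:
    """Return the nearest whole number of video frames for a nominal duration.

    Rounding to the nearest frame is an assumption made for this sketch.
    """
    return round(duration_ms * refresh_hz / 1000)


visible_face = frames_for(200)  # visible emotional face
masked_face = frames_for(33)    # briefly presented face on the opposite side
mask = frames_for(167)          # scrambled neutral face used as backward mask

print(visible_face, masked_face, mask)
```

Under these assumptions, the masked face and its mask together occupy the same number of frames as the visible face, so both sides of the screen start and end at the same time.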

The experimental task is the classical Go/no-go task (Tamietto and Gelder, 2008). Participants must press the left arrow key on the keyboard as quickly as possible when they detect the target facial expression (Go response). They should not respond when they detect the non-target expression (No-go response). The target expression of the Go reaction is fixed in each block. The target face is the 200-ms visible emotional (either happy or fearful) face. Each trial has a time limit of 1.5 s. The next trial begins after 1.5 s or a key response.

Before the formal experiment, the participants practice until they are familiar with the task. The formal experiment consists of 4 blocks, each with 90 trials. The target expression of the Go reaction is fixed within each block. For half the participants, the target expressions for the four blocks are happy, fear, fear, and then happy. For the other half, the sequence is fear, happy, happy, and then fear. These two ABBA sequences are balanced across participants.
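The counterbalancing of the two ABBA sequences can be sketched as follows. The text does not say how participants were assigned to a sequence, so alternating by participant index is an assumption, and `block_order` is a hypothetical helper name.

```python
# The two ABBA block sequences described in the text.
SEQUENCES = (
    ("happy", "fear", "fear", "happy"),
    ("fear", "happy", "happy", "fear"),
)


def block_order(participant_index: int) -> tuple:
    """Return the Go-target expression for each of the 4 blocks.

    Assumption: even-numbered participants get the first sequence and
    odd-numbered participants the second, which balances the two orders.
    """
    return SEQUENCES[participant_index % 2]


for p in range(4):
    print(p, block_order(p))
```

Each sequence uses every target expression in exactly two blocks, so order effects are balanced both within and across participants.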

At the end of the experiment, the participants complete an awareness check task. This task follows the same procedure as the formal experiment, but with half the number of trials and a modified experimental task. Participants must choose or guess whether the briefly presented 33-ms face that precedes the mask (i.e., the 167-ms scrambled neutral face) is emotional or neutral.

The experiment employs a 2 (emotion of the visible face: happy or fearful) × 3 (emotional consistency between visible and invisible faces: consistent, irrelevant, or inconsistent) within-subject design. There are six experimental conditions. Each condition has 60 trials—30 Go trials and 30 No-go trials.
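The design can be enumerated to check that the condition counts agree with the block structure (4 blocks of 90 trials). This is an illustrative sketch: the variable names are hypothetical, the mapping from consistency level to masked expression follows the description of the trial procedure, and an even spread of conditions across blocks is an assumption.

```python
from itertools import product

VISIBLE = ("happy", "fearful")
CONSISTENCY = ("consistent", "irrelevant", "inconsistent")
TRIALS_PER_CONDITION = 60          # 30 Go + 30 No-go
N_BLOCKS, TRIALS_PER_BLOCK = 4, 90


def masked_expression(visible: str, consistency: str) -> str:
    """Expression of the masked face implied by the consistency level."""
    if consistency == "consistent":
        return visible
    if consistency == "irrelevant":
        return "neutral"
    return "fearful" if visible == "happy" else "happy"


conditions = list(product(VISIBLE, CONSISTENCY))
total_trials = len(conditions) * TRIALS_PER_CONDITION

# Assumption: conditions are spread evenly over the blocks.
per_block = TRIALS_PER_CONDITION // N_BLOCKS

for visible, consistency in conditions:
    print(visible, consistency, masked_expression(visible, consistency))
print(total_trials, per_block)
```

The six conditions at 60 trials each give the same total as 4 blocks of 90 trials.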

We calculated the mean and standard deviation across the 27 participants for the following indices: overall accuracy, overall reaction time, and the d’ of the awareness check task. The d’ was calculated using signal detection theory, with emotional faces as signal and neutral faces as noise.

Participants who fell outside the mean ± 2.5 standard deviations (SD) of all participants on any of the above indices were excluded. In total, 9 participants’ d’ exceeded the mean + 2.5 SD of all participants in the awareness check task.
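The exclusion rule can be sketched as below. The scores are made-up values for illustration, `flag_outliers` is a hypothetical helper, and the use of the sample standard deviation is an assumption, since the text does not say whether the sample or population formula was used.

```python
from statistics import mean, stdev


def flag_outliers(values, k=2.5):
    """Return indices of values outside mean +/- k standard deviations.

    Assumption: the sample standard deviation (n - 1 denominator) is used.
    """
    m, s = mean(values), stdev(values)
    lower, upper = m - k * s, m + k * s
    return [i for i, v in enumerate(values) if v < lower or v > upper]


# Made-up scores for 20 hypothetical participants, one of them extreme.
scores = [0.1] * 19 + [2.0]
flagged = flag_outliers(scores)
print(flagged)
```

The same function applies unchanged to accuracy, reaction time, or awareness-check scores, since the rule is stated in terms of each index separately.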

Therefore, 18 participants were included in the final analysis. Their mean accuracy on Go trials was 0.94, and the mean false alarm rate on No-go trials was 0.12.

In the final awareness check task, the mean d’ of all participants was 0.08, which was not significantly different from 0 (t(17) = 1.629, p = 0.122). This demonstrated that the participants could not distinguish whether the 33-ms masked face was emotional or neutral.
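For readers unfamiliar with d’, the index is the difference between the z-transformed hit rate and false alarm rate. The sketch below treats "emotional" responses to emotional masked faces as hits and "emotional" responses to neutral masked faces as false alarms. The log-linear correction (adding 0.5 to each count) is an assumption used here to avoid infinite z-scores; the text does not state how extreme rates were handled, and `d_prime` is a hypothetical helper name.

```python
from statistics import NormalDist


def d_prime(hits, misses, false_alarms, correct_rejections):
    """Sensitivity index d' = z(hit rate) - z(false alarm rate).

    Assumption: a log-linear correction is applied to the counts so that
    rates of exactly 0 or 1 do not produce infinite z-scores.
    """
    hit_rate = (hits + 0.5) / (hits + misses + 1)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1)
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    return z(hit_rate) - z(fa_rate)


# Made-up counts: a participant who guesses has equal hit and false alarm rates.
print(d_prime(10, 10, 10, 10))
# Made-up counts: a participant who can see the masked faces.
print(d_prime(18, 2, 2, 18))
```

A d’ near zero means that "emotional" responses were equally likely for emotional and neutral masked faces, which is the pattern expected when the masked face is not consciously perceived.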

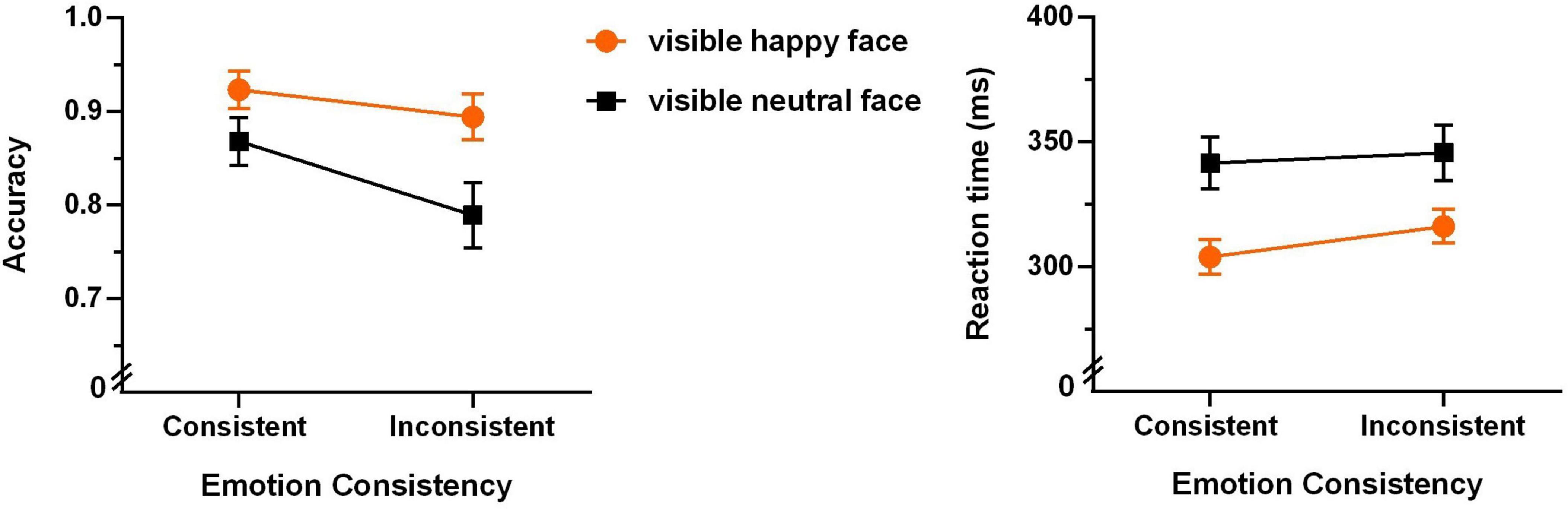

The mean accuracies and reaction times for the six experimental conditions are shown in Figure 2.

Accuracy

A 2 (emotion of the visible face: happy or fearful) × 3 (emotional consistency between visible and invisible faces: consistent, irrelevant, or inconsistent) repeated-measures ANOVA of accuracy only revealed a significant main effect of “emotion of the visible face” (F(1,17) = 12.782, p = 0.002, ηp2 = 0.429; the left panel of Figure 2). The fearful face condition shows significantly higher accuracy than the happy face condition. The main effect of emotional consistency was marginally significant (F(2,34) = 3.012, p = 0.062, ηp2 = 0.151). The interaction between them was not significant (F(2,34) = 0.627, p = 0.540, ηp2 = 0.036).

These results indicate that the participants’ accuracy on the Go/no-go task was significantly higher when identifying the fearful faces compared to happy faces, supporting the “negativity bias” (Carretié et al., 2001; Luo et al., 2010; Zhu and Luo, 2012).

Reaction time

A 2 (emotion of the visible face: happy or fearful) × 3 (emotional consistency between visible and invisible faces: consistent, irrelevant, or inconsistent) repeated-measures ANOVA of reaction time revealed that the main effect of “emotion of the visible face” was not significant (F(1,17) = 0.482, p = 0.497, ηp2 = 0.028), and the interaction was not significant (F(2,34) = 0.240, p = 0.788, ηp2 = 0.014). But the main effect of emotional consistency was significant (F(2,34) = 5.224, p = 0.011, ηp2 = 0.235; the right panel of Figure 2). Bonferroni multiple comparisons revealed that the consistent condition was significantly faster than the inconsistent condition (p = 0.032), and the irrelevant condition (i.e., the neutral face condition) was marginally significantly faster than the inconsistent condition (p = 0.057).

This demonstrates that even if a face on one side of the visual field is not consciously perceived, its emotion (specifically happiness or fear) can affect the emotional discrimination of the face on the other side of the visual field. When the emotions of the visible and invisible faces conflict, discriminating the visible emotional face takes significantly longer than when the emotions are consistent. This evidence supports the unconscious processing of facial expressions at the behavioral level, which is consistent with previous studies (Bertini et al., 2013; Tamietto and Gelder, 2008). In addition, the effect of emotional consistency arises primarily from the interference of conflicting invisible facial expressions with the discrimination of visible faces.

A repeated-measures, within-factors power analysis in G*Power revealed a minimum sample size of 19 to achieve adequate power to detect a medium effect size (parameters: effect size f(U) = 0.5, α = 0.05, power = 0.85, number of groups = 1, number of measurements = 4, “as in SPSS” option enabled) (Faul et al., 2009).

Forty-seven sophomores (7 males and 40 females), aged 20 years old, took part in this study. All participants reported normal or corrected-to-normal visual acuity and were naive to the purpose of the experiment. They all gave electronic informed consent in accordance with procedures and protocols approved by the institutional review board of our university and received course bonus points for their participation. To explore the correlation between the unconscious processing of happy faces, prosocial tendency, and extraversion, they finished an online questionnaire measuring prosocial tendency and extraversion personality traits after the experiment.

The experiment was conducted on the laptop of each student at home. The experimental program was written in E-Prime 2.0.

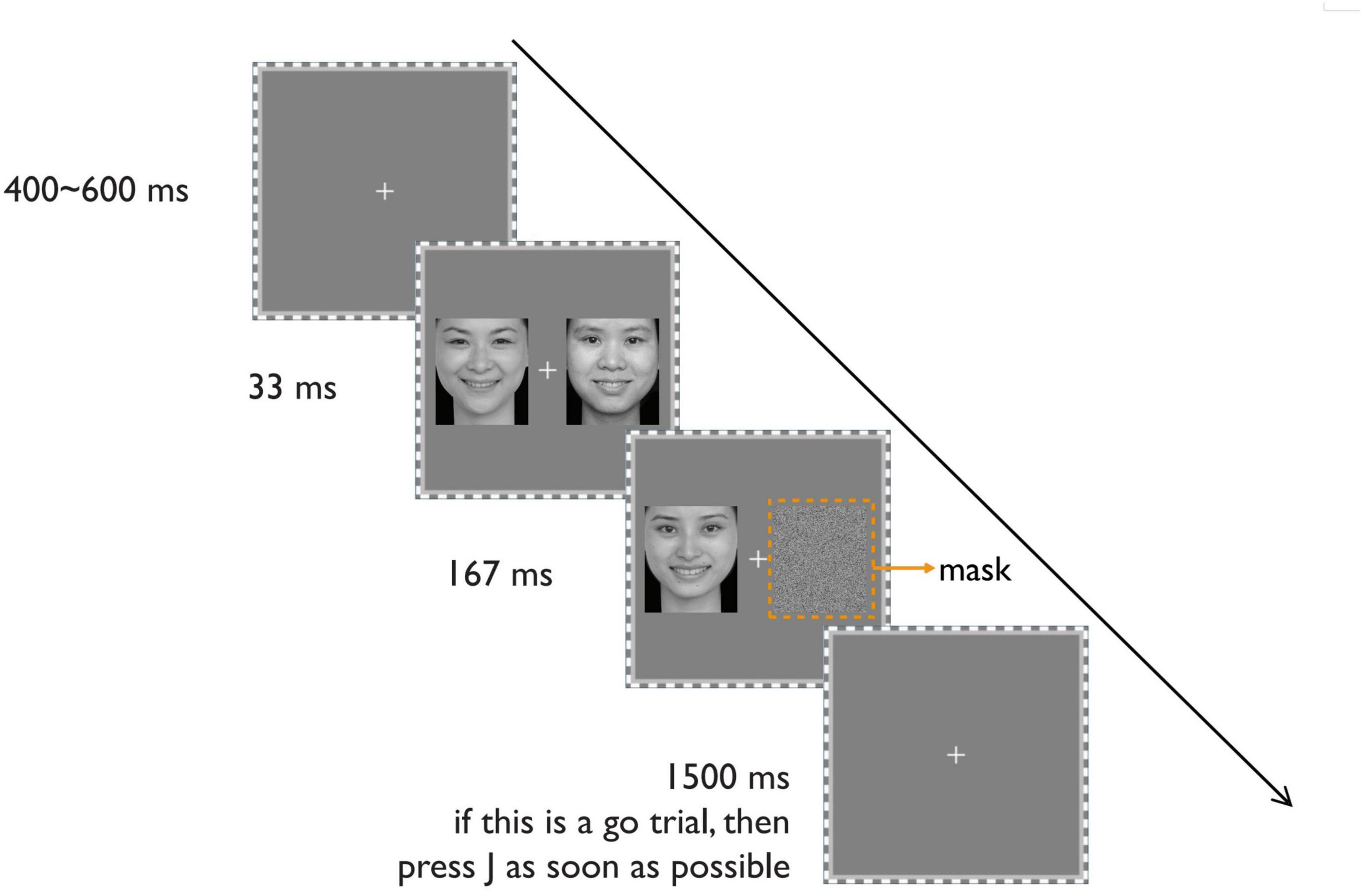

From the Chinese Emotional Face System (Gong et al., 2011), we selected pictures of happy and neutral faces with straight heads and hairless faces. Eight (4 male and 4 female) happy faces, with open-mouthed broad smiles, and eight (4 male and 4 female) neutral faces were selected. For the pictures used to backwardly mask the happy or neutral faces, a new neutral face was selected and spatially scrambled (see Figure 3). Another four (2 male and 2 female) happy faces and another four (2 male and 2 female) neutral faces were selected for the practice phase.

Figure 3. The schematic display of the procedure of a typical trial. Reproduced with permission from Gong et al. (2011).

Finally, we adjusted the average RGB luminance of each face to around 135 through Photoshop software.

Two measures were used in this study: the revised Chinese edition of Prosocial Tendency Measure (PTM) (Kou et al., 2007) and the extraversion subscale of the revised Chinese edition of the simplified NEO-FFI (Neuroticism Extraversion Openness Five-Factor Inventory) (Yao and Liang, 2010).

The revised PTM, which has high reliability and validity (Kou et al., 2007), consists of 26 questions assessing one’s tendency to help other people under various conditions.

The extraversion subscale of the revised Chinese edition of the simplified NEO-FFI (Neuroticism Extraversion Openness Five-Factor Inventory) comprises 12 questions measuring the extraversion personality, which also shows high reliability and validity (Yao and Liang, 2010).

As shown in Figure 3, each trial starts with a fixation at the center of the screen for 400–600 ms. Then, on one side of the screen (randomly left or right side), a happy or neutral face appears for 33 ms, followed by a spatially scrambled neutral face for 167 ms as a backward mask. Simultaneously, the other side of the screen displays two successive faces for 33 and 167 ms, respectively. These faces share the same expression (happy or neutral) but have different identities. The manipulation of the visible side differs from Experiment 1 to make the two visual fields as similar as possible.

Before the formal experiment, the participants practice until they are familiar with the task. The experimental task, identical to Experiment 1, is the classical Go/no-go task (Tamietto and Gelder, 2008). Participants must press the J key on the keyboard as quickly as possible when they detect the target facial expression (Go response) and not respond when they detect the other non-target expression (No-go response). The target expression for the Go reaction is fixed in each block. The target face is the 167-ms visible (either happy or neutral) face. Each trial has a time limit of 1500 ms. The next trial begins after 1500 ms or a key response.

The formal experiment consists of 2 blocks, each containing 64 trials. The target expression for the Go reaction is fixed within each block. For half the participants, the target expressions for two blocks are happy and then neutral (Happy-Neutral sequence). For the other half, the target expressions for two blocks are neutral and then happy (Neutral-Happy sequence). These two sequences are balanced across participants.

Unlike in Experiment 1, we ensured that all three faces were of the same gender within each trial. In addition, the identities of the masked-side faces were completely different from those of the visible-side faces. Therefore, the masked faces were never exposed at a conscious level.

At the end of the experiment, the participants complete an awareness check task. This task follows the same procedure as the formal experiment but consists of only 16 trials. Participants must choose or guess whether the briefly presented 33-ms face that precedes the mask (i.e., the 167-ms scrambled neutral face) is happy or neutral.

The experiment employs a 2 (emotion of the visible face: happy or neutral) × 2 (emotional consistency between visible and invisible faces: consistent or inconsistent) within-subject design, which is different from Experiment 1. As a result, there are four experimental conditions (Table 1). Each condition has 32 trials—16 Go trials and 16 No-go trials.

We calculated the mean and standard deviation for 47 participants on these indices: the overall accuracy and reaction time, and the accuracy of the awareness check task. Unlike Experiment 1, we did not calculate the d’ by the signal detection theory method due to the small number of trials in the awareness check task for Experiment 2.

Three participants’ overall accuracy on the Go/no-go task fell below the mean − 2.5 SD of all participants. No participant’s accuracy exceeded the mean + 2.5 SD of all participants in the awareness check task.

Consequently, data from 44 participants were considered valid for analysis. Their mean accuracy of Go trials was 0.93, while the mean false alarm rate of No-go trials was 0.23.

In the final awareness check task, the mean accuracy of all participants was 0.581, significantly higher than 0.5 (i.e., chance-level accuracy) (t(43) = 3.468, p ≈ 0.001). This suggests that some participants could recognize the 33-ms masked happy or neutral face.

Therefore, we divided them into two groups using an accuracy threshold of 0.6. Twenty-two participants formed the high-accuracy group, with a mean accuracy of 0.701, significantly higher than 0.5 (t(21) = 13.575, p < 0.001). The remaining 22 participants formed the low-accuracy group, with a mean accuracy of 0.461, not significantly different from 0.5 (t(21) = –1.54, p = 0.139). Therefore, the low-accuracy group was named the “unaware group,” while the high-accuracy group was named the “partially aware group.”
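The grouping and the comparison against chance can be sketched as follows. The accuracies are made-up values and both helper names are hypothetical. With 16 awareness-check trials, accuracies are multiples of 1/16, so none can equal 0.6 exactly and the choice between "at least" and "above" the threshold does not matter. Only the t statistic and degrees of freedom are computed; obtaining the p-value would require the t distribution, which is not in the Python standard library.

```python
from math import sqrt
from statistics import mean, stdev


def split_by_awareness(accuracies, threshold=0.6):
    """Split awareness-check accuracies into unaware and partially aware groups."""
    unaware = [a for a in accuracies if a < threshold]
    partially_aware = [a for a in accuracies if a >= threshold]
    return unaware, partially_aware


def t_vs_chance(accuracies, chance=0.5):
    """One-sample t statistic against chance level, with degrees of freedom."""
    n = len(accuracies)
    t = (mean(accuracies) - chance) / (stdev(accuracies) / sqrt(n))
    return t, n - 1


# Made-up accuracies for six hypothetical participants (out of 16 trials each).
accs = [7 / 16, 8 / 16, 9 / 16, 10 / 16, 11 / 16, 12 / 16]
unaware, partially_aware = split_by_awareness(accs)
print(unaware, t_vs_chance(unaware))
print(partially_aware, t_vs_chance(partially_aware))
```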

(1) Unaware group

To examine the strictly unconscious effect, we first analyzed the data of the unaware group. The mean accuracies and reaction times for the four experimental conditions are shown in Figure 4.

Figure 4. Accuracies (left panel) and reaction times (right panel) of the Go/no-go task of the unaware group (N = 22, the error bars denote ± 1 SEM).

Accuracy

A 2 (emotion of the visible face: happy or neutral) × 2 (emotional consistency between visible and invisible faces: consistent or inconsistent) repeated-measures ANOVA of accuracy revealed that the main effect of emotional consistency was highly significant (F(1,21) = 15.039, p < 0.001, ηp2 = 0.417). As shown in Figure 4, the consistent condition shows significantly higher accuracy than the inconsistent condition. The main effect of “emotion of the visible face” was also highly significant (F(1,21) = 15.051, p < 0.001, ηp2 = 0.417), with significantly higher accuracy for happy than neutral faces. The interaction between them was marginally significant (F(1,21) = 3.789, p = 0.065, ηp2 = 0.153).

Reaction time

A 2 (emotion of the visible face: happy or neutral) × 2 (emotional consistency between visible and invisible faces: consistent or inconsistent) repeated-measures ANOVA of reaction time only revealed a significant main effect of “emotion of the visible face” (F(1,21) = 18.484, p < 0.001, ηp2 = 0.468). As shown in Figure 4, the happy face condition shows significantly shorter reaction time than the neutral face condition. The main effect of emotional consistency was not significant (F(1,21) = 1.192, p = 0.154, ηp2 = 0.095), and the interaction between them was not significant (F(1,21) = 0.522, p = 0.478, ηp2 = 0.024).

This result indicates that the participants’ reaction time on the Go/no-go task is significantly shorter when identifying the happy face than the neutral face. Along with the results of accuracy, they all support the happy face recognition advantage in extrafoveal vision (Calvo et al., 2010).

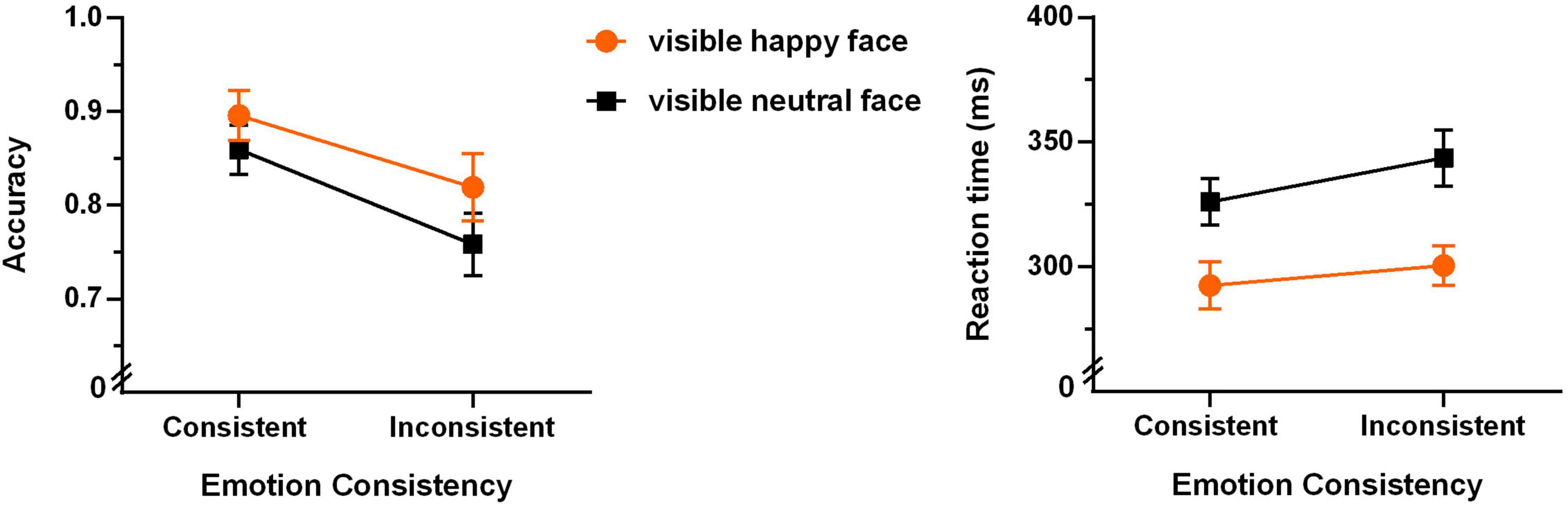

(2) Partially aware group

Furthermore, we analyzed the data of the partially aware group. The mean accuracies and reaction times for the four experimental conditions are shown in Figure 5.

Figure 5. Accuracies (left panel) and reaction times (right panel) of the Go/no-go task of the partially aware group (N = 22, the error bars denote ± 1 SEM).

Accuracy

A 2 (emotion of the visible face: happy or neutral) × 2 (emotional consistency between visible and invisible faces: consistent or inconsistent) repeated-measures ANOVA of accuracy revealed that the main effect of emotional consistency was highly significant (F(1,21) = 8.606, p = 0.008, ηp2 = 0.291). As shown in Figure 5, the consistent condition shows significantly higher accuracy than the inconsistent condition. The main effect of “emotion of the visible face” was marginally significant (F(1,21) = 3.598, p = 0.072, ηp2 = 0.146), with higher accuracy for happy than neutral faces. The interaction between them was not significant (F(1,21) = 1.481, p = 0.237, ηp2 = 0.066).

Reaction time

A 2 (emotion of the visible face: happy or neutral) × 2 (emotional consistency between visible and invisible faces: consistent or inconsistent) repeated-measures ANOVA of reaction time revealed a significant main effect of “emotion of the visible face” (F(1,21) = 8.636, p = 0.008, ηp2 = 0.291) and a marginally significant main effect of “emotional consistency” (F(1,21) = 3.686, p = 0.069, ηp2 = 0.149). As shown in Figure 5, happy faces show significantly shorter reaction times than neutral faces, and the consistent condition shows shorter reaction times than the inconsistent condition. The interaction effect was not significant (F(1,21) = 1.228, p = 0.280, ηp2 = 0.055).

A comparison of the two groups shows that the emotional consistency effect is robust regardless of awareness level and is primarily reflected in the accuracy index.

The unconscious processing of invisible happy faces was reflected in two aspects:

(1) the promotion effect of invisible happy expressions on identifying happy faces, which is quantified by ACCPP – ACCPT and RTPT – RTPP (see Table 1 for the explanation of the abbreviations). The across-participant mean Promotion Effect can be observed from the slope of the orange lines in Figures 4, 5.

(2) the interference effect of invisible happy expressions on identifying neutral faces, which is quantified by ACCTT – ACCTP and RTTP – RTTT. In the same vein, the across-participant mean Interference Effect can be observed from the slope of the black lines in Figures 4, 5.

The larger the above 4 indices, the stronger the unconscious processing of happy faces.
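The four indices can be computed from per-participant condition means as sketched below. The reading of the abbreviations is inferred from the two definitions above rather than taken from Table 1: the first letter is assumed to code the visible face and the second the masked face, with P for happy and T for neutral. The function name and the example values are hypothetical.

```python
def unconscious_indices(acc, rt):
    """Compute the promotion and interference effects for one participant.

    `acc` and `rt` map condition codes to mean accuracy and mean reaction
    time. Assumed coding: first letter = visible face, second letter =
    masked face, P = happy, T = neutral.
    """
    return {
        "promotion_acc": acc["PP"] - acc["PT"],
        "promotion_rt": rt["PT"] - rt["PP"],
        "interference_acc": acc["TT"] - acc["TP"],
        "interference_rt": rt["TP"] - rt["TT"],
    }


# Made-up condition means for one hypothetical participant.
acc = {"PP": 0.95, "PT": 0.90, "TT": 0.92, "TP": 0.85}
rt = {"PP": 500.0, "PT": 520.0, "TT": 560.0, "TP": 575.0}  # milliseconds
indices = unconscious_indices(acc, rt)
print(indices)
```

The signs are arranged so that all four values are positive when the masked happy face helps with happy targets and hinders neutral targets.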

After calculating the above indices, Pearson correlation analysis was performed on the above 4 indices and scores of two questionnaires.
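A minimal sketch of this analysis step is given below, using only the standard library. The results that follow report FDR-corrected p-values without naming the procedure, so the Benjamini-Hochberg method shown here is an assumption. Both function names and all example numbers are hypothetical.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


def benjamini_hochberg(pvals):
    """Return Benjamini-Hochberg adjusted p-values in the original order.

    Assumption: this is the FDR procedure; the text does not specify one.
    """
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down, enforcing monotonicity.
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, pvals[i] * m / rank)
        adjusted[i] = running_min
    return adjusted


# Made-up data: an index of unconscious processing and a questionnaire score.
effect = [1.0, 2.0, 3.0, 4.0]
score = [2.0, 4.0, 6.0, 8.0]
print(pearson_r(effect, score))

# Made-up raw p-values from three correlations.
print(benjamini_hochberg([0.01, 0.04, 0.03]))
```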

(1) Unaware group

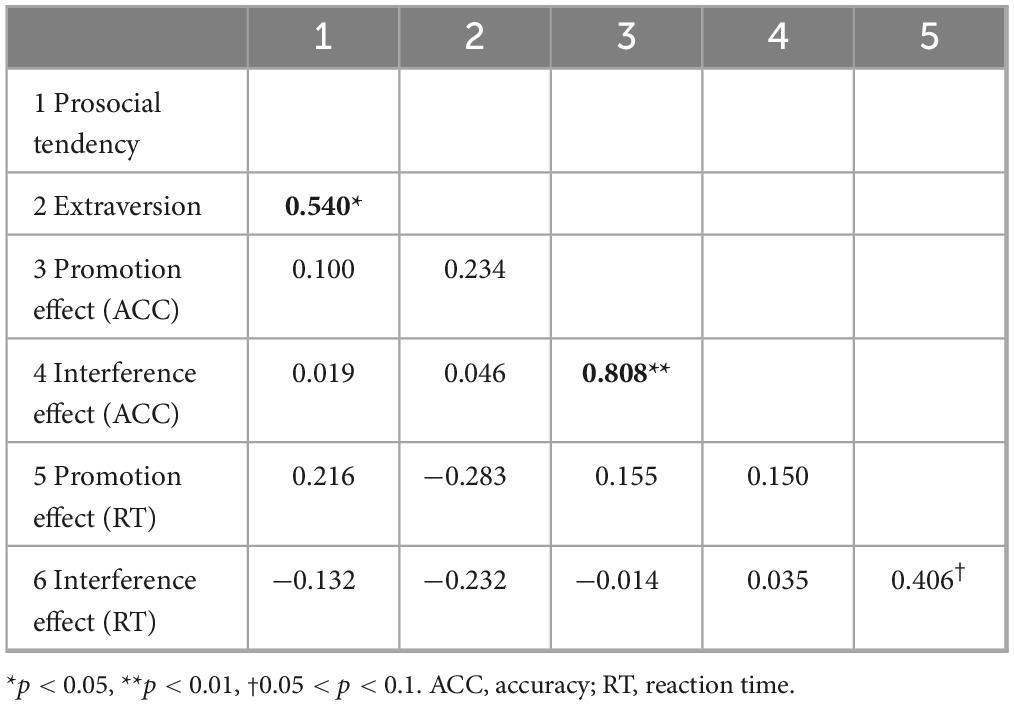

As shown in Table 2, three significant correlations were found for the unaware group.

First, the promotion effect of reaction time (i.e., RTPT – RTPP) and the promotion effect of accuracy (i.e., ACCPP – ACCPT) were very significantly positively correlated [r(22) = 0.577, p = 0.005, FDR-corrected p = 0.005]. In addition, the promotion effect of reaction time (i.e., RTPT – RTPP) and the interference effect of accuracy (i.e., ACCTT – ACCTP) were significantly positively correlated [r(22) = 0.444, p = 0.039, FDR-corrected p = 0.045].

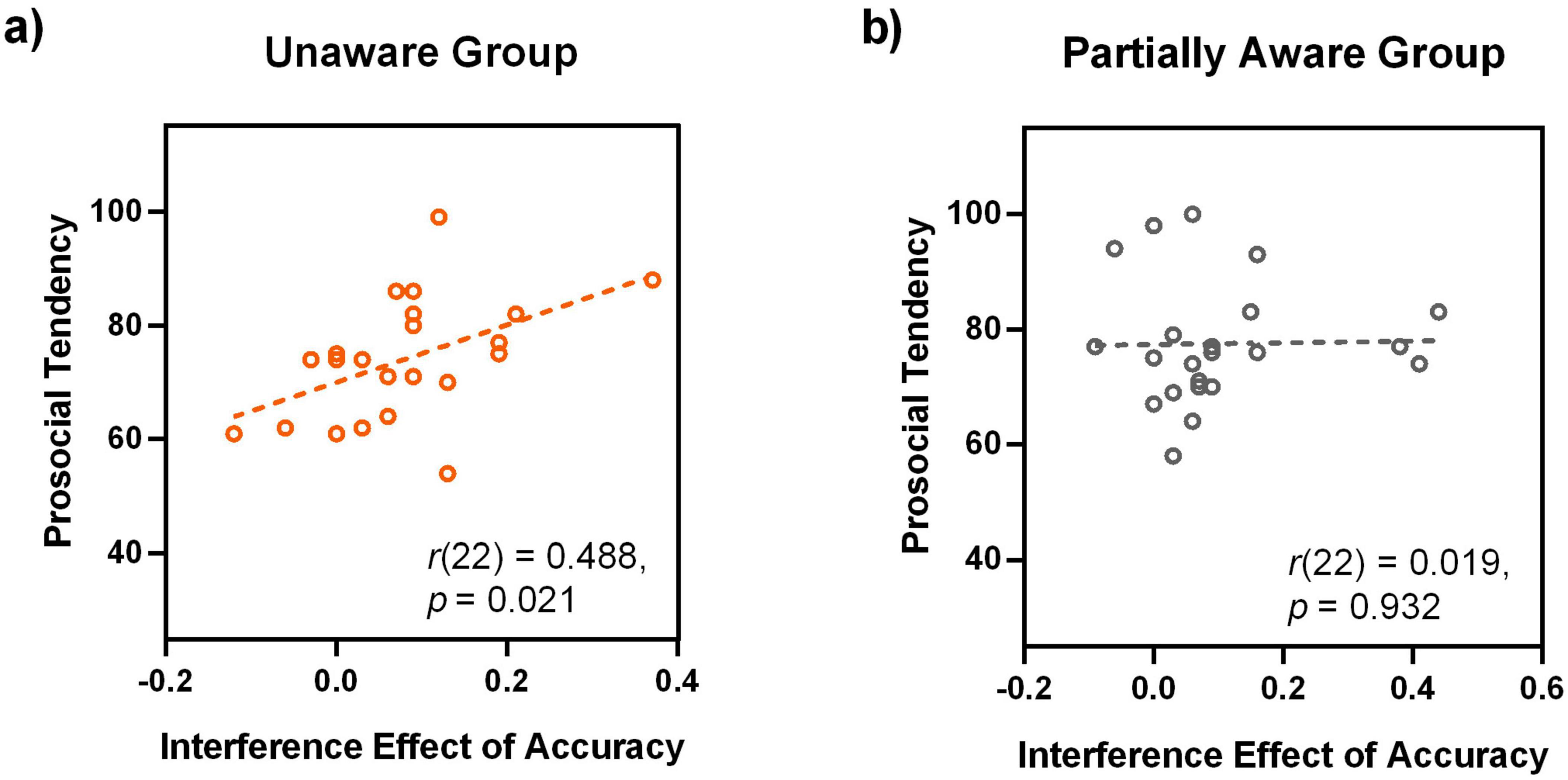

Second, the prosocial tendency score and the interference effect of accuracy (i.e., ACCTT – ACCTP) were significantly positively correlated [r(22) = 0.488, p = 0.021, FDR-corrected p = 0.023] (Figure 6A). This result indicates that there is a significant correlation between the unconscious processing of happy expressions and the prosocial tendency.

Figure 6. Correlation between prosocial tendency and the processing of happy faces for the unaware (A) and partially aware (B) groups. Note that the panel (B) shows non-significant correlation for the partially aware group.

(2) Partially aware group

As shown in Table 3, only two significant correlations were found for the partially aware group.

Table 3. Correlations between all the behavioral indices and personalities for the partially aware group.

First, the promotion effect of accuracy (i.e., ACCPP – ACCPT) and the interference effect of accuracy (i.e., ACCTT – ACCTP) were very significantly positively correlated [r(22) = 0.808, p < 0.001, FDR-corrected p < 0.001].

Second, the prosocial tendency score was significantly positively correlated with extraversion [r(22) = 0.540, p = 0.009, FDR-corrected p = 0.01], which is quite different from the results of the unaware group (as shown in Table 2) and the overall correlation result [r(44) = 0.215, p = 0.161].

For a direct comparison with the unaware group, we also presented the correlation between the prosocial tendency score and the interference effect of accuracy (i.e., ACCTT – ACCTP), which is not significant at all (Figure 6B).

Taken together, a positive correlation was demonstrated between individual prosocial tendency and the processing of happy faces, specifically for the unaware group. This correlation was reflected in the interference effect of accuracy.

Previous studies have shown that the brain can process unconscious (i.e., invisible) emotional faces (Bertini and Làdavas, 2021; Diano et al., 2016; Jiang and He, 2006; Pegna et al., 2005; Qiu et al., 2022; Tamietto and De Gelder, 2010; Wang et al., 2023), which influence subsequent cognition and behaviors (Almeida et al., 2013; Anderson et al., 2012; Gruber et al., 2016; Sagliano et al., 2020; Vetter et al., 2019). Consistent with previous behavioral studies (Bertini et al., 2013; Tamietto and Gelder, 2008), the ANOVA results of the present study (both Experiment 1 and Experiment 2) provide additional behavioral-level evidence for the unconscious processing of emotional faces (specifically happy and fearful faces). Furthermore, Experiment 2 revealed a significant correlation between the behavioral index of unconscious processing of extrafoveal happy faces and one’s prosocial tendency. However, no such correlation was found with extraversion personality.

The correlation results of Experiment 2 revealed that the stronger an individual's prosocial tendency, the stronger the interference effect of unconscious happy expressions on identifying neutral faces. Facial expressions, which convey non-verbal social cues such as social intentions, play an important role in social communication (Frith, 2009; Tracy et al., 2015). For example, happy expressions convey trust, friendliness, approval, or liking, and fearful expressions indicate vigilance or potential danger. In Experiment 2, we focused solely on happy faces, omitting fearful faces, for two reasons. First, the unconscious processing of fearful faces has been extensively studied, and prior studies have already demonstrated a correlation between the neuroimaging response to subliminal fearful faces and psychopathology-related traits such as negative affectivity (including anxiety, depression, and neuroticism) (Günther et al., 2020; Vizueta et al., 2012). Second, the happy facial expression is the most recognizable facial expression, serving as a positive sign of prosocial intentions even in the most remote cultures (Ekman and Friesen, 1971). Happy faces are identified more accurately and quickly than other facial expressions, even in the extrafoveal visual field (Calvo et al., 2010). Moreover, happy faces can attract attention in the dot-probe paradigm, an effect that could not be attributed to low-level factors (Wirth and Wentura, 2020). The correlation results of Experiment 2 support one of the social communicative functions of happy face perception: receiving prosocial messages from happy faces and thereby promoting prosocial behavior toward the people who display them. This result is in keeping with a previous study in which participants were trained to perceive happy rather than angry expressions on an emotionally ambiguous face, leading to a decrease in self-reported state anger and aggressive behaviors in adolescents at high risk of criminal offending and delinquency (Penton-Voak et al., 2013). Therefore, the perception of subtle expressions of happiness might causally promote prosocial tendencies and reduce antisocial behaviors like aggression. Future studies could further examine this possibility, potentially paving the way for unconscious interventions to encourage prosocial behavior and discourage antisocial actions.

In addition, Killgore and Yurgelun-Todd (2007) suggested that empathy and prosocial behaviors might be related to the ability to detect subtle expressions of sadness, because sad facial expressions communicate loss and the need for social support. Consequently, further research into sad facial expressions is warranted. Future studies should also consider dynamic rather than static facial expressions, as dynamic expressions are more effective in inducing unconscious emotional responses (Sato et al., 2014).

Some researchers have argued that the results of unconscious processing of faces could be attributed to partial or residual awareness (Kleckner et al., 2018; Kouider et al., 2010). It should be noted that the correlation result of Experiment 2 is restricted to the unaware group (Figure 6 and Tables 2, 3). It therefore demonstrates a dissociation between the conscious and unconscious levels. As Merikle and Daneman (2000) noted, a qualitative difference between unconscious-level and conscious-level results is the most valid indicator of unawareness. Thus, the distinct patterns of correlation results support the notion that the effect in the present study arises at the unconscious level rather than from partial or residual awareness. Some may argue that a 33-ms presentation is long enough to produce partial awareness. However, the 33-ms face was presented in extrafoveal vision, and participants could not predict which visual field the conscious face would appear in. These factors made it difficult to detect the 33-ms extrafoveal face. In addition, the extrafoveal presentation of facial expressions more closely resembles everyday life, as we do not always gaze directly at others’ faces. Our results suggest that in daily life, prosocial individuals are more likely to detect happy facial expressions in extrafoveal vision, even with limited cues.

The current study had several limitations. First, the awareness check procedure was not conducted on a trial-by-trial basis, which might be considered insufficiently rigorous. During some trials, participants’ responses may have been influenced by partial awareness. However, if the awareness check is conducted in a trial-wise fashion, the secondary task might interfere with the main task by interrupting transitions from one trial to the next (Cheng et al., 2019; Rabagliati et al., 2018). Second, there is a long-lasting debate on how to measure the efficacy of backward masking techniques (Wiens, 2006). To ensure strict unconsciousness of backward-masked stimuli, the experimenters should present forced-choice questions about the emotional valence of each masked stimulus. The present study adhered to this protocol. Some researchers argue that d’ = 0 may not be sufficient to guarantee the absence of consciousness. This is because d’ = 0 is a null result that may reflect a lack of statistical power. Additionally, d’ = 0 might result from low motivation rather than a true lack of awareness (Merikle and Daneman, 2000; Wiens, 2006). Future research could implement a trial-by-trial awareness check procedure, asking participants to rate their level of awareness. This approach would help study the dose-response relationship between changes in awareness and other variables (Wiens, 2006). Lastly, to achieve a more balanced gender representation, more male participants should be enrolled to bring the male-to-female ratio closer to 1:1. This will help determine whether the correlation effect is consistent across genders.
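For readers unfamiliar with the sensitivity index discussed above, the following minimal sketch shows how d’ is conventionally computed from a forced-choice awareness check as the difference between the z-transformed hit rate and false-alarm rate. It is illustrative only and is not the analysis code of the present study: the function name `d_prime`, the mapping of responses to hits and false alarms, the log-linear correction, and the trial counts are all assumptions made for the example.

```python
from statistics import NormalDist


def d_prime(hits, misses, false_alarms, correct_rejections):
    """Sensitivity index: d' = z(hit rate) - z(false-alarm rate)."""
    # Log-linear correction (an assumption of this sketch) keeps both
    # rates strictly between 0 and 1 so the z-transform stays finite.
    hit_rate = (hits + 0.5) / (hits + misses + 1)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1)
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    return z(hit_rate) - z(fa_rate)


# Hypothetical counts, for illustration only (not data from the study).
# Assumed mapping: "happy" responses to masked happy faces are hits;
# "happy" responses to masked neutral faces are false alarms.
chance_level = d_prime(hits=20, misses=20, false_alarms=20, correct_rejections=20)
above_chance = d_prime(hits=30, misses=10, false_alarms=10, correct_rejections=30)
```

With equal hit and false-alarm rates the index is zero, which is the pattern expected under unawareness; as the text notes, however, a d’ near zero in a small sample does not by itself establish the absence of awareness.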

In summary, few studies have examined the unconscious processing of extrafoveal happy faces. The present study underscores the need for future research into the unconscious processing of extrafoveal happy expressions and calls for more investigation into the adaptive functions of unconscious emotional face processing.

(1) The discrimination of visible emotional faces was modulated by the facial expression of the invisible face in the opposite visual field. Emotionally consistent conditions showed shorter reaction time (Experiment 1) or higher accuracy (Experiment 2) than inconsistent conditions.

(2) The unconscious processing of happy faces is positively correlated with individual prosocial tendency, but not with extraversion. These results shed new light on the functional role of the unconscious processing of happy expressions and support the social-communicative function of facial expressions.

The original contributions presented in this study are included in this article/Supplementary material; further inquiries can be directed to the corresponding author.

The studies involving humans were approved by the Institutional Review Board of Beijing Union University. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

QX: Conceptualization, Formal analysis, Funding acquisition, Investigation, Methodology, Project administration, Software, Supervision, Visualization, Validation, Writing – original draft, Writing – review and editing.

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. This research was supported by grants from Beijing Social Science Fund (grant number: 18JYC027), Beijing Municipal Education Commission (grant number: KM202311417008), and Beijing Union University (grant number: Zk10201604).

Many thanks to Meihui Song and Xuanhe Wang (our undergraduate students) for data collection. We have used Notion AI (Plus) to refine the language.

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2024.1458373/full#supplementary-material

Alkozei, A., and Killgore, W. D. S. (2015). Emotional intelligence is associated with reduced insula responses to masked angry faces. Neuroreport 26, 567–571. doi: 10.1097/WNR.0000000000000389

Almeida, J., Pajtas, P. E., Mahon, B. Z., Nakayama, K., and Caramazza, A. (2013). Affect of the unconscious: visually suppressed angry faces modulate our decisions. Cogn. Affect. Behav. Neurosci. 13, 94–101. doi: 10.3758/s13415-012-0133-7

Anderson, E., Siegel, E., White, D., and Barrett, L. F. (2012). Out of sight but not out of mind: unseen affective faces influence evaluations and social impressions. Emotion 12, 1210–1221.

Bertini, C., and Làdavas, E. (2021). Fear-related signals are prioritised in visual, somatosensory and spatial systems. Neuropsychologia 150:107698. doi: 10.1016/j.neuropsychologia.2020.107698

Bertini, C., Cecere, R., and Làdavas, E. (2013). I am blind, but I “see” fear. Cortex 49, 985–993. doi: 10.1016/j.cortex.2012.02.006

Calvo, M. G., Nummenmaa, L., and Avero, P. (2010). Recognition advantage of happy faces in extrafoveal vision: featural and affective processing. Vis. Cogn. 18, 1274–1297.

Carretié, L., Mercado, F., Tapia, M., and Hinojosa, J. A. (2001). Emotion, attention, and the ‘negativity bias’, studied through event-related potentials. Int. J. Psychophysiol. 41, 75–85.

Cheng, K., Ding, A., Jiang, L., Tian, H., and Yan, H. (2019). Emotion in Chinese words could not be extracted in continuous flash suppression. Front. Hum. Neurosci. 13:309. doi: 10.3389/fnhum.2019.00309

Cui, J., Olson, E. A., Weber, M., Schwab, Z. J., Rosso, I. M., Rauch, S. L., et al. (2014). Trait emotional suppression is associated with increased activation of the rostral anterior cingulate cortex in response to masked angry faces. Neuroreport 25, 771–776. doi: 10.1097/WNR.0000000000000175

Diano, M., Celeghin, A., Bagnis, A., and Tamietto, M. (2016). Amygdala response to emotional stimuli without awareness: facts and interpretations. Front. Psychol. 7:2029. doi: 10.3389/fpsyg.2016.02029

Ekman, P., and Friesen, W. V. (1971). Constants across cultures in the face and emotion. J. Pers. Soc. Psychol. 17, 124–129.

Faul, F., Erdfelder, E., Buchner, A., and Lang, A.-G. (2009). Statistical power analyses using G*Power 3.1: tests for correlation and regression analyses. Behav. Res. Methods 41, 1149–1160. doi: 10.3758/BRM.41.4.1149

Frith, C. (2009). Role of facial expressions in social interactions. Philos. Trans. R. Soc. Lond. B. Biol. Sci. 364, 3453–3458.

Gong, X., Huang, Y.-X., Wang, Y., and Luo, Y.-J. (2011). Revision of the Chinese facial affective picture system. Chin. Ment. Health J. 25, 40–46.

Gruber, J., Siegel, E. H., Purcell, A. L., Earls, H. A., Cooper, G., and Barrett, L. F. (2016). Unseen positive and negative affective information influences social perception in bipolar I disorder and healthy adults. J. Affect. Disord. 192, 191–198. doi: 10.1016/j.jad.2015.12.037

Günther, V., Hußlack, A., Weil, A.-S., Bujanow, A., Henkelmann, J., Kersting, A., et al. (2020). Individual differences in anxiety and automatic amygdala response to fearful faces: a replication and extension of Etkin et al. (2004). Neuroimage Clin. 28:102441. doi: 10.1016/j.nicl.2020.102441

Günther, V., Pecher, J., Webelhorst, C., Bodenschatz, C. M., Mucha, S., Kersting, A., et al. (2023). Non-conscious processing of fear faces: a function of the implicit self-concept of anxiety. BMC Neurosci. 24:12. doi: 10.1186/s12868-023-00781-9

Jiang, Y., and He, S. (2006). Cortical responses to invisible faces: dissociating subsystems for facial-information processing. Curr. Biol. 16, 2023–2029.

Killgore, W. D., and Yurgelun-Todd, D. A. (2007). Unconscious processing of facial affect in children and adolescents. Soc. Neurosci. 2, 28–47.

Kleckner, I. R., Anderson, E. C., Betz, N. J., Wormwood, J. B., Eskew, R. T. Jr., and Barrett, L. F. (2018). Conscious awareness is necessary for affective faces to influence social judgments. J. Exp. Soc. Psychol. 79, 181–187. doi: 10.1016/j.jesp.2018.07.013

Kline, R., Bankert, A., Levitan, L., and Kraft, P. (2019). Personality and prosocial behavior: a multilevel meta-analysis. Polit. Sci. Res. Methods 7, 125–142.

Kou, Y., Hong, H.-F., Tan, C., and Li, L. (2007). Revisioning prosocial tendencies measure for adolescent. Psychol. Dev. Educ. 23, 112–117.

Kouider, S., de Gardelle, V., Sackur, J., and Dupoux, E. (2010). How rich is consciousness? The partial awareness hypothesis. Trends Cogn. Sci. 14, 301–307. doi: 10.1016/j.tics.2010.04.006

Liu, D., Liu, W., Yuan, X., and Jiang, Y. (2023). Conscious and unconscious processing of ensemble statistics oppositely modulate perceptual decision-making. Am. Psychol. 78, 346–357. doi: 10.1037/amp0001142

Luo, W., Feng, W., He, W., Wang, N.-Y., and Luo, Y.-J. (2010). Three stages of facial expression processing: ERP study with rapid serial visual presentation. Neuroimage 49, 1857–1867.

Merikle, P. M., and Daneman, M. (2000). “Conscious vs. unconscious perception,” in The New Cognitive Neurosciences, 2nd Edn, ed. M. S. Gazzaniga (Cambridge, MA: MIT Press), 1295–1303.

Pegna, A. J., Khateb, A., Lazeyras, F., and Seghier, M. L. (2005). Discriminating emotional faces without primary visual cortices involves the right amygdala. Nat. Neurosci. 8, 24–25. doi: 10.1038/nn1364

Pelli, D. G. (1997). The VideoToolbox software for visual psychophysics: transforming numbers into movies. Spat. Vis. 10, 437–442.

Penton-Voak, I. S., Thomas, J., Gage, S. H., McMurran, M., McDonald, S., and Munafò, M. R. (2013). Increasing recognition of happiness in ambiguous facial expressions reduces anger and aggressive behavior. Psychol. Sci. 24, 688–697.

Qiu, Z., Lei, X., Becker, S. I., and Pegna, A. J. (2022). Neural activities during the processing of unattended and unseen emotional faces: a voxel-wise meta-analysis. Brain Imaging Behav. 16, 2426–2443. doi: 10.1007/s11682-022-00697-8

Rabagliati, H., Robertson, A., and Carmel, D. (2018). The importance of awareness for understanding language. J. Exp. Psychol. Gen. 147, 190–208.

Sagliano, L., Maiese, B., and Trojano, L. (2020). Danger is in the eyes of the beholder: the effect of visible and invisible affective faces on the judgment of social interactions. Cognition 203:104371. doi: 10.1016/j.cognition.2020.104371

Sato, W., Kubota, Y., and Toichi, M. (2014). Enhanced subliminal emotional responses to dynamic facial expressions. Front. Psychol. 5:994. doi: 10.3389/fpsyg.2014.00994

Skora, L. I., Scott, R. B., and Jocham, G. (2024). Stimulus awareness is necessary for both instrumental learning and instrumental responding to previously learned stimuli. Cognition 244:105716. doi: 10.1016/j.cognition.2024.105716

Tamietto, M., and De Gelder, B. (2010). Neural bases of the non-conscious perception of emotional signals. Nat. Rev. Neurosci. 11:697. doi: 10.1038/nrn2889

Tamietto, M., and Gelder, B. D. (2008). Affective blindsight in the intact brain: neural interhemispheric summation for unseen fearful expressions. Neuropsychologia 46, 820–828. doi: 10.1016/j.neuropsychologia.2007.11.002

Tracy, J. L., Randles, D., and Steckler, C. M. (2015). The nonverbal communication of emotions. Curr. Opin. Behav. Sci. 3, 25–30.

Vetter, P., Badde, S., Phelps, E. A., and Carrasco, M. (2019). Emotional faces guide the eyes in the absence of awareness. Elife 8:e43467. doi: 10.7554/eLife.43467

Vizueta, N., Patrick, C. J., Jiang, Y., Thomas, K. M., and He, S. (2012). Dispositional fear, negative affectivity, and neuroimaging response to visually suppressed emotional faces. Neuroimage 59, 761–771. doi: 10.1016/j.neuroimage.2011.07.015

Wang, Y., Luo, L., Chen, G., Luan, G., Wang, X., Wang, Q., et al. (2023). Rapid processing of invisible fearful faces in the human amygdala. J. Neurosci. 43, 1405–1413. doi: 10.1523/JNEUROSCI.1294-22.2022

Wirth, B. E., and Wentura, D. (2020). It occurs after all: attentional bias towards happy faces in the dot-probe task. Atten. Percept. Psychophys. 82, 2463–2481.

Yao, R., and Liang, L. (2010). Analysis of the application of simplified NEO-FFI to undergraduates. Chin. J. Clin. Psychol. 18, 457–459.

Keywords: unconscious, emotional face, happy face, prosocial tendency, extraversion

Citation: Xu Q (2025) Unconscious processing of happy faces correlates with prosocial tendency but not extraversion. Front. Psychol. 15:1458373. doi: 10.3389/fpsyg.2024.1458373

Received: 05 July 2024; Accepted: 29 November 2024; Published: 04 February 2025.

Edited by:

George L. Malcolm, University of East Anglia, United Kingdom

Copyright © 2025 Xu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Qian Xu, sftxuqian@buu.edu.cn
