- Department of Psychiatry and Behavioral Sciences, Northwestern University Feinberg School of Medicine, Chicago, IL, United States

Introduction

Neuropsychological testing can inform practitioners and scientists about brain-behavior relationships that guide diagnostic classification and treatment planning (Donders, 2020). However, not all examinees remain engaged throughout testing and some may exaggerate or feign impairment, rendering their performance non-credible and uninterpretable (Roor et al., 2024). It is therefore important to regularly assess the validity of data obtained during a neuropsychological evaluation (Sweet et al., 2021). Yet performance validity assessment (PVA) is a complex process. Practitioners must know when and how to use multiple performance validity tests (PVTs) while accounting for various contextual, diagnostic, and intrapersonal factors (Lippa, 2018). Furthermore, inaccurate PVA can lead to erroneous and potentially harmful judgments regarding an examinee's mental health and neuropsychological status. Although the methods used to address these complexities in PVA are evolving (Bianchini et al., 2001; Boone, 2021), improvement is still needed.

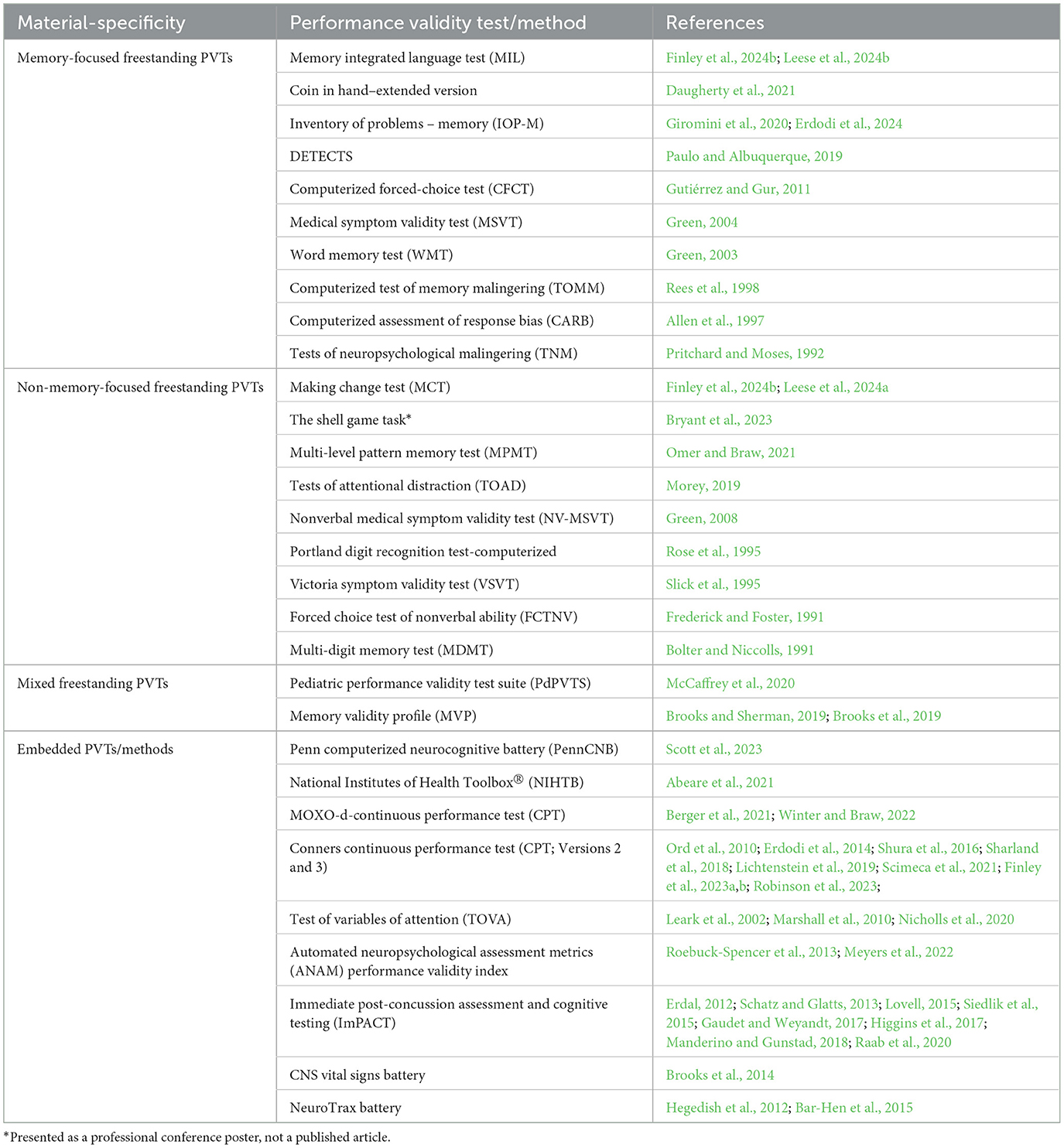

Modern digital technologies have the potential to significantly improve PVA, but such technologies have not received much attention. Most PVTs used today are pencil-and-paper tests developed several decades ago (Martin et al., 2015), and digital innovations have largely been confined to computerized validity testing (see Table 1). Meanwhile, other areas of digital neuropsychology have rapidly expanded. Technologies can now capture high-dimensional data conducive to precision medicine (Parsons and Duffield, 2020; Harris et al., 2024), and this surge in digital assessment may soon become the rule rather than the exception for neuropsychology (Bilder and Reise, 2019; Germine et al., 2019). If PVA does not keep pace with other digital innovations in neuropsychology, many validity tests and methods may lose relevance.

This paper aims to increase awareness of how digital technologies can improve PVA so that researchers within neuropsychology and relevant organizations have a clinically and scientifically meaningful basis for transitioning to digital platforms. Herein, I describe five ways in which digital technologies can improve PVA: (1) generating more informative data, (2) leveraging advanced analytics, (3) facilitating scalable and sustainable research, (4) increasing accessibility, and (5) enhancing efficiencies.

Generating more informative data

Generating a greater volume, variety, and velocity of data core and ancillary to validity testing may improve the detection of non-credible performance. With these data, scientists and practitioners can better understand the dimensionality of performance validity and assess it effectively, especially in cases without clear evidence of fabrication. However, capturing sundry data in PVA is challenging, as practitioners are often limited to a few PVTs throughout an evaluation that is completed in a single snapshot of time (Martin et al., 2015). Furthermore, many PVTs index redundant information because they have similar detection paradigms that generate only one summary cut-score (Boone, 2021). Digital technologies can address these issues by capturing additional aspects of performance validity without increasing time or effort.

Digitally recording the testing process is one way to generate more diverse data points than a summary score. Some process-based metrics are already employed in PVA, including recording response consistency and exaggeration across test items (Schroeder et al., 2012; Finley et al., 2024a). For example, Leese et al. (2024a) found that using digital software to assess discrepancies between item responses and correct answers improved the detection of non-credible performance. Using digital tools to objectively and unobtrusively record response latencies and reaction times during testing is another useful process-based approach (Erdodi and Lichtenstein, 2021; Rhoads et al., 2021). Examinees typically cannot maintain consistent rates of slowed response latencies across items when attempting to feign impairment (Gutiérrez and Gur, 2011). Various software packages can record these process-based scores (e.g., item-level indices of response time, reliable span, and exaggeration magnitude) in most existing tests if they are migrated to tablets/computers (Kush et al., 2012). Recording both the process and outcome (summary scores) of test completion can index dimensions of performance validity across and within tests.
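As a minimal sketch of how item-level process data might be summarized alongside a conventional accuracy score, the Python example below computes the coefficient of variation of response latencies from a logged test session. The log format, function names, and example values are hypothetical illustrations, not taken from any cited software, and no validated cutoff is implied.

```python
from statistics import mean, stdev


def latency_consistency(latencies_ms):
    """Coefficient of variation (sample SD / mean) of item-level latencies."""
    if len(latencies_ms) < 2:
        raise ValueError("need at least two item latencies")
    average = mean(latencies_ms)
    if average <= 0:
        raise ValueError("latencies must be positive")
    return stdev(latencies_ms) / average


def summarize_item_log(item_log):
    """Summarize outcome (accuracy) and process (latency) data for one test.

    item_log: list of (response, correct_answer, latency_ms) tuples,
    one per item. This layout is an assumption made for illustration.
    """
    n_correct = sum(1 for response, key, _ in item_log if response == key)
    latencies = [latency for _, _, latency in item_log]
    return {
        "n_items": len(item_log),
        "accuracy": n_correct / len(item_log),
        "mean_latency_ms": mean(latencies),
        "latency_cv": latency_consistency(latencies),
    }


# Made-up four-item session: three correct responses, latencies in ms.
example_log = [("A", "A", 800), ("B", "B", 1000), ("A", "B", 1200), ("B", "B", 1000)]
summary = summarize_item_log(example_log)
```

A larger `latency_cv` indicates less consistent response speed across items. Whether a given value reflects non-credible performance would have to be established empirically for each test and population.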

Technologies can also record biometric data ancillary to validity testing. Biometrics including oculomotor, cardiovascular, body gesture, and electrodermal responses are indicators of cognitive load and are associated with deception (Ayres et al., 2021). Deception is believed to increase cognitive load because it requires more complex processing to falsify a response (Dinges et al., 2024). Although deception is different from non-credible performance, neuroimaging research suggests non-credible performance can be indicative of greater cognitive effort (Allen et al., 2007). For this reason, technologies like eye-tracking have been used to augment PVA (Braw et al., 2024). These studies are promising, but other avenues within this literature have yet to be explored due to technological limitations. Fortunately, many technologies now possess built-in cameras, accelerometers, gyroscopes, and sensors that “see,” “hear,” and “feel” at a basic level, and may be embedded within existing PVTs to record biometrics.

Technologies under development for cognitive testing may also provide informative data that have not yet been linked to PVA. For example, speech analysis software for verbal fluency tasks (Holmlund et al., 2019) could identify non-credible word choice or grammatical errors. Similarly, digital phenotyping technologies may identify novel and useful indices during validity testing, such as keystroke dynamics (e.g., slowed/inconsistent typing; Chen et al., 2022) embedded within PVTs requiring typed responses. These are among many burgeoning technologies that can generate the higher-dimensional data needed for robust PVA without adding time or labor. However, access to a greater range and depth of data requires advanced methods to analyze the data effectively and efficiently.

Leveraging advanced analytics

Fortunately, technologies can leverage advanced analytics to rapidly and accurately analyze a large influx of digital data in real time. Although several statistical approaches are described within the PVA literature (Boone, 2021; Jewsbury, 2023), machine learning (ML) and item response theory (IRT) analytics may be particularly useful for analyzing large volumes of interrelated, nonlinear, and high-dimensional data at the item level (Reise and Waller, 2009; Mohri et al., 2012).

Not only can these approaches analyze more complex data but they can also improve the development and refinement of PVTs relative to classical measurement approaches. For example, person-fit statistics is an IRT approach that has been used to identify non-credible symptom reporting in dichotomous and polytomous data (Beck et al., 2019). This approach may also improve embedded PVTs by estimating the extent to which each item-level response deviates from one's true abilities (Bilder and Reise, 2019). Scott et al. (2023) found that using person-fit statistics helped embedded PVTs detect subtle patterns of non-credible performance. IRT is especially amenable to computerized adaptive testing, which adjusts each item's difficulty based on one's response. Computerized adaptive testing systems can create shorter and more precise PVTs with psychometrically equivalent alternative forms (Gibbons et al., 2008). These systems can also detect careless responding based on unpredictable error patterns that deviate from normal difficulty curves. Detecting careless responding may be useful for PVTs embedded within digital self-paced continuous performance tests (e.g., Nicholls et al., 2020; Berger et al., 2021). Other IRT approaches can improve PVTs by scrutinizing item difficulty and discriminatory power and identifying culturally biased items. For example, differential item functioning is an IRT approach that may identify items on English-verbally mediated PVTs that are disproportionately challenging for those who do not speak English as their primary language, allowing for appropriate adjustments.
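To make the idea of person-fit concrete, the sketch below computes the standardized log-likelihood statistic (commonly written lz), one widely used person-fit index, under a two-parameter logistic model. It is an illustration only: the item parameters and ability value are made up, ability is treated as known rather than estimated, and lz is not necessarily the index used in the studies cited above.

```python
import math


def p_correct(theta, a, b):
    """2PL probability of a correct response (a: discrimination, b: difficulty)."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))


def lz_person_fit(responses, theta, items):
    """Standardized log-likelihood person-fit statistic.

    responses: list of 0/1 item scores.
    items: list of (a, b) parameter pairs, one per item.
    Large negative values flag response patterns that are unlikely
    given the model and the assumed ability level.
    """
    loglik = expected = variance = 0.0
    for u, (a, b) in zip(responses, items):
        p = p_correct(theta, a, b)
        q = 1.0 - p
        loglik += u * math.log(p) + (1 - u) * math.log(q)
        expected += p * math.log(p) + q * math.log(q)
        variance += p * q * math.log(p / q) ** 2
    if variance == 0.0:
        raise ValueError("statistic undefined when every item has p = 0.5")
    return (loglik - expected) / math.sqrt(variance)


# Hypothetical five-item test ordered from easy to hard, ability fixed at 0.
items = [(1.0, -2.0), (1.0, -1.0), (1.0, 0.0), (1.0, 1.0), (1.0, 2.0)]
lz_expected = lz_person_fit([1, 1, 1, 0, 0], theta=0.0, items=items)
lz_aberrant = lz_person_fit([0, 0, 1, 1, 1], theta=0.0, items=items)
```

In this toy case, passing the easy items and failing the hard ones yields a positive value, whereas failing the easiest items while passing the hardest yields a strongly negative value. The latter is the kind of model-inconsistent pattern a person-fit index is designed to flag.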

ML has proven useful in symptom validity test development (Orrù et al., 2021) and may function similarly for PVTs. Two studies recently investigated whether supervised ML improves PVA (Pace et al., 2019; Hirsch et al., 2022). Pace et al. (2019) found that a supervised ML model trained with various features (demographics, cognitive performance errors, response time, and a PVT score) discriminated between genuine and simulated cognitive impairment with high accuracy. Using similar features, Hirsch et al. (2022) found that their supervised models had moderate to weak prediction of PVT failure in a clinical attention-deficit/hyperactivity disorder sample. No studies have used unsupervised ML for PVA. It is possible that unsupervised ML could also identify groups of credible and non-credible performing examinees using relevant factors such as PVT scores, litigation status, medical history, and referral reasons, without explicit programming. Software can be developed to extract data for the ML models via computerized questionnaires or electronic medical records. Deep learning, a form of ML that processes data through multiple layers, may also detect complex and anomalous patterns indicative of non-credible performance. Deep learning may be especially useful for analyzing response sequences over time (e.g., non-credible changes in performance across repeat medico-legal evaluations). Furthermore, deep-learning models may be effective at identifying inherent statistical dependencies and patterns of non-credible performance, and thus generating expectations of how genuine responses should appear. Combining these algorithms with other statistical techniques that assess response complexity and highly anomalous responses (e.g., Lundberg and Lee, 2017; Parente and Finley, 2018; Finley and Parente, 2020; Orrù et al., 2020; Mertler et al., 2021; Parente et al., 2021, 2023; Finley et al., 2022; Rodriguez et al., 2024) may increase the signal of non-credible performance.
These algorithmic approaches can improve as we better understand cognitive phenotypes and what is improbable for certain disorders using precision medicine and bioinformatics.
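The unsupervised approach described above can be illustrated with a deliberately small sketch: a two-cluster k-means routine, written with the standard library only, that groups examinees on two hypothetical features (PVT accuracy and latency variability). The feature choice, the values, and the deterministic starting centroids are all assumptions for illustration. Real applications would need feature scaling, far more data, and external validation, because clusters are not diagnoses.

```python
def squared_distance(p, q):
    """Squared Euclidean distance between two equal-length tuples."""
    return sum((x - y) ** 2 for x, y in zip(p, q))


def two_means(points, max_iter=50):
    """Minimal 2-cluster k-means with a deterministic start.

    points: list of feature tuples. Starting centroids are the
    lexicographically smallest and largest points, a simplification
    chosen so the example is reproducible.
    """
    centroids = [min(points), max(points)]
    labels = [0] * len(points)
    for _ in range(max_iter):
        # Assign each point to its nearest centroid.
        labels = [
            0 if squared_distance(p, centroids[0]) <= squared_distance(p, centroids[1]) else 1
            for p in points
        ]
        # Recompute each centroid as the mean of its members.
        updated = []
        for k in (0, 1):
            members = [p for p, label in zip(points, labels) if label == k]
            if members:
                updated.append(tuple(sum(dim) / len(members) for dim in zip(*members)))
            else:
                updated.append(centroids[k])
        if updated == centroids:
            break
        centroids = updated
    return labels, centroids


# Made-up (PVT accuracy proportion, latency coefficient of variation) pairs.
examinees = [
    (0.98, 0.15), (0.96, 0.20), (0.94, 0.18), (1.00, 0.12),
    (0.60, 0.55), (0.55, 0.60), (0.70, 0.50),
]
labels, centroids = two_means(examinees)
```

Here the first four examinees fall into one cluster and the last three into the other. Interpreting either cluster as credible or non-credible would still require comparison against an independent criterion.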

Facilitating scale and sustainability

To optimize the utility of these digital data, technologies can include point-of-testing acquisition software that automatically transfers data to cloud-based, centralized repositories. These repositories facilitate sustainable and scalable innovations by increasing data access and collaboration among PVA stakeholders (see Reeves et al., 2007 and Gaudet and Weyandt, 2017 for large-scale developments of digital tests with embedded PVTs). Multidisciplinary approaches are needed to make theoretical and empirical sense of the data collected via digital technologies (Collins and Riley, 2016). With more comprehensive and uniform data amenable to data mining and deep-learning analytics, collaborating researchers can address overarching issues that remain poorly understood within research. For example, with larger centralized datasets, researchers can directly evaluate different statistical approaches (e.g., chaining likelihood ratios vs. multivariable discriminant function analysis, Bayesian model averaging, or logistic regression) as well as the joint validity of standardized test batteries (Davis, 2021; Erdodi, 2023; Jewsbury, 2023). Such data and findings could also help determine robust criterion-grouping combinations, given that multiple PVTs assessing complementary aspects of performance across various cognitive domains may be necessary for a strong criterion-grouping combination (Schroeder et al., 2019; Soble et al., 2020). Similarly, researchers could expand upon existing decision-making models (e.g., Rickards et al., 2018; Sherman et al., 2020) by using these comprehensive data to develop algorithms that automatically generate credible/non-credible profiles based on the type and proportion or number of PVTs failed in relation to various contextual and diagnostic factors, symptom presentations, and clinical inconsistencies (across medical records, self- and informant-reports, or behavioral observations).
A greater range and depth of data may further help elucidate the extent to which several putative factors—such as bona fide injury/disease, normal fluctuation and variability in testing, level of effort (either to perform well or to deceive), and symptom validity, among others—are associated with performance validity (Larrabee, 2012; Bigler, 2014). Understanding these associations could help identify the mechanisms underlying non-credible performance.
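One of the statistical approaches named above, chaining likelihood ratios, can be sketched in a few lines. The example converts a base rate to prior odds, multiplies by the likelihood ratio of each PVT result, and converts back to a probability. The sensitivity, specificity, and base-rate values are hypothetical, and the calculation assumes the PVTs are conditionally independent, an assumption that correlated PVTs would violate.

```python
def likelihood_ratio(sensitivity, specificity, failed):
    """Positive LR for a failed PVT, negative LR for a passed PVT."""
    if not (0.0 < sensitivity < 1.0 and 0.0 < specificity < 1.0):
        raise ValueError("sensitivity and specificity must lie strictly between 0 and 1")
    if failed:
        return sensitivity / (1.0 - specificity)
    return (1.0 - sensitivity) / specificity


def chain_likelihood_ratios(base_rate, results):
    """Posterior probability of non-credible performance.

    base_rate: assumed prior probability of non-credible performance.
    results: list of (sensitivity, specificity, failed) tuples, one per PVT.
    Assumes conditional independence between PVTs (illustrative only).
    """
    if not 0.0 < base_rate < 1.0:
        raise ValueError("base_rate must lie strictly between 0 and 1")
    odds = base_rate / (1.0 - base_rate)
    for sensitivity, specificity, failed in results:
        odds *= likelihood_ratio(sensitivity, specificity, failed)
    return odds / (1.0 + odds)


# Hypothetical case: 30% base rate, two failed PVTs (sensitivity .50, specificity .90).
posterior_two_fails = chain_likelihood_ratios(0.30, [(0.5, 0.9, True), (0.5, 0.9, True)])
# Same PVTs, but one failed and one passed.
posterior_mixed = chain_likelihood_ratios(0.30, [(0.5, 0.9, True), (0.5, 0.9, False)])
```

Under these made-up values, two failures raise the estimated probability well above the base rate, while a mixed result yields a much smaller shift. Larger centralized datasets would allow such chained estimates to be checked against observed outcomes and against the multivariable alternatives listed above.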

Collaboration is especially needed for basic and applied sciences to coalesce unique aspects of PVA that have been studied independently, such as integrating neuropsychology and neurocognitive processing theories to develop more sophisticated stimuli/paradigms (Leighton et al., 2014). For example, less applied scientific models, such as memory familiarity vs. conscious recollection theories, may be applied to clinically available PVTs to reduce false-positive rates in certain neurological populations (Eglit et al., 2017). Similar areas of cognitive science have also shown that using pictorial or numerical stimuli (vs. words) across multiple learning trials can reduce false-positive errors in clinical settings (Leighton et al., 2014). Furthermore, integrating data in real time into these repositories offers a sustainable and accurate way of estimating PVT failure base rates and developing cutoffs accordingly. Finally, as proposed by the National Neuropsychology Network (Loring et al., 2022), a centralized repository for digital data that is backward-compatible with analog test data can provide a smooth transition from traditional pencil-and-paper tests to digital formats. These repositories (including those curated via the National Neuropsychology Network) thus enable sustainable innovation by supporting continuous incremental refinement of PVTs over time.

Increasing accessibility

As observed in other areas of neuropsychology (Miller and Barr, 2017), digital technologies can offer more accessible PVA. Specifically, web-based PVTs can help reach underserved and geographically restricted communities, with the understanding that disparities in access to digital technology may also exist. Although more web-based PVTs are needed, not every PVT requires digitization for telehealth (e.g., Reliable Digit Span; Kanser et al., 2021; Harrison and Davin, 2023). Digital PVTs can also increase accessibility in primary care settings where digital cognitive screeners are being developed for face-to-face evaluations and may be completed in distracting, unsupervised environments (Zygouris and Tsolaki, 2015). Validity indicators could be embedded within these screeners rather than developed as new freestanding PVTs. The National Institutes of Health Toolbox® (Abeare et al., 2021) and Penn Computerized Neurocognitive Battery (Scott et al., 2023) are well-established digital screeners with embedded PVTs that offer great promise for these evaluations. In primary care, embedded PVTs could serve as preliminary screeners for atypical performance that warrants further investigation. Digital PVTs may also increase accessibility in research settings. Although research volunteers are unlikely to deliberately feign impairment, they may lose interest, doze off, or rush through testing (An et al., 2017), especially in dementia-focused research where digital testing is common. Some digitally embedded PVTs have been developed for ADHD research (Table 1) and may be used in other research focused on digital cognitive testing (Bauer et al., 2012).

Enhancing efficiencies

Finally, the application of digital technologies introduces new efficiencies; in PVA, they hold the promise of improved standardization and administration/scoring accuracy. Technologies can leverage automated algorithms to reduce time spent on scoring and routine aspects of PVA (e.g., finding/adjusting PVT cutoffs according to various contextual/intrapersonal factors). Automation would allow providers to allocate more time to case conceptualization and responding to (rather than detecting) validity issues. Greater efficiencies in PVA translate into greater cost-efficiencies as well as reduced collateral expenses for specialized training, testing support, and materials (Davis, 2023). Further, digital PVTs can automatically store, retrieve, and analyze data to generate multiple relevant scores (e.g., specificity, sensitivity, predictive power adjusted for diagnostic-specific base rates, false-positive estimates, and likelihood ratios or probability estimates for single/multivariable failure combinations). Automated scoring will likely become increasingly useful as more PVTs and data are generated.
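As an illustration of the automated scoring described above, the sketch below computes positive and negative predictive power for a single PVT cutoff at different base rates. The operating characteristics and base rates are hypothetical values chosen for the example, not published figures for any test.

```python
def predictive_values(sensitivity, specificity, base_rate):
    """Return (positive predictive value, negative predictive value).

    All inputs are proportions between 0 and 1. base_rate is the assumed
    prevalence of non-credible performance in the setting of interest.
    """
    true_pos = sensitivity * base_rate
    false_pos = (1.0 - specificity) * (1.0 - base_rate)
    false_neg = (1.0 - sensitivity) * base_rate
    true_neg = specificity * (1.0 - base_rate)
    ppv = true_pos / (true_pos + false_pos)
    npv = true_neg / (true_neg + false_neg)
    return ppv, npv


# Hypothetical cutoff with sensitivity .50 and specificity .90,
# evaluated at a low (10%) and a higher (40%) base rate.
ppv_low, npv_low = predictive_values(0.5, 0.9, 0.10)
ppv_high, npv_high = predictive_values(0.5, 0.9, 0.40)
```

With these example values, the same failed PVT is far more informative at the higher base rate than at the lower one. This is why a digital system that retrieves setting-specific base rates automatically could reduce the manual lookup and adjustment that this step currently requires.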

Limitations and concluding remarks

By no means an exhaustive review, this paper describes five ways in which digital technologies can improve PVA. These improvements can complement rather than replace the uniquely human aspects of PVA. Thus, the upfront investments required to transition to digital approaches are likely justifiable. However, other limitations deserve attention before making this transition. As described elsewhere (Miller and Barr, 2017; Germine et al., 2019), limitations to digital assessment may include variability across devices, which can impose different perceptual, motor, and cognitive demands that affect the reliability and accuracy of the tests. Variations in hardware and software within the same class of devices can affect stimulus presentation and response (including response latency) measurement. Individual differences in access to and familiarity with technology may further affect test performance. Additionally, the rapid advancement in technologies suggests that hardware and software can quickly become obsolete. A large influx of data and the application of “black box” ML algorithms and cloud-based repositories also raise concerns regarding data security and privacy. Addressing these issues and implementing digital methods into practice or research would require substantial technological and human infrastructure that may not be attainable in certain settings (Miller, 2019). Indeed, the utility of digital assessments likely depends on the context in which they are implemented. For example, PVA is critical in forensic evaluations, but the limitations described above could challenge compliance with the evolving standards for the admissibility of scientific evidence in these evaluations. Further discussion of these limitations, along with the logistical and practical considerations for a digital transition, is needed (see Miller, 2019; Singh and Germine, 2021).
Finally, other digital opportunities, such as using validity indicators with ecological momentary assessment and virtual reality technologies, merit further discussion. Moving forward, scientists are encouraged to expand upon these digital innovations to ensure that PVA evolves alongside the broader landscape of digital neuropsychology.

Author contributions

J-CF: Conceptualization, Investigation, Methodology, Resources, Supervision, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Acknowledgments

I would like to thank Jason Soble and Anthony Robinson for providing their expertise and guidance during the preparation of this manuscript.

Conflict of interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Abeare, C., Erdodi, L., Messa, I., Terry, D. P., Panenka, W. J., Iverson, G. L., et al. (2021). Development of embedded performance validity indicators in the NIH Toolbox Cognitive Battery. Psychol. Assess. 33, 90–96. doi: 10.1037/pas0000958

Allen, L. M., Conder, R. L., Green, P., and Cox, D. R. (1997). CARB'97 manual for the computerized assessment of response bias. Durham, NC: CogniSyst.

Allen, M. D., Bigler, E. D., Larsen, J., Goodrich-Hunsaker, N. J., and Hopkins, R. O. (2007). Functional neuroimaging evidence for high cognitive effort on the Word Memory Test in the absence of external incentives. Brain Injury 21, 1425–1428. doi: 10.1080/02699050701769819

An, K. Y., Kaploun, K., Erdodi, L. A., and Abeare, C. A. (2017). Performance validity in undergraduate research participants: A comparison of failure rates across tests and cutoffs. Clin. Neuropsychol. 31, 193–206. doi: 10.1080/13854046.2016.1217046

Ayres, P., Lee, J. Y., Paas, F., and Van Merrienboer, J. J. (2021). The validity of physiological measures to identify differences in intrinsic cognitive load. Front. Psychol. 12:702538. doi: 10.3389/fpsyg.2021.702538

Bar-Hen, M., Doniger, G. M., Golzad, M., Geva, N., and Schweiger, A. (2015). Empirically derived algorithm for performance validity assessment embedded in a widely used neuropsychological battery: validation among TBI patients in litigation. J. Clin. Exper. Neuropsychol. 37, 1086–1097. doi: 10.1080/13803395.2015.1078294

Bauer, R. M., Iverson, G. L., Cernich, A. N., Binder, L. M., Ruff, R. M., and Naugle, R. I. (2012). Computerized neuropsychological assessment devices: joint position paper of the American Academy of Clinical Neuropsychology and the National Academy of Neuropsychology. Arch. Clin. Neuropsychol. 27, 362–373. doi: 10.1093/arclin/acs027

Beck, M. F., Albano, A. D., and Smith, W. M. (2019). Person-fit as an index of inattentive responding: a comparison of methods using polytomous survey data. Appl. Psychol. Meas. 43, 374–387. doi: 10.1177/0146621618798666

Berger, C., Lev, A., Braw, Y., Elbaum, T., Wagner, M., and Rassovsky, Y. (2021). Detection of feigned ADHD using the MOXO-d-CPT. J. Atten. Disord. 25, 1032–1047. doi: 10.1177/1087054719864656

Bianchini, K. J., Mathias, C. W., and Greve, K. W. (2001). Symptom validity testing: a critical review. Clin. Neuropsychol. 15, 19–45. doi: 10.1076/clin.15.1.19.1907

Bigler, E. D. (2014). Effort, symptom validity testing, performance validity testing and traumatic brain injury. Brain Injury 28, 1623–1638. doi: 10.3109/02699052.2014.947627

Bilder, R. M., and Reise, S. P. (2019). Neuropsychological tests of the future: How do we get there from here? Clin. Neuropsychol. 33, 220–245. doi: 10.1080/13854046.2018.1521993

Bolter, J. F., and Niccolls, R. (1991). Multi-Digit Memory Test. Wang Neuropsychological Laboratories.

Boone, K. B. (2021). Assessment of Feigned Cognitive Impairment. London: Guilford Publications.

Braw, Y. C., Elbaum, T., Lupu, T., and Ratmansky, M. (2024). Chronic pain: Utility of an eye-tracker integrated stand-alone performance validity test. Psychol. Inj. Law 13, 139–151. doi: 10.1007/s12207-024-09507-6

Brooks, B. L., Fay-McClymont, T. B., MacAllister, W. S., Vasserman, M., and Sherman, E. M. (2019). A new kid on the block: the memory validity profile (MVP) in children with neurological conditions. Child Neuropsychol. 25, 561–572. doi: 10.1080/09297049.2018.1477929

Brooks, B. L., and Sherman, E. M. (2019). Using the Memory Validity Profile (MVP) to detect invalid performance in youth with mild traumatic brain injury. Appl. Neuropsychol. 8, 319–325. doi: 10.1080/21622965.2018.1476865

Brooks, B. L., Sherman, E. M., and Iverson, G. L. (2014). Embedded validity indicators on CNS Vital Signs in youth with neurological diagnoses. Arch. Clin. Neuropsychol. 29, 422–431. doi: 10.1093/arclin/acu029

Bryant, A. M., Pizzonia, K., Alexander, C., Lee, G., Revels-Strother, O., Weekman, S., et al. (2023). 77 The Shell Game Task: Pilot data using a simulator-design study to evaluate a novel attentional performance validity test. J. Int. Neuropsychol. Soc. 29, 751–752. doi: 10.1017/S1355617723009359

Chen, M. H., Leow, A., Ross, M. K., DeLuca, J., Chiaravalloti, N., Costa, S. L., et al. (2022). Associations between smartphone keystroke dynamics and cognition in MS. Digital Health 8:234. doi: 10.1177/20552076221143234

Collins, F. S., and Riley, W. T. (2016). NIH's transformative opportunities for the behavioral and social sciences. Sci. Transl. Med. 8, 366ed14. doi: 10.1126/scitranslmed.aai9374

Daugherty, J. C., Querido, L., Quiroz, N., Wang, D., Hidalgo-Ruzzante, N., Fernandes, S., et al. (2021). The coin in hand–extended version: development and validation of a multicultural performance validity test. Assessment 28, 186–198. doi: 10.1177/1073191119864652

Davis, J. J. (2021). “Interpretation of data from multiple performance validity tests,” in Assessment of feigned cognitive impairment, ed. K. B. Boone (London: Guilford Publications), 283–306.

Davis, J. J. (2023). Time is money: Examining the time cost and associated charges of common performance validity tests. Clin. Neuropsychol. 37, 475–490. doi: 10.1080/13854046.2022.2063190

Dinges, L., Fiedler, M. A., Al-Hamadi, A., Hempel, T., Abdelrahman, A., Weimann, J., et al. (2024). Exploring facial cues: automated deception detection using artificial intelligence. Neural Comput. Applic. 26, 1–27. doi: 10.1007/s00521-024-09811-x

Donders, J. (2020). The incremental value of neuropsychological assessment: a critical review. Clin. Neuropsychol. 34, 56–87. doi: 10.1080/13854046.2019.1575471

Eglit, G. M., Lynch, J. K., and McCaffrey, R. J. (2017). Not all performance validity tests are created equal: the role of recollection and familiarity in the Test of Memory Malingering and Word Memory Test. J. Clin. Exp. Neuropsychol. 39, 173–189. doi: 10.1080/13803395.2016.1210573

Erdal, K. (2012). Neuropsychological testing for sports-related concussion: how athletes can sandbag their baseline testing without detection. Arch. Clin. Neuropsychol. 27, 473–479. doi: 10.1093/arclin/acs050

Erdodi, L., Calamia, M., Holcomb, M., Robinson, A., Rasmussen, L., and Bianchini, K. (2024). M is for performance validity: The IOP-M provides a cost-effective measure of the credibility of memory deficits during neuropsychological evaluations. J. Forensic Psychol. Res. Pract. 24, 434–450. doi: 10.1080/24732850.2023.2168581

Erdodi, L. A. (2023). Cutoff elasticity in multivariate models of performance validity assessment as a function of the number of components and aggregation method. Psychol. Inj. Law 16, 328–350. doi: 10.1007/s12207-023-09490-4

Erdodi, L. A., and Lichtenstein, J. D. (2021). Invalid before impaired: An emerging paradox of embedded validity indicators. Clin. Neuropsychol. 31, 1029–1046. doi: 10.1080/13854046.2017.1323119

Erdodi, L. A., Roth, R. M., Kirsch, N. L., Lajiness-O'Neill, R., and Medoff, B. (2014). Aggregating validity indicators embedded in Conners' CPT-II outperforms individual cutoffs at separating valid from invalid performance in adults with traumatic brain injury. Arch. Clin. Neuropsychol. 29, 456–466. doi: 10.1093/arclin/acu026

Finley, J. C. A., Brook, M., Kern, D., Reilly, J., and Hanlon, R. (2023b). Profile of embedded validity indicators in criminal defendants with verified valid neuropsychological test performance. Arch. Clin. Neuropsychol. 38, 513–524. doi: 10.1093/arclin/acac073

Finley, J. C. A., Brooks, J. M., Nili, A. N., Oh, A., VanLandingham, H. B., Ovsiew, G. P., et al. (2023a). Multivariate examination of embedded indicators of performance validity for ADHD evaluations: a targeted approach. Appl. Neuropsychol. 23, 1–17. doi: 10.1080/23279095.2023.2256440

Finley, J. C. A., Kaddis, L., and Parente, F. J. (2022). Measuring subjective clustering of verbal information after moderate-severe traumatic brain injury: A preliminary review. Brain Injury 36, 1019–1024. doi: 10.1080/02699052.2022.2109751

Finley, J. C. A., Leese, M. I., Roseberry, J. E., and Hill, S. K. (2024b). Multivariable utility of the Memory Integrated Language and Making Change Test. Appl. Neuropsychol. Adult 1–8. doi: 10.1080/23279095.2024.2385439

Finley, J. C. A., and Parente, F. J. (2020). Organization and recall of visual stimuli after traumatic brain injury. Brain Injury 34, 751–756. doi: 10.1080/02699052.2020.1753113

Finley, J. C. A., Rodriguez, C., Cerny, B., Chang, F., Brooks, J., Ovsiew, G., et al. (2024a). Comparing embedded performance validity indicators within the WAIS-IV Letter-Number Sequencing subtest to Reliable Digit Span among adults referred for evaluation of attention deficit/hyperactivity disorder. Clin. Neuropsychol. 2024, 1–17. doi: 10.1080/13854046.2024.2315738

Frederick, R. I., and Foster, H. G. (1991). Multiple measures of malingering on a forced-choice test of cognitive ability. Psychol. Assess. 3, 596–602. doi: 10.1037/1040-3590.3.4.596

Gaudet, C. E., and Weyandt, L. L. (2017). Immediate Post-Concussion and Cognitive Testing (ImPACT): a systematic review of the prevalence and assessment of invalid performance. Clin. Neuropsychol. 31, 43–58. doi: 10.1080/13854046.2016.1220622

Germine, L., Reinecke, K., and Chaytor, N. S. (2019). Digital neuropsychology: Challenges and opportunities at the intersection of science and software. Clin. Neuropsychol. 33, 271–286. doi: 10.1080/13854046.2018.1535662

Gibbons, R. D., Weiss, D. J., Kupfer, D. J., Frank, E., Fagiolini, A., Grochocinski, V. J., et al. (2008). Using computerized adaptive testing to reduce the burden of mental health assessment. Psychiatr. Serv. 59, 361–368. doi: 10.1176/ps.2008.59.4.361

Giromini, L., Viglione, D. J., Zennaro, A., Maffei, A., and Erdodi, L. A. (2020). SVT Meets PVT: development and initial validation of the inventory of problems–memory (IOP-M). Psychol. Inj. Law 13, 261–274. doi: 10.1007/s12207-020-09385-8

Green, P. (2004). Green's Medical Symptom Validity Test (MSVT) for Microsoft Windows: User's manual. Kelowna: Green's Publishing.

Green, P. (2008). Green's Nonverbal Medical Symptom Validity Test (NV-MSVT) for Microsoft Windows: User's manual 1.0. Kelowna: Green's Publishing.

Gutiérrez, J. M., and Gur, R. C. (2011). “Detection of malingering using forced-choice techniques,” in Detection of malingering during head injury litigation, ed. C. R. Reynolds (Cham: Springer), 151–167. doi: 10.1007/978-1-4614-0442-2_4

Harris, C., Tang, Y., Birnbaum, E., Cherian, C., Mendhe, D., and Chen, M. H. (2024). Digital neuropsychology beyond computerized cognitive assessment: Applications of novel digital technologies. Arch. Clin. Neuropsychol. 39, 290–304. doi: 10.1093/arclin/acae016

Harrison, A. G., and Davin, N. (2023). Detecting non-credible performance during virtual testing. Psychol. Inj. Law 16, 264–272. doi: 10.1007/s12207-023-09480-6

Hegedish, O., Doniger, G. M., and Schweiger, A. (2012). Detecting response bias on the MindStreams battery. Psychiat. Psychol. Law 19, 262–281. doi: 10.1080/13218719.2011.561767

Higgins, K. L., Denney, R. L., and Maerlender, A. (2017). Sandbagging on the immediate post-concussion assessment and cognitive testing (ImPACT) in a high school athlete population. Arch. Clin. Neuropsychol. 32, 259–266. doi: 10.1093/arclin/acw108

Hirsch, O., Fuermaier, A. B., Tucha, O., Albrecht, B., Chavanon, M. L., and Christiansen, H. (2022). Symptom and performance validity in samples of adults at clinical evaluation of ADHD: a replication study using machine learning algorithms. J. Clin. Exp. Neuropsychol. 44, 171–184. doi: 10.1080/13803395.2022.2105821

Holmlund, T. B., Cheng, J., Foltz, P. W., Cohen, A. S., and Elvevåg, B. (2019). Updating verbal fluency analysis for the 21st century: applications for psychiatry. Psychiatry Res. 273, 767–769. doi: 10.1016/j.psychres.2019.02.014

Jewsbury, P. A. (2023). Invited commentary: Bayesian inference with multiple tests. Neuropsychol. Rev. 33, 643–652. doi: 10.1007/s11065-023-09604-4

Kanser, R. J., O'Rourke, J. J. F., and Silva, M. A. (2021). Performance validity testing via telehealth and failure rate in veterans with moderate-to-severe traumatic brain injury: a veterans affairs TBI model systems study. NeuroRehabilitation 49, 169–177. doi: 10.3233/NRE-218019

Kush, J. C., Spring, M. B., and Barkand, J. (2012). Advances in the assessment of cognitive skills using computer-based measurement. Behav. Res. Methods 44, 125–134. doi: 10.3758/s13428-011-0136-2

Larrabee, G. J. (2012). Performance validity and symptom validity in neuropsychological assessment. J. Int. Neuropsychol. Soc. 18, 625–630. doi: 10.1017/S1355617712000240

Leark, R. A., Dixon, D., Hoffman, T., and Huynh, D. (2002). Fake bad test response bias effects on the test of variables of attention. Arch. Clin. Neuropsychol. 17, 335–342. doi: 10.1093/arclin/17.4.335

Leese, M. I., Finley, J. C. A., Roseberry, S., and Hill, S. K. (2024a). The Making Change Test: Initial validation of a novel digitized performance validity test for tele-neuropsychology. Clin. Neuropsychol. 2024, 1–14. doi: 10.1080/13854046.2024.2352898

Leese, M. I., Roseberry, J. E., Soble, J. R., and Hill, S. K. (2024b). The Memory Integrated Language Test (MIL test): initial validation of a novel web-based performance validity test. Psychol. Inj. Law 17, 34–44. doi: 10.1007/s12207-023-09495-z

Leighton, A., Weinborn, M., and Maybery, M. (2014). Bridging the gap between neurocognitive processing theory and performance validity assessment among the cognitively impaired: a review and methodological approach. J. Int. Neuropsychol. Soc. 20, 873–886. doi: 10.1017/S135561771400085X

Lichtenstein, J. D., Flaro, L., Baldwin, F. S., Rai, J., and Erdodi, L. A. (2019). Further evidence for embedded performance validity tests in children within the Conners' continuous performance test–second edition. Dev. Neuropsychol. 44, 159–171. doi: 10.1080/87565641.2019.1565535

Lippa, S. M. (2018). Performance validity testing in neuropsychology: A clinical guide, critical review, and update on a rapidly evolving literature. Clin. Neuropsychol. 32, 391–421. doi: 10.1080/13854046.2017.1406146

Loring, D. W., Bauer, R. M., Cavanagh, L., Drane, D. L., Enriquez, K. D., Reise, S. P., et al. (2022). Rationale and design of the national neuropsychology network. J. Int. Neuropsychol. Soc. 28, 1–11. doi: 10.1017/S1355617721000199

Lovell (2015). ImPACT test administration and interpretation manual. Available at: http://www.impacttest.com (accessed July 23, 2024).

Lundberg, S. M., and Lee, S. I. (2017). “A unified approach to interpreting model predictions,” in Advances in neural information processing systems, eds. I. Guyon, U. Von Luxburg, S. Bengio, H. Wallach, R. Fergus, S. Vishwanathan, et al. (New York: Curran Associates), 4765–4774.

Manderino, L., and Gunstad, J. (2018). Collegiate student athletes with history of ADHD or academic difficulties are more likely to produce an invalid protocol on baseline ImPACT testing. Clin. J. Sport Med. 28, 111–116. doi: 10.1097/JSM.0000000000000433

Marshall, P., Schroeder, R., O'Brien, J., Fischer, R., Ries, A., Blesi, B., et al. (2010). Effectiveness of symptom validity measures in identifying cognitive and behavioral symptom exaggeration in adult attention deficit hyperactivity disorder. Clin. Neuropsychol. 24, 1204–1237. doi: 10.1080/13854046.2010.514290

Martin, P. K., Schroeder, R. W., and Odland, A. P. (2015). Neuropsychologists' validity testing beliefs and practices: a survey of North American professionals. Clin. Neuropsychol. 29, 741–776. doi: 10.1080/13854046.2015.1087597

McCaffrey, R. J., Lynch, J. K., Leark, R. A., and Reynolds, C. R. (2020). Pediatric performance validity test suite (PdPVTS): Technical manual. Multi-Health Systems, Inc.

Mertler, C. A., Vannatta, R. A., and LaVenia, K. N. (2021). Advanced and Multivariate Statistical Methods: Practical Application and Interpretation. London: Routledge. doi: 10.4324/9781003047223

Meyers, J. E., Miller, R. M., and Vincent, A. S. (2022). A validity measure for the automated neuropsychological assessment metrics. Arch. Clin. Neuropsychol. 37, 1765–1771. doi: 10.1093/arclin/acac046

Miller, J. B. (2019). Big data and biomedical informatics: Preparing for the modernization of clinical neuropsychology. Clin. Neuropsychol. 33, 287–304. doi: 10.1080/13854046.2018.1523466

Miller, J. B., and Barr, W. B. (2017). The technology crisis in neuropsychology. Arch. Clin. Neuropsychol. 32, 541–554. doi: 10.1093/arclin/acx050

Mohri, M., Rostamizadeh, A., and Talwalkar, A. (2012). Foundations of Machine Learning. London: The MIT Press.

Morey, L. C. (2019). Examining a novel performance validity task for the detection of feigned attentional problems. Appl. Neuropsychol. 26, 255–267. doi: 10.1080/23279095.2017.1409749

Nicholls, C. J., Winstone, L. K., DiVirgilio, E. K., and Foley, M. B. (2020). Test of variables of attention performance among ADHD children with credible vs. non-credible PVT performance. Appl. Neuropsychol. 9, 307–313. doi: 10.1080/21622965.2020.1751787

Omer, E., and Braw, Y. (2021). The Multi-Level Pattern Memory Test (MPMT): Initial validation of a novel performance validity test. Brain Sci. 11, 1039–1055. doi: 10.3390/brainsci11081039

Ord, J. S., Boettcher, A. C., Greve, K. W., and Bianchini, K. J. (2010). Detection of malingering in mild traumatic brain injury with the Conners' Continuous Performance Test–II. J. Clin. Exp. Neuropsychol. 32, 380–387. doi: 10.1080/13803390903066881

Orrù, G., Mazza, C., Monaro, M., Ferracuti, S., Sartori, G., and Roma, P. (2021). The development of a short version of the SIMS using machine learning to detect feigning in forensic assessment. Psychol. Inj. Law 14, 46–57. doi: 10.1007/s12207-020-09389-4

Orrù, G., Monaro, M., Conversano, C., Gemignani, A., and Sartori, G. (2020). Machine learning in psychometrics and psychological research. Front. Psychol. 10:2970. doi: 10.3389/fpsyg.2019.02970

Pace, G., Orrù, G., Monaro, M., Gnoato, F., Vitaliani, R., Boone, K. B., et al. (2019). Malingering detection of cognitive impairment with the B test is boosted using machine learning. Front. Psychol. 10:1650. doi: 10.3389/fpsyg.2019.01650

Parente, F. J., and Finley, J. C. A. (2018). Using association rules to measure subjective organization after acquired brain injury. NeuroRehabilitation 42, 9–15. doi: 10.3233/NRE-172227

Parente, F. J., Finley, J. C. A., and Magalis, C. (2021). An association rule general analytical system (ARGAS) for hypothesis testing in qualitative and quantitative research. Int. J. Quant. Qualit. Res. Methods 9, 1–13. Available online at: https://ssrn.com/abstract=3773480

Parente, F. J., Finley, J. C. A., and Magalis, C. (2023). A quantitative analysis for non-numeric data. Int. J. Quant. Qualit. Res. Methods 11, 1–11. doi: 10.37745/ijqqrm13/vol11n1111

Parsons, T., and Duffield, T. (2020). Paradigm shift toward digital neuropsychology and high-dimensional neuropsychological assessments. J. Med. Internet Res. 22:e23777. doi: 10.2196/23777

Paulo, R., and Albuquerque, P. B. (2019). Detecting memory performance validity with DETECTS: a computerized performance validity test. Appl. Neuropsychol. 26, 48–57. doi: 10.1080/23279095.2017.1359179

Pritchard, D., and Moses, J. (1992). Tests of neuropsychological malingering. Forensic Rep. 5, 287–290.

Raab, C. A., Peak, A. S., and Knoderer, C. (2020). Half of purposeful baseline sandbaggers undetected by ImPACT's embedded invalidity indicators. Arch. Clin. Neuropsychol. 35, 283–290. doi: 10.1093/arclin/acz001

Rees, L. M., Tombaugh, T. N., Gansler, D. A., and Moczynski, N. P. (1998). Five validation experiments of the Test of Memory Malingering (TOMM). Psychol. Assess. 10, 10–20. doi: 10.1037/1040-3590.10.1.10

Reeves, D. L., Winter, K. P., Bleiberg, J., and Kane, R. L. (2007). ANAM® Genogram: Historical perspectives, description, and current endeavors. Arch. Clin. Neuropsychol. 22, S15–S37. doi: 10.1016/j.acn.2006.10.013

Reise, S. P., and Waller, N. G. (2009). Item response theory and clinical measurement. Annu. Rev. Clin. Psychol. 5, 27–48. doi: 10.1146/annurev.clinpsy.032408.153553

Rhoads, T., Resch, Z. J., Ovsiew, G. P., White, D. J., Abramson, D. A., and Soble, J. R. (2021). Every second counts: a comparison of four dot counting test scoring procedures for detecting invalid neuropsychological test performance. Psychol. Assess. 33, 133–141. doi: 10.1037/pas0000970

Rickards, T. A., Cranston, C. C., Touradji, P., and Bechtold, K. T. (2018). Embedded performance validity testing in neuropsychological assessment: potential clinical tools. Appl. Neuropsychol. 25, 219–230. doi: 10.1080/23279095.2017.1278602

Robinson, A., Calamia, M., Penner, N., Assaf, N., Razvi, P., Roth, R. M., et al. (2023). Two times the charm: Repeat administration of the CPT-II improves its classification accuracy as a performance validity index. J. Psychopathol. Behav. Assess. 45, 591–611. doi: 10.1007/s10862-023-10055-7

Rodriguez, V. J., Finley, J. C. A., Liu, Q., Alfonso, D., Basurto, K. S., Oh, A., et al. (2024). Empirically derived symptom profiles in adults with attention-deficit/hyperactivity disorder: An unsupervised machine learning approach. Appl. Neuropsychol. 23, 1–10. doi: 10.1080/23279095.2024.2343022

Roebuck-Spencer, T. M., Vincent, A. S., Gilliland, K., Johnson, D. R., and Cooper, D. B. (2013). Initial clinical validation of an embedded performance validity measure within the automated neuropsychological metrics (ANAM). Arch. Clin. Neuropsychol. 28, 700–710. doi: 10.1093/arclin/act055

Roor, J. J., Peters, M. J., Dandachi-FitzGerald, B., and Ponds, R. W. (2024). Performance validity test failure in the clinical population: A systematic review and meta-analysis of prevalence rates. Neuropsychol. Rev. 34, 299–319. doi: 10.1007/s11065-023-09582-7

Rose, F. E., Hall, S., and Szalda-Petree, A. D. (1995). Portland digit recognition test-computerized: measuring response latency improves the detection of malingering. Clin. Neuropsychol. 9, 124–134. doi: 10.1080/13854049508401594

Schatz, P., and Glatts, C. (2013). “Sandbagging” baseline test performance on ImPACT, without detection, is more difficult than it appears. Arch. Clin. Neuropsychol. 28, 236–244. doi: 10.1093/arclin/act009

Schroeder, R. W., Martin, P. K., Heinrichs, R. J., and Baade, L. E. (2019). Research methods in performance validity testing studies: Criterion grouping approach impacts study outcomes. Clin. Neuropsychol. 33, 466–477. doi: 10.1080/13854046.2018.1484517

Schroeder, R. W., Twumasi-Ankrah, P., Baade, L. E., and Marshall, P. S. (2012). Reliable digit span: A systematic review and cross-validation study. Assessment 19, 21–30. doi: 10.1177/1073191111428764

Scimeca, L. M., Holbrook, L., Rhoads, T., Cerny, B. M., Jennette, K. J., Resch, Z. J., et al. (2021). Examining Conners continuous performance test-3 (CPT-3) embedded performance validity indicators in an adult clinical sample referred for ADHD evaluation. Dev. Neuropsychol. 46, 347–359. doi: 10.1080/87565641.2021.1951270

Scott, J. C., Moore, T. M., Roalf, D. R., Satterthwaite, T. D., Wolf, D. H., Port, A. M., et al. (2023). Development and application of novel performance validity metrics for computerized neurocognitive batteries. J. Int. Neuropsychol. Soc. 29, 789–797. doi: 10.1017/S1355617722000893

Sharland, M. J., Waring, S. C., Johnson, B. P., Taran, A. M., Rusin, T. A., Pattock, A. M., et al. (2018). Further examination of embedded performance validity indicators for the Conners' Continuous Performance Test and Brief Test of Attention in a large outpatient clinical sample. Clin. Neuropsychol. 32, 98–108. doi: 10.1080/13854046.2017.1332240

Sherman, E. M., Slick, D. J., and Iverson, G. L. (2020). Multidimensional malingering criteria for neuropsychological assessment: A 20-year update of the malingered neuropsychological dysfunction criteria. Arch. Clin. Neuropsychol. 35, 735–764. doi: 10.1093/arclin/acaa019

Shura, R. D., Miskey, H. M., Rowland, J. A., Yoash-Gantz, R. E., and Denning, J. H. (2016). Embedded performance validity measures with postdeployment veterans: Cross-validation and efficiency with multiple measures. Appl. Neuropsychol. 23, 94–104. doi: 10.1080/23279095.2015.1014556

Siedlik, J. A., Siscos, S., Evans, K., Rolf, A., Gallagher, P., Seeley, J., et al. (2015). Computerized neurocognitive assessments and detection of the malingering athlete. J. Sports Med. Phys. Fitness 56, 1086–1091.

Singh, S., and Germine, L. (2021). Technology meets tradition: A hybrid model for implementing digital tools in neuropsychology. Int. Rev. Psychiat. 33, 382–393. doi: 10.1080/09540261.2020.1835839

Slick, D. J., Hoop, G., and Strauss, E. (1995). The Victoria Symptom Validity Test. Odessa, FL: Psychological Assessment Resources. doi: 10.1037/t27242-000

Soble, J. R., Alverson, W. A., Phillips, J. I., Critchfield, E. A., Fullen, C., O'Rourke, J. J. F., et al. (2020). Strength in numbers or quality over quantity? Examining the importance of criterion measure selection to define validity groups in performance validity test (PVT) research. Psychol. Inj. Law 13, 44–56. doi: 10.1007/s12207-019-09370-w

Sweet, J. J., Heilbronner, R. L., Morgan, J. E., Larrabee, G. J., Rohling, M. L., and Boone, K. B. (2021). American Academy of Clinical Neuropsychology (AACN) 2021 consensus statement on validity assessment: Update of the 2009 AACN consensus conference statement on neuropsychological assessment of effort, response bias, and malingering. Clin. Neuropsychol. 35, 1053–1106. doi: 10.1080/13854046.2021.1896036

Winter, D., and Braw, Y. (2022). Validating embedded validity indicators of feigned ADHD-associated cognitive impairment using the MOXO-d-CPT. J. Atten. Disord. 26, 1907–1913. doi: 10.1177/10870547221112947

Keywords: performance validity, malinger, feign, digital, artificial intelligence, technology, neuropsychology, computerized

Citation: Finley J-CA (2024) Performance validity testing: the need for digital technology and where to go from here. Front. Psychol. 15:1452462. doi: 10.3389/fpsyg.2024.1452462

Received: 20 June 2024; Accepted: 29 July 2024;

Published: 13 August 2024.

Edited by:

Alessio Facchin, Magna Graecia University, Italy

Reviewed by:

Ruben Gur, University of Pennsylvania, United States

Tyler M. Moore, University of Pennsylvania, United States, in collaboration with reviewer RG

Rachael L. Ellison, Rosalind Franklin University of Medicine and Science, United States

Copyright © 2024 Finley. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: John-Christopher A. Finley, jfinley3045@gmail.com
