- 1Chongqing University of Posts and Telecommunications, Chongqing, China

- 2Yangtze Normal University, Fuling District, China

The Bergen Facebook addiction scale (BFAS) is a screening instrument frequently used to evaluate Facebook addiction. However, its reported reliability varies considerably across studies. This study aimed to evaluate the reliability of the BFAS and its adaptation, the Bergen Social Media Addiction Scale (BSMAS), and to identify which study characteristics are associated with this reliability. We performed a reliability generalization meta-analysis involving 173,641 participants across 127 articles, which reported 147 Cronbach’s alpha values for internal consistency. The random-effects model yielded pooled Cronbach’s alpha values of 0.8535 (95% CI [0.8409, 0.8660]) for the BFAS and 0.8248 (95% CI [0.8116, 0.8380]) for the BSMAS. Moderator analyses indicated that the mean and standard deviation of the total scores accounted for 10.06 and 36.7% of the total variability in the BFAS alpha values, respectively. For the BSMAS, the standard deviation of the total scores and sample size accounted for 13.54 and 10.22% of the total variability in alpha values, respectively. Meta-ANOVA analyses revealed that none of the categorical variables significantly affected the estimated alpha values for either the BFAS or BSMAS. Our findings endorse the BFAS and BSMAS as reliable instruments for measuring social media addiction.

1 Introduction

Social media addiction is a psychological condition characterized by an excessive focus on social media platforms. Individuals with this addiction feel a strong compulsion to use social media and invest substantial time and energy in it, often at the expense of their social activities, learning, interpersonal relationships, mental health, and overall well-being (Andreassen and Pallesen, 2014). Research has consistently highlighted the detrimental health effects of social media addiction, including sleep disturbances (Ho, 2021; Marino et al., 2018), impaired decision-making (Delaney et al., 2018), and increased risk of depression (Ho, 2021; Mamun and Griffiths, 2019; Seabrook et al., 2016). Therefore, accurately assessing social media addiction is crucial for understanding its underlying mechanisms and potential harmful effects. The Bergen Facebook addiction scale (BFAS; Andreassen et al., 2012) is a widely used tool for assessing Facebook addiction. It is a self-report scale designed primarily for college students and is based on six criteria: salience, tolerance, mood modification, relapse, withdrawal, and conflict, as defined by Brown (1993) and Griffiths (1996). The BFAS includes a 6-item short version and an 18-item standard version. Each item is rated on a 5-point Likert scale (1 = very rarely, 5 = very often). The total score is calculated by summing the individual item scores, with higher totals indicating more severe addiction to the Facebook platform. Preliminary findings indicate that the BFAS demonstrates good validity. Total BFAS scores correlate well with other measures of Facebook activity, neuroticism, and extraversion, and show a negative relationship with conscientiousness. Additionally, higher BFAS scores are associated with delayed sleep onset and wake times (Andreassen et al., 2012). 
Given the proliferation of social media platforms beyond Facebook, researchers have adapted the BFAS to assess addiction across various platforms through the Bergen Social Media Addiction Scale (BSMAS; Schou Andreassen et al., 2016). Both the BFAS and BSMAS have been translated into several languages, including German (Brailovskaia et al., 2018), Spanish (Elphinston et al., 2022), Portuguese (da Veiga et al., 2019), and Chinese (Yam et al., 2019), due to their demonstrated validity.

In classical test theory, reliability refers to how consistently a measurement tool produces results. It is typically defined as the ratio of true score variance to the total variance, reflecting the proportion of variance in scores due to the true score rather than measurement error (Higgins et al., 2003; Rodriguez and Maeda, 2006). Cronbach’s alpha is commonly used to assess reliability because it provides a measure of internal consistency, indicating how well the items in a scale measure the same underlying construct. Researchers frequently use it as the reliability indicator for the BFAS. The BFAS itself has a good Cronbach’s alpha (0.83). Studies using the BFAS have also found high internal consistency reliability in specific contexts. However, there are several issues with reporting this reliability indicator in studies. For the BFAS, Cronbach’s alpha ranges from 0.66 (Błachnio et al., 2017; Brailovskaia et al., 2023) to 0.94 (Satici, 2019; Soraci et al., 2023). Similarly, for BSMAS, it varies from 0.66 (Chung et al., 2019) to 0.92 (Brailovskaia et al., 2019; Hoşgör et al., 2021). These variations highlight significant discrepancies in reported reliability. Another major issue is that, when some studies have used the BFAS or BSMAS, they report reliability values from previous research rather than calculating them from their own data, which can lead to inaccurate or misleading conclusions. This phenomenon of omitting or improperly reporting reliability values is an issue of reliability induction (Henson and Thompson, 2002). It is clear that reliability is context-dependent and can vary based on sample and testing conditions. Discrepancies in reliability estimates as well as reliability induction threaten the reliability of statistical analyses and research conclusions based on such indicators. 
Although the BFAS and BSMAS are widely used, no study has systematically examined how the reliability of the two tools varies across testing contexts or estimated their overall reliability.
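To make the internal consistency indicator discussed above concrete, the following minimal sketch computes Cronbach's alpha from raw item responses using the standard formula, alpha = k/(k - 1) × (1 - sum of item variances / variance of total scores). The function name `cronbach_alpha` and the example responses are hypothetical and are not taken from any of the included studies.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of item scores.

    Illustrative helper (hypothetical name); uses sample variances (n - 1).
    """
    if len(responses) < 2:
        raise ValueError("need at least two respondents")
    k = len(responses[0])  # number of items on the scale
    if k < 2:
        raise ValueError("need at least two items")
    # Variance of each item across respondents
    item_vars = [variance([row[i] for row in responses]) for i in range(k)]
    # Variance of the summed total score across respondents
    total_var = variance([sum(row) for row in responses])
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical responses to a six-item scale rated 1-5 (made-up data)
example = [
    [1, 2, 1, 2, 1, 1],
    [3, 3, 2, 3, 3, 2],
    [4, 5, 4, 4, 5, 4],
    [2, 2, 3, 2, 2, 3],
    [5, 4, 5, 5, 4, 5],
]
print(round(cronbach_alpha(example), 3))
```

Because alpha depends on the item and total-score variances in the sample at hand, the same scale can yield different values in different samples, which is why each study needs to compute it from its own data.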

Reliability generalization analysis is a method that evaluates the average reliability of a measurement tool, explores variability in reliability across studies, and identifies factors that affect reliability (Henson and Thompson, 2002; Vacha-Haase, 1998). This study uses this approach to address gaps in the current research on the BFAS and BSMAS. Specifically, it aims to (1) estimate the average internal consistency reliability of the BFAS and BSMAS, (2) assess the variability in reliability across different studies, (3) identify study characteristics that might influence reliability, and (4) address issues related to reliability induction.

2 Materials and methods

The review methods and reporting followed the Reliability Generalization Meta-analysis (REGEMA) guidelines (Sánchez-Meca et al., 2021), which outline best practices for conducting reliability generalization studies. The research protocol was registered with the International Prospective Register of Systematic Reviews (PROSPERO ID: CRD42021295390) to ensure transparency and adherence to systematic review standards.

2.1 Study search strategy

Systematic searches were conducted in the EBSCO, Elsevier, Springer, ProQuest, Wiley Online Library, and CNKI databases using keywords such as ‘Facebook addiction,’ ‘social media addiction,’ and related terms. No search limits were applied. In addition, backward searches were performed from recent qualitative reviews and key studies to identify additional relevant articles. The final search was completed on December 30, 2021.

2.2 Study selection criteria

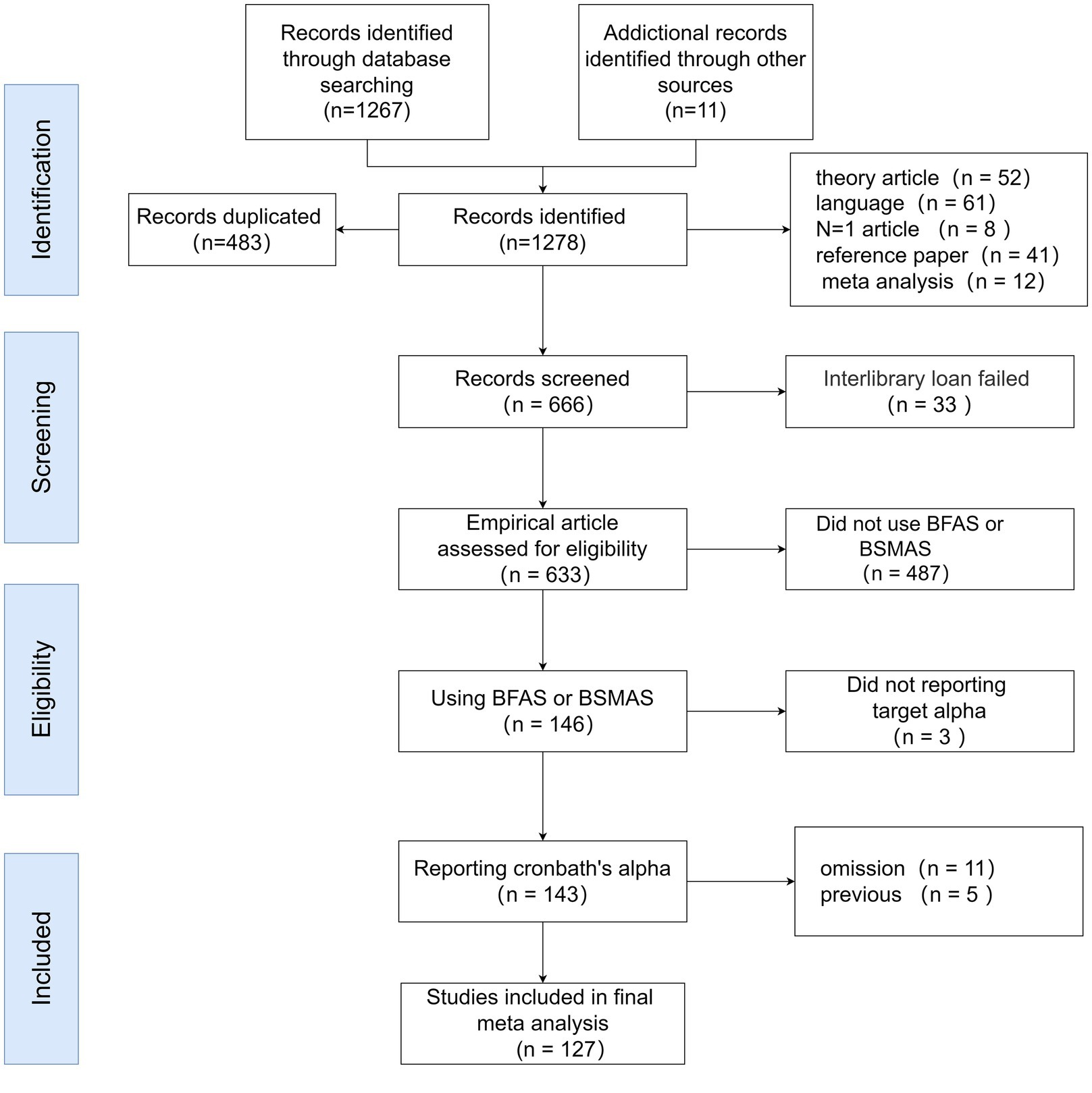

To be included in this reliability generalization meta-analysis, studies had to meet the following criteria: (1) Published in English or Chinese; (2) Empirically reported Cronbach’s alpha values for the scales used; (3) Published in a peer-reviewed academic journal or as a dissertation to ensure quality. Figure 1 illustrates the study selection process.

2.3 Data extraction and coding

Characteristics were extracted only from studies that reported the target Cronbach’s alpha values. To examine how study characteristics influenced alpha values, the following categorical moderators were coded: COVID-19, administration, country, test language, study aim, study nature, test length, participant group, sampling method, and social media platform. Continuous variables included publication year, sample size, mean and standard deviation of sample age, female proportion, and mean and standard deviation of the total score. Missing data were recorded as missing, and no imputation was performed. The coding manual, developed by the first and second authors, included detailed guidelines for extracting and categorizing study characteristics. To check accuracy, a random sample of 40 studies was coded independently by two coders. Agreement between coders was assessed using the intraclass correlation coefficient (ranging from 0.99 to 1) for continuous variables and kappa coefficients (ranging from 0.87 to 1.00) for categorical variables.
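The kappa coefficient used for the categorical variables can be illustrated with a short sketch. The code below computes Cohen's kappa for two coders, defined as (observed agreement - chance agreement) / (1 - chance agreement). It assumes the two-coder Cohen variant; the function name `cohen_kappa` and the example codes are hypothetical.

```python
from collections import Counter


def cohen_kappa(codes_a, codes_b):
    """Cohen's kappa for two coders' categorical codes of the same studies."""
    if len(codes_a) != len(codes_b) or not codes_a:
        raise ValueError("both coders must code the same non-empty set of studies")
    n = len(codes_a)
    # Proportion of studies on which the two coders agree
    observed = sum(a == b for a, b in zip(codes_a, codes_b)) / n
    # Agreement expected by chance from each coder's marginal frequencies
    freq_a, freq_b = Counter(codes_a), Counter(codes_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        # Both coders used a single identical category throughout
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical codes for the "administration" moderator in four studies
coder_1 = ["online", "online", "paper", "paper"]
coder_2 = ["online", "paper", "paper", "paper"]
print(cohen_kappa(coder_1, coder_2))  # 0.5
```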

2.4 Data analysis

The current study used Cronbach’s alpha values for the reliability generalization meta-analysis. The alpha values were not transformed, following Thompson and Vacha-Haase (2000), who suggested that transformation may not be necessary for the analyses conducted. Random-effects models in a frequentist framework were chosen because they account for variability between studies, in line with standard practice in meta-analysis. Studies were weighted by inverse variance, and the between-study variance was estimated by restricted maximum likelihood. The confidence limits of the overall reliability estimates were computed using the method proposed by Knapp and Hartung (2003). Heterogeneity was assessed using the Q test and the I² index, which indicates the percentage of total variation across studies due to heterogeneity rather than chance (Thompson and Vacha-Haase, 2000). I² values of 25, 50, and 75% correspond to low, moderate, and high heterogeneity, respectively (Higgins et al., 2003). Moderator analyses were used to explain the variance in alpha values: meta-analyses of variance (meta-ANOVA) for categorical variables and meta-regression for continuous variables. The adjustment proposed by Knapp and Hartung (2003) was used to test the statistical significance of each moderator and to account for residual heterogeneity. The QW and QE indices were used to examine model misspecification in the meta-ANOVA and meta-regression, respectively. In addition, R² was used to quantify the proportion of variance explained by the moderator variables. If more than one moderator contributed to the variance of coefficient alpha, a multiple meta-regression analysis was conducted to identify the unique contribution of each moderator.
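The pooling and heterogeneity steps described above can be sketched in a few lines. This is a simplified illustration and not the authors' analysis. It swaps in the closed-form DerSimonian-Laird estimator of the between-study variance in place of restricted maximum likelihood, and it reports a plain standard error without the Knapp-Hartung adjustment. The function name, the alpha values, and their sampling variances are all hypothetical.

```python
from math import sqrt


def random_effects_pool(alphas, variances):
    """Inverse-variance random-effects pooling with Q, I^2, and tau^2.

    tau^2 uses the DerSimonian-Laird estimator (an assumption made for
    simplicity; the study itself used restricted maximum likelihood).
    """
    k = len(alphas)
    if k < 2 or k != len(variances):
        raise ValueError("need at least two studies with matching variances")
    # Fixed-effect (inverse-variance) weights and mean, needed for Q
    w = [1.0 / v for v in variances]
    sum_w = sum(w)
    fixed_mean = sum(wi * a for wi, a in zip(w, alphas)) / sum_w
    # Cochran's Q: weighted squared deviations from the fixed-effect mean
    q = sum(wi * (a - fixed_mean) ** 2 for wi, a in zip(w, alphas))
    df = k - 1
    # DerSimonian-Laird between-study variance, truncated at zero
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c)
    # I^2: share of total variation attributed to heterogeneity, in percent
    i2 = max(0.0, (q - df) / q) * 100.0 if q > 0 else 0.0
    # Random-effects weights add tau^2 to each study's sampling variance
    w_re = [1.0 / (v + tau2) for v in variances]
    pooled = sum(wi * a for wi, a in zip(w_re, alphas)) / sum(w_re)
    se = sqrt(1.0 / sum(w_re))
    return {"pooled": pooled, "se": se, "Q": q, "I2": i2, "tau2": tau2}


# Hypothetical alpha values and sampling variances from five studies
result = random_effects_pool(
    [0.83, 0.88, 0.79, 0.91, 0.85],
    [0.0004, 0.0002, 0.0006, 0.0001, 0.0003],
)
print(result)
```

Under the random-effects model, a larger between-study variance pulls the study weights toward equality, so small studies count for relatively more than they would under a fixed-effect model.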

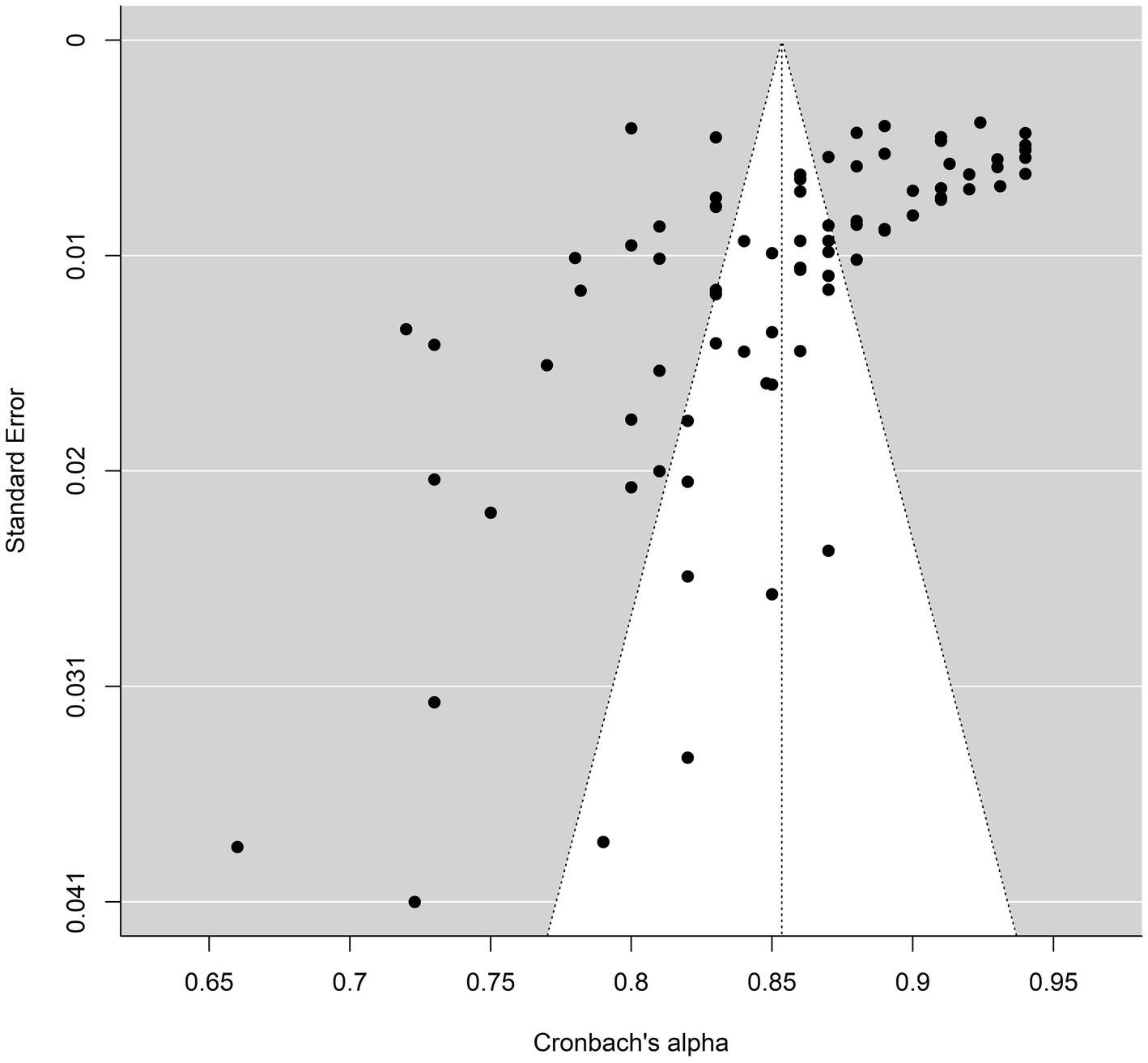

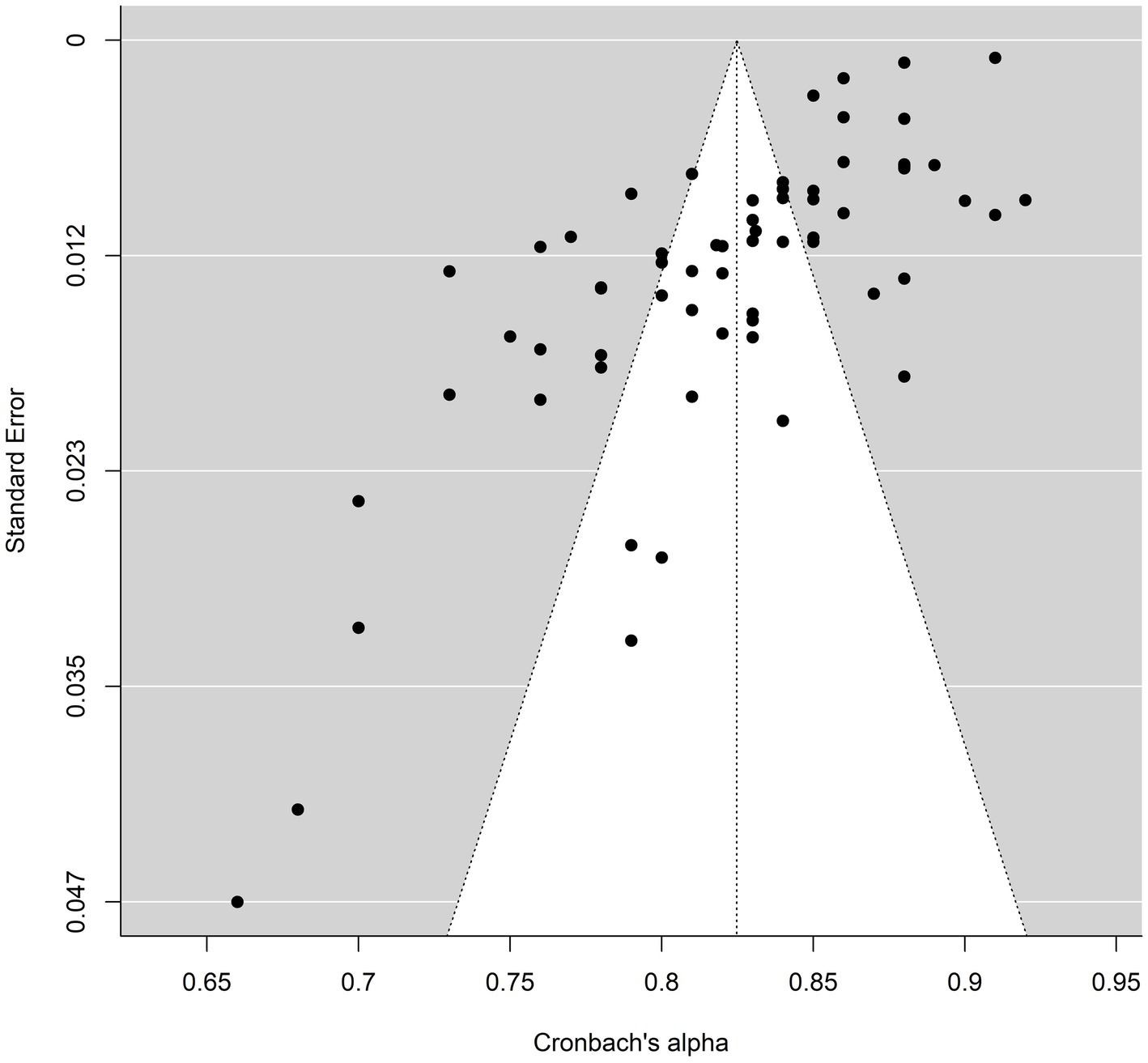

Publication bias was assessed using funnel plots for both BFAS and BSMAS, and the trim-and-fill method (Duval and Tweedie, 2000) was used to estimate and adjust for any asymmetry in the funnel plots. The asymmetry of the funnel plots indicates that there are potential coefficient alphas that were not included in the current meta-analysis, and the number of these coefficient alphas can be estimated using the trim-and-fill method. The fail-safe number (Durlak and Lipsey, 1991) was calculated to assess the robustness of the meta-analytic findings against publication bias. Meta-analytic results were considered reliable if the fail-safe number exceeded the critical value of 5 × k + 10, where k represents the number of studies included in the analysis. If the fail-safe number falls below this critical value, publication bias or file drawer problems may exist.
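The fail-safe criterion stated above reduces to a simple comparison, sketched below. The function names are hypothetical, and the value of k in the usage example is illustrative only (147 is the total number of alpha values across both scales, whereas the analyses in this study were run separately for each scale).

```python
def fail_safe_threshold(k):
    """Critical value 5 * k + 10 for k included studies."""
    return 5 * k + 10


def exceeds_fail_safe_threshold(fail_safe_n, k):
    """True if the fail-safe number exceeds the critical value."""
    return fail_safe_n > fail_safe_threshold(k)


# Illustrative k only; see the note above
print(fail_safe_threshold(147))  # 745
```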

All statistical analyses were performed using the metafor package (version 3.8; Viechtbauer, 2010) in R 4.1.2 for Windows.

3 Results

3.1 Description of sample

In total, 127 articles reporting 147 reliability values from 173,641 subjects were included in the formal meta-analysis (articles with reliability induction were excluded). By country, the largest numbers of reliability values came from China (21), Germany (20), the United States (19), Italy (18), and Turkey (11). By scale language, the five most common were English (38), Chinese (20), German (20), Italian (18), and Turkish (11). By number of subjects, the top five countries were Norway (47,283), China (46,712), Italy (11,435), the United States (10,137), and Hungary (4,073), and the top five scale languages were English (70,221), Chinese (45,797), Italian (11,435), Hungarian (7,043), and German (6,840). By sample type, the numbers of subjects in the mixed sample, undergraduate student sample, unknown sample, and adult sample were 96,756, 35,871, 34,600, 3,499, and 2,915, respectively. A total of 122,018 subjects completed the BFAS, and 51,623 completed the BSMAS.

Sixteen articles had reliability-induction issues. Twelve of these used the BFAS: eight did not report any reliability value, and four cited the reliability value from the original scale study instead of computing their own. The remaining four used the BSMAS: three did not report any reliability value, and one cited the original value.

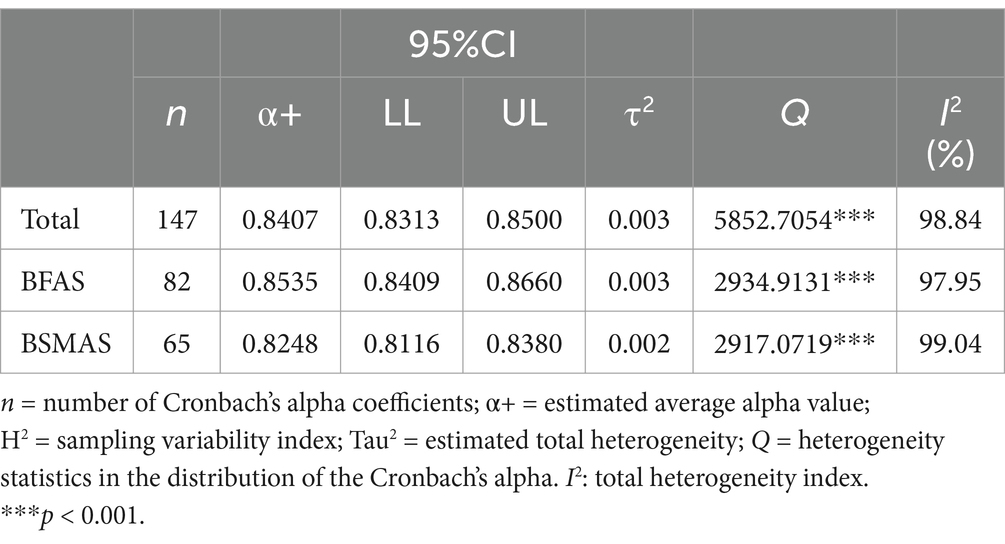

3.2 Overall reliability estimates and test of heterogeneity

Across both scales, the pooled Cronbach’s alpha was 0.8407 (95% CI [0.8313, 0.8500]); more specific results are presented in Table 1. To investigate the sources of heterogeneity in the overall reliability estimates, we first tested scale version (BFAS vs. BSMAS) as a moderator. The effect of version was significant, QM = 10.03, p < 0.0001, QE = 5851.985, p < 0.0001, τ² = 0.0027, R² = 7.04%. The high residual heterogeneity indicates substantial variability in reliability estimates across studies, suggesting that factors such as sample characteristics and study conditions influence reliability. Heterogeneity was therefore also tested separately for each version; the estimates are presented in Table 1.

The meta-analysis revealed that the BFAS and BSMAS had high internal consistency, with Cronbach’s alpha values of 0.85 and 0.82, respectively, indicating strong reliability. The results also indicate heterogeneity in the reliability values of both the BFAS and BSMAS; therefore, further analyses are required.

3.3 Moderation analysis

3.3.1 Meta-ANOVA for categorical variables

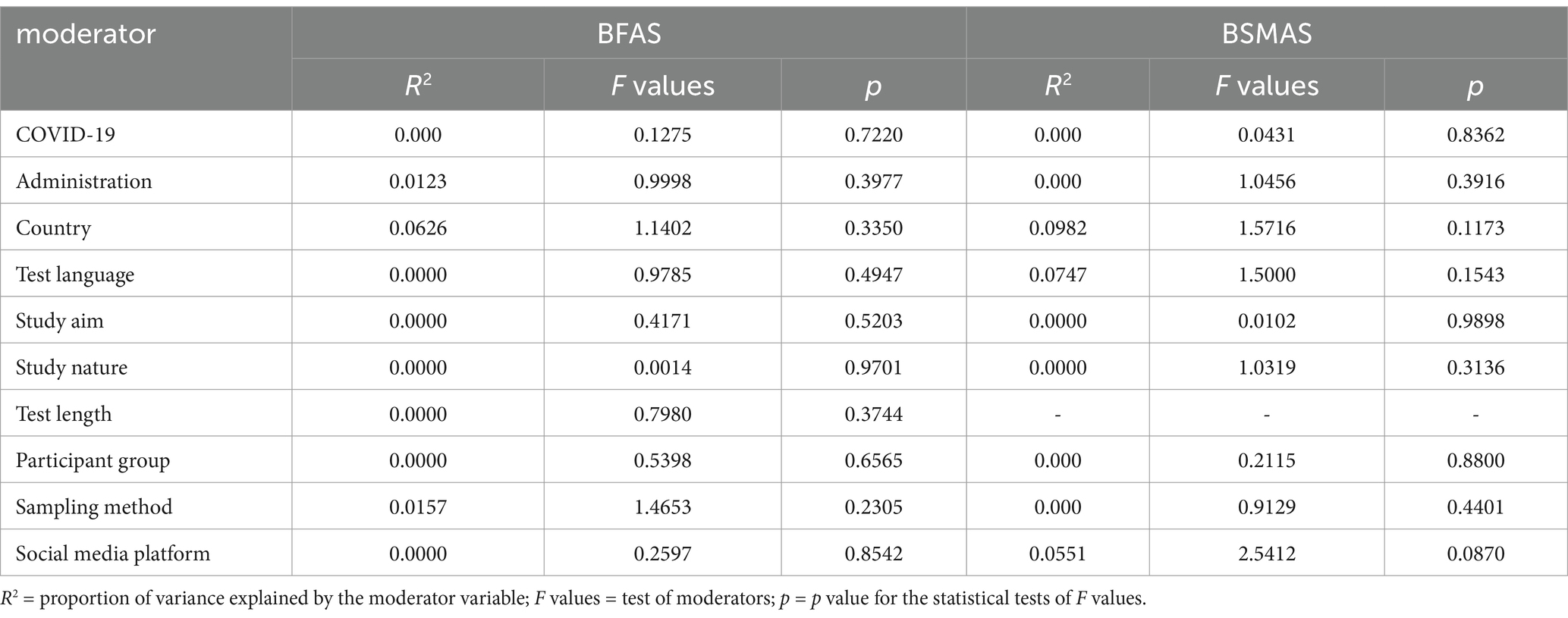

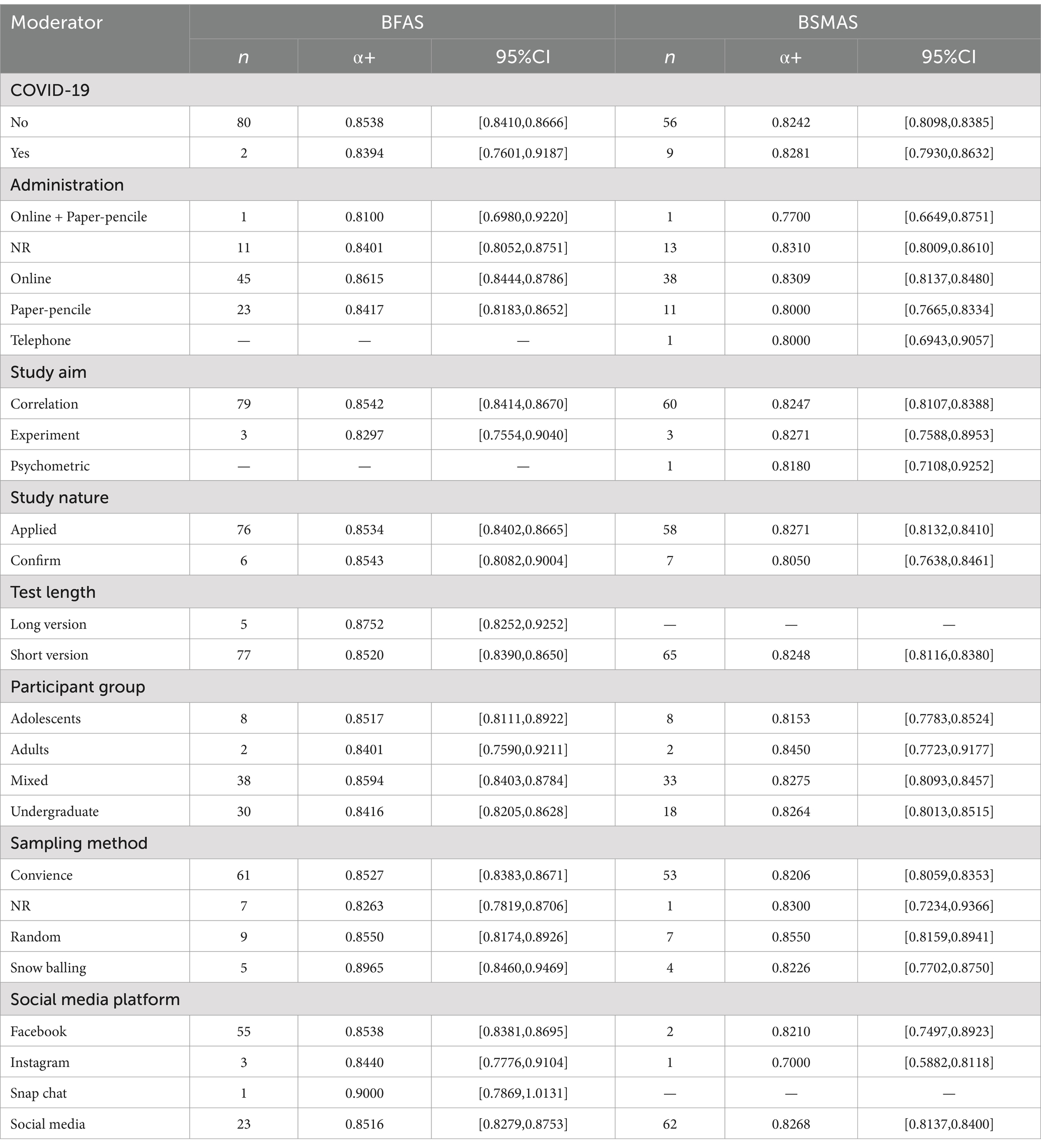

The summary results of the meta-ANOVA for the categorical variables are shown in Table 2. For all categorical variables, the differences in the estimated average alpha values of the BFAS and BSMAS were not statistically significant. That is, none of COVID-19, administration, country, test language, study aim, study nature, test length, participant group, sampling method, or social media platform affected the average internal consistency reliability of the BFAS or BSMAS. Table 3 presents the reliability estimates at the different levels of the categorical variables.

3.3.2 Meta-regression for continuous variables

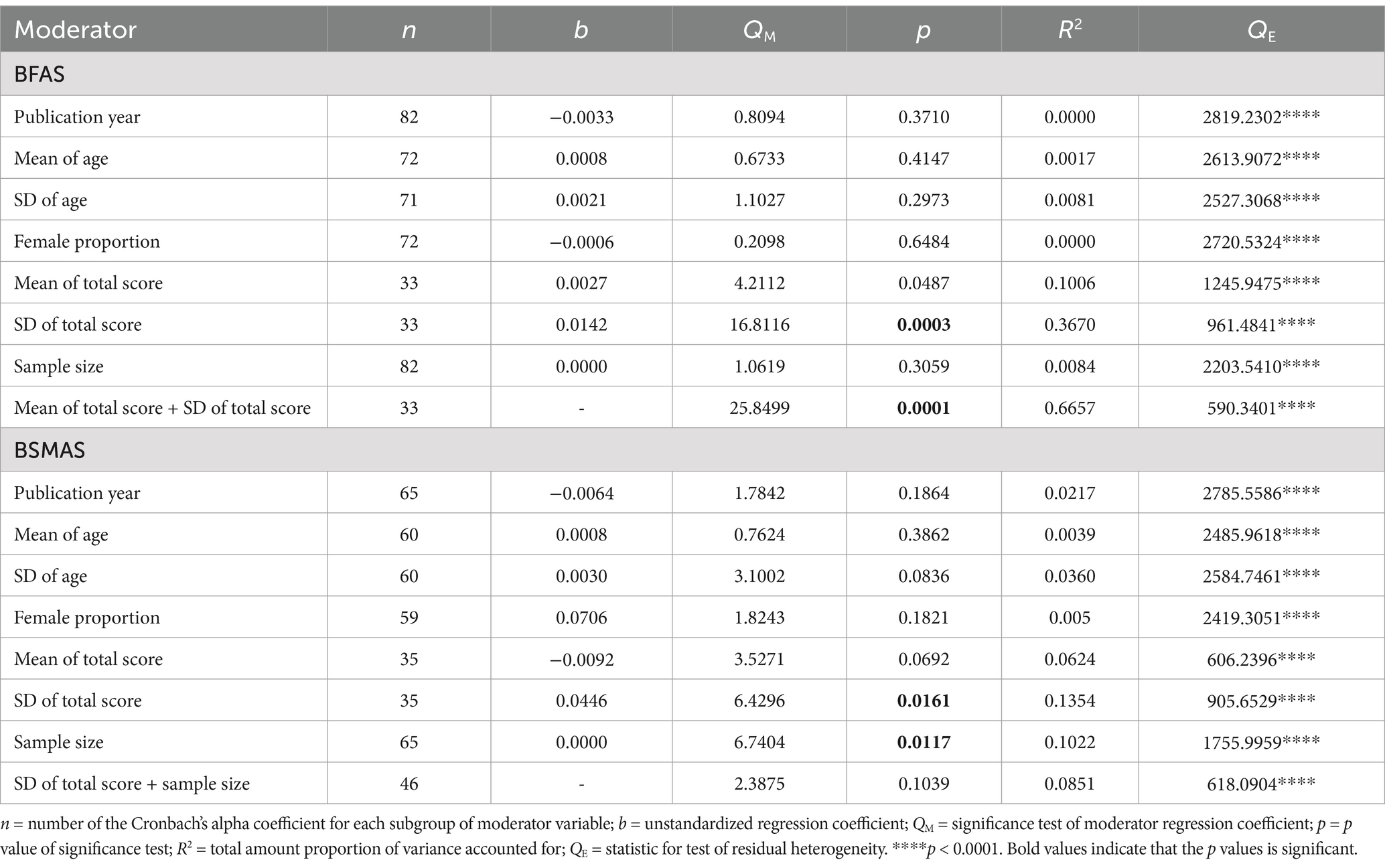

As shown in Table 4, the mean and standard deviation of the total score for the BFAS accounted for 10.06 and 36.7% of the variance in alpha values, respectively. Together, these two variables explained 66.57% of the variance in alpha values. For the BSMAS, the standard deviation of the total score and sample size accounted for 13.54 and 10.22% of the variance in alpha values, respectively. Jointly, however, these two variables explained only 8.51% of the variance.
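The percentages above are R² values from meta-regression. The text does not spell out the formula, so the sketch below assumes the common pseudo-R² definition: the proportional reduction in the between-study variance (τ²) when moderators are added to the model, truncated at zero. The function name and the τ² values are hypothetical.

```python
def pseudo_r2(tau2_null, tau2_model):
    """Percent of between-study variance accounted for by the moderators.

    tau2_null:  tau^2 from the model without moderators
    tau2_model: residual tau^2 from the model with moderators
    Assumed definition; negative values are truncated to zero.
    """
    if tau2_null <= 0:
        return 0.0
    return max(0.0, 100.0 * (tau2_null - tau2_model) / tau2_null)


# Hypothetical tau^2 values, not taken from Table 4
print(pseudo_r2(0.004, 0.003))
```

Under this definition, each model is fitted to the studies that report all of its moderators, so a multiple meta-regression may rest on a smaller set of studies than the single-moderator models.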

3.4 Publication bias

To investigate publication bias for the BFAS and BSMAS, funnel plots were drawn (Figures 2 and 3). The trim-and-fill method estimated zero missing studies on the right side for both the BFAS (SE = 5.1902) and the BSMAS (SE = 4.6142), indicating no publication bias. Fail-safe numbers were also calculated using the Rosenthal, Orwin, and Rosenberg methods. For the BFAS, these were 24,493,065 (Rosenthal), 82 (Orwin), and 22,335,449 (Rosenberg); for the BSMAS, they were 21,193,629 (Rosenthal), 65 (Orwin), and 44,612,242 (Rosenberg), indicating that the reliability generalization results are relatively robust.

4 Discussion

The purpose of this study was to conduct a meta-analysis of the internal consistency reliability of the BFAS and BSMAS using a reliability generalization method. First, the pooled internal consistency reliabilities of the BFAS and BSMAS were 0.8535 (95% CI [0.8409, 0.8660]) and 0.8248 (95% CI [0.8116, 0.8380]), respectively. Second, heterogeneity was high among the studies using each scale. Third, categorical variables did not significantly moderate the reliability of either scale. However, for the BFAS, the mean and standard deviation of total scores were significant moderators, whereas for the BSMAS, only the standard deviation of total scores and sample size played a role. Fourth, reliability-induction issues were noted for both scales. For the BFAS, eight studies failed to report reliability values, and four cited the original reliability values instead of computing their own. For the BSMAS, three studies omitted reliability reporting, and one cited the original values.

According to the evaluation criteria for internal consistency reliability, a value of 0.9 indicates good internal consistency, values above 0.8 are ideal, values above 0.7 suggest that the scale should be modified, and values below 0.7 indicate that it should be reworked (Sijtsma, 2009). This study’s results show that both the BFAS and BSMAS have an estimated reliability above 0.8, which is ideal. This finding is consistent with the initial reliability values of the two measures, indicating that these tools are reliable for measuring an individual’s social media addiction. Moreover, heterogeneity was significantly high among the studies using each scale. This indicates that reliability differed considerably across populations, samples, age groups, countries, regions, and publication years. Despite this, the average estimated reliability of the two tools still reached a high level, which indirectly supports the stability of their measurement results. However, the BSMAS is more heterogeneous than the BFAS, which may be related to the social media platforms measured. The BFAS was specifically designed to investigate Facebook addiction, whereas the BSMAS covers a wider range of platforms.

In addition to examining the average estimated reliability and heterogeneity of the BFAS and BSMAS, this study examined the impact of study characteristics (continuous and categorical variables) on their reliability. The results showed that, for the BFAS, the moderating effect of categorical variables on the average reliability estimate was not statistically significant, while the mean and standard deviation of the test scores affected its reliability. For the BSMAS, only the standard deviation of the test scores and sample size affected its internal consistency reliability. The year that the study was published, mean age of the subjects, standard deviation of the subjects’ age, proportion of women in the sample, and total test score had no impact on internal consistency. For the BFAS, the mean and standard deviation of the test scores independently explained about 10.06 and 36.7% of the variance, respectively, while the two together explained about 66.57% of the variance. For the BSMAS, the standard deviation of test scores and sample size explained approximately 13.54 and 10.22% of the variance, respectively, but the two were not jointly significant. The effects of test scores and standard deviations on reliability estimates have also been reported in other reliability generalization studies (Blázquez-Rincón et al., 2022; Liang et al., 2021; López-Pina et al., 2015). Our findings are consistent with classical test theory, which posits that greater variation in observed scores enhances reliability, and with previous studies showing that the variability in test scores influences reliability estimates (Vacha-Haase, 1998).

The present study identified reliability-induction issues associated with both the BFAS and BSMAS. This phenomenon can be attributed, in part, to a misunderstanding regarding the nature of reliability—specifically, whether it pertains to the measurement instrument itself or the outcomes derived from the testing process. Our findings underscore a prevalent misconception that reliability is an intrinsic quality of the testing tool, rather than a characteristic that is contingent upon specific testing conditions and sample populations. It is important to note that the reliability of psychological assessments is not an inherent property of the instrument; rather, it is a feature of the results obtained from the test. Consequently, administering the same assessment to different sample groups will inevitably yield varying reliability estimates due to factors such as differences in research samples, testing environments, cultural contexts, and linguistic backgrounds. Therefore, it is imperative for researchers to consistently report the reliability of a testing instrument as it pertains to their specific study context.

This study had certain limitations. The main limitations were as follows: Firstly, the research was restricted to English and Chinese publications, which could potentially limit the broader applicability of the findings. Secondly, there was a notable underrepresentation of studies from South America and Africa, and most studies lacked racial demographic data, which might restrict the thoroughness of the analysis. Thirdly, since race was not reported in the majority of studies, it was not included as a variable in this analysis. Fourthly, the exploratory model indicated that variations in test scores were the primary source of error. Nevertheless, a significant portion of the variations remained unexplained. Future research should consider including studies from a wider range of languages and regions. It should also explore the influence of racial and cultural factors, as well as investigate other possible factors that could moderate reliability.

The widespread use of social media has made addiction a prevalent issue, and the assessment of social media addiction has become a major topic in cyberpsychology. Effectively evaluating social media addiction is crucial for guiding adolescents to use social media responsibly and for intervening in cases of addiction. This paper uses reliability generalization to analyze the reliability of the widely used BFAS and its adaptation, the BSMAS, explores the sources of variation in reliability, and confirms that both scales demonstrate high reliability. They are therefore suitable for broad application in assessing social media addiction and can be used in clinical settings to identify participants who meet the criteria for addiction.

5 Conclusion

In summary, this pioneering study offers the first generalized assessment of the internal consistency reliability of the BFAS and BSMAS. Our results demonstrate that both tools have average reliability estimates exceeding 0.8, confirming their stability and dependability as evaluation instruments for social media addiction. This robust reliability underscores their suitability for use in a wide range of research and clinical settings. However, the study’s limitations, such as language and regional constraints, should be considered. Future research should aim to validate these findings in diverse languages and contexts, and examine additional factors that may impact the reliability of these tools.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Ethics statement

The studies involving humans were approved by the Ethics Committee of Chongqing University of Posts and Telecommunications. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

J-LM: Conceptualization, Data curation, Formal analysis, Methodology, Software, Writing – original draft. ZJ: Methodology, Software, Writing – review & editing. CL: Investigation, Supervision, Validation, Visualization, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. The present study was supported by Humanities & Social Sciences Program of Chongqing Education Committee (Chang Liu, 22SKSZ070; Jianling Ma, 21SKGH064; and ZhengCheng Jin, 23SKGH121) and the 2020 Key Social Sciences Program of Chongqing Education Science 13th-Five-Year-Plan (Jianling MA, 2020-GX-114).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Andreassen, C. S., and Pallesen, S. (2014). Social network site addiction - an overview. Curr. Pharm. Des. 20, 4053–4061. doi: 10.2174/13816128113199990616

Andreassen, C. S., Torsheim, T., Brunborg, G. S., and Pallesen, S. (2012). Development of a Facebook addiction scale. Psychol. Rep. 110, 501–517. doi: 10.2466/02.09.18.PR0.110.2.501-517

Błachnio, A., Przepiorka, A., Senol-Durak, E., Durak, M., and Sherstyuk, L. (2017). The role of personality traits in Facebook and internet addictions: a study on Polish, Turkish, and Ukrainian samples. Comput. Hum. Behav. 68, 269–275. doi: 10.1016/j.chb.2016.11.037

Blázquez-Rincón, D., Durán, J. I., and Botella, J. (2022). The fear of COVID-19 scale: a reliability generalization meta-analysis. Assessment 29, 940–948. doi: 10.1177/1073191121994164

Brailovskaia, J., Margraf, J., and Teismann, T. (2023). Repetitive negative thinking mediates the relationship between addictive Facebook use and suicide-related outcomes: a longitudinal study. Curr. Psychol. 42, 6791–6799. doi: 10.1007/s12144-021-02025-7

Brailovskaia, J., Rohmann, E., Bierhoff, H. W., Schillack, H., and Margraf, J. (2019). The relationship between daily stress, social support and Facebook addiction disorder. Psychiatry Res. 276, 167–174. doi: 10.1016/j.psychres.2019.05.014

Brailovskaia, J., Schillack, H., and Margraf, J. (2018). Facebook addiction disorder in Germany. Cyberpsychol. Behav. Soc. Netw. 21, 450–456. doi: 10.1089/cyber.2018.0140

Brown, R. I. F. (1993). “Some contributions of the study of gambling to the study of other addictions” in Gambling behavior and problem gambling. eds. W. R. Eadington and J. Cornelius (Reno, NV: Univer. of Nevada Press), 341–372.

Chung, K. L., Morshidi, I., Yoong, L. C., and Thian, K. N. (2019). The role of the dark tetrad and impulsivity in social media addiction: findings from Malaysia. Personal. Individ. Differ. 143, 62–67. doi: 10.1016/j.paid.2019.02.016

da Veiga, G. F., Sotero, L., Pontes, H. M., Cunha, D., Portugal, A., and Relvas, A. P. (2019). Emerging adults and Facebook use: the validation of the Bergen Facebook addiction scale (BFAS). Int. J. Ment. Heal. Addict. 17, 279–294. doi: 10.1007/s11469-018-0018-2

Delaney, D., Stein, L. A. R., and Gruber, R. (2018). Facebook addiction and impulsive decision-making. Addict. Res. Theory 26, 478–486. doi: 10.1080/16066359.2017.1406482

Durlak, J. A., and Lipsey, M. W. (1991). A practitioner's guide to meta-analysis. Am. J. Community Psychol. 19, 291–332. doi: 10.1007/BF00938026

Duval, S., and Tweedie, R. (2000). Trim and fill: a simple funnel-plot-based method of testing and adjusting for publication bias in meta-analysis. Biometrics 56, 455–463. doi: 10.1111/j.0006-341X.2000.00455.x

Elphinston, R. A., Gullo, M. J., and Connor, J. P. (2022). Validation of the Facebook addiction questionnaire. Personal. Individ. Differ. 195:111619. doi: 10.1016/j.paid.2022.111619

Henson, R. K., and Thompson, B. (2002). Characterizing measurement error in scores across studies: some recommendations for conducting “reliability generalization” studies. Meas. Eval. Couns. Dev. 35, 113–127. doi: 10.1080/07481756.2002.12069054

Higgins, J. T., Thompson, S. G., Deeks, J. J., and Altman, D. G. (2003). Measuring inconsistency in meta-analyses. Br. Med. J. 327, 557–560. doi: 10.1136/bmj.327.7414.557

Ho, T. T. Q. (2021). Facebook addiction and depression: loneliness as a moderator and poor sleep quality as a mediator. Telematics Inform. 61:101617. doi: 10.1016/j.tele.2021.101617

Hoşgör, H., Ülker Dörttepe, Z. U., and Memiş, K. (2021). Social media addiction and work engagement among nurses. Perspect. Psychiatr. Care 57, 1966–1973. doi: 10.1111/ppc.12774

Knapp, G., and Hartung, J. (2003). Improved tests for a random effects meta-regression with a single covariate. Stat. Med. 22, 2693–2710. doi: 10.1002/sim.1482

Liang, J. H., Shou, Y. Y., Wang, M. C., Deng, J. X., and Luo, J. (2021). Alabama parenting questionnaire-9: a reliability generalization meta-analysis. Psychol. Assess. 33, 940–951. doi: 10.1037/pas0001031

López-Pina, J. A., Sánchez-Meca, J., López-López, J. A., Marín-Martínez, F., Núñez-Núñez, R. M., Rosa-Alcázar, A. I., et al. (2015). The Yale-Brown obsessive compulsive scale: a reliability generalization meta-analysis. Assessment 22, 619–628. doi: 10.1177/1073191114551954

Mamun, M. A. A., and Griffiths, M. D. (2019). The association between Facebook addiction and depression: a pilot survey study among Bangladeshi students. Psychiatry Res. 271, 628–633. doi: 10.1016/j.psychres.2018.12.039

Marino, C., Gini, G., Vieno, A., and Spada, M. M. (2018). The associations between problematic Facebook use, psychological distress and well-being among adolescents and young adults: a systematic review and meta-analysis. J. Affect. Disord. 226, 274–281. doi: 10.1016/j.jad.2017.10.007

Rodriguez, M. C., and Maeda, Y. (2006). Meta-analysis of coefficient alpha. Psychol. Methods 11, 306–322. doi: 10.1037/1082-989X.11.3.306

Sánchez-Meca, J., Marín-Martínez, F., López-López, J. A., Núñez-Núñez, R. M., Rubio-Aparicio, M., López-García, J. J., et al. (2021). Improving the reporting quality of reliability generalization meta-analyses: the REGEMA checklist. Res. Synth. Methods 12, 516–536. doi: 10.1002/jrsm.1487

Satici, S. A. (2019). Facebook addiction and subjective well-being: a study of the mediating role of shyness and loneliness. Int. J. Ment. Heal. Addict. 17, 41–55. doi: 10.1007/s11469-017-9862-8

Schou Andreassen, C. S., Billieux, J., Griffiths, M. D., Kuss, D. J., Demetrovics, Z., Mazzoni, E., et al. (2016). The relationship between addictive use of social media and video games and symptoms of psychiatric disorders: a large-scale cross-sectional study. Psychol. Addict. Behav. 30, 252–262. doi: 10.1037/adb0000160

Seabrook, E. M., Kern, M. L., and Rickard, N. S. (2016). Social networking sites, depression, and anxiety: a systematic review. JMIR Mental Health 3:e50. doi: 10.2196/mental.5842

Sijtsma, K. (2009). On the use, the misuse, and the very limited usefulness of Cronbach's alpha. Psychometrika 74, 107–120. doi: 10.1007/s11336-008-9101-0

Soraci, P., Ferrari, A., Barberis, N., Luvarà, G., Urso, A., Del Fante, E., et al. (2023). Psychometric analysis and validation of the Italian Bergen Facebook addiction scale. Int. J. Ment. Heal. Addict. 21, 451–467. doi: 10.1007/s11469-020-00346-5

Thompson, B., and Vacha-Haase, T. (2000). Psychometrics is datametrics: the test is not reliable. Educ. Psychol. Meas. 60, 174–195. doi: 10.1177/0013164400602002

Vacha-Haase, T. (1998). Reliability generalization: exploring variance in measurement error affecting score reliability across studies. Educ. Psychol. Meas. 58, 6–20. doi: 10.1177/0013164498058001002

Viechtbauer, W. (2010). Conducting meta-analyses in R with the metafor package. J. Stat. Softw. 36, 1–48. doi: 10.18637/jss.v036.i03

Keywords: Facebook addiction, Facebook addiction scale, reliability, reliability generalization, meta-analysis

Citation: Ma J-L, Jin Z and Liu C (2024) The Bergen Facebook addiction scale: a reliability generalization meta-analysis. Front. Psychol. 15:1444039. doi: 10.3389/fpsyg.2024.1444039

Edited by:

Saleem Alhabash, Michigan State University, United States

Copyright © 2025 Ma, Jin and Liu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jian-Ling Ma; Chang Liu

Jian-Ling Ma, ZhengCheng Jin, Chang Liu