- 1 BrainCheck Inc., Austin, TX, United States

- 2 Frontier Psychiatry, PLLC, Billings, MT, United States

Introduction: Previous validation studies demonstrated that BrainCheck Assess (BC-Assess), a computerized cognitive test battery, can reliably and sensitively distinguish individuals with different levels of cognitive impairment (i.e., normal cognition (NC), mild cognitive impairment (MCI), and dementia). Compared with other traditional paper-based cognitive screening instruments commonly used in clinical practice, the Montreal Cognitive Assessment (MoCA) is generally accepted to be among the most comprehensive and robust screening tools, with high sensitivity/specificity in distinguishing MCI from NC and dementia. In this study, we examined: (1) the linear relationship between BC-Assess and MoCA and their equivalent cut-off scores, and (2) the extent to which they agree on their impressions of an individual’s cognitive status.

Methods: A subset of participants (N = 55; age range 54–94, mean/SD = 80/9.5) from two previous studies who took both the MoCA and BC-Assess were included in this analysis. Linear regression was used to calculate equivalent cut-off scores for BC-Assess based on those originally recommended for the MoCA to differentiate MCI from NC (cut-off = 26), and dementia from MCI (cut-off = 19). Impression agreement between the two instruments was measured through overall agreement (OA), positive percent agreement (PPA), and negative percent agreement (NPA).

Results: A high Pearson correlation coefficient of 0.77 (CI = 0.63–0.86) was observed between the two scores. According to this relationship, MoCA cutoffs of 26 and 19 correspond to BC-Assess scores of 89.6 and 68.5, respectively. These scores are highly consistent with the currently recommended BC-Assess cutoffs (i.e., 85 and 70). The two instruments also show a high degree of agreement in their impressions based on their recommended cut-offs: (i) OA = 70.9%, PPA = 70.4%, NPA = 71.4% for differentiating dementia from MCI/NC; (ii) OA = 83.6%, PPA = 84.1%, NPA = 81.8% for differentiating dementia/MCI from NC.

Discussion: This study provides further validation of BC-Assess in a sample of older adults by showing its high correlation and agreement in impression with the widely used MoCA.

Introduction

Millions of older adults around the world suffer from Alzheimer’s Disease and AD-Related Dementias (AD/ADRD), yet these conditions are still underdiagnosed and often detected in their late stages (Lang et al., 2017; Amjad et al., 2018; Liu et al., 2023), particularly in primary care settings (Borson et al., 2006). An early diagnosis can prompt the delivery of timely interventions, open access to resources limited by medical necessity, and encourage planning ahead for the future support, living, and safety of the person living with dementia. The importance of early diagnosis has been further accentuated by the United States Food and Drug Administration’s approval of two novel treatment options for Alzheimer’s Disease [Lecanemab (Van Dyck et al., 2023) and Donanemab (Reardon, 2023)]. The earlier the diagnosis, the more likely treatments and interventions will be able to slow disease progression (Rasmussen and Langerman, 2019), which can help preserve function for longer.

In clinical practice, a common standard has been to use paper-based instruments such as the Montreal Cognitive Assessment (MoCA) or the Mini-Mental State Examination (MMSE) to measure cognition when screening for AD/ADRD. Of these, the MoCA is generally accepted to be among the most comprehensive and robust screening tools, with high sensitivity/specificity in detecting cognitive impairment (Nasreddine et al., 2005; Carson et al., 2018; Dautzenberg et al., 2020). It covers a broad range of domains essential for cognitive assessment and has been well-validated in many studies (Nasreddine et al., 2005; Smith et al., 2007; Luis et al., 2009; Roalf et al., 2013). However, these paper-based instruments have certain limitations. Firstly, they lack the precision needed for measuring response time, an important aspect of measuring cognitive processes. Secondly, these tests are time- and labor-intensive, as they require verbal administration and manual scoring by a trained or licensed administrator. Moreover, these administration and scoring processes introduce inconsistency and subjectivity, which make the instruments susceptible to interrater variability (Price et al., 2011; Hilgeman et al., 2019; Cumming et al., 2020). Lastly, although these tools have been available for many years, the detection rate of cognitive impairment and AD/ADRD has remained low (Chodosh et al., 2004; Lang et al., 2017), suggesting that additional tools are necessary to improve the rate and timeliness of diagnosis. Doing so would help lower the high burden of care that comes with late-stage clinical symptoms, which drives significant costs in the healthcare system (Alzheimers Association, 2019; Matthews et al., 2019).

Computerized cognitive assessments inherently offer greater efficiency and feasibility, thanks to their self-administration capability, remote accessibility, automated scoring, and ability to seamlessly integrate with electronic health records (EHR). Designed to overcome limitations in traditional instruments and align with clinical workflow, BrainCheck has developed a digital cognitive assessment tool, BrainCheck Assess (BC-Assess), to objectively measure multiple cognitive domains to aid early detection of AD/ADRD. BC-Assess takes 10–15 min to complete and can be self-administered remotely or administered in person by clinical staff with minimal training required. A comprehensive clinical report is immediately generated with test results and applicable knowledge to aid in clinical decision making. Multiple validation studies demonstrated that BC-Assess could reliably and sensitively identify those suffering from concussion (Yang et al., 2017) or age-related cognitive impairment (Groppell et al., 2019; Ye et al., 2022). In the latest study (Ye et al., 2022) with 99 participants, we found BC-Assess overall scores were significantly different across the three groups [normal cognition (NC), mild cognitive impairment (MCI), and dementia (DEM)]. Results showed ≥88% sensitivity and specificity for separating DEM from NC, and ≥77% sensitivity and specificity for differentiating MCI from NC and DEM. However, we have not provided a crosswalk between BC-Assess and a traditional paper-based cognitive test that allows for converting scores from one test to the other and supports interpretation of its results.

The aim of this study was to validate BC-Assess against the MoCA through comparison of their scores. In this study, we retrospectively analyzed clinical data collected from two previous studies (Groppell et al., 2019; Ye et al., 2022) to examine: the linear relationship between BC-Assess overall score and MoCA total score and their equivalent cut-off scores, and the extent to which they agree on their impressions of an individual’s cognitive status.

Materials and methods

Participants

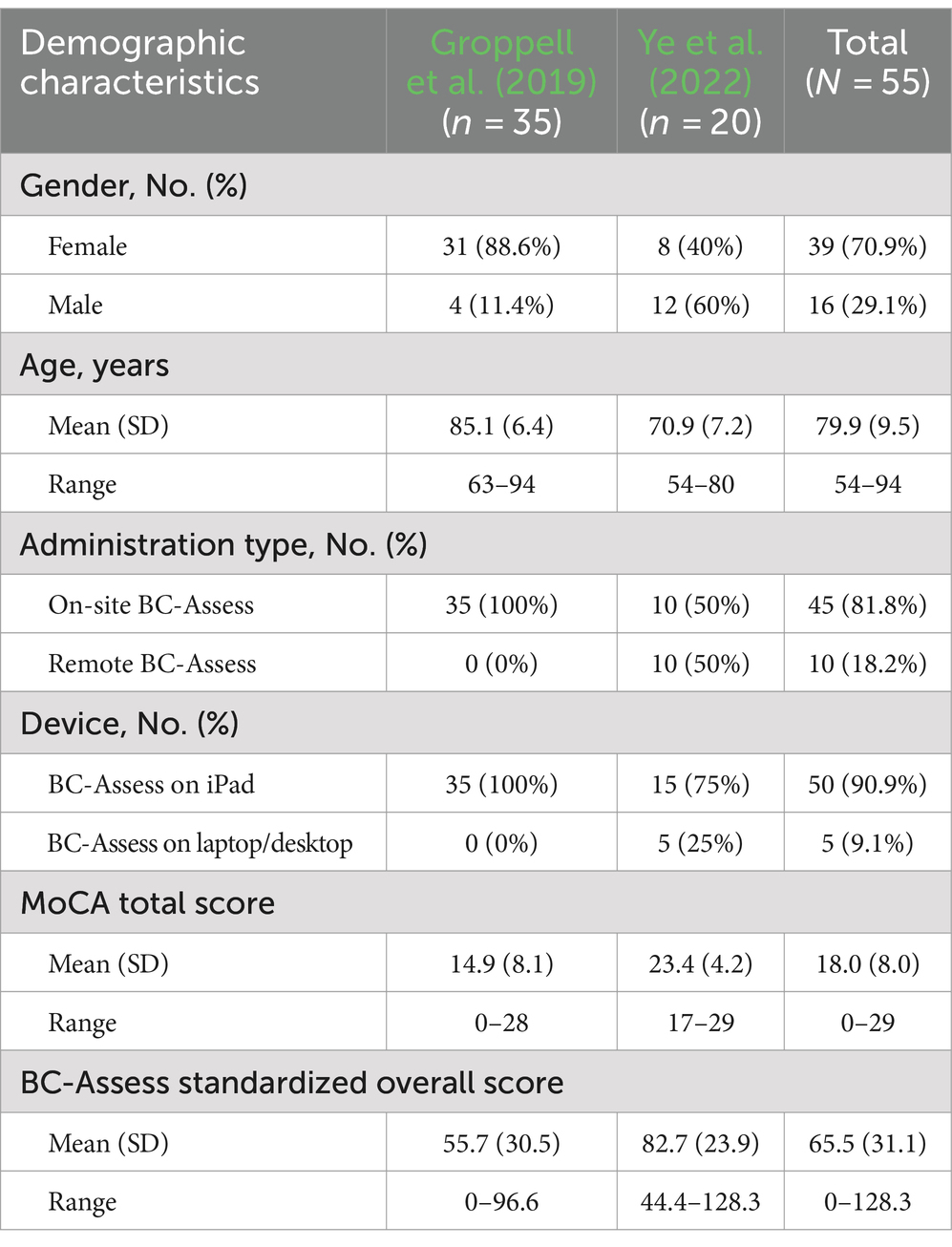

A subset of 55 participants (age range 54–94, mean/SD = 79.9/9.5; 70.9% Female) from two previous studies (Groppell et al., 2019: N = 35; Ye et al., 2022: N = 20) who completed both the MoCA and BC-Assess were included in this analysis. Table 1 summarizes demographic characteristics of the participants from each study. The 35 participants from the Groppell et al. study were recruited from two assisted-living facilities in Houston, TX. The 20 participants from the Ye et al. study were recruited from a research registry maintained by the University of Washington (UW) Alzheimer’s Disease Research Center associated with UW Medicine’s Memory and Brain Wellness Center.

Data collection

Participants in the Groppell et al. group completed both the MoCA and BC-Assess on the same day at the testing centers. In the Ye et al. group, the time interval between the two tests and the administration type varied among participants. In this group, one participant completed the MoCA 28 days after the BC-Assess; the remaining 19 participants completed the BC-Assess 39–299 days after the MoCA. MoCA scores for these participants were obtained from EHR, where only the total scores were available. Of these 19 participants, 10 were administered the BC-Assess battery remotely over a video call with the moderator due to the COVID-19 pandemic.

MoCA total scores

The MoCA is a cognitive screening test that measures multiple cognitive domains: short-term memory recall, visuospatial abilities, executive functioning, phonemic fluency, verbal abstraction, attention, concentration, language, and time and place orientation. The total possible score is 30 points. Cut-off scores of 19 and 26 are typically used to differentiate dementia from MCI and MCI from NC, respectively (Davis et al., 2013).

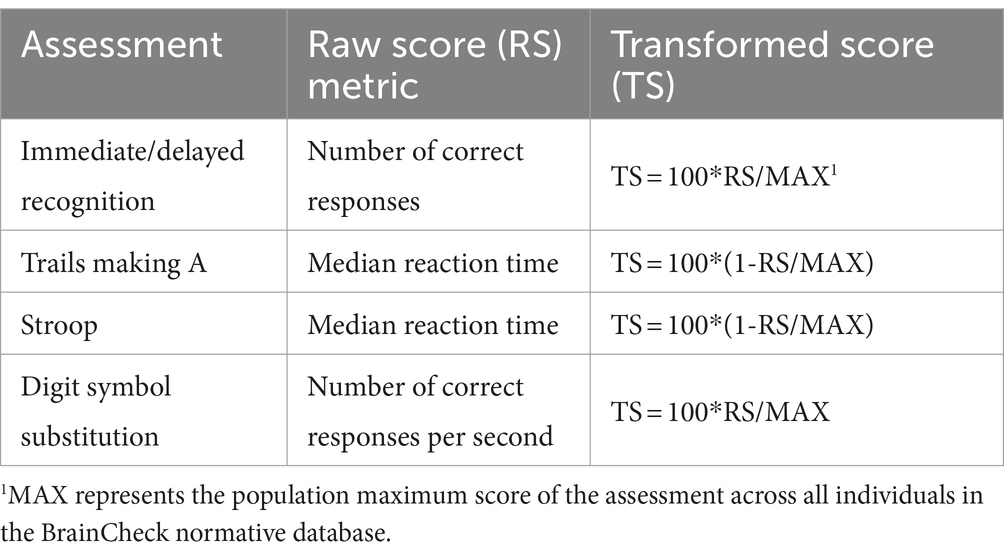

BC-Assess standardized overall scores

The BC-Assess consisted of six individual assessments: Immediate/Delayed Recognition, Digit Symbol Substitution, Stroop, and Trails Making A/B. A detailed description of these assessments can be found in our previous study (Yang et al., 2017). Performance on each assessment was quantified by either accuracy- or reaction time-based measures (Table 2). The BC-Assess raw overall score was calculated as the mean of performance scores from all assessments in the battery (except the Trails Making B), where each assessment-specific score had been transformed from its natural range into a common range [0,100] using the formula in Table 2. An overall z-score was then calculated using population mean and standard deviation values from the BrainCheck normative database to correct for age and testing device differences across participants. The BC-Assess standardized overall score (BC-SS) was the z-score rescaled to describe each individual’s overall score relative to a population mean of 100 and a population standard deviation of 15. The BC-SS ranges from 0 to 200; scores falling outside this range were clipped to the nearest bound.
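The rescaling described above can be illustrated with a minimal sketch. The function name and the `norm_mean` and `norm_sd` arguments are hypothetical stand-ins for the age- and device-specific values from the BrainCheck normative database, which are not reported here; only the mean of 100, the standard deviation of 15, and the 0 to 200 clipping range come from the text.

```python
def standardized_score(raw_score, norm_mean, norm_sd, lower=0.0, upper=200.0):
    """Rescale a raw overall score to a mean-100, SD-15 standardized score.

    norm_mean and norm_sd are hypothetical normative values for the
    participant's age group and testing device (not given in the text).
    """
    if norm_sd <= 0:
        raise ValueError("norm_sd must be positive")
    z = (raw_score - norm_mean) / norm_sd   # z-score against the normative group
    scaled = 100.0 + 15.0 * z               # population mean 100, SD 15
    return min(max(scaled, lower), upper)   # clip to the stated 0-200 range


# Illustrative values only: a raw score one SD below the normative mean.
example = standardized_score(raw_score=50.0, norm_mean=60.0, norm_sd=10.0)
```

Under these made-up normative values, a raw score one standard deviation below the mean yields a standardized score of 85.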

Data analysis

This study analyzed the relationship between BC-SS and MoCA total scores. Linear regression was used to find the linear relationship between the two test scores. The best fit model was used to calculate equivalent cut-off scores for BC-SS based on those originally recommended for the MoCA to differentiate MCI from NC (cut-off = 26), and dementia from MCI (cut-off = 19). Impression agreement between the two instruments was measured through overall agreement (OA), positive percent agreement (PPA), and negative percent agreement (NPA).
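For readers less familiar with these agreement measures, the following sketch shows one common way to compute them from paired impressions. It makes two assumptions not stated in the text: scores below a cut-off are assigned to the more impaired category, and the MoCA impression is treated as the comparator when computing PPA and NPA. The function names and the six score pairs are hypothetical and are not study data.

```python
def classify(score, mci_cutoff, dementia_cutoff):
    """Three-way impression from a score and two cut-offs.

    Assumption: a score below a cut-off falls in the more impaired category.
    """
    if score < dementia_cutoff:
        return "Dementia"
    if score < mci_cutoff:
        return "MCI"
    return "NC"


def agreement(test_positive, reference_positive):
    """Return OA, PPA, and NPA (as fractions) from paired boolean calls."""
    pairs = list(zip(test_positive, reference_positive))
    both_pos = sum(t and r for t, r in pairs)
    both_neg = sum((not t) and (not r) for t, r in pairs)
    n_pos = sum(r for _, r in pairs)          # comparator-positive cases
    n_neg = len(pairs) - n_pos                # comparator-negative cases
    return {
        "OA": (both_pos + both_neg) / len(pairs),
        "PPA": both_pos / n_pos if n_pos else float("nan"),
        "NPA": both_neg / n_neg if n_neg else float("nan"),
    }


# Hypothetical paired scores, for illustration only.
moca_scores = [28, 24, 17, 21, 12, 27]
bcss_scores = [95, 80, 72, 66, 50, 83]

moca_calls = [classify(s, mci_cutoff=26, dementia_cutoff=19) for s in moca_scores]
bc_calls = [classify(s, mci_cutoff=85, dementia_cutoff=70) for s in bcss_scores]

# Dementia versus MCI/NC, with the MoCA impression as comparator.
dementia_agreement = agreement(
    [c == "Dementia" for c in bc_calls],
    [c == "Dementia" for c in moca_calls],
)
# Dementia/MCI versus NC.
impaired_agreement = agreement(
    [c != "NC" for c in bc_calls],
    [c != "NC" for c in moca_calls],
)
```

Collapsing the three-way impression into two binary comparisons mirrors how the results are reported (dementia versus MCI/NC, and dementia/MCI versus NC).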

The above analysis assumed that the cognitive status of participants had not changed significantly between the two tests, which might not be true for participants from the Ye et al. study. The effect of remote testing was also assumed to be negligible for those completing the BC-Assess remotely. For comparison purposes, the same analysis was performed on the entire study sample and on only the Groppell et al. group, who took the BC-Assess and MoCA in person and on the same day.

Results

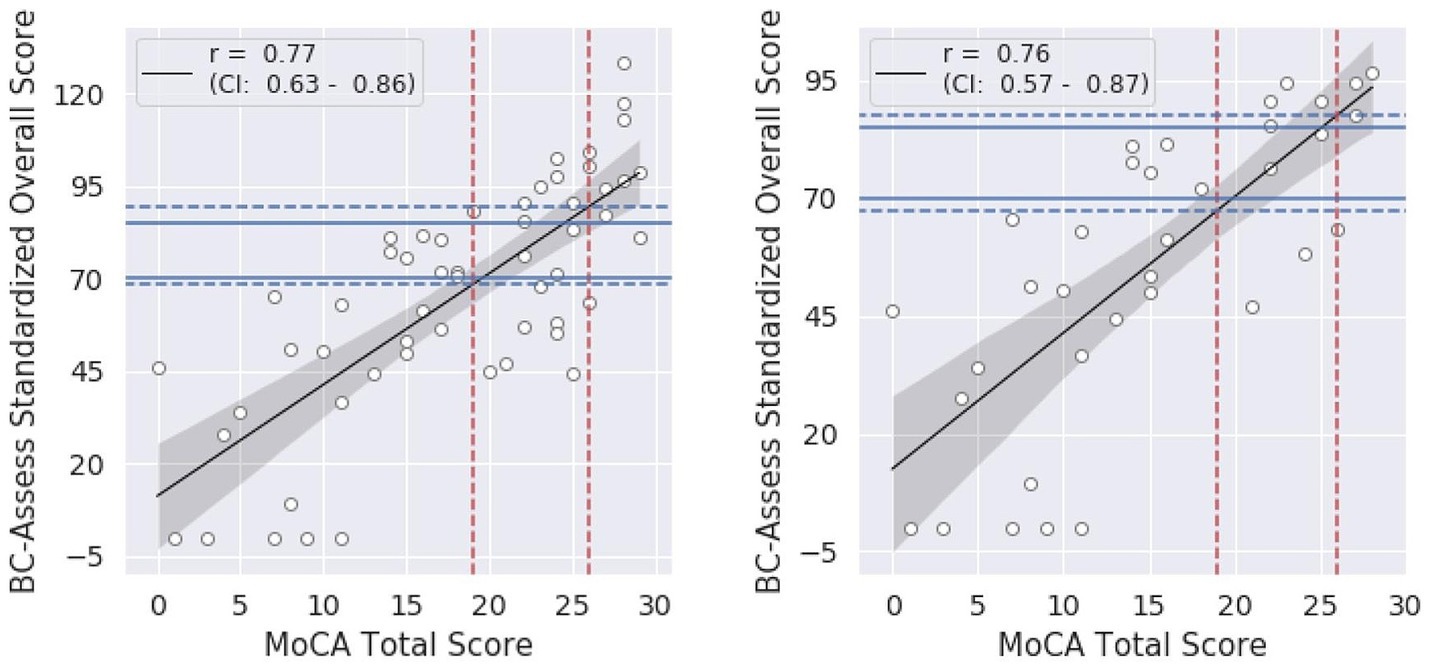

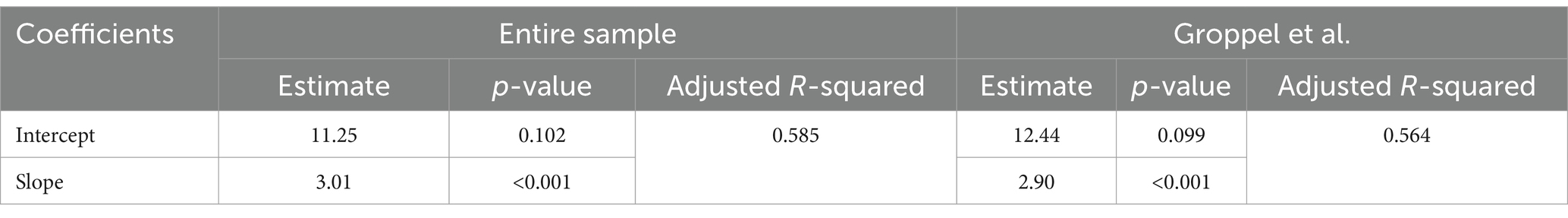

Summary statistics of the BC-SS and MoCA total score across participants in each group and the entire sample are shown in Table 1. A high correlation between the two test scores was observed for both the entire study sample (Pearson correlation coefficient r = 0.77; 95% Confidence Interval = 0.63–0.86; Figure 1 - left panel) and for only the Groppell et al. group (r = 0.76; 95% Confidence Interval = 0.57–0.87; Figure 1 - right panel). Coefficient estimates and goodness of fit of the linear regression model characterizing the relationship between the two scores are shown in Table 3 for each case.
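The text does not state how the confidence intervals were computed. As a plausibility check, the sketch below applies the standard Fisher z-transformation to the reported (rounded) correlations and sample sizes; the function name is hypothetical, and the choice of method is an assumption rather than the authors' documented procedure.

```python
import math


def pearson_ci(r, n, z_crit=1.959964):
    """Approximate confidence interval for a Pearson r via Fisher's z.

    z_crit defaults to the two-sided 95% normal quantile.
    """
    if n <= 3:
        raise ValueError("n must exceed 3")
    if not -1.0 < r < 1.0:
        raise ValueError("r must lie strictly between -1 and 1")
    z = math.atanh(r)                  # Fisher transformation
    se = 1.0 / math.sqrt(n - 3)        # standard error on the z scale
    return math.tanh(z - z_crit * se), math.tanh(z + z_crit * se)


entire_sample_ci = pearson_ci(0.77, 55)   # entire study sample
groppell_ci = pearson_ci(0.76, 35)        # Groppell et al. group only
```

Rounded to two decimals, this gives approximately 0.63–0.86 for the entire sample and 0.57–0.87 for the Groppell et al. group, consistent with the reported intervals.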

Figure 1. Relationship between the BC-SS and MoCA total score for the entire sample (left) and for only the Groppell et al. group (right): black lines = linear regression models, shaded areas = 95% confidence intervals. Blue dashed lines represent BC-SS calculated from the model for each MoCA cut-off value (red dashed lines). Blue solid lines show BC-SS cut-offs currently recommended.

Table 3. Linear regression model fitting (BC-SS = Intercept + Slope × MoCA Total Score) for the entire sample and for only the Groppell et al. group.

According to the linear relationship found for the entire sample, MoCA cutoffs of 26 and 19 correspond to BC-Assess scores of 89.6 and 68.5, respectively. Similar BC-Assess scores, 87.8 and 67.5, respectively, were obtained for the case where only the Groppell et al. group was included. Results based on either set of participants are close to the currently recommended BC-Assess cutoffs (i.e., 85 and 70).
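Because both equivalent cut-offs lie on the same fitted line, the line implied by the entire-sample result can be recovered from the two reported points. The sketch below does this arithmetic; the function names are hypothetical, and the recovered coefficients are approximations derived from rounded values rather than the Table 3 estimates.

```python
def line_through(p1, p2):
    """Return (intercept, slope) of the straight line through two points."""
    (x1, y1), (x2, y2) = p1, p2
    if x1 == x2:
        raise ValueError("points must have distinct x values")
    slope = (y2 - y1) / (x2 - x1)
    intercept = y1 - slope * x1
    return intercept, slope


# Reported entire-sample pairs: (MoCA cut-off, equivalent BC-Assess score).
intercept, slope = line_through((19, 68.5), (26, 89.6))


def moca_to_bcss(moca_total):
    """Approximate BC-SS for a MoCA total score under the implied line."""
    return intercept + slope * moca_total
```

The implied slope is about 3.0 BC-SS points per MoCA point. Any conversion obtained this way inherits the uncertainty of the underlying regression and of the rounding, so it is best read as a rough guide.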

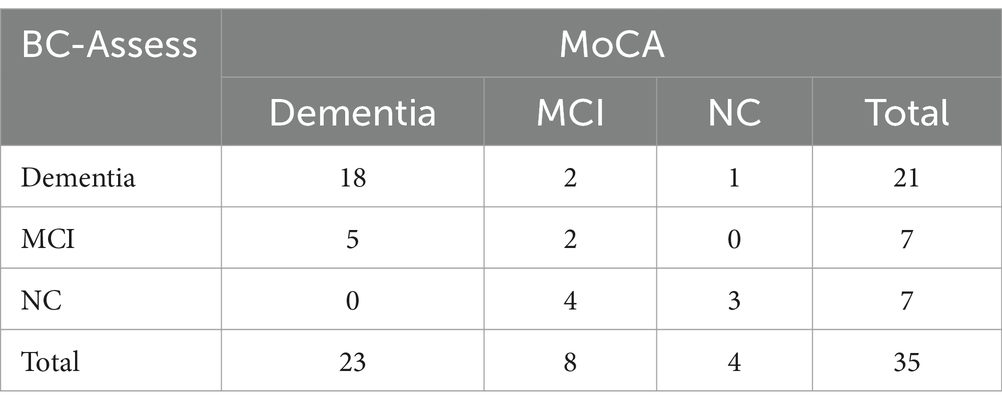

Tables 4 and 5 show the confusion matrices for comparisons of the two tests’ impressions according to their recommended cut-offs for the entire study sample and for only the Groppell et al. group, respectively. For both sets of participants, a high degree of agreement in their impressions was observed:

a. Entire sample: OA = 70.9%, PPA = 70.4%, NPA = 71.4% for differentiating dementia from MCI/NC; and OA = 83.6%, PPA = 84.1%, NPA = 81.8% for differentiating dementia/MCI from NC.

b. Groppell et al. group: OA = 77.1%, PPA = 78.3%, NPA = 75.0% for differentiating dementia from MCI/NC; and OA = 85.7%, PPA = 87.1%, NPA = 75.0% for differentiating dementia/MCI from NC.

Table 4. Number of participants from the entire study sample classified as NC, MCI, and Dementia by BC-Assess (rows) and MoCA (columns) based on their recommended cut-offs.

Table 5. Number of participants from the Groppell et al. group classified as NC, MCI, and Dementia by BC-Assess (rows) and MoCA (columns) based on their recommended cut-offs.

Discussion

This retrospective study sought to compare the psychometric characteristics of a digital cognitive assessment (BC-Assess) against the widely used paper MoCA based on existing data collected from a cohort of 55 participants in two previous studies. We found a strong linear association between scores from these two tests and a high agreement in their impression of an individual’s cognitive status. The MoCA cut-off scores commonly used for detecting dementia (cut-off = 19) and MCI (cut-off = 26) corresponded to BC-Assess scores of 68.5 and 89.6, respectively, which align well with the cut-off values recommended by BrainCheck (70 and 85). These results further demonstrate the validity of BC-Assess as a cognitive screening tool.

Over the years, BC-Assess has gained good adoption among primary care providers for its demonstrated high diagnostic performance (Groppell et al., 2019; Ye et al., 2022), high usability and feasibility of implementation (BrainCheck, 2019, 2020), and its likelihood of securing medical claim reimbursement. However, many providers who have been trained to use the MoCA are not familiar with the clinical meaning of BC-Assess scores and how they relate to the MoCA scores they have been trained on. This limits these providers’ usage of BC-Assess with their patients and may hinder the widespread screening of cognitive impairment in the community. By directly comparing BC-Assess against the MoCA and providing BC-Assess equivalent cut-off scores for detecting MCI and dementia, this study offers additional support to providers in interpreting BC-Assess results and in decision making, which is expected to facilitate their use of this computerized tool in clinical practice.

To promote widespread detection of cognitive impairment and AD/ADRD, it is critical to equip primary care providers with assessment tools that are not only validated and reliable but also easy to administer and seamlessly integrated into their routine workflows (Mattke et al., 2023). In the primary care setting, the limited time of patient visits, which last an average of 18.9 min (Neprash et al., 2023), and the fact that geriatric patients tend to have multiple health conditions that take priority over cognitive concerns pose a major challenge for deploying cognitive assessments. As a computerized cognitive assessment, BC-Assess holds promise for meeting these criteria, as demonstrated in multiple case studies (BrainCheck, 2019, 2020). To gain a better understanding of BC-Assess’ usability and feasibility of implementation in comparison with the MoCA, future studies with comprehensive evaluations of the deployment process for each tool across a wide range of real-world settings are needed.

The results in this study should be interpreted within the context of some limitations. Firstly, the BC-Assess and MoCA were taken at different time points for a large proportion of participants. For these participants, the time interval between the two tests was up to several months. A participant’s cognitive status might change substantially during this time due to normal aging and other medical issues, which is especially true for older adults. This could lead to unsound results because the analysis of the linear relationship between scores from the two tests assumed that there was no or minimal intra-individual variability in cognition across the two measures. Another factor that could have contaminated the results is the inconsistency of administration type across participants. With 10 out of 55 participants completing the BC-Assess remotely, the effects of self-administration and variability of testing environment on test scores, if any, would need to be taken into account. Secondly, the study sample lacked information about education level and was relatively small and homogeneous in regard to age and range of cognitive performance, which may impact the generalizability of the findings. More than 90% of participants were over 65 years of age, and nearly 60% of them were older than 80. Similarly, roughly 50% of participants scored lower than 19 on the MoCA test. Only 30% and 20% of participants scored between 19 and 26 and higher than 26, respectively. A larger sample size and more symmetric distributions of education level, age, and range of cognitive performance are imperative to acquire a more precise and generalizable relationship between the two tests. In addition, this study lacked a thorough comparison between the BC-Assess and MoCA by cognitive domain because MoCA subscale information was missing for participants from the Ye et al. study. Future studies utilizing BC-Assess and the MoCA or other cognitive measurements should include more controlled procedures to further corroborate these results.

Data availability statement

The datasets presented in this article will be made available upon reasonable request to the corresponding author.

Ethics statement

The studies involving humans were approved by University of Washington Institutional Review Board and Solutions Institutional Review Board. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

DH: Conceptualization, Formal analysis, Investigation, Methodology, Writing – original draft, Writing – review & editing. KS: Writing – review & editing. RG: Writing – review & editing. BH: Conceptualization, Investigation, Methodology, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. The authors declare that this study received funding from BrainCheck, Inc. The funder was not involved in the study design, collection, analysis, interpretation of data, the writing of this article or the decision to submit it for publication.

Conflict of interest

DH, KS, RG and BH were employed by BrainCheck Inc., Austin. RG was also employed by Frontier Psychiatry, PLLC, Billings. All authors receive salaries and stock options from BrainCheck.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Alzheimers Association (2019). 2019 Alzheimer’s disease facts and figures. Alzheimers Dement. 15, 321–387. doi: 10.1016/j.jalz.2019.01.010

Amjad, H., Roth, D. L., Sheehan, O. C., Lyketsos, C. G., Wolff, J. L., and Samus, Q. M. (2018). Underdiagnosis of dementia: an observational study of patterns in diagnosis and awareness in US older adults. J. Gen. Intern. Med. 33, 1131–1138. doi: 10.1007/s11606-018-4377-y

Borson, S., Scanlan, J. M., Watanabe, J., Tu, S. P., and Lessig, M. (2006). Improving identification of cognitive impairment in primary care. Int. J. Geriatr. Psychiatry 21, 349–355. doi: 10.1002/gps.1470

BrainCheck. (2019). Case study: new cognitive testing for neurology clinic enhances experience for patients and staff. Available at: https://braincheck.com/articles/case-study-comprehensive-neurology/ (Accessed May 1, 2024).

BrainCheck. (2020). Case study: Fast and accurate cognitive assessment. Available at: https://braincheck.com/articles/accurate-and-fast-cognitive-assessment/ (Accessed May 1, 2024).

Carson, N., Leach, L., and Murphy, K. J. (2018). A re-examination of Montreal cognitive assessment (MoCA) cutoff scores. Int. J. Geriatr. Psychiatry 33, 379–388. doi: 10.1002/gps.4756

Chodosh, J., Petitti, D. B., Elliott, M., Hays, R. D., Crooks, V. C., Reuben, D. B., et al. (2004). Physician recognition of cognitive impairment: evaluating the need for improvement. J. Am. Geriatr. Soc. 52, 1051–1059. doi: 10.1111/j.1532-5415.2004.52301.x

Cumming, T. B., Lowe, D., Linden, T., and Bernhardt, J. (2020). The AVERT MoCA data: scoring reliability in a large multicenter trial. Assessment 27, 976–981. doi: 10.1177/1073191118771516

Dautzenberg, G., Lijmer, J., and Beekman, A. (2020). Diagnostic accuracy of the Montreal cognitive assessment (MoCA) for cognitive screening in old age psychiatry: determining cutoff scores in clinical practice. Avoiding spectrum bias caused by healthy controls. Int. J. Geriatr. Psychiatry 35, 261–269. doi: 10.1002/gps.5227

Davis, D. H., Creavin, S. T., Yip, J. L., Noel-Storr, A. H., Brayne, C., and Cullum, S. (2013). “The Montreal cognitive assessment for the diagnosis of Alzheimer’s disease and other dementia disorders” in Cochrane database of systematic reviews. ed. The Cochrane collaboration (Chichester, UK: John Wiley & Sons, Ltd).

Groppell, S., Soto-Ruiz, K. M., Flores, B., Dawkins, W., Smith, I., Eagleman, D. M., et al. (2019). A rapid, Mobile neurocognitive screening test to aid in identifying cognitive impairment and dementia (brain check): cohort study. JMIR Aging 2:e12615. doi: 10.2196/12615

Hilgeman, M. M., Boozer, E. M., Snow, A. L., Allen, R. S., and Davis, L. L. (2019). Use of the Montreal cognitive assessment (MoCA) in a rural outreach program for military veterans. J Rural Soc Sci. 34, 2–16.

Lang, L., Clifford, A., Wei, L., Zhang, D., Leung, D., Augustine, G., et al. (2017). Prevalence and determinants of undetected dementia in the community: a systematic literature review and a meta-analysis. BMJ Open 7:e011146. doi: 10.1136/bmjopen-2016-011146

Liu, Y., Jun, H., Becker, A., Wallick, C., and Mattke, S. (2023). Detection rates of mild cognitive impairment in primary Care for the United States Medicare Population. J. Prev. Alzheimers Dis. 11, 7–12.

Luis, C. A., Keegan, A. P., and Mullan, M. (2009). Cross validation of the Montreal cognitive assessment in community dwelling older adults residing in the southeastern US. Int. J. Geriatr. Psychiatry 24, 197–201. doi: 10.1002/gps.2101

Matthews, K. A., Xu, W., Gaglioti, A. H., Holt, J. B., Croft, J. B., Mack, D., et al. (2019). Racial and ethnic estimates of Alzheimer’s disease and related dementias in the United States (2015–2060) in adults aged ≥65 years. Alzheimers Dement. 15, 17–24. doi: 10.1016/j.jalz.2018.06.3063

Mattke, S., Batie, D., Chodosh, J., Felten, K., Flaherty, E., Fowler, N. R., et al. (2023). Expanding the use of brief cognitive assessments to detect suspected early-stage cognitive impairment in primary care. Alzheimers Dement. 19, 4252–4259. doi: 10.1002/alz.13051

Nasreddine, Z. S., Phillips, N. A., Bédirian, V., Charbonneau, S., Whitehead, V., Collin, I., et al. (2005). The Montreal cognitive assessment, MoCA: a brief screening tool for mild cognitive impairment. J. Am. Geriatr. Soc. 53, 695–699. doi: 10.1111/j.1532-5415.2005.53221.x

Neprash, H. T., Mulcahy, J. F., Cross, D. A., Gaugler, J. E., Golberstein, E., and Ganguli, I. (2023). Association of Primary Care Visit Length with Potentially Inappropriate Prescribing. JAMA Health Forum. 4:e230052. doi: 10.1001/jamahealthforum.2023.0052

Price, C. C., Cunningham, H., Coronado, N., Freedland, A., Cosentino, S., Penney, D. L., et al. (2011). Clock drawing in the Montreal cognitive assessment: recommendations for dementia assessment. Dement. Geriatr. Cogn. Disord. 31, 179–187. doi: 10.1159/000324639

Rasmussen, J., and Langerman, H. (2019). Alzheimer’s disease – why we need early diagnosis. Degener Neurol Neuromuscul Dis. 9, 123–130. doi: 10.2147/DNND.S228939

Reardon, S. (2023). Alzheimer’s drug donanemab: what promising trial means for treatments. Nature 617, 232–233. doi: 10.1038/d41586-023-01537-5

Roalf, D. R., Moberg, P. J., Xie, S. X., Wolk, D. A., Moelter, S. T., and Arnold, S. E. (2013). Comparative accuracies of two common screening instruments for classification of Alzheimer’s disease, mild cognitive impairment, and healthy aging. Alzheimers Dement. 9, 529–537. doi: 10.1016/j.jalz.2012.10.001

Smith, T., Gildeh, N., and Holmes, C. (2007). The Montreal cognitive assessment: validity and utility in a memory clinic setting. Can. J. Psychiatr. 52, 329–332. doi: 10.1177/070674370705200508

Van Dyck, C. H., Swanson, C. J., Aisen, P., Bateman, R. J., Chen, C., Gee, M., et al. (2023). Lecanemab in early Alzheimer’s disease. N. Engl. J. Med. 388, 9–21. doi: 10.1056/NEJMoa2212948

Yang, S., Flores, B., Magal, R., Harris, K., Gross, J., Ewbank, A., et al. (2017). Diagnostic accuracy of tablet-based software for the detection of concussion. PLoS One 12:e0179352.

Keywords: dementia, mild cognitive impairment, BrainCheck, MoCA, neuropsychology, Alzheimer, cognition, computerized cognitive assessment

Citation: Huynh D, Sun K, Ghomi RH and Huang B (2024) Comparing psychometric characteristics of a computerized cognitive test (BrainCheck Assess) against the Montreal cognitive assessment. Front. Psychol. 15:1428560. doi: 10.3389/fpsyg.2024.1428560

Edited by:

Maira Okada de Oliveira, Massachusetts General Hospital and Harvard Medical School, United States

Reviewed by:

Xudong Li, Capital Medical University, China

Luis Carlos Jaume, University of Buenos Aires, Argentina

Copyright © 2024 Huynh, Sun, Ghomi and Huang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Bin Huang, bin@braincheck.com
