- 1Department of Physical Education, Sun Yat-sen University, Guangzhou, China

- 2Hebei Sports University, Shijiazhuang, China

- 3Organization Department of the CPC Jinzhou Municipal Committee, Jinzhou, China

- 4University of Derby, Derby, United Kingdom

- 5School of Physical Education, Kunming University of Science and Technology, Kunming, China

- 6Physical Education School, Shenzhen University, Shenzhen, China

The current study presents the development process and initial validation of the Engagement in Athletic Training Scale (EATS), which was designed to evaluate athletes’ engagement in athletic training. In study 1, item generation and initial content validity of the EATS were achieved. In study 2, the factor structure of the EATS was examined using exploratory factor analysis (EFA) and exploratory structural equation modeling (ESEM). Internal consistency reliabilities of the subscales were examined (N = 460). In study 3, factor structure, discriminant validity, internal consistency reliability, and nomological validity of the EATS were further examined in an independent sample (N = 513). Meanwhile, measurement invariance of the EATS across samples (study 2 and study 3) and genders was evaluated. Overall, results from the 3 rigorous studies provided initial psychometric evidence for the 19-item EATS and suggested that the EATS could be used as a valid and reliable measure to evaluate athletes’ engagement in athletic training.

Introduction

In competitive sports, athlete burnout has been extensively studied because it may result in profound negative consequences, including mental health problems, poor performance, injuries, and dropout from sport (Isoard-Gautheur et al., 2012; Gustafsson et al., 2017; Martinent et al., 2020). Researchers and practitioners have invested significant resources and efforts to better understand the mechanisms of burnout development and to prevent the onset of burnout symptoms (Raedeke and Smith, 2001). With the spread of positive psychology, which emphasizes human resources and potentials rather than malfunctioning and weakness (Seligman and Csikszentmihalyi, 2000), researchers in sports fields started paying attention to the engagement of athletes. These studies advocate that the promotion of positive sports experiences of athletes, rather than the treatment and prevention of malfunctioning and negative experiences of athletes, should be given more attention. Lonsdale et al. (2007b) conducted a series of four studies to qualitatively conceptualize the structure of athlete engagement (AE) and quantitatively develop and validate a psychometrically sound instrument measuring athlete engagement (Lonsdale et al., 2007a). The Athlete Engagement Questionnaire (AEQ) includes 16 items measuring 4 dimensions of dedication, confidence, vigor, and enthusiasm. Athletes used a 5-point Likert scale (1 = almost never; 2 = rarely; 3 = sometimes; 4 = frequently; 5 = almost always) to indicate “how often they felt this way in the past four months.” Dedication represents a desire to invest effort and time toward achieving goals one views as important (e.g., I am dedicated to achieving my goals in sports). Confidence refers to a belief in one’s ability to attain a high level of performance and achieve desired goals (e.g., I am confident in my abilities). Vigor is defined as physical, mental, and emotional energy or liveliness (e.g., I feel energized when I participate in my sport).
Enthusiasm reflects the feelings of excitement and high levels of enjoyment (e.g., I feel excited about my sport). Since the development of the AEQ, it has been widely used in athlete engagement studies and translated into different languages (Martins et al., 2014; Wang et al., 2014; De Francisco et al., 2018). Conceptualization of athlete engagement and the development of the AEQ allow researchers to better understand and promote positive sports experiences of athletes (Lonsdale et al., 2007b; Hodge et al., 2009).

It is noteworthy that the initial research on athlete engagement in competitive sports was mainly inspired by studies on work engagement (Schaufeli et al., 2002). Lonsdale et al. borrowed the concepts and structure of work engagement to conceptualize and construct athlete engagement (Lonsdale et al., 2007a,b). Schaufeli et al. (2002) defined work engagement as a positive, fulfilling, work-related state of mind that was characterized by vigor, dedication, and absorption. Following a similar logic, Lonsdale et al. (2007b) revealed three core AE dimensions of confidence, vigor, and dedication in their qualitative study with confidence being considered as the context-specific dimension. Therefore, athlete engagement was initially defined as a persistent, positive, cognitive-affective experience in sports that was characterized by confidence, dedication, and vigor (Lonsdale et al., 2007b). In their further quantitative studies, the enthusiasm dimension was added because it was differentiated from the vigor dimension and finally formed the four-dimensional construct of the AEQ based on the results of sequential statistical analyses (Lonsdale et al., 2007a). Kahn, the pioneering researcher on employee engagement, proposes that engaged employees should be physically, emotionally, and cognitively involved in their work roles, which implies that employee engagement may include physical, cognitive, and emotional components (Kahn, 1990). Similarly, in the academic setting, researchers have reached a consensus that the construct of engagement is multidimensional and includes behavioral, cognitive, and affective (or emotional) aspects, operating collectively to reflect individuals’ positive approach to learning activities (Nystrand and Gamoran, 1991; Fredricks et al., 2004; Appleton et al., 2008; Alrashidi et al., 2016). Although Lonsdale et al. 
(2007a) claimed to include cognitive and affective components of AE in their definition, this differs somewhat from the assumptions on work engagement by Kahn (1990) and the engagement in academic settings (Fredricks et al., 2004; Appleton et al., 2008). Specifically, the dedication, absorption, and vigor in Schaufeli et al. (2002) and confidence and enthusiasm in Lonsdale et al. (2007a) describe individuals’ psychological engagement rather than their behaviors (Schaufeli et al., 2002; Upadyaya and Salmela-Aro, 2013). As a result, Schaufeli et al.’s (2002) construct of engagement was considered to be missing information pertaining to individuals’ attendance, adherence to social norms, and following the rules (Upadyaya and Salmela-Aro, 2013; Alrashidi et al., 2016). Therefore, athlete engagement is mainly related to the continuous and positive emotional and cognitive feelings of athletes toward their sports, which does contribute to the quality of training but cannot directly reflect to what extent athletes are engaged in specific sessions of their athletic training, especially at the behavioral level. Athletic training involves various behavioral and cognitive activities guided by particular requirements (e.g., tactical and technical skills; training load and intensity), which dynamically influence and are influenced by athletes’ affective states in the process of performing these activities (Gabbett et al., 2017; Neupert et al., 2024). Therefore, engagement in athletic training (EAT) is different from athlete engagement but similar to academic engagement, which requires individuals to behaviorally, cognitively, and affectively engage in their learning and study activities (Nystrand and Gamoran, 1991). Ben-Eliyahu et al. (2018) defined engagement as the intensity of productive involvement with an activity, encompassing three distinctive yet related components: behavioral, affective, and cognitive engagement.
There are some typical scenarios often observed in academic settings where students could be emotionally engaged in tasks at hand but not thinking about the learning at all (Appleton et al., 2008). Some students could be thinking about materials and experiencing emotions but not implementing any learning activities at the behavioral level (Reschly and Christenson, 2022). Some students could be behaviorally active but not cognitively and affectively engaged in the task at hand (Appleton et al., 2006). Similar scenarios are also often observed in athletic training settings. For example, athletes may be physically repeating the movements and actions without thinking about the reasons and requirements of the training tasks at all (Kuokkanen et al., 2021; Montull et al., 2022). Some athletes may think too much about the techniques and worry about making mistakes, especially under pressure from coaches, leading them to be reluctant to try the movements physically (Kuokkanen et al., 2021). These kinds of scenarios are harmful to the quality of athletic training and cannot be reflected by athlete engagement.

Athletic training aims at improving and maintaining competitive competence and sports performance of athletes; it is a planned sports activity that is led and guided by coaches and mainly engaged in by athletes. Therefore, scientific and reasonable plans and contents (tasks, number of repetitions, intensity and volume, etc.) arranged by coaches in athletic training are the basis of the training quality, while the extent to which athletes engage in these training tasks is the guarantee of the training quality. Previous research has extensively evaluated training quality from physical, technical, and tactical perspectives (Saw et al., 2016; Jeffries et al., 2020; Inoue et al., 2022; Bucher Sandbakk et al., 2023; Shell et al., 2023); however, no research has investigated how athletes’ engagement in athletic training may contribute to their training quality. The main reason lies in two unresolved questions: (1) the concept and structure of athletes’ engagement in athletic training have not yet been clarified; (2) no valid and reliable instrument is available to assess athletes’ engagement in athletic training. Although Reynders et al. (2019) adjusted academic engagement items to the sports context to assess athletes’ behavioral engagement, affective engagement, and cognitive engagement by changing terms, such as “teacher” into “coach” and “learning-related activities” to “training or competition,” they did not differentiate athletic training from competition, which are two different settings with context-specific characteristics. In addition, only internal consistency reliability, and no other psychometric property of the modified instrument, was evaluated and reported in the Reynders et al. (2019) study, which leaves open the question of whether an instrument modified from an academic setting can be used to assess athletes’ engagement in athletic training.
Collectively, it is imperative to clarify the concept and structure of engagement in athletic training and further develop a psychometrically sound and context-specific instrument that could be used to assess athletes’ engagement in athletic training.

To shed light on these two questions, three studies were organized. In study 1, the concept and structure of the engagement in athletic training were clarified and the item pool of the Engagement in Athletic Training Scale (EATS) was generated using a qualitative approach. In study 2, factor structure, discriminant validity, and internal consistency reliability of the EATS were initially evaluated in a sample of Chinese athletes. In study 3, factor structure, discriminant validity, internal consistency reliability, and nomological validity of the EATS were further examined in an independent sample of Chinese athletes. Meanwhile, measurement invariance of the EATS across samples and genders was evaluated. Ethical approval for the entire project (involving three studies) was obtained from a local university’s human and animal research ethics committee (Reference No. M202300127).

Study 1: item generation and content validity of the EATS

The purpose of study 1 was to develop a preliminary version of the EATS and further evaluate its content validity. First, based on previous research on work engagement and student engagement, athletes and coaches were interviewed to explore the definition and structure of engagement in athletic training. Second, the pool of EATS items was developed based on interviews with athletes. Third, the content validity of the preliminary version of the EATS was evaluated by athletes, coaches, and sports psychologists.

Method of study 1

Participants

Participants for interview

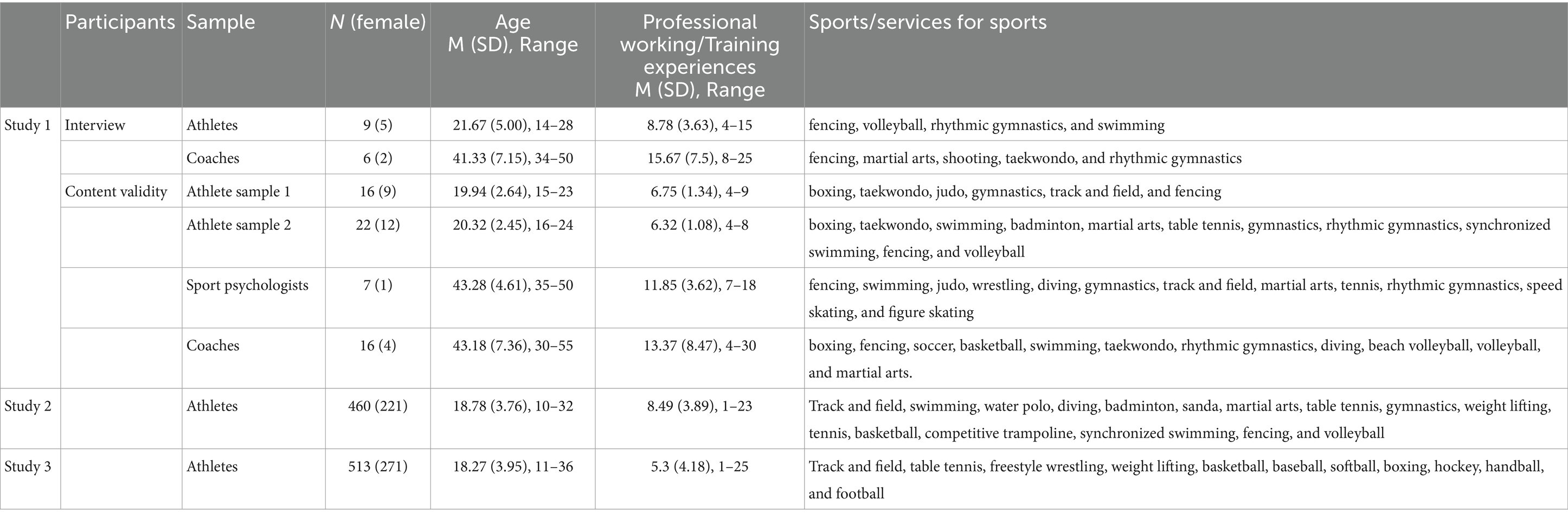

Six coaches (two women) and nine athletes (five women) were invited to participate in a series of face-to-face semi-structured interviews. All participants were competing at national or international games at the moment of data collection. Basic information of participants is presented in Table 1.

Participants for content validity

Sixteen athletes (athlete sample 1) were invited to evaluate whether the EATS items were clear and easy to understand. Twenty-two athletes (athlete sample 2) were invited to evaluate to what extent the EATS items were applicable to measure their EAT. All participants were competing at national or international games at the time of data collection. Sixteen coaches were invited to evaluate to what extent the EATS items were applicable to evaluate athletes’ EAT in their coaching practice. All coaches had experience coaching at national or international games. Seven sport psychologists were invited to evaluate to what extent the EATS items were applicable to measure athletes’ EAT. All sport psychologists had experience working with athletes competing at national or international games. Basic information of participants is presented in Table 1.

Procedure

All participants were contacted by telephone or online and invited to participate in this study. They were provided a copy of the invitation letter together with a written informed consent form, in which the anonymous treatment of data was clarified and the research purpose of the study and the requirements for participation were clearly described. For interviews, coaches and athletes attended face-to-face semi-structured interviews, which took place in a quiet room and were audio-taped (Supplementary material A: interview outline). Qualitative interviews were transcribed verbatim and analyzed using content analysis via NVivo 11 to clarify and identify the conceptual and operational definitions of athletes’ EAT and further generate a preliminary version of the EATS (Supplementary material B).

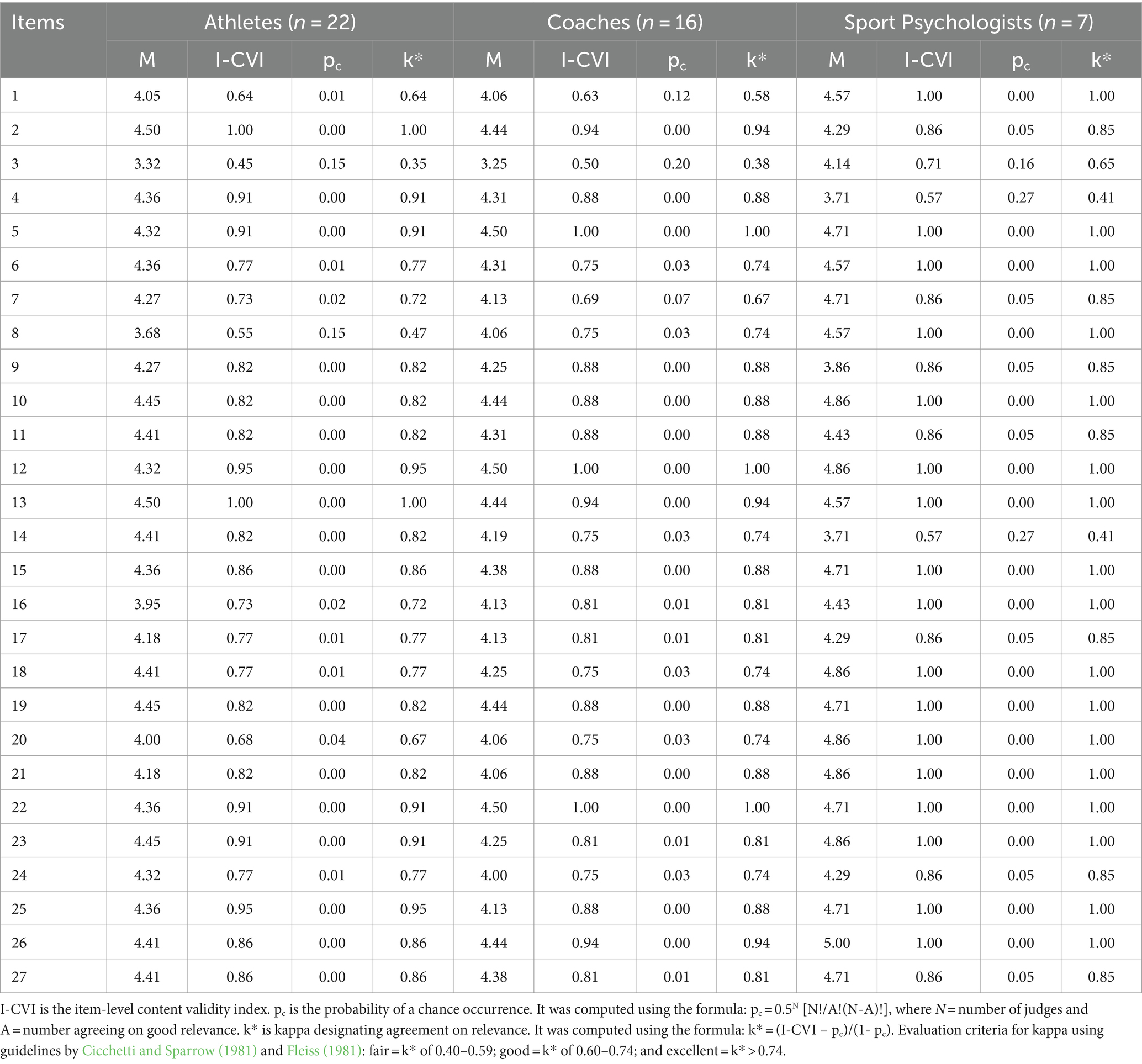

For content validity evaluation, athlete sample 1 was invited to evaluate to what extent they could easily understand the EATS items using a 5-point Likert scale (1 = not clear at all, 5 = very clear). A response below 4 out of 5 from athletes suggests that they may have difficulties understanding the item, indicating that revision or removal of the item should be considered. Athletes (sample 2), coaches, and sports psychologists were invited to answer an online version of the EATS, in which the applicability of the EATS items in measuring athletes’ EAT was evaluated using a 5-point Likert scale (1 = inapplicable at all, 5 = highly applicable). They were also asked to provide suggestions for additional items if appropriate. The content validity index of each item (I-CVI) was computed by dividing the number of judges who gave a rating of 4 or 5 on a specific item by the total number of judges (Lynn, 1986). In addition, the modified kappa statistic (k*), an index of agreement of a certain type, was computed to further evaluate the content validity of items. The modified kappa statistic was proposed to address the issue of chance agreement in CVI values by adjusting for chance agreement on relevance only, rather than chance agreement of any type (Polit et al., 2007). Therefore, it is a good supplementary indicator to the CVI in content validity evaluation. According to Polit et al. (2007, p. 466), any I-CVI greater than 0.78 would be considered excellent, regardless of the number of judges. Guidelines described in Fleiss (1981) and Cicchetti and Sparrow (1981) were used as evaluation criteria for k* (fair: k* = 0.40–0.59; good: k* = 0.60–0.74; excellent: k* > 0.74). Both the I-CVI and k* were subsequently used as a reference for deciding whether to retain, delete, or revise the item. It should be noted that the values of I-CVI and k* converge with an increasing number of judges.
With 10 or more judges, any I-CVI value greater than 0.75 yields a k* greater than 0.75, which means that an I-CVI of 0.75 would be considered “excellent” with a panel of more than 9 judges, but not with a panel of fewer than 9 judges (Polit et al., 2007). All participants participated in the study voluntarily and returned their consent form before the data collection. For athletes who were under 18 years old at the time of data collection, informed consent was obtained from their coaches, who were asked to act in loco parentis.
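The I-CVI and modified kappa described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' script: the function names are hypothetical, the chance-agreement term follows the binomial formula in Polit et al. (2007) with a probability of 0.5, and the label "below fair" for values under 0.40 is an assumption, since the text only names the fair, good, and excellent bands.

```python
from math import comb


def item_cvi(n_relevant: int, n_judges: int) -> float:
    """I-CVI: proportion of judges who rated the item 4 or 5."""
    return n_relevant / n_judges


def modified_kappa(n_relevant: int, n_judges: int) -> float:
    """Modified kappa (k*): I-CVI adjusted for chance agreement on relevance."""
    i_cvi = item_cvi(n_relevant, n_judges)
    # Probability that exactly n_relevant of n_judges agree by chance (p = 0.5 each).
    p_chance = comb(n_judges, n_relevant) * 0.5 ** n_judges
    return (i_cvi - p_chance) / (1 - p_chance)


def rate_kappa(kappa: float) -> str:
    """Map k* (rounded to two decimals) onto the bands quoted in the text."""
    k = round(kappa, 2)
    if k > 0.74:
        return "excellent"
    if k >= 0.60:
        return "good"
    if k >= 0.40:
        return "fair"
    return "below fair"  # assumed label; not named in the text


# Hypothetical example: 8 of 16 judges rate an item as applicable (4 or 5).
print(item_cvi(8, 16), round(modified_kappa(8, 16), 2), rate_kappa(modified_kappa(8, 16)))
```

With small panels the chance term is large (for 4 of 7 judges it is about 0.27), which is why k* falls well below the I-CVI there, whereas with larger panels the two values converge.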

Results of study 1

Consistent with previous assumptions of engagement as a multidimensional construct, the results of the qualitative content analysis revealed that athletes’ EAT could be represented by three distinctive but correlated dimensions of behavioral engagement, cognitive engagement, and affective engagement. Athletes could be behaviorally active but not cognitively or affectively engaged in their training. For example, one could be actively repeating the training tasks without thinking about why she or he should do it. Similarly, athletes may be intensively experiencing their affective feelings while not paying attention to coaches’ instructions or failing to meet coaches’ expectations. Logically, the three EAT components may influence each other and together affect athletes’ training quality. We defined the EAT as the intensity of athletes’ productive involvement with their training-related activities and tasks. The operational definition was organized as the extent to which athletes execute, comprehend, analyze, and affectively engage in their training-related tasks. Specifically, behavioral engagement refers to the extent to which elite athletes physically and behaviorally engage in their training activities, including physical, skill-related, and tactical training tasks. Cognitive engagement refers to the extent to which athletes correctly comprehend, actively analyze, and proactively concentrate on their training-related tasks. Affective engagement refers to the affective status that athletes express and experience throughout the training period, including both positive and negative affect.
According to the abovementioned conceptual and operational definitions, a preliminary version of the EATS was developed based on the qualitative content analyses. It includes 27 items, with 10 items measuring behavioral engagement (example item: I successfully completed the training tasks assigned by my coach), 8 items measuring cognitive engagement (example item: I know exactly the purpose of my training tasks), and 9 items measuring affective engagement (example item: I enjoy the training process) (Supplementary material B: 27-item EATS). Materials supporting the development of the definitions of EAT are available on request from the corresponding author.

It was found that athlete sample 1 reported an average score of 4.78 out of 5, ranging from 4.38 to 5 (on average, 97% of ratings per item were 4 or higher, ranging from 88 to 100%), which means that the EATS items could be easily and clearly understood by athletes. Table 2 presents the I-CVI values and the modified kappa values (k*) computed based on the responses of athletes, coaches, and sports psychologists. As the sample sizes of athletes (n = 22) and coaches (n = 16) were larger than 10, an I-CVI of 0.75 could be considered “excellent” (Polit et al., 2007). For the athlete sample, the I-CVI values of 6 items (item 1: 0.64; item 3: 0.45; item 7: 0.73; item 8: 0.55; item 16: 0.73 and item 20: 0.68) were lower than 0.75. The k* values of the same 6 items were below 0.74 with 4 items (item 1: 0.64; item 7: 0.72; item 16: 0.72 and item 20: 0.67) falling between 0.60 and 0.74 (good) and 2 items (item 3: 0.35 and item 8: 0.47) lower than 0.59 (fair). These results suggested that 25 items were rated as good or excellent and 2 items (item 3 and item 8) were rated as fair. For the coach sample, the I-CVI values of 3 items (item 1: 0.63; item 3: 0.50; item 7: 0.69) were lower than 0.75. The k* values of the same 3 items were below 0.74 with 1 item (item 7: 0.67) falling between 0.60 and 0.74 (good) and 2 items (item 1: 0.58 and item 3: 0.38) lower than 0.59 (fair). These results suggested that 25 items were rated as good or excellent and 2 items (item 1 and item 3) were rated as fair. For the sport psychologist sample, the I-CVI values of 3 items (item 3: 0.71; item 4: 0.57, and item 14: 0.57) were lower than 0.78. The k* values of the same 3 items were below 0.74 with 1 item (item 3: 0.65) falling between 0.60 and 0.74 (good) and 2 items (item 4: 0.41 and item 14: 0.41) lower than 0.59 (fair). These results suggested that 25 items were rated as good or excellent and 2 items (item 4 and item 14) were rated as fair.
No additional items were suggested by athletes, coaches, and sports psychologists. Given the inconsistent results observed across the three populations on the content validity of items, we decided to retain all items for the data collection in study 2. Removal or revision decisions would be made together with the results of the statistical analysis in study 2.

Study 2: initial validation of the EATS

The purpose of study 2 was to initially investigate the factor structure, discriminant validity, and internal consistency reliability of the preliminary version of the EATS among a sample of Chinese athletes.

Method of study 2

Participants

A sample of 478 Chinese athletes from 16 sports was invited to participate in this study. Excluding invalid questionnaires, data from 460 athletes were used for data analysis. All athletes had experience competing at national or international games at the time of data collection. Basic information on the participants is presented in Table 1. DeVellis and Thorpe (2021) recommended an item–participant ratio between 1:5 and 1:10 for factor analysis. The initial EATS includes 27 items, so at most 270 participants would be required, which means that our sample size was sufficient for our analytical purposes. In addition, a post-hoc RMSEA-based power analysis was conducted to estimate the statistical power of the analyses with our sample size using power4SEM (Wang and Rhemtulla, 2021). Specifically, power for the RMSEA test of not-close fit was computed assuming a population RMSEA (H1) of 0.01 [suggested by MacCallum et al. (2006)] to test the H0 of RMSEA ≥0.05 with our sample size of 460, various df values (204–324 for the 1- to 6-factor EFA models; 101–249 for the 27-item and 19-item 4-factor ESEM models), and an alpha level of 0.05. The statistical power of our analyses to reject not-close fit equaled 1, which further supports that our sample size was sufficient to achieve convincing statistical power in our analyses.
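The logic of the RMSEA-based power analysis for the test of not-close fit can be illustrated with a rough sketch. It is not the power4SEM implementation: the function name is hypothetical, and the noncentral chi-square distributions are replaced here by a normal approximation (mean df + λ, variance 2(df + 2λ)), which is an assumption made only to keep the example dependency-free. The noncentrality parameter is taken as λ = (N − 1) · df · RMSEA², and H0 is rejected when the test statistic falls below the lower α quantile of its H0 distribution.

```python
from math import sqrt
from statistics import NormalDist


def power_not_close_fit(n, df, rmsea_h0=0.05, rmsea_h1=0.01, alpha=0.05):
    """Approximate power to reject H0: RMSEA >= rmsea_h0 when the true RMSEA is rmsea_h1."""
    # Noncentrality parameters under H0 and H1.
    ncp0 = (n - 1) * df * rmsea_h0 ** 2
    ncp1 = (n - 1) * df * rmsea_h1 ** 2
    # Normal approximation to the noncentral chi-square (an assumption of this sketch).
    dist_h0 = NormalDist(df + ncp0, sqrt(2 * (df + 2 * ncp0)))
    dist_h1 = NormalDist(df + ncp1, sqrt(2 * (df + 2 * ncp1)))
    # Not-close fit is supported when the statistic is small: lower-tail critical value.
    critical = dist_h0.inv_cdf(alpha)
    return dist_h1.cdf(critical)


# Smallest and largest df values reported in the text, with N = 460.
for df in (101, 324):
    print(df, round(power_not_close_fit(460, df), 3))
```

Under these assumptions the approximate power is very close to 1 across the reported df range, in line with the result stated in the text; an exact calculation would use the noncentral chi-square distribution directly.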

Procedure

Coaches were contacted and were provided information about the study to obtain permission to access their athletes. After receiving their approval, informed consent was obtained from athletes before data collection. In addition, for those athletes who were younger than 18 years old, informed consent was obtained from their coaches who were asked to act in loco parentis. All athletes participated in this study voluntarily and completed an anonymous online version of the EATS after the daily training. They were reminded in the instructions of the online survey that all of the information they provided would be absolutely confidential, especially as their coaches would not be able to access their responses. It took about 5 min to complete the questionnaire.

Measure

A preliminary version of the EATS consists of 27 items and includes 3 subscales of behavioral engagement (10 items), cognitive engagement (8 items), and affective engagement (9 items). Athletes were requested to indicate to what extent they disagree or agree with each statement that related to the latest session of athletic training on a 5-point Likert scale ranging from 1 (strongly disagree) to 5 (strongly agree).

Data analysis

SPSS version 22 was used to calculate the descriptive statistics of variables, and Mplus 7.31 was used to conduct exploratory factor analysis (EFA) and exploratory structural equation modeling (ESEM) to investigate the factor structure of the EATS. First, EFAs were conducted using the robust maximum likelihood estimator (MLR) in Mplus to compare one to six correlated latent factor structures of the EATS. As recommended by Marsh et al. (2009, 2014), the EFAs were estimated with an oblique geomin rotation. The number of factors with an eigenvalue greater than one, together with a large bend in the scree plot, was taken to indicate a plausible factor structure of the EATS. Meanwhile, goodness-of-fit indices of the different solutions were compared, with better fit indicating a more reasonable and desirable solution.

Furthermore, confirmatory factor analysis (CFA) has been criticized for relying on a highly restrictive independent cluster model, in which cross-loadings of items on unintended factors in multidimensional instruments are forced to be zero (Asparouhov and Muthen, 2009). ESEM has been proposed to overcome this limitation of CFA because it integrates the principles of EFA within the CFA/SEM framework and provides a better representation of the complex multidimensional structures of instruments (Asparouhov and Muthen, 2009). ESEM has been widely used in studies from various domains that aim to examine the factor structure and measurement invariance of instruments (Appleton et al., 2016). Therefore, as a second step, ESEM using the robust maximum likelihood (MLR) estimator was conducted to reexamine the factor structure and the performance of the items of the EATS. The fit of the models using EFA and ESEM approaches was compared, and the performance of items was evaluated. Multiple fit indices were used to evaluate the adequacy of the model fit to the data in the present study, including the chi-square value, comparative fit index (CFI), Tucker–Lewis index (TLI), root mean square error of approximation (RMSEA) accompanied by its 90% confidence interval (CI), and standardized root mean square residual (SRMR). Because no specific model-data fit recommendations are available for ESEM, the criteria commonly recommended for the independent cluster model CFA approach were adopted in this study for both EFA and ESEM analyses (Appleton et al., 2016). Specifically, CFI and TLI values above 0.90, SRMR values close to (or less than) 0.08, and RMSEA values up to 0.08 indicate an acceptable fit. CFI and TLI values exceeding 0.95, and SRMR and RMSEA close to (or less than) 0.08 and 0.06, respectively, represent a good fit (Hu and Bentler, 1999).
In terms of interpreting the extracted factors, items with a primary loading less than 0.40 and/or a cross-loading greater than 0.32 were considered problematic (Tabachnick and Fidell, 2019) and were considered for removal. Cronbach’s alpha coefficient (α) and composite reliability (CR) were calculated to evaluate the internal consistency reliability of the EATS. Values of α > 0.70 (Nunnally and Bernstein, 1994) and CR > 0.70 (Raykov and Shrout, 2002) indicate acceptable internal consistency reliability.
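The two reliability coefficients named above can be sketched as follows. This is an illustrative example rather than the analysis used in the study: the function names and the toy data are hypothetical, Cronbach’s alpha uses the standard variance-based formula, and composite reliability is computed from standardized primary loadings under the assumption of uncorrelated residuals (cross-loadings, which ESEM allows, are ignored here).

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """item_scores: one list per item, each holding one score per respondent."""
    k = len(item_scores)
    item_var_sum = sum(variance(item) for item in item_scores)
    # Total score per respondent across all items of the subscale.
    totals = [sum(responses) for responses in zip(*item_scores)]
    return k / (k - 1) * (1 - item_var_sum / variance(totals))


def composite_reliability(loadings):
    """CR from standardized loadings: (sum l)^2 / ((sum l)^2 + sum(1 - l^2))."""
    sum_sq = sum(loadings) ** 2
    error = sum(1 - l ** 2 for l in loadings)
    return sum_sq / (sum_sq + error)


# Hypothetical 4-item subscale answered by 5 respondents on a 5-point scale.
scores = [
    [4, 5, 3, 4, 2],
    [4, 4, 3, 5, 2],
    [5, 5, 2, 4, 3],
    [4, 5, 3, 4, 1],
]
print(round(cronbach_alpha(scores), 3))
print(round(composite_reliability([0.7, 0.7, 0.7, 0.7]), 3))
```

Both coefficients would then be compared against the 0.70 threshold adopted in the text.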

Results of study 2

Factor structure

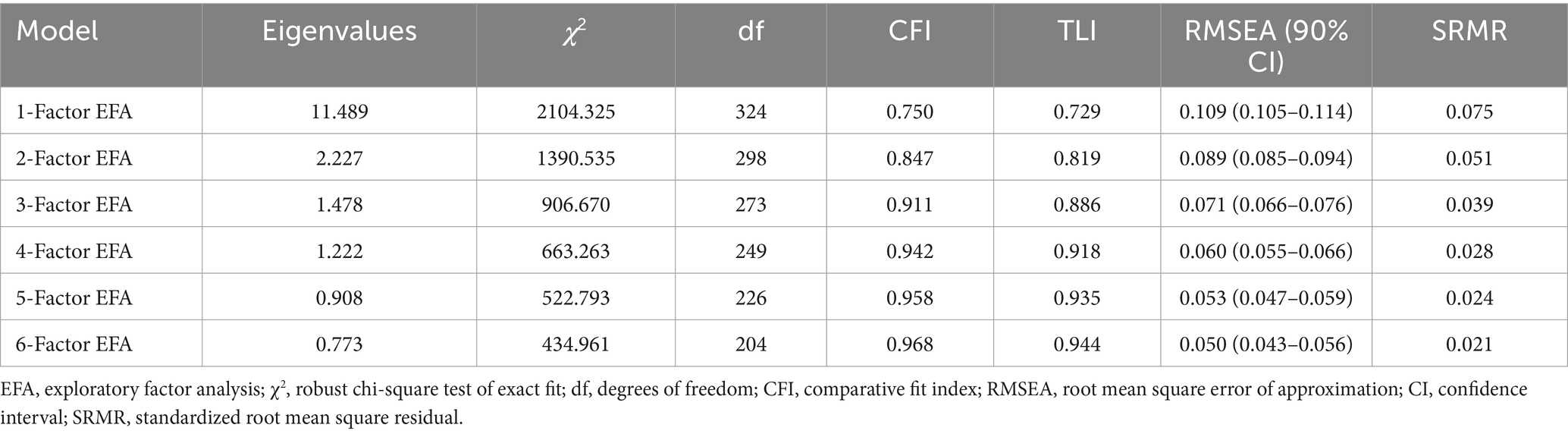

Table 3 presents the eigenvalues and goodness-of-fit indices of the different EFA solutions. The 4-factor solution outperformed the other solutions because all of its eigenvalues were larger than 1 and it demonstrated an acceptable model fit. Although the 5- and 6-factor solutions demonstrated better model fit than the 4-factor solution, some eigenvalues of these two solutions were smaller than 1. In addition, inspection of the factor loadings of the items revealed difficulties in interpreting the results of the two solutions. The four components of the four-factor solution could represent behavioral engagement, cognitive engagement, positive affective engagement, and negative affective engagement. Therefore, in the following ESEM analysis, the four-factor solution was adopted.

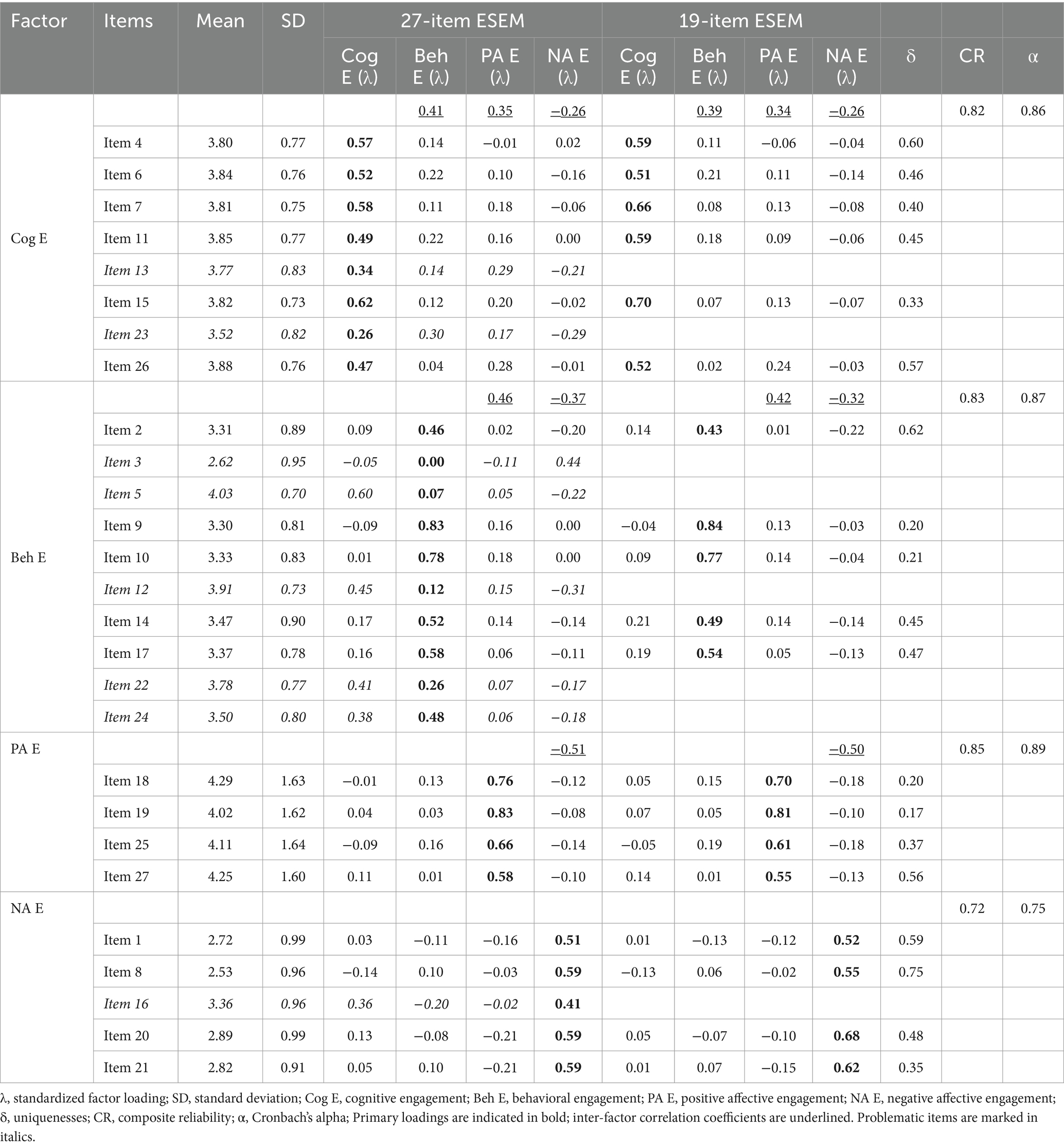

Results of the 27-item 4-factor ESEM demonstrated an acceptable model fit, χ2 (249) = 521.703, p < 0.001; CFI = 0.945; TLI = 0.922; SRMR = 0.028; RMSEA = 0.049 (90% CI: 0.043–0.055). Inspection of the factor loadings of items revealed that the primary loadings of 6 items (items 3, 5, 12, 13, 22, and 23) were lower than 0.40 and the cross-loadings of 2 items (items 16 and 24) were larger than 0.32 (see Table 4). The four items (items 1, 4, 8, and 14) that were rated as fair by any of the three samples in the content validity analysis in study 1 were found to function well and were therefore retained. However, item 3, which was rated as fair by both athletes and coaches in the content validity analysis in study 1, was found to be problematic. Therefore, the 8 problematic items (items 3, 5, 12, 13, 16, 22, 23, and 24) were removed from further analysis. Excluding these 8 items substantially improved the fit of the model to the data: χ2 (101) = 189.857, p < 0.001; CFI = 0.972; TLI = 0.953; SRMR = 0.023; RMSEA = 0.044 (90% CI: 0.034–0.053). Further examination of the factor loadings revealed that primary loadings ranged from 0.51 to 0.84 and cross-loadings were smaller than 0.32, ranging from −0.01 to 0.26, which suggested that the 19 items of the EATS functioned well (see Table 4).
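As a quick check of how the reported indices map onto the fit criteria adopted in this study, the thresholds can be written as a small classifier. This is a simplified sketch: the function name is hypothetical, and the "close to (or less than)" wording of the criteria is treated here as a strict cutoff, which is an assumption.

```python
def classify_fit(cfi, tli, srmr, rmsea):
    """Classify model fit using the CFA-based cutoffs quoted in the text."""
    # Good fit: CFI and TLI above 0.95, SRMR up to 0.08, RMSEA up to 0.06.
    if cfi > 0.95 and tli > 0.95 and srmr <= 0.08 and rmsea <= 0.06:
        return "good"
    # Acceptable fit: CFI and TLI above 0.90, SRMR and RMSEA up to 0.08.
    if cfi > 0.90 and tli > 0.90 and srmr <= 0.08 and rmsea <= 0.08:
        return "acceptable"
    return "poor"


# Fit indices reported for the two ESEM models in study 2.
print(classify_fit(cfi=0.945, tli=0.922, srmr=0.028, rmsea=0.049))  # 27-item model
print(classify_fit(cfi=0.972, tli=0.953, srmr=0.023, rmsea=0.044))  # 19-item model
```

Under these cutoffs the 27-item model is classified as acceptable and the 19-item model as good, consistent with the improvement described above.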

Table 4. Descriptive statistics, standardized factor loadings, inter-factor correlations, composite reliability, and Cronbach’s alpha of the 27-item ESEM and 19-item ESEM in study 2.

Discriminant validity and internal consistency reliability

It was found that the inter-factor correlations (ESEM results) ranged from −0.50 to 0.42, which suggested that the four factors were distinctive but correlated with each other (Table 4). These results provided initial support for the discriminant validity of the 19-item EATS. All four subscales demonstrated acceptable internal consistency reliabilities with composite reliability (CR) ranging from 0.72 to 0.85 and Cronbach’s alpha coefficients ranging from 0.75 to 0.89. Table 4 displays item means, standard deviations, standardized factor loadings, inter-factor correlations, composite reliability, and Cronbach’s alpha coefficients of the EATS subscales.

Study 3: further examination of the psychometric properties of the EATS

The purpose of study 3 was to further investigate the factor structure, discriminant validity, internal consistency reliability, and nomological validity of the EATS among an independent sample of Chinese athletes. Furthermore, measurement invariance of the EATS across samples and genders was examined.

Method of study 3

Participants and procedure

A sample of 550 Chinese athletes from 11 sports was invited to participate in this study. Excluding invalid questionnaires, data from 513 athletes were used for data analysis. All athletes had experience competing at national or international games at the time of data collection. Basic information on the participants is presented in Table 1. According to the results of the post-hoc RMSEA-based power analysis conducted in study 2, the sample size of 513 is sufficient for our statistical analyses. The procedure was the same as that in study 2. It took about 10 min to complete all questionnaires in study 3.

Measure

The 19-item EATS was used to measure athletes’ behavioral engagement (6 items), cognitive engagement (5 items), positive affective engagement (4 items), and negative affective engagement (4 items). Athletes were requested to indicate to what extent they disagreed or agreed with each statement that related to the latest session of athletic training on a 5-point Likert scale ranging from 1 (strongly disagree) to 5 (strongly agree) (see Supplementary material C).

A 5-item Chinese version of the Subjective Vitality Scale (SVS; Ryan and Frederick, 1997; Liu and Chung, 2019) was employed to measure athletes’ subjective vitality. Responses were provided on a 7-point Likert scale ranging from 1 (strongly disagree) to 7 (strongly agree). Previous research has suggested that the SVS displayed satisfactory validity and reliability among Chinese athletes (Liu et al., 2022). Internal consistency reliability of the scale in previous research (α = 0.954) and in the present study (α = 0.955) was satisfactory.

A 4-item Chinese version of the concentration disruption subscale of the Sport Anxiety Scale-2 (SAS-2; Smith et al., 2006) was used to measure participants’ concentration disruption. Responses were measured on a 4-point Likert scale ranging from 1 (never) to 4 (always). Previous research revealed that the concentration disruption subscale displayed satisfactory validity and reliability among Chinese elite athletes (Liu et al., 2022). Internal consistency reliability of the concentration disruption subscale in previous research (α = 0.896) and in the present study (α = 0.895) was satisfactory.

A 3-item Chinese version of the effort subscale of the Intrinsic Motivation Inventory (IMI; McAuley et al., 1989) was used to measure participants’ effort input in their athletic training. Responses were measured on a 7-point Likert scale ranging from 1 (completely disagree) to 7 (completely agree). Previous research revealed that the effort subscale displayed satisfactory validity and reliability among athletes (McAuley et al., 1989). Internal consistency reliability of the effort subscale in previous research (α = 0.84) was satisfactory. In the present study, the internal consistency reliability was slightly lower than 0.7 (α = 0.676).

A nine-item Chinese version of the International Positive and Negative Affect Schedule Short Form (I-PANAS-SF; Thompson, 2007; Liu et al., 2020) was used to measure athletes’ positive affect (four items) and negative affect (five items) in general in this study. Responses were provided on a 5-point Likert scale ranging from 1 (never) to 5 (always). Previous research revealed that the Chinese version of the I-PANAS-SF displayed satisfactory validity and reliability among Chinese athletes (e.g., Liu et al., 2022). Internal consistency reliability of the I-PANAS-SF in a previous study (α = 0.755–0.88) and the current study (α = 0.758–0.82) was satisfactory.

Data analysis

First, ESEM using an MLR estimator was conducted to further examine the factor structure of the 19-item EATS. Second, Cronbach’s alpha coefficients and composite reliability (CR) were calculated to evaluate the internal consistency reliability of the EATS. Third, the nomological validity of the EATS was evaluated by examining correlations between the EATS subscales and their theoretically related variables. Finally, measurement invariance of the EATS across samples (study 2 and study 3) and genders (male athletes from study 2 and study 3 vs. female athletes from study 2 and study 3) was investigated using a sequential model testing approach via multiple-group ESEM. Specifically, four models, namely, configural invariance, metric invariance (weak invariance), scalar invariance (strong invariance), and item uniqueness invariance (strict invariance), were evaluated (Meredith, 1993). Because the chi-square difference test is sensitive to sample size, the differences in the descriptive fit indices (ΔCFI, ΔRMSEA, and ΔSRMR) were used in model comparisons in this study. According to Chen (2007), when testing metric invariance, non-invariance is indicated by a change of ≥ 0.010 in the CFI supplemented by a change of ≥ 0.015 in the RMSEA or ≥ 0.030 in the SRMR; when testing scalar or uniqueness invariance, non-invariance is indicated by a change of ≥ 0.010 in the CFI supplemented by a change of ≥ 0.015 in the RMSEA or ≥ 0.010 in the SRMR.

Results of study 3

Factor structure, discriminant validity, and internal consistency reliability

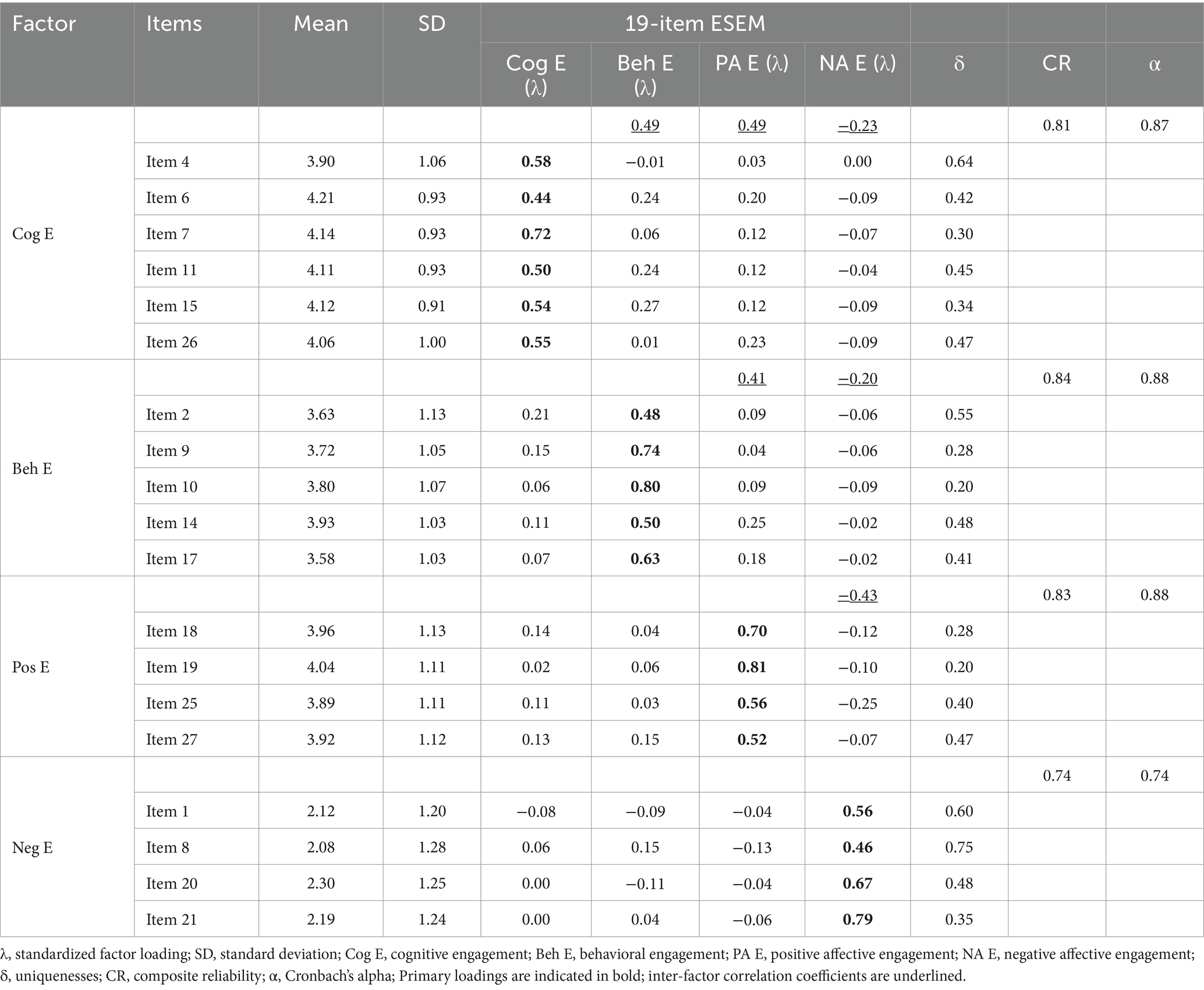

The ESEM results suggested that the 19-item 4-factor ESEM displayed a good fit to the data, χ² (101) = 191.43, p < 0.001, CFI = 0.975, TLI = 0.958, SRMR = 0.021, RMSEA = 0.042 (90% CI: 0.033–0.051). Primary loadings were larger than 0.4 (ranging from 0.44 to 0.81), and cross-loadings were smaller than 0.32 (ranging from −0.25 to 0.27), which suggested that the 19 items of the EATS functioned well. Low-to-moderate inter-factor correlations (−0.43 to 0.49) were revealed. These results suggested that the four factors of the EATS were distinct from but related to each other, which provided further support for the discriminant validity of the EATS. All four subscales demonstrated acceptable internal consistency reliabilities with composite reliability (CR) ranging from 0.74 to 0.84 and Cronbach’s alpha coefficients ranging from 0.74 to 0.88. Table 5 displays item means, standard deviations, standardized factor loadings, inter-factor correlations, and internal consistency reliability of the EATS.

Table 5. Descriptive statistics, standardized factor loadings, inter-factor correlations, composite reliability, and Cronbach’s alpha of the 19-item ESEM in study 3.

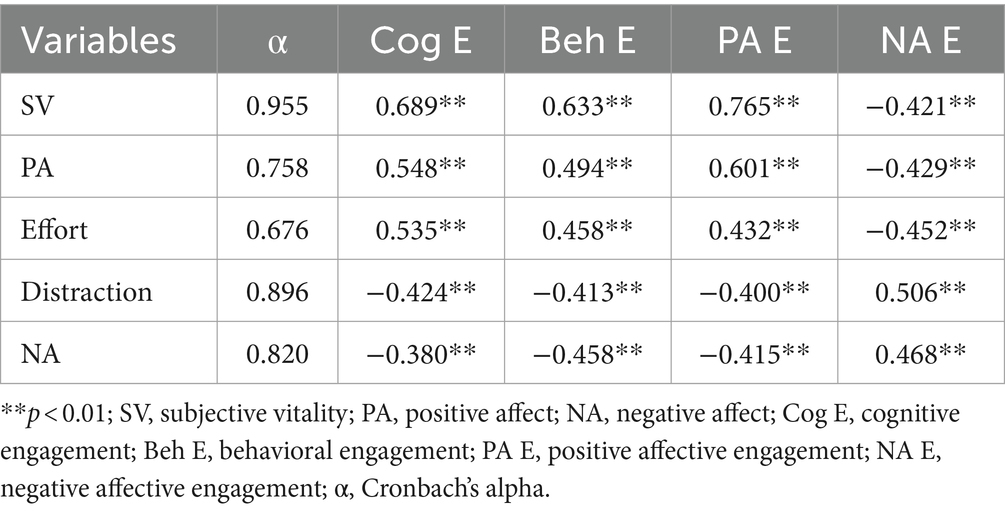
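As a rough plausibility check on the fit statistics reported for study 3, the RMSEA point estimate can be recomputed from the chi-square value, its degrees of freedom, and the sample size. The sketch below assumes the standard maximum-likelihood formula; the MLR estimator applies a scaling correction, so software output may differ slightly. The function name `rmsea` is a hypothetical helper.

```python
import math


def rmsea(chi_square: float, df: int, n: int) -> float:
    """RMSEA point estimate, sqrt(max(chi2 - df, 0) / (df * (n - 1)))."""
    if df <= 0 or n <= 1:
        raise ValueError("df must be positive and n must exceed 1")
    return math.sqrt(max(chi_square - df, 0.0) / (df * (n - 1)))


# Values reported in the text for the 19-item 4-factor ESEM in study 3.
estimate = rmsea(chi_square=191.43, df=101, n=513)
print(round(estimate, 3))  # 0.042
```

The recomputed value agrees with the reported RMSEA of 0.042 to three decimal places.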

Nomological validity

Previous research in work and academic settings has revealed that engagement is positively associated with vitality (Chughtai and Buckley, 2011; Bakker et al., 2020), subjective wellbeing (Ouweneel et al., 2012; Matthews et al., 2014; King et al., 2015; Garg and Singh, 2019), trait flow (Smith et al., 2023), and satisfaction (Alarcon and Lyons, 2011; Derbis et al., 2018) and negatively associated with burnout and negative affect (Bledow et al., 2011; Upadyaya et al., 2016; Chen et al., 2020). Based on this evidence, it is reasonable to assume that athletes’ behavioral engagement, cognitive engagement, and positive affective engagement would be positively associated with their subjective vitality, positive affect in general, and effort put into athletic training while negatively related to negative affect in general and concentration disruption in athletic training. On the other hand, we anticipated that athletes’ negative affective engagement would be negatively associated with the abovementioned positive outcomes (subjective vitality, positive affect in general, and effort put into athletic training) and positively associated with the negative outcomes (negative affect in general and concentration disruption in athletic training). Therefore, nomological validity was evaluated by examining the relationships between the EATS subscales and the abovementioned positive and negative outcomes. Table 6 displays the correlations among variables. As hypothesized, moderate-to-high positive correlations were found between behavioral engagement, cognitive engagement, and positive affective engagement on the one hand and the positive outcomes on the other. Meanwhile, moderate negative associations were found between these three subscales and the negative outcomes. In addition, negative affective engagement was negatively associated with the positive outcomes and positively associated with the negative outcomes. These findings provided support for the nomological validity of the EATS.

Table 6. Correlations between the EATS subscales and theoretically related variables in the nomological validity analysis.

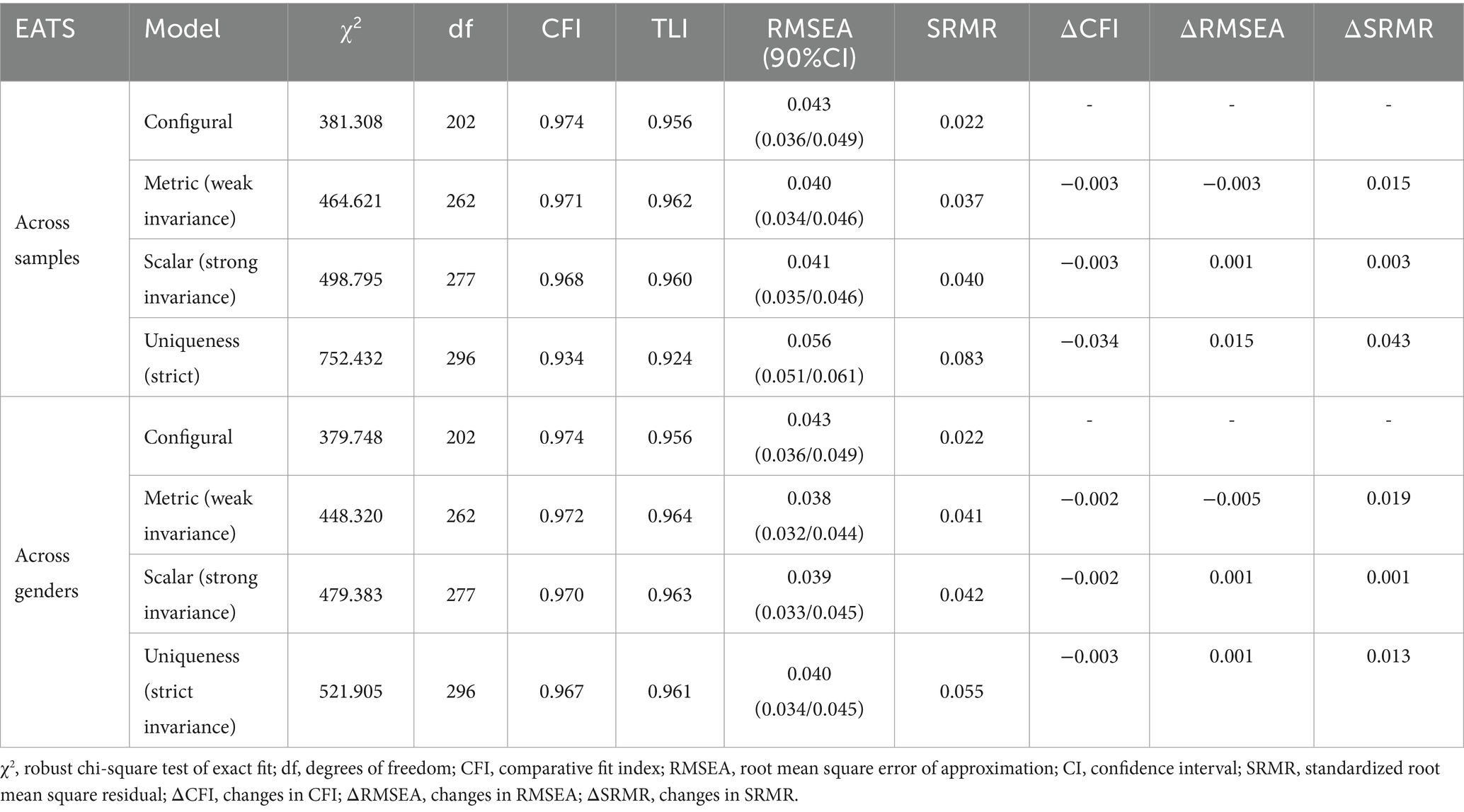

Invariance testing across samples and genders

Table 7 displays the goodness-of-fit indices for the invariance analysis of the EATS measurement model across samples and genders. For the invariance analysis across samples, there were no substantial changes in the selected indices (ΔCFI, ΔRMSEA, and ΔSRMR) for the metric and scalar invariance tests. However, for the uniqueness invariance test, the changes in CFI and SRMR were larger than the cutoff values (ΔCFI = 0.034 > 0.01; ΔRMSEA = 0.015; ΔSRMR = 0.043 > 0.01). Therefore, we concluded that weak and strong invariance, but not strict invariance, of the EATS measurement model across samples was supported. For the invariance analysis across genders, there were no substantial changes in the selected indices (ΔCFI, ΔRMSEA, and ΔSRMR) for the metric and scalar invariance tests. Although the change in SRMR was larger than the cutoff value (ΔSRMR = 0.013 > 0.01) for the uniqueness invariance test, no substantial changes were evidenced in the CFI and RMSEA (ΔCFI = 0.003 < 0.01; ΔRMSEA = 0.001 < 0.015). Therefore, we concluded that weak, strong, and strict invariance of the EATS measurement model across genders was supported.
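The decision rule attributed to Chen (2007) in the data analysis section can be sketched as a small function and applied to the changes in fit reported for the uniqueness (strict) invariance step. The class and function names are hypothetical, and the changes are treated as absolute magnitudes, which is an assumption about how the reported values should be read.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FitChange:
    """Absolute change in fit indices between two nested invariance models."""
    d_cfi: float
    d_rmsea: float
    d_srmr: float


def non_invariant(change: FitChange, step: str) -> bool:
    """Return True when the rule summarized in the text flags non-invariance.

    step is "metric", "scalar", or "uniqueness".
    """
    if step not in {"metric", "scalar", "uniqueness"}:
        raise ValueError(f"unknown invariance step: {step}")
    # The SRMR cutoff is looser for the metric step than for later steps.
    srmr_cutoff = 0.030 if step == "metric" else 0.010
    cfi_flag = change.d_cfi >= 0.010
    supplement_flag = change.d_rmsea >= 0.015 or change.d_srmr >= srmr_cutoff
    # The CFI change must be supplemented by an RMSEA or SRMR change.
    return cfi_flag and supplement_flag


# Changes reported in the text for the uniqueness invariance test.
across_samples = FitChange(d_cfi=0.034, d_rmsea=0.015, d_srmr=0.043)
across_genders = FitChange(d_cfi=0.003, d_rmsea=0.001, d_srmr=0.013)

print(non_invariant(across_samples, "uniqueness"))  # True
print(non_invariant(across_genders, "uniqueness"))  # False
```

Under this reading, strict invariance is rejected across samples and retained across genders, because the SRMR change across genders is not accompanied by a CFI change of at least 0.010.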

General discussion

In competitive sports, athlete engagement has been extensively examined; it mainly concerns athletes’ continuous and positive emotional and cognitive feelings toward their sports in general. However, it cannot reflect the extent to which athletes have engaged in specific sessions of their athletic training or, further, their training quality. In contrast, athletes’ engagement in athletic training reflects the intensity of athletes’ productive involvement with their training-related activities and tasks and could be treated as one of the indicators for monitoring the training quality of athletes. To the best of our knowledge, no psychometrically sound and context-specific measure of athletes’ engagement in athletic training has been developed yet. To address this gap, in a series of three rigorous studies, we conceptualized the definition and structure of engagement in athletic training and then developed and initially evaluated the psychometric properties of the Engagement in Athletic Training Scale (EATS). Collectively, it was found that engagement in athletic training could be characterized by four distinct but related dimensions: behavioral engagement, cognitive engagement, positive affective engagement, and negative affective engagement. Psychometric analyses revealed that the 19-item EATS displayed satisfactory reliability and validity and could be used to assess athletes’ engagement in athletic training. The current study adds to the literature by clarifying the concept and structure of engagement in athletic training and by providing a valid and reliable instrument for assessing it, which makes further investigations of the antecedents and consequences of engagement in athletic training possible.

The definition and structure of engagement in athletic training have not been clarified or investigated in previous research, which is the main reason that work on this particular topic is rare in the literature. In study 1, we conducted face-to-face semi-structured interviews with coaches and athletes and qualitatively analyzed the verbatim interview transcripts using content analysis. It was revealed that athletes’ engagement in athletic training could be represented by three distinct but correlated dimensions: behavioral engagement, cognitive engagement, and affective engagement. This is consistent with previous research on student engagement (Nystrand and Gamoran, 1991; Ben-Eliyahu et al., 2018). We defined engagement in athletic training as the intensity of athletes’ productive involvement with their training-related activities and tasks. The operational definition was the extent to which athletes execute, comprehend, analyze, and affectively (both positively and negatively) engage in their training-related tasks. This definition reflects both overt behaviors and covert cognitive and affective activities throughout the process of athletic training. In practice, coaches usually evaluate the training quality of athletes by observing overt indicators, including physical behaviors (the quantity that athletes complete in athletic training, such as repetitions, training intensity, and volume), physical movements (the quality of movements and actions, such as accuracy and stability), and performance outcomes (concrete performance). However, it is impossible for coaches to directly observe the extent to which athletes have cognitively engaged in their athletic training. 
For example, a typical piece of feedback from coaches is that some athletes only physically repeat the movements and actions without thinking about the rationale behind, and the requirements of, the training tasks, which is the main reason that little or no improvement is observed after a period of training even though they seem to have worked very hard. Similarly, it is difficult for coaches to accurately observe the affective states of athletes during athletic training because most athletes are prone to hide their true emotions, especially negative ones, to avoid being judged by coaches as mentally weak or not tough enough. Therefore, it is imperative to develop an instrument that can be used to assess athletes’ engagement in athletic training as a supplemental tool for monitoring their training quality in addition to observations from coaches. To achieve this purpose, in study 1, we first developed a pool of 27 EATS items based on the results of the qualitative analysis. Although there was some inconsistency across samples in the content validity of 4 (out of 27) items, we decided to retain all items for further examination in the statistical analysis in study 2.

The results of study 2 revealed that the EATS successfully captured the core components of behavioral, cognitive, and affective engagement in athletic training. However, it is noteworthy that the three-factor EFA solution was inferior to the four-factor EFA solution, in which affective engagement was divided into positive affective engagement and negative affective engagement. Although this result was inconsistent with previous findings on academic engagement, it makes sense because both positive and negative affective engagement were reported by athletes and coaches and, more importantly, they contributed differently to overall engagement in athletic training. Further analysis using the ESEM approach revealed that the 4-factor structure of the 19-item EATS demonstrated a good fit to the data after removing the eight problematic items, including items rated as fair in the content validity analysis. Moderate inter-factor correlations among the four dimensions were revealed, which were consistent with previous findings and provided support for discriminant validity. In other words, the four dimensions were distinct from but associated with each other. A significant negative correlation was revealed between negative affective engagement and positive affective engagement, which suggested that they may co-exist in one particular athletic training session. This result further reminds researchers and practitioners that athletic training is a dynamic and complex process, in which athletes’ affective states may change from moment to moment or across training tasks. In practice, some athletes often mention that they are more physically involved in activities they like than in those they do not. The result further highlights that athletes’ engagement in athletic training provides more detailed information than athlete engagement can provide. 
For example, although an athlete may have reported high scores on athlete engagement (continuous and positive emotional and cognitive feelings toward their sports in general), she or he may also experience different affective engagements in the process of specific athletic training. This further justified the rationale of the current study that athlete engagement was insufficient to reflect or monitor training quality in comparison with engagement in athletic training.

The results of study 3 provided further support for the factor structure, discriminant validity, and internal consistency reliability of the EATS. Moreover, nomological validity was evidenced by the finding that the EATS subscales were significantly associated with theoretically related variables. Although no previous research has purposely explored these relationships in the context of competitive sport, previous findings of moderate relationships of academic engagement and work engagement with similar variables justified the selection of the variables examined for nomological validity. Finally, invariance analyses across samples and genders provided further psychometric evidence for the EATS. Measurement invariance is one of the fundamental properties of psychometrically sound scales. Weak invariance examines whether the change in each item score corresponds to the change in the factor score in the same way across groups. Strong invariance examines whether the values of the observed variables reflect the values of the latent variables in the same way across different groups. Strict invariance additionally examines whether item uniquenesses are equal across groups; evidence of strict invariance means that any difference between groups is a true difference rather than a measurement artifact. The results of the invariance analysis in study 3 revealed that weak and strong invariance of the EATS across samples was supported, while weak, strong, and strict invariance across genders was supported. Based on these results, we can conclude that athletes from different samples (study 2 and study 3) interpreted the EATS items in a similar way (weak invariance) and that the mean scores of participants from different samples on the EATS subscales were comparable (strong invariance). 
Similarly, for gender invariance analysis, it was concluded that female athletes and male athletes interpreted the EATS items in a similar way (weak invariance), and the mean scores reported by female athletes and male athletes on the EATS subscales were comparable (strong invariance), and moreover, if there were significant differences across genders, they were true and meaningful differences.
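The three levels of invariance discussed above can be written compactly in conventional multiple-group factor model notation. The symbols used here are standard notation introduced for illustration and do not appear in the original analysis: x is the vector of item responses for athlete i in group g, τ the item intercepts, Λ the factor loadings, η the latent factors, ε the item residuals, and Θ the residual (uniqueness) covariance matrix.

```latex
\begin{align*}
\text{measurement model:} \quad & x_{ig} = \tau_g + \Lambda_g \eta_{ig} + \varepsilon_{ig},
  \qquad \operatorname{Var}(\varepsilon_{ig}) = \Theta_g \\
\text{weak (metric):} \quad & \Lambda_g = \Lambda \\
\text{strong (scalar):} \quad & \Lambda_g = \Lambda, \quad \tau_g = \tau \\
\text{strict (uniqueness):} \quad & \Lambda_g = \Lambda, \quad \tau_g = \tau, \quad \Theta_g = \Theta
\end{align*}
```

Under strong invariance, and writing κ for the latent mean of group g, the expected item scores are E(x) = τ + Λκ, so differences in observed subscale means between groups can arise only from differences in latent means. This is why strong invariance is the condition invoked above for comparing mean scores across samples and genders.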

Collectively, the current study clarified and conceptualized the definition and structure of engagement in athletic training, contributing new theoretical insights to the literature on this topic. A psychometrically sound and context-specific instrument, the EATS, was initially developed and validated in two independent samples, in which it displayed satisfactory validity and reliability. These results suggest that the EATS could be used to assess athletes’ engagement in athletic training for both research and practical purposes. However, several limitations of this study should be acknowledged. First, a convenience sampling approach was used. Although athletes from various sports were invited, athletes from many other sports (e.g., rowing and winter sports) were not, so the results of the current study may not be generalizable to athletes from other sports. Future researchers are encouraged to further investigate the psychometric properties of the EATS in more representative samples. Second, only athlete-level variance was considered; coach-level, sport-level, and institutional-level effects were not examined. Given that athletes are naturally nested within coaches, sports, and institutions, future studies are encouraged to use multi-level designs to differentiate effects at different levels. Third, for nomological validity, variables assumed to be theoretically related on the basis of evidence from other fields (work engagement and academic engagement) were used in this study. Future studies may consider further identifying and investigating antecedents and consequences of engagement in athletic training. Fourth, although measurement invariance across samples and genders was examined in this study, the distributions of age and sporting experience levels were unequal, so measurement invariance across age and sporting experience groups was not examined. 
In addition, longitudinal invariance, which is important for longitudinal and interventional research, was not examined. Future researchers are encouraged to address these research questions.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving humans were approved by the Institutional Review Board at Sun Yat-sen University. The studies were conducted in accordance with the local legislation and institutional requirements. Written informed consent for participation in this study was provided by the participants’ legal guardians/next of kin.

Author contributions

J-DL: Conceptualization, Data curation, Formal analysis, Methodology, Project administration, Resources, Validation, Writing – original draft, Writing – review & editing. J-XW: Investigation, Methodology, Writing – original draft. Y-DZ: Investigation, Resources, Validation, Writing – review & editing. Z-HW: Data curation, Formal analysis, Writing – original draft, Writing – review & editing. SZ: Methodology, Writing – review & editing. J-CH: Data curation, Formal analysis, Project administration, Writing – review & editing. HL: Conceptualization, Validation, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declared that they were an editorial board member of Frontiers, at the time of submission. This had no impact on the peer review process and the final decision.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2024.1402065/full#supplementary-material

References

Alarcon, G. M., and Lyons, J. B. (2011). The relationship of engagement and job satisfaction in working samples. J. Psychol. 145, 463–480. doi: 10.1080/00223980.2011.584083

Alrashidi, O., Phan, H. P., and Ngu, B. H. (2016). Academic engagement: an overview of its definitions, dimensions, and major conceptualisations. Int. Educ. Stud. 9, 41–52. doi: 10.5539/ies.v9n12p41

Appleton, J. J., Christenson, S. L., and Furlong, M. J. (2008). Student engagement with school: critical conceptual and methodological issues of the construct. Psychol. Sch. 45, 369–386. doi: 10.1002/pits.20303

Appleton, J. J., Christenson, S. L., Kim, D., and Reschly, A. L. (2006). Measuring cognitive and psychological engagement: validation of the student engagement instrument. J. Sch. Psychol. 44, 427–445. doi: 10.1016/j.jsp.2006.04.002

Appleton, P. R., Ntoumanis, N., Quested, E., Viladrich, C., and Duda, J. L. (2016). Initial validation of the coach-created empowering and disempowering motivational climate questionnaire (EDMCQ-C). Psychol. Sport Exerc. 22, 53–65. doi: 10.1016/j.psychsport.2015.05.008

Asparouhov, T., and Muthen, B. (2009). Exploratory structural equation modeling. Struct. Equ. Model. Multidiscip. J. 16, 397–438. doi: 10.1080/10705510903008204

Bakker, A. B., Petrou, P., Op den Kamp, E. M., and Tims, M. (2020). Proactive vitality management, work engagement, and creativity: the role of goal orientation. Appl. Psychol. Int. Rev. 69, 351–378. doi: 10.1111/apps.12173

Ben-Eliyahu, A., Moore, D., Dorph, R., and Schunn, C. D. (2018). Investigating the multidimensionality of engagement: affective, behavioral, and cognitive engagement across science activities and contexts. Contemp. Educ. Psychol. 53, 87–105. doi: 10.1016/j.cedpsych.2018.01.002

Bledow, R., Schmitt, A., Frese, M., and Kühnel, J. (2011). The affective shift model of work engagement. J. Appl. Psychol. 96, 1246–1257. doi: 10.1037/a0024532

Bucher Sandbakk, S., Walther, J., Solli, G. S., Tønnessen, E., and Haugen, T. (2023). Training quality—what is it and how can we improve it? Int. J. Sports Physiol. Perform. 18, 557–560. doi: 10.1123/ijspp.2022-0484

Chen, F. F. (2007). Sensitivity of goodness of fit indexes to lack of measurement invariance. Struct. Equ. Model. 14, 464–504. doi: 10.1080/10705510701301834

Chen, H., Richard, O. C., Dorian Boncoeur, O., and Ford, D. L. (2020). Work engagement, emotional exhaustion, and counterproductive work behavior. J. Bus. Res. 114, 30–41. doi: 10.1016/j.jbusres.2020.03.025

Chughtai, A. A., and Buckley, F. (2011). Work engagement: antecedents, the mediating role of learning goal orientation and job performance. Career Dev. Int. 16, 684–705. doi: 10.1108/13620431111187290

Cicchetti, D. V., and Sparrow, S. (1981). Developing criteria for establishing interrater reliability of specific items: application to assessment of adaptive behavior. Am. J. Ment. Defic. 86, 127–137.

De Francisco, C., Arce, C., Graña, M., and Sánchez-Romero, E. I. (2018). Measurement invariance and validity of the athlete engagement questionnaire. Int. J. Sports Sci. Coaching 13, 1008–1014. doi: 10.1177/1747954118787488

Derbis, R., Jasiński, A. M., and Craig, T. (2018). Work satisfaction, psychological resiliency and sense of coherence as correlates of work engagement. Cogent Psychol. 5:1451610. doi: 10.1080/23311908.2018.1451610

DeVellis, R. F., and Thorpe, C. T. (2021). Scale development: Theory and applications. New York, NY: Sage Publications.

Fredricks, J. A., Blumenfeld, P. C., and Paris, A. H. (2004). School engagement: potential of the concept, state of the evidence. Rev. Educ. Res. 74, 59–109. doi: 10.3102/00346543074001059

Gabbett, T. J., Nassis, G. P., Oetter, E., Pretorius, J., Johnston, N., Medina, D., et al. (2017). The athlete monitoring cycle: a practical guide to interpreting and applying training monitoring data. Br. J. Sports Med. 51, 1451–1452. doi: 10.1136/bjsports-2016-097298

Garg, N., and Singh, P. (2019). Work engagement as a mediator between subjective well-being and work-and-health outcomes. Manag. Res. Rev. 43, 735–752. doi: 10.1108/MRR-03-2019-0143

Gustafsson, H., DeFreese, J. D., and Madigan, D. J. (2017). Athlete burnout: review and recommendations. Curr. Opin. Psychol. 16, 109–113. doi: 10.1016/j.copsyc.2017.05.002

Hodge, K., Lonsdale, C., and Jackson, S. A. (2009). Athlete engagement in elite sport: an exploratory investigation of antecedents and consequences. Sport Psychologist 23, 186–202. doi: 10.1123/tsp.23.2.186

Hu, L. T., and Bentler, P. M. (1999). Cutoff criteria for fit indexes in covariance structure analysis: conventional criteria versus new alternatives. Struct. Equ. Model. 6, 1–55. doi: 10.1080/10705519909540118

Inoue, A., dos Santos Bunn, P., do Carmo, E. C., Lattari, E., and da Silva, E. B. (2022). Internal training load perceived by athletes and planned by coaches: a systematic review and meta-analysis. Sports Med. Open 8:35. doi: 10.1186/s40798-022-00420-3

Isoard-Gautheur, S., Guillet-Descas, E., and Lemyre, P. N. (2012). A prospective study of the influence of perceived coaching style on burnout propensity in high level young athletes: using a self-determination theory perspective. Sport Psychologist 26, 282–298. doi: 10.1123/tsp.26.2.282

Jeffries, A. C., Wallace, L., Coutts, A. J., McLaren, S. J., McCall, A., and Impellizzeri, F. M. (2020). Athlete-reported outcome measures for monitoring training responses: a systematic review of risk of bias and measurement property quality according to the COSMIN guidelines. Int. J. Sports Physiol. Perform. 15, 1203–1215. doi: 10.1123/ijspp.2020-0386

Kahn, W. A. (1990). Psychological conditions of personal engagement and disengagement at work. Acad. Manag. J. 33, 692–724. doi: 10.2307/256287

King, R. B., McInerney, D. M., Ganotice, F. A., and Villarosa, J. B. (2015). Positive affect catalyzes academic engagement: cross-sectional, longitudinal, and experimental evidence. Learn. Individ. Differ. 39, 64–72. doi: 10.1016/j.lindif.2015.03.005

Kuokkanen, J., Virtanen, T., Hirvensalo, M., and Romar, J. E. (2021). The reliability and validity of the sport engagement instrument in the Finnish dual career context. Int. J. Sport Exerc. Psychol. 20, 1345–1367. doi: 10.1080/1612197X.2021.1979074

Liu, J. D., and Chung, P. K. (2019). Factor structure and measurement invariance of the subjective vitality scale: evidence from Chinese adolescents in Hong Kong. Qual. Life Res. 28, 233–239. doi: 10.1007/s11136-018-1990-5

Liu, H., Wang, X., Wu, D.-H., Zou, Y.-D., Jiang, X.-B., Gao, Z.-Q., et al. (2022). Psychometric properties of the Chinese translated athlete burnout questionnaire: evidence from Chinese collegiate athletes and elite athletes. Front. Psychol. 13:823400. doi: 10.3389/fpsyg.2022.823400

Liu, J. D., You, R. H., Liu, H., and Chung, P. K. (2020). Chinese version of the international positive and negative affect schedule short form: factor structure and measurement invariance. Health Qual. Life Outcomes 18, 219–235. doi: 10.1186/s12955-020-01526-6

Lonsdale, C., Hodge, K., and Jackson, S. (2007a). Athlete engagement: II. Development and initial validation of the athlete engagement questionnaire. Int. J. Sport Psychol. 38, 471–492.

Lonsdale, C., Hodge, K., and Raedeke, T. (2007b). Athlete engagement: I. A qualitative investigation of relevance and dimensions. Int. J. Sport Psychol. 38, 451–470.

Lynn, M. R. (1986). Determination and quantification of content validity. Nurs. Res. 35, 382–385. doi: 10.1097/00006199-198611000-00017

MacCallum, R. C., Browne, M. W., and Cai, L. (2006). Testing differences between nested covariance structure models: power analysis and null hypotheses. Psychol. Methods 11, 19–35. doi: 10.1037/1082-989X.11.1.19

Marsh, H. W., Morin, A. J., Parker, P. D., and Kaur, G. (2014). Exploratory structural equation modeling: an integration of the best features of exploratory and confirmatory factor analysis. Annu. Rev. Clin. Psychol. 10, 85–110. doi: 10.1146/annurev-clinpsy-032813-153700

Marsh, H. W., Muthen, B., Asparouhov, T., Ludtke, O., Robitzsch, A., Morin, A. J. S., et al. (2009). Exploratory structural equation modeling, integrating CFA and EFA: application to students’ evaluations of university teaching. Struct. Equ. Model. 16, 439–476. doi: 10.1080/10705510903008220

Martinent, G., Louvet, B., and Decret, J. C. (2020). Longitudinal trajectories of athlete burnout among young table tennis players: a 3-wave study. J. Sport Health Sci. 9, 367–375. doi: 10.1016/j.jshs.2016.09.003

Martins, P., Rosado, A., Ferreira, V., and Biscaia, R. (2014). Examining the validity of the athlete engagement questionnaire (AEQ) in a Portuguese sport setting. Motriz 20, 1–7. doi: 10.1590/S1980-65742014000100001

Matthews, R. A., Mills, M. J., Trout, R. C., and English, L. (2014). Family-supportive supervisor behaviors, work engagement, and subjective well-being: a contextually dependent mediated process. J. Occup. Health Psychol. 19, 168–181. doi: 10.1037/a0036012

McAuley, E., Duncan, T., and Tammen, V. V. (1989). Psychometric properties of the intrinsic motivation inventory in a competitive sport setting: a confirmatory factor analysis. Res. Q. Exerc. Sport 60, 48–58. doi: 10.1080/02701367.1989.10607413

Meredith, W. (1993). Measurement invariance, factor analysis and factorial invariance. Psychometrika 58, 525–543. doi: 10.1007/BF02294825

Montull, L., Slapsinskaite-Dackeviciene, A., Kiely, J., Hristovski, R., and Balague, N. (2022). Integrative proposals of sports monitoring: subjective outperforms objective monitoring. Sports Med. Open 8:41. doi: 10.1186/s40798-022-00432-z

Neupert, E., Holder, T., Gupta, L., and Jobson, S. A. (2024). More than metrics: the role of socio-environmental factors in determining the success of athlete monitoring. J. Sports Sci. 42, 323–332. doi: 10.1080/02640414.2024.2330178

Nystrand, M., and Gamoran, A. (1991). Instructional discourse, student engagement, and literature achievement. Res. Teach. Engl. 25, 261–290. doi: 10.58680/rte199115462

Ouweneel, E., Le Blanc, P. M., Schaufeli, W. B., and Van Wijhe, C. I. (2012). Good morning, good day: a diary study on positive emotions, hope, and work engagement. Hum. Relat. 65, 1129–1154. doi: 10.1177/0018726711429382

Polit, D. F., Beck, C. T., and Owen, S. V. (2007). Is the CVI an acceptable indicator of content validity? Appraisal and recommendations. Res. Nurs. Health 30, 459–467. doi: 10.1002/nur.20199

Raedeke, T. D., and Smith, A. L. (2001). Development and preliminary validation of an athlete burnout measure. J. Sport Exerc. Psychol. 23, 281–306. doi: 10.1123/jsep.23.4.281

Raykov, T., and Shrout, P. E. (2002). Reliability of scales with general structure: point and interval estimation using a structural equation modeling approach. Struct. Equ. Model. 9, 195–212. doi: 10.1207/s15328007sem0902_3

Reschly, A. L., and Christenson, S. L. (2022). Handbook of research on student engagement. 2nd Edn. Boston, MA: Springer US.

Reynders, B., Vansteenkiste, M., Van Puyenbroeck, S., Aelterman, N., De Backer, M., Delrue, J., et al. (2019). Coaching the coach: intervention effects on need-supportive coaching behavior and athlete motivation and engagement. Psychol. Sport Exerc. 43, 288–300. doi: 10.1016/j.psychsport.2019.04.002

Ryan, R. M., and Frederick, C. (1997). On energy, personality, and health: subjective vitality as a dynamic reflection of well-being. J. Pers. 65, 529–565. doi: 10.1111/j.1467-6494.1997.tb00326.x

Saw, A. E., Main, L. C., and Gastin, P. B. (2016). Monitoring the athlete training response: subjective self-reported measures trump commonly used objective measures: a systematic review. Br. J. Sports Med. 50, 281–291. doi: 10.1136/bjsports-2015-094758

Schaufeli, W. B., Salanova, M., Gonzalez-Roma, V., and Bakker, A. B. (2002). The measurement of engagement and burnout: a two sample confirmatory factor analytic approach. J. Happiness Stud. 3, 71–92. doi: 10.1023/A:1015630930326

Seligman, M. E., and Csikszentmihalyi, M. (2000). Positive psychology. An introduction. Am. Psychol. 55, 5–14. doi: 10.1037/0003-066X.55.1.5

Shell, S. J., Slattery, K., Clark, B., Broatch, J. R., Halson, S. L., and Coutts, A. J. (2023). Development and validity of the subjective training quality scale. Eur. J. Sport Sci. 23, 1102–1109. doi: 10.1080/17461391.2022.2111276

Smith, A. C., Ralph, B. C. W., Smilek, D., and Wammes, J. D. (2023). The relation between trait flow and engagement, understanding, and grades in undergraduate lectures. Br. J. Educ. Psychol. 93, 742–757. doi: 10.1111/bjep.12589

Smith, R. E., Smoll, F. L., Cumming, S. P., and Grossbard, J. R. (2006). Measurement of multidimensional sport performance anxiety in children and adults: the sport anxiety Scale-2. J. Sport Exerc. Psychol. 28, 479–501. doi: 10.1123/jsep.28.4.479

Tabachnick, B. G., and Fidell, L. S. (2019). Using multivariate statistics. 7th Edn. New York: Pearson Publishers.

Thompson, E. R. (2007). Development and validation of an internationally reliable short-form of the positive and negative affect schedule (PANAS). J. Cross-Cult. Psychol. 38, 227–242. doi: 10.1177/0022022106297301

Upadyaya, K., and Salmela-Aro, K. (2013). Development of school engagement in association with academic success and well-being in varying social contexts: a review of empirical research. Eur. Psychol. 18, 136–147. doi: 10.1027/1016-9040/a000143

Upadyaya, K., Vartiainen, M., and Salmela-Aro, K. (2016). From job demands and resources to work engagement, burnout, life satisfaction, depressive symptoms, and occupational health. Burn. Res. 3, 101–108. doi: 10.1016/j.burn.2016.10.001

Wang, Y. A., and Rhemtulla, M. (2021). Power analysis for parameter estimation in structural equation modeling: a discussion and tutorial. Adv. Methods Pract. Psychol. Sci. 4, 1–17. doi: 10.1177/2515245920918253

Keywords: athlete engagement, engagement in athletic training, validity, reliability, measurement invariance

Citation: Liu J-D, Wu J-X, Zou Y-D, Wang Z-H, Zhang S, Hu J-C and Liu H (2024) Development and initial validation of the Engagement in Athletic Training Scale. Front. Psychol. 15:1402065. doi: 10.3389/fpsyg.2024.1402065

Edited by:

Mauro Virgilio Gomes Barros, Universidade de Pernambuco, Brazil

Reviewed by:

Paulo Felipe Ribeiro Bandeira, Regional University of Cariri, Brazil

Gustavo Silva, University Institute of Maia (ISMAI), Portugal

Copyright © 2024 Liu, Wu, Zou, Wang, Zhang, Hu and Liu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jing-Dong Liu, liujd7@mail.sysu.edu.cn; Hao Liu, liuhao1508@outlook.com
