- ¹ Department of Philosophy, University of Vienna, Vienna, Austria

- ² Complexity Science Hub (CSH) Vienna, Vienna, Austria

- ³ Ronin Institute, Essex, NJ, United States

- ⁴ Middle European Interdisciplinary Master’s Program in Cognitive Science, University of Vienna, Vienna, Austria

- ⁵ Cognitive Science Program, University of Toronto, Toronto, ON, Canada

- ⁶ Institute for the History and Philosophy of Science and Technology, University of Toronto, Toronto, ON, Canada

- ⁷ Department of Psychology, University of Toronto, Toronto, ON, Canada

- ⁸ Department of Philosophy, University of Toronto, Toronto, ON, Canada

The way organismic agents come to know the world, and the way algorithms solve problems, are fundamentally different. The most sensible course of action for an organism does not simply follow from logical rules of inference. Before it can even use such rules, the organism must tackle the problem of relevance. It must turn ill-defined problems into well-defined ones, turn semantics into syntax. This ability to realize relevance is present in all organisms, from bacteria to humans. It lies at the root of organismic agency, cognition, and consciousness, arising from the particular autopoietic, anticipatory, and adaptive organization of living beings. In this article, we show that the process of relevance realization is beyond formalization. It cannot be captured completely by algorithmic approaches. This implies that organismic agency (and hence also cognition and consciousness) is, at heart, not computational in nature. Instead, we show how relevance is realized by an adaptive and emergent triadic dialectic (a trialectic), which manifests as a metabolic and ecological-evolutionary co-constructive dynamic. This results in a meliorative process that enables an agent to continuously keep a grip on its arena, its reality. To be alive means to make sense of one’s world. This kind of embodied ecological rationality is a fundamental aspect of life, and a key characteristic that sets it apart from non-living matter.

“To live is to know.”

“Between the stimulus and the response, there is a space. And in that space lies our freedom and power to choose our responses.”

(Frankl, 1946, 2020)

“Voluntary actions thus demonstrate a ‘freedom from immediacy.’ ”

(Haggard, 2008; channeling Shadlen and Gold, 2004)

1 Introduction

All organisms are limited beings that live in a world overflowing with potential meaning (Varela et al., 1991; Weber and Varela, 2002; Thompson, 2007), a world profoundly exceeding their grasp (Stanford, 2010). Environmental cues likely to be important in a given situation tend to be scarce, ambiguous, and fragmentary. Clear and obvious signals are rare (Felin and Felin, 2019).¹ Few problems we encounter in such a “large world” are well-defined (Savage, 1954). On top of this, organisms constantly encounter situations they have never come across before. To make sense of such an ill-defined and open-ended world—in order to survive, thrive, and evolve—the organism must first realize what is relevant in its environment. It needs to solve the problem of relevance.

In contrast, algorithms—broadly defined as automated computational procedures, i.e., finite sets of symbols encoding operations that can be executed on a universal Turing machine—exist in a “small world” (Savage, 1954). They do so by definition, since they are embedded and implemented within a predefined formalized ontology (intuitively: their “digital environment” or “computational architecture”), where all problems are well-defined. They can only mimic (emulate, or simulate) partial aspects of a large world: algorithms cannot identify or solve problems that are not precoded (explicitly or implicitly) by the rules that characterize their small world (Cantwell Smith, 2019). In such a world, everything and nothing is relevant at the same time.

This is why the way organisms come to know their world fundamentally differs from algorithmic problem solving or optimization (Roli et al., 2022; see also Weber and Varela, 2002; Di Paolo, 2005). This elementary insight has profound consequences for research into artificial intelligence (Jaeger, 2024a), but also for our understanding of natural agency, cognition, and ultimately also consciousness, which will be the focus of this article. An organism’s actions and behavior are founded on the ability to cope with unexpected situations, with cues that are uncertain, ambivalent, or outright misleading (see Wrathall and Malpas, 2000; Ratcliffe, 2012; Wheeler, 2012, for discussion). It does so by being directly embodied and embedded in its world, which allows it to actively explore its surroundings through action and perception (see, for example, Varela et al., 1991; Thompson, 2007; Soto et al., 2016a; Di Paolo et al., 2017). Algorithms do not have this ability: due to their rigidly formalized nature, they cannot truly improvise, but can only mimic exploratory behavior and emulate true agency.

For an organism, unlike an algorithm, the most sensible (and thus “rational”) course of action does not simply follow from logical rules of inference, not even abductive inference to the best explanation (see, for example, Feyerabend, 1975; Arthur, 1994; Thompson, 2007; Gigerenzer, 2021; Riedl and Vervaeke, 2022; Roli et al., 2022). Before they can “infer” anything, living beings must first turn ill-defined problems into well-defined ones, transform large worlds into small, translate intangible semantics into formalized syntax (defined as the rule-based processing of symbols free of contingent, vague, and ambiguous external referents). And they must do this incessantly: it is a defining feature of their mode of existence.

This process is called relevance realization (Vervaeke et al., 2012; Vervaeke and Ferraro, 2013a,b). The ability to solve the problem of relevance is a necessary condition and the defining criterion for making sense of a large world.² Indeed, we could say that an organism actively brings forth a whole world of meaning (Varela et al., 1991; Rolla and Figueiredo, 2023).

In this article, we shall argue that the ability to realize relevance—to bring forth a world—is present in all organisms, from the simplest bacteria to the most sophisticated human beings. In fact, it is a universal feature of any limited living being that must make sense of its large world. Therefore, relevance realization is one of the key properties that sets apart living systems from non-living ones, such as algorithms and their concrete physical implementations, which we will call machines. The ability to solve the problem of relevance arises from the characteristic self-referential self-manufacturing dynamic organization of living matter, which enables organisms to attain a degree of self-determination, to act with some autonomy, and to anticipate the consequences that may follow from their actions.

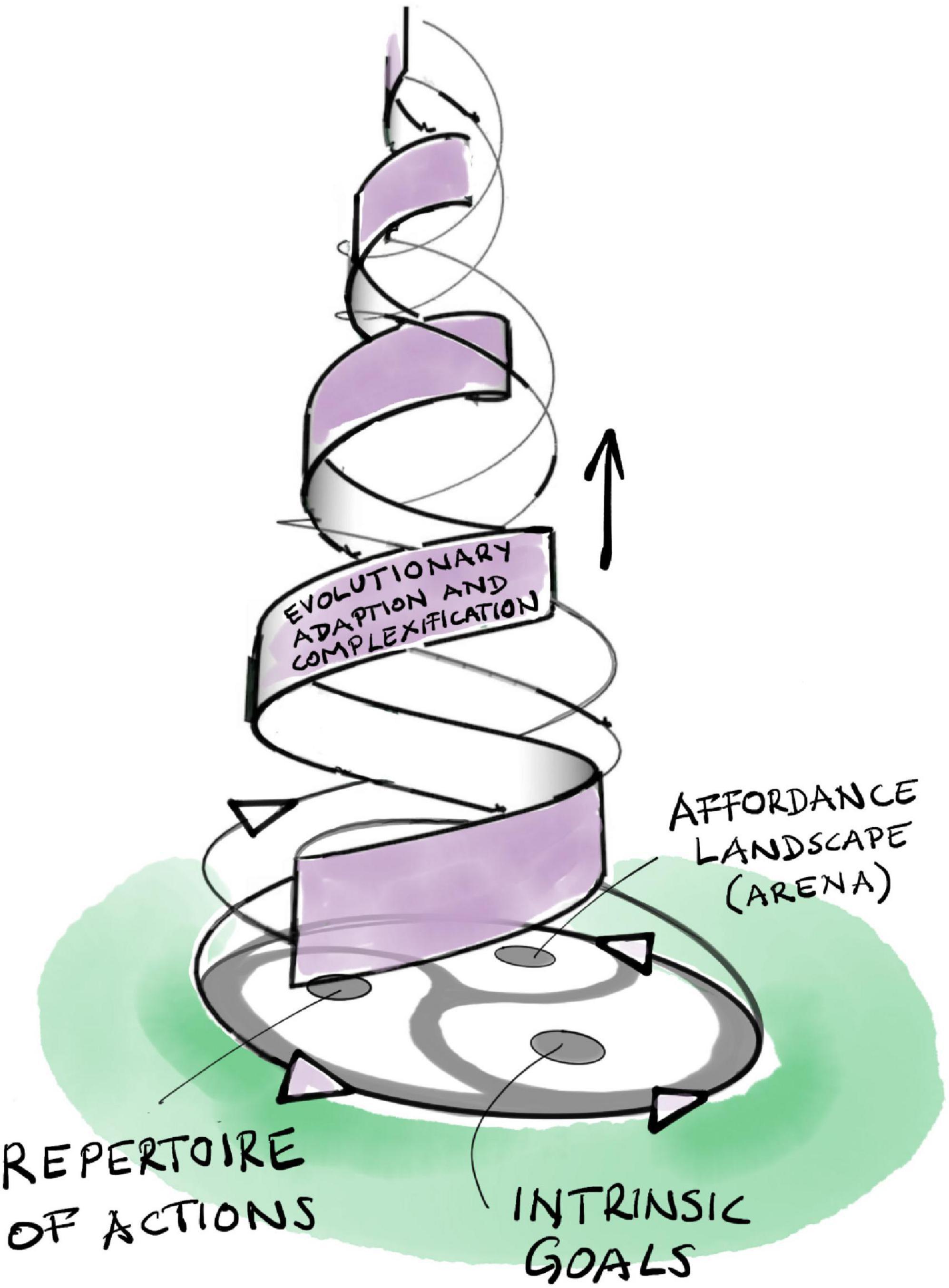

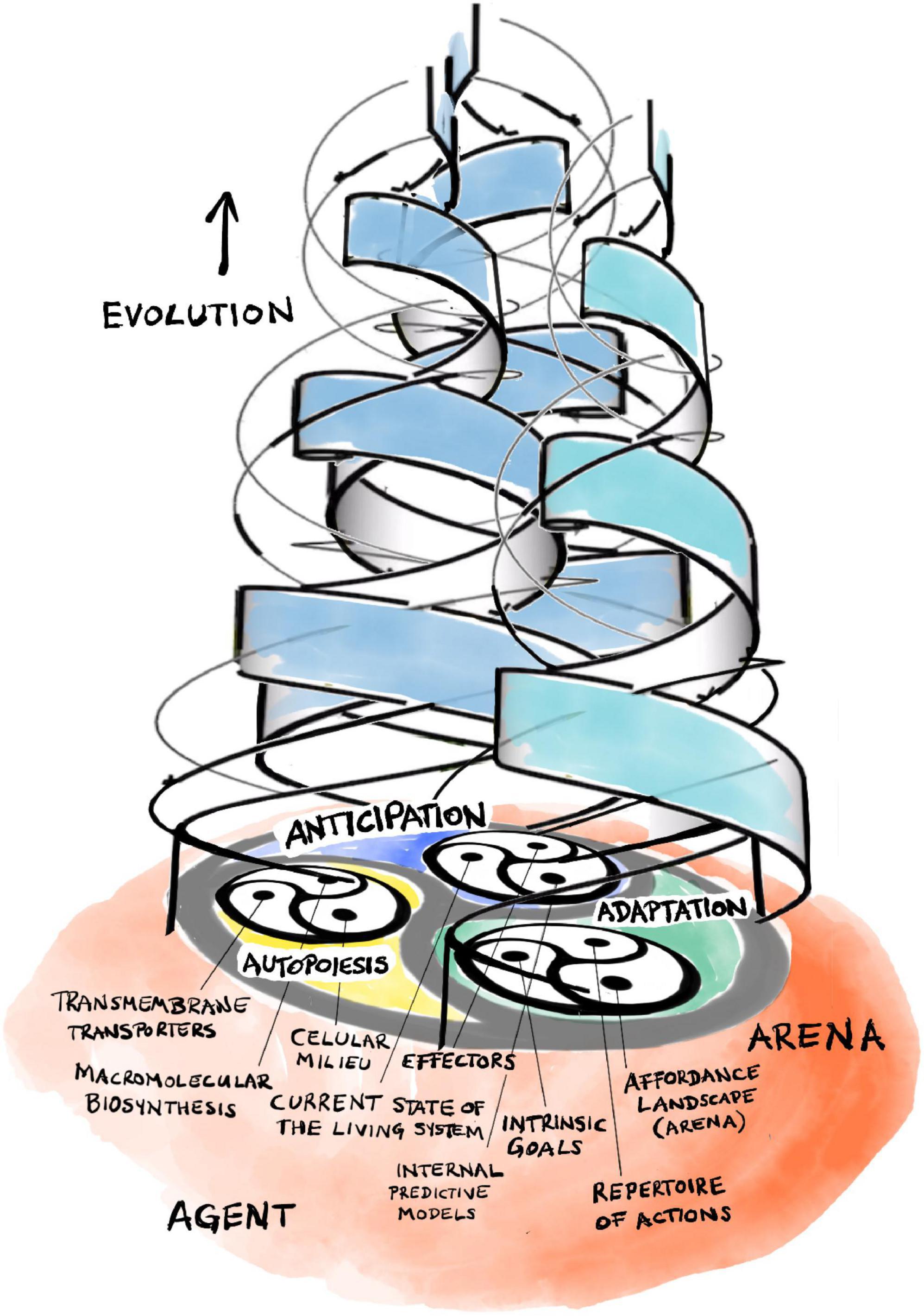

All of this involves a radically context-dependent generative dialectic³ called opponent processing—the continual establishment of trade-offs and synergies between competing and complementary organismic behaviors and dynamics (Vervaeke et al., 2012). Such competing and synergizing processes also mediate an organism’s interactions with its living and non-living surroundings, interactions that are inherently and irreducibly semantic, in the sense of having value (i.e., relevance) for the organism as a unity which strives to persist in the face of the constant threat of decay. This manifests as mutually co-creating (and thus collectively co-emergent) interrelations between a living agent’s intrinsic goals, its repertoire of actions, and the affordances that arise from the interactions of the organism with its experienced environment (Walsh, 2015).

As we shall see, this dialectic and hierarchical tangle of processes forms the generative core of the phenomenon of natural agency, and therefore also of its evolutionary elaborations: cognition and consciousness (cf. Felin and Koenderink, 2022). There is nothing mystifying or obscurantist about this view. In fact, we can demonstrate that this dialectic is straightforwardly analogous to the dynamics of an evolving population of biological individuals, where different survival strategies are played out against each other to bring about adaptation through natural selection (Vervaeke et al., 2012). It is, in essence, a Darwinian adaptive evolutionary dynamic. Our central claim in what follows is that this dialectic dynamic of relevance realization is not an algorithmic process, due to its fundamentally impredicative and co-constructive nature. Therefore, natural agency, cognition, and consciousness are, at their very core, not computational phenomena, and if we restrict ourselves to studying them by purely computational means, we are likely to miss the point entirely.

We will carefully unpack this rather dense and compact mission statement in the following sections. As our starting point, let us take the analogy of relevance and evolutionary fitness implied above (see also Vervaeke et al., 2012).⁴ Both concepts are closely related in the sense of pertaining to a radically context-dependent match between an agent and its immediate task-relevant environment, or arena (Vervaeke et al., 2017). Neither “fitness” nor “relevance” has any universal attributes: there is no trait that renders you fit in all environments, nor is there any factor that is relevant across all possible situations. Furthermore, both fitness and relevance can only be assessed in a relative manner: one individual in a specific population and environment is fitter than another, and some features of the world may be more relevant than others. In other words, these concepts are only explanatory when used in an appropriate comparative context.

Because of this close conceptual analogy between relevance and fitness, an understanding of relevance realization ought to be of an evolutionary, ecological, and economic nature, formulated as the interplay of competing adaptive processes, situated in a particular setting, and phrased in terms of the variable and weighted commitments of organisms to a range of different goals.

Opponent processing means that organisms constantly play different approaches and strategies against each other in a process that iteratively evaluates progress toward a problem solution (toward reaching a particular goal). This can include dynamically switching back and forth between opposing strategies, if the necessity arises. By identifying molecular, cellular, organismic, ecological, and evolutionary processes and relations that can implement such an adaptive dynamic, we can generalize the concept of relevance realization from the fundamental prerequisite for cognition in animals with nervous systems (including us humans, of course; Vervaeke et al., 2012) to the fundamental prerequisite underlying natural agency in all living organisms.

It is important to mention at the outset that our project—to naturalize relevance realization—is situated within an incredibly rich historical context of established research traditions. It is not our aim here to provide a careful review of all of those traditions (although we will do our best to refer to them wherever relevant). Instead, we see relevance realization as a promising framework that could provide a conceptual bridge between empirical approaches to organismic biology and the cognitive neurosciences, research into artificial intelligence, and intellectual traditions such as evolutionary epistemology (see, for example, Campbell, 1974; Lorenz, 1977; Bradie, 1986; Cziko, 1995; Bradie and Harms, 2023; but also Wimsatt, 2007), ecological psychology (Gibson, 1966, 1979), 4E cognition (Di Paolo et al., 2017; Durt et al., 2017; Newen et al., 2018; Carney, 2020; Shapiro and Spaulding, 2021), and biosemiotics (Favareau, 2010; Deacon, 2011, 2015; Pattee and Rączaszek-Leonardi, 2012; Barbieri, 2015). We draw from all of these in our argument. Note, however, that to achieve our aim of cross-disciplinary translation, we will deliberately try to avoid the specific terminologies of these various domains, and express ourselves in terms that we hope are easily accessible to a wide range of researchers across relevant disciplines.

This article is structured as follows: section “2 Agential emergentism” starts by outlining a number of problems we encounter when considering cognition (and the world) as some form of algorithmic computation and introduces our proposed alternative perspective. In section “3 Relevance realization,” we characterize the process of relevance realization, outlining its foundational role in cognition and in the choice of rational strategies to solve problems. Section “4 Biological organization and natural agency” introduces the notion of biological organization, shows how it emerged as a novel kind of organization of matter at the origin of life, and demonstrates how it enables basic autonomy and natural agency through the organism’s ability to set its own intrinsic goals. The pursuit of its goals requires an organism to anticipate the consequences of its actions. How this is achieved even in the simplest of creatures is the topic of section “5 Basic biological anticipation.” In section “6 Affordance, goal, and action,” we examine the adaptive dialectic (or trialectic) interplay between an organism’s goals, actions, and affordances. As the core of our argument, we bring this model to bear on the process of relevance realization itself, and show how this process cannot be captured completely by any kind of algorithm. In section “7 To live is to know,” we synthesize the insights from the previous three sections, to show how the evolution of cognition in organisms with a nervous system, and perhaps also consciousness, can be explained as adaptations to realizing relevance in ever more complex situations. In section “8 Conclusion,” we conclude our argument by applying the concept of relevance realization to rational strategies for problem solving. We argue that a basic ecological notion of rational behavior is common to all living beings. In other words, organisms make sense of the world in a way that makes sense to them.

2 Agential emergentism

Contemporary approaches to natural agency and cognition take a very wide range of stances on what these two concepts mean, what they refer to, and how they relate to each other. Some of these approaches consider cognition a fundamental property of all living beings (see, for example, Maturana and Varela, 1980; Varela et al., 1991; Lyon, 2006; Baker and Stock, 2007; Shapiro, 2007; Thompson, 2007; Baluška and Levin, 2016; Birch et al., 2020; Lyon et al., 2021), some treat it as restricted to animals with sufficiently complex internal models of the world (Djedovic, 2020; Sims, 2021) or in possession of a nervous system (e.g., Moreno et al., 1997; Moreno and Etxeberria, 2005; Moreno and Mossio, 2015; Di Paolo et al., 2017). Some see natural agency as a phenomenon that is clearly distinct from cognition (see, for example, Kauffman, 2000; Barandiaran and Moreno, 2008; Rosen, 2012; Moreno and Mossio, 2015; Fulda, 2017), some consider the two to be the same (e.g., Maturana and Varela, 1980; Lyon, 2006; Thompson, 2007; Baluška and Levin, 2016). Among this diversity of perspectives, we can identify two general trends in attitudes. Let us call them agential emergentism and computationalism.⁵

Computationalism, as we use the term here, encompasses various forms of cognitivism and connectionism (cf. classification by Thompson, 2007). It is extremely popular and widespread in contemporary scientific and philosophical thinking, the basic tenet being that both natural agency and cognition are special varieties of algorithmic computation. Computationalism formulates agential and cognitive phenomena in terms of (often complicated, nonlinear, and heavily feedback-driven) input-output information processing (see Baluška and Levin, 2016; Levin, 2021, for a particularly strong and explicit example). In this framework, goal-directedness—sometimes unironically called “machine wanting” (McShea, 2013)—tends to be explained by some kind of cybernetic homeostatic regulation (e.g., McShea, 2012, 2013, 2016; Lee and McShea, 2020).

The strongest versions of computationalism assert that all physical processes which can be actualized (not just cognitive ones) must be Turing-computable. This pancomputationalist attitude is codified in the strong (or physical) Church-Turing conjecture (also called the Church-Turing-Deutsch conjecture; Deutsch, 1985, 1997; Lloyd, 2006).⁶ It is fundamentally reductionist in nature, an attempt to force the explanation of all of physical reality in terms of algorithmic computation based on lower-level mechanisms. For this reason, computation is not considered exclusive to living systems. Researchers in the pancomputationalist paradigm see agency and cognition as continuous with non-linear and self-organizing information processing outside the living world (see, for example, Levin, 2021; Bongard and Levin, 2023). On this view, there is no fundamental boundary between the realms of the living and the non-living, between biology and computer engineering. The emergence of natural agency and cognition in living systems is simply due to a (gradual) increase in computational complexity and capacity in the underlying physical processes.

We consider this view of reality to be highly problematic. One issue with pancomputationalism is that physical processes and phenomena are generally not discrete by nature (at least not obviously so) and are rarely deterministic in the sense that algorithmic processes are (Longo, 2009; Longo and Paul, 2011). We will not dwell on this here, but will focus instead on the relation between syntactic formal systems and a fundamentally semantic and ill-defined large world. In this context, it is crucial to distinguish the ability to algorithmically simulate (i.e., approximate) physical and cognitive processes from the claim that these processes intrinsically are a form of computation. The latter view mistakes the map for the territory by misunderstanding the original purpose of the theory of computation: as defined by Church and Turing, “computation” is a rote procedure performed by a human agent (the original “computer”) carrying out some calculation, logical inference, or planning procedure (Church, 1936; Turing, 1937; see Copeland, 2020, for a historical review). The theory of computation was intended as a model of specific human activities, not a model of the brain or physical reality in general. Consequently, assuming that the brain or the world in general is a computer means committing a category mistake called the equivalence fallacy (Copeland, 2020). Treating the world as computation imputes symbolic (information) content onto physical processes that is only really present in our simulations, not in the physical processes that we model. The world we directly experience as living organisms is not formalized and, in fact, is not formalizable completely by any limited being, as we shall see in section “3 Relevance realization.” This poses an obvious and fundamental problem for the pancomputationalist view.

To better understand and ultimately overcome this problem, we adopt an alternative stance called agential emergentism (outlined in detail in Walsh, 2013, 2015). The basic idea is to provide a fresh and expanded perspective on life that allows us to bridge the gap between the syntactic and the semantic realms, between small and large worlds. Agential emergentism postulates that all organisms possess a kind of natural agency. Note that this is not the same as the so-called intentional stance (Dennett, 1987; recently reviewed in Okasha, 2018), which merely encourages us to treat living systems as if they had agency while retaining a thoroughly reductionist worldview (see also the teleonomy account of Mayr, 1974, 1982, 1992). In contrast, agential emergentism treats agency as natural and fundamental: the key property that distinguishes living from non-living systems (Moreno and Mossio, 2015; Walsh, 2015; Mitchell, 2023). Only organisms—not algorithms or other machines—are true agents, because only they can act on their own behalf, for their own reasons, in pursuit of their own goals (Kauffman, 2000; Nicholson, 2013; Walsh, 2015; Roli et al., 2022; Mitchell, 2023; Jaeger, 2024a).

We can define natural agency in its broadest sense as the capability of a living system to initiate actions according to its own internal norms (Di Paolo, 2005; Barandiaran et al., 2009; Moreno and Mossio, 2015; Djedovic, 2020; Walsh and Rupik, 2023). This capability arises from the peculiar self-referential and hierarchical causal regime that underlies the self-manufacturing organization of living matter (see section “4 Biological organization and natural agency”). A lower bound for normativity arises from such self-manufacture: the right thing to do is what keeps me alive (Weber and Varela, 2002; Di Paolo, 2005; Di Paolo et al., 2017; Djedovic, 2020). This imposes a sharp discontinuity between the realms of the living and the non-living: algorithms and machines only possess extrinsic purpose, imposed on them from outside their own organization, while organismic agents can, and indeed must, define their own intrinsic goals (see also Nicholson, 2013; Mossio and Bich, 2017).

This is why it makes good sense to treat relevance realization from an agential rather than computationalist perspective: the ability to solve the problem of relevance is intimately connected to the possession of intrinsic goals. To put it simply: if you do not truly want or desire anything, if there is nothing that is good or bad in your world, you cannot realize what is relevant for you. Phrased a bit less informally: the relevance of certain features of a situation to the organism is a function of the organism’s goals and how the situation promotes or impedes them. This is why algorithms in their small worlds cannot solve the problem of relevance: they never even encounter it! In addition, relevance realization requires an organism to assess potential outcomes of its behavior.⁷ To realize what is relevant in a given situation, you have to be able to somehow anticipate the consequences of your actions. On top of all this, an organism must be motivated to pursue its goals. Motivation ultimately stems from our fragility and mortality (Jonas, 1966; Weber and Varela, 2002; Thompson, 2007; Deacon, 2011; Moreno and Mossio, 2015). While it is possible to impose external motivation on a system that mimics aspects of internal motivation, true internal motivation can only arise from precariousness. We must be driven to continue living. Without this drive, we do not get the proper Darwinian dynamics of open-ended evolution (as argued in detail in Roli et al., 2022; Jaeger, 2024b). In what follows, we develop a naturalistic evolutionary account of relevance realization that is grounded in this simple set of basic principles.

3 Relevance realization

Organisms actively explore their world through their actions. For an organismic agent, selecting an appropriate action in a given situation poses a truly formidable challenge. How do living systems—including us humans—even begin to tackle the problems they encounter in their environment, considering that they live in a large world that contains an indefinite (and potentially infinite) number of features that may be relevant to the situation at hand? To address this question, we must first clarify what kind of environment we are dealing with.

It is not simply the external physical environment that matters to the organism, but its experienced environment, the environment it perceives, the environment that makes a difference for choosing how to act (sometimes called the organism’s umwelt; von Uexküll, 1909; Thompson, 2007; Walsh D. M., 2012). Both paramecia and porpoises, for example, live in the physical substance “water,” but due to their enormous size difference, they have to deal with very distinct sensorimotor contexts concerning their propulsion through that physical medium (Walsh, 2015). What matters most on the minuscule scale of the paramecium is the viscosity of water. It “digs” or “drills” its way through a very syrupy medium. The porpoise, in contrast, needs to solve problems of hydrodynamics at a much larger scale. It experiences almost none of the viscosity but all the fluidity of water, hence the convergent evolution of a fish-like body shape and of structures like flippers that enhance its hydrodynamic properties.

This simple example illustrates an important general point: what is relevant to an organism in its environment is never an entirely subjective or objective feature. Instead, it is transjective, arising through the interaction of the agent with the world (Vervaeke and Mastropietro, 2021a,b). In other words, the organism enacts, and thereby brings forth, its own world of meaning and value (Varela et al., 1991; Weber and Varela, 2002; Di Paolo, 2005; Thompson, 2007; Rolla and Figueiredo, 2023). This grounds the process of relevance realization in a constantly changing and evolving agent-arena relationship, where “arena” designates the situated and task-relevant portion of the larger experienced environment (see Vervaeke et al., 2017, p. 104). The question of relevance then becomes the question of how an agent manages to delimit the appropriate arena, to single out the task-relevant features of its experienced environment, given its specific situation.

For the computationalist, singling out task-relevant features is indistinguishable from problem solving itself, and both must be subsumed under an algorithmic frame. If we take the human context of scientific inquiry as an example, inductive, deductive, and abductive inference—all adhering to explicitly formalized logical rules—are generally deemed sufficient for identifying, characterizing, and solving research problems (see Roli et al., 2022, for a critical discussion). Accordingly, the general problem solving framework by Newell and Simon (1972) delimits a problem formally by requiring specific initial and goal states, plus a set of operators (actions) used by the problem-solving agent to transition from the former to the latter within a given set of constraints. A problem solution is then defined as a sequence of actions that succeeds in getting the agent from the initial state to the attainment of its goal. The agent’s task is prescribed as solving a formal optimization problem that identifies solutions to a given challenge. To a computationalist, the foundation of natural agency and cognition is formal problem solving.
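The Newell and Simon framing can be made concrete with a minimal sketch. The Python below is our own illustration, not code from Newell and Simon: the toy problem (reach the number 10 from 1 using the hypothetical operators `add_one` and `double`, never exceeding 20) and all function names are assumptions chosen for brevity. A breadth-first search returns a shortest sequence of operator names leading from the initial state to a goal state. Note that the states, the goal test, the operators, and the constraint must all be prestated before the search can even begin: the problem is a small world by construction.

```python
from collections import deque
from typing import Callable, Dict, Hashable, List, Optional

State = Hashable
Operator = Callable[[State], Optional[State]]  # returns None if the move violates a constraint


def solve(initial: State,
          is_goal: Callable[[State], bool],
          operators: Dict[str, Operator]) -> Optional[List[str]]:
    """Breadth-first search for a shortest sequence of operator names
    leading from the initial state to a state satisfying is_goal."""
    frontier = deque([(initial, [])])
    visited = {initial}
    while frontier:
        state, plan = frontier.popleft()
        if is_goal(state):
            return plan
        for name, op in operators.items():
            successor = op(state)
            if successor is not None and successor not in visited:
                visited.add(successor)
                frontier.append((successor, plan + [name]))
    return None  # no solution exists within the prestated state space


# Hypothetical toy problem: starting from 1, reach 10 without ever exceeding 20.
LIMIT = 20
toy_operators: Dict[str, Operator] = {
    "add_one": lambda n: n + 1 if n + 1 <= LIMIT else None,
    "double": lambda n: n * 2 if n * 2 <= LIMIT else None,
}

plan = solve(1, lambda n: n == 10, toy_operators)
print(plan)  # ['add_one', 'double', 'add_one', 'double']
```

Everything the search "knows" about the problem is contained in `toy_operators` and the goal test; a state or action that was not encoded there simply does not exist for it.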

This kind of computational framing can be very useful. For instance, it points our attention to the issue of combinatorial explosion, revealing that for all but the most trivial problems, the space of potential solutions is truly astronomical (Newell and Simon, 1972). For problem solving to be tractable under real-world constraints, agents must rely on heuristics, makeshift solutions that are far from perfect (Simon and Newell, 1958). Unlike algorithms (strictly defined), heuristics are not guaranteed to converge toward a correct solution of a well-posed problem in finite time. Still, they have been tried and tested, and they work well enough (they satisfice) in a range of situations which the agent or its ancestors have encountered in the past, or which the agent deems in some way analogous to such past experiences (Simon, 1956, 1957, 1989; Simon and Newell, 1958; Gigerenzer and Gaissmaier, 2011; Gigerenzer, 2021).
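The arithmetic of combinatorial explosion, and the contrast between optimizing and satisficing, can be sketched as follows. This is a minimal illustration under our own assumptions: `count_action_sequences`, `optimize`, and `satisfice` are hypothetical names, options are plain numbers scored by their own value, and satisficing is reduced to its simplest aspiration-level form (stop at the first option that is good enough), which is only one reading of Simon's idea. With 10 possible actions at each of 20 steps, there are already 10^20 distinct action sequences.

```python
import random
from typing import Callable, List, Optional, Sequence, Tuple, TypeVar

T = TypeVar("T")


def count_action_sequences(branching: int, depth: int) -> int:
    """Number of distinct action sequences of length `depth` when
    `branching` actions are available at every step: branching ** depth."""
    return branching ** depth


def optimize(options: Sequence[T], value: Callable[[T], float]) -> Tuple[T, int]:
    """Exhaustive optimization: examine every option and return the best one,
    together with the number of options examined."""
    return max(options, key=value), len(options)


def satisfice(options: Sequence[T], value: Callable[[T], float],
              aspiration: float) -> Tuple[Optional[T], int]:
    """Aspiration-level search: return the first option whose value reaches
    `aspiration`, together with the number of options examined.
    Returns (None, len(options)) if no option is good enough."""
    for examined, option in enumerate(options, start=1):
        if value(option) >= aspiration:
            return option, examined
    return None, len(options)


print(count_action_sequences(10, 20) == 10 ** 20)  # True

rng = random.Random(0)
options: List[float] = [rng.random() for _ in range(100_000)]
best, n_opt = optimize(options, lambda x: x)
good_enough, n_sat = satisfice(options, lambda x: x, aspiration=0.9)
print(n_opt, n_sat)  # n_opt is 100000; n_sat counts options examined before stopping
```

The satisficer trades a guarantee of the best option for a drastic reduction in search effort. Note, however, that the options, the value function, and the aspiration level are all handed to it from outside.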

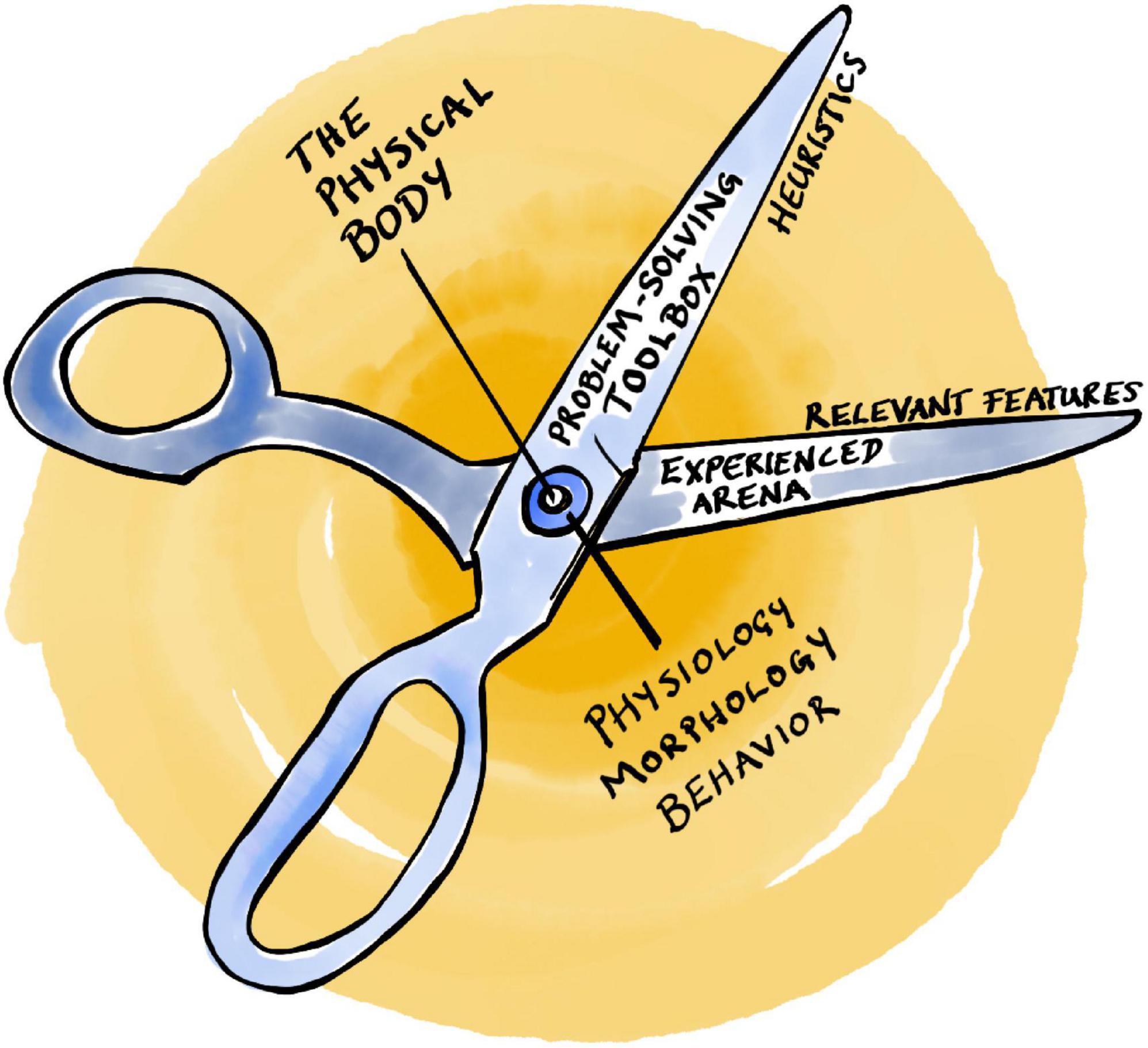

This notion of bounded rationality (Simon, 1957) is illustrated by the visual metaphor of Simon’s scissors with two blades that have to fit together (Figure 1; Simon, 1990): (1) the agent’s internal cognitive toolbox (with its particular set of heuristics and associated limitations), and (2) an experienced arena with a given structure of relevant features. Put simply, heuristics must be adapted to the task at hand, otherwise they do not work. On top of this, the physical body with its peculiar physiology, morphology, and sensorimotor abilities can be added to the metaphor as the pivot between the two scissor blades (Figure 1; Mastrogiorgio and Petracca, 2016; Gallese et al., 2020), reflecting the notion of embodied bounded rationality (Gallese et al., 2020; Petracca, 2021; Petracca and Grayot, 2023), or evolved embodied heuristics (Gigerenzer, 2021).

Evolved embodied heuristics can effectively reduce the problem of intractably large search spaces. They are indispensable tools for limited beings in large worlds, because they enable us to ignore a vast amount of information that is likely to be irrelevant (Brighton, 2018). Yet, they leave one central issue untouched: how to link the use of specific heuristics to the identification of underlying relevant cues (Felin et al., 2017; Felin and Koenderink, 2022). The problem of relevance thus persists. One reason for this is that heuristics remain confined to a small world after all. They are still algorithms (automated computational procedures) in the broader sense of the term.
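To make this point concrete, here is a minimal Python sketch of take-the-best, a lexicographic heuristic discussed in the heuristics literature cited above (e.g., Gigerenzer and Gaissmaier, 2011). It compares two options on binary cues ordered by validity and decides on the first cue that discriminates, ignoring all remaining cues. The function name, the toy cues, the treatment of missing cues as 0, and the choice to return `None` instead of guessing when no cue discriminates are our own assumptions. Note what the sketch takes for granted: the list of cues and their ranking are supplied from outside. The heuristic frugally ignores information, but it cannot tell us which cues are relevant in the first place.

```python
from typing import Dict, List, Optional

Cues = Dict[str, int]  # binary cue values: 1 = present, 0 = absent


def take_the_best(a: Cues, b: Cues, cues_by_validity: List[str]) -> Optional[str]:
    """Judge which of two options scores higher on some criterion.

    Cues are inspected in order of (assumed, precomputed) validity; the first
    cue on which the options differ decides, and all later cues are ignored.
    Missing cue values are treated as 0, a simplification.
    Returns "a", "b", or None if no cue discriminates (at which point the
    original heuristic would guess).
    """
    for cue in cues_by_validity:
        value_a, value_b = a.get(cue, 0), b.get(cue, 0)
        if value_a != value_b:
            return "a" if value_a > value_b else "b"
    return None


# Hypothetical example: which of two cities is larger?
cues = ["is_capital", "has_international_airport", "has_university"]
city_x = {"is_capital": 0, "has_international_airport": 1, "has_university": 1}
city_y = {"is_capital": 0, "has_international_airport": 0, "has_university": 1}

print(take_the_best(city_x, city_y, cues))  # a
```

Passing a different cue list (for example, only `["has_university"]`) changes the verdict to `None`: the outcome depends entirely on a prior decision about which features count.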

This reveals a vicious circularity in the argument above (Vervaeke et al., 2012; Riedl and Vervaeke, 2022): it presupposes that an agent can turn ill-defined problems into problems that are defined precisely enough to be tractable heuristically. There must still be a well-defined goal and search space, a set of available actions, and the agent must be able to categorize its problems to judge whether a given situation is analogous to contexts encountered in the past (Vervaeke et al., 2012; see also Hofstadter and Sander, 2013). And here lies the crux: all of this requires the agent to distinguish relevant features in its experienced environment—to circumscribe its arena—before it can apply any heuristics. In other words, embodied heuristics presuppose a solution to the problem that they are meant to tackle in the first place.

Algorithmic approaches to relevance realization in a large world generally get us nowhere. A first challenge is that the search space required for formal optimization usually cannot be circumscribed precisely, because the collection of large-world features that may be relevant in a given situation is indefinite: it cannot be prestated explicitly in the form of a mathematical set (Roli et al., 2022).⁸ Indeed, the collection of potentially relevant features may also be infinite, because even the limited accessible domain of an organism’s large world can be inexhaustibly fine-grained, or because there is no end to the ways in which it can be validly partitioned into relevant features (Kauffman, 1971; Wimsatt, 2007). Connected to this difficulty is the additional problem that we cannot define an abstract mathematical class of all relevant features across all imaginable situations or problems, since there is no essential general property that all of these features share (Vervaeke et al., 2012). What is relevant is radically mutable and situation-dependent. Moreover, the internal structure of the class of relevant features for any particular situation is unknown (if it has any predefined structure at all): we cannot say in advance, or derive from first principles, how such features may relate to each other, and therefore cannot simply infer one from another. Last but not least, framing the process of relevance realization as a formal optimization problem inexorably leads to an infinite regress: delimiting the search space for one problem poses a new optimization challenge at the next level (how to find the relevant search space limits and dimensions) that needs a formalized search space of its own, and so on and so forth.

Taken together, these problems constitute an insurmountable challenge for any limited being attempting to construct a universal formal theory of relevance for general problem solving in a large world. In other words, relevance realization is not completely formalizable. This makes sense if we consider that relevance realization is the act of formalization, of turning semantics into syntax, as we will outline in detail below. This is exactly how David Hilbert defined “formalization” for the purpose of his ultimately unsuccessful program to put mathematics on a complete and consistent logical foundation (Zach, 2023). Hilbert’s failure was made obvious by Gödel’s incompleteness theorems, which show that every consistent formal system expressive enough to encode elementary arithmetic is incomplete: there will always be propositions that are true but cannot be proven within that formalism (reviewed in Nagel and Newman, 2001). If systems of mathematical propositions cannot be completely formalized, even in principle, is it really surprising that the large world we live in cannot be either?

Once we accept that organisms live in a large world, and that this world is not fully formalizable, we must recognize that natural agency and cognition cannot be grounded wholly in formal problem solving, or any other form of algorithmic computation. Before they can attempt to solve any problems, organisms must first perform the basic task of formalizing or framing the problem they want to solve. Without this basic framing, it is impossible to formulate hypotheses for abductive reasoning or, more generally, to select actions that are appropriate to the situation at hand. Algorithms are unable to perform this kind of framing. By their nature, they are confined to the syntactic realm, always operating within some given formal frame—their predefined ontology—which must be provided and precoded by an external programmer (see also Jaeger, 2024a).9 This is true even if the framing provided is indirect and implicit, and may allow for a diverse range of optimization targets, as is the case in many contemporary approaches to unsupervised machine learning. Indeed, the inability of algorithms to frame problems autonomously has been widely recognized as one of the fundamental limitations of our current quest for artificial general intelligence (see, for example, McCarthy and Hayes, 1969; Dreyfus, 1979, 1992; Dennett, 1984; Cantwell Smith, 2019; Roitblat, 2020; Roli et al., 2022). Algorithms cannot, on their own, deal with the ambiguous semantics of a large world.

This has some profound and perhaps counterintuitive implications. The most notable of these is the following: if the frame problem, defined in its most general form as the problem of relevance (Dennett, 1984; Vervaeke et al., 2012; Riedl and Vervaeke, 2022), cannot be solved within an algorithmic framework, yet organisms are able to realize relevance, then the behavior and evolution of organisms cannot be fully captured by formal models based on algorithmic frameworks.

More specifically, it follows that relevance realization cannot be an algorithmic process itself: to avoid vicious circularity and infinite regress, it must be conceptualized as lying outside the realm of purely syntactic inferential computation (Roli et al., 2022; Jaeger, 2024a). As a direct corollary, it must also lie outside the domain of symbolic processing,10 which is embedded in the realm of syntax, and is therefore completely formalized just like algorithmic computation (Vervaeke et al., 2012). Note that this does not preclude the possibility of superficially mimicking or emulating the process of relevance realization through algorithmic simulation or formal symbolic description. Remember that we are formulating an incompleteness argument here, which suggests that a purely algorithmic (syntactic) approach will never be able to capture the process of relevance realization in its entirety. To any limited being, there always remains some semantic residue in its large world that defies precise definition. To make sense of such a world, natural and cognitive agents cannot rely exclusively on algorithmic computation or symbolic processing.

How, then, are we to understand relevance realization if not in terms of formal problem solving? One possibility is through an economic perspective (Vervaeke et al., 2012; Vervaeke and Ferraro, 2013a,b), which frames the problem of relevance in terms of commitment, i.e., the dynamic allocation of resources by an agent to the pursuit of a range of potentially conflicting or competing goals. On this view, relevance is realized through opponent processing, a meta-heuristic approach: the agent employs a number of complementary or even antagonistic heuristics that are played against each other in the presence of different kinds of challenges and trade-offs. The trade-offs involved can be subsumed under the general opposition of efficiency vs. resilience or, more specifically, under the oppositions of generality vs. specialization, exploration vs. exploitation, and focusing vs. diversifying (Vervaeke et al., 2012; Andersen et al., 2022). On this account, resource allocation is fundamentally dialectic: the agent continually reassesses what strategy does or does not work in a given situation, and adjusts its goals and priorities accordingly, which in turn affects its appraisal of progress. This leads to a situated and temporary adaptive fit between agent and arena, which is continuously updated according to the experienced environment, anticipated outcomes of actions, and the inner state of the agent. Overall, it accounts for the context-specific nature of relevance realization in terms of localized adaptive dynamics.

Such high-level adaptive dynamics can be embedded in a physical context through the notion of predictive processing (see, for example, Friston, 2010, 2013; Clark, 2013, 2015; Hohwy, 2014; Andrews, 2021; Colombo and Wright, 2021; Seth, 2021; Andersen et al., 2022). Predictive processing means that an agent iteratively and recursively evaluates the relevance of its sensory input through the estimation of prediction errors. It does this by measuring the discrepancy between expectations based on its internal models of the world (see section “5 Basic biological anticipation”) and the sensory feedback it receives from its interactions within its current arena. Prediction errors are weighted by their estimated precision: higher weights are assigned to input whose prediction errors are expected to be reliable (i.e., of low variance), while input that persistently produces large and erratic errors is preferentially discounted. Particular importance is attributed to error dynamics, i.e., the rate at which prediction errors change over time: actions and cognitive strategies that rapidly reduce prediction errors in a particular stream of sensory input tend to be selected (Friston et al., 2012; Kiverstein et al., 2019; Andersen et al., 2022). Predictive processing can ground the economic account of relevance realization by connecting it to the underlying perceptual and cognitive processes that account for the dynamic and recurrent weighting of prediction errors.
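The weighting of prediction errors described above can be illustrated with a toy sketch. It assumes, as is common in predictive-processing models, that the precision of a sensory stream is estimated as the inverse variance of its recent prediction errors; the function names, the two streams, and all numbers are hypothetical, and the sketch is not the formalism of Friston and colleagues. Error dynamics are not modeled. In keeping with the argument of this section, such a sketch can at best mimic one aspect of relevance realization.

```python
from statistics import pvariance


def precision_weights(error_history, floor=1e-9):
    """Map each sensory stream to a normalized precision weight.

    Precision is taken (by assumption) as the inverse variance of the
    stream's recent prediction errors, so streams with erratic errors
    receive small weights.
    """
    precision = {
        stream: 1.0 / (pvariance(errors) + floor)  # floor avoids division by zero
        for stream, errors in error_history.items()
    }
    total = sum(precision.values())
    return {stream: p / total for stream, p in precision.items()}


def update_estimate(estimate, observations, weights, rate=0.5):
    """Nudge a scalar estimate toward the observations, scaling each
    prediction error (observation minus current estimate) by its weight."""
    weighted_error = sum(
        weights[stream] * (value - estimate) for stream, value in observations.items()
    )
    return estimate + rate * weighted_error


# Hypothetical error histories for two sensory streams.
history = {
    "touch": [0.1, -0.1, 0.1, -0.1],   # small, consistent errors
    "vision": [2.0, -1.5, 1.8, -2.2],  # large, erratic errors
}
weights = precision_weights(history)
new_estimate = update_estimate(0.0, {"touch": 1.0, "vision": 5.0}, weights)
print(weights, new_estimate)
```

With these made-up numbers, almost all of the weight goes to the touch stream, so the estimate moves to roughly half of the touch error (about 0.5), even though the vision stream reports a much larger discrepancy.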

For our present purposes, however, both of these accounts exhibit several significant limitations. The economic account was developed specifically in the context of human cognition, and it is not entirely clear whether it can be generalized beyond that scope. It takes agency (even intention) for granted without providing a naturalistic justification for that assumption. The commitment of resources in the economic account, and the assignment of precision weights in predictive processing, presuppose that the agent has intrinsic goals in relation to which such actions can be taken (Andersen et al., 2022). This presupposition may be acceptable in the context of human cognition, with its evident and explicit intentionality, but poses a serious challenge for generalizing relevance realization to the domain of non-human and (even more so) non-cognitive living beings.

On top of all this, both the economic account and formalized versions of predictive processing remain embedded in a thoroughly computationalist framework that views the allocation of relevance (and hence resources or precision weights) as a simple iterative and recursive algorithmic process. This raises the question of where organismic goals come from in the first place, and how anticipated outcomes can affect the strategies and actions chosen. For these reasons, we will take a different route, with the aim of grounding the process of relevance realization in the basic organization of living beings and the kind of agent-arena relationship this organization entails. Our approach is not intended to oppose the economic account or the account based on predictive processing, but rather to ground them in the natural sciences with the aim of extending relevance realization beyond human cognition and intentionality. It is an evolutionary view of relevance realization, which also explains why the process cannot be completely captured by a purely syntactic or algorithmic approach.

4 Biological organization and natural agency

The ability to solve the problem of relevance crucially relies on an agent setting intrinsic goals. Therefore, we first need to demonstrate that all organisms can define and pursue their own goals without requiring any explicit intentionality, cognitive capabilities, or consciousness. Such basic natural agency does not primarily rely on causal indeterminacy or randomness. Instead, it rests on the peculiar self-referential and hierarchical causal regime that underlies the organization of living matter (see, for example, Rosen, 1958a,b, 1959, 1972, 1991; Piaget, 1967; Varela et al., 1974; Varela, 1979; Maturana and Varela, 1980; Juarrero, 1999, 2023; Kauffman, 2000; Weber and Varela, 2002; Di Paolo, 2005; Thompson, 2007; Barandiaran et al., 2009; Louie, 2009, 2013, 2017a; Deacon, 2011; Montévil and Mossio, 2015; Moreno and Mossio, 2015; Mossio and Bich, 2017; DiFrisco and Mossio, 2020; Hofmeyr, 2021; Harrison et al., 2022; Mitchell, 2023; Mossio, 2024a).

This peculiar organization of living matter is both the source and the outcome of the capacity of a living cell or multicellular organism to self-manufacture (Hofmeyr, 2021). Life is what life does. A free-living cell, for example, must be able to produce all its required macromolecular components from external sources of matter and energy, must render these components functional through constant maintenance of a suitable and bounded internal milieu, must assemble functional components in a way that upholds its self-maintaining and self-reproducing abilities throughout its life cycle and, if we want it to evolve, must pass this integrated functional organization on to future generations via reproduction with some form of reliable but imperfect heredity (Saborido et al., 2011; Hofmeyr, 2021; Jaeger, 2024b; Pontarotti, 2024). This process of self-manufacture is encapsulated by the abstract concept of autopoiesis (Varela et al., 1974, 1991; Varela, 1979; Maturana and Varela, 1980; Weber and Varela, 2002; Thompson, 2007), which emphasizes the core ability of a living system to self-produce and maintain its own boundaries.

Biological organization emerged at the origin of life, and is therefore shared among all organisms, from bacteria to plants to fungi to animals to humans. In fact, some of us have argued previously that it is a fundamental prerequisite for biological evolution by natural selection (see Walsh, 2015; Jaeger, 2019, 2024b, for details). This kind of organization is unique to organisms. Nothing equivalent exists anywhere outside the realm of the living. While self-organizing physical processes, such as candle flames, convection currents (e.g., Bénard cells), turbulent water flows, or hurricanes, share some important properties with living systems, including a temporary local reduction of entropy at the expense of increased entropy in their surroundings, they are not able to self-manufacture in the sense described above (Kauffman, 2000; Deacon, 2011; Djedovic, 2020; Hofmeyr, 2021).

Two aspects of biological organization are particularly important here. First, note that functional organization is not the same as physical structure: the capability to self-manufacture does not coincide with any specific arrangement of material parts, nor does it correspond to any fixed pattern of interacting processes (cf. Rosen, 1991; Louie, 2009). Instead, the systemic pattern of interactions that constitutes biological organization is fundamentally fluid and dynamic: connections between components constantly change—in fact, have to change—for the capacity to self-manufacture to be preserved (see, for instance, Kauffman, 2000; Deacon, 2011; DiFrisco and Mossio, 2020; Hofmeyr, 2021; Jaeger, 2024b).

Second, at the heart of all contemporary accounts of biological organization lies the concept of organizational closure (see Letelier et al., 2011; Moreno and Mossio, 2015; Cornish-Bowden and Cárdenas, 2020; Mossio, 2024b, for reviews). Organizational closure is a peculiar relational pattern of collective dependence between the functional components of a living system: each component process must be generated by, and must in turn generate, at least one other component process within the same organization (Montévil and Mossio, 2015; Moreno and Mossio, 2015; Mossio et al., 2016). This means that individual components could not operate—or even exist—without each other. Let us illustrate this central concept using two complementary approaches.

One useful way to think about biological organization is to separate the underlying processes (physico-chemical flows) from the higher-level constraints that impinge on them by reducing their dynamical degrees of freedom (Juarrero, 1999, 2023; Deacon, 2011; Pattee and Rączaszek-Leonardi, 2012; Montévil and Mossio, 2015; Mossio et al., 2016). Constraints arise through the interactions between the component processes that make up the living system. Like the underlying flows, they are dynamic, but change at different time scales. Constraints can thus be formally described as boundary conditions imposed on the underlying dynamics (Montévil and Mossio, 2015; Mossio et al., 2016). They decrease the degrees of freedom of the living system as a consequence of the restrictions that are placed upon it by the organized interactions of its constituent processes. An enzyme is a good example of a constraint: it alters the kinetics of a biochemical reaction without itself being altered in the process.

We can now conceptualize organizational closure as the closure of constraints (Montévil and Mossio, 2015): the organism-level pattern of constraints restricts and channels the dynamics of the underlying processes in such a way as to preserve the overall pattern of constraints. Evidently, organizational closure is causally circular: it is a form of self-constraint (Juarrero, 1999, 2023; Montévil and Mossio, 2015). In this way, the organization of the system becomes the cause of its own relative stability: this is what equips an organism with identity and individuality (Deacon, 2011; Montévil and Mossio, 2015; Moreno and Mossio, 2015; Mossio et al., 2016; DiFrisco and Mossio, 2020).
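The relational condition stated above for organizational closure (each component process is generated by, and in turn generates, at least one other component process within the same organization) can be checked mechanically on a static dependency graph. The following is a minimal sketch under loud assumptions: the function name `satisfies_closure` and the component labels are hypothetical, dependencies are reduced to (producer, product) pairs, and everything the text stresses about dynamics, time scales, and thermodynamic openness is ignored. It is not the formalism of Montévil and Mossio (2015) and, by the authors' own argument, no check of this kind captures biological organization itself.

```python
def satisfies_closure(components, generates):
    """Return True if every component is generated by at least one *other*
    component and generates at least one other component, counting only
    (producer, product) pairs that lie entirely inside `components`."""
    internal = {
        (producer, product)
        for producer, product in generates
        if producer in components and product in components and producer != product
    }
    produced = {product for _, product in internal}
    producers = {producer for producer, _ in internal}
    return all(c in produced and c in producers for c in components)


# Hypothetical toy organization: A, B, C form a cycle of mutual production.
parts = {"A", "B", "C"}
print(satisfies_closure(parts, {("A", "B"), ("B", "C"), ("C", "A")}))  # True

# An "assembly line" without feedback: nothing inside produces A.
print(satisfies_closure(parts, {("A", "B"), ("B", "C")}))  # False
```

Self-production (a pair such as `("A", "A")`) and production by something outside the set are deliberately ignored, mirroring the phrase "at least one other component process within the same organization." Note that the check is only a necessary condition: two disjoint cycles would also pass it, although they would not form one integrated organization.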

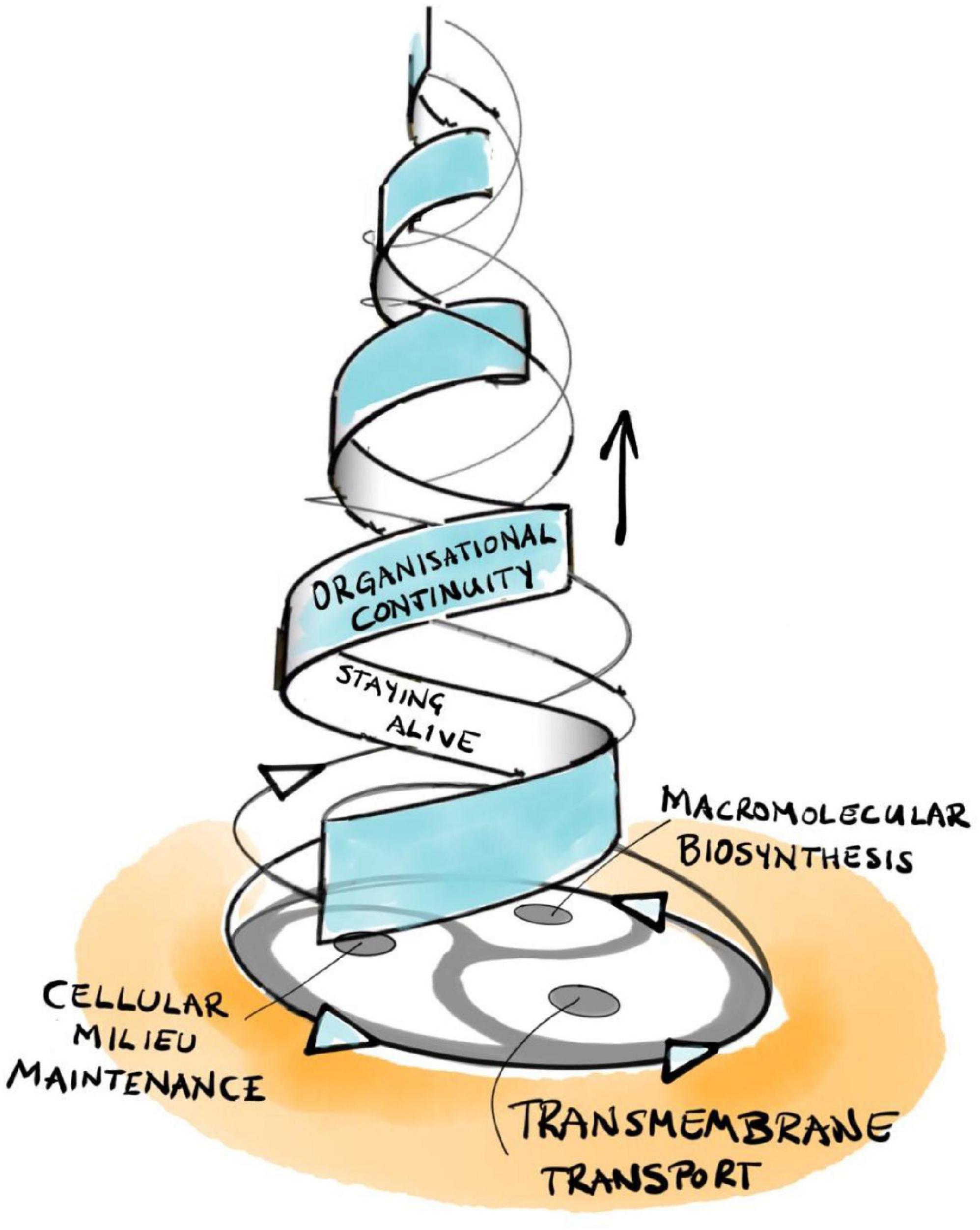

Organizational closure requires thermodynamic openness: it only occurs in physical systems that operate far from equilibrium. The basic reason for this is that the organism must constantly produce work by harvesting some entropy gradient in its environment to regenerate, repair, and replenish its set of constraints (Kauffman, 2000; Deacon, 2011). The result is organizational continuity (DiFrisco and Mossio, 2020; Mossio and Pontarotti, 2020; Pontarotti, 2024). To revisit our previous example, think of all the enzymes in a living cell, enabling a set of interrelated biochemical flows that lead to macromolecular synthesis and the continued replenishment of the pool of enzymes. This requires physical work (driven by ATP hydrolysis and other exergonic reactions). Note that enzyme concentrations need not be kept constant over time. They only have to remain within the less stringent boundaries that ensure the future preservation of overall metabolic flow. Metabolic flow can (and indeed must) change adaptively in response to environmental conditions and the internal requirements of the organism, but if it ceases to repair and replenish itself, the organism dies.

A more formal and abstract way to think about biological organization is Robert Rosen’s relational theory of metabolism-repair (M,R)-systems (Rosen, 1958a,b, 1959, 1972, 1991), and its recent refinement into fabrication-assembly (F,A)-systems (Hofmeyr, 2021). It treats biological organization in the rich explanatory context of Aristotle’s four “causes,” or aitia (Rosen, 1991; Louie, 2009, 2013, 2017a). Aitia go beyond the restricted modern scientific sense of “causality.” They correspond to different ways of answering “why” questions about some natural phenomenon (Falcon, 2023). As an example, take a marble sculpture depicting Aristotle: its material cause is the marble it is made of, its formal cause is what makes it a sculpture of Aristotle (and not of anyone else), its efficient cause is the sculptor wielding their tools to produce the sculpture, and its final cause is the sculptor’s intention to make a statue of Aristotle.

Using the (meta)mathematical tool of category theory, Rosen constructs a rigorous formal framework that distinguishes material causes (physico-chemical flows) from their processors, which are the efficient causes generating the particular dynamics that characterize a living system (Rosen, 1991, later extensively refined by Louie, 2009, 2013, 2017a). Thinking of enzymes again as possible examples of efficient causes, we can see that this distinction is similar in spirit, but not equivalent, to the separation of processes and constraints above. Constraints, as we shall see, incorporate aspects not only of efficient but also of formal cause.

Rosen’s central insight is that his (M,R)-system models are open to material (and energy) flows but are closed to efficient causation (Rosen, 1991; Louie, 2009, 2013, 2017a).11 This is a form of organizational closure, meaning that the efficient cause of each processor is another processor within the organization of the system. Formally, each processor must be part of a hierarchical cycle of efficient causation. Such cycles represent a type of self-referential circularity that Rosen calls immanent causation. This is more than mere cybernetic feedback, which is restricted to material causes (i.e., hierarchically “flat”) and only generates circular material flows. Hierarchical cycles, in contrast, consist of nested cycles of interacting processors that preserve their own pattern of interrelations over multiple scales of space and time. As an example, recall the circular multi-level relationship between intermediary metabolism/macromolecular biosynthesis, the internal milieu, and the regulated permeability of the boundaries of a living cell that enable its self-manufacturing capability (Figure 2; Hofmeyr, 2017, 2021).

As a consequence of this hierarchical circularity, efficient cause coincides with final cause in living systems (Rosen, 1991). This is precisely what is meant by autopoiesis or self-manufacture: the primary and most fundamental goal of an organism is to keep on producing itself. Biological organization is intrinsically and unavoidably teleological in this specific and well-defined sense (Weber and Varela, 2002; Deacon, 2011; Mossio and Bich, 2017). We will discuss the wider consequences of introducing this particular kind of finality into the study of biological systems in section “8 Conclusion.” For now, let us simply assure the reader that it leads to none of the difficulties usually associated with teleological explanation (as argued in detail in Walsh, 2015).

Hofmeyr extends Rosen’s mathematical methodology in a number of crucial respects. First, he integrates the missing formal cause into Rosen’s framework: its role is to determine the specific functional form of each efficient processor and/or material flow (Hofmeyr, 2018, 2021). It is in this precise sense that the notion of “constraint” includes aspects of both formal and efficient cause. With this tool in hand, we can now extend Rosen’s framework and map it explicitly onto the cellular processes involved in self-manufacture—intermediary metabolism/macromolecular biosynthesis, maintenance of the internal milieu, and transmembrane transport (Figure 2; Hofmeyr, 2017, 2021). The resulting model is called a fabrication-assembly (F,A)-system to reflect the fact that self-manufacture consists of two fundamental aspects: self-fabrication of required components, plus their self-assembly into a functional whole (Hofmeyr, 2007, 2017, 2021).

(F,A)-Systems highlight a number of features of biological organization that are not evident from Rosen’s original account. First of all, one of the major efficient causes of the model (the interior milieu) exists only at the level of the whole living system (or individual cell), and cannot be reduced or localized to any subset of component processes (Hofmeyr, 2007, 2017). If it was not already clear before: biological organization is an irreducible systems-level property. This is perhaps why it is so difficult to study with purely reductionist analytical approaches (but see Jaeger, 2024c).

Second, (F,A)-systems are closed to efficient cause but open to formal causation. This means that the specific form of their processors and flows constantly changes while still maintaining organizational closure (Hofmeyr, 2021). This enables physiological and evolutionary adaptation by introducing heritable variability to Rosen’s formalism (see also Barbieri, 2015). It links the fundamental biological principles of organization and variability in a way that is not possible with the less refined distinction between processes and constraints (cf. Montévil et al., 2016; Soto et al., 2016a,b; Jaeger, 2024b). We will revisit this topic in section “6 Affordance, goal, and action,” when we start focusing on ecology and evolution.

Finally, due to overall closure, all efficient causes in the (F,A)-model are part of hierarchical cycles with the mathematical property of being self-referential in the sense of being collectively impredicative, which means that the processors involved mutually define and generate each other, and thus cannot exist in isolation (Hofmeyr, 2021). This leads to an apparent paradox: without these processors all being present at the same time, already interacting with each other, none of them can ever become active in the first place. To compare and contrast it with Turing’s (1937) halting problem, Hofmeyr calls this kind of deadlock the starting problem of biological systems. It illustrates the basic difference between mere recursion (processes feeding back on each other) and co-construction (processes building each other through continuous constraint-building).12

With this conceptual toolkit in hand, we can now revisit and refine the distinction between living and non-living systems, in order to better understand why the self-manufacturing organization of living matter cannot be fully formalized or captured by algorithmic computation. Rosen frames this problem in terms of complexity (Rosen, 1991; see also Louie, 2009; Louie and Poli, 2017; Hofmeyr, 2021). He (re)defines a complex system through the presence of at least one hierarchical cycle among its functional components, while the category of simple systems comprises all those that are not complex (Rosen, 1991; Louie, 2009). Rosennean complex systems include living cells and organisms, plus systems that contain them (such as symbioses, ecologies, societies, and economies). This contrasts with more familiar definitions of “complexity,” which rely on the number, nonlinearity, and heterogeneity of (interactions among) system components, on the presence of regulatory feedback and emergent properties, and/or on the algorithmic incompressibility of simulated system dynamics. Such definitions result in graded rather than categorical differences in the complexity of living vs. non-living systems (see, for example, Mitchell, 2009; Ladyman and Wiesner, 2020).

Based on his categorical distinction between simple and complex systems, Rosen derives his most famous conjecture (Rosen, 1991; Louie, 2009, 2013, 2017a): he shows, in a mathematically rigorous manner, that only simple systems can be captured completely by analytical (algorithmic) models, while any characterization of complex systems in terms of computation must necessarily remain incomplete. This closely relates to our claims concerning the formalization of relevance realization from section “3 Relevance realization.” Rosen’s, like ours, is an incompleteness argument analogous to Gödel’s proof in mathematics. It says that it may well be possible to approximate aspects of biological organization through algorithmic simulation, but it will never capture the full range of dynamic behaviors or the evolutionary potential of a living system completely. If true, this implies that the strong Church-Turing conjecture—that all physical processes in nature must be computable (Deutsch, 1985, 1997; Lloyd, 2006; see section “2 Agential emergentism”)—is false, since biological organization provides a clear counterexample of a physical process that cannot be captured fully by computation.

Here, we extend and recontextualize Rosen’s conjecture to arrive at an even stronger claim: it no longer makes sense to ask if organisms are computable if they are not completely formalizable in the first place. While discussions about computability focus on our limited ability to predict organismic behavior and evolution, our argument about formalization reveals even deeper limitations concerning our ability to explain living systems.

To recognize these limitations for what they are, we need to return to the matter of intrinsic goals. Once a living system is able to maintain organizational continuity through self-constraint or immanent causation, it starts exhibiting a certain degree of self-determination (Mossio and Bich, 2017). In other words, it becomes autonomous (Moreno and Mossio, 2015) because, ultimately, the process of maintaining closure must be internally driven. Even though the environment is a necessary condition for existence (not just as a source of food and energy, as we shall see in section “6 Affordance, goal, and action”), an organism does not behave in a purely reactive manner with regard to external inputs. Instead, future states of the system are dynamically presupposed by its own inherent organization at earlier points in time (Bickhard, 2000). This is exactly what we mean when we say an organism is its own final cause. If organizational continuity ceases, the organism dies and is thus no longer a living system.13 It still engages in exchange with its environment (e.g., by getting colonized by saprophages), but the inner source of its aliveness is gone. According to Rosen, it has made the transition from complex to simple. Complexity, therefore, originates from within. And it remains opaque to any external observer, forever beyond full formalization.

Taken together, all of the above amounts to a minimal account of what it means for an organism to act for its own reasons, on its own behalf (Kauffman, 2000; Mitchell, 2023): basic natural agency is characterized by the ability to define and attain the primary and principal goal of all living beings, namely to keep themselves alive. This is achieved through the process of autopoiesis or self-manufacture, implemented by a self-referential, hierarchical, and impredicative causal regime that realizes organizational closure. This simple model, which is completely compatible with the known laws of physics, provides a naturalistic proof of principle that organisms can (and indeed do) pursue at the very least one fundamental goal: to continue their own existence. It accounts for the organism’s fundamental constitutive autonomy (Moreno and Mossio, 2015). But this is not enough. What we need to look at next is another important dimension of an organism’s behavior: its agent-arena relations, which are guided by a kind of interactive autonomy (Di Paolo, 2005; Moreno and Mossio, 2015; Vervaeke et al., 2017). A full-blown account of natural agency requires both organizational and ecological dimensions. The latter will be the topic of the next two sections.

5 Basic biological anticipation

The interactive dimension of natural agency is also called adaptive agency, because it is concerned with how an organism, once it has achieved basic self-manufacture, can adaptively regulate its state in response to its environment (Di Paolo, 2005; Moreno and Mossio, 2015; Di Paolo et al., 2017). As an example, consider the paramecium again: its cilia beat as a consequence of its metabolism and the maintenance of its internal milieu, but their effect lies outside the cell, causing the organism to move toward food sources, or food to be brought into proximity through the currents induced in its viscous watery surroundings. Thus, constraints subject to closure can (and indeed must) exert effects beyond the boundaries of the organism.14 Agency is not only an organizational, but also an ecological phenomenon (Walsh, 2015). It is as much about the relations of the agent to its arena, as it is about internal self-manufacture.

Once it has set itself a goal, the organism needs to be able to pursue it. Such pursuit presupposes two things: first, the organism must be motivated to attain its goal. Motivation ultimately springs from an organism’s fragility and mortality (Jonas, 1966; Weber and Varela, 2002; Thompson, 2007; Deacon, 2011; Moreno and Mossio, 2015; see also section “2 Agential emergentism”). If a system cannot perish (or at least suffer strain or damage), it has no reason to act. This is why the execution of an algorithm must be triggered by an external agent. It pursues nothing on its own.15

Second, and more importantly, the organism must be able to identify appropriate combinations or sequences of actions, suitable strategies, that increase the likelihood of attaining its goals. This is what it means to make the right “choice”: to select an appropriate action or strategy from one’s repertoire that satisfices in a given situation. The chosen path may not be optimal—nothing ever is. But making adequate choices still requires the ability to assess, in some reliable way, the potential consequences of one’s actions. More precisely, it means that even the simplest organism must have the capacity to project the present state of the world into the future or, perhaps more appropriately, to pull the future back into the present (Louie, 2009; Rosen, 2012; Kiverstein and Sims, 2021; Sims, 2021). Or simply put: any purposive system is able to perform an activity (rather than some other activity) because of its (likely) consequences. Recall that this cannot rely on intention or awareness, if we are to develop an account of relevance realization suitable for all living beings. Therefore, our next task is to show that even the most primitive organisms are anticipatory systems (Louie, 2009, 2012, 2017b; Rosen, 2012).

An anticipatory system possesses predictive internal models of itself and its immediate environment (the accessible part of the large world it lives in; Rosen, 2012). These models need not be explicit representations. They simply stand for an organism’s “expectations” in some generic way. Often, they manifest as evolved automatisms or habituated instinctive behaviors: selectors of actions inherited from ancestors that succeeded in surviving (and later reproducing) in comparable circumstances in the past.16 Only exceptionally, in a small set of highly complex animals (including humans), can we speak of representations or even premeditated mental simulations of future events (Deacon, 2011). Most internal predictive models are much simpler, yet still anticipatory. Let us examine what the minimal requirements of such biological anticipation are.

To start with a simple example: a bacterium can modify how often it switches the direction of its flagellar rotation, and thus how often it changes course, to swim up a nutrient gradient or, conversely, to avoid the presence of a toxin. This evolved automatism anticipates the outcome of the bacterium’s actions in two ways: first, it enables the organism to discern between nutritious (good) and noxious (bad) substances in its surroundings. Second, it directs the bacterium’s movements toward an expected beneficial outcome, or away from a suspected detrimental one. This process can and does go wrong: outside the laboratory there is not always an abundance of food at the top of the sugar gradient, and new dangers constantly await.

It is important to repeat that there is nothing the bacterium explicitly intends to do, nor is it in any way aware of what it is doing, or how it selects an appropriate action. Its responses are evolved habits in the sense that there are few alternative paths of action, there is very little flexibility in behavior, and there is certainly no self-reflection. And yet, bacteria have evolved the capacity to distinguish what is good and what is bad for their continued existence, purely based on endless runs of trial-and-error in countless generations of ancestors. This is basic relevance realization grounded in adaptive evolution. And it qualifies as basic anticipatory behavior: the expected outcome of an action influences the bacterium’s present selection of actions and strategy. The fundamental requirement for a predictive model is fulfilled: there is a subsystem in the bacterium’s physiology that induces changes in its present state based on expectations about what the future may bring. This is what we mean when we say that anticipatory systems can pull the future into the present.
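The bacterial example can be made concrete with a toy run-and-tumble walk. This is a minimal sketch under stated assumptions, not a biophysical model: the one-dimensional world, the linear attractant profile, the step size, the tumbling probabilities (0.1 while conditions improve, 0.5 otherwise), and all function names are hypothetical choices. What it illustrates is the point made in the text: a fixed, inherited rule that compares the concentration sensed now with the one sensed a moment ago is enough to bias movement toward an expected beneficial outcome, without anything resembling intention or awareness. Here the rule is simply given in advance, whereas in the bacterium it is the product of evolution.

```python
import random


def attractant(x):
    """Hypothetical nutrient profile: concentration rises linearly with x."""
    return x


def run_and_tumble(steps, biased, rng, step_size=0.1):
    """Simulate one cell in 1D and return its final position.

    If `biased` is True, the cell tumbles (picks a random new heading) less
    often while the sensed concentration is rising. Otherwise it tumbles at
    a fixed rate, i.e., it performs an unbiased persistent random walk.
    """
    x, heading = 0.0, 1
    previous = attractant(x)
    for _ in range(steps):
        x += heading * step_size
        current = attractant(x)
        improving = current > previous  # temporal comparison: now vs. a moment ago
        p_tumble = 0.1 if (biased and improving) else 0.5
        if rng.random() < p_tumble:
            heading = rng.choice((-1, 1))
        previous = current
    return x


def mean_final_position(biased, cells=200, steps=500, seed=1):
    """Average final position over many independently simulated cells."""
    rng = random.Random(seed)
    return sum(run_and_tumble(steps, biased, rng) for _ in range(cells)) / cells


print("with evolved bias:", mean_final_position(biased=True))
print("without bias:     ", mean_final_position(biased=False))
```

With these arbitrary parameters, the biased cells should end up well up the gradient on average, while the unbiased ones stay near the origin; the exact values depend on the seed. The "expectation" lives entirely in the asymmetry of the tumbling rule.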

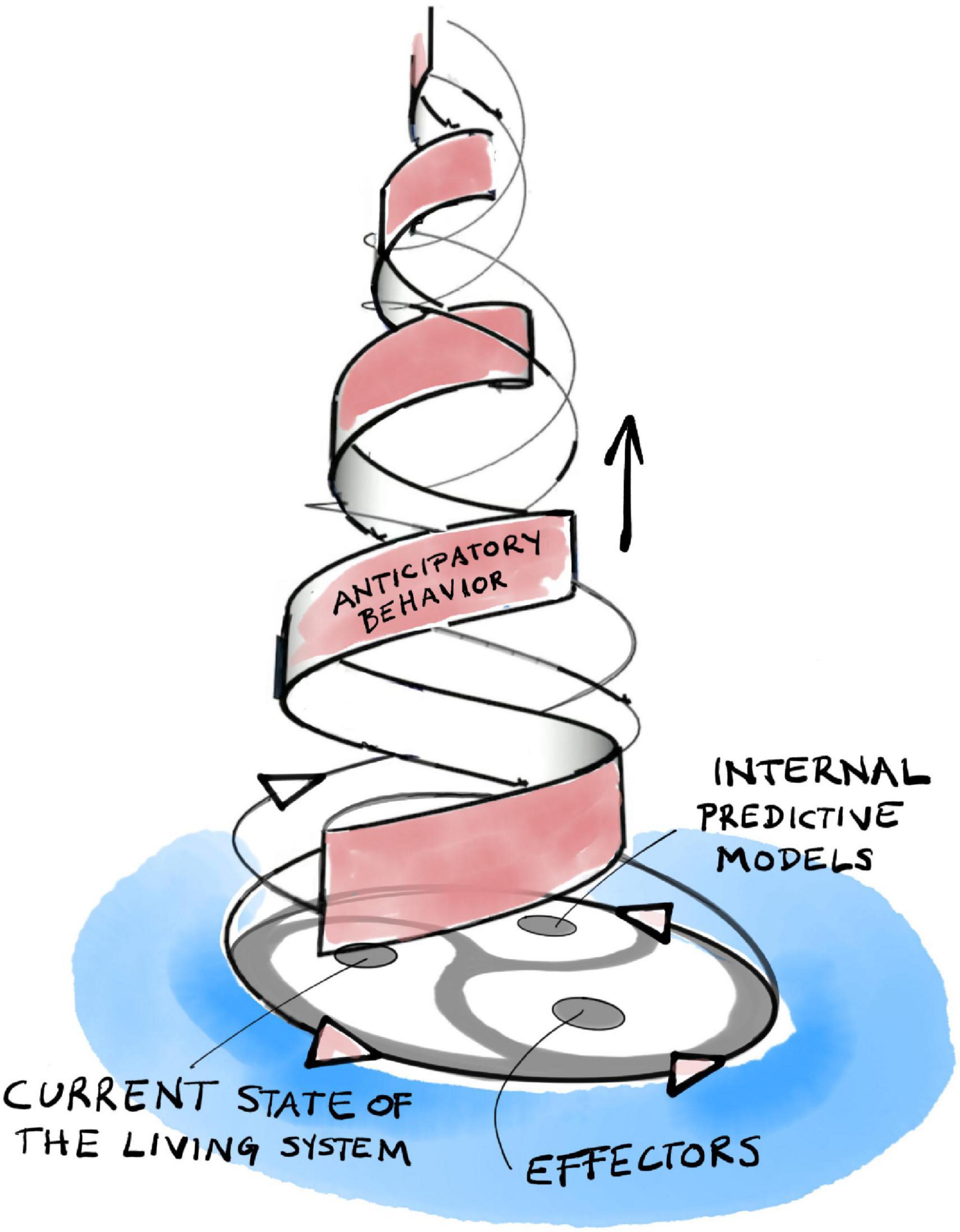

More generally, we can consider a living system organized in the way described in section “4 Biological organization and natural agency,” which contains predictive models as subsystems with effectors that feed back on its self-manufacturing organization. These effectors influence system dynamics in two distinct ways (Louie, 2009, 2012, 2017b; Rosen, 2012). Either they modify the selection of actions (as in the bacterial example above), or they modulate the sensory inputs of the system (see also Kiverstein and Sims, 2021). Our expectations constrain and color what we perceive. It is in this sense that anticipation is most essential for relevance realization.

Let us consider human predictive processing once more (see section “3 Relevance realization”). On the one hand, people cannot help but project their expectations onto their environment. It is hard to let go of preconceived notions: we only modify our expectations when we encounter errors and discrepancies of a magnitude we can no longer ignore. On the other hand, we also use opponent processing to great effect when we play predictive scenarios against each other, monitoring the streams of discrepancies they generate in order to prioritize among them. We will revisit such adaptive evolution of internal models in section “7 To live is to know.” For now, it suffices to say that human beings are anticipatory systems par excellence, and our behavior cannot be understood unless we take seriously our abilities to plan ahead and strategize.

The internal organization of anticipatory systems is intimately related to the basic organization of living systems (Louie, 2012; Rosen, 2012), and it exhibits self-referential patterns familiar from our discussion of biological organization (Figure 3). Without going into too much detail, let us note that organisms generate their internal models of the world from within their own organization. These models, in turn, direct the organism’s behavior, its choice of actions and strategies, through their effectors. Actions have consequences and, thus, the internal models are modified through the comparison of their predictions with actual outcomes. This leads to a dialectic adaptive dynamic that guides the organism’s active exploration of its large world, not unlike the dynamic that governs the interactions of the self-manufacturing processes described in section “4 Biological organization and natural agency” (compare Figure 2 with Figure 3).

As is the case with Rosennean complex systems, there are anticipatory systems that are not organisms. Economies, as a case in point, are heavily driven by internal (individual- and societal-level) models of their own workings (e.g., Tuckett and Taffler, 2008; Schotanus et al., 2020). Once somebody finds out how to predict the stock market, for instance, its dynamics will immediately and radically change in response. We can thus say that all organisms are anticipatory systems, but not all anticipatory systems are organisms; similarly, all anticipatory systems are complex systems, but not all complex systems are anticipatory (Louie, 2009; Rosen, 2012).

To summarize: all organisms, from bacteria to humans, are anticipatory agents. They are able to set their own goals and pursue them based on their internal predictive models. Organisms, essentially, are systems that solve the problem of relevance. In contrast, algorithms and machines are purely reactive: even if they seemingly do anticipate and are able to simulate future sequences of operations in their small-world context, it is always in response to a task or target that is ultimately predefined and externally imposed.

It is worth interjecting at this point that nothing in our account violates any laws of logic or physics. In particular, we do not allow future physical states of the world to affect the present. The states of internal predictive models are as much physically embedded in the present as the organism of which they are a part. Also, the model can (and often does) go wrong. Consequences of actions are bound to diverge from predictions in some, often surprising, ways. A good model is one where they do not diverge catastrophically. A bad model can be improved by experience through the monitoring and correction of errors. This yields the adaptive dialectic dynamic depicted in Figure 3. Remember that every living system can do this. With this capacity in hand, we can now move to the bigger picture of how organisms evolve an ever richer repertoire of goals and actions through solving the problem of relevance (cf. Roli et al., 2022).

6 Affordance, goal, and action

We now come to the core of our evolutionary account of relevance realization, which is based on an organism-centered agential perspective on evolution called situated Darwinism (Walsh D. M., 2012, 2015). It is an ecological theory of agency and its role in evolution, which focuses on the engagement of organisms with their experienced environment. Situated Darwinism rests on three basic ingredients: (1) a collection of intrinsic goals for the organism to pursue (see section “4 Biological organization and natural agency”), (2) a set of available actions (the repertoire of the agent, shaped by its experience and expectations; section “5 Basic biological anticipation”), and (3) affordances in the experienced environment. In what follows, we show that the dialectic co-emergent dynamics between these three components provide an evolutionary explanation of relevance realization.

Affordances are what the environment offers an agent (Gibson, 1966, 1979; Chemero, 2003; recently reviewed in Heras-Escribano, 2019). They typically manifest as opportunities or impediments, defined with respect to an organismic agent pursuing some goal (Walsh, 2015). An open door, for example, affords us passage, but when the door is shut it prevents us from entering. Similarly, gradients of nutrients and toxins provide positive and negative affordances to a bacterium seeking to persist and reproduce. In this context, perception becomes the active identification of affordances. Actions are initiated in direct response to them. Affordances are a quintessentially relational, ecological, and thus transjective phenomenon: they do not reside objectively in the physical environment, but neither are they subjective. Instead, they arise through the exploratory interaction of an agent with particular attributes and aspects of its surroundings (Chemero, 2003; Stoffregen, 2003; Di Paolo, 2005; Walsh, 2015). Together, they constitute the agent’s arena, the task-relevant part of its experienced environment (see section “3 Relevance realization”).

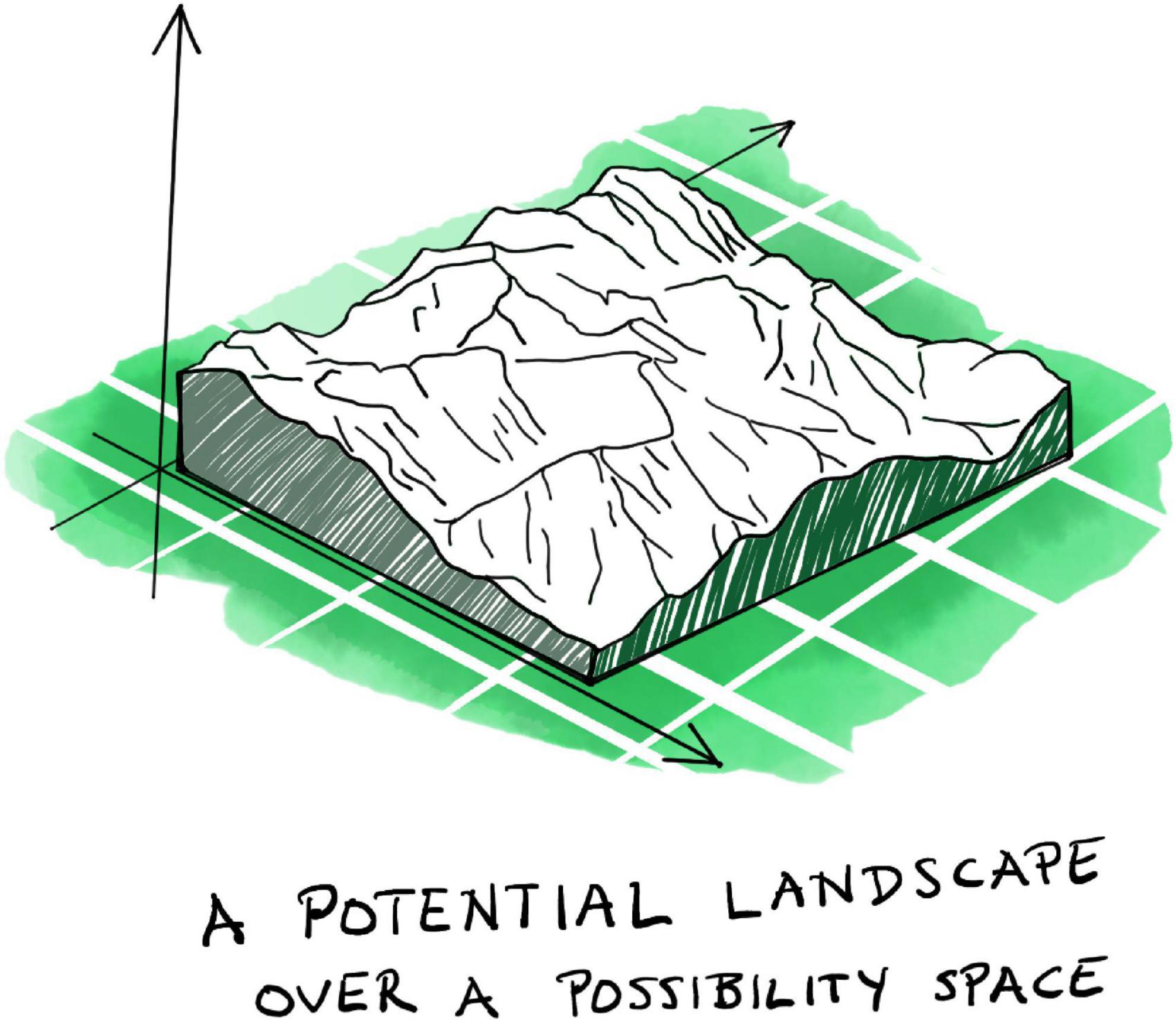

Affordances ground the agent-arena relationship in the world: organisms experience their physical environment as an affordance landscape (Walsh, 2014, 2015; Felin and Kauffman, 2019). This concept highlights the complementarity of agent and arena. The latter does not simply preexist, independent of the agent. Through their mutual interrelations, an organism’s goals, actions, and affordances continuously co-constitute each other through the kind of emergent dialectic dynamic we have already encountered in the last two sections (Figure 4). The arena (as an affordance landscape) constantly co-emerges and co-evolves with the evolving set of goals and action repertoire of the agent as it explores and comes to know the large world it is living in.

Figure 4. The triadic dialectic (trialectic) underlying evolutionary adaptation and complexification.

This dialectic proceeds in the following way: an organismic agent identifies affordances in its surroundings through active sensing or perception (which may be based on predictive processing as described in section “3 Relevance realization”), generating its arena by delimiting the task-relevant region of its larger experienced environment. This highlights the co-dependency of agent and arena: an organism’s affordance landscape exists only in relation to the set of intrinsic goals that the organism may select to pursue17 (see section “5 Basic biological anticipation”). This landscape represents a world of meaning, laden with value, where affordances are more or less “good” or “bad” with respect to attaining the agent’s goals. We cannot repeat often enough that this does not require any explicit intention, awareness, or cognitive or mental processing. The classification of affordances as beneficial or detrimental, and their effect on the process of selecting a goal, may be habituated by adaptive evolution through the inherited memory of the successes and failures of previous generations.

To complicate the situation, different (even conflicting or contradictory) goals can coexist at the same time, even in agents with very simple behavioral repertoires and predictive internal models. A bacterium, for instance, may encounter a nutrient gradient that coincides with the presence of a toxin: contradictory affordances that create conflicting signals of attraction and avoidance. Moreover, the same goal can be assigned different weights (priorities) in different situations, and goals may build on each other in other non-trivial ways that tend to be radically dependent on context. All of this means that the goals of an agent come with a complicated and rich structure of interdependencies, intimately commingled with the affordances in its arena (Haugeland, 1998; Walsh D. M., 2012; Walsh, 2014, 2015).

When an agent selects a particular goal to pursue, it collapses its set of goals down to one specific element. Similar considerations apply to the choice of an appropriate action, or a small set of actions that constitute a strategy, from the organism’s current repertoire to pursue the selected goal. This repertoire also comes with a complicated and rich internal structure: some actions are riskier, or more strenuous, or more difficult to carry out than others; some are quicker, more obvious, or more directly related to the attainment of the goal. Some actions only make sense if employed in a certain combination or temporal order. The potential usefulness of an action must be assessed through internal predictive models that take all these complications into account (see section “5 Basic biological anticipation”). Based on this, the agent collapses its repertoire to select a particular action, or combination or sequence of actions, which it will commit to carry out.

All of this leads the agent to leverage specific positive affordances in its arena, while trying to avoid or ameliorate negative ones. Through its actions, it thereby also collapses the set of available affordances (the arena) to some subset of itself. Taken together, all of the above results in a constant, coordinated, and co-dependent collapse and reconstruction of all three sets (affordances, goals, and actions), ultimately committing the agent to a particular pursuit at any one time through a specific sequence of actions. This is how agential behavior is generated.

Of course, this is not the end of the process. Committing to a specific action or strategy will immediately change the affordance landscape and will likely also affect the set of goals of the agent. Acting in one’s arena inevitably generates new opportunities and impediments, while modifying, suppressing, or removing others. The altered affordance landscape is then again perceived and assessed by the agent (through its internal predictive models), affecting the weights and relations of its goals, while generating new ones (and rendering others obsolete). All the while, the agent may learn to carry out new actions and to adjust old ones. This iterative evaluation and amelioration of behavioral patterns (Figure 4) leads to an adaptive dynamic that induces a closer fit between the agent and its arena, a tighter and often more intricate agent-arena relationship, and thus a firmer and broader grip on the world. In short, the agent may learn to behave more appropriately in its particular situation. It is enacting its own adaptation (Di Paolo, 2005).

This yields an organism-centered model of Darwinian evolution, which is very different from most other current approaches to evolutionary biology. It is thoroughly Darwinian, because the population-level selection of heritable variants remains central for stabilizing adaptive behavioral patterns across generations (see Walsh D. M., 2012; Walsh, 2015). This is especially evident in simple organisms (like bacteria), whose small and rather rigid sets of affordances, goals, and actions are strongly shaped by genetic inheritance. Yet, unlike reductionist and strictly adaptationist accounts of Darwinian evolution, it also explains how such simple behaviors can evolve into more complex and multi-layered interactions between an agent and its arena over time.18

Situated Darwinism implies that agents and arenas constantly co-evolve and co-construct each other (Walsh, 2014, 2015; Rolla and Figueiredo, 2023). This constant mutual engagement recapitulates Darwin’s original framing of evolution through the organism’s struggle for existence. In particular, it reunites the selection of appropriate behaviors by an agent during its lifetime (physiological and behavioral adaptation) with the organism’s ability to preserve and adjust its organization within and across generations (evolutionary adaptation; Saborido et al., 2011; DiFrisco and Mossio, 2020; Mossio and Pontarotti, 2020; Pontarotti, 2024). Several of us have argued in detail elsewhere that such an integration of adaptive processes within and across generations is a fundamental prerequisite for evolution by natural selection (Walsh, 2015; Jaeger, 2019, 2024b).