- 1Distance Education, Research and Application Centre, Hitit University, Çorum, Türkiye

- 2Department of Physical Education Teacher Education, Faculty of Sport Sciences, Hitit University, Çorum, Türkiye

Introduction: Improving teachers' digital competences is sine qua non for effective teaching and learning in today's digital society. However, there is a limited number of comprehensive and reliable scales to measure teachers' digital competences. Regarding this, the present study aimed to develop and validate a comprehensive scale to assess teachers' digital competences.

Methods: Building on previous studies, a draft scale was developed and piloted with a sample of teachers from all educational levels. Exploratory Factor Analysis (EFA) was used to refine the scale, resulting in a five-point Likert scale with 36 items loading onto four factors. The final scale was named the Teachers' Digital Competences Scale (TDC-S). Confirmatory Factor Analysis (CFA) was employed to validate the four-factor structure. Reliability was assessed using Cronbach's alpha (α), McDonald's omega (ω), and Composite Reliability (CR), all of which indicated sound psychometric properties. Convergent and discriminant validity analyses were also performed to assess the validity of the latent structures in the TDC-S.

Results and discussion: The findings suggest that the TDC-S is a valid and reliable instrument for assessing teachers' digital competences at all grade levels from primary to high school. It can be used to inform teacher training and development programs and to identify teacher candidates who need additional support in improving their digital competences.

1 Introduction

Digital technologies have had a profound impact on society and learning culture over the past two decades. This has necessitated the adaptation of schools and society to this digital transformation, which was further underscored by the COVID-19 pandemic. In response, many countries have prioritized the digitalization of society and education, developing frameworks and tools to improve digital competency (Fernández-Miravete and Prendes-Espinosa, 2022). In today's digital society, it is essential for every citizen to acquire digital skills as a core competence for personal development, socialization, employment, and lifelong learning (Council of the European Union, 2018; Rodríguez-García et al., 2019). Digital competence and digitalization are expected to play crucial roles in shaping the future economic and social landscape of Europe. Thus, recognizing its growing importance, the European Council has highlighted digital competence as a fundamental skill necessary for personal fulfillment, promoting a healthy lifestyle, ensuring employability, fostering active citizenship, and facilitating social inclusion. This strategic focus on digital competence is in line with the evolving educational needs and trends, emphasizing the critical role that digital literacy plays in contemporary society (European Commission, 2023). The European Commission's proactive approach to integrating digital competence into essential competencies for individuals demonstrates a forward-looking strategy aimed at equipping citizens with the skills needed to thrive in an increasingly digitalized world. By prioritizing digital competence as a key competence, the European Council not only acknowledges the transformative potential of digital skills but also shows a commitment to preparing individuals for the challenges of the digital age (Castaño Muñoz et al., 2023; Council of the European Union, 2018). 
However, digital transformation presents some major challenges for education systems, including digital literacy and the quality of education. Improving teachers' and students' digital competences is essential to achieve digital transformation effectively in educational settings (Martín-Párraga et al., 2023). In this context, educators at all levels of education have a great responsibility to raise qualified and creative individuals. Thus, teachers need to update their competency profiles and teaching strategies to align with the requirements of the digital society (Caena and Redecker, 2019; Gómez-García et al., 2022).

Although the impact of technology on the teaching-learning process has become more evident in the last few years, education systems must overcome many challenges to integrate digital technologies effectively into this process. Influencing factors and challenges include the use of virtual learning environments, emerging technologies, digital platforms, and social networks (Garzón Artacho et al., 2020). To achieve the goal of sustainable and quality education, teachers need to be competent enough to meet the educational demands placed on them (Mafratoglu et al., 2023). This has led to the development of not only teachers' digital competences (TDC) but also students' digital competences and the utilization of digital technology to improve education (Ghomi and Redecker, 2019). Teachers who were exposed to technology in the middle of their professional lives, especially during the COVID-19 pandemic period, had to use various educational tools such as e-textbooks, internet technologies, exam programs and educational resources (Yelubay et al., 2020). Caught unprepared, teachers have faced a lack of understanding of digital technology, which has caused a huge gap in the education system (Ochoa Fenández, 2020). Therefore, the challenge of preparing teachers to ensure the effective and efficient use of digital technology in schools remains unresolved. The evolving nature of technology requires educators to continuously develop their skills and digital competences to keep pace with the rapidly changing educational realm (Falloon, 2020).

The integration of ICTs in the educational context has prompted the development of policies to address one of the pedagogical challenges, namely improving teachers' digital literacy (Garzón Artacho et al., 2020). In this regard, particularly the emergence of “The European Framework for the Digital Competence of Educators” (DigCompEdu) in 2017 has catalyzed the formulation and implementation of policies by various nations to assess and enhance TDC (Christine, 2017). The updated version of DigCompEdu framework proposes a structured six-point progression model for appraising educator competence proficiency. The framework outlines six distinct levels of TDC from A1 (Awareness) to C2 (Innovation). Concurrently with the introduction of DigCompEdu, there has been a salient surge in research endeavors focusing on the examination of TDC (Aydin and Yildirim, 2022; Madsen et al., 2023). To facilitate the acquisition of digital competency among educators, several competence frameworks have been proposed. All these frameworks aim to explore the way in which technologies should be integrated and used in teaching in order to identify educational needs and propose personalized educational programs (Flores-Lueg and Roig Vila, 2016).

TDC and underpinning frameworks are essential for effective teaching and learning in the 21st century. These frameworks provide a clear and comprehensive roadmap for teacher development, supporting the development of school-wide digital learning initiatives, and enabling the pathways to the assessment of the impact of teacher professional development programs. In line with this, several organizations have attempted to identify and develop TDC. These efforts include European Digital Competence Framework for Educators, DigCompEdu; ISTE standards for Educators; UNESCO ICT Competence Framework for Teachers; Spanish Common Framework of Teacher's Digital Competence; British Digital Teaching Professional Framework; Colombian ICT Competences for teachers' professional development; Chilean ICT Competences and Standards for the teaching profession. However, Cabero-Almenara et al. (2020b) evaluated seven most commonly used TDC frameworks and concluded that the DigCompEdu is the most appropriate model for assessing TDC. The structured breakdown of DigCompEdu offers a robust framework for assessing educators' competencies, enabling a detailed examination of their digital skills and capabilities within the educational domain. This hierarchical model categorizes educators into discrete stages, facilitating a systematic approach to evaluating and enhancing educator competence (Cabero-Almenara et al., 2020b). By delineating competence levels in this manner, the model supports the continuous professional development (CPD) of teachers, aligning with the evolving demands of the educational landscape (Santo et al., 2022). In line with this, many researchers have employed DigCompEdu as a framework to develop instruments in order to assess TDC (Alarcón et al., 2020; Cabero-Almenara and Palacios-Rodríguez, 2020; Ghomi and Redecker, 2019). 
Building upon the DigCompEdu framework, SELFIEforTEACHERS has been developed more recently as a self-reflection tool designed to support primary and secondary teachers in developing their digital capabilities. Through a self-reflective process, teachers are able to self-assess their digital competence, pinpointing both strengths and areas for improvement. The feedback provided by this tool empowers teachers to actively engage in their professional learning journey, fostering the integration of digital technologies within their professional context (European Commission, 2023). Additionally, this tool empowers teachers to take charge of their professional growth and development. By fostering a culture of self-directed learning, SELFIEforTEACHERS aligns with contemporary educational paradigms that emphasize the importance of personalized and continuous professional development for educators.

In a similar vein, in the present study, DigCompEdu served as the theoretical framework informing the creation of the item pool and the development of the draft scale. In addition to the value of these frameworks, scale development studies on the assessment of TDC are important because these tools can provide invaluable information about teachers' knowledge, skills, and attitudes regarding digital technologies (Rodríguez-García et al., 2019). This information can be used to identify areas where teachers need additional support and to provide them with feedback for self-directing their own continuous professional learning. Furthermore, measuring teachers' digital competencies is essential for ensuring effective integration of digital resources in educational settings, enhancing teaching practices, and preparing educators to meet the demands of digital learning environments. A variety of TDC measurement tools are available (Alarcón et al., 2020; Al Khateeb, 2017; Cabero-Almenara et al., 2020a; Ghomi and Redecker, 2019; Gümüş and Kukul, 2023; Kuzminska et al., 2019; Tondeur et al., 2017; Tzafilkou et al., 2022). All these efforts reflect the growing importance of teachers' digital competency, which is among the most important competences that teachers should master in today's digital society (Aydin and Yildirim, 2022; Cabero-Almenara and Martínez, 2019; Cabero-Almenara and Palacios-Rodríguez, 2020).

Given this context, although a number of assessment tools have been devised to assess TDC, a comprehensive synthesis and critical evaluation of these tools has been lacking. In response to this need, Nguyen and Habók (2024) systematically reviewed 33 TDC scales sourced from peer-reviewed journals indexed in prominent databases, such as the Education Resources Information Center (ERIC), Web of Science (WoS), and Scopus. The review covered the period from 2011 to 2022, and the search terms included “ICT competency” or “ICT literacy” or “digital literacy” or “information literacy” or “computer literacy” or “technology literacy” and “assessment”. The study aimed to identify prevalent evaluation aspects, the types of assessment tools employed, and the reported reliability and validity of these tools, in addition to the frameworks or models underpinning their design. The analysis revealed a predominant research focus on digital competence in teachers' utilization of educational technology, integration of ICT in teaching and learning, professional development, and support mechanisms for learners through digital competence. The results suggested that future research should continue to advance the exploration of TDC. The study also concluded that there is no perfect assessment tool for TDC, which makes it difficult to gauge teachers' or students' skills accurately. To create effective assessment tools, researchers should consider factors such as the study setting, the participants, and the available resources. Additionally, since technology keeps changing, these assessment tools need to be updated regularly to keep up with new advancements.
As a result, despite the availability of several tools to assess TDC, there is a need for further tools that are more comprehensive and practical, that can be used to measure the digital competence of teachers, and that are empirically tested in different contexts (Nguyen and Habók, 2024). The selection of an appropriate measurement tool for a particular context will depend on the specific needs of the researchers, teachers and the school or district. This highlights the need for a practical and comprehensive scale to assess TDC. Moreover, existing scales often focus on subject-specific digital competences (Al Khateeb, 2017) and primarily target university faculty members (Cabero-Almenara et al., 2020a), undergraduate and graduate students (Tzafilkou et al., 2022), and teacher candidates (Tondeur et al., 2017). Unlike previous studies, the present study addresses these limitations by drawing on a sample of teachers from diverse branches and levels, ranging from primary to upper-secondary grade levels. This empirical originality underlines the comprehensiveness, practical applicability, and rationale of the current study. Consequently, by developing and validating a comprehensive scale that measures TDC, the present study contributes to both theoretical and practical aspects of educational technology research. On the practical side, by using the TDC-S, teachers can self-assess their digital competence, identify both strengths and areas for improvement, obtain feedback for self-directing their continuous professional learning, and foster the integration of digital technologies within their professional context.

In the present study, our aim is to develop and validate a contemporary measurement tool, building upon previous reports and studies in the field. To align with this overarching goal, we have outlined specific research objectives: (1) To examine the validity and reliability of the Teachers' Digital Competence Scale. (2) To evaluate and confirm both the exploratory and confirmatory factor structures of the Teachers' Digital Competence Scale.

2 Materials and methods

2.1 Development process of TDC scale

This study employed a quantitative research method, specifically a descriptive survey design, to develop the Teachers' Digital Competence Scale (TDC-S). The development of the scale followed an 8-step framework proposed by DeVellis (2017). This framework ensures the reliability, validity, and comprehensiveness of the resulting measurement tool. The stages of development include identifying conceptual bases, generating an item pool, determining the format for measurement, obtaining expert review for the item pool, considering the inclusion of validation items, administering items to a development sample, evaluating the items, and optimizing the scale length (DeVellis, 2017).

2.2 Participants

The study was conducted in the 2022–2023 academic year with a sample of teachers from different educational levels and branches in Çorum, Türkiye. Convenience sampling was used to select the participants. Data were collected through online and face-to-face survey techniques, ensuring voluntary participation and protecting the privacy and confidentiality of the participants. Informed consent was obtained from the participants, who were informed about the purpose of the study and the conditions of participation. In addition, a letter of approval was obtained from the Ethics Committee Review Board of Hitit University (2021-88/10.01.2022). Accordingly, the study was carried out in accordance with the guidelines set forth in the Declaration of Helsinki. All participants took part in the surveys of their own free will, and no incentives were given to encourage participation.

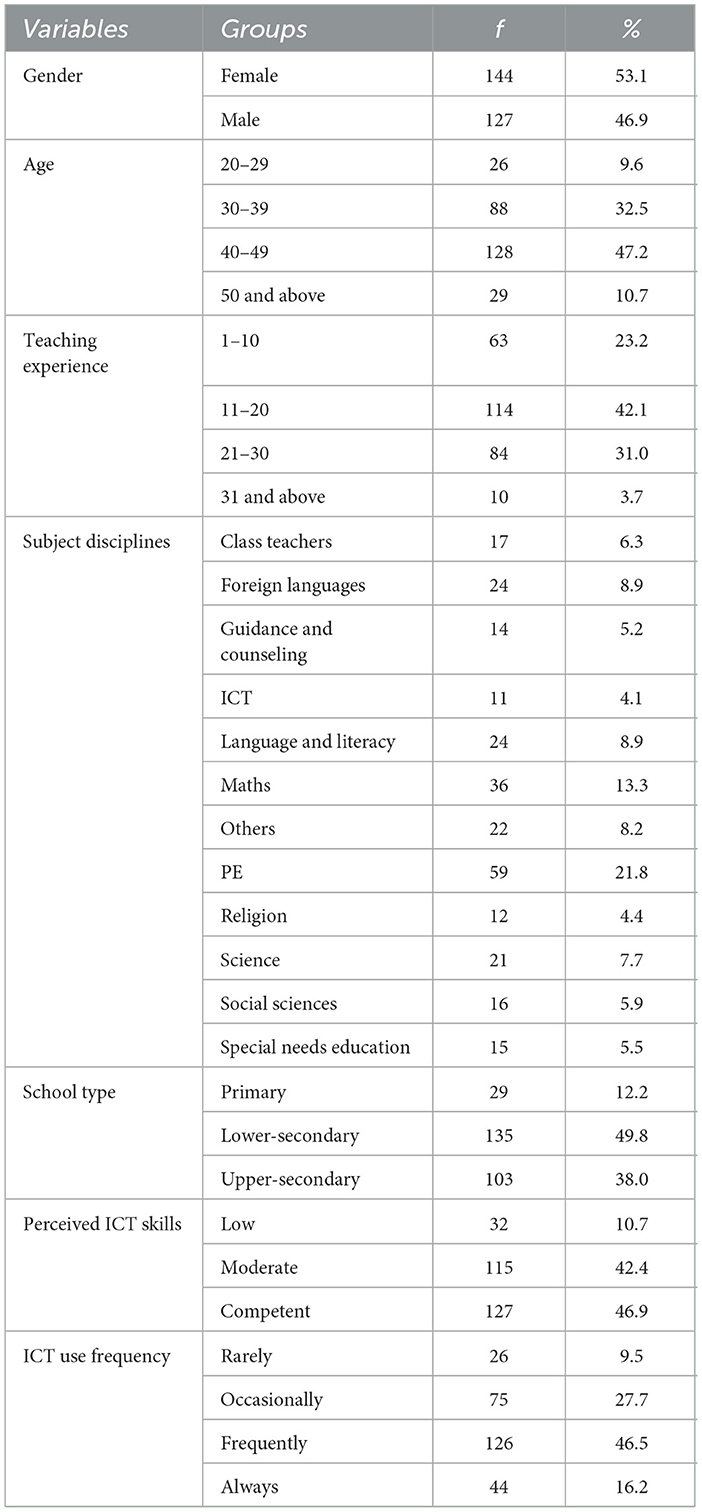

The initial scale form consisted of 46 items developed building on previous studies in the literature (Alarcón et al., 2020; Al Khateeb, 2017; Cabero-Almenara et al., 2020a; Ghomi and Redecker, 2019; Gümüş and Kukul, 2023; Kuzminska et al., 2019; Tondeur et al., 2017; Tzafilkou et al., 2022), expert opinions and feedback. A total of 271 teachers participated in the study. Validity and reliability analyses were conducted. The demographic characteristics of the participants are presented in Table 1.

Upon analysis of the demographic data presented in Table 1, it is evident that the study group consisted of 271 teachers, with 53% female and 47% male participants. The age distribution of the participants was as follows: 10% were aged 20–29, 32% were aged 30–39, 47% were aged 40–49, and 11% were aged 50 and above, with a mean age of 40.61 (SD=7.57). In terms of teaching experience, 23% of the participants had 1–10 years of experience, 42% had 11–20 years, 31% had 21–30 years, and 4% had 31 years teaching experience and above, with a mean seniority of 16.70 (SD=7.96). In line with the research aim, the participants represented a wide range of subject disciplines, including class teachers and special needs education teachers. They were employed in primary schools (12%), lower-secondary schools (50%), and upper-secondary schools (38%). Regarding the perceived ICT skills of the participant teachers, the findings indicated that 11% reported insufficient competence in ICT use, while 42% reported a moderate level of competency. The majority of participants (47%) reported a high level of competency in ICT use. The frequency of ICT use in their classes varied, with 10% reporting rare use, 28% reporting occasional use, 46% reporting frequent use, and 16% reporting constant use during classes.

2.3 Analysis of data

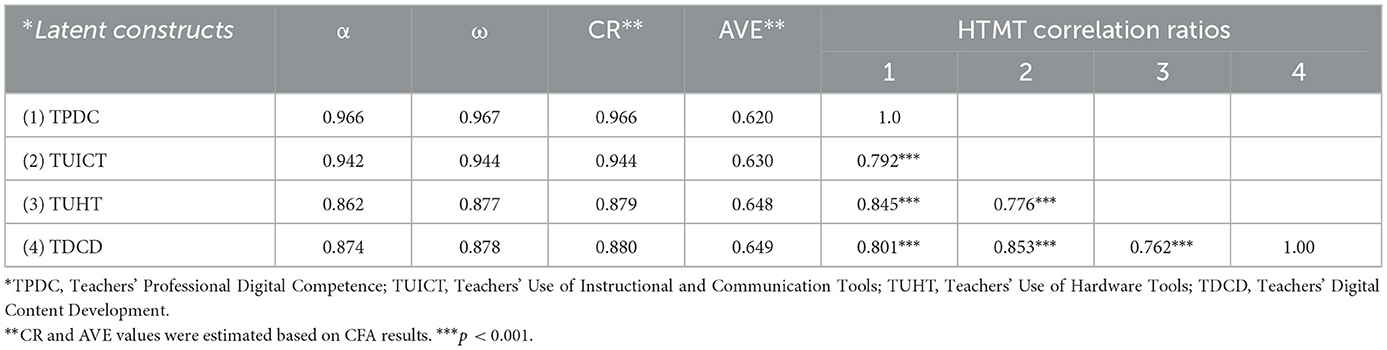

The validity and reliability of the Teachers' Digital Competence Scale were examined. Before conducting the analyses, the normality assumption was evaluated by examining the skewness and kurtosis values, and the data were found to be normally distributed. Then, the Kaiser-Meyer-Olkin (KMO) coefficient and Bartlett's test were used to evaluate the suitability of the data for factor analysis. According to Kaiser (1974), KMO values in the 0.90s are superb, in the 0.80s are commendable, in the 0.70s are adequate, in the 0.60s are mediocre, in the 0.50s are unsatisfactory, and below 0.50 are unacceptable. After checking the appropriateness of the data set for factor analysis, an Exploratory Factor Analysis (EFA) and a Confirmatory Factor Analysis (CFA) were performed to evaluate the construct validity of the scale. Internal consistency coefficients [Cronbach's alpha (α), McDonald's omega (ω), and Composite Reliability (CR)] were estimated to assess the reliability of the scale. In addition to internal consistency coefficients, convergent and discriminant validity indicators, namely CR, Average Variance Extracted (AVE), and the Heterotrait-Monotrait (HTMT) ratio of correlations, were examined to assess the psychometric quality of the scale.

The primary objective of this study was to explore the latent structure of the 46 items. To achieve this goal, a common factor model, specifically Exploratory Factor Analysis (EFA), was employed as the appropriate statistical technique (Watkins, 2021). EFA is a common method used in research to identify latent factors that explain the observed variance in a set of variables. In this study, squared multiple correlations (SMCs) were used to estimate the initial communality values (Tabachnick and Fidell, 2019). SMC is a measure that indicates the proportion of variance in an observed variable that can be accounted for by the common factors. It provides an initial estimate of the shared variance among the variables before the factor analysis is conducted (Watkins, 2021). By employing EFA and using SMC for initial communality estimates, this study aimed to uncover the latent structure and understand the underlying relationships among the measured variables. While EFA was used to determine the underlying factor structure of the scale, CFA was used to confirm the factor structure proposed in EFA. The methodological choices in this study were designed to ensure a rigorous and comprehensive analysis of the data, resulting in a reliable and valid measurement tool for assessing TDC.

3 Results

In this section, the findings of the validity and reliability studies conducted as part of the development of the instrument are presented. Exploratory factor analysis (EFA) and confirmatory factor analysis (CFA) were conducted to demonstrate construct validity. Internal consistency coefficients were estimated, and convergent and discriminant validity tests were performed, to assess the psychometric quality of the scale.

3.1 Exploratory factor analysis (EFA)

Exploratory factor analysis (EFA) is a multivariate statistical technique that has become a cornerstone in the development and validation of measurement tools. However, the application of EFA requires researchers to make several methodological decisions, each of which can have a significant impact on the results. These decisions include the choice of factor extraction method, rotation method, and factor loading threshold, among others. There is no one-size-fits-all approach to EFA, and the best choices for each decision will vary depending on the specific research context. However, researchers should carefully consider the available options and make their decisions based on sound theoretical and empirical grounds (Watkins, 2021).

An EFA was performed to ensure the construct validity of the Teachers' Digital Competence Scale. Before performing EFA, the dataset was checked for its suitability for factor analysis using several indicators. Firstly, the sample size of 271 participants met the recommended criteria for factor analysis (Bryant and Yarnold, 1995; Tabachnick and Fidell, 2001). Furthermore, the correlation matrix of the data was inspected to assess the inter-correlations among the scale items. The majority of inter-correlations exceeded the threshold of 0.3 (Tabachnick and Fidell, 2001). This indicates a sufficient level of association between the items, which is a prerequisite for factor analysis. In addition, Bartlett's test of sphericity was conducted to evaluate the interdependence among the items. The test yielded a significant result (χ² = 8,916.504, df = 630, p < 0.05), suggesting that the items in the dataset are not independent and are suitable for factor analysis (Bartlett, 1954). Lastly, the Kaiser-Meyer-Olkin (KMO) measure of sampling adequacy was calculated. The KMO value of 0.965, which exceeds the recommended threshold of 0.70 (Kaiser, 1974), indicates that the dataset is highly suitable for factor analysis. In summary, all these indicators provide confidence in the suitability of the dataset for conducting factor analysis.
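The two suitability checks described above can be illustrated with a minimal sketch. It assumes the standard textbook forms of Bartlett's statistic, χ² = −(n − 1 − (2p + 5)/6)·ln|R| with df = p(p − 1)/2, and of the overall KMO index based on partial correlations taken from the inverse correlation matrix. The 3×3 correlation matrix `toy_corr` and all function names are hypothetical and are not the study's data or software; only the sample size of 271 and the item count of 36 come from the text.

```python
import math

def cholesky(a):
    """Lower-triangular Cholesky factor of a symmetric positive-definite matrix."""
    n = len(a)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(low[i][k] * low[j][k] for k in range(j))
            if i == j:
                low[i][j] = math.sqrt(a[i][i] - s)
            else:
                low[i][j] = (a[i][j] - s) / low[j][j]
    return low

def invert(a):
    """Matrix inverse by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    aug = [list(row) + [float(i == j) for j in range(n)] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [v - f * w for v, w in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]

def bartlett_sphericity(corr, n_obs):
    """Return (chi-square, df) for Bartlett's test of sphericity."""
    p = len(corr)
    chol = cholesky(corr)
    log_det = 2.0 * sum(math.log(chol[i][i]) for i in range(p))  # ln|R|
    chi2 = -(n_obs - 1 - (2 * p + 5) / 6) * log_det
    return chi2, p * (p - 1) // 2

def kmo(corr):
    """Overall Kaiser-Meyer-Olkin measure of sampling adequacy."""
    p = len(corr)
    inv = invert(corr)
    r2 = a2 = 0.0
    for i in range(p):
        for j in range(p):
            if i != j:
                partial = -inv[i][j] / math.sqrt(inv[i][i] * inv[j][j])
                r2 += corr[i][j] ** 2   # squared zero-order correlations
                a2 += partial ** 2      # squared partial correlations
    return r2 / (r2 + a2)

# Hypothetical 3-item correlation matrix, for illustration only.
toy_corr = [[1.0, 0.6, 0.5], [0.6, 1.0, 0.4], [0.5, 0.4, 1.0]]
chi2, df = bartlett_sphericity(toy_corr, n_obs=271)
kmo_value = kmo(toy_corr)

# Degrees of freedom for 36 retained items: 36 * 35 / 2.
df_36_items = 36 * 35 // 2
print(round(chi2, 3), df, round(kmo_value, 3), df_36_items)
```

The last line of the sketch shows that 36 items give 630 degrees of freedom, which agrees with the df reported for Bartlett's test.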

After confirming the EFA assumptions, a principal axis factor analysis (PAF) with oblique rotation was conducted. Oblique rotation was chosen to allow for the possibility of correlated factors, which is appropriate when the factors are expected to be related or correlated. PAF is a method of extracting factors from a correlation matrix, and oblique rotation is a method of rotating the factors in such a way that they are allowed to correlate with each other. This is in contrast to orthogonal rotation, in which the factors are forced to be uncorrelated (Watkins, 2021). It is noteworthy that the factor structure analysis was conducted without imposing any restrictions, allowing the scale items to load on any number of factors. This approach provides flexibility in capturing the underlying structure of the data and allows for a more accurate representation of the TDC. By employing an oblique rotation and not imposing any restrictions on the factor structure, the analysis aimed to capture the complex relationships and potential interdependencies among the scale items. This approach is supported by the literature, which emphasizes the importance of considering the design and analytical decisions in factor analysis and the consequences they have on the obtained results (Fabrigar et al., 1999; Li et al., 2015).

Prior to conducting exploratory factor analysis (EFA), researchers should establish a threshold for factor loadings to be considered meaningful (Worthington and Whittaker, 2006). Pattern coefficients from an oblique rotation that meet this threshold are defined as salient. It is common practice to treat factor loadings of 0.30, 0.32, or 0.40 as salient (Hair et al., 1998), meaning that around 9%, 10%, or 16% (the factor loading squared) of a variable's variance is explained by the factor. Some researchers consider 0.30 or 0.32 to be salient for EFA and 0.40 to be salient for PCA (Watkins, 2021). These thresholds reflect practical significance but do not guarantee statistical significance. A loading of 0.32 may account for 10% of a variable's variance, yet it may not be statistically significantly different from zero (Zhang and Preacher, 2015). In this regard, Norman and Streiner (2014) suggested an approximation based on Pearson correlation coefficients to calculate the statistical significance (p = 0.01) of factor loadings. For the TDC scale, statistical significance (p = 0.05) would equate to 0.32. Therefore, in this study, a loading of 0.32, which may account for 10% of a variable's variance, was specified as the threshold for salient loadings (Watkins, 2021). In line with this, the preliminary analysis indicated that 10 items should be removed from the item pool. Items 4, 19, 36, and 45 had factor loadings below the cut-off value of 0.32. Additionally, items 7, 25, 28, 30, and 34 were removed because they cross-loaded on more than one factor, with a difference of less than 0.10 between loadings. As a result of the preliminary analysis, these 10 items were removed to refine the scale prior to EFA, as suggested by Tabachnick and Fidell (2019) and Watkins (2021).
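The arithmetic behind the salience thresholds, and one possible reading of the two removal rules, can be sketched as follows. The function names, the example loadings, and the exact cross-loading rule (a second salient loading within 0.10 of the largest one) are assumptions made for illustration; the text does not specify how the rule was implemented.

```python
def variance_explained(loading):
    """Share of an item's variance explained by a factor (loading squared)."""
    return loading ** 2

def classify_item(loadings, salient=0.32, min_gap=0.10):
    """Classify an item from its pattern coefficients, one per factor.

    Assumed rule: drop the item if no loading reaches the salience
    threshold, or if two salient loadings differ by less than min_gap.
    """
    ranked = sorted((abs(x) for x in loadings), reverse=True)
    if ranked[0] < salient:
        return "drop: no salient loading"
    if len(ranked) > 1 and ranked[1] >= salient and ranked[0] - ranked[1] < min_gap:
        return "drop: cross-loading"
    return "keep"

# Thresholds named in the text and the variance each one implies.
for threshold in (0.30, 0.32, 0.40):
    print(threshold, round(variance_explained(threshold), 2))

# Hypothetical items loading on four factors.
clean_item = [0.75, 0.10, 0.05, 0.02]
weak_item = [0.25, 0.20, 0.10, 0.05]
cross_item = [0.45, 0.40, 0.05, 0.00]
print(classify_item(clean_item), classify_item(weak_item), classify_item(cross_item))
```

Taking absolute values treats negative loadings the same way as positive ones, which is a design choice of this sketch rather than something stated in the text.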

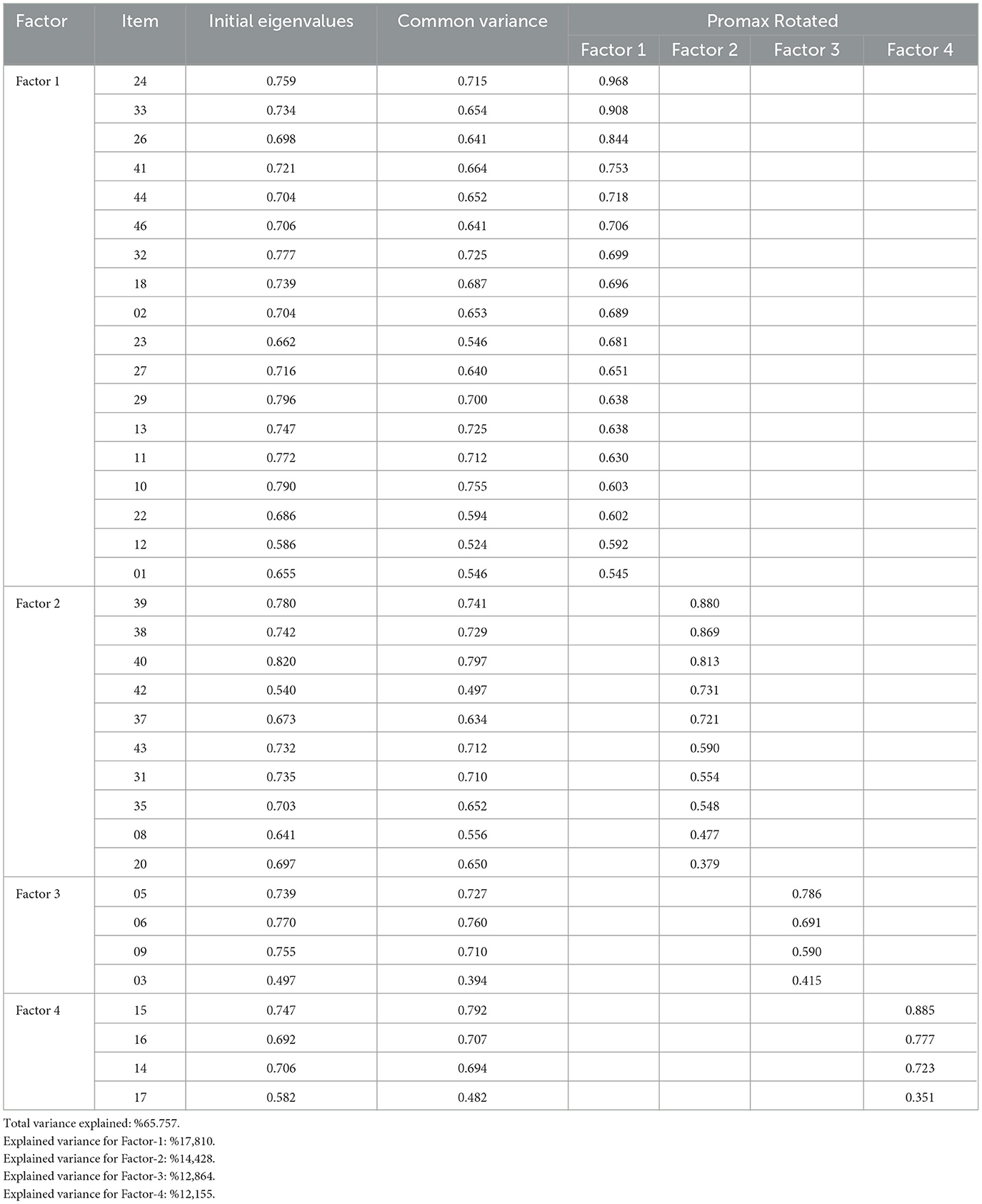

EFA outputs are presented in Table 2 and Figure 1. The factor structure of the scale was examined by analyzing the eigenvalues, salient loadings, total variance explained, and scree plot. The scree plot of the eigenvalues is presented in Figure 1. The EFA results provided evidence for the construct validity of the Teachers' Digital Competence Scale because the factor structure of the scale was found to be consistent with the underlying theoretical framework, DigCompEdu. EFA is a widely accepted method for assessing the construct validity of measurement instruments and was used in this study to validate the Teachers' Digital Competence Scale.

The scree plot in Figure 1 suggested a four-dimension structure with eigenvalues above 1. The initial eigenvalues of the first, second, third, and fourth factors are 19.981, 2.527, 1.295, and 1.161, respectively. The number of factors with eigenvalues above 1 indicates the number of components.
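The eigenvalue-greater-than-one rule applied above can be written as a short sketch. It uses the four initial eigenvalues reported in the text and assumes that they come from the unreduced correlation matrix of the 36 retained items, whose eigenvalues sum to the number of items. Under that assumption each eigenvalue divided by 36 gives an initial share of total variance; these initial shares are not the same quantity as the post-extraction or rotated percentages, so they need not match the percentages reported for the final solution.

```python
# Initial eigenvalues reported in the text for the first four factors.
reported_eigenvalues = [19.981, 2.527, 1.295, 1.161]
n_items = 36  # assumption: the 36 items retained after the preliminary analysis

def kaiser_retained(eigenvalues, cutoff=1.0):
    """Keep the eigenvalues above the cutoff (eigenvalue-greater-than-one rule)."""
    return [ev for ev in eigenvalues if ev > cutoff]

retained = kaiser_retained(reported_eigenvalues)
# Eigenvalues of a correlation matrix sum to the number of variables,
# so eigenvalue / n_items is that factor's initial share of total variance.
shares = [ev / n_items for ev in retained]
cumulative_share = sum(shares)

print(len(retained))
print([round(100 * s, 1) for s in shares], round(100 * cumulative_share, 1))
```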

When the total variance explained was examined, the first factor explained 24.262% of the total variance, while the second, third, and fourth factors explained 17.810%, 14.428%, 12.864%, and 12.155% of the factor variance, respectively. The total variance explained by the four factors together was 65.757%. This value is consistent with the proportion of variance (about two-thirds) that multifactor scales are expected to account for in social science research, as suggested by Hair et al. (2019).

Promax output was examined to determine which items fell under each of the four factors. The EFA results provided evidence for the construct validity of the TDC Scale, as the factor structure of the scale was found to be consistent and mostly overlapping with the dimensions of DigCompEdu framework.

When the distribution of the Promax-rotated items in the Teachers' Digital Competence Scale was examined, the first factor consisted of 18 items and was labeled “Teachers' Professional Digital Competence” (TPDC), the second factor consisted of 10 items and was labeled “Teachers' Use of Instructional and Communication Tools” (TUICT), the third factor consisted of 4 items and was labeled “Teachers' Use of Hardware Tools” (TUHT), and the fourth factor consisted of 4 items and was labeled “Teachers' Digital Content Development” (TDCD). The factor loadings of the items in the first factor ranged between 0.968 and 0.545. Factor loadings of the items in the second, third, and fourth factors ranged between 0.880 and 0.379, 0.786 and 0.415, and 0.885 and 0.351, respectively. The results of the analysis showed that there were no cross-loading items in the scale and that no item had a factor loading below 0.32. Based on the eigenvalues, explained variance, and the scree plot, it was concluded that the TDC-S may have a four-factor latent structure. However, it is important that this structure is statistically demonstrated and theoretically justified for the convenience of researchers. Therefore, the four-factor structure proposed by EFA was tested using CFA.

3.2 Confirmatory factor analysis

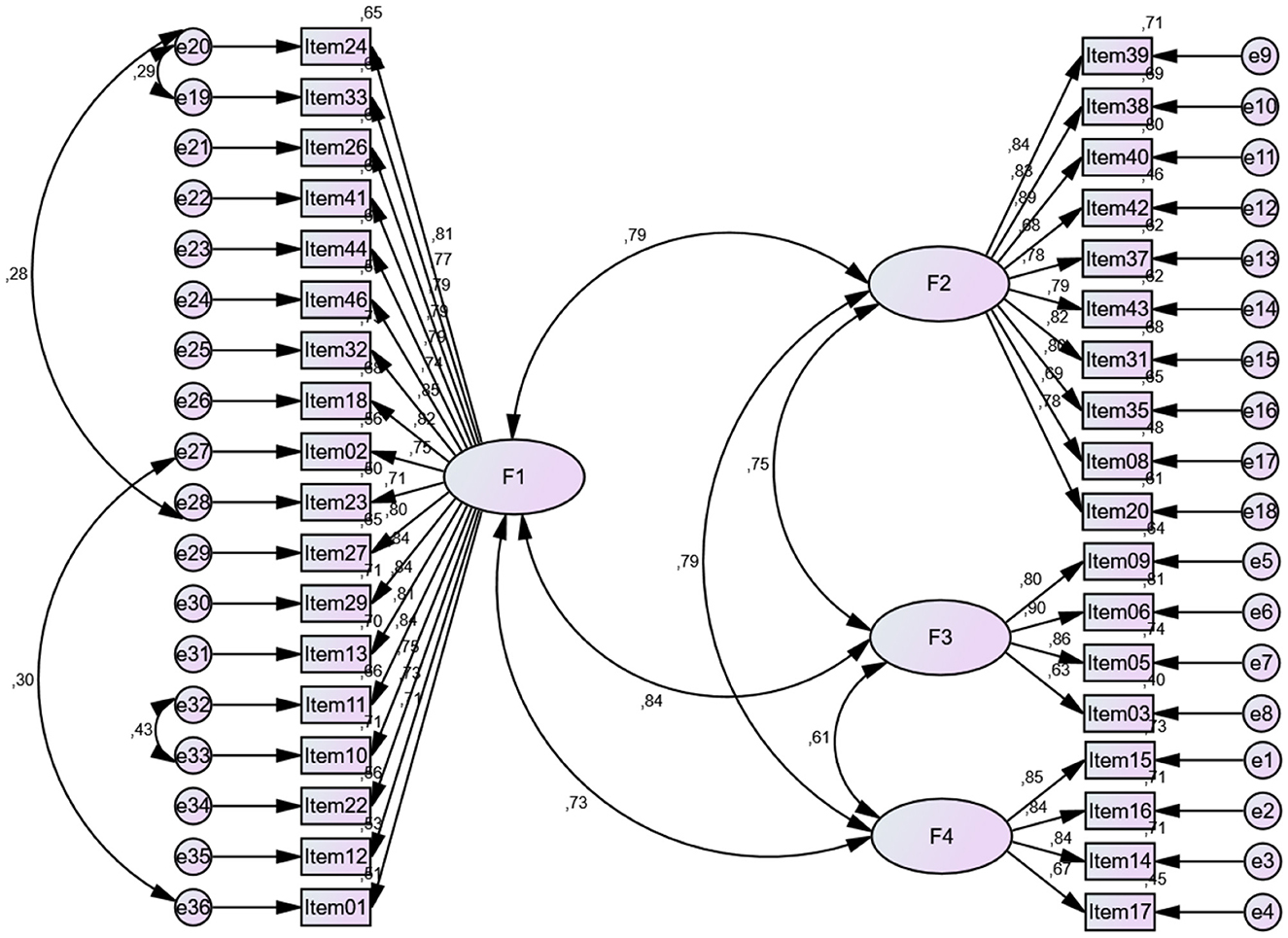

The suitability of the four-factor structure that emerged from the Exploratory Factor Analysis (EFA) was tested using Confirmatory Factor Analysis (CFA) with three models. Model 1 was a first-order four-factor uncorrelated model, Model 2 was a first-order four-factor correlated model, and Model 3 was a first-order four-factor correlated model with modifications. The fit indices obtained from testing all three models are reported in Table 3, and the CFA output of measurement Model 3 is illustrated in Figure 2.

Table 3. Goodness of fit indices and criterion values regarding the fitness of the measurement model of the TDC scale.

The χ²/df, RMSEA, NFI, CFI, and IFI values were examined to determine the fit of the alternative measurement models. The Δχ² test was employed to determine whether fit differed significantly between the models. The results of the model fit and criterion values are presented in Table 3.

A comparative analysis of model fit indices revealed that Model 1 exhibited the poorest fit [χ² = 2,324.244, df = 594, p = 0.00, χ²/df = 3.913, RMSEA = 0.104, CFI = 0.802, IFI = 0.803, NFI = 0.752], with most indices falling outside acceptable thresholds. In contrast, Model 3 outperformed the other two models, producing indices [χ² = 1,480.693, df = 584, p = 0.00, χ²/df = 2.535, RMSEA = 0.075, CFI = 0.897, IFI = 0.898, NFI = 0.842] that met or exceeded acceptability criteria. Based on the CFA results, the fit values of the modified model were at an acceptable level, and the four-factor structure was supported as the best fit to the dataset (Collier, 2020; Tabachnick and Fidell, 2019). Additionally, the Δχ² test was performed to determine whether fit differed significantly between the models. The results showed that both Model 2 (Δχ² = 717.579) and Model 3 (Δχ² = 843.551) fit significantly better than Model 1.
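The reported fit statistics can be cross-checked with a few lines of arithmetic. The sketch below recomputes χ²/df, RMSEA, and the χ² difference between Model 1 and Model 3 from the values given in the text. It assumes the common RMSEA form sqrt(max(χ² − df, 0) / (df·(N − 1))) with N = 271; some software divides by N instead. The upper-tail probability uses the exact closed form that holds for even degrees of freedom, which applies here because Δdf = 10. All function names are illustrative.

```python
import math

N = 271  # sample size reported in the text
models = {"Model 1": (2324.244, 594), "Model 3": (1480.693, 584)}

def rmsea(chi2, df, n):
    """Root mean square error of approximation (N - 1 convention assumed)."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))

def chi2_sf_even_df(x, df):
    """Upper-tail chi-square probability; closed form valid for even df only."""
    if df % 2 != 0:
        raise ValueError("this closed form requires an even number of df")
    half = x / 2.0
    term, total = 1.0, 1.0
    for i in range(1, df // 2):
        term *= half / i   # accumulates (x/2)^i / i!
        total += term
    return math.exp(-half) * total

for name, (chi2, df) in models.items():
    print(name, round(chi2 / df, 3), round(rmsea(chi2, df, N), 3))

# Chi-square difference test: uncorrelated Model 1 versus modified Model 3.
delta_chi2 = models["Model 1"][0] - models["Model 3"][0]
delta_df = models["Model 1"][1] - models["Model 3"][1]
p_value = chi2_sf_even_df(delta_chi2, delta_df)
print(round(delta_chi2, 3), delta_df, p_value < 0.001)
```

Under these assumptions the recomputed χ²/df and RMSEA values agree with the reported ones to three decimals, and the difference between the two χ² values reproduces the Δχ² reported for Model 3.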

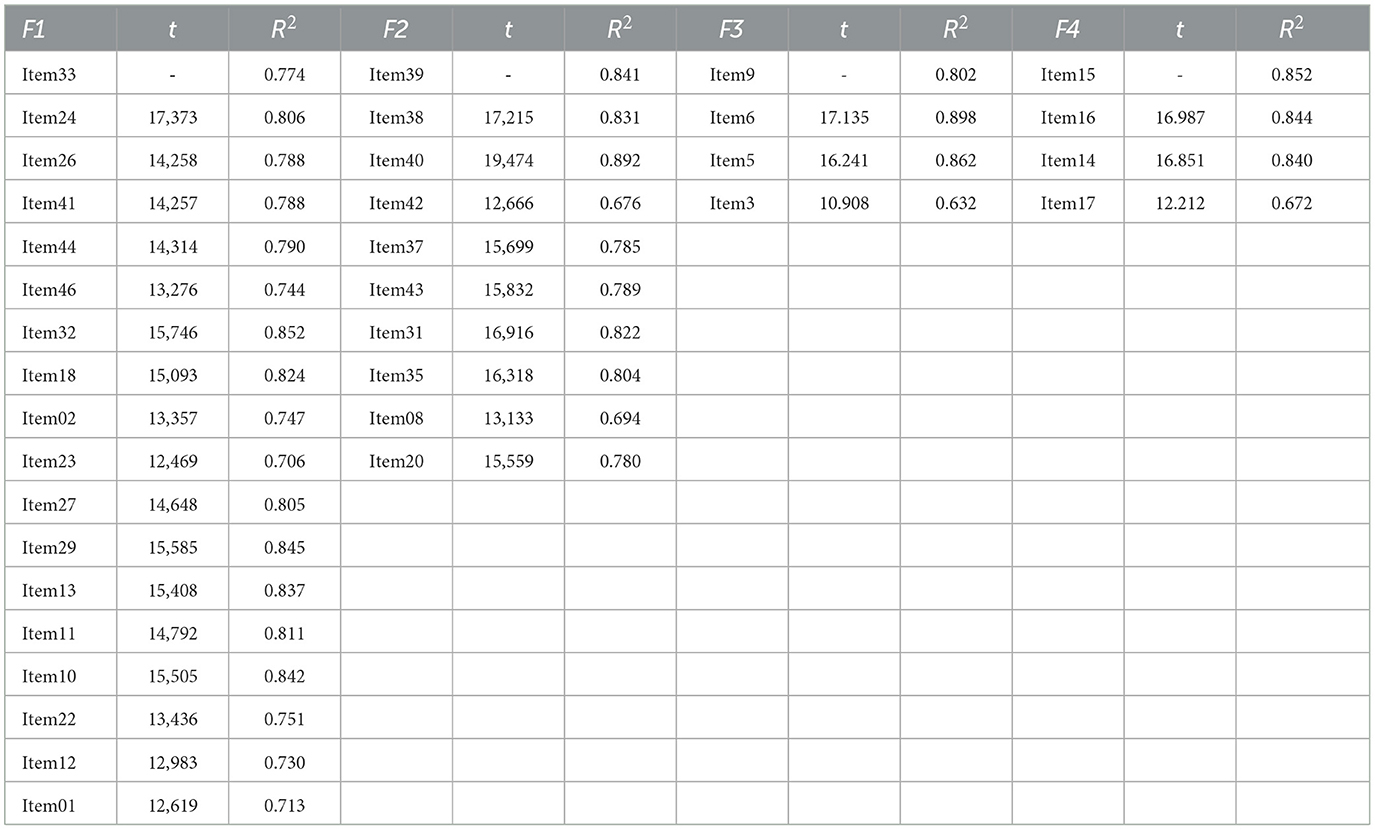

When the t and R² values of the items in the measurement model are examined in Table 4, the highest values belonged to Item24 in the first factor (t = 17.373, R² = 0.806), Item40 in the second factor (t = 19.474, R² = 0.892), Item6 in the third factor (t = 17.135, R² = 0.898), and Item16 in the fourth factor (t = 16.987, R² = 0.844). These items make the highest contribution to their respective factors. These findings supported and validated the four-factor structure proposed in the EFA.

3.3 Reliability, discriminant and convergent validity analysis

To assess the reliability of the TDC scale, a comprehensive battery of psychometric measures was employed. While Cronbach's alpha coefficient is commonly used to assess the reliability of multi-factor scales, its reliance on the assumption of unidimensionality has been widely criticized (Sijtsma, 2009). In recognition of this limitation, researchers have advocated the use of multiple reliability indices, and this approach was followed to ensure the psychometric soundness of the TDC scale. One such index is McDonald's omega (ω) coefficient. The ω coefficient is a more robust measure of reliability than Cronbach's alpha, as it does not assume unidimensionality and is less affected by the number of items in a scale.
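For readers who want to see how the most familiar of these indices is obtained, the following minimal sketch computes Cronbach's alpha from a respondents-by-items score matrix using only the Python standard library. The response data and the function name are hypothetical and serve purely as an illustration; they are not taken from the TDC-S dataset.

```python
from statistics import variance


def cronbach_alpha(scores: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents-by-items score matrix."""
    n_items = len(scores[0])
    if n_items < 2:
        raise ValueError("alpha needs at least two items")
    # Sample variance of each item (column) across respondents.
    item_vars = [variance([row[j] for row in scores]) for j in range(n_items)]
    # Sample variance of each respondent's total score.
    total_var = variance([sum(row) for row in scores])
    return (n_items / (n_items - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical five-point Likert responses (5 respondents x 4 items).
responses = [
    [4, 5, 4, 4],
    [3, 3, 2, 3],
    [5, 5, 5, 4],
    [2, 2, 3, 2],
    [4, 4, 4, 5],
]
alpha = cronbach_alpha(responses)
print(round(alpha, 3))
```

Because alpha depends only on the item variances and the variance of the total score, it grows with the number of items, which is one of the reasons the text complements it with ω and CR.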

In addition to reliability, establishing discriminant validity is essential in research involving latent variables, particularly when multiple items or indicators are employed to operationalize constructs. This ensures that the latent constructs do not represent the same underlying phenomenon (Hamid et al., 2017). Discriminant validity is concerned with the extent to which a construct is distinct from other constructs. In this study, the composite reliability (CR) and average variance extracted (AVE) values were estimated to assess the convergent and discriminant validity of the latent structures of the TDC scale. CR, a measure of internal consistency, is the combined reliability of the latent constructs that underlie the scale (Fornell and Larcker, 1981). The CR coefficient is a more robust measure of reliability than Cronbach's alpha, as it takes into account both the factor loadings and the error variances of the items (Sijtsma, 2009). Hair et al. (2019) suggested a CR value of 0.70 or higher as indicative of acceptable reliability. AVE is a measure of the amount of variance in the items of a construct that is explained by the construct itself. An AVE value of 0.50 or higher is generally considered indicative of acceptable convergent validity. The internal consistency, convergent validity, and discriminant validity estimates, together with the Heterotrait-Monotrait (HTMT) ratio of correlations between the constructs, are presented in Table 5.

As illustrated in Table 5, the Cronbach's alpha internal consistency coefficients calculated for the underlying constructs, namely TPDC (α = 0.97), TUICT (α = 0.94), TUHT (α = 0.86), and TDCD (α = 0.87), indicated good reliability regarding the internal consistency of the indicators (Hair et al., 1998). Additionally, McDonald's omega (ω) coefficients, another internal consistency measure, ranged from 0.88 to 0.97 across the constructs of the TDC scale, which also supported the high internal consistency of the indicators. All constructs exhibited CR values above the recommended threshold of 0.70 (Hair et al., 2019), ranging from 0.880 to 0.966. Additionally, all constructs exhibited AVE values above the recommended threshold of 0.50 (Fornell and Larcker, 1981), ranging from 0.620 to 0.649.

Convergent validity is a type of construct validity that assesses the extent to which a measure correlates with other measures of the same construct. In other words, it measures how well a measure captures the construct it is designed to assess. Convergent validity is demonstrated when a measure is highly correlated with other measures of the same construct, and when the items in the measure are all measuring the same thing (Hair, 2009). Two statistical indicators that can be used to assess convergent validity are CR and AVE. Hair (2009) suggests that convergent validity is observed when CR is higher than AVE, and AVE is higher than 0.5. In this study, all CR values are higher than estimated AVE values and all AVE values are higher than 0.5, which is an indication of the convergent validity of the latent constructs in the TDC scale. These findings suggest that the TDC scale constructs have good convergent validity, meaning that the items within each construct are well-correlated and measure the same underlying concept.
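The CR and AVE criteria described above can be illustrated with a short sketch. It assumes standardized factor loadings λ, for which each item's error variance is 1 − λ², and uses the commonly cited Fornell and Larcker (1981) formulas: AVE is the mean of the squared loadings, and CR = (Σλ)² / [(Σλ)² + Σ(1 − λ²)]. The loadings below are hypothetical and are not taken from Table 4; the function names are likewise illustrative.

```python
def average_variance_extracted(loadings: list[float]) -> float:
    """AVE: mean of the squared standardized loadings."""
    return sum(l * l for l in loadings) / len(loadings)


def composite_reliability(loadings: list[float]) -> float:
    """CR = (sum l)^2 / ((sum l)^2 + sum(1 - l^2)) for standardized loadings."""
    squared_sum = sum(loadings) ** 2
    error_var = sum(1 - l * l for l in loadings)
    return squared_sum / (squared_sum + error_var)


def meets_convergent_criteria(
    loadings: list[float], cr_min: float = 0.70, ave_min: float = 0.50
) -> bool:
    """Check the thresholds cited in the text: CR >= 0.70, AVE >= 0.50, CR > AVE."""
    cr = composite_reliability(loadings)
    ave = average_variance_extracted(loadings)
    return cr >= cr_min and ave >= ave_min and cr > ave


# Hypothetical standardized loadings for a four-item factor (not from Table 4).
example_loadings = [0.80, 0.80, 0.80, 0.80]
print(round(average_variance_extracted(example_loadings), 3))
print(round(composite_reliability(example_loadings), 3))
print(meets_convergent_criteria(example_loadings))
```

With equal loadings of 0.80, the AVE equals the squared loading and the CR is noticeably higher, which shows why CR exceeding AVE is the expected pattern for a well-behaved factor.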

Discriminant validity was assessed using the HTMT ratio of correlations method (Henseler et al., 2015). The HTMT ratio is a modern approach to testing multicollinearity issues within latent constructs, and it is reportedly superior to Fornell and Larcker's (1981) method, which compares the square root of each AVE with the correlations between constructs (Hamid et al., 2017). As proposed by Henseler et al. (2015), if the HTMT ratio is below 0.90, discriminant validity has been established between two constructs. All HTMT ratios between the TDC scale constructs were below 0.90, although minimal concerns exist regarding the discriminant validity of the TUICT and TDCD constructs, for which the HTMT ratio was above 0.85. However, even this ratio remained below the threshold of 0.90, suggesting that discriminant validity can be considered acceptable for these two constructs in the measurement model. These results provide support for the distinctiveness of the TDC scale constructs (Henseler et al., 2015; Raykov, 1997). In conclusion, the TDC-S is a psychometrically sound measure of teachers' digital competences, as evidenced by the convergent and discriminant validity of the constructs demonstrated by the CR, AVE, and HTMT ratio indices.
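The HTMT ratio can be sketched as follows. For two constructs, it divides the mean correlation between items of different constructs by the geometric mean of the average within-construct item correlations. This is a minimal illustration assuming raw (not absolute) correlations and at least two items per construct; the correlation matrix and the function name are hypothetical and do not reproduce the values in Table 5.

```python
from itertools import combinations
from math import sqrt


def _mean(values):
    values = list(values)
    return sum(values) / len(values)


def htmt(corr, items_a, items_b):
    """HTMT ratio between two constructs from an item correlation matrix."""
    # Heterotrait-heteromethod: correlations between items of different constructs.
    hetero = _mean(corr[i][j] for i in items_a for j in items_b)
    # Monotrait-heteromethod: correlations among items of the same construct.
    mono_a = _mean(corr[i][j] for i, j in combinations(items_a, 2))
    mono_b = _mean(corr[i][j] for i, j in combinations(items_b, 2))
    return hetero / sqrt(mono_a * mono_b)


# Hypothetical correlation matrix: items 0-1 form construct A, items 2-3 construct B.
corr = [
    [1.0, 0.8, 0.4, 0.4],
    [0.8, 1.0, 0.4, 0.4],
    [0.4, 0.4, 1.0, 0.5],
    [0.4, 0.4, 0.5, 1.0],
]
ratio = htmt(corr, items_a=[0, 1], items_b=[2, 3])
print(round(ratio, 3), ratio < 0.90)
```

A ratio approaching 1 means that items correlate about as strongly across constructs as within them, which is why values below the 0.90 cut-off are read as evidence of distinct constructs.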

4 Discussion and conclusions

Digital competence is essential for teachers to use digital educational tools effectively, enhance the learning process, and engage students. It also contributes to teachers' professional development by enabling them to learn new teaching methods, collaborate with other teachers, and expand their professional networks (Basilotta-Gómez-Pablos et al., 2022; Garzón Artacho et al., 2020). In this regard, the present study aimed to develop a valid and reliable measurement tool to assess the digital competences of teachers. The sample consisted of 271 teachers with a mean age of 40.61 ± 7.57 years. As a result, a five-point Likert-type scale consisting of 36 items loading onto four dimensions was developed, validated, and named the Teachers' Digital Competences Scale (TDC-S). The first factor, consisting of 18 items, was labeled “Teachers' Professional Digital Competence (TPDC)”. The second factor, labeled “Teachers' Use of Instructional and Communication Tools (TUICT)”, consisted of 10 items, while the third factor, labeled “Teachers' Use of Hardware Tools (TUHT)”, consisted of four items. Finally, the fourth factor, consisting of four items, was labeled “Teachers' Digital Content Development (TDCD)”. These four factors largely overlap with the six dimensions of DigCompEdu. The total variance explained by the four factors was 65.757%, which exceeds the cut-off point for multi-factor scales. Regarding reliability and validity, Cronbach's alpha, McDonald's omega, CR, and AVE were examined, and all values supported the psychometric quality of the TDC-S. Confirmatory factor analysis (CFA) confirmed the four-factor structure proposed by exploratory factor analysis (EFA). Taken together, these results indicate that the TDC-S is a reliable and valid tool for assessing teachers' digital competences.

Our research offers distinct theoretical and empirical contributions when juxtaposed with prior studies focused on the development of TDC scales (Alarcón et al., 2020; Al Khateeb, 2017; Cabero-Almenara et al., 2020a; Ghomi and Redecker, 2019; Gümüş and Kukul, 2023; Kuzminska et al., 2019; Tondeur et al., 2017). Among these, only three studies (Al Khateeb, 2017; Gümüş and Kukul, 2023; Tondeur et al., 2017) employed both EFA and CFA. In contrast, other studies used exclusively CFA (Alarcón et al., 2020; Cabero-Almenara et al., 2020a; Ghomi and Redecker, 2019) or EFA (Kuzminska et al., 2019) to discern the primary components of the digital competence scale, such as Internet skills and Technology/ICT Literacy. Unlike many previous scale development studies, we utilized both EFA and CFA, and further examined validity by assessing Composite Reliability (CR), Average Variance Extracted (AVE), and the Heterotrait-Monotrait (HTMT) correlation ratio. This rigorous approach arguably offers a more comprehensive scale. Because the validity and reliability study of the TDC-S was rigorously conducted, the scale can be administered to teachers at all levels and in different branches to measure their digital competences. Thus, the TDC-S can support determining the digital competency level of teachers or prospective teachers. Additionally, our participant pool consisted of 271 teachers spanning a range of educational levels from primary to upper-secondary grades. This contrasts with previous studies, which either had limited sample sizes (Al Khateeb, 2017) or focused on specific teaching demographics, such as pre-service teachers (Tondeur et al., 2017), subject-specific teachers (Al Khateeb, 2017), or those in higher education (Cabero-Almenara et al., 2020a). Notably, although a limited number of studies (Alarcón et al., 2020; Ghomi and Redecker, 2019; Gümüş and Kukul, 2023) recruited a sample of teachers across all educational levels, their methodologies lacked the combined EFA and CFA approach, and/or their convergent and discriminant validity measures for latent structures remain unclear. Lastly, our EFA results showed that the total variance explained by all four factors in the TDC-S surpassed that of other studies (Al Khateeb, 2017; Kuzminska et al., 2019; Tondeur et al., 2017), underscoring the robustness of the TDC-S.

Despite its theoretical and practical contributions to the field of TDC, our study has some limitations, since the TDC-S was validated with a sample of teachers from a limited number of schools at different grade levels in Türkiye. Future research should therefore focus on adapting the TDC-S to other languages and countries, or on developing similar instruments in those countries, to enable cross-cultural comparisons and discussions. It would also be of interest to incorporate students' views on the digital competence of their educators and the digital resources available in their learning environment into instruments of this kind.

The development of the TDC-S in this study is expected to add to the existing literature by providing a means of evaluating TDC, which is essential for meeting individual needs. Owing to its robust psychometric properties, the TDC-S will also serve as a valuable instrument for future research. The TDC-S holds the potential for broad applicability across diverse educational professionals and prospective teachers. This tool, validated through empirical research, offers a means to assess the digital proficiency of teachers and pre-service educators, enabling the identification of areas requiring further development. The TDC-S can contribute to future studies aimed at evaluating and enhancing the digital competencies of educators, thereby supporting ongoing efforts to sustain teachers' continuous professional learning. Furthermore, the validity and reliability of the scale can be tested with larger samples by applying it to different groups of teachers. Future research should also administer the TDC-S together with other existing digital competence assessment tools in order to test the concurrent validity of the measures. In addition, the TDC-S can be applied in future studies to evaluate group differences in TDC-S scores according to gender, age, branch, and similar conditions. This could provide valuable insights into the factors that influence TDC. Such insights can be pivotal in reshaping teacher training curricula, ensuring the integration of digital competences into the teaching-learning process in the digital era.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving humans were approved by Hitit University Ethical Board of Non-Interventional Studies (2021-88/10.01.2022). The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

MA: Writing – original draft, Writing – review & editing, Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Project administration, Software, Supervision, Validation. TY: Data curation, Writing – original draft, Writing – review & editing. MK: Conceptualization, Validation, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Acknowledgments

The authors sincerely acknowledge the teachers who participated in the study on a voluntary basis and provided written informed consent.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2024.1356573/full#supplementary-material

References

Al Khateeb, A. A. M. (2017). Measuring digital competence and ICT literacy: an exploratory study of in-service English language teachers in the context of Saudi Arabia. Int. Educ. Stud. 10, 38–51. doi: 10.5539/ies.v10n12p38

Alarcón, R., Del Pilar Jiménez, E., and De Vicente-Yagüe, M. I. (2020). Development and validation of the DIGIGLO, a tool for assessing the digital competence of educators. Br. J. Educ. Technol. 51, 2407–2421. doi: 10.1111/bjet.12919

Aydin, M. K., and Yildirim, T. (2022). Teachers' digital competence: bibliometric analysis of the publications of the Web of Science scientometric database. Inform. Technol. Learn. Tools 91, 205–220. doi: 10.33407/itlt.v91i5.5048

Bartlett, M. S. (1954). A note on the multiplying factors for various χ2 approximations. J. Royal Statis.Soc. Series B 16, 296–298. doi: 10.1111/j.2517-6161.1954.tb00174.x

Basilotta-Gómez-Pablos, V., Matarranz, M., Casado-Aranda, L.-A., and Otto, A. (2022). Teachers' digital competencies in higher education: a systematic literature review. Int. J. Educ. Technol. Higher Educ. 19:8. doi: 10.1186/s41239-021-00312-8

Bryant, F. B., and Yarnold, P. R. (1995). “Principal-components analysis and exploratory and confirmatory factor analysis,” in Reading and Understanding Multivariate Statistics, eds. L. G. Grimm and P. R. Yarnold (Washington, DC: American Psychological Association), 99–136.

Cabero-Almenara, J., Gutiérrez-Castillo, J.-J., Palacios-Rodríguez, A., and Barroso-Osuna, J. (2020a). Development of the teacher digital competence validation of digcompedu check-in questionnaire in the university context of Andalusia (Spain). Sustainability 12:15. doi: 10.3390/su12156094

Cabero-Almenara, J., and Martínez, A. (2019). Information and Communication Technologies and initial teacher training. Digital models and competences. Profesorado 23, 247–268. doi: 10.30827/profesorado.v23i3.9421

Cabero-Almenara, J., and Palacios-Rodríguez, A. (2020). Digital Competence Framework for Educators DigCompEdu. Translation and adaptation of DigCompEdu Check-In questionnaire. EDMETIC Rev. Educ. Mediática Y TIC. 9, 213–234. doi: 10.21071/edmetic.v9i1.12462

Cabero-Almenara, J., Romero-Tena, R., and Palacios-Rodríguez, A. (2020b). Evaluation of teacher digital competence frameworks through expert judgement: the use of the expert competence coefficient. J. New Approa. Educ. Res. 9:2. doi: 10.7821/naer.2020.7.578

Caena, F., and Redecker, C. (2019). Aligning teacher competence frameworks to 21st century challenges: The case for the European Digital Competence Framework for Educators (Digcompedu). Eur. J. Educ. 54, 356–369. doi: 10.1111/ejed.12345

Castaño Muñoz, J., Vuorikari, R., Costa, P., Hippe, R., and Kampylis, P. (2023). Teacher collaboration and students' digital competence—evidence from the SELFIE tool. Eur. J. Teach. Educat. 46, 476–497. doi: 10.1080/02619768.2021.1938535

Christine, R. (2017). European Framework for the Digital Competence of Educators: DigCompEdu, Publications Office of the European Union. Belgium. Available at: https://policycommons.net/artifacts/2163302/european-framework-for-the-digital-competence-of-educators/2918998/ (accessed November 01, 2023). CID: 20.500.12592/qzx461.

Collier, J. (2020). Applied Structural Equation Modeling using AMOS: basic to advanced techniques. New York: Routledge.

Council of the European Union (2018). Council Recommendation of 22 May 2018 on Key Competences for Lifelong Learning. Brussels, Belgium: Official Journal of the European Union.

DeVellis, R. F. (2017). Scale Development: Theory and Applications 4th ed. Thousand Oaks, CA: Sage Publications.

European Commission (2023). SELFIE for teachers: Designing and developing a self reflection tool for teachers' digital competence. Publications Office. Available at: https://data.europa.eu/doi/10.2760/561258

Fabrigar, L. R., Wegener, D. T., MacCallum, R. C., and Strahan, E. J. (1999). Evaluating the use of exploratory factor analysis in psychological research. Psychol. Methods 4, 272–299. doi: 10.1037/1082-989X.4.3.272

Falloon, G. (2020). From digital literacy to digital competence: the teacher digital competency (TDC) framework. Educ. Technol. Res. Dev. 68, 2449–2472. doi: 10.1007/s11423-020-09767-4

Fernández-Miravete, Á. D., and Prendes-Espinosa, P. (2022). Digitalization of educational organizations: evaluation and improvement based on DigCompOrg model. Societies 12:6. doi: 10.3390/soc12060193

Flores-Lueg, C., and Roig Vila, R. (2016). Design and validation of a scale of self-assessment Digital Skills for students of education. Pixel-Bit-Revista De Medios Y Educ. 48, 209–224. doi: 10.12795/pixelbit.2016.i48.14

Fornell, C., and Larcker, D. F. (1981). Evaluating structural equation models with unobservable variables and measurement error. J. Mark. Res. 18, 39–50. doi: 10.1177/002224378101800104

Garzón Artacho, E., Martínez, T. S., Ortega Martín, J. L., Marín Marín, J. A., and Gómez García, G. (2020). Teacher training in lifelong learning—the importance of digital competence in the encouragement of teaching innovation. Sustainability 12:7. doi: 10.3390/su12072852

Ghomi, M., and Redecker, C. (2019). “Digital Competence of Educators (DigCompEdu): Development and Evaluation of a Self-assessment Instrument for Teachers' Digital Competence,” in Proceedings of the 11th International Conference on Computer Supported Education, 541–548.

Gómez-García, G., Hinojo-Lucena, F.-J., Fernández-Martín, F.-D., and Romero-Rodríguez, J.-M. (2022). Educational challenges of higher education: validation of the information competence scale for future teachers (ICS-FT). Educ. Sci. 12:1. doi: 10.3390/educsci12010014

Gümüş, M. M., and Kukul, V. (2023). Developing a digital competence scale for teachers: validity and reliability study. Educ. Inform. Technol. 28, 2747–2765. doi: 10.1007/s10639-022-11213-2

Hair, J. F., Black, W. C., Babin, B. J., Anderson, R. E., and Tatham, R. L. (1998). Multivariate Data Analysis. Upper Saddle River, NJ: Prentice Hall.

Hair, J. F., Risher, J. J., Sarstedt, M., and Ringle, C. M. (2019). When to use and how to report the results of PLS-SEM. Eur. Busin. Rev. 31, 2–24. doi: 10.1108/EBR-11-2018-0203

Hamid, M. R. A., Sami, W., and Sidek, M. H. M. (2017). Discriminant validity assessment: use of Fornell & Larcker criterion versus HTMT criterion. J. Phys.: Conf. Series. 890:012163. doi: 10.1088/1742-6596/890/1/012163

Henseler, J., Ringle, C. M., and Sarstedt, M. (2015). A new criterion for assessing discriminant validity in variance-based structural equation modeling. J. Acad. Mark. Sci. 43, 115–135. doi: 10.1007/s11747-014-0403-8

Kaiser, H. F. (1974). An index of factorial simplicity. Psychometrika 39, 31–36. doi: 10.1007/BF02291575

Kuzminska, O., Mazorchuk, M., Morze, N., Pavlenko, V., and Prokhorov, A. (2019). “Study of digital competence of the students and teachers in Ukraine,” in Information and Communication Technologies in Education, Research, and Industrial Applications, eds. V. Ermolayev, M. C. Suárez-Figueroa, V. Yakovyna, H. C. Mayr, M. Nikitchenko, & A. Spivakovsky (Cham: Springer International Publishing), 148–169.

Li, X.-L., Dong, V. A.-Q., and Fong, K. N. K. (2015). Reliability and Validity of School Function Assessment for Children with Cerebral Palsy in Guangzhou, China. Hong Kong Journal of Occupational Therapy 26, 43–50. doi: 10.1016/j.hkjot.2015.12.001

Madsen, S. S., O'Connor, J., Janeš, A., Klančar, A., Brito, R., and Demeshkant, N. (2023). International perspectives on the dynamics of pre-service early childhood teachers' digital competences. Educ. Sci. 13:7. doi: 10.3390/educsci13070633

Mafratoglu, R., Altinay, F., Koç, A., Dagli, G., and Altinay, Z. (2023). Developing a School improvement scale to transform education into being sustainable and quality driven. Sage Open 13:21582440231157584. doi: 10.1177/21582440231157584

Martín-Párraga, L., Llorente-Cejudo, C., and Barroso-Osuna, J. (2023). Self-perception of digital competence in university lecturers: a comparative study between universities in spain and peru according to the DigCompEdu model. Societies 13:6. doi: 10.3390/soc13060142

Nguyen, L. A. T., and Habók, A. (2024). Tools for assessing teacher digital literacy: a review. J. Comput. Educ. 11, 305–346. doi: 10.1007/s40692-022-00257-5

Norman, G. R., and Streiner, D. L. (2014). Biostatistics: The Bare Essentials. Shelton, Connecticut: People's Medical House.

Ochoa Fenández, C. J. (2020). “Special Session—XR Education 21th. Are We Ready for XR Disruptive Ecosystems in Education?,” in 2020 6th International Conference of the Immersive Learning Research Network (iLRN) (San Luis Obispo, CA: IEEE), 424–26. doi: 10.23919/iLRN47897.2020.9155215

Raykov, T. (1997). Scale reliability, Cronbach's coefficient alpha, and violations of essential tau-equivalence with fixed congeneric components. Multivariate Behav. Res. 32, 329–353. doi: 10.1207/s15327906mbr3204_2

Rodríguez-García, A. M., Raso Sanchez, F., and Ruiz-Palmero, J. (2019). Digital competence, higher education and teacher training: a meta-analysis study on the Web of Science. Pixel-BIT-Revista de Medios y Educ. 54, 65–81. doi: 10.12795/pixelbit.2019.i54.04

Santo, E., do, E., Dias-Trindade, S., and Reis, R. S. dos. (2022). Self-Assessment of digital competence for educators: a Brazilian study with university professors. Res. Soc. Dev. 11:9. doi: 10.33448/rsd-v11i9.30725

Sijtsma, K. (2009). On the use, the misuse, and the very limited usefulness of Cronbach's Alpha. Psychometrika 74, 107–120. doi: 10.1007/s11336-008-9101-0

Tabachnick, B. G., and Fidell, L. S. (2001). Using Multivariate Statistics (4th ed.). Boston: Allyn & Bacon.

Tabachnick, B. G., and Fidell, L. S. (2019). Using Multivariate Statistics, 7th ed. London: Pearson.

Tondeur, J., Aesaert, K., Pynoo, B., van Braak, J., Fraeyman, N., and Erstad, O. (2017). Developing a validated instrument to measure preservice teachers' ICT competencies: meeting the demands of the 21st century. Br. J. Educ. Technol. 48, 462–472. doi: 10.1111/bjet.12380

Tzafilkou, K., Perifanou, M., and Economides, A. A. (2022). Development and validation of students' digital competence scale (SDiCoS). Int. J. Educ. Technol. Higher Educ. 19:30. doi: 10.1186/s41239-022-00330-0

Watkins, M. W. (2021). Exploratory Factor Analysis: A Guide to Best Practice. Thousand Oaks, CA: Sage Publications.

Worthington, R. L., and Whittaker, T. A. (2006). Scale development research: a content analysis and recommendations for best practices. Couns. Psychol. 34, 806–838. doi: 10.1177/0011000006288127

Yelubay, Y., Seri, L., Dzhussubaliyeva, D., and Abdigapbarova, U. (2020). “Developing future teachers' digital culture: challenges and perspectives,” in 2020 IEEE European Technology and Engineering Management Summit (E-TEMS) (Dortmund: IEEE), 1–6.

Keywords: digital competence, reliability, scale development, teachers, validation

Citation: Aydin MK, Yildirim T and Kus M (2024) Teachers' digital competences: a scale construction and validation study. Front. Psychol. 15:1356573. doi: 10.3389/fpsyg.2024.1356573

Received: 15 December 2023; Accepted: 25 July 2024;

Published: 16 August 2024.

Edited by: Fahriye Altinay, Near East University, Cyprus

Reviewed by: Muhammet Berigel, Karadeniz Technical University, Türkiye; Joao Mattar, Pontifical Catholic University of São Paulo, Brazil

Copyright © 2024 Aydin, Yildirim and Kus. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Mehmet Kemal Aydin
