- 1 Faculty of Arts and Philosophy, IPEM Institute of Psychoacoustics and Electronic Music, Ghent University, Ghent, Belgium

- 2 Institute of Cognitive Sciences and Technologies, National Research Council of Italy (CNR), Rome, Italy

In this perspective paper, we explore the use of haptic feedback to enhance human-human interaction during musical tasks. We start by providing an overview of the theoretical foundation that underpins our approach, which is rooted in the embodied music cognition framework, and by briefly presenting the concepts of action-perception loop, sensorimotor coupling and entrainment. Thereafter, we focus on the role of haptic information in music playing and we discuss the use of wearable technologies, namely lightweight exoskeletons, for the exchange of haptic information between humans. We present two experimental scenarios in which the effectiveness of this technology for enhancing musical interaction and learning might be validated. Finally, we briefly discuss some of the theoretical and pedagogical implications of the use of technologies for haptic communication in musical contexts, while also addressing the potential barriers to the widespread adoption of exoskeletons in such contexts.

1 Introduction

One of the primary motivations for human collaborative interaction is the pursuit of reaching goals that typically go beyond the scope of individual capabilities (Jarrassé et al., 2012; Sebanz and Knoblich, 2021). Such forms of collaboration commonly rely on the exchange of verbal and sensory information among the interacting humans. For instance, a table, too heavy for one person, can be moved collaboratively by two individuals. Effective collaboration can reduce cost, time and overall effort required for the task.

In this paper, we consider the question of how collaborative interactions might be enhanced through the use of technology with the twofold aim of increasing effectiveness and reducing the effort required from humans. We believe that playing music is a suitable context in which to test this hypothesis, for at least two reasons. First, playing music is paradigmatic for human-human collaboration and sensorimotor interaction (D’Ausilio et al., 2012, 2015; Nijs, 2019). Second, providing a measurable definition of the augmented level of collaboration in the domain of music is seemingly facilitated by the fact that several quantifiable sensorimotor parameters are at stake in music interaction (Biasutti et al., 2013; Volpe et al., 2016; Campo et al., 2023). For example, a stable musical rhythm and tempo require each musician in an ensemble to coordinate their actions in response to the rhythms played by other musicians. The co-regulation of the ensemble can be measured by onset extraction and subsequent analysis of timing intervals (for example, by using period trackers based on Kalman filtering; Leman, 2021).
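To make the idea of analyzing timing intervals concrete, the following minimal sketch computes inter-onset intervals, an average tempo, and the asynchrony between two players from lists of note onset times. It is an illustration only, not the Kalman-based period tracker cited above: the function names, the example onset times, and the assumption that onsets have already been extracted and can be paired note by note are ours.

```python
from statistics import mean, stdev


def inter_onset_intervals(onsets):
    """Time differences (s) between successive note onsets of one player."""
    return [later - earlier for earlier, later in zip(onsets, onsets[1:])]


def tempo_bpm(iois):
    """Average tempo in beats per minute, assuming one onset per beat."""
    return 60.0 / mean(iois)


def asynchrony_stats(onsets_a, onsets_b):
    """Mean and standard deviation (s) of onset asynchronies (b minus a).

    Assumes both players produced the same number of notes, so that
    onsets can be paired by index (no missed or extra notes).
    """
    if len(onsets_a) != len(onsets_b):
        raise ValueError("onset lists must have the same length")
    diffs = [b - a for a, b in zip(onsets_a, onsets_b)]
    return mean(diffs), stdev(diffs)


# Hypothetical onset times in seconds for two players.
player_a = [0.0, 0.5, 1.0, 1.5, 2.0]
player_b = [0.02, 0.51, 1.03, 1.5, 2.04]

iois_a = inter_onset_intervals(player_a)
mean_async, sd_async = asynchrony_stats(player_a, player_b)
print(f"tempo of player A: {tempo_bpm(iois_a):.1f} BPM")
print(f"mean asynchrony: {mean_async * 1000:.1f} ms (SD {sd_async * 1000:.1f} ms)")
```

In such a sketch, a smaller spread of asynchronies would be read as tighter co-regulation, while a drifting mean would suggest that one player systematically leads or lags.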

Over the last decades, musical interactions have been conceptualized within the broad theoretical framework of embodied cognition, according to which human action and body movement play a central role in the experience of music, among other sensorimotor activities (Leman, 2007; Biasutti et al., 2013). The question we want to address here concerns the potential of technologies to enhance these embodied interactions. We envision that providing an additional channel of information based on haptic feedback might enrich the exchange of information among users and thereby help boost the collaborative dimension of human-human interaction. The challenge is to determine whether these technologies can enhance collaborative interactions and how to empirically demonstrate their positive effects, if any.

To address these questions, we first outline the theoretical foundation on which our explorations are based, namely the embodied music cognition framework, focusing especially on the concepts of action-perception loop and entrainment (Section 2). Thereafter, we examine the nature of haptic information (Section 3) and the role of haptic exoskeletons to communicate such information (Section 4). We present two experimental scenarios that could demonstrate the effectiveness of the technology for enhancing musical interaction (Section 5). Finally, we discuss the theoretical and pedagogical implications of this perspective technology as well as its limitations (Section 6).

2 Action-perception cycles and entrainment

Using technology to add sensory feedback providing information on other musicians could be seen as a natural extension of the human action-perception cycle, in which external auditory feedback generates a motor output, which in turn produces auditory feedback and another motor response (Kaspar et al., 2014; Leman and Maes, 2014; Pinardi et al., 2023). The technological augmentation of the sensory feedback with the addition of haptic information would influence action-perception cycles via entrainment, which can be conceived of as a dynamic adaptation of the actions of a subject influenced by the actions of another subject (Kaspar et al., 2014; König et al., 2016; Pinardi et al., 2023). Both action-perception loops and entrainment are fundamental concepts that underpin the theoretical foundation for technology-mediated collaborative feedback (Kaspar et al., 2014).

Music performance is an excellent domain for exploring this kind of feedback as it involves a tight coupling of perception and action. The idea that music taps into a shared action repertoire for both the encoding (playing) and decoding (listening, dancing) of music has been central to the embodied music cognition framework (Leman, 2007; Barsalou, 2008; Godøy and Leman, 2010; Leman and Maes, 2014; Schiavio, 2014). It connects with recent trends in cognitive science for understanding human action and experience (Prinz, 1997; Sheets-Johnstone, 1999; Thelen, 2000; Gibbs, 2006; Shapiro, 2011; Witt, 2011). The conceptual framework generated a solid body of empirical research that provided evidence for the role of the human motor system and body movements in music perception, showing that how we move affects how we interpret and perceive rhythm, and that what we hear depends on how we move and vice versa (Phillips-Silver and Trainor, 2005, 2007; Maes and Leman, 2013; Manning and Schutz, 2013; Maes et al., 2014a). For instance, Maes et al. (2014b) showed that music-driven gestures could facilitate music perception, and Moura et al. (2023) recently showed that the tonal complexity of music is tightly coupled to the knee-bending movements of saxophone players performing the music.

In embodied music cognition, sensorimotor coupling is seen as a lower-level form of action-perception coupling. Sensorimotor coupling covers multiple proprioceptive and exteroceptive cycles at work during playing, including haptic feedback (Leman, 2007). Consider a violinist who receives proprioceptive feedback from their own musculoskeletal system holding the violin, as well as acoustic feedback from the sound produced through air and bone conduction. At a higher level, one could mention, for example, gestures, body movements and actions connected to perceived structures. In this embodied perspective, the collaborative interaction with co-regulated actions in view of a shared musical goal is one of the most characteristic aspects of any ensemble, from duet, to trio, to big orchestra (Biasutti et al., 2013; Glowinski et al., 2013; Badino et al., 2014). The action-perception cycle can be seen as the ability of each subject to react coherently to changes that occur in the ensemble (Biasutti et al., 2013). Last but not least, action-perception cycles also exist between the players and the listeners, with feedback coming through multiple sensory channels, such as the visual channel (facial expressions, gaze, gestures and movements), the acoustic channel (e.g., singing, hand clapping), and the olfactory channel (e.g., different smells).

The action-perception cycle can thus be seen as the engine driving co-regulated actions at group level. Entrainment has been suggested to be a useful concept (Clayton, 2012; Keller et al., 2014; MacRitchie et al., 2017; Clayton et al., 2020) to capture the dynamic change in musical actions. Clayton (2012) distinguished between three different levels of entrainment, namely intra-individual, inter-individual, and inter-group entrainment. Intra-individual entrainment refers to processes that occur within a particular human being, such as the entrainment of networks of neuronal oscillators or the coordination between individual body parts (e.g., the limbs of a drummer). Inter-individual (or intra-group) entrainment concerns the coordination between the actions of individuals in a group, as might occur in chamber or jazz ensembles. Finally, inter-group entrainment concerns the coordination between different groups.

Notably, the interaction and synchronization between individuals in Western group ensembles primarily occurs through visual and auditory channels (Goodman, 2002; Biasutti et al., 2013). This is because the nature of the task, where musicians play individual instruments, prevents the exchange of information between players via physical touch. What is at stake in this paper, however, is the emergence of novel technologies that could facilitate the haptic communication among musicians, thereby fostering a more profound sensorimotor interaction between them.

3 Haptic feedback

Having defined the basic concepts for collaborative interaction, it is of interest to consider how haptic feedback affects music playing. In general, haptics refers to the study of how humans perceive and manipulate through touch, specifically through kinesthetic (force/position) and cutaneous (tactile) receptors (Hannaford and Okamura, 2016). Research has demonstrated the significance of touch and haptic information in facilitating human interaction, such as dancers synchronizing their movements through touch (Sofianidis and Hatzitaki, 2015; Chauvigné et al., 2018) or children learning to walk with parental assistance (Berger et al., 2014). Moreover, haptic feedback might be used to provide visuo-spatial information to people with visual impairments or blindness (see Sorgini et al., 2018, for a review). For instance, a recent work by Marichal et al. (2023) presented a system for training the basic mathematical skills of children with visual impairments, based on the combination of additional haptic and auditory information.

In music learning, haptic information serves as a powerful tool, enabling learners to adjust their movements and develop new musical actions (Abrahamson et al., 2016). Teachers use touch to physically guide learners’ movement, to direct their attention to their bodies and to receive haptic information about students’ bodies, such as tension, which helps them guide, assess, and adapt their touch to the learners’ needs (Zorzal and Lorenzo, 2019; Bremmer and Nijs, 2020). Remarkably, haptic communication in music education depends on music teachers’ understanding of the ethical boundaries of physical contact with their learners, as learners might be highly sensitive to being touched (Bremmer and Nijs, 2020).

Over the last decade, several haptic feedback-based devices for instrumental music training have been developed. Most of them are based on vibrotactile stimulators that provide real-time feedback whenever the player deviates from a target movement trajectory (van der Linden et al., 2011), adopts an incorrect body posture (Dalgleish and Spencer, 2014), or deviates from the target pitch (Yoo and Choi, 2017), or that provide guidance for accurately executing rhythmic patterns that require multi-limb coordination (Holland et al., 2010) (see Figure 1 for a conceptual representation of these devices). Nevertheless, validation studies of these devices show that the effectiveness of vibrotactile feedback depends on the player’s attentional needs, with some individuals experiencing difficulties concentrating on playing due to frequent and/or unclear vibrotactile input (van der Linden et al., 2011; Yoo and Choi, 2017). These findings suggest that vibrotactile feedback may not be the most effective type of haptic feedback for facilitating sensorimotor skill acquisition (van Breda et al., 2017).

Figure 1. A conceptual representation of state-of-the-art technologies developed for music playing. The systems typically include multiple sensors that track students’ physiological processes and provide real-time feedback on various movement and audio parameters related to their performance. They indicate when a player deviates from the target movement trajectory, adopts incorrect body posture, or deviates from the target pitch. They are usually designed to be used individually.

Surprising as it may seem, however, little research has been conducted on technologies that actively assist musicians in developing prediction schemes while playing via physical forces that guide sensorimotor control (e.g., when the teacher holds and guides the student’s arm while playing). Yet, kinesthetic haptic feedback, which transmits force and position information of the motor target movement, is considered a more promising type of haptic feedback for promoting the development of sensorimotor abilities as it enables fine-grained movement guidance in space and time (Fujii et al., 2015; Pinardi et al., 2023). For example, the validation study of the Haptic Guidance System apparatus (HAGUS), a kinesthetic device that targets the optimal rendition of wrist movements during drumming, suggests that force haptic guidance is significantly more effective than auditory guidance alone at communicating velocity information (Grindlay, 2008).

Furthermore, with the possible exception of the MoveMe system (Fujii et al., 2015), which connects an expert and a beginner via two haptic robots to enable the expert to guide and correct the beginner’s hand movement in real time, it seems that none of the previously mentioned devices tackle interpersonal interaction, which is vital in joint music performance and learning (D’Ausilio et al., 2015; Nijs, 2019).

4 Haptic exoskeleton technology

Exoskeletons are body-grounded kinesthetic devices designed to closely interact with the structures of the human body. They are positioned on the user’s body to provide sensory touch information, serving as amplifiers to enhance, strengthen, or recover human locomotor performance (Zhang et al., 2017). In this paper, we focus on lightweight, portable upper-limb exoskeletons with spring mechanisms, consisting of two haptic robotic modules for the shoulder and elbow. In addition to shoulder and elbow active modules, the robotic interface also includes passive elements that stabilize the device and evenly distribute the reaction forces over the user’s body, as well as torque-controlled, compliant actuators for accurate haptic rendering. This design allows the user to move the shoulder and elbow freely while receiving assistive action for flexion-extension.

This system is suitable for instrumental music training, particularly for those instruments that require relatively large arm movements for sound production, like string or percussion instruments (see, e.g., the CONBOTS project, conbots.eu). Such a robotic system could convey force and position information on the motor target to the user, potentially improving prediction accuracy and accelerating and facilitating the learning process. Exoskeletons might have the capability to not only provide haptic information to the user and guide their movement based on a targeted trajectory, but also to enable haptic communication between two exoskeleton-wearing users, allowing them to obtain accurate information on each other’s movements and forces in real-time (Takagi et al., 2017, 2018). The physical communication between users could thus be established by physically connecting two exoskeleton-wearing humans via a coupling mechanical impedance, which is haptically rendered in correspondence with the exoskeleton’s attachment points. As a result, users should experience a virtual impedance in accordance with their joints, whose equilibrium positions are based on the joint positions of the other user. Notably, the system’s visco-elastic properties enable real-time modulation of virtual impedance during tasks by adjusting spring/damping coefficients.
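The coupling described above can be illustrated with a minimal sketch in which the virtual impedance is modeled as a linear spring-damper acting on a single joint. The linear form, the class and function names, and the numerical values are assumptions made for illustration; the actual controller of the exoskeleton is not specified here.

```python
from dataclasses import dataclass


@dataclass
class JointState:
    angle: float     # joint angle in rad
    velocity: float  # joint angular velocity in rad/s


def coupling_torque(own: JointState, partner: JointState,
                    stiffness: float, damping: float) -> float:
    """Torque (N*m) rendered on the wearer's joint by a virtual spring-damper.

    The equilibrium position of the virtual spring is the partner's joint
    angle, so the torque pulls the wearer toward the partner's posture.
    """
    return (stiffness * (partner.angle - own.angle)
            + damping * (partner.velocity - own.velocity))


# Hypothetical elbow states of two violinists and coupling coefficients.
violinist_a = JointState(angle=0.0, velocity=0.0)
violinist_b = JointState(angle=0.5, velocity=0.0)
K, D = 2.0, 0.1  # N*m/rad and N*m*s/rad, illustrative values only

torque_on_a = coupling_torque(violinist_a, violinist_b, K, D)
torque_on_b = coupling_torque(violinist_b, violinist_a, K, D)
print(torque_on_a, torque_on_b)  # equal magnitude, opposite sign
```

Under these assumptions, rendering the torque on both wearers gives a bidirectional coupling, rendering it on one wearer only gives a unidirectional one, and setting both coefficients to zero removes the haptic channel altogether. Changing `K` and `D` during a task corresponds to the real-time modulation of the virtual impedance.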

5 Music interaction scenarios

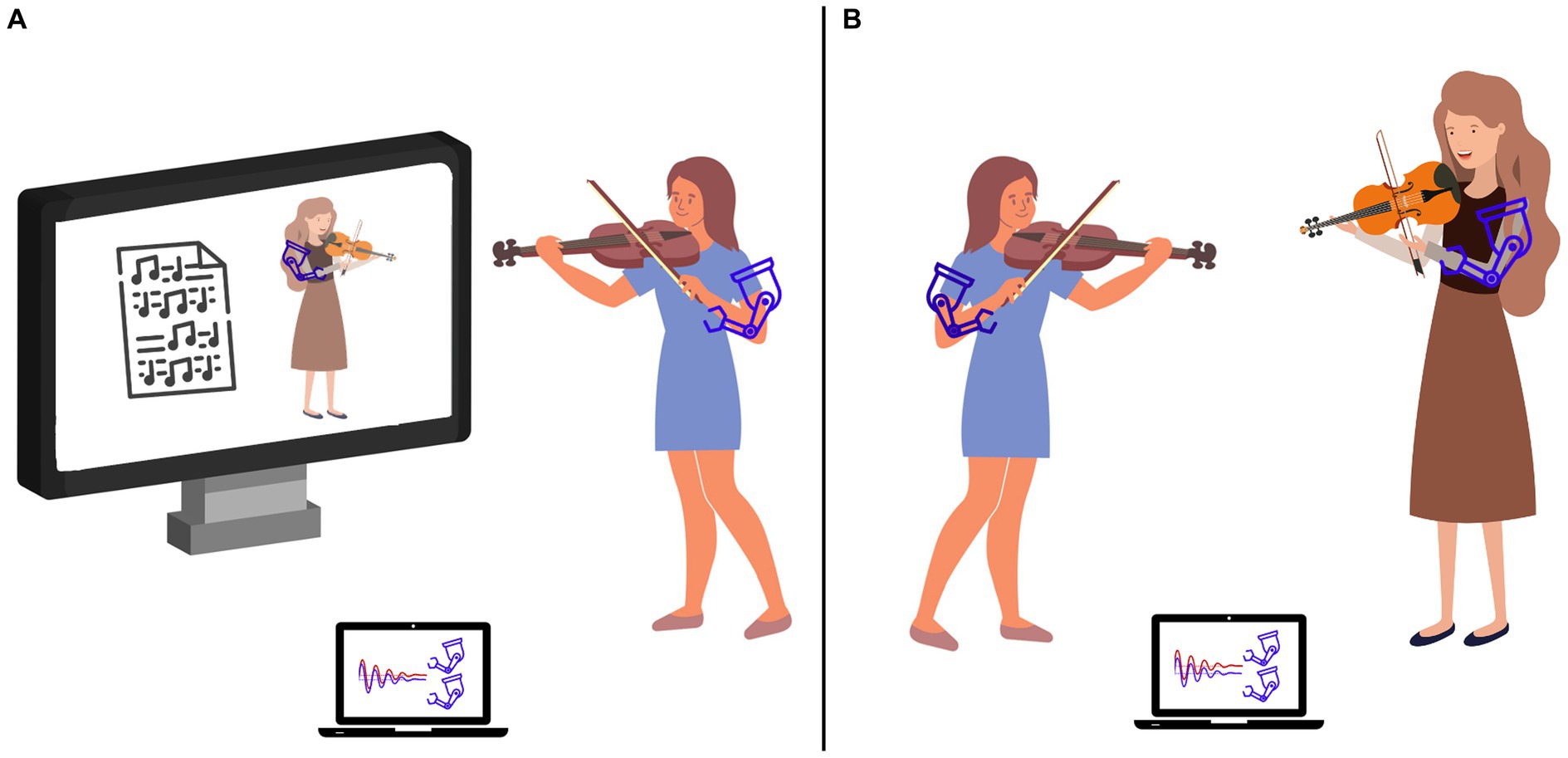

In this section, we propose two experimental scenarios in which to test the efficacy of real-time haptic feedback mediated by exoskeletons for musical tasks. The first scenario is based on the unidirectional human-machine interaction (see Figure 2A). The second scenario is based on the bidirectional human-human interaction (see Figure 2B).

Figure 2. A conceptual representation of the real-time kinesthetic haptic feedback mediated by exoskeletons to enhance learning and performance. Besides providing haptic information to the users and guiding their movement based on a targeted trajectory (A), this technology has the capability to enable the exchange of forces between two exoskeleton-wearing users, allowing them to obtain haptic information on each other’s movements in real-time, thus enhancing sensorimotor interaction and facilitating entrainment effects between users without interfering with the general performance and flow of the lesson or rehearsal (B).

5.1 Unidirectional human-machine interaction

The first scenario is based on a music playback system for violin beginners which involves a student who plays along with a video showing the teacher. The video is augmented with haptic exoskeletons providing information about the teacher’s movement. Wearing the lightweight exoskeleton, the student receives auditory, visual, and haptic information while performing the task (see Figure 2A). The student’s goal is to synchronize their bowing movements with those of the teacher. Besides following the teacher’s instructions audio-visually, students can also benefit from the haptic information on the actual movements performed during the lesson, thus introducing an additional information channel. It would be reasonable to hypothesize that such additional haptic feedback can enhance the action-perception cycles by providing additional information which fuels the student’s sensorimotor loop. The enrichment of action-perception cycles might have, in turn, cascading positive effects on the student’s performance of bowing movements and on learning pace. Improvements in bowing can be quantitatively assessed by comparing the movement parameters (e.g., trajectory and smoothness) of a group of students who train with the haptic teacher with those of a control group of learners training with the video playback only.
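As an illustration of how smoothness could be quantified in such a comparison, the sketch below follows the general idea of the spectral arc length measure (SPARC; Balasubramanian et al., 2015): the length of the normalized magnitude spectrum of the speed profile, taken over an adaptively selected frequency band. It is a simplified sketch under stated assumptions rather than a reference implementation: the spectrum is evaluated directly on a frequency grid with step `df` instead of using a zero-padded FFT, and the default values of `fc`, `amp_th` and `df`, as well as the synthetic speed profiles, are assumptions.

```python
import cmath
import math


def spectral_arc_length(speed, fs, fc=10.0, amp_th=0.05, df=0.05):
    """Negative arc length of the normalized magnitude spectrum of a speed
    profile sampled at fs Hz. Values closer to zero indicate smoother motion.
    """
    freqs = [k * df for k in range(int(round(fc / df)) + 1)]
    # Fourier magnitude evaluated directly at each frequency up to fc.
    mags = [abs(sum(v * cmath.exp(-2j * math.pi * f * i / fs)
                    for i, v in enumerate(speed)))
            for f in freqs]
    peak = max(mags)
    if peak == 0.0:
        raise ValueError("speed profile contains no movement")
    mags = [m / peak for m in mags]
    # Adaptive band: from the first to the last frequency reaching amp_th.
    above = [k for k, m in enumerate(mags) if m >= amp_th]
    lo, hi = above[0], above[-1]
    if hi == lo:
        return 0.0
    span = freqs[hi] - freqs[lo]
    length = 0.0
    for k in range(lo, hi):
        d_freq = (freqs[k + 1] - freqs[k]) / span
        d_mag = mags[k + 1] - mags[k]
        length += math.hypot(d_freq, d_mag)
    return -length


# Synthetic one-second bow strokes sampled at 100 Hz (illustrative only).
fs = 100
t = [i / fs for i in range(fs)]
smooth = [30 * x**2 * (1 - x)**2 for x in t]                # bell-shaped speed
jerky = [v * (1 + 0.8 * math.sin(2 * math.pi * 6 * x))      # 6 Hz tremor added
         for v, x in zip(smooth, t)]

print(spectral_arc_length(smooth, fs), spectral_arc_length(jerky, fs))
```

In this sketch the stroke with the superimposed oscillation yields a more negative value than the bell-shaped one. In an actual study, the speed profile would come from the bow or arm kinematics of each participant, and group means would be compared between the haptic and the control condition.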

5.2 Bidirectional human-human interaction

The second scenario shifts from individual training to joint music performance, where individual musicians operate as processing units within a complex dynamical system, with the objective to drive each other toward a common esthetic goal (D’Ausilio et al., 2012). For instance, string musicians in an orchestra need precise gesture coordination and motion synchronization to produce a unified, harmonious, and cohesive section sound. Performers currently exchange sensorimotor information through visual and auditory feedback (Biasutti et al., 2013). The central question at hand is whether bidirectional haptic feedback can serve as additional feedback that improves violinists’ co-regulated action in terms of bowing gesture coordination, motion synchronicity, tempo stability, volume balance, and tone blending.

To test this in an experimental setting, pairs of violinists (with varying expertise levels) might be asked to perform the same piece multiple times in four different conditions: audio-visual-haptic, audio-visual, audio-haptic, and audio-only. The experimental task would be to perform a musical piece as well as possible as a group, especially in terms of tempo changes such as ritardando and accelerando, which require precise rhythmic co-regulation and synchronization. In order to exclude the influence of visual information in the audio-haptic and audio-only conditions, participants could be separated by a black curtain. The bidirectional haptic feedback would be activated through exoskeletons worn by participants throughout the experiment in the audio-visual-haptic and audio-haptic conditions only. Importantly, violinists should not be informed that the forces they feel are coming from their colleague. We hypothesize that the presence of haptic feedback would improve violinists’ performance because it provides direct or non-mediated sensorimotor feedback on motor parameters of bowing gestures. In contrast, indirect (or mediated) sensorimotor feedback via auditory and/or visual channels requires the translation from one modality (audio, visual) to another (motor). Our hypothesis is that direct (non-mediated) feedback will prove more effective than indirect (mediated) feedback. This improvement is expected to be even more pronounced in violinist pairs with less experience.

6 Discussion

6.1 Extension of the theoretical basis

As described in Section 2, the theoretical underpinning of collaborative interaction relies on the concepts of action-perception cycle and entrainment. Obviously, both concepts need further elaboration and refinement, for example in the direction of predictive processing (Clark, 2013), and expressive information exchange (Leman, 2016). The success of integrating haptic exoskeleton technology in a natural action-perception cycle indeed draws upon the ability of the user to feed and form predictive models of acting and interacting. The concept of entrainment thereby offers a dynamic perspective for understanding co-regulated actions. Concurrently, enabling bidirectional haptic feedback between two musicians could contribute to the emergence of novel research paradigms, the development of new metrics, and a deeper understanding of the mechanisms that govern action-perception cycles and entrainment.

To date, the level of unidirectional interaction can be assessed through the metrics developed in Campo et al. (2023), which compare movement information captured via a motion capture system with infra-red cameras on the basis of kinematic features, such as movement smoothness (SPARC, see Balasubramanian et al., 2015) or the similarity of the bow gestures of the student relative to the teacher. Additional metrics can rely on angular information of joints provided by the exoskeleton. The bidirectional interaction could be measured in terms of entrainment, which indicates the effect of collaborative interaction via the dynamic change of actions. The angular velocity of the shoulder and elbow of each violinist using the exoskeleton, as well as the movement displacement of the upper and lower arm recorded with a motion capture system, can be used as signals. Bidirectional interaction may then be mapped out using techniques from dynamical systems analysis, such as recurrence analysis (Demos et al., 2018; Rosso et al., 2023), or cross-wavelet transforms (Torrence and Compo, 1998; Eerola et al., 2018).
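A very reduced form of cross-recurrence analysis can be sketched as follows. Two signals (for example, the elbow angular velocities of two violinists) are standardized, and for each time lag the proportion of sample pairs that fall within a given radius of each other is computed; this corresponds to the recurrence rate along one diagonal of a cross-recurrence plot. The sketch omits the phase-space embedding used in full recurrence analyses, and the function names, the radius, and the synthetic signals are assumptions for illustration.

```python
import math
from statistics import mean, pstdev


def zscore(signal):
    """Standardize a signal to zero mean and unit standard deviation."""
    m, s = mean(signal), pstdev(signal)
    return [(v - m) / s for v in signal]


def diagonal_recurrence_profile(x, y, max_lag, radius=0.1):
    """Recurrence rate for each lag between two standardized signals.

    A positive lag k compares x[i] with y[i + k], so a peak at a positive
    lag suggests that y follows x by k samples.
    """
    x, y = zscore(x), zscore(y)
    n = min(len(x), len(y))
    profile = {}
    for lag in range(-max_lag, max_lag + 1):
        pairs = [(x[i], y[i + lag]) for i in range(n) if 0 <= i + lag < n]
        hits = sum(1 for a, b in pairs if abs(a - b) < radius)
        profile[lag] = hits / len(pairs)
    return profile


# Synthetic example: a 2 Hz oscillation sampled at 100 Hz, with the
# "follower" delayed by 10 samples (100 ms) relative to the "leader".
fs, freq, delay = 100, 2.0, 10
leader = [math.sin(2 * math.pi * freq * i / fs) for i in range(500)]
follower = [math.sin(2 * math.pi * freq * (i - delay) / fs) for i in range(500)]

profile = diagonal_recurrence_profile(leader, follower, max_lag=20)
best_lag = max(profile, key=profile.get)
print(f"highest recurrence at lag {best_lag} samples ({1000 * best_lag / fs:.0f} ms)")
```

With real performance data, the location and height of the peak in such a profile, and how they change across conditions or over the course of a piece, could serve as simple indicators of who leads and how strongly the two players are coupled.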

6.2 Extension of the scenarios

Additional experimental scenarios in collaborative haptic interaction will be needed to allow for a clearer understanding of the effects of kinesthetic haptic feedback. Of particular interest might be the extension of the music playback scenario from unidirectional to bidirectional interaction. Unlike in the bidirectional human-human scenario, in the playback scenario the bidirectional exchange would occur between the user and an AI teacher, trained via machine learning of bowing movements. Of course, the development of a bidirectional platform for interaction is a challenging task involving human-based AI. The unidirectional approach, as well as an understanding of the adaptive mechanisms that might co-regulate the dynamics of haptic human-human interactions, are steps toward such a bidirectional system equipped with advanced machine intelligence for interacting.

Furthermore, understanding the needs, preferences, and concerns of users is essential for the technology’s successful adoption and further development (Bauer, 2014; Mroziak and Bowman, 2016; Michałko et al., 2022). Since musicians typically rely on auditory and visual information for communication (Goodman, 2002; Biasutti et al., 2013), they may initially find this additional communication channel distracting rather than beneficial. Therefore, to achieve successful technology adoption, a participatory design should be carefully implemented, encompassing various validation scenarios and intense interaction between the developers and end users at every stage of technology development (Bobbe et al., 2021; Michałko et al., 2022). This approach will not only help shape the design of the technology to better meet users’ expectations (Bauer, 2014; Mroziak and Bowman, 2016), but it can also help verify in a proactive way the technical effectiveness of the devices themselves.

6.3 Extending music education

Traditionally, learning to play a musical instrument is mediated by verbal and non-verbal communication between the teacher and the trainee, including hand gestures and physical guidance, in addition to verbal imagery and metaphors (Williamon and Davidson, 2002; Nijs, 2019; Bremmer and Nijs, 2020). Each of these communication channels has been demonstrated to play a positive role in transferring instrumental music instruction and ideas (Simones et al., 2017; Meissner and Timmers, 2019; Bremmer and Nijs, 2020), but their drawbacks have also been identified, such as ambiguous interpretation, delayed feedback, and the fact that they only provide a rough approximation of the target movement (Hoppe et al., 2006; Howard et al., 2007; Grindlay, 2008). Some of the currently available devices provide real-time objective feedback to overcome these limitations (Schoonderwaldt and Demoucron, 2009; Blanco et al., 2021), but they focus on movement and posture monitoring, reinforcing the master-apprentice approach, which has been criticized for emphasizing reproductive imitation over creative music making (Nijs, 2019; Schiavio et al., 2020). Exoskeletons may be a promising alternative to current technologies because they enable real-time, direct sensorimotor feedback between two users, thereby supporting teacher-student or peer interactions, which are key to students’ long-term music engagement (Davidson et al., 1996; Zdzinski, 2021). Exoskeleton technology might also enhance learning and current communication channels (auditory and visual) without interfering with lesson performance or flow (see Figure 2B). It could be of interest to investigate the impact of various haptic modes of exoskeletons (bidirectional vs. unidirectional) on different pedagogical models, as well as their impact on interpersonal relationships between teachers and students and among peers.

6.4 Extension to other domains

Haptic devices have been developed in the fields of rehabilitation (Wu and Chen, 2023), sport science (Spelmezan et al., 2009), and the mixed reality and gaming industry (Moon et al., 2023). However, little research has pointed out the potential benefits of collaborative interaction with force haptic feedback through exoskeletons. So far, research has predominantly concentrated on the action-perception cycle rather than the entrainment dynamics typical of collaborative interactions. Lightweight exoskeleton technology offers considerable potential in several (non-musical) domains of application where bidirectional, i.e., collaborative, interaction is useful, for example, in physiotherapy where movements are guided in interaction with the therapist (Bjorbækmo and Mengshoel, 2016). It could be of interest to investigate whether incorporating bidirectional haptic feedback via exoskeletons could improve the relationship between physiotherapists and patients, thereby allowing for a faster recovery process.

6.5 Limitations

The current state-of-the-art exoskeletons are considered lightweight compared to, for instance, exoskeletons used in factories; however, they might still be perceived as bulky and heavy, especially when worn by children. The scalability of anthropomorphic features is also a critical issue for exchanging meaningful feedback between, say, a child and an adult, who are often paired in training/learning contexts. In addition, these exoskeletons currently require the assistance and control of experts for correct attachment to limbs and for setting the different interaction paradigms such as unidirectional and bidirectional. More effort will be required to make these devices more user-friendly and ready to be used outside the laboratory. Another important factor to investigate is the potential time delay between two users when exchanging forces. Further limitations concern the restricted domain of application in music learning, with arm exoskeletons being less useful when playing brass or woodwind instruments, which produce sound through air emission and finger movements rather than shoulder and elbow movements.

7 Conclusion

In this paper, we envisioned the possibility of using lightweight robotic exoskeletons to allow for the exchange of haptic information during musical interactions. We introduced two possible validation scenarios involving violin playing to explore and test the feasibility of adding haptic information to the audio and visual channels on which collaborative interaction is based. These scenarios leverage music playback systems and real-time interactive music making, especially in the context of learning. However, the application of kinesthetic haptic systems that allow for bidirectional force exchange may potentially extend to various domains, encompassing physical rehabilitation, sports, gaming, and those fields in which gestures and limb movements benefit from haptic guidance. In such scenarios, exoskeletons can be employed to enhance the entrainment effect, ultimately fostering improved co-regulation activities.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Author contributions

AM: Writing – review & editing, Writing – original draft, Visualization, Conceptualization. NDS: Writing – review & editing, Writing – original draft, Supervision, Conceptualization. AC: Writing – review & editing. ML: Writing – review & editing, Writing – original draft, Supervision, Funding acquisition, Conceptualization.

Funding

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. This work was funded by the H2020/ICT European project “CONnected through roBOTS: Physically Coupling Humans to Boost Handwriting and Music Learning” (CONBOTS) (grant agreement no. 871803; call topic ICT-092019-2020) and the Methusalem project (grant agreement BOF.MET.2015.0001.01; ML) funded by the Flemish Government.

Acknowledgments

We thank Prof. Nevio Luigi Tagliamonte for his comments on an earlier version of this manuscript.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Abrahamson, D., Sánchez-García, R., and Smyth, C. (2016). Metaphors are projected constraints on action: An ecological dynamics view on learning across the disciplines. Available at: https://escholarship.org/uc/item/9d78b83f.

Badino, L., D’Ausilio, A., Glowinski, D., Camurri, A., and Fadiga, L. (2014). Sensorimotor communication in professional quartets. Neuropsychologia 55, 98–104. doi: 10.1016/j.neuropsychologia.2013.11.012

Balasubramanian, S., Melendez-Calderon, A., Roby-Brami, A., and Burdet, E. (2015). On the analysis of movement smoothness. J. Neuroeng. Rehabil. 12:112. doi: 10.1186/s12984-015-0090-9

Barsalou, L. W. (2008). Grounded cognition. Annu. Rev. Psychol. 59, 617–645. doi: 10.1146/annurev.psych.59.103006.093639

Bauer, W. I. (2014). Music learning and technology. 1. Available at: https://www.newdirectionsmsu.org/issue-1/bauer-music-learning-and-technology/.

Berger, S. E., Chan, G. L., and Adolph, K. E. (2014). What cruising infants understand about support for locomotion. Infancy 19, 117–137. doi: 10.1111/infa.12042

Biasutti, M., Concina, E., Wasley, D., and Williamon, A. (2013). Behavioral coordination among chamber musicians: A study of visual synchrony and communication in two string quartets. 223–228. Available at: http://performancescience.org/publication/isps-2013/.

Bjorbækmo, W. S., and Mengshoel, A. M. (2016). “A touch of physiotherapy”—the significance and meaning of touch in the practice of physiotherapy. Physiother. Theory Pract. 32, 10–19. doi: 10.3109/09593985.2015.1071449

Blanco, A. D., Tassani, S., and Ramirez, R. (2021). Real-time sound and motion feedback for violin bow technique learning: a controlled, randomized trial. Front. Psychol. 12:1268. doi: 10.3389/fpsyg.2021.648479

Bobbe, T., Oppici, L., Lüneburg, L.-M., Münzberg, O., Li, S.-C., Narciss, S., et al. (2021). What early user involvement could look like—developing technology applications for piano teaching and learning. Multimodal Technol. Interact. 5:38. doi: 10.3390/mti5070038

Bremmer, M., and Nijs, L. (2020). The role of the body in instrumental and vocal music pedagogy: a dynamical systems theory perspective on the music teacher’s bodily engagement in teaching and learning. Front. Educ. 5:79. doi: 10.3389/feduc.2020.00079

Campo, A., Michałko, A., Van Kerrebroeck, B., Stajic, B., Pokric, M., and Leman, M. (2023). The assessment of presence and performance in an AR environment for motor imitation learning: a case-study on violinists. Comput. Hum. Behav. 146:107810. doi: 10.1016/j.chb.2023.107810

Chauvigné, L. A., Belyk, M., and Brown, S. (2018). Taking two to tango: fMRI analysis of improvised joint action with physical contact. PLoS One 13:e0191098. doi: 10.1371/journal.pone.0191098

Clark, A. (2013). Whatever next? Predictive brains, situated agents, and the future of cognitive science. Behav. Brain Sci. 36, 181–204. doi: 10.1017/S0140525X12000477

Clayton, M. (2012). What is entrainment? Definition and applications in musical research. Empir. Musicol. Rev. 7, 49–56. doi: 10.18061/1811/52979

Clayton, M., Jakubowski, K., Eerola, T., Keller, P. E., Camurri, A., Volpe, G., et al. (2020). Interpersonal entrainment in music performance: theory, method, and model. Music. Percept. 38, 136–194. doi: 10.1525/mp.2020.38.2.136

D’Ausilio, A., Badino, L., Li, Y., Tokay, S., Craighero, L., Canto, R., et al. (2012). “Communication in orchestra playing as measured with granger causality” in Intelligent Technologies for Interactive Entertainment. eds. A. Camurri and C. Costa (Berlin Heidelberg, Germany: Springer), 273–275.

D’Ausilio, A., Novembre, G., Fadiga, L., and Keller, P. E. (2015). What can music tell us about social interaction? Trends Cogn. Sci. 19, 111–114. doi: 10.1016/j.tics.2015.01.005

Dalgleish, M., and Spencer, S. (2014). “POSTRUM: developing good posture in trumpet players through directional haptic feedback” in Paper presented at the 9th conference on interdisciplinary musicology (CIM14), 3rd-6th December 2014 (Berlin: Staatliches Institut für Musikforschung).

Davidson, J. W., Howe, M. J. A., Moore, D. G., and Sloboda, J. A. (1996). The role of parental influences in the development of musical performance. Br. J. Dev. Psychol. 14, 399–412. doi: 10.1111/j.2044-835X.1996.tb00714.x

Demos, A. P., Chaffin, R., and Logan, T. (2018). Musicians body sway embodies musical structure and expression: a recurrence-based approach. Music. Sci. 22, 244–263. doi: 10.1177/1029864916685928

Eerola, T., Jakubowski, K., Moran, N., Keller, P. E., and Clayton, M. (2018). Shared periodic performer movements coordinate interactions in duo improvisations. R. Soc. Open Sci. 5:171520. doi: 10.1098/rsos.171520

Fujii, K., Russo, S. S., Maes, P., and Rekimoto, J. (2015). “MoveMe: 3D haptic support for a musical instrument” in Proceedings of the 12th international conference on advances in computer entertainment technology

Gibbs, R. W. (2006). Embodiment and cognitive science. New York, NY, USA: Cambridge University Press.

Glowinski, D., Mancini, M., Cowie, R., Camurri, A., Chiorri, C., and Doherty, C. (2013). The movements made by performers in a skilled quartet: a distinctive pattern, and the function that it serves. Front. Psychol. 4:841. doi: 10.3389/fpsyg.2013.00841

Godøy, R. I., and Leman, M. (2010). Musical gestures: sound, movement, and meaning. Routledge. Available at: https://books.google.com?id=lHaMAgAAQBAJ.

Grindlay, G. (2008). Haptic guidance benefits musical motor learning. In: 2008 Symposium on Haptic Interfaces for Virtual Environment and Teleoperator Systems, 397–404.

Hannaford, B., and Okamura, A. M. (2016). “Haptics” in Springer handbook of robotics. eds. B. Siciliano and O. Khatib (Berlin Heidelberg: Springer).

Holland, S., Bouwer, A. J., Dalgleish, M., and Hurtig, T. M. (2010). “Feeling the beat where it counts: fostering multi-limb rhythm skills with the haptic drum kit” in Proceedings of the fourth international conference on tangible, embedded, and embodied interaction, 21–28.

Hoppe, D., Sadakata, M., and Desain, P. (2006). Development of real-time visual feedback assistance in singing training: a review. J. Comput. Assist. Learn. 22, 308–316. doi: 10.1111/j.1365-2729.2006.00178.x

Howard, D. M., Brereton, J., Welch, G. F., Himonides, E., DeCosta, M., Williams, J., et al. (2007). Are real-time displays of benefit in the singing studio? An exploratory study. J. Voice 21, 20–34. doi: 10.1016/j.jvoice.2005.10.003

Jarrassé, N., Charalambous, T., and Burdet, E. (2012). A framework to describe, analyze and generate interactive motor behaviors. PLoS One 7:e49945. doi: 10.1371/journal.pone.0049945

Kaspar, K., König, S., Schwandt, J., and König, P. (2014). The experience of new sensorimotor contingencies by sensory augmentation. Conscious. Cogn. 28, 47–63. doi: 10.1016/j.concog.2014.06.006

Keller, P. E., Novembre, G., and Hove, M. J. (2014). Rhythm in joint action: psychological and neurophysiological mechanisms for real-time interpersonal coordination. Philos. Trans. R. Soc. B Biol. Sci. 369:20130394. doi: 10.1098/rstb.2013.0394

König, S. U., Schumann, F., Keyser, J., Goeke, C., Krause, C., Wache, S., et al. (2016). Learning new sensorimotor contingencies: effects of long-term use of sensory augmentation on the brain and conscious perception. PLoS One 11:e0166647. doi: 10.1371/journal.pone.0166647

Leman, M. (2007). Embodied music cognition and mediation technology. MIT Press. Available at: https://books.google.com?id=s70oAeq3XYMC.

Leman, M. (2016). The expressive moment: how interaction (with music) shapes human empowerment. MIT Press. Available at: https://books.google.com?id=1KwDDQAAQBAJ.

Leman, M. (2021). Co-regulated timing in music ensembles: a Bayesian listener perspective. J. New Music Res. 50, 121–132. doi: 10.1080/09298215.2021.1907419

Leman, M., and Maes, P.-J. (2014). The role of embodiment in the perception of music. Empir. Musicol. Rev. 9, 236–246. doi: 10.18061/emr.v9i3-4.4498

MacRitchie, J., Varlet, M., and Keller, P. E. (2017). “Embodied expression through entrainment and co-representation in musical ensemble performance” in The Routledge Companion to Embodied Music Interaction, Eds. M. Lesaffre, P-J. Maes, and M. Leman (New York, NY, USA and London, UK: Routledge)

Maes, P.-J., Dyck, E. V., Lesaffre, M., Leman, M., and Kroonenberg, P. M. (2014a). The coupling of action and perception in musical meaning formation. Music. Percept. 32, 67–84. doi: 10.1525/mp.2014.32.1.67

Maes, P.-J., and Leman, M. (2013). The influence of body movements on children’s perception of music with an ambiguous expressive character. PLoS One 8:e54682. doi: 10.1371/journal.pone.0054682

Maes, P.-J., Leman, M., Palmer, C., and Wanderley, M. (2014b). Action-based effects on music perception. Front. Psychol. 4:1008. doi: 10.3389/fpsyg.2013.01008

Manning, F., and Schutz, M. (2013). “Moving to the beat” improves timing perception. Psychon. Bull. Rev. 20, 1133–1139. doi: 10.3758/s13423-013-0439-7

Marichal, S., Rosales, A., González Perilli, F., Pires, A. C., and Blat, J. (2023). Auditory and haptic feedback to train basic mathematical skills of children with visual impairments. Behav. Inform. Technol. 42, 1081–1109. doi: 10.1080/0144929X.2022.2060860

Meissner, H., and Timmers, R. (2019). Teaching young musicians expressive performance: an experimental study. Music. Educ. Res. 21, 20–39. doi: 10.1080/14613808.2018.1465031

Michałko, A., Campo, A., Nijs, L., Leman, M., and Van Dyck, E. (2022). Toward a meaningful technology for instrumental music education: teachers’ voice. Front. Educ. 7:1027042. doi: 10.3389/feduc.2022.1027042

Moon, H. S., Orr, G., and Jeon, M. (2023). Hand tracking with vibrotactile feedback enhanced presence, engagement, usability, and performance in a virtual reality rhythm game. Int. J. Hum. Comput. Interact. 39, 2840–2851. doi: 10.1080/10447318.2022.2087000

Moura, N., Vidal, M., Aguilera, A. M., Vilas-Boas, J. P., Serra, S., and Leman, M. (2023). Knee flexion of saxophone players anticipates tonal context of music. Npj Sci. Learn. 8, 22–27. doi: 10.1038/s41539-023-00172-z

Mroziak, J., and Bowman, J. (2016). “Music TPACK in higher education: educating the educators” in Handbook of technological pedagogical content knowledge (TPACK) for educators. 2nd ed (London, UK: Routledge)

Nijs, L. (2019). “Moving together while playing music: Promoting involvement through student-centred collaborative practices,” in Becoming musicians. Student involvement and teacher collaboration in higher music education, Eds. S. Gies and J. H. Sætre (Oslo: NMH Publications). 239–260.

Phillips-Silver, J., and Trainor, L. J. (2005). Feeling the beat: movement influences infant rhythm perception. Science 308:1430. doi: 10.1126/science.1110922

Phillips-Silver, J., and Trainor, L. J. (2007). Hearing what the body feels: auditory encoding of rhythmic movement. Cognition 105, 533–546. doi: 10.1016/j.cognition.2006.11.006

Pinardi, M., Longo, M. R., Formica, D., Strbac, M., Mehring, C., Burdet, E., et al. (2023). Impact of supplementary sensory feedback on the control and embodiment in human movement augmentation. Commun. Eng. 2:64. doi: 10.1038/s44172-023-00111-1

Prinz, W. (1997). Perception and action planning. Eur. J. Cogn. Psychol. 9, 129–154. doi: 10.1080/713752551

Rosso, M., Moens, B., Leman, M., and Moumdjian, L. (2023). Neural entrainment underpins sensorimotor synchronization to dynamic rhythmic stimuli. NeuroImage 277:120226. doi: 10.1016/j.neuroimage.2023.120226

Schiavio, A. (2014). Action, Enaction, inter(en)action. Empir. Musicol. Rev. 9, 254–262. doi: 10.18061/emr.v9i3-4.4440

Schiavio, A., Küssner, M. B., and Williamon, A. (2020). Music teachers’ perspectives and experiences of ensemble and learning skills. Front. Psychol. 11:291. doi: 10.3389/fpsyg.2020.00291

Schoonderwaldt, E., and Demoucron, M. (2009). Extraction of bowing parameters from violin performance combining motion capture and sensors. J. Acoust. Soc. Am. 126, 2695–2708. doi: 10.1121/1.3227640

Sebanz, N., and Knoblich, G. (2021). Progress in joint-action research. Curr. Dir. Psychol. Sci. 30, 138–143. doi: 10.1177/0963721420984425

Shapiro, L. (2011). Embodied cognition, vol. 39. London, UK and New York, NY, USA: Routledge, 121–140.

Sheets-Johnstone, M. (1999). The primacy of movement. Amsterdam, The Netherlands: John Benjamins Publishing Company.

Simones, L., Rodger, M., and Schroeder, F. (2017). Seeing how it sounds: observation, imitation, and improved learning in piano playing. Cogn. Instr. 35, 125–140. doi: 10.1080/07370008.2017.1282483

Sofianidis, G., and Hatzitaki, V. (2015). Interpersonal entrainment in dancers: Contrasting timing and haptic cues. Posture, Balance Brain Int Work Proc, 34–44.

Sorgini, F., Caliò, R., Carrozza, M. C., and Oddo, C. M. (2018). Haptic-assistive technologies for audition and vision sensory disabilities. Disabil. Rehabil. Assist. Technol. 13, 394–421. doi: 10.1080/17483107.2017.1385100

Spelmezan, D., Jacobs, M., Hilgers, A., and Borchers, J. (2009). Tactile motion instructions for physical activities. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, 2243–2252.

Takagi, A., Ganesh, G., Yoshioka, T., Kawato, M., and Burdet, E. (2017). Physically interacting individuals estimate the partner’s goal to enhance their movements. Nat. Hum. Behav. 1:0054. doi: 10.1038/s41562-017-0054

Takagi, A., Usai, F., Ganesh, G., Sanguineti, V., and Burdet, E. (2018). Haptic communication between humans is tuned by the hard or soft mechanics of interaction. PLoS Comput. Biol. 14:e1005971. doi: 10.1371/journal.pcbi.1005971

Thelen, E. (2000). Grounded in the world: developmental origins of the embodied mind. Infancy 1, 3–28. doi: 10.1207/S15327078IN0101_02

Torrence, C., and Compo, G. P. (1998). A practical guide to wavelet analysis. Bull. Am. Meteorol. Soc. 79, 61–78. doi: 10.1175/1520-0477(1998)079<0061:APGTWA>2.0.CO;2

van Breda, E., Verwulgen, S., Saeys, W., Wuyts, K., Peeters, T., and Truijen, S. (2017). Vibrotactile feedback as a tool to improve motor learning and sports performance: a systematic review. BMJ Open Sport Exerc. Med. 3:e000216. doi: 10.1136/bmjsem-2016-000216

van der Linden, J., Johnson, R., Bird, J., Rogers, Y., and Schoonderwaldt, E. (2011). “Buzzing to play: lessons learned from an in the wild study of real-time vibrotactile feedback” in Paper presented at the SIGCHI conference on human factors in computing systems CHI’11 (New York, NY: Association for Computing Machinery), 533–542.

Volpe, G., D’Ausilio, A., Badino, L., Camurri, A., and Fadiga, L. (2016). Measuring social interaction in music ensembles. Philos. Trans. R. Soc. B Biol. Sci. 371:20150377. doi: 10.1098/rstb.2015.0377

Williamon, A., and Davidson, J. W. (2002). Exploring co-performer communication. Music. Sci. 6, 53–72. doi: 10.1177/102986490200600103

Witt, J. K. (2011). Action’s effect on perception. Curr. Dir. Psychol. Sci. 20, 201–206. doi: 10.1177/0963721411408770

Wu, Q., and Chen, Y. (2023). Adaptive cooperative control of a soft elbow rehabilitation exoskeleton based on improved joint torque estimation. Mech. Syst. Signal Process. 184:109748. doi: 10.1016/j.ymssp.2022.109748

Yoo, Y., and Choi, S. (2017). “A longitudinal study of haptic pitch correction guidance for string instrument players” in Paper presented at the 2017 IEEE world haptics conference (WHC) (Munich), 177–182.

Zdzinski, S. F. (2021). “The role of the family in supporting musical learning” in Routledge international handbook of music psychology in education and the community. eds. A. Creech, D. A. Hodges, and S. Hallam (London, UK: Routledge), 401–417.

Zhang, J., Fiers, P., Witte, K. A., Jackson, R. W., Poggensee, K. L., Atkeson, C. G., et al. (2017). Human-in-the-loop optimization of exoskeleton assistance during walking. Science 356, 1280–1284. doi: 10.1126/science.aal5054

Keywords: instrumental music training, haptic feedback, collaborative learning, technology mediation, exoskeletons, wearable robotics, human-human interaction (HHI), kinesthetic feedback

Citation: Michałko A, Di Stefano N, Campo A and Leman M (2024) Enhancing human-human musical interaction through kinesthetic haptic feedback using wearable exoskeletons: theoretical foundations, validation scenarios, and limitations. Front. Psychol. 15:1327992. doi: 10.3389/fpsyg.2024.1327992

Edited by:

Pil Hansen, University of Calgary, Canada

Reviewed by:

Bettina E. Bläsing, Bielefeld University, Germany

Copyright © 2024 Michałko, Di Stefano, Campo and Leman. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Aleksandra Michałko, aleksandra.michalko@ugent.be