- Conservatory of Music, Communication University of Zhejiang, Hangzhou, China

Introduction: Drawing on the S-O-R model, this study investigates how three stimuli from AI-modified music (i.e., event relevance, lyric resonance, and AI-singer origins) and two responses from social media content consumers (i.e., audience interpretation and emotional resonance) influence social media engagement with personalized background music modified by artificial intelligence (AI).

Methods: The structural equation modeling analyses of 467 social media content consumers’ responses confirmed the role of those three stimuli and the mediating effect of audience interpretation and emotional resonance in shaping social media engagement.

Results: The findings shed light on the underlying mechanisms that drive social media engagement in the context of AI-modified background music created for non-professional content creators.

Discussion: The theoretical and practical implications of this study advance our understanding of social media engagement with AI-singer-originated background music and provide a basis for future investigations into this rapidly evolving phenomenon in the gig economy.

1 Introduction

In recent years, artificial intelligence (AI) has revolutionized the way social media content, such as music and art, is produced and consumed, generating new career opportunities in social media content creation (Suh et al., 2021; Fteiha et al., 2024). This paradigm shift not only empowers professionals and artists but also extends opportunities to ordinary digital users to engage in content creation for income generation. Digital platforms such as Facebook Live, Metaverse, TikTok, Twitter, YouTube, and WeChat have allowed non-professional content creators to share self-made, engaging and high-quality content with global audiences (Rodgers et al., 2021). Despite the burgeoning interest in leveraging AI for content creation, a fundamental question remains largely unaddressed: how does AI-modified music, as different from human-generated music, affect listeners?

The integration of AI in music dates back to the mid-1960s, with early research focusing on music as a cognitive process and algorithmic composition (Berz and Bowman, 1995). The integration of AI technology may help unlock new dimensions of artistic expression and audience engagement (Zulić, 2019). AI-driven innovations such as AIVA may redefine traditional paradigms of musical performance and composition (Zulić, 2019). Despite such remarkable development, the integration of AI in music production is still in its nascent stages, with ample room for exploration and innovation. By elucidating the nuanced interplay between AI-modified music and human perception, this study seeks to contribute to the understanding of the evolving relationship between technology and creativity in the digital age.

AI-modified music can differ from human-composed music in several ways. Understanding these differences is essential for comprehending the unique value proposition of AI-modified music in social media content creation. Indeed, the two types of music differ in their underlying mechanisms of music generation and in their implications for audience experience. While human composers infuse their creations with personal emotions, cultural nuances, and artistic insights, AI algorithms operate based on computational models and data-driven analyses (Zulić, 2019). Consequently, music purely modified by AI may lack the depth of human expression and the contextual richness inherent in human-created compositions. Moreover, the proliferation of AI in the creative domain engenders concerns regarding the authenticity and emotional resonance of AI-generated art (Trunfio and Rossi, 2021). Therefore, alongside exploring the instrumental role of AI-modified music in social media engagement, this study seeks to critically evaluate the perceptual and emotional dimensions of AI-generated compositions vis-à-vis their human counterparts.

The evolution of social media has facilitated the rise of non-professional content creators who leverage various forms of content, including gaming, tutorials, and talk shows, to interact with their audience in real-time (Spangler, 2023). Music, as a central element in content creation, plays a significant part in shaping atmosphere, evoking emotions, and influencing audience perceptions (Kuo et al., 2013; Schäfer et al., 2013; Hudson et al., 2015). Particularly noteworthy is the prevalence of AI-powered tools, such as RIADA and Juedeck, which enable non-professional creators to customize and personalize music to align with their content (Civit et al., 2022; Dredge, 2023). These tools offer features such as tempo adjustment, mood customization, and even the creation of entirely new compositions, thereby providing creators with unprecedented flexibility in tailoring music to suit their specific needs (Alvarez et al., 2023).

Non-professional content creators in this study refer to the individuals who produce various forms of content to showcase their skills, talents, and expertise in activities such as gaming, tutorials, and talk shows in real-time, creating a sense of immediacy and interactivity with their audience through social media. Over 50% of U.S. non-professional creators have profited from their content, with over 75% starting the practice within one year (Spangler, 2023). Music often plays a critical role in creating atmosphere and arousing emotions (Kuo et al., 2013; Schäfer et al., 2013; Hudson et al., 2015), affecting creators’ perceived personality and attractiveness (Yang and Li, 2013). Songs can express individuals’ inner feelings, produce sensations, and bring audiences to various emotional states by sharing an emotional state, triggering specific memories (Schön et al., 2008). In particular, non-professional content creators often perform (e.g., dance or sing) with songs of modified lyrics to produce viral video content. For instance, 34% of the most popular non-professional content creators on TikTok attributed their success to dancing content with background music (Shutsko, 2020).

Unlike studies that focus on the power of AI for professional artists (Anantrasirichai and Bull, 2022; Kadam, 2023), this study focuses on the AI-modified social media music that non-professional content creators develop to add value to their social media content rather than to sell as musical works. This focus has practical significance considering the size of the gig economy, which is expected to grow from USD 455.2 billion in 2023 to USD 1,864.16 billion by 2031, according to the Business Research Insights report (2023). Music-AI developers such as Soundful and Ecrett have provided services to facilitate the creation of royalty-free music for social media content, game development, and video production. Indeed, the ability to personalize background music using AI holds immense potential for enhancing the cognitive and affective engagement and satisfaction of social media content consumers (Hwang and Oh, 2020; Gray, 2023).

The literature has captured the advantages of AI-facilitated music creation, including breaking conventional musical structures, enabling listeners to tailor music, and adapting lyrics and vocal styles to suit various cultural contexts (Civit et al., 2022; Deruty et al., 2022). As a result, non-professional content creators can modify lyrics and infuse local elements to create personalized songs in response to special events (e.g., birthdays, celebrations, and social incidents). Those modified songs can provide meaningful expression of creators’ personal experiences and foster empathy among audiences. Moreover, AI allows music consumers to perform songs using the voice and singing techniques of their favorite singers, thereby overcoming the original singers’ limitations due to language and cultural differences. Despite the growing popularity of personalized background music, limited attention has been given to the role of AI-modified music in increasing audience engagement in content created by non-professionals. As such, studying the effect of AI-facilitated musical tools can not only inspire creative artists to connect with audiences by experimenting with different musical elements (Grech et al., 2023) but also empower non-professional content creators to commercialize their ideas.

In this context, not much is known about the factors that influence users’ social media engagement, i.e., social media consumers’ positive cognitive, emotional and behavioral activities during or related to consumer-content interactions (Trunfio and Rossi, 2021). The first predictor of social media engagement could be event relevance, which refers to the connection between a piece of music and a specific event, with the music enhancing the emotional impact of the event or evoking extramusical feelings relevant to the event (Ibrahim, 2023). Non-professional content creators may use AI to modify existing songs to align with the mood, theme, atmosphere, and location of a specific event to attract consumer attention (Anantrasirichai and Bull, 2022). Understanding how event relevance influences social media engagement can provide insights into the importance of context-specific music in generating user interest, interactions, and discussions.

The second feature of AI-modified background music could be lyric resonance, which in this study refers to the emotional impact and connection that the lyrics of a song have on social media consumers (Deng et al., 2015). It indicates a song’s ability to evoke strong emotions, resonate with personal experiences, and create a deep sense of connection or understanding. Lyrics that evoke strong emotional responses, convey relatable narratives or express profound thoughts have the potential to spark discussions and connect content consumers on social media platforms (Mori, 2022). Exploring the impact of lyric resonance on social media engagement can shed light on the role of meaningful and relatable lyrics in enhancing social media consumer interaction and fostering a sense of community.

Third, the singer’s origins, i.e., the cultural background or heritage of singers that stimulates listeners’ emotional connection and familiarity (Hemmasi, 2020), may also affect social media engagement. AI-modified songs that mimic singers from specific regions or cultural backgrounds can stimulate online communities centered around specific musical styles or cultural interests. Examining the impact of singer origins on social media engagement can provide valuable insights into the role of cultural diversity and representation in personalized AI-generated music. As such, this study aims to examine the roles of event relevance, lyric resonance, singer origins, audience interpretation, and emotional resonance in social media engagement with personalized background music.

To investigate the three features of AI-modified music, a quantitative research study is necessary. By examining the roles of event relevance, lyric resonance, and singer origins in social media consumers’ cognitive and emotional responses, this study aims to provide valuable insights into the factors that influence social media engagement of personalized background music. The findings of this study will inform the design and development of AI-generated music systems that cater to individual preferences, enhance emotional resonance, and foster active participation and engagement on social media platforms.

2 Literature review and hypotheses

2.1 Theoretical perspective

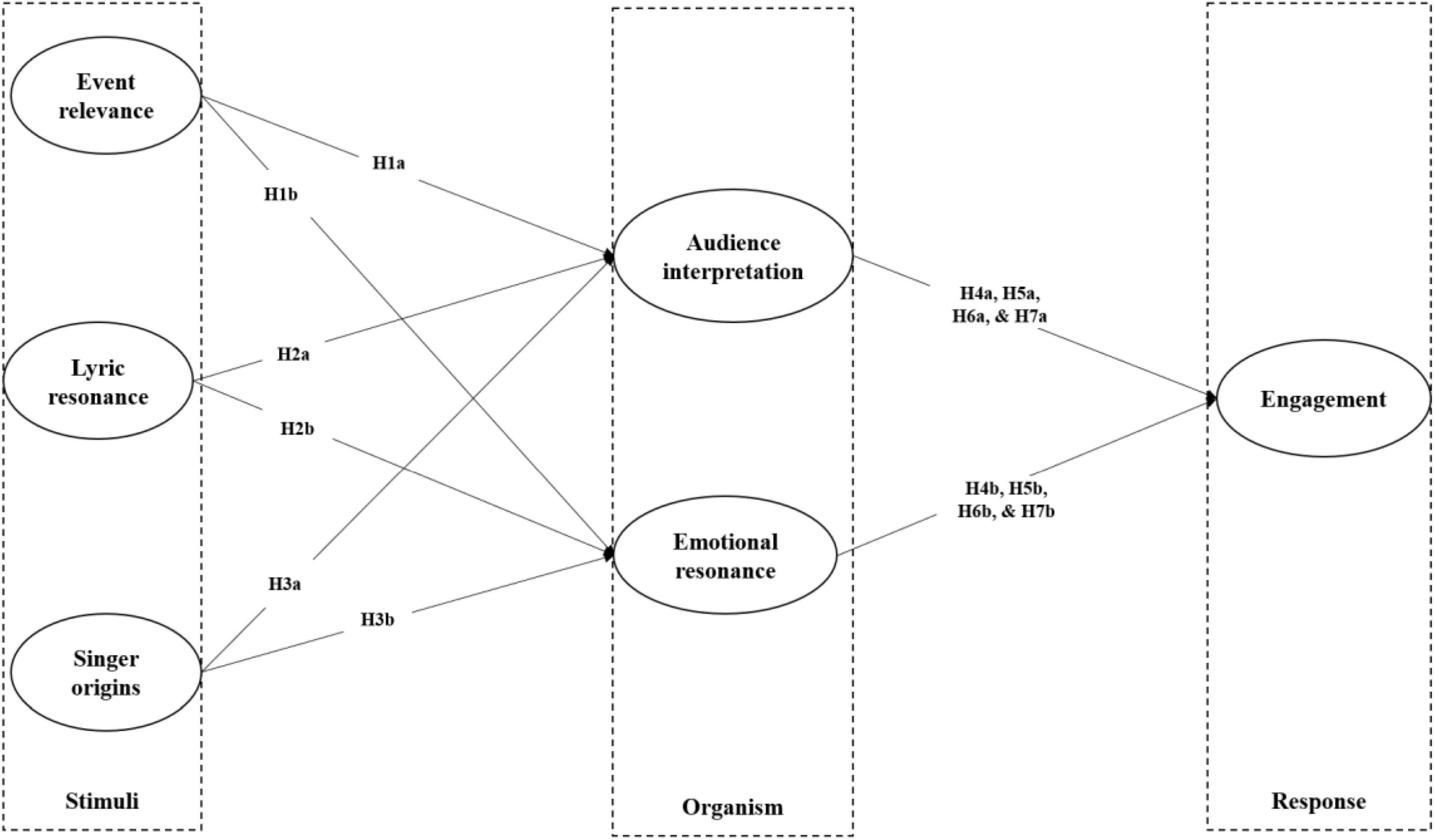

Social media engagement studies (Carlson et al., 2018; Perez-Vega et al., 2021; Abbasi et al., 2023; Wang et al., 2023) have adopted the Stimuli-Organism-Response (S-O-R) model, which assumes that atmospheric and informational cues (S) can trigger a consumer’s cognitive and affective reactions to these cues (O) that further influence his or her behavioral responses (R) (Mehrabian and Russell, 1974; Bigne et al., 2020). In particular, researchers (Suh et al., 2021; Kang and Lou, 2022; Kadam, 2023) have increasingly recognized the role of AI in enhancing audience engagement. In the context of music, the stimuli involve lyrics, musical style and artist characteristics that affect the consumer’s perception of the music (Belfi and Kacirek, 2021; Zhang et al., 2023); the organism refers to music consumers’ personal preferences, emotional states, cultural background, and cognitive processes when processing the external stimuli (Pandita et al., 2021); response, the reactions of the organism to the stimuli, may involve engagement levels and preferences of music (Hwang and Oh, 2020).

This study follows previous studies to adopt the S-O-R model to conceptualize social media content consumers’ responses to AI-modified background music. Music consumers often resonate with music that reflects or comments on the social contexts they have experienced (Murray and Murray, 1996; DeWall et al., 2011). Particular events or experiences often form the social contexts that influence social media content creators’ choice of personalized background music (Threadgold et al., 2019) to align with the intended theme or atmosphere in the content. For instance, Nelson Mandela’s struggles for freedom and equality have been conveyed in the lyrics of ‘Guang Hui Sui Yue’, a classic song by the Hong Kong rock band Beyond. AI-modified music can form an exposure effect where specific social events, lyrics, tunes, and singers are connected to elicit consumers’ implicit memory (e.g., paternal love), thereby capturing their attention and establishing a connection. When a song is modified to reflect a very emotional event, it can be an effective cue to bring back the strong emotion that was felt at that moment (Arjmand et al., 2017; Schaefer, 2017). As a result, this study considers the relevance of the social events reflected in the AI-modified music as a component of the stimuli.

Another important stimulus is the resonance delivered by lyrics, i.e., the degree to which the lyrics of AI-modified background music resonate with social media users’ personal experiences, emotions, or themes in their daily lives. Lyrics that evoke familiarity, connection, or emotional relevance can enhance the audience’s engagement with the music (Ruth and Schramm, 2021). Following previous studies (Ruth and Schramm, 2021), this study considers resonating lyrics reflected in the AI-modified music as a component of the stimuli.

Finally, AI could imitate the voices and accents of artists from a specific area to sing the songs modified by non-professional social media creators, thereby forming stimuli for social media consumers. Indeed, individuals working and living in culturally diverse cities often retain their hometown identities and self-esteem (Whitesell et al., 2009). AI-modified songs can adopt the voices, accents, and images of singers who share the same cultural and geographical backgrounds as audiences from the same areas to form cultural affinity (Yoon, 2017). In doing so, non-professional creators can foster a sense of belonging and community with their audiences. Therefore, the use of singers from a specific hometown can serve as a stimulus in social media content targeted at a specific cohort of audiences.

Audiences respond to the stimuli through cognitive and emotional processes (Juslin and Västfjäll, 2008). In particular, the cognitive process involves audiences’ understanding and interpretation of AI-modified songs. This may involve social media users’ understanding of the lyrics, the music composition, and the overall context of the AI-modified songs, i.e., whether consumers can extract the meaning and assimilate the information that the non-professional creators are trying to deliver. It may also involve consumers’ emotional interpretation, i.e., how they perceive and understand the emotional content conveyed by the AI-modified music. Previous studies (Juslin and Västfjäll, 2008; Hill, 2013) have recognized the importance of audience interpretation for audiences to connect with musicians and highlighted the need to explore underlying mechanisms of audience response to music. Moreover, these users integrate their cultural backgrounds, life experiences, and musical preferences into the interpretation process. As such, social media content consumers may attribute specific meaning, personal relevance, and subjective understanding to the music. These individualized cognitive processes collectively influence how they perceive and make sense of the AI-modified songs. Drawing on the above discussion, this study considers audience interpretation of AI-modified songs as an organism (O) factor.

This study follows previous scholars (Tubillejas-Andres et al., 2020; Nagano et al., 2023) to adopt music consumers’ emotional response as the response factor in the S-O-R model. Audiences’ organismic processes may also involve emotional resonance, i.e., the degree of emotional connection, alignment, and resonance experienced by the audience with the personalized background music (Qiu et al., 2019). Social media engagement represents the content consumers’ responses to AI-modified music. It involves interactive behaviors, including likes, comments, shares, and discussions related to personalized background music on social media platforms. It reflects the level of involvement, connection, and interest that individuals exhibit toward AI-modified music and their willingness to actively participate and engage with it in the social media environment (Threadgold et al., 2019; Kang and Lou, 2022). Therefore, this study considers how the three key elements of AI-modified songs (i.e., event relevance, lyric resonance, and singer origins) influence audience interpretation and emotional resonance (organismic processes), which in turn impact social media engagement with AI-modified background music (response).

2.2 Event relevance

Social media content consumers’ interpretation of musical works relies on cognitive and communicative efforts, which can be enhanced through inferences (Fraser et al., 2021). AI-modified background music provides familiar contexts where social media content consumers can understand the events, sensations, and memories that they have experienced before. AI-modified background music can be specifically tailored to different events, such as weddings, parties, and relaxation scenarios. This allows content creators to align with content consumers’ experiences or memories of previous events (Gan et al., 2020). The relevance of events delivered by AI-modified background music could also influence social media content consumers’ emotional resonance. Indeed, personalized background music allows social media content consumers to find emotional connections and overall positive emotional resonance when the music is contextually relevant to the given event. Previous studies (Morris and Boone, 1998; Juslin and Västfjäll, 2008; Kwon et al., 2022) have provided empirical evidence on the role of background music in enhancing consumers’ arousal, affect, and attention to ongoing social media content. In particular, compared to original songs, AI-modified music may stimulate a stronger emotional connection and overall positive interpretation of the AI-modified music when it is contextually closer to the given event. As such, AI-modified music can remind the audience of romantic encounters, career milestones, or personal achievements. In short, social media content consumers are more likely to interpret and emotionally resonate with personalized background music that aligns with specific events. Based on such discussion, this study predicts the following hypotheses:

H1a: Event relevance has a positive impact on audience interpretation.

H1b: Event relevance has a positive impact on audience emotional resonance.

The impact of lyrics on the audience’s emotional responses has been well documented (Anderson et al., 2003; DeWall et al., 2011; Mori and Iwanaga, 2014). Music studies (Mori and Iwanaga, 2014) have associated lyrics with emotions, especially in songs consumed in daily life. Happy and sad lyrics could stimulate social media content consumers’ perception of the pleasant and unpleasant states and situations that the songs are expressing (Vuoskoski et al., 2012; Ruth and Schramm, 2021). With the recent introduction of AI music generators such as LyricStudio and MelodyStudio, social media content creators can easily create and modify lyrics for their music. Research on AI-generated music (Louie et al., 2020) has confirmed the positive impact of AI-generated lyrics on audience satisfaction with the songs. In particular, social media content creators could take advantage of social media platforms to learn about social media content consumers’ experiences, views, and opinions on various events and then integrate those elements into their content (Nwagwu and Akintoye, 2023). As such, AI-modified background music incorporating lyrics relevant to social media content consumers’ personal experiences or preferred topics in their lives can contribute to positive interpretation and emotional resonance. This suggests that lyric resonance plays a crucial role in influencing how audiences interpret and feel about AI-modified background music. Based on the above discussion, the following hypotheses can be predicted:

H2a: Lyric resonance positively influences audience interpretation.

H2b: Lyric resonance positively influences emotional resonance.

While not much has been written about the role of singers’ hometown origins in consumers’ interpretation of AI-modified background music on social media, previous studies (Oh and Lee, 2014; Fraser et al., 2021) shed light on the role of pop stars’ origins and hometowns in different contexts. For instance, American celebrities touring hometowns were often met with cheers and screams from audiences who share the same hometown roots (McClain, 2010). These stars can serve as a hometown brand that stimulates the understanding of shared experiences, places, and emotions and thereby generates a sense of belonging (Wei et al., 2023). AI music tools now enable a specific piece of background music to be performed by virtually any singer. This function could allow social media content creators to achieve cultural affinity with content consumers by using a singer of the same hometown origins to sing a modified song (e.g., with hometown accents). As such, social media content consumers are likely to form cultural connections and develop favorable interpretations of and emotional resonance with the social media content. As a result, the following hypotheses can be predicted:

H3a: Singers’ hometown origins positively influence audience interpretation of AI-generated personalized background music.

H3b: Singers’ hometown origins positively influence the emotional resonance of audiences.

Social media engagement involves an audience’s positive cognitive and emotional activity during interactions on social media (Delbaere et al., 2021). Cognitive processing involves a social media content consumer’s degree of understanding and thought about a specific social media content or personal brand (Hollebeek, 2011). Several scholars found that social media engagement can be achieved when audiences can understand the values and meaning of the messages (Shang et al., 2017; Cheng et al., 2020). AI tools can help analyze social media content consumers’ favorite music types and predict the music elements (e.g., themes and lyrics) that are more likely to generate audience liking, commenting, and sharing. As such, social media content creators can integrate those elements into their social media content to help content consumers better understand the tones, attitudes, and origins of the music and interpret it in a meaningful and engaging manner, leading to increased social media interactions and engagement metrics such as likes, comments, and shares.

Likewise, emotional resonance achieved through AI-modified music could grab users’ attention and evoke user engagement (Shang et al., 2017; Schreiner and Riedl, 2019). When AI-modified background music is able to resonate with audience emotions, audiences are likely to develop positive sentiments and thus foster meaningful interactions with social media content consumers. This is particularly true when content consumers find a strong emotional connection to the AI-modified music content: the sense of community will encourage these audiences to join discussions and conversations. The sense of community could further encourage engagement as users actively participate in discussions, conversations, and reposts (Tafesse and Wien, 2018). As a result, the following hypotheses can be predicted:

H4a: Audience interpretation is positively associated with audience social media engagement related to AI-modified background music.

H4b: Audience emotional resonance is positively associated with audience social media engagement related to AI-modified background music.

In addition to its direct impact, positive audience interpretation by social media consumers could also mediate the relationship between the three stimuli from AI-modified background music and social media engagement. The special meaning achieved by relating to specific events, using modified lyrics, and adopting singers of specific origins could provide rich information and facilitate effective communication between social media content creators and content consumers, which has been found to positively influence social media engagement (Osterholt, 2021; Nwagwu and Akintoye, 2023). Hence, this study further predicts the following mediating effect of audience interpretation:

H5a: Audience interpretation positively mediates the relationship between event relevance and social media engagement related to AI-modified background music.

H6a: Audience interpretation positively mediates the relationship between lyric resonance and social media engagement related to AI-modified background music.

H7a: Audience interpretation positively mediates the relationship between AI-singer origins and social media engagement related to AI-modified background music.

Moreover, emotional resonance felt by social media consumers could also mediate the relationship between the three stimuli from AI-modified background music and social media engagement. Several scholars (Morris and Boone, 1998; Juslin and Västfjäll, 2008) have recognized the role of music in evoking emotions through mechanisms beyond music. For instance, the contagious influence of other content consumers on social media can arise when exciting events, touching lyrics, and popular singers are integrated into a piece of background music to provoke strong emotions among them. Therefore, this study predicts the following mediating effect of emotional resonance:

H5b: Emotional resonance positively mediates the relationship between event relevance and social media engagement related to AI-modified background music.

H6b: Emotional resonance positively mediates the relationship between lyric resonance and social media engagement related to AI-modified background music.

H7b: Emotional resonance positively mediates the relationship between AI-singer origins and social media engagement related to AI-modified background music.

The above hypotheses can form the following conceptual framework (see Figure 1).
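
As a compact restatement of H1a to H7b, the hypothesized paths can be written as three structural equations. This is only a sketch: the symbols are introduced here for illustration and are not the study's notation (EVT = event relevance, LYR = lyric resonance, ORG = AI-singer origins, INT = audience interpretation, RES = emotional resonance, ENG = social media engagement), and direct paths from the stimuli to engagement are omitted because the hypotheses above do not state them.

```latex
\begin{align}
\mathit{INT} &= a_1\,\mathit{EVT} + a_2\,\mathit{LYR} + a_3\,\mathit{ORG} + \zeta_1
  && \text{(H1a, H2a, H3a)} \\
\mathit{RES} &= b_1\,\mathit{EVT} + b_2\,\mathit{LYR} + b_3\,\mathit{ORG} + \zeta_2
  && \text{(H1b, H2b, H3b)} \\
\mathit{ENG} &= c_1\,\mathit{INT} + c_2\,\mathit{RES} + \zeta_3
  && \text{(H4a, H4b)}
\end{align}
% Indirect (mediated) effects implied by the equations above:
%   via audience interpretation: a_1 c_1 (H5a), a_2 c_1 (H6a), a_3 c_1 (H7a)
%   via emotional resonance:     b_1 c_2 (H5b), b_2 c_2 (H6b), b_3 c_2 (H7b)
```

Under this notation, each mediation hypothesis corresponds to a positive product of two path coefficients, for example $a_1 c_1 > 0$ for H5a.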

3 Materials and methods

3.1 Data collection procedure

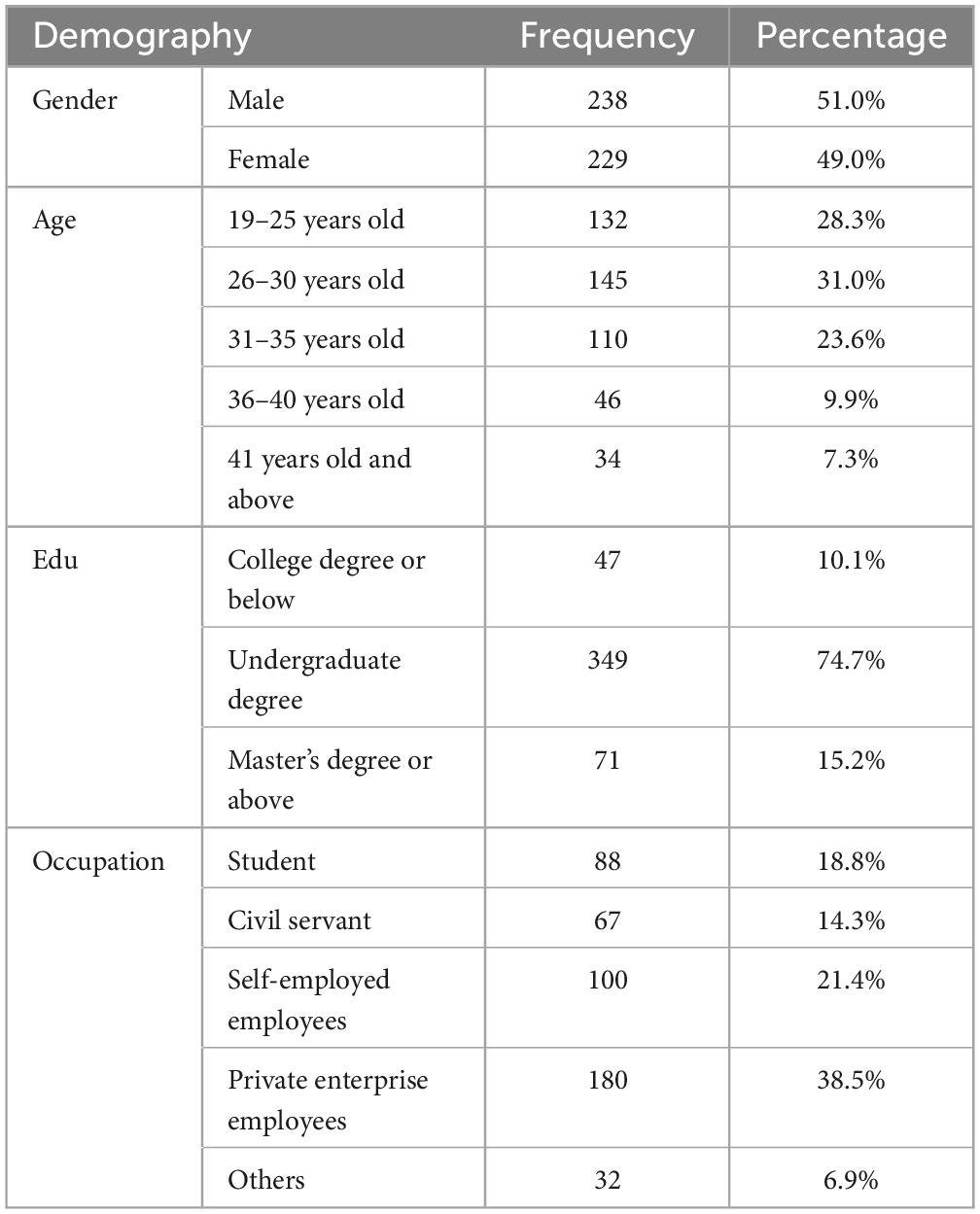

The data used in this study were collected through WENJUANXING, a popular online survey platform in southeastern China. This study distributed the questionnaire after explaining the research purpose to the respondents. Participants were informed that they could leave without repercussions and that their data would only be used for publication and not be shared with other organizations. By confirming this information, this study sought to ensure that respondents shared their thoughts freely. After excluding invalid responses, 467 valid responses remained from the 500 questionnaires collected. Among the 467 participants, 238 were male (51%) and 229 were female (49%).

Respondents aged 26 to 30 formed the largest group (31%), followed by those aged 19 to 25 (28.3%). This result indicates a significant presence of young adults in our study. Furthermore, 74% of respondents hold bachelor’s degrees, suggesting a predominantly educated participant pool. This demographic distribution aligns with previous research highlighting university students and young adult graduates as key demographics in studies regarding music consumption and technology adoption (Karim et al., 2020; Li, 2022). According to Li (2022), individuals in university and young adult life stages demonstrate a heightened interest in music idols and celebrities. This interest often extends to their engagement with novel technologies, as highlighted by Karim et al. (2020), who found that university students and young adult graduates are frequently early adopters of new technologies, such as AI-mediated music platforms.

The selection of university students and young adult graduates as the primary demographic for this study is well-founded given their significance in both music consumption and technology adoption behaviors. Previous studies have underscored the relevance of this demographic group in understanding trends in digital music consumption and the adoption of innovative music technologies. For instance, Borja et al. (2015) explored the preferences and behaviors of international university students regarding music streaming platforms, highlighting their role as early adopters in the digital music landscape. Likewise, Cha et al. (2020) investigated the impact of technological advancements on the pop music consumption of young adults, reinforcing the importance of studying this demographic in the context of music and technology.

Moreover, the demographic profile of university students often includes a diverse range of cultural backgrounds, socioeconomic statuses, and technological proficiencies (Simon and Ainsworth, 2012), thus enriching the breadth and depth of our study findings. Furthermore, the inclusion of young adult graduates in our study extends our scope beyond the confines of universities, offering insights into the post-graduation phase when individuals are navigating career transitions and establishing their identities as consumers in the digital marketplace.

Moreover, 38.5% of respondents indicated employment in private enterprises, followed by self-employed respondents (21.4%), students (18.8%), and government employees, i.e., civil servants (14.3%). Such information reflects a diverse occupational background among participants. Table 1 shows the demographic statistics of the respondents.

In short, the convergence of empirical evidence from previous research, alongside the detailed demographic profile of our sample, strengthens the credibility and relevance of our study findings in advancing scholarly understanding and practical applications in the field of digital music and consumer behavior.

3.2 Measures

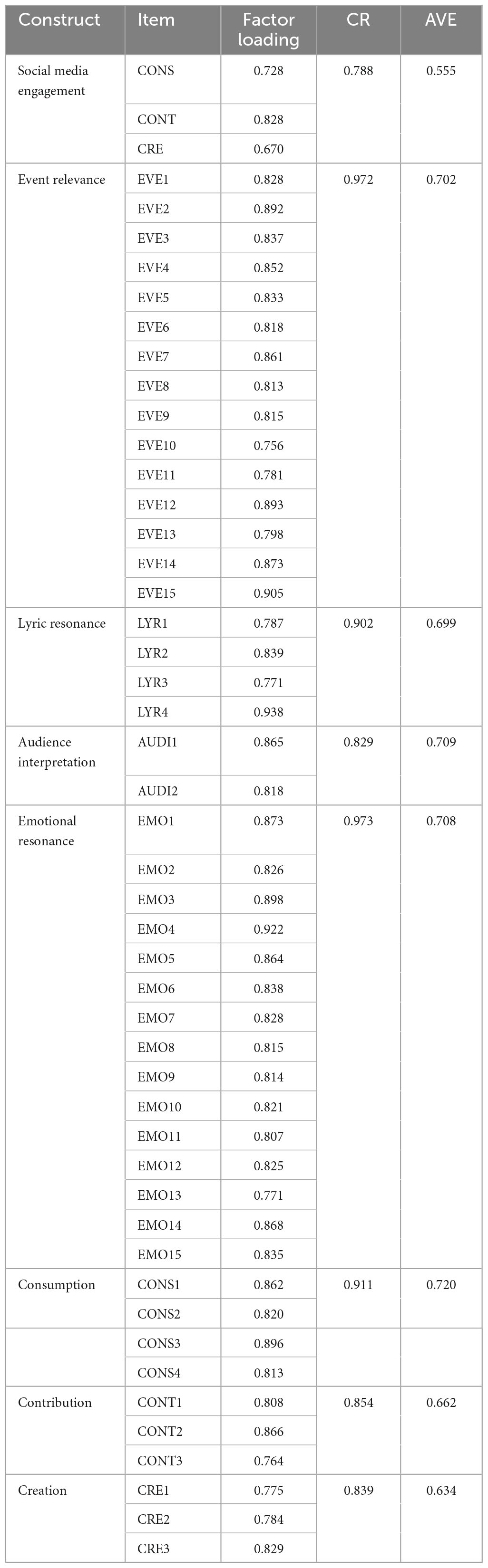

To ensure the reliability and validity of the questionnaire, all items used to measure the variables in this study were adapted from previously published scales. As this study collected data from China, the questionnaire was prepared through a back-translation process. Following Brislin (1970), two researchers independently translated the questionnaire from English to Mandarin and back to English. To ensure the accuracy of the translation, this study consulted with other researchers. All measures in this study used a 5-point Likert scale (1: strongly disagree; 5: strongly agree), except AI-singer origins, which was measured by a binary item adopted from Zhu et al. (2022): a dummy variable that equals 1 if the singer is generated by AI technology, and 0 otherwise. The measurement of event relevance was adopted from Wang (2019), which includes fifteen items, such as “The background music connects to the details of events relevant to me.” The scale of lyric resonance adopted the measurement items proposed by Lee et al. (2021). The measurement of audience interpretation was from Alsaad et al. (2023) and includes two items. Emotional resonance was measured by fifteen items adopted from Toivo et al. (2023). The measurement of social media engagement was derived from Alsaad et al. (2023) and consisted of ten items divided into three subconstructs: consumption, contribution, and creation. The detailed measurement items are provided in the Supplementary Appendix.

3.3 Analysis

SPSS 25.0 and AMOS 24.0 were used for statistical analysis. First, descriptive statistics, common method bias (CMB) testing, reliability analysis, and correlation analysis were conducted in SPSS. Then, AMOS was used to conduct a confirmatory factor analysis (CFA) to evaluate the measurement model. To assess model fit, this study employed conventional indexes and some of the most commonly used standards in the literature (CMIN/DF < 3, CFI > 0.90, and RMSEA and SRMR < 0.08). Reliability was assessed with Cronbach’s alpha and composite reliability. This study employed structural equation modeling (SEM) with maximum likelihood estimation to evaluate each hypothesis. Moreover, the bootstrap method with 5,000 resamples was used to test the mediation effects. By controlling for the effects of measurement error, this method can reduce bias in estimating effects in a mediation analysis (Ledgerwood and Shrout, 2011).
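To illustrate the bootstrap logic behind a mediation test, the following Python sketch computes a percentile confidence interval for an indirect effect. It is a simplified illustration, not the AMOS procedure used in this study: it assumes observed (not latent) variables, ordinary least squares regressions, and synthetic data, and the function names `indirect_effect` and `bootstrap_ci` are hypothetical.

```python
import numpy as np


def indirect_effect(x, m, y):
    """Product-of-coefficients estimate a*b for the path x -> m -> y."""
    # a: slope from regressing the mediator on the predictor
    a = np.polyfit(x, m, 1)[0]
    # b: slope of the mediator when the outcome is regressed on mediator and predictor
    design = np.column_stack([np.ones_like(x), m, x])
    coefs, *_ = np.linalg.lstsq(design, y, rcond=None)
    b = coefs[1]
    return a * b


def bootstrap_ci(x, m, y, n_boot=5000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for the indirect effect."""
    rng = np.random.default_rng(seed)
    n = len(x)
    estimates = np.empty(n_boot)
    for i in range(n_boot):
        # Resample respondents with replacement, keeping each row intact
        idx = rng.integers(0, n, size=n)
        estimates[i] = indirect_effect(x[idx], m[idx], y[idx])
    lower, upper = np.percentile(estimates, [100 * alpha / 2, 100 * (1 - alpha / 2)])
    return lower, upper


# Synthetic data only (assumed effect sizes, not the study's data)
rng = np.random.default_rng(42)
n = 467
x = rng.normal(size=n)
m = 0.5 * x + rng.normal(size=n)
y = 0.4 * m + 0.1 * x + rng.normal(size=n)

point = indirect_effect(x, m, y)
lower, upper = bootstrap_ci(x, m, y)
print(f"indirect effect = {point:.3f}, 95% CI = [{lower:.3f}, {upper:.3f}]")
```

An indirect effect is typically treated as significant when the confidence interval excludes zero.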

4 Results

4.1 Common method bias

Because the data were self-reported by live stream users, common method bias (CMB) might be an issue. To examine this, the study applied Harman’s single-factor test, entering all items into an exploratory factor analysis (EFA). The first factor explained 37.801% of the variance, below the 40% threshold, indicating that common method bias is not a serious problem in this study.
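As a rough illustration of Harman’s single-factor test, the Python sketch below computes the share of total variance captured by the first unrotated component of the item correlation matrix. It assumes principal-component extraction and uses synthetic item responses with a hypothetical two-factor structure; the function name `first_factor_variance_share` is also hypothetical, and the result says nothing about the study’s actual data.

```python
import numpy as np


def first_factor_variance_share(items):
    """Share of total variance explained by the first unrotated component."""
    corr = np.corrcoef(items, rowvar=False)
    eigenvalues = np.linalg.eigvalsh(corr)  # returned in ascending order
    return eigenvalues[-1] / eigenvalues.sum()


# Synthetic responses: two independent latent factors, six items each (assumed)
rng = np.random.default_rng(0)
n_respondents, n_items = 467, 12
factors = rng.normal(size=(n_respondents, 2))
loadings = np.zeros((2, n_items))
loadings[0, :6] = 0.7
loadings[1, 6:] = 0.7
noise = rng.normal(scale=0.7, size=(n_respondents, n_items))
items = factors @ loadings + noise

share = first_factor_variance_share(items)
print(f"First factor explains {share:.1%} of the variance")
```

A share below the chosen cutoff (40% in this study) is read as evidence that a single common factor does not dominate the responses.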

4.2 Reliability and validity

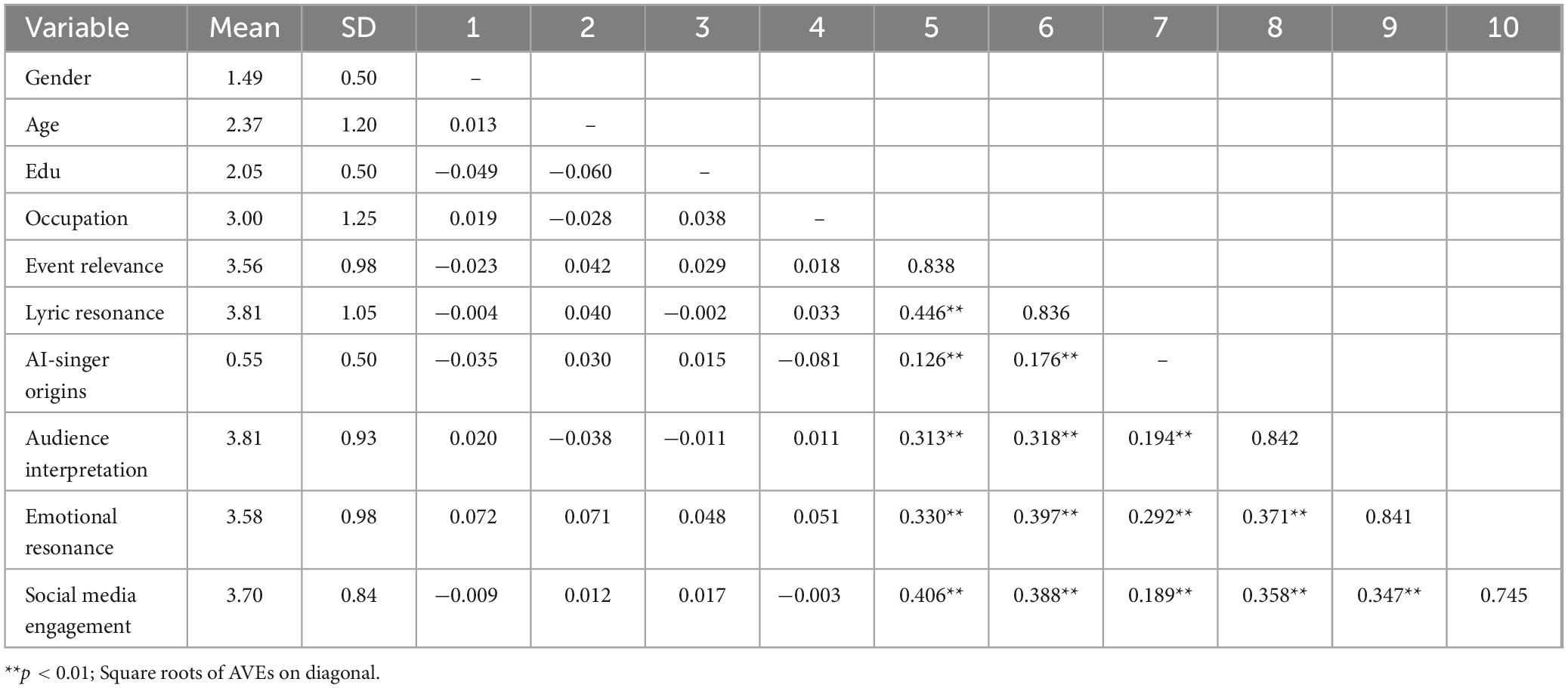

Cronbach’s alpha and composite reliability (CR) were used to assess the variables’ internal consistency. Both values exceed the recommended threshold of 0.7 for every variable, indicating good reliability. All factor loadings and average variance extracted (AVE) values are greater than 0.5, indicating convergent validity (see Table 2). The fit of the measurement model is CMIN/DF = 2.49, CFI = 0.928, SRMR = 0.045, and RMSEA = 0.057, indicating that the structural validity of this study is good. A comparison of the correlation coefficients with the square roots of the AVEs of each variable indicates good discriminant validity (see Table 3).
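For readers unfamiliar with these indices, the Python sketch below shows how CR and AVE are conventionally computed from standardized factor loadings, and how the square root of AVE is compared with inter-construct correlations. The loadings and correlations are hypothetical placeholders, not values from Table 2 or Table 3, and the function names are illustrative.

```python
import numpy as np


def composite_reliability(loadings):
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    lam = np.asarray(loadings, dtype=float)
    error_variances = 1.0 - lam**2  # standardized loadings assumed
    return float(lam.sum() ** 2 / (lam.sum() ** 2 + error_variances.sum()))


def average_variance_extracted(loadings):
    """AVE = mean of the squared standardized loadings."""
    lam = np.asarray(loadings, dtype=float)
    return float(np.mean(lam**2))


def discriminant_validity_ok(ave, correlations_with_other_constructs):
    """True if sqrt(AVE) exceeds every correlation with other constructs."""
    return bool(np.sqrt(ave) > np.max(np.abs(correlations_with_other_constructs)))


# Hypothetical loadings for one construct and its correlations with others
hypothetical_loadings = [0.72, 0.78, 0.81, 0.75]
hypothetical_correlations = [0.41, 0.52, 0.38]

cr = composite_reliability(hypothetical_loadings)
ave = average_variance_extracted(hypothetical_loadings)
print(f"CR = {cr:.3f}, AVE = {ave:.3f}")
print("Discriminant validity:", discriminant_validity_ok(ave, hypothetical_correlations))
```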

4.3 Structural equation model analysis

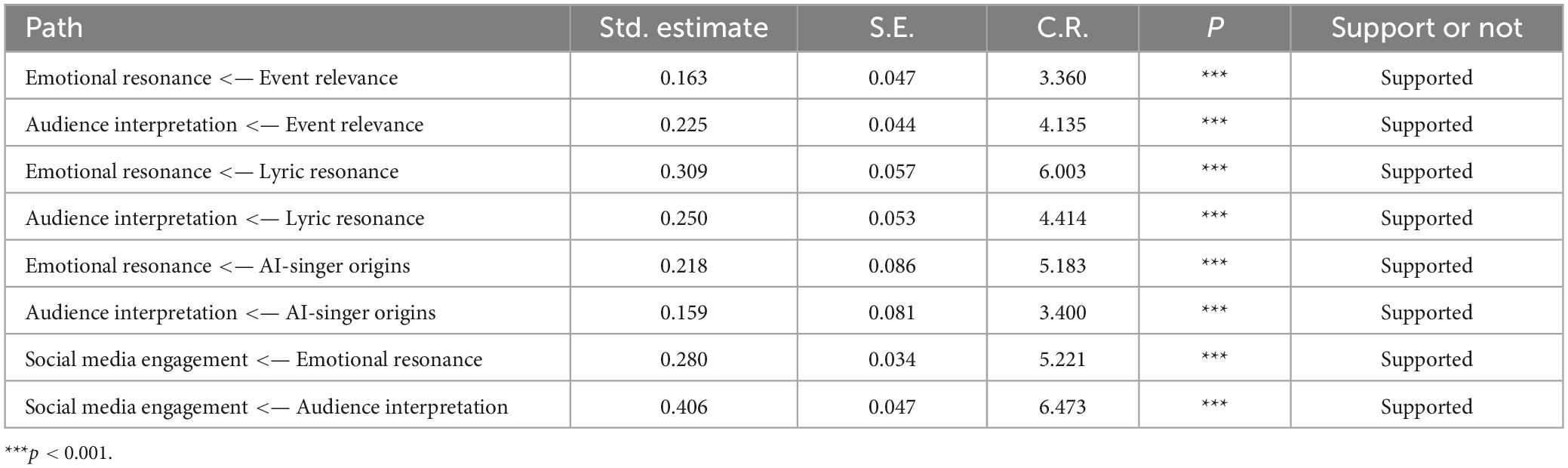

A structural equation model (SEM) was estimated in AMOS to test the hypotheses. The initial model containing only the predictor, mediator, and outcome shows an excellent fit to the data (CMIN/DF = 2.52, CFI = 0.923, SRMR = 0.065, and RMSEA = 0.057). Table 4 displays the model’s path coefficients. Event relevance (β = 0.163, p < 0.05), lyric resonance (β = 0.309, p < 0.05), and AI-singer origins (β = 0.218, p < 0.05) have significant positive effects on emotional resonance; event relevance (β = 0.225, p < 0.05), lyric resonance (β = 0.250, p < 0.05), and AI-singer origins (β = 0.159, p < 0.05) have significant positive impacts on audience interpretation; emotional resonance (β = 0.280, p < 0.05) and audience interpretation (β = 0.406, p < 0.05) have significant positive impacts on social media engagement. This evidence supports H1a, H2a, H3a, H4a, H1b, H2b, H3b, and H4b.
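The cutoffs stated in Section 3.3 can be applied mechanically to the fit indices reported for the measurement model (Section 4.2) and the structural model. The Python sketch below is illustrative only: the function name `meets_fit_criteria` is hypothetical, and it assumes that the 0.08 cutoff applies to both RMSEA and SRMR.

```python
def meets_fit_criteria(cmin_df, cfi, rmsea, srmr):
    """Check each fit index against the cutoffs listed in Section 3.3."""
    return {
        "CMIN/DF < 3": cmin_df < 3,
        "CFI > 0.90": cfi > 0.90,
        "RMSEA < 0.08": rmsea < 0.08,  # assumed to share the SRMR cutoff
        "SRMR < 0.08": srmr < 0.08,
    }


# Fit indices as reported in Sections 4.2 and 4.3
measurement_model = meets_fit_criteria(cmin_df=2.49, cfi=0.928, rmsea=0.057, srmr=0.045)
structural_model = meets_fit_criteria(cmin_df=2.52, cfi=0.923, rmsea=0.057, srmr=0.065)

for name, checks in [("measurement", measurement_model), ("structural", structural_model)]:
    for criterion, passed in checks.items():
        print(f"{name:12s} {criterion:14s} {'pass' if passed else 'fail'}")
```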

4.4 Mediating effects testing

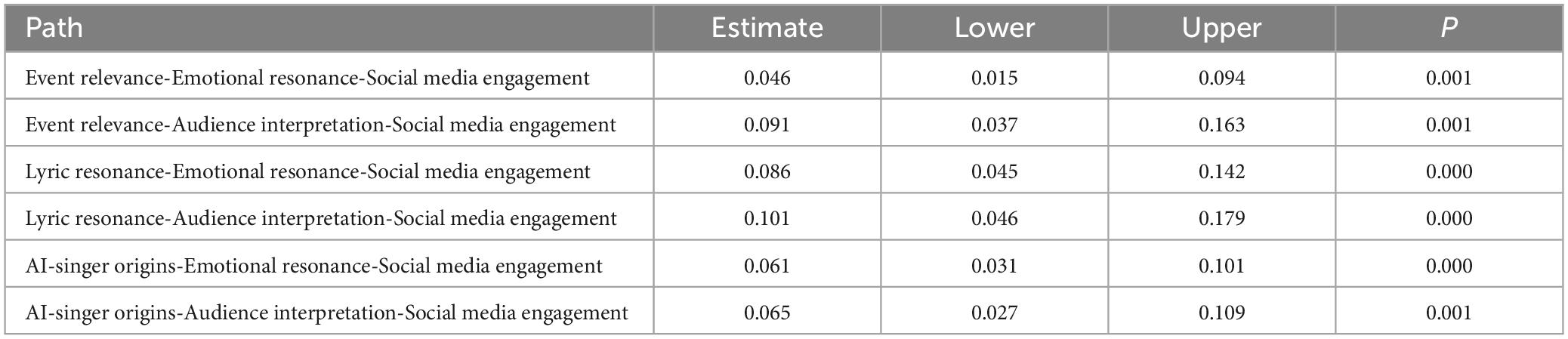

This study further examined the serial mediation effects of emotional resonance and audience interpretation on the association between event relevance, lyric resonance, AI-singer origins and social media engagement, using the bootstrapping method in AMOS. The results are shown in Table 5. The indirect effect of event relevance on social media engagement is significant both via emotional resonance (0.046, 95% confidence interval [CI] = [0.015, 0.094]) and via audience interpretation (0.091, 95% CI = [0.037, 0.163]). The indirect effect of lyric resonance on social media engagement is significant via emotional resonance (0.086, 95% CI = [0.045, 0.142]) and via audience interpretation (0.101, 95% CI = [0.046, 0.179]). The indirect effect of AI-singer origins on social media engagement is likewise significant via emotional resonance (0.061, 95% CI = [0.031, 0.101]) and via audience interpretation (0.065, 95% CI = [0.027, 0.109]). Thus, H5a, H6a, H7a, H5b, H6b, and H7b are supported.
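In a single-mediator path, the indirect effect is conventionally the product of the stimulus-to-mediator coefficient and the mediator-to-outcome coefficient. As a consistency check, the Python sketch below multiplies the rounded path coefficients reported in Section 4.3 and compares each product with the indirect effect reported above. The variable names are illustrative, and small differences are expected because the published coefficients are rounded to three decimals.

```python
# Path coefficients reported in Section 4.3 (stimulus -> mediator)
stimulus_to_mediator = {
    ("event relevance", "emotional resonance"): 0.163,
    ("lyric resonance", "emotional resonance"): 0.309,
    ("AI-singer origins", "emotional resonance"): 0.218,
    ("event relevance", "audience interpretation"): 0.225,
    ("lyric resonance", "audience interpretation"): 0.250,
    ("AI-singer origins", "audience interpretation"): 0.159,
}

# Path coefficients reported in Section 4.3 (mediator -> social media engagement)
mediator_to_engagement = {
    "emotional resonance": 0.280,
    "audience interpretation": 0.406,
}

# Indirect effects reported in Section 4.4
reported_indirect = {
    ("event relevance", "emotional resonance"): 0.046,
    ("lyric resonance", "emotional resonance"): 0.086,
    ("AI-singer origins", "emotional resonance"): 0.061,
    ("event relevance", "audience interpretation"): 0.091,
    ("lyric resonance", "audience interpretation"): 0.101,
    ("AI-singer origins", "audience interpretation"): 0.065,
}

differences = {}
for (stimulus, mediator), a in stimulus_to_mediator.items():
    product = a * mediator_to_engagement[mediator]
    reported = reported_indirect[(stimulus, mediator)]
    differences[(stimulus, mediator)] = abs(product - reported)
    print(f"{stimulus} via {mediator}: a*b = {product:.4f}, reported = {reported:.3f}")
```

Each product agrees with the reported indirect effect to within about 0.001, which is consistent with rounding of the published coefficients.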

5 Discussion

Drawing on the growing interest from researchers and practitioners (Civit et al., 2022), this study explores how AI enables non-professional content creators to create and modify music to add value to their videos, games, and other content (Dredge, 2023). The structural equation modeling analysis using AMOS 24 provided empirical support to the hypothesized conceptual framework. Analysis results indicate that the model adequately represents the relationships among the variables under investigation. In doing so, this study makes several contributions.

First, the focus on AI-modified music by non-professional content creators adds to the music studies that focus on AI-facilitation in music creation among professional artists (Anantrasirichai and Bull, 2022). More importantly, this study sheds light on the growing body of literature on the gig economy (Waldkirch et al., 2021), where ordinary individuals such as non-professionals are also able to create social media content to generate income. Our findings suggest that non-professional content creators can use AI to tailor songs sung by singers with cultural affinity with modified lyrics to elicit content consumers’ experiences or memories regarding important social events. In doing so, we contribute to the AI-music literature by unraveling the key elements that AI enables those creators to develop to achieve consumer engagement.

Second, the findings extend the S-O-R model by contextualizing the three stimuli (S; i.e., event relevance, lyric resonance, and AI-singer origins) from AI-modified music and content consumers’ cognitive (i.e., audience interpretation) and emotional (i.e., emotional resonance) processes (O) that further affect their social media engagement behavior (R). Specifically, the impact of event relevance, lyric resonance and AI-singer origins on social media content consumers’ interpretation and emotional resonance is consistent with previous studies on the role of background music in arousing content consumers’ emotional changes (Juslin and Västfjäll, 2008). In particular, this study elaborated on how AI-modified background music can stimulate content consumers’ emotions by reminding them of previous events (e.g., milestones and achievements) that are important to their individual lives and how cultural affinity is achieved to stimulate content consumers’ sense of belonging.

Third, this study elaborates on the role of social media content consumers’ cognitive and emotional processing in influencing social media engagement. In particular, it demonstrated the serial mediation effects of emotional resonance and audience interpretation on the relationship between event relevance, lyric resonance, AI-singer origins, and social media engagement. The integration of these research findings with existing literature is noteworthy. Prior studies have emphasized the role of cognitive understanding and emotional resonance in shaping consumers’ responses to various media content (Qiu et al., 2019; Delbaere et al., 2021). This study contributes to this body of knowledge by examining these mechanisms in the context of AI-modified music. In particular, it adds empirical evidence regarding how social media content consumers’ interpretations of lyrical content and emotional connections with music play a pivotal role in fostering social engagement with AI-generated background music.

The practical implications of the research findings are twofold. First, non-professional content creators and digital platforms can leverage the identified factors of event relevance, lyric resonance, and AI-singer origins to enhance social media content consumers’ cognitive understanding and emotional experiences of social media content. This can be achieved by adopting AI to learn the popular events, themes, and emotions shared by different categories of social media content consumers. Recognizing and integrating those elements into background music can lead to increased social media engagement, facilitating a wider reach and greater impact. Second, understanding the mediating effects of audience interpretation and emotional resonance offers valuable guidance for non-professional content creators seeking effective strategies for attracting social media consumers. By encouraging audience understanding and eliciting emotional responses to the background music, non-professional content creators can foster a more engaged and loyal content consumer base.

Despite the aforementioned contributions and insights, this study is subject to some limitations. First, this study focused on a specific genre of music, i.e., the AI-modified singer model. While addressing the research gaps, it may also limit the generalizability of the findings to AI-generated music genres, especially those produced by professional musicians. Second, the study adopted self-reported measures for audience interpretation, emotional resonance, and social media engagement, which may be subject to response biases. Future studies could consider integrating physiological measures or behavioral observations to complement self-reported data. Third, findings of this study are not causal due to the chosen research design, which primarily relies on survey data and structural equation modeling. While these methods provide valuable insights into associations between variables, they do not allow for manipulation of possible confounding variables that may influence the observed relationships. Future studies can adopt controlled experiments to confidently make causal claims. Fourth, this study only focused on social media engagement as a dependent variable. Future studies could develop and examine other potential dimensions of social media engagement with AI-modified music. Expanding the scope of engagement measures could provide a more comprehensive understanding of social media content consumers’ responses to AI-modified music.

6 Conclusion

This study sheds light on the underlying mechanisms that drive social media engagement in the context of AI-modified background music. By investigating the roles of event relevance, lyric resonance, AI-singer origins, audience interpretation, and emotional resonance, this study provides valuable insights for researchers and practitioners in the social media and digital music business. The theoretical contributions and practical implications of this study advance our understanding of social media engagement with AI-singer-originated background music and provide a basis for future investigations in this rapidly evolving domain.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the author, without undue reservation.

Ethics statement

The studies involving humans were approved by the Research Department, Communication University of Zhejiang. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

XG: Writing – review and editing, Writing – original draft, Methodology, Investigation, Formal analysis, Data curation.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Conflict of interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2024.1267516/full#supplementary-material

Footnotes

- ^ https://www.makeuseof.com/free-ai-music-generators-create-unique-songs/

- ^ https://www.lyrics.com/lyric/28688428/Beyond/Guang+Hui+Sui+Yue

References

Abbasi, A. Z., Tsiotsou, R. H., Hussain, K., Rather, R. A., and Ting, D. H. (2023). Investigating the impact of social media images’ value, consumer engagement, and involvement on eWOM of a tourism destination: A transmittal mediation approach. J. Retail. Consum. Serv. 71:103231. doi: 10.1016/j.jretconser.2022.103231

Alsaad, A., Alam, M. M., and Lutfi, A. (2023). A sensemaking perspective on the association between social media engagement and pro-environment behavioural intention. Technol. Soc. 72:102201.

Alvarez, P., Quiros, J. G. D., and Baldassarri, S. (2023). RIADA: A machine-learning based infrastructure for recognising the emotions of spotify songs. Int. J. Interact. Multimed. Artif. Intell. 8, 168–181. doi: 10.9781/ijimai.2022.04.002

Anantrasirichai, N., and Bull, D. (2022). Artificial intelligence in the creative industries: A review. Artif. Intell. Rev. 55, 1–68.

Anderson, C. A., Carnagey, N. L., and Eubanks, J. (2003). Exposure to violent media: The effects of songs with violent lyrics on aggressive thoughts and feelings. J. Pers. Soc. Psychol. 84:960. doi: 10.1037/0022-3514.84.5.960

Arjmand, H.-A., Hohagen, J., Paton, B., and Rickard, N. S. (2017). Emotional responses to music: Shifts in frontal brain asymmetry mark periods of musical change. Front. Psychol. 8:2044. doi: 10.3389/fpsyg.2017.02044

Belfi, A. M., and Kacirek, K. (2021). The famous melodies stimulus set. Behav. Res. Methods 53, 34–48. doi: 10.3758/s13428-020-01411-6

Berz, W. L., and Bowman, J. (1995). An historical perspective on research cycles in music computer-based technology. Bull. Counc. Res. Music Educ. 126, 15–28.

Bigne, E., Chatzipanagiotou, K., and Ruiz, C. (2020). Pictorial content, sequence of conflicting online reviews and consumer decision-making: The stimulus-organism-response model revisited. J. Bus. Res. 115, 403–416. doi: 10.1016/j.jbusres.2019.11.031

Borja, K., Dieringer, S., and Daw, J. (2015). The effect of music streaming services on music piracy among college students. Comp. Hum. Behav. 45, 69–76.

Brislin, R. W. (1970). Back-translation for cross-cultural research. J. Cross Cult. Psychol. 1, 185–216.

Carlson, J., Rahman, M., Voola, R., and De Vries, N. (2018). Customer engagement behaviours in social media: Capturing innovation opportunities. J. Serv. Market. 32, 83–94. doi: 10.2196/37982

Cha, K. C., Suh, M., Kwon, G., Yang, S., and Lee, E. J. (2020). Young consumers’ brain responses to pop music on Youtube. Asia Pac. J. Market. Logist. 32, 1132–1148.

Cheng, Y., Wei, W., and Zhang, L. (2020). Seeing destinations through vlogs: Implications for leveraging customer engagement behavior to increase travel intention. Int. J. Contemp. Hosp. Manag. 32, 3227–3248.

Civit, M., Civit-Masot, J., Cuadrado, F., and Escalona, M. J. (2022). A systematic review of artificial intelligence-based music generation: Scope, applications, and future trends. Expert Syst. Appl. 209:118190.

Delbaere, M., Michael, B., and Phillips, B. J. (2021). Social media influencers: A route to brand engagement for their followers. Psychol. Market. 38, 101–112.

Deng, J. J., Leung, C. H., Milani, A., and Chen, L. (2015). Emotional states associated with music: Classification, prediction of changes, and consideration in recommendation. ACM Trans. Interact. Intell. Syst. 5, 1–36.

Deruty, E., Grachten, M., Lattner, S., Nistal, J., and Aouameur, C. (2022). On the development and practice of AI technology for contemporary popular music production. Trans. Int. Soc. Music Inf. Retr. 5, 35–49.

DeWall, C. N., Pond, R. S. Jr., Campbell, W. K., and Twenge, J. M. (2011). Tuning in to psychological change: Linguistic markers of psychological traits and emotions over time in popular US song lyrics. Psychol. Aesth. Creativ. 5:200.

Dredge, S. (2023). Creative AIS and music in 2022: Startups, artists and key questions. London: Music Ally.

Fraser, T., Crooke, A. H. D., and Davidson, J. W. (2021). “Music has no borders”: An exploratory study of audience engagement with youtube music broadcasts during COVID-19 lockdown, 2020. Front. Psychol. 12:643893. doi: 10.3389/fpsyg.2021.643893

Fteiha, B., Altai, R., Yaghi, M., and Zia, H. (2024). Revolutionizing video production: An AI-powered cameraman robot for quality content. Eng. Proc. 60:19. doi: 10.3390/engproc2024060019

Gan, C., Huang, D., Chen, P., Tenenbaum, J. B., and Torralba, A. (2020). “Foley music: Learning to generate music from videos,” in Proceedings of the 16th European conference computer vision–ECCV 2020, (Glasgow), 16.

Grech, A., Mehnen, J., and Wodehouse, A. (2023). An extended AI-experience: Industry 5.0 in creative product innovation. Sensors 23:3009. doi: 10.3390/s23063009

Hemmasi, F. (2020). Tehrangeles dreaming: Intimacy and imagination in southern California’s Iranian pop music. Durham, NC: Duke University Press.

Hill, A. (2013). Understanding interpretation, informing composition: Audience involvement in aesthetic result. Organ. Sound 18, 43–59.

Hollebeek, L. (2011). Exploring customer brand engagement: Definition and themes. J. Strat. Market. 19, 555–573.

Hudson, S., Roth, M. S., Madden, T. J., and Hudson, R. (2015). The effects of social media on emotions, brand relationship quality, and word of mouth: An empirical study of music festival attendees. Tour. Manag. 47, 68–76.

Hwang, A. H.-C., and Oh, J. (2020). Interacting with background music engages E-Customers more: The impact of interactive music on consumer perception and behavioral intention. J. Retail. Consum. Serv. 54:101928.

Ibrahim, J. (2023). Video game music and Nostalgia: A look into leitmotifs in video game music. Available online at: https://www.diva-portal.org/smash/record.jsf?dswid=-3292&pid=diva2%3A1763460

Juslin, P. N., and Västfjäll, D. (2008). Emotional responses to music: The need to consider underlying mechanisms. Behav. Brain Sci. 31, 559–575.

Kadam, A. V. (2023). Music and AI: How artists can leverage ai to deepen fan engagement and boost their creativity. Int. J. Sci. Res. 11, 1589–1594.

Kang, H., and Lou, C. (2022). AI agency vs. human agency: Understanding human–AI interactions on TikTok and their implications for user engagement. J. Comp. Mediat. Commun. 27:zmac014.

Karim, M. W., Haque, A., Ulfy, M. A., Hossain, M. A., and Anis, M. Z. (2020). Factors influencing the use of E-wallet as a payment method among Malaysian young adults. J. Int. Bus. Manag. 3, 1–12.

Kuo, F.-F., Shan, M.-K., and Lee, S.-Y. (2013). “Background music recommendation for video based on multimodal latent semantic analysis,” in Proceedings of the 2013 IEEE international conference on multimedia and expo (ICME), (San Jose, CA).

Kwon, Y.-S., Lee, J., and Lee, S. (2022). The impact of background music on film audience’s attentional processes: Electroencephalography alpha-rhythm and event-related potential analyses. Front. Psychol. 13:933497. doi: 10.3389/fpsyg.2022.933497

Ledgerwood, A., and Shrout, P. E. (2011). The trade-off between accuracy and precision in latent variable models of mediation processes. J. Pers. Soc. Psychol. 101:1174. doi: 10.1037/a0024776

Lee, S.-H., Choi, S., and Kim, H.-W. (2021). Unveiling the success factors of BTS: A mixed-methods approach. Internet Res. 31, 1518–1540.

Li, K. (2022). A Qualitative study on the significance of idol worship of college students. Cult. Commun. Soc. J. 3, 1–7.

Louie, R., Coenen, A., Huang, C. Z., Terry, M., and Cai, C. J. (2020). “Novice-AI music co-creation via AI-steering tools for deep generative models,” in Proceedings of the 2020 CHI conference on human factors in computing systems, (New York, NY).

McClain, A. S. (2010). American ideal: How “American Idol” constructs celebrity, collective identity, and American discourses. Philadelphia, PA: Temple University.

Mehrabian, A., and Russell, J. A. (1974). An approach to environmental psychology. Cambridge, MA: The MIT Press.

Mori, K. (2022). Decoding peak emotional responses to music from computational acoustic and lyrical features. Cognition 222:105010. doi: 10.1016/j.cognition.2021.105010

Mori, K., and Iwanaga, M. (2014). Pleasure generated by sadness: Effect of sad lyrics on the emotions induced by happy music. Psychol. Music 42, 643–652.

Morris, J. D., and Boone, M. A. (1998). The effects of music on emotional response, brand attitude, and purchase intent in an emotional advertising condition. ACR North Am. Adv. 25:518.

Murray, N. M., and Murray, S. B. (1996). Music and lyrics in commercials: A cross-cultural comparison between commercials run in the Dominican republic and in the United States. J. Ad. 25, 51–63.

Nagano, M., Ijima, Y., and Hiroya, S. (2023). Perceived emotional states mediate willingness to buy from advertising speech. Front. Psychol. 13:1014921. doi: 10.3389/fpsyg.2022.1014921

Nwagwu, W. E., and Akintoye, A. (2023). Influence of social media on the uptake of emerging musicians and entertainment events. Info. Dev. 7:02666669221151162.

Oh, I., and Lee, H.-J. (2014). K-pop in Korea: How the pop music industry is changing a post-developmental society. Cross Curr. 3, 72–93.

Osterholt, L. (2021). Addressing audience engagement through creative performance techniques. Hon. Projects 657, 71–79.

Pandita, S., Mishra, H. G., and Chib, S. (2021). Psychological impact of covid-19 crises on students through the lens of stimulus-organism-response (SOR) model. Child. Youth Serv. Rev. 120:105783. doi: 10.1016/j.childyouth.2020.105783

Perez-Vega, R., Kaartemo, V., Lages, C. R., Razavi, N. B., and Männistö, J. (2021). Reshaping the contexts of online customer engagement behavior via artificial intelligence: A conceptual framework. J. Bus. Res. 129, 902–910.

Qiu, Z., Ren, Y., Li, C., Liu, H., Huang, Y., Yang, Y., et al. (2019). “Mind band: A crossmedia AI music composing platform,” in Proceedings of the 27th ACM international conference on multimedia, (San Francisco, CA).

Rodgers, W., Yeung, F., Odindo, C., and Degbey, W. Y. (2021). Artificial intelligence-driven music biometrics influencing customers’ retail buying behavior. J. Bus. Res. 126, 401–414.

Ruth, N., and Schramm, H. (2021). Effects of prosocial lyrics and musical production elements on emotions, thoughts and behavior. Psychol. Music 49, 759–776.

Schaefer, H.-E. (2017). Music-evoked emotions–current studies. Front. Neurosci. 11:600. doi: 10.3389/fnins.2017.00600

Schäfer, T., Sedlmeier, P., Städtler, C., and Huron, D. (2013). The psychological functions of music listening. Front. Psychol. 4:511. doi: 10.3389/fpsyg.2013.00511

Schön, D., Boyer, M., Moreno, S., Besson, M., Peretz, I., and Kolinsky, R. (2008). Songs as an aid for language acquisition. Cognition 106, 975–983.

Schreiner, M., and Riedl, R. (2019). Effect of emotion on content engagement in social media communication: A short review of current methods and a call for neurophysiological methods. Inf. Syst. Neurosci. 2018, 195–202.

Shang, S. S., Wu, Y.-L., and Sie, Y.-J. (2017). Generating consumer resonance for purchase intention on social network sites. Comp. Hum. Behav. 69, 18–28.

Shutsko, A. (2020). “User-generated short video content in social media. A case study of TikTok. Social computing and social media,” in Proceedings of the 12th International conference, SCSM 2020, Held as Part of the 22nd HCI International conference, HCII 2020: Participation, User experience, consumer experience, and applications of social computing, (Copenhagen), 22.

Simon, J., and Ainsworth, J. W. (2012). Race and socioeconomic status differences in study abroad participation: The role of habitus, social networks, and cultural capital. Int. Scholar. Res. Notices 2012:413896.

Spangler, T. (2023). More than 50% of nonprofessional U.S. creators now monetize their content, Adobe Study finds. Los Angeles, CA: Variety.

Suh, M., Youngblom, E., Terry, M., and Cai, C. J. (2021). “AI as social glue: Uncovering the roles of deep generative AI during social music composition,” in Proceedings of the 2021 CHI conference on human factors in computing systems, (New York, NY).

Tafesse, W., and Wien, A. (2018). Using message strategy to drive consumer behavioral engagement on social media. J. Consum. Market. 35, 241–253.

Threadgold, E., Marsh, J. E., McLatchie, N., and Ball, L. J. (2019). Background music stints creativity: Evidence from compound remote associate tasks. Appl. Cogn. Psychol. 33, 873–888.

Toivo, W., Scheepers, C., and DeWaele, J. (2023). RER-LX: A new scale to measure reduced emotional resonance in bilinguals’ later learnt language. Bilingualism doi: 10.1017/S1366728923000561 [Epub ahead of print].

Trunfio, M., and Rossi, S. (2021). Conceptualising and measuring social media engagement: A systematic literature review. Ital. J. Market. 2021, 267–292.

Tubillejas-Andres, B., Cervera-Taulet, A., and García, H. C. (2020). How emotional response mediates servicescape impact on post consumption outcomes: An application to opera events. Tour. Manag. Perspect. 34:100660.

Vuoskoski, J. K., Thompson, W. F., Ilwain, D. M., and Eerola, T. (2012). Who enjoys listening to sad music and why? Music Percept. 29, 311–317. doi: 10.1525/mp.2012.29.3.311

Waldkirch, M., Bucher, E., Schou, P. K., and Grünwald, E. (2021). Controlled by the algorithm, coached by the crowd–how HRM activities take shape on digital work platforms in the gig economy. Int. J. Hum. Resour. Manag. 32, 2643–2682.

Wang, B. (2019). Entertainment enjoyment as social: Identifying relatedness enjoyment in entertainment consumption. Davis, CA: University of California.

Wang, X., Cheng, M., Li, S., and Jiang, R. (2023). The interaction effect of emoji and social media content on consumer engagement: A mixed approach on peer-to-peer accommodation brands. Tour. Manag. 96:104696. doi: 10.1016/j.tourman.2022.104696

Wei, C., Wang, C., Sun, L., Xu, A., and Zheng, M. (2023). The hometown is hard to leave, the homesickness is unforgettable–the influence of homesickness advertisement on hometown brand citizenship behavior of consumers. Behav. Sci. 13:54. doi: 10.3390/bs13010054

Whitesell, N. R., Mitchell, C. M., and Spicer, P. (2009). A longitudinal study of self-esteem, cultural identity, and academic success among American Indian adolescents. Cult. Divers. Ethn. Minor. Psychol. 15, 38–50. doi: 10.1037/a0013456

Yang, Q., and Li, C. (2013). Mozart or metallica, who makes you more attractive? A mediated moderation test of music, gender, personality, and attractiveness in cyberspace. Comp. Hum. Behav. 29, 2796–2804.

Yoon, K. (2017). Korean wave| cultural translation of K-Pop among asian canadian fans. Int. J. Commun. 11:17.

Zhang, S., Guo, D., and Li, X. (2023). The rhythm of shopping: How background music placement in live streaming commerce affects consumer purchase intention. J. Retail. Consum. Serv. 75:103487.

Zhu, H., Pan, Y., Qiu, J., and Xiao, J. (2022). Hometown ties and favoritism in Chinese corporations: Evidence from CEO dismissals and corporate social responsibility. J. Bus. Ethics 176, 283–310.

Keywords: social media engagement, AI-modified background music, event relevance, lyric resonance, AI-singer origins, audience interpretation, emotional resonance

Citation: Gu X (2024) Enhancing social media engagement using AI-modified background music: examining the roles of event relevance, lyric resonance, AI-singer origins, audience interpretation, emotional resonance, and social media engagement. Front. Psychol. 15:1267516. doi: 10.3389/fpsyg.2024.1267516

Received: 26 July 2023; Accepted: 25 March 2024;

Published: 15 April 2024.

Edited by:

Anfan Chen, Hong Kong Baptist University, Hong Kong SAR, China

Reviewed by:

Xiaoyan Liu, Beijing Jiaotong University, China

Aaron Ng, Singapore Institute of Technology, Singapore

Copyright © 2024 Gu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Xiaohui Gu, guxiaoh@cuz.edu.cn
