- ¹School of Child Studies, Kyungpook National University, Daegu, Republic of Korea

- ²Department of English Education, Kyungpook National University, Daegu, Republic of Korea

- ³Department of Early Childhood Education, Keimyung College University, Daegu, Republic of Korea

- ⁴Department of Home Economics Education, Kyungpook National University, Daegu, Republic of Korea

Introduction: This study investigates attention mechanisms and the accuracy of emotion judgment among South Korean children by employing Korean and American faces in conjunction with eye-tracking technology.

Methods: A total of 42 participants were individually presented with photos featuring either Korean or American children, and their task was to judge the emotions conveyed through the facial expressions in each photo. The participants’ eye movements during picture viewing were meticulously observed using an eye tracker.

Results: The analysis of the emotion judgment task outcomes revealed that the accuracy scores for discerning emotions of joy, sadness, and anger in Korean emotional faces were found to be significantly higher than those for American children. Conversely, no significant difference in accuracy scores was observed for the recognition of fear emotion between Korean and American faces. Notably, the study also uncovered distinct patterns of fixation duration among children, depending on whether they were viewing Korean or American faces. These patterns predominantly manifested in the three main facial areas of interest, namely the eyes, nose, and mouth.

Discussion: The observed phenomena can be best understood within the framework of the “other-race effect.” Consequently, this prototype formation leads to heightened accuracy in recognizing and interpreting emotional expressions exhibited by faces belonging to the same racial group. The present study contributes to a deeper understanding of how attention mechanisms and other-race effects impact emotion judgment among South Korean children. The utilization of eye-tracking technology enhances the validity and precision of our findings, providing valuable insights for both theoretical models of face processing and practical applications in various fields such as psychology, education, and intercultural communication.

1. Introduction

For human social interactions, it is important to not only know and express one’s emotions but also recognize and respond appropriately to others’ emotions. Many studies have indicated the ability to read other people’s emotions, namely emotion recognition skills, as an essential factor in interpersonal interactions (Siegman et al., 1987; Choi et al., 2014). Emotion recognition is mainly performed through verbal cues, such as speech and voice, and non-verbal cues, such as facial expressions and behaviors. According to Albert Mehrabian’s 7-38-55 communication model, words of the conversation account for 7%, tone and voice account for 38%, but facial expressions account for 55% of emotion recognition (Mehrabian, 2017). This shows that facial expressions are signals that best reflect and convey human emotional states (Ekman, 1982; Barrett et al., 2007), and they are closely tied to successful interpersonal relationships.

Recognizing faces plays a pivotal role in social interactions (Haxby et al., 2002). Surprisingly, even infants as young as 6 months old exhibit an impressive ability to differentiate between human faces and those of monkeys (Pascalis et al., 2002). However, an intriguing shift occurs around 9 months of age, where infants become increasingly skilled at distinguishing human faces specifically. This phenomenon is known as perceptual narrowing, where face perception becomes finely tuned based on individual experiences.

Further evidence of the influence of experience on face perception is reflected in the “other-race effect.” Studies have shown that individuals tend to recognize and distinguish faces of their own race more accurately than faces of other races (Meissner and Brigham, 2001; Kelly et al., 2007, 2009). Previous studies have reported that the other-race effect is evident from the age of 9 months (Sangrigoli and De Schonen, 2004; Hayden et al., 2007; Kelly et al., 2007, 2009). A study of six- and nine-month-old infants in South Korea found that Korean infants distinguish Asian faces better than white faces, confirming that race-based selective facial recognition is a common developmental phenomenon (Kim and Song, 2011). Moreover, the other-race effect can be expected to influence the process of interacting and communicating with individuals of different races and cultures as the world enters the era of internationalization and hyper-connectedness.

Facial expressions are an important cue for emotion recognition, and the ability to recognize them begins to develop early in life (Darwin, 1872; Haviland and Lelwica, 1987; Termine and Izard, 1988). Even a 10-week-old infant can distinguish between joy, sadness, and anger in their mother’s face (Haviland and Lelwica, 1987). By the end of their first year, infants exhibit social referencing abilities, using facial expressions of others to guide their behavior in uncertain situations (Klinnert et al., 1986). Around the ages of 3–5, they can recognize basic emotions, such as joy, sadness, anger, and fear, from other people’s facial expressions (Termine and Izard, 1988; Park et al., 2007). Further, the ability to recognize complex emotions (such as embarrassment and shame) and basic emotions develops after the age of five (Yi et al., 2012). The accuracy of emotion recognition from facial expressions increases with age (Zuckerman et al., 1980; Harrigan, 1984; Markham and Adams, 1992; Nowicki and Duke, 1994), but the development of this ability varies depending on the type of emotion. For example, at 4 and 7 months old, infants display a preference for looking at happy faces compared to angry or neutral faces (Walden, 1991). In addition, children are better at recognizing emotions of pleasure, such as joy, than emotions of displeasure, such as sadness, anger, and fear (Fabes et al., 1994). However, the development of children’s emotion recognition ability is not fully understood, especially in relation to the age at which they are able to recognize different types of emotion.

On the other hand, it is also necessary to determine whether emotion recognition from facial expressions differs according to a person’s biological factors such as age, sex, and race. In particular, a difference in emotion recognition from facial expressions arising from differences in race is called the other-race effect, which is the focus of this paper.

Ekman (1972) and Izard and Malatesta (1987) verified that there are facial expressions that are universally recognized through a comparative cultural study of facial expressions. However, other studies indicate a difference in emotion recognition accuracy from facial expressions based on race (Izard, 1971; Huang et al., 2001; Elfenbein and Ambady, 2002). As such, it is difficult to conclude that facial expressions are culturally universal, which are understood in a universal way without any background based on cultural context (Gendron et al., 2014). Hence, it is necessary to conduct research on emotion recognition skills from facial expressions according to race. South Korea has historically maintained a monoculture and has been monolingual for around 5,000 years, and the pride of maintaining the population as a single race is imprinted in the Korean mindset (Kymlicka, 1996; Park, 2006). Ethnic composition of South Korea consists of 97.7% Korean, 2% Japanese, 0.1% Chinese, 0.1% U.S. white persons, and so on (Britanica, 2000). As such, international marriages and the influx of foreigners have not been active compared to other countries. Currently, most Korean children have rare opportunities to be exposed to people of other races in South Korea. Therefore, we can assume that Korean children may exhibit differences in emotion recognition from facial expressions derived from the same and other races. Moreover, as South Korea has not yet become free of multicultural challenges due to the increase in international marriages and internationalization, there is interest in the research on emotion recognition according to race and multicultural families. However, in previous research, the studied faces of other races were Southeast Asians or Japanese, similar to Koreans, or were limited to black and white persons (Ha et al., 2011; Choi et al., 2014). 
In addition, the participants of prior studies were elementary school students or older individuals, and there is no research on Korean children in the developmental stage of emotion recognition.

This study used eye-tracking to investigate differences in emotion recognition accuracy from facial expressions. The use of eye-tracking is based on the eye-mind link hypothesis (Just and Carpenter, 1976), which states that if we are looking at a particular object or area of a scene, it is likely that we are thinking about that object or area. Just and Carpenter (1976) found that eye fixations are closely correlated with cognitive processes and can be used to predict the performance of participants on cognitive tasks and to identify the cognitive processes that are involved in a particular task. Eye tracking provides information about where and for how long visual attention is paid to specific stimuli (Carpenter, 1988). Furthermore, by gathering specific information on how much attention participants pay to a certain stimulus and the order in which they view it, it is possible to infer their cognitive process indirectly. Because visual attention on a fixed area reflects the cognitive process of concentrating on information about that area (Just and Carpenter, 1980; Holmqvist et al., 2011; Lockhofen and Mulert, 2021), the eye-tracking method is considered especially useful for understanding the development of emotion recognition from facial expressions, particularly among children with limited verbal expression.

Facial expressions consist of muscle movements in specific areas of the face. Therefore, to recognize emotions from facial expressions, information is collected and identified by paying attention to visual cues in areas such as the eyes, nose, and mouth. Previous studies have revealed that certain areas of the face contain more useful information for emotional identification than others, and the eyes and mouth, which move the most and take on various shapes, are among the facial components that provide major cues for emotion recognition. For example, to accurately recognize joy, one has to look at the mouth because the rising corners of the mouth serve as a decisive cue for joy. In contrast, to accurately recognize sadness, anger, and fear, one must look at the eyes, as the gaze and size of the eyes provide cues (Eisenbarth and Alpers, 2011). Individuals with schizophrenia and autism tend to be distracted and unfocused and are unable to pay attention to facial features, and they therefore have difficulty recognizing emotions from facial expressions (Kohler et al., 2003). In particular, individuals with autism tend to look less at the eye area, which provides an important cue for recognizing emotions (Klin et al., 2002; Spezio et al., 2007; Stephan and Caine, 2009). However, these previous studies do not imply that the nose area provides no emotional information. Several previous studies have considered the nose area (Watanabe et al., 2011; Schurgin et al., 2014; Reisinger et al., 2020). In particular, the nose area plays an important role in comparing pleasant and unpleasant emotions (Schurgin et al., 2014) and in comparing emotion recognition between deaf and hearing people (Watanabe et al., 2011). In addition, a previous eye-tracking study on the perception of other-race faces by Chinese children aged 4–7 years found that children spend more time fixating on the nose than on other areas of the face, suggesting that the nose plays a role in facial recognition (Hu et al., 2014).

In this context, we hypothesized that Korean children would be more accurate in recognizing emotions from Korean children’s facial expression than from American children’s facial expression. We also hypothesized that Korean children would show different eye movement patterns when recognizing emotions from Korean children’s faces than from American children’s faces. Based on these hypotheses, this study addresses the following research questions: Is there a difference in the accuracy of Korean children’s emotion recognition (joy, sadness, anger, and fear) from Korean and American children’s facial expressions? Is there a difference in the eye movement patterns in Korean children’s emotion recognition (joy, sadness, anger, and fear) from Korean and American children’s facial expressions?

2. Materials and methods

2.1. Participants

The participants were six-year-old Korean children enrolled in kindergartens and daycare centers in the Daegu metropolitan area. Their participation was approved by their parents or legal guardian through a signed consent form. A total of 109 consent forms (52 from kindergarten A, 33 from kindergarten B, and 24 from kindergarten C) were collected, with no restrictions placed on which children could take part. This study was approved by the Institutional Review Board of Kyungpook National University under approval number KNU2019-0136.

The eye-tracking experiment was conducted in two phases because of the children’s limited attention span, as explained below in detail. In the first phase, 46 children were deemed unsuitable for the eye-tracking experiment after calibration: these children had severe myopia, had astigmatism, or wore glasses, and most of them could not reach the 80% threshold in eye-tracking recognition rate. Only 52 of the 63 children selected for the second phase actually took part. After the second phase, 10 children were excluded because they had an eye-tracking recognition rate of less than 80%, refused to respond, or had missing responses. The final analysis used data from 42 children, of whom 19 were boys and 23 were girls, with an average age of 75.9 months (range 70 to 81 months, SD = 3.16).

2.2. Experimental tools

2.2.1. Eye tracker

The eye-tracking device used in the experiment was the Tobii 1750 eye tracker (Tobii Technology, Stockholm, Sweden), a 17-inch screen-based eye-tracking system that uses an IR camera with a frame rate of 50 Hz built into the bottom of the screen to track the user’s gaze. The device allows researchers to freely set areas of interest (AOIs) and, using Tobii’s ClearView analysis software, to extract commonly used eye-tracking metrics such as fixation duration, that is, how long the participants look at each AOI.

2.2.2. Photo stimuli

The Assessment of Children’s Emotional Skills (ACES) developed by Schultz and the Korean ACES were used as photo stimuli by displaying them on the eye-tracking screen. The Korean ACES was modified to suit Korean domestic circumstances, such as ethnic composition, by replacing the original ACES photos with photos of Korean children (Chung et al., 2022). The original ACES tools include four sets of photographs of non-Korean children, both black and white, showing four emotional expressions: joy, sadness, anger, and fear. Note that only four of Ekman’s (1999) six basic emotions (joy, sadness, anger, fear, disgust, and surprise) were considered, because disgust and surprise are more complex emotions, like pride and shame, and are therefore not correctly recognized even by 5-year-old children (Shaffer, 2005).

Accordingly, photos expressing the same emotions were taken of Korean children, and a domestic validation was performed. To minimize the difference between the facial expressions of Korean and American children, children aged 5–12 attending acting institutes posed expressions as similar as possible to those in the original ACES tools.

All photos were 10-inch-wide squares. Sixteen photos from the original ACES tools and another 16 photos from the Korean ACES tools were used, with each set consisting of four photos for each of the four emotions. The 32 photos in these two sets show 15 girls and 17 boys, with 16 Korean children and 16 American children of various races. See Supplementary Figure S1. Children sat so that their eyes were approximately two feet from the center of the screen along its perpendicular, which gave both horizontal and vertical visual angles of approximately 23.54 degrees.
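The reported visual angle can be reproduced from the photo size and the viewing distance. The following is a minimal sketch, assuming the two-foot distance is taken as exactly 24 inches and the photo is centered on the line of sight; the function name is illustrative and not part of the study's tooling.

```python
import math

def visual_angle_deg(stimulus_size, viewing_distance):
    """Visual angle (degrees) subtended by a stimulus centered on the line of sight.

    Both arguments must be in the same unit (inches here).
    """
    return math.degrees(2 * math.atan((stimulus_size / 2) / viewing_distance))

# 10-inch photo viewed from about two feet (24 inches is an assumed conversion)
angle = visual_angle_deg(10, 24)
print(round(angle, 2))  # 23.54
```

Because the photos are square, the same value applies to the horizontal and vertical directions.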

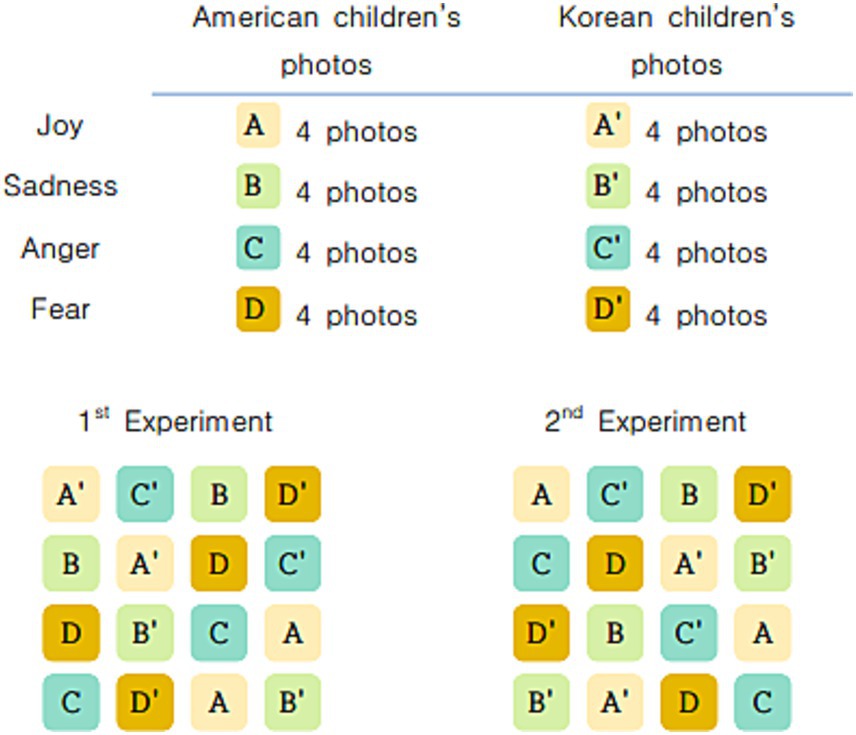

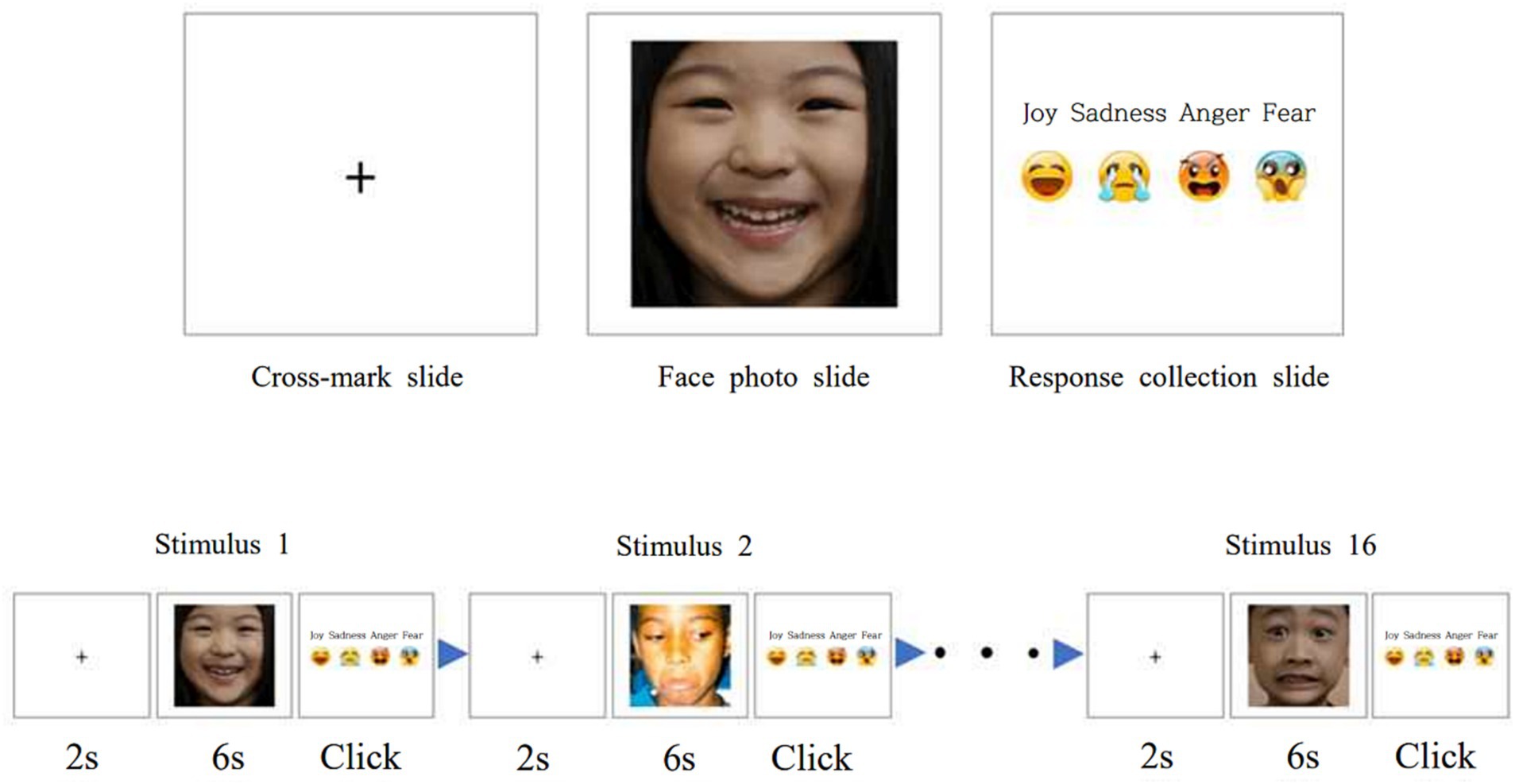

Because the children’s attention span was short, the experiment was conducted in two phases, each of which showed them 16 different photos. Each set of 16 photos consisted of eight Korean and eight American children’s photos, with two photos per emotion for each nationality, and all the photos were presented using a blocking method. The blocking method arranges the stimuli in blocks so that stimuli of the same kind do not appear repeatedly in succession; for instance, photos with the same emotion or individuals of the same race were not shown more than twice in a row. In the experiment, the fixed blocking method shown in Figure 1 was used.
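The ordering constraint described here (no emotion and no race more than twice in a row) can be checked mechanically. The sketch below is only an illustration: the study used a fixed order (Figure 1), whereas this code draws a hypothetical order by rejection sampling, and all names in it are assumptions.

```python
import random
from itertools import groupby, product

EMOTIONS = ["joy", "sadness", "anger", "fear"]
NATIONALITIES = ["Korean", "American"]

def longest_run(values):
    """Length of the longest run of identical consecutive values."""
    return max(len(list(group)) for _, group in groupby(values))

def satisfies_blocking(order, max_run=2):
    """True if no emotion and no nationality appears more than max_run times in a row."""
    nationalities = [nationality for nationality, _, _ in order]
    emotions = [emotion for _, emotion, _ in order]
    return longest_run(nationalities) <= max_run and longest_run(emotions) <= max_run

def make_phase_order(seed=0, max_attempts=100_000):
    """Rejection-sample one 16-photo order (hypothetical; the study used a fixed order)."""
    rng = random.Random(seed)
    # 2 nationalities x 4 emotions x 2 photos each = 16 stimuli per phase
    photos = [(n, e, i) for n, e in product(NATIONALITIES, EMOTIONS) for i in (1, 2)]
    for _ in range(max_attempts):
        rng.shuffle(photos)
        if satisfies_blocking(photos):
            return list(photos)
    raise RuntimeError("no valid order found; increase max_attempts")

order = make_phase_order()
for nationality, emotion, index in order:
    print(nationality, emotion, index)
```

A fixed order, as used in the experiment, has the advantage that every child sees the stimuli in the same sequence; the validator `satisfies_blocking` can still be applied to such a hand-built order.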

Two additional stimuli were used in addition to the Korean and American children’s facial photos. One was a cross-marked slide that helped focus the child’s gaze before the facial photo stimulus, and the second was a slide that was added to collect responses from the child after the facial photo stimulus was presented.

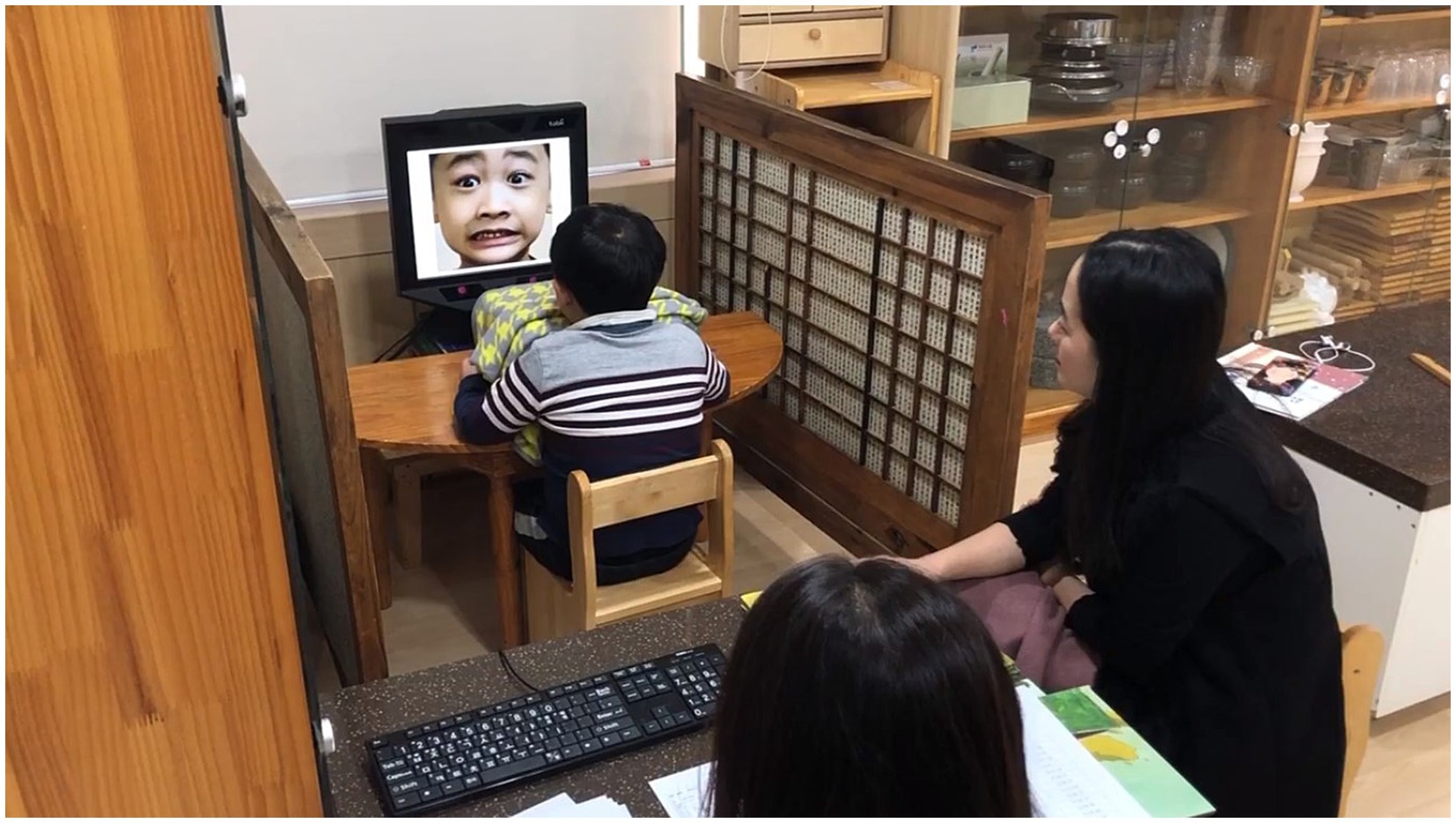

Figure 2. Photo stimuli Procedure. (Photograph in the stimulus 2 reproduced from ACES database with permission of David Schultz).

The cross-marked slide was presented first for 2 s, after which the second slide appeared automatically and showed only a child’s facial photo from the ACES tools for 6 s. During this time, the participants were required to look at the child in the picture and infer which of the four emotions was represented. They responded only after moving on to the next slide, which was the response collection slide. Participants were asked to speak only on the response collection slide, rather than responding immediately to the picture, to prevent unnecessary gaze from being captured while they spoke.

The response collection slide, the last slide for each photo stimulus, advanced to the slides for the next photo stimulus only when the research assistant clicked the mouse. The same three-slide sequence was then repeated for the subsequent photo stimulus. Figure 2 illustrates the process of presenting a photo stimulus.

2.2.3. Experimental procedure

Before the experiment, the researcher visited the participants’ institutions to find a suitable place for the experiment, because the participants could have become distracted from the eye-tracking experiment if there were external noise or stimulation. Thus, the researcher found a quiet, independent space where the participating children could remain focused. The illuminance was around 300 lux on the desk, which is the level recommended for lighting in kindergartens or daycare centers in Korea, and it is an ideal illuminance for eye tracking.

Three researchers were involved in the experiment. Researcher 1 was in charge of building rapport with the participating children and of explaining and conducting the experiment. Researcher 2 was in charge of installing the eye-tracking device, adjusting it to the children’s eye level, and collecting responses. Researcher 3 was in charge of accompanying the children to the experiment site and preparing it for the next set.

First, after receiving parental consent, Researcher 3 escorted each child to the experimental site from the classroom. When the child arrived at the experimental site, Researcher 1 tried to build rapport with them so the child could adapt to the experimental environment. Researcher 1 greeted the child, talked about what they had been doing in the classroom before coming to the experiment site, and explained the experiment: “Today’s experiment involves looking at pictures of various friends on a computer screen and telling us how they feel.” Then, Researcher 1 made the child sit in the correct position. During the eye-tracking experiment, each participant was asked to keep their body as still as possible to ensure that the eye-tracking was accurate. However, children have a shorter attention span than adults, and it was difficult for them to sit still. To ensure stable data collection in cases where the participant exhibited excessive head movements, the researcher gently supported the child’s head with their hands after obtaining consent (Lee, 2019). This was done to minimize head movements and ensure the reliable acquisition of data. The authors were aware of the potential ethical issue raised by supporting the children’s heads and took care to handle it. Specifically, we informed the parents and children about the experiment in detail, used a soft padded head restraint, and monitored the children closely during the experiment to ensure that they were comfortable. No participating children reported any discomfort or pain during the experiment.

Researcher 2 helped adjust the height of the pedestal and ensured that the distance between the eye tracker and the child was appropriate based on the child’s seated height (see Figure 3).

After completing these preparations, the eye-tracking experiment began with the five-point calibration to measure whether the child’s eye movement was being properly tracked through an example stimulation. The participants practiced seeing how the photo appeared on the screen and when to respond. Subsequently, the participants were shown the 16 photo stimuli. During this process, Researcher 2 clicked the mouse button while collecting the child’s response from behind to quickly move on to the next stimulus.

The eye-tracking experiment ended after all 16 photo stimuli were shown and the responses were collected. Then, the child was asked whether they had experienced any inconvenience during the experiment, and a gift was given to them: a bingo game board for the first phase of the experiment and a mini block for the second phase. The first and second phases were performed with a gap of at least 1 day. After each phase of the experiment, Researcher 3 escorted the child back to the classroom and brought the next child to the experiment site. It took approximately 15–20 min for one child to complete both phases of the experiment.

2.3. Analysis

This study analyzed the fixation duration on the particular facial areas that participants looked at to infer the emotions of the children in the pictures. The studied facial areas were the eyes, nose, and mouth. As the sizes of the eyes, nose, and mouth of the child in each photo stimulus were different, the AOIs were set individually (see Figure 4). In this study, the nose was included as an AOI in addition to the eyes and mouth. The data extracted via the Tobii eye tracker were fixation durations, defined as the total time for which the eyes were fixed on each AOI.
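The fixation-duration metric described above amounts to summing the durations of all fixations that land inside each AOI. The sketch below illustrates that idea only; the rectangular AOI coordinates, the fixation records, and the function name are hypothetical and do not reflect the ClearView export format or the AOI shapes actually drawn in the study.

```python
# Hypothetical AOI rectangles for one photo, in screen pixels: (left, top, right, bottom)
aois = {
    "eyes": (300, 250, 700, 350),
    "nose": (430, 350, 570, 480),
    "mouth": (400, 480, 600, 580),
}

# Hypothetical fixations: (x, y, duration in seconds)
fixations = [
    (450, 300, 0.40),
    (500, 400, 0.25),
    (520, 520, 0.60),
    (480, 310, 0.30),
    (100, 100, 0.20),  # falls outside every AOI and is ignored
]

def total_fixation_duration(fixations, aois):
    """Sum fixation durations per AOI; a fixation counts toward at most one AOI."""
    totals = {name: 0.0 for name in aois}
    for x, y, duration in fixations:
        for name, (left, top, right, bottom) in aois.items():
            # Half-open bounds so that adjacent AOIs never both claim a point
            if left <= x < right and top <= y < bottom:
                totals[name] += duration
                break
    return totals

durations = total_fixation_duration(fixations, aois)
print(durations)
```

Because each photo has its own AOIs, a real analysis would hold one such AOI dictionary per stimulus.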

Figure 4. Examples of AOIs. (Photograph on the right reproduced from ACES database with permission of David Schultz).

The data were analyzed using Jamovi 1.2.27. A two-way repeated measures ANOVA (Type 3 Sums of Squares) was performed with emotion and nationality as independent variables and emotion recognition accuracy as the dependent variable. Based on the results, post hoc tests (Bonferroni) were conducted. Additionally, a three-way repeated measures ANOVA (Type 3 Sums of Squares) was conducted with emotion, nationality, and AOI region as independent variables and eye fixation duration as the dependent variable. Because the interaction effects were significant, separate two-way repeated measures ANOVAs (Type 3 Sums of Squares) were performed for each emotion (joy, sadness, anger, fear) with AOI and nationality as independent variables and eye fixation duration as the dependent variable. Post hoc tests (Bonferroni) were conducted based on the results. Jamovi used the R package “afex” to conduct the ANOVAs, and it automatically selected one of two approximations, either the Kenward–Roger or the Satterthwaite approximation, for estimating the degrees of freedom (df) and calculating p-values (Singmann, 2018).

3. Results

3.1. Accuracy for the recognition of facial expressions

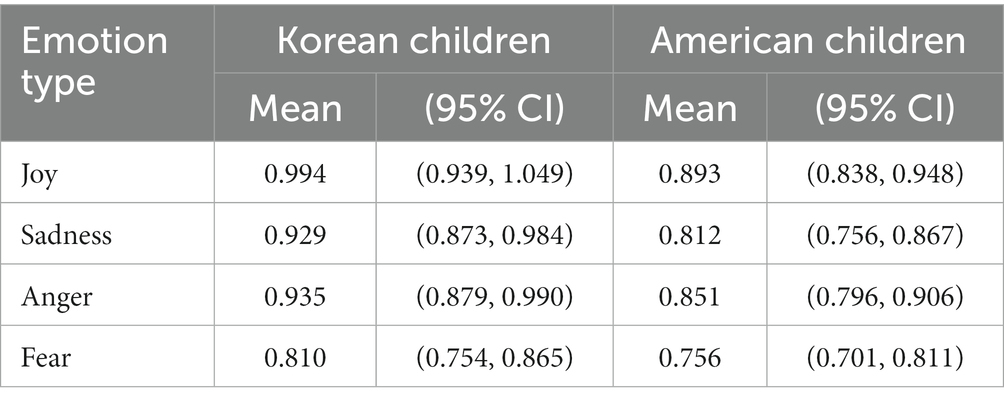

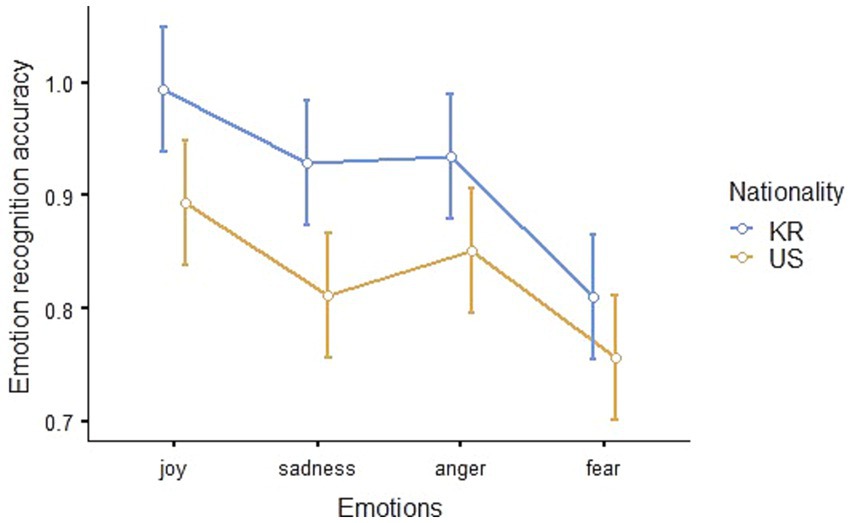

The accuracy of emotion recognition from the faces of Korean and American children was analyzed across all emotion types and for individual emotion types. The Estimated Marginal Means (Emotion × Nationality, SE = 0.028) of recognition accuracy are shown in Table 1 and visualized in Figure 5.

A repeated measures ANOVA was performed to examine the difference in the accuracy of emotion recognition from the facial expressions of Korean and American children. There were significant differences between emotion types (F(3, 123) = 10.479, p < 0.001, partial η² = 0.204) and nationalities (F(1, 41) = 31.978, p < 0.001, partial η² = 0.438), but the nationality × emotion type interaction was not statistically significant (F(3, 123) = 0.591, p = 0.622, partial η² = 0.014).
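The partial eta squared values reported here can be cross-checked from the F statistics and their degrees of freedom, since partial eta squared equals F × df_effect / (F × df_effect + df_error). The sketch below is a reader-side consistency check under that standard identity, not the computation performed by Jamovi; the function and variable names are illustrative.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)

# (F, df_effect, df_error, reported partial eta squared) from the accuracy ANOVA
reported = {
    "emotion": (10.479, 3, 123, 0.204),
    "nationality": (31.978, 1, 41, 0.438),
    "emotion x nationality": (0.591, 3, 123, 0.014),
}

for effect, (f_value, df_effect, df_error, eta_reported) in reported.items():
    eta = partial_eta_squared(f_value, df_effect, df_error)
    print(f"{effect}: computed {eta:.3f}, reported {eta_reported:.3f}")
```

All three computed values agree with the reported ones to three decimal places.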

In a post hoc analysis by nationality, there was also a statistically significant difference in the accuracy of emotion recognition between Korean and American children’s faces (t(41) = 5.65, p_bonferroni < 0.001).

In a post hoc analysis by emotion type, the differences between joy and fear (t(123) = 5.482, p_bonferroni < 0.001), sadness and fear (t(123) = 2.978, p_bonferroni = 0.021), and anger and fear (t(123) = 3.756, p_bonferroni = 0.002) were statistically significant, and the rest of the comparisons showed no significant differences.
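The Bonferroni-adjusted p-values for the emotion comparisons can be approximated from the reported t statistics. The sketch below assumes two-sided tests and six pairwise comparisons among the four emotions (an assumption about how the correction was applied, not something stated in the text), and it integrates the Student t density numerically instead of calling a statistics library.

```python
import math

def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_c = math.lgamma((df + 1) / 2) - math.lgamma(df / 2) - 0.5 * math.log(df * math.pi)
    return math.exp(log_c - (df + 1) / 2 * math.log1p(x * x / df))

def two_sided_p(t_value, df, steps=2000):
    """Two-sided p-value via Simpson's rule on [0, |t|]; steps must be even."""
    upper = abs(t_value)
    h = upper / steps
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central_half = total * h / 3  # P(0 < T < |t|)
    return max(0.0, 1.0 - 2.0 * central_half)

def bonferroni(p_value, n_comparisons):
    """Bonferroni adjustment: multiply by the number of comparisons, cap at 1."""
    return min(1.0, p_value * n_comparisons)

n_pairs = math.comb(4, 2)  # six pairwise comparisons among four emotions (assumed)
for label, t_value in [("sadness vs fear", 2.978), ("anger vs fear", 3.756)]:
    adjusted = bonferroni(two_sided_p(t_value, 123), n_pairs)
    print(f"{label}: adjusted p = {adjusted:.3f}")
```

Under these assumptions the adjusted values come out close to the reported 0.021 and 0.002.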

In sum, children recognized joy, sadness, and anger more accurately than fear, and Korean children’s emotions were more accurately recognized than the emotions of American children.

3.2. Eye-tracking results

Fixation duration according to the type of emotion and AOI was measured via eye tracking. The results are depicted in Table 2. A repeated measures ANOVA was performed to examine differences in fixation duration during emotion recognition from the facial expressions of Korean and American children. The nationality × emotion type × AOI interaction was statistically significant (F(6, 246) = 14.69, p < 0.001, partial η² = 0.264). In other words, how children distributed their fixation duration across the AOIs varied according to nationality and emotion type when they judged emotions in the facial expressions of Korean and American children. Hence, a separate repeated measures ANOVA was performed for each emotion type to examine differences in fixation duration between Korean and American children’s faces, considering the nationality × AOI interaction.

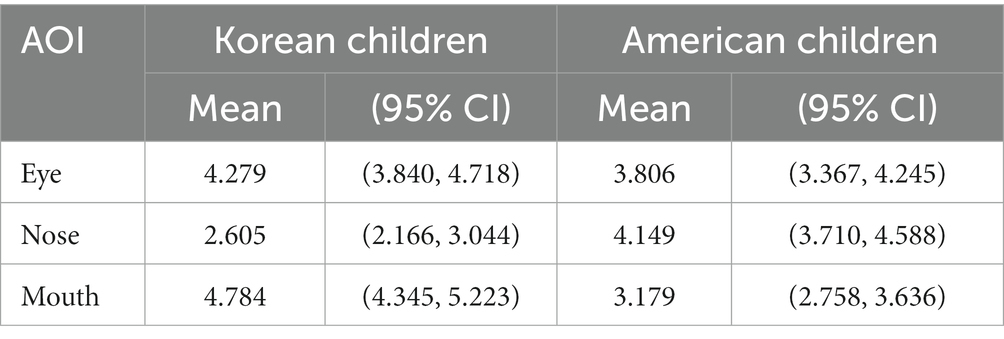

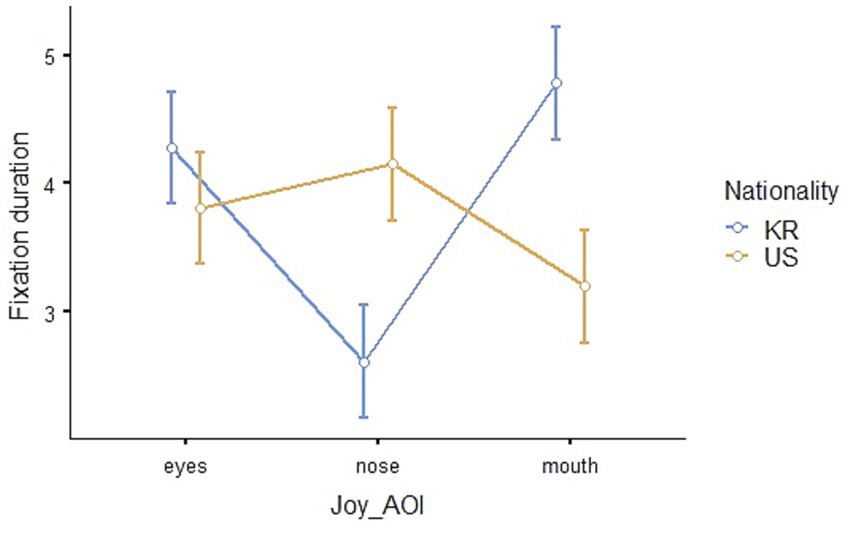

3.2.1. Eye-tracking results for joy

The results of the repeated measures ANOVA comparing the eye-tracking outcomes for joy between Korean and American children’s faces showed that the nationality × AOI interaction was statistically significant (F(2, 82) = 40.05, p < 0.001, partial η² = 0.494). The Estimated Marginal Means (Nationality × AOI, SE = 0.223) of fixation duration are shown in Table 2 and visualized in Figure 6.

The post hoc analysis indicated statistically significant differences between the fixation duration on the eyes and nose (t(136.557) = 4.958, p_bonferroni < 0.001) and on the nose and mouth (t(136.557) = −6.454, p_bonferroni < 0.001) of Korean children. Additionally, the differences in the fixation duration on Korean children’s eyes and American children’s mouths (t(135.802) = 3.215, p_bonferroni = 0.024), Korean children’s noses and American children’s eyes (t(135.802) = −3.571, p_bonferroni = 0.007) and noses (t(122.960) = −6.196, p_bonferroni < 0.001), as well as Korean children’s mouths and American children’s mouths (t(122.960) = 6.369, p_bonferroni < 0.001), were statistically significant. Hence, the fixation duration on the eyes, nose, and mouth showed a different pattern when recognizing joy from the facial expressions of Korean and American children.

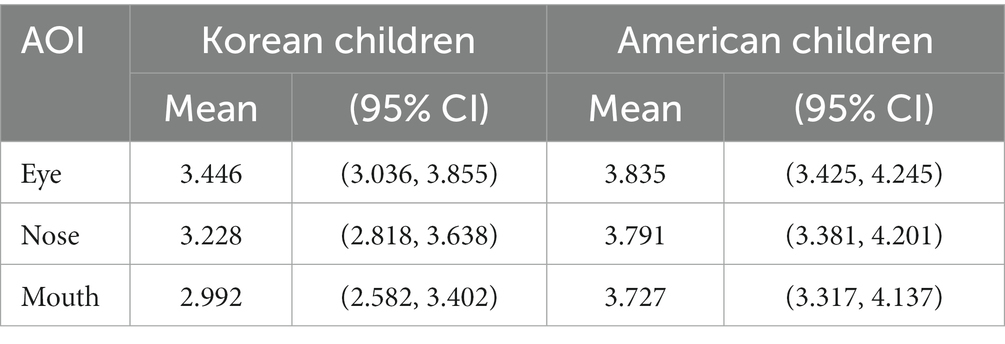

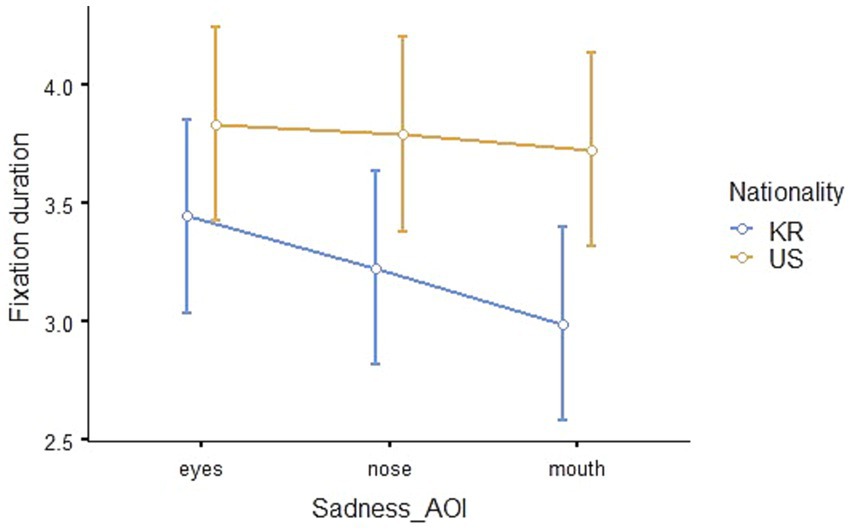

3.2.2. Eye-tracking results for sadness

The results of a repeated measures ANOVA comparing the eye-tracking outcomes for sadness between Korean and American children’s faces revealed that the main effect of nationality was statistically significant (F(1, 41) = 20.187, p < 0.001, partial η² = 0.330). The Estimated Marginal Means (Nationality × AOI, SE = 0.208) of fixation duration are shown in Table 3 and visualized in Figure 7.

The post hoc analysis indicated that the total fixation duration on the eyes, nose, and mouth of American children was longer than that on Korean children (t(41) = −4.49, p_bonferroni < 0.001). In other words, the pattern of fixation duration across the eyes, nose, and mouth did not differ significantly when recognizing sadness from Korean and American children’s facial expressions, but the total fixation duration on the eyes, nose, and mouths of American children was significantly longer.

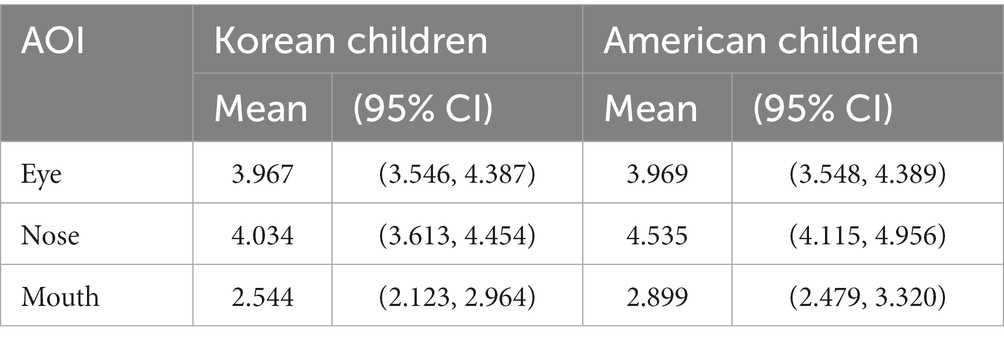

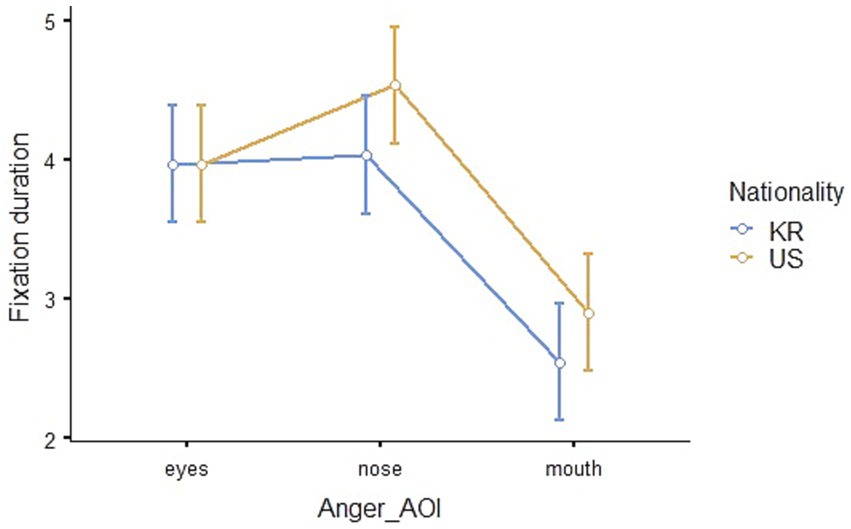

3.2.3. Eye-tracking results for anger

The results of the repeated measures ANOVA comparing the eye-tracking outcomes for anger between Korean and American children’s faces demonstrated that the main effects of nationality (F(1, 41) = 9.89, p = 0.003, partial η² = 0.194) and AOI (F(2, 82) = 14.91, p < 0.001, partial η² = 0.267) were statistically significant, but their interaction was not (F(2, 82) = 1.52, p = 0.226, partial η² = 0.036). The Estimated Marginal Means (Nationality × AOI, SE = 0.213) of fixation duration are shown in Table 4 and visualized in Figure 8.

The post hoc analysis indicated that the differences in the fixation duration on the eyes and mouth (t(82) = 4.12, p_bonferroni < 0.001) and on the nose and mouth (t(82) = 5.16, p_bonferroni < 0.001) were statistically significant, and that the fixation duration when recognizing anger was significantly longer for American children’s faces than for Korean children’s faces (t(41) = −3.15, p_bonferroni = 0.003).

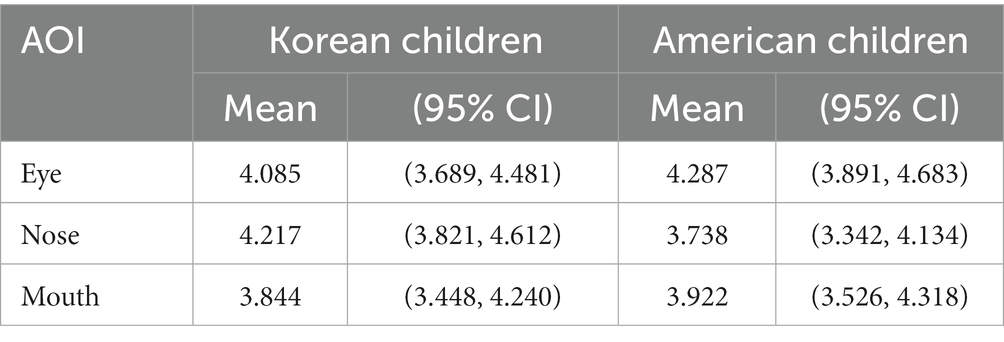

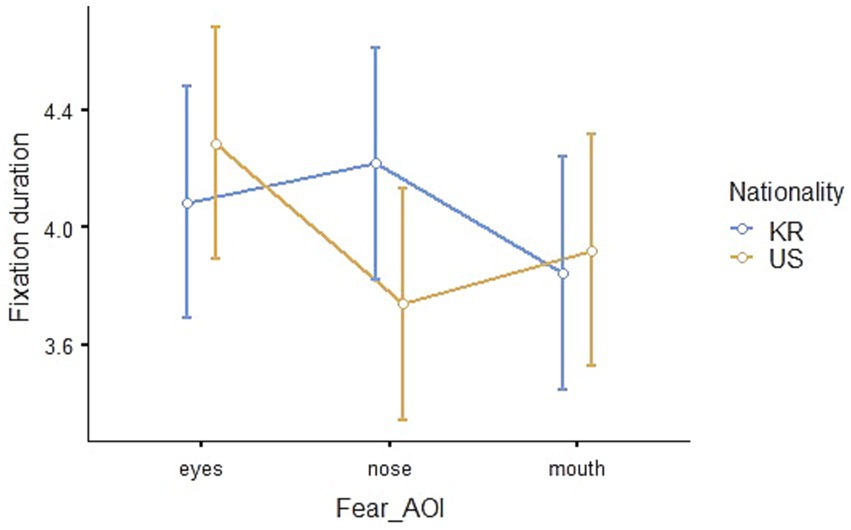

3.2.4. Eye-tracking results for fear

The results of the repeated measures ANOVA comparing the eye-tracking outcomes for fear between Korean and American children’s faces showed that neither the main effects of nationality (F(1, 41) = 0.509, p = 0.480, partial η² = 0.012) and AOI (F(2, 82) = 0.723, p = 0.488, partial η² = 0.017) nor their interaction (F(2, 82) = 2.466, p = 0.091, partial η² = 0.057) were statistically significant. The Estimated Marginal Means (Nationality × AOI, SE = 0.201) of fixation duration are shown in Table 5 and visualized in Figure 9.

4. Discussion

This study analyzed whether 6-year-old Korean children differ in emotion recognition accuracy and eye movement when viewing Korean and American children’s faces. The implications derived from the results are as follows. First, regarding children’s emotion recognition accuracy, the facial expressions of individuals of the same race were recognized more accurately than those of other races for all emotion types. This result is consistent with past studies showing a culture-based difference in emotion recognition from facial expressions (Matsumoto and Ekman, 1989; Huang et al., 2001; Elfenbein and Ambady, 2002, 2003a; Choi et al., 2014). The findings also support studies showing that the physical distance between those who express emotions and those who recognize them is related to emotion recognition accuracy (Elfenbein and Ambady, 2003b). In this regard, Hu et al. (2014) tested whether and how Chinese children aged 4 to 7 years scan faces of their own and other races differently for face recognition. In addition, this study shows that the other-race effect exists not only in face recognition but also in the emotional recognition of facial expressions.

It was also found that children’s emotion recognition accuracy differs based on not only race but also the type of emotion. The results indicate that, regardless of race, children can recognize joy, sadness, and anger more accurately than fear. This result is consistent with previous studies showing that some emotions can be distinguished more easily than others; specifically, pleasant emotions were found to be easier to distinguish than unpleasant ones (Field and Walden, 1982; Harrigan, 1984; Michalson and Lewis, 1985; Caron et al., 1988; Denham et al., 1990). The finding that children’s emotion recognition accuracy differs based on the type of emotion suggests that there may be different developmental trajectories for different emotions. Some emotions may be easier to recognize at younger ages, while the ability to recognize others may take longer to develop.

Overall, the participants recognized Korean children’s facial expressions more accurately than those of American children for all emotions. Thus, there was a difference in emotion recognition based on race and/or culture, indicating the influence of the other-race effect on the accuracy of emotion recognition from facial expressions. Additionally, Korean children were able to recognize joy, sadness, and anger more accurately than fear through facial expressions. This indicates that they go through the developmental process of emotion recognition in a culturally universal way. Based on the results, we concluded that children’s emotion recognition accuracy is affected by both race and emotion type.

Second, during the process of emotion recognition from facial expressions, children’s fixation duration exhibited different patterns on AOIs depending on whether the pictured children were Korean or American and on the type of emotion. The patterns of children’s gaze at the eyes, nose, and mouth of Korean and American children in the pictures were different when recognizing joy. Regarding sadness, although there was a difference in whole fixation duration, the gaze patterns were similar for Korean and American children. When recognizing anger, Korean children spent more time looking at pictures of American children than pictures of Korean children, and they paid more attention to the eyes and nose than the mouth. However, no significant difference in the gaze patterns or fixation duration was observed when recognizing fear. In eye-tracking studies, children’s fixed visual attention on a particular area means that the participants are interested in that area (Goldberg and Kotval, 1999), and sustained fixation on a certain area means that cognitive processing of complex information is taking place in relation to that area (Henderson and Hollingworth, 1998). The findings of this study suggest that children’s visual attention patterns on AOIs are influenced by both race and emotion type. Although facial processing primarily relies on holistic strategies rather than feature-based processing, it is crucial to consider more complex fixation patterns (van Belle et al., 2010; Nakabayashi and Liu, 2014; Megreya, 2018). We may therefore interpret the results as reflecting a difference in how children recognize the emotions of individuals of their own race and of unfamiliar races.

From a developmental perspective, the medial prefrontal cortex (mPFC) appears to play a specialized role in understanding our own and others’ communicative intentions (Krueger et al., 2009; Grossmann, 2013). Even at 3 months, the prefrontal cortex is activated when babies process faces (Johnson et al., 2005). However, this response is not fully mature. In the early stages, infants’ cortical processing of faces is relatively approximate and uncoordinated, becoming more sensitive to properly positioned human faces only after further development. The brain’s left and right hemispheres are specialized for different functions, with the right hemisphere responsible for processing emotional information, particularly in facial processing. This specialization begins in the womb (Kasprian et al., 2011) and continues to develop in early life (Stephan et al., 2003). Another brain region, the superior temporal sulcus (STS), is also involved in face processing (Allison et al., 2000; Decety et al., 2013). The STS plays a crucial role in processing changing features of faces, such as eye gaze and expressions, providing essential information for social cues. Moreover, the posterior region, where the STS is located, appears to generate a configural representation of faces, which is essential for extracting the invariant features that make a face unique. However, developmental studies have shown that the configural strategy for face processing does not fully develop until 12 years of age (Carey and Diamond, 1977).

According to Nelson (2001), as individuals age and gain experience, their ability to recognize faces becomes more specialized, leading to a narrower range of facial recognition abilities. This specialization increases the sensitivity of the face recognition system to differences in identity within one’s own species. Through extensive exposure to human faces, a mental representation of a prototype is formed, which is finely attuned to commonly observed human faces. Individual faces are then encoded based on their deviations from this prototype.

Based on this perspective, children may have different ways of recognizing emotions from the facial expressions of an unfamiliar race. Specifically, the longer fixation duration but lower emotion recognition accuracy for American faces can be attributed to gathering more information for emotion judgment from unfamiliar faces. Even though joy is an emotion that children accurately recognize, the nationality-based difference in the gaze pattern for joy can be interpreted as a result of engagement in the emotion recognition process when handling less familiar visual data. On the other hand, Korean children looked at the entire AOI region evenly for sad facial expressions, but they paid more attention to the eyes and nose than the mouth for angry facial expressions. Additionally, they spent more time looking at American children’s angry facial expressions than at Korean children’s.

Lane (2000) argued that different types of emotions are represented by specific circuits that include different brain structures. The differences in visual processing patterns across emotion types observed in this study suggest that the brain has become specialized. However, accuracy in recognizing fear from facial expressions did not differ, and the visual patterns were also not significantly different, between Korean and American children’s faces. This suggests that fear is a relatively less specialized area than joy, sadness, and anger. These findings can be considered as providing empirical evidence for the eye-mind link by establishing an interpretable relationship between visual patterns and emotion recognition from facial expressions.

As South Korea gradually becomes a multicultural society, cultural differences in emotion recognition accuracy imply that children from multicultural families may face challenges in their peer relations and interactions. The inability to accurately recognize the emotions of children from multicultural families may negatively affect peer relationships or interactions. Hence, early childhood educational institutions need to observe whether children from multicultural families are experiencing difficulties in emotion recognition during their peer interactions. They must also provide support and create opportunities for children from multicultural families to develop and improve their ability to accurately interpret emotions from each other’s facial expressions. This can facilitate better communication and understanding among children from diverse cultural backgrounds, fostering positive relationships and interactions within a multicultural society.

4.1. Limitations

Since previous studies have shown that the accuracy of emotion recognition is related to language skills, social skills, and peer interactions (Chung et al., 2020), it would be meaningful to examine whether there are differences between groups through eye-tracking along with these related variables.

Although two sets of photos were chosen from the original and Korean ACES, which had already been individually validated, there was a significant difference (t30 = −5.603, p < 0.001) in luminance between these sets. However, the relationship between luminance and emotion recognition accuracy turned out to be non-significant (r = −0.286, p = 0.112). We could not determine any further effect of this difference on the fixation pattern, nor could we find a previous study on the fixation pattern according to the brightness of photos. Hence, it will be necessary to study changes in eye fixation pattern according to the brightness of photos.
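The reported correlation can be related to its p value through the standard conversion t = r√(n − 2) / √(1 − r²) with n − 2 degrees of freedom. The sketch below illustrates that arithmetic under one loud assumption: that the correlation was computed over n = 32 photos, a figure inferred here from the 30 degrees of freedom of the luminance comparison and not stated in the text. The function names are illustrative, and the p value is obtained by simple numerical integration of the t density rather than by a statistics package.

```python
import math


def t_from_r(r: float, n: int) -> float:
    """Convert a Pearson correlation to a t statistic with n - 2 degrees of freedom."""
    return r * math.sqrt(n - 2) / math.sqrt(1.0 - r * r)


def t_pdf(x: float, df: int) -> float:
    """Probability density of Student's t distribution."""
    coef = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return coef * (1.0 + x * x / df) ** (-(df + 1) / 2)


def two_sided_p(t: float, df: int, steps: int = 2000) -> float:
    """Two-sided p value via Simpson's rule on [0, |t|]; steps must be even."""
    upper = abs(t)
    h = upper / steps
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central_area = total * h / 3.0  # probability mass between 0 and |t|
    return 1.0 - 2.0 * central_area


n_photos = 32  # ASSUMPTION: inferred from the reported df = 30, not given in the text
r_reported = -0.286

t_stat = t_from_r(r_reported, n_photos)
p_value = two_sided_p(t_stat, n_photos - 2)
print(f"t({n_photos - 2}) = {t_stat:.3f}, two-sided p = {p_value:.3f}")
```

Under this assumption the conversion gives a t statistic of about −1.63 and a two-sided p value of roughly 0.11, in line with the reported non-significant result.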

This study was conducted on a single group of Korean children, so it is not possible to determine whether the observed effects are due to cultural or racial influences. Future studies should target children from diverse racial groups that share a common culture, such as in the United States, or present photos of children from other East Asian countries to clarify the differences between race and culture.

Only 42 participants’ data were used for analysis, although the initial sample size was 104, so the rejection rate seems rather high. Although we could find no methodological issues, it would be better if the rejection rate could be lowered. For example, a newer eye tracker that offers better precision and accuracy and allows greater freedom of head movement could be used.

Finally, the complex design of this study, which involved a three-way repeated measures ANOVA followed by post-hoc tests with Bonferroni correction, may have resulted in an overly conservative control of the Type-I error. Additionally, due to the correlated nature of the data within the two-way ANOVA framework, there is a possibility of false positives in the results.
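The trade-off described here can be illustrated numerically. The following sketch assumes independent tests, which does not hold for the correlated repeated measures in this study, and uses hypothetical raw p values; it is meant only to show how the family-wise error rate grows with the number of comparisons and how the Bonferroni adjustment holds it at or below the nominal level.

```python
def familywise_error_rate(alpha: float, m: int) -> float:
    """Probability of at least one false positive across m independent tests."""
    return 1.0 - (1.0 - alpha) ** m


def bonferroni_adjust(p_values):
    """Bonferroni-adjusted p values: multiply by the number of tests, cap at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


alpha = 0.05
m = 3  # e.g., the three pairwise AOI comparisons (eyes-nose, eyes-mouth, nose-mouth)

uncorrected = familywise_error_rate(alpha, m)    # each test run at alpha
corrected = familywise_error_rate(alpha / m, m)  # each test run at alpha / m

# HYPOTHETICAL raw p values, for illustration only (not results from this study).
raw_p = [0.004, 0.020, 0.300]
adjusted = bonferroni_adjust(raw_p)

print(f"FWER without correction: {uncorrected:.3f}")
print(f"FWER with Bonferroni:    {corrected:.3f}")
print("adjusted p values:", [round(p, 3) for p in adjusted])
```

With three independent tests at α = 0.05, the uncorrected family-wise error rate is about 0.143, whereas testing each comparison at α/3 keeps it just under 0.05. When tests are positively correlated, the true family-wise rate under Bonferroni is lower still, which is the sense in which the correction can be overly conservative.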

Data availability statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found below: https://drive.google.com/drive/folders/1o1VtUwWO0nBMdysaEjyd3IGXsRUhO1Qt?usp=drive_link.

Ethics statement

The studies involving humans were approved by the Institutional Review Board of Kyungpook National University (KNU2019-0136). The studies were conducted in accordance with the local legislation and institutional requirements. Written informed consent for participation in this study was provided by the participants’ legal guardians/next of kin. Written informed consent was obtained from the minor(s)’ legal guardian/next of kin for the publication of any potentially identifiable images or data included in this article.

Author contributions

CC, HL, and SC contributed to the conceptualization of the study. SC and HL contributed toward the methodology of the study. HJ and JL contributed toward the investigation. SC, HL, and JL contributed toward the visualization. CC acquired the funding for the study. CC and HL were responsible for project administration and supervised the study. CC, SC, HJ, JL, and HL wrote the original draft of the manuscript. CC and HL reviewed and edited the manuscript. All authors contributed to manuscript revision, read, and approved the submitted version.

Funding

This work was supported by the Ministry of Education of the Republic of Korea and the National Research Foundation of Korea [grant number NRF-2019S1A5A2A03051508].

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2023.1235238/full#supplementary-material

Abbreviations

AOI, area of interest; ACES, Assessment of Children’s Emotional Skills; ANOVA, analysis of variance.

References

Allison, T., Puce, A., and McCarthy, G. (2000). Social perception from visual cues: role of the STS region. Trends Cogn. Sci. 4, 267–278. doi: 10.1016/S1364-6613(00)01501-1

Barrett, L. F., Lindquist, K. A., and Gendron, M. (2007). Language as context for the perception of emotion. Trends Cogn. Sci. 11, 327–332. doi: 10.1016/j.tics.2007.06.003

Britannica (2000). South Korea. Available at: https://www.britannica.com/place/South-Korea (Accessed July 5, 2023).

Carey, S., and Diamond, R. (1977). From piecemeal to configurational representation of faces. Science 195, 312–314. doi: 10.1126/science.831281

Caron, A. J., Caron, R. F., and Mac Lean, D. J. (1988). Infant discrimination of naturalistic emotional expressions: the role of face and voice. Child Dev. 59, 604–616. doi: 10.2307/1130560

Choi, H.-S., Ghim, H.-R., and Eom, J.-S. (2014). Differences in recognizing the emotional facial expressions of diverse races in Korean children and undergraduates. Korean. J. Dev. Psychol. 27, 19–31.

Chung, C., Choi, S., Bae, J., Jeong, H., and Lee, H. (2022). Developing and validating a Korean version of the assessment of Children’s emotional skills. Child Psychiatry Hum. Dev. doi: 10.1007/s10578-022-01452-2

Chung, C., Chung, H., Lee, H., and Lee, J. (2020). The effects of facial expression emotional accuracy and language ability on social competence of young children. J. Learn. Centered Curric. Instr. 20, 143–166. doi: 10.22251/jlcci.2020.20.10.143

Darwin, C. (1872). The expression of the emotions in man and animals. London, United Kingdom: John Murray.

Decety, J., Chen, C., Harenski, C., and Kiehl, K. A. (2013). An fMRI study of affective perspective taking in individuals with psychopathy: imagining another in pain does not evoke empathy. Front. Hum. Neurosci. 7:489. doi: 10.3389/fnhum.2013.00489

Denham, S. A., McKinley, M., Couchoud, E. A., and Holt, R. (1990). Emotional and behavioral predictors of preschool peer ratings. Child Dev. 61, 1145–1152. doi: 10.1111/j.1467-8624.1990.tb02848.x

Eisenbarth, H., and Alpers, G. W. (2011). Happy mouth and sad eyes: scanning emotional facial expressions. Emotion 11, 860–865. doi: 10.1037/a0022758

Ekman, P. (1972). Universals and cultural differences in facial expressions of emotion. Neb. Symp. Motiv. 19, 207–283.

Ekman, P. (1982). “Methods for measuring facial action” in Handbook of methods in nonverbal behavior research. eds. P. Ekman and K. R. Scherer (Cambridge, United Kingdom: Cambridge University Press), 45–90.

Ekman, P. (1999). “Basic emotions” in The handbook of cognition and emotion. eds. T. Dalgleish and T. Power (Sussex, UK: John Wiley & Sons, Ltd), 45–60.

Elfenbein, H. A., and Ambady, N. (2002). On the universality and cultural specificity of emotion recognition: a meta-analysis. Psychol. Bull. 128, 203–235. doi: 10.1037/0033-2909.128.2.203

Elfenbein, H. A., and Ambady, N. (2003a). Universals and cultural differences in recognizing emotions. Curr. Dir. Psychol. Sci. 12, 159–164. doi: 10.1111/1467-8721.01252

Elfenbein, H. A., and Ambady, N. (2003b). When familiarity breeds accuracy: cultural exposure and facial emotion recognition. J. Pers. Soc. Psychol. 85, 276–290. doi: 10.1037/0022-3514.85.2.276

Fabes, R. A., Eisenberg, N., Karbon, M., Troyer, D., and Switzer, G. (1994). The relations of children’s emotion regulation to their vicarious emotional responses and comforting behaviors. Child Dev. 65, 1678–1693. doi: 10.1111/j.1467-8624.1994.tb00842.x

Field, T. M., and Walden, T. A. (1982). Production and discrimination of facial expressions by preschool children. Child Dev. 53, 1299–1311. doi: 10.2307/1129020

Gendron, M., Roberson, D., van der Vyver, J. M., and Barrett, L. F. (2014). Perceptions of emotion from facial expressions are not culturally universal: evidence from a remote culture. Emotion 14, 251–262. doi: 10.1037/a0036052

Goldberg, J. H., and Kotval, X. P. (1999). Computer interface evaluation using eye movements: methods and constructs. Int. J. Ind. Ergon. 24, 631–645. doi: 10.1016/S0169-8141(98)00068-7

Grossmann, T. (2013). The role of medial prefrontal cortex in early social cognition. Front. Hum. Neurosci. 7:340. doi: 10.3389/fnhum.2013.00340

Ha, R. Y., Kang, J. I., Park, J. I., An, S. K., and Cho, H.-S. (2011). Differences in the emotional recognition of Japanese and Caucasian facial expressions in Koreans. Mood. Emot. 9, 17–23.

Harrigan, J. A. (1984). The effects of task order on children’s identification of facial expressions. Motiv. Emot. 8, 157–169. doi: 10.1007/BF00993071

Haviland, J. M., and Lelwica, M. (1987). The induced affect response: 10-week-old infants’ responses to three emotion expressions. Dev. Psychol. 23, 97–104. doi: 10.1037/0012-1649.23.1.97

Haxby, J. V., Hoffman, E. A., and Gobbini, M. I. (2002). Human neural systems for face recognition and social communication. Biol. Psychiatry 51, 59–67. doi: 10.1016/S0006-3223(01)01330-0

Hayden, A., Bhatt, R. S., Joseph, J. E., and Tanaka, J. W. (2007). The other-race effect in infancy: evidence using a morphing technique. Infancy 12, 95–104. doi: 10.1111/j.1532-7078.2007.tb00235.x

Henderson, J. M., and Hollingworth, A. (1998). “Eye movements during scene viewing: An overview” in Eye guidance in reading and scene perception. ed. G. Underwood (Amsterdam, Netherlands: Elsevier Science Ltd), 269–293.

Holmqvist, K., Nyström, M., Andersson, R., Dewhurst, R., Jarodzka, H., and Van de Weijer, J. (2011). Eye tracking: A comprehensive guide to methods and measures. Oxford, United Kingdom: Oxford University Press.

Hu, C., Wang, Q., Fu, G., Quinn, P. C., and Lee, K. (2014). Both children and adults scan faces of own and other races differently. Vis. Res. 102, 1–10. doi: 10.1016/j.visres.2014.05.010

Huang, Y., Tang, S., Helmeste, D., Shioiri, T., and Someya, T. (2001). Differential judgement of static facial expressions of emotions in three cultures. Psychiatry Clin. Neurosci. 55, 479–483. doi: 10.1046/j.1440-1819.2001.00893.x

Izard, C. E., and Malatesta, C. Z. (1987). “Perspectives on emotional development I: differential emotions theory of early emotional development” in Handbook of infant development. ed. J. D. Osofsky (New Jersey, United States: John Wiley & Sons)

Johnson, M., Griffin, R., Csibra, G., Halit, H., Farroni, T., Haan, M., et al. (2005). The emergence of the social brain network: evidence from typical and atypical development. Dev. Psychopathol. 17, 599–619. doi: 10.1017/S0954579405050297

Just, M. A., and Carpenter, P. A. (1976). Eye fixations and cognitive processes. Cogn. Psychol. 8, 441–480. doi: 10.1016/0010-0285(76)90015-3

Just, M. A., and Carpenter, P. A. (1980). A theory of reading: from eye fixations to comprehension. Psychol. Rev. 87, 329–354. doi: 10.1037/0033-295x.87.4.329

Kasprian, G., Langs, G., Brugger, P. C., Bittner, M., Weber, M., Arantes, M., et al. (2011). The prenatal origin of hemispheric asymmetry: an in utero neuroimaging study. Cereb. Cortex 21, 1076–1083. doi: 10.1093/cercor/bhq179

Kelly, D. J., Liu, S., Lee, K., Quinn, P. C., Pascalis, O., Slater, A. M., et al. (2009). Development of the other-race effect during infancy: evidence toward universality? J. Exp. Child Psychol. 104, 105–114. doi: 10.1016/j.jecp.2009.01.006

Kelly, D. J., Quinn, P. C., Slater, A. M., Lee, K., Ge, L., and Pascalis, O. (2007). The other-race effect develops during infancy: evidence of perceptual narrowing. Psychol. Sci. 18, 1084–1089. doi: 10.1111/j.1467-9280.2007.02029.x

Kim, Y., and Song, H. J. (2011). The role of race in Korean infants’ face recognition. Korean. J. Dev. Psychol. 24, 55–66.

Klin, A., Jones, W., Schultz, R., Volkmar, F., and Cohen, D. (2002). Visual fixation patterns during viewing of naturalistic social situations as predictors of social competence in individuals with autism. Arch. Gen. Psychiatry 59, 809–816. doi: 10.1001/archpsyc.59.9.809

Klinnert, M. D., Emde, R. N., Butterfield, P., and Campos, J. J. (1986). Social referencing: the infant's use of emotional signals from a friendly adult with mother present. Dev. Psychol. 22, 427–432. doi: 10.1037/0012-1649.22.4.427

Kohler, C. G., Turner, T. H., Bilker, W. B., Brensinger, C. M., Siegel, S. J., Kanes, S. J., et al. (2003). Facial emotion recognition in schizophrenia: intensity effects and error pattern. Am. J. Psychiatry 160, 1768–1774. doi: 10.1176/appi.ajp.160.10.1768

Krueger, K. R., Wilson, R. S., Kamenetsky, J. M., Barnes, L. L., Bienias, J. L., and Bennett, D. A. (2009). Social engagement and cognitive function in old age. Exp. Aging Res. 35, 45–60. doi: 10.1080/03610730802545028

Kymlicka, W. (1996). Multicultural citizenship: A liberal theory of minority rights. New York: Oxford University Press

Lane, R. D. (2000). “Neural correlates of conscious emotional experience” in Cognitive neuroscience of emotion. eds. R. D. Lane and L. Nadel (Oxford: Oxford University Press), 345–370.

Lee, B. (2019). Development of eye tracker system for early childhood. J. Korea Contents Assoc. 19, 91–98. doi: 10.5392/JKCA.2019.19.07.091

Lockhofen, D. E. L., and Mulert, C. (2021). Neurochemistry of visual attention. Front. Neurosci. 15:643597. doi: 10.3389/fnins.2021.643597

Markham, R., and Adams, K. (1992). The effect of type of task on children’s identification of facial expressions. J. Nonverbal Behav. 16, 21–39. doi: 10.1007/BF00986877

Matsumoto, D., and Ekman, P. (1989). American-Japanese cultural differences in intensity ratings of facial expressions of emotion. Motiv. Emot. 13, 143–157. doi: 10.1007/bf00992959

Megreya, A. M. (2018). Feature-by-feature comparison and holistic processing in unfamiliar face matching. PeerJ. 6:e4437. doi: 10.7717/peerj.4437

Meissner, C. A., and Brigham, J. C. (2001). Thirty years of investigating the own-race bias in memory for faces: a meta-analytic review. Psychol. Public Policy Law 7, 3–35. doi: 10.1037/1076-8971.7.1.3

Michalson, L., and Lewis, M. (1985). “What do children know about emotions and when do they know it?” in The socialization of emotions. eds. M. Lewis and C. Saarni (Boston, MA: Springer)

Nakabayashi, K., and Liu, C. H. (2014). Development of holistic vs. featural processing in face recognition. Front. Hum. Neurosci. 8:831. doi: 10.3389/fnhum.2014.00831

Nelson, C. A. (2001). The development and neural bases of face recognition. Infant Child Dev. 10, 3–18. doi: 10.1002/icd.239

Nowicki, S., and Duke, P. (1994). Individual differences in the nonverbal communication of affect: the diagnostic analysis of nonverbal accuracy scale. J. Nonverbal Behav. 18, 9–35. doi: 10.1007/BF02169077

Park, B.-S. (2006). The particularity of Korea in the multicultural minorities. Soc. Philos. 12, 99–126.

Park, S.-J., Song, I. H., Ghim, H. R., and Cho, K. J. (2007). Developmental changes in emotional-states and facial expression. Korean. J. Sci. Emot. Sensib. 10, 127–133.

Pascalis, O., De Haan, M., and Nelson, C. A. (2002). Is face processing species-specific during the first year of life? Science 296, 1321–1323. doi: 10.1126/science.1070223

Reisinger, D. L., Shaffer, R. C., Horn, P. S., Hong, M. P., Pedapati, E. V., Dominick, K. C., et al. (2020). Atypical social attention and emotional face processing in autism spectrum disorder: insights from face scanning and pupillometry. Front. Integr. Neurosci. 13:76. doi: 10.3389/fnint.2019.00076

Sangrigoli, S., and De Schonen, S. D. (2004). Recognition of own-race and other-race faces by three-month-old infants. J. Child Psychol. Psychiatry 45, 1219–1227. doi: 10.1111/j.1469-7610.2004.00319.x

Schurgin, M. W., Nelson, J., Iida, S., Ohira, H., Chiao, Y., and Franconeri, S. L. (2014). Eye movements during emotion recognition in faces. J. Vis. 14:14. doi: 10.1167/14.13.14

Shaffer, D. R. (2005). Social and personality development. 5th Edn. CA, United States: Wadsworth/Thomson Learning.

Siegman, A. W., Feldstein, S., Tomasso, C. T., Ringel, N., and Lating, J. (1987). Expressive vocal behavior and the severity of coronary artery disease. Psychosom. Med. 49, 545–561. doi: 10.1097/00006842-198711000-00001

Singmann, H. (2018). Afex: analysis of factorial experiments. [R package]. Available at: https://cran.r-project.org/package=afex.

Spezio, M. L., Adolphs, R., Hurley, R. S., and Piven, J. (2007). Abnormal use of facial information in high-functioning autism. J. Autism Dev. Disord. 37, 929–939. doi: 10.1007/s10803-006-0232-9

Stephan, B. C. M., and Caine, D. (2009). Aberrant pattern of scanning in prosopagnosia reflects impaired face processing. Brain Cogn. 69, 262–268. doi: 10.1016/j.bandc.2008.07.015

Stephan, K. E., Marshall, J. C., Friston, K. J., Rowe, J. B., Ritzl, A., Zilles, K., et al. (2003). Lateralized cognitive processes and lateralized task control in the human brain. Science 301, 384–386. doi: 10.1126/science.1086025

Termine, N. T., and Izard, C. E. (1988). Infants’ responses to their mothers’ expressions of joy and sadness. Dev. Psychol. 24, 223–229. doi: 10.1037/0012-1649.24.2.223

van Belle, G., Ramon, M., Lefèvre, P., and Rossion, B. (2010). Fixation patterns during recognition of personally familiar and unfamiliar faces. Front. Psychology 1:20. doi: 10.3389/fpsyg.2010.00020

Walden, T. A. (1991). “Infant social referencing” in The development of emotion regulation and dysregulation. Cambridge studies in social and emotional development. eds. J. Garber and K. A. Dodge, vol. 1991 (Cambridge: Cambridge University Press), 69–88.

Watanabe, K., Matsuda, T., Nishioka, T., and Namatame, M. (2011). Eye gaze during observation of static faces in deaf people. PLoS One 6:e16919. doi: 10.1371/journal.pone.0016919

Yi, S.-M., Cho, K. J., and Ghim, H. R. (2012). Developmental changes in reading emotional states through facial expression. Korean. J. Dev. Psychol. 25, 55–72.

Keywords: facial expression, emotions, attention, emotion judgment, eye tracking

Citation: Chung C, Choi S, Jeong H, Lee J and Lee H (2023) Attention mechanisms and emotion judgment for Korean and American emotional faces: an eye movement study. Front. Psychol. 14:1235238. doi: 10.3389/fpsyg.2023.1235238

Edited by:

Antonio Maffei, University of Padua, Italy

Reviewed by:

Letizia Della Longa, University of Padua, Italy

Sayuri Hayashi, National Center of Neurology and Psychiatry, Japan

Copyright © 2023 Chung, Choi, Jeong, Lee and Lee. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Hyorim Lee, rimchild@knu.ac.kr
