ORIGINAL RESEARCH article

Front. Psychol., 30 May 2023

Sec. Forensic and Legal Psychology

Volume 14 - 2023 | https://doi.org/10.3389/fpsyg.2023.1152904

This article is part of the Research Topic Contextualizing Interviews to Detect Verbal Cues to Truths and Deceit.

Introduction: The verbal deception literature is largely based upon North American and Western European monolingual English speaker interactions. This paper extends this literature by comparing the verbal behaviors of 88 South Asian bilinguals, interviewed in either their first (Hindi) or second (English) language, and 48 British monolinguals interviewed in English.

Methods: All participants took part in a live event, after which they were interviewed, having been incentivized to be either deceptive or truthful. Event details, complications, verifiable sources, and plausibility ratings were analyzed as a function of veracity, language, and culture.

Results: Main effects revealed cross-cultural similarities in both first and second language interviews, whereby all liars’ verbal responses were impoverished and were rated as less plausible than those of truthtellers. However, a series of cross-cultural interactions emerged whereby bilingual South Asian truthtellers and liars interviewed in first and second languages exhibited varying patterns of verbal behavior, differences that have the potential to trigger erroneous assessments in practice.

Discussion: Despite limitations, including concerns centered on the reductionist nature of deception research, our results highlight that while cultural context is important, impoverished, simple verbal accounts should trigger a ‘red flag’ for further attention irrespective of culture or interview language, since the cognitive load typically associated with formulating a deceptive account apparently manifests in a broadly similar manner.

The forensic interviewing literature concerned with distinguishing liars from truth-tellers is largely based upon North American and Western European research and has typically focused on monolingual English speaker interactions (see Granhag and Strömwall, 2004; Castillo and Mallard, 2012; Laing, 2015; Leal et al., 2018). Yet, the transnational nature of criminal investigation is such that forensic interviewers regularly encounter persons of interest from diverse cultures. Culture can be defined as ‘the collective programming’ that distinguishes one group from another (Hofstede and Hofstede, 2005), and so culture typically refers to characteristic societal markers that determine individual attitudes, behaviors and values (Lytle et al., 1995). Accordingly, individuals from different cultures communicate differently, often varying in factors such as their degree of verbal directness, cohesion and coherence, pacing and pauses, and what they choose to say (e.g., Levine et al., 2007; Reynolds et al., 2011; Taylor et al., 2014).

Psychological understanding of verbal deception in these ‘cross-cultural’ interactions is not well advanced, despite verbal behavior being known to be culturally diverse. Hence, cultural variability adds further to the well-documented challenges of detecting verbal truth and lies in forensic interview contexts, challenges that in part stem from the inconsistent nature of verbal cues in general (see Porter et al., 2000; Dando and Bull, 2011; Vrij, 2014; Sandham et al., 2020). Even when interviewer and interviewee share the same first language, differences in expected norms and cultural speech practices are sufficient to trigger misunderstandings, both in face-to-face dialogue (van der Zee et al., 2014; Giebels et al., 2017; Taylor et al., 2017) and in computer-mediated interactions (e.g., Levine et al., 2007; Durant and Shepherd, 2013; Hurn and Tomalin, 2013).

Additional challenges can arise when the interviewer, the interviewee, or both are required to speak in a second language, since this complicates verbal communication still further (see Cheng and Broadhurst, 2005; Da Silva and Leach, 2013; Duñabeitia and Costa, 2015). While English is the most widely spoken language worldwide, for many it is a second language, and so it is not uncommon for cross-cultural interviews to be conducted in English. Irrespective of veracity, the additional cognitive demands associated with communicating in a second language (Segalowitz, 2010; Tavakoli and Wright, 2020) may trigger verbal cues that research on first language interviews identifies as diagnostic of truth and lies. For example, research has indicated that liars often provide impoverished, simple verbal accounts, which lack verifiable information and contain proportionally fewer event details and complications than those of truth-tellers (e.g., Leal et al., 2018; Vrij et al., 2020; Vrij and Vrij, 2020). Although some of these verbal cues appear stable across cultures (Vrij and Vrij, 2020), as far as we are aware the forensic verbal deception literature has yet to fully investigate differences between first and second language interviews.

Cognitive theories of deception predict differences in verbal behaviors because lying can be more difficult than being truthful. For example, Cognitive Load/Effort theory (Vrij, 2000) posits that lying is often a more complex mental activity than telling the truth, since liars must manage the numerous concurrent cognitive demands associated with (among other things) withholding the truth, formulating a deceptive account, matching accounts to known or discoverable information, appearing plausible, and maintaining consistency (e.g., Buller and Burgoon, 1996; Hartwig et al., 2005; Vrij et al., 2011; Leins et al., 2012; Vrij et al., 2012; Verigin et al., 2020). Consequently, liars may offer more impoverished and vacuous accounts than truthtellers in response to questions, as a way of managing their deception.

Indeed, the amount of event information provided in verbal accounts has consistently emerged as a useful cue to veracity, with truthtellers typically providing more detail in their accounts than deceivers (e.g., DePaulo et al., 2003; Vrij, 2008; Taylor et al., 2013; Dando and Ormerod, 2020; Dando C. J. et al., 2022). Operationalizing this cue in practice can be challenging, since researchers typically work from interview transcripts, whereas interviewers must be alert to deception in real time. Although interviewers are not generally required to make veracity decisions, they are expected to be alert to deception, and so concerns about the cue’s utility are valid. Nonetheless, the amount of event detail provided has been found to cue more accurate truth and lie decisions in both face-to-face and remote interview contexts (e.g., DePaulo et al., 2003; Vrij, 2008). Further, researchers have reported that both professionals and some laypersons are able to recognize information-poor, impoverished accounts, and so are socially alert to this verbal behavior (e.g., Vrij and Mann, 2004; Verigin et al., 2020).

The amount of verifiable source information provided, the complexity of answers, and the overarching plausibility of the account are related factors that have also emerged as veracity cues (see Vrij and Vrij, 2020; Vrij et al., 2022). Verifiable sources, or ‘concrete’ details that could be verified from, for example, witness statements, CCTV, and trace evidence (see also Ormerod and Dando, 2015), are often more common in truthful accounts (e.g., Vrij et al., 2020; Leal et al., 2022). Complications are details that complicate an account by adding uninvited, additional event-relevant detail. For example, ‘I went up to the food counter, which had a basket of fruit on top. The fruit looked really lovely. I remember there were bananas, which I really love’ rather than ‘I went to the food counter’. Again, research has indicated that truthful accounts often include more complications (e.g., Vrij et al., 2021b), suggesting that a paucity of complications is indicative of deception.

Plausibility, in terms of judging how ‘likely’ or ‘believable’ an account is (e.g., DePaulo et al., 2003; Leal et al., 2019), is a subjective assessment/rating. Nonetheless, plausibility judgments have been found to distinguish truth-tellers from liars, with plausibility ratings of deceptive accounts typically significantly lower (e.g., DePaulo et al., 2003; Vrij et al., 2021a). Furthermore, plausibility ratings made on a Likert scale were found to positively predict details, complications and verifiable sources, indicating that observers recognized differences in these verbal behaviors (Vrij et al., 2021a). Indeed, many interview techniques developed to amplify veracity cues in real time have drawn on the notion that truthful accounts should be more plausible and make more sense, and so have focused on credibility cues (e.g., Dando and Bull, 2011; Granhag and Hartwig, 2014; Ormerod and Dando, 2015). It appears that professional observers and interviewers are able to recognize improbable accounts in some circumstances, particularly when interviewers employ techniques to amplify credibility cues (e.g., Dando et al., 2009, 2018; Vrij et al., 2009; Evans et al., 2013; Lancaster et al., 2013).

Our understanding of how consistency, verifiability, plausibility and complications relate to veracity is more advanced for North American and Western European participants than for other populations. It seems sensible to assume, however, that theories of Cognitive Load/Effort are relevant irrespective of culture, since cognitive processes such as memory and attention are universal. What is less clear is how cognitive load will manifest across different cultures. For example, Taylor et al. (2017) found that liars with North African cultural backgrounds tended to increase their provision of perceptual details when lying, with this supplanting their cultural norm of providing social details. The opposite was true for liars from Western Europe. Conversely, some researchers have reported that truthtellers provide more event details and checkable sources irrespective of language (see Ewens et al., 2016, 2017; Leal et al., 2018). For example, Russian, Korean and Hispanic truthtellers were found to include more complications than liars when providing travel accounts (Vrij and Vrij, 2020). These differing findings illustrate how nascent this area is, with a paucity of interview-relevant research findings.

The few studies of veracity across second language and bilingual communication suggest that expectations, the cues attended to, and language (first versus second) all affect veracity judgment performance. Bilinguals experience heightened cognitive load when being both deceptive and truthful in a second language (Da Silva and Leach, 2013; Akehurst et al., 2018), and so verbal cues to veracity such as low information, reduced complexity, and fewer verifiable sources may be apparent but not necessarily associated with lying. However, laypersons and professionals (police officers) appear to believe that liars communicating in both first and second languages are likely to exhibit similar verbal veracity cues (Leach et al., 2020). They also expect differences in interview length due, for example, to misunderstanding of questions and delayed response times (Leach et al., 2020); this has been borne out by increased response durations when being deceptive in a second versus a first language (McDonald et al., 2020).

Despite expectations of similar verbal behaviors, a lie bias has begun to emerge when judging non-native (second language) speakers. In contrast, a truth bias is more evident when judging native (first language) speakers (Da Silva and Leach, 2013; Evans and Michael, 2014; Wylie et al., 2022). Similarly, veracity judgment accuracy is better when judging first rather than second language speakers (Da Silva and Leach, 2013; Taylor et al., 2014; Leach et al., 2017; Akehurst et al., 2018), although not always. Others have reported improved veracity judgments in second language contexts (e.g., Evans et al., 2013), or no discernible differences as a function of language (first, Cantonese; second, English; Cheng and Broadhurst, 2005), although in this research the language status of the observers is not always clear.

The research reported here seeks to advance our understanding of the occurrence and potential cueing utility of details, verifiable details, complications, and plausibility as verbal veracity cues in forensic interview contexts, with bilinguals from a non-western culture. Specifically, monolingual (British) and bilingual (South Asian) participants took part in a laboratory task that involved carrying out an activity (that differed in part as a function of liar or truthteller condition), following which they were interviewed in either their first (English and Hindi) or second (English) language. All deceptive participants self-generated an account to convince the interviewer that they had completed the same activity as the truthful participants. Interviewers and interviewees were culture and language matched. Interview transcripts were coded and rated for plausibility.

The relevant literature is sparse and the findings are mixed. Hence, we formulated a series of questions driven not only by a clear need to advance understanding of verbal behaviors across different cultures, but also by the real-world challenges, and associated empirical questions, raised by professionals/practitioners tasked with maximizing opportunities to better understand truth and lies. It is these research questions and challenges that guided both our paradigm and our analysis approach, as follows.

First, using first language (L1) as a proxy for operationalizing culture, we examined the occurrence of verbal cues (event details, complications, and verifiable sources) and plausibility as a function of veracity. Consistent with previous research, we expected truthtellers to present more of each cue than liars irrespective of cultural background.

Second, we examined the occurrence of verbal cues and plausibility when participants were interviewed in a first versus a second language, as a function of veracity. Consistent with previous research, we expected second language speakers to produce fewer of the verbal cues than first language speakers.

Third, we examined cultural differences and similarities in the occurrence of verbal cues and plausibility as a function of veracity. While we recognize the inconsistencies of prior research in this area, we expected that judgments of plausibility would be particularly affected for those interviewed in their second language, since empirical evidence of a lie bias toward second language speakers has begun to emerge (see Wylie et al., 2022).

An a priori power analysis was conducted using G*Power 3.1 (Faul et al., 2007) to estimate the minimum sample size. A power analysis for ANOVA (main effects and interactions) for three groups with a numerator df of 2 indicated that the required sample size of mock witnesses to detect large effects (assuming power = 0.80 and α = 0.05) was N = 121. Thus, the obtained sample size of N = 136 was adequate given resource constraints and access to bilingual populations, and is in line with sample size norms described in many empirical cross-cultural studies such as the one reported here (e.g., Al-Simadi, 2000; Cheng and Broadhurst, 2005; Castillo and Mallard, 2012; Evans et al., 2017; Primbs et al., 2022). Participant interviewees were recruited through word of mouth, social media and advertisements placed in the locality of the University. This research was approved by Lancaster University’s Psychology Ethics Committee and was run in accordance with the British Psychological Society code of ethical conduct.
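For readers unfamiliar with how such estimates are produced, the relations below sketch the fixed-effects ANOVA power calculation in the form G*Power uses. They assume Cohen's effect size f, total sample size N, k groups (cells), numerator degrees of freedom u, and significance level α. The article does not report the value of f that was entered, so none is filled in here. Cohen's conventional benchmark for a large effect is f = 0.40.

```latex
f = \sqrt{\frac{\eta_p^2}{1 - \eta_p^2}}, \qquad
\lambda = f^2 N, \qquad
\mathrm{power} = \Pr\left[ F'_{u,\; N-k,\; \lambda} > F^{-1}_{u,\; N-k}(1 - \alpha) \right]
```

Here F' is the noncentral F distribution with noncentrality λ, and F^{-1}_{u, N-k} is the quantile function of the central F distribution. The minimum N is the smallest sample size at which power reaches the target (0.80 in this study).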

A total of 136 adults took part in this research (64 males and 72 females). The mean age was 22.13 years (SD = 2.14), ranging from 18 to 29 years. There were 88 (64.7%) bilingual participants with Hindi as their first language and English as a second language (41 male and 47 female) and 48 (35.3%) monolingual English speakers (23 male and 25 female). Participants were randomly allocated to either the liar or truthteller veracity condition, resulting in 70 liars (51.5%) and 66 truthtellers (48.5%). Bilingual participants were further allocated to one of two interview language groups, namely first language (Hindi) or second language (English). Accordingly, there were six conditions: (i) monolingual British liars (25 participants), (ii) monolingual British truthtellers (23 participants), (iii) bilingual first language interview liars (22 participants), (iv) bilingual first language interview truthtellers (23 participants), (v) bilingual second language interview truthtellers (20 participants), and (vi) bilingual second language interview liars (23 participants). There were no significant differences in age across the groups, F(5, 130) = 0.621, p = 0.684, nor in gender distribution, χ²(5, N = 136) = 1.450, p = 0.919.

Two female volunteer research assistants (aged 22 and 24 years; from here on referred to as interviewers) conducted the interviews: one bilingual (Hindi and English) and one monolingual (English). The monolingual interviewer, a British citizen born in the UK and employed at an English university, conducted all monolingual English interviews. The bilingual interviewer, a second-generation British Indian, conducted all interviews with bilingual participants according to language condition. Both interviewers underwent bespoke training over a 2-day period. Training was designed for this research by the first author, adopting a collaborative pedagogical approach, and comprised (i) a 2-h classroom-based introduction to the interview protocol behaviors, and (ii) a 2-h practice session that included 3 practice interviews, which were digitally recorded to allow feedback and evaluation. Once the interviewers had attended the classroom training session (training day 1) and completed the practice interviews to the required level of competency (training day 2), they were able to commence research interviews. Interviewers were naïve to the veracity conditions and hypotheses.

Prior to participation, all participants completed a 10-item hard copy self-report language proficiency and background questionnaire to guide groupings into first and second language conditions (Supplementary materials OSF). Monolingual participants were all British citizens, born in the UK, with English as their first/only language. Bilingual participants were all Indian citizens born in India, attending a UK university to study at postgraduate level. None of the bilingual participants (n = 88) self-reported having spent any time learning or working in another English-speaking country before the age of 16 years, and they reported starting to learn English at a mean age of 9.51 years (SD = 2.16, range 6 to 15 years). On a Likert scale ranging from 1 (extremely poorly/never) to 7 (extremely well/always), bilingual participants reported that they spoke English well (M = 5.64, SD = 0.93), always spoke Hindi at home as a child (M = 7.00, SD = 0.00), always spoke Hindi with their parents (M = 7.00, SD = 0.00), always spoke Hindi at school (M = 6.78, SD = 0.53), and always spoke Hindi with friends (M = 6.79, SD = 0.55). The mean number of years spent completing formal education in English was 3.84 (SD = 1.19). All bilingual participants reported that the language spoken at their first place of education was Hindi. Bilingual participants (Supplementary materials OSF) were also asked which language they preferred to use (Hindi, English, or either/both) in various contexts while studying and living in England (see Table 1).

Immediately post interview, participants completed a hard copy questionnaire comprising a series of Likert scale questions ranging from 1 (very little/extremely easy/not at all) to 7 (very much/extremely hard/extremely motivated). Questions concerned adherence to the pre-interview instructions, motivation, experienced difficulty, and understanding.

Irrespective of condition, all interviews were similarly structured and comprised three information gathering phases, in the same order. First, participants were asked to provide a free recall account of everything they could remember, followed by a series of probing questions, finishing with a second free recall account (see Table 2). Explain and rapport building phases preceded the formal information gathering phases. Interviews finished with a closure phase (see Table 2).
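The fixed phase order described above can be encoded as a simple data structure. This is also convenient when checking adherence to the protocol. The following is a minimal sketch. The phase labels are hypothetical, and placing the explain phase before the rapport phase is an assumption based on the order in which they are mentioned here. Table 2 gives the actual protocol.

```python
from __future__ import annotations

from collections.abc import Sequence

# Hypothetical labels for the six interview phases, in protocol order.
# Assumption: the explain phase precedes the rapport phase.
PROTOCOL_PHASES = (
    "explain",
    "rapport",
    "free_recall_1",
    "probing_questions",
    "free_recall_2",
    "closure",
)


def phases_in_order(observed: Sequence[str]) -> bool:
    """Return True if every phase occurred exactly once, in protocol order."""
    return tuple(observed) == PROTOCOL_PHASES


def missing_phases(observed: Sequence[str]) -> list[str]:
    """Return the protocol phases that never occurred in the observed interview."""
    seen = set(observed)
    return [phase for phase in PROTOCOL_PHASES if phase not in seen]


if __name__ == "__main__":
    # Probing questions moved ahead of the first free recall.
    swapped = ["explain", "rapport", "probing_questions",
               "free_recall_1", "free_recall_2", "closure"]
    print(phases_in_order(PROTOCOL_PHASES))  # True
    print(phases_in_order(swapped))          # False
    print(missing_phases(["explain", "closure"]))
```

An exact tuple comparison is deliberately strict: any missing, repeated, or reordered phase fails the check, and `missing_phases` helps diagnose which phases were omitted.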

Participants were recruited to take part in an unspecified activity followed by an interview. They were warned that they might be asked to deceive the interviewer as part of the interview but were naïve to the real aims of the project. All participants were asked to meet Researcher A (a confederate) in a café on the ground floor of a university building. Researcher A instructed the participant to deliver a package to Researcher B (also a confederate), who would be waiting in an office on the third floor of the building. The package was marked confidential. It was explained to the participant that the package contained some important documents. Hence, once the package had been delivered to Researcher B, it was vital that the participant return to Researcher A, who would be waiting outside the café in the courtyard, with proof of safe delivery in the form of a signed receipt. Researcher A then told participants that some money had gone missing and that they were going to be interviewed about it. Each participant was given 10 min to prepare for the interview.

Participants in the truth condition arrived at the third floor office and were met by Researcher C (a confederate), who explained that Researcher B was running 15 min late and so could not sign for the package just yet. However, Researcher C suggested they go downstairs to the café until Researcher B returned. Participants in this condition accompanied Researcher C to the café, where they had a coffee (or similar) and chatted about a series of general topics (e.g., university, where they lived, whether they had visited nearby cities). After approximately 15 min, Researcher C and the participant returned to the third floor office. Researcher B was waiting, took the package from the participant, and provided a signed receipt, which the participant took back to Researcher A (back downstairs in the café), as instructed.

Participants in the deception condition, however, upon arrival at the third floor office, were immediately told by another confederate that the intended recipient (Researcher B) had just left the office but that they should not wait for his return, because it would seriously delay delivery of the package. Instead, they were instructed to deliver the package themselves to a courier who was waiting outside the building, but before doing so to forge Researcher B’s signature on the proof of delivery receipt, which should then be returned to Researcher A as directed. The participant was instructed to forge the signature by copying Researcher B’s signature from his bank card, which was in his wallet on the office desk. They were further instructed to take £5 from Researcher B’s wallet to give to the courier. All deceptive participants completed this task as instructed. Once the deceptive participants gave the signed receipt to Researcher A, they were told that some money had gone missing and that they were going to be interviewed about it. Researcher A gave the deceptive participants 10 min to develop a “plausible” account of having been in the café with Researcher C for a coffee and told them that their role was to persuade the interviewer that they were being truthful.

Each participant was then interviewed about the theft of £5 from Researcher B’s office. Two interviewers (one monolingual and one bilingual) conducted all interviews. Monolingual participants were all interviewed in English by the same Western monolingual interviewer. The bilingual interviewer conducted all bilingual interviews in the participants’ first (Hindi) or second (English) language, randomly allocated across veracity conditions (lie and truth).

Interviews were digitally audio recorded. English interviews were transcribed verbatim. Hindi interviews were first translated and then transcribed. Transcripts were coded for event details, verifiable information, and complications by a group of 10 British monolingual coders (each coding between 12 and 15 transcripts), all of whom were naïve to the experimental conditions and hypotheses. Coders were postgraduate research students with experience of coding transcripts for information items with reference to a set of coding instructions. Prior to coding, all coders took part in two classroom-based group training sessions, each lasting 2 h. Coding training was run by the first author and comprised (i) instruction/teaching on coding in general, (ii) project-specific coding instructions, (iii) group coding of sample transcripts with feedback, (iv) individual coding of transcripts with feedback and group discussion regarding agreement and managing disagreement across coders, and (v) plausibility coding explanation/instruction. Coders also rated each transcript for plausibility. Items in each of the categories were only scored once (i.e., repetitions were not scored). Each of the 10 coders independently coded a minimum of three of the same transcripts.

Guided by the approach to coding employed by Leal et al. (2018) and Vrij and Vrij (2020), we counted the number of verifiable sources provided. Verifiable source information concerns verbalizations that could be used to verify the information provided by interviewees during the interview, such as named individuals, CCTV footage, text and phone conversations, and purchasing information. For example, ‘I went to the lab on the second floor, scanned in using my student ID and then logged onto my emails’ includes 2 verifiable sources (the student ID scan and the email log-in) that could be accessed to verify what the participant said. Event information details were defined as units of detail/information about the café paradigm event (from start to end) and included all visual, spatial, temporal, auditory, and action details. For example, ‘There was a desk and three chairs. There was a middle-aged man sitting on the middle chair. He was talking to someone on the phone. We spent 20 min in the café. XXX brought me a coffee, and packet of crisps. After a while, XXX got a call telling us to go back upstairs’ includes 17 event information items. We defined a complication as a verbalization that serves to make the account of the event more complex and detailed. For example, ‘I was talking to XXX when I asked if we could move because of the fridge in the corner. The light inside was so bright I almost wanted to put sunglasses on!’ includes two complications. Information items, verifiable sources and complications were coded only once, in that each item was assigned to only one of the verbal cue categories. Repetitions within each category were not coded. Plausibility (see Vrij et al., 2020) was rated on a 7-point Likert scale asking coders how ‘believable/plausible’ the account was (1 = not at all believable/plausible; 7 = completely believable/plausible).
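The counting rules above can be expressed as a small tally function: each item is assigned to a single cue category, and repetitions within a category are not scored. The following is a hypothetical sketch, not the authors' coding tool. It assumes coders have already segmented a transcript into (category, item) pairs, with each item reduced to a normalized label.

```python
from __future__ import annotations

from collections.abc import Iterable

# Hypothetical labels for the three coded verbal cue categories.
CATEGORIES = ("event_detail", "verifiable_source", "complication")


def tally_codes(coded_units: Iterable[tuple[str, str]]) -> dict[str, int]:
    """Count unique items per cue category for one transcript.

    Each unit is a (category, item) pair, where `item` is a normalized label
    for one piece of information. Repeated mentions of an item are counted
    once, and an item may be assigned to only one category.
    """
    counted: dict[str, set[str]] = {category: set() for category in CATEGORIES}
    assigned: dict[str, str] = {}
    for category, item in coded_units:
        if category not in counted:
            raise ValueError(f"unknown category: {category!r}")
        # Remember the first category an item was coded under.
        first_category = assigned.setdefault(item, category)
        if first_category != category:
            raise ValueError(f"{item!r} coded as both {first_category} and {category}")
        counted[category].add(item)  # sets ignore repetitions
    return {category: len(items) for category, items in counted.items()}


# The verifiable-source example above, with the ID scan mentioned twice.
units = [
    ("verifiable_source", "student ID scan"),
    ("verifiable_source", "email log-in"),
    ("verifiable_source", "student ID scan"),
]
print(tally_codes(units))
# {'event_detail': 0, 'verifiable_source': 2, 'complication': 0}
```

The function raises an error rather than silently double-counting an item coded under two categories. This mirrors the rule that each item belongs to exactly one category.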

Thirty of these transcripts were randomly selected. Two-way mixed effects intraclass correlation coefficients (ICCs) for agreement between multiple (10) raters were computed for event details, verifiable sources and complications. Mean estimates with 95% CIs revealed very good inter-rater reliability for (i) event details, ICC = 0.994 (95% CI 0.991; 0.997), (ii) verifiable sources, ICC = 0.894 (95% CI 0.836; 0.940), and (iii) complications, ICC = 0.920 (95% CI 0.876; 0.955).
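ICCs of this kind follow from a two-way ANOVA decomposition of the transcripts × raters matrix. The sketch below computes McGraw and Wong's absolute-agreement ICC in two forms: the single-rater form, ICC(A,1), and the average-rater form, ICC(A,k), which corresponds to the ‘mean estimates’ reported. For absolute agreement, the point-estimate formulas are the same under two-way random and two-way mixed models; the model choice affects interpretation. Confidence intervals, which require F-distribution quantiles, are omitted, and the function name is hypothetical.

```python
from __future__ import annotations

from collections.abc import Sequence


def icc_absolute_agreement(ratings: Sequence[Sequence[float]]) -> tuple[float, float]:
    """Return (ICC(A,1), ICC(A,k)) for an n x k matrix (rows = transcripts, columns = raters)."""
    n = len(ratings)
    if n < 2:
        raise ValueError("need at least two rated transcripts")
    k = len(ratings[0])
    if k < 2 or any(len(row) != k for row in ratings):
        raise ValueError("need a rectangular matrix with at least two raters")

    grand_mean = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    # Partition the total sum of squares into transcripts, raters and residual.
    ss_rows = k * sum((m - grand_mean) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand_mean) ** 2 for m in col_means)
    ss_total = sum((x - grand_mean) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    single = (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )
    average = (ms_rows - ms_error) / (ms_rows + (ms_cols - ms_error) / n)
    return single, average


# Toy data: rater 2 scores every transcript one point higher than rater 1.
single, average = icc_absolute_agreement([[1, 2], [2, 3], [3, 4]])
print(round(single, 3), round(average, 3))  # 0.667 0.8
```

In the toy data the raters rank transcripts identically, but the constant offset lowers absolute agreement below 1. This penalty for systematic rater differences is what distinguishes agreement from consistency ICCs.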

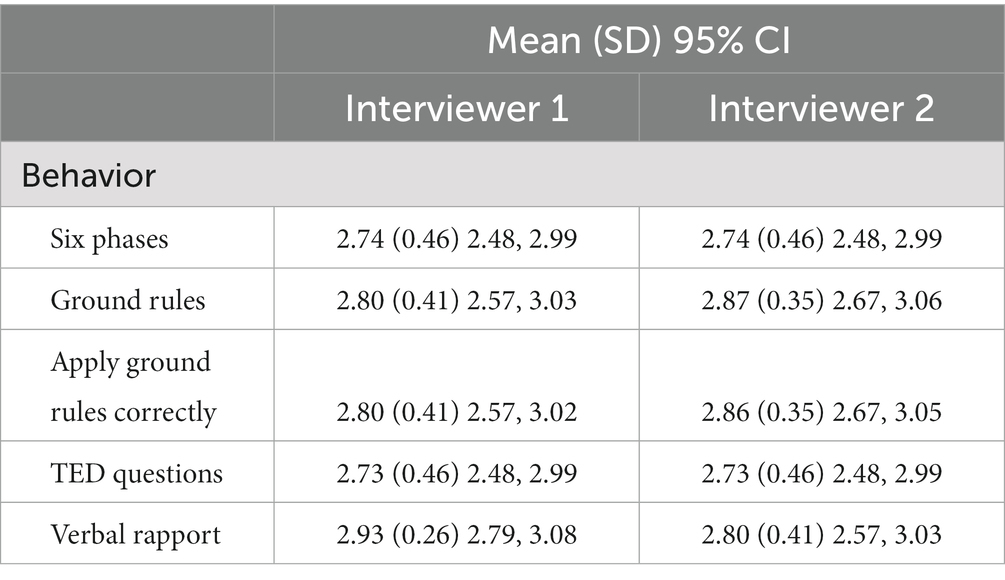

The same sample of 30 interviews was coded by an additional two independent coders for interviewer adherence to the interview protocol, using a scoring sheet that listed each of the required interviewer behaviors: (i) inclusion of the 6 phases in the correct order, (ii) explaining the ground rules correctly, (iii) implementing the four ground rules at the start of all three information gathering phases, (iv) asking four TED questions, and (v) using verbal rapport building behaviors in the rapport phase. Each behavior was scored from 1 to 3 (e.g., 3 = fully and correctly explained the four ground rules, 2 = partially explained the four ground rules, 1 = did not explain the four ground rules). Two-way mixed effects ICC analysis testing for absolute agreement between coders across the sample of 30 interviews revealed good inter-rater reliability for each of the interviewer behaviors: (i) six phases, ICC = 0.937 (95% CI 0.867; 0.970), (ii) correct ground rules, ICC = 1.000 (95% CI 1.00; 1.00), (iii) use of ground rules across three phases, ICC = 0.944 (95% CI 0.889; 0.972), (iv) four TED questions, ICC = 0.865 (95% CI 0.498; 0.964), and (v) rapport building, ICC = 0.757 (95% CI 0.096; 0.935). Mean scores for each behavior as a function of interviewer revealed a very high level of adherence to the protocol, with no significant differences between interviewers for any behavior, all Fs < 1.120, all ps > 0.299 (see Table 3).

Table 3. Mean interviewer protocol adherence scores across interviewer 1 and 2 (dip sample of 30 interviews).

A series of 3 (Language: South Asian L1; South Asian L2; British L1) X 2 (Veracity: Truth; Lie) ANOVAs were conducted across the three dependent variables (Event details; Verifiable sources; Complications), applying Bonferroni’s correction as appropriate. Main effects are reported in the results text, interactions are displayed in Table 4.
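Bonferroni's correction multiplies each p-value by the number of comparisons in its family. Equivalently, it tests each comparison at α divided by that number. The sketch below assumes that the family of p-values is supplied explicitly. The article does not state the family size used for each analysis, and the p-values in the usage line are hypothetical.

```python
from __future__ import annotations

from collections.abc import Sequence


def bonferroni_adjust(p_values: Sequence[float]) -> list[float]:
    """Multiply each p-value by the family size m, capping the result at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


def bonferroni_reject(p_values: Sequence[float], alpha: float = 0.05) -> list[bool]:
    """Reject a null hypothesis when its Bonferroni-adjusted p-value falls below alpha."""
    return [p_adj < alpha for p_adj in bonferroni_adjust(p_values)]


# Hypothetical family of three pairwise comparisons.
p_raw = [0.010, 0.020, 0.400]
print(bonferroni_adjust(p_raw))
print(bonferroni_reject(p_raw))  # [True, False, False]
```

Reporting adjusted p-values, rather than a reduced α, keeps the reported values directly comparable with the conventional 0.05 threshold. This matches how the ‘Bonferroni adjusted’ p-values in the results below are presented.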

There was a significant main effect of veracity for event details, F(1, 130) = 1022.73, p < 0.001, ηp² = 0.89. All liars provided fewer event details than truthtellers (M Liar = 24.49, SD = 5.35, 95% CI 22.58, 26.21; M Truth = 66.97, SD = 10.26, 95% CI 65.09, 68.84, d = 5.44). The main effect of language was non-significant, F(2, 130) = 1.96, p = 0.146 (M SA L1 = 47.54, SD = 25.42, 95% CI 45.25, 49.82; M SA L2 = 44.62, SD = 23.66, 95% CI 42.28, 46.96; M British = 44.13, SD = 19.32, 95% CI 42.69, 47.09). The language X veracity interaction was significant, F(2, 130) = 6.59, p = 0.002, ηp² = 0.09. South Asian truthtellers provided significantly more event details than South Asian liars in both first (L1) and second (L2) languages, all ps < 0.001 (see Table 4).
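Partial eta squared can be recovered from a reported F ratio and its degrees of freedom as ηp² = F·df_effect / (F·df_effect + df_error). This provides a quick consistency check on reported effect sizes. As a minimal sketch (the function name is illustrative), applying it to the language × veracity interaction for event details, F(2, 130) = 6.59, gives approximately 0.09.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Recover partial eta squared from an F ratio and its degrees of freedom."""
    numerator = f_value * df_effect
    return numerator / (numerator + df_error)


# F ratios and degrees of freedom taken from the event-detail analysis.
print(round(partial_eta_squared(1022.73, 1, 130), 2))  # 0.89 (veracity)
print(round(partial_eta_squared(6.59, 2, 130), 2))     # 0.09 (language x veracity)
```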

There was a significant main effect of veracity for complications, F(1, 130) = 248.84, p < 0.001, ηp² = 0.66. All liars provided fewer complications than truthtellers (M Liar = 2.10, SD = 1.43, 95% CI 1.77, 2.44; M Truth = 5.92, SD = 1.56, 95% CI 5.57, 6.26, d = 1.06). The main effect of language was non-significant, F(2, 130) = 2.38, p = 0.096 (M SA L1 = 4.24, SD = 1.23, 95% CI 3.83, 4.66; M SA L2 = 3.63, SD = 1.09, 95% CI 3.21, 4.06; M British = 4.15, SD = 1.35, 95% CI 3.75, 4.55). The language X veracity interaction was significant, F(2, 130) = 10.05, p < 0.001, ηp² = 0.13. South Asian truthtellers provided significantly more complications than South Asian liars in both first (L1) and second (L2) languages, all ps < 0.001 (see Table 4).
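The d values above are standardized differences between liars and truthtellers. The sketch below implements one common formulation: Cohen's d with a pooled standard deviation, computed from group means, standard deviations, and sizes. The article does not state which formulation of d it used, so values from this sketch may not reproduce the reported d values. The function name is illustrative.

```python
import math


def cohens_d(mean_1: float, sd_1: float, n_1: int,
             mean_2: float, sd_2: float, n_2: int) -> float:
    """Cohen's d for two independent groups, using the pooled standard deviation."""
    pooled_variance = ((n_1 - 1) * sd_1 ** 2 + (n_2 - 1) * sd_2 ** 2) / (n_1 + n_2 - 2)
    return (mean_1 - mean_2) / math.sqrt(pooled_variance)


# Complication descriptives: truthtellers (n = 66) versus liars (n = 70).
print(round(cohens_d(5.92, 1.56, 66, 2.10, 1.43, 70), 2))  # 2.56
```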

There were significant main effects of veracity, F(1, 130) = 152.99, p < 0.001, ηp² = 0.54, and language, F(2, 130) = 11.44, p < 0.001, ηp² = 0.15, for verifiable sources. Liars provided fewer verifiable sources (M Liar = 2.66, SD = 1.19, 95% CI 2.72, 3.04) than truthtellers (M Truth = 6.09, SD = 1.78, 95% CI 5.70, 6.49), p < 0.001, d = 0.82. South Asian L1 participants (M SA L1 = 4.12, SD = 2.49, 95% CI 3.67, 4.55) provided fewer verifiable details than South Asian L2 participants (M SA L2 = 5.29, SD = 2.21, 95% CI 4.80, 7.78), p = 0.003, d = 0.50. The language X veracity interaction was significant, F(2, 130) = 3.93, p = 0.022, ηp² = 0.06 (see Table 4). South Asian truthtellers provided significantly more verifiable details than South Asian liars in both first (L1) and second (L2) languages, all ps < 0.001. South Asian L1 liars provided fewer verifiable details than South Asian L2 liars, p < 0.001.

There was a significant main effect of veracity for plausibility ratings, F(1, 130) = 38.59, p < 0.001, ηp² = 0.23. All liars were rated less plausible (M Liar = 3.27, SD = 1.18, 95% CI 3.01, 3.54) than truthtellers (M Truth = 4.52, SD = 1.26, 95% CI 4.21, 4.77, d = 0.20). The main effect of language was also significant, F(2, 130) = 3.85, p = 0.024 (M SA L1 = 4.09, SD = 1.33; M SA L2 = 3.47, SD = 1.16; M British = 4.02, SD = 1.51). The veracity X language interaction was significant, F(2, 130) = 8.138, p < 0.001, ηp² = 0.11. British (English speaking) truthtellers were rated more plausible than South Asian L1 and L2 truthtellers, all ps < 0.032 (Bonferroni adjusted). British (English speaking) liars were rated less plausible than South Asian (L1 Hindi) liars, p = 0.023 (Bonferroni adjusted).
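The pairwise comparisons for plausibility are reported as Bonferroni adjusted. The size of each comparison family is not stated in this section, so the minimal sketch below simply treats it as the number of p-values passed in; the function name is hypothetical and the example p-values are invented, not taken from this study.

```python
def bonferroni(p_values, alpha=0.05):
    """Bonferroni correction for a family of m comparisons.

    Returns (adjusted p-values, reject flags). Each adjusted p is min(1, p * m);
    rejecting when the adjusted p is below alpha is equivalent to testing
    each raw p against alpha / m.
    """
    m = len(p_values)
    adjusted = [min(1.0, p * m) for p in p_values]
    reject = [p_adj < alpha for p_adj in adjusted]
    return adjusted, reject


# Hypothetical raw p-values for three pairwise comparisons.
adjusted, reject = bonferroni([0.004, 0.010, 0.020])
print(adjusted, reject)
```

With three comparisons, a raw p of 0.020 would no longer count as significant at α = 0.05, because its adjusted value is 0.06.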

Overall, self-reported motivation to comply with researcher instructions was high, M = 6.13 (SD = 0.87). Main effects of veracity (M Liar = 6.16, SD = 0.91; M Truth = 6.11, SD = 0.83) and culture (M British = 6.27, SD = 0.89; M SA = 6.06, SD = 0.85) were non-significant, all Fs < 1.671, all ps > 0.194. However, the veracity X culture interaction was significant: British liars self-reported more motivation (M British = 6.60, SD = 0.76) than South Asian liars (M SA = 5.91, SD = 0.90), p = 0.001. All other interactions were non-significant, p = 0.173.

Overall, self-reported adherence to researcher instructions (as a function of condition) was high, M = 6.32 (SD = 0.72). Main effects of veracity (truthteller, liar) and culture (British, South Asian) were non-significant, as was the veracity X culture interaction, all Fs < 0.001, all ps > 0.269.

Overall, participants self-reported the interview to be neither easy nor difficult (M = 4.34, SD = 0.50). Main effects of veracity, F(1, 132) = 195.167, p < 0.001, ηp² = 0.60, and culture (British, South Asian), F(1, 132) = 18.463, p < 0.001, ηp² = 0.12, were significant. All liars found the interview more difficult (M Liar = 3.04, SD = 1.20) than truthtellers (M Truth = 5.71, SD = 1.26), p < 0.001. South Asian participants found the interview more difficult than British participants (M SA = 4.05, SD = 1.68; M British = 4.88, SD = 1.94). The veracity X culture interaction was significant, F(1, 132) = 7.787, p = 0.006, ηp² = 0.06. South Asian truthtellers and liars found the interview more difficult than British truthtellers (M SA Truth = 5.21, SD = 1.23; M British Truth = 6.65, SD = 0.65) and liars (M SA Liar = 2.93, SD = 1.25; M British Liar = 3.43, SD = 0.95), respectively, all ps < 0.002.

Overall, participants' self-reported understanding of the interviewer’s questions was high (M = 6.78, SD = 0.50). Main effects of veracity (truthteller, liar) and culture (British, South Asian) were non-significant, as was the veracity X culture interaction, all Fs < 4.206, all ps > 0.042.

There is a paucity of research concerned with verbal veracity cues in forensic interview contexts with bilinguals from non-western cultures. We investigated the occurrence of several verbal behaviors that have emerged from North American and Western European monolingual research as promising cues to veracity. To investigate differences and similarities in verbal behaviors between cultures as a function of veracity and interview language (L1 and L2), South Asian participants were interviewed in first and second languages, whereas British participants were interviewed in English only.

Irrespective of interview language (L1; L2) or culture (South Asian; British), all liars verbalized significantly fewer event details, verifiable sources, and complications than truthtellers. This pattern of results is consistent with our expectations and with previous research (e.g., DePaulo et al., 2003; Vrij, 2008; Leal et al., 2018; Vrij and Vrij, 2020; Vrij et al., 2021b, 2022), and it advances understanding by suggesting that these verbal behaviors are stable across cultures (British and South Asian) for liars and truthtellers, including when interviews are conducted in a second language. This latter finding is arguably the most intriguing, given the often-made assumption that speaking in a second language degrades the quality of discourse (Taylor et al., 2014): speaking in a second language places additional demands on neural processing (Perani and Abutalebi, 2005), which makes conversations more challenging (Ullman, 2001; Da Silva and Leach, 2013). Nonetheless, as predicted by cognitive load theories and as others have reported, the increased cognitive demand typically associated with deception appears to have affected verbal behavior similarly across cultures, irrespective of interview language.

We expected that second language speakers would include fewer of some verbal cues than first language speakers, owing to the additive cognitive load stemming from language and veracity. Our results do not support this hypothesis: main effects revealed that South Asian participants in the L2 condition provided more verifiable sources than their South Asian L1 counterparts, and South Asian L2 liars likewise provided more verifiable sources than South Asian L1 liars. That said, the additional cognitive load imposed by speaking in a second language is neither consistent nor static. Practice can lighten this load: as L2 proficiency improves, control mechanisms strengthen, significantly reducing interference between languages and making second language conversations ‘easier’ (e.g., Costa et al., 2006; Albl-Mikasa et al., 2020; Liu et al., 2021). It therefore seems reasonable to expect that bilingual L2 proficiency may moderate cross-cultural differences in verbal veracity cues in an interview context (e.g., Evans et al., 2013).

Here, our bilingual participants were studying at a British university, and all indicated regular, daily use of L2. Indeed, responses to the language questionnaire indicate that many participants preferred to speak English rather than Hindi while at university, or had no preference, so participants were clearly comfortable speaking either language. Accordingly, our findings may be limited to bilinguals with a high level of English language proficiency. Objective evaluations of language proficiency that map directly onto cognitive load may be needed to test for possible additive effects, and so to fully understand the utility of verbal cues. Furthermore, because second language ability develops variously according to exposure to relevant language-learning and cultural contexts, second language ability may be inadequate despite initial appearances if that exposure is limited and/or intermittent (see Francis, 2006).

Our results are broadly consistent with prior literature and reinforce an observation made elsewhere (Taylor et al., 2017), namely that cultural variations in interpersonal norms and memory encoding may manifest as ‘main effect’ differences in the behaviors observed in two cultures. This does not affect the evidence for aggregate effects of veracity across our dependent variables. It does, however, affect any effort to give a point estimate (Nahari et al., 2019) that answers the practical question of whether a particular person of interest is lying: the amount of information that provides the best cut-off between liars and truthtellers may differ for each culture.
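The point about culture-specific cut-offs can be made concrete with a toy example. The sketch below is purely illustrative: it uses invented complication counts for two hypothetical groups, A and B, and for each group picks the cut-off that maximizes Youden's J (sensitivity + specificity - 1). Youden's J is a common criterion swapped in here for illustration; it is not a procedure used in this study, and the function name is hypothetical.

```python
def youden_cutoff(truth_counts, lie_counts):
    """Return (best_cutoff, best_j) over all observed count values.

    Assumption: an account is classified as truthful when its count is at
    or above the cut-off. Ties keep the first (lowest) cut-off examined.
    """
    best_cutoff, best_j = None, -1.0
    for cutoff in sorted(set(truth_counts) | set(lie_counts)):
        # Sensitivity: share of truthful accounts at or above the cut-off.
        sensitivity = sum(c >= cutoff for c in truth_counts) / len(truth_counts)
        # Specificity: share of deceptive accounts below the cut-off.
        specificity = sum(c < cutoff for c in lie_counts) / len(lie_counts)
        j = sensitivity + specificity - 1
        if j > best_j:
            best_cutoff, best_j = cutoff, j
    return best_cutoff, best_j


# Invented complication counts for two hypothetical groups (not study data).
group_a = {"truth": [5, 6, 6, 7, 8], "lie": [1, 2, 2, 3, 4]}
group_b = {"truth": [3, 4, 4, 5, 6], "lie": [0, 1, 1, 2, 3]}

for name, data in (("A", group_a), ("B", group_b)):
    cutoff, j = youden_cutoff(data["truth"], data["lie"])
    print(f"group {name}: cut-off = {cutoff}, J = {j:.2f}")
```

With these invented numbers the best cut-off is 5 complications for group A but 3 for group B, even though liars provide fewer complications than truthtellers in both groups: the same count would be read differently depending on the group.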

Despite inconsistencies in prior research, we expected that liars across all conditions would be judged less plausible than truthtellers. Our results support this hypothesis: all liars were rated less plausible. We found no differences in plausibility ratings as a function of L1 or L2 for South Asian participants, but British (English speaking) truthtellers were rated more plausible than South Asian L1 and L2 truthtellers. Further, British (English speaking) liars were rated less plausible than South Asian liars interviewed in L1 (Hindi). These results suggest that some judges may tend to use more extreme ratings when judging British speakers, which may reflect the cultural background of our raters, all of whom were monolingual British. These findings are also broadly in line with research suggesting a more pronounced observer lie bias when judging non-native speakers (Da Silva and Leach, 2013; Evans and Michael, 2014; Wylie et al., 2022), although this may not be the case where judges and coders are bilingual and culturally matched to the interviewee, since assessments of plausibility are likely to vary with the knowledge and expertise of those making the judgment. This may bear on whether cross-cultural interviews should be conducted in a second language or via an interpreter.

That some cues manifested differently across our two cultural groups raises a challenge for future research and practice in forensic interview contexts. As Taylor and colleagues summarize (Taylor et al., 2017), the challenge for the research community is that research could become reductionary, with researchers introducing “new moderators and cut their samples into smaller ‘cultures’” (Hope et al., 2022). This reinforces the view that future research should concern itself less with providing ways to determine veracity and more with techniques that improve the interaction between interview technique, interviewer, and the person of interest being interviewed. A constructive interaction is likely to provide the best opportunity to derive checkable evidence that aids an investigation (see also Dando and Ormerod, 2020), rather than relying on research to project an absolute (but arbitrary) number of cues associated with truthtellers and liars. The cultural differences in cue generation found in this research suggest that monolingual British interviewers and observers may well misjudge the veracity of British and South Asian liars and truthtellers, irrespective of whether they base their judgments on plausibility, numbers of complications, or verifiable sources.

Whilst our findings suggest that verifiability and plausibility may be useful cues to deception, and that broadly speaking they appear robust across cultures, how they manifest in absolute terms will vary. It will be interesting to determine whether this remains true for cues that concern not information but other elements of the interaction, such as relational humor (Hamlin et al., 2020) and rapport (Gabbert et al., 2021; Dando C. et al., 2022). We might hypothesize, for example, that if a person of interest speaking in a second language focuses entirely on providing information, the wider facets of the interaction may suffer, and this may also expose their deception.

The limitations of our research are clear and ubiquitous. The paradigm employed allowed us to control several variables toward unpicking differences and similarities in verbal behaviors across cultures, but our approach may reduce generalizability. We culturally matched interviewer and interviewee, which maps onto the paradigms employed by some researchers (e.g., Cheng and Broadhurst, 2005; Leal et al., 2018) but differs from other approaches (e.g., Elliott and Leach, 2016; Akehurst et al., 2018). We also kept interviewers constant: the same bilingual interviewer interviewed the bilingual group, and a second, monolingual interviewer interviewed the monolingual group. This reduces potentially confounding variables arising from differences in interviewer behavior, but conversely introduces the possibility that our results are confounded by behaviors specific to each interviewer. That said, we used an interview protocol, and the tension between single and multiple interviewers is common to all experimental interviewing research of this kind.

We used transcripts only as the basis for plausibility judgments; others have found transcripts to yield higher discrimination accuracy for second language interviews than audio-visual and/or audio-only excerpts (Akehurst et al., 2018). We sought to optimize the accuracy of judgments by eliminating non-verbal behavior, which can decrease accuracy (Vrij et al., 2010; Bull et al., 2019; Sandham et al., 2020; Dando C. J. et al., 2022). However, in doing so we reduced a complex, multifaceted social interaction to a series of sentences, and verbal behavior is likely to convey far more in a live interaction. Hence our findings may be most relevant to transcript-only judgments. Further research on the utility of verbal cues when listening to the audio alone versus listening to the audio while also observing the social interaction would add to our results.

Translation of the Hindi interviews into English is a limitation that others have highlighted, since around 10% of information may be lost in the process of translation (see Ewens et al., 2017; Leal et al., 2018). This may have affected our results, although the information loss is likely small, and translation is a limitation for all bilingual research, irrespective of discipline. We coded each item of verbalized information only once within each of the three categories (event details; complications; verifiable sources), so there were no within-category duplications. However, some information items may not have been mutually exclusive across categories; an item of event information may also map onto our definition of a verifiable source, for example. We controlled for this possibility by analyzing each category individually, which maps onto the approach employed by others and does not negate our findings. In addition, South Asian liars self-reported being slightly less motivated to be deceptive than British liars. The locus of this result is unclear, and the literature in this regard is sparse. It may be that motivation was influenced by an interplay of intercultural communication, cultural group membership, and social moral values (see Giles et al., 2019).

Finally, an a priori power analysis (Faul et al., 2007) revealed that our sample size was adequate to detect large effects but not small effects, so future research might consider larger samples toward a more nuanced understanding, although the importance of small effect sizes for applied research is currently the subject of debate (see Götz et al., 2022; Primbs et al., 2022).
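The inputs to the power analysis are not reported in this section, so the sketch below is only an illustration of the kind of calculation involved. It assumes α = 0.05, a total N of 136, and an F test with 2 numerator and 130 error degrees of freedom (as for the language X veracity interactions), and it uses Cohen's conventional f = 0.10 (small) and f = 0.40 (large). It follows G*Power's fixed-effects convention that the noncentrality parameter is λ = f² × N, but it estimates power by Monte Carlo simulation rather than reproducing G*Power's exact computation; the function names are hypothetical.

```python
import random


def f_critical_df1_2(alpha: float, df_error: int) -> float:
    """Critical F for 2 numerator df, solving (1 + 2x / d2) ** (-d2 / 2) = alpha."""
    return (df_error / 2.0) * (alpha ** (-2.0 / df_error) - 1.0)


def simulated_power(cohens_f: float, n_total: int, df_error: int,
                    alpha: float = 0.05, reps: int = 20000, seed: int = 1) -> float:
    """Monte Carlo power of an F test with 2 numerator df.

    Assumes G*Power's fixed-effects convention: lambda = f**2 * N.
    """
    rng = random.Random(seed)
    lam = cohens_f ** 2 * n_total
    f_crit = f_critical_df1_2(alpha, df_error)
    hits = 0
    for _ in range(reps):
        # Noncentral chi-square with 2 df: all noncentrality on one normal.
        x1 = (rng.gauss(0.0, 1.0) + lam ** 0.5) ** 2 + rng.gauss(0.0, 1.0) ** 2
        # Central chi-square with df_error df, drawn as Gamma(df/2, scale 2).
        x2 = rng.gammavariate(df_error / 2.0, 2.0)
        f_stat = (x1 / 2.0) / (x2 / df_error)
        if f_stat > f_crit:
            hits += 1
    return hits / reps


for label, f in (("small", 0.10), ("large", 0.40)):
    print(label, round(simulated_power(f, n_total=136, df_error=130), 2))
```

Under these assumptions the large-effect estimate comes out well above the conventional 0.80 target, while the small-effect estimate falls far below it, which mirrors the pattern described in the paragraph.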

Despite the limitations of research of this nature, our findings offer novel insights into the impact of two contextual variables, culture and language, on verbal behavior in face-to-face forensic interviews that were information gathering in nature and designed to amplify potential verbal veracity cues. Our results are promising in again highlighting that, while context is an important consideration, impoverished, simple verbal accounts should trigger a ‘red flag’ for further attention, irrespective of culture and interview language.

The datasets presented in this study can be found in an online repository: https://osf.io/p72ur/?view_only=aa29c3c9cd4f463996cb08e85ca3f473.

The studies involving human participants were reviewed and approved by the Lancaster University Ethics Committee. The participants provided their written informed consent to participate in this study.

CD and PT designed the paradigm. AS, CD, and PT steered the research question and data analysis and wrote the introduction, method, results, and discussion. All authors contributed to the article and approved the submitted version.

We would like to thank our team of coders, interviewers, and volunteer research assistants who worked together to assist us in bringing this project to life.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Akehurst, L., Arnhold, A., Figueiredo, I., Turtle, S., and Leach, A. M. (2018). Investigating deception in second language speakers: interviewee and assessor perspectives. Leg. Criminol. Psychol. 23, 230–251. doi: 10.1111/lcrp.12127

Albl-Mikasa, M., Ehrensberger-Dow, M., Hunziker Heeb, A., Lehr, C., Boos, M., Kobi, M., et al. (2020). Cognitive load in relation to non-standard language input. Trans. Cogn. Behav. 3, 263–286. doi: 10.1075/tcb.00044.alb

Al-Simadi, F. A. (2000). Detection of deceptive behavior: a cross-cultural test. Soc. Behav. Personal. Int. J. 28, 455–461. doi: 10.2224/sbp.2000.28.5.455

Bull, R., van der Burgh, M., and Dando, C. (2019). “Verbal cues fostering perceptions of credibility and truth/lie detection” in The Palgrave handbook of deceptive communication. ed. T. Docan-Morgan (New York: Palgrave)

Buller, D. B., and Burgoon, J. K. (1996). Interpersonal deception theory. Commun Theory 6, 203–242. doi: 10.1111/j.1468-2885.1996.tb00127.x

Castillo, P. A., and Mallard, D. (2012). Preventing cross-cultural bias in deception judgments: the role of expectancies about nonverbal behavior. J. Cross-Cult. Psychol. 43, 967–978. doi: 10.1177/0022022111415672

Cheng, K. H. W., and Broadhurst, R. (2005). The detection of deception: the effects of first and second language on lie detection ability. Psychiatry Psychol. Law 12, 107–118. doi: 10.1375/pplt.2005.12.1.107

Costa, A., Santesteban, M., and Ivanova, I. (2006). How do highly proficient bilinguals control their lexicalization process? Inhibitory and language-specific selection mechanisms are both functional. J. Exp. Psychol. Learn. Mem. Cogn. 32, 1057–1074. doi: 10.1017/S1366728906002495

Da Silva, C. S., and Leach, A. M. (2013). Detecting deception in second-language speakers. Leg. Criminol. Psychol. 18, 115–127. doi: 10.1111/j.2044-8333.2011.02030.x

Dando, C. J., and Bull, R. (2011). Maximising opportunities to detect verbal deception: training police officers to interview tactically. J. Investig. Psychol. Offender Profiling 8, 189–202. doi: 10.1002/jip.145

Dando, C. J., Bull, R., Ormerod, T. C., and Sandham, A. L. (2018). “Helping to sort the liars from the truth-tellers: the gradual revelation of information during investigative interviews” in Investigating the truth. ed. R. Bull (London: Routledge), 173–189.

Dando, C. J., and Ormerod, T. C. (2020). Noncoercive human intelligence gathering. J. Exp. Psychol. Gen. 149, 1435–1448. doi: 10.1037/xge0000724

Dando, C., Taylor, D. A., Caso, A., Nahouli, Z., and Adam, C. (2022). Interviewing in virtual environments: towards understanding the impact of rapport-building behaviours and retrieval context on eyewitness memory. Mem. Cogn. 51, 1–18. doi: 10.3758/s13421-022-01362-7

Dando, C. J., Taylor, P. J., Menacere, T., Ormerod, T. C., Ball, L. J., and Sandham, A. L. (2022). Sorting insiders from co-workers: remote synchronous computer-mediated triage for investigating insider attacks. Hum. Factors. doi: 10.1177/00187208211068292, [Epub ahead of print]

Dando, C., Wilcock, R., and Milne, R. (2009). The cognitive interview: the efficacy of a modified mental reinstatement of context procedure for frontline police investigators. Appl. Cogn. Psychol. 23, 138–147. doi: 10.1002/acp.1451

DePaulo, B. M., Lindsay, J. J., Malone, B. E., Muhlenbruck, L., Charlton, K., and Cooper, H. (2003). Cues to deception. Psychol. Bull. 129, 74–118. doi: 10.1037/0033-2909.129.1.74

Duñabeitia, J. A., and Costa, A. (2015). Lying in a native and foreign language. Psychon. Bull. Rev. 22, 1124–1129. doi: 10.3758/s13423-014-0781-4

Durant, A., and Shepherd, I. (2013). “‘Culture’ and ‘communication’ in intercultural communication” in Intercultural Negotiations (Abingdon: Routledge), 19–34.

Elliott, E., and Leach, A. M. (2016). You must be lying because I don’t understand you: language proficiency and lie detection. J. Exp. Psychol. Appl. 22:488.

Evans, J. R., and Michael, S. W. (2014). Detecting deception in non-native English speakers. Appl. Cogn. Psychol. 28, 226–237. doi: 10.1002/acp.2990

Evans, J. R., Michael, S. W., Meissner, C. A., and Brandon, S. E. (2013). Validating a new assessment method for deception detection: introducing a psychologically based credibility assessment tool. J. Appl. Res. Mem. Cogn. 2, 33–41. doi: 10.1016/j.jarmac.2013.02.002

Evans, J. R., Pimentel, P. S., Pena, M. M., and Michael, S. W. (2017). The ability to detect false statements as a function of the type of statement and the language proficiency of the statement provider. Psychol. Public Policy Law 23, 290–300. doi: 10.1037/law0000127

Ewens, S., Vrij, A., Leal, S., Mann, S., Jo, E., Shaboltas, A., et al. (2016). Using the model statement to elicit information and cues to deceit from native speakers, non-native speakers and those talking through an interpreter. Appl. Cogn. Psychol. 30, 854–862. doi: 10.1002/acp.3270

Ewens, S., Vrij, A., Mann, S., Leal, S., Jo, E., and Houston, K. (2017). The effect of the presence and seating position of an interpreter on eliciting information and cues to deceit. Psychol. Crime Law 23, 180–200. doi: 10.1080/1068316X.2016.1239100

Faul, F., Erdfelder, E., Lang, A. G., and Buchner, A. (2007). G*Power 3: a flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behav. Res. Methods 39, 175–191. doi: 10.3758/BF03193146

Francis, N. (2006). The development of secondary discourse ability and metalinguistic awareness in second language learners. Int. J. Appl. Linguist. 16, 37–60. doi: 10.1111/j.1473-4192.2006.00105.x

Gabbert, F., Hope, L., Luther, K., Wright, G., Ng, M., and Oxburgh, G. (2021). Exploring the use of rapport in professional information-gathering contexts by systematically mapping the evidence base. Appl. Cogn. Psychol. 35, 329–341. doi: 10.1002/acp.3762

Giebels, E., Oostinga, M. S. D., Taylor, P. J., and Curtis, J. (2017). The cultural dimension of uncertainty avoidance impacts police-civilian interaction. Law Hum. Behav. 41, 93–102. doi: 10.1037/lhb0000227

Giles, R. M., Rothermich, K., and Pell, M. D. (2019). Differences in the evaluation of prosocial lies: a cross-cultural study of Canadian, Chinese, and German adults. Front. Commun. 4:38. doi: 10.3389/fcomm.2019.00038

Götz, F. M., Gosling, S. D., and Rentfrow, P. J. (2022). Small effects: the indispensable foundation for a cumulative psychological science. Perspect. Psychol. Sci. 17, 205–215. doi: 10.1177/1745691620984483

Granhag, P. A., and Hartwig, M. (2014). “The strategic use of evidence (SUE) technique: a conceptual overview” in Deception detection: current challenges and new approaches. eds. P. A. Granhag, A. Vrij, and B. Verschuere (Chichester: Wiley), 231–251.

Granhag, P. A., and Strömwall, L. A. (eds.) (2004). The detection of deception in forensic contexts. Cambridge: Cambridge University Press.

Hamlin, I., Taylor, P. J., Cross, L., MacInnes, K., and Van der Zee, S. (2020). A psychometric investigation into the structure of deception strategy use. J. Police Crim. Psychol. 37, 229–239. doi: 10.1007/s11896-020-09380-4

Hartwig, M., Granhag, P. A., Strömwall, L. A., and Vrij, A. (2005). Detecting deception via strategic disclosure of evidence. Law Hum. Behav. 29, 469–484. doi: 10.1007/s10979-005-5521-x

Hofstede, G., and Hofstede, G. J. (2005). Cultures and organizations: software of the mind (2nd ed.). New York: McGraw-Hill.

Hope, L., Anakwah, N., Antfolk, J., Brubacher, S. P., Flowe, H., Gabbert, F., et al. (2022). Urgent issues and prospects: examining the role of culture in the investigative interviewing of victims and witnesses. Leg. Criminol. Psychol. 27, 1–31. doi: 10.1111/lcrp.12202

Hurn, B. J., and Tomalin, B. (2013). “What is cross-cultural communication?” in Cross-cultural communication (London: Palgrave Macmillan), 1–19.

Laing, B. L. (2015). The language and cross-cultural perceptions of deception. Provo, UT: Brigham Young University.

Lancaster, G. L., Vrij, A., Hope, L., and Waller, B. (2013). Sorting the liars from the truth tellers: the benefits of asking unanticipated questions on lie detection. Appl. Cogn. Psychol. 27, 107–114. doi: 10.1002/acp.2879

Leach, A. M., Da Silva, C. S., Connors, C. J., Vrantsidis, M. R., Meissner, C. A., and Kassin, S. M. (2020). Looks like a liar? Beliefs about native and non-native speakers' deception. Appl. Cogn. Psychol. 34, 387–396. doi: 10.1002/acp.3624

Leach, A. M., Snellings, R. L., and Gazaille, M. (2017). Observers' language proficiencies and the detection of non-native speakers' deception. Appl. Cogn. Psychol. 31, 247–257. doi: 10.1002/acp.3322

Leal, S., Vrij, A., Deeb, H., and Fisher, R. P. (2022). Interviewing to detect omission lies. Appl. Cogn. Psychol. 37, 26–41. doi: 10.1002/acp.4020

Leal, S., Vrij, A., Deeb, H., and Kamermans, K. (2019). Encouraging interviewees to say more and deception: the ghostwriter method. Leg. Criminol. Psychol. 24, 273–287. doi: 10.1111/lcrp.12152

Leal, S., Vrij, A., Vernham, Z., Dalton, G., Jupe, L., Harvey, A., et al. (2018). Cross-cultural verbal deception. Leg. Criminol. Psychol. 23, 192–213. doi: 10.1111/lcrp.12131

Leins, D. A., Fisher, R. P., and Vrij, A. (2012). Drawing on liars' lack of cognitive flexibility: detecting deception through varying report modes. Appl. Cogn. Psychol. 26, 601–607. doi: 10.1002/acp.2837

Levine, T. R., Park, H. S., and Kim, R. K. (2007). Some conceptual and theoretical challenges for cross-cultural communication research in the 21st century. J. Intercult. Commun. Res. 36, 205–221. doi: 10.1080/17475750701737140

Liu, L., Margoni, F., He, Y., and Liu, H. (2021). Neural substrates of the interplay between cognitive load and emotional involvement in bilingual decision making. Neuropsychologia 151:107721. doi: 10.1016/j.neuropsychologia.2020.107721

Lytle, A. L., Brett, J. M., Barsness, Z. I., Tinsley, C. H., and Janssens, M. (1995). A paradigm for confirmatory cross-cultural research in organizational behavior. Res. Organ. Behav. 17, 167–214.

McDonald, M., Mormer, E., and Kaushanskaya, M. (2020). Speech cues to deception in bilinguals. Appl. Psycholinguist. 41, 993–1015. doi: 10.1017/S0142716420000326

Nahari, G., Ashkenazi, T., Fisher, R. P., Granhag, P. A., Hershkowitz, I., Masip, J., et al. (2019). ‘Language of lies’: urgent issues and prospects in verbal lie detection research. Leg. Criminol. Psychol. 24, 1–23. doi: 10.1111/lcrp.12148

Ormerod, T. C., and Dando, C. J. (2015). Finding a needle in a haystack: toward a psychologically informed method for aviation security screening. J. Exp. Psychol. Gen. 144, 76–84. doi: 10.1037/xge0000030

Perani, D., and Abutalebi, J. (2005). The neural basis of first and second language processing. Curr. Opin. Neurobiol. 15, 202–206. doi: 10.1016/j.conb.2005.03.007

Porter, S., Woodworth, M., and Birt, A. R. (2000). Truth, lies, and videotape: an investigation of the ability of federal parole officers to detect deception. Law Hum. Behav. 24, 643–658. doi: 10.1023/A:1005500219657

Primbs, M. A., Pennington, C. R., Lakens, D., Silan, M. A. A., Lieck, D. S., Forscher, P. S., et al. (2022). Are small effects the indispensable foundation for a cumulative psychological science? A reply to Götz et al. (2022). Perspect. Psychol. Sci. 18, 508–512. doi: 10.1177/17456916221100420

Reynolds, S., Valentine, D., and Munter, M. (2011). Guide to cross-cultural communication. Upper Saddle River, N.J.: Pearson Education India.

Sandham, A. L., Dando, C. J., Bull, R., and Ormerod, T. C. (2020). Improving professional observers’ veracity judgements by tactical interviewing. J. Police Crim. Psychol. 37, 1–9. doi: 10.1007/s11896-020-09391-1

Tavakoli, P., and Wright, C. (2020). Second language speech fluency: from research to practice. Cambridge: Cambridge University Press.

Taylor, P. J., Dando, C. J., Ormerod, T. C., Ball, L. J., Jenkins, M. C., Sandham, A., et al. (2013). Detecting insider threats through language change. Law Hum. Behav. 37, 267–275. doi: 10.1037/lhb0000032

Taylor, P. J., Larner, S., Conchie, S. M., and Menacere, T. (2017). Culture moderates changes in linguistic self-presentation and detail provision when deceiving others. R. Soc. Open Sci. 4:170128. doi: 10.1098/rsos.170128

Taylor, P., Larner, S., Conchie, S., and Van der Zee, S. (2014). “Cross-cultural deception detection” in Detecting deception: current challenges and cognitive approaches. eds. P. A. Granhag, A. Vrij, and B. Verschuere (West Sussex: Wiley-Blackwell), 175–201.

Ullman, M. T. (2001). The neural basis of lexicon and grammar in first and second language: the declarative/procedural model. Biling. Lang. Cogn. 4, 105–122. doi: 10.1017/S1366728901000220

van der Zee, S., Taylor, P. J., Tomblin, S., and Conchie, S. M. (2014). “Cross-cultural deception detection” in Detecting deception: current challenges and cognitive approaches. eds. P. A. Granhag, A. Vrij, and B. Verschuere (West Sussex: Wiley-Blackwell).

Verigin, B. L., Meijer, E. H., Vrij, A., and Zauzig, L. (2020). The interaction of truthful and deceptive information. Psychol. Crime Law 26, 367–383. doi: 10.1080/1068316X.2019.1669596

Vrij, A. (2000). Detecting lies and deceit: the psychology of lying and implications for professional practice. Chichester: Wiley.

Vrij, A. (2008). Detecting lies and deceit: Pitfalls and opportunities (2nd ed.). Chichester: John Wiley and Sons.

Vrij, A. (2014). Interviewing to detect deception. Eur. Psychol. 19, 184–194. doi: 10.1027/1016-9040/a000201

Vrij, A., Deeb, H., Leal, S., Granhag, P., and Fisher, R. P. (2021a). Plausibility: a verbal cue to veracity worth examining? Eur. J. Psychol. Appl. Legal Context 13, 47–53. doi: 10.5093/ejpalc2021a4

Vrij, A., Fisher, R. P., and Leal, S. (2022). How researchers can make verbal lie detection more attractive for practitioners. Psychiatry Psychol. Law, 1–14. doi: 10.1080/13218719.2022.2035842

Vrij, A., Granhag, P. A., Mann, S., and Leal, S. (2011). Outsmarting the liars: toward a cognitive lie detection approach. Curr. Dir. Psychol. Sci. 20, 28–32. doi: 10.1177/0963721410391245

Vrij, A., Granhag, P. A., and Porter, S. (2010). Pitfalls and opportunities in nonverbal and verbal lie detection. Psychol. Sci. Public Interest 11, 89–121. doi: 10.1177/1529100610390861

Vrij, A., Leal, S., Deeb, H., Chan, S., Khader, M., Chai, W., et al. (2020). Lying about flying: the efficacy of the information protocol and model statement for detecting deceit. Appl. Cogn. Psychol. 34, 241–255. doi: 10.1002/acp.3614

Vrij, A., Leal, S., Granhag, P. A., Mann, S., Fisher, R. P., Hillman, J., et al. (2009). Outsmarting the liars: the benefit of asking unanticipated questions. Law Hum. Behav. 33, 159–166. doi: 10.1007/s10979-008-9143-y

Vrij, A., Leal, S., Mann, S., and Fisher, R. (2012). Imposing cognitive load to elicit cues to deceit: inducing the reverse order technique naturally. Psychol. Crime Law 18, 579–594. doi: 10.1080/1068316X.2010.515987

Vrij, A., and Mann, S. (2004). Detecting deception: the benefit of looking at a combination of behavioral, auditory and speech content related cues in a systematic manner. Group Decis. Negot. 13, 61–79. doi: 10.1023/B:GRUP.0000011946.74290.bc

Vrij, A., Palena, N., Leal, S., and Caso, L. (2021b). The relationship between complications, common knowledge details and self-handicapping strategies and veracity: a meta-analysis. Eur. J. Psychol. Appl. Legal Context 13, 55–77. doi: 10.5093/ejpalc2021a7

Vrij, A., and Vrij, S. (2020). Complications travel: a cross-cultural comparison of the proportion of complications as a verbal cue to deceit. J. Invest. Psychol. Offender Profiling 17, 3–16. doi: 10.1002/jip.1538

Keywords: detecting deception, plausibility, first and second language, cross cultural, South Asian

Citation: Dando CJ, Taylor PJ and Sandham AL (2023) Cross cultural verbal cues to deception: truth and lies in first and second language forensic interview contexts. Front. Psychol. 14:1152904. doi: 10.3389/fpsyg.2023.1152904

Received: 28 January 2023; Accepted: 11 April 2023;

Published: 30 May 2023.

Edited by:

Haneen Deeb, University of Portsmouth, United Kingdom

Reviewed by:

Nicola Palena, University of Bergamo, Italy

Copyright © 2023 Dando, Taylor and Sandham. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Coral J. Dando, c.dando@westminster.ac.uk
