- Department of Elementary School Education, School of Primary Education, Shanghai Normal University Tianhua College, Shanghai, China

Following the global COVID-19 outbreak, blended learning (BL) has received increasing attention from educators. The purposes of this study were: (a) to develop a measurement to evaluate the effectiveness of blended learning for undergraduates; and (b) to explore the potential association between blended learning effectiveness and student learning outcomes. The research consisted of two stages. In Stage I, a measurement for evaluating undergraduates’ perceptions of blended learning was developed. In Stage II, a non-experimental, correlational design was used to examine whether blended learning effectiveness is associated with student learning outcomes. SPSS 26.0 and AMOS 23.0 were used to conduct factor analysis and structural equation modeling. The results showed that: (1) the hypothesized factors (course overview, course objectives, assessments, class activities, course resources, and technology support) were aligned as a unified system in blended learning; and (2) there was a positive relationship between the effectiveness of blended learning and student learning outcomes. Additional findings, explanations, and suggestions for future research are also discussed.

1. Introduction

Following the global COVID-19 outbreak, blended learning (BL) has received increasing attention from educators. BL can be defined as an approach that combines face-to-face and online learning (Dos, 2014), and it has become the default means of delivering educational content worldwide during the pandemic because of its rich pedagogical practices, flexible approaches, and cost-effectiveness (Tamim, 2018; Lakhal et al., 2020). Moreover, empirical research has shown that BL fosters active learning strategies, multi-technology learning processes, and learner-centered learning experiences (Feng et al., 2018; Han and Ellis, 2021; Liu, 2021). Students are also increasingly requesting BL courses when they are unable to attend classes on campus (Brown et al., 2018). In addition, researchers have examined the positive effects of BL on student engagement, academic performance, and student satisfaction (Alducin-Ochoa and Vázquez-Martínez, 2016; Manwaring et al., 2017).

In China, the Ministry of Education has strongly supported educational informatization since 2012 by issuing a number of policies (Ministry of Education, 2012). In 2016, China issued the Guiding Opinions of the Ministry of Education on Deepening the Educational and Teaching Reform of Colleges and Universities, which emphasized promoting the BL model in higher education. In 2017, the Ministry of Education listed BL as one of the trends driving education reform in the New Media Alliance Horizon Report: 2017 Higher Education Edition. In 2018, at the National Conference on Undergraduate Education in Colleges and Universities in the New Era, Minister of Education Chen Baosheng called for promoting a classroom revolution and new teaching models such as the flipped classroom and the BL approach. In 2020, the first batch of national BL courses was designated, which pushed BL to the forefront of teaching reform. During the pandemic, BL was implemented in all universities and colleges in China.

However, a number of researchers have reported contrary findings regarding the benefits of BL. Given students’ prerequisites, resources, and attitudes, the BL model may not be applicable to all courses, such as practicum courses (Boyle et al., 2003; Naffi et al., 2020). Moreover, students, teachers, and educational institutions may lack BL experience and therefore may not be sufficiently prepared (for example, in terms of technology access) to implement BL methods or to attend to the efficiency of BL initiatives (Xiao, 2016; Liliana, 2018; Adnan and Anwar, 2020). Another major concern is that BL practice is hard to evaluate because there are few standardized BL criteria (Yan and Chen, 2021; Zhang et al., 2022). In addition, some studies have concluded that BL made no significant contribution to student performance and test scores compared with traditional learning environments (U.S. Department of Education, 2009). Therefore, it is necessary to explore the essential elements of BL in higher education and to examine the effect of BL on student academic achievement. This paper offers insights for those attempting to implement BL in classroom practice to effectively support student needs in higher education.

The purposes of this research were: (1) to develop a measurement with key components to evaluate BL among undergraduates; and (2) to explore the associations between perceptions of BL effectiveness and student learning outcomes (SLOs) in a higher education course using the developed measurement.

The significance of the current study is as follows: (1) Only a few studies have focused on BL measurement in higher education and its effects on SLOs; the current study therefore adds to the literature on BL measurement and its validity. (2) The Ministry of Education in China has an explicit goal of updating university teaching methods and strategies in accordance with the demands of the twenty-first century. The current study will therefore contribute to these national goals, enhance understanding of BL, and provide a theoretical framework along with evidence of its applicability. (3) Faculty members in higher education who attempt to apply the BL model in their instruction will be made aware of the basic components of BL that contribute to SLOs.

2. Literature review

2.1. Definitions of BL

BL is also referred to as “hybrid,” “flexible,” “mixed,” “flipped,” or “inverted” learning. The BL concept was first proposed in the late 20th century against the backdrop of growing technological innovation (Keogh et al., 2017). BL is generally defined as an approach that integrates traditional face-to-face teaching with web-based instruction.

However, this definition has been hotly debated by researchers in recent years. Oliver and Trigwell (2005) posited that BL may take on different meanings under different theories, so the concept should be revised. Others have attempted to clarify BL by classifying the proportion of online learning it involves and the different models that fall under the BL umbrella. Allen and Seaman (2010) proposed that 30–70% of instruction in BL should be delivered online; courses with more than 70% online delivery would be considered online learning, and those with less than 30% would be considered traditional face-to-face learning. The Handbook of Blended Learning, edited by Bonk and Graham (2006), set out three categories of BL: web-enhanced learning, reduced face-time learning, and transforming blends. Web-enhanced learning adds extra online materials and learning experiences to traditional face-to-face instruction. Reduced face-time learning shifts part of the face-to-face lecture time to computer-mediated activities. Transforming blends mix traditional face-to-face instruction with web-based interactions through which students actively construct their knowledge.
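To make the proportion-based classification concrete, the following Python sketch applies the 30% and 70% cut-offs attributed to Allen and Seaman (2010) above. The function name and the treatment of the boundary values (exactly 30% and exactly 70% counted as blended) are illustrative assumptions, not part of the original classification.

```python
def classify_delivery(online_percent: float) -> str:
    """Hypothetical helper: classify a course by the percentage (0-100) of
    instruction delivered online, using the 30% / 70% cut-offs described above.
    Boundary values 30 and 70 are assumed to count as blended learning."""
    if not 0 <= online_percent <= 100:
        raise ValueError("online_percent must be between 0 and 100")
    if online_percent > 70:
        return "online learning"
    if online_percent >= 30:
        return "blended learning"
    return "traditional face-to-face learning"


for share in (10, 30, 50, 70, 85):
    print(share, classify_delivery(share))
```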

This study views BL as an instructional approach that provides both synchronous and asynchronous modes of delivery, through which students construct their own understandings and interact with others; this view is widely shared by researchers (Liliana, 2018; Bayyat et al., 2021). In other words, this definition emphasizes that learning has to be experienced by the learner.

2.2. Essential elements of BL

Previous studies, universities, and organizations have discussed the essential components of online learning courses. Blackboard assesses online learning environments on four scales (course design, cooperation, assessment, and learner support) comprising 63 items. Quality Matters evaluates online learning in the following categories: course overview, objectives, assessment, teaching resources, activities and cooperation, course technology, learner support, and practicability. California State Universities rate courses on ten scales comprising 58 items, including learning evaluation, cooperation and activities, technology support, mobile technology, accessibility, and course reflection. New York State Universities evaluate BL on six sub-scales: course overview, course design, assignments, class activities, cooperation, and assessment. Because criteria designed specifically for BL are lacking, these standards have been drawn on when evaluating BL.

The present study used Biggs’ (1999) constructive alignment as the main theoretical framework for analyzing BL courses. “Constructive means the idea that students construct meaning through relevant activities … and the alignment aspect refers to what the teachers do, which is to set up a learning environment” (Biggs, 1999, p. 13). Later, in the book Teaching for Quality Learning at University, Biggs and Tang (2011) explained that the two terms, ‘constructive’ and ‘alignment’, originate from constructivist theory and curriculum theory, respectively. Constructivism was described as “learners use their own activity to construct their knowledge as interpreted through their own existing schemata.” The term “alignment” emphasizes that the assessments set are relevant and conducive to the intended learning goals (Biggs and Tang, 2011, p. 97). According to Biggs, several critical components should be closely linked within the learning context, including learning objectives, teaching/learning activities, and assessment tasks. These main components are defined as follows:

(1) Learning objectives indicate the expected level of student understanding and performance. They tell students what they have to do, how they should do it, and how they will be assessed. Both course overview and learning objectives involve intended learning outcomes.

(2) Teaching/learning activities are a set of learning processes that students have to complete themselves to achieve a course’s intended learning outcomes. In BL, activities include both online and face-to-face activities in which students can engage in collaboration and social interaction (Hadwin and Oshige, 2011; Ellis et al., 2021). Interactive learning activities are chosen to best support course objectives and students’ learning outcomes (Clark and Post, 2021). Examples of activities in BL include group problem-solving, discussion with peers or teachers, peer instruction, and answering clicker questions or in-class polls (Matsushita, 2017).

(3) Assessment tasks are tools for determining students’ achievements based on evidence. In BL, assessments can be conducted either online or in class. Examples of assessments in BL include online quizzes, group projects, fieldwork notes, and individual assignments.

(4) In addition, given the definition of BL and recent advances in information technology, online resources and technological support have become essential components of BL courses (Darling-Aduana and Heinrich, 2018; Turvey and Pachler, 2020). Similarly, Ellis and Goodyear (2016) and Laurillard (2013) emphasized the role of technical devices in BL, whilst Zawacki-Richter (2009) regarded online resources and technological support as central to meeting BL course requirements. Liu (2021) further suggested that a BL model should include teaching objectives, operating procedures, teaching evaluation, and teaching resources across the before-class, in-class, and after-class phases. With this in mind, the present study integrates both the essential curriculum components of the face-to-face course and information technology into the teaching and learning aspects of the BL course.

2.3. BL effectiveness and SLOs

Many researchers have reported benefits of the BL approach for SLOs, citing its role in improving teaching methods and in developing learners’ skills, talents, and interest in learning. Garrison and Kanuka (2004) reported increased completion rates as a result of BL. Other researchers reported similar results. Kenney and Newcombe (2011) and Demirkol and Kazu (2014) conducted comparisons and found that students in BL environments had higher average scores than those in non-BL environments. Alsalhi et al. (2021) conducted a quasi-experimental study at Ajman University (n = 268) and found that BL had a positive effect on students’ academic success in a statistics course. “BL helps to balance a classroom that contains students with different readiness, motivation, and skills to learn. Moreover, BL deviates from traditional teaching and memorizing of students” (Alsalhi et al., 2021, p. 253). No statistically significant differences were found among students on the university-related variables examined.

However, researchers have also shown that the BL approach may not suit all learners or improve their learning outcomes. Oxford Group (2013) reported that about 16% of learners had negative attitudes toward BL, while 26% of learners chose not to complete BL. Kintu et al. (2017) examined the relationship between student characteristics, BL design, and learning outcomes and found that BL design helped raise student satisfaction (n = 238). The study also found that BL predicted learning outcomes for learners with high self-regulation skills. Similarly, Siemens (2005) indicated that students with more learner interaction reported higher satisfaction and achieved better learning outcomes. Hara (2000) identified ambiguous course design and potential technical difficulties as major barriers in BL practice, which led to unsatisfactory learning outcomes. Clark and Post (2021) conducted a study in higher education comparing the effectiveness of different instructional approaches (face-to-face, eLearning, and BL) and found that individual students valued active learning in both face-to-face and eLearning classes. Moreover, completing eLearning activities before face-to-face classes helped students perform well on the assessment. However, the study also noted that attending face-to-face courses was positively associated with students’ final grades.

2.4. Research questions and hypotheses

To address these gaps, the present research posed the following research questions and hypotheses:

RQ1: What components (among course overview, course objectives, assessments, activities, course resources, and technology support) contribute to the measurement?

RQ2: Is there an association between BL effectiveness and SLOs in higher education?

H1: All components (among course overview, course objectives, assessments, class activities, course resources, and technology support) contribute to the BL course model.

H2: There is an association between BL effectiveness and SLOs.

3. Methodology

The study employed a non-experimental, correlational design and used survey responses from undergraduates to address the research questions. Specifically, a higher education institution in Shanghai with a specialization in teacher education was studied. The present study was a part of an instructional initiative project at this institution designed to identify students’ perceptions of the effectiveness of BL and explore the possible relationships between BL effectiveness and SLOs.

The present research consisted of two stages: Stage I (from March 2021 to July 2021) aimed to develop a measurement for evaluating undergraduates’ BL perceptions through a survey of undergraduates who had experienced BL courses. Stage II (from September 2021 to January 2022) aimed to use the developed measurement to examine whether or not there is an association between BL effectiveness and SLOs.

3.1. Instruments

3.1.1. Effectiveness of BL scale (EBLS)

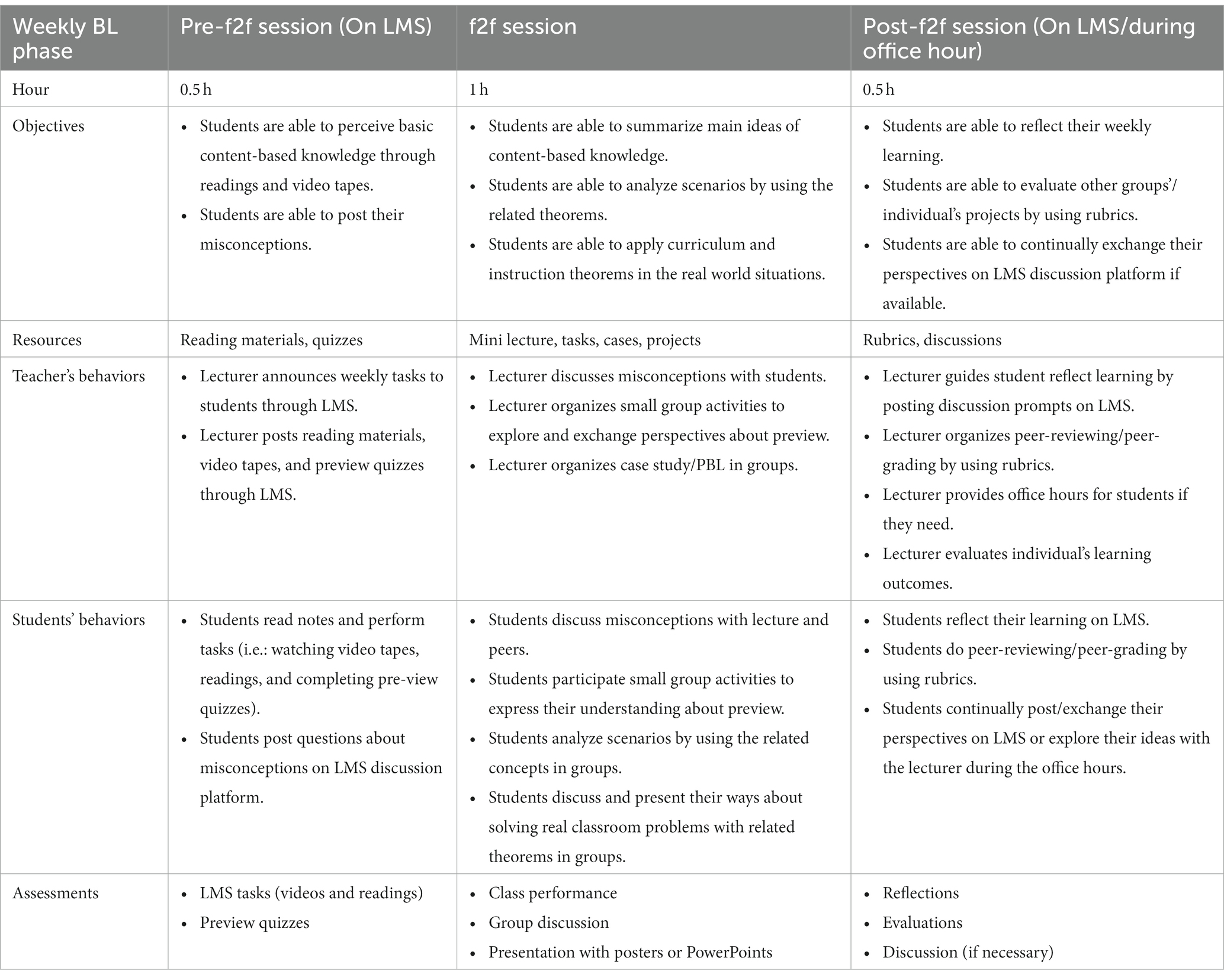

In Stage I, based on Biggs’ theoretical framework and the existing literature, the measurement used in this study was designed to comprise six sub-scales: course overview, learning objectives, assessments, course resources, teaching/learning activities, and technology support. After these criteria were compared, an instrument titled “Blended Learning Evaluation” was adapted from the Quality Matters Course Design Rubric Standards (QM Rubric). Following consultation with teaching experts experienced in BL design and application, the rubric was revised so that it could be applied to both the online and face-to-face portions of a course. Table 1 details the modified measurement.

Next, a panel of two experts, two blended course design trainers, and two faculty members in the curriculum and instruction department evaluated the appropriateness and relevance of each item in the instrument. Subsequently, a group of 10 sophomore and senior students read the items and gave feedback on how they understood them. Based on their comments and the panel’s suggestions, a few minor changes were made, and the panel evaluated content validity again before the instrument was administered.

Finally, the EBLS, modified from the Quality Matters Course Design Rubric Standards, was adopted as the initial scale for the construct validity and reliability checks. The EBLS comprised six sub-scales (25 items in total): course overview (4 items), course objectives (4 items), assessments (4 items), course resources (5 items), in-class and online activities (4 items), and technology support (4 items). Items were rated on a 5-point Likert scale (1 = Strongly Disagree, 2 = Disagree, 3 = Neutral, 4 = Agree, 5 = Strongly Agree).
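As an illustration of how EBLS responses could be scored, the sketch below sums 5-point ratings into the six sub-scale totals listed above and an overall total, which can range from 25 to 125. The assumption that the 25 items are ordered sub-scale by sub-scale, and the names `EBLS_SUBSCALES` and `score_ebls`, are hypothetical.

```python
# Sub-scale item counts of the 25-item EBLS, as listed in the text.
# The item ORDER assumed here (sub-scales in the listed sequence) is hypothetical.
EBLS_SUBSCALES = {
    "course overview": 4,
    "course objectives": 4,
    "assessments": 4,
    "course resources": 5,
    "in-class and online activities": 4,
    "technology support": 4,
}


def score_ebls(responses):
    """Sum 5-point Likert responses (1-5) into sub-scale totals and an overall total."""
    n_items = sum(EBLS_SUBSCALES.values())
    if len(responses) != n_items:
        raise ValueError(f"expected {n_items} responses, got {len(responses)}")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")
    scores, start = {}, 0
    for name, count in EBLS_SUBSCALES.items():
        scores[name] = sum(responses[start:start + count])
        start += count
    scores["total"] = sum(responses)
    return scores


print(score_ebls([4] * 25)["total"])  # 100
```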

3.1.2. Student learning outcomes


In the second stage of the study, students’ marks in the Curriculum and Instruction Theorem module were used as an indicator of their learning outcomes. This was a 16-week, semester-long mandatory course taken by 91 sophomores from September 2021 to January 2022. It aimed to develop not only students’ in-depth disciplinary and academic knowledge but also their skills in cooperation, technology, inquiry, discussion, presentation, and reflection. The course was designed as a synchronous BL curriculum in which all students had both face-to-face and technology-mediated interactions (see Table 2). Each week, there were 1.5 h of face-to-face learning that combined lectures, tutorials, and fieldwork. The lectures taught key concepts using examples and non-examples and connected teaching theories to practical issues. The tutorials provided opportunities for students to collaborate with peers or in groups. The fieldwork offered opportunities for students to observe real classes and interview cooperating teachers or students in local elementary schools. Technology-mediated interactions, supported by the Learning Management System (LMS), provided supplementary learning resources, reading materials, related videos, cases, assessments, and other resources from the Internet. Students were also required to complete online quizzes, assignments, projects, and discussions on the LMS.

The final marks of the course were derived from both formative and summative assessments. The formative assessments covered attendance and participation, individual assignments (quizzes, reflections, discussions, case studies, and class observation reports) and group projects (lesson plan analysis, mini-instruction, reports). The summative assessment was a paper-based final examination, as required by the college administrators.

3.2. Participants

In Stage I of the study, the target population was sophomore and junior undergraduates from different majors at a higher education institution in Shanghai. Detailed demographic information is reported in the Results section. Notably, owing to practical constraints, a convenience sample was used. As explained by McMillan and Schumacher (2010), although the generalizability of results from such a sample is more limited, the findings are nevertheless useful when considering BL effectiveness. Care was therefore taken to gather demographic background information on the respondents so that the participants could be described accurately.

In Stage II of the study, the participants were 91 sophomores who took the synchronous BL course Curriculum and Instruction Theorem in the School of Primary Education in fall 2021 (September 2021–January 2022).

3.3. Data collection procedures, analysis and presentation

Institutional Review Board (IRB) approval was obtained before data collection in both Stage I and Stage II. In Stage I, the IRB-approved informed consent form included a brief introduction to the study purpose, the time required to complete the survey, possible risks and benefits, the researcher’s contact information, and other details. It also made clear to potential respondents that the survey was voluntary and anonymous. SurveyMonkey was used to administer the survey. In Stage II, the IRB-approved informed consent form was also provided to participants, and the LMS was used for data collection.

To address RQ1, the study followed four steps:

1. An initial measurement was adapted and translated from the QM rubric, and its content validity was checked by the expert panel.

2. The reliability of the measurement was examined.

3. Exploratory factor analysis (EFA) was conducted to test construct validity.

4. Confirmatory factor analysis (CFA) was conducted to test the fit between the hypothesized model and the data. Ultimately, a revised BL measurement was developed with factor loadings and weights. In Stage I, SPSS 26.0 and AMOS 23.0 were used to conduct factor analysis and structural equation modeling.

To address RQ2, the study followed two steps:

1. Descriptive statistics (mean, standard deviation, minimum, and maximum) were calculated for undergraduates’ perceptions of BL effectiveness, as measured by the developed instrument.

2. SLOs were regressed on perceived BL effectiveness to examine whether overall BL effectiveness was associated with student achievement. In Stage II, SPSS 26.0 was used to run the correlation and multiple regression analyses.

3.4. Limitations

Based on the threats to internal, external, construct, and statistical conclusion validity summarized by McMillan and Schumacher (2010), the following limitations of this study are acknowledged. First, because the data were self-reported, participants’ responses may have been biased and may not reflect their true feelings or behaviors. Second, the study used a convenience sample rather than a sample drawn from all undergraduates in higher education in Shanghai; population external validity is therefore limited to students with characteristics similar to those of the respondents. Finally, although care was taken to phrase the research questions in terms of association rather than effects, the correlational design limits our ability to draw causal inferences. The results may be suggestive, but further research is needed to draw conclusions about the impact of BL.

4. Results

4.1. What factors (among course overview, course objectives, assessments, class activities, course resources, and technology support) contribute to the measurement?

4.1.1. Demographic information in stage I

In Stage I, a survey with 25 items in six sub-scales was delivered to undergraduates who had experienced BL in higher education. In total, 295 valid questionnaires were collected (March 2021 to July 2021). Of the respondents, 27% were male and 73% were female. Their majors included education (51%), literature (22%), computer science (11%), business (10%), arts (5%), and others (1%). All respondents were single and aged 19–20 years.

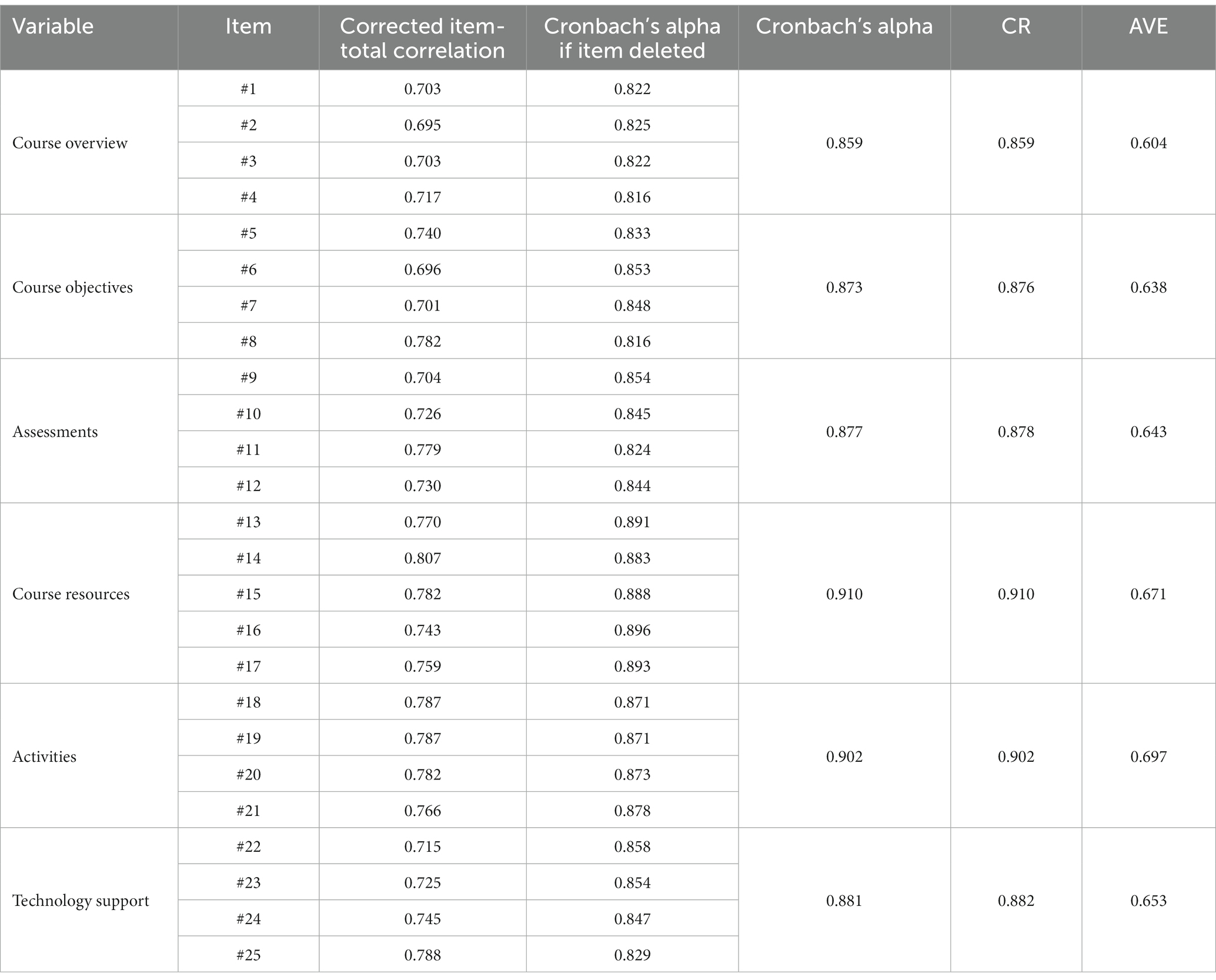

4.1.2. Reliability analysis

To address RQ1, reliability analysis and EFA were conducted on the questionnaire results. Test reliability refers to “the consistency of measurement – the extent to which the results are similar over different forms of the same instrument or occasions of data collection” (McMillan and Schumacher, 2010, p. 179). Specifically, the study examined internal consistency (Cronbach’s alpha), composite reliability (CR), and average variance extracted (AVE) as evidence of reliability. As shown in Table 3, Cronbach’s alpha for the full 25-item scale was 0.949 (N = 295), and the alpha values for the six sub-scales were 0.859, 0.873, 0.877, 0.910, 0.902, and 0.881. Because the alpha values of the total scale and all sub-scales exceeded 0.70, the reliability of the survey was relatively high and therefore acceptable. Moreover, the AVE of each sub-scale exceeded 0.50, indicating good convergence of the measurement, and all CR values exceeded 0.80, indicating high composite reliability. Therefore, this blended course evaluation measurement is deemed reliable.
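The three reliability indices can be computed as in the following minimal Python sketch, which uses the standard formulas: Cronbach’s alpha from item and total-score variances, and CR and AVE from standardized factor loadings under the usual assumption of uncorrelated error terms. It is not the SPSS/AMOS procedure used in the study, and the example loadings are hypothetical.

```python
import numpy as np


def cronbach_alpha(items):
    """Cronbach's alpha; items is an (n_respondents, k_items) array for one scale."""
    x = np.asarray(items, dtype=float)
    k = x.shape[1]
    item_variances = x.var(axis=0, ddof=1)
    total_variance = x.sum(axis=1).var(ddof=1)
    return k / (k - 1) * (1 - item_variances.sum() / total_variance)


def composite_reliability(loadings):
    """CR from standardized loadings, assuming uncorrelated error terms."""
    lam = np.asarray(loadings, dtype=float)
    error_variances = 1 - lam ** 2
    return lam.sum() ** 2 / (lam.sum() ** 2 + error_variances.sum())


def average_variance_extracted(loadings):
    """AVE: mean squared standardized loading of a construct's items."""
    lam = np.asarray(loadings, dtype=float)
    return float(np.mean(lam ** 2))


example_loadings = [0.78, 0.80, 0.75, 0.82]  # hypothetical sub-scale loadings
print(round(composite_reliability(example_loadings), 3),
      round(average_variance_extracted(example_loadings), 3))
```

Under these formulas, AVE > 0.50 means that, on average, each item shares more variance with its factor than with measurement error.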

4.1.3. Exploratory factor analysis

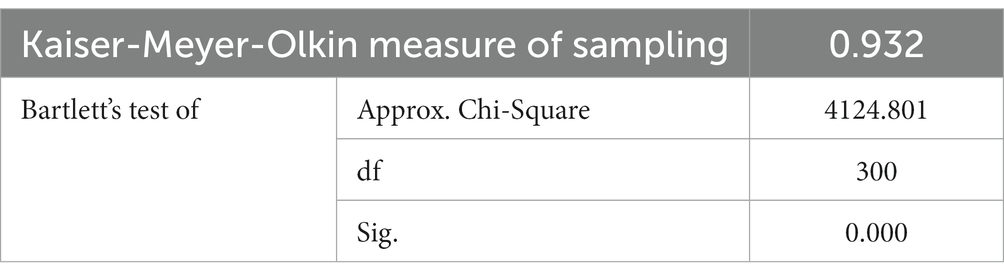

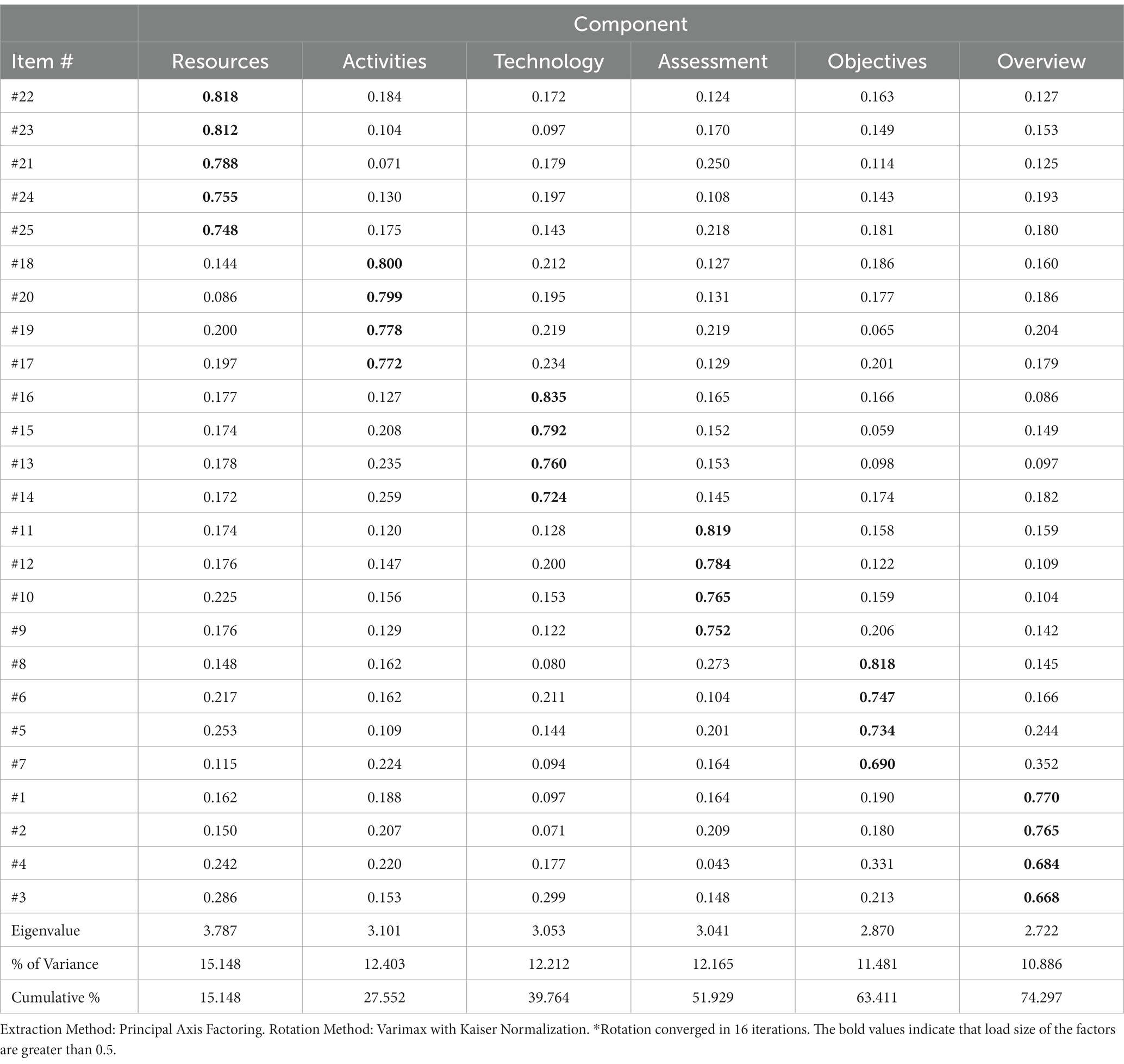

In line with the research design, EFA was then carried out in SPSS 26.0 to assess construct validity, that is, to identify whether some or all factors (among course overview, course objectives, assessments, class activities, course resources, and technology support) perform well in the context of blended course design. According to Bryant and Arnold (1995), the sample for EFA should be at least five times the number of variables (a subjects-to-variables ratio of 5 or greater), and every analysis should be based on “a minimum of 100 observations regardless of the subjects-to-variables ratio” (p. 100). This study included 25 variables and 295 valid responses, a subjects-to-variables ratio of nearly 12 (295/25 ≈ 11.8), well above the minimum of 5. The Kaiser-Meyer-Olkin (KMO) measure was 0.932, greater than the 0.70 criterion proposed by Kaiser and Rice (1974), indicating that the sampling was more than adequate. As shown in Table 4, the approximate chi-square of Bartlett’s test of sphericity was 4124.801 (p < 0.001), so the test was significant, indicating that the item correlation matrix differs from an identity matrix. Therefore, the data were suitable for EFA.
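The two suitability checks can be sketched in Python using their standard formulas: Bartlett’s statistic chi-square = -(n - 1 - (2p + 5)/6) * ln|R| with p(p - 1)/2 degrees of freedom, and the overall KMO as the summed squared correlations divided by the summed squared correlations plus summed squared partial correlations. The data below are simulated (295 respondents, 6 items driven by one common factor), and the sketch is not a reproduction of the SPSS output.

```python
import numpy as np
from scipy import stats


def bartlett_sphericity(data):
    """Bartlett's test that the item correlation matrix is an identity matrix."""
    x = np.asarray(data, dtype=float)
    n, p = x.shape
    corr = np.corrcoef(x, rowvar=False)
    _, log_det = np.linalg.slogdet(corr)  # log-determinant, stable for many items
    chi_square = -(n - 1 - (2 * p + 5) / 6) * log_det
    df = p * (p - 1) // 2
    return chi_square, df, stats.chi2.sf(chi_square, df)


def kmo(data):
    """Overall Kaiser-Meyer-Olkin measure of sampling adequacy."""
    x = np.asarray(data, dtype=float)
    corr = np.corrcoef(x, rowvar=False)
    inv = np.linalg.inv(corr)
    scale = np.sqrt(np.diag(inv))
    partial = -inv / np.outer(scale, scale)  # partial correlations
    off_diag = ~np.eye(corr.shape[0], dtype=bool)
    r2 = np.sum(corr[off_diag] ** 2)
    q2 = np.sum(partial[off_diag] ** 2)
    return r2 / (r2 + q2)


# Simulated responses: one common factor plus item-specific noise.
rng = np.random.default_rng(0)
factor = rng.normal(size=(295, 1))
data = factor + 0.5 * rng.normal(size=(295, 6))
chi_square, df, p_value = bartlett_sphericity(data)
print(round(chi_square, 1), df, p_value, round(kmo(data), 3))
```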

EFA refers to “how items are related to each other and how different parts of an instrument are related” (McMillan and Schumacher, 2010, p. 176). Principal component analysis with varimax rotation was used to examine how the 25 blended course design items in the “Blended Course Evaluation Survey” grouped together. According to the EFA results in Table 5 (Rotated Factor Matrix), the 25 items loaded on six factors with eigenvalues greater than 1, and all rotated loadings were close to or higher than 0.70 (Comrey and Lee, 1992). These six factors therefore mapped well onto the hypothesized dimensions, and the measurement showed relatively good construct validity. Hence, in answer to RQ1, all of the factors (course overview, course objectives, assessments, class activities, course resources, and technology support) performed well in the measurement.
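For readers who want to see the mechanics, the following Python sketch extracts principal-component loadings from the item correlation matrix, keeps components with eigenvalues greater than 1 (the Kaiser criterion), and applies a varimax rotation. It assumes a raw varimax without the Kaiser normalization that SPSS applies by default, so loadings may differ slightly from SPSS output; the two-factor data are simulated.

```python
import numpy as np


def pca_loadings(data):
    """Principal-component loadings of the item correlation matrix (eigenvalues > 1 kept)."""
    corr = np.corrcoef(np.asarray(data, dtype=float), rowvar=False)
    eigenvalues, eigenvectors = np.linalg.eigh(corr)  # eigh returns ascending order
    order = np.argsort(eigenvalues)[::-1]             # re-sort descending
    eigenvalues, eigenvectors = eigenvalues[order], eigenvectors[:, order]
    keep = eigenvalues > 1.0                          # Kaiser criterion
    return eigenvectors[:, keep] * np.sqrt(eigenvalues[keep])


def varimax(loadings, max_iter=100, tol=1e-6):
    """Orthogonal varimax rotation (raw, without Kaiser normalization)."""
    p, k = loadings.shape
    rotation = np.eye(k)
    criterion = 0.0
    for _ in range(max_iter):
        rotated = loadings @ rotation
        column_ss = np.sum(rotated ** 2, axis=0)
        gradient = loadings.T @ (rotated ** 3 - rotated * column_ss / p)
        u, s, vt = np.linalg.svd(gradient)
        rotation = u @ vt                             # stays orthogonal
        previous, criterion = criterion, np.sum(s)
        if previous != 0 and criterion / previous < 1 + tol:
            break
    return loadings @ rotation


# Simulated data: items 0-2 driven by one factor, items 3-5 by another.
rng = np.random.default_rng(1)
f1, f2 = rng.normal(size=(2, 500, 1))
data = np.hstack([f1 + 0.4 * rng.normal(size=(500, 3)),
                  f2 + 0.4 * rng.normal(size=(500, 3))])
unrotated = pca_loadings(data)
rotated = varimax(unrotated)
print(rotated.round(2))
```

Because the rotation is orthogonal, each item’s communality (its row sum of squared loadings) is unchanged; only the distribution of loadings across factors becomes simpler to interpret.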

Table 5. Rotated factor matrix*.

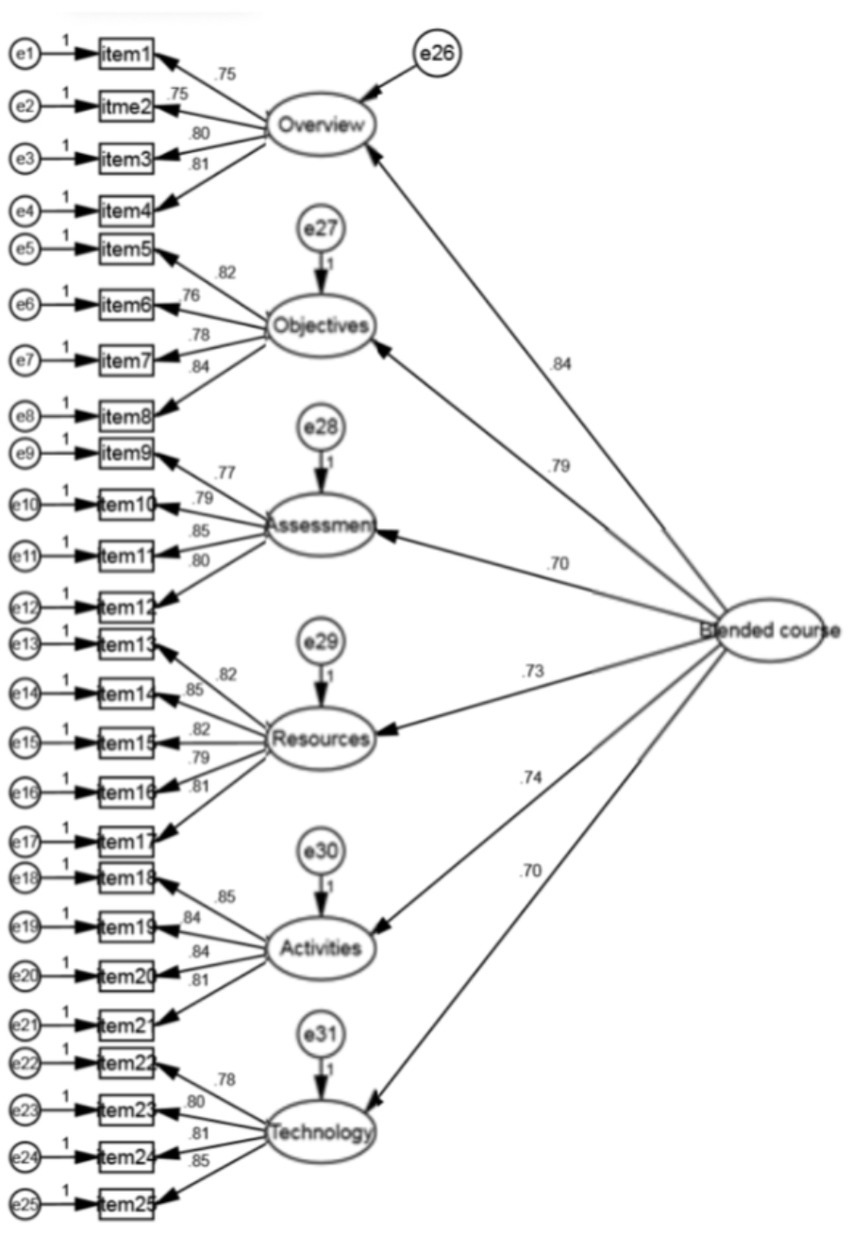

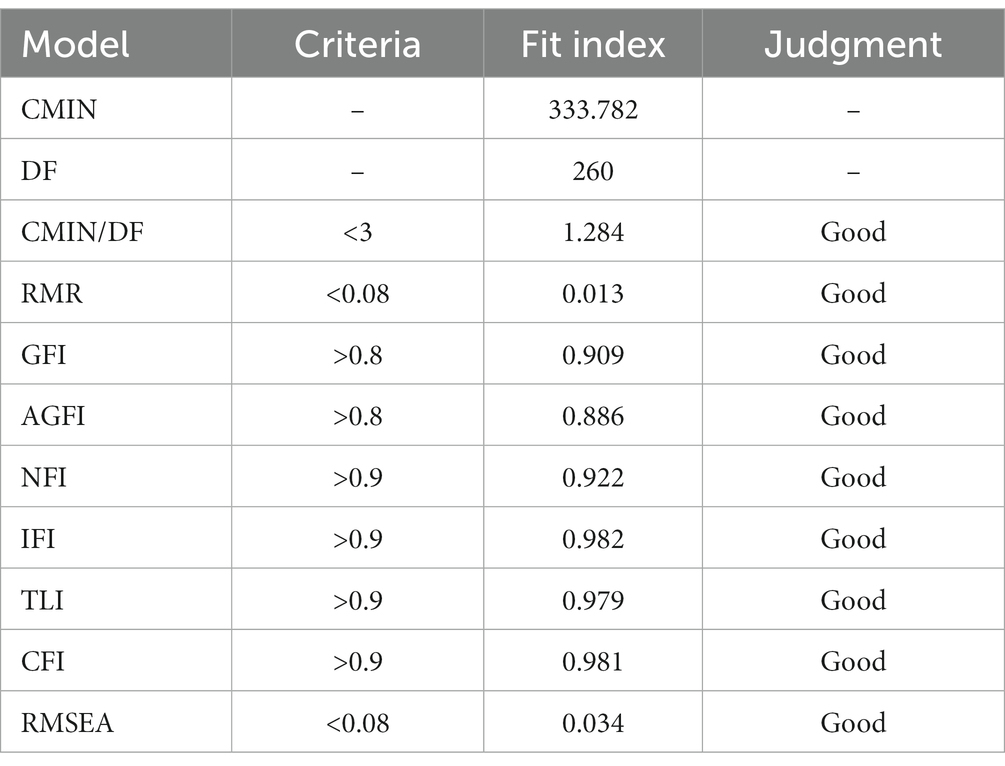

To examine further the extent to which each factor contributes to the measurement, the hypothesized model was tested, and the weight of each factor was then calculated from the structural equation model for use by educators. To determine whether the hypothesized model fit the collected data, AMOS 23.0 was used to carry out confirmatory factor analysis. Compared with the criteria in Table 6, the root mean square error of approximation (RMSEA) was 0.034, below the rule-of-thumb cut-off of 0.05 for a good model. The TLI (0.979) and CFI (0.981) were also above the target for a good model. Moreover, CMIN/DF was 1.284 (below 3), GFI was 0.909 (above 0.8), AGFI was 0.886 (above 0.8), NFI was 0.922 (above 0.9), IFI was 0.982 (above 0.9), and RMR was 0.013 (below 0.08). Based on these criteria, the initial model appears to fit the data well; in other words, it can adequately explain and evaluate blended course design.
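The threshold comparison above can be expressed as a small Python check. The cut-offs are those stated in the text; because the text does not state its TLI/CFI target, the 0.90 value used for those two indices is an assumption (a commonly used convention). The names `CRITERIA` and `check_fit` are illustrative.

```python
# Cut-offs as stated in the text; TLI/CFI targets are ASSUMED (0.90 convention).
CRITERIA = {
    "RMSEA": ("<", 0.05),
    "CMIN/DF": ("<", 3.0),
    "GFI": (">", 0.80),
    "AGFI": (">", 0.80),
    "NFI": (">", 0.90),
    "IFI": (">", 0.90),
    "RMR": ("<", 0.08),
    "TLI": (">", 0.90),  # assumed threshold
    "CFI": (">", 0.90),  # assumed threshold
}


def check_fit(indices, criteria=CRITERIA):
    """Return {index: True/False} depending on whether each value meets its cut-off."""
    results = {}
    for name, value in indices.items():
        direction, cutoff = criteria[name]
        results[name] = value < cutoff if direction == "<" else value > cutoff
    return results


# Fit indexes reported in the text.
reported = {"RMSEA": 0.034, "CMIN/DF": 1.284, "GFI": 0.909, "AGFI": 0.886,
            "NFI": 0.922, "IFI": 0.982, "RMR": 0.013, "TLI": 0.979, "CFI": 0.981}
results = check_fit(reported)
print(all(results.values()))  # True
```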

Table 6. Comparison of fit indexes for alternative models of the structure of the blended learning measurement.

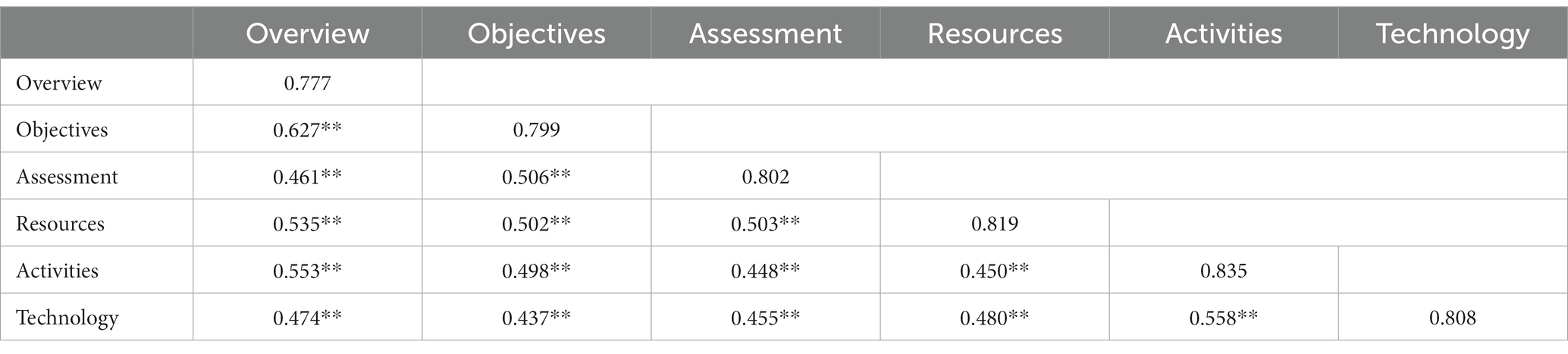

4.1.4. Confirmatory factor analysis

Focusing on the model itself, CFA was used to examine the relationships between the model and the data. Figure 1 shows that most subtests provided relatively strong measures of the appropriate ability or construct. Specifically, each factor was positively correlated with the others. For instance, course overview was positively correlated with course objectives, assessments, course resources, class activities, and technology support, with correlation coefficients of 0.72, 0.52, 0.61, 0.63, and 0.55, respectively. In standardized terms, a course rated one standard deviation higher on course overview tends, on average, to be rated 0.72, 0.52, 0.61, 0.63, and 0.55 standard deviations higher on the other factors, respectively; these are associations, not causal effects. The results are consistent with the statement that “cognitive tests and cognitive factors are positively correlated” (Keith, 2015, p. 335). This study also tested the discriminant validity of the measurement to ensure that each factor was distinct from the others. According to Fornell and Larcker’s (1981) criterion, the square root of each factor’s AVE must be greater than its correlations with the other factors. The results in Table 7 show that the smallest square root of AVE (0.777) exceeded the largest inter-factor correlation (0.72). This confirms that the hypothesized model had sufficient discriminant validity. Therefore, the hypothesized model reflected the data well.
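The Fornell and Larcker check can be sketched as below: for each factor, the square root of its AVE is compared with its largest absolute correlation with any other factor. The AVE values and correlation matrix in the example are hypothetical and only borrow the 0.72 and 0.52 correlations reported above.

```python
import numpy as np


def fornell_larcker(ave, corr):
    """For each factor, True if sqrt(AVE) exceeds its largest |correlation| with other factors."""
    sqrt_ave = np.sqrt(np.asarray(ave, dtype=float))
    r = np.abs(np.asarray(corr, dtype=float))
    results = []
    for i, root in enumerate(sqrt_ave):
        others = np.delete(r[i], i)  # drop the diagonal (self-correlation)
        results.append(bool(root > others.max()))
    return results


# Hypothetical three-factor example.
ave = [0.62, 0.70, 0.66]
corr = [[1.00, 0.72, 0.52],
        [0.72, 1.00, 0.60],
        [0.52, 0.60, 1.00]]
print(fornell_larcker(ave, corr))
```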

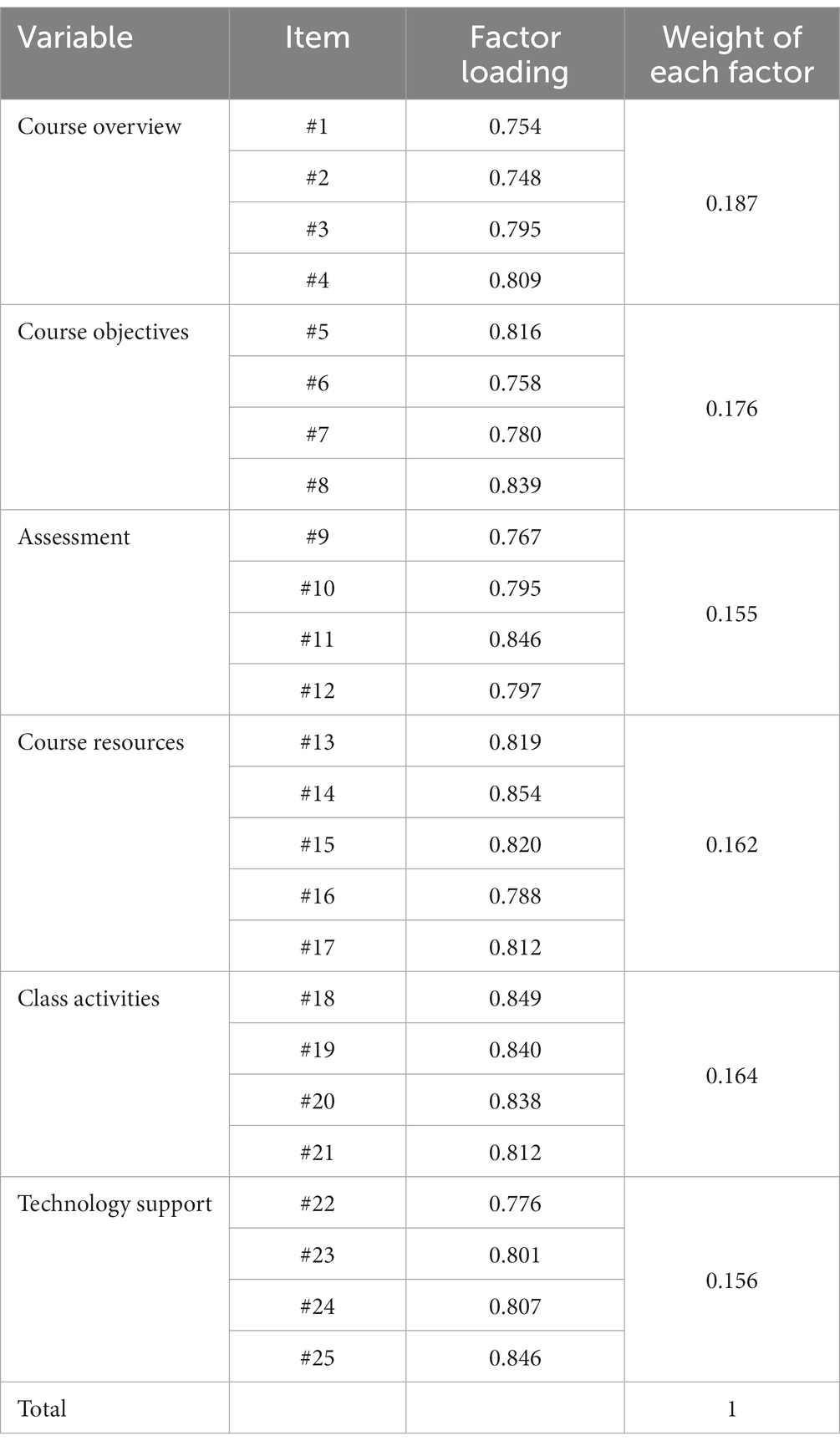

The weight of each factor in the model was then calculated from the structural equation model for use by educators (see Figure 2). For example, the weight of course overview = 0.84/(0.84 + 0.79 + 0.70 + 0.73 + 0.74 + 0.70) = 0.84/4.50 ≈ 0.187. The other weights were calculated in the same way, and the results are shown in Table 8. The total score of a blended course design is calculated as follows: score of course overview × 0.187 + score of course objectives × 0.176 + score of assessment × 0.155 + score of course resources × 0.162 + score of class activities × 0.164 + score of technology support × 0.156. The full score for this measurement is 100.
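The weighting scheme can be reproduced with a short Python sketch: each factor’s weight is its Figure 2 coefficient divided by the sum of all six coefficients. Plain rounding gives 0.156 for assessment rather than the reported 0.155; since the reported weights sum to exactly 1.000, the published value may reflect unrounded coefficients or an adjustment so that the weights sum to 1. The sketch also assumes, hypothetically, that each sub-scale score is expressed on a 0–100 scale so that the weighted total is out of 100.

```python
# Coefficients from Figure 2 as used in the worked example above.
COEFFICIENTS = {
    "course overview": 0.84,
    "course objectives": 0.79,
    "assessment": 0.70,
    "course resources": 0.73,
    "class activities": 0.74,
    "technology support": 0.70,
}

# Weights as reported in the text (they sum to 1.000).
REPORTED_WEIGHTS = {
    "course overview": 0.187,
    "course objectives": 0.176,
    "assessment": 0.155,
    "course resources": 0.162,
    "class activities": 0.164,
    "technology support": 0.156,
}


def factor_weights(coefficients):
    """Weight of each factor = its coefficient / sum of all coefficients."""
    total = sum(coefficients.values())
    return {name: value / total for name, value in coefficients.items()}


def total_score(subscale_scores, weights=REPORTED_WEIGHTS):
    """Weighted total; assumes (hypothetically) each sub-scale score is on a 0-100 scale."""
    return sum(weights[name] * subscale_scores[name] for name in weights)


weights = factor_weights(COEFFICIENTS)
print({name: round(w, 3) for name, w in weights.items()})
print(round(total_score({name: 100.0 for name in REPORTED_WEIGHTS}), 6))
```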

4.2. Is there an association between the effectiveness of blended learning and student learning outcomes?

4.2.1. Demographic information in stage II

In Stage II, 91 responses were collected through the LMS. Of the respondents, 16% were male and 84% were female. All respondents in Stage II took the synchronous BL course Curriculum and Instruction Theorem in the School of Primary Education in Fall 2021 (September 2021–January 2022).

4.2.2. Descriptive statistics

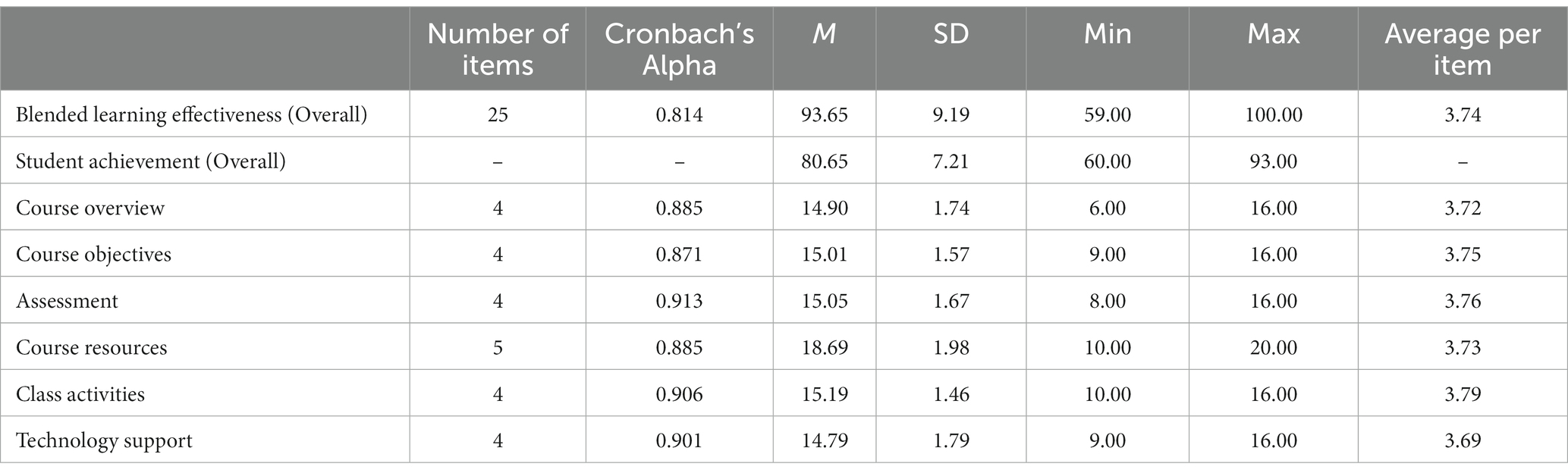

In answering RQ2, descriptive statistics for undergraduates’ perceptions of BL effectiveness and for SLOs are reported in Table 9. Higher scores on this measure indicate that undergraduates perceive BL as more effective, with responses ranging from 1 (“Strongly Disagree”) to 4 (“Strongly Agree”). The six elements of BL effectiveness had an overall mean of 93.65 (an item average of 3.74, which corresponds to “agree”). The subscale scores were very similar and likewise indicate that undergraduates “agree” that BL is effective with respect to course overview, course objectives, assessment, course resources, class activities, and technology support. Table 9 also provides information about overall SLOs: students’ learning outcomes in BL (final marks composed of formative and summative assessments) had an overall mean of 80.65, with a maximum of 93.00 and a minimum of 60.00.

Table 9. Descriptive statistics for the overall scores and subscales of the measures of blended learning effectiveness and student achievement (N = 91).

4.2.3. Regressions between BL effectiveness and SLOs

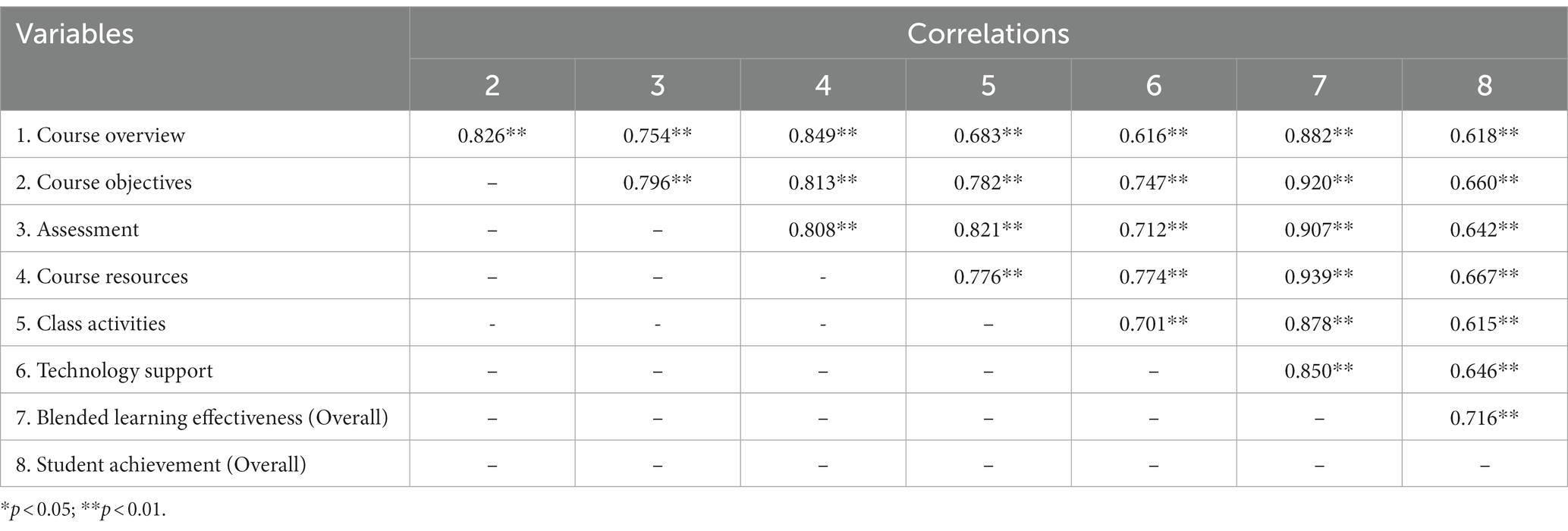

To further examine the relationship between BL effectiveness and SLOs, SLOs were regressed on perceived BL effectiveness. This analysis examined whether overall BL effectiveness was associated with student achievement. In addition, Pearson correlations between key variables were calculated (Table 10). The overall BL effectiveness score was significantly and positively correlated with student achievement (r = 0.716, p < 0.01).
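The two statistics named above, the Pearson correlation and the least-squares regression of SLOs on BL effectiveness, can be sketched from first principles in Python. This is an illustration only: the study used SPSS 26.0, and the function names and the short data lists below are hypothetical rather than the study’s data.

```python
import math

def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length samples."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def simple_ols(x, y):
    """Intercept and slope of y = intercept + slope * x fitted by ordinary least squares."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    slope = sxy / sxx
    return my - slope * mx, slope

if __name__ == "__main__":
    # Hypothetical data: BL effectiveness totals (x) and final marks (y).
    bl_scores = [78, 85, 90, 94, 99]
    final_marks = [66, 72, 79, 83, 90]
    print(round(pearson_r(bl_scores, final_marks), 3))
    print(simple_ols(bl_scores, final_marks))
```

The slope returned by `simple_ols` plays the role of the unstandardized coefficient b reported for the regression model.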

Table 10. Descriptive statistics and Pearson correlations between key variables in the regression models.

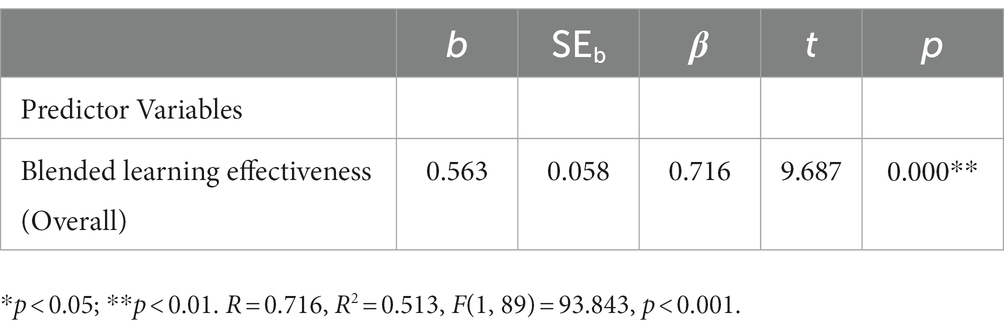

Table 11 shows the results of regressing total student academic performance on the overall BL effectiveness score, which combines six components (course overview, course objectives, assessment, course resources, class activities, and technology support). The full model was statistically significant. Directly addressing RQ2, undergraduates’ perceived BL effectiveness explained 51.3% of the variance in academic performance, F(1, 89) = 93.843, p < 0.001, adjusted R2 = 0.508, which is conventionally considered a large effect. Each one-point increase in perceived BL effectiveness was associated with a 0.563-point increase in academic performance (b = 0.563, p < 0.001). Thus, to answer the final research question, there is a positive association between the effectiveness of BL and student learning achievement.
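With a single predictor, R² equals the squared Pearson correlation, so the reported figures can be cross-checked against one another. The Python sketch below uses only standard identities for an ordinary least-squares regression; the function names are hypothetical. Recovering R² from F(1, 89) = 93.843 gives about 0.513 (the 51.3% above), and the reported 0.508 appears to match the adjusted R² for N = 91. Starting instead from the rounded r = 0.716 gives F ≈ 93.6, a small gap from 93.843 that is consistent with rounding of r.

```python
def r2_from_f(f_stat, df_model, df_resid):
    """R^2 implied by an overall F test: F*df1 / (F*df1 + df2)."""
    return f_stat * df_model / (f_stat * df_model + df_resid)

def adjusted_r2(r2, n, k):
    """Adjusted R^2 for n observations and k predictors."""
    return 1 - (1 - r2) * (n - 1) / (n - k - 1)

def f_from_r(r, n):
    """F(1, n - 2) for a one-predictor regression whose Pearson correlation is r."""
    r2 = r * r
    return r2 / (1 - r2) * (n - 2)

if __name__ == "__main__":
    n, k = 91, 1  # N = 91 respondents, one predictor
    r2 = r2_from_f(93.843, k, n - k - 1)
    print(round(r2, 3), round(adjusted_r2(r2, n, k), 3))  # prints 0.513 0.508
    print(round(f_from_r(0.716, n), 1))                   # prints 93.6
```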

Table 11. Summary of simultaneous multiple linear regression results predicting student achievement from perceptions of the blended learning effectiveness.

5. Conclusion and discussion

BL combines face-to-face interaction with online learning, with the instructor managing students in a technology-supported learning environment. In the post-pandemic era, BL courses are widely used and accepted by educators, students, and universities. However, the effectiveness of BL remains controversial, and the lack of an accurate scale for measuring it has been a major concern. This study developed a measurement to evaluate BL for undergraduates and investigated the relationship between the effectiveness of BL and SLOs. Biggs’ (1999) constructive alignment, which covers factors such as course overview, learning objectives, teaching/learning activities, and assessment, served as the primary theoretical framework for conceptualizing the scale. Related literature further indicated the importance of technology and resources as essential components, so these were added, giving a scale with six subscales.

RQ1 explored the essential components of BL. Stage I recruited 295 undergraduates from different majors at a university in Shanghai, and a hypothesized measurement comprising 6 subscales (25 items in total) was examined. Construct validity was examined with EFA and CFA. As a result, a 6-factor, 5-point Likert-type scale of BL effectiveness consisting of 25 items was developed. The six factors explained 68.4% of the total variance. The internal consistency reliability coefficient (Cronbach’s alpha) was 0.949 for the total scale and 0.859, 0.873, 0.877, 0.910, 0.902, and 0.881 for the six subscales, respectively. These results demonstrate that the hypothesized factors (course overview, course objectives, assessments, class activities, course resources, and technology support), mainly proposed by Biggs (1999), are aligned as a unified system in BL. Furthermore, the results reflect the real concerns of students as they experience BL in higher education. However, the participants were drawn only from students enrolled in BL courses at a single university, which limits how far the findings can be generalized. Future research could recruit a larger sample that includes undergraduates from other universities to further test the validity of the scale.
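The reliability coefficients above are Cronbach’s alpha values. As a minimal sketch of how alpha is computed for one subscale, the Python below applies the standard formula, alpha = k/(k − 1) × (1 − sum of item variances / variance of total scores), using sample variances. The function name and the response matrix are hypothetical; the study computed its values in SPSS 26.0.

```python
from statistics import variance

def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of k item scores:
    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores)).
    Sample variances (n - 1 denominator) are used throughout."""
    if len(responses) < 2:
        raise ValueError("need at least two respondents")
    k = len(responses[0])
    if k < 2 or any(len(row) != k for row in responses):
        raise ValueError("every respondent needs the same number (>= 2) of items")
    items = list(zip(*responses))  # one tuple of scores per item
    item_var_sum = sum(variance(item) for item in items)
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_var_sum / total_var)

if __name__ == "__main__":
    # Hypothetical Likert-type responses from five students to a three-item subscale.
    subscale = [[4, 4, 3], [3, 3, 3], [4, 3, 4], [2, 2, 1], [3, 4, 3]]
    print(round(cronbach_alpha(subscale), 3))
```

Alpha rises toward 1 as items covary more strongly relative to their individual variances, which is why the total-scale value (0.949) exceeds every subscale value.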

RQ2 examined the association between BL effectiveness and SLOs. In Stage II, the study recruited 91 students who participated in a synchronous BL course at the College of Education. The results demonstrated a positive relationship between the effectiveness of BL and SLOs: the more effective undergraduates perceived BL to be, the better their SLOs. This finding is consistent with previous literature (Demirkol and Kazu, 2014; Alsalhi et al., 2021). Moreover, the descriptive analysis provided additional guidance for educators designing and implementing BL for undergraduates. First, undergraduates expect a clear course overview explaining how to start the course, how to learn through it, and how their learning outcomes will be evaluated; a clear syllabus with detailed explanations should therefore be prepared and distributed at the outset of a BL course. Second, undergraduates pay attention to curriculum objectives and, as they progress through the course, continually check whether their work helps them achieve those objectives; on this basis, it is recommended that objectives be outlined at the beginning of each chapter and that expected learning outcomes (for example, rubrics) be shown. Finally, undergraduates enjoy rich social interaction in both face-to-face and online activities, so a variety of classroom activities suited to students at different levels is recommended. Future studies could conduct more detailed analyses; for example, it would be valuable to explore the indirect effects of BL effectiveness on SLOs. In addition, qualitative research could be conducted to identify the underlying reasons why BL affects SLOs.

Data availability statement

The datasets presented in this article are not publicly available because of the permissions obtained from the participants. Requests to access the datasets should be directed to XH, 158499958@qq.com.

Ethics statement

The studies involving human participants were reviewed and approved by the Shanghai Normal University Tianhua College. The participants provided their written informed consent to participate in this study.

Author contributions

XH: drafting the manuscript, data analysis, and funding acquisition. JF: theoretical framework and methodology. BW: supervision. YC: data collection and curation. YW: reviewing and editing. The author confirms being the sole contributor of this work and has approved it for publication.

Funding

This research was supported by the Chinese Association for Non-Government Education (grant number: CANFZG22268) and Shanghai High Education Novice Teacher Training Funding Plan (grant number: ZZ202231022).

Acknowledgments

I would like to express my appreciation to my colleagues: Prof. Jie Feng and Dr. Yinghui Chen. They both provided invaluable feedback on this manuscript.

Conflict of interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Adnan, M., and Anwar, K. (2020). Online learning amid the COVID-19 pandemic: students’ perspectives. J. Pedagog. Sociol. Psychol. 2, 45–51. doi: 10.33902/JPSP.2020261309

Alducin-Ochoa, J. M., and Vázquez-Martínez, A. I. (2016). Academic performance in blended-learning and face-to-face university teaching. Asian Soc. Sci. 12:207. doi: 10.5539/ass.v12n3p207

Allen, I. E., and Seaman, J. (2010). Class differences: online education in the United States, 2010. The Sloan consortium. Babson Survey Research Group. Available at: http://sloanconsortium.org/publications/survey/class_differences (Accessed October 7, 2014)

Alsalhi, N., Eltahir, M., Al-Qatawneh, S., Quakli, N., Antoun, H., Abdelkader, A., et al. (2021). Blended learning in higher education: a study of its impact on students’ performance. Int. J. Emerg. Technol. Learn. 16, 249–268. doi: 10.3991/ijet.v16i14.23775

Bayyat, M., Muaili, Z., and Aldabbas, L. (2021). Online component challenges of a blended learning experience: a comprehensive approach. Turk. Online J. Dist. Educ. 22, 277–294. doi: 10.17718/tojde.1002881

Biggs, J. (1999). Teaching for quality learning at university. Buckingham: Open University Press/Society for Research into Higher Education.

Biggs, J., and Tang, C. (2011). Teaching for quality learning at university: what the student does (4th Edn.). London: McGraw-Hill.

Bonk, C., and Graham, C. (2006). The handbook of blended learning: global perspectives, local designs. San Francisco, CA: Pfeiffer Publishing.

Boyle, T., Bradley, C., Chalk, P., Jones, R., and Pickard, P. (2003). Using blended learning to improve student success rates in learning to program. J. Educ. Media 28, 165–178. doi: 10.1080/1358165032000153160

Brown, C., Davis, N., Sotardi, V., and Vidal, W. (2018). Towards understanding of student engagement in blended learning: a conceptualization of learning without borders, 318–323. Available at: http://2018conference.ascilite.org/wp-content/uploads/2018/12/ASCILITE-2018-Proceedings-Final.pdf

Bryant, F. B., and Yarnold, P. R. (1995). “Principal-components analysis and exploratory and confirmatory factor analysis” in Reading and understanding multivariate statistics. eds. L. G. Grimm and P. R. Yarnold (Washington, DC: American Psychological Association), 99–136.

Clark, C., and Post, G. (2021). Preparation and synchronous participation improve student performance in a blended learning experience. Australas. J. Educ. Technol. 37, 187–199. doi: 10.14742/ajet.6811

Comrey, A. L., and Lee, H. B. (1992). A first course in factor analysis (2nd Edn.). Hillsdale, NJ: Lawrence Erlbaum Associates.

Darling-Aduana, J., and Heinrich, C. J. (2018). The role of teacher capacity and instructional practice in the integration of educational technology for emergent bilingual students. Comput. Educ. 126, 417–432. doi: 10.1016/j.compedu.2018.08.002

Demirkol, M., and Kazu, I. Y. (2014). Effect of blended environment model on high school students’ academic achievement. Turk. Online J. Educ. Technol. 13, 78–87.

Dos, B. (2014). Developing and evaluating a blended learning course. Anthropologist 17, 121–128. doi: 10.1080/09720073.2014.11891421

Ellis, R., Bliuc, A. M., and Han, F. (2021). Challenges in assessing the nature of effective collaboration in blended university courses. Australas. J. Educ. Technol. 37:1–14. doi: 10.14742/ajet.5576

Ellis, R. A., and Goodyear, P. (2016). Models of learning space: integrating research on space, place and learning in higher education. Rev. Educat. 4, 149–191. doi: 10.1002/rev3.3056

Feng, X., Wang, R., and Wu, Y. (2018). A literature review on blended learning: based on analytical framework of blended learning. J. Dist. Educ. 12, 13–24. doi: 10.15881/j.cnki.cn33-1304/g4.2018.03.002

Fornell, C., and Larcker, D. F. (1981). Evaluating structural equation models with unobservable variables and measurement error. J. Mark. Res. 18, 39–50. doi: 10.1177/002224378101800104

Garrison, D. R., and Kanuka, H. (2004). Blended learning: uncovering its transformative potential in higher education. Internet High. Educ. 7, 95–105. doi: 10.1016/j.iheduc.2004.02.001

Hadwin, A., and Oshige, M. (2011). Self-regulation, coregulation, and socially shared regulation: exploring perspectives of social in self-regulated learning theory. Teach. Coll. Rec. 113, 240–264. doi: 10.1007/s11191-010-9259-6

Han, F., and Ellis, R. (2021). Patterns of student collaborative learning in blended course designs based on their learning orientations: a student approaches to learning perspective. Int. J. Educ. Technol. High. Educ. 18:66. doi: 10.1186/s41239-021-00303-9

Hara, N. (2000). Students’ distress with a web-based distance education course. Inf. Commun. Soc. 557–579. doi: 10.1080/13691180010002297

Kaiser, H. F., and Rice, J. (1974). Little Jiffy, Mark IV. Educ. Psychol. Meas. 34, 111–117. doi: 10.1177/001316447403400115

Keith, T. (2015). Multiple regression and beyond: an introduction to multiple regression and structural equation modeling (2nd Edn.). New York: Routledge.

Kenney, J., and Newcombe, E. (2011). Adopting a blended learning approach: challenges encountered and lessons learned in an action research study. J. Asynchronous Learn. Netw. 15, 45–57. doi: 10.24059/olj.v15i1.182

Keogh, J. W., Gowthorp, L., and McLean, M. (2017). Perceptions of sport science students on the potential applications and limitations of blended learning in their education: a qualitative study. Sports Biomech. 16, 297–312. doi: 10.1080/14763141.2017.1305439

Kintu, M., Zhu, C., and Kagambe, E. (2017). Blended learning effectiveness: the relationship between student characteristics, design features and outcomes. Int. J. Educ. Technol. High. Educ. 14, 1–20. doi: 10.1186/s41239-017-0043-4

Lakhal, S., Mukamurera, J., Bedard, M. E., Heilporn, G., and Chauret, M. (2020). Features fostering academic and social integration in blended synchronous courses in graduate programs. Int. J. Educ. Technol. High. Educ. 17:5. doi: 10.1186/s41239-020-0180-z

Laurillard, D. (2013). Rethinking university teaching: a conversational framework for the effective use of learning technologies. London: Routledge

Liliana, C. M. (2018). Blended learning: deficits and prospects in higher education. Australas. J. Educ. Technol. 34, 42–56. doi: 10.14742/ajet.3100

Liu, Y. (2021). Blended learning of management courses based on learning behavior analysis. Int. J. Emerg. Technol. Learn. 16, 150–165. doi: 10.3991/IJET.V16I09.22741

Manwaring, K. C., Larsen, R., Graham, C. R., Henrie, C. R., and Halverson, L. R. (2017). Investigating student engagement in blended learning settings using experience sampling and structural equation modeling. Internet High. Educ. 35, 21–33. doi: 10.1016/j.iheduc.2017.06.002

Matsushita, K. (2017). Deep active learning: toward greater depth in university education. Singapore: Springer

McMillan, J. H., and Schumacher, S. (2010). Research in education: evidence-based inquiry (7th Edn.). Upper Saddle River, NJ: Pearson Education, Inc.

Naffi, N., Davidson, A.-L., Patino, A., Beatty, B., Gbetoglo, E., and Duponsel, N. (2020). Online learning during COVID-19: 8 ways universities can improve equity and access. The Conversation. Available at: https://theconversation.com/online-learning-during-covid-19-8-ways-universities-can-improve-equity-and-access-145286

Oliver, M., and Trigwell, K. (2005). Can “blended learning” be recommended? E-Learn. Digit. Media 2, 17–26. doi: 10.2304/elea.2005.2.1.17

Oxford Group (2013). Blended learning: current use, challenges and best practices. Available at: http://www.kineo.com/m/0/blended-learning-report-202013.pdf

Siemens, G. (2005). Connectivism: a learning theory for the digital age. Int. J. Instr. Technol. Dist. Learn. 2, 3–10. Available at: http://itdl.org/Journal/Jan_05/Jan_05.pdf

Tamim, R. M. (2018). Blended learning for learner empowerment: voices from the middle east. J. Res. Technol. Educ. 50, 70–83. doi: 10.1080/15391523.2017.1405757

Turvey, K., and Pachler, N. (2020). Design principles for fostering pedagogical provenance through research in technology supported learning. Comput. Educ. 146:103736. doi: 10.1016/j.compedu.2019.103736

U.S. Department of Education, Office of Planning, Evaluation, and Policy Development (2009). Evaluation of evidence-based practices in online learning: a meta-analysis and review of online learning studies. Washington, DC: U.S. Department of Education.

Xiao, J. (2016). Who am I as a distance tutor? An investigation of distance tutors’ professional identity in China. Distance Educ. 37, 4–21. doi: 10.1080/01587919.2016.1158772

Yan, Y., and Chen, H. (2021). Developments and emerging trends of blended learning: a document co-citation analysis (2003-2020). Int. J. Emerg. Technol. Learn. 16, 149–164. doi: 10.3991/ijet.v16i24.25971

Zawacki-Richter, O. (2009). Research areas in distance education: a Delphi study. Int. Rev. Res. Open Distrib. Learn. 10, 1–17.

Keywords: blended learning effectiveness, measurement, undergraduates, student learning outcomes, structural equation modeling

Citation: Han X (2023) Evaluating blended learning effectiveness: an empirical study from undergraduates’ perspectives using structural equation modeling. Front. Psychol. 14:1059282. doi: 10.3389/fpsyg.2023.1059282

Edited by:

Simone Belli, Complutense University of Madrid, Spain

Reviewed by:

María-Camino Escolar-Llamazares, University of Burgos, Spain

Ramazan Yilmaz, Bartin University, Türkiye

Denok Sunarsi, Pamulang University, Indonesia

Copyright © 2023 Han. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Xiaotian Han, 158499958@qq.com
