
ORIGINAL RESEARCH article

Front. Psychol., 14 September 2022

Sec. Perception Science

Volume 13 - 2022 | https://doi.org/10.3389/fpsyg.2022.945951

This article is part of the Research Topic Advances in Color Science: From Color Perception to Color Metrics and its Applications in Illuminated Environments.

Color harmony is a focus of many researchers in art and design, and research results on it have been widely applied in artistic creation and design. Developments in signal processing and artificial intelligence have provided new ideas and methods for color harmony theory and color harmony calculation. In this article, psychological experimental methods and information technology are combined to design and quantify 16-dimensional physical features of multiple colors, including multi-color statistical features and multi-color contrast features. Eighty-four subjects are invited to rate, on a 5-level scale, the degree of color harmony of 164 multi-color materials selected from screenshots of film and television scenes. Based on the multi-color physical features and the subjective evaluation experiment, a correlation analysis is first carried out, which shows that overall lightness, difference of color tones, number of colors, lightness contrast, color tone contrast, and cool/warm contrast are significantly correlated with color harmony. Regression and classification prediction models of color harmony are then constructed with machine learning algorithms. For regression, linear models predict more accurately than nonlinear models, with a highest accuracy of 63.9%, indicating that the multi-color physical features can explain color harmony well. For classification, Random Forest (RF) performs best, with an accuracy of 80.2%.

Color harmony refers to the matching of two or more colors, organized in an orderly and coordinated manner, so that the combination makes people feel pleased and satisfied (Zheng, 2013). Color harmony is widely used in industrial design, graphic design, interior design, and other color-matching scenarios, and because it is strongly correlated with emotion, it is also applied in computer vision fields such as affective computing and semantic analysis.

Color harmony theory is divided into classical and modern color harmony theory according to the analysis method. In the 19th century, the study of classical color harmony theory focused on qualitative analysis; that is, color harmony theory was summed up in descriptive rules (Zheng, 2013). Goethe held that color can evoke emotional fluctuations and expounded the effects of color from physiological and psychological perspectives (Die Berlin-Brandenburgische Akademie der Wissenschaften in Göttingen und die Heidelberger Akademie der Wissenschaften (Hrsg.), 2004). Chevreul divided color harmony rules into two categories, harmony of similar colors and harmony of contrasting colors, which inspired the development of color system research (Zhang et al., 1996). On this basis, Itten theorized seven types of color contrast: contrast of hue, contrast of value, contrast of temperature, complementary contrast (neutralization), simultaneous contrast (from Chevreul), contrast of saturation (mixtures with gray), and contrast of extension (from Goethe) (Itten, 1999). However, these studies rely on a fragmentary understanding of subjective experience, lack data support, and mostly remain at the level of describing the properties of the objects studied.

At the beginning of the 20th century, with the construction of color systems, the study of color harmony theory shifted toward quantitative analysis. Researchers, represented by Munsell, Ostwald, Moon, and Spencer, were no longer satisfied with general rules of color harmony and sought to investigate, analyze, and explain color harmony more precisely. Munsell developed the Munsell color system, one of the best-known color systems to date, and proposed seven rules of color harmony: vertical harmony, radial harmony, circumferential harmony, oblique interior harmony, oblique transverse interior harmony, spiral harmony, and elliptical harmony (Zhang and Zhang, 2003). Ostwald’s color harmony theory is based on regular positions in his color system and includes two-color, three-color, and multi-color harmony (Sakahara, 2002). Based on the Munsell color system, Moon and Spencer carried out a comprehensive quantitative study of color harmony, covering a geometric formulation of classical color harmony theory, area in color harmony, and evaluation of the degree of color harmony (Moon and Spencer, 1944).

Nowadays, with the development of signal processing and artificial intelligence, more and more researchers adopt new perspectives and methods to study color harmony. Lu et al. (2015) combined classical color harmony theory with machine learning and proposed a Bayesian framework for constructing a color harmony model. In this framework, the Matsuda and Moon-Spencer color harmony models are integrated as prior conditions into a training process based on Latent Dirichlet Allocation (LDA), achieving good prediction performance on a public dataset. On this basis, Lu et al. (2016) further considered the spatial relationships between colors and proposed a discriminative learning method based on LDA. Zaeimi and Ghoddosian (2020) proposed an art-inspired meta-heuristic algorithm for global optimization in color harmony modeling; using the relative positions of colors on the hue circle and the harmony templates of Moon-Spencer theory, the algorithm searches for the best combination of multiple colors. Wang et al. (2022) combined experimental psychology with artificial intelligence, divided the factors affecting color harmony into objective and subjective factors, studied the relationships among these factors and color harmony, and constructed a mathematical model to predict color harmony. That study first abstracted the generation of color harmony as a process leading from objective factors to subjective factors, and attempted to establish a research paradigm for color harmony.

In general, current research on color harmony modeling involves key technologies such as feature extraction and machine learning. In feature extraction, Huang et al. (2022) proposed a Feature Map Distillation (FMD) framework in which the teacher and student networks have feature maps of different sizes, and Zhang et al. (2021) integrated several well-proven modules to learn both short-term and long-term features from video inputs while avoiding intensive computation. In machine learning, Ohata et al. (2021) proposed an automatic detection method for COVID-19 infection from chest X-ray images that combines CNNs with established machine learning methods such as k-Nearest Neighbor, Bayes, Random Forest, multi-layer perceptron (MLP), and Support Vector Machine (SVM). Lei et al. (2022) proposed a Meta Ordinal Regression Forest (MORF) method for medical image classification with ordinal labels, which learns the ordinal relationship by combining a convolutional neural network with a differentiable forest in a meta-learning framework, improving model generalization with ordinal information. These methods provide technical support for current color harmony modeling research.

Existing research on color harmony theory mainly takes simple multi-color combinations, such as color pairs and three-color combinations, as the research object, which cannot meet the requirements of real-life applications. Therefore, this article takes multi-color materials from real-life scenes as the research object, combines experimental psychology with information technology, and constructs a few-shot color harmony modeling method suited to real-life scenes. The rest of the article is organized as follows. (1) Construct a dataset of multi-color materials and smooth the materials to eliminate the interference of semantic information (see section “Multi-color dataset construction”). (2) Design and quantify the multi-color physical features (see section “Multi-color physical features extraction”). (3) Carry out the subjective evaluation experiment on color harmony based on the semantic differential (SD) method (see section “The subjective evaluation experiment on color harmony”). (4) Analyze the experimental results and construct multi-color harmony prediction models with machine learning algorithms (see section “Results and discussion”).

The multi-color materials should reflect the multi-color relationships of real-life scenes as closely as possible, and their color-matching modes should be as rich as possible. Therefore, this article selects screenshots of film and television scenes based on real life as the source of multi-color materials, which are more practical than synthetic ones (Jiang et al., 2019). The selection principles are as follows: (1) only color pictures are selected; (2) pictures containing virtual scenes are removed, so that the materials reflect the multi-color relationships of real-life scenes; (3) pictures containing obvious semantic information, such as facial expressions and text, are removed to avoid affecting the evaluation of color harmony; and (4) pictures containing rich color information are preferred. The final multi-color dataset contains 164 materials captured from film and television scenes of 18 classic film types, each with a resolution of 1,280 × 720 pixels.

According to CIE Publication No. 17.4, hue is the attribute of a visual sensation according to which an area appears to be similar to one, or to proportions of two, of the perceived colors. Chroma is the colorfulness of an area judged in proportion to the brightness of a reference white, and lightness is the brightness of an area judged relative to the brightness of a reference white. Therefore, this article adopts the CIELAB color space proposed by the International Commission on Illumination (CIE), which is approximately perceptually uniform and device independent, to extract the basic color attributes (Connolly and Fleiss, 1997). In CIELAB, L* represents lightness, a* the red/green axis, and b* the yellow/blue axis. Specifically, the L* dimension represents lightness, and the hue angle h and chroma C*, calculated from the a* and b* dimensions, represent hue and chroma.

Note that the L*, a*, and b* values are computed from the digital image files, which are stored in RGB color space. The conversion follows ITU-R Recommendation BT.709 with the D65 white point reference, in the steps below.

Step I: Convert RGB color space to XYZ color space, with the R, G, and B values normalized to the interval [0, 1].

Step II: Normalize the XYZ values by the D65 white point.

Step III: Calculate the value of $f(t)$, where t represents the white-normalized X, Y, or Z value of XYZ color space.

Step IV: Convert XYZ color space to CIELAB color space.
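The four steps can be sketched in plain Python as follows. This is a minimal sketch under stated assumptions, not the authors’ code: 8-bit input, the sRGB transfer curve for linearization (sRGB shares the BT.709 primaries and D65 white point), the widely published BT.709/D65 RGB-to-XYZ matrix, and the CIE 1976 definition of $f(t)$. The article does not list its exact constants.

```python
def srgb_to_linear(c: float) -> float:
    """Undo the sRGB transfer curve for one channel value in [0, 1] (assumed encoding)."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


# Widely published RGB -> XYZ matrix for BT.709 primaries and a D65 white point.
M = (
    (0.4124, 0.3576, 0.1805),
    (0.2126, 0.7152, 0.0722),
    (0.0193, 0.1192, 0.9505),
)
# Step II reference white: XYZ of RGB = (1, 1, 1), so white maps to L* = 100, a* = b* = 0.
WHITE = tuple(sum(row) for row in M)


def f(t: float) -> float:
    """Step III: CIE 1976 function f(t), linear near zero and a cube root elsewhere."""
    delta = 6 / 29
    return t ** (1 / 3) if t > delta ** 3 else t / (3 * delta ** 2) + 4 / 29


def rgb_to_lab(r: int, g: int, b: int) -> tuple[float, float, float]:
    """Convert one 8-bit RGB pixel to (L*, a*, b*)."""
    # Step I: normalize to [0, 1], linearize, and apply the RGB -> XYZ matrix.
    lin = [srgb_to_linear(v / 255) for v in (r, g, b)]
    x, y, z = (sum(m * c for m, c in zip(row, lin)) for row in M)
    # Steps II and III: divide by the white point, then apply f.
    fx, fy, fz = (f(v / w) for v, w in zip((x, y, z), WHITE))
    # Step IV: XYZ -> CIELAB.
    return 116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz)
```

For example, `rgb_to_lab(255, 255, 255)` gives L* = 100 and a* = b* = 0 (up to rounding), because the white point is computed from the same matrix. Whether the original pipeline linearized the RGB values first is not stated; dropping `srgb_to_linear` changes the resulting L*, a*, and b* values noticeably.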

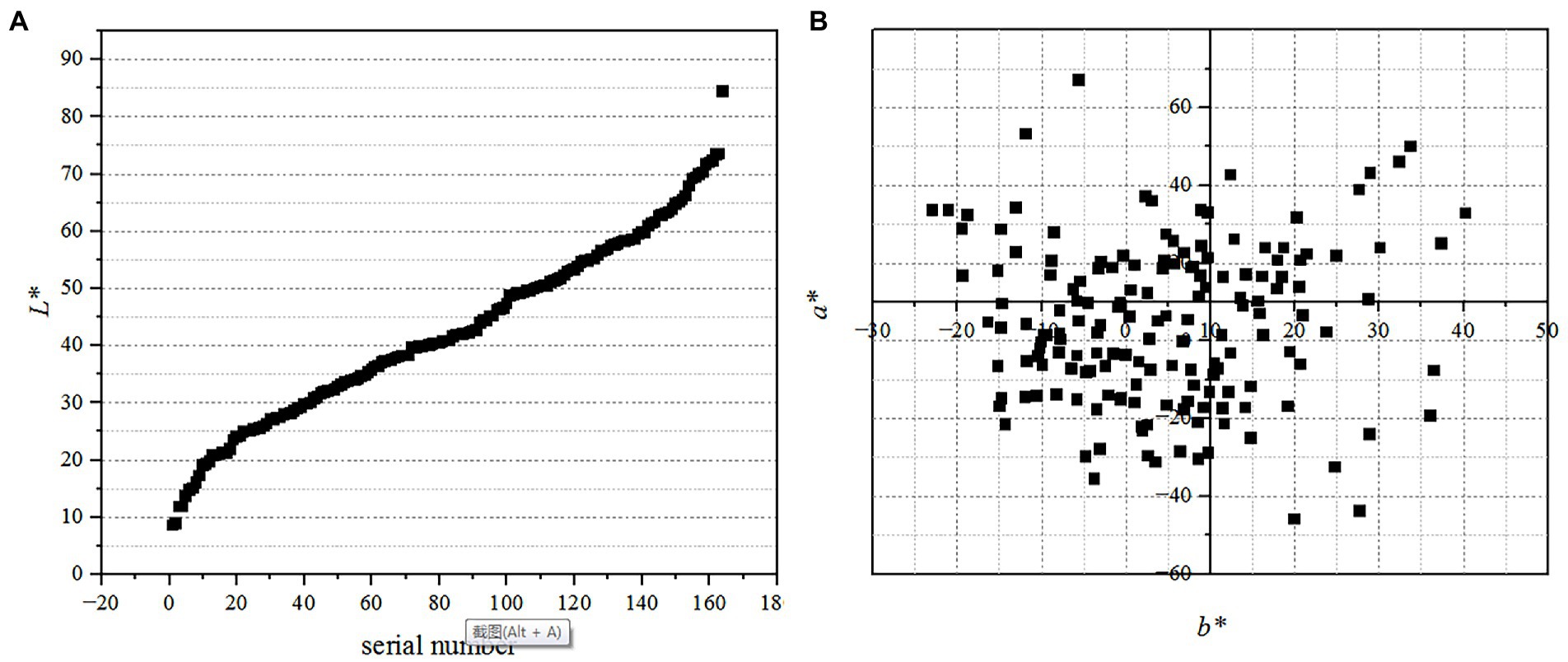

Finally, the spatial distribution of the basic color attributes of the materials is shown in Figure 1.

Figure 1. The spatial distribution of the basic color attributes of the materials. (A) The distribution of L* values, with the x-axis giving the serial number of each value sorted from smallest to largest; (B) the distribution of a* and b* values in the a*-b* plane.

The materials are screenshots of film and television scenes, which contain not only color information but also edge information. People generally recognize specific objects by their edges, so edge information carries much semantic information and can affect the evaluation of the degree of color harmony. Therefore, the edge information of the multi-color materials must be processed.

A circular average filter is adopted to smooth the edge information of the multi-color materials. The average filter replaces the grayscale of a pixel with the average grayscale of the pixels in its neighborhood. The steps are as follows.

Step I: Let $f(x, y)$ denote the grayscale of pixel $(x, y)$.

Step II: Calculate the average value $g(x, y)$ of all pixels in the circular template S centered at $(x, y)$ with radius n, that is, $g(x, y) = \frac{1}{M}\sum_{(s, t) \in S} f(s, t)$, where M is the number of pixels in S.

Step III: Replace the grayscale of the original pixel with the average value $g(x, y)$.
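A direct, unoptimized sketch of the circular average filter (Steps I to III) for a single channel is shown below. The border rule (averaging only the template pixels that fall inside the image) is an assumption, since the article does not specify one; applying the filter to each color channel of a color image separately is likewise assumed.

```python
def disk_offsets(n: int) -> list[tuple[int, int]]:
    """(dy, dx) offsets of the circular template of radius n centered at (0, 0)."""
    return [(dy, dx)
            for dy in range(-n, n + 1)
            for dx in range(-n, n + 1)
            if dy * dy + dx * dx <= n * n]


def circular_mean_filter(img: list[list[float]], n: int) -> list[list[float]]:
    """Replace each f(x, y) by g(x, y), the mean over the disk of radius n around it.

    Near the border only template pixels inside the image are averaged
    (assumed border rule).
    """
    h, w = len(img), len(img[0])
    offsets = disk_offsets(n)
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [img[y + dy][x + dx] for dy, dx in offsets
                    if 0 <= y + dy < h and 0 <= x + dx < w]
            out[y][x] = sum(vals) / len(vals)  # Step III: replace with the mean
    return out
```

With n = 50 on a 1,280 × 720 picture, this loop visits roughly 7,800 template pixels per output pixel, so in practice a vectorized convolution (for example, with NumPy or SciPy) would be used instead; the averaging itself is the same.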

This article sets the smoothing radius n to 50 pixels, according to a subjective evaluation experiment carried out in a previous study (Wang et al., 2020). In that experiment, two multi-color materials with rich edge information were selected from each color tone category as evaluation materials and smoothed with 10 circular mean filters, with template radii from 10 to 100 pixels in steps of 10 pixels. The subjects were asked to identify, for each group of materials, the minimum smoothing radius at which edge information could no longer be seen.

Figure 2 shows the differences between original and smoothed materials. As shown in Figures 2A,B, the scene information in the original material (e.g., a church) is blurred and can no longer be recognized by the human eye. Similarly, as shown in Figures 2C,D, the character’s face is blurred, so the actor can no longer be identified.

Figure 2. The differences between original and smoothed materials. (A,C) Original materials; (B,D) the corresponding smoothed materials.

In general, a color palette is a board used to mix pigments, and palettes can be circular, elliptic, or rectangular in shape. In color analysis of film and television scenes, a rectangular color palette is often used to display the dominant colors and their proportions. Therefore, so that subjects can grasp the multi-color information of each material more intuitively, we first construct a rectangular color palette for each multi-color material using the K-means clustering algorithm (Krishna and Murty, 1999). The input data are the values $x_1, x_2, \ldots, x_m$ of the m pixels in one picture, where $x_i$ represents the L*, a*, and b* values of the ith pixel in CIELAB color space. The palette is constructed as follows.

Step I: Randomly select j cluster centroids $\mu_1, \mu_2, \ldots, \mu_j$, where j is the number of cluster centroids and each $\mu_k$ holds the R, G, and B channel values of the kth cluster centroid.

Step II: For each pixel $x_i$, calculate the category $c^{(i)}$ it should belong to:

$$c^{(i)} = \arg\min_{k} \lVert x_i - \mu_k \rVert^2$$

where $c^{(i)}$ is the category whose centroid has the shortest Euclidean distance to $x_i$ among all j cluster centroids.

Step III: For each category k, calculate the mean of the pixels $x_i$ assigned to it as the new cluster centroid: $\mu_k = \frac{\sum_{i=1}^{m} 1\{c^{(i)} = k\}\, x_i}{\sum_{i=1}^{m} 1\{c^{(i)} = k\}}$.

Step IV: Repeat Steps II and III until the centroids $\mu_k$ remain unchanged; the final centroids $\mu_1, \ldots, \mu_j$ are the dominant colors of the multi-color material. Let $n_k$ denote the number of pixels $x_i$ assigned to $\mu_k$.

Step V: Generate the dominant colors from the cluster centroids $\mu_k$, and calculate the length ratio of each dominant color from $n_k$ (i.e., $n_k / m$) to construct the color palette.
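Steps I to V can be sketched as plain Lloyd-style K-means. This is an illustration, not the authors’ implementation: the random initialization, the iteration cap, and the rule of keeping a centroid in place when its cluster becomes empty are assumptions. `pixels` may hold L*, a*, b* triples or R, G, B triples.

```python
import random


def sq_dist(p, q):
    """Squared Euclidean distance between two color vectors."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def nearest(p, centroids):
    """Index of the centroid closest to p (ties go to the lowest index)."""
    return min(range(len(centroids)), key=lambda k: sq_dist(p, centroids[k]))


def kmeans_palette(pixels, j=8, max_iter=100, seed=0):
    """Return (dominant colors mu_k, pixel counts n_k, length ratios n_k / m).

    pixels: list of 3-tuples; assumes len(pixels) >= j.
    """
    rng = random.Random(seed)
    # Step I: use j randomly chosen pixels as the initial centroids.
    centroids = [tuple(map(float, p)) for p in rng.sample(pixels, j)]
    for _ in range(max_iter):
        # Step II: assign each pixel to its nearest centroid.
        labels = [nearest(p, centroids) for p in pixels]
        # Step III: move each centroid to the mean of its members
        # (an empty cluster keeps its old centroid).
        new = []
        for k in range(j):
            members = [p for p, c in zip(pixels, labels) if c == k]
            if members:
                new.append(tuple(sum(ch) / len(members) for ch in zip(*members)))
            else:
                new.append(centroids[k])
        # Step IV: stop once the centroids no longer change.
        if new == centroids:
            break
        centroids = new
    # Step V: count pixels per dominant color and derive the length ratios.
    labels = [nearest(p, centroids) for p in pixels]
    counts = [labels.count(k) for k in range(j)]
    m = len(pixels)
    return centroids, counts, [n / m for n in counts]
```

On a full 1,280 × 720 material this pure-Python loop is slow; downsampling the pixels before clustering, or using a library K-means, is the usual remedy. Because K-means depends on its random start, fixing `seed` makes the palette reproducible.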

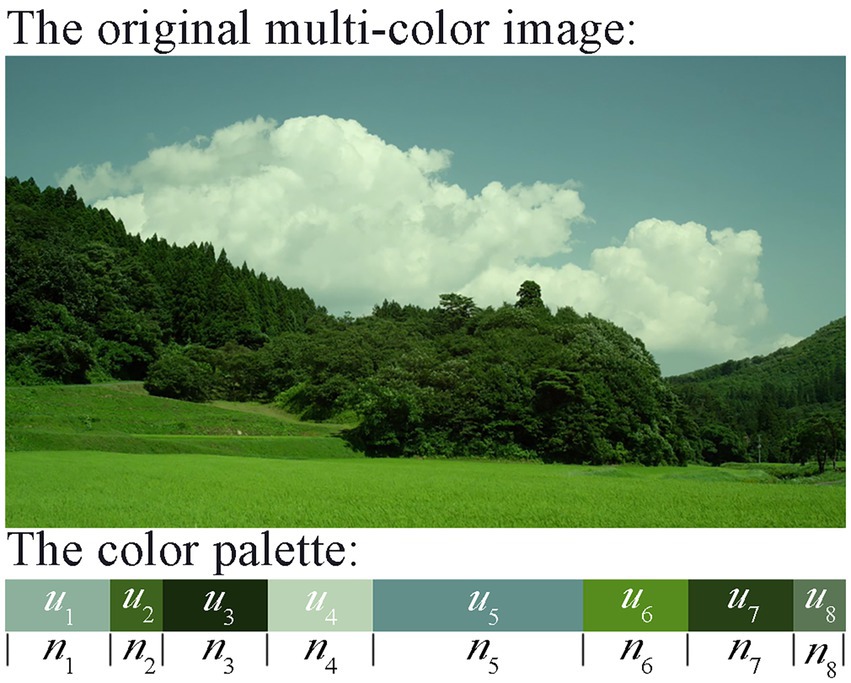

As shown in Figure 3, we generate a color palette to represent the dominant colors. The color palette serves three functions. (1) It is used to test whether the hue attribute of the multi-color materials is distributed uniformly and completely. (2) In the subjective evaluation of color harmony, each multi-color material is presented together with its color palette, making the experimental procedure more comprehensive and accurate. (3) Some multi-color physical features are extracted with the color palette as input.

Figure 3. Color palette of a multi-color material generated by the K-means clustering algorithm. Each block represents one dominant color, and its length represents that color’s length in the color palette. j is set to 8.

Multi-color physical features can be quantified directly using existing color systems and information technology methods; they follow objective physical laws and are independent of human cognition, and are therefore also known as low-level or objective features (Huang and Shen, 2002). To construct the multi-color harmony prediction model, this article first designs and quantifies 16-dimensional physical features of multiple colors and then extracts them from the smoothed multi-color materials, as shown in Table 1. The calculation of each feature is described in detail below.

Color moments (Gonzalez and Woods, 2020) are simple and effective physical features for representing color. Since most of the color information is concentrated in the low-order moments, the first-, second-, and third-order moments are sufficient to express the color distribution of a multi-color material.

Based on CIELAB color space, this article quantifies the first-, second-, and third-order moments of the L*, a*, and b* dimensions as the Color Moment (CM) feature, constructing a nine-dimensional feature vector.

Here, L*, a*, and b* represent the lightness, red/green, and yellow/blue values in CIELAB color space, respectively; N represents the number of pixels in each multi-color material; and i indexes the ith pixel.
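The sketch below uses the common definition of color moments (mean, standard deviation, and the cube root of the third central moment for each channel); the exact normalization in the article’s equations may differ, so treat it as an assumption.

```python
import math


def color_moments(lab_pixels):
    """9-D Color Moment (CM) feature from a list of (L*, a*, b*) pixels.

    Returns [mu_L, mu_a, mu_b, sigma_L, sigma_a, sigma_b, s_L, s_a, s_b].
    """
    n = len(lab_pixels)
    feats = []
    for ch in zip(*lab_pixels):  # iterate over the L*, a*, b* channels
        mu = sum(ch) / n                                        # first-order moment
        sigma = math.sqrt(sum((v - mu) ** 2 for v in ch) / n)   # second-order moment
        m3 = sum((v - mu) ** 3 for v in ch) / n
        skew = math.copysign(abs(m3) ** (1 / 3), m3)            # real cube root keeps the sign
        feats.append((mu, sigma, skew))
    # Group by moment order rather than by channel.
    return [f[i] for i in range(3) for f in feats]
```

Taking the cube root of the third moment puts all three moments in the same units as L*, a*, and b*, which keeps the nine components on comparable scales before normalization.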

Information entropy describes the degree of disorder, or uncertainty, of information and is quantified from the occurrence probabilities of discrete random events (Li, 2008). The richer the colors of a picture, the greater its information entropy. Therefore, this article calculates the information entropy of the grayscale image of each multi-color material as a one-dimensional Color Richness (CR) feature.

Here, $p_i$ represents the proportion of pixels whose grayscale is i in one picture, and the result is the one-dimensional grayscale entropy of the picture.
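A minimal sketch of the Color Richness (CR) feature follows. It assumes integer grayscales and base-2 logarithms, so the entropy is in bits; with another base the value scales by a constant factor and the ranking of materials is unchanged.

```python
import math
from collections import Counter


def grayscale_entropy(gray_pixels):
    """One-dimensional grayscale entropy H = -sum_i p_i * log2(p_i).

    gray_pixels: flat iterable of integer grayscales (e.g. 0-255).
    """
    counts = Counter(gray_pixels)
    total = sum(counts.values())
    # Only grayscales that actually occur contribute; 0 * log 0 is taken as 0.
    return -sum((c / total) * math.log2(c / total) for c in counts.values())
```

For example, a picture whose pixels are split evenly between two grayscales has an entropy of 1 bit, and a single-grayscale picture has an entropy of 0.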

The spatial density of multiple colors refers to how closely the various colors are arranged in a picture, which affects color harmony. In this article, the watershed algorithm (Beucher and Meyer, 1993; Hu, 2010; Shen et al., 2015), a region-based segmentation method, is adopted to segment the color regions of the multi-color materials.

The watershed algorithm draws on mathematical morphology and treats the picture as a topographic map in which grayscale corresponds to altitude: high grayscales are peaks and low grayscales are valleys. Water always flows toward lower ground until it reaches a low-lying place, called a basin, so eventually all the water collects in different basins, and the ridges between basins are called watersheds. Water falling exactly on a watershed is equally likely to flow into either of the adjacent basins.

Applying this idea to image segmentation amounts to finding the different “basins” and “watersheds” in a grayscale image; the regions they delimit are the targets to be segmented. The bottom-up simulated flooding process is defined as follows.

Equation (20) gives the initial condition of the recursive process, which starts from the pixels with the minimum grayscale in image I; h ranges over the grayscales, from the minimum value to the maximum value. Equation (21) gives the recursion: at each altitude h + 1, the pixels with grayscale h + 1 either form a newly generated basin, whose lowest pixels are a new minimum appearing at altitude h + 1, or, where they are connected to an existing basin, are assigned to that basin.

Through this recursion, all pixels in image I are divided into basins. A pixel that would belong to two or more basins at the same time lies on a watershed, and the segmentation lines are determined from these watershed points, thereby realizing image segmentation.
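The flooding idea can be sketched with a priority queue, in the spirit of Meyer’s flooding algorithm. This simplified version is an illustration under stated assumptions, not the authors’ implementation: it uses 4-connectivity, seeds one basin per regional minimum, and assigns every pixel to the first basin that reaches it, so it yields labeled regions rather than explicit one-pixel watershed lines. A production alternative is `skimage.segmentation.watershed` in scikit-image.

```python
import heapq


def neighbors4(r, c, h, w):
    """4-connected neighbors of (r, c) inside an h x w grid."""
    for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
        if 0 <= nr < h and 0 <= nc < w:
            yield nr, nc


def regional_minima(img):
    """Give each plateau with no lower neighbor its own basin label (1, 2, ...)."""
    h, w = len(img), len(img[0])
    seen = [[False] * w for _ in range(h)]
    markers = [[0] * w for _ in range(h)]
    n = 0
    for r0 in range(h):
        for c0 in range(w):
            if seen[r0][c0]:
                continue
            v, plateau, stack, is_min = img[r0][c0], [], [(r0, c0)], True
            seen[r0][c0] = True
            while stack:  # flood-fill the plateau of equal grayscale
                r, c = stack.pop()
                plateau.append((r, c))
                for nr, nc in neighbors4(r, c, h, w):
                    if img[nr][nc] < v:
                        is_min = False
                    elif img[nr][nc] == v and not seen[nr][nc]:
                        seen[nr][nc] = True
                        stack.append((nr, nc))
            if is_min:
                n += 1
                for r, c in plateau:
                    markers[r][c] = n
    return markers


def watershed(img):
    """Flood from the regional minima in order of increasing grayscale."""
    h, w = len(img), len(img[0])
    labels = regional_minima(img)
    heap, order = [], 0
    for r in range(h):
        for c in range(w):
            if labels[r][c]:
                heap.append((img[r][c], order, r, c))
                order += 1
    heapq.heapify(heap)
    while heap:
        _, _, r, c = heapq.heappop(heap)
        for nr, nc in neighbors4(r, c, h, w):
            if labels[nr][nc] == 0:  # the first basin to reach a pixel claims it
                labels[nr][nc] = labels[r][c]
                heapq.heappush(heap, (img[nr][nc], order, nr, nc))
                order += 1
    return labels
```

For example, `watershed([[0, 5, 9, 5, 0]])` returns `[[1, 1, 1, 2, 2]]`: two basins grow from the two minima, and the peak pixel goes to the basin that reaches it first. On unsmoothed photographs, raw watersheds are known to over-segment, because every small local minimum seeds its own basin.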

Based on this region segmentation, this article quantifies the number of segmented regions and the standard deviation of the number of pixels in each region of a material as the Space Density (SD) feature, constructing a two-dimensional feature vector.

Here, N represents the number of segmented regions in one material, and $n_i$ represents the number of pixels in the ith segmented region.
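Given a label map from any segmentation, such as a watershed, the two-dimensional Space Density (SD) feature can be computed as below. Using the population standard deviation rather than the sample one is an assumption; the article does not say which.

```python
from collections import Counter
from statistics import pstdev


def space_density(labels):
    """Return (N, standard deviation of region sizes) for a 2-D label map."""
    sizes = Counter(v for row in labels for v in row)  # pixels per region label
    return len(sizes), pstdev(list(sizes.values()))
```

A picture split into many small, equally sized regions gives a large N and a small spread, while a few large blocks with one tiny region give a small N and a large spread.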

Since color tone contains information on both hue and chroma, this article quantifies this feature in CIELAB color space, which separates the lightness property well from the color tone property. A one-dimensional Color Tone Contrast (CTC) feature is constructed by calculating the standard deviation of the a* and b* values of the pixels in one material.

Here, $a_i^*$ and $b_i^*$ represent the a* and b* values of the ith pixel of one material in CIELAB color space, respectively; $\bar{a}^*$ and $\bar{b}^*$ represent the average values of a* and b*; and N represents the number of pixels in one material.
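The sketch below assumes one natural reading of “the standard deviation of a* and b*”: the root-mean-square distance of each pixel’s (a*, b*) from the mean (a*, b*), which pools both chromatic axes into a single number. The article’s exact formula may combine the two axes differently.

```python
import math


def color_tone_contrast(ab_pixels):
    """Assumed CTC: sqrt(mean((a - a_mean)^2 + (b - b_mean)^2)) over all pixels."""
    n = len(ab_pixels)
    a_mean = sum(a for a, _ in ab_pixels) / n
    b_mean = sum(b for _, b in ab_pixels) / n
    return math.sqrt(
        sum((a - a_mean) ** 2 + (b - b_mean) ** 2 for a, b in ab_pixels) / n
    )
```

Because lightness is excluded, two pictures that differ only in L* get the same CTC value, which matches the stated aim of separating color tone from lightness.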

Based on CIELAB color space, this article takes the L* values of the pixels in each material as input to quantify the one-dimensional Lightness Contrast (LC) feature.

Here, pixel j is a pixel within the eight-neighborhood centered on pixel i, as shown in Figure 4, and N represents the number of pixels j different from pixel i that enter the calculation.
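A sketch of the Lightness Contrast (LC) feature under an assumed form: the mean squared L* difference over all ordered pairs (i, j) in which j is one of the eight neighbors of i, with N the number of such pairs. This follows a common neighborhood-contrast definition; the article’s exact normalization may differ.

```python
def lightness_contrast(l_grid):
    """Assumed LC: mean of (L_i - L_j)^2 over every pixel i and 8-neighbor j."""
    h, w = len(l_grid), len(l_grid[0])
    total, pairs = 0.0, 0
    for y in range(h):
        for x in range(w):
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    ny, nx = y + dy, x + dx
                    # Skip the center pixel itself and neighbors outside the image.
                    if (dy or dx) and 0 <= ny < h and 0 <= nx < w:
                        total += (l_grid[y][x] - l_grid[ny][nx]) ** 2
                        pairs += 1
    return total / pairs
```

Because only adjacent pixels are compared, LC responds to local lightness transitions rather than to the global L* spread captured by the second-order color moment.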

Because cool/warm perception is strongly correlated with multi-color perception, this article defines and quantifies the Cool/Warm Contrast (CWC) feature based on earlier research by Ou et al. (2018). Based on CIELAB color space, the hue angle and chroma of each pixel in a material are taken as input, and the steps are as follows.

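Step I: Calculate the hue angle h and chroma C* in CIELAB color space:

$$h_i = \arctan\left(\frac{b_i^*}{a_i^*}\right), \qquad C_i^* = \sqrt{(a_i^*)^2 + (b_i^*)^2}$$

Here, $a_i^*$ and $b_i^*$ represent the a* and b* values of the ith pixel of one material in CIELAB color space, respectively, and $h_i$ is taken in the quadrant determined by the signs of $a_i^*$ and $b_i^*$.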

Step II: Calculate the degree of cool/warm of each pixel based on the model of Ou et al. (2018).

Here, $h_i$ and $C_i^*$ represent the hue angle and chroma of the ith pixel of one material in CIELAB color space, respectively.

Step III: Calculate the Cool/Warm Contrast (CWC) feature from the per-pixel cool/warm values obtained in Step II.

Here, pixel j is a pixel within the eight-neighborhood centered on pixel i, and N represents the number of pixels j different from pixel i that enter the calculation.
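Steps I to III can be sketched as follows. The Ou et al. (2018) cool/warm equation enters as a caller-supplied function `warmth(h, c)` rather than being re-derived here, and Step III assumes a mean-squared-difference contrast over eight-neighborhoods, a common image-contrast form; both are assumptions. `math.atan2` places the hue angle in the correct quadrant, which a bare arctan(b*/a*) does not.

```python
import math


def hue_chroma(a, b):
    """Step I: hue angle h in degrees, in [0, 360), and chroma C* from a*, b*."""
    return math.degrees(math.atan2(b, a)) % 360, math.hypot(a, b)


def cool_warm_contrast(ab_grid, warmth):
    """Steps II and III for a 2-D grid of (a*, b*) pixels.

    warmth(h, c) stands in for the Ou et al. (2018) cool/warm model (not
    reproduced here). The contrast is assumed to be the mean squared
    difference of cool/warm values over all (pixel, 8-neighbor) pairs.
    """
    w_map = [[warmth(*hue_chroma(a, b)) for a, b in row] for row in ab_grid]
    rows, cols = len(w_map), len(w_map[0])
    total, pairs = 0.0, 0
    for y in range(rows):
        for x in range(cols):
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    ny, nx = y + dy, x + dx
                    if (dy or dx) and 0 <= ny < rows and 0 <= nx < cols:
                        total += (w_map[y][x] - w_map[ny][nx]) ** 2
                        pairs += 1
    return total / pairs
```

For illustration only, `cool_warm_contrast(grid, lambda h, c: c * math.cos(math.radians(h)))` runs with a hypothetical toy warmth function; meaningful CWC values require substituting the published Ou et al. model.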

The importance of area in color design has been demonstrated in aesthetics and art practice, and researchers have studied how area affects color harmony and beauty (Granger, 1953; Odabaşıoğlu and Olguntürk, 2020). Therefore, the standard deviation of the number of pixels belonging to each cluster centroid in the color palette is quantified as the one-dimensional Area Difference (AD) feature.

Here, j represents the number of cluster centroids, and $n_i$ represents the number of pixels belonging to the ith cluster centroid.
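The Area Difference (AD) feature reduces to one line once the palette cluster sizes $n_1, \ldots, n_j$ are known (for example, from the K-means step). The population standard deviation is assumed, since the article does not say which variant it uses.

```python
from statistics import pstdev


def area_difference(cluster_counts):
    """AD feature: population standard deviation of n_1, ..., n_j."""
    return pstdev(cluster_counts)
```

For example, a palette whose eight clusters are all the same size gives AD = 0, and AD grows as one or two dominant colors take over the picture.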

A total of 84 subjects participated in this experiment, all college students aged 18 to 30 years (35 males and 49 females). None of the subjects majored in a color-related field or had professional knowledge of color. Before the formal experiment, each subject took the Ishihara color blindness test (Marey et al., 2015) to check their color perception.

To avoid interference from environmental noise in the subjective evaluation of multi-color harmony, the experiment is conducted in a standard listening room measuring 5.37 m × 6 m, with background noise no higher than 30 dB(A). Following “Methodology for the Subjective Assessment of the Quality of Television Pictures” (ITU-R BT.500-14), the ambient illumination at the monitor (i.e., the light incident on the monitor from the surrounding environment, measured perpendicular to the monitor) is set to 200 lux (lux is the unit of illuminance, the luminous flux received per unit area).

An independent monitor, a BenQ XL2720-B color LCD running Windows 7 (Microsoft Corporation, Redmond, United States), is used to present the multi-color materials. The monitor has an aspect ratio of 16:9 and a resolution of 1,920 × 1,080 pixels. The “slideshow” function of ACDSee (official free version; ACD Systems International Inc., Shanghai, China) is used to present the stimuli in random order. Before the experiment, the monitor is calibrated with the Display Color Calibration function of the Windows operating system.

Only one subject performs the experiment at a time. After the subject enters the laboratory, the experimental assistant hands out a questionnaire, and the subject signs the informed consent form and fills in personal information (gender, age, and major).

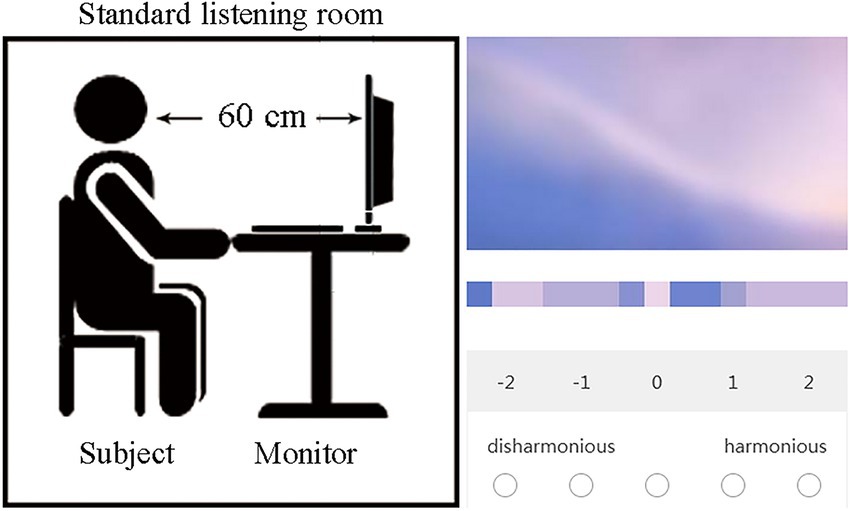

Then, as shown in Figure 5, the subject sits in front of the monitor with the eyes level with the center of the monitor, at a viewing distance of 60 cm. The monitor displays each material in random order in full screen, including the smoothed multi-color material and its color palette, and the color harmony scores are collected through the “wjx.cn” application on a mobile phone.

Figure 5. The experimental setup. Left: the position of the subject relative to the monitor; right: the data-collection interface of the “wjx.cn” application on the mobile phone.

The experiment is introduced to the subject as follows: “The aim of this experiment is to study the quantifiable rules of color harmony. You will score a total of 164 multi-color materials for their degree of color harmony on a 5-level scale, where −2 means ‘disharmonious,’ −1 means ‘a little disharmonious,’ 0 means ‘no feeling,’ 1 means ‘a little harmonious,’ and 2 means ‘harmonious.’” The detailed procedure is as follows.

Step I: The subject previews all the multi-color materials in advance to become familiar with them and to build an internal psychological scale for evaluating color harmony.

Step II: Three randomly selected materials are displayed, and the subject rates them according to his or her subjective feelings, so that unfamiliarity with the experimental procedure does not affect the evaluation results.

Step III: The 164 multi-color materials are divided equally into two groups and presented on the monitor in random order. The subject rates the degree of color harmony of each multi-color material on the 5-level scale.

There is no time limit, and subjects advance to the next material themselves. Completing the experiment with all 84 subjects took 1 week; each participant spent about 30 min on average, including a 5-min break after evaluating the first group.

This section is organized as follows. Section “Reliability analysis” analyzes the reliability of the experimental data. Section “Correlation analysis” discusses in detail the correlation between each multi-color physical feature and color harmony. Section “Multi-color harmony model construction” uses linear and nonlinear learning algorithms, based on the results of feature selection, to construct regression and classification prediction models of color harmony, and measures their accuracy with a series of objective evaluation methods. Finally, section “Comparative research” reports a comparative experiment that discusses the applicability and limitations of the proposed models.

In this article, Cronbach’s alpha is adopted to evaluate the internal consistency of the experimental results of the 84 subjects (Bartko, 1966), as shown in Equation (31).

$$\alpha = \frac{K}{K-1}\left(1 - \frac{\sum_{i=1}^{K} \sigma_{Y_i}^2}{\sigma_X^2}\right) \quad (31)$$

Here, K represents the number of subjects; $\sigma_X^2$ represents the population variance of the total observed scores over all materials; and $\sigma_{Y_i}^2$ represents the variance of the observed scores of subject i over the materials. A Cronbach’s alpha above 0.7 is generally taken to indicate good internal consistency.

The Cronbach’s alpha of the 84 subjects in this experiment is 0.908, above the 0.7 threshold, indicating that the experimental data are reliable and can support the subsequent correlation analysis and modeling.
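Equation (31) can be checked with a few lines of Python. In this sketch the subjects play the role of the K items and the materials are the observations, so `scores[k][m]` is subject k’s rating of material m. Population variances are used throughout; the ratio in Equation (31) is unchanged if sample variances are used consistently.

```python
from statistics import pvariance


def cronbach_alpha(scores):
    """Cronbach's alpha with subjects as the K items.

    scores[k][m] is subject k's rating of material m.
    """
    k = len(scores)
    item_var = sum(pvariance(s) for s in scores)      # sum of per-subject variances
    totals = [sum(col) for col in zip(*scores)]        # total score of each material
    return k / (k - 1) * (1 - item_var / pvariance(totals))
```

Two subjects who rate every material identically give alpha = 1, while two subjects whose ratings are uncorrelated give alpha close to 0.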

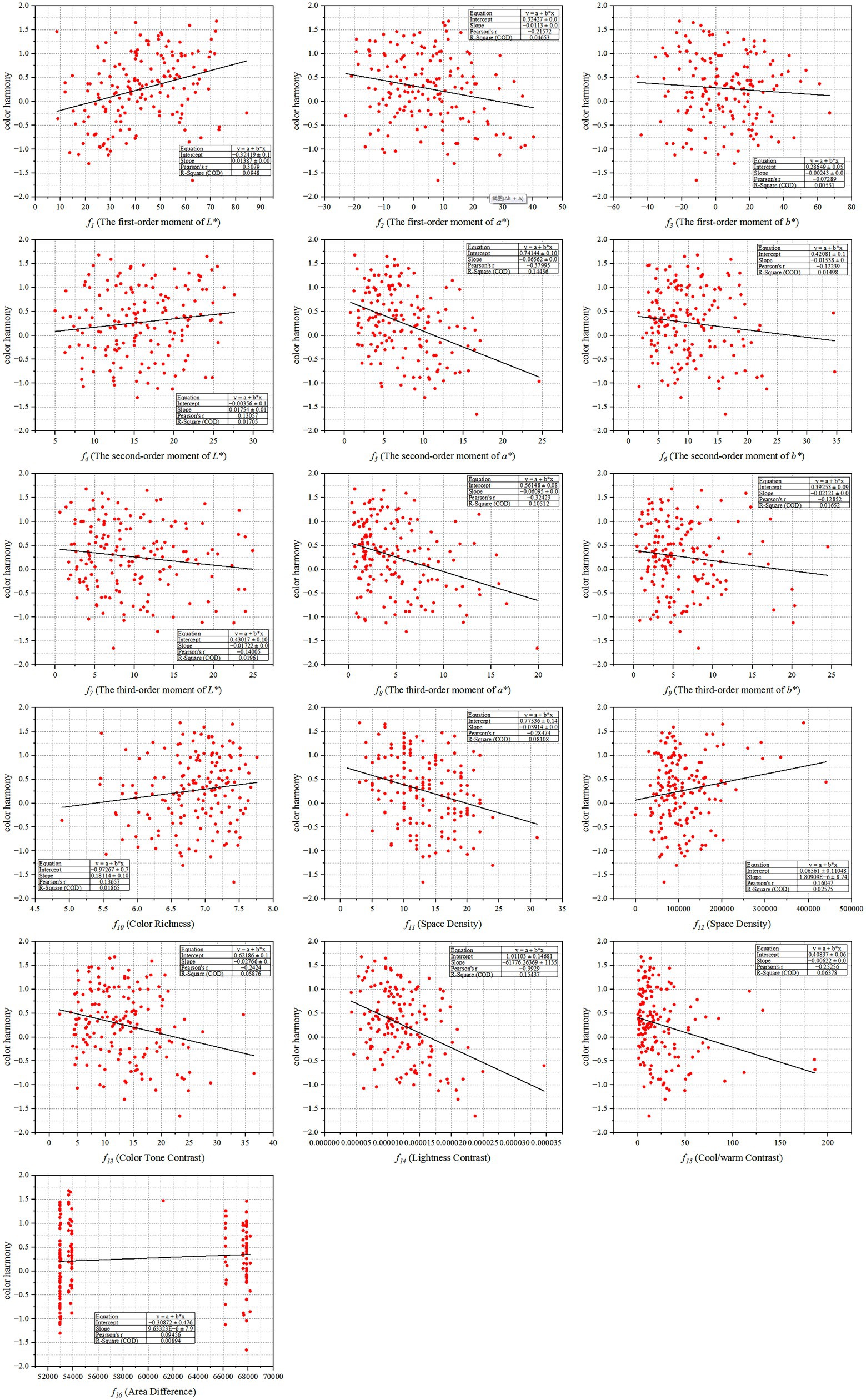

After obtaining the multi-color physical features and the subjective color harmony scores of all multi-color materials, we first draw scatter plots and fitting curves to analyze qualitatively the relationship between each multi-color physical feature and color harmony, and then analyze it quantitatively with correlation coefficients. Figure 6 shows the scatter plots between the multi-color physical features and color harmony.

Figure 6. The scatter plots between multi-color physical features and color harmony. Among them, X-axis represents each multi-color physical feature, and Y-axis represents the corresponding value of the degree of color harmony.

Figure 6 shows that several multi-color physical features are correlated with color harmony: the first-order moment of L*, the first-, second-, and third-order moments of a*, Space Density, Color Tone Contrast, Lightness Contrast, and Cool/Warm Contrast. The first-order moment of L* is positively correlated with color harmony, whereas the first-, second-, and third-order moments of a*, Space Density, Color Tone Contrast, Lightness Contrast, and Cool/Warm Contrast are negatively correlated with it. That is, the brighter, cooler, and simpler a multi-color combination is, and the smaller the differences among its colors, the more likely it is to be perceived as harmonious.

The Pearson’s correlation coefficients (r) and fitting curves are also shown in the scatter plots, and the magnitude of r is used, as is common practice, to grade each correlation as strong, moderate, weak, or absent. Lightness Contrast has the largest Pearson’s correlation coefficient in magnitude. The first-order moment of L* and the second- and third-order moments of a* are also correlated with color harmony, whereas the first-order moment of a*, Space Density, Color Tone Contrast, and Cool/Warm Contrast are only weakly correlated with it.

To sum up, a multi-color combination is more likely to be perceived as harmonious when its lightness contrast is lower and its overall lightness is higher. Harmony is also more likely when the combination is cooler, the differences among its colors are smaller, the spatial density of its color blocks is lower, and its color tone contrast and cool/warm contrast are lower.
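The correlation analysis above can be illustrated with a minimal Python sketch (NumPy assumed). It computes Pearson’s correlation coefficient between each feature column and the harmony scores, then ranks the features by the absolute value of that coefficient. Several details are hypothetical: the function name `feature_correlations`, the matrix layout (one row per multi-color material, one column per feature), and the feature labels. The sketch does not apply the strength thresholds used in the article.

```python
import numpy as np


def feature_correlations(X, y, names):
    """Return (name, r) pairs sorted by |r|, strongest correlation first.

    X: (n_materials, n_features) matrix of multi-color physical features.
    y: length-n vector of color harmony scores.
    names: one (hypothetical) label per feature column.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    results = []
    for j, name in enumerate(names):
        # np.corrcoef returns a 2x2 matrix; entry [0, 1] is Pearson's r(x_j, y)
        r = np.corrcoef(X[:, j], y)[0, 1]
        results.append((name, float(r)))
    return sorted(results, key=lambda item: abs(item[1]), reverse=True)


if __name__ == "__main__":
    # Toy numbers for illustration only, not data from the article
    X = [[1, 5], [2, 3], [3, 4], [4, 1]]
    y = [2, 4, 6, 8]
    for name, r in feature_correlations(X, y, ["lightness", "contrast"]):
        print(f"{name}: r = {r:+.3f}")
```

The sign of each coefficient keeps the direction of the relationship, which corresponds to the positive and negative correlations discussed above.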

To further study the relationship between multi-color physical features and color harmony, machine learning algorithms are adopted to construct multi-color harmony prediction models on the basis of the correlation analysis. The multi-color physical features differ in scale (dimension). To remove these differences and thereby improve the accuracy of the prediction models, this article first normalizes the input multi-color physical feature values:

$$X^{*}=\frac{X-X_{\min}}{X_{\max}-X_{\min}}$$

where $X_{\min}$ represents the minimum of the feature vector $X$ and $X_{\max}$ represents its maximum.
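The normalization can be sketched in NumPy as below. This minimal sketch assumes min-max scaling per feature column. The helper names are hypothetical, and the guard for constant columns is an addition that the article does not describe. Returning the minima and maxima allows the same scaling to be reused on held-out data.

```python
import numpy as np


def min_max_normalize(X):
    """Scale each feature column to [0, 1] via (x - min) / (max - min).

    Returns the scaled matrix plus the per-column minima and maxima so the
    same transform can be reused on held-out data.
    """
    X = np.asarray(X, dtype=float)
    x_min = X.min(axis=0)
    x_max = X.max(axis=0)
    return apply_min_max(X, x_min, x_max), x_min, x_max


def apply_min_max(X, x_min, x_max):
    """Apply a previously fitted min-max transform (values may fall outside [0, 1])."""
    span = x_max - x_min
    # Constant columns have zero span; divide by 1 instead so they map to 0
    span = np.where(span == 0, 1.0, span)
    return (np.asarray(X, dtype=float) - x_min) / span
```

Under cross-validation, computing the minimum and maximum on the training folds only, then applying them to the test fold with `apply_min_max`, prevents information from the test fold from leaking into training.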

Multivariable Linear Regression (MLR) (Hidalgo and Goodman, 2013) is highly interpretable, so MLR is adopted to construct the regression prediction model. Assume that there is a linear relationship between the independent variables $x_1, x_2, \ldots, x_p$ and the dependent variable $y$, as shown in Equation (25).

$$y=\beta_0+\beta_1x_1+\beta_2x_2+\cdots+\beta_px_p+\varepsilon \tag{25}$$

Here, $\beta_0, \beta_1, \ldots, \beta_p$ are unknown parameters. $\beta_0$ is the regression constant, and $\beta_1, \ldots, \beta_p$ are the overall regression parameters. $\varepsilon$ is a random error that follows the normal distribution $N(0,\sigma^2)$. When $p=1$, the equation is called the univariate linear regression model; when $p\geq2$, it is called the MLR model. The Ordinary Least Squares (OLS) method is adopted to estimate the overall regression parameters. OLS finds the values of $\beta_0, \ldots, \beta_p$ that minimize the objective function

$$Q=\sum_{i=1}^{n}\left(y_i-\beta_0-\sum_{j=1}^{p}\beta_jx_{ij}\right)^2$$
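A minimal NumPy sketch of the OLS estimate for Equation (25) follows. It is an illustration only, not the Weka implementation used in the article. The sketch prepends a column of ones so that the regression constant is estimated together with the other parameters. `np.linalg.lstsq` then returns the parameter vector that minimizes the squared-error objective. The function names are hypothetical.

```python
import numpy as np


def fit_mlr(X, y):
    """OLS estimate for y = b0 + b1*x1 + ... + bp*xp + e.

    Returns (b0, b), where b holds b1..bp, minimizing the sum of squared
    residuals between y and the fitted values.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    # A leading column of ones lets lstsq estimate the constant b0 jointly
    design = np.column_stack([np.ones(len(X)), X])
    coef, _residuals, _rank, _sv = np.linalg.lstsq(design, y, rcond=None)
    return coef[0], coef[1:]


def predict_mlr(b0, b, X):
    """Evaluate the fitted linear model on new feature rows."""
    return b0 + np.asarray(X, dtype=float) @ b


if __name__ == "__main__":
    # Toy data generated exactly from y = 1 + 2*x1 - x2 (no noise)
    X = [[0, 1], [1, 0], [1, 1], [2, 3]]
    y = [0, 3, 2, 2]
    b0, b = fit_mlr(X, y)
    print(b0, b)
```

`lstsq` is used here instead of explicitly inverting $X^\top X$ because it is numerically more stable when features are nearly collinear.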

In this article, 10-fold cross-validation is used to evaluate the accuracy of the models. The dataset is divided into 10 subsets. Each subset serves in turn as the test set while the remaining nine are used for training, and the prediction errors on the held-out subsets are evaluated. The evaluation indexes are Pearson’s correlation coefficient, the Mean Absolute Error (MAE), and the Root Mean Squared Error (RMSE). MAE and RMSE are calculated as follows.

$$\mathrm{MAE}=\frac{1}{n}\sum_{i=1}^{n}\left|\hat{y}_i-y_i\right|$$

$$\mathrm{RMSE}=\sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(\hat{y}_i-y_i\right)^2}$$

where $\hat{y}_i$ represents the predicted value, $y_i$ represents the true value, and $n$ represents the number of materials in the testing set.
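The 10-fold evaluation and the three indexes can be sketched as follows. The sketch assumes NumPy and uses a plain OLS model as a stand-in for the Weka models. It shuffles the materials once with a fixed seed and predicts every material exactly once, from a model trained on the other nine folds. Pearson’s r, MAE, and RMSE are then computed on the pooled out-of-fold predictions. Pooling is an assumption; averaging per-fold metrics is an equally common alternative. All names are hypothetical.

```python
import numpy as np


def ols_fit(X, y):
    """Stand-in model: OLS coefficients [b0, b1, ..., bp]."""
    design = np.column_stack([np.ones(len(X)), X])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coef


def ols_predict(coef, X):
    return coef[0] + X @ coef[1:]


def kfold_predictions(X, y, fit, predict, k=10, seed=0):
    """Predict each sample once, using a model trained on the other k-1 folds."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(y)), k)
    y_pred = np.empty_like(y)
    for test_idx in folds:
        train_idx = np.setdiff1d(np.arange(len(y)), test_idx)
        model = fit(X[train_idx], y[train_idx])
        y_pred[test_idx] = predict(model, X[test_idx])
    return y_pred


def regression_metrics(y_true, y_pred):
    """Pearson's r, MAE, and RMSE between true and predicted values."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    err = y_pred - y_true
    return {
        "r": float(np.corrcoef(y_true, y_pred)[0, 1]),
        "mae": float(np.mean(np.abs(err))),
        "rmse": float(np.sqrt(np.mean(err ** 2))),
    }
```

Any model can be plugged in by passing a matching pair of `fit` and `predict` callables, for example `regression_metrics(y, kfold_predictions(X, y, ols_fit, ols_predict))`.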

The Weka software (version 3.8.3; The University of Waikato, Hamilton, New Zealand), which provides machine learning and data mining functions, was adopted to construct the MLR model. In addition, we apply the M5 rules (Duggal and Singh, 2012) as the attribute selection method. Rule-based discriminators are placed in front of the linear regression model, which yields a piecewise linear regression model with improved accuracy. Finally, the linear regression prediction model constructed with the M5 rules is as follows.

In this model, the input variables represent, respectively, the first-order moment of L*, the second-order moment of L*, the second-order moment of a*, space density, color tone contrast, lightness contrast, and cool/warm contrast.

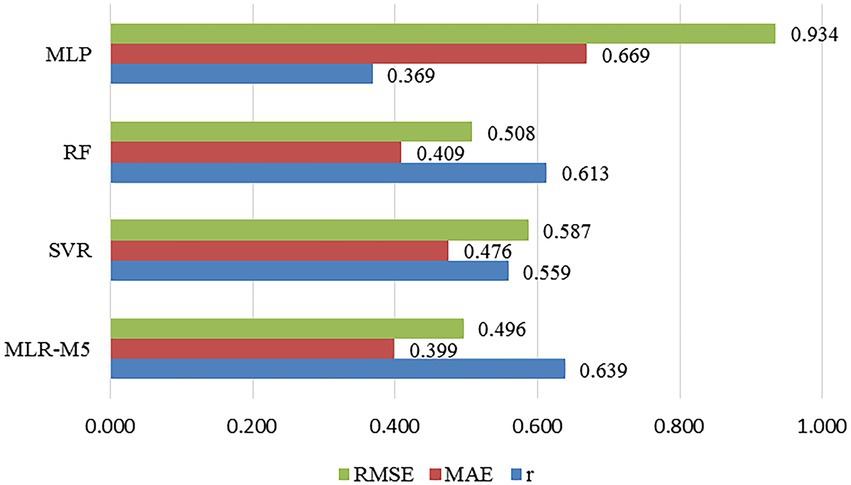
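Weka’s M5 rules build piecewise linear models by partitioning the data and fitting a linear model in each region. The sketch below is a much simplified stand-in for that idea, not the M5 algorithm itself. It searches for a single threshold on a single feature, fits a separate OLS model on each side, and keeps the split with the lowest total squared error. Real M5 model trees choose splits by standard deviation reduction and add pruning and smoothing, which are omitted here. All names and the `min_leaf` parameter are hypothetical.

```python
import numpy as np


def _ols(X, y):
    design = np.column_stack([np.ones(len(X)), X])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coef


def _sse(coef, X, y):
    resid = y - (coef[0] + X @ coef[1:])
    return float(resid @ resid)


def fit_one_split(X, y, min_leaf=5):
    """Try every observed threshold on every feature; keep the split whose
    two per-branch OLS models give the lowest total squared error."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    best = None
    for j in range(X.shape[1]):
        for t in np.unique(X[:, j])[:-1]:
            left = X[:, j] <= t
            if left.sum() < min_leaf or (~left).sum() < min_leaf:
                continue  # too few samples on one side to fit a reliable model
            cl = _ols(X[left], y[left])
            cr = _ols(X[~left], y[~left])
            sse = _sse(cl, X[left], y[left]) + _sse(cr, X[~left], y[~left])
            if best is None or sse < best[0]:
                best = (sse, j, t, cl, cr)
    if best is None:
        raise ValueError("not enough samples for a split with min_leaf")
    return best[1:]  # (feature index, threshold, left coef, right coef)


def predict_one_split(model, X):
    j, t, cl, cr = model
    X = np.asarray(X, dtype=float)
    left = X[:, j] <= t
    out = np.empty(len(X))
    out[left] = cl[0] + X[left] @ cl[1:]
    out[~left] = cr[0] + X[~left] @ cr[1:]
    return out
```

The single rule ("if feature j ≤ t use the left model, otherwise the right model") plays the role of the discriminator placed in front of the linear regression.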

Building on the linear regression models, this article also applies nonlinear machine learning algorithms to regression model construction to further explore the relationship between multi-color physical features and color harmony. The three classic nonlinear machine learning algorithms used in this article are Support Vector Regression (SVR), Random Forest (RF), and Multilayer Perceptron (MLP). The prediction results of the four machine learning algorithms are compared in Figure 7.

Figure 7. The comparison of the regression prediction results of the five machine learning algorithms.

The linear regression model is more accurate than the nonlinear regression models, with MLR reaching a prediction accuracy (Pearson’s correlation coefficient) of 63.9%. Therefore, the relationship between color harmony and the multi-color physical features designed and quantified in this article can be modeled with linear regression, which gives a reasonable explanation of the factors influencing color harmony. Among the nonlinear algorithms, SVR and RF have relatively close prediction accuracies of 55.9% and 61.3%, respectively, with RF performing slightly better than SVR. MLP performs poorly, with a prediction accuracy of only 36.9%.

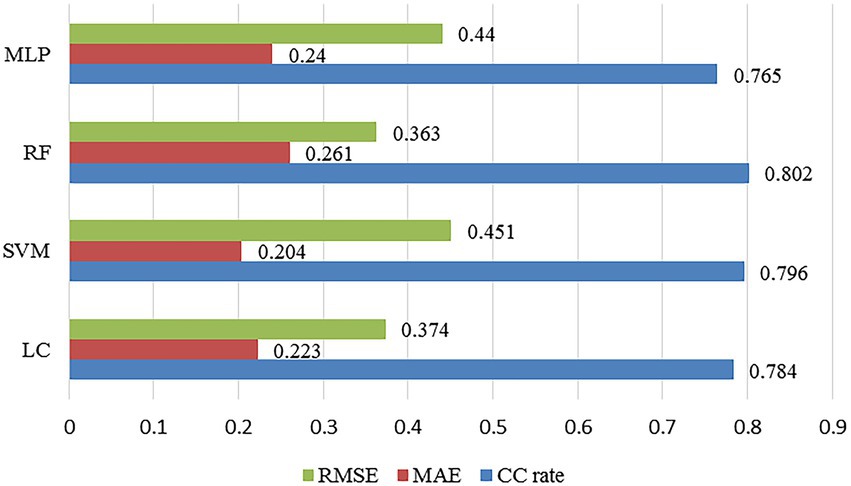

Since the prediction accuracy of the color harmony regression model still needs improvement, this article transforms the problem into a binary classification problem and adopts machine learning algorithms to construct classification prediction models. The classification threshold is set to 0: materials whose degree of color harmony is greater than 0 belong to the “harmonious” category, and those whose degree is less than 0 belong to the “disharmonious” category. The Logical Classification (LC) (Povhan, 2020), Support Vector Machine (SVM) (Ohata et al., 2021), RF (Lei et al., 2022), and MLP are adopted to construct the classification prediction models (Gao et al., 2019). During modeling, 10-fold cross-validation is used to optimize the model parameters and reduce evaluation errors. The prediction accuracy of the classification models is measured by the Correct Classification Rate (CC), MAE, and RMSE. CC is calculated as follows.

$$\mathrm{CC}=\frac{TP+TN}{P+N}$$

where TP is the number of positive samples correctly predicted as positive by the model, TN is the number of negative samples correctly predicted as negative, P is the number of positive samples, and N is the number of negative samples. The prediction results of the four machine learning algorithms are compared in Figure 8.

Figure 8. The comparison of the classification prediction results of the four machine learning algorithms.

Overall, the classification prediction models constructed in this article perform better than the regression models. RF has the best classification performance, with a classification accuracy of 80.2%. LC and SVM have similar classification accuracies of 78.4% and 79.6%, respectively. MLP again has the poorest performance, with a classification accuracy of 76.5%.
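The labeling rule and the CC index described above can be sketched as follows (NumPy assumed; function names hypothetical). Here, scores exactly equal to the threshold are assigned to the “disharmonious” class. This is an assumption, because the article only defines the cases above and below 0.

```python
import numpy as np


def to_labels(scores, threshold=0.0):
    """1 = 'harmonious' (score > threshold), 0 = 'disharmonious' otherwise."""
    return (np.asarray(scores, dtype=float) > threshold).astype(int)


def correct_classification_rate(y_true, y_pred):
    """CC = (TP + TN) / (P + N) for binary labels coded as 1 and 0."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    tp = np.sum((y_true == 1) & (y_pred == 1))  # positives predicted positive
    tn = np.sum((y_true == 0) & (y_pred == 0))  # negatives predicted negative
    p = np.sum(y_true == 1)
    n = np.sum(y_true == 0)
    return float((tp + tn) / (p + n))
```

For binary labels, P + N is simply the number of samples, so CC coincides with ordinary classification accuracy.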

To verify the applicability of the proposed regression prediction models of color harmony, we construct a dataset of 120 new screenshots of film and television scenes with 5-level color harmony scores. The 16-dimensional multi-color physical features are extracted, and Equation (37), the MLR model with the M5 rules, is adopted to predict the color harmony of the new dataset. The prediction accuracy, evaluated by Pearson’s correlation coefficient, reaches 50.3%, indicating that the proposed model performs well on a similar dataset. The scatter plot and fitted curve between the true values and the predicted values are shown in Figure 9.

Figure 9. The scatter plot between the true values and the predicted values. The X-axis represents the true value of color harmony, and the Y-axis represents the corresponding value predicted by the proposed regression model.

To further test the prediction performance of the proposed regression model on a dissimilar dataset, we select one public dataset for comparison: the International Affective Picture System (IAPS) (Lang et al., 2008). IAPS is one of the most widely used datasets for experimental investigations of emotion and attention. It consists of documentary-style natural color photos of scenes, labeled with pleasure (P), arousal (A), and dominance (D) ratings based on the PAD emotion space (Mehrabian, 1980; Russell, 1980). Color harmony (CH) is then calculated as below, based on the research by Wang et al. (2022) on the relationship between color harmony and emotion. A total of 90 materials are selected for the comparative experiment, excluding pictures with obvious semantic information, e.g., people with clear facial expressions.

We use the MLR model with and without the M5 rules to predict color harmony; the model with the M5 rules is Equation (37). The Pearson’s correlation coefficients between the true values and the predicted values are and , respectively. Figure 10 compares the regression prediction results on the different datasets.

The accuracy on IAPS is lower than that on our own dataset of film and television screenshots, possibly for the following reasons. (1) Our dataset consists of screenshots of film and television scenes with deliberate color design. All materials are high-resolution and share the same format, which avoids effects on data labeling. (2) The subjects who rated IAPS cover a wider age range than the subjects in this article. Previous studies (Zhang et al., 2019) have shown that people of different ages have different color preferences, which may affect their judgment of color harmony. Therefore, the prediction models constructed in this article are more suitable for the field of art and design, where the pictures to be analyzed are of high quality.

In addition, unlike the results on our dataset, the MLR model without the M5 rules is more accurate on IAPS than the model with the M5 rules, suggesting that the M5-rule model overfits.

Since the accuracy of the regression models other than MLR is not ideal, we train the models with different sample sizes to analyze how sample size influences the experimental results. We add the 120 screenshots of film and television scenes with 5-level color harmony scores (constructed in section “Comparison with other datasets”) to the dataset constructed in this article. We then conduct comparative experiments on randomly selected samples of 82, 164, and 284 materials. The results, measured by Pearson’s correlation coefficient, are shown in Figure 11.

The average accuracies for sample sizes of 82, 164, and 284 are 49.4%, 54.5%, and 54.8%, respectively, increasing with sample size. The average accuracies of MLR, SVR, RF, and MLP are 56.8%, 57.8%, 55%, and 42%, respectively. For the explainable MLR model, the highest accuracy is obtained with 164 samples. To sum up, a small sample size reduces the accuracy of the prediction model. Once the sample size reaches a certain level, careful subjective experimental labeling helps improve accuracy, which offers an idea for few-shot learning. In addition, explainable linear models are easier to construct when the extracted physical features are strongly correlated with the subjective ground truth.
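The sample-size experiment can be organized as in the following sketch. For each sample size, random subsets are drawn without replacement and scored by a caller-supplied evaluation function, for example one that returns the cross-validated Pearson’s r of a model. The number of repeated draws per size, the seed, and all names are assumptions, since the article does not state how many random selections were made.

```python
import numpy as np


def sample_size_curve(X, y, sizes, evaluate, n_repeats=10, seed=0):
    """Mean score of `evaluate(X_sub, y_sub)` over random subsets of each size.

    Subsets are drawn without replacement; `evaluate` is any callable that
    returns a scalar score (hypothetical, e.g. a cross-validated r).
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    rng = np.random.default_rng(seed)
    curve = {}
    for size in sizes:
        scores = []
        for _ in range(n_repeats):
            idx = rng.choice(len(y), size=size, replace=False)
            scores.append(evaluate(X[idx], y[idx]))
        curve[size] = float(np.mean(scores))
    return curve
```

Averaging several random draws per size reduces the chance that one unlucky subset dominates the comparison between 82, 164, and 284 samples.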

In this article, we combine experimental psychology and information technology to study the mechanism of color harmony in real-life scenes. The main contributions are the attribute analysis and model construction of color harmony. Specifically, (1) we design and quantify seven categories of 16-dimensional multi-color physical features that are suitable for extraction from real-life scenes, namely Color Moment, Color Richness, Space Density, Lightness Contrast, Color Tone Contrast, Cool/Warm Contrast, and Area Difference. The correlation analysis shows that the overall lightness, difference of the color tones, number of multiple colors, lightness contrast, color tone contrast, and cool/warm contrast are significantly related to color harmony. (2) Based on machine learning algorithms, a regression prediction model and a classification prediction model of color harmony are constructed. The prediction accuracy of the linear regression model is higher than that of the nonlinear regression models, with a maximum of 63.9%. This indicates that the multi-color physical features designed and quantified in this article are effective. It also indicates that color harmony can be modeled with linear regression, giving a reasonable explanation of the mechanism of color harmony. Among the classification models, RF has the best prediction performance, with a prediction accuracy of 80.2%.

Future work includes the following. (1) Taking the classical color harmony theories into consideration, we will design and quantify multi-color physical features consistent with their theoretical descriptions, so as to fully explore the factors influencing color harmony. (2) Previous studies (Jonauskaite et al., 2020) have shown that color preference is easily affected by gender, age, and cultural background. Therefore, we plan to introduce personalized factors as constraints into the prediction model. To do so, we will supplement the dataset and increase the number of differentiated subjects, further improving the prediction accuracy of the model. (3) For industry applications, we will develop color-matching software to increase the practicability of the research results.

The original contributions presented in the study are included in the article/Supplementary material; further inquiries can be directed to the corresponding author.

Ethical review and approval was not required for the study on human participants in accordance with the local legislation and institutional requirements. The patients/participants provided their written informed consent to participate in this study.

SW and YJ constructed the overall research framework. SW designed and quantified multi-color physical features and revised the manuscript. JLa and JJ constructed the material dataset. JLi carried out the subjective evaluation experiment. SW and JLi completed the correlation analysis and model construction and drafted the manuscript. All authors contributed to the article and approved the submitted version.

Funding was provided by the National Key Research and Development Program (Nos. 2021YFF0901705 and 2021YFF0901700) and the Open Project of the Key Laboratory of Audio and Video Restoration and Evaluation, Ministry of Culture and Tourism (2021KFKT006).

JJ is employed by China Digital Culture Group Co., Ltd.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2022.945951/full#supplementary-material

Bartko, J. J. (1966). The intraclass correlation coefficient as a measure of reliability. Psychol. Rep. 19, 3–11. doi: 10.2466/pr0.1966.19.1.3

Beucher, S., and Meyer, F. (1993). The morphological approach to segmentation: the watershed transformation. Math. Morphol. Imag. Proc. 34, 433–481.

Connolly, C., and Fleiss, T. (1997). A study of efficiency and accuracy in the transformation from RGB to CIELAB color space. IEEE Trans. Image Process. 6, 1046–1048. doi: 10.1109/83.597279

Berlin-Brandenburgische Akademie der Wissenschaften, Akademie der Wissenschaften in Göttingen, and Heidelberger Akademie der Wissenschaften (Eds.) (2004). Goethe-Wörterbuch, Vol. 4, 119. Stuttgart: Verlag W. Kohlhammer.

Duggal, H., and Singh, P. (2012). Comparative study of the performance of M5-rules algorithm with different algorithms. J. Softw. Eng. Appl. 5, 270–276. doi: 10.4236/jsea.2012.54032

Gao, S., Zhou, M., Wang, Y., Cheng, J., Yachi, H., and Wang, J. (2019). Dendritic neuron model with effective learning algorithms for classification, approximation, and prediction. IEEE Trans. Neural Netw. Learn. Syst. 30, 601–614. doi: 10.1109/TNNLS.2018.2846646

Gonzalez, R. C., and Woods, R. E. (2020). “Digital image processing,” in Ruan Qiuqi, Ruan Yuzhi. 4th Edn. ed. H. Tan, Beijing: Publishing House of Electronics Industry, 279–282.

Granger, G. W. (1953). Area balance in color harmony: an experimental study. Science 117, 59–61. doi: 10.1126/science.117.3029.59

Hidalgo, B., and Goodman, M. (2013). Multivariate or multivariable regression? Am. J. Public Health 103, 39–40. doi: 10.2105/AJPH.2012.300897

Hu, J. (2010). Research on interpolation of medical image and image segmentation in 3D reconstruction system. Nanjing: Nanjing University of Aeronautics and Astronautics.

Huang, X. L., and Shen, L. S. (2002). Research on content-based image retrieval techniques. Acta Electron. Sin. 7, 1065–1071. doi: 10.2753/CSH0009-4633350347

Huang, Z., Yang, S., Zhou, M. C., Li, Z., Gong, Z., and Chen, Y. (2022). Feature map distillation of thin nets for low-resolution object recognition. IEEE Trans. Image Process. 31, 1364–1379. doi: 10.1109/TIP.2022.3141255

Jiang, W., Wang, S., Su, Y., and Liu, J. (2019). “A study of color-emotion image set construction and feature analysis.” in 2019 IEEE/ACIS 18th International Conference on Computer and Information Science (ICIS), June 17, 2019. 99–104.

Jonauskaite, D., Abu-Akel, A., Dael, N., Oberfeld, D., Abdel-Khalek, A. M., al-Rasheed, A. S., et al. (2020). Universal patterns in color-emotion associations are further shaped by linguistic and geographic proximity. Psychol. Sci. 31, 1245–1260. doi: 10.1177/0956797620948810

Krishna, K., and Murty, M. N. (1999). Genetic K-means algorithm. IEEE Trans. Syst. Man. Cy. Part B. 29, 433–439. doi: 10.1109/3477.764879

Lang, P., Bradley, M., and Cuthbert, B. (2008). International affective picture system (IAPS): Affective ratings of pictures and instruction manual. Technical report, Univ. Florida, Gainesville.

Lei, Y., Zhu, H., Zhang, J., and Shan, H. (2022). Meta ordinal regression Forest for medical image classification with ordinal labels. IEEE/CAA J. Autom. Sin. 9, 1233–1247. doi: 10.1109/JAS.2022.105668

Li, X. (2008). “A New Measure of Image Scrambling Degree Based on Grey Level Difference and Information Entropy.” in 2008 International Conference on Computational Intelligence and Security, December 13, 2008. 350–354.

Lu, P., Peng, X., Li, R., and Wang, X. (2015). Towards aesthetics of image: a Bayesian framework for color harmony modeling. Signal Process Image Commun. 39, 487–498. doi: 10.1016/j.image.2015.04.003

Lu, P., Peng, X., Yuan, C., Li, R., and Wang, X. (2016). Image color harmony modeling through neighbored co-occurrence colors. Neurocomputing 201, 82–91. doi: 10.1016/j.neucom.2016.03.035

Marey, H. M., Semary, N. A., and Mandour, S. S. (2015). Ishihara electronic color blindness test: an evaluation study. Ophthalmol. Res. Int. J. 3, 67–75. doi: 10.9734/OR/2015/13618

Mehrabian, A. (1980). Basic dimensions for a general psychological theory. Cambridge: Oelgeschlager, Gunn & Hain, 39–53.

Moon, P., and Spencer, D. E. (1944). Aesthetic measure applied to color harmony. J. Opt. Soc. Am. 34, 234–242. doi: 10.1364/JOSA.34.000234

Odabaşıoğlu, S., and Olguntürk, N. (2020). Effect of area on color harmony in simulated interiors. Color Res. Appl. 45, 710–727. doi: 10.1002/col.22508

Ohata, E. F., Bezerra, G. M., Chagas, J. V. S., Lira Neto, A. V., Albuquerque, A. B., Albuquerque, V. H. C., et al. (2021). Automatic detection of COVID-19 infection using chest X-ray images through transfer learning. IEEE/CAA J. Autom. Sin. 8, 239–248. doi: 10.1109/JAS.2020.1003393

Ou, L.-C., Yuan, Y., Sato, T., Lee, W. Y., Szabó, F., Sueeprasan, S., et al. (2018). Universal models of colour emotion and colour harmony. Color Res. Appl. 43, 736–748. doi: 10.1002/col.22243

Povhan, I. (2020). Logical classification trees in recognition problems. IAPGOŚ 10, 12–15. doi: 10.35784/iapgos.927

Russell, J. A. (1980). A circumplex model of affect. J. Pers. Soc. Psychol. 39, 1161–1178. doi: 10.1037/h0077714

Sakahara, K. (2002). “An exterior expression of the houses on the CG and a color assessment on the method of pair comparison.” in 9th Congress of the International Colour Association. June 6, 2002. Vol. 4421. 170–173.

Shen, X., Wu, X., and Han, D. (2015). Survey of research on watershed segmentation algorithms. Comput. Eng. 41, 26–30. doi: 10.3969/j.issn.1000-3428.2015.10.006

Wang, S., Jiang, W., Su, Y., and Liu, J. (2020). Human perceptual responses to multiple colors: a study of multicolor perceptual features modeling. Color Res. Appl. 45, 728–742. doi: 10.1002/col.22512

Wang, S., Liu, J., Liu, S., Yin, B., Jiang, J., and Lan, J. (2022). Research on attribute analysis and modeling of color harmony based on objective and subjective factors. IEEE Access 10, 75074–75096. doi: 10.1109/ACCESS.2022.3190386

Zaeimi, M., and Ghoddosian, A. (2020). Color harmony algorithm: an art-inspired metaheuristic for mathematical function optimization. Soft. Comput. 24, 12027–12066. doi: 10.1007/s00500-019-04646-4

Zhang, X., Chen, M., and Ji, H. (1996). Industrial design concept and method. Beijing: Beijing Institute of Technology Press.

Zhang, Y., Liu, P., Han, B., Xiang, Y., and Li, L. (2019). Hue, chroma, and lightness preference in Chinese adults: age and gender differences. Color Res. Appl. 44, 967–980. doi: 10.1002/col.22426

Zhang, W., Wang, J., and Lan, F. (2021). Dynamic hand gesture recognition based on short-term sampling neural networks. IEEE/CAA J. Autom. Sin. 8, 110–120. doi: 10.1109/JAS.2020.1003465

Keywords: color harmony, aesthetic measure, multiple colors, feature extraction, machine learning, real-life scene

Citation: Wang S, Liu J, Jiang J, Jiang Y and Lan J (2022) Attribute analysis and modeling of color harmony based on multi-color feature extraction in real-life scenes. Front. Psychol. 13:945951. doi: 10.3389/fpsyg.2022.945951

Received: 20 May 2022; Accepted: 29 August 2022;

Published: 14 September 2022.

Edited by:

Yandan Lin, Fudan University, China

Reviewed by:

Suchitra Sueeprasan, Chulalongkorn University, Thailand

Copyright © 2022 Wang, Liu, Jiang, Jiang and Lan. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yujian Jiang, yjjiang@cuc.edu.cn
