- Laboratory of Brain and Cognition, National Institute of Mental Health, Bethesda, MD, United States

“Parting is such sweet sorrow.” Taste metaphors provide a rich vocabulary for describing emotional experience, potentially serving as an adaptive mechanism for conveying abstract emotional concepts using concrete verbal references to our shared experience. We theorized that the popularity of these expressions results from the close association with hedonic valence shared by these two domains of experience. To explore the possibility that this affective quality underlies the semantic similarity of these domains, we used a behavioral “odd-one-out” task in an online sample of 1059 participants in order to examine the semantic similarity of concepts related to emotion, taste, and color, another rich source of sensory metaphors. We found that the semantic similarity of emotion and taste concepts was greater than that of emotion and color concepts. Importantly, the similarity of taste and emotion concepts was strongly related to their similarity in hedonic valence, a relationship which was also significantly greater than that present between color and emotion. These results suggest that the common core of valence between taste and emotion concepts allows us to bridge the conceptual divide between our shared sensory environment and our internal emotional experience.

Introduction

Metaphors such as “parting is such sweet sorrow”, “right to the bitter end” exemplify the use of our experience of taste to express our emotional states, which is naturally a difficult task, given the internal and essentially subjective nature of emotions. One reason for this phenomenon might have to do with the affective attributes of hedonic valence and arousal (physiological activation), shared by both domains of experience (Young, 1966; Russell, 2003; Small et al., 2003; Barrett and Bliss-Moreau, 2009; Sakamoto and Watanabe, 2016). Current theories of emotion posit that, like taste and interoception, the perception of the internal state of the body, emotion fundamentally serves as a homeostatic signaling mechanism, with certain highly valenced emotions such as fear promoting bodily homeostasis and social emotions such as loss and heartache potentially signaling deviations from homeostasis within the context of social relationships (Damasio, 1999; Barrett and Simmons, 2015). With some exceptions, sweet tastes have positive hedonic valence and bitter tastes negative, which are fundamental properties of food that individuals learn very early in development. Thus, when trying to communicate about other experiences with positive or negative valence, such as internal emotional experiences, we might naturally use terms relating to taste as external referents to convey the substance of our message.

An underlying reason for this shared association with valence might have to do with the neural structures shared by both taste and emotion, such as the insular cortex. The insular cortex is the primary cortical area involved in taste perception (Small, 2010; Avery et al., 2020) and in representing inferences about specific taste properties, which are automatically activated when viewing pictures of food (Simmons et al., 2013b; Avery et al., 2021). The insular cortex is also heavily implicated in the sensory component of emotional experience (Singer et al., 2009), perhaps due to its involvement in the representation of pain and interoceptive sensations from the body (Craig, 2003; Simmons et al., 2013a; Avery et al., 2017). Indeed, according to the Conceptual Metaphor Theory taste–emotion metaphors serve to ground the abstract emotional concepts by using a concrete reference to our shared experience (Lakoff and Johnson, 1980, 1999; Barsalou, 2008; Martin, 2016).

With such a close functional connection of taste and emotion in the human brain and given the shared importance of valence, we might expect that these cognitive domains are tightly linked within the conceptual structure of human thought. One way we can explore this possibility is through examining the semantic similarity of taste and emotion concepts present in the English language. We predict that taste and emotion concepts would exhibit a high degree of semantic similarity, as measured by behavioral tasks, which would be greater than the similarity between emotion and color concepts. Color represents a sensory domain closely associated with emotion that forms another rich source of descriptive metaphors (Sutton and Altarriba, 2016; Jonauskaite et al., 2020), which, unlike taste, is not as clearly linked to emotion-related neural systems. A recent study of emotion semantics across languages identified that the semantic similarity between emotion concepts is most strongly driven by their similarity in hedonic valence (Jackson et al., 2019). Accordingly, we would also expect that the semantic similarity between taste and emotion concepts would be significantly related to similarity in valence, and that this relationship would be greater than that observed between color and emotion concepts.

Materials and Methods

Taste, Emotion, and Color Concepts

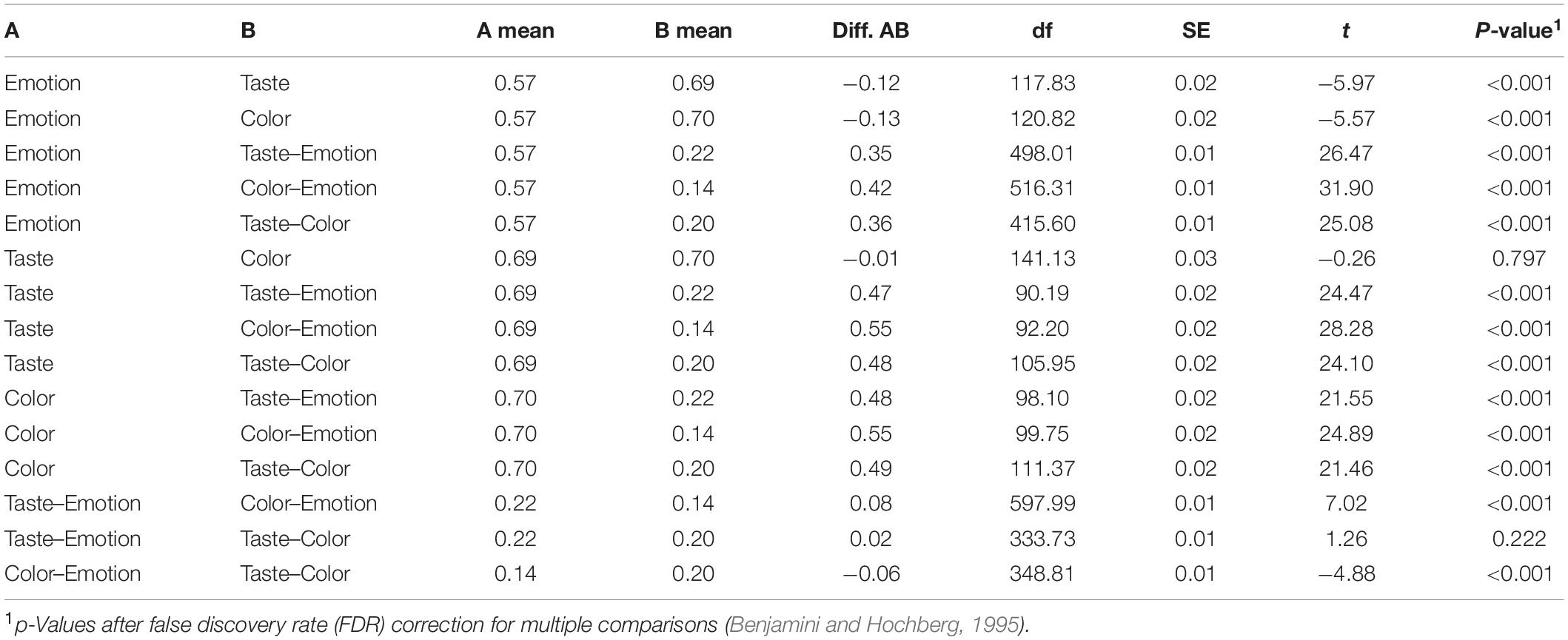

A list of 51 English words drawn from a database of cross-linguistic concepts (List et al., 2018) was used to generate the stimuli for the behavioral task, containing 24 words describing emotion concepts and 13 words describing color concepts which were previously used in study of cross-cultural emotion semantics (Jackson et al., 2019). Also included were 14 descriptive concepts related to taste and food properties. The emotion and color concept groups are the same sets of concepts used in a previous study of emotion semantics (Jackson et al., 2019). The Taste concepts consist of 14 concepts selected by the authors (JA, AL, and AM) relating to taste and food qualities, available within the Concepticon database (List et al., 2016). These consist of four of the five basic tastes (sweet, sour, salty, bitter) as well as several food-related concepts identified within the Concepticon database under the Semantic Field of Food and Drink, with the Ontological Category of Property (List et al., 20161). A full list of the concepts for each of these three groups is available in Table 2.

Ratings of valence and arousal for each of these concepts were obtained from the Warriner Affective database, which contains ratings of valence and arousal for over 14,000 English words, obtained from an online survey of an English-speaking population (Warriner et al., 2013). Concreteness (specificity) ratings for these concepts were obtained from the database of concreteness ratings (Brysbaert et al., 2014). Two of the Taste concepts (“cooked”, “unripe”) were not listed in these databases, so those words have been excluded from their respective analyses. We compared each set of concepts on their average levels of valence, arousal, and concreteness using ANOVA.

Behavioral Experiments

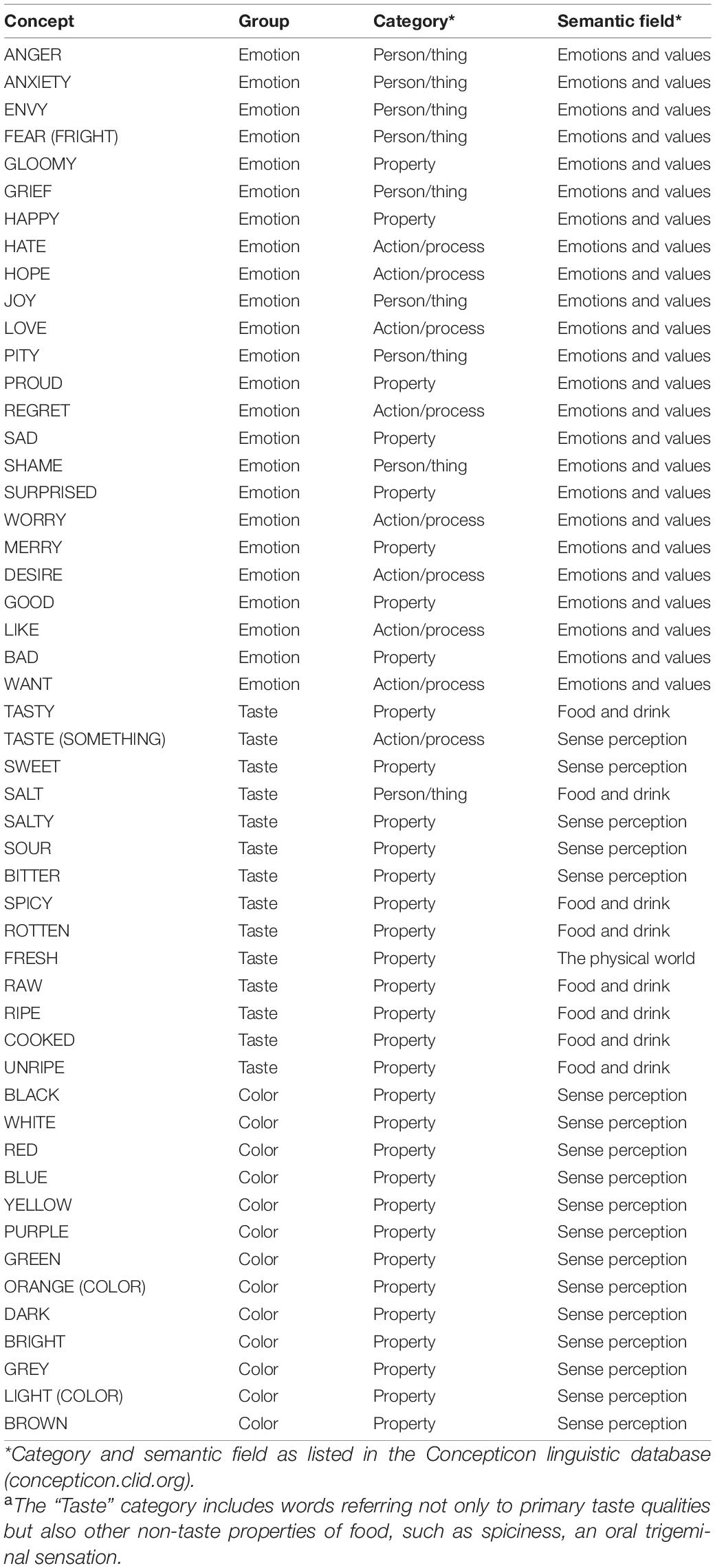

We examined the semantic structure of taste, color, and emotion concepts behaviorally using an odd-one-out triplet task (Hebart et al., 2020) in a group of online participants on the Amazon Mechanical Turk platform. Ethics approval for this study was granted by the NIH Combined Neuroscience Institutional Review Board under 962 protocol number 93-M-0170. The Institutional Review Board of the NIH approved all procedures, and informed consent was obtained for all online subjects. We selected only English-speaking participants from within the continental United States, who indicated that English was their primary language. Within this task, each subject viewed three words on the screen, randomly drawn from the full set of Emotion, Taste, and Color concepts. For each of these word triplets, subjects were instructed to select the word which was the least similar to the other two concepts (Figure 1A). The choice of the odd-one-out indicates which pair of words participants find to be most similar. For instance, the participants might see the words “GREEN,” “BLUE,” and “SOUR.” As “GREEN” and “BLUE” fall within the same conceptual category, they would most likely pick “SOUR” as the odd-one-out. However, participants might also see the words “GREEN,” “ENVY,” and “UNRIPE,” which all belong to different conceptual categories, so the choice is not so straightforward. One advantage of this task is that, rather than explicitly measuring the similarity of two concepts on one dimension, this task measures the overall implicit similarity of these concepts across multiple dimensions. We can then examine how much one or more specific dimensions (e.g., valence and arousal) contribute to that similarity. Rather than explicitly asking how similar two concepts are, without providing any context for that comparison, in this task the third word in the set always serves as the context by which to compare the other two words. By repeatedly varying that context through this task, we are thus sampling across wide variety of different contexts, which allows us to approximate the implicit similarity of these concepts.

Figure 1. The semantic similarity of Taste, Color, and Emotion concepts. (A) In an online behavioral task, we asked participants to select the “odd-one-out” from a series of three-word prompts, each containing concept words related to taste, emotion, or color. (B) The data from this task were used to create a similarity matrix, which indicated the semantic similarity of each pair of concepts. The pairwise semantic similarity values in the matrix reflect the observed probability that neither word in a pair was selected as the odd-one-out during the behavioral task.

Every possible 3-word combination of these 51 words was tested, which was a total of 20,825 triplets. Each full set of triplets was broken into Human Interaction Tasks (HITs), with 25 distinct triplets per HIT. To verify reliability of the behavioral data, we set out to test the full set of 20,825 triplets twice, then compare the resulting behavioral similarity matrices from those two datasets. We did so by calculating the Pearson’s correlation of the upper triangles of both similarity matrices (see section “Behavioral Data Analysis” for more). The order of those triplets was randomized so that subjects across sessions did not encounter triplets in the same order, thus we tested a total of 1666 unique HITs. Subjects were allowed to complete 2 HITs maximum during a testing session, thus some subjects completed 25 unique triplets and others completed 50 unique triplets. The average duration of a participant’s online session across both datasets was 210 s or 3 min 30 s (SD = 128 s). Subjects were compensated $0.50 for each HIT completed. The triplet set each participant observed was drawn from the same full set of triplets without replacement, participants within each session each completed a unique set of triplets and no participant saw the same triplet twice. The sample size for this study was thus the total number of individuals required to complete all HITs (min 833, max 1666). To filter out any potential non-human responses or non-compliant subjects, we included two catch trial triplets within each HIT which consisted of the words PLUS, MINUS, and EQUALS. For these catch trials, subjects were simply instructed to select the word PLUS. After excluding non-compliant HITs, we re-posted the rejected HITs, so that we could collect responses for all triplet combinations. A total of 1814 HITs were completed across both datasets, by 1166 subjects. Using our exclusion criteria, a total of 148 HITs were rejected for non-compliance (approximately 9%). After excluding non-compliant HITs, the data from a total of 1059 online participants was used for the subsequent analyses. Data were pooled across participants, ignoring any participant-specific effects. Given the number of triplets tested, the data from any individual participant constituted at most 0.12% of the total sample.

Behavioral Data Analysis

The responses to the triplet task were used to generate a semantic similarity matrix, as follows (Hebart et al., 2020). For every pair of two words from our full set of 51 concepts, we first identified the number of triplets in which both words were present. From this set of triplets, we identified the number of times either word was selected as the odd-one-out. We subtracted this number from the total number of triplets in the set to obtain the number triplets in which neither word was selected. We then divided this number by the number of triplets in the set to obtain the observed probability that neither concept would be selected as the odd-one-out. Higher numerical values of this index thus reflect a greater degree of semantic similarity between the two concepts.

Concept Groups

We subdivided the upper triangle of the resulting semantic similarity matrix into six sections which included groups of cells containing similarity values comparing concepts within each concept set (Emotion, Taste, and Color groups) or between each concept set (Taste–Emotion, Color–Emotion, and Taste–Color groups; see Figure 1). We then compared the average semantic similarity values within these concept groups in the subsequent analyses.

In our first analysis, we examined whether Taste concepts were more semantically similar to Emotion concepts than Color concepts were to Emotion concepts. For this analysis, we performed an ANOVA comparing the average similarity values of all concept groups. We tested for a main effect of concept group and a main effect of category type (similarity Within concept sets or Between concept sets).

We also performed post hoc t-tests comparing the average similarity values for each concept group against every other, including the average value of the cells in the Taste–Emotion group to the average value of the cells in the Color–Emotion group. These post hoc statistical tests were then false discovery rate-corrected for multiple comparisons, in order to reduce the likelihood of Type-I errors due to performing multiple statistical comparisons (Benjamini and Hochberg, 1995).

Relationship to Valence and Arousal

The core dimensions of emotional concepts are valence and arousal (Russell, 2003; Barrett and Bliss-Moreau, 2009), which have been shown to largely account for the variance within semantic networks of emotional concepts (Jackson et al., 2019). One explanation for the relatively greater similarity of taste and emotion concepts, compared to color and emotion concepts, could be that taste and emotion concepts share a similar conceptual structure of valence and arousal. Thus, the similarity between taste and emotion concepts would be much more related to these shared attributes of valence and arousal than would the similarity between color and emotion concepts, the latter of which lack the shared conceptual similarity related to valence and arousal.

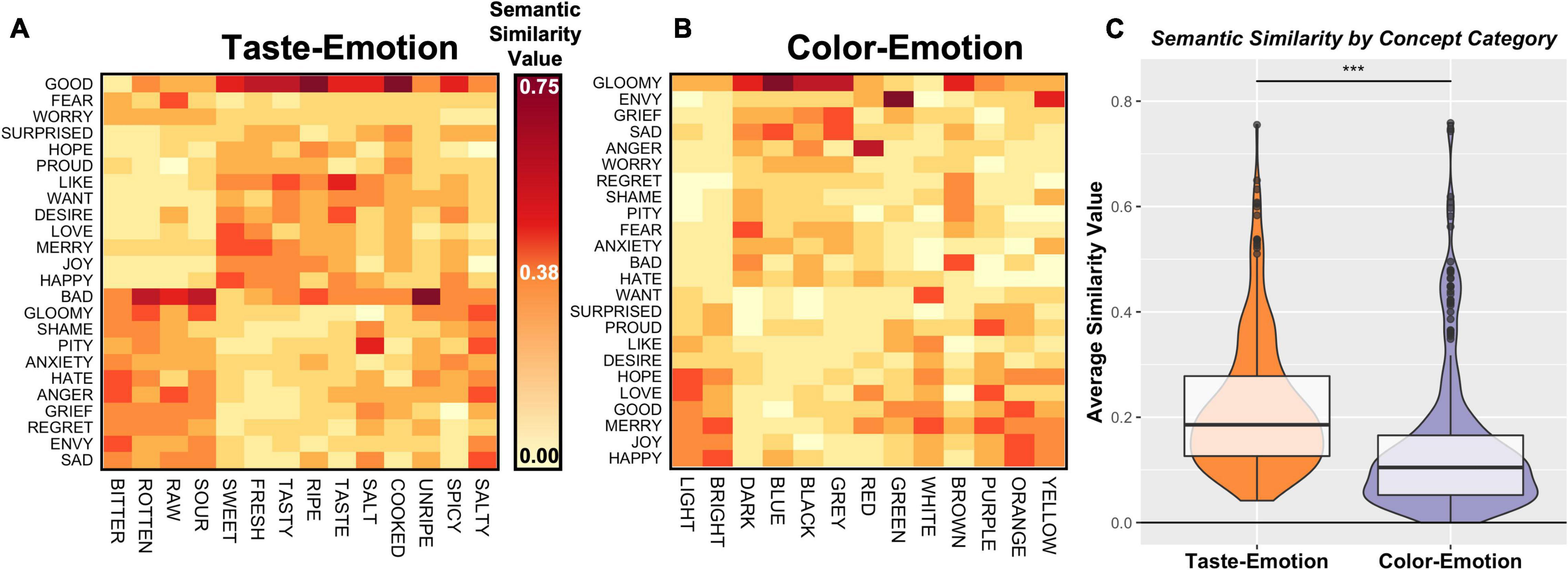

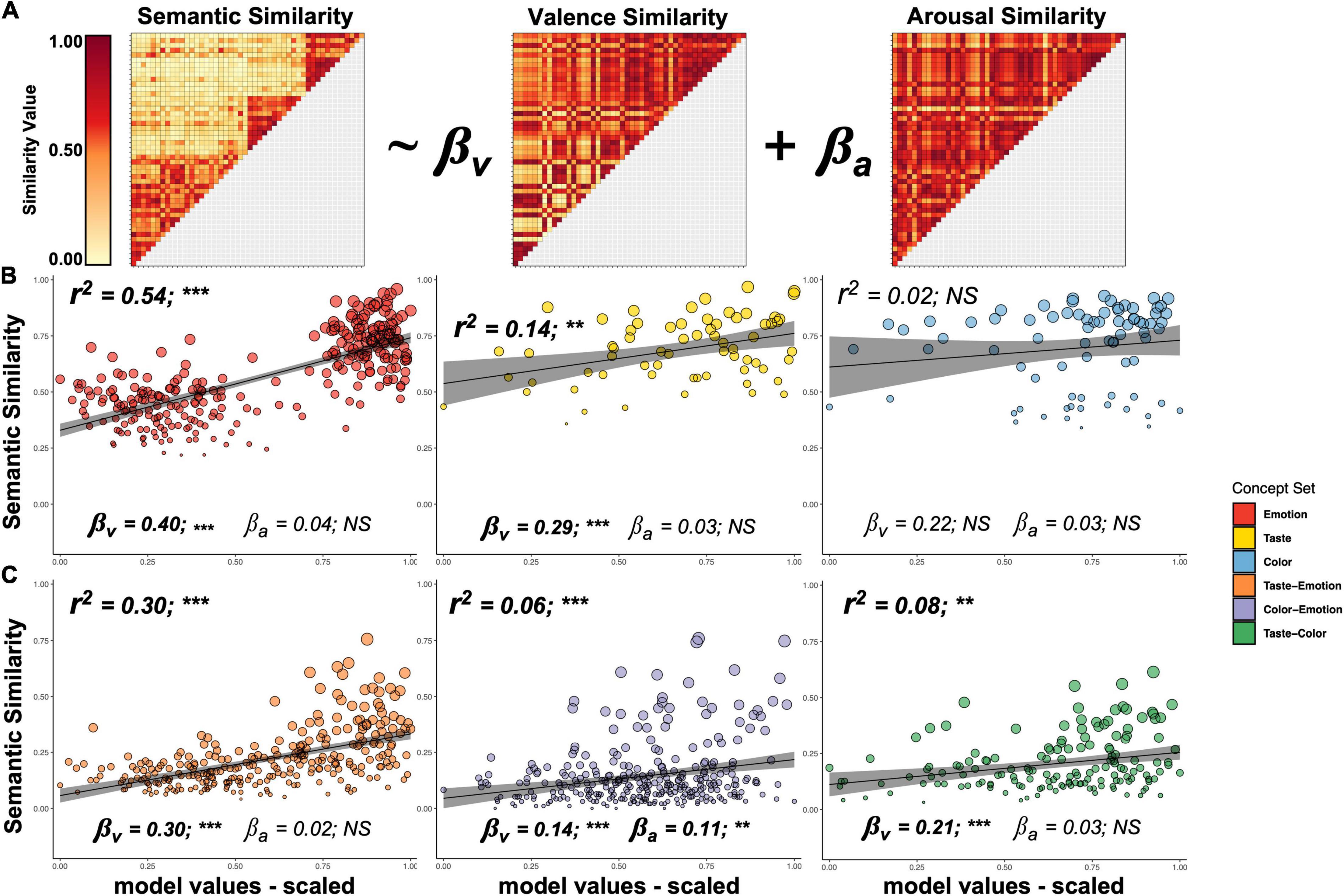

To examine this possibility, we used the ratings from Warriner et al. (2013) database to identify the associated valence and arousal of our taste, color, and emotion concepts. We used these ratings to generate valence and arousal similarity matrices for these concepts, with the same dimensions as the semantic similarity matrices, as follows (see Figure 3). For both valence and arousal ratings, we generated separate distance matrices reflecting the Euclidean distance between each pair of concepts. We then rescaled the distances to values to within a range of 0 and 1 by subtracting the minimum distance from each value and then dividing the resulting number by the range of distance values. To generate the final valence and arousal similarity matrices, we subtracted each of these scaled distance values from 1. We subdivided these valence and arousal matrices into six concept groups as we did above with the semantic similarity matrix.

We included these valence and arousal similarity matrices as regressors in general linear models (GLMs) to examine how similarity in valence and arousal is related to semantic similarity of these concepts. We performed an ANCOVA to identify overall effects of valence and arousal as well as significant group × valence and group × arousal interactions, which would signify that the association between similarity in hedonic valence or arousal and semantic similarity differs across concept groups. We then performed post hoc tests to examine the valence and arousal relationships for each concept group individually using a GLMs. We specifically compared Taste–Emotion and Color–Emotion groups using ANCOVA to determine if the relationship between valence similarity and semantic similarity differed significantly between these two groups.

We tested the significance of each of these GLMs using a permutation test procedure, in which we randomly permuted the semantic similarity values used as the dependent variable 1000 times before application of the GLM. This allowed us to generate an empirical distribution of random r2 values, which we then compared to the observed GLM r2 value. The resulting p-value was thus the proportion of values in this empirical distribution greater than our observed value.

Results

Behavioral Task Results

The three concept groups differed significantly in average concreteness (p < 0.001) and arousal (p < 0.001), but not valence (p = 0.31). The average triplet response time across both sessions was 5.0 s (SD = 7.5 s). Thus, the average length of a HIT (25 triplets) was approximately 125 s. The average participant session (including 1 or 2 HITs) was 210 s (SD = 128 s). Data from the two complete sets of triplets, which were collected on separate days, were used to construct a pairwise similarity matrix containing values for all 51 × 51 concept pairs. The correlation between the upper triangles of these two pairwise similarity matrices was extremely high (r = 0.96, p < 0.001), indicating these measurements were extremely stable across multiple groups of participants. The two similarity matrices were averaged together for the subsequent analyses.

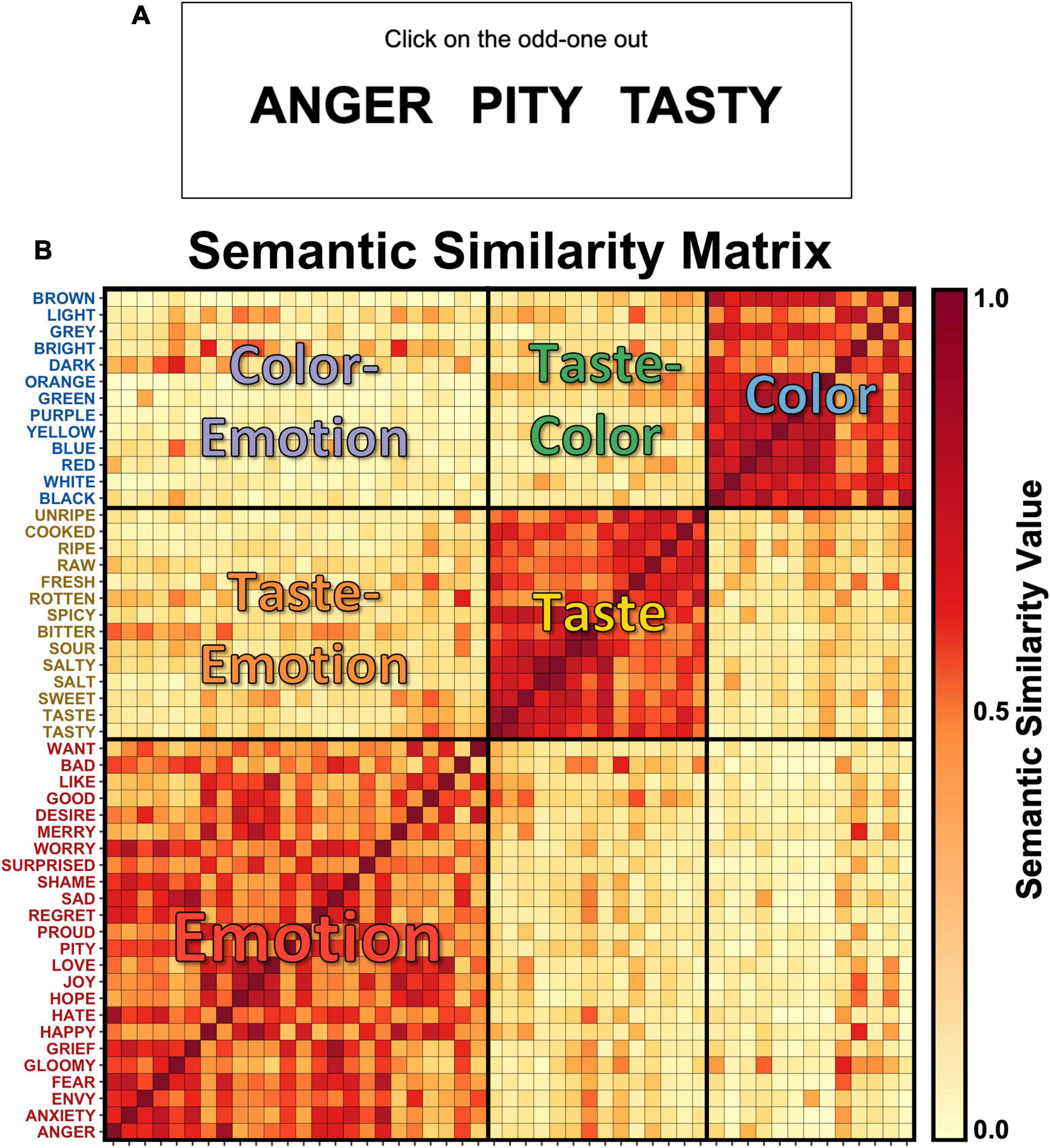

As shown in the color-coded heatmap in Figure 1, the individual concepts are tightly clustered within their respective semantic categories. To examine this structure more closely, we next selected the specific arrays within the larger matrix which corresponded to the three within-category partitions (Emotion, Taste, Color) and the three between-category partitions (Taste–Emotion, Color–Emotion, and Taste–Color; see Figure 1B). We then compared the average similarity of the cells in those groups using ANOVA. We observed a main effect of category type, as similarity within category was significantly greater than between-category similarity (p < 0.001). Notably, planned post hoc t-tests identified that, when comparing between category partitions, the average similarity of taste and emotion concepts was significantly greater than the average similarity of color and emotion concepts (t = 7.0, p < 0.001; Figure 2; see Table 1 for full set of pairwise comparisons), indicating that pairings of taste and emotion concepts were judged to have greater semantic similarity than pairings of color and emotion concepts. This finding held both for the full set of taste concepts, as well as pairings of the four basic tastes and emotion concepts (t = 7.4, p < 0.001).

Figure 2. The semantic similarity of Taste and Emotion concept pairs is greater than that of Color and Emotion Concept pairs. We compared the similarity of (A) Taste and Emotion concept pairs to (B) Color and Emotion concept pairs by selecting the relevant cells of the matrix and comparing the average similarity values within. (C) The results of this analysis show that participants judged taste concepts to have greater conceptual similarity to emotion concepts than did color concepts. ***p < 0.001.

Figure 3. Relationship between semantic similarity and similarity in valence and arousal. (A) We compared the pairwise semantic similarity between concepts to those concepts’ similarity in valence and arousal using a general linear model. (B) The similarity of Emotion and Taste concepts by themselves, but not Color concepts, was related to their similarity in hedonic valence. (C) This relationship with valence was also present in the similarity of Taste–Emotion, Color–Emotion, and Taste–Color concept pairs. βv, beta-coefficient for valence similarity; βv, beta-coefficient for arousal similarity.***p < 0.001; **p < 0.01; NSp > 0.05.

Relationship to Valence and Arousal

Using the Warriner et al. (2013) database, we generated separate pairwise matrices of the similarity of Emotion, Taste, or Color concepts in valence and arousal (Figure 3A). We used the relevant sub-sections of the valence and arousal matrices as regressors in GLMs, to predict the similarity values in our behavioral similarity matrix. An ANCOVA of data from all groups revealed a significant effect of group (p < 0.001), valence (p < 0.001), arousal (p < 0.007), and a significant interaction of group × valence (p < 0.001), indicating that the relationship between conceptual similarity and similarity of valence differed across concept groups. We did not observe a significant group × arousal interaction effect (p = 0.57). At the individual concept group level, we observed that the semantic similarity of Emotion concepts was significantly related to their similarity in valence (βv = 0.40, p < 0.001; see Figure 3B) as it was for Taste concepts (βv = 0.29, p < 0.005) but not Color concepts (βv = 0.22, p = 0.25). For the between-category concepts, we observed that the similarity of Taste–Emotion concepts was also highly related to their similarity in valence (βv = 0.30, p < 0.001; Figure 3C), as was the similarity of Taste–Color concepts (βv = 0.21, p < 0.001) and, to a somewhat lesser degree, to Color–Emotion concepts (βv = 0.14, p < 0.001). Importantly, when comparing the valence relationship between Taste–Emotion and Color–Emotion groups, we observed a significant group × valence interaction (p < 0.001), as the relationship between semantic similarity and valence similarity was significantly greater for Taste–Emotion than Color–Emotion (t = 3.3, p < 0.001). Again, this finding held both for the full set of Taste–Emotion concept pairs, as well as for pairings of the four primary taste qualities and emotion concepts (t = 3.4, p < 0.001). Similarity in arousal scores was not significantly related to conceptual similarity in any of the concept groups except for the Color–Emotion group (βa = 0.11, p = 0.004).

Discussion

We sought to understand the prevalence of verbal taste metaphors for emotional concepts by using a behavioral task designed to test the semantic similarity of a variety of concepts related to taste, emotion, and color. We found that taste concepts are judged to be more semantically similar to emotion concepts than are color concepts, another rich and ubiquitous source of metaphors for emotional concepts (Sutton and Altarriba, 2016; Jonauskaite et al., 2020).

We next examined the associated valence and arousal of these concepts and confirmed that the similarity of emotion concepts was highly related to their similarity in valence, a relationship which accounted for over half of the variability in their semantic similarity. This finding underscores the importance of hedonic valence as one of the core features of emotional experience (Russell, 2003; Barrett and Bliss-Moreau, 2009) and echoes the prior finding that hedonic valence and arousal represent a source of universal structure for emotion semantics (Jackson et al., 2019). We also found that the similarity of taste and emotion concepts was also significantly related to their similarity in valence, which accounted for nearly 30% of the variance in the distribution (see also Zhou and Tse, 2020). This relationship between valence and semantic similarity was also significantly greater between taste and emotion concepts than between color and emotion concepts. These findings support our prior prediction that a shared ability to communicate valence underlies the similarity in conceptual structure between these two distinct conceptual domains.

Conceptual metaphor and grounded cognition theories imply that these conceptual domains are tied together not just in the structure of the English language, but in the structure of cognition as well (Lakoff and Johnson, 1980; Barsalou, 2008; Winter, 2016; Speed and Majid, 2020). Thus, the association between taste and emotion concepts may be due, in part, to the recruitment of similar neural structures involved in processing these separate conceptual domains, such as the insular cortex (Singer et al., 2009; Small, 2010; Avery et al., 2017, 2020). In support of this possibility, an fMRI study of metaphorical statements identified that when metaphorical taste expressions were substituted for emotion expressions, they led to increased activation of the insular and orbitofrontal cortices (Citron and Goldberg, 2014), two regions commonly associated with the perception of taste and inferred taste when viewing pictures of appetizing foods (Simmons et al., 2013b,2014; Avery et al., 2020, 2021), as well as the amygdala and hippocampus. This suggests that the substitution of more concrete metaphorical expressions makes these statements more emotionally engaging and thus better mechanisms for conveying their intended emotional information.

Interestingly, though the similarity of color concepts was not related to valence or arousal, the similarity between color concepts and emotion concepts was related to these factors, though to a degree much less than taste and emotion concepts. Previous studies have also identified valence and arousal related associations between color and emotion words (Sutton and Altarriba, 2016). Other studies suggest that this pattern is not limited to linguistic metaphors, but that similarity in hedonic valence may underly cross-modal correspondence between color and music (Palmer et al., 2013), color and taste (Wan et al., 2014), as well as taste and abstract geometric shapes (Velasco et al., 2016; Wang and Spence, 2017). Thus, it is not surprising that, in the present study, we also identified that the similarity of taste and color concepts was also related to their similarity in valence. Taken together, these results suggest that a similarity in hedonic valence may be a key component of any metaphorical association with emotion concepts, as well as other concept groups that contain a high affective internal structure.

One limitation of the present study is that our behavioral data as well as the valence and arousal norms we employed in the analysis of this data (Warriner et al., 2013) were both obtained from western, English-speaking populations. Taste–emotion metaphors are present across multiple languages, however (for example, see Zhou and Tse, 2020), implying the association between these two domains might be a universal feature of human language. Thus, it will be important for future studies to obtain more widespread cross-cultural measures of these norms and associations (see Jonauskaite et al., 2020) in order to verify their similarity across human languages (see Kemmerer, 2019 for an in-depth discussion of culture and conceptual structure). Future studies on the association between sensory and emotional concepts using this task design would also benefit from a broader group of sensory concept categories, such as olfaction, which also has a close association with emotion at the neural level (Zald and Pardo, 1997; Rolls, 2008; Kontaris et al., 2020).

Some of the concepts used in the “taste” category in this study have multiple sensory associations beyond strictly taste, such as olfactory, tactile, or oral trigeminal qualities, especially the food-related properties such as “spicy” and “rotten.” Within chemosensory research, the concept of “taste” refers strictly to the five basic tastes (sweet, salty, sour, bitter, and umami/savory). However, within this study, we sought to investigate the more common concept of taste as present within the English language, which is often interchangeable with flavor (the combination of taste and smell). Notably, human neuroimaging studies have identified that food-related properties such as flavor, texture, and spiciness recruit the same gustatory regions of the insula (de Araujo and Rolls, 2004; Seubert et al., 2015; Kawakami et al., 2016) that are involved in the response to basic tastes (Avery et al., 2020). As this region also responds strongly to visual representations of foods in a taste–quality-specific manner (Avery et al., 2021), the conceptual processing of these various food-related properties likely involves the same neural substrate in the brain. Importantly, we identified that the core results of this study, that of greater semantic similarity for Taste–Emotion pairings and the greater relationship between semantic similarity and valence similarity for Taste–Emotion pairings, held for the four primary taste qualities as well as for the full set of Taste concepts.

Conceptual metaphors, in general, are used as a method for communicating one concept through another related concept (Lakoff and Johnson, 1980). When the concepts to be conveyed are more abstract and internal, such as emotion concepts, conceptual metaphors from more concrete domains, such as taste or color, may be used to ground the concepts in experiences from our external shared environment (Lakoff and Johnson, 1980, 1999; Barsalou, 2008; for a review of conceptual grounding in touch, taste, and smell see Speed and Majid, 2020). This process of conceptual grounding may then result in a conceptual structure for these abstract concepts which is more easily shared between individuals. Our findings suggest that within this process of conceptual metaphor generation, we use the core affective properties of valence and arousal as a way of bridging the divide between concepts relating to our shared sensory environment and concepts which relate to our internal experience.

Data Availability Statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found below: https://osf.io/njch2/.

Ethics Statement

The studies involving human participants were reviewed and approved by the NIH Combined Neuroscience Institutional Review Board. The patients/participants provided their written informed consent to participate in this study.

Author Contributions

JA and AM designed the research. JA, AL, and MC performed the research. JA analyzed the data. JA, AL, MC, and AM wrote the manuscript. All authors contributed to the article and approved the submitted version.

Funding

This study was supported by the Intramural Research Program of the National Institute of Mental Health, National Institutes of Health, and it was conducted under NIH Clinical Study Protocol 93-M-0170 (ZIA MH002588). Clinical-trials.gov, ID: NCT00001360.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Footnotes

References

Avery, J. A., Gotts, S. J., Kerr, K. L., Burrows, K., Ingeholm, J. E., Bodurka, J., et al. (2017). Convergent gustatory and viscerosensory processing in the human dorsal mid-insula. Hum. Brain Mapp. 38, 2150–2164. doi: 10.1002/hbm.23510

Avery, J. A., Liu, A. G., Ingeholm, J. E., Gotts, S. J., and Martin, A. (2021). Viewing images of foods evokes taste quality-specific activity in gustatory insular cortex. Proc. Natl. Acad. Sci. U.S.A. 118:e2010932118. doi: 10.1073/pnas.2010932118

Avery, J. A., Liu, A. G., Ingeholm, J. E., Riddell, C. D., Gotts, S. J., and Martin, A. (2020). Taste quality representation in the human brain. J. Neurosci. 40, 1042–1052.

Barrett, L. F., and Bliss-Moreau, E. (2009). Affect as a psychological primitive. Adv. Exp. Soc. Psychol. 41, 167–218.

Barrett, L. F., and Simmons, W. K. (2015). Interoceptive predictions in the brain. Nat. Rev. Neurosci. 16, 419–429.

Benjamini, Y., and Hochberg, Y. (1995). Controlling the false discovery rate: a practical and powerful approach to multiple testing. J. R. Statist. Soc. Seri. B 57, 289–300.

Brysbaert, M., Warriner, A. B., and Kuperman, V. (2014). Concreteness ratings for 40 thousand generally known English word lemmas. Behav. Res. Methods 46, 904–911.

Citron, F. M. M., and Goldberg, A. E. (2014). Metaphorical sentences are more emotionally engaging than their literal counterparts. J. Cogn. Neurosci. 26, 2585–2595. doi: 10.1162/jocn_a_00654

Craig, A. D. (2003). Interoception: the sense of the physiological condition of the body. Curr. Opin. Neurobiol. 13, 500–505.

de Araujo, I. E., and Rolls, E. T. (2004). Representation in the human brain of food texture and oral fat. J. Neurosci. 24, 3086–3093.

Hebart, M. N., Zheng, C. Y., Pereira, F., and Baker, C. I. (2020). Revealing the multidimensional mental representations of natural objects underlying human similarity judgements. Nat. Hum. Behav. 4, 1–20. doi: 10.1038/s41562-020-00951-3

Jackson, J. C., Watts, J., Henry, T. R., List, J.-M., Forkel, R., Mucha, P. J., et al. (2019). Emotion semantics show both cultural variation and universal structure. Science (New York, NY) 366, 1517–1522. doi: 10.1126/science.aaw8160

Jonauskaite, D., Abu-Akel, A., Dael, N., Oberfeld, D., Abdel-Khalek, A. M., Al-Rasheed, A. S., et al. (2020). Universal patterns in color-emotion associations are further shaped by linguistic and geographic proximity. Psychol. Sci. 31, 1245–1260. doi: 10.1177/0956797620948810

Kawakami, S., Sato, H., Sasaki, A. T., Tanabe, H. C., Yoshida, Y., Saito, M., et al. (2016). The brain mechanisms underlying the perception of pungent taste of capsaicin and the subsequent autonomic responses. Front. Hum. Neurosci. 9:720. doi: 10.3389/fnhum.2015.00720

Kemmerer, D. (2019). Concepts in the Brain: The View From Cross-Linguistic Diversity. Oxford: Oxford University Press.

Kontaris, I., East, B. S., and Wilson, D. A. (2020). Behavioral and neurobiological convergence of odor, mood and emotion: a review. Front. Behav. Neurosci. 14:35. doi: 10.3389/fnbeh.2020.00035

Lakoff, G., and Johnson, M. (1999). Philosophy in the Flesh: The Embodied Mind and Its Challenge to Western Thought. New York: Basic Books.

List, J. M., Cysouw, M., and Forkel, R. (2016). “Concepticon: a resource for the linking of concept lists,” in Proceedings of the Tenth International Conference on Language Resources and Evaluation (LREC’16), 2393–2400.

List, J. M., Greenhill, S. J., Anderson, C., Mayer, T., Tresoldi, T., and Forkel, R. (2018). CLICS2: An improved database of cross-linguistic colexifications assembling lexical data with the help of cross-linguistic data formats. Linguist. Typol. 22, 277–306.

Martin, A. (2016). GRAPES - grounding representations in action, perception, and emotion systems: how object properties and categories are represented in the human brain. Psychon. Bull. Rev. 23, 979–990. doi: 10.3758/s13423-015-0842-3

Palmer, S. E., Schloss, K. B., Xu, Z., and Prado-León, L. R. (2013). Music-color associations are mediated by emotion. Proc. Natl. Acad. Sci. U.S.A. 110, 8836–8841. doi: 10.1073/pnas.1212562110

Rolls, E. (2008). Functions of the orbitofrontal and pregenual cingulate cortex in taste, olfaction, appetite and emotion. Acta Physiol. Hung. 95, 131–164. doi: 10.1556/APhysiol.95.2008.2.1

Russell, J. A. (2003). Core affect and the psychological construction of emotion. Psychol. Rev. 110, 145–172.

Sakamoto, M., and Watanabe, J. (2016). Cross-modal associations between sounds and drink tastes/textures: a study with spontaneous production of sound-symbolic words. Chem. Sen. 41, 197–203. doi: 10.1093/chemse/bjv078

Seubert, J., Ohla, K., Yokomukai, Y., Kellermann, T., and Lundström, J. N. (2015). Superadditive opercular activation to food flavor is mediated by enhanced temporal and limbic coupling. Hum. Brain Mapp. 36, 1662–1676. doi: 10.1002/hbm.22728

Simmons, W. K., Avery, J. A., Barcalow, J. C., Bodurka, J., Drevets, W. C., and Bellgowan, P. (2013a). Keeping the body in mind: insula functional organization and functional connectivity integrate interoceptive, exteroceptive, and emotional awareness. Hum. Brain Mapp. 34, 2944–2958. doi: 10.1002/hbm.22113

Simmons, W. K., Rapuano, K. M., Kallman, S. J., Ingeholm, J. E., Miller, B., Gotts, S. J., et al. (2013b). Category-specific integration of homeostatic signals in caudal but not rostral human insula. Nat. Neurosci. 16, 1551–1552. doi: 10.1038/nn.3535

Simmons, W. K., Rapuano, K. M., Ingeholm, J. E., Avery, J., Kallman, S., Hall, K. D., et al. (2014). The ventral pallidum and orbitofrontal cortex support food pleasantness inferences. Brain Struct. Funct. 219, 473–483. doi: 10.1007/s00429-013-0511-0

Singer, T., Critchley, H. D., and Preuschoff, K. (2009). A common role of insula in feelings, empathy and uncertainty. Trends Cogn. Sci. 13, 334–340. doi: 10.1016/j.tics.2009.05.001

Small, D. M., Gregory, M. D., Mak, Y. E., Gitelman, D., Mesulam, M. M., and Parrish, T. (2003). Dissociation of neural representation of intensity and affective valuation in human gustation. Neuron 39, 701–711. doi: 10.1016/s0896-6273(03)00467-7

Speed, L. J., and Majid, A. (2020). Grounding language in the neglected senses of touch, taste, and smell. Cogn. Neuropsychol. 37, 363–392. doi: 10.1080/02643294.2019.1623188

Sutton, T. M., and Altarriba, J. (2016). Color associations to emotion and emotion-laden words: a collection of norms for stimulus construction and selection. Behav. Res. Methods 48, 686–728. doi: 10.3758/s13428-015-0598-8

Velasco, C., Woods, A. T., Marks, L. E., Cheok, A. D., and Spence, C. (2016). The semantic basis of taste-shape associations. PeerJ 4:e1644. doi: 10.7717/peerj.1644

Wan, X., Woods, A. T., van den Bosch, J. J., McKenzie, K. J., Velasco, C., and Spence, C. (2014). Cross-cultural differences in crossmodal correspondences between basic tastes and visual features. Front. Psychol. 5:1365. doi: 10.3389/fpsyg.2014.01365

Wang, Q. J., and Spence, C. (2017). “A sweet smile”: the modulatory role of emotion in how extrinsic factors influence taste evaluation. Cogn. Emot. 32, 1052–1061. doi: 10.1080/02699931.2017.1386623

Warriner, A. B., Kuperman, V., and Brysbaert, M. (2013). Norms of valence, arousal, and dominance for 13,915 English lemmas. Behav. Res. Methods 45, 1191–1207. doi: 10.3758/s13428-012-0314-x

Winter, B. (2016). Taste and smell words form an affectively loaded and emotionally flexible part of the English lexicon. Lang. Cogn. Neurosci. 31, 975–988.

Zald, D. H., and Pardo, J. V. (1997). Emotion, olfaction, and the human amygdala: amygdala activation during aversive olfactory stimulation. Proc. Natl. Acad. Sci. U.S.A. 94, 4119–4124.

Keywords: taste, emotion, metaphor, cognition, grounded cognition

Citation: Avery JA, Liu AG, Carrington M and Martin A (2022) Taste Metaphors Ground Emotion Concepts Through the Shared Attribute of Valence. Front. Psychol. 13:938663. doi: 10.3389/fpsyg.2022.938663

Received: 07 May 2022; Accepted: 24 June 2022;

Published: 12 July 2022.

Edited by:

Carlos Cornejo, Pontificia Universidad Católica de Chile, ChileReviewed by:

Greg Smutzer, Temple University, United StatesKathrin Ohla, Research Center Jülich, Germany

Copyright © 2022 Avery, Liu, Carrington and Martin. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jason A. Avery, amFzb24uYXZlcnlAbmloLmdvdg==, orcid.org/0000-0003-4097-2819

Jason A. Avery

Jason A. Avery Alexander G. Liu

Alexander G. Liu Madeline Carrington

Madeline Carrington Alex Martin

Alex Martin