94% of researchers rate our articles as excellent or good

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.

Find out more

ORIGINAL RESEARCH article

Front. Psychol., 03 August 2022

Sec. Human-Media Interaction

Volume 13 - 2022 | https://doi.org/10.3389/fpsyg.2022.920844

Recent advances in automation technology have increased the opportunity for collaboration between humans and multiple autonomous systems such as robots and self-driving cars. In research on autonomous system collaboration, the trust users have in autonomous systems is an important topic. Previous research suggests that the trust built by observing a task can be transferred to other tasks. However, such research did not focus on trust in multiple different devices but in one device or several of the same devices. Thus, we do not know how trust changes in an environment involving the operation of multiple different devices such as a construction site. We investigated whether trust can be transferred among multiple different devices, and investigated the effect of two factors: the similarity among multiple devices and the agency attributed to each device, on trust transfer among multiple devices. We found that the trust a user has in a device can be transferred to other devices and that attributing different agencies to each device can clarify the distinction among devices, preventing trust from transferring.

With the evolution of automation technology, autonomous systems, such as robots and self-driving cars, are becoming a familiar part of people's lives, and the opportunities for people and systems to work together are increasing. There are a variety of ways in which people and autonomous systems can collaborate, from having a person and an arm robot physically assemble parts together to having a person monitor a system while leaving the work to the system. Trust plays an important role in the collaboration between humans and autonomous systems because a system that cannot be trusted may lead to humans avoiding the system due to excessive risk perception of the work and miscommunication of intentions (Oleson et al., 2011).

Therefore, methods for estimating the trust people have in autonomous systems (John and Neville, 1992; Chen et al., 2020; Kohn et al., 2021) and methods for improving trust based on the estimated trust have been studied by investigating how people change their trust in autonomous systems (Floyd et al., 2015). Floyd et al. (2015) developed an algorithm to infer trust in oneself and generate more “trustworthy” behavior in robots working with humans. Soh et al. (2020) showed that when the same robot performs different tasks, the trust acquired in the previous task is transferred to the later task, a phenomenon called “multi-task trust transfer.” It was also shown that the degree of trust transfer is greater when both of the tasks are more similar and when the latter task is easier than the previous task.

Soh et al. have focused on the transition of trust in the same type of device. However, there are situations where multiple devices with different functions and shapes are operated (Tan et al., 2020, 2021). And, there are a lot of examples from the industrial situation such as the combination of trucks and excavators on a construction site (Stentz et al., 1999) to the daily situation such as the combination of smartphones and smartwatches (Chen et al., 2014). Also, even though multiple devices don't exist simultaneously, we could face the transition of trust among multiple devices such as when we update a device to a new one. Therefore, it is necessary to understand not only the transition of trust in the same type of device but also how trust transitions among multiple devices with different functions and shapes. We call the transition of trust among multiple devices “multi-device trust transfer (MDTT),” and investigated whether MDTT can occur and what characteristics it has through an experiment. This paper makes the following contributions:

• We conducted a human-subjects study and found that the trust a user has in a device can be transferred to other devices.

• We also found that creating different agents for each device can enhance the distinction among the devices, preventing trust from transferring.

The study of the trust humans have in robots is an important topic in human-robot interaction. However, trust is a multi-dimensional concept that has varying definitions even within the same field (Xie et al., 2019). For example, Gambetta (2000) defined trust as the subjective probability with which an agent assesses whether another agent will perform a particular action. Jones and Marsh (1997) broadly defined trust as being in three categories: basic trust, general trust, and contextual trust. Basic trust is the trust subject X has regardless of the object and is determined by X's experience. General trust is the trust X has in the other party Y regardless of the context. In a collaborative environment with an autonomous system, context is the task, and general trust is the trust X has in Y regardless of the task. Contextual trust is the trust X has in Y for a specific context, and in a collaborative environment, it is the trust X has in Y in task α. We focused on general trust and contextual trust to investigate the trust users have in an autonomous system.

The success or failure of a task affects trust (Chen et al., 2020). When a task succeeds, trust generally increases, and when it fails, trust decreases. Therefore, by repeating the same task, the user's trust in the system will converge to a value corresponding to the system's ability to perform the task. This process of adjusting the user's trust to a value suitable for the performance of the system is called “trust calibration” (Lee and See, 2004). When the user's trust is not properly calibrated and is lower than the actual performance of the robot, it is called “distrust,” and when it is higher than the actual performance, it is called “overtrust” (Lee and See, 2004). The uncalibrated state has various disadvantages (Oleson et al., 2011; Ullrich et al., 2021). For example, Freedy et al. (2007) showed that humans more frequently intervene with robots in the distrust state, resulting in longer work times. Therefore, several methods of facilitating calibration have been proposed (McGuirl and Sarter, 2006; Verberne et al., 2012; Okamura and Yamada, 2020; Zhang et al., 2020; Lebiere et al., 2021). For example, Wang et al. (2016) improved trust and performance by increasing the transparency of a robot using automatically generated descriptions of the robot.

The observation of the success or failure of a task transfers not only to the observed task but also to different tasks. Soh et al. (2020) called this phenomenon “trust transfer” and investigated the effect of the differences in the relationships between tasks on it. In their experiment, they asked participants to observe a task in which an autonomous robot grasps an object and investigated how the user's trust in the same robot performing a different task changes before and after the observation.

Two factors, similarity between tasks and difference in difficulty, were varied in their experiment. For the similarity-between-tasks factor, they prepared two conditions of similar and dissimilar condition and used two types of tasks, a grasping task to grasp an object and a navigation task. In the similar condition, they evaluated the user's trust in the robot for the grasping task after observing the same task. Whereas, as the dissimilar condition, they evaluated it in the navigation task after observing the grasping task. For the difference-in-difficulty factor, they prepared two levels for each type of task, easy and difficult, by varying the ease of grasping the object in the grasping task and the presence or absence of an accompanying person in the navigation task.

The results of their experiment indicated that the degree of trust transfer was greater for the same type of task than for different tasks. It was also shown that observing the success of a task with high difficulty was transferred to increase the trust in a task with low difficulty. Soh et al. (2020) investigated trust transfer when the same device performed multiple tasks but did not investigate trust transfer among multiple devices. We investigated multi-device trust transfer (MDTT), which is trust transfer among multiple devices.

In human-agent interaction, it has been shown that agency, the perception of intentionality in an anthropomorphic artifact, can transfer among multiple devices (Ogawa and Ono, 2008; Syrdal et al., 2009; Kim et al., 2013). In a system design in which a virtual agent, such as a computer graphics (CG) character, migrates to multiple devices, the agency of the virtual agent is also migrated to those devices. This agent is called a migrate agent.

Imai et al. (1999) have shown that using the ITACO system, in which the same on-screen agent between a display attached to the robot and a laptop, increased the impression of the robot among participants who interacted with the agent on the laptop. Reig et al. (2020) proposed a design in which a personal AI assistant on a user's smartphone transfers to service robots in public places. They compared their proposed design with that in which the robot does not adapt to the user and in which the robot adapts to the user by storing information about the user and found that users prefer the design where their personal AI assistant migrates to the robot.

Even though studies have suggested that agency can enhance trust in a system (Waytz et al., 2014; Large et al., 2019), previous research on migrate agents did not sufficiently investigate the effect of migration in terms of trust. Therefore, it is possible that the trust a user has in a migrate agent may also be transferred to the migrated devices along with the transfer of agency. Therefore, we also investigated MDTT when using a migrate agent and the relationship between agency and MDTT.

We now describe our experiment designed to investigate the characteristics of MDTT. In particular, we formulated the following hypotheses and verified them through the experiment.

Hypothesis 1 (H1): When there are multiple devices and each device performs a task, the observation of one device's successful completion of the task will affect the trust in the other devices.

Research has been conducted on trust when several of the same devices are used, and it has been found that the behavior of one device affects the entire group (Gao et al., 2013; Fooladi Mahani et al., 2020). This suggests that trust may be transferred even among multiple devices with different functions and shapes.

Hypothesis 2 (H2): The degree of trust transfer is greater between similar devices than between devices with different characteristics.

It is thought that the more similar a device is to the observed device, the more likely trust will be transferred. For example, if we observe a self-driving car, we are more likely to apply that experience to another type of self-driving car, such as a bus, than to an autonomous drone.

Hypothesis 3 (H3): Using a migrate agent will increase the degree of trust transfer compared with not using it.

When agency transitions between devices using a migrate agent, it is thought that the user treats the source device and destination device as the same entity. As a result, trust is expected to be transferred along with the transition of the agency.

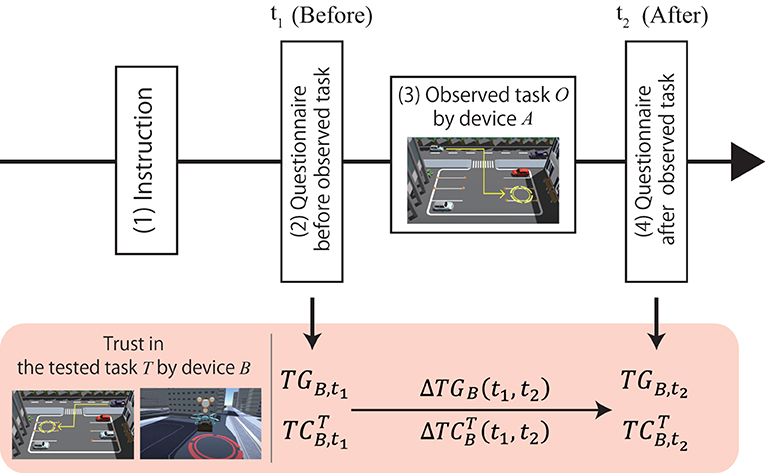

In this experiment, we used 3D CG to create videos of several different devices performing a task and investigated the characteristics of MDTT by measuring the trust in another device before and after the participants observed the video of one device performing the task. Each participant watched two videos depicting different devices performing a task. The task to be watched first is called the observed task, and the task to be watched after the observed task is called the tested task. Figure 1 shows the flow of this experiment, and the following sections provide details of the experimental settings. We measured trust transfer by evaluating the change in the trust in the device performing the tested task before and after the participants watch the observed task.

Figure 1. Experimental procedure proceeded from left to right, and tn at top indicates when participants answered questionnaire. In lower part, correspondence between time and trust value is shown.

We prepared the following three factors.

• Time: Time of evaluating trust

This is a factor to investigate the existence of MDTT (H1). Each condition in the time factor indicates the time when to evaluate the trust participants have in a device performing the tested task. There are two conditions: the “Before” condition, under which participants have not seen the observed task yet, and “After” condition under which participants have seen the observed task.

• Device: Similarity of devices

This is a factor to investigate the effect of device similarity on MDTT (H2). We prepared a “Similar-device” condition, under which the device performing the tested task has similar functionality to the device performing the observed task, and “Dissimilar-device” condition under which the device differs in functionality.

• Agent: Type of agents that migrate to devices

This is a factor to investigate the effect of agency transition on MDTT (H3). We prepared three conditions: the “No-agent” condition, under which no agent is created, “With-migrate-agent” condition, under which a migrating agent transfers among devices and performs two tasks, and the “With-agent” condition under which there is an agent for each device without transition.

To take into account the effect of viewing experience on trust, we designed the device and agent factors as between-subjects factors.

The two tasks used in this experiment were a driving task and a drone task. The driving task involves a self-driving car parking in a parking lot, as shown in Figure 2A. The self-driving car at the top of the screen will attempt to park in the parking space indicated with the yellow circle. Cones are placed at the four corners of the parking space, and if the car hits one of these cones, the task will fail. The drone task, as shown in Figure 2B, involves a drone carrying luggage from the start point inside the blue circle through the city to the goal inside the red circles. If the drone collides with a building or another elevated structure during transportation, the task is considered a failure. We created videos of successful and failed scenes for each task.

The driving task was used as the observed task under all conditions and as the tested task under the Similar-device condition. The drone task was used under the Dissimilar-device condition. However, under the Similar-device condition, the observed and tested tasks used different types of vehicles and stop positions.

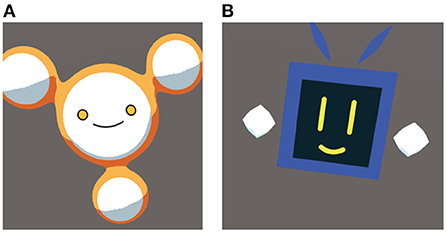

Under the With-agent and With-migrate-agent conditions, we prepared two types of agents as shown in Figure 3. The name of each agent was “Blue” and “Yellow” based on their color, and the name was given under the condition where each agent was used. Different voices were used to clarify the differences between the agents. The agent was placed floating on the top of the device to make it clear that it is the subject that executes the task. Under the With-migrate-agent condition, we created a video to clarify that the agent is being transferred between devices.

Figure 3. Design of agents. (A) Agent type “Yellow.” (B) Agent type “Blue.” These agents were used under with-agent and with-migrate-agent conditions.

To measure trust in a device, we created a questionnaire for trust evaluation referring to the questionnaire created by Washburn et al. (2020) and prepared two questions: “Can the robot be trusted?” and “Do you think this task will be successful?” Both questions were answered on a 7-point Likert scale. The former corresponds to the evaluation of general trust, and the latter corresponds to that of contextual trust.

We focused on the effect of the device and agent factors on not the existence but the degree of MDTT. Therefore, we introduced a scale called “trust change,” which was used in a previous study (Soh et al., 2020), to evaluate the degree of MDTT. Trust change is the degree of change in the trust value before and after the observation of a certain task. Let the value of general trust for device A at time t be TGA, t, and the value of contextual trust for device A in task L at time t be . Let tbefore and tafter be the time before and after the observation of different devices performing the task, respectively, and the trust change for each trust ΔTGA and can be expressed as follows:

In short, to test H1, we used general trust and contextual trust and compared the difference between before and after seeing the observed task. To test H2 and H3, we converted both values into trust change and compared the difference due to the device and agent factors.

Figure 1 shows the flow of the experimental procedure and corresponding evaluation values.

Participants first provided their age and sex then were explained the task they were going to watch during the experiment. Under the Similar-device condition, the driving task was explained, and under the Dissimilar-device condition, both driving and drone tasks were explained. When explaining the tasks, it was mentioned that the task can be successful or fail, and both successful and failed scenes were shown as examples.

After the explanation of the task, under the With-agent and With-migrate-agent conditions (not No-agent condition), the participants watched a video to instruct them that the agents will operate the devices. This video introduced the agents by their names and the task they were in charge of. Under the With-agent condition, two agents (Yellow and Blue) were introduced, and under the With-migrate-agent condition, only one of the agents was introduced. To understand how the trust questionnaire was asked to participants, we attached the detail of raw instruction as Supplementary material.

Before watching the observed task, the participants were asked to answer the three questions related to trust and the execution time described in the previous section for both the observed and tested tasks. The time of the “Before” condition corresponds to this phase.

Participants watched the driving task being performed as an observed task. However, under the With-migrate-agent condition, the transition of the agent to the device used in the tested task was shown at the end of the video.

After the participants finished watching the observed task, they were asked the same questions as in Section 3.5.2 for the observed and tested tasks. The time of the “After” condition corresponds to this phase. Then, according to the instruction that the participants would evaluate their trust in several tasks, participants watched the tested task and answered the questions as well as the observed task. Finally, participants were asked to answer the questions about the objects depicted in the video to avoid invalid responses and comment on the experiment as a whole by writing freely.

We recruited 600 participants using a crowdsourcing service. To investigate the effect of MDTT on various people, we prepared two criteria for recruiting participants. One of the criteria is the participants who are over 18 years old, the other is the participants who can read the Japanese instruction. There were 277 male participants (46.2%), 320 female participants (53.3%), and 3 participants who answered “other” (0.5%), and the average age was 39.7 years old. 100 participants were equally assigned to each of the six conditions, which were a combination of device and agent factors. Each participant was paid 135 yen equally as a reward after the experiment through that service. In order to eliminate the influence of the agent's appearance, the With-migrate-agent condition was designed so that the number of participants in the experiment for each agent, Yellow and Blue, was the same. Under the With-agent condition, we made the number of participants watching the video that each agent performs the observed task equally.

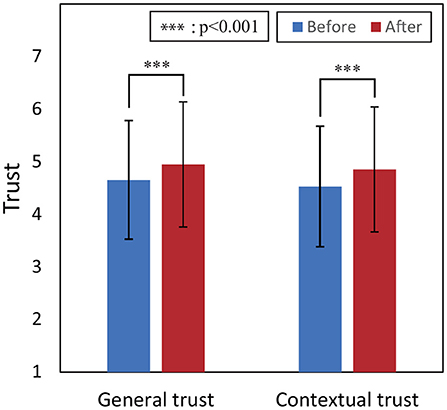

Figure 4 shows the values of general trust and contextual trust for the tested task before and after watching the observed task. To statistically test the differences in these values, a three-way analysis of variance (ANOVA) was conducted, taking into account the effect of the device and agent factors, and adding the both factors in addition to the time factor of before and after watching.

Figure 4. Results of comparison between mean of each trust value before and after watching observed task. Error bars represent standard deviations.

Regarding contextual trust, there was a significant difference in the time factor were shown at a 5% level of significance [F(1, 594) = 70.45, p < 0.001, partial η2 = 0.11]. And there was no three-way and two-way interaction, so it was shown that contextual trust increased after the viewing of the observed task in all conditions of device and agent factor.

Regarding general trust, there was a significant difference in the time factor [F(1, 594) = 80.47, p < 0.001, partial η2 = 0.12]. However, there was a two-way interaction between the time and agent factors [F(2, 594) = 3.57, p = 0.029 < 0.05, partial η2 = 0.012]. There was no need to test for the simple main effect of the agent factor under each time condition; thus, we tested for the simple main effect of the time factor under each agent condition followed by Bonferroni correction. We found that the simple main effect of the time factor under all agent conditions was significant (No-agent: p < 0.001; With-agent: p = 0.001; With-migrate-agent: p < 0.001). This suggests that general trust significantly increased after the viewing of the observed task. These results indicate that both contextual trust and general trust significantly increased before and after the watching of the observed task regardless of device and agent factors, supporting H1.

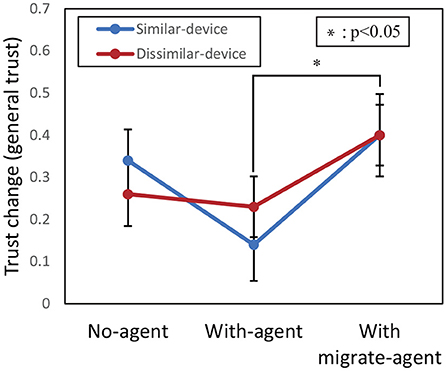

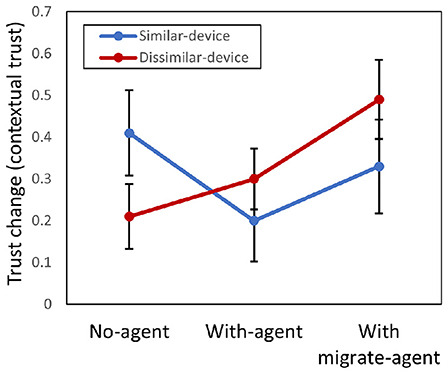

To evaluate the effect of the device and agent factors on the degree of MDTT, a two-way ANOVA between the device and agent factors was conducted on the trust-change values of general trust (Figure 5) and contextual trust (Figure 6).

Figure 5. Results of comparison between mean scores of trust change of general trust. Error bars represent standard errors.

Figure 6. Results of comparison between mean scores of trust change of contextual trust. Error bars represent standard errors.

Regarding general trust, there was a significant difference in the agent factor [F(2, 594) = 3.57, p = 0.029, partial η2 = 0.012]. However, there were no significant differences for the device factor [F(1, 594) = 0.003, p = 0.96, partial η2<0.001] and a two-way interaction between both factors [F(2, 594) = 0.58, p = 0.57, partial η2 = 0.002]. Therefore, multiple comparisons using the Bonferroni method for the agent factor showed a significant difference between the With-migrate-agent and With-agent conditions (p=0.023).

Regarding contextual trust, there was no significant difference in the agent factor [F(2, 594) = 1.47, p = 0.231, partial η2 = 0.005], device factor [F(1, 594) = 0.067, p = 0.79, partial η2<0.001], and a two-way interaction between both factors [F(2, 594) = 2.09, p = 0.13, partial η2 = 0.007].

The results show that H2, “The degree of trust-transfer will be greater between similar devices than between devices with different properties,” was rejected because there was no difference between the device factor regarding trust change for both general trust and contextual trust.

For H3, “Using a migrate agent increases the degree of trust transfer compared with not using a migrate agent,” it was found that the With-migrate-agent condition increased the degree of trust transfer compared with the With-agent condition regarding general trust. However, since there was no significant difference compared with the No-agent condition, H3 was partially supported.

We investigated the characteristics of MDTT using videos including 3D CG characters. Hypothesis 1 was supported by the result that both general trust and contextual trust were significantly higher after watching than before watching the observed task. This was also supported by the result that the after-watching ratings were significantly higher regardless of device and agent factors, indicating that MDTT occurred under all conditions. When multiple autonomous devices are used at the same time, the trust that the user has in one device transitions to another device, so it is necessary to take the other devices into account when estimating the trust in a device. This is especially important to accurately estimate trust when a user has little experience in observing a device's ability to perform a task, such as when using a new system.

Hypothesis 2 was rejected because no difference was found in the device factor between general trust and context trust. This suggests that device similarity has no effect on the degree of MDTT. However, in a previous study of trust transfer (Soh et al., 2020), the similarity of tasks enhanced the degree of the trust transfer. As a hypothesis for the difference, the trust transfer might be weakened by transfer among multiple different devices. As the result, the effect of similarity was also weakened and lost. From the free descriptions for the Dissimilar-device condition, the comment “I thought the drone would also work well because the parking was smooth” was obtained, suggesting that MDTT may occur even when devices are dissimilar in properties. Although two types of tasks were used, driving and drone, there were comments that both were broadly regarded as automatic-driving tasks, which may be due to the fact that the properties of the devices were similar to some extent even under the Dissimilar-device condition.

Although there was no significant difference between the No-agent and With-migrate-agent conditions regarding H3, the degree of trust transfer was significantly smaller under the With-agent condition in terms of general trust. This means that the transition of agency does not increase the degree of MDTT, but attributing different agencies to each device weakens MDTT. In Soh et al.'s (2020) research, the value of general trust was correlated with that of contextual trust. Thus, this unbalanced result is unintuitive. As a hypothesis for the difference, among the multi-dimensions of trust, migrate agents might specifically affect the dimension related to personality. The general trust is evaluated for the system regardless of a task, thus the evaluation of it might be near to that of personality. And, it is shown that migrate agents could increase the impression (Imai et al., 1999). Therefore, migrate agents increased the general trust. On the other hand, when using a device with each agent, it emphasized the distinction between agents and prevented the transfer of general trust clearly. It is also possible to avoid overtrust and negative trust transfer by intentionally changing the agency to be attributed to each device. Also, the other reason there was no difference between the No-agent and With-migrate-agent conditions is that even under the No-agent condition, identification is possible between devices. We did not explicitly indicate in the instructions that the device performing the observed task and that performing the tested task were different.

We created videos using 3D CG and had people watch them. However, people may have different impressions of a physical device and 3D CG, which may affect the transition of trust. Therefore, it is necessary to conduct experiments to investigate the effects of using actual devices. We investigated MDTT when the observed task was successful, but in reality, a system may fail. In previous studies (Lee and See, 2004), the failure of a task decreased trust. Therefore, it is necessary to investigate negative MDTT since the decrease in trust transfer due to failure of the observed task may be transferred to another device. It is also necessary to consider more types of devices, such as humanoid robots, and investigate the effects of such differences in more detail.

In this experiment, we designed the agents and devices with reference to the previous work (Reig et al., 2020) so that the participants can distinguish between the device and agent of the observed task and that of the tested task. For the identification, Reig et al. changed the name, voice, and appearance of a virtual agent. Therefore, in our experiment, we designed the two agents to have different names, voices, and appearances each other. Also, for the device, we designed different appearances and names for each device. Under the Similar-device condition, one is a light blue compact car, and the other is a blue truck. Under the Different-device condition, one is a light blue compact car, and the other is a light blue drone. According to the above setting, we designed the appearance and explained those devices in the instructions. However, since we did not execute the manipulation check for the participant's device identification, there is the possibility that the participants did not distinguish between the device of the observed task and that of the tested task, and the participants transferred their trust in the device because they recognized it as the same one.

We formulated three hypotheses regarding multi-device trust transfer (MDTT), the transition of trust among multiple different devices and conducted an experiment on MDTT. We found that by observing one device successfully completing a task, trust is transferred to other different devices. However, there was no effect on the degree of MDTT due to differences in the similarity of devices. As a result of investigating the effect of the transition of agency by a migrate agent on the degree of MDTT, it was found that there was no difference in this degree when comparing the case in which a migrate agent was used and in which no agent was used. However, compared with drawing a different agent for each device, the degree of MDTT became larger when using a migrate agent.

The original contributions presented in the study are included in the article/Supplementary material; further inquiries can be directed to the corresponding author.

The studies involving human participants were reviewed and approved by Keio University Faculty of Science and Technology, Graduate School of Science and Technology Bioethics Committee. All participants in this study read our informed consent presented in web form and approved the participation.

KE conducted the experiment. KO performed the statistical analysis and drafted the manuscript. All authors contributed to the conception and design of the study, manuscript revision, read, and approved the submitted version.

This work was supported in part by JST, CREST Grant Number JPMJCR19A1, Japan and JSPS KAKENHI Grant Number JP20K19897.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2022.920844/full#supplementary-material

Chen, M., Nikolaidis, S., Soh, H., Hsu, D., and Srinivasa, S. (2020). Trust-aware decision making for human-robot collaboration: model learning and planning. ACM Trans. Hum. Robot Interact. 9, 1–23. doi: 10.1145/3359616

Chen, X. A., Grossman, T., Wigdor, D. J., and Fitzmaurice, G. (2014). “Duet: exploring joint interactions on a smart phone and a smart watch,” in Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, CHI '14 (New York, NY: Association for Computing Machinery), 159–168. doi: 10.1145/2556288.2556955

Floyd, M. W., Drinkwater, M., and Aha, D. W. (2015). “Trust-guided behavior adaptation using case-based reasoning,” in Proceedings of the 24th International Conference on Artificial Intelligence, IJCAI'15 (Buenos Aires: AAAI Press), 4261–4267.

Fooladi Mahani, M., Wang, Y., and Jiang, L. (2020). A Bayesian trust inference model for human-multi-robot teams. Int. J. Soc. Robot. 13, 1951–1965. doi: 10.1007/s12369-020-00705-1

Freedy, A., DeVisser, E., Weltman, G., and Coeyman, N. (2007). “Measurement of trust in human-robot collaboration,” in 2007 International Symposium on Collaborative Technologies and Systems (Orlando, FL), 106–114. doi: 10.1109/CTS.2007.4621745

Gambetta, D (2000). Can we trust trust. Trust: making and breaking cooperative relations. Br. J. Sociol. 13, 213–237. doi: 10.2307/591021

Gao, F., Clare, A., Macbeth, J., and Cummings, M. (2013). “Modeling the impact of operator trust on performance in multiple robot control,” in 2013 AAAI Spring Symposium Series (Standford, CA: AAAI Press).

Imai, M., Ono, T., and Etani, T. (1999). “Agent migration: communications between a human and robot,” in IEEE SMC'99 Conference Proceedings, 1999 IEEE International Conference on Systems, Man, and Cybernetics (Cat. No. 99CH37028), Vol. 4 (Tokyo), 1044–1048. doi: 10.1109/ICSMC.1999.812554

John, L., and Neville, M. (1992). Trust, control strategies and allocation of function in human-machine systems. Ergonomics 35, 1243–1270. doi: 10.1080/00140139208967392

Jones, S., and Marsh, S. (1997). Human-computer-human interaction: trust in CSCW. SIGCHI Bull. 29, 36–40. doi: 10.1145/264853.264872

Kim, C. H., Yamazaki, Y., Nagahama, S., and Sugano, S. (2013). “Recognition for psychological boundary of robot,” in 2013 8th ACM/IEEE International Conference on Human-Robot Interaction (HRI) (Tokyo: IEEE), 161–162. doi: 10.1109/HRI.2013.6483551

Kohn, S. C., de Visser, E. J., Wiese, E., Lee, Y.-C., and Shaw, T. H. (2021). Measurement of trust in automation: a narrative review and reference guide. Front. Psychol. 12:4138. doi: 10.3389/fpsyg.2021.604977

Large, D. R., Harrington, K., Burnett, G., Luton, J., Thomas, P., and Bennett, P. (2019). “To please in a pod: employing an anthropomorphic agent-interlocutor to enhance trust and user experience in an autonomous, self-driving vehicle,” in Proceedings of the 11th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, AutomotiveUI '19 (New York, NY: Association for Computing Machinery), 49–59. doi: 10.1145/3342197.3344545

Lebiere, C., Blaha, L. M., Fallon, C. K., and Jefferson, B. (2021). Adaptive cognitive mechanisms to maintain calibrated trust and reliance in automation. Front. Robot. AI 8:135. doi: 10.3389/frobt.2021.652776

Lee, J. D., and See, K. A. (2004). Trust in automation: designing for appropriate reliance. Hum. Factors 46, 50–80. doi: 10.1518/hfes.46.1.50.30392

McGuirl, J. M., and Sarter, N. B. (2006). Supporting trust calibration and the effective use of decision aids by presenting dynamic system confidence information. Hum. Factors 48, 656–665. doi: 10.1518/001872006779166334

Ogawa, K., and Ono, T. (2008). “Itaco: effects to interactions by relationships between humans and artifacts,” in 8th International Conference on Intelligent Virtual Agents (IVA 2008) (Tokyo: Springer), 296–307. doi: 10.1007/978-3-540-85483-8_31

Okamura, K., and Yamada, S. (2020). Empirical evaluations of framework for adaptive trust calibration in human-AI cooperation. IEEE Access 8, 220335–220351. doi: 10.1109/ACCESS.2020.3042556

Oleson, K., Billings, D., Kocsis, V., Chen, J., and Hancock, P. (2011). “Antecedents of trust in human-robot collaborations,” in 2011 IEEE International Multi-Disciplinary Conference on Cognitive Methods in Situation Awareness and Decision Support (CogSIMA) (Miami Beach, FL: IEEE), 175–178. doi: 10.1109/COGSIMA.2011.5753439

Reig, S., Luria, M., Wang, J. Z., Oltman, D., Carter, E. J., Steinfeld, A., et al. (2020). “Not some random agent: multi-person interaction with a personalizing service robot,” in Proceedings of the 2020 ACM/IEEE International Conference on Human-Robot Interaction, HRI '20 (New York, NY: Association for Computing Machinery), 289–297. doi: 10.1145/3319502.3374795

Soh, H., Xie, Y., Chen, M., and Hsu, D. (2020). Multi-task trust transfer for human-robot interaction. Int. J. Robot. Res. 39, 233–249. doi: 10.1177/0278364919866905

Stentz, A., Bares, J., Singh, S., and Rowe, P. (1999). A robotic excavator for autonomous truck loading. Auton. Robots 7, 175–186. doi: 10.1023/A:1008914201877

Syrdal, D. S., Koay, K. L., Walters, M. L., and Dautenhahn, K. (2009). “The boy-robot should bark!: children's impressions of agent migration into diverse embodiments,” in Proceedings: New Frontiers of Human-Robot Interaction, A Symposium at AISB (Edinburgh).

Tan, X. Z., Luria, M., and Steinfeld, A. (2020). “Defining transfers between multiple service robots,” in Companion of the 2020 ACM/IEEE International Conference on Human-Robot Interaction, HRI '20 (New York, NY: Association for Computing Machinery), 465–467. doi: 10.1145/3371382.3378258

Tan, X. Z., Luria, M., Steinfeld, A., and Forlizzi, J. (2021). “Charting sequential person transfers between devices, agents, and robots,” in Proceedings of the 2021 ACM/IEEE International Conference on Human-Robot Interaction, HRI '21 (New York, NY: Association for Computing Machinery), 43–52. doi: 10.1145/3434073.3444654

Ullrich, D., Butz, A., and Diefenbach, S. (2021). The development of overtrust: an empirical simulation and psychological analysis in the context of human-robot interaction. Front. Robot. AI 8:44. doi: 10.3389/frobt.2021.554578

Verberne, F. M. F., Ham, J., and Midden, C. J. H. (2012). Trust in smart systems: sharing driving goals and giving information to increase trustworthiness and acceptability of smart systems in cars. Hum. Factors 54, 799–810. doi: 10.1177/0018720812443825

Wang, N., Pynadath, D. V., and Hill, S. G. (2016). “Trust calibration within a human-robot team: comparing automatically generated explanations,” in 2016 11th ACM/IEEE International Conference on Human-Robot Interaction (HRI) (Christchurch: IEEE), 109–116. doi: 10.1109/HRI.2016.7451741

Washburn, A., Adeleye, A., An, T., and Riek, L. D. (2020). Robot errors in proximate HRI: how functionality framing affects perceived reliability and trust. ACM Trans. Hum. Robot Interact. 9, 1–21. doi: 10.1145/3380783

Waytz, A., Heafner, J., and Epley, N. (2014). The mind in the machine: anthropomorphism increases trust in an autonomous vehicle. J. Exp. Soc. Psychol. 52, 113–117. doi: 10.1016/j.jesp.2014.01.005

Xie, Y., Bodala, I. P., Ong, D. C., Hsu, D., and Soh, H. (2019). “Robot capability and intention in trust-based decisions across tasks,” in 2019 14th ACM/IEEE International Conference on Human-Robot Interaction (HRI) (Deagu: IEEE), 39–47. doi: 10.1109/HRI.2019.8673084

Zhang, Y., Liao, Q. V., and Bellamy, R. K. E. (2020). “Effect of confidence and explanation on accuracy and trust calibration in ai-assisted decision making,” in Proceedings of the 2020 Conference on Fairness, Accountability, and Transparency (New York, NY: Association for Computing Machinery), 295–305. doi: 10.1145/3351095.3372852

Keywords: trust, human-agent interaction (HAI), trust transfer, human-AI cooperation, trusted AI, virtual agent, multi-device

Citation: Okuoka K, Enami K, Kimoto M and Imai M (2022) Multi-device trust transfer: Can trust be transferred among multiple devices? Front. Psychol. 13:920844. doi: 10.3389/fpsyg.2022.920844

Received: 15 April 2022; Accepted: 27 June 2022;

Published: 03 August 2022.

Edited by:

Augusto Garcia-Agundez, Brown University, United StatesReviewed by:

Seiji Yamada, National Institute of Informatics, JapanCopyright © 2022 Okuoka, Enami, Kimoto and Imai. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Kohei Okuoka, b2t1b2thQGFpbGFiLmljcy5rZWlvLmFjLmpw

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.

Research integrity at Frontiers

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.