
ORIGINAL RESEARCH article

Front. Psychol., 05 August 2022

Sec. Psychology of Language

Volume 13 - 2022 | https://doi.org/10.3389/fpsyg.2022.917700

This article is part of the Research Topic Modality and Language Acquisition: How does the channel through which language is expressed affect how children and adults are able to learn?

Signed and written languages are intimately related in proficient signing readers. Here, we tested whether deaf native signing beginning readers are able to make rapid use of ongoing sign language processing to facilitate the recognition of written words. Deaf native signing children (mean age 10 years, 7 months) received prime-target pairs with sign word onsets as primes and written words as targets. In a control group of hearing children (matched to the deaf children in reading ability; mean age 8 years, 8 months), spoken word onsets were used as primes instead. Targets (written German words) were completions either of the German signs or of the spoken word onsets. The participants' task was to decide whether the target was a possible German word. Sign onsets facilitated the processing of written targets in deaf children, just as spoken word onsets facilitated the processing of written targets in hearing children. In both groups, priming elicited similar effects in the simultaneously recorded event-related potentials (ERPs), starting as early as 200 ms after the onset of the written target. These results suggest that beginning readers can use ongoing lexical processing in their native language, be it signed or spoken, to facilitate written word recognition. We conclude that intimate interactions between sign and written language might in turn facilitate reading acquisition in deaf beginning readers.

There is an ongoing debate on how deaf individuals who command a signed language acquire literacy (Perfetti and Sandak, 2000; Holmer et al., 2016). Written languages are typically based on spoken languages, and signed languages do not share relevant phonology or orthography with written languages. Therefore, deaf individuals typically cannot use direct form links between sign and written language. Here, we tested whether emerging literacy in deaf children is nevertheless closely connected to sign language processing at the word level. From a neurocognitive perspective, we investigated whether young signers can exploit aspects of rapid sign processing to foster written word recognition, and whether they do so similarly to young hearing readers exploiting aspects of rapid spoken word processing.

Just as hearing individuals process sequentially unfolding speech incrementally, signing individuals process sequentially unfolding signs gradually. As soon as hearing individuals have heard some speech sounds, memory representations of words that temporally match the input become available, and words that no longer match the unfolding input are subsequently excluded (e.g., Allopenna et al., 1998; Dahan et al., 2001). Although fundamentally different from phonology in spoken languages, signed languages also have a sequential, decomposable phonological structure. Handshape, location, movement, palm orientation, and non-manual cues such as facial gestures (including mouth movements) are typically considered phonological units of sign languages; they define individual signs and unfold over time (Sandler and Lillo-Martin, 2006; Papaspyrou et al., 2008; Brentari, 2011). Like listeners recognizing spoken words, signers use the sequential nature of signs to activate corresponding memory representations even before the sign has been completed (Grosjean, 1981; Clark and Grosjean, 1982; Emmorey and Corina, 1990).

In hearing readers, incremental processing of spoken words can immediately modulate the processing of written words. Processing links between the two domains are exemplified by priming studies, which typically combine spoken primes (complete words or word onsets) with written targets (for an overview, see Zwitserlood, 1996). A direct repetition of spoken and written words [“pepper” – pepper (here and in the following, italics represent written stimulus materials)] immediately facilitates processing of written target words (compared to unrelated prime-target pairs like “pepper” – window) in hearing adults (Holcomb et al., 2005) and in hearing children (Reitsma, 1984; Sauval et al., 2017). Facilitation has been observed even when only spoken word onsets were presented as primes (e.g., “can” – candy or “ano” – anorak; Soto-Faraco et al., 2001; Spinelli et al., 2001; Friedrich et al., 2004a,b, 2008, 2013; Friedrich, 2005).

Event-related potentials (ERPs) recorded in priming studies indicate that, in hearing adults, the processing initiated by spoken word onsets affects early stages of the processing of immediately following written target words. Two ERP deflections are typically obtained when spoken word onsets are used to prime written target words: prime-target overlap in phonology has consistently elicited more positive-going, left-lateralized ERP amplitudes (the so-called P350 effect in word onset priming) and reduced N400 amplitudes with a central distribution (each compared to unrelated targets; Friedrich et al., 2004a,b, 2013; Friedrich, 2005). Both effects start 200–300 ms after the onset of a written target word, a time window associated with access to stored word representations (e.g., Grainger et al., 2006; Holcomb and Grainger, 2006, 2007). Given the close phonological form relationship between spoken and written words in alphabetic writing systems, links between spoken and written language processing might already originate at the level of phonological representations (e.g., Ferrand and Grainger, 1992; Grainger et al., 2006; Pattamadilok et al., 2017), but they might also arise at the level of word form representations. This raises the question of whether links between written language and sign language, which are not connected via grapheme-phoneme correspondences at the surface level, also originate at the level of word form representations.

Previous priming research has shown that signing adults implicitly activate signs and their phonological forms when reading written words [e.g., ASL while reading English words: Morford et al., 2011, 2014; Meade et al., 2017; Quandt and Kubicek, 2018; DGS while reading German words: Kubus et al., 2015; Hong Kong Sign Language (HKSL) while reading Cantonese words: Thierfelder et al., 2020]. These studies used pairs of written words that were unrelated in orthography and phonology in a given spoken language but shared sign units in the respective sign language, such as MOVIE and PAPER, which share location and handshape in ASL (here and in the following, capitals denote signed stimulus materials) but no speech sounds in spoken English. When deaf signing participants had to detect semantic similarities in pairs of written words, implicit phonological priming of the underlying signs sped up responses; conversely, decisions about semantic differences were slowed when the written word pairs overlapped in sign phonology (for ASL: Morford et al., 2011, 2014; for DGS: Kubus et al., 2015). In addition, Deaf native signers show phonological similarity effects in ASL when recalling lists of written English words (Miller, 2007).

Using online neurocognitive measures, previous ERP studies with signing adults have suggested intimate links between sign processing and written word processing. This was attested by unimodal priming studies using either signed prime-target pairs (Lee et al., 2019; Hosemann et al., 2020) or written prime-target pairs (Meade et al., 2017). In the signed priming studies, a phonological relation in the written domain modulated responses to sign pairs like BAR – STAR, which are phonologically unrelated in ASL but phonologically related in written English (e.g., Lee et al., 2019). Here, N400 effects starting 325 ms after target onset were obtained (although interpreting the onset of ERP effects for signed targets is complicated by variation in the sequential structure and timing of the continuously unfolding signs). In the written priming study, phonologically and orthographically unrelated written word pairs like gorilla – bath were related through their sign language translations GORILLA – BATH, whose ASL signs share handshape and location (Meade et al., 2017). Written prime-target pairs overlapping in sign phonology elicited an N400 effect starting 300 ms after target onset. In the present study, we tested whether signing children link sign processing to written word processing as early as adult signers do.

So far, very little is known about sign language processing and its links to reading in children who have acquired a sign language. Two phonological priming studies have suggested that deaf children who were native signers of Sign Language of the Netherlands (NGT) applied incremental phonological processing to signs (Ormel et al., 2009) and that signs are tightly associated with written words (Ormel et al., 2012). In their first study, Ormel and colleagues tested 8–12-year-old deaf children with picture-sign pairs in a picture verification task: children were asked whether the picture and the sign matched. Some non-matching sign-picture pairs, such as DOG and CHAIR, shared sign phonology in NGT (location and movement), whereas others did not. As in previous work with deaf adults, implicit phonological priming of the signs inhibited responding in deaf children when the sign-picture pairs did not match. In a follow-up study, Ormel and colleagues investigated 9–11-year-old deaf children in a picture-word verification task. Here, the NGT translation of the Dutch word and the sign for the picture were either phonologically related or not. Again, children indicated mismatches more slowly when word-picture pairs implicitly overlapped in sign phonology (compared to unrelated pairs). More recently, Villwock et al. (2021) investigated co-activation of ASL and written English in deaf signing children (mean age 12.9 years) using a semantic judgment task for written words. As in previous studies with deaf adults (Morford et al., 2011), a subset of the semantically related and unrelated word pairs shared sign phonology in ASL. The children were faster to make “yes” decisions (i.e., that the words were semantically related) when the ASL translations were phonologically related. Consistent with the results of Ormel et al. (2012), this indicates that children have sign language phonology available while they are reading.

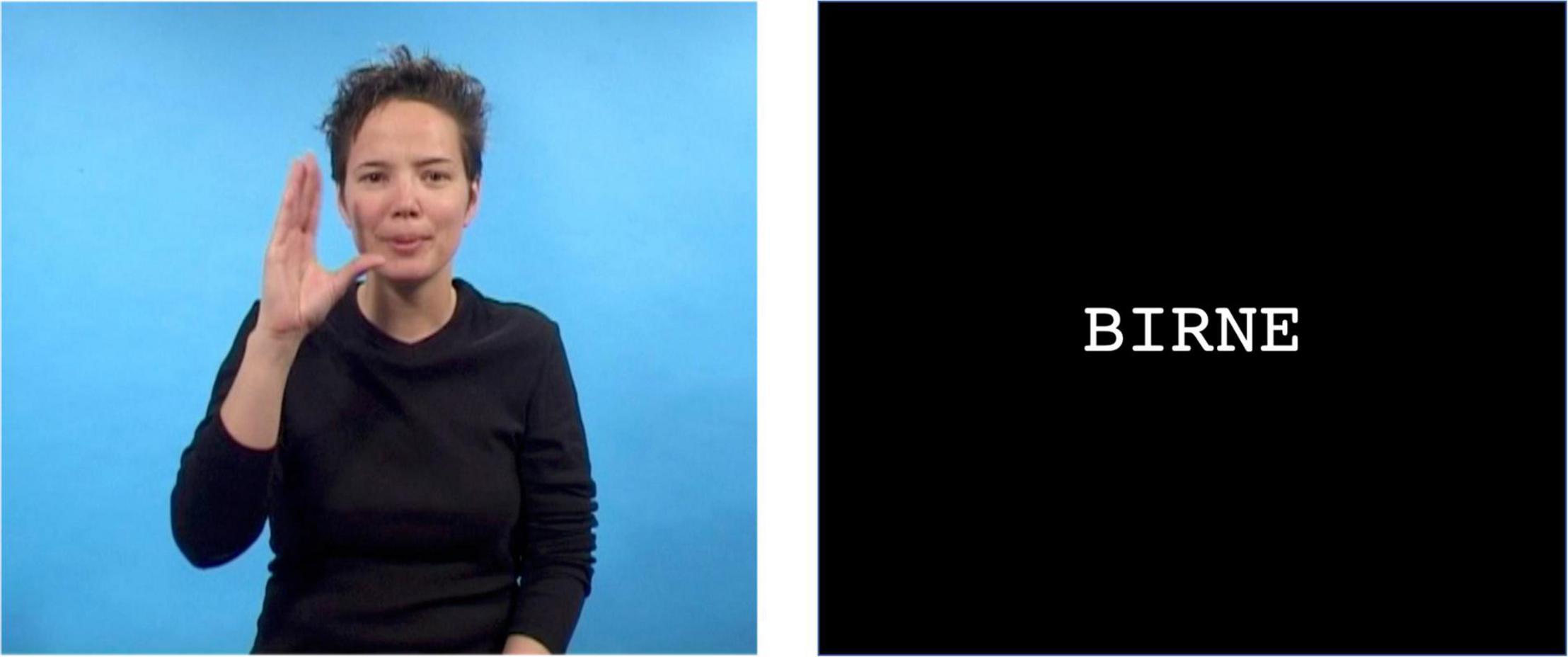

In the present study, we use online neurocognitive measures to investigate the temporal dynamics underlying interactions between sign and written language processing in deaf signing beginning readers. By recording ERPs to targets in word onset priming, we aimed to uncover whether beginning readers use incremental processing of sign onsets (deaf native signing children) similarly to incremental processing of spoken word onsets (hearing children) to foster ongoing written word processing. Deaf and hearing children were matched on reading skills. Deaf beginning readers saw videos of sign word onsets (primes), which were followed by written words (targets). Hearing beginning readers watched and heard a speaker articulating word onsets (primes), which were followed by written words (targets). Both groups were asked to decide whether the written target was a possible German word. The crucial comparison within each group was between responses to targets in the condition in which prime and target were related [Overlapping condition; e.g., KU¹ – Kuchen (Engl. cake) and “ku” – Kuchen, respectively] and responses in the Unrelated condition (e.g., WE – Kuchen and “we” – Kuchen, respectively). An example trial in the Overlapping condition, with a sign prime followed by a written word target, is provided in Figure 1.

Figure 1. Illustration of one trial in the overlapping sign fragment – written word condition: The sign fragment “BI” for BIRNE (pear) is followed by the overlapping written word Birne (pear).

Based on earlier studies using spoken-written word onset priming with hearing adults (Friedrich et al., 2004a,b, 2008, 2013; Friedrich, 2005), we expected P350 and N400 effects to precede behavioral responses (lexical decisions). If beginning readers exploit ongoing processing in their native language as rapidly as experienced adult readers do, ERP effects should start as early as 200 ms after target word onset (for hearing adults: e.g., Friedrich et al., 2004a,b, 2008, 2013; Friedrich, 2005; Grainger et al., 2006; for deaf signing adult readers: e.g., Gutierrez-Sigut et al., 2017).

Data from fourteen congenitally deaf children (hearing threshold >90 dB in the better ear; seven girls) who had learned sign language from birth from their deaf parents (“native signers”) and fourteen typically developing hearing children (four girls) with hearing parents (“controls”) were included in the study. We recruited the deaf children from schools for the deaf and hard of hearing in Germany that ran a bilingual (German and DGS) curriculum at the time. A control group of hearing children was then recruited by matching levels of word reading comprehension across groups. The hearing children were monolingual speakers of German from local primary schools in the city of Hamburg. An additional three native signers and four controls originally participated in the study, but their data were excluded from analysis because of low EEG data quality due to excessive movement (two native signers, two controls), refusal to complete the reading test (one native signer), low performance on the reading test (one control), or technical failure during EEG recording (one control). None of the children had any neurological disease or learning difficulties. We obtained written informed parental consent for all children.

Data from native signers were collected first. Based on their performance on a normed German word reading test for beginning readers (ELFE 1–6, word reading comprehension subtest; Lenhard and Schneider, 2006), a younger control group was then recruited to ensure similar levels of word reading comprehension across groups. In this subtest, a picture was presented together with four written words, and the child was asked to underline the word that matched the picture. We report raw scores, i.e., the total number of correct responses within 3 min [native signers: M = 35.9, SD = 9.6; controls: M = 35.9, SD = 7.1; t(26) = 0, p = 1, Cohen’s d = 0]. Note that the timeline of reading development differs between deaf and hearing readers because of differences in language exposure and access to language input in the two populations (see e.g., Mayberry et al., 2011; Miller and Clark, 2011; Trezek et al., 2011). As a result, the control group was significantly younger than the group of native signers [native signers: M = 10.7 years, SD = 18 months; controls: M = 8.8 years, SD = 10 months; t(26) = 4.16, p < 0.001, Cohen’s d = 1.57]. The two groups did not differ in non-verbal cognitive ability, measured with Raven’s Colored Progressive Matrices (Raven, 1936) [native signers: M = 68.1, SD = 25.9; controls: M = 62.8, SD = 27.1; t(26) = −0.53, p = 0.603, Cohen’s d = 0.20]. Percentile scores relative to norms for German children are reported (Bulheller and Häcker, 2002).
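The t and d values above can be recomputed from the reported summary statistics. The following is a minimal Python sketch that assumes Student's independent-samples t-test with pooled variance (the text does not name the variant); the helper name `pooled_t_and_d` is ours. The sign of t depends only on which group is entered first, which is why the reported value for the non-verbal scores is negative.

```python
import math


def pooled_t_and_d(m1, sd1, n1, m2, sd2, n2):
    """Student's independent-samples t (pooled variance) and Cohen's d
    computed from group means, standard deviations and sizes."""
    df = n1 + n2 - 2
    # Pooled SD: each group's variance weighted by its degrees of freedom
    sp = math.sqrt(((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / df)
    d = (m1 - m2) / sp
    t = (m1 - m2) / (sp * math.sqrt(1 / n1 + 1 / n2))
    return t, df, d


# Raven's CPM percentiles: native signers (first) vs. controls (second)
t, df, d = pooled_t_and_d(68.1, 25.9, 14, 62.8, 27.1, 14)
print(f"t({df}) = {t:.2f}, d = {d:.2f}")  # t(26) = 0.53, d = 0.20
```

With equal group sizes, d and t are related by t = d·√(n/2), so either value can be checked from the other.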

We used 80 concrete common nouns (see Supplementary Material) selected to be known to young children. As no lexical development scale exists for the acquisition of DGS, we checked the nouns against the CDI-ASL (Anderson and Reilly, 2002) and the CDI-BSL (Woolfe et al., 2010). Targets consisted of complete written words, whereas primes consisted of onsets of signs or spoken words. Following Friedrich et al. (2009) and Schild et al. (2011), spoken word onsets consisted of the first syllable of the respective target word. To create the spoken word onsets (fragments), we filmed a hearing male actor speaking the complete words in front of a blue screen and cut each recording after the first syllable. The sign stimuli were created by filming a female deaf native signer of DGS, a professional employee of a sign language film company, while she produced each noun in DGS in front of a blue screen.

As no established measure exists that would have allowed us to determine the uniqueness point of DGS signs, we created the sign fragments on the basis of theories of sign phonology. In these accounts, location has been proposed as the equivalent of a syllable onset, and movement and location properties serve as the skeletal structure for syllable-like units (for a more differentiated analysis of syllables in signs, see Sandler, 1989; Brentari, 1998). The combination of movement and location has been shown to produce phonological effects on lexical retrieval that are similar across language modalities (Gutierrez et al., 2012a,b). On this basis, a deaf native signer cut each complete sign video at the point in time when the hands had reached the correct location. Since the phonological parameters of a sign are expressed simultaneously, all sign fragments showed the correct handshape and a very brief movement (M = 52.94 ms). Sign fragments (M = 1418 ms, SD = 108 ms) were on average longer than spoken fragments (M = 1050 ms, SD = 19 ms).

To determine whether these fragments were ambiguous sign onsets, we presented them to two deaf native and two deaf near-native signing adults, who were asked to complete each sign fragment as rapidly as possible. Of the 80 sign fragments, 43 were completed with the intended sign by all participants (“unambiguous sign word onsets”). The remaining 37 sign fragments received at least one completion other than the target sign from which they were created (“ambiguous sign word onsets,” marked by an asterisk in the Supplementary Material). Because we needed all trials in the ERP experiment and our choice of signs was constrained by other criteria (e.g., that they be concrete nouns known to young children), we included all trials in the ERP analyses. For the reaction times, however, we additionally analyzed responses to ambiguous and unambiguous word onsets separately (see section “Results”).

For each participant, half of the concrete nouns (i.e., 40) were used as targets in the Overlapping condition and the other half as targets in the Unrelated condition. Allocation of targets to conditions was counterbalanced across participants in both groups. The same primes preceded targets in both conditions. For example, a participant was presented with a prime followed by its target in the Overlapping condition (e.g., KU/“ku” – Kuchen [Engl. cake]) in one block and with that same prime followed by a target in the Unrelated condition (e.g., KU/“ku” – Wecker [Engl. alarm clock]) in a different block. Additionally, 20 trials with pseudowords were presented for the lexical decision task. Pseudowords were created by changing only the last one or two letters of existing words. In 10 of these trials, the prime and pseudoword overlapped (e.g., AU/“au” – Aune [pseudoword derived from Auto, Engl. car]); in the remaining 10 trials, the prime and pseudoword were unrelated (e.g., HUNG/“hung” – Namel [pseudoword derived from Name, Engl. name]).
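The counterbalancing of word targets can be sketched as follows. This Python sketch assumes, hypothetically, that target nouns are grouped into fixed pairs whose members serve as each other's unrelated targets, as in the KU – Kuchen / KU – Wecker and WE – Kuchen examples; the text does not state how unrelated targets were assigned. The helper names and the noun “Tasse” (cup) with its fragment “TA” are illustrative and not taken from the stimulus list.

```python
from typing import NamedTuple


class Trial(NamedTuple):
    prime: str      # sign/spoken word onset, e.g. "KU"
    target: str     # written word shown after the prime
    condition: str  # "Overlapping" or "Unrelated"


def build_list(pairs, onsets, version):
    """Build one counterbalanced trial list (word targets only).

    pairs   -- hypothetical pairing of target nouns, e.g. ("Kuchen", "Wecker")
    onsets  -- maps each noun to its fragment, e.g. {"Kuchen": "KU"}
    version -- 0 or 1; version 1 swaps the roles within each pair, so that
               across the two lists every noun is a target once per condition
    """
    trials = []
    for first, second in pairs:
        if version == 1:
            first, second = second, first
        prime = onsets[first]
        # The same prime precedes its own completion and an unrelated noun;
        # in the experiment these two trials appeared in different blocks.
        trials.append(Trial(prime, first, "Overlapping"))
        trials.append(Trial(prime, second, "Unrelated"))
    return trials


pairs = [("Kuchen", "Wecker"), ("Birne", "Tasse")]  # "Tasse" is illustrative
onsets = {"Kuchen": "KU", "Wecker": "WE", "Birne": "BI", "Tasse": "TA"}
list_0 = build_list(pairs, onsets, version=0)  # e.g. KU - Kuchen, KU - Wecker
list_1 = build_list(pairs, onsets, version=1)  # e.g. WE - Wecker, WE - Kuchen
```

Each list contains every noun exactly once as a target, half in each condition, and each prime appears twice. Alternating `version` across participants gives the counterbalancing described above; block assignment and pseudoword trials are left out of the sketch.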

All participants were tested individually in a quiet room at their school (native signers) or at the university (controls). After completing the reading and non-verbal cognitive ability tests, the EEG recording cap was fitted and the child was seated in front of a computer.

Presentation® software (Neurobehavioral Systems, Inc., Berkeley, CA, United States)² was used to control stimulus presentation and record behavioral responses. All visual stimuli were presented on a computer screen placed approximately 40 cm in front of the participant. Videos of signed and spoken stimuli were presented at natural speed and at a size of 21.4 cm × 17.1 cm on a black background, showing the face and torso of the signer or speaker. Written stimuli were presented in white capital letters (font: Courier, font size: 41) on a black background. For controls, auditory stimuli were presented through speakers positioned directly to the left and right of the computer screen.

Each trial began with a fixation picture presented for 1,000 ms at the center of the screen; participants were asked to fixate on it whenever it appeared. The prime was then presented, followed by a blank screen for 450 ms before the target appeared. Participants were asked to press the space bar only if they believed the target was a possible written word in German. A response was followed by a feedback stimulus (2,000 ms in duration): a smiley for correct and a picture of a ghost for incorrect responses. The next trial started after a 1,500 ms inter-trial interval (from response onset), during which the screen was blank. If participants did not respond within 5,000 ms, the task continued with the inter-trial interval regardless.
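The fixed timing parameters and the go/no-go response rule can be summarized in a short Python sketch. This is a hypothetical illustration, not the Presentation® script used in the study. It assumes that withholding a response, or pressing only after the 5,000 ms window, counts as correct for pseudowords; feedback and inter-trial timing are not modeled.

```python
FIXATION_MS = 1000         # fixation picture
BLANK_MS = 450             # blank screen between prime and target
RESPONSE_WINDOW_MS = 5000  # after this, the trial continues without a response


def trial_onsets(prime_duration_ms):
    """Onsets (ms from trial start) of the events up to target onset."""
    prime = FIXATION_MS
    blank = prime + prime_duration_ms
    target = blank + BLANK_MS
    return {"fixation": 0, "prime": prime, "blank": blank, "target": target}


def is_correct(target_is_word, rt_ms):
    """Go/no-go scoring: a space-bar press (rt_ms) within the response window
    is correct for words; no press (rt_ms is None) or a late press is
    treated as a withheld response, which is correct for pseudowords."""
    pressed = rt_ms is not None and rt_ms <= RESPONSE_WINDOW_MS
    return pressed == target_is_word


# With the mean fragment durations reported above:
print(trial_onsets(1418)["target"])  # sign primes: 2868
print(trial_onsets(1050)["target"])  # spoken primes: 2500
```

Because primes are presented at natural speed, target onset varies from trial to trial with the fragment duration, which is why ERPs are time-locked to the written target rather than to the trial start.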

Trials were presented in one of two pseudo-random orders, and in blocks of 10 with short breaks in between. A set of 10 practice trials preceded the experimental blocks. Trial order and response hand were counterbalanced across participants in both groups. The total duration of the experiment was about 60 min (including breaks).

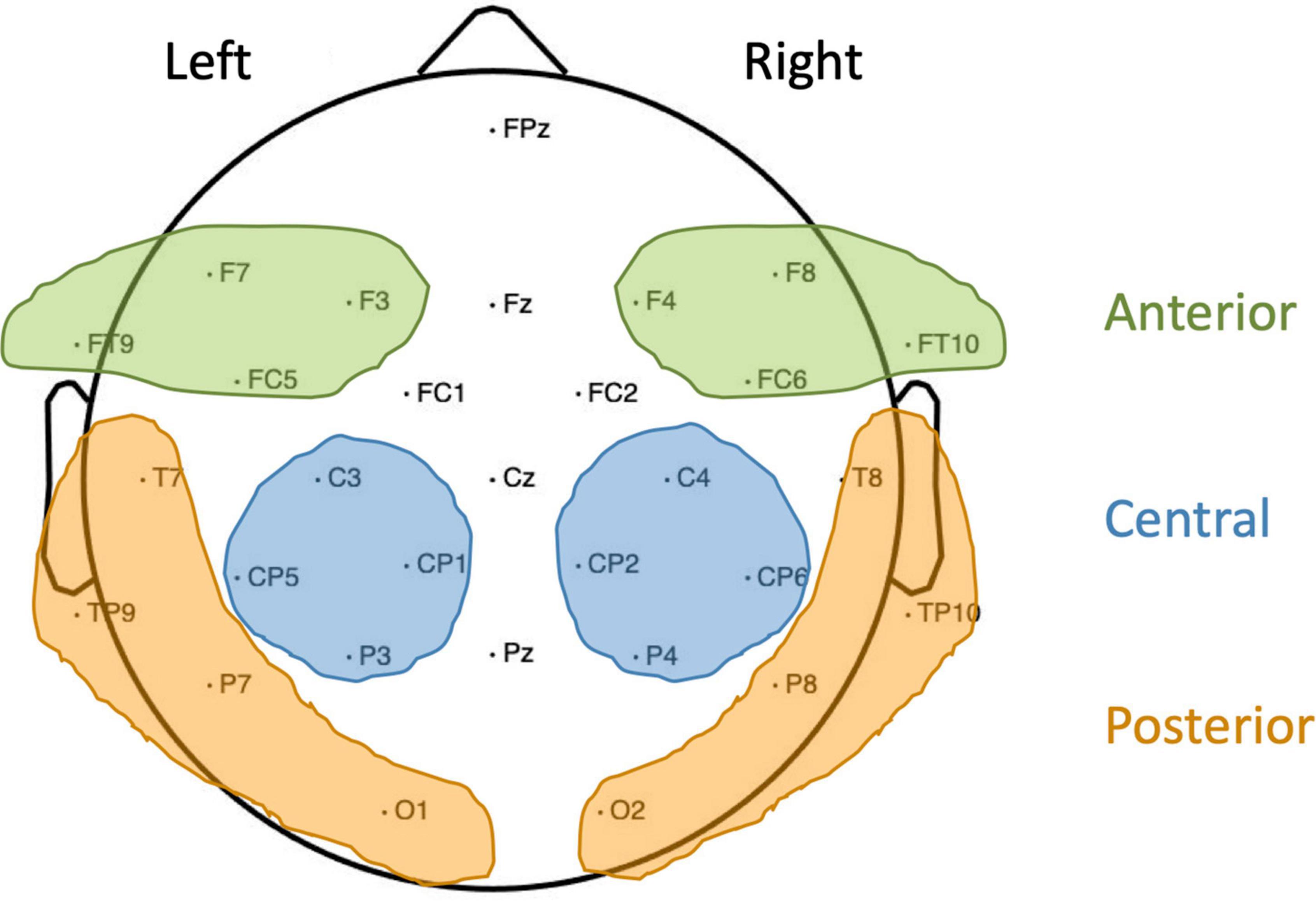

The continuous electroencephalogram (EEG; 500 Hz sampling rate, 22-bit resolution, 0.01–100 Hz bandpass) was recorded from 30 active Ag/AgCl electrodes (Brain Products) mounted in an elastic cap (Easycap) according to the 10–20 system. Additionally, electrodes F9 and F10 (positioned close to the outer canthi of the left and right eye) were used to monitor horizontal eye movements, and two further electrodes below the eyes recorded vertical eye movements, all referenced to the nose. A left frontal scalp electrode (AF3) served as ground. Off-line analysis was performed using BESA-Research software (MEGIS Software GmbH; Version 5.3): the EEG was re-referenced to an average reference, eye artifacts were corrected using the surrogate Multiple Source Eye Correction method (Berg and Scherg, 1994), and noisy trials were manually excluded. If an electrode was noisy throughout a substantial part of the recording, it was interpolated. In controls, no electrodes were interpolated for two children, one electrode for three children, two electrodes for seven children, and three electrodes for another two children. In native signers, no electrodes were interpolated for eight children, one electrode for three children, and two electrodes for another three children. A minimum of 22 artifact-free trials per condition was included for each child. Controls (M = 31.11, SD = 5.05) did not differ from native signers (M = 32.21, SD = 3.30) in the average number of artifact-free trials included per condition, t(26) = 0.336, p = 0.74, d = 0.26. ERPs were computed for target words with correct responses, from the onset of the written word up to 1,000 ms post-stimulus. The ERPs were baseline-corrected to a 200 ms pre-stimulus period.
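The re-referencing, baseline-correction, and averaging steps can be sketched with NumPy. This is a simplified illustration, not BESA's implementation: eye correction, channel interpolation, and manual trial rejection are omitted, and the epoch array layout (trials × channels × samples, from −200 to 1,000 ms at 500 Hz) and all function names are assumptions.

```python
import numpy as np

SFREQ_HZ = 500
TMIN_MS, TMAX_MS = -200, 1000  # epoch: 200 ms baseline to 1,000 ms post-target


def epoch_times_ms():
    """Sample times (ms) of an epoch: -200, -198, ..., 998."""
    n_samples = int((TMAX_MS - TMIN_MS) * SFREQ_HZ / 1000)
    return TMIN_MS + np.arange(n_samples) * 1000 / SFREQ_HZ


def average_reference(epochs):
    """Subtract the mean over channels at every sample.
    epochs: array of shape (n_trials, n_channels, n_samples)."""
    return epochs - epochs.mean(axis=1, keepdims=True)


def baseline_correct(epochs, times_ms):
    """Subtract each trial's and channel's mean over the pre-stimulus period."""
    pre = times_ms < 0
    return epochs - epochs[..., pre].mean(axis=-1, keepdims=True)


def compute_erp(epochs, correct):
    """Average the re-referenced, baseline-corrected epochs of correct trials.
    Returns an array of shape (n_channels, n_samples)."""
    cleaned = baseline_correct(average_reference(epochs), epoch_times_ms())
    return cleaned[np.asarray(correct, dtype=bool)].mean(axis=0)


# Synthetic demo (not real EEG): 40 trials, 30 channels
rng = np.random.default_rng(0)
demo_erp = compute_erp(rng.normal(size=(40, 30, 600)), np.ones(40, dtype=bool))
print(demo_erp.shape)  # (30, 600)
```

Both re-referencing and baseline correction are linear operations, so in this sketch their order does not change the result; applying the average reference to continuous data, as BESA does, is equivalent for these purposes.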
The dependent variable for the ERPs was the mean amplitude for each participant in the Overlapping and the Unrelated condition across regions of interest and time windows informed by previous work (Friedrich et al., 2004a,b, 2013; Friedrich, 2005; Schild et al., 2011). For the P350, regions of interest were: left anterior (F7, F3, FT9, and FC5), right anterior (F4, F8, FC6, and F10), left posterior (T7, TP9, P7, and O1) and right posterior (T8, TP10, P8, and O2). For the N400, regions of interest were left central (C3, CP5, CP1, and P3) and right central (C4, CP2, CP6, and P4). Regions of interest are illustrated in Figure 2. Time windows for both the P350 and the N400 were 200–400 and 400–600 ms post-stimulus onset.
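The dependent measure, mean amplitude per region of interest and time window, can be sketched as follows. This sketch assumes a per-participant, per-condition ERP array (channels × samples) and half-open time windows; whether the window end points are inclusive is not stated. The electrode lists are copied from the text, while the dictionary keys and the function name are hypothetical.

```python
import numpy as np

# Regions of interest (electrodes copied from the text above)
ROIS = {
    "left_anterior": ["F7", "F3", "FT9", "FC5"],    # P350
    "right_anterior": ["F4", "F8", "FC6", "F10"],   # P350
    "left_posterior": ["T7", "TP9", "P7", "O1"],    # P350
    "right_posterior": ["T8", "TP10", "P8", "O2"],  # P350
    "left_central": ["C3", "CP5", "CP1", "P3"],     # N400
    "right_central": ["C4", "CP2", "CP6", "P4"],    # N400
}
WINDOWS_MS = {"200-400": (200, 400), "400-600": (400, 600)}


def mean_amplitude(erp, ch_names, times_ms, roi, window):
    """Mean voltage of one participant's condition ERP (channels x samples),
    averaged over the ROI's electrodes and the window [start, end) in ms."""
    rows = [ch_names.index(ch) for ch in ROIS[roi]]
    start, end = WINDOWS_MS[window]
    cols = np.flatnonzero((times_ms >= start) & (times_ms < end))
    return float(erp[np.ix_(rows, cols)].mean())
```

Calling this function for each participant, condition, region, and window yields one value per cell, which is the input format the repeated-measures ANOVAs described in the results require.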

Figure 2. Overview of electrodes used and regions of interest formed. For the P350, left and right anterior (shaded green) and posterior (shaded yellow) regions were used. For the N400, left and right central regions (shaded blue) were used.

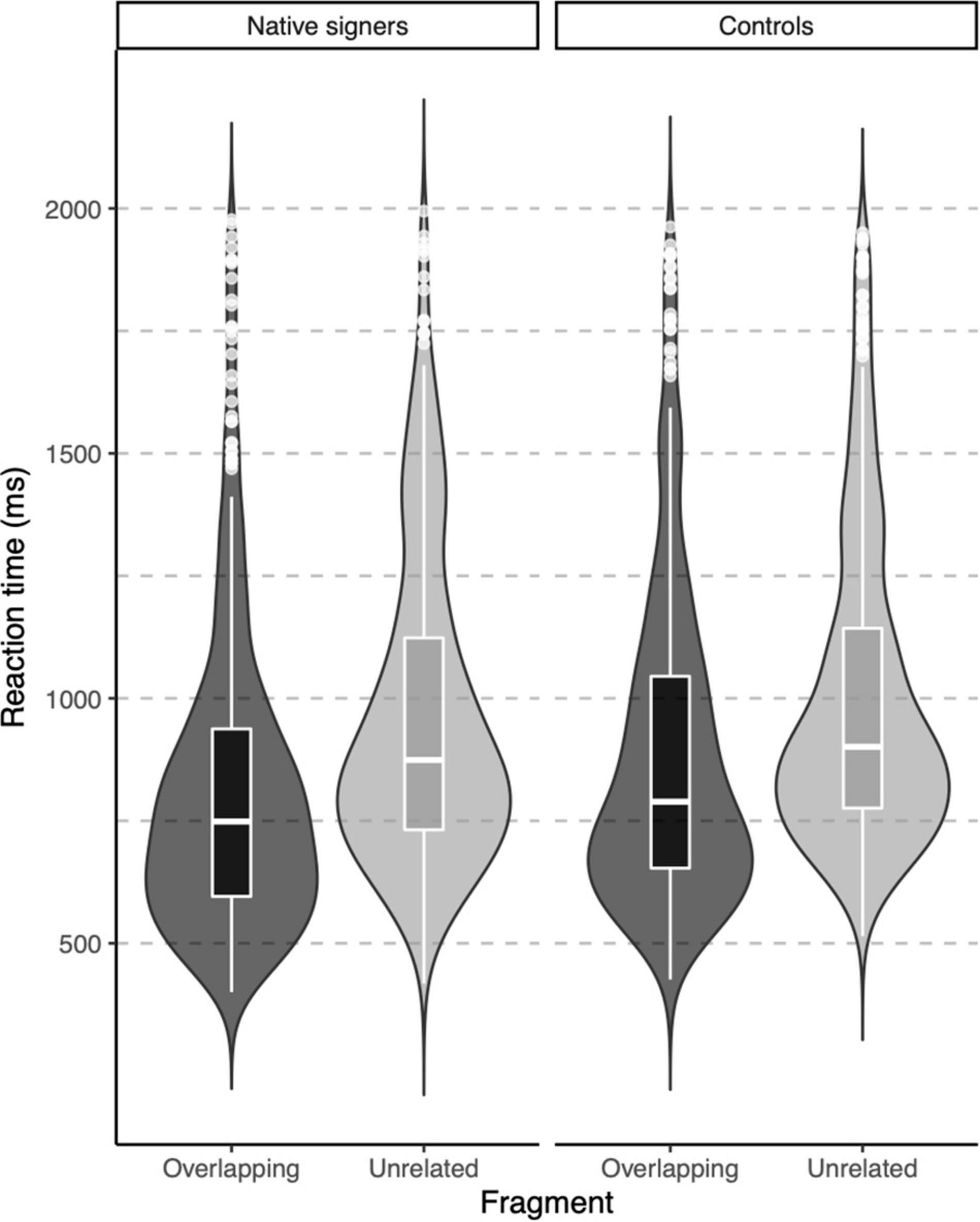

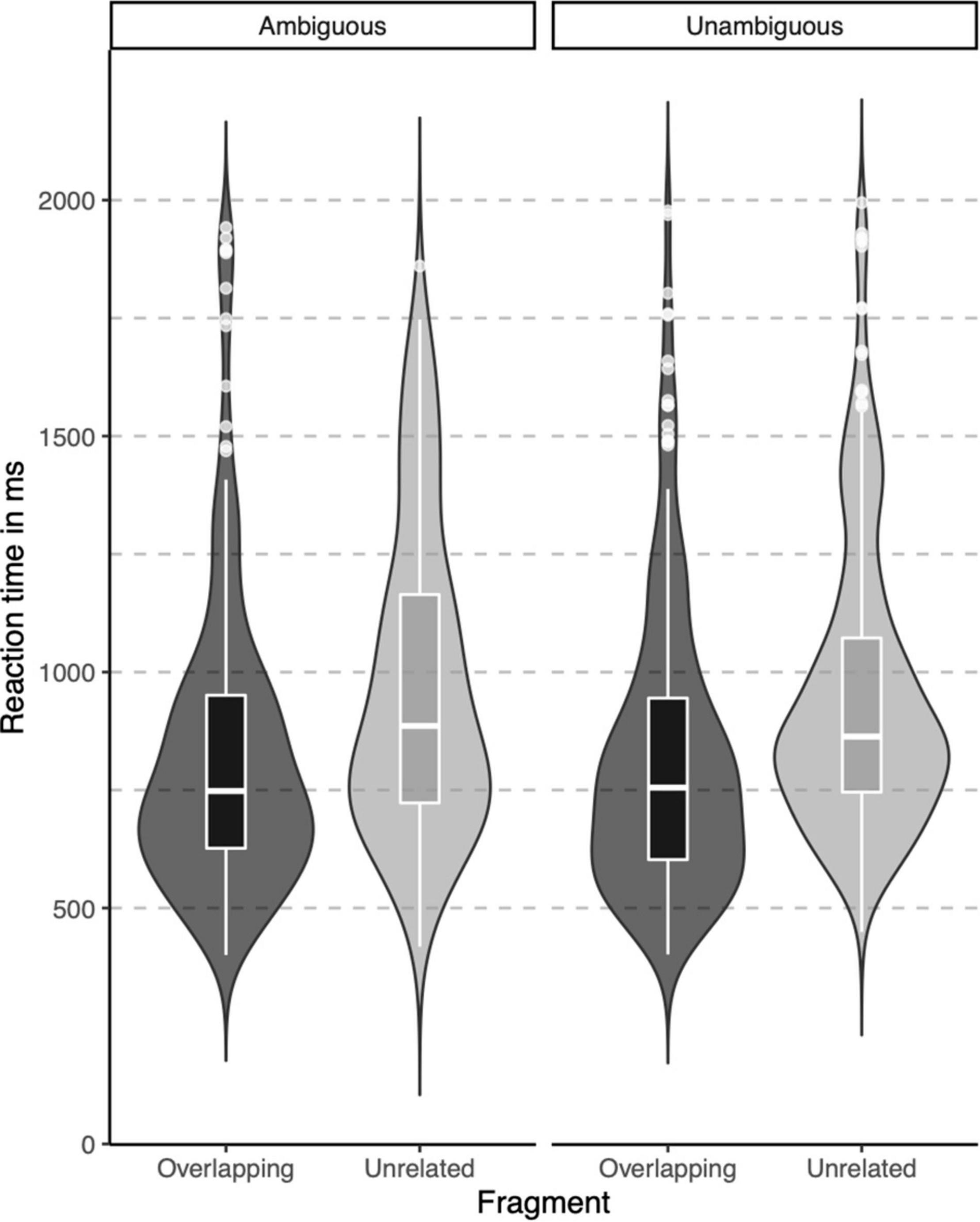

In the lexical decision task, both groups were highly accurate in identifying words (percentage of button presses in response to word targets), but native signers (Mdn = 97.5%) were slightly less accurate than controls (Mdn = 100%), U = 46, p = 0.012. Native signers also identified pseudowords as words more often (percentage of button presses in response to pseudowords; native signers: Mdn = 35%, controls: Mdn = 17.5%, U = 116, p = 0.042). Reaction times were analyzed only for correct responses to word targets. In Figure 3, violin-boxplots depict reaction times across groups and word onsets. As the reaction times were considerably positively skewed, values were log-transformed (natural logarithm) before we conducted an ANOVA with Group (Native signers vs. Controls) as a between-subject factor and Word Onset (Overlapping vs. Unrelated) as a within-subject factor. Neither the main effect of Group [F(1,26) = 1.42, p = 0.244] nor the Group × Word Onset interaction [F(1,26) = 1.49, p = 0.233] was significant. Crucially, the main effect of Word Onset was significant [F(1,26) = 107.27, p < 0.001]. Both native signers (Overlapping: M = 817 ms, SD = 311 ms; Unrelated: M = 962 ms, SD = 314 ms) and controls (Overlapping: M = 888 ms, SD = 325 ms; Unrelated: M = 1002 ms, SD = 314 ms) responded faster when prime and target overlapped than when they were unrelated. In native signers, an additional ANOVA with Word Onset (Overlapping vs. Unrelated) and Predictability (Ambiguous vs. Unambiguous) as within-subject factors yielded a significant main effect of Word Onset [F(1,13) = 43.53, p < 0.001]. Neither the main effect of Predictability [F(1,13) = 0.16, p = 0.700] nor the Word Onset × Predictability interaction [F(1,13) = 0.00, p = 0.950] was significant.
Native signers responded faster when prime and target overlapped than when they were unrelated, both for ambiguous word onsets (Overlapping: M = 829 ms, SD = 314 ms; Unrelated: M = 971 ms, SD = 326 ms) and for unambiguous word onsets (Overlapping: M = 823 ms, SD = 308 ms; Unrelated: M = 956 ms, SD = 307 ms; see Figure 4).
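For a 2 (Group, between) × 2 (Word Onset, within) design, each ANOVA effect reduces to a t-test on derived per-subject scores. The Group effect is a t-test on subject means. The Word Onset main effect and the interaction are tests on Unrelated − Overlapping difference scores against their pooled within-group variance. In each case F = t² with df = (1, 26). The Python sketch below assumes per-subject condition means of natural-log RTs as input and equal group sizes (14 per group, as here). It is an illustration, not the software used in the study, and the demo uses synthetic data, not the study's data.

```python
import numpy as np
from scipy import stats


def mixed_2x2_anova(group_a, group_b):
    """Group (between) x Word Onset (within, 2 levels) ANOVA.

    group_a, group_b: arrays of shape (n_subjects, 2) with each subject's mean
    log RT for [Overlapping, Unrelated]; equal group sizes are assumed.
    Returns {effect: (F, df_effect, df_error, p)}.
    """
    a = np.asarray(group_a, dtype=float)
    b = np.asarray(group_b, dtype=float)
    if a.shape != b.shape:
        raise ValueError("this sketch assumes equal group sizes")
    n = len(a)
    df_err = 2 * n - 2

    def pooled_var(x, y):
        # With equal n, the pooled within-group variance is the mean variance
        return (x.var(ddof=1) + y.var(ddof=1)) / 2

    da, db = a[:, 1] - a[:, 0], b[:, 1] - b[:, 0]  # Unrelated - Overlapping
    ma, mb = a.mean(axis=1), b.mean(axis=1)        # per-subject means
    s2_d, s2_m = pooled_var(da, db), pooled_var(ma, mb)

    f_values = {
        # Between-subject effect: squared t-test on subject means
        "Group": (ma.mean() - mb.mean()) ** 2 / (s2_m * 2 / n),
        # Within-subject main effect: grand mean difference vs. pooled error
        "Word Onset": np.concatenate([da, db]).mean() ** 2 / (s2_d / (2 * n)),
        # Interaction: do the difference scores differ between groups?
        "Group x Word Onset": (da.mean() - db.mean()) ** 2 / (s2_d * 2 / n),
    }
    return {name: (f, 1, df_err, stats.f.sf(f, 1, df_err))
            for name, f in f_values.items()}


# Synthetic demo data (NOT the study's data): 14 subjects per group
rng = np.random.default_rng(1)
signers = np.log(rng.lognormal(np.log([820.0, 960.0]), 0.2, size=(14, 2)))
controls = np.log(rng.lognormal(np.log([890.0, 1000.0]), 0.2, size=(14, 2)))
for effect, (f, df1, df2, p) in mixed_2x2_anova(signers, controls).items():
    print(f"{effect}: F({df1},{df2}) = {f:.2f}, p = {p:.3f}")
```

The log transform makes the positively skewed RT distribution more symmetric before averaging. The difference-score formulation shows why every effect in this design has one numerator degree of freedom.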

Figure 3. Violin-boxplots depicting reaction times (in ms) for correct responses for Native signers (left) and Controls (right) for Overlapping (dark gray) and Unrelated (light gray) fragments.

Figure 4. Violin-boxplots depicting reaction times (in ms) for correct responses in Native signers only, for Ambiguous (left) and Unambiguous (right) fragments.

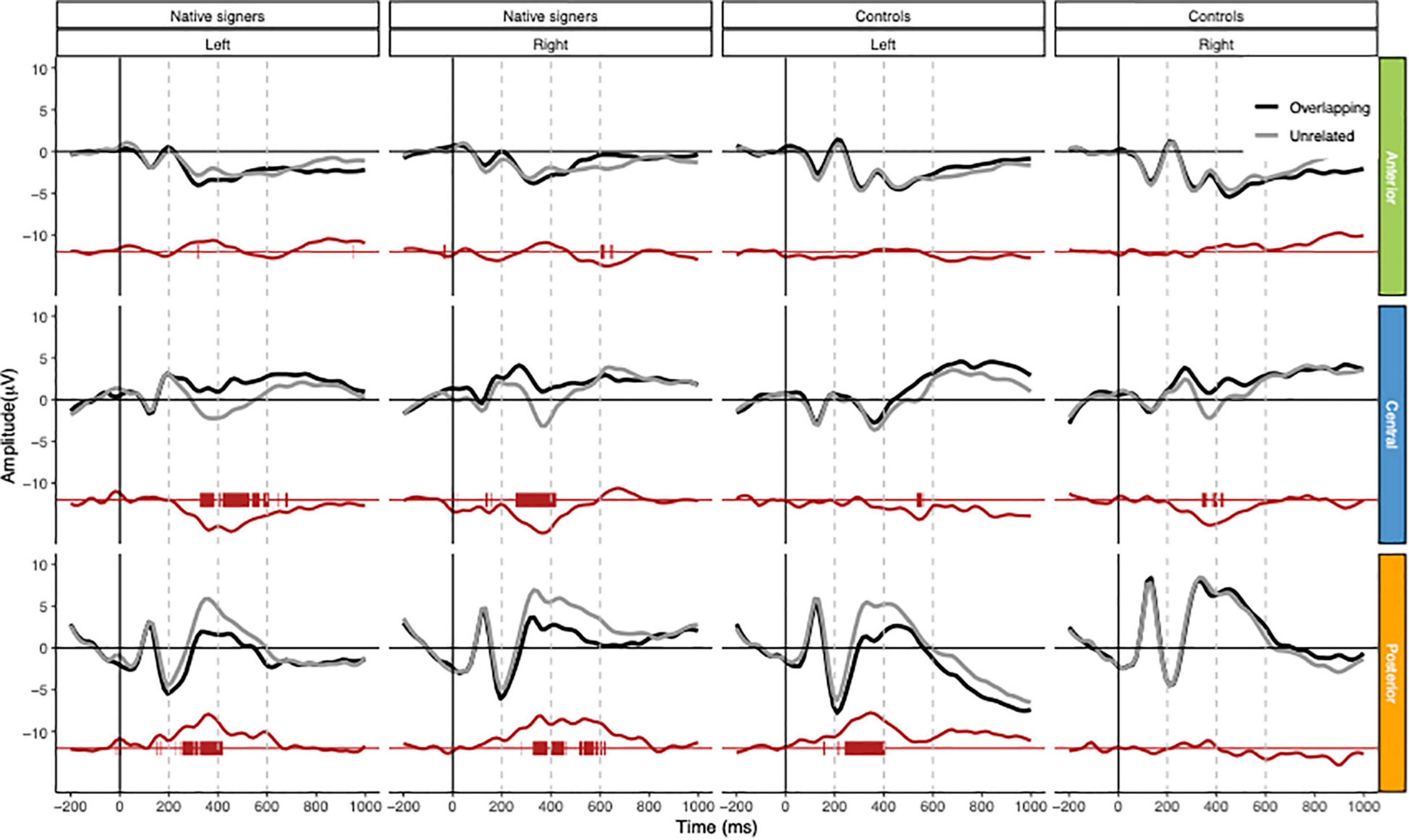

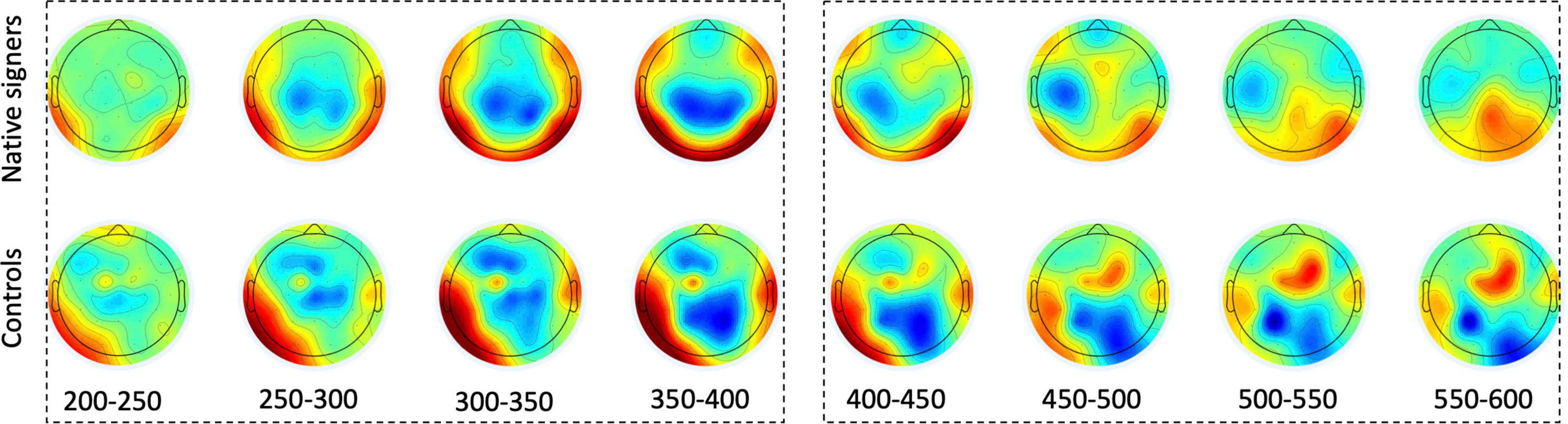

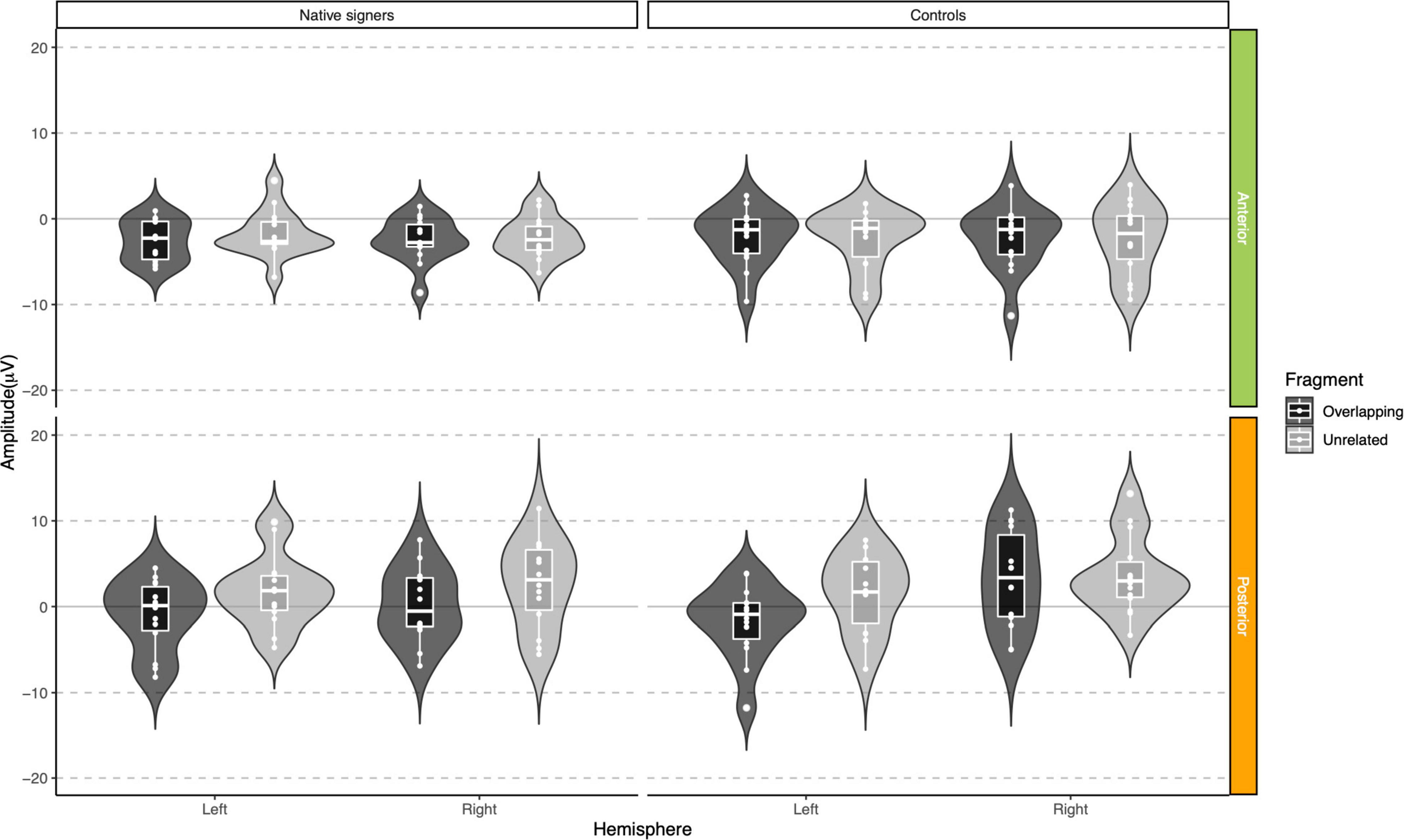

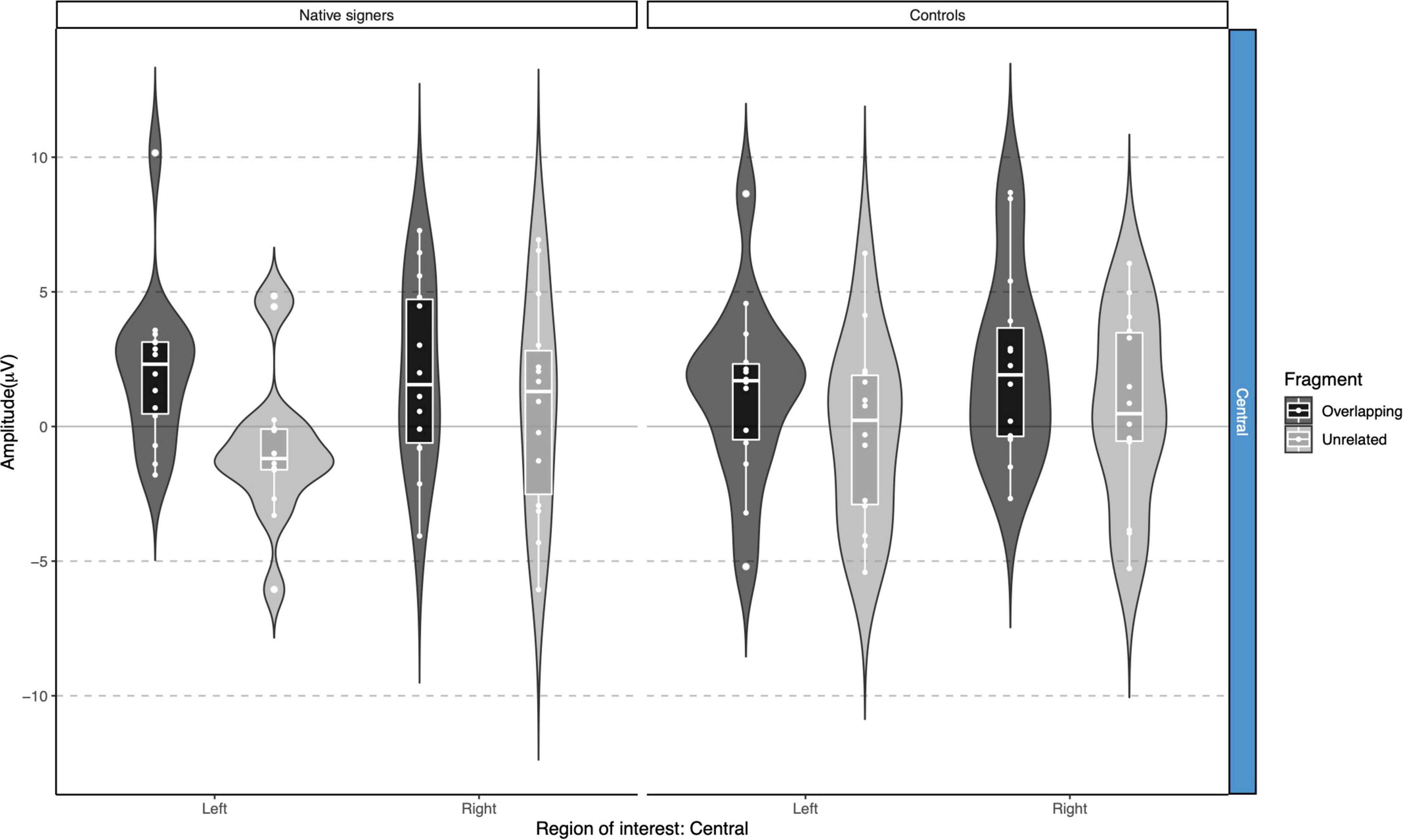

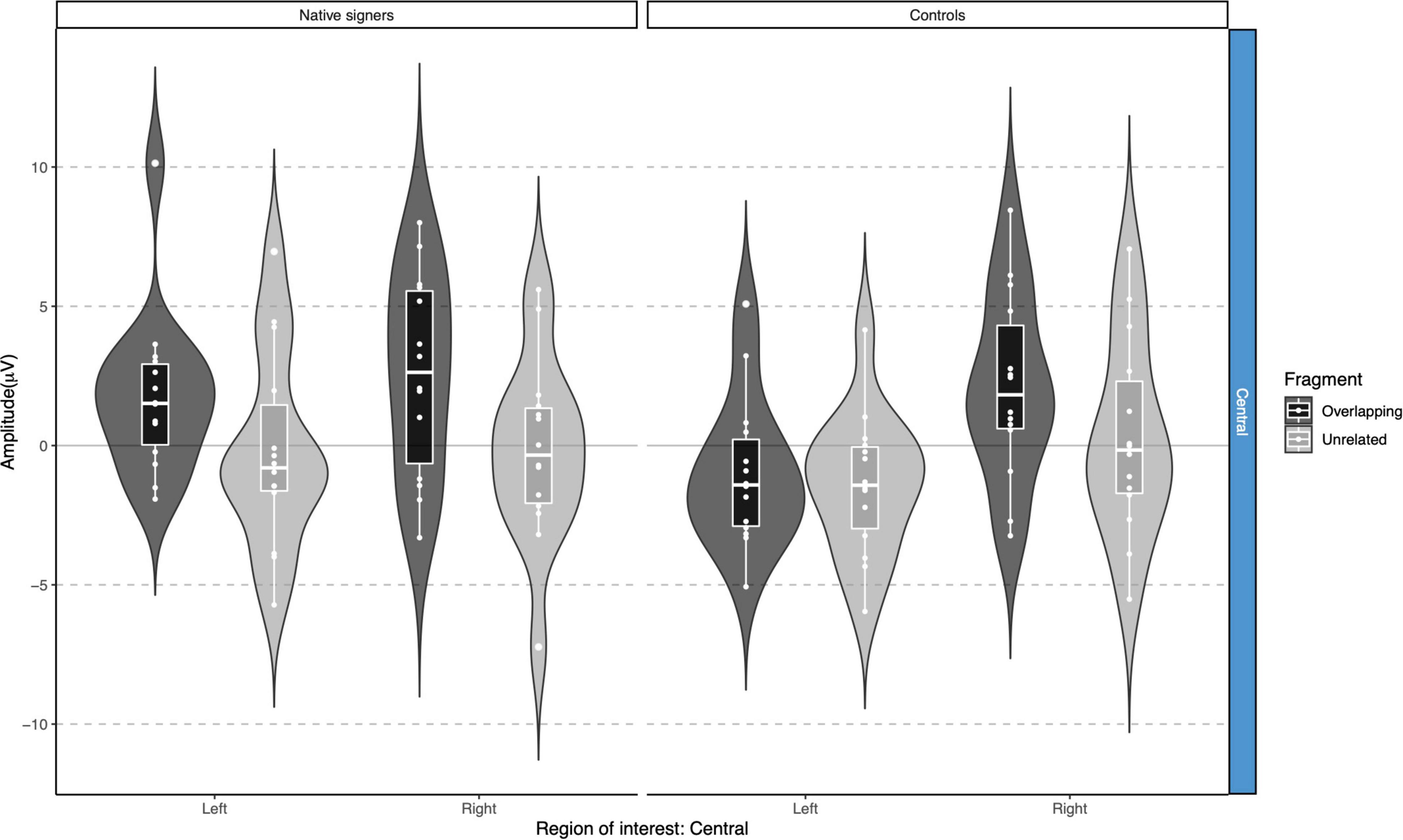

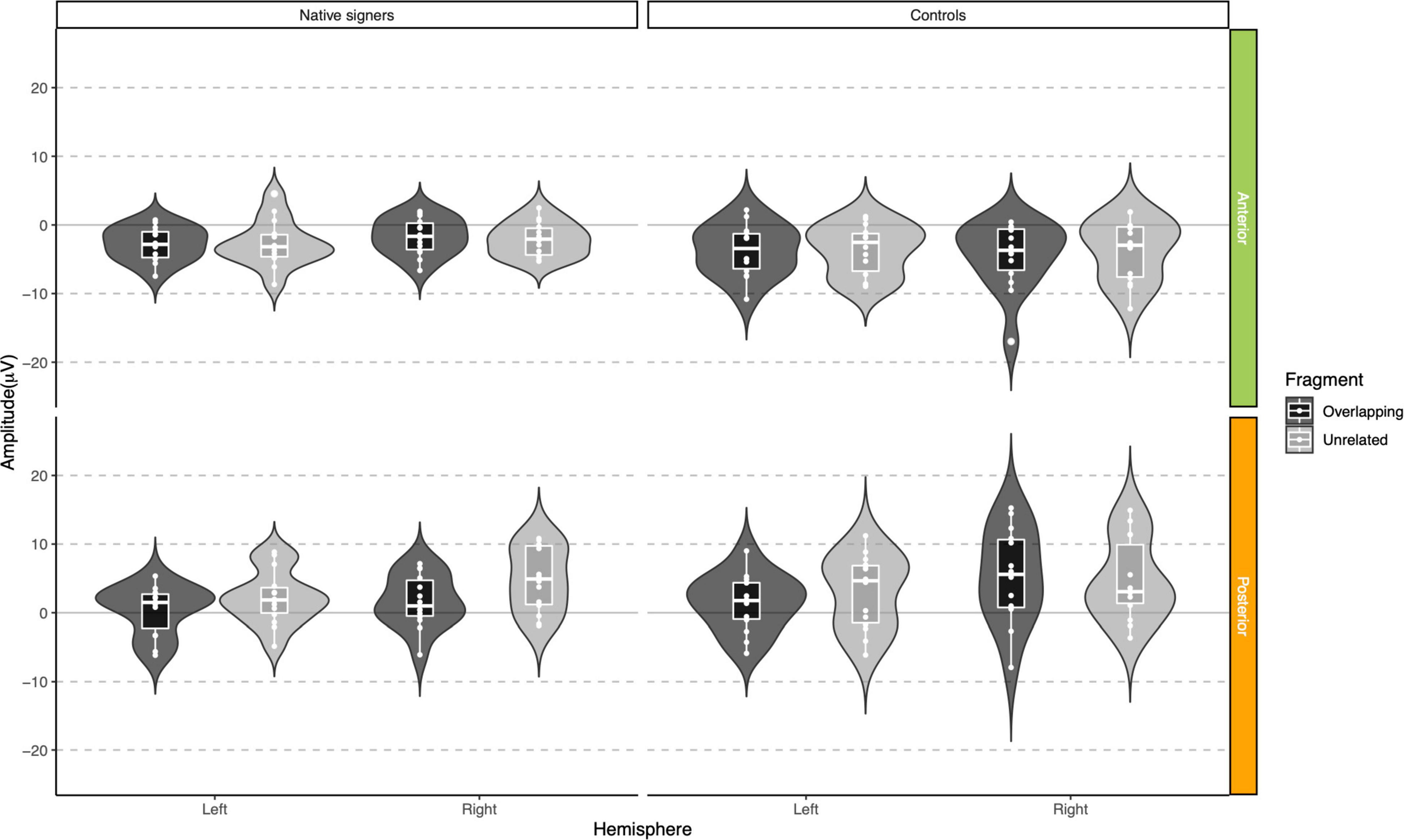

Grand average waveforms for both word onsets (Overlapping vs. Unrelated), as well as difference waves (Unrelated – Overlapping), for each region of interest (Anterior, Central, and Posterior) and hemisphere (Left vs. Right) in both groups (Controls vs. Native signers) are presented in Figure 5. Figure 6 shows topographical voltage maps of the difference waves across the 200–400 ms and 400–600 ms time windows for both groups. A posterior positivity, which we relate to the P350 effect, was visible in both groups. This effect was left-lateralized in controls but bilaterally distributed in native signers. At central regions, a bilateral negativity, which we relate to the N400, was evident in both groups. Central tendencies and distributions of mean amplitudes across conditions and groups for the left and right hemispheric regions of interest are presented in Figures 7 and 8 for the P350/positivity and in Figures 9 and 10 for the N400/negativity.
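The difference waves and the 50 ms steps of the topographic maps can be sketched as follows. This sketch assumes that each map shows the mean of the difference wave within a 50 ms bin; the maps could also be instantaneous snapshots. The function names are hypothetical.

```python
import numpy as np


def difference_wave(erp_unrelated, erp_overlapping):
    """Unrelated - Overlapping, per channel and sample."""
    return np.asarray(erp_unrelated) - np.asarray(erp_overlapping)


def binned_means(diff, times_ms, start=200, stop=600, step=50):
    """Mean of the difference wave in consecutive bins [t, t + step) from
    start to stop; returns an array of shape (n_channels, n_bins)."""
    lows = np.arange(start, stop, step)
    return np.stack(
        [diff[:, (times_ms >= lo) & (times_ms < lo + step)].mean(axis=1)
         for lo in lows],
        axis=1,
    )
```

Between 200 and 600 ms this produces eight bins per channel. Interpolating these channel values over the scalp gives one map per bin.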

Figure 5. Grand average waveforms for Native signers (left two columns) and Controls (right two columns) for the Overlapping (black line) and Unrelated (gray line) fragments across regions of interest (Anterior, Central, Posterior × Left, Right). The difference wave (Unrelated – Overlapping) is shown in red, together with an indication of where it differs significantly from zero (point-by-point; p < 0.01). Vertical dashed gray lines indicate measurement windows.

Figure 6. Topographical voltage maps of the difference waves (Unrelated – Overlapping) for Native signers (upper panels) and Controls (lower panels) between 200 and 600 ms post-stimulus in 50 ms increments. Dashed boxes indicate time windows used for measurement. Green indicates zero, colors toward dark red indicate a positive difference, colors toward dark blue indicate a negative difference. Nose is at the top.

Figure 7. Violin-boxplots depicting mean amplitude for the P350/positivity in the 200–400 ms time window for Native signers (left) and Controls (right) for the Unrelated (light gray) and Overlapping (dark gray) fragments across regions of interest.

Figure 8. Violin-boxplots depicting mean amplitude for the P350/positivity in the 400–600 ms time window for Native signers (left) and Controls (right) for the Unrelated (light gray) and Overlapping (dark gray) fragments across regions of interest.

Figure 9. Violin-boxplots depicting mean amplitude for the N400/negativity in the 200–400 ms time window for Native signers (left) and Controls (right) for the Unrelated (light gray) and Overlapping (dark gray) fragments across regions of interest.

Figure 10. Violin-boxplots depicting mean amplitude for the N400/negativity in the 400–600 ms time window for Native signers (left) and Controls (right) for the Unrelated (light gray) and Overlapping (dark gray) fragments across regions of interest.

For the P350 effect, separate repeated-measures ANOVAs were conducted on the lateral regions of interest for each time window (200–400 and 400–600 ms), with Condition (Overlapping vs. Unrelated), Region (Anterior vs. Posterior), and Hemisphere (Left vs. Right) as within-subject factors and Group (Controls vs. Native signers) as a between-subject factor. For the N400 effect, a repeated-measures ANOVA with Condition (Overlapping vs. Unrelated) and Hemisphere (Left vs. Right) as within-subject factors and Group (Controls vs. Native signers) as a between-subject factor was conducted on the central regions of interest, separately for each time window (200–400 and 400–600 ms).

In the 200–400 ms time window, the P350 regions of interest revealed a significant four-way Condition × Hemisphere × Region × Group interaction [F(1,26) = 8.41, p = 0.008], which we followed up with separate repeated-measures ANOVAs per region. For the anterior regions of interest, neither the main effects of Condition, Hemisphere, or Group nor any interactions between factors were significant (all p > 0.29). For the posterior regions of interest, significant main effects of Condition [F(1,26) = 38.06, p < 0.001] and Hemisphere [F(1,26) = 6.68, p = 0.016] were modulated by a significant Condition × Hemisphere interaction [F(1,26) = 5.10, p = 0.033]. Post hoc comparisons with Tukey’s correction for multiple comparisons showed that mean amplitude in the 200–400 ms time window was more positive in the Unrelated condition than in the Overlapping condition in the left posterior region [M = −3.01, SE = 0.50, t(51.9) = −6.02, p < 0.001] as well as in the right posterior region [M = −1.45, SE = 0.50, t(51.9) = −2.90, p = 0.027]. The main effect of Group was not significant [F(1,26) = 0.28, p = 0.60], nor were any of the interactions with Group (all p > 0.08; for full results see Supplementary Tables 1A–D). For the N400 regions of interest, only the main effect of Condition was significant [F(1,26) = 21.40, p < 0.001], with more negative amplitudes in the Unrelated than in the Overlapping condition. No other effects or interactions were significant (all p > 0.06; see Supplementary Tables 3, 4 for full results).

In the 400–600 ms time window, the ANOVA on the P350 regions of interest revealed a significant four-way Condition × Hemisphere × Region × Group interaction [F(1,26) = 9.42, p = 0.005], which we followed up with separate repeated-measures ANOVAs per region. For the anterior regions of interest, there were no significant main effects of Condition, Hemisphere, or Group and no significant interactions (all p > 0.37). For the posterior regions of interest, significant main effects of Condition [F(1,26) = 7.17, p = 0.013] and Hemisphere [F(1,26) = 6.13, p = 0.020] were qualified by a significant Condition × Hemisphere × Group interaction [F(1,26) = 5.74, p = 0.024]. The main effect of Group was not significant [F(1,26) = 0.90, p = 0.35], nor were other interactions with Group (all p > 0.07). Post hoc comparisons with Tukey's correction for multiple comparisons showed that mean amplitude in the 400–600 ms time window was more positive in the Unrelated condition than in the Overlapping condition in the right posterior region in Native signers only [M = −3.20, SE = 0.94, t(45.8) = −3.39, p = 0.028; all other p > 0.54; for full results see Supplementary Tables 2A–D]. The ANOVA on the N400 regions of interest revealed only a main effect of Condition [F(1,26) = 20.00, p < 0.001], with more negative amplitudes in the Unrelated condition than in the Overlapping condition. No other effects or interactions were significant (all p > 0.27; see Supplementary Tables 3, 4 for full results).

The main aim of the present study was to investigate whether and when during online processing beginning readers link signed language (deaf participants) or spoken language (hearing participants) to written word recognition. We tested two groups of children, congenitally deaf (native signing) and hearing beginning readers, who were matched on reading skill. Our behavioral results showed that both groups of children matched word onsets (signed or spoken) to written target words: signed and spoken onsets facilitated lexical decisions to the corresponding written words. The ERPs were informative about the processes involved when deaf and hearing children link their native language to reading. In both groups, priming effects emerged as early as 200 ms after the onset of the written target word. That is, deaf and hearing beginning readers appear to use incremental processing in their native language for written word processing as rapidly as hearing adults do (see Friedrich et al., 2004a,b, 2008, 2013; Friedrich, 2005). Moreover, we found similar ERP deflections for onset priming in signing and hearing children, and these deflections resemble those found in hearing adults. Together, our results suggest that the links between sign language proficiency and reading in deaf readers attested in previous studies (Padden and Ramsey, 1998; Chamberlain and Mayberry, 2008) might, at least in part, be mediated by implicit associations between representations of signs and written words that are accessed automatically even by beginning readers.

Facilitated lexical decision responses to targets preceded by a related word onset demonstrate that children link spoken language processing (hearing children) as well as sign language processing (deaf children) to reading. For hearing children, close links between spoken and written language are well established (for review see Goswami and Bryant, 2016). At the behavioral level, spoken-written priming of phonologically related words has previously been observed in 8–10-year-olds (Clahsen and Fleischhauer, 2014; Quémart et al., 2018). For signing children, the present behavioral results are in line with findings showing links between sign language processing and reading in signing deaf adults (see Ormel and Giezen, 2014; Giezen and Emmorey, 2016 for reviews) and signing deaf children (Ormel et al., 2012; Villwock et al., 2021). However, response latencies reflect only the outcome of complex recognition and decision processes and do not allow us to disentangle whether facilitation reflects early, rather automatic aspects of processing or later, rather decision-related ones (for further discussion see Friedrich et al., 2013; Schild and Friedrich, 2018). Our neurocognitive data therefore strengthen these claims and extend them by indicating when during processing the facilitation arises.

Across both groups of children, ERP effects of prime-target overlap first emerged in the time window from 200 to 400 ms after target word onset. Compared to overlapping pairs, unrelated pairs elicited more positive amplitudes at lateral electrode leads (the P350 effect) and more negative amplitudes at central electrodes (the N400 effect). This is in line with P350 and N400 effects previously reported for spoken-written word onset priming in hearing adults (Friedrich et al., 2004a,b, 2008, 2013; Friedrich, 2005). In contrast to the ERP effects found for spoken-written word onset priming in hearing adults, P350 difference topographies in hearing and signing children were more pronounced over posterior than over anterior electrode leads. Thus, the topography of P350 effects for written target words appears to follow a posterior-to-anterior gradient from middle childhood to adulthood. This is somewhat remarkable because spoken word onset priming elicited comparable P350 difference topographies, with a (left-)anterior distribution, in hearing adults (e.g., Friedrich et al., 2009; Schild et al., 2014; Schild and Friedrich, 2018) and in hearing children (preschoolers and first graders; Schild et al., 2011, 2014b). That is, there was no posterior-to-anterior gradient of P350 effects for spoken targets during development. We might therefore conclude that the neural processing of written words, as tapped by spoken-written word onset priming, undergoes more restructuring from middle childhood to adulthood than the neural processing of spoken words does. Note, however, that the present study presented video clips of the speakers, whereas all previous studies with children and adults used unimodal spoken materials.

The earlier time window of ERP differences between related and unrelated pairs, in particular, has been associated with lexical access in written word recognition in hearing adults (e.g., Grainger et al., 2006; Holcomb and Grainger, 2006, 2007) and in signing adults (for signing readers see Gutierrez-Sigut et al., 2017). We therefore conclude that hearing children link incremental processing in the spoken domain to written word processing as early as hearing adults do. Similarly, signing children link incremental processing of signs to written word processing as early as adult signers do. More specifically, our results show that beginning readers are sensitive to the outcome of some form of matching between representations in different modalities (spoken/signed and written), and that target word processing is affected by mismatches. This is the first study to provide neurocognitive data with high temporal resolution demonstrating that deaf signing children rapidly exploit sign word onsets to facilitate written word identification. Our results suggest that native signing children activate written word representations on the basis of sign word onsets, just as hearing children activate written word representations on the basis of spoken word onsets.

How could incrementally processed signs modulate the processing of written words? One possibility is that mouthings, which are relatively common in DGS, provide some direct form cues linking signs and written word representations. Mouthings relate to the phonology of spoken language, as they are speech-derived mouth actions accompanying manual signs (Boyes-Braem and Sutton-Spence, 2001; Brentari, 2011). There might be some grapheme-mouthing correspondence between DGS and written German that deaf native signers can use in a similar way to the grapheme-phoneme correspondence used by hearing readers. Studies focusing on co-active mouth patterns in deaf readers report reliable mappings between orthography and mouthing (Vinson et al., 2010; Giustolisi et al., 2017). This is consistent with an fMRI study demonstrating that mouthings accompanying signs generated activations similar to speechreading, whereas mouth patterns unrelated to spoken language (so-called “mouth gestures”) generated activations similar to manual signs without mouth movements (Capek et al., 2008). Indeed, the sign word onsets we presented contained naturally produced mouth patterns (see video examples in the Supplementary Material). Our results might therefore further inform the ongoing debate on whether mouthings and manual components share lexical representations or whether mouthings occur incidentally through simultaneous code mixing and blending (see Sutton-Spence, 1999; Boyes-Braem and Sutton-Spence, 2001; Sutton-Spence and Day, 2001; Emmorey et al., 2005; Johnston and Schembri, 2007; Nadolske and Rosenstock, 2007; Donati and Branchini, 2013).

The early onset of ERP effects in both groups of beginning readers, in particular, might confirm the assumption that lexical access is selective neither to the modality (sign, spoken, or written) nor to the language (sign language or written language) of the input that the system receives (Morford et al., 2011, 2019). Non-selective lexical access is well established in research with hearing bilinguals (Spivey and Marian, 1999; Lagrou et al., 2011; for review see Kroll et al., 2015). In previous spoken-written word onset priming studies with hearing adults, we already related the P350 effect to modality-independent lexical access (Friedrich et al., 2004a, 2013; Friedrich, 2005). The present results suggest that the ERP effects obtained in word onset priming might be language non-selective as well. For native signing beginning readers, we might conclude that they can facilitate lexical access in written word recognition via an implicit, language non-selective linguistic pathway (for a similar conclusion drawn from priming data see Villwock et al., 2021). Hence, reading proficiency might well be modulated by sign language proficiency (McQuarrie and Abbott, 2013; Corina et al., 2014; Holmer et al., 2016). Moreover, deaf readers might even benefit uniquely from language non-selective lexical access during reading, since there is typically less competition between the phonological and orthographic patterns of signs and written words than between co-activated spoken words and the respective written words in hearing individuals (for further discussion see Morford et al., 2019).

P350 effects elicited in signing and hearing beginning readers were similar in timing but differed in the lateralization of the posteriorly distributed ERP differences. As in previous spoken-written priming studies with adults (Friedrich et al., 2004a,b, 2008, 2009), hearing children in the present study showed a left-lateralized P350 effect. In contrast, deaf native signing children showed a bilateral distribution of the P350 effect. Given the inverse problem in ERP research, topographic ERP effects have to be interpreted with caution. However, the different topographies of P350 effects in hearing and deaf beginning readers obtained here are consistent with topographic differences in ERP effects previously shown for deaf and hearing adults (Neville et al., 1982; Emmorey et al., 2017; Sehyr et al., 2020). For example, in word reading, individual reading ability was associated with a larger N170 over right-hemisphere occipital sites in deaf readers, but with a smaller N170 over the right hemisphere in hearing readers (Emmorey et al., 2017; Sehyr et al., 2020). These ERP findings converge with fMRI evidence that deaf signers recruit more bilaterally distributed networks for reading than hearing readers do (Emmorey et al., 2013). In light of these ERP and fMRI studies with deaf adult readers, the bilateral P350 response in deaf native signing children (compared to the left-lateralized response in reading-matched hearing children) fits the assumption that deaf signers recruit the right hemisphere for processing visual word forms to a greater extent than hearing readers do (see also Emmorey and Lee, 2021).

Following the P350 and N400 effects, there was evidence for later ERP differences between overlapping and unrelated prime-target pairs (see Figure 5). Previously, we interpreted extended positive-going ERP effects for related spoken word targets as evidence for long-lasting facilitation of the respective candidate words (see Friedrich et al., 2013). However, the present design does not allow us to relate these extended ERP effects to the processing of more or less appropriate related candidate words, as we did in that earlier study with matching and partially mismatching spoken target words. In addition to extended facilitation, late ERP effects might also reflect strategic effects associated with the lexical decision responses, which occurred between approximately 820 and 1,000 ms after target word onset (see also Friedrich et al., 2013). Future research with systematically varied prime-target overlap should further investigate the functional role of these late ERP effects, which appear to be more pronounced in beginning signing readers than in beginning hearing readers (see Figure 5).

With regard to the different sub-processes that might be reflected in the P350 effect, the N400 effect, and the reaction times (for further discussion see Friedrich et al., 2013; Schild and Friedrich, 2018), word onset priming might provide a promising tool to further investigate the aspects of processing that might have contributed to the diverging behavioral and ERP effects obtained in previous sign priming studies. For example, signed prime-target pairs overlapping in sign units have been found to cause behavioral facilitation (Dye and Shih, 2006), no effect (Mayberry and Witcher, 2005), or even inhibition (Corina and Hildebrandt, 2002; Carreiras et al., 2008), each relative to unrelated prime-target pairs. Similarly, some ERP studies have revealed a reduced N400 when signed primes and targets overlapped (for ASL: Meade et al., 2018; for DGS: Hosemann et al., 2020), whereas others obtained an enhanced N400 for overlap (Baus and Carreiras, 2012; Gutierrez et al., 2012a), in both cases relative to completely unrelated prime-target pairs. This might suggest that different sign parameters affect different aspects of processing in different ways. A recent eye-tracking study on Cantonese reading with Hong Kong Sign Language that systematically varied different sign parameters also pointed in this direction (Thierfelder et al., 2020). One might suggest that the parallel activation of multiple memory representations of words (presumably reflected in the P350 effect) and the selection of the most promising candidate among them (presumably reflected in the N400 effect and in the lexical decision latencies) are differentially sensitive to different units of sign language.

The present study indicates that deaf beginning readers engage rapid sign language co-activation during visual word recognition. Sign onset primes modulated ERP responses to subsequent written target words that overlapped lexically with the primes, with effects starting 200 ms after target word onset. This suggests that deaf beginning readers implicitly link signs to written word recognition. In addition, subsequent selection mechanisms underlying the behavioral responses appeared to be facilitated for matching targets. Our results demonstrate co-activation from DGS as a native language to written German as a second language in deaf signing beginning readers. ERPs recorded in signing individuals might be a promising tool for disentangling which incremental and lexical processes in written word recognition link to which aspects of processing in the signed domain.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The studies involving human participants were reviewed and approved by the Ethikkommission der Deutschen Gesellschaft für Psychologie. Written informed consent to participate in this study was provided by the participants’ legal guardian/next of kin. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

BH-F: conceptualization, funding, supervision, investigation, writing – original draft, and writing – review and editing. MG: formal analysis, investigation, and writing – review and editing. BR: conceptualization, funding, supervision, and writing – review and editing. CF: conceptualization, funding, supervision, investigation, and writing – review and editing. All authors contributed to the article and approved the submitted version.

This research was funded by a grant from the German Federal Ministry of Education and Research (BMBF) awarded to BH-F, BR, and CF.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

We are grateful to Melanie Wegerhoff, Anja Elz, and Berit Müller for their help with data collection, Claudia Zierul for her support in statistics, schools for helping with participant recruitment, and children for participating.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2022.917700/full#supplementary-material

Allopenna, P. D., Magnuson, J. S., and Tanenhaus, M. K. (1998). Tracking the time course of spoken word recognition using eye movements: evidence for continuous mapping models. J. Mem. Lang. 38, 419–439. doi: 10.1006/jmla.1997.2558

Anderson, D., and Reilly, J. (2002). The MacArthur Communicative Development Inventory: normative data for American Sign Language. J. Deaf Stud. Deaf Educ. 7, 83–106. doi: 10.1093/deafed/7.2.83

Baus, C., and Carreiras, M. (2012). “Word processing,” in Language from a Biological Point of View: Current Issues in Biolinguistics, eds C. Boeckx, M. C. Horno-Chéliz, and J. L. Mendívil-Giró (Cambridge: Scholars), 165–183.

Berg, P., and Scherg, M. (1994). A multiple source approach to the correction of eye artifacts. Electroencephalogr. Clin. Neurophysiol. 90, 229–241. doi: 10.1016/0013-4694(94)90094-9

Boyes-Braem, P., and Sutton-Spence, R. (2001). The Hands are the Head of the Mouth: The Mouth as Articulator in Sign Languages. Hamburg: Signum.

Brentari, D. (2011). “Sign language phonology,” in The Handbook of Phonological Theory, 2nd Edn, eds J. Goldsmith, J. Riggle, and A. C. L. Yu (Chichester: Blackwell Publishing), 691–721.

Bulheller, S., and Häcker, H. (2002). Coloured Progressive Matrices (CPM), 3rd Edn. Frankfurt: Harcourt.

Capek, C. M., Waters, D., Woll, B., MacSweeney, M., Brammer, M. J., McGuire, P. K., et al. (2008). Hand and mouth: cortical correlates of lexical processing in British Sign Language and speechreading English. J. Cogn. Neurosci. 20, 1220–1234. doi: 10.1162/jocn.2008.20084

Carreiras, M., Gutiérrez-Sigut, E., Baquero, S., and Corina, D. (2008). Lexical processing in Spanish Sign Language (LSE). J. Mem. Lang. 58, 100–122. doi: 10.1016/j.jml.2007.05.004

Chamberlain, C., and Mayberry, R. I. (2008). ASL syntactic and narrative comprehension in skilled and less skilled adult readers: bilingual-bimodal evidence for the linguistic basis of reading. Appl. Psycholinguist. 28, 537–549. doi: 10.1017/S014271640808017X

Clahsen, H., and Fleischhauer, E. (2014). Morphological priming in child German. J. Child Lang. 41, 1305–1333. doi: 10.1017/S0305000913000494

Clark, L. E., and Grosjean, F. (1982). Sign recognition processes in American Sign Language: the effect of context. Lang. Speech 25, 325–340. doi: 10.1177/002383098202500402

Corina, D. P., Hafer, S., and Welch, K. (2014). Phonological awareness for American Sign Language. J. Deaf Stud. Deaf Educ. 19, 530–545. doi: 10.1093/deafed/enu023

Corina, D. P., and Hildebrandt, U. (2002). “Psycholinguistic investigations of phonological structure in American Sign Language,” in Modality and Structure in Signed and Spoken Languages, eds R. P. Meier, K. Cormier, and D. Quinto-Pozos (Cambridge: Cambridge University Press), 88–111.

Dahan, D., Magnuson, J. S., Tanenhaus, M. K., and Hogan, E. M. (2001). Subcategorical mismatches and the time course of lexical access: evidence for lexical competition. Lang. Cogn. Process. 16, 507–534. doi: 10.1080/01690960143000074

Donati, C., and Branchini, C. (2013). “Challenging linearization: Simultaneous mixing in the production of bimodal,” in Challenges to Linearization, eds I. Roberts and T. Biberauer (Berlin, Boston: De Gruyter Mouton), 93–128.

Dye, M. W. G., and Shih, S. (2006). “Phonological priming in British Sign Language,” in Laboratory Phonology, eds L. Goldstein, D. H. Whalen, and T. Best (Berlin: Mouton de Gruyter), 243–263.

Emmorey, K., Borinstein, H., and Thompson, R. (2005). “Bimodal bilingualism: code-blending between spoken English and American Sign Language,” in ISB4: Proceedings of the 4th International Symposium on Bilingualism, eds J. Cohen, K. T. McAlister, K. Rolstad, and J. MacSwan (Somerville, MA: Cascadilla Press), 663–673.

Emmorey, K., and Corina, D. (1990). Lexical recognition in sign language: effects of phonetic structure and morphology. Percept. Mot. Skills 71, 1227–1252. doi: 10.2466/pms.1990.71.3f.1227

Emmorey, K., and Lee, B. (2021). The neurocognitive basis of skilled reading in prelingually and profoundly deaf adults. Lang. Linguist. Compass 15:e12407. doi: 10.1111/lnc3.12407

Emmorey, K., Midgley, K. J., Kohen, C. B., Sehyr, Z. S., and Holcomb, P. J. (2017). The N170 ERP component differs in laterality, distribution, and association with continuous reading measures for deaf and hearing readers. Neuropsychologia 106, 298–309. doi: 10.1016/j.neuropsychologia.2017.10.001

Emmorey, K., Weisberg, J., McCullough, S., and Petrich, J. A. F. (2013). Mapping the reading circuitry for skilled deaf readers: an fMRI study of semantic and phonological processing. Brain Lang. 126, 169–180. doi: 10.1016/j.bandl.2013.05.001

Ferrand, L., and Grainger, J. (1992). Phonology and orthography in visual word recognition: evidence from masked non-word priming. Q. J. Exp. Psychol. A 45, 353–372. doi: 10.1080/02724989208250619

Friedrich, C. K. (2005). Neurophysiological correlates of mismatch in lexical access. BMC Neurosci. 6:64. doi: 10.1186/1471-2202-6-64

Friedrich, C. K., Felder, V., Lahiri, A., and Eulitz, C. (2013). Activation of Words with Phonological Overlap. Front. Psychol. 4:556. doi: 10.3389/fpsyg.2013.00556

Friedrich, C. K., Kotz, S. A., Friederici, A. D., and Alter, K. (2004a). Pitch modulates lexical identification in spoken word recognition: ERP and behavioral evidence. Cogn. Brain Res. 20, 300–308. doi: 10.1016/j.cogbrainres.2004.03.007

Friedrich, C. K., Kotz, S. A., Friederici, A. D., and Gunter, T. C. (2004b). ERPs reflect lexical identification in word fragment priming. J. Cogn. Neurosci. 16, 541–552.

Friedrich, C. K., Lahiri, A., and Eulitz, C. (2008). Neurophysiological evidence for underspecified lexical representations: asymmetries with word initial variations. J. Exp. Psychol. Hum. Percept. Perform. 34, 1545–1559. doi: 10.1037/a0012481

Friedrich, C. K., Schild, U., and Röder, B. (2009). Electrophysiological indices of word fragment priming allow characterizing neural stages of speech recognition. Biol. Psychol. 80, 105–113. doi: 10.1016/j.biopsycho.2008.04.012

Giezen, M. R., and Emmorey, K. (2016). Language co-activation and lexical selection in bimodal bilinguals: evidence from picture-word interference. Bilingualism: Lang. Cogn. 19, 264–276. doi: 10.1017/s1366728915000097

Giustolisi, B., Mereghetti, E., and Cecchetto, C. (2017). Phonological blending or code mixing? Why mouthing is not a core component of sign language grammar. Nat. Lang. Linguist. Theory 35, 347–365. doi: 10.1007/s11049-016-9353-9

Grainger, J., Kiyonaga, K., and Holcomb, P. J. (2006). The time course of orthographic and phonological code activation. Psychol. Sci. 17, 1021–1026.

Gutierrez, E., Müller, O., Baus, C., and Carreiras, M. (2012a). Electrophysiological evidence for phonological priming in Spanish Sign Language lexical access. Neuropsychologia 50, 1335–1346. doi: 10.1016/j.neuropsychologia.2012.02.018

Gutierrez, E., Williams, D., Grosvald, M., and Corina, D. (2012b). Lexical access in American Sign Language: an ERP investigation of effects of semantics and phonology. Brain Res. 1468, 63–83. doi: 10.1016/j.brainres.2012.04.029

Schild, U., Becker, A. B., and Friedrich, C. K. (2014b). Processing of syllable stress is functionally different from phoneme processing and does not profit from literacy acquisition. Front. Psychol. 5:530. doi: 10.3389/fpsyg.2014.00530

Gutierrez-Sigut, E., Vergara-Martínez, M., and Perea, M. (2017). Early use of phonological codes in deaf readers: an ERP study. Neuropsychologia 106, 261–279. doi: 10.1016/j.neuropsychologia.2017.10.006

Holcomb, P. J., Anderson, J., and Grainger, J. (2005). An electrophysiological study of cross-modal repetition priming. Psychophysiology 42, 493–507. doi: 10.1111/j.1469-8986.2005.00348.x

Holcomb, P. J., and Grainger, J. (2006). On the time course of visual word recognition: an event-related potential investigation using masked repetition priming. J. Cogn. Neurosci. 18, 1631–1643. doi: 10.1162/jocn.2006.18.10.1631

Holcomb, P. J., and Grainger, J. (2007). Exploring the temporal dynamics of visual word recognition in the masked repetition priming paradigm using event-related potentials. Brain Res. 1180, 39–58. doi: 10.1016/j.brainres.2007.06.110

Holmer, E., Heimann, M., and Rudner, M. (2016). Evidence of an association between sign language phonological awareness and word reading in deaf and hard-of-hearing children. Res. Dev. Disabil. 48, 145–159. doi: 10.1016/j.ridd.2015.10.008

Hosemann, J., Mani, N., Herrmann, A., Steinbach, M., and Altvater-Mackensen, N. (2020). Signs activate their written word translation in deaf adults: an ERP study on cross-modal co-activation in German Sign Language. Glossa J. Gen. Linguist. 5:57. doi: 10.5334/gjgl.1014

Johnston, T. A., and Schembri, A. (2007). Australian Sign Language (Auslan): An Introduction to Sign Language Linguistics. Cambridge: Cambridge University Press.

Kroll, J. F., Dussias, P. E., Bice, K., and Perrotti, L. (2015). Bilingualism, mind, and brain. Annu. Rev. Linguist. 1, 377–394. doi: 10.1146/annurev-linguist-030514-124937

Kubus, O., Villwock, A., Morford, J. P., and Rathmann, C. (2015). Word recognition in deaf readers: cross-language activation of German Sign Language and German. Appl. Psycholinguist. 36, 831–854. doi: 10.1017/S0142716413000520

Lagrou, E., Hartsuiker, R. J., and Duyck, W. (2011). Knowledge of a second language influences auditory word recognition in the native language. J. Exp. Psychol. Learn. Mem. Cogn. 37, 952–965. doi: 10.1037/a0023217

Lee, B., Meade, G., Midgley, K. J., Holcomb, P. J., and Emmorey, K. (2019). ERP Evidence for co-activation of English words during recognition of American Sign Language signs. Brain Sci. 9:148. doi: 10.3390/brainsci9060148

Lenhard, W., and Schneider, W. (2006). ELFE 1-6: Ein Leseverständnistest für Erst- bis Sechstklässler. Göttingen: Hogrefe.

Mayberry, R. I., del Giudice, A. A., and Lieberman, A. M. (2011). Reading achievement in relation to phonological coding and awareness in deaf readers: a meta-analysis. J. Deaf Stud. Deaf Educ. 16, 164–188. doi: 10.1093/deafed/enq049

Mayberry, R. I., and Witcher, P. (2005). Age of Acquisition Effects on Lexical Access in ASL: Evidence for the Psychological Reality of Phonological Processing in Sign Language. Paper presented at the 30th Boston University Conference on Language Development, Boston.

McQuarrie, L., and Abbott, M. (2013). Bilingual deaf students’ phonological awareness in ASL and reading skills in English. Sign Lang. Stud. 14, 80–100. doi: 10.1353/sls.2013.0028

Meade, G., Lee, B., Midgley, K. J., Holcomb, P. J., and Emmorey, K. (2018). Phonological and semantic priming in American Sign Language: N300 and N400 effects. Lang. Cogn. Neurosci. 33, 1092–1106. doi: 10.1080/23273798.2018.1446543

Meade, G., Midgley, K. J., Sehyr, Z., Holcomb, P. J., and Emmorey, K. (2017). Implicit co-activation of American Sign Language in deaf readers: an ERP study. Brain Lang. 170, 50–61. doi: 10.1016/j.bandl.2017.03.004

Miller, P. (2007). The role of spoken and sign languages in the retention of written words by prelingually deafened native signers. J. Deaf Stud. Deaf Educ. 12, 184–208. doi: 10.1093/deafed/enl031

Miller, P., and Clark, M. D. (2011). Phonemic awareness is not necessary to become a skilled deaf reader. J. Dev. Phys. Disabil. 23, 459. doi: 10.1007/s10882-011-9246-0

Morford, J. P., Kroll, J. F., Piñar, P., and Wilkinson, E. (2014). Bilingual word recognition in deaf and hearing signers: effects of proficiency and language dominance on cross-language activation. Second Lang. Res. 30, 251–271. doi: 10.1177/0267658313503467

Morford, J. P., Occhino, C., Zirnstein, M., Kroll, J. F., Wilkinson, E., and Piñar, P. (2019). What is the source of bilingual cross-language activation in deaf bilinguals? J. Deaf Stud. Deaf Educ. 24, 356–365. doi: 10.1093/deafed/enz024

Morford, J. P., Wilkinson, E., Villwock, A., Piñar, P., and Kroll, J. F. (2011). When deaf signers read English: do written words activate their sign translations? Cognition 118, 286–292. doi: 10.1016/j.cognition.2010.11.006

Nadolske, M. A., and Rosenstock, R. (2007). “Occurrence of mouthings in American Sign Language: A preliminary study,” in Visible Variation: Comparative Studies on Sign Language Structure, eds P. M. Perniss, R. Pfau, and M. Steinbach (Berlin: De Gruyter Mouton), 35–62.

Neville, H. J., Kutas, M., and Schmidt, A. (1982). Event-related potential studies of cerebral specialization during reading: II. Studies of congenitally deaf adults. Brain Lang. 16, 316–337. doi: 10.1016/0093-934X(82)90089-X

Ormel, E., and Giezen, M. R. (2014). “Bimodal bilingual cross-language interaction: Pieces of the puzzle,” in Bilingualism and Bilingual Deaf Education, eds M. Marschark, G. Tang, and H. Knoors (New York: Oxford University Press), 75–101.

Ormel, E., Hermans, D., Knoors, H., and Verhoeven, L. (2009). The role of sign phonology and iconicity during sign processing: the case of deaf children. J. Deaf Stud. Deaf Educ. 14, 436–448. doi: 10.1093/deafed/enp021

Ormel, E., Hermans, D., Knoors, H., and Verhoeven, L. (2012). Cross-language effects in written word recognition: the case of bilingual deaf children. Bilingualism: Lang. Cogn. 15, 288–303. doi: 10.1017/S1366728911000319

Padden, C., and Ramsey, C. (1998). Reading ability in signing deaf children. Top. Lang. Disord. 18, 30–46. doi: 10.1097/00011363-199898000-00005

Papaspyrou, C., von Meyenn, A., Matthaei, M., and Herrmann, B. (2008). Grammatik der Deutschen Gebärdensprache aus Der Sicht Gehörloser Fachleute. Hamburg: Signum.

Pattamadilok, C., Chanoine, V., Pallier, C., Anton, J. L., Nazarian, B., Belin, P., et al. (2017). Automaticity of phonological and semantic processing during visual word recognition. NeuroImage 149, 244–255. doi: 10.1016/j.neuroimage.2017.02.003

Perfetti, C. A., and Sandak, R. (2000). Reading optimally builds on spoken language: implications for deaf readers. J. Deaf Stud. Deaf Educ. 5, 32–50. doi: 10.1093/deafed/5.1.32

Quandt, L. C., and Kubicek, E. (2018). Sensorimotor characteristics of sign translations modulate EEG when deaf signers read English. Brain Lang. 187, 9–17. doi: 10.1016/j.bandl.2018.10.001

Quémart, P., Gonnerman, L. M., Downing, J., and Deacon, S. H. (2018). The development of morphological representations in young readers: a cross-modal priming study. Dev. Sci. 21:e12607. doi: 10.1111/desc.12607

Raven, J. C. (1936). Mental Tests Used in Genetic Studies: The Performance of Related Individuals on Tests Mainly Educative and Mainly Reproductive. Ph.D. thesis, King’s College London, UK.

Reitsma, P. (1984). Sound priming in beginning readers. Child Dev. 55, 406–423. doi: 10.2307/1129952

Sandler, W. (1989). Phonological Representation of the Sign: Linearity and Nonlinearity in American Sign Language. Dordrecht: De Gruyter Mouton.

Sandler, W., and Lillo-Martin, D. (2006). Sign Language and Linguistic Universals. Cambridge, UK: Cambridge University Press.

Sauval, K., Casalis, S., and Perre, L. (2017). Phonological contribution during visual word recognition in child readers. An intermodal priming study in Grades 3 and 5. J. Res. Read. 40, 158–169. doi: 10.1111/1467-9817.12070

Schild, U., Becker, A. B., and Friedrich, C. K. (2014). Phoneme-free prosodic representations are involved in pre-lexical and lexical neurobiological mechanisms underlying spoken word processing. Brain Lang. 136, 31–43. doi: 10.1016/j.bandl.2014.07.006

Schild, U., and Friedrich, C. K. (2018). What determines the speed of speech recognition? Evidence from congenitally blind adults. Neuropsychologia 112, 116–124. doi: 10.1016/j.neuropsychologia.2018.03.002

Schild, U., Röder, B., and Friedrich, C. K. (2011). Learning to read shapes the activation of neural lexical representations in the speech recognition pathway. Dev. Cogn. Neurosci. 1, 163–174. doi: 10.1016/j.dcn.2010.11.002

Sehyr, Z. S., Midgley, K. J., Holcomb, P. J., Emmorey, K., Plaut, D. C., and Behrmann, M. (2020). Unique N170 signatures to words and faces in deaf ASL signers reflect experience-specific adaptations during early visual processing. Neuropsychologia 141:107414. doi: 10.1016/j.neuropsychologia.2020.107414

Soto-Faraco, S., Sebastián-Gallés, N., and Cutler, A. (2001). Segmental and suprasegmental mismatch in lexical access. J. Mem. Lang. 45, 412–432. doi: 10.1006/jmla.2000.2783

Spinelli, E., Segui, J., and Radeau, M. (2001). Phonological priming in spoken word recognition with bisyllabic targets. Lang. Cogn. Process. 16, 367–392. doi: 10.1080/01690960042000111

Spivey, M. J., and Marian, V. (1999). Cross-talk between native and second languages: partial activation of an irrelevant lexicon. Psychol. Sci. 10, 281–284. doi: 10.1111/1467-9280.00151

Sutton-Spence, R. (1999). The influence of English on British Sign Language. Int. J. Biling. 3, 363–394. doi: 10.1177/13670069990030040401

Sutton-Spence, R., and Day, L. (2001). “Mouthings and mouth gesture in British Sign Language (BSL),” in The Hands are the Head of the Mouth: The Mouth as Articulator in Sign Languages, eds P. Boyes Braem and R. Sutton-Spence (Hamburg: Signum), 69–87.

Thierfelder, P., Wigglesworth, G., and Tang, G. (2020). Sign phonological parameters modulate parafoveal preview effects in deaf readers. Cognition 201:104286. doi: 10.1016/j.cognition.2020.104286

Trezek, B. J., Wang, Y., and Paul, P. V. (2011). “Processes and components of reading,” in The Oxford Handbook of Deaf Studies, Language and Education, 2nd Edn, eds M. Marschark and P. E. Spencer (New York: Oxford University Press), 99–114.

Villwock, A., Wilkinson, E., Piñar, P., and Morford, J. P. (2021). Language development in deaf bilinguals: deaf middle school students co-activate written English and American Sign Language during lexical processing. Cognition 211:104642. doi: 10.1016/j.cognition.2021.104642

Vinson, D. P., Thompson, R. L., Skinner, R., Fox, N., and Vigliocco, G. (2010). The hands and mouth do not always slip together in British Sign Language: dissociating articulatory channels in the lexicon. Psychol. Sci. 21, 1158–1167. doi: 10.1177/0956797610377340

Woolfe, T., Herman, R., Roy, P., and Woll, B. (2010). Early vocabulary development in deaf native signers: a British Sign Language adaptation of the communicative development inventories. J. Child Psychol. Psychiatry 51, 322–331. doi: 10.1111/j.1469-7610.2009.02151.x

Keywords: sign language, ERPs, lexical processing, deaf children, reading, German Sign Language (DGS)

Citation: Hänel-Faulhaber B, Groen MA, Röder B and Friedrich CK (2022) Ongoing Sign Processing Facilitates Written Word Recognition in Deaf Native Signing Children. Front. Psychol. 13:917700. doi: 10.3389/fpsyg.2022.917700

Received: 11 April 2022; Accepted: 24 June 2022;

Published: 05 August 2022.

Edited by:

Aaron Shield, Miami University, United States

Reviewed by:

Jill P. Morford, University of New Mexico, United States

Copyright © 2022 Hänel-Faulhaber, Groen, Röder and Friedrich. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Barbara Hänel-Faulhaber, Barbara.Haenel-Faulhaber@uni-hamburg.de

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
