- 1Department of Cognitive Science, University of California, San Diego, San Diego, CA, United States

- 2Program in Neurosciences, University of California, San Diego, San Diego, CA, United States

- 3Center for Neural Science, New York University, New York, NY, United States

- 4School of Information Technology and Electrical Engineering, The University of Queensland, Brisbane, QLD, Australia

Interactive neurorobotics is a subfield which characterizes brain responses evoked during interaction with a robot, and their relationship with the behavioral responses. Gathering rich neural and behavioral data from humans or animals responding to agents can act as a scaffold for the design process of future social robots. This research seeks to study how organisms respond to artificial agents in contrast to biological or inanimate ones. This experiment uses the novel affordances of the robotic platforms to investigate complex dynamics during minimally structured interactions that would be difficult to capture with classical experimental setups. We then propose a general framework for such experiments that emphasizes naturalistic interactions combined with multimodal observations and complementary analysis pipelines that are necessary to render a holistic picture of the data for the purpose of informing robotic design principles. Finally, we demonstrate this approach with an exemplar rat–robot social interaction task which included simultaneous multi-agent tracking and neural recordings.

Introduction

As technology and automation increasingly permeate every aspect of modern life, our relationship with these systems has begun to blur the lines between tool use and social interaction. Humans are routinely being asked to engage with artificial agents in the form of chat bots, recommender systems, and social robots, all of which exhibit facets of agency more familiarly associated with living beings (Saygin et al., 2000; Gazzola et al., 2007; Saygin et al., 2012). Such a promethean transition has raised an urgent need to study how biological organisms adapt and extend their social mechanisms to new digital simulacra (Baudrillard, 1981). The proliferation of technology has made sophisticated robotics accessible to many more scientists, empowering them to create a wide array of new applications, such as the use of robots to investigate animal models by physically interacting with those animals.

The goals of this research can be broadly broken down into two categories. The first seeks to directly study how organisms respond to artificial agents in contrast to biological or inanimate ones. The second uses the novel affordances of the robotic platforms to investigate coordination dynamics between agents. Here we propose that, to realize the full potential of the approach, both goals must be integrated, as they provide complementary information necessary to contextualize one another’s results. For example, if one were to use a robot to study social behavior without characterizing the organism’s differential response to robots and conspecifics, it would be difficult to know whether the results reflected a generalizable reaction or were an artifact of the animal’s particular response to the robot. Despite these epistemological liabilities, the degree to which animals’ responses differ between agent types, as well as the character of those differences, remains an open question.

At the nexus of our broadly construed categories is the nascent field, interactive biorobotics, that uses robots to experimentally probe questions in behavioral ethology, neuroscience, and psychology, using a variety of animal assays (Narins et al., 2003, 2005; Ishii et al., 2006; Shi et al., 2013; Lakatos et al., 2014; Gergely et al., 2016). Robot frogs that can emit auditory calls have been set up in environmental habitats and elicit fighting and even mating responses from wild frogs (Narins et al., 2003, 2005). Robot fish that interact with living schools of fish and vibrating robots that attract bees have shown effects on collective behavior in laboratory and naturalistic environments (Schmickl et al., 2021). Robot rats, like the Waseda Rat and the iRat, interact with living rats and affect their behavior in laboratory settings (Ishii et al., 2006; Wiles et al., 2012). The Waseda rat was used specifically to manipulate stress and anxiety-like behaviors, whereas the iRat was used to induce social interaction dynamics.

In this paper we provide multimodal recordings from rats engaged in a social experiment with rats, robots, and objects. We show that interactions with agents of varying animacy and motion characteristics evoke different behavioral responses. In addition, we show measurable variations in the activity of multiple brain regions across different interactive contexts.

Background

Real organisms do not exist within a sterile world, acting against a quiescent backdrop. The world they adapted to is dynamic, inhabited by other agents, each behaving and interacting according to their own imperatives. Therefore, if science is to actually understand how the brain synthesizes stimuli and produces effective behavior it must tackle these complex, weakly constrained settings. It is technically complex to administer experimental manipulations in a minimally constrained environment, and the availability of new technology created an opportunity for experimentalists to partner with roboticists and computationalists to create data capture and analysis tools necessary to extract robust effects from the results.

Interactive biorobotics presents a promising new approach to facilitate experimental manipulation while retaining the complexities of inter-agent dynamics. As Datteri suggests, interactive biorobotics is a methodologically novel field that is distinct from classical biorobotics (Datteri, 2020). In classical biorobotics experiments, the robot is meant to “simulate” or replicate a function of a living system without direct interaction with the organism itself. In interactive biorobotics, the robot is meant to stimulate interaction with living systems, where certain capacities of the robot are manipulated to engage and stimulate the living system. The target of the explanation is the behavior of the living system in response to the robotic agent. A key aspect of interactive biorobotics is the integration and habituation of living systems to interactive robot counterparts (Quinn et al., 2018; Datteri, 2020). A popular example of an interactive biorobotics paradigm with animals was the Waseda Rat, a rat-like robot that continuously chases a living rat to induce stress and anxiety-like behavior (Ishii et al., 2006; Shi et al., 2013).

Many experiments have demonstrated the viability of this technique for investigating neuroscientific questions. These results are the purview of interactive neurorobotics, a subfield which characterizes brain responses evoked during interaction with a robot, and their relationship with the behavioral responses. A pioneering early example of an interactive neurorobotics study is Saygin et al. (2012), which used neuroimaging while human subjects were presented with robots and androids in order to study the neural basis of the “uncanny valley.” This effect describes how a robot may seem more eerie or creepy to a human during interaction as its appearance increases in human-likeness (Mori, 1970; Saygin et al., 2012). Behavioral evidence suggests that macaque monkeys have a similar response when presented with virtual avatars (Steckenfinger and Ghazanfar, 2009). However, it is unknown to what extent the uncanny valley effect is present in other animals, such as rodents. While this is a fascinating question, the uncanny valley concerns robots that are close to humans in looks, form, and movement. The iRat is a minimalist robot intended not to be rodent-like in appearance, smell, or sound, in order to avoid any possible uncanny valleys.

An example of an interactive neurorobotics experiment, where the brain is measured along with animal behavior, was conducted with the predator-like robot known as “Robogator.” This robot alligator, which can walk and bite, interacted with rats in a dynamic foraging task (Choi and Kim, 2010). In their task, as a rat approaches a food reward, the Robogator suddenly snaps its jaws toward the rodent, resulting in an approach-avoidance conflict paradigm. In this experiment, Choi and Kim (2010) drastically inhibited amygdala function by locally infusing muscimol (a GABA agonist) and inducing electrolytic lesions. They found that without amygdala function, animals showed diminished fear responses toward the robot. They also demonstrated that local infusions of muscimol globally suppressed amygdala activity, leading to increased exploration of the robot. Similar approach and avoidance dynamics are present in a variety of behavioral paradigms related to predation, social interaction, reward learning, and threat detection (Mobbs and Kim, 2015; Jacinto et al., 2016). The increase in exploration under conditions in which the amygdala is suppressed underscores the importance of the state of the animal in approaching potentially threatening, frightening, or unknown stimuli. This is an inevitable feature of social interaction dynamics, guiding an animal to engage, or not, in social interactions based on its own sense of safety, stress, or anxiety.

When potential danger or even conditions of high uncertainty are present, there is a cost to exploration due to rats’ natural propensity for neophobia (Mitchell, 1976; Modlinska et al., 2015). Inherent in introducing robot counterparts to rats is not only the uncertainty of novelty but also risk assessment regarding the fear of harm. Thus, in order to explore robots, rats must engage in regulatory behaviors allowing the neural system to enter states that allow exploratory behaviors. Inevitably, this necessitates a balance between the sympathetic nervous system and the parasympathetic nervous system, invoking allostatic processing in which systemic stability is achieved through continuous change. Robots have been more often used in fear and stress inducing paradigms with novel robots that exert extreme regulatory demands on the rat (Ishii et al., 2006; Choi and Kim, 2010). During the management of uncertainty, self-regulatory behaviors actively modulate internal demands to meet external demands (Nance and Hoy, 1996).

In addition to examining behaviors related to novelty and fear, studies have also been performed examining the similarity between rodent social behaviors and behaviors toward a robot. Rats have been shown to behave similarly toward mobile robots as they do toward rats, exhibiting behaviors such as approaching, avoiding, sniffing, and following (Wiles et al., 2012; del Angel Ortiz et al., 2016; Heath et al., 2018). The analysis showed that the rats demonstrated similar relative orientation formations when interacting with another rat or a moving robot. Analysis of relative spatial position in rat–rat dyads in comparison with rat–robot dyads shows similarities, raising the question of whether these dynamic interactions might have social elements (del Angel Ortiz et al., 2016). Endowing robots with coordination dynamics that mitigate concerns of dominance still leaves uncertainty regarding the potential animacy of the agent.

A robot’s ability to engage in self-propelled motion is crucial for its potential to elicit social and attentive behaviors. Studies of social recognition and novel object recognition traditionally disregard dynamic objects that exhibit self-propelled movement despite early demonstrations that even two-dimensional objects that exhibit self-propelled movement are often associated with sociality, agency, and animacy detection (Heider and Simmel, 1944). Self-propelled motion can take various forms. Biological motion is associated with maximizing smoothness and can be recognized as animate even with minimal representation (Flash and Hogan, 1985; Todorov and Jordan, 1998; Saygin et al., 2004). Mechanical and materials constraints on interactive robots make the use of biological motion rare and difficult to achieve. However, interactive robots provide an opportunity to experimentally manipulate agency by utilizing elements of animation through movement trajectories and temporal coordination (Hoffman and Ju, 2014).

A robotic platform that displayed emergent semi-naturalistic movement patterns is the iRat neurorobotics platform (Ball et al., 2010; Wiles et al., 2012). The iRat was originally developed with a neurally feasible model that simulated the function of the hippocampus, entorhinal cortex and parietal cortex for the purpose of learning spatial environments (Ball et al., 2010). By virtue of entering an environment and exhibiting appropriate spatial navigation and object avoidance, the iRat garnered observational attention from on-looking rats (Wiles et al., 2012). This raised the question of whether the iRat could be driven to behave in a socially interactive manner that would result in rats engaging in prosocial behavior with the iRat as they do with conspecifics (Rutte and Taborsky, 2008; Bartal et al., 2011). Quinn et al. (2018) used the iRat to interact with rats for the purpose of eliciting social responses and examining prosocial behavior toward robots (for an artist’s depiction and a picture of live interaction, see Figure 1). Rats will not only engage in prosocial behaviors with each other, such as freeing other rats from an enclosed restrainer, but have also been shown to reciprocate with robots (Wiles et al., 2012; Quinn et al., 2018).

Figure 1. (A) An artist’s depiction of rat–robot and rat–object interactions from Quinn et al. (2018) (Photo credit with permission from Rosana Margarida Couceiro). (B) A picture of live rat–robot interaction.

In this paper we further prior work by investigating the effect of agent type on both behavioral and neural outcomes. For our experimental model we considered rats engaging in a socio-robotic experiment developed according to principles of rodent-centered design (see Figure 1). In this study, rats interacted in a circular arena with other rats, robots, or objects. They engaged in naturalistic behaviors during this time and the resultant observational data was used to characterize the dynamics of rats’ behavioral repertoires in the presence of different agents or objects. While the rats were freely behaving, we used multi-site electrophysiological recordings to examine brain states during different behavioral states. We examine how the agent-based interactions influence neural oscillations within local field potentials in the olfactory bulb, amygdala and hippocampus (see Section “Brain Areas of Interest”) during grooming, immobility, and rearing behaviors (see Section “Behaviors of Interest”). To investigate dynamic interactions, we demonstrate the use of deep learning video tracking for offline multi-animal and robot tracking.

Brain areas of interest

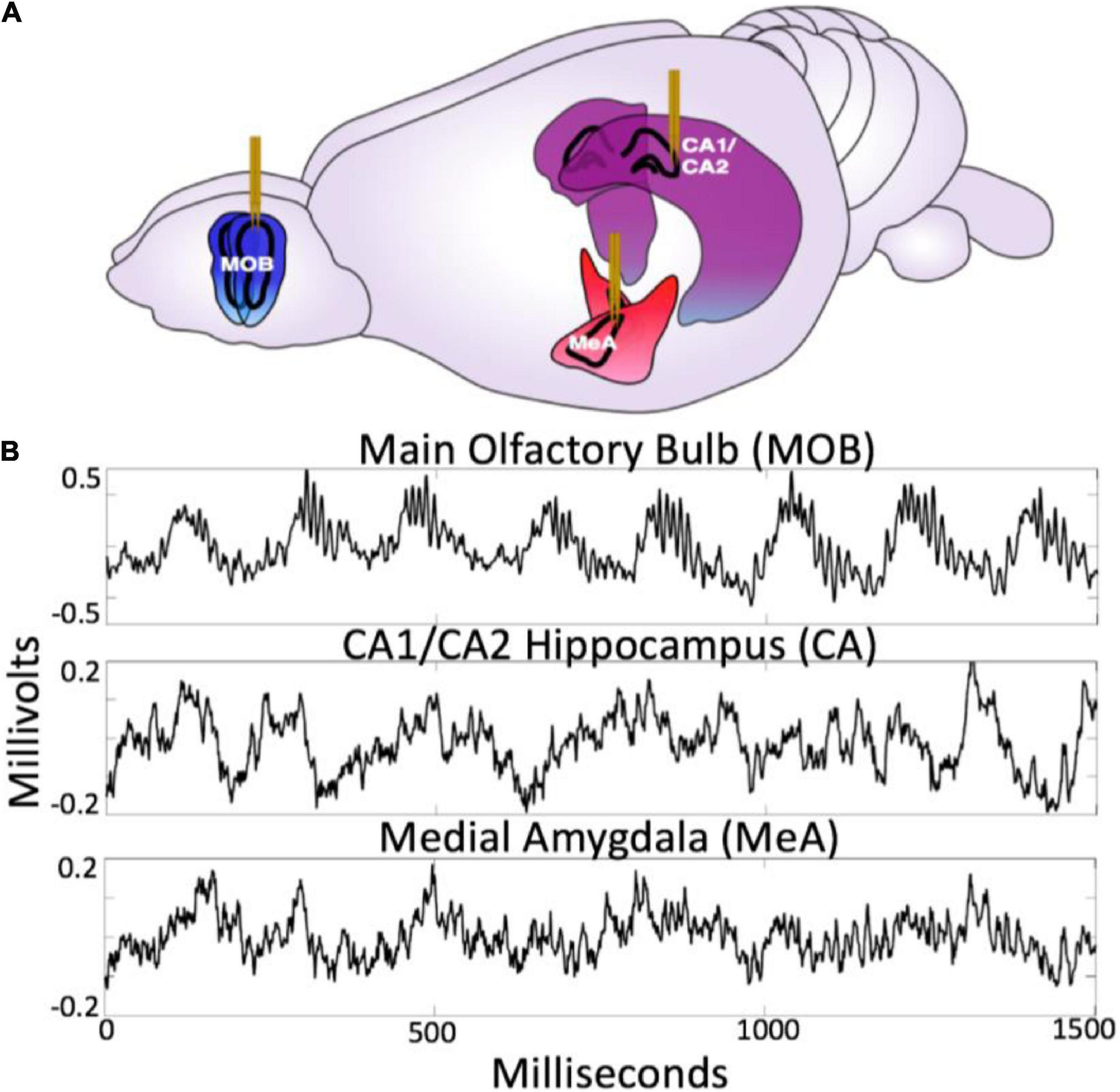

The neural circuit examined in this paper was chosen because it spans specific functional aspects critical for social behavior. Together, the olfactory bulb, amygdala, and hippocampus form a tightly connected network (for review see Brodal, 1947 and Eichenbaum and Otto, 1992) that provides information about autonomic, sensory, spatio-temporal, and affective context (Moberly et al., 2018; Jacobs, 2022) (see Figure 2). They are thus well situated to provide valuable information regarding the benefits and liabilities of the external environment during social interaction, exploration, and sampling of sensory information. Further, they have well-characterized anatomical connectivity and diverse observed forms of frequency dynamics, which make them an excellent subject for studying the holistic closed-loop processing of social information.

Figure 2. (A) Diagram of electrode placement in the main olfactory bulb (MOB), medial amygdala (MeA), and hippocampus (CA1/CA2). Figure adapted from scidraw.io under Creative Commons 4.0 license (Tang, 2019). (B) Raw traces from the MOB, CA1/CA2, and MeA.

The olfactory bulb is a dominant primary sensory organ for rodents, playing a central role in odor discrimination and social cognition for rats (Dantzer et al., 1990). The main olfactory bulb (MOB) local field potential exhibits neural oscillations associated with sensory processing (Kay et al., 2009). Rojas-Líbano et al. (2014) have shown that the frequency of the theta oscillation in the olfactory bulb LFP reliably follows respiration rate (2–12 Hz). For this study the lower frequency olfactory bulb LFP will be used as a proxy for respiration. Respiration-entrained oscillations provide information about the state of the autonomic nervous system, and are distinct from theta oscillations (Tort et al., 2018a,b). It is important to note that respiratory rhythms are not restricted to the olfactory bulb, and are also found throughout the brain (Rojas-Líbano et al., 2014; Heck et al., 2017). The following study seeks to highlight the important contribution of olfaction and respiratory-related brain rhythms to brain dynamics (Jacobs, 2012; Lebedev et al., 2018), in order to simultaneously examine sensory and autonomic dynamics as they relate to agent and object interactions. Olfactory and hippocampal theta oscillations also show coherence during olfactory discrimination tasks (Kay, 2014). Recent work also suggests coupling of the beta rhythm from olfactory bulb to hippocampus, which suggests a directionality of functional connectivity going from OB to hippocampus (Gourévitch et al., 2010). The olfactory bulb also exhibits two distinct gamma oscillations associated with contextual odor recognition processing, low gamma (50–60 Hz) during states of grooming and immobility and high gamma (70–100 Hz) associated with odor sensory processing (Kay, 2005). Future work will highlight the role of these rhythms, whereas the current work focuses on dynamics within the theta, respiratory, and beta frequencies. (For raw traces of the MOB LFP see Figure 2B).

The amygdala is a complex of historically grouped nuclei located in the medial temporal lobe, commonly associated with affective processing, saliency, associative learning, and aspects of value (Gallagher and Chiba, 1996). Oscillations in the amygdala and their coherence with other brain structures have been linked to learning and memory performance (Paré et al., 2002). The function of the medial amygdala (MeA) is associated with the accessory olfactory system, which receives inputs from the vomeronasal organ, playing a role in social recognition memory, predatory recognition, and sexual behavior (Bergan et al., 2014). The MeA receives input from accessory olfactory areas and has projections to hypothalamic nuclei that regulate defensive and reproductive behavior (Swanson and Petrovich, 1998). The basomedial nucleus has been linked to the regulation and control of fear and anxiety-related behaviors (Adhikari et al., 2015). Amir et al. (2015) investigated how principal cells in the basolateral amygdala respond to the Robogator robot during a foraging task. A group of cells reduced their firing rate during the initiation of foraging while another group increased firing rate. It was found that this depended on whether the rat initiated movement, with the authors suggesting that the amygdala is not only coding threats and rewards, but is also closely related to the behavioral output. (For raw traces of the MeA LFP see Figure 2B).

The CA1/CA2 subregion of the hippocampus is functionally associated with spatial navigation, contextual information, and episodic memory formation. The CA1 region of the hippocampus is commonly associated with spatial navigation dorsally and social/affective memory ventrally (van Strien et al., 2009). The hippocampal theta rhythm is a 4–10 Hz oscillation generated from the septo-hippocampal interactions, which temporally organize the activity of CA1 place cells according to the theta phase. The CA2 region of the hippocampus plays an important role in modulating the hippocampal theta rhythm and also an essential part in social memory (Mercer et al., 2007; Hitti and Siegelbaum, 2014; Smith et al., 2016). CA2 receives projections from the basal nucleus of the amygdala, which plays an important role for contextual fear conditioning (Pitkänen et al., 2000; Goosens and Maren, 2001). Ahuja et al. (2020) demonstrated that CA1 pyramidal cells were responsive to a robot that indicates a shock zone. Robots have also been developed for the purpose of improving behavioral reproducibility when examining aspects of rodent spatial cognition in neuroscience. When rats navigate through a maze, there can often be a variety of factors that an experimenter might want more control over. In this case a robot with an onboard pellet dispenser was used to regulate the rat’s direction and speed as they moved through the maze (Gianelli et al., 2018). (For raw traces of the CA1/CA2 LFP see Figure 2B).

Behaviors of interest

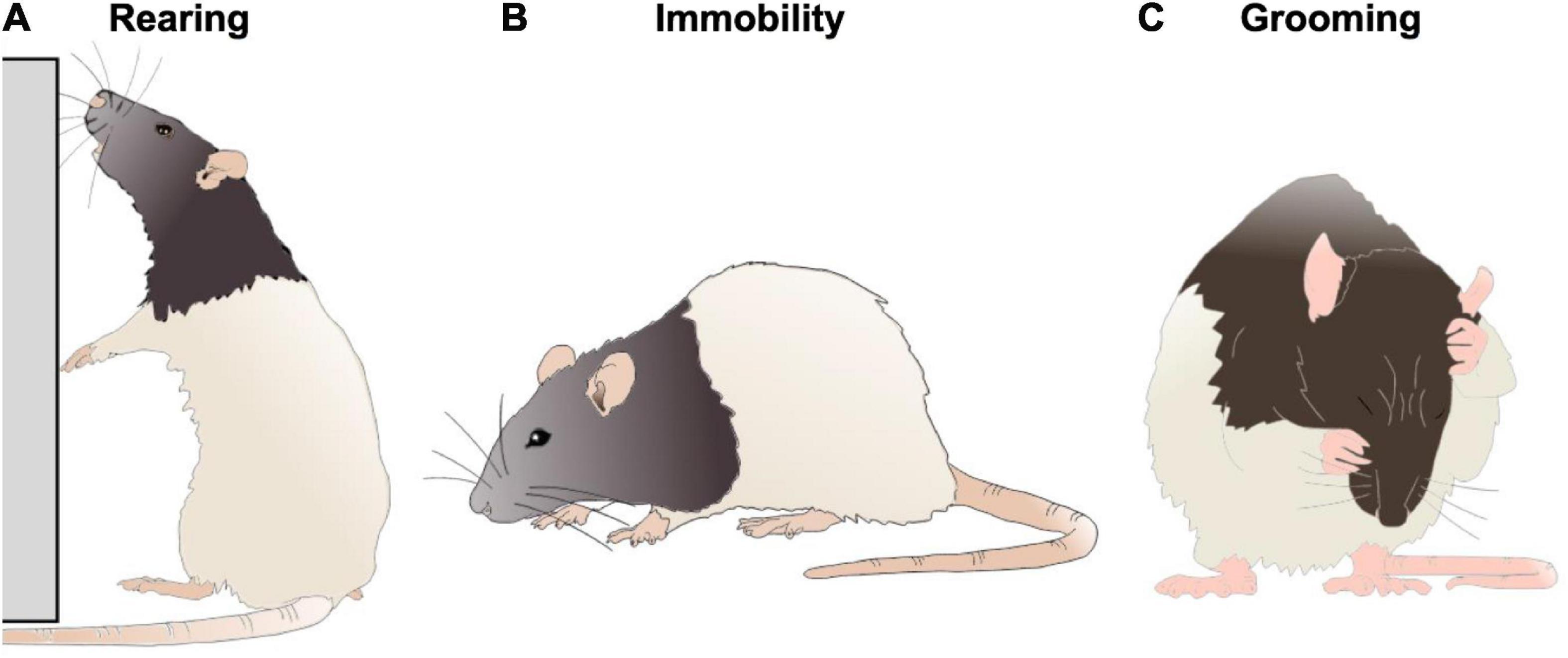

The naturalistic behaviors used for the comparative neural analysis were chosen due to their ready identifiability and representation of distinct types of exploratory and self-regulatory behavior. The behaviors were generally demonstrated when the rat temporarily stopped running or walking and was not physically exploring the other agent on the open field. The behaviors we prioritized include immobility, self-grooming, and rearing (see Figure 3). Given that the state of the rat differed widely across behaviors, it was particularly important to examine how the presence of different agents (rat, robot, or object) perturbed its state within a particular behavior.

Figure 3. A depiction of a rat engaging in rearing (A), immobility (B), and grooming (C) behaviors. Figure adapted from scidraw.io under Creative Commons 4.0 license.

Immobility is a complex behavior distinguished by the motionlessness exhibited by the subject. This behavior is distinct and significant as an intermediary between the regulatory and exploratory states that rats can alternate between. During immobility, the rat is likely in a heightened state of arousal, with an intensity of alertness. While this behavior is often related to an expression of fear, it also occurs during lower arousal states related to alertness and uncertainty (Golani et al., 1993; Kay et al., 2009; Gordon et al., 2014). On current understanding, immobility is a behavior indicative of risk assessment, occurring either in anticipation of a threat or as a result of one (Kay, 2005). Immobility allows the rat time to acquire and interpret environmental stimuli, triangulate any potential discomfort or stressors, and act accordingly.

Self-grooming, hereafter referred to as grooming, is a behavior inherent to rodents that is communicative of not only hygienic regulation, but also self-regulation of stress (Fernández-Teruel and Estanislau, 2016). Grooming is a regulatory process, which often serves the function of de-arousal (Kalueff et al., 2016). Grooming includes sequences of rapid elliptical strokes, unilateral strokes, and licking of the body or anogenital area. Due to the behavioral complexity of grooming, the frequency and duration of grooming bouts are dependent on context (Song et al., 2016).

Rearing is an exploratory action, exhibited as a means of increasing the rat’s access to stimuli in the environment. During a rear, the rat straightens and lengthens its spine and maneuvers its forelegs to increase its height. When rearing, rats increase their sensory exploration of the surrounding environment (Lever et al., 2006). This fluid behavior varies greatly in its duration and frequency. For the purposes of this paper, rearing was specifically defined with relation to the wall of the environment.

Materials and methods

Animals and housing

All experiments and maintenance procedures were performed in an American Association for Accreditation of Laboratory Animal Care (AAALAC) accredited facility in accordance with NIH and Institutional Animal Care and Use Committee (IACUC) ethical guidelines and were preapproved by the IACUC. Six Sprague-Dawley rats (n = 6) (Harlan Laboratories) took part in the behavioral experiments. Three rats (n = 3) were surgically implanted with electrodes for electrophysiological recordings. The rats were acquired at 6 weeks old and housed in pairs. Cagemates were put together in an enriched environment for 30 min a day and were maintained on a 12 h day/night cycle. After receiving surgery, each implanted rat was single-housed for the duration of the experiment. To offset the lack of social enrichment from being single-housed, it was taken out to play in the enriched environment with its former cagemate on the same schedule.

Experimental conditions and trial information

Data were collected for four types of conditions: rat–rat interactions, rat–robot interactions, rat–object interactions, and open field. Trials were collected from a rat and a robot freely roaming in an arena (n = 40). Comparison conditions included object trials (n = 21), social interaction trials with other rats (n = 20), and solo open field exploration (n = 84) (see Supplementary Information for Section “Animals and Housing”). Trials were approximately 3 min long and were counterbalanced for order effects. The robots and the animals were recorded using an overhead camera at 30 FPS. The videos were used to hand-label the onset and offset of each behavior with approximately 0.1 s precision (see Supplementary Information Section “Behavioral Video Coding”), and the tracker was used to estimate the position of the interacting agents for each video frame. Rats were surgically implanted with electrodes in the main olfactory bulb, hippocampus, and amygdala simultaneously to record local field potentials during freely moving behavior (see Supplementary Information for Section “Surgical Procedure and Neural Implants and Recordings”).

Robot

The iRat (n = 2) is a robotics and modeling platform created by the Complex and Intelligent Systems Laboratory (Ball et al., 2010). The iRat is a two-wheeled mobile robot that measures 180 mm × 100 mm × 70 mm. It is capable of both Wizard of Oz (WoZ) interaction, in which an experimenter controls the robot, and performing pre-programmed behaviors. The two robots were distinguished visually by color (red and white iRat/green and white iRat) and by distinct odors. The red iRat was tagged with frankincense essential oil, and the green iRat was tagged with myrrh essential oil. These odors were chosen in order to match preference profiles and are within the same category of woody scents. Our lab has previously shown that rats do not demonstrate a preference for either scent (Quinn et al., 2018). For information about the experimenter’s control of the robot’s movement dynamics see Supplementary Information Section “Wizard of Oz (WoZ).” For the experiment, the speed of both robots was limited to below 0.5 m/s. In addition to the iRats, multiple Arduino-based mobile robots were also used (see Section “Arduino Board of Education Shield Robots” in the Supplementary Information).

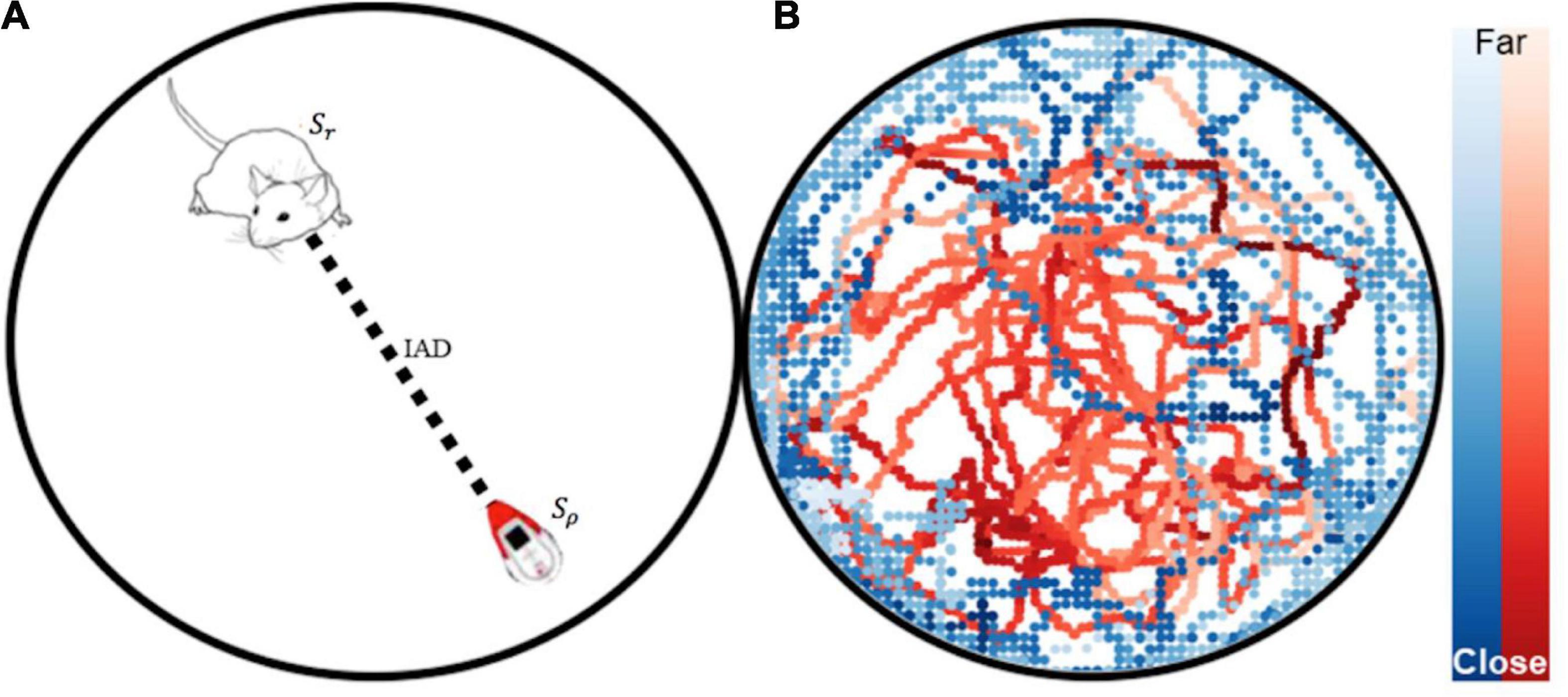

Automated tracking

Multi-agent pose tracking was performed using SLEAP (Social LEAP Estimates Animal Pose) (Pereira et al., 2022). Let $S$ denote an agent’s state: its position in Cartesian coordinates $(x, y)$ and its orientation $\theta$ in radians, each indexed by video frame $t$ (see Figure 4). The state of the rat is denoted by $S_r = \{x_{rat}, y_{rat}, \theta_{rat}\}$ and that of the robot by $S_\rho = \{x_{robot}, y_{robot}, \theta_{robot}\}$. Inter-agent distance (IAD) was calculated as the Euclidean distance between the position vectors of $S_r$ and $S_\rho$. (For video animations of the tracking data please see Supplementary Information. For information on the data pipeline for tracking see Supplementary Information Section “Neural Network Offline Tracking Training and Validation Results”).

Figure 4. (A) Animal and robot position tracking with SLEAP. Automated tracking-based behavior segmentation with state vectors $S_r$ and $S_\rho$, and the inter-agent distance (IAD). (B) Tracking data from a robot free roam trial is plotted.
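The inter-agent distance described above can be sketched in a few lines. This is a minimal illustration, assuming each track is a list of per-frame (x, y) positions in pixels; the function name `inter_agent_distance` and the coordinates are hypothetical and are not taken from the study's pipeline.

```python
import math

def inter_agent_distance(rat_xy, robot_xy):
    """Per-frame Euclidean distance between two tracks of (x, y) positions."""
    if len(rat_xy) != len(robot_xy):
        raise ValueError("tracks must cover the same video frames")
    return [
        math.hypot(x_rat - x_robot, y_rat - y_robot)
        for (x_rat, y_rat), (x_robot, y_robot) in zip(rat_xy, robot_xy)
    ]

# Hypothetical pixel coordinates for three frames (not data from the study).
rat_track = [(0.0, 0.0), (3.0, 4.0), (10.0, 10.0)]
robot_track = [(3.0, 4.0), (3.0, 4.0), (10.0, 22.0)]

iad = inter_agent_distance(rat_track, robot_track)
print(iad)  # [5.0, 0.0, 12.0]
```

Orientation is not needed for the distance itself, so the sketch ignores θ. Real tracking output may also contain frames with missing detections, which would need to be handled before computing distances.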

Behavioral and tracking statistics

For the tracking data, inter-agent distances were calculated as the Euclidean distance between agents for the rat–rat, rat–robot, and rat–object interaction conditions. For the behavioral events, the event frequency was calculated per trial and the mean duration for each behavioral event type was calculated per condition. The distributions of inter-agent distances, the mean event counts per trial, and the mean event durations per condition were each compared using one way Welch’s t-tests, and effect sizes were calculated using Cohen’s d.
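As a rough illustration of the two statistics named above, the following sketch computes Welch's t statistic (with Welch–Satterthwaite degrees of freedom) and Cohen's d using only the standard library. It assumes the pooled-standard-deviation form of Cohen's d, which the text does not specify; the function names and sample values are hypothetical.

```python
import math
from statistics import mean, variance

def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    va = variance(a) / len(a)  # squared standard error of group a
    vb = variance(b) / len(b)  # squared standard error of group b
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df

def cohens_d(a, b):
    """Cohen's d with a pooled sample standard deviation (an assumption)."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    return (mean(a) - mean(b)) / math.sqrt(pooled_var)

# Hypothetical per-trial values for two conditions (not data from the study).
robot = [2.0, 4.0, 6.0, 8.0]
rat = [1.0, 2.0, 3.0, 4.0]

t_stat, dof = welch_t(robot, rat)
d = cohens_d(robot, rat)
print(round(t_stat, 3), round(dof, 3), round(d, 3))  # 1.732 4.412 1.225
```

A p-value additionally requires the t distribution; in practice a library routine such as `scipy.stats.ttest_ind(a, b, equal_var=False)` performs Welch's test directly.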

Mixed effects model

A general linear mixed-effect model was constructed to perform an ordinary least squares regression of a response variable as a function of a mixture of fixed and random effects. Fixed effects include the behavior and agent type, while random effects include the influence of the variance of each individual rat on the response variable within the region. Null distributions were created by taking the aggregate average of all behavioral events. This allows for the comparison of changes in average power within rats, while controlling for any uneven sample sizes and individual differences in overall power within brain regions. The effect size was estimated by subtracting the means in question and dividing by the standard deviation of the residual. The intercept of the baseline group is reported as Int., the standard error of the mean as SEM, and the mean coefficients of the comparisons as M; z scores, p-values, and effect size estimates are also reported.
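One way to write down a model of this kind is sketched below. It assumes additive fixed effects and a single random intercept per rat; the text does not state whether interaction terms or other random-effect structures were used, so all symbols here are illustrative. Here $y_{ij}$ is the response (for example, average power in one region) for behavioral event $i$ of rat $j$, and $\hat{\mu}_a$, $\hat{\mu}_b$ are the two estimated means being compared.

```latex
y_{ij} = \beta_0 + \beta_{\mathrm{behavior}(i)} + \beta_{\mathrm{agent}(i)} + u_j + \varepsilon_{ij},
\qquad u_j \sim \mathcal{N}(0, \sigma_u^2),
\quad \varepsilon_{ij} \sim \mathcal{N}(0, \sigma_\varepsilon^2)

d = \frac{\hat{\mu}_a - \hat{\mu}_b}{\hat{\sigma}_\varepsilon}
```

Under this reading, the random intercept $u_j$ absorbs each rat's overall power level, and the effect size $d$ scales a difference in means by the residual standard deviation, as described above.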

Results

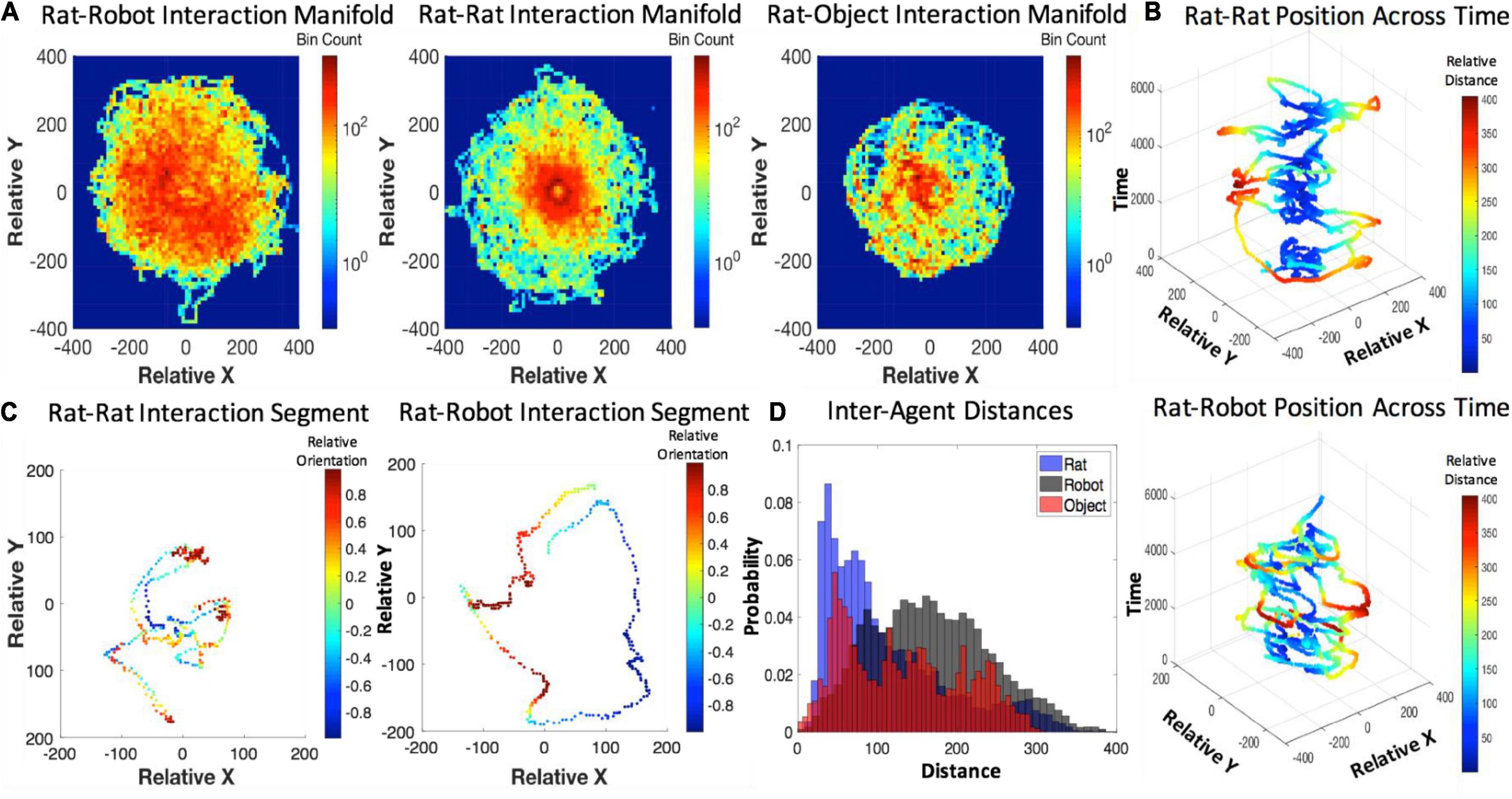

Tracking results

The inter-agent distances maintained during interaction were minimal between rats, slightly longer for rats and objects, and the longest for rats and robots (see Figure 5). The inter-agent distances for rat–robot interactions indicate that the rat and robot maintain a significantly longer distance on average (M = 167.09 pixels, SEM = 0.14) than interactions with conspecifics (M = 110.20 pixels, SEM = 0.21, t = 227.86, p < e-10, d = 0.75). The rat–robot inter-agent distance distribution had a significantly higher mean than the rat–object distance distribution (M = 131.44 pixels, SEM = 0.22, t = 135.56, p < e-10, d = 0.48). The rat–object inter-agent distance distribution showed a significantly higher mean than rat–rat distances (t = 69.93, p < e-10, d = 0.28). For more information about the time course of inter-agent distance see Supplementary Information Section “Examination of Within-Trial Habituation.”

Figure 5. (A) Agent-based interaction manifolds represented by 2D histogram bins along the relative x- and y- position axes. (B) Relative position over time for the rat–rat and rat–robot interactions with a color map revealing inter-distance between agents. (C) Shorter segments of relative position of rat–rat and rat–robot interactions with a color map revealing relative orientation. (D) A histogram of inter-agent distance per rat, robot, and object conditions.

Behavioral hypothesis tests

For a summary of the hand-coded behavioral event identification please see Supplementary Information Section “Behavioral Video Coding.”

Immobility

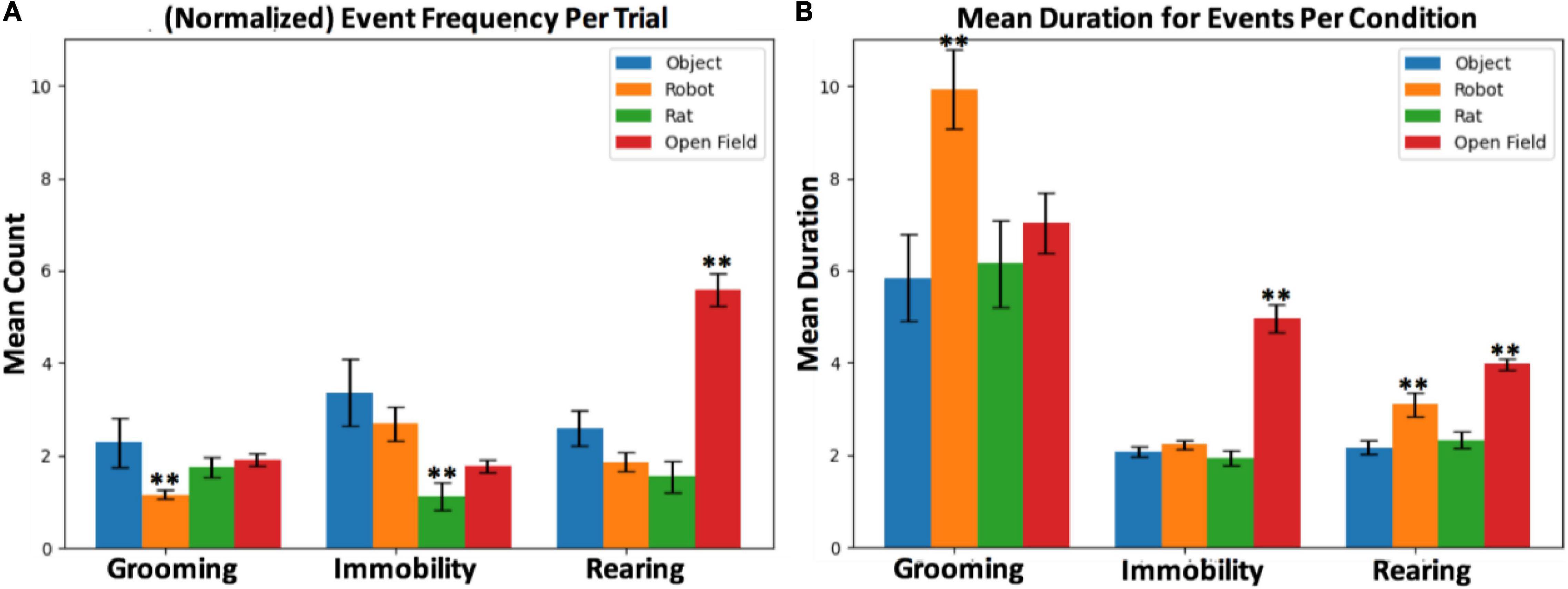

It was predicted that interaction with the robot would lead to increased risk assessment behaviors, signified by increased immobility behavior (see Figure 6). The mean frequency of immobility events per trial during rat–robot interactions (M = 2.70, SEM = 0.37) was significantly higher than during rat–rat interactions (M = 1.31, SEM = 0.31, t = 3.29, p = 0.001, d = 0.75). The mean duration of immobility in the open field condition (M = 4.95, SEM = 0.30) was significantly longer than during robot (t = 2.73, p < e-4, d = 1.44), rat (t = 8.77, p < e-4, d = 1.15), and object (t = 9.06, p < e-4, d = 1.40) interactions.

Figure 6. (A) Mean counts per trial and (B) mean duration per condition for grooming, rearing and immobility events. The ** symbol refers to statistically significant differences based on hypothesis testing.

Grooming

Another hypothesis was that the robot would perturb grooming behavior due to distress related to a possible threat and stimulus uncertainty (see Figure 6). Counter to our prediction, grooming events during interaction with the robot were significantly less frequent on average per trial (M = 1.16, SEM = 0.11) than during rat (M = 1.74, SEM = 0.22, t = 2.38, p = 0.012, d = 0.82) and object interactions (M = 2.29, SEM = 0.11, t = 2.07, p < 0.04, d = 1.00). Despite being less frequent, grooming events during interaction with the robot (M = 9.93, SEM = 0.85) showed a marginally longer duration than during interaction with rats (M = 6.15, SEM = 0.94, t = 1.78, p < 0.04, d = 0.36) and objects (M = 5.8, SEM = 0.93, t = 1.93, p < 0.03, d = 0.39), indicating an alteration in the duration of distress-related grooming in the presence of robots.

Rearing

It was predicted that the robot and open field would result in increased rearing as an escape-related exploratory response (see Figure 6). Rearing events during interaction with the robot (M = 3.09, SEM = 0.26) showed a significantly longer duration than during rat (M = 2.32, SEM = 0.18, t = 2.36, p < 0.01, d = 0.30) and object (M = 2.17, SEM = 0.16, t = 2.94, p < 0.002, d = 0.38) interactions, supporting the hypothesis that the presence of a robot might elicit exploration. The mean duration of rearing events in the open field condition (M = 3.9, SEM = 0.12) was significantly longer than in the robot (t = 2.99, p < 0.002, d = 0.34), object (t = 8.99, p < e-4, d = 0.76), and rat (t = 7.62, p < e-4, d = 0.69) conditions. Empty open fields typically elicit exploratory vigilance; thus it appears that the open field induces an increase in hypervigilance relative to the robot.

Neurophysiological results

Neurophysiological signals were recorded in rats during their behavioral displays (grooming, immobility, and rearing) occurring throughout interaction sessions with different agents (rat, robot, and object). The behavioral epochs were extracted from video data aligned with the neural data and occurred naturally throughout the interaction sessions (see Section “Behavioral Video Coding” in Supplementary Information for more detail about how epochs were extracted). The event markers from video coding were then used to segment the local field potential data. The durations of these naturalistic behavioral epochs were allowed to vary so as not to impose artificial cut-offs on the natural display of behaviors.
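
The segmentation step can be sketched as follows, assuming the video-coded markers are available as onset and offset times in seconds on the same clock as the neural recording. The function name, the 1000 Hz sampling rate, and the event list are hypothetical; the optional padding simply keeps some context around each variable-length event.

```python
def segment_epochs(lfp, sample_rate_hz, events, pad_s=0.0):
    """Cut variable-length epochs out of one LFP channel.

    lfp            -- sequence of samples for a single channel
    sample_rate_hz -- sampling rate of the recording
    events         -- iterable of (label, onset_s, offset_s) from video coding
    pad_s          -- optional context kept before onset and after offset
    """
    epochs = []
    for label, onset_s, offset_s in events:
        if offset_s <= onset_s:
            raise ValueError(f"event {label!r} has non-positive duration")
        # Clamp to the recording so padding never indexes outside the signal.
        start = max(0, round((onset_s - pad_s) * sample_rate_hz))
        stop = min(len(lfp), round((offset_s + pad_s) * sample_rate_hz))
        epochs.append((label, lfp[start:stop]))
    return epochs


# Hypothetical 10 s recording at 1000 Hz with two hand-coded events.
fs = 1000
signal = list(range(10 * fs))
coded_events = [("immobility", 1.0, 2.0), ("grooming", 4.5, 7.5)]
epochs = segment_epochs(signal, fs, coded_events, pad_s=1.0)
```

Keeping each epoch at its natural length means downstream summaries have to cope with unequal sample counts, for example by averaging within frequency bands rather than across time points.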

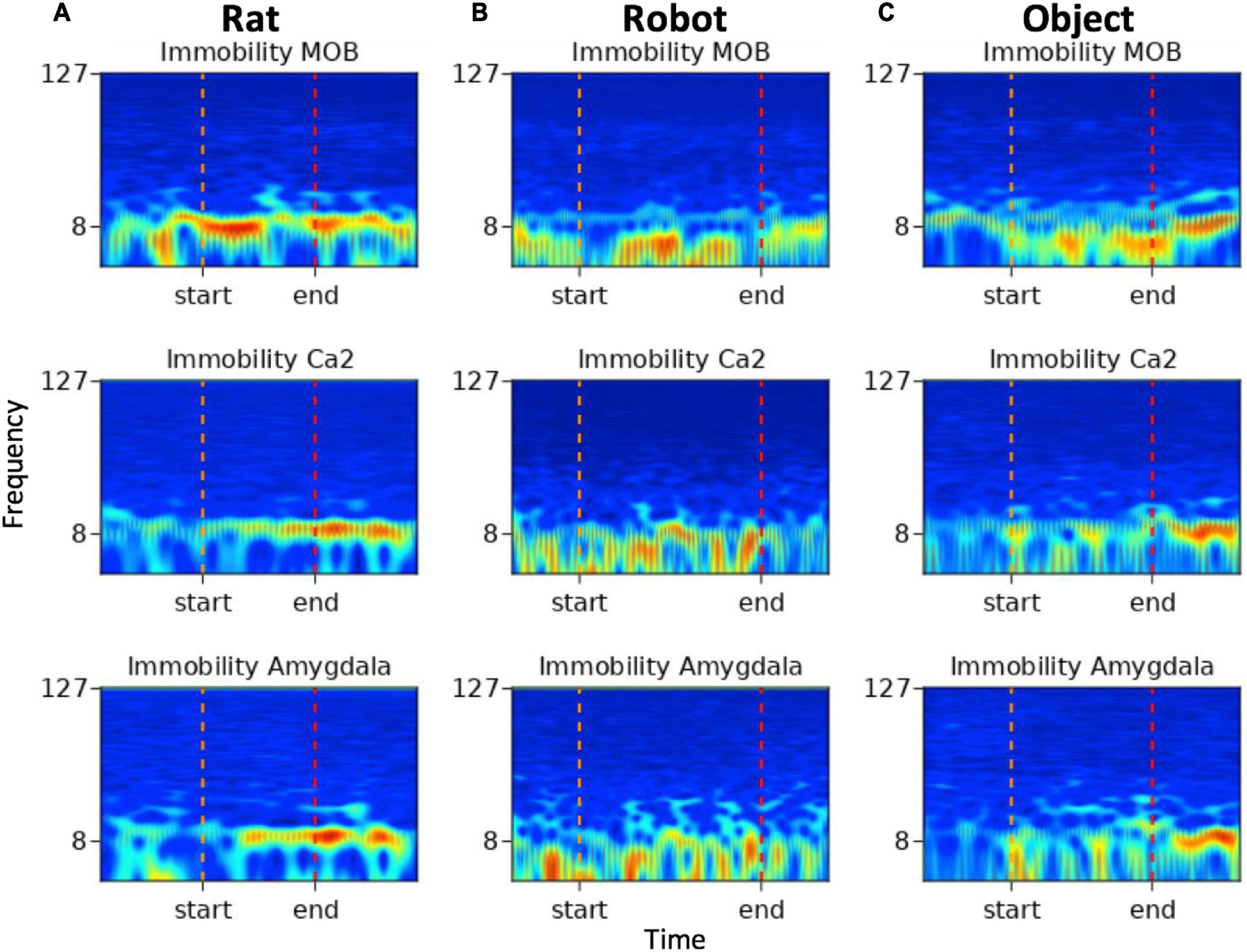

Spectrograms

To demonstrate an example of inter-agent variation within one behavior type, the spectrograms below (see Figure 7) show multi-region brain dynamics from periods in which implanted rats exhibited immobility behaviors during rat, robot, or object interactions. The pre- and post-event windows are 1 s in length, providing a sense of scale for the viewer. In Figure 7A the period of immobility in response to a rat is approximately 1 s, and in Figure 7B the period of immobility in response to the robot is approximately 3 s.

Figure 7. Spectrograms of immobility events from (A) rat, (B) robot, and (C) object conditions. Frequency (Hz) is represented along the y-axis with markers for 8 and 127 Hz, time is represented along the x-axis, the color map represents high amplitude in red and low amplitude in blue. The start and end indicators denote the onset and offset of the variable duration behavioral events.

The immobility event which occurred during rat–rat social interactions in Figure 7A shows increased amplitude within the theta range in all brain regions with some transient beta oscillations in MOB and amygdala toward the end of the event. The immobility events that occurred during robot and object interactions in Figures 7B,C show high amplitude in respiratory oscillations in all regions with more pronounced beta oscillations in the amygdala during the event.

Power spectral densities

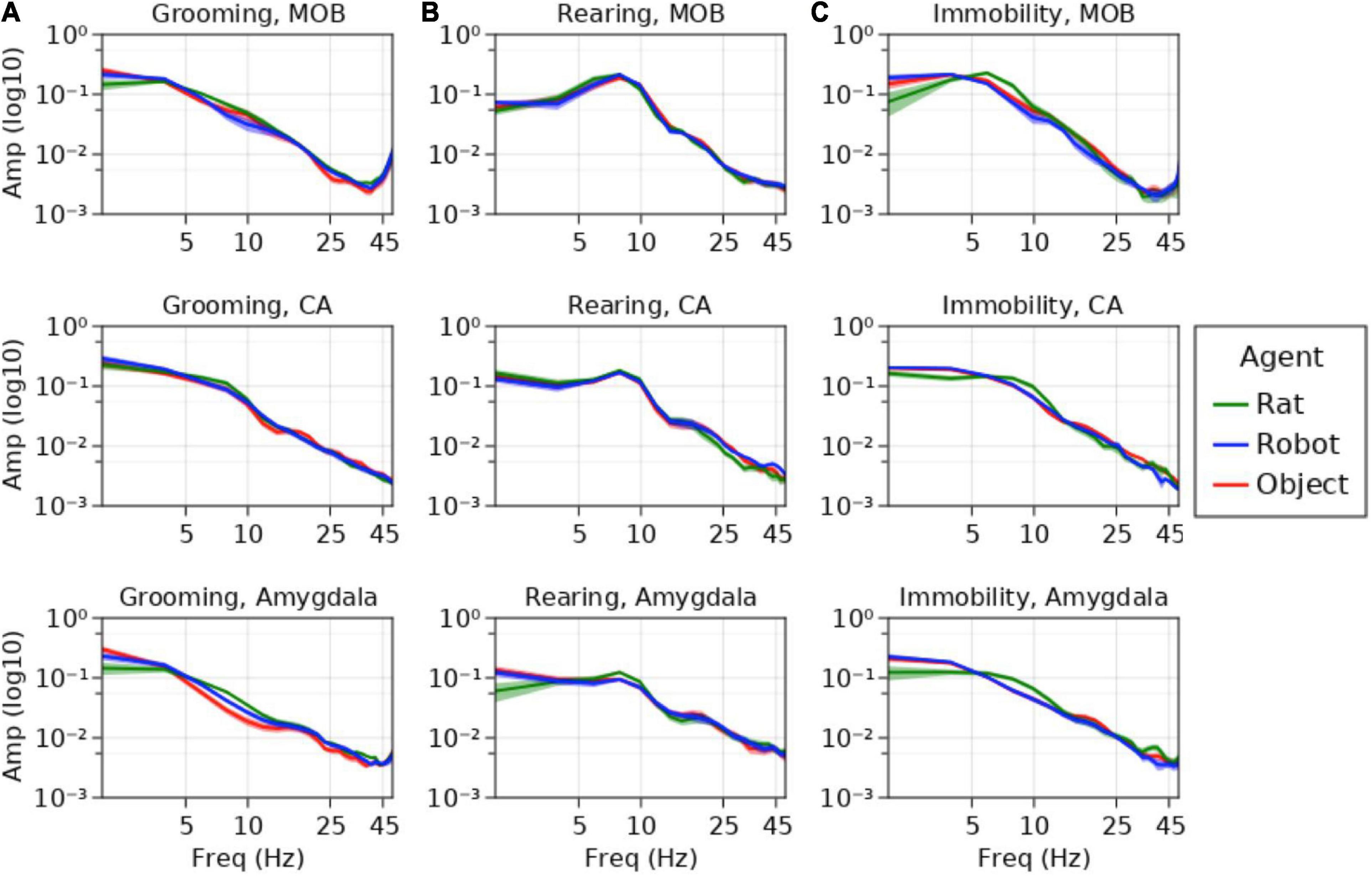

See Figure 8.

Figure 8. Average power spectral densities for MOB, CA1/CA2, and amygdala for (A) grooming, (B) rearing, and (C) immobility events. Amplitude is represented on the y-axis, and frequency is represented along the x-axis. Average amplitudes during interaction with rats are represented in green, robots in blue, and objects in red.
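
For readers who want to see how an amplitude spectrum like those in Figure 8 can be reduced to per-band values, the sketch below uses a plain windowed FFT from NumPy. The spectral estimator used in the study is not specified in this section, so this is a stand-in, and the band edges and the synthetic 8 Hz test signal are illustrative assumptions only.

```python
import numpy as np


def band_amplitude(epoch, sample_rate_hz, band_hz):
    """Mean FFT amplitude of `epoch` within the closed band (low, high) in Hz."""
    epoch = np.asarray(epoch, dtype=float)
    # Remove the DC offset and taper the edges to limit spectral leakage.
    windowed = (epoch - epoch.mean()) * np.hanning(epoch.size)
    amplitude = np.abs(np.fft.rfft(windowed)) / epoch.size
    freqs = np.fft.rfftfreq(epoch.size, d=1.0 / sample_rate_hz)
    low, high = band_hz
    in_band = (freqs >= low) & (freqs <= high)
    if not in_band.any():
        raise ValueError("epoch too short to resolve this band")
    return float(amplitude[in_band].mean())


# Illustrative band edges only; the study's exact definitions may differ.
BANDS_HZ = {"respiratory": (1.0, 4.0), "theta": (6.0, 12.0), "beta": (15.0, 35.0)}

fs = 1000
t = np.arange(0, 2.0, 1.0 / fs)
toy_epoch = np.sin(2 * np.pi * 8.0 * t)  # synthetic 8 Hz oscillation
profile = {name: band_amplitude(toy_epoch, fs, edges)
           for name, edges in BANDS_HZ.items()}
```

Frequency resolution is the inverse of epoch duration, so a 1 s immobility event resolves 1 Hz bins while a 3 s event resolves roughly 0.33 Hz bins; very short epochs may not contain a usable estimate for the slowest bands.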

Neural hypothesis testing

The results of the mixed effects model showed a variety of effects in the respiratory, theta, and beta frequency ranges (see Figure 8 for average power spectral density plots). There are also marginal effects in the gamma range that will require a larger data set for validation and will not be addressed in this study.

Theta oscillations: olfactory exploration and salience

Other conspecifics were expected to elicit heightened sensorimotor exploration relative to robots and objects (see Figure 8). Rats are inherently more complex olfactory stimuli, and this is indicated by theta oscillations. Immobility events showed significantly higher theta amplitude in MOB during the rat–rat interaction trials (Int = 0.026, SEM = 0.0055) compared to the events from the robot (M = −0.0057, SEM = 0.0020, z = −2.83, p < 0.005, d = −0.96) and marginally larger than object trials (M = −0.0043, SEM = 0.0018, z = −2.36, p < 0.02, d = −0.7). In the MOB, the rat–robot interactions showed significantly lower theta amplitude during grooming events (Int = 0.010, SEM = 0.002) than rat–rat interactions (M = 0.0028, SEM = 0.0009, z = 2.75, p < 0.003, d = 0.82) but no difference from objects (M = 0.0008, SEM = 0.0013, z = 0.65, d = 0.24). Rearing events during rat–rat interactions (Int = 0.0015, SEM = 0.0001) exhibited significantly larger amygdalar theta oscillations than rearing events during rat–robot interactions (M = −0.0002, SEM = 0.0001, z = −2.76, p < 0.006, d = −0.59) but showed no difference from rat–object interactions (M = −7.2e-5, SEM = 0.0001, z = −0.62, d = −0.29).
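
One way to read the reported values, assuming the model used treatment (dummy) coding so that "Int" is the estimate for the reference condition and each other value is an offset from it, is to add the offset to the intercept. This reading is an assumption on our part rather than something stated in this section, and the helper below is hypothetical.

```python
def condition_estimates(intercept, offsets):
    """Implied per-condition estimates under treatment (dummy) coding.

    intercept -- estimate for the reference condition
    offsets   -- mapping of other condition -> coefficient relative to reference
    """
    estimates = {"reference": intercept}
    for condition, coefficient in offsets.items():
        estimates[condition] = intercept + coefficient
    return estimates


# MOB theta amplitude during immobility, values as reported in the text;
# treating them as intercept plus offsets is an assumption.
mob_theta = condition_estimates(0.026, {"robot": -0.0057, "object": -0.0043})
```

Under this reading, a negative offset means the condition sits below the reference condition rather than that its amplitude is negative.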

Respiratory rhythms: autonomic state and distress regulation

As an indicator of autonomic state and distress regulation, we predicted higher amplitude respiratory oscillations for the object and robot interactions during grooming (see Figure 8). Immobility events showed a significantly higher respiratory amplitude in the amygdala during the rat–robot interactions (Int = 0.0032, SEM = 0.0001) than during rat–rat interactions (M = −0.001, SEM = 0.0003, z = −3.06, p < 0.0022, d = −1), but showed only a trend toward a significant difference from rat–object interactions (M = −0.0004, SEM = 0.0002, z = −2.07, p < 0.04, d = −0.4). Respiratory rhythm amplitudes in the amygdala during grooming events from rat–robot trials (Int = 0.0039, SEM = 0.0004) were significantly larger than those during events from rat–rat interactions (M = −0.00016, SEM = 0.0006, z = −2.75, p = 0.006, d = −0.8), but showed no significant difference from rat–object trials (M = 1e-5, SEM = 0.0007, z = −0.04, p = 0.96, d = −1.47e-2). Taken together, these findings indicate that both robots and novel objects elicited an increase in arousal relative to conspecifics. Consistent with this interpretation, rearing also showed a significantly larger amygdalar respiratory amplitude for rat–robot conditions (Int = 0.0022, SEM = 0.0002) when compared with rat–rat conditions (M = −0.0007, SEM = 0.0003, z = −2.55, p < 0.01, d = −0.7), but was not significantly different from rearing events from rat–object interactions (M = −0.0001, SEM = 0.0003, z = 0.43, p = 0.67, d = −0.1).

Theta and high beta oscillations: sensorimotor exploration and recognition

Although we did not have explicit prior hypotheses regarding the hippocampus and amygdala theta oscillations during grooming, we did expect that there may be differences revealing whether the rat was recognizing the robot more as an object or more as a conspecific (see Figure 8). Hippocampal theta amplitude during grooming events from rat–rat interactions (Int = 0.0054, SEM = 0.0007) was significantly different from the object conditions (M = −0.0015, SEM = 0.0005, z = −3.05, p < 0.002, d = −1.5), but showed no difference when compared with the robot conditions (M = −0.0009, SEM = 0.0001, z = −1.42, p = 0.16, d = −0.9). The amplitude of theta oscillations in the amygdala during grooming events from rat–rat interactions (Int = 0.0013, SEM = 0.0001) was significantly larger than in the object conditions (M = −0.0002, SEM = 0.0001, z = −2.58, p < 0.01, d = −1) but not significantly different from the robot conditions (M = −0.0001, SEM = 0.0001, z = 1.28, p = 0.2, d = −0.5). One implication of this is that the movement dynamics of another rat may elicit larger theta than the moving robot or stationary objects. Although this may be obligatory movement coding in the hippocampus, this dynamic might also serve to monotonically index rat, robot, and object based on this parameter.

As observed in previous work, it was expected that the object would result in a robust burst at beta frequency in the hippocampus due to learning of the object stimulus (Rangel et al., 2015). Instead, the observed increases in high-amplitude beta were more indicative of the high beta/low gamma activity observed in the hippocampus during object-place associations (Trimper et al., 2017). The amplitude of beta in the hippocampus during rearing events from rat–object interactions (Int = 0.0004, SEM = 1e-5) was significantly higher than during events from rat–rat (M = −0.0001, SEM = 1e-5, z = −4.9, p < 1e-6, d = −2) and rat–robot interactions (M = −0.0001, SEM = 1e-5, z = −4.17, p < 1e-4, d = −1). This too indicates that the rat is likely associating the object with its place and differentiates the object from the robot and rat, both of which are moving.

Discussion

Animals are inextricably embedded within an environment and situated within a rich social world, bound to active exploration in recursive loops of perception and action (Kirsh and Maglio, 1994; De Jaegher and Froese, 2009). Brain dynamics rapidly and transiently switch from exploring the external world to evaluating the internal effect of the world on the organism (Marshall et al., 2017). Artificial systems often lack the complexity, adaptivity and responsiveness of animate systems, but can mimic kinematic and dynamic properties that inanimate objects often lack. It is an open question in the literature as to whether the rat perceives the robot as an animate or inanimate object. This study presents data to suggest that brain regions preferentially dissociate between rat, robot, and object based on sensorimotor exploration, salience, and autonomic distress regulation, indicating that the robot bears similarity to both a rat and an object. Primarily, this study outlines a general approach for such experiments that emphasize naturalistic interactions and complementary analysis pipelines that are necessary to render a holistic picture of the behavioral and brain dynamics evoked during interactive neurorobotics experiments.

Dynamic behavioral data regarding inter-agent distance suggest that the rat may initially engage in risk assessment behaviors when interacting with the robot, whereas it more readily approaches a conspecific or stationary object. This may indicate that the rat feels safer approaching conspecifics and stationary objects than a robot. The distance between agents was also affected by the robot more often occupying the middle of the field and the rat showing a preference for the wall, a thigmotaxic strategy generally attributed to safety seeking (Lipkind et al., 2004). While that may have influenced the distance, the trajectories over time show a spiraling between rat and robot, which suggests that the rat was actively avoiding the robot. The trajectories also suggest that as time passes the rat may become habituated to the robot and allow for decreased inter-agent distance at the end of a trial (see Figure 5). This differs from two prior robot-rat studies, the Waseda rat and the Robogator, where rats’ engagement with the robot is primarily enemy avoidance (Choi and Kim, 2010; Shi et al., 2013). Instead, the rats in our study begin to engage in closer interactions across time rather than keeping their distance. This is more consistent with a behavioral study of the robot e-Puck and rats in which the robot elicits social behaviors from the rats (del Angel Ortiz et al., 2016).

In the present study, however, rats do show distress responses to the robot that exceed those to a conspecific or a novel object. Nevertheless, they demonstrate maximal anxiety on the empty open field (used to test anxiety, Prut and Belzung, 2003); this indicates that the robot on the open field might provide comfort or that it presents a degree of behavioral competition between curiosity and anxiety. Specifically, interactions with a robot elicited or perturbed immobility, grooming, and rearing behaviors. The authors note that the rat–rat interactions resulted in few instances of immobility due to the rats’ active engagement, and in reduced self-grooming, likely due to the availability of social grooming by the conspecific and the absence of distress with the conspecific. Rat conspecifics interacted heavily, engaging in coordinated exploration, following, interactive play, and anogenital exploration (also see Figure 5). This is consistent with findings detailed in a prior study (del Angel Ortiz et al., 2016).

From a design perspective, roboticists look for cues regarding whether the rat perceives the robot as more of an object or as an animal. Neuroimaging studies of human subjects viewing social androids and their movements addressed this question, discovering that the brain dissociates between androids and humans according to form and motion interactions (Saygin et al., 2012). Neural data in the present study demonstrate both robust and subtle differences in responses to the rat, the robot, and the object. Specifically, hippocampal theta during grooming differentiates the rat from the object; however, the robot (falling in between the two) is not significantly different from either. Here it is possible that the rat is coded according to its movement dynamics, which are missing from the object, while the moving robot carries some features of each.

In contrast, theta dynamics in the MOB are similar for robot and object while differentiating the rat. This was expected based on the complexity of the inherent biological odors of the rat. The increased amplitude of respiratory rhythms in the amygdala during alert immobility in response to the robot and object suggests autonomic regulation related to increased distress-related arousal when compared with a rat. This suggests that interacting with a rat may be more engaging and less distressing. This finding is consistent with the behavioral interaction data. Thus, the brain appears to differentiate between a rat and our robots, but also distinguishes between the robot and objects. These findings provide support for the context-based modulation of brain signals (Kalueff et al., 2016). They also imply that through iterative design of robots, one could eventually produce a robot for which many regions of the brain do not readily distinguish the robot from a rat.

Future directions will also involve collecting a larger data set for the purpose of examining transient gamma activity, especially during investigation of the other agent or object. Rhythms like high gamma indicate active processing of the external sensory world, while low gamma is likely related to regulating an animal’s internal interoceptive milieu (Kay et al., 2009). It is recommended to use techniques such as burst detection to capture the more transient agent-based brain responses within behavior. Future approaches should also examine inter-regional communication, such as coherence and dynamic coupling (Fries, 2015; Breston et al., 2021). Additionally, future studies should also incorporate a richer repertoire of stimuli and robots.

Comparing the neurobehavioral states evoked by conspecifics, robots, and objects may clue us in to some of the minimal requirements for an animal to perceive artificial agents as social others. A key insight from Datteri (2020) about the philosophical foundations of the field is that interactive biorobotics experiments by themselves do not necessarily tell us about how organisms interact with conspecifics or predators; Datteri suggests that we should examine these interactions on their own terms before drawing unwarranted conclusions from the observations. This does not preclude a comparative approach; it just requires that we first take the robot case on its own terms and then compare it with data from social, object, and predator interactions.

Thus, these initial data from our exemplar rodent-centered design study suggest that comparing the effects of rat, robot, and object on naturalistic behaviors is a promising direction for the field of interactive neurorobotics. A limitation of this study is that the robots’ motion dynamics were animated by human drivers, which introduces potential issues with anthropomorphism of interaction that can be better addressed using autonomous robots. However, WoZ is a necessary step in the development of autonomous systems, allowing for a diverse collection of movement dynamics that autonomous robots currently lack the complexity and sensitivity to exhibit. Follow-up studies will be performed using autonomous robotic systems, such as PiRat (Heath et al., 2018). Autonomous robots allow dynamics or functions to be systematically manipulated according to model-based reasoning.

The results of this study also have implications for engineering design. From an engineering perspective, key considerations of social robots are safety, interactivity, and robustness. The considerations must not only be viewed from the perspective of sound engineering design, but they must also be considered from the internal perspective of the animal meant to interact with the robot. For example, the observational data and quantitative analyses suggest that a key aspect of designing interactive robots is safety. In this case safety must be considered not only by making sure that the robot is designed with physical safety in mind but more importantly whether the animal interlocutor feels safe when interacting with the robot. Safety is a key first step toward the eventual goal of getting the animal to accept the robot as a potential social companion and, thus, a primary consideration in interactivity as well.

Safety and robustness must also be considered from the perspective of species-specific behaviors. A robot’s weight should be chosen carefully for the agents it will be interacting with. If the robot is too light, it can be easily tipped over, dented, or crushed. If the robot is too heavy, the weight can strain the motors, reduce speed or maneuverability, or cause damage to the environment or to the rodents. For rats, robot shells or covers should be designed so that they can be easily removed by users, but not by the animals, and made of a relatively non-porous material that can be easily cleaned in case of contamination by any substance. Robotic platforms made for interaction with non-human animals must be mostly waterproof. This is because urination and urine marking were common occurrences in our observations, as they are natural features of interactivity, and thus the robot’s shell or exterior coating must effectively seal the electrical components from an animal’s urine. We observed multiple occasions on which animals urine-marked the field, stationary objects, and occasionally conspecifics. Urine marking is a communicative act that can denote territoriality and dominance and carries rich social information (Leonardis et al., 2021). Cloth, fibers, or other such materials should be avoided or carefully tucked away from the rats’ access to prevent choking, shredding, or scent marking that would harm the rat or damage the device. Marking may bias future interactions if the robot is contaminated with social odor from another conspecific.

Interactive robots have visual, auditory, olfactory, tactile, and even gustatory aspects that should be actively taken into account during the design process. For instance, audition plays a key role. It is critical that interactive robots emit audio frequencies outside of the range of rodent distress calls, which induce panic, irritation, or stress responses. When designing interactive robots for animals it is also critical to take the animal’s preferred sensory systems into account, which for rats brings olfaction to the foreground and is why the robots in this study were tagged with olfactory stimuli. Contestabile et al. (2021) used a dynamic moving object as a control for complex social stimuli and found that mice prefer complex social stimuli where more multisensory information, such as tactile, visual, olfactory, and auditory cues, is available. They demonstrate that an object imbued with social odor does not recapitulate the complexities of social interaction, and they emphasize the importance of multisensory integration. Future approaches for designing interactions should emphasize the multisensory nature of the design problem.

Robotics has significant potential to offer animal research because its use enables experimenters to control complex experimental parameters and to test embodied computational models that interact with the real world (Webb, 2000). The dynamics of sociality are non-trivial and require convergent data regarding the model system. The holistic framework of capturing naturalistic behaviors in multiple contexts with fine-grained analyses sets forth rich neural and behavioral data to scaffold the design process of future social robots. The field of interactive neurorobotics allows for the examination of how robots evoke emergent behavior and brain dynamics in living creatures during a variety of agent-based interactions. This approach can be generalized to other animals and to humans. Social robotic interactions with humans also have affective dimensions that are indexed by autonomic signals, and design principles can be improved by taking detailed behavioral interactions and neural signaling into account. Future directions will be to use advanced dynamical systems methods for examining the interaction between rats and robots that are governed by autonomous algorithms. It is essential that we also look beyond these narrow experimental contexts and generalize these lessons to our own technologically enmeshed world. While there is no putting out the fire of our increasing integration with autonomous systems, we have the opportunity to use the methods and insights gained from interactive neurorobotics to mold them into cooperative companions.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation, to any qualified researcher. A summary of the behavioral, tracking and neural data has been made publicly available at https://github.com/ericleonardis/InteractiveNeuroroboticsFrontiers.

Ethics statement

The rodent study was reviewed and approved by the University of California, San Diego (UCSD) Institutional Animal Care and Use Committee (IACUC), a compliance, ethics, and integrity review committee housed within the UCSD Animal Welfare Program as part of UCSD Research Affairs. The work also adhered to NIH ethical guidelines and the UCSD laboratory in which the work took place was inspected and approved by the UCSD Animal Welfare Program and is fully accredited by the American Association for Accreditation of Laboratory Animal Care (AAALAC).

Author contributions

EL, LQ, and AC designed the study. EL, LQ, AC, RL-M, LSe, and LSc collected the data. LQ performed the neurosurgeries. EL, RK, RL-M, JW, and LB-G performed the behavioral data analysis. LB and EL performed the neural data analysis. EL, AC, LB, RL-M, and LSe wrote and revised the article. All authors contributed to the article and approved the submitted version.

Funding

This work was funded by NSF BRAIN EAGER 1451221; NIMH R01 5R01MH110514-02; and Kavli Institute for Brain and Mind.

Acknowledgments

We acknowledge the help and input from Nicklas Boyer, Scott Heath, Carlos Ramirez-Brinez, Jt Taufatofua, Ola Olsson, Marcelo Aguilar-Rivera, Emmanuel Gygi, Estelita Leija, and many more. We thank David Ball, Scott Heath, and the iRat team for developing the iRat platform.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2022.897603/full#supplementary-material

References

Adhikari, A., Lerner, T. N., Finkelstein, J., Pak, S., Jennings, J. H., Davidson, T. J., et al. (2015). Basomedial amygdala mediates top-down control of anxiety and fear. Nature 527, 179–185. doi: 10.1038/nature15698

Ahuja, N., Lobellová, V., Stuchlík, A., and Kelemen, E. (2020). Navigation in a space with moving objects: rats can avoid specific locations defined with respect to a moving robot. Front. Behav. Neurosci. 206:576350. doi: 10.3389/fnbeh.2020.576350

Amir, A., Lee, S. C., Headley, D. B., Herzallah, M. M., and Pare, D. (2015). Amygdala signaling during foraging in a hazardous environment. J. Neurosci. 35, 12994–13005. doi: 10.1523/JNEUROSCI.0407-15.2015

Ball, D., Heath, S., Wyeth, G., and Wiles, J. (2010). “iRat: Intelligent Rat Animat Technology,” in Proceedings of the 2010 Australasian Conference on Robotics and Automation, Australia.

Bartal, I. B. A., Decety, J., and Mason, P. (2011). Empathy and pro-social behavior in rats. Science 334, 1427–1430.

Bergan, J. F., Ben-Shaul, Y., and Dulac, C. (2014). Sex-specific processing of social cues in the medial amygdala. eLife 3:e02743.

Breston, L., Leonardis, E. J., Quinn, L. K., Tolston, M., Wiles, J., and Chiba, A. A. (2021). Convergent cross sorting for estimating dynamic coupling. Sci. Rep. 11:20374.

Choi, J. S., and Kim, J. J. (2010). Amygdala regulates risk of predation in rats foraging in a dynamic fear environment. Proc. Natl. Acad. Sci. U.S.A. 107, 21773–21777. doi: 10.1073/pnas.1010079108

Contestabile, A., Casarotto, G., Girard, B., Tzanoulinou, S., and Bellone, C. (2021). Deconstructing the contribution of sensory cues in social approach. Eur. J. Neurosci. 53, 3199–3211. doi: 10.1111/ejn.15179

Dantzer, R., Tazi, A., and Bluthé, R. M. (1990). Cerebral lateralization of olfactory-mediated affective processes in rats. Behav. Brain Res. 40, 53–60. doi: 10.1016/0166-4328(90)90042-d

Datteri, E. (2020). The logic of interactive biorobotics. Front. Bioeng. Biotechnol. 8:637. doi: 10.3389/fbioe.2020.00637

De Jaegher, H., and Froese, T. (2009). On the role of social interaction in individual agency. Adapt. Behav. 17, 444–460.

del Angel Ortiz, R., Contreras, C. M., Gutiérrez-Garcia, A. G., and González, M. F. M. (2016). Social interaction test between a rat and a robot: A pilot study. Int. J. Adv. Robot. Syst. 13:4.

Eichenbaum, H., and Otto, T. (1992). “The hippocampus and the sense of smell,” in Chemical Signals in Vertebrates, eds R. L. Doty and D. Müller-Schwarze (Boston, MA: Springer), 67–77.

Fernández-Teruel, A., and Estanislau, C. (2016). Meanings of self-grooming depend on an inverted U-shaped function with aversiveness. Nat. Rev. Neurosci. 17, 591–591. doi: 10.1038/nrn.2016.102

Flash, T., and Hogan, N. (1985). The coordination of arm movements: an experimentally confirmed mathematical model. J. Neurosci. 5, 1688–1703.

Fouse, A., Weibel, N., Hutchins, E., and Hollan, J. D. (2011). “ChronoViz: a system for supporting navigation of time-coded data,” in Proceedings of the CHI’11 Extended Abstracts on Human Factors in Computing Systems, New York, NY.

Gallagher, M., and Chiba, A. A. (1996). The amygdala and emotion. Curr. Opin. Neurobiol. 6, 221–227.

Gazzola, V., Rizzolatti, G., Wicker, B., and Keysers, C. (2007). The anthropomorphic brain: the mirror neuron system responds to human and robotic actions. Neuroimage 35, 1674–1684. doi: 10.1016/j.neuroimage.2007.02.003

Gergely, A., Compton, A. B., Newberry, R. C., and Miklósi, Á. (2016). Social interaction with an “Unidentified Moving Object” elicits A-not-B error in domestic dogs. PLoS One 11:e0151600. doi: 10.1371/journal.pone.0151600

Gianelli, S., Harland, B., and Fellous, J. M. (2018). A new rat-compatible robotic framework for spatial navigation behavioral experiments. J. Neurosci. Methods 294, 40–50. doi: 10.1016/j.jneumeth.2017.10.021

Golani, I., Benjamini, Y., and Eilam, D. (1993). Stopping behavior: constraints on exploration in rats (Rattus norvegicus). Behav. Brain Res. 53, 21–33. doi: 10.1016/s0166-4328(05)80263-3

Goosens, K. A., and Maren, S. (2001). Contextual and auditory fear conditioning are mediated by the lateral, basal, and central amygdaloid nuclei in rats. Learn. Memory 8, 148–155. doi: 10.1101/lm.37601

Gordon, G., Fonio, E., and Ahissar, E. (2014). Learning and control of exploration primitives. J. Comput. Neurosci. 37, 259–280.

Gourévitch, B., Kay, L. M., and Martin, C. (2010). Directional coupling from the olfactory bulb to the hippocampus during a go/no-go odor discrimination task. J. Neurophysiol. 103, 2633–2641. doi: 10.1152/jn.01075.2009

Heath, S., Ramirez-Brinez, C. A., Arnold, J., Olsson, O., Taufatofua, J., Pounds, P., et al. (2018). “PiRat: An autonomous framework for studying social behaviour in rats and robots,” in Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Manhattan, NY. doi: 10.1109/iros.2018.8594060

Heck, D. H., McAfee, S. S., Liu, Y., Babajani-Feremi, A., Rezaie, R., Freeman, W. J., et al. (2017). Breathing as a fundamental rhythm of brain function. Front. Neural Circuits 10:115. doi: 10.3389/fncir.2016.00115

Heider, F., and Simmel, M. (1944). An experimental study of apparent behavior. Am. J. Psychol. 57, 243–259.

Hitti, F. L., and Siegelbaum, S. A. (2014). The hippocampal CA2 region is essential for social memory. Nature 508, 88–92.

Hoffman, G., and Ju, W. (2014). Designing robots with movement in mind. J. Hum. Robot Interact. 3, 91–122.

Ishii, H., Ogura, M., Kurisu, S., Komura, A., Takanishi, A., Iida, N., et al. (2006). “Experimental study on task teaching to real rats through interaction with a robotic rat,” in Proceedings 9th International Conference on Simulation of Adaptive Behavior, SAB 2006, Berlin.

Jacinto, L. R., Cerqueira, J. J., and Sousa, N. (2016). Patterns of theta activity in limbic anxiety circuit preceding exploratory behavior in approach-avoidance conflict. Front. Behav. Neurosci. 10:171. doi: 10.3389/fnbeh.2016.00171

Jacobs, L. F. (2012). From chemotaxis to the cognitive map: the function of olfaction. Proc. Natl. Acad. Sci. U.S.A. 109, 10693–10700. doi: 10.1073/pnas.1201880109

Jacobs, L. F. (2022). How the evolution of air breathing shaped hippocampal function. Philos. Trans. R. Soc. Lond. B Biol. Sci. 377:20200532. doi: 10.1098/rstb.2020.0532

Kalueff, A. V., Stewart, A. M., Song, C., Berridge, K. C., Graybiel, A. M., and Fentress, J. C. (2016). Neurobiology of rodent self-grooming and its value for translational neuroscience. Nat. Rev. Neurosci. 17, 45–59. doi: 10.1038/nrn.2015.8

Kay, L. M. (2005). Theta oscillations and sensorimotor performance. Proc. Natl. Acad. Sci. U.S.A. 102, 3863–3868. doi: 10.1073/pnas.0407920102

Kay, L. M. (2014). Circuit oscillations in odor perception and memory. Progress Brain Res. 208, 223–251.

Kay, L. M., Beshel, J., Brea, J., Martin, C., Rojas-Líbano, D., and Kopell, N. (2009). Olfactory oscillations: the what, how and what for. Trends Neurosci. 32, 207–214.

Kirsh, D., and Maglio, P. (1994). On distinguishing epistemic from pragmatic action. Cogn. Sci. 18, 513–549. doi: 10.1016/j.actpsy.2007.02.001

Lakatos, G., Gácsi, M., Konok, V., Bruder, I., Bereczky, B., Korondi, P., et al. (2014). Emotion attribution to a non-humanoid robot in different social situations. PLoS One 9:e114207. doi: 10.1371/journal.pone.0114207

Lebedev, M. A., Pimashkin, A., and Ossadtchi, A. (2018). Navigation patterns and scent marking: underappreciated contributors to hippocampal and entorhinal spatial representations? Front. Behav. Neurosci. 12:98. doi: 10.3389/fnbeh.2018.00098

Leonardis, E., Semenuks, A., and Coulson, S. (2021). What is indexical and iconic in animal blending? Contributed symposia on conceptual blending in animal cognition. Cogn. Sci. Soc. Conf. 2021:27.

Lever, C., Burton, S., and O’Keefe, J. (2006). Rearing on hind legs, environmental novelty, and the hippocampal formation. Rev. Neurosci. 17, 111–134. doi: 10.1515/revneuro.2006.17.1-2.111

Lipkind, D., Sakov, A., Kafkafi, N., Elmer, G. I., Benjamini, Y., and Golani, I. (2004). New replicable anxiety-related measures of wall vs. center behavior of mice in the open field. J. Appl. Physiol. 97, 347–359. doi: 10.1152/japplphysiol.00148.2004

Marshall, A. C., Gentsch, A., Jelinčić, V., and Schütz-Bosbach, S. (2017). Exteroceptive expectations modulate interoceptive processing: repetition-suppression effects for visual and heartbeat evoked potentials. Sci. Rep. 7:16525. doi: 10.1038/s41598-017-16595-9

Mercer, A., Trigg, H. L., and Thomson, A. M. (2007). Characterization of neurons in the CA2 subfield of the adult rat hippocampus. J. Neurosci. 27, 7329–7338.

Mitchell, D. (1976). Experiments on neophobia in wild and laboratory rats: a reevaluation. J. Comp. Physiol. Psychol. 90, 190–197. doi: 10.1037/h0077196

Mobbs, D., and Kim, J. J. (2015). Neuroethological studies of fear, anxiety, and risky decision-making in rodents and humans. Curr. Opin. Behav. Sci. 5, 8–15. doi: 10.1016/j.cobeha.2015.06.005

Moberly, A. H., Schreck, M., Bhattarai, J. P., Zweifel, L. S., Luo, W., and Ma, M. (2018). Olfactory inputs modulate respiration-related rhythmic activity in the prefrontal cortex and freezing behavior. Nat. Commun. 9:1528. doi: 10.1038/s41467-018-03988-1

Modlinska, K., Stryjek, R., and Pisula, W. (2015). Food neophobia in wild and laboratory rats (multi-strain comparison). Behav. Process. 113, 41–50. doi: 10.1016/j.beproc.2014.12.005

Nance, P. W., and Hoy, C. S. (1996). Assessment of the autonomic nervous system. Phys. Med. Rehabil. 10, 15–35.

Narins, P. M., Grabul, D. S., Soma, K. K., Gaucher, P., and Hödl, W. (2005). Cross-modal integration in a dart-poison frog. Proc. Natl. Acad. Sci. U.S.A. 102, 2425–2429. doi: 10.1073/pnas.0406407102

Narins, P. M., Hödl, W., and Grabul, D. S. (2003). Bimodal signal requisite for agonistic behavior in a dart-poison frog, Epipedobates femoralis. Proc. Natl. Acad. Sci. U.S.A. 100, 577–580. doi: 10.1073/pnas.0237165100

Paré, D., Collins, D. R., and Pelletier, J. G. (2002). Amygdala oscillations and the consolidation of emotional memories. Trends Cogn. Sci. 6, 306–314.

Pereira, T. D., Shaevitz, J. W., and Murthy, M. (2020). Quantifying behavior to understand the brain. Nat. Neurosci. 23, 1537–1549.

Pereira, T. D., Tabris, N., Matsliah, A., Turner, D. M., Li, J., Ravindranath, J., et al. (2022). SLEAP: A deep learning system for multi-animal pose tracking. Nat. Methods 19, 486–495. doi: 10.1038/s41592-022-01426-1

Pitkänen, A., Pikkarainen, M., Nurminen, N., and Ylinen, A. (2000). Reciprocal connections between the amygdala and the hippocampal formation, perirhinal cortex, and postrhinal cortex in rats: a review. Ann. N.Y. Acad. Sci. 911, 369–391. doi: 10.1002/hipo.20314

Prut, L., and Belzung, C. (2003). The open field as a paradigm to measure the effects of drugs on anxiety-like behaviors: a review. Eur. J. Pharmacol. 463, 3–33. doi: 10.1016/s0014-2999(03)01272-x

Quinn, L. K., Schuster, L. P., Aguilar-Rivera, M., Arnold, J., Ball, D., Gygi, E., et al. (2018). When rats rescue robots. Anim. Behav. Cogn. 5, 368–379.

Rangel, L. M., Chiba, A. A., and Quinn, L. K. (2015). Theta and beta oscillatory dynamics in the dentate gyrus reveal a shift in network processing state during cue encounters. Front. Syst. Neurosci. 9:96. doi: 10.3389/fnsys.2015.00096

Rojas-Líbano, D., Frederick, D. E., Egaña, J. I., and Kay, L. M. (2014). The olfactory bulb theta rhythm follows all frequencies of diaphragmatic respiration in the freely behaving rat. Front. Behav. Neurosci. 8:214. doi: 10.3389/fnbeh.2014.00214

Rutte, C., and Taborsky, M. (2008). The influence of social experience on cooperative behaviour of rats (Rattus norvegicus): direct vs generalised reciprocity. Behav. Ecol. Sociobiol. 62, 499–505.

Saygin, A. P., Chaminade, T., Ishiguro, H., Driver, J., and Frith, C. (2012). The thing that should not be: predictive coding and the uncanny valley in perceiving human and humanoid robot actions. Soc. Cogn. Affect. Neurosci. 7, 413–422.

Saygin, A. P., Cicekli, I., and Akman, V. (2000). Turing test: 50 years later. Minds Mach. 10, 463–518.

Saygin, A. P., Wilson, S. M., Hagler, D. J. Jr., Bates, E., and Sereno, M. I. (2004). Point-light biological motion perception activates human premotor cortex. J. Neurosci. 24, 6181–6188.

Schmickl, T., Szopek, M., Mondada, F., Mills, R., Stefanec, M., Hofstadler, D. N., et al. (2021). Social integrating robots suggest mitigation strategies for ecosystem decay. Front. Bioeng. Biotechnol. 9:612605. doi: 10.3389/fbioe.2021.612605

Shi, Q., Ishii, H., Kinoshita, S., Konno, S., Takanishi, A., Okabayashi, S., et al. (2013). A rat-like robot for interacting with real rats. Robotica 31, 1337–1350.

Smith, A. S., Williams Avram, S. K., Cymerblit-Sabba, A., Song, J., and Young, W. S. (2016). Targeted activation of the hippocampal CA2 area strongly enhances social memory. Mol. Psychiatry 21, 1137–1144. doi: 10.1038/mp.2015.189

Song, C., Berridge, K. C., and Kalueff, A. V. (2016). ‘Stressing’ rodent self-grooming for neuroscience research. Nat. Rev. Neurosci. 17:591.

Steckenfinger, S. A., and Ghazanfar, A. A. (2009). Monkey visual behavior falls into the uncanny valley. Proc. Natl. Acad. Sci. U.S.A. 106, 18362–18366.

Sturman, O., von Ziegler, L., Schläppi, C., Akyol, F., Privitera, M., Slominski, D., et al. (2020). Deep learning-based behavioral analysis reaches human accuracy and is capable of outperforming commercial solutions. Neuropsychopharmacology 45, 1942–1952. doi: 10.1038/s41386-020-0776-y

Tang, W. (2019). Hippocampus and Rat Prefrontal Cortex. Scidraw.io: OpenSource scientific drawings. Genève: Zenodo.

Todorov, E., and Jordan, M. I. (1998). Smoothness maximization along a predefined path accurately predicts the speed profiles of complex arm movements. J. Neurophysiol. 80, 696–714. doi: 10.1152/jn.1998.80.2.696

Tort, A. B., Brankačk, J., and Draguhn, A. (2018a). Respiration-entrained brain rhythms are global but often overlooked. Trends Neurosci. 41, 186–197.

Tort, A. B., Ponsel, S., Jessberger, J., Yanovsky, Y., Brankačk, J., and Draguhn, A. (2018b). Parallel detection of theta and respiration-coupled oscillations throughout the mouse brain. Sci. Rep. 8:6432. doi: 10.1038/s41598-018-24629-z

Trimper, J. B., Galloway, C. R., Jones, A. C., Mandi, K., and Manns, J. R. (2017). Gamma oscillations in rat hippocampal subregions dentate gyrus, CA3, CA1, and subiculum underlie associative memory encoding. Cell Rep. 21, 2419–2432. doi: 10.1016/j.celrep.2017.10.123

van Strien, N. M., Cappaert, N. L. M., and Witter, M. P. (2009). The anatomy of memory: an interactive overview of the parahippocampal–hippocampal network. Nat. Rev. Neurosci. 10, 272–282. doi: 10.1038/nrn2614

Keywords: robot, interaction, amygdala, hippocampus, olfactory bulb, social robotics, local field potential, immobility behavior

Citation: Leonardis EJ, Breston L, Lucero-Moore R, Sena L, Kohli R, Schuster L, Barton-Gluzman L, Quinn LK, Wiles J and Chiba AA (2022) Interactive neurorobotics: Behavioral and neural dynamics of agent interactions. Front. Psychol. 13:897603. doi: 10.3389/fpsyg.2022.897603

Received: 16 March 2022; Accepted: 27 June 2022;

Published: 17 August 2022.

Edited by:

Thierry Chaminade, Centre National de la Recherche Scientifique (CNRS), France

Reviewed by:

Bradly J. Alicea, Orthogonal Research and Education Laboratory, United States

Ruediger Dillmann, Karlsruhe Institute of Technology (KIT), Germany

Copyright © 2022 Leonardis, Breston, Lucero-Moore, Sena, Kohli, Schuster, Barton-Gluzman, Quinn, Wiles and Chiba. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Eric J. Leonardis, ejleonar@ucsd.edu
