- Center Leo Apostel, Vrije Universiteit Brussel, Brussels, Belgium

As a result of the identification of “identity” and “indistinguishability” and strong experimental evidence for the presence of the associated Bose-Einstein statistics in human cognition and language, we argued in previous work for an extension of the research domain of quantum cognition. In addition to quantum complex vector spaces and quantum probability models, we showed that quantization itself, with words as quanta, is relevant and potentially important to human cognition. In the present work, we build on this result and introduce a powerful radiation quantization scheme for human cognition. We show that the lack of independence of the Bose-Einstein statistics compared to the Maxwell-Boltzmann statistics can be explained by the presence of a “meaning dynamics,” which causes words to be attracted to the same words. As a result, words clump together in the same states, a phenomenon well known for photons in the early years of quantum mechanics, where it led to fierce disagreements between Planck and Einstein. Using a simple example, we introduce all the elements needed to get a better and more detailed view of this “meaning dynamics,” such as micro and macro states, and Maxwell-Boltzmann, Bose-Einstein and Fermi-Dirac numbers and weights, and we compare this example and its graphs with the radiation quantization scheme of a Winnie the Pooh story and its graphs. By connecting a concept directly to human experience, we show that entanglement is a necessity for preserving the “meaning dynamics” we identified, and it becomes clear in what way Fermi-Dirac addresses human memory. Within the human mind, as a crucial aspect of memory, in spaces with internal parameters, identical words can nevertheless be assigned different states and hence realize locally and contextually the necessary distinctiveness, structured by a Pauli exclusion principle, for human thought to thrive.

1. Introduction

Quantum cognition explores the possibility of using quantum structures to model aspects of human cognition (Aerts and Aerts, 1995; Khrennikov, 1999; Atmanspacher, 2002; Gabora and Aerts, 2002; Bruza and Cole, 2005; Busemeyer et al., 2006; Aerts, 2009a; Bruza and Gabora, 2009; Lambert Mogilianski Zamir and Zwirn, 2009; Aerts and Sozzo, 2011; Busemeyer and Bruza, 2012; Haven and Khrennikov, 2013; Dalla Chiara et al., 2015; Melucci, 2015; Pothos et al., 2015; Blutner and beim Graben, 2016; Moreira and Wichert, 2016; Broekaert et al., 2017; Gabora and Kitto, 2017; Surov, 2021). So far, it is primarily the structures of the complex vector space of quantum states and the quantum probability model that have been used fruitfully to describe aspects of human cognition. Recently, we have shown that quantum statistics, and more specifically the Bose-Einstein statistics, is also prominently and convincingly present in human cognition, and more specifically in the structure of human language (Aerts and Beltran, 2020, 2022). The presence of the Bose-Einstein statistics in quantum mechanics is associated with the “identity” and “indistinguishability” of quantum particles, and is probably still one of the most poorly understood aspects of quantum reality (French and Redhead, 1988; Saunders, 2003; Muller and Seevinck, 2009; Krause, 2010; Dieks and Lubberdink, 2020). There are connections to entanglement, and in linear quantum optics the “indistinguishability” of photons is now used experimentally to fabricate qubits, so that “indistinguishability” is considered a “resource” for quantum computing. Nevertheless, it remains one of the most mysterious quantum properties, and it is structurally different from entanglement (Franco and Compagno, 2018).
The original interest of one of us in identifying in human cognition and language an equivalent of this situation of “indistinguishability” in quantum mechanics, leading to the Bose-Einstein statistics, was motivated by working on a specific interpretation of quantum mechanics, called the “conceptuality interpretation” (Aerts, 2009b). Thus, this original motivation was aimed more at increasing the understanding and explanation of what “identical” and “indistinguishable” quantum particles really are, rather than intended to introduce an additional rationale for research in quantum cognition. With a focus still primarily on this original motivation, work continued on the identification of a Bose-Einstein-like statistics by one of us, with a PhD student and collaborator, and more and better experimental evidence was collected for the superiority of Bose-Einstein statistics in modeling specific situations in human language as compared to Maxwell-Boltzmann statistics (Aerts et al., 2015). However, only by switching to a completely different approach, because the original approach of finding experimental evidence was not fully satisfying, was a layer of insight opened up, showing that the presence of the Bose-Einstein statistics in human cognition and language, makes a new unexplored structure of quantum mechanics important, and perhaps even crucial, to the research field of quantum cognition (Aerts and Beltran, 2020, 2022). The reason we dare to assert this, while being aware of the many aspects that remain to be explored, is because the “radiation and quantization scheme that we were able to identify as uniquely structurally underlying this presence of the Bose-Einstein statistics in human cognition and language” also appears to be the underlying structure of Zipf's law, and its continuous version, Pareto's law (Pareto, 1897; Zipf, 1935, 1949). 
Zipf's and Pareto's laws are empirical laws, without a satisfactory theoretical foundation about which there is even a minimum of consensus, and yet they show up spontaneously in many different situations in many domains. These range from rankings of the size of cities (Gabaix, 1999) and rankings of the size of income (Aoki and Makoto, 2017) to rankings that seem much more marginal, such as the number of people who watch the same TV station (Eriksson et al., 2013), the rankings of notes in music (Zanette, 2006), or the rankings of cells' transcriptomes (Lazardi et al., 2021). Thus, the radiation and quantization scheme that we propose in detail in this article can be the foundation of these dynamical situations, where Zipf's or Pareto's law is empirically established. But the radiation and quantization scheme we propose is much richer than the simple ranking that gives rise to Zipf's law, although it contains and explains it. It introduces, for example, the notion of “energy level,” and thereby the possibility of giving an energetic foundation to the dynamics underlying Zipf's and Pareto's laws. As is explicitly shown in the example we analyze, the notions of micro and macro states are introduced, together with the associated entropy as a governing factor for this dynamics. This means that our approach can have broad application and value for very general and widely present dynamical situations in different domains of science, among others in psychology and economics.

In Section 2 we outline the steps that took place in the identification of the Bose-Einstein statistics during the first phase, mainly also to show why it was difficult to find enough experimental evidence to push that approach through to a satisfactory conclusion. In Section 3, we analyze the Planck and Wien radiation laws, which were already previously an inspiration to us, but never directly. We explain how a direct application of the continuous functions, Bose-Einstein and Maxwell-Boltzmann, led to a totally different, and much more powerful approach in terms of identifying the Bose-Einstein statistics in human cognition and language. We outline in what way the historical discussions that took place, between Planck, Einstein, and Ehrenfest, before Einstein made the prediction of the possibility of a Bose-Einstein condensate for a boson gas in 1925, inspired and helped us to properly develop the radiation quantization scheme, the elaboration of which is the subject of this article. We illustrate the radiation and quantization scheme using the example story of Winnie the Pooh, the first story we analyzed in Aerts and Beltran (2020), and illustrate the radiation quantization scheme by means of the graphs of this Winnie the Pooh story. In Section 4, we then further develop this radiation and quantization scheme. We identify the lack of independence embedded in the Bose-Einstein statistical probabilities, as clearly and sharply put forward by Einstein and Ehrenfest for the radiation and quantization scheme of light, as caused by a “dynamics of meaning” intrinsic to human creations by what humans and their creations are. We analyze this “dynamics of meaning” in detail using an example of four words distributed over seven energy levels with total energy equal to seven. Using the example, we show how micro and macro states are formed, and how the exact combinatorial-based Maxwell-Boltzmann, Bose-Einstein, and Fermi-Dirac functions, are calculated. 
We set up the graphs of this example, and compare them to the graphs of the Winnie the Pooh story. We elaborate on how to introduce “that which radiates” into the scheme, and explain how in a natural way this must possess the form of a memory structure, more specifically the human memory in the case of human cognition and language. We analyze, by grounding concepts in human experience, how entanglement is indispensable when texts seek precision of formulation and maximization of meaning. The bosonic and fermionic structures can also be understood from this grounding, the bosonic present as the basic element of communication, thus of language, and the fermionic present as the basic element of “that which radiates,” “the speaker,” “the writer,” in short “the memory.”

2. Identity and Indistinguishability

Our original intuition, which set us on the track to identify “identity” and “indistinguishability” in human cognition, and more specifically in human language, was the following. If the expression “eight cats” comes up in a story, then generally these cats are completely “identical” and “indistinguishable” from each other within that story, and within the way we use the expression “eight cats” in human language. This holds at least when we consider the story in its generality, such that it can be read and/or listened to by any human mind linguistically capable of doing so; this nuance is important when we introduce the difference between bosonic and fermionic structures in Section 4. However, “identity” and “indistinguishability” for quantum particles in quantum mechanics is not simply an epistemic matter, but also involves two very different statistical behaviors, compared to how classical particles behave. More specifically, the Maxwell-Boltzmann statistics, which describes the behavior of classical particles, is replaced by the Bose-Einstein statistics when the quantum particles are bosons, and by the Fermi-Dirac statistics when the quantum particles are fermions. Bosons are the particles of the quantum forces: electromagnetism and its photons, the weak force and its W and Z bosons, and the strong force and its gluons. They are further characterized by carrying a spin of integer value, i.e., spin 0, spin 1, spin 2, etc. Fermions, on the other hand, are particles of matter: quarks, electrons, muons, tauons and their respective neutrinos. They are further characterized by carrying a spin of half-integer value, i.e., spin 1/2, spin 3/2, spin 5/2, etc. For the replacement in human language of the Maxwell-Boltzmann statistics by a statistics that would be closer to Bose-Einstein, we were initially guided by a specific reasoning, before we began a systematic search for evidence for it.
Let us illustrate this reasoning with an example.

Suppose we go to a farm where there are about as many cats available as dogs, and we ask the farmer to choose two animals for us, where each animal is either a cat or a dog. And let us suppose that the farmer chooses for us, with probability 1/2 to choose a cat, and probability 1/2 to choose a dog. We also make the hypothesis that the farmer's choice of the first animal does not affect the farmer's choice of the second animal, e.g., asking the farmer to choose at random based on the toss of a non-biased coin. In that case, it is easy to check that we have probability 1/4 = 1/2·1/2 that we are offered by the farmer two cats, probability 1/4 = 1/2·1/2 that we are offered two dogs, and probability 1/2 = 1/2·1/2+1/2·1/2 that we are offered a cat and a dog. This is a situation well described using the Maxwell-Boltzmann statistics, and indeed, the way these probabilities form, as products of independent individual probabilities for the animals separately, is typical of the Maxwell-Boltzmann statistics. We obtain a Maxwell-Boltzmann description in its optimal detail if we consider the farmer's choice of “a cat and a dog” different from the choice of “a dog and a cat.” For example, if the cats and dogs were put in two baskets, and we can easily distinguish the two baskets, and hence also the choices “cat in the first basket and dog in the second basket” is easy to distinguish from “dog in the first basket and cat in the second basket.” The probabilities then become 1/4, 1/4, 1/4 and 1/4, each being the product of the independent probabilities for cat or dog, 1/2 and 1/2. 
In the real situation of the example, the difference between the farmer offering “a cat and a dog” or “a dog and a cat” is not relevant, and hence we arrived at 1/4, 1/4 and 1/2 for the respective probabilities, and we will call this a “Maxwell-Boltzmann description with presence of epistemic indistinguishability” between “cat and dog” and “dog and cat,” the underlying statistics indeed remains Maxwell-Boltzmann. However, the Bose-Einstein statistics intrinsically deviates from this Maxwell-Boltzmann with epistemic indistinguishability. Indeed, Bose-Einstein assigns 1/3, 1/3, and 1/3 probabilities to “two cats,” “two dogs,” and “a cat and a dog,” respectively. Let us develop further our initial reasoning on this issue to show how we saw a way to support the presence of the Bose-Einstein probabilities in human cognition. Indeed, our initial reasoning argued that, although they clearly cannot arise from products of probabilities of the farmer's independent separate choices, they may nevertheless be understood if we consider human cognition and human language more closely. Let us suppose that it is not the farmer who chooses, but that at home the children, and there are two of them, a son and a daughter, are doing the choosing. And the three choices are presented to them like this, what do you wish, two kittens, two puppies, or a kitten and a puppy. All sorts of factors can play into how such a choice turns out, but there seems no reason to assume that it will turn out to be a Maxwell-Boltzmann choice with epistemic indistinguishability between “a kitten and a puppy” and “a puppy and a kitten,” and hence with probabilities 1/4, 1/4 and 1/2 for the choices of the three possibilities, “two kittens,” “two puppies” or “a kitten and a puppy.” On the contrary, it may well be the case that “a cat and a dog” as a choice is rather problematically presented to the children by the parents, and that this alternative is even assigned less than 1/3 probability. 
On the other hand, suppose the children do not agree, and it is decided to let them choose separately and independently from each other, then Maxwell-Boltzmann with epistemic indistinguishability and hence probabilities 1/4, 1/4 and 1/2 comes back into the picture.
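
The two sets of probabilities in the farm example can be reproduced by direct enumeration. The following is a minimal sketch, assuming fair and independent choices for the Maxwell-Boltzmann case and equal weight per unordered combination for the Bose-Einstein case; the function and variable names are ours and purely illustrative.

```python
from fractions import Fraction
from itertools import combinations_with_replacement, product

ANIMALS = ("cat", "dog")


def maxwell_boltzmann(n_choices=2):
    """Independent fair choices; ordered outcomes are merged into unordered ones
    (the "epistemic indistinguishability" of the text)."""
    p_ordered = Fraction(1, len(ANIMALS)) ** n_choices
    probs = {}
    for ordered in product(ANIMALS, repeat=n_choices):
        key = tuple(sorted(ordered))
        probs[key] = probs.get(key, 0) + p_ordered
    return probs


def bose_einstein(n_choices=2):
    """Assumption: every unordered combination receives the same weight."""
    combos = list(combinations_with_replacement(ANIMALS, n_choices))
    return {combo: Fraction(1, len(combos)) for combo in combos}


mb = maxwell_boltzmann()
be = bose_einstein()
for combo in be:
    print(combo, "Maxwell-Boltzmann:", mb[combo], "Bose-Einstein:", be[combo])
```

The Maxwell-Boltzmann values arise as sums of products of independent probabilities, whereas the Bose-Einstein values cannot be factorized in that way, which is the lack of independence discussed above.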

This “indistinguishability,” of concepts, along with “lack of independence,” of corresponding probabilities, and how it possibly would appear in human cognition and language, as illustrated by the example of “cat” and “dog” above, was pondered about by one of the authors in the 2000s, during the emergence of the research domains of quantum cognition and quantum information retrieval (Aerts and Aerts, 1995; Khrennikov, 1999; Atmanspacher, 2002; Aerts and Czachor, 2004; Van Rijsbergen, 2004; Widdows, 2004; Bruza and Cole, 2005; Busemeyer et al., 2006). A first attempt to find experimental evidence for the idea took place in the years that followed by using how aspects of human cognition could be investigated starting from customized searches on the World-Wide Web. More specifically, combinations of quantities of cats and dogs were considered, in varying sizes, such as “eleven cats,” “ten cats and one dog,” “nine cats and two dogs,” “eight cats and three dogs,” and so on, up to “two cats and nine dogs,” “one cat and ten dogs,” ending with “eleven dogs.” So the combinations were chosen in such a way that the sum of the number of cats and dogs always equals eleven. If we think back to the farmer who lives on a farm with many cats and dogs who would be asked to fill eleven baskets, in each basket a cat or a dog, again it is the Maxwell-Boltzmann distribution that comes up. The situation of “five cats and six dogs” will be much more common than the situation of “eleven cats,” because each basket will be filled in an independent manner with either a cat or a dog, with probability 1/2 for this choice, bearing in mind that there are as many cats as dogs on the farm, and the farmer is assumed to choose in an independent manner for each basket again. The idea was to search the frequency of occurrence of these twelve sentences with varying combinations of numbers of cats and dogs on the World-Wide Web using the Google search engine. 
The underlying idea was identical to that expressed in the example above, namely, that the human mind chooses differently from the way cats and dogs end up in baskets through the farmer's choices, and more specifically, that the Bose-Einstein statistics might provide a better model for this human way of choosing than the Maxwell-Boltzmann statistics. The results indeed showed that the Maxwell-Boltzmann statistics is not respected by the frequencies of occurrence of these sentences on the World-Wide Web, and that the Bose-Einstein statistics may well provide a better model (see Section 4.3 of Aerts, 2009b). The results were published in 2009, a year which, after a very active second half of the 2000s with several conference proceedings, also saw the first comprehensive special issue on “Quantum Cognition” (Bruza and Gabora, 2009). It took several years before Tomas Veloz, in the work for his PhD, undertook a much more systematic study, starting from the same idea, but now adding pairs of concepts other than “cat, dog,” such as “sister, brother,” “son, daughter,” etc., and also considering sums other than 11. A cognitive experiment was also set up, in which participants were asked to choose between several of the proposed alternatives. This time, it became clear in a much more convincing way that Bose-Einstein provided a better model than Maxwell-Boltzmann for the outcomes obtained in the considered cases (Aerts et al., 2015).
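
To make the contrast for the eleven baskets concrete, the sketch below tabulates the two idealized predictions for the twelve sentences. It assumes probability 1/2 per basket for Maxwell-Boltzmann, as in the farmer example, and the simplest Bose-Einstein case in which all twelve combinations are equally weighted; the models actually fitted in the cited studies may differ, and the helper names are ours.

```python
from fractions import Fraction
from math import comb

TOTAL = 11  # number of baskets, each holding a cat or a dog


def mb_probability(cats, total=TOTAL):
    """Binomial probability of `cats` cats when every basket is filled
    independently with probability 1/2."""
    return Fraction(comb(total, cats), 2 ** total)


def be_probability(total=TOTAL):
    """Assumption: each of the total + 1 combinations is equally likely."""
    return Fraction(1, total + 1)


for cats in range(TOTAL, -1, -1):
    dogs = TOTAL - cats
    print(f"{cats:2d} cats, {dogs:2d} dogs: "
          f"MB = {float(mb_probability(cats)):.4f}, "
          f"BE = {float(be_probability()):.4f}")
```

Under these assumptions “five cats and six dogs” is 462 times as probable as “eleven cats” in the Maxwell-Boltzmann case, while the Bose-Einstein case treats them alike.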

Both in Aerts (2009b) and in Aerts et al. (2015), the evidence gathered from searches on the World-Wide Web had a weak element, namely that search engines such as Google or Yahoo do not make a very reliable count of the number of web pages, while these numbers of web pages played an important role in calculating the probabilities, and thus in comparing Bose-Einstein statistics with Maxwell-Boltzmann statistics as a model for the results obtained. The idea, then, was to address the relative weakness of previous attempts to find experimental evidence by replacing searches on search engines such as Google and Yahoo with searches in corpuses of documents. More specifically, the use of the corpuses “Google Books” (https://www.english-corpora.org/googlebooks/x.asp), “News on Web” (NOW) (https://corpus.byu.edu/now/), and “Corpus of Contemporary American English” (COCA) (https://corpus.byu.edu/coca/) was investigated. With searches on these corpuses of documents one was assured of the exactness of the frequencies of occurrence indicated by a search, so that the probabilities calculated from them carried great experimental reliability. The corpuses proved perfectly suited to study entanglement in human cognition and language (Beltran and Geriente, 2019). However, with regard to Maxwell-Boltzmann and Bose-Einstein, the sentences needed to test the difference between the two statistics turned out to occur too rarely even in Google Books, the largest of the three corpuses. Most searches for these sentences led to zero hits, mainly because of the unconventional nature of their content, as there are not many stories where, for example, “seven cats and four dogs” is mentioned.
When it became clear that Google Books is also the largest corpus available anyway, we knew that only the World-Wide Web is large enough to demonstrate “in this way” the superior modeling power in human cognition of the Bose-Einstein statistics over the Maxwell-Boltzmann statistics. The PhD student among the authors of the present article then tried to use Google anyway, and decided to count the hits manually. This was possible because, with the current size of the World-Wide Web, the “numbers of hits” for sentences such as “seven cats and four dogs” came to between 100 and 250, few enough to count manually and thus to arrive at a reliable result for the frequencies of occurrence. Even more convincingly than in previous studies, the Bose-Einstein distribution proved to yield a better model for the collected data than the Maxwell-Boltzmann distribution (Beltran, 2021). At this stage, however, having found it impossible to test the original idea in even the largest of the available corpuses of documents, namely Google Books, we began to look for other possible ways to determine the nature of the underlying statistics of human cognition and language. And it turned out that a much more direct experimental test was indeed possible than the ones we had tried so far.

3. A Radiation and Quantization Scheme for Human Cognition

During the reflections set forth in Section 2, while in pursuit of the Bose-Einstein statistics in human cognition and language, the closest example of a Bose-Einstein statistics in physical reality was also always a subject of our reflection. This closest example is that of electromagnetic radiation, light, consisting of photons, which are bosons, and therefore behave following the Bose-Einstein statistics. There is also a historically very interesting aspect to this example of “light with its photons,” for it is the study of how a material entity that is heated begins to emit light radiation that led scientists on the path to quantum mechanics. Let us write down the radiation law for light, for its form has played a role in our further investigation of the presence of the Bose-Einstein statistics in human cognition and language.

$$B(\nu, T) = \frac{2h\nu^3}{c^2}\,\frac{1}{e^{\frac{h\nu}{kT}} - 1} \tag{1}$$

The quantity B(ν, T) is the amount of energy, per unit surface area, per unit time, per unit solid angle, per unit frequency at frequency ν that is radiated by a material entity that has been heated. The temperature T is measured in degrees Kelvin, hence with reference to the absolute zero. Three constants occur in this radiation law for light, h is Planck's constant, c is the speed of light, and k is Boltzmann's constant. We digress a little further, also on some of the historical aspects of how this radiative law of light played a role in the emergence of quantum mechanics, because that's also how we came to the much more direct and powerful way of identifying the Bose-Einstein statistics in human cognition and language.

It was Max Planck who in 1900 proposed the function shown in formula (1) as the function that describes the radiation from a black body and thus initiated the first phase (1900–1925) of the development of quantum physics (Planck, 1900). In physics, a “black body” means, “a body that absorbs all incident light.” The radiation law formulated by Planck was thus supposed to describe the radiation emitted by a material entity as a result of heating, without involving reflected radiation. Often the theory developed during these years, which was primarily a collection of novel but mostly ad hoc rules, is called the “Old Quantum Theory.” Planck began the study of the radiation from a black body using a law formulated by Wilhelm Wien, which showed very good agreement with the experimental measurements available at the time (Wien, 1897). During the year 1900, experiments were carried out with long wavelength radiation which showed indisputably that Wien's law failed for this type of radiation (Rubens and Kurlbaum, 1900), while Planck was then fully engaged in proving a thorough derivation from Boltzmann's thermodynamics for Wien's law. The introduction of the constant h, now named after him, together with the constant k, which Planck named after Boltzmann, was clearly intended primarily as a rescue operation for his thoroughly elaborated thermodynamic theory of electromagnetic radiation. Indeed, in an almost miraculous way, the new law now also described very well the long wavelength radiation that had discredited Wien's original law. A study of the scientific literature and correspondences between scientists of the period also makes it clear that Planck was not thinking about “quantization” at all, in the sense we understand it today. It was therefore Albert Einstein, in his article describing the photoelectric effect (Einstein, 1905), who was the first to consider the hν introduced by Planck, as explicit “quanta of light,” later called “photons” (Klein, 1961). 
Let us consider explicitly what Wien's law is, since, as will become clear, it is relevant to the part of our inquiry that we wish to bring forward here. We will write Wien's law in the following form

$$B(\nu, T) = \frac{2h\nu^3}{c^2}\,e^{-\frac{h\nu}{kT}} \tag{2}$$

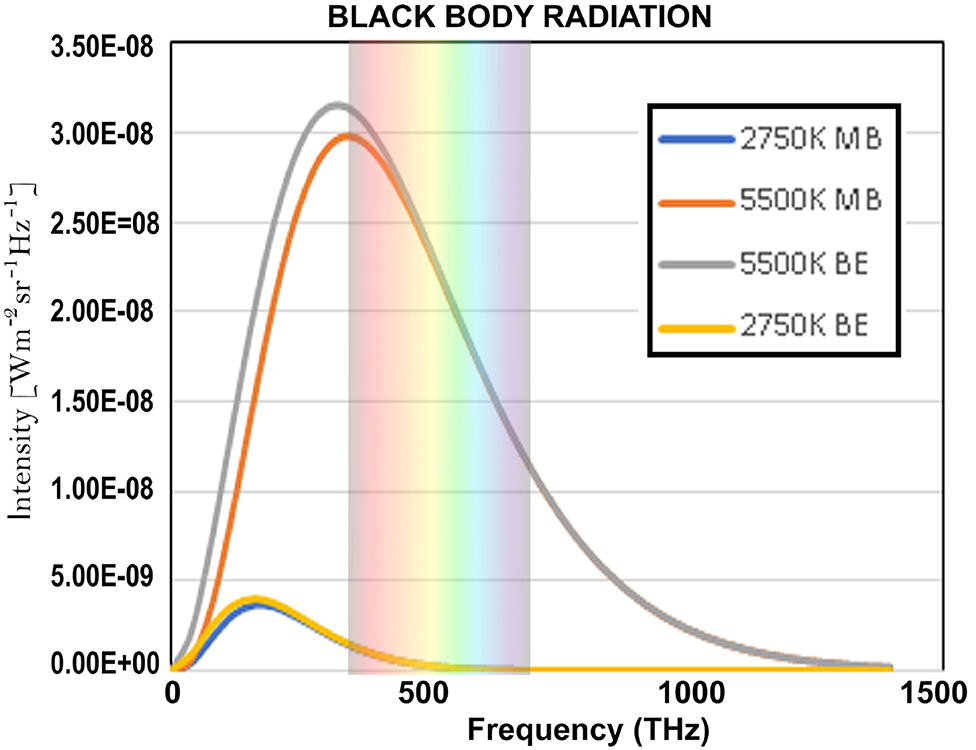

where it can be considered an approximation of Planck's law. Indeed, if hν >> kT, which is the case for short-wavelength radiation, we can approximate $e^{h\nu/kT} - 1$ well by $e^{h\nu/kT}$, and then Planck's law reduces to Wien's law. Historically, it was of course not Wien but Planck who introduced the two constants h and k; Wien proposed an arbitrary constant in the law as he formulated it. In Figure 1 we have represented both Planck's radiation law and Wien's approximation. The gray and yellow graphs are the Planck's radiation law curves for 5500° Kelvin, which is approximately the temperature of the sun, and 2750° Kelvin, respectively, and the red and blue graphs are the Wien's radiation law curves for 5500° Kelvin and 2750° Kelvin, respectively. We can clearly see on the graphs that for high frequencies the two curves, the Wien curve and the Planck curve, practically coincide, and this was the reason that experiments with low-frequency electromagnetic waves were needed to measure the difference between the two (Rubens and Kurlbaum, 1900).

Figure 1. The gray and yellow graphs are the Planck's radiation law curves for 5500° Kelvin, which is approximately the temperature of the sun, and 2750° Kelvin, respectively, and the red and blue graphs are the Wien's radiation law curves for 5500° Kelvin and 2750° Kelvin, respectively. We can clearly see on the graphs that for high frequencies, starting from red light, the two curves, the Wien curve and the Planck curve, practically coincide, and this was the reason that experiments were needed with low frequency electromagnetic waves to measure the difference between the two.
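
The agreement of the two curves at high frequencies, and their divergence at low frequencies, can be checked numerically. The sketch below assumes the standard textbook forms of the two laws (Planck with the factor 1/(e^{hν/kT} − 1), Wien with e^{−hν/kT}) and the SI values of the constants; the two sample frequencies are our own illustrative choices and are not taken from the figure.

```python
import math

H = 6.62607015e-34  # Planck's constant in J s
C = 2.99792458e8    # speed of light in m/s
K = 1.380649e-23    # Boltzmann's constant in J/K


def planck(nu, temperature):
    """Planck's radiation law for frequency nu (Hz) and temperature (Kelvin)."""
    x = H * nu / (K * temperature)
    return (2 * H * nu ** 3 / C ** 2) / math.expm1(x)


def wien(nu, temperature):
    """Wien's radiation law, the approximation valid for h*nu >> k*T."""
    x = H * nu / (K * temperature)
    return (2 * H * nu ** 3 / C ** 2) * math.exp(-x)


def wien_over_planck(nu, temperature):
    """Ratio of the two laws; analytically equal to 1 - exp(-h*nu / (k*T))."""
    return wien(nu, temperature) / planck(nu, temperature)


for nu in (1e13, 1e15):  # hypothetical low and high sample frequencies
    print(f"nu = {nu:.0e} Hz, Wien/Planck at 5500 K = "
          f"{wien_over_planck(nu, 5500.0):.5f}")
```

The ratio is close to one when hν is much larger than kT and drops well below one in the opposite regime, which is where the measurements of Rubens and Kurlbaum discriminated between the two laws.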

What we want to make clear now is that the portions of the functions in the two laws that are different are exactly the signatures of the two statistics we talked about in Section 2, the Bose-Einstein and the Maxwell-Boltzmann. Or, to put it more precisely,

$$N(E_i) = \frac{1}{A e^{\frac{E_i}{B}} - 1} \tag{3}$$

and

$$N(E_i) = \frac{1}{C e^{\frac{E_i}{D}}} \tag{4}$$

the two portions of the laws that are different, are functions that represent the continuous form of the Bose-Einstein statistics and of the Maxwell-Boltzmann statistics, respectively. We have this time replaced the term hν with Ei, with an index i, which stands for “energy level number i,” and N(Ei) is the number of quanta which have energy Ei. We introduced two general constants, A and B, for the Bose-Einstein distribution, and two, C and D, for the Maxwell-Boltzmann distribution. In each case the two constants are completely determined by summing over all energy levels, with i running from 0 to n, where N is the total number of quanta and E is the total energy. Hence

$$N = \sum_{i=0}^{n} \frac{1}{A e^{\frac{E_i}{B}} - 1}, \qquad E = \sum_{i=0}^{n} \frac{E_i}{A e^{\frac{E_i}{B}} - 1} \tag{5}$$

for the case of Bose-Einstein, and

$$N = \sum_{i=0}^{n} \frac{1}{C e^{\frac{E_i}{D}}}, \qquad E = \sum_{i=0}^{n} \frac{E_i}{C e^{\frac{E_i}{D}}} \tag{6}$$

for the case of Maxwell-Boltzmann.
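
How the two totals fix the two constants can be sketched numerically. We assume here that the continuous forms are N(Ei) = 1/(A e^{Ei/B} − 1) for Bose-Einstein and N(Ei) = 1/(C e^{Ei/D}) for Maxwell-Boltzmann, with energy levels Ei = i. For Maxwell-Boltzmann the mean energy E/N depends on D alone, so a simple bisection suffices; this solver is our illustration and not necessarily the procedure used to produce the results in this article. The totals in the usage lines are those reported for the Winnie the Pooh story later in this section.

```python
import math


def bose_einstein(energy, a, b):
    """Assumed continuous Bose-Einstein form: 1 / (A e^{E/B} - 1), with A > 1."""
    return 1.0 / (a * math.exp(energy / b) - 1.0)


def maxwell_boltzmann(energy, c, d):
    """Assumed continuous Maxwell-Boltzmann form: 1 / (C e^{E/D})."""
    return 1.0 / (c * math.exp(energy / d))


def totals(distribution, const1, const2, n_levels):
    """Total number of quanta N and total energy E over E_i = 0, ..., n_levels - 1."""
    counts = [distribution(i, const1, const2) for i in range(n_levels)]
    return sum(counts), sum(i * count for i, count in enumerate(counts))


def solve_maxwell_boltzmann(total_n, total_e, n_levels):
    """Find (C, D) that reproduce total_n and total_e.

    Assumes total_e / total_n lies below (n_levels - 1) / 2, so a solution exists.
    """
    target = total_e / total_n

    def mean_energy(d):
        weights = [math.exp(-i / d) for i in range(n_levels)]
        return sum(i * w for i, w in enumerate(weights)) / sum(weights)

    lo, hi = 1e-3, 1e6  # mean_energy increases monotonically with d
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if mean_energy(mid) < target:
            lo = mid
        else:
            hi = mid
    d = 0.5 * (lo + hi)
    c = sum(math.exp(-i / d) for i in range(n_levels)) / total_n
    return c, d


c_const, d_const = solve_maxwell_boltzmann(2655, 242891, 543)
n_check, e_check = totals(maxwell_boltzmann, c_const, d_const, 543)
print(f"C = {c_const:.6g}, D = {d_const:.6g}, N = {n_check:.1f}, E = {e_check:.1f}")
```

The Bose-Einstein constants A and B are fixed by N and E in the same way, but the two equations no longer decouple, so a two-dimensional root search would be needed there; `totals(bose_einstein, a, b, n_levels)` can serve to check any candidate pair.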

As we mention in Section 2, because even the largest available corpus of documents was too small to allow the collection of relevant hits for sentences such as “four cats and seven dogs,” we had begun to look for other possible ways to identify the statistical structure in human cognition and language. At some point, the idea arose to use the continuous functions (3) and (4), which are the fingerprints of Bose-Einstein and Maxwell-Boltzmann, to identify the underlying statistics in human cognition and language. This implied introducing “energy levels” for words, with “the number of times a word occurs in a text” as a criterion for the “energy level” the word is in within the text. Indeed, we were inspired by Planck's and Wien's radiation laws for a black body, which describe “the number of photons” emitted over the different energy levels. Both laws also classify the photons by energy level, given by Planck's formula E = hν, which links the energy level E to the frequency ν of the radiation, as can be seen in Figure 1. The frequency ranges from zero, hence energy level equal to zero, to ever higher frequencies, hence increasing energy levels, with fading radiation for increasing frequencies.

Note also that both (3) and (4) have only two constants in them, A and B, and C and D, respectively, which are determined by the total number of words of the text, N, and by the total energy, E, of all the radiation generated by the text. And this total number of words and this total energy of radiation are uniquely determined by (5) and (6), respectively, in the Bose-Einstein case or the Maxwell-Boltzmann case. This means that there are “no parameters” to possibly make a Bose-Einstein model fit better than a Maxwell-Boltzmann model, or even to fit either one. We were not fully aware at first of this total lack of any possible “fitting parameters,” but as we began to pick a text to test our idea for the first time, this insight dawned. And so we knew that even with this first test, with the first text we would pick, it would be all or nothing. At worst, neither the Bose-Einstein (Planck) formula nor the Maxwell-Boltzmann (Wien) formula would show a fit of any quality. That would then indicate that the underlying statistics of human cognition and language are of a completely different nature, with the rather inevitable result that our basic idea was just plain wrong. We chose a text from the Winnie the Pooh stories, more specifically the story titled “In Which Piglet Meets a Heffalump” (Milne, 1926). We counted the words by hand, to arrive at a ranking starting from energy level E0 = 0 for the word that occurs the most, which was the word And (we will notate “words” with a capital letter and in italic, as in our previous articles). Then comes energy level E1 = 1 for the word that occurs the second most, which was the word He, and so on, up to the words that occur only once, such as the one we classified as the last, the word You've. In Table 1 we have presented a portion of these ranked words; the full table is available at https://www.dropbox.com/s/xshgz2xmaqhtz4e/WinniethePoohData.xlsx?dl=0.

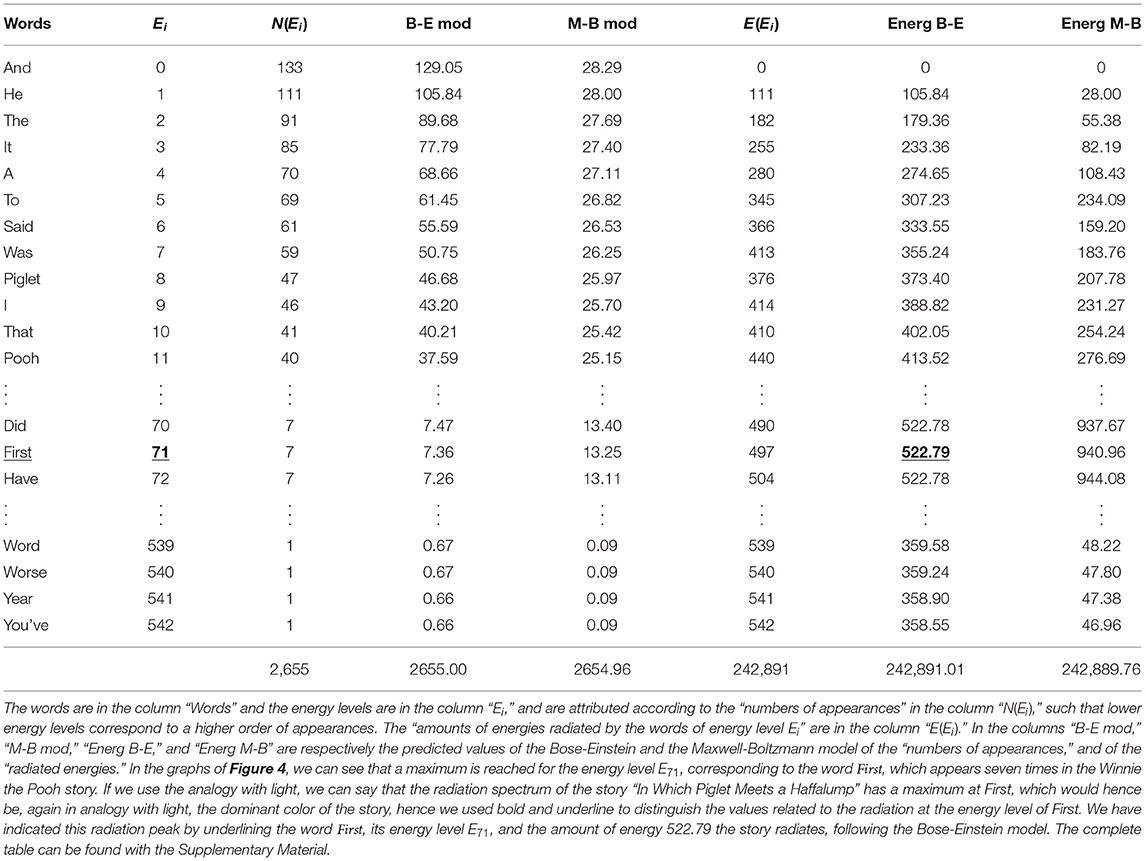

Table 1. An energy scale representation of the words of the Winnie the Pooh story “In Which Piglet Meets a Haffalump” by A. A. Milne as published in Milne (1926).

In the first column of Table 1, the words are ranked from the most frequently occurring word, And, to the least frequently occurring word, You've. The Winnie the Pooh story we selected contains 543 different words, which means that the story, within our scheme, is assigned 543 different energy levels, from energy level 0 to energy level 542. The second column of Table 1 lists these energy levels, running from the lowest energy level, E0 = 0, to the highest energy level, E542 = 542, reached in this particular story. The third column shows “the number of times” N(Ei) that the word in the first column, which corresponds to energy level Ei, occurs in the story. Specifically, the word He, corresponding to energy level E1, occurs 111 times, and the word First, corresponding to energy level E71, occurs 7 times. The total number of words in the Winnie the Pooh story is 2,655 and, by construction, is therefore equal to $\sum_{i=0}^{542} N(E_i)$. Before we explain columns four and five, we describe column six, because together with the first three columns it completes the information that has its roots in the experimental data collected from the Winnie the Pooh story. The quantities in column six are the “energies per energy level” E(Ei). More specifically, we multiply the number of words in energy level Ei, i.e., N(Ei), by the value Ei of this energy level, which gives E(Ei) = N(Ei)Ei, namely the energy radiated from that specific energy level Ei, where each word radiates and contributes to the total energy E(Ei) of energy level Ei. Once we have entered this “energy per energy level” in column six, we can immediately calculate the total energy E radiated by the entire text by summing these energies over all energy levels, so $E = \sum_{i=0}^{542} E(E_i)$. For the Winnie the Pooh story we find E = 242,891.
Columns one, two, three and six contain the experimental data we retrieved from the Winnie the Pooh story. Some of these data are completely independent of the radiation scheme we propose for human cognition by analogy with the radiation of light, for example the number of occurrences of each word and the total number of words of the story. Other experimental data are not totally independent of this radiation scheme. The way we choose the energy levels, and how we assign words to well-defined energy levels, influence the values of these energies in an obvious way. Let us note, however, that it is difficult to imagine a simpler radiation scheme; we will return to this later, for a real surprise awaited us in this respect.
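
The construction of columns one, two, three and six can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the words of the story were counted by hand, so the function name `radiation_scheme`, the regular-expression tokenizer, and the tie-breaking order among equally frequent words are hypothetical choices, and the sample sentence is invented for the demonstration.

```python
import re
from collections import Counter


def radiation_scheme(text):
    """Rank words by frequency and attach an energy level to each.

    Returns rows (word, E_i, N(E_i), E(E_i)) plus the totals N and E.
    The tokenizer is an assumption; it is not the procedure of the paper.
    """
    # Hypothetical tokenization: lowercase, keep letters and apostrophes.
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter(words)
    rows = []
    # most_common() sorts by descending count; ties keep first-seen order,
    # so every distinct word still receives its own energy level.
    for level, (word, n) in enumerate(counts.most_common()):
        rows.append((word, level, n, n * level))  # E(E_i) = N(E_i) * E_i
    total_words = sum(n for _, _, n, _ in rows)
    total_energy = sum(e for _, _, _, e in rows)
    return rows, total_words, total_energy


# Invented sample text, used only to exercise the function.
sample = "and he said and he went and piglet said"
rows, N, E = radiation_scheme(sample)
for row in rows:
    print(row)
print("N =", N, "E =", E)
```

Applied to the full text of a story, the same function would produce the analogue of Table 1, although a different tokenization or tie-breaking rule may shift individual words between neighboring energy levels.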

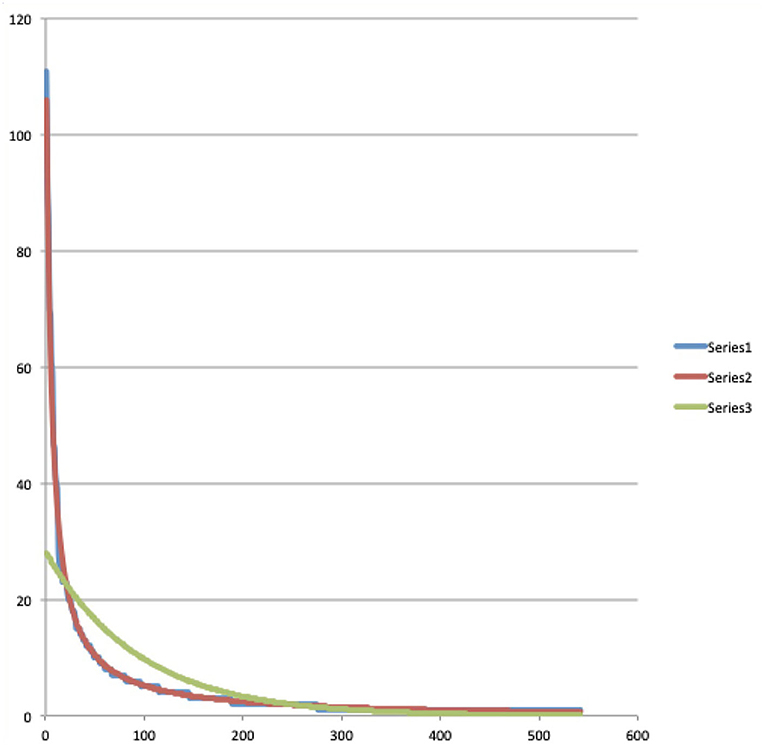

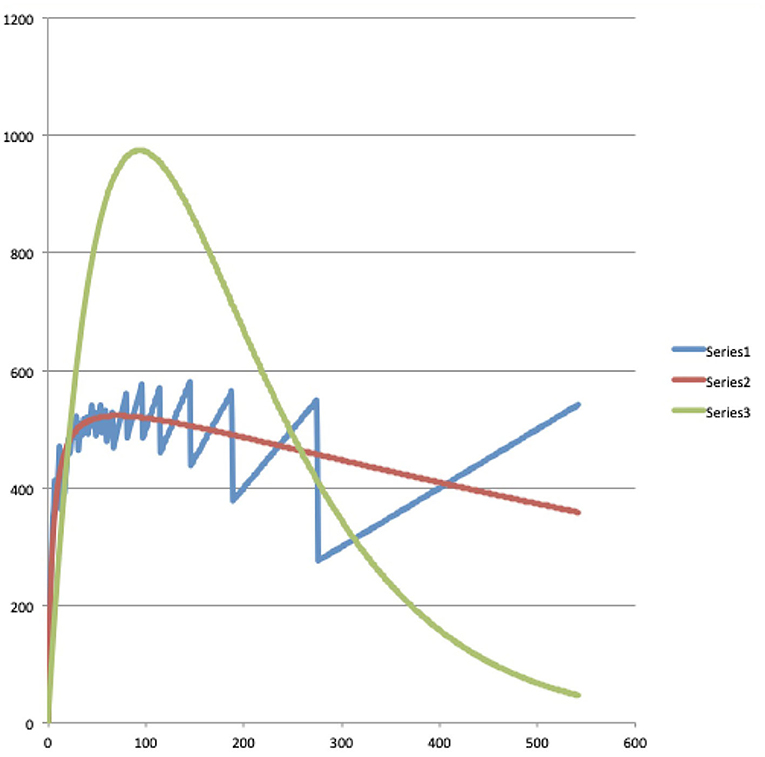

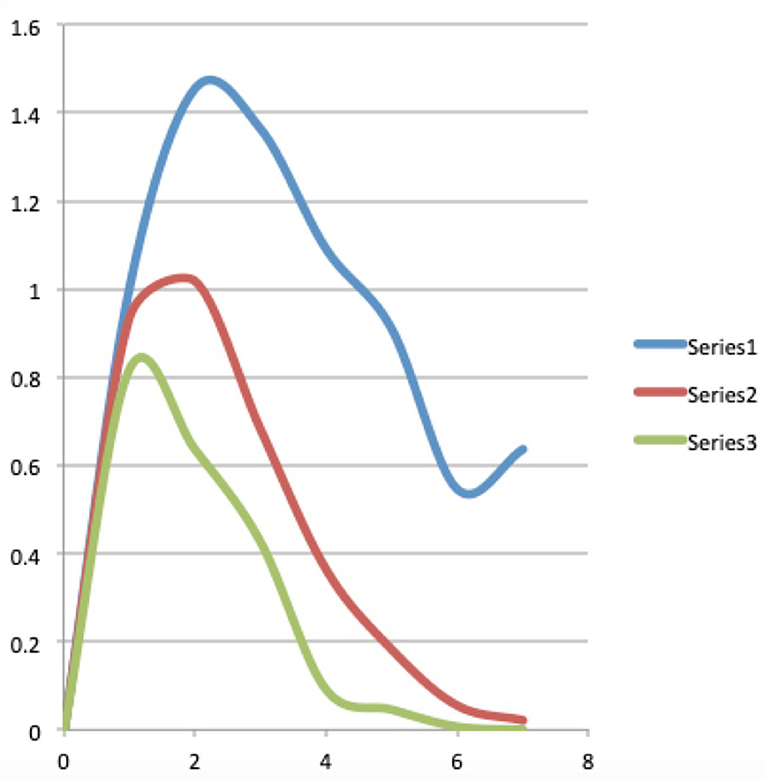

Let us now consider columns four, five, seven and eight. They contain the results we obtained by testing both the Bose-Einstein distribution function (3) and the Maxwell-Boltzmann distribution function (4) for their ability to model the experimental data contained in columns one, two, three and six within the proposed radiation scheme. Here we were rewarded with a big surprise: when we tried the two functions, the Bose-Einstein function turned out to model the data practically perfectly for the values A = 1.0078 and B = 593.51 of the constants A and B. Equally important for our sense of being on the trail of an important new discovery was that the Maxwell-Boltzmann function, tried on the same experimental data from the Winnie the Pooh story, did not lead to a good model at all; on the contrary, it deviated strongly from the experimental data. In addition to the data in Table 1 itself, this result is convincingly illustrated by the graphs of the data contained in Table 1. Figure 2 depicts, in blue, red, and green, respectively, the experimental data (column three of Table 1), the Bose-Einstein function as a model (column four of Table 1), and the Maxwell-Boltzmann function as a model (column five of Table 1). Although Figure 2 already shows clearly that the Bose-Einstein function gives a very good description of the experimental data, certainly in comparison to the poor modeling by the Maxwell-Boltzmann function, this becomes even clearer in a log/log graph of the values in Figure 2. Such a log/log graph of the three graphs in Figure 2 is shown in Figure 3, where the natural logarithms of the values on both the x-axis and the y-axis of Figure 2 are plotted.
Even more manifestly than Figure 2, Figure 3 illustrates how the Bose-Einstein function produces a practically perfect model and how the Maxwell-Boltzmann function totally fails to do so. Figure 4 shows a graph of the energy values, i.e., columns six, seven and eight, again with blue for the experimental data, red for the Bose-Einstein model and green for the Maxwell-Boltzmann model. Because multiplications take place here, the discreteness of the experimental data is more noticeable against the continuous Bose-Einstein and Maxwell-Boltzmann models, but it is nevertheless clear here too that the Bose-Einstein model represents the correct evolution of the energetic radiation, while the Maxwell-Boltzmann model does not do so at all. At this point in the description of our result, it is important to emphasize again that we are not talking about an “approximation” here. The two experimental data for the Winnie the Pooh story, namely that the total number of words is N = 2,655 and that the total energy radiated by the story is E = 242,891, unambiguously determine the constants A and B, or C and D, so that for both Bose-Einstein and Maxwell-Boltzmann there is only one solution that satisfies these experimental constraints, the size of N and the size of E. That the Bose-Einstein function, as defined in (3) (the red graph in Figures 2–4), coincides with the experimental function (the blue graph in Figures 2–4), is therefore a very special event, and not at all a matter of “approximation” or “fitting of parameters,” especially since this is not the case at all for the Maxwell-Boltzmann function, as defined in (4) (the green graph in Figures 2–4).
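
The statement that N and E leave no free parameters can be checked numerically. The sketch below assumes that (3) has the form N(Ei) = 1/(A e^{Ei/B} − 1) and that (4) has the form N(Ei) = 1/(C e^{Ei/D}); these functions are defined earlier in the paper and are restated here as an assumption. The helper names and the nested bisection are illustrative choices, not the procedure used to produce Table 1.

```python
import math

LEVELS = range(543)   # energy levels E_i = 0, 1, ..., 542
N_TOTAL = 2655        # total number of words in the story
E_TOTAL = 242891      # total energy radiated by the story


def be(i, A, B):
    """Assumed form of (3): Bose-Einstein occupation of level i."""
    return 1.0 / (A * math.exp(i / B) - 1.0)


def bisect(f, lo, hi, steps=60):
    """Root of a monotone function f on [lo, hi] by plain bisection."""
    f_lo_positive = f(lo) > 0
    for _ in range(steps):
        mid = 0.5 * (lo + hi)
        if (f(mid) > 0) == f_lo_positive:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def solve_A(B):
    """For fixed B, the A > 1 whose occupations sum to N_TOTAL."""
    return bisect(lambda A: sum(be(i, A, B) for i in LEVELS) - N_TOTAL,
                  1.0 + 1e-9, 1e6)


def be_energy(B):
    """Total energy of the Bose-Einstein model once A is tied to N_TOTAL."""
    A = solve_A(B)
    return sum(i * be(i, A, B) for i in LEVELS)


def solve_bose_einstein():
    B = bisect(lambda B: be_energy(B) - E_TOTAL, 1.0, 1e5)
    return solve_A(B), B


def solve_maxwell_boltzmann():
    """Assumed form of (4): N(E_i) = 1 / (C * exp(E_i / D))."""
    def energy(D):
        w = [math.exp(-i / D) for i in LEVELS]
        return N_TOTAL * sum(i * wi for i, wi in zip(LEVELS, w)) / sum(w)
    D = bisect(lambda D: energy(D) - E_TOTAL, 1.0, 1e5)
    C = sum(math.exp(-i / D) for i in LEVELS) / N_TOTAL
    return C, D


A, B = solve_bose_einstein()
C, D = solve_maxwell_boltzmann()
print("Bose-Einstein:     A =", round(A, 4), " B =", round(B, 2))
print("Maxwell-Boltzmann: C =", round(C, 4), " D =", round(D, 2))
```

If the assumed forms are right, the Bose-Einstein constants should come out close to the values A = 1.0078 and B = 593.51 quoted above; once N and E are fixed, no choice is left to the modeler.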

Figure 2. A representation of the “number of appearances” of words in the Winnie the Pooh story “In Which Piglet Meets a Haffalump” (Milne, 1926), ranked from lowest energy level, corresponding to the most often appearing word, to highest energy level, corresponding to the least often appearing word as listed in Table 1. The blue graph (Series 1) represents the data, i.e., the collected numbers of appearances from the story (column “N(Ei)” of Table 1), the red graph (Series 2) is a Bose-Einstein distribution model for these numbers of appearances (column “B-E mod” of Table 1), and the green graph (Series 3) is a Maxwell-Boltzmann distribution model (column “M-B mod” of Table 1).

Figure 3. Representation of the log/log graphs of the “numbers of appearances” and their Bose-Einstein and Maxwell-Boltzmann models. The red and blue graphs coincide almost completely, whereas the green graph does not coincide at all with the blue graph of the data. This shows that the Bose-Einstein distribution is a good model for the numbers of appearances, while the Maxwell-Boltzmann distribution is not.

Figure 4. A representation of the “energy distribution” of the Winnie the Pooh story “In Which Piglet Meets a Haffalump” (Milne, 1926) as listed in Table 1. The blue graph (Series 1) represents the energy radiated by the story per energy level (column “E(Ei)” of Table 1), the red graph (Series 2) represents the energy radiated by the Bose-Einstein model of the story per energy level (column “Energ B-E” of Table 1), and the green graph (Series 3) represents the energy radiated by the Maxwell-Boltzmann model of the story per energy level (column “Energ M-B” of Table 1).

Admittedly, it was also possible that the agreement of the experimental data of the Winnie the Pooh story with the Bose-Einstein statistics was a lucky coincidence, and so we immediately started looking for other stories to see whether the phenomenon would repeat. Because we wanted to avoid having to classify words from other stories into our radiation scheme by hand again, we planned in parallel to develop a program that would perform this ordering by computer. Then a second surprise occurred, with potentially far-reaching consequences: after some searching on the World-Wide Web, we found that such a program already existed and, moreover, that it was being used by researchers interested in Zipf's law in human language (Zipf, 1935, 1949). More importantly, we discovered that the scheme proposed by Zipf was very similar to our scheme and, in the simplest case, identical to it. It is true that the notions of “energies” and the idea of “radiation” were not introduced, but the mathematical form of the two schemes was the same. Zipf's law is an empirical law, and although theoretical foundations have been attempted here and there, no theoretical derivation is accepted by consensus as its foundation. If we consider the log/log graph that goes along with the Winnie the Pooh story, i.e., the graph shown in Figure 3, we do indeed see a curve that does not deviate much from a straight line, and this is what Zipf particularly noted. So when we began to examine texts from other stories, there was the additional question of whether there would be a connection there as well, and whether the log/log graphs we would look at are those that also interested Zipf from a purely empirical standpoint.
For the many subsequent texts we examined, of many different stories, shorter as well as longer than the Winnie the Pooh story, and also of the length of novels, again and again we found an equally strong confirmation of the presence of a Bose-Einstein statistics (Aerts and Beltran, 2020). Zipf's law (Zipf, 1935, 1949), and its continuous version, Pareto's law (Pareto, 1897), both turn up in all kinds of situations of human-created systems, the rankings of cities according to their size (Gabaix, 1999), the rankings of incomes from low to high (Aoki and Makoto, 2017), of wealth, of corporation sizes, but also in rankings of number of people watching the same TV channel (Eriksson et al., 2013), cells “transcriptomes” (Lazardi et al., 2021), the rankings of notes in music (Zanette, 2006) and so on… so certainly it is not only in human language that this law emerges. That Zipf's and Pareto's laws appear so prominently in many areas of human presence is a hint that our theoretical underpinning of these laws by means of a radiation and quantization scheme may also find wide application in these other areas, and not only concerns the structure of human language. The connection we make in the next Section 4 with a “meaning dynamics” also already points in the direction of a possible wide application in these other areas.
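
A short calculation suggests why the log/log graph of the Bose-Einstein model stays close to a straight line over part of its range. It assumes that (3) has the form N(Ei) = 1/(A e^{Ei/B} − 1), which is restated here as an assumption, and it expands the exponential to first order, which is only justified for energy levels well below B:

$$
N(E_i) = \frac{1}{A\,e^{E_i/B} - 1} \;\approx\; \frac{1}{(A - 1) + A\,E_i/B} \;=\; \frac{B/A}{E_i + B(A-1)/A}, \qquad E_i \ll B .
$$

With A = 1.0078 and B = 593.51 this gives roughly N(Ei) ≈ 588.9/(Ei + 4.59), a shifted hyperbola of the Zipf-Mandelbrot type with exponent 1. This is a rough low-energy estimate offered for orientation, not a derivation of Zipf's law, and it becomes inaccurate for the higher energy levels of the story, where Ei is comparable to B.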

4. A Dynamics of Meaning

We wish in this section to arrive at some new insights that account for why we dared to present the comparison between electromagnetic radiation and how it interacts with matter, and human language and how it interacts with the human mind, i.e., the dynamics of human cognition, as more than just a superficial metaphor. In Aerts and Beltran (2020), the first paper where we wrote down our findings, we worked out a comparison between the human language and a gas composed of bosonic atoms close to absolute zero, or, more precisely, in the temperature region where the Bose-Einstein condensate phenomenon begins to manifest itself. The reason we then opted for that comparison, while primarily Planck's radiative law, as we outline it here in Section 3, had been our inspiration, was, because we thought in that way we could more easily gain insight into “what those energies of words really represent.” After all, photons always move at the speed of light, and have mass equal to zero, whereas atoms are closer to our near material reality. Probably we were also influenced by the spectacular aspects of the Bose-Einstein condensation, realized in 1995, and by now well mastered by experimental quantum physicists in many labs around the world (Cornell and Wieman, 2002; Ketterle, 2002). We should also note here that our activity within the research area of quantum cognition has always been two-fold, consisting of at least two strands. On the one hand, the use of the quantum formalism in human cognition leads to the possibility of describing kinds of dynamics within human cognition, e.g., decisions, but often also reasonings that are called “heuristics,” or “fallacies,” not satisfying ordinary logic- and rationality-based dynamics. Also, in this strand within quantum cognition we have always tried to “understand” what exactly is going on, “heuristics” and even “fallacies” may be “logics and rationalities” of a hidden deeper nature of human cognition. 
The second strand, which we certainly do not consider less important, is to better understand quantum mechanics as applied to the description of the physical micro-world, by analyzing the application of quantum mechanics in human cognition and language. This second strand is very present in our investigation of the presence of Bose-Einstein statistics in human cognition. The idea is that “if we can understand how Bose-Einstein forms within human cognition and language, then perhaps that can give us insights into what Bose-Einstein means for the physical micro-world.” During the research on this comparison of human language with a boson gas at a temperature close to absolute zero, we became fascinated by the question of how it was possible that Albert Einstein wrote a paper describing the Bose-Einstein condensate as early as 1924, i.e., before the emergence of modern quantum mechanics in 1925–1926. As we examined this question more closely, a world of thought, discourse, and correspondence opened up which, the more we read about it, we increasingly found scientifically relevant to our finding of the presence of Bose-Einstein statistics in human cognition and language.

The episode where the Indian physicist Satyendra Nath Bose wrote a letter to Einstein announcing a new proof for Planck's law, and how Einstein was so excited by Bose's new calculation that he translated Bose's article into German, and arranged for it to appear in the then leading journal “Zeitschrift für Physik” (Bose, 1924) is well known. However, what really took place, why for example Einstein was so enthusiastic, and why he applied Bose's method to an ideal gas right away, and wrote three articles about it (Einstein, 1924, 1925a,b), where in the second one he predicted the existence of a Bose-Einstein condensate, is already much less known. Historical sources, which have since been studied in great detail by historians of science and philosophers of science, including letters of correspondence between the scientists involved, show that three scientists played the leading role reflecting on Planck's law of radiation. Max Planck, Albert Einstein and Paul Ehrenfest, with a one-time but influential appearance by Satyendra Nath Bose, dominated the discussions about the nature of electromagnetic radiation between 1905 and 1925, the period often called the Old Quantum Theory (Howard, 1990; Darrigol, 1991; Monaldi, 2009; Pérez and Sauer, 2010; Gorroochurn, 2018). We want to draw on the interesting events, reflections, and discussions that took place during that period to point out the connections not only to our own research, but also to the endeavor of what we have now come to call “Quantum Cognition” as a domain of research.

First of all, let us note that Max Planck was already inspired by Boltzmann's statistical view of thermodynamics in the original formulation of his radiation law (Planck, 1900). Indeed, he proposed a model for the radiation of a black body that used a theory originally developed by Boltzmann for the description of gases. Boltzmann had already used his own thermodynamics to study light radiation (Boltzmann, 1978), and in this sense had also influenced Wien (Wien, 1897), on whose work Planck built further. It is sometimes claimed that Planck's law solved the ultraviolet catastrophe, namely the prediction of a law derived by Lord Rayleigh that light would radiate more and more at higher frequencies, which clearly did not agree with the experiments. However, historical sources indicate that this is not how things happened: Rayleigh's law was only published in June 1900, when Planck was already fully engaged, in the footsteps of Boltzmann and Wien, in working out a thermodynamic description for the radiation of a black body (Klein, 1961). We already noted in Section 3 that Planck did not think of quantization, and that it was Einstein who brought this notion to the stage in 1905. However, the difference between Planck and Einstein in the interpretation of what was going on, with Ehrenfest also reflecting on these differences, was much deeper than that. It is worth noting that Ehrenfest was the principal student of Ludwig Boltzmann in Vienna and was also a close friend of Einstein. With Einstein's support he had succeeded Hendrik Lorentz at the University of Leiden in the Netherlands, and Einstein would visit Leiden regularly for discussions with his friend. Let us make clear some scientific aspects of the situation under consideration before we pick out what is scientifically important to us from these discussions.
What Planck, Einstein and Ehrenfest were reflecting on, following Boltzmann, starts from a formula with combinatorial quantities in it, derived from a combinatorial reasoning, often formulated from an imagining of situations of “particles distributed among baskets”—think of the reasoning of the farmer on the farm who picks out two cats and two dogs by putting them in two baskets, as we described in Section 2. To then approximate this combinatorial formula by a continuous function, limits are applied that are very good approximations for when many particles are involved. And indeed many particles are always involved when statistical mechanics is used for thermodynamic purposes. Just as the three scientists were in complete agreement that the radiation law had to be derived using statistical thermodynamics, there was likewise no difference of opinion regarding any aspect of this limit calculation, which by the way is standard, and can be found in any book on statistical thermodynamics (Huang, 1987). So then what were the discussions about, and what did the three disagree about?

We can best illustrate the disagreement by going back to the example of the two entities that can be in two states, as we considered in Section 2. To stay close to the subject of our paper, we take for the two entities two concepts Animal, and for the two states in which each concept Animal can be found, Cat and Dog. Planck imagined entities he called “resonators” as elementary constituents of the electromagnetic radiation he wanted to model. In Planck's representation of the situation, the energy elements were then “distributed among the various resonators.” Since energy elements have no identity, Planck used the combinatorial formula for “identical particles distributed among baskets” before making the limit transition to the continuous function. That is why, after the limit procedure, Planck finds a continuous function of the Bose-Einstein form, such as (3), which can indeed be recognized in (1), as we already remarked in Section 3. Einstein, however, already in his 1905 article on the photoelectric effect (Einstein, 1905), insisted on considering the energy elements as quanta, to which he even wished to grant an existence of their own in space, and Ehrenfest supported him in this way of interpreting Planck's radiation law. Over the years there was also a growing understanding that, when the light quanta are considered as entities in themselves, like the atoms and molecules of a gas, Planck's derivation of the radiation formula exposes a fundamental problem. The probabilities for the relevant micro states, which were necessary for Boltzmann's theory in the calculation of entropy, did not appear to be statistically independent for the different light quanta. More to the point, if the probabilities for the micro states of different light quanta were assumed to be independent, the radiation law again became that of Wien, a result that Ehrenfest derived formally in 1911 (Ehrenfest, 1911; Monaldi, 2009).
In the years that followed, as the lack of statistical independence became the focus of the disagreement between Planck on the one hand and Einstein and Ehrenfest on the other, the nature of this disagreement became more and more clear. We have already explained in Section 2 what is meant by this lack of statistical independence, using the example of the Two Animals, which can be Cat or Dog. The Maxwell-Boltzmann probabilities of this situation are 1/4 for two cats, 1/4 for two dogs, and 1/2 for a cat and a dog, while the Bose-Einstein probabilities are 1/3 for two cats, 1/3 for two dogs, and 1/3 for a cat and a dog. It is easy to understand that the latter cannot result from an independent choice between Cat and Dog. For Einstein, who wanted to consider the energy packets in Planck's formula as independently existing light quanta, this lack of independence was a major problem. Meanwhile the notion of light quanta was gaining ground among other scientists. For some, the idea that light particles formed conglomerates of light molecules began to make sense. However, there were also numerous physicists who followed the view of Planck, and explained the peculiar statistics of the energy elements required by Planck's law of radiation as rather useful “fictions” of the “resonator model,” awaiting further discoveries related to it (Howard, 1990; Pérez and Sauer, 2010).
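
The two sets of probabilities can be reproduced by direct enumeration. The following minimal sketch (all variable names are illustrative) counts the four equally likely ordered outcomes for Maxwell-Boltzmann and the three equally likely unordered outcomes for Bose-Einstein, and then checks that an independent choice does not reproduce the latter.

```python
from collections import Counter
from fractions import Fraction
from itertools import combinations_with_replacement, product

STATES = ("Cat", "Dog")

# Maxwell-Boltzmann: each animal chooses independently with probability 1/2,
# so the four ordered outcomes are equally likely.
mb = Counter()
for outcome in product(STATES, repeat=2):
    mb[tuple(sorted(outcome))] += Fraction(1, 4)

# Bose-Einstein: the three unordered outcomes are equally likely.
pairs = list(combinations_with_replacement(STATES, 2))
be = {pair: Fraction(1, len(pairs)) for pair in pairs}

for pair in pairs:
    print(pair, "MB:", mb[pair], "BE:", be[pair])

# Independence check. If each animal were Cat with the same probability p,
# p*p = (1-p)*(1-p) = 1/3 would force p = 1/2, but (1/2)**2 = 1/4.
# Even with different probabilities p and q for the two animals, p*q = 1/3
# and (1-p)*(1-q) = 1/3 require p + q = 1, and p*(1-p) = 1/3 has no real root.
p = Fraction(1, 2)
print("independent choice reproduces BE:", p * p == be[("Cat", "Cat")])
```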

Things took a new turn when Einstein received a letter from Satyendra Nath Bose, who claimed to have found a new derivation of Planck's radiation law. Bose had tried to publish his paper but had failed, and he sent it to Einstein, suspecting that the latter would be interested; Bose was indeed aware of the ongoing discussions about Planck's radiation law. Einstein was intrigued by Bose's calculation, translated Bose's article into German, and sent it to Zeitschrift für Physik, where it was published (Bose, 1924). Moreover, he immediately set to work applying Bose's approach to a model of an ideal gas, about which he published an article the same year and two additional articles in January of the following year (Einstein, 1924, 1925a,b). Ehrenfest was not at all enthusiastic about Bose's method and made this clear to a fellow physicist, writing the following in a letter dated October 9, 1924, to Abram Joffé: “Precisely now Einstein is with us. We coincide fully with him that Bose's disgusting work by no means can be understood in the sense that Planck's radiation law agrees with light atoms moving independently (if they move independently one of each other, the entropy of radiation would depend on the volume not as in Planck, but as in W. Wien, i.e., in the following way: )” (in Moskovchenko and Frenkel, 1990, p. 171–172). In his first quantum gas article (Einstein, 1924), Einstein was silent on the problem of the statistical dependence of the light quanta that leads to Planck's law of radiation in Bose's method. The second article (Einstein, 1925a) he wrote during a stay in Leiden with Ehrenfest, and in it he openly states, “Ehrenfest and other colleagues have objected, regarding Bose's theory of radiation and my analogous theory of the ideal gas, that in these theories the quanta or molecules are not treated as statistically independent entities, without this circumstance being specifically pointed out in our articles. This is entirely correct” (in Einstein, 1925a, page 5).

It is important to note that, parallel to these events, Louis de Broglie had come forward with the hypothesis of “a wave character also for material particles” (de Broglie, 1924), and, although Einstein only learned of de Broglie's writings after his contacts with Bose, he had read de Broglie's thesis with great interest before writing his second article on the quantum gas (Einstein, 1925a). In this article, it becomes clear that he sees the statistical dependence of gas atoms as caused by a “mutual influence,” the nature of which is as yet totally unknown. However, he also mentions that the wave-particle duality, brought out in de Broglie's work, might play a role in unraveling it (Einstein, 1925a; Monaldi, 2009). Not much later, in a letter to Erwin Schrödinger, who had begun to take an interest in the quantum gas at this time (Schrödinger, 1926a), Einstein wrote the following passage: “In Bose's statistics, which I have used, the quanta or molecules are not treated as independent from one another. […] According to this procedure, the molecules do not seem to be localized independently from each other, but they have a preference to be in the same cell with other molecules. […] According to Bose, the molecules crowd together relatively more often than under the hypothesis of statistical independence” (in Monaldi, 2009, page 392; see also Figure 1 in Monaldi, 2009, where this passage is shown in Einstein's handwriting). Here Einstein explained the nature of this statistical dependence to Schrödinger with crystal clarity. Several letters between Einstein and Schrödinger in late 1925 about Einstein's quantum gas, together with Schrödinger's reading of de Broglie's work on the quantum theory of gases (de Broglie, 1924), were instrumental in Schrödinger's formulation of wave mechanics (Schrödinger, 1926b; Howard, 1990).
When Einstein realized what it meant that Schrödinger's matter waves were defined on the configuration spaces of the quanta, which made these waves fundamentally different from de Broglie's waves, it marked the turning point for him, where he would step away from the new more and more abstract mathematically defined quantum formalism (Howard, 1990).

In modern quantum mechanics, the Bose-Einstein statistics is directly connected to the “exchange symmetry” applied to the quantum states, i.e., the unit vectors of the complex Hilbert space. In this sense, “identity” and “indistinguishability” seem to be the unique new way of understanding the Bose-Einstein statistics, but is that really the case? Is a blue photon indistinguishable from a red photon even if we can distinguish the two with our human eyes? A blue photon and a red photon possess different amounts of energy, and in a radiation scheme such as we have proposed here, both will therefore be classified in a different energy level. By the way, it is always good when it comes to notions that are difficult to grasp, to find out how quantum experimentalists deal with them. Especially since there is now interest in using the “indistinguishability” of photons with the intention of fabricating “entangled photons” that could be used as qubits for quantum computing. It then became clear, just by checking how quantum experimentalists deal with it, that blue photons are indeed “distinguishable” from red photons. We describe in Aerts and Beltran (2020) how indistinguishable photons are prepared and treated by quantum experimentalists, but in short, how blue photons are distinguishable from red photons is quite the same as we have also classified different words in different energy levels within the radiation scheme we have proposed for human cognition and language. And indeed, different words are not indistinguishable, even by definition of what they are. Yet it is precisely there, with such not indistinguishable photons and words that the Bose-Einstein statistics intervenes by thoroughly attaching other probabilities of occurrence to them. 
And not just “other probabilities of occurrence,” but “probabilities of occurrence that cannot come from independent choices for the individual cases.” Consider again the example of the choice between a cat and a dog. According to the Maxwell-Boltzmann statistics, this gives rise to 1/2·1/2 = 1/4 for “two cats,” 1/2·1/2 = 1/4 for “two dogs,” and 1/2·1/2+1/2·1/2 = 1/2 for “a cat and a dog,” probabilities of occurrence which “do” arise as products of the probabilities of occurrence of the individual cases, choosing with probability 1/2 for “one cat” and probability 1/2 for “one dog.” The Bose-Einstein statistics of this situation, with probabilities of occurrence 1/3 for “two cats,” 1/3 for “two dogs,” and 1/3 for “a dog and a cat,” do not allow such a decomposition into products of individual probabilities of occurrence at all. It is this lack of independence that Einstein found so annoying, and which he suddenly, as if he had grown tired of resisting it, accepted in the method of calculation that Bose presented to him. It is argued in Howard (1990), with numerous excerpts from articles, lectures, and correspondence of Einstein, that this phase nevertheless also marked his turning away from quantum mechanics. Einstein saw no other possibility than to concede that the probabilities of occurrence of different quanta of light were not independent, because the experiments failed for a Maxwell-Boltzmann version of Planck's radiation law, which was, after all, Wien's radiation law. But Einstein believed that there was a specific force at work that caused these peculiar probabilities, which deviate from those of the Maxwell-Boltzmann statistics, to come into existence.
When modern quantum mechanics came into full development in the following years, and Erwin Schrödinger formulated his wave mechanics on the basis of waves in the configuration space of particles, causing the phenomenon we now call “entanglement,” Einstein found this a bridge too far, and began the resistance that would lead to the famous article with the EPR paradox (Einstein et al., 1935).

In the studies in which we set out to identify the Bose-Einstein statistics in human cognition and language, which we described in Section 2, we proposed that “a cat and a dog” would indeed be chosen no more frequently than “two cats” or “two dogs.” We suggested that this would be due to the “context in which the choice takes place” being such that the alternatives from which to choose are restricted to three, “two cats,” “two dogs,” or “a cat and a dog,” thus resulting in 1/3, 1/3, and 1/3 as probabilities of choice. If, on the other hand, the context in which the choice takes place consists of the four alternatives “two cats,” “two dogs,” “one cat and one dog,” and “one dog and one cat,” then one gets the natural context for a Maxwell-Boltzmann type statistics, where the probabilities for the choices will be 1/4, 1/4 and 1/2. But does this provide a complete and satisfying explanation for the presence of the Bose-Einstein statistics? Let us revisit our analysis of the Winnie the Pooh story “In Which Piglet Meets a Haffalump” to show that there may be something else going on after all. We consider the words in Table 1. There we see that the word Piglet is classified with energy level 8 and occurs 47 times in the story. The word First is classified with energy level 71 and occurs 7 times in the story, while the word Year is classified with energy level 541 and occurs 1 time in the story. Now suppose we could ask Alan Alexander Milne to write a few extra paragraphs specifically for this Winnie the Pooh story. It is easy to understand that in these new paragraphs the probability of the word Piglet appearing is much greater than the probability of the word First appearing, which in turn is much greater than the probability of the word Year appearing. Why is this so?
The reason is that the story carries “meaning,” and the words that have more affinity with this meaning have a greater chance of appearing when the story is written. Another way of expressing the same thing is to state that “meaning” is a force that makes the same words attract each other and clump together. By identifying “meaning” as the cause for “the clumping of the same words in the text of a story,” have we discovered the force, the one that Einstein called a “mysterious unprecedented force,” that causes photons to clump into the same states within light?

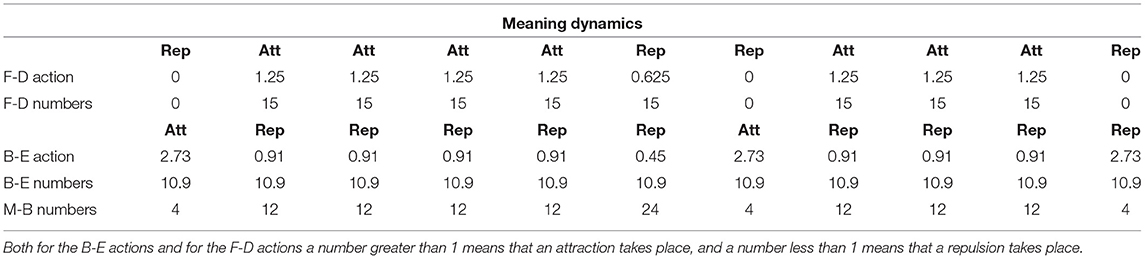

We will now consider an example of a story consisting of a small number of words, such that we can use the original exact combinatorial formulas to describe and analyze it. Our analysis this time will thus be “exact”: we are not obliged, as we are with a large number of words, to resort to continuous functions such as (3) and (4), which are approximations to these exact combinatorial formulas, in order to identify the difference between Bose-Einstein and Maxwell-Boltzmann. This will allow us to gain yet more insight into “what exactly is going on.” We will see that we can likewise better identify the subtle structure of this “meaning dynamics.” It will also allow us to give the “Fermi-Dirac statistics” a place within our global analysis. The situation we wish to analyze is presented in Table 2. We consider “four words” distributed over “seven energy levels” in such a way that for each configuration “the total energy of this configuration is equal to seven.” Let us first describe what we find in the first part of Table 2, under the heading “Four Words Distributed over Seven Energy Levels.” The first column shows the numbering of the energy levels, starting with energy level 0 and continuing with 1, 2, 3, 4, 5, 6, and 7. Column two shows a first possible configuration of four words with total energy equal to seven: one word in energy level 7 and three words in energy level 0. Column three shows a second possible configuration, this time with one word in energy level 6, one word in energy level 1, and two words in energy level 0. Indeed, this again gives four words distributed among the seven energy levels such that the total energy is equal to seven. The following columns show the other possible configurations, always four words distributed over seven energy levels such that the total energy is equal to seven. 
Each such configuration is called a macro state; it will become clear in the following why we introduce this terminology. Table 2 shows that we can construct eleven different configurations in this way, so there are eleven macro states for four words distributed over seven energy levels with total energy equal to seven. In the third row of the first part of Table 2, we have numbered these macro states from 1 to 11. Let us now explain the numbers found on the second row of the first part of Table 2, which we have called “Maxwell-Boltzmann numbers,” written “M-B numbers” on that row. The first of those numbers, in the second column of the table, i.e., belonging to the first macro state, is equal to 4. It is the number of ways that the configuration of this first macro state can form if an underlying interchange of the four words in play leads to a distinguishable state, without counting interchanges of words that are in the same energy level, as they are by definition indistinguishable. If we think through carefully what is being formulated here, we understand that there are indeed 4 such micro states. Namely, any of the three words in energy level 0 can be exchanged with the word in energy level 7, leading to a state that is in principle distinguishable from the previous one. That gives three new distinguishable states plus the one we started from, for a total of 4 in principle distinguishable states. We already mentioned the combinatorial formulas we would use, and there is such a formula to arrive at this number 4. It is given by dividing 4!, the number of possible arrangements obtained by exchanging all four words, by 3!, expressing that exchanges of the indistinguishable words in the same energy level are not counted. 
The general combinatorial formula that gives us for each macro state the number of micro states that exist underlying it, and that are in principle distinguishable from each other, is the following:

$$\frac{N!}{\prod_{i} N_i!} \tag{7}$$

where N is the number of considered words, hence N = 4 in our case, and $N_i$ is the number of words in energy level i. Remember that 0! = 1, which is important for the formula to apply correctly to all macro states, even when 0 words are present in a specific energy level. From (7) we calculate the number of micro states for each macro state, to be found in Table 2 in the second row denoted by “M-B numbers.” Let us take a moment to consider the largest number of micro states we obtain in this way, namely the 24 belonging to macro state number six. Indeed, we wish to emphasize again why we consider these micro states as “in principle” distinguishable. For macro state number six, each of the four words belongs to a different energy level, and the four words are therefore, by definition, different words. Let us give an example of such a piece of text consisting of four different words. “Dog loves white cat” is such a sentence. Now if we interchange “dog” and “cat,” this sentence becomes “Cat loves white dog,” and this is indeed a different sentence, which we have no problem distinguishing from the previous one. This example makes clear how this “distinguishability” is akin to what we were thinking about with our simple example in Section 2, of choosing two animals, with the option of choosing a cat or choosing a dog. We can probably find examples of sentences with four words that we do not treat as distinguishable within a given context, and that is why we added the “in principle.” The total number of micro states is given by the sum of the numbers of micro states per macro state, and for this case of four words distributed over seven energy levels, such that the total energy is always equal to seven, this total number of micro states is equal to 120. We can now move on to the second part of Table 2, under the title “Maxwell-Boltzmann.”
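The counting described above can be checked with a short Python sketch. It is only an illustration, not the procedure used to build Table 2: the function names `macro_states` and `mb_number` are hypothetical, and the ordering of the macro states (descending in the highest occupied energy level) is an assumption, chosen because it agrees with the two details given in the text, namely that macro state six has 24 micro states and that macro state four has two words in energy level 1.

```python
from collections import Counter
from math import factorial

N_WORDS = 4        # number of words
TOTAL_ENERGY = 7   # total energy of every configuration


def macro_states(n_words, total_energy):
    """List macro states as non-increasing tuples of word energies."""
    def rec(words_left, energy_left, max_level):
        if words_left == 0:
            if energy_left == 0:
                yield ()
            return
        # Try the highest admissible energy level first.
        for level in range(min(max_level, energy_left), -1, -1):
            for rest in rec(words_left - 1, energy_left - level, level):
                yield (level,) + rest
    return list(rec(n_words, total_energy, total_energy))


def mb_number(state):
    """Number of micro states N! / prod_i N_i! of one macro state."""
    count = factorial(len(state))
    for n_i in Counter(state).values():   # n_i = words sharing one level
        count //= factorial(n_i)
    return count


states = macro_states(N_WORDS, TOTAL_ENERGY)
numbers = [mb_number(s) for s in states]

for index, (state, number) in enumerate(zip(states, numbers), start=1):
    print(index, state, number)
print("macro states:", len(states), "micro states:", sum(numbers))
```

Under these assumptions the sketch lists eleven macro states whose M-B numbers add up to 120, in agreement with the counts stated in the text.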

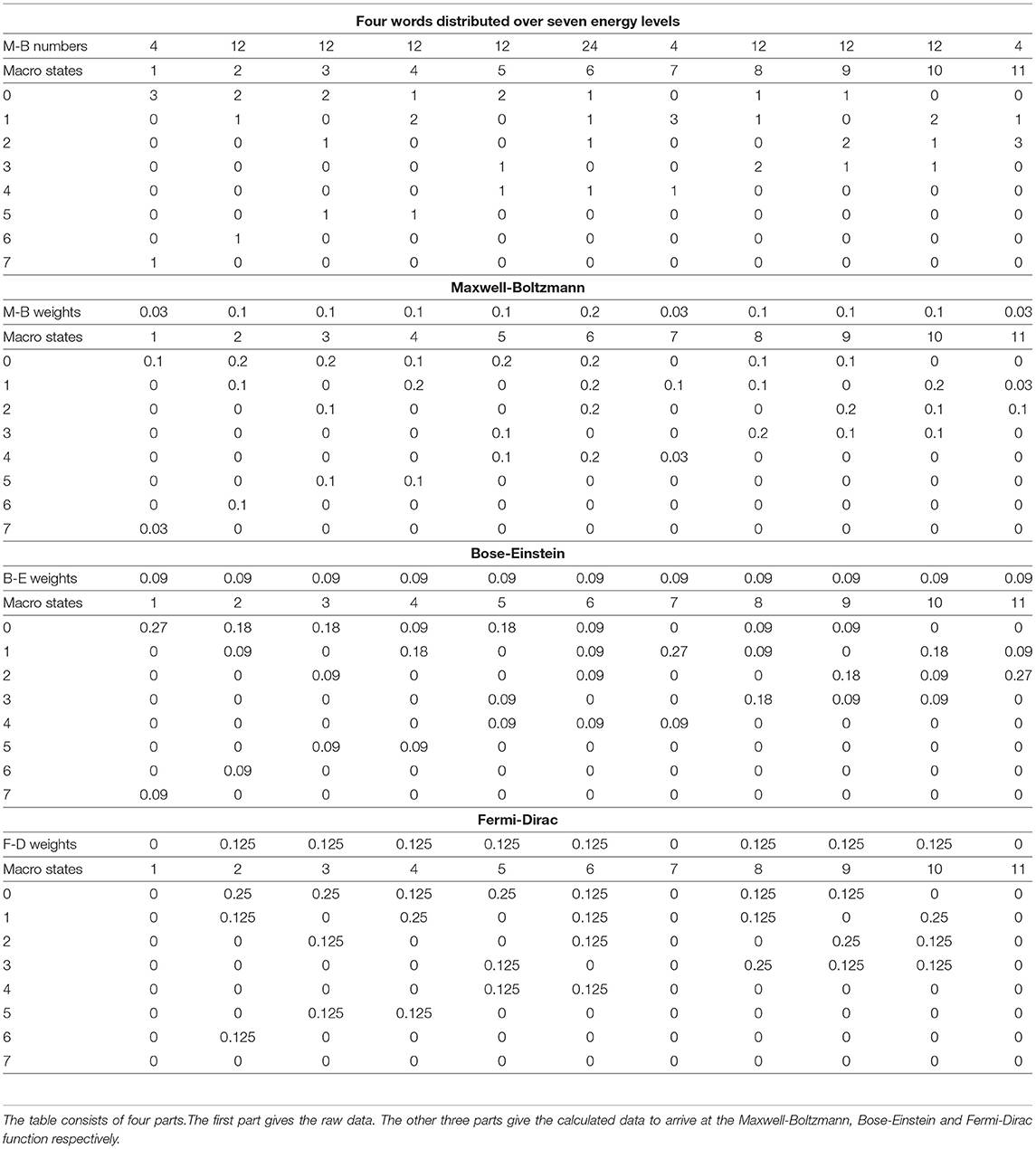

Table 2. The data of an example consisting of four words distributed over seven energy levels with total energy equal to seven.

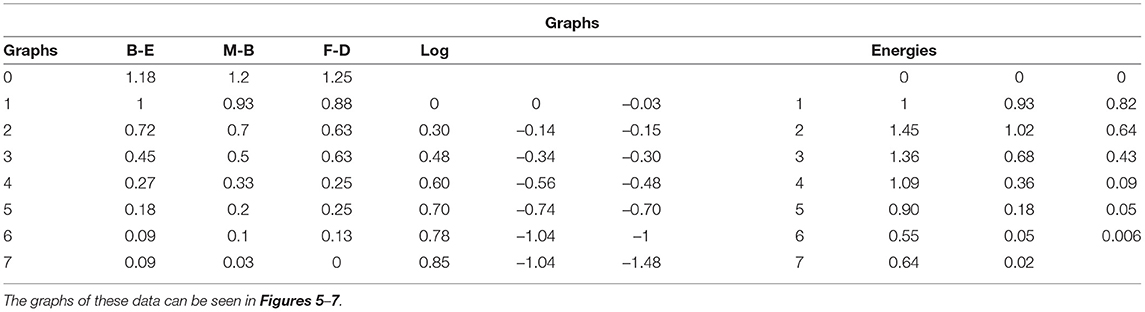

Here we make the calculations to arrive at the exact version, i.e., based only on combinatorial formulas, of the continuous Maxwell-Boltzmann function given in (4). In the first row of this second part of Table 2, we calculate the Maxwell-Boltzmann weights. To do this, we divide, for each macro state, the number of micro states that this macro state contains by the total number of micro states. That is a very natural way to assign a weight per macro state that probabilistically captures the number of micro states in that macro state, the sum of all the weights being equal to 1. Or, to use the picture in which Ludwig Boltzmann looked at this situation: suppose all micro states are equally likely to be realized, then each Maxwell-Boltzmann weight of a macro state represents the probability that this macro state will be realized under a Maxwell-Boltzmann statistics. The numbers in rows four through eleven of the second part of Table 2 give the weighted average values per energy level of the words present in each macro state. Let us look at one of them in detail, for example, the number located in the fifth row, the row of energy level 1, and fifth column, the column of macro state 4, which is equal to 0.2. We obtained this number by multiplying the M-B weight of the macro state in this fifth column, i.e., macro state number 4, which is 0.1, by the number of words this macro state has in energy level 1 according to the first part of Table 2, i.e., 2 words, and hence this gives 0.2. So this 0.2 is the weighted average number of words contributed by the underlying micro states of this macro state to energy level 1. If we add up all these weighted averages obtained in the same way over the whole fifth row, that is, the row of energy level 1, we get the value of the Maxwell-Boltzmann function in energy level 1. And if we do that for each energy level we get the complete Maxwell-Boltzmann function, which we have represented in the third column of Table 3. 
Let us repeat that these are the exact values of the Maxwell-Boltzmann function, to which (4) is therefore a continuous approximation. We have also included a graph of this Maxwell-Boltzmann function for the situation of four words distributed over seven energy levels with the total energy equal to seven; it is the red graph shown in Figure 5. Before we further analyze the details of this exact version of the Maxwell-Boltzmann function, let us explain how we obtain the corresponding exact version of the Bose-Einstein function and the corresponding exact version of the Fermi-Dirac function.
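The Maxwell-Boltzmann calculation just described can be sketched in Python by following Boltzmann's picture literally: enumerate every micro state, treat them all as equally likely, and average the number of words per energy level. This is a minimal illustration under the assumptions stated in the text (four distinguishable words, energy levels 0 to 7, total energy 7). The variable names are hypothetical, and exact fractions are used so that no rounding enters.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

N_WORDS, TOTAL_ENERGY = 4, 7
LEVELS = range(TOTAL_ENERGY + 1)

# Micro states: one energy per distinguishable word, total energy fixed.
micro_states = [m for m in product(LEVELS, repeat=N_WORDS)
                if sum(m) == TOTAL_ENERGY]

# Group micro states by macro state, i.e., by the multiset of energies.
mb_numbers = Counter(tuple(sorted(m, reverse=True)) for m in micro_states)

# M-B weight of a macro state: its share of all micro states.
mb_weights = {state: Fraction(number, len(micro_states))
              for state, number in mb_numbers.items()}

# Exact M-B function: weighted average number of words per energy level.
mb_function = [sum(weight * state.count(level)
                   for state, weight in mb_weights.items())
               for level in LEVELS]

# Entry discussed in the text: macro state (5, 1, 1, 0) at energy level 1.
print(mb_weights[(5, 1, 1, 0)] * 2)
print([float(value) for value in mb_function])
```

The first printed value reproduces the entry 0.2 discussed above, as the product of the M-B weight 0.1 and the 2 words in energy level 1. Summing the function over all energy levels returns the four words, which is a convenient consistency check.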

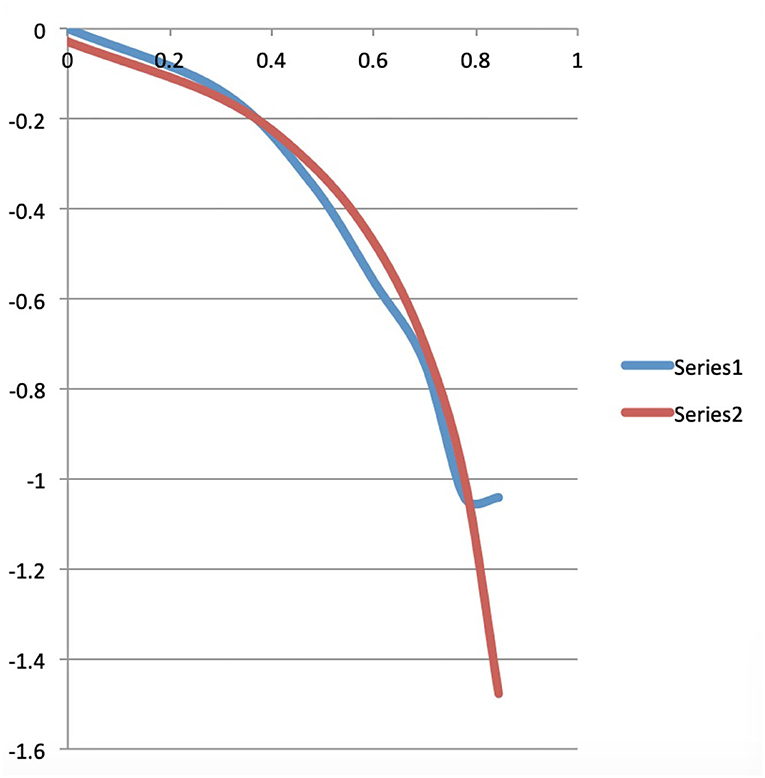

Table 3. The calculated data for the Bose-Einstein, Maxwell-Boltzmann and Fermi-Dirac functions belonging to the example of “four words distributed over seven energy levels with total energy equal to seven,” whose raw data and previous calculated data can be found in Table 2.

Figure 5. The blue, red, and green curves are the graphs of the Bose-Einstein, Maxwell-Boltzmann, and Fermi-Dirac functions for the situation of four words distributed over seven energy levels with the total energy equal to seven.

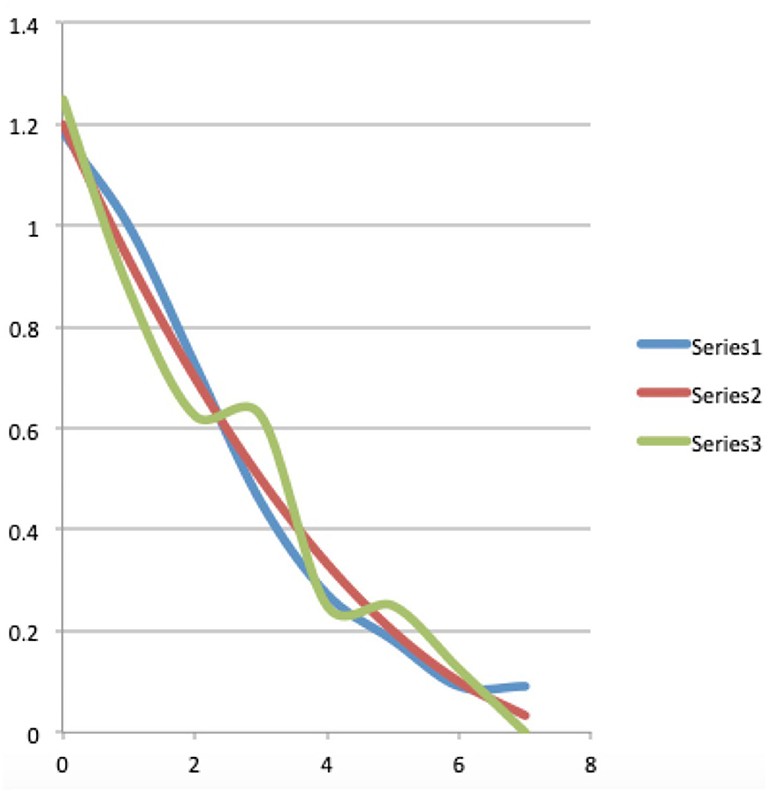

For that, we go back to Table 2, to the third part of this table, under the heading Bose-Einstein. What we called the Maxwell-Boltzmann weights, represented on the row “M-B weights” of the second part of Table 2, now become the Bose-Einstein weights, represented on the row “B-E weights” of the third part of Table 2. These are all equal to 1 divided by the number of macro states, i.e., 1/11. We thus encounter here the transformation of the Maxwell-Boltzmann weights, which are naturally given by the presence of in principle distinguishable micro states, to Bose-Einstein weights, which are all taken equal per macro state. It is this transformation that Einstein found highly inconvenient and which he wished to attribute to the presence of a still entirely mysterious force that causes photons to clump together in the same states. We have explained above how in human cognition and human language the force of “meaning” can play this role, and in analyzing our example further we will show this more concretely. The remainder of the calculation proceeds exactly as we explained in detail for the Maxwell-Boltzmann case, except that this time we substitute the Bose-Einstein weights for the earlier Maxwell-Boltzmann weights. We thus obtain the values of the exact Bose-Einstein function, which we find in the second column of Table 3 and represented as the blue graph in Figure 5. At this point it becomes interesting to compare the red and blue graphs of Figure 5 with the graphs of the Bose-Einstein and Maxwell-Boltzmann functions of the Winnie the Pooh story, i.e., the red and green graphs of Figure 2. 
To allow for a more accurate comparison, we also constructed the graphs of the log/log functions in Figure 6, whose values can be found in Table 3, the fifth, sixth and seventh columns, which we can then compare with the log/log functions of the Bose-Einstein functions and the Maxwell-Boltzmann functions of the Winnie the Pooh story, more specifically depicted in the red and green graphs of Figure 3. Although our example of four words distributed over seven energy levels so that the total energy is equal to seven is an extremely small example, we already see similarities with the graphs of the continuous functions. The Bose-Einstein graph extends higher than the Maxwell-Boltzmann graph in 0 and is less curved in the log/log version, as is also the case for the Bose-Einstein and the Maxwell-Boltzmann of the Winnie the Pooh story. The higher value at 0 is associated with the tendency for words to accumulate at small energies when there is not much energy available, ultimately leading to the formation of a Bose-Einstein condensate with all words in the energy level 0. Before showing how the identification of a “meaning dynamics” gives us a finer understanding of the differences between Bose-Einstein and Maxwell-Boltzmann, we wish to elaborate on the Fermi-Dirac version of this situation.
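The transformation from Maxwell-Boltzmann weights to Bose-Einstein weights described above can also be sketched in Python. The sketch assumes only what the text states: every macro state receives the same Bose-Einstein weight, 1 divided by the number of macro states, and the function is then computed exactly as in the Maxwell-Boltzmann case. The names `be_weight`, `occupation` and `gainers` are hypothetical helpers introduced for illustration.

```python
from collections import Counter
from fractions import Fraction
from itertools import combinations_with_replacement
from math import factorial

N_WORDS, TOTAL_ENERGY = 4, 7
LEVELS = range(TOTAL_ENERGY + 1)

# Macro states: multisets of four energies that add up to the total energy.
states = [c for c in combinations_with_replacement(LEVELS, N_WORDS)
          if sum(c) == TOTAL_ENERGY]


def mb_number(state):
    """Number of micro states N! / prod_i N_i! of one macro state."""
    count = factorial(len(state))
    for n_i in Counter(state).values():
        count //= factorial(n_i)
    return count


total_micro = sum(mb_number(s) for s in states)
mb_weight = {s: Fraction(mb_number(s), total_micro) for s in states}
be_weight = {s: Fraction(1, len(states)) for s in states}  # all equal


def occupation(weights):
    """Weighted average number of words per energy level."""
    return [sum(w * s.count(level) for s, w in weights.items())
            for level in LEVELS]


be_function = occupation(be_weight)
mb_function = occupation(mb_weight)

# Macro states whose weight increases when going from M-B to B-E.
gainers = [s for s in states if be_weight[s] > mb_weight[s]]

print([str(value) for value in be_function])
print(gainers)
```

Under these assumptions, the only macro states that gain weight are those with three words in the same energy level, whose weight rises from 1/30 to 1/11. This is the clumping of identical words in the same state that the text attributes to the “meaning dynamics.”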

Figure 6. The blue and red curves are the log/log graphs of the Bose-Einstein and Maxwell-Boltzmann functions of the situation of four words distributed over seven energy levels with total energy equal to seven.