- ¹Key Laboratory of Applied Psychology, Chongqing Normal University, Chongqing, China

- ²School of Education, Chongqing Normal University, Chongqing, China

- ³Key Laboratory of Emotion and Mental Health, Chongqing University of Arts and Sciences, Chongqing, China

Social impairment is a defining phenotypic feature of autism. The present study investigated whether individuals with autistic traits exhibit altered perceptions of social emotions. Two groups of participants (High-AQ and Low-AQ) were recruited based on their scores on the autism-spectrum quotient (AQ). Their behavioral responses and event-related potentials (ERPs) elicited by social and non-social stimuli with positive, negative, and neutral emotional valence were compared in two experiments. In Experiment 1, participants were instructed to view social-emotional and non-social emotional pictures. In Experiment 2, participants were instructed to listen to social-emotional and non-social emotional audio recordings. More negative emotional reactions and smaller amplitudes of late ERP components (the late positive potential in Experiment 1 and the late negative component in Experiment 2) were found in the High-AQ group than in the Low-AQ group in response to the social-negative stimuli. In addition, amplitudes of these late ERP components in both experiments elicited in response to social-negative stimuli were correlated with the AQ scores of the High-AQ group. These results suggest that individuals with autistic traits have altered emotional processing of social-negative emotions.

Introduction

Autism spectrum disorder (ASD) is a neurodevelopmental disorder that affects 1–2% of children globally. ASD is commonly characterized by functional deficits in social behavior, difficulties in social communication, and restricted and repetitive interests (American Psychiatric Association, 2013). Such social impairment is a defining phenotypic feature of ASD (Ogawa et al., 2019; Liu et al., 2020). Previous studies of individuals with ASD have found diminished motivation to engage in social activities (Tsurugizawa et al., 2020), deficits in reciprocal social communication (De Crescenzo et al., 2019), and deficits in the ability to understand the beliefs and intentions of others (Chevallier et al., 2012; Ruta et al., 2017). It has been suggested that the core social impairment of ASD may involve impaired social motivation (Sumiya et al., 2020). This “social motivational theory” of ASD has suggested that a lack of reward feelings from social stimuli (i.e., social motivation deficit or social anhedonia) can substantially lead individuals with ASD to demonstrate a generally reduced preference for, and orientation toward, social stimuli (McPartland et al., 2012; Ruta et al., 2017) with a subsequent loss of social learning opportunities (Ruta et al., 2017; Li et al., 2019).

The autism-spectrum quotient (AQ) (Baron-Cohen et al., 2001) has been widely adopted as a way to evaluate autistic traits. It can be used to measure the core autism-related deficits in both ASD individuals and typically developing individuals. Individuals with ASD generally score at the extreme end of the AQ distribution in the general population (Dubey et al., 2015). Prior studies have found that quantifiable autistic traits are continuously distributed in typically developing individuals (Lazar et al., 2014; Sindermann et al., 2019) and that higher scores on the AQ questionnaire generally reflect a higher level of autistic traits (Ruzich et al., 2015). Individuals with high AQ scores (i.e., individuals with autistic traits or High-AQ individuals) exhibit social impairments similar to those exhibited by individuals with ASD (Poljac et al., 2012; Becker et al., 2021). Compared to individuals with low AQ scores (Low-AQ individuals), High-AQ individuals generally exhibit a reduced interest in social stimuli such as faces (Chita-Tegmark, 2016; Fogelson et al., 2019), eye gaze (Kliemann et al., 2010), social voices (Lepistö et al., 2005; Honisch et al., 2021), and social scenes (Chawarska et al., 2013; Chita-Tegmark, 2016; Tang J. S. Y. et al., 2019). These studies show that High-AQ individuals have a specific impairment in the processing of social stimuli.

Although several studies have shown that the perception of social stimuli is altered in individuals with ASD or autistic traits (Poljac et al., 2012; Chawarska et al., 2013; Robertson and Baron-Cohen, 2017; Tang J. S. Y. et al., 2019; Becker et al., 2021), these studies used only neutral social stimuli. Mental processing in individuals with ASD (Alaerts et al., 2013) or autistic traits (Azuma et al., 2015; Kerr-Gaffney et al., 2020) has been found to differ depending on the emotional valence of the stimulus, in that such individuals exhibit worse recognition of stimuli with negative rather than positive emotional valence. However, very little is known about the cognitive and neural mechanisms involved in the processing of social-emotional stimuli, a process that is fundamental to human communication (De Stefani and De Marco, 2019), in individuals with autistic traits.

Event-related potentials (ERPs) can be used to measure the neurophysiological mechanisms underlying the processing of emotional stimuli. For example, the N1, an early ERP component in emotion processing, reflects early attention to emotional stimuli in both the visual modality (Xia et al., 2017; Zhao et al., 2020b) and the auditory modality (Tumber et al., 2014). Larger N1 amplitudes have been observed in response to emotional pictures (Cheng et al., 2017; Tang W. et al., 2019) and emotional audio recordings (Tumber et al., 2014) than in response to neutral stimuli. In addition, the late positive potential (LPP) in the visual modality and the late negative component (LNC) in the auditory modality are late ERP components in emotion processing that are thought to index the evaluation of emotional information (Calbi et al., 2019; Zubair et al., 2020).

The present study aimed to investigate whether individuals with autistic traits would exhibit atypical perception of social-emotional stimuli in both the visual and auditory modalities, as measured using ERPs. As suggested by the social motivational theory of ASD (Chevallier et al., 2012), we hypothesized that individuals with autistic traits would display a similar alteration of their processing of social-emotional stimuli. In addition, as individuals with autistic traits exhibit worse recognition of negative emotional stimuli (Kerr-Gaffney et al., 2020; Meng et al., 2020), the present study hypothesized that altered perception of social-emotional stimuli in such individuals would mainly be exhibited in regard to social-negative emotions.

Experiment 1

Materials and Methods

Participants

A total of 2,592 undergraduate students aged from 18 to 23 years (Mean = 22.88 years, SD = 1.27 years) from the Chongqing Normal University were recruited to complete the Mandarin Version of the AQ questionnaire (Baron-Cohen et al., 2001; Lehnhardt et al., 2013) to estimate the magnitude of their autistic traits.

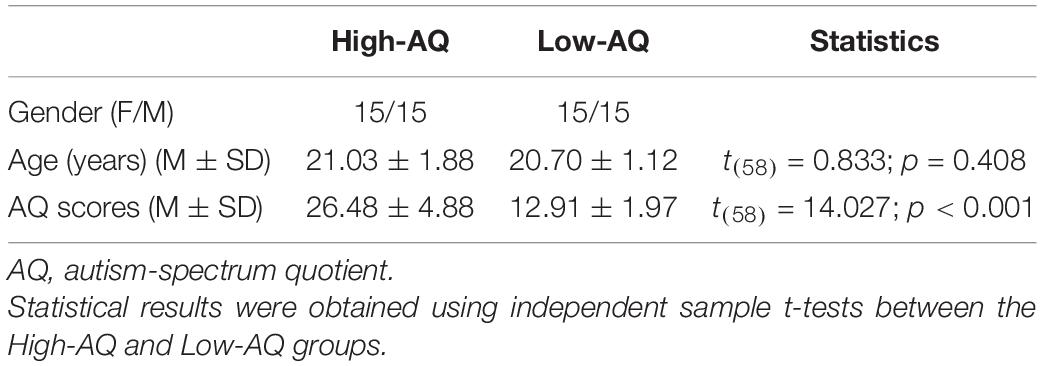

Then, two subsets of participants, those exhibiting the top 10% and bottom 10% of AQ scores (Meng et al., 2019, 2020) from the total of 2,592 undergraduate students were randomly selected and divided into High-AQ (n = 30) and Low-AQ (n = 30) groups. The detailed demographic characteristics of the participants in the High-AQ and Low-AQ groups are listed in Table 1. All participants had normal or corrected-to-normal vision and reported no history of neurological or psychiatric disorders. Written informed consent was provided by all participants prior to participation in the experiment in accordance with the Declaration of Helsinki, and the procedures of Experiment 1 were approved by the Chongqing Normal University Research Ethics Committee. The procedures were performed in accordance with ethical guidelines and regulations.
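The extreme-groups selection described above can be sketched as follows. This is a minimal illustration only, not the authors' actual procedure: the function name `select_extreme_groups`, the dictionary input, the fixed random seed, and the handling of tied scores at the cutoffs (left to sort order here) are all assumptions.

```python
import random


def select_extreme_groups(scores, fraction=0.10, n_per_group=30, seed=0):
    """Sample participants from the top and bottom `fraction` of AQ scores.

    `scores` maps a participant id to an AQ total score (hypothetical input).
    Returns (high_ids, low_ids).
    """
    ranked = sorted(scores, key=scores.get)        # participant ids, ascending by score
    k = max(1, int(len(ranked) * fraction))        # number of participants in each tail
    low_pool, high_pool = ranked[:k], ranked[-k:]  # bottom and top tails
    rng = random.Random(seed)                      # seeded so the draw is reproducible
    high = rng.sample(high_pool, min(n_per_group, len(high_pool)))
    low = rng.sample(low_pool, min(n_per_group, len(low_pool)))
    return high, low
```

With 2,592 screened students, each 10% tail would contain 259 candidates, from which 30 per group are drawn at random.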

Stimuli

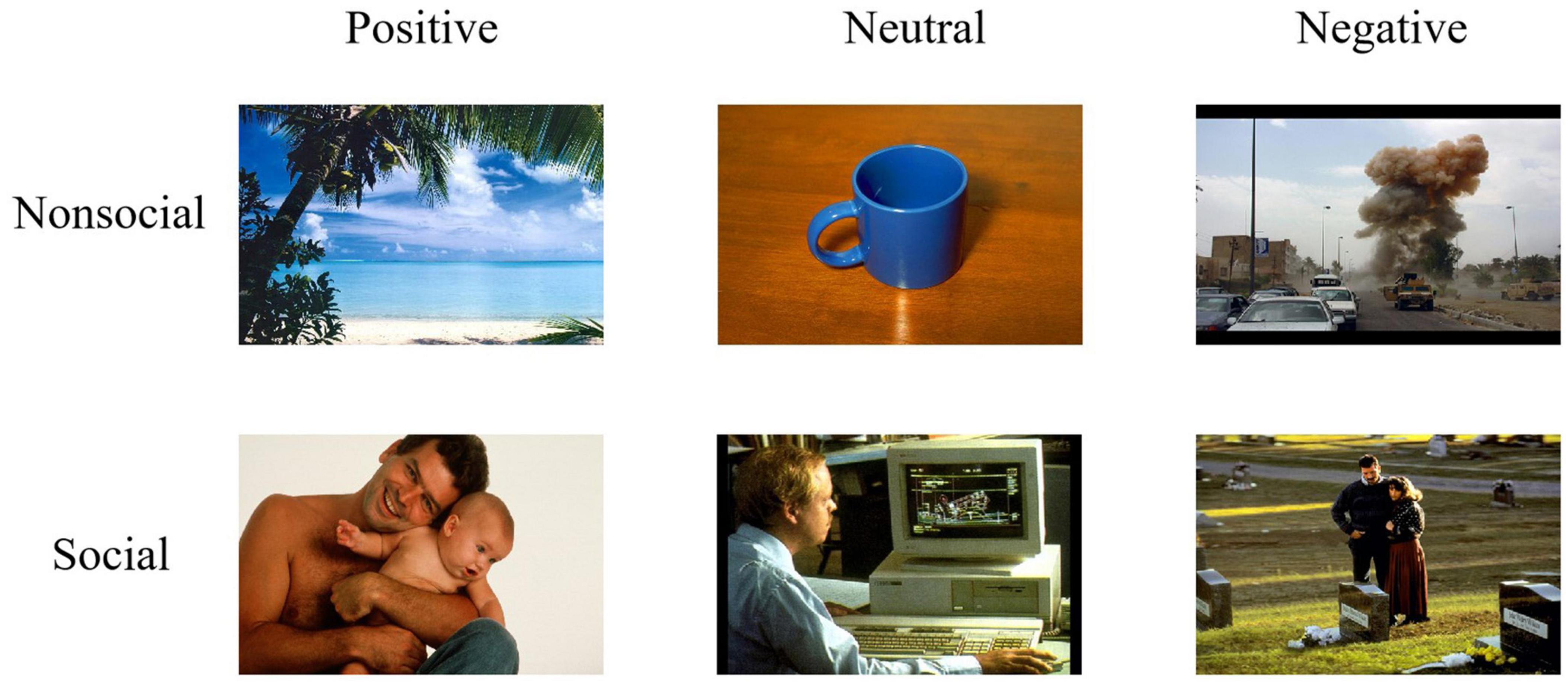

A total of 144 digital pictures with negative, neutral, or positive emotional valence were selected from the International Affective Picture System (IAPS) (Lang and Bradley, 2007) and the Chinese Affective Picture System (CAPS) (Lu et al., 2005). These pictures also had either social or non-social dimensions. Social emotions rely on the presence of human forms interacting in cognitively complex ways involving language, meaning, and social intentionality to activate the emotion, whereas non-social emotions have nothing to do with social interaction and are not caused by other people (Silk and House, 2011; Tam, 2012; Mendelsohn et al., 2018). Based on previous research on the selection of social-emotional and non-social emotional stimuli (Silvers et al., 2012; Vrtička et al., 2013), our study selected 72 social pictures depicting two people or parts of people interacting or engaging in a social relationship, and 72 non-social pictures containing no images of people. These pictures were further classified into six types: social-neutral, non-social neutral, social-positive, non-social positive, social-negative, and non-social negative pictures (for examples, see Figure 1). All selected pictures were 413 × 311 pixels (10.5 cm × 7.9 cm).

Figure 1. Examples of pictures used in Experiment 1. Examples of nonsocial (top panel) and social (bottom panel) pictures with positive (left column), neutral (middle column), and negative (right column) emotional valence. Pictures were selected from the International Affective Picture System (IAPS) (Lang et al., 2005) (Reproduced with permission from Di Yang), and the Chinese Affective Picture System (CAPS) (Reproduced with permission from Di Yang and Yuanyan Hu).

Forty-three undergraduate students (21 females) rated the emotional valence (1 = extremely negative, 5 = neutral, 9 = extremely positive) and arousal (1 = low arousal, 9 = high arousal) produced by the selected pictures using 9-point Likert scales. In addition, they were instructed to judge whether each picture depicted social content (1 = social or 2 = non-social). The detailed descriptive statistics are summarized in Supplementary Tables 1, 2. According to the results of the assessment, social and non-social pictures were matched in emotional valence and arousal within each emotion category.

Experimental Procedure

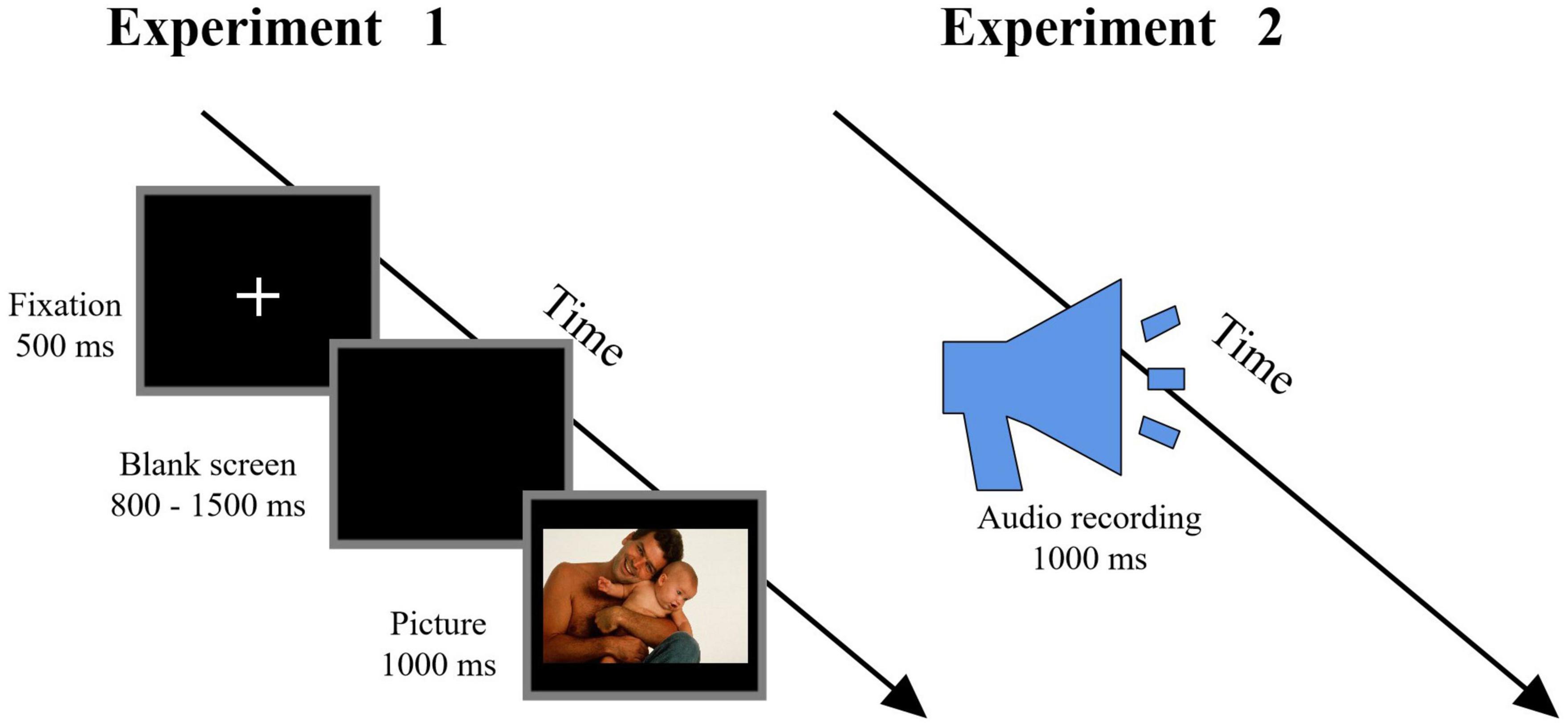

Participants were seated in a quiet room at a comfortable temperature. As in previous studies (Hsu et al., 2020; Zhou et al., 2020), an implicit processing (passive viewing) paradigm was employed. As shown in Figure 2 (left column), at the start of a trial, a fixation cross was presented for a duration of 500 ms. After 800–1,500 ms, a picture was presented for 1,000 ms, and participants were asked to view the picture passively with attention. The order of the pictures’ presentation was randomized. The experimental procedure was programmed using the E-Prime 3.0 software (Psychology Software Tools, PA, United States). Electroencephalography (EEG) data were recorded simultaneously. The entire experimental procedure consisted of three blocks, each containing 140 trials and with an inter-trial interval of 500 ms.

Figure 2. Flowchart describing the experimental design. Left column: Procedure of Experiment 1, in which participants were instructed to passively view the pictures. Right column: Procedure of Experiment 2, in which participants were instructed to passively listen to the audio recordings (Reproduced with permission from Di Yang and Yuanyan Hu).

After the EEG recording session, the participants were instructed to respond as accurately and quickly as possible by pressing a specific key (“1,” “2,” or “3”) to judge the emotional valence (positive, neutral, or negative) of the picture. Key-pressing was counterbalanced across participants to control for order effects. After judging the emotional valence, participants were instructed to rate their subjective emotional reactions (1 = very unhappy, 5 = neutral, 9 = very happy) to each picture, based on a 9-point Likert scale.

Electroencephalography Recording

Electroencephalography data were recorded from 64 scalp sites, using tin electrodes mounted on an actiCHamp system (Brain Vision LLC, Morrisville, NC, United States; pass band: 0.01–100 Hz; sampling rate: 1,000 Hz). The electrode at the right mastoid was used as a recording reference, and that on the medial frontal aspect was used as the ground electrode. All electrode impedances remained below 5 kΩ.

Electroencephalography Data Analysis

Electroencephalography data were pre-processed and analyzed using MATLAB R2014a (MathWorks, United States) and the EEGLAB v13.6.5b toolbox (Delorme and Makeig, 2004). Continuous EEG signals were band-pass filtered at 0.01–40 Hz. Epochs spanning 200 ms before to 800 ms after stimulus onset were extracted from the continuous EEG, and each epoch was baseline-corrected using the 200 ms interval prior to stimulus onset. The EEG epochs were visually inspected, and bad trials containing significant noise from gross movements were removed; these excluded trials constituted 7 ± 6.7% of the total number of trials. Electro-oculogram (EOG) artifacts were corrected via an independent component analysis (ICA) algorithm (Jung et al., 2001).
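The epoching and baseline-correction step can be illustrated for a single channel as below. This is a sketch under stated assumptions rather than the EEGLAB pipeline the authors used: the function name `extract_epoch` and the list-based signal are hypothetical, and the 1,000 Hz default follows the recording parameters reported above.

```python
def extract_epoch(signal, onset, sfreq=1000, pre_ms=200, post_ms=800):
    """Cut one epoch around `onset` (a sample index) and baseline-correct it.

    The mean of the pre-stimulus interval is subtracted from every sample,
    so the baseline period averages to zero.
    """
    pre = int(pre_ms * sfreq / 1000)    # samples before stimulus onset
    post = int(post_ms * sfreq / 1000)  # samples after stimulus onset
    if onset - pre < 0 or onset + post > len(signal):
        raise ValueError("epoch extends beyond the recording")
    epoch = signal[onset - pre:onset + post]
    baseline = sum(epoch[:pre]) / pre   # mean of the 200 ms pre-stimulus window
    return [value - baseline for value in epoch]
```

At a 1,000 Hz sampling rate one sample corresponds to 1 ms, so each epoch contains 1,000 samples.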

Based on the scalp topographies of both the single-participant and group-level ERP waveforms, and on previous studies (Foti and Hajcak, 2008; Hajcak et al., 2010), the dominant ERP components involved in Experiment 1 were identified, including early ERP components (N1, P2, and N2) and late ERP components (P3 and LPP). Amplitudes of N1 and N2 components were measured at the frontal-central electrodes (F1, Fz, F2, FC1, FCz, and FC2) and calculated as average ERP amplitudes within N1 latency intervals of 80–120 ms and N2 latency intervals of 210–250 ms. Amplitudes of P2, P3, and LPP components were measured at the parietal-occipital electrodes (P1, Pz, P2, PO3, POz, and PO4) and calculated as the average ERP amplitudes within P2 latency intervals of 200–240 ms, P3 latency intervals of 310–350 ms, and LPP latency intervals of 400–700 ms.
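The mean-amplitude measure described above (average voltage within a latency window, averaged across a set of electrodes) can be sketched as follows. The function name `mean_amplitude`, the dictionary layout of the ERP data, and the half-open treatment of the window end are assumptions made for illustration; the window and electrode values are taken from the text.

```python
# Latency windows (ms after stimulus onset) and electrode sets from Experiment 1.
WINDOWS_MS = {"N1": (80, 120), "N2": (210, 250), "P2": (200, 240),
              "P3": (310, 350), "LPP": (400, 700)}
FRONTAL_CENTRAL = ["F1", "Fz", "F2", "FC1", "FCz", "FC2"]
POSTERIOR = ["P1", "Pz", "P2", "PO3", "POz", "PO4"]


def mean_amplitude(erp, channels, window_ms, sfreq=1000, epoch_start_ms=-200):
    """Average ERP amplitude inside `window_ms`, averaged over `channels`.

    `erp` maps a channel name to a list of samples whose first sample lies at
    `epoch_start_ms` relative to stimulus onset (hypothetical layout).
    The window is treated as half-open: start included, end excluded.
    """
    start = int((window_ms[0] - epoch_start_ms) * sfreq / 1000)
    stop = int((window_ms[1] - epoch_start_ms) * sfreq / 1000)
    per_channel = [sum(erp[ch][start:stop]) / (stop - start) for ch in channels]
    return sum(per_channel) / len(per_channel)
```

For example, `mean_amplitude(erp, POSTERIOR, WINDOWS_MS["LPP"])` would give the LPP amplitude for one participant and condition.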

Statistical Analysis

Sample sizes for Experiment 1 were calculated using G*Power 3 (v3.1.9.2) for a repeated measures analysis of variance (ANOVA), within-between (F test), with a desired power of 99%, a 1% significance level, and an effect size of 0.29 (calculated from the interactive effect of results).

Amplitudes of dominant ERP components (N1, P2, N2, P3, and LPP) and behavioral data [accuracies (ACCs), reaction times (RTs), and subjective emotional reactions] in Experiment 1 were compared using a three-way mixed-design ANOVA, with within-participant factors of “emotion” (positive, neutral, and negative) and “sociality” (social and non-social), as well as the between-participants factor of “group” (High-AQ and Low-AQ). The Mauchly test was used to test sphericity in the repeated measures ANOVA. The degrees of freedom for F-ratios were corrected according to the Greenhouse–Geisser method (Benjamini and Hochberg, 1995). If the interaction between the three factors was significant, simple effect analysis between groups was performed for each condition and reported in the results. Other detailed interaction effects are presented in the Experiment 1 section of the Supplementary Results.

In addition, to investigate the relationship between neural responses and autistic traits, a Pearson correlation was calculated between participants’ AQ scores and ERP amplitudes (N1, P2, N2, P3, and LPP) in Experiment 1.
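For reference, the Pearson correlation used here can be computed as in the sketch below. The function names `pearson_r` and `t_from_r` are illustrative and not taken from the authors' analysis scripts; the t statistic is the standard transformation of r with n − 2 degrees of freedom that underlies the significance test.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("need two sequences of equal length (at least 3 values)")
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return sxy / math.sqrt(sxx * syy)


def t_from_r(r, n):
    """t statistic (df = n - 2) for testing r against zero."""
    return r * math.sqrt((n - 2) / (1 - r * r))
```

As an illustration, a correlation of r = −0.41 in a group of 30 participants corresponds to t ≈ −2.38 on 28 degrees of freedom.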

Results

Behavioral Data

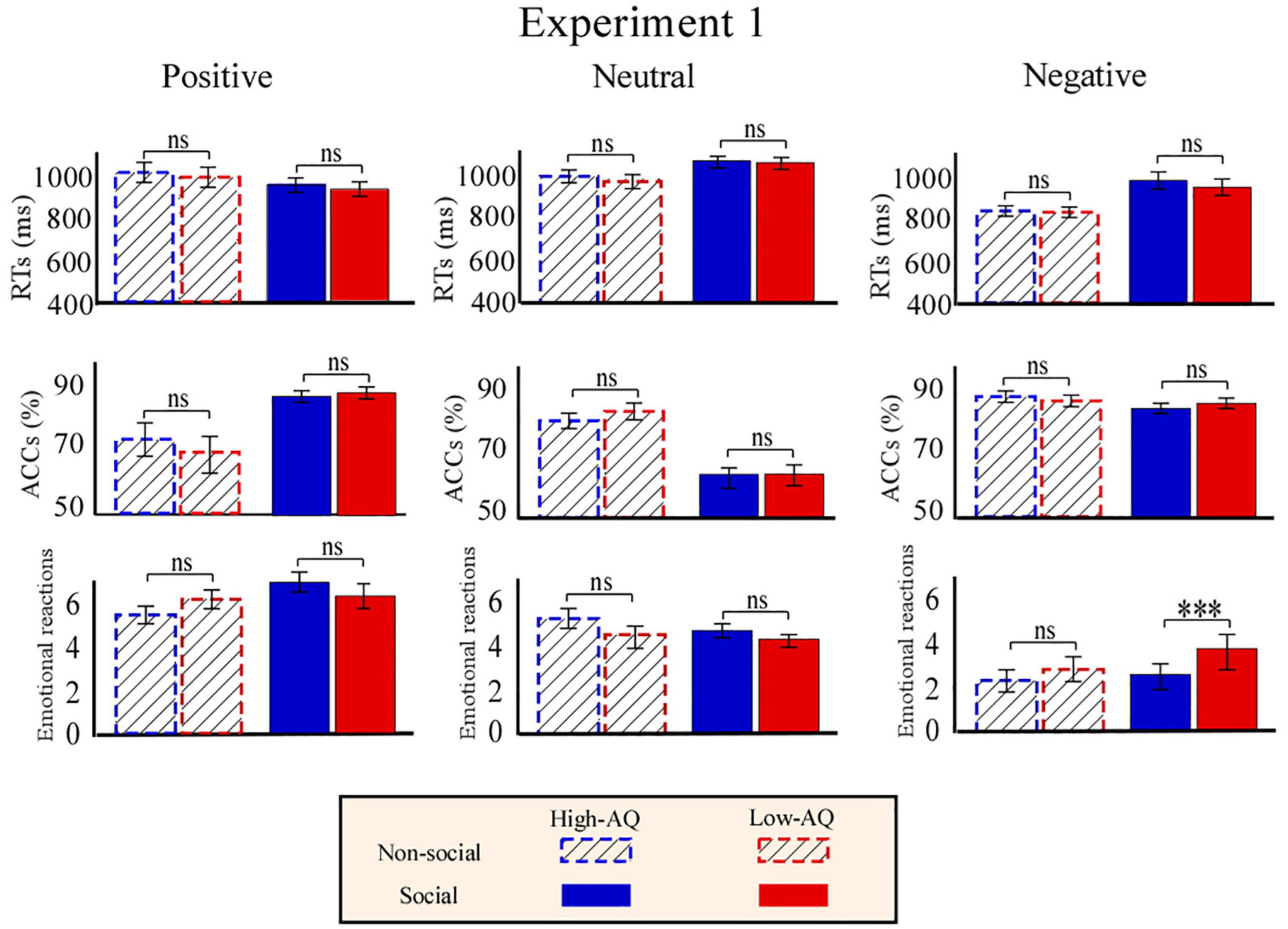

Accuracies, RTs, and emotional reactions for each condition in Experiment 1 are summarized in Figure 3. RTs were modulated by the main effects of “emotion” [F(2, 57) = 80.86, p < 0.001] and “sociality” [F(1, 58) = 114.66, p < 0.001]. Participants displayed longer RTs toward the neutral pictures (1,127.05 ± 28.11 ms) than toward the positive (942.80 ± 22.11 ms, p < 0.001) and negative (929.48 ± 21.83 ms, p < 0.001) pictures. No significant difference in RTs was identified between the positive and negative pictures (p = 0.282). In addition, participants displayed longer RTs toward the social pictures (1,035.67 ± 22.79 ms) than toward the non-social pictures (963.89 ± 21.71 ms).

Figure 3. Behavioral results from Experiment 1. Bar charts show responses of High-AQ (blue) and Low-AQ (red) groups to positive (left column), neutral (middle column), and negative (right column) pictures with social (solid bar) or non-social (dotted bar) dimensions. RTs, ACCs, and emotional reactions are shown in the top, middle, and bottom panels, respectively. Data in the bar charts are expressed as Mean ± SEM. ns: p > 0.05, *p < 0.05, **p < 0.01, ***p < 0.001.

Accuracies were modulated by the main effects of “emotion” [F(2, 57) = 45.14, p < 0.001] and “sociality” [F(1, 58) = 8.01, p = 0.006]. Participants exhibited higher accuracy toward the negative pictures (91.5 ± 1.0%) than the positive (81.0 ± 1.9%, p < 0.001) and neutral (68.7 ± 2.0%, p < 0.001) pictures, and exhibited higher accuracy toward the positive pictures than the neutral pictures (p < 0.001). In addition, participants exhibited higher accuracy toward the social pictures (82.0 ± 1.0%) than the non-social pictures (78.8 ± 1.1%).

Emotional reactions were modulated by the main effect of “emotion” [F(2, 57) = 241.53, p < 0.001]. Post hoc comparisons showed that participants felt more negative toward the negative pictures (2.91 ± 0.09) than toward the positive (6.29 ± 0.15, p < 0.001) and neutral (4.39 ± 0.07, p < 0.001) pictures, and felt more negative toward the neutral pictures than toward the positive pictures (p < 0.001). Importantly, the participants’ emotional reactions were modulated by the interaction of “emotion” × “sociality” × “group” [F(2, 57) = 4.24, p = 0.020]. Simple effects analysis indicated that participants’ emotional reactions to social-negative pictures were more negative for the High-AQ group than for the Low-AQ group [High-AQ group: 2.49 ± 0.78, Low-AQ group: 3.55 ± 0.74; F(2, 57) = 29.21, p < 0.001]. However, emotional reactions did not differ between groups in the other conditions (p > 0.05 for all comparisons).

Event-Related Potentials Data

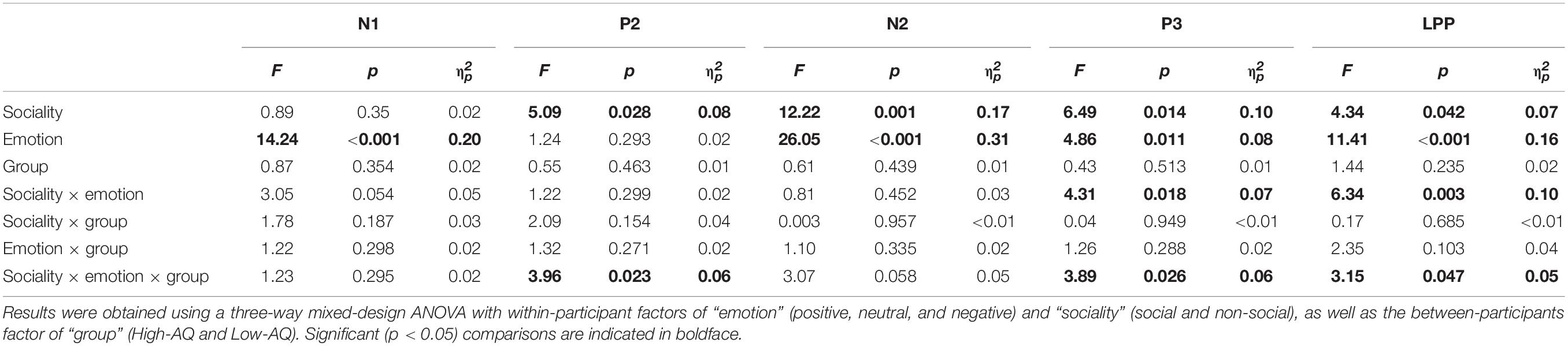

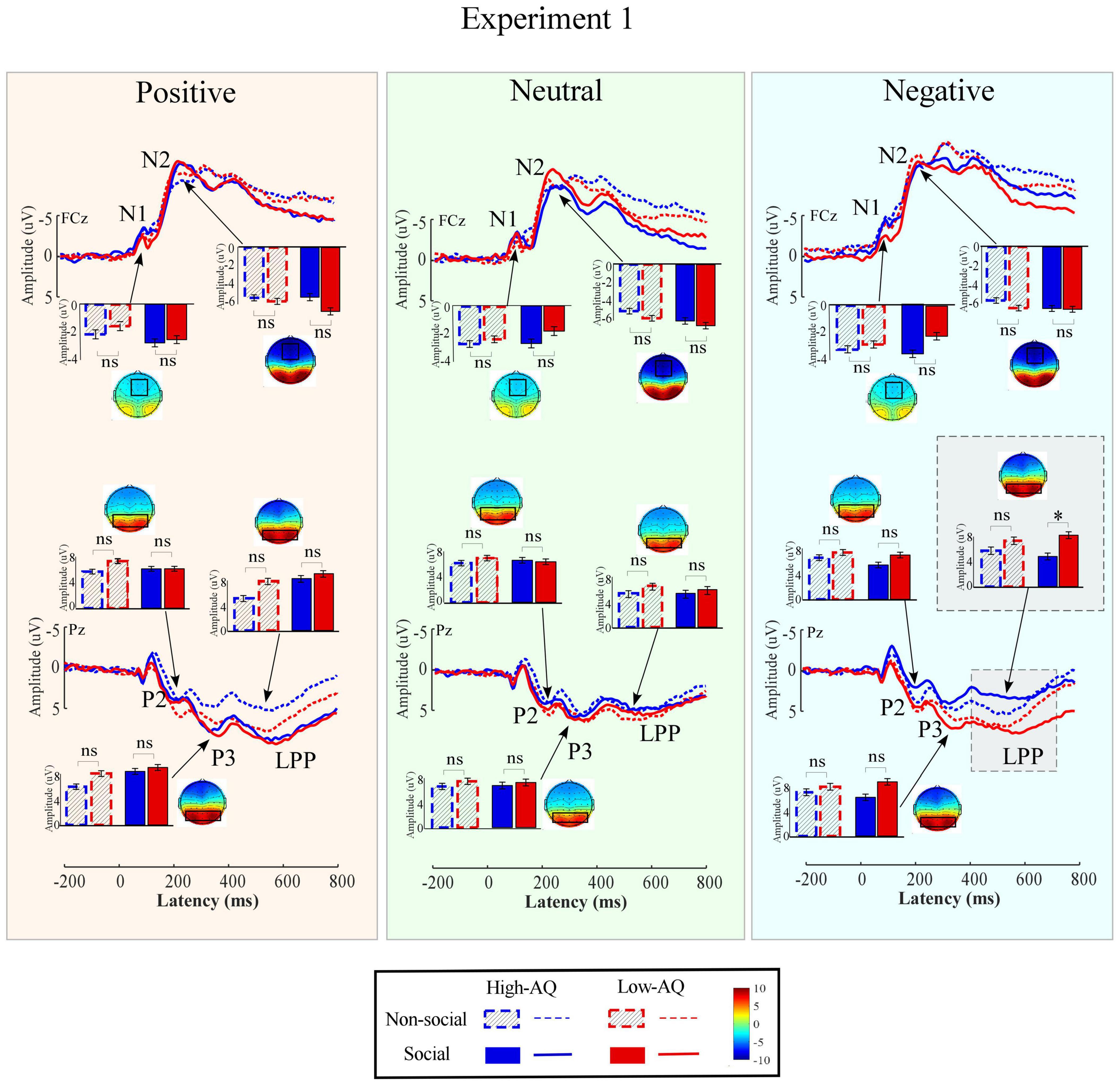

Amplitudes of dominant ERP components in Experiment 1 were compared by a three-way mixed-design ANOVA. The relevant results are shown in Figure 4 and Table 2.

Figure 4. Event-related potential waveforms, scalp topography distributions, and bar charts for Experiment 1. ERP waveforms exhibited by the High-AQ (blue) and Low-AQ (red) groups in response to positive (left column), neutral (middle column), and negative (right column) pictures with social (solid) or non-social (dotted) dimensions. Electrodes used to estimate the ERP amplitudes are marked using black squares on their respective topographic distributions. Data in the bar charts are expressed as Mean ± SEM. ns: p > 0.05, *p < 0.05, **p < 0.01, ***p < 0.001.

N1

The N1 amplitudes were significantly modulated by the main effect of “emotion” [F(2, 57) = 14.24, p < 0.001]. Negative pictures (−2.91 ± 0.35 μV) elicited larger N1 amplitudes than did positive (−2.01 ± 0.34 μV, p < 0.001) and neutral (−2.25 ± 0.35 μV, p < 0.001) pictures. However, no significant difference was observed in N1 amplitudes elicited by the positive and neutral pictures (p = 0.190).

P2

The P2 amplitudes were significantly modulated by the main effect of “sociality” [F(1, 58) = 5.09, p = 0.028]. Non-social pictures (7.32 ± 0.63 μV) elicited larger P2 amplitudes than social pictures (6.87 ± 0.64 μV).

N2

The N2 amplitudes were significantly modulated by the main effects of “sociality” [F(1, 58) = 12.22, p = 0.001] and “emotion” [F(2, 57) = 26.06, p < 0.001]. Social pictures (−9.77 ± 0.71 μV) elicited larger N2 amplitudes than non-social pictures (−8.78 ± 0.63 μV). Negative pictures (−9.98 ± 0.65 μV) elicited larger N2 amplitudes than positive (−8.40 ± 0.67 μV, p < 0.001) and neutral pictures (−9.45 ± 0.70 μV, p = 0.011). In addition, neutral pictures elicited larger N2 amplitudes than positive pictures (p < 0.001).

P3

The P3 amplitudes were significantly modulated by the main effects of “sociality” [F(1, 58) = 6.49, p = 0.014] and “emotion” [F(2, 57) = 4.86, p = 0.011]. Social pictures (7.91 ± 0.66 μV) elicited larger P3 amplitudes than non-social pictures (7.27 ± 0.64 μV). Positive pictures (8.07 ± 0.58 μV) elicited larger P3 amplitudes than negative (7.40 ± 0.68 μV, p = 0.011) and neutral (7.29 ± 0.69 μV, p = 0.013) pictures. However, no significant difference was observed in P3 amplitudes elicited by the negative and neutral pictures (p = 0.654).

Late Positive Potential

The LPP amplitudes were significantly modulated by the main effects of “sociality” [F(1, 58) = 4.34, p = 0.042] and “emotion” [F(2, 57) = 12.22, p = 0.001]. Social pictures (6.77 ± 0.72 μV) elicited larger LPP amplitudes than non-social pictures (6.18 ± 0.69 μV). Positive pictures (7.51 ± 0.59 μV) elicited larger LPP amplitudes than negative (6.33 ± 0.76 μV, p = 0.003) and neutral (5.69 ± 0.78 μV, p < 0.001) pictures. However, no significant difference was observed in LPP amplitudes elicited by the negative and neutral pictures (p = 0.064).

Importantly, LPP amplitudes were modulated by the interaction of “emotion” × “sociality” × “group” [F(2, 57) = 3.15, p = 0.047]. As shown in Figure 4, simple effects analysis indicated that, for social-negative pictures, LPP amplitudes were smaller in the High-AQ group than in the Low-AQ group [High-AQ group: 4.66 ± 1.07 μV, Low-AQ group: 7.98 ± 1.06 μV; F(2, 57) = 4.83, p = 0.032]. However, no significant difference was observed between groups in the other conditions (p > 0.05 for all comparisons).

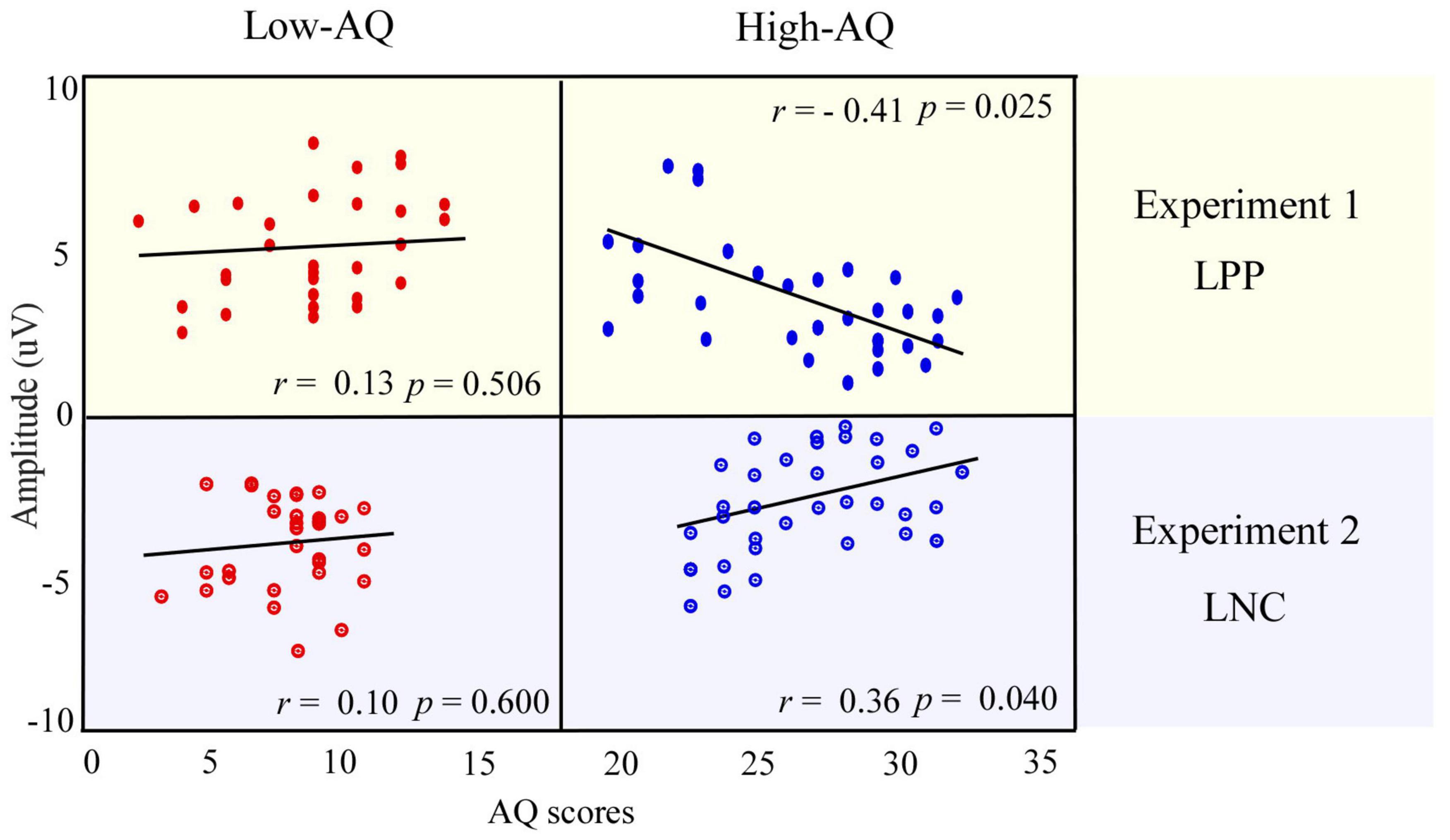

Correlation Between Event-Related Potential Data and Autism-Spectrum Quotient Scores

For social-negative pictures, the LPP amplitudes were negatively correlated with the AQ scores of the High-AQ group (r = −0.41, p = 0.025), but not correlated with the AQ scores of the Low-AQ group (r = 0.13, p = 0.506). No other reliable correlation was found between AQ scores and ERP amplitudes in other conditions (p > 0.05 for all correlations). The correlation results are displayed in Figure 5 (top panel).

Figure 5. Relationship between AQ scores and ERP amplitudes. Top panel: correlations between the LPP amplitudes in response to social-negative pictures in Experiment 1 and the AQ scores of the High-AQ (blue dots) and Low-AQ (red dots) groups. Bottom panel: correlations between the LNC amplitudes in response to social-negative audio recordings in Experiment 2 and the AQ scores of the High-AQ (blue dots) and Low-AQ (red dots) groups. Correlations were calculated using the Pearson correlation coefficient.

Experiment 2

Materials and Methods

Participants

A total of 2,083 undergraduate students aged from 18 to 23 years (Mean = 21.18 years, SD = 1.68 years) from the Chongqing Normal University (who did not participate in Experiment 1) were recruited to complete the Mandarin Version of the AQ questionnaire (Baron-Cohen et al., 2001; Lehnhardt et al., 2013).

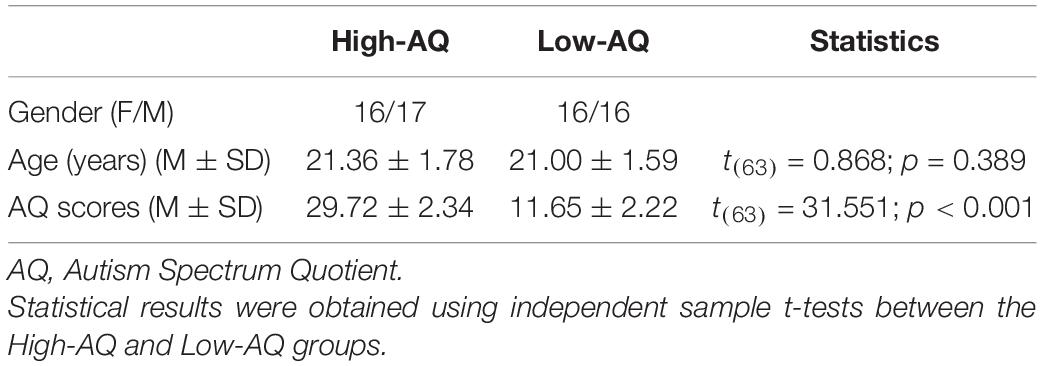

Then, a subset of participants (those exhibiting the top 10% and bottom 10% of AQ scores) (Meng et al., 2019, 2020) were randomly selected and divided into High-AQ (n = 33) and Low-AQ (n = 32) groups. The detailed demographic characteristics of the High-AQ and Low-AQ groups are listed in Table 3. All the participants had normal hearing and no history of neurological or psychiatric disorders. Informed consent was given by all participants before the formal experiment in accordance with the Declaration of Helsinki, and all procedures were approved by the Chongqing Normal University Research Ethics Committee. The procedures were performed in accordance with ethical guidelines and regulations.

Stimuli

A total of 60 negative, neutral, and positive audio recordings were selected from the Montreal Affective Voices database (Belin et al., 2008) and the Chinese Affective Voices database (Liu and Pell, 2012). These audio recordings had either social or non-social dimensions. Based on previous research on the selection of social-emotional and non-social emotional stimuli (Silvers et al., 2012; Vrtička et al., 2013), our study selected 30 social audio recordings of a male or female human voice producing vowels such as /a/, and 30 non-social audio recordings of non-human sounds, such as musical instruments, tools, and animals. These audio recordings were then further classified into six types: social-neutral, non-social neutral, social-positive, non-social positive, social-negative, and non-social negative. These categories are consistent with the types of stimuli in Experiment 1.

All audio recordings were edited to last 1,000 ms, with a mean intensity of 70 dB (Panksepp and Bernatzky, 2002). All audio recordings were evaluated by 40 undergraduate students (20 females). They were asked to evaluate emotional valence (1 = extremely negative, 5 = neutral, 9 = extremely positive) and arousal (1 = not at all aroused, 9 = extremely aroused) produced by the audio recordings by using 9-point Likert scales. They were also instructed to judge whether the audio recordings involved social content (1 = social or 2 = non-social). The detailed descriptive statistics are summarized in Supplementary Tables 3, 4. According to the results of the assessment, social and non-social audio recordings were matched in emotional valence and arousal.

Experimental Procedure

Participants were seated in a quiet room at a comfortable temperature. As in previous studies (Hsu et al., 2020; Zhou et al., 2020), an implicit processing (passive listening) paradigm was employed and participants were asked to attentively listen to the audio recordings (Figure 2, right column). Each audio recording lasted for 1,000 ms with an inter-stimulus interval (ISI) of 1.5–2.5 s, and the order of audio recordings was randomized. The experimental procedure was programmed using the E-Prime 3.0 software (Psychology Software Tools, PA, United States). EEG data were recorded simultaneously. The whole experimental procedure consisted of two blocks, each containing 210 audio recordings.

After each EEG recording session, the participants were instructed to respond as accurately and quickly as possible by pressing a specific key (either “1,” “2,” or “3”) to judge the emotional valence of the audio recording (positive, neutral, or negative). Key-pressing was counterbalanced across participants to control for order effects. After judging the emotional valence, participants were instructed to rate their subjective emotional reactions (1 = very unhappy, 5 = neutral, 9 = very happy) to each audio recording, based on a 9-point Likert scale.

Electroencephalography Recording

Same as Experiment 1.

Electroencephalography Data Analysis

The EEG data pre-processing procedure of Experiment 2 was the same as that used in Experiment 1. Bad trials excluded after visual inspection constituted 5 ± 2.1% of the total number of trials.

Based on the scalp topographies of both single-participant and group-level ERP waveforms, and on previous studies (Cheng et al., 2017; Meng et al., 2020), the dominant ERP components involved in Experiment 2 were identified, including early ERP components (N1 and P2) and the LNC. N1 and P2 were identified as the most negative and most positive deflections, respectively, at 100–300 ms after the onset of the audio recording, with maximum distribution at the frontal-central electrodes. The LNC, distributed over the frontal-central electrodes, was a long-lasting negative wave within a latency interval of 300–700 ms after the onset of the audio recording. Amplitudes of N1 and P2 were both measured at the frontal-central electrodes (Fz, F1, F2, FCz, FC1, FC2, Cz, C1, and C2) and calculated as the average ERP amplitudes within N1 latency intervals of 100–120 ms and P2 latency intervals of 190–210 ms. Amplitudes of the LNC were measured at the frontal-central electrodes (Fz, F1, F2, FCz, FC1, and FC2) and calculated as the average ERP amplitudes within a latency interval of 300–700 ms.
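Identifying N1 and P2 as the most negative and most positive deflections within 100–300 ms can be sketched as a simple peak search on a single averaged waveform. The function name `peak_in_window` and the list-based waveform are hypothetical, and the epoch is assumed to start 200 ms before stimulus onset at a 1,000 Hz sampling rate, as in Experiment 1.

```python
def peak_in_window(erp, window_ms, polarity, sfreq=1000, epoch_start_ms=-200):
    """Return (latency_ms, amplitude) of the extreme deflection in a window.

    `polarity` is "negative" (e.g., N1) or "positive" (e.g., P2).
    `erp` is a list of samples whose first sample lies at `epoch_start_ms`.
    """
    if polarity not in ("negative", "positive"):
        raise ValueError("polarity must be 'negative' or 'positive'")
    start = int((window_ms[0] - epoch_start_ms) * sfreq / 1000)
    stop = int((window_ms[1] - epoch_start_ms) * sfreq / 1000)
    segment = erp[start:stop]
    pick = min if polarity == "negative" else max
    idx = pick(range(len(segment)), key=segment.__getitem__)  # index of the extreme
    latency_ms = window_ms[0] + idx * 1000 / sfreq
    return latency_ms, segment[idx]
```

A peak latency found this way can then guide the choice of a narrow averaging window centered on the component, such as the 100–120 ms and 190–210 ms intervals reported above.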

Statistical Analysis

Sample sizes for Experiment 2 were calculated using G*Power 3 (v3.1.9.2; see text footnote 1) for a repeated measures ANOVA, within-between (F test), with a desired power of 99%, a 1% significance level, and an effect size of 0.25 (calculated from the interactive effect of results).

In Experiment 2, amplitudes of dominant ERP components (N1, P2, and LNC) and behavioral data (ACCs, RTs, and subjective emotional reactions) were compared using a three-way mixed-design ANOVA, with within-participant factors of “emotion” (positive, neutral, and negative) and “sociality” (social and non-social), as well as the between-participants factor of “group” (High-AQ and Low-AQ). If the interaction between the three factors was significant, simple effect analysis was performed between groups for each condition and reported in the results. Other detailed interaction effects are presented in the Experiment 2 section of the Supplementary Results.

In addition, to investigate the relationship between the neural responses and autistic traits, a Pearson correlation was calculated between participants’ AQ scores and the amplitudes of the ERP components (N1, P2, and LNC) in Experiment 2.

Results

Behavioral Data

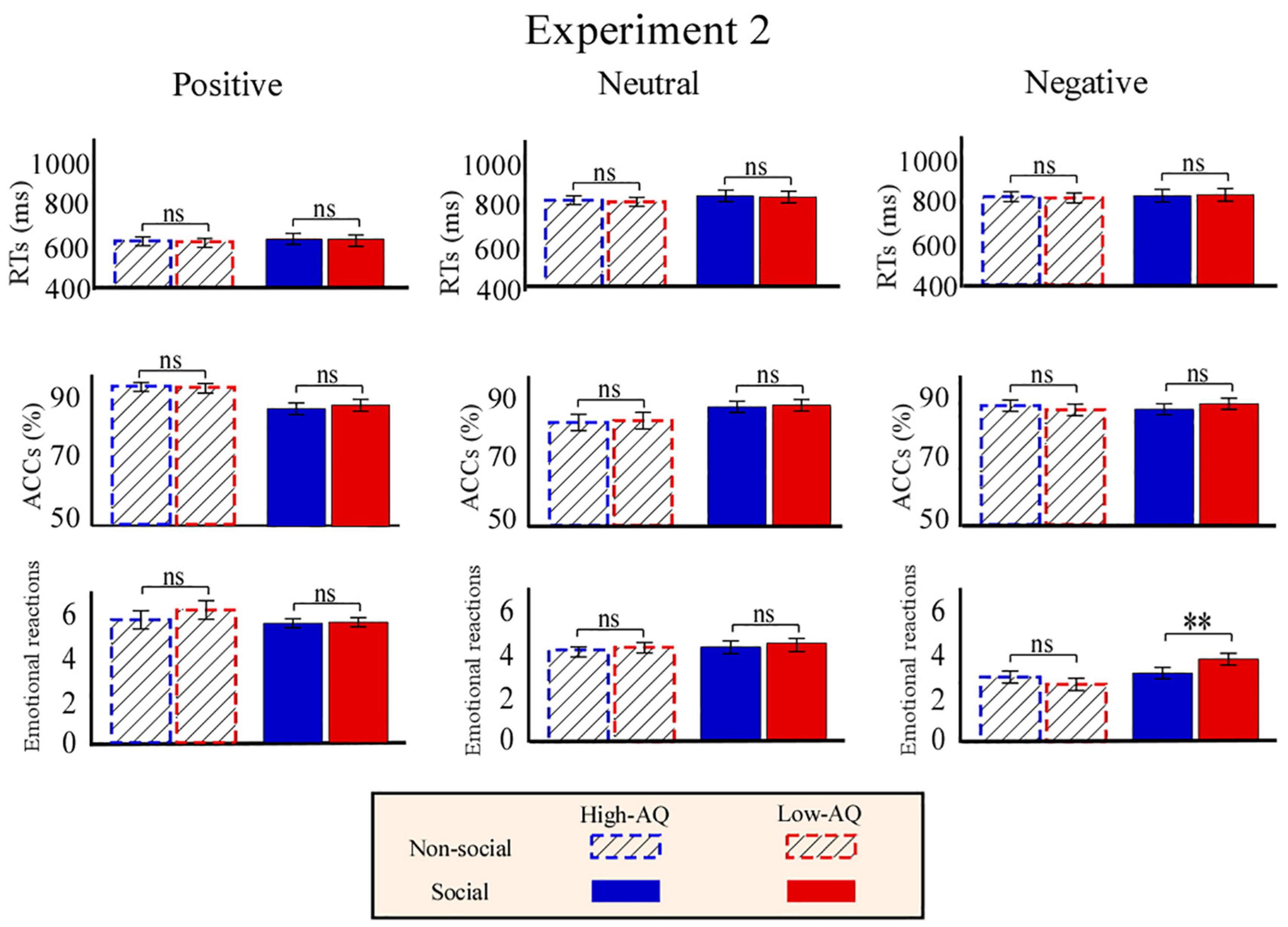

As shown in Figure 6, RTs were modulated by the main effect of “sociality” [F(1, 63) = 11.27, p = 0.001], with participants displaying longer RTs toward the social audio recordings (633.15 ± 12.66 ms) than toward the non-social audio recordings (606.71 ± 9.67 ms).

Figure 6. Behavioral results from Experiment 2. Bar charts show responses of High-AQ (blue) and Low-AQ (red) groups to positive (left column), neutral (middle column), and negative (right column) audio recordings with social (solid bar) or non-social (dotted bar) dimensions. RTs, ACCs, and emotional reactions are shown in the top, middle, and bottom panels, respectively. Data in the bar charts are expressed as Mean ± SEM. ns: p > 0.05, *p < 0.05, **p < 0.01, ***p < 0.001.

Accuracies were modulated by the main effect of “emotion” [F(2, 62) = 3.19, p = 0.046]. Post hoc comparisons showed that participants displayed higher ACCs toward the positive audio recordings (87.9 ± 1.0%) than toward the negative audio recordings (81.0 ± 1.9%, p = 0.047).

Emotional reactions were modulated by the main effects of “sociality” [F(1, 63) = 5.62, p = 0.021] and “emotion” [F(2, 62) = 543.77, p < 0.001]. Participants felt more negative toward the social audio recordings (5.05 ± 0.05) than toward the non-social audio recordings (4.89 ± 0.08). Participants felt more negative toward the negative audio recordings (3.10 ± 0.08) than toward the positive (7.03 ± 0.12, p < 0.001) and neutral (4.77 ± 0.05, p < 0.001) audio recordings, and felt more negative toward the neutral audio recordings than toward the positive audio recordings (p < 0.001). Importantly, emotional reactions were modulated by the interaction of “emotion” × “sociality” × “group” [F(2, 62) = 8.21, p = 0.001]. Simple effects analysis indicated that for social-negative audio recordings, the High-AQ group felt more negative than the Low-AQ group [High-AQ: 3.14 ± 0.14, Low-AQ: 3.81 ± 0.13; F(2, 62) = 12.57, p = 0.001]. However, there was no group difference observed in the other conditions (p > 0.05 for all comparisons), as shown in Figure 6.

Event-Related Potential Data

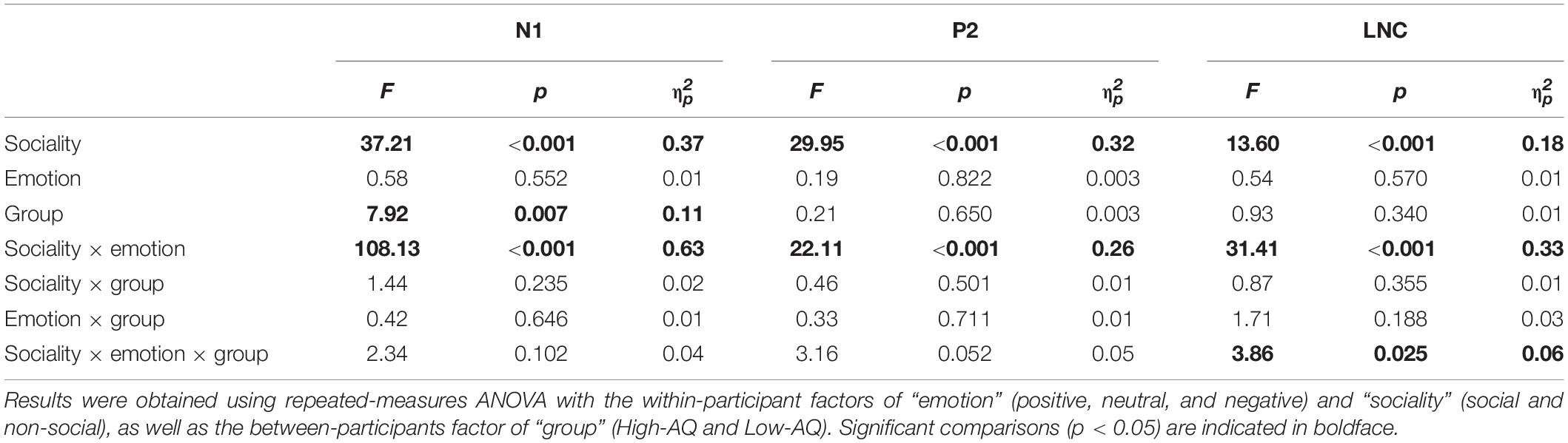

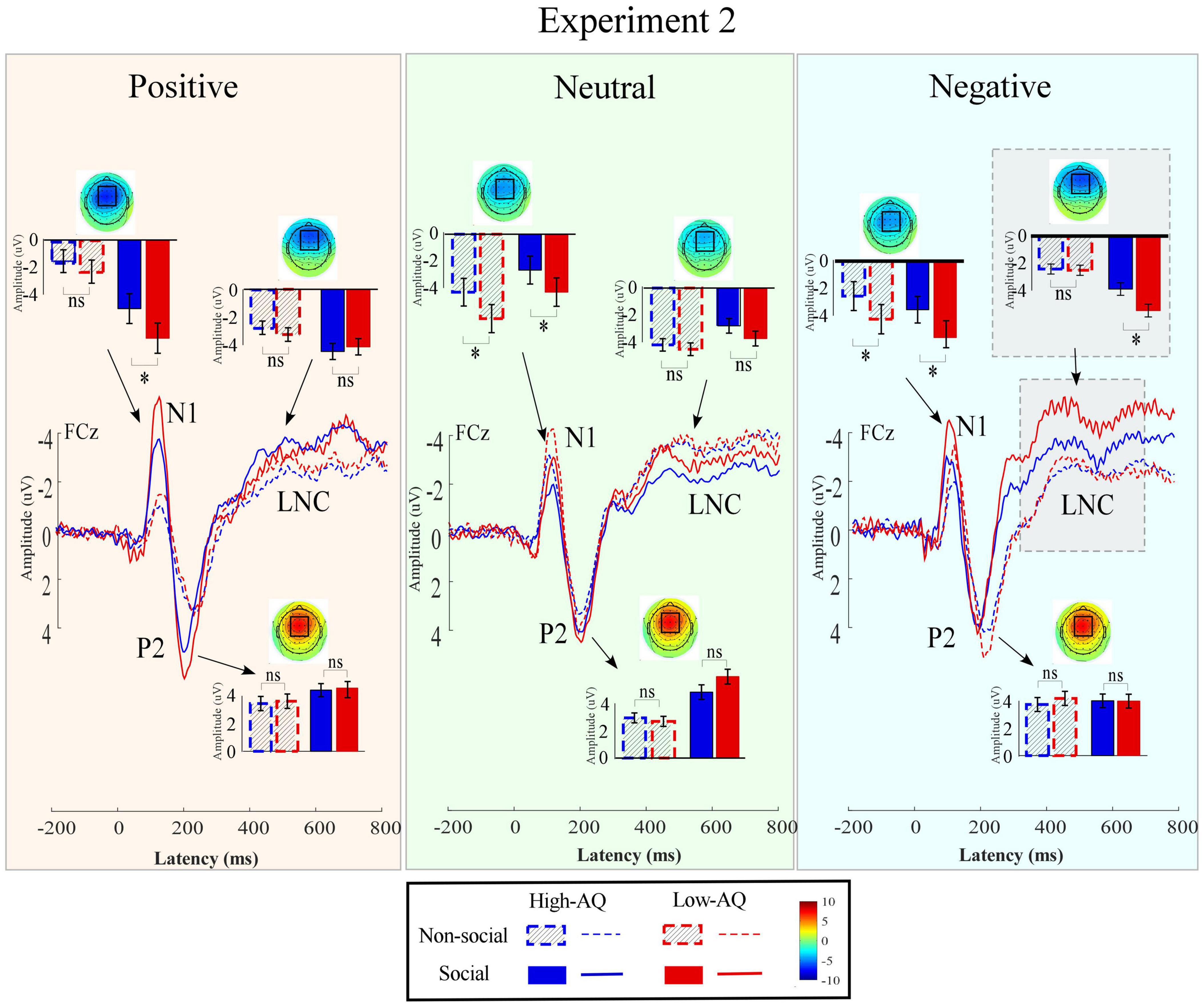

Amplitudes of dominant ERP components in Experiment 2 were compared by a three-way mixed-design ANOVA. The relevant results are shown in Figure 7 and Table 4.

Figure 7. Event-related potential waveforms, scalp topography distributions, and bar charts for Experiment 2. ERP waveforms exhibited by the High-AQ (blue) and Low-AQ (red) groups while passively listening to positive (left column), neutral (middle column), and negative (right column) audio recordings with social (solid) or non-social (dotted) dimensions. Electrodes used to estimate the mean ERP amplitudes are marked using black squares on their respective topographic distributions. Data in the bar charts are expressed as Mean ± SEM. ns: p > 0.05, *p < 0.05, **p < 0.01, ***p < 0.001.

N1

The N1 amplitudes were modulated by the main effects of “sociality” (F1,63 = 37.21, p < 0.001) and “group” (F1,63 = 7.92, p = 0.007). Social audio recordings elicited larger N1 amplitudes than non-social audio recordings (social: −3.50 ± 0.25 μV, non-social: −2.57 ± 0.22 μV). N1 amplitudes in the High-AQ group were smaller than in the Low-AQ group (High-AQ: −2.41 ± 0.31 μV, Low-AQ: −3.66 ± 0.32 μV).

P2

The P2 amplitudes were modulated by the main effect of “sociality” (F1,63 = 29.95, p < 0.001), with social audio recordings eliciting larger P2 amplitudes than non-social audio recordings (social: 4.22 ± 0.30 μV, non-social: 3.14 ± 0.27 μV).

Late Negative Component

The LNC amplitudes were modulated by the main effect of “sociality” (F1,63 = 13.60, p < 0.001), with social audio recordings eliciting larger LNC amplitudes than non-social audio recordings (social: −2.92 ± 0.23 μV, non-social: −2.31 ± 0.18 μV). Importantly, the LNC amplitudes were significantly modulated by the interaction effect of “emotion” × “sociality” × “group” (F2,62 = 3.86, p = 0.025). Simple effects analysis indicated that for social-negative audio recordings, LNC amplitudes in the High-AQ group were smaller than in the Low-AQ group (High-AQ: −2.93 ± 0.34 μV, Low-AQ: −4.12 ± 0.35 μV; F2,62 = 5.77, p = 0.019). However, no group difference was observed in the other conditions (p > 0.05 for all comparisons).

Correlation Between Event-Related Potential Data and Autism-Spectrum Quotient Scores

The LNC amplitudes elicited by social-negative audio recordings were positively correlated with the AQ scores of the High-AQ group (r = 0.36, p = 0.040) but not with those of the Low-AQ group (r = 0.10, p = 0.600), as seen in Figure 5 (bottom panel). Because the LNC is a negative-going component, this positive correlation means that higher AQ scores were associated with smaller (less negative) LNC amplitudes. No other reliable correlations between AQ scores and ERP amplitudes were found (p > 0.05).
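To make the direction of such a correlation concrete, the following Python sketch computes a Pearson coefficient and the corresponding t statistic (n − 2 degrees of freedom) for a negative-going component. It is only an illustration: the helper names `pearson_r` and `t_statistic` and all numbers in it are hypothetical and are not taken from the study, and the source does not state which software computed the reported correlations.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length samples."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two samples of equal length with n >= 3")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    dx = [a - mean_x for a in x]
    dy = [b - mean_y for b in y]
    cross = sum(a * b for a, b in zip(dx, dy))
    return cross / math.sqrt(sum(a * a for a in dx) * sum(b * b for b in dy))


def t_statistic(r, n):
    """t statistic for testing r against zero (n - 2 degrees of freedom).

    Undefined for |r| = 1, where the denominator is zero.
    """
    return r * math.sqrt(n - 2) / math.sqrt(1 - r * r)


# Hypothetical values, NOT data from the study
aq_scores = [30, 32, 34, 36, 38]
lnc_amplitudes = [-5.0, -6.0, -3.0, -4.0, -2.0]  # μV; less negative = smaller LNC

r = pearson_r(aq_scores, lnc_amplitudes)
t = t_statistic(r, len(aq_scores))
print(f"r = {r:.2f}, t({len(aq_scores) - 2}) = {t:.2f}")
```

In this toy sample the amplitudes become less negative as the scores rise, so r is positive even though the component itself shrinks in magnitude, which is the same reading applied to the LNC result.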

Discussion

The present study investigated whether High-AQ individuals exhibit altered perception of social-emotional stimuli. The behavioral and neural responses to social-negative stimuli differed between groups in both the visual and auditory modalities. More negative emotional reactions and smaller late ERP components (LPP in Experiment 1 and LNC in Experiment 2) in response to both social-negative pictures and social-negative audio recordings were found in the High-AQ group as compared to the Low-AQ group. Thus, the present study suggests that individuals with autistic traits may exhibit altered social perception in response to social-negative stimuli.

Experiment 1

In agreement with previous studies (Kissler and Bromberek-Dyzman, 2021), the results of Experiment 1 showed that the N1 amplitudes elicited by the positive and negative pictures were larger than those elicited by the neutral pictures. Since the N1 component represents the early sensory and attention processing of visual information (Huang et al., 2017; Xia et al., 2017; Oeur and Margulies, 2020; Zhao et al., 2020a), the present results suggest that early sensory and attention processing resources were activated by emotional pictures in Experiment 1. In addition, in agreement with previous studies showing that social stimuli elicited larger amplitudes than non-social stimuli in the N2 time window in the visual modality (Fishman et al., 2011), the present study found that social pictures elicited larger N2 amplitudes than non-social pictures. The N2 amplitudes evoked by visual stimuli have been suggested to be an effective index of attention (Ort et al., 2019), with a higher level of attention to stimuli inducing a larger N2 amplitude in the visual modality (Orlandi and Proverbio, 2019). Thus, our findings regarding N2 in Experiment 1 suggest that social pictures captured more attention than non-social pictures.

Also in agreement with previous studies (Calbi et al., 2019), LPP amplitudes in Experiment 1 were modulated by “emotion,” with positive pictures eliciting larger amplitudes than neutral pictures. As the LPP over the posterior parietal cortical area is relevant to the conscious evaluation of emotional stimuli (Leventon et al., 2014), and LPP amplitudes are positively correlated with the valence of the emotional stimuli (Suo et al., 2017), it appears that, in the present study design, the mental processing resources for emotional valence evaluation were recruited under passive viewing conditions, and that more of these resources were involved in the response to positive pictures than to neutral pictures.

More importantly, LPP amplitudes were modulated by the interaction of “sociality,” “emotion,” and “group.” That is to say, for the social-negative pictures, LPP amplitudes in the High-AQ group were lower than those in the Low-AQ group, whereas no group difference was found for other kinds of pictures (i.e., social-neutral, non-social neutral, social-positive, non-social positive, and non-social negative). In addition, LPP amplitudes in response to social-negative pictures were correlated with the AQ scores of the participants in the High-AQ group, such that the higher the AQ scores of the participants, the smaller the LPP amplitudes elicited in response to social-negative pictures. This suggests that the LPP amplitudes evoked by social-negative pictures were sensitive to the degree of autistic traits in the High-AQ group. Thus, these results suggest that for the High-AQ group, fewer mental processing resources for emotional valence evaluation were involved in the response to social-negative pictures. This supports our hypothesis that altered processing of social-negative stimuli in the visual modality can be found in individuals with autistic traits.

Experiment 2

In agreement with prior studies (Solberg Økland et al., 2019; Hosaka et al., 2021), the N1 amplitudes elicited by the social audio recordings (human voices) in Experiment 2 were larger than those elicited by the non-social audio recordings (non-human sounds). Since the N1 component in the auditory modality is an index of early auditory processing (Zhao et al., 2016), and focused auditory attention could result in larger N1 amplitudes in response to audio recordings (Tumber et al., 2014), our findings suggest that social audio recordings capture more attention than non-social audio recordings. In addition, our results showed that the N1 amplitudes were modulated by the main effect of “group,” in that the N1 amplitudes of the High-AQ group were smaller than those of the Low-AQ group, which is in agreement with prior studies of individuals with autistic traits (Meng et al., 2019) and ASD (Ruta et al., 2017). This suggests that the High-AQ group’s attention to audio recordings in the implicit processing (passive listening) paradigm was less than that of the Low-AQ group.

In agreement with prior ERP studies (Cheng et al., 2017; Keuper et al., 2018), the LNC amplitudes in Experiment 2 were modulated by “emotion,” with positive and negative audio recordings eliciting larger amplitudes in the LNC time window than neutral audio recordings. As LNC over the posterior parietal cortical area is relevant to the conscious cognition of emotional stimuli (Leventon et al., 2014), and LNC amplitudes are positively correlated with the evaluation of the subjective emotional valence of emotional stimuli (Suo et al., 2017), it appears that more mental processing resources were recruited for evaluation of positive and negative audio recordings as compared to neutral audio recordings.

Importantly, LNC amplitudes were modulated by the interaction between “sociality,” “emotion,” and “group.” For the social-negative audio recordings, LNC amplitudes in the High-AQ group were lower than those in the Low-AQ group, whereas no difference was found between the two groups for other kinds of audio recordings. Thus, our results suggest that the High-AQ group exhibited impaired mental processing for evaluation of social-negative audio recordings. In addition, LNC amplitudes in response to social-negative audio recordings were correlated with the AQ scores of participants in the High-AQ group: the higher the AQ score, the smaller the LNC amplitudes in response to social-negative audio recordings. These results suggest that the LNC amplitudes elicited in response to social-negative audio recordings were sensitive to the magnitude of autistic traits, supporting our hypothesis that altered perception of social-negative stimuli in the auditory modality can be found in individuals with autistic traits.

Altered Social-Emotional Processing by Individuals With Autistic Traits

A key result of this study is that both experiments found significant behavioral differences between the High-AQ and Low-AQ groups in response to social-negative stimuli: in both, the High-AQ group exhibited more negative emotional reactions to the social-negative stimuli than did the Low-AQ group. In addition, ERP results showed that LPP amplitudes elicited in response to social-negative pictures (Experiment 1) and LNC amplitudes elicited in response to social-negative audio recordings (Experiment 2) were lower in the High-AQ group than in the Low-AQ group. Both LPP and LNC amplitudes were correlated with the AQ scores of the High-AQ group only when they were elicited by social-negative stimuli.

These results support our hypothesis that individuals with autistic traits have altered processing of social-negative stimuli. Prior studies have suggested that social emotions have greater emotional significance than non-social emotions (Tam, 2012), which could activate individuals’ intrinsic motivation to respond to social emotions (Gourisankar et al., 2018). According to the social motivational theory of ASD (Scheggi et al., 2020), one possible explanation for these findings could be that individuals with autistic traits lack the intrinsic motivation to process socially significant emotional stimuli. In addition, autistic individuals exhibit enhanced perception, attention, and memory capabilities, which may cause their experience of the world to become too intense and even aversive (Markram et al., 2007), causing many of the key autistic symptoms, such as disorders of social interaction (Stavropoulos and Carver, 2018) and perception (Cascio et al., 2015). Therefore, the atypical perception of social-negative emotions by individuals with autistic traits may be the result of their overly intense and avoidant processing of social-negative stimuli in comparison to other types of social-emotional or non-social emotional stimuli. The social-negative stimuli may make individuals with autistic traits experience emotions that are too intense and thus contribute to a decline in their intrinsic motivation.

Despite these potential implications, several limitations of the present study should be noted. First, although the influence of autistic traits on reactions to social or non-social emotional stimuli was assessed in an experimental setting, whether and how these reactions are related to real-world behavior requires further investigation. Second, the present study used a passive listening/viewing paradigm. Filler trials were not used to evaluate whether participants focused sufficiently on the emotional stimuli, and thus further investigations should take this into account.

Conclusion

This study investigated the influence of autistic traits on the perception of social-emotional stimuli. ERPs were used to measure the neural reactions that were elicited by social-emotional stimuli in both the High-AQ and Low-AQ groups. More negative emotional reactions and smaller late ERP component amplitudes (LPP of pictures and LNC of audio recordings) were found in the High-AQ group than in the Low-AQ group in response to the social-negative stimuli. In addition, LPP or LNC amplitudes in response to social-negative stimuli were correlated with the AQ scores of the High-AQ group. These results suggest that individuals with autistic traits exhibit altered behavioral and neural processing of social-negative emotional stimuli.

Data Availability Statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found in the article/Supplementary Material.

Ethics Statement

The studies involving human participants were reviewed and approved by the Chongqing Normal University Research Ethics Committee. The patients/participants provided their written informed consent to participate in this study.

Author Contributions

DY: conceptualization, methodology, software, data curation, and writing—original draft preparation. HT: methodology, software, and writing—original draft preparation. HG: data curation and writing—original draft preparation. ZL: supervision. YH: resources. JM: conceptualization, methodology, funding acquisition, and writing—reviewing and editing. All authors contributed to the article and approved the submitted version.

Funding

This work was funded by the Youth Foundation of Social Science and Humanity, China Ministry of Education (19YJC190016).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

We are grateful to Lingxiao Li and Jin Jiang for their support. We thank all participants who took part in this study.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2022.746192/full#supplementary-material

Abbreviations

ASD, autism spectrum disorder; AQ, autism-spectrum quotient; ERP, event-related potential; DSM-5, Diagnostic and Statistical Manual of Mental Disorders (5th ed.); IAPS, International Affective Picture System; CAPS, Chinese Affective Picture System; ISI, inter-stimulus interval; ANOVA, analysis of variance; EEG, electroencephalography; ACCs, accuracies; RTs, reaction times; EOG, electro-oculogram; ICA, independent component analysis.

References

Alaerts, K., Woolley, D. G., Steyaert, J., Di Martino, A., Swinnen, S. P., and Wenderoth, N. (2013). Underconnectivity of the superior temporal sulcus predicts emotion recognition deficits in autism. Soc. Cogn. Affect. Neurosci. 9, 1589–1600. doi: 10.1093/scan/nst156

American Psychiatric Association (2013). Diagnostic and statistical manual of mental disorders, 5th ed (DSM-5). Arlington: American Psychiatric Association.

Azuma, R., Deeley, Q., Campbell, L. E., Daly, E. M., Giampietro, V., Brammer, M. J., et al. (2015). An fMRI study of facial emotion processing in children and adolescents with 22q11.2 deletion syndrome. J. Neurodev. Disord. 7:1. doi: 10.1186/1866-1955-7-1

Baron-Cohen, S., Wheelwright, S., Skinner, R., Martin, J., and Clubley, E. (2001). The Autism-Spectrum Quotient (AQ): evidence from Asperger syndrome/high-functioning autism, males and females, scientists and mathematicians. J. Autism Dev. Disord. 31, 5–17. doi: 10.1023/A:1005653411471

Becker, C., Caterer, E., Chouinard, P. A., and Laycock, R. (2021). Alterations in rapid social evaluations in individuals with high autism traits. J. Autism Dev. Disord. 2021:47958. doi: 10.1007/s10803-020-04795-8

Belin, P., Fillion-Bilodeau, S., and Gosselin, F. (2008). The Montreal Affective Voices: a validated set of nonverbal affect bursts for research on auditory affective processing. Behav. Res. Methods 40, 531–539. doi: 10.3758/BRM.40.2.531

Benjamini, Y., and Hochberg, Y. (1995). Controlling the false discovery rate: a practical and powerful approach to multiple testing. J. R. Stat. Soc. Ser. B: Methodol. 57, 289–300. doi: 10.1111/j.2517-6161.1995.tb02031.x

Calbi, M., Siri, F., Heimann, K., Barratt, D., Gallese, V., Kolesnikov, A., et al. (2019). How context influences the interpretation of facial expressions: a source localization high-density EEG study on the “Kuleshov effect”. Sci. Rep. 9:2107. doi: 10.1038/s41598-018-37786-y

Cascio, C. J., Gu, C., Schauder, K. B., Key, A. P., and Yoder, P. (2015). Somatosensory event-related potentials and association with tactile behavioral responsiveness patterns in children with ASD. Brain Topograp. 28, 895–903. doi: 10.1007/s10548-015-0439-1

Chawarska, K., Macari, S., and Shic, F. (2013). Decreased spontaneous attention to social scenes in 6-month-old infants later diagnosed with autism spectrum disorders. Biol. Psychiatry 74, 195–203. doi: 10.1016/j.biopsych.2012.11.022

Cheng, J., Jiao, C., Luo, Y., and Cui, F. (2017). Music induced happy mood suppresses the neural responses to other’s pain: evidences from an ERP study. Sci. Rep. 7:13054. doi: 10.1038/s41598-017-13386-0

Chevallier, C., Kohls, G., Troiani, V., Brodkin, E. S., and Schultz, R. T. (2012). The social motivation theory of autism. Trends Cogn. Sci. 16, 231–239. doi: 10.1016/j.tics.2012.02.007

Chita-Tegmark, M. (2016). Social attention in ASD: A review and meta-analysis of eye-tracking studies. Res. Dev. Disabil. 48, 79–93. doi: 10.1016/j.ridd.2015.10.011

De Crescenzo, F., Postorino, V., Siracusano, M., Riccioni, A., Armando, M., Curatolo, P., et al. (2019). Autistic symptoms in schizophrenia spectrum disorders: a systematic review and meta-analysis. Front. Psychiatry 10:78. doi: 10.3389/fpsyt.2019.00078

De Stefani, E., and De Marco, D. (2019). Language, gesture, and emotional communication: an embodied view of social interaction. Front. Psychol. 10:2063. doi: 10.3389/fpsyg.2019.02063

Delorme, A., and Makeig, S. (2004). EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J. Neurosci. Methods 134, 9–21. doi: 10.1016/j.jneumeth.2003.10.009

Dubey, I., Ropar, D., and Hamilton, A. F. D. C. (2015). Measuring the value of social engagement in adults with and without autism. Mol. Autism 6:35. doi: 10.1186/s13229-015-0031-2

Fishman, I., Ng, R., and Bellugi, U. (2011). Do extraverts process social stimuli differently from introverts? Cogn. Neurosci. 2, 67–73. doi: 10.1080/17588928.2010.527434

Fogelson, N., Li, L., Diaz-Brage, P., Amatriain-Fernandez, S., and Valle-Inclan, F. (2019). Altered predictive contextual processing of emotional faces versus abstract stimuli in adults with Autism Spectrum Disorder. Clin. Neurophysiol. 130, 963–975. doi: 10.1016/j.clinph.2019.03.031

Foti, D., and Hajcak, G. (2008). Deconstructing reappraisal: descriptions preceding arousing pictures modulate the subsequent neural response. J. Cogn. Neurosci. 20, 977–988. doi: 10.1162/jocn.2008.20066

Gourisankar, A., Eisenstein, S. A., Trapp, N. T., Koller, J. M., Campbell, M. C., Ushe, M., et al. (2018). Mapping movement, mood, motivation and mentation in the subthalamic nucleus. Royal Soc. Open Sci. 5:171177. doi: 10.1098/rsos.171177

Hajcak, G., MacNamara, A., and Olvet, D. M. (2010). Event-related potentials, emotion, and emotion regulation: an integrative review. Dev. Neuropsychol. 35, 129–155. doi: 10.1080/87565640903526504

Honisch, J. J., Mane, P., Golan, O., and Chakrabarti, B. (2021). Keeping in time with social and non-social stimuli: Synchronisation with auditory, visual, and audio-visual cues. Sci. Rep. 11:8805. doi: 10.1038/s41598-021-88112-y

Hosaka, T., Kimura, M., and Yotsumoto, Y. (2021). Neural representations of own-voice in the human auditory cortex. Sci. Rep. 11:591. doi: 10.1038/s41598-020-80095-6

Hsu, C.-T., Sato, W., and Yoshikawa, S. (2020). Enhanced emotional and motor responses to live versus videotaped dynamic facial expressions. Sci. Rep. 10:16825. doi: 10.1038/s41598-020-73826-2

Hu, L., and Iannetti, G. D. (2019). Neural indicators of perceptual variability of pain across species. Proc. Nat. Acad. Sci. 116:1782. doi: 10.1073/pnas.1812499116

Huang, C.-Y., Lin, L. L., and Hwang, I.-S. (2017). Age-related differences in reorganization of functional connectivity for a dual task with increasing postural destabilization. Front. Aging Neurosci. 9:96. doi: 10.3389/fnagi.2017.00096

Jung, T. P., Makeig, S., Westerfield, M., Townsend, J., Courchesne, E., and Sejnowski, T. J. (2001). Analysis and visualization of single-trial event-related potentials. Human Brain Mapp. 14, 166–185. doi: 10.1002/hbm.1050

Kerr-Gaffney, J., Mason, L., Jones, E., Hayward, H., Ahmad, J., Harrison, A., et al. (2020). Emotion recognition abilities in adults with anorexia nervosa are associated with autistic traits. J. Clin. Med. 9:1057. doi: 10.3390/jcm9041057

Keuper, K., Terrighena, E. L., Chan, C. C. H., Junghoefer, M., and Lee, T. M. C. (2018). How the dorsolateral prefrontal cortex controls affective processing in absence of visual awareness - insights from a combined EEG-rTMS Study. Front. Human Neurosci. 12:412. doi: 10.3389/fnhum.2018.00412

Kissler, J., and Bromberek-Dyzman, K. (2021). Mood induction differently affects early neural correlates of evaluative word processing in L1 and L2. Front. Psychol. 11:588902. doi: 10.3389/fpsyg.2020.588902

Kliemann, D., Dziobek, I., Hatri, A., Steimke, R., and Heekeren, H. R. (2010). Atypical reflexive gaze patterns on emotional faces in autism spectrum disorders. J. Neurosci. 30:12281. doi: 10.1523/JNEUROSCI.0688-10.2010

Lang, P. J., and Bradley, M. M. (2007). “The International Affective Picture System (IAPS) in the study of emotion and attention,” in Handbook of emotion elicitation and assessment, eds J. A. Coan and J. J. B. Allen (Oxford: Oxford University Press), 29–46.

Lang, P. J., Bradley, M. M., and Cuthbert, B. N. (2005). International Affective Picture System (IAPS): Affective Rating of Pictures and Instruction Manual. Technical Report A-6. Gainesville, FL: University of Florida.

Lazar, S. M., Evans, D. W., Myers, S. M., Moreno-De Luca, A., and Moore, G. J. (2014). Social cognition and neural substrates of face perception: Implications for neurodevelopmental and neuropsychiatric disorders. Behav. Brain Res. 263, 1–8. doi: 10.1016/j.bbr.2014.01.010

Lehnhardt, F.-G., Gawronski, A., Pfeiffer, K., Kockler, H., Schilbach, L., and Vogeley, K. (2013). The investigation and differential diagnosis of Asperger syndrome in adults. Deutsches Arzteblatt Int. 110, 755–763. doi: 10.3238/arztebl.2013.0755

Lepistö, T., Kujala, T., Vanhala, R., Alku, P., Huotilainen, M., and Näätänen, R. (2005). The discrimination of and orienting to speech and non-speech sounds in children with autism. Brain Res. 1066, 147–157. doi: 10.1016/j.brainres.2005.10.052

Leventon, J. S., Stevens, J. S., and Bauer, P. J. (2014). Development in the neurophysiology of emotion processing and memory in school-age children. Dev. Cogn. Neurosci. 10, 21–33. doi: 10.1016/j.dcn.2014.07.007

Li, X., Zhang, Y., Xiang, B., and Meng, J. (2019). Differences between empathy for face and body pain: cognitive and neural responses. Brain Sci. Adv. 5, 256–264. doi: 10.26599/BSA.2019.9050022

Liu, P., and Pell, M. D. (2012). Recognizing vocal emotions in Mandarin Chinese: A validated database of Chinese vocal emotional stimuli. Behav. Res. Methods 44, 1042–1051. doi: 10.3758/s13428-012-0203-3

Liu, X., Bautista, J., Liu, E., and Zikopoulos, B. (2020). Imbalance of laminar-specific excitatory and inhibitory circuits of the orbitofrontal cortex in autism. Mol. Autism 11:83. doi: 10.1186/s13229-020-00390-x

Lu, B., Hui, M., and Yu-Xia, H. (2005). The development of native Chinese affective picture system–a pretest in 46 college students. Chinese Mental Health J. 19, 719–722.

Markram, H., Rinaldi, T., and Markram, K. (2007). The intense world syndrome–an alternative hypothesis for autism. Front. Neurosci. 1, 77–96. doi: 10.3389/neuro.01.1.1.006.2007

McPartland, J. C., Crowley, M. J., Perszyk, D. R., Mukerji, C. E., Naples, A. J., Wu, J., et al. (2012). Preserved reward outcome processing in ASD as revealed by event-related potentials. J. Neurodev. Disord. 4:16. doi: 10.1186/1866-1955-4-16

Mendelsohn, A. L., Cates, C. B., Weisleder, A., Berkule Johnson, S., Seery, A. M., Canfield, C. F., et al. (2018). Reading aloud, play, and social-emotional development. Pediatrics 141:e20173393. doi: 10.1542/peds.2017-3393

Meng, J., Li, Z., and Shen, L. (2020). Altered neuronal habituation to hearing others’ pain in adults with autistic traits. Sci. Rep. 10:15019. doi: 10.1038/s41598-020-72217-x

Meng, J., Shen, L., Li, Z., and Peng, W. (2019). Top-down effects on empathy for pain in adults with autistic traits. Sci. Rep. 9:8022. doi: 10.1038/s41598-019-44400-2

Oeur, R. A., and Margulies, S. S. (2020). Target detection in healthy 4-week old piglets from a passive two-tone auditory oddball paradigm. BMC Neurosci. 21:52. doi: 10.1186/s12868-020-00601-4

Ogawa, S., Iriguchi, M., Lee, Y.-A., Yoshikawa, S., and Goto, Y. (2019). Atypical Social Rank Recognition in Autism Spectrum Disorder. Sci. Rep. 9:15657. doi: 10.1038/s41598-019-52211-8

Orlandi, A., and Proverbio, A. M. (2019). Bilateral engagement of the occipito-temporal cortex in response to dance kinematics in experts. Sci. Rep. 9:1000. doi: 10.1038/s41598-018-37876-x

Ort, E., Fahrenfort, J. J., Ten Cate, T., Eimer, M., and Olivers, C. N. (2019). Humans can efficiently look for but not select multiple visual objects. eLife 8:e49130. doi: 10.7554/eLife.49130

Panksepp, J., and Bernatzky, G. (2002). Emotional sounds and the brain: the neuro-affective foundations of musical appreciation. Behav. Proc. 60, 133–155. doi: 10.1016/S0376-6357(02)00080-3

Poljac, E., Poljac, E., and Wagemans, J. (2012). Reduced accuracy and sensitivity in the perception of emotional facial expressions in individuals with high autism spectrum traits. Autism 17, 668–680. doi: 10.1177/1362361312455703

Robertson, C. E., and Baron-Cohen, S. (2017). Sensory perception in autism. Nat. Rev. Neurosci. 18, 671–684. doi: 10.1038/nrn.2017.112

Ruta, L., Famà, F. I., Bernava, G. M., Leonardi, E., Tartarisco, G., Falzone, A., et al. (2017). Reduced preference for social rewards in a novel tablet based task in young children with Autism Spectrum Disorders. Sci. Rep. 7:3329. doi: 10.1038/s41598-017-03615-x

Ruzich, E., Allison, C., Smith, P., Watson, P., Auyeung, B., Ring, H., et al. (2015). Measuring autistic traits in the general population: a systematic review of the Autism-Spectrum Quotient (AQ) in a nonclinical population sample of 6,900 typical adult males and females. Mol. Autism 6:2. doi: 10.1186/2040-2392-6-2

Scheggi, S., Guzzi, F., Braccagni, G., De Montis, M. G., Parenti, M., and Gambarana, C. (2020). Targeting PPARα in the rat valproic acid model of autism: focus on social motivational impairment and sex-related differences. Mol. Autism 11:62. doi: 10.1186/s13229-020-00358-x

Silk, J. B., and House, B. R. (2011). Evolutionary foundations of human prosocial sentiments. Proc. Nat. Acad. Sci. USA 108, 10910–10917. doi: 10.1073/pnas.1100305108

Silvers, J. A., McRae, K., Gabrieli, J. D. E., Gross, J. J., Remy, K. A., and Ochsner, K. N. (2012). Age-related differences in emotional reactivity, regulation, and rejection sensitivity in adolescence. Emotion 12, 1235–1247. doi: 10.1037/a0028297

Sindermann, C., Cooper, A., and Montag, C. (2019). Empathy, autistic tendencies, and systemizing tendencies-relationships between standard self-report measures. Front. Psychiatry 10:307. doi: 10.3389/fpsyt.2019.00307

Solberg Økland, H., Todorović, A., Lüttke, C. S., McQueen, J. M., and de Lange, F. P. (2019). Combined predictive effects of sentential and visual constraints in early audiovisual speech processing. Sci. Rep. 9:7870. doi: 10.1038/s41598-019-44311-2

Stavropoulos, K. M., and Carver, L. J. (2018). Oscillatory rhythm of reward: anticipation and processing of rewards in children with and without autism. Mol. Autism 9:4. doi: 10.1186/s13229-018-0189-5

Sumiya, M., Okamoto, Y., Koike, T., Tanigawa, T., Okazawa, H., Kosaka, H., et al. (2020). Attenuated activation of the anterior rostral medial prefrontal cortex on self-relevant social reward processing in individuals with autism spectrum disorder. NeuroImag. Clin. 26:102249. doi: 10.1016/j.nicl.2020.102249

Suo, T., Liu, L., Chen, C., and Zhang, E. (2017). The functional role of individual-alpha based frontal asymmetry in the evaluation of emotional pictures: evidence from event-related potentials. Front. Psychiatry 8:180. doi: 10.3389/fpsyt.2017.00180

Tam, N. D. (2012). Derivation of the evolution of empathic other-regarding social emotions as compared to non-social self-regarding emotions. BMC Neurosci. 13(Suppl. 1):28. doi: 10.1186/1471-2202-13-S1-P28

Tang, J. S. Y., Chen, N. T. M., Falkmer, M., Bolte, S., and Girdler, S. (2019). Atypical visual processing but comparable levels of emotion recognition in adults with autism during the processing of social scenes. J. Autism Dev. Disord. 49, 4009–4018. doi: 10.1007/s10803-019-04104-y

Tang, W., Bao, C., Xu, L., Zhu, J., Feng, W., Zhang, W., et al. (2019). Depressive symptoms in late pregnancy disrupt attentional processing of negative-positive emotion: an eye-movement study. Front. Psychiatry 10:780. doi: 10.3389/fpsyt.2019.00780

Tsurugizawa, T., Tamada, K., Ono, N., Karakawa, S., Kodama, Y., Debacker, C., et al. (2020). Awake functional MRI detects neural circuit dysfunction in a mouse model of autism. Sci. Adv. 6:eaav4520. doi: 10.1126/sciadv.aav4520

Tumber, A. K., Scheerer, N. E., and Jones, J. A. (2014). Attentional demands influence vocal compensations to pitch errors heard in auditory feedback. PLoS One 9:e109968. doi: 10.1371/journal.pone.0109968

Vrtička, P., Sander, D., and Vuilleumier, P. (2013). Lateralized interactive social content and valence processing within the human amygdala. Front. Human Neurosci. 6:358. doi: 10.3389/fnhum.2012.00358

Xia, L., Gu, R., Zhang, D., and Luo, Y. (2017). Anxious individuals are impulsive decision-makers in the delay discounting task: an ERP Study. Front. Behav. Neurosci. 11:5. doi: 10.3389/fnbeh.2017.00005

Zhao, X., Li, H., Wang, E., Luo, X., Han, C., Cao, Q., et al. (2020a). Neural correlates of working memory deficits in different adult outcomes of ADHD: an event-related potential study. Front. Psychiatry 11:348. doi: 10.3389/fpsyt.2020.00348

Zhao, X., Li, X., Song, Y., Li, C., and Shi, W. (2020b). Autistic traits and emotional experiences in Chinese college students: Mediating role of emotional regulation and sex differences. Res. Autism Spect. Disord. 77:101607. doi: 10.1016/j.rasd.2020.101607

Zhao, Y., Luo, W., Chen, J., Zhang, D., Zhang, L., Xiao, C., et al. (2016). Behavioral and neural correlates of self-referential processing deficits in bipolar disorder. Sci. Rep. 6:24075. doi: 10.1038/srep24075

Zhou, F., Li, J., Zhao, W., Xu, L., Zheng, X., Fu, M., et al. (2020). Empathic pain evoked by sensory and emotional-communicative cues share common and process-specific neural representations. eLife 9:e56929. doi: 10.7554/eLife.56929

Keywords: autism, autistic traits, social emotion, perception, event-related potentials

Citation: Yang D, Tao H, Ge H, Li Z, Hu Y and Meng J (2022) Altered Processing of Social Emotions in Individuals With Autistic Traits. Front. Psychol. 13:746192. doi: 10.3389/fpsyg.2022.746192

Received: 23 July 2021; Accepted: 28 January 2022;

Published: 03 March 2022.

Edited by:

Paola Binda, University of Pisa, Italy

Reviewed by:

Sarah Melzer, Harvard Medical School, United States

Li Hu, Institute of Psychology, Chinese Academy of Sciences (CAS), China

Copyright © 2022 Yang, Tao, Ge, Li, Hu and Meng. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jing Meng

†ORCID: Yuanyan Hu, orcid.org/0000-0001-8069-9293

‡These authors have contributed equally to this work
