ORIGINAL RESEARCH article

Front. Psychol., 23 January 2023

Sec. Educational Psychology

Volume 13 - 2022 | https://doi.org/10.3389/fpsyg.2022.1096337

This article is part of the Research Topic Executive Functions, Self-Regulation and External-Regulation: Relations and new evidence

Self-regulated learning (SRL) plays a critical role in asynchronous online courses. In recent years, attention has been focused on identifying student subgroups with different patterns of online SRL behaviors and comparing their learning performance. However, there is limited research leveraging traces of SRL behaviors to detect student subgroups and examine the subgroup differences in cognitive load and student engagement. The current study tracked the engagement of 101 graduate students with SRL-enabling tools integrated into an asynchronous online course. According to the recorded SRL behaviors, this study identified two distinct student subgroups, using sequence analysis and cluster analysis: high SRL (H-SRL) and low SRL (L-SRL) groups. The H-SRL group showed lower extraneous cognitive load and higher learning performance, germane cognitive load, and cognitive engagement than the L-SRL group did. Additionally, this study articulated and compared temporal patterns of online SRL behaviors between the student subgroups combining lag sequential analysis and epistemic network analysis. The results revealed that both groups followed three phases of self-regulation but performed off-task behaviors. Additionally, the H-SRL group preferred activating mastery learning goals to improve ethical knowledge, whereas the L-SRL group preferred choosing performance-avoidance learning goals to pass the unit tests. The H-SRL group invested more in time management and notetaking, whereas the L-SRL group engaged more in surface learning approaches. This study offers researchers both theoretical and methodological insights. Additionally, our research findings help inform practitioners about how to design and deploy personalized SRL interventions in asynchronous online courses.

As the COVID-19 pandemic continues, there is a recent trend shifting from technology-assisted or blended learning toward totally online learning among universities worldwide (Hew et al., 2020). Online courses are usually provided in two modes: synchronous and asynchronous. Compared with the former, asynchronous online learning (AOL) can hold larger numbers of students, afford greater flexibility in time and space, and encompass greater student autonomy (Yoon et al., 2021). For example, asynchronous online courses (AOCs) enable students to learn anytime and anywhere. This is particularly beneficial to students who face practical challenges managing time zone differences and unstable internet access during the pandemic. Moreover, students can proceed through the course at their own pace, resulting in learner-centered learning processes (Kim et al., 2021). Despite this, students are often confronted with difficulties sustaining commitment in AOCs (Alhazbi and Hasan, 2021). For example, due to the lack of real-time learning support from instructors and peers, online learners struggle to organize and manage their learning tasks by themselves, causing negative learning experiences and outcomes (Seufert, 2020). Therefore, this time-independent delivery mode requires learners to enact self-regulated learning (SRL) strategies to plan and manage their learning processes independently. A review article by Wong et al. (2019a) reveals that considerable efforts have been made to integrate SRL-enabling tools into AOCs to support SRL strategy use. Unfortunately, even when presented with opportunities to facilitate self-regulation in AOL environments, not all students adopted optimal SRL behaviors to achieve expected learning outcomes (Fincham et al., 2018; Wong et al., 2019a). Therefore, it is necessary to (1) identify subgroups of students with different patterns of SRL behaviors and (2) examine subgroup differences regarding learning outcomes.

The person-centered approach is considered suitable because it can identify homogeneous clusters of individuals who exhibit similar features within their cluster but function in a different way compared with those from other clusters (Hong et al., 2020). Previous studies (e.g., Zheng et al., 2019) utilize various person-centered approaches (e.g., cluster analysis) to classify students according to SRL behaviors. However, many of them rely strongly on self-report measures of SRL behaviors, which suffer from issues including response bias and generate limited information about actual SRL strategy use (Baker et al., 2020). Moreover, even in those studies that remove the aforementioned restrictions of self-reports by using behavioral data (e.g., clickstreams), students are profiled based on the cumulative frequencies of SRL behaviors, which ignores the dynamic and contextual nature of SRL (Azevedo, 2014; Siadaty et al., 2016). In other words, the aggregate, nontemporal representations of SRL behaviors fail to retain any information about how students perform SRL over time and how their learning activities are adapted to meet specific task and environmental demands (Baker et al., 2020). Therefore, whether and how chronological representations of SRL behaviors can be used to identify student subgroups warrants investigation.

In recent years, there have been increasing numbers of attempts to compare learning performance across students’ SRL profiles in online learning environments (e.g., Cicchinelli et al., 2018; Lan et al., 2019). However, little is known about the differences in cognitive load (CL) and student engagement (SE) between SRL profiles, especially in the context of AOL. When studying in AOCs, in addition to dealing with the learning task at hand, students have to handle decisions that instructors are often responsible for, including planning how to proceed and reflecting on what they already learned (Seufert, 2018, 2020). Such additional demands require students to exert effective self-regulation, which otherwise might cause “mental fatigue” or cognitive overload that impedes learning (Seufert, 2018). Moreover, recent review studies building bridges between SRL and CL make theoretical arguments that self-regulation of learning processes relates to cognitive load (Seufert, 2018, 2020; de Bruin et al., 2020). Nevertheless, little empirical evidence to date has been found to verify this argument in AOL settings. Additionally, SE is another crucial determinant of online learners’ academic success (Wong and Liem, 2021). When switching to “emergency remote learning” during COVID-19, students found themselves fighting “digital burnout” or “online learning fatigue” and thus disengaged from course activities (Martin et al., 2022). Prior research suggests that students’ SRL strategies, as well as SRL profiles, have associations with their engagement in AOCs (e.g., Anthonysamy et al., 2020; Pérez-Álvarez et al., 2020). However, to our knowledge, no study exists to investigate how actual behavioral processes of SRL relate to SE in AOL environments. Therefore, whether and how subgroups of students with distinct patterns of SRL behavioral trajectories differ in CL and SE warrants investigation.

The emergence of temporal learning analytics allows researchers to explore whether student subgroups can be identified based on temporal SRL behaviors, compare how the SRL behaviors of student subgroups unfold dynamically over time, and interpret why student subgroups differ in learning outcomes (Knight et al., 2017; Chen et al., 2018; Saint et al., 2022). In temporal learning analytics, two common types of temporal features are considered: the passage of time (e.g., how much time learners spend on learning tasks) and the temporal order (e.g., how events or states are sequentially organized; Chen et al., 2018). The current study focused on analyzing the temporal order of SRL behaviors. Although a growing number of studies have taken the temporality of SRL into account, SRL researchers (e.g., Saint et al., 2020a,b) point out that most temporal analyses of SRL lack a sound theoretical underpinning or use a single analytical method, raising concerns about the ontologically flat explanations of learning described by Reimann et al. (2014). This study captured students’ SRL behaviors as they interacted with SRL-enabling tools embedded in an AOC designed according to Zimmerman’s (2000) three-phase model and Barnard et al.’s (2009) online SRL strategies. We then combined lag sequential analysis and epistemic network analysis to articulate and compare patterns of how students’ SRL behaviors unfolded throughout the course. Such a combination can enhance our understanding of the temporal nature of SRL processes.

SRL refers to “an active, constructive process whereby learners set goals for their learning and then attempt to monitor, regulate, and control their cognition, motivation, and behavior, guided and constrained by their goals and the contextual features of the environment” (Pintrich, 2000, p. 453). In developing various SRL models, researchers have reached a consensus that SRL is a cyclical and dynamic process (Panadero, 2017). Zimmerman (2000) divides SRL processes into three cyclical phases: forethought, performance, and self-reflection, each containing specific SRL strategies that learners are expected to execute. Furthermore, researchers increasingly emphasize that SRL is highly context-specific because of continuous innovation in learning formats (Kim et al., 2018). To capture and measure the essence of online SRL, Barnard et al. (2009) operationalized the three-phase model by conceptualizing six constructs: goal setting, environmental structuring, task strategies, time management, help seeking, and self-evaluation. Based on these online SRL constructs, many studies have captured actual online SRL behaviors (e.g., Ye and Pennisi, 2022) and perceived online SRL strategies (e.g., Sun et al., 2017) and have developed interventions for promoting online SRL (e.g., Lai et al., 2018). However, these studies have paid little attention to the temporal dynamics of these online SRL behaviors.

Advances in SRL theory, learning technology, and analytic method have motivated the emergence of temporal learning analytics for SRL (Knight et al., 2017; Chen et al., 2018). First, modern SRL research conceptualizes SRL as a series of temporal events that learners perform during actual learning situations rather than as stable and decontextualized traits or aptitudes (Winne, 2010; Azevedo, 2014). Second, advanced learning technologies (e.g., intelligent tutoring systems) have been developed for tracing temporal characteristics of SRL by recording fine-grained behavioral data on the fly (Azevedo et al., 2018; Azevedo and Gašević, 2019). Third, recent developments in temporal analysis methods have further spurred researchers to undertake temporal analyses of SRL (see review by Saint et al., 2022).

By reviewing existing empirical studies employing behavioral data to explore the temporal dynamics of self-regulation in AOL, we found that very few studies (e.g., Cicchinelli et al., 2018; Fincham et al., 2018; Srivastava et al., 2022) have attempted to identify student subgroups by comparing SRL traces across individual students. For example, based on traces of SRL activities codified from log files captured by learning management systems (LMSs), Cicchinelli et al. (2018) divided learners into four subgroups (i.e., continuously active, inactive, procrastinators, and probers) utilizing sequence analysis and agglomerative hierarchical clustering. Additionally, the majority of relevant studies reveal and compare processes or patterns in online SRL by student subgroups using various temporal analytical techniques including, but not limited to: lag sequential analysis (LSA), epistemic network analysis (ENA), process mining (PM), and sequential pattern mining (SPM; e.g., Saint et al., 2020a; Hwang et al., 2021; Zheng et al., 2021; Sun et al., 2023). For example, Wong et al. (2019b) leveraged SPM to explore 103 Massive Open Online Course (MOOC) learners’ interactive sequences with course activities related to SRL and compared the differences in sequential patterns between students who viewed the SRL-prompt videos and those who did not.

In sum, researchers have illustrated heterogeneity in student SRL behaviors in AOL environments. However, most of them established student subgroups based on (quasi-) experimental designs or through comparisons of cumulative counts of SRL behaviors across students. The use of temporal SRL behaviors for detecting student subgroups is still an underexplored area of research but is one that can extend our current knowledge on the complex nature of temporally unfolding SRL processes. Additionally, although many temporal analyses were undertaken using the same data source in similar learning contexts, their research findings are not entirely consistent and may even be contradictory. One reason for this is that these researchers generally adopt a single analytical approach per study, and different analytical approaches between studies may lead to inconsistent research results (Saint et al., 2020a). As Reimann (2009) pointed out, analyses using a single analytical approach may suffer from ontological flatness. Therefore, multiple analytical approaches should be consolidated to confirm and complement each other in examinations of temporal dynamics of SRL.

Cognitive load theory assumes that (1) for learning to take place, information must be encoded into long-term memory by working memory (WM) and (2) human WM is limited in both capacity and duration (Sweller et al., 1998, 2019). When performing complex or novel learning tasks, learners must process large amounts of information and interactions simultaneously, which may overload their finite WM and thus impair academic performance (Sweller, 2010). Sweller et al. (1998) defined cognitive load (CL) as the amount of WM resources required to process complex or novel information. They recognize three types of cognitive load: intrinsic, extraneous, and germane. Intrinsic cognitive load (ICL) refers to the processing resources associated with the inherent properties of the task and is determined by task complexity and learner expertise (Sweller et al., 1998). Extraneous cognitive load (ECL) arises from unnecessary and irrelevant information imposing processing demands due to suboptimal instructional design (Sweller et al., 1998). ECL could distract learners from the task at hand and hamper learning (Stiller and Bachmaier, 2018). Germane cognitive load (GCL) refers to the WM resources that learners devote to dealing with ICL (Sweller et al., 1998). Unlike the other two loads, GCL helps with schema construction and automation and thus benefits learning (Miller et al., 2021). Appropriate instructional design can manage ICL, reduce ECL, and encourage GCL while still preventing overload (Van Merriënboer and Sweller, 2010).

Researchers have recently extended previous research on CL by unraveling the intricate relationship between SRL and CL (de Bruin et al., 2020; Seufert, 2020). Eitel et al. (2020) propose that (1) CL results not only from how instruction is designed but also from how learners process this instruction and (2) how instruction is processed by learners depends on their ability and willingness to exert self-control. According to Baumeister et al. (2007), self-control is portrayed as a conscious, deliberate, and effortful subset of self-regulation. Eitel et al. (2020) further demonstrated that offering learners proper guidance about cognitive and metacognitive strategies can improve their self-control of cognitive processing to reduce ECL and foster GCL. Additionally, Seufert (2018) argued that in different phases of self-regulation, learners need to invest cognitive and metacognitive resources in addition to dealing with the original learning task. The affordances of self-regulation impose cognitive load and might even cause cognitive overload (Seufert, 2018). Seufert (2018) analyzed the affordances of Zimmerman (2000) three phases of SRL in terms of ICL, ECL, and GCL. Meanwhile, external learning supports (e.g., prompts) have the potential to promote effective self-regulation processes, which can elicit the optimal amount of CL (Seufert, 2018). A handful of empirical studies (e.g., Liu and Sun, 2021; Sun and Liu, 2022) also illuminate how the employment of SRL-enabling tools for supporting SRL strategies can optimize cognitive load in AOCs.

In sum, researchers have established theoretical connections between SRL and CL and suggested how to optimize CL by externally supporting learners’ self-regulation. However, since this is an emerging research topic, limited studies have empirically investigated the underlying mechanisms through which temporally unfolding SRL processes have associations with ICL, ECL, and GCL. Additionally, to our knowledge, no studies have examined the relationship between SRL and CL in a specific course, especially in the context of AOL.

Student engagement (SE) refers to a student’s active participation and involvement in learning tasks and activities and consists of three different but related dimensions: behavioral, emotional, and cognitive (Fredricks et al., 2004). Behavioral engagement (BE) describes students’ observable behaviors while participating in academic activities that are crucial for attaining desired academic outcomes and preventing dropouts (Fredricks et al., 2004). This includes attention, concentration, effort, persistence, positive conduct, absence of disruptive behaviors, and involvement in curricular and extracurricular activities (Fredricks et al., 2004; Appleton et al., 2008). Emotional engagement (EE) describes students’ affective reactions (e.g., anger, anxiety, boredom, happiness, and interest) to teachers, peers, courses, and schools, their willingness to do the coursework, their sense of belonging in school, and their evaluation of school-related outcomes (Fredricks et al., 2004). Cognitive engagement (CE) describes thoughtfulness and willingness to exert effort to comprehend complex ideas and master difficult skills (Fredricks et al., 2004). It reflects students’ psychological investment in learning and strategic emphases on active self-regulation of skills and usage of deep learning strategies (Fredricks et al., 2004; Greene, 2015).

Prior research has adopted variable-centered approaches (e.g., correlation and regression) to associate SRL with SE in AOL (e.g., Pellas, 2014). For example, Sun and Rueda (2012) analyzed 203 college students’ self-reports of self-regulation and engagement after watching video recordings of lectures in a distance course. They found that self-regulation was significantly positively correlated with BE, EE, and CE, implying that students with higher levels of self-regulation demonstrated higher levels of engagement. The positive relationship between SRL and SE has been well established in variable-centered studies (Anthonysamy et al., 2020). Going beyond analyzing SRL behaviors from a variable-centered perspective, which assumes the same relations and average means for an entire population, recent studies (e.g., Pérez-Álvarez et al., 2020) increasingly concentrate on person-centered approaches to detect divergent SRL profiles and how those profiles differ regarding SE. These approaches are especially apt for studies conducted in AOL contexts where SRL behaviors vary greatly across individual students. For example, mapping SRL behavioral indicators with the clickstreams of 5,014 learners enrolled in an MOOC, Lan et al. (2019) employed K-means to find two types of learners (i.e., auditors and attentive) who shared similar patterns of SRL behaviors. They concluded that the attentive learners who followed the learning pathway intended by the instructors showed higher course engagement and completion rates than the auditors who accessed course content selectively and irregularly.

In sum, existing studies have illuminated the impacts of students’ SRL profiles on their engagement in AOCs, but most are limited to examining BE. Whether and how SRL profiles are associated with EE and CE remains unclear. Moreover, these studies distinguished SRL profiles according to frequency-based measures of SRL behaviors. To date, no studies have related divergent profiles of temporally unfolding SRL processes to the three types of SE.

The purpose of the current study is therefore threefold: (1) identifying student subgroups according to traces of online SRL behaviors; (2) examining the student subgroup differences in learning performance, CL, and SE; and (3) articulating and comparing behavior patterns of online SRL between the student subgroups. This study offers researchers both theoretical and methodological insights. Additionally, our research findings inform practitioners about how to design and deploy personalized SRL interventions in the context of AOL. Accordingly, the research questions are as follows:

RQ1. Can student subgroups be identified by the traces of SRL behaviors collected from the use of SRL-enabling tools to complete an AOC? If so, what are their characteristics?

RQ2. Do the identified student subgroups significantly differ in learning performance, cognitive load, and student engagement?

RQ3. How does this study differentiate the identified student subgroups according to their behavior patterns of online SRL?

We recruited 113 graduate students from universities in northern Taiwan, none of whom had previously attended a research ethics course. Participants were asked to complete an asynchronous online research ethics course consisting of four learning units, each of which took approximately 40 min to complete. Twelve students were excluded because of data limitations, such as incomplete traces of SRL behaviors and insufficient learning time, leaving a final sample of 101 students (mean age = 24.21 years, SD = 3.37, 53.5% female).

Sun and Liu (2022) designed the learning units according to Zimmerman’s (2000) three-phase SRL model and integrated Barnard et al.’s (2009) online SRL strategies, in the form of tools, into the three phases of SRL. In the forethought phase, learners selected a learning unit from the course list with reference to their personal interests and prior-knowledge test scores (Figure 1). They were then required to set a learning goal and a learning duration with reference to previous learners’ average unit test scores and time-on-unit (Figure 2). Based on Elliot and Church’s (1997) achievement goal theory, we offered learners a choice among three learning goals: mastery, performance-approach, and performance-avoidance (Figure 2). Based on the learning duration data collected by Sun et al. (2018, 2019), we provided four duration options: 20, 30, 45, and 60 min. Learners who wanted to change the learning unit could click “Course List” to return to the course list and reselect a unit.

After making their plan, learners proceeded to the performance phase, in which they could apply SRL strategies via the embedded tools while studying multimedia learning materials (Figure 3). Learners could play and control the Flash-animated learning materials and switch freely between content sections using a navigation menu. A toolbar at the top of the course interface offered three tools: “Countdown,” “Expected Time,” and “Notes.” Clicking “Countdown” showed how much time was left; the remaining time was hidden again after 5 s without a click. When only 5 min were left, the “Countdown” icon flashed to remind learners to adjust their learning pace, for example by resetting the learning duration via “Expected Time.” While studying the materials, learners could use “Notes” to type in, delete, and save notes. Learners who wanted to change the learning unit and learning goal could click “Course List” to return to the course list and recreate their study plan.

After studying the learning materials, learners evaluated their performance by taking a unit test. After finishing the test, learners received a performance feedback report showing their test performance and the items they missed (Figure 4). Based on this feedback, learners decided whether to retake the unit test, review the learning materials, or start another learning unit. After finishing all the learning units, learners were asked to fill out the cognitive load and student engagement questionnaires.

Considering the prevalence of digital multitasking and distraction in AOL settings, this study defined and identified learners’ off-task behaviors following Sun et al.’s (2018) study, which was carried out in the same course. Specifically, an off-task behavior was recorded when there was a gap of 20 min between two consecutive keystrokes or clicks. Note that we excluded the environmental structuring dimension because it is hard to measure from action logs. Additionally, students were asked to pass the course independently, so help-seeking tools were not provided in the course. Nevertheless, learners could contact instructors via email when they encountered technical problems.
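The 20-min gap rule can be illustrated with a short Python sketch. It is not the course system's actual implementation: the function name `flag_off_task`, the use of `datetime` timestamps, and the decision to count a gap of exactly 20 min as off-task are assumptions made for illustration.

```python
from datetime import datetime, timedelta

# Threshold taken from the text; treating it as inclusive is an assumption.
OFF_TASK_GAP = timedelta(minutes=20)


def flag_off_task(timestamps):
    """Return the positions i where the gap between event i and event i + 1
    (after sorting by time) reaches the off-task threshold."""
    ordered = sorted(timestamps)
    return [
        i
        for i in range(len(ordered) - 1)
        if ordered[i + 1] - ordered[i] >= OFF_TASK_GAP
    ]


# Hypothetical click log for one learner.
clicks = [
    datetime(2022, 1, 1, 9, 0),
    datetime(2022, 1, 1, 9, 5),
    datetime(2022, 1, 1, 9, 30),   # 25 min after the previous click
    datetime(2022, 1, 1, 9, 31),
]
off_task_positions = flag_off_task(clicks)
```

Here only the gap that follows the second click exceeds the threshold, so a single off-task behavior would be logged for this learner.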

We collected the participants’ SRL behaviors according to a coding scheme (Table 1) developed from Zimmerman’s (2000) three-phase model and Barnard et al.’s (2009) online SRL strategies. This study developed 10 SRL behavior codes and embedded the coding rules into the learning system. Whenever learners used the SRL-enabling tools or went off-task, the corresponding behaviors were detected and recorded automatically. For descriptions of each coded behavior, please see “Participants and settings.”

Online unit tests were administered to evaluate the participants’ research ethics knowledge acquired in the course. Specifically, the four tests contained 25, 13, 17, and 16 multiple-choice items, and the maximum score of each test was 100 points. We averaged the four test scores for each participant as his or her learning performance score. All the items were developed and applied by Sun et al. (2018, 2019).

The cognitive load questionnaire by Leppink et al. (2013) was adapted to measure the participants’ ICL (three items), ECL (three items), and GCL (four items). All the items were assessed on an 11-point Likert scale (0 = strongly disagree, 10 = strongly agree). The Cronbach’s α was 0.92, 0.90, and 0.92 for ICL, ECL, and GCL, respectively.

The student engagement questionnaire by Fredricks et al. (2005) was adapted to measure the participants’ BE (five items), EE (six items), and CE (eight items). All the items were assessed on a 5-point Likert scale (1 = strongly disagree, 5 = strongly agree). The Cronbach’s α was 0.71, 0.92, and 0.87 for BE, EE, and CE, respectively.
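For readers unfamiliar with the reliability coefficient reported for both questionnaires, the following Python sketch computes Cronbach's α from its standard definition, α = k/(k − 1) × (1 − Σ item variances / variance of total scores). The function name `cronbach_alpha` and the toy responses are hypothetical; they are not the study's data or analysis script.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    k = len(responses[0])                      # number of items in the subscale
    items = list(zip(*responses))              # one tuple of scores per item
    item_var_sum = sum(variance(scores) for scores in items)
    total_var = variance([sum(person) for person in responses])
    return k / (k - 1) * (1 - item_var_sum / total_var)


# Hypothetical answers from four respondents to a three-item subscale (0-10 scale).
toy_responses = [[2, 3, 2], [5, 5, 6], [8, 7, 8], [9, 9, 10]]
alpha = cronbach_alpha(toy_responses)
```

Sample variances (n − 1 denominator) are used throughout; because the same denominator appears in the numerator and the denominator of the ratio, the choice does not affect α.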

A sequence analysis with the R package TraMineR (Gabadinho et al., 2011) was undertaken to visualize and compare the sequences of behaviors captured based on our coding scheme. The first step of implementing between-sequence comparisons was obtaining edit distances for pairs of sequences as the minimal cost, in terms of inserting, deleting, and substituting sequence behaviors to transform one sequence into another. Specifically, a dissimilarity matrix was established using the optimal matching algorithm with an insertion/deletion cost of 1 and a substitution cost matrix based on observed transition rates between behaviors. Based on the dissimilarity matrix, we employed K-medoids with the R package fpc (Henning, 2020) to organize these behavior sequences into homogeneous clusters. Meanwhile, the average silhouette method was used via the R package factoextra (Kassambara and Mundt, 2020) to find the optimal number of clusters. To label the identified clusters, we used TraMineR to plot the behavior distribution and representative sequences for each cluster. Additionally, Welch’s independent t tests were performed to quantify the differences between the clusters regarding learning performance, cognitive load, and student engagement.
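The optimal matching step can be sketched in plain Python as follows. This is an illustration under stated assumptions, not the TraMineR implementation: the substitution cost is assumed to be 2 minus the two observed transition rates between a pair of behaviors (one common way to derive costs from transition rates), and the behavior codes `GOAL`, `NOTE`, and `TEST` are hypothetical placeholders rather than the codes in Table 1.

```python
from collections import Counter


def transition_rates(sequences):
    """Estimate P(next = b | current = a) from all observed sequences."""
    pair_counts, from_counts = Counter(), Counter()
    for seq in sequences:
        for a, b in zip(seq, seq[1:]):
            pair_counts[(a, b)] += 1
            from_counts[a] += 1
    return {pair: n / from_counts[pair[0]] for pair, n in pair_counts.items()}


def substitution_cost(a, b, rates):
    """Assumed cost: 2 - P(b | a) - P(a | b); identical behaviors cost nothing."""
    if a == b:
        return 0.0
    return 2.0 - rates.get((a, b), 0.0) - rates.get((b, a), 0.0)


def om_distance(s, t, rates, indel=1.0):
    """Minimal total cost of insertions, deletions, and substitutions
    needed to turn sequence s into sequence t (dynamic programming)."""
    prev = [j * indel for j in range(len(t) + 1)]
    for i, a in enumerate(s, start=1):
        curr = [i * indel]
        for j, b in enumerate(t, start=1):
            curr.append(min(
                prev[j] + indel,                              # delete a
                curr[j - 1] + indel,                          # insert b
                prev[j - 1] + substitution_cost(a, b, rates)  # substitute
            ))
        prev = curr
    return prev[-1]


# Hypothetical behavior sequences for three learners.
sequences = [
    ["GOAL", "NOTE", "TEST"],
    ["GOAL", "TEST"],
    ["GOAL", "NOTE", "NOTE", "TEST"],
]
rates = transition_rates(sequences)
dissimilarity = [[om_distance(s, t, rates) for t in sequences] for s in sequences]
```

The resulting `dissimilarity` matrix plays the role of the input to the clustering step: the first two toy sequences differ by one deleted `NOTE`, so their distance equals the insertion/deletion cost of 1.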

This study ran an LSA (Bakeman and Quera, 2011) using GSEQ 5.1 software to identify, visualize, and compare significant transition patterns among the SRL behavior codes demonstrated by the clusters. First, the SRL behaviors were coded into two-behavior sequences according to the chronological order. Second, to tally transitions among these behavior codes, the LSA produced a transitional frequency matrix in which each cell represents the number of times that one particular “given” code transitions immediately to another “target” code. Third, after generating the transitional frequency matrix, it proceeded to compute a transitional probability matrix. Specifically, a transitional probability represents the ratio of the frequency of a cell to the frequency for that row. Fourth, it computed an adjusted residual (i.e., z score) for each transition to determine whether the transitional probability showed significant deviation from its expected value. A z score above 1.96 implies that the transition from one code to another successor code reaches statistical significance (p < 0.05). Last, the behavioral transition diagram for each cluster was created according to the significant transition sequences.
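The LSA steps described above (frequency matrix, transitional probabilities, adjusted residuals) can be sketched in Python. The sketch assumes the commonly used adjusted-residual formula, z = (observed − expected) / sqrt(expected × (1 − row share) × (1 − column share)); GSEQ may apply additional options that are not reproduced here, and the two-code sequence is hypothetical.

```python
from math import sqrt


def transition_frequencies(sequence, codes):
    """Count how often each 'given' code is immediately followed by each 'target' code."""
    index = {code: i for i, code in enumerate(codes)}
    freq = [[0] * len(codes) for _ in codes]
    for given, target in zip(sequence, sequence[1:]):
        freq[index[given]][index[target]] += 1
    return freq


def transitional_probabilities(freq):
    """Divide each cell by its row total."""
    return [[x / sum(row) if sum(row) else 0.0 for x in row] for row in freq]


def adjusted_residuals(freq):
    """z score per cell under the assumed adjusted-residual formula."""
    n = sum(map(sum, freq))
    row_tot = [sum(row) for row in freq]
    col_tot = [sum(col) for col in zip(*freq)]
    z = []
    for i, row in enumerate(freq):
        z_row = []
        for j, observed in enumerate(row):
            expected = row_tot[i] * col_tot[j] / n
            var = expected * (1 - row_tot[i] / n) * (1 - col_tot[j] / n)
            z_row.append((observed - expected) / sqrt(var) if var > 0 else 0.0)
        z.append(z_row)
    return z


# Hypothetical sequence alternating between two behavior codes.
codes = ["A", "B"]
sequence = ["A", "B", "A", "B", "A", "B", "A", "B", "A"]
freq = transition_frequencies(sequence, codes)
probs = transitional_probabilities(freq)
z_scores = adjusted_residuals(freq)
significant = [
    (codes[i], codes[j])
    for i in range(len(codes))
    for j in range(len(codes))
    if z_scores[i][j] > 1.96
]
```

Only the transitions listed in `significant` would be drawn as arrows in a behavioral transition diagram.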

An ENA (Shaffer, 2017) was implemented via the ENA Web Tool (version 1.7.0; Marquart et al., 2018) to model, visualize, and compare the cooccurrences of the codes for the two groups. First, this study defined the SRL behavior codes as the ENA codes, the participants as the units of analysis, and two consecutive SRL behaviors as the moving stanza. Second, based on the temporal behaviors, it created an adjacency matrix per stanza per participant, summed the adjacency matrices across all stanzas into a cumulative adjacency matrix for each participant, and then converted each resulting cumulative adjacency matrix into a normalized adjacency vector in a high-dimensional space. Third, it constructed a projected ENA space by performing dimensional reduction on the vectors via means rotation (MR) and/or singular value decomposition (SVD). MR is performed to position group means along a common axis to obtain the largest differences between the groups, whereas SVD is utilized to generate orthogonal dimensions that represent the most variance explained by each dimension. Fourth, it produced each participant’s epistemic network graphs in this space employing two coordinated representations: (1) a projected point graph, which showed the location of his or her network in the two-dimensional ENA space, and (2) a weighted network graph where nodes represent the codes and edges correspond to the relative frequency of links between any pair of nodes. The node positions are fixed across all networks and determined through an optimization routine minimizing the distance between the projected points and the centroids of their corresponding network graphs. Last, to compare the network graphs between the groups, we created ENA subtraction graphs by subtracting the weight of each connection in one group network from the corresponding connections in the other. In addition, the distributions of the projected points for the groups were compared using two-sample t tests.
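The accumulation and normalization steps of ENA can be sketched as follows. This is a simplified illustration, not the ENA Web Tool's code: it assumes undirected connections, ignores repetitions of the same code, normalizes each participant's vector to unit length, and omits the dimensional reduction (MR/SVD) and node-placement steps. The code names are hypothetical.

```python
from itertools import combinations
from math import sqrt


def ena_vector(behaviors, codes):
    """Accumulate co-occurrences over moving stanzas of two consecutive
    behaviors and return the unit-length connection vector."""
    pairs = list(combinations(codes, 2))        # one entry per unordered code pair
    counts = dict.fromkeys(pairs, 0)
    for prev, curr in zip(behaviors, behaviors[1:]):
        if prev == curr:
            continue                            # assumption: no self-connections
        key = (prev, curr) if (prev, curr) in counts else (curr, prev)
        counts[key] += 1
    raw = [counts[pair] for pair in pairs]
    length = sqrt(sum(v * v for v in raw))
    return pairs, [v / length if length else 0.0 for v in raw]


def subtract_networks(weights_a, weights_b):
    """Edge-wise difference between two (mean) network vectors."""
    return [a - b for a, b in zip(weights_a, weights_b)]


# Hypothetical behavior traces for two learners.
codes = ["GOAL", "NOTE", "TEST"]
pairs, learner_1 = ena_vector(["GOAL", "NOTE", "GOAL", "TEST"], codes)
_, learner_2 = ena_vector(["GOAL", "TEST", "GOAL", "TEST"], codes)
difference = subtract_networks(learner_1, learner_2)
```

Positive entries in `difference` mark connections that are relatively stronger for the first learner, which is the information a subtraction graph conveys at the group level.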

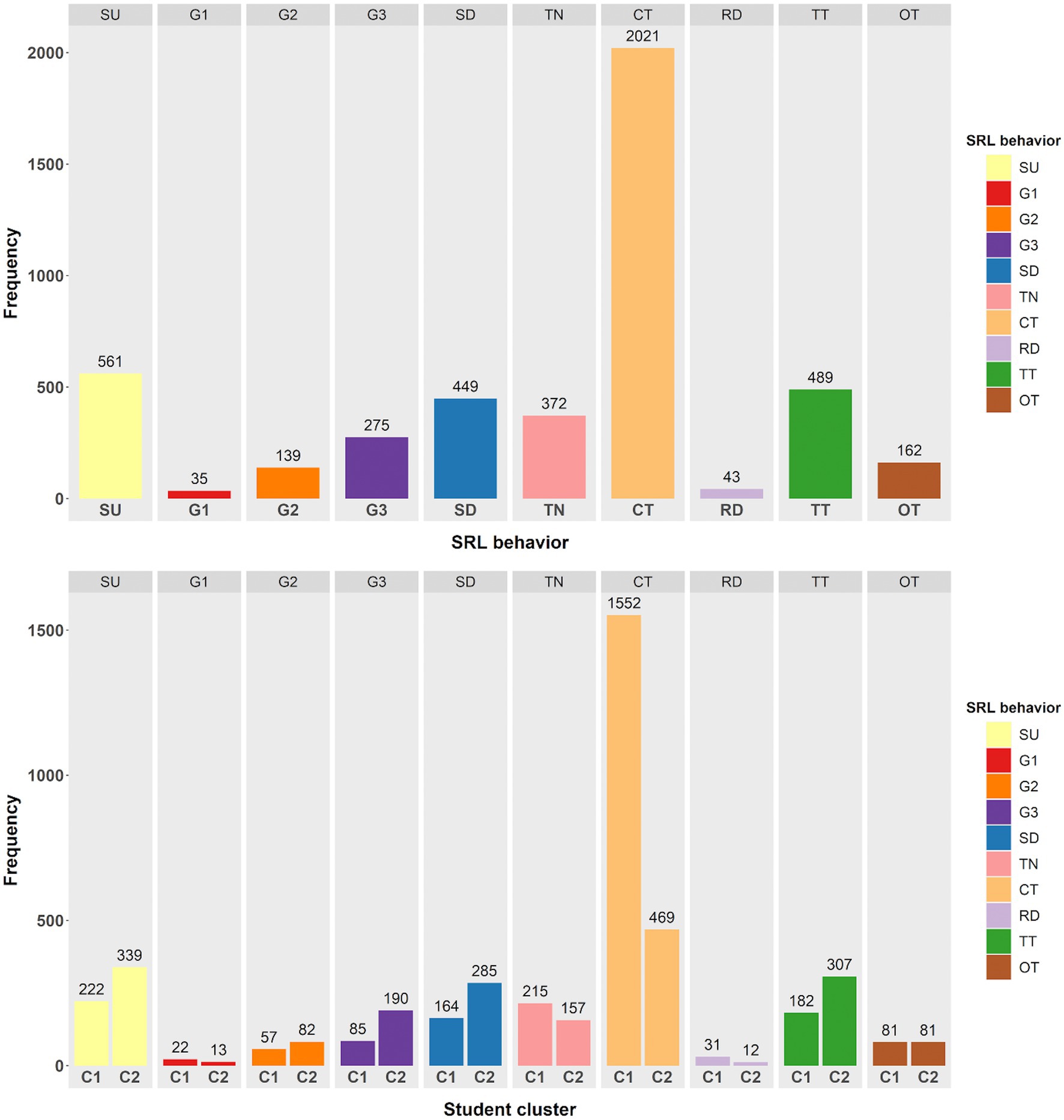

We collected a total of 4,546 SRL behaviors generated by the whole sample. Figure 5 displays the behavior frequencies. Moreover, this study visualized behavior sequences for each participant in Figure 6. Each point on the x-axis of the figure represents a corresponding position of a behavior sequence, and each value on the y-axis represents a single participant. Each line shows a series of SRL behaviors, as distinguished by different colors, that an individual learner executed during the course. Figure 6 reveals that for the learning of each unit, almost all participants start with selecting a learning unit, then setting a learning goal and duration, and end up taking a unit test. It also shows that the vast majority exhibited unique and personalized SRL behavior sequences, especially in the forethought and performance phases. For example, some students are more inclined to set performance-avoidance goals. Moreover, the sequence length widely varies from 16 to 193, indicating that some students performed longer sequences of behaviors. Such differences suggest that learner heterogeneity in temporal SRL behaviors may exist.

Figure 5. The frequencies of SRL behaviors for the overall sample (top) and the two clusters (bottom). C1 = Cluster 1; C2 = Cluster 2.

According to Figure 7, K = 2 was chosen as the ideal number of clusters. Subsequently, the partitioning around medoids (PAM) algorithm was used on the dissimilarity matrix obtained from sequence analysis, classifying participants into two clusters: Cluster 1 (n = 36) and Cluster 2 (n = 65). Figures 8–10 illustrate that between-cluster heterogeneity and within-cluster homogeneity became readily apparent in the two-cluster SRL behaviors. Although Cluster 1 had a smaller number of participants than Cluster 2, the former exhibited more frequent behaviors and longer sequence lengths (Figure 8). Both clusters’ state distributions of SRL behaviors from the beginning to the end of the course are depicted in Figure 9. Figures 5 and 9 show that students from Cluster 1 devoted more effort to the performance phase, especially in time management, whereas those from Cluster 2 focused more on the regulatory activities of the forethought and reflection phases. Moreover, learners from Cluster 2 preferred setting performance-avoidance learning goals.
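To make the clustering step above concrete, the following is a minimal sketch of a PAM-style procedure operating directly on a precomputed dissimilarity matrix. It is not the R routine used in the study: the function names are hypothetical, the swap phase accepts the first improving swap rather than searching for the best one, and the toy matrix is built from six one-dimensional points standing in for sequence dissimilarities.

```python
import numpy as np


def total_cost(dissim, medoids):
    """Sum of each object's dissimilarity to its nearest medoid."""
    return dissim[:, medoids].min(axis=1).sum()


def pam(dissim, k):
    """PAM-style k-medoids on a square dissimilarity matrix.

    BUILD: greedily add the medoid that lowers the total cost most.
    SWAP: exchange a medoid with a non-medoid whenever the cost drops
    (simplification: first improving swap is accepted).
    """
    n = dissim.shape[0]
    medoids = [int(np.argmin(dissim.sum(axis=1)))]
    while len(medoids) < k:
        candidates = [c for c in range(n) if c not in medoids]
        medoids.append(min(candidates, key=lambda c: total_cost(dissim, medoids + [c])))

    improved = True
    while improved:
        improved = False
        current = total_cost(dissim, medoids)
        for position in range(k):
            for candidate in range(n):
                if candidate in medoids:
                    continue
                trial = medoids.copy()
                trial[position] = candidate
                cost = total_cost(dissim, trial)
                if cost < current - 1e-12:
                    medoids, current, improved = trial, cost, True

    labels = np.argmin(dissim[:, medoids], axis=1)
    return medoids, labels


# Toy data: two well-separated groups of one-dimensional points.
toy_points = np.array([0.0, 1.0, 2.0, 10.0, 11.0, 12.0])
toy_dissim = np.abs(toy_points[:, None] - toy_points[None, :])
toy_medoids, toy_labels = pam(toy_dissim, k=2)
```

In the study's setting, `dissim` would be the pairwise dissimilarity matrix produced by the sequence analysis, and the returned medoids correspond to the most central sequence of each cluster.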

To further explore the differences in how learners from different clusters regulated their learning, we extracted the medoid, or most central sequences, from the two clusters as their representative sequences (Figure 10). Cluster 1 was represented by eight representative sequences, which were long and covered 36.1% of the sequences. In Cluster 2, we identified only one representative sequence, which was relatively short in length but gave 69.2% coverage. The sequences mined from Cluster 1 showed that the learners adaptively went through the three phases of SRL and demonstrated sophisticated behavior transitions. For example, when facing different learning units, learners modified learning goals by self-evaluating their performance at that time. When studying unit materials, they executed strategies of notetaking and time management depending on their learning needs. After off-task behaviors occurred, the learners usually checked the remaining learning time to adjust the subsequent learning pace. In contrast, the representative sequence identified in Cluster 2 indicated that although three-phase SRL was triggered, the participants predictably repeated the same set of SRL behaviors without any modification of strategies across the four learning units. Interestingly, they oriented themselves toward performance-avoidance learning goals. Given the findings above, we labeled Cluster 1 and Cluster 2 as the high online self-regulated learning group (H-SRL) and the low online self-regulated learning group (L-SRL), respectively.

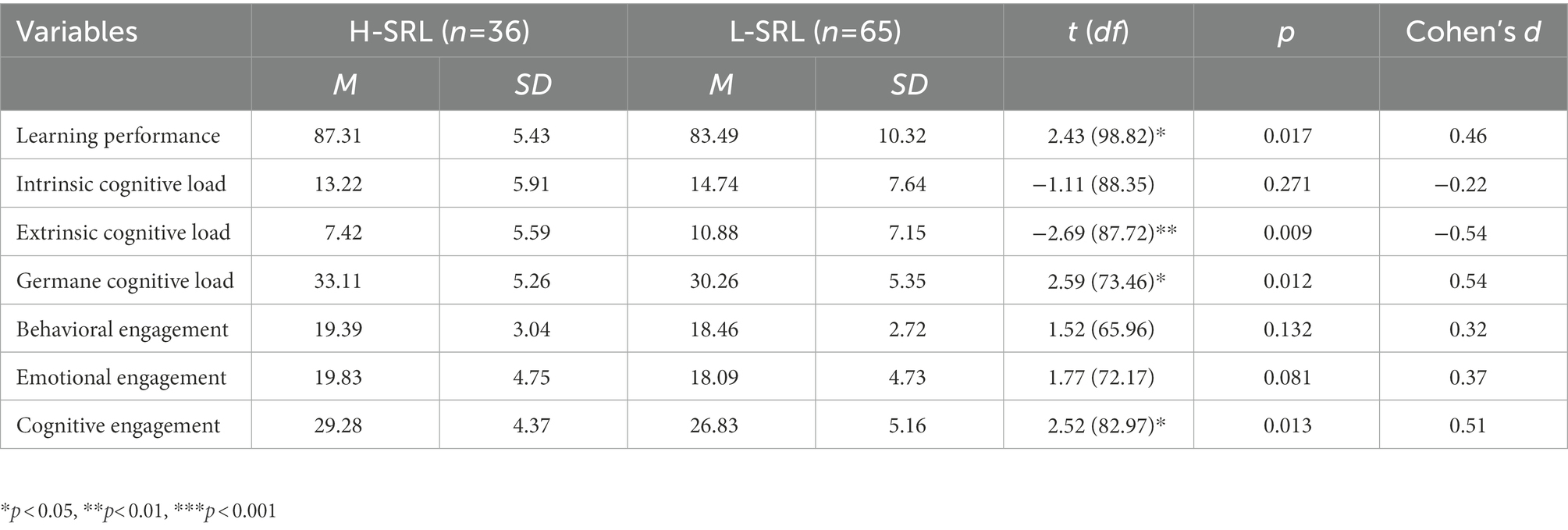

Table 2 shows that the H-SRL (M = 87.31, SD = 5.43) had significantly better learning performance than the L-SRL (M = 83.49, SD = 10.32). Moreover, the H-SRL (M = 7.42, SD = 5.59) exhibited significantly lower ECL than the L-SRL (M = 10.88, SD = 7.15). The H-SRL (M = 33.11, SD = 5.26) experienced significantly greater GCL than the L-SRL (M = 30.26, SD = 5.35). However, the t test results on ICL revealed nonsignificant differences between the groups. For the SE, the CE of the H-SRL (M = 29.28, SD = 4.37) was significantly higher than that of the L-SRL (M = 26.83, SD = 5.16), but no significant differences were found in BE and EE. According to Cohen (1988), the effect size was small for learning performance and medium for ECL, GCL, and CE.

Table 2. The results of Welch’s independent t tests on learning performance, cognitive load, and student engagement between the two groups.
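For readers who wish to reproduce this kind of comparison from summary statistics, the sketch below computes Welch's t, the Welch–Satterthwaite degrees of freedom, and Cohen's d. The group sizes (36 and 65) are taken from the cluster sizes reported above. The pooled-standard-deviation form of Cohen's d is an assumption, since the text does not state which variant was used, and values computed from rounded means and standard deviations will only approximate those in Table 2.

```python
import math


def welch_t(mean1, sd1, n1, mean2, sd2, n2):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    var1, var2 = sd1**2 / n1, sd2**2 / n2
    t_value = (mean1 - mean2) / math.sqrt(var1 + var2)
    df = (var1 + var2) ** 2 / (var1**2 / (n1 - 1) + var2**2 / (n2 - 1))
    return t_value, df


def cohens_d(mean1, sd1, n1, mean2, sd2, n2):
    """Cohen's d using the pooled standard deviation (assumed variant)."""
    pooled = math.sqrt(((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n1 + n2 - 2))
    return (mean1 - mean2) / pooled


# Learning performance: H-SRL (n = 36) versus L-SRL (n = 65).
t_perf, df_perf = welch_t(87.31, 5.43, 36, 83.49, 10.32, 65)
d_perf = cohens_d(87.31, 5.43, 36, 83.49, 10.32, 65)

# Extraneous cognitive load: a negative d means lower ECL in the H-SRL group.
d_ecl = cohens_d(7.42, 5.59, 36, 10.88, 7.15, 65)
```

Under Cohen's (1988) conventions, |d| near 0.2 is read as small and near 0.5 as medium, which is how the effect sizes above are interpreted.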

Supplementary Appendix A presents the LSA results. The significant behavior patterns are portrayed in Figure 11, where the behavior codes are signified with round rectangles and the significant transitions are signified with arrows. Both groups shared some common transition sequences. In the forethought phase, the participants started by choosing a learning unit, then settled on a learning goal, and ended up with setting a learning duration (SUG1, G1SD, SUG2, G2SD, SUG3, and G3SD), indicating that they usually acted in compliance with the tools supporting goal setting. In the performance phase, they repeatedly took notes (TNTN) and usually performed time management-related behavior transitions such as repeatedly checking remaining learning time (CTCT), checking remaining learning time before attempting a test (CTTT) and switching between checking remaining time and resetting learning durations (CTRD). These sequences illustrate that students are required to invest much effort in organization and time management in AOL contexts. Additionally, it should be noted that both groups exhibited off-task behaviors after setting a learning duration (SDOT) or before checking remaining learning time (OTCT). This kind of behavior transition indicates that off-task behaviors are difficult to prevent in AOL environments, but SRL-enabling tools can offer remedy support, such as displaying the remaining learning time. In the self-reflection phase, after completing a unit test and receiving system feedback, the learners either attended the same unit test again (TTTT) or started another learning unit (TTSU), indicating that learners evaluated their learning according to the unit test and system feedback and then made learning adjustments. However, some different behavioral transfers were found between the two groups. 
The H-SRL usually went off-task after selecting a learning unit (SUOT), indicating that learners disengaged from the forethought phase, possibly because they struggled to determine an appropriate learning goal and learning duration by themselves. In contrast, the L-SRL directly attempted a test after setting a learning duration (SDTT) or undertaking off-task activities (OTTT), indicating that the L-SRL gravitated more toward unit tests to pass exams through minimal engagement.
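The transition codes above (e.g., SUG1 for SU followed by G1) come from lag-1 sequential analysis. A minimal sketch of that computation follows, using the adjusted-residual formula described by Bakeman and Quera (2011). The function name, the toy sequences, and the conventional cutoff of z > 1.96 are illustrative assumptions rather than details taken from this section.

```python
import numpy as np


def lag1_adjusted_residuals(sequences, codes):
    """Count lag-1 transitions and compute adjusted residuals (z-scores).

    z_ij = (x_ij - e_ij) / sqrt(e_ij * (1 - p_i+) * (1 - p_+j)),
    where e_ij = row_i * col_j / N.
    """
    index = {code: i for i, code in enumerate(codes)}
    counts = np.zeros((len(codes), len(codes)))
    for sequence in sequences:
        for given, target in zip(sequence, sequence[1:]):
            counts[index[given], index[target]] += 1

    total = counts.sum()
    row = counts.sum(axis=1, keepdims=True)
    col = counts.sum(axis=0, keepdims=True)
    expected = row * col / total
    denom = np.sqrt(expected * (1 - row / total) * (1 - col / total))
    with np.errstate(divide="ignore", invalid="ignore"):
        z_scores = np.where(denom > 0, (counts - expected) / denom, 0.0)
    return counts, z_scores


# Toy sequences for two hypothetical learners.
toy_codes = ["SU", "G1", "SD", "TN", "CT", "TT"]
toy_sequences = [
    ["SU", "G1", "SD", "CT", "CT", "TT", "SU", "G1", "SD", "TN", "TT"],
    ["SU", "G1", "SD", "TN", "TN", "CT", "TT"],
]
toy_counts, toy_z = lag1_adjusted_residuals(toy_sequences, toy_codes)

# Transitions exceeding the assumed cutoff, named as in the text (e.g., "SUG1").
significant = [
    toy_codes[i] + toy_codes[j]
    for i in range(len(toy_codes))
    for j in range(len(toy_codes))
    if toy_z[i, j] > 1.96
]
```

Pooling transitions across learners, as done here, is one common choice; the residuals could equally be computed per group to compare the H-SRL and L-SRL patterns.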

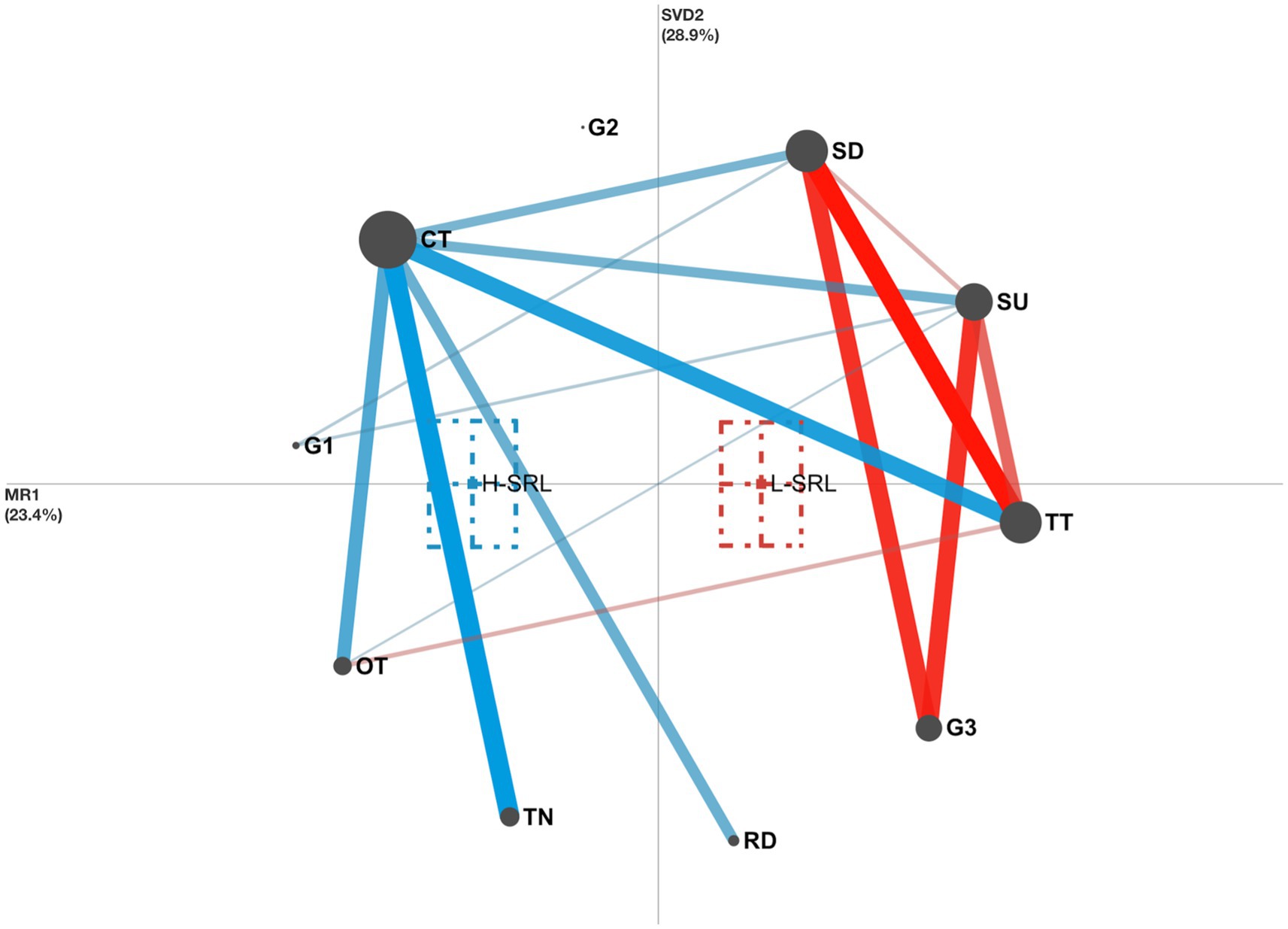

The results of ENA showed that the x-axis corresponding to MR explained 23.4% of the variance in the network, while the y-axis corresponding to SVD explained 28.9% of the variance in the network. Moreover, two-sample t tests were applied to examine whether the network centroids (colored squares surrounded by dashed-border rectangles representing 95% confidence intervals) for the two groups differed along both the x-axis and the y-axis. We found a significant difference between the H-SRL (M = −1.34, SD = 0.93) and the L-SRL (M = 0.74, SD = 1.16) on the x-axis (t = −9.86, df = 86.81, p < 0.001) but a nonsignificant difference between the H-SRL (M = 0.00, SD = 1.34) and the L-SRL (M = 0.00, SD = 1.79) on the y-axis (t = 0.00, df = 90.26, p = 1.00). These findings indicate that the H-SRL made stronger connections to G1, TN, CT, and RD, whereas the L-SRL made stronger connections to G3 and TT.

The ENA subtraction graph (Figure 12) was used to compare the mean networks of these two groups. Specifically, the H-SRL displayed stronger connections of SU and SD with G1 and weaker connections of SU and SD with G3 than the L-SRL, indicating that the H-SRL tended to choose mastery learning goals, while the L-SRL tended to set performance-avoidance learning goals. Moreover, the H-SRL showed more associations related to TN, CT, and RD and fewer associations related to TT than the L-SRL, indicating that the H-SRL preferred enacting organization and time management strategies to master the course content, while the L-SRL focused more on the unit tests than on the course materials. The H-SRL exhibited stronger links between OT and SU and CT and weaker links between OT and TT than the L-SRL. These links indicate that the H-SRL was more likely to exhibit off-task behaviors while planning for the learning units and usually checked the remaining learning time when off-task behaviors occurred. In contrast, when continuing learning was impeded due to off-task activities, the L-SRL was more inclined to start taking the unit tests rather than shifting back to reading the unit materials.

Figure 12. Comparison between the H-SRL (blue) and L-SRL (red) groups. Blue edges represent stronger associations in the H-SRL network; red edges represent stronger associations in the L-SRL network.
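The subtraction underlying Figure 12 can be illustrated as follows. This sketch assumes each participant is already represented by a vector of normalized connection weights; the function name, edge labels, and toy values are hypothetical and are not taken from the study's data.

```python
import numpy as np


def subtraction_network(vectors, groups, edge_names, group_a="H-SRL", group_b="L-SRL"):
    """Mean network of group_a minus mean network of group_b, edge by edge.

    Positive values mark connections that are stronger in group_a;
    negative values mark connections that are stronger in group_b.
    """
    matrix = np.asarray(vectors, dtype=float)
    labels = np.asarray(groups)
    difference = matrix[labels == group_a].mean(axis=0) - matrix[labels == group_b].mean(axis=0)
    return dict(zip(edge_names, difference))


# Toy connection weights for four hypothetical participants.
toy_edges = ["SU-G1", "SU-G3", "OT-TT"]
toy_vectors = [
    [0.8, 0.1, 0.1],
    [0.6, 0.3, 0.1],
    [0.2, 0.7, 0.5],
    [0.2, 0.5, 0.7],
]
toy_groups = ["H-SRL", "H-SRL", "L-SRL", "L-SRL"]
toy_difference = subtraction_network(toy_vectors, toy_groups, toy_edges)
```

In the toy result, a positive entry would be drawn as a blue edge and a negative entry as a red edge, following the color convention of Figure 12.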

This study found heterogeneity in students’ behavioral processes for online SRL. Specifically, we classified the participants into two clusters (i.e., H-SRL and L-SRL) according to their traces of SRL behaviors derived from an AOC with SRL-enabling tools. We found that the H-SRL obtained higher learning performance than the L-SRL. This finding is partially consistent with Cicchinelli et al. (2018), who leveraged behavioral trajectories of SRL codified from trace data derived from an LMS to detect student subgroups. They found that the group that performed SRL behaviors in regular and structured ways achieved higher test scores than the groups that rarely or irregularly engaged in SRL activities.

Additionally, the H-SRL experienced lower ECL and higher GCL than the L-SRL, which verifies the theoretical assumption that learners’ self-regulation of learning processes has associations with their cognitive loads (Seufert, 2018). Moreover, these findings support the view that how learners process instruction relates to ECL and depends on learners’ abilities and willingness to exert self-control (Eitel et al., 2020). In this study, compared to the L-SRL, the H-SRL who exerted more self-control of their cognitive processing (e.g., checking learning time frequently) showed lower ECL. Additionally, the findings substantiate another assertion that the use of learning strategies and external learning supports is associated with GCL (Klepsch and Seufert, 2020). In this study, the H-SRL who engaged more with SRL strategies via the SRL tools, particularly time management and notetaking, experienced higher GCL than the L-SRL. Another possible reason for this result is that in contrast with the L-SRL, the H-SRL bore lower ECL, freeing up more mental resources for germane processes to maximize learning. Additionally, the H-SRL showed more CE than the L-SRL, which aligns with the findings of Kim et al. (2021), who demonstrated the relationship between SRL strategy use and CE in AOCs. They found that students who more frequently performed resource management strategies (e.g., time management) showed higher CE.

The above findings suggest that although the SRL-enabling tools were provided to support SRL strategies in AOL environments, not every learner will take advantage of such tools or benefit from them enough to regulate their learning processes well. It is highly possible that some learners ignore or do not comply with the provided SRL support (Bannert et al., 2015). When compliance with support is poor, learners’ regulation may not be well aligned with the learning processes, or learners may fail to engage in deeper learning processes (Seufert, 2018).

The LSA results showed that both groups went through three-phase SRL cycles and executed many identical behavior transitions among SRL behaviors, which implies that the SRL-enabling tools, to some extent, can facilitate students’ implementation of SRL strategies in AOL. However, we also noticed that both groups performed off-task behaviors in the performance phase. This is not surprising, as opportunity costs for studying are relatively high when students are in AOCs (Eitel et al., 2020). Opportunity costs reflect events or activities that one must delay or sacrifice to achieve an academic goal (Wolters and Brady, 2021). For example, it is challenging to persist in engaging with an AOC when the mobile phone is in reach or when friends are present. To address this issue, as suggested by Kim et al. (2021), educators should design AOCs in a way that is helpful to sustain students’ engagement throughout the course. Additionally, the LSA results also reveal the differences in behavior transitions between the groups. For the H-SRL, off-task situations were detected in the forethought phase. One possible explanation is that insufficient reference information provided in the forethought phase made learners struggle to accurately judge the difficulty of course content and thus hesitate to set learning goals and learning durations. This explanation is underpinned by Hwang et al. (2021), who report that students who referred to peers’ suggestions for self-regulation were more likely to set appropriate learning goals in an AOC with SRL support. In contrast, the L-SRL displayed more transition sequences related to taking tests. Specifically, when encountering some challenges, such as distraction or managing learning processes independently, the L-SRL tended to avoid such challenges by attempting unit tests directly, suggesting that the L-SRL had a strong tendency to follow surface learning approaches. 
Surface learning approaches are characterized by weak learner commitment toward studying, low engagement with learning content, and high concentration on assessment and are negatively associated with learning performance (Matcha et al., 2019; Taub et al., 2022). Similarly, many prior studies on SRL also demonstrated the adoption of surface learning approaches in AOL (Loeffler et al., 2019). For example, based on the use of study tactics extracted from trace data that an LMS captured, Saint et al. (2020b) identified four learner strategy groups (i.e., active agile, summative gamblers, active cohesive, and semiengaged groups) and reported that the summative gamblers group tended to use surface learning approaches and underperformed on course exams compared with other groups. Specifically, this group mostly focused on summative assessments and exhibited suboptimal learning behaviors such as jumping straight to a summative test after goal setting.

We conducted an ENA to confirm and complement the LSA findings. Unlike the LSA, which generated directional transition sequences, the ENA quantitatively compared the two groups’ networks of co-occurrences between behaviors and uncovered the group differences in specific network connections in more detail. Specifically, a significant difference was found in the co-occurrence networks between the two groups. Moreover, the ENA subtraction graph showed that the H-SRL had stronger associations between selecting learning units and performing off-task behaviors, whereas the L-SRL had stronger associations of taking unit tests with both setting learning durations and performing off-task behaviors, which confirmed the LSA findings. More interestingly, whereas the LSA results showed that both groups shared some common behavior patterns, the ENA subtraction graph unveiled group differences within these patterns. Specifically, the H-SRL made more connections to setting mastery learning goals and managing learning time, which echoes prior review research by Wolters and Brady (2021), who reported positive correlations between college students’ use of time management strategies and the adoption of mastery learning goals. In contrast, the L-SRL made more connections to setting performance-avoidance learning goals and taking unit tests, which verified the LSA finding that the L-SRL preferred surface learning approaches. Similar findings were also reported by Jovanović et al. (2017), who mined five student groups (i.e., intensive, strategic, highly strategic, selective, and highly selective groups) according to students’ learning sequences representing their interactions with an LMS and revealed that the intensive and strategic groups outperformed the highly selective group in exam performance. 
They found that the intensive and strategic groups displayed mastery-goal orientation and actively practiced different learning strategies to adapt to the course requirements, whereas the highly selective group exhibited performance-goal orientation and typically employed surface learning approaches.

The present study contributes to research in the field of SRL in several ways. First, we examined the temporal dynamics of students’ SRL behaviors in the context of AOL by identifying and visualizing potential student subgroups (i.e., H-SRL and L-SRL) based on students’ trajectories of online SRL behaviors. Second, we investigated whether and how the differences in SRL behavioral trajectories are associated with AOL success by (1) testing the student subgroups for differences regarding learning performance, cognitive load, and student engagement and (2) uncovering the SRL behavior patterns of the subgroups. Third, this study provided empirical evidence for the association of the self-regulation of learning processes with cognitive load and student engagement. We found that the H-SRL had lower ECL and higher GCL and CE than the L-SRL. Last, this study is the first attempt to combine LSA and ENA to articulate and compare behavior patterns of SRL. It not only offers more holistic and in-depth insights into the temporal characteristics of SRL but also addresses, to some extent, the concerns of ontological flatness proposed by Reimann et al. (2014).

The current findings have important implications for the research and practice around SRL in the context of AOL. First, considering that the L-SRL preferred performance-avoidance goals and ignored time management and notetaking, instructors should encourage students to pursue mastery learning goals and actively engage in time management and notetaking, especially in AOCs. Additionally, this study informs the design of adaptive SRL interventions. Since not all learners were able to equally benefit from fixed SRL support, SRL interventions should be tailored to meet the needs of students with different patterns of SRL behaviors. We highly recommend that educators develop adaptive SRL interventions that can track and evaluate SRL behavior changes on the fly and provide immediate and personalized suggestions on SRL strategy use. Additionally, the temporal analyses of learners’ interactions with SRL support can evaluate how an SRL intervention relates to learning outcomes. Indeed, Damgaard and Nielsen (2018) highlighted the importance of examining the mechanisms that behavioral interventions affect, as interventions may fall short of intended positive effects if the understanding of the likely affected behavioral pathways is insufficient. Finally, the visualization of temporal SRL behaviors conveys quantitative information in a more digestible and actionable way, which enables instructors to (1) pinpoint how SRL processes unfold over time and differ across different SRL groups and (2) determine when and how to intervene as warranted.

The current study has some limitations that should be addressed in future research. First, all the participants were graduate students from universities located in northern Taiwan, which may limit the generalizability of our findings. Future studies should include a larger sample of students at other educational levels and from different countries/regions. Second, as with most SRL research, this study conducted a postanalysis of students’ SRL behaviors. Future studies could integrate this postanalysis into AOCs to offer students immediate learning analytics-based feedback to support their calibration for SRL. Third, this study did not collect students’ scores on prior knowledge tests regarding research ethics, which limits the examination of the relationship between students’ prior knowledge and their SRL behavioral traces. In the future, researchers could investigate whether student groups with distinct SRL processes differ in prior knowledge and how students with different levels of prior knowledge perform their SRL behavioral trajectories. Fourth, the participants’ SRL behaviors were dominated by time management due to the time restrictions imposed in the course, which may make our findings unrepresentative of most behavioral data-based SRL studies, especially those in authentic learning settings in which time management is usually in the background. Thus, we encourage researchers to examine further the association of time management with learning outcomes in AOL settings. For example, future studies could explore how students’ learning outcomes are related to the frequency of time management behaviors or SRL behavioral sequences involving time management. Moreover, it remains unclear whether our findings about distinct SRL behavioral patterns can be generalized to large-scale open AOL environments, such as MOOCs. 
Finally, because SRL is a multidimensional construct that includes (meta)cognitive, emotional, motivational, and behavioral components, it is difficult to use a single data source to capture the full range of SRL processes. Hence, future researchers could utilize multimodal multichannel data (e.g., physiological measures) to create a more comprehensive picture of SRL processes.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethical approval was not provided for this study on human participants because all of the research data were stored on the first author’s and the corresponding author’s personal computers that are password-protected, and can be accessed by only the first author and corresponding author of this paper for research purposes. All of the participants in the sample voluntarily participated in the study. Individual responses were confidential. No identifying information linked responses to individuals, and thus students’ private information was fully protected in the study. There are no conflicts of interest to declare. Written informed consent for participation was not required for this study in accordance with the national legislation and the institutional requirements.

JS contributed to course development, data collection, research idea, and manuscript writing and editing. YL contributed to research idea, data analysis, and manuscript writing and revision. XL contributed to manuscript writing and editing. XH contributed to manuscript review and editing. All authors contributed to the article and approved the submitted version.

This research was supported by the National Science and Technology Council (formerly Ministry of Science and Technology) in Taiwan through Grant numbers MOST 111-2410-H-A49-018-MY4, MOST 110-2511-H-A49-009-MY2, MOST 107-2628-H-009-004-MY3, MOST 105-2511-S-009-013-MY5, and NSC 99-2511-S009-006-MY3. The publication was made possible in part by support from the HKU Libraries Open Access Author Fund sponsored by the HKU Libraries.

We would like to thank NYCU’s ILTM (Interactive Learning Technology and Motivation, see: http://ILTM.lab.nycu.edu.tw) lab members and the students for participating in the study. We would like to thank Hsueh-Er Tsai for developing the online self-regulated learning system for the study as well as the reviewers who provided valuable comments. We would also like to thank Prof. Chien Chou and her team for their support in developing multimedia learning materials on research ethics.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2022.1096337/full#supplementary-material

Alhazbi, S., and Hasan, M. A. (2021). The role of self-regulation in remote emergency learning: comparing synchronous and asynchronous online learning. Sustainability 13:11070. doi: 10.3390/su131911070

Anthonysamy, L., Koo, A.-C., and Hew, S.-H. (2020). Self-regulated learning strategies and non-academic outcomes in higher education blended learning environments: a one decade review. Educ. Inf. Technol. 25, 3677–3704. doi: 10.1007/s10639-020-10134-2

Appleton, J. J., Christenson, S. L., and Furlong, M. J. (2008). Student engagement with school: critical conceptual and methodological issues of the construct. Psychol. Sch. 45, 369–386. doi: 10.1002/pits.20303

Azevedo, R. (2014). Issues in dealing with sequential and temporal characteristics of self- and socially-regulated learning. Metacogn. Learn. 9, 217–228. doi: 10.1007/s11409-014-9123-1

Azevedo, R., and Gašević, D. (2019). Analyzing multimodal multichannel data about self-regulated learning with advanced learning technologies: issues and challenges. Comput. Hum. Behav. 96, 207–210. doi: 10.1016/j.chb.2019.03.025

Azevedo, R., Taub, M., and Mudrick, N. V. (2018). “Understanding and reasoning about real-time cognitive, affective, and metacognitive processes to foster self-regulation with advanced learning technologies” in Handbook of self-regulation of learning and performance. eds. P. A. Alexander, D. H. Schunk, and J. A. Greene. 2nd ed (London: Routledge), 254–270.

Bakeman, R., and Quera, V. (2011). Sequential Analysis and Observational Methods for the Behavioral Sciences. Cambridge: Cambridge University Press.

Baker, R., Xu, D., Park, J., Yu, R., Li, Q., Cung, B., et al. (2020). The benefits and caveats of using clickstream data to understand student self-regulatory behaviors: opening the black box of learning processes. Int. J. Educ. Technol. High. Educ. 17, 1–24. doi: 10.1186/s41239-020-00187-1

Bannert, M., Sonnenberg, C., Mengelkamp, C., and Pieger, E. (2015). Short- and long-term effects of students’ self-directed metacognitive prompts on navigation behavior and learning performance. Comput. Hum. Behav. 52, 293–306. doi: 10.1016/j.chb.2015.05.038

Barnard, L., Lan, W. Y., To, Y. M., Paton, V. O., and Lai, S.-L. (2009). Measuring self-regulation in online and blended learning environments. Internet High. Educ. 12, 1–6. doi: 10.1016/j.iheduc.2008.10.005

Baumeister, R. F., Vohs, K. D., and Tice, D. M. (2007). The strength model of self-control. Curr. Dir. Psychol. Sci. 16, 351–355. doi: 10.1111/j.1467-8721.2007.00534.x

Chen, B., Knight, S., and Wise, A. F. (2018). Critical issues in designing and implementing temporal analytics. J. Learning Analytics 5, 1–9. doi: 10.18608/jla.2018.53.1

Cicchinelli, A., Veas, E., Pardo, A., Pammer-Schindler, V., Fessl, A., Barreiros, C., et al. (2018). “Finding traces of self-regulated learning in activity streams” in Proceedings of the 8th International Conference on Learning Analytics and Knowledge. eds. A. Pardo, K. Bartimote-Aufflick, G. Lynch, S. B. Shum, R. Ferguson, A. Merceron, and X. Ochoa (ACM), 191–200. doi: 10.1145/3170358.3170381

Cohen, J. (1988). Statistical Power Analysis for the Behavioral Sciences. United States: Lawrence Erlbaum Associates.

Damgaard, M. T., and Nielsen, H. S. (2018). Nudging in education. Econ. Educ. Rev. 64, 313–342. doi: 10.1016/j.econedurev.2018.03.008

de Bruin, A. B. H., Roelle, J., Carpenter, S. K., and Baars, M., EFG-MRE (2020). Synthesizing cognitive load and self-regulation theory: a theoretical framework and research agenda. Educ. Psychol. Rev. 32, 903–915. doi: 10.1007/s10648-020-09576-4

Eitel, A., Endres, T., and Renkl, A. (2020). Self-management as a bridge between cognitive load and self-regulated learning: the illustrative case of seductive details. Educ. Psychol. Rev. 32, 1073–1087. doi: 10.1007/s10648-020-09559-5

Elliot, A. J., and Church, M. A. (1997). A hierarchical model of approach and avoidance achievement motivation. J. Pers. Soc. Psychol. 72, 218–232. doi: 10.1037/0022-3514.72.1.218

Fincham, E., Gašević, D., Jovanović, J., and Pardo, A. (2018). From study tactics to learning strategies: an analytical method for extracting interpretable representations. IEEE Trans. Learn. Technol. 12, 59–72. doi: 10.1109/TLT.2018.2823317

Fredricks, J. A., Blumenfeld, P., Friedel, J., and Paris, A. (2005). “School engagement” in What do children need to flourish? Conceptualizing and measuring indicators of positive development. eds. K. A. Moore and L. H. Lippman (Berlin: Springer), 305–321.

Fredricks, J. A., Blumenfeld, P. C., and Paris, A. H. (2004). School engagement: potential of the concept, state of the evidence. Rev. Educ. Res. 74, 59–109. doi: 10.3102/00346543074001059

Gabadinho, A., Ritschard, G., Müller, N. S., and Studer, M. (2011). Analyzing and visualizing state sequences in R with TraMineR. J. Stat. Softw. 40, 1–37. doi: 10.18637/jss.v040.i04

Greene, B. A. (2015). Measuring cognitive engagement with self-report scales: reflections from over 20 years of research. Educ. Psychol. 50, 14–30. doi: 10.1080/00461520.2014.989230

Hennig, C. (2020). Fpc: flexible procedures for clustering. Available at: https://cran.r-project.org/web/packages/fpc/index.html

Hew, K. F., Jia, C., Gonda, D. E., and Bai, S. (2020). Transitioning to the “new normal” of learning in unpredictable times: pedagogical practices and learning performance in fully online flipped classrooms. Int. J. Educ. Technol. High. Educ. 17, 1–22. doi: 10.1186/s41239-020-00234-x

Hong, W., Bernacki, M. L., and Perera, H. N. (2020). A latent profile analysis of undergraduates’ achievement motivations and metacognitive behaviors, and their relations to achievement in science. J. Educ. Psychol. 112, 1409–1430. doi: 10.1037/edu0000445

Hwang, G.-J., Wang, S.-Y., and Lai, C.-L. (2021). Effects of a social regulation-based online learning framework on students’ learning achievements and behaviors in mathematics. Comput. Educ. 160:104031. doi: 10.1016/j.compedu.2020.104031

Jovanović, J., Gašević, D., Dawson, S., Pardo, A., and Mirriahi, N. (2017). Learning analytics to unveil learning strategies in a flipped classroom. Internet High. Educ. 33, 74–85. doi: 10.1016/j.iheduc.2017.02.001

Kassambara, A., and Mundt, F. (2020). Factoextra: extract and visualize the results of multivariate data analyses. Available at: https://cran.r-project.org/web/packages/factoextra/index.html

Kim, D., Jo, I.-H., Song, D., Zheng, H., Li, J., Zhu, J., et al. (2021). Self-regulated learning strategies and student video engagement trajectory in a video-based asynchronous online course: a Bayesian latent growth modeling approach. Asia Pac. Educ. Rev. 22, 305–317. doi: 10.1007/s12564-021-09690-0

Kim, D., Yoon, M., Jo, I.-H., and Branch, R. M. (2018). Learning analytics to support self-regulated learning in asynchronous online courses: a case study at a women’s university in South Korea. Comput. Educ. 127, 233–251. doi: 10.1016/j.compedu.2018.08.023

Klepsch, M., and Seufert, T. (2020). Understanding instructional design effects by differentiated measurement of intrinsic, extraneous, and germane cognitive load. Instr. Sci. 48, 45–77. doi: 10.1007/s11251-020-09502-9

Knight, S., Wise, A. F., and Chen, B. (2017). Time for change: why learning analytics needs temporal analysis. J. Learning Analytics 4, 7–17. doi: 10.18608/jla.2017.43.2

Lai, C.-L., Hwang, G.-J., and Tu, Y.-H. (2018). The effects of computer-supported self-regulation in science inquiry on learning outcomes, learning processes, and self-efficacy. Educ. Technol. Res. Dev. 66, 863–892. doi: 10.1007/s11423-018-9585-y

Lan, M., Hou, X., Qi, X., and Mattheos, N. (2019). Self-regulated learning strategies in world’s first MOOC in implant dentistry. Eur. J. Dent. Educ. 23, 278–285. doi: 10.1111/eje.12428

Leppink, J., Paas, F., Van der Vleuten, C. P. M., Van Gog, T., and Van Merriënboer, J. J. G. (2013). Development of an instrument for measuring different types of cognitive load. Behav. Res. Methods 45, 1058–1072. doi: 10.3758/s13428-013-0334-1

Liu, Y., and Sun, J. C.-Y. (2021). “The mediation effects of task strategies on the relationship between engagement and cognitive load in a smart instant feedback system” in Proceedings of the 21st IEEE international conference on advanced learning technologies (IEEE).

Loeffler, S. N., Bohner, A., Stumpp, J., Limberger, M. F., and Gidion, G. (2019). Investigating and fostering self-regulated learning in higher education using interactive ambulatory assessment. Learn. Individ. Differ. 71, 43–57. doi: 10.1016/j.lindif.2019.03.006

Marquart, C. L., Hinojosa, C., Swiecki, Z., and Shaffer, D. W. (2018). Epistemic network analysis. Available at: http://app.epistemicnetwork.org/login.html

Martin, F., Xie, K., and Bolliger, D. U. (2022). Engaging learners in the emergency transition to online learning during the COVID-19 pandemic. J. Res. Technol. Educ. 54, S1–S13. doi: 10.1080/15391523.2021.1991703

Matcha, W., Gašević, D., Ahmad Uzir, N., Jovanović, J., and Pardo, A. (2019). Analytics of learning strategies: associations with academic performance and feedback. In S. Hsiao, J. Cunningham, K. McCarthy, G. Lynch, C. Brooks, R. Ferguson, & U. Hoppe (Eds.), Proceedings of the 9th International Conference on Learning Analytics and Knowledge (pp. 461–470). ACM. doi: 10.1145/3303772.3303787

Miller, D. J., Noble, P., Medlen, S., Jones, K., and Munns, S. L. (2021). Brief research report: psychometric properties of a cognitive load measure when assessing the load associated with a course. J. Exp. Educ. 1–10. doi: 10.1080/00220973.2021.1947763

Panadero, E. (2017). A review of self-regulated learning: six models and four directions for research. Front. Psychol. 8:422. doi: 10.3389/fpsyg.2017.00422

Pellas, N. (2014). The influence of computer self-efficacy, metacognitive self-regulation and self-esteem on student engagement in online learning programs: evidence from the virtual world of second life. Comput. Hum. Behav. 35, 157–170. doi: 10.1016/j.chb.2014.02.048

Pérez-Álvarez, R. A., Maldonado-Mahauad, J., Sharma, K., Sapunar-Opazo, D., and Pérez-Sanagustín, M. (2020). Characterizing learners’ engagement in MOOCs: an observational case study using the NoteMyProgress tool for supporting self-regulation. IEEE Trans. Learn. Technol. 13, 676–688. doi: 10.1109/TLT.2020.3003220

Pintrich, P. R. (2000). “The role of goal orientation in self-regulated learning” in Handbook of Self-regulation. eds. M. Boekaerts, P. R. Pintrich, and M. Zeidner (United States: Academic Press), 451–502.

Reimann, P. (2009). Time is precious: variable- and event-centred approaches to process analysis in CSCL research. Int. J. Comput.-Support. Collab. Learn. 4, 239–257. doi: 10.1007/s11412-009-9070-z

Reimann, P., Markauskaite, L., and Bannert, M. (2014). E-research and learning theory: what do sequence and process mining methods contribute? Br. J. Educ. Technol. 45, 528–540. doi: 10.1111/bjet.12146

Saint, J., Fan, Y., Gašević, D., and Pardo, A. (2022). Temporally-focused analytics of self-regulated learning: a systematic review of literature. Computers and Education: Artificial Intelligence 3:100060. doi: 10.1016/j.caeai.2022.100060

Saint, J., Gašević, D., Matcha, W., Ahmad Uzir, N., and Pardo, A. (2020a). Combining analytic methods to unlock sequential and temporal patterns of self-regulated learning. In C. Rensing, H. Drachsler, V. Kovanović, N. Pinkwart, M. Scheffel, & K. Verbert (Eds.), Proceedings of the 10th international conference on learning analytics and knowledge (pp. 402–411). ACM. doi: 10.1145/3375462.3375487

Saint, J., Whitelock-Wainwright, A., Gašević, D., and Pardo, A. (2020b). Trace-SRL: a framework for analysis of microlevel processes of self-regulated learning from trace data. IEEE Trans. Learn. Technol. 13, 861–877. doi: 10.1109/TLT.2020.3027496

Seufert, T. (2018). The interplay between self-regulation in learning and cognitive load. Educ. Res. Rev. 24, 116–129. doi: 10.1016/j.edurev.2018.03.004

Seufert, T. (2020). Building bridges between self-regulation and cognitive load—an invitation for a broad and differentiated attempt. Educ. Psychol. Rev. 32, 1151–1162. doi: 10.1007/s10648-020-09574-6

Siadaty, M., Gasevic, D., and Hatala, M. (2016). Trace-based micro-analytic measurement of self-regulated learning processes. J. Learning Analytics 3, 183–214. doi: 10.18608/jla.2016.31.11

Srivastava, N., Fan, Y., Raković, M., Singh, S., Jovanović, J., van der Graaf, J., et al. (2022). Effects of internal and external conditions on strategies of self-regulated learning: a learning analytics study. In A. F. Wise, R. Martinez-Maldonado, & I. Hilliger (Eds.), Proceedings of the 12th international conference on learning analytics and knowledge (pp. 392–403). ACM. doi: 10.1145/3506860.3506972

Stiller, K. D., and Bachmaier, R. (2018). Cognitive loads in a distance training for trainee teachers. Front. Educ. 3:44. doi: 10.3389/feduc.2018.00044

Sun, J. C.-Y., and Rueda, R. (2012). Situational interest, computer self-efficacy and self-regulation: their impact on student engagement in distance education. Br. J. Educ. Technol. 43, 191–204. doi: 10.1111/j.1467-8535.2010.01157.x

Sun, J. C.-Y., Wu, Y.-T., and Lee, W.-I. (2017). The effect of the flipped classroom approach to OpenCourseWare instruction on students’ self-regulation. Br. J. Educ. Technol. 48, 713–729. doi: 10.1111/bjet.12444

Sun, J. C.-Y., Lin, C.-T., and Chou, C. (2018). Applying learning analytics to explore the effects of motivation on online students’ reading behavioral patterns. International Review of Research in Open and Distributed Learning 19, 210–227. doi: 10.19173/irrodl.v19i2.2853

Sun, J. C.-Y., Yu, S.-J., and Chao, C.-H. (2019). Effects of intelligent feedback on online learners’ engagement and cognitive load: the case of research ethics education. Educational Psychology 39, 1293–1310. doi: 10.1080/01443410.2018.1527291

Sun, J. C.-Y., and Liu, Y. (2022). The mediation effect of online self-regulated learning between engagement and cognitive load: a case of an online course with smart instant feedback. International Journal of Online Pedagogy and Course Design (IJOPCD) 12, 1–17. doi: 10.4018/IJOPCD.295953

Sun, J. C.-Y., Tsai, H.-E., and Cheng, W. K. R. (2023). “Effects of personalized learning integrated with data visualization on the behavioral patterns of online learners” in Paper will be presented at the international conference on artificial intelligence and education (IEEE)

Sweller, J. (2010). Element interactivity and intrinsic, extraneous, and germane cognitive load. Educ. Psychol. Rev. 22, 123–138. doi: 10.1007/s10648-010-9128-5

Sweller, J., van Merrienboer, J. J. G., and Paas, F. G. W. C. (1998). Cognitive architecture and instructional design. Educ. Psychol. Rev. 10, 251–296. doi: 10.1023/A:1022193728205

Sweller, J., van Merriënboer, J. J. G., and Paas, F. (2019). Cognitive architecture and instructional design: 20 years later. Educ. Psychol. Rev. 31, 261–292. doi: 10.1007/s10648-019-09465-5

Taub, M., Banzon, A. M., Zhang, T., and Chen, Z. (2022). Tracking changes in students’ online self-regulated learning behaviors and achievement goals using trace clustering and process mining. Front. Psychol. 13:813514. doi: 10.3389/fpsyg.2022.813514

Van Merriënboer, J. J. G., and Sweller, J. (2010). Cognitive load theory in health professional education: design principles and strategies. Med. Educ. 44, 85–93. doi: 10.1111/j.1365-2923.2009.03498.x

Winne, P. H. (2010). Improving measurements of self-regulated learning. Educ. Psychol. 45, 267–276. doi: 10.1080/00461520.2010.517150

Wolters, C. A., and Brady, A. C. (2021). College students’ time management: a self-regulated learning perspective. Educ. Psychol. Rev. 33, 1319–1351. doi: 10.1007/s10648-020-09519-z

Wong, J., Baars, M., Davis, D., Van Der Zee, T., Houben, G.-J., and Paas, F. (2019a). Supporting self-regulated learning in online learning environments and MOOCs: a systematic review. Int. J. Human–Computer Interaction 35, 356–373. doi: 10.1080/10447318.2018.1543084

Wong, J., Khalil, M., Baars, M., de Koning, B. B., and Paas, F. (2019b). Exploring sequences of learner activities in relation to self-regulated learning in a massive open online course. Comput. Educ. 140:103595. doi: 10.1016/j.compedu.2019.103595

Wong, Z. Y., and Liem, G. A. D. (2021). Student engagement: current state of the construct, conceptual refinement, and future research directions. Educ. Psychol. Rev. 34, 107–138. doi: 10.1007/s10648-021-09628-3

Ye, D., and Pennisi, S. (2022). Using trace data to enhance students’ self-regulation: a learning analytics perspective. Internet High. Educ. 54:100855. doi: 10.1016/j.iheduc.2022.100855

Yoon, M., Lee, J., and Jo, I.-H. (2021). Video learning analytics: investigating behavioral patterns and learner clusters in video-based online learning. Internet High. Educ. 50:100806. doi: 10.1016/j.iheduc.2021.100806

Zheng, J., Li, S., and Lajoie, S. P. (2021). Diagnosing virtual patients in a technology-rich learning environment: a sequential mining of students’ efficiency and behavioral patterns. Educ. Inf. Technol. 27, 4259–4275. doi: 10.1007/s10639-021-10772-0

Zheng, J., Xing, W., and Zhu, G. (2019). Examining sequential patterns of self- and socially shared regulation of STEM learning in a CSCL environment. Comput. Educ. 136, 34–48. doi: 10.1016/j.compedu.2019.03.005

Keywords: temporal learning analytics, educational data mining, asynchronous online course, self-regulated learning, cognitive load, student engagement

Citation: Sun JC-Y, Liu Y, Lin X and Hu X (2023) Temporal learning analytics to explore traces of self-regulated learning behaviors and their associations with learning performance, cognitive load, and student engagement in an asynchronous online course. Front. Psychol. 13:1096337. doi: 10.3389/fpsyg.2022.1096337

Received: 12 November 2022; Accepted: 15 December 2022;

Published: 23 January 2023.

Edited by:

Jesus de la Fuente, University of Navarra, Spain

Reviewed by:

Manuel Soriano-Ferrer, University of Valencia, Spain

Copyright © 2023 Sun, Liu, Lin and Hu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yiming Liu

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
