
ORIGINAL RESEARCH article

Front. Psychol., 15 December 2022

Sec. Cognition

Volume 13 - 2022 | https://doi.org/10.3389/fpsyg.2022.1090024

This article is part of the Research Topic “Improving Financial Decisions.”

Financially vulnerable consumers are often associated with suboptimal financial behaviors. Financial education programs evaluated so far have had difficulty reaching this target population effectively. To address this problem, we built a behaviorally informed financial education program that incorporates insights from both motivational and behavioral change theories. In a quasi-experimental field study among financially vulnerable people in the Netherlands, we compared this program with both a control group and a traditional program group. Compared with the control group, we found robust positive effects of the behaviorally informed program on financial skills and knowledge and on self-reported financial behavior, but not on other outcomes. Additionally, we found no evidence that the behaviorally informed program performed better than the traditional program. Finally, we discuss the findings and limitations of this study in light of the financial education literature and provide implications for policymaking and directions for future research.

A growing number of studies document suboptimal financial behaviors among low-income, low-educated, and unemployed consumers. These behaviors range from undersaving (Shurtleff, 2009) to excessive and overly expensive borrowing (Skiba and Tobacman, 2008; Cole et al., 2014) and poor financial management (Perry and Morris, 2005; De Beckker et al., 2019). Furthermore, low-income and low-educated consumers are less able to absorb shocks in income or expenses, such as those caused by the Covid-19 outbreak, which makes them financially vulnerable (Hasler et al., 2018). Survey studies consistently show that financially vulnerable consumers have lower levels of financial knowledge and skills than others (Bernheim, 1998; Lusardi and Mitchell, 2014; De Beckker et al., 2019; Klapper and Lusardi, 2020; Lusardi et al., 2020b), while lower levels of financial knowledge are associated with poorer financial management (Hilgert et al., 2003), poorer credit behavior (Lusardi and Tufano, 2015), and lower financial well-being (Yakoboski et al., 2020). Traditionally, these findings have been seen as a strong rationale for educating financially vulnerable consumers to foster healthy financial behavior and improve their financial well-being.

However, the literature is inconclusive about whether financial education is an effective policy instrument, both in general and for financially vulnerable consumers. Literature reviews disagree: some suggest that financial education is effective (e.g., Lusardi and Mitchell, 2014), while others conclude the opposite (Willis, 2011). Meta-analyses also provide mixed results. Fernandes et al. (2014) report only very small effects of financial education on financial knowledge and financial behavior. More recent meta-analyses show that financial education can affect both financial knowledge and financial behavior (Kaiser and Menkhoff, 2017; Kaiser et al., 2022), or at least some financial behaviors, such as record keeping and saving (Miller et al., 2015). However, the effects on financial behavior are small to medium.1 Furthermore, both Fernandes et al. (2014) and Kaiser and Menkhoff (2017) found that financial education is less effective for low-income clients.2 This raises a question also posed by Kaiser and Menkhoff (2017): What can be done to make financial education more effective, specifically for financially vulnerable consumers?

The purpose of our study is to investigate the impact of a behaviorally informed financial education program (BI program) targeting financially vulnerable consumers, compared with both a control group and a traditional financial education program (traditional program). Several studies suggest that effective financial education programs require a combination of knowledge transfer, skill-building, and increased motivation to create the desired changes in behavior (Hilgert et al., 2003; Peeters et al., 2018). The traditional program focuses primarily on knowledge transfer and skill-building. In designing the BI program, we supplemented these elements of the traditional program with three elements focusing on motivation and practice. First, the program follows a need-driven and adaptive approach in which participants decide which topics will be discussed. Second, trainers apply insights from autonomy-supportive teaching (Ryan and Deci, 2000a; Kusurkar et al., 2011), the stages-of-change model (Prochaska et al., 1992; Peeters et al., 2018), and motivational interviewing techniques (Miller and Rollnick, 2002) to enhance intrinsic motivation, resolve ambivalence, and support behavioral change. Third, the program contains implementation assignments to encourage practice and behavioral change. To keep the length of the program the same, the BI program pays less attention to knowledge transfer than the traditional program. Our research question is: Does the BI program improve financial outcomes compared with both no program and a traditional financial education program?

In collaboration with five Dutch local government and debt counseling organizations, we conducted a quasi-experimental study including financially vulnerable individuals. Using a difference-in-difference design, we compared the BI program with both a traditional program and a control group. We collected data both before the start of the program (baseline) and 6 months later (endline). We estimated the effects on five groups of outcomes (indices): financial skills and knowledge, financial-psychological indicators, financial behavior, financial well-being, and financial situation. In addition, we collected data during the last program session to gain insight into the program-related outcomes. The main finding of our study is that the BI program had a positive impact on self-reported financial skills and knowledge and financial behavior but not on financial well-being and financial situation. Also, the BI program was not more effective than the traditional program.

Our work contributes to the existing literature in two ways. First, we developed a financial education program based on behavioral insights and investigated whether such a program could be (more) effective for financially vulnerable consumers. Prior studies have shown that offering a program at teachable moments (Kaiser and Menkhoff, 2017) and adjusting its content and didactics (e.g., rules-of-thumb-based instructions and activating, differentiating didactics; Drexler et al., 2014; Kaiser and Menkhoff, 2018; Nagel et al., 2019; Iterbeke et al., 2020) can improve the effectiveness of financial education programs. However, the literature lacks studies that evaluate the impact of programs building on motivational insights (Peeters et al., 2018). Second, our work focuses on an important but understudied target population: financially vulnerable individuals. As discussed, financially vulnerable individuals may have large potential to benefit from financial education. However, only a limited number of studies have investigated the impact of classroom-based financial education for this target population (Collins, 2013; Reich and Berman, 2015).3 We aim to fill these gaps.

This paper proceeds as follows. In “Design of the financial education programs,” we discuss the design of both the traditional and the behaviorally informed program. “Materials and methods” describes the quasi-experimental design and sample. In “Data and empirical strategy,” we describe the data and empirical strategy. “Results” discusses our results. “Discussion” concludes with a discussion of our findings and provides some advice for policymakers.

In addressing suboptimal financial behaviors and outcomes, financial education programs have traditionally focused on financial literacy as the primary determinant. These programs build on human capital theory applied to financial behavior (Lusardi and Mitchell, 2014; Entorf and Hou, 2018), assuming that (1) poor financial decisions are caused by a lack of financial skills and knowledge (a lack of human capital), (2) financial education (knowledge transfer and skills training) improves participants’ financial skills and knowledge, (3) participants subsequently make better (better-informed) financial decisions, and (4) these decisions contribute to their financial well-being and financial situation. The traditional program in the Netherlands was developed by the National Institute for Family Finance Information (Nibud) and is used by a wide range of Dutch local government and non-profit organizations.4 The program was designed for financially vulnerable individuals, and its purpose is to promote healthy financial behaviors. The traditional program follows the rationale of human capital theory and focuses on knowledge transfer and skills training.

Table 1 summarizes the characteristics of the traditional program. The traditional program has a method-driven approach with a fixed set-up, including a fixed order of topics. The program uses standard course materials, including a workbook for participants and a manual for trainers.5 The program comprises eight basic training modules and several additional modules. The basic modules focus on essential financial knowledge (e.g., distinguishing types of income, expenditures, and financial products) and skills (e.g., budgeting, applying for additional allowances, and keeping track of finances). Importantly, the program pays limited attention to psychological aspects such as motivation and self-regulation. In the first session, participants set their own goal for the program by completing a statement (“I want to achieve the following with the course…”). During the last session, participants make an action plan and receive tips for sticking to it. The program is designed for small groups or classroom settings with one trainer and 8–15 participants. It consists of five to six weekly sessions of 2.5 h, resulting in a total of 12.5–15 h of financial education.6

We designed a behaviorally informed financial education program using insights from both motivation and behavioral change theories and elements of the traditional program. As with the traditional program, the purpose of the BI program was to foster healthy financial behavior. However, the intended mechanism for reaching this goal differs. In designing this program, we assumed that improving financial knowledge and skills is insufficient to foster healthy financial behavior. Individuals often face ambivalence, internal and external barriers, and problems with self-regulation that may prevent behavioral change. Furthermore, the financial problems faced by financially vulnerable consumers may tax the mental capacity required for financial decision-making (Ridley et al., 2020; De Bruijn and Antonides, 2022). For these reasons, we designed a program that focuses both on improving financial skills and knowledge and on supporting behavioral change.

The set-up of the BI program differs from the traditional program in three respects: (1) approach, (2) role of the trainer, and (3) assignments (see Table 1 for an overview). First, the BI program adopts a need-driven and adaptive approach, meaning that the contents of the program are adapted to the participants’ needs. This approach is reflected in the role participants play in designing the program. In the first session, the trainer presents potential topics organized around four modules: (1) income, (2) financial administration, (3) making ends meet (now), and (4) making ends meet (later). Participants then choose which topics they will discuss during the remaining sessions, and the trainer designs the rest of the program accordingly. As a consequence, the topics discussed differ across program rounds.

Second, we adjusted the role of the trainer. An important task of the trainer in the BI program is to increase motivation and implementation and to support behavioral change. We instructed trainers to apply elements of autonomy-supportive teaching derived from self-determination theory (Kusurkar et al., 2011). The purpose of autonomy-supportive teaching is to make participants feel autonomous, competent in their learning, and supported by their peers and trainers (enhancing relatedness), all of which increase intrinsic or autonomous motivation (Ryan and Deci, 2000a,b; Kusurkar et al., 2011).7 We translated these insights into two elements of the program: (1) a design-your-program assignment during the first session, in which participants set their own goals and choose topics for the remaining sessions, and (2) participants deciding on their own homework. Additionally, we instructed trainers to avoid a controlling context and to encourage active participation during the sessions. The trainer’s role was to create a positive, respectful, and open atmosphere in which participants felt safe to share their stories, feelings, and questions, which supported the feeling of relatedness (Kusurkar et al., 2011).

Furthermore, trainers use the transtheoretical model of behavioral change and specific interviewing techniques as motivational instruments. The transtheoretical model proposes six stages of behavioral change: precontemplation, contemplation, preparation, action, maintenance, and termination (Prochaska et al., 1992; Prochaska and Velicer, 1997). Several studies have adapted this model to the context of financial behaviors and financial education (for an overview, see Peeters et al., 2018). During the first session, the trainer discusses the model and asks participants in which stage they place themselves and which barriers they face in changing their financial behavior. In later sessions, the model serves as a reference point. Additionally, trainers apply specific interviewing techniques, such as eliciting change talk, reflective listening, and asking open questions. Change talk is a motivational interviewing technique that helps participants resolve ambivalence, a common aspect of behavioral change, by highlighting the differences between their current and desired situations (Miller and Rollnick, 2002). Trainers use specific open questions (e.g., How do you do it now? How are you going to do it? And how do you keep it up?) to enhance the implementation of desired behaviors.

Third, we designed implementation assignments to encourage behavioral change. Each program session ends with participants making a goal-action plan and creating a rule of thumb. In a goal-action plan, participants define a session-related goal, describe the activities planned to achieve that goal, describe what and whom they need to perform these activities, and set a deadline. These plans may help participants develop concrete, actionable goals and apply them in practice. Rules of thumb are simple heuristics or routines for financial decision-making (e.g., “I save 50 euros every month for unexpected expenditures”) that are easy to recall and implement. Drexler et al. (2014) found that a rule-of-thumb-based financial education program for micro-entrepreneurs worked better than a standard accounting approach. Because universal rules of thumb do not address the complexity of the context of consumer financial decisions (Willis, 2008), we chose self-created rules of thumb as an assignment. To create enough room for these new elements, the BI program pays less attention to knowledge transfer, while keeping the program about the same length as the traditional program.

In other respects, the set-ups of the BI and traditional programs are similar. Before roll-out, we tested the BI program in a field setting and improved the program materials. We then trained the BI program trainers in the foundations, design, and key elements of this program during a full-day session. Trainers taught either the BI or the traditional program, not both.

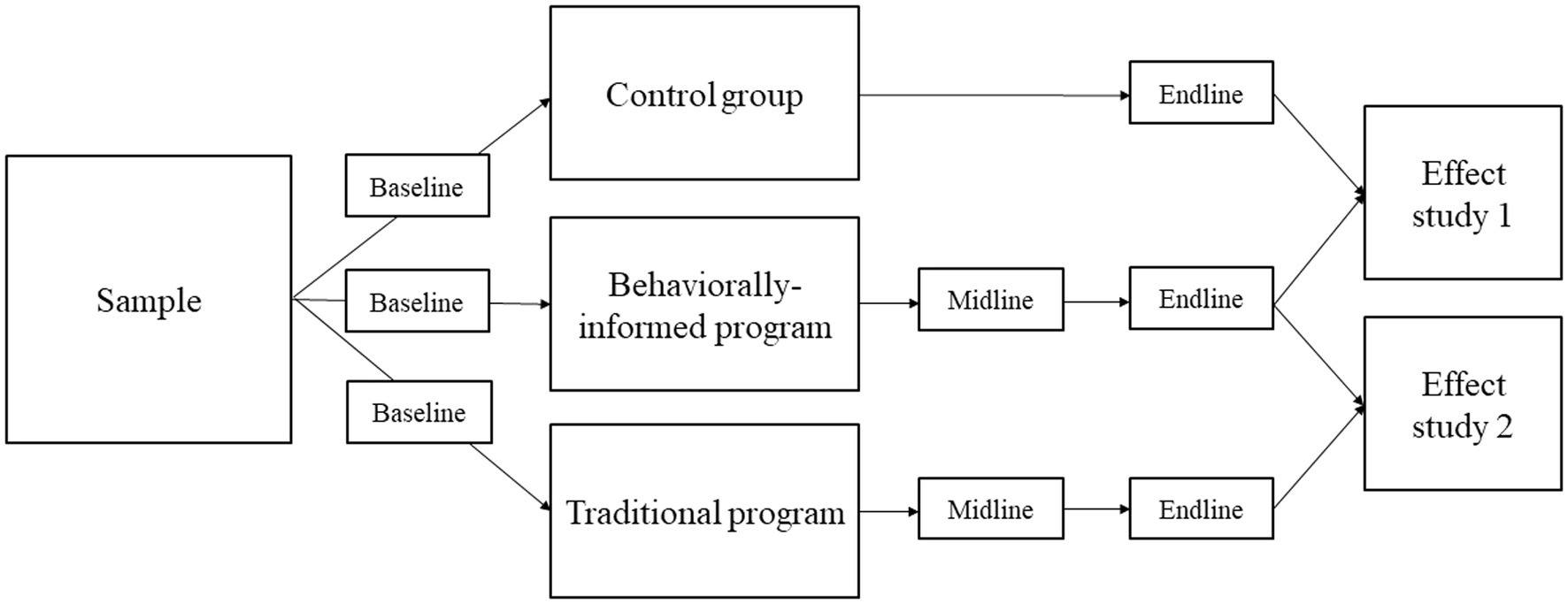

Figure 1 shows the quasi-experimental design of our study, which consisted of three conditions: (1) a traditional financial education program group, (2) a behaviorally informed (BI) financial education program group, and (3) a control group receiving no financial education. We conducted the field study in collaboration with five local government or debt counseling organizations. These field partners varied in area (urban vs. rural), population size (from cities with fewer than 40,000 to cities with more than 300,000 inhabitants), and type of organization (municipality, social welfare organization). None of these field partners was able to implement all three conditions. Three field partners (numbered 1–3) implemented the BI program and a control group, while two field partners (numbered 4 and 5) implemented both programs but no control group. As a consequence, the quasi-experimental design consisted of two separate effect studies. Effect study 1 focused on the effects of the BI program compared with the control group. Effect study 2 investigated whether the BI program was more effective than the traditional program. The design of this study was approved by the Research Committee of the Wageningen School of Social Sciences. As a consequence, this study was exempted from review by the ethics committee (in line with the formal requirements at the time we conducted this study).

Figure 1. Quasi-experimental research design. Effect study 1 compared the behaviorally informed program group with the control group. Effect study 2 compared the behaviorally informed with the traditional program group. Baseline survey: before the start of the program; midline survey: during the last session; endline survey: 6 months after the program’s start.

Participants were recruited from September 2017 to December 2018. We implemented several program rounds for each field partner during the study period and recruited participants before each program round,8 in close connection with the organizational processes of each field partner. As a consequence, recruitment processes differed among the field partners. Field partner 1 recruited participants during locally organized recruitment events, to which it invited a broad target population (welfare claimants, debt service clients, and clients of local welfare organizations). Field partners 2, 3, and 5 mainly recruited participants among their clients (clients of budget management, debt services, and local welfare organizations) through their professionals. For field partner 5, some clients participated in the program to complete their budget management trajectory. For field partner 4, most participants enrolled in a financial education program as part of their debt reduction trajectory. We classified the participants as financially vulnerable based on the recruitment process (mainly among debt service clients and welfare claimants) and the baseline sample characteristics (see “Sample information and balancing”).

All potential participants were informed about the purpose of the study, assignment procedures (if applicable), expected efforts (completing surveys), incentives for completing the survey, anonymous processing of the data, and the voluntary character of participation in the study. If people agreed to participate in the study, they signed an informed consent form. A total of 277 participants signed the consent form and completed the baseline survey, while 174 participants also completed the endline survey.

We applied different procedures in assigning participants to the experimental conditions. For effect study 1, we planned to randomly assign participants to the experimental and control conditions as much as possible. However, this was not feasible in practice, mainly because in several program rounds, our field partners recruited too few participants to split them into a treatment and a control group. In these cases, we assigned most or all participants to the BI program group to ensure that the program could start with a minimum number of participants. Adhering to our key principle that all control group participants should be willing to participate in a financial education program, we applied three methods to create a control group. First, some participants were randomly assigned to the control group (9 participants in the final sample). Second, we asked potential participants who were willing to participate in the program but could not start immediately (e.g., for practical reasons) to participate in the control group (10 participants). Both these and the randomly assigned participants were invited to participate in the program after completing the endline survey. Third, we recruited an additional group of participants outside the standard recruitment process. Using mailings and face-to-face recruitment, we asked people from a similar target group (debt trajectory clients, social benefit claimants, and visitors of financial consultation hours) to participate in the study. To uphold our key principle for the control group, we included an additional question in the baseline survey: “Are you willing to participate in a financial education program if you were able and had the time to participate?” We included in the control group only participants who answered “yes” to this question (14 in the final sample). In the final sample, four participants took part in the study twice: first in the control group and thereafter in the BI program group. Overall, the control group consisted of 33 participants.

For effect study 2, we assigned participants to one of the two program groups as follows. For field partner 4, we asked potential participants for their preferred time of day (afternoon or evening) to follow the program and assigned them accordingly. To reduce selection bias, we rotated the time slots of the programs (round 1: traditional program in the afternoon and BI program in the evening; round 2: vice versa). For field partner 5, we randomly assigned most participants to one of the two program groups; a few participants were assigned according to their preference for a particular program time. None of the field partners informed potential participants that the programs offered at different times differed in content. If two or more members of the same household participated in the study (effect study 1 or 2), we assigned them to the same experimental condition. In “Sample information and balancing,” we compare the conditions in both effect studies on background characteristics and outcomes.
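The household rule above (all members of one household share a condition) can be combined with random assignment as in the following minimal Python sketch. It illustrates the idea under stated assumptions and is not the procedure the field partners actually used: the input format of (person, household) pairs, the function name `assign_by_household`, and the choice to deal shuffled households out to the arms in turn (which keeps the number of households per arm as equal as possible) are all hypothetical.

```python
import random
from collections import defaultdict


def assign_by_household(participants, arms=("BI", "traditional"), seed=None):
    """Randomly assign whole households to arms (hypothetical helper).

    participants: iterable of (person_id, household_id) pairs.
    Returns a dict mapping person_id -> arm, so that all members of
    one household always end up in the same arm.
    """
    rng = random.Random(seed)

    # Group people by household so the household is the unit of assignment.
    households = defaultdict(list)
    for person_id, household_id in participants:
        households[household_id].append(person_id)

    # Shuffle households, then deal them out to the arms in turn; this keeps
    # the number of households per arm as equal as possible.
    household_ids = sorted(households)
    rng.shuffle(household_ids)

    assignment = {}
    for position, household_id in enumerate(household_ids):
        arm = arms[position % len(arms)]
        for person_id in households[household_id]:
            assignment[person_id] = arm
    return assignment


people = [("p1", "h1"), ("p2", "h1"), ("p3", "h2"), ("p4", "h3"), ("p5", "h4")]
print(assign_by_household(people, seed=2017))
```

Randomizing households rather than individuals is also what makes household-level clustering of standard errors the natural choice later in the analysis.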

We collected survey (baseline, midline, and endline), administrative, observational, and interview data. Participants completed the baseline survey before or at the start of the first program session; a small number completed it before the second program meeting. Participants in both program groups completed the midline survey at the end of the final program meeting. This survey contained different outcome measures than the baseline and endline surveys and focused primarily on evaluating different aspects of the program (see “Measures: Evaluation of program aspects”). The endline survey was administered about 6 months after the start of the program.9 We made substantial efforts to increase the endline response rate by sending two email reminders, sending text messages, and calling non-respondents several times.

The main survey mode for all survey rounds was CAWI (computer-assisted web interviewing). A small number of participants completed the surveys on paper; research assistants then digitized and checked these data. Participants received a cookbook or a 5-euro gift card for completing the baseline survey and a 10-euro gift card for completing the endline survey.

Table 2 provides an overview of the survey completion rates. A total of 277 participants completed the baseline survey. The final sample consisted of 140 participants for effect study 1 (33 assigned to the control group and 107 to the BI program group) and 141 participants for effect study 2 (107 assigned to the BI program group and 34 to the traditional program group). Endline response rates differed slightly across conditions, ranging from 56.9% (control group) to 68.0% (traditional program group). Additionally, we collected administrative data on program attendance, reasons for absence, and household members who also participated in the study. Furthermore, we collected observational and interview data to check whether both financial education programs were implemented as designed. Research assistants observed a substantial share of the program sessions using a standardized observation form and interviewed the trainers of both programs.

We report a large number of outcome variables relative to the sample size. As a consequence, we would expect some variables to show significant results by chance alone. To avoid overemphasizing any single significant result, we reduced the number of outcome variables using the standardized index procedure proposed by Kling et al. (2007), which has been applied in several other studies (e.g., Banerjee et al., 2015; Theodos et al., 2018). For each group of outcomes, we report a summary index. We constructed these indices as follows. First, we created a score for every single outcome, using factor analysis or principal component analysis where needed. Second, we switched signs where necessary so that higher scores correspond to “better” outcomes. Third, we standardized each outcome into a z-score, separately for effect studies 1 and 2, by subtracting the mean of the control group (traditional program group) at the corresponding survey round and dividing by the corresponding standard deviation of the control (traditional program) group. Fourth, we created five groupings of outcomes, each reflecting a specific domain, and averaged the z-scores within each grouping. Finally, we standardized each average again against the mean and standard deviation of the control (traditional program) group. As a result, the estimates can be interpreted as effect sizes in standard deviation units relative to the control (traditional program) group. Below, we briefly discuss the indices, the underlying individual outcomes, and the covariates used (see Supplementary material for all items).
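The index construction described above can be sketched in Python as follows. This is a simplified illustration, not the authors’ code: it starts from outcome scores that have already been computed (the factor or principal component scoring is not shown), handles one survey round at a time, assumes complete data with no missing scores, and uses the sample standard deviation (n − 1 denominator). The names `summary_index`, `standardize`, `outcomes`, `is_reference`, and `higher_is_better` are hypothetical; `is_reference` marks members of the control group (effect study 1) or the traditional program group (effect study 2).

```python
from statistics import mean, stdev


def standardize(values, reference_values):
    """z-scores of `values` using the mean and SD of `reference_values`."""
    mu, sd = mean(reference_values), stdev(reference_values)
    return [(v - mu) / sd for v in values]


def summary_index(outcomes, is_reference, higher_is_better):
    """Kling-style summary index for one domain and one survey round.

    outcomes: dict of outcome name -> list of scores (one per respondent)
    is_reference: list of bools, True for reference-group respondents
    higher_is_better: dict of outcome name -> bool; False flips the sign
    """
    z_by_outcome = []
    for name, values in outcomes.items():
        # Orient every outcome so that higher means "better".
        signed = values if higher_is_better[name] else [-v for v in values]
        # z-score against the reference group only.
        reference = [v for v, ref in zip(signed, is_reference) if ref]
        z_by_outcome.append(standardize(signed, reference))

    # Average the z-scores per respondent within the domain.
    averaged = [mean(z) for z in zip(*z_by_outcome)]

    # Re-standardize the average against the reference group, so that
    # effects read as reference-group standard deviation units.
    reference_avg = [v for v, ref in zip(averaged, is_reference) if ref]
    return standardize(averaged, reference_avg)


# Toy example: two outcomes, three reference and three program respondents.
index = summary_index(
    outcomes={"budgeting": [1, 2, 3, 4, 5, 6], "stress": [5, 4, 3, 2, 1, 0]},
    is_reference=[True, True, True, False, False, False],
    higher_is_better={"budgeting": True, "stress": False},
)
print([round(x, 2) for x in index])  # → [-1.0, 0.0, 1.0, 2.0, 3.0, 4.0]
```

By construction, the reference group’s index has mean 0 and standard deviation 1, which is what allows a DID coefficient on the index to be read as an effect size.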

We measured financial skills and knowledge using a one-dimensional scale (α = 0.909)10 comprising four applied knowledge items (e.g., “I know which letter or email I should keep and which I can throw away”) and four applied skills items (e.g., “I know how to make a budget”).11 Principal component analysis yielded a single component underlying the eight items.
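The α values reported for the scales in this section are conventionally Cronbach’s alpha. As a reminder of what this coefficient measures, the Python sketch below computes it from item scores with the standard formula α = k/(k − 1) · (1 − Σ item variances / variance of the total score). It assumes complete responses and items already oriented in the same direction; the function name `cronbach_alpha` is illustrative, and this is not the software the authors used.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for a list of items, each a list of respondent scores.

    All items must cover the same respondents in the same order, with no
    missing values. Sample variances (n - 1) are used throughout; because
    the same denominator appears in numerator and denominator, alpha is
    identical to the population-variance version.
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    item_variance_sum = sum(variance(item) for item in items)
    totals = [sum(scores) for scores in zip(*items)]  # scale score per respondent
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))


# Two items answered identically by four respondents: perfectly consistent.
print(cronbach_alpha([[1, 2, 3, 4], [1, 2, 3, 4]]))  # → 1.0
```

Uncorrelated items drive α toward 0, which is why a two-item measure with only moderate inter-item correlation, like the financial attitude items, is reported as having low reliability.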

The financial-psychological indicators index consisted of three parts: financial attitude, financial-psychological self-evaluation, and motivation. We measured financial attitude using two items addressing participants’ attitudes toward spending money (OECD, 2015). Because this measure is commonly used in evaluations of financial education programs, we included it despite its low reliability (r = 0.586). Financial-psychological self-evaluation included three items (α = 0.838) measuring financial self-efficacy (Lown, 2011), perceived control (Van Dijk et al., 2020), and difficulties with self-control in keeping track of one’s financial affairs. Lastly, we measured motivation using a single item: “I find it important to keep track of my financial affairs.”

The index of financial behavior consisted of three different scales: budgeting, keeping track, and consuming consciously. We measured budgeting using four items developed by Kempson et al. (2013), asking whether one makes a budget, how often and accurately one does this, and whether one sticks to it.12 The keeping-track scale consisted of five items (α = 0.845) measuring to what extent the respondents keep track of their financial affairs. The consuming-consciously scale included three items (α = 0.844) about consciously spending money.

The financial well-being index consisted of three outcomes: chronic financial stress, general financial stress, and a financial well-being scale. Chronic financial stress was measured using a six-item scale (α = 0.939) reflecting different stress symptoms related to one’s financial situation. General financial stress was measured by a single item: “In the last month, how often did you experience stress due to your financial situation?” We used the abbreviated scale (α = 0.727) of the Consumer Financial Protection Bureau (2015, 2017) to measure financial well-being.13 According to the Bureau’s definition, financial well-being refers to a state in which one can fully meet current and ongoing financial obligations, feels secure, and is able to make choices to enjoy life.

The financial situation index included three aspects of one’s household financial situation. We measured the relative financial buffer using an item asking how long one’s household could continue to pay for groceries and fixed costs if the main income source were lost (derived from OECD, 2015). Additionally, we measured how well one’s household was able to make ends meet (Eurostat, 2014) and perceived debts, by asking whether respondents thought their household had excessive debts (Lusardi and Mitchell, 2018).

We also collected survey data on age (in years), gender (male), educational level (lower, intermediate, and higher educated), migration background (dummy variable), partner, children (dummy variable), net household income (log),14 and main household income source (dummy variable: income from employment or self-employment versus other types of income). In addition, we included a dummy variable for receiving professional assistance with financial management (from an administrator or budget holder) and/or professional debt assistance (debt rescheduling scheme or amicable debt settlement). Items on income, living with a partner, and living with children were included in both the baseline and the endline surveys.

The midline survey provides insight into the differences between both programs as perceived by the participants, with respect to three categories of outcomes.

Building on the validated teaching behavior scale of Maulana et al. (2015), we constructed measures for two domains of trainers’ teaching behavior: (1) clear instruction and creating a safe learning environment (α = 0.932) and (2) adaptive teaching and activating learning (α = 0.909).15 We expected similar scores for both programs on the first dimension and higher scores for the BI program on the second.

We measured two aspects of participants’ evaluation of the program: (1) perceived usefulness of the program and its elements (α = 0.821) and (2) overall satisfaction with the program (single item). See Supplementary material for the measurement instruments.

Participants were asked whether the program contributed to (1) improving financial management (four items, α = 0.887), (2) improving financial-psychological indicators (four items, α = 0.864), and (3) providing and applying the implementation tools designed for the BI program (two items: rules of thumb and goal-action plans, α = 0.722). We expected similar scores for both programs on the first aspect and higher scores for the BI program on the second and third aspects. See Supplementary material for the measurement instruments.

Table 3 shows the descriptive statistics for our full sample and for each condition separately. Nearly all participants in our study were financially vulnerable. The average net household income was 1,424 euros per month, around the Dutch monthly minimum wage. Most participants (64%) relied on social benefits (social welfare, disability, unemployment, or pension) as their main income source; only about one-third (36%) earned income from (self-)employment. Furthermore, participants mainly had a lower (47.0%) or intermediate (40%) level of education; only a small share was higher educated. More than two-thirds of the sample received professional assistance with financial management and/or problematic debts. Most participants were female (58%), and the average age was 43.3 years. Only 22% of our sample had a partner, while about one-third (36%) had one or more children living at home.

Additionally, we tested whether the groups in both effect studies were balanced. In effect study 1, the BI program group appeared well balanced relative to the control group on several background characteristics, but less so on education level, professional assistance, household income, and partner status. In effect study 2 (BI program versus traditional program group), differences in baseline characteristics were more pronounced, specifically for income, professional assistance, and income from (self-)employment. To control for these imbalances, we included the variables listed above as covariates in our regressions. We also performed a robustness check in which we matched subjects on all background characteristics (see “Empirical methods”).
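The text does not state which balance statistic was used. One common diagnostic, shown here only as an assumed illustration, is the standardized mean difference: the difference in group means divided by the pooled standard deviation, with a rule-of-thumb threshold of about 0.1 in absolute value (some authors use 0.25). The helper names and the threshold default are hypothetical.

```python
from math import sqrt
from statistics import mean, variance


def standardized_difference(treated: list[float], control: list[float]) -> float:
    """Difference in means divided by the pooled SD (root of the mean of both group variances)."""
    pooled_sd = sqrt((variance(treated) + variance(control)) / 2)
    return (mean(treated) - mean(control)) / pooled_sd


def flag_imbalance(covariates: dict[str, tuple[list[float], list[float]]],
                   threshold: float = 0.1) -> list[str]:
    """Names of covariates whose absolute standardized difference exceeds the threshold."""
    return [name for name, (t, c) in covariates.items()
            if abs(standardized_difference(t, c)) > threshold]


# Hypothetical toy data: (treated values, control values) per covariate.
example = {"log_income": ([7.1, 7.3, 7.2], [7.0, 7.2, 7.1]),
           "partner": ([0, 1, 0], [0, 1, 0])}
print(flag_imbalance(example))  # ['log_income']
```

Unlike a t-test, this statistic does not depend on sample size, which is one reason it is often preferred for judging balance in small samples such as this one.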

We observed non-compliance in effect study 1 (see Table 2) and non-take-up in both financial education programs in effect study 2. To incorporate non-compliance and non-take-up in our empirical strategy, we estimated three types of treatment effects: (1) intention-to-treat effects (effect studies 1 and 2), (2) local average treatment effects (effect study 1), and (3) treated-only effects (effect study 2).

To estimate intention-to-treat (ITT) effects, we used a difference-in-differences (DID) approach. For the ITT analysis, we compared participants assigned to the BI program group with those assigned to the control group (effect study 1) or the traditional program group (effect study 2), irrespective of whether they actually received the treatment.16 The ITT model estimates the effects of offering the BI program and takes the following form:

$$Y_{it} = \beta_0 + \beta_1 Z_i + \beta_2 T_t + \beta_3 (Z_i \times T_t) + \boldsymbol{\gamma}'\mathbf{X}_{it} + \varepsilon_{it} \qquad (1)$$

$Y_{it}$ is the outcome variable for individual $i$ in period $t$. $Z_i$ is a binary variable indicating the assigned treatment status of each unit (0 = control or traditional program group, 1 = BI program group). $T_t$ is a time dummy (0 = baseline, 1 = endline). The parameter of interest is $\beta_3$, the DID estimator; $\varepsilon_{it}$ is the error term. The vector $\mathbf{X}_{it}$ contains the covariates. To ensure enough observations per estimated coefficient, we limited the number of covariates per specification to three. Based on the balance checks (“Sample information and balancing”), we included household income (log), professional assistance, and education level as covariates in the main specification, and income from (self-)employment, partner, and migration background in a robustness check. Where available, we included both baseline and endline values of the covariates: according to Lechner (2010), time-varying covariates (if not affected by the treatment) remove time confounding better than pre-treatment measures alone. Following the recommendations of Abadie et al. (2017), we clustered standard errors at the household level because participants belonging to the same household were assigned to the same treatment group. For the DID analyses, we used the diff package developed by Villa (2016).
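Without covariates, the OLS coefficient on the interaction of assignment and period in the ITT model equals the difference-in-differences of the four group-by-period means. The sketch below computes that quantity directly; it deliberately omits the covariates and the household-clustered standard errors used in the study, and the row layout `(y, z, t)` is an assumption for illustration.

```python
from collections import defaultdict
from statistics import mean


def did_estimate(rows):
    """2x2 difference-in-differences from cell means.

    rows: iterable of (y, z, t) with z = assigned group (1 = BI program)
    and t = period (1 = endline). Returns
    (mean[z=1, t=1] - mean[z=1, t=0]) - (mean[z=0, t=1] - mean[z=0, t=0]).
    """
    cells = defaultdict(list)
    for y, z, t in rows:
        cells[(z, t)].append(y)
    m = {key: mean(values) for key, values in cells.items()}
    # Change in the assigned group minus change in the comparison group.
    return (m[(1, 1)] - m[(1, 0)]) - (m[(0, 1)] - m[(0, 0)])


# Toy data (made up): the BI group gains 0.7, the comparison group 0.2.
rows = [(0.0, 0, 0), (0.2, 0, 1), (0.1, 1, 0), (0.8, 1, 1)]
print(round(did_estimate(rows), 3))  # 0.5
```

Subtracting the comparison group's change removes common time trends, which is why the design relies on the common trend assumption discussed in the limitations.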

For effect study 1, we additionally estimated local average treatment effects (LATE) in a two-stage least squares (2SLS) framework, which addresses potential selection effects caused by non-compliance. This model assumes that all effects operate via treated individuals (i.e., subjects who participated in the financial education program). Following the guidelines of Gerber and Green (2012), we counted partially treated subjects as fully treated. In the first stage, we used the assigned treatment status ($Z_i$) as an instrumental variable for the actual treatment status ($D_i$). The first-stage equation takes the following form:

$$D_i = \pi_0 + \pi_1 Z_i + \boldsymbol{\pi}_2'\mathbf{W}_i + u_i \qquad (2)$$

In the second stage, we used the following equation to estimate the treatment effects, where $Y_{i1}$ is the endline outcome and $\hat{D}_i$ is the fitted treatment status from the first stage:

$$Y_{i1} = \delta_0 + \delta_1 \hat{D}_i + \boldsymbol{\delta}_2'\mathbf{W}_i + e_i \qquad (3)$$

The parameter of interest is $\delta_1$, the treatment effect among compliers. Compliers are subjects who participate in the treatment (BI program) only if they are assigned to it. The vector $\mathbf{W}_i$ contains the same covariates as the difference-in-differences analysis (see Equation 1) and, additionally, the baseline score of the outcome of interest.
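With a single binary instrument, a binary treatment, and no covariates, 2SLS reduces to the Wald estimator: the ITT effect divided by the effect of assignment on participation. The sketch below illustrates this simplified case only; the authors’ specification adds covariates and the baseline outcome, and the toy data are made up.

```python
from statistics import mean


def wald_late(y, d, z):
    """IV (Wald) estimate of the LATE with binary instrument z and binary treatment d.

    LATE = (E[y | z=1] - E[y | z=0]) / (E[d | z=1] - E[d | z=0]),
    i.e. the ITT effect divided by the first-stage effect on participation.
    """
    def arm_mean(values, arm):
        return mean(v for v, zi in zip(values, z) if zi == arm)

    itt = arm_mean(y, 1) - arm_mean(y, 0)
    first_stage = arm_mean(d, 1) - arm_mean(d, 0)
    if first_stage == 0:
        raise ValueError("assignment does not shift participation")
    return itt / first_stage


# Toy data: 3 of 4 assigned participants comply; nobody in the control arm participates.
z = [1, 1, 1, 1, 0, 0, 0, 0]
d = [1, 1, 1, 0, 0, 0, 0, 0]
y = [1.0, 1.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0]
print(wald_late(y, d, z))  # 1.0 (ITT of 0.75 scaled up by the 0.75 compliance rate)
```

Because the first-stage effect is at most one, the LATE is at least as large in magnitude as the ITT effect, which is consistent with the expectation stated in the results.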

For effect study 2, the 2SLS approach was not feasible because of non-take-up in both financial education program groups. As an alternative, we estimated a treated-only model in which we compared educated participants of the BI program with educated participants of the traditional program.17 The treated-only model estimates the effects of participating in the behaviorally informed program and thus provides insight into its efficacy, which is relevant for policymakers and practitioners. We used the following equation:

$$Y_{it} = \beta_0 + \beta_1 D_i + \beta_2 T_t + \beta_3 (D_i \times T_t) + \boldsymbol{\gamma}'\mathbf{X}_{it} + \varepsilon_{it} \qquad (4)$$

Equation (4) is very similar to Equation (1). The difference is that we used the actual treatment status ($D_i$) of each unit (0 = traditional program group, 1 = BI program group) rather than the assigned treatment group ($Z_i$). We defined educated participants as those who completed at least one program session (main analyses) or at least three sessions (robustness check).
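The treated-only sample depends on the attendance threshold. As a minimal sketch, assuming a hypothetical record with a participant id, a program label, and the number of sessions attended, the helper below keeps “educated” participants and codes their actual treatment status; switching the threshold from one to three reproduces the logic of the robustness check.

```python
from typing import NamedTuple


class Participant(NamedTuple):
    pid: int        # hypothetical participant identifier
    program: str    # hypothetical label: "BI" or "traditional"
    sessions: int   # number of program sessions attended


def treated_only_sample(participants, min_sessions=1):
    """Map participant id -> actual treatment status (1 = BI, 0 = traditional),
    keeping only participants who attended at least min_sessions sessions."""
    return {p.pid: int(p.program == "BI")
            for p in participants if p.sessions >= min_sessions}


people = [Participant(1, "BI", 0), Participant(2, "BI", 2),
          Participant(3, "traditional", 4), Participant(4, "traditional", 1)]
print(treated_only_sample(people))                  # {2: 1, 3: 0, 4: 0}
print(treated_only_sample(people, min_sessions=3))  # {3: 0}
```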

To verify whether choices in model specification and estimation affected our results, we performed several robustness analyses in which we varied (1) the covariates included and (2) the inclusion or exclusion of participants from particular field partners. Additionally, we estimated a propensity score matching difference-in-differences model to measure ITT and treated-only effects. In estimating the propensity score, we used a rich set of control variables (see Table 3) that might affect treatment assignment, program participation, and (any of) the outcomes.
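The matching algorithm is not specified here, so the following is only one possible variant: 1:1 nearest-neighbour matching on the propensity score with replacement, followed by a difference-in-differences on the matched pairs. The sketch assumes propensity scores have already been estimated (for example with a logit on the Table 3 variables); the function name and tuple layout are hypothetical.

```python
from statistics import mean


def psm_did(treated, controls):
    """Nearest-neighbour (1:1, with replacement) propensity-score matching DID.

    treated, controls: lists of (pscore, y_baseline, y_endline). Each treated unit
    is matched to the control with the closest propensity score; the estimate is the
    mean over treated units of (own change in y) - (matched control's change in y).
    """
    if not controls:
        raise ValueError("need at least one control unit")
    effects = []
    for ps, y0, y1 in treated:
        # Ties are broken by list order (min returns the first closest control).
        _, c0, c1 = min(controls, key=lambda c: abs(c[0] - ps))
        effects.append((y1 - y0) - (c1 - c0))
    return mean(effects)


treated = [(0.6, 0.0, 1.0), (0.3, 0.5, 0.7)]
controls = [(0.55, 0.0, 0.2), (0.25, 0.4, 0.5), (0.9, 0.0, 0.0)]
print(round(psm_did(treated, controls), 3))  # 0.45
```

Matching on the score balances the observed covariates, while differencing removes time-invariant unobserved differences between matched units; kernel or radius matching are common alternatives.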

The statistical power of our study may have been reduced by three factors. First, our final sample was relatively small, despite intensive recruitment and survey response campaigns. Second, some of those assigned to either the traditional or the BI program group did not complete any financial education session (see Table 2), and one participant of the control group attended the sessions. Third, participants of both programs attended fewer sessions (3.6 on average) than the planned five to six. Because of these challenges, we expected difficulties in detecting treatment effects on outcomes that may change only after a change in financial behavior (financial well-being and financial situation), and in detecting significant differences between the BI and traditional programs. To address these problems, we minimized the number of outcomes by using standardized indices (see “Measures: Outcomes and covariates”). Furthermore, we applied different empirical methods and specifications to avoid overreliance on any single method or specification (as discussed above). We interpret an estimate as an effect only if it was robust, that is, significant under different specifications.
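The construction of the standardized indices is described in a section not shown here. A common approach, assumed for this sketch, is to z-score each component against the control group, average the z-scores per respondent, and re-standardize the average against the control group, so that effects are expressed in control-group SD units as in the figures. The function names and toy data are hypothetical.

```python
from statistics import mean, stdev


def z_against_control(values, is_control):
    """Standardize values using the mean and SD of the control-group observations."""
    control = [v for v, c in zip(values, is_control) if c]
    mu, sd = mean(control), stdev(control)
    return [(v - mu) / sd for v in values]


def standardized_index(components, is_control):
    """Summary index: z-score each component against the control group, average the
    z-scores per respondent, then re-standardize the average against the control group."""
    z_columns = [z_against_control(col, is_control) for col in components]
    averages = [mean(row) for row in zip(*z_columns)]
    return z_against_control(averages, is_control)


# Two components for four respondents; the first two are controls (made-up values).
index = standardized_index([[1, 2, 3, 4], [2, 4, 4, 6]], [True, True, False, False])
print([round(v, 3) for v in index])  # [-0.707, 0.707, 1.414, 2.828]
```

Combining several related items into one index reduces the number of hypothesis tests, which is the motivation given in the text.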

To estimate the effects on evaluation scores of program elements (using the midline survey; see “Measures: Evaluation of program aspects”), we applied propensity score matching using a set of control variables measured in the baseline survey. We performed these estimations using psmatch2 (Leuven and Sianesi, 2003) without clustering the standard errors.

We used observational and interview data to evaluate the implementation of both financial education programs. The traditional program was largely implemented according to its design. Its trainers discussed all main topics of the workbook in more or less the same order. Sometimes, they discussed additional modules provided in the course materials, such as “varying income,” “money and children,” and “money and relationships.” As homework, trainers asked participants to keep track of their income and expenditures during the program period. Traditional program trainers differed in how they handled the set-a-goal assignment (not taught vs. a more prominent role) and in how much they sought interaction with participants (more vs. less prominent role). The trainers involved in our study were experienced and had already run this program for several years.

Most elements of the BI program were implemented according to its design. Trainers paid considerable attention to the “design-your-program” assignment. The main topics participants chose were budgeting, bookkeeping, financial administration, and resisting temptations. Furthermore, trainers discussed the stages-of-change model, applied elements of the (motivational) interviewing techniques, and discussed the goal-action-plan assignment. We observed some deviations from the program design. First, during the “design-your-program” assignment, some trainers steered participants toward particular topics (e.g., budgeting). Second, most trainers did not deliver the rules-of-thumb assignment, possibly because of time constraints. Additionally, both programs regularly had fewer than eight participants. Overall, these deviations from the planned implementation in both programs might have attenuated the differences between the programs, thus limiting the experimental manipulation in effect study 2.

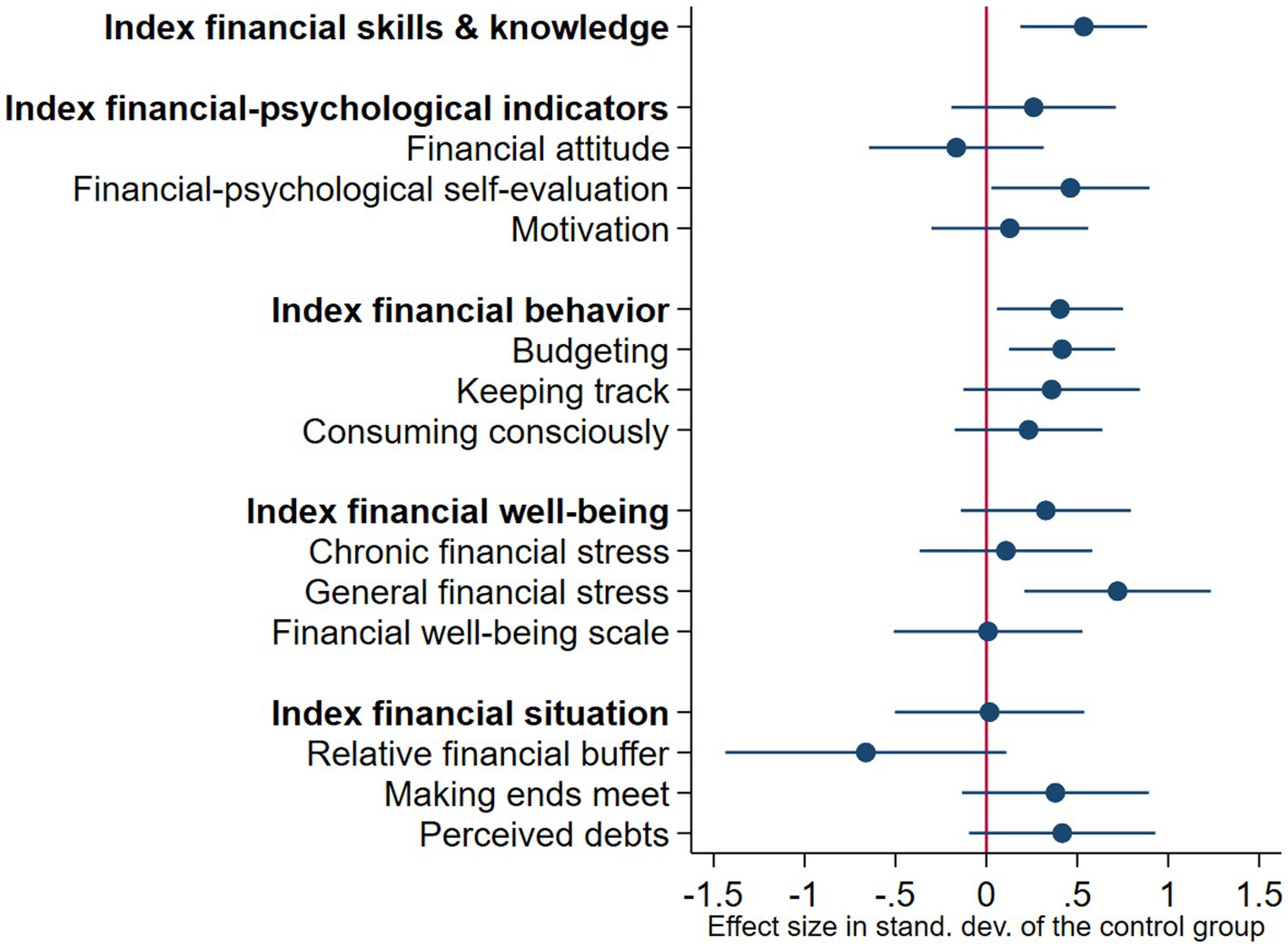

Figure 2 and Table 4 provide an overview of the ITT effects of the BI program compared with the control group. We found significant effects of being assigned to the BI program on financial skills and knowledge (ITT = 0.536, SE = 0.176, p = 0.003) and financial behavior (ITT = 0.405, SE = 0.175, p = 0.023). Thus, offering the program improved financial skills and knowledge by 0.54 SDs and financial behavior by 0.41 SDs. These results held under all specifications. In contrast, we did not find significant positive ITT effects on financial-psychological outcomes, subjective financial well-being, or financial situation.
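The figures report 95% confidence intervals. As a rough check, assuming a normal approximation (the published intervals may be slightly wider if t-based inference was used), the intervals can be recovered from the reported estimates and standard errors:

```python
from statistics import NormalDist


def normal_ci(estimate: float, se: float, level: float = 0.95) -> tuple[float, float]:
    """Wald-type confidence interval: estimate +/- z * SE under a normal approximation."""
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% interval
    return estimate - z * se, estimate + z * se


# ITT estimates and standard errors as reported in the text.
for label, est, se in [("financial skills and knowledge", 0.536, 0.176),
                       ("financial behavior", 0.405, 0.175)]:
    low, high = normal_ci(est, se)
    print(f"{label}: {est:.3f} [{low:.3f}, {high:.3f}]")
# financial skills and knowledge: 0.536 [0.191, 0.881]
# financial behavior: 0.405 [0.062, 0.748]
```

Both intervals exclude zero, consistent with the significant ITT effects reported above.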

Figure 2. Behaviorally informed program versus control group: Intention-to-treat effects. This figure summarizes the intention-to-treat effects for the five primary outcomes (see Table 4, column 1). All treatment effects are presented as standardized z-scores, standardized to the control group. Each entry shows the standardized outcome and its 95% confidence interval.

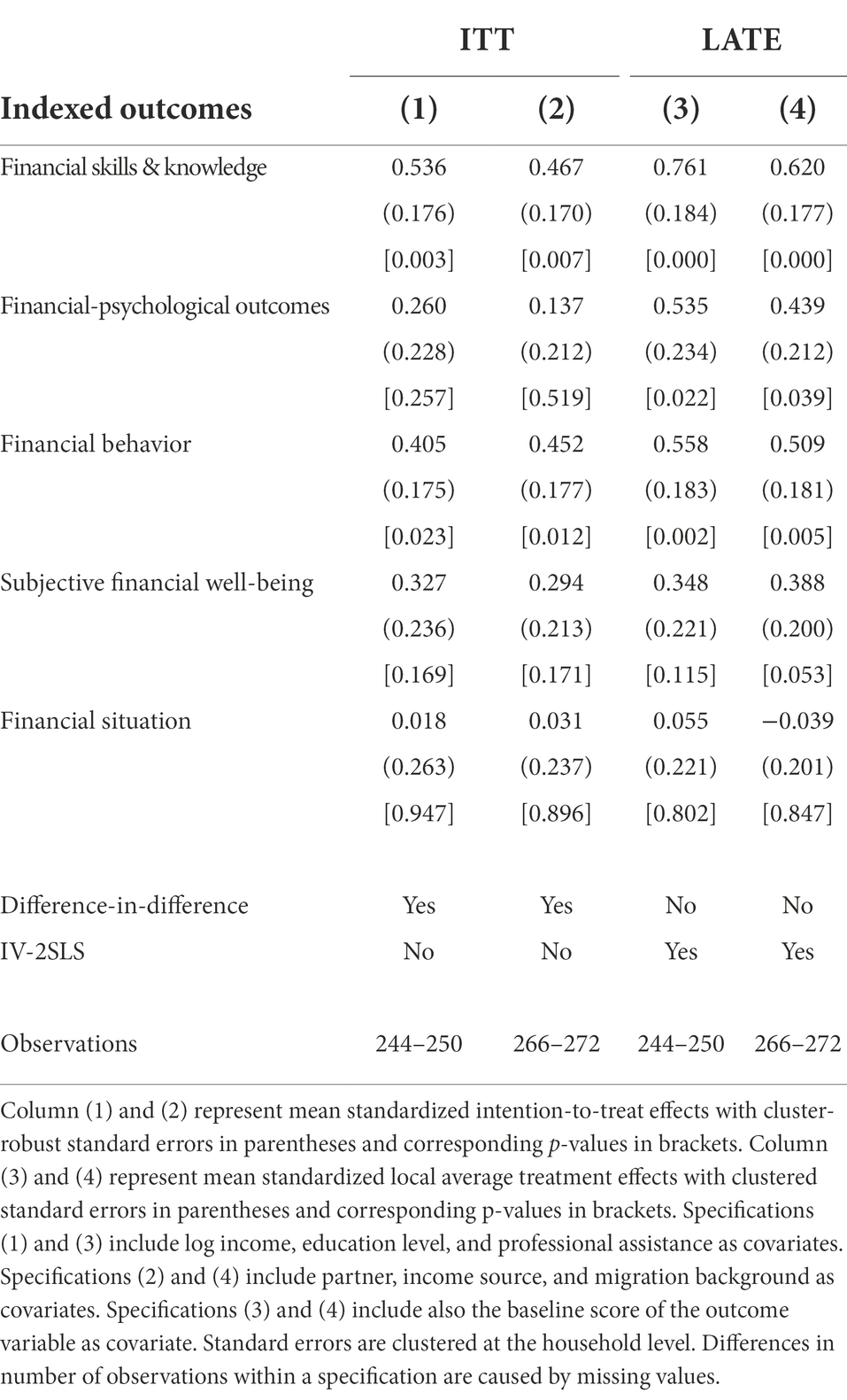

Table 4. Behaviorally informed program versus control group: Intention-to-treat and local average treatment effects.

Figure 3 and Table 4 show the LATE estimates of the BI program. We found robust significant positive effects on financial skills and knowledge (LATE = 0.761, SE = 0.184, p < 0.001), financial-psychological outcomes (LATE = 0.535, SE = 0.234, p = 0.022), and financial behavior (LATE = 0.558, SE = 0.183, p = 0.002). Thus, the effect on financial-psychological outcomes was significant under the LATE model, but not under the ITT model. In line with our expectations, the LATE effects were larger than the ITT effects.

Figure 3. Behaviorally informed program versus control group: Local average treatment effects. This figure summarizes the local average treatment effects for the five primary outcomes (see Table 4, column 3). All treatment effects are presented as standardized z-scores, standardized to the control group. Each entry shows the standardized outcome and its 95% confidence interval. The effects on financial-psychological self-evaluation, keeping track, and general financial stress were not robust under alternative specifications.

We conducted the following analyses to check the robustness of our ITT and LATE results: (1) applying a propensity score matching difference-in-differences method, (2) dropping participants of field partners that did not implement a control group, (3) dropping household income as a covariate (to avoid missing values), (4) including two dummy variables for professional assistance (receiving financial management assistance and receiving debt assistance), and (5) dropping participants who participated in both the control and the BI program group. Our results were robust under these alternative specifications.

We conducted exploratory analyses of the ITT and LATE effects on all individual outcomes. Positive effects on budgeting (ITT = 0.416, SE = 0.147, p = 0.006; LATE = 0.426, SE = 0.179, p = 0.017) mainly drove the effect on financial behavior. The effects on the other aspects of financial behavior (keeping track and consuming consciously) were also positive, but not significant, as reflected in Figure 2. Additionally, positive effects on motivation (LATE = 0.518, SE = 0.198, p = 0.009) were the main driver of the LATE effect on financial-psychological outcomes. We did not find robust significant ITT or LATE effects on other individual outcomes.

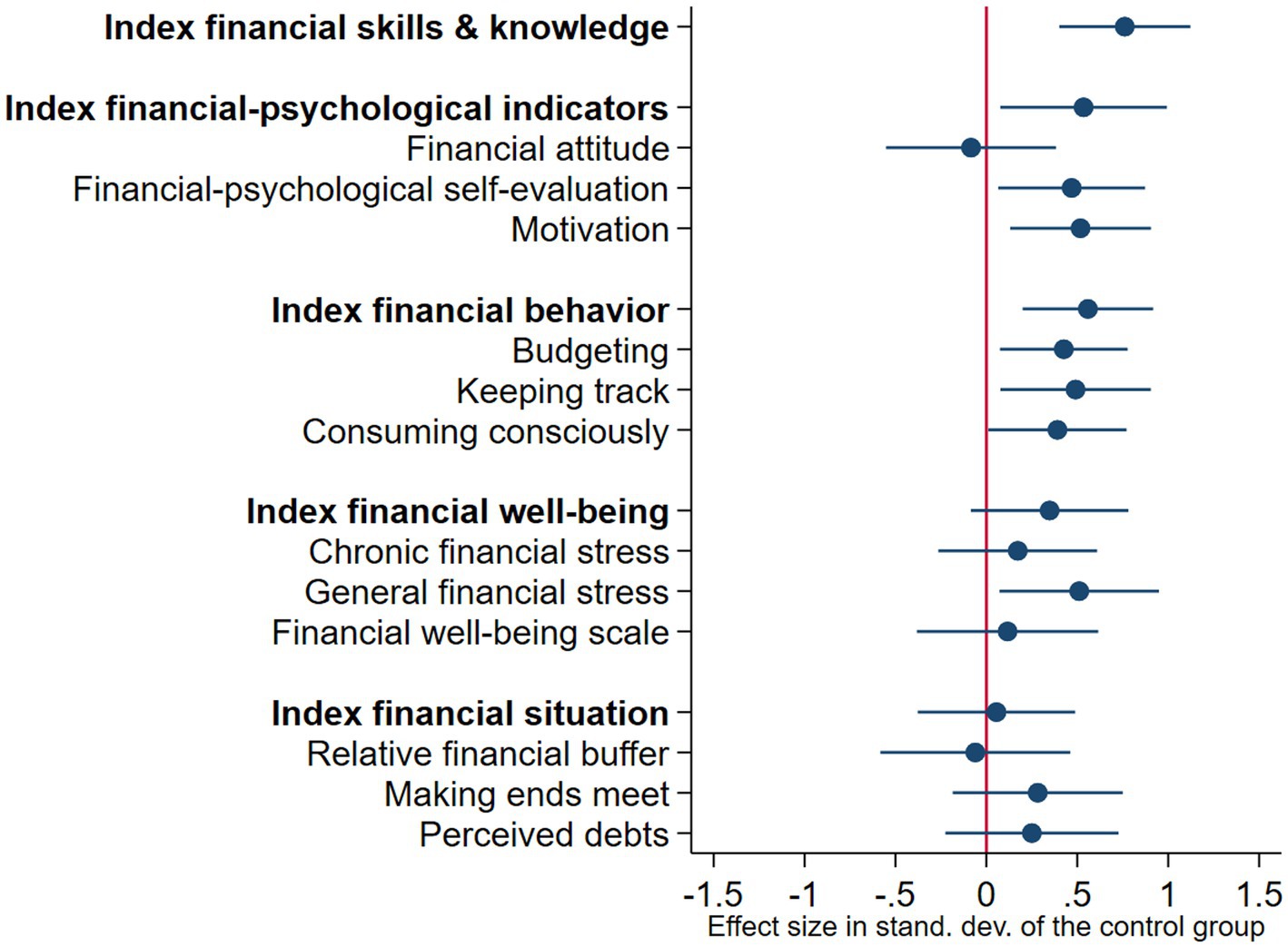

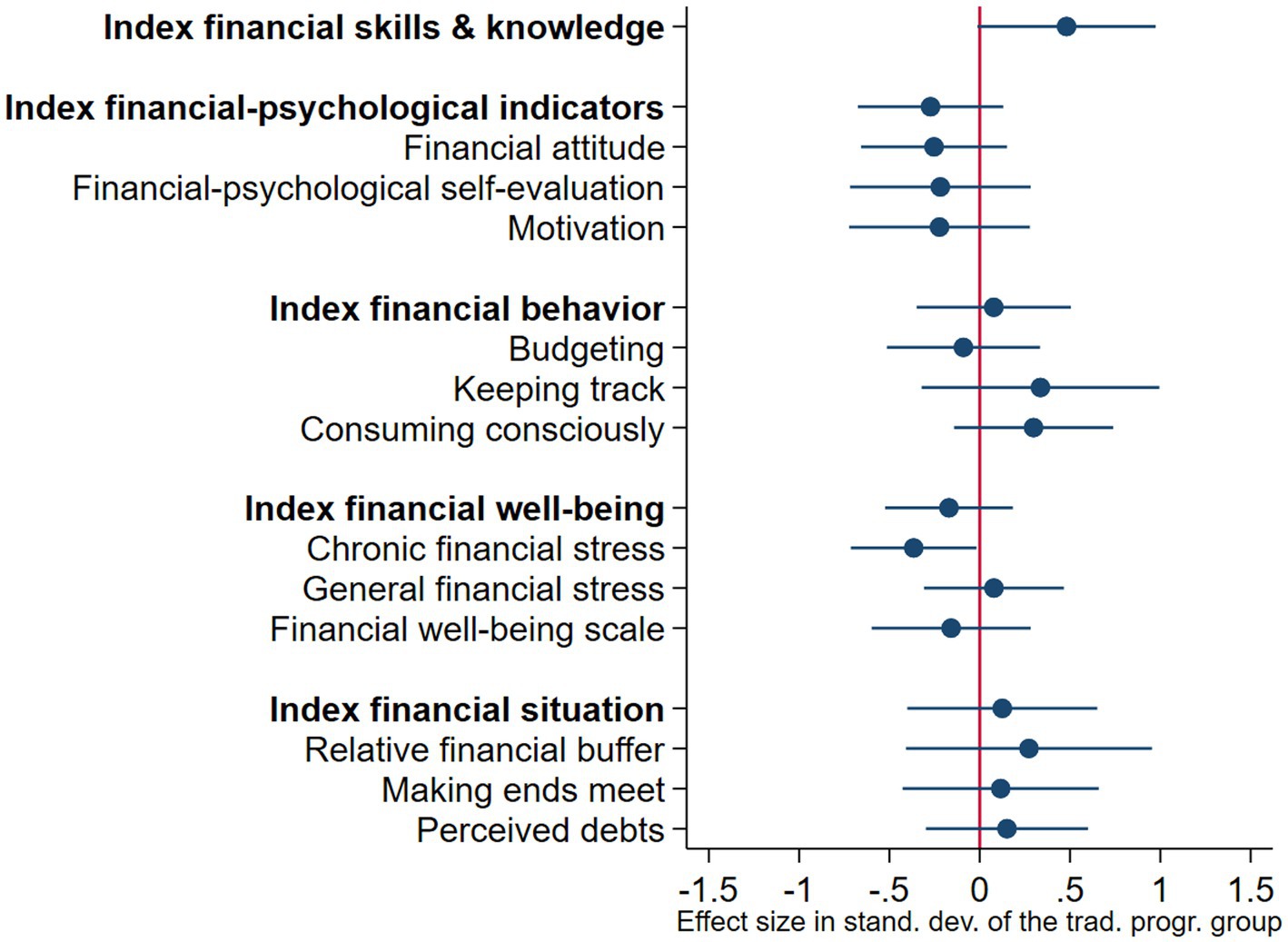

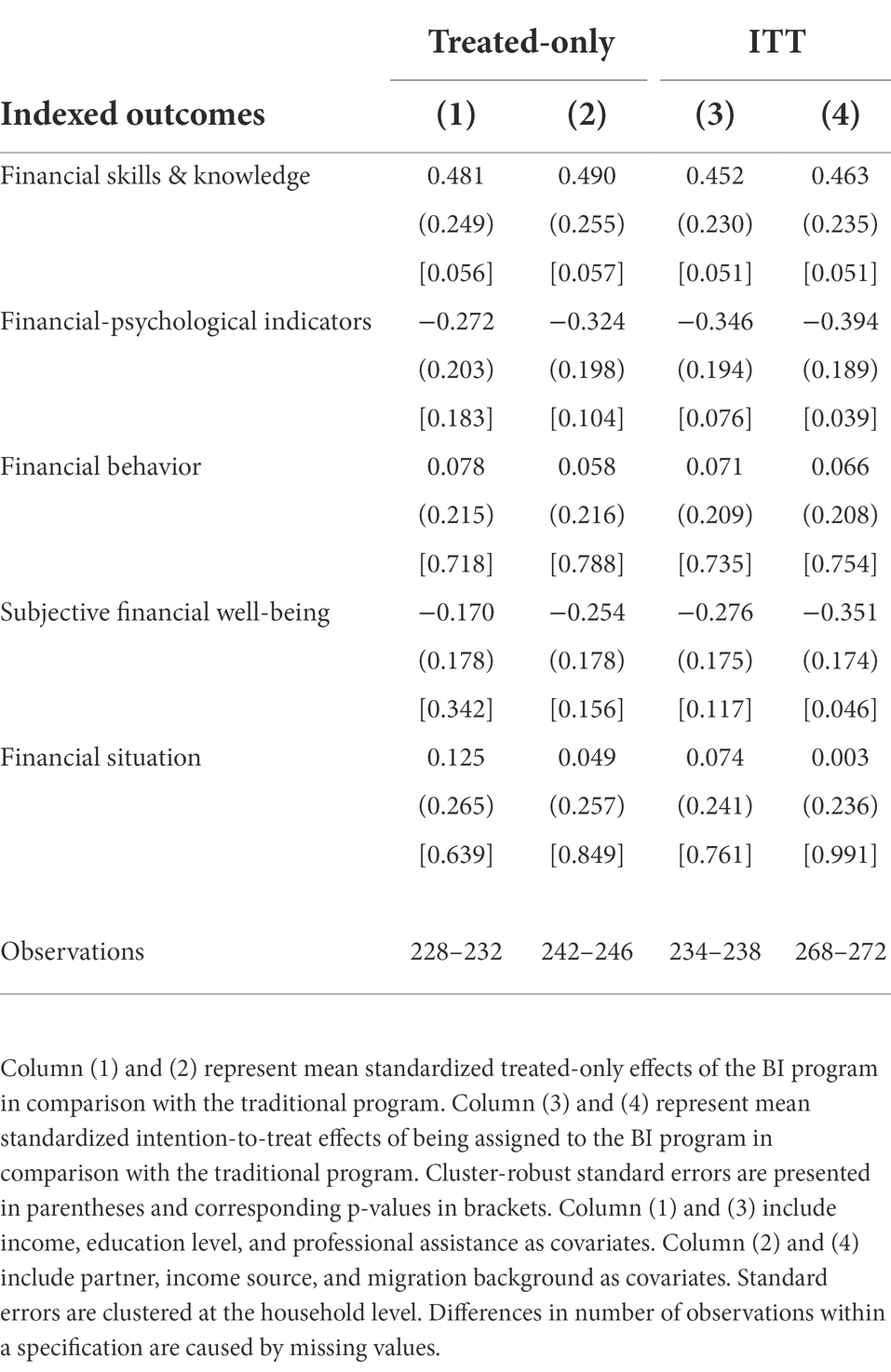

Figure 4 and Table 5 display the treated-only effects of the BI program in comparison with the traditional program. We did not find evidence for effects of participating in the BI program compared to the traditional program for any of the outcomes. None of the outcomes were significant under more than one specification. The non-results were robust under (1) alternative specifications (same as for effect study 1), (2) the ITT-model, and (3) treatment assignment based on three or more sessions attended. We exploratively analyzed the effects on all 13 individual outcomes. We did not find robust effects on any of these outcomes.

Figure 4. Behaviorally informed (BI) versus traditional program: Treated-only effects. This figure summarizes the treated-only effects for the five primary outcomes (see Table 5, column 1). Treatment effects are presented as standardized z-scores, standardized to the traditional program group, together with their 95% confidence intervals.

Table 5. Behaviorally informed versus traditional program: Treated-only and intention-to-treat effects.

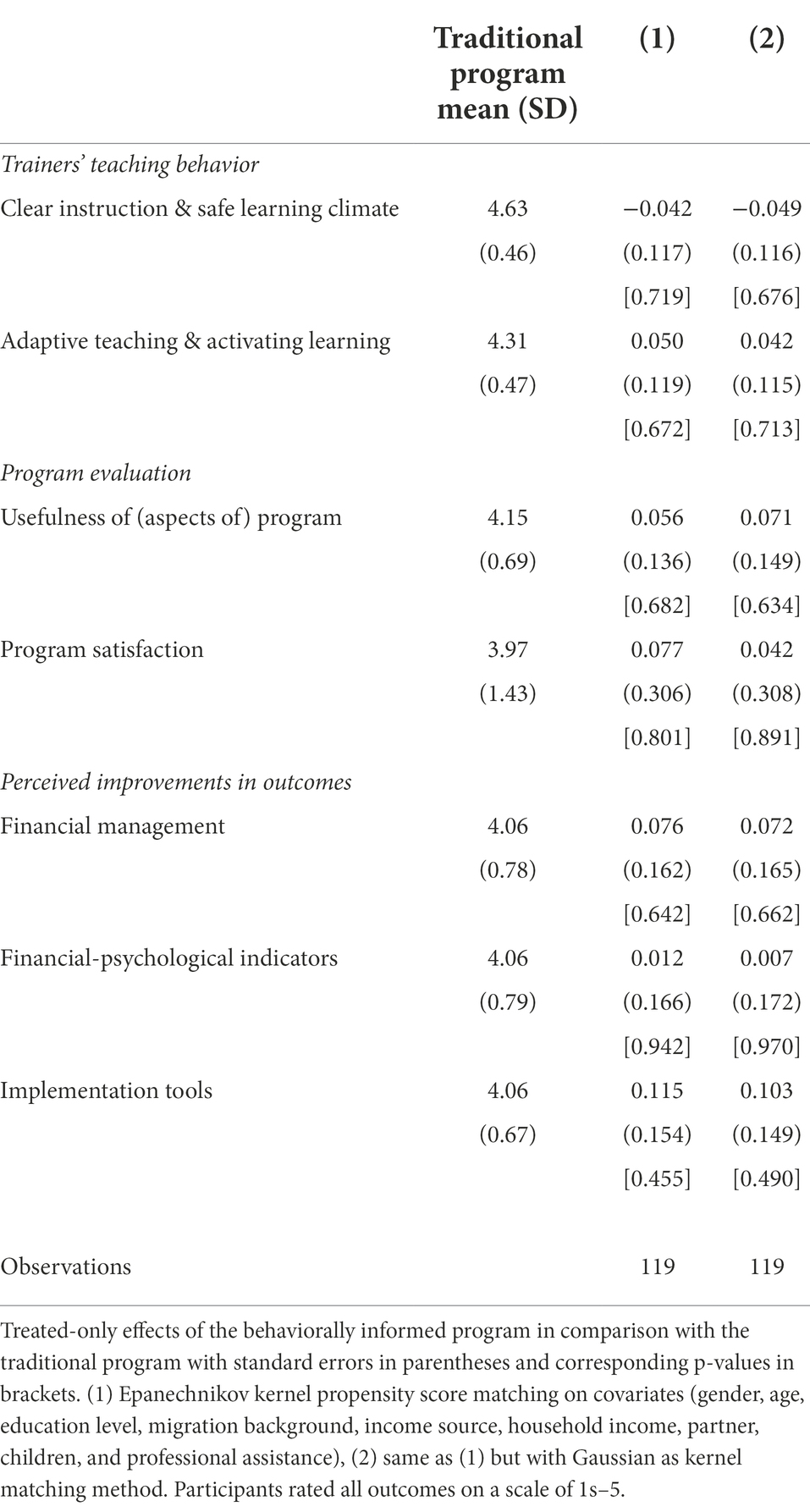

We conducted additional analyses to find out why we did not find differences between the BI and the traditional program. First, we explored the treated-only effects of participating in the BI program, compared with participating in the traditional program, on evaluation scores of program elements (using the midline survey; see Table 6). We expected participants of the BI program to score relatively higher than participants of the traditional program on financial-psychological outcomes, adaptive teaching and activating learning (trainer evaluation), and use of implementation tools. However, neither the effects on these outcomes nor those on the other outcomes were significant. We note that participants of the traditional program gave relatively high mean scores, suggesting little room for higher scores in the BI program. These results suggest that, as evaluated by their participants, the BI and the traditional program performed more or less equally.

Table 6. Behaviorally informed versus traditional program: Treated-only effects on evaluation scores of program elements.

Second, we investigated the role of teaching experience as a potential determinant of evaluation scores on program elements. The rationale is that the BI program trainers had no experience in teaching this program, whereas the traditional program trainers did. A lack of BI teaching experience might have lowered the BI program scores, which could explain the null results for the comparison between both programs. To examine this explanation, we considered two types of teaching experience. First, we considered ex-ante teaching experience: we analyzed whether BI program trainers with previous experience in teaching financial education obtained higher scores than trainers without this experience. Second, we considered on-the-road teaching experience: trainers taught multiple program rounds and consequently became more experienced in teaching the BI program, so we investigated whether BI program trainers obtained higher scores in later program rounds. Overall, neither ex-ante nor on-the-road experience in teaching the behaviorally informed program significantly affected participants’ evaluation scores on the program elements.

This study aimed at designing a behaviorally informed financial education program targeting financially vulnerable consumers and investigating its impact compared with both a control group and a traditional financial education program. Below, we discuss the main findings, implications, and limitations of our study.

Our study has three main findings. First, the behaviorally informed program had a positive effect on financial behavior compared with the control group. Offering the BI program improved financial behavior by 0.41 SDs (ITT effect), while the improvement for compliers was 0.56 SDs on average (LATE). Additionally, we found significant treatment effects on financial skills and knowledge of 0.54 SDs (ITT) and 0.76 SDs (LATE), respectively. For financial-psychological outcomes, the treatment effect was significant only under the LATE model (0.54 SDs). These results held under several robustness specifications. Exploratory analysis suggested that the effect on financial behavior was mainly explained by a positive effect on budgeting. In terms of program intensity, target population, and study design, our study is most comparable to Collins (2013), who found positive effects of a mandatory financial education program on some self-reported behaviors, specifically paying bills on time and planning for the future, but no consistent effects on budgeting. A potential explanation for why we found positive effects on budgeting specifically is that budgeting was among the topics most often chosen in the BI program. Furthermore, the motivational and implementation components of the program might have helped participants start, improve, and sustain this activity. Financial skills and knowledge, financial-psychological outcomes, and behaviors may be considered direct effects of the BI program because these elements were taught during the program sessions.

Second, we did not find evidence for positive effects on financial well-being and financial situation. A potential reason is that our sample was too small to detect effects that may still be meaningful. Given our sample size, α = 0.05, β = 0.2, and the estimated standard errors, the post-hoc minimum detectable effect sizes (MDEs) for these outcomes were 0.66 and 0.74 SDs, respectively. Furthermore, we should expect smaller effects on these two outcomes because they are only indirectly related to the financial education program and may require more time and education to develop. We note that financial behavior is positively associated with both financial well-being (r = 0.286, p < 0.001) and financial situation (r = 0.274, p < 0.001). This is in line with the literature, which predicts and finds a positive impact of financial behavior (e.g., budgeting) on financial well-being and financial outcomes (e.g., Zhang et al., 2021). For example, improved budgeting practices might help people take control of their finances, avoid overspending, and save for long-term goals (Zhang et al., 2021). As a consequence, their financial well-being and financial situation might improve. Following this reasoning, because the BI program improved budgeting, it might have positive effects on these outcomes in the longer run. However, our study cannot provide a definitive answer, and future studies should address this issue.
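The exact MDE computation is not given. Assuming the conventional two-sided formula MDE = (z₁₋α/₂ + z₁₋β) · SE, with α = 0.05 and β = 0.2 (80% power) the multiplier is about 2.80, so the reported MDEs imply standard errors of roughly 0.24 and 0.26. These implied SEs are back-calculated for illustration and are not reported values.

```python
from statistics import NormalDist


def minimum_detectable_effect(se: float, alpha: float = 0.05, power: float = 0.80) -> float:
    """Two-sided MDE = (z_{1 - alpha/2} + z_{power}) * SE under a normal approximation."""
    nd = NormalDist()
    return (nd.inv_cdf(1 - alpha / 2) + nd.inv_cdf(power)) * se


multiplier = minimum_detectable_effect(1.0)  # about 2.80 for alpha = 0.05, power = 0.8
for mde in (0.66, 0.74):
    print(f"MDE {mde:.2f} SD implies SE of about {mde / multiplier:.3f}")
# MDE 0.66 SD implies SE of about 0.236
# MDE 0.74 SD implies SE of about 0.264
```

Halving an MDE requires halving the standard error, which with independent observations means roughly quadrupling the sample size.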

Third, our study did not find evidence that the BI program performed better than the traditional program on the primary outcomes. Given our sample size, the minimum detectable effect sizes ranged from 0.50 to 0.74 SDs. As smaller effects can still be meaningful, our results cannot settle whether the BI program is more effective than the traditional program. However, we did not even find significant differences between the programs on the program evaluation measures. These findings suggest that the perceived differences between the programs were smaller than expected. A potential explanation is that the programs overlapped substantially in topics discussed and education time. Additionally, both low attendance rates and deviations in implementation relative to the program designs might have attenuated the (experienced) differences between the programs. Furthermore, the traditional program already obtained high scores from participants, leaving little room for improvement. As a consequence, we cannot determine whether the new elements contributed to the effectiveness of the BI program.

We acknowledge some shortcomings of our study. First, the evaluation period of 6 months between treatment and outcome measurement is short. We recommend that future studies implement a longer evaluation period, preferably of at least 18–24 months, to pick up possible indirect effects on financial situation and financial well-being. Second, we report low reliability for the commonly used financial attitude measure. Third, we faced difficulties in implementing the field study. We were not able to fully randomize the allocation of participants to the treatment groups. As a consequence, we cannot rule out all potential threats related to selection, despite our efforts to increase the credibility of the common trend assumption. Additionally, we may have lacked statistical power due to difficulties with recruiting participants, attrition, and partial compliance. An ideal solution to these problems would be to run an RCT with a substantially larger sample. However, both our study and Collins (2013) show that this is not simple. Given the hard-to-reach target population and the complex context (different governmental interventions running at the same time), it will be hard to have full control over all potential threats to the design, implementation, and results of such a study. A natural experiment might be a better solution, although it may come with selection problems and difficulties in collecting survey data. To encourage future research on the BI program in different settings, we make the elementary course materials of this program available.18

We end with an important puzzle. Low take-up and high drop-out are major problems for financial education programs targeting financially vulnerable consumers (Collins, 2013; Kaiser and Menkhoff, 2017; Theodos et al., 2018). We faced the same problems: despite considerable efforts by our field partners to reach the target population (e.g., advertisements, mailings, and recruitment via professionals), take-up was low and drop-out rates were considerable. These issues may reflect low demand for financial education. Furthermore, not participating can be rational, as financial education does not benefit everyone (Lusardi et al., 2020a). These problems affect the cost-effectiveness of financial education programs: an effective program might not be cost-effective if too few people participate. How to reach this target population remains an open question. Consequently, policymakers and practitioners may consider alternative strategies to foster healthy financial behavior and improve financial well-being. For example, they may encourage the use of budgeting tools building on commitment strategies and mental accounting (Dolan et al., 2012), as budgeting seems to improve financial well-being (Zhang et al., 2021).

In their meta-study, Kaiser and Menkhoff (2017) raise two remaining problems. First, how can we improve the effectiveness of financial education programs? Second, how can we effectively reach people who do not participate? These problems are especially pressing for reaching financially vulnerable consumers. Our work addresses the first issue and suggests that a modest financial education intervention incorporating behavioral insights has a modest positive impact on the financial skills and knowledge and the financial behavior of this target population. This result is hopeful as meta-studies have found only (very) small effects of financial education interventions on financial behavior of financially vulnerable people (Fernandes et al., 2014; Kaiser and Menkhoff, 2017).

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found below: Codes and data are available (upon request) via Data Archiving and Networked Services (DANS): https://doi.org/10.17026/dans-27y-s77z.

Ethical review and approval were not required for the study on human participants in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

TM designed the behaviorally informed financial education program. E-JB and TM collected the data. E-JB performed the analyses and wrote the manuscript, while GA and TM provided feedback. E-JB, GA, and TM contributed to the design of the study. All authors contributed to the article and approved the submitted version.

This work was supported by ZonMw (535.002.001) and by the Netherlands Organization for Scientific Research (023.005.060 to E-JB).

We gratefully thank Eline Maussen and Barbera van der Meulen for their research assistance. We also thank Erwin Bulte, Henriëtte Prast, Lars Tummers, Marrit van den Berg, Wilco van Dijk, Fred van Raaij, and Timo Verlaat for their useful comments.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2022.1090024/full#supplementary-material

1. ^Kaiser and Menkhoff (2017) found an effect size of 0.09 (standardized mean difference) on financial behavior and of 0.21 on budgeting and planning behavior.

2. ^A recent meta-study did not find differences between low- and higher-income subjects (Kaiser et al., 2022).

3. ^Some studies found that other types of financial education interventions, like financial counselling, financial coaching, and education in a matched savings account program, can improve financial outcomes for low-income participants (e.g., Agarwal et al., 2010; Grinstein-Weiss et al., 2015; Theodos et al., 2018).

4. ^The description of the traditional program applies to the program provided by Nibud until 2019. Recently, Nibud has changed the set-up of the program and, on the basis of the results of this study, has already implemented some elements of the behaviorally informed program. See https://www.nibud.nl/kennis-in-de-praktijk/cursussen-workshops-coaching/budgetcursus-omgaan-met-geld/ for more information about course materials (in Dutch).

5. ^We note that one of our field partners used their own materials based on the program developed by Nibud (before the 2019 change). This program included a fixed set-up and similar topics as the traditional program.

6. ^Field partners 4 and 5 implemented, respectively, five and six traditional program sessions. We note that the average number of program sessions attended was the same for the BI and traditional program (see Table 2).

7. ^We note that most people do not find elementary financial activities (e.g., budgeting or deciding about financial products) inherently interesting or enjoyable. Because intrinsic motivation for these financial behaviors is not evident, facilitating and promoting internalization is essential to enhance learning and behavioral change (Niemiec and Ryan, 2009).

8. ^A program round consisted of 5–6 weeks in which the field partners either offered both programs (field partners 4 and 5) or only the BI program (field partners 1–3). In total, field partners implemented 5–9 program rounds. Overall, the field partners provided the BI program 28 times and the traditional program 12 times.

9. ^A longer evaluation period was not feasible within the restricted time frame of the funding by the Dutch science organization ZonMw.

10. ^Reported Cronbach’s alphas (α) and Spearman-Brown coefficients (r) were estimated using all baseline surveys.

11. ^The literature lacks a validated test that measures applied financial skills and knowledge in the context of our study. As a consequence, we decided to include these self-reported measures.

12. ^The budgeting score was computed by the procedure proposed by Kempson et al. (2013). Because the individual items are artificially coherent (one item ends up in all subscales), Cronbach’s alpha is inflated.

13. ^The original scale consisted of five items. We excluded one item ("I am just getting by financially") because it could be interpreted ambiguously.

14. ^Income was measured in six categories. We used the midpoint of each category as the income level (the upper limit for the lowest category and the lower limit for the highest category). We took the logarithm of this level and used it in our analyses. To avoid missing endline income values, we imputed baseline income values for ten participants. None of these participants reported changes in partner status, which supports the reliability of the imputation.

15. ^We found these two components after including all nine items in a principal component analysis.

16. ^Due to survey attrition, not all initially assigned participants were included, which slightly modifies the ITT design. Survey attrition did not seem to be systematic, as descriptive statistics after baseline survey completion were quite similar to those after baseline and endline survey completion, both overall and per condition.

17. ^We determined non-compliance using the attendance registration of the field partners. As an additional check, we asked in the endline survey whether respondents had participated in a financial education program in the last six months. Four members of the BI program group (ITT) who were non-compliers according to the administrative data reported having participated in a program. To ensure that the control group only contained non-educated participants, we dropped these participants from the treated-only analysis for effect study 1.

18. ^Elementary course materials of the BI program are available (in Dutch) upon request from the corresponding author.

Abadie, A., Athey, S., Imbens, G., and Wooldridge, J. (2017). When should you adjust standard errors for clustering? NBER working paper 24003. doi: 10.3386/w24003

Agarwal, S., Amromin, G., Ben-David, I., Chomsisengphet, S., and Evanoff, D. D. (2010). Learning to cope: voluntary financial education and loan performance during a housing crisis. Am. Econ. Rev. 100, 495–500. doi: 10.1257/aer.100.2.495

Banerjee, A., Duflo, E., Goldberg, N., Karlan, D., Osei, R., Pariente, W., et al. (2015). A multifaceted program causes lasting progress for the very poor: evidence from six countries. Science 348:1260799. doi: 10.1126/science.1260799

Bernheim, D. (1998). “Financial illiteracy, education, and retirement saving,” in Living With Defined Contribution Pensions. eds. O. Mitchell and S. Schieber (Philadelphia, PA: University of Pennsylvania Press), 38–68.

Cole, S., Paulson, A., and Shastry, G. K. (2014). Smart money? The effect of education on financial outcomes. Rev. Financ. Stud. 27, 2022–2051. doi: 10.1093/rfs/hhu012

Collins, J. M. (2013). The impacts of mandatory financial education: evidence from a randomized field study. J. Econ. Behav. Organ. 95, 146–158. doi: 10.1016/j.jebo.2012.08.011

Consumer Financial Protection Bureau (2015). Measuring Financial Well-being: A Guide to Using the CFPB Financial Well-being Scale. Washington DC: Consumer Financial Protection Bureau.

Consumer Financial Protection Bureau (2017). CFPB Financial Well-being Scale: Scale Development Technical Report. Washington, DC: Consumer Financial Protection Bureau.

De Beckker, K., De Witte, K., and Van Campenhout, G. (2019). Identifying financially illiterate groups: an international comparison. Int. J. Consum. Stud. 43, 490–501. doi: 10.1111/ijcs.12534

De Bruijn, E., and Antonides, G. (2022). Poverty and economic decision making: a review of scarcity theory. Theor. Decis. 92, 5–37. doi: 10.1007/s11238-021-09802-7

Dolan, P., Elliott, A., Metcalfe, R., and Vlaev, I. (2012). Influencing financial behavior: from changing minds to changing contexts. J. Behav. Financ. 13, 126–142.

Drexler, A., Fischer, G., and Schoar, A. (2014). Keeping it simple: financial literacy and rules of thumb. Am. Econ. J. Appl. Econ. 6, 1–31. doi: 10.1257/app.6.2.1

Entorf, H., and Hou, J. (2018). Financial education for the disadvantaged? A review. SAFE working paper 205. doi: 10.2139/ssrn.3167709

Eurostat (2014). Methodological guidelines and description of EU-SILC target variables: 2014 operation. Available at: https://circabc.europa.eu/sd/a/2aa6257f-0e3c-4f1c-947f-76ae7b275cfe/DOCSILC065 (Accessed July 13, 2017)

Fernandes, D., Lynch, J. G., and Netemeyer, R. G. (2014). Financial literacy, financial education, and downstream financial behaviors. Manag. Sci. 60, 1861–1883. doi: 10.1287/mnsc.2013.1849

Gerber, A. S., and Green, D. P. (2012). Field Experiments: Design, Analysis, and Interpretation. New York, NY: W. W. Norton & Company.

Grinstein-Weiss, M., Guo, S., Reinertson, V., and Russell, B. (2015). Financial education and savings outcomes for low-income IDA participants: does age make a difference? J. Consum. Aff. 49, 156–185. doi: 10.1111/joca.12061

Hasler, A., Lusardi, A., and Oggero, N. (2018). Financial fragility in the US: evidence and implications. GFLEC working paper 2018-1. Available at: https://gflec.org/wp-content/uploads/2018/04/Financial-Fragility-in-the-US.pdf?x21285

Hilgert, M. A., Hogarth, J. M., and Beverly, S. G. (2003). Household financial management: the connection between knowledge and behavior. Fed. Reserv. Bull. 89:309.

Iterbeke, K., De Witte, K., Declercq, K., and Schelfhout, W. (2020). The effect of ability matching and differentiated instruction in financial literacy education. Evidence from two randomised control trials. Econ. Educ. Rev. 78:101949. doi: 10.1016/j.econedurev.2019.101949

Kaiser, T., Lusardi, A., Menkhoff, L., and Urban, C. (2022). Financial education affects financial knowledge and downstream behaviors. J. Financ. Econ. 145, 255–272. doi: 10.1016/j.jfineco.2021.09.022

Kaiser, T., and Menkhoff, L. (2017). Does financial education impact financial literacy and financial behavior, and if so, when? World Bank Econ. Rev. 31, 611–630. doi: 10.1093/wber/lhx018

Kaiser, T., and Menkhoff, L. (2018). Active learning fosters financial behavior: experimental evidence. DIW Berlin Discussion Paper 1743. doi: 10.2139/ssrn.3208637

Kempson, E., Perotti, V., and Scott, K. (2013). Measuring Financial Capability: A New Instrument and Results From Low- and Middle-income Countries. Washington, DC: World Bank.

Klapper, L., and Lusardi, A. (2020). Financial literacy and financial resilience: evidence from around the world. Financ. Manag. 49, 589–614. doi: 10.1111/fima.12283

Kling, J. R., Liebman, J. B., and Katz, L. F. (2007). Experimental analysis of neighborhood effects. Econometrica 75, 83–119. doi: 10.1111/j.1468-0262.2007.00733.x

Kusurkar, R. A., Croiset, G., and Ten Cate, T. J. (2011). Twelve tips to stimulate intrinsic motivation in students through autonomy-supportive classroom teaching derived from self-determination theory. Med. Teach. 33, 978–982. doi: 10.3109/0142159X.2011.599896

Lechner, M. (2010). The estimation of causal effects by difference-in-difference methods. Found. Trends in Econom. 4, 165–224. doi: 10.1561/0800000014

Leuven, E., and Sianesi, B. (2003). PSMATCH2: Stata module to perform full Mahalanobis and propensity score matching, common support graphing, and covariate imbalance testing. Statistical Software Components.

Lown, J. M. (2011). Outstanding AFCPE® conference paper: development and validation of a financial self-efficacy scale. J. Financ. Couns. Plan. 22, 54–63.

Lusardi, A., Michaud, P. C., and Mitchell, O. S. (2020a). Assessing the impact of financial education programs: a quantitative model. Econ. Educ. Rev. 78:101899. doi: 10.1016/j.econedurev.2019.05.006

Lusardi, A., and Mitchell, O. S. (2014). The economic importance of financial literacy: theory and evidence. J. Econ. Lit. 52, 5–44. doi: 10.1257/jel.52.1.5

Lusardi, A., and Mitchell, O. S. (2018). “Older women’s labor market attachment, retirement planning, and household debt,” in Women Working Longer: Increased Employment at Older Ages. eds. C. Goldin and L. F. Katz (Chicago, IL: The University of Chicago Press), 185–215.

Lusardi, A., Mitchell, O. S., and Oggero, N. (2020b). Debt and financial vulnerability on the verge of retirement. J. Money, Credit, Bank. 52, 1005–1034. doi: 10.1111/jmcb.12671

Lusardi, A., and Tufano, P. (2015). Debt literacy, financial experiences, and overindebtedness. J. Pension Econ. Finance 14, 332–368. doi: 10.1017/S1474747215000232

Maulana, R., Helms-Lorenz, M., and van de Grift, W. (2015). Development and evaluation of a questionnaire measuring pre-service teachers’ teaching behaviour: a Rasch modelling approach. Sch. Eff. Sch. Improv. 26, 169–194. doi: 10.1080/09243453.2014.939198

Miller, M., Reichelstein, J., Salas, C., and Zia, B. (2015). Can you help someone become financially capable? A meta-analysis of the literature. World Bank Res. Obs. 30, 220–246. doi: 10.1093/wbro/lkv009

Miller, W. R., and Rollnick, S. (2002). Motivational Interviewing: Preparing People for Change. 2nd Edn. New York, NY: Guilford Press.

Nagel, H., Rosendahl Huber, L., van Praag, M., and Goslinga, S. (2019). The effect of a tax training program on tax compliance and business outcomes of starting entrepreneurs: evidence from a field experiment. J. Bus. Ventur. 34, 261–283. doi: 10.1016/j.jbusvent.2018.10.006

Niemiec, C. P., and Ryan, R. M. (2009). Autonomy, competence, and relatedness in the classroom. Theory Res. Educ. 7, 133–144. doi: 10.1177/1477878509104318

OECD (2015). OECD/INFE toolkit for measuring financial literacy and financial inclusion. Available at: https://www.oecd.org/daf/fin/financial-education/2015_OECD_INFE_Toolkit_Measuring_Financial_Literacy.pdf (Accessed July 15, 2017)

Peeters, N., Rijk, K., Soetens, B., Storms, B., and Hermans, K. (2018). A systematic literature review to identify successful elements for financial education and counseling in groups. J. Consum. Aff. 52, 415–440. doi: 10.1111/joca.12180

Perry, V. G., and Morris, M. D. (2005). Who is in control? The role of self-perception, knowledge, and income in explaining consumer financial behavior. J. Consum. Aff. 39, 299–313. doi: 10.1111/j.1745-6606.2005.00016.x

Prochaska, J. O., DiClemente, C. C., and Norcross, J. C. (1992). In search of how people change: applications to addictive behaviors. Am. Psychol. 47, 1102–1114. doi: 10.1037/0003-066X.47.9.1102

Prochaska, J. O., and Velicer, W. F. (1997). The transtheoretical model of health behavior change. Am. J. Health Promot. 12, 38–48. doi: 10.4278/0890-1171-12.1.38

Reich, C. M., and Berman, J. S. (2015). Do financial literacy classes help? An experimental assessment in a low-income population. J. Soc. Serv. Res. 41, 193–203. doi: 10.1080/01488376.2014.977986

Ridley, M., Rao, G., Schilbach, F., and Patel, V. (2020). Poverty, depression, and anxiety: causal evidence and mechanisms. Science 370:eaay0214. doi: 10.1126/science.aay0214

Ryan, R. M., and Deci, E. L. (2000a). Self-determination theory and the facilitation of intrinsic motivation, social development, and well-being. Am. Psychol. 55, 68–78. doi: 10.1037/0003-066X.55.1.68

Ryan, R. M., and Deci, E. L. (2000b). Intrinsic and extrinsic motivations: classic definitions and new directions. Contemp. Educ. Psychol. 25, 54–67. doi: 10.1006/ceps.1999.1020

Shurtleff, S. (2009). Improving savings incentives for the poor. National Center for Policy Analysis, Brief Analysis 672.

Skiba, P. M., and Tobacman, J. (2008). Payday loans, uncertainty, and discounting: explaining patterns of borrowing, repayment, and default. Vanderbilt Law Econ. Res. Paper 08–33. doi: 10.2139/ssrn.1319751

Theodos, B., Stacy, C. P., and Daniels, R. (2018). Client led coaching: a random assignment evaluation of the impacts of financial coaching programs. J. Econ. Behav. Organ. 155, 140–158. doi: 10.1016/j.jebo.2018.08.019

Van Dijk, W. W., van der Werf, M. M. B., and van Dillen, L. F. (2020). The psychological inventory of financial scarcity (PIFS): a psychometric evaluation. J. Behav. Exp. Econ. 101:101939. doi: 10.1016/j.socec.2022.101939

Villa, J. M. (2016). Diff: simplifying the estimation of difference-in-differences treatment effects. Stata J. 16, 52–71. doi: 10.1177/1536867X1601600108

Willis, L. E. (2011). The financial education fallacy. Am. Econ. Rev. 101, 429–434. doi: 10.1257/aer.101.3.429

Yakoboski, P. J., Lusardi, A., and Hasler, A. (2020). The 2020 TIAA Institute-GFLEC personal finance index: many do not know what they do and do not know. Available at: https://gflec.org/initiatives/personal-finance-index/ (Accessed January 25, 2021)

Keywords: financial literacy and education, financial behavior, financially vulnerable people, behavioral insights, quasi-experimental field study, financial education

Citation: de Bruijn E-J, Antonides G and Madern T (2022) A behaviorally informed financial education program for the financially vulnerable: Design and effectiveness. Front. Psychol. 13:1090024. doi: 10.3389/fpsyg.2022.1090024

Received: 04 November 2022; Accepted: 25 November 2022;

Published: 15 December 2022.

Edited by:

Irina Anderson, University of East London, United Kingdom

Reviewed by:

Victor Maas, University of Amsterdam, Netherlands

Copyright © 2022 de Bruijn, Antonides and Madern. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Ernst-Jan de Bruijn, e.de.bruijn@law.leidenuniv.nl

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.