REGISTERED REPORT article

Front. Psychol., 05 January 2023

Sec. Educational Psychology

Volume 13 - 2022 | https://doi.org/10.3389/fpsyg.2022.1012787

This article is part of the Research Topic Registered Reports on the Role of Representational Competencies in Multimedia Learning and Learning with Multiple Representations

Multiple external representations (e.g., diagrams, equations) and their interpretations play a central role in science and science learning as research has shown that they can substantially facilitate the learning and understanding of science concepts. Therefore, multiple and particularly visual representations are a core element of university physics. In electrodynamics, which students encounter already at the beginning of their studies, vector fields are a central representation typically used in two forms: the algebraic representation as a formula and the visual representation depicted by a vector field diagram. While the former is valuable for quantitative calculations, vector field diagrams are beneficial for showing many properties of a field at a glance. However, benefiting from the mutual complementarity of both representations requires representational competencies aiming at referring different representations to each other. Yet, previous study results revealed several student problems particularly regarding the conceptual understanding of vector calculus concepts. Against this background, we have developed research-based, multi-representational learning tasks that focus on the visual interpretation of vector field diagrams aiming at enhancing a broad, mathematical as well as conceptual, understanding of vector calculus concepts. Following current trends in education research and considering cognitive psychology, the tasks incorporate sketching activities and interactive (computer-based) simulations to enhance multi-representational learning. In this article, we assess the impact of the learning tasks in a field study by implementing them into lecture-based recitations in a first-year electrodynamics course at the University of Göttingen. For this, a within- and between-subjects design is used comparing a multi-representational intervention group and a control group working on traditional calculation-based tasks. 
To analyze the impact of multiple representations, students' performance in a vector calculus test as well as their perceived cognitive load during task processing is compared between the groups. Moreover, analyses offer guidance for further design of multi-representational learning tasks in field-related physics topics.

Mathematics and physics concepts are often represented in some form of external representation (De Cock, 2012). Different forms of representation, that is, multiple representations (MRs), make it possible to express a concept or a (learning) subject in various manners by focusing on different properties and characteristics. In complementing and constraining each other, multiple representations enable a deep understanding of a situation or a construct (Ainsworth, 1999; Seufert, 2003). Moreover, using multiple representations was found to have positive effects on knowledge acquisition and problem-solving skills (e.g., Nieminen et al., 2012; Rau, 2017). Regarding the understanding and communication of science concepts, visual representations are particularly crucial (Cook, 2006). According to previous research, they can help to reduce the abstract nature of science concepts and were shown to support students in developing scientific conceptions (e.g., Cook, 2006; Chiu and Linn, 2014; Suyatna et al., 2017). However, to benefit from multimedia learning environments, representational competencies are required that are based on an understanding of how individual representations depict information, how they relate to each other, and how to choose an appropriate representation to solve a problem (DeFT framework; Ainsworth, 2006). Without representational competencies, visual representations cannot fully unfold their potential as meaning-making tools.

Additionally, learning with and mentally processing visual representations often places special demands on the visuo-spatial working memory, thus increasing cognitive load (Baddeley, 1986; Cook, 2006; Logie, 2014). Here, previous research showed that externalizing visuo-spatial information can provide cognitive relief (e.g., Bilda and Gero, 2005). In this regard, sketching (or drawing) visual cues in multimedia learning has become an increasing scientific focus in recent years (Ainsworth and Scheiter, 2021). According to empirical findings, sketching allows learners to pay more attention to details (Ainsworth and Scheiter, 2021), thus supporting a visual understanding of concepts (Wu and Rau, 2018). Correspondingly, previous studies reported positive learning effects of sketching activities in (multi-)representational learning environments, as they increase attention and engagement with the representations and help to activate prior knowledge, to understand a representation's properties, or to recall information (e.g., Leopold and Leutner, 2012; Wu and Rau, 2018; Kohnle et al., 2020; Ainsworth and Scheiter, 2021). Typical sketching activities are copying a given representation, creating a visual representation with modified individual features or by transforming textual information into a drawing, or inventing a novel representation (e.g., to reason; Kohnle et al., 2020; Ainsworth and Scheiter, 2021). Moreover, with respect to Cognitive Load Theory (Sweller, 2010), which characterizes the limited capacity of working memory resources based on three types of cognitive load (intrinsic, extraneous, and germane), sketching activities are able to promote a more effective use of these resources (Bilda and Gero, 2005). 
In addition to cognitive relief provided by sketching in multi-representational learning, previous work demonstrated the added value of interactive (computer-based) simulations for the development of representational competencies (e.g., Stieff, 2011; Kohnle and Passante, 2017). Integrating simulations into multimedia learning environments fosters active learning, thus supporting students' use of scientific representations for communication and helping them to integrate their representational knowledge systematically with content knowledge (Linn et al., 2006; Stieff, 2011). Specifically, complementing simulation-based learning with the aforementioned sketching activities was found to support a deeper understanding of the representation being presented (Wu and Rau, 2018; Kohnle et al., 2020; Ainsworth and Scheiter, 2021).

Considering the value of multiple representations for science learning, it is unsurprising that they also play a major role in university physics. For instance, in electrodynamics, vector field representations are deeply rooted in the developmental history of the domain (Faraday, 1852), being represented either algebraically as a formula or graphically using arrows. In university experimental lectures, an introduction to electric and magnetic fields typically starts from concrete analogous representations of electric or magnetic field lines and then moves on to more abstract or idealized visual-graphical and symbolical representations (Küchemann et al., 2021). Using demonstration experiments, electric and magnetic field lines are visualized, for example, by semolina grains (Benimoff, 2006; Lincoln, 2017; Küchemann et al., 2021) or iron filings (Thompson, 1878; Küchemann et al., 2021), respectively. When representing a quantity as a vector field, the field's properties, its divergence and curl, and further the integral theorems of Gauss and Stokes are of particular importance for physics applications (Griffiths, 2013). Accordingly, a sound understanding of vector calculus is of great importance for undergraduate and graduate physics studies. For example, a study by Burkholder et al. (2021) found a significant correlation between extensive preparation in vector calculus and students' performance in an introductory course on electromagnetism.

However, further research also revealed that conceptual understanding, which is relevant to physics comprehension, often caused difficulties for students (e.g., Pepper et al., 2012; Singh and Maries, 2013; Bollen et al., 2015). Besides conceptual gaps regarding vector field representations in general, learning difficulties in dealing with vector field concepts such as divergence and curl became particularly apparent. For example, students struggled to extract information about divergence or curl from vector field diagrams, and they interpreted and used these concepts literally instead of referring to their physics-mathematical concepts (Ambrose, 2004; Pepper et al., 2012; Singh and Maries, 2013; Bollen et al., 2015, 2016, 2018; Baily et al., 2016; Klein et al., 2018, 2019). In a study on students' difficulties regarding the curl of vector fields, Jung and Lee (2012) diagnosed the gap between mathematical and conceptual reasoning as a major source of comprehension problems. Furthermore, Singh and Maries (2013) concluded that graduate students struggle with the concepts of divergence and curl, even though they know how to calculate them mathematically. In the context of electrostatics and electromagnetism, it was also shown that conceptual gaps regarding vector calculus led to improper understanding and errors when applying essential principles in physics (Ambrose, 2004; Jung and Lee, 2012; Bollen et al., 2015, 2016; Li and Singh, 2017). Regarding these findings, it is noticeable that the aforementioned studies did not strictly distinguish between conceptual understanding and representational competencies with respect to vector fields. This is not surprising, since there is strong overlap of the two areas in this subject domain: vector fields are, as such, a form of representation that cannot be understood in a subject context isolated from concepts. 
Conversely, it is almost impossible to learn electrodynamics concepts without vector field representations.

In introductory physics texts, vector concepts are typically given as mathematical expressions, but are either not or insufficiently explained qualitatively (Smith, 2014). Even in more advanced physics textbooks, there is little geometric explanation or discussion of vector field concepts and integral theorems. Regarding the aforementioned empirical findings, relevance and requirement of new instructions that address a conceptual understanding become even more apparent. Consequently, numerous authors advocated the use of visual representations in order to foster a conceptual understanding. Following this line of research, Bollen et al. (2018) developed a guided-inquiry teaching-learning sequence on vector calculus in electrodynamics aiming at strengthening the connection between visual and algebraic representations. Implementing the tutorials in a second-year undergraduate electrodynamics course revealed a positive effect of the interventions on physics students' conceptual understanding and their ability to visually interpret vector field diagrams. In addition, subjects expressed primarily positive feedback regarding the learning approach. However, as discussed by the authors, the exact results should be interpreted with care as the number of participants was small and the implementation followed a less streamlined structure as, for example, no strict control and intervention group design was used. Additionally, Klein et al. (2018, 2019) developed text-based instructions for visually interpreting divergence using vector field diagrams. Eye tracking was used to analyze representation-specific visual behaviors, such as evaluating vectors along coordinate directions. Here, gaze analyses revealed a quantitative increase in conceptual understanding as a result of this intervention (Klein et al., 2018, 2019). In addition to a positive impact of visual cues on performance measures, a positive correlation with students' response confidence was found. 
This means that students not only answered correctly more often, but also trusted their answers more, which is a desirable result of successful teaching (Lindsey and Nagel, 2015; Klein et al., 2017, 2019). In subsequent interviews, subjects named diagram-specific mental operations, such as decomposing vectors and evaluating field components along coordinate directions, as a main source of problems (Klein et al., 2018). Thus, a follow-up experimental study involved sketching activities aiming at generating representation-specific aids (e.g., field components) to support the visual interpretation of divergence (Hahn and Klein, 2021, 2022a). Here, sketching was shown to significantly reduce perceived cognitive load when applying visual problem-solving strategies related to a field's divergence (Hahn and Klein, 2022a).
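The strategy of evaluating field components along coordinate directions can be mimicked numerically. The following is a minimal sketch, not taken from the cited studies: it assumes a two-dimensional field given as a Python function and estimates divergence and the z-component of curl with central differences. The function names, the step size `h`, and the two example fields are hypothetical choices made for illustration.

```python
from typing import Callable, Tuple

# A 2D vector field maps a point (x, y) to its components (Fx, Fy).
Field = Callable[[float, float], Tuple[float, float]]


def divergence(field: Field, x: float, y: float, h: float = 1e-5) -> float:
    """Central-difference estimate of dFx/dx + dFy/dy at (x, y)."""
    # Change of the x-component along the x-direction.
    dfx_dx = (field(x + h, y)[0] - field(x - h, y)[0]) / (2 * h)
    # Change of the y-component along the y-direction.
    dfy_dy = (field(x, y + h)[1] - field(x, y - h)[1]) / (2 * h)
    return dfx_dx + dfy_dy


def curl_z(field: Field, x: float, y: float, h: float = 1e-5) -> float:
    """Central-difference estimate of dFy/dx - dFx/dy at (x, y)."""
    # Change of the y-component along the x-direction.
    dfy_dx = (field(x + h, y)[1] - field(x - h, y)[1]) / (2 * h)
    # Change of the x-component along the y-direction.
    dfx_dy = (field(x, y + h)[0] - field(x, y - h)[0]) / (2 * h)
    return dfy_dx - dfx_dy


def source_field(x: float, y: float) -> Tuple[float, float]:
    """Hypothetical example: F = (x, 0), arrows grow along x."""
    return (x, 0.0)


def rotational_field(x: float, y: float) -> Tuple[float, float]:
    """Hypothetical example: F = (-y, x), arrows circulate around the origin."""
    return (-y, x)


div_source = divergence(source_field, 0.5, 0.5)
curl_source = curl_z(source_field, 0.5, 0.5)
div_rot = divergence(rotational_field, 0.5, 0.5)
curl_rot = curl_z(rotational_field, 0.5, 0.5)

print("source field:     div =", round(div_source, 6), " curl_z =", round(curl_source, 6))
print("rotational field: div =", round(div_rot, 6), " curl_z =", round(curl_rot, 6))
```

In the first field only the x-component changes along x, so the divergence is nonzero while the curl vanishes. In the second field each component changes only along the other coordinate direction, so the divergence vanishes while the curl does not.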

With regard to previous findings concerning student problems, building upon the existing multi-representational teaching-learning materials, and using the DeFT framework (Ainsworth, 2006), four multi-representational learning tasks were developed aiming at visually interpreting vector field diagrams (Hahn and Klein, 2022b). Their structure follows the Modeling Instruction approach as each task addresses one vector calculus concept in which the representational forms are used in a coordinated manner aiming at developing conceptual understanding (e.g., McPadden and Brewe, 2017). Furthermore, sketching activities and a vector field simulation are incorporated to provide cognitive relief, to foster engagement with the representations, and to support the development of representational competencies related to vector calculus concepts. Here, representation-specific sketching activities, such as sketching vector components or highlighting rows or columns to support evaluation along coordinate directions, were included (Klein et al., 2018, 2019; Hahn and Klein, 2021, 2022a). Additionally, typical sketching tasks for learning with simulations, such as copying or creating a vector field diagram, were involved (Kohnle et al., 2020). As part of the present registered report study, the research-based multi-representational learning tasks are implemented into lecture-based recitations in a first-year electrodynamics course. Consequently, the present study aims at evaluating the added value of multiple representations in task-based learning of vector calculus by comparing a multi-representational intervention group and a control group working on traditional calculation-based tasks. 
Therefore, the following guiding question is investigated: “Do multi-representational learning tasks have a higher learning impact than traditional (calculation-based) tasks in the context of vector fields?” Considering previous research findings and theoretical frameworks from cognitive psychology on multi-representational learning, and on the use of sketching activities and simulations, we hypothesize that multi-representational, sketching- and simulation-based tasks

(H1) promote students' performance as measured by a vector field performance test (that includes tasks related to vector calculus, vector field quantities, and vector field concepts), and

(H2) reduce perceived cognitive load (as measured by a cognitive load questionnaire) during task processing.

Learning tasks are implemented in the weekly recitations on experimental physics II in the summer semester 2022 and 2023. Physics students usually attend experimental physics II in their second semester of study, then encountering university electromagnetism for the first time. The module includes a lecture with demonstration experiments and weekly recitations in which the compulsory assignments are discussed. Dividing the study into an alpha and a beta implementation (summer semester 2022 and 2023, respectively) primarily serves to consolidate the data. In the alpha implementation, all instruments and learning tasks are tested and psychometrically characterized, thus providing guidance for improvement. Then, alpha as well as beta implementation are used to evaluate the effectiveness of the intervention aiming at answering the guiding question and testing the hypotheses. Study design and procedure are identical in both implementations in order to transfer conclusions from the alpha to the beta implementation.

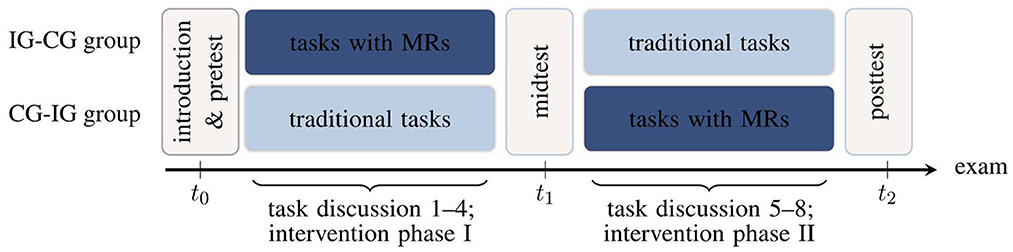

The studies are based on within- and between-subjects treatments wrapped in a rotational design (Figure 1). At the beginning of the lecture period, all recitation groups are randomly divided into two superordinate groups (IG-CG and CG-IG groups, respectively), both serving as intervention group (IG) and control group (CG) at some time but in different order. Students select a fixed recitation group on their own without knowing about the later assignment to a treatment condition. Before the first intervention phase, students take a performance test on vector calculus (section 2.3). Subsequently, the first intervention phase starts and in each of the following four weeks, students complete a mandatory intervention task (either a multi-representational or a traditional task) in addition to a set of standard tasks which does not differ between the groups. The latter consists of typical, predominantly calculation- and formula-based, problem-solving tasks that have always been used in the course (e.g., they present some vector fields and students must calculate divergence or curl). First, the upper group in Figure 1 serves as the intervention group (IG) and works on the multi-representational learning tasks, while the lower group, acting as a waiting group, serves as the control group (CG) and works on traditional (calculation-based) tasks. All assignments are completed by self-study within one week, submitted for correction, and discussed with a dedicated, independent intervention tutor during the subsequent recitation. Prior to each task discussion, a short questionnaire on perceived cognitive load during task processing and means of task assistance is deployed (section 2.3). After the first intervention phase in the seventh week of the semester, students again complete the performance test on vector calculus and another evaluation questionnaire. Subsequently, the groups switch roles and the second four-week intervention phase starts. 
Finally, the performance test on vector calculus and the questionnaire are administered again.

Figure 1. Study design with timeline from left (t0) to right (t2; intervention group IG, control group CG, multiple representations MRs). The designations “IG-CG group” and “CG-IG group” refer to the chronological order of the groups in the rotational design (first intervention group, then control group, or vice versa).

Due to the lack of comparable studies regarding target group and topic, power analyses are based on effect sizes of methodologically similar studies. Akkus and Cakiroglu (2010) reported medium (η2 = 0.128, f = 0.383) to large effect sizes (η2 = 0.233, f = 0.551) when comparing seventh grade students' algebra performance between an experimental group provided with a multiple representation-based algebra instruction and a control group using a conventional instruction. Power analyses with G⋆Power 3.1 (Faul et al., 2009) indicated that for an analysis of covariance (p < 0.05) including two covariates (e.g., semester of study and school leaving examination grade) and an average effect size of f = 0.467, a sample size of N = 51 students would be required to obtain a desired power of 0.9 (McDonald, 2014). A meta-analysis by Sokolowski (2018) found a large overall weighted mean effect size of f = 0.53 regarding the use of representations in Pre-K through fifth grade mathematics compared to traditional teaching methods, which would require a sample size of N = 40 under the aforementioned assumptions. Regarding the alpha implementation of this study, pre- and midtest were completed by N = 116 and N = 64 students, respectively. For the beta implementation, similar sample sizes can be expected, which would be consistent with the results of the power analyses.
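The reported pairs of η2 and f are consistent with the standard conversion f = sqrt(η2 / (1 − η2)). The following minimal sketch only re-derives the effect sizes quoted above. It does not reproduce the G⋆Power sample size calculation, and the function name is a hypothetical choice.

```python
import math


def eta_squared_to_f(eta_sq: float) -> float:
    """Convert eta squared to Cohen's f via f = sqrt(eta^2 / (1 - eta^2))."""
    if not 0.0 <= eta_sq < 1.0:
        raise ValueError("eta squared must lie in [0, 1)")
    return math.sqrt(eta_sq / (1.0 - eta_sq))


# Effect sizes quoted from Akkus and Cakiroglu (2010) in the text above.
f_medium = eta_squared_to_f(0.128)
f_large = eta_squared_to_f(0.233)

# Simple arithmetic mean of the two values, as used for the power analysis.
f_average = (f_medium + f_large) / 2

print(round(f_medium, 3), round(f_large, 3), round(f_average, 3))
```

Rounded to three decimals, the values match f = 0.383, f = 0.551, and the average of f = 0.467 stated in the text.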

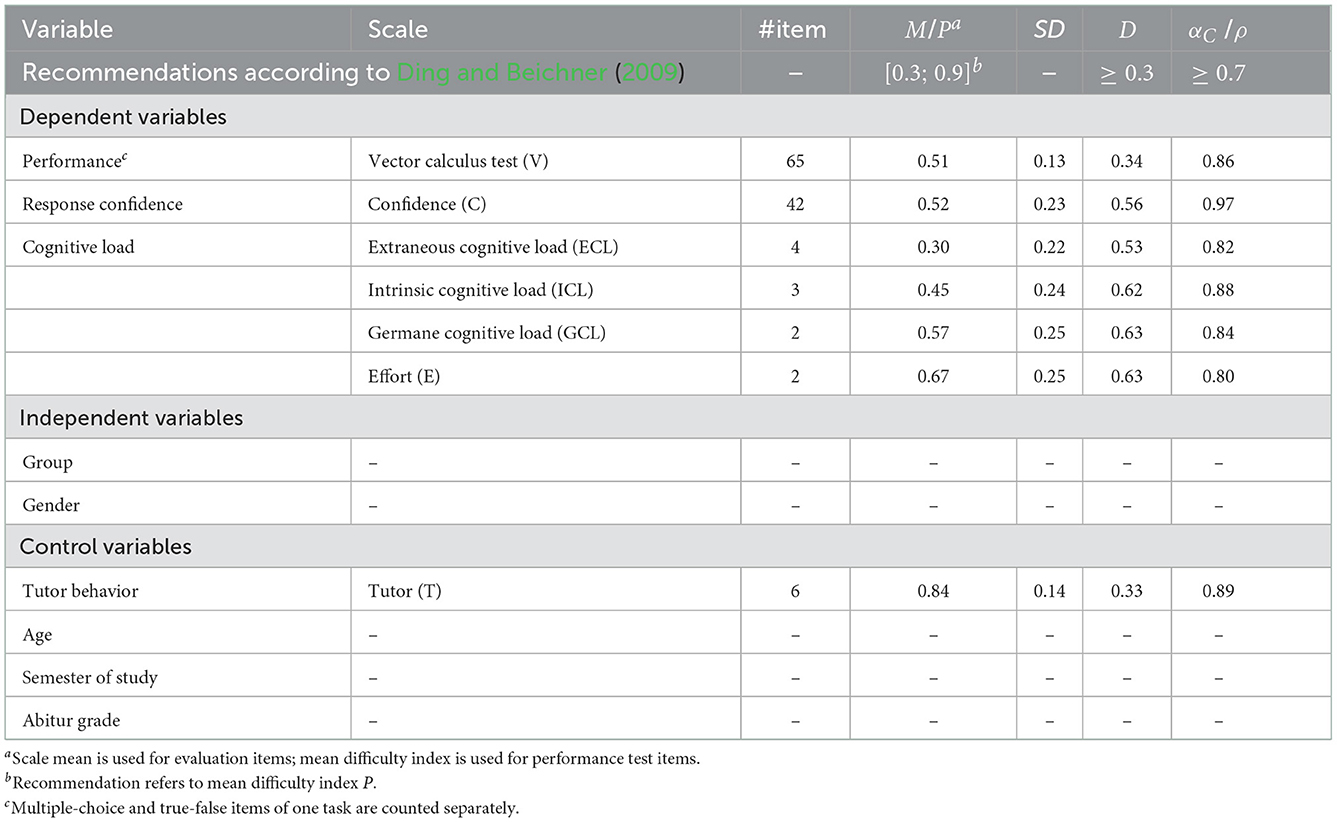

Test and scale analyses reported in the following are based on the assessments and responses of the alpha implementation. Here, data from the first implementation phase are used to ensure the largest possible data base. Therefore, the performance test at t0 (N = 116) and the weekly questionnaire used in the first recitation after the pretest (N = 93) were examined. The sample from the pretest included 86 male and 27 female undergraduate physics students with a mean age of 20.3 ± 1.9 years, a mean school leaving examination grade of 1.7 ± 0.6 [“Abitur” grade; referring to a scale from 1 (best) to 6], and a mean of 2.8 ± 1.5 semesters of study. Prior to test and scale analyses, all datasets were cleaned of outliers. An overview of all variables and scales used in the study is given in Table 1.

Table 1. Overview of variables, instruments, and scales including scale analyses results of the alpha implementation (scale mean M, mean difficulty index P, mean standard deviation SD, mean discrimination index D, Cronbach's alpha αC, Spearman-Brown coefficient ρ).

Initially, all subjects completed a test with demographic questions (e.g., age, gender, semester of study) and a performance test on vector calculus assessing conceptual understanding closely linked with representational competencies. The performance test included 19 tasks, partly comprising several subtasks, hence, a total of 65 items (multiple-choice and true-false items of one task counted separately) covering seven different subtopics of vector calculus. Forty-nine of the items were designed in multiple-choice or true-false format, while the remaining 16 items required a sketch, formula, justification, calculation, or a proof. Most of the items were taken from established concept tests on electrodynamics (CURrENT) or have been used and validated in a similar form in previous studies (Bollen et al., 2015, 2018; Baily et al., 2016, 2017; Klein et al., 2018, 2019, 2021; Hahn and Klein, 2022a; Rabe et al., 2022). Exploratory factor analysis of the performance test did not reveal a distinct factor structure which is a common result for concept tests in STEM education research (e.g., FCI; Heller and Huffman, 1995; Hestenes and Halloun, 1995; Huffman and Heller, 1995; Scott et al., 2012). Therefore, for the following analyses, the performance test is considered in its entirety. With a mean difficulty index of P = 0.51, a mean discrimination index of D = 0.34, and a reliability of αC = 0.86 (Table 1), the performance test shows satisfactory psychometric properties according to the recommendations of Ding and Beichner (2009). Additionally, for most of the multiple-choice and true-false items, response confidence was assessed using a 6-point Likert-type rating scale (1 = absolutely confident to 6 = not confident at all; D = 0.56, αC = 0.97; Table 1) to provide insight into student response behavior beyond performance measures. 
Since previous studies, also in the context of instruction-based learning of vector field concepts, found positive correlations between performance and confidence (e.g., Lindsey and Nagel, 2015; Klein et al., 2019), it will be investigated whether group membership influences this correlation.
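For readers unfamiliar with the reported test statistics, the sketch below shows how an item difficulty index (proportion of correct responses) and Cronbach's alpha can be computed from a matrix of dichotomous item scores. The score matrix is invented toy data, not study data, and the use of sample variances is an assumption; the authors' exact computation is documented in their Supplementary material, not here.

```python
from statistics import mean, variance
from typing import List, Sequence


def difficulty_index(item_scores: Sequence[int]) -> float:
    """Proportion of correct responses for one item scored 0/1."""
    return mean(item_scores)


def cronbach_alpha(score_matrix: List[List[int]]) -> float:
    """Cronbach's alpha for rows = students, columns = items."""
    k = len(score_matrix[0])
    # Transpose so that each tuple holds all responses to one item.
    items = list(zip(*score_matrix))
    item_variance_sum = sum(variance(item) for item in items)
    total_variance = variance([sum(row) for row in score_matrix])
    return k / (k - 1) * (1 - item_variance_sum / total_variance)


# Hypothetical toy data: 5 students x 4 items (1 = correct, 0 = incorrect).
toy_scores = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
]

item_difficulties = [difficulty_index(item) for item in zip(*toy_scores)]
mean_difficulty = mean(item_difficulties)
alpha = cronbach_alpha(toy_scores)

print("item difficulties:", item_difficulties)
print("mean difficulty P:", round(mean_difficulty, 2))
print("Cronbach's alpha: ", round(alpha, 2))
```

For this toy matrix the mean difficulty is 0.5 and alpha is 0.8; the numbers serve only to check the formulas by hand.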

In weekly recitations, students answered a short questionnaire related to the previous learning task providing information about the cognitive load they experienced while completing the task as well as any kind of task assistance. The items regarding cognitive load are based on a scale measuring the three types of cognitive load from Leppink et al. (2013) which was supplemented by items from Klepsch et al. (2017) and Krell (2017). The final questionnaire contained 12 items measuring cognitive load on a 6-point Likert-type rating scale (1 = strongly disagree to 6 = strongly agree). As a result of principal component analysis with varimax rotation, four subscales can be identified (75.03% variance explanation, excluding item CL10; Table 1): extraneous cognitive load (four items, αC = 0.82), intrinsic cognitive load (three items, αC = 0.88), germane cognitive load (two items, ρ = 0.84), and effort (two items, ρ = 0.80). The three scales for extraneous, intrinsic, and germane cognitive load reflect the three types of cognitive load according to Sweller (2010), with the germane cognitive load scale primarily addressing perceived improvement in understanding. In addition, the effort scale assesses the effort expended in task completion (Paas and Van Merriënboer, 1994; Krell, 2017). Following the recommendations of Ding and Beichner (2009), item and scale analyses yielded good values of item-total correlation (rit ≥ 0.56) and discrimination indices (Di ≥ 0.50) as well as the scales' mean discrimination indices (D ≥ 0.53) and their reliabilities (αC ≥ 0.80). In addition to the perceived cognitive load, means of task assistance (e.g., “working together in a group with students from my course”, “looking up in a textbook”) were assessed using a choice format.
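For the two-item scales, reliability is reported as a Spearman-Brown coefficient ρ. A common form for two items is ρ = 2r / (1 + r), where r is the Pearson correlation between the items. The sketch below assumes this form and uses invented ratings on the 6-point scale; it is an illustration, not the authors' analysis script.

```python
import math
from typing import Sequence


def pearson_r(a: Sequence[float], b: Sequence[float]) -> float:
    """Pearson correlation between two equally long rating lists."""
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    ss_a = sum((x - mean_a) ** 2 for x in a)
    ss_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(ss_a * ss_b)


def spearman_brown(r: float) -> float:
    """Spearman-Brown coefficient for a two-item scale: 2r / (1 + r)."""
    return 2 * r / (1 + r)


# Hypothetical ratings of six students on two items of one scale.
item_a = [1, 2, 3, 4, 5, 6]
item_b = [2, 1, 4, 3, 6, 5]

r_items = pearson_r(item_a, item_b)
rho = spearman_brown(r_items)

print("r =", round(r_items, 3), " rho =", round(rho, 3))
```

Because ρ exceeds r for any positive correlation, the coefficient expresses the reliability of the combined two-item scale rather than the agreement of the single items.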

After the intervention phases, the students again completed the performance test which was extended by a module-specific task on electrostatics. In addition, a questionnaire was used which surveyed the tutor's behavior during task discussion as a control variable using six items (6-point Likert-type rating scale from 1 = strongly disagree to 6 = strongly agree). The items are based on the “tutor evaluation questionnaire” by Dolmans et al. (1994) supplemented by modifications from Baroffio et al. (1999) and Pinto et al. (2001). Following the results of principal component analysis with varimax rotation, the scale will be considered in its entirety (68.61% variance explanation). It shows a high reliability (αC = 0.89) and a satisfactory discrimination index (D = 0.33; Table 1). Further information and detailed documentation of the study material, instruments, scales, and test analyses can be found in the Supplementary material.

As required for parametric procedures, all scales for dependent and control variables (Table 1) were checked for normally distributed scale scores. Regarding the hypotheses, statistical analyses will mainly comprise (co-)variance analyses to examine the influence of group membership on the dependent variables. Both intervention phases are methodologically treated equally, but analyzed separately to ensure the largest possible data base. Moreover, the rotational design allows a comparison of the pre-post learning gains of intervention and control group within each phase. Here, a common measure of gain, Hake's gain, defined as the quotient of absolute gain and maximum possible gain, is used (Hake, 1998). However, a comparison of both conditions (traditional vs. multi-representational) can also take place within the IG-CG and CG-IG groups, since each group is once CG and once IG. In addition, 2 × 2 analyses of variance will be conducted to examine the impact of the intervention comparing different time points (pre-post comparison). Moreover, the performance test will be examined in more detail using Rasch analysis.
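Hake's gain, as described above, is g = (post − pre) / (max − pre). A minimal sketch follows; the percentage scale with a maximum of 100 and the example scores are assumptions made for illustration, and this section does not state whether the gain is computed per student or from group averages.

```python
def hake_gain(pre: float, post: float, max_score: float = 100.0) -> float:
    """Normalized gain: absolute gain divided by maximum possible gain."""
    if pre >= max_score:
        # No room for improvement, so the quotient is undefined.
        raise ValueError("gain is undefined when the pretest score is already maximal")
    return (post - pre) / (max_score - pre)


# Hypothetical scores in percent: 40% before and 70% after an intervention phase.
example_gain = hake_gain(pre=40.0, post=70.0)

print("Hake's gain g =", example_gain)
```

In this example the student realizes 30 of 60 possible percentage points, so half of the maximum possible gain is achieved. Normalizing by the remaining headroom makes gains comparable between groups that start from different pretest levels.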

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethical review and approval was not required for the study on human participants in accordance with the local legislation and institutional requirements. The patients/participants provided their written informed consent to participate in this study.

PK supervised data collection and gave feedback on the first draft of the manuscript. LH performed the statistical analyses and wrote the first draft of the manuscript. All authors contributed to conception and design of the study.

We acknowledge support by the Open Access Publication Funds of the Göttingen University.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2022.1012787/full#supplementary-material

Ainsworth, S. E. (1999). The functions of multiple representations. Comput. Educ. 33, 131–152. doi: 10.1016/S0360-1315(99)00029-9

Ainsworth, S. E. (2006). DeFT: a conceptual framework for considering learning with multiple representations. Learn. Instruct. 16, 183–198. doi: 10.1016/j.learninstruc.2006.03.001

Ainsworth, S. E., and Scheiter, K. (2021). Learning by drawing visual representations: potential, purposes, and practical implications. Curr. Dir. Psychol. Sci. 30, 61–67. doi: 10.1177/0963721420979582

Akkus, O., and Cakiroglu, E. (2010). “The effects of multiple representations-based instruction on seventh grade students' algebra performance,” in Proceedings of the Sixth Congress of the European Society for Research in Mathematics Education (CERME), eds V. Durand-Guerrier, S. Soury-Lavergne, and F. Arzarello (Lyon: Institut National de Recherche Pédagogique), 420–429.

Ambrose, B. S. (2004). Investigating student understanding in intermediate mechanics: identifying the need for a tutorial approach to instruction. Am. J. Phys. 72, 453–459. doi: 10.1119/1.1648684

Baily, C., Bollen, L., Pattie, A., Van Kampen, P., and De Cock, M. (2016). “Student thinking about the divergence and curl in mathematics and physics contexts,” in Proceedings of the Physics Education Research Conference 2016 (College Park, MD: American Institute of Physics), 51–54. doi: 10.1119/perc.2015.pr.008

Baily, C., Ryan, Q. X., Astolfi, C., and Pollock, S. J. (2017). Conceptual assessment tool for advanced undergraduate electrodynamics. Phys. Rev. Phys. Educ. Res. 13, 020113. doi: 10.1103/PhysRevPhysEducRes.13.020113

Baroffio, A., Kayser, B., Vermeulen, B., Jacquet, J., and Vu, N. V. (1999). Improvement of tutorial skills: an effect of workshops or experience? Acad. Med. 74, S75–S77. doi: 10.1097/00001888-199910000-00045

Benimoff, A. I. (2006). The electric fields experiment: a new way using conductive tape. Phys. Teach. 44, 140–141. doi: 10.1119/1.2173317

Bilda, Z., and Gero, J. S. (2005). Does sketching off-load visuo-spatial working memory. Stud. Designers 5, 145–160.

Bollen, L., Van Kampen, P., Baily, C., and De Cock, M. (2016). Qualitative investigation into students' use of divergence and curl in electromagnetism. Phys. Rev. Phys. Educ. Res. 12, 020134. doi: 10.1103/PhysRevPhysEducRes.12.020134

Bollen, L., Van Kampen, P., and De Cock, M. (2015). Students' difficulties with vector calculus in electrodynamics. Phys. Rev. ST Phys. Educ. Res. 11, 020129. doi: 10.1103/PhysRevSTPER.11.020129

Bollen, L., van Kampen, P., and De Cock, M. (2018). Development, implementation, and assessment of a guided-inquiry teaching-learning sequence on vector calculus in electrodynamics. Phys. Rev. Phys. Educ. Res. 14, 020115. doi: 10.1103/PhysRevPhysEducRes.14.020115

Burkholder, E. W., Murillo-Gonzalez, G., and Wieman, C. (2021). Importance of math prerequisites for performance in introductory physics. Phys. Rev. Phys. Educ. Res. 17, 010108. doi: 10.1103/PhysRevPhysEducRes.17.010108

Chiu, J. L., and Linn, M. C. (2014). Supporting knowledge integration in chemistry with a visualization-enhanced inquiry unit. J. Sci. Educ. Technol. 23, 37–58. doi: 10.1007/s10956-013-9449-5

Cook, M. P. (2006). Visual representations in science education: the influence of prior knowledge and cognitive load theory on instructional design principles. Sci. Educ. 90, 1073–1091. doi: 10.1002/sce.20164

De Cock, M. (2012). Representation use and strategy choice in physics problem solving. Phys. Rev. ST Phys. Educ. Res. 8, 020117. doi: 10.1103/PhysRevSTPER.8.020117

Ding, L., and Beichner, R. (2009). Approaches to data analysis of multiple-choice questions. Phys. Rev. ST Phys. Educ. Res. 5, 020103. doi: 10.1103/PhysRevSTPER.5.020103

Dolmans, D. H., Wolfhagen, I., Schmidt, H., and Van der Vleuten, C. (1994). A rating scale for tutor evaluation in a problem-based curriculum: validity and reliability. Med. Educ. 28, 550–558. doi: 10.1111/j.1365-2923.1994.tb02735.x

Faraday, M. (1852). III. Experimental researches in electricity: twenty-eighth series. Philos. Trans. R. Soc. Lond. 142, 25–56. doi: 10.1098/rstl.1852.0004

Faul, F., Erdfelder, E., Buchner, A., and Lang, A.-G. (2009). Statistical power analyses using G*Power 3.1: tests for correlation and regression analyses. Behav. Res. Methods 41, 1149–1160. doi: 10.3758/BRM.41.4.1149

Hahn, L., and Klein, P. (2021). “Multiple Repräsentationen als fachdidaktischer Zugang zum Satz von Gauß - Qualitative Zugänge zur Interpretation der Divergenz von Vektorfeldern,” in PhyDid B - Didaktik der Physik - Beiträge zur DPG-Frühjahrstagung, eds J. Grebe-Ellis and H. Grötzebauch (Fachverband Didaktik der Physik, virtuelle DPG-Frühjahrstagung 2021), 95–100. Available online at: https://ojs.dpg-physik.de/index.php/phydid-b/article/view/1151/1237

Hahn, L., and Klein, P. (2022a). “Kognitive Entlastung durch Zeichenaktivitäten? Eine empirische Untersuchung im Kontext der Vektoranalysis,” in Unsicherheit als Element von naturwissenschaftsbezogenen Bildungsprozessen, eds S. Habig and H. van Vorst (Gesellschaft für Didaktik der Chemie und Physik, virtuelle Jahrestagung 2021), 384–387. Available online at: https://www.gdcp-ev.de/wp-content/tb2022/TB2022_384_Hahn.pdf

Hahn, L., and Klein, P. (2022b). “Vektorielle Feldkonzepte verstehen durch Zeichnen? Erste Wirksamkeitsuntersuchungen,” in PhyDid B - Didaktik der Physik - Beiträge zur DPG-Frühjahrstagung, eds H. Grötzebauch and S. Heinicke (Fachverband Didaktik der Physik, virtuelle DPG-Frühjahrstagung 2022), 119–126. Available online at: https://ojs.dpg-physik.de/index.php/phydid-b/article/view/1259/1485

Hake, R. R. (1998). Interactive-engagement versus traditional methods: a six-thousand-student survey of mechanics test data for introductory physics courses. Am. J. Phys. 66, 64–74. doi: 10.1119/1.18809

Heller, P., and Huffman, D. (1995). Interpreting the force concept inventory: a reply to Hestenes and Halloun. Phys. Teach. 33, 503–511. doi: 10.1119/1.2344279

Hestenes, D., and Halloun, I. (1995). Interpreting the force concept inventory: a response to March 1995 critique by Huffman and Heller. Phys. Teach. 33, 502–506. doi: 10.1119/1.2344278

Huffman, D., and Heller, P. (1995). What does the force concept inventory actually measure? Phys. Teach. 33, 138–143. doi: 10.1119/1.2344171

Jung, K., and Lee, G. (2012). Developing a tutorial to address student difficulties in learning curl: a link between qualitative and mathematical reasoning. Can. J. Phys. 90, 565–572. doi: 10.1139/p2012-054

Klein, P., Hahn, L., and Kuhn, J. (2021). Einfluss visueller Hilfen und räumlicher Fähigkeiten auf die graphische Interpretation von Vektorfeldern: eine Eye-Tracking-Untersuchung. Z. Didakt. Naturwiss. 27, 181–201. doi: 10.1007/s40573-021-00133-2

Klein, P., Müller, A., and Kuhn, J. (2017). Assessment of representational competence in kinematics. Phys. Rev. Phys. Educ. Res. 13, 010132. doi: 10.1103/PhysRevPhysEducRes.13.010132

Klein, P., Viiri, J., and Kuhn, J. (2019). Visual cues improve students' understanding of divergence and curl: evidence from eye movements during reading and problem solving. Phys. Rev. Phys. Educ. Res. 15, 010126. doi: 10.1103/PhysRevPhysEducRes.15.010126

Klein, P., Viiri, J., Mozaffari, S., Dengel, A., and Kuhn, J. (2018). Instruction-based clinical eye-tracking study on the visual interpretation of divergence: how do students look at vector field plots? Phys. Rev. Phys. Educ. Res. 14, 010116. doi: 10.1103/PhysRevPhysEducRes.14.010116

Klepsch, M., Schmitz, F., and Seufert, T. (2017). Development and validation of two instruments measuring intrinsic, extraneous, and germane cognitive load. Front. Psychol. 8, 1997. doi: 10.3389/fpsyg.2017.01997

Kohnle, A., Ainsworth, S. E., and Passante, G. (2020). Sketching to support visual learning with interactive tutorials. Phys. Rev. Phys. Educ. Res. 16, 020139. doi: 10.1103/PhysRevPhysEducRes.16.020139

Kohnle, A., and Passante, G. (2017). Characterizing representational learning: a combined simulation and tutorial on perturbation theory. Phys. Rev. Phys. Educ. Res. 13, 020131. doi: 10.1103/PhysRevPhysEducRes.13.020131

Krell, M. (2017). Evaluating an instrument to measure mental load and mental effort considering different sources of validity evidence. Cogent Educ. 4, 1280256. doi: 10.1080/2331186X.2017.1280256

Küchemann, S., Malone, S., Edelsbrunner, P., Lichtenberger, A., Stern, E., Schumacher, R., et al. (2021). Inventory for the assessment of representational competence of vector fields. Phys. Rev. Phys. Educ. Res. 17, 020126. doi: 10.1103/PhysRevPhysEducRes.17.020126

Leopold, C., and Leutner, D. (2012). Science text comprehension: drawing, main idea selection, and summarizing as learning strategies. Learn. Instr. 22, 16–26. doi: 10.1016/j.learninstruc.2011.05.005

Leppink, J., Paas, F., Van der Vleuten, C. P., Van Gog, T., and Van Merriënboer, J. J. (2013). Development of an instrument for measuring different types of cognitive load. Behav. Res. Methods 45, 1058–1072. doi: 10.3758/s13428-013-0334-1

Li, J., and Singh, C. (2017). Investigating and improving introductory physics students' understanding of symmetry and Gauss's law. Eur. J. Phys. 39, 015702. doi: 10.1088/1361-6404/aa8d55

Lincoln, J. (2017). Electric field patterns made visible with potassium permanganate. Phys. Teach. 55, 74–75. doi: 10.1119/1.4974114

Lindsey, B. A., and Nagel, M. L. (2015). Do students know what they know? Exploring the accuracy of students' self-assessments. Phys. Rev. ST Phys. Educ. Res. 11, 020103. doi: 10.1103/PhysRevSTPER.11.020103

Linn, M. C., Lee, H.-S., Tinker, R., Husic, F., and Chiu, J. L. (2006). Teaching and assessing knowledge integration in science. Science 313, 1049–1050. doi: 10.1126/science.1131408

Logie, R. H. (2014). Visuo-spatial Working Memory. London: Psychology Press. doi: 10.4324/9781315804743

McPadden, D., and Brewe, E. (2017). Impact of the second semester University Modeling Instruction course on students' representation choices. Phys. Rev. Phys. Educ. Res. 13, 020129. doi: 10.1103/PhysRevPhysEducRes.13.020129

Nieminen, P., Savinainen, A., and Viiri, J. (2012). Relations between representational consistency, conceptual understanding of the force concept, and scientific reasoning. Phys. Rev. ST Phys. Educ. Res. 8, 010123. doi: 10.1103/PhysRevSTPER.8.010123

Paas, F. G., and Van Merriënboer, J. J. (1994). Instructional control of cognitive load in the training of complex cognitive tasks. Educ. Psychol. Rev. 6, 351–371. doi: 10.1007/BF02213420

Pepper, R. E., Chasteen, S. V., Pollock, S. J., and Perkins, K. K. (2012). Observations on student difficulties with mathematics in upper-division electricity and magnetism. Phys. Rev. ST Phys. Educ. Res. 8, 010111. doi: 10.1103/PhysRevSTPER.8.010111

Pinto, P. R., Rendas, A., and Gamboa, T. (2001). Tutors' performance evaluation: a feedback tool for the PBL learning process. Med. Teach. 23, 289–294. doi: 10.1080/01421590120048139

Rabe, C., Drews, V., Hahn, L., and Klein, P. (2022). “Einsatz von multiplen Repräsentationsformen zur qualitativen Beschreibung realer Phänomene der Fluiddynamik,” in PhyDid B - Didaktik der Physik - Beiträge zur DPG-Frühjahrstagung, eds H. Grötzebauch and S. Heinicke (Fachverband Didaktik der Physik, virtuelle DPG-Frühjahrstagung 2022), 71–77. Available online at: https://ojs.dpg-physik.de/index.php/phydid-b/article/view/1269/1491

Rau, M. A. (2017). Conditions for the effectiveness of multiple visual representations in enhancing STEM learning. Educ. Psychol. Rev. 29, 717–761. doi: 10.1007/s10648-016-9365-3

Scott, T. F., Schumayer, D., and Gray, A. R. (2012). Exploratory factor analysis of a Force Concept Inventory data set. Phys. Rev. ST Phys. Educ. Res. 8, 020105. doi: 10.1103/PhysRevSTPER.8.020105

Seufert, T. (2003). Supporting coherence formation in learning from multiple representations. Learn. Instr. 13, 227–237. doi: 10.1016/S0959-4752(02)00022-1

Singh, C., and Maries, A. (2013). “Core graduate courses: a missed learning opportunity?” in AIP Conference Proceedings (College Park, MD: American Institute of Physics), 382–385. doi: 10.1063/1.4789732

Smith, E. M. (2014). Student & Textbook Presentation of Divergence [Master thesis]. Corvallis, OR: Oregon State University.

Sokolowski, A. (2018). The effects of using representations in elementary mathematics: meta-analysis of research. IAFOR J. Educ. 6, 129–152. doi: 10.22492/ije.6.3.08

Stieff, M. (2011). Improving representational competence using molecular simulations embedded in inquiry activities. J. Res. Sci. Teach. 48, 1137–1158. doi: 10.1002/tea.20438

Suyatna, A., Anggraini, D., Agustina, D., and Widyastuti, D. (2017). “The role of visual representation in physics learning: dynamic versus static visualization,” in Journal of Physics: Conference Series, International Conference on Science and Applied Science, Solo, Indonesia (Esquimalt, BC: IOP Publishing), 012048. doi: 10.1088/1742-6596/909/1/012048

Sweller, J. (2010). Element interactivity and intrinsic, extraneous, and germane cognitive load. Educ. Psychol. Rev. 22, 123–138. doi: 10.1007/s10648-010-9128-5

Keywords: multiple representations, simulation, conceptual understanding, vector fields, physics, sketching, task-based learning, lecture-based recitations

Citation: Hahn L and Klein P (2023) The impact of multiple representations on students' understanding of vector field concepts: Implementation of simulations and sketching activities into lecture-based recitations in undergraduate physics. Front. Psychol. 13:1012787. doi: 10.3389/fpsyg.2022.1012787

Received: 05 August 2022; Accepted: 05 December 2022; Published: 05 January 2023.

Edited by:

Sarah Malone, Saarland University, Germany

Reviewed by:

Jose Pablo Escobar, Pontificia Universidad Católica de Chile, Chile

Copyright © 2023 Hahn and Klein. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Larissa Hahn, larissa.hahn@uni-goettingen.de

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
