- 1Research Institute for Sport and Exercise, Faculty of Health, University of Canberra, Canberra, ACT, Australia

- 2Faculty of Health, University of Canberra, Canberra, ACT, Australia

- 3Health Research Institute, University of Canberra, Canberra, ACT, Australia

- 4Australian National University, Canberra, ACT, Australia

- 5School of Science and Technology, Nottingham Trent University, Nottingham, United Kingdom

- 6Australian Army, Adelaide, SA, Australia

- 7Department of Defence, Australian Government, Edinburgh, SA, Australia

Personnel in many professions must remain “ready” to perform diverse activities. Managing individual and collective capability is a common concern for leadership and decision makers. Typical existing approaches for monitoring readiness involve keeping detailed records of training, health and equipment maintenance, or – less commonly – data from wearable devices that can be difficult to interpret and can raise privacy concerns. A widely applicable, simple psychometric measure of perceived readiness would be invaluable in generating rapid evaluations of current capability directly from personnel. To develop this measure, we conducted exploratory factor analysis and confirmatory factor analysis with a sample of 770 Australian military personnel. The 32-item Acute Readiness Monitoring Scale (ARMS) demonstrated good model fit, and comprised nine factors: overall readiness; physical readiness; physical fatigue; cognitive readiness; cognitive fatigue; threat-challenge (i.e., emotional/coping) readiness; skills-and-training readiness; group-team readiness, and equipment readiness. Readiness factors were negatively correlated with recent stress, current negative affect and distress, and positively correlated with resilience, wellbeing, current positive affect and a supervisor’s rating of soldier readiness. The development of the ARMS facilitates a range of new research opportunities: enabling quick, simple and easily interpreted assessment of individual and group readiness.

Introduction

Maintaining capabilities spanning physical, cognitive, emotional, skill and team domains is common to many professions, for example: construction (Yip and Rowlinson, 2006; Nahrgang et al., 2011); nursing (Kuiper and Pesut, 2004); medicine/surgery (Elfering et al., 2017); sales/marketing (McFarland et al., 2016), and many more (Bakker and Demerouti, 2007). Research in occupational psychology offers frameworks such as the Job Demands–Resources (JD-R) model (Bakker and Demerouti, 2007), which specifies that the balancing of these capabilities against job demands predicts: job burnout (e.g., Demerouti et al., 2001; Bakker et al., 2005), organizational commitment, work enjoyment (Bakker et al., 2010), connectedness (Lewig et al., 2007), and work engagement (Bakker et al., 2007). At present, the majority of this balancing – through careful management, planning, training and recruitment – remains a challenging, time-demanding and slow (or infrequent) task, with diverse approaches and methods deployed in different contexts (Chuang et al., 2016; Der-Martirosian et al., 2017; Shah et al., 2017; Sharma et al., 2018). Against this backdrop, offering a brief, psychometrically validated, easily interpreted and widely applicable assessment of acute performance readiness may substantially improve the performance planning of personnel and their managers.

In the military, just as in other occupations, individuals and groups are developed, supported and assessed in relation to their integrated capabilities, encompassing the physical, cognitive, and psychological, as well as role-specific skills, team functioning, and equipment (Peterson et al., 2011; Reivich et al., 2011; Training and Doctrine Command, 2011). Indeed, military personnel around the world perform important roles extending beyond direct combat, to include: remote operations; peacekeeping (Bester and Stanz, 2007); protecting key assets (borders, heritage sites, endangered species); cyber activities; emergency responding (e.g., floods, bushfires, earthquakes); and collaborating with other nations (Rollins, 2001; Faber et al., 2008; Apiecionek et al., 2012; Roy and Lopez, 2013). In this way, the diversity of military roles reflects many professional and occupational settings. This diversity comprises a complex array of duties, priorities, and constraints: requiring individuals to be highly capable, strategically adaptive, and prepared to the highest standards (Rutherford, 2013). Accordingly, personnel must remain ready to perform their job role, across a wide array of capabilities, often for prolonged periods of time.

Readiness Profiles as Indicative of Resilience

As well as seeking to facilitate improved management of role readiness in acute timeframes, we also sought to respond to recent critiques of the concept of resilience – which has been adopted in workplaces around the world (Robertson et al., 2015; Crane, 2017). Current research has tended to focus on stable individual attributes that predict positive adaptations to adversity (“stable protective or mitigating personal factors” – e.g., Rutter, 1987; Connor and Davidson, 2003). This focus has constrained the ability to also examine resilience’s theoretical emphasis on the acute interaction of person-in-environment and the dynamic, interactive nature of resilience (Windle et al., 2011; Pangallo et al., 2015). Further, most current research in resilience characterizes the “positive adaptations” as exclusively referring to mental health or subjective wellbeing, when the positive/desirable response might also refer to performance (i.e., sport) or physical recovery. Responding to the research priorities of the Australian Army we set out to develop an instrument that would assess short term performance capability - to capture the desired resilience profile of responding to change-and-adversity that their personnel should demonstrate (Gilmore, 2016). Accordingly, this conceptualisation of resilience should encapsulate performance capability: (a) persisting/thriving through challenging situations; (b) rebounding following setbacks; (c) mitigating detrimental effects if-and-when they occur; and (d) learning and improving from challenges – including deliberate and planned challenges (e.g., training) as well as unexpected or unplanned challenges (ranging from the theater-of-war to also include family issues, interpersonal conflict and equipment failures – cf. Richardson, 2002 - see also Forces Command Resilience Plan, 2015). 
This focus on the dynamic maintenance of individual and group/team capability through various circumstances may represent a different definition of resilience to some offered in the literature, some of which focus on relatively stable attributes of the individual. Indeed, the Australian Army currently defines resilience as “the capacity of individuals, teams and organizations to adapt, recover and thrive in situations of risk, challenge, danger, complexity and adversity” (Forces Command Resilience Plan, 2015). Another key difference between “resilient force capability” and previous work on resilience is the emphasis on overall performance capability as opposed to health, mental health, and stress coping – the traditional foci of resilience research. Nevertheless, the elements of a dynamic process (Luthar et al., 2000) responding to different forms of challenge or adversity (Rutter, 1987; Lee and Cranford, 2008) and a repertoire of resources and tendencies (Agaibi and Wilson, 2005) all remain consistent with resilience research.

To better reflect this emphasis on resilient performance capability, we focused instead on the concept of “readiness,” to reflect an acute, situational state, and assessing perceptions of what can be achieved or attempted in the immediate future (cf. Grier et al., 2012). In this research, we proposed that changes in such a readiness state in response to recent events may, over time, be used to infer individual and group resilience. For example, an individual who maintains high levels of readiness and capability through significant challenges could be viewed as more resilient than someone whose readiness was impaired by the same, or even less significant, adversity (similar logic could apply for inferring physical or emotional resilience from acute response profiles). An instrument assessing readiness in this way would not only reflect a response to recent critiques of resilience measurement (e.g., Windle et al., 2011; Pangallo et al., 2015), but it would better facilitate the comparison and analysis of situational psychological perceptions to objective indices of physiological stress and resilience indicators such as cortisol, testosterone, and heart-rate variability (e.g., Hellewell and Cernak, 2018).

Defining and Operationalizing Readiness

Based on this proposition that acute subjective ratings of “readiness” represent a potentially useful tool for supporting performance and training, then the next step is to conceptualize acute readiness. We operationally defined “readiness” as an acute state of preparation and capability to perform any key task or role, in the immediate future. We expected this readiness state to fluctuate in response to recent tasks, for example those causing fatigue. This conceptualization differs from some established approaches, detailed below, which reflect a more general, chronic development of skills or capability. To be useful for informing immediate performance management and key decision-making, the instrument we developed needed to focus on the acute assessment of readiness.

As an example of how readiness has previously been monitored, the United States Army defined unit readiness as “the ability of a unit to perform as designed” (Dabbieri, 2003; p. 28), assessed in four areas: personnel, equipment-on-hand, equipment serviceability, and training (Army Regulation 220-1, 2003). Personnel readiness indicated the extent to which key roles in the unit were occupied by trained and capable individuals – e.g., percentages of fulfilled positions, whether they were available for deployment, and whether they were qualified/trained for their assigned positions. Equipment-on-hand referred to the extent that the necessary equipment was available to perform the unit’s role/mission: typically measured as a percentage of available-versus-specified equipment. Equipment readiness indicated the extent to which the equipment-on-hand was functional and operational. Training readiness indicated how well soldiers individually, and the unit collectively, were prepared to execute assigned tasks and missions – example indices might include a commander’s rating of soldiers’ individual performance of wartime tasks, a rating of the unit’s collective performance, and the estimated number of training days needed for soldiers and the unit to be ready to perform such tasks (Griffith, 2006). Under this approach to monitoring readiness, numerous bureaucratic and administrative records had to be combined, often taking significant time and resources, to build a representation of a unit’s readiness. These evaluations would be, by necessity, infrequent and after-the-fact: relying on a combination of information from diverse sources. Such a combination of measures is not uncommon, and military contexts are often viewed as strong examples of organization and structure. Nevertheless, approaches like this may limit the ability of readiness monitoring tools to inform key decision-making.
A more immediate and more readily integrated approach to monitoring may be available through the frequent use of short and psychometrically sound measures.

Where reviews and research have attempted to conceptualize readiness, their focus has been both broad, and longer term, spanning multiple constructs, including: self-efficacy; commitment; perceived organizational support; physical fitness; sense-of-community; technical competence; family-life; and job satisfaction (Adams et al., 2009; Blackburn, 2014). While these narrative reviews offered a broad overview of readiness, they included more stable traits and attributes, and did not offer clear advice for measurement, noting for example: “Measures that do exist are generally tailored to a specific domain and their generalizability is unclear. The models related to individual readiness are still relatively few and often lack empirical support. These have not been broadly validated” (Adams et al., 2009, p. iii). Hence the necessity of developing a suitable psychometric measure of acute readiness is increasingly clear.

Existing Psychometric Measures of Readiness

Psychometric research studies have examined different forms of readiness, including: exercise readiness (e.g., freshness/energy and fatigue -Strohacker and Zakrajsek, 2016; Strohacker et al., 2021); readiness to return to sport following injury (skills/fitness and confidence/self-efficacy – Conti et al., 2019); cognitive readiness (e.g., operational and strategic – Grier, 2012; Grier et al., 2012); and – considering a military context - readiness for combat (e.g., discipline and “military climate” - Bester and Stanz, 2007; see also Wen et al., 2014). None of the existing scales span all the different roles fulfilled by military personnel - reaching beyond combat - which necessitated the development of a new specific measure. In reviewing such scales, it is clear that “readiness” can be conceptualized as multidimensional, spanning multiple domains including physical, cognitive, emotional, social, skills/training, and even equipment/resources. In combination, these different components may combine to form an overall indication of acute readiness. Further, important distinctions can be made between acute readiness versus attributes developed over time, such as skills-and-training, as well as a distinction between group-level constructs such as climate and individual states such as fatigue or freshness. We set out to develop a scale to focus on acute, individual, multidimensional readiness – in order to (when implemented) detect short-term changes in individual and group capability.

Objective Measures and Wearables

Aside from time-consuming and resource-heavy medical examinations, the main alternative to a psychometric approach for monitoring readiness would be the use of wearable devices to monitor physiological signals such as heart rate, heart-rate variability, skin temperature, and galvanic skin response (Domb, 2019; Seshadri et al., 2019). Biochemical markers are also available through the sampling of sweat and sometimes blood to capture metabolites and electrolytes (Bandodkar and Wang, 2014; Lee et al., 2018). Decreasing production costs, increasing portability and the opportunity for real-time monitoring have made these popular options. Nevertheless, these devices can demonstrate inconsistent reliability between different circumstances, and are often dependent on communications networks, with implications for battery life and data-security (Evenson et al., 2015; Baig et al., 2017; Peake et al., 2018; Seshadri et al., 2019). In many settings, including the military, construction, food processing, nursing and allied health professions, complications are caused via: (a) exposure to wide variations in temperatures; (b) frequent heavy usage; (c) impacts; and (d) challenges such as water, sweat, grit and sand. Keeping such devices operational over long periods can generate a significant impost, for example, through charging, maintenance and software updates. The information extracted from the user is often removed, analyzed and stored elsewhere, which may not ‘empower’ the user in terms of increasing awareness or facilitating immediate decision making (Seshadri et al., 2019). Without access to suitable network connectivity or local information processing capabilities, the raw information gathered by these devices is often uninterpretable by the user, and over extended periods may simply be deleted or become outdated before it is uploaded.
There are also reported occasions where a user’s own perceptions and performance differ substantially from the interpretation offered by wearables, such as athletes being removed from competition despite feeling good and performing well (Blair et al., 2017; Coyne et al., 2018). For these reasons, coupled with the inability of wearables to evaluate all dimensions of readiness (for example skills/training, team-functioning and equipment), a “low-tech” psychometric instrument may be beneficial at least to complement wearable technology: both to compensate in instances when conditions limit the effectiveness of the wearable, and also in facilitating better alignment between subjective perceptions and objectively measured data.

Delineating Acute Readiness From Related Concepts

Finally, in reviewing existing psychometric measures of relevant and related concepts, several key issues support the development of a new instrument. Measures such as stress-recovery (e.g., Kölling et al., 2015; Nässi et al., 2017), daily hassles (e.g., Holm and Holroyd, 1992) and the Task-Load Index (e.g., Hart and Staveland, 1988; Byers et al., 1989) all assess subjective perceptions of recent past events. These measures do not assess perceptions of what a person is able to do in the immediate future, and while we may expect a close relationship, performers in many settings must often complete challenging tasks but remain “ready” for another challenge – indeed this is a core requirement of the role. Measures of current subjective state such as affect (e.g., Watson et al., 1988; Crawford and Henry, 2010), anxiety or wellbeing (Ryff, 1989; Van Dierendonck, 2005) likewise do not assess the perception of what may be attempted in the immediate future – what one is ready for. Similarly, while there may be a relationship, many personnel (especially in the military) are asked to perform regardless of affect or anxiety, and so a more meaningful question would be: “what are you ready for right now?” The ability to gain insights into this “readiness” state would be invaluable to individuals and their managers, for whom planning of actions and responses is often time-limited (for example, 2-to-4 h was typical in Burr, 2018). The other related concept might be self-efficacy (Bandura, 1977; Sherer et al., 1982): which can be applied to specific tasks or broader skill-sets. While clearly relevant to the concept of readiness, especially with reference to roles and skills, we argue that immediate readiness has a more specific focus than the broad judgment of being capable that typically informs self-efficacy.
Likewise, self-efficacy is more likely to be relatively stable over time (Ryckman et al., 1982; Lane et al., 2004; Chesney et al., 2006), indicating broad perceptions of capability, but not reflecting immediate judgments of situational readiness as a function of recent events such as sleep, nutrition, trauma, or physical and mental fatigue. Responding to these concerns, we developed a simple instrument that would be meaningful to users and their immediate line-managers: thus necessitating the use of plain language in both the questions/items and the higher-level constructs.

Present Research

A systematically developed measure of acute readiness, with items that are applicable across diverse professions and job roles, is necessary for timely, informative and psychometrically sound assessments of readiness. We aimed to develop and evaluate the initial evidence of validity for an instrument we called the Acute Readiness Monitoring Scale (ARMS). The ARMS was designed as a multidimensional measure assessing individuals’ perceptions of readiness for imminent challenges, drawing on capabilities from the domains of physical, cognitive, emotional, social, skills-training and equipment. We collected data from personnel across all three phases of the Army’s “Force Generation Cycle1,” spanning diverse roles and ranks, and then conducted two analyses to assess the internal structure (to determine the extent to which the items of a measurement instrument are in line with the construct of interest via factor analyses; Chan, 2014). We also sought to evaluate the correlations of ARMS factors to other related variables. These steps are in accordance with the Standards for Educational and Psychological Testing (The Standards; developed by the American Educational Research Association [AERA] et al., 2014). Additionally, we sought to examine evidence for reliability and discriminant validity of the subscales of the ARMS.

Overview of Study 1

The aim of Study 1 was to first develop a pool of items to assess acute readiness in the form of: (a) overall readiness; (b) physical readiness; (c) cognitive readiness; (d) emotional readiness (termed “readiness for threat-and-challenge” to be more acceptable to the user-group); (e) the social readiness of the unit, group or team; (f) the suitability of one’s training and skills for immediate tasks; and (g) equipment readiness. Second, we set out to evaluate the internal structure, internal consistency, and discriminant validity of the subscale scores of the new measure.

Method – Study 1

Participants

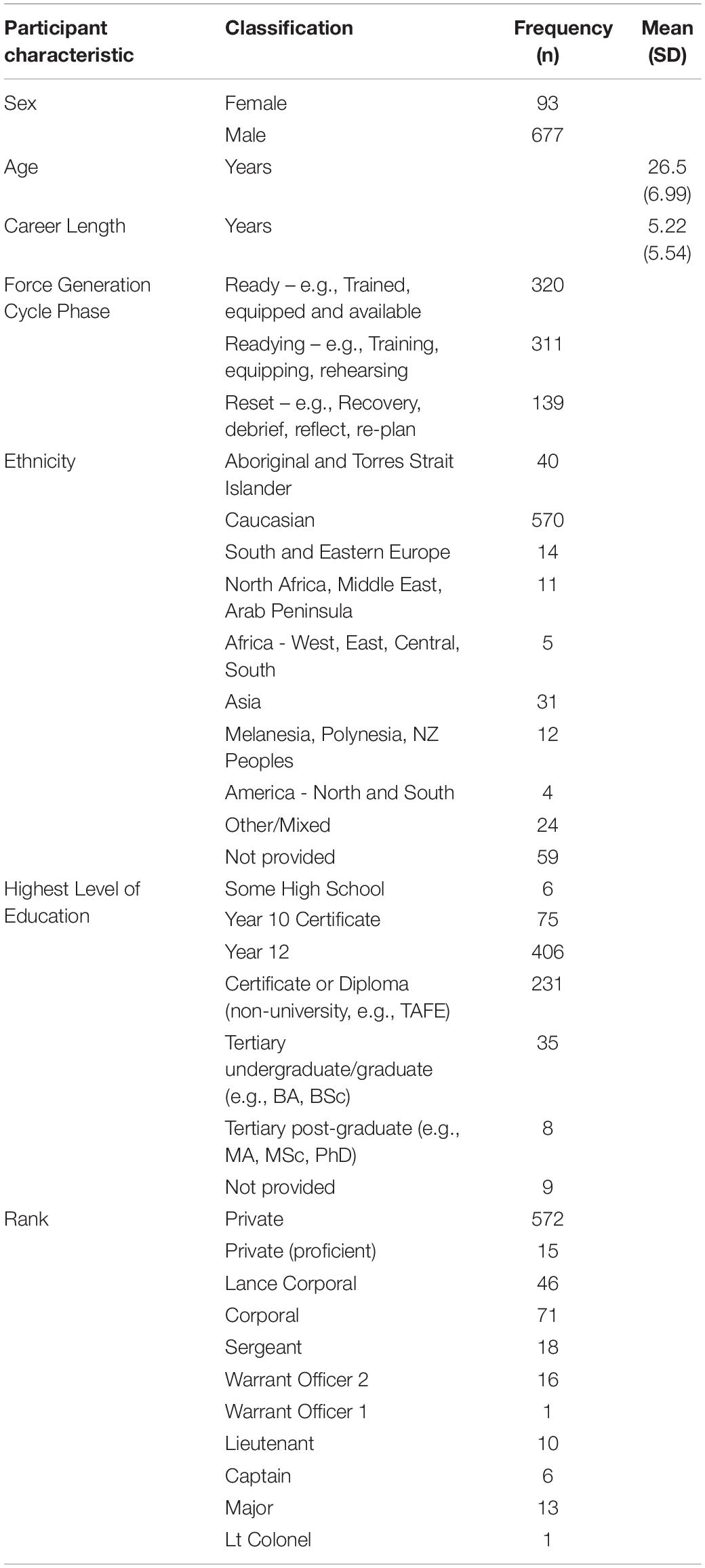

We targeted a sample of five to ten participants per item (Anthoine et al., 2014), acknowledging that Comrey and Lee (1992) asserted that a sample size of 300 is typically viewed as appropriate. The total final sample consisted of 770 Australian Army personnel (Nmale = 677, Nfemale = 93), with a mean age of 26.5 years (SD = 7.0 years). Seven participants declined to participate at the informed consent stage, and any corresponding data were destroyed. One participant spoiled their answers and was excluded. Participants were drawn from all three phases of the “Force Generation Cycle” (Nready = 358; Nreadying = 186; Nreset = 226) and from a wide variety of trades, spanning: infantry, artillery, engineers, signals, chefs, armored divisions, clerks, and more, but not including special forces. Participants were drawn from a wide range of ranks (NPR = 572; NPR(P) = 15; NLCPL = 46; NCPL = 71; NSGT = 18; NWO2 = 16; NWO1 = 1; NLT = 10; NCAPT = 6; NMAJ = 13; NLTCOL = 1). Career length in the Army ranged from 0.5 to 42.5 years (mean = 5.2 years, SD = 5.5 years). Ethnicity was self-reported using the Australian Bureau of Statistics reporting system (Australian Bureau of Statistics, 2016), coded as follows: (a) Aboriginal and Torres Strait Islander (n = 40); (b) Arab, North Africa and Middle East (n = 11); (c) Africa (n = 5); (d) Americas – North and South (n = 4); (e) Asia (n = 31); (f) Caucasian and Western European (n = 570); (g) Melanesia, Polynesia and New Zealand Peoples (n = 12); (h) South and Eastern European (n = 14); (i) Mixed/Other (n = 24); and (j) no answer (n = 59; see Table 1). The total sample was randomly divided into n = 500 for the Exploratory Factor Analysis (Study 1) and n = 270 for the Confirmatory Factor Analysis (Study 2), and this split was checked to ensure no significant differences in composition. The subsequent evaluation of convergent and divergent validity was conducted using the whole sample.
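The random division of the sample into exploratory and confirmatory subsets can be illustrated with a minimal sketch. The paper does not describe how the randomization was performed, so the function name `split_sample`, the seed, and the use of integer participant IDs are all assumptions made purely for illustration.

```python
import random

def split_sample(participant_ids, n_first, seed=2019):
    """Randomly partition IDs into two disjoint subsets (hypothetical helper)."""
    ids = list(participant_ids)
    rng = random.Random(seed)  # assumed seed, fixed only so the split is reproducible
    rng.shuffle(ids)
    return ids[:n_first], ids[n_first:]

# 770 participants split into the EFA subset (n = 500) and the CFA subset (n = 270)
efa_ids, cfa_ids = split_sample(range(770), n_first=500)
print(len(efa_ids), len(cfa_ids))  # 500 270
```

Checking that the two subsets do not differ in composition (for example by phase, rank or sex) would be a separate step that this sketch does not cover.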

Acute Readiness Monitoring Scale

The ARMS items were designed to assess participants’ perceptions of readiness for immediate challenges and tasks that could be demanding physically, cognitively, or emotionally, or that draw on specific skills/training, teamwork and team functioning, or equipment. Items were also developed to provide an overall appraisal of readiness. An initial pool of items was developed based upon the operational definition of acute readiness. These items were then reviewed by the rest of the research team, who made suggestions for improvements and/or proposed alternative items. Items were kept brief (but not single word items), were not double-barreled in syntax, and did not borrow heavily from any one existing measure. Reverse-scored items were included. The content of items was informed by existing self-report measures of readiness, affect, perceived task load, stress-recovery, coping, and fatigue (e.g., stress recovery – Kölling et al., 2015; Task-Load Index – Byers et al., 1989; affect – Watson et al., 1988; wellbeing – Ryff, 1989; self-efficacy – Sherer et al., 1982). The initial item pool is listed in Supplementary File 1. The items were scored on a seven-point Likert scale from zero (does not apply at all) to six (fully applies), and introduced with the phrase “Please answer the following questions in relation to how ready you feel for any upcoming task or challenge.” The 7-point response format is common in sport and performance psychology (e.g., Bartholomew et al., 2011; Ng et al., 2011) and consistent with survey takers’ preferences: performing well in terms of discriminative power (Preston and Colman, 2000). Through this process, our team generated 12–14 items assessing perceptions of each construct, with the intention of selecting approximately four items per factor/subscale in the final product.
The proposed items were subsequently evaluated by a user group (n = 23) and an international panel of experts (n = 7) – with feedback leading us to (for example) de-emphasize resilience concepts (initially a focus from the industry stakeholders), and remove references to “right now” which was already implied in the question stem. We also presented and discussed the proposed approach to a group of senior stakeholders at a workshop held on 8th April 2019.

Procedure

Ethical approval was obtained for both the expert panel consultation (HREC, 1739) and the main data collection (DST LD 08-19 and HREC 2193). Subsequently, task orders were issued from Australian Army Headquarters to make troops available for data collection visits lasting approximately 1 h. Data collection took place in person, at locations across Australia, using paper-and-pen surveys – typically in groups of between 15 and 100 personnel in one sitting. Commanding officers were not required to be present, but some chose to attend and complete the task with their units (see Participants, above). Prior to survey administration, participants were advised of the wider intention of the project to generate a readiness monitoring instrument and shown mock-ups of how such a system could look and be used in practice. Participants were assured that there were no right or wrong responses, reminded of the anonymity of their responses, and encouraged to respond honestly. We recorded the time of day that survey completion began, the most recent task that the participant had completed, and how demanding they found that task, using the NASA Task Load Index (TLX). Only personnel who were on-base at the time of data collection were included. Participation in the study was voluntary, and this was emphasized through the informed consent process as well as in the small presentation preceding the data collection. All participants completed a written informed consent form prior to taking the survey, which was administered in person immediately prior to the data collection. Participants were informed they could return to other tasks if they did not wish to participate, although – as implied above – several chose to complete the survey with their team and then withheld consent for the data to be used.

Data Analyses

Exploratory factor analysis (EFA) identifies the dimensionality of constructs by examining relations between items and factors (Netemeyer et al., 2012). For this reason, EFA is typically performed in the early stages of developing a new or revised instrument (Wetzel, 2012). In this study, seven candidate factors were developed: (a) overall readiness; (b) physical readiness; (c) cognitive readiness; (d) threat-challenge readiness; (e) skills-readiness; (f) group readiness; and (g) equipment readiness. This hypothesized structure was used to inform how we explored the structural pattern of the preliminary scale, along with a scree plot and eigenvalues (Thompson, 2004). Scree plots are useful to estimate where a significant drop occurs in the strength of possible factors (Cattell, 1966; Netemeyer et al., 2003).

We developed the factorial structure of the new measure using EFA, Exploratory Structural-Equation Modeling (ESEM) and CFA. ESEM incorporates aspects of the CFA process, such as specifying item-combinations and relationships, within the exploratory phase of the scale development (Asparouhov and Muthén, 2009). As such, ESEM is useful in clarifying key issues such as cross loading and potential shared error variance before moving to the CFA stage. Statistical analyses were conducted in SPSS (IBM Corp, 2016 – data cleansing, collating emerging factors, checking, internal reliability) and MPlus 8.3 (Muthén and Muthén, 2012 - running models, assessing each factor, model, and fit indices). We worked from the Pearson correlation matrix that is the default in MPlus, and we used oblique “geomin” rotation in line with guidance for EFA (Dien, 2010). For CFA modeling, latent factors were permitted to correlate, with cross-loadings of items on unintended factors being constrained to zero. Similar to CFA, as the analysis progressed into evaluating ESEM models, items could load on their predefined latent factors, while estimating cross loadings and considering this in the development of the evolving model.

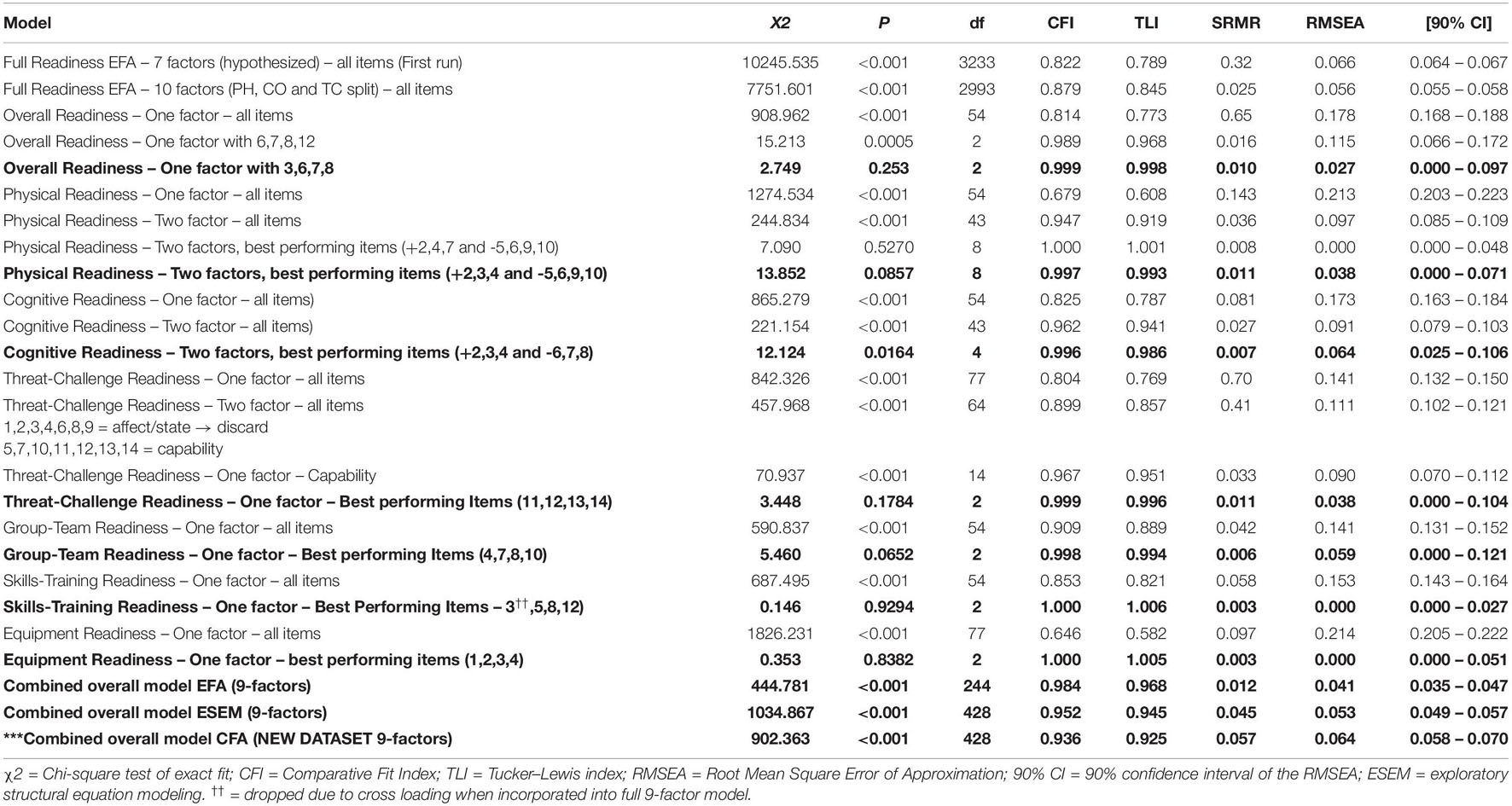

As complete models were evaluated, goodness-of-fit was assessed using the χ2 test (with p-value) and χ2/df (degrees of freedom), Comparative Fit Index (CFI), Tucker-Lewis Index (TLI), Root Mean Square Error of Approximation (RMSEA), and Standardized Root Mean Square Residual (SRMR). Adequate and excellent model-to-data fit was indicated by a non-significant χ2 test or χ2/df < 2.5, CFI and TLI values >0.90 and >0.95, respectively, and RMSEA and SRMR values at or below 0.08 and 0.06, respectively (Hu and Bentler, 1999; Marsh et al., 2004; Hooper et al., 2008). The strength of factor loadings was informed by the recommendations put forth by Comrey and Lee (1992; i.e., > 0.71 = “excellent,” > 0.63 = “very good,” > 0.55 = “good,” > 0.45 = “fair,” < 0.30 = “poor”). The internal consistency of the subscale scores was determined through an assessment of Cronbach’s alpha (Cronbach, 1951). In line with the recommendation by Nunnally (1978), internal consistency estimates > 0.70 were deemed adequate.
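As an illustration of the internal consistency criterion, Cronbach’s alpha for a subscale of k items can be computed as k/(k − 1) × (1 − Σ item variances / variance of the summed score). The sketch below is not the authors’ procedure (they report using SPSS); the function name `cronbach_alpha` and the response data are invented for illustration, and complete data with no missing values are assumed.

```python
from statistics import variance

def cronbach_alpha(rows):
    """Cronbach's alpha for complete data.

    rows: one list per respondent, each holding k item scores.
    """
    k = len(rows[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Sample variance of each item across respondents
    item_vars = [variance([r[i] for r in rows]) for i in range(k)]
    # Sample variance of each respondent's summed subscale score
    total_var = variance([sum(r) for r in rows])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)

# Invented responses to a four-item subscale on the 0-6 response format
demo = [
    [5, 6, 5, 6],
    [2, 3, 2, 2],
    [4, 4, 5, 4],
    [1, 1, 2, 1],
    [6, 5, 6, 6],
]
alpha = cronbach_alpha(demo)
print(round(alpha, 2), "adequate" if alpha > 0.70 else "below 0.70")
```

Reverse-scored items would need to be recoded before being passed to a function like this.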

Results – Study 1

Item Distribution

Prior to the factor analyses, data were scanned for univariate normality regarding the assumption for the use of maximum likelihood estimation method. Median values for skewness and kurtosis for the 88 candidate items were 0.69 and 0.11, respectively, and ranged from −2.21 to 1.36 for skewness, and −1.01 to 5.16 for kurtosis. Two heavily skewed items were removed from the analysis (“I am sick today” and “I am injured today” – both very frequently scored as zero or “zero inflated”). We observed up to 2% missing data in some variables, and so data were analyzed using a robust maximum likelihood estimator (MLR). MLR yields robust fit indices and standard errors in the case of non-normal data and operates well when categorical variables with a minimum of five response categories are employed (Rhemtulla et al., 2012; Bandalos, 2014).
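The kind of distributional screening described above can be sketched with moment-based skewness and excess kurtosis. This is an assumption-laden illustration: statistical packages such as SPSS report bias-adjusted estimators, so their values differ slightly from these formulas, and the two example items are invented (one roughly symmetric, one “zero inflated” like the sickness and injury items).

```python
def skew_kurtosis(values):
    """Return (skewness, excess kurtosis) from central moments."""
    n = len(values)
    mean = sum(values) / n
    m2 = sum((v - mean) ** 2 for v in values) / n
    if m2 == 0:
        raise ValueError("item has no variance")
    m3 = sum((v - mean) ** 3 for v in values) / n
    m4 = sum((v - mean) ** 4 for v in values) / n
    return m3 / m2 ** 1.5, m4 / m2 ** 2 - 3.0

# Invented item responses on the 0-6 response format
symmetric_item = [0, 1, 2, 3, 4, 5, 6]
zero_inflated_item = [0] * 18 + [1, 6]  # almost everyone answers zero

for name, item in [("symmetric", symmetric_item),
                   ("zero inflated", zero_inflated_item)]:
    skew, kurt = skew_kurtosis(item)
    print(f"{name}: skewness = {skew:.2f}, excess kurtosis = {kurt:.2f}")
```

The zero-inflated item produces a large positive skew, which is the pattern that would prompt an item to be reviewed for removal.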

Configurations of the Proposed Factors

The exploratory factor analysis process, using the subset of n = 500 respondents, began with an initial analysis run to obtain eigenvalues for each factor in the data. Next, the Kaiser-Meyer-Olkin (KMO) Measure of Sampling Adequacy (KMO = 0.962) and Bartlett’s Test of Sphericity (χ2(3655) = 37578.3, p < 0.001) were employed to determine that the data collected were appropriate for an exploratory factor analysis. Having established the data were suitable for EFA, we continued to explore the data. The initial analysis suggested 13 factors with an eigenvalue over one, whereas the scree plot suggested a firm transition (“scree”) after nine factors. As such, we used MPlus to generate factor solutions between 7 and 14 factors, and reviewed the solutions in relation to: (a) the hypothesized factor structure; (b) feedback from the expert panel (prioritizing conceptual clarity); and (c) user-group workshop and stakeholder inputs (for example prioritizing interpretability, brevity and parsimony). By reviewing the multiple factor solutions simultaneously, we recorded which items consistently loaded together, and which items consistently cross-loaded, or failed to load meaningfully. Items largely loaded consistently into the expected factors, but two proposed factors in particular – physical readiness and cognitive readiness – contained two clusters of items loading meaningfully onto separate factors, which we interpreted as distinguishing between “readiness” and “fatigue.” We investigated this development further using the process detailed below. The proposed threat-challenge factor split into items clearly pertaining to “readiness” alongside others capturing simply “affect,” leading these latter items to be discarded as they were considered inconsistent with the targeted emphasis on performance capability.
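Two of the quantities reported above can be checked with simple arithmetic. Bartlett’s test has p(p − 1)/2 degrees of freedom for p items, so the reported df of 3655 appears consistent with p = 86 (the 88 candidate items minus the two removed for skew). The sketch below also shows the standard form of the test statistic and the eigenvalue-over-one count; the function names, the log-determinant value and the eigenvalues are hypothetical placeholders, not values from the study.

```python
def bartlett_sphericity(n, p, log_det_r):
    """Return (chi_square, df) for Bartlett's test of sphericity.

    n: sample size; p: number of items;
    log_det_r: natural log of the determinant of the p x p correlation matrix.
    """
    chi_square = -((n - 1) - (2 * p + 5) / 6) * log_det_r
    df = p * (p - 1) // 2
    return chi_square, df

def kaiser_count(eigenvalues):
    """Number of factors with an eigenvalue over one."""
    return sum(1 for ev in eigenvalues if ev > 1.0)

# log_det_r is a placeholder; only the degrees of freedom are being checked here
_, df = bartlett_sphericity(n=500, p=86, log_det_r=-1.0)
print(df)  # 3655

# Hypothetical eigenvalues, for illustration only
print(kaiser_count([12.4, 3.1, 1.8, 1.02, 0.97, 0.6]))  # 4
```

The eigenvalue-over-one rule tends to suggest more factors than a scree plot, which matches the pattern the authors describe (13 versus nine).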

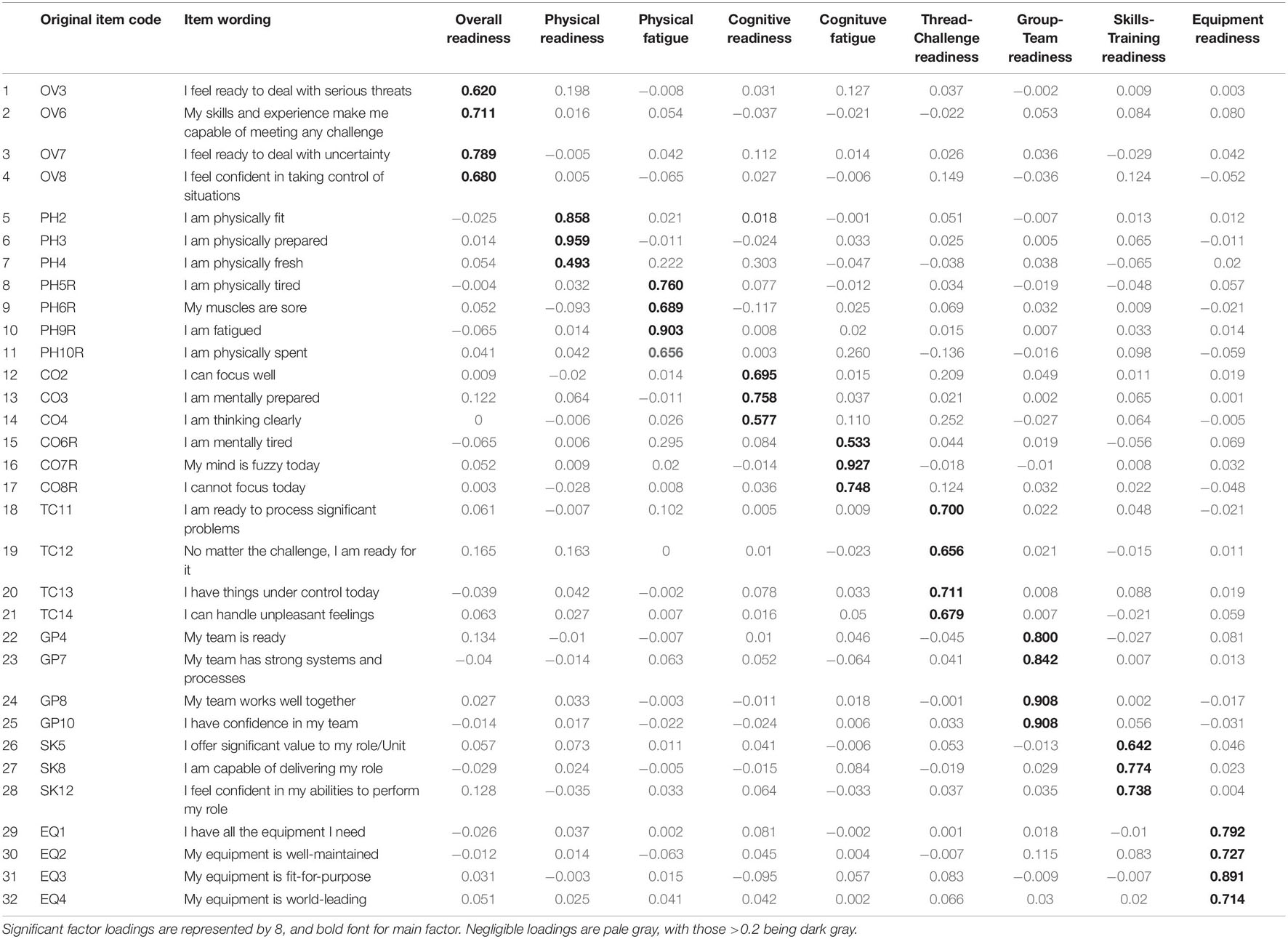

At this stage, we examined each of the hypothesized factors using EFA, ESEM and CFA to systematically remove problematic items, and then re-ran the resulting ESEM model with the best performing items. For these analyses, we included CFA steps as they were helpful in comparing models and selecting items with strong primary factor loadings to ultimately inform the final ESEM model (see also, Bhavsar et al., 2020). Model misspecification was identified through assessments of standardized factor loadings and modification indices, in a manner similar to item reduction approaches used in previous scale development procedures (e.g., Rocchi et al., 2017). Alongside these statistical criteria, we also considered the conceptual coverage of the items. Items with standardized factor loadings below 0.30, as well as items with multiple (two or more) moderate-sized or large modification indices (over 10), were reviewed and considered for deletion. As such, 56 of the 88 items were deleted in a systematic manner over several iterations. The resulting nine one-factor models each had excellent fit (see Table 2). After removing one item that cross-loaded (SK3), the revised combined/overall model demonstrated good fit [χ2 (428) = 1034.867, p < 0.001; χ2/df = 2.4; CFI = 0.95; TLI = 0.95; SRMR = 0.05; RMSEA = 0.05 (90% CI: 0.05, 0.06)]. The result of the EFA in Study 1 was a refined measure that included 32 items arranged into nine factors (see Table 3).
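The statistical part of the item-review rule described above can be sketched as a simple filter. This is a minimal illustration, assuming the two stated thresholds (loading below 0.30; two or more modification indices over 10); the function name, item labels and values are hypothetical, and in the study flagged items were also judged on conceptual coverage rather than deleted automatically.

```python
def flag_items(loadings, modification_indices, min_loading=0.30, mi_cutoff=10.0):
    """Return items to review: weak primary loading, or two or more large MIs."""
    flagged = []
    for item, loading in loadings.items():
        large_mis = [mi for mi in modification_indices.get(item, []) if mi > mi_cutoff]
        if abs(loading) < min_loading or len(large_mis) >= 2:
            flagged.append(item)
    return flagged


# Hypothetical item labels and values, for illustration only.
loadings = {"item_a": 0.72, "item_b": 0.24, "item_c": 0.65, "item_d": 0.58}
modification_indices = {
    "item_a": [3.1],
    "item_c": [14.2, 11.7],  # two modification indices over 10
    "item_d": [12.5],        # only one, so not flagged on this criterion
}

print(flag_items(loadings, modification_indices))  # ['item_b', 'item_c']
```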

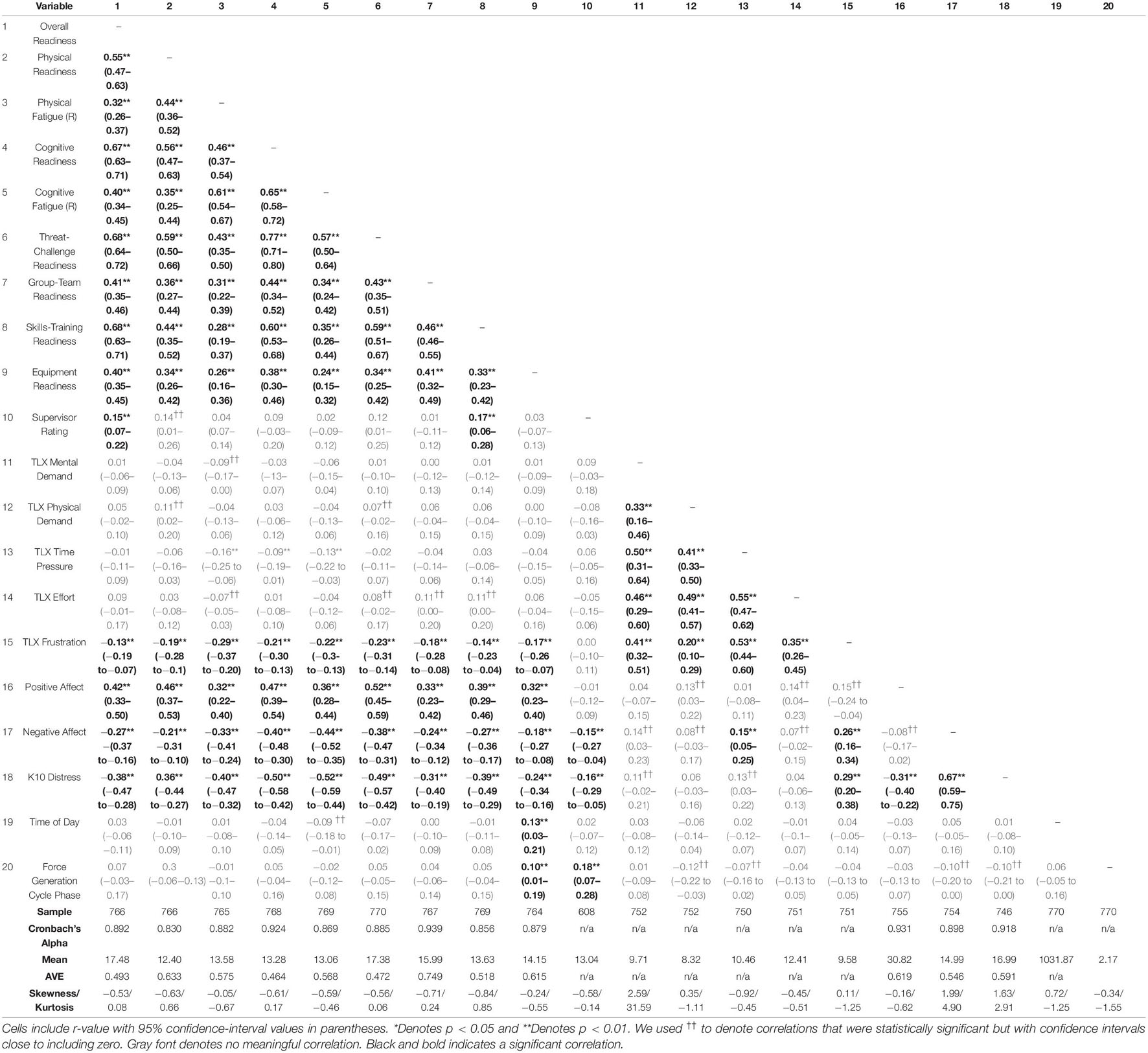

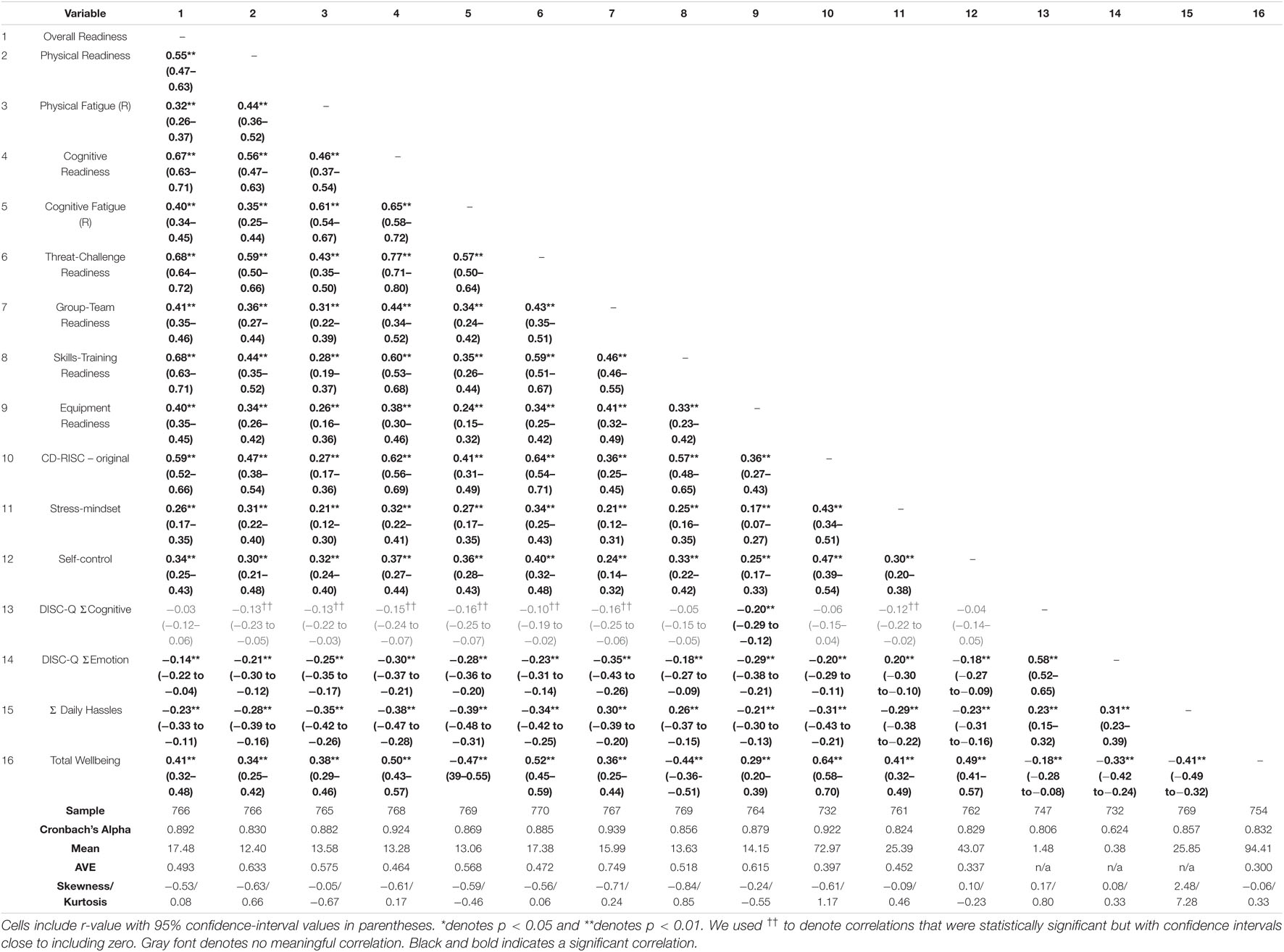

Standardized factor loadings were significant and above 0.30 (range 0.49 to 0.90; see Table 3). Four cross-loadings greater than 0.20 on unintended factors were present, but these were not viewed as a concern warranting deletion of the items (see also Bhavsar et al., 2020). Subscale correlations ranged from 0.26 to 0.77 and were in the expected directions (see Tables 4, 5). Cronbach’s alpha reliability coefficients, which were over 0.70 for all factors, are also reported in Tables 4, 5.
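For readers less familiar with the reliability coefficient reported here, the following sketch computes Cronbach’s alpha from raw item scores using the standard formula, α = k/(k − 1) × (1 − Σ item variances / variance of total scores). The function name and the response matrix are hypothetical and are not data from the study.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    k = len(rows[0])
    item_variances = [variance([row[i] for row in rows]) for i in range(k)]
    total_variance = variance([sum(row) for row in rows])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical responses (5 respondents x 3 items), not data from the study.
responses = [
    [1, 2, 1],
    [2, 2, 3],
    [3, 4, 3],
    [4, 4, 5],
    [5, 5, 4],
]
alpha = cronbach_alpha(responses)
print(round(alpha, 3))  # 0.936
```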

Table 4. Correlation table for concurrent and discriminant validity of study 2 – acute and short-term correlates.

Table 5. Correlation table for concurrent and discriminant validity of study 2 – traits and long-term correlates.

Overview of Study 2

The aims of Study 2 were: first, to test the revised items and factor structure from Study 1 with a previously unused sample (n = 270 from the original sample of 770); and second, to test the nomological network of the readiness scale, by examining its relations with indicators of recent stress and demands, as well as protective traits such as resilience and self-control. Based on the theoretical rationale that resilience represents an array of traits that mitigate the effects of stress and demand on current readiness (e.g., Rutter, 1987; Connor and Davidson, 2003), we proposed that: (a) job demands, daily hassles, and perceived load in the most recent task would influence current readiness; and (b) current readiness would also be influenced by mitigating traits including resilience, stress mindset and self-control. We further predicted that (c) current readiness should be correlated with current affect, current distress, and a supervisor’s rating of current soldier readiness. Specifically, perceived readiness should be positively correlated with positive affect and supervisor’s ratings of soldier readiness, but negatively correlated with reported levels of distress and negative affect.

Method – Study 2

Confirmatory Factor Analysis (CFA) was conducted on the remaining sample of 270 participants, randomly separated from the data used in Study 1. Subsequently, using the full 770 participants, correlational analysis was conducted using Pearson correlations, their 95% bootstrapped confidence intervals (CI), and the average variance extracted (AVE) of the factors, examining relations between: (a) ARMS factors; (b) recent sources of stress and demand (DISC-Q scores, Daily-Hassles and NASA-TLX); (c) resilience promoting traits (CD-RISC, Stress Mindset, Self-Control); (d) other current indicators of readiness including the K10 distress scale and PANAS affect score; and (e) a single item Visual Analog Scale rating of each soldier’s readiness by their current supervisor (see Tables 4, 5).
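A bootstrapped confidence interval for a Pearson correlation can be sketched as follows. This is a minimal percentile-bootstrap illustration, assuming resampling of respondents with replacement; the source does not specify the bootstrap variant, the number of resamples or the software used, so those choices, the function names and the example data are all assumptions.

```python
import math
import random


def pearson_r(xs, ys):
    """Pearson correlation; returns None if either variable has zero variance."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        return None
    return sxy / math.sqrt(sxx * syy)


def bootstrap_ci(xs, ys, n_boot=2000, level=0.95, seed=1):
    """Percentile bootstrap CI for r, resampling respondents with replacement."""
    rng = random.Random(seed)
    n = len(xs)
    estimates = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        r = pearson_r([xs[i] for i in idx], [ys[i] for i in idx])
        if r is not None:  # skip degenerate resamples with no variance
            estimates.append(r)
    estimates.sort()
    tail = (1 - level) / 2
    lower = estimates[int(tail * len(estimates))]
    upper = estimates[int((1 - tail) * len(estimates)) - 1]
    return lower, upper


# Hypothetical scores for 30 respondents, not data from the study.
xs = list(range(30))
ys = [x + 5 * ((7 * x) % 5 - 2) for x in xs]

r = pearson_r(xs, ys)
lower, upper = bootstrap_ci(xs, ys)
print(round(r, 3), lower, upper)
```

An interval that excludes 0 would count toward concurrent validity under the criteria used in this study, and one that excludes 1 and −1 toward discriminant validity.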

Measures

We selected a range of measures to assess convergent and discriminant validity of the newly developed ARMS. In the following paragraphs, we report the Cronbach’s alpha reliability from the original validation or a cited revalidation; for internal reliability scores in the current study, see Tables 4, 5. To assess recent stress and load experienced by participants, we included three measures: (a) chronic load using the 22-item Demand-Induced Strain Compensation Questionnaire to assess both cognitive and emotional occupational strain (Bova et al., 2015; α = 0.55–0.78); (b) recent low-level stresses using the 117-item Daily Hassles Scale (Kanner et al., 1981; Holm and Holroyd, 1992; α = 0.80–0.88); and (c) perceptions of how demanding the most recent task was – in terms of mental demand, physical demand, time-pressure, frustration and effort – using the NASA Task Load Index (NASA-TLX – Hart and Staveland, 1988; Xiao et al., 2005; α > 0.80).

To assess traits that protect performance capability from the effects of stress (i.e., resilience), we used: (a) the 25-item Connor-Davidson Resilience Scale (CD-RISC – Connor and Davidson, 2003; α = 0.89); (b) the 8-item Stress Mindset scale assessing the degree to which respondents view stress and challenge as developmental experiences versus detrimental (Crum et al., 2013; α = 0.86); (c) the 13-item Self-Control Scale (Tangney et al., 2004; α = 0.85); and (d) subjective wellbeing, which we hypothesized would be protective against stress and hassles in promoting acute readiness and capability. For this we used the Ryff and Keyes (1995) 18-item psychological wellbeing scale (α = 0.33–0.56).

To assess other potential indices of acute readiness, we assessed: (a) affective state, using the Positive and Negative Affect Schedule (PANAS – Watson et al., 1988; α = 0.87–0.88); (b) 30-day distress using the Kessler 10-item Distress Scale (K10 – Sampasa-Kanyinga et al., 2018; α = 0.88); and (c) a rating by the supervisor, using a Visual Analog Scale (VAS) from 1 to 20 (1 = “Not ready for likely duties”; 10 = “Suitably ready for likely duties”; 20 = “Beyond capable in relation to likely duties”). This VAS was developed specifically for this study. Participants gave additional consent for the supervisor’s rating to be sought.

Analysis

Concurrent validity would be demonstrated by a significant correlation (p < 0.05 and confidence intervals not including 0) between corresponding factors (e.g., overall readiness and positive affect). Discriminant validity would be supported when two criteria were met: (1) the 95% CI of the correlation between ARMS factors and the other factors did not include 1 or −1 (Bagozzi et al., 1991; Chan et al., 2018); and (2) either the Average Variance Extracted (AVE) of each factor was larger than its shared variance (i.e., square of correlation) with the other factors (Fornell and Larcker, 1981), or a visual inspection of the scatter plot between the two variables did not suggest any linear or curvilinear relationship.
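The AVE comparison in criterion (2) can be sketched as below. AVE is computed here in the common way, as the mean of the squared standardized loadings of a factor’s items, which the source does not spell out; the function names, loadings and correlations are hypothetical values for illustration only.

```python
def average_variance_extracted(loadings):
    """AVE: mean of the squared standardized loadings of a factor's items."""
    return sum(loading ** 2 for loading in loadings) / len(loadings)


def fornell_larcker_ok(ave_a, ave_b, correlation):
    """True when both AVEs exceed the shared variance (squared correlation)."""
    shared_variance = correlation ** 2
    return ave_a > shared_variance and ave_b > shared_variance


# Hypothetical standardized loadings for two factors.
ave_a = average_variance_extracted([0.6, 0.7, 0.8])
ave_b = average_variance_extracted([0.7, 0.8, 0.9])

print(round(ave_a, 4), round(ave_b, 4))
print(fornell_larcker_ok(ave_a, ave_b, 0.50))  # True: shared variance is 0.25
print(fornell_larcker_ok(ave_a, ave_b, 0.75))  # False: shared variance is 0.5625
```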

Results – Study 2

Using CFA, the model specified in Study 1 showed acceptable fit to the new data [χ2 (428) = 902.363, p < 0.001; χ2/df = 2.1; CFI = 0.94; TLI = 0.93; SRMR = 0.06; RMSEA = 0.06 (90% CI: 0.06, 0.07)]. These fit indices are similar to those from Study 1, and are broadly interpreted as on the boundary between “acceptable” and “good” model fit. Given the intended implementation by the stakeholders, we agreed that no further refinements were necessary to improve model fit at this stage.
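Two of the reported fit indices follow directly from the chi-square statistic, and the sketch below recomputes them for both studies. It uses the textbook RMSEA point estimate, sqrt(max(χ2 − df, 0) / (df × (N − 1))), and assumes subsample sizes of 500 (Study 1) and 270 (Study 2). Robust estimation may apply a scaling correction that this formula ignores, so this is a plausibility check rather than a reproduction of the original output; the function names are hypothetical.

```python
import math


def chi_square_ratio(chi2, df):
    """Normed chi-square: the model chi-square divided by its degrees of freedom."""
    return chi2 / df


def rmsea(chi2, df, n):
    """Point estimate of RMSEA from the model chi-square (uncorrected formula)."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


# Chi-square values and df as reported in the text; n is the assumed subsample size.
study_1 = {"chi2": 1034.867, "df": 428, "n": 500}
study_2 = {"chi2": 902.363, "df": 428, "n": 270}

for label, fit in (("Study 1", study_1), ("Study 2", study_2)):
    ratio = chi_square_ratio(fit["chi2"], fit["df"])
    estimate = rmsea(fit["chi2"], fit["df"], fit["n"])
    print(label, round(ratio, 1), round(estimate, 2))
```

Under these assumptions the recomputed values round to 2.4 and 0.05 for Study 1, and 2.1 and 0.06 for Study 2, in line with the reported indices.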

For concurrent validity, ARMS factors were positively associated with each other, as well as with positive affect, while negatively correlating with K10-distress and negative affect (see Table 4). For discriminant validity, the 95% CI of the correlations between the ARMS factors and most aspects of the NASA-TLX spanned zero, consistent with the notion that recent task-load alone is not a sufficient predictor of immediate readiness. Nevertheless, TLX-frustration demonstrated small correlations with ARMS subscales, suggesting that the emotional experience of frustration may be more relevant in determining perceptions of immediate readiness than other forms of task-load (see Table 4). Similarly, DISC-Q scores for emotional strain were negatively correlated with ARMS factors, although DISC-Q cognitive strain showed only small correlations. Thus, emotional load and emotional strain seem to be more promising indicators of readiness perceptions than recent cognitive load and/or strain. As expected – noting that fatigue items were reverse coded – all ARMS subscales were positively correlated with the resilience-promoting traits of resilience (CD-RISC), stress-mindset, self-control, and psychological wellbeing, while being negatively correlated with the total severity of recent hassles. ARMS subscales were positively correlated with positive affect, and negatively correlated with negative affect and K10-distress, while meeting the criteria to demonstrate discriminant validity. The final Cronbach’s alpha for the overall ARMS scale was 0.949.

Discussion

We developed and validated a psychometric instrument suitable for assessing acute readiness: the ARMS. The intent behind this tool was to facilitate rapid, reliable indications from personnel themselves of current individual and group capabilities for immediate tasks, as well as the ability to monitor how individuals and groups respond to training and deployment challenges. One data sample, collected from Australian Army personnel, was divided into two analyses, with findings supporting the key aspects of construct validity in this new psychometric tool.

Summary of Findings

In Study 1, a total of 32 items for the ARMS received support in the forms of user-group endorsement, expert panel clarification, and then the demonstration of a factor structure that was largely consistent with expectations, including good model fit indices. The inclusion of four items to indicate “overall readiness” as a brief scale helps to facilitate the relatively un-intrusive monitoring of day-to-day readiness. The factor structure was kept simple, with no modeling beyond the nine factors themselves and no sharing of error variances. In Study 2, the factor structure developed in Study 1 was supported, showing acceptable fit in a fresh sample. Further, the concurrent validity of the ARMS was evaluated by examining the correlations between subscales, as well as with targeted constructs such as recent task-load, time-of-day, affect, distress and supervisor-ratings of readiness. The subscales of the ARMS showed small-to-moderate intercorrelations, as might be expected, and were also moderately associated with affect and K10-distress. The subscales “overall readiness” and “equipment readiness” showed small but statistically significant correlations with the supervisor’s rating of readiness. Most aspects of recent task-load were not associated with ARMS scores, although frustration in the most recent task was consistently correlated with ARMS scores. Additionally, ARMS subscales were largely correlated with recent stress, as indicated by severity of recent “daily hassles” and evaluations of occupational emotional demands (DISC-Q – De Jonge and Dormann, 2003). The initial validation of a psychometric tool for monitoring readiness across a range of contexts and job-roles provides the basis for further cross-sectional and longitudinal evaluations.

The main divergence from expectations was the emergence of separate “readiness” and “fatigue” factors under both the “physical” and “cognitive” subscales. While correlated, these items could not be modeled within single factors: i.e., they were not two ends of the same spectrum. A similar observation was made in a recent study of exercise readiness (Strohacker and Zakrajsek, 2016), with “freshness” and “fatigue” modeled as separate factors. Informal reviews with the user-group supported the interpretation that – while perhaps counter-intuitive – one can be fatigued by recent commitments and yet still “ready-to-go-again.” Likewise, one can feel neither fresh nor fatigued. As such, a respondent could indicate physical or mental readiness-versus-fatigue to be any configuration of: (a) high:high; (b) high:low; (c) low:high; or (d) low:low. In the studied population – perhaps faced with frequent combinations of physical and mental load, as well as certain cultural norms around admitting to weakness or vulnerability – it is possible that the observed pattern is unique to the military, but that would largely support the need for further research to assess the suitability of the ARMS for different contexts. Upon returning to the wider literature, however, we did find examples of this pattern in other research. For example, Boolani and colleagues have characterized different correlates of both trait (Boolani and Manierre, 2019) and state fatigue-versus-energy (Boolani et al., 2018), as well as different responses to physical activity (Boolani et al., 2021). As such, our findings may be adding to a growing awareness that the experiences of energy and fatigue may be separate.

Comparison to Previous Findings

We developed the ARMS as a highly usable and easily interpreted scale. In comparison to the bureaucratic-administrative exercise of monitoring training, equipment servicing and medical reporting processes (cf. Dabbieri, 2003), the ARMS represents a new and complementary opportunity. This new psychometric instrument allows leaders/managers to quickly request validated, meaningful readiness scores from an individual, team or wider group: for example, in rapidly planning a new project, tender or crisis response. Not only would the resulting scores from the ARMS be almost immediate and in a consistent format, they would be provided by personnel with direct access to the relevant information. Further, information provided from the ARMS is readily integrated, synthesized and interpreted. These are all advancements complementing the existing management practices, which rely on finding, studying and synthesizing information from a wide range of records that are generated infrequently and stored in different formats. Further, in comparison to the data from wearable technology that monitors physiological signs such as heart-rate variability, sleep or physical activity logs (cf. Domb, 2019; Seshadri et al., 2019), data from the ARMS should be more readily interpreted with minimal additional processing, and more readily collated at the group level to give information that planners and decision-makers may need. For example, information about lack of sleep or sustained physical exertion during operations may simply represent quite typical role requirements from long-distance travel or night-shift work, and so may tell the receiver little about the individual or group’s capabilities for further actions. Likewise, in a situation with limited connectivity and/or constraints on battery usage, the ARMS could be completed quickly using pen and paper, then collated and interpreted in situ, without computational processing.

While previous psychometric instruments have been developed, the ARMS is arguably the only instrument to balance brevity (i.e., 4 core items + 28 expansion items) with capturing the multidimensional nature of acute readiness, across various roles and performance capabilities (cf. Bester and Stanz, 2007). Existing measures have either focused on specific aspects of readiness (e.g., cognitive readiness – Grier, 2012; exercise readiness – Strohacker and Zakrajsek, 2016; return from injury readiness – Conti et al., 2019) or broad overall appraisals of self-efficacy or anxiety. These measures have a much higher-ratio of items-per-component, representing a higher burden on respondents. Combining multiple different scales is also less suitable for immediate integration into a multidimensional model representing acute readiness. Similarly, psychometric measures of recent hassles, stress recovery, self-efficacy, affect, or anxiety are typically either: (a) too long; (b) not specific enough to be used and interpreted by users (i.e., broader academic concepts); (c) focused on past events, not the immediate here-and-now; or (d) some combination of a-c. The ARMS is the only instrument designed to be brief, readily interpreted by users themselves, and specifically focused on acute readiness.

Next Steps

We recognize that the scale may require further validation in other contexts and groups in order to assess its generalizability. The sample in this study was relatively young, well-educated and predominantly Caucasian, which may necessitate caution in seeking to apply these findings in other contexts/populations. Likewise, the emergence of physical and mental factors in “readiness” and “fatigue” warrants further examination in future research, although it is consistent with at least one previous study (Strohacker and Zakrajsek, 2016). Further, while the ARMS has been developed to be implemented into a regular program of monitoring, for example through an app or regular reporting practices, it has not been tested in that context. Hence, it would be important to determine how the instrument behaves when completed frequently, and whether there are ways of optimizing the utility of the instrument – for example through carefully managing how often, at what time-of-day, and after what events each subscale should be completed. This type of research would allow for the estimation of test-retest reliability, in circumstances where consistent scores would be expected, and also sensitivity to changes. While one aim of developing the ARMS was to facilitate a novel method for evaluating resilience – by observing acute psychological responses to challenges, adversity and training activities – the next logical step would be to evaluate the extent to which the instrument enables this evaluation. Future research assessing the use of the ARMS as an indicator of resilience is needed and would require repeated administration of the ARMS before and after a defined challenging event, or series of events.

Limitations

The entire sample in this study included military personnel who were “on-base,” and so much less likely to be experiencing the fatigue generated by sustained operations and time away-from-home (or, for example, the fatigue caused by working from home over an extended period). Hence, it would be important to test the ARMS under diverse circumstances – both more civilian-relevant professions and also in more demanding military circumstances: potentially evolving items or factors to capture the effects of those activities. Finally, through the conduct of this study including talking to respondents and reviewing qualitative comments, coupled with the literature reviewed in preparing the ARMS, it is possible that there are other dimensions of readiness not assessed in the current measure. These forms might include social support from family and friends, rather than focusing on one’s unit or team, and also behavioral readiness such as having good routines throughout one’s day, or good diet and sleep patterns.

Conclusion

Overall, therefore, the present study has developed and provided initial validation of an instrument to assess acute multidimensional readiness with a focus on performance capability. The resulting instrument offers many opportunities both to resolve or circumvent limitations in other measures, and to open up new avenues of research in further refining the scale and optimizing its implementation in real-world settings. Furthermore, the development of the ARMS has advanced the conceptual understanding in this topic, by identifying a potentially important distinction between readiness as “freshness” versus “fatigue” as separate but correlated constructs. In addition to facilitating a new avenue of research on acute readiness itself – a previously under-researched concept – the development of the ARMS opens up opportunities to resolve real-world problems around readiness-monitoring, with implications for inferring resilience beyond cross-sectional trait measures, and potential to be adopted and applied in other contexts such as sport, occupational settings and health-monitoring. Finally, by offering the ability to capture subjective perceptions of readiness, the new scale also may facilitate research on how wearable technology and objectively measured indices relate to these subjective perceptions, and how this “nexus” of different forms of information might be useful in managing training, performance and recovery in a wide array of performance contexts. Overall, the ARMS makes it possible to assess acute readiness, for the group and individual, in a way that is un-intrusive and easily interpreted, yet psychometrically validated. As such, numerous new possibilities open up following the development of this scale.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics Statement

The studies involving human participants were reviewed and approved by Defense (Australia) Human Research Ethics Committee (Low Risk) and University of Canberra Human Research Ethics Committee. The patients/participants provided their written informed consent to participate in this study.

Author Contributions

RK conceptualized the project alongside co-authors and stakeholders, collected and analyzed data, and prepared the written report. AF, BR, and MS provided important conceptual and methodological inputs in designing, implementing and interpreting the study (BR also participated in data collection). TN and MW are statisticians who provided critical oversight in planning, implementing and interpreting the analysis of data. LM was the Army liaison who ensured access to data collection opportunities and oversaw the secure collection and storage of data, as well as assisting in design and interpretation phases of the project. DC was the lead contributor from Defense Science Technology Group, playing a crucial role in the design, planning and facilitation of the study: spanning conceptualization, ethical approvals, methods and the planning of data collection. All authors contributed to the article and approved the submitted version.

Funding

This research was funded by a grant from Defence Science Technology, Australia, as part of the “Human Performance Research Network” (“HPRnet” – Award ID: 2016000167).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

The authors wish to acknowledge important contributions from Amy Eades and Peter Howard, who assisted in the inputting of data from paper forms, and Jonathan Upshall who assisted in the data collection process. Dev Roychowdhury was involved in the development of the questionnaire items. Eugene Aidman offered important inputs at the early stages of conceiving this project, as well as advice in refining the ethics application. Further, in addition to the extensive support from LTCOL Lee Melberzs (author), LTCOL Bevan McDonald reviewed, advocated, and facilitated the project and was invaluable in making this research possible.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2021.738609/full#supplementary-material

Footnotes

- The Australian Army, like many other armed forces, enacts a training cycle (the “Force Generation Cycle”): rotating personnel through three phases of Ready, Readying, and Reset. Each phase comes with different expectations, activities, and support requirements (Australian Army, 2014). While adopting this cyclical approach facilitates a sound balance of training, recovery and active duties, the Australian Army subsequently identified an opportunity to optimize and individualize activities within this cycle (Gilmore, 2016) – which precipitated the current research project.

References

Adams, B. D., Hall, C. D. T., and Thomson, M. H. (2009). Military Individual Readiness. État de Préparation Militaire de L’individu (DRDC No. CR-2009-075). Ottawa, ON: Department of National Defence.

Agaibi, C. E., and Wilson, J. P. (2005). Trauma, PTSD, and resilience: a review of the literature. Trauma Viol. Abuse 6, 195–216. doi: 10.1177/1524838005277438

American Educational Research Association [AERA], American Psychological Association [APA], and National Council on Measurement in Education [NCME] (2014). Standards for Educational and Psychological Testing. Washington, DC: American Educational Research Association.

Anthoine, E., Moret, L., Regnault, A., Sébille, V., and Hardouin, J. B. (2014). Sample size used to validate a scale: a review of publications on newly-developed patient reported outcomes measures. Health Qual. Life Outcomes 12, 1–10. doi: 10.1186/s12955-014-0176-2

Apiecionek, L., Kosowski, T., Kruszynski, H., Piotrowski, M., and Palka, R. (2012). “The concept of integration tool for the civil and military service cooperation during emergency response operations,” in Proceedings of the 2012 Military Communications and Information Systems Conference, MCC 2012, New York, NY.

Asparouhov, T., and Muthén, B. (2009). Exploratory structural equation modeling. Struct. Equ. Model. 16, 397–438. doi: 10.1080/10705510903008204

Australian Army (2014). Chief of Army Opening Address to Land Forces 2014, Army, September 24, 2014. Available online at: https://www.army.gov.au/our-work/speeches-and-transcripts/chief-of-army-opening-address-to-land-forces-2014 (accessed February 6, 2018).

Australian Bureau of Statistics (2016). 2016 Census QuickStats. Canberra: Australian National Government.

Bagozzi, R. P., Yi, Y., and Phillips, L. W. (1991). Assessing construct validity in organizational research. Admin. Sci. Q. 36, 421–458. doi: 10.2307/2393203

Baig, M. M., Gholam-Hosseini, H., Moqeem, A. A., Mirza, F., and Lindén, M. (2017). A systematic review of wearable patient monitoring systems – current challenges and opportunities for clinical adoption. J. Med. Syst. 7, 109–115. doi: 10.1007/s10916-017-0760-1

Bakker, A. B., and Demerouti, E. (2007). The job demands-resources model: state of the art. J. Manag. Psychol. 22, 309–328. doi: 10.1108/02683940710733115

Bakker, A. B., Demerouti, E., and Euwema, M. C. (2005). Job resources buffer the impact of job demands on burnout. J. Occupat. Health Psychol. 10, 170–180. doi: 10.1037/1076-8998.10.2.170

Bakker, A. B., Hakanen, J. J., Demerouti, E., and Xanthopoulou, D. (2007). Job resources boost work engagement, particularly when job demands are high. J. Educ. Psychol. 99, 274–284. doi: 10.1037/0022-0663.99.2.274

Bakker, A. B., van Veldhoven, M., and Xanthopoulou, D. (2010). Beyond the demand-control model: thriving on high job demands and resources. J. Pers. Psychol. 9, 3–16. doi: 10.1027/1866-5888/a000006

Bandalos, D. L. (2014). Relative performance of categorical diagonally weighted least squares and robust maximum likelihood estimation. Struct. Equ. Model. 21, 102–116. doi: 10.1080/10705511.2014.859510

Bandodkar, A. J., and Wang, J. (2014). Non-invasive wearable electrochemical sensors: a review. Trends Biotechnol. 32, 363–371. doi: 10.1016/j.tibtech.2014.04.005

Bandura, A. (1977). Self-efficacy: toward a unifying theory of behavioral change. Psychol. Rev. 84, 191–215. doi: 10.1037/0033-295X.84.2.191

Bartholomew, K. J., Ntoumanis, N., Ryan, R. M., and Thøgersen-Ntoumani, C. (2011). Psychological need thwarting in the sport context: assessing the darker side of athletic experience. J. Sport Exerc. Psychol. 33, 75–102. doi: 10.1123/jsep.33.1.75

Bester, P. C., and Stanz, K. J. (2007). The conceptualisation and measurement of combat readiness for peace-support operations – an exploratory study. SA J. Industr. Psychol. 33, 68–78. doi: 10.4102/sajip.v33i3.398

Bhavsar, N., Bartholomew, K. J., Quested, E., Gucciardi, D. F., Thøgersen-Ntoumani, C., Reeve, J., et al. (2020). Measuring psychological need states in sport: theoretical considerations and a new measure. Psychol. Sport Exerc. 47:101617. doi: 10.1016/j.psychsport.2019.101617

Blackburn, D. (2014). Military individual readiness: an overview of the individual components of the Adam, Hall, and Thomson model adapted to the Canadian Armed Forces. Can. Milit. J. 14, 36–45.

Blair, M. R., Body, S. F., and Croft, H. G. (2017). Relationship between physical metrics and game success with elite rugby sevens players. Intern. J. Perform. Analys. Sport 15, 1037–1046. doi: 10.1080/24748668.2017.1348060

Boolani, A., Bahr, B., Milani, I., Caswell, S., Cortes, N., Smith, M. L., et al. (2021). Physical activity is not associated with feelings of mental energy and fatigue after being sedentary for 8 hours or more. Ment. Health Phys. Activ. 21:100418. doi: 10.1016/j.mhpa.2021.100418

Boolani, A., and Manierre, M. (2019). An exploratory multivariate study examining correlates of trait mental and physical fatigue and energy. Fatigue Biomed. Health Behav. 7, 29–40. doi: 10.1080/21641846.2019.1573790

Boolani, A., O’Connor, P. J., Reid, J., Ma, S., and Mondal, S. (2018). Predictors of feelings of energy differ from predictors of fatigue. Fatigue Biomed. Health Behav. 7, 12–28. doi: 10.1080/21641846.2018.1558733

Bova, N., De Jonge, J., and Guglielmi, D. (2015). The demand-induced strain compensation questionnaire: a cross-national validation study. Stress Health 31, 236–244. doi: 10.1002/smi.2550

Burr, R. L. G. (2018). Accelerated Warfare: Futures Statement for An Army in Motion. Canberra: Australian Army.

Byers, J. C., Bittner, A. C., and Hill, S. G. (1989). “Traditional and raw task load index (TLX) correlations: are paired comparisons necessary?,” in Advances in Industrial Ergonomics and Safety, Vol. 32, ed. A. Mital (London: Taylor Francis), 481–485.

Cattell, R. B. (1966). The scree test for the number of factors. Multivariate Behav. Res. 1, 245–276. doi: 10.1207/s15327906mbr0102_10

Chan, D. K. C., Keegan, R. J., Lee, A. S. Y., Yang, S. X., Zhang, L., Rhodes, R. E., et al. (2018). Towards a better assessment of perceived social influence: the relative role of significant others on young athletes. Scand. J. Med. Sci. Sports 29, 286–298. doi: 10.1111/sms.13320

Chan, E. K. H. (2014). “Standards and guidelines for validation practices: development and evaluation of measurement instruments,” in Validity and Validation in Social, Behavioral, and Health Sciences, eds B. D. Zumbo and E. K. H. Chan (New York, NY: Springer), 9–24.

Chesney, M. A., Neilands, T. B., Chambers, D. B., Taylor, J. M., and Folkman, S. (2006). A validity and reliability study of the coping self-efficacy scale. Br. J. Health Psychol. 11, 421–437. doi: 10.1348/135910705X53155

Chuang, C. H., Jackson, S. E., and Jiang, Y. (2016). Can knowledge-intensive teamwork be managed? examining the roles of HRM systems, leadership, and tacit knowledge. J. Manag. 42, 524–554. doi: 10.1177/0149206313478189

Comrey, A. L., and Lee, H. B. (1992). A First Course in Factor Analysis, 2nd Edn, Hillsdale, NJ: Lawrence Erlbaum.

Connor, K. M., and Davidson, J. R. T. (2003). Development of a new resilience scale: the Connor-Davidson Resilience scale (CD-RISC). Depress. Anxiety 18, 76–82. doi: 10.1002/da.10113

Conti, C., di Fronso, S., Pivetti, M., Robazza, C., Podlog, L., and Bertollo, M. (2019). Well-come back! Professional basketball players perceptions of psychosocial and behavioral factors influencing a return to pre-injury levels. Front. Psychol. 10:222. doi: 10.3389/fpsyg.2019.00222

Coyne, J. O. C., Gregory Haff, G., Coutts, A. J., Newton, R. U., and Nimphius, S. (2018). The current state of subjective training load monitoring—A practical perspective and call to action. Sports Med. Open 4:58. doi: 10.1186/s40798-018-0172-x

Crane, M. F. (2017). Managing for Resilience: A Practical Guide for Employee Wellbeing and Organizational Performance. London: Routledge.

Crawford, J. R., and Henry, J. D. (2010). The Positive and Negative Affect Schedule (PANAS): construct validity, measurement properties and normative data in a large non-clinical sample. Br. J. Clin. Psychol. 43, 245–265. doi: 10.1348/0144665031752934

Cronbach, L. J. (1951). Coefficient alpha and the internal structure of tests. Psychometrika 16, 297–334. doi: 10.1007/BF02310555

Crum, A. J., Salovey, P., and Achor, S. (2013). Rethinking stress: the role of mindsets in determining the stress response. J. Pers. Soc. Psychol. 104, 716–733. doi: 10.1037/a0031201

De Jonge, J., and Dormann, C. (2003). “The DISC model: demand-induced strain compensation mechanisms in job stress,” in Occupational Stress in the Service Professions, eds M. F. Dollard, A. H. Winefield, and H. R. Winefield (London: Taylor and Francis), 43–74.

Demerouti, E., Nachreiner, F., Bakker, A. B., and Schaufeli, W. B. (2001). The job demands-resources model of burnout. J. Appl. Psychol. 86, 499–512. doi: 10.1037/0021-9010.86.3.499

Der-Martirosian, C., Radcliff, T. A., Gable, A. R., Riopelle, D., Hagigi, F. A., Brewster, P., et al. (2017). Assessing hospital disaster readiness over time at the US Department of Veterans Affairs. Prehosp. Disaster Med. 32, 46–57. doi: 10.1017/S1049023X16001266

Dien, J. (2010). Evaluating two-step PCA of ERP data with geomin, infomax, oblimin, promax, and varimax rotations. Psychophysiology 47, 170–183. doi: 10.1111/j.1469-8986.2009.00885.x

Domb, M. (2019). “Wearable devices and their implementation in various domains,” in Wearable Devices - the Big Wave of Innovation, ed. N. Nasiri (London: IntechOpen), doi: 10.5772/intechopen.86066

Elfering, A., Grebner, S., Leitner, M., Hirschmüller, A., Kubosch, E. J., and Baur, H. (2017). Quantitative work demands, emotional demands, and cognitive stress symptoms in surgery nurses. Psychol. Health Med. 22, 604–610. doi: 10.1080/13548506.2016.1200731

Evenson, K. R., Goto, M. M., and Furberg, R. D. (2015). Systematic review of the validity and reliability of consumer-wearable activity trackers. Intern. J. Behav. Nutr. Phys. Activity 12:159. doi: 10.1186/s12966-015-0314-1

Faber, A. J., Willerton, E., Clymer, S. R., MacDermid, S. M., and Weiss, H. M. (2008). Ambiguous absence, ambiguous presence: a qualitative study of military reserve families in wartime. J. Fam. Psychol. 22, 222–230. doi: 10.1037/0893-3200.22.2.222

Forces Command Resilience Plan (2015). Headquarters Forces Command Resilience Report Financial Year 2017/2018. Available online at: https://cove.army.gov.au/sites/default/files/06-07/06/180227-Guide-HQ-FORCOMD-Military-Health-and-Psychology-Commanding-Office.pdf (accessed July 2018).

Fornell, C., and Larcker, D. F. (1981). Evaluating structural equation models with unobservable variables and measurement error. J. Market. Res. 18, 39–50. doi: 10.2307/3151312

Gilmore, P. W. (2016). Leading a Resilient Force. Army Research Paper, No. 11. Available online at: https://researchcentre.army.gov.au/sites/default/files/161107_gilmore_resilient.pdf (accessed July 2018).

Grier, R. A. (2012). Military cognitive readiness at the operational and strategic levels: a theoretical model for measurement development. J. Cogn. Eng. Decis. Mak. 6, 358–392. doi: 10.1177/1555343412444606

Grier, R. A., Fletcher, J. D., and Morrison, J. E. (2012). Defining and measuring military cognitive readiness. Proc. Hum. Fact. Ergon. Soc. 56, 435–437. doi: 10.1177/1071181312561098

Griffith, J. (2006). What do the soldiers say: needed ingredients for determining unit readiness. Arm. Forc. Soc. 32, 367–388. doi: 10.1177/0095327X05280555

Hart, S. G., and Staveland, L. E. (1988). “Development of NASA-TLX (Task Load Index): results of empirical and theoretical research,” in Human Mental Workload, eds P. A. Hancock and N. Meshkati (Amsterdam: North Holland Press), doi: 10.1016/S0166-4115(08)62386-9

Hellewell, S. C., and Cernak, I. (2018). Measuring resilience to operational stress in Canadian Armed Forces personnel. J. Traum. Stress 31, 89–101. doi: 10.1002/jts.22261

Holm, J. E., and Holroyd, K. A. (1992). The daily hassles scale (revised): does it measure stress or symptoms? Behav. Assess. 14, 465–482.

Hooper, D., Coughlan, J., and Mullen, M. R. (2008). Structural equation modelling: guidelines for determining model fit. Electron. J. Bus. Res. Methods 6, 53–60. doi: 10.12691/amp-5-3-2

Hu, L. T., and Bentler, P. M. (1999). Cutoff criteria for fit indexes in covariance structure analysis: conventional criteria versus new alternatives. Struct. Equ. Model. 6, 1–55. doi: 10.1080/10705519909540118

Kanner, A. D., Coyne, J. C., Schaefer, C., and Lazarus, R. S. (1981). Comparison of two modes of stress measurement: daily hassles and uplifts versus major life events. J. Behav. Med. 4, 1–40. doi: 10.1007/BF00844845

Kölling, S., Hitzschke, B., Holst, T., Ferrauti, A., Meyer, T., Pfeiffer, M., et al. (2015). Validity of the acute recovery and stress scale: training monitoring of the German Junior National Field Hockey Team. Intern. J. Sports Sci. Coach. 10, 529–542. doi: 10.1260/1747-9541.10.2-3.529

Kuiper, R. A., and Pesut, D. J. (2004). Promoting cognitive and metacognitive reflective reasoning skills in nursing practice: self-regulated learning theory. J. Adv. Nurs. 45, 381–391. doi: 10.1046/j.1365-2648.2003.02921.x

Lane, J., Lane, A. M., and Kyprianou, A. (2004). Self-efficacy, self-esteem and their impact on academic performance. Soc. Behav. Pers. 32, 247–256. doi: 10.2224/sbp.2004.32.3.247

Lee, H. H., and Cranford, J. A. (2008). Does resilience moderate the associations between parental problem drinking and adolescents’ internalizing and externalizing behaviors? A study of Korean adolescents. Drug Alcoh. Depend. 96, 213–221. doi: 10.1016/j.drugalcdep.2008.03.007

Lee, J., Wheeler, K., and James, D. A. (2018). Wearable Sensors in Sport: A Practical Guide to Usage and Implementation (Springer Briefs in Applied Sciences and Technology), 1st Edn, London: Springer.

Lewig, K. A., Xanthopoulou, D., Bakker, A. B., Dollard, M. F., and Metzer, J. C. (2007). Burnout and connectedness among Australian volunteers: a test of the job demands–resources model. J. Vocat. Behav. 71, 429–445. doi: 10.1016/j.jvb.2007.07.003

Luthar, S. S., Cicchetti, D., and Becker, B. (2000). The construct of resilience: a critical evaluation and guidelines for future work. Child Dev. 71, 543–562.

Marsh, H. W., Hau, K. T., and Wen, Z. (2004). In search of golden rules: comment on hypothesis-testing approaches to setting cutoff values for fit indexes and dangers in overgeneralizing Hu and Bentler’s (1999) findings. Struct. Equ. Model. 11, 320–341. doi: 10.1207/s15328007sem1103_2

McFarland, R. G., Rode, J. C., and Shervani, T. A. (2016). A contingency model of emotional intelligence in professional selling. J. Acad. Mark. Sci. 44, 108–118.

Muthén, L., and Muthén, B. (2012). Mplus Version 7 User’s Guide. Los Angeles, CA: Muthén & Muthén, doi: 10.1111/j.1532-5415.2004.52225.x

Nahrgang, J. D., Morgeson, F. P., and Hofmann, D. A. (2011). Safety at work: a meta-analytic investigation of the link between job demands, job resources, burnout, engagement, and safety outcomes. J. Appl. Psychol. 96, 71–94. doi: 10.1037/a0021484

Nässi, A., Ferrauti, A., Meyer, T., Pfeiffer, M., and Kellmann, M. (2017). Psychological tools used for monitoring training responses of athletes. Perform. Enhanc. Health 5, 125–133. doi: 10.1016/j.peh.2017.05.001

Netemeyer, R. G., Bearden, W. O., and Sharma, S. (2003). Scaling Procedures. Thousand Oaks, CA: SAGE Publications, Inc. doi: 10.4135/9781412985772

Netemeyer, R., Bearden, W., and Sharma, S. (2012). “Validity,” in Scaling Procedures, eds R. G. Netemeyer, W. O. Bearden, and S. Sharma (Thousand Oaks, CA: Sage Publications), 72–88. doi: 10.4135/9781412985772.n4

Ng, J. Y., Lonsdale, C., and Hodge, K. (2011). The basic needs satisfaction in sport scale (BNSSS): instrument development and initial validity evidence. Psychol. Sport Exerc. 12, 257–264. doi: 10.1016/j.psychsport.2010.10.006

Pangallo, A., Zibarras, L., Lewis, R., and Flaxman, P. (2015). Resilience through the lens of interactionism: a systematic review. Psychol. Assess. 27, 1–20. doi: 10.1037/pas0000024

Peake, J. M., Kerr, G., and Sullivan, J. P. (2018). A critical review of consumer wearables, mobile applications, and equipment for providing biofeedback, monitoring stress, and sleep in physically active populations. Front. Physiol. 9:743. doi: 10.3389/fphys.2018.00743

Peterson, C., Park, N., and Castro, C. A. (2011). Assessment for the U.S. Army comprehensive soldier fitness program: the global assessment tool. Am. Psychol. 66, 10–18. doi: 10.1037/a0021658

Preston, C. C., and Colman, A. M. (2000). Optimal number of response categories in rating scales: reliability, validity, discriminating power, and respondent preferences. Acta Psychol. 104, 1–15. doi: 10.1016/S0001-6918(99)00050-5

Reivich, K. J., Seligman, M. E. P., and McBride, S. (2011). Master resilience training in the U.S. Army. Am. Psychol. 66, 25–34. doi: 10.1037/a0021897

Rhemtulla, M., Brosseau-Liard, P. É., and Savalei, V. (2012). When can categorical variables be treated as continuous? A comparison of robust continuous and categorical SEM estimation methods under suboptimal conditions. Psychol. Methods 17, 354–373. doi: 10.1037/a0029315

Richardson, G. E. (2002). The metatheory of resilience and resiliency. J. Clin. Psychol. 58, 307–321. doi: 10.1002/jclp.10020

Robertson, I. T., Cooper, C. L., Sarkar, M., and Curran, T. (2015). Resilience training in the workplace from 2003 to 2014: a systematic review. J. Occup. Organ. Psychol. 88, 533–562. doi: 10.1111/joop.12120

Rocchi, M., Pelletier, L., Cheung, S., Baxter, D., and Beaudry, S. (2017). Assessing need-supportive and need-thwarting interpersonal behaviours: the Interpersonal Behaviours Questionnaire (IBQ). Pers. Individ. Differ. 104, 423–433. doi: 10.1016/j.paid.2016.08.034

Rollins, J. W. (2001). Civil-military cooperation (CIMIC) in crisis response operations: the implications for NATO. Intern. Peacekeep. 8, 122–129. doi: 10.1080/13533310108413883

Roy, T. C., and Lopez, H. P. (2013). A comparison of deployed occupational tasks performed by different types of military battalions and resulting low back pain. Milit. Med. 178:e0937-43. doi: 10.7205/milmed-d-12-00539

Rutherford, P. (2013). Training in the army: meeting the needs of a changing culture. Train. Dev. 40, 8–10.

Rutter, M. (1987). Psychosocial resilience and protective mechanisms. Am. J. Orthopsych. 57, 316–331. doi: 10.1111/j.1939-0025.1987.tb03541.x

Ryckman, R. M., Robbins, M. A., Thornton, B., and Cantrell, P. (1982). Development and validation of a physical self-efficacy scale. J. Pers. Soc. Psychol. 42, 891–900. doi: 10.1037/0022-3514.42.5.891

Ryff, C. D. (1989). Happiness is everything, or is it? Explorations on the meaning of psychological well-being. J. Pers. Soc. Psychol. 57, 1069–1081. doi: 10.1037/0022-3514.57.6.1069

Ryff, C. D., and Keyes, C. L. M. (1995). The structure of psychological well-being revisited. J. Pers. Soc. Psychol. 69, 719–727. doi: 10.1037/0022-3514.69.4.719

Sampasa-Kanyinga, H., Zamorski, M. A., and Colman, I. (2018). The psychometric properties of the 10-item Kessler psychological distress scale (K10) in Canadian military personnel. PLoS One 13:e0196562. doi: 10.1371/journal.pone.0196562

Seshadri, D. R., Li, R. T., Voos, J. E., Rowbottom, J. R., Alfes, C. M., Zorman, C. A., et al. (2019). Wearable sensors for monitoring the physiological and biochemical profile of the athlete. NPJ Digit. Med. 72, 2–16. doi: 10.1038/s41746-019-0150-9

Shah, N., Irani, Z., and Sharif, A. M. (2017). Big data in an HR context: exploring organizational change readiness, employee attitudes and behaviors. J. Bus. Res. 70, 366–378. doi: 10.1016/j.jbusres.2016.08.010

Sharma, N., Herrnschmidt, J., Claes, V., Bachnick, S., De Geest, S., and Simon, M. (2018). Organizational readiness for implementing change in acute care hospitals: an analysis of a cross-sectional, multicentre study. J. Adv. Nurs. 74, 2798–2808. doi: 10.1111/jan.13801

Sherer, M., Maddux, J. E., Mercandante, B., Prentice-Dunn, S., Jacobs, B., and Rogers, R. W. (1982). The self-efficacy scale: construction and validation. Psychol. Rep. 51, 663–671. doi: 10.2466/pr0.1982.51.2.663

Strohacker, K., Keegan, R. J., Beaumont, C. T., and Zakrajsek, R. A. (2021). Applying P-technique factor analysis to explore person-specific models of readiness-to-exercise. Front. Sports Act. Living 3:685813. doi: 10.3389/fspor.2021.685813

Strohacker, K., and Zakrajsek, R. A. (2016). Determining dimensionality of exercise readiness using exploratory factor analysis. J. Sports Sci. Med. 15, 229–238.

Tangney, J. P., Baumeister, R. F., and Boone, A. L. (2004). High self-control predicts good adjustment, less pathology, better grades, and interpersonal success. J. Pers. 72, 271–324. doi: 10.1111/j.0022-3506.2004.00263.x

Thompson, B. (2004). Exploratory and Confirmatory Factor Analysis: Understanding Concepts and Applications. Washington, DC: American Psychological Association, doi: 10.1037/10694-000

Training and Doctrine Command (2011). The U.S. Army Learning Concept for 2015. TRADOC Pamphlet 525-8-2. Vilnius: Training and Doctrine Command.