
ORIGINAL RESEARCH article

Front. Psychol., 22 July 2021

Sec. Neuropsychology

Volume 12 - 2021 | https://doi.org/10.3389/fpsyg.2021.671470

This article is part of the Research Topic Virtual, Mixed, and Augmented Reality in Cognitive Neuroscience and Neuropsychology.

A correction has been applied to this article in:

Corrigendum: May I Smell Your Attention: Exploration of Smell and Sound for Visuospatial Attention in Virtual Reality

When interacting with technology, attention is mainly driven by audiovisual and, increasingly, haptic stimulation. Olfactory stimuli are largely neglected, although the sense of smell influences many of our daily choices, affects our behavior, and can capture and direct our attention. In this study, we investigated the effect of smell and sound on visuospatial attention in a virtual environment. We implemented the Bells Test, an established neuropsychological test to assess attentional and visuospatial disorders, in virtual reality (VR). We conducted an experiment with 24 participants comparing user performance under three experimental conditions (smell, sound, and smell and sound). The results show that multisensory stimuli play a key role in driving the attention of the participants and highlight asymmetries in directing spatial attention. We discuss the relevance of the results within and beyond human-computer interaction (HCI), particularly with regard to the opportunity of using VR for rehabilitation and assessment procedures for patients with spatial attention deficits.

In our everyday life, we tend to underestimate the importance of smell as a source of information and as a means of interacting with our environment. Smell can evoke memories more intensely than any other modality (Herz and Schooler, 2002; Obrist et al., 2014), and it can convey meaning (e.g., warn us of danger) (Obrist et al., 2014) or promote instinctive behaviors (e.g., avoidance) (Stevenson, 2009). Humans selectively shift their attention in response to smells in their surrounding space, and this shift depends on their distance to the odor source and on the perceived pleasantness of the odor (Rinaldi et al., 2018). While the effect of smell on spatial attention is increasingly studied in psychology and neuroscience, its study within HCI is still in its infancy.

The spatial design features of smell are increasingly recognized in virtual reality (VR) applications. For example, VaiR (Rietzler et al., 2017) and Head Mounted Wind (Cardin et al., 2007) both extend the VR headset with wind and thermal stimuli, while Season Traveler (Ranasinghe et al., 2018) also integrates olfactory stimuli to increase users' sense of presence in a multisensory VR experience. While there is growing interest in exploring the potential of smell to enrich VR experiences (Carulli et al., 2018; Risso et al., 2018; Bordegoni et al., 2019) and in designing wearable olfactory interfaces [e.g., Wang et al. (2020), Amores and Maes (2017), and Amores et al. (2018)], the study of smell in relation to its spatial features is still limited [e.g., Maggioni et al. (2020), Shaw et al. (2019), and Kim and Ando (2010)]. Hence, we specifically investigated the effect of smell, presented under unimodal and multimodal conditions, on capturing users' attention during a visual exploration task in VR.

The participants were asked to search for specific visual targets among distractors in a virtual environment. We adopted three experimental conditions (smell, sound, and smell and sound) and a control condition (vision only). To enable this investigation, we created a VR implementation of the Bells Test (Gauthier et al., 1989), an established neuropsychological test used to assess attentional and visuospatial disorders (Ferber and Karnath, 2001; Azouvi et al., 2002). The Bells Test is widely used in clinical settings for the diagnosis of unilateral spatial neglect (USN), which describes the inability of certain patients with brain damage to respond to stimuli presented on the contralesional side of their space.

Based on the results of the experiment with 24 participants, we show that performance was higher when multimodal stimulation was used. That is, a combination of olfactory and auditory stimuli was significantly more effective in capturing the visuospatial attention of the participants toward the right side of the VR exploration space. Interestingly, we found asymmetries in the spatial orientation effects: stimulation coming from the left side of space resulted in higher performance toward the left, regardless of the experimental condition. We discuss these findings in light of the growing efforts toward the development of multisensory HCI applications, especially in the context of promoting VR as an effective tool both for the assessment and rehabilitation of brain damage disorders related to spatial attention.

In this section, we discuss the relevant related studies on attention and spatial attention, specifically through the lens of multisensory stimulation and olfactory research, which is increasingly recognized within HCI. We highlight the potential of VR not only for the design of multisensory VR experiences through the integration of smell but also as a tool for implementing and adapting traditional assessment methods of spatial neglect.

When considering attention, one of the simplest distinctions is between top-down and bottom-up processes. The former refers to the voluntary allocation of attention to specific information or features, while the latter refers to the automatic shift of attention triggered by salient sensory stimulation (Corbetta and Shulman, 2002; Pinto et al., 2013). Spatial attention prioritizes locations in the environment, and it involves both top-down and bottom-up processes. The brain structures mainly involved in visuospatial processing and orienting movements are the superior colliculi (Krauzlis et al., 2013). These structures are also strongly involved in integrating and processing the multisensory inputs perceived from the external environment (Meredith and Stein, 1986). Attentional shifts in one modality are usually accompanied by the integration of other modalities (Driver and Spence, 1998); for example, a sudden movement followed by a sound from the same spatial area leads to the integration of vision and audition. Neural correlates of this process include the ventral frontoparietal network, which is strongly lateralized to the right cerebral hemisphere (Corbetta and Shulman, 2002).

The attentional system also appears to be responsible for recognizing multisensory objects by simultaneously binding multimodal signals across space (Busse et al., 2005). Specifically, the integration of different sensory modalities has been shown to have a strong effect on spatial attention. For example, Santangelo and colleagues (Santangelo and Spence, 2007; Santangelo et al., 2008) explored the use of multisensory cues to capture spatial attention during low and high perceptual load tasks, and their results appear to indicate that only bimodal cues effectively captured spatial attention regardless of increases in perceptual load. Moreover, Keller (2011) compared visual and olfactory attention and highlighted similarities between the two modalities, especially when attention is allocated to a specific time window or to specific features (e.g., odor pleasantness).

The importance of understanding the mechanisms that influence the attention of users, especially related to its visuospatial characteristics, is increasingly recognized when designing interactive systems (e.g., in computer vision, see review by Nguyen et al., 2018). More specific to VR, Dohan and Mu (2019) implemented a gaze-controlled VR game that deployed an eye tracking system to better explore the attention and behavioral patterns of users, while Almutawa and Ueoka (2019) explored the influence of spatial awareness in VR, especially during the transition from the real world to VR environments.

Despite the predominance of studies on other sensory modalities, there is a growing body of research on smell and cross-modal correspondences, especially between smell and sound (Belkin et al., 1997; Crisinel and Spence, 2012; Deroy et al., 2013). Von Békésy (1964), for example, suggested that the brain processes the direction of auditory stimuli and of odors in similar ways. This work showed that the olfactory system, like the auditory system but with lower spatial sensitivity, can determine the direction of a scent by detecting time differences between the two nostrils on the order of 0.3 ms. Building on similar principles, Porter et al. (2007) demonstrated that humans can navigate space by scent-tracking. Moreover, there is evidence of a link between olfaction and vision, highlighting the role that smell plays in attracting visual attention. For example, Seigneuric et al. (2010) found that in a visual exploration task, smell influences visual attention and, more specifically, facilitates the recognition of an object associated with the perceived odor. Together, these findings encourage greater consideration of the role that smell can play in spatial attention when combined with other sensory modalities.

A few studies have investigated the relationship between attention and multisensory stimulation, for example, across vision, touch, and audition (Spence et al., 1998; Van Hulle et al., 2013), between attention and tactile information (Spence, 2002; Spence and Gallace, 2007), and between audition and vision (McDonald et al., 2000; Gallace and Spence, 2006). These studies provide evidence that multisensory stimuli lead to faster and stronger responses than unisensory stimuli. This enhancement is not simply due to the additive effects of concurrent sensory information: multisensory stimulation often elicits more accurate responses than predicted by additive models of the unisensory responses (Colonius and Diederich, 2003; Murray et al., 2005; Spence and Ho, 2008; Lunn et al., 2019). This “super-additivity,” which arises from perceiving and integrating stimuli from different sensory modalities, is governed by three basic rules (Holmes and Spence, 2005): to obtain an increase in neural activity, and consequently a stronger and faster response, the multisensory stimuli must come from similar spatial locations, must be perceived at almost the same moment, and at least one of them must be only weakly effective in evoking a neural response on its own.
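To make super-additivity concrete, the sketch below computes two quantities often used in multisensory research: an enhancement index (the percent gain of the combined response over the strongest unisensory response) and a super-additivity check (whether the combined response exceeds the sum of the unisensory responses). The response values are hypothetical (think of them as mean spike counts) and are not data from this study.

```python
def enhancement_index(combined: float, unisensory: list[float]) -> float:
    """Percent gain of the multisensory response over the best unisensory one."""
    best = max(unisensory)
    if best <= 0:
        raise ValueError("the strongest unisensory response must be positive")
    return 100.0 * (combined - best) / best


def is_superadditive(combined: float, unisensory: list[float]) -> bool:
    """True if the combined response exceeds the sum of the unisensory responses."""
    return combined > sum(unisensory)


# Hypothetical responses: two weakly effective stimuli and their combination.
smell_alone, sound_alone, smell_and_sound = 2.0, 3.0, 9.0

print(enhancement_index(smell_and_sound, [smell_alone, sound_alone]))  # 200.0
print(is_superadditive(smell_and_sound, [smell_alone, sound_alone]))   # True
```

Because the index is relative to the strongest unisensory response, the same absolute gain yields a larger index when the unisensory responses are weak, which is in line with the third rule above.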

Recent developments have resulted in systems such as VaiR (Rietzler et al., 2017), Head Mounted Wind (Cardin et al., 2007), and Season Traveler (Ranasinghe et al., 2018), which illustrate advances in multisensory technology. VaiR (Rietzler et al., 2017) and Head Mounted Wind (Cardin et al., 2007) are wearable displays that use arrays of fans or pneumatic air nozzles attached to users as extensions of the VR headset; studies of these systems have shown that participants experienced an increased sense of presence and could correctly detect airflow directions, but the systems do not address the sense of smell. Season Traveler (Ranasinghe et al., 2018) adds olfactory stimuli to wind and thermal stimuli and confirmed the previous results regarding an enhanced sense of presence in a virtual environment. In this case, scented air was released near the user's nose to increase the sense of presence in a multisensory VR experience, but it was not studied with regard to its spatial features. Indeed, while there is growing interest in designing wearable olfactory interfaces (e.g., Amores and Maes, 2017; Amores et al., 2018; Wang et al., 2020) and in enriching user experiences with olfactory stimuli (Bordegoni et al., 2019; Micaroni et al., 2019), the study of smell in relation to its spatial features is still limited (e.g., Kim and Ando, 2010; Shaw et al., 2019; Maggioni et al., 2020).

Most recently, Maggioni et al. (2020) not only highlighted the spatial design features of smell but also illustrated its effect in three application scenarios, including two VR implementations. Most relevant to this study is their VR implementation focusing on users' ability to locate the source of an olfactory stimulus in a 360° virtual space. The authors compared smell with sound stimuli and also combined the two sources of information. Their results showed an interaction effect of stimulation modality and user position on the accuracy of locating the source [F(2, 12) = 18.6, p < 0.01, η² = 0.75] and provide valuable information about users' performance in localizing olfactory and auditory spatial cues. More specifically, in the front position, accuracy was comparable across all modalities, whereas in the back position it differed significantly between olfactory and auditory stimuli and between olfactory and audio-olfactory stimuli (the front position refers to cues presented within the 180° centered on the participant's head direction, and the back position to cues outside this range). Together with auditory stimuli, olfactory stimuli can play an even more important role in navigating VR environments, where interactions are mainly based on audiovisual stimulation that can easily overwhelm users' attention. That study also underlines the importance of carefully choosing scent-delivery parameters when exploring the spatial features of smell, as we do in our smell-enhanced VR implementation of the Bells Test, a well-established neuropsychological test.
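The front/back distinction used by Maggioni et al. (2020) can be expressed as a test on the angle between the cue direction and the head direction. A minimal sketch, assuming yaw angles in degrees on the horizontal plane; the function names are hypothetical, and treating a cue at exactly 90° as "front" is an arbitrary choice made here.

```python
def wrap_degrees(angle: float) -> float:
    """Map any angle to the interval [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def cue_position(cue_yaw: float, head_yaw: float) -> str:
    """'front' if the cue lies within the 180° sector centred on the head
    direction (at most 90° to either side), otherwise 'back'."""
    offset = wrap_degrees(cue_yaw - head_yaw)
    return "front" if abs(offset) <= 90.0 else "back"


print(cue_position(45.0, 0.0))    # front
print(cue_position(-135.0, 0.0))  # back
print(cue_position(350.0, 20.0))  # front (offset of -30°)
```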

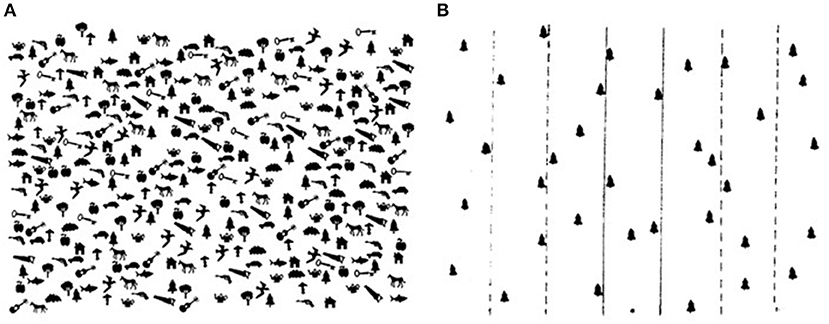

Visual exploration tasks, such as the line bisection task (i.e., a task in which participants mark the midpoint of a horizontal line printed on a sheet placed in front of them) or cancellation tasks (i.e., tasks in which participants search for and mark visual targets among distractor figures), are commonly used in neuropsychology to assess visuospatial deficits (Plummer et al., 2003). The Bells Test (Gauthier et al., 1989) (Figure 1) is widely used for the assessment of unilateral spatial neglect (USN).

Figure 1. (A) Bells Test and (B) its target distribution (Gauthier et al., 1989).

Neglect is generally defined as “the failure to report, respond, or orient to stimuli presented to the side opposite a brain lesion, when this failure cannot be attributed to either sensory or motor defects” (Heilman et al., 1984). Neglect is mainly due to lesions involving the right inferior parietal lobe and the adjacent temporal lobe (Vallar and Perani, 1986) and occurs in ~30% of patients who have had a stroke (Nijboer et al., 2013). There is also evidence of ipsilateral neglect, in which the neglected side of space corresponds to the lesioned hemisphere (Weintraub and Mesulam, 1987). In addition, patients with neglect usually lack awareness of their disorder (anosognosia) (Gialanella et al., 2005). For example, when asked to copy an image located in front of them, they copy only its right half, and they may eat only the right part of their food while reporting that their performance is correct.

Cancellation tasks like the Bells Test are commonly performed during the assessment of this deficit, and patients usually show behavioral patterns of hypo-attention on the contralesional side (i.e., left) and hyper-attention on the ipsilesional side (i.e., right) (Albert, 1973; Rapcsak et al., 1989; Plummer et al., 2003). Participants are instructed to search for and mark as many bells as they can find among visual distractors (see Figure 1). This is traditionally done with paper and pencil, with the additional instruction not to move the sheet placed in front of them. In this way, neuropsychologists can reconstruct the exploration path followed by the patient and assess possible visuospatial and attentional deficits such as USN.

Here, we present a VR implementation of the Bells Test in which the visual search is augmented with olfactory and auditory stimulation, delivered individually or combined, to explore the effect of these modalities on the visuospatial attention and performance of the participants in VR. We maintained the features of the Bells Test as it would be used in clinical settings with neuropsychological patients; however, we adapted the test to cover a larger canvas and to provide an immersive experience for participants without attentional or visuospatial deficits. To do so, we increased the number of targets (i.e., from 35 to 75) and distractors (i.e., from 280 to 520) to cover a 180° VR exploration space, simulating a distance of ~2 m between the participant and the semicircular space. As mentioned in Section Technology Advances to Enable the Study of Spatial Attention With Smell, we followed the recent study by Maggioni et al. (2020) on the accuracy of participants in the front and back positions in VR when using olfactory stimuli, which led us to focus only on the front position, that is, the 180° centered on the head direction of the participants.

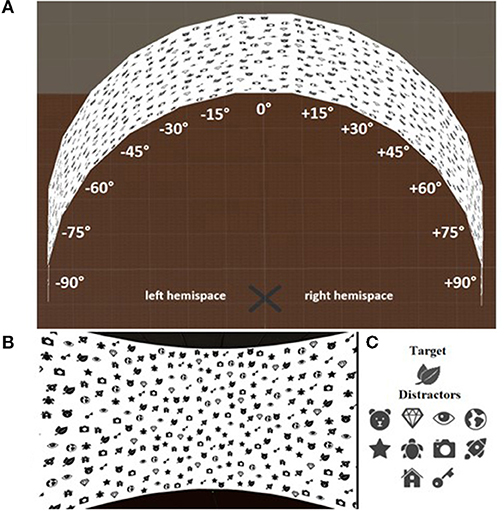

We divided the VR space into 13 sections of equal height and width: a central section and six sections in each of the left and right hemispaces (see Figure 2). The central section was set at 0° rotation, perpendicular to the participant's point of view during the visual exploration task, while in the left and right hemispaces, the rotation of each successive section around the vertical axis increased by 15° until it reached +90° in the right hemispace and −90° in the left hemispace. Each section contained five targets and 40 distractors. The VR environment was rendered so that the participants could not perceive the edges of the 13 sections that made up the 180° exploration space, giving the illusion of a regular semicircle (Figure 2). This solution avoided a section-by-section exploration procedure and instead promoted exploration of the entire space without visual bias. The VR scenario was created using Unity 3D software (version 2018.2.5), and the participants performed the visual exploration task wearing an HTC Vive VR headset.

Figure 2. Illustration of the VR implementation of the Bells Test with (A) the 13 sections and (B) from the point of view of the participants. (C) Target and distractor items used in the VR implementation.
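To make the layout concrete, the sketch below computes the yaw rotation of each of the 13 sections and the position of its centre on a circle around the participant. It assumes the ~2 m viewing distance mentioned above, x pointing right and z pointing forward, and negative angles for the left hemispace; the function is hypothetical and is not the study's Unity code.

```python
import math

SECTION_STEP_DEG = 15.0   # rotation increment between adjacent sections
SECTIONS_PER_SIDE = 6     # sections in each hemispace
RADIUS_M = 2.0            # approximate participant-to-wall distance (assumption)


def section_layout(radius: float = RADIUS_M) -> list[dict]:
    """Return yaw and centre position (x, z) for each section, left to right."""
    layout = []
    for index in range(-SECTIONS_PER_SIDE, SECTIONS_PER_SIDE + 1):
        yaw = index * SECTION_STEP_DEG          # -90° ... 0° ... +90°
        rad = math.radians(yaw)
        layout.append({
            "index": index,                     # negative = left, 0 = centre, positive = right
            "yaw_deg": yaw,                     # rotating the panel by this yaw keeps it
                                                # perpendicular to the line of sight from the centre
            "x": round(radius * math.sin(rad), 3),
            "z": round(radius * math.cos(rad), 3),
        })
    return layout


for section in section_layout():
    print(section)
```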

For the user study, we selected peppermint as the olfactory stimulus. Peppermint scent has been shown to have an arousing effect on the central nervous system and has been used in previous studies on attention (Warm et al., 1991; Dember et al., 2001), as well as on workload, work efficiency, and alertness, for example, in driving tasks (Raudenbush et al., 2009). To ensure semantic congruency between the visual and olfactory stimuli, based on a prior study (Raudenbush et al., 2009), we replaced the traditional bells of the Bells Test with a new target symbol (i.e., two small mint leaves; see Figure 2).

The study aimed to explore the effect of olfactory and auditory stimulation on visuospatial attention. We used the implementation of the Bells Test described above, integrated the different modalities, and compared the performance of the participants under three experimental conditions: smell, sound, and smell and sound stimuli. We also included a visual-only control condition without any additional sensory stimulation, for a total of four conditions. The study was approved by the University Science and Technology Cross Schools Research Ethics Committee. Each participant gave written informed consent after being instructed about the study procedures and after completing an olfactory assessment test (Nordin et al., 2003) used to exclude people with smell dysfunctions, adverse reactions to strong smells, respiratory problems, flu, serious head trauma, or brain disorders.

This was a mixed study design, with the three experimental conditions and the visual-only control condition (without any additional sensory stimulation) as a within-participants factor and the stimulation origin side as a between-participants factor. The participants were asked to complete the visuospatial exploration task in VR as fast as possible (i.e., “find the target item ‘mint leaves’”). Each condition followed the same rules as the control condition but introduced smell (Condition I), sound (Condition II), or combined smell and sound (Condition III) stimulation during the exploration task. Therefore, for each participant, we tested the unisensory effects in Conditions I and II (i.e., olfactory only and auditory only) and the multisensory effects in Condition III (i.e., combined smell and sound). The stimulation origin side for each condition was counterbalanced across participants. The exploration space was limited to a 180° field of view to prevent participants from turning around and thereby inverting the origin of the stimuli, in line with a prior study (Maggioni et al., 2020) that showed the effectiveness of focusing on the front position (see Section Technology Advances to Enable the Study of Spatial Attention With Smell). The participants performed the visual exploration task wearing an HTC Vive VR headset. At the beginning of each exploration task, the participants were instructed to put on the headset and to reach a mark located in the middle of the virtual semicircle (see Figure 2A). While performing the task, they were allowed to move their head and rotate their body freely, but they were asked to remain on the mark.

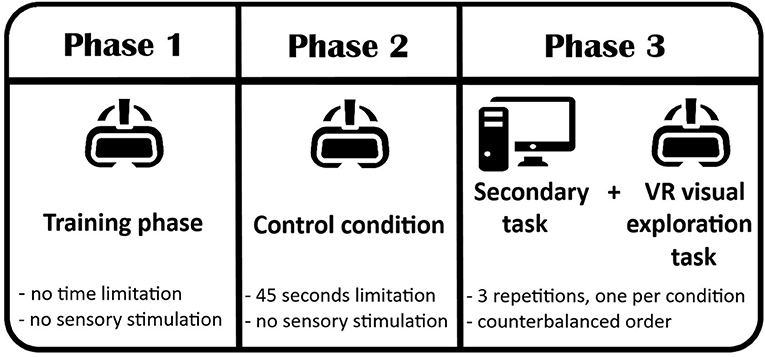

To investigate the effect of unimodal and multimodal stimuli on visuospatial attention in VR, we used peppermint (i.e., essential oil from Holland and Barrett) as the olfactory stimulus because of its effect on the central nervous system described above and its prior use in studying attention (Warm et al., 1991; Dember et al., 2001). As the auditory stimulus, we opted for a simple sequence of three piano G tones, in which the first and third tones were the same and the second was one octave higher than the other two. Following prior studies (see Ben-Artzi and Marks, 1995; Carnevale and Harris, 2016; McCormick et al., 2018), this sequence was chosen to avoid any correlation due to pitch (e.g., pitch-space associations). The study was divided into three phases (see the overview in Figure 3).

Figure 3. Overview of the three main study phases. After the training phase and the control condition of the VR visual exploration task, the participants proceeded with the three experimental conditions, each starting with the secondary task followed by the VR exploration task. The order of the conditions was counterbalanced across participants.
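The pitch relations of the auditory cue can be written down explicitly. In the sketch below, the tones are pure sine waves rather than piano samples, the base tone is assumed to be G4 (MIDI note 67) because the octave is not stated, the sample rate is an arbitrary choice, and the 1 s total duration is the one reported for the auditory stimulus.

```python
import math

SAMPLE_RATE = 44_100  # samples per second (assumption)


def midi_to_hz(note: int) -> float:
    """Equal-temperament frequency with A4 (MIDI 69) = 440 Hz."""
    return 440.0 * 2 ** ((note - 69) / 12)


def g_tone_sequence(base_note: int = 67,
                    total_s: float = 1.0) -> tuple[list[float], list[float]]:
    """Three equal-length tones: G, the G one octave higher, then G again."""
    notes = [base_note, base_note + 12, base_note]
    freqs = [midi_to_hz(n) for n in notes]
    samples_per_tone = int(SAMPLE_RATE * total_s / len(notes))
    samples = []
    for f in freqs:
        # 0.5 amplitude leaves headroom below full scale
        samples.extend(
            0.5 * math.sin(2 * math.pi * f * i / SAMPLE_RATE)
            for i in range(samples_per_tone)
        )
    return freqs, samples


freqs, samples = g_tone_sequence()
print([round(f, 2) for f in freqs])  # [392.0, 783.99, 392.0]
print(len(samples) / SAMPLE_RATE)    # 1.0
```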

The participants started with the training phase (Phase 1) to familiarize themselves with the VR exploration task without any time constraints, sensory stimulation, or distractors, only with visual targets. Phase 1 was followed by the control condition (Phase 2) and finally by the main part of the experiment (Phase 3), which comprised two parts: a secondary task and the visuospatial exploration task under all three conditions. The order of the conditions and the origin of the stimulation (left or right hemispace), excluding the initial control condition, were counterbalanced using a Latin square design, as was the position of targets and distractors in each of the 13 sections across task conditions. Each of the three phases is described in detail below.
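The counterbalancing can be illustrated with a cyclic 3 × 3 Latin square for the experimental conditions, crossed with the two stimulation sides. This is a minimal sketch under assumptions: participants are assigned to rows and sides in rotation, the control condition always comes first, and the study's actual assignment table is not reported. A single cyclic square balances the position of each condition but not first-order carry-over effects (a Williams design would use all six orders).

```python
from itertools import cycle

CONDITIONS = ["I (smell)", "II (sound)", "III (smell and sound)"]
SIDES = ["left", "right"]


def cyclic_latin_square(items: list[str]) -> list[list[str]]:
    """Row r is the item list rotated by r positions, so every item appears
    exactly once in each row and each column."""
    n = len(items)
    return [[items[(r + c) % n] for c in range(n)] for r in range(n)]


def assign(n_participants: int = 24) -> list[dict]:
    """Give each participant a stimulation side and a condition order,
    always preceded by the visual-only control condition."""
    cells = [(side, row) for side in SIDES for row in cyclic_latin_square(CONDITIONS)]
    plan = []
    for pid, (side, row) in zip(range(1, n_participants + 1), cycle(cells)):
        plan.append({"participant": pid, "side": side, "order": ["control", *row]})
    return plan


for entry in assign()[:3]:
    print(entry)
```

With 24 participants and six side-by-order cells, each cell receives four participants.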

During the training phase, the participants familiarized themselves with the exploration task, the controller functionality, and the VR environment. They were instructed to find and select specific targets (i.e., small figures of mint leaves). They selected a target by pointing at it with a virtual green ray originating from the bottom of the HTC controller and by pulling and releasing the trigger to highlight it. Visual instructions on the use of the controller were provided. The participants were also informed of the time constraint in the testing phase that would follow. In this phase, there were no distractors, the targets were highlighted in red, and no time limit was set. At the end of the training phase, the participants could restart the training until they were comfortable with the task and interaction. This phase was important to counteract possible bias due to novelty effects introduced by the visual exploration task and the VR environment. When a participant was ready to proceed, we started the control condition.
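Pointer-based selection of this kind is typically implemented as a ray cast from the controller. The text does not describe how selection was implemented in Unity, so the following is only an engine-independent sketch: each target is approximated by a small sphere (the 5 cm radius and the target names are hypothetical), and the nearest target crossed by the ray is selected.

```python
import math
from typing import Optional

Vec = tuple[float, float, float]


def hit_distance(origin: Vec, direction: Vec, centre: Vec, radius: float) -> Optional[float]:
    """Distance along the ray to its closest approach to the sphere centre,
    or None if the ray misses the sphere or the target is behind the controller."""
    norm = math.sqrt(sum(c * c for c in direction))
    unit = [c / norm for c in direction]
    to_centre = [c - o for c, o in zip(centre, origin)]
    t = sum(a * b for a, b in zip(to_centre, unit))   # projection of the centre onto the ray
    if t < 0:
        return None
    miss_sq = sum(a * a for a in to_centre) - t * t   # squared perpendicular distance
    return t if miss_sq <= radius * radius else None


def pick_target(origin: Vec, direction: Vec, targets: dict[str, Vec],
                radius: float = 0.05) -> Optional[str]:
    """Name of the nearest target hit by the ray when the trigger is released."""
    hits = {}
    for name, centre in targets.items():
        t = hit_distance(origin, direction, centre, radius)
        if t is not None:
            hits[name] = t
    return min(hits, key=hits.get) if hits else None


targets = {"leaf_a": (0.0, 1.5, 2.0), "leaf_b": (0.5, 1.5, 2.0)}
print(pick_target((0.0, 1.2, 0.0), (0.0, 0.15, 1.0), targets))  # leaf_a
```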

In this phase, the participants performed the control condition (i.e., the visual-only exploration task). They were instructed to find and select the targets (i.e., mint leaves) among the distractors (as shown in Figure 2), pointing at each target with the virtual green ray of the HTC controller and pulling and releasing the trigger to highlight it. In Section Measures and Hypothesis, we describe the measures employed to evaluate the performance of the participants.

This final phase comprised a secondary task followed by the VR visual exploration task under all three experimental conditions. The secondary task followed the same counterbalanced order as the VR task and was carried out before each of the three experimental conditions so that the participants could familiarize themselves with the sensory stimulation they would experience later in the VR task. Therefore, each secondary task condition always matched the subsequent VR experimental condition. This step aimed to reduce novelty effects and biases due to the introduction of the olfactory and auditory stimuli that characterize the experimental conditions of the VR visual exploration task, and its data were not further analyzed. The secondary task was performed on a desktop computer: sets of the figures used in the VR Bells Test implementation (see Figure 2) were presented on a 22-inch monitor placed on a desk 150 cm from the participants. A speaker behind the monitor delivered the sound stimuli, and a nozzle connected to the smell delivery device was placed on top of the monitor. For both trial types, the participants had to press the “Y” key on a keyboard if the target was present in the set of images and the “N” key if it was not. The target figure was preceded by smell (Condition I), sound (Condition II), or smell and sound (Condition III) stimuli.

The secondary task consisted of a randomized presentation of 30 distractor trials and 10 target trials. Trials differed from one another in the combination and position of the images. Each trial started with a 3,000-ms fixation point (a white square of 10 × 10 pixels), followed by a set of four images arranged as shown in Figure 4. In the distractor trials, we presented four random distractors, and in the target trials, three random distractors and the target (the same mint leaves icon used in the VR exploration task). In the target trials, the fixation point was accompanied by the same sensory stimulation used in the VR exploration task, according to the three experimental conditions: a single smell presentation with a 1 s delivery time (Condition I), a single presentation of the sound sequence (Condition II), or the combination of smell and sound (Condition III).
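A trial list with the structure described above can be generated as follows. The sketch assumes a hypothetical pool of distractor image names, a target name of `mint_leaves`, and a seeded random generator; it reproduces only the trial counts, the 3,000 ms fixation, the four-image sets, and the cue on target trials, not the study's actual stimulus files or randomization.

```python
import random

FIXATION_MS = 3000


def build_trials(distractor_pool: list[str], target: str = "mint_leaves",
                 n_distractor_trials: int = 30, n_target_trials: int = 10,
                 seed: int = 0) -> list[dict]:
    rng = random.Random(seed)
    trials = []
    for _ in range(n_distractor_trials):
        images = rng.sample(distractor_pool, 4)      # four random distractors
        trials.append({"images": images, "cued": False, "correct_key": "N"})
    for _ in range(n_target_trials):
        images = rng.sample(distractor_pool, 3) + [target]
        rng.shuffle(images)                          # vary the target position
        # "cued": the fixation point is paired with the condition's stimulus
        trials.append({"images": images, "cued": True, "correct_key": "Y"})
    rng.shuffle(trials)                              # randomized presentation order
    for trial in trials:
        trial["fixation_ms"] = FIXATION_MS
    return trials


trials = build_trials([f"distractor_{i}" for i in range(12)])
print(len(trials), sum(t["cued"] for t in trials))  # 40 10
```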

After each secondary task, the participants were verbally instructed to continue with the corresponding visual exploration task in VR (the three conditions were presented in counterbalanced order across participants). Each visual exploration session was limited to 45 s. In a pilot study with five participants, we observed that this interval was long enough for the participants to explore almost the entire VR exploration space but made it difficult for them to select all the targets in both hemispaces. This choice allowed us to observe in greater detail the differences between the two hemispaces attributable to the experimental conditions.

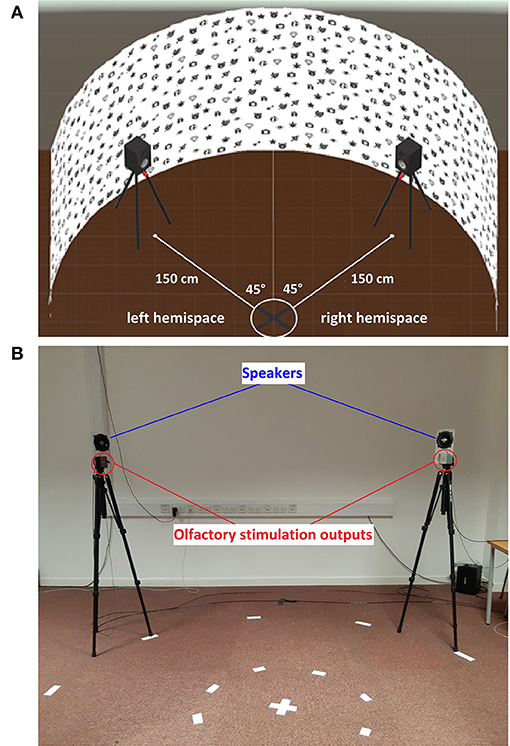

The side of stimulus origin (left or right hemispace) was counterbalanced across participants. The delivery point for the olfactory and auditory stimuli was set at ±45° from the participant's head at a distance of 150 cm (Figure 5). We placed the origin of the stimuli at ±45° to draw the attention of the participants toward the middle of the right or left hemispace. This also allowed the participants to continue the visuospatial exploration without being drawn toward the extreme edge of the hemispace.

Figure 5. Schematic representation of the VR environment. (A) The curved white virtual wall used to implement the Bells Test inside the VR headset. (B) The real-world location of the olfactory (red) and auditory (blue) stimulation outputs mounted on top of a tripod.
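As a quick check of the geometry, the sketch below converts the ±45° delivery angle and 150 cm distance into horizontal coordinates relative to the participant's head. The axis convention (x to the right, z forward) and the function name are illustrative assumptions.

```python
import math


def delivery_point(side: str, angle_deg: float = 45.0,
                   distance_m: float = 1.5) -> tuple[float, float]:
    """Return (x, z) of the stimulus output; negative x is the left hemispace."""
    sign = {"left": -1.0, "right": 1.0}[side]
    rad = math.radians(angle_deg)
    return (round(sign * distance_m * math.sin(rad), 3),
            round(distance_m * math.cos(rad), 3))


print(delivery_point("right"))  # (1.061, 1.061)
print(delivery_point("left"))   # (-1.061, 1.061)
```

Each speaker, with its attached nozzle, is therefore about 1.06 m in front of and 1.06 m to the side of the participant.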

The olfactory stimuli were administered using a custom-made, computer-controlled scent delivery device previously used in other research studies (Maggioni et al., 2018, 2020; Cornelio et al., 2020). The device contains three electro-valves (4-mm solenoid/spring pneumatic valves) that regulate the airflow (on/off) from an ultra-low-noise, oil-free compressor with a storage tank (8 Bar maximum capacity, 24 L, 93–78 L/min at 1–2 Bar; Bambi Air, Birmingham, United Kingdom). The compressor supplies a regulated airflow (max 70 L/s) through 4-mm plastic pipes that pass through the electro-valves to glass bottles containing the essential oil. The airflow was set at a constant pressure of 1.5 Bar-L/min through an air regulator. The smell reached the participants through a 3D-printed single-channel nozzle. Each valve was connected to a jar of essential oil: one for the left hemispace, one for the right hemispace, and one for the secondary task. The smell-output nozzles were anchored in laser-cut plates attached to the sound speakers, which were mounted on two tripods. Thus, the olfactory and auditory stimuli shared the same output position.

With the setup shown in Figure 5, the olfactory stimulus reached the participants after 6 s on average, based on a pilot study with five participants. During the pilot study, the participants were instructed to report when they started to perceive the olfactory stimulus by pressing the trigger of the HMD controller. Using the same procedure, we additionally tested the auditory stimulus duration in order to synchronize the auditory and olfactory stimuli for Condition III. The olfactory stimulus was delivered for 1 s with delivery intervals of 5 s and was triggered automatically at the beginning of the visual exploration task. Delivery was not continuous, to avoid possible habituation effects and environmental saturation. The auditory stimulus, the sequence of three piano G tones, also lasted 1 s and was repeated every 5 s until the end of the task. Hence, the auditory and olfactory stimulation were synchronized: the auditory stimulation started after 5 s and lasted 1 s, matching the 6 s needed for the smell to reach the user.
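The synchronization logic can be checked with a simple schedule. The sketch assumes that a new puff starts every 5 s from t = 0, uses the reported 1 s durations, ~6 s average travel time, and 45 s session limit, and treats the travel time as constant, which simplifies an average measured in a pilot.

```python
SESSION_S = 45
INTERVAL_S = 5
PUFF_S = 1
SOUND_S = 1
SMELL_TRAVEL_S = 6     # average time for the scent to reach the participant
SOUND_OFFSET_S = 5     # first sound sequence starts 5 s into the task


def schedule(session_s: int = SESSION_S) -> dict[str, list[tuple[int, int]]]:
    """(start, end) times in seconds for valve openings, sounds, and expected smell arrival."""
    valve = [(t, t + PUFF_S) for t in range(0, session_s, INTERVAL_S)]
    sound = [(t, t + SOUND_S) for t in range(SOUND_OFFSET_S, session_s, INTERVAL_S)]
    arrival = [(start + SMELL_TRAVEL_S, start + SMELL_TRAVEL_S + PUFF_S)
               for start, _ in valve if start + SMELL_TRAVEL_S < session_s]
    return {"valve": valve, "sound": sound, "smell_arrival": arrival}


s = schedule()
print(s["sound"][:2])          # [(5, 6), (10, 11)]
print(s["smell_arrival"][:2])  # [(6, 7), (11, 12)]
```

Under these assumptions, each sound sequence ends at the moment the corresponding puff is expected to arrive.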

To assess the performance of the participants, we collected the following data at the end of each condition, all recorded automatically by the script implemented in Unity: (a) the number of targets (i.e., mint leaves) selected, together with their location (left or right hemispace and section of appearance; see Section Materials and Methods); and (b) the starting point (which of the 13 sections in the left or right hemispace) and the exploration path followed by the participants during the VR visual exploration task, combined with head-position data recorded by the VR headset.
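As an illustration of how the measures listed above could be derived from exported selection logs, the following Python sketch counts targets per hemispace and orders selections into an exploration path. The record layout (`Selection`, with `hemispace`, `section`, and `time_s` fields) is hypothetical: the original logging was done by a Unity script whose format is not described here, and head-position data are omitted. Approximating the starting point by the first selected target is a simplifying assumption.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Selection:
    """One selected target (mint leaf). Field names are hypothetical."""
    hemispace: str  # "left" or "right"
    section: int    # section of the display in which the target appeared
    time_s: float   # seconds since the start of the exploration task


def targets_per_hemispace(selections: list[Selection]) -> tuple[int, int]:
    """Count selected targets in the left and right hemispaces."""
    counts = Counter(s.hemispace for s in selections)
    return counts["left"], counts["right"]  # Counter returns 0 for missing keys


def exploration_path(selections: list[Selection]) -> list[tuple[str, int]]:
    """Sequence of (hemispace, section) pairs, ordered by selection time."""
    ordered = sorted(selections, key=lambda s: s.time_s)
    return [(s.hemispace, s.section) for s in ordered]


def starting_point(selections: list[Selection]) -> tuple[str, int]:
    """ASSUMPTION: approximate the starting point by the first selected target.

    Raises IndexError if no target was selected.
    """
    return exploration_path(selections)[0]
```

Using the first selection keeps the sketch independent of headset data; a closer match to the described measure would use the head orientation at task onset instead.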

Based on a prior study, we formulated the following two hypotheses:

H1: the introduction of olfactory and auditory stimulation will draw the attention of the participants to the hemispace from which they are delivered.

H2: the combination of both stimuli (i.e., the additive effect of multisensory stimulation explained in Section The Role of Smell Stimulation for Spatial Attention) will have a stronger effect on the performance of the participants than unisensory stimulation.

An a priori statistical power analysis to estimate the required sample size was performed in G*Power. We specified a repeated-measures ANOVA with condition (i.e., control, I, II, and III) as a within-participants factor and the side of stimulation as a between-participants factor. Approximately 21 participants were required to obtain a power of 0.95 with an alpha level of 0.05 and a medium effect size (f = 0.25) (Faul et al., 2007; Lakens, 2013).

The study involved a total of 24 participants (M age = 28.96 ± 5.14 years; five females; five left-handed). All participants had normal or corrected-to-normal vision. Based on the results of the olfactory assessment test, no participant reported an olfactory dysfunction that would prevent participation in the experiment.

To test the hypotheses (see Section Measures and Hypothesis), we analyzed the number of selected targets and their position in the exploration space (i.e., left or right hemispace). The analysis was performed in IBM SPSS 26. First, we tested the data for normality with the Shapiro–Wilk test (Sheskin, 2011). The number of targets selected on both sides in the three experimental conditions was normally distributed (p > 0.05); therefore, we applied parametric analyses. We performed a general linear model (GLM) multivariate analysis with the number of targets selected in the left and right hemispaces (visuospatial attention performance) as dependent variables, and with experimental condition (i.e., control, smell, sound, and smell-sound) and the origin side of sensory stimulation (i.e., left or right) as fixed factors.

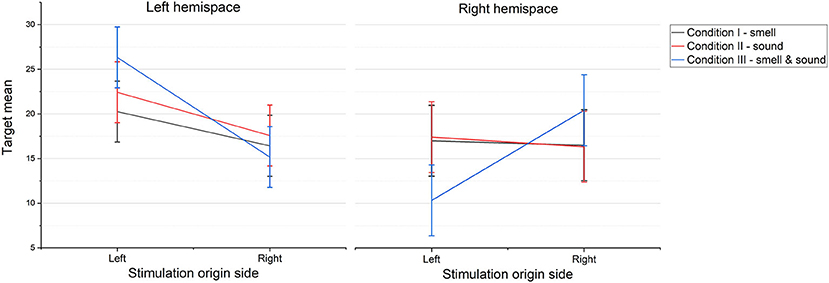

The results showed a significant main effect of the origin side of sensory stimulation (i.e., left or right) on the visuospatial attention performance of the participants [F(1, 89) = 11.23; p = 0.001], particularly in the left hemispace (stimulation from the left: M = 23.00, SD = 7.58; stimulation from the right: M = 16.39, SD = 9.33) (H1). This means that the participants explored the left side of the VR space more accurately when the sensory stimulation originated from the left, independently of the type of stimulation (experimental condition). Furthermore, as shown in Figure 6, there was a significant interaction between experimental condition and origin side of sensory stimulation on visuospatial attention performance in the right hemispace [F(2, 89) = 1.36; p = 0.037]. Visual inspection of Figure 6 suggests that this difference is most pronounced in condition III, where olfactory and auditory stimuli were combined. Bonferroni-corrected multiple comparisons showed that, for the right hemispace in condition III, there was a significant difference (p = 0.004) between the targets selected when the stimulation came from the left (M = 10.33; SD = 7.87) and those selected when the stimulation came from the right (M = 20.42; SD = 7.98). This result seems to support the hypothesis of a stronger effect of multisensory stimulation compared with unimodal stimulation (H2).

Figure 6. Mean scores of the participants in the visuospatial exploration task for the left and right hemispaces under the three experimental conditions, for each origin side (left or right) of the sensory stimulation. Error bars represent the standard errors of the means.

To verify which experimental condition drove the visuospatial attention of the participants, we calculated hemispace dominance (i.e., the hemispace with the higher number of selected targets) for the control condition (no stimulation) and for each experimental condition. We then examined the congruency between the origin side of the sensory stimulation and the hemispace dominance of the participants in each condition (i.e., whether participants found more targets in the hemispace that matched the origin of the sound and smell). A chi-square analysis of the congruency frequencies showed no significant difference in hemispace dominance (left or right) in the unisensory conditions [smell: χ2(1) = 0.67, p > 0.05; sound: χ2(1) = 0.01, p > 0.05] or in the control condition [no stimulation: χ2(1) = 0.17, p > 0.05]. In contrast, we found a significant difference for the multisensory stimulation [smell and sound together: χ2(1) = 8.17, p = 0.004]: hemispace dominance tended to be congruent with the origin of the stimulation in the multisensory condition (H2) (see Table 1).
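The dominance-and-congruency procedure above can be sketched as follows. This is a minimal illustration assuming a chi-square goodness-of-fit test of congruent versus incongruent counts against an even split (df = 1) and assuming that participants with tied target counts are excluded; the text does not specify either detail, and all function names are hypothetical. For df = 1, the upper-tail p-value equals erfc(√(χ²/2)), so only the standard library is needed.

```python
import math
from typing import Optional


def dominance(left: int, right: int) -> Optional[str]:
    """Hemispace with more selected targets; None on a tie."""
    if left > right:
        return "left"
    if right > left:
        return "right"
    return None


def is_congruent(stim_side: str, left: int, right: int) -> Optional[bool]:
    """True if the dominant hemispace matches the stimulation side (None on a tie)."""
    dom = dominance(left, right)
    return None if dom is None else dom == stim_side


def chi_square_even_split(congruent: int, incongruent: int) -> tuple[float, float]:
    """Goodness-of-fit chi-square against a 50/50 split (df = 1) and its p-value."""
    expected = (congruent + incongruent) / 2
    if expected == 0:
        raise ValueError("no classifiable participants")
    chi2 = ((congruent - expected) ** 2 + (incongruent - expected) ** 2) / expected
    # A 1-df chi-square is a squared standard normal: P(X > chi2) = erfc(sqrt(chi2 / 2)).
    p = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p


def congruency_test(participants: list[tuple[str, int, int]]) -> tuple[float, float]:
    """participants: (stimulation side, left targets, right targets) per person."""
    flags = [is_congruent(side, left, right) for side, left, right in participants]
    flags = [f for f in flags if f is not None]  # ASSUMPTION: ties are excluded
    n_congruent = sum(flags)
    return chi_square_even_split(n_congruent, len(flags) - n_congruent)
```

As a purely arithmetic check of the formula, not a report of the study's counts, a split of 19 congruent to 5 incongruent participants gives χ2(1) ≈ 8.17 and p ≈ 0.004, while an even 12/12 split gives χ2(1) = 0 and p = 1.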

In this section, we discuss the principal effects of multisensory stimulation on visuospatial attention, focusing specifically on the spatial differences highlighted by the results. We then highlight the potential of multisensory stimulation and VR for the design of future HCI tools that integrate smell. Finally, we consider the opportunity of using VR as a tool for implementing and augmenting classical neuropsychological rehabilitation with multisensory stimulation.

The study presented here explored the effect of auditory and olfactory stimulation on visuospatial attention and found evidence that combining these two modalities had a strong effect on exploration of the right hemispace. In the left hemispace, we observed a significant main effect of the origin of sensory stimulation (±45° left or right), independently of the sensory modality used.

Given that the participants were predominantly from Western countries, this difference between the two hemispaces could be explained by lexical-cultural factors, since all participants had been trained since childhood to read and write from left to right. There is evidence in the literature supporting this hypothesis. At the end of the 1960s, a series of studies demonstrated that a point can be located more accurately when presented in the left visual field (Kimura, 1969). Another study (Vaid and Singh, 1989) explored asymmetries in the perception of visual stimuli in four groups of participants: left-to-right readers, right-to-left readers, bidirectional (left-to-right and right-to-left) readers, and illiterate participants. Their results revealed a significant left-hemifield preference only for the left-to-right readers, and they did not find any reliable differences between left- and right-handers.

These results could be further explained by the pseudoneglect phenomenon (Bowers and Heilman, 1980). Pseudoneglect is observed mainly in line bisection tasks performed by individuals without visuospatial or attentional deficits and, like neglect, is characterized by a systematic misplacement of the midpoint of a line. The bias, however, runs in the opposite direction: patients with neglect usually err toward the right side of the line, whereas individuals without neglect tend to place the midpoint toward the left (Bowers and Heilman, 1980; McCourt and Jewell, 1999; Jewell and McCourt, 2000). All these findings point to a natural facilitation of the left exploration space, leading to increased attentional focus on the left hemispace. This could explain why the participants in this study appeared to be less influenced by the stimulation used to draw their attention toward the left hemispace, whereas in the right hemispace only multisensory stimulation seemed able to influence user performance, owing to the additive effect of sound and smell combined (Colonius and Diederich, 2003; Murray et al., 2005; Spence and Ho, 2008; Lunn et al., 2019). Interestingly, Voinescu et al. (2020) concluded that auditory stimulation is recommended for VR tasks requiring frequent interaction and responses to relevant information, as it yielded better performance than visual stimulation. In contrast, we found that auditory stimulation alone did not appear sufficient to influence attention in the right hemispace, where only multisensory stimulation influenced the attention of the users and significantly improved their performance. Future research with a more heterogeneous population (i.e., balanced in gender and with different cultural backgrounds) would allow a better understanding of possible gender or cultural differences.

Although we included only participants without attentional or visuospatial deficits in this experiment, this research was also motivated by the authors' interest in promoting VR as a tool for neuropsychological assessment and rehabilitation. While a range of classical methods is available for clinical applications, VR and multisensory technology provide compelling and novel opportunities that have not yet been fully exploited. By implementing the Bells Test in VR and introducing olfactory stimulation, we take a small first step in that direction, extending the impact of this work beyond HCI.

Several rehabilitation methods have been developed for USN, each based on a specific theoretical framework (Pierce and Buxbaum, 2002; Azouvi et al., 2017). Over recent decades, rehabilitation and assessment methods have been updated to use new technologies, and VR has already been used in neuropsychology to assess and rehabilitate visuospatial impairments, attention deficits, and USN (Rose et al., 2005; Pedroli et al., 2015). Moreover, VR, and more specifically head-mounted displays (HMDs), have been shown to be efficient and reliable for neuropsychological purposes (Foerster et al., 2016) and to be accessible to wheelchair users (Hansen et al., 2019). Focusing on USN, VR-based methods have in some cases proved even more sensitive for assessment, especially for patients with mild neglect who may go undetected by standard paper-and-pencil tests (Kim et al., 2011), while other studies showed better outcomes after VR-based rehabilitation sessions than after classical methods (Buxbaum et al., 2008; Dawson et al., 2008). Multisensory stimulation is also increasingly recognized and adopted as an effective USN rehabilitation method (Zigiotto et al., 2020), and VR technology is well suited to controlling and integrating different sensory modalities. Nevertheless, VR implementations of USN rehabilitation methods focus mainly on visual and auditory stimuli (Tsirlin et al., 2009) and only rarely include haptic interactions (Baheux et al., 2006; Teruel et al., 2015). To date, and to the best of the authors' knowledge, no study has used olfactory stimuli in a neuropsychological visual cancellation task, and this study represents an initial step toward the creation of VR multisensory environments that include olfactory stimulation.
The results showed that multisensory stimuli can successfully draw visuospatial attention toward the right side of the exploration space in participants without attentional or visuospatial deficits. Future application of this methodology to a clinical population could provide more insight into whether smell can be integrated into multisensory stimulation to draw visuospatial attention toward the neglected left side. These results represent a first step toward exploring the use of smell and sound in a traditional visuospatial test (i.e., the Bells Test) in a VR environment. This innovation could lead to new rehabilitation tools for clinical use and open up new opportunities for working with patients.

Recent years have seen growing interest in, and efforts toward, moving HCI toward multisensory interaction (Obrist et al., 2016). The opportunities for multisensory experience design (Velasco and Obrist, 2020) and for enhancing the sense of presence through smell in virtual environments are increasingly recognized [e.g., Season Traveller (Ranasinghe et al., 2018)], yet we are only starting to scratch the surface of this potential, especially regarding the spatial features of smell stimulation (Maggioni et al., 2020).

This study contributes insights into the integration of olfactory and multisensory stimulation in VR, opening up a design space for a range of application scenarios. Consider the following example: everyday life is pervaded by notifications (e.g., emails, telephone calls, social media updates). Although notifications aim to provide information about background events, they can also cause frustration and decrease user performance by interrupting an ongoing activity (be it work, play, or learning), as each notification demands attention. A prior study has shown that olfactory notifications can improve user performance and are perceived as less disruptive (Maggioni et al., 2018). Beyond demonstrating these benefits of olfactory stimulation, that study (Maggioni et al., 2018) also offers initial evidence that smell influences visuospatial attention on a computer screen in a work environment. These findings, combined with the results of the present study on spatial attention, can inspire future studies on how an olfactory notification system could help guide the attention of workers toward a specific task in increasingly distributed and ubiquitous information spaces (e.g., multiple screens, multi-platform, and cross-media content; Brudy et al., 2019). To achieve the best possible performance, multimodal stimuli can be carefully crafted based on the needs, preferences, individual characteristics, and backgrounds of users (e.g., handedness, cultural background). This is becoming even more relevant with advances in augmented reality applications that blend the real world with virtual worlds (e.g., Hartmann et al., 2019).

Moreover, beyond the attentional domain, multisensory stimulation has the additional advantage of enhancing or compensating for people's sensory capabilities, creating more inclusive interactive environments. For example, prior studies have shown that augmenting the environment with auditory and tactile stimuli can improve spatial learning and orientation in students who are blind (Albouys-Perrois et al., 2018) and spatial skills in children with visual impairments (Brule et al., 2016). Adding smell stimuli to virtual environments could help increase the sense of presence of users and, above all, could be used to prepare and train people for dangerous situations, such as fire evacuations (Nilsson et al., 2019), or to help them recover from traumatic experiences or posttraumatic stress disorder (PTSD), such as war scenarios (Aiken and Berry, 2015).

We explored the use of smell, alone and combined with another modality (sound), in a visuospatial exploration task in VR. We aimed to understand the effect of unisensory and multisensory stimulation on capturing the attention of users by comparing smell, sound, and combined smell-and-sound stimuli. The results showed that olfactory stimuli combined with auditory stimuli were significantly more effective in capturing the visuospatial attention of the participants toward the right side of the VR exploration space, while stimulation from the left side resulted in higher performance in the left hemispace, with no significant differences between the three experimental conditions. Furthermore, the VR implementation provides insights into the paths participants used to explore visuospatial stimuli. Future studies could investigate personal and cultural differences between participants and compare the performance of non-clinical and clinical groups (e.g., people with a diagnosis of spatial neglect due to a brain lesion). Finally, this study extends recent efforts around multisensory HCI, with the potential to create not just more immersive but also more inclusive interactive experiences. Above all, we believe in the importance of integrating the sense of smell into future HCI scenarios to drive the spatial attention of users.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The studies involving human participants were reviewed and approved by the Sciences & Technology Cross-Schools Research Ethics Committee at the University of Sussex. The project reference number is ER/EM443/3. The patients/participants provided their written informed consent to participate in this study.

ND identified the potential research area at the intersection of visual attention and multisensory stimuli (i.e., use of scent and sound stimuli) through discussions with EM and MO. ND carried out a detailed literature review and defined the experimental design based on the co-authors' feedback. ND developed the VR environment with support from DP and designed the experimental protocol and integration of the sensory stimuli. ND was responsible for data collection and analysis with close input and feedback from the co-authors, mainly EM, MO, and AG. All authors contributed to the article and approved the submitted version.

The authors would like to thank the Erasmus+ Traineeship program and the European Research Council, European Union's Horizon 2020 program (Grant no: 638605) for supporting this research.

DP is employed by the company Ultraleap Ltd., but the research was carried out as part of his PhD at the University of Sussex. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The reviewer MC declared a shared affiliation with one of the authors ND to the handling editor at time of review.

The authors would like to thank Giada Brianza for the advice during the study design, Robert Cobden for the technical support in the VR implementation, and Dr. Patricia Cornelio for the help in recruiting participants.

Aiken, M. P., and Berry, M. J. (2015). Posttraumatic stress disorder: possibilities for olfaction and virtual reality exposure therapy. Virtual Real. 19, 95–109. doi: 10.1007/s10055-015-0260-x

Albert, M. L. (1973). A simple test of visual neglect. Neurology 23, 658–664. doi: 10.1212/WNL.23.6.658

Albouys-Perrois, J., Laviole, J., Briant, C., and Brock, A. M. (2018). “Towards a multisensory augmented reality map for blind and low vision people: a participatory design approach,” in Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems (Montreal, QC: ACM), 1–14. doi: 10.1145/3173574.3174203

Almutawa, A., and Ueoka, R. (2019). “The influence of spatial awareness on VR: investigating the influence of the familiarity and awareness of content of the real space to the VR,” in Proceedings of the 2019 3rd International Conference on Artificial Intelligence and Virtual Reality (Singapore), 26–30. doi: 10.1145/3348488.3348502

Amores, J., and Maes, P. (2017). “Essence,” in Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems (New York, NY: ACM), 28–34.

Amores, J., Richer, R., Zhao, N., Maes, P., and Eskofier, B. M. (2018). “Promoting relaxation using virtual reality, olfactory interfaces and wearable EEG,” in 2018 IEEE 15th International Conference on Wearable and Implantable Body Sensor Networks (BSN 2018) (Las Vegas, NV), 98–101. doi: 10.1109/bsn.2018.8329668

Azouvi, P., Jacquin-Courtois, S., and Luauté, J. (2017). Rehabilitation of unilateral neglect: evidence-based medicine. Ann. Phys. Rehabil. Med. 60, 191–197. doi: 10.1016/j.rehab.2016.10.006

Azouvi, P., Samuel, C., Louis-Dreyfus, A., Bernati, T., Bartolomeo, P., Beis, J. M., et al. (2002). Sensitivity of clinical and behavioural tests of spatial neglect after right hemisphere stroke. J. Neurol. Neurosurg. Psychiatry 73, 160–166. doi: 10.1136/jnnp.73.2.160

Baheux, K., Yoshizawa, M., Yoshida, Y., Seki, K., and Handa, Y. (2006). “Simulating hemispatial neglect with virtual reality,” in 2006 International Workshop on Virtual Rehabilitation (New York, NY: IEEE), 100–105. doi: 10.1109/IWVR.2006.1707535

Belkin, K., Martin, R., Kemp, S. E., and Gilbert, A. N. (1997). Auditory pitch as a perceptual analogue to odor quality. Psychol. Sci. 8, 340–342. doi: 10.1111/j.1467-9280.1997.tb00450.x

Ben-Artzi, E., and Marks, L. E. (1995). Visual-auditory interaction in speeded classification: role of stimulus difference. Percept. Psychophys. 57, 1151–1162. doi: 10.3758/BF03208371

Bordegoni, M., Carulli, M., and Ferrise, F. (2019). “Improving multisensory user experience through olfactory stimuli,” in Emotional Engineering, Vol.7, ed S. Fukuda (Springer, Cham), 201–231. doi: 10.1007/978-3-030-02209-9_13

Bowers, D., and Heilman, K. M. (1980). Pseudoneglect: effects of hemispace on a tactile line bisection task. Neuropsychologia 18, 491–498. doi: 10.1016/0028-3932(80)90151-7

Brudy, F., Holz, C., Rädle, R., Wu, C. J., Houben, S., Klokmose, C. N., et al. (2019). “Cross-Device taxonomy: survey, opportunities and challenges of interactions spanning across multiple devices,” in Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems - CHI '19, 28 (Glasgow: Association for Computing Machinery). doi: 10.1145/3290605.3300792

Brule, E., Bailly, G., Brock, A., Valentin, F., Denis, G., and Jouffrais, C. (2016). “MapSense: multi-sensory interactive maps for children living with visual impairments,” in Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems (San Jose, CA: ACM), 445–457. doi: 10.1145/2858036.2858375

Busse, L., Roberts, K. C., Crist, R. E., Weissman, D. H., and Woldorff, M. G. (2005). The spread of attention across modalities and space in a multisensory object. Proc. Natl. Acad. Sci. U. S. A. 102, 18751–18756. doi: 10.1073/pnas.0507704102

Buxbaum, L. J., Palermo, M. A., Mastrogiovanni, D., Read, M. S., Rosenberg-Pitonyak, E., Rizzo, A. A., et al. (2008). Assessment of spatial attention and neglect with a virtual wheelchair navigation task. J. Clin. Exp. Neuropsychol. 30, 650–660. doi: 10.1080/13803390701625821

Cardin, S., Vexo, F., and Thalmann, D. (2007). Head Mounted Wind. Computer Animation and Social Agents (CASA2007). Available online at: https://infoscience.epfl.ch/record/104359 (accessed November 8, 2020).

Carnevale, M. J., and Harris, L. R. (2016). Which direction is up for a high pitch? Multisens. Res. 29, 113–132. doi: 10.1163/22134808-00002516

Carulli, M., Bordegoni, M., Ferrise, F., Gallace, A., Gustafsson, M., and Pfuhl, T. (2018). “Simulating multisensory wine tasting experience,” in Proceedings of International Design Conference, DESIGN (Dubrovnik: Faculty of Mechanical Engineering and Naval Architecture). doi: 10.21278/idc.2018.0485

Colonius, H., and Diederich, A. (2003). Multisensory interaction in saccadic reaction time: a time-window-of-integration model. J. Cogn. Neurosci. 16, 1000–1009. doi: 10.1162/0898929041502733

Corbetta, M., and Shulman, G. L. (2002). Control of goal-directed and stimulus-driven attention in the brain. Nat. Rev. Neurosci. 3, 201–215. doi: 10.1038/nrn755

Cornelio, P., Maggioni, E., Brianza, G., Subramanian, S., and Obrist, M. (2020). “SmellControl: the study of sense of agency in smell,” in ICMI 2020 - Proceedings of the 2020 International Conference on Multimodal Interaction (Netherlands: ACM), 470–480. doi: 10.1145/3382507.3418810

Crisinel, A. S., and Spence, C. (2012). A fruity note: crossmodal associations between odors and musical notes. Chem. Senses 37, 151–158. doi: 10.1093/chemse/bjr085

Dawson, A. M., Buxbaum, L. J., and Rizzo, A. A. (2008). “The virtual reality lateralized attention test: sensitivity and validity of a new clinical tool for assessing hemispatial neglect,” in 2008 Virtual Rehabilitation (Vancouver: IWVR), 77–82. doi: 10.1109/ICVR.2008.4625140

Dember, W. N., Warm, J. S., and Parasuraman, R. (2001). Olfactory Stimulation and Sustained Attention. Compendium of Olfactory Research, 39–46.

Deroy, O., Crisinel, A. S., and Spence, C. (2013). Crossmodal correspondences between odors and contingent features: odors, musical notes, and geometrical shapes. Psychon. Bull. Rev. 20, 878–896. doi: 10.3758/s13423-013-0397-0

Dohan, M., and Mu, M. M. (2019). “Understanding User Attention in VR using gaze controlled games,” in TVX 2019 - Proceedings of the 2019 ACM International Conference on Interactive Experiences for TV and Online Video, 19 (Salford), 167–173. doi: 10.1145/3317697.3325118

Driver, J., and Spence, C. (1998). Crossmodal attention. Curr. Opin. Neurobiol. 8, 245–253. doi: 10.1016/S0959-4388(98)80147-5

Faul, F., Erdfelder, E., Lang, A.-G., and Buchner, A. (2007). G*Power 3: a flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behav. Res. Methods 39, 175–191. doi: 10.3758/BF03193146

Ferber, S., and Karnath, H. O. (2001). How to assess spatial neglect - line bisection or cancellation tasks? J. Clin. Exp. Neuropsychol. 23, 599–607. doi: 10.1076/jcen.23.5.599.1243

Foerster, R. M., Poth, C. H., Behler, C., Botsch, M., and Schneider, W. X. (2016). Using the virtual reality device oculus rift for neuropsychological assessment of visual processing capabilities. Sci. Rep. 6, 1–10. doi: 10.1038/srep37016

Gallace, A., and Spence, C. (2006). Multisensory synesthetic interactions in the speeded classification of visual size. Percept. Psychophys. 68, 1191–1203. doi: 10.3758/BF03193720

Gauthier, L., Dehaut, F., and Joanette, Y. (1989). The bells test: a quantitative and qualitative test for visual neglect. Int. J. Clin. Neuropsychol. XI, 49–54.

Gialanella, B., Monguzzi, V., Santoro, R., and Rocchi, S. (2005). Functional recovery after hemiplegia in patients with neglect: the rehabilitative role of anosognosia. Stroke 36, 2687–2690. doi: 10.1161/01.STR.0000189627.27562.c0

Hansen, J. P., Trudslev, A. K., Harild, S. A., Alapetite, A., and Minakata, K. (2019). “Providing access to VR through a wheelchair,” in Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (Glasgow: ACM), 5–12. doi: 10.1145/3290607.3299048

Hartmann, J., Holz, C., Ofek, E., and Wilson, A. D. (2019). “RealityCheck: blending virtual environments with situated physical reality,” in Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems - CHI '19 (Glasgow: ACM), 12. doi: 10.1145/3290605.3300577

Heilman, K. M., Valenstein, E., and Watson, R. T. (1984). Neglect and related disorders. Semin. Neurol. 4, 209–219. doi: 10.1055/s-2008-1041551

Herz, R. S., and Schooler, J. W. (2002). A naturalistic study of autobiographical memories evoked by olfactory and visual cues: testing the Proustian hypothesis. Am. J. Psychol. 115, 21–32. doi: 10.2307/1423672

Holmes, N. P., and Spence, C. (2005). Multisensory integration: space, time and superadditivity. Curr. Biol. 15, R762–R764. doi: 10.1016/j.cub.2005.08.058

Jewell, G., and McCourt, M. E. (2000). Pseudoneglect: a review and meta-analysis of performance factors in line bisection tasks. Neuropsychologia 38, 93–110. doi: 10.1016/S0028-3932(99)00045-7

Keller, A. (2011). Attention and olfactory consciousness. Front. Psychol. 2, 380. doi: 10.3389/fpsyg.2011.00380

Kim, D. W., and Ando, H. (2010). “Development of directional olfactory display,” in Proceedings - VRCAI 2010, ACM SIGGRAPH Conference on Virtual-Reality Continuum and Its Application to Industry (New York, NY: ACM Press), 143–144.

Kim, Y. M., Chun, M. H., Yun, G. J., Song, Y. J., and Young, H. E. (2011). The effect of virtual reality training on unilateral spatial neglect in stroke patients. Ann. Rehabil. Med. 35:309. doi: 10.5535/arm.2011.35.3.309

Kimura, D. (1969). Spatial localization in left and right visual fields. Can. J. Psychol. 23, 445–458. doi: 10.1037/h0082830

Krauzlis, R. J., Lovejoy, L. P., and Zénon, A. (2013). Superior colliculus and visual spatial attention. Ann. Rev. Neurosci. 36, 165–182. doi: 10.1146/annurev-neuro-062012-170249

Lakens, D. (2013). Calculating and reporting effect sizes to facilitate cumulative science: a practical primer for t-tests and ANOVAs. Front. Psychol. 4:863. doi: 10.3389/fpsyg.2013.00863

Lunn, J., Sjoblom, A., Ward, J., Soto-Faraco, S., and Forster, S. (2019). Multisensory enhancement of attention depends on whether you are already paying attention. Cognition 187, 38–49. doi: 10.1016/j.cognition.2019.02.008

Maggioni, E., Cobden, R., Dmitrenko, D., Hornbæk, K., and Obrist, M. (2020). SMELL SPACE: mapping out the olfactory design space for novel interactions. ACM Transact. Comp. Hum. Interact. 27, 1–26. doi: 10.1145/3402449

Maggioni, E., Cobden, R., Dmitrenko, D., and Obrist, M. (2018). “Smell-O-message: integration of olfactory notifications into a messaging application to improve users' performance,” in ICMI 2018 - Proceedings of the 2018 International Conference on Multimodal Interaction (Boulder, CO), 45–54. doi: 10.1145/3242969.3242975

McCormick, K., Lacey, S., Stilla, R., Nygaard, L. C., and Sathian, K. (2018). Neural basis of the crossmodal correspondence between auditory pitch and visuospatial elevation. Neuropsychologia 112, 19–30. doi: 10.1016/j.neuropsychologia.2018.02.029

McCourt, M. E., and Jewell, G. (1999). Visuospatial attention in line bisection: stimulus modulation of pseudoneglect. Neuropsychologia 37, 843–855. doi: 10.1016/S0028-3932(98)00140-7

McDonald, J. J., Teder-Sälejärvi, W. A., and Hillyard, S. A. (2000). Involuntary orienting to sound improves visual spatial attention. Nature 407, 906–908. doi: 10.1038/35038085

Meredith, M. A., and Stein, B. E. (1986). Visual, auditory, and somatosensory convergence on cells in superior colliculus results in multisensory integration. J. Neurophysiol. 56, 640–662. doi: 10.1152/jn.1986.56.3.640

Micaroni, L., Carulli, M., Ferrise, F., Gallace, A., and Bordegoni, M. (2019). An olfactory display to study the integration of vision and olfaction in a virtual reality environment. J. Comp. Inf. Sci. Eng. 19:8. doi: 10.1115/1.4043068

Murray, M. M., Molholm, S., Michel, C. M., Heslenfeld, D. J., Ritter, W., Javitt, D. C., et al. (2005). Grabbing your ear: rapid auditory-somatosensory multisensory interactions in low-level sensory cortices are not constrained by stimulus alignment. Cerebral Cortex 15, 963–974. doi: 10.1093/cercor/bhh197

Nguyen, T. V., Zhao, Q., and Yan, S. (2018). Attentive systems: a survey. Int. J. Comput. Vis. 126, 86–110. doi: 10.1007/s11263-017-1042-6

Nijboer, T. C. W., Kollen, B. J., and Kwakkel, G. (2013). Time course of visuospatial neglect early after stroke: a longitudinal cohort study. Cortex 49, 2021–2027. doi: 10.1016/j.cortex.2012.11.006

Nilsson, T., Shaw, E., Cobb, S. V. G., Miller, D., Roper, T., Lawson, G., et al. (2019). “Multisensory virtual environment for fire evacuation training,” in Extended Abstracts of the 2019 CHI Conference on Human Factors in Computing Systems (Glasgow), 1–4. doi: 10.1145/3290607.3313283

Nordin, S., Brämerson, A., Murphy, C., and Bende, M. (2003). A Scandinavian adaptation of the multi-clinic smell and taste questionnaire: evaluation of questions about olfaction. Acta Otolaryngol. 123, 536–542. doi: 10.1080/00016480310001411

Obrist, M., Tuch, A. N., and Hornbaek, K. (2014). “Opportunities for odor: experiences with smell and implications for technology,” in Proceedings of the 32nd Annual ACM Conference on Human Factors in Computing Systems (CHI '14) (Toronto, ON), 2843–2852. doi: 10.1145/2556288.2557008

Obrist, M., Velasco, C., Vi, C. T., Ranasinghe, N., Israr, A., Cheok, A. D., et al. (2016). “Touch, taste, & smell user interfaces: the future of multisensory HCI,” in Proceedings of the 2016 CHI Conference Extended Abstracts on Human Factors in Computing Systems - CHI EA '16 (San Jose, CA), 3285–3292. doi: 10.1145/2851581.2856462

Pedroli, E., Serino, S., Cipresso, P., Pallavicini, F., and Riva, G. (2015). Assessment and rehabilitation of neglect using virtual reality: a systematic review. Front. Behav. Neurosci. 9:226. doi: 10.3389/fnbeh.2015.00226

Pierce, S. R., and Buxbaum, L. J. (2002). Treatments of unilateral neglect: a review. Arch. Phys. Med. Rehabil. 83, 256–268. doi: 10.1053/apmr.2002.27333

Pinto, Y., van der Leij, A. R., Sligte, I. G., Lamme, V. A. F., and Scholte, H. S. (2013). Bottom-up and top-down attention are independent. J. Vis. 13:16. doi: 10.1167/13.3.16

Plummer, P., Morris, M. E., and Dunai, J. (2003). Assessment of unilateral neglect. Phys. Ther. 83, 732–740. doi: 10.1093/ptj/83.8.732

Porter, J., Craven, B., Khan, R. M., Chang, S. J., Kang, I., Judkewitz, B., et al. (2007). Mechanisms of scent-tracking in humans. Nat. Neurosci. 10, 27–29. doi: 10.1038/nn1819

Ranasinghe, N., Jain, P., Tram, N. T. N., Koh, K. C. R., Tolley, D., Karwita, S., et al. (2018). “Season traveller: multisensory narration for enhancing the virtual reality experience,” in Conference on Human Factors in Computing Systems - Proceedings, 2018-April (New York, NY: Association for Computing Machinery), 1–13.

Rapcsak, S. Z., Verfaellie, M., Fleet, S., and Heilman, K. M. (1989). Selective attention in hemispatial neglect. Arch. Neurol. 46, 178–182. doi: 10.1001/archneur.1989.00520380082018

Raudenbush, B. B., Grayhem, R., Sears, T., and Wilson, I. (2009). Effects of peppermint and cinnamon odor administration on simulated driving alertness, mood and workload. North Am. J. Psychol. 11, 245–256.

Rietzler, M., Plaumann, K., Kränzle, T., Erath, M., Stahl, A., and Rukzio, E. (2017). “VaiR: simulating 3D airflows in virtual reality,” in Conference on Human Factors in Computing Systems - Proceedings, 2017-May (New York, NY: Association for Computing Machinery), 5669–5677.

Rinaldi, L., Maggioni, E., Olivero, N., Maravita, A., and Girelli, L. (2018). Smelling the space around us: odor pleasantness shifts visuospatial attention in humans. Emotion 18, 971–979. doi: 10.1037/emo0000335

Risso, P., Covarrubias Rodriguez, M., Bordegoni, M., and Gallace, A. (2018). Development and testing of a small-size olfactometer for the perception of food and beverages in humans. Front. Dig. Human. 5, 1–13. doi: 10.3389/fdigh.2018.00007

Rose, F. D., Brooks, B. M., and Rizzo, A. A. (2005). Virtual reality in brain damage rehabilitation: review. CyberPsychol. Behav. 8, 241–262. doi: 10.1089/cpb.2005.8.241

Santangelo, V., Ho, C., and Spence, C. (2008). Capturing spatial attention with multisensory cues. Psychon. Bull. Rev. 15, 398–403. doi: 10.3758/PBR.15.2.398

Santangelo, V., and Spence, C. (2007). Multisensory cues capture spatial attention regardless of perceptual load. J. Exp. Psychol. Hum. Percept. Perf. 33, 1311–1321. doi: 10.1037/0096-1523.33.6.1311

Seigneuric, A., Durand, K., Jiang, T., Baudouin, J. Y., and Schaal, B. (2010). The nose tells it to the eyes: crossmodal associations between olfaction and vision. Perception 39, 1541–1554. doi: 10.1068/p6740

Shaw, E., Roper, T., Nilsson, T., Lawson, G., Cobb, S. V. G., and Miller, D. (2019). “The heat is on: exploring user behaviour in a multisensory virtual environment for fire evacuation,” in Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (Glasgow: ACM). doi: 10.1145/3290605.3300856

Sheskin, D. J. (2011). Handbook of Parametric and Nonparametric Statistical Procedures. Boca Raton, FL: Chapman & Hall/CRC.

Spence, C. (2002). Multisensory attention and tactile information-processing. Behav. Brain Res. 135, 57–64. doi: 10.1016/S0166-4328(02)00155-9

Spence, C., and Gallace, A. (2007). Recent developments in the study of tactile attention. Can. J. Exp. Psychol. 61, 196–207. doi: 10.1037/cjep2007021

Spence, C., and Ho, C. (2008). Multisensory warning signals for event perception and safe driving. Theor. Issues Ergon. Sci. 9, 523–554. doi: 10.1080/14639220701816765

Spence, C., Nicholls, M. E. R., Gillespie, N., and Driver, J. (1998). Cross-modal links in exogenous covert spatial orienting between touch, audition, and vision. Percept. Psychophys. 60, 544–557. doi: 10.3758/BF03206045

Stevenson, R. J. (2009). An initial evaluation of the functions of human olfaction. Chem. Senses 35, 3–20. doi: 10.1093/chemse/bjp083

Teruel, M. A., Oliver, M., Montero, F., Navarro, E., and González, P. (2015). “Multisensory treatment of the hemispatial neglect by means of virtual reality and haptic techniques,” in Artificial Computation in Biology and Medicine. IWINAC 2015. Lecture Notes in Computer Science, vol 9107. eds J. Ferrández Vicent, J. Álvarez-Sánchez, F. de la Paz López, F. Toledo-Moreo, H. Adeli (Cham: Springer). doi: 10.1007/978-3-319-18914-7_4

Tsirlin, I., Dupierrix, E., Chokron, S., Coquillart, S., and Ohlmann, T. (2009). Uses of virtual reality for diagnosis, rehabilitation and study of unilateral spatial neglect: review and analysis. Cyberpsychol. Behav. 12:208. doi: 10.1089/cpb.2008.0208

Vaid, J., and Singh, M. (1989). Asymmetries in the perception of facial affect: is there an influence of reading habits? Neuropsychologia 27, 1277–1287. doi: 10.1016/0028-3932(89)90040-7

Vallar, G., and Perani, D. (1986). The anatomy of unilateral neglect after right-hemisphere stroke lesions. A clinical/CT-scan correlation study in man. Neuropsychologia 24, 609–622. doi: 10.1016/0028-3932(86)90001-1

Van Hulle, L., Van Damme, S., Spence, C., Crombez, G., and Gallace, A. (2013). Spatial attention modulates tactile change detection. Exp. Brain Res. 224, 295–302. doi: 10.1007/s00221-012-3311-5

Velasco, C., and Obrist, M. (2020). Multisensory Experiences: Where the Senses Meet Technology. Oxford: Oxford University Press.

Voinescu, A., Fodor, L. A., Fraser, D. S., and David, D. (2020). “Exploring attention in VR: effects of visual and auditory modalities,” in Advances in Intelligent Systems and Computing, 1217 AISC (Springer), 677–683.

Von Békésy, G. (1964). Olfactory analogue to directional hearing. J. Appl. Physiol. 19, 369–373. doi: 10.1152/jappl.1964.19.3.369

Wang, Y., Amores, J., and Maes, P. (2020). “On-face olfactory interfaces,” in Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (Honolulu, HI: ACM), 1–9. doi: 10.1145/3313831.3376737

Warm, J. S., Dember, W. N., and Parasuraman, R. (1991). Effects of olfactory stimulation on performance and stress in a visual sustained attention task. J. Soc. Cosmet. Chem. 42, 199–210.

Weintraub, S., and Mesulam, M. M. (1987). Right cerebral dominance in spatial attention. Arch. Neurol. 44:621. doi: 10.1001/archneur.1987.00520180043014

Keywords: virtual reality, smell, sound, multisensory, visuospatial attention

Citation: Dozio N, Maggioni E, Pittera D, Gallace A and Obrist M (2021) May I Smell Your Attention: Exploration of Smell and Sound for Visuospatial Attention in Virtual Reality. Front. Psychol. 12:671470. doi: 10.3389/fpsyg.2021.671470

Received: 23 February 2021; Accepted: 21 June 2021;

Published: 22 July 2021.

Edited by:

Mounia Ziat, Bentley University, United States

Reviewed by:

Charlotte Magnusson, Lund University, Sweden

Copyright © 2021 Dozio, Maggioni, Pittera, Gallace and Obrist. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Nicolò Dozio, nicolo.dozio@polimi.it

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.