ORIGINAL RESEARCH article

Front. Psychol., 07 June 2021

Sec. Neuropsychology

Volume 12 - 2021 | https://doi.org/10.3389/fpsyg.2021.664367

This article is part of the Research Topic Affective, Cognitive and Social Neuroscience: New Knowledge in Normal Aging, Minor and Major Neurocognitive Disorders

Background: As with cognitive function, the ability to recognize emotions changes with age. In the literature regarding the relationship between recognition of emotion and cognitive function during aging, the effects of predictors such as aging, emotional state, and cognitive domains on emotion recognition are unclear. This study was performed to clarify the cognitive functions underlying recognition of emotional facial expressions, and to evaluate the effects of depressive mood on recognition of emotion in elderly subjects, as well as to reproduce the effects of aging on the recognition of emotional facial expressions.

Materials and Methods: A total of 26 young (mean age = 20.9 years) and 30 elderly subjects (71.6 years) participated in the study. All subjects participated in face perception, face matching, emotion matching, and emotion selection tasks. In addition, elderly subjects were administered a multicomponent cognitive test: the Neurobehavioral Cognitive Status Examination (Cognistat) and the Geriatric Depression Scale-Short Version. We analyzed these factors using multiple linear regression.

Results: There were no significant differences between the two groups in the face perception task, but in the face matching, emotion matching, and emotion selection tasks, elderly subjects showed significantly poorer performance. Among elderly subjects, multiple regression analyses showed that performance on the emotion matching task was predicted by age, emotional status, and cognitive function, but paradoxical relationships were observed between recognition of emotional faces and some verbal functions. In addition, 47% of elderly participants showed cognitive decline in one or more domains, although all of them had total Cognistat scores above the cutoff.

Conclusion: It might be crucial to consider preclinical pathological changes such as mild cognitive impairment when testing for age effects in elderly populations.

As with cognitive functions, it is crucial for people to be able to recognize emotions. Therefore, there is a long history of research on emotion recognition in subjects ranging from infants to the elderly (Bridges, 1932; Gitelson, 1948). Some authors have suggested that the ability to recognize emotions changes with age (Isaacowitz et al., 2007; Mill et al., 2009). As age-related differences have been observed not only in emotion recognition but also in cognitive functions (Glisky, 2007), their relationship has been examined. There are two main views of the relationship between age-related changes in emotional and cognitive functions in the literature. One perspective suggests that changes in emotion recognition are independent of those in cognitive function, although both are influenced by age. For example, Baena et al. (2010), who examined younger and older adults using an emotional identification task and cognitive tests targeting prefrontal functions, suggested that age effects may contribute independently to each function. The second viewpoint suggests that changes in emotion recognition are dependent on cognitive functions. Suzuki and Akiyama (2013) examined cognitive and emotional function in younger and older adults and reported that age-related deficits in emotion identification could be explained by general cognitive abilities and not by age itself. More recently, Virtanen et al. (2017) focused on an elderly population using large-scale sampling and suggested that the decline in emotion recognition may depend on cognitive functions. In their study, declines in the recognition of some types of emotion, such as anger, fear, and disgust, were sensitive to cognitive deterioration even when cognitive function was within the normal range, i.e., Mini-Mental State Examination (MMSE) scores were above the cutoff point. 
As their study adjusted for several factors, such as age, sex, education level, depressive symptoms, and antidepressant use, their results may indicate that recognition of emotion is mediated by cognitive function.

It is not yet clear which of these perspectives is more accurate. Nevertheless, these studies suggest that cognitive functions mediate the ability to recognize emotion in elderly people, even when cognitive tasks other than those used to assess prefrontal function are considered. A few points still require clarification. First, Virtanen et al. (2017) used the MMSE as a cognitive task and determined that a decrease of 1 or 2 points was correlated with diminished emotion recognition. The MMSE is a multifactor test that includes immediate memory, orientation, delayed recall, and working memory (Shigemori et al., 2010), and the total MMSE score is calculated as the sum of these abilities. It is not clear which of these abilities may contribute to deterioration of emotion recognition. Second, the prevalence of depression is high in elderly populations and is associated with age-related factors (Djernes, 2006), and depression also affects the recognition of emotions (Demenescu et al., 2010). Therefore, it may be important to consider not only cognitive function but also depressive mood in the emotion recognition paradigm. Virtanen et al. (2017), however, treated depressive symptoms and antidepressant use only as covariates to be adjusted for when estimating the effects of cognitive decline. We believe that depressive mood as well as cognitive function should be considered in analyzing the factors influencing emotion recognition.

The present study was performed to reproduce the effects of aging on the recognition of emotional facial expressions, to clarify the cognitive functions underlying recognition of emotional facial expressions, and to evaluate the effects of depressive mood on recognition of emotion in elderly subjects.

We compared 26 adults (12 men, 14 women) aged 19–24 years (mean = 20.9 years) to 30 healthy elderly individuals (15 men, 15 women) aged 61–79 years (mean = 71.6 years). The education level of the older subjects ranged from 9 to 16 years (mean = 13.9 years, standard deviation = 2.2, and median = 14 years). The younger adults were graduate and undergraduate students, with an education level ranging from 13 to 18 years (mean = 15.1 years, standard deviation = 1.2, and median = 15 years). All elderly participants were recruited through the Silver Human Resource Center in Tokyo as paid volunteers. The human resource center was requested to recruit elderly participants conforming to our inclusion criteria, i.e., age from 60s to 70s with no history of dementia or neurological and psychiatric disorders. None of the participants were excluded from the study once it had started.

All of the elderly participants were tested using the Japanese version of the neurobehavioral cognitive status examination Cognistat (Matsuda and Nakatani, 2004) to assess their cognitive abilities and the Japanese version of the Geriatric Depression Scale-Short Version (GDS-S-J; Sugishita and Asada, 2009) to evaluate their depressive symptoms.

To evaluate subjects’ emotion recognition abilities, we used emotion matching and emotion selection tasks. We also used face perception and face matching tasks to clarify subjects’ basic face perception ability. The procedures for these four tasks were adopted from previous studies (Miller et al., 2012; Kumfor et al., 2014). The original stimuli were based on Caucasian faces; however, in the present study, to make the stimuli appropriate for a Japanese population, face stimuli were selected from a Japanese facial expression database (Fujimura and Umemura, 2018), including eight models (four males and four females) and six basic emotions (happiness, anger, disgust, sadness, fear, and surprise) and neutral. Pictures were cropped to create long oval shapes to hide the hair and then converted into grayscale images.

The aim of the face perception task was to confirm subjects’ basic perceptual ability. Each picture was 75.3 × 51.8 pixels (height × width) in size, and the distance between stimuli was 44.8 pixels. Either two different models or two pictures of the same model, all with a neutral expression, were presented on the computer screen, and subjects were asked, “Are these pictures the same?” We used eight models (four male and four female). There were 28 trials including 20 pairs (8 matched and 12 unmatched pairs). The hit rate (number of correct identifications of a pair/28 pairs) was used as the measure of participants’ performance; the maximum score was 1.0.

The aim of the face matching task was to clarify subjects’ face identification ability independent of their emotional expression recognition ability. A pair of faces expressing different emotions (six facial expressions and one neutral expression) and showing either the same model or different models was presented on the computer screen, and subjects were asked, “Do these show the same person?” There were 42 trials (21 matched and 21 unmatched models) and no counterbalancing. We used three male models, and different facial expressions were presented for each trial. The hit rate (number of correct identifications of a pair/42 pairs) was used as the measure of each participant’s performance; the maximum score was 1.0. All stimulus properties in the face matching task were the same as those in the face perception task.

The aim of the emotion matching task was to evaluate subjects’ emotion identification ability without verbal labeling, independent of face identification. Two faces of different people with the same or different emotional expressions were presented on the computer screen, and the subjects were asked to decide whether the two facial expressions were the same. There were 42 trials (21 matched and 21 unmatched facial expressions) composed of different models and without counterbalancing. We used three male models and presented different models for each trial. The hit rate (number of correct identifications of each pair/42 pairs) was used as a measure of each participant’s performance; the maximum score was 1.0. All stimulus properties in the emotion matching task were the same as those in the face perception task.

The emotion selection task measured the participants’ ability to select a facial expression based on a verbal label. The subjects viewed arrays of seven pictures of a single model displaying six emotional expressions and one neutral expression, and they were asked to point to the target face corresponding to a label spoken by the examiner. The size of each picture was 32.2 × 20.6 pixels (height × width). One face was displayed in the center of the screen, and the other six faces were displayed at a radial distance of 48.9 pixels from the center. There were 42 trials, and we used six models (three male and three female). The faces and the positions of the emotions on the screen were varied across trials. To maximize the intrinsic value of the stimuli, a partial credit score was adopted. In this scoring method, each response was given credit based on the proportion of subjects in the reference group giving that response, relative to the proportion giving the most frequent response. For example, if a given stimulus was classified as “happy” by 50% of the reference group, “angry” by 40%, and “neutral” by 10%, then the response “happy” would receive a score of 1.0 (0.5/0.5), “angry” would receive 0.8 (0.4/0.5), and “neutral” would receive 0.2 (0.1/0.5). All other responses would receive a score of 0 (Heberlein et al., 2004).
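The partial credit rule described above can be sketched in a few lines of Python. This is only an illustration of the arithmetic in the worked example; the function names and the dictionary layout are assumptions made here and are not taken from the study's own analysis scripts.

```python
def partial_credit_scores(reference_proportions):
    """Map each response label to its partial-credit score for one stimulus.

    reference_proportions: dict of label -> proportion of the reference
    group that gave that label (hypothetical input layout).
    Each proportion is divided by the proportion of the modal response.
    """
    modal = max(reference_proportions.values())
    if modal <= 0:
        raise ValueError("reference group gave no responses")
    return {label: p / modal for label, p in reference_proportions.items()}


def score_response(response, reference_proportions):
    """Score one response; labels the reference group never chose get 0."""
    return partial_credit_scores(reference_proportions).get(response, 0.0)


# Worked example from the text: 50% "happy", 40% "angry", 10% "neutral".
example = {"happy": 0.5, "angry": 0.4, "neutral": 0.1}
scores = partial_credit_scores(example)
```

With this input, "happy" scores 1.0, "angry" 0.8, and "neutral" 0.2, and any label absent from the reference responses (for instance "sad") scores 0.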

The experiment was conducted in a quiet room with one subject at a time. Before the experimental task, elderly subjects were administered the Cognistat examination and the Japanese version of the GDS-S-J. During the experimental task, the participants were seated 55 cm from the monitor (Iiyama ProLite XU2390HS-B2, Japan). The experimental order of the four tasks was counterbalanced among subjects.

All statistical analyses were performed using R version 3.5.1 (R Core Team, 2018) with the effectsize package (Ben-Shachar et al., 2020) for effect size (d) analyses, the anovakun package version 4.8.4 (Iseki, 2019) for mixed-model univariate ANOVA, and the MASS package (Fox and Weisberg, 2019) for linear regression analyses. The face perception, face matching, and emotion matching tasks were analyzed using Welch’s t test, and the emotion selection task was analyzed using mixed-model univariate ANOVA, with emotion (anger, disgust, fear, sadness, surprise, happiness, and neutral) as the within-subject variable and age (young, elderly) as the between-subject variable. Post hoc analyses using Holm’s sequentially rejective Bonferroni procedure for multiple comparisons were conducted to examine interaction and main effects. In the elderly group, stepwise multiple linear regression analyses were conducted to identify predictors of face identification and emotion recognition ability. Scores on the face matching and emotion matching tasks were entered as dependent variables, and age, face perception task score, GDS-S-J score, and Cognistat subtest scores were entered as independent variables in separate regression analyses. In all analyses, P < 0.05 was taken to indicate statistical significance.
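For readers unfamiliar with Holm's sequentially rejective procedure, the following Python sketch shows how adjusted P values are obtained. It is a generic illustration, not the anovakun implementation used in the study, and the P values in the example are hypothetical.

```python
def holm_adjust(p_values):
    """Return Holm-adjusted p-values in the original order.

    The smallest p-value is multiplied by m, the next by m - 1, and so on;
    a running maximum keeps the adjusted values monotone, and values are
    capped at 1.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        candidate = min(1.0, (m - rank) * p_values[idx])
        running_max = max(running_max, candidate)
        adjusted[idx] = running_max
    return adjusted


# Hypothetical raw p-values for three post hoc comparisons.
raw = [0.01, 0.04, 0.03]
adjusted = holm_adjust(raw)
significant = [p < 0.05 for p in adjusted]
```

In this made-up example only the first comparison remains below 0.05 after adjustment, because the second and third are both raised to 0.06.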

The study design was approved by the Ethical Review Committee of Chuo University Institute of Cultural Sciences (protocol number: 03 [FY2018]). All participants provided written informed consent to participate in the study. Although none of the procedures placed a high burden on the participants, they were told that they could take a rest at any time during the study if necessary.

Three subjects in the control group were excluded from the analyses due to missing data. Thus, data of 23 young subjects were analyzed (11 men and 12 women, aged 19–24 years [mean = 21.1 years]; Table 1). In addition, due to a ceiling effect, the orientation Cognistat subtest score was excluded from the regression analyses.

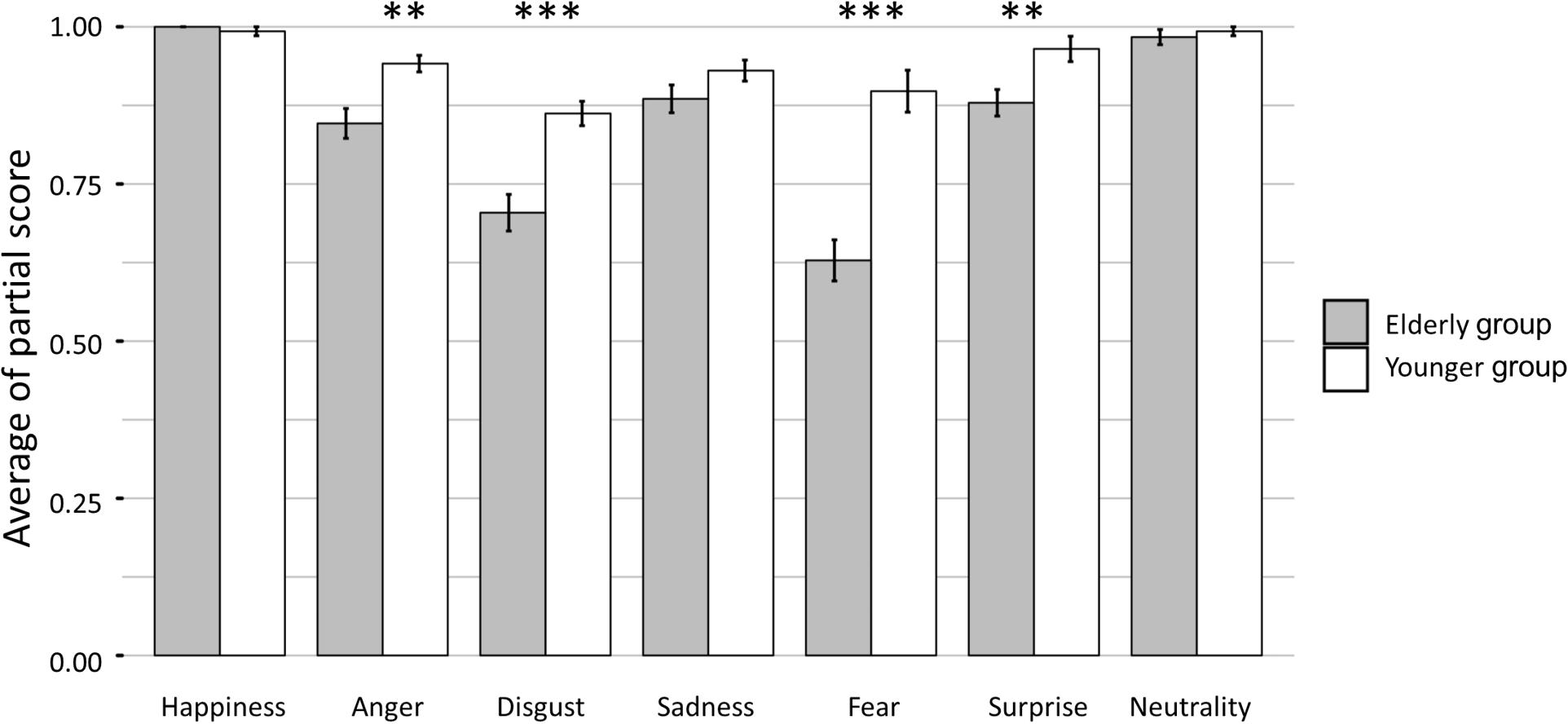

Although there were no significant differences between the two groups in the face perception task [Welch’s t (40.8) = –2.0, P = 0.057, and d = –0.61], significant differences were observed for the face matching [Welch’s t (41.0) = –4.5, P < 0.001, and d = –1.4] and emotion matching tasks [Welch’s t (50.8) = –2.4, P = 0.02, and d = –0.7]. These results indicated that young and elderly subjects had similar performance in face perception, whereas elderly subjects had lower face identification and emotion recognition abilities. In addition, the emotion selection task showed a significant interaction [F (3.9) = 10.2, P < 0.001, and η2g = 0.14], and the simple main effect tests revealed that the performance in emotional face recognition was lower in the elderly group than in young adults for the emotions of anger, disgust, fear, and surprise (Figure 1).
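The Welch statistics and effect sizes reported above have the following general form. The sketch below uses only the Python standard library; the two score lists are hypothetical, and the pooled-standard-deviation definition of Cohen's d is an assumption, since the text does not state which variant was computed.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    na, nb = len(a), len(b)
    va, vb = variance(a), variance(b)  # sample variances (n - 1 denominator)
    se2 = va / na + vb / nb
    t = (mean(a) - mean(b)) / sqrt(se2)
    df = se2 ** 2 / ((va / na) ** 2 / (na - 1) + (vb / nb) ** 2 / (nb - 1))
    return t, df


def cohens_d(a, b):
    """Cohen's d using the pooled standard deviation (assumed variant)."""
    na, nb = len(a), len(b)
    pooled = sqrt(((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2))
    return (mean(a) - mean(b)) / pooled


# Hypothetical scores for two small groups, for illustration only.
group_a = [1, 2, 3, 4, 5]
group_b = [2, 4, 6, 8, 10]
t_stat, dof = welch_t(group_a, group_b)
d = cohens_d(group_a, group_b)
```

Because the degrees of freedom are estimated from the two sample variances, they are generally non-integer, which is why values such as 40.8 and 50.8 appear in the results.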

Figure 1. Comparison of the emotion selection task results between the elderly subjects and young subjects. The performance of the elderly subjects was lower than that of young subjects in recognizing facial expressions denoting anger, disgust, fear, and surprise (∗∗P < 0.01 and ∗∗∗P < 0.001).

Multiple regression analyses were conducted to identify the predictors of emotion recognition ability in the elderly group. Explanatory variables were selected by stepwise selection using Akaike’s information criterion. We calculated the variance inflation factor (VIF) for each candidate explanatory variable; the values ranged from 1.2 to 2.4, well below 10, the value above which multicollinearity is considered problematic (Belsley et al., 1980). In the face matching task, the model was significant, although the adjusted R2 was low (adjR2 = 0.21, P < 0.05); in the emotion matching task, the model was significant and the adjusted coefficient of multiple determination was considerably higher (adjR2 = 0.71, P < 0.001). Partial regression coefficients for each factor are shown in Table 2.
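For reference, the quantities named in this paragraph have the standard textbook definitions below. The notation (n observations, p predictors, k estimated parameters, RSS for the residual sum of squares) is introduced here for illustration, and the AIC is written in the form commonly used for Gaussian linear models, up to an additive constant; the exact form used by the software is not stated in the text.

```latex
% VIF of predictor j, where R_j^2 comes from regressing predictor j
% on all the other candidate predictors
\mathrm{VIF}_j = \frac{1}{1 - R_j^2}

% Adjusted coefficient of determination
R^2_{\mathrm{adj}} = 1 - \left(1 - R^2\right)\frac{n - 1}{n - p - 1}

% AIC for a Gaussian linear model (up to an additive constant)
\mathrm{AIC} = n \ln\!\left(\frac{\mathrm{RSS}}{n}\right) + 2k
```

Under the first definition, the largest reported VIF of 2.4 corresponds to R_j^2 = 1 - 1/2.4 ≈ 0.58, whereas a VIF of 10 would correspond to R_j^2 = 0.90.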

This study was performed to reproduce the effects of aging on the recognition of emotional facial expressions, to clarify the cognitive functions underlying recognition of emotional facial expressions, and to evaluate the effects of depressive mood on recognition of emotion in elderly subjects. To achieve these aims, we compared experimental emotion recognition tasks and a multicomponent neuropsychological test instead of using a simple screening test (Virtanen et al., 2017). The results were as follows. First, compared to young adult subjects, elderly subjects showed clear deterioration of their ability to recognize emotional facial expressions. Notably, the effects of age varied among categories of emotional expression. Second, regression analyses indicated that performance on the emotion matching task was predicted by depressive mood, cognitive function, and age.

Previous studies have reported that cognition of negative facial expressions, such as anger and fear, declines with age, while cognition of positive facial expressions, such as joy, is less affected by aging (Calder et al., 2003; Suzuki et al., 2007). Age-related changes and their discrepancies between categories have often been explained as positivity bias supporting socioemotional selectivity theory (Mather and Carstensen, 2005). That is, it is believed that elderly people act and think in pursuit of current happiness rather than information that will be beneficial in the future. The results of the present study also replicated these phenomena in elderly participants. In addition, age-related changes may be related to the study paradigm used. Suzuki and Akiyama (2013) reported that when neutral expressions were presented momentarily followed by labeling of the presented expressions, the hit rate of joyful expressions decreased and false positives increased in elderly participants. On the other hand, Saito et al. (2020) reported that immediate detection of joyful expressions by the visual search paradigm is less likely to occur in elderly participants. In the present study, seven different facial expressions were presented at once, which may have made happiness relatively easy to identify.

As reported previously (Keightley et al., 2006; Mill et al., 2009), we confirmed that older participants had a poorer ability to recognize emotional facial expressions. In addition, our study confirmed that this ability declined not only during tasks with a high cognitive load, such as the emotion selection task, which required participants to select a facial expression based on a verbal label, but also during tasks with a low cognitive load, such as the emotion matching task, in which subjects were instructed to match emotional expression stimuli. These results suggested that the decline in emotional expression recognition in elderly people is a general phenomenon independent of task demands. In addition, our study demonstrated that an aging effect was apparent not only compared to young subjects but also in comparisons among elderly subjects. In multiple regression analyses, age was a powerful predictor of performance in the emotion matching task. Therefore, aging may be a crucial factor in the decline in ability to recognize emotional facial expressions. In contrast, in the face matching task, which showed significant declines compared to performance among younger subjects, age was not a predictor of face identification among elderly subjects of different ages. These results suggested that, compared to the face matching task, the emotion matching task was relatively sensitive to aging within the elderly age range.

In multiple regression analyses, our study also confirmed that depressive mood was related to performance on the emotion matching task. Although Demenescu et al. (2010) reported impaired recognition of facial expressions in subjects with anxiety and major depression, their subjects were diagnosed patients, whereas our subjects had not been diagnosed. The cutoff points for the GDS-S-J are 5/6 for depressive tendency and 9/12 for depressive state (Watanabe and Imagawa, 2013); in the present study, only two subjects exceeded the cutoff for depressed mood, and none demonstrated a depressed state. These results suggested that not only clinical depression but also emotional state within the normal range may be crucial for recognition of facial expressions.

Demented patients have also been reported to show a decline in the recognition of facial expressions, and a relationship between this recognition deficit and cognitive capacities has been demonstrated (Torres Mendonça De Melo Fádel et al., 2019). In the present study, however, the elderly participants did not have dementia; rather, our elderly subjects were recruited from a community job service, and all scored above the cutoff score on the Cognistat. These results suggest that the decline in emotion recognition may be related to aging, and not to pathological changes in the brain. However, our elderly subjects may not have been completely healthy subjects. Some showed decreased scores on Cognistat subtests, with the most notable decline being on the memory subtest followed by the attention (digit span) subtest; 47% (14/30) and 33% (10/30) of elderly subjects, respectively, were below the cutoff points in these subtests. Therefore, our subjects may have included some individuals from a preclinical population, such as people with mild cognitive impairment (MCI; Petersen et al., 1999). At the time of this experiment, our elderly subjects had no apparent issues related to their cognitive function, and their functional abilities were apparently preserved (i.e., they could manage their schedule and come to the experimental room independently). However, some showed declines in one or more cognitive domains. Therefore, some of our subjects may have met the diagnostic criteria for MCI (Albert et al., 2011) or mild cognitive disorder (American Psychiatric Association, 2013). We cannot define a biological substrate related to age at this stage, so MCI should be taken into consideration in explaining the effects of aging on the recognition of emotional expression.

The present study revealed an effect of hidden cognitive decline and depressive mood on recognition of facial expression within an elderly population. However, our study had limitations. First, the sample size and the variability of cognitive test scores in the elderly group were small, and therefore we could not conclude that cognitive functions and depressive mood definitely affect recognition of emotional facial expressions. Second, we did not assess cognitive function or depressive mood in young adults, so the predictors of facial expression recognition shown in this study may be limited to the elderly. Third, the face recognition paradigm used was different from that used by Virtanen et al. (2017), and therefore it remains possible that differences between the results of these studies were due to differences in the paradigms used.

In conclusion, we found that elderly individuals showed a decline in the recognition of emotional facial expressions. The effect of age was apparent not only in comparison with a young population but also among the elderly subjects themselves. In addition to the effect of age, emotional status (depressive tendencies) and cognitive function were also related to recognition of emotional expression, but paradoxical relationships were apparent between recognition of emotional faces and some verbal functions. It may be important to consider preclinical pathological changes, such as MCI, in evaluating the effects of age in elderly populations.

The data analyzed in this study is subject to the following licenses/restrictions: The data are not publicly available due to ethical restrictions. The data that support the findings of this study are available on request from the corresponding author, AM. Requests to access these datasets should be directed to AM, Z3JlZW5AdGFtYWNjLmNodW8tdS5hYy5qcA==.

The studies involving human participants were reviewed and approved by Ethical review committee of Chuo University Institute of Cultural Sciences [protocol number: 03 (FY2018)]. The participants provided their written informed consent to participate in this study.

AM and RO designed experiments. RO performed the experiments. RO analyzed the results. AM and RO wrote the manuscript. Both authors read and approved the final manuscript.

This work was supported by JSPS KAKENHI Grant Numbers 18H03663 and 20K20871 and JSPS Topic-Setting Program to Advance Cutting-Edge Humanities and Social Sciences Research Area Cultivation and JSPS Bilateral Joint Research Projects. This study was also supported in part by Chuo University Grant for Special Research.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

We would like to thank all the participants in this study. In addition, we would like to thank Prof. Olivier Piguet and Dr. Fiona Kumfor for their cooperation.

Albert, M. S., DeKosky, S. T., Dickson, D., Dubois, B., Feldman, H. H., Fox, N. C., et al. (2011). The diagnosis of mild cognitive impairment due to Alzheimer’s disease: Recommendations from the National Institute on Aging-Alzheimer’s Association workgroups on diagnostic guidelines for Alzheimer’s disease. Alzheimer’s Dement. 7, 270–279. doi: 10.1016/j.jalz.2011.03.008

American Psychiatric Association (2013). Diagnostic and Statistical Manual of Mental Disorders, 5th Edn. Washington, D.C: American Psychiatric Publishing, doi: 10.1176/appi.books.9780890425596.893619

Baena, E., Allen, P. A., Kaut, K. P., and Hall, R. J. (2010). On age differences in prefrontal function: The importance of emotional/cognitive integration. Neuropsychologia 48, 319–333. doi: 10.1016/j.neuropsychologia.2009.09.021

Belsley, D. A., Kuh, E., and Welsch, R. E. (1980). Regression Diagnostics: Identifying Influential Data and Sources of Collinearity. New Jersey, NJ: Wiley.

Ben-Shachar, M., Makowski, D., and Lüdecke, D. (2020). Compute and interpret indices of effect size. Vienna: R Core Team.

Bridges, K. M. B. (1932). Emotional Development in Early Infancy. Child Dev. 3:324. doi: 10.2307/1125359

Calder, A. J., Keane, J., Manly, T., Sprengelmeyer, R., Scott, S., Nimmo-Smith, I., et al. (2003). Facial expression recognition across the adult life span. Neuropsychologia 41, 195–202. doi: 10.1016/S0028-3932(02)00149-5

Demenescu, L. R., Kortekaas, R., den Boer, J. A., and Aleman, A. (2010). Impaired attribution of emotion to facial expressions in anxiety and major depression. PLoS One 5:15058. doi: 10.1371/journal.pone.0015058

Djernes, J. K. (2006). Prevalence and predictors of depression in populations of elderly: A review. Acta Psychiat. Scand. 113, 372–387. doi: 10.1111/j.1600-0447.2006.00770.x

Fox, J., and Weisberg, S. (2019). R Companion to Applied Regression, 3rd Edn. Thousand Oaks CA: Sage.

Fujimura, T., and Umemura, H. (2018). Development and validation of a facial expression database based on the dimensional and categorical model of emotions. Cognit. Emot. 32, 1663–1670. doi: 10.1080/02699931.2017.1419936

Glisky, E. L. (2007). “Changes in Cognitive Function in Human Aging,” in Brain Aging: Models, Methods, and Mechanisms, ed. D. R. Riddle (Boca Raton, FL: CRC Press).

Heberlein, A. S., Adolphs, R., Tranel, D., and Damasio, H. (2004). Cortical regions for judgments of emotions and personality traits from point-light walkers. J. Cognit. Neurosci. 16, 1143–1158. doi: 10.1162/0898929041920423

Isaacowitz, D. M., Löckenhoff, C. E., Lane, R. D., Wright, R., Sechrest, L., Riedel, R., et al. (2007). Age differences in recognition of emotion in lexical stimuli and facial expressions. Psychol. Aging 22, 147–159. doi: 10.1037/0882-7974.22.1.147

Iseki, R. (2019). Anovakun (version 4.8.4.). Available Online at: http://riseki.php.xdomain.jp/

Keightley, M. L., Winocur, G., Burianova, H., Hongwanishkul, D., and Grady, C. L. (2006). Age effects on social cognition: Faces tell a different story. Psychol. Aging 21, 558–572. doi: 10.1037/0882-7974.21.3.558

Kumfor, F., Sapey-Triomphe, L.-A., Leyton, C. E., Burrell, J. R., Hodges, J. R., and Piguet, O. (2014). Degradation of emotion processing ability in corticobasal syndrome and Alzheimer’s disease. Brain 137, 3061–3072. doi: 10.1093/brain/awu246

Mather, M., and Carstensen, L. L. (2005). Aging and motivated cognition: The positivity effect in attention and memory. Trends Cognit. Sci. 9, 496–502. doi: 10.1016/j.tics.2005.08.005

Matsuda, O., and Nakatani, M. (2004). Manual for Japanese version of the Neurobehavioral Cognitive Status Examination (COGNISTAT). Tokyo: World Planning.

Mill, A., Allik, J., Realo, A., and Valk, R. (2009). Age-Related Differences in Emotion Recognition Ability: A Cross-Sectional Study. Emotion 9, 619–630. doi: 10.1037/a0016562

Miller, L. A., Hsieh, S., Lah, S., Savage, S., and Hodges, J. R. (2012). One size does not fit all: Face emotion processing impairments in semantic dementia, behavioural-variant frontotemporal dementia and Alzheimer’s disease are mediated by distinct cognitive deficits. Behav. Neurol. 25, 53–60. doi: 10.3233/BEN-2012-0349

Petersen, R. C., Smith, G. E., Waring, S. C., Ivnik, R. J., Tangalos, E. G., and Kokmen, E. (1999). Mild Cognitive Impairment. Arch. Neurol. 56:303. doi: 10.1001/archneur.56.3.303

Saito, A., Sato, W., and Yoshikawa, S. (2020). Older adults detect happy facial expressions less rapidly. R. Soc. Open Sci. 7:191715. doi: 10.1098/rsos.191715

Shigemori, K., Ohgi, S., Okuyama, E., Shimura, T., and Schneider, E. (2010). The factorial structure of the mini mental state examination (MMSE) in Japanese dementia patients. BMC Geriatr. 10:36. doi: 10.1186/1471-2318-10-36

Sugishita, M., and Asada, T. (2009). The creation of the Geriatric Depression Scale-Short version-Japanese. Jap. J. Cognit. Neurosci. 11, 87–90.

Suzuki, A., and Akiyama, H. (2013). Cognitive aging explains age-related differences in face-based recognition of basic emotions except for anger and disgust. Aging Neuropsychol. Cognit. 20, 253–270. doi: 10.1080/13825585.2012.692761

Suzuki, A., Hoshino, T., Shigemasu, K., and Kawamura, M. (2007). Decline or improvement? Age-related differences in facial expression recognition. Biol. Psychol. 74, 75–84. doi: 10.1016/j.biopsycho.2006.07.003

Torres Mendonça De Melo Fádel, B., Santos, De Carvalho, R. L., Belfort Almeida, Dos Santos, T. T., et al. (2019). Facial expression recognition in Alzheimer’s disease: A systematic review. J. Clin. Exp. Neuropsychol. 41, 192–203. doi: 10.1080/13803395.2018.1501001

Virtanen, M., Singh-Manoux, A., Batty, G. D., Ebmeier, K. P., Jokela, M., Harmer, C. J., et al. (2017). The level of cognitive function and recognition of emotions in older adults. PLoS One 12:1–11. doi: 10.1371/journal.pone.0185513

Keywords: emotional face recognition, elderly people, cognitive function, depression, multiple regression analyses

Citation: Ochi R and Midorikawa A (2021) Decline in Emotional Face Recognition Among Elderly People May Reflect Mild Cognitive Impairment. Front. Psychol. 12:664367. doi: 10.3389/fpsyg.2021.664367

Received: 05 February 2021; Accepted: 10 May 2021;

Published: 07 June 2021.

Edited by:

Sara Palermo, Unità di Neuroradiologia, Carlo Besta Neurological Institute (IRCCS), Italy

Reviewed by:

Carol Dillon, Centro de Educación Médica e Investigaciones Clínicas Norberto Quirno (CEMIC), Argentina

Copyright © 2021 Ochi and Midorikawa. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Akira Midorikawa, Z3JlZW5AdGFtYWNjLmNodW8tdS5hYy5qcA==

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
