- Journalism Department, School of Journalism and Communication, Renmin University of China, Beijing, China

This study examined factors that contribute to individuals’ acceptance of health misinformation, including health-related anxiety, preexisting misinformation beliefs, and repeated exposure. A large-scale online survey found that health-related anxiety was positively associated with health misinformation acceptance. Preexisting misinformation beliefs and repeated exposure to health misinformation were also both positively associated with health misinformation acceptance. Demographic variables were significantly associated with health misinformation acceptance as well. In general, women accepted more health misinformation than men. Participants’ age, education level, and income were each negatively associated with their acceptance of health misinformation.

Introduction

Due to the development of technology and the popularity of social media, people increasingly rely on the Internet as their primary source of information (Lazer et al., 2018). This trend has been observed worldwide, including in China. As of the third quarter of 2020, Tencent’s WeChat reported more than 1.2 billion monthly active users, and Sina Weibo reported 224 million daily active users (Statista, 2020). Social media has also become a prominent venue for exchanging health-related information. This exchange contributes to public health care, especially in China, where many people lack sufficient health resources (Zhang et al., 2020). A nationally representative Chinese survey found that 98.35% of participants used WeChat to acquire health information (Zhang et al., 2017). Another study found that online information influenced people’s personal beliefs (Stevenson et al., 2007). At the same time, there are serious concerns about the prevalence of misinformation on social media. It can have detrimental effects on individuals and on society (Broniatowski et al., 2018; Chou et al., 2018). For example, one study found that false news reached more people and spread faster than accurate news (Vosoughi et al., 2018). In 2019, the Food Safety Office of the State Council of China hosted an online knowledge contest in which 12 million participants answered 1,000 quiz questions. Their accuracy rate was merely 50%. The spread of health misinformation has been identified as the most important reason for the public’s limited and inaccurate knowledge of health and food safety (Xinhua News, 2019).

Misinformation has been widely discussed in the political realm because political news has a significant impact on socialization and democratization. For instance, one study examined digital trace data across various electronic platforms. It found that the audience for political fake news consists of a small, disloyal group of heavy Internet users (Nelson and Taneja, 2018). In recent years, misleading information has also appeared on other topics, such as vaccination and nutrition (Lazer et al., 2018), posing serious threats to public health.

Health misinformation refers to false or inaccurate health-related claims that lack scientific evidence (Chou et al., 2018). It deters individuals from engaging in recommended health behaviors and undermines institutional efforts to promote public health (Oh and Lee, 2019). Given its detrimental impact on individuals’ health, such false statements have drawn attention from academia, industry, and governments (Chen et al., 2018). For example, several studies have explored how specific health misinformation spreads on social media (e.g., Bode and Vraga, 2018; Chen et al., 2018; Oh and Lee, 2019). Topics include cancer-related misinformation, Zika virus rumors, food safety rumors, and claims about influenza vaccines. One study analyzed tweets posted on Twitter during the 2014 Ebola outbreak and found that 10% of messages contained false, or at least partially false, information (Sell et al., 2020). Another experiment examined conspiracy cues in Zika-related information. Conspiracy theories significantly influenced individuals’ beliefs but had less tangible influence on their intentions to get vaccinated (Lyons et al., 2019).

Most existing literature has focused on three areas:

- the content characteristics of health misperceptions (Moran et al., 2016);
- diffusion patterns and the factors behind the fast spread of health misinformation (Chen et al., 2018; Jamison et al., 2020);
- the impact of unsubstantiated statements (Broniatowski et al., 2018; Swire-Thompson and Lazer, 2020).

Misinformation acceptance has been widely discussed in political psychology. Some scholars argue that the psychological drivers that lead people to believe misinformation need to be addressed (Chou et al., 2020). Social media is an increasingly prominent source of health information for Chinese people. Even so, a recent systematic review indicated that few studies have examined the prevalence of misinformation in the Chinese context (Wang et al., 2019).

Social media makes it easy for ill-intentioned actors to disseminate misinformation deliberately. Such misinformation can significantly affect individuals’ affective, cognitive, and behavioral responses to health-related issues. Understanding how people evaluate, process, and come to trust anti-science claims is therefore crucial to curbing and debunking health misinformation. However, less is known about how belief systems, emotion, and cognition shape people’s receptivity to specific health messages on social media.

This study reports a large-scale online survey conducted through a mobile news application in China. The objectives were twofold:

- (a) to examine how anxiety about health-related issues, preexisting beliefs, and repeated exposure to health misinformation may contribute to the acceptance of unverified information on social media;
- (b) to explore demographic differences in health misinformation acceptance, such as sex, age, education level, and socioeconomic status.

Factors Contributing to Health Misinformation Acceptance

Misinformation refers to “cases in which people’s beliefs about factual matters are not supported by clear evidence and expert opinion” (Nyhan and Reifler, 2010). Misinformation can be spread intentionally or unintentionally. Rumor is defined as “a proposition for belief in general circulation within a community without proof or evidence of its authenticity” (Walker and Blaine, 1991). The current study does not draw a clear distinction between misinformation and rumor, because both terms focus on the characteristics of unverified information.

Misinformation is not new to the Internet or social media. Nonetheless, Internet technology and the popularity of social media have made information easier to share and misinformation more prevalent. The fast spread of misinformation on social media can provoke anxiety and fear. It can also lead to more serious consequences, such as irrational beliefs or undesirable behaviors (Fernández-Luque and Bau, 2015). This is especially true of health-related misinformation. For example, even after corrections, 20–25% of the public continue to believe rumors linking childhood vaccines to autism (Lewandowsky et al., 2012).

Some studies of misinformation adoption have focused on demographic differences such as sex, age, education level, or income. These studies assume that some people are naturally more inclined to believe misinformation (e.g., Greenspan, 2008). Other studies have focused on the content and context of misinformation, suggesting that anyone can be susceptible to misbeliefs (Greenhill and Oppenheim, 2017). Research has also shown that individuals rely on sources, narratives, and context to evaluate the believability of social media messages (Karlova and Fisher, 2013).

Specific to health misinformation, some studies have explored how psychological characteristics relate to people’s acceptance of and trust in health misbeliefs:

- Individuals’ epistemic beliefs influence the extent to which they accept and share online misinformation (Chua and Banerjee, 2017).
- Online falsehoods can make health threats feel psychologically closer to individuals. The resulting higher perceived threat increases the likelihood that people share misinformation (Williams Kirkpatrick, 2020).
- Exposure to collective opinion can reduce individuals’ propensity to believe and share health-related misinformation (Li and Sakamoto, 2015).
- In simulated pandemic settings, congruence between people’s original affective states and the emotions triggered by the content led to higher acceptance of inaccurate claims (Na et al., 2018).
- Interviews with people in Hubei Province, China, during the COVID-19 outbreak found that participants seldom sought to verify information. Even those who tried relied more on heuristic cues than on systematic processing (Zou and Tang, 2020).

The current study examines how three key variables contribute to misinformation acceptance in the health arena: health-related anxiety, preexisting beliefs, and repeated exposure to misbeliefs. We then examine how socio-demographic variables are associated with people’s health misinformation acceptance.

Health-Related Anxiety

In the context of health misinformation perception, health-related anxiety can be defined as “how anxious the individual is about issues directly related to the rumor” (Greenhill and Oppenheim, 2017). Individuals with higher levels of anxiety are more motivated to seek information, either to legitimize their anxiety or to ease their worry (Fergus, 2013). Previous studies have found a positive association between health anxiety and the need for health information (for a review, see McMullan et al., 2019). Health information shared online often comes from unknown sources and is of low quality. As a result, people may need to spend more time confirming or disconfirming its validity (Starcevic and Berle, 2013). Under such circumstances, anxious individuals are less likely to use central processing, such as examining the logic or plausibility of unverified information. Negative affective states such as fear and anxiety can even increase the perceived believability of false information. Individuals with higher levels of health anxiety are more affected by negative information and therefore more likely to share health misinformation (Pezzo and Beckstead, 2006; Cisler and Koster, 2010). For instance, one study noted that anxious people were more likely to disseminate misinformation as a way to vent negative emotions (Pezzo and Beckstead, 2006). We therefore propose the first hypothesis:

H1: Individuals’ health-related anxiety is positively associated with their health misinformation acceptance.

Preexisting Misinformation Beliefs

Preexisting beliefs are the opinions and attitudes people already hold before they process any new information (Ecker et al., 2014). Persuasion literature indicates that individuals are more likely to accept messages that are congruent with their preexisting attitudes or opinions (McGuire, 1972). When individuals encounter new information, they check it against their existing knowledge to assess its compatibility. Social judgment theory posits that persuasive messages are judged against an individual’s existing position (the anchor attitude). If a new message falls within the latitude of acceptance, it is perceived as closer to the anchor attitude than it actually is (Brehmer, 1976). Messages that are consistent with one’s beliefs are evaluated and processed more fluently, so individuals are more likely to accept them as truthful. Messages that are incongruent with or contradict an individual’s preexisting beliefs can cause cognitive dissonance and are more difficult to accept (Greenhill and Oppenheim, 2017).

In the context of health misinformation, individuals are more likely to believe new information if it is congruent with their preexisting beliefs. Some people are skeptical of health-related information or misinformation. Others are more receptive and more likely to accept what they are told. Therefore, we predict:

H2: Individuals’ preexisting beliefs will be positively associated with their health misinformation acceptance.

Repeated Exposure

When misinformation spreads, individuals differ in how often they encounter it: some may have heard a claim many times, while others may never have heard it at all. Previous research in cognitive science has shown that prior exposure increases the perceived accuracy of a statement (for a detailed review, see Dechêne et al., 2010). Repeated exposure to (mis)information may trigger several cognitive processes as well as biases in information processing:

- **Processing fluency.** A common account is that repetition leads to processing fluency, which is known to produce the illusory truth effect (Wang et al., 2016). For example, repeated exposure to the same information may increase an individual’s perceived familiarity with it. Familiar information is easier to process at both the perceptual and conceptual levels (Whittlesea, 1993).
- **Availability.** According to the availability heuristic, people use the most familiar and most recent information as the basis for forming attitudes and judging the accuracy of statements.
- **Trust in false information.** The effect of repeated exposure has also been detected in people’s trust in false and unverified information (Fazio et al., 2015).
- **Memory distortion.** Misinformation can distort memory: individuals may forget where they encountered a claim and, over time, come to treat fictional sources as factual ones (Shen et al., 2019).

Therefore, it is reasonable to expect that individuals will be more likely to believe health-related misinformation when it has been repeated.

H3: Individuals’ repeated exposure to health misinformation is positively associated with their health misinformation acceptance.

Demographic Factors

The factors discussed above may increase or decrease acceptance of health misinformation. Demographic characteristics may also affect how people respond to it. However, the existing literature remains inconclusive on how age, sex, income, and education level relate to rumor acceptance. We therefore posed a research question on the relationship between demographic characteristics and health misinformation acceptance.

RQ1: How might individuals’ sex, age, education level, and income associate with their health misinformation acceptance?

Methods

Participants and Procedure

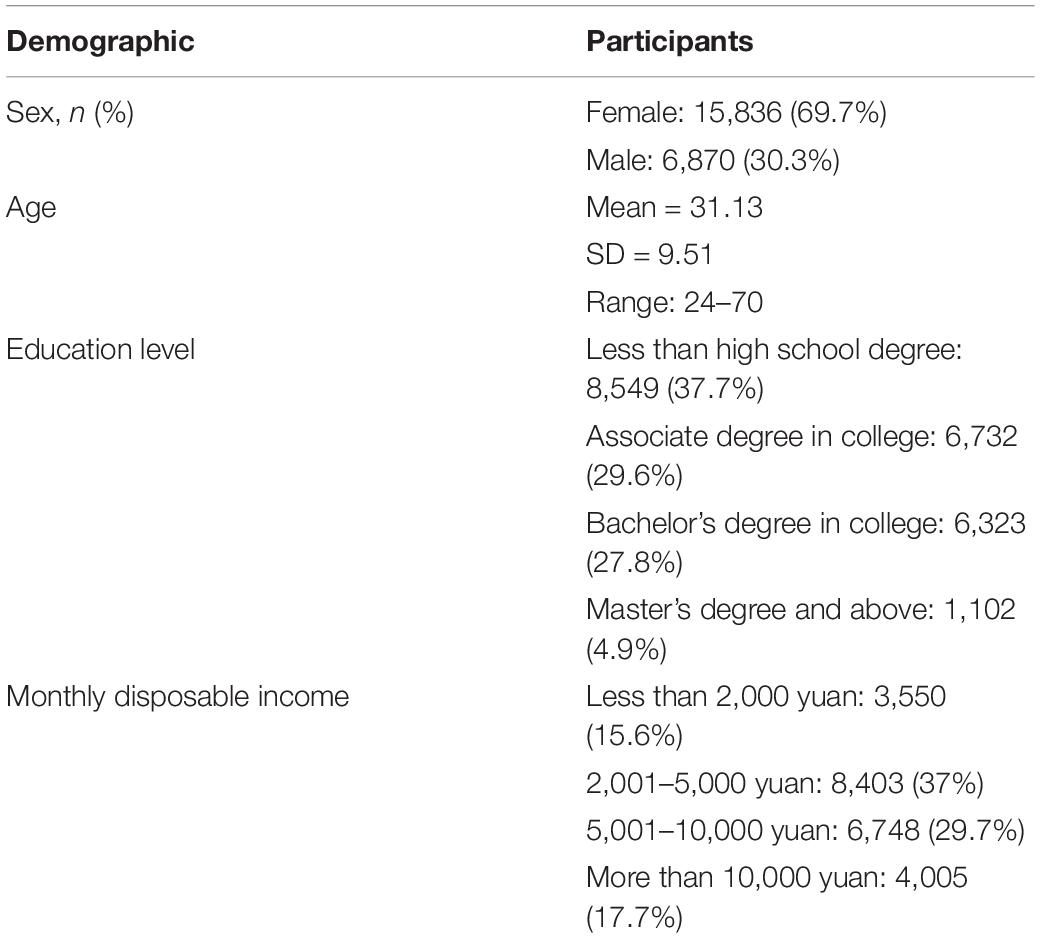

A total of 22,706 participants took part in the survey (mean age = 31.13, SD = 9.51). Of these, 69.7% (n = 15,836) were female and 30.3% (n = 6,870) were male. Table 1 presents descriptive statistics on participants’ demographic information. The survey received ethical approval from the Internal Review Board of the School of Journalism and Communication, Renmin University of China.

The online survey was conducted through the Tencent News mobile application, because 99.1% of Internet users access the Internet on mobile phones. Tencent is one of China’s largest Internet portals, and it launched the Tencent News application in 2010 to provide users with the latest news and information. According to statistics from Aurora Mobile, Baidu APP, Toutiao, and Tencent News APP are the three largest news platforms by daily active users, each on the order of a hundred million. Tencent News APP ranked second, with a penetration rate of 22.4% as of the third quarter of 2020 (Sina News, 2020).

The online questionnaire was randomly sent to users of the Tencent News mobile application in mainland China. Consent was obtained before participants began answering the questionnaire. The survey questionnaire is included in Appendix A.

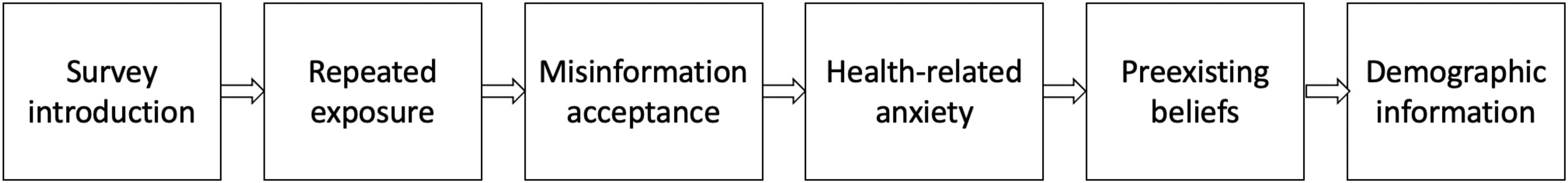

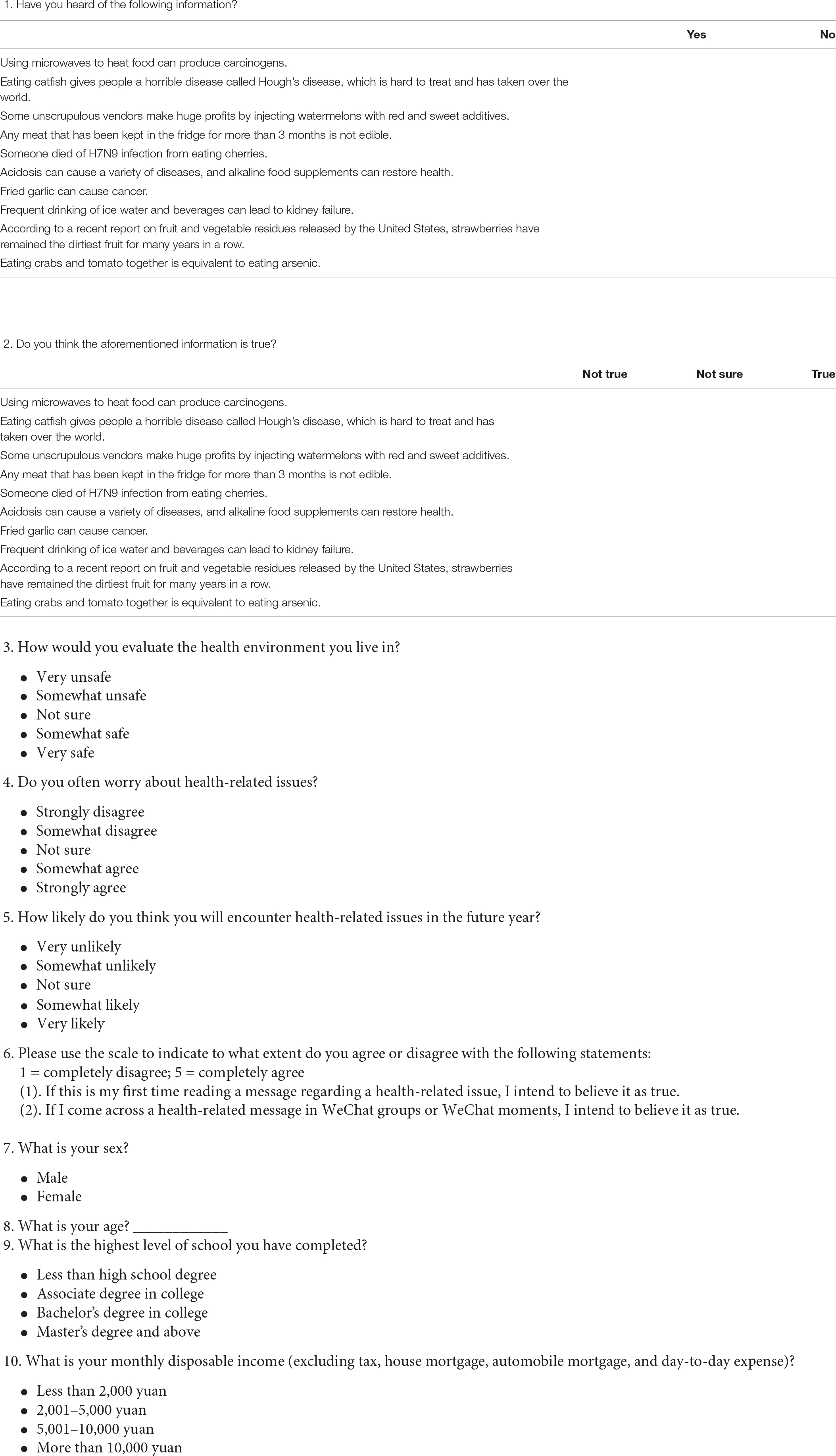

Participants were first presented with ten items of health-related misinformation. The items were drawn from debunking messages released by Jiaozhen, the fact-checking platform of Tencent News. The final items were selected based on the popularity, frequency, and topics of the misinformation. For each item, participants indicated whether they had been aware of it and then whether they believed it. After responding to all ten items, participants answered questions about health-related anxiety, preexisting misinformation beliefs, and demographic information. The survey design is shown in Figure 1.

Measures

Misinformation Acceptance

Misinformation acceptance was measured by asking participants whether each health-related (mis)information item was true. Sample items include “Using microwaves to heat food may cause cancer” and “Eating catfish can lead to a terrifying disease called Halff’s disease, which is so difficult to treat that it has taken over the world.” The misinformation acceptance composite was the number of the ten health misinformation items that a participant rated as true (α = 0.85).
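The sketch below shows one way to compute a count-based composite and its internal consistency. It assumes each participant’s responses are coded 0/1 (1 = rated as true) in a participants-by-items matrix. The data are hypothetical, and the function names are illustrative, not the authors’ analysis code. For dichotomous items, Cronbach’s α is equivalent to the KR-20 coefficient.

```python
import numpy as np


def acceptance_composite(ratings: np.ndarray) -> np.ndarray:
    """Count, per participant (row), how many items were rated as true (coded 1)."""
    return ratings.sum(axis=1)


def cronbach_alpha(items: np.ndarray) -> float:
    """Cronbach's alpha for a participants-by-items matrix.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total score).
    For 0/1 items this equals KR-20.
    """
    k = items.shape[1]
    item_vars = items.var(axis=0, ddof=1)          # sample variance of each item
    total_var = items.sum(axis=1).var(ddof=1)      # sample variance of the summed score
    return k / (k - 1) * (1 - item_vars.sum() / total_var)


# Hypothetical 0/1 ratings: 4 participants x 10 items (1 = rated as true).
ratings = np.array([
    [1, 1, 1, 1, 1, 1, 1, 1, 0, 0],
    [1, 1, 1, 0, 1, 1, 0, 1, 0, 0],
    [0, 0, 1, 0, 0, 0, 0, 0, 0, 0],
    [1, 1, 1, 1, 1, 1, 1, 1, 1, 1],
])
print(acceptance_composite(ratings).tolist())   # [8, 6, 1, 10]
print(round(cronbach_alpha(ratings), 2))        # 0.93
```

The same two functions could be applied to the repeated-exposure responses, under the same 0/1 coding assumption.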

Health-Related Anxiety

Health-related anxiety captured how anxious participants were about their current health environment and future health conditions. To measure it, we adapted one question from a previous study (“How likely do you think you will encounter health-related issues in the future year,” Greenhill and Oppenheim, 2017). We then added two more questions to form a scale (see Appendix A). The three items, each rated on a five-point Likert-type scale, showed acceptable reliability (α = 0.72).

Preexisting Misinformation Beliefs

Participants’ preexisting misinformation beliefs were measured by two items on a five-point Likert-type scale including “If this is my first time reading a message regarding a health-related issue, I intend to believe it as true” and “If I come across a health-related message in WeChat groups or WeChat moments, I intend to believe it as true.” The two items showed acceptable reliability (α = 0.73).

Repeated Exposure

Before indicating whether they accepted each item, participants reported whether they had previously heard or read that (mis)information. This measure followed previous studies of food safety rumors (Feng and Ma, 2019) and political or economic rumors (Greenhill and Oppenheim, 2017). Overall repeated exposure was calculated by summing the exposure responses across the ten health misinformation items. The ten items showed acceptable reliability (α = 0.74).

Results

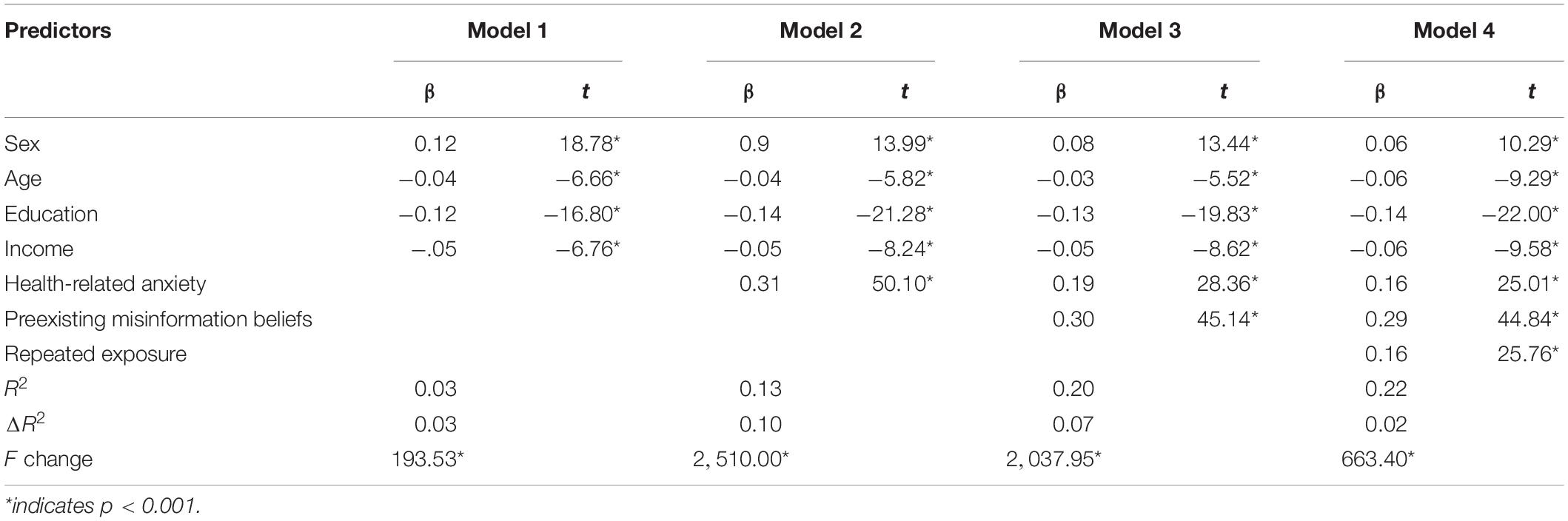

On average, participants correctly identified fewer than four of the ten items as misinformation. The mean number of items they failed to recognize as misinformation was 6.55 (SD = 3.06). Hierarchical regression analysis was used to test the hypotheses and answer the research question (see Table 2). In the first step, the demographic variables (sex, age, education level, and income) were entered into the linear regression model. Health-related anxiety, preexisting misinformation beliefs, and repeated exposure were then entered in successive steps.
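The blockwise entry described above can be sketched in Python with NumPy’s least-squares solver. This is a minimal illustration, not the authors’ analysis. The data are simulated, the generating coefficients are arbitrary, and the helper names are hypothetical. Each block is appended to the predictors already in the model, and the increment in R² is tested with an F-change statistic. Predictors enter in a fixed, theory-driven order rather than being selected by the data.

```python
import numpy as np


def r_squared(X: np.ndarray, y: np.ndarray) -> float:
    """R^2 of an OLS fit of y on X; an intercept column is added here."""
    X1 = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(X1, y, rcond=None)
    resid = y - X1 @ coef
    centered = y - y.mean()
    return 1.0 - (resid @ resid) / (centered @ centered)


def hierarchical_regression(blocks, y):
    """Enter predictor blocks cumulatively; return R^2, delta R^2, df, and F-change per step."""
    n = len(y)
    X, r2_prev, k_prev, steps = None, 0.0, 0, []
    for name, block in blocks:
        # Append the new block (1-D or 2-D) to the predictors already in the model.
        X = np.column_stack([block] if X is None else [X, block])
        k = X.shape[1]
        r2 = r_squared(X, y)
        delta_r2, dk, df_resid = r2 - r2_prev, k - k_prev, n - k - 1
        f_change = (delta_r2 / dk) / ((1.0 - r2) / df_resid)
        steps.append({"step": name, "R2": r2, "dR2": delta_r2,
                      "df": (dk, df_resid), "F_change": f_change})
        r2_prev, k_prev = r2, k
    return steps


# Simulated (hypothetical) data using the study's sample size; coefficients are arbitrary.
rng = np.random.default_rng(0)
n = 22_706
demographics = np.column_stack([
    rng.integers(0, 2, n),       # sex (0 = male, 1 = female)
    rng.normal(31, 9.5, n),      # age
    rng.integers(1, 6, n),       # education level (ordinal, hypothetical coding)
    rng.integers(1, 8, n),       # income level (ordinal, hypothetical coding)
])
anxiety = rng.normal(3.3, 0.8, n)
beliefs = rng.normal(2.7, 0.9, n)
exposure = rng.normal(4.9, 2.5, n)
acceptance = 0.8 * anxiety + 0.7 * beliefs + 0.2 * exposure + rng.normal(0, 2.5, n)

steps = hierarchical_regression(
    [("demographics", demographics), ("anxiety", anxiety),
     ("preexisting beliefs", beliefs), ("repeated exposure", exposure)],
    acceptance,
)
for s in steps:
    print(f"{s['step']:<20} R2={s['R2']:.3f} dR2={s['dR2']:.3f} "
          f"F({s['df'][0]}, {s['df'][1]})={s['F_change']:.1f}")
```

With N = 22,706, the residual degrees of freedom for the anxiety, belief, and exposure steps are 22,700, 22,699, and 22,698, the same values reported below. Standardized coefficients (β) could be obtained by z-scoring all variables before fitting.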

First, participants’ health-related anxiety (M = 3.30, SD = 0.80) was positively associated with misinformation acceptance, β = 0.31, t(22,700) = 50.10, p < 0.001. After controlling for demographic variables, health-related anxiety explained a significant portion of the variance in misinformation acceptance, ΔR² = 0.10, F(1, 22,700) = 2,510.00, p < 0.001. Therefore, H1 was supported.
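As a worked consistency check on these statistics, consider the standard F-change test for a hierarchical R² increment (the formula is general and not stated in the source). It compares the added explained variance per added predictor with the residual variance of the larger model:

$$
F_{\text{change}} = \frac{\Delta R^2 / \Delta k}{\left(1 - R^2_{\text{full}}\right) / \left(N - k_{\text{full}} - 1\right)}
$$

With N = 22,706 and k_full = 5 (four demographic variables plus anxiety), the residual degrees of freedom and the single-predictor identity F = t² give:

$$
\begin{aligned}
df_{\text{resid}} &= 22{,}706 - 5 - 1 = 22{,}700,\\
F(1,\ 22{,}700) &= t^2 = 50.10^2 \approx 2{,}510.0.
\end{aligned}
$$

The same relation holds approximately for the later one-predictor steps: 45.14² ≈ 2,037.6 and 25.76² ≈ 663.6. The small gaps from the reported F values are consistent with rounding of the t statistics.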

Second, participants’ preexisting misinformation beliefs (M = 2.74, SD = 0.90) were also positively associated with misinformation acceptance, β = 0.30, t(22,699) = 45.14, p < 0.001. After controlling for demographic variables and health-related anxiety, preexisting misinformation beliefs explained a significant portion of the variance in misinformation acceptance, ΔR² = 0.07, F(1, 22,699) = 2,037.95, p < 0.001. Therefore, H2 was supported.

Third, participants’ repeated exposure to misinformation (M = 4.94, SD = 2.53) was also positively associated with misinformation acceptance, β = 0.16, t(22,698) = 25.76, p < 0.001. Repeated exposure explained a significant portion of the variance in misinformation acceptance, ΔR² = 0.02, F(1, 22,698) = 663.40, p < 0.001. This result held after controlling for demographic variables, health-related anxiety, and preexisting misinformation beliefs. Therefore, H3 was supported.

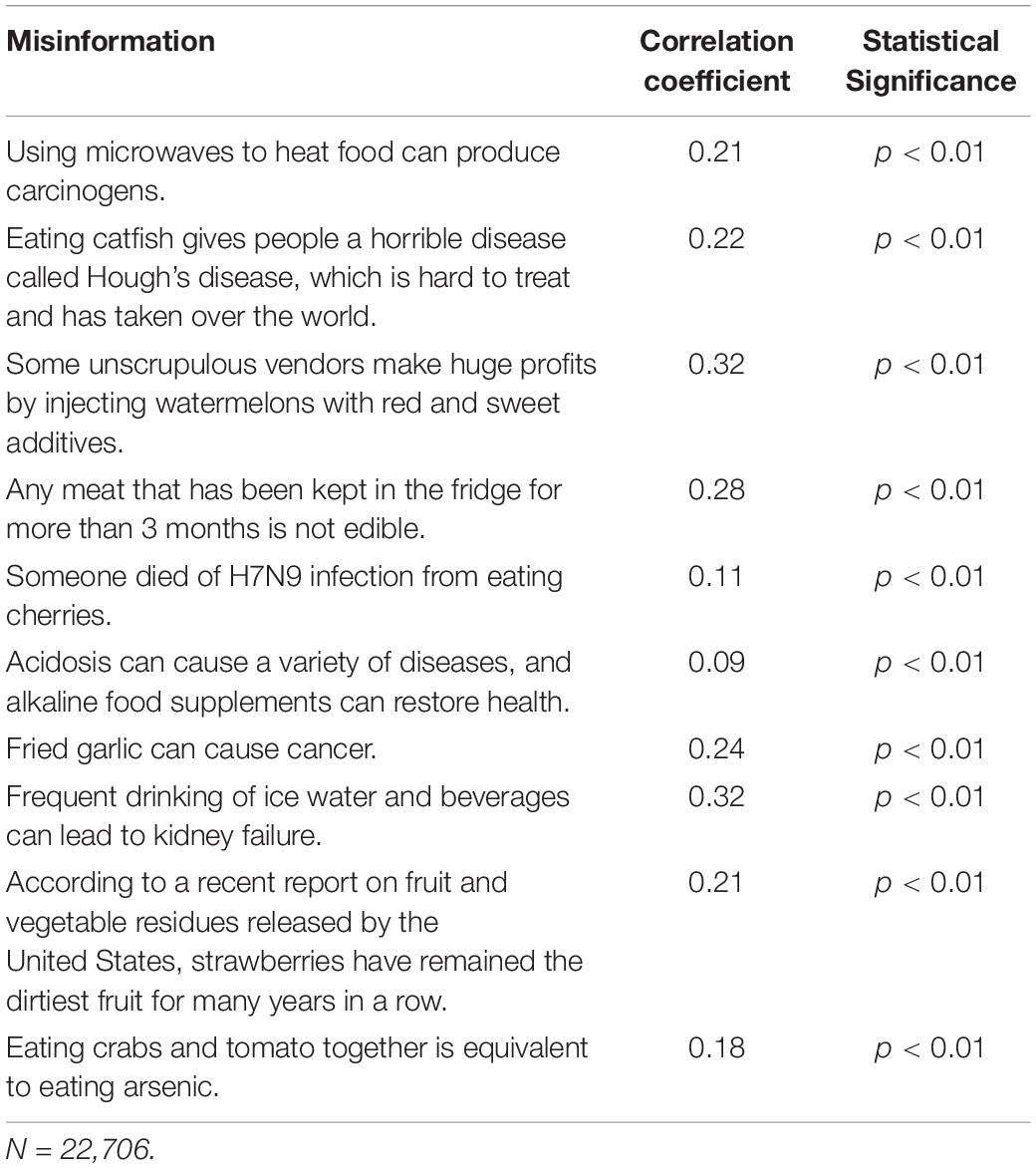

Exposure and acceptance were both measured for each of the same ten items. We therefore conducted an additional item-by-item correlation analysis for each piece of health misinformation. Prior exposure was positively correlated with acceptance for every item, with correlations ranging from 0.09 to 0.32. Table 3 presents the detailed results.
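An item-by-item analysis of this kind can be sketched as follows. The sketch assumes both exposure and acceptance are coded 0/1 for each item; the exact coding of the exposure question is an assumption here. For two binary variables, the Pearson correlation equals the phi coefficient. The data are hypothetical, and `item_correlations` is an illustrative name.

```python
import numpy as np


def item_correlations(exposure: np.ndarray, acceptance: np.ndarray):
    """Pearson r between exposure and acceptance for each item (column).

    Both arrays are participants x items and coded 0/1, so each r is a phi coefficient.
    An item with no variation in either column would yield nan.
    """
    if exposure.shape != acceptance.shape:
        raise ValueError("exposure and acceptance must have the same shape")
    return [float(np.corrcoef(exposure[:, j], acceptance[:, j])[0, 1])
            for j in range(exposure.shape[1])]


# Hypothetical responses: 4 participants x 2 items (1 = heard before / rated as true).
exposure = np.array([[1, 1], [1, 0], [0, 1], [0, 0]])
acceptance = np.array([[1, 1], [1, 1], [0, 0], [0, 0]])
print([round(r, 2) for r in item_correlations(exposure, acceptance)])  # [1.0, 0.0]
```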

To answer the research question, the demographic variables (sex, age, education level, and income) were also included in the linear regression model. Participants’ sex (dummy coded as 0 = male, 1 = female) was significantly associated with misinformation acceptance, β = 0.06, t(22,698) = 10.29, p < 0.001. Participants’ age was negatively associated with misinformation acceptance, β = −0.05, t(22,698) = −9.29, p < 0.001. Education level was also negatively associated with misinformation acceptance, β = −0.14, t(22,698) = −22.00, p < 0.001. So was income level, β = −0.06, t(22,698) = −9.58, p < 0.001.

Discussion

Technology is making it easier to disseminate information on the Internet and social media. As a result, misinformation continues to shape people’s attitudes and opinions on socio-political issues worldwide. The proliferation of health misinformation on social media has raised particular concern during the current global pandemic, when we must also fight an infodemic (Zarocostas, 2020). Health misinformation can significantly affect individuals’ physical and psychological health. Yet psychological responses to it on social media have been largely overlooked compared with responses to political misinformation, which makes examining them important.

The present study reported findings from a large-scale online survey conducted on a mobile news application in China. Drawing on previous literature on misinformation receptivity, it identified three emotional and cognitive factors associated with health misinformation acceptance: anxiety about health-related issues, preexisting misinformation beliefs, and repeated exposure. The study also found that individuals’ sex, age, income, and education level were all associated with their acceptance of health misinformation.

Above all, individuals’ anxiety about health-related issues was strongly associated with their health misinformation acceptance. This finding is in line with previous studies showing that health anxiety increases the odds that people accept and share unverified information (Oh and Lee, 2019). Individuals with higher health anxiety are more sensitive to negative information and more likely to spread unsubstantiated messages (Pezzo and Beckstead, 2006; Oh and Lee, 2019). Widely spread health misinformation on social media usually contains fear appeals and uncertainty, and it is therefore loaded with intense emotion that can instigate anxiety and panic. According to the negativity bias, negative information and its related emotions affect individuals’ psychological states more strongly than positive or neutral information does (Rozin and Royzman, 2001). This effect may be intensified among people who are already anxious about health-related issues. Our results resonate with the role of emotional congruence in rumor acceptance (Na et al., 2018). They suggest that individuals tend to accept anxiety-inducing messages because of the messages’ potential negative consequences for their health. Individuals might also spread these messages to ease their worry.

Second, individuals’ preexisting misinformation beliefs were positively associated with their acceptance of misinformation. Confirmation bias is the tendency to favor information that is in line with one’s prior beliefs. It applies when individuals already hold misinformed beliefs, and also in ambiguous situations, where individuals tend to interpret ambiguous information as supporting their prior beliefs. Our findings reaffirm the role of belief systems in leading people to accept misinformation in the context of health. People devote limited cognitive effort to assessing the truthfulness of information (Zou and Tang, 2020). Instead, they assess new information using their cognitive experience together with their affective responses (i.e., health-related anxiety). Previous studies of political rumors, for example, found that people’s general tendency to believe conspiracy theories, along with their worldviews, is associated with rejecting scientific findings (Lewandowsky et al., 2013). Studies of Zika virus-related rumors (Al-Qahtani et al., 2016) and anti-vaccine (mis)information (Jolley and Douglas, 2014) have reported similar results.

Similarly, repeated exposure to health misinformation was positively associated with misinformation acceptance. A previous study found that misinformation “sticky” enough to be circulated can be disseminated widely and proliferate (Heath and Heath, 2007). Even among participants with a more solid knowledge base, repetition may still lead to insidious misperceptions (Fazio et al., 2015). This suggests that subjective knowledge may not always constrain fluency heuristics. Our results showed that repeated exposure was associated with greater receptivity to health misinformation. Notably, the item-by-item analysis (Table 3) implied that a statement’s plausibility might affect how far people believe it to be true. For instance, consumers are concerned about food safety. Given this concern, a statement such as “some unscrupulous vendors make huge profits by injecting watermelons with red and sweet additives” seems reasonable and is thus more likely to be accepted.

In terms of demographic differences, women were more likely than men to accept health misinformation. Participants’ age, income, and education level were all negatively associated with their health misinformation acceptance. These results suggest that individuals with higher socioeconomic status (including higher education and higher income) were less likely to accept health misinformation than those from lower socioeconomic groups.

Many studies have tested the knowledge gap hypothesis and found that social class is an important factor in explaining why information dissemination is more effective among people of higher socioeconomic status. One meta-analysis found that the knowledge gap extends beyond social and political topics to health-science topics and international issues (Hwang and Jeong, 2009). The health-science knowledge gap could affect whether people of different socioeconomic status adopt preventive health behaviors. People with higher socioeconomic status tend to have higher health literacy and are more likely to adopt recommended preventive health behaviors. Previous studies found that engagement in preventive behavior is strongly influenced by socio-demographic variables such as socioeconomic status, age, and sex (Rosenstock et al., 1994). For example, knowledge about food safety is positively associated with individuals’ perceived susceptibility and risk perceptions related to food safety (Mou and Lin, 2014).

Overall, the present study extends theoretical models of misinformation acceptance into the health arena by exploring three potential predictors: health-related anxiety, preexisting misinformation beliefs, and repeated exposure to misleading statements. Taken together, the findings suggest that two mechanisms lead people to misperceptions. The first is congruence, in terms of both affective states and beliefs; the second is repetition. Anti-science statements may exert influence by increasing perceived familiarity, which in turn makes new information easier to process (i.e., processing fluency). More seriously, the impact of misperceptions may be stronger among people who are already anxious about health risks. As a result, some brief interventions, such as the myth vs. fact approach (Schwarz et al., 2007), may fail or even backfire. Even explicit warnings or retractions of misinformation in news articles (Ecker et al., 2010) may not fully safeguard against the availability heuristic or diminish long-term misperceptions.

These findings offer insights into misinformation debunking for health practitioners:

1. **Build institutional trust.** Public health authorities should take measures to increase people’s institutional trust. Greater trust might alleviate health-related anxiety and, in turn, reduce the odds that people are swayed by inflammatory misbeliefs.
2. **Counter bias and repetition.** Active inoculation (or prebunking) may help counter cognitive biases and the effect of repeated exposure by increasing people’s ability to recognize and resist false information, as shown for COVID-19 (van der Linden et al., 2020). Repeated warnings may also weaken the continued influence of misinformation that stems from individuals’ psychological characteristics (Ecker et al., 2011).
3. **Prioritize plausible or ambiguous messages.** Social media messages that seem more plausible or ambiguous should receive priority, because such messages were found to be more readily accepted after prior exposure.
4. **Improve health literacy.** More fundamentally, this study indicates the significance and urgency of improving individuals’ health literacy, which enables people to critically examine health (mis)information on social media. Repeated exposure and preexisting misinformation beliefs were linked to people’s receptivity to false statements and their belief in them, yet both are difficult to control in practice. Even so, improved health literacy can help individuals evaluate the plausibility of health (mis)information on social media and reduce uncertainty.

Limitations and Future Directions

The current study also has several limitations. First, the health-related misinformation used in the study was by no means representative of all health misinformation on social media. It is therefore possible that the observed associations among the key variables pertain only to the specific health misinformation adopted in the current study. The topics included in this study were carefully selected by a fact-checking platform based on popularity, frequency, and generalizability. Even so, future studies could incorporate a wider range of health-related topics with varying levels of severity and familiarity.

Second, the design of the study was correlational. Although significant associations were observed in the survey, causal relations can only be established through longitudinal designs or controlled experiments. Future studies could use carefully designed experiments with pretested materials as stimuli to rule out the effects of confounding factors. With advances in computational social science, future studies could also adopt more sophisticated methods. For example, they could collect individuals’ behavioral data together with their social network data to trace the actual spread of misinformation while controlling for network effects.

Third, the measurements in this survey were mostly self-developed. The key variables we aimed to study were inspired by previous studies. However, practical constraints on survey distribution and logistics led us to include self-developed measurements that better fit the scope of the current study. Because the composites we calculated all showed acceptable reliability, we believe the self-developed measures do not substantially undermine the practical contributions of these findings. Future studies could incorporate both subjective and objective measurements to capture individuals’ affective, cognitive, and behavioral responses to health misinformation. For example, in addition to anxiety and health misinformation acceptance, future studies could measure emotions, perceived trust, sharing intentions, and actual sharing or dissemination behaviors.

Conclusion

In the age of fake news and online misinformation, the negative effects of its dissemination cannot be overlooked. Health misinformation deserves more attention from both academia and industry because of its significant impact on individuals’ lives. This article reported key results from a large-scale online survey aimed at identifying the factors that contribute to the acceptance of health misinformation. Health-related anxiety, preexisting misinformation beliefs, and repeated exposure were all positively associated with health misinformation acceptance. Furthermore, the results suggest notable demographic differences in health misinformation acceptance. Female participants in this study were more likely than males to accept health misinformation as true. Individuals with lower education and lower income were also more receptive to health misinformation than those with higher education and higher income.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics Statement

The studies involving human participants were reviewed and approved by the Institutional Review Board of the School of Journalism and Communication, Renmin University of China. The participants provided their written informed consent to participate in this study.

Author Contributions

WP and JF contributed to the conception and design of the study. JF organized the survey. WP and DL performed the statistical analysis and wrote the first draft of the manuscript. All authors performed additional statistical analyses, wrote sections of the manuscript, contributed to manuscript revision, and read and approved the submitted version.

Funding

This study was supported by the Journalism and Marxism Research Center, Renmin University of China (MXG202008).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Al-Qahtani, A. A., Nazir, N., Al-Anazi, M. R., Rubino, S., and Al-Ahdal, M. N. (2016). Zika virus: a new pandemic threat. J. Infect. Dev. Countr. 10, 201–207. doi: 10.3855/jidc.8350

Bode, L., and Vraga, E. K. (2018). See something, say something: correction of global health misinformation on social media. Health Commun. 33, 1131–1140. doi: 10.1080/10410236.2017.1331312

Brehmer, B. (1976). Social judgment theory and the analysis of interpersonal conflict. Psychol. Bull. 83:985. doi: 10.1037/0033-2909.83.6.985

Broniatowski, D. A., Jamison, A. M., Qi, S., AlKulaib, L., Chen, T., Benton, A., et al. (2018). Weaponized health communication: Twitter bots and Russian trolls amplify the vaccine debate. Am. J. Public Health 108, 1378–1384. doi: 10.2105/ajph.2018.304567

Chen, L., Wang, X., and Peng, T.-Q. (2018). Nature and diffusion of gynecologic cancer–related misinformation on social media: analysis of tweets. J. Med. Internet Res. 20:e11515. doi: 10.2196/11515

Chou, W.-Y. S., Gaysynsky, A., and Cappella, J. N. (2020). Where we go from here: health misinformation on social media. Am. J. Public Health 110, S273–S275. doi: 10.2105/ajph.2020.305905

Chou, W.-Y. S., Oh, A., and Klein, W. M. (2018). Addressing health-related misinformation on social media. JAMA 320, 2417–2418. doi: 10.1001/jama.2018.16865

Chua, A. Y., and Banerjee, S. (2017). To share or not to share: the role of epistemic belief in online health rumors. Int. J. Med. Inf. 108, 36–41. doi: 10.1016/j.ijmedinf.2017.08.010

Cisler, J. M., and Koster, E. H. (2010). Mechanisms of attentional biases towards threat in anxiety disorders: an integrative review. Clin. Psychol. Rev. 30, 203–216. doi: 10.1016/j.cpr.2009.11.003

Dechêne, A., Stahl, C., Hansen, J., and Wänke, M. (2010). The truth about the truth: a meta-analytic review of the truth effect. Pers. Soc. Psychol. Rev. 14, 238–257. doi: 10.1177/1088868309352251

Ecker, U. K., Lewandowsky, S., Fenton, O., and Martin, K. (2014). Do people keep believing because they want to? Preexisting attitudes and the continued influence of misinformation. Mem. Cogn. 42, 292–304. doi: 10.3758/s13421-013-0358-x

Ecker, U. K., Lewandowsky, S., Swire, B., and Chang, D. (2011). Correcting false information in memory: manipulating the strength of misinformation encoding and its retraction. Psychon. Bull. Rev. 18, 570–578. doi: 10.3758/s13423-011-0065-1

Ecker, U. K., Lewandowsky, S., and Tang, D. T. (2010). Explicit warnings reduce but do not eliminate the continued influence of misinformation. Mem. Cogn. 38, 1087–1100. doi: 10.3758/mc.38.8.1087

Fazio, L. K., Brashier, N. M., Payne, B. K., and Marsh, E. J. (2015). Knowledge does not protect against illusory truth. J. Exp. Psychol. Gen. 144:993. doi: 10.1037/xge0000098

Feng, Q., and Ma, Z. (2019). The dissemination of on-line rumours and social class differences: a study on the on-line food-safety rumours. J. Commun. Rev. 28, 28–41.

Fergus, T. A. (2013). Cyberchondria and intolerance of uncertainty: examining when individuals experience health anxiety in response to Internet searches for medical information. Cyberpsychol. Behav. Soc. Netw. 16, 735–739. doi: 10.1089/cyber.2012.0671

Fernández-Luque, L., and Bau, T. (2015). Health and social media: perfect storm of information. Healthc. Inform. Res. 21:67. doi: 10.4258/hir.2015.21.2.67

Greenhill, K. M., and Oppenheim, B. (2017). Rumor has it: the adoption of unverified information in conflict zones. Int. Stud. Q. 61, 660–676. doi: 10.1093/isq/sqx015

Greenspan, S. (2008). Annals of Gullibility: Why We Get Duped and How to Avoid It. Santa Barbara, CA: ABC-CLIO.

Heath, C., and Heath, D. (2007). Made to Stick: Why Some Ideas Survive and Others Die. New York, NY: Random House.

Hwang, Y., and Jeong, S.-H. (2009). Revisiting the knowledge gap hypothesis: a meta-analysis of thirty-five years of research. J. Mass Commun. Q. 86, 513–532. doi: 10.1177/107769900908600304

Jamison, A. M., Broniatowski, D. A., Dredze, M., Wood-Doughty, Z., Khan, D., and Quinn, S. C. (2020). Vaccine-related advertising in the Facebook Ad Archive. Vaccine 38, 512–520. doi: 10.1016/j.vaccine.2019.10.066

Jolley, D., and Douglas, K. M. (2014). The effects of anti-vaccine conspiracy theories on vaccination intentions. PLoS One 9:e89177. doi: 10.1371/journal.pone.0089177

Karlova, N. A., and Fisher, K. E. (2013). A social diffusion model of misinformation and disinformation for understanding human information behaviour. Inform. Res. 18:573.

Lazer, D. M., Baum, M. A., Benkler, Y., Berinsky, A. J., Greenhill, K. M., Menczer, F., et al. (2018). The science of fake news. Science 359, 1094–1096.

Lewandowsky, S., Ecker, U. K., Seifert, C. M., Schwarz, N., and Cook, J. (2012). Misinformation and its correction: continued influence and successful debiasing. Psychol. Sci. Public Interest 13, 106–131. doi: 10.1177/1529100612451018

Lewandowsky, S., Oberauer, K., and Gignac, G. E. (2013). NASA faked the moon landing—therefore, (climate) science is a hoax: an anatomy of the motivated rejection of science. Psychol. Sci. 24, 622–633. doi: 10.1177/0956797612457686

Li, H., and Sakamoto, Y. (2015). “Computing the veracity of information through crowds: a method for reducing the spread of false messages on social media,” in Proceedings of the 2015 48th Hawaii International Conference on System Sciences, Kauai.

Lyons, B., Merola, V., and Reifler, J. (2019). Not just asking questions: effects of implicit and explicit conspiracy information about vaccines and genetic modification. Health Commun. 34, 1741–1750. doi: 10.1080/10410236.2018.1530526

McGuire, W. J. (1972). Attitude change: the information processing paradigm. Exp. Soc. Psychol. 5, 108–141.

McMullan, R. D., Berle, D., Arnáez, S., and Starcevic, V. (2019). The relationships between health anxiety, online health information seeking, and cyberchondria: systematic review and meta-analysis. J. Affect. Disord. 245, 270–278. doi: 10.1016/j.jad.2018.11.037

Moran, M. B., Lucas, M., Everhart, K., Morgan, A., and Prickett, E. (2016). What makes anti-vaccine websites persuasive? A content analysis of techniques used by anti-vaccine websites to engender anti-vaccine sentiment. J. Commun. Healthc. 9, 151–163. doi: 10.1080/17538068.2016.1235531

Mou, Y., and Lin, C. A. (2014). Communicating food safety via the social media: the role of knowledge and emotions on risk perception and prevention. Sci. Commun. 36, 593–616. doi: 10.1177/1075547014549480

Na, K., Garrett, R. K., and Slater, M. D. (2018). Rumor acceptance during public health crises: testing the emotional congruence hypothesis. J. Health Commun. 23, 791–799. doi: 10.1080/10810730.2018.1527877

Nelson, J. L., and Taneja, H. (2018). The small, disloyal fake news audience: the role of audience availability in fake news consumption. New Media Soc. 20, 3720–3737. doi: 10.1177/1461444818758715

Nyhan, B., and Reifler, J. (2010). When corrections fail: the persistence of political misperceptions. Polit. Behav. 32, 303–330. doi: 10.1007/s11109-010-9112-2

Oh, H. J., and Lee, H. (2019). When do people verify and share health rumors on social media? The effects of message importance, health anxiety, and health literacy. J. Health Commun. 24, 837–847. doi: 10.1080/10810730.2019.1677824

Pezzo, M. V., and Beckstead, J. W. (2006). A multilevel analysis of rumor transmission: effects of anxiety and belief in two field experiments. Basic Appl. Soc. Psychol. 28, 91–100. doi: 10.1207/s15324834basp2801_8

Rosenstock, I. M., Strecher, V. J., and Becker, M. H. (1994). “The health belief model and HIV risk behavior change,” in Preventing AIDS, eds R. J. DiClemente and J. L. Peterson (Berlin: Springer), 5–24. doi: 10.1007/978-1-4899-1193-3_2

Rozin, P., and Royzman, E. B. (2001). Negativity bias, negativity dominance, and contagion. Pers. Soc. Psychol. Rev. 5, 296–320. doi: 10.1207/s15327957pspr0504_2

Schwarz, N., Sanna, L. J., Skurnik, I., and Yoon, C. (2007). Metacognitive experiences and the intricacies of setting people straight: implications for debiasing and public information campaigns. Adv. Exp. Soc. Psychol. 39, 127–161. doi: 10.1016/s0065-2601(06)39003-x

Sell, T. K., Hosangadi, D., and Trotochaud, M. (2020). Misinformation and the US Ebola communication crisis: analyzing the veracity and content of social media messages related to a fear-inducing infectious disease outbreak. BMC Public Health 20:550. doi: 10.1186/s12889-020-08697-3

Shen, C., Kasra, M., Pan, W., Bassett, G. A., Malloch, Y., and O’Brien, J. F. (2019). Fake images: the effects of source, intermediary, and digital media literacy on contextual assessment of image credibility online. New Media Soc. 21, 438–463. doi: 10.1177/1461444818799526

Sina News (2020). Aurora: A Series of Reports on the New Information Industry. Available online at: https://finance.sina.com.cn/tech/2020-12-02/doc-iiznctke4421877.shtml (accessed January 26, 2021).

Starcevic, V., and Berle, D. (2013). Cyberchondria: towards a better understanding of excessive health-related Internet use. Expert Rev. Neurother. 13, 205–213. doi: 10.1586/ern.12.162

Statista (2020). Number of Daily Active Users of Sina Weibo in China from 4th Quarter 2017 to 3rd Quarter 2020. Available online at: https://www.statista.com/statistics/1058070/china-sina-weibo-dau/ (accessed January 26, 2021).

Stevenson, F. A., Kerr, C., Murray, E., and Nazareth, I. (2007). Information from the Internet and the doctor-patient relationship: the patient perspective–a qualitative study. BMC Fam. Pract. 8:47. doi: 10.1186/1471-2296-8-47

Swire-Thompson, B., and Lazer, D. (2020). Public health and online misinformation: challenges and recommendations. Annu. Rev. Public Health 41, 433–451. doi: 10.1146/annurev-publhealth-040119-094127

van der Linden, S., Roozenbeek, J., and Compton, J. (2020). Inoculating against fake news about COVID-19. Front. Psychol. 11:566790. doi: 10.3389/fpsyg.2020.566790

Vosoughi, S., Roy, D., and Aral, S. (2018). The spread of true and false news online. Science 359, 1146–1151. doi: 10.1126/science.aap9559

Walker, C. J., and Blaine, B. (1991). The virulence of dread rumors: a field experiment. Lang. Commun. 11, 291–297. doi: 10.1016/0271-5309(91)90033-r

Wang, W.-C., Brashier, N. M., Wing, E. A., Marsh, E. J., and Cabeza, R. (2016). On known unknowns: fluency and the neural mechanisms of illusory truth. J. Cogn. Neurosci. 28, 739–746. doi: 10.1162/jocn_a_00923

Wang, Y., McKee, M., Torbica, A., and Stuckler, D. (2019). Systematic literature review on the spread of health-related misinformation on social media. Soc. Sci. Med. 240:112552. doi: 10.1016/j.socscimed.2019.112552

Whittlesea, B. W. (1993). Illusions of familiarity. J. Exp. Psychol. Learn. Mem. Cogn. 19:1235. doi: 10.1037/0278-7393.19.6.1235

Williams Kirkpatrick, A. (2020). The spread of fake science: lexical concreteness, proximity, misinformation sharing, and the moderating role of subjective knowledge. Public Understand. Sci. [Epub ahead of print].

Xinhua News (2019). Eliminate Health Myths and Get Rid of Health Anxiety. Available online at: http://www.xinhuanet.com/food/2019-07/16/c_1124758041.htm (accessed January 26, 2021).

Zarocostas, J. (2020). How to fight an infodemic. Lancet 395:676. doi: 10.1016/s0140-6736(20)30461-x

Zhang, L., Jung, E. H., and Chen, Z. (2020). Modeling the pathway linking health information seeking to psychological well-being on WeChat. Health Commun. 35, 1101–1112. doi: 10.1080/10410236.2019.1613479

Zhang, X., Wen, D., Liang, J., and Lei, J. (2017). How the public uses social media WeChat to obtain health information in China: a survey study. BMC Med. Inform. Decis. Mak. 17:66. doi: 10.1186/s12911-017-0470-0

Appendix

Appendix A. Survey questionnaire.

Keywords: health misinformation, social media, misinformation acceptance, health-related anxiety, preexisting misinformation beliefs, repeated exposure

Citation: Pan W, Liu D and Fang J (2021) An Examination of Factors Contributing to the Acceptance of Online Health Misinformation. Front. Psychol. 12:630268. doi: 10.3389/fpsyg.2021.630268

Received: 17 November 2020; Accepted: 08 February 2021;

Published: 01 March 2021.

Edited by:

Xiaofei Xie, Peking University, China

Reviewed by:

Michael K. Hauer, Drexel University, United States

Kai Shu, Illinois Institute of Technology, United States

Copyright © 2021 Pan, Liu and Fang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jie Fang, 20080073@ruc.edu.cn
