- 1Institute of Psychology, Chinese Academy of Sciences, Beijing, China

- 2Department of Psychology, University of Chinese Academy of Sciences, Beijing, China

Background: Trait self-esteem reflects stable self-evaluation and affects social interaction patterns. However, whether and how trait self-esteem is expressed through behavior remains controversial. Considering that facial expressions can effectively convey information related to personal traits, the present study investigated the three-dimensional (3D) facial movements related to self-esteem level and the sex differences therein.

Methods: The sample comprised 238 participants (46.2% males, 53.8% females). Their levels of trait self-esteem were evaluated with the Rosenberg Self-Esteem Scale (SES) (47.9% low self-esteem, 52.1% high self-esteem). During self-introductions, their facial movements in 3D space were recorded by Microsoft Kinect. Two-way ANOVA was performed to analyze the effects of self-esteem and gender on 3D facial movements. Additionally, logistic regression models were established to describe the relationship between 3D facial movements and self-esteem levels in both genders.

Results: The two-way ANOVA revealed a main effect of trait self-esteem level on cheek and lip movements. There was also a significant interaction between trait self-esteem and gender on the variability of lip movements. In addition, combinations of facial movements identified trait self-esteem in men and women with 75.5% and 68% accuracy, respectively.

Conclusion: The present results suggest that the 3D facial expressions of individuals with different trait self-esteem levels differ, and that this difference is affected by gender. Our study explores a possible way in which trait self-esteem plays a role in social interaction and also provides a basis for automatic self-esteem recognition.

Introduction

Self-esteem is a person’s positive or negative evaluation of oneself (Rosenberg, 1965), and it can be divided into trait self-esteem (long-term fluctuations) and state self-esteem (short-term fluctuations) (Kernis, 1993). While state self-esteem is influenced by social evaluation (Leary et al., 2003), trait self-esteem is both influenced by social evaluation and, in turn, affects social behavior (Greenwald and Banaji, 1995), social decision-making (Anthony et al., 2007), and ultimately, individual well-being (Baumeister et al., 2003). Individuals with higher trait self-esteem usually appear self-confident to others (Krause et al., 2016). They make a more positive first impression (Back et al., 2011), are more likely to be accepted after interviews (Imada and Hakel, 1977), and have higher sales performance at work (Miner, 1962). Conversely, the perception of others’ self-esteem is an important factor in determining the mode of communication (Macgregor et al., 2013). One way to understand how trait self-esteem affects social interaction is to investigate the expression of trait self-esteem.

Previous studies have reported that males have slightly higher self-esteem than females (Feingold, 1994). Some comparisons showed that gender is an important factor in the relationship between self-esteem and body image (Israel and Ivanova, 2002), alcohol use (Neumann et al., 2009), and life satisfaction (Moksnes and Espnes, 2013). In addition, the self-reported personality traits of men and women with high self-esteem differ slightly (Robins et al., 2001): agentic traits such as extraversion are important for men’s self-esteem, while communal traits such as agreeableness are important for women’s self-esteem. However, only a few studies have investigated sex differences in the expression of trait self-esteem. Considering that men and women with high self-esteem tend to show different traits and behave differently, they are likely to express their trait self-esteem in different ways.

Many researchers have studied the perception of self-esteem rather than its expression, and their results have been inconsistent. One study revealed that self-esteem may not be perceived well through explicit behavioral cues (e.g., maintaining eye contact and hesitating when speaking) (Savin-Williams and Jaquish, 1981). Other studies indicated zero correlation between self-reported self-esteem and self-esteem as perceived by others (Yeagley et al., 2007; Kilianski, 2008). However, a recent study has shown that when controlling for acquaintance, people can identify trait self-esteem from self-introduction videos fairly accurately (Hirschmueller et al., 2018). The perception of self-esteem is influenced by prior knowledge and individual differences (Ostrowski, 2010; Nicole et al., 2015), and it is difficult for perceivers to identify fine-grained behavioral information. Using advanced technology to encode the expression of trait self-esteem may be an objective way to study the interpersonal effects of trait self-esteem. For example, previous research found a relationship between encoded gait information and trait self-esteem (Sun et al., 2017).

Compared with other expressions, facial movements convey more information about personal traits (Harrigan et al., 2004) and attract more attention. Meanwhile, facial expressions play an important role in social interaction and communication (Berry and Landry, 1997; Ishii et al., 2018). As a result, facial movement is likely to be one aspect of trait self-esteem expression. On the one hand, facial movements are related to personality and depression (de Melo et al., 2019; Suen et al., 2019), which are linked to self-esteem (Robins et al., 2001). On the other hand, gender differences exist in facial movements (Hu et al., 2010). Taken together, these previous findings suggest that there are sex differences in the facial movements related to trait self-esteem. Therefore, it is important to distinguish the facial expressions that characterize men and women with high or low self-esteem. Moreover, three-dimensional (3D) facial data are generally considered to contain more information than 2D facial data and to perform better in identifying traits (Jones et al., 2012; de Melo et al., 2019). Thus, exploring the facial expressions of trait self-esteem in 3D space gives a more comprehensive picture.

The purpose of this study was to investigate the 3D facial movements related to trait self-esteem and whether this relationship is influenced by gender. Based on previous findings, we hypothesized that facial movements would be separately influenced by trait self-esteem level and gender. In addition, we expected an interaction between self-esteem level and gender in their effect on facial movements.

Materials and Methods

Participants

A total of 240 students and workers from the University of Chinese Academy of Sciences participated in this study. Participants had to meet three inclusion criteria: (1) at least 18 years old; (2) fluent in Mandarin; and (3) healthy and able to make normal facial expressions. After excluding the data of participants who frequently covered their faces with their hands, 238 qualified samples were obtained, of which 110 were male and 128 were female. The retention rate was 99.17%. The average age was 22.8 years (SD = 2.8).

A post hoc power analysis in G*Power (Faul et al., 2007) for the 238 participants, with an alpha level of 0.05 and a medium effect size, indicated high statistical power (power = 0.97) (MacCallum et al., 1996).

Measures

Rosenberg Self-Esteem Scale

This study used the Chinese version of the Rosenberg Self-Esteem Scale (SES) (Rosenberg, 1965; Ji and Yu, 1993) to measure trait self-esteem (Kang, 2019). The scale contains 10 questions in total and scores range from 10 to 40; the higher the score, the higher the level of self-esteem. Generally speaking, scores lower than 15 indicate very low self-esteem, scores of 15–20 indicate low self-esteem, scores of 20–30 indicate normal self-esteem, scores of 30–35 indicate high self-esteem, and scores above 35 indicate very high self-esteem. In this study, we divided the subjects into two groups based on their self-esteem score. Subjects with scores higher than 30 were assigned to the high self-esteem group (HSE), and those with scores lower than or equal to 30 were assigned to the low self-esteem group (LSE).
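The grouping rule described above can be illustrated with a minimal Python sketch. The function name `classify_ses` and the constant `HSE_CUTOFF` are hypothetical names introduced here for illustration; only the cutoff of 30 and the 10 to 40 score range come from the text.

```python
HSE_CUTOFF = 30  # from the text: scores above 30 are HSE, 30 or below are LSE


def classify_ses(score: int) -> str:
    """Return "HSE" or "LSE" for a Rosenberg SES total score (10 to 40)."""
    if not 10 <= score <= 40:
        raise ValueError(f"SES total score must lie in 10..40, got {score}")
    return "HSE" if score > HSE_CUTOFF else "LSE"


if __name__ == "__main__":
    for s in (18, 30, 31, 38):
        print(s, classify_ses(s))
```

Note that a score of exactly 30 falls into the LSE group under this rule.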

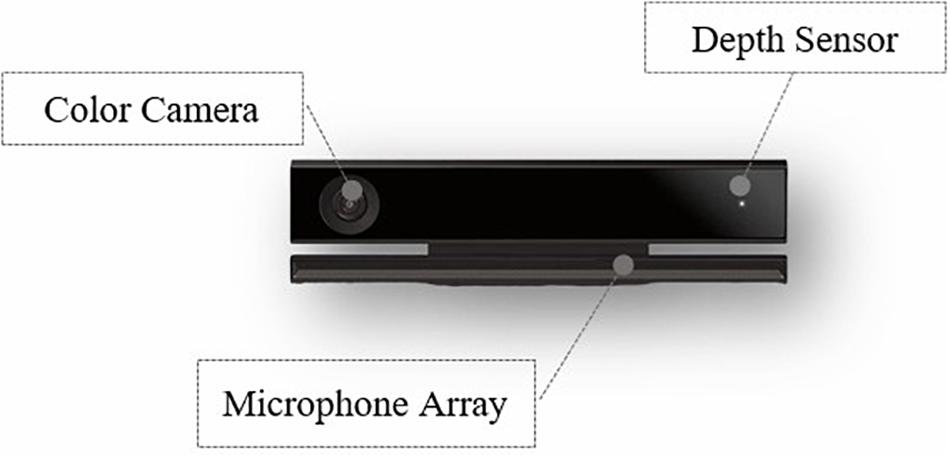

Kinect

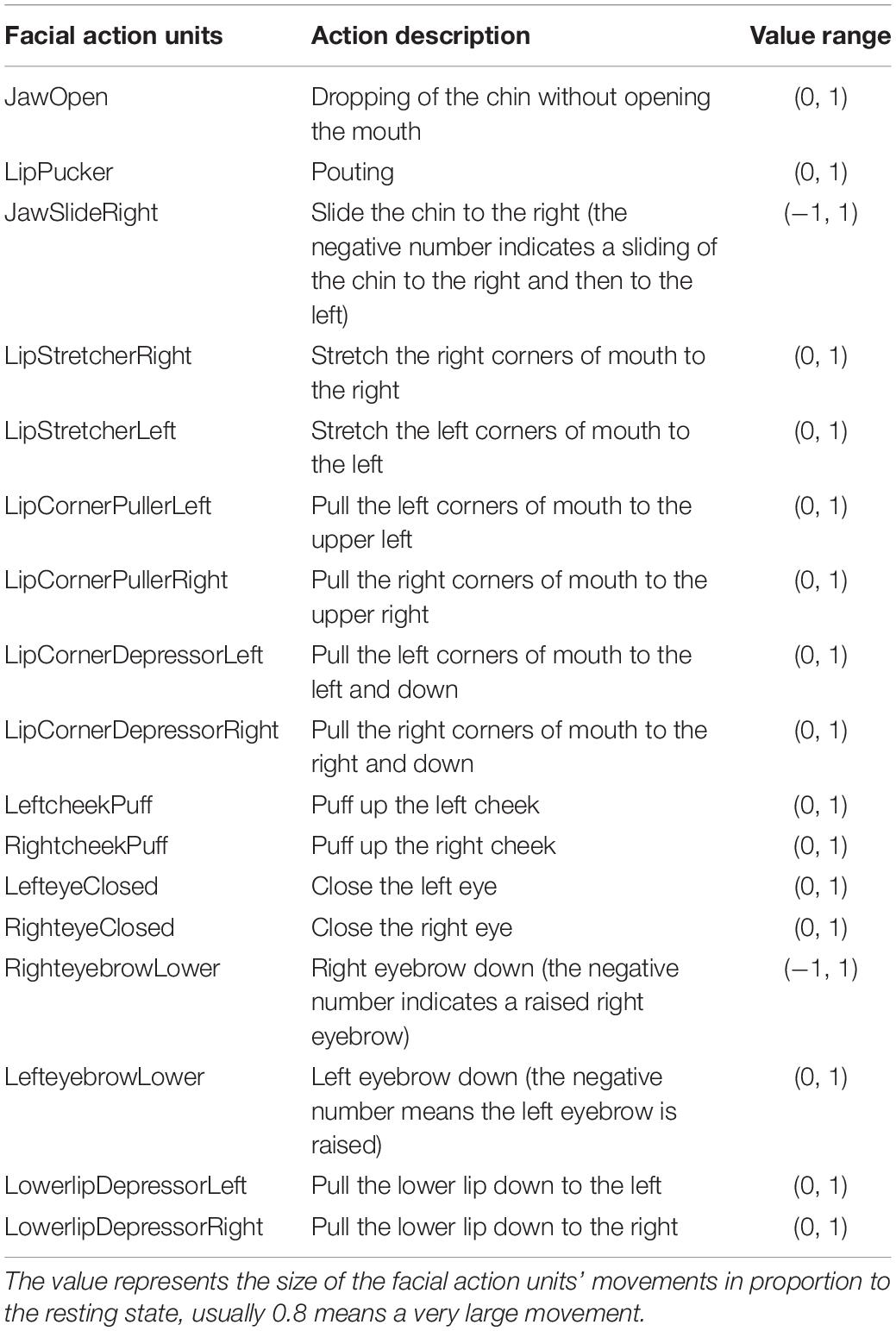

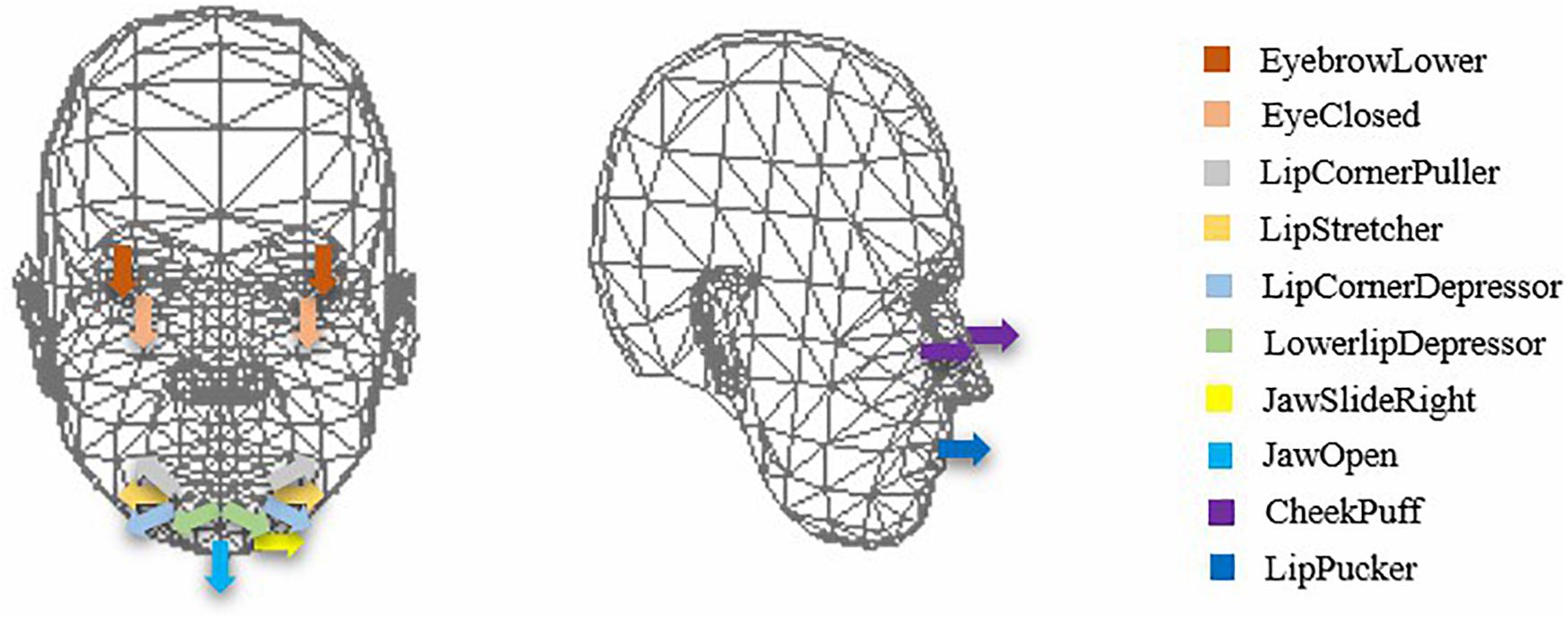

A Kinect camera can be used to recognize facial movements automatically (Zaletelj and Košir, 2017) (Figure 1). The sampling frequency of the Kinect is 30 Hz, which means it records 30 frames of facial activity per second. To describe 3D facial movements quantitatively, Kinect captures and tracks 17 key action units (AUs) (Ekman and Friesen, 1978) around the facial features in 3D space. The specific meaning of each AU is provided in Table 1, and the corresponding facial movements are illustrated in Figure 2, which shows that three AUs move in the depth dimension. Most of the AUs are expressed as a numeric weight that varies between 0 and 1. Three of the AUs (jaw slide right, right eyebrow lower, and left eyebrow lower) vary between −1 and +1. The absolute value of the numeric weight represents the moving distance of the corresponding AU. Kinect thus produces one time series for each AU’s movements.

Design and Procedure

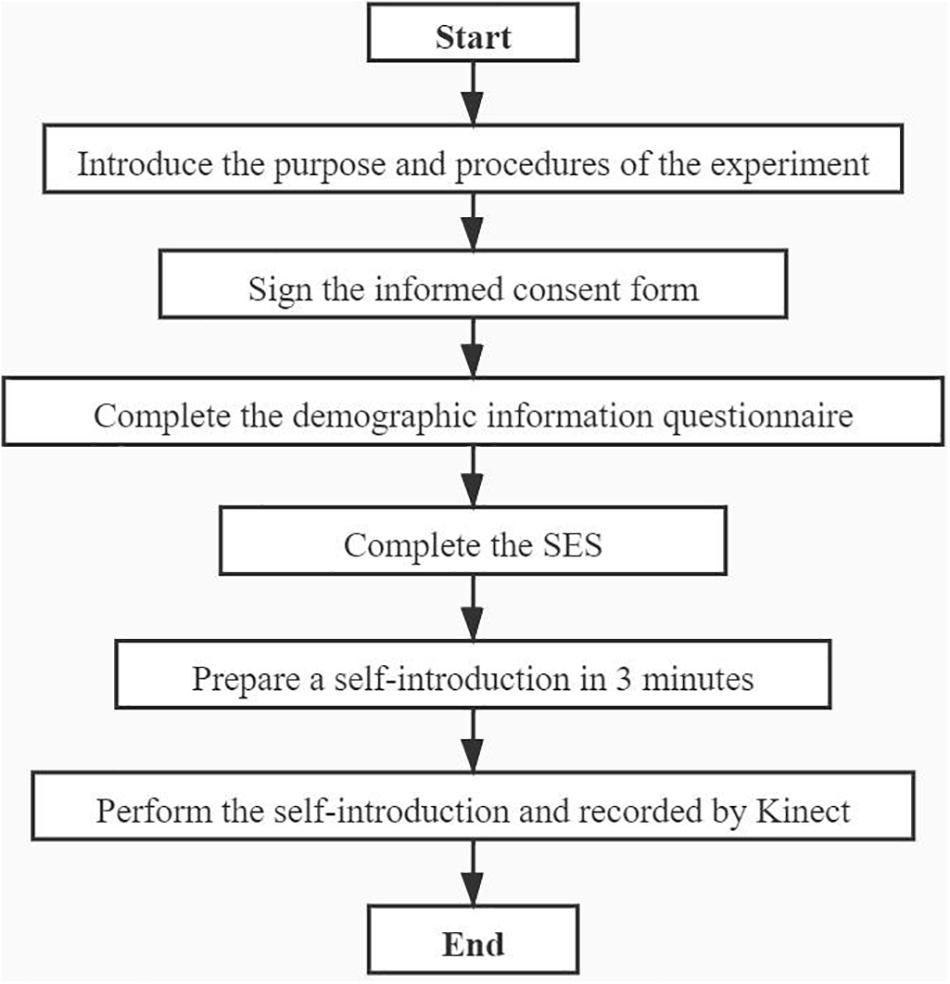

To analyze the relationship between self-esteem and 3D facial movements, this study designed a facial data collection experiment with a self-introductory situation according to the following steps: (1) First, the demographic information of a subject was recorded. (2) Then, the level of self-esteem was determined using the SES. (3) During the experimental task (self-introduction), the participants’ facial changes in 3D space (the movement of AUs) were captured by Kinect.

Specifically, during the experimental task, a self-introductory situation was set. Compared with conversation contexts and emotional-induction scenes, self-introduction facing the camera is least affected by social evaluation. We believe that in this situation, the subjects show their trait self-esteem rather than state self-esteem (Park et al., 2007). In this section, participants were asked to make a 1-min self-introduction using the following outline: (1) Please introduce yourself and your hometown in detail; (2) Please give a detailed account of your major and the interesting research work you did when you were at school; (3) Please describe your plans for the future and what kind of work you would like to do. Meanwhile, illumination, the relative position, and the relative distance between Kinect and participants were strictly controlled. For illumination, we chose a bright room without direct sunlight for data acquisition; for relative position, we asked the participants to keep their body as still as possible; for distance, we controlled the distance between the camera and the participants to 3 m.

The whole process of the experiment is shown in Figure 3. The same steps were followed for each subject independently. The entire process took approximately 10 min.

Statistical Analysis

After the experiment, we had demographic information, a self-esteem score, and 3D facial movement data for each subject. We then preprocessed the data. For self-esteem scores, the LSE group was defined as those whose self-esteem score was less than or equal to 30 points, while the HSE group had a score greater than 30. For AU data, we normalized the values and eliminated outliers lying more than three standard deviations from the mean. Then the mean and standard deviation of each AU were calculated to describe the magnitude and variability of the facial muscle movements. The greater the absolute value of the mean, the larger the movement amplitude; the greater the standard deviation, the larger the facial variability.
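As a rough illustration of this feature extraction, the sketch below removes frames lying more than three standard deviations from the mean of one AU time series and then computes the mean and standard deviation of the remaining frames. Several details are assumptions, not taken from the study: the function name `au_features` is hypothetical, the sample standard deviation is used, outliers are judged against the series' own mean, and the normalization step is omitted because the text does not specify the method.

```python
from statistics import mean, stdev


def au_features(series, k=3.0):
    """Mean and SD of one AU time series after k-sigma outlier removal.

    Assumption: outliers are frames more than k sample standard
    deviations away from the mean of this series.
    """
    m = mean(series)
    s = stdev(series)
    if s > 0:
        kept = [x for x in series if abs(x - m) <= k * s]
    else:
        kept = list(series)  # constant series: nothing to remove
    return {
        "mean": mean(kept),                              # movement amplitude
        "std": stdev(kept) if len(kept) > 1 else 0.0,    # movement variability
        "n_kept": len(kept),
    }


if __name__ == "__main__":
    # Toy series: 20 frames at 0.1 plus one spike at 0.9.
    toy = [0.1] * 20 + [0.9]
    print(au_features(toy))
```

In the toy series the single spike lies beyond three standard deviations and is dropped, so the features describe only the 20 remaining frames.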

For each AU indicator, descriptive analyses were used to calculate the means and SDs for each group (LSE vs HSE), and the data were also analyzed according to gender (male vs female). Considering the ratio of the number of variables to the sample size, differences between the mean scores for group × gender were tested using two-way ANOVA. Then, multivariate analyses using logistic regression models were conducted to identify the relationship between AU movements and self-esteem level, and odds ratios (ORs) were calculated. In addition, stepwise logistic regression was used to remove unimportant independent variables and obtain the best-fitting models. All statistical analyses were performed in SPSS 22.0, with a two-tailed probability value of <0.05 considered statistically significant.
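For reference, the standard form of a binary logistic regression model and its odds ratios can be written as follows. The symbols are introduced here for illustration and do not appear in the source: $x_1,\dots,x_k$ denote the AU indicators retained in a model, $\beta_i$ their coefficients, and coding HSE as the positive outcome is an assumption.

```latex
\[
P(\mathrm{HSE} \mid x_1,\dots,x_k)
  = \frac{1}{1 + \exp\!\left(-\left(\beta_0 + \sum_{i=1}^{k} \beta_i x_i\right)\right)},
\qquad
\mathrm{OR}_i = e^{\beta_i}
\]
```

Under this form, an odds ratio above 1 means that a one-unit increase in the indicator raises the odds of belonging to the HSE group, and an odds ratio below 1 means that it lowers them.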

Results

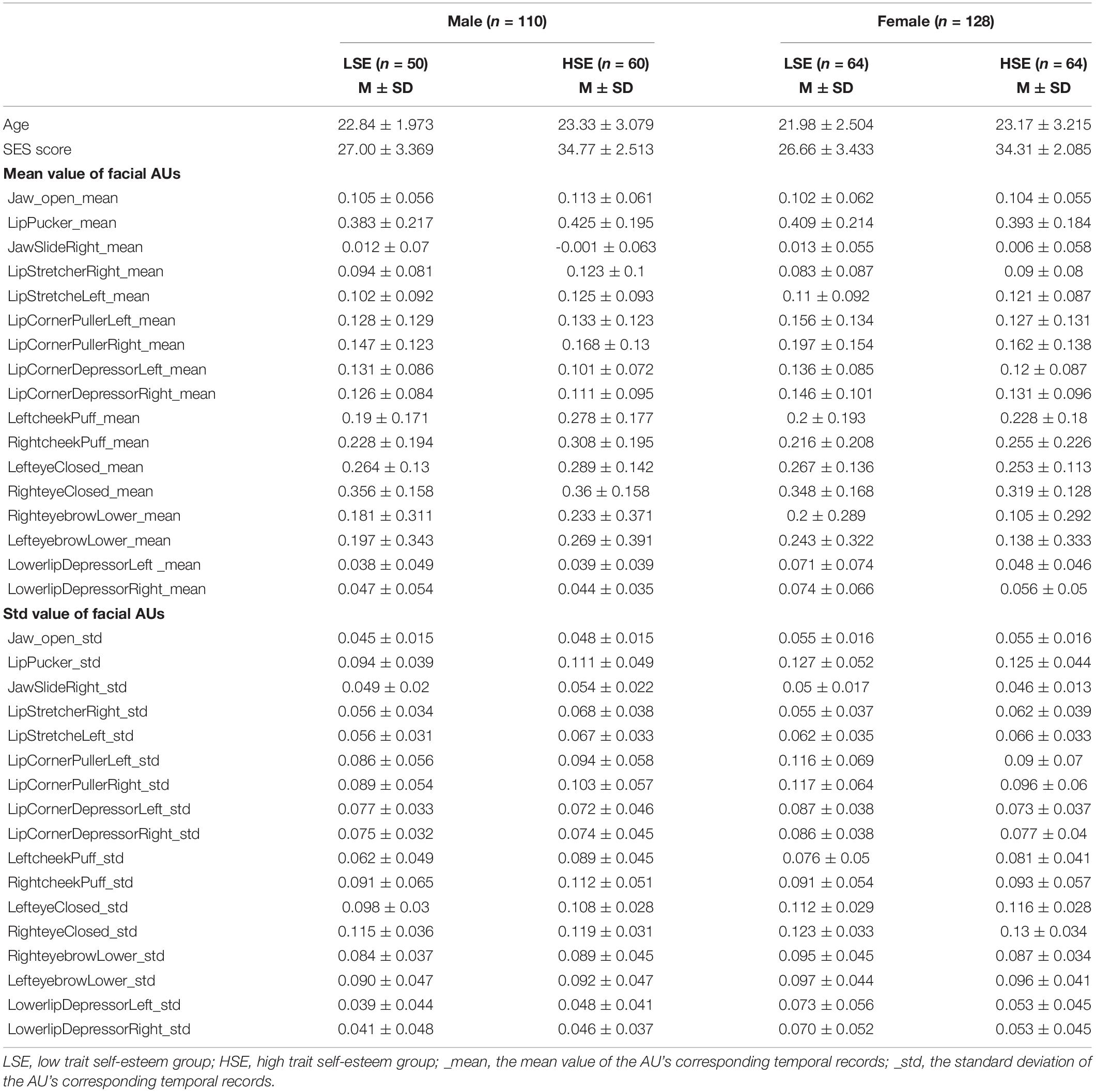

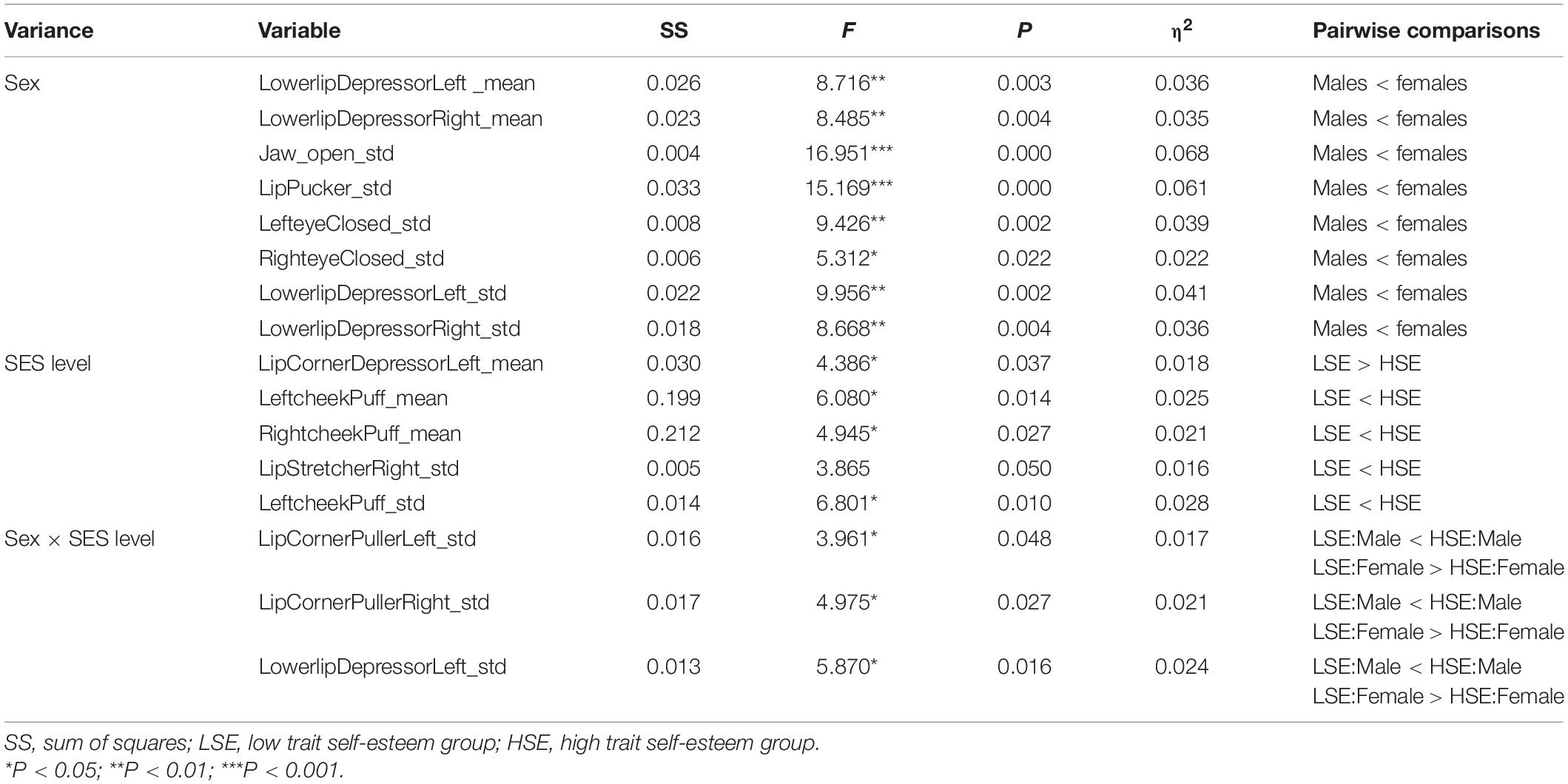

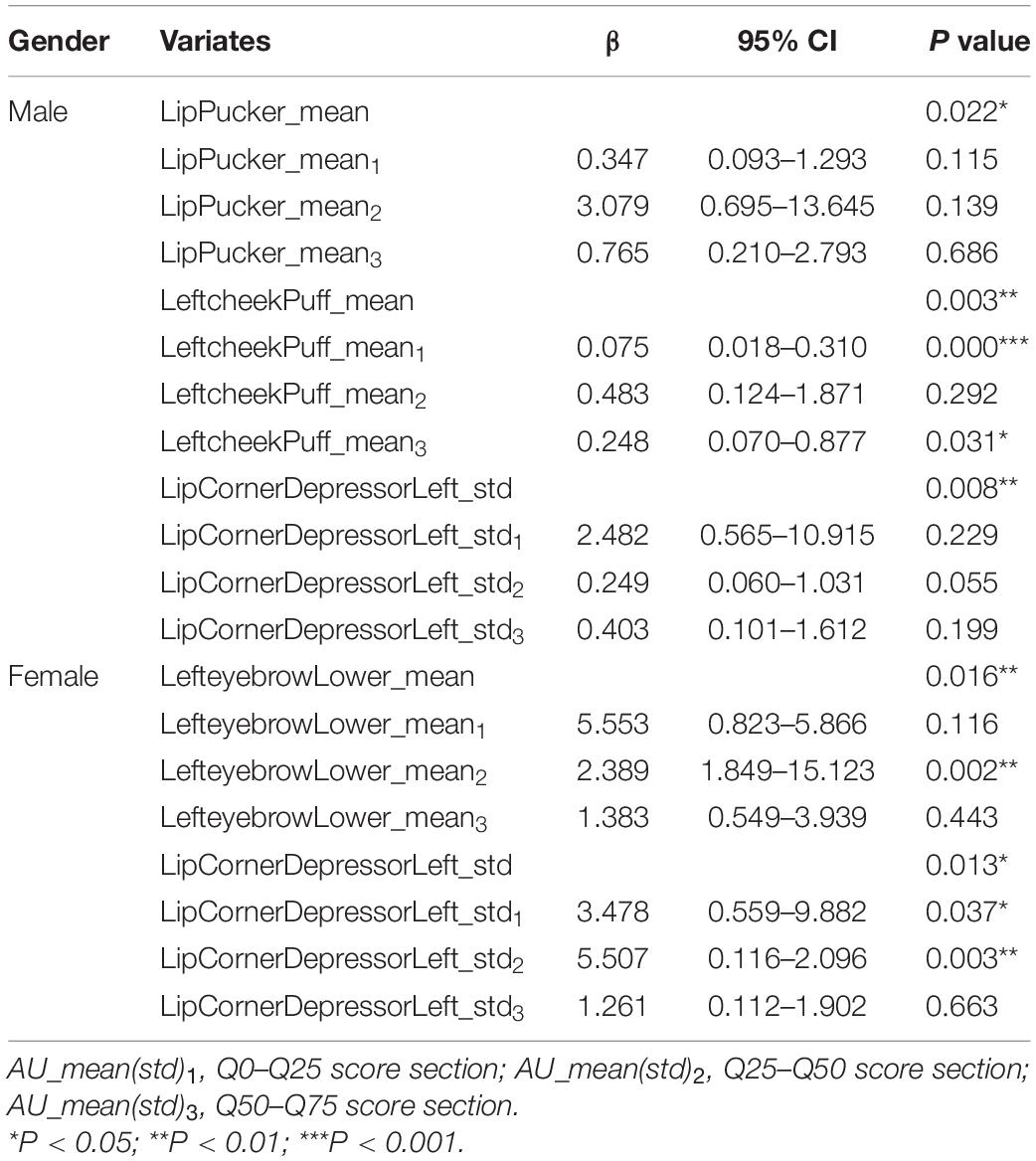

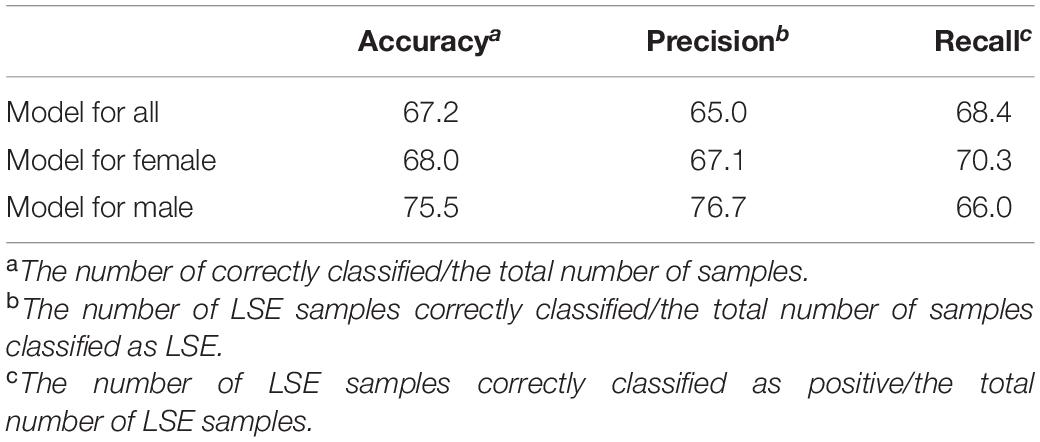

A total of 110 males (46.22%) and 128 females (53.78%) were involved in this study. No significant sex × SE-level differences in age distribution were found. The means and standard deviations of AU movements are reported as M ± SD in Table 2. The significant results from a 2 × 2 ANOVA with SE level (i.e., LSE and HSE) and sex (i.e., male and female) as factors are shown in Table 3. Significant models predicting self-esteem level from AU movements are shown in Table 4.

There were significant main effects of SE level on LipCornerDepressorLeft_mean [F (1, 234) = 4.386, P = 0.037], LeftcheekPuff_mean [F (1, 234) = 6.080, P = 0.014], RightcheekPuff_mean [F (1, 234) = 4.945, P = 0.027], LipStretcherRight_std [F (1, 234) = 3.865, P = 0.050], and LeftcheekPuff_std [F (1, 234) = 6.801, P = 0.010]. The results revealed that the amplitude and richness of facial movements increased with higher self-esteem, with the exception of LipCornerDepressorLeft_mean.

There were also significant interaction effects between sex and self-esteem level: LipCornerPullerLeft_std [F (1, 234) = 3.961, P = 0.048], LipCornerPullerRight_std [F (1, 234) = 4.975, P = 0.027], and LowerlipDepressorLeft_std [F (1, 234) = 5.870, P = 0.016]. These three AUs in men with low self-esteem moved more variably than men with high self-esteem, while in women, the opposite was true.

Forward stepwise logistic regression models were fitted to check whether some crucial AU movements could be combined to predict self-esteem in an optimal model for each gender. Table 4 presents the multivariable associations between AU movements in 3D space and self-esteem. Three independent variables were included in the logistic regression model for men: LipPucker_mean (P = 0.022), LeftcheekPuff_mean (P = 0.003), and LipCornerDepressorLeft_std (P = 0.008). Two independent variables were included in the logistic regression model for women: LefteyebrowLower_mean (P = 0.016) and LipCornerDepressorLeft_std (P = 0.013). These AU indicators might be powerful when combined to predict self-esteem. Both logistic regression models were significant (P < 0.001), with classification accuracies of 75.5% for men and 68% for women. In addition, we further confirmed that building separate models for men and women performed better than building a combined model (Table 5).

Discussion

The purpose of the study was to explore the relationship between 3D facial movements and trait self-esteem. Kinect was employed to quantify facial movements during self-introduction, and statistical analysis explored the relationship between facial movements and level of self-esteem. The results were consistent with our hypothesis, revealing that some 3D facial movements were related to trait self-esteem and that sex influences this relationship.

Previous studies found that self-esteem may not be perceived well through explicit behavioral cues (Savin-Williams and Jaquish, 1981; Kilianski, 2008). In contrast, the present findings indicate that during self-introductions, trait self-esteem has a significant effect on the movements of some facial AUs, and a specific combination of AU movements can predict the level of trait self-esteem. This extends recent work showing that strangers’ perceptions of self-esteem based on self-introduction videos were fairly accurate (r = 0.31, P = 0.002) (Hirschmueller et al., 2018). Specifically, compared with the LSE group, individuals in the HSE group puffed both cheeks more, stretched their lips to the right more variably, and puffed their left cheek more variably. In contrast, people with high self-esteem depressed their left lip corner less; lip corner depression is a distinctive movement that best represents sadness (coded as AU15 in FACS) (Ekman and Friesen, 1978). One potential explanation is that trait self-esteem may be expressed through different emotions during self-rating. While individuals with high self-esteem tend to express more positive emotions such as pride (Tracy and Robins, 2003; Agroskin et al., 2014) and happiness (Kim et al., 2019) when introducing themselves, those with low self-esteem are more likely to exhibit negative emotions including sadness and disgust (Satoh, 2001). In addition, it is noteworthy that the left cheek was found to be the area most affected by trait self-esteem (for amplitude: η² = 0.025; for variability: η² = 0.028). This leads to the hypothesis that trait self-esteem may be a potential factor in the left cheek bias (Nicholls et al., 2002; Lindell and Savill, 2010; Lindell, 2013). Future research on the factors underlying the left cheek bias could take trait self-esteem into consideration.
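The effect sizes for the left cheek can be related to the reported F statistics with a short Python sketch. This assumes that the reported values are partial eta squared, which the text does not state explicitly; the function name `partial_eta_squared` is introduced here for illustration.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


if __name__ == "__main__":
    # F values and degrees of freedom as reported in the Results section.
    print(round(partial_eta_squared(6.080, 1, 234), 3))  # LeftcheekPuff_mean
    print(round(partial_eta_squared(6.801, 1, 234), 3))  # LeftcheekPuff_std
```

Under that assumption, the two computed values round to 0.025 and 0.028, which agrees with the effect sizes quoted for amplitude and variability.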

Moreover, trait self-esteem and gender interact in their influence on facial movements. In terms of the variability of lip movements, high self-esteem males move more variably than low self-esteem males, while low self-esteem females move more variably than high self-esteem females. One explanation for why men and women express their trait self-esteem differently is that self-esteem is largely derived from interpersonal experience (Leary et al., 1995) and gender identity (Cvencek et al., 2016). On this view, men who are more intimidating enjoy higher social status and thus higher levels of self-esteem. In contrast, women who are more affiliative have better interpersonal relationships and thus higher levels of self-esteem (Baldwin and Keelan, 1999). An alternative explanation is that men and women with high self-esteem have different personality traits. While agentic traits such as extraversion are more important for men’s self-esteem, communal traits (e.g., agreeableness) are more crucial for women’s self-esteem (Robins et al., 2001). Although the mechanism is unclear, our study indicates that the facial expressions of trait self-esteem are related to gender, and that gender-specific self-esteem prediction models perform better than a combined model. It is recommended that future studies on self-esteem consider gender as an important factor.

The study has several implications. First, the present study collected self-introduction video data and employed advanced 3D facial movement quantification techniques to determine the relationship between 3D facial expressions and trait self-esteem. To the authors’ knowledge, it is the first to investigate the quantified 3D facial expressions of trait self-esteem, and our results demonstrate the value of doing so. Second, our research reveals the effect of gender on the facial expression of self-esteem and provides suggestions for future nonverbal research on self-esteem. Finally, the results provide a basis for predicting self-esteem from 3D facial data. Given that self-report methods may yield false answers in interview and job scenarios (McLarnon et al., 2019), a facial prediction method offers a supplementary strategy to measure self-esteem.

However, the findings must be considered in light of several limitations. First, since this study was conducted in China, the extent to which the findings generalize to other cultures is uncertain. Previous studies have indicated that Asian subjects may have different facial expressions than subjects from other cultural backgrounds (Matsumoto and Ekman, 1989). In addition, cultures differ significantly in the magnitude of the gender effect on self-esteem. Future cross-cultural research might help to understand how the facial expression of self-esteem differs between cultures. Second, according to the lens model (Watson and Brunswik, 1958), the influence of trait self-esteem in social interaction is realized through the expression of trait self-esteem and the perception of that expression. This study objectively explored only the relationship between facial expressions and self-esteem and did not investigate the extent to which human perceivers are affected by these expressions. A further limitation is that participants’ background knowledge may have influenced their self-reported scores.

Regarding future lines of research, stable trait self-esteem prediction models can be developed on the basis of research on trait self-esteem expression. Because self-reports can be affected by social approval and background knowledge, automatic self-esteem recognition could be used to measure self-esteem in interview scenarios or in special populations. Furthermore, multimodal data (such as eye movements, facial movements, and gestures) can be combined to describe the expression of self-esteem more fully and establish more accurate prediction models.

Data Availability Statement

The datasets presented in this article are not readily available because raw data cannot be made public. If necessary, we can provide behavioral characteristic data. Requests to access the datasets should be directed to XL.

Ethics Statement

The studies involving human participants were reviewed and approved by the scientific research ethics committee of the Chinese Academy of Sciences Institute of Psychology (H15010). The patients/participants provided their written informed consent to participate in this study.

Author Contributions

TZ contributed to the conception and design of the study. XL collected the data and developed the instrument. XW performed most of the statistical analysis and wrote the manuscript with input from all authors. YW performed part of the statistical analysis and helped drafting a part of the text. All authors contributed to the article and approved the submitted version.

Funding

This research was funded by the Key Research Program of the Chinese Academy of Sciences (No. ZDRW-XH-2019-4).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We wish to thank all subjects for their participation in this study.

References

Agroskin, D., Klackl, J., and Jonas, E. (2014). The self-liking brain: a VBM study on the structural substrate of self-esteem. PLoS One 9:e86430. doi: 10.1371/journal.pone.0086430

Anthony, D. B., Wood, J. V., and Holmes, J. G. (2007). Testing sociometer theory: self-esteem and the importance of acceptance for social decision-making. J. Exp. Soc. Psychol. 43, 425–432. doi: 10.1016/j.jesp.2006.03.002

Back, M. D., Schmukle, S. C., and Egloff, B. (2011). A closer look at first sight: social relations lens model analysis of personality and interpersonal attraction at zero acquaintance. Eur. J. Pers. 25, 225–238. doi: 10.1002/per.790

Baldwin, M. W., and Keelan, J. P. R. (1999). Interpersonal expectations as a function of self-esteem and sex. J. Soc. Pers. Relationsh. 16, 822–833. doi: 10.1177/0265407599166008

Baumeister, R. F., Campbell, J. D., Krueger, J. I., and Vohs, K. D. (2003). Does high self-esteem cause better performance, interpersonal success, happiness, or healthier lifestyles? Psychol. Sci. Public Interest 4, 1–44. doi: 10.1111/1529-1006.01431

Berry, D. S., and Landry, J. C. (1997). Facial maturity and daily social interaction. J. Pers. Social Psychol. 72, 570–580. doi: 10.1037/0022-3514.72.3.570

Cvencek, D., Greenwald, A. G., and Meltzoff, A. N. (2016). Implicit measures for preschool children confirm self-esteem’s role in maintaining a balanced identity. J. Exp. Soc. Psychol. 62, 50–57. doi: 10.1016/j.jesp.2015.09.015

de Melo, W. C., Granger, E., and Hadid, A. (2019). “Combining global and local convolutional 3D networks for detecting depression from facial expressions,” in Proceedings of the 2019 14th IEEE International Conference on Automatic Face and Gesture Recognition (Lille), 554–561.

Ekman, P., and Friesen, W. V. (1978). Facial Action Coding System (FACS): a technique for the measurement of facial action. Riv. Psichiatr. 47, 126–138.

Faul, F., Erdfelder, E., Lang, A.-G., and Buchner, A. (2007). G∗Power 3: a flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behav. Res. Methods 39, 175–191. doi: 10.3758/BF03193146

Feingold, A. (1994). Gender differences in personality: a meta-analysis. Psychol. Bull. 116, 429–456. doi: 10.1037/0033-2909.116.3.429

Greenwald, A. G., and Banaji, M. R. (1995). Implicit social cognition: attitudes, self-esteem, and stereotypes. Psychol. Rev. 102, 4–27. doi: 10.1037/0033-295x.102.1.4

Harrigan, J. A., Wilson, K., and Rosenthal, R. (2004). Detecting state and trait anxiety from auditory and visual cues: a meta-analysis. Pers. Soc. Psychol. Bull. 30, 56–66. doi: 10.1177/0146167203258844

Hirschmueller, S., Schmukle, S. C., Krause, S., Back, M. D., and Egloff, B. (2018). Accuracy of self-esteem judgments at zero acquaintance. J. Pers. 86, 308–319. doi: 10.1111/jopy.12316

Hu, Y., Yan, J., and Shi, P. (2010). “A fusion-based method for 3D facial gender classification,” in Proceedings of the 2010 The 2nd International Conference on Computer and Automation Engineering (ICCAE) (Singapore), 369–372. doi: 10.1109/ICCAE.2010.5451407

Imada, A. S., and Hakel, M. D. (1977). Influence of nonverbal-communication and rater proximity on impressions and decisions in simulated employment interviews. J. Appl. Psychol. 62, 295–300. doi: 10.1037/0021-9010.62.3.295

Ishii, L. E., Nellis, J. C., Boahene, K. D., Byrne, P., and Ishii, M. (2018). The importance and psychology of facial expression. Otolaryngol. Clin. North Am. 51, 1011–1017. doi: 10.1016/j.otc.2018.07.001

Israel, A. C., and Ivanova, M. Y. (2002). Global and dimensional self-esteem in preadolescent and early adolescent children who are overweight: Age and gender differences. Int. J. Eat. Disord. 31, 424–429. doi: 10.1002/eat.10048

Jones, A. L., Kramer, R. S. S., and Ward, R. (2012). Signals of personality and health: the contributions of facial shape, skin texture, and viewing angle. J. Exp. Psychol. Hum. Percept. Perform. 38, 1353–1361. doi: 10.1037/a0027078

Kang, Y. (2019). The relationship between contingent self-esteem and trait self-esteem. Soc. Behav. Pers. Int. J. 47, e7575. doi: 10.2224/sbp.7575

Kernis, M. H. (1993). “The roles of stability and level of self-esteem in psychological functioning,” in Self-Esteem, ed. R. F. Baumeister (New York, NY: Springer US), 167–182. doi: 10.1007/978-1-4684-8956-9_9

Kilianski, S. E. (2008). Who do you think I think I am? Accuracy in perceptions of others’ self-esteem. J. Res. Pers. 42, 386–398. doi: 10.1016/j.jrp.2007.07.004

Kim, E. S., Hong, Y.-J., Kim, M., Kim, E. J., and Kim, J.-J. (2019). Relationship between self-esteem and self-consciousness in adolescents: an eye-tracking study. Psychiatry Invest. 16, 306–313. doi: 10.30773/pi.2019.02.10.3

Krause, S., Back, M. D., Egloff, B., and Schmukle, S. C. (2016). Predicting Self-confident Behaviour with Implicit and Explicit Self-esteem Measures. Eur. J. Personal. 30, 648–662. doi: 10.1002/per.2076

Leary, M. R., Gallagher, B., Fors, E., Buttermore, N., Baldwin, E., Kennedy, K., et al. (2003). The invalidity of disclaimers about the effects of social feedback on self-esteem. Pers. Soc. Psychol. Bull. 29, 623–636. doi: 10.1177/0146167203029005007

Leary, M. R., Tambor, E. S., Terdal, S. K., and Downs, D. L. (1995). Self-Esteem as an interpersonal monitor. J. Pers. Soc. Psychol. 68, 270–274. doi: 10.1207/s15327965pli1403

Lindell, A. K. (2013). The silent social/emotional signals in left and right cheek poses: A literature review. Laterality 18, 612–624. doi: 10.1080/1357650x.2012.737330

Lindell, A. K., and Savill, N. J. (2010). Time to turn the other cheek? The influence of left and right poses on perceptions of academic specialisation. Laterality 15, 639–650. doi: 10.1080/13576500903201784

MacCallum, R. C., Browne, M. W., and Sugawara, H. M. (1996). Power analysis and determination of sample size for covariance structure modeling. Psychol. Methods 1, 130–149. doi: 10.1037/1082-989x.1.2.130

Macgregor, J. C. D., Fitzsimons, G. M., and Holmes, J. G. (2013). Perceiving low self-esteem in close others impedes capitalization and undermines the relationship. Pers. Relatsh. 20, 690–705. doi: 10.1111/pere.12008

Matsumoto, D., and Ekman, P. (1989). American-Japanese cultural differences in intensity ratings of facial expressions of emotion. Motiv. Emot. 13, 143–157. doi: 10.1007/BF00992959

McLarnon, M. J. W., DeLongchamp, A. C., and Schneider, T. J. (2019). Faking it! Individual differences in types and degrees of faking behavior. Pers. Individ. Dif. 138, 88–95. doi: 10.1016/j.paid.2018.09.024

Miner, J. B. (1962). Personality and ability factors in sales performance. J. Appl. Psychol. 46, 6–13. doi: 10.1037/h0044317

Moksnes, U. K., and Espnes, G. A. (2013). Self-esteem and life satisfaction in adolescents-gender and age as potential moderators. Qual. Life Res. 22, 2921–2928. doi: 10.1007/s11136-013-0427-4

Neumann, C. A., Leffingwell, T. R., Wagner, E. F., Mignogna, J., and Mignogna, M. (2009). Self-esteem and gender influence the response to risk information among alcohol using college students. J. Subst. Use 14, 353–363. doi: 10.3109/14659890802654540

Nicholls, M. E. R., Clode, D., Lindell, A. K., and Wood, A. G. (2002). Which cheek to turn? The effect of gender and emotional expressivity on posing behavior. Brain Cogn. 48, 480–484. doi: 10.1006/brcg.2001.1402

Nicole, W., Wilhelm, F. H., Birgit, D., and Jens, B. (2015). Gender differences in experiential and facial reactivity to approval and disapproval during emotional social interactions. Front. Psychol. 6:1372. doi: 10.3389/fpsyg.2015.01372

Ostrowski, T. (2010). Self-esteem and social support in the occupational stress-subjective health relationship among medical professionals. Polish Psychol. Bull. 40, 13–19. doi: 10.2478/s10059-009-0003-5

Park, L. E., Crocker, J., and Kiefer, A. K. (2007). Contingencies of self-worth, academic failure, and goal pursuit. Pers. Soc. Psychol. Bull. 33, 1503–1517. doi: 10.1177/0146167207305538

Robins, R. W., Tracy, J. L., Trzesniewski, K., Potter, J., and Gosling, S. D. (2001). Personality correlates of self-esteem. J. Res. Pers. 35, 463–482. doi: 10.1006/jrpe.2001.2324

Rosenberg, M. (1965). Society and the Adolescent Self-Image. Princeton, NJ: Princeton University Press.

Satoh, Y. (2001). Self-disgust and self-affirmation in university students. Jpn. J. Educ. Psychol. 49, 347–358. doi: 10.5926/jjep1953.49.3_347

Savin-Williams, R. C., and Jaquish, G. A. (1981). The assessment of adolescent self-esteem: a comparison of methods. J. Pers. 49, 324–335. doi: 10.1111/j.1467-6494.1981.tb00940.x

Suen, H.-Y., Hung, K.-E., and Lin, C.-L. (2019). TensorFlow-based automatic personality recognition used in asynchronous video interviews. IEEE Access 7, 61018–61023. doi: 10.1109/access.2019.2902863

Sun, B., Zhang, Z., Liu, X., Hu, B., and Zhu, T. (2017). Self-esteem recognition based on gait pattern using Kinect. Gait Posture 58, 428–432. doi: 10.1016/j.gaitpost.2017.09.001

Tracy, J. L., and Robins, R. W. (2003). “Does pride have a recognizable expression?,” in Emotions Inside Out: 130 Years after Darwin’s the Expression of the Emotions in Man and Animals, Vol. 1000, eds P. Ekman, J. J. Campos, R. J. Davidson, and F. B. M. DeWaal (New York, NY: New York Acad Sciences), 313–315. doi: 10.1196/annals.1280.033

Watson, A. J., and Brunswik, E. (1958). Perception and the representative design of psychological experiments. Philos. Q. 8:382. doi: 10.2307/2216617

Yeagley, E., Morling, B., and Nelson, M. (2007). Nonverbal zero-acquaintance accuracy of self-esteem, social dominance orientation, and satisfaction with life. J. Res. Pers. 41, 1099–1106. doi: 10.1016/j.jrp.2006.12.002

Keywords: facial expressions, three-dimensional data, gender, logistic regression, trait self-esteem, two-way ANOVA

Citation: Wang X, Liu X, Wang Y and Zhu T (2021) How Can People Express Their Trait Self-Esteem Through Their Faces in 3D Space? Front. Psychol. 12:591682. doi: 10.3389/fpsyg.2021.591682

Received: 05 August 2020; Accepted: 04 January 2021;

Published: 04 February 2021.

Edited by:

Pietro Cipresso, Istituto Auxologico Italiano (IRCCS), Italy

Reviewed by:

Lucia Billeci, National Research Council (CNR), Italy

X. Ju, NHS Greater Glasgow and Clyde, United Kingdom

Copyright © 2021 Wang, Liu, Wang and Zhu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Xiaoqian Liu, liuxiaoqian@psych.ac.cn

Xiaoyang Wang, Xiaoqian Liu, Yuqian Wang and Tingshao Zhu